- 1 The Dina Recanati School of Medicine, Reichman University, Herzliya, Israel

- 2 Baruch Ivcher School of Psychology, Institute for Brain, Cognition and Technology, Reichman University, Herzliya, Israel

Pairing tactile with auditory stimulation has been shown to enhance the perception of various stimulus properties, including intensity, location, and comprehensibility in noise. However, the effect of adding haptics on emotional state is still poorly understood, despite the key role of bodily experiences in shaping emotional states. In the current study, we investigated the impact of a multisensory audio-tactile music experience on emotional states and anxiety levels. For this purpose, we developed an in-house algorithm and hardware that convert audio information into vibration perceivable through touch, optimized for music. We compared participants’ emotional experiences of music when provided with audio only versus audio-tactile feedback, and further investigated how allowing participants to freely select their music of choice affects the experience. Results indicate that multisensory music significantly increased positive mood and decreased state anxiety compared with the audio-only condition. These findings underscore the potential of multisensory stimulation and sensory-enhanced music-touch experiences, specifically for emotional regulation. The results were further enhanced when participants were given the autonomy to choose the musical content. We discuss the importance of multisensory enhancement and embodied experiences for emotional states. We further outline the potential of multisensory experiences for producing robust representations, and discuss specific use cases for technologies that enable controlled multisensory experiences, particularly VR headsets, which increasingly incorporate multifrequency haptic feedback through their controllers and APIs.

Introduction

Imagine yourself at a live concert, waiting for your favorite artist to take the stage. Suddenly, bright lights turn on and you see the artist step onto a magnificent stage. The visual effects, together with the performers’ striking outfits, heighten your anticipation. Then you hear the sound you love so much and feel the bass vibrating under your feet. You know every note of the opening song, which has given you hope many times in the past, but the live multisensory experience sweeps you into a wholesome feeling of happiness. This common multisensory experience results from information from multiple sensory modalities being integrated to create a coherent and robust perception of the world. This integration is essential for adapting to environmental changes and responding to stimuli efficiently (Kristjánsson et al., 2016). Beyond improving perception, multisensory manipulations have been shown to significantly affect cognition, emotions, and behavior in real-world scenarios (Shams et al., 2011; Khan et al., 2022; Cornelio et al., 2021). This indicates that when sensory signals offer congruent information, they are integrated into enhanced neural and behavioral processes. Importantly, congruent sensory input also enhances emotional experiences (Garcia Lopez et al., 2022), which may be one reason why concerts are a more unique and emotionally engaging experience than listening to music on a stereo system or headphones (Trost et al., 2024).

There is ample evidence that music listening is an effective sensory manipulation for upregulating positive emotions (e.g., happiness and relaxation) and downregulating negative emotions (Lawton, 2014; Groarke and Hogan, 2019). In particular, music has been shown to effectively reduce stress, mitigate anxiety and decrease depression symptoms, both through music therapy and through passive listening (Mas-Herrero et al., 2023; De Witte et al., 2020; Ferreri et al., 2019). However, music familiarity and preferences may mediate listeners’ emotional experiences (Van Den Bosch et al., 2013). Prior research indicates that familiarity with music enhances the perception of consonance, leading to higher pleasantness ratings (McLachlan et al., 2013), and activates brain regions associated with reward, whereas unfamiliar music elicits activity in regions related to recognition and novelty detection (Freitas et al., 2018). Furthermore, preferred music (selected by the participants) has been associated with increased dopaminergic activity in the mesolimbic system (Salimpoor et al., 2011). This suggests that familiar and preferred music would strongly affect emotional experience and could easily be incorporated into daily living, providing an accessible and cost-effective intervention with significant therapeutic potential (Finn and Fancourt, 2018; De Witte et al., 2020; Harney, 2023).

The reciprocal link between music and emotions is not surprising, as emotional experiences arise from perceiving the world through our senses (Polania et al., 2024). The circumplex model of emotion (Russell, 1980) suggests that emotional experiences can be described along two dimensions: emotional valence and emotional arousal. Valence denotes the degree to which an emotion is positive or negative, whereas arousal can be defined as the degree of subjective activation, or more simply, low versus high emotional intensity (Pessoa and Adolphs, 2010; Nejati et al., 2021). Consistent with this, a large-scale study indicated that 82% of participants use music to increase valence (Lawton, 2014), and music also alters physiological markers of arousal, including heart rate, blood pressure, and cortisol levels (De Witte et al., 2020). Indeed, neuroscientific studies indicate that sensation affects emotions very quickly and without cognitive demand (Guo et al., 2015), even when the sensory stimulus is subliminal (Roschk and Hosseinpour, 2020). This suggests that music-based sensory manipulation might be a relatively effortless way to manage emotions (Rodriguez and Kross, 2023).

In recent years, progress in multisensory technologies has become increasingly important for addressing sensory, cognitive and emotional processes (Amedi et al., 2024; Tuominen and Saarni, 2024). Sensory substitution devices, which use specific translation algorithms to convey information typically delivered by one sensory modality through a different modality (Heimler and Amedi, 2020; Heimler, Striem-Amit and Amedi, 2015), have been used to enhance various sensory experiences (Kristjánsson et al., 2016). Virtual reality (VR) likewise uses multisensory stimulation to further immerse users in generated environments (Montana et al., 2020). These technologies have helped expand the scope of multisensory enhancement research (Cornelio et al., 2021), showing that multisensory integration optimizes perception beyond what each sense can achieve individually, as demonstrated by improved spatial perception (Snir et al., 2024), speech comprehension (Ciesla et al., 2021), attention, and even memory recall using stimuli based on multisensory integration (Stein et al., 2020). Moreover, it has been suggested that multisensory stimuli in VR enhance user performance, perception, experience and sense of presence (Melo et al., 2020).

While most of the existing literature on multisensory enhancement has been confined to the visual and auditory modalities (Cornelio et al., 2021), recent work on audio-tactile stimulation suggests that it allows more intuitive substitution, requiring little or no training (rather than the tens of hours often required by visual-auditory devices, for instance; Snir et al., 2024), possibly because these modalities share common mechanisms (Russo, 2020; Senkowski and Engel, 2024). Since sound is a multimodal phenomenon that can be experienced both sonically and through vibrations (Trivedi et al., 2019), and since hearing and touch are sensitive to partially overlapping frequencies and are processed in common brain regions (Auer et al., 2007), it is reasonable to assume that coupling them will also enrich subjective experience (Melo et al., 2020). Moreover, recent evidence stresses the benefits of multisensory (audio and tactile) stimulation over audio alone for learning and sensory enhancement in healthy participants (Ciesla et al., 2019; Ciesla et al., 2022), for enriching subjective experience in terms of emotional valence, arousal, and enjoyment (Turchet et al., 2020; Garcia Lopez et al., 2022), and for yielding a greater sense of embodiment, engagement and agency (Leonardis et al., 2014). Tactile technologies on their own have also been developed to target anxiety (Faerman et al., 2022; Goncu-Berk et al., 2020). These approaches nonetheless consider the impact of tactile experience on self-ownership and self-agency, which have been proposed as a basis for the sense of embodiment (Shimada, 2022).

Returning to the concert example, the multisensory experience of hearing one’s favorite music in synchrony with the vibrating ground can thus enhance the emotional experience. VR concerts have become increasingly popular and provide more immersive experiences than simply watching on a screen; however, emphasis is usually placed on vision and audition, neglecting synchronized haptics (Venkatesan and Wang, 2023), which play an important role in both the perception and the enjoyment of music (Merchel and Altinsoy, 2020). Supporting evidence suggests that listening to recorded music without vibrotactile feedback is less perceptually rich and immersive (Ideguchi and Muranaka, 2007). In their book Musical Haptics, Papetti and Saitis (2018) explain in detail why haptic technology is the most suitable means of communicating musical information and enriching the experience of music listening. Despite the attention musical haptics have recently received, user experience evaluation methods have not yet been established, and healthcare applications remain underexplored (Remache-Vinueza et al., 2021).

Building on this literature, the current study features a novel tactile device developed by our lab in the form of two handheld balls, along with a novel algorithm, developed by one of the authors (A.S.), dedicated to transforming musical information into touch. The algorithm transforms frequency content and emphasizes various audio features in order to better match the sound content to the perceptual characteristics of the tactile modality. To further strengthen the experience, actuators with matching content are also placed on the chest and ankles. The devices use wide-frequency-response actuators on par with those in some of the most popular VR/gaming controllers (e.g., Oculus Pro and PS5). The system was tested for user experience by dozens of users (both healthy and with various mental health conditions), with overall positive subjective responses, yet the current study is the first to test the device in an experimental setting. We use this unique setup to perform a proof-of-concept examination of multisensory music and its impact on emotional experience and state anxiety markers. We hypothesize that audio-tactile music will enhance the regulatory qualities of music on emotional state to a greater extent than the same music experienced through the auditory modality alone. Based on the reviewed literature, we expect to find increased valence and decreased arousal, as measured by the Self-Assessment Manikin (SAM; Bradley and Lang, 1994), and lower STAI state anxiety scores (Spielberger, 1983). We further hypothesize that the effects of audio-tactile musical experiences will be more pronounced when participants are able to select their music of choice, due to its added emotional relevance (Fuentes-Sánchez et al., 2022).

Methods

Participants: Thirty-two healthy individuals participated in this proof-of-concept study (20 females and 11 males; mean age = 22 years, SD = 3.8). Participants were not native English speakers but were fluent in English. All provided informed consent and were not paid for participation. Participants were recruited online via the Reichman University Sona Systems platform, which facilitates student participation in psychology and neuroscience studies. The study was approved by the Institutional Review Board (IRB) of Reichman University (P_2023192).

Preparation of stimuli

To choose one instrumental musical track for the experiment, we used CrowdAI, released by Spotify (Brost et al., 2019), which contains approximately 4 million tracks. By using an instrumental track as the experimental stimulus, we sought to study the effect of music alone, as opposed to the combined effect of musical and lyrical content on emotions (Barradas and Sakka, 2022). This dataset includes eight audio features (Instrumentalness, Acousticness, Speechiness, Liveness, Tempo, Energy, Valence and Danceability). Using the Python package GSA (Heggli, 2020, GitHub repository), we excluded tracks that are very popular or completely unknown (including only tracks with between 50 and 500 followers) to minimize familiarity (Ward et al., 2014). Moreover, we included only instrumental tracks (Instrumentalness > 0.8) with high valence (>50%) and a fast tempo (>120 BPM), in order to keep participants aroused without triggering negative experiences (Pyrovolakis et al., 2022).

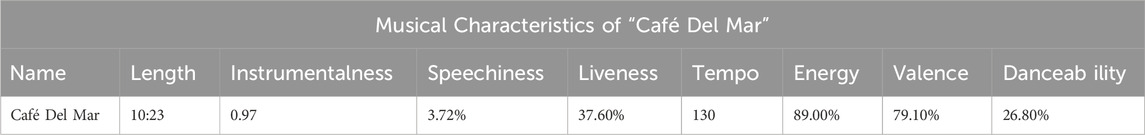

This yielded a list of 50 tracks, from which we excluded those shorter than 7 min. We then randomly selected one track: “Café Del Mar” (Michael Woods Remix) by Energy 52. The track’s features are shown in Table 1 (track features taken from Spotify for Developers, Get Track’s Audio Features (v1), n.d.; retrieved 18 October 2023 from https://developer.spotify.com/documentation/web-api/reference/get-audio-features).

Table 1. Audio feature profile of “Café Del Mar”, derived from Spotify for Developers.
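The inclusion criteria described above can be sketched as a simple filter followed by a random draw. This is a minimal illustration, not the GSA-based pipeline actually used: the `Track` record and its field names (`followers`, `instrumentalness`, `valence`, `tempo`, `duration_s`) are hypothetical stand-ins for the dataset’s columns, the follower bounds are assumed to be inclusive, and valence is assumed to be on a 0–1 scale (so “>50%” becomes `> 0.5`).

```python
import random
from dataclasses import dataclass


@dataclass
class Track:
    # Hypothetical record; field names are illustrative, not the dataset's schema.
    name: str
    followers: int
    instrumentalness: float  # 0-1
    valence: float           # 0-1
    tempo: float             # beats per minute
    duration_s: float        # track length in seconds


def passes_criteria(t: Track) -> bool:
    """Inclusion criteria described in the Methods."""
    return (
        50 <= t.followers <= 500       # neither very popular nor completely unknown
        and t.instrumentalness > 0.8   # instrumental tracks only
        and t.valence > 0.5            # high valence
        and t.tempo > 120              # fast tempo (>120 BPM)
        and t.duration_s >= 7 * 60     # drop tracks shorter than 7 min
    )


def select_stimulus(tracks, seed=None):
    """Filter the catalogue, then draw one eligible track at random."""
    pool = [t for t in tracks if passes_criteria(t)]
    if not pool:
        raise ValueError("no track satisfies the inclusion criteria")
    return random.Random(seed).choice(pool), pool


# Toy catalogue with made-up values, to show the filter in action.
catalogue = [
    Track("A", 120, 0.90, 0.70, 128, 600),   # eligible
    Track("B", 5000, 0.90, 0.70, 128, 600),  # too popular
    Track("C", 200, 0.30, 0.70, 128, 600),   # not instrumental
    Track("D", 200, 0.95, 0.60, 124, 300),   # shorter than 7 min
]
chosen, pool = select_stimulus(catalogue, seed=1)
print(chosen.name, len(pool))
```

Seeding the random draw makes the stimulus choice reproducible, which is useful when documenting how a single track was picked from the eligible pool.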

Experimental setup

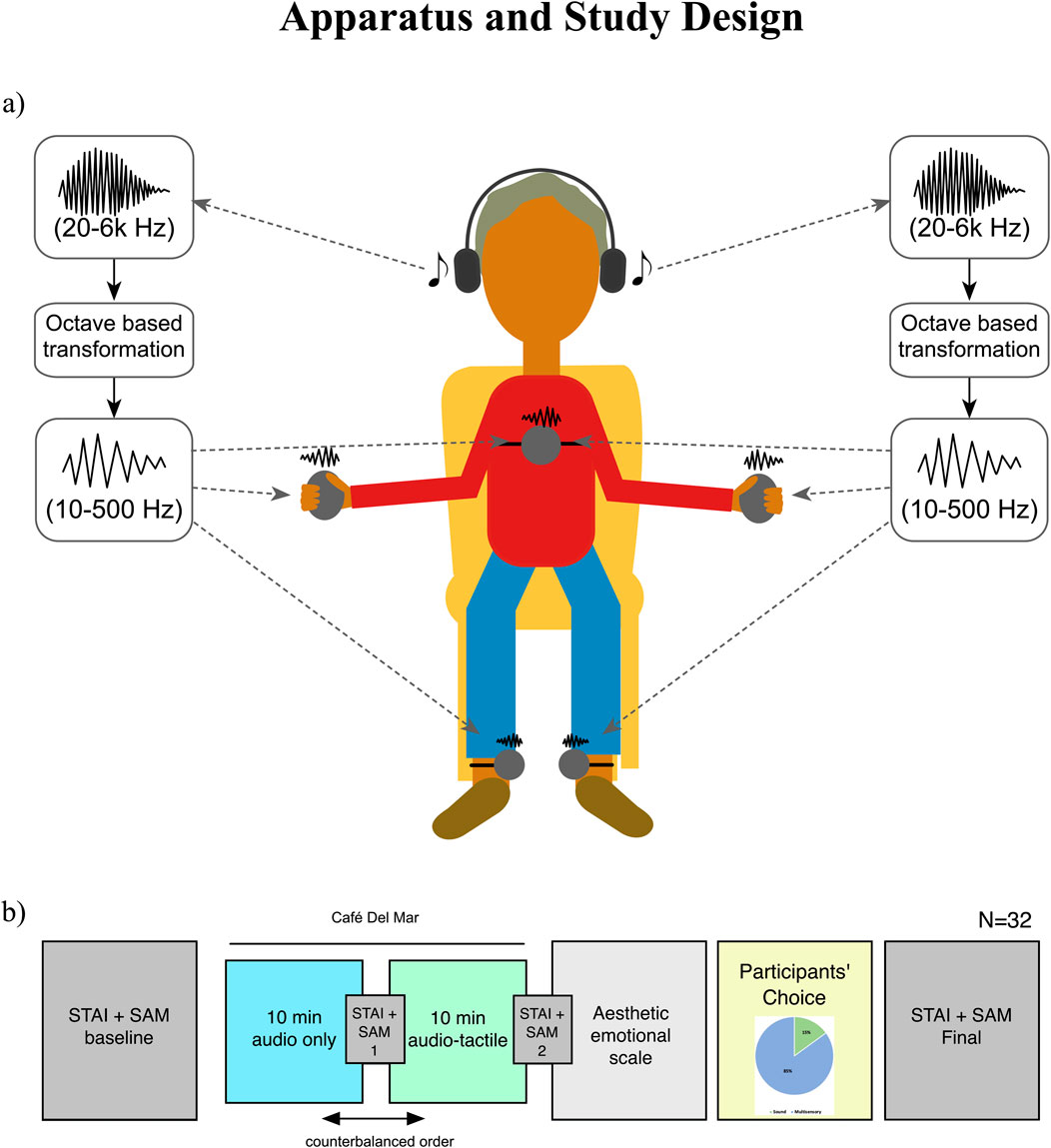

Each participant received the music through headphones (BOSE QC35 IIA), as well as vibrations on the palms, the chest and the ankles derived from separately transformed left and right audio channels. The tactile devices and conversion algorithm were developed in-house. The actuators were voice coil actuators (replacement parts for PlayStation 5 controllers) with a full response range rated between 10 and 1,050 Hz (frequency of maximum acceleration rated at approximately 65 Hz, tested at 0.85 m/s² peak-to-peak; for further details see Jagt et al., 2024). Each actuator was driven by a 3 W Class D amplifier. The actuators’ output was found to lose intensity above approximately 300 Hz. We therefore corrected the response curve using a cascaded filter, resulting in a flat response up to 300 Hz and perceivable actuation up to ∼500 Hz. The algorithm converting musical content to tactile actuation (see below) was calibrated accordingly for this frequency range (i.e., 10–500 Hz). The hand actuators were housed in 3D-printed balls with a diameter of 2.8 cm (standard stress ball size), while the ankle and chest actuators were attached to the participants’ bodies using Velcro straps (see Figure 1).
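The filters that make up the cascade are not specified in the text. As a hedged illustration only, the sketch below assumes a 48 kHz sample rate, a hypothetical +6 dB high-shelf biquad centered near the 300 Hz rolloff to lift the weaker upper range, and a second-order low-pass at 500 Hz to bound the output, both built from the standard Audio EQ Cookbook biquad formulas. The gain, corner frequencies and sample rate are placeholders, not the values used in the study.

```python
import cmath
import math


def high_shelf(f0, gain_db, fs, slope=1.0):
    """Audio EQ Cookbook high-shelf biquad, normalized so that a[0] == 1."""
    A = 10 ** (gain_db / 40)
    w0 = 2 * math.pi * f0 / fs
    cw, sq = math.cos(w0), math.sqrt(A)
    alpha = math.sin(w0) / 2 * math.sqrt((A + 1 / A) * (1 / slope - 1) + 2)
    b = [A * ((A + 1) + (A - 1) * cw + 2 * sq * alpha),
         -2 * A * ((A - 1) + (A + 1) * cw),
         A * ((A + 1) + (A - 1) * cw - 2 * sq * alpha)]
    a = [(A + 1) - (A - 1) * cw + 2 * sq * alpha,
         2 * ((A - 1) - (A + 1) * cw),
         (A + 1) - (A - 1) * cw - 2 * sq * alpha]
    return [x / a[0] for x in b], [x / a[0] for x in a]


def low_pass(f0, fs, q=1 / math.sqrt(2)):
    """Audio EQ Cookbook second-order low-pass biquad (Butterworth when q = 1/sqrt(2))."""
    w0 = 2 * math.pi * f0 / fs
    cw, alpha = math.cos(w0), math.sin(w0) / (2 * q)
    b = [(1 - cw) / 2, 1 - cw, (1 - cw) / 2]
    a = [1 + alpha, -2 * cw, 1 - alpha]
    return [x / a[0] for x in b], [x / a[0] for x in a]


def gain_db(sections, f, fs):
    """Magnitude response (dB) of biquads in series, evaluated at frequency f."""
    z1 = cmath.exp(-2j * math.pi * f / fs)  # z^-1 on the unit circle
    h = 1 + 0j
    for b, a in sections:
        h *= (b[0] + b[1] * z1 + b[2] * z1 ** 2) / (a[0] + a[1] * z1 + a[2] * z1 ** 2)
    return 20 * math.log10(abs(h))


def apply_cascade(sections, x):
    """Run samples through each biquad in turn (direct form I)."""
    for b, a in sections:
        y, x1, x2, y1, y2 = [], 0.0, 0.0, 0.0, 0.0
        for xn in x:
            yn = b[0] * xn + b[1] * x1 + b[2] * x2 - a[1] * y1 - a[2] * y2
            x2, x1, y2, y1 = x1, xn, y1, yn
            y.append(yn)
        x = y
    return x


FS = 48_000  # assumed sample rate
# Hypothetical correction: +6 dB shelf around the 300 Hz rolloff, then a 500 Hz low-pass.
correction = [high_shelf(300, 6.0, FS), low_pass(500, FS)]
for f in (50, 150, 300, 450):
    print(f"{f:4d} Hz: {gain_db(correction, f, FS):+.2f} dB")
```

The shelf has unity gain at DC and its full boost toward Nyquist, so the cascade leaves the lowest frequencies untouched while lifting the region around the shelf corner before the low-pass rolls off. In practice the shelf gain and corner would be fitted to a measured actuator response rather than chosen by hand.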

Figure 1. Actuator placement on the body and step-by-step flow of the study. (a) Participants were seated and equipped with noise-canceling headphones and five identical voice coil actuators. Two were installed within ergonomically sized plastic spheres to be held in the hands. The remaining three actuators were attached via straps to the ankles and the front center of the chest. The music was converted algorithmically to match somatosensory properties, preserving stereo separation of left and right for the hands and ankles and using a monophonic mixdown for the chest. (b) The procedure included three experiential conditions: 1. sound-only (normal music listening through headphones), 2. multisensory (audio through headphones and vibration actuators simultaneously), 3. participant-choice (free choice of music and of whether to experience it as sound-only or multisensory). STAI and SAM questionnaires were administered before and between all conditions. Thirty-two participants took part in the study.

The experimental setup included a PC for music playback and algorithmic processing, connected to an 8-output-channel sound card (ESI GIGAPORT eX). Output channels 1–2 were mapped to audio, and the following channels were mapped to haptics: channels 3–4 (hands; left and right, respectively), 5–6 (ankles; left and right, respectively) and 7 (chest; a software-based mixdown of the stereo signal to a single mono actuator). All left-side actuators received identical haptic content, as did all right-side actuators. Each side was processed as a separate algorithmic conversion stream, corresponding directly to the stereo audio channel on the same side of the body. We targeted the chest, palms, and ankles because together they span three somatotopic locations repeatedly highlighted in bodily-mapping research (see Discussion for more details).
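The channel assignment described above can be expressed as a small routing function. This is a sketch under assumptions: it works on single sample frames, assumes the per-side haptic streams have already been produced by the conversion algorithm, assumes the chest mixdown is an equal-weight average of the left and right haptic streams, and leaves output channel 8 silent. `route_frame` is a hypothetical helper, not part of the study’s Max/MSP patcher.

```python
def route_frame(audio_l, audio_r, haptic_l, haptic_r):
    """Map one audio frame and its per-side haptic samples to 8 output channels.

    List index 0 corresponds to output channel 1 on the sound card.
    """
    out = [0.0] * 8
    out[0], out[1] = audio_l, audio_r        # ch 1-2: headphones
    out[2], out[3] = haptic_l, haptic_r      # ch 3-4: left/right hand
    out[4], out[5] = haptic_l, haptic_r      # ch 5-6: left/right ankle (same content as hands)
    out[6] = 0.5 * (haptic_l + haptic_r)     # ch 7: chest, mono mixdown (assumed average)
    return out                               # ch 8 stays unused


frame = route_frame(0.2, -0.4, 1.0, 0.0)
print(frame)
```

Averaging keeps the chest signal within the same amplitude range as the side channels; other mixdown laws would be equally plausible.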

Audio-to-tactile conversion was performed by an in-house algorithm that transforms the audio signal into information perceivable by the tactile sense, using a combination of frequency compression and feature detection meant to enhance certain qualities of the musical content. The algorithm performed audio-to-tactile conversion of the streamed audio with low latency. It was designed to take information within the most prominent range of frequencies used in music (mainly between 20 and 6,000 Hz) and convert it in bands, via octave-based transformation, to a range of vibrations robustly perceivable by the human tactile system (up to 700 Hz; see Merchel and Altinsoy, 2020). Taking into account the ideal range of the actuators selected for the current project, the resulting haptic output was configured between 10 and 500 Hz. Following frequency transformation, peak detection and enhancement were applied to further emphasize moments of attack and rhythmic features (see Figure S1). (The precise algorithm is currently patent pending; for further information, contact the author A.S.) Online algorithmic transformation and channel mixing were processed within a Max/MSP patcher (Cycling ’74, version 8.5.5), which received the musical output from Spotify. BlackHole (Existential Audio, Canada; GNU General Public License version 3.0, GPLv3) was used as a virtual sound card to pass audio between the two applications.
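Because the conversion algorithm itself is patent pending and not described, the sketch below shows only a generic, hypothetical form of octave-based frequency compression: it splits the 20–6,000 Hz musical range into octave bands and maps each band edge onto the 10–500 Hz haptic range so that relative position on a logarithmic (octave) scale is preserved. The mapping function and band layout are assumptions for illustration, not the authors’ method, and the peak-detection stage is omitted.

```python
import math

SRC_LO, SRC_HI = 20.0, 6000.0  # musical range named in the Methods (Hz)
DST_LO, DST_HI = 10.0, 500.0   # haptic output range named in the Methods (Hz)


def compress_frequency(f_in):
    """Map f_in onto the haptic range, preserving its relative position in octaves."""
    if not SRC_LO <= f_in <= SRC_HI:
        raise ValueError("frequency outside the source range")
    pos = math.log2(f_in / SRC_LO) / math.log2(SRC_HI / SRC_LO)  # 0 at 20 Hz, 1 at 6 kHz
    return DST_LO * 2 ** (pos * math.log2(DST_HI / DST_LO))


def octave_bands(lo=SRC_LO, hi=SRC_HI):
    """Split [lo, hi] into octave-wide bands; the last band is truncated at hi."""
    edges = [lo]
    while edges[-1] * 2 < hi:
        edges.append(edges[-1] * 2)
    edges.append(hi)
    return list(zip(edges[:-1], edges[1:]))


for lo, hi in octave_bands():
    print(f"{lo:6.0f}-{hi:6.0f} Hz -> {compress_frequency(lo):5.1f}-{compress_frequency(hi):5.1f} Hz")
```

Under this mapping each source octave shrinks to roughly log2(50)/log2(300) ≈ 0.69 of an octave in the haptic range. A working system would additionally need to move each band’s content to its new location (for example by extracting band envelopes and resynthesizing them at the target frequencies), which this sketch does not attempt.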

Procedure

Upon arrival, each participant completed a consent form, after which the experimenter placed the actuators on their chest, ankles and hands (the latter in the form of handheld haptic spheres). Participants then provided baseline ratings. At the start of each session, participants listened to calibration tones and felt a reference vibration from the haptic actuators; each individual adjusted the audio volume and vibration amplitude until the stimulus felt clearly perceptible yet comfortable. The chosen audio level was then locked for that participant and remained constant across all experimental conditions.

Participants then listened to the same track twice (“Café Del Mar”, 10 min) under two sensory conditions, counterbalanced across participants: 1) sound-only, in which participants listened to the music over headphones while the tactile devices were deactivated, and 2) multisensory, in which participants experienced the music through headphones along with vibration emitted by the tactile devices. The study employed a within-subject design in which each participant underwent both conditions with the same musical piece. Before and after each test condition, participants rated their current emotional valence and arousal, each on a scale from 0 to 100, using the Self-Assessment Manikin (SAM; Bradley and Lang, 1994), and completed the STAI state questionnaire (Spielberger, 1983). Upon completing both conditions, participants also filled out the Aesthetic Emotional Scale questionnaire (Schindler et al., 2017). They were then asked to select one more song of their own choosing, as well as the condition under which they preferred to experience it (sound-only/multisensory). Following this added experience, each participant rated their current emotional state using the STAI and SAM as before (see Figure 1).

Analysis

To determine the number of participants needed for the study, we conducted a power analysis using G*Power software (Faul et al., 2007). For a within-subjects design with a medium effect size (Cohen’s d = 0.5), 80% power, and a significance level of α = 0.05, the required sample size was 32 participants.
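As a back-of-envelope check on this sample size, the normal approximation for a paired (within-subjects) comparison gives n = ((z₁₋α/₂ + z₁₋β) / d)². The sketch below assumes a two-sided test. G*Power’s exact noncentral-t computation for a paired t-test will typically return a slightly larger n, and neither calculation is specific to the nonparametric tests used later.

```python
import math
from statistics import NormalDist


def paired_sample_size(d, alpha=0.05, power=0.80, two_sided=True):
    """Normal-approximation sample size for a within-subjects comparison with effect size d."""
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2 if two_sided else 1 - alpha)
    z_beta = std_normal.inv_cdf(power)
    return math.ceil(((z_alpha + z_beta) / d) ** 2)


print(paired_sample_size(0.5))  # prints 32
```

With d = 0.5 the unrounded value is about 31.4, which rounds up to 32 participants.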

Shapiro–Wilk tests indicated that the normality assumption was violated (i.e., the data were not normally distributed). Therefore, to test differences in emotional valence, arousal and state anxiety scores across conditions, we conducted Friedman tests for nonparametric repeated-measures comparisons.
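The Friedman statistic ranks each participant’s scores across the k conditions and compares the rank sums Rⱼ: χ²r = 12/(nk(k+1)) ΣRⱼ² − 3n(k+1). The pure-Python sketch below implements this textbook formula with average ranks for ties but without a tie correction. `scipy.stats.friedmanchisquare` does apply a tie correction, and the study’s analyses were run in R, so values on real data may differ slightly. The ratings in the example are made up.

```python
def average_ranks(values):
    """Rank values from 1..k; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2 + 1  # mean of 1-based ranks i+1..j+1
        i = j + 1
    return ranks


def friedman_chi2(rows):
    """Friedman chi-square (no tie correction); rows = subjects, columns = conditions."""
    n, k = len(rows), len(rows[0])
    rank_sums = [0.0] * k
    for row in rows:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
    return 12 / (n * k * (k + 1)) * sum(R * R for R in rank_sums) - 3 * n * (k + 1)


# Toy example: columns = baseline, sound-only, multisensory (made-up ratings).
ratings = [[70, 68, 80], [55, 60, 72], [81, 79, 85], [64, 64, 70]]
print(friedman_chi2(ratings))
```

The statistic is compared against a chi-square distribution with k − 1 degrees of freedom, which is why the Results report χ²r with 2 degrees of freedom for three measurement points.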

Pairwise differences between conditions were tested with Wilcoxon signed-rank tests, comparing emotional change (relative to baseline) following multisensory music and following auditory-only music. Effect sizes for the nonparametric analyses were calculated using Kendall’s tau and Kendall’s W. All p values were corrected for multiple comparisons using the Bonferroni correction. All statistical tests were performed in R (Mangiafico, 2016).
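The pairwise step can be sketched as a Wilcoxon signed-rank statistic with its large-sample normal approximation, followed by a Bonferroni adjustment. This is a simplified illustration: zero differences are dropped, tied absolute differences receive average ranks, no tie or continuity correction is applied to the variance, and the function names are hypothetical. Software packages also differ in which rank sum they report as the statistic (R’s `wilcox.test` reports the positive-rank sum V for paired data, while SciPy’s two-sided `wilcoxon` reports the smaller of the two sums), so reported values may not be directly comparable.

```python
import math


def wilcoxon_signed_rank(before, after):
    """Return (W_plus, z) for paired samples using the normal approximation.

    Zero differences are dropped; tied |differences| get average ranks.
    No tie or continuity correction is applied to the variance.
    """
    diffs = [a - b for b, a in zip(before, after) if a != b]
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        for m in range(i, j + 1):
            ranks[order[m]] = (i + j) / 2 + 1  # mean of 1-based ranks i+1..j+1
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24)
    return w_plus, (w_plus - mean) / sd


def bonferroni(p_values):
    """Multiply each p value by the number of tests, capping at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Toy paired data (made-up): baseline vs. post-condition ratings.
w, z = wilcoxon_signed_rank([10, 20, 30, 40, 50], [12, 25, 29, 48, 50])
print(w, round(z, 3))
print(bonferroni([0.01, 0.04, 0.6]))
```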

Results

Emotional valence is higher following the multisensory condition

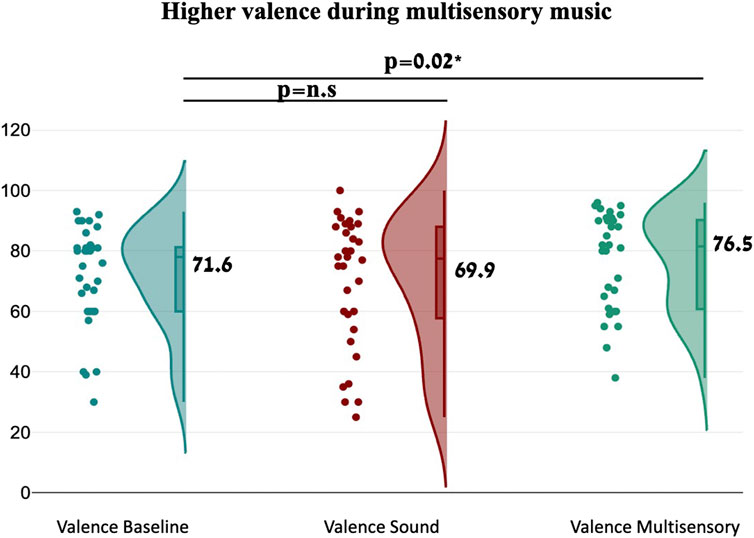

Comparing emotional valence ratings at baseline (Mean = 71.6 ± 16; Median = 78, IQR = 21), following sound-only “Café Del Mar” (Mean = 69.9 ± 21; Median = 77.5, IQR = 30) and following multisensory “Café Del Mar” (Mean = 76.5 ± 16; Median = 81.5, IQR = 29) yielded a significant Friedman repeated-measures test (χ²r(2, N = 32) = 7.14, p = 0.02, Kendall’s W = 0.112). To identify the simple effect driving this result, we conducted Wilcoxon signed-rank tests, which indicated significantly higher valence following the multisensory condition relative to baseline (W = 137.5, z = 1.72, p = 0.04, moderate effect size, Kendall’s τ = 0.30), whereas valence following the sound-only condition did not differ significantly from baseline (W = 174, z = −0.03, p = 0.4; Figure 2).

Figure 2. Valence scores between conditions. Group change in valence ratings in each music condition (sound-only and multisensory) relative to baseline. Changes in valence differed significantly between conditions, suggesting that while the multisensory experience of music increases emotional valence, the sound-only condition slightly decreases it (N = 32; Wilcoxon signed-rank test; ∗∗p < 0.01, ∗p < 0.05; Bonferroni corrections were applied to all p values).

The same analysis of emotional arousal did not yield a significant difference (χ²r(2, N = 32) = 2.29, p = 0.31) between arousal at baseline (Mean = 43.9 ± 24; Median = 49.4, IQR = 42), following the sound-only condition (Mean = 36.4 ± 24.9; Median = 35, IQR = 40.7) and following the multisensory condition (Mean = 38.7 ± 27.1; Median = 34.5, IQR = 36; W = 192.5, z = 0.07, p = 0.09).

State anxiety decreases only following the multisensory condition

Comparing STAI scores at baseline (Mean = 33.7 ± 9; Median = 34, IQR = 13), following the sound-only condition (Mean = 33.1 ± 12; Median = 29, IQR = 12) and following the multisensory condition (Mean = 29.7 ± 7; Median = 28, IQR = 14) yielded a significant Friedman repeated-measures test (χ²r(2, N = 32) = 8.31, p = 0.015, Kendall’s W = 0.13). Pairwise comparisons of each music condition with baseline using Wilcoxon signed-rank tests revealed lower state anxiety scores following the multisensory condition (W = 251, z = 2.55, p = 0.02) but not following the sound-only condition (W = 178.5, z = 1.7, p = 0.17; see Figure 3a).

Figure 3. State anxiety (STAI) scores between conditions. (a) STAI scores at baseline and following the sound-only and multisensory conditions (N = 32; Friedman test for repeated measures/Wilcoxon signed-rank tests; ∗∗p < 0.01, ∗p < 0.05; Bonferroni corrections were applied to all p values). (b) Scores of the eight participants with the highest STAI baseline scores, showing lower STAI ratings following the multisensory condition.
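Kendall’s W for a Friedman test follows directly from the test statistic as W = χ²r / (N(k − 1)). With N = 32 participants and k = 3 measurement points, the χ²r values reported in the Results can be converted as below; this is a worked check of the arithmetic, not a re-analysis of the data.

```python
def kendalls_w(chi2_r, n, k):
    """Kendall's W from a Friedman chi-square with n subjects and k conditions."""
    return chi2_r / (n * (k - 1))


# Friedman chi-square values reported in the Results (N = 32, k = 3).
reported = {"valence": 7.14, "arousal": 2.29, "STAI": 8.31}
for measure, chi2_r in reported.items():
    print(f"{measure}: W = {kendalls_w(chi2_r, n=32, k=3):.3f}")
```

These reproduce the reported W = 0.112 for valence and W = 0.13 for state anxiety to the stated precision.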

STAI scores range from a minimum of 20 to a maximum of 80. The full range is commonly divided into three classifications: low anxiety (20–37), moderate anxiety (38–44), and high anxiety (45–80; Kayikcioglu et al., 2017). Eight participants in our sample had baseline state anxiety scores within the moderate-to-high range. Their STAI scores are plotted in Figure 3b and show a common tendency to decrease following multisensory music.
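The STAI classification above can be expressed as a small helper that also picks out participants with elevated baseline anxiety. This sketch assumes integer total scores and uses made-up participant IDs and scores purely for illustration.

```python
def stai_category(score: int) -> str:
    """Classify a STAI state total score using the 20-37 / 38-44 / 45-80 bands."""
    if not 20 <= score <= 80:
        raise ValueError("STAI totals range from 20 to 80")
    if score <= 37:
        return "low"
    if score <= 44:
        return "moderate"
    return "high"


# Hypothetical baseline scores keyed by made-up participant IDs.
baseline = {"p01": 33, "p02": 41, "p03": 52, "p04": 37}
elevated = sorted(pid for pid, s in baseline.items() if stai_category(s) != "low")
print(elevated)
```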

Preference for multisensory experience and associations between subjective responses and valence

After experiencing both conditions, participants were asked to select a condition of choice (sound-only or multisensory) for their next song. Twenty-eight participants (88%) chose to experience their song in a multisensory fashion, while only four chose the sound-only condition. Examining the relationship between emotional ratings following the chosen condition and the Aesthetic Emotional Scale revealed small-to-medium correlations with valence, but not with arousal ratings. Significant correlations with valence were found for the items “The experience fascinated me” (r = 0.42, p = 0.02), “Felt something wonderful” (r = 0.41, p = 0.02), “Was impressed” (r = 0.44, p = 0.01) and “Made me happy” (r = 0.38, p = 0.03).

Significantly enhanced effects when participants select their music of choice

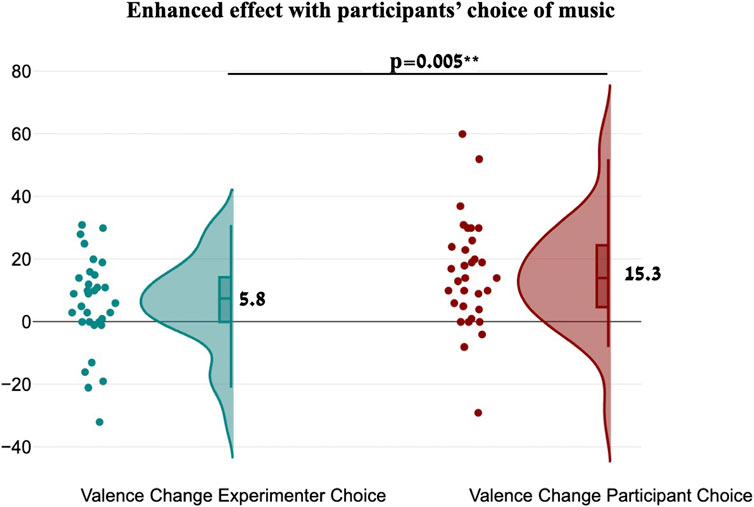

Participants were given the choice to experience any song they wished under their condition of choice (sound-only/multisensory). Emotional ratings following this self-selected experience were compared with baseline to measure the emotional change induced by each participant’s song of choice. This change was then compared with the change each participant experienced with “Café Del Mar” under the same condition. A Wilcoxon signed-rank test yielded a significant difference in valence change following “Café Del Mar” (Mean = 5.8 ± 13; Median = 8, IQR = 11) relative to participants’ song of choice (Mean = 15.3 ± 17; Median = 14, IQR = 19), showing greater efficacy for participants’ choice (W = 411.5, z = 3.67, p = 0.005, τ = 0.65). Changes in arousal did not differ significantly (W = 335.5, z = 1.32, p = 0.36) (see Figure 4; for details regarding participants’ songs of choice, see also Table S1).

Figure 4. Valence ratings for the experimenter-selected versus participant-selected track. Valence ratings following participants’ song of choice compared with “Café Del Mar” (a standard playlist intended for relaxation purposes) (N = 32; Wilcoxon signed-rank tests; ∗∗∗p < 0.001, ∗∗p < 0.01, ∗p < 0.05; Bonferroni corrections were applied to all p values).

Discussion

Audio-tactile enhancement of valence and state anxiety but not arousal

The current experiment set out to explore the potential effect of a multisensory (audio-tactile) experience on emotional state during music listening. We investigated this using our tactile setup, which uses actuators with a wide frequency response, and our novel algorithm, designed specifically to transform musical content so that it is perceivable through touch (patent pending). We originally hypothesized that a combined audio-tactile musical experience would show added effects on valence and arousal, as well as on state anxiety markers, relative to auditory-only music listening. We found that adding our haptic device indeed had a significant effect on valence and on self-reported state anxiety, but did not affect emotional arousal (see Figures 2, 3). We further hypothesized that participants would experience added effects when selecting the musical content themselves. The effect of self-choice was indeed significant, particularly for valence, which further highlights the additive impact of combining tactile information with auditory musical experiences. Below we discuss our findings in light of the existing literature, considering a number of influential factors, including multisensory perception and its neural substrates, features of tactile awareness and embodiment, and the effects of agency in musical selection. We consider each of these in relation to our findings on emotional states.

Perceptual and attentional enhancement as a result of combined audio-tactile sensory stimulation

First, we discuss the enhancement of the experience caused by multisensory integration of two modalities in tandem (auditory and tactile information) as opposed to only one. The enhancement of distinct sensory stimuli is a key feature of multisensory integration (Diederich and Colonius, 2004; Peiffer et al., 2007; Khan et al., 2022). Specifically, combining sensory inputs can amplify or suppress incoming signals: when both salient and weak perceptual stimuli are presented, the more salient stimuli are enhanced while the weaker ones are suppressed (Bauer et al., 2015). This mechanism reduces perceptual uncertainty and enables the brain to make more reliable decisions by matching information across senses (Shams et al., 2011; Lu et al., 2016).
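One standard way to formalize how combining senses reduces perceptual uncertainty is the maximum-likelihood (reliability-weighted) cue-combination model. It is included here only as an illustration of the principle: it assumes independent, Gaussian-distributed auditory (A) and tactile (T) estimates with variances σ_A² and σ_T², and it was not fitted to data in this study.

```latex
\hat{s}_{AT} = w_A\,\hat{s}_A + w_T\,\hat{s}_T, \qquad
w_A = \frac{\sigma_T^2}{\sigma_A^2 + \sigma_T^2}, \qquad
w_T = 1 - w_A = \frac{\sigma_A^2}{\sigma_A^2 + \sigma_T^2}

\sigma_{AT}^2 = \frac{\sigma_A^2\,\sigma_T^2}{\sigma_A^2 + \sigma_T^2} \le \min\left(\sigma_A^2, \sigma_T^2\right)
```

Because the combined variance is never larger than either single-cue variance, the bimodal estimate is at least as reliable as the better unimodal one, and the more reliable cue receives the larger weight.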

The use of auditory and tactile stimuli in particular (as opposed to audio-visual stimuli, for instance) may further strengthen the multisensory effect. Several studies have now demonstrated immediate effects on auditory experience and enhanced auditory capabilities induced by simultaneous tactile stimulation (for more details, see the Introduction). These include, among others, enhanced music perception (Russo et al., 2012; Young et al., 2016), speech perception (Ciesla et al., 2019; Ciesla et al., 2022; Fletcher et al., 2020), auditory localization (Snir et al., 2024; Snir et al., 2024; Fletcher, 2021) and instant audio-tactile binding within complex environments (Snir et al., 2025). Other parallels have also been demonstrated: musicians, who are known for enhanced auditory detection abilities, also show better detection of emotion and frequency through touch than non-musician controls (Sharp et al., 2019; Sharp et al., 2019b). Auditory stimulation has also been shown to bias tactile perception of sinusoidal “sweeps” (Landelle et al., 2023).

The current findings support this view, showing that multisensory integration of audio-tactile music (relative to unisensory music) enhances the upregulation of positive emotions and the downregulation of state anxiety. Unlike unisensory music studies in which both emotional valence and arousal mediate emotion regulation and pleasure (Salimpoor et al., 2009), here multisensory stimulation affected valence but not arousal. Effects on valence were expected, as music is known to improve mood, particularly when experienced multisensorily (Yu et al., 2019). Surprisingly, arousal did not differ significantly, contrary to prior findings on novel sensory experiences showing that unfamiliar music increases electrodermal responses, which are strongly related to emotional arousal (Van Den Bosch et al., 2013). However, the relaxing content of the music may have reduced arousal to some degree (Baccarani et al., 2023), which may also explain the lack of change in arousal following participants’ song of choice relative to baseline. At the same time, the novelty of haptics and of the multisensory experience of music offered in the current experiment might have increased general arousal beyond the effect of music familiarity (Park et al., 2019; Baccarani et al., 2023), so that these opposing influences may have offset each other. Our arousal results thus differ from those of Siedenburg et al. (2024), who found that vibrotactile enhancement was strong in the latent dimension of ‘musical engagement’, encompassing the sense of being part of the music, arousal, and groove, highlighting the potential of vibrotactile cues for creating intense musical experiences.

Effects on emotion have also been demonstrated in audio-visual processing. Enhanced processing of audio-visual emotional stimuli shows that multisensory integration contributes to a coherent sense of self and an intensified sense of presence (Yu et al., 2019). Moreover, it has been suggested that emotional stimuli may sensitize all sensory modalities (Sharvit et al., 2019) by modifying early sensory responses across visual, auditory and somatosensory modalities, under the control of supramodal networks for the emotional regulation of perception and attention (Domínguez-Borràs et al., 2017; Vuilleumier, 2005; Domínguez-Borràs and Vuilleumier, 2013). This further strengthens the view that multimodal stimulation may be key to enhancing sensory experiences and their potential effect on emotional regulation.

Neural substrates of auditory and tactile processing

Multisensory auditory-visual perception has been studied much more extensively than auditory-tactile perception. Nonetheless, the expanding research on auditory-tactile processing points to both neural and psychophysical reasons for the immediacy and affective qualities of such integration (Merchel and Altinsoy, 2020). These effects may be due to similarities between the two senses as well as the proximity of the brain regions in which this information is processed (Wu et al., 2015). For instance, both the auditory and somatosensory systems encode frequency, over partially overlapping frequency ranges (Pongrac, 2008; Merchel and Altinsoy, 2020; Bolanowski et al., 1988). Yet the link may also have deeper roots in central nervous system processing, and some authors claim that interactions between the two systems may be inherent (Bernard, 2022). A number of neuroimaging studies have demonstrated the convergence of auditory and tactile neural pathways in the brain, showing that vibrotactile stimulation can activate the auditory cortex (Auer et al., 2007; Levänen et al., 1998; Schürmann et al., 2006). Furthermore, multisensory areas originally understood to respond to auditory and visual stimulation have been shown to respond to tactile stimulation as well (Beauchamp et al., 2008). Other work has used texture, an object feature conveyed by auditory, somatosensory and motor cues, to show shared activity in primary auditory and somatosensory areas during effective discrimination (Landelle et al., 2023).

Tactile emotional enhancement due to embodiment via tactile stimulation

Tactile experiences play a crucial role in how individuals perceive and interact with their surroundings, in both physical and virtual environments. Touch provides critical feedback for body ownership and agency, shaping our perception of reality (Aoyagi et al., 2021; Cui and Mousas, 2023). Self-ownership and self-agency are both grounded in bodily sensorimotor processing and together constitute the embodied self, the self that is situated in the “here and now” (Gallagher, 2000). Self-ownership arises from bodily multisensory integration, whereas self-agency arises from sensorimotor integration related to intentional movement (Shimada, 2022). This perspective suggests that tactile stimulation, particularly when delivered at various positions along the body, may affect embodiment of the musical experience by directing self-awareness towards different body locations and by modulating immersion and body sensations (Putkinen et al., 2024; Cavdir, 2024). Hence, in the current study, stimulation sites were chosen for their high sensitivity to emotional impact. Specifically, the chest is sensitive to every high-arousal emotion, reflecting the cardiorespiratory changes that listeners consistently feel in the heart–lung area (Nummenmaa et al., 2014; Nummenmaa et al., 2018; Putkinen et al., 2024). The palms are among the most touch-sensitive surfaces of the body, making them well suited for conveying the energizing aspects of music-evoked affect (Ackerley et al., 2014). Finally, the ankles extend stimulation to the lower-limb circuitry engaged in approach-oriented and rhythm-entrained states (e.g., dancing), allowing us to engage locomotor embodiment without obstructing other sensors (Hove and Risen, 2009). Stimulating this triad therefore covers core interoceptive arousal, upper-extremity action, and lower-extremity movement, giving a balanced yet practical embodiment profile for modulating mood (Putkinen et al., 2024).

Indeed, research into VR concert experiences indicates that tactile stimulation increases the sense of presence, connection, and immersion in musical experiences (Venkatesan and Wang, 2023). Neuroscientific investigation suggests that when extrinsic body information (derived primarily from visual and auditory inputs) and intrinsic body information (derived from sensorimotor inputs) are consistent, the brain recognizes the body as self, generating a sense of ownership and a sense of agency (Shimada, 2022). Relatedly, many genres of music activate the supplementary motor area (SMA), which controls internally generated movements, illustrating the involuntary motor planning evoked by a musical piece (Wu et al., 2015) even in the absence of overt movement (Zatorre et al., 2007; Maes et al., 2014); this may contribute to the increased sense of self-agency in audio-tactile music experiences. Music listening has further been shown to promote brain plasticity and connectivity in structures related to sensory processing, memory formation and motor function, and to stimulate complex cognition and multisensory integration, demonstrating that music listening involves somatosensory processing (Wu et al., 2019). Furthermore, motor responses such as dancing or tapping to a rhythm are directly associated with music as an automatic response to auditory processing (Repp and Penel, 2004). In fact, for people who are deaf, body vibration is the only means of experiencing music, and many of them are able to enjoy music and dance to it using only such cues (Tranchant et al., 2017). Such findings suggest that the enhanced emotional effects of the added tactile actuation observed here may be driven in part by enhanced embodiment of the music.
Finally, embodiment theories approach the perception of music (or any other salient sensory stimulus) as always being in relation to the “self”, taking one’s body as a reference frame while considering all sensory, motor and affective processes (Tajadura-Jiménez, 2008; Damasio, 1999; Niedenthal, 2007). From this perspective, we suggest that the enhanced emotional response to audio-tactile music may be due to enhanced embodiment, caused by changing the frame of reference through the use of haptics.

Enhanced effects when the music is chosen by the participants

Emotional responses to music largely depend on the music, the listener and the situation; hence there is no universal music or sound that will trigger the same emotional reaction in everyone (Tajadura-Jiménez, 2008). By enabling free choice of any musical piece with instantaneous tactile feedback, the current setup provided the opportunity to compare the impact of musical selection on valence and arousal. The current study shows that multisensory music enhances emotional valence (but not arousal), and that this enhancement is even greater when participants choose the music they would like to experience. There thus appears to be an additive effect on valence of multisensory integration and musical choice. Liljeström et al. (2013) argue that self-selected music enhances positive valence (but not arousal) because it offers a greater sense of control over the situation. Moreover, evidence has linked user control over music devices (mp3 player, radio, computer) to positive mood responses, and in the current study we believe the design of the handheld haptics may have further contributed to a sense of control, partially explaining the multisensory enhancement of emotional valence (Krause et al., 2015). Another possible explanation is that musical preference is strongly correlated with pleasure (positive valence), but less so with relaxation (low arousal; Ho and Loo, 2023; Fuentes-Sánchez et al., 2022). Consistent with this, a comparison of functional connectivity following experimenter-chosen versus participant-chosen music showed that largely familiar pieces generate the most functional connections and promote brain plasticity and connectivity in structures related to sensory processing, memory formation and motor function, while stimulating complex cognition and multisensory integration (Wu et al., 2019).
Additionally, listening to self-chosen music increased activity in reward regions such as the ventral striatum and nucleus accumbens (Singer et al., 2023) and activated cerebral µ-opioid receptors (Putkinen et al., 2024). Taken together, these findings suggest that the emotional benefits of multisensory music are amplified by self-selection, and potentially by an enhanced sense of control afforded by handheld haptics, likely engaging reward-related brain regions and reinforcing the pleasure of personally meaningful music. Nonetheless, further research is needed to understand the impact of the device design on users’ sense of agency and its potential benefit to the overall experience.

Potential for use and development

As mentioned above, technological interventions are becoming increasingly important for addressing emotional regulation. Our device, which is based on the type of coils embedded in gaming/VR controllers, shows that such hardware can help enhance the emotional effects of musical experiences. VR setups in particular thus provide an off-the-shelf opportunity to leverage immersive environments and engage users in ways that traditional methods cannot, through highly controlled multisensory stimulation covering all three primary sensory modalities: visual, auditory, and tactile (Montana et al., 2020). Although music-based VR interventions often focus primarily on audio and visual elements (Tuominen and Saarni, 2024), we argue, based on both our own findings and data collected by other researchers, that the integration of haptics in particular may have robust effects on the emotional experience of music (Siedenburg et al., 2024; Haynes et al., 2021). Indeed, growing interest in haptic capabilities within both the VR and neuroscientific communities has clarified the importance of proper tactile embedding, particularly for experiences centered on auditory processing (Yang et al., 2021; Spence and Gao, 2024; Zhao et al., 2021). Unlike most existing solutions, our device (and the conversion algorithm in particular) offers three key advantages: 1) it allows low-latency, live conversion of audio to tactile stimulation; 2) it embeds in the haptics a representation of almost the entire audio frequency range, converted to a somatosensory perceivable frequency range; 3) it is based on existing actuators and does not require unique or specially manufactured vibration hardware.

The audio-to-tactile conversion algorithm used in the current study differs from basic playback of music through tactile coils, which often attenuates frequencies above a certain cutoff (often anything above 200–400 Hz), in effect allowing only the low-end content of the music to be perceived. Such a coil design is sensible because it keeps the vibration devices from being audible and, more importantly, because this is the main frequency range in which the somatosensory system can perceive vibrations (Merchel and Altinsoy, 2020). In contrast, the music-to-tactile algorithm used in the current study converts higher-frequency content into this range, embedding more of the auditorily perceived content into the tactile signal. Further research is needed to fully understand the impact of this particular algorithm relative to others, in particular its effects on musical experience compared with tactile stimulation using other conversions or none, or with vibration unrelated to the musical content.
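To make the contrast between low-pass playback and frequency conversion concrete, the following is a minimal sketch of one generic way to fold audible frequencies into a tactile band. It is not the study's in-house algorithm, whose internals are not described here; the bounds (20 Hz to 20 kHz for audio, 30 to 300 Hz for touch), the log-linear mapping, and the function names `lowpass_passthrough` and `map_to_tactile` are all hypothetical choices made for illustration.

```python
import math
from typing import Optional

# Illustrative bounds: assumptions for this sketch, not the study's parameters.
AUDIO_MIN_HZ = 20.0
AUDIO_MAX_HZ = 20_000.0
TACTILE_MIN_HZ = 30.0
TACTILE_MAX_HZ = 300.0


def lowpass_passthrough(freq_hz: float, cutoff_hz: float = 300.0) -> Optional[float]:
    """Hypothetical model of basic coil playback: components above the cutoff are lost."""
    return freq_hz if freq_hz <= cutoff_hz else None


def map_to_tactile(freq_hz: float) -> float:
    """Hypothetical log-linear compression of the audible range into the tactile band.

    Equal frequency ratios (e.g. octaves) in the input become equal but smaller
    ratios in the output, so the ordering of pitches is preserved.
    """
    f = min(max(freq_hz, AUDIO_MIN_HZ), AUDIO_MAX_HZ)  # clamp to the audible range
    # Relative position of f on a log axis between the audio bounds, in [0, 1].
    pos = math.log(f / AUDIO_MIN_HZ) / math.log(AUDIO_MAX_HZ / AUDIO_MIN_HZ)
    # Place the frequency at the same relative position on a log axis of the tactile band.
    return TACTILE_MIN_HZ * (TACTILE_MAX_HZ / TACTILE_MIN_HZ) ** pos


if __name__ == "__main__":
    for f in (60.0, 250.0, 1000.0, 4000.0, 12000.0):
        print(f"{f:>8.0f} Hz  low-pass: {lowpass_passthrough(f)}  mapped: {map_to_tactile(f):.1f} Hz")
```

With these assumed bounds, a 1 kHz component is discarded by the low-pass model but survives the mapping as a vibration inside the tactile band. Because a 1000:1 audio range is squeezed into a 10:1 tactile range, one audio octave becomes a ratio of only about 2^(1/3); whether such compressed differences remain discriminable through the skin, where frequency resolution is much coarser than in hearing, is exactly the kind of question the comparative research called for above would need to address.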

Although the current study investigated emotional effects in particular, the application may be relevant more broadly. One such aspect is the enhanced effect on the musical experience when participants choose the music themselves. Notably, allowing participants free choice resulted in a variety of musical genres (see Table S1 in supplementary material for a detailed description of the music chosen by participants), yet the effects remained intact. On the one hand, this points to the potential importance of tactile experiences for the effects of music regardless of musical style; on the other, it strengthens the claim that the algorithm and setup robustly transform various types of musical content.

Limitations and future directions

The study incorporated an in-house algorithm to create an enhanced audio-tactile musical experience. Further research is needed to compare the emotional impact of our synchronous audio conversion algorithm with that of other audio-to-tactile devices that do not embed such algorithmic conversion. Furthermore, as this was a proof-of-concept study, the hardware, although built with multi-frequency actuators similar to those embedded in Meta controllers, was not yet integrated into an actual VR headset. Future work includes fully integrating the algorithm into an existing VR headset using only its included hardware and software, ensuring both hardware compatibility (audio playback, haptics delivered directly from the controllers) and algorithmic compatibility with the existing APIs. Such studies should also incorporate visual feedback, in order to better assess the influence of all three sensory modalities and compare the impact of each modality separately and in combination. Finally, the current study assumes that synchronized, well-matched audio-tactile content is important for the effects of multisensory integration; further studies using stimulation that is asynchronous in timing and mismatched in content are warranted to assess the importance of integrated multisensory stimulation for emotional markers.

To better assess the therapeutic potential of a music-based audio-tactile intervention, future studies should also include participants with particular difficulties in emotional regulation. These could include anxiety and stress-related disorders (e.g., chronic anxiety, acute stress disorder, post-traumatic stress disorder). Future work should also examine longitudinal use of such a device and its long-term effects in these populations. Other groups to consider include individuals with hearing impairments, who often have limited access to music both because of their reduced hearing capabilities (often a reduced range of perceivable frequencies) and because of the acoustic complexity of musical content.

Conclusion

Our findings highlight the role of haptic feedback and self-agency in enhancing emotional responses to music, with audio-tactile music increasing positive valence and reducing state anxiety. These results underscore the importance of multisensory integration in emotion regulation and have implications for therapeutic applications and accessibility. In virtual reality (VR), combining audio-tactile music with immersive environments could enhance presence, emotional engagement, and user control. Future research should explore these interactions to advance VR-based interventions, interactive media, and digital solutions for wellbeing.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by Reichman University Institutional Review Board and Research Compliance Office. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

NS: Conceptualization, Data curation, Formal Analysis, Investigation, Methodology, Project administration, Validation, Writing – original draft, Writing – review and editing, Visualization. AS: Conceptualization, Formal Analysis, Funding acquisition, Methodology, Software, Supervision, Visualization, Writing – original draft, Writing – review and editing. AA: Conceptualization, Funding acquisition, Resources, Supervision, Writing – original draft, Writing – review and editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This project has received funding as part of the GuestXR project by the European Union’s Horizon 2020 research and innovation programme under grant agreement No 101017884 (AA) and the European Research Council proof of concept grant under project acronym TouchingSpace360 (AA); this research was also supported by the Israel Science Foundation under Grant No. 3709/24 (AA).

Acknowledgments

We would like to thank Uri Barak for assisting with the statistical analysis and Darya Hadas for her design and support with the hardware.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The handling editor JSA declared a past co-authorship with the author AA.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2025.1592652/full#supplementary-material

References

Ackerley, R., Saar, K., McGlone, F., and Backlund Wasling, H. (2014). Quantifying the sensory and emotional perception of touch: differences between glabrous and hairy skin. Front. Behav. Neurosci. 8, 34. doi:10.3389/fnbeh.2014.00034

Amedi, A., Shelly, S., Saporta, N., and Catalogna, M. (2024). Perceptual learning and neural correlates of virtual navigation in subjective cognitive decline: a pilot study. iScience 27 (12), 111411. doi:10.1016/j.isci.2024.111411

Aoyagi, K., Wen, W., An, Q., Hamasaki, S., Yamakawa, H., Tamura, Y., et al. (2021). Modified sensory feedback enhances the sense of agency during continuous body movements in virtual reality. Sci. Rep. 11 (1), 2553. doi:10.1038/s41598-021-82154-y

Auer Jr, E. T., Bernstein, L. E., Sungkarat, W., and Singh, M. (2007). Vibrotactile activation of the auditory cortices in deaf versus hearing adults. Neuroreport 18 (7), 645–648. doi:10.1097/wnr.0b013e3280d943b9

Baccarani, A., Donnadieu, S., Pellissier, S., and Brochard, R. (2023). Relaxing effects of music and odors on physiological recovery after cognitive stress and unexpected absence of multisensory benefit. Psychophysiology 60 (7), e14251. doi:10.1111/psyp.14251

Barradas, G. T., and Sakka, L. S. (2022). When words matter: a cross-cultural perspective on lyrics and their relationship to musical emotions. Psychol. Music 50 (2), 650–669. doi:10.1177/03057356211013390

Bauer, J., Dávila-Chacón, J., and Wermter, S. (2015). Modeling development of natural multi-sensory integration using neural self-organisation and probabilistic population codes. Connect. Sci. 27 (4), 358–376. doi:10.1080/09540091.2014.971224

Beauchamp, M. S., Yasar, N. E., Frye, R. E., and Ro, T. (2008). Touch, sound and vision in human superior temporal sulcus. Neuroimage 41 (3), 1011–1020. doi:10.1016/j.neuroimage.2008.03.015

Bernard, C. (2022). Perception of audio-haptic textures for new touchscreen interactions. (Doctoral dissertation, TU Delft). Available online at: https://hal.science/tel-03591014/.

Bolanowski Jr, S. J., Gescheider, G. A., Verrillo, R. T., and Checkosky, C. M. (1988). Four channels mediate the mechanical aspects of touch. J. Acoust. Soc. Am. 84 (5), 1680–1694. doi:10.1121/1.397184

Bradley, M. M., and Lang, P. J. (1994). Measuring emotion: the self-assessment manikin and the semantic differential. J. Behav. Ther. Exp. Psychiatry 25 (1), 49–59. doi:10.1016/0005-7916(94)90063-9

Brost, B., Mehrotra, R., and Jehan, T. (2019). “The music streaming sessions dataset,” in The world wide web conference, 2594–2600.

Cavdir, D. (2024). “Auditory-tactile narratives: designing new embodied auditory-tactile mappings using body maps,” in Proceedings of the 19th international audio mostly conference: explorations in sonic cultures, 105–115.

Cieśla, K., Wolak, T., Lorens, A., Heimler, B., Skarżyński, H., and Amedi, A. (2019). Immediate improvement of speech-in-noise perception through multisensory stimulation via an auditory to tactile sensory substitution. Restor. Neurology Neurosci. 37 (2), 155–166. doi:10.3233/rnn-190898

Ciesla, K., Wolak, T., Lorens, A., Skarżyński, H., and Amedi, A. (2021). Speech-to-touch sensory substitution: a 10-decibel improvement in speech-in-noise understanding after a short training.

Cieśla, K., Wolak, T., Lorens, A., Mentzel, M., Skarżyński, H., and Amedi, A. (2022). Effects of training and using an audio-tactile sensory substitution device on speech-in-noise understanding. Sci. Rep. 12 (1), 3206. doi:10.1038/s41598-022-06855-8

Cornelio, P., Velasco, C., and Obrist, M. (2021). Multisensory integration as per technological advances: a review. Front. Neurosci. 15, 652611. doi:10.3389/fnins.2021.652611

Cui, D., and Mousas, C. (2023). Exploring the effects of virtual hand appearance on midair typing efficiency. Comput. Animat. Virtual Worlds 34 (3-4), e2189. doi:10.1002/cav.2189

Damasio, A. R. (1999). How the brain creates the mind. Sci. Am. 281 (6), 112–117. doi:10.1038/scientificamerican1299-112

De Witte, M., Spruit, A., van Hooren, S., Moonen, X., and Stams, G. J. (2020). Effects of music interventions on stress-related outcomes: a systematic review and two meta-analyses. Health Psychol. Rev. 14 (2), 294–324. doi:10.1080/17437199.2019.1627897

Diederich, A., and Colonius, H. (2004). Bimodal and trimodal multisensory enhancement: effects of stimulus onset and intensity on reaction time. Percept. Psychophys. 66 (8), 1388–1404. doi:10.3758/bf03195006

Domínguez-Borràs, J., and Vuilleumier, P. (2013). “Affective biases in attention and perception,” in Handbook of human affective neuroscience, 331–356.

Domínguez-Borràs, J., Rieger, S. W., Corradi-Dell'Acqua, C., Neveu, R., and Vuilleumier, P. (2017). Fear spreading across senses: visual emotional events alter cortical responses to touch, audition, and vision. Cereb. Cortex 27 (1), 68–82. doi:10.1093/cercor/bhw337

Faerman, M. V., Ehgoetz Martens, K. A., Meehan, S. K., and Staines, W. R. (2022). Neural correlates of trait anxiety in sensory processing and distractor filtering. Psychophysiology 62, e14706. doi:10.1111/psyp.14706

Faul, F., Erdfelder, E., Lang, A.-G., and Buchner, A. (2007). G*Power 3: a flexible statistical power analysis program for the social, behavioral, and biomedical sciences. Behav. Res. Methods 39, 175–191. doi:10.3758/bf03193146

Ferreri, L., Mas-Herrero, E., Zatorre, R. J., Ripollés, P., Gomez-Andres, A., Alicart, H., et al. (2019). Dopamine modulates the reward experiences elicited by music. Proc. Natl. Acad. Sci. 116 (9), 3793–3798. doi:10.1073/pnas.1811878116

Finn, S., and Fancourt, D. (2018). The biological impact of listening to music in clinical and nonclinical settings: a systematic review. Prog. Brain Res. 237, 173–200. doi:10.1016/bs.pbr.2018.03.007

Fletcher, M. D. (2021). Can haptic stimulation enhance music perception in hearing-impaired listeners? Front. Neurosci. 15, 723877. doi:10.3389/fnins.2021.723877

Fletcher, M. D., Song, H., and Perry, S. W. (2020). Electro-haptic stimulation enhances speech recognition in spatially separated noise for cochlear implant users. Sci. Rep. 10 (1), 12723. doi:10.1038/s41598-020-69697-2

Freitas, C., Manzato, E., Burini, A., Taylor, M. J., Lerch, J. P., and Anagnostou, E. (2018). Neural correlates of familiarity in music listening: a systematic review and a neuroimaging meta-analysis. Front. Neurosci. 12, 686. doi:10.3389/fnins.2018.00686

Fuentes-Sánchez, N., Pastor, R., Eerola, T., Escrig, M. A., and Pastor, M. C. (2022). Musical preference but not familiarity influences subjective ratings and psychophysiological correlates of music-induced emotions. Personality Individ. Differ. 198, 111828. doi:10.1016/j.paid.2022.111828

Gallagher, S. (2000). Philosophical conceptions of the self: implications for cognitive science. Trends Cognitive Sci. 4 (1), 14–21. doi:10.1016/s1364-6613(99)01417-5

García López, Á., Cerdán, V., Ortiz, T., Sánchez Pena, J. M., and Vergaz, R. (2022). Emotion elicitation through vibrotactile stimulation as an alternative for deaf and hard of hearing people: an EEG study. Electronics 11 (14), 2196. doi:10.3390/electronics11142196

Goncu-Berk, G., Halsted, T., Zhang, R., and Pan, T. (2020). “Therapeutic touch: reactive clothing for anxiety,” in Proceedings of the 14th EAI international conference on pervasive computing technologies for healthcare, 239–242.

Groarke, J. M., and Hogan, M. J. (2019). Listening to self-chosen music regulates induced negative affect for both younger and older adults. PLoS One 14 (6), e0218017. doi:10.1371/journal.pone.0218017

Guo, B., Wang, Z., Yu, Z., Wang, Y., Yen, N. Y., Huang, R., et al. (2015). Mobile crowd sensing and computing: the review of an emerging human-powered sensing paradigm. ACM Comput. Surv. (CSUR) 48 (1), 1–31. doi:10.1145/2794400

Harney, C. L. (2023). Developing an effective music listening intervention for anxiety. University of Leeds.

Haynes, A., Lawry, J., Kent, C., and Rossiter, J. (2021). FeelMusic: enriching our emotive experience of music through audio-tactile mappings. Multimodal Technol. Interact. 5 (6), 29. doi:10.3390/mti5060029

Heggli, O. A. (2020). Generalized spotify analyser [Source code]. GitHub. Available online at: https://github.com/oleheggli/Generalized-Spotify-Analyser.

Heimler, B., and Amedi, A. (2020). Are critical periods reversible in the adult brain? Insights on cortical specializations based on sensory deprivation studies. Neurosci. Biobehav. Rev. 116, 494–507. doi:10.1016/j.neubiorev.2020.06.034

Heimler, B., Striem-Amit, E., and Amedi, A. (2015). Origins of task-specific sensory-independent organization in the visual and auditory brain: neuroscience evidence, open questions and clinical implications. Curr. Opin. Neurobiol. 35, 169–177. doi:10.1016/j.conb.2015.09.001

Ho, H. Y., and Loo, F. Y. (2023). A theoretical paradigm proposal of music arousal and emotional valence interrelations with tempo, preference, familiarity, and presence of lyrics. New Ideas Psychol. 71, 101033. doi:10.1016/j.newideapsych.2023.101033

Hove, M. J., and Risen, J. L. (2009). It's all in the timing: interpersonal synchrony increases affiliation. Soc. Cogn. 27 (6), 949–960. doi:10.1521/soco.2009.27.6.949

Ideguchi, T., and Muranaka, M. (2007). “Influence of the sensation of vibration on perception and sensibility while listening to music,” in Second international conference on innovative computing, information and control (ICICIC 2007) (IEEE), 5.

Jagt, M., Ganis, F., and Serafin, S. (2024). Enhanced neural phase locking through audio-tactile stimulation. Front. Neurosci. 18, 1425398. doi:10.3389/fnins.2024.1425398

Kayikcioglu, O., Bilgin, S., Seymenoglu, G., and Deveci, A. (2017). State and trait anxiety scores of patients receiving intravitreal injections. Biomed. Hub 2 (2), 1–5. doi:10.1159/000478993

Khan, M. A., Abbas, S., Raza, A., Khan, F., and Whangbo, T. (2022). Emotion based signal enhancement through multisensory integration using machine learning. Comput. Mater. Continua 71 (3), 5911–5931. doi:10.32604/cmc.2022.023557

Krause, A. E., North, A. C., and Hewitt, L. Y. (2015). Music-listening in everyday life: devices and choice. Psychol. Music 43 (2), 155–170. doi:10.1177/0305735613496860

Kristjánsson, Á., Moldoveanu, A., Jóhannesson, Ó. I., Balan, O., Spagnol, S., Valgeirsdóttir, V. V., et al. (2016). Designing sensory-substitution devices: principles, pitfalls and potential. Restor. Neurology Neurosci. 34 (5), 769–787. doi:10.3233/rnn-160647

Landelle, C., Caron-Guyon, J., Nazarian, B., Anton, J. L., Sein, J., Pruvost, L., et al. (2023). Beyond sense-specific processing: decoding texture in the brain from touch and sonified movement. iScience 26 (10), 107965. doi:10.1016/j.isci.2023.107965

Leonardis, D., Frisoli, A., Barsotti, M., Carrozzino, M., and Bergamasco, M. (2014). Multisensory feedback can enhance embodiment within an enriched virtual walking scenario. Presence 23 (3), 253–266. doi:10.1162/pres_a_00190

Levänen, S., Jousmäki, V., and Hari, R. (1998). Vibration-induced auditory-cortex activation in a congenitally deaf adult. Curr. Biol. 8 (15), 869–872. doi:10.1016/s0960-9822(07)00348-x

Liljeström, S., Juslin, P. N., and Västfjäll, D. (2013). Experimental evidence of the roles of music choice, social context, and listener personality in emotional reactions to music. Psychol. Music 41 (5), 579–599. doi:10.1177/0305735612440615

Lu, L., Lyu, F., Tian, F., Chen, Y., Dai, G., and Wang, H. (2016). An exploratory study of multimodal interaction modeling based on neural computation. Sci. China. Inf. Sci. 59 (9), 92106. doi:10.1007/s11432-016-5520-1

Maes, P. J., Leman, M., Palmer, C., and Wanderley, M. M. (2014). Action-based effects on music perception. Front. Psychol. 4, 1008. doi:10.3389/fpsyg.2013.01008

Mas-Herrero, E., Ferreri, L., Cardona, G., Zatorre, R. J., Pla-Juncà, F., Antonijoan, R. M., et al. (2023). The role of opioid transmission in music-induced pleasure. Ann. N. Y. Acad. Sci. 1520 (1), 105–114. doi:10.1111/nyas.14946

McLachlan, N., Marco, D., Light, M., and Wilson, S. (2013). Consonance and pitch. J. Exp. Psychol. General 142 (4), 1142–1158. doi:10.1037/a0030830

Melo, M., Gonçalves, G., Monteiro, P., Coelho, H., Vasconcelos-Raposo, J., and Bessa, M. (2020). Do multisensory stimuli benefit the virtual reality experience? A systematic review. IEEE Trans. Vis. Comput. Graph. 28 (2), 1428–1442. doi:10.1109/TVCG.2020.3010088

Merchel, S., and Altinsoy, M. E. (2020). Psychophysical comparison of the auditory and tactile perception: a survey. J. Multimodal User Interfaces 14 (3), 271–283. doi:10.1007/s12193-020-00333-z

Montana, J. I., Matamala-Gomez, M., Maisto, M., Mavrodiev, P. A., Cavalera, C. M., Diana, B., et al. (2020). The benefits of emotion regulation interventions in virtual reality for the improvement of wellbeing in adults and older adults: a systematic review. J. Clin. Med. 9 (2), 500. doi:10.3390/jcm9020500

Nejati, V., Majdi, R., Salehinejad, M. A., and Nitsche, M. A. (2021). The role of dorsolateral and ventromedial prefrontal cortex in the processing of emotional dimensions. Sci. Rep. 11 (1), 1971. doi:10.1038/s41598-021-81454-7

Niedenthal, P. M. (2007). Embodying emotion. Science 316 (5827), 1002–1005. doi:10.1126/science.1136930

Park, K. S., Hass, C. J., Fawver, B., Lee, H., and Janelle, C. M. (2019). Emotional states influence forward gait during music listening based on familiarity with music selections. Hum. Mov. Sci. 66, 53–62. doi:10.1016/j.humov.2019.03.004

Peiffer, A. M., Mozolic, J. L., Hugenschmidt, C. E., and Laurienti, P. J. (2007). Age-related multisensory enhancement in a simple audiovisual detection task. Neuroreport 18 (10), 1077–1081. doi:10.1097/wnr.0b013e3281e72ae7

Pessoa, L., and Adolphs, R. (2010). Emotion processing and the amygdala: from a “low road” to “many roads” of evaluating biological significance. Nat. Rev. Neurosci. 11 (11), 773–782. doi:10.1038/nrn2920

Polanía, R., Burdakov, D., and Hare, T. A. (2024). Rationality, preferences, and emotions with biological constraints: it all starts from our senses. Trends Cognitive Sci. 28 (3), 264–277. doi:10.1016/j.tics.2024.01.003

Pongrac, H. (2008). Vibrotactile perception: examining the coding of vibrations and the just noticeable difference under various conditions. Multimed. Syst. 13 (4), 297–307. doi:10.1007/s00530-007-0105-x

Putkinen, V., Zhou, X., Gan, X., Yang, L., Becker, B., Sams, M., et al. (2024). Bodily maps of musical sensations across cultures. Proc. Natl. Acad. Sci. 121 (5), e2308859121. doi:10.1073/pnas.2308859121

Pyrovolakis, K., Tzouveli, P., and Stamou, G. (2022). Multi-modal song mood detection with deep learning. Sensors 22 (3), 1065. doi:10.3390/s22031065

Remache-Vinueza, B., Trujillo-León, A., Zapata, M., Sarmiento-Ortiz, F., and Vidal-Verdú, F. (2021). Audio-tactile rendering: a review on technology and methods to convey musical information through the sense of touch. Sensors 21 (19), 6575. doi:10.3390/s21196575

Repp, B. H., and Penel, A. (2004). Rhythmic movement is attracted more strongly to auditory than to visual rhythms. Psychol. Res. 68, 252–270. doi:10.1007/s00426-003-0143-8

Rodriguez, M., and Kross, E. (2023). Sensory emotion regulation. Trends Cognitive Sci. 27 (4), 379–390. doi:10.1016/j.tics.2023.01.008

Roschk, H., and Hosseinpour, M. (2020). Pleasant ambient scents: a meta-analysis of customer responses and situational contingencies. J. Mark. 84 (1), 125–145. doi:10.1177/0022242919881137

Russell, J. A. (1980). A circumplex model of affect. J. Personality Soc. Psychol. 39 (6), 1161–1178. doi:10.1037/h0077714

Russo, F. (2020). Music beyond sound: weighing the contributions of touch, sight, and balance. Acoust. Today 16, 37. doi:10.1121/at.2020.16.1.37

Russo, F. A., Ammirante, P., and Fels, D. I. (2012). Vibrotactile discrimination of musical timbre. J. Exp. Psychol. Hum. Percept. Perform. 38 (4), 822–826. doi:10.1037/a0029046

Salimpoor, V. N., Benovoy, M., Longo, G., Cooperstock, J. R., and Zatorre, R. J. (2009). The rewarding aspects of music listening are related to degree of emotional arousal. PLoS One 4 (10), e7487. doi:10.1371/journal.pone.0007487

Salimpoor, V. N., Benovoy, M., Larcher, K., Dagher, A., and Zatorre, R. J. (2011). Anatomically distinct dopamine release during anticipation and experience of peak emotion to music. Nat. Neurosci. 14 (2), 257–262. doi:10.1038/nn.2726

Schindler, I., Hosoya, G., Menninghaus, W., Beermann, U., Wagner, V., Eid, M., et al. (2017). Measuring aesthetic emotions: a review of the literature and a new assessment tool. PLoS One 12 (6), e0178899. doi:10.1371/journal.pone.0178899

Schürmann, M., Caetano, G., Hlushchuk, Y., Jousmäki, V., and Hari, R. (2006). Touch activates human auditory cortex. Neuroimage 30 (4), 1325–1331. doi:10.1016/j.neuroimage.2005.11.020

Senkowski, D., and Engel, A. K. (2024). Multi-timescale neural dynamics for multisensory integration. Nat. Rev. Neurosci. 25 (9), 625–642. doi:10.1038/s41583-024-00845-7

Shams, L., Wozny, D. R., Kim, R., and Seitz, A. (2011). Influences of multisensory experience on subsequent unisensory processing. Front. Psychol. 2, 264. doi:10.3389/fpsyg.2011.00264

Sharp, A., Houde, M. S., Bacon, B. A., and Champoux, F. (2019a). Musicians show better auditory and tactile identification of emotions in music. Front. Psychol. 10, 1976. doi:10.3389/fpsyg.2019.01976

Sharp, A., Houde, M. S., Maheu, M., Ibrahim, I., and Champoux, F. (2019b). Improved tactile frequency discrimination in musicians. Exp. Brain Res. 237 (6), 1575–1580. doi:10.1007/s00221-019-05532-z

Sharvit, G., Vuilleumier, P., and Corradi-Dell'Acqua, C. (2019). Sensory-specific predictive models in the human anterior insula. F1000Research 8, 164. doi:10.12688/f1000research.17961.1

Shimada, S. (2022). Multisensory and sensorimotor integration in the embodied self: relationship between self-body recognition and the mirror neuron system. Sensors 22 (13), 5059. doi:10.3390/s22135059

Siedenburg, K., Bürgel, M., Özgür, E., Scheicht, C., and Töpken, S. (2024). Vibrotactile enhancement of musical engagement. Sci. Rep. 14 (1), 7764. doi:10.1038/s41598-024-57961-8

Singer, N., Poker, G., Dunsky-Moran, N., Nemni, S., Balter, S. R., Doron, M., et al. (2023). Development and validation of an fMRI-informed EEG model of reward-related ventral striatum activation. Neuroimage 276, 120183. doi:10.1016/j.neuroimage.2023.120183

Snir, A., Cieśla, K., and Amedi, A. (2025). A novel tactile technology enables sound source identification by hearing-impaired individuals in a complex 3D audio environment. doi:10.21203/rs.3.rs-7418108/v1

Snir, A., Cieśla, K., Ozdemir, G., Vekslar, R., and Amedi, A. (2024). Localizing 3D motion through the fingertips: following in the footsteps of elephants. IScience 27 (6), 109820. doi:10.1016/j.isci.2024.109820

Snir, A., Cieśla, K., Vekslar, R., and Amedi, A. (2024). Highly compromised auditory spatial perception in aided congenitally hearing-impaired and rapid improvement with tactile technology. IScience 27 (9), 110808. doi:10.1016/j.isci.2024.110808

Spence, C., and Gao, Y. (2024). Augmenting home entertainment with digitally delivered touch. i-Perception 15 (5), 20416695241281474. doi:10.1177/20416695241281474

Stein, B. E., Stanford, T. R., and Rowland, B. A. (2020). Multisensory integration and the society for neuroscience: then and now. J. Neurosci. 40 (1), 3–11. doi:10.1523/jneurosci.0737-19.2019

Tajadura-Jiménez, A. (2008). Embodied psychoacoustics: spatial and multisensory determinants of auditory-induced emotion (Ph.D. thesis). Gothenburg: Chalmers University of Technology.

Tranchant, P., Shiell, M. M., Giordano, M., Nadeau, A., Peretz, I., and Zatorre, R. J. (2017). Feeling the beat: bouncing synchronization to vibrotactile music in hearing and early deaf people. Front. Neurosci. 11, 507. doi:10.3389/fnins.2017.00507

Trivedi, U., Alqasemi, R., and Dubey, R. (2019). “Wearable musical haptic sleeves for people with hearing impairment,” in Proceedings of the 12th ACM international conference on pervasive technologies related to assistive environments, 146–151.

Trost, W., Trevor, C., Fernandez, N., Steiner, F., and Frühholz, S. (2024). Live music stimulates the affective brain and emotionally entrains listeners in real time. Proc. Natl. Acad. Sci. 121 (10), e2316306121. doi:10.1073/pnas.2316306121

Tuominen, P. P., and Saarni, L. A. (2024). The use of virtual technologies with music in rehabilitation: a scoping systematic review. Front. Virtual Real. 5, 1290396. doi:10.3389/frvir.2024.1290396

Turchet, L., West, T., and Wanderley, M. M. (2020). Touching the audience: musical haptic wearables for augmented and participatory live music performances. Personal Ubiquitous Comput. 25 (4), 749–769. doi:10.1007/s00779-020-01395-2

Van Den Bosch, I., Salimpoor, V. N., and Zatorre, R. J. (2013). Familiarity mediates the relationship between emotional arousal and pleasure during music listening. Front. Hum. Neurosci. 7, 534. doi:10.3389/fnhum.2013.00534

Venkatesan, T., and Wang, Q. J. (2023). Feeling connected: the role of haptic feedback in VR concerts and the impact of haptic music players on the music listening experience. Arts 12 (4), 148. doi:10.3390/arts12040148

Vuilleumier, P. (2005). How brains beware: neural mechanisms of emotional attention. Trends Cognitive Sci. 9 (12), 585–594. doi:10.1016/j.tics.2005.10.011

Ward, M. K., Goodman, J. K., and Irwin, J. R. (2014). The same old song: the power of familiarity in music choice. Mark. Lett. 25, 1–11. doi:10.1007/s11002-013-9238-1

Wu, C., Stefanescu, R. A., Martel, D. T., and Shore, S. E. (2015). Listening to another sense: somatosensory integration in the auditory system. Cell Tissue Res. 361, 233–250. doi:10.1007/s00441-014-2074-7

Wu, K., Anderson, J., Townsend, J., Frazier, T., Brandt, A., and Karmonik, C. (2019). Characterization of functional brain connectivity towards optimization of music selection for therapy: a fMRI study. Int. J. Neurosci. 129 (9), 882–889. doi:10.1080/00207454.2019.1581189

Yang, T. H., Kim, J. R., Jin, H., Gil, H., Koo, J. H., and Kim, H. J. (2021). Recent advances and opportunities of active materials for haptic technologies in virtual and augmented reality. Adv. Funct. Mater. 31 (39), 2008831. doi:10.1002/adfm.202008831

Young, G. W., Murphy, D., and Weeter, J. (2016). Haptics in music: the effects of vibrotactile stimulus in low frequency auditory difference detection tasks. IEEE Trans. Haptics 10 (1), 135–139. doi:10.1109/toh.2016.2646370

Yu, L., Cuppini, C., Xu, J., Rowland, B. A., and Stein, B. E. (2019). Cross-modal competition: the default computation for multisensory processing. J. Neurosci. 39 (8), 1374–1385. doi:10.1523/jneurosci.1806-18.2018

Zatorre, R. J., Chen, J. L., and Penhune, V. B. (2007). When the brain plays music: auditory–motor interactions in music perception and production. Nat. Rev. Neurosci. 8 (7), 547–558. doi:10.1038/nrn2152

Keywords: multisensory (audio, tactile), music emotion, virtual reality, sensory enhancement, embodied music cognition, anxiety, music technology

Citation: Schwartz N, Snir A and Amedi A (2025) Feeling the music: exploring emotional effects of auditory-tactile musical experiences. Front. Virtual Real. 6:1592652. doi: 10.3389/frvir.2025.1592652

Received: 12 March 2025; Accepted: 28 July 2025;

Published: 01 October 2025.

Edited by:

Justine Saint-Aubert, Inria Rennes - Bretagne Atlantique Research Centre, France

Reviewed by:

Peter Eachus, University of Salford, United Kingdom

Ravali Gourishetti, Max Planck Institute for Intelligent Systems, Germany

Copyright © 2025 Schwartz, Snir and Amedi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Adi Snir, adi.snir@post.runi.ac.il, adisaxophone@gmail.com; Amir Amedi, amir.amedi@runi.ac.il

†These authors have contributed equally to this work and share first authorship

Naama Schwartz

Adi Snir

Amir Amedi*