- 1Department of the Arts, University of Bologna, Bologna, Italy

- 2Department of Political Sciences, Communication and International Relations, University of Macerata, Macerata, Italy

- 3Department of Computer Science and Engineering, University of Bologna, Bologna, Italy

In this paper we introduce M-AGEW (Magic AuGmentEd Workout), an Augmented Reality (AR) application that assists users during outdoor, high-dynamic workouts such as jogging and calisthenics. It is a client-server-based system with a proprietary data structure (WKAN) that dynamically defines sequences of workouts as finite state machines. M-AGEW adapts workout intensity dynamically based on real-time sensor data and overlays contextual AR feedback and guidance, including biometric readings and a virtual coaching avatar. The technology was created through a user-centric design process, supported by an initial user study and industrial partnership. We validate M-AGEW through a technology acceptance evaluation with professional athletes, reporting promising results in usability, enjoyment, and perceived usefulness. Our findings suggest that AR headsets can effectively enhance and supplement outdoor physical activity, offering a motivating alternative to standard fitness monitoring.

1 Introduction

Extended reality (XR) has become an increasingly popular technology for diverse applications, including entertainment, education, and healthcare (Ning et al., 2021; Rauschnabel et al., 2022; Hajahmadi et al., 2024a; b; Stacchio et al., 2024). This is because XR supports virtual experiences that blend the physical and digital worlds, allowing seamless and immersive interactions. Within the XR spectrum, Augmented Reality (AR) lets users see the surrounding world and augment it by displaying and interacting with virtual objects, with a situated or non-situated approach (Billinghurst et al., 2015; Fitzmaurice, 1993). As a consequence, AR allows detecting, recognizing, and processing objects, actions, and situations (Soltani and Morice, 2020; Sawan et al., 2020). These concepts are rooted in Fitzmaurice (1993), which introduced the idea of situated information spaces, i.e., spaces where digital information is associated and collocated with the spatial locations that act as its anchors. Considering the advancements and affordability of modern AR devices and ecosystems, AR can now support informed spaces, providing information regarding physical objects and chaperoning a user through a specific process (e.g., training, forecasting, making a choice).

Among the various fields of application, AR has been applied to sport (Soltani and Morice, 2020), particularly in training activities, to augment the real world with useful information mostly concerning (Soltani and Morice, 2020; Sawan et al., 2020): a) training for both professionals and amateurs (Bonfert et al., 2022; Kim et al., 2022); b) enhancing the user experience and engagement (Tan et al., 2015; Sport Business Journals, 2021; Hamada et al., 2022); c) measuring and increasing performance (Pascoal et al., 2019; Kowatsch et al., 2021; Nekar et al., 2022).

Despite their proven usefulness (Soltani and Morice, 2020), AR systems have not been fully exploited in the context of high-dynamic sports (e.g., running, body-weight activities, boxing) and outdoor sports activities (Baman et al., 2010; Soltani and Morice, 2020; Sawan et al., 2020). This is also because current hardware and software limitations make the design of AR systems for high-dynamic and outdoor sports complex (Soltani and Morice, 2020; Sawan et al., 2020).

Considering fitness, Head Mounted Display (HMD) ergonomics must be evaluated with respect to an athlete’s actions (Soltani and Morice, 2020). Moreover, the information presented during the activity should be functional and related to the current activity and its completion while not obstructing the user’s view (Soltani and Morice, 2020). For the outdoor context, crucial variables are the HMD’s lens properties and the design of the AR interface (Pascoal et al., 2020), considering that hologram visibility varies with natural light conditions (Seo et al., 2018; Hertel and Steinicke, 2021). Another important aspect is the dynamic customization of the user activities based on personal data (e.g., physical characteristics, training goal, real-time biometric data) (Technogym, 2017; Martin-Niedecken et al., 2019). Finally, the system should also provide sensory feedback to keep users active, motivated, and engaged (Wu et al., 2013; Soltani and Morice, 2020; Sawan et al., 2020).

Given the lack of applications that take all the mentioned aspects into consideration, we report here the design and implementation of an AR system, Magic AuGmentEd Workout (M-AGEW1), which assists users in two outdoor high-dynamic sports activities: jogging and calisthenics (e.g., body weight exercises). M-AGEW follows a data-driven client-server model: the client app is designed to be deployed on various AR headsets and serves as a visualization tool, while the server manages system logic, data storage, and processing during workouts. This design allows for a lightweight system deployable on any AR headset with minimal computational requirements. It is worth noting that each workout is codified as a custom key-value data structure, which is interpreted by the server as a Finite-State-Machine (FSM) where each state represents an activity, and the transition from the current state to the next one is triggered by its completion.

M-AGEW overlays a dynamic AR virtual interface in the user’s view, offering context-specific guidance and performance indicators based on sensor data. For instance, during jogging, it uses GPS to display the distance to the goal and the actual speed. The workout plan adjusts dynamically based on historical sensor data, using a simple yet effective thresholding mechanism and leaving the acceptance of each adjustment to the user’s discretion. Moreover, it incorporates virtual elements, like a virtual avatar and progress bars, used as engagement elements. Finally, the system is adaptable to various environments, including parks and urban streets. To arrive at the M-AGEW implementation discussed here, we first investigated, through a preliminary study, which design factors should guide the system development, including the user interface and device ergonomics; this preliminary study also included a user study to validate the first mockup of the system.

It is worth noting that, with this work, we provide a first step in verifying how an AR headset system can improve and potentially replace the digital gadgets currently used for outdoor and highly dynamic workouts: smartphones and smartwatches (Lopez et al., 2019; Schiewe et al., 2020).

Indeed, replacing classical devices with AR-adaptive experiences provides several advantages, including freedom of movement, immersive real-time feedback and spatial alignment of training cues, and a reduction of both distraction sources and unnatural movements (e.g., users raising their arm to look at their smartphone/smartwatch). For this reason, we first evaluated, from both a hardware and software perspective, the best AR headset candidate to develop our system, particularly considering critical factors such as Field Of View, Battery Life, Display Brightness, and available AR framework. Then, we evaluated M-AGEW, developed and deployed on the chosen AR headset, from a technology-acceptance perspective, involving professional athletes affiliated with a leading company in the production of sports equipment2, which also participated in the mentioned user study and the system design. The obtained results support the possible acceptance of M-AGEW for outdoor workout training.

In summary, the contributions of our work amount to:

The rest of the paper is organized as follows. Section 2 reviews the state-of-the-art works that fall closest to this work. In Section 3, we introduce a preliminary analysis of the factors that influenced the design of our system. Section 4 describes the M-AGEW system architecture, detailing both client and server applications, while Section 5 reports our experimental settings and details the obtained results. Finally, Section 6 describes the limitations of our work and outlines future extensions of M-AGEW.

2 Related works

Despite the availability of XR systems for sports activities, which offer assistance, guidance, and training, only a limited number of them specifically cater to highly dynamic sports (Liu et al., 2020; Tan et al., 2015; Sport Business Journals, 2021; Pascoal et al., 2019; Kowatsch et al., 2021; Nekar et al., 2022; Hamada et al., 2022; Kim et al., 2022; Bonfert et al., 2022; Bozgeyikli and Bozgeyikli, 2022). Some of these systems focus on outdoor activities like jogging (Tan et al., 2015; Sport Business Journals, 2021; Hamada et al., 2022), while others target specific sports like tennis, football, and skiing (Liu et al., 2020; Kim et al., 2022; Bonfert et al., 2022; Bozgeyikli and Bozgeyikli, 2022).

In disciplines different from jogging and calisthenics, XR technology has been used as a guide (Nozawa et al., 2019; Yeo et al., 2019). In Nozawa et al. (2019) a VR ski training system has been proposed using an indoor ski simulator. The simulator guides a user to reproduce the movements of a professional skier, represented as a digital avatar. Authors of Yeo et al. (2019) faced the problem of repeated self-directed practice sessions in wrist motion-based sports, providing a general AR framework to direct a user in her/his actions. The framework proposed the usage of AR wearable devices for both displaying guidance and correcting postures and actions in real time. However, for both such works, no evaluation of the system’s usability or effectiveness has been provided.

In the context of jogging, all relevant works focused on increasing the enjoyment of exercising outdoors (Tan et al., 2015; Sport Business Journals, 2021; Hamada et al., 2022). To this aim, authors of Tan et al. (2015); Sport Business Journals (2021) presented an AR app showing virtual elements to increase user engagement while running. In particular, Tan et al. (2015) provided a combination of wearable visual, audio, and sensing technology to realize a game-like AR environment to enhance jogging, by switching between visual and audio modes using sensor technology to enable a form of on-demand AR. However, the proposed system is not spatially modular or configurable by data, and its design was not validated. In Hamada et al. (2022) an AR system has been developed for the EPSON Moverio BT40s (EMBT) device, focusing on the competitive social aspects by displaying digital avatars while jogging, demonstrating that virtual partners increase the enjoyment of running. However, such a system does not provide any modularity concerning the track to follow nor provide an implementation for exercises different from jogging.

Another consideration pertains to the adaptability of the training experience, as explored in works such as (Martin-Niedecken et al., 2019; Marienko et al., 2020; Huang et al., 2021). Adaptive training involves tailoring the training process to the specific needs of the user by leveraging technological training systems and techniques. On such a concept, the ExerCube, as presented in Martin-Niedecken et al. (2019), introduces an adaptive fitness game setup designed for indoor training. The game dynamically adjusts its difficulty based on players’ individual gaming and fitness skills, considering factors such as race speed, heart rate within a predefined range, and the number of mistakes made (reflecting cognitive and mental focus). The authors demonstrated that users’ experience in the ExerCube game, including flow, enjoyment, and motivation, is comparable to that of personal training. However, to the best of our knowledge, such a system cannot be used in outdoor workouts nor be configured with a data-driven approach.

On a purely commercial level, applications like “ZRX: Zombies Run” (ZRX, 2021) enable users to engage in dynamic, audio-driven activities. For example, users hear various audio tracks that simulate the experience of escaping from zombies, enhancing the element of fun and motivation. However, systems like this, primarily designed for joggers, lack real-time and modularly visualizable biometrics and workout information and were not designed for AR.

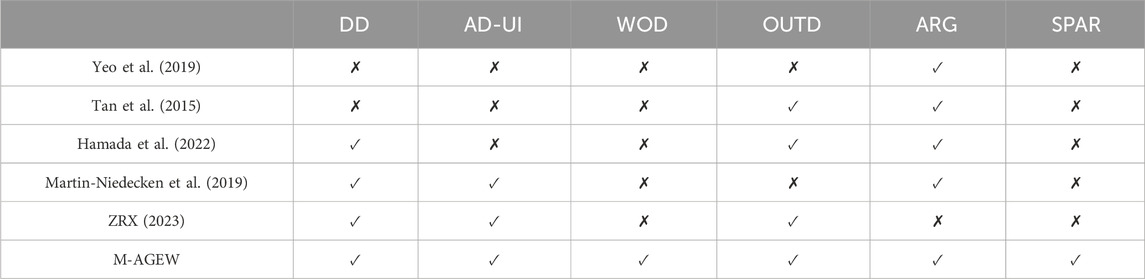

A comparison between M-AGEW features and all the cited related works is reported in Table 1. To the best of our knowledge, none of the cited works provided a data-driven AR interface specifically designed to support the completion of outdoor workouts, for both jogging and body-weight activities, exploring the possible evolution of classical guidance approaches (i.e., smartphone and smartwatch) in outdoor contexts. At the same time, none of the previous works provided a custom data structure to define and instantiate a modular AR interface that provides dynamic instruction for carrying out a workout. Moreover, to the best of our knowledge, none has followed an academic-industrial user study to design and validate an AR interface for outdoor workout activities.

Table 1. Comparison of the considered systems across relevant features: Data Driven (DD), Adaptive User-Interface (AD-UI), Workout as Interpretable Data (WOD), Outdoor-designed (OUTD), Augmented Reality Guidance (ARG), and Straightforward Porting to other AR devices (SPAR).

3 Preliminary studies on AR for outdoor workouts, device choice and user interface

3.1 Preliminary study: user-study on desired features for an AR app for outdoor workouts

Considering the lack of studies regarding the factors that could influence AR adoption in outdoor sports with dynamic guidance, and considering the lack of user studies from an academic-industrial perspective on the topic (Soltani and Morice, 2020; Sawan et al., 2020; Goebert and Greenhalgh, 2020; Uhm et al., 2022), we initiated a collaboration with one of the leading industrial companies in the field2. In this collaboration, we performed a preliminary analysis of the main factors that could influence the development and acceptance of such a system.

Firstly, we collaborated with the company, designing storyboard mockups (Hartson and Pyla, 2018) exploiting Figma3 in order to create a static visual representation of our prototype user interface and system logic, convey the layout, and structure following guidelines from Billinghurst et al. (2005); Pascoal et al. (2018); Kim et al. (2020). In the mockups4, the flow of the AR system is reported, comprising the beginning of the user activities, the workout flow (including the alternation of jogging and bodyweight exercises), and the provided AR guidance. In the entire design process, the mockups were optimized through discussion and usability inspection to prevent errors (Rieman et al., 1995).

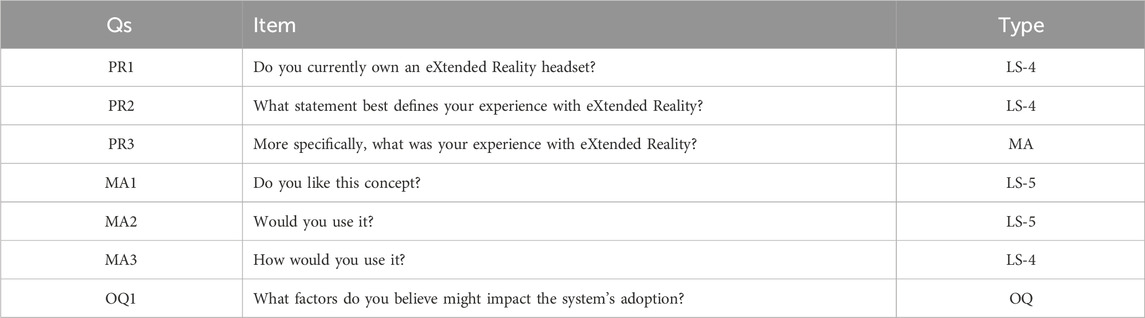

We then designed a user survey to assess the acceptance of an outdoor AR system, exploiting the previously defined mockups. It was composed of questions regarding demographics, possession of XR devices and familiarity with them (PR-items), questions related to the possible acceptance of the system presented with the previously developed mockup (MA-items), and a final open question (OQ-item) to investigate the reasons why users would not adopt the system in case they answered question item MA2 negatively. The latter follows the qualitative analysis methodology “thinking aloud” to catch the cons of the AR system proposal (Lewis, 1982). Table 2 reports all the questions and items that were provided in the survey. The survey was distributed online using the SurveyMonkey service by the company5.

Table 2. Questions for the Preliminary survey. LS-

We collected 1392 answers but we cut out all the records that contained at least one null value to consider only the subjects that were motivated in answering all the question items, resulting in 1056 answers. Within this set, the participants were mostly balanced by gender (518 males and 533 females) and exhibited an average age of 41.61

Figure 1 presents the results obtained for the PR-x question items. The majority of respondents do not possess an XR device, and among those who do, more have a VR device than an AR headset (see Figure 1a), in line with the trends observed in a recent survey (Statista, Richter, 2023). Consistently with this result, many of them are not familiar with XR or have never experienced it. Among those who have (421 subjects), more had used AR on their smartphones/headsets than VR, making this pool of subjects suitable for evaluating the preliminary system design (Figures 1b,c).

We then filtered out all the participants who had not experienced AR, resulting in 320 subjects who viewed the system mockup to evaluate both the MA and OQ question items (Figure 2). Analyzing the MA question items, it is evident that the considered population liked the system design described in the mockups (Figure 2a). They also exhibited a general intention to use the system in their workouts (as reported in Figure 2b). Among the 178 subjects who provided a positive answer to the latter (probably/definitely use it), there was uncertainty about the frequency of usage (Figure 2c).

However, it is worth noting that 20.63% of the users stated that they would probably or definitely not use the system. To explore the reasons behind this decision, we analyzed the responses to OQ1. From these answers, we identified the main system criticalities reported by the users, including (a) becoming distracted and at risk of injury; (b) not being able to focus on training; (c) experiencing view obstruction due to digital information; and (d) encountering a non-intuitive user interface. In summary, the results of this preliminary analysis allowed us to identify two key variables to focus on for improving the usability and ergonomics of the proposed system: (a) the ergonomics of the AR device and (b) the interface design to address all the issues reported by our participants.

3.2 Preliminary study: Device choice

Considering the results obtained in our preliminary analysis, we carefully reviewed many commercial AR headsets for the high-dynamic sports use case, considering factors such as ergonomics, software frameworks, and hardware features that are crucial in outdoor contexts (e.g., battery life, lens reflection, and luminance), although none has so far been specifically designed for the outdoors.

Analyzing the literature (Tzima et al., 2021; Singh et al., 2022; Lee et al., 2023; Hamada et al., 2022; Shin et al., 2023), we narrowed down the choice to three candidate devices: Magic Leap 1 (ML1), Hololens 2 (HL2) (Microsoft, 2022), and EMBT (Epson, 2022). As mentioned, we considered different features while selecting the best option for the outdoor use case (reported in Table 3): availability of a dedicated AR Framework (AFW), the diagonal Field of View (FOV), Battery Life (BL) in hours, Weight (W) in grams, and maximum Brightness (MBR) in nits (Samini et al., 2021; Leap, 2023; Yin et al., 2022). The weight refers to the headset alone, without the computational unit, in case the two are separated.

None of these devices was designed for outdoor use, so external components, such as sunglasses, must be added to cope with changing light conditions. Taking this into account, the ML1 device emerged as the best choice for our intended use case: its software frameworks (Lumin SDK and Magic Leap Tools), ergonomics, hardware capabilities, and battery life make it the most suitable candidate.

We chose the ML1 also considering its separated headset and computational unit, which can be conveniently attached to the user’s clothing. While the EMBT shares this feature, the ML1 boasts superior computational power, hardware components, and field-of-view, and offers an SDK for integrating various AR functionalities.

3.3 Preliminary study: M-AGEW user interface and experience design

Considering the outdoor sports context, the design of the user interface is crucial. It should consider several factors such as the AR headset hardware features, the layout, the color palette, the shape of the elements, the frequency of appearance, and, finally, the variable light conditions. Considering the initial positive evaluation results in the preliminary study, we maintained the same UI narratives and interface elements, while at the same time expanding each of the proposed activities and focusing on different aspects to address all the criticalities that emerged during the preliminary survey (Billinghurst et al., 2005; Pascoal et al., 2018; Lee et al., 2023; Lilligreen and Wiebel, 2023).

3.3.1 Design guidelines for interface elements

The rules governing the presence of interface elements are informed by usability principles (Shneiderman and Plaisant, 2010) focusing on the effective presentation of the visual contents, and their placements.

Based on feedback from our preliminary user study, which highlighted issues such as distraction risk and obstructed vision, we adopted a design strategy focused on low cognitive load, visual hierarchy, and spatial layout anchoring to ensure clear, non-intrusive guidance during the workout. Our guidelines draw from human-computer interaction (HCI) research (Ejaz et al., 2019): considering that jogging and calisthenics activities affect the cognitive abilities of runners, we designed all the UI elements to be as simple and cognitively light as possible. We adhered to design principles that prioritize the effective comprehension of these elements by users during their workouts. To achieve this, we focused on the critical design considerations described below.

3.3.1.1 Visual hierarchy

A clear visual hierarchy has been established to direct users’ attention toward crucial workout information, while de-prioritizing decorative or less essential features. This involves the utilization of size, color, and placement to highlight important elements such as exercise instructions, progress indicators, and feedback (Benyon, 2014). This intentional design approach ensures that essential components, including exercise instructions, progress indicators, and feedback, are prominently featured within the user interface. Through the adjustment of interface component sizes, the use of distinctive and attention-catching colors, and the strategic placement of vital information, the user experience is enhanced by enabling swift and intuitive access to critical workout details, all while reducing distractions from less important elements (Dong, 2019).

3.3.1.2 Typography

A meticulous choice of fonts and text styles has been made to ensure that workout instructions and information remain highly legible, even when users are in outdoor settings with fluctuating lighting and visibility conditions. Sans-serif fonts were favored due to their straightforward and legible nature in digital interfaces, optimizing their size, weight, and spacing (Josephson, 2008; Sawyer et al., 2020). Furthermore, careful consideration was given to text styles, encompassing font size, thickness, and spacing, with the primary goal of improving readability. Crucial information, such as exercise instructions, was intentionally displayed in larger and bold fonts, guaranteeing its prominence and effortless comprehension for users (Russell et al., 2001). This approach significantly contributes to the creation of a user-friendly and efficient interface (Heim, 2007).

3.3.1.3 Iconography

Thoughtfully designed icons and symbols are employed to convey information quickly, reducing the reliance on text and enhancing user understanding (Heim, 2007; Ejaz et al., 2019). The M-AGEW Augmented Workout system incorporates a comprehensive array of interface elements meticulously designed to enhance the user experience and provide crucial feedback and guidance throughout workouts (Huang et al., 2015; Su and Liu, 2012). These elements serve various functions, such as facilitating user interaction, offering real-time information, and boosting user engagement. Notable components include the timer that keeps users informed about workout time, while the arrow acts as a navigational guide. Elements like the rest icon and celebration icons enhance motivation, and the workout status indicator offers progress snapshots. The coach icon ensures coaching assistance and the green target element aids goal visualization. Biometric and workout data provide real-time insights, and the progress bar communicates the distance covered during sprints. The map aids navigation, the loop icon tracks repetitions, and the heart rate zone element ensures users stay within their target heart rate zone. These elements collectively create a user-centric and data-rich workout experience, promoting engagement, motivation, and effective training in the M-AGEW system.

3.3.1.4 Contrast and color

Appropriate color schemes and contrast levels have been incorporated to improve readability, ensuring that text and graphics are easily discernible against diverse backgrounds, including outdoor settings (Lee et al., 2012). The design process commenced with a deliberate selection of a suitable color palette. This palette is characterized not only by its aesthetic appeal but also by its functionality in terms of providing contrast. Colors were selected based on their legibility against both bright and dark backgrounds. To achieve this, we opted for high-contrast color combinations, such as using white text on a blue background or black text on a white background for critical information, thereby guaranteeing optimal visibility (Hertel and Steinicke, 2021; Gabbard and Swan II, 2008).

3.3.1.5 Layout structure

Regarding the placement of the visual content, the predefined positions for interface elements are informed by Gestalt principles of perceptual organization, which suggest that users tend to perceive and process information more efficiently when it is organized in a structured and predictable manner (Koffka, 1935). It is worth noting that, in all the interface element design phases, we adopted the recently defined Panel and Proxy design patterns (Lee et al., 2023).

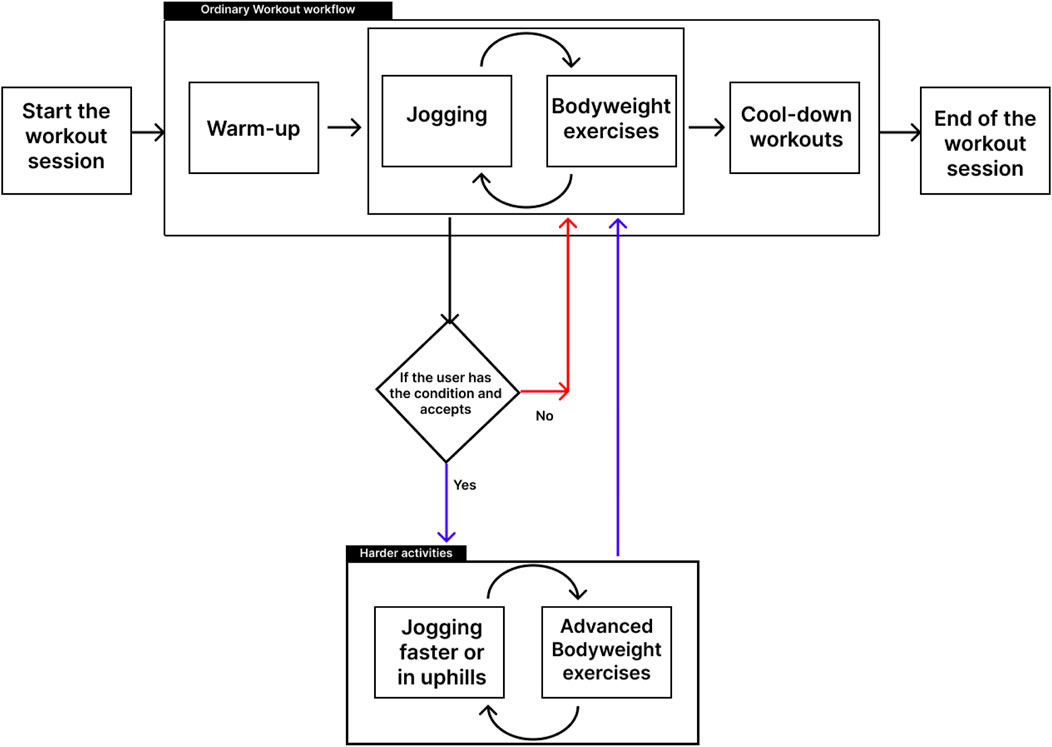

3.3.2 User experience flow

Built upon all the aforementioned guidelines, the user experience flow within the M-AGEW system is designed to provide a structured and engaging journey for users throughout their outdoor workouts. This comprehensive user experience flow ensures that users can seamlessly navigate their augmented workouts, from inception to completion, while providing opportunities for adaptability to individual fitness levels and goals. This flow consists of three main parts, each serving a distinct purpose (as illustrated in Figure 4): Start, Workout, and End.

1. Start: The beginning of the workout experience is decided by the users with a simple click of the start button in their AR view;

2. Workout: The core of the user experience revolves around the workout itself, which can be executed at an easy or, depending on the user’s fitness level, a hard intensity level. This phase encompasses various modules, including warm-ups, sprints, bodyweight exercises, and cool-downs. The system’s logic guides users through these workout modules and assesses their physical condition, potentially offering more challenging exercises if appropriate. The overall step tracks the completion of training exercises and partially completed exercises, represented by green circles. Each stage encompasses four phases: tutorial, middle, final, and rest. The tutorial phase offers a concise exercise explanation that blends visual components with coach-provided voice guidance. The middle phase concentrates on the crucial points within the exercise, showcasing exercise status and coach recommendations. In the final phase, users receive notifications denoting proximity to the finish point. The final phase signifies the attainment of workout objectives, featuring celebratory visual and auditory elements. Subsequently, a rest phase enables users to recuperate between exercises;

3. End: The workout session comes to an end during this phase, where the users experience a celebratory moment.

4 M-AGEW: Magic AuGmentEd Workout

M-AGEW (Magic AuGmentEd Workout) is an AR system designed to assist users in outdoor workout sessions, providing step-by-step guidance during training sessions. The system can adapt the workout scenario to a user’s performance and can manage a sequence of different activities: Jogging, Sprint, Body-Weight, and Rest.

The user is supported in the completion of each activity with different dynamic user interfaces displayed on the AR headset. A user is firstly guided by the information contained in the workout data structure. Depending on how the user responds, the rest of the workout is then adapted accordingly. M-AGEW includes an adaptive approach to increase the workout difficulty based on user performance, providing support for adaptive training. Therefore the user’s actions trigger the advancements and completion of the sequence of activities.
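The adaptive behavior described above can be illustrated with a minimal sketch of a thresholding rule. It assumes that performance is summarized by the average of recent speed samples and that the workout has only the two intensity levels mentioned in Section 3.3.2; the function names, the 10% margin, and the minimum number of samples are hypothetical choices made for illustration, not values reported for M-AGEW.

```python
from statistics import mean


def suggest_harder(recent_speeds_kmh, suggested_speed_kmh, margin=0.10, min_samples=5):
    """Return True when the recent average speed exceeds the suggested
    speed by more than `margin` (a fraction). Both defaults are assumptions."""
    if len(recent_speeds_kmh) < min_samples:
        return False  # not enough history to make a decision
    return mean(recent_speeds_kmh) > suggested_speed_kmh * (1.0 + margin)


def next_difficulty(current, recent_speeds_kmh, suggested_speed_kmh, user_accepts):
    """Move from 'easy' to 'hard' only if the threshold is met AND the user
    accepts the proposal; otherwise keep the current level."""
    if (
        current == "easy"
        and suggest_harder(recent_speeds_kmh, suggested_speed_kmh)
        and user_accepts
    ):
        return "hard"
    return current
```

In this sketch the user keeps the final say: a proposal that is declined leaves the workout at its current intensity.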

In the following, we describe the general system architecture components while detailing the main working mechanisms of the outdoor activities here considered.

4.1 System architecture

The M-AGEW system architecture follows the client-server paradigm in a data-driven setting, as depicted in Figure 3.

4.1.1 Client applications

The client, implemented as an AR experience, acts as a dynamic visualization tool: data is stored and processed on a remote server. The client always waits for a signal from the server that contains data about the current activity, such as the type of the activity, the repetitions, and other properties that change according to its type (a thorough description of them is reported in Section 4.2). Once the client receives updated data from the server, it spawns a different interface, which changes according to the type of the current activity. In each of those interfaces, all the elements are dynamically updated based on the user’s actions and sensor-generated data. As for the latter, we moved the collection of sensor data to an application designed for mobile devices. This choice was driven by portability across different AR devices and by sensor precision: not all the devices currently on the market have sensors (Arena et al., 2022) (e.g., Inertial Measurement Units or GPS), and even when they do, these are usually not as precise as the ones offered by current mobile devices (Poulose and Han, 2019) (e.g., GPS location services in AR devices are usually implemented with a network-driven approach, which lowers their precision and requires a continuous connection).
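The dispatch step described above, where the interface is chosen from the type of the current activity, can be sketched as a simple lookup. The sketch is written in Python only for illustration and does not reflect the actual client code; the interface names, the `type` key, and the `select_interface` function are hypothetical.

```python
# Hypothetical mapping from activity type to the interface to spawn.
INTERFACES = {
    "Jogging": "JoggingInterface",
    "Sprint": "SprintInterface",
    "Body-Weight": "BodyWeightInterface",
    "Rest": "RestInterface",
}


def select_interface(message):
    """Pick the interface for the activity described in a server message.

    `message` is assumed to be a dict decoded from the server signal and to
    carry the activity type under the (hypothetical) key "type".
    """
    activity_type = message.get("type")
    try:
        return INTERFACES[activity_type]
    except KeyError:
        raise ValueError(f"unsupported activity type: {activity_type!r}") from None
```

Keeping the mapping in data rather than in branching code means that a new activity type would require one new table entry plus its interface.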

The AR client application was developed with the Unity Game Engine and the MLTools SDK, written in C#, targeting the ML1 as a device, following the consideration made in Section 3.2. The mobile application collects and streams the user’s sensor data each second (limited to GPS for our implementation) and was written in Java and deployed on a Samsung Galaxy S22.

4.1.2 Server system

As mentioned, the server component holds the main logic and is responsible for the primary computational workload. The server starts its computation by reading a custom key-value data structure (WKAN, detailed in Section 4.2), which contains the information related to all the activities the user should carry out during the workout. From this data, the server models and instantiates a Finite State Machine (FSM) whose states and transitions depend on the type of activities detailed in the structure. The server is also responsible for interfacing this FSM with the AR experience, allowing it to fetch the current state (and so the current activity) and to trigger the transition to the next state, based on the set conditions and the data coming from both the AR interface and the mobile device apps. This means that it should also collect and manage all the sensor data coming from mobile devices. The advantages of such an approach are manifold: (a) it allows users to flexibly create workout plans, (b) it does not overload the limited resources of the AR devices (e.g., RAM and battery life), and (c) it provides a low-cost approach to deploy M-AGEW on many AR devices (i.e., developers may adapt the interaction system for a different device while maintaining the same logic and UI elements).
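A minimal sketch of how a server could derive such a state machine from a list of workout entries follows. It assumes a purely linear sequence in which the only transition is the completion of the current activity; the class and method names are hypothetical, and the actual transition conditions used by M-AGEW depend on the activity type and on sensor data.

```python
class WorkoutFSM:
    """Linear finite state machine: one state per activity, in list order."""

    def __init__(self, activities):
        if not activities:
            raise ValueError("a workout needs at least one activity")
        self._activities = list(activities)
        self._index = 0

    @property
    def finished(self):
        """True once every activity has been completed."""
        return self._index >= len(self._activities)

    @property
    def current(self):
        """The activity of the current state, or None when finished."""
        return None if self.finished else self._activities[self._index]

    def complete_current(self):
        """Fire the 'activity completed' transition and return the new state."""
        if not self.finished:
            self._index += 1
        return self.current
```

A client would repeatedly fetch `current` to know which interface to display, while the server would call `complete_current()` when the completion condition of the activity is met.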

The server component was implemented as a Representational State Transfer (REST) Application Programming Interface (API) in Python, using the Flask web framework and a set of open-source libraries that analyze the data received from the client devices and trigger the functionalities described in the following.

4.2 Workout data structure

Workout plans have been modeled using a custom key-value data structure that we defined as WorKout Activity Notation (WKAN). Each WKAN data structure contains a list of activities, each of which represents a WKAN entry. Each entry always includes the type of activity and the set of actions to carry out, along with their difficulties. The rest of the information depends on the exercise type.

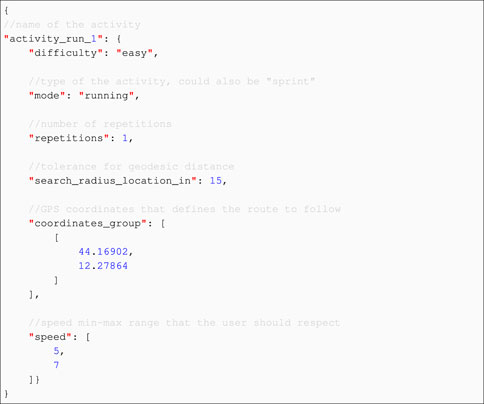

4.2.1 WKAN: Jogging and sprint

The fields of the Jogging and Sprint activities additionally include the locations describing the route to follow while walking/running, the confidence distance used to decide that the user has reached those locations, the suggested speed, and the number of repetitions per route. An exemplar key-value structure for those activities is reported in Listing 1.

4.2.2 WKAN: Body-weight and rest activity

The Body-Weight activity includes instead the exercise types (e.g., Squat or Skipping Jumps) along with the number of sequence repetitions and single exercise executions. It also contains exemplar images that are displayed in AR to visually explain to the user how the exercise should be performed. In case the exercise requires a particular real-world physical facility, it also includes information on the coordinates to locate it and a picture of the facility itself. An exemplar key-value structure for those activities is reported in Listing 2.

Finally, the Rest activity just provides the resting time. The entire WKAN data structure is composed of a sequence of key-value pairs, representing different Jogging, Sprint, Body-Weight, and Rest activities.
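To make the description above more concrete, the following is a minimal sketch of what a WKAN-like list of key-value entries could look like and how a server might sanity-check it on load. It is not the authors' actual notation: every field name (`type`, `locations`, `confidence_m`, `speed_kmh`, `rest_s`, and so on), every value, and the `validate` helper are hypothetical and chosen only to mirror the prose.

```python
import json

# Hypothetical WKAN-like workout: field names and values are illustrative only.
WORKOUT = [
    {"type": "Jogging", "difficulty": "easy",
     "locations": [[44.4949, 11.3426], [44.4962, 11.3441]],
     "confidence_m": 15, "speed_kmh": 9.0, "repetitions": 1},
    {"type": "Sprint", "difficulty": "hard",
     "locations": [[44.4962, 11.3441], [44.4975, 11.3450]],
     "confidence_m": 10, "speed_kmh": 16.0, "repetitions": 2},
    {"type": "BodyWeight", "difficulty": "medium",
     "exercises": [{"name": "Squat", "executions": 12}],
     "repetitions": 3, "images": ["squat.png"]},
    {"type": "Rest", "rest_s": 60},
]

# Fields each activity type is assumed to require, mirroring the prose above.
REQUIRED = {
    "Jogging": {"locations", "confidence_m", "speed_kmh", "repetitions"},
    "Sprint": {"locations", "confidence_m", "speed_kmh", "repetitions"},
    "BodyWeight": {"exercises", "repetitions", "images"},
    "Rest": {"rest_s"},
}


def validate(entries):
    """Return a list of human-readable problems; an empty list means well-formed."""
    problems = []
    for i, entry in enumerate(entries):
        kind = entry.get("type")
        if kind not in REQUIRED:
            problems.append(f"entry {i}: unknown type {kind!r}")
            continue
        missing = REQUIRED[kind] - set(entry)
        if missing:
            problems.append(f"entry {i} ({kind}): missing {sorted(missing)}")
    return problems


# Round-trip through JSON, as a server would when loading the plan from a file.
loaded = json.loads(json.dumps(WORKOUT))
```

Keeping the type-specific requirements in one table means that a new activity type only needs a new row, which fits the idea of a notation whose remaining fields depend on the exercise type.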

4.2.3 WKAN FSM interpretation and thresholding mechanism

The WKAN data structure serves as the foundational blueprint from which the server instantiates a Finite State Machine (FSM) that guides the user through the outdoor workout session. Each WKAN entry, formatted as a JSON key-value pair, describes a single activity and its parameters, effectively mapping one FSM state. The sequence of these entries reflects the linear or conditional progression of the workout routine, with transitions determined by real-time sensor data streamed from the mobile application.

Upon loading the WKAN structure, the server parses the list of key-value pairs and builds the FSM accordingly. Each entry in the WKAN file defines a state as:

For each collected state in the created FSM:

To provide a practical example, during a Jogging segment, the system may require the user to maintain a minimum speed of 2.5 m/s for at least 60 s. If the user exceeds this threshold—say, jogging at 3.2 m/s—the system may prompt them to increase workout intensity. This is done via the AR interface, where users can respond through a controller click, hand gesture, or voice command.

In the case of a positive response, the FSM transitions to a harder version of the next activity. For instance, the transition from Jogging to Sprinting would occur only if the user’s average speed during jogging exceeds 3.0 m/s and if acceleration patterns (derived from inertial sensors) show consistent increases over a 10-s window. In this case, the Sprinting state will define a new set of requirements, such as sustaining a speed above 4.5 m/s for a fixed distance (e.g., 200 m), representing a meaningful escalation in difficulty.

This adaptive mechanism not only enhances the personalization of the workout but also ensures the progression logic is transparent and reproducible, allowing for fine-tuning in future implementations.
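The progression logic described in this section can be sketched as a small state machine. The code below is only an illustration under simplifying assumptions: the class and field names (`State`, `WorkoutFSM`, `harder`, `advance`) are hypothetical, the 3.0 m/s threshold is taken from the example above, and the acceleration-pattern condition is deliberately left out.

```python
from dataclasses import dataclass


@dataclass
class State:
    """One FSM state, built from one (hypothetical) WKAN entry."""
    name: str
    min_speed_ms: float = 0.0       # speed the user is asked to sustain
    harder: "State | None" = None   # optional harder variant of this activity


class WorkoutFSM:
    """Linear sequence of states; each step may swap in a harder variant."""

    def __init__(self, states):
        self.states = list(states)
        self.index = 0
        self.current = self.states[0]

    def advance(self, avg_speed_ms, accepts_harder, threshold_ms=3.0):
        """Move to the next state once the current activity is complete.

        The harder variant is chosen only if the measured average speed
        exceeded the threshold AND the user accepted the prompt.
        Returns the new state, or None when the workout is finished.
        """
        if self.index + 1 >= len(self.states):
            return None
        self.index += 1
        nxt = self.states[self.index]
        if nxt.harder is not None and accepts_harder and avg_speed_ms > threshold_ms:
            nxt = nxt.harder
        self.current = nxt
        return nxt


# Usage: a jogging segment whose follow-up can be upgraded to a sprint.
sprint = State("Sprint", min_speed_ms=4.5)
fsm = WorkoutFSM([
    State("Jogging", 2.5),
    State("Jogging", 2.5, harder=sprint),
    State("Rest"),
])
```

Storing the harder variant on the state itself keeps the default plan readable as a plain sequence, while the decision to escalate stays in a single place (`advance`), which is where thresholds would be tuned.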

4.3 AR activity interfaces

As mentioned in Section 4.1 the AR experience spawns dynamic interfaces based on the type of the current activity. For this reason, in this Section, we will report the description of each of the implemented interfaces, detailing the roles of all the UI elements.

4.3.1 Jogging interface

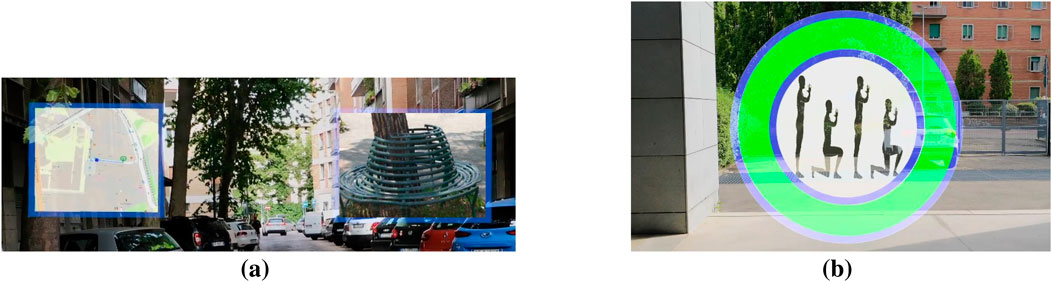

During a jogging session, the user can observe their current position and the one to be reached, along with the suggested path to follow, on a minimap generated with OpenStreetMap (OpenStreetMap contributors, 2017) based on the current GPS location and the locations provided in the WKAN file. In addition, the user can visualize biometric information to monitor their health and progress throughout the workout. The speed (expressed in km/h) and the beats per minute (BPM) are calculated and displayed based on the collected GPS data, streamed from the mobile device. In particular, the BPM values are synthetically generated from the current speed of the subject, following known ranges (Lambert et al., 1998). A colored bar indicates the user’s current fatigue level, calculated taking into account the speed, the distance traveled so far, and the BPM, according to known and verified scales (see Figure 5a) (Marotta et al., 2021).

Figure 5. Jogging Activity interface views. (a) The AR interface presents a map with the location of the user and the route to follow. Metrics such as fatigue level and bpm are visualized along the map. (b) The enlarged version of the AR interface map. The blue dot represents the position of the user, the green one is the POI and the blue line is the suggested route.

The visualized map can be expanded and positioned in front of the user by clicking the controller trigger, occluding all the other visual elements so far described, to let the user focus on her/his position and the target location (Figure 5b).
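As an illustration of how the speed readout and the "location reached" check could be derived from GPS fixes streamed once per second, the sketch below uses the standard haversine formula with only the Python standard library. This is an assumption made for the example, not necessarily the method used in M-AGEW; the function names and the confidence-distance parameter are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(a, b):
    """Great-circle distance in metres between two (lat, lon) points in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(h))


def speed_kmh(prev_fix, curr_fix, dt_s=1.0):
    """Speed between two consecutive GPS fixes taken dt_s seconds apart."""
    return haversine_m(prev_fix, curr_fix) / dt_s * 3.6


def reached(user, poi, confidence_m):
    """True when the user is within the confidence distance of the target."""
    return haversine_m(user, poi) <= confidence_m
```

In practice, raw one-second GPS deltas are noisy, so a real implementation would likely smooth the speed over a short window before displaying it.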

The progress of the jogging activity is defined by reaching a specific Point Of Interest (POI), defined as a

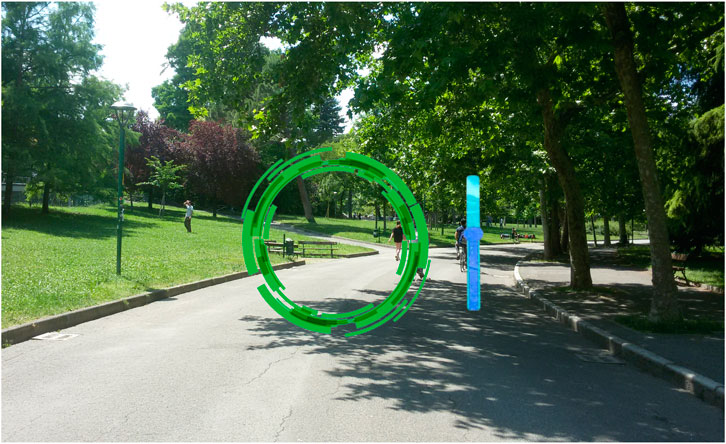

4.3.2 Sprint interface

The Sprint activity represents a special case of the jogging activity, since the user is asked to run along a straight road at her/his maximum speed. In this activity, the user’s location is monitored to check whether s/he has reached the arrival line. Moreover, the distance between the user’s location and the final point is shown on the interface to provide an engaging and motivating visualization that may stimulate the user to reach her/his goal. To this aim, the progression is represented by a circular element, which scales according to the distance already covered (Figure 6). This object is anchored in front of the user and reaches its maximum size immediately before the target location is reached. Along with this, we also adopted a classical progress bar, which increases according to the same metric, showing the completion percentage.

Figure 6. Sprint Activity interface view. The interface shows a circle and a progress bar that are inversely proportional to the distance to the goal.
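The mapping from covered distance to the two sprint indicators can be sketched as follows. This is a hypothetical illustration: the function name, the linear scaling, and the `min_scale`/`max_scale` bounds are assumptions, since the exact scaling law of the circle is not specified.

```python
def sprint_progress(total_m, remaining_m, max_scale=1.0, min_scale=0.1):
    """Return (circle_scale, percent_complete) for the sprint interface."""
    if total_m <= 0:
        raise ValueError("total_m must be positive")
    # Clamp so GPS jitter beyond either end of the track cannot break the UI.
    covered = min(max(total_m - remaining_m, 0.0), total_m)
    fraction = covered / total_m
    # The circle grows linearly from min_scale at the start to max_scale at the goal.
    scale = min_scale + (max_scale - min_scale) * fraction
    return scale, round(fraction * 100)
```

Driving both indicators from the same `fraction` guarantees that the circle and the progress bar never disagree, which matches the description of the bar increasing "according to the same metric".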

4.3.3 Body-weight and rest activities

In the body-weight activity, the user is asked to execute one or more calisthenics exercises. In case the execution of the exercises requires a real-world facility, the user is guided towards the POI which is located with an interface similar to the Jogging one; but in this case, the bio-metric stats would not be visible, and an image representing the real-world location is placed on the top-right section of the view (a view example is reported in Figure 7a). In case the execution of the exercises does not require any facility, the user will just visualize the exercise.

Figure 7. Body-Weight interface views. (a) The user is guided towards the exercise facility with a map and a picture of the facility itself. (b) Exercise progress bar.

The exercises can be quantified in terms of time or number of repetitions. In the timed case, the interface shows a timer indicating how much time is left until the exercise is completed; otherwise, when defined as a set of discrete repetitions, the system shows a descending counter corresponding to the missing repetitions. In the latter, repetition times have been estimated beforehand thanks to the collaboration of fitness experts. During the execution, a completion status circle is provided (Figure 7b) along with the same fatigue level bar provided in the Jogging activity (Figure 5a).
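One plausible reading of the repetition counter is that it descends according to the pre-estimated time per repetition rather than by sensing each movement. Under that assumption (and with hypothetical names and parameters), the counter could be computed as:

```python
import math


def remaining_repetitions(total_reps, seconds_per_rep, elapsed_s):
    """Repetitions still to do, assuming each one takes a pre-estimated time."""
    if seconds_per_rep <= 0:
        raise ValueError("seconds_per_rep must be positive")
    done = math.floor(max(elapsed_s, 0) / seconds_per_rep)
    return max(total_reps - done, 0)
```

For example, with 12 repetitions estimated at 2.5 s each, the counter would show 10 after 5 s and stay at 0 once the estimated total time has passed.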

In the Rest activity, a simple timer is displayed along with an icon representing it: the user should wait until the indicated time to have a proper rest.

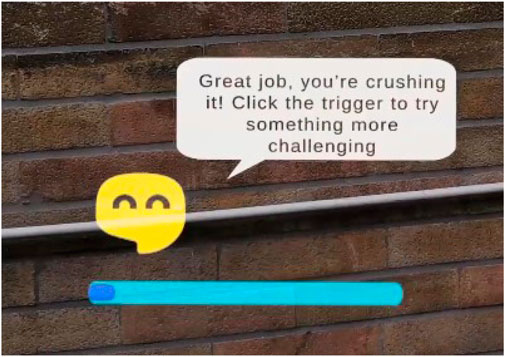

4.3.4 Virtual trainer assistant interface

As previously mentioned, M-AGEW integrates a digital avatar that vocally interacts with the user during the different steps of the workout. The avatar is used to (a) inform the user when one activity ends and a new one starts, (b) ask whether the user would like to increase the intensity of the workout, and (c) provide dynamic motivational messages during high-fatigue activities. Figure 8 shows the avatar. Its simple design aims at providing a non-invasive and functional assistant that can be seen in an outdoor context without occluding the user’s view. The avatar provides multi-modal communication: each message is both presented in textual form and vocally synthesized using an external library (Natesh Bhat, 2021). In the case of (a) and (b), a progress bar is visualized below the avatar, indicating the time left before the next activity starts and/or the time left to choose a harder version of it. The interface containing the avatar spawns after the conclusion of each of the previously detailed activities.

Figure 8. Image representing the 2D digital assistant avatar. In this picture, the avatar is asking the user if she/he wants to increase the difficulty of the next exercise.

5 Experimental setting and data analysis

5.1 Experience design and participants

To quantify the users’ satisfaction with M-AGEW, we built a custom workout around the headquarters of the company we collaborated with. In this workout, the FSM encompassed all activities from Section 4, including warm-up jogging, sprint, jogging, exercise session, another jogging, and cool-down jogging. During the experimental design process, we built an Activity Diagram describing it (see footnote 6).

To evaluate M-AGEW’s usability on a sunny, precipitation-free summer day, we integrated external sunglasses into the ML device to improve visibility. To assess the efficacy of our system, we enlisted eight professional company workers and athletes (2 females and 6 males).

Those users were selected from the company, considering the target users defined in Section 3.1 (i.e., early-middle-aged adults who are inclined to adopt AR but are not technological experts) and the limited sample available within the pool of workers of the collaborating company itself. Moreover, the sample size is close to 10, which has repeatedly proven to be sufficient to discover over 80% of existing interface design problems (Salomoni et al., 2017). The population had an average age of M: 37.12 (SD:

5.2 Assessment model

To assess the user’s experience with the application, participants completed three questionnaires: the Short User Experience Questionnaire (UEQ-S), selected questions from the technology acceptance model (TAM), and the NASA Task Load Index (NASA-TLX) (Schrepp et al., 2017; Hart and Staveland, 1988; Yousafzai et al., 2007). By combining these assessment scales, we aimed to understand user experience, acceptance, and potential areas for improvement for M-AGEW, providing valuable insights for design enhancements.

In particular, to evaluate how the users assess and experience the application in terms of usability, functionality, aesthetics, and emotional impact, we utilized the shortened version of the User Experience Questionnaire (UEQ-S) (Schrepp et al., 2017). This scale comprises eight items grouped into two dimensions: Pragmatic Quality (PQ), composed of items 1-4, and Hedonic Quality (HQ), represented by items 5-8. The pragmatic quality dimension focuses on usability and functionality, evaluating factors such as ease of use, efficiency, and effectiveness. The hedonic quality dimension focuses on users’ subjective experience and emotional response, assessing attractiveness, novelty, and engagement. The scale spans from −3 to 3, with −3 representing one extreme of the dimension (e.g., strongly disagree) and 3 indicating the opposite extreme (e.g., strongly agree). Table 4 presents the questions of the UEQ-S, along with their corresponding measurement criteria.
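The grouping of the eight UEQ-S items into the two dimensions can be illustrated with a short scoring sketch. The function name and the input layout (one list of eight answers per participant, already on the −3 to 3 scale) are assumptions made for the example, and any values passed to it here are made up rather than taken from the study.

```python
from statistics import mean


def ueq_s_scores(responses):
    """Compute PQ, HQ and overall means from UEQ-S answers.

    `responses` is a list of 8-item answer lists, already on the -3..3 scale.
    """
    for r in responses:
        if len(r) != 8 or any(not -3 <= x <= 3 for x in r):
            raise ValueError("each response needs 8 values in [-3, 3]")
    pq = mean([mean(r[:4]) for r in responses])  # items 1-4: Pragmatic Quality
    hq = mean([mean(r[4:]) for r in responses])  # items 5-8: Hedonic Quality
    return {"PQ": pq, "HQ": hq, "Overall": mean([pq, hq])}
```

With two fictitious participants answering `[3, 3, 3, 3, 1, 1, 1, 1]` and `[1, 1, 1, 1, -1, -1, -1, -1]`, the function yields a PQ of 2, an HQ of 0, and an overall score of 1.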

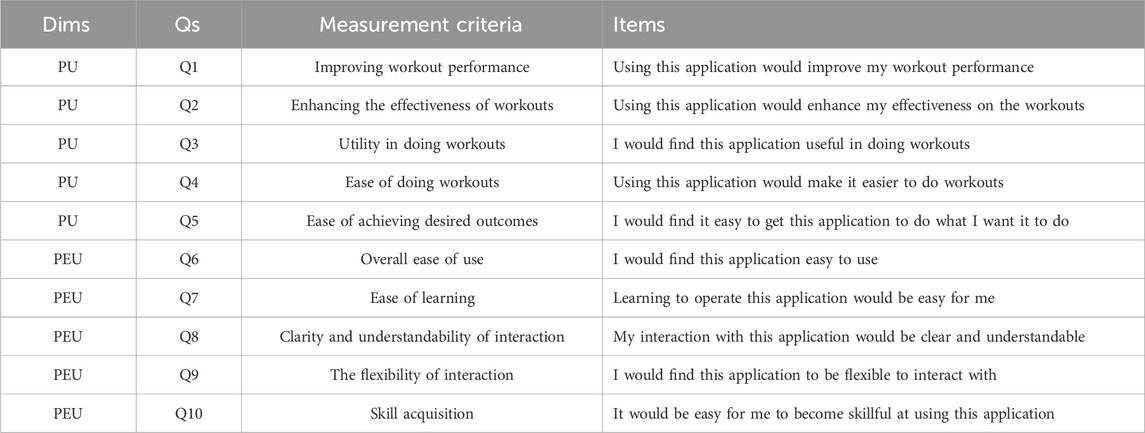

In addition, participants were invited to provide feedback on their expectations and perceptions of the application’s usefulness (PU) and ease of use (PEU) by answering a set of questions taken from the TAM scale (Yousafzai et al., 2007). This survey comprises ten questions, which participants rate on a scale from 1 to 7. The questions about PU and PEU and their corresponding measurement criteria are presented in Table 5.

Table 5. Questions for measuring the Perception of the application’s Usefulness (PU) and Ease of Use (PEU).

To evaluate instead the perceived mental workload of participants while using the application, we employed the NASA-TLX. This index measures various aspects of workload, including mental, physical, and temporal demands, as well as performance, effort, and frustration levels (Hart and Staveland, 1988). The questionnaire consists of six dimensions or subscales, which participants rate on a score between 0 and 100 from low to high, indicating their perceived workload for each dimension. The questions pertaining to the NASA-TLX and their corresponding measurement criteria are presented in Table 6.

5.3 Data analysis and results

In this Section, we report all our statistical analyses to first validate the reliability of the collected results and then provide descriptive analysis for them.

5.3.1 Internal consistency and reliability test

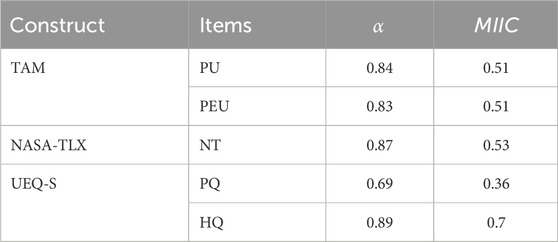

The collected data has undergone a reliability check to test for internal consistency and validate our research. For all our considered scales, TAM, NASA-TLX, and UEQ-S, we computed the widely accepted Cronbach’s alpha index (Cronbach, 1951) and the Mean inter-item correlation (MIIC) (Gulliksen, 1945) for each sub-group (i.e., construct).

Cronbach’s alpha provides a measure of the internal consistency of a test or scale; it is expressed as a number between 0 and 1. Internal consistency describes the extent to which all the items in a test measure the same concept or construct (Tavakol and Dennick, 2011). In addition, reliability estimates show the amount of measurement error in a test. Put simply, this interpretation of reliability is the correlation of the test with itself. Cronbach’s alpha score is considered reliable when (

Where:

The mean inter-item correlation serves as a method for assessing the internal consistency reliability of a test or questionnaire. This metric examines whether individual questions within the assessment yield consistently appropriate results. It is the result of the average of the upper or lower triangle in the correlation matrix calculated for the different question items representing the construct, as reported in Equation 2:

Where:

The reliable range for MIIC falls between 0.15 and 0.50 (Gulliksen, 1945). If the correlation is below 0.15, the items lack a strong correlation, indicating a potential issue in measuring the same construct. Conversely, a correlation exceeding 0.50 suggests items that are closely related and may be redundant (relevant in scale definition and initial validation).
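For readers who want to reproduce these two indices, the sketch below implements their standard textbook forms: Cronbach's alpha as k/(k−1) · (1 − Σ item variances / variance of the total score), and the MIIC as the mean of the pairwise Pearson correlations between items. It uses only the Python standard library; the function names and the input layout (one list of scores per item, one score per participant) are assumptions for the example and not the authors' analysis code.

```python
from itertools import combinations
from statistics import mean, variance


def cronbach_alpha(items):
    """items: list of k item-score lists, each holding one score per participant."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]  # per-participant total
    item_var = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_var / variance(totals))


def pearson(x, y):
    """Pearson correlation coefficient between two equally long score lists."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def miic(items):
    """Mean of the upper-triangle (off-diagonal) inter-item correlations."""
    return mean([pearson(x, y) for x, y in combinations(items, 2)])
```

Note that both functions require every item to have non-zero variance across participants; with a sample as small as the one considered here, a constant item would make the correlation undefined.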

The obtained results are reported in Table 7, exhibiting reliable scores for almost all of the groups, except for the PQ construct of the UEQ-S scales, whose score is slightly lower than the

Table 7. Cronbach’s

These values can be explained by the low scores obtained on the supportive item in the PQ dimension (see Section 5.3.2). However, the corresponding MIIC exhibits a value in the optimal range, which compensates for this small offset. Therefore, the collected data can be considered reliable for subsequent analysis. Moreover, further analysis of this construct could provide hints on why this occurred, also helping to understand the attitude of the considered users toward the general user experience. For this reason, we take this into account in our further analyses of all the measured constructs.

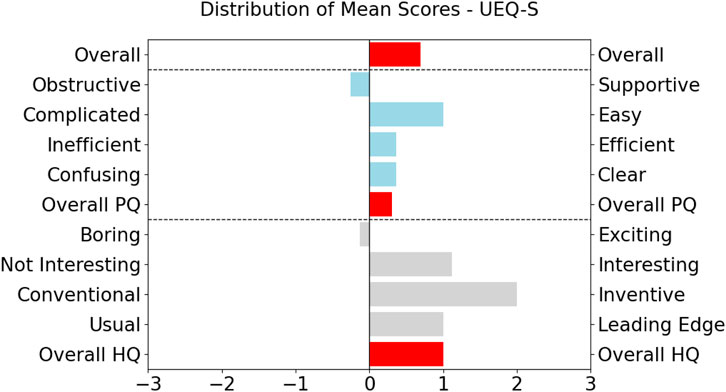

5.3.2 UEQ-S

A comparison of the mean scores from the UEQ-S questionnaire, including the mean scores of individual questions and of the overall PQ (Pragmatic Quality) and HQ (Hedonic Quality) dimensions, is provided in the bar chart reported in Figure 9.

Figure 9. Comparison of mean scores from UEQ-S questionnaire. The questions measure the PQ and HQ dimensions.

The PQ dimension scores are skewed towards positive ones, indicating a positive assessment of usability and effectiveness of the user experience. It is worth noticing that the users particularly perceived the system as very easy to use but at the same time, they were neutral or relatively negative about the system’s ability to be supportive. The HQ dimension got slightly higher average scores, suggesting an even stronger response in terms of emotional appeal and enjoyment, in particular for the perceived level of innovativeness.

However, some questions within both dimensions yielded mean scores below 0, implying mixed or neutral perceptions. For example, the question about “Perceived support” in the PQ dimension exhibited a mean score of −0.25, indicating diverse opinions among users regarding the level of support provided by the system. Likewise, the question concerning “Perceived excitement” in the HQ dimension obtained a mean score of −0.125, suggesting that users’ experience of excitement during system interaction was neutral or relatively subdued.

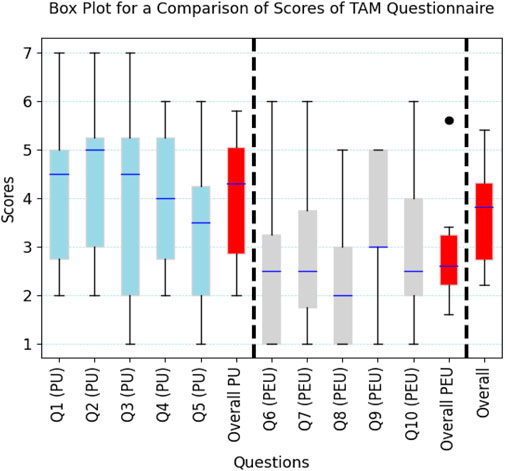

5.3.3 TAM

The results of the TAM questionnaire are illustrated in Figure 10, where the scores are visually represented by box plots. The overall PU items report an average high score exhibiting a slightly negative skewness. Different question items (Q1, Q2, Q4), and also the overall scores are centralized towards

Figure 10. Comparison of scores from the TAM questionnaire. The questions measure the Perception of the application’s Usefulness (PU) and the Perception of the Ease of Use (PEU).

5.3.4 NASA-TLX

The box plot describing the scores from the NASA-TLX questionnaire, reported in Figure 11, indicates a moderate workload level experienced by the majority of participants. In particular, our participants found using the system not too mentally or temporally demanding (items Q1 and Q3), even if they found achieving the task physically burdensome (item Q2). These physical demands could be due to the weight of the AR device and computation unit (even if light, they are additional gadgets that users wear on their head and carry in their pockets).

Despite this, the users felt they performed well and did not need much effort to use the system (items Q4 and Q5), in contrast with the physical demand observed above. This positive outcome is also supported by the high variability of the scores for item Q2.

Finally, they felt moderately frustrated (item Q6), a fact that could be attributable to their limited experience with AR devices. Notably, outliers were observed in the effort and frustration dimensions (Q5-Q6), indicating extreme scores deviating from the general pattern: some users found M-AGEW very easy to use and not frustrating at all.

6 Conclusions & future works

In this work, we introduced M-AGEW, an AR system to support users in outdoor workouts with a data-driven approach. The development of M-AGEW was preceded by a preliminary user study and literature review to establish the main factors that would let users adopt an AR-outdoor design system for physical workouts. Considering the results from this preliminary study, we developed M-AGEW which guides users, with a dynamic approach, through different outdoor activities defined as a novel key-value data structure named WKAN.

Finally, we validated the system with a user campaign involving professionals from the aforementioned company. The observed results point towards a positive attitude for the different investigated constructs. This is, to the best of our knowledge, the first work reporting this kind of analysis in the context of an AR outdoor workout system.

However, our system does not integrate any external smart devices capable of measuring real-time speed, acceleration, and GPS in parallel, such as commercial smartwatches, that would improve both the biometric feedback visualization and activity completion feedback.

In future works, we will include them along with Human Digital Twin paradigms, leveraging user-generated and prior data for robust activity detection, dynamic training schedule creation, and injury prevention (Lee et al., 2010; Tao and Zhang, 2017; Barricelli et al., 2020; Stacchio et al., 2022). Additionally, we are keen on exploring the capabilities of advanced AR headsets such as Magic Leap 2 and Apple Vision Pro, which boast improved outdoor visibility through features like luminance, dynamic dimming (Leap, 2023), and video pass-through (Apple, 2023).

Finally, to further validate and enhance the effectiveness of M-AGEW, within diverse user contexts, we plan to conduct an extensive user-testing campaign after those integrations. This user study will involve a more varied user base, ensuring that our solution caters to a wide range of preferences, needs, and physical activities. Through these future developments and user-centric evaluations, we aim to refine M-AGEW into a comprehensive and versatile tool for individuals seeking optimal support in their physical activity endeavors.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding authors.

Author contributions

VA: Software, Writing – review and editing. LS: Conceptualization, Data curation, Formal Analysis, Methodology, Software, Validation, Writing – original draft. PC: Data curation, Formal Analysis, Methodology, Supervision, Validation, Writing – original draft. SH: Conceptualization, Formal Analysis, Methodology, Writing – review and editing. LD: Funding acquisition, Resources, Supervision, Writing – review and editing. GM: Funding acquisition, Resources, Supervision, Writing – review and editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Acknowledgments

The authors express their sincere gratitude to Wellness Explorers for its valuable support and collaboration throughout the development of this research.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1A video demo is available here.

2To comply with the company’s internal policy its name is not cited.

4The Figma storyboard mockups are accessible through this link.

5https://www.surveymonkey.com/

6The Figma activity diagram is accessible through this link.

References

Apple (2023). Vision OS features. Available online at: https://developer.apple.com/visionos/.

Arena, F., Collotta, M., Pau, G., and Termine, F. (2022). An overview of augmented reality. Computers 11, 28. doi:10.3390/computers11020028

Baman, T. S., Gupta, S., and Day, S. M. (2010). Cardiovascular health, part 2: sports participation in athletes with cardiovascular conditions. Sports Health 2, 19–28. doi:10.1177/1941738109356941

Barricelli, B. R., Casiraghi, E., Gliozzo, J., Petrini, A., and Valtolina, S. (2020). Human digital twin for fitness management. IEEE Access 8, 26637–26664. doi:10.1109/access.2020.2971576

Benyon, D. (2014). Designing interactive systems: a comprehensive guide to hci. UX Interact. Des. 3.

Billinghurst, M., Grasset, R., and Looser, J. (2005). Designing augmented reality interfaces. ACM Siggraph Comput. Graph. 39, 17–22. doi:10.1145/1057792.1057803

Billinghurst, M., Clark, A., and Lee, G. (2015). A survey of augmented reality. Found. Trends® Human–Computer Interact. 8, 73–272. doi:10.1561/1100000049

Bonfert, M., Lemke, S., Porzel, R., and Malaka, R. (2022). “Kicking in virtual reality: the influence of foot visibility on the shooting experience and accuracy,” in 2022 IEEE conference on virtual reality and 3D user interfaces (VR) (IEEE), 711–718.

Bozgeyikli, L. L., and Bozgeyikli, E. (2022). “Tangiball: foot-Enabled embodied tangible interaction with a ball in virtual reality,” in 2022 IEEE conference on virtual reality and 3D user interfaces (VR) (IEEE), 812–820.

Cronbach, L. J. (1951). Coefficient alpha and the internal structure of tests. Psychometrika 16, 297–334. doi:10.1007/bf02310555

Dong, R. (2019). “Minimalist style of ui interface design in the age of self-media,” in Proc. Of international conference on information and social science, 217–221.

Ejaz, A., Ali, S. A., Ejaz, M. Y., and Siddiqui, F. A. (2019). Graphic user interface design principles for designing augmented reality applications. Int. J. Adv. Comput. Sci. Appl. (IJACSA) 10, 209–216. doi:10.14569/ijacsa.2019.0100228

Epson (2022). Moverio BT-40S. Available online at: https://www.epson.co.uk/en_GB/products/smart-glasses/see-through-mobile-viewer/moverio-bt-40s/p/31096 (Accessed January 3, 2022).

Fitzmaurice, G. W. (1993). Situated information spaces and spatially aware palmtop computers. Commun. ACM 36, 39–49. doi:10.1145/159544.159566

Gabbard, J. L., and Swan II, J. E. (2008). Usability engineering for augmented reality: employing user-based studies to inform design. IEEE Trans. Vis. Comput. Graph. 14, 513–525. doi:10.1109/tvcg.2008.24

GeoPy (2023). Geocoding library for python. Available online at: https://github.com/geopy/geopy.

Goebert, C., and Greenhalgh, G. P. (2020). A new reality: fan perceptions of augmented reality readiness in sport marketing. Comput. Hum. Behav. 106, 106231. doi:10.1016/j.chb.2019.106231

Gulliksen, H. (1945). The relation of item difficulty and inter-item correlation to test variance and reliability. Psychometrika 10, 79–91. doi:10.1007/bf02288877

Hajahmadi, S., Calvi, I., Stacchiotti, E., Cascarano, P., and Marfia, G. (2024a). Heritage elements and artificial intelligence as storytelling tools for virtual retail environments. Digital Appl. Archaeol. Cult. Herit. 34, e00368. doi:10.1016/j.daach.2024.e00368

Hajahmadi, S., Stacchio, L., Giacché, A., Cascarano, P., and Marfia, G. (2024b). “Investigating extended reality-powered digital twins for sequential instruction learning: the case of the rubik’s cube,” in 2024 IEEE international symposium on mixed and augmented reality (ISMAR) 259–268.

Hamada, T., Hautasaari, A., Kitazaki, M., and Koshizuka, N. (2022). “Solitary jogging with a virtual runner using smartglasses,” in 2022 IEEE conference on virtual reality and 3D user interfaces (VR) (IEEE), 644–654.

Hart, S. G., and Staveland, L. E. (1988). “Development of NASA-TLX (task load index): results of empirical and theoretical research,” in Advances in psychology, 52 (Elsevier), 139–183. doi:10.1016/s0166-4115(08)62386-9

Hartson, R., and Pyla, P. S. (2018). The UX book: Agile UX design for a quality user experience. Morgan Kaufmann.

Heim, S. (2007). The resonant interface: HCI foundations for interaction design. Addison-Wesley Longman Publishing Co., Inc.

Hertel, J., and Steinicke, F. (2021). “Augmented reality for maritime navigation assistance-egocentric depth perception in large distance outdoor environments,” in 2021 IEEE virtual reality and 3D user interfaces (VR) (IEEE), 122–130.

Huang, S.-C., Bias, R. G., and Schnyer, D. (2015). How are icons processed by the brain? Neuroimaging measures of four types of visual stimuli used in information systems. J. Assoc. Inf. Sci. Technol. 66, 702–720. doi:10.1002/asi.23210

Huang, G., Qian, X., Wang, T., Patel, F., Sreeram, M., Cao, Y., et al. (2021). “Adaptutar: an adaptive tutoring system for machine tasks in augmented reality,” in Proceedings of the 2021 CHI conference on human factors in computing systems, 1–15.

Josephson, S. (2008). Keeping your readers’ eyes on the screen: an eye-tracking study comparing sans serif and serif typefaces. Vis. Commun. Q. 15, 67–79. doi:10.1080/15551390801914595

Kim, J., Lorenz, M., Knopp, S., and Klimant, P. (2020). “Industrial augmented reality: concepts and user interface designs for augmented reality maintenance worker support systems,” in 2020 IEEE international symposium on mixed and augmented reality adjunct (ISMAR-Adjunct) (IEEE), 67–69.

Kim, J., Ka, J., Lee, Y., Lee, Y., Park, S., and Kim, W. (2022). “Mixed reality-based outdoor training system to improve football player performance,” in 2022 international conference on engineering and emerging technologies (ICEET) (IEEE), 1–3.

Kowatsch, T., Lohse, K.-M., Erb, V., Schittenhelm, L., Galliker, H., Lehner, R., et al. (2021). Hybrid ubiquitous coaching with a novel combination of Mobile and holographic conversational agents targeting adherence to home exercises: four design and evaluation studies. J. Med. Internet Res. 23, e23612. doi:10.2196/23612

Lambert, M., Mbambo, Z., and Gibson, A. S. C. (1998). Heart rate during training and competition for long-distance running. J. Sports Sci. 16, 85–90. doi:10.1080/026404198366713

Leap, M. (2023). Four optics breakthroughs to power enterprise AR. Available online at: https://www.magicleap.com/hubfs/Magic-Leap-2-Optics-Highlights-Paper.pdf.

Lee, M.-W., Khan, A. M., Kim, J.-H., Cho, Y.-S., and Kim, T.-S. (2010). “A single tri-axial accelerometer-based real-time personal life log system capable of activity classification and exercise information generation,” in 2010 annual international conference of the IEEE engineering in medicine and biology IEEE, 1390–1393.

Lee, G. A., Dünser, A., Kim, S., and Billinghurst, M. (2012). “Cityviewar: a Mobile outdoor ar application for city visualization,” in 2012 IEEE international symposium on mixed and augmented reality-arts, media, and humanities ISMAR-AMH IEEE, 57–64.

Lee, B., Sedlmair, M., and Schmalstieg, D. (2023). Design patterns for situated visualization in augmented reality. arXiv Prepr. arXiv:2307.09157 30, 1324–1335. doi:10.1109/tvcg.2023.3327398

Lewis, C. (1982). Using the “thinking-aloud” method in cognitive interface design. IBM TJ Watson Res. Cent. Yorkt. Heights.

Lilligreen, G., and Wiebel, A. (2023). Near and far interaction for outdoor augmented reality tree visualization and recommendations on designing augmented reality for use in nature. SN Comput. Sci. 4, 248. doi:10.1007/s42979-023-01675-7

Liu, H., Wang, Z., Mousas, C., and Kao, D. (2020). “Virtual reality racket sports: virtual drills for exercise and training,” in 2020 IEEE international symposium on mixed and augmented reality (ISMAR) (IEEE), 566–576.

Lopez, G., Abe, S., Hashimoto, K., and Yokokubo, A. (2019). “On-site personal sport skill improvement support using only a smartwatch,” in 2019 IEEE international conference on pervasive computing and communications workshops (PerCom workshops) (IEEE), 158–164.

Marienko, M., Nosenko, Y., and Shyshkina, M. (2020). Personalization of learning using adaptive technologies and augmented reality. arXiv Prepr. arXiv:2011.05802.

Marotta, L., Buurke, J. H., van Beijnum, B.-J. F., and Reenalda, J. (2021). Towards machine learning-based detection of running-induced fatigue in real-world scenarios: evaluation of imu sensor configurations to reduce intrusiveness. Sensors 21, 3451. doi:10.3390/s21103451

Martin-Niedecken, A. L., Rogers, K., Turmo Vidal, L., Mekler, E. D., and Márquez Segura, E. (2019). “Exercube vs. personal trainer: evaluating a holistic, immersive, and adaptive fitness game setup,” in Proceedings of the 2019 CHI conference on human factors in computing systems, 1–15.

Microsoft (2022). Microsoft hololens 2. Available online at: https://www.microsoft.com/en-us/hololens.

Natesh Bhat (2021). Offline text to speech (TTS) converter for python. Available online at: https://github.com/nateshmbhat/pyttsx3.

Nekar, D. M., Kang, H. Y., and Yu, J. H. (2022). Improvements of physical activity performance and motivation in adult men through augmented reality approach: a randomized controlled trial. J. Environ. Public Health 2022, 3050424. doi:10.1155/2022/3050424

Ning, H., Wang, H., Lin, Y., Wang, W., Dhelim, S., Farha, F., et al. (2021). A survey on metaverse: the state-of-the-art, technologies, applications, and challenges. arXiv Prepr. arXiv:2111.09673.

Nozawa, T., Wu, E., and Koike, H. (2019). “Vr ski coach: indoor ski training system visualizing difference from leading skier,” in 2019 IEEE conference on virtual reality and 3D user interfaces (VR) (IEEE), 1341–1342.

OpenStreetMap contributors (2017). Planet dump. Available online at: https://planet.osm.org.

Pascoal, R., Alturas, B., de Almeida, A., and Sofia, R. (2018). “A survey of augmented reality: making technology acceptable in outdoor environments,” in 2018 13th iberian conference on information systems and technologies (CISTI) (IEEE), 1–6.

Pascoal, R. M., de Almeida, A., and Sofia, R. C. (2019). “Activity recognition in outdoor sports environments: smart data for end-users involving mobile pervasive augmented reality systems,” in Adjunct proceedings of the 2019 ACM international joint conference on pervasive and ubiquitous computing and proceedings of the 2019 ACM international symposium on wearable computers, 446–453.

Pascoal, R., Almeida, A. D., and Sofia, R. C. (2020). Mobile pervasive augmented reality Systems—Mpars: the role of user preferences in the perceived quality of experience in outdoor applications. ACM Trans. Internet Technol. (TOIT) 20, 1–17. doi:10.1145/3375458

Poulose, A., and Han, D. S. (2019). Hybrid indoor localization using imu sensors and smartphone camera. Sensors 19, 5084. doi:10.3390/s19235084

Puggioni, M., Frontoni, E., Paolanti, M., and Pierdicca, R. (2021). Scoolar: an educational platform to improve students’ learning through virtual reality. IEEE Access 9, 21059–21070. doi:10.1109/access.2021.3051275

Rauschnabel, P. A., Felix, R., Hinsch, C., Shahab, H., and Alt, F. (2022). What is xr? Towards a framework for augmented and virtual reality. Comput. Hum. Behav. 133, 107289. doi:10.1016/j.chb.2022.107289

Richter, F. (2023). AR and VR adoption is still in its infancy. Available online at: https://www.statista.com/chart/28467/virtual-and-augmented-reality-adoption-forecast/#:~:text=Statista%20estimates%20that%2098%20million,smartphone%20users%20across%20the%20planet.

Rieman, J., Franzke, M., and Redmiles, D. (1995). “Usability evaluation with the cognitive walkthrough,” in Conference companion on human factors in computing systems, 387–388.

Russell, M., Hull, J., and Wesley, R. (2001). Reading with rsvp on a small screen: does font size matter? Usability News 3.

Salomoni, P., Prandi, C., Roccetti, M., Casanova, L., Marchetti, L., and Marfia, G. (2017). Diegetic user interfaces for virtual environments with hmds: a user experience study with oculus rift. J. Multimodal User Interfaces 11, 173–184. doi:10.1007/s12193-016-0236-5

Samini, A., Palmerius, K. L., and Ljung, P. (2021). “A review of current, complete augmented reality solutions,” in 2021 international conference on cyberworlds (CW) (IEEE), 49–56.

Sawan, N., Eltweri, A., De Lucia, C., Pio Leonardo Cavaliere, L., Faccia, A., and Roxana Moşteanu, N. (2020). “Mixed and augmented reality applications in the sport industry,” in 2020 2nd international conference on E-Business and E-commerce engineering, 55–59.

Sawyer, B. D., Dobres, J., Chahine, N., and Reimer, B. (2020). The great typography bake-off: comparing legibility at-a-glance. Ergonomics 63, 391–398. doi:10.1080/00140139.2020.1714748

Schiewe, A., Krekhov, A., Kerber, F., Daiber, F., and Krüger, J. (2020). “A study on real-time visualizations during sports activities on smartwatches,” in Proceedings of the 19th international conference on mobile and ubiquitous multimedia, 18–31.

Schrepp, M., Hinderks, A., and Thomaschewski, J. (2017). Design and evaluation of a short version of the user experience questionnaire (ueq-s). Int. J. Interact. Multimedia Artif. Intell. 4 (6), 103–108. doi:10.9781/ijimai.2017.09.001

Seo, S., Kang, D., and Park, S. (2018). Real-time adaptable and coherent rendering for outdoor augmented reality. EURASIP J. Image Video Process. 2018, 118–8. doi:10.1186/s13640-018-0357-8

Shin, S., Batch, A., Butcher, P. W., Ritsos, P. D., and Elmqvist, N. (2023). The reality of the situation: a survey of situated analytics. IEEE Trans. Vis. Comput. Graph. 30, 5147–5164. doi:10.1109/tvcg.2023.3285546

Shneiderman, B., and Plaisant, C. (2010). Designing the user interface: strategies for effective human-computer interaction. Pearson Education India.

Singh, S., Singh, J., Shah, B., Sehra, S. S., and Ali, F. (2022). Augmented reality and gps-based resource efficient navigation system for outdoor environments: integrating device camera, sensors, and storage. Sustainability 14, 12720. doi:10.3390/su141912720

Soltani, P., and Morice, A. H. (2020). Augmented reality tools for sports education and training. Comput. and Educ. 155, 103923. doi:10.1016/j.compedu.2020.103923

Sport Business Journals (2021). Nike running Ad features snap’s augmented reality glasses. Available online at: https://www.sportsbusinessjournal.com/Daily/Issues/2021/11/10/Technology/nike-running-ad-features-snaps-augmented-reality-glasses.

Stacchio, L., Angeli, A., and Marfia, G. (2022). Empowering digital twins with extended reality collaborations. Virtual Real. and Intelligent Hardw. 4, 487–505. doi:10.1016/j.vrih.2022.06.004

Stacchio, L., Garzarella, S., Cascarano, P., De Filippo, A., Cervellati, E., and Marfia, G. (2024). Danxe: an extended artificial intelligence framework to analyze and promote dance heritage. Digital Appl. Archaeol. Cult. Herit. 33, e00343. doi:10.1016/j.daach.2024.e00343

Su, K.-W., and Liu, C.-L. (2012). A mobile nursing information system based on human-computer interaction design for improving quality of nursing. J. Med. Syst. 36, 1139–1153. doi:10.1007/s10916-010-9576-y

Taber, K. S. (2018). The use of cronbach’s alpha when developing and reporting research instruments in science education. Res. Sci. Educ. 48, 1273–1296. doi:10.1007/s11165-016-9602-2

Tan, C. T., Byrne, R., Lui, S., Liu, W., and Mueller, F. (2015). “Joggar: a mixed-modality ar approach for technology-augmented jogging,” in SIGGRAPH Asia 2015 Mobile graphics and interactive applications (ACM digital library), 1.

Tao, F., and Zhang, M. (2017). Digital twin shop-floor: a new shop-floor paradigm towards smart manufacturing. IEEE Access 5, 20418–20427. doi:10.1109/access.2017.2756069

Tavakol, M., and Dennick, R. (2011). Making sense of cronbach’s alpha. Int. J. Med. Educ. 2, 53–55. doi:10.5116/ijme.4dfb.8dfd

Technogym (2017). Skillrow. Rowing device and fitness class. Available online at: https://www.technogym.com/us/line/skillrow-class/.

Tzima, S., Styliaras, G., and Bassounas, A. (2021). Augmented reality in outdoor settings: evaluation of a hybrid image recognition technique. J. Comput. Cult. Herit. (JOCCH) 14, 1–17. doi:10.1145/3439953

Uhm, J.-P., Kim, S., Do, C., and Lee, H.-W. (2022). How augmented reality (ar) experience affects purchase intention in sport e-commerce: roles of perceived diagnosticity, psychological distance, and perceived risks. J. Retail. Consumer Serv. 67, 103027. doi:10.1016/j.jretconser.2022.103027

Wu, H.-K., Lee, S. W.-Y., Chang, H.-Y., and Liang, J.-C. (2013). Current status, opportunities and challenges of augmented reality in education. Comput. and Educ. 62, 41–49. doi:10.1016/j.compedu.2012.10.024

Yeo, H.-S., Koike, H., and Quigley, A. (2019). “Augmented learning for sports using wearable head-worn and wrist-worn devices,” in 2019 IEEE conference on virtual reality and 3D user interfaces (VR) (IEEE), 1578–1580.

Yin, K., Hsiang, E.-L., Zou, J., Li, Y., Yang, Z., Yang, Q., et al. (2022). Advanced liquid crystal devices for augmented reality and virtual reality displays: principles and applications. Light Sci. and Appl. 11, 161. doi:10.1038/s41377-022-00851-3

Yousafzai, S. Y., Foxall, G. R., and Pallister, J. G. (2007). Technology acceptance: a meta-analysis of the tam: part 1. J. Model. Manag. 2, 251–280. doi:10.1108/17465660710834453

ZRX (2021). Zombies run. Available online at: https://zrx.app/.

ZRX (2023). ZRX: zombies run + marvel move. Available online at: https://play.google.com/store/apps/details?id=com.sixtostart.zombiesrunclient&hl=it.

Keywords: augmented reality, outdoor workouts, human-computer interaction, adaptive training, wearable interfaces, AR fitness systems

Citation: Armandi V, Stacchio L, Cascarano P, Hajahmadi S, Donatiello L and Marfia G (2025) An augmented outdoor workout system for jogging and calisthenics support. Front. Virtual Real. 6:1613717. doi: 10.3389/frvir.2025.1613717

Received: 17 April 2025; Accepted: 21 August 2025; Published: 22 September 2025.

Edited by: Jihad El-Sana, Ben-Gurion University of the Negev, Israel

Reviewed by: Jan Lacko, Pan-European University, Slovakia; Hu Xin, City University of Macau, Macao SAR, China

Copyright © 2025 Armandi, Stacchio, Cascarano, Hajahmadi, Donatiello and Marfia. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Pasquale Cascarano, pasquale.cascarano2@unibo.it; Gustavo Marfia, gustavo.marfia@unibo.it
