- Human-Computer Interaction (HCI) Group, Computer Science IX, University of Würzburg, Würzburg, Germany

The sense of embodiment and the sense of spatial presence are two fundamental constructs in Virtual Reality, shaping user experience and behavior. While empirical studies have consistently shown that both constructs are influenced by similar cues, theoretical discussions often treat them as separate, leaving their conceptual relationship underexplored. This paper systematically examines the conceptual overlap between these two constructs, revealing the extent of their interconnection. Through a detailed analysis, we present fourteen arguments that demonstrate how cues designed to enhance one construct also impact the other. This unified perspective highlights that any cue contributing to one construct is likely to influence the other. Furthermore, our findings challenge the suitability of common network-based models in representing the relationship between the two constructs. As an alternative, we suggest a table-based representation that maps the influence of individual cues onto both constructs, highlighting their relative impact. By bridging this theoretical gap, our work clarifies the intertwined nature of these constructs, with potential applications in the development of more cohesive measurement instruments and further research in presence and embodiment.

1 Introduction

Virtual Reality (VR) as an interface technology has the potential to evoke a diverse range of intense qualia, i.e., subjective states of conscious experience. Many VR applications are specifically designed to elicit particular experiential states, making the relationship between VR cues and user experiences a central concern for designers and developers. The key question they face is: which VR-related cues trigger the desired state of experience for my application’s users? While the term qualia captures the subjective and phenomenal nature of these experiences (Kanai and Tsuchiya, 2012), scientific research typically relies on constructs—theoretically grounded, multidimensional models—to describe and measure them. The interplay between constructs, their underlying factors, and the cues that elicit them remains an active area of scientific investigation, both empirically and theoretically. Among the most widely studied constructs in VR research are spatial presence and virtual embodiment. These two constructs are particularly significant because they play a crucial role in numerous VR applications. For example, a high sense of presence can increase the effect of training or therapy applications (Grassini et al., 2020; Mantovani and Castelnuovo, 2003; Herbelin et al., 2002; Alsina-Jurnet et al., 2011). Virtual embodiment can be leveraged to strengthen interpersonal communication in social VR (Kullmann et al., 2023; Smith and Neff, 2018) or to increase emotional responses to virtual stimuli (Gall et al., 2021).

Empirical studies consistently show correlations between the sense of virtual embodiment and spatial presence. Manipulations designed primarily to strengthen or weaken virtual embodiment often have similar effects on spatial presence. Notably, manipulations such as the presence or absence of an avatar (Unruh et al., 2021; Wolf et al., 2021; Slater et al., 2018), the synchrony of visuotactile stimulation (Gall et al., 2021; Halbig and Latoschik, 2024; Pritchard et al., 2016), or the perspective from which an avatar is controlled (Matsuda et al., 2021; Unruh et al., 2024; Iriye and St. Jacques, 2021) have been shown to influence both virtual embodiment and spatial presence. However, this influence does not necessarily run in only one direction. Just as traditional virtual embodiment cues can enhance spatial presence, traditional spatial presence cues may, in turn, influence the sense of embodiment. The overall level of immersion, determined by factors such as display technology (Waltemate et al., 2018; Roth and Latoschik, 2020), the integration of sensory channels independent of the avatar (Gall, 2022; Guterstam et al., 2015), or the field of view of the Head-Mounted Display (HMD) (Falcone et al., 2022; Nakano et al., 2021), can significantly affect both spatial presence and virtual embodiment.

While empirical findings repeatedly highlight these interconnections, theoretical models have yet to fully account for them. Many of the established VR theories focus primarily on either presence or virtual embodiment, without explicitly addressing their relationship—for example, Schubert (2009), Kilteni et al. (2012), or Skarbez et al. (2017). Others acknowledge a connection but remain relatively vague or superficial in explaining the link between the two constructs, as seen in the works of Biocca (1997), Slater (2009), or Latoschik and Wienrich (2022). As a result, most researchers also tend to think about the two phenomena separately. However, a notable exception has emerged recently. The Implied Body Framework (IBF) introduces a relatively new approach to linking the two qualia (Forster et al., 2022). It is based on the assumption that every form of multisensory correlation indicates the existence of implied bodies. These implied bodies, in turn, give rise to an internal body representation. Spatial presence then emerges from the spatial reference point of this body representation. Thus, the IBF understands presence as arising from embodiment processes.

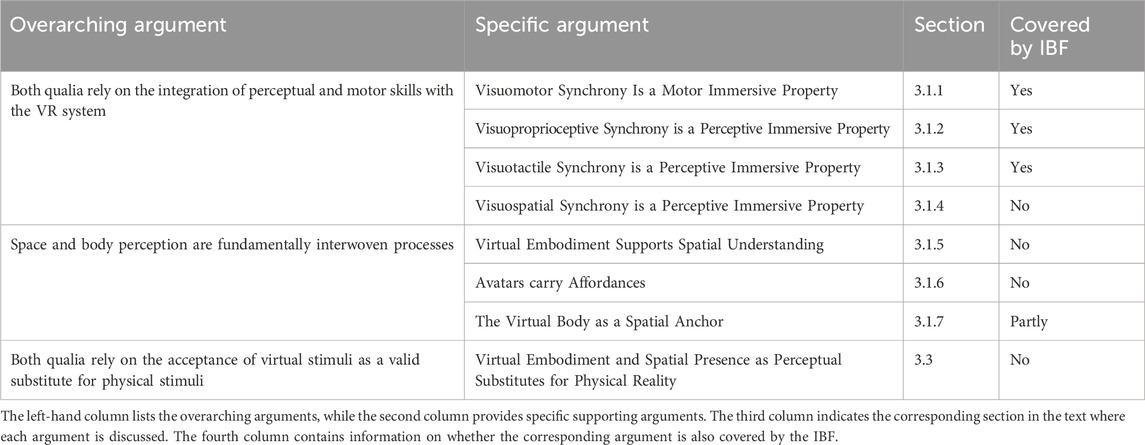

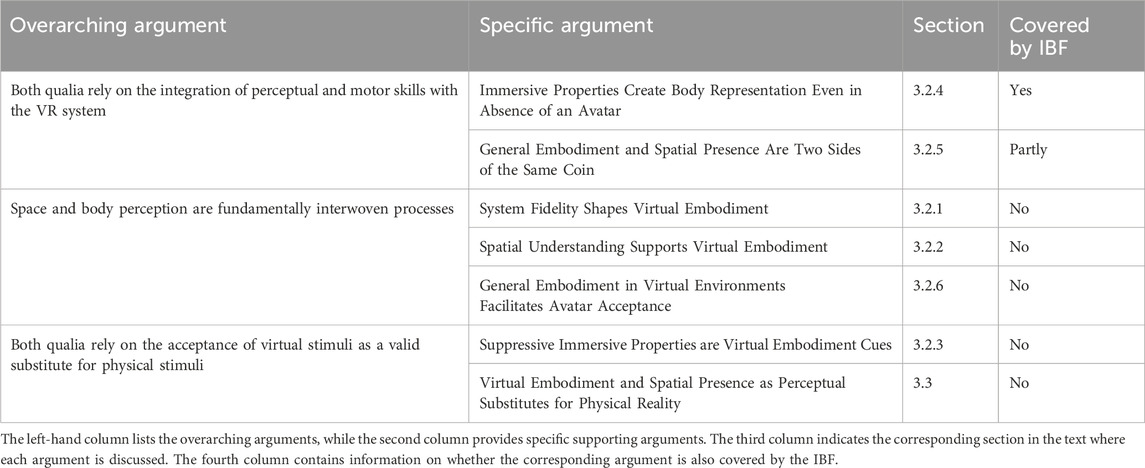

However, despite these advances, we believe that no existing theory or empirical work fully captures the entire spectrum of conceptual overlap between the two constructs. In this work, we aim to bridge this gap by contributing to the further development of theoretical ideas regarding their connection. We challenge the conventional separation between virtual embodiment and spatial presence. By thoroughly analyzing the established concepts, we reveal the significant overlap between them. Through this examination, we identify fourteen key arguments that illustrate how cues typically linked to one construct also influence the other. Tables 1 and 2 provide an overview of the arguments. Table 1 shows how traditional virtual embodiment cues affect spatial presence, while Table 2 shows the reverse. Both tables also indicate which arguments are covered by the IBF, revealing several important connections it overlooks and highlighting the need for a more comprehensive perspective.

Table 1. Overview of arguments supporting the idea that traditional virtual embodiment cues influence spatial presence.

Table 2. Overview of arguments supporting the idea that traditional spatial presence cues influence virtual embodiment.

On the one hand, this list of arguments provides a valuable tool for interpreting various empirical findings. On the other hand, it highlights the deep conceptual connection between the two constructs and their associated qualia. From this conceptual connection, we also draw overarching theoretical conclusions. First, imposing a hierarchical or network-based structure on the constructs and their dimensions leads to conceptual circularity with limited predictive value; the two constructs are simply too conceptually intertwined for this to be effective. Instead, we propose visualizing their relationship through a table that captures the relative strength with which various cues influence each construct. Second, any bottom-up cue that has the potential to manipulate one of the constructs also has the potential to manipulate the other. Third, the widely used subjective measurement instruments cannot clearly separate the two constructs. These insights underscore the need for a more integrated approach to studying virtual embodiment and spatial presence, both in terms of measurement instruments and conceptual frameworks.

2 Background

2.1 The theoretical concept behind spatial presence

In our definition of spatial presence, we follow Wirth et al. (2007), according to whom spatial presence is a construct with two aspects. The first aspect is the sensation of being physically situated in the virtual environment, i.e., users perceive the virtual environment as their current location. The second aspect is the sensation of being able to act in this virtual environment, i.e., users perceive the possibilities to act and interact in the virtual environment, while the interaction possibilities of the physical environment diminish. In their comprehensive review of presence theories and concepts, Skarbez et al. (2017) demonstrate that this definition is widely accepted among researchers. They categorize such definitions as the “being there–active” definitions. Consequently, many presence questionnaires include items or even dedicated subscales designed to measure these two dimensions (Schubert et al., 2001; Vorderer et al., 2004; Hartmann et al., 2016). Reviews further indicate that questionnaires remain the most widely used method for assessing spatial presence (Hein et al., 2018; Souza et al., 2021).

There are various theoretical models that make statements about the emergence of spatial presence. Slater (2009) refers to the sense of being in the virtual environment as the “place illusion”. According to his model, this quale arises from the sensorimotor contingencies that a system supports. Sensorimotor contingencies refer to the extent to which users can perceive and interact with the virtual environment using their bodies in ways that follow the same principles as in physical reality. The more valid actions a system enables, the more immersive it becomes and the stronger the place illusion experienced by the user. For example, head tracking allows users to explore the environment naturally by moving their heads, while higher pixel density enables them to inspect objects more closely. This idea is also reflected in other theories. Schubert et al. (1999) express it with the term embodied presence. According to them, “presence develops from the representation of navigation (movement) of the own body (or body parts) as a possible action in the virtual world.” (Schubert et al., 1999, p. 272). Biocca (1997) speaks of “progressive embodiment”, by which he means the continuous integration of sensorimotor abilities into the computer interface, resulting in a tighter coupling of the body and its abilities to a user interface. It is important to note that when Biocca and Schubert discuss embodiment, they are not referring to avatars, as is common in more recent research. We explore this distinction in greater detail later.

These ideas about the emergence of spatial presence align well with our initial definition. As humans, we possess physical characteristics and abilities that allow us to extract information from our environment, orient ourselves within it, and ultimately act purposefully within the given context. By engaging these innate capacities, VR systems can more powerfully evoke a sense of being in the virtual world, rather than merely observing it. When we describe the emergence of spatial presence in this work, we describe it as an ever-increasing coupling between users, their motor and perceptive abilities, and the VR system. This concerns the purely physiological components of the body, e.g., its sensory organs, but also the direct bottom-up processing of the VR stimuli, e.g., via multisensory integration. We therefore follow the traditional view that considers spatial presence a pre-cognitive phenomenon—one that does not require active or conscious processing (Slater, 2009).

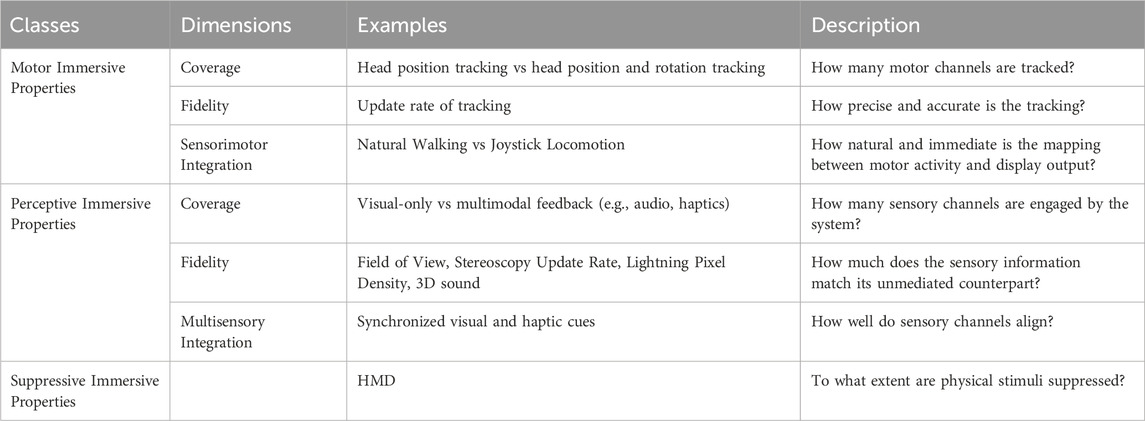

2.2 Spatial presence cues

Different VR systems can be distinguished by their properties, which determine whether and to what extent the user’s motor and perceptive abilities are coupled to the VR system. We call these “immersive properties” because immersion is typically seen as the main factor for spatial presence (Slater, 2009; Skarbez et al., 2017). Biocca (1997) distinguishes between different classes of simulation technologies, i.e., those that focus on increasing sensory engagement and those that focus on increasing motor engagement. We apply this distinction to the immersive properties of VR to obtain a meaningful categorization of spatial presence cues. A summary of this categorization can be found in Table 3.

2.2.1 Motor immersive properties

Motor immersive properties are the immersive properties of a VR system that ensure that the user’s motor activations are recorded and translated into meaningful changes in the system’s output. Head tracking is probably the most central example. Another example is whether a VR system allows a player to walk freely or restricts walking (Regenbrecht and Schubert, 2002). Tracking a motor channel alone is not sufficient, however: activity on the respective motor channel must always produce a corresponding change in the output of the VR system. This change in output need not be visual. A motor channel can also be linked to the sound output of the VR system, for example, by creating footstep sounds when walking in the virtual world (Nordahl, 2005). We distinguish three dimensions along which motor immersive properties become effective. These dimensions help us understand how these properties create a link between the body and the VR system.

The first dimension is the coverage, which refers to the number of motor channels supported by the VR system. With head tracking, for example, one can distinguish whether only the rotation or also the translation of the head movement is tracked (see, for example, Brübach et al. (2024) or Zanbaka et al. (2005)). Another example would be whether a VR system supports controllers or not.

The second dimension is the technical fidelity with which the user’s motor activities are tracked. The question is therefore not only whether a motor channel is supported, but also how faithfully it is tracked. Head tracking with an update rate of 1 Hz, for example, will hardly lead to a sufficient sense of spatial presence, no matter how good the mapping to the system’s output is. In the context of motor immersive properties, fidelity therefore refers primarily to the quality with which the motor channels are tracked.
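To make the 1 Hz example concrete, the following Python sketch bounds how far the rendered head orientation can lag behind the real one when tracking is sampled at a given rate. It is a simplified illustration, not a model from the cited literature: it assumes a constant head rotation speed (a hypothetical 100°/s, chosen only for illustration) and ignores rendering and display latency. The function name is hypothetical.

```python
def worst_case_pose_error_deg(update_rate_hz: float, head_speed_deg_s: float) -> float:
    """Upper bound (degrees) on how far the tracked head orientation can lag
    behind the true orientation between two tracking samples.

    Simplifying assumptions: constant rotation speed, no rendering or
    display latency; only the sampling interval is considered.
    """
    if update_rate_hz <= 0:
        raise ValueError("update rate must be positive")
    sample_interval_s = 1.0 / update_rate_hz
    return head_speed_deg_s * sample_interval_s


# Hypothetical head rotation speed of 100 deg/s, for illustration only.
for rate in (1, 90):
    lag = worst_case_pose_error_deg(rate, 100.0)
    print(f"{rate:>3} Hz -> up to {lag:.1f} deg of lag")
```

Under these assumptions, a 1 Hz tracker can leave the displayed view up to a full 100° behind the head, whereas a 90 Hz tracker keeps the bound near 1°. This illustrates why fidelity matters independently of how well the mapping itself is designed.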

The third dimension is the quality of the sensorimotor integration, which concerns the mapping between motor channels and the output of the VR system. It refers to how naturally and immediately a user’s physical movements translate into corresponding responses of the VR system. Congruence increases as the mapping between user actions and system responses approximates the sensorimotor contingencies typical of non-mediated interactions. High congruence supports the brain’s ability to integrate motor actions with the output of the VR system, reinforcing a coherent and more immersive experience. Suppose we were to build an application in which the rotation axes of the head tracking are swapped, e.g., tilting the head up or down (pitch) results in horizontal rotation of the display image (yaw) and vice versa. Even though this creates a direct mapping from motor channels to the output of the system, it is easy to see that the resulting link between body and system is flawed and highly unlikely to generate a sense of spatial presence. A more realistic example is given by papers that compare locomotion in VR via joystick versus real walking, e.g., Ryu et al. (2020) or Usoh et al. (1999). Navigation via joystick also couples motor skills to the VR system. However, the link is more congruent when actual walking is used, which results in increased spatial presence.
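The swapped-axes thought experiment can be expressed as a minimal Python sketch. It treats head orientation as three independent Euler angles, which is a deliberate simplification, and the names `HeadRotation`, `congruent_mapping`, `swapped_mapping`, and `mapping_error` are hypothetical. Both mappings use the same motor channel and could be tracked with identical fidelity; they differ only in congruence, which the summed per-axis deviation makes visible.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HeadRotation:
    yaw: float    # degrees, rotation about the vertical axis
    pitch: float  # degrees, nodding up or down
    roll: float   # degrees, tilting toward a shoulder


def congruent_mapping(head: HeadRotation) -> HeadRotation:
    # The virtual camera rotates exactly as the head does.
    return head


def swapped_mapping(head: HeadRotation) -> HeadRotation:
    # Hypothetical incongruent mapping: head pitch drives camera yaw and vice versa.
    return HeadRotation(yaw=head.pitch, pitch=head.yaw, roll=head.roll)


def mapping_error(mapping, head: HeadRotation) -> float:
    """Sum of absolute per-axis deviations between head and camera rotation."""
    cam = mapping(head)
    return (abs(cam.yaw - head.yaw)
            + abs(cam.pitch - head.pitch)
            + abs(cam.roll - head.roll))


nod = HeadRotation(yaw=0.0, pitch=-20.0, roll=0.0)  # looking down by 20 degrees
print(mapping_error(congruent_mapping, nod))  # 0.0
print(mapping_error(swapped_mapping, nod))    # 40.0
```

In this toy framing, coverage and fidelity are held constant, so any difference in the resulting experience would have to stem from the mapping alone.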

2.2.2 Perceptive immersive properties

Perceptive immersive properties are the properties of a VR system that ensure that the user’s perceptive abilities are engaged (to a higher degree) by the virtual simulation. Like motor immersive properties, they can be analyzed along three dimensions that determine how they become effective.

The first dimension is the coverage of sensory channels, referring to how many of the human senses are engaged by the VR system. Systems can be differentiated based on the extent to which they stimulate different sensory modalities. VR experiences that include not only visual input but also auditory (Brinkman et al., 2015; Poeschl et al., 2013) or tactile cues (Kaul et al., 2017; Haddod et al., 2019) tend to elicit a stronger sense of spatial presence.

The second dimension is the fidelity of the sensory information generated by the VR system. Biocca (1997) defines sensory fidelity as “the degree to which the energy array produced by a mediated display matches the energy array of an unmediated stimulus.” Put differently, sensory fidelity is the degree to which a user’s perceptual abilities are supported by, and thus coupled to, the VR system. A system that supports stereoscopic vision, for example, supports its users’ ability to extract depth information from the generated stimuli, which results in higher spatial presence (Uz-Bilgin et al., 2019; Ling et al., 2012). Similarly, higher pixel density engages more visual processing resources, while a broader field of view strengthens immersion by engaging the user’s peripheral vision. Sensory fidelity is not limited to visual stimuli. For instance, stereo audio can enhance the sense of presence compared to mono audio, and 3D audio can amplify this effect further (Brinkman et al., 2015). Acoustic output can thus be brought progressively closer to how it would sound in the physical environment, and with each step the VR system engages more of the user’s perceptual capabilities. This increased engagement also enhances the user’s ability to interpret and respond to auditory cues within the virtual environment.

The third dimension is the quality of multisensory integration. Spatial presence is strengthened when sensory channels are synchronized. For instance, if auditory stimuli originate from a different location than their corresponding visual stimuli, the sense of presence is disrupted (Kim and Lee, 2022). Similarly, misalignment between the visual and haptic positioning of a table can diminish spatial presence (Gall and Latoschik, 2018). Coherent multisensory stimuli support a fundamental perceptual ability: the brain’s capacity to merge information from multiple sensory inputs into a coherent representation, which allows users to extract and interpret information about their environment.

Although we distinguish here between motor and perceptive immersive properties, they should by no means be regarded as independent. As explained above, the effect of motor immersive properties depends on how congruent their mapping to the system’s output is, and this mapping in turn depends on the perceptive immersive properties. In head tracking, for example, the update rate of the display must be sufficiently high to maintain congruence between head movements and the visual response; congruence therefore depends not only on the fidelity of the tracking but also on the fidelity of the system output. Ultimately, all perceptive immersive properties can shape how users perceive the changes in the VR system that result from motor activity.

2.2.3 Suppressing immersive properties

The third class of immersive properties consists of those that suppress stimuli from the physical environment. These properties vary in the number of sensory channels they suppress and the degree to which suppression occurs. The best example is the HMD itself, which blocks visual stimuli from the physical environment and therefore also generates a much greater sense of presence than desktop VR (Axelsson et al., 2001; Merz et al., 2024; Kim et al., 2014; Cummings and Bailenson, 2016). Another example is noise-cancelling headphones, which suppress acoustic signals from the physical environment that could otherwise harm the sense of being in the virtual environment (Kojić et al., 2023). Suppressing immersive properties promote the coupling between users and the stimuli produced by the VR system, albeit indirectly. By limiting exposure to real-world stimuli, they reduce interference from the physical environment and free up perceptual resources, which in turn strengthens engagement with the virtual stimuli.

2.3 The theoretical concept behind the sense of embodiment

When it comes to the sense of embodiment, the starting point is usually the well-known Rubber Hand Illusion (Botvinick and Cohen, 1998). In this experiment, a visible rubber hand is stroked synchronously with the participant’s hidden real hand, creating the compelling sensation that the rubber hand belongs to them. The experiment demonstrated that healthy humans are able to incorporate an external object into their own mental body representation. Later research showed that this phenomenon extends beyond rubber hands to various external objects, including tools (Cardinali et al., 2009; Cardinali et al., 2012; Garbarini et al., 2015). This plasticity of the mental body representation has since opened up many opportunities for VR applications. In this work, we use the term “mental body representation” as an umbrella term for the frequently used terms “body schema” (a largely non-conscious mental representation of the biological body, especially its spatial extent and location) and “body image” (a largely conscious mental representation of the biological body and how a person sees, thinks, and feels about it). Since the distinction between these two concepts has also been viewed critically (Cuzzolaro, 2018), we stick to the umbrella term, which is adequate for most of our arguments.

One of the most notable working definitions for the sense of embodiment is provided by Kilteni et al. (2012), who conceptualize it as “the ensemble of sensations that arise in conjunction with being inside, having, and controlling a body.” (Kilteni et al., 2012, p. 374). This definition aligns with the general concept of embodiment in cognitive sciences, which focuses on the bodily self-consciousness that arises from having and controlling a body (Blanke and Metzinger, 2009; Herbelin et al., 2016).

In the realm of VR, Kilteni et al. (2012) relate these sensations to a virtual body. The emphasis here is on the sense of embodiment toward a 3D object that can be seen through the VR system, usually an avatar. In the present work, we call this “virtual embodiment”. The idea is that the properties of the virtual entity are processed as if they were integral parts of one’s biological body. The question is thus to what extent the avatar is incorporated into one’s own mental body representation and how strongly this representation is influenced by the avatar. Kilteni et al. (2012) divide this process into the same three dimensions as the general embodiment definition: self-location, agency, and body ownership. Self-location refers to the sense of actually being located inside the virtual body. Agency refers to the user’s sense of control over the virtual body. Body ownership involves recognizing the virtual body as one’s own and as the source of experienced sensations. Empirical findings suggest a strong interaction between body ownership and agency, with both often reinforcing each other (see Braun et al. (2018) for a review). However, these two components can also be dissociated. For example, Argelaguet et al. (2016) compared a more realistic hand with limited motor control to a more abstract hand with better motor control. The results showed that the sense of body ownership was higher for the realistic hand, while the sense of agency was simultaneously lower. The usefulness of distinguishing between general embodiment and virtual embodiment becomes even clearer when considering De Vignemont’s (2018) philosophical account of bodily awareness. According to her, bodily awareness is shaped not only by ownership, but also by what she calls bodily presence: the sense of one’s body as a physical object among other objects in three-dimensional space.
This becomes evident in the case of amputees, who often report that the missing limb (a phantom limb) is still there, even though they no longer perceive it as functionally usable or as truly part of their body. In these cases, bodily presence remains intact, even in the absence of agency or ownership. Transferred to VR, this suggests that even if no virtual embodiment occurs because no avatar is present, users may still retain a general sense of bodily presence. That is, the general feeling of being an embodied agent in space can persist even without a visible or controllable virtual body. As with spatial presence, virtual embodiment is typically measured using subjective methods such as questionnaires (Gonzalez-Franco and Peck, 2018; Kilteni et al., 2012).

2.4 Virtual embodiment cues

In contrast to spatial presence, the typical cues that generate virtual embodiment are less diverse. To understand how virtual embodiment cues work, one has to understand the nature of the human mental body representation. It mainly results from the integration of information from four sensory channels: visual and tactile (both exteroceptive), as well as proprioceptive and vestibular (both interoceptive) (Holmes and Spence, 2004; Lopez et al., 2012; Chiba et al., 2016). The prerequisite for the sense of virtual embodiment is usually a correlation between visual signals associated with the avatar and signals from the other channels that affect the mental body representation. This process can be understood through the lens of Bayesian inference, which suggests that the brain continuously integrates sensory signals in a probabilistic manner to form a coherent mental body representation (Shams and Beierholm, 2022; Samad et al., 2015). The brain weights the inputs from the sensory channels, taking their temporal and spatial discrepancies into account, and continuously tries to integrate this information into a unified body representation. From this perspective, the experience that the avatar is part of one’s own body is simply the most likely explanation the brain can offer for the current sensory input. Virtual embodiment cues act on this sensory input, which shapes the internal body representation.
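The Bayesian view can be illustrated with a short Python sketch of two standard building blocks: reliability-weighted (maximum-likelihood) fusion of a visual and a proprioceptive estimate of hand position, and a Bayesian causal inference step of the kind discussed in work such as Shams and Beierholm (2022), which computes the posterior probability that both signals share a common cause. This is a simplified one-dimensional illustration under Gaussian assumptions, not a model proposed in this work. All variances, the prior, and the function names are hypothetical.

```python
import math


def fuse(x_vis, var_vis, x_prop, var_prop):
    """Reliability-weighted fusion of two Gaussian cues.

    Each cue is weighted by its inverse variance (its reliability).
    Returns the fused estimate and its (smaller) variance.
    """
    w_vis = (1 / var_vis) / (1 / var_vis + 1 / var_prop)
    estimate = w_vis * x_vis + (1 - w_vis) * x_prop
    variance = 1 / (1 / var_vis + 1 / var_prop)
    return estimate, variance


def p_common_cause(x_vis, var_vis, x_prop, var_prop,
                   var_prior, mu_prior=0.0, prior_common=0.5):
    """Posterior probability that both signals stem from one source (C = 1).

    Assumes Gaussian sensory noise and a Gaussian prior over the source position.
    """
    # Likelihood of both measurements if they share a single source (C = 1)
    d1 = var_vis * var_prop + var_vis * var_prior + var_prop * var_prior
    q1 = ((x_vis - x_prop) ** 2 * var_prior
          + (x_vis - mu_prior) ** 2 * var_prop
          + (x_prop - mu_prior) ** 2 * var_vis) / d1
    like_c1 = math.exp(-0.5 * q1) / (2 * math.pi * math.sqrt(d1))

    # Likelihood if each signal has its own independent source (C = 2)
    s_vis = var_vis + var_prior
    s_prop = var_prop + var_prior
    q2 = (x_vis - mu_prior) ** 2 / s_vis + (x_prop - mu_prior) ** 2 / s_prop
    like_c2 = math.exp(-0.5 * q2) / (2 * math.pi * math.sqrt(s_vis * s_prop))

    num = like_c1 * prior_common
    return num / (num + like_c2 * (1 - prior_common))


# Hypothetical values (cm): vision is assumed more reliable than proprioception.
print(fuse(0.0, 1.0, 2.0, 4.0))  # fused estimate lies closer to the visual cue
print(p_common_cause(0.0, 1.0, 2.0, 4.0, var_prior=100.0))   # small offset
print(p_common_cause(0.0, 1.0, 20.0, 4.0, var_prior=100.0))  # large offset
```

With these assumed values, a small visuoproprioceptive offset yields a high posterior probability of a common cause, which one could read as favoring the interpretation that the seen hand and the felt hand are the same. A large offset makes separate causes the more likely explanation.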

The first class of virtual embodiment cues is visuoproprioceptive synchrony: the interoception caused by passive movements of the biological body has to correlate with the visual perception of the avatar’s movements. It occurs when the visible motion of the avatar accurately corresponds to the user’s own bodily movements as sensed through proprioception. Visuoproprioceptive synchrony has a rather passive character and is mostly used to describe the synchrony of the avatar with movements that were not actively initiated by the user, e.g., in the Virtual Hand Illusion (Halbig and Latoschik, 2024; Gall et al., 2021; Slater et al., 2008). The second class is visuomotor synchrony. The interoception caused by actively moving the biological body has to correlate (spatially and temporally) with the visual perception of the avatar’s movements. Technically, “the interoception caused by actively moving the biological body” would also be part of proprioception, so visuomotor synchrony could be regarded as a special form of visuoproprioceptive synchrony. Nevertheless, the distinction makes sense because visuomotor synchrony emphasizes the additional correlation with motor commands that were actively initiated by the user. The third class is visuotactile synchrony. The tactile perception of a user has to correlate (spatially and temporally) with the visual perception of collisions between the avatar and other objects in the virtual environment. Although visuotactile synchrony may also affect agency, it most strongly supports body ownership (Kalckert and Ehrsson, 2012; Tsakiris and Haggard, 2005). Visuotactile synchrony is unique among the modalities due to the duality of touch, also known as the touchant-touché phenomenon: in self-contact, we experience a tactile sensation as both the touched (touché) and the touching (touchant) (de Vignemont, 2018).
Applied to virtual embodiment, this means that when a person touches their own biological body, two distinct forms of visuotactile synchrony can be established: one between the visual perception of touching the avatar and the tactile sensation of touching one’s own body, and another between the visual perception of being touched by the virtual hand and the tactile sensation of being touched on the biological body. Bovet et al. (2018) demonstrated that both types of synchrony are important and that humans are highly sensitive to mismatches in these cues. They showed that when visual alignment during self-touch is disrupted, such as through a “floating” hand offset, both body ownership and agency significantly decline, even when motion cues are otherwise preserved. A fourth class is visuospatial synchrony, or simply perspective. This class of cues concerns the match between the viewpoint from which users look at the virtual scene and the position of the avatar. Various studies have shown that the sense of virtual embodiment is higher when the user’s viewpoint is inside the avatar, i.e., first-person perspective (1PP), compared to a perspective outside the avatar, i.e., third-person perspective (3PP) (Gorisse et al., 2017; Galvan Debarba et al., 2017; Slater et al., 2010; Maselli and Slater, 2013).

Overall, all four classes of cues have been shown to enhance the sense of embodiment in various experiments, though none of them is strictly necessary for it to occur (see Toet et al., 2020). For instance, ownership of an avatar can emerge solely through 1PP, even in the absence of additional sensory input (Maselli and Slater, 2013; Piryankova et al., 2014). At the same time, ownership can also be created for an avatar seen from 3PP if sufficient embodiment cues from other sources provide supporting evidence (Pomés and Slater, 2013; Gorisse et al., 2019). This flexibility highlights the adaptability of the perceptual processes that determine where we experience our body and what we perceive as part of it.

Another important virtual embodiment cue is the visual similarity between users and their avatars. However, as with spatial presence cues, we focus primarily on the bottom-up aspects of virtual embodiment and therefore do not discuss this cue further.

3 The interwoven nature of spatial presence and the sense of embodiment

In the previous sections, we provided a theoretical foundation for virtual embodiment and spatial presence. We adhered to widespread and established theories and strictly separated the two constructs in their theoretical derivation. In this section, however, we challenge this separation. We systematically examine the established concepts and demonstrate the extent to which they overlap. This leads us to identify fourteen distinct arguments that provide a more unified and comprehensive understanding of both constructs. These arguments highlight how the typical cues associated with one construct also influence the other, and where this parallelism is evident in subjective measurement tools. To facilitate the discussion, we divide the arguments into two categories. First, we present arguments that illustrate how cues associated with virtual embodiment influence spatial presence (see Table 1 for an overview). Next, we reverse the focus and present arguments showing how cues typically linked to spatial presence affect virtual embodiment (see Table 2 for an overview). The individual arguments can further be grouped under three overarching arguments that show the conceptual parallels between the two constructs at a higher level. The first overarching argument is that both constructs rely on the integration of perceptual and motor skills with the VR system. The second is that space and body perception are fundamentally interwoven processes. The third is that both constructs rely on the acceptance of virtual stimuli as a valid substitute for physical stimuli. This subdivision is also shown in Tables 1 and 2.

3.1 How traditional virtual embodiment cues affect the sense of spatial presence

3.1.1 Visuomotor synchrony is a motor immersive property

A closer examination of traditional virtual embodiment cues reveals a significant overlap with the principles underlying traditional spatial presence cues, particularly in how both types of cues influence user experience. For the motor immersive properties, we defined three dimensions along which they can reinforce the sense of spatial presence: raising the number of motor channels tracked, increasing the fidelity with which they are tracked, and increasing the congruency of the mapping between motor channels and the system’s output. The visuomotor synchronization of an avatar illustrates all three dimensions particularly well. Implementing such synchronization requires tracking multiple motor channels with sufficient fidelity, and it typically achieves a high degree of mapping congruence. When users move their arm or hand and see the corresponding virtual limb replicate the movement in real time, the result is a highly congruent mapping of a motor channel to the output of the VR system. The same arguments we use to classify head tracking as an immersive property of VR also apply to visuomotor synchrony in avatar embodiment. So, by definition, visuomotor synchrony is a motor immersive property. This view helps explain why studies repeatedly find that VR gloves elicit both a higher sense of presence and a higher sense of embodiment than controllers (Adkins et al., 2021; Palombo et al., 2024; Lin et al., 2019). Compared to an inverse kinematics solution driven by controllers, VR gloves increase the number of tracked motor channels and the accuracy with which they are tracked, and ultimately create a more congruent representation of a virtual hand. They therefore meet all the requirements for how motor immersive properties increase spatial presence. 
These improvements can also enhance the sense of embodiment; however, their impact is not necessarily uniform across its different dimensions. A relevant example is the study of Argelaguet et al. (2016), in which a realistic virtual hand, featuring a greater number of motor channels, led to an increased sense of body ownership. At the same time, however, it resulted in a lower sense of agency compared to a more abstract hand that visualized fewer motor channels. Notably, the study also found that task performance was worse when participants used the realistic hand, indicating a decrease in perceived motor control. These findings suggest that, particularly for the sense of agency, the quality and precision of the mapping between the user’s motor activity and the movements of the virtual hand play a critical role. In this case, they were more decisive than the sheer number of motor channels tracked and visualized.

3.1.2 Visuoproprioceptive synchrony is a perceptive immersive property

The rationale for classifying visuomotor synchrony as an immersive property extends to visuoproprioceptive synchrony. When they function properly, visuoproprioceptive and visuomotor synchrony work in almost the same way: both establish a correlation between the user’s internally generated sensory signals and the external sensory information provided by the VR system. This integration, together with the coordination of additional sensory channels with the content produced by the VR system, is a typical spatial presence mechanism. Sensory integration strengthens the connection between the user and the simulation, which ultimately convinces users that their location is the location shown by the simulation. This explains why past work has found that visuoproprioceptive synchronization of a virtual hand alone, without any active user input, is a strong spatial presence cue (Halbig and Latoschik, 2024; Gall et al., 2021).

3.1.3 Visuotactile synchrony is a perceptive immersive property

A typical way in which perceptive immersive properties enhance spatial presence is by integrating other senses in addition to the visual channel, which VR applications primarily engage. The tactile sense is frequently used for this purpose. However, tactile feedback alone is insufficient; it must be meaningfully linked to events within the virtual environment. To be effective, the VR system must simulate a visible collision between a virtual object and the user’s body, such as a football striking the head (Kaul et al., 2017), an enemy attack in a fighting game (Cui and Mousas, 2021), or the user’s virtual hand making contact with their virtual torso during self-touch (Bovet et al., 2018). The VR system therefore requires a representation of the body in the virtual world, which forms the basis for congruently embedding tactile feedback in the action. The most intuitive and natural representation of the biological body in VR is the avatar (in Section 3.2.4, we discuss why the avatar is not the only way to represent the body). The mechanisms by which tactile feedback enhances spatial presence are the same as those that contribute to the virtual body becoming part of one’s own mental body representation. Visuotactile synchrony of an avatar is a perfect example of how perceptive immersive properties increase immersion in a simulation by increasing the number of sensory channels addressed and by promoting multisensory integration. By definition, then, visuotactile synchrony is a perceptive immersive property.

3.1.4 Visuospatial synchrony is a perceptive immersive property

When considered in isolation, the influence of perspective on spatial presence may appear less intuitive, as any perspective can ostensibly support a sense of being within the virtual environment. Nevertheless, many studies show that a 1PP leads to a higher sense of spatial presence than a 3PP (Matsuda et al., 2021; Unruh et al., 2024; Iriye and St. Jacques, 2021; Otsubo et al., 2024; Tamaki and Nakajima, 2022). To understand why, it helps to recall the nature of the human mental body representation and how it dynamically integrates information from various sensory channels. If we consider visuospatial synchrony in isolation, two sensory channels are particularly relevant: the visual channel, which delivers computer-generated signals rendered as a virtual body, and the proprioceptive channel, which provides input from the user’s biological body. If we now manipulate the perspective relative to the virtual body (non-1PP conditions), a kind of tug-of-war occurs between the biological body and the virtual body. The biological body pulls on the rope with proprioceptive cues while its visual cues are suppressed. In contrast, the avatar pulls on the rope with visual cues while it is incapable of delivering proprioceptive cues in the first place. If we show an avatar from 3PP, proprioceptive signals from the biological body still indicate that it is located directly beneath the camera, i.e., the origin of the user’s perspective, while the visual cues of the virtual environment indicate that the body is located some distance in front of the camera. The perspective relative to the avatar is, therefore, nothing more than another form of visuoproprioceptive synchrony. Perspective can thus be seen as a perceptive immersive property of VR, one that primarily affects the multisensory integration of the visual output of the VR system with the proprioceptive input of its user. 
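
The tug-of-war described above can be made concrete with the reliability-weighted (maximum-likelihood) cue-combination rule that is widely used to model multisensory integration. This is a swapped-in textbook model, not the authors' own formalization, and the positions and noise variances below are hypothetical values chosen only for illustration. The sketch reduces the problem to one dimension: the forward distance of the felt body from the camera.

```python
def fuse_estimates(x_vis: float, var_vis: float, x_prop: float, var_prop: float) -> tuple[float, float]:
    """Inverse-variance (maximum-likelihood) fusion of a visual and a proprioceptive estimate.

    Each cue is weighted by its reliability (1 / variance); the fused estimate
    lies between the two cues and is pulled toward the more reliable one.
    """
    rel_vis = 1.0 / var_vis
    rel_prop = 1.0 / var_prop
    w_vis = rel_vis / (rel_vis + rel_prop)  # weight of the visual (avatar) cue
    fused = w_vis * x_vis + (1.0 - w_vis) * x_prop
    fused_var = 1.0 / (rel_vis + rel_prop)  # fused estimate is more precise than either cue
    return fused, fused_var


# Hypothetical 1D example: forward distance (m) of the body from the camera.
# Proprioception always reports the body directly beneath the camera (0.0 m).
PROP_POS, PROP_VAR = 0.0, 0.04  # assumed proprioceptive noise variance
VIS_VAR = 0.01                  # assumed visual noise variance

# 1PP: the avatar is rendered where the biological body is, so the cues agree.
fused_1pp, _ = fuse_estimates(0.0, VIS_VAR, PROP_POS, PROP_VAR)

# 3PP: the avatar is rendered 1.5 m in front of the camera, so the cues conflict.
fused_3pp, var_3pp = fuse_estimates(1.5, VIS_VAR, PROP_POS, PROP_VAR)

print(f"1PP fused position: {fused_1pp:.2f} m")
print(f"3PP fused position: {fused_3pp:.2f} m (variance {var_3pp:.3f})")
# Residual conflict in 3PP: how far each cue lies from the fused percept.
print(f"visual residual: {1.5 - fused_3pp:.2f} m, proprioceptive residual: {fused_3pp - PROP_POS:.2f} m")
```

Under these assumed variances, the 1PP case produces no conflict, whereas in 3PP the fused estimate lands between the avatar and the biological body (about 1.20 m), so neither cue is fully explained. This residual discrepancy is one way to picture the disturbed visuoproprioceptive synchrony described in the text.
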
This becomes particularly relevant in conjunction with the other typical virtual embodiment cues. For example, if we create visuomotor synchrony for the arms of an avatar seen from 3PP, we still couple motor channels to the output of the VR system: the relative rotation and position changes of the biological arms are mapped to the virtual world. What visuomotor synchrony no longer achieves, however, is the mapping of the position of these arms relative to the user’s perspective. A central function of visuomotor synchronization is to provide users with information about the current position of their limbs in the virtual environment. When the avatar is embodied from a 3PP, this positional information is largely inaccessible. The congruency of the coupling between body and VR system established by visuomotor synchrony therefore depends largely on visual perspective. Sections 3.1.5 and 3.1.6 explain in more detail why this matters for spatial presence. The same line of argumentation applies to visuotactile synchrony, which becomes effective when tactile feedback on the biological body is temporally and spatially aligned with the visual input from the avatar. Manipulating perspective therefore also disturbs synchrony at the spatial level.

3.1.5 Virtual embodiment supports spatial understanding

Part of the sense of being in a certain place depends on the ability to build up a spatial understanding of that place. This is also why depth cues, such as stereoscopy, are among the traditional spatial presence cues: they foster this spatial understanding. This is reflected in spatial presence questionnaires, which ask users whether they felt like they were “just perceiving pictures” or whether they “felt that the virtual world surrounded” them (Schubert et al., 2001). Virtual embodiment can support this spatial understanding as well. Visuotactile synchrony, for example, describes congruent tactile information that is always defined relative to an object in the virtual environment. When users receive visuotactile feedback about their own body, they simultaneously gain information about the virtual environment, its objects, and the position and size of their body relative to it. In this way, their subjective understanding of the space grows. The argument also applies to visuomotor and visuoproprioceptive synchrony, which describe congruent movements of the virtual body through the virtual space. These provide users with information about the position of their own body parts relative to the virtual environment and its objects, ultimately fostering their understanding of the virtual space. By serving as a reference point, the virtual body helps users intuitively grasp the size and position of other objects in the room (Van Der Hoort et al., 2011; Banakou et al., 2013). Empirical studies have shown that size and distance estimations in virtual environments improve when users have an avatar (Mohler et al., 2008; Ries et al., 2008; Mohler et al., 2010). Increasing avatar fidelity can further enhance this effect (Ebrahimi et al., 2018; Jung et al., 2018), which illustrates how typical embodiment cues contribute to improved spatial perception in VR. 
An interesting special case arises when the virtual environment presents a uniform black background without any distinguishable features, as in Gall et al. (2021). Even in such a case, virtual embodiment supports spatial understanding, because the avatar itself provides cues that allow the perception of spatial dimensions. For example, stereoscopic stimuli emanate from the virtual body itself, allowing for 3D space perception. As the image parallax is larger for objects closer to the viewer (Burdea and Coiffet, 2003; Sherman and Craig, 2003), the depth information that can potentially be inferred from the stereoscopic view of a nearby virtual body is even greater than for objects farther away. In the same way, the movement of a virtual body generates motion parallax. Stereoscopy and motion parallax are two of the most important depth cues in VR (Sherman and Craig, 2003). Just as certain traditional immersive properties enhance spatial understanding through richer and more congruent sensory information, virtual embodiment reinforces this understanding. It helps users develop a spatial mental model of the virtual environment, thereby strengthening the sense of spatial presence.
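
The claim that image parallax grows for nearby objects can be checked with a short worked example. The sketch below uses the standard vergence-angle geometry for two eyes fixating a point straight ahead; the interpupillary distance of 63 mm and the chosen distances are assumed, illustrative values. It compares how much the binocular angle changes when an object moves 10 cm in depth close to the body (roughly where one's own hand is seen) versus far away in the scene.

```python
import math

IPD_M = 0.063  # assumed interpupillary distance in meters


def vergence_angle(distance_m: float, ipd_m: float = IPD_M) -> float:
    """Angle (radians) between the two lines of sight fixating a point straight ahead."""
    return 2.0 * math.atan(ipd_m / (2.0 * distance_m))


def relative_disparity(near_m: float, far_m: float) -> float:
    """Difference in vergence angle between two depths (radians).

    This binocular disparity signal tells the visual system how far apart
    the two points are in depth.
    """
    return vergence_angle(near_m) - vergence_angle(far_m)


# Same 10 cm depth step, once near the body and once deep in the scene.
near_step = relative_disparity(0.40, 0.50)  # e.g., a virtual hand moving away from the eyes
far_step = relative_disparity(5.00, 5.10)   # e.g., an object on the far side of a room

print(f"near step: {math.degrees(near_step):.3f} deg")
print(f"far step:  {math.degrees(far_step):.4f} deg")
print(f"ratio: {near_step / far_step:.0f}x")
```

With these assumed values, the same depth step near the body produces a disparity change roughly a hundred times larger than far away, which is consistent with the argument that a nearby virtual body is a rich source of stereoscopic depth information.
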

3.1.6 Avatars carry affordances

In addition to the purely spatial component, the definition of spatial presence also includes the perceived possibilities for action. Users feel spatially present if they are able to mentally represent actions of their own body in the virtual world (Hartmann et al., 2016; Schubert, 2009). This idea forms the basis of many measurement tools for spatial presence, for example, when we ask users to what extent they had the feeling that they could “act” or “be active” in the virtual environment (Hartmann et al., 2016). Giving users a virtual body that follows their movements is a perfect example of representing actions of their own body in the virtual world. It shows users seamlessly and naturally how they can interact with the virtual environment through their own actions. Ultimately, it is the body that determines the possible actions that we can perform on an object. When users control an avatar, the avatar inherently carries affordances that stem from real-world experiences. Various studies show that an avatar and its characteristics change how people perceive the interaction possibilities in VR (Joy et al., 2022; Arend and Müsseler, 2021; Saxon et al., 2019). This reveals a strong theoretical connection between the two constructs, specifically between the agency dimension of virtual embodiment and the action-oriented dimension of spatial presence. Even if the virtual body cannot directly interact with objects, for example by picking something up, its mere presence might foster a stronger sense of agency than in applications without an avatar. This offers an interesting perspective on how virtual embodiment can serve as a spatial presence cue. In this regard, visuomotor and visuospatial synchrony are particularly important. They ensure that the position of virtual hands and arms aligns with proprioceptive input, which makes interactions feel more immediate and natural. 
As a result, users experience a stronger sense of agency for the virtual body and a stronger sense of being active in the virtual environment.

3.1.7 The virtual body as a spatial anchor

Another important idea explains why virtual embodiment affects spatial presence: one’s own body and one’s own location are normally inseparable. Slater (2009) wrote: “As we have argued, the action involved in looking at your own body provides very powerful evidence for [Place Illusion] (your body is in the place you perceive yourself to be)”. A visible body is therefore a helpful cue for convincing someone that the virtual world is their current location. The more effective the virtual embodiment cues, the more they support the integration of sensory signals associated with the avatar into the user’s internal body representation. It is crucial to grasp the full extent of this effect. In some cases, it is so strong that the influence of virtual embodiment on the body schema and body image persists even after the HMD has been removed, partially overriding the sensory input from the biological body (Groen and Werkhoven, 1998; Peck et al., 2013; Reinhard et al., 2020; Banakou et al., 2018). People do not merely experience the illusion that the virtual body moves like them or belongs to them; rather, the arrangement of illuminated pixels becomes their visible body. In the course of this process, the influence of the biological body, which anchors a person in the physical world, diminishes. At the same time, the influence of the virtual body, which anchors them in the virtual world, grows. The internal body concept is increasingly determined by the stimuli produced by the VR system. So when we ask users about their perceived location, it is pivotal what they perceive as their body, and therefore where they perceive it to be. The growing acceptance of the avatar thus also provides growing visual evidence that one is truly located in the virtual environment.

3.2 How traditional spatial presence cues affect the sense of virtual embodiment

The previous subsections have argued in detail that typical bottom-up virtual embodiment cues can also increase spatial presence. But what about the reverse case? Do all spatial presence cues also increase virtual embodiment? This is much more difficult to show, simply because traditional spatial presence cues are far more diverse than virtual embodiment cues, and because the definitions and measurement tools for presence are broader and more adaptable. Despite this complexity, we have gathered several arguments that illustrate how typical spatial presence cues influence virtual embodiment.

3.2.1 System fidelity shapes virtual embodiment

With virtual embodiment, the focus is on the virtual avatar. The avatar is a subset of what the VR system as a whole has to offer, primarily on the visual level. This relatively trivial observation underlies a central argument for how spatial presence cues can also influence virtual embodiment. We refer here to the perceptive immersive properties that enhance the fidelity of sensory information generated by the VR system, e.g., pixel density, refresh rate, stereoscopic vision, or field of view. These properties allow users to perceive the content of the VR system with greater precision and detail. As the avatar is always part of the system, its perception is inextricably linked to these cues. A sufficiently high pixel density and stereoscopy are the basis for perceiving an avatar as a three-dimensional body that potentially belongs to oneself, rather than as a sequence of mere images played in front of one’s eyes. Furthermore, studies have shown that body ownership is particularly enhanced when the virtual hand is perceived as more realistic, an effect that is itself influenced by these very immersive display properties (e.g., Argelaguet et al., 2016). Similarly, the update rate is critical for virtual embodiment. A sufficient update rate ensures that all movements are rendered smoothly and accurately, which is essential for triggering virtual embodiment. Typical virtual embodiment cues, such as visuomotor synchrony and visuotactile synchrony, depend on temporally accurate movement, either of the avatar itself or of objects that move relative to the avatar. An inadequate update rate can lead to lag or stuttering, which in turn disrupts these processes. Empirically, this is exactly why artificial latency is often introduced into avatar movements when researchers attempt to create a break in virtual embodiment (Halbig and Latoschik, 2024; Gall et al., 2021; Caserman et al., 2019). 
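
The artificial-latency manipulation mentioned above can be implemented by delaying the tracked pose before it drives the avatar. The sketch below shows one simple way to do this with a fixed-length frame buffer. The class name, the 90 Hz frame rate, and the 300 ms delay are hypothetical choices for illustration, not the parameters used in the cited studies, and a real engine would apply the delayed pose inside its own update loop.

```python
from collections import deque
from typing import Deque, Generic, TypeVar

Pose = TypeVar("Pose")


class DelayedPoseStream(Generic[Pose]):
    """Hypothetical helper: replays tracked poses with a fixed artificial latency.

    The delay is quantized to whole frames, so the added latency is
    delay_frames * frame_ms (on top of the system's inherent latency).
    """

    def __init__(self, delay_ms: float, frame_ms: float) -> None:
        self.delay_frames = max(0, round(delay_ms / frame_ms))
        # Holds the current pose plus the delay_frames poses before it.
        self._buffer: Deque[Pose] = deque(maxlen=self.delay_frames + 1)

    def push(self, tracked_pose: Pose) -> Pose:
        """Store this frame's tracked pose and return the pose to render on the avatar."""
        self._buffer.append(tracked_pose)
        # Once the buffer is full, the oldest entry is exactly delay_frames old;
        # before that, we fall back to the oldest pose seen so far.
        return self._buffer[0]


# Hypothetical setup: 90 Hz rendering with 300 ms of added latency.
stream = DelayedPoseStream(delay_ms=300.0, frame_ms=1000.0 / 90.0)
print(stream.delay_frames)  # 27

for frame in range(30):
    rendered = stream.push(frame)  # frame index as a stand-in for a hand pose
print(rendered)  # 2, i.e., the pose from 27 frames earlier
```

Quantizing the delay to whole frames keeps the sketch simple; sub-frame latencies would require interpolating between buffered poses.
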
The field of view is also important, because under normal conditions, we see our own body primarily in peripheral vision. A wider field of view allows more visual cues from the avatar to be perceived. When those cues are synchronized with, for example, motor activity, they increase the impact of the avatar on the user’s mental body representation. Empirical evidence supports this idea, showing that an HMD’s field of view can function as a virtual embodiment cue (Falcone et al., 2022; Nakano et al., 2021). More broadly, any immersive property that enhances the visual fidelity of a VR system enhances avatar fidelity as well.

3.2.2 Spatial understanding supports virtual embodiment

In Section 3.1.5, we explained how virtual embodiment can increase spatial presence by improving spatial understanding. This argument can also be reversed. It rests largely on the inseparability of the VR system and the avatar (see Section 3.2.1). As already explained, some of the typical immersive properties of VR promote spatial understanding, and this understanding also extends to the avatar itself. If an avatar is rendered with strong stereoscopic depth cues, users can better understand not only the virtual environment but also the dimensionality of the avatar itself. Traditional spatial presence cues like stereoscopy, motion parallax, field of view, or proper illumination ensure that a grid of illuminated pixels can be interpreted as a real human body and not just as flashing lights in front of the eyes. The information processing that allows users to understand the space, its dimensions, and its arrangement also allows them to understand the avatar, its dimensions, composition, and position. All four classes of bottom-up virtual embodiment cues require such spatial understanding. Visuotactile synchrony, for example, arises when tactile feedback on the biological body is temporally and spatially aligned with the visual input from the avatar. The virtual embodiment cues thus become effective on the basis of immersive properties that allow the synchrony between the biological and virtual body to be perceived in the first place. This offers a plausible explanation for why HMD-based VR setups, for example, yield higher embodiment ratings than 2D screens (Wenk et al., 2023; Juliano et al., 2020). Avatar perception and spatial understanding are deeply interconnected.

3.2.3 Suppressive immersive properties are virtual embodiment cues

Perhaps the most central paradigm for researching embodiment phenomena is the rubber hand illusion, which has been adapted and modified in numerous ways for research purposes. Researchers, for example, have manipulated the position of the rubber hand (Tsakiris and Haggard, 2005; Cadieux et al., 2011), altered the instrument used for touching the hand (Ward et al., 2015; Schütz-Bosbach et al., 2009), or adjusted the timing of the tactile stimulation (Shimada et al., 2009; Shimada et al., 2014). The illusion can even be induced without the rubber hand itself, creating ownership of a volume of empty space (Guterstam et al., 2013). Numerous studies, conducted under various conditions, have repeatedly demonstrated how readily the human brain integrates external elements into its mental representation of the body. However, almost all forms and facets of the rubber hand illusion share a common characteristic: they hide the biological hand from the participants’ view, usually with a blanket or a standing screen. The visibility of the real (biological) hand significantly diminishes the ownership illusion for the rubber hand (Armel and Ramachandran, 2003). The blanket or screen that hides the real hand, allowing the rubber hand illusion to take effect, is the equivalent of the suppressive immersive properties of a VR application. The visual stimuli that emanate from the biological body are part of the physical environment. Seemingly by chance, the suppressive immersive properties lay the foundation for virtual embodiment by diminishing the visual stimuli of the biological body. This is not merely coincidental; rather, it reflects the fact that virtual embodiment and spatial presence rely on overlapping cognitive and perceptual mechanisms. Both rely on the attenuation of physical stimuli and the dominance and acceptance of virtual stimuli. 
The same process that creates a stronger coupling between the user and the simulation also facilitates the process that allows the avatar to become part of the mental body representation. From this perspective, suppressive immersive properties also serve as virtual embodiment cues. This could explain why setups that display both the physical and the virtual body tend to yield lower scores for both presence and body ownership, as seen in comparisons between Augmented Reality and VR (Wolf et al., 2022), or between CAVE and HMD setups (Waltemate et al., 2018).

3.2.4 Immersive properties create body representation even in the absence of an avatar

While many researchers associate embodiment closely with visual representations of the body, such as avatars or virtual limbs, we argue that embodiment in VR begins long before any avatar appears. The mental body representation is shaped and modulated not only by visual input, but also by proprioceptive and tactile stimuli. So far, we have discussed embodiment almost exclusively as embodiment toward an avatar, which we called virtual embodiment. However, an avatar does not create the mental body representation; under certain conditions, it can only align with or modify the existing representation. This means that even in the absence of a visible avatar, the user’s biological body continues to be implicitly represented within the virtual environment. One compelling example of this is visuotactile synchrony, which requires a 3D object to collide with the user. Avatars often make this collision explicit by providing a visible surface for it, but they are not necessary for tactile integration to occur. When tactile feedback is applied in a spatially congruent way, it implies a virtual body surface, even if no avatar is shown. For instance, Kaul et al. (2017) developed a soccer header simulation in which participants used their heads to strike virtual soccer balls. In one condition, users wore a vibrotactile grid around the head that delivered tactile feedback upon contact. Even without any avatar present, participants reported increased presence. The system treated the head as a body part embedded in the virtual world, and so did the users. Other applications have also demonstrated how collisions can be simulated in VR without an avatar (Günther et al., 2020; Guterstam et al., 2015). This kind of body mapping also arises through proprioceptive and motor-related cues, such as head tracking. A visual perspective implies a spatial location, and proprioception informs users that their body resides just beneath that perspective. 
VR users who lack an avatar do not perceive themselves as disembodied floating cameras. Instead, they implicitly assume that their body is present, albeit invisible, rather than nonexistent (Murphy, 2017). This aligns with De Vignemont’s (2018) view that a bodily presence persists even without visual representation. The idea that body representations are not solely derived from avatars is also reflected in the IBF. This framework suggests that multisensory integration inherently implies the presence of a body, even in the absence of an explicitly rendered avatar. For example, an implied body can be inferred from visuomotor correlations during head tracking. These insights have significant implications for how we measure virtual embodiment. The traditional view often links embodiment strictly to avatars, but many commonly used measurement tools already extend beyond that scope. For example, the Avatar Embodiment Questionnaire (Peck and Gonzalez-Franco, 2021) includes items like “I felt out of my body” or “I felt my own body could have been affected by the virtual world.” These refer not to the avatar, but to broader body representations. Similarly, the Virtual Embodiment Questionnaire (Roth and Latoschik, 2020) includes a “Change” factor with items such as “I felt like the form or appearance of my own body had changed”. Physiological evidence supports this link as well: Guterstam et al. (2015) demonstrated that integrating congruent tactile feedback in a VR application enhances the physiological response to a threat stimulus, even without a visible avatar. Our standard measures of virtual embodiment are thus responsive not only to avatar-related cues, but also to traditional spatial presence cues, such as congruent tactile feedback (independent of an avatar) or motor feedback via head tracking.

3.2.5 General embodiment and spatial presence are two sides of the same coin

When Kilteni et al. (2012) introduced their working definition of the sense of embodiment toward a virtual body, they derived it directly from the more general sense of embodiment, which is understood as “the ensemble of sensations that arise in conjunction with being inside, having, and controlling a body” (Kilteni et al., 2012, p. 374). The definition itself implies that the feeling of embodying an avatar is only part of the larger construct of owning and controlling a body. There must therefore be a collection of cues that, in addition to the virtual embodiment cues, reinforce the general sense of owning and controlling a body in a virtual environment. We argue that every spatial presence cue has the potential to be such a general embodiment cue. There is a central conceptual overlap between general embodiment and spatial presence. Spatial presence is typically defined as the feeling of being physically located in a virtual environment. But this “being there” depends heavily on the engagement of bodily capabilities: sensory input, perceptual alignment, motor control, and spatial orientation. As such, spatial presence is deeply rooted in the body. This perspective is echoed by Biocca (1997), who described the emergence of spatial presence as “progressive embodiment.” Most theories of presence adopt an embodied view of spatial cognition, asserting that perception and action are never detached from the body but are shaped by what the body can do. When we talk about immersive properties in VR, such as head tracking, stereoscopy, or other depth cues, we are talking about how well a system supports the body’s native capabilities. This means that spatial presence cues do not just place a user in the virtual environment; they also implicitly reinforce the sensation of being embodied in that environment. For example, motor-related cues such as head tracking allow users to move naturally and perceive those movements in real time. 
This motor feedback not only enhances the illusion of “being there,” but also supports the sense of having a body that acts and senses within the virtual space. The same logic applies to perceptual cues. Stereoscopy, for example, provides depth perception by presenting slightly different images to each eye, simulating the way we see in the real world. From an embodied cognition perspective, our depth perception is closely linked to our body’s physicality: the positions of our eyes and how they converge on objects. When stereoscopy creates a convincing sense of depth, it not only enhances spatial presence but also supports the sense of “being” a body that perceives depth and navigates space. Recent research supports this view. Halbig and Latoschik (2024) present two studies in which traditional spatial presence cues (head tracking and depth information) were manipulated in environments without avatars. In both cases, the manipulations increased not only spatial presence but also the general sense of being embodied in the virtual space. These results indicate how many immersive properties have the potential to manipulate the general sense of embodiment in VR. In Section 3.2.4, we explained that this manipulation of general embodiment also affects our subjective and objective measures of virtual embodiment. This section thus further shows that many spatial presence cues also influence virtual embodiment.

3.2.6 General embodiment in virtual environments facilitates avatar acceptance

In Section 3.1.7, we argued that virtual embodiment anchors users in the virtual environment, thereby enhancing their sense of presence. The questions of where one perceives the body and where one feels present are closely intertwined. This reasoning supported our claim that embodiment cues can enhance spatial presence. However, the relationship may also work in the opposite direction. If certain spatial presence cues immerse users more deeply in the virtual world, e.g., because more sensory channels are addressed, these users might also find it easier to connect to the avatar, which is inextricably presented as part of this world. After all, we are used to body and location belonging together. If we ask users whether they accept a virtual hand as their own, it makes a difference whether they generally perceive the virtual environment as their current location. This suggests that the influence of spatial presence cues on the general sense of embodiment can also increase the effectiveness of virtual embodiment, even when these cues do not directly address the avatar. While this effect may not be particularly strong overall, there is evidence to support its existence. For example, it can explain the results reported in Section 5 of Gall (2022). Gall manipulated presence by changing the location of a table in a room, making its position congruent or incongruent with the virtual representation seen through an HMD. Participants could explore the table with their left hand in order to recognize its congruent or incongruent position; however, the left hand was not visible. The participants then turned to the right, where they saw a virtual hand whose position was congruent with their right hand. This right hand was simply lying on a hand rest. Participants in the congruent table condition experienced both a higher sense of spatial presence and a higher sense of body ownership for the virtual hand. 
This experiment is another striking example of how a strict separation between spatial and bodily perception breaks down. The alignment of visual stimuli from the virtual environment with proprioceptive and tactile feedback reinforced the conviction of being physically located within the virtual space. On the one hand, this enhanced sense of spatial presence made it easier to accept elements of the virtual world, such as the virtual hand, as part of one’s own body. On the other hand, the congruent sensory information also helped participants understand the position of their own body relative to elements of the virtual environment. This anchoring process may have further strengthened the feeling that their body and the virtual elements exist within the same space, which, in turn, could have increased the acceptance of the virtual hand as part of their own body. Here, the results of Halbig and Latoschik (2024) are particularly relevant, as they demonstrate that spatial presence cues are versatile enough to also function as general embodiment cues.

3.3 Virtual embodiment and spatial presence as perceptual substitutes for physical reality

One way of looking at VR qualia is through the idea that human perception operates within a set of frames that guide how we perceive the world and how that perception changes in response to our own behavior and to events in the environment. These frames are shaped by lifelong learning in physical reality, by current interoceptive and exteroceptive states, and by deeper evolutionary processes that have optimized our sensory systems for interpreting signals from the unmediated world. If the stimuli generated by the VR system match these frames, we tend to accept them as adequate substitutes for physical stimuli. This does not necessarily mean that what happens feels realistic; it simply means that the simulation does not disturb perception. Both spatial presence and virtual embodiment rely on this same mechanism: the degree to which virtual stimuli can effectively replace unmediated ones. Asking whether someone feels present in a virtual environment and asking whether they perceive the virtual body as their own are two different ways of measuring the success of this process. Consequently, our measurement tools inherently assess this process, at least to some extent. The idea described here draws heavily on the Congruence and Plausibility model of Latoschik and Wienrich (2022). This model predicts that virtual embodiment cues and spatial presence cues increase the overall plausibility of the simulation. Rather than focusing on individual cues in isolation, it emphasizes how each cue contributes to this overall plausibility. When congruent cues successfully increase plausibility, it becomes less relevant whether we ask users if they feel present in the virtual world or if they perceive the virtual body as their own; both experiences are, at least in part, grounded in how plausible the simulation feels.
If the simulation as a whole is convincing, this can help users feel present in the virtual world, but it can also help them accept the virtual body shown there as their own. This suggests that all the described cues have the potential to influence both constructs. This argument accounts for the bidirectional relationship between spatial presence and embodiment: on the one hand, virtual embodiment cues can enhance spatial presence; on the other hand, spatial presence cues can reinforce virtual embodiment.

4 Discussion

In the previous sections, we explored various perspectives and arguments demonstrating the deep interconnection between virtual embodiment and spatial presence. A detailed examination of either construct inevitably leads back to the other, creating a continuous, cyclical relationship. This can be illustrated with an example. Consider a very simple VR setup: an HMD with stereoscopic view, sufficient pixel density, and head tracking. Stereoscopy and pixel density provide enough information about the nature of the virtual environment for users to perceive it as a space in which they feel actually located, not just as flashing lights in front of their eyes. Head tracking additionally links a motor channel to the VR system, which provides more possibilities to act in this virtual space. Together, these features couple physical abilities to the simulation, which at the same time ensures that the user’s internal body representation is increasingly made up of virtual stimuli. If we now incorporate an avatar into the VR system and give the user motor control over it, stereoscopic vision and pixel density allow users to perceive the avatar and its movements with a certain fidelity, which helps them perceive the avatar as an actual body that might belong to them. Visuomotor control gives users a sense of agency over this avatar, which increases the embodiment effect. At the same time, this agency strengthens the coupling between motor channels and the VR system, i.e., it strengthens the immersive process already started by head tracking. Thus, it also increases users' sense of being able to act and to be effective in the virtual environment. Control over the avatar also changes the perception of the virtual environment, especially the size and arrangement of objects and one’s own position and size relative to them. This improved spatial understanding, in turn, increases the sense of being there.
The interplay of visuomotor synchrony and the visual perception of the avatar with a certain fidelity also ensures that users actually develop a sense of ownership over this avatar. The user’s internal body concept is therefore determined even more by the virtual stimuli, which further increases the connection to the VR system and thus its immersive qualities. For a user who has now incorporated the avatar even more strongly into their own body concept, the avatar also serves as a visual indication that their body, and therefore they themselves, are actually in the virtual environment. This mutual reinforcement further drives an embodiment-presence loop.

We could now try to translate our arguments and findings into a network-based model that connects the constructs, their factors, and cues in a flow diagram. This approach has been taken in several other theories of VR constructs, e.g., Skarbez et al. (2017) or Forster et al. (2022). However, taking this route would raise a major problem: in such a diagram, almost all factors and constructs would be connected to each other. The result would be a cyclic, nearly complete graph with no predictive value in terms of causes and effects. For this reason, we argue that it is not useful to conceptualize the relationship between these two constructs and their associated qualia as one directly causing the other.

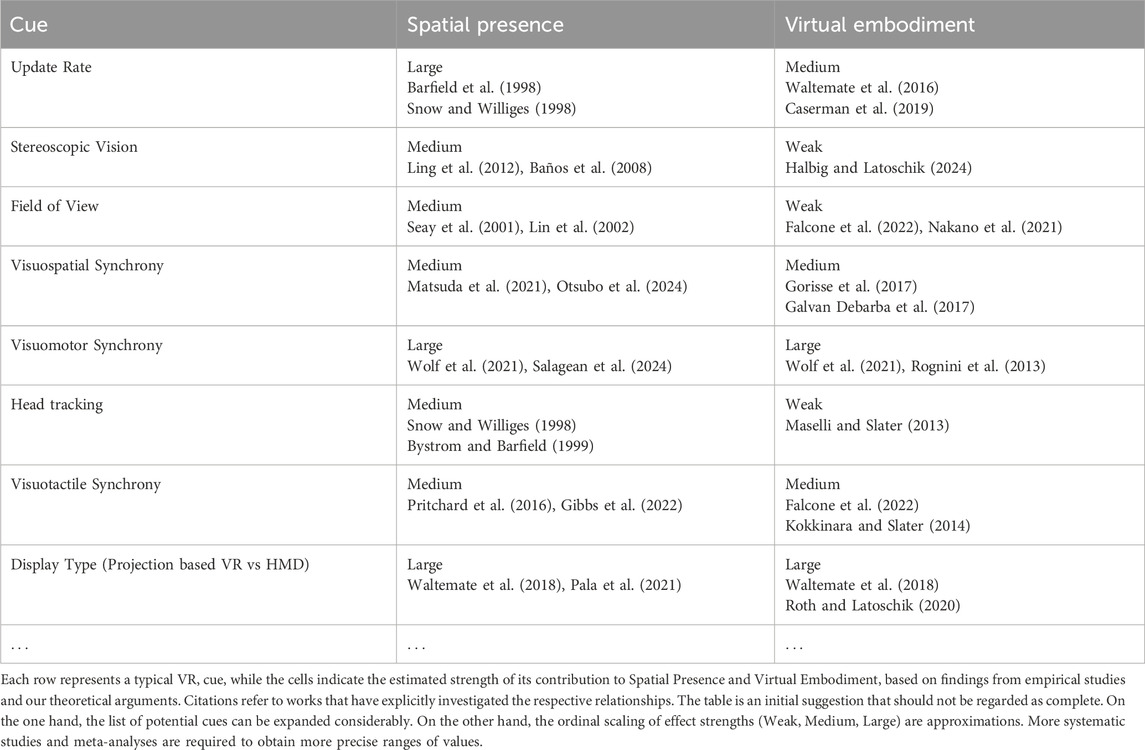

This fundamental link between the two constructs also means that we should no longer consider the cues for virtual embodiment and spatial presence separately. Any cue that affects measures of spatial presence also has the potential to influence measures of virtual embodiment, and vice versa. This applies primarily to subjective measures. We should therefore move away from a dichotomous perspective and instead differentiate cues by how strongly they contribute to each construct. As a counterargument, one could point to studies in which a specific manipulation significantly affected one construct but not the other, e.g., Gorisse et al. (2017); Suk and Laine (2023); Wolf et al. (2020); Unruh et al. (2023). However, what stands out in these studies is that whenever a significant difference is observed in one of the two constructs, there is at least a descriptive difference in the other that trends in the same direction. While manipulations often strengthen or weaken both constructs, their effects are rarely identical. The influence follows the same general direction but is not strictly parallel, as the actual impact on each construct depends on various factors. The same pattern can be found, for example, for immersive properties and their influence on spatial presence. Some studies find no significant effect of field of view (de Kort et al., 2006; Johnson and Stewart, 1999), stereoscopy (Balakrishnan et al., 2012; Baños et al., 2008), or even head tracking (Bystrom and Barfield, 1999; Halbig and Latoschik, 2024) on the sense of presence. Yet, from a broader perspective, there is no doubt that these properties are spatial presence cues, cf. Cummings and Bailenson (2016). Under certain conditions, their influence may simply not be strong enough to yield a significant effect.
Therefore, discussions about the influence of cues on these constructs should not be limited by the dichotomous framework imposed by significance testing.

Based on these ideas, we propose a table-based representation as an alternative to network models for capturing the relationship between spatial presence and virtual embodiment. Table 4 represents individual VR cues as rows and the two constructs as columns. Each cell reflects the estimated strength of influence that a particular cue exerts on a given construct, informed by both empirical evidence and theoretical considerations. We have opted for ordinal-scaled approximations (i.e., Weak, Medium, Large) to represent these effect strengths. These estimates are preliminary and should not be regarded as definitive; further empirical studies and meta-analyses are necessary to establish more precise and generalizable values. Similarly, the list of cues included in the table is not exhaustive but serves as a starting point for systematic comparison. This table structure avoids circular dependencies and instead offers a qualitative, comparative overview of cue-effect relationships. It also provides a clear, abstracted description of the relationship between the two constructs as elaborated throughout this paper. Both qualia, along with their associated factors, are human-defined constructs, i.e., simplified representations of the underlying neural processes. Their connection lies in the substantial overlap between the concepts behind these constructs and the measurement methods derived from them. This explains why there is a large pool of overlapping cues, each with a different impact on the two constructs. When evaluating the impact of VR applications, several key experiential dimensions come into focus: the user’s sense of being located in the virtual environment, the perceived ability to act within it, the sense of ownership over the virtual body, and the sense of agency in controlling it. We argue that these dimensions are not entirely independent but rather represent different facets of the same underlying process.
Each questionnaire used to assess one of these experiential dimensions merely sets the focus differently. This conceptual interdependence suggests that current subjective measurement approaches may be insufficient for isolating the two constructs from each other. The core idea of organizing these constructs and their contributing factors in a non-hierarchical manner, without creating direct dependencies at the qualia or factor level, derives from the Congruence and Plausibility model proposed by Latoschik and Wienrich (2022). While their model provides a general framework for VR-related qualia, this paper offers a focused application of its principles to the sense of embodiment and spatial presence.

Table 4. The Cue-Effect table visualizes the relationship between common VR cues, Spatial Presence, and Virtual Embodiment.
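To make the structure of the cue-effect table more concrete, the following Python sketch encodes it as a mapping from cues to ordinal strengths for both constructs. The cue names (`cue_A`, `cue_B`, `cue_C`), the levels assigned to them, and the helper functions are hypothetical placeholders for illustration only; they are not the entries of Table 4. Unlike a network model, this structure contains no edges between cues or constructs, so no cycles can arise: each row simply records how strongly one cue is estimated to act on each construct, and every row is required to rate both constructs.

```python
from enum import IntEnum


class Strength(IntEnum):
    """Ordinal effect-strength levels (Weak < Medium < Large)."""
    WEAK = 1
    MEDIUM = 2
    LARGE = 3


CONSTRUCTS = ("spatial_presence", "virtual_embodiment")

# Hypothetical rows: placeholder cue names and levels, NOT the values in Table 4.
cue_effect_table = {
    "cue_A": {"spatial_presence": Strength.LARGE, "virtual_embodiment": Strength.WEAK},
    "cue_B": {"spatial_presence": Strength.MEDIUM, "virtual_embodiment": Strength.MEDIUM},
    "cue_C": {"spatial_presence": Strength.WEAK, "virtual_embodiment": Strength.LARGE},
}


def validate(table):
    """Require every cue to carry a rating for both constructs (no empty cells)."""
    for cue, row in table.items():
        missing = [c for c in CONSTRUCTS if c not in row]
        if missing:
            raise ValueError(f"{cue} has no rating for {missing}")


def dominant_constructs(table, cue):
    """Return the construct(s) on which a cue is rated strongest; ties return both."""
    row = table[cue]
    top = max(row[c] for c in CONSTRUCTS)
    return [c for c in CONSTRUCTS if row[c] == top]


def rank_cues(table, construct):
    """Order cues by their ordinal strength for one construct, strongest first."""
    return sorted(table, key=lambda cue: table[cue][construct], reverse=True)


validate(cue_effect_table)
print(rank_cues(cue_effect_table, "spatial_presence"))  # ['cue_A', 'cue_B', 'cue_C']
print(dominant_constructs(cue_effect_table, "cue_B"))
```

Because each cell is an ordinal value rather than a causal link, the same rows could later hold pooled effect sizes instead of Weak/Medium/Large labels without changing the shape of the table.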

5 Limitations and conclusion

Based on our arguments, we can confidently state that spatial presence and virtual embodiment are shaped by a highly overlapping set of cues. However, the exact weight of each cue’s influence on either construct remains largely unknown. These weights are precisely what the cells of the cue-effect table proposed in the discussion would contain. Addressing this gap will require targeted empirical studies and broader meta-analyses. Such studies would ideally use experimental designs that systematically manipulate a single immersive cue while keeping other variables constant, in order to isolate its specific effects on both spatial presence and virtual embodiment. In parallel, meta-analyses aggregating existing studies could help estimate the average effect size of specific cues across contexts. To support this, future empirical work should ideally report spatial presence and virtual embodiment measures side by side, even when only one is the primary focus. Additionally, reporting standardized effect sizes for each manipulation would greatly improve comparability across studies and provide a stronger empirical foundation for future reviews and meta-analyses. Beyond methodological considerations, it is equally important to reflect on how we describe and label these phenomena in both research and design practice. As discussed extensively in this paper, the representation of the human body in VR does not rely solely on a visible avatar. It is therefore conceptually misleading to label conditions as “embodiment” vs. “no embodiment” based solely on avatar visibility. Such labels risk obscuring the nuanced and continuous nature of embodiment in VR.
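As a minimal sketch of the reporting and aggregation step suggested above, the following Python code computes a bias-corrected standardized mean difference (Hedges' g) from the summary statistics of a between-subjects manipulation and pools several such estimates with a fixed-effect, inverse-variance weighted mean. The function names and all numbers are hypothetical; the formulas are standard textbook definitions, and an actual meta-analysis across heterogeneous contexts would typically also consider a random-effects model and heterogeneity statistics.

```python
import math


def hedges_g(mean_1, sd_1, n_1, mean_2, sd_2, n_2):
    """Hedges' g and its sampling variance for two independent groups.

    Assumes a between-subjects design (e.g., cue present vs. absent).
    """
    df = n_1 + n_2 - 2
    pooled_sd = math.sqrt(((n_1 - 1) * sd_1**2 + (n_2 - 1) * sd_2**2) / df)
    d = (mean_1 - mean_2) / pooled_sd  # Cohen's d with pooled SD
    j = 1 - 3 / (4 * df - 1)  # small-sample bias correction
    var_d = (n_1 + n_2) / (n_1 * n_2) + d**2 / (2 * (n_1 + n_2))
    return j * d, j**2 * var_d


def pool_fixed_effect(estimates):
    """Inverse-variance weighted mean of (effect, variance) pairs and its SE."""
    weights = [1 / var for _, var in estimates]
    total = sum(weights)
    pooled = sum(w * g for w, (g, _) in zip(weights, estimates)) / total
    return pooled, math.sqrt(1 / total)


# Hypothetical summary statistics (mean, SD, n) for one manipulated cue,
# with spatial presence and virtual embodiment reported side by side.
presence_g, _ = hedges_g(5.0, 1.0, 20, 4.0, 1.0, 20)
embodiment_g, _ = hedges_g(4.6, 1.2, 20, 4.2, 1.1, 20)
print(f"presence g = {presence_g:.2f}, embodiment g = {embodiment_g:.2f}")

# Hypothetical pooling of the presence effect of the same cue across two studies.
studies = [hedges_g(5.0, 1.0, 20, 4.0, 1.0, 20), hedges_g(4.8, 1.1, 30, 4.3, 1.0, 30)]
pooled_g, se = pool_fixed_effect(studies)
print(f"pooled presence g = {pooled_g:.2f} (SE {se:.2f})")
```

Computing g separately for the presence and the embodiment measure of the same manipulation yields the side-by-side report described above. Because both measures come from the same participants, their effect sizes are correlated and should not be pooled with each other as if they were independent studies.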