- ¹College of Science and Engineering, Hamad Bin Khalifa University, Doha, Qatar

- ²Cardiff School of Management, Cardiff Metropolitan University, Cardiff, United Kingdom

- ³Department of Computer Science, Qatar University, Doha, Qatar

- ⁴GHD Engineering, Architecture and Construction Services, Doha, Qatar

- ⁵Visual and Data-Intensive Computing, CRS4, Cagliari, Italy

- ⁶National Research Center in HPC, Big Data, and QC, Cagliari, Italy

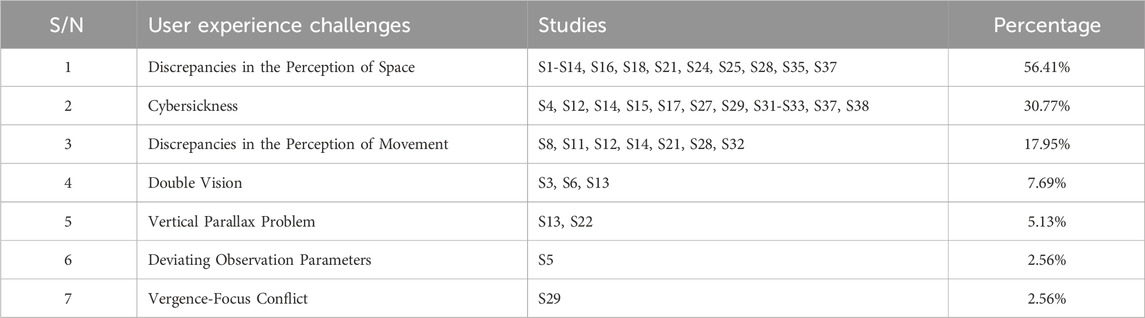

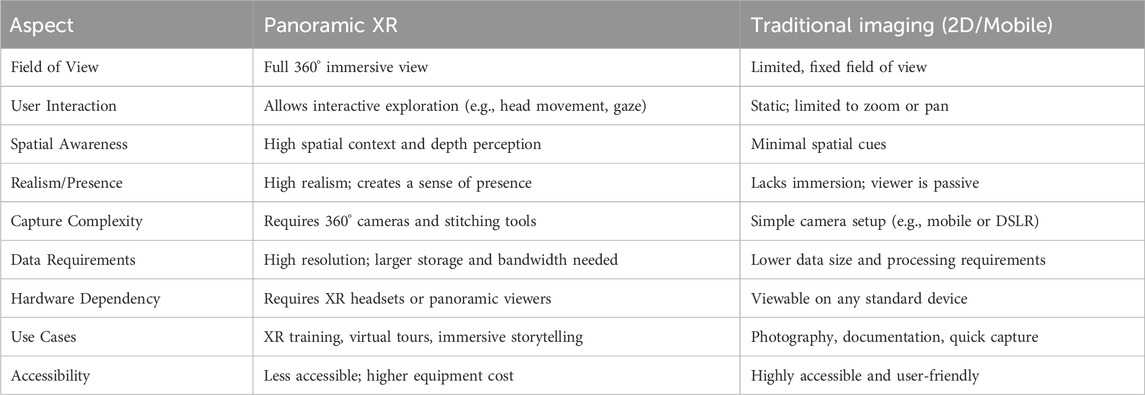

Panoramic imaging plays a pivotal role in creating immersive experiences within Extended Reality (XR) environments, including Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR). This paper presents a scoping review of the research on panoramic-based XR technologies, focusing on both static and dynamic 360° imaging techniques. The study analyzes 39 primary studies published between 2020 and 2024, offering insights into the technological frameworks, applications, and limitations of these XR systems. The findings reveal that education, tourism, entertainment, and gaming are the most dominant sectors leveraging panoramic-based XR, accounting for 28.21%, 25.64%, 23.08%, and 20.51% of the reviewed studies, respectively. In contrast, challenges such as high computational demands, low image quality and depth perception, and bandwidth and latency issues are among the critical limitations identified in 28.21%, 23.08%, and 15.38% of the studies, respectively. The analysis also explores the level of user interaction and immersion supported by these systems, specifically in terms of degrees of freedom (DoF). A majority of the studies (56.41%) offer 3DoF, which allows users to look around within a static position, while only 35.90% provide 6DoF, enabling full movement in space. This indicates that most panoramic XR applications currently support limited interaction, though 6DoF systems are being adopted in a notable portion of the reviewed work to enable more immersive experiences. The review further examines key perceptual studies related to user experiences, including visual perception, presence and immersion, cognitive load and attention distribution, and spatial awareness in panoramic XR environments. In addition, user experience challenges such as discrepancies in spatial and movement perception, along with cybersickness, are among the most commonly reported issues. 
The paper concludes by outlining future research directions aimed at addressing these challenges, optimizing system performance, reducing user discomfort, and expanding the applicability of panoramic-based XR technologies in fields such as healthcare, industrial training, and remote collaboration.

1 Introduction

Extended Reality (XR) is an umbrella term that encompasses Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR), referring to immersive technologies that blur the boundaries between the digital and physical worlds (Cardenas-Robledo et al., 2022; Samala et al., 2024). These technologies have gained significant attention across disciplines due to their ability to enhance interaction, perception, and engagement in a wide range of contexts (Kourtesis, 2024; Tukur et al., 2024b). Detailed definitions and distinctions among XR modalities are presented in Section 3.1. XR is now being applied across numerous domains such as education (Liarokapis et al., 2024), tourism (Marczell-Szilágyi et al., 2023), healthcare (Logeswaran et al., 2021), entertainment (Ansari et al., 2022), and industrial training (Chen M. et al., 2022), where it supports simulations, remote collaboration, visualization, and storytelling.

Among the core enablers of immersive XR experiences is panoramic imaging, which involves the capture and rendering of 360-degree visual environments (Tukur et al., 2023; Fergusson de la Torre, 2024; Shinde et al., 2023). Panoramic-based XR systems allow users to explore spatial scenes from within, often using head-mounted displays (HMDs) to simulate the sensation of being physically present in a remote or simulated environment (Chen M. et al., 2022; Livatino et al., 2023). This imaging approach is especially relevant for applications where realism, spatial awareness, and immersion are critical—such as virtual tours (Marczell-Szilágyi et al., 2023), training (Chen M. et al., 2022), medical simulations (Mergen et al., 2024), and interactive media experiences (Chen et al., 2024).

Despite the advancements in XR applications through panoramic imaging, several challenges persist, broadly categorized into technological and user experience issues. Technological limitations include high computational demands (Zhang et al., 2020; Pintore et al., 2023b), low image quality and insufficient depth perception (Livatino et al., 2023; Marrinan and Papka, 2021), and bandwidth and latency constraints (Li et al., 2023; Zheng et al., 2021). These limitations directly influence the quality and realism of XR environments, constraining scalability and real-time responsiveness. On the other hand, user experience challenges encompass motion sickness (Ma et al., 2023), cognitive overload, and limited interaction capabilities (Waidhofer et al., 2022). Importantly, these issues are often tightly interrelated with technological constraints. For example, latency caused by slow rendering or data transmission can disrupt visual flow and spatial coherence, leading to disorientation or cybersickness. Similarly, poor image quality and inaccurate depth perception can degrade user presence and immersion. Addressing these challenges requires a comprehensive understanding of both the underlying technical frameworks and the perceptual implications they have on users in XR systems.

However, despite its growing presence, panoramic XR has not been sufficiently examined in a consolidated or systematic manner in existing literature. Most reviews in the XR field focus on broader hardware and software innovations, specific XR modalities, or sector-specific implementations (e.g., XR in education (Koumpouros, 2024; Liarokapis et al., 2024) or healthcare (Mergen et al., 2024)). These reviews often treat panoramic imaging as a secondary feature or exclude it altogether. Furthermore, while technological developments—such as scene capture, rendering, and deployment—have progressed, there is limited integration of these advancements with user-centered evaluations that consider perceptual outcomes such as motion sickness (Lu et al., 2023), immersion (Peng, 2024), and cognitive load (Hebbar et al., 2022).

This presents two critical gaps in the literature:

Addressing these gaps is crucial for guiding future development, ensuring more effective XR design, and expanding its use in both practical and research contexts. To this end, this paper presents a scoping review that analyzes and synthesizes existing research on panoramic-based XR systems published between 2020 and 2024. The review aims to bridge technical and user experience perspectives, offering an integrated view of the current state of the field.

The following research questions guide this study:

The contributions of this research are as follows:

By addressing these questions, this review provides a structured synthesis of panoramic XR technologies, offering insights into both the technological frameworks and application domains, as well as user experience considerations. Through analysis of the existing literature and identification of key gaps, the study aims to offer a clear roadmap for future research and development in immersive XR applications utilizing panoramic imaging.

Previous surveys have primarily focused on panoramic-based applications within specific industrial sectors. For instance, Shinde et al. (2023) reviewed 360° panoramic visualization technologies in the Architecture, Engineering, and Construction (AEC) industry, while similar efforts have been made in the construction domain (Eiris Pereira and Gheisari, 2019), scene understanding (Gao et al., 2022), and video streaming (Shafi et al., 2020; Khan, 2024). These studies offer valuable domain-specific insights but do not provide a holistic perspective on panoramic XR across multiple sectors. Other reviews have explored panoramic imagery in relation to 3D geometry reconstruction and scene understanding (da Silveira and Jung, 2023), or in broader visual computing contexts (da Silveira et al., 2022). Separately, several studies have examined XR applications more generally, without focusing on panoramic content. For example, Minna et al. (2021) reviewed XR in collaborative work settings, Cardenas-Robledo et al. (2022) explored XR for Industry 4.0, and Zoleykani et al. (2024) addressed XR’s role in construction safety. Additional reviews have focused on specific modalities such as VR (Mergen et al., 2024; Chen et al., 2024), AR (Koumpouros, 2024), or MR (Tran et al., 2024). In contrast, our scoping review synthesizes panoramic-based XR technologies across diverse application domains (such as education, healthcare, entertainment, and real estate) while integrating analysis of user experience, perceptual challenges, and cognitive factors. To the best of our knowledge, this is the first study to provide a cross-sector synthesis that unifies technical frameworks, applications, and user-centric considerations specific to panoramic XR.

The remainder of this paper is organized as follows. Section 2 outlines the research methodology employed in this study. Section 3 discusses and analyzes the technological frameworks used for panoramic imaging in XR applications, as identified in the literature (RQ1). Section 4 explores the application space and limitations of panoramic-based XR systems (RQ2 and RQ3). In Section 5, we examine the perceptual studies conducted and challenges faced by users when interacting with panoramic-based XR systems (RQ4). Section 6 presents the key findings, strengths, and limitations of the study, followed by the conclusion in Section 7.

2 Methodology

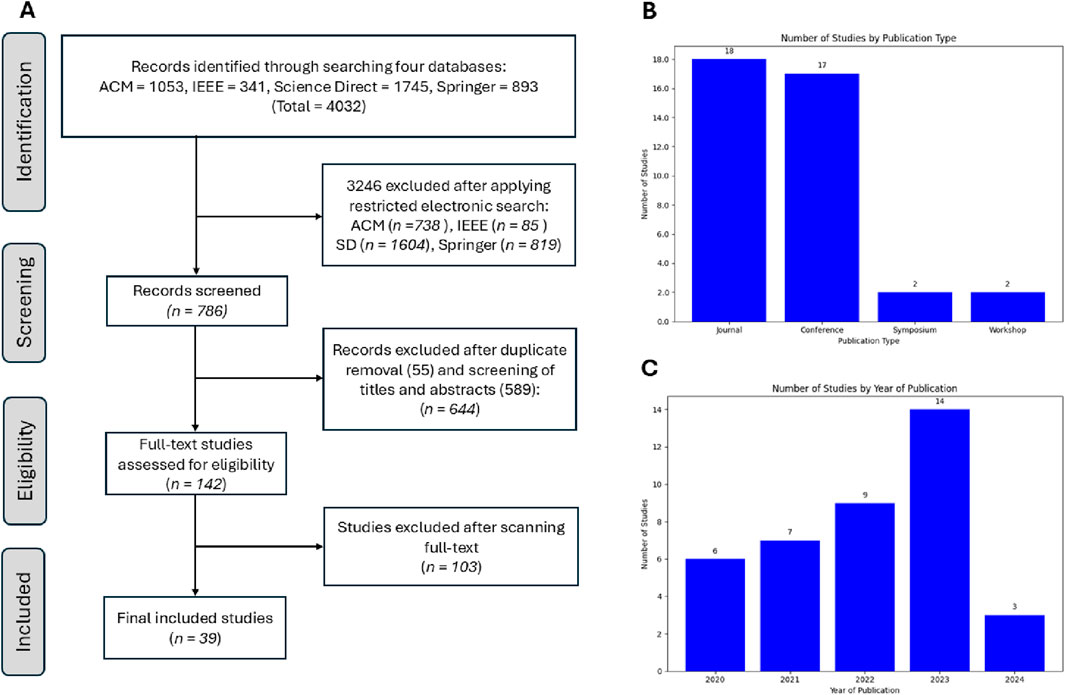

To address the research questions outlined in the introduction, we adopted the guidelines of the PRISMA Extension for Scoping Reviews (PRISMA-ScR) (Tricco et al., 2018), a well-established framework for ensuring transparency, methodological rigor, and comprehensive coverage in scoping reviews, especially when synthesizing findings across diverse study designs and domains, as in the case of panoramic-based XR technologies. Figure 1A illustrates the PRISMA chart of the included studies. A completed PRISMA checklist, detailing where each item is reported in the manuscript, is provided as Supplementary Table S1. The literature search was conducted through the following stages:

Figure 1. Methodology charts: (A) PRISMA chart of the included studies; (B) publication type of the selected papers; (C) distribution of studies over the years. Note: The initial identification phase, as shown in part A of this figure, reflects the total number of records retrieved using broad search terms across all databases (n = 4,032). The “Restricted Electronic Search Results” in Table 1 refers to the refined subset (n = 786) obtained after applying database-specific filters such as publisher, date range, and metadata fields (e.g., title, abstract).

2.1 Search strategy

The search strategy encompassed the selection of bibliographic databases, formulation of search terms and strings, establishment of inclusion and exclusion criteria, and the process for selecting relevant studies for inclusion.

2.2 Data source selection

To ensure comprehensive coverage of relevant literature, we selected four major academic databases for this review: ACM Digital Library, IEEE Xplore, ScienceDirect, and Springer. These databases were chosen for their broad indexing of high-quality research in computer science, engineering, immersive technologies, multimedia systems, and human-computer interaction, all areas highly relevant to both the technological and user experience aspects of panoramic XR. Although foundational journals such as Nature and Science were not searched directly, relevant articles indexed within the selected databases were captured when meeting the inclusion criteria. This approach ensured both domain relevance and comprehensive coverage.

2.3 Search terms

Given the diversity and complexity of creating immersive extended reality (XR) applications, it was essential to focus on the specific intersection between XR technologies and panoramic imaging. The following terms were identified as most representative for generating search queries:

2.4 Search strings

Search queries were formulated using appropriate literal and semantic synonyms to capture a wide range of relevant results. Table 1 presents the search strings applied to each data source.¹

The search strings intentionally focused on technological terms, because perceptual and user experience aspects (e.g., motion sickness, immersion, cognitive load) are often discussed within technical studies. To ensure these aspects were captured, we included them in the full-text screening and applied inclusion criteria that considered technological, perceptual, and user-experience perspectives.

2.5 Search criteria

Studies were included based on their direct relevance to extended reality applications created using panoramic imaging. The inclusion and exclusion criteria were as follows:

2.5.1 Inclusion criteria

2.5.2 Exclusion criteria

2.6 Study selection

The study selection process was conducted in two phases: (1) duplicate removal and initial screening of titles and abstracts for relevance, followed by (2) a full-text review to confirm eligibility based on the predefined inclusion and exclusion criteria.

2.6.1 Screening based on duplicate removal, title, and abstract

In this phase, a total of 55 duplicate records were identified among the 786 restricted search results and removed. The remaining studies were then screened based on their titles, abstracts, and the availability of full texts. An additional 589 studies were excluded for reasons including irrelevance to the review scope or inaccessible full texts. As a result, 142 unique full-text articles were retained for subsequent eligibility assessment.

2.6.2 Screening based on full-text

The remaining studies were then filtered through a full-text review, applying the inclusion and exclusion criteria detailed in Section 2.5. Studies that merely mentioned “Extended Reality” or related terms (VR, AR, MR, etc.) without substantial discussion, or that did not involve panoramic imaging, were excluded. After a thorough review, 39 studies were identified as the most relevant and were included as primary sources. The multidisciplinary nature of this study is reflected in the diverse range of publication venues, as detailed in the references. Figure 1B illustrates the publication types of the included studies, while Figure 1C presents their distribution over the last half-decade.
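The record counts reported across the Figure 1 note and Sections 2.6.1 and 2.6.2 can be checked with a few lines of arithmetic. The sketch below is only a consistency check under the assumption that the 55 duplicates were removed from the 786 restricted results; the variable names are illustrative, and the number of full-text exclusions is derived here rather than stated in the text.

```python
# Counts reported in the Figure 1 note and Sections 2.6.1-2.6.2.
identified = 4032               # broad search across all databases
restricted = 786                # after database-specific filters
duplicates = 55                 # removed in phase 1
excluded_title_abstract = 589   # irrelevant or full text inaccessible
included = 39                   # primary studies after full-text review

# Phase 1: duplicate removal, then title/abstract screening.
after_dedup = restricted - duplicates
full_text_assessed = after_dedup - excluded_title_abstract

# Phase 2: full-text review (this figure is derived, not stated in the paper).
excluded_full_text = full_text_assessed - included

print(f"after duplicate removal: {after_dedup}")
print(f"assessed in full text:   {full_text_assessed}")
print(f"excluded at full text:   {excluded_full_text}")
```

The second printed value matches the 142 full-text articles reported in Section 2.6.1.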

To ensure methodological rigor and reduce bias, the screening and selection process was independently conducted by three co-authors. Each retrieved full-text article was reviewed by at least two of the three reviewers. If both reviewers agreed on inclusion or exclusion, the decision was accepted without further deliberation. In cases of disagreement, the third senior co-author acted as an adjudicator. The final decision was made through consensus, ensuring that all included studies reflected a jointly agreed-upon judgment. In addition, Microsoft Excel was used for screening and data extraction, while Overleaf’s .bib system managed references, supporting consistent tracking and collaboration throughout the review process.
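The two-reviewer rule with adjudication described above can be summarized as a small decision function. This is a simplified sketch, not the authors' tooling: the function name and signature are hypothetical, and the consensus discussion is reduced to a single adjudicator vote.

```python
from typing import Optional


def screening_decision(reviewer_a: bool,
                       reviewer_b: bool,
                       adjudicator: Optional[bool] = None) -> bool:
    """Return True if a study is included, False if it is excluded.

    Hypothetical helper: agreement between the two reviewers is final;
    a disagreement is resolved by the third (senior) co-author.
    """
    if reviewer_a == reviewer_b:
        # Both reviewers agree, so no further deliberation is needed.
        return reviewer_a
    if adjudicator is None:
        raise ValueError("disagreement requires the adjudicator's vote")
    return adjudicator


print(screening_decision(True, True))                      # both include
print(screening_decision(True, False, adjudicator=False))  # adjudicated
```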

3 Panoramic-based XR technologies

3.1 Extended reality overview

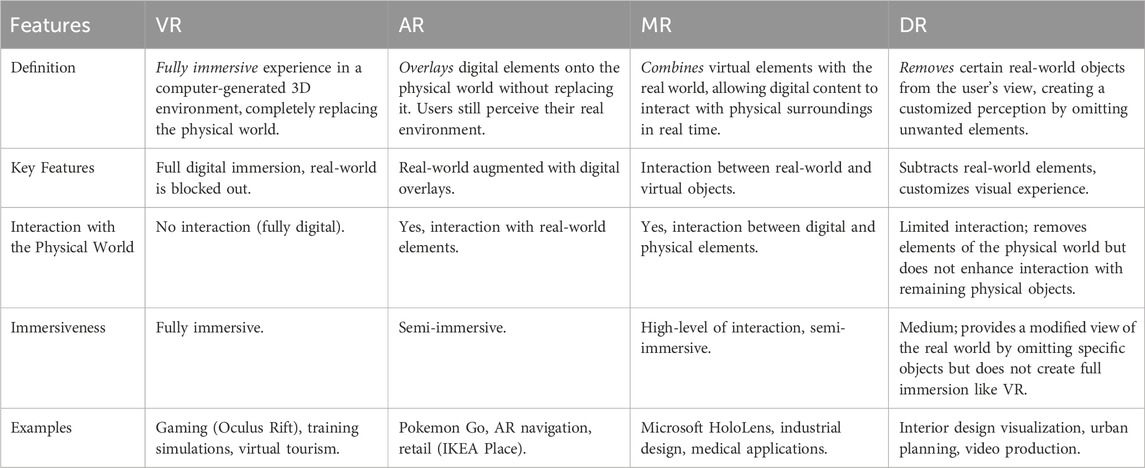

Extended Reality (XR) involves any technology that blurs the line between the physical and digital world, integrating digital elements into the user’s experience of reality (Samala et al., 2024). In this section, we present the definitions and analysis of the technological frameworks identified for panoramic imaging within XR applications, as summarized in Tables 2–4. Based on an extensive review of the literature, these frameworks extend beyond the traditional XR components of VR, AR, and MR, incorporating two additional categories: Diminished Reality (DR) (Cheng et al., 2022) and Augmented Virtual Reality (AVR) (Lee et al., 2023; Ou et al., 2004).² This broader categorization reflects the evolving nature of XR technologies and their diverse applications.

1. Virtual Reality (VR): VR fully immerses users in a computer-generated, three-dimensional environment that completely replaces the physical world (Wohlgenannt et al., 2020). Users typically engage with this virtual environment through the use of VR headsets (such as Oculus Rift or HTC Vive) and motion controllers, which allow them to navigate and interact with the digital space. VR is designed to simulate real-world scenarios or create entirely new, imagined environments, making it ideal for applications in gaming, simulations, training, and virtual tourism. For example, in architectural visualization, users can “walk through” virtual building designs to get a real-time, immersive preview of the space before construction begins.

2. Augmented Reality (AR): AR enhances the physical world by overlaying digital elements—such as images, videos, 3D objects, or sounds—onto the user’s view of their real-world surroundings (Arena et al., 2022). Unlike VR, AR does not replace the real environment; instead, it adds virtual elements that are integrated into the user’s actual surroundings. AR can be experienced through devices like smartphones, tablets, or AR glasses (e.g., Microsoft HoloLens or Google Glass). A common example of AR is the mobile game Pokémon GO, where digital characters appear in the real world through the phone’s camera. AR is also widely used in industries like retail (virtual try-ons), healthcare (surgical assistance), and education (interactive learning).

3. Mixed Reality (MR): MR is a blend of both the physical and virtual worlds, where digital elements not only coexist with the real environment but also interact with it in real-time (Speicher et al., 2019). MR offers a more advanced level of integration compared to AR, as digital content can be anchored to real-world objects and interact with the physical environment based on contextual information. For example, MR allows a virtual object to be placed on a real-world table, and when the user moves around the table, they can see the object from different angles. This level of interaction is achieved through sophisticated sensors and spatial awareness technology found in devices like the Microsoft HoloLens 2. MR is increasingly used in fields like industrial design, healthcare (e.g., medical training simulations), and collaborative work environments, where digital and real-world data merge seamlessly for interactive experiences.

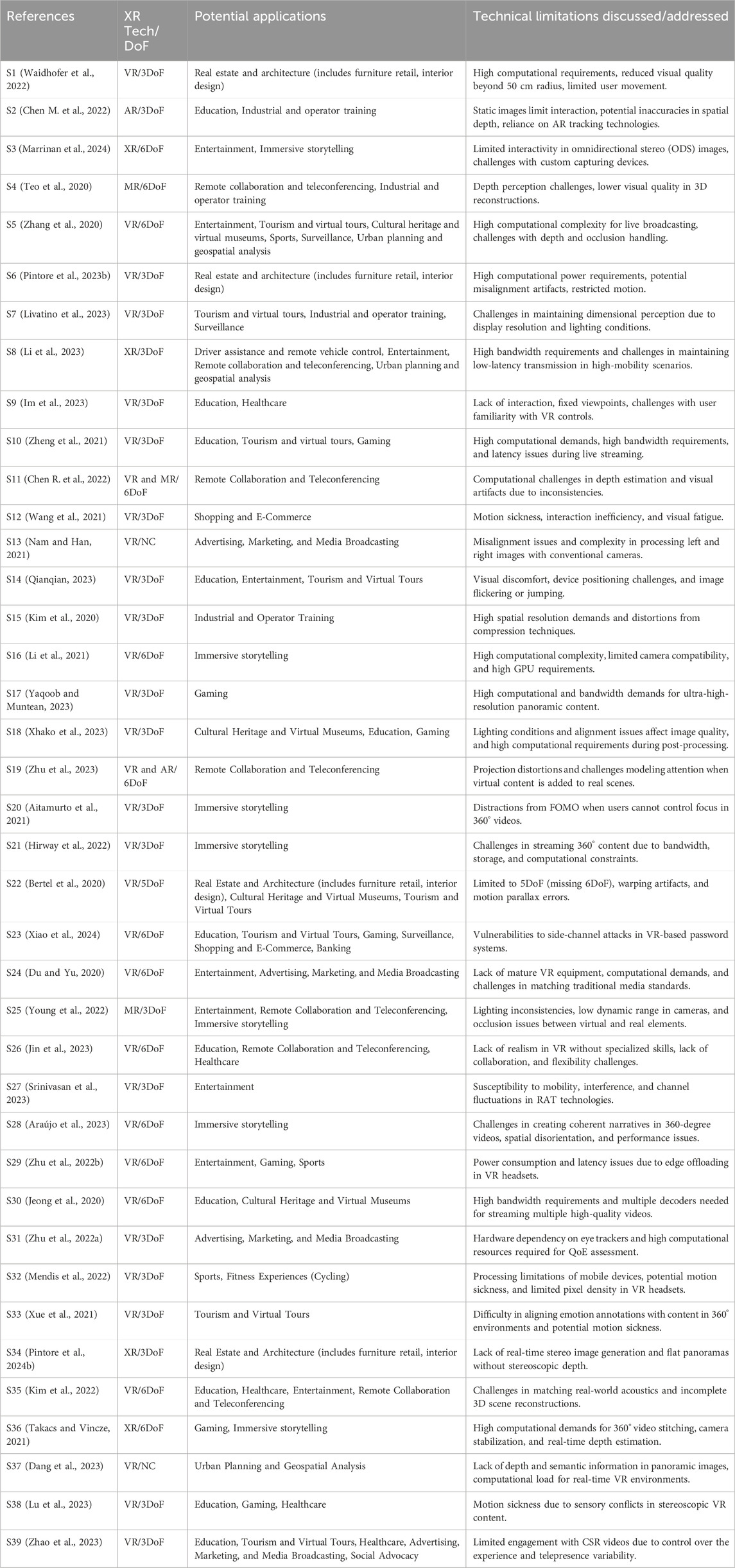

Table 4. Overview of XR technologies, their degree of freedom (DoF), potential applications, and technical limitations.

MR integrates virtual content with the physical world, enabling real-time interaction. Devices like the HoloLens 2 use optical see-through displays to overlay holograms onto the real environment (Balogh et al., 2006). In contrast, newer VR headsets such as the Meta Quest Pro and HTC Vive XR Elite use passthrough MR, capturing the physical world with cameras and blending it with virtual elements. While optical MR offers more natural perception of the real world, passthrough MR provides enhanced immersion with higher virtual content fidelity. Given this overlap, we have merged AVR into MR to reflect the evolving convergence of these technologies.

4. Diminished Reality (DR): DR is a specialized form of Augmented Reality (AR) where certain real-world objects or elements are digitally removed from the user’s field of vision, effectively “erasing” unwanted parts of the environment (Cheng et al., 2022). Unlike AR, which overlays digital information on the real world, DR aims to subtract elements from the physical surroundings, creating a customized perception by omitting specific objects or distractions (Cheng et al., 2022). DR is often used in applications such as interior design, where users can see how a space might look after removing furniture or other elements, or in urban planning, where buildings or infrastructure can be digitally erased to visualize a space before renovations or developments (Tukur et al., 2023; Pintore et al., 2022). For instance, DR can be used to remove unwanted objects like power lines in architectural renderings or even to enhance real-time video feeds by removing unnecessary distractions from a scene.

A comprehensive comparison of these XR technologies is presented in Table 3, highlighting their key features, interaction with the physical world, level of immersiveness, and relevant examples.

3.2 Discussion and analysis of panoramic-based XR technological frameworks

Table 2 provides an overview of the various panoramic imaging approaches employed in immersive extended reality (XR) research, highlighting their contributions, year of publication, and the type of panoramic imaging utilized. As shown in Table 2, panoramic imaging, both static and dynamic, has been widely adopted across XR applications to enhance immersive user experiences by offering 360-degree views. Specifically, Figure 2 reveals that “Dynamic Panoramic Imaging” is used in 25 out of the 39 included studies (64.10%), while “Static Panoramic Imaging” is used in 13 studies (33.33%). Additionally, a single study (2.56%) incorporates both static and dynamic panoramic imaging. This demonstrates the diverse and evolving use of panoramic techniques within the XR ecosystem.
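The percentages quoted above follow directly from the study counts. As a minimal sketch, the snippet below recomputes them from the counts given in the text (25, 13, and 1 of 39); the dictionary keys and the helper name `share` are illustrative only.

```python
TOTAL_STUDIES = 39

# Study counts per panoramic imaging type, as reported in the text.
counts = {
    "dynamic": 25,
    "static": 13,
    "static and dynamic": 1,
}


def share(count: int, total: int = TOTAL_STUDIES) -> float:
    """Percentage of included studies, rounded to two decimals."""
    return round(100 * count / total, 2)


shares = {kind: share(n) for kind, n in counts.items()}

for kind, pct in shares.items():
    print(f"{kind}: {pct:.2f}%")
```

The same helper applies to the other distributions in this review, since each is reported over the same 39 included studies.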

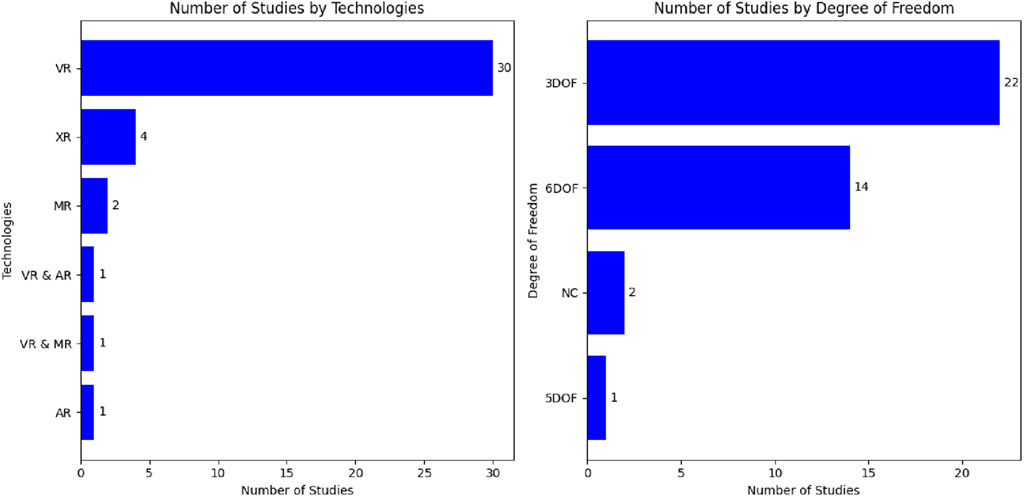

The frameworks for panoramic imaging in XR vary according to the specific XR technology and its intended application. The most prevalent technological framework is Virtual Reality (VR), which is employed in 76.92% of the included studies. This indicates that VR remains the dominant platform for panoramic-based XR systems. The second most common framework, representing 10.26% of the studies, is XR in its broader sense, where multiple XR components are employed without exclusive focus on a single technology. Following these, the other XR technologies (“MR,” “VR and AR,” “VR and MR,” and “AR”) constitute 5.13%, 2.56%, 2.56%, and 2.56% of the studies, respectively (see Figure 3-Left; Table 4). This distribution suggests that while VR dominates XR applications, other technologies are also contributing to the diversity of immersive experiences, albeit at a lower rate.

Figure 3. Distribution of studies by XR technologies and degrees of freedom. DoF, Degree of Freedom; NC, Not Clear.

The use of panoramic content differs markedly across XR categories, extending beyond mere prevalence. Panoramic imaging in VR is utilized to construct immersive environments that envelop the user, facilitating experiences such as virtual field trips, training simulations, and narrative-based settings. In MR, panoramic imagery functions as a perceptual framework that allows for the anchoring and interaction with 3D content, applicable in collaborative remote tasks or hybrid spatial simulations (Teo et al., 2020). AR applications frequently utilize panoramic imagery as contextual or instructional backgrounds, such as overlaying step-by-step guides onto panoramic captures for industrial procedures (Chen M. et al., 2022). However, full integration is limited by challenges in spatial registration.
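To make concrete how a single 360° capture can surround the viewer, the sketch below maps a viewing direction to a pixel in a panorama. It assumes the widely used equirectangular layout, which the text above does not specify; the function name, angle conventions, and image size are illustrative assumptions rather than details taken from the reviewed studies.

```python
def direction_to_pixel(yaw_deg: float, pitch_deg: float,
                       width: int, height: int) -> tuple[int, int]:
    """Map a viewing direction to (column, row) in an equirectangular image.

    Assumed conventions: yaw 0 is the image center and wraps every 360
    degrees; pitch +90 is the top row and pitch -90 is the bottom row.
    """
    # Longitude spans the full image width, latitude the full height.
    u = ((yaw_deg + 180.0) % 360.0) / 360.0
    v = (90.0 - pitch_deg) / 180.0
    # Clamp so that u or v equal to 1.0 stays inside the image.
    col = min(int(u * width), width - 1)
    row = min(int(v * height), height - 1)
    return col, row


# Looking straight ahead lands in the middle of a 4096 x 2048 panorama.
print(direction_to_pixel(0.0, 0.0, 4096, 2048))
```

A renderer would run such a lookup for every display pixel given the current head orientation, which is why head rotation alone is enough to explore this kind of content.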

From both a literature and a practical perspective, panoramic imaging naturally aligns with VR environments, which are designed to fully immerse users within a 360-degree field of view. This spherical content is well-suited for head-mounted displays and supports strong presence and spatial awareness. In contrast, integrating panoramic content into AR applications presents greater challenges, such as achieving accurate spatial registration of panoramic imagery in real-world contexts, maintaining depth consistency, and avoiding perceptual conflicts between the digital and physical layers. These limitations likely contribute to the lower adoption of panoramic imaging in AR-based XR systems. Despite these challenges, numerous studies illustrate significant applications of panoramic imaging in AR contexts. Chen M. et al. (2022) employ panoramic visuals to integrate real-world industrial training scenarios into AR overlays, enabling workers to obtain guided instructions in spatially accurate environments. This integration approach prioritizes panoramic imaging as a spatial reference for augmentations; however, challenges related to visual alignment and real-time responsiveness persist as significant design issues. In MR systems, panoramic content serves a complementary function by providing a perceptual backdrop within interactive 3D environments. Teo et al. (2020) integrate 360° panoramic photo bubbles with 3D reconstructions to facilitate collaborative remote workspaces, enhancing panoramic imagery with spatially aware virtual objects. In contrast to VR, MR applications require real-time occlusion management and accurate calibration of virtual objects with panoramic textures. AVR, integrated within the MR framework, enhances the user experience by providing stereoscopic panoramic immersion alongside manipulable virtual elements, thereby improving user agency and depth realism (Lee et al., 2023; Ou et al., 2004). These examples demonstrate that while VR is the primary domain for panoramic imaging, AR and MR present distinct advantages and limitations that influence the registration, rendering, and interaction of panoramic content within XR platforms.

The degrees of freedom (DoF) supported by the XR systems in the reviewed studies also vary. The majority of the studies, 56.41%, support 3 degrees of freedom (3DoF), allowing rotational movement but restricting positional movement within the virtual space. In contrast, 35.90% of the primary studies provide 6 degrees of freedom (6DoF), enabling both rotational and translational movement for enhanced interactivity. A single study (2.56%) supports 5DoF, while 5.13% of the included studies do not clearly indicate their DoF (see Figure 3-Right; Table 4). This analysis suggests that while 3DoF remains the standard in many XR applications, 6DoF systems are being adopted in a notable portion of the reviewed work to enable more immersive experiences.
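The difference between 3DoF and 6DoF can be expressed as which components of a tracked head pose a system actually uses. The following is a minimal sketch under assumed names (`Pose`, `constrain_pose`) and an assumed Euler-angle representation; it is not drawn from any of the reviewed systems.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose:
    """Head pose: three rotational plus three translational components."""
    yaw: float = 0.0    # rotation, degrees
    pitch: float = 0.0
    roll: float = 0.0
    x: float = 0.0      # translation, meters
    y: float = 0.0
    z: float = 0.0


def constrain_pose(tracked: Pose, dof: int) -> Pose:
    """Keep only the pose components that a 3DoF or 6DoF system uses."""
    if dof == 6:
        # Rotation and translation both drive the rendered view.
        return tracked
    if dof == 3:
        # Rotation only: the viewer stays at the capture position.
        return Pose(yaw=tracked.yaw, pitch=tracked.pitch, roll=tracked.roll)
    raise ValueError("only 3DoF and 6DoF are modelled in this sketch")


tracked = Pose(yaw=30.0, pitch=10.0, roll=0.0, x=1.0, y=0.0, z=-2.0)
print(constrain_pose(tracked, 3))
print(constrain_pose(tracked, 6))
```

In the 3DoF case the translation is discarded, which mirrors a viewer looking around from the fixed position at which the panorama was captured.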

3DoF systems are common due to their technical simplicity and extensive hardware compatibility; however, their primary application lies in passive or semi-interactive panoramic XR environments. Examples include virtual tourism (Livatino et al., 2023), educational storytelling (Aitamurto et al., 2021), and live broadcasting, where users navigate 360° environments by adjusting their viewpoint without physical movement (Livatino et al., 2023; Aitamurto et al., 2021; Zhao et al., 2023). In contrast, 6DoF is used in contexts that require extensive spatial interaction, including immersive storytelling, industrial training, and collaborative design. Here, panoramic content is integrated with stereoscopy or depth synthesis to facilitate locomotion, manipulation, or shared spatial reference (Marrinan et al., 2024; Teo et al., 2020). The presented use cases illustrate that the DoF constitutes both a technical choice and a design decision that aligns with user experience objectives.

Additionally, it is worth noting the temporal evolution of research in this domain. Table 2 and Figure 1C indicate a steady annual increase in the number of studies from 2020 to the present. This upward trend reflects the rapid expansion and growing interest in panoramic imaging technologies within XR, underscoring the dynamic and evolving nature of this field. The slight drop in publications in the current year is likely attributable to the fact that the data was collected in the third quarter, with additional studies potentially forthcoming by the year’s end. This trajectory suggests that panoramic imaging in XR continues to gain traction, positioning the field as both timely and significant in advancing immersive technologies.

4 Applications and limitations of panoramic-based XR technologies

4.1 Applications of panoramic-based XR technologies across industrial sectors

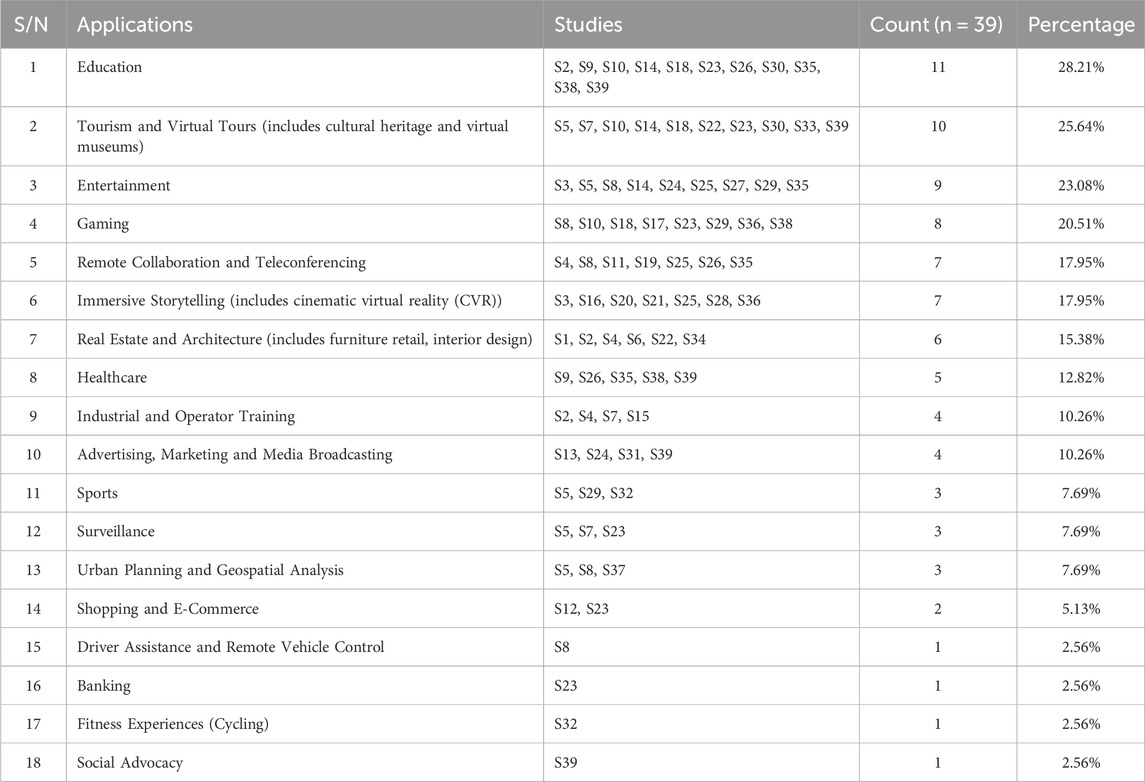

This section explores the diverse applications of panoramic-based XR technologies identified in the studies included in our review. These applications span eighteen (18) major categories, reflecting their impact across industrial sectors. A comprehensive summary of these studies, highlighting their key contributions to the advancement of XR technologies, along with their respective occurrences, is provided in Tables 4 and 5, respectively.

1. Education: Panoramic imaging enables the creation of highly immersive learning environments such as virtual classrooms, anatomy labs, and engineering workshops by capturing real-world spaces in 360°. Unlike conventional XR, which often relies on computer-generated models that require time-consuming development and technical expertise, panoramic-based XR allows educators to quickly generate realistic training environments using actual imagery. This approach reduces content creation overhead while preserving contextual authenticity, making it ideal for scenarios such as virtual field trips, medical simulations, or equipment operation training. Moreover, the full field-of-view offered by panoramic imaging allows learners to freely explore their surroundings from a first-person perspective, supporting experiential learning and improving spatial awareness. For example, students can examine the layout of an operating room or navigate an industrial setting as if they were physically present. This fosters better knowledge retention and engagement, particularly in remote or resource-limited settings where physical access to labs, machinery, or clinical environments may not be feasible. Panoramic XR also supports asynchronous and self-paced learning, giving students the flexibility to revisit complex environments and reinforce understanding through repeatable, low-cost immersion.

Source(s): (Jin et al., 2023; Im et al., 2023; Liarokapis et al., 2024).

2. Tourism and Virtual Tours (includes cultural heritage and virtual museums): Panoramic content is highly effective for providing immersive virtual access to heritage sites and tourist attractions. Unlike conventional XR, which often requires time-intensive 3D modeling, panoramic imaging uses real-world 360° captures to deliver authentic visual experiences with significantly less development effort. This approach allows users to explore environments such as museums, archaeological sites, or historical landmarks in a natural and intuitive way. Because the imagery is captured directly from physical locations, it retains the visual richness and spatial context of the original environment—qualities that are often difficult to replicate in synthetic XR scenes. Additionally, panoramic tours are more accessible and scalable, making them ideal for remote education, cultural outreach, and virtual tourism. The ability to embed contextual elements like audio guides or informational hotspots within the 360° view further enhances user engagement, offering a compelling and cost-effective alternative to fully modeled XR environments.

Source(s): (Marczell-Szilágyi et al., 2023; Hamid et al., 2021; Evrard and Krebs, 2018).

3. Entertainment: In cinematic VR and live performances, panoramic-based XR provides users with a highly immersive, front-row experience by capturing real-world events in 360° video. Unlike conventional XR, which relies on computer-generated scenes, panoramic imaging brings realism and authenticity by using actual footage of concerts, festivals, or theatrical productions. This allows users to explore the environment freely, enhancing the sense of presence without the need for complex modeling or animation. Panoramic visuals are often paired with spatial audio, allowing the sound to shift naturally with the user’s head movement, further increasing immersion. This is particularly valuable in entertainment settings where visual fidelity and emotional engagement are essential. Additionally, panoramic content is more cost-effective and efficient to produce than fully synthetic XR experiences, making it ideal for wide-scale virtual distribution of live events and immersive storytelling.

Source(s): (Ansari et al., 2022; Zhao et al., 2023; Li et al., 2023).

4. Gaming: While most gaming XR systems rely on fully synthetic, computer-generated environments, panoramic imaging offers a unique advantage by introducing real-world textures and visual contexts through 360° imagery. This hybrid approach allows developers to build games that blend interactive elements with photo-realistic backdrops, enhancing immersion without the overhead of detailed 3D asset creation. Panoramic content provides players with a visually rich environment captured from actual locations, which is especially useful for narrative-driven or exploratory games where realism contributes to the user experience. Unlike traditional XR, which requires significant resources for environment design and rendering, panoramic XR enables faster content development by leveraging pre-captured scenes, making it well-suited for indie developers, educational games, or location-based storytelling. Additionally, panoramic XR can support lightweight interaction models, allowing for intuitive gameplay in mobile or low-compute settings where full 6DoF interaction may not be feasible. This makes it more accessible and scalable for broader audiences while still delivering immersive, context-aware gameplay experiences.

Source(s): (Xhako et al., 2023; Zheng et al., 2021).

5. Real Estate and Architecture (includes furniture retail and interior design): Panoramic imaging plays a valuable role in enabling virtual walkthroughs of properties and architectural designs. Unlike conventional XR, which typically requires labor-intensive 3D modeling and rendering, panoramic-based XR leverages 360° imagery to quickly and accurately capture real spaces, making it far more efficient for showcasing existing environments. This approach allows potential buyers or clients to remotely explore interiors and layouts with a high degree of spatial realism and context, offering a true-to-life impression of room dimensions, lighting, and finishes. The simplicity of panoramic capture also makes it ideal for large-scale or rapid deployment across multiple properties, without the cost and time associated with building detailed 3D models. In architectural visualization, panoramic imaging provides a practical solution for presenting design concepts, renovations, or staging ideas, especially during early planning stages. While it may not support dynamic interaction with objects like fully synthetic XR, it excels in communicating visual intent and spatial flow, offering an accessible and immersive experience to clients and stakeholders.

Source(s): (Miljkovic et al., 2023; Tukur et al., 2024a; Zhi et al., 2022; Tukur et al., 2022).

6. Immersive Storytelling: Panoramic imaging is particularly well-suited for immersive storytelling and cinematic virtual reality (CVR), where the goal is to place users within real-world narrative environments. Unlike conventional XR, which often relies on animated or synthetic scenes, panoramic content uses 360° video or images to deliver photorealistic settings captured from real locations, enhancing authenticity and emotional impact. This format allows for user-directed viewing, where the audience can freely look around and explore the environment from their own perspective, adding a layer of personalization and agency to the storytelling experience. It is especially effective for documentaries, journalism, and cultural narratives, where realism and presence are critical to audience engagement. Compared to traditional XR, panoramic storytelling requires less production time and technical complexity while still delivering immersive, high-quality experiences. It also enables creators to embed spatial audio and interactive elements, enriching the narrative without the need for full 3D scene reconstruction. These advantages make panoramic XR a compelling and accessible medium for storytellers aiming to engage users through immersive, reality-based content.

Source(s): (Szita and Lo, 2021; Rothe et al., 2018; MacQuarrie and Steed, 2017; Thatte et al., 2016).

7. Industrial and Operator Training: Panoramic-based AR/VR offers an efficient and practical approach to training in complex or hazardous industrial environments. By using 360° imagery of real machinery, control rooms, or factory floors, panoramic XR allows trainees to gain situational awareness and procedural understanding without exposing them to physical risks. Compared to traditional XR, which typically requires fully modeled 3D environments, panoramic imaging provides a faster and more scalable solution that still delivers high visual fidelity. This approach is particularly beneficial for onboarding or refresher training, where the emphasis is on recognizing spatial layouts, equipment placement, and operational flow. Trainees can navigate through the environment at their own pace, with annotations or overlays guiding them through key tasks or safety protocols. The real-world context captured in panoramic scenes enhances learning by grounding it in familiar, authentic settings—something often lacking in synthetic XR environments. Additionally, panoramic XR reduces the technical overhead of creating interactive training modules, making it more accessible to industries with limited development resources or time constraints. This makes it an ideal tool for training in sectors such as manufacturing, logistics, energy, and maintenance.

Source(s): (Chen M. et al., 2022; Teo et al., 2020; Livatino et al., 2023).

8. Remote Collaboration and Teleconferencing: Panoramic-based XR enhances remote collaboration by capturing complete 360° views of real environments, enabling participants to share immersive, real-time visual context during meetings, design reviews, or consultations. Unlike conventional XR, which may rely on abstract 3D models or avatars, panoramic imaging presents authentic, real-world scenes, allowing users to better understand spatial arrangements, equipment layouts, or environmental details during remote sessions. This is particularly useful in fields such as architecture, engineering, healthcare, and manufacturing, where spatial awareness and context are crucial for effective communication. Panoramic content supports telepresence, giving remote participants the sense of “being there” without physically visiting the site. It also reduces the need for complex scene modeling or simulation, making deployment faster and more cost-effective. By combining panoramic visuals with live annotations, pointer tools, or embedded instructions, XR-based teleconferencing can support collaborative decision-making, equipment inspections, or remote training with greater visual clarity than typical video calls or standard XR environments. These benefits make panoramic imaging a valuable solution for enhancing efficiency and understanding in distributed teamwork.

Source(s): (Jin et al., 2023; Teo et al., 2020; Li et al., 2023; Young et al., 2022).

9. Healthcare: Panoramic XR plays an important role in medical education and simulation-based training by recreating real clinical environments—such as operating rooms, dental clinics, and emergency care units—using 360° visual captures. Compared to traditional XR, which often requires detailed 3D modeling of medical settings, panoramic imaging offers a faster and more realistic alternative, capturing the exact layout, equipment placement, and spatial relationships found in actual healthcare facilities. This realism is especially beneficial for training that emphasizes spatial orientation, procedural flow, and environmental familiarity, such as navigating sterile zones, identifying instrument locations, or observing team dynamics during surgeries. The visual fidelity of panoramic content allows learners to immerse themselves in real-world scenarios, which enhances knowledge retention and helps bridge the gap between theoretical instruction and hands-on practice. Moreover, panoramic XR can be easily scaled and distributed for remote or asynchronous learning, making it a valuable tool for medical schools, hospitals, and continuing education programs—particularly in settings where physical access to clinical environments is limited. By offering an authentic, low-risk learning experience, panoramic XR complements traditional XR tools while reducing the need for expensive and complex 3D development pipelines.

Source(s): (Logeswaran et al., 2021; Mergen et al., 2024).

10. Advertising and Marketing: Panoramic imaging provides a compelling medium for immersive advertising and brand storytelling by allowing consumers to engage with 360° content that replicates real-world settings. Unlike conventional XR, which often relies on synthetic or heavily stylized environments, panoramic XR offers photorealistic, location-based experiences that can be quickly produced and deployed across digital platforms. Through panoramic storytelling, brands can create interactive commercials, virtual showrooms, or branded tours, where users explore the experience at their own pace. This user-directed engagement increases immersion and emotional connection, making marketing messages more memorable and impactful. For example, a 360° product showcase can give viewers the sense of standing inside a retail space, walking around a new car model, or experiencing a resort—all from their device. Compared to traditional XR, panoramic content is faster to produce, more visually authentic, and easier to integrate into web and mobile platforms, making it accessible to a wider audience. This makes it an ideal solution for businesses seeking immersive outreach without the development overhead of fully interactive XR experiences.

Source(s): (Dimitrova et al., 2024; Zhao et al., 2023).

11. Sports: Panoramic XR enhances sports viewing and training experiences by capturing the entire 360° field of play, allowing users to immerse themselves in live or recorded events from any angle. Unlike traditional XR, which often involves virtual reconstructions or stylized simulations, panoramic imaging delivers real-time, photorealistic visuals of actual matches, stadiums, and player movements, creating a sense of being physically present at the venue. This immersive viewpoint gives spectators control over their experience, enabling them to switch perspectives—for instance, from the sideline to behind the goal—based on their interests. For athletes and coaches, panoramic playback can provide multi-angle replays and spatial awareness insights, which are valuable for post-game analysis and tactical review. Compared to fully synthetic XR, panoramic-based systems are quicker to deploy and do not require modeling complex animations or environments. They also offer a more authentic fan experience, making them well-suited for remote engagement, virtual attendance, or personalized broadcasting. These advantages position panoramic XR as a practical and impactful tool in both sports entertainment and training contexts.

Source(s): (Le Noury et al., 2022; Mendis et al., 2022).

12. Shopping and E-Commerce: Panoramic-based XR enables the creation of immersive virtual store environments that replicate real retail spaces using 360° imagery. Unlike traditional XR, which often relies on fully synthetic or gamified interfaces, panoramic imaging captures authentic retail layouts and product displays, allowing customers to navigate and explore stores as if they were physically present. This approach enhances spatial understanding and product context, helping users visualize item placement, size, and aesthetic appeal within a real-world setting. For example, customers can virtually walk through a showroom, view products from different angles, and interact with embedded product information—all within a realistic visual environment. Compared to conventional XR shopping apps, panoramic XR is faster and more cost-effective to deploy, especially for small businesses or retailers with limited resources for 3D modeling. It also improves user engagement and decision-making, offering a more intuitive and personalized experience than flat web interfaces or catalog-based e-commerce platforms. As such, panoramic XR bridges the gap between physical retail and digital convenience, offering a practical path toward immersive online shopping.

Source(s): (Baltierra, 2023; Wang et al., 2021).

13. Surveillance: In security and monitoring applications, panoramic imaging offers a distinct advantage by providing comprehensive wide-angle coverage through 360° camera feeds. This minimizes blind spots and reduces the need for multiple fixed-position cameras. When integrated into XR environments, panoramic content allows operators to immerse themselves in the monitored space, offering a more intuitive and spatially aware interface than traditional surveillance dashboards or synthetic XR environments. Unlike conventional XR, which may simulate security scenarios or rely on abstracted data views, panoramic XR presents real-time, photorealistic visuals from actual environments—enabling faster threat detection, situational understanding, and response coordination. Security personnel can explore surveillance feeds by naturally shifting their view within a virtual control room or even remotely, enhancing coverage efficiency and decision-making. Furthermore, panoramic XR can be layered with interactive elements such as alerts, motion tracking, and sensor data overlays, creating a multi-sensory command interface that improves situational control in both public safety and private security contexts. Its realistic, immersive view of the environment makes panoramic XR particularly effective for critical infrastructure monitoring, crowd management, and emergency response coordination.

Source(s): (Ott et al., 2006; Livatino et al., 2023).

14. Driver Assistance and Remote Vehicle Control: Panoramic imaging plays a critical role in enhancing situational awareness for remote operators and autonomous vehicle systems. By capturing the entire 360° environment around a vehicle, panoramic XR enables operators to perceive the driving context in a natural, immersive format, offering a broader and more realistic field of view compared to conventional XR systems that may rely on segmented or simulated visualizations. When integrated into XR interfaces, panoramic content allows remote drivers or supervisors to look around freely using head movement, mimicking the natural behavior of in-vehicle observation. This supports more intuitive and responsive control, especially in complex or high-risk environments such as warehouses, mines, or urban delivery zones. The immersive display of depth cues and spatial relationships aids in obstacle detection, path planning, and maneuvering in tight or unpredictable settings. Unlike synthetic XR models, which may require high computational loads for rendering dynamic environments, panoramic imaging can stream real-time visual data with reduced latency and greater visual fidelity. This makes it a practical solution for scenarios where real-time decision-making, safety, and situational precision are paramount—offering a scalable and cost-effective path toward immersive remote vehicle operations.

Source(s): (Li et al., 2023; Mukhopadhyay et al., 2023).

15. Urban Planning and Geospatial Analysis: Panoramic imaging provides urban planners, architects, and stakeholders with an immersive way to visualize real-world environments in 360°, including infrastructure layouts, public spaces, and transportation networks. Unlike traditional XR, which often relies on synthetic 3D models, panoramic XR offers real photographic context, enabling users to assess existing conditions and proposed changes with greater spatial awareness. This approach enhances virtual site visits, supports collaborative decision-making, and improves communication across disciplines—especially when in-person inspections are not feasible. Planners can explore visibility, pedestrian access, or traffic flow directly within a panoramic interface, making it a valuable tool for design evaluation, community engagement, and geospatial analysis. Its lower production cost and ease of capture make panoramic imaging a practical alternative to more resource-intensive XR modeling approaches.

Source(s): (Ma et al., 2023; Dang et al., 2023).

16. Fitness Experiences (Cycling): Panoramic XR enhances virtual fitness activities—such as cycling, rowing, or treadmill workouts—by immersing users in realistic 360° environments like nature trails, cityscapes, or scenic routes. Unlike synthetic XR, which typically uses stylized or game-like graphics, panoramic imaging delivers photorealistic visuals captured from actual locations, creating a more authentic and motivating experience. Users can navigate or move through these environments in sync with their physical activity, simulating outdoor exploration while remaining indoors. This not only boosts engagement and workout adherence, but also supports mental wellbeing by providing visual variety and a sense of presence. Additionally, panoramic XR is easier and quicker to implement than building detailed 3D fitness environments, making it a cost-effective and scalable solution for home fitness apps, rehabilitation programs, and gym-based experiences.

Source(s): (Mendis et al., 2022).

17. Social Advocacy: Panoramic storytelling has emerged as a powerful tool in advocacy efforts focused on issues such as poverty, climate change, human rights, and disaster response. By capturing real-world conditions in immersive 360° visuals, panoramic XR enables viewers to experience environments firsthand, fostering empathy and emotional engagement that traditional media or synthetic XR cannot easily replicate. The sense of presence offered by panoramic imaging allows users to observe communities, crises (Ahmad et al., 2012; Pintore et al., 2012), or affected regions from an intimate, ground-level perspective—strengthening the impact of the message and encouraging action, awareness, or policy support. Because it requires less technical effort than fully modeled XR, panoramic content is especially suited for NGOs and grassroots campaigns that seek cost-effective, authentic storytelling with high emotional resonance.

Source(s): (Zhao et al., 2023).

18. Banking: In banking environments, panoramic XR is used to support security awareness and customer service training by recreating real-world branch layouts and daily operational scenarios. Through 360° imagery, trainees can immerse themselves in realistic environments—such as teller stations, lobbies, or vault areas—without the need for costly or time-consuming 3D modeling. Panoramic imaging allows institutions to simulate customer interactions, emergency drills, or even security breaches in a safe, repeatable, and visually accurate way. This enhances spatial understanding and procedural learning while ensuring that staff are trained in contextually relevant environments. Compared to traditional XR, panoramic approaches are more cost-effective, easier to update, and better suited for quickly deploying training across multiple locations.

Source(s): (Xiao et al., 2024; Rasanji et al., 2024).

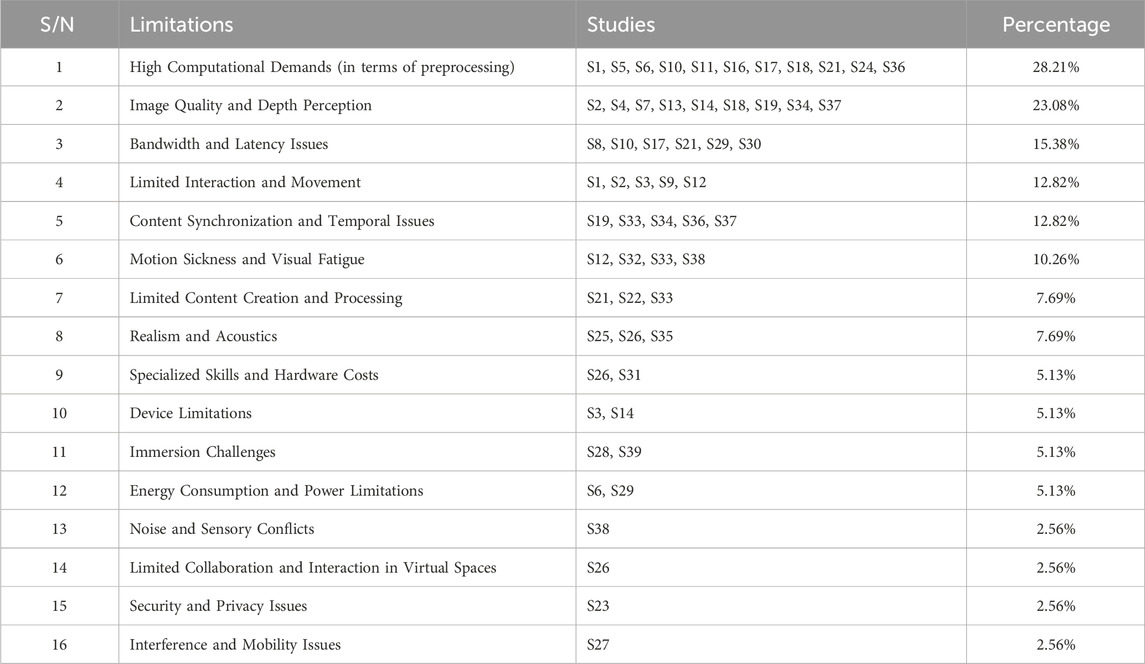

4.2 Limitations of panoramic-based XR technologies

This section examines the limitations of panoramic-based XR technologies identified in the studies included in our review. These limitations span sixteen (16) key categories, each highlighting specific technical challenges affecting the XR technologies addressed in the reviewed articles. A comprehensive summary of these limitations, along with their occurrences, is presented in Tables 4 and 6, respectively.

1. High Computational Demands: Panoramic imaging requires real-time stitching, rendering, and playback of ultra-high-resolution 360° content. These processes significantly strain system resources, especially in interactive XR scenarios, where latency must be minimized to preserve immersion. These demands can limit the scalability and accessibility of XR applications. For example, rendering a 360-degree video in a VR environment may require powerful GPUs, especially when targeting high frame rates (e.g., 90 FPS or above) and resolutions (e.g., 4K per eye), which are essential for minimizing motion blur and latency (Marrinan and Papka, 2021). In contrast, lower-end GPUs may suffice for static panoramic experiences or applications where frame rate and visual fidelity are less critical, such as simple virtual tours or training modules (Lyu et al., 2019). However, for highly interactive or photorealistic VR environments involving dynamic content and head-tracking, GPU performance becomes a key determinant of user comfort and immersion (Li et al., 2021). This highlights the trade-off between immersive quality and hardware requirements, which can affect accessibility across different user groups and institutions.

Source(s): (Waidhofer et al., 2022; Zhang et al., 2020; Pintore et al., 2023b; Zheng et al., 2021; Chen R. et al., 2022; Li et al., 2021; Yaqoob and Muntean, 2023; Xhako et al., 2023; Hirway et al., 2022; Du and Yu, 2020; Takacs and Vincze, 2021).
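
To make the frame-rate and resolution figures above concrete, the following is a minimal back-of-the-envelope sketch, not a benchmark. It assumes that "4K per eye" means 3840 × 2160 pixels (headset panels differ), and the `RenderTarget` type and function names are introduced here purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RenderTarget:
    width_px: int    # horizontal pixels per eye
    height_px: int   # vertical pixels per eye
    eyes: int        # 2 for stereoscopic HMD rendering
    fps: float       # target refresh rate


def frame_budget_ms(target: RenderTarget) -> float:
    """Time available to produce one frame, in milliseconds."""
    return 1000.0 / target.fps


def pixels_per_second(target: RenderTarget) -> int:
    """Pixels that must be produced per second, ignoring overdraw."""
    return int(target.width_px * target.height_px * target.eyes * target.fps)


# Assumption: "4K per eye" is read as 3840 x 2160.
hmd = RenderTarget(width_px=3840, height_px=2160, eyes=2, fps=90)
print(f"frame budget: {frame_budget_ms(hmd):.2f} ms")
print(f"throughput:   {pixels_per_second(hmd) / 1e9:.2f} Gpx/s")
```

Under these assumptions the renderer has roughly 11 ms per frame and must deliver about 1.5 billion pixels per second, which gives a sense of why lower-end GPUs are more likely to cope with static or low-frame-rate panoramic content than with interactive scenes.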

2. Image Quality and Depth Perception: Achieving photorealism across a full panoramic field is difficult due to inconsistent lighting, depth ambiguity, and image warping at stitch lines. These artifacts reduce the spatial coherence needed for effective presence and depth judgment. For instance, in immersive training simulations, improper depth perception may disrupt the learning experience, causing misjudgment of distances or spatial relationships.

Recent studies illustrate the efficacy of panoramic and stereoscopic XR systems in enhancing the alignment of spatial relationships in immersive training environments. Chen M. et al. (2022) created a panoramic augmented reality (PAR) teaching platform intended for intricate industrial wiring operations. The technology superimposes virtual instructional content onto real-world panoramic visuals, allowing trainees to physically position cables, connectors, and tools within a realistic environment. This strongly resembles actual assembly tasks, such as aligning mechanical fasteners or components, where precise spatial alignment is important. Livatino et al. (2023) evaluated user perception of spatial dimensions in photorealistic panoramic virtual reality environments. Participants exhibited considerable precision in assessing object distances and spatial configurations, underscoring the efficacy of panoramic VR for tasks that require spatial judgement, including component placement and layout verification. Nam and Han (2021) presented a stereoscopic image optimisation technique that aligns binocular disparity by Euler angle correction, leading to improved spatial coherence and depth consistency. This advancement is particularly vital for training applications that require users to fully understand complicated spatial configurations without misalignment or visual distortion. These examples highlight the power of panoramic and XR technologies to facilitate spatial alignment in activities that call for accuracy, procedural comprehension, and immersive contextualisation.

Source(s): (Chen M. et al., 2022; Teo et al., 2020; Livatino et al., 2023; Nam and Han, 2021; Qianqian, 2023; Xhako et al., 2023; Zhu et al., 2023; Pintore et al., 2024b; Dang et al., 2023).

3. Bandwidth and Latency Issues: High-resolution panoramic content, such as 360-degree videos and live streaming, requires significant bandwidth for smooth operation, especially in real-time applications. Additionally, latency issues—delays between user input and system response—can disrupt the immersive experience. For example, live-streamed panoramic sports events may experience interruptions or delays, reducing the quality of the experience.

Source(s): (Li et al., 2023; Zheng et al., 2021; Yaqoob and Muntean, 2023; Hirway et al., 2022; Zhu Z. et al., 2022; Jeong et al., 2020).
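
The bandwidth pressure described above can be illustrated with a rough estimate. The sketch below computes the uncompressed bitrate of an equirectangular stream and the fraction of the sphere that a rectangular viewport covers. The resolution, frame rate, and field of view are hypothetical example values; real streams are compressed by a video codec, so the raw figure is only an upper bound.

```python
import math


def raw_bitrate_mbps(width_px: int, height_px: int, fps: float,
                     bits_per_pixel: int = 24) -> float:
    """Uncompressed bitrate in megabits per second."""
    return width_px * height_px * fps * bits_per_pixel / 1e6


def viewport_fraction(h_fov_deg: float, v_fov_deg: float) -> float:
    """Fraction of the full sphere covered by a rectangular field of view."""
    half_h = math.radians(h_fov_deg) / 2
    half_v = math.radians(v_fov_deg) / 2
    # Solid angle of a right rectangular pyramid with the given apex angles.
    solid_angle = 4 * math.asin(math.sin(half_h) * math.sin(half_v))
    return solid_angle / (4 * math.pi)


# Hypothetical example: a 3840 x 1920 panorama at 30 FPS, viewed at 90 x 90 degrees.
print(f"raw bitrate: {raw_bitrate_mbps(3840, 1920, 30):.0f} Mbit/s")
print(f"sphere visible at once: {viewport_fraction(90, 90):.1%}")
```

With these example values the viewer sees about one sixth of the sphere at any moment, while the stream carries all of it. This is one way to see why full-sphere delivery is bandwidth-hungry relative to conventional video.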

4. Limited Interaction and Movement: Many panoramic systems, particularly those using static imagery, restrict users to 3DoF, reducing interaction fidelity. This is unlike native 3D environments, where 6DoF is standard. For example, a virtual tour of a museum might only allow users to rotate and zoom in or out but not move freely within the space.

Source(s): (Waidhofer et al., 2022; Chen M. et al., 2022; Marrinan et al., 2024; Im et al., 2023; Wang et al., 2021).
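
The 3DoF restriction follows directly from how a single static panorama is sampled. The sketch below is an illustrative assumption rather than any reviewed system's implementation: it maps a head pose to the equirectangular pixel at the centre of the view. The `HeadPose` type, the axis conventions, and the image size are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HeadPose:
    yaw_deg: float     # rotation about the vertical axis, positive to the right
    pitch_deg: float   # rotation up (+) or down (-)
    x_m: float = 0.0   # translation, as tracked by a 6DoF headset
    y_m: float = 0.0
    z_m: float = 0.0


def panorama_pixel(pose: HeadPose, width_px: int, height_px: int) -> tuple[int, int]:
    """Pixel at the centre of the user's view in an equirectangular image.

    Only yaw and pitch are used: a single static panorama carries no scene
    geometry, so the translation fields cannot change what is sampled.
    """
    u = (pose.yaw_deg / 360.0 + 0.5) % 1.0                    # wrap horizontally
    v = 0.5 - max(-90.0, min(90.0, pose.pitch_deg)) / 180.0   # clamp at the poles
    col = min(int(u * width_px), width_px - 1)
    row = min(int(v * height_px), height_px - 1)
    return col, row


standing = HeadPose(yaw_deg=30.0, pitch_deg=10.0)
stepped = HeadPose(yaw_deg=30.0, pitch_deg=10.0, x_m=1.5)  # user walks 1.5 m sideways
print(panorama_pixel(standing, 4096, 2048) == panorama_pixel(stepped, 4096, 2048))
```

Because the lookup ignores position, walking through the room leaves the image unchanged. Supporting 6DoF therefore needs additional information, such as depth or multiple capture points.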

5. Content Synchronization and Temporal Issues: Synchronizing panoramic content, particularly when integrating multiple media streams (e.g., audio, video, and interactive elements), can be complex. Temporal misalignments, such as out-of-sync audio and visuals, can disrupt the user experience. In virtual storytelling, a delay between narrative voiceovers and visual cues can break immersion.

Source(s): (Zhu et al., 2023; Xue et al., 2021; Pintore et al., 2024b; Takacs and Vincze, 2021; Dang et al., 2023).
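
A simple way to reason about temporal misalignment is to compare each stream's current presentation timestamp against a master clock. The following is a minimal sketch under stated assumptions: the stream names, the choice of video as master, and the 40 ms tolerance are hypothetical values chosen for illustration, not thresholds taken from the reviewed studies.

```python
def out_of_sync(stream_pts_ms: dict[str, float], master: str,
                tolerance_ms: float = 40.0) -> dict[str, float]:
    """Return streams whose timestamp deviates from the master by more than
    the tolerance. Values are signed offsets in ms (positive = ahead of master).
    """
    reference = stream_pts_ms[master]
    return {
        name: pts - reference
        for name, pts in stream_pts_ms.items()
        if name != master and abs(pts - reference) > tolerance_ms
    }


# Hypothetical snapshot of three streams in a narrated 360-degree scene.
snapshot = {"video": 12000.0, "audio": 12085.0, "hotspots": 12010.0}
print(out_of_sync(snapshot, master="video"))
```

Here the narration is 85 ms ahead of the picture and would be flagged, while the interactive hotspots are within tolerance. A player could use such a check to decide when to resynchronize.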

6. Motion Sickness and Visual Fatigue: The wide field of view (FoV) and immersive nature of panoramic XR experiences can heighten the risk of motion sickness and visual fatigue, particularly when there is a mismatch between visual cues and the user’s vestibular (balance) system. This issue is exacerbated when panoramic content includes rapid camera movements, abrupt scene transitions, or inconsistent stitching artifacts that disrupt spatial continuity. Unlike traditional VR environments—where camera control and scene design can be optimized for comfort—panoramic XR often relies on pre-captured 360° content, limiting opportunities for real-time adjustment. Additionally, lower frame rates, high latency, or display inconsistencies in rendering panoramic scenes can contribute to eye strain, nausea, and disorientation, particularly during extended sessions or for first-time users.

The onset of motion sickness in virtual environments varies widely, but symptoms can appear within just 5–10 min, especially in high-motion scenarios or among first-time users, typically due to visual-vestibular mismatch and system latency (Conner et al., 2022; McHugh, 2019; Ryu and Ryu, 2021). For instance, Gruden et al. (2021) observed significant discomfort within minutes during a dynamic VR driving simulation. On the other end of the spectrum, Nordahl et al. (2019) reported symptom escalation after 7 h in a 12-h continuous VR session, suggesting a practical upper limit for exposure. Additionally, repeated use may reduce symptoms over time (Conner et al., 2022). Factors influencing sickness onset include user demographics (Peng, 2024), content intensity (Chattha et al., 2020), and hardware characteristics such as latency and field of view (Ryu and Ryu, 2021).

Source(s): (Wang et al., 2021; Mendis et al., 2022; Xue et al., 2021; Lu et al., 2023).

7. Content Creation Complexity: Creating high-quality panoramic content for XR applications involves considerable technical and logistical challenges. Unlike conventional XR pipelines that often rely on computer-generated environments, panoramic XR typically requires specialized hardware such as multi-lens 360° cameras, stereo rigs, or LiDAR systems to capture the full environment (Pintore et al., 2024a). The raw footage must then undergo computationally intensive post-processing steps including stitching, color correction, exposure balancing, and geometric alignment to ensure seamless visual continuity. For interactive or dynamic scenes, additional complexities arise—such as synchronizing multiple panoramic viewpoints, integrating depth information, or generating stereoscopic panoramas. Furthermore, real-time panoramic applications (e.g., live streaming or telepresence) demand robust encoding and low-latency transmission pipelines, often requiring advanced technical expertise. These challenges make the content creation process more resource-intensive and less accessible compared to traditional 3D modeling or synthetic XR content generation.

Source(s): (Hirway et al., 2022; Bertel et al., 2020; Xue et al., 2021).

8. Realism and Acoustics: A critical factor in delivering immersive panoramic XR experiences is the integration of realistic audio that aligns with the user’s spatial orientation. However, many panoramic XR systems fall short in providing spatially coherent and directionally accurate audio, which significantly undermines the sense of presence. Unlike in fully 3D-rendered environments where positional audio can be dynamically generated and updated in real time, panoramic content often relies on pre-recorded or stereo soundtracks that do not adapt to the user’s head movement or gaze direction. This creates a disconnect between what the user sees and hears, especially when rotating within a 360° scene. Furthermore, there is a lack of standardized frameworks or toolkits for seamlessly embedding and synchronizing spatial audio with panoramic visuals—particularly for stereoscopic or multi-perspective content. The absence of spatial soundscapes can flatten the sensory experience, making environments feel static or artificial and reducing the emotional and cognitive impact of the XR content.

Source(s): (Young et al., 2022; Jin et al., 2023; Kim et al., 2022).
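
The difference between a fixed stereo soundtrack and audio that follows head movement can be sketched with a deliberately simple model. The example below uses constant-power stereo panning driven by the angle between the source and the listener's yaw. This is an assumption made for illustration: it is far cruder than HRTF-based or ambisonic rendering and cannot distinguish front from back. The function name and angle conventions are hypothetical.

```python
import math


def stereo_gains(source_azimuth_deg: float, head_yaw_deg: float) -> tuple[float, float]:
    """Constant-power (left, right) gains for a source, given the listener's yaw.

    Both angles are measured clockwise from the scene's forward direction.
    """
    relative = math.radians(source_azimuth_deg - head_yaw_deg)
    pan = math.sin(relative)               # -1 = hard left, +1 = hard right
    angle = (pan + 1.0) * math.pi / 4.0    # map pan to 0..pi/2
    return math.cos(angle), math.sin(angle)


# A source 90 degrees to the right of the scene's forward direction.
left, right = stereo_gains(90.0, head_yaw_deg=0.0)
print(f"facing forward: L={left:.2f} R={right:.2f}")
left, right = stereo_gains(90.0, head_yaw_deg=90.0)
print(f"facing source:  L={left:.2f} R={right:.2f}")
```

When the listener turns to face the source, the gains become equal. A pre-mixed stereo track lacks exactly this update step, which is why the sound appears to rotate with the head instead of staying anchored in the scene.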

9. Specialized Skills and Hardware Costs: Developing and deploying panoramic-based XR systems requires both specialized technical expertise and high-cost equipment, which poses a significant barrier to entry for many institutions and developers. Capturing high-quality panoramic content often involves the use of omnidirectional or multi-lens cameras, stereoscopic rigs, and sometimes LiDAR scanners—all of which require precise setup, calibration, and operation. Post-processing such content also demands familiarity with tools for stitching, projection mapping, optical flow alignment, and managing large datasets. On the display side, panoramic XR experiences typically benefit from high field-of-view (FoV) head-mounted displays (HMDs) with high resolution and refresh rates to minimize distortion and ensure immersion—devices that are still relatively costly and not uniformly available across markets. The combination of equipment costs, processing requirements, and skilled labor needed to create and deliver panoramic XR experiences limits its widespread adoption, particularly in resource-constrained settings such as public education, small businesses, or developing regions.

Source(s): (Jin et al., 2023; Zhu H. et al., 2022).

10. Device Limitations: Panoramic XR experiences depend heavily on hardware capable of delivering high-resolution, wide field-of-view (FoV) displays to accurately render 360° content. However, many consumer-grade HMDs (head-mounted displays) have limitations in terms of resolution, FoV, and refresh rates, which directly impact the visual quality and immersive potential of panoramic scenes. Inadequate resolution can lead to pixelation and screen-door effects, where the user sees visible gaps between pixels—distracting from the experience and breaking immersion. Additionally, a narrow FoV may prevent users from perceiving the full spatial context of the scene, effectively cropping the intended panoramic experience and causing disorientation during head movement. These limitations are particularly problematic for static panoramic content, which relies heavily on visual fidelity and clarity to convey depth, realism, and presence in the absence of interactive geometry. Moreover, not all HMDs support the decoding or playback of high-bitrate panoramic video, further constraining the delivery of high-quality content on mainstream devices. As a result, the hardware bottleneck remains a key barrier to the broader accessibility and effectiveness of panoramic XR applications.

Source(s): (Marrinan et al., 2024; Teo et al., 2020).
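
The interplay between source resolution and display resolution can be expressed as pixels per degree. The sketch below is a simplification that assumes pixels are spread uniformly across the field of view and ignores lens distortion. The panorama widths and the headset figures (2000 pixels across a 100° field of view) are hypothetical example values.

```python
def pixels_per_degree(pixels_across: int, degrees_across: float) -> float:
    """Average angular resolution, assuming a uniform pixel distribution."""
    return pixels_across / degrees_across


def limiting_ppd(pano_width_px: int, display_width_px: int,
                 display_fov_deg: float) -> float:
    """Angular resolution the viewer actually gets: the lower of the
    panorama's (spread over 360 degrees) and the display's.
    """
    return min(pixels_per_degree(pano_width_px, 360.0),
               pixels_per_degree(display_width_px, display_fov_deg))


# Hypothetical headset: 2000 px per eye across a 100-degree horizontal FoV.
for pano_width in (4096, 8192):
    ppd = limiting_ppd(pano_width, display_width_px=2000, display_fov_deg=100.0)
    print(f"{pano_width}-px panorama -> {ppd:.1f} px/deg")
```

In this example a 4096-pixel-wide panorama is the bottleneck, while an 8192-pixel-wide one is limited by the display. Either side of the pipeline can therefore cap the perceived sharpness.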

11. Immersion Challenges: While panoramic XR environments offer immersive visuals through full 360° coverage, they often fall short in simulating real-world physical cues that are essential for a convincing sense of presence. A key limitation arises when panoramic scenes are created from stitched 2D images, which lack the depth information required to accurately reproduce occlusion, parallax, and motion-based perspective shifts. This becomes particularly noticeable when users move their heads or attempt to navigate the environment—resulting in visual inconsistencies that break the illusion of being physically present within the scene. For example, objects in the foreground may appear flat or misaligned when viewed from different angles, and the absence of dynamic lighting or shadows can make environments feel static or artificial. Unlike 3D-modeled XR experiences that adjust scene rendering based on user position and orientation, traditional panoramic content often remains viewpoint-fixed or shallow, limiting natural interaction and depth perception. These limitations reduce sensorimotor congruence, which is critical for immersion, and can lead to user disengagement or discomfort, especially in applications where spatial awareness is key (e.g., training, navigation, or collaboration).

Source(s): (Araújo et al., 2023; Zhao et al., 2023).

12. Energy Consumption and Power Limitations: Panoramic XR applications are particularly demanding in terms of processing power and energy consumption, especially when deployed on mobile or untethered platforms such as standalone headsets, tablets, or smartphones. Rendering and displaying high-resolution 360° content requires continuous decoding of large video files or textures, real-time stitching of multiple image streams, and frequent scene redrawing as the user changes viewpoint. These operations place a sustained load on the GPU, CPU, and memory subsystems, leading to rapid battery drain and thermal buildup. The situation becomes more critical in live-streamed panoramic experiences, where real-time network transmission and decoding further increase the energy footprint. This significantly limits the duration and reliability of field-based applications such as outdoor training, mobile journalism, or tourism. Additionally, power constraints may force resolution scaling or frame rate reductions, which degrade visual quality and negatively impact user experience. Unlike traditional XR applications where content complexity can be adjusted dynamically, panoramic XR systems often operate with pre-captured high-resolution imagery, leaving limited flexibility for optimization. As such, power efficiency becomes a major bottleneck in the scalability and portability of panoramic XR—particularly in contexts where infrastructure is limited or prolonged use is required.

Source(s): (Pintore et al., 2023b; Zhu Z. et al., 2022).

13. Noise and Sensory Conflicts: Panoramic XR experiences can suffer from sensory mismatches when visual, audio, or haptic elements are not properly integrated. For example, static or misaligned directional audio in a 360° scene, or the absence of tactile feedback, can create disorientation and reduce immersion. These conflicts are particularly disruptive in training and storytelling applications, where realism is essential. Addressing them requires better synchronization of multimodal cues within panoramic environments.

Source(s): (Lu et al., 2023).

14. Limited Collaboration and Interaction in Virtual Spaces: Panoramic XR, particularly when based on static 360° imagery, presents significant limitations in supporting multi-user collaboration and interactive co-navigation. Unlike synthetic XR environments—where users can move freely, interact with 3D objects, and engage with avatars in real time—panoramic scenes are typically fixed-viewpoint and pre-rendered, offering limited scope for dynamic interaction. This restricts users from jointly manipulating content, annotating scenes, or engaging in spatially aware dialogue, which are critical features in collaborative domains such as architecture reviews, remote training, and virtual meetings. The absence of interactive elements, depth-aware objects, and avatar presence reduces the sense of co-presence and weakens the immersive quality of shared virtual spaces. Additionally, because panoramic content lacks environmental responsiveness (e.g., object physics, lighting changes), it is less effective in scenarios that require active exploration, feedback loops, or synchronous decision-making. These limitations make panoramic XR less suited for tasks that go beyond passive viewing, especially where real-time spatial interaction is crucial.

Source(s): (Jin et al., 2023).

15. Security and Privacy Issues: Panoramic XR captures full-scene environments, increasing the risk of unintentionally recording sensitive or private information—such as personal identities, confidential documents, or secure areas. This is especially critical in sectors like healthcare, education, and finance. Unlike traditional XR, panoramic content leaves less control over what is included in the frame, making anonymization and redaction more complex. Additionally, when shared or streamed, this data may be vulnerable to unauthorized access without proper encryption and access controls. Addressing these concerns requires privacy-aware design, secure data handling, and compliance with relevant regulations.

Source(s): (Xiao et al., 2024).

16. Interference and Mobility Issues: Mobile panoramic XR applications often involve streaming or transmitting high-resolution 360° content, which can be affected by network interference, latency spikes, and signal instability. These issues are more pronounced in dynamic environments where users are on the move or operating in areas with inconsistent wireless coverage. Such disruptions can cause visual artifacts, frame drops, or lag, affecting continuity in use cases like live streaming, remote collaboration, or field operations. Additionally, mobile devices may face handover delays or connection drops during transitions between network zones. Addressing these challenges requires efficient compression, adaptive streaming protocols, and stable wireless infrastructure to maintain a smooth and immersive experience.

Source(s): (Srinivasan et al., 2023).
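The adaptive streaming idea mentioned under item 16 can be illustrated with a minimal sketch. It assumes a hypothetical bitrate ladder (the labels and Mbps values below are invented for illustration and are not taken from Srinivasan et al., 2023) and a simple rule: pick the highest-quality rendition whose bitrate fits within a safety fraction of the measured throughput, falling back to the lowest rendition when nothing fits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rendition:
    label: str
    bitrate_mbps: float


# Hypothetical ladder for a 360-degree stream; values are illustrative only.
LADDER = [
    Rendition("2K", 8.0),
    Rendition("4K", 25.0),
    Rendition("8K", 80.0),
]


def pick_rendition(throughput_mbps, ladder=LADDER, safety=0.8):
    """Return the highest-bitrate rendition that fits the throughput budget.

    `safety` reserves headroom for throughput fluctuations (an assumed
    heuristic, not a value from the reviewed studies).
    """
    budget = throughput_mbps * safety
    affordable = [r for r in ladder if r.bitrate_mbps <= budget]
    if not affordable:
        # Nothing fits: degrade to the cheapest rendition instead of stalling.
        return min(ladder, key=lambda r: r.bitrate_mbps)
    return max(affordable, key=lambda r: r.bitrate_mbps)


if __name__ == "__main__":
    for measured in (5.0, 40.0, 200.0):
        print(measured, "Mbps ->", pick_rendition(measured).label)
```

Production players typically also smooth throughput estimates and account for buffer occupancy; this sketch shows only the selection step.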

4.3 Discussions and analysis of the applications and limitations of panoramic-based XR technologies

As detailed in Table 5, our review of 39 studies reveals that education is the leading application area for panoramic-based XR (28.21%), particularly in creating virtual classrooms, labs, and training simulations. Tourism and virtual tours follow (25.64%), leveraging XR for cultural exploration. Entertainment comes next (23.08%), with applications such as immersive live events and virtual concerts, while the gaming sector (20.51%) focuses on dynamic 360° gameplay experiences.

Other notable sectors include remote collaboration and immersive storytelling (17.95% each), real estate and architecture (15.38%), and healthcare (12.82%), where XR enhances visualization, communication, and training. Additional emerging domains—such as advertising, sports, and urban planning—each represent less than 11% of the reviewed studies, highlighting areas of future potential.
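The percentages above can be mapped back to approximate study counts, which may be easier to interpret with a sample of 39. The sketch below does this arithmetic; the counts are inferred here from the reported shares and are not stated in the text, and the dictionary keys are shorthand labels for the sectors named above.

```python
TOTAL_STUDIES = 39

# Shares as reported in the text (percent of the 39 reviewed studies).
reported_shares = {
    "Education": 28.21,
    "Tourism and virtual tours": 25.64,
    "Entertainment": 23.08,
    "Gaming": 20.51,
    "Remote collaboration": 17.95,
    "Immersive storytelling": 17.95,
    "Real estate and architecture": 15.38,
    "Healthcare": 12.82,
}


def share_to_count(share_percent, total=TOTAL_STUDIES):
    """Convert a percentage share into the nearest whole number of studies."""
    return round(share_percent / 100 * total)


counts = {sector: share_to_count(p) for sector, p in reported_shares.items()}

if __name__ == "__main__":
    for sector, n in counts.items():
        # Recompute the share from the inferred count as a consistency check.
        print(f"{sector}: {n} studies ({100 * n / TOTAL_STUDIES:.2f}%)")
```

The inferred counts add up to more than 39, which is consistent with individual studies being counted under more than one application sector.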

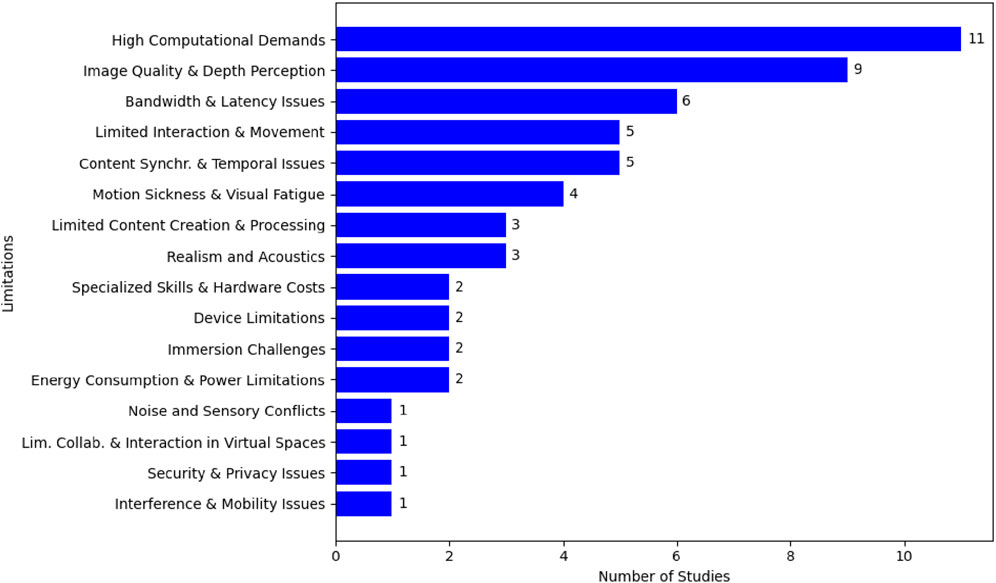

Alongside these applications, the literature reveals a range of limitations of panoramic-based XR technologies. These limitations span technical and practical aspects and, as shown in Table 6 and Figure 4, are grouped into sixteen (16) major categories.

The most prevalent limitation identified is high computational demands, noted in 28.21% of the reviewed studies. Panoramic-based XR systems require significant processing power for real-time rendering, image stitching, and delivering smooth, immersive experiences. This challenge is particularly pronounced in VR applications, where the need to maintain high frame rates and visual quality can strain even high-end hardware. For example, rendering 16K panoramic video in real time can overwhelm standard GPU capabilities, causing latency and reducing the overall quality of the user experience.
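To give a sense of scale for the 16K example, the following back-of-the-envelope sketch estimates the uncompressed pixel data rate of a panoramic stream. The frame size (15360 × 7680, a 2:1 equirectangular layout), 8-bit RGB pixels, and 60 frames per second are assumptions chosen for illustration, not values reported by the reviewed studies. Real pipelines transmit compressed video, so the figure describes decoded frame data rather than network bitrate.

```python
def raw_rate_bytes_per_s(width, height, fps, bytes_per_pixel=3):
    """Uncompressed data rate of a video stream in bytes per second."""
    return width * height * bytes_per_pixel * fps


# Assumed frame sizes (2:1 equirectangular); not taken from the reviewed studies.
PANO_16K = (15360, 7680)
PANO_4K = (3840, 1920)

if __name__ == "__main__":
    rate_16k = raw_rate_bytes_per_s(*PANO_16K, fps=60)
    rate_4k = raw_rate_bytes_per_s(*PANO_4K, fps=60)
    print(f"16K: {rate_16k / 1e9:.2f} GB/s")
    print(f"4K:  {rate_4k / 1e9:.2f} GB/s")
    print(f"ratio: {rate_16k / rate_4k:.0f}x")
```

Under these assumptions the decoded 16K stream amounts to roughly 21.2 GB/s, sixteen times the rate of a 3840 × 1920 panorama at the same frame rate.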

Another key limitation is related to image quality and depth perception, cited by 23.08% of the studies. Although panoramic imaging offers a 360-degree view, maintaining consistent image quality and accurate depth perception across all angles can be difficult. Visual artifacts, pixelation, and incorrect depth cues can negatively affect the immersive experience, especially in AR and MR applications where digital objects need to align seamlessly with the real world. This is a common challenge in architectural visualization and gaming applications where precise object placement is crucial.
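The pixelation noted above can be related to angular pixel density: a panorama spreads its horizontal resolution over the full 360°, so only a fraction of its pixels falls inside the viewer's field of view at any moment. The sketch below illustrates this with a simplified estimate that assumes an equirectangular image and measures density along the equator only; the example width and field of view are hypothetical, not figures from the reviewed studies.

```python
def pixels_per_degree(pano_width_px, horizontal_span_deg=360.0):
    """Horizontal pixel density of an equirectangular panorama (at the equator)."""
    return pano_width_px / horizontal_span_deg


def viewport_pixels(pano_width_px, fov_deg):
    """Approximate number of source pixels spanning a horizontal field of view."""
    return pixels_per_degree(pano_width_px) * fov_deg


if __name__ == "__main__":
    width = 3840  # hypothetical "4K" panorama width
    fov = 100.0   # hypothetical headset horizontal field of view, in degrees
    print(f"{pixels_per_degree(width):.2f} px/deg")
    print(f"{viewport_pixels(width, fov):.0f} px across the viewport")
```

With these example values, a 3840-pixel-wide panorama provides about 10.7 pixels per degree, so a 100° viewport is covered by only about a thousand source pixels horizontally.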

Bandwidth and latency issues are highlighted in 15.38% of the studies. Transmitting high-quality panoramic images or video streams in real time requires significant bandwidth, which can lead to latency problems. This limitation is especially relevant in applications such as live streaming of sports events or concerts, where delays can disrupt the immersive experience. Remote collaboration and teleconferencing platforms face the same issues, which degrade the quality of interaction.

Limited interaction and movement capabilities are discussed in 12.82% of the studies. Many panoramic-based XR applications restrict users to predefined viewpoints or limit their ability to interact with virtual objects, which reduces overall engagement and immersion. For example, virtual museum tours often let users only observe the environment, without interactive features for deeper engagement with the exhibits.

Content synchronization and temporal issues are another common limitation, discussed in 12.82% of the studies. Synchronizing real-time data, such as live audio and video feeds, with panoramic imagery can be challenging, especially when dealing with multiple input sources. Temporal discrepancies in XR systems can lead to disjointed user experiences, particularly in live event broadcasting or collaborative virtual environments, where synchronization is key to maintaining coherence.

Motion sickness and visual fatigue, noted in 10.26% of the studies, are significant challenges in panoramic-based XR technologies. They stem from mismatched visual and vestibular cues or prolonged focus on stereoscopic images, leading to discomfort, nausea, or eye strain. These issues are particularly concerning for extended XR use, such as in training or gaming, highlighting the need for improved display technologies and ergonomic designs to enhance user comfort.

The remaining ten limitations each appear in less than 10% of the included studies. Although they are reported less frequently, they still pose significant concerns for the research and development of panoramic-based XR technologies. These limitations, including realism and acoustics, energy consumption and power constraints, as well as security and privacy issues, highlight the complexities and barriers in fully leveraging panoramic-based XR technologies to create immersive and effective XR applications.

5 Perceptual studies and user experience challenges

5.1 Perceptual studies

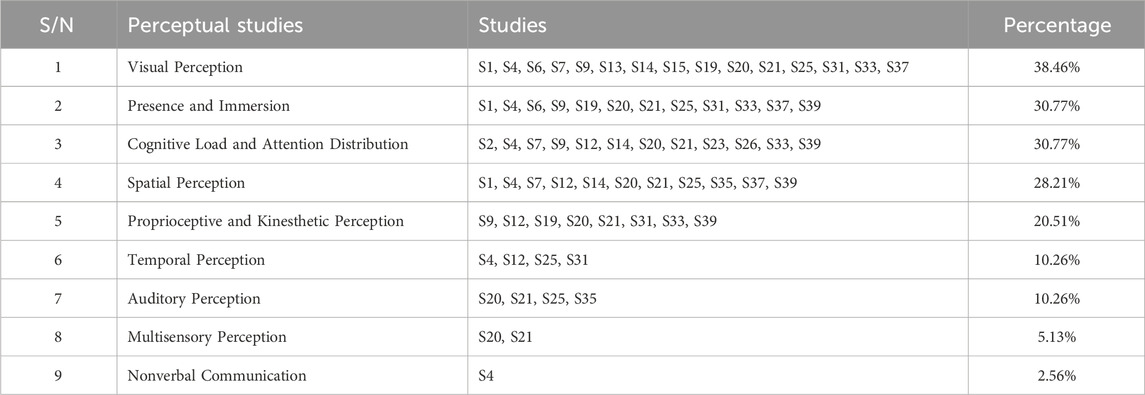

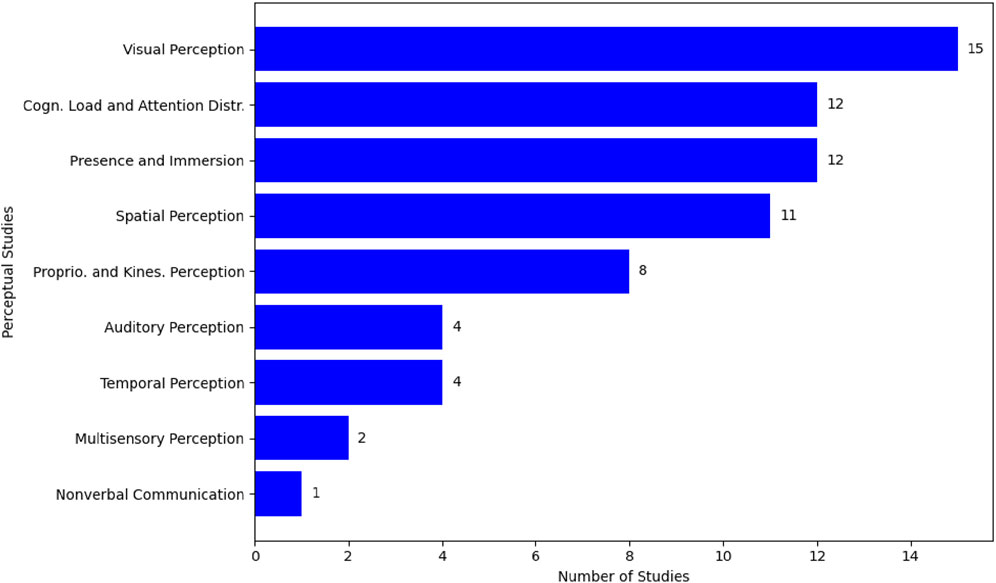

This section provides an in-depth analysis of the various perceptual studies on panoramic-based XR technologies as identified in the reviewed literature. These studies are categorized into nine (9) key areas, representing user perceptual studies conducted across a diverse range of proposed panoramic-based XR applications. A comprehensive summary of these studies, including their frequency and rankings, is presented in Table 7 and visually illustrated in Figure 5. Additionally, Table 8 offers a detailed overview of the sources’ user perceptual studies, encompassing the apparatus utilized, participant demographics, age ranges, and study durations.

Table 8. Summary of the sources’ user perceptual studies, equipment used, participant demographics, age range, and study duration.

Drawing from insights provided in the literature, the following sections elaborate on these perceptual studies, offering brief explanations and illustrative examples to highlight their significance within the context of panoramic-based XR technologies.

1. Visual Perception: This refers to how users interpret visual stimuli presented in panoramic-based XR systems. The visual sense is undoubtedly the most important source of information in the perception of virtual worlds (Doerner and Steinicke, 2022). In immersive environments, the quality of visual perception is crucial for creating a sense of realism. For instance, panoramic videos in VR need to simulate light, depth, and motion accurately so that the user experiences the environment as naturally as possible.

2. Presence and Immersion: Presence describes the user’s sensation of “being there” in the virtual environment. Immersion refers to the extent to which the system can surround the user with stimuli that block out the real world (Doerner and Steinicke, 2022). In panoramic-based XR, achieving high immersion and presence is critical, as seen in applications like virtual tourism, where users need to feel fully immersed in 360-degree views.

3. Cognitive Load and Attention Distribution: In XR environments, managing the user’s cognitive load is key to ensuring effective interaction and learning (Hebbar et al., 2022). For example, in virtual education, attention must be directed to relevant objects without overwhelming users with too much information. Effective panoramic systems strategically distribute visual and auditory cues to manage cognitive load.

4. Spatial Perception: Users must perceive depth, distance, and spatial relationships accurately in panoramic XR environments. In architectural applications, for example, spatial perception allows users to experience 3D models of buildings as if they were physically moving through them (Doerner and Steinicke, 2022).

5. Temporal Perception: This involves the user’s sense of time in the virtual world (Read et al., 2021). Panoramic-based XR must synchronize movements, sounds, and visuals so that they match the user’s real-time perception. In gaming applications, for instance, this is crucial for seamless interaction and immersion.

6. Proprioceptive and Kinesthetic Perception: This refers to the awareness of the body’s movement and position in space (Doerner and Steinicke, 2022). In XR, this is often leveraged through motion tracking technologies that allow users to feel as though their physical movements are mirrored in the virtual world, such as in fitness simulations.