- VR and AR Research Unit, Institute of Visual Computing and Human-Centered Technology, Faculty of Informatics, TU Wien, Vienna, Austria

Mobile robots are becoming more common in Virtual Reality applications, especially for delivering physical interactions through Encountered-Type Haptic Devices (ETHD). However, current safety standards and definitions of collaborative robots (Cobots) do not sufficiently address situations where an immersed user shares the workspace and interacts with a mobile robot without seeing it. In this paper, we explore the specific safety challenges of using mobile platforms for ETHDs in a large-scale immersive setup. We review existing robotic safety standards and perform a risk assessment of our immersive autonomous mobile ETHD system CoboDeck. We demonstrate a structured approach for potential risk identification and mitigation via a set of hardware and software safety measures. These include strategies for robot behavior, such as pre-emptive repositioning and active collision avoidance, as well as fallback mechanisms. We suggest a simulation-based testing framework that allows evaluating the safety measures systematically before involving human subjects. Based on that, we examine the impact of different proposed safety strategies on the number of collisions, robot movement, haptic feedback rendering, and noise resilience. Our results show considerably improved safety and robustness with our suggested approach.

1 Introduction

With the recent advancements in quality and field of view of Head-Mounted Displays (HMDs), Virtual Reality (VR) environments are becoming increasingly immersive. In addition to visual immersion, haptic feedback can further enhance the user’s sense of presence by allowing physical interaction with virtual objects (Sallnäs et al., 2000). However, placing a passive proxy object to overlap with the virtual one is not enough, as continuously aligning the objects is difficult. A specific class of haptic technologies known as Encountered-Type Haptic Devices (ETHDs) utilizes robots to position a proxy object that allows users to touch and interact with a corresponding virtual object as if it were physically present (Yokokohji et al., 1996; 2005). Compared to passive proxy objects, ETHDs provide more dynamic feedback and a wider range of simulation possibilities (Araujo et al., 2016). They can deliver high-fidelity tactile sensations without adding external load or restricting movement, unlike wearable haptic devices, which can reduce user comfort by introducing additional gear on the user’s body (de Tinguy et al., 2020; Horie et al., 2021).

Previously, ETHDs relied mostly on stationary robotic arms to create haptic feedback within a limited volume (Mercado et al., 2021; Vonach et al., 2017). To improve scalability and enable large-scale haptic interaction, ETHDs have recently been integrated with mobile platforms, such as quadcopters (Yamaguchi et al., 2016; Hoppe et al., 2018; Abtahi et al., 2019; Abdullah et al., 2018) and wheeled robots (Suzuki et al., 2020; Wang et al., 2020). Although these mobile ETHDs (mETHDs) can be highly maneuverable, they cannot provide the forces necessary to simulate sturdier objects, like walls. For that, more powerful devices with substantial payloads should be employed, such as lower-tier industrial robotic arms. Robotic arms with reasonable payload are very heavy; thus, mobile robotic platforms able to provide enough stability and movability as a base for such arms need to be massive. Moreover, at a large scale, the robot needs to be autonomous to minimize the response delay when providing haptics. However, the high weight of such mobile robots makes collision risks more severe for the user.

Ultimately, the human-robot interaction (HRI) should be safe. Therefore, collaborative robots, also known as cobots, were introduced. This group of robots has advanced safety features built in, such as power and force limitations, and an emergency stop at contact. Nevertheless, in typical interactive industrial scenarios, cobots do not move while the user physically interacts with them and stop or move very slowly if the user just shares the same space. These safety measures add complexity and strongly restrict the possible interactions.

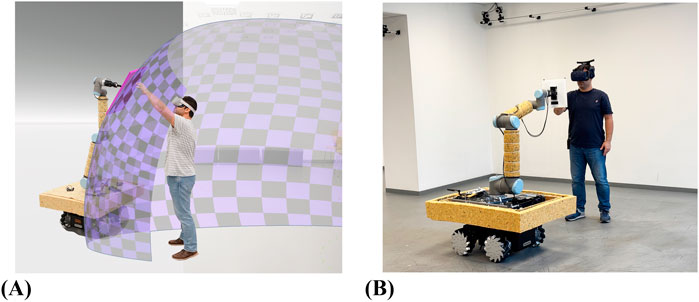

In a haptic VR scenario, the user needs to be physically collocated and touch the robot that provides the real-time haptic feedback. In a large-scale setup, such as a design studio, the VR user would need to freely walk and interact with the virtual content, requiring a mobile robot to quickly react to her intention to interact (see Figure 1A). Apart from this, the HMD is effectively blindfolding the user from the physical world. Sharing space with any mobile robot the VR user cannot see makes her vulnerable to collisions and other hazards during close proximity interaction due to the robot’s size and shape, its possible corners and edges, or any instability due to the load’s weight, shape, and inertia (see Figure 1B).

Figure 1. Close-proximity human-robot interactions in an mETHD system. (A) Overlays the virtual object that the user is interacting with in VR. (B) Shows the physical human-robot collaborative workspace. The user remains unaware of the position of the robot as she is immersed in VR.

Although there are works on safety in HRI covering various user-aware path-planning algorithms and different sensors to avoid unwanted collisions, these efforts are largely focused on traditional HRI settings with limited interactions (see Section 2.1). The combination of industrial experiences with safety research has led to the development of safety standards and industry norms. However, existing safety protocols are only partially applicable and sometimes not sufficient for the challenges posed by any ETHDs near a VR user. For example, a common safety measure in HRI is the maintenance of a substantial distance between humans and robots in a shared workspace. The industrial norms call for the robot to stop its operation or proceed at a considerably reduced speed if a human enters its space. However, neither is suitable for mETHDs.

We review the relevant studies and industrial standards further in Section 2. There seems to be no research that systematically addresses the safety of users in large-scale VR setups with mETHDs from design to complete implementation. This paper seeks to fill this gap by (1) conducting a comprehensive risk analysis of an mETHD utilizing a mobile robot carrying a robotic arm as an example case, identifying various safety risks associated with such systems. Based on the result of this risk analysis (2) we propose safety measures at both hardware and software levels, tailored specifically for close proximity HRI in mETHDs. Furthermore, (3) we describe a testing approach that enables systematic testing and validation of safety protocols. Using the implemented testing framework, we define a series of test cases to (4) evaluate and validate our proposed safety-oriented robot behaviors, demonstrating their effectiveness in enhancing safety in large-scale VR applications involving mobile autonomous robots. Although our mobile platform and robotic arm form a single unit, here we primarily focus on the mobile platform’s safety, which is underexplored in research. We developed our safety approach for a special case of a ground-based heavy mobile robot, where safety is critical. However, it can be applied to other types of mobile robots like vacuum cleaners, suspended robots, and drones.

2 State of the art

2.1 Human-robot interaction and safety

Early studies in HRI mostly recommended segregating robot workspaces from human operators to prevent accidents (Engelberger, 1985). However, some early works suggest basic safety considerations for close collaboration between robots and humans. For example, Heinzmann and Zelinsky (1999) introduced simple and basic safety mechanisms for robotic manipulators, aiming to create “human-friendly robots” capable of operating safely near human users. For shared workspaces, Baerveldt (1992) introduced basic localization mechanisms to stop the robot when a human approaches, as well as emergency stop buttons mounted on robots to handle hardware or software failures. While these early safety measures are useful in general HRI contexts, they are very basic and fall short in applications involving more dynamic and close interactions.

Physical safety is recognized as absolutely necessary for human-robot collaboration (HRC). Ensuring physical safety requires careful consideration in the design of the robot itself (Giuliani et al., 2010; Casals et al., 1993), the environment (Michalos et al., 2015), and the control systems governing robot movements (Sisbot et al., 2007; Kulić, 2006; Haddadin et al., 2008; De Santis et al., 2008). Alami et al. (2006) reviewed general safety principles and challenges in physical HRI, while De Santis et al. (2008) provided a comprehensive review on safety standards, injury criteria, mechanical and control techniques, and planning for safe robotic operations. Haddadin et al. (2008) also examined strategies for collision detection and mitigation, experimentally validating techniques to reduce injury risk from physical contact. Haddadin et al. (2009) highlighted risks for humans interacting with industrial robots by systematically testing various industrial robots colliding with crash test dummies to document possible injuries from uncontrolled robot movements. Beluško et al. (2016) proposed visual work instructions to support safe industrial robot operation, enhancing both safety and productivity but potentially reducing immersion in VR applications, such as ETHDs. When considering autonomous mobile robots, safety becomes even more critical due to the mobility and dynamic nature of these systems (Hata et al., 2019).

Beyond physical safety, the user’s feeling of security when interacting with a robot, known as perceived safety, is vital in HRC. Factors influencing perceived safety include comfort, trust, and a sense of control (Akalin et al., 2022). Various methods to measure perceived safety, such as questionnaires, physiological assessments, behavioral analysis, and direct feedback devices, have been proposed (Rubagotti et al., 2022; Menolotto et al., 2024). Importantly, these factors may differ in VR applications, where users are immersed and unaware of a robot in close proximity, yet still required to interact with it.

According to ISO 10218 (ISO, 2011a), collaborative robots must undergo a risk assessment before being used. Although risk assessment and mitigation largely rely on expert knowledge, recently various tools and methods have been proposed to support risk assessment and reduction of cobots (Huck et al., 2021). Askarpour et al. (2016) introduced SAFER-HRC, a safety analysis method based on formal verification techniques to explore errors between human operators and robots. Chemweno et al. (2020) conducted a review of the state-of-the-art in risk assessment processes and safety safeguards. Semi-automated risk assessment methods are often effective in static environments, such as production lines with well-defined tasks. However, these approaches do not cover VR simulations, where users should dynamically and continuously interact with robots to haptically experience the elements of virtual environments (VEs).

Zacharaki et al. (2020) conducted a comprehensive review of prior research in this field and proposed a systematic method for ensuring safety in HRC. In an earlier survey, Lasota et al. (2017) categorized existing related works into safety through control, motion planning, prediction, and consideration of psychological factors. While these studies inspired our safety design, we optimize specifically for VR settings, where the user is effectively blindfolded by HMDs. Moreover, in applications such as mETHDs, physical contact between the user and the robot is intended and unavoidable. Therefore, unlike conventional HRC contexts, mETHDs require collision avoidance algorithms that distinguish between safe, intentional interactions and undesired contact.

2.2 Industry norms

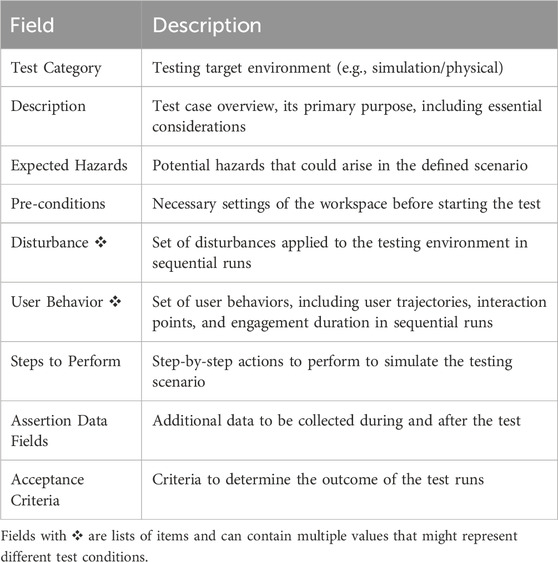

As robots become more common in manufacturing, industry experts and organizations are actively developing safety standards for HRC applications. These efforts have led to the publication of various types of documents by the International Organization for Standardization (ISO) and the American National Standards Institute (ANSI). A brief comparison of related standards used in shaping our presented safety guideline is listed in Table 1.

Table 1. Overview of safety standards in Human-Robot Interaction: summarizing key details and differences across standards governing safety in industrial and collaborative robotics, personal care robots, and control systems.

The ISO 10218 series (ISO, 2011a; ISO, 2011b) provides guidelines for the safe deployment of industrial robots. It includes fundamental aspects such as robot safety requirements, risk assessment, and safety protocols to prevent accidents in industrial settings. The first part focuses on the safety requirements related to the robot itself, including specifications for design, construction, and integration to ensure safe operation in industrial environments. The second part extends this by emphasizing the safety of the overall robotic system, including the robot, its environment, and human interaction.

In addition to ISO 10218, ISO/TS 15066 offers specialized guidance on collaborative robot systems, emphasizing risk assessment and safety prerequisites such as power and force limitations and speed monitoring (ISO, 2016). A subsequent analysis discussing applications, requirements, and efforts to address the limitations of this technical specification was published in a report by Marvel from the National Institute of Standards and Technology (NIST) (Marvel, 2017). ISO/TS 15066 defines four types of HRC operations: Safety-rated Monitored Stop (SMS), Hand Guiding (HG), Speed and Separation Monitoring (SSM), and Power and Force Limiting (PFL). In SMS the robot halts its motion while a human is in the workspace. HG allows cobots to move under direct control from an operator. SSM permits cobots to move concurrently with the operator as long as they maintain a pre-defined distance apart. PFL requires cobots to have force feedback to detect contact with humans and react accordingly. Under this standard, when a human operator is in the shared workspace, the cobot must either remain stationary (SMS and HG) or move very slowly (SSM and PFL). Adhering to these constraints in VR might significantly reduce the performance of cobots in delivering haptic feedback as mETHDs. Furthermore, in scenarios where robots interact with visually isolated VR users, they must be able to respond quickly to avoid collisions. This requires rapid movements conflicting with the speed limitations imposed by the standard.
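To make the conflict with haptic rendering concrete, the following minimal sketch shows a distance-based speed limit in the spirit of SSM. It is an illustration only and not the protective separation distance calculation from ISO/TS 15066; the function name `ssm_speed_limit`, the linear ramp, and all threshold values are hypothetical assumptions.

```python
def ssm_speed_limit(
    separation_m: float,
    stop_distance_m: float = 0.5,        # assumed: robot must stand still below this
    full_speed_distance_m: float = 2.0,  # assumed: no restriction beyond this
    max_speed_mps: float = 1.0,          # assumed nominal speed cap
) -> float:
    """Return an allowed robot speed for a given human-robot separation.

    Simplified, hypothetical rule: zero speed inside the stop distance,
    full speed beyond the outer distance, linear ramp in between.
    """
    if separation_m <= stop_distance_m:
        return 0.0
    if separation_m >= full_speed_distance_m:
        return max_speed_mps
    span = full_speed_distance_m - stop_distance_m
    return max_speed_mps * (separation_m - stop_distance_m) / span


if __name__ == "__main__":
    for d in (0.3, 1.25, 3.0):
        print(f"separation {d:.2f} m -> limit {ssm_speed_limit(d):.2f} m/s")
```

Under such a rule the allowed speed drops to zero at touching distance, which is exactly where an mETHD has to position a prop or move away from an approaching user.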

For personal care robots, ISO 13482 (ISO, 2014) outlines safety requirements for non-industrial environments, addressing collision avoidance, impact force thresholds, and fault tolerance to ensure safe interaction. However, the standard is not suitable for VR applications, as it primarily focuses on healthcare robots, which have different characteristics and functions.

The European EN ISO 13849 series (ISO, 2023; 2012) focuses on the safety of control systems, delineating performance levels for safety-related aspects and offering guidance on designing and validating safety functions. The general risk assessment procedure for machinery is provided by ISO 12100 (ISO, 2010), forming the basis for our risk assessment.

In the United States, the Robotic Industries Association (RIA) issues the ANSI/RIA R15.08 standard series, which provides guidelines for the safe deployment of autonomous mobile robots in industrial environments (Robotic Industries Association, 2020; 2023). This series of standards emphasizes human safety through key principles such as navigation safety, obstacle detection, emergency stop systems, and the use of sensors to prevent collisions. While highly relevant for autonomous mobile robots used in mETHDs, its focus is on traditional industrial settings, not on the unique challenges posed by VR users interacting with mobile robots. As a result, while ANSI/RIA R15.08 can serve as a foundational reference, VR applications demand more stringent safety measures.

2.3 Safety in ETHDs in VR

Safety considerations in VR are crucial because users are isolated from the physical environment, making them vulnerable to various hazards like collisions (Kang and Han, 2020; Kanamori et al., 2018). The addition of cobots further heightens these safety challenges. One primary application of robots in VR is in ETHDs. Safety has been considered a critical factor in the design of many ETHDs. For example, Vonach et al. (2017) and Mercado et al. (2021) outlined safety measures for their robotic arm haptic platforms, including speed and torque limits, emergency switches, and human oversight. Similarly, Dai et al. (2022) implemented speed and force limitations to minimize collision risks. Zhou and Popescu (2025) proposed a redirection strategy for static and dynamic objects to ensure safety by avoiding the user colliding with a moving ETHD. In addition to that, they argued that safety is enforced because users interact with the ETHD using a handheld stick rather than their bare hands. While this is valid, it may be less applicable in scenarios where direct haptic feedback with bare hands is required.

In mETHDs, the likelihood of unintended collisions increases. Common safety measures, such as emergency stop mechanisms with dead-man switches and visual emergency warnings to alert users of potential collisions, are discussed in related works (Abtahi et al., 2019; Yixian et al., 2020). More recent works proposed using an overseer equipped with an emergency stop button to monitor the entire system and intervene in case of safety risks (Gomi et al., 2024; Onishi et al., 2022). These mechanisms are further detailed and evaluated in our proposed guidelines. Some mETHDs incorporate hardware-specific safety mechanisms. For instance, Abtahi et al. (2019) developed a critical landing mechanism for drones, while Bouzbib et al. (2020) designed dedicated stop mechanisms for moving columns. Although effective, such solutions are highly tailored to specific hardware and are, therefore, unsuitable as general safety guidelines.

Using bumpers and soft pads around the robot, similar to what we proposed in this paper, is suggested by Weng et al. (2025). In this category, Horie et al. (2021) proposed designing safe end-effectors by covering their edges with soft materials to minimize the risk of harsh collisions with the user’s body or the robot itself. However, this approach is not always practical, particularly when the end-effector must simulate hard materials such as stone or concrete.

Guda et al. (2020) tackled safety in ETHDs by developing a robot placement algorithm that ensures collision-free interaction between the user and the robot. This algorithm enables the end-effector to reach most areas of interest while maintaining user safety. However, it is designed specifically for robotic arms and does not extend to mobile platforms.

Integrating visual feedback to increase user awareness and perceived safety has been suggested in the literature (Kästner and Lambrecht, 2019; Oyekan et al., 2019). At the same time, displaying the robotic ETHDs in VR might disrupt the user’s immersion and distract from the simulated experience (Medeiros et al., 2021). For instance, Mercado et al. (2022) explored various visual feedback methods to raise user awareness of ETHDs, designing 18 safety techniques and establishing eight evaluation criteria to balance immersion and perceived safety. Similarly, Horie et al. (2021) introduced a ‘safety guardian’ that visualizes the robotic arm and end-effector in VR to prevent collisions. Hoshikawa et al. (2024) and Gomi et al. (2024) suggested robot visualization to enhance safety for VR users, but both reported breaks in immersion in their studies. A review of visual feedback methods to improve awareness in VR, along with evaluation criteria for ETHD applications, is presented in (Garcia, 2021). We have also adopted this technique to alert users in emergency situations, despite its potential to affect immersion.

Bouzbib and Bailly (2022) presented a structured approach for identifying a wide spectrum of failures and potential risks in ETHDs, accompanied by proposed solutions to mitigate them. Although safety risks are included within the discussed failures, the focus is more on outlining initial mitigation actions rather than developing a comprehensive safety guideline specifically for ETHDs.

To summarize, there are a number of works that partially addressed safety in ETHD design. However, the literature still lacks a comprehensive guideline or an in-depth exploration of safety for mETHDs in VR. This work aims to bridge that gap by proposing safety considerations specifically tailored for VR applications, with a focus on mETHDs.

3 Safety requirements analysis

ISO/TS 15066:2016 emphasizes the importance of conducting a comprehensive risk analysis for collaborative robotic systems to ensure safe human-robot interaction. In line with these guidelines, we analyzed possible risks in large-scale VR systems with mETHDs and human actors, taking the CoboDeck as a case example.

CoboDeck is an immersive VR autonomous mETHD system, where VR users can freely walk and interact in a large virtual environment. The employed collaborative robot RB-Kairos consists of an omnidirectional mobile platform with a robotic arm, each controlled by a separate onboard computer unit. It moves in real space and provides user interactions with virtual objects. For that purpose, the robot presents a physical prop in the real environment that corresponds to the position and orientation of a virtual counterpart. The robot’s autonomous operation is controlled over the Robot Operating System (ROS) by several state machines called “behaviors”, between which the robot switches depending on the situation. Unity 3D simulates the virtual space, where the user can interact with the presented virtual objects naturally by hand, unaware of the robot’s position or actions. For further details on the framework architecture and implementation, please refer to (Mortezapoor et al., 2023).

The robot is equipped with a variety of sensors and subsystems to perceive its own state and surroundings, i.e., Light Detection and Ranging (LiDAR) sensors, an Inertial Measurement Unit (IMU), and internal sensors for odometry, several localization subsystems, cameras, and many more. As required by ISO 13849-1, the robot’s key systems are redundant, so the robot has multiple ways to perform a particular function if one system fails. As a cobot, it also has some common safety measures, such as remote emergency stop buttons and LiDAR-based obstacle detection. As they are implemented by the manufacturer, they will not be discussed in this work.

To perform the analysis in a systematic way, one researcher created an initial document, collecting possible hazards from their own experience, related work, and interviews with four experts regarding CoboDeck. First, the actors involved in the interaction scenario were identified: the user, robot, and overseer. Then the functions and risks of the actors were listed. In a large table, the variations of possible risk-associated situations were listed and assessed for the severity of the risk as well as the probability of its occurrence. On this basis, the four experts individually extended the document with comments, additional hazards, and their initial subjective assessment applied to CoboDeck, which the researcher, as a meta-expert, merged into one document. Finally, the four experts plus the meta-expert finalized the document in an extensive group discussion, identifying over 100 individual hazardous scenarios. The hazards include unintended contact between the user and robot that could lead to an injury, particularly in sensitive regions (Park et al., 2019), but also situations that could lead to damage to equipment or the environment. For each actor, we summarize the most notable identified risks below.

3.1 System’s actors and their risks

3.1.1 VR user

The VR User, or user for short, is an individual immersed in the virtual environment by wearing a tracked HMD and moving freely within the physical space, without seeing it. One of the primary safety risks is an unexpected or overly quick motion of the user, to which the robot cannot appropriately react or might overreact, e.g., by avoiding the user in the wrong direction. The user may experience sudden physiological symptoms, such as cybersickness (LaViola, 2000) due to VR exposure. Delays or irregularities in visualization or tracking data may also lead to unexpected user behavior. Faulty tracking or incorrect localization of the user might also increase the collision risk. In such scenarios, timely intervention may be necessary to mitigate potential risks to the user’s health, prevent injuries, or avoid further damage.

3.1.2 Overseer

An overseer is an individual responsible for monitoring the system from outside and ensuring safety during operation. She monitors both the physical workspace and the system’s operational information on the monitors. The information includes the robot’s current behavior, the VR scene with the user, and the robot’s motion plan. In the event of a hazardous situation, the overseer has to intervene and stop the user and the robot.

One potential risk for the overseer is the misinterpretation of the workspace situation. Given the complexity of the monitoring task, there is also a chance of missing critical parameter changes or failing to intervene in time. Furthermore, although the overseer should remain outside the workspace, entering this space poses additional risks. In such a case, she could only be detected by the robot’s LiDAR sensors. If the sensors malfunction or lack sufficient resolution, a collision could occur. Other risks include the overseer’s unexpected illness, power failure for the stationary computers, or low battery levels in the wireless emergency stop device. Although cobots are designed to fail safely with emergency stop if the device becomes inactive or disconnected, there is still a delayed detection risk.
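The delayed-detection risk mentioned above can be illustrated with a heartbeat watchdog. The sketch below is a generic pattern and not the manufacturer's implementation; the class name `HeartbeatWatchdog`, the timeout value, and the idea that the wireless emergency stop device sends periodic heartbeats are assumptions made for illustration.

```python
from typing import Optional


class HeartbeatWatchdog:
    """Flags a remote device as lost when its heartbeat is overdue.

    Hypothetical sketch: timestamps are passed in explicitly (in seconds)
    so that the logic is independent of any particular clock or middleware.
    """

    def __init__(self, timeout_s: float) -> None:
        self.timeout_s = timeout_s
        self.last_seen_s: Optional[float] = None

    def beat(self, now_s: float) -> None:
        """Record that a heartbeat arrived at time now_s."""
        self.last_seen_s = now_s

    def expired(self, now_s: float) -> bool:
        """Return True if no heartbeat was ever seen or the last one is too old."""
        if self.last_seen_s is None:
            return True
        return (now_s - self.last_seen_s) > self.timeout_s


if __name__ == "__main__":
    watchdog = HeartbeatWatchdog(timeout_s=0.5)
    watchdog.beat(now_s=1.0)
    for t in (1.4, 1.6):
        action = "emergency stop" if watchdog.expired(t) else "continue"
        print(f"t={t:.1f} s -> {action}")
```

With such a scheme, a disconnected device is only noticed after the timeout has elapsed plus the interval at which `expired` is polled, so a shorter timeout reduces the detection delay at the cost of more false alarms on an unreliable wireless link.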

3.1.3 Robot

The robot refers to an autonomous mobile platform that supports movement in any direction without rotation and features a robotic arm for serving props for interaction with the user. The robot can reach speeds of up to 3 m/s in all directions, with acceleration and deceleration rates of

A key risk associated with the robot is the potential for uncontrolled loss of traction. Rapid velocity or acceleration changes can cause the robot to slide if ground grip is insufficient or tip over due to a sudden redistribution of momentum, especially with the robot arm stretched out, which increases the risk of injury. Sensor and subsystem failures pose additional risks. Delays or inaccuracies in data from the LiDAR sensors or the localization subsystem can result in incorrect robot behavior. For instance, the robot’s delayed response to changes in the user’s or its own positions, or an obstacle, might lead to collisions. The limited resolution of LiDAR sensors can interfere with the robot’s situational awareness. For example, the sensors might fail to detect the legs of a chair, while dirt on a sensor might register as a non-existent obstacle. A low battery level can render critical functionalities unreliable or inoperative, posing further safety risks. Moreover, the computers responsible for controlling the robot’s behavior could crash, leading to system-wide failures. Errors in the robot’s behavior engine may also result in unpredictable reactions, creating a dangerous situation where only overseers can intervene in time to prevent harm.

Furthermore, the props presented by the robot also introduce risks. They may detach and fall if not securely mounted or if their weight exceeds the robot arm’s capacity. A loose prop in motion could be propelled towards nearby individuals, posing a significant hazard. Furthermore, if the robot moves with its articulated arm outstretched, it may risk injuring users or damaging itself through collisions with environmental elements.

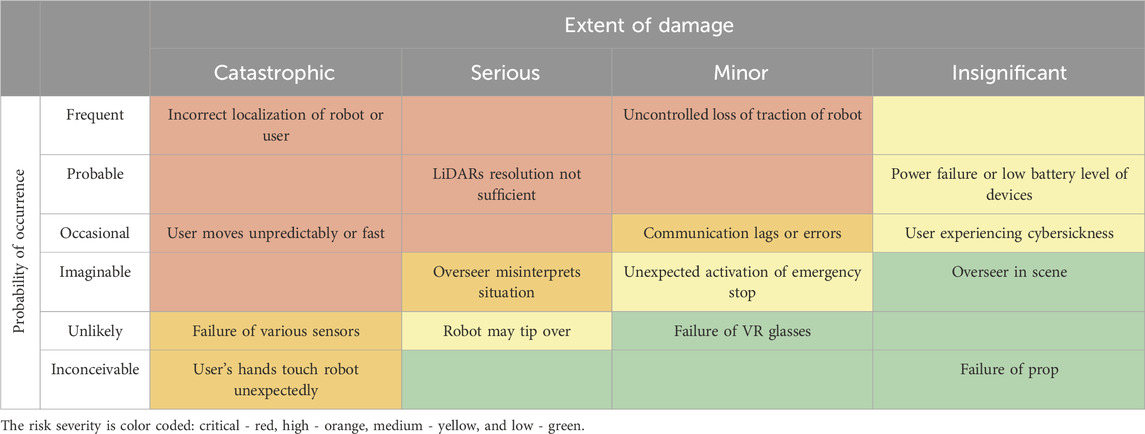

3.2 Risk assessment matrix

We analyzed the apparent dangers with a risk matrix, i.e., a table with the probability of occurrence on one axis and the potential extent of damage on the other. This matrix is a visual tool to summarize the risk evaluation. Note that the system was analyzed in its basic functional state, with common-sense and standard safety features already implemented, such as the cobot’s intrinsic safety functions. These are therefore excluded from the table. Table 2 shows a shortened version of the resulting matrix, where several scenarios were combined to fit in this paper. The probability of occurrence is divided into six levels, from “Frequent” to “Inconceivable”. We specified that “Frequent” corresponds to the occurrence of this task or scenario 7 to 10 times within 1 h of normal operation, “Probable” to 4 to 6 times, and “Occasional” to 0 to 3 times in 1 h. “Imaginable” is a situation that can occur, but is not probable within 1 h of normal operation, while “Unlikely” should not be possible in 100 runs. “Inconceivable” describes the likelihood of situations that should never occur. In addition, we defined the extent of possible damage ranging from “catastrophic” (user would be injured or objects would be irreparably damaged) through “serious” (damage to the robot, prop, or room, which can be repaired) and “minor” (does not need repair) to “insignificant” (needs no further attention). The combination of the probability and severity of the damage leads to the evaluation of the risk severity: critical, high, medium, and low.

Table 2. Shortened risk matrix: an overview of the potential extent of damage and probability of occurrence of identified risks of the CoboDeck.

The goal of the risk assessment is not only to identify the risks but also to plan for the actions that will reduce the risks’ severity to As Low As Reasonably Practicable (ALARP) (Jones-Lee and Aven, 2011; Langdalen et al., 2020).
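The structure of the risk matrix in Section 3.2 can be sketched as a simple lookup. The level names below are taken from the text, whereas the scoring rule (product of the two ranks) and the cut-off values are hypothetical assumptions chosen for illustration; they do not reproduce the cell-by-cell assignment of Table 2.

```python
from enum import IntEnum


class Probability(IntEnum):
    """Six probability levels named in the text, ordered from rarest to most frequent."""
    INCONCEIVABLE = 0
    UNLIKELY = 1
    IMAGINABLE = 2
    OCCASIONAL = 3
    PROBABLE = 4
    FREQUENT = 5


class Damage(IntEnum):
    """Four damage levels named in the text, ordered from mildest to worst."""
    INSIGNIFICANT = 0
    MINOR = 1
    SERIOUS = 2
    CATASTROPHIC = 3


def risk_severity(probability: Probability, damage: Damage) -> str:
    """Combine both axes into one of the four risk severities.

    Assumption: the score is the product of the 1-based ranks (1..24),
    and the thresholds 16, 9, and 4 are illustrative, not from the paper.
    """
    score = (probability + 1) * (damage + 1)
    if score >= 16:
        return "critical"
    if score >= 9:
        return "high"
    if score >= 4:
        return "medium"
    return "low"


if __name__ == "__main__":
    print(risk_severity(Probability.IMAGINABLE, Damage.CATASTROPHIC))
    print(risk_severity(Probability.IMAGINABLE, Damage.INSIGNIFICANT))
```

A mitigation measure then corresponds to moving a scenario to a lower probability level, a lower damage level, or both, and re-evaluating the resulting severity against the ALARP goal.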

For instance, there is an imaginable hazard of the robot driving over the user’s foot. Providing metal-reinforced builders’ shoes would reduce the extent of damage from “high” to “low”. For a more complex case, consider interaction. To facilitate interaction, the robot positions itself frontally to the user and places a prop within arm’s reach of the user. The maximum distance to enable any interaction with extended robot and human arms would be 188 cm with low or “imaginable” injury probability. Reducing the distance increases the possible interaction range but has the trade-off of increasing risk. Standing directly next to each other, the interaction volume would be the largest possible, but the risk of serious injuries or unintentional collisions gets high or “catastrophic” since sensitive areas such as the head and neck are within the action radius of the robot arm. Consider that the robot’s footprint with its arm parked has a radius of 77 cm, but increases to a maximum of 147 cm if the arm is extended horizontally. Therefore, adding 50 cm to the safety distance reduces the volume for interaction, but enables the user to leave the action radius simply by pulling back the arms. This way, the sensitive areas of the body will be outside the interactive volume by default, and the risk of the user touching the robot will be reduced from high to low. Similarly to the examples above, we analyzed all identified risk-associated scenarios to create a number of strategies for risk mitigation in the form of specific safety measures discussed in this paper.
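The distances in this example can be read as nested zones around the robot. The sketch below encodes one possible interpretation: the three radii come from the text, while the zone names, the function `proximity_zone`, and the assumption that all distances are measured from the robot's center to the user's body are hypothetical.

```python
PARKED_FOOTPRINT_CM = 77.0           # footprint radius with the arm parked (from the text)
EXTENDED_ARM_RADIUS_CM = 147.0       # footprint radius with the arm extended horizontally (from the text)
MAX_INTERACTION_DISTANCE_CM = 188.0  # largest distance that still allows interaction (from the text)


def proximity_zone(distance_cm: float) -> str:
    """Classify the user's distance to the robot into illustrative zones.

    Assumption: distance_cm is measured from the robot's center to the
    user's body; the zone labels are not terminology from the paper.
    """
    if distance_cm <= PARKED_FOOTPRINT_CM:
        return "platform_footprint"      # user overlaps the platform itself
    if distance_cm <= EXTENDED_ARM_RADIUS_CM:
        return "arm_action_radius"       # head and neck within reach of the robot arm
    if distance_cm <= MAX_INTERACTION_DISTANCE_CM:
        return "arms_only_interaction"   # only outstretched arms reach the prop
    return "out_of_reach"                # no interaction possible


if __name__ == "__main__":
    for d in (60, 120, 170, 200):
        print(f"{d} cm -> {proximity_zone(d)}")
```

In this reading, the band between the extended-arm radius and the maximum interaction distance is where the user can leave the robot's action radius simply by pulling back the arms.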

4 Safety measures

A group of risks could be addressed on the hardware level, such as LiDAR resolution and communication. We particularly considered the detection of thin objects such as chair legs. Therefore, we selected devices with a wide field of view (270

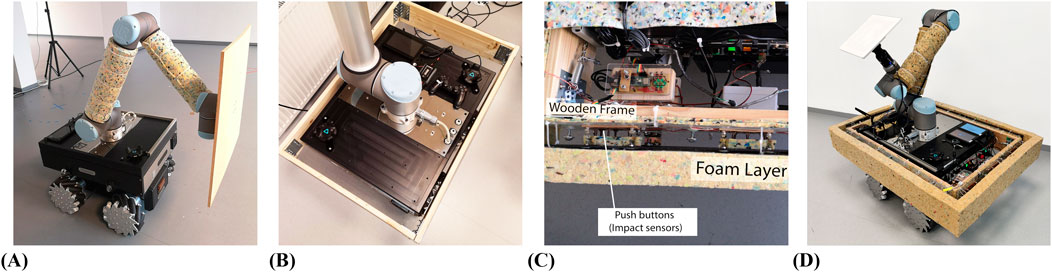

4.1 Mechanical protection: safety bumper and impact sensors

Fast motion and the potential risk of losing grip on the floor surface might lead to unintended contact, such as brushing by or a low-impact collision. Our RB-Kairos robot is environment-aware via LiDAR sensors but has no means to either detect or soften a collision. Another concern is the protection of the robot’s enhancing devices and sensors and their connections to the physical interfaces of the robotic platform, in the event of unintended contact.

To serve these purposes, we implemented safety bumpers with two primary features. One feature is a high-sensitivity rapid impact detection system, ensuring that the robot can take immediate action to minimize potential harm. Depending on the situation, this could involve activating an emergency stop or redirecting the movement to prevent further contact. The second feature is the composition: the equipment should have a rigid protection case, while obstacles and the user require impact-dampening cushioning made of soft, yet dense and elastic material. The bumper should also be compact and light enough to avoid affecting the acceleration, traction, and floor grip of the cobot due to the extra weight. A composite foam is an example of an ideal material for that.

For our RB-Kairos robot (see Figure 2A), we created a suspended wood and foam frame around the platform. The wooden part provides stability and protects the wired connections on the robot (see Figure 2B). On it, we mounted equally distributed arrays of push buttons for impact sensing (see Figure 2C). To ensure reliable operation of the sensors, we attached thin 2 mm plastic plates over them, held at a distance by springs, to guarantee that more than one sensor is triggered on impact. Those were, in turn, covered with a 5 cm thick composite foam as a cushioning layer (see Figure 2C). The robot with bumpers is depicted in Figure 2D. The impact sensors were then connected to a PJRC Teensy 4.1 microcontroller attached via USB to the mobile platform’s computer. The controller processes the triggering of the sensors in any of the sensor clusters and triggers an emergency stop on the robotic platform.

Figure 2. An RB-Kairos robot with safety bumpers and impact sensors. (A) The robot in its original shape without safety bumpers. (B) The wooden frame around the robot is a solid bumper part. (C) The safety bumpers’ top view, the impact sensors array placed between the wooden frame and the external foam layer. (D) The robot with complete safety bumpers.

4.2 Tracking redundancy: co-localization accuracy and reliability

Accurate and precise co-localization of both the user and the robot is essential for haptic interaction within the shared space. Localization issues, such as delayed or damaged data, can lead to erratic or unintended robot behavior, affect the user’s experience, and introduce safety risks, especially from the robot’s side. To address these risks, robust localization strategies are required.

To enhance reliability and mitigate the risks, we propose employing multiple localization systems operating in parallel, with their data inputs fused through a Kalman filter. This approach not only compensates for the inherent errors of individual subsystems but also ensures fault tolerance, providing a safety net in case one localization method fails. Such data fusion ensures safer and more seamless interactions within VR environments.

In CoboDeck, we employed several localization solutions, with their outputs fused using an Extended Kalman Filter (Moore and Stouch, 2016) to ensure precise robot positioning. The first solution utilizes the robot’s dual LiDAR sensors combined with a pre-scanned map of the environment for localization, implemented through Adaptive Monte Carlo Localization (AMCL) (Fox et al., 1999). The second solution involves a scalable, low-cost marker-based GPU tracking system, where large markers are mounted on the ceiling to provide sub-centimeter precise tracking (Podkosova et al., 2016). The third solution uses HTC Vive Lighthouse tracking (HTC and Valve, 2016) with four base stations and two HTC Vive V2 trackers attached diagonally onto the mobile platform to provide submillimeter accuracy. Note that each solution can also be replaced as needed, as the Vive tracking will be replaced within the next iteration of the project. The Extended Kalman Filter is a fail-safe localization mechanism working at 30 Hz that accounts for different precision and noise levels in the localization solutions enhancing the final localization precision and robustness of the robotic system. Due to the real-time rendering requirement for high-frequency tracking (minimum 90 Hz), the user currently cannot profit from such fusion and should rely on the best available tracking solution that is constantly checked for quality.
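The fault-tolerance idea behind fusing several localization sources can be illustrated with a much simpler stand-in than the Extended Kalman Filter used in CoboDeck. The following minimal sketch, assuming static 2D position readings with known isotropic variances, applies inverse-variance weighting (the measurement-update step of a Kalman filter without a motion model). The names `PositionEstimate` and `fuse_positions` are hypothetical and are not part of the described system.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class PositionEstimate:
    """One 2D position reading with an isotropic variance in m^2."""
    x: float
    y: float
    variance: float


def fuse_positions(
    readings: Sequence[Optional[PositionEstimate]],
) -> Optional[PositionEstimate]:
    """Inverse-variance weighted fusion of the readings that are available.

    A source that has dropped out is passed as None and skipped, so the
    fused estimate degrades gracefully instead of failing outright.
    """
    valid = [r for r in readings if r is not None and r.variance > 0.0]
    if not valid:
        return None  # no localization at all: the caller has to react
    weights = [1.0 / r.variance for r in valid]
    total = sum(weights)
    x = sum(w * r.x for w, r in zip(weights, valid)) / total
    y = sum(w * r.y for w, r in zip(weights, valid)) / total
    # The fused variance is never larger than the best single source.
    return PositionEstimate(x, y, 1.0 / total)
```

With three sources, a precise reading dominates the result, and passing `None` for a failed source leaves a valid estimate built from the remaining two. A real deployment would additionally need a motion model, time alignment of the streams, and outlier rejection.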

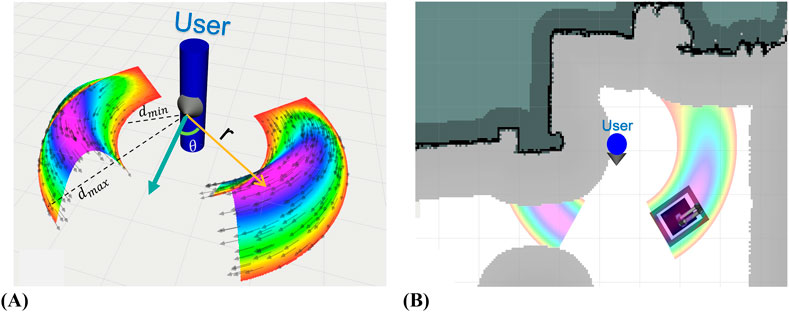

4.3 Positioning strategy for large scale setups

For large-scale haptic VR systems, robot positioning dynamics affect both user safety and system efficiency. The robot should not remain at the site of its last interaction until the next one. At first, it would be too close to the user, creating a potentially dangerous situation. This situation will be addressed in the next section. Then later, it might be too far from the next interaction point, introducing a substantial response delay, requiring the use of higher speeds for positioning with subsequent issues. Given the user’s freedom to move in any direction and distance, a strategically positioned robot nearby might minimize response time and ensure a smooth user experience while maintaining the safety of the workspace.

To tackle this challenge, we propose the robot to “Chase” the user. Under this strategy, the robot follows the user, maintaining a short but safe distance, making itself available for haptic interaction on short notice. We suggest a user-centric weighted chase space consisting of two arches as shown in Figure 3A for the robot’s positioning near the user. The formula for the weights is outlined in Equation 1 below:

Figure 3. Chase space. (A) Full chase space on both sides of the user. The elevation and coloring depict the weight distribution, where higher/purple is preferable. The arrows depict the robot’s orientation. (B) Chase space in use excludes areas occupied by obstacles or their buffer zones.

The function

When in use, the parts of the space that are obstructed by obstacles (static or dynamic) should be excluded from consideration, as shown in Figure 3B. This approach avoids interference with the user’s free movement and safety, but also ensures that the robot is consistently positioned optimally for serving the interaction.

Implementing the “Chase” strategy has multiple benefits. We showed in Mortezapoor et al. (2023) that it reduces the total distance traveled by the robot, which in turn conserves battery life. It also significantly improves the response time for haptic interactions. Moreover, this strategy enhances safety - the robot actively maintains a safe distance from the user and does not obstruct the user’s direct walking path, thus reducing the risk of collisions. Through this user-aware re-positioning, the robot not only becomes more efficient and safe in its role but also contributes to a more seamless haptic VR experience.

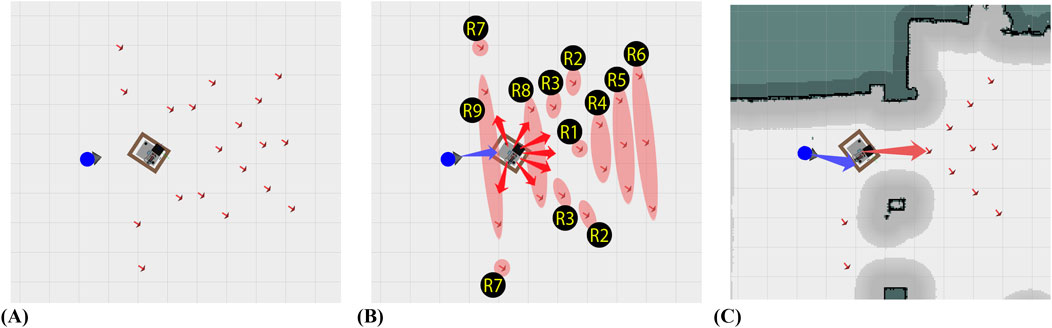

4.4 Fast collision prevention strategy

In haptic VR, the user is in close proximity, and interaction dynamics and the environment itself result in a variety of possible collision scenarios. In the basic scenario, the VE is static and the objects’ positions are known and remain unchanged. The robot can be strategically positioned in safe zones when not interacting with the user and positioned inside the virtual obstacle during interaction. These safe positions would need to be reevaluated after each VE or workspace change. However, when the robot travels to or from the interaction, unexpected collisions still can occur, necessitating a fast response and repositioning.

In contrast, dynamic environments, such as a design studio with dynamic behaviors and content, present a more complex challenge. The robot must adapt in real time to new elements and layout alterations. Alternatively, the content might be dynamic. The user might open the door she touched a second ago and try walking through it while the door simulation robot is still blocking her path. In such dynamic scenes, it is critical for the robot to instantly and safely reposition itself in response to the user’s actions, prioritizing her safety over everything else.

To achieve instant reaction, the regular safety protocols and the robot’s operational parameters should be readjusted and extended. This includes decreasing the standard obstacle buffer size to gain more space for navigation around obstacles or increasing acceleration limits for the agile reaction. To save time for navigation planning, there also should be precalculated options to escape and reestablish the minimum safe distance from the user.

In our proposed strategy, the potential escape positions away from the user and her potential walking path should be computed concurrently and prioritized based on real-time assessments of the workspace situation (see Figure 4). Factoring priorities include the current extended occlusion map (obstacles and their buffer zones), position, and orientation of both the user and the robot. Figure 4A provides a top-down view of a scenario with an idle robot near an approaching user. Here, the small red arrows show 19 possible escape positions in a constellation shaped to correspond best to the space and the robot’s shape and maneuverability.

Figure 4. Escape direction calculation. The small red arrows around the robot indicate possible escape positions. (A) The robot in the escape situation with all possible escape points available. (B) If the user walks toward the robot (blue arrow), the escape positions are prioritized based on escape regions (lower region number gains higher priority). (C) Escape situation with occlusions: the robot moves toward the best available escape position within the escape region with highest priority.

Although other constellation shapes and sizes are possible, in the case of CoboDeck and its real workspace, this version performed the best. This constellation comprises nine regions (see Figure 4B) with different priorities, from which the highest priority goes to R1 and the lowest to R9. The high-priority regions allow the robot to have more time to clear the direct walking path while not driving too far from the user. These are picked to be far enough from the current position of the robot to allow high initial acceleration and to leave enough space for deceleration during a quick escape. The escape positions’ accessibility depends on static and dynamic obstacles in the workspace, leaving only feasible positions for path planning. When higher-priority regions are masked by obstacles or their buffer zones, regions with lower priorities are taken, although they might lead to larger distances to the user after the escape is performed. When multi-part regions are available (R2, R3, and R7), the robot prefers the sub-region that is farther from the user’s position. The same applies to the escape destinations within each region when multiple inner-region escape destinations exist. For an omnidirectional robot, aligning the escape orientation with the robot’s current orientation leads to a faster escape, as rotation takes time.

Upon detecting a potential collision, the robot escapes from the user to the highest-priority escape position. In Figure 4C, this process is visualized, showing how some escape positions are excluded due to obstacles.
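The selection logic described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the CoboDeck implementation: the `EscapeCandidate` type, the `is_free` occupancy callback, and the tie-breaking by Euclidean distance are hypothetical. Candidates masked by obstacles are dropped, the lowest region number wins, and within a region the position farther from the user is preferred.

```python
import math
from dataclasses import dataclass
from typing import Callable, Iterable, Optional, Tuple


@dataclass(frozen=True)
class EscapeCandidate:
    x: float
    y: float
    region: int  # 1 = highest priority (R1), 9 = lowest (R9)


def select_escape(
    candidates: Iterable[EscapeCandidate],
    user_xy: Tuple[float, float],
    is_free: Callable[[EscapeCandidate], bool],
) -> Optional[EscapeCandidate]:
    """Pick the best feasible escape position, or None if all are blocked."""
    feasible = [c for c in candidates if is_free(c)]
    if not feasible:
        return None  # no way out: a fallback such as Inform has to take over

    def sort_key(c: EscapeCandidate) -> Tuple[int, float]:
        distance_to_user = math.hypot(c.x - user_xy[0], c.y - user_xy[1])
        # Lower region number first; within a region, farther from the user.
        return (c.region, -distance_to_user)

    return min(feasible, key=sort_key)
```

Returning `None` when every candidate is masked keeps the decision of what to do next (for example, informing the user) outside the selection function.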

4.5 Actions at autonomous operation limits

Despite the measures implemented to enable the robot’s autonomous risk management, it may encounter situations beyond its resolution capabilities. Scenarios such as extremely constrained maneuvering spaces or unexpected functional delays present such challenges. The “Inform” strategy is designed to bridge the gap between autonomous robot functionality and the need for human intervention or awareness with the following sequence of means:

Robot visualization in VR: Disclosing the robot’s physical position enables the user to adjust her actions to resolve an issue without leaving VR. However, the immersion might be compromised.

Notification to the overseer: In parallel, the overseer alert ensures that complications are promptly addressed by a trained professional.

Human-safe robot recovery action: A predefined robot action can be executed when standard autonomous responses are insufficient, but the situation might still be resolved without the need for the overseer’s intervention. For instance, the robot’s recovery action can be to stay still until an escape route becomes available as the user steps out of the way.

Emergency robot stop: This feature is for scenarios with an immediate threat to the user’s safety and when a rapid halt preserves equipment, as in the case of a severe malfunction. It might go beyond the human-triggered functionality with programmatic triggers, e.g., based on self-test results. The stop can be canceled only by the overseer.

Notification for VR disengagement: Some situations might require an alert for the user to exit the VR momentarily by removing the goggles. This measure applies when immediate user awareness of the real-world environment is necessary and should be complemented by prior instructions.

4.6 System health monitoring

In close human-robot interaction, especially if the user cannot see the robot, the presence of an external expert observer (overseer) is indispensable. The overseer should be situation-aware, monitoring the state of the robot’s and user’s hardware and software systems, and understand user-triggered responses.

From the robot’s side, the overseer needs to have an overview of the status of the robot’s onboard computer, internal inter-components network connection, ROS Master process, and the function and data flow of the sensors as well as subsystems such as localization, navigation, and the arm’s Inverse Kinematic (IK) planner and joint synchronization. From the user’s side of the system, the overseer needs to ensure the healthy function of the VR computer and its operating system, network connection, ROS data synchronization, VR hardware, backend, and application. In parallel, the overseer needs to constantly monitor the physical workspace, the robot, and the user.

To accomplish this complex task and for better visibility, we suggest a portable screen or, even better, standalone AR glasses to allow seeing the workspace with overlayed necessary information, as we presented the concept in Mortezapoor et al. (2025). The status monitoring solutions should operate independently of the robot’s onboard or user’s computers, to ensure continuous and reliable observation, should any part of the system fail. Additionally, this monitoring solution can also have semi-automatic capabilities. In certain scenarios, such as major failures, it can have the authority to trigger a hardware-level emergency stop of the robot and notification of the user, thereby providing an additional layer of safety.

4.7 Pre-deployment testing

Before safe operation is established, a real user cannot be collocated with the robot due to health concerns. However, the user’s behavior can be simulated. Similarly, the robot’s software solutions should be extensively tested in simulation before being deployed to the real robot. For mobile robots, this also saves battery life and decreases the number of real tests that are necessary to verify the simulation results.

To confirm that the robot behaves as expected and fulfills its safety and performance requirements from the end user’s viewpoint, we chose a black-box testing paradigm (Beizer and Wiley, 1996). For that, the user behavior is defined for a test under various scenarios and only the robot’s responses are monitored. The integration between the user and robot subsystems should be seamless and the same for both real and simulated operations. This means that the robot responses can be tested with real or simulated users and robots in all possible combinations with minimal differences. The safety testing should start with a full simulation of both and progress towards full reality.

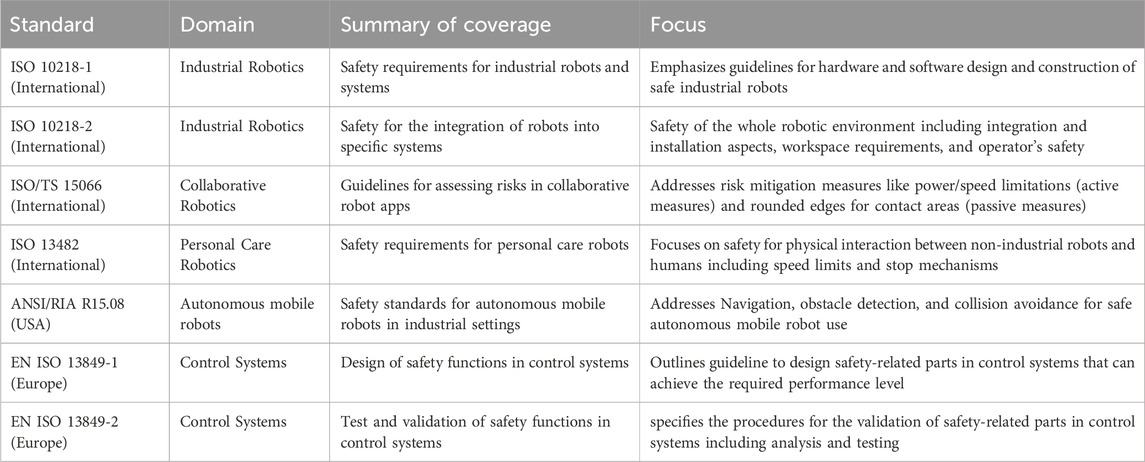

We defined a template shown in Table 3 (more details in Supplementary Appendix 1), for the creation of flexible and comprehensive test scenarios, that include all necessary parameters, and to tailor testing conditions to specific cases. The template is easily translatable into code, enabling efficient implementation. This way, the system’s performance can be systematically evaluated, even under extreme or unusual conditions, maximizing failure coverage and enhancing overall robustness. In addition, test-specific criteria can be introduced depending on the scenario, e.g., a criterion specifying maintenance of a minimum safe distance (e.g., 1.5 m) during robot-user interactions. The test passes the general safety requirements if no robot-user collisions occur for either actor and the robot avoids collisions with the workspace.
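The general pass criterion and an optional test-specific criterion can be expressed in a few lines. The sketch below is an assumption-laden illustration: the `RunResult` fields and the `passes_safety` function are hypothetical names and do not reproduce the actual template of Table 3.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class RunResult:
    """Hypothetical summary of one test run (field names are assumptions)."""
    user_robot_collisions: int
    robot_environment_collisions: int
    min_user_robot_distance_m: float


def passes_safety(
    result: RunResult,
    min_safe_distance_m: Optional[float] = None,
) -> bool:
    """General criterion: no collisions of any kind.

    If a test-specific minimum distance is given (e.g., 1.5 m), the smallest
    observed user-robot distance must not fall below it.
    """
    if result.user_robot_collisions > 0:
        return False
    if result.robot_environment_collisions > 0:
        return False
    if (
        min_safe_distance_m is not None
        and result.min_user_robot_distance_m < min_safe_distance_m
    ):
        return False
    return True
```

Keeping the test-specific threshold as an optional argument lets the same check serve both the general requirement and stricter scenarios.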

Based on the defined template, we implemented a Unity-based testing framework. The framework provides test case definition, user behavior replay and simulation, and scenario repetition for data collection and analysis. Similar to the VR setup with ROS synchronization, it allows testing in both simulated and real-world environments. The user properties and trajectories, combined with environmental parameters according to the test case template, are stored in JSON files, allowing for consistent and reproducible test executions. Repeatable tests and result logging further aid in optimizing subsystems and improving system performance. The major components of the testing framework are:

The design of the testing framework with a wide parameterization range ensures that the robotic system can be rigorously tested in a wide range of scenarios. The high level of customization and flexibility allows the tests to closely mimic real-world user behaviors and interactions, enhancing the reliability and applicability of the testing outcomes.

5 Evaluation

In this section, we evaluate the proposed safety measures. For that, we implemented test cases that cover the majority of the critical risks. They can also serve as practical examples demonstrating how such a system can be systematically tested and assessed using the proposed methods and tools.

The first set of tests addresses the effectiveness of our proposed strategies in contrast to their absence. The second set tests the system’s robustness to signal disturbances that might reflect malfunctions or external interference on the most critical localization system. Due to a high number of tests, we tested our system in a fully simulated setup.

5.1 Technical setup

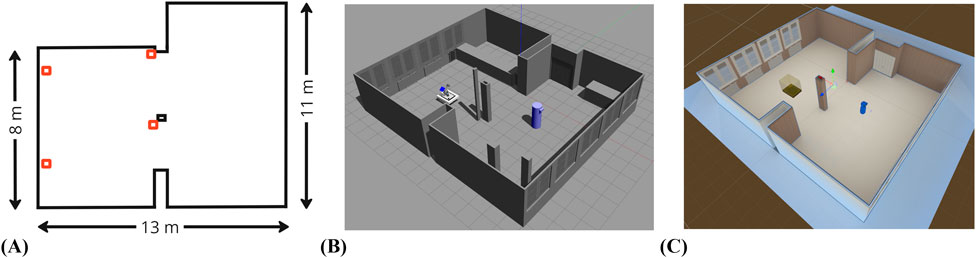

Our testing environment replicates the lab space. The robotic platform was simulated using a detailed 3D model of the robot provided by the manufacturer. The robot simulation employed the Gazebo engine and user simulation and test execution were done in Unity on a separate PC, as shown in Figure 5.

Figure 5. The simulation workspace for testing based on the accessible real space. (A) The layout of the accessible physical workspace. (B) The simulation environment of the robotic system. (C) The testing framework environment.

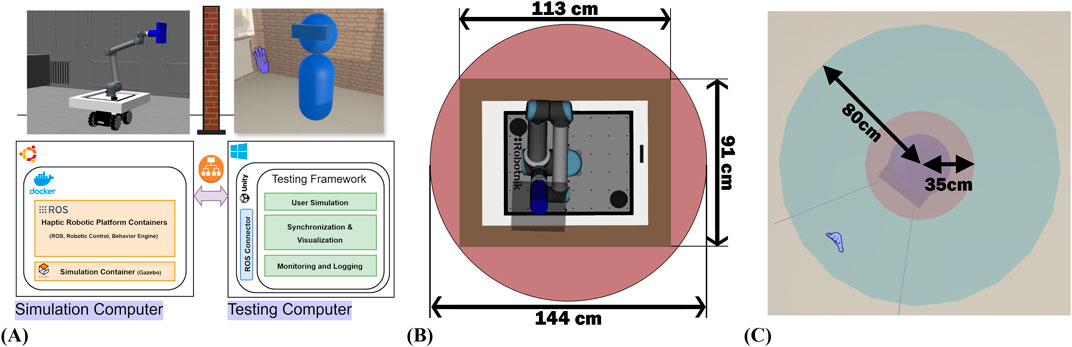

The testing runs across two PCs connected via LAN for synchronized operation. The robot simulation computer (Ubuntu 22.04, Intel Core i9 14700KF, 128 GB RAM, Nvidia RTX 4090) manages the robotic simulation in Gazebo, maintaining a real-time factor of 1.0 for most of the test execution. The robotic system runs ROS Melodic in a Docker environment with GPU pass-through for optimized performance. The testing computer (Windows 11, Intel Core i7 9900K, 32 GB RAM, Nvidia RTX 2080Ti) runs the Unity-based testing framework. Figure 6A illustrates the system deployment.

Figure 6. Test environment architecture and key parameterization: (A) Deployment schema of the testing and simulation environments. (B) The footprint and the minimum in-place turning space of the simulated robot. (C) The footprint of the simulated user, with the red circle depicting the user’s collision space, and the blue circle indicating the Inform activating space.

The simulated robot is a Robotnik RB-KAIROS with a UR10 robotic arm and Mecanum wheels, supporting omnidirectional driving. The robot’s footprint forms a 113 cm by 91 cm rectangle, as shown in Figure 6B. A minimal enclosing circle with a 77 cm radius around the robot defines the minimum space required for in-place rotation. The safety strategies were implemented in FlexBe Engine (Schillinger et al., 2016) as behaviors running on the onboard computer.

5.2 Evaluation’s system settings

To systematically evaluate the system’s safety performance, we defined standardized test conditions, including user behavior, robot behaviors, initial positions, and evaluation metrics.

Initial Positions: For consistency, the robot and user always started from predefined positions with a 9-m separation. This ensured the robot had sufficient time to initialize and select appropriate behaviors and start following the user.

User Behavior: For consistency in user behavior across all test cases, we employed a predefined set of trajectories leading through a sequence of 100 interaction points randomly distributed across the simulated workspace. The interaction points were generated randomly within the test environment and filtered to ensure that their orientation allowed sufficient space for the user and robot approach in at least 90% of the cases. The remaining 10% included positions where successful delivery within the interaction time was not possible due to physical constraints, such as narrow spaces near walls or corners. While the primary evaluation objective is serving haptic interaction and performance, it is also important to address the failure cases.

At each point, the user first remains stationary for 12 s, simulating the visual exploration of an object. Then, the interaction is initiated and lasts for 20 s, during which the robot positions itself and delivers the haptic feedback. After the interaction, the user waits 5 s simulating decision-making before moving to the next point. The user moves between points at a translational speed of approximately 1.2 m/s and an angular speed of

Chase Settings: In all of our tests, the robot had the Chase behavior active to ensure the desired distance between the robot and the user without active interaction. The value of the environment-dependent Chase parameters

Escape Settings: To trigger Escape, we set a minimum distance threshold of 2.1 m between the user’s and the robot’s positions when the robot is in front of the user within the frontal missing arc shown in Figure 4A, or 1.7 m at any other angle. Escape is disabled for the duration of the interaction and is initiated right after the interaction finishes. This keeps the user’s direct walking path clear if the object is removed after the interaction.
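The Escape trigger condition can be sketched as a small geometric check. The 2.1 m and 1.7 m thresholds come from the settings above; the angular width of the frontal arc is not stated in this section, so it is passed in as the hypothetical parameter `frontal_half_angle_rad`, and the function name `escape_triggered` is likewise an assumption.

```python
import math
from typing import Tuple

FRONT_THRESHOLD_M = 2.1  # robot inside the frontal arc of the user
OTHER_THRESHOLD_M = 1.7  # robot at any other angle


def escape_triggered(
    user_xy: Tuple[float, float],
    user_heading_rad: float,
    robot_xy: Tuple[float, float],
    frontal_half_angle_rad: float,  # assumption: not specified in the text
    interacting: bool = False,
) -> bool:
    """Return True if the robot is closer to the user than the threshold."""
    if interacting:
        return False  # Escape is disabled while a haptic interaction runs
    dx = robot_xy[0] - user_xy[0]
    dy = robot_xy[1] - user_xy[1]
    distance = math.hypot(dx, dy)
    # Bearing of the robot relative to the user's heading, wrapped to [-pi, pi].
    bearing = math.atan2(dy, dx) - user_heading_rad
    bearing = math.atan2(math.sin(bearing), math.cos(bearing))
    in_front = abs(bearing) <= frontal_half_angle_rad
    threshold = FRONT_THRESHOLD_M if in_front else OTHER_THRESHOLD_M
    return distance < threshold
```

For example, with a user at the origin facing along the x-axis, a robot 2.0 m straight ahead triggers Escape, whereas a robot 2.0 m to the side does not.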

Inform Settings: The Inform alert about a potential hazard is handled in Unity. It is activated from FlexBe when the Escape behavior fails, or when any part of the robot enters a 0.80 m space around the user (see the blue circle in Figure 6C). To emulate the reaction time to the Inform alert, based on our observations from the physical system, the test user was programmed to do a full stop within 1 s and stay still while the Inform behavior is active. The user could then continue her trajectory once the safety was restored.

Test Data Collection: During each test run, we collected the robot’s localization data, traveled distance, user-robot and robot-environment collisions, the number of triggered Inform and Escape behaviors, the number of successful and unsuccessful haptic interactions, and various timings. We also recorded the duration for which the distance between the robot and the user was less than 1 m. This 1 m threshold is critical, especially when the robot is positioned orthogonally to the user, as its bumper corners may pose a collision risk (see Figures 6B,C).
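One way to obtain the critical proximity duration from a logged distance signal is to sum the sampling intervals that start below the threshold. The sketch below assumes time-sorted `(timestamp_s, distance_m)` samples; the function name and the log format are hypothetical, and the framework's actual computation may differ.

```python
from typing import Sequence, Tuple


def critical_proximity_duration(
    samples: Sequence[Tuple[float, float]],
    threshold_m: float = 1.0,
) -> float:
    """Total time in seconds spent closer than threshold_m.

    samples holds (timestamp_s, distance_m) pairs sorted by time. Each
    interval is attributed to the distance measured at its start.
    """
    total = 0.0
    for (t0, d0), (t1, _d1) in zip(samples, samples[1:]):
        if d0 < threshold_m:
            total += t1 - t0
    return total
```

The resolution of this estimate is bounded by the logging rate, which matters when the reported durations are well below one second.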

To measure the system’s haptic performance, the number of successful haptic prop serves and the haptic response time were recorded, indicating how the system responded to haptic requests in each of the test variations. These parameters and test acceptance criteria (defined in Section 4.7) provided an informative evaluation of both the safety and performance of the system.

5.3 Safety strategies impact tests

These tests examine how Escape and Inform behaviors, implementing corresponding strategies, enhance user safety. First, we evaluate the system without them and then progressively introduce the behaviors to analyze their individual and combined effects in the following conditions:

1. Without safety behaviors (“No Safety”): The system operates only with the Chase behavior, maintaining user proximity without Escape or Inform. This condition serves as a baseline.

2. Inform’s impact (“Inform Only”): The Inform behavior is added, allowing the system to warn the user of potential proximity hazards. This test assesses the Inform effectiveness in preventing collisions and its influence on system performance.

3. Escape’s impact (“Escape Only”): The Escape behavior is enabled without Inform. This test evaluates Escape’s impact in maintaining safe distances and its limitations without user warnings.

4. With Safety Behaviors (“Inform and Escape”): Both safety behaviors are active for evaluation of their combined effectiveness for safety.

5.3.1 Results

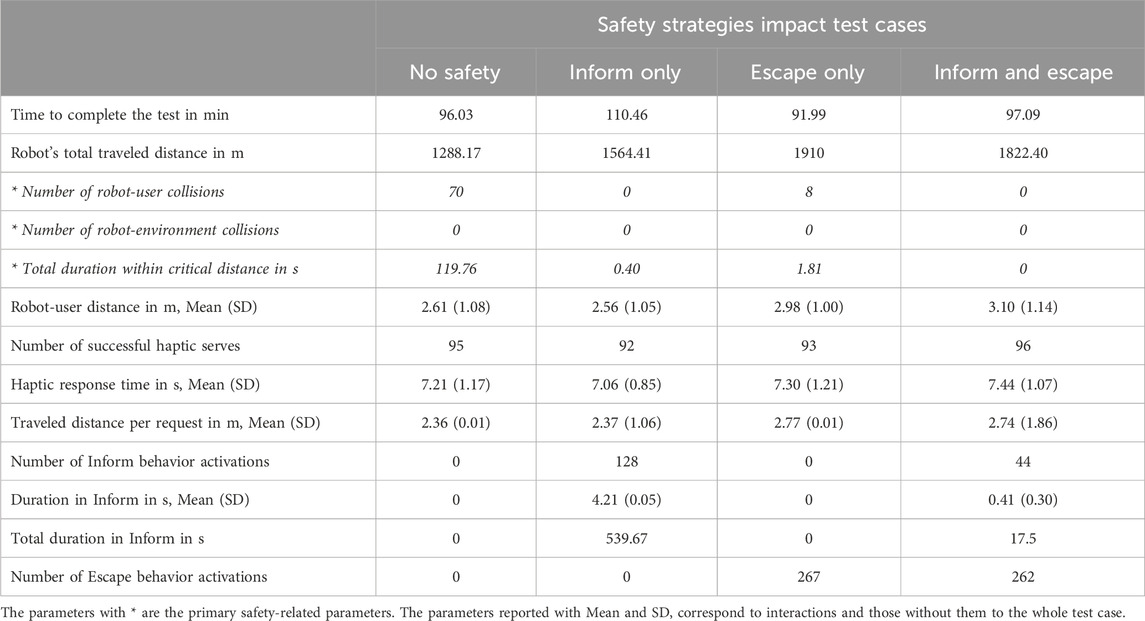

Table 4 presents the results of the conducted tests. The test durations varied, with the “No Safety” case taking 96.03 min. “Escape Only” reduced it by 4% (91.99 min), while “Inform Only” increased the runtime by 15% (110.46 min). The “Inform and Escape” condition had a runtime of 97.09 min, closely matching the “No Safety” case, indicating that the two behaviors combined have minimal impact on task performance time.

The robot’s traveled distance was 1288.17 m in the “No Safety” condition. With “Inform Only”, it increased by 21% (1564.41 m), and with “Escape Only”, it reached the highest value at 1910 m (+48%). With “Inform and Escape”, the robot traveled 1822.40 m, falling between the “Inform Only” and “Escape Only” conditions but still noticeably higher than in the “No Safety” condition. Notably, the traveled distance did not directly correlate with test duration.
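The reported percentages follow directly from the values quoted in the text. As a brief check, assuming only those numbers (the dictionary and function names are illustrative), the relative change against the “No Safety” baseline can be recomputed as follows:

```python
def relative_change_percent(baseline: float, value: float) -> float:
    """Signed change of value relative to baseline, in percent."""
    return (value - baseline) / baseline * 100.0


# Values as quoted in the text for the four conditions.
durations_min = {
    "No Safety": 96.03,
    "Inform Only": 110.46,
    "Escape Only": 91.99,
    "Inform and Escape": 97.09,
}
distances_m = {
    "No Safety": 1288.17,
    "Inform Only": 1564.41,
    "Escape Only": 1910.0,
    "Inform and Escape": 1822.40,
}

for condition in durations_min:
    duration_change = relative_change_percent(
        durations_min["No Safety"], durations_min[condition]
    )
    distance_change = relative_change_percent(
        distances_m["No Safety"], distances_m[condition]
    )
    print(f"{condition}: duration {duration_change:+.1f}%, "
          f"distance {distance_change:+.1f}%")
```

The comparison makes the decoupling visible: “Escape Only” has the largest increase in traveled distance while having the shortest runtime.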

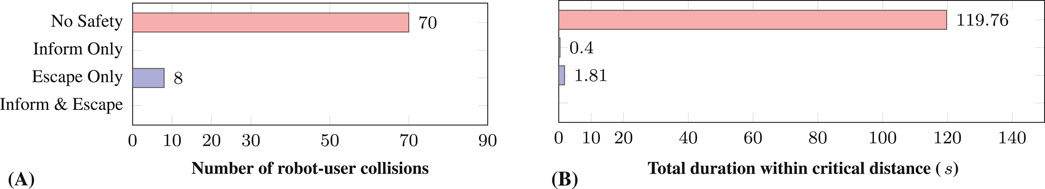

The bar chart in Figure 7A depicts the number of collisions in the tests. No user-robot collisions occurred in tests where Inform was active (“Inform Only” and “Inform and Escape”). In contrast, “Escape Only” resulted in eight collisions, while “No Safety” recorded 70 collisions. No collisions between the robot and the environment were observed in any of the tests.

Figure 7. Safety-related parameters of the test case runs. (A) The number of collisions between the robot and the user. (B) The total duration of critical proximity (

Critical proximity duration (time spent within 1 m of the user) was significantly reduced by the safety behaviors. In the “No Safety” condition, it was 119.76 s. “Inform Only” reduced it to 1.81 s, while “Escape Only” lowered it further to 0.40 s. With both behaviors active (“Inform and Escape”), no critical proximity events were recorded. The distribution is shown in Figure 7B.

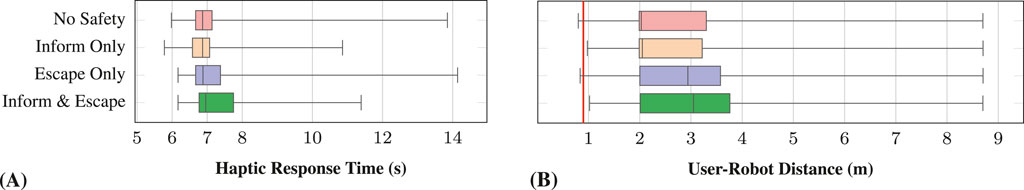

Throughout the test runs, the logged average distance between the robot and the user was 2.61 m (SD 1.08 m) in “No Safety”, and 2.56 m (SD 1.05 m) in “Inform Only”. This is below our preferred distance of 3 m between the robot and the user. The distance increased to an average of 2.98 m (SD 1.00 m) in “Escape Only” and reached a mean of 3.10 m (SD 1.14 m) in the “Inform and Escape” condition. Figure 8B illustrates the distribution of the user-robot distance.

Figure 8. Safety Strategies Impact Tests Results. (A) Haptic response time, and (B) the distance between the robot and the user compared to the baseline. The red line in (B) indicates the collision distance between the user and the robot.

The number of successfully served haptic interactions and the average haptic response time (time between initiation and actual haptic interaction) remained consistent across all four conditions. The maximum of 96 out of 100 haptic requests served successfully was reached in the “Inform and Escape” condition, while the minimum of 92 out of 100 occurred in the “Inform Only” condition. The haptic response time showed slight variations, with the shortest mean response time of 7.06 s (SD 0.85 s) recorded in the “Inform Only” condition, and the longest mean response time of 7.44 s (SD 1.07 s) in the “Inform and Escape” condition. The distribution of haptic response times across the test cases is shown in Figure 8A.

The Inform behavior was activated 128 times in “Inform Only”, compared to 44 times in “Inform and Escape”, a 66% reduction. Additionally, the total duration of Inform signals was significantly higher in “Inform Only” (539.67 s) compared to “Inform and Escape” (17.5 s), showing that the Escape behavior reduces reliance on user warnings. The Escape behavior was activated 267 times in “Escape Only” and 262 times in “Inform and Escape”, showing consistent activation frequency across these cases.

5.3.2 Discussion

In the “No Safety” condition, the system experienced 70 collisions between the user and the robot over a 96 min operation time. Introducing the Inform behavior alone eliminated all user-robot collisions and reduced the duration of critical proximity from nearly 2 min to less than 2 s. However, this came at the cost of activating Inform 128 times, with each activation averaging 4.21 s. The system required the user to fully stop and detach from the VE for a total of 8.5 min with one or more Informs per interaction. In reality, frequent warnings have a notable impact on user presence and on the perception of overall system performance, as the many interruptions remind the user of the physical world.

In contrast, “Escape Only” had less impact on VR presence, as no warnings were issued. This setup allowed the user to operate uninterrupted. However, while Escape considerably reduced the number of user-robot collisions (from 70 to 8) and the total critical proximity duration (from 2 min to

The “Inform and Escape” condition provided the best safety-performance balance. It eliminated collisions and critical proximity events while maintaining a completion time similar to the “No Safety” condition. Inform was triggered two-thirds less than in the “Inform Only” case, due to Escape proactively preventing warnings. Inform activations averaged under half a second, reducing total activation time to 17.5 s compared to 539.67 s in the “Inform Only” condition. However, Escape was activated 262 times, increasing the robot’s traveled distance by 41% compared to “No Safety” and 16% compared to “Inform Only”.

Despite increased robot travel in all safety conditions, haptic response time remained consistent. In the “Inform and Escape” case, response time increased by only 3%, confirming that extra movement primarily occurred during idle periods. Notably, this condition also achieved the highest number of successful haptic serves (96 out of 100), suggesting that improved positioning and repositioning enhanced interaction performance.

5.4 Disturbance resilience tests

Testing under environmental disturbances is important for validating system robustness in real-world conditions, where sensor noise and localization errors are common. For that, the haptic system in the “Inform and Escape” condition was tested with noise as a disturbance, following the methodology described in Section 4.7. The noise was applied to:

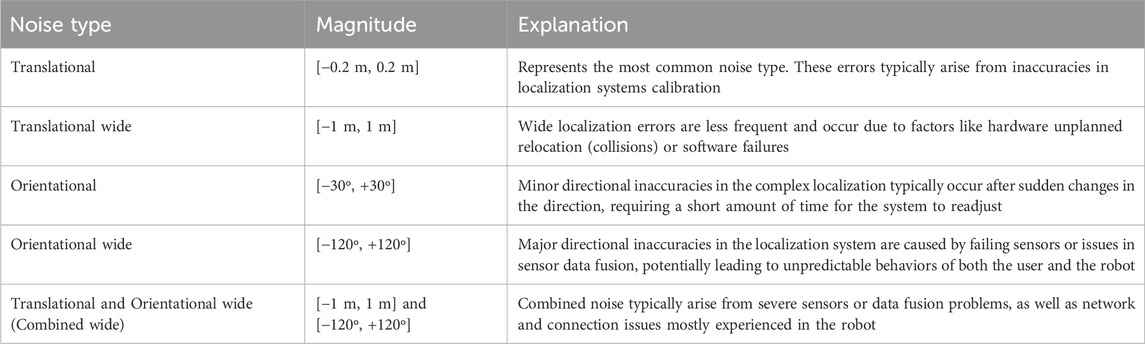

The types of applied noise, selected based on our experience with the physical setup, are detailed in Table 5. The noise was randomized within defined ranges and injected independently into the localization data streams, without interfering with the subsystems’ internal workflow.
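The injection scheme described above can be illustrated with a minimal sketch. It is not the implementation used in the testing framework: the `Pose2D` type, the `inject_noise` helper, the choice of a uniform distribution, and the robot's noise range are all assumptions made for illustration. Only the 1 m bound for the user matches the wide translational noise magnitude mentioned in the discussion.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    """Hypothetical 2D pose as reported by a localization stream."""
    x: float    # metres
    y: float    # metres
    yaw: float  # radians


def inject_noise(pose, max_translation, max_rotation, rng):
    """Return a perturbed copy of `pose`; the true pose is never modified.

    The translational offset has a random direction and a magnitude of at
    most `max_translation`; the yaw offset lies within +/- `max_rotation`.
    Uniform sampling is an assumption, not taken from the paper.
    """
    radius = rng.uniform(0.0, max_translation)
    direction = rng.uniform(0.0, 2.0 * math.pi)
    return Pose2D(
        x=pose.x + radius * math.cos(direction),
        y=pose.y + radius * math.sin(direction),
        yaw=pose.yaw + rng.uniform(-max_rotation, max_rotation),
    )


# Separate generators keep the user and robot disturbances independent.
user_rng = random.Random(1)
robot_rng = random.Random(2)

true_user = Pose2D(0.0, 0.0, 0.0)
true_robot = Pose2D(3.0, 0.0, math.pi)

# Only the copies handed to the consumers are noisy; the simulated
# subsystems keep working on their own unmodified internal state.
noisy_user = inject_noise(true_user, max_translation=1.0, max_rotation=0.0, rng=user_rng)
noisy_robot = inject_noise(true_robot, max_translation=0.2, max_rotation=0.1, rng=robot_rng)
```

Keeping the true poses immutable and perturbing only the published copies mirrors the requirement that the noise must not interfere with the subsystems' internal workflow.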

Table 5. Noise types and magnitudes for localization disturbance test variations and the explanation of their possible source and impact on the system.

5.4.1 Results

In this group of tests, the system in the “Escape and Inform” condition without noise serves as the comparison baseline. Supplementary Appendix 2 provides a full summary of the test results. The system successfully served the haptic prop in 94–97 out of 100 interaction points, maintaining service performance despite localization noise. The measured test durations did not deviate much from the baseline (97.09 min), staying in the 97.1–101.55 min range across the noise conditions. The primary factor here was user behavior, which remained consistent across all tests; noise combined with the safety behaviors had minimal impact on the completion time.

Traveled distance per interaction also remained consistent (mean 2.74–3.13 m, SD 1.55–2.16 m). The robot’s true traveled distance remained similar to the baseline (mean 1786.91 m, SD 49.53 m). However, in conditions with translational noise injected into the robot’s data stream, the testing framework reported traveled distances significantly and abnormally higher than the baseline. We obtained the actual traveled distances from ROS logs and used them to cross-check the framework’s report (see Supplementary Appendix 2). This discrepancy is an artifact of the black-box testing framework and the noise implementation, which calculated the traveled distance from noisy localization data. The effect of noise on the robot’s own localization should be tested as well, but this would require a different implementation and hybrid testing.
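The origin of this artifact can be reproduced with a short sketch. The trajectory, sampling interval, and noise magnitude below are hypothetical and chosen only to make the effect visible; they do not correspond to the values in Table 5.

```python
import math
import random


def path_length(points):
    """Sum of Euclidean distances between consecutive (x, y) samples."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


rng = random.Random(0)

# Hypothetical ground truth: a 10 m straight drive sampled every 1 cm.
true_path = [(0.01 * i, 0.0) for i in range(1001)]

# Hypothetical disturbance: independent jitter of up to 0.1 m per axis
# added to every localization sample.
noisy_path = [
    (x + rng.uniform(-0.1, 0.1), y + rng.uniform(-0.1, 0.1))
    for x, y in true_path
]

true_length = path_length(true_path)    # about 10 m
noisy_length = path_length(noisy_path)  # several times larger than true_length
```

Because the jitter between two consecutive samples is much larger than the true displacement between them, summing sample-to-sample distances mostly accumulates noise. A distance metric computed from an undisturbed source, such as the robot's own logs, avoids this inflation.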

Likewise, no true collisions occurred in any of these test case variations, although the test logs indicated collisions between the user and the robot in some variations. Further inspection of the scene captures confirmed that these were artifacts caused by the noisy data. Brief instances of critical proximity (1 m or less) in cases with translational noise were also verified as artifacts rather than genuine events (see Supplementary Appendix 2).

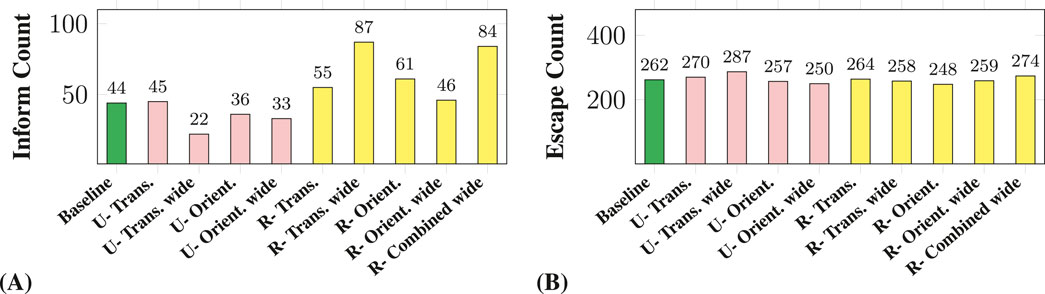

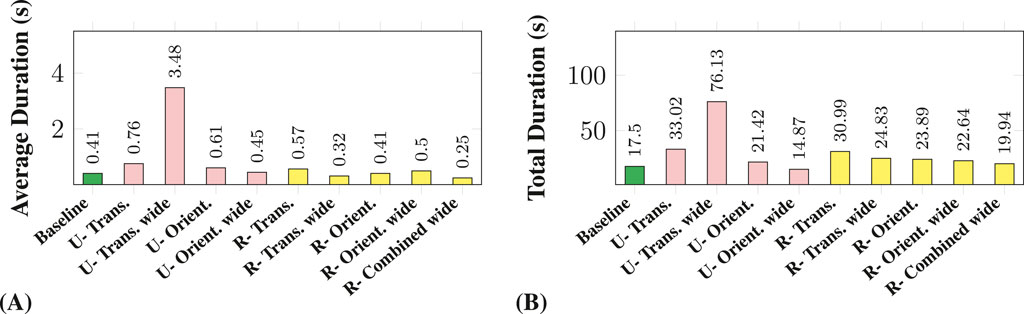

Escape activations were generally consistent with the baseline, as shown in Figure 9B, with fluctuations below 10%. Inform activations varied: they increased when noise affected the robot’s localization, particularly in the wide translational noise case, but decreased when noise was applied to the user, where the wide translational noise case recorded half as many activations as the baseline (see Figure 9A). Interestingly, when noise was applied to the user, the average duration and total active time of Inform were consistently higher than the baseline, except for the total duration in the orientation-wide noise case. In contrast, when noise was applied to the robot, Inform durations generally aligned with or fell below the baseline (Figure 10).

Figure 9. The number of Inform and Escape behavior activations in each test condition. In these charts, green is used for the baseline, pink for the user (U), and yellow for the robot (R). (A) The number of Inform activations. (B) The number of Escape activations.

Figure 10. Inform activations in each test condition. In these charts, green color is used for the baseline, pink for the user (U), and yellow for the robot (R). (A) The average time Inform behavior stayed active per condition. (B) The total duration of active Inform in test conditions.

5.4.2 Discussion

This test category further demonstrated the system’s ability to maintain user safety under varying conditions, even in the presence of localization noise. The robot did not collide with the environment in the tests, even when subjected to translational and orientational localization noise. This resilience stems from the robot-centric LiDAR-based localization and obstacle detection, which does not rely on global localization data. While localization noise can disrupt navigation, it does not impair the robot’s ability to detect and avoid obstacles during movement. Indeed, the collisions are typically a result of fast user movements toward the robot rather than the robot’s navigation errors.

An interesting anomaly was observed when the user experienced wide translational noise. In this case, the number of Inform activations dropped to exactly half of the baseline, yet the mean and total durations of activations were significantly higher than those of other test cases in this category. Additionally, this test condition recorded the highest number of Escape activations among all scenarios. This behavior can be attributed to the wide translational noise (up to 1 m), which caused Inform to remain active for extended periods and prompted the system to enter Escape behavior more frequently. Despite these unusual patterns, none of the tests, including the wide translational noise case, resulted in any collisions between the user and the robot, maintaining user safety throughout.

Such unusual cases underscore the utility of the proposed testing framework, which allows developers to identify, investigate, and elaborate on outlier scenarios by defining narrower and more specific test cases. This capability helps explore a broader range of situations and system responses to identify unforeseen hazards that could compromise safety. The test outcomes can also serve as training material for the overseer, who needs to interpret the observed situation correctly.

6 Discussion

While safety concerns have been partially addressed in several previous works on custom ETHD systems (e.g., Vonach et al., 2017; Mercado et al., 2021), the discussion remains open, especially for the use of mobile robots. Based on our experience, we proposed an approach to integrate a constellation of safety measures into the design of a mETHD system. As groundwork, a thorough initial risk analysis reveals potential hazards, which have to be addressed with suitable safety measures. The risk analysis has to be revisited repeatedly until all risks are at least at the ALARP level (Jones-Lee and Aven, 2011; Langdalen et al., 2020).

In a mETHD system, the robot and user are closer to each other than the safety standards suggest, often in direct contact, and the user cannot see the robot. Therefore, when developing such a system, the possible failure of every safety measure should be assumed. Fundamentally, multiple hardware safeties should be present as an indispensable backup solution to cover the worst-case scenario. A cobot is usually already equipped with a number of mitigating mechanisms on a hardware level, like joint torque sensors or an emergency stop. However, some of them can also create a hazard if triggered at the wrong moment. Thus, we specify the inclusion of a human overseer with a standard remote emergency stop, which is crucial for immediately halting the robot from a distance when necessary. Additionally, we propose placing contact sensors on the mobile platform, creating a three-way emergency stop mechanism.

One critical aspect of hardware-level safety is ensuring that the robot’s acceleration and velocity are sufficient for rapid pre-emptive collision avoidance maneuvers. Another essential feature is the robot’s capability of omnidirectional mobility, enabling it to react and reposition efficiently without requiring in-place rotation before movement. These capabilities were key factors in selecting the robot used in CoboDeck, as it can navigate omnidirectionally and maintain speeds comparable to a typical human walking in VR.

We analyzed which other parts of the robot might be dangerous for the user and could be addressed with physical means. Inspired by the level of safety of soft robots (Raatz et al., 2015), we proposed to employ soft materials in areas where user contact is possible, even if improbable. For example, in CoboDeck, we equipped the robot with a suspended wooden frame with an extra foam layer, as well as foam matting around the robotic arm, as shown in Figure 2. We also considered what and how the robot can sense with redundant sensors, and strongly recommend enhancing the localization system’s reliability and confidence.

Nevertheless, the best safety measure is to prevent the worst case from arising in the first place. In this paper, we proposed a set of behavior strategies for mETHDs: “Chase”, “Escape”, and “Inform”. These general strategies systematically address the performance and safety of such systems and could be customized for the specifics of the individual robots. Software-level safety measures involve real-time system monitoring, dynamic user response, and dedicated safety robot behaviors. For instance, we proposed the Chase behavior for repositioning during the robot’s idle state. The robot actively moves to areas that the user is less likely to explore from where they currently stand (see Section 4.3; Figure 3). This approach could be extended to other systems, like those in Bouzbib et al. (2020), and considered in path-planning strategies that move components to safer positions when they are not in use for interactions.

In scenarios where users take unexpected paths, the robotic system must respond promptly with controlled behaviors, such as the Escape strategy we proposed, explained in Section 4.4 and depicted in Figure 4. For robots lacking omnidirectional movement, Escape behavior design must ensure a fast response without requiring an in-place rotation, as delays here could compromise safety. Since Escape behavior is dependent on both the robot’s mobility and the environment’s geometry, it should be tailored to each specific system. Additionally, variations in VR applications may lead to different user behaviors, necessitating further customization of the robot’s movements. In the case of Zoomwalls (Yixian et al., 2020), it would be advisable to consider the response of the system if the VR user acts in an unpredictable way, such as walking backward.

When everything fails and the robot cannot prevent a collision autonomously, fallback strategies are crucial. These include providing the user with visual or auditory alerts, such as the Inform behavior we proposed in Section 4.5. Inform helps maintain user awareness during unexpected situations by temporarily interrupting the VR immersion to ensure safe interaction with the robotic system.

We suggest implementing the strategies as a state machine, whose modular structure avoids tight coupling and allows the strategies to be modified for the specifics of each robot. The proposed strategies are also applicable to non-omnidirectional grounded robots, but they should account for the specific robot orientation and, for bulky robots such as those in Suzuki et al. (2020), the air resistance. For mETHDs based on vacuum cleaners (Wang et al., 2020), where stepping onto the robot is a hazard, either the robot needs to be covered by a proxy object (a physical solution) or the distances to the user should be increased. For ceiling-mounted mobile props (Bouzbib et al., 2020), the chasing position should be far enough from the user to account for a possible fall during movement, and the prop should stop for the interaction so that the user can close in from a safe distance.
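As a rough illustration of the state-machine idea, the following sketch encodes the behaviors as states and keeps all transitions in a single table. The `SERVE` state, the event names, and every transition are assumptions made for this example; they are not the CoboDeck implementation.

```python
from enum import Enum, auto


class Behavior(Enum):
    CHASE = auto()   # idle repositioning away from the user's likely path
    SERVE = auto()   # presenting the haptic prop (hypothetical state name)
    ESCAPE = auto()  # active collision avoidance
    INFORM = auto()  # fallback: warn the user


class Event(Enum):
    # All event names are hypothetical.
    HAPTIC_REQUEST = auto()
    HAPTIC_DONE = auto()
    USER_APPROACHING = auto()
    USER_CRITICAL = auto()
    USER_CLEAR = auto()


# Transition table: (current behavior, event) -> next behavior.
# Adapting a strategy to another robot only requires editing its rows
# or swapping the code that runs inside the affected state.
TRANSITIONS = {
    (Behavior.CHASE, Event.HAPTIC_REQUEST): Behavior.SERVE,
    (Behavior.CHASE, Event.USER_APPROACHING): Behavior.ESCAPE,
    (Behavior.CHASE, Event.USER_CRITICAL): Behavior.INFORM,
    (Behavior.SERVE, Event.HAPTIC_DONE): Behavior.CHASE,
    (Behavior.ESCAPE, Event.USER_CLEAR): Behavior.CHASE,
    (Behavior.ESCAPE, Event.USER_CRITICAL): Behavior.INFORM,
    (Behavior.INFORM, Event.USER_CLEAR): Behavior.CHASE,
}


def step(state, event):
    """Return the next behavior; events without a table entry are ignored."""
    return TRANSITIONS.get((state, event), state)


# Example run: the user walks toward the idle robot faster than it can
# escape, is warned, and then backs away.
state = Behavior.CHASE
for event in (Event.USER_APPROACHING, Event.USER_CRITICAL, Event.USER_CLEAR):
    state = step(state, event)
```

In this sketch, proximity events are deliberately ignored while serving, since the user is expected to be close to the prop during an interaction; a real system would need its own rule for that case.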

Consequently, our safety strategies generalize to various mobile grounded, suspended, or flying robots, except body-grounded ones, as our use of 2D distances and positioning is not applicable to robots attached to the user. Yet, the safety risk analysis, physical means, and redundant sensors have to be customized for each individual case. Nevertheless, once defined, some solutions might be reused on a similarity basis. Likewise, the testing should also consider specifics in the requirements elicited during risk analysis, the use-case specifics, and hardware limitations.

Implementation of a close-to-reality simulation system with an integrated easy-to-adapt testing framework makes evaluation of the mETHD safety fast, repeatable, low-cost, and reliable. Such a framework allows testing various scenarios involving sensor noise, delays, and other unwanted factors that can be challenging to replicate in a physical system. The evaluation section demonstrated how this testing approach can identify the system’s vulnerabilities prior to deployment, ensuring that the employed safety measures are validated under realistic and potentially adverse conditions. Thorough pre-deployment testing ensures that mETHD’s software controls are reliable, safe, and ready for real-world use.

However, for the final deployment of the haptic VR system, the testing cannot be limited to virtual tests only. It should be followed by semi-virtual tests with a real robot and a simulated user. This step will help to identify the physical details that could not be simulated, such as floor-to-wheel traction, wireless connectivity, actual battery life, and reflective surfaces or bad lighting in the working volume that might affect the system. Next, replaying real user motion can address behavioral specifics, such as unexpected actions or speed alteration, without endangering the user. In our ongoing research, we are using a small four-wheeled omnidirectional robot with a makeshift mannequin on top to test the user detection mechanisms and collision reaction. It moves according to a pre-recorded real user trajectory and can trigger a haptic request. After this step, the transition should be made to live testing with real users to confirm the resilience under final working conditions.

7 Limitations

This paper has several limitations that were addressed only partially or not at all. One limitation is that we did not cover the use of the robotic arm and its user-aware dynamic (re)positioning when rendering large virtual objects. The interactions with stationary or body-anchored robotic arms have been covered in the existing literature (Mercado et al., 2021; Vonach et al., 2017; Horie et al., 2021). Therefore, this work focused on the mobile platform that can support such an arm and greatly increase the interaction range.

Our risk analysis and parameterization are mostly based on our existing CoboDeck system. Therefore, they generalize only to a certain extent and should be reevaluated for other systems. Environment geometry, hardware specifics, and the user’s tasks within a system might also have a significant impact on the risk assessment and on the full set of safety measures and their parameterization. Taking this into account, we placed more emphasis on developing strategies than on specific guidelines. The strategies themselves have to be adapted to the specifics of the work environment and the robots’ characteristics. For instance, Escape in narrow spaces will likely work for a vacuum cleaner but might need adaptations for a fast and heavy platform like RB Kairos.