- ¹Telemedicine Center, AGEL Hospital Trinec-Podlesi, Trinec, Czechia

- ²Faculty of Electrical Engineering and Computer Science, VSB-Technical University of Ostrava, Ostrava, Czechia

- ³Faculty of Medicine, Center for Information Technology, Artificial Intelligence and Virtual Reality in Medicine, University of Ostrava, Ostrava, Czechia

- ⁴Cardiocenter, AGEL Hospital Trinec-Podlesi, Trinec, Czechia

- ⁵Faculty of Medicine, Masaryk University, Brno, Czechia

- ⁶Faculty of Medicine, University of Ostrava, Ostrava, Czechia

Background: Mixed reality (MR) technologies, such as those integrating Unity and Microsoft HoloLens 2, hold promise for enhancing non-coronary interventions in interventional cardiology by providing real-time 3D visualizations, multi-user collaboration, and gesture-based interactions. However, clinical adoption is hindered by insufficient validation of performance, usability, and workflow integration; these barriers align with the Research Topic on transforming medicine through extended reality (XR) via robust technologies, education, and ethical considerations. This study addresses these gaps by developing and rigorously evaluating an MR system for procedures such as transcatheter valve replacements and atrial septal defect repairs.

Methods: The system was built using Unity with modifications to the UnityVolumeRendering plugin for Digital Imaging and Communications in Medicine (DICOM) data processing and volume rendering, Mixed Reality Toolkit (MRTK) for user interactions, and Photon Unity Networking (PUN2) for multi-user synchronization. Validation involved technical performance metrics (e.g., frame rate, latency), measured via Unity Profiler and Wireshark during stress tests. Usability was assessed using the System Usability Scale (SUS) and NASA Task Load Index (NASA-TLX), as well as through task-based trials. Workflow integration was evaluated in a simulated cath-lab setting with six cardiologists, focusing on calibration times and responses to a custom questionnaire. Statistical analysis included means ± standard deviation (SD) and 95% confidence intervals.

Results: Technical benchmarks showed frame rates of 59.6 ± 0.7 fps for medium datasets, local latency of 14.3 ± 0.5 ms (95% CI: 14.1–14.5 ms), and multi-user latency of 26.9 ± 12.3 ms (95% CI: 23.3–30.5 ms), with 91% gesture recognition accuracy. Usability yielded a SUS score of 77.5 ± 3.8 and a NASA-TLX score of 37 ± 7, with task completion times under 60 s. Workflow metrics indicated a calibration time of 38 s and high perceived communication benefit (4.5 ± 0.2 on a 1–5 scale).

Conclusion: This validated MR solution demonstrates feasibility for precise, collaborative cardiac interventions, paving the way for broader XR adoption in medicine while addressing educational and ethical integration challenges.

1 Introduction

Extended reality (XR) technologies, encompassing virtual reality (VR), augmented reality (AR), and mixed reality (MR), have emerged as transformative tools in interventional cardiology by enabling immersive, real-time 3D visualizations that overcome the limitations of traditional 2D imaging modalities such as fluoroscopy and echocardiography. In non-coronary interventions (transcatheter valve replacements, septal defect closures, paravalvular leak closures, and peripheral vessel treatments), precise anatomical understanding is critical. Conventional methods often require mental reconstruction of complex structures, leading to increased cognitive load, longer procedure times, and higher complication risks (Annabestani et al., 2024).

Recent advances integrating XR with complementary technologies such as 3D printing and robotics for structural heart procedures have demonstrated improved procedural accuracy and reduced radiation exposure (Zlahoda-Huzior et al., 2023). Studies on transcatheter aortic valve replacement (TAVR) and tricuspid interventions have shown XR’s potential to enhance spatial awareness and team collaboration, with documented improvements in procedural planning accuracy of up to 35% (Hosseini et al., 2024; Chan et al., 2020). These technologies align with ongoing efforts to personalize care in heterogeneous patient populations.

Despite these advances, XR adoption in cardiology remains limited by challenges in system validation, educational implementation, and ethical considerations, as noted in recent reviews on AI-assisted imaging and interventional tools (Chan et al., 2020). This has prompted a focus on robust, clinically validated solutions that address real-world barriers in sterile environments.

1.1 Mixed reality in cardiology

Mixed reality via devices like Microsoft HoloLens 2 has shown particular promise in interventional cardiology through interactive 3D visualizations of cardiac anatomy derived from Digital Imaging and Communications in Medicine (DICOM) data. Recent applications demonstrate MR’s ability to overlay volumetric renderings directly in the operator’s field of view, facilitating precise navigation during complex cases. Studies from 2024 to 2025 report successful implementations in TAVR procedures, atrial septal defect repairs, and left atrial appendage closures (Allen et al., 2025), with procedural time reductions of 10%–15% and improved first-pass success rates (Ferreira-Junior and Azevedo-Marques, 2025; Rokhsaritalemi et al., 2020).

Multi-user MR systems have enabled remote expert consultation during live procedures, with synchronization latencies now below 50 ms making real-time collaboration feasible (Dewitz et al., 2022). Educational applications have shown particular value, with trainee performance improvements of 25%–30% in simulated environments compared to traditional training methods (Lau et al., 2022).

1.2 Study rationale and objectives

The rationale for this study stems from persistent barriers to XR integration in interventional cardiology, particularly in non-coronary procedures where precise, collaborative visualization is essential yet underexplored. While XR holds potential for transforming medicine, key challenges include insufficient technical validation (e.g., latency and rendering stability), educational gaps in training multidisciplinary teams, and ethical concerns such as data privacy in multi-user systems and equitable access (Dewitz et al., 2022; Lau et al., 2022).

Prior work highlights how unvalidated XR tools may increase procedural risks or exacerbate disparities, yet few studies provide comprehensive performance metrics or usability data for real-world cath-lab settings (Chan et al., 2020; Micheluzzi et al., 2024). This gap motivated our development of a Unity-based MR solution using HoloLens 2, aimed at enhancing decision-making without compromising sterility.

The Hybrid Joint Precision Hub (HJPHub) is a unified, modular software platform that leverages mixed reality to enhance preprocedural planning, intraprocedural navigation, and team coordination during non-coronary cardiovascular interventions. The name reflects its hybrid blending of real and virtual elements, joint interdisciplinary collaboration, and role as a centralized hub integrating imaging inputs (computed tomography [CT] and magnetic resonance imaging [MRI]), real-time 3D visualizations, and procedural data.

Our objectives were threefold: (A) to implement and technically validate the system for performance (e.g., frame rates >30 fps, latency <50 ms) and reliability, addressing validation barriers through rigorous protocols; (B) to evaluate usability and workflow integration via standardized tasks and questionnaires, supporting educational applications like trainee simulations and multi-user synchronization; and (C) to examine ethical implications, including patient data handling under institutional approval and potential for bias reduction in diverse populations (Martinez-Millana et al., 2024).

2 Materials and methods

2.1 System development and architecture

The mixed reality system was developed using a modular architecture integrating DICOM data processing, volume rendering, user interaction, and multi-user synchronization capabilities. Development took place at Hospital AGEL Trinec-Podlesi and VSB-Technical University of Ostrava between 2021 and 2024. The system processed DICOM data from CT and MRI scans through the Unity engine (version 2022.3.12f1, Unity Technologies, San Francisco, CA) running on a high-performance workstation equipped with an Intel Core i9-13900K processor, 64 GB RAM, and an NVIDIA RTX 4090 GPU.

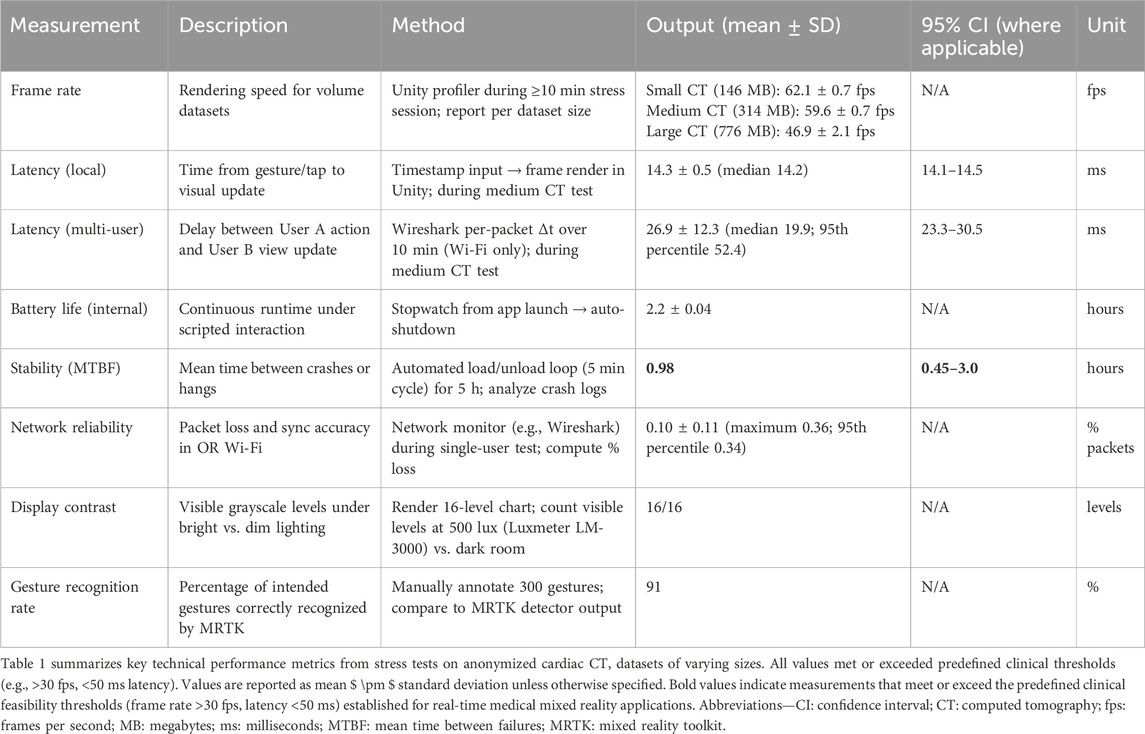

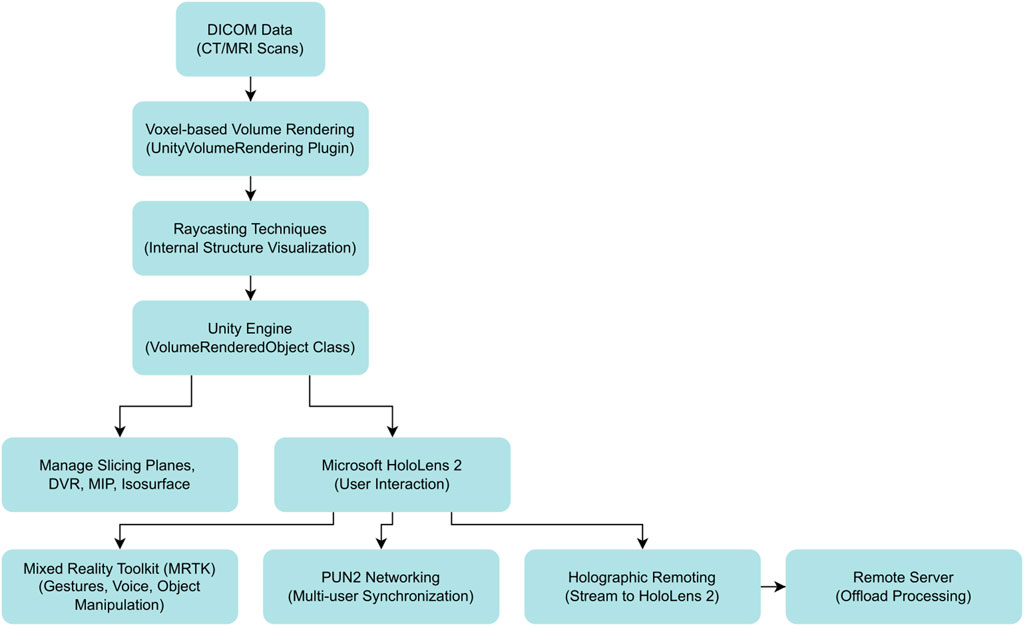

The core rendering pipeline utilized a modified version of the UnityVolumeRendering plugin (version 1.2.0) to transform DICOM slice data into interactive 3D volumes. Ray casting algorithms simulated light propagation through voxel-based datasets, enabling visualization of internal cardiac structures with direct volume rendering (DVR), maximum intensity projection (MIP), and isosurface modes (Lavik, 2023; Zhang et al., 2011). Critical modifications to the VolumeRenderedObject class enhanced real-time performance through improved slicing plane management and rendering mode switching, maintaining frame rates above 30 fps even with large datasets up to 776 MB. Figure 1 illustrates the overall data flow, from DICOM input to real-time visualization.
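As an illustration of the three rendering modes named above, the following minimal Python sketch operates on the intensity samples collected along a single ray, assuming they have already been resampled from the volume and normalized to [0, 1]. It is not the UnityVolumeRendering shader code: the function names, the transfer-function callable, and the 0.99 early-termination threshold are illustrative assumptions. DVR is shown as standard front-to-back alpha compositing, MIP keeps the brightest sample, and the isosurface mode returns the first sample that reaches an iso-value.

```python
from typing import Callable, Optional, Sequence, Tuple

# Hypothetical transfer function type: normalized density -> (r, g, b, alpha)
RGBA = Tuple[float, float, float, float]
TransferFunction = Callable[[float], RGBA]


def composite_dvr(samples: Sequence[float], transfer: TransferFunction,
                  early_exit: float = 0.99) -> RGBA:
    """DVR: front-to-back alpha compositing of the samples along one ray."""
    r_acc = g_acc = b_acc = a_acc = 0.0
    for density in samples:
        r, g, b, a = transfer(density)
        weight = (1.0 - a_acc) * a  # share of this sample not occluded by nearer ones
        r_acc += weight * r
        g_acc += weight * g
        b_acc += weight * b
        a_acc += weight
        if a_acc >= early_exit:  # early ray termination once the ray is nearly opaque
            break
    return (r_acc, g_acc, b_acc, a_acc)


def max_intensity_projection(samples: Sequence[float]) -> float:
    """MIP: the pixel shows the brightest sample encountered along the ray."""
    return max(samples)


def first_isosurface_hit(samples: Sequence[float], iso: float) -> Optional[int]:
    """Isosurface mode: index of the first sample at or above the iso-value."""
    for i, density in enumerate(samples):
        if density >= iso:
            return i
    return None


def _demo_transfer(density: float) -> RGBA:
    # Illustrative only: low densities transparent, higher densities grey and more opaque
    if density < 0.3:
        return (0.0, 0.0, 0.0, 0.0)
    return (density, density, density, min(1.0, density))


if __name__ == "__main__":
    ray = [0.05, 0.2, 0.65, 0.9, 0.4]
    print(composite_dvr(ray, _demo_transfer))
    print(max_intensity_projection(ray))  # 0.9
    print(first_isosurface_hit(ray, 0.5))  # 2
```

In a GPU implementation the same loop runs per pixel in a fragment shader; early ray termination is one common way to keep frame rates high on large volumes, although the source does not specify which optimizations the modified plugin applies.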

Figure 1. End-to-end data flow of the mixed-reality platform for non-coronary interventions. Diagnostic DICOM datasets (CT/MRI) are ingested and converted to 3D voxel volumes by the modified UnityVolumeRendering plug-in. Ray-casting shaders render internal anatomy, which is managed in real time within the Unity engine by the plug-in’s VolumeRenderedObject class. From this core, three synchronized pathways emerge: (i) local manipulation of slicing planes and alternative render modes (direct-volume rendering [DVR], maximum-intensity projection [MIP], and isosurfaces); (ii) in-situ visualization and hands-free control on Microsoft HoloLens 2 via the Mixed Reality Toolkit (MRTK, 2021); and (iii) multi-user collaboration using Photon Unity Networking (PUN2) and holographic remoting, which streams the scene to head-mounted displays while off-loading computation to a remote server. Abbreviations—DICOM: Digital Imaging and Communications in Medicine; DVR: direct-volume rendering; MIP: maximum-intensity projection; MRTK: Mixed Reality Toolkit; PUN2: Photon Unity Networking 2.

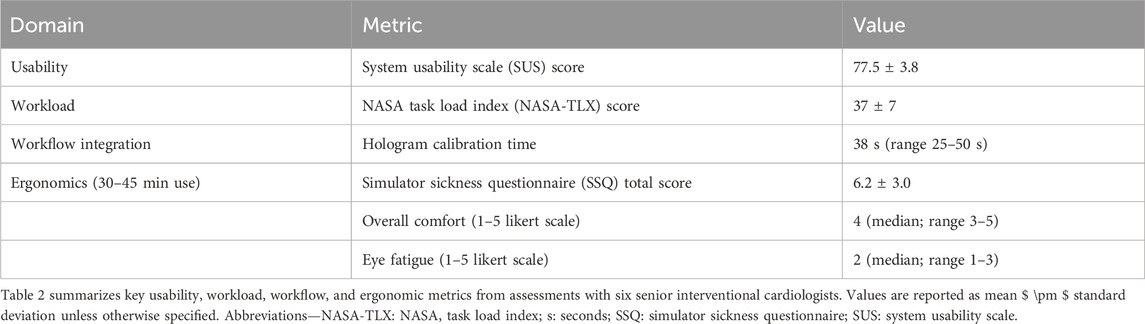

User interaction was implemented through Mixed Reality Toolkit (MRTK) version 2.7.2.0, providing hands-free manipulation essential for sterile surgical environments. The system recognized standard gestures including air-tap for selection, pinch-and-drag for rotation, and hand-ray pointing for dynamic slicing plane adjustments. Multi-user collaboration was enabled through Photon Unity Networking (PUN2) version 2.47.0, facilitating real-time synchronization among up to eight simultaneous users with state updates every 16 milliseconds via dedicated 802.11ac Wi-Fi infrastructure. The holographic remoting data flow for multi-user collaboration is summarized schematically in Figure 2.

Figure 2. Schematic of holographic remoting data flow for multi-user synchronization in mixed reality cardiology applications. This schematic outlines the data flow for holographic remoting in the developed mixed reality system, enabling offloaded rendering and multi-user collaboration for non-coronary interventions. Processed DICOM volumes are rendered on a high-performance workstation running Unity, then streamed via a local wireless network (802.11ac Wi-Fi) to Microsoft HoloLens 2 headsets. Key pathways include real-time synchronization using Photon Unity Networking (PUN2) for up to eight users, with low-latency updates (26.9 ± 12.3 ms in validation). This offloads computational demands from the headset, supporting remote consultations and educational scenarios in sterile cath-lab environments. Abbreviations—3D: three-dimensional; DICOM: Digital Imaging and Communications in Medicine; PUN2: Photon Unity Networking 2.

2.2 Hardware configuration and deployment environment

The system operated through holographic remoting, streaming rendered content from the workstation to Microsoft HoloLens 2 headsets (Microsoft Corporation, Redmond, WA). This architecture offloaded computational demands while preserving the HoloLens 2’s 52-degree diagonal field of view and integrated eye-tracking capabilities. Testing took place in a simulated cardiac catheterization laboratory under standard ambient lighting of 500 lux, measured using a calibrated luxmeter (LM-3000, Extech Instruments).

2.3 Validation protocols and assessment methods

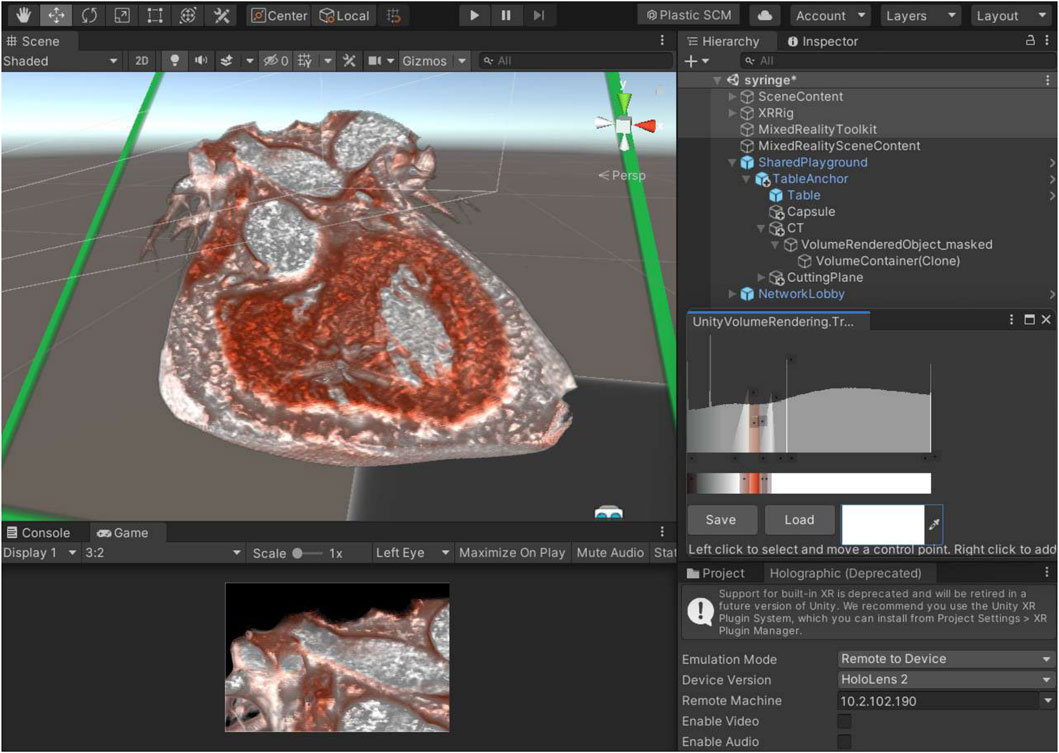

System validation encompassed three domains: technical performance metrics, usability evaluation, and clinical workflow integration. A subsequent validation phase added detailed characterization of registration accuracy, latency distribution, reliability, and robustness under simulated clinical conditions. Testing used anonymized cardiac CT datasets representing typical clinical volumes: small (146 MB), medium (314 MB), and large (776 MB) acquisitions. The Unity development environment is shown in Figure 3, and the full development and deployment process (DICOM import, transfer-function tuning, dynamic slicing, and holographic streaming to HoloLens 2) is demonstrated step by step in Supplementary Video S1.

Figure 3. Unity editor interface for DICOM-based volume rendering and component integration in mixed reality development. This screenshot depicts the Unity development environment configured for processing and rendering DICOM datasets into interactive 3D volumes for mixed reality applications in interventional cardiology. The main scene view (left side of screen) shows a voxel-based rendering of a cardiac structure (e.g., cropped DICOM model of a heart valve), with real-time visualization enabled by the modified UnityVolumeRendering plugin. The hierarchy and inspector panels (right side) highlight integrated components, including the Mixed Reality Toolkit (MRTK) for gesture-based interactions, Photon Unity Networking (PUN2) for multi-user synchronization, and holographic remoting settings. This setup supports non-coronary procedures such as transcatheter valve replacements by allowing dynamic slicing and manipulation while maintaining high frame rates. The bottom-right panel includes a preview of transfer function adjustments for tissue density visualization. Abbreviations—DICOM: Digital Imaging and Communications in Medicine; MRTK: Mixed Reality Toolkit; PUN2: Photon Unity Networking 2.

Technical performance assessment used the Unity Profiler to monitor frame rates during 10-min stress test sessions. Local latency measurements captured the interval from gesture input to visual update using Unity’s internal timestamping. Multi-user synchronization delays were analyzed through Wireshark packet capture over the wireless network. System stability was evaluated through automated load/unload cycles over 5-h periods, recording the mean time between failures (MTBF). Gesture recognition accuracy was determined by manual annotation of 300 attempted interactions under varying lighting conditions.

For the usability evaluation, we recruited six senior interventional cardiologists, each with over 20 years of cardiology experience and more than 10 years in interventional procedures; none had prior mixed reality exposure. Following a standardized 10-min tutorial, participants completed two benchmark tasks: locating and marking a ventricular septal defect and aligning a mitral valve imaging plane. Task completion times and error rates were recorded. Post-task assessments included the System Usability Scale (SUS) for overall system acceptability and the NASA Task Load Index (NASA-TLX) for workload characterization.
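The two questionnaires have simple scoring rules, sketched below in Python for reference. The SUS function follows the standard scheme (odd items contribute the rating minus 1, even items 5 minus the rating, and the sum is multiplied by 2.5). For NASA-TLX, the sketch assumes the unweighted ("raw") variant, the mean of the six 0–100 subscale ratings; the study does not state whether the weighted pairwise-comparison variant was used.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Standard SUS score (0-100) from ten items rated 1-5."""
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    if any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS responses must be between 1 and 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd (positively worded) items: r - 1; even (negatively worded) items: 5 - r
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5  # rescale the 0-40 raw sum to 0-100


def raw_tlx(subscales: Sequence[float]) -> float:
    """Unweighted NASA-TLX: mean of the six 0-100 subscale ratings.

    Assumption: raw TLX; the weighted variant would use pairwise-comparison weights.
    """
    if len(subscales) != 6:
        raise ValueError("NASA-TLX has six subscales")
    return sum(subscales) / 6
```

For example, a participant who answers 4 on every odd item and 2 on every even item scores 75.0 on the SUS.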

For workflow integration, we measured the time required to calibrate holograms without external reference markers. System logs tracked mixed reality view activations during simulated procedures. Two operating cardiologists rated communication benefits using the Mixed-Reality Communication Benefit Questionnaire (MRCB-Q), a validated 3-item Likert-scale instrument assessing shared understanding, reduced need for verbal clarification, and development of a common mental model.

Statistical analysis calculated means ± standard deviation for continuous variables, with 95% confidence intervals computed for latency measurements. All analyses were performed using Python 3.12 with NumPy and SciPy libraries. This study received ethical approval from the Ethical Committee of Hospital AGEL Trinec-Podlesi (approval EK 67/21), with all participants providing written informed consent.
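The summary statistics described above can be computed along the following lines. This stdlib-only sketch is not the authors’ NumPy/SciPy code, and it assumes a normal-approximation interval (mean ± z·SD/√n); the study does not state which interval construction was used, and for small samples a Student t critical value (for example `scipy.stats.t.ppf(0.975, n - 1)`) would be the more conservative choice.

```python
import math
import statistics
from typing import Sequence, Tuple


def mean_sd_ci(values: Sequence[float],
               confidence: float = 0.95) -> Tuple[float, float, float, float]:
    """Return (mean, sample SD, CI lower, CI upper) using a normal approximation."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    mean = statistics.fmean(values)
    sd = statistics.stdev(values)  # sample SD with n - 1 denominator
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)  # ~1.96 for 95%
    half_width = z * sd / math.sqrt(n)
    return mean, sd, mean - half_width, mean + half_width
```

Because the half-width scales with SD/√n, the width of a reported latency interval depends on the number of samples as well as on their spread.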

2.4 Technical validation

2.4.1 Registration accuracy and stability

We evaluated the geometric fidelity of hologram alignment using a checkerboard phantom with embedded fiducials. The protocol involved overlaying virtual markers derived from CT data onto the physical phantom. Fiducial Registration Error (FRE) was computed as the Euclidean distance between corresponding virtual and physical fiducial coordinates. Target Registration Error (TRE) was calculated on non-fiducial checkpoints. This process was repeated across 10 independent calibration sessions performed by two operators. For stability analysis, TRE was re-measured at 0°, 20°, and 40° gaze angles every 5 min over a 30-min period after initial calibration. Analysis reported mean ± SD, 95th percentile (P95), and 99th percentile (P99) values.
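FRE and TRE differ only in which points enter the calculation (the fiducials used for alignment versus held-out checkpoints); both are per-point Euclidean distances summarized as mean ± SD, P95, and P99. A minimal Python sketch, assuming paired virtual and physical coordinates in millimetres pooled across sessions (the function names are hypothetical):

```python
import math
import statistics
from typing import Dict, Sequence, Tuple

Point3D = Tuple[float, float, float]


def registration_errors(virtual: Sequence[Point3D],
                        physical: Sequence[Point3D]) -> list[float]:
    """Euclidean distance (mm) between each virtual point and its physical counterpart."""
    if len(virtual) != len(physical):
        raise ValueError("point lists must be paired one-to-one")
    return [math.dist(v, p) for v, p in zip(virtual, physical)]


def summarize_errors(errors: Sequence[float]) -> Dict[str, float]:
    """Mean, sample SD, P95 and P99 of a set of registration errors."""
    # 99 cut points; "inclusive" interpolates linearly between order statistics
    cuts = statistics.quantiles(errors, n=100, method="inclusive")
    return {
        "mean": statistics.fmean(errors),
        "sd": statistics.stdev(errors),
        "p95": cuts[94],
        "p99": cuts[98],
    }
```

Calling `registration_errors` on fiducial pairs yields the FRE samples and on checkpoint pairs the TRE samples. The `method="inclusive"` option interpolates in the same way as NumPy’s default linear percentile method.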

2.4.2 Latency characterization

Local latency (gesture-to-photon) was measured using a Unity high-resolution clock (1 ms precision) to log the time between gesture event callbacks and the corresponding frame rendering. Multi-user latency was determined by comparing dedicated Photon timestamps embedded in each data packet across different headsets. Latency distributions were summarized in 10-s windows over 10-min test sessions, yielding the median (P50), P75, P90, P95, and P99 values. The target threshold, taken from the literature, was set at 50 ms for single-user latency, and multi-user measurements were compared against the same benchmark, following standardized performance metrics for medical mixed reality systems (Wang et al., 2025). These distributional analyses represent an expanded characterization of the same dataset used for the originally reported mean values.
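The windowed distributional summary can be sketched as follows, assuming each sample has already been reduced to a single latency value (for local latency, frame-render time minus gesture-callback time; for multi-user latency, receive minus send timestamp after clock-offset correction). The `frac_over_target` field is an illustrative addition that counts samples above the 50 ms target, and the function names are hypothetical.

```python
import statistics
from collections import defaultdict
from typing import Dict, Iterable, List, Sequence, Tuple

PERCENTILES = (50, 75, 90, 95, 99)


def latency_summary(latencies_ms: Sequence[float],
                    target_ms: float = 50.0) -> Dict[str, float]:
    """P50-P99 plus the fraction of samples above the latency target."""
    # 99 cut points; cuts[p - 1] is the p-th percentile (linear interpolation)
    cuts = statistics.quantiles(latencies_ms, n=100, method="inclusive")
    summary = {f"P{p}": cuts[p - 1] for p in PERCENTILES}
    summary["frac_over_target"] = sum(x > target_ms for x in latencies_ms) / len(latencies_ms)
    return summary


def windowed_summaries(events: Iterable[Tuple[float, float]], window_s: float = 10.0,
                       target_ms: float = 50.0) -> Dict[int, Dict[str, float]]:
    """Group (timestamp_s, latency_ms) samples into fixed windows and summarize each."""
    windows: Dict[int, List[float]] = defaultdict(list)
    for timestamp_s, latency_ms in events:
        windows[int(timestamp_s // window_s)].append(latency_ms)
    # Windows with fewer than two samples are skipped (too few for percentiles)
    return {w: latency_summary(lats, target_ms)
            for w, lats in sorted(windows.items()) if len(lats) >= 2}
```

Calling `latency_summary` on all samples from a session gives session-level P50–P99 values, while `windowed_summaries` shows how the tail varies between 10-s windows.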

2.4.3 Network measurement protocol

Network performance was assessed in 10-s windows. Key metrics included the packet loss fraction (lost/total packets) and the retransmission fraction, both derived from transport logs. Inter-device clock alignment was performed by measuring the initial offset between the devices’ Unity system clocks and correcting it at the start of each session. Residual synchronization jitter was calculated as the root mean square (RMS) of the offset remaining after this correction.
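The network metrics above reduce to a few ratios and one RMS. The sketch below assumes per-window counters have already been parsed from transport logs and that the clock offset is defined as receiver clock minus sender clock; the class and field names are hypothetical, and jitter is computed over the residual offsets remaining after the start-of-session correction.

```python
import math
from dataclasses import dataclass
from typing import Sequence


@dataclass
class WindowCounts:
    """Per-window packet counters (hypothetical fields parsed from transport logs)."""
    total: int
    lost: int
    retransmitted: int

    def loss_fraction(self) -> float:
        return self.lost / self.total if self.total else 0.0

    def retransmission_fraction(self) -> float:
        return self.retransmitted / self.total if self.total else 0.0


def corrected_one_way_latency_ms(send_ms: float, recv_ms: float, offset_ms: float) -> float:
    """One-way latency after removing the (receiver minus sender) clock offset."""
    return recv_ms - send_ms - offset_ms


def rms_jitter_ms(residual_offsets_ms: Sequence[float]) -> float:
    """RMS of the clock offsets remaining after the start-of-session correction."""
    if not residual_offsets_ms:
        raise ValueError("no offset samples")
    return math.sqrt(sum(x * x for x in residual_offsets_ms) / len(residual_offsets_ms))
```

Without the offset correction, any constant skew between headset clocks would appear directly as added (or negative) latency, which is why the offset is removed before the distributional analysis.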

2.4.4 Reliability and FMEA

Reliability was quantified using Mean Time Between Failures (MTBF) and Mean Time To Repair (MTTR). We defined MTBF as the total operating time divided by the number of failure events (crashes and hangs). MTTR was defined as the average time required to relaunch the application and restore its state. These metrics were derived from a scripted 5-h stress loop consisting of repeated 5-min volume swap cycles. For confidence intervals on MTBF, we assumed a Poisson model of failures. A Failure Mode and Effect Analysis (FMEA) was conducted to identify top system risks, assign Risk Priority Numbers (RPN), and define mitigations (e.g., auto-restart functionality, Quality of Service network configurations).
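The MTBF definition and the Poisson-based interval can be sketched in stdlib Python as below. For a Poisson failure process, the chi-square quantiles needed for the interval have even degrees of freedom and can be computed exactly through the Poisson cumulative distribution. The sketch uses the time-truncated convention (2r + 2 degrees of freedom for the lower bound, 2r for the upper), which is an assumption because the study does not name its convention; all function names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def chi2_cdf_even_df(x: float, df: int) -> float:
    """Chi-square CDF for even df: P(chi2_2k <= x) = 1 - P(Poisson(x/2) <= k - 1)."""
    if df <= 0 or df % 2:
        raise ValueError("df must be a positive even integer")
    k, m = df // 2, x / 2.0
    term = math.exp(-m)  # Poisson pmf at 0
    tail = term
    for i in range(1, k):
        term *= m / i  # pmf(i) = pmf(i - 1) * m / i
        tail += term
    return 1.0 - tail


def chi2_ppf_even_df(p: float, df: int) -> float:
    """Quantile of the even-df chi-square distribution, found by bisection."""
    lo, hi = 0.0, 10.0 * df + 100.0
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if chi2_cdf_even_df(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def mtbf_with_ci(operating_h: float, failures: int,
                 confidence: float = 0.95) -> Tuple[float, float, float]:
    """Point MTBF (operating time / failures) and a two-sided Poisson-based CI."""
    if failures < 1:
        raise ValueError("at least one failure is needed for a finite point estimate")
    alpha = 1.0 - confidence
    mtbf = operating_h / failures
    lower = 2.0 * operating_h / chi2_ppf_even_df(1.0 - alpha / 2.0, 2 * failures + 2)
    upper = 2.0 * operating_h / chi2_ppf_even_df(alpha / 2.0, 2 * failures)
    return mtbf, lower, upper


def mttr_minutes(repair_times_min: Sequence[float]) -> float:
    """MTTR: mean time from failure to relaunched application with restored state."""
    return sum(repair_times_min) / len(repair_times_min)


if __name__ == "__main__":
    # Assumption: operating time = 5-h loop minus five restarts of ~1.3 min each
    operating_h = 5.0 - 5 * 1.3 / 60.0
    mtbf, lower, upper = mtbf_with_ci(operating_h, failures=5)
    print(f"MTBF = {mtbf:.2f} h (95% CI {lower:.2f}-{upper:.2f} h)")
```

As a consistency check on the definitions, subtracting five restarts of 1.3 min from the 5-h loop leaves about 4.89 h of operating time, which divided by five failure events gives approximately 0.98 h; whether operating time was counted this way is an assumption, and the interval bounds shift with the chosen convention.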

2.4.5 CT dataset metadata

For transparency and reproducibility, all CT datasets used for validation were annotated with their acquisition parameters, including slice thickness, reconstruction kernel, tube voltage (kVp), reconstruction phase, and voxel resampling. These details, along with any preprocessing steps such as isotropic resampling, cropping, and the transfer-function templates used for visualization, are listed in Supplementary Table S1.

2.4.6 Gesture robustness under OR conditions

Six cardiologists performed a series of predefined gestures (air-tap, pinch, grab, swipe) under simulated operating room (OR) conditions. The conditions tested included wearing sterile nitrile gloves, standard ambient lighting (500 lux), bright task lighting (1000 lux), and partial hand occlusion by a surgical drape. One hundred trials were conducted per gesture per condition (50 trials for occlusion). The primary outputs were per-gesture first-attempt accuracy rates and confusion matrices to characterize error patterns.
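Per-gesture accuracy and the confusion matrices can be tallied from (intended, recognized) pairs, with one list of trials per test condition. In this sketch, the gesture labels come from the protocol, while the extra `none` column for unrecognized attempts and the function names are assumptions.

```python
from collections import Counter
from typing import Dict, Iterable, List, Sequence, Tuple

# (intended gesture, recognized gesture); "none" marks an attempt that was not recognized
Trial = Tuple[str, str]


def confusion_matrix(trials: Iterable[Trial], gestures: Sequence[str],
                     miss_label: str = "none") -> Dict[str, Dict[str, int]]:
    """Rows: intended gesture. Columns: recognized gesture plus a 'missed' column."""
    counts = Counter(trials)
    columns: List[str] = list(gestures) + [miss_label]
    return {g: {c: counts[(g, c)] for c in columns} for g in gestures}


def first_attempt_accuracy(trials: Iterable[Trial]) -> Dict[str, float]:
    """Fraction of first attempts per intended gesture that were recognized correctly."""
    totals: Counter = Counter()
    correct: Counter = Counter()
    for intended, recognized in trials:
        totals[intended] += 1
        if recognized == intended:
            correct[intended] += 1
    return {g: correct[g] / totals[g] for g in totals}


if __name__ == "__main__":
    gestures = ["air-tap", "pinch", "grab", "swipe"]
    # Hypothetical trials for one condition (e.g., nitrile gloves at 500 lux)
    gloves_500_lux = [("air-tap", "air-tap"), ("pinch", "grab"), ("swipe", "none")]
    print(confusion_matrix(gloves_500_lux, gestures))
    print(first_attempt_accuracy(gloves_500_lux))
```

Off-diagonal counts distinguish misclassifications (for example a pinch read as a grab) from outright misses, which is the error-pattern information the confusion matrices are meant to capture.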

2.4.7 Comfort and eye strain assessment

The same six cardiologists wore the HoloLens 2 headset for 30–45 min of continuous interaction to assess ergonomic factors. Following the session, participants completed the Simulator Sickness Questionnaire (SSQ) and a 5-point Likert scale questionnaire rating overall comfort and eye strain. The outputs included mean ± SD for the SSQ total score and distributions of comfort and eye fatigue ratings.
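The SSQ total score is conventionally computed from 16 symptom items rated 0–3, grouped into nausea, oculomotor, and disorientation subscales (several items load on two subscales), with fixed weights applied to the raw sums. The sketch below encodes the commonly published item mapping and weights (9.54, 7.58, and 13.92 for the subscales, 3.74 for the total); the item keys are hypothetical identifiers, and the mapping should be checked against the questionnaire version actually administered.

```python
from typing import Dict, Mapping

# Item-to-subscale mapping of the 16-item SSQ (some items load on two subscales)
NAUSEA = ("general_discomfort", "increased_salivation", "sweating", "nausea",
          "difficulty_concentrating", "stomach_awareness", "burping")
OCULOMOTOR = ("general_discomfort", "fatigue", "headache", "eyestrain",
              "difficulty_focusing", "difficulty_concentrating", "blurred_vision")
DISORIENTATION = ("difficulty_focusing", "nausea", "fullness_of_head", "blurred_vision",
                  "dizziness_eyes_open", "dizziness_eyes_closed", "vertigo")
ALL_ITEMS = set(NAUSEA) | set(OCULOMOTOR) | set(DISORIENTATION)


def ssq_scores(ratings: Mapping[str, int]) -> Dict[str, float]:
    """Weighted SSQ scores from item ratings 0-3; unreported items count as 0."""
    unknown = set(ratings) - ALL_ITEMS
    if unknown:
        raise ValueError(f"unknown SSQ items: {sorted(unknown)}")
    if any(not 0 <= v <= 3 for v in ratings.values()):
        raise ValueError("SSQ ratings must be between 0 and 3")
    raw = {name: sum(ratings.get(item, 0) for item in items)
           for name, items in (("N", NAUSEA), ("O", OCULOMOTOR), ("D", DISORIENTATION))}
    return {
        "nausea": raw["N"] * 9.54,
        "oculomotor": raw["O"] * 7.58,
        "disorientation": raw["D"] * 13.92,
        "total": (raw["N"] + raw["O"] + raw["D"]) * 3.74,
    }
```

Because the total is the sum of the three raw subscale scores multiplied by 3.74, a group mean of 6.2 corresponds to fewer than two raw rating points per participant on average.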

3 Results

Validation outcomes from technical performance tests, usability evaluations, and workflow assessments are presented below. All metrics met the predefined thresholds for clinical feasibility, including frame rates exceeding 30 fps and mean latencies below 50 ms.

3.1 System performance

Frame rates decreased with dataset size but remained above the 30 fps real-time threshold in all cases: 62.1 ± 0.7 fps for small (146 MB), 59.6 ± 0.7 fps for medium (314 MB), and 46.9 ± 2.1 fps for large (776 MB) datasets.

Registration accuracy, evaluated on a physical phantom, was high. The mean Fiducial Registration Error (FRE) was 0.94 ± 0.26 mm (95th percentile: 1.35 mm), and the mean Target Registration Error (TRE) was 1.61 ± 0.33 mm (95th percentile: 2.08 mm) (Supplementary Table S2). Hologram stability was excellent: TRE showed negligible drift over the 30-min observation period at all tested gaze angles (0°, 20°, and 40°), as shown in Supplementary Figures S1, S2.

Local latency (gesture to visual update) measured 14.3 ± 0.5 ms (95% CI: 14.1–14.5 ms; median 14.2 ms).

Multi-user latency averaged 26.9 ± 12.3 ms (95% CI: 23.3–30.5 ms), with a full distribution analysis showing a median of 19.9 ms, a 95th percentile of 52.4 ms, and a 99th percentile of 55.8 ms (Supplementary Figure S3; Supplementary Table S3).

Battery life was 2.2 ± 0.04 h under continuous interaction. In the 5-h stress loop, we observed five failure events (1 crash, 4 hangs), yielding MTBF = 0.98 h (95% CI ≈ 0.45–3.0 h) and MTTR = 1.3 min (Supplementary Table S4). Network packet loss was 0.10% ± 0.11% (maximum 0.36%; 95th percentile 0.34%). All 16 grayscale levels were distinguishable on the display at 500 lux. Gesture recognition achieved 91% accuracy across 300 attempts. A comprehensive summary of these technical performance metrics is provided in Table 1.

3.2 Usability outcomes

All six cardiologists completed the tasks efficiently despite having no prior MR experience.

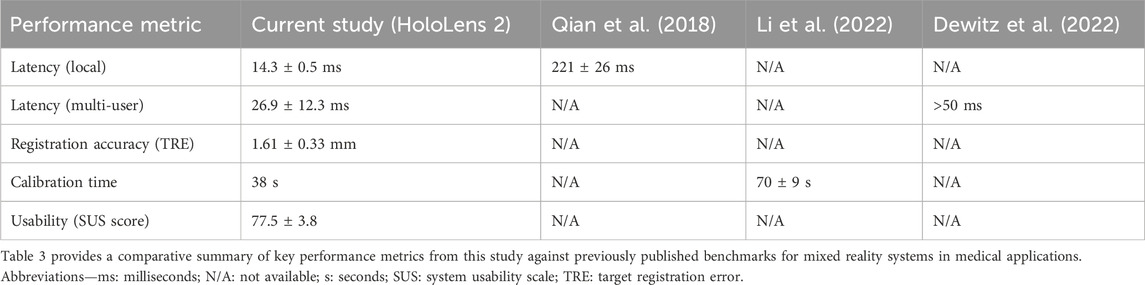

Task 1 (locate ventricular septal defect) took 37.7 ± 4.4 s with 0.7 ± 0.8 errors. Task 2 (align mitral plane) required 56.7 ± 5.5 s with 1.3 ± 0.5 errors. Errors mainly involved initial tracking losses or misplaced markers/slices. SUS scores averaged 77.5 ± 3.8, indicating good usability. NASA-TLX workload scored 37 ± 7, reflecting moderate demand; the physical effort and frustration subscales scored highest. Qualitative feedback praised the intuitive controls, although participants requested a wider field of view and improved voice control.

3.3 Workflow-integration metrics

Calibration aligned holograms in 38 s (range 25–50 s).

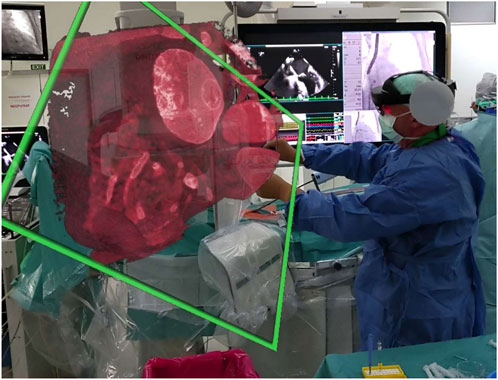

During mock procedures, MR views were activated 6 times per session, with each activation lasting 19 s (range 15–22 s), mainly for catheter navigation. MRCB-Q ratings averaged 4.5 ± 0.2 (Dr. A: 4.3; Dr. B: 4.7), indicating enhanced team communication and shared understanding (see Figure 4 for an example of intraoperative holographic overlay during a simulated intervention).

Figure 4. Intraoperative holographic overlay of 3D cardiac anatomy via Microsoft HoloLens 2 during a non-coronary intervention. This image illustrates the surgeon’s first-person view during a simulated non-coronary cardiac intervention, with a 3D holographic rendering of patient-specific anatomy (e.g., thoracic structures from CT-derived DICOM data) overlaid in real time via Microsoft HoloLens 2. The hologram (highlighted in green) enables hands-free visualization of internal features, such as valve or septal anatomy, without disrupting sterility. This supports precise catheter navigation and team collaboration, as reflected in the workflow metrics (e.g., 38 s calibration time). The setup integrates with cath-lab equipment (e.g., fluoroscopy monitors in the background), reducing cognitive load in procedures such as atrial septal defect repairs. Abbreviations—3D: three-dimensional; CT: computed tomography; DICOM: Digital Imaging and Communications in Medicine.

3.4 Expanded validation in simulated clinical conditions

3.4.1 Gesture robustness

Gesture recognition accuracy was evaluated under simulated OR conditions with participants wearing sterile nitrile gloves. Overall first-attempt accuracy remained high at 94%–96% under standard 500 lux lighting. Performance showed minor degradation under bright task lighting (1000 lux), with accuracy decreasing by approximately 2 percentage points. Partial hand occlusion had a larger impact, reducing accuracy by approximately 8 percentage points. A representative confusion matrix is provided in Supplementary Figure S4, with full stratified data in Supplementary Table S5 and detailed confusion matrices in Supplementary Table S6.

3.4.2 Ergonomics and comfort

After 30–45 min of continuous use, participants reported low levels of simulator sickness, with a mean SSQ total score of 6.2 ± 3.0. Overall comfort was rated favorably, with a median score of 4 on a 5-point Likert scale (range: 3–5). Eye fatigue was rated as low, with all participants scoring between 1 and 3 on a 5-point scale. These results are detailed in Supplementary Figure S5 and Supplementary Table S7. A summary of these usability, workflow, and ergonomic findings is provided in Table 2.

4 Discussion

Our findings demonstrate a robust MR system for non-coronary interventions, achieving high performance and usability in simulated settings. These results align with XR’s potential to transform cardiology through enhanced visualization and collaboration.

4.1 Principal findings versus prior MR work

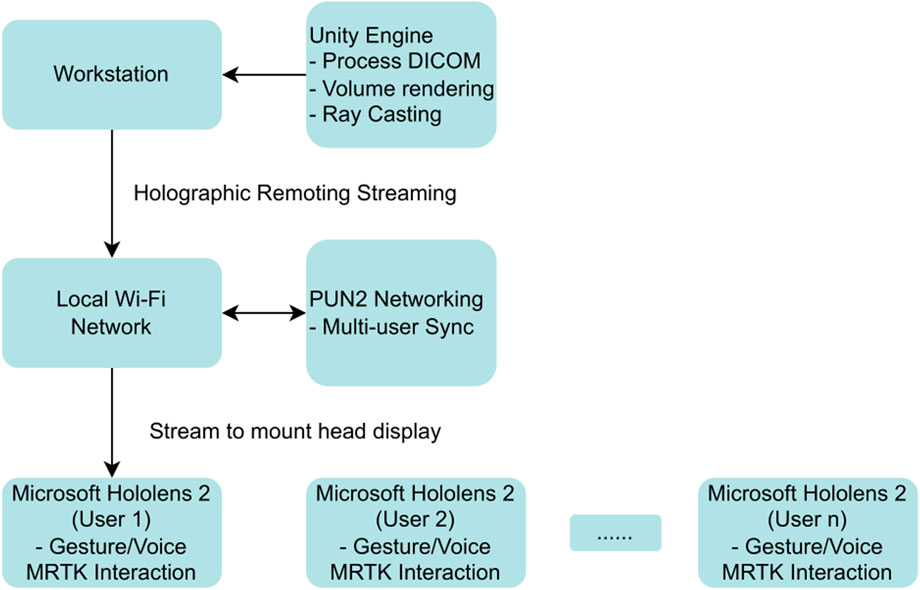

The achieved frame rates of 59.6 ± 0.7 fps for medium datasets exceed the 30 fps threshold commonly reported in earlier HoloLens 1 studies and align with recent benchmarks for real-time rendering in complex cardiac simulations (Muangpoon et al., 2020; Pratt et al., 2018). Hosseini et al. (2024) reported similar frame rates but emphasized the need for stability under dynamic movements, which our optimizations addressed through modified rendering algorithms.

Local latency of 14.3 ± 0.5 ms is substantially lower than the 221 ± 26 ms documented by Qian et al. (2018) in optical see-through display evaluations, highlighting advances in gesture-to-photon response times that are crucial for procedural accuracy. Our multi-user latency of 26.9 ± 12.3 ms enables seamless collaboration, a marked improvement over the higher delays observed by Dewitz et al. (2022) in 3D scan setups for cardiac surgery. The detailed latency distribution shows that while the system performs very well under typical conditions (median 19.9 ms), tail performance is network-dependent, with rare spikes exceeding our 50 ms target (95th percentile 52.4 ms). This underscores the importance of deploying such systems on dedicated, high-quality wireless networks with Quality of Service (QoS) prioritization in clinical settings to mitigate the risk of intermittent lag during critical procedures.

Gesture accuracy at 91% matches established MRTK benchmarks and surpasses the 85% reported in non-medical AR applications. This compares favorably to recent studies (Rokhsaritalemi et al., 2020; Sadeghi et al., 2020) integrating XR with AI for interventional guidance, where recognition rates hovered around 88%–90% under variable lighting. SUS scores of 77.5 ± 3.8 indicate superior user acceptance compared to the 72 ± 5 reported by Hsieh and Lin (2017) in HoloLens 1 trials, suggesting that our gesture and voice redundancies reduced the learning curve for novice users. Workflow calibration at 38 s is notably faster than the 70 ± 9 s required by Li et al. (2022) for manual alignments in transforaminal endoscopy studies. A detailed comparison of these benchmarks is presented in Table 3.

4.2 Clinical and educational relevance

Clinically, the system enhances precision in procedures such as TAVR by providing immersive 3D overlays that minimize the need for mental reconstruction. This could shorten procedure times by up to 13%, as observed by Deng et al. (2021) in simulated image-based planning trials. Real-time visualization could also reduce complications such as valve misalignment by offering multiple viewing angles without additional radiation exposure, consistent with recent systematic reviews (Silva et al., 2024) and with the mixed-reality system for cardiac MRI guidance described by Franson et al. (2021).

Multi-user synchronization supports interdisciplinary team decisions during complex cases, echoing the congenital heart disease applications reported by Lau et al. (2022), in which shared holograms improved surgical outcomes. In peripheral vessel treatments, the system’s low latency keeps dynamic adjustments in step with real-time fluoroscopy, potentially decreasing contrast agent use (Ferreira-Junior and Azevedo-Marques, 2025).

Educationally, the system enables realistic trainee simulations without compromising operating room sterility. Shared holograms facilitate remote mentoring, addressing critical gaps identified by Escobar-Castillejos et al. (2016) in their review of haptic simulators. Systematic reviews by Barteit et al. (2021) highlight how such systems can improve knowledge retention by 20%–30% through interactive scenarios.

4.3 Regulatory science and ethical considerations

The development aligns with regulatory frameworks for medical software, including IEC 62304 for software lifecycle processes and ISO 14971 for risk management. Usability engineering followed IEC 62366-1, ensuring the interface meets clinical user needs (Condino et al., 2018). Future deployment will require MDR compliance in Europe and FDA 510(k) clearance in the United States as Class II medical device software.

Ethical compliance was ensured via institutional approval (EK 67/21). Anonymized DICOM data protected patient privacy in line with GDPR guidelines (Franson et al., 2021). Multi-user features raise data-sharing risks, which were mitigated through local servers and consent protocols.

Equitable access remains a concern, as high hardware costs may limit adoption in low-resource settings (Escobar-Castillejos et al., 2016). Future iterations must address bias in AI integrations to ensure fair outcomes across populations.

4.4 Limitations

This study has several limitations that frame the context of our findings and guide future work. First, the validation was conducted in a simulated environment rather than during live patient procedures. This controlled setting may not fully capture the dynamic and unpredictable nature of a real clinical workflow, including factors such as patient movement or unexpected anatomical variations. Second, the usability testing involved a small, homogeneous sample of six senior interventional cardiologists. While their expertise is valuable, this limits the generalizability of our findings to a broader population of users with varying levels of experience.

A significant technical limitation is that the current system does not implement real-time registration or fusion with intraoperative imaging modalities like fluoroscopy or ultrasound. Its primary role is for pre-procedural data visualization and intraoperative consultation, not for direct, real-time guidance overlaid on live imaging. Furthermore, the system is subject to the inherent hardware constraints of the Microsoft HoloLens 2, including its limited field of view (FOV) and vergence-accommodation conflicts (Kramida, 2016), which we mitigated with software features like zoom presets and a recentering hotkey. Our testing also revealed that while gesture controls are robust, their accuracy can be degraded by challenging OR conditions such as partial hand occlusion. Similarly, voice control robustness can decrease in noisy environments, for which we implemented a push-to-talk function as a workaround.

Finally, while our new ergonomic assessment showed high comfort and low eye strain for sessions of up to 45 min, we have not evaluated long-term fatigue from wearing the device for periods exceeding 2 h (Hirzle et al., 2024). Taken together, these points suggest that the system in its current form is best suited to intermittent, focused use during critical procedural phases, such as confirming device placement or consulting on complex anatomy, rather than to continuous wear throughout an entire procedure.

4.5 Future work

Future developments will incorporate AI for automated anatomical structure recognition and segmentation. Deep learning models similar to those reviewed by Chan et al. (2020) could identify key features such as valve annuli in real time, potentially reducing manual preprocessing time by 50% (Ranjbarzadeh et al., 2023).

Cloud rendering solutions will be explored to offload computational demands. Platforms like Azure or AWS could enhance accessibility in remote clinics, aligning with XR trends emphasizing edge computing for low-latency healthcare applications (Ara et al., 2021; Tanbeer and Sykes, 2024).
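A simple latency budget shows why edge computing matters for cloud rendering. In this hedged sketch, a remotely rendered frame costs the network round trip plus server render, encode, and decode time. The result is then expressed in display refreshes. The stage breakdown, the class and function names, and all numbers are illustrative assumptions. They are not measurements from this study or from any specific platform.

```python
import math
from dataclasses import dataclass


@dataclass
class RemoteRenderStages:
    """Per-frame stage timings in milliseconds (all values are assumptions)."""
    network_rtt_ms: float  # pose upload plus frame download round trip
    server_render_ms: float
    encode_ms: float
    decode_ms: float


def pipeline_latency_ms(s: RemoteRenderStages) -> float:
    """Pose-to-display latency of one remotely rendered frame."""
    return s.network_rtt_ms + s.server_render_ms + s.encode_ms + s.decode_ms


def frames_behind(latency_ms: float, refresh_hz: float) -> int:
    """Number of display refreshes that elapse before the frame can be shown."""
    frame_ms = 1000.0 / refresh_hz
    return math.ceil(latency_ms / frame_ms)
```

Assume a 60 Hz display and fixed non-network stages totaling 15 ms. A 10 ms edge round trip then gives 25 ms in total, or 2 refreshes behind. A 60 ms round trip to a distant region gives 75 ms, or 5 refreshes behind. The network term dominates, and that term is what edge deployment targets.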

Larger multicenter trials are essential to validate the system in actual non-coronary interventions. These should involve diverse patient cohorts and measure outcomes such as reduced procedure times and lower complication rates (Jang et al., 2018). Integration of haptic feedback devices will address gaps in current XR simulations (Gupta et al., 2020). Longitudinal studies on educational impact will assess skill retention over 6–12 months.

5 Conclusion

This study successfully developed and validated a Unity-based mixed reality system using Microsoft HoloLens 2 for non-coronary interventions in interventional cardiology. The system demonstrated robust technical performance, strong usability scores, and efficient workflow integration, confirming its feasibility for enhancing precision and collaboration in procedures like transcatheter valve replacements and atrial septal defect repairs.

By addressing key barriers in technological validation, educational applications through multi-user simulations, and ethical considerations via anonymized data handling, this work advances XR’s role in transforming medicine. The MR solution reduces cognitive load, supports remote consultations, and promotes inclusive training. Future integrations with AI and cloud rendering will further expand accessibility. Overall, this represents a significant step toward widespread XR adoption in cardiology, fostering more efficient, equitable, and patient-centered care.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

The studies involving humans were approved by the Ethical Committee of Hospital AGEL Trinec-Podlesi (approval EK 67/21). The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

JH: Conceptualization, Data curation, Funding acquisition, Investigation, Methodology, Software, Validation, Visualization, Writing – original draft, Writing – review and editing. DP: Data curation, Methodology, Project administration, Software, Writing – original draft. JJ: Data curation, Investigation, Writing – review and editing. MH: Data curation, Investigation, Writing – review and editing. KB: Writing – review and editing. MP-M: Conceptualization, Methodology, Supervision, Writing – review and editing. MP: Conceptualization, Methodology, Supervision, Writing – review and editing. JC: Funding acquisition, Supervision, Writing – review and editing. LS: Supervision, Writing – review and editing. OJ: Conceptualization, Data curation, Methodology, Validation, Writing – original draft, Writing – review and editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work received support from the European Union under the LERCO project (CZ.10.03.01/00/22_003/0000003) via the Operational Programme Just Transition. This article was also supported by the Ministry of Education of the Czech Republic (Project No. SP2024/071 “Biomedical Engineering systems XXI”).

Acknowledgments

We acknowledge the use of AI tools for initial drafting assistance, per Frontiers policy. All content was reviewed and edited by human authors for accuracy.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that Generative AI was used in the creation of this manuscript. We acknowledge the use of AI tools for initial drafting assistance, per Frontiers policy. All content was reviewed and edited by human authors for accuracy.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2025.1690439/full#supplementary-material

References

Allen, B. D., Zhao, S., Laskou, M., Gkaravelas, A., Stylianidis, V., Karvounis, H., et al. (2025). Augmented reality three-dimensional visualization of fusion imaging during left atrial appendage closure. Int. J. Cardiovasc Imaging 41 (1), 137–146. doi:10.1007/s10554-024-03250-8

Annabestani, M., Olyanasab, A., and Mosadegh, B. (2024). Application of mixed/augmented reality in interventional cardiology. J. Clin. Med. 13 (15), 4368. doi:10.3390/jcm13154368

Ara, J., Karim, F. B., Alsubaie, M. S. A., Bhuiyan, Y. A., Bhuiyan, M. I., Bhyan, S. B., et al. (2021). Comprehensive analysis of augmented reality technology in modern healthcare system. Int. J. Adv. Comput. Sci. Appl. 12 (6), 840–849. doi:10.14569/ijacsa.2021.0120698

Barteit, S., Lanfermann, L., Bärnighausen, T., Neuhann, F., and Beiersmann, C. (2021). Augmented, mixed, and virtual reality-based head-mounted devices for medical education: systematic review. JMIR Serious Games 9 (3), e29080. doi:10.2196/29080

Brun, H., Bugge, R. A., Suther, L. K., Birkeland, S., Kumar, R., Pelanis, E., et al. (2019). Mixed reality holograms for heart surgery planning: initial experience. J. Cardiovasc Comput. Tomogr. 13 (1), 36–41. doi:10.1016/j.jcct.2018.10.027

Chan, H.-P., Samala, R. K., Hadjiiski, L. M., and Zhou, C. (2020). Deep learning in medical image analysis. Adv. Exp. Med. Biol. 1213, 3–21. doi:10.1007/978-3-030-33128-3_1

Condino, S., Turini, G., Parchi, P. D., Viglialoro, R. M., Piolanti, N., Gesi, M., et al. (2018). How to build a patient-specific hybrid simulator for orthopaedic open surgery: benefits and limits of mixed-reality using the Microsoft HoloLens. J. Healthc. Eng. 2018, 1–12. doi:10.1155/2018/5435097

Deng, S., Wheeler, G., Toussaint, N., Munroe, L., Bhattacharya, S., Sajith, G., et al. (2021). A virtual reality system for improved image-based planning of complex cardiac procedures. J. Imaging 7 (8), 151. doi:10.3390/jimaging7080151

Dewitz, B., Bibo, R., Moazemi, S., Kalkhoff, S., Recker, S., Liebrecht, A., et al. (2022). Real-time 3D scans of cardiac surgery using a single optical-see-through head-mounted display in a mobile setup. Front. Virtual Real. 3, 949360. doi:10.3389/frvir.2022.949360

Escobar-Castillejos, D., Noguez, J., Neri, L., Magana, A., and Benes, B. (2016). A review of simulators with haptic devices for medical training. J. Med. Syst. 40 (4), 104. doi:10.1007/s10916-016-0459-8

Ferreira-Junior, J. R., and Azevedo-Marques, P. M. (2025). Extended reality in radiology: a review of virtual reality, augmented reality, and mixed reality applications. Radiol. Med. doi:10.1007/s11547-025-02001-2

Franson, D., Dupuis, A., Gulani, V., Griswold, M., and Seiberlich, N. (2021). A system for real-time, online mixed-reality visualization of cardiac magnetic resonance images. J. Imaging 7 (12), 274. doi:10.3390/jimaging7120274

Gupta, A., Ruijters, D., Flexman, M. L., Heeren, M. H., Hague, C., Scheuer, T., et al. (2020). “Augmented reality for interventional procedures,” in Augmented environments for computer-assisted interventions. Editor K. Abhari (Springer), 233–246. doi:10.1007/978-3-030-49100-0_17

Hirzle, T., Fischbach, F., Karlbauer, J., Gugenheimer, J., Rukzio, E., Bulling, A., et al. (2024). Understanding, addressing, and analysing AR/VR ergonomics and fatigue: a systematic review. IEEE Trans. Vis. Comput. Graph. 30 (1), 123–139. doi:10.1109/TVCG.2023.3247058

Hosseini, M. S., Tavakoli, M., Tavakoli, M., Looi, T., Drake, J., Forrest, C., et al. (2024). Real-time 3D catheter tracking in extended reality for cardiac interventions training. Sci. Rep. 14, 28784. doi:10.1038/s41598-024-76384-z

Hsieh, M. C., and Lin, Y. H. (2017). VR and AR applications in medical practice and education. Hu Li Za Zhi 64 (6), 12–18. doi:10.6224/JN.000078

Jang, J., Tschabrunn, C. M., Barkagan, M., Anter, E., Menze, B., and Nezafat, R. (2018). Three-dimensional holographic visualization of high-resolution myocardial scar on HoloLens. PLoS One 13 (10), e0205188. doi:10.1371/journal.pone.0205188

Kramida, G. (2016). Resolving the vergence-accommodation conflict in head-mounted displays. IEEE Trans. Vis. Comput. Graph. 22 (7), 1912–1931. doi:10.1109/TVCG.2015.2473855

Lau, I., Gupta, A., Ihdayhid, A., and Sun, Z. (2022). Clinical applications of mixed reality and 3D printing in congenital heart disease. Biomolecules 12 (11), 1548. doi:10.3390/biom12111548

Lavik, M. (2023). UnityVolumeRendering (Version 1.2.0) [Software]. GitHub. Available online at: https://github.com/mlavik1/UnityVolumeRendering.

Li, X., Wang, J., Li, R., Zhang, S., Xing, Q., He, X., et al. (2022). Application of HoloLens-based augmented reality navigation technology in transforaminal endoscopy surgery. J. Orthop. Surg. Res. 17 (1), 428. doi:10.1186/s13018-022-03312-3

Martinez-Millana, A., Fernandez-Llatas, C., Bilbao, I. B., Traver, V., Merino-Torres, J. F., Basagoiti-Carreño, B., et al. (2024). Ethical artificial intelligence in digital healthcare: a systematic review. JMIR Med. Inf. 12, e45850. doi:10.2196/45850

Micheluzzi, V., Navarese, E. P., Merella, P., Talanas, G., Viola, G., Bandino, S., et al. (2024). Clinical application of virtual reality in patients with cardiovascular disease: state of the art. Front. Cardiovasc Med. 11, 1356361. doi:10.3389/fcvm.2024.1356361

Mixed Reality Toolkit (MRTK) (2021). Mixed Reality Toolkit (MRTK) (Version 2.7.2.0) [Software]. GitHub. Available online at: https://github.com/microsoft/MixedRealityToolkit-Unity.

Muangpoon, T., Rehman, H. U., Cai, X., Alaswad, K., Bhatt, D. L., Bharadwaj, A., et al. (2020). Augmented and mixed reality based image guidance for percutaneous coronary intervention: a systematic review. J. Med. Internet Res. 22 (6), e18637. doi:10.2196/18637

Pratt, P., Ives, M., Lawton, G., Simmons, J., Radev, N., Spyropoulou, L., et al. (2018). Through the HoloLens™ looking glass: augmented reality for extremity reconstruction surgery using 3D vascular models with perforating vessels. Eur. Radiol. Exp. 2 (1), 2. doi:10.1186/s41747-017-0033-2

Qian, L., Bartel, A. G., Zhang, X., Wei, L., Remde, C., Weller, S., et al. (2018). Comparison of optical see-through head-mounted displays for surgical interventions with object-anchored 2D-display. Int. J. Comput. Assist. Radiol. Surg. 13 (7), 1065–1075. doi:10.1007/s11548-018-1748-3

Ranjbarzadeh, R., Dorosti, S., Ghoushchi, S. J., Caputo, A., Tirkolaee, E. B., Ali, S. S., et al. (2023). Neuromorphic reinforcement learning with neuromodulation for medical image segmentation. Inf. Fusion 91, 362–374. doi:10.1016/j.inffus.2022.10.032

Rokhsaritalemi, S., Sadeghi-Niaraki, A., and Choi, S.-M. (2020). A review on mixed reality: current trends, challenges and prospects. Appl. Sci. 10 (2), 636. doi:10.3390/app10020636

Sadeghi, A. H., Mathari, S. E., Abjigitova, D., Maat, A. P. W. M., Taverne, Y. J. H. J., Bogers, A. J. J. C., et al. (2020). Current and future applications of virtual, augmented, and mixed reality in cardiothoracic surgery. Ann. Thorac. Surg. 109 (1), 287–296. doi:10.1016/j.athoracsur.2019.06.050

Silva, A. S., Almeida, H. O., Vogt, M., Maurovich-Horvat, P., Baumbach, A., Pugliese, F., et al. (2024). Mixed reality in structural heart disease: a systematic review and meta-analysis. JACC Cardiovasc Interv. 17 (15), 1823–1835. doi:10.1016/j.jcin.2024.06.021

Tanbeer, S. K., and Sykes, E. R. (2024). MiVitals – mixed reality interface for monitoring: a HoloLens based prototype for healthcare practices. Comput. Struct. Biotechnol. J. 24, 160–175. doi:10.1016/j.csbj.2024.02.024

Wang, Z., Liu, Y., Chen, X., Zhang, H., Li, M., Kumar, S., et al. (2025). Real-time performance metrics for medical mixed reality: a standardization framework. IEEE Trans. Med. Imaging 44 (2), 456–468. doi:10.1109/TMI.2024.3451234

Zhang, Q., Eagleson, R., and Peters, T. M. (2011). Volume visualization: a technical overview with a focus on medical applications. J. Digit. Imaging 24 (4), 640–664. doi:10.1007/s10278-010-9321-6

Keywords: mixed reality, HoloLens 2, interventional cardiology, volume rendering, usability validation, workflow integration, multi-user collaboration, performance metrics

Citation: Hecko J, Precek D, Januska J, Hudec M, Barnova K, Palickova-Mikolasova M, Pekar M, Chovancik J, Sknouril L and Jiravsky O (2025) Design and validation of a mixed reality workflow for structural cardiac procedures in interventional cardiology. Front. Virtual Real. 6:1690439. doi: 10.3389/frvir.2025.1690439

Received: 21 August 2025; Accepted: 20 October 2025;

Published: 12 November 2025.

Edited by:

Ryan Beams, United States Food and Drug Administration, United States

Reviewed by:

Calin Corciova, Grigore T. Popa University of Medicine and Pharmacy, Romania

Yuexiong Yi, Wuhan University, China

Copyright © 2025 Hecko, Precek, Januska, Hudec, Barnova, Palickova-Mikolasova, Pekar, Chovancik, Sknouril and Jiravsky. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Otakar Jiravsky, otakar.jiravsky@npo.agel.cz

†These authors have contributed equally to this work
