- 1School of Psychology, University of Central Lancashire, Preston, UK

- 2Department of Building, Energy and Environmental Engineering, University of Gävle, Gävle, Sweden

- 3Neuroscience Center, University of Helsinki, Helsinki, Finland

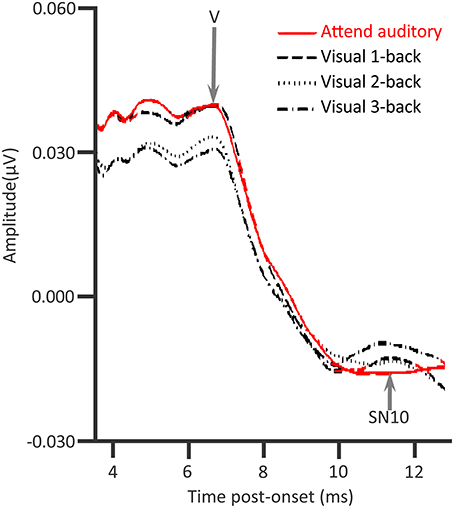

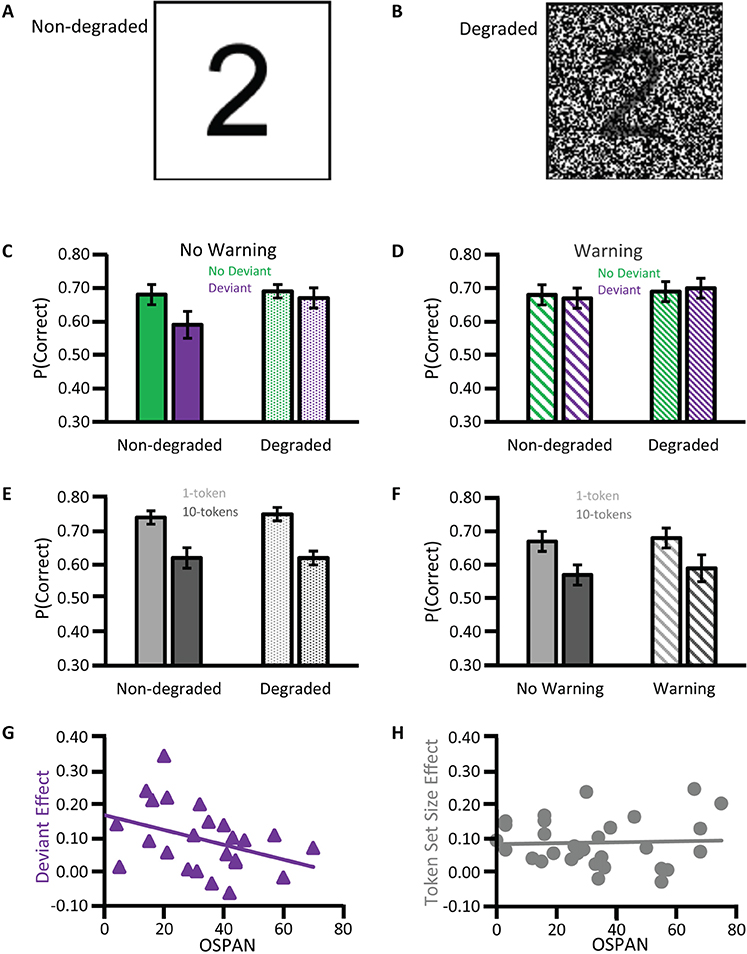

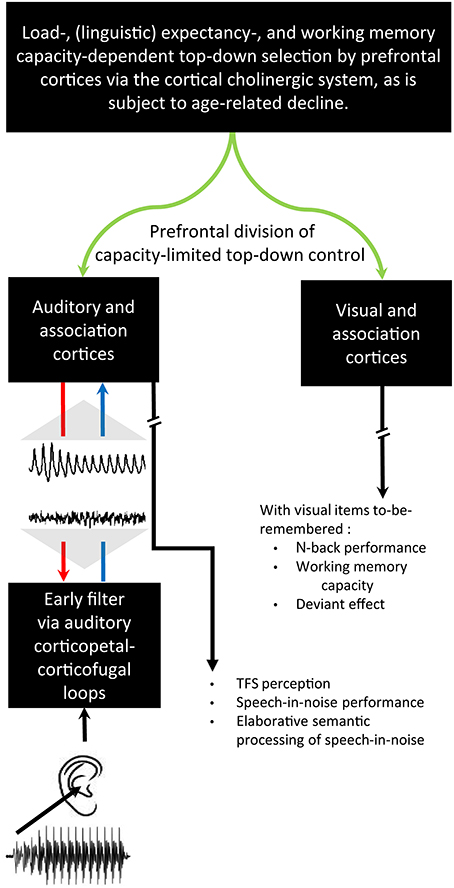

The rostral brainstem receives both “bottom-up” input from the ascending auditory system and “top-down” descending corticofugal connections. Speech information passing through the inferior colliculus of elderly listeners reflects the periodicity envelope of a speech syllable. This information arguably also reflects a composite of temporal-fine-structure (TFS) information from the higher frequency vowel harmonics of that repeated syllable. The amplitude of those higher frequency harmonics, bearing even higher frequency TFS information, correlates positively with the word recognition ability of elderly listeners under reverberatory conditions. Also relevant is that working memory capacity (WMC), which is subject to age-related decline, constrains the processing of sounds at the level of the brainstem. Turning to the effects of a visually presented sensory or memory load on auditory processes, there is a load-dependent reduction of that processing, as manifest in the auditory brainstem responses (ABR) evoked by to-be-ignored clicks. Wave V decreases in amplitude with increases in the visually presented memory load. A visually presented sensory load also produces a load-dependent reduction of a slightly different sort: The sensory load of visually presented information limits the disruptive effects of background sound upon working memory performance. A new early filter model is thus advanced whereby systems within the frontal lobe (affected by sensory or memory load) cholinergically influence top-down corticofugal connections. Those corticofugal connections constrain the processing of complex sounds such as speech at the level of the brainstem. Selective attention thereby limits the distracting effects of background sound entering the higher auditory system via the inferior colliculus. Processing TFS in the brainstem relates to perception of speech under adverse conditions. Attentional selectivity is crucial when the signal heard is degraded or masked: e.g., speech in noise, speech in reverberatory environments. The assumptions of a new early filter model are consistent with these findings: A subcortical early filter, with a predictive selectivity based on acoustical (linguistic) context and foreknowledge, is under cholinergic top-down control. A prefrontal capacity limitation constrains this top-down control as is guided by the cholinergic processing of contextual information in working memory.

Introduction

One of the most challenging tasks that most people perform upon a daily basis is perceiving and understanding speech in background sound such as noise. Be that noise interfering voices in a restaurant, music, or traffic in the street, the socio-psychological impact is profound for many elderly listeners, whether or not they suffer from peripheral hearing loss. The majority of audiological patients have difficulty understanding conversation in noise (Kochkin, 2000). Noise may obscure or degrade speech information, such that only a fraction of the speech signal is available to the listener's brain. Listening and communicating under adverse conditions (Mattys et al., 2012) is known to engage compensatory brain mechanisms, particularly in elderly listeners (Wong et al., 2009).

The purpose of this article is to provide a theoretical model explaining phenomena related to the cognitive hearing science of the perception and comprehension of speech in noise. This model is intended to focus new enquiry. Having highlighted the scale of the problem motivating this objective, we first offer two necessary definitions: (i) Elevated audiometric thresholds define hearing impairment; (ii) Sensory processing is the way that the nervous system receives information from the auditory periphery and turns that information into perceptual representations. Deficits of sensory processing thus not only include losses that cause elevated audiometric thresholds and/or supra-threshold auditory processing deficits, but also include what has been termed “hidden loss” (Schaette and McAlpine, 2011; Plack et al., 2014). Considering such hidden loss, Kujawa and Liberman (2015) have revealed cochlear synaptopathy in an animal model, characterized by changes either at the level of the synapse from hair cells to auditory nerve fibers or at the level of the nerve fibers themselves. Kujawa and Liberman showed that in age-related hearing loss, synaptopathy precedes hair cell loss. This synaptopathy likely causes problems hearing in noise even before the loss of those hair cells. Accordingly, such synaptopathy is one origin of a hidden loss, which affects hearing (in noise) without elevating audiometric thresholds. Further, when the person's brain adapts to peripheral loss such as damage to hair cells, this loss can become hidden. The nervous mechanisms of sensory processing between primary auditory nerve fibers and the rostral brainstem of the central auditory system thus undergo adaptive neuroplastic changes, such that the individual is audiometrically normal (Schaette and McAlpine, 2011). The evidence for hidden loss thus challenges a watertight definition of hearing impairment based on audiometric thresholds alone. To further specify the definition of sensory processing, deficits in sensory processing may thus reside in the auditory periphery or in the central auditory system. However, the long-term neuroplastic changes in sensory processing, which accommodate sensorineural loss, involve adaptive changes in the auditory nerve and/or the central auditory system.

Turning from defining sensory processing to applying this notion to aging, the aging of individuals with bilateral sloping hearing loss causes a decline in sensory processing. Specifically, the weaker activation of superior temporal regions reflects that decline (Wong et al., 2010). This is accompanied by an increase in the recruitment of more general cognitive brain areas of the frontal lobe (Wong et al., 2009). The development of a larger and more active left pars triangularis of the inferior frontal gyrus and the left superior frontal gyrus compensate when listening under adverse conditions including speech in noise (Wong et al., 2010). Also, prefrontal activation correlated positively with improved speech-in-noise performance in older adults. These data thus support the decline-compensation hypothesis (Wong et al., 2009). This hypothesis postulates that the neurophysiological characteristics of an aging brain with respect to sensorily and cognitively demanding tasks include a reduced activation in (auditory) sensory areas, which otherwise support sensory processing, alongside an increase in general cognitive (association) areas, respectively. Long-term neuroanatomical changes, which permit compensatory prefrontal cortical activation to sensory decline, may be a double-edged sword. Such changes may cause maladaptive changes in cognitive abilities not related to speech-in-noise perception. In that sense, these changes would reflect a cognitive decline. Having introduced the decline-compensation hypothesis, we now turn to other extant hypotheses.

A seminal review (Schneider and Pichora-Fuller, 2000) contrasts four further hypotheses of associated declines in sensory and cognitive processing. The “sensory deprivation hypothesis” and the “information degradation hypothesis” both assume that sensory decline occurs before cognitive decline. The “sensory deprivation hypothesis” assumes that prolonged sensory decline drives a chronic cognitive change. By contrast, the “information degradation hypothesis” assumes that sensory decline immediately drives an acute cognitive decline. The “cognitive load on perception hypothesis” assumes that age-related cognitive decline occurs before sensory decline. Cognitive decline thus drives changes in perception: what we term sensory processing. The “common-cause hypothesis” assumes a common age-related factor causes a deterioration of both sensory processing and cognition. Wong et al.'s (2009, 2010) data supporting the decline-compensation hypothesis are also compatible with long-term chronic changes assumed by the sensory deprivation hypothesis. These data are not compatible with the acute changes assumed by the information degradation hypothesis and are agnostic as to whether sensory decline drives cognitive decline, or vice-versa as the cognitive load on perception hypothesis assumes. However, these data out-rule the common-cause hypothesis: There was not an age-related decline in the activation during speech-in-noise perception across sensory and cognitive areas (Wong et al., 2009).

Pertinent to these findings, Lin et al. (2011) postulated that the compensatory dedication of general cognitive resources to difficult auditory perception could also cause an accelerated decline in cognitive faculties. With peripheral age-related hearing loss leading to deafferentation of the auditory nerves and, in turn, a loss of afferents within the central auditory system, what happens is that the perception and understanding of speech becomes more difficult. Other cases where auditory perception is difficult are under environmentally adverse conditions such as noise or reverberation. A competing theory that Lin et al. evaluated is that social isolation and loneliness, caused by communication impairments (Strawbridge et al., 2000), could relate to cognitive decline and neuroanatomical indicators of Alzheimer's disease pathology (Bennett et al., 2006). The decline-compensation hypothesis (Wong et al., 2009, 2010) rather assumes that the compensatory dedication of general cognitive resources to difficult auditory perception accelerates neurocognitive decline. Of particular interest are complex span tests that assess working memory capacity (WMC); (e.g., Daneman and Carpenter, 1980; Turner and Engle, 1989; for an introduction to different working memory (WM) processes, see Baddeley, 1986). These complex span tasks involve retaining a memory load during some form of concurrent mental processing—tasks that are more strongly affected by cognitive aging than simple verbal short-term memory span (Bopp and Verhaeghen, 2005). Forward digit span requires the mental operations of retaining digit items in their original order, a measure of simple verbal short-term memory span. Backward digit span also requires the concurrent reordering of those items for backward report. Backward digit span and complex span tasks thus share the common requirement for concurrent mental processing during retention. Backward recall, sharing commonalities with both forward recall and complex span, is thus only intermediately susceptible to cognitive aging (Bopp and Verhaeghen, 2005).

Having introduced aging and working memory, it is worth considering the role of working memory in the perception of speech under acoustically adverse conditions. Perceiving and understanding speech in noise involves retaining a memory load. Such context proactively predicts, and retroactively repairs, utterances containing degraded sensory information (Marslen-Wilson, 1975; Samuel, 1981; Shahin and Miller, 2009; Shahin et al., 2012). The retention of information occurs while the listener concurrently performs linguistic processing. This lingustic processing affects the perceptual and semantic processing of that degraded sensory information in a top-down manner. Indeed, Uslar et al. (2013) revealed that the more complex the linguistic processing required, when perceiving speech in noise, the higher the signal-to-noise ratio required to identify 80% of the presented stimuli. Uslar et al.'s findings thus cohere well with the notion that speech-in-noise perception relies on a WM function: managing the trade-off between the (more complex linguistic) processing and the retention of (semanto-syntactic contextual) information. Further, corroboration of this notion stems from training on a backward span task (in noise). Such training improves complex span performance—WM improvements generalizing from the backward span task—and also enhances speech-in-noise performance (Ingvalson et al., 2015).

Turning to a different form of adverse conditions, background noise from to-be-ignored sources is not the only form of noise affecting the processing of to-be-attended speech. Reverberation pervades the built-environment and is particularly challenging for hearing-impaired listeners: The speech signal produced by the talker reverberates-off of hard surfaces, such as walls, reaching the listener in the form of an echo at a delay from the speech signal. Reverberation thus obscures speech perception cues of the direct signal (Nábělek, 1988). However, it has been shown that humans have the ability of perceptual compensation (Watkins and Raimond, 2013): They use tacit knowledge of the room acoustics from immediate prior speech sound context to reduce the adverse effects of reverberation on speech perception. Accordingly, the listener's brain forms, and retains in memory, a mental model of the room's acoustics when listening. This model is used in a top-down manner to select and predict the perceptual representation of the current utterance to support speech perception under reverberatory adverse conditions.

A goal of the present article is thus to refocus new enquiry into the perception and comprehension of speech under adverse conditions by offering a new theoretical cognitive model of subcortical speech processing. The necessary evidence integrated thus centers on the relation of WM to the brainstem's processing of speech under adverse conditions. These conditions include noise and reverberation. A further goal is to communicate, beyond the consequences of such peripheral masking effects, how cognitive aging and plasticity of the auditory nerves and central auditory system driven by hearing loss can affect the brain's processing of speech in noise.

In the following, we will introduce the pivotal role of the rostral auditory brainstem as an anatomical and informational hub of the “bottom-up” ascending and “top-down” descending auditory systems. In turn, we will review the current state-of-the-art on the complex Auditory Brainstem Response (cABR) to speech sounds. What then ensues is a discussion of findings concerning the relation of effects of reverberation on the speech intelligibility to the speech ABR representation of speech TFS. These findings concern elderly listeners. This discussion will flow then into how memory load and WMC can influence the generation of wave V of the auditory brainstem response (ABR) to clicks. In turn, the influence of memory load and sensory load on auditory distraction will be considered. The discussion will ultimately converge on a new early filter model, reviving Broadbent's (1958) influential assumption: There is a capacity limitation on how the human mind processes information. That bottleneck in processing selects information early on for further processing. The rostral brainstem is arguably crucial in the operation of that early filter, to which we now turn.

The Rostral Brainstem as a Computational Hub in the Ascending and Descending Auditory Systems Serving as an Early Filter

Generators of the Auditory Brainstem Response

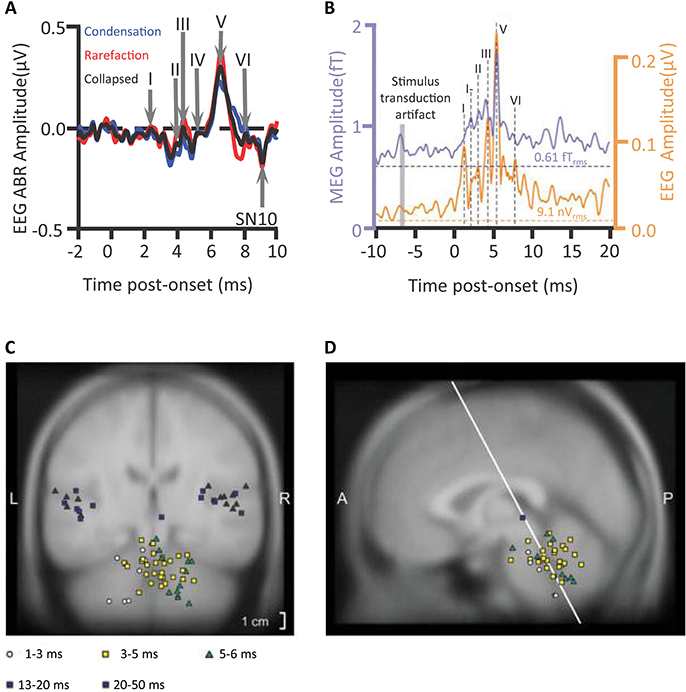

A rapid volley of deflections of the click-elicited ABR, deflections of scalp-measured electrical potentials, occur mostly within the first 10 ms after the onset of a sound (Figure 1A). Tone-pip-elicited ABR deflections occur slightly later (Ikeda, 2015). Assessments of the deflections of ABRs are already in routine clinical use. The audiology lecturer's E-COLI mnemonic (Hall, 2007) detailing a one-to-one peak-to-structure mapping, misidentifies the nature of ABR source generation. The mnemonic specifies E: eighth nerve action potential (wave I); C: cochlear nucleus (wave II); O: olivary complex (superior) (wave III); L: lateral lemniscus (wave IV); I: inferior colliculus (wave V). This bottom-up route does reflect some of the detail of the ascension of information through the subcortical auditory system upwards toward the medial geniculate body of the thalamus and then the auditory cortex. Yet, sophistication is warranted: Many-to-one mappings of anatomical source generator structures to each deflection are apparent (Hall, 2007). Further, vertex-negative troughs as well as vertex-positive peaks can also have source generators. Multiple sources can be concurrently active and a subset of those generators reflected in the timing and amplitude of the ABR peak (Figures 2A,B).

Figure 1. Auditory brainstem response deflections. An individual's auditory brainstem responses (ABRs) averaged from waveforms of scalp-measured electroencephalogram (EEG) epochs in response to clicks (A) plotted for condensation or rarefaction leading phase or collapsed across leading phase. Waves I–VI are visible as positive deflections at the scalp. A subsequent scalp negativity, which though reduced by a filter, is still visible (SN10), Campbell et al. (2012); n = 1. The grand-averaged wave V latency-normalized ABR to clicks presented to the left ear and the average of corresponding magnetic ABR waveforms (mABRs) was acquired simultaneously with a whole head array of magnetometers and collapsed across magnetometers (B), Parkkonen et al. (2009); n = 7. Equivalent Current Dipoles (C,D) locations were normalized from individual MRIs onto the coordinates of the Montreal Neurological Institute average brain offering theoretical source generators of mABR deflections, wave V (green triangles) being generated contralateral to stimulation. SN10 generators and auditory middle latency generators localized to cortical regions. Credits: (A) is adapted with permission from Campbell et al. (2012). Promotional and commercial use of the material in print, digital or mobile device format is prohibited without the permission from the publisher Wolters Kluwer Health. Please contact aGVhbHRocGVybWlzc2lvbnNAd29sdGVyc2tsdXdlci5jb20= for further information. (B–D) are adapted with permission of John Wiley and Sons from Parkkonen et al. (2009). Copyright © 2009 Wiley-Liss, Inc.

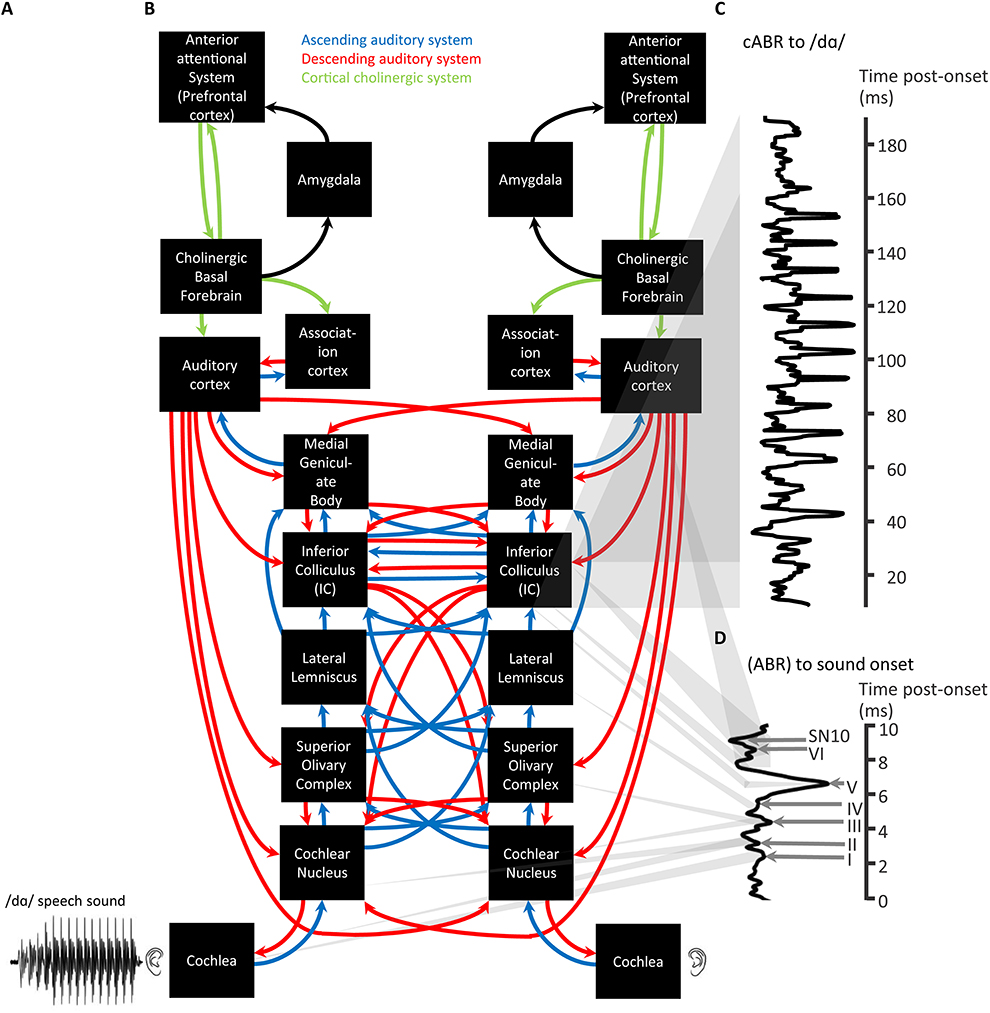

Figure 2. A schematic of cortical cholinergic influence, reliant on the neurotransmitter acetylcholine, on the descending auditory system affecting the flow of information through the ascending auditory system in relation to the generation of ABRs and cABRs. A complex stimulus waveform of a speech sound v/dɑ/, illustrated on the lower left (A), passing through the rostral brainstem including the Lateral Lemniscus and Inferior Colliculus (B) generating a cABR (C). As also shown in green (A), these structures are under the top-down control of the prefrontal cortex via basal forebrain cholinergic projections (green) to auditory cortex that corticofugally control corticopetal-corticofugal loops in the ascending (blue) and descending (red) auditory system. These loops thus attentionally tune the selective processing of ascending auditory information. There is a delay in the time-course of the cABR with respect to the stimulus waveform by the time (ca. 8 ms) for ascending auditory information to reach the rostral brainstem. The preceding ABR to stimulus onset is generated during this delay (D). Gray shadowing denotes theoretical mappings of source generators to scalp-measured responses; n = 21. Credits: The schematic of the ascending and descending auditory pathways is adapted with permission of John Wiley and Sons from Chandrasekaran and Kraus (2010). Copyright © 2010 Society for Psychophysiological Research. Waveforms are reprinted from Chandrasekaran et al. (2009), Copyright © 2009, with permission from Elsevier in respect to Chandrasekaran et al. (2009: Exp.1).

Further vindicating a sophistication concerning the mapping of source generators to deflections, a far-field magnetoencephalographic investigation (Parkkonen et al., 2009) localized wave V to regions posterior and lateral to both the lateral lemniscus and inferior colliculus (IC) of the hemisphere contralateral to the stimulation. These Equivalent Current Dipole source models of magnetic Auditory Brainstem Responses (mABR) represented the net effect of simultaneously active sources. It cannot be out-ruled that concurrent activation of both lateral lemniscus and IC contributed to this Wave V. However, as measured directly during surgery, fibers of the lateral lemniscus have been shown to generate the Wave V peak (Møller and Jannetta, 1982; Møller et al., 1994). Those fibers enter the IC, though there may be further consequences for the activation of the IC indicated by the later longer-lasting high-amplitude SN10 negativity (Davis and Hirsh, 1979; Møller and Jannetta, 1983)1. This IC is the largest structure of the brainstem and wave V the largest wave of the ABR with commonly used filtering parameters. However, wave V is not affected by deafferentation of the IC (Møller and Burgess, 1986).

As depicted in Figure 2A, ABR source generators are subcortical processing stations. These stations are on the pathway of the ascending auditory system, mediated by neuronal elements originating from sensory receptors. In psychological terms that pathway may be described as bottom-up. This pathway begins with the auditory nerve fibers that input the cochlear nuclei and bifurcate from where information is then transmitted upward to other brainstem, midbrain, and thalamic stations up to the auditory cortex. These ascending connections running from cochlear to cortex are termed corticopetal connections.

Interim Summary

There are a series of subcortical generators of the ABR within the ascending auditory system. There are many-to-one mappings from the activation of generators to the sequence of scalp-measured deflections in the ABR.

Corticopetal-Corticofugal Loops

Not only is there an ascending auditory system, as we have already introduced, but there is also a descending auditory system. There are extensive efferent top-down projections of this descending auditory system. These systems of ascending and descending connections are not independent (Bajo and King, 2013). Rather, Bajo and King theorize that the auditory system is a series of dynamic loops in which changes in activity at higher levels in the brain affect neural coding in the IC. These loops also affect other subcortical nuclei as much as signals received from lower structures of the brainstem (Figure 2). In control theory, such loops could permit a corrective positive feedback. Accordingly, a loop receives a top-down expectancy of neural output descending from the requirements of higher structures of the auditory system. To specify these terms, an “expectancy” is a prediction signal from higher structures to lower structures in the context of previous ascending input from a lower structure. This prediction signal is also based on what information the higher structures “require” lower structures to select. For instance, consider selective attention to behaviorally relevant targets of a certain fundamental frequency: The prediction signal coding the expectancy from higher structures may require lower structures to provide information about the behaviorally relevant fundamental frequency. The deviation of the actual neural output of an ascending connection from that expectancy then leads to an alteration in the descending connections of that loop. Those altered descending connections, in turn, affect how the ascending connections code future neural input. As Figure 2 depicts, the auditory system is thus theoretically a collection of dynamic control loops. As each of these loops contain corticopetal and corticofugal connections, such a loop is termed a corticopetal-corticofugal loop. Each loop is influenced by changes in higher levels and input from lower loops. Suga et al. (2000) postulate that such corticopetal-corticofugal loops perform cortically “egocentric selection.” Noise information ascends affecting descending corticofugal connections. This effect on corticofugal connections leads to a transient shift, thus sharpening the lateral inhibition of ascending connections. Accordingly, subsequent noise leads to a small suppressed ascending output to noise information: a small short-lived cortical change thus occurs in response to noise stimulation. When the ascending information is a fear-conditioned signal rather than noise, that information ascends to the auditory cortex and auditory association cortex. In turn, these cortices activate the cholinergic basal forebrain via the amygdala—a cortical influence on the basal forebrain that can also be affected by an unconditioned somatosensory shock stimulus, possibly by ascending thalamic routes (Weinberger, 1998).

Interim Summary

The auditory system is a hierarchy of corticopetal-corticofugal loops. These loops can dynamically adapt. By virtue of being hierarchically organized, such a loop can selectively filter incoming information on the basis of top-down control from higher structures.

Cortical Cholinergic Attention System

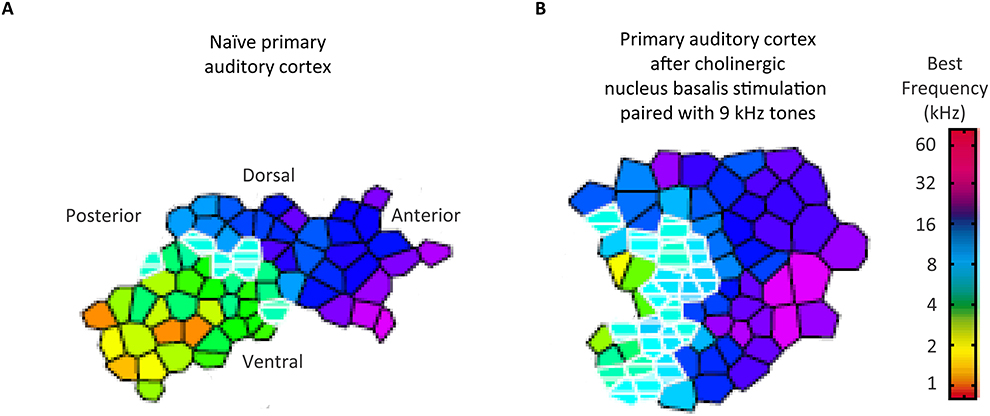

Having introduced the notion of hierachical control of corticopetal-corticofugal loops of the central auditory system, we turn now to how the highest of these loops could be controlled. Sarter et al. (2005) reviewed evidence for a reciprocal feedback loop between the basal forebrain and the prefrontal cortex. This feedback loop controls the cholinergic projections to the prefrontal cortex within an anterior attentional system (Figure 2A). This positive feedback loop also controls the cholinergic output to other brain areas including sensory areas, yet without reciprocal feedback. Such a system of cholinergic feedback has the basis for top-down control of sensory processing. This control occurs through the basal forebrain through the release of acetylcholine by efferent top-down projections to sensory areas including the auditory cortex (Kilgard and Merzenich, 1998; Figure 3). Acetylcholine thus affects the auditory cortex; top-down projections influencing sensory cortical processing. Kilgard and Merzenich revealed that such top-down reorganization occurred without either a fearful or an aversive stimulus. It is thus viable that prefrontally controlled attention to stimuli, for instance during the long-term experience of listening to a specific language, rather than fear conditioning, can cholinergically permit attention to those auditory experiences to cause long-term changes in the operation of egocentric selection by corticopetal-corticofugal loops. Also viable is that the prefrontally controlled cholinergic modulation of corticofugal connections from the auditory cortex is an attentional modulation of auditory subcortical processing.

Figure 3. Cholinergic influences on the auditory cortical organization without fear conditioning. The representative best frequency map, derived from cortical mapping of respones to pure tones of 45 different frequencies at 15 different intensities, show tonotopy of naïve primary auditory cortex (A) and the corresponding map following the pairing of 9 kHz sounds with stimulation of the cholinergic nucleus basalis of the basal forebrain (B). A comparable auditory cortical reorganization did not occur with pairing of sounds with such stimulation after a lesioning of cholinergic rather than GABAergic nucleus basalis neurons. Credit: From Kilgard and Merzenich (1998). Reprinted with permission from AAAS.

Visual attentional demands can also influence such subcortical auditory processing. When a cat visually attends a mouse, subcortical auditory responses of the dorsal cochlear nucleus are reduced (Hernández-Peón et al., 1956). Further, attention to a visual discrimination task reduces responses of the auditory nerve to clicks (Oatman, 1971; Oatman and Anderson, 1977). In humans, Lukas (1980) revealed that attention to the visual modality also reduces auditory nerve responses, while Puel et al. (1988) showed that such attention reduced the otoacoustic emissions evoked by a click. Prefrontal influences of visual attention on such subcortical auditory filtering by corticofugal influences on corticopetal-corticofugal loops could also, in turn, permit visual attention to influence the cortically generated auditory supratemporal mismatch negativity (Erlbeck et al., 2014; Campbell, 2015). This convergent evidence thus points toward a very early stage of attention that influences subcortical auditory mechanisms.

Interim Summary

We introduced the cholinergic top-down control assumption that the cholinergic cortical attentional system controls an early filter. Corticofugal modulation of corticopetal-corticofugal loops leads to an attentional selection crucially affecting the level of the rostral brainstem. The rostral brainstem is the locus of action of that filter, being integral to the confluence of ascending, descending, ipsilateral, and contralateral effective connectivity of the subcortical central auditory system.

Attention and Auditory Brainstem Responses

In contrast to this evidence for top-down control, ABRs proved, in several early studies, to be unaffected by attention (Woldorff et al., 1987, 1993; Woldorff and Hillyard, 1991). Compelling was that, juxtaposed with Woldorff et al.'s findings indicating there are no attentional effects on ABRs, in the same studies, there were attentional augments of auditory middle latency response (AMLR) deflections (20–50 ms.), alongside attentional augments of auditory long latency responses (ALLRs). These ALLRs include N1 and P2. In Woldorff et al.'s “dichotic” listening tasks, participants attempted to attend to target deviants (D) in an oddball sequence of standards (S), SSSSDSSSSSSSSD… Attending those deviants, while ignoring unattended deviants in an oddball sequence, presented in the other ear, affected the P20–P50 of the AMLR and the “Nd” of ALLRs. Contrastingly, ABRs were unaffected by such attention in these dichotic listening tasks.

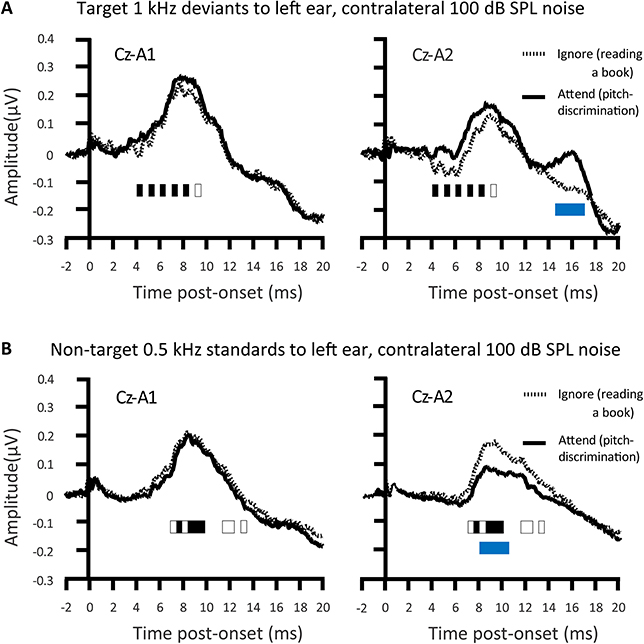

Inconsistent with the findings of Woldorff et al., Ikeda et al. (2008) showed that selective attention affected tone-pip ABRs (Figure 4). A task requirement of perceptual discrimination between pips of a target frequency and a non-target frequency, alongside rather loud (100 dB SPL) contralateral masking noise, sufficed to cause attentional augments of ABRs. Those attentional augments occurred in the range of waves II–VI in response to attended target sounds relative to sounds that participants just ignored (while reading a book). Conversely, Ikeda et al. (2008) also revealed attentional decrements of all ABRs to attended frequent non-targets relative to acoustically identical sounds that participants just ignored. The augments and decrements of ABRs by selective attention were particularly apparent with a contralateral Cz-A2 bipolar channel than with the Cz-A1 channel ipsilateral to stimulation. These Cz-A2 ABRs arguably more strongly reflected right hemisphere generators that were contralateral to the left ear that received the tone pips. The extent of these selective attention effects on ABRs were also stronger with louder (100 dB SPL) than with quieter (80 dB SPL) masking noise. The implication is that the mechanisms of selective attention affecting ABR generation are promoted by the binaural interaction of information from to-be-ignored masking noise; masking noise that would make the task more effortful. These mechanisms affect generators ipsilateral and contralateral to the attended ear. An assumption is that these mechanisms involve the descending corticofugal routes between subcortical processing stations.

Figure 4. Attention modulations of the auditory brainstem response (ABR). An attentional augment overlaps the grand-averaged ABRs to deviants presented with contralateral 100 dB SPL noise. That augment is a vertex positivity occuring when participants attend for deviant targets, relative to when participants ignored the sounds whilst reading a book (A). This augment reached significance at the times denoted by black rectangles. The response to the attended target was significantly more contralateral during the time denoted by the blue rectangle. There was a corresponding attentional decrement, a negativity, to the attended non-target standards, relative to when participants ignore all sound whilst reading a book (B). This decrement reached significance at the times denoted by black rectangles. This attentional decrement was also significantly more contralateral during Wave V, as denoted by the blue rectangle; n = 24. Credit: Adapted with permission from Ikeda et al. (2008). Promotional and commercial use of the material in print, digital or mobile device format is prohibited without the permission from the publisher Wolters Kluwer Health. Please contact aGVhbHRocGVybWlzc2lvbnNAd29sdGVyc2tsdXdlci5jb20= for further information.

The earliest signs of binaural interaction of the ascending auditory system in the ABR, at least in some individuals, occur during Wave III (e.g., Wong, 2002; Hu et al., 2014). This Wave III generation could implicate the superior olivary complexes (SOC) after the first bifurcation from the cochlear nucleus within the subcortical ascending auditory system. Such binaural interactions can be attentionally modulated at least for tone-pip stimuli (Ikeda, 2015). These interactions involve cells exhibiting ipsilateral excitation alongside contralateral inhibition (Ikeda, 2015). Conceivable is that binaural interactions with tone pips also engage cells exhibiting ipsilateral excitation as well as contralateral excitation (Ikeda, 2015). The findings of Ikeda et al. (2008) revealed that selective attentional effects on Wave II can be affected by contralateral noise. The descending olivocochlear projection could mediate an improved selection of the attended target at the level of the cochlear nucleus. This selection would occur prior to the first bifurcation of the ascending auditory system including an ascending projection to the SOC of the contralateral hemisphere. The top-down influence of that descending olivocochlear projection could exclusively involve covert attentional mechanisms. Such mechanisms could operate at the level of the cochlear nucleus or also involve the outer hair cells (Maison et al., 2001). Another hypothesis is that these covert attentional mechanisms even modulate the muscles affected during the middle ear acoustical reflex (Ikeda et al., 2013). There is thus evidence for a corticofugally operated top-down early selective filtering mechanism affecting processing during the first few milliseconds. This mechanism comes particularly into play under adverse conditions including noise (Maison et al., 2001). This mechanism is arguably less necessary and apparent under the experimental conditions that Woldorff et al. employed. The early processing of that sound, affected by top-down attentional effects, thus becomes sensitive to the demands of the task and what the sound is.

Interim Summary

The ABR is attentionally modulated in loud noise.

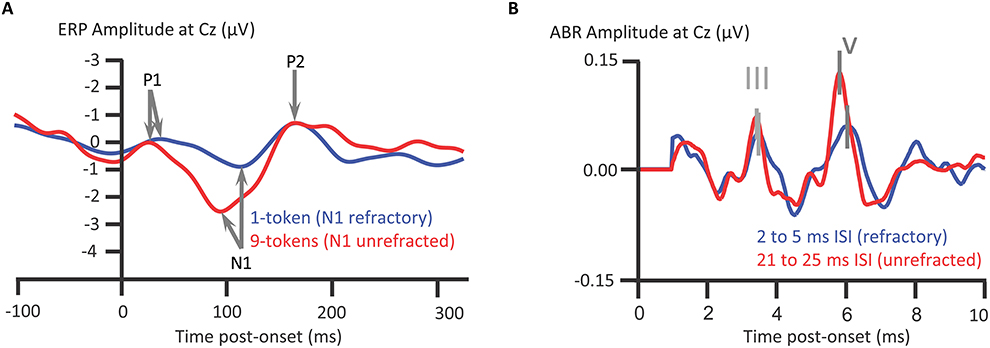

Refractoriness of ABRs and ALLRs

We turn now from attentional modulations of ABRs and ALLRs to their relative susceptibility to attenuation on repeated presentation of a sound: refractoriness. In this subsection, we intend to tackle why the subcortical processing indexed by ABRs more closely reflects temporal information within the acoustical waveform than thalmocortically generated responses. The answer to this question hinges on this notion of refractoriness. The time-course of auditory evoked responses (EPs), otherwise known as “auditory event-related potentials” (ERPs), are time-locked to the onset of a sound. Deflections of the ALLRs of auditory ERPs, such as the supratemporally generated auditory N1, attenuate on repeated presentation of a sound. This attenuation recovers after a period of silence (e.g., Butler, 1973; Campbell and Neuvonen, 2007), as is termed the refractory period. When stimulus-specific neuronal elements are unstimulated, those neurons are released from refractoriness (e.g., Campbell et al., 2003, 2005, 2007; see Figure 5A). By contrast to ALLRs, such as the auditory N1, ABRs are relatively unaffected by refractoriness: For instance, even with multiple reductions in interstimulus interval from 53 to 3 ms, all ABR deflections were unaffected except for wave V (Picton et al., 1992). Wave V showed a prolongation of peak latency at interstimulus intervals of 3 ms only. However, Valderrama et al. (2014) compared ABRs elicited with interstimulus intervals of 21–25 ms to those elicited with interstimulus intervals of 2–5 ms. Valderrama et al. thus found shorter interstimulus intevals reduced ABR amplitudes and affected ABR morphology. On balance, ABRs are less subject to refractoriness than the auditory N1; this refractoriness occuring at briefer interstimulus intervals, with which stimuli evoke ABRs with a clear morphology. Indeed, Valderrama et al. (2014) deconvolved overlapping ABR signals with interstimulus intervals as short as 2–5 ms. Thus when a complex sound such as a speech stimulus /dɑ/ is presented, the consequence, after the ABR to the onset, is that the rostral brainstem generates an ongoing response to aspects of the ongoing /dɑ/ sound.

Figure 5. Longer refractory periods of auditory N1 than for ABRs. The grand-averaged auditory N1 to a tone in a pitch-varying sequence of tones, 9 different pitch tokens, presented at an interstimulus interval (ISI) of 328 ms., is less refracted than when presented in a 1-token repeated tone sequence (A). Stimulus-specific cortical neuronal elements sensitive to pitch become less responsive upon repeated stimulation, as recovers after a period of quiescence. Such quiescence is more common with multiple different pitch tokens. The inter-token repetition interval between stimulation of stimulus-specific elements is longer with a higher token set size. Such elements contribute to N1 generation and thus N1 is refracted in the 1-token relative to 9-token sequences; Campbell et al. (2007); n = 12. ABRs are also subject to refractoriness (B), though new deconvolution techniques show sounds still elicit ABRs with ISIs of 2–5 ms. The ABRs are from a representative participant with intact hearing, Valderrama et al. (2014); n = 1. Credits: (A) is adapted with permission of John Wiley and Sons from Campbell et al. (2007). Copyright © 2007 Society for Psychophysiological Research. (B) is reprinted from Valderrama et al. (2014). Copyright © 2014, with permission from Elsevier.

Interim Summary

The ABR is relatively unaffected by refractoriness. Thus when complex sounds are presented, a flowing river-of-information passes through the rostral brainstem that abstracts envelope and periodicity information generating a cABR response.

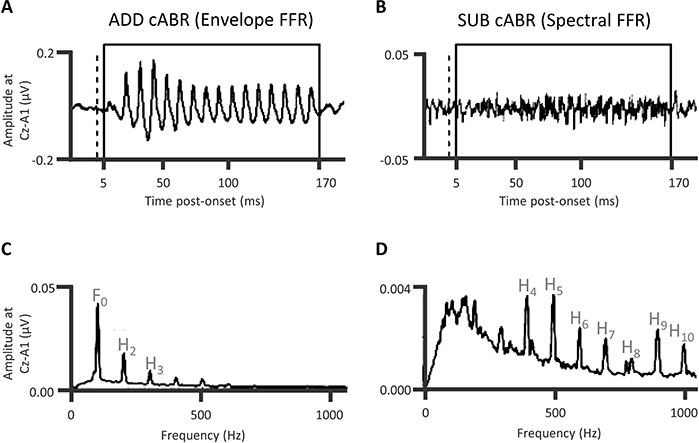

Attention, Expectancy, and Prediction Affect Both the cABR and Speech-in-Noise Perception

Being relatively unaffected by refractoriness, the cABR is thus responsive to landmarks in the acoustical waveform (Skoe and Kraus, 2010; Campbell et al., 2012). The representation of lower frequencies of that acoustical waveform predominates the cABR waveform. The cABR generator process thus seems to abstract the envelope and the fundamental frequency of the stimulus away from the acoustical waveform. The cABR does so at a time-lag of 8 to 10 ms. After the ABR response to the consonantal onset of the /dɑ/ stimulus, the cABR reflects that informational flow through rostral brainstem generators of the ABRs, with the contribution of a distinct Frequency Following Response or “FFR” (Chandrasekaran and Kraus, 2010; Xu and Gong, 2014; Bidelman, 2015; Xu and Ye, 2015). This FFR locks primarily to the fundamental frequency of the vowel portion that the rostral brainstem also generates, albeit in the IC. The form of FFR typically recorded when analyzing cABRs is an “envelope FFR” (Aiken and Picton, 2008) or “envelope following response” (Easwar et al., 2015; Varghese et al., 2015). This EFR follows the periodicity envelope. The envelope differs from the spectral FFR (Aiken and Picton, 2008; Easwar et al., 2015) that follows the spectral frequency of the stimulus. Though there are cochlear nucleus (CN), trapezoid body, and superior olivary complex (SOC) contributions to the FFR (Marsh et al., 1974) as well as a cortical contribution (Coffey et al., 2016), there is a dramatic reduction in a form of FFR accomplished by a subcortical cooling of the IC (Smith et al., 1975). On balance, generators in the vicinity of the rostral brainstem, encompassing the lateral lemniscus and IC, predominate both the cABR to consonantal and vowel portions of a speech sound. The flow of information through the rostral brainstem indexed by the cABR is time-lagged. This time-lag concerns how long the landmark information takes to reach the rostral brainstem. A series of investigations revealed that attention augments the FFR: Galbraith and colleagues (Galbraith and Arroyo, 1993; Galbraith et al., 1995, 1998, 2003) showed that whether comparing attending sounds to not attending sounds, or whether attending to a selected auditory stream of sound while ignoring another, an attentional augment of the FFR is shown and that FFR is higher in amplitude with speech sounds (for an alternative perspective, see Varghese et al., 2015). A separate series of experiments also corroborated that the familiarity of speech or music affected the time-course and dynamics of FFR via experience-dependent plasticity (Musacchia et al., 2007; Wong et al., 2007; Song et al., 2008; Chandrasekaran et al., 2012).

Turning from these initial studies revealing influences of experience and attention on FFRs, a recent investigation of auditory attention and FFRs (Lehmann and Schönwiesner, 2014) showed that attentional selection in background speech noise can rely on both frequency and spatial cues. This selection can also rely on frequency cues alone. In Lehmann and Schönwiesner's procedure, participants attended to vowels uttered by the designated speaker while ignoring another speaker (attend the male and ignore the female, or attend the female and ignore the male). These participants were required to detect occasional attended pitch-deviant target vowels by pressing a button. In a diotic condition, audio-recordings of a male repeating /a/ and a female speaker repeating /i/ were intermixed such that the same sound mixture was presented to both ears. In a dichotic condition, the male speaker's repeated /a/ was presented to the left ear and the female speaker's repeated /i/ was presented to the contralateral ear. In both the diotic and dichotic conditions, the FFR followed the distinct fundamentals of both vowels. In the dichotic condition only, attending the male (on the left) relative to attending the female (on the right) increased the amplitude of the FFR at the fundamental frequency of male's /a/. The direction of attention thus arguably affects the FFR. Lehmann and Schönwiesner computed a neural spectral modulation index of how much attention affects the FFR. This index was higher in the dichotic than the diotic conditions. Spatial cues were thus important to attentional selection, which conceivably occurs at the level the rostral brainstem. Further, frequency cues were also sufficient for attentional selection in that the modulation index was above zero in the diotic condition. Accordingly, attentional selection does not require the segregation of attended and ignored information to different sides of the brain. Further, individual variability in the amplitude of these attentional FFR augments, whilst selecting one voice and ignoring another, was related to the detection of pitch-deviant targets in the attended stream (Lehmann and Schönwiesner, 2014): the stronger the attentional modulation of FFR, the lower the discriminability of the attended pitch-deviant target. Relative to individuals performing at ceiling, participants who struggled more with the task thus applied more attention to the task's stimuli affecting the brainstem representation of those stimuli. The IC, at least in part, generated this attentionally augmented FFR (Bidelman, 2015). In addition, an extensive corticofugal efferent system arguably influenced the generation of this attentional augment in a manner that is both goal-directed and behaviorally relevant. For evidence of a cortical contribution to FFR, see Coffey et al. (2016).

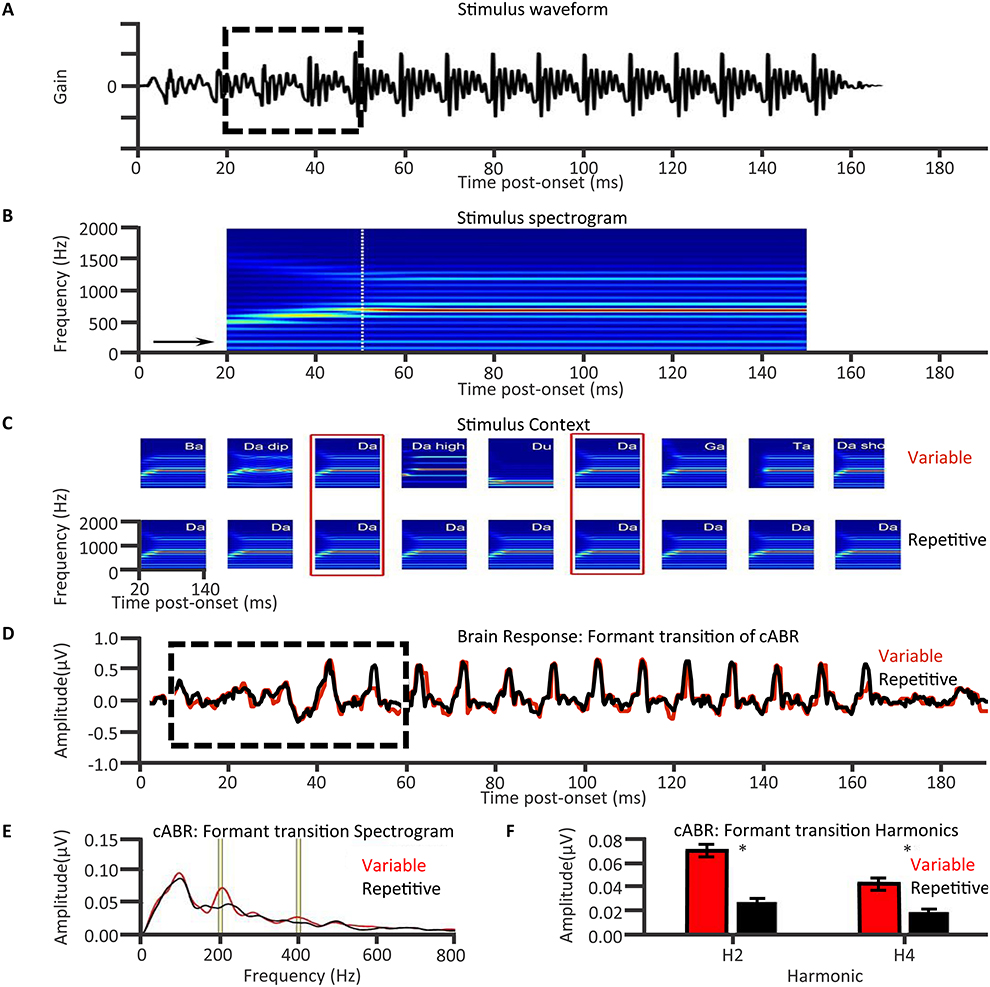

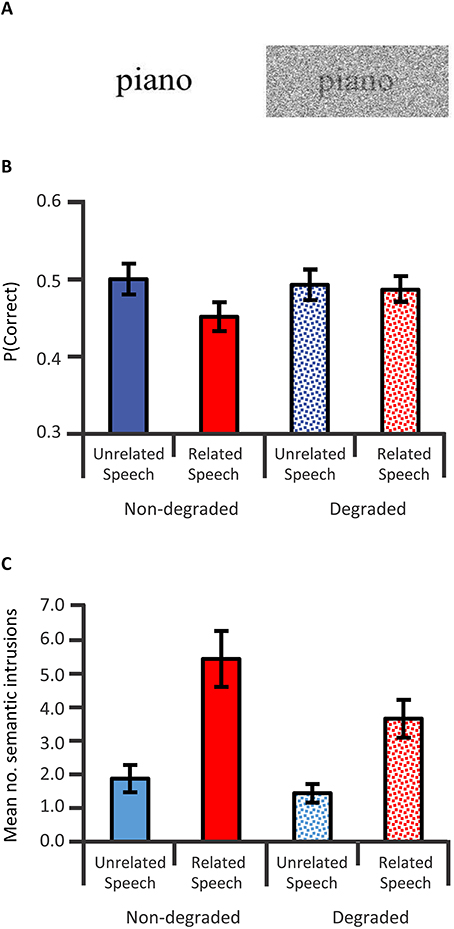

Having established the FFRs of cABRs are influenced by auditory attention and long-term auditory experience, it is worth emphasizing that the cABRs generated in the rostral brainstem are not the automated readout of stimulus attributes in an informational vacuum. Rather, cABR generation is affected by expectancies derived from the immediate preceding context. An investigation of neural entrainment in children revealed such effects of acoustical context on cABR (Chandrasekaran et al., 2009). The notion was that a variable sequence of acoustically distinct monosyllables containing a /dɑ/ syllable prevents the preceding context from predictively enhancing the neural representation of the current stimulus /dɑ/. “Neural entrainment” using the context of a repeated /dɑ/ (Figures 6A,B) reflected such an enhancement. This neural entrainment enhanced the cABR second harmonic amplitude during the formant transition between consonantal and steady-state vowel portions of /dɑ/ (Figures 6C–E). The cortex could process a memory of the preceding context, leading to a top-down expectancy. Subcortical corticopetal-corticofugal loops attempt to meet that expectancy when encoding the current stimulation. The stronger such neural entrainment for the second harmonic in the formant transition, the better the speech-in-noise performance. Such neural entrainment of the cABR is thus functionally relevant for speech-in-noise performance.

Figure 6. Top-down influences of auditory speech context on cABRs: Speech stimulus context affects the formant transition of the cABR in a manner that predicts speech in noise performance in 8- to 13-year olds with intact hearing. A long /dɑ/ stimulus (A) contains a formant transition (boxed) as the acoustical spectrogram (B) illustrates. Chandrasekaran et al. (2009) derived cABRs to /dɑ/ in a variable speech or in a repeated /dɑ/ context (C). The cABRs revealed no significant effect of speech context during the steady-state vowel portion (D), but during the formant transition (boxed) context influenced cABRs. The amplitude spectra of the cABR (E) during the formant transition revealed a repetitive context augmented the second and fourth harmonics, as was significant (F). Correlations revealed that the higher this presumably top-down speech-context modulation of the representation of the second harmonic during the formant transition of the cABR, the better the speech-in-noise performance on the Hearing in Noise Test (not shown). Credit: Reprinted from Chandrasekaran et al. (2009). Copyright © 2009, with permission from Elsevier in respect to Chandrasekaran et al. (2009: Exp.1); n = 21.

This neural entrainment, enhancing the second harmonic during the formant transition, predicted speech-in-noise performance (Chandrasekaran et al., 2009) as assessed by the Hearing In Noise Test or HINT (Nilsson et al., 1994). The more faithful the cABR was to the auditory signal during the transition from the consonantal to the vowel portion, the better the speech-in-noise performance. This evidence concerning speech-in-noise performance of children coheres well with that from older adults. Anderson and Kraus (2010) compared two such adults, with near-identical audiograms (≤25 dB HL for audiometric frequencies from 125 to 8 kHz) to one another. The individual with poorer speech-in-noise peformance exhibited a weaker representation of the fundamental frequency and second harmonic in the FFR of the cABR. Comparing two groups of older adults who showed good and poor speech-in-noise performance, respectively, Anderson et al. (2011) found no significant audiometric difference (≤25 dB HL from 125 to 4 kHz), yet the difference in the FFR of the cABR was replicated. In those older adults, the presence of meaningless syntactic speech adversely affected the faithfulness of the cABR to a repeated /dɑ/. This influence of background speech noise was particularly strong in those showing poor speech-in-noise performance on the HINT: The higher the overall root mean square (RMS) of the cABR in quiet or noise, or the stronger the correlation of the cABR waveform to /dɑ/ in quiet and noise, the better the speech-in-noise performance on the HINT (Anderson et al., 2011).

Kindred to the neural entrainment of the cABR that predicted HINT performance (Chandrasekaran et al., 2009), Chandrasekaran et al. (2012) revealed that using repeated rather than changing stimuli augmented the FFR and reduced the cerebral blood flow in the IC: a repetition suppression effect. The processing of sound in the IC becomes more efficient when predictable. The fidelity of the FFR and the associated repetition suppression is particularly pronounced in those who have learned to process the sound well: e.g., English-speakers who rapidly learn new vocabulary based on the recognition of lexically meaningful tones, having acquired the mapping of distinct pitch patterns of one English pseudoword onto pictures of different objects. These findings could thus relate to the second language acquisition of tonal languages, such as Mandarin Chinese.

When sequences of natural stimuli such as speech exhibit an inherent acoustical variability with time, thus not promoting neural entrainment and repetition suppression, the nature of the filtering of the auditory information at the level of the rostral brainstem is thus arguably non-absolute. The IC shows increased bloodflow reflecting a less efficient processing of the stimulus and generates waveforms less faithful to the stimulus suggesting the filter is wide open to unpredicted stimuli. Accordingly, the experience-dependent corticofugal efferent influence on the rostral brainstem typically permits a selectivity for information promoted by top-down expectancies. Not only acoustical but also semantic and linguistic factors may influence expectancies. Those factors affect the ascendency of information in the auditory system from the IC upward. The influence of these top-down expectancies on corticopetal-corticofugal loops effectively operate as an early filter (Broadbent, 1958). The neural entrainment of facets of the cABR, FFR, or repetition suppression at the IC reflect the selectivity of that early filter, for instance, by affecting the perception of speech in noise. Yet the selectivity of that filtering is only near-absolute under conditions that promote neural entrainment or repetition suppression within the IC. These conditions are atypical in natural acoustically varying to-be-attended stimulation that is often in the presence of noise. The new early filter model offered here thus proposes that the early filter is not only affected by top-down experience-dependent selective attentional factors but also by neural entrainment. This assumption that neural entrainment affects the early filter is thus not as discrepant as Broadbent's (1958) early selection model was with the evidence supporting attenuation (Treisman, 1960, 1964a,b, 1969; Treisman and Riley, 1969) and late selection models (Gray and Wedderburn, 1960; Deutsch and Deutsch, 1963).

Interim Summary

Top-down attentional as well as experience-dependent plasticity factors influence cABR generation. In support of an assumption of predictive selection by the early filter, this generation is also affected by the neural entrainment determined by the speech context. This neural entrainment affects the attention selectivity for speech in noise.

TFS and Age-Dependent Decline of Temporal Resolution

Having discussed how attention, expectancy, and prediction affect the sub-cortical representation of speech in the central auditory system, as well as speech-in-noise performance, we turn now to the representation of TFS. TFS is best understood by first considering how the auditory periphery analyses sound. The structure of the basilar membrane within the cochlea performs a Fourier-analysis-like function (von Békésy, 1960): The basilar membrane resolves a complex sound into component narrowband signals. In response to a sinusoidal stimulation, the basilar-membrane response takes the form of a traveling wave that shows a peak amplitude at a specific place on the basilar membrane, depending on the frequency of the stimulation. Due to the mechanical properties of the basilar membrane, the basal end responds most vigorously to high-frequency sounds and the apical end to low-frequency sounds. This tonotopically organized pattern of vibration is transduced by the inner hair cells. In the auditory nerve, each transduced component narrowband signal thus has a temporal envelope, an informational trace of the slow amplitude dynamics of the upper extremes of basilar membrane deflections of that narrowband waveform. This temporal envelope varies at lower frequency, slower than the higher frequency TFS information bounded within that envelope. This amplitude modulation envelope supplies cues to speech perception that are not only necessary but also sometimes alone sufficient for speech perception (Drullman et al., 1994a,b; Shannon et al., 1995). In quiet, slow-rate temporal-envelope cues (4–16 Hz) are especially important for speech identification (Drullman et al., 1994a) when higher frequency amplitude modulation envelope is present. Also in quiet, medium rate amplitude modulation envelope (2–128 Hz) is also important when lower frequency amplitude modulation envelope is absent (Drullman et al., 1994b). In the presence of interfering sounds, slow temporal-envelope cues (0.4–2 Hz) become important conveying prosody (Füllgrabe et al., 2009), as do high rate temporal-envelope cues (50–200 Hz) conveying fundamental frequency (Stone et al., 2009, 2010).

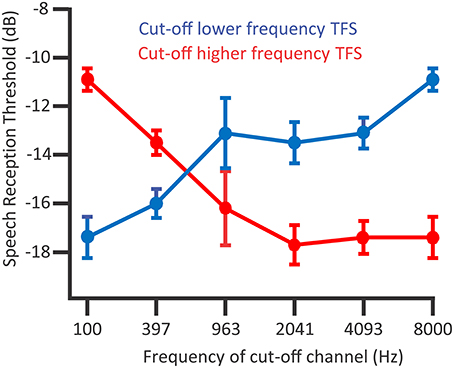

The temporal information bounded within this temporal-envelope is TFS, i.e., the fluctuations in amplitude close to the center frequency of a narrowband signal, which are higher in frequency than the amplitude modulation envelope. In tone-vocoded sound, narrow frequency bandwidths of sound—and in turn the resolved narrowband signal at the basilar membrane—“channels” have envelope information preserved yet the TFS replaced with a tonal sound amplitude-modulated by that envelope. Hopkins and Moore (2010) explored how incrementally replacing the content of tone-vocoded channels with the original speech channels improved speech recognition in noise. Listeners were between 19 and 24 years of age and audiometrically normal in the test ear. These listeners were sensitive to TFS as can be used in speech perception in noise: The speech reception thresholds of target signals containing partial TFS information improved when adding speech TFS information to the tone-vocoded sound (Figure 7; Hopkins and Moore, 2010). The TFS information improved thresholds in a procedure that incrementally replaced higher-and-higher frequency tone-vocoded channels with speech TFS (Figure 7, red line). TFS information also improved thresholds in a procedure replacing lower-and-lower frequency tone-vocoded channels of noise with speech TFS in the same bandwidth (Figure 7, blue line). Noteworthy is that TFS in higher frequency ranges aided speech recognition when no TFS was available in lower frequency ranges. In an analogous experiment, Hopkins and Moore (2010) also showed that speech TFS information is less useful to those with hearing impairment, albeit potentially confounded by the hearing-impaired participants being older (Moore et al., 2012; Füllgrabe, 2013; Füllgrabe et al., 2015).

Figure 7. The presence of TFS above 1500 Hz contributes to speech recognition thresholds in normal hearing listeners. Considering the blue line, a procedure adding TFS between 8000 and 4093 Hz to tone-vocoded channels resulted in improved (lower) speech reception thresholds. Considering the red line, when lower frequency TFS is already available, after adding TFS between 963 and 2041 Hz that improved speech reception thresholds, there were no dramatic improvements from adding higher frequency TFS. Data points denote mean speech reception thresholds; error bars denote the standard error of the mean; n = 7. Credit: Reprinted with permission from Hopkins and Moore (2010). Copyright © 2010, Acoustic Society of America.

Another study complements Hopkins and Moore's (2010) demonstration that TFS is important for speech identification in the presence of speech background sounds. Stone et al.'s (2011) experiments investigated the dynamic range of usable TFS information by comparing the addition of TFS information to the amplitude peaks of a vocoded speech signal by adding that TFS information to the valleys and troughs of this vocoded signal. Whether added to amplitude peaks or to troughs, TFS information improved identification of the target speech over a background talker: Adding target and background noise TFS information to a channel containing the corresponding temporal envelope information proved useful. This TFS information was useful for channel levels—relative to the RMS sound level of that channel—from about 10 dB below to 7 dB above that RMS sound level. However, the range of channel sound levels where TFS was useful depended on the relative levels of the target sound to the background masking talker: For an experimental condition in which background noise dominated the target more, adding TFS to peaks was more useful at channel sound levels further below the RMS sound level of the channel than in an experimental condition in which the background noise did not dominate the target as much. Further, adding TFS information to peaks when the background dominated more was more useful than adding TFS information to dips. Stone et al.'s (2011) results thus show that TFS information is not exclusively useful for listening in dips, but rather TFS also contributes to the segregation of target to-be-attended speech from the to-be-ignored background speech sound.

Having shown how processing TFS information is important to the recognition of speech in speech noise, we turn to how the subcortical processing of TFS is relevant to one of the outstanding unresolved conundrums of cognitive hearing science. This conundrum is that of isolating the age-related decline in temporal processing that is caused by effects of peripheral hearing loss on the auditory nerve and central auditory system from age-related declines that are unconnected to audiometric loss. Presbycusis, age-related sloping loss, may drive a progressive deafferentation of unstimulated neurons spreading upward in the ascending auditory system, which ultimately results in chronic cognitive change according to the sensory deprivation hypothesis. Hearing-impaired listeners can experience supra-threshold auditory processing deficits, characterized by distorted processing of audible speech cues. Peripheral damage to outer hair cells and reductions in peripheral compression and frequency selectivity contribute to these deficits, as does a reduced access to TFS information in the speech waveform, leading to this distortion (Summers et al., 2013). However, this impairment of TFS processing, which affects distortion, is not necessarily always a direct or indirect consequence of peripheral damage.

There is evidence for an independent age-related decline in temporal resolution as reflected by the action of the rostral brainstem of the central auditory system (Marmel et al., 2013)2. Marmel et al. investigated an audiometrically heterogeneous population of adults with a wide age range (Supplementary Figure 1A). Participants with thresholds greater than 20 dB HL had a sensorineural loss. To investigate inter-individual variability in temporal resolution at the level of the rostral brainstem of the central auditory system, Marmel et al. used an FFR synchronization index. This index comprised of the cross-correlation of FFR to the stimulus and also comprised of the signal-to-noise ratio of the FFR. Such an index thus tracked how faithful the FFR was to the acoustical stimulus. This FFR synchronization index decreased with age in a manner reflecting a poorer temporal resolution at the level of the rostral brainstem, which is associated with higher frequency difference limens. These higher limens reflected poorer pitch discrimination abilities. A tendency for sloping loss to be more severe in elder participants was confirmed (Supplementary Figure 1), yet at 500 Hz, hearing thresholds did not correlate significantly with age (Supplementary Figure 1C). Marmel et al. presented stimuli in this frequency range when measuring absolute auditory thresholds, frequency difference limens, and FFR synchronization. The influence of age on FFR synchronization in this frequency range, without a significant influence of age on hearing level, thus strains any assumption that a peripheral presbycusis could be the sole cause of this effect of age on the processing of sound by the central auditory system (though see Footnote 2). Further, this FFR synchronization was not associated with absolute auditory thresholds. The point is that there was an age-related decline in temporal resolution arguably at the level of the rostral brainstem that was associated with impairments in pitch discrimination abilities. Pitch discrimination abilities appeared to hinge both on absolute auditory thresholds and on the FFR synchronization index. However, FFR synchronization yet not absolute auditory threshold was affected by age. It is tenable that auditory absolute threshold could affect the place-coding of auditory information, in turn affecting pitch discrimination. Equally, absolute auditory threshold could affect the coding of auditory information that is not phase-locked. However, the firing of neurons conveying that auditory information would have to be asynchronous. The upshot of s (Marmel et al.'2013) findings is that there is an age-related functionally relevant decline in auditory temporal resolution at the level of the rostral brainstem. This decline is arguably independent of audiometric hearing loss, which though affecting frequency discrimination, was not affected by age within the frequency ranges investigated.

The question that still remains is whether aging of the auditory nerves and central auditory system alone drives this functionally relevant decline of temporal resolution arguably at the level of the rostral brainstem, as indexed by FFR synchronization. Such aging could relate to a decline of inhibitory GABAergic (Caspary et al., 2008; Anderson et al., 2011) or cholinergic (Zubieta et al., 2001) systems of neurotransmission. Such systems involve respectively, γ-aminobutyric acid or acetylcholine. A decline in temporal processing may limit the speed of acoustical fluctuations that the (auditory nerve and, in turn the) central auditory system can follow. Such a decline thus renders it impossible for the central auditory system to represent high frequencies using the rate facet of a place-rate code, which affect the IC's generation of the FFR.

Interim Summary

There is an age-related decline in supra-threshold auditory processing, which Marmel et al. (2013) revealed as independent of audiometric hearing loss (Marmel et al., 2013). This age-related decline occurs alongside a decline in the temporal resolution of the FFR, which arguably the rostral brainstem generates. This age-related decline in temporal resolution could also impair speech recognition in noise (Hopkins and Moore, 2010). There is a comparable age-related decline in TFS sensitivity, which even occurs in audiometrically normal adults (Füllgrabe, 2013; Füllgrabe et al., 2015). However, peripheral hearing loss could also drive a decline in the processing of sounds in the auditory nerves and central auditory system. This loss is either measurable in the audiogram, or is “hidden” (Schaette and McAlpine, 2011; Plack et al., 2014).

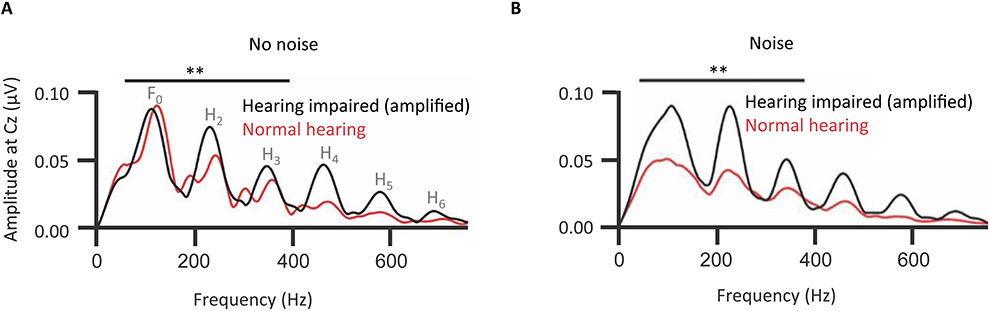

Neuroplastic Changes to Accommodate High-Frequency Audiometric Loss

A hypothesis is that the decline of temporal resolution of the central auditory system, indexed by the FFR, comes from long-term neuroplastic change to accommodate the loss of audiometric sensitivity, especially in the high-frequency range. Older adults with mild-to-moderate hearing impairment show FFRs of the cABR with an, at first counterintuitive, higher amplitude fundamental and lower harmonics than normal-hearing controls (Figure 8; Anderson et al., 2013). One explanation is the higher amplitude of the FFR in hearing-impaired listeners might be due to a larger effective modulation depth in those listeners caused by the reduction or abolition of cochlear compression (Füllgrabe et al., 2003; Oxenham and Bacon, 2003).

Figure 8. Long-term neuroplastic changes accommodate peripheral sensorineural hearing loss: Effects of hearing loss on the amplitude spectra of the envelope FFR of grand-averaged cABR representing the fundamental and lower harmonics of the vowel portion of a /dɑ/ stimulus under acoustical background conditions of noise or no noise. The fundamental and lower harmonics (F0 to H3) were together represented (A) significantly more strongly (denoted by **) in elderly individuals with mild-to-moderate sloping hearing loss (n = 15), than in elderly controls without such a loss (n = 15). Background noise (pink noise of signal-noise ratio 10 dB) exacerbated this effect (B). There was no hearing impairment-associated effect in higher harmonics. Arguably, hearing-impaired participants have learned to rely on lower-frequency speech cues, particularly in noise. With the same hearing-impaired listeners without amplification the pattern of significance replicated, though these effects were slightly weaker without amplification (not shown). Credit: Reprinted with permission from Anderson et al. (2013). Copyright © 2013, Acoustic Society of America.

However, such results are also germane to another theory (Woods and Yund, 2007) that sensorineural impairment leads to a remapping from the auditory cortex—with impoverished output to high frequency cues—to the auditory association cortex. Accordingly, that remapping, to compensate, takes the low frequency cues still available for phoneme recognition and amplifies those cues within the central auditory system (Woods and Yund, 2007). Whether occurring between the auditory cortex and auditory association cortex, or between other structures of the auditory system, this remapping has consequences. Anderson et al.'s (2013) data relate to such a remapping. Those consequences alter the generation of the FFR in the rostral brainstem. Anderson et al.'s (2013) analyses of higher harmonics Aiken and Picton (2008) revealed no corresponding upregulation of high frequency cues.

Further, Anderson et al.'s analyses revealed that whether the stimuli were unamplified or amplified, using the NAL-R fitting formula (Byrne and Dillon, 1986), there was a bias in persons with mild-to-moderate hearing loss toward a stronger upregulation of lower rather than high frequency components in noise. Indeed, this bias for upregulating high frequency components was even stronger when amplified. If this bias were due to peripheral factors alone, such as a reduction in cochlear compression, then, if there were no long-term consequent neuroplastic changes, we would predict amplification would attenuate that bias. Anderson et al.'s analyses revealed the reverse of that prediction: amplification enhanced this bias. Accordingly, while peripheral factors such as declining cochlear compression would affect the FFR, long-term neuroplastic changes also take place that affect the FFR. Amplification with the NAL-R formula used did not remediate these changes.

Anderson et al.'s (2013) FFR findings from older adults with mild-to-moderate hearing impairment cohere well with evidence of a slightly different sort. Upon receiving an aid that amplifies high frequency cues, hearing aid users who have had unaided high frequency hearing loss for many years, can hear the amplified sound as distorted (Woods and Yund, 2007; Galster et al., 2011). Reasons, which are not necessarily mutually exclusive, could include regions of dead cochlea (Vickers et al., 2001; Mackersie et al., 2004; Moore, 2004; Preminger et al., 2005; Aazh and Moore, 2007; Vinay and Moore, 2007; Zhang et al., 2014). However, other reasons could include the long-term plasticity of the auditory nerves or central auditory system attempting to make the best use of lower frequency information from a damaged periphery. The low frequency sound can also seem too loud: “hypercusis.” Here the notion is that the encoded low frequency cues swamp high frequency perceptual cues. This problem is even more apparent in the FFR under conditions of background noise (Anderson et al., 2013). As Galster et al. (2011) note, “the inability to restore audibility of high-frequency speech and the possible contraindication for the restoration of high-frequency speech are established conundrums of hearing care.” This neuroplastic change is, at least in part, reversible. Training programs improve the aided perception of word-initial phonemes for those who have become accustomed to high frequency loss (Woods and Yund, 2007); people who presumably have partially functional basal cochlear regions. The neuroplastic changes, which adapt to hearing loss and seem to implicate the rostral brainstem, thus seem, on the whole, to be reversible. These neuroplastic changes are reversible even in later life and even after extensive hearing aid use. At first glance, such a finding would cohere well with the notion of neuroplastic recovery from neuroplastic long-term changes that accommodate peripheral hearing loss. However, it is worth considering that GABA units can increase in the auditory cortex due to training (Guo et al., 2012). Accordingly, systems of neurotransmission could have aged affecting the temporal resolution of the central auditory system to a point that is not normal. Those systems of neurotransmission could be subject to recovery due to training. While training was effective for nearly all individuals, there were factors affecting the inter-individual differences in the efficacy of training (Stecker et al., 2006). The distortion and annoyance issues associated with receiving an aid after becoming accustomed to sensorineural hearing impairment also concern signal processing techniques. These techniques map information in the high frequency components in the to-be-amplified sound onto lower frequency regions of cochlea (Galster et al., 2011). Approaches include frequency compression (Glista et al., 2009) and frequency transposition (Füllgrabe et al., 2010).

Such a signal processing approach might be more advisable than training when the majority of high frequency (basal) regions of cochlea are dead—the relevant afferents of the eighth cranial nerve have atrophied. At first, it is hard to imagine how such individuals could benefit from a training in listening to high frequency information: If a region of cochlea is dead, there is no sound transduction at the characteristic frequencies of the inner hair cells of that region. However, if sounds are loud, a frequency component produces a broader excitation pattern across auditory nerve fibers. With loud enough frequency components, regions of live inner hair cells neighboring dead cochlear regions, would thus be able to transduce some high-frequency information: “off-frequency listening” (Westergaard, 2004). Foreseeable is that training these persons to use information from off-frequency listening might have some benefit with very high levels of amplification. For persons with extensive dead basal cochlear regions, a prediction is that such training is not as effective as the suggested signal processing approaches.

Anderson et al.'s (2013) analyses offer intriguing biomarkers to evaluate for specifity in predicting such treatment's outcomes. These analyses were geared to investigating both lower frequency components and higher frequency components of the FFR of the cABR. As such these analyses revealed low frequency cues swamp higher frequency cues following neuroplastic changes that accommodate sensorineural loss. By contrast to these analyses, the representation of the stimulus classically apparent in the cABR, is relatively abstracted from the TFS at the level of the rostral brainstem. Whether the FFR of the cABR was responsive to the steady-state segment of a vowel or the steady-state sound of a cello, that FFR represented the fundamental and lower harmonics more strongly than the higher harmonics: As depicted in Figure 9, such lower frequency components were more strongly represented even when higher harmonics are of a higher intensity, as attributable to the low-pass characteristics of brainstem phase-locking (Musacchia et al., 2007; Skoe and Kraus, 2010).

Figure 9. Evidence for the low-pass properties of the auditory brainstem. Spectrograms of steady-state portions of speech (A) and non-speech (B) stimuli (left-hand panels) reflect the same fundamental frequency of 100 Hz, but a different harmonic structure. The corresponding spectrograms of the Frequency Following Responses of the cABR (right-hand panels), reveal that FFR follows the fundamental and lower harmonics more strongly than higher frequency harmonics; n = 29. Credits: Adapted with permission from Musacchia et al. (2007). Copyright © 2007 National Academy of Sciences, U.S.A., after Skoe and Kraus (2010). Adapted with permission from Skoe and Kraus (2010). Promotional and commercial use of the material in print, digital or mobile device format is prohibited without the permission from the publisher Wolters Kluwer Health. Please contact aGVhbHRocGVybWlzc2lvbnNAd29sdGVyc2tsdXdlci5jb20= for further information.

Interim Summary

Audiometric hearing loss could drive a decline of temporal resolution in the central auditory system. This age-related decline could be a long-term adaptation to higher frequency loss at the periphery. However, Füllgrabe et al. (2015) have shown an age-related decline of temporal resolution in audiometrically normal individuals, who are audiometrically matched across age groups. This decline thus arguably occurs in the central auditory system. This finding would thus indicate that audiometric hearing loss does not drive all such decline. However, this assertion comes with a caveat that there may be hidden loss (Schaette and McAlpine, 2011; Plack et al., 2014; Kujawa and Liberman, 2015) that is age-related. Accordingly, that hidden loss does not affect the audiogram but still drives this decline thus affecting the central auditory system. The cABR can reflect neuroplastic changes in response to peripheral sensorineural loss upregulating the relative representation of lower rather than high frequency components arguably at the rostral brainstem. This upregulation occurs in a manner exacerbated by noise and by amplification, as could relate to distortion, hypercusis, and annoyance issues. The cABR is thus an intriguing biomarker that could have specificity informing the approach to treatment. Stimulus transduction artifact-free cABRs can now be recorded through hearing aids (Bellier et al., 2015). It remains to be determined how well such cABR attributes—including noise sensitivity and the extent of adaptation to lower frequency components—predict the outcomes of fitting. This fitting concerns signal processing, directional microphones, binaural care, and choice of noise reduction schemes. Also to-be-determined is how well such cABR attributes predict the benefit from behavioral interventions such as perceptual training (Woods and Yund, 2007).

From the Limits on Phase-Locking in the Inferior Colliculus to Top-Down Neural Entrainment During Speech Perception

We have seen that prolonged hearing impairment has consequences for the generation of FFR of the cABR, which typically reflects low frequency sound components. The temporal envelope information in a narrowband signal is definitively lower in frequency than the TFS information bound within that envelope. Narrowband signals with a lower center frequency, are, however more dominated by temporal envelope information than narrowband signals with a higher center frequency. Much speech TFS information is transmitted through those higher center frequency narrowband signals. The question remains for neuroscience as to how TFS is re-coded prior to the rostral brainstem. Spectral FFRs are known to represent harmonics of acoustical information as high as 1500 Hz (Aiken and Picton, 2008). Yet, a processing of TFS above 1500 Hz contributed to speech target recognition in speech background noise (Hopkins and Moore, 2010). The frequency components of that TFS over 1500 Hz are thus somehow processed by the brain. Such temporal information is available at the level of the cochlear nucleus (Palmer and Russell, 1986; Winter and Palmer, 1990). Skoe and Kraus (2010) postulate that a place code facet of a rate-place code (Rhode and Greenberg, 1994) recodes information about higher frequencies. Such a place code could be supported by a form of tonotopy within the IC (e.g., Malmierca et al., 2008). Indeed, Harris et al. (1997) support this notion of a rate-place code with multi-unit recordings from gerbil IC. Frequencies below 1000 Hz activated a broad phase-locked population. Higher frequencies induced activation of a more focal population without phase-locked firing of the constituent neuronal elements. The spectral FFR is thus more strongly affected by lower frequencies.

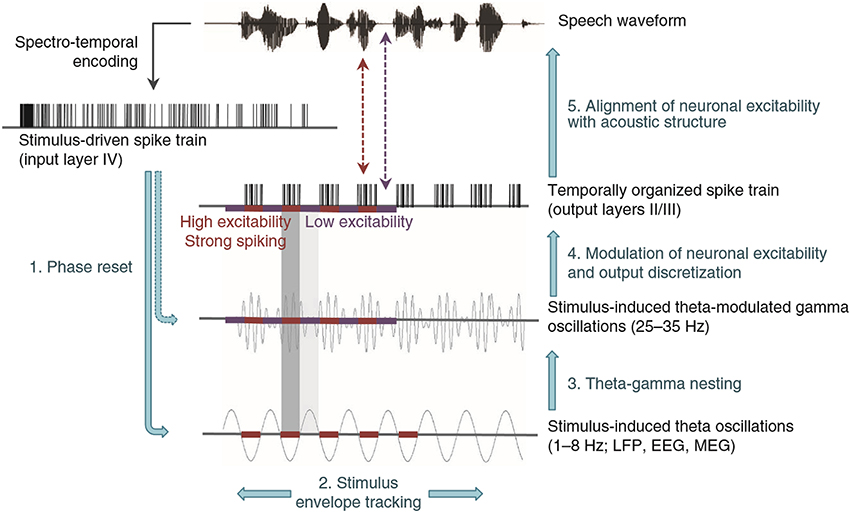

Turning from these evoked responses to neuronal oscillations, a recent model of how cortical theta (1–8 Hz) and gamma (25–35 Hz) oscillations process speech assumes a high-resolution spectrotemporal representation of speech in the primary auditory cortex (Figure 10; Giraud and Poeppel, 2012). This representation enters input layer IV upon which operations are performed to code speech into the theta- and gamma-band, albeit a representation encoded in a neuronal spike train. At first blush, the assumption of such a representation contrasts with the upper limit of phase-locking in the FFR. This limit is known to drop from 3.5 kHz in the guinea pig auditory nerve to 2–3 kHz in the cochlear nucleus (Palmer and Russell, 1986; Winter and Palmer, 1990) down to 1000 Hz in the central nucleus of the guinea pig's IC, right down to 250 Hz in auditory cortex (Wallace et al., 2002, 2005). This cortical limit is likely an over-estimate in non-human primates (Steinschneider et al., 1980, 2008), perhaps even humans.