- 1School of Computer Software, College of Intelligence and Computing, Tianjin University, Tianjin, China

- 2Tianjin University of Traditional Chinese Medicine, Tianjin, China

- 3School of Computer Science and Technology, College of Intelligence and Computing, Tianjin University, Tianjin, China

- 4CSIRO Data61, Sydney, NSW, Australia

- 5Institute of Fundamental and Frontier Sciences, University of Electronic Science and Technology of China, Chengdu, China

Gliomas have the highest mortality rate and prevalence among the primary brain tumors. In this study, we proposed a supervised brain tumor segmentation method which detects diverse tumoral structures of both high grade gliomas and low grade gliomas in magnetic resonance imaging (MRI) images based on two types of features, the gradient features and the context-sensitive features. Two-dimensional gradient and three-dimensional gradient information was fully utilized to capture the gradient change. Furthermore, we proposed a circular context-sensitive feature which captures context information effectively. These features, totally 62, were compressed and optimized based on an mRMR algorithm, and random forest was used to classify voxels based on the compact feature set. To overcome the class-imbalanced problem of MRI data, our model was trained on a class-balanced region of interest dataset. We evaluated the proposed method based on the 2015 Brain Tumor Segmentation Challenge database, and the experimental results show a competitive performance.

1. Introduction

Gliomas, the most common brain tumors in adults, have the highest mortality rate and prevalence among the primary brain tumors (DeAngelis, 2001). They can be classified into high grade gliomas (HGG) and low grade gliomas (LGG). HGG is more aggressive and infiltrative than LGG, thus patients with HGG have a shorter life expectancy (Louis et al., 2007). Magnetic resonance imaging (MRI) with multiple sequences, such as T2-weighted fluid attenuated inversion recovery (Flair), T1-weighted (T1), T1-weighted contrast-enhanced (T1c), and T2-weighted (T2) provides detailed and valuable information of the brain, and thus is commonly used to diagnose brain diseases, plan the medical treatment strategies, and monitor tumor progression (Bauer et al., 2013; Zeng et al., 2018a). However, gliomas from MRI are difficult to localize as they invade into almost everywhere in the brain with various shapes and sizes and heterogeneous growth patterns (Zhao et al., 2018); they have similar appearances with other diseases such as stroke or inflammation observed in the images; and they are also tangled with surrounding tissues, causing the boundaries diffusive and blurry (Goetz et al., 2016). Furthermore, the scale of MRI voxels is not uniform as the X-ray computed tomography (CT) scans, causing the same tumors to have different gray values, especially when the scans are obtained at different institutions (Sapra et al., 2013). Manual segmentation requires expertise and manually labeling each voxel is laborious and time-consuming (Gordillo et al., 2013). Meanwhile, a variability of 20% and 28% for intra- and inter-rater respectively has been reported for manually segmentation of brain tumors (Mazzara et al., 2004; Goetz et al., 2016). For these reasons, automatic methods instead of manual segmentation with high accuracy and less time-consumption is in high demand.

In this paper, our goal is to propose an automatic method to detect the three different regions of interest (ROI): complete tumor, tumor core, and enhancing tumor from the brain MRI. Our main contributions can be summarized as following:

1. A group of features named circular context-sensitive (CCS) features were proposed. The CCS features fully utilize the histogram information of rays along various orientations and with various lengths.

2. Gradient information was fully utilized by extracting two-dimensional and three-dimensional features. A total of 62 features were extracted to detect and classify the brain tumors.

3. We used an mRMR feature selection algorithm, which could select features that have minimum redundancy and maximum relevance with each others. This is used to significantly reduce the computational cost and increase the efficiency.

The paper is organized as follows: we give a brief literature review of related work in section 2. Then the methods are described in details in section 3. We give the experimental results in section 4, followed by the conclusions in section 5.

2. Related Work

Numerous methods of brain tumor detection and segmentation including semi-automatic methods and full-automatic techniques have been proposed (Tang et al., 2017). These segmentation techniques can be roughly divided into 4 categories: threshold-based techniques, region-based techniques, model-based techniques, and pixel/voxel classification techniques.

The threshold-based techniques, region-based techniques, and pixel classification techniques are commonly used for two-dimensional image segmentation (Vijayakumar and Gharpure, 2011). Model-based techniques and voxel classification methods are usually used for three-dimensional image segmentation. We will review the four types of methods in the following subsections.

2.1. Threshold-Based Techniques

Threshold-based method is a simple and computationally efficient approach to segment brain tumors because only intensity values need to be considered. The objects in the image are classified by comparing their intensities with one or more intensity threshold values (Gordillo et al., 2013). The Otsu algorithm (Otsu, 1979), Bernsen algorithm (Bernsen, 1986), and Niblack algorithm (Niblack, 1986) are simple and commonly used algorithms.

Gibbs et al. proposed an unsupervised approach using a global threshold to segment. The ROI for the tumor extraction task from the MRI images (Gibbs et al., 1996). Stadlbauer et al. used the Gaussian distribution of intensity values as the threshold to segment tumors in brain T2-weighted MRI (Stadlbauer et al., 2004). However, if the information in the image is too complex, the threshold-based algorithm is not suitable. It is also limited to extract enhanced tumor areas.

2.2. Region-Based Techniques

Region-based methods divide an image into several regions that have homogeneity properties according to a predefined criterion (Adams and Bischof, 1994). Region growing and watershed methods are the most commonly used region-based methods for brain tumor segmentation.

Ho et al. proposed a region competition method which modulates the propagation term with a signed local statistical force to reach a stable state (Ho et al., 2002). Salman et al. examined the seeded region growing and active contour to be compared against experts' manual segmentations (Salman et al., 2012). Sato et al. proposed a Sobel gradient magnitude-based region growing algorithm which solves the partial volume effect problem (Sato et al., 2000). Deng proposed a region growing method which was based on the gradients and variances along and inside of the boundary curve (Deng et al., 2010).

Letteboer et al. and Dam et al. described multi-scale watershed segmentation (Letteboer et al., 2001; Dam et al., 2004). Letteboer et al. proposed a semi-automatic multi-scale watershed algorithm for brain tumor segmentation in MR images (Letteboer et al., 2001). Region-based techniques are used commonly in brain tumor segmentation. However, region-based segmentation has the over-segmentation problem and there is considerable difficulty in marker extraction when using marker-based watershed segmentation. Li and Wan solved these problems by proposing an improved watershed segmentation method with an optimal scale based on ordered dither halftone and mutual information (Li and Wan, 2010).

2.3. Model-Based Techniques

Model-based segmentation techniques could be divided into parametric deformable and geometric deformable approaches. There are a number of studies on image segmentation based on active contours, which is a popular parametric deformable method (Boscolo et al., 2002; Amini et al., 2004). Snake is one of the most commonly used geometric deformable algorithm for brain tumor segmentation. Luo et al. proposed a deformable model to segment brain tumors (Luo et al., 2003). This method combined the adaptive balloon force and the gradient vector flow (GVF) force to increase the GVF snake's capture range and convergence speed. Ho et al. proposed a new region competition method for automatic 3D brain tumor segmentation based on level-set snakes which overcome the difficulty in initialization and the missing boundary problems by modulating the propagation term with a signed local statistical force (Ho et al., 2002).

2.4. Pixel/Voxel Classification Techniques

Voxel-based classification usually uses voxel attributes for each voxel in the image such as gray level and color information. In brain tumor segmentation, voxel-based techniques are classified as unsupervised classifiers and supervised classifiers to cluster each voxel in the feature space (Gordillo et al., 2013).

Juang and Wu proposed a color-converted segmentation approach with the K-means clustering technique for MRI which converts the input gray-level MRI image into a color space image and the image is labeled by cluster indices (Juang and Wu, 2010). Selvakumar et al. implemented a voxel classification method which combined K-means clustering and fuzzy C-means (FCM) segmentation (Selvakumar et al., 2012). Vasuda and Satheesh improved the conventional FCM by implementing data compression including quantization and aggregation to significantly reduce the dimensionality of the input (Vasuda and Satheesh, 2010). Comparing to the conventional FCM, the modified FCM has a higher convergence rate. Ji et al. proposed a modified possibilistic FCM clustering of MRI utilizing local contextual information to impose local spatial continuity to reduce noise and resolve classification ambiguity (Ji et al., 2011). Autoencoders were used in Vaidhya et al. and Zeng et al. work for brain tumor segmentation and other imaging tasks (Vaidhya et al., 2015; Zeng et al., 2018b). Zhang et al. proposed a hidden Markov random field model and the expectation-maximization algorithm for brain segmentation on MRI (Zhang et al., 2001).

For the voxel-classification MRI processing techniques, proper depiction of voxels is required as a criteria to accurately classify each voxel. In the previous studies, Zulpe et al. used gray-level co-occurrence matrix (GLCM) textural features to detect the brain tumors (Zulpe and Pawar, 2012); Context-sensitive features were used in Meier et al.'s study to classify tumors and non-tumors (Meier et al., 2014). Meanwhile, a feature selection algorithm also requires good designs to select a compact set of features in order to reduce the computation cost (Zou et al., 2016a,b; Su et al., 2018), considering the huge data size of the MRI. In our study, one set of informative features and efficient feature selection algorithm were proposed. The experimental results have demonstrated that promising brain tumor segmentation performance can be achieved using the proposed method.

3. Methodology

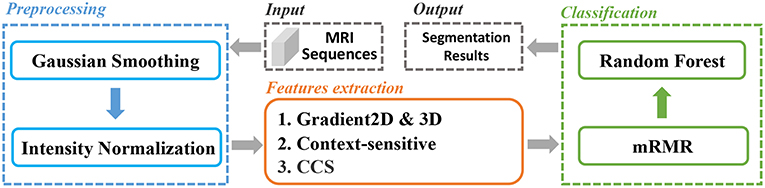

In this paper, we extracted various types of features from the brain MRI and used for classification. And an mRMR feature selection method was used to reduce the feature dimension and select the best feature set. The whole pipeline was depicted in Figure 1. Firstly, the MRI sequences were pre-processed with smoothing and normalization operations. Secondly, we extracted two types of features, gradient-based features and context-sensitive features. Thirdly, we used an mRMR feature selection method to select the optimal feature set with minimal redundancy and maximal relevance. We will explain the whole process in detail later.

3.1. Data

We used the training data of BraTS 2015 as our training and test data (Menze et al., 2015). It provides 4 sequences T1, T1c, T2, and Flair. The image data contains 220 HGG (anaplastic astrocytomas and glioblastoma multiforme tumors) MR scans and 54 LGG (histological diagnosis: astrocytomas or oligoastrocytomas) cases. The “ground truth” are labeled by manual annotations with 0-5 with four types of tumoral structures labeled as the following: “necrotic (or fluid-filled) core” is labeled 1, “edema” is labeled 2, “non-enhancing(solid) core” is labeled 3, and “enhancing core” is labeled 4. The normal tissue is labeled 0. We evaluated our work within three regions: complete tumor (which contains necrotic core, edema, non-enhancing core and enhancing core), tumor core (which contains necrotic core, non-enhancing, and enhancing core) and enhancing tumor.

3.2. Pre-processing

We carried out smoothing and normalization on the MRI sequences to reduce the impact of image noise and to enhance image quality for further processing. As for smoothing, we chose the Gaussian filter which has been widely used in image processing and computer vision for noise suppression (Bergholm, 1987; Deng and Cahill, 1993; Kharrat et al., 2009; Zeng et al., 2017).

For further processing, MRI sequences are sensitive to all the acquisition conditions such as MR protocols, MR scanners, and MR adjustments (Sled et al., 1998). Even for the same tissue information acquired with the same conditions, there will be a variation because MRI intensities do not have a tissue specific value. In order to eliminate the impact of the variation for further image processing which is based on image intensity, we normalized the smoothed value to the range from 0 to 1. The normalization was calculated as in Equation (1)

where X* and X are the normalized and raw gray value respectively; Xmax is the maximal gray value, and Xmin is the minimal gray values.

3.3. Gradient Based Features

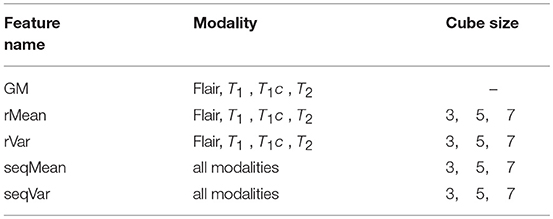

The gradient value represents the rate of change in the direction of the largest possible intensity change. In our study, we used the central difference gradient as the gradient operator. For each voxel p, the derivative at one direction is the mean of the two voxels adjacent to p in that particular direction. Here we calculated two sets of gradient-based features within the ROI. The first set calculated the gradient along each coordinate plane, which we named as Gradient2D. The Gradient2D of one image in each coordinate plane has two components: the x-derivative and the y-derivative. In Equation (2), we take the x-derivative as an example (I is the input image). The second set, the Gradient3D, is based on the three-dimensional gradient magnitude. We further divide the Gradient3D into five subsets, the GM, rMean, rVar, seqMean, and seqVar, and we show them in Table 1.

The GM feature consists of the three-dimensional gradient magnitude, which is calculated based on Equation (3). Gx is the directional gradient along the x-axis, Gy is the directional gradient along the y-axis and Gz is the directional gradient along the z-axis. In our study, we also used the central difference gradient as gradient operator to extract the GM feature for each respective MRI image sequence. The operator is given in Equation (2).

We further extracted the rMean and rVar features by calculating the mean and variance of the GM feature over a cube-shaped neighborhood with sizes 33, 53, 73 for each GM feature of each respective sequence. Meanwhile, we extracted seqMean and seqVar by calculating the mean and variance of the GM features over the sequences in cube-shaped neighborhoods.

3.4. Circular Context-Sensitive Feature

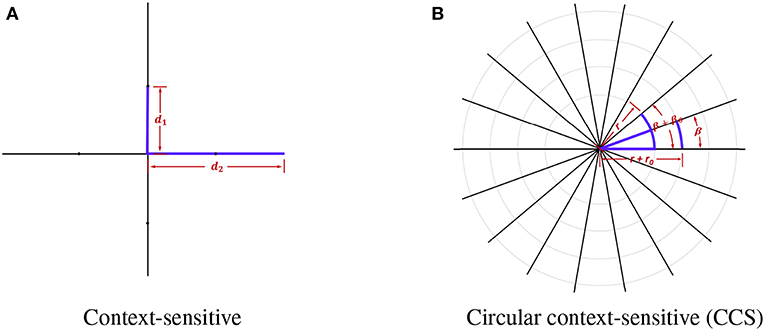

Meier et al. proposed context-sensitive features for brain tumor segmentation which extracts ray features in plane by calculating the histogram using intensity values from T1 and Flair-weighted images after atlas-normalization (Meier et al., 2014). The rationale of this method is that the intensity range of T1 and Flair-weighted modalities is larger than that of the healthy tissues. Based on this method, we proposed a circular context-sensitive (CCS) feature to capture more details in various sizes.

In context-sensitive features, every voxel sends out four rays with radius r ∈ {10, 20} and orienting at ang ∈ {0, π/2, π, 2π/3}. In order to obtain more information and extract features in multiple scales, we made several improvements to the original context-sensitive features. Firstly, instead of utilizing only voxel information in the horizontal or vertical directions, we used rays evenly distributed on a circle to swipe all the orientations, which are denser. The directions are calculated using the following equation:

where β is the initial angle, βθ is the step size rotating around the center point, and Nβ is the total number of directions. Secondly, in order to capture context features with all the scales, we used a continuous radius to cover the neighboring voxel information as much as possible. The radius r is defined as:

where r0 is the initial radius, where rθ is the step size moving toward the outermost circle, rmin and rmax are the minimum and maximum of the radius, and Nr is the total number of rays. We show the comparison between the original context-sensitive features and the circular context-sensitive features in Figure 2. The original context-sensitive sends out four rays in four directions. The CCS features, however, send out rays along all the orientations and with all the radius, which is supposed to capture rich context information. In our studies, we used 8 rays evenly distributed on 45 circles ranging from 10 to 20 with even numbers to capture the context-sensitive features.

Figure 2. The original context-sensitive features and the circular context-sensitive (CCS) features. (A) shows the original context-sensitive features. (B) shows the CCS features. In this example, the center voxel sends out 18 rays of length r+n*rθ with an angle β+n*βθ. The CCS can fully extract context information instead of only voxel information in horizontal or vertical directions.

In the MRI, the slice thickness varies considerably which will affect the feature extraction results so the features are considered only for the T1 and Flair-weighted in-plane images. In summary, our CCS feature extraction is summarized as the following:

1. For one voxel i, we calculated r and ang of all the rays, based on Equations (4, 5).

2. Using T1 and Flair images, we computed the histograms and obtained the maximal Hmax and minimal Hmin histogram values.

3. The mean values of each ray was calculated using Hmax and Hmin as the CCS feature values.

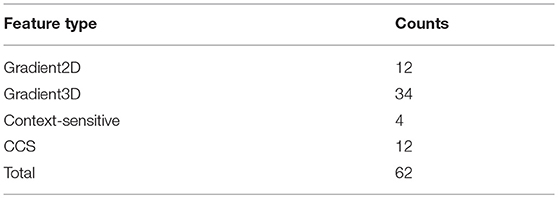

In summary, we extracted 12 Gradient2D features, 34 Gradient3D features, 4 context-sensitive features, and 12 CCS features, as shown in Table 2. In the next section, we will show the voxel-based classification and feature selection to label different regions of the brain MR images.

3.5. Feature Selection Based on mRMR

We classified the brain MRI images into five categories: normal tissue, edema, non-enhancing core, necrotic core, and enhancing core. Random forests (RF) (Breiman, 2001) is an ensemble learning method for classification, regression, and other tasks, and has been widely used in image analysis (Jin et al., 2014; Liu et al., 2015). It consists of multitude of decision trees and outputs the votes over each tree. They are able to handle multi-class problems, and they provide a probabilistic output instead of hard label separations. However, due to the large volume of MRI images, direct classification based on the extracted features (as shown in Table 2) is time-consuming. A proper feature selection algorithm will greatly reduce the computational cost and increase the efficiency.

Minimal Redundancy maximal Relevance(mRMR) was proposed by Peng et al. which can select features that have minimum redundancy and maximum relevance with each other (Peng et al., 2005). We use Equation (6) to search for features which have the maximum relevance, and use the minimal redundancy condition as the Equation (7) to select mutually exclusive features. Equation (8) gives mRMR features. xi represents the i−th feature in feature set S with target class c. Φ means the combination of D and R.

In order to avoid over-learning and under-learning, we evaluated our RF classifier by 5-fold cross validation with the measurements in section 3.7. In detail, we divided the subcases into 5 roughly equal parts. For each k = 1, 2, …, 5, we fit the RF model to the other 4 parts, and predict the kth part with the fitted RF model. The final outcome equals to the mean of the results of the 5-folds. In our study, we used the mRMR feature selection method to select the minimal feature set which reduces the computational cost without performance degradation. The raw 62-dimensional feature set is shown in Table 2. The mRMR feature selection details are as follows:

1. Train the RF classifier with top f features which are ranked by mRMR from the raw feature set and test the model with 5-fold cross validation. Here, we set f = 62−5n, n = 0, 1, 2, …, 12.

2. Rank the performance in step 1. Set the final feature set with fm dimension as the features which achieve the best performance among all the f-dimensional features. Here, fm is the dimension of the final feature set.

3.6. Solution to the Class-Imbalance Problem

The “BraTS” data is seriously unbalanced, with less than 1% of voxels being tumor voxels. Training on them would result in problems such as higher mis-classification rate for the minority class data. Thus, we carried out three steps to overcome the class-imbalance problem.

1. Detect the boundary of ROI: We used a plane to move along each axis's direction until it detected a voxel labeled with none-zero. Then the plane in the current position was used as the boundary in that direction.

2. Split the ROI: After step 1, each raw MR image would have a total of 6 boundaries in the xyz space. We split out a cuboid as our ROI using the boundaries. This removed a large number of zero label voxels and voxels inside each ROI contained all the categories.

3. Equally select voxels for each category: Within the segmented ROI, the label with the least number of voxels was recorded. We randomly picked the equal number of voxels in the ROI to form a balanced data.

3.7. Performance Measurements

To show the performance of our segmentation approach, we use Dice, positive predictive value (PPV), Sensitivity, Specificity to evaluate HGG and LGG tumor regions segmentation. TP represents the number of “true positive,” where “true positive” is the event that the test makes a positive prediction, and the subject has a positive ground truth. FP is the number of “false positive,” where “false positive” is the event that the test makes a positive prediction, and the subject has a negative ground truth. FN is the number of “false negative” and TN indicateds the size of “true negative” set.

1. Dice

The Dice score normalizes the number of true positives to the average size of the two segmented areas. It is identical to the F-score (the harmonic mean of the precision recall curve) and can be transformed monotonously to the Jaccard score.

2. Positive predictive value (PPV)

The PPV represents the proportions of positive results in tests that are true positive results.

3. Sensitivity

Sensitivity measures the proportion of actual positives that are correctly identified as such.

4. Specificity

Specificity (also called the true negative rate) measures the proportion of actual negatives that are correctly identified as such.

4. Experimental Results

In our experiments, we extracted four groups of features after pre-processing: Gradient2D features, Gradient3D features, context-sensitive features, and CCS features, including totally 62 features (as shown in Table 2). The Gradient3D set contains five subsets: the GM, rMean, rVar, seqMean, and seqVar. We used the mRMR feature selection method to select a compact set of features and built the random forest classifier.

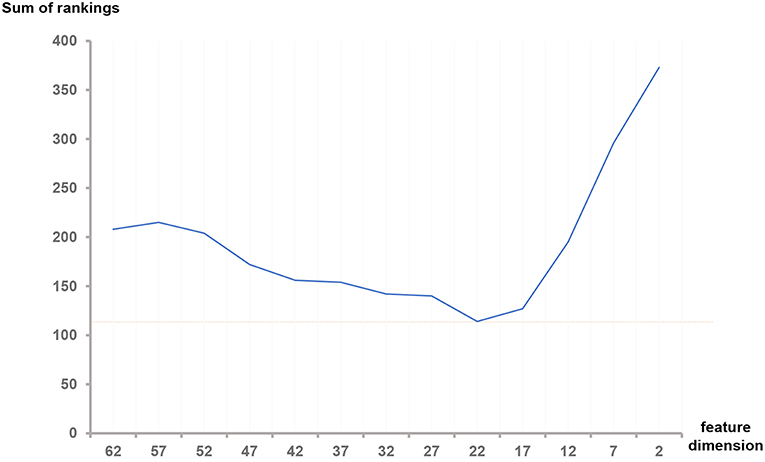

Firstly, we built the random forest classifier using the top f feature set ranked by mRMR respectively. We used f to denote the dimension of the feature set, where f = 62−5n, n = 0, 1, 2, …, 12. Secondly, we compared the performance of feature sets with diverse f. The best feature dimension fm is the f which has the best performance. We recorded this fm-dimensional feature set as the optimal feature set. Here we present the performance comparisons between diverse fs; Then we show the performance comparisons among different feature groups. Next, we compared our results with Meier et al.'s method (Meier et al., 2014). Lastly, we show the segmentation results marked with different colors.

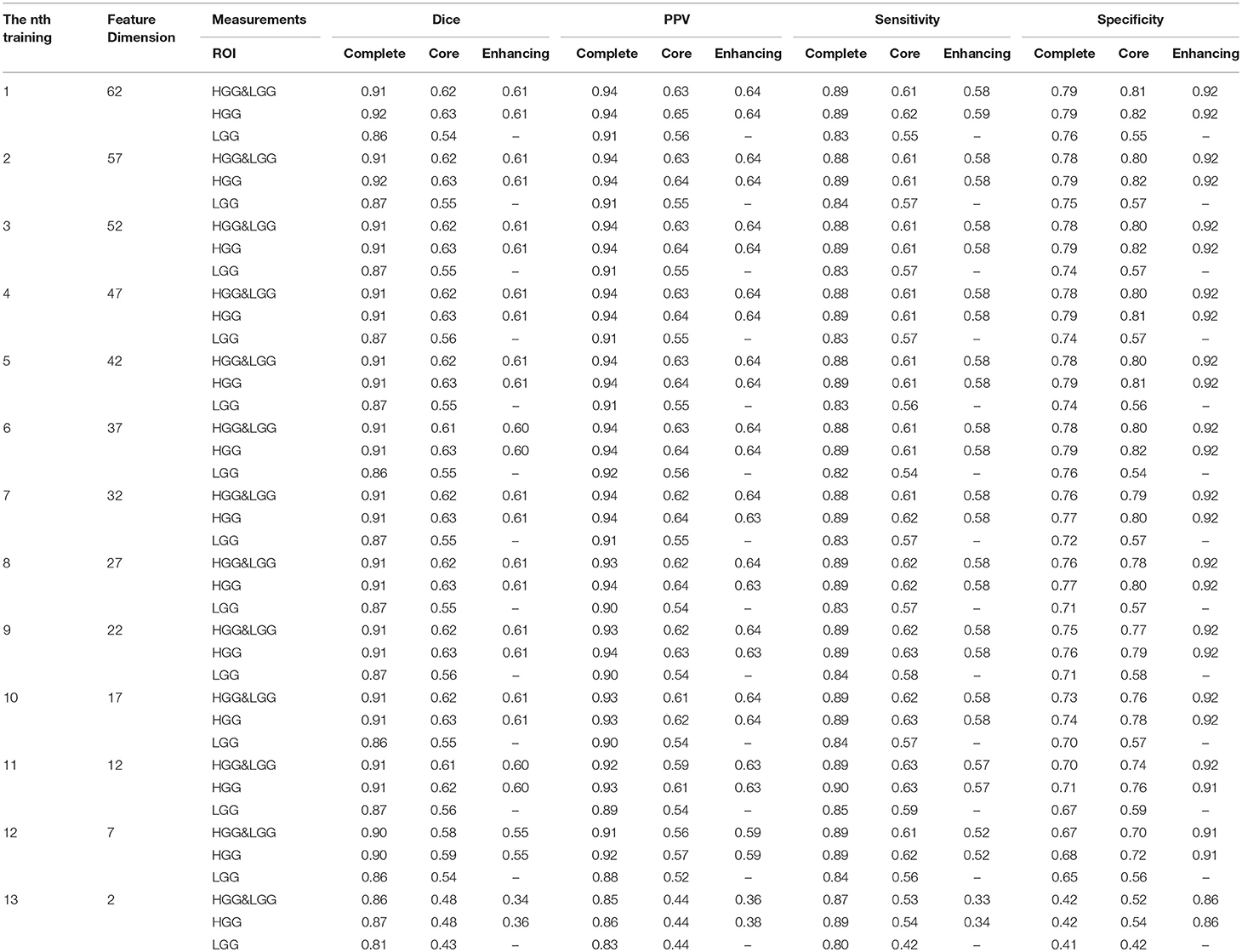

4.1. Comparison Between Feature Sets With Different Dimensions

In this step, we trained our model with different numbers of features as shown in Table 3. For the n-th training, we selected the top f-dimensional feature set ranked by the mRMR feature selection method according to their relevance and redundancy, where f = 62−5n, n = 0, 1, 2, …, 12. Here we used random forest as our classifier and set the number of trees in the forest to 100. For every n, we evaluated the model by the measurements in section 3.7 within three regions: complete tumor, tumor core, and enhancing tumor. The HGG&LGG, HGG, and LGG MR scans subsets were tested.

As shown in Table 3, the difference between the results of two adjacent experiments is very small. It is difficult to distinguish which dimension f has the best performance. In order to obtain an intuitive feature selection outcome, we provide an overall ranking of the performances for each f and show the results in Figure 3. It can be seen that 22-dimensional feature set achieves the best performance among all the tested feature sets.

Figure 3. Sum of rankings for each feature dimension f. The ranking rules are defined as follows: In each feature set with dimension f, we observed the three metrics (Dice, sensitivity and specificity) in three ROIs (Complete, enhancing and core) of HGG, LGG and both, totally 32 values in Table 3. We ranked the corresponding values across all the f. If equal values appeared, we took another metric, the size of the feature set into account. Then we summed all the rankings of each f and plotted the curve.

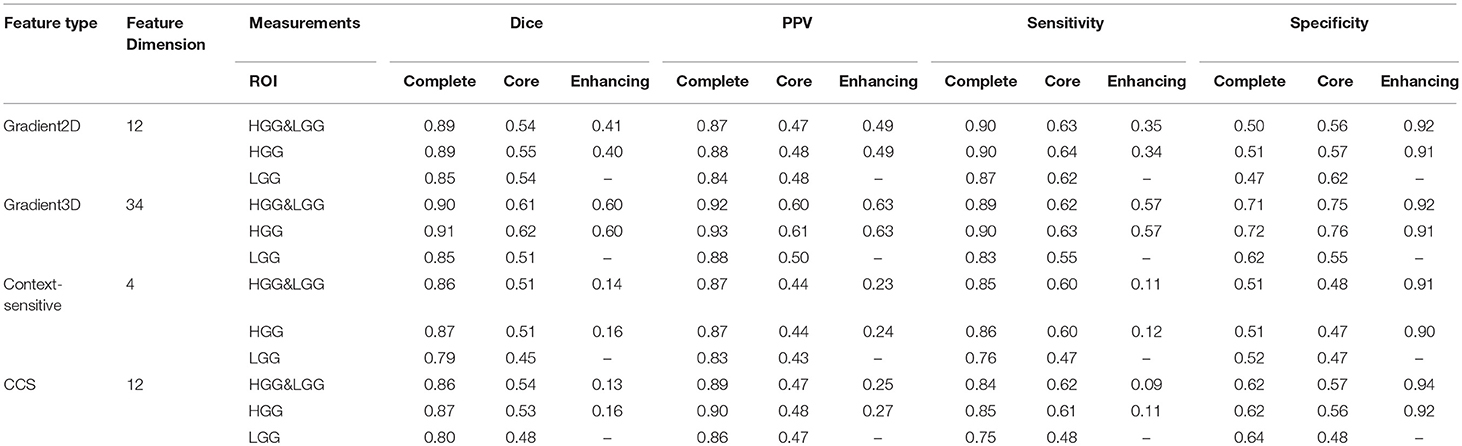

4.2. Comparison Between Different Feature Groups

In our studies, We have tested four different types of features. In order to learn which feature group is more informative for classification, we trained the model with each feature group and compared the performances of each feature group in Table 4. The table shows that the Gradient3D group performed far better than the other groups. It obtained a high Dice score (0.91) for complete tumor in HGG and a high PPV (0.92) for complete tumor in HGG&LGG datasets. And the CCS features performs slightly better than the context-sensitive features. However, compared with using all the 62 features, using a single group cannot achieve a better performance, which shows that each group is useful for classification and the integration of all the four groups is more helpful for classification.

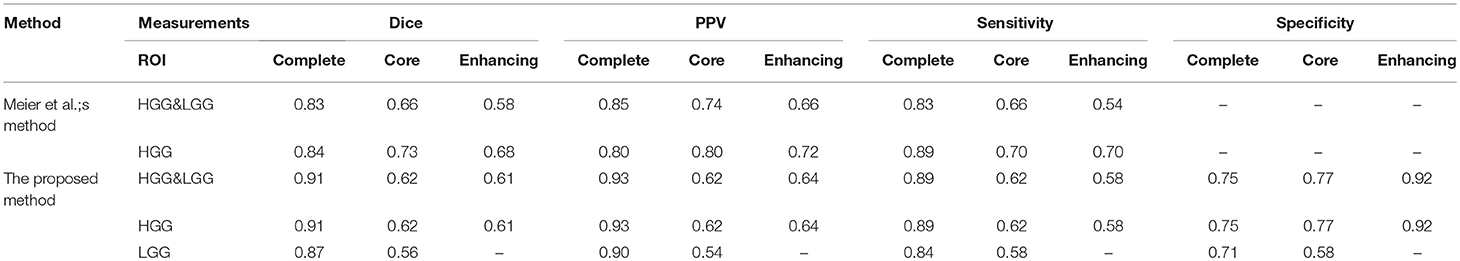

4.3. Comparison With Other Method

We compared our methods with another method which was proposed by Meier et al. (2014). They extracted appearance-sensitive and context-sensitive features and also used random forest as a classifier.

As shown in Table 5, Meier et al. evaluated the classifier with three ROIs: complete tumor, tumor core, and enhancing tumor. However, the LGG performance was not mentioned. In our experiments, we trained the HGG&LGG, HGG, and LGG models and tested our models with three ROIs. As shown in Table 3, we had better performance especially for complete tumor. Compared to Meier et al.'s method, we not only extract the context-sensitive features, but we also made improvements and proposed the circular context-sensitive features, which considered multiple scales and multiple directions.

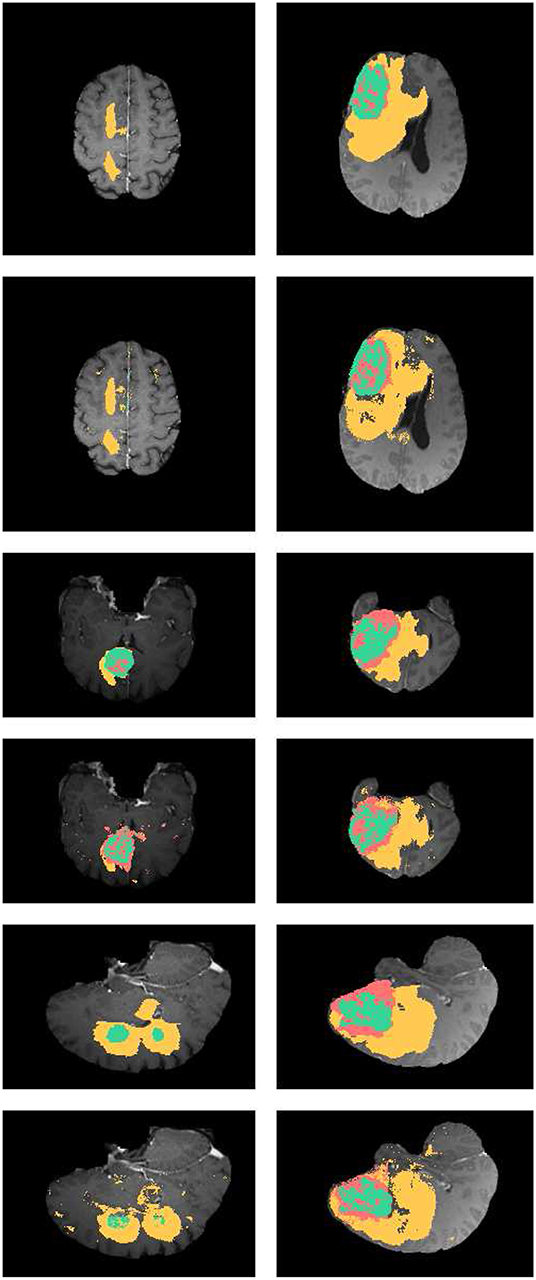

4.4. Performance of Brain Tumor Segmentation

In Figure 4, the axial, sagittal, and coronal slices of the ground truth are shown in rows 1, 3, and 5, respectively. The corresponding slices of the segmentation results are shown in rows 2, 4, and 6, respectively. As shown in Figure 4, our segmentation results are consistent with the ground truth. And we have good performance in all axial, sagittal, and coronal directions.

Figure 4. Examples the brain tumor segmentation results using the proposed method. The rows 1,3,5 are the axial, sagittal, and coronal slices of the ground truth. Rows 2,4,6 are the axial, sagittal, and coronal slices of our results. The labels of the tumor structure: enhancing tumor (green), tumor core(green and red), complete tumor (green, red, and yellow).

5. Conclusions

In our study, we proposed a supervised brain tumor segmentation method for MRI scans. We extracted four types of feature groups named Gradient2D set, Gradient3D set, context-sensitive features, and circular context-sensitive features, totally 62 features. Then we selected a set of the most informative feature set based on the mRMR algorithm and used them to build the random forest in order to distinguish different regions of brain tumors. We presented the performance comparisons among different dimensions of feature sets for feature selection, comparisons among different feature subgroups and comparisons with other tumor segmentation approaches. The results show that the proposed method is competitive in segmenting brain tumors.

Data Availability

Publicly available datasets were analyzed in this study. This data can be found here: http://braintumorsegmentation.org/.

Author Contributions

JZ conducted the experiements. ZM, LW, and CS participated the manuscript writing. QZ and RS designed the experiments and edited the manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Adams, R., and Bischof, L. (1994). Seeded region growing. IEEE Trans. Pattern Anal. Mach. Intell. 16, 641–647. doi: 10.1109/34.295913

Amini, L., Soltanian-Zadeh, H., Lucas, C., and Gity, M. (2004). Automatic segmentation of thalamus from brain MRI integrating fuzzy clustering and dynamic contours. IEEE Trans. Biomed. Eng. 51, 800–811. doi: 10.1109/TBME.2004.826654

Bauer, S., Wiest, R., Nolte, L.-P., and Reyes, M. (2013). A survey of MRI-based medical image analysis for brain tumor studies. Phys. Med. Biol. 58:R97. doi: 10.1088/0031-9155/58/13/R97

Bergholm, F. (1987). “Edge focusing,” in IEEE Transactions on Pattern Analysis and Machine Intelligence, 726–741.

Bernsen, J. (1986). Dynamic thresholding of grey level images. in Proceedings of the Eighth International Conference on Pattern Recognition (Paris).

Boscolo, R., Brown, M. S., and McNitt-Gray, M. F. (2002). Medical image segmentation with knowledge-guided robust active contours. Radiographics 22, 437–448. doi: 10.1148/radiographics.22.2.g02mr26437

Dam, E., Loog, M., and Letteboer, M. (2004). “Integrating automatic and interactive brain tumor segmentation,” in Proceedings of the 17th International Conference on Pattern Recognition. ICPR 2004, Vol. 3 (Cambridge: IEEE), 790–793.

DeAngelis, L. M. (2001). Brain tumors. N. Engl. J. Med. 344, 114–123. doi: 10.1056/NEJM200101113440207

Deng, G., and Cahill, L. (1993). “An adaptive Gaussian filter for noise reduction and edge detection,” in Nuclear Science Symposium and Medical Imaging Conference, 1993 IEEE Conference Record (San Francisco, CA: IEEE), 1615–1619.

Deng, W., Xiao, W., Deng, H., and Liu, J. (2010). “MRI brain tumor segmentation with region growing method based on the gradients and variances along and inside of the boundary curve,” in 2010 3rd International Conference on Biomedical Engineering and Informatics, Vol. 1 (Yantai), 393–396.

Gibbs, P., Buckley, D. L., Blackband, S. J., and Horsman, A. (1996). Tumour volume determination from MR images by morphological segmentation. Phys. Med. Biol. 41, 2437. doi: 10.1088/0031-9155/41/11/014

Goetz, M., Weber, C., Binczyk, F., Polanska, J., Tarnawski, R., Bobek-Billewicz, B., et al. (2016). DALSA: Domain adaptation for supervised learning from sparsely annotated MR images. IEEE Trans. Med. Imaging 35, 184–196. doi: 10.1109/TMI.2015.2463078

Gordillo, N., Montseny, E., and Sobrevilla, P. (2013). State of the art survey on MRI brain tumor segmentation. Magn. Reson. Imaging 31, 1426–1438. doi: 10.1016/j.mri.2013.05.002

Ho, S., Bullitt, E., and Gerig, G. (2002). “Level-set evolution with region competition: automatic 3-D segmentation of brain tumors,” in Proceedings of 16th International Conference on Pattern Recognition, Vol. 1 (Quebec City, QC: IEEE), 532–535.

Ji, Z.-X., Sun, Q.-S., and Xia, D.-S. (2011). A modified possibilistic fuzzy c-means clustering algorithm for bias field estimation and segmentation of brain MR image. Comput. Med. Imaging Graph. 35, 383–397. doi: 10.1016/j.compmedimag.2010.12.001

Jin, M., Govindarajan, L. N., and Cheng, L. (2014). “A random-forest random field approach for cellular image segmentation,” in 2014 IEEE 11th International Symposium on Biomedical Imaging (ISBI) (Beijing: IEEE), 1251–1254.

Juang, L.-H., and Wu, M.-N. (2010). MRI brain lesion image detection based on color-converted k-means clustering segmentation. Measurement 43, 941–949. doi: 10.1016/j.measurement.2010.03.013

Kharrat, A., Benamrane, N., Messaoud, M. B., and Abid, M. (2009). “Detection of brain tumor in medical images,” in 2009 3rd International Conference on Signals, Circuits and Systems (Medenine: IEEE), 1–6.

Letteboer, M., Niessen, W., Willems, P., Dam, E. B., and Viergever, M. (2001). “Interactive multi-scale watershed segmentation of tumors in MR brain images,” in Proceedings of the IMIVA Workshop of MICCAI (Berlin, Heidelberg).

Li, G., and Wan, Y. (2010). “Improved watershed segmentation with optimal scale based on ordered dither halftone and mutual information,” in IEEE International Conference on Computer Science and Information Technology, Vol. 9 (Chengdu: IEEE), 296–300.

Liu, J., Narayanan, D., Chang, K., Kim, L., Turkbey, E., Lu, L., et al. (2015). “Automated segmentation of the thyroid gland on CT using multi-atlas label fusion and random forest,” in Biomedical Imaging (ISBI), 2015 IEEE 12th International Symposium on (New York City, NY: IEEE), 1114–1117.

Louis, D. N., Ohgaki, H., Wiestler, O. D., Cavenee, W. K., Burger, P. C., Jouvet, A., et al. (2007). The 2007 WHO Classification of Tumours of the Central Nervous System. International Agency for Research on Cancer.

Luo, S., Li, R., and Ourselin, S. (2003). “A new deformable model using dynamic gradient vector flow and adaptive balloon forces,” in APRS Workshop on Digital Image Computing (Brisbane, QLD) 9–14.

Mazzara, G. P., Velthuizen, R. P., Pearlman, J. L., Greenberg, H. M., and Wagner, H. (2004). Brain tumor target volume determination for radiation treatment planning through automated MRI segmentation. Int. J. Radiat. Oncol. Biol. Phys. 59, 300–312. doi: 10.1016/j.ijrobp.2004.01.026

Meier, R., Bauer, S., Slotboom, J., Wiest, R., and Reyes, M. (2014). “Appearance-and context-sensitive features for brain tumor segmentation,” in MICCAI BraTS Workshop (Boston, MA).

Menze, B., Jakab, A., Bauer, S., Kalpathy-Cramer, J., Farahani, K., Kirby, J., et al. (2015). The multimodal brain tumor image segmentation benchmark (BRATS). IEEE Trans. Med. Imaging 34, 1993–2024. doi: 10.1109/TMI.2014.2377694

Niblack, W. (1986). An Introduction to Digital Image Processing, Vol. 34. Englewood Cliffs, NJ: Prentice-Hall.

Otsu, N. (1979). A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 9, 62–66. doi: 10.1109/TSMC.1979.4310076

Peng, H., Long, F., and Ding, C. (2005). Feature selection based on mutual information criteria of max-dependency, max-relevance, and min-redundancy. IEEE Trans. Pattern Anal. Mach. Intell. 27, 1226–1238. doi: 10.1109/TPAMI.2005.159

Salman, Y. M., Assal, M. A., Badawi, A. M., Alian, S. M., and El-Bayome, M. El. (2005). “Validation techniques for quantitative brain tumors measurements,” in Proceedings of 2005 IEEE Engineering in Medicine and Biology 27th Annual Conference (Shanghai), 7048–7051.

Sapra, P., Singh, R., and Khurana, S. (2013). “Brain tumor detection using neural network,” in International Journal of Science and Modern Engineering (IJISME), 2319–6386.

Sato, M., Lakare, S., Wan, M., Kaufman, A., and Nakajima, M. (2000). “A gradient magnitude based region growing algorithm for accurate segmentation,” in Proceedings 2000 International Conference on Image Processing (Cat. No.00CH37101), Vol. 3 (Vancouver, BC), 448–451.

Selvakumar, J., Lakshmi, A., and Arivoli, T. (2012). “Brain tumor segmentation and its area calculation in brain MR images using K-mean clustering and fuzzy c-mean algorithm,” in International Conference on Advances in Engineering, Science and Management (IEEE), 186–190.

Sled, J. G., Zijdenbos, A. P., and Evans, A. C. (1998). A nonparametric method for automatic correction of intensity nonuniformity in MRI data. IEEE Trans. Med. Imaging 17, 87–97. doi: 10.1109/42.668698

Stadlbauer, A., Moser, E., Gruber, S., Buslei, R., Nimsky, C., Fahlbusch, R., et al. (2004). Improved delineation of brain tumors: an automated method for segmentation based on pathologic changes of 1H-MRSI metabolites in gliomas. Neuroimage 23, 454–461. doi: 10.1016/j.neuroimage.2004.06.022

Su, R., Wu, H., Xu, B., Liu, X., and Wei, L. (2018). “Developing a multi-dose computational model for drug-induced hepatotoxicity prediction based on toxicogenomics data,” in IEEE/ACM Transactions on Computational Biology and Bioinformatics, doi: 10.1109/TCBB.2018.2858756

Tang, W., Wan, S., Yang, Z., Teschendorff, A. E., and Zou, Q. (2017). Tumor origin detection with tissue-specific miRNA and DNA methylation markers. Bioinformatics 34, 398–406. doi: 10.1093/bioinformatics/btx622

Vaidhya, K., Thirunavukkarasu, S., Alex, V., and Krishnamurthi, G. (2015). “Multi-modal brain tumor segmentation using stacked denoising autoencoders,” in International Workshop on Brainlesion: Glioma, Multiple Sclerosis, Stroke and Traumatic Brain Injuries (Springer), 181–194.

Vasuda, P., and Satheesh, S. (2010). Improved fuzzy C-means algorithm for MR brain image segmentation. Int. J. Comput. Sci. Eng. 2, 1713–1715.

Vijayakumar, C., and Gharpure, D. C. (2011). Development of image-processing software for automatic segmentation of brain tumors in MR images. J. Med. Phys. Assoc. Med. Phys. India 36, 147–158. doi: 10.4103/0971-6203.83481

Zeng, N., Qiu, H., Wang, Z., Liu, W., Zhang, H., and Li, Y. (2018a). A new switching-delayed-PSO-based optimized SVM algorithm for diagnosis of Alzheimer's disease. Neurocomputing 320, 195–202. doi: 10.1016/j.neucom.2018.09.001

Zeng, N., Zhang, H., Li, Y., Liang, J., and Dobaie, A. M. (2017). Denoising and deblurring gold immunochromatographic strip images via gradient projection algorithms. Neurocomputing 247, 165–172. doi: 10.1016/j.neucom.2017.03.056

Zeng, N., Zhang, H., Song, B., Liu, W., Li, Y., and Dobaie, A. M. (2018b). Facial expression recognition via learning deep sparse autoencoders. Neurocomputing 273, 643–649. doi: 10.1016/j.neucom.2017.08.043

Zhang, Y., Brady, M., and Smith, S. (2001). Segmentation of brain MR images through a hidden Markov random field model and the expectation-maximization algorithm. IEEE Trans. Med. Imaging 20, 45–57. doi: 10.1109/42.906424

Zhao, X., Wu, Y., Song, G., Li, Z., Zhang, Y., and Fan, Y. (2018). A deep learning model integrating FCNNs and CRFs for brain tumor segmentation. Med. Image Anal. 43, 98–111. doi: 10.1016/j.media.2017.10.002

Zou, Q., Wan, S., Ju, Y., Tang, J., and Zeng, X. (2016a). Pretata: predicting TATA binding proteins with novel features and dimensionality reduction strategy. Bmc Syst. Biol. 10(Suppl. 4):114. doi: 10.1186/s12918-016-0353-5

Zou, Q., Zeng, J., Cao, L., and Ji, R. (2016b). A novel features ranking metric with application to scalable visual and bioinformatics data classification. Neurocomputing 173, 346–354. doi: 10.1016/j.neucom.2014.12.123

Keywords: brain tumor segmentation, gradient, context-sensitive, random forest, mRMR, class-imbalanced

Citation: Zhao J, Meng Z, Wei L, Sun C, Zou Q and Su R (2019) Supervised Brain Tumor Segmentation Based on Gradient and Context-Sensitive Features. Front. Neurosci. 13:144. doi: 10.3389/fnins.2019.00144

Received: 15 January 2019; Accepted: 07 February 2019;

Published: 14 March 2019.

Edited by:

Nianyin Zeng, Xiamen University, ChinaReviewed by:

Haiyang Yu, Nanyang Technological University, SingaporeLin Gu, National Institute of Informatics, Japan

Copyright © 2019 Zhao, Meng, Wei, Sun, Zou and Su. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Quan Zou, em91cXVhbkBuY2xhYi5uZXQ=

Ran Su, cmFuLnN1QHRqdS5lZHUuY24=

Junting Zhao

Junting Zhao Zhaopeng Meng1,2

Zhaopeng Meng1,2 Leyi Wei

Leyi Wei Changming Sun

Changming Sun Quan Zou

Quan Zou Ran Su

Ran Su