- ¹Beijing Key Laboratory of Intraocular Tumor Diagnosis and Treatment, Beijing Ophthalmology and Visual Sciences Key Laboratory, Medical Artificial Intelligence Research and Verification Key Laboratory of the Ministry of Industry and Information Technology, Beijing Tongren Eye Center, Beijing Tongren Hospital, Capital Medical University, Beijing, China

- ²Beijing Eaglevision Technology Co., Ltd., Beijing, China

- ³Beijing Ophthalmology and Visual Science Key Laboratory, Beijing Tongren Eye Center, Beijing Tongren Hospital, Beijing Institute of Ophthalmology, Capital Medical University, Beijing, China

- ⁴eResearch Centre, Monash University, Melbourne, VIC, Australia

- ⁵ECSE, Faculty of Engineering, Monash University, Melbourne, VIC, Australia

- ⁶Department of Ophthalmology, Medical Faculty Mannheim, Heidelberg University, Mannheim, Germany

This study aimed to develop an automated computer-based algorithm to estimate axial length and subfoveal choroidal thickness (SFCT) from color fundus photographs. In the population-based Beijing Eye Study 2011, we took fundus photographs and measured SFCT by optical coherence tomography (OCT) and axial length by optical low-coherence reflectometry. Using 6394 color fundus images taken from 3468 participants, we trained and evaluated a deep-learning-based algorithm for the estimation of axial length and SFCT. The algorithm had a mean absolute error (MAE) of 0.56 mm [95% confidence interval (CI): 0.53, 0.61] for axial length and of 49.20 μm (95% CI: 45.83, 52.54) for SFCT. Estimated and measured values showed coefficients of determination of r² = 0.59 (95% CI: 0.50, 0.65) for axial length and r² = 0.62 (95% CI: 0.57, 0.67) for SFCT. Bland–Altman plots revealed a mean difference of −0.16 mm (95% limits of agreement: −1.60, 1.27 mm) for axial length and of −4.40 μm (95% limits of agreement: −131.8, 122.9 μm) for SFCT. For the estimation of axial length, heat map analysis showed where the convolutional neural network (CNN) drew its signals. It used predominantly the macular region as a whole in eyes with an axial length of < 22 mm, the foveal region in eyes with an axial length of 22–26 mm, and the extrafoveal region in eyes with an axial length of > 26 mm. For the estimation of SFCT, the CNN used mostly the central part of the macular region, the fovea or perifovea, independently of the SFCT. Our study shows that deep-learning-based algorithms may be helpful in estimating axial length and SFCT from conventional color fundus images. They may be a further step in the semiautomatic assessment of the eye.

Introduction

Axial length and subfoveal choroidal thickness (SFCT) are among the most important biometric parameters of the eye. They are directly or indirectly associated with axial ametropias and with maculopathies such as myopic macular degeneration and pachychoroid-associated macular diseases, to name only a few (Fujiwara et al., 2009; Spaide, 2009; Saka et al., 2010; Cheung et al., 2013; Shao et al., 2014; Ohno-Matsui et al., 2015; Tideman et al., 2016; Yan et al., 2018a, b; Lim et al., 2020; Peng et al., 2020). Both parameters can be determined non-invasively, relatively easily, and with relatively high precision. However, their measurement requires costly ophthalmological devices that are not readily available, and their use is personnel-dependent and time-consuming. There has, therefore, been an incentive to assess axial length and SFCT by means other than the conventional measurement devices. Fundus photographs can be taken with easily available devices, including smartphones (Bastawrous et al., 2016; Toy et al., 2016; Muiesan et al., 2017; Mamtora et al., 2018). We therefore conducted this study to assess whether readily taken photographs of the ocular fundus could serve to estimate both biometric parameters with deep-learning-based algorithms. In previous studies, artificial intelligence has already been shown to be helpful in the assessment of medical images and the diagnosis of diseases (Ting et al., 2017; Biousse et al., 2020; Milea et al., 2020). Deep learning, a subset of artificial intelligence, allows computational systems to learn representations directly from a large number of images without explicitly designed, hand-crafted features (LeCun et al., 2015).
Deep-learning techniques trained on color fundus images have produced systems with competitive or close-to-expert performance in three areas. The first is the automatic detection of ophthalmic diseases, including diabetic retinopathy (Cao et al., 2020; Gargeya and Leng, 2017; Ting et al., 2017), age-related macular degeneration (Burlina et al., 2017; Grassmann et al., 2018; González-Gonzalo et al., 2020), retinopathy of prematurity (Wang et al., 2018; Mao et al., 2020), glaucoma (Hemelings et al., 2020), and other disorders (Shah et al., 2020). The second is the assessment of ocular and systemic risk factors such as age, gender, body mass index, and blood pressure. The third is the estimation of refractive error (Poplin et al., 2018; Varadarajan et al., 2018; Chun et al., 2020).

Materials and Methods

The Beijing Eye Study 2011 was a population-based, cross-sectional study conducted in Northern China (Wei et al., 2013; Yan et al., 2015). The Medical Ethics Committee of the Beijing Tongren Hospital approved the study protocol, and all participants gave informed consent. The study was carried out in five communities in the urban area of Haidian District and three communities in the village area of Daxing District. The only eligibility criterion for inclusion was an age of ≥ 50 years. In total, 3468 individuals (1963 female, 56.6%) participated in the eye examination. The axial length of the right eyes was measured by optical low-coherence reflectometry (Lenstar 900 Optical Biometer, Haag-Streit, 3098 Koeniz, Switzerland). After medical mydriasis, photographs of the macula and optic disk were taken using a 45° fundus camera (Type CR6-45NM, Canon Inc, Lake Success, NY, United States). SFCT was measured using spectral-domain optical coherence tomography (SD-OCT) (Spectralis, wavelength of 870 nm; Heidelberg Engineering Co, Heidelberg, Germany) in the enhanced depth imaging (EDI) mode. Seven OCT sections, each comprising 100 averaged scans, were obtained in a 5° × 30° rectangle centered on the fovea. The horizontal section running through the center of the fovea was selected for further analysis. SFCT was defined as the vertical distance from the hyperreflective line of Bruch's membrane to the hyperreflective line of the inner surface of the sclera. The measurements were performed using the Heidelberg Eye Explorer software (v. 5.3.3.0; Heidelberg Engineering Co, Heidelberg, Germany) (Figure 1). Only the right eye of each study participant was assessed. The interobserver agreement between two ophthalmologists in measuring SFCT had been assessed in a previous study and had yielded r² = 0.98 (Shao et al., 2013).

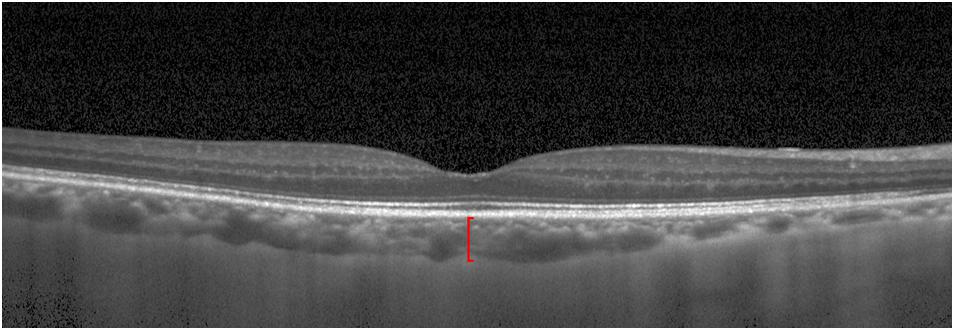

Figure 1. Optical coherence tomographic image (enhanced depth imaging mode) showing the retina and the choroid. Red line: subfoveal choroidal thickness.

We randomly split the dataset into a development dataset and a validation dataset at a ratio of 9:1. The development dataset was further divided into a training set and a tuning set at a ratio of 8:1 (Table 1).
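The Results report 2811 development and 313 validation participants, which suggests the split was made per participant, so that all images of one eye stay in the same subset. That is our reading, not a stated detail. A minimal sketch of such a grouped 9:1 split followed by an 8:1 training/tuning split is shown below. The participant IDs, file names, and seed are hypothetical.

```python
import random
from collections import defaultdict

def grouped_split(image_records, seed=0):
    """Split (participant_id, image_path) records 9:1 into development/validation,
    then the development part 8:1 into training/tuning. Every participant's images
    land in exactly one subset. The seed and record format are illustrative assumptions."""
    by_participant = defaultdict(list)
    for pid, path in image_records:
        by_participant[pid].append(path)

    ids = sorted(by_participant)
    random.Random(seed).shuffle(ids)

    n_val = round(len(ids) / 10)       # 9:1 development/validation
    val_ids, dev_ids = ids[:n_val], ids[n_val:]
    n_tune = round(len(dev_ids) / 9)   # 8:1 training/tuning within development
    tune_ids, train_ids = dev_ids[:n_tune], dev_ids[n_tune:]

    def gather(id_list):
        return [(pid, p) for pid in id_list for p in by_participant[pid]]

    return gather(train_ids), gather(tune_ids), gather(val_ids)

# Usage with 100 hypothetical participants, each with a macula- and a disc-centred image
records = [(f"P{i:04d}", f"P{i:04d}_{view}.jpg") for i in range(100) for view in ("macula", "disc")]
train, tune, val = grouped_split(records)
print(len(train), len(tune), len(val))  # 160 20 20
```

Grouping by participant rather than by image avoids leaking the same eye into both training and validation. Such leakage would otherwise inflate the validation metrics.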

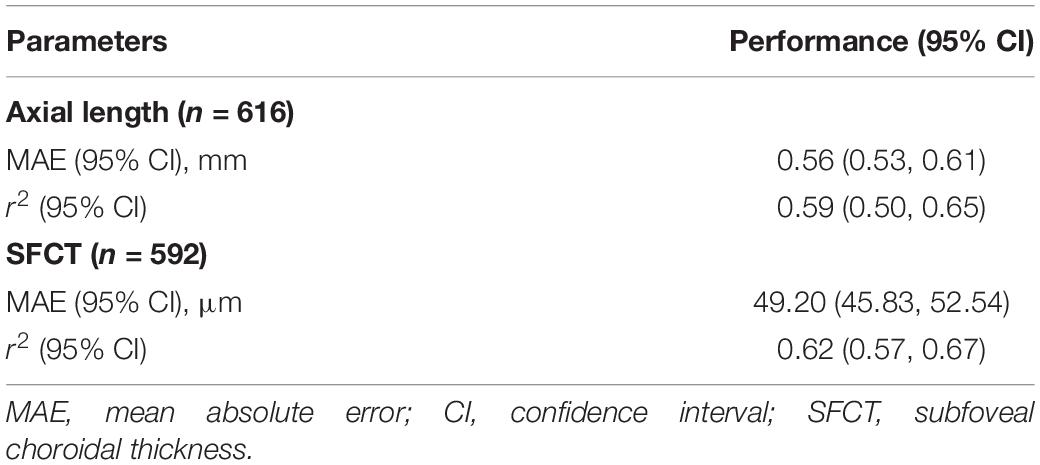

Table 1. Baseline characteristics (mean ± standard deviation) of participants in the development group and validation group.

For the development of the algorithm, we used a convolutional neural network (CNN), a specialized deep-learning model (Krizhevsky et al., 2012), to analyze the digitized fundus images. The models used the same configuration and CNN architecture as Inception-ResNet-v2 (Szegedy et al., 2016). Based on this architecture, a modified 164-layer CNN was employed to estimate axial length and SFCT. The network parameters were initialized with those of a model pretrained on ImageNet classification.

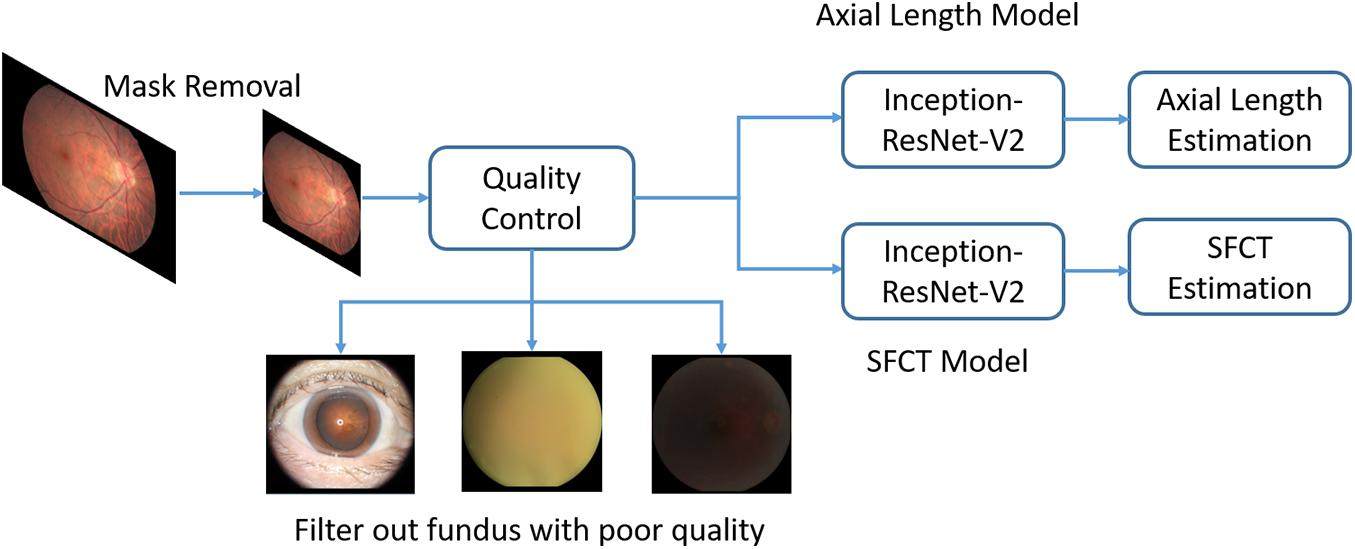

Before the analysis, we preprocessed the images to improve the CNN-based analysis. We removed the dark background by detecting the circular mask of the photographs and resized the images to 500 × 500 pixels. A quality control module was applied after background removal to assess image quality and to filter out unqualified images (Figure 2). The criteria for excluding poor-quality images followed the procedures used in previous investigations (Zago et al., 2018). They used parameters such as the readable region ratio, illumination, blurriness, and image content. The pixel values of the selected images were linearly mapped from the range (0, 255) to (0, 1). In the training stage, a batch of images, called the training batch, was generated and fed into the network. The Huber loss was calculated on this batch (Huber, 2004), and the corresponding gradients of the loss were back-propagated to update the network parameters. We set the batch size (also known as mini-batch size) to 14. Stochastic gradient descent with a learning rate of 0.0001 was used for the mini-batch optimization.
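The background-removal, resizing, and scaling steps can be sketched in NumPy as follows. The paper does not specify how the circular mask was detected or which interpolation was used. The brightness threshold, bounding-box crop, and nearest-neighbour resize below are therefore assumptions, and the quality-control module is not reproduced.

```python
import numpy as np

def preprocess_fundus(img, threshold=10, size=500):
    """Crop a fundus photograph to its bright circular field of view, resize it
    to size x size by nearest-neighbour sampling, and map 0..255 linearly to 0..1.

    img: uint8 array of shape (H, W, 3). `threshold` is an assumed intensity
    cut-off separating the dark background from the illuminated fundus."""
    mask = img.max(axis=2) > threshold          # pixels belonging to the fundus disc
    rows = np.flatnonzero(mask.any(axis=1))
    cols = np.flatnonzero(mask.any(axis=0))
    if rows.size == 0:
        raise ValueError("no fundus region found above threshold")
    crop = img[rows[0]:rows[-1] + 1, cols[0]:cols[-1] + 1]

    # Nearest-neighbour resize: take source rows/columns at evenly spaced positions
    h, w = crop.shape[:2]
    r_idx = np.arange(size) * h // size
    c_idx = np.arange(size) * w // size
    resized = crop[r_idx][:, c_idx]

    return resized.astype(np.float32) / 255.0   # linear mapping (0, 255) -> (0, 1)

# Usage: a synthetic 600 x 800 image with a bright disc of radius 250 on a black background
yy, xx = np.mgrid[:600, :800]
disc = (yy - 300) ** 2 + (xx - 400) ** 2 <= 250 ** 2
img = np.zeros((600, 800, 3), dtype=np.uint8)
img[disc] = 180
out = preprocess_fundus(img)
print(out.shape)  # (500, 500, 3)
```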

Figure 2. Overview of a deep convolutional neural network (CNN)-based model training pipeline to automatically estimate axial length and subfoveal choroidal thickness from color fundus images.
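The training step described in the paragraph before Figure 2 combines a mini-batch of 14 images, the Huber loss, back-propagated gradients, and stochastic gradient descent. It can be illustrated on a toy problem. In this sketch, a linear model on random features stands in for the CNN. The Huber threshold δ = 1.0 is an assumption: it is the default of Keras' Huber loss, but the paper does not report the value.

```python
import numpy as np

def huber_loss_and_grad(pred, target, delta=1.0):
    """Mean Huber loss over a batch and its gradient w.r.t. the predictions.
    Quadratic for |error| <= delta, linear beyond it (less sensitive to outliers)."""
    err = pred - target
    abs_err = np.abs(err)
    quadratic = abs_err <= delta
    loss = np.where(quadratic, 0.5 * err ** 2, delta * (abs_err - 0.5 * delta))
    # d(mean loss)/d(pred): err in the quadratic zone, +/- delta outside it
    grad = np.where(quadratic, err, delta * np.sign(err)) / err.size
    return float(loss.mean()), grad

def sgd_epoch(w, b, X, y, batch_size=14, lr=1e-4, rng=None):
    """One pass of mini-batch SGD for a linear regressor y ~ X @ w + b
    (a hypothetical stand-in for the CNN's parameters)."""
    if rng is None:
        rng = np.random.default_rng(0)
    order = rng.permutation(len(X))
    for start in range(0, len(X), batch_size):
        idx = order[start:start + batch_size]
        _, g = huber_loss_and_grad(X[idx] @ w + b, y[idx])
        w = w - lr * (X[idx].T @ g)     # chain rule: dL/dw = X^T dL/dpred
        b = b - lr * float(g.sum())     # dL/db = sum of dL/dpred
    return w, b

# Usage on simulated, noise-free data. lr=0.05 is used only so that the toy
# example converges quickly; the paper reports 0.0001 for the CNN.
rng = np.random.default_rng(42)
X = rng.normal(size=(140, 5))
y = X @ np.array([0.5, -0.3, 0.2, 0.0, 0.1]) + 1.0
w, b = np.zeros(5), 0.0
before, _ = huber_loss_and_grad(X @ w + b, y)
for _ in range(20):
    w, b = sgd_epoch(w, b, X, y, lr=0.05, rng=rng)
after, _ = huber_loss_and_grad(X @ w + b, y)
print(f"loss before: {before:.4f}, after: {after:.6f}")
```

The Huber loss behaves like a squared error for small residuals and like an absolute error for large ones. As a result, occasional extreme biometric values pull less strongly on the parameter updates.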

To implement and deploy the network, an open-source software library (Keras, v2.2.2) was used for training and evaluation. The model was trained on two NVIDIA Titan X GPUs with CUDA 9.0 and cuDNN 7.0. The Inception-ResNet-v2 network architecture used in this work is publicly available in the Keras Applications package.

Since axial length and SFCT are continuous values, model performance was assessed with the mean absolute error and the coefficient of determination (r²). We calculated the mean absolute error and r² together with their 95% confidence intervals (CIs), which were derived from 2000 repeated evaluations. Bland–Altman plots were used to visualize the agreement between the estimated and the measured values.
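The two metrics and their CIs can be sketched as below. We read "2000 repeated evaluations" as 2000 bootstrap resamples of the validation set with percentile intervals. That interpretation, the seed, and the definition r² = 1 − SS_res/SS_tot (rather than a squared Pearson correlation) are assumptions. The data in the usage example are simulated, not study data.

```python
import numpy as np

def mae(y_true, y_pred):
    """Mean absolute error."""
    return float(np.mean(np.abs(y_true - y_pred)))

def r2(y_true, y_pred):
    """Coefficient of determination, 1 - SS_res / SS_tot."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - np.mean(y_true)) ** 2)
    return float(1.0 - ss_res / ss_tot)

def bootstrap_ci(metric, y_true, y_pred, n_boot=2000, alpha=0.05, seed=0):
    """Point estimate plus a percentile CI from n_boot resamples drawn with replacement."""
    rng = np.random.default_rng(seed)
    n = len(y_true)
    stats = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)        # resample eyes with replacement
        stats[i] = metric(y_true[idx], y_pred[idx])
    lo, hi = np.percentile(stats, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    return metric(y_true, y_pred), float(lo), float(hi)

# Usage with simulated values (axial-length-like scale, 616 eyes)
rng = np.random.default_rng(1)
measured = rng.normal(23.2, 1.1, size=616)
estimated = measured + rng.normal(0.0, 0.7, size=616)
print(bootstrap_ci(mae, measured, estimated))
print(bootstrap_ci(r2, measured, estimated))
```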

To illustrate the fundus regions predominantly used by the CNN for its estimates, we incorporated an additional convolutional visualization layer into our network architecture (Zhou et al., 2016). This layer takes the image features learned by the preceding layers and assigns each feature a weight indicating its importance. The result is displayed as a heat map.
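In the class activation mapping approach of Zhou et al. (2016), the heat map is a weighted sum of the last convolutional feature maps. The weights are those that connect the globally average-pooled features to the output. The NumPy sketch below makes three assumptions: feature maps of shape (h, w, K), one output weight per channel, and nearest-neighbour upsampling with min-max scaling. The paper does not state its upsampling or normalization, and the feature maps and weights in the usage example are random stand-ins.

```python
import numpy as np

def raw_cam(features, weights):
    """Weighted sum of the K feature maps: M(x, y) = sum_k w_k * F_k(x, y)."""
    return np.tensordot(features, weights, axes=([2], [0]))

def heat_map(features, weights, out_size):
    """Upsample the raw map to out_size x out_size (nearest neighbour) and scale to [0, 1]."""
    cam = raw_cam(features, weights)
    h, w = cam.shape
    rows = np.arange(out_size) * h // out_size
    cols = np.arange(out_size) * w // out_size
    cam = cam[rows][:, cols]
    cam = cam - cam.min()
    peak = cam.max()
    return cam / peak if peak > 0 else cam

# Usage with hypothetical last-layer activations (non-negative, as after ReLU)
rng = np.random.default_rng(0)
feats = rng.random((8, 8, 16))
wts = rng.normal(size=16)
estimate = feats.mean(axis=(0, 1)) @ wts              # global average pooling + dense output (bias omitted)
print(np.isclose(raw_cam(feats, wts).mean(), estimate))  # True
hm = heat_map(feats, wts, 500)
print(hm.shape)  # (500, 500)
```

The check in the usage example shows why the map is interpretable. With global average pooling, the spatial mean of the raw map equals the network's output (minus the bias), so brighter regions are those that contribute most to the estimate.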

Results

Of the 3468 participants of the Beijing Eye Study, fundus images of 3124 (90.1%) individuals were eventually included in the present study, after the images of 344 (9.9%) individuals had been excluded according to the criteria detailed above. Of the included photographs, 3239 images were centered on the macula and 3065 images were centered on the optic nerve head. For the estimation of axial length, the development group comprised 5688 fundus images from 2811 participants, and the validation group consisted of 616 images from 313 participants. Since some participants had not undergone OCT imaging, the development group for the estimation of SFCT comprised 5436 fundus images of the macula from 2672 participants, and the model was validated using 592 images from 300 participants (Table 1). The mean axial length was 23.24 mm (median, 23.12 mm; range, 18.96–30.88 mm), and the mean SFCT was 257 μm (median, 252 μm; range, 12–854 μm). An axial length between 22 mm and 26 mm was measured for 5550 (88.0%) images, and an SFCT between 150 μm and 350 μm for 3862 (64.1%) images. The development group and the validation group did not differ significantly in axial length (P = 0.488) or SFCT (P = 0.163).

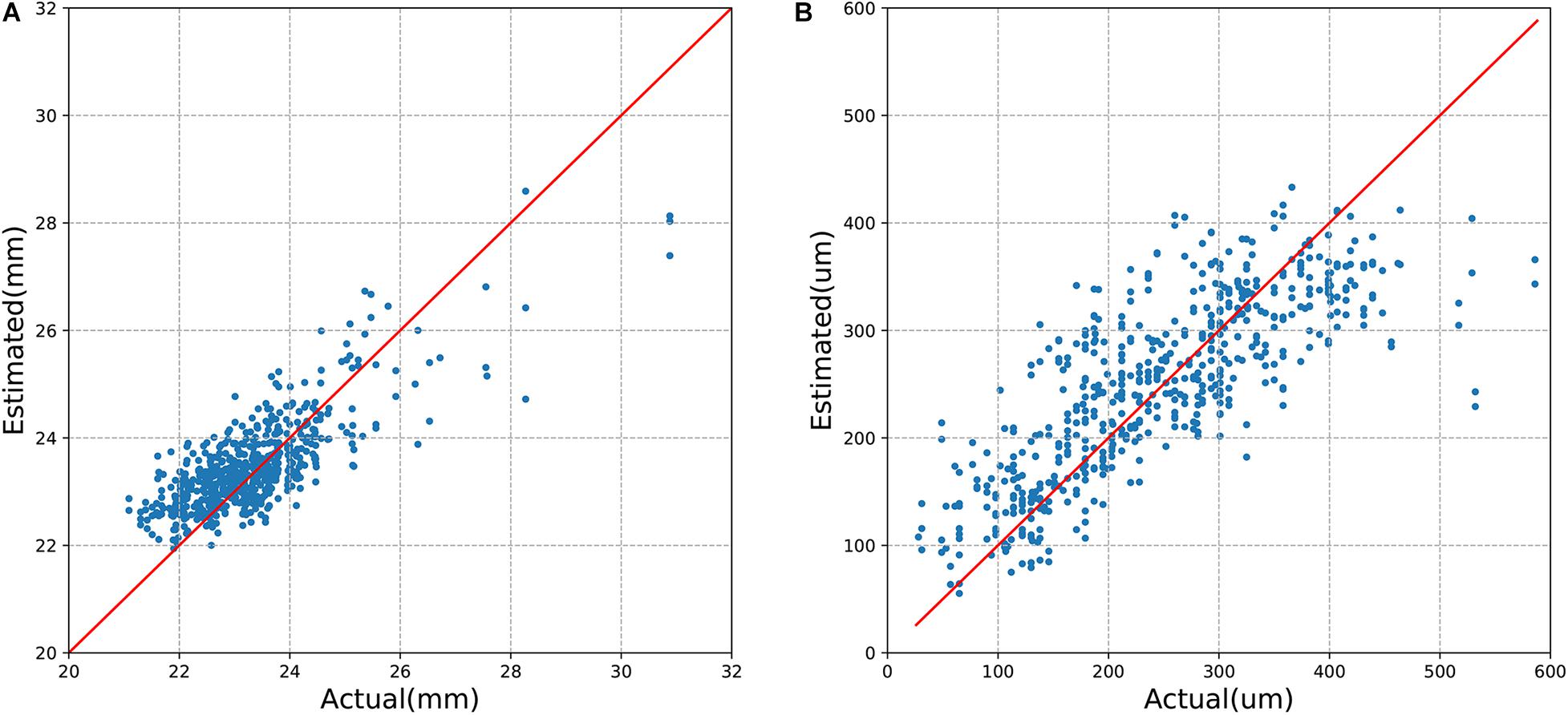

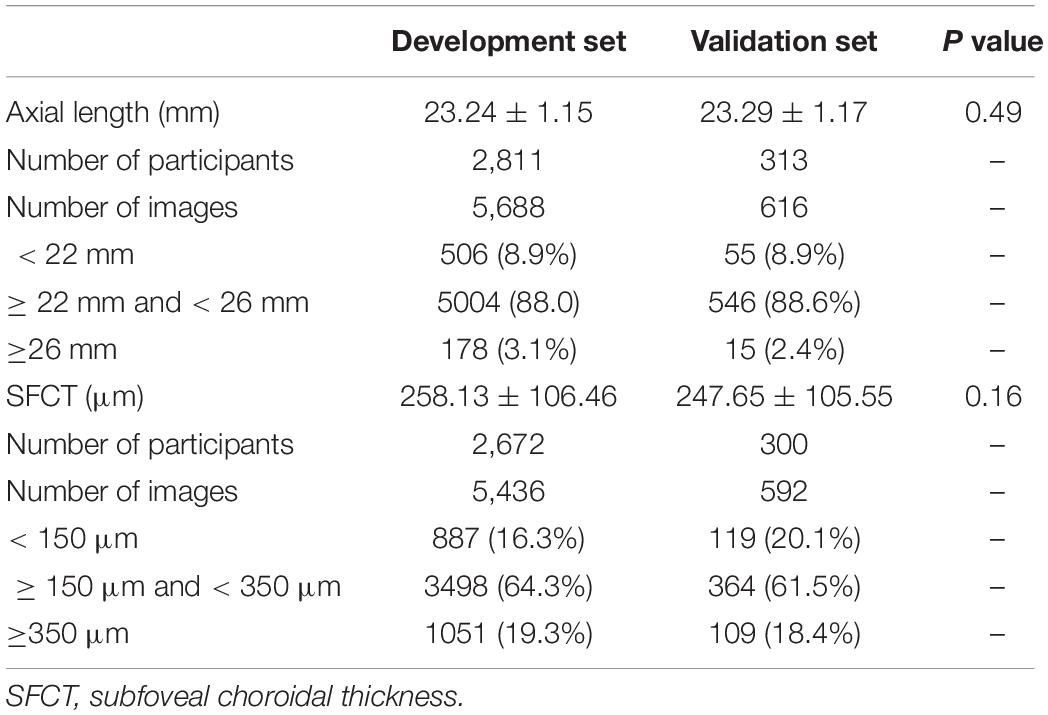

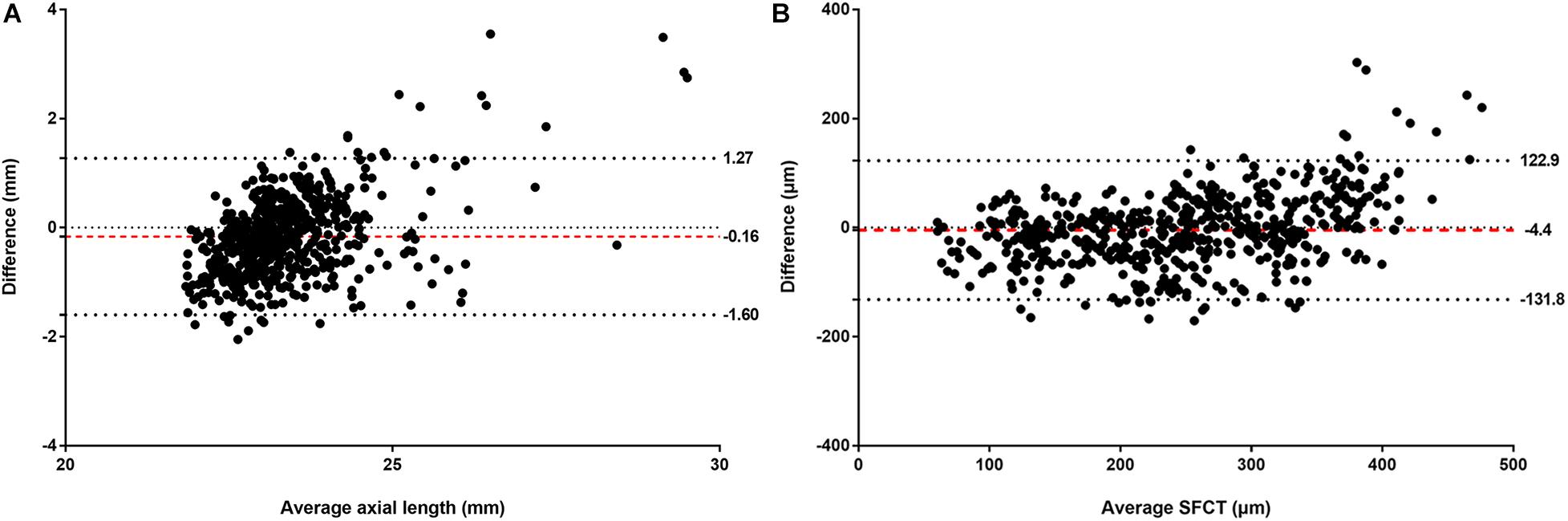

The MAE of the algorithm was 0.56 mm (95% CI, 0.53–0.61) for the estimation of axial length and 49.20 μm (95% CI, 45.83–52.54) for the estimation of SFCT (Table 2). The coefficients of determination were r² = 0.59 (95% CI, 0.50–0.65) for axial length and r² = 0.62 (95% CI, 0.57–0.67) for SFCT. The estimated and measured values showed a roughly linear relationship for both parameters (Figure 3). In the Bland–Altman plots, the mean difference in axial length was −0.16 mm (95% limits of agreement, −1.60 to 1.27 mm), and 3.7% (23/616) of the measurement points lay outside the 95% limits of agreement (Figure 4). The mean difference in SFCT was −4.40 μm (95% limits of agreement, −131.8 to 122.9 μm), and 4.9% (29/592) of the measurement points lay outside the 95% limits of agreement. In a subgroup analysis, the MAE for estimating axial length in eyes with an axial length of 22–26 mm was 0.50 mm (95% CI, 0.47–0.53). The subgroup MAE for estimating SFCT was 42.47 μm (95% CI, 38.80–46.32).

Figure 4. Bland–Altman plots comparing the measured and estimated (A) axial length and (B) subfoveal choroidal thickness (SFCT). X-axis: mean of the measured and estimated values. Y-axis: measured values minus estimated values. The three dotted lines show the mean difference and the 95% limits of agreement.
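The quantities plotted in Figure 4 follow the standard Bland–Altman definitions: the mean of the differences (measured minus estimated) and 95% limits of agreement at the mean ± 1.96 standard deviations. The sketch below uses the sample standard deviation (ddof=1), which is our assumption. As an arithmetic check, reading the reported axial-length interval (−1.60 to 1.27 mm around −0.16 mm) as limits of agreement implies a standard deviation of the differences of about (1.27 + 1.60)/(2 × 1.96) ≈ 0.73 mm. The SFCT interval implies about 65 μm.

```python
import numpy as np

def bland_altman(measured, estimated, z=1.96):
    """Return x-axis means, y-axis differences, mean difference, lower/upper limits
    of agreement, and the share of points outside the limits."""
    measured = np.asarray(measured, dtype=float)
    estimated = np.asarray(estimated, dtype=float)
    diff = measured - estimated                  # y-axis: measured minus estimated
    avg = (measured + estimated) / 2             # x-axis: mean of the two values
    mean_diff = diff.mean()
    sd = diff.std(ddof=1)
    lower, upper = mean_diff - z * sd, mean_diff + z * sd
    share_outside = np.mean((diff < lower) | (diff > upper))
    return avg, diff, float(mean_diff), float(lower), float(upper), float(share_outside)

# Usage with four hypothetical axial-length pairs (mm)
_, _, md, lo, hi, out = bland_altman([23.0, 24.0, 22.5, 25.1], [23.2, 23.8, 22.9, 25.0])
print(md, lo, hi, out)
```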

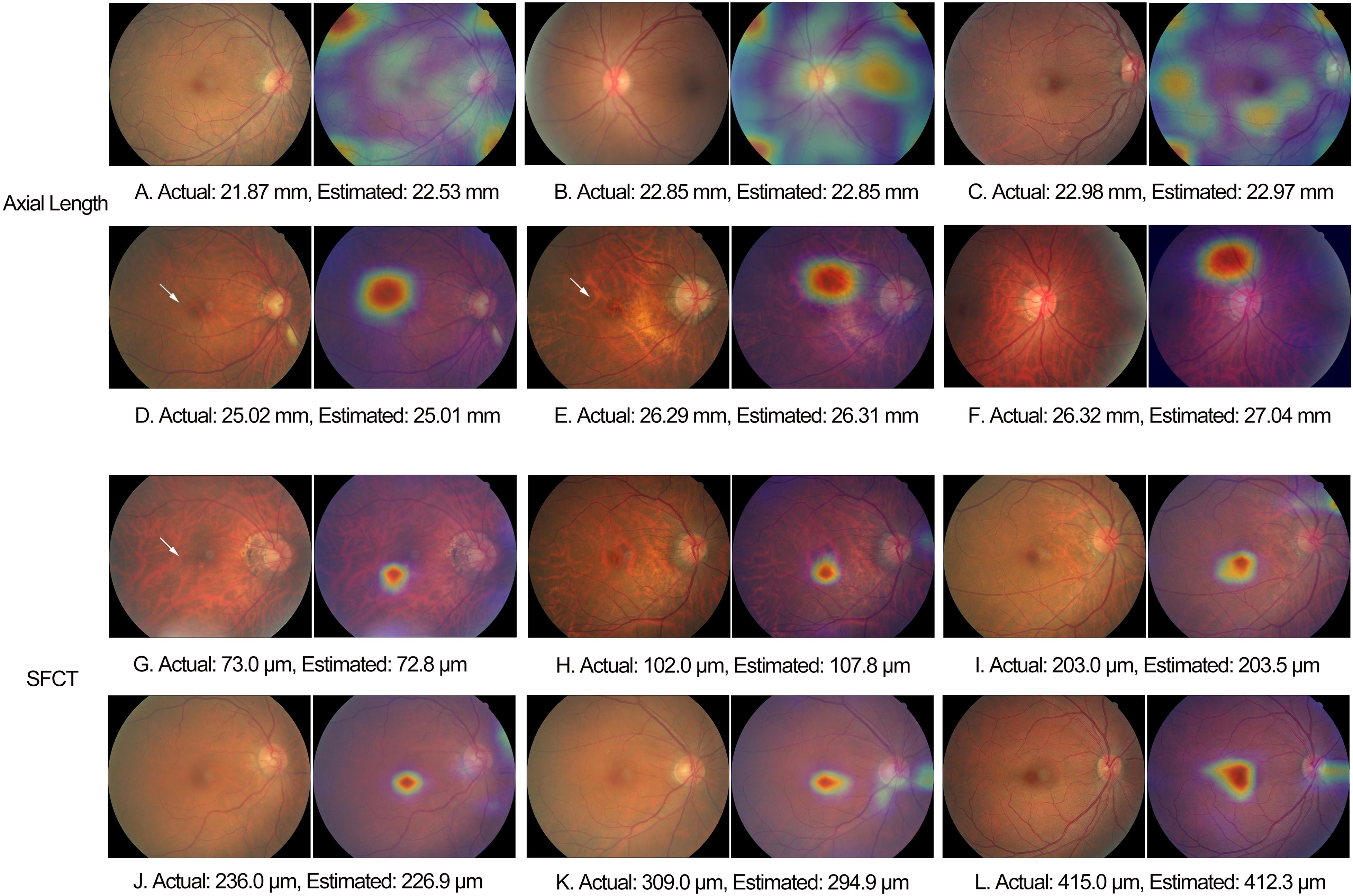

For the estimation of axial length, the heat map analysis showed that the CNN used signals from different regions depending on axial length (Figures 5A–F). In eyes with an axial length of < 22 mm, it used signals from the macular region as a whole. In eyes with an axial length of 22 to < 26 mm, it used signals mostly from the foveal region. In eyes with an axial length of > 26 mm, it used signals from the extrafoveal region within the macula. For the estimation of SFCT, the CNN used mostly the central part of the macular region, the fovea or perifovea, independently of the SFCT (Figures 5G–L).

Figure 5. Examples of heat maps generated in eyes of different axial length and subfoveal choroidal thickness. White arrow: fundus tessellation.

Discussion

In our population-based study, the CNN-based algorithm had a mean absolute error for estimating axial length and SFCT of 0.56 mm and 49.20 μm, respectively, and the Bland–Altman plots revealed a mean difference in axial length and SFCT of −0.16 mm and −4.40 μm, respectively.

Our results with respect to the estimation of axial length cannot be directly compared with those of other investigations, since, to our knowledge, axial length has not yet been addressed in a deep-learning study. Komuku et al. (2020) used an adaptive binarization method to analyze choroidal vessels on color fundus photographs and a deep-learning-based method to estimate SFCT from the binarization-generated choroidal vessel images. The correlations between the choroidal vasculature appearance index and choroidal thickness were −0.60 for normal eyes (P < 0.01) and −0.46 for eyes with central serous chorioretinopathy (CSC) (P < 0.01). For the deep-learning system, the correlation coefficients between the values estimated from the color images and the true choroidal thickness were 0.68 for normal eyes (P < 0.01) and 0.48 for eyes with CSC (P < 0.01). These values are comparable with the r² of 0.62 found in our study, which had a larger study population and population-based recruitment.

The difference between the estimated and measured axial length values was smaller than that between axial length measurements by optical low-coherence reflectometry and by ultrasound biometry [mean difference, −0.72 mm (95% CI, −0.75, −0.69 mm)] (Gursoy et al., 2011). In this context, it is not the mean difference but the scatter of the differences between two methods that markedly influences the clinical reliability and validity of a technique. The algorithm in our study overestimated axial length in eyes with a short axial length and underestimated SFCT in eyes with a thick choroid. These findings may be related to an underrepresentation of eyes with a short axial length and of eyes with a thick SFCT in the study population. Most eyes included in the study had an axial length of 22–26 mm and an SFCT of 150–350 μm. The advantage of population-based recruitment thus came with a disadvantage: a relative lack of eyes at the extremes of the axial length and SFCT distributions. Future studies may preferentially include such eyes to further improve the algorithm.

The observations made in our study agree with the findings of other investigations and with clinical experience: axially elongated eyes differ in the appearance of their posterior fundus from eyes with a short axial length. The same holds true for SFCT, since it is strongly correlated with axial length (Fujiwara et al., 2009; Wei et al., 2013). A main feature of an axially elongated eye is an increased degree of fundus tessellation, which is also strongly correlated with a thinner SFCT (Yan et al., 2015). Non-highly myopic eyes show several further features of increasing axial elongation (Jonas et al., 2015, 2017, 2019; Guo et al., 2018). First, the Bruch's membrane (BM) opening shifts, usually in the temporal direction. This leads to an overhanging of BM into the intrapapillary compartment at the nasal optic disk and, correspondingly, an absence of BM at the temporal disk border in the form of a parapapillary gamma zone. Second, the temporal BM shift leads to an ovalization of the ophthalmoscopically detectable optic disk shape and a decrease in the ophthalmoscopic horizontal disk diameter. Third, the development of the parapapillary gamma zone increases the disk–fovea distance and, correspondingly, decreases the angle kappa between the two temporal vascular arcades. In view of this long list of axial elongation-associated morphological changes in the posterior fundus, it might have been expected that deep-learning-based algorithms, like ophthalmologists, can estimate axial length. Interestingly, the heat map analysis revealed that the signals used depended on axial length. The CNN drew predominantly on the macular region as a whole in eyes with an axial length of < 22 mm, on the foveal region in eyes with an axial length of 22–26 mm, and on the extrafoveal region in eyes with an axial length of > 26 mm. For the estimation of SFCT, the CNN used mostly the central part of the macular region, the fovea or perifovea, independently of the SFCT.
This agrees with the finding of a previous study that the degree of fundus tessellation assessed in the macular region or in the parapapillary region can be used to estimate SFCT and that a high degree of fundus tessellation is a surrogate for a leptochoroid (Yan et al., 2015).

The practical importance of an algorithm estimating axial length may lie in its combination with portable and inexpensive fundus cameras (Bastawrous et al., 2016; Toy et al., 2016; Muiesan et al., 2017; Mamtora et al., 2018). Based on the data available so far, it seems unlikely that a deep-learning algorithm based only on fundus photographs will be better than biometry for the measurement of axial length. The same may hold true for the assessment of SFCT.

When the results of our study are discussed, its limitations should be taken into account. First, the study population included only subjects aged ≥ 50 years, so the results cannot be directly transferred to younger individuals. Second, the study population consisted only of Chinese individuals, so future studies may address populations of different ethnicity. Third, the use of both optic-disk-centered and macula-centered fundus photographs for the training and validation of the algorithm might have led to some scatter in the estimations. However, the fovea was also visible on the optic-disk-centered images and, vice versa, the optic disk was visible on the macula-centered photographs. The fovea, the most important region for estimating SFCT and axial length, was thus assessable in both types of photographs. In addition, the optic nerve head shows characteristic axial-length-related features. Including its full image in the optic-disk-centered photographs can therefore only have helped in finding the best-fitting algorithm. This also holds true for the estimation of SFCT, since SFCT is strongly correlated with axial length (Liu et al., 2018). Furthermore, adding the optic-disk-centered photographs increased the sample size for training the model. Fourth, the heat maps did not rule out that other features in the images were also used, and we did not perform a quantitative validation of the heat maps. Fifth, the study population, as a real-world group, also included eyes with disorders of the macula and optic nerve, but we did not analyze whether their inclusion influenced the performance of the algorithm. Sixth, we did not include a second data set from a completely different study population, so the validation of the algorithm could still be further strengthened.
Further research may include data sets from populations of different age ranges and ethnicities and may use different fundus cameras. In addition, the performance of the model may be improved by using more data for the development of the algorithm and by refining the training scheme, for example with data augmentation.

In conclusion, deep-learning-based algorithms may be helpful for estimating axial length and SFCT based on conventional color fundus images. They may be a further step in the semiautomatic assessment of the eye.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by the Ethics Committee of Beijing Tongren Hospital. The patients/participants provided their written informed consent to participate in this study. Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author Contributions

LD, YNY, QZ, NZ, YXW, and WBW: design of the study. XYH, JHX, CXL, and ZYG: development of the algorithm. YNY, QZ, YXW, JX, LS, YJL, and YL: gathering the data. LD, XYH, YNY, QZ, NZ, YXW, JX, and JBJ: performing the data analysis. LD, XYH, and JHX: drafting the first version of the manuscript. All authors: revision and approval of the manuscript.

Funding

This study was supported by the Capital Health Research and Development of Special (2020-1-2052); Science and Technology Project of Beijing Municipal Science and Technology Commission (Z201100005520045, Z181100001818003); and the Beijing Municipal Administration of Hospitals’ Ascent Plan (DFL20150201).

Conflict of Interest

XYH, JHX, and CXL were employed by the company Beijing Eaglevision Technology Co., Ltd., China.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.


References

Bastawrous, A., Giardini, M. E., Bolster, N. M., Peto, T., Shah, N., Livingstone, I. A., et al. (2016). Clinical validation of a smartphone-based adapter for optic disc imaging in Kenya. JAMA Ophthalmol. 134, 151–158. doi: 10.1001/jamaophthalmol.2015.4625

Biousse, V., Newman, N. J., Najjar, R. P., Vasseneix, C., Xu, X., Ting, D. S., et al. (2020). Optic disc classification by deep learning versus expert neuro-ophthalmologists. Ann. Neurol. 88, 785–795. doi: 10.1002/ana.25839

Burlina, P. M., Joshi, N., Pekala, M., Pacheco, K. D., Freund, D. E., and Bressler, N. M. (2017). Automated grading of age-related macular degeneration from color fundus images using deep convolutional neural networks. JAMA Ophthalmol. 135, 1170–1176. doi: 10.1001/jamaophthalmol.2017.3782

Cao, J., You, K., Jin, K., Lou, L., Wang, Y., Chen, M., et al. (2020). Prediction of response to anti-vascular endothelial growth factor treatment in diabetic macular oedema using an optical coherence tomography-based machine learning method. Acta Ophthalmol. 99, e19–e27. doi: 10.1111/aos.14514

Cheung, C. M., Loh, B. K., Li, X., Mathur, R., Wong, E., Lee, S. Y., et al. (2013). Choroidal thickness and risk characteristics of eyes with myopic choroidal neovascularization. Acta Ophthalmol. 91, e580–e581. doi: 10.1111/aos.12117

Chun, J., Kim, Y., Shin, K. Y., Han, S. H., Oh, S. Y., Chung, T. Y., et al. (2020). Deep learning-based prediction of refractive error using photorefraction images captured by a smartphone: model development and validation study. JMIR Med. Inform. 8:e16225. doi: 10.2196/16225

Fujiwara, T., Imamura, Y., Margolis, R., Slakter, J. S., and Spaide, R. F. (2009). Enhanced depth imaging optical coherence tomography of the choroid in highly myopic eyes. Am. J. Ophthalmol. 148, 445–450. doi: 10.1016/j.ajo.2009.04.029

Gargeya, R., and Leng, T. (2017). Automated identification of diabetic retinopathy using deep learning. Ophthalmology 124, 962–969. doi: 10.1016/j.ophtha.2017.02.008

González-Gonzalo, C., Sánchez-Gutiérrez, V., Hernández-Martínez, P., Contreras, I., Lechanteur, Y. T., Domanian, A., et al. (2020). Evaluation of a deep learning system for the joint automated detection of diabetic retinopathy and age-related macular degeneration. Acta Ophthalmol. 98, 368–377. doi: 10.1111/aos.14306

Grassmann, F., Mengelkamp, J., Brandl, C., Harsch, S., Zimmermann, M. E., Linkohr, B., et al. (2018). A deep learning algorithm for prediction of age-related eye disease study severity scale for age-related macular degeneration from color fundus photography. Ophthalmology 125, 1410–1420. doi: 10.1016/j.ophtha.2018.02.037

Guo, Y., Liu, L. J., Tang, P., Feng, Y., Wu, M., Lv, Y. Y., et al. (2018). Optic disc-fovea distance and myopia progression in school children: the Beijing Children Eye Study. Acta Ophthalmol. 96, e606–e613. doi: 10.1111/aos.13728

Gursoy, H., Sahin, A., Basmak, H., Ozer, A., Yildirim, N., and Colak, E. (2011). Lenstar versus ultrasound for ocular biometry in a pediatric population. Optom. Vis. Sci. 88, 912–919. doi: 10.1097/OPX.0b013e31821cc4d6

Hemelings, R., Elen, B., Barbosa-Breda, J., Lemmens, S., Meire, M., Pourjavan, S., et al. (2020). Accurate prediction of glaucoma from colour fundus images with a convolutional neural network that relies on active and transfer learning. Acta Ophthalmol. 98, e94–e100. doi: 10.1111/aos.14193

Jonas, J. B., Ohno-Matsui, K., Jiang, W. J., and Panda-Jonas, S. (2017). Bruch membrane and the mechanism of myopization. A new theory. Retina 37, 1428–1440. doi: 10.1097/IAE.0000000000001464

Jonas, J. B., Ohno-Matsui, K., and Panda-Jonas, S. (2019). Myopia: anatomic changes and consequences for its etiology. Asia Pac. J. Ophthalmol. 8, 355–359. doi: 10.1097/01.APO.0000578944.25956.8b

Jonas, R. A., Wang, Y. X., Yang, H., Li, J. J., Xu, L., Panda-Jonas, S., et al. (2015). Optic disc-fovea distance, axial length and parapapillary zones. The Beijing Eye Study. PLoS One 10:e0138701. doi: 10.1371/journal.pone.0138701

Komuku, Y., Ide, A., Fukuyama, H., Masumoto, H., Tabuchi, H., Okadome, T., et al. (2020). Choroidal thickness estimation from colour fundus photographs by adaptive binarisation and deep learning, according to central serous chorioretinopathy status. Sci. Rep. 10:5640. doi: 10.1038/s41598-020-62347-7

Krizhevsky, A., Sutskever, I., and Hinton, G. E. (2012). ImageNet classification with deep convolutional neural networks. Adv. Neural Inf. Process. Syst. 25, 1097–1105.

Lim, H. B., Kim, K., Won, Y. K., Lee, W. H., Lee, M. W., and Kim, J. Y. (2020). A comparison of choroidal thicknesses between pachychoroid and normochoroid eyes acquired from wide-field swept-source OCT. Acta Ophthalmol. 99, e117–e123. doi: 10.1111/aos.14522

Liu, B., Wang, Y., Li, T., Lin, Y., Ma, W., Chen, X., et al. (2018). Correlation of subfoveal choroidal thickness with axial length, refractive error, and age in adult highly myopic eyes. BMC Ophthalmol. 18:127. doi: 10.1186/s12886-018-0791-5

Mamtora, S., Sandinha, M. T., Ajith, A., Song, A., and Steel, D. H. W. (2018). Smart phone ophthalmoscopy: a potential replacement for the direct ophthalmoscope. Eye 32, 1766–1771. doi: 10.1038/s41433-018-0177-1

Mao, J., Luo, Y., Liu, L., Lao, J., Shao, Y., Zhang, M., et al. (2020). Automated diagnosis and quantitative analysis of plus disease in retinopathy of prematurity based on deep convolutional neural networks. Acta Ophthalmol. 98, e339–e345. doi: 10.1111/aos.14264

Milea, D., Najjar, R. P., Zhubo, J., Ting, D., Vasseneix, C., Xu, X., et al. (2020). Artificial intelligence to detect papilledema from ocular fundus photographs. N. Engl. J. Med. 382, 1687–1695. doi: 10.1056/NEJMoa1917130

Muiesan, M. L., Salvetti, M., Paini, A., Riviera, M., Pintossi, C., Bertacchini, F., et al. (2017). Ocular fundus photography with a smartphone device in acute hypertension. J. Hypertens. 35, 1660–1665. doi: 10.1097/HJH.0000000000001354

Ohno-Matsui, K., Kawasaki, R., Jonas, J. B., Cheung, C. M., Saw, S. M., Verhoeven, V. J., et al. (2015). International classification and grading system for myopic maculopathy. Am. J. Ophthalmol. 159, 877–883. doi: 10.1016/j.ajo.2015.01.022

Peng, C., Li, L., Yang, M., Teng, D., Wang, J., Lai, M., et al. (2020). Different alteration patterns of sub-macular choroidal thicknesses in aquaporin-4 immunoglobulin G antibodies sero-positive neuromyelitis optica spectrum diseases and isolated optic neuritis. Acta Ophthalmol. 98, 808–815. doi: 10.1111/aos.14325

Poplin, R., Varadarajan, A. V., Blumer, K., Liu, Y., McConnell, M. V., Corrado, G. S., et al. (2018). Prediction of cardiovascular risk factors from retinal fundus photographs via deep learning. Nat. Biomed. Eng. 2, 158–164. doi: 10.1038/s41551-018-0195-0

Saka, N., Ohno-Matsui, K., Shimada, N., Sueyoshi, S., Nagaoka, N., Hayashi, W., et al. (2010). Long-term changes in axial length in adult eyes with pathologic myopia. Am. J. Ophthalmol. 150, 562.e1–568.e1. doi: 10.1016/j.ajo.2010.05.009

Shah, M., Roomans Ledo, A., and Rittscher, J. (2020). Automated classification of normal and Stargardt disease optical coherence tomography images using deep learning. Acta Ophthalmol. 98, e715–e721. doi: 10.1111/aos.14353

Shao, L., Xu, L., Chen, C. X., Yang, L. H., Du, K. F., Wang, S., et al. (2013). Reproducibility of subfoveal choroidal thickness measurements with enhanced depth imaging by spectral-domain optical coherence tomography. Invest. Ophthalmol. Vis. Sci. 54, 230–233. doi: 10.1167/iovs.12-10351

Shao, L., Xu, L., Wei, W. B., Chen, C. X., Du, K. F., Li, X. P., et al. (2014). Visual acuity and subfoveal choroidal thickness: the Beijing Eye Study. Am. J. Ophthalmol. 158, 702.e1–709.e1. doi: 10.1016/j.ajo.2014.05.023

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J., and Wojna, Z. (2016). “Rethinking the inception architecture for computer vision,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, (New York, NY: IEEE), 2818–2826.

Tideman, J. W., Snabel, M. C., Tedja, M. S., van Rijn, G. A., Wong, K. T., Kuijpers, R. W., et al. (2016). Association of axial length with risk of uncorrectable visual impairment for Europeans with myopia. JAMA Ophthalmol. 134, 1355–1363. doi: 10.1001/jamaophthalmol.2016.4009

Ting, D. S. W., Cheung, C. Y., Lim, G., Tan, G. S. W., Quang, N. D., Gan, A., et al. (2017). Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes. JAMA 318, 2211–2223. doi: 10.1001/jama.2017.18152

Toy, B. C., Myung, D. J., He, L., Pan, C. K., Chang, R. T., Polkinhorne, A., et al. (2016). Smartphone-based dilated fundus photography and near visual acuity testing as inexpensive screening tools to detect referral warranted diabetic eye disease. Retina 36, 1000–1008. doi: 10.1097/IAE.0000000000000955

Varadarajan, A. V., Poplin, R., Blumer, K., Angermueller, C., Ledsam, J., Chopra, R., et al. (2018). Deep learning for predicting refractive error from retinal fundus images. Invest. Ophthalmol. Vis. Sci. 59, 2861–2868. doi: 10.1167/iovs.18-23887

Wang, J., Ju, R., Chen, Y., Zhang, L., Hu, J., Wu, Y., et al. (2018). Automated retinopathy of prematurity screening using deep neural networks. EBioMedicine 35, 361–368. doi: 10.1016/j.ebiom.2018.08.033

Wei, W. B., Xu, L., Jonas, J. B., Shao, L., Du, K. F., Wang, S., et al. (2013). Subfoveal choroidal thickness: the Beijing Eye Study. Ophthalmology 120, 175–180.

Yan, Y. N., Wang, Y. X., Xu, L., Xu, J., Wei, W. B., and Jonas, J. B. (2015). Fundus tessellation: prevalence and associated factors: the Beijing Eye Study 2011. Ophthalmology 122, 1873–1880. doi: 10.1016/j.ophtha.2015.05.031

Yan, Y. N., Wang, Y. X., Yang, Y., Xu, L., Xu, J., Wang, Q., et al. (2018a). Long-term progression and risk factors of fundus tessellation in the Beijing Eye Study. Sci. Rep. 8:10625. doi: 10.1038/s41598-018-29009-1

Yan, Y. N., Wang, Y. X., Yang, Y., Xu, L., Xu, J., Wang, Q., et al. (2018b). Ten-year progression of myopic maculopathy: the Beijing Eye Study 2001-2011. Ophthalmology 125, 1253–1263. doi: 10.1016/j.ophtha.2018.01.035

Zago, G. T., Andreão, R. V., Dorizzi, B., and Teatini Salles, E. O. (2018). Retinal image quality assessment using deep learning. Comput. Biol. Med. 103, 64–70. doi: 10.1016/j.compbiomed.2018.10.004

Keywords: deep learning, convolutional neural network, axial length, subfoveal choroidal thickness, fundus photography, fundus image

Citation: Dong L, Hu XY, Yan YN, Zhang Q, Zhou N, Shao L, Wang YX, Xu J, Lan YJ, Li Y, Xiong JH, Liu CX, Ge ZY, Jonas JB and Wei WB (2021) Deep Learning-Based Estimation of Axial Length and Subfoveal Choroidal Thickness From Color Fundus Photographs. Front. Cell Dev. Biol. 9:653692. doi: 10.3389/fcell.2021.653692

Received: 15 January 2021; Accepted: 10 March 2021;

Published: 09 April 2021.

Edited by:

Lianhua Chi, La Trobe University, Australia

Reviewed by:

Haishuai Wang, Fairfield University, United States
Baiying Lei, Shenzhen University, China

Copyright © 2021 Dong, Hu, Yan, Zhang, Zhou, Shao, Wang, Xu, Lan, Li, Xiong, Liu, Ge, Jonas and Wei. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Wen Bin Wei, weiwenbintr@163.com

†These authors have contributed equally to this work and share the first authorship
