- Department of Computer Science, Virginia Tech, Blacksburg, VA, United States

Glossaries play a major role in enhancing students' comprehension of core concepts. Glossary terms have complex interrelationships that cannot be fully illustrated by standard approaches such as listing all terms in a linear, alphabetized list. To overcome this limitation, we introduce an interactive, concept-map-based design for glossary terms within the OpenDSA interactive eTextbook system. Glossary terms are visualized as nodes in a graph, and their relationships are described on the edges. A concept map associated with the selected term is generated on demand. We evaluate the effectiveness of our design by comparing student use of our concept-map-based glossary to the traditional alphabetized list. We used exercises that target comprehension of the glossary terms to familiarize students with the concept maps.

1. Introduction

Books have a long tradition of including glossaries, and this has carried over to digital media (Smith, 2000; Beetham and Sharpe, 2013). Glossaries are collections of the main terms and jargon used in the associated text, along with their meanings or definitions. Traditionally, a glossary is displayed as a list that is either sorted alphabetically or grouped by chapter. Computer Science courses are no different from other digital courses when it comes to glossaries. Glossaries play a significant role in providing definitions for the many concepts and terms found in Computer Science courses. This is especially true of core Computer Science courses such as Data Structures and Algorithms, which usually have hundreds of glossary terms (ODSA Contributors, 2012).

Given the complex interrelationships of the glossary terms in Computer Science courses, the traditional alphabetical list does not give students a suitable means to make the best use of the glossary. In particular, we have identified the following shortcomings in traditional glossary lists. First, students find it hard to navigate a long list of glossary terms to find the terms they are interested in. Moreover, a long list is not an appealing format for students. Second, the list does not help students draw connections between related terms. Finally, the list usually offers little or no interaction with the terms.

We believe these shortcomings limit the value students get from glossaries. Therefore, we propose a new design for glossary terms that overcomes these shortcomings. The main motivations for our implementation are:

• The design needs to be appealing for students to encourage them to use the glossaries more frequently.

• Each glossary lookup should focus on the term of interest along with its relationships to other terms.

• Tools used to implement glossaries should be compatible with the containing eTextbook infrastructure.

• The visualization should be interactive.

As explained below, we adopt an implementation of the glossary based on concept maps.

2. Related Work

Concept maps have been widely used to represent the relationships among a number of related items or terms. According to Novak and Cañas (2008), concept maps are graphical tools for organizing and representing knowledge. A concept is designated by a label, displayed in a box or circle, and connected by lines to other concepts. Thus, a concept map can be viewed as a graph, with concepts as the nodes and relationships as the edges. Concept maps were developed in 1972 by Joseph D. Novak (Novak and Musonda, 1991), who used them to follow and understand changes in children's knowledge of science. The ability of concept maps to convey information effectively was later explained by psychological research relating concept map design to the way human memory works. This relationship draws on Miller (1956), which models human memory not as a single “vessel” that is filled sequentially, but as a complex set of interrelated memory systems that can be fed parallel information at once. This is why information presented in a concept map, with the concepts displayed side by side, is easier to comprehend than a long paragraph containing the same information.

Concept maps are among a group of tools that can be used to construct and share information in a meaningful way. Eppler (2006) discusses concept maps, mind maps, conceptual diagrams, and visual metaphors. According to Eppler, a concept map is a top-down diagram that shows the relationships between different concepts. A mind map, on the other hand, is a radial diagram that is centered around an image and represents the hierarchical connections between different portions of the learned material. A conceptual diagram uses boxes to represent abstract concepts in a systematic illustration and highlights the relationships between these concepts. Finally, a visual metaphor is a graphical structure based on the shape of a familiar, easily recognizable natural or man-made artifact. It can model an activity or a story to organize content meaningfully. These graphical representation tools are used to deliver different types of information. Of these tools, the concept map was shown to be the best suited for delivering information to students and summarizing the key topics in courses (Eppler, 2006). Concept maps can also help clarify the elements of a large topic and give examples of specific concepts.

Prior to the work described in this paper, the OpenDSA eTextbook system already made use of conceptual diagrams and visual metaphors where appropriate to convey content (Fouh et al., 2012, 2014, 2016). However, OpenDSA previously offered only a traditional alphabetized list of hundreds of glossary terms, which, as described below, students used little.

2.1. Concept Maps in Education

The possible use of concept maps in education was discussed in Novak (1990). The initial focus was on teaching science; however, Novak determined that concept maps were useful for representing knowledge and information from many disciplines. The main result of this research was that concept maps can benefit students, but they cannot be the only source of information. In a follow-up study (Novak, 2010), Novak suggested using “expert skeleton” concept maps for educational purposes. Expert skeleton concept maps are prepared by an expert in the knowledge domain and represent the basic information in the field as scaffolding. This approach was shown to facilitate meaningful learning and to present a general view of the ideas in a way that removes misconceptions.

Stewart et al. (1979) suggest three uses of concept maps in the educational process: as curricular tools, as instructional tools, and as a means of evaluation. The curricular aspect relates to designing a subject's curriculum: concept maps help the designer determine the main ideas in the subject and, hence, improve the intended learning outcomes. The instructional aspect deals with teaching the concepts and ideas to students, which is the most obvious use of concept maps in education. Finally, the evaluation aspect suggests using concept maps to assess students. Kwon and Cifuentes (2007) examine the effect on students of generating concept maps. Middle school students were divided into three groups: those who individually generated concept maps for some course concepts, those who created these concept maps in groups, and those who did not use concept maps to learn the material. In general, students who worked on concept maps had more positive attitudes toward the concepts than those who did not, and students who worked individually understood the concepts better than those who worked in groups.

Concept maps have been used in many disciplines to facilitate learning of the main ideas and concepts. Stewart et al. (1979) discussed the use of concept maps in teaching biology, explaining how to extract the main concepts in biology and how to define relations between these concepts for use in concept maps. Similarly, Lloyd (1990) studied the elaboration of concepts in biology. In particular, three textbooks targeting different audiences were chosen, and their presentation of a specific concept was investigated. The author created three concept maps for the concepts of interest, one from each book. The goal of the study was to evaluate the different levels at which related concepts are presented alongside a specific concept. Relatedly, Hwang et al. (2014) showed that there is a trade-off between the amount of information represented in a concept map and the cognitive load on students.

In Computer Science, concept maps have been shown to increase students' ability to distinguish related terms, which reflects a better understanding of the concepts. For instance, Sanders et al. (2008) used concept maps to facilitate understanding of the main concepts of object-oriented programming, such as classes, objects, and inheritance. Mühling (2016) used concept maps to investigate the knowledge structure of beginning CS students. Concept maps were shown to be useful in identifying common knowledge configurations and differences in learners' knowledge based on their educational background.

Van Bon-Martens et al. (2014) explored the applicability of concept maps for delivering scientific knowledge in practical decision-making situations. They performed five studies covering five different fields in public health. The results showed that concept maps were effective in highlighting the key issues and delivering the required medical information. Turns et al. (2000) discussed the use of concept maps in engineering education. That work related the most important concepts in engineering, including experimentation, research, analysis, and modeling, and identified their relationships. It also showed the connections between these concepts and the impact of applying them in many areas.

2.2. Concept Map Tools

Many tools can generate concept maps, including commercial tools (free or paid) and tools proposed in the research literature. First, we consider commercially available tools that have been developed to help design and create concept maps. Examples include MindMeister (2007), Lucidchart (2008), MindMup (2013), and Cmap (2014). All these tools have features that enable users to create powerful concept maps. Some allow users to create a concept map online and either store it in the cloud or download it for local use. The output of these tools can be saved in multiple formats, such as images or portable documents. However, the main drawback that makes these tools unsuitable for our implementation is that they all produce static output. Given the number of glossary terms in our target eTextbooks, it would not be feasible to show the entire course concept map, nor is it feasible to create a static map for each term. Another drawback is that a map generated as a static image does not let students interact with it.

The research literature proposes many tools that automatically generate concept maps. Villalon and Calvo (2011) introduce concept map miner (CMM), which automatically generates concept maps from prose. CMM builds a grammatical tree for each sentence to extract the compound nouns. The relationships between these nouns are then identified through a semantic layer. Finally, the compound nouns are represented as a terminology map, a reduced version with no grammatical dependencies. However, one issue with CMM and similar tools is that they depend on the structure of the given sentences to extract the main concepts. The extracted concepts represent the most common words in these sentences, which are not always the core concepts of the course. Therefore, such tools are not always suitable for generating concept maps for a course glossary.

3. Implementation

Our concept map implementation takes as input a glossary in the form of a collection of terms and definitions that have been annotated to explicitly store links to related terms. From this, it automatically generates an interactive concept map showing the nearby relationships of any selected term in the glossary. The concepts, their definitions, and their relationships were prepared by hand to ensure that only the core concepts are presented. The tool was developed and tested in two courses at Virginia Tech: CS2114 Software Design and Data Structures (referred to hereafter as CS2) and CS3114 Data Structures and Algorithms (referred to hereafter as CS3). These are the primary courses on data structures and algorithms that CS majors take in our department.

In the research literature, many layout designs have been proposed for concept maps, depending on the application. For instance, some concept maps use a horizontal hierarchical view of the nodes. Others use tree designs, which represent a vertical hierarchy. General graph layouts do not follow any particular structure. Links are usually directional, pointing from one node to another, but they can also specify bi-directional relations between nodes, with a different relation given for each direction. In either case, the links are typically labeled with the type of relation between the nodes.

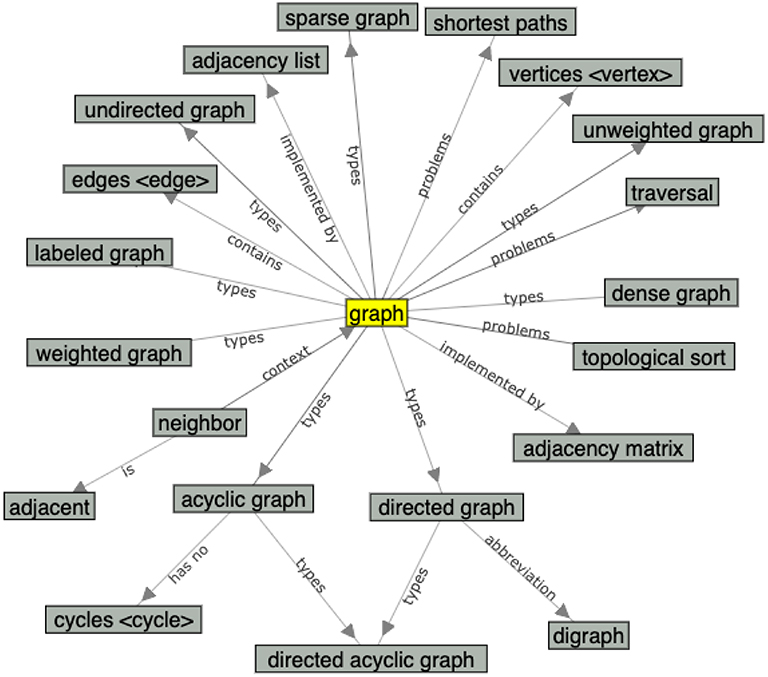

This flexibility makes concept maps a good fit for modeling glossaries. In our design, we consider each glossary term separately. The glossary term is represented as the main concept in its local region of the course's concept graph. By annotating each term to explicitly store links to related terms, we can identify all terms related to this central concept. The related terms are then presented in the concept map as nodes connected to the main term. We use directional links pointing out from the main concept toward these terms, and each link is labeled according to the relation between the terms. Example relations include “part of,” “example of,” “implemented in,” “consists of,” and “synonym.” Figure 1 shows a sample concept map that includes one main concept (“graph”) and multiple related concepts. Note that in some cases, related terms have been expanded to show their own related terms.

3.1. Implementation Goals

The main goals of our implementation are:

• The concept map needs to be interactive, such that the output graph is dynamic and can be manipulated by users.

• The concept map should be accessible from the course contents. We display both the original glossary list and the generated concept map graph when a student clicks on a term in the course contents, so that students can check both.

• Although the concept map can theoretically support any number of hierarchical levels, we limit the number of levels so as not to clutter the display to the point where it distracts users from the relations of the selected term.

3.2. Platform and Features

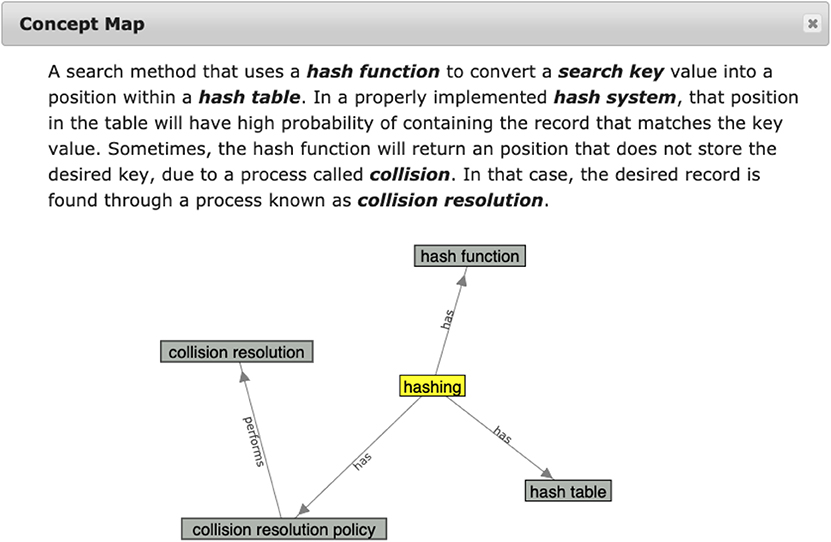

We implemented the concept map to be generated on the fly when a concept term is selected by the user, either from the eTextbook text or from the glossary list. The local concept map for the term is automatically generated from the global concept map of the entire glossary, so we neither need to store individual maps for every concept nor spend time loading them from the server. The local concept map is displayed in a separate browser window, while the original glossary list is displayed in the original browser page with the selected term highlighted. In the concept map window, the term definition is also shown above the graph, as in Figure 2. This allows students to read the concept definition while viewing its relations to other concepts, which helps them better understand the relationships.
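To make the on-demand extraction concrete, here is a minimal sketch in Python (our runtime code is JavaScript; Python is used only for illustration). It assumes the global concept map is available as a list of (source term, relation label, target term) triples; the helper names `build_index` and `local_map` are hypothetical. Indexing the triples once turns each local map into a dictionary lookup, which is why no per-term map has to be stored or fetched.

```python
from collections import defaultdict

# Illustrative triples (source term, relation label, target term), taken from
# the "acyclic graph" example in Section 3.2.1; a full glossary has many more.
CONNECTIONS = [
    ("acyclic graph", "types", "directed acyclic graph"),
    ("acyclic graph", "has no", "cycles"),
]


def build_index(connections):
    """Index the global concept map once, in both directions (hypothetical helper)."""
    outgoing = defaultdict(list)  # term -> [(label, related term)]
    incoming = defaultdict(list)  # term -> [(label, term that points to it)]
    for source, label, target in connections:
        outgoing[source].append((label, target))
        incoming[target].append((label, source))
    return outgoing, incoming


def local_map(outgoing, term):
    """Return the labeled edges leaving `term`, computed on demand."""
    # .get() on a defaultdict does not insert a new key for unknown terms.
    return [(term, label, target) for label, target in outgoing.get(term, [])]


outgoing, incoming = build_index(CONNECTIONS)
print(local_map(outgoing, "acyclic graph"))
```

Keeping an `incoming` index alongside `outgoing` costs little and makes it cheap to show nodes that point toward the selected term when that is useful.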

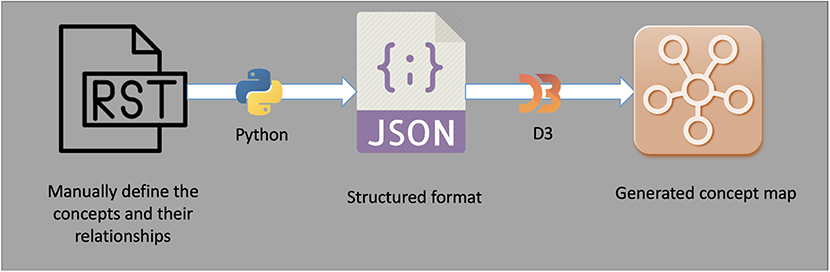

The steps for converting the glossary terms into concept maps and adding interactive actions to them are shown in Figure 3.

3.2.1. Creating a Concept Map From the Glossary

OpenDSA uses reStructuredText (RST) as its content authoring language. RST is a lightweight markup language widely used by the Python community. It includes a number of features for creating digital book-like artifacts, including glossary support. OpenDSA uses RST (and the Sphinx compiler for converting RST to HTML) because it is extensible. Thus, it is easy for us to take the standard glossary support mechanism (a list of terms with definitions) and annotate those entries with other attributes.

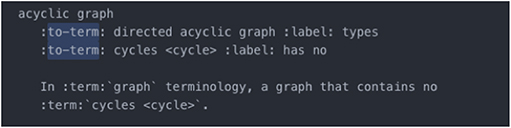

The starting point for our concept map implementation is the RST glossary file. Each term has a separate entry in the file, along with its definition. Figure 4 shows part of the glossary RST file, specifically the entry for the “acyclic graph” term. The term is displayed on the first line, and the related terms are displayed on the following two lines after the keyword “to-term,” an attribute that we have added to glossary terms. This means there will be relations pointing out from the term “acyclic graph” toward “directed acyclic graph” and “cycles.” The label for each relation is also shown in Figure 4. The labels used here, “types” and “has no,” indicate the relation type between the concept and its connected concepts.
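The following sketch shows how such annotated entries could be turned into connection triples. Because Figure 4 is not reproduced here, the entry syntax in `SAMPLE` (a `:to-term:` line carrying the related term, followed by a `:label:` field on the same line) is an assumption, as are the names `TO_TERM` and `parse_glossary`; the actual OpenDSA glossary syntax may differ.

```python
import re

# Assumed entry syntax (hypothetical; the real format is shown in Figure 4).
SAMPLE = """.. glossary::

   acyclic graph
      :to-term: directed acyclic graph :label: types
      :to-term: cycles :label: has no

      In graph terminology, a graph is acyclic if it contains no cycles.
"""

# Matches a line such as "   :to-term: cycles :label: has no".
TO_TERM = re.compile(r"^\s*:to-term:\s*(?P<target>.+?)\s*:label:\s*(?P<label>.+?)\s*$")


def parse_glossary(text):
    """Extract (term, label, related term) triples from annotated glossary RST."""
    connections = []
    term, term_indent = None, None
    for line in text.splitlines():
        stripped = line.strip()
        if not stripped or stripped.startswith(".."):
            continue  # skip blank lines and directives such as ".. glossary::"
        indent = len(line) - len(line.lstrip())
        match = TO_TERM.match(line)
        if match:
            if term is not None:
                connections.append((term, match.group("label"), match.group("target")))
        elif term_indent is None or indent <= term_indent:
            # Term lines sit at the shallowest indentation; definitions are deeper.
            term, term_indent = stripped, indent
    return connections


print(parse_glossary(SAMPLE))
# -> [('acyclic graph', 'types', 'directed acyclic graph'), ('acyclic graph', 'has no', 'cycles')]
```

Definition lines are ignored simply because they are indented more deeply than the term lines, so the parser does not need to understand RST beyond indentation.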

Editing the RST file to add the connections that build the concept map is the most time-consuming step in our implementation. It needs to be done only once, but it requires examining the concepts manually, defining their relations, and entering them into the RST file. After these relations are stored in the RST file, the remaining stages that generate the concept maps are fully automated.

3.2.2. Building the JSON File

The next stage, after defining in the RST file the connections that make up the concept map, is to convert the file into a structured data format that can be processed efficiently. We chose JSON (2017) as our structured format. JSON (“JavaScript Object Notation”) is a lightweight data-interchange format that is natively supported by JavaScript. This allows the eTextbook's runtime support to easily and efficiently generate the graph object for the entire concept map, which is in turn used to extract the local concepts for a given term.

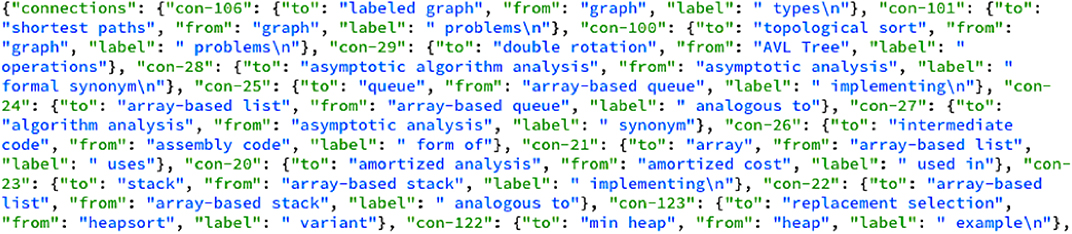

The RST file is converted into JSON by a Python script that reads the RST glossary file, extracts the connections, and stores them in the JSON file. Part of the result is shown in Figure 5. Every connection in the JSON file is represented as an entry of the form (“con-i”: {“to”: to-label, “from”: from-label, “label”: label-name}). Here “con-i” is the connection number, “to-label” is the main concept, “from-label” is its related concept, and “label-name” is the type of connection.
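A minimal sketch of this serialization step, assuming the connections have already been extracted as (main concept, label, related concept) triples. The `con-i` keys and the `to`/`from`/`label` fields follow the description above, with `to` holding the main concept and `from` the related concept; numbering the connections from 1 and the function name `connections_to_json` are assumptions.

```python
import json


def connections_to_json(connections):
    """Serialize (main concept, label, related concept) triples as con-i entries.

    Field roles follow the description of Figure 5: "to" is the main concept,
    "from" is the related concept. Numbering from 1 is an assumption.
    """
    payload = {}
    for i, (main, label, related) in enumerate(connections, start=1):
        payload[f"con-{i}"] = {"to": main, "from": related, "label": label}
    return json.dumps(payload, indent=2)


print(connections_to_json([
    ("acyclic graph", "types", "directed acyclic graph"),
    ("acyclic graph", "has no", "cycles"),
]))
```

On the browser side, the resulting file can be read with `JSON.parse` and turned into the in-memory graph from which local maps are extracted.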

3.2.3. Concept Maps Implementation Using D3

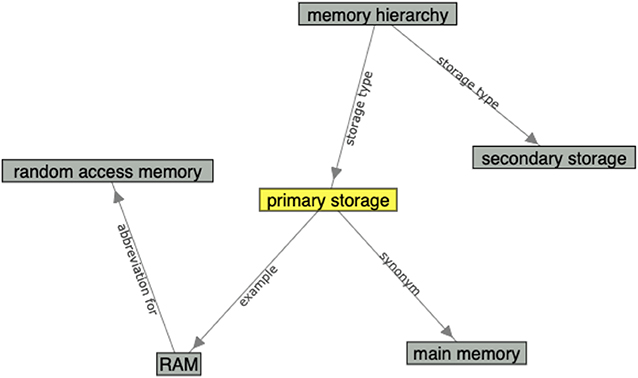

The JSON file is then fed to the last stage, which generates the glossary concept graph in memory. We use the standard D3 JavaScript graphics library to visualize the glossary concept map. D3 provides many graph layout algorithms; we chose the force-directed layout. This allows students to drag and drop nodes within the window, moving a node while it remains connected to its neighbors in the graph. This, in turn, lets students examine their concepts of interest in more detail, especially when the graph has a large number of nodes. The main concept is excluded from this drag-and-drop action so that it remains in the center of the window. We display the main concept in a different color to distinguish it from the other nodes. Figures 1 and 2 show examples of generated concept maps. Notice that the main concept has a different color. Links are directed from the main concept to the other concepts, with labels on the edges. The force layout produces a visually appealing arrangement.
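In D3, the force layout is driven by `d3.forceSimulation`, and a node can be held in place by setting its `fx` and `fy` properties, which is one way to keep the main concept centered. As a rough, language-swapped illustration of the same idea, the Python sketch below runs a simplified Fruchterman-Reingold spring embedder with the main concept pinned at the center. It is not D3's algorithm (D3 combines many-body and link forces with a different integration scheme), and the function name and all parameter values are hypothetical.

```python
import math
import random


def force_layout(nodes, edges, center, width=600.0, height=400.0,
                 iterations=150, seed=1):
    """Simplified Fruchterman-Reingold layout with `center` pinned in place.

    `nodes` is a list of node names; `edges` is a list of (a, b) pairs.
    """
    rng = random.Random(seed)
    pos = {n: [rng.uniform(0, width), rng.uniform(0, height)] for n in nodes}
    pos[center] = [width / 2, height / 2]  # pinned, like fx/fy in d3-force
    k = math.sqrt(width * height / len(nodes))  # ideal distance between nodes
    temperature = width / 10  # maximum step per iteration, cooled over time

    for _ in range(iterations):
        disp = {n: [0.0, 0.0] for n in nodes}
        # Repulsion between every pair of nodes.
        for i, a in enumerate(nodes):
            for b in nodes[i + 1:]:
                dx, dy = pos[a][0] - pos[b][0], pos[a][1] - pos[b][1]
                dist = max(math.hypot(dx, dy), 1e-6)
                f = k * k / dist
                disp[a][0] += dx / dist * f
                disp[a][1] += dy / dist * f
                disp[b][0] -= dx / dist * f
                disp[b][1] -= dy / dist * f
        # Attraction along each link.
        for a, b in edges:
            dx, dy = pos[a][0] - pos[b][0], pos[a][1] - pos[b][1]
            dist = max(math.hypot(dx, dy), 1e-6)
            f = dist * dist / k
            disp[a][0] -= dx / dist * f
            disp[a][1] -= dy / dist * f
            disp[b][0] += dx / dist * f
            disp[b][1] += dy / dist * f
        # Move every node except the pinned main concept, clamped to the window.
        for n in nodes:
            if n == center:
                continue
            dx, dy = disp[n]
            length = max(math.hypot(dx, dy), 1e-6)
            step = min(length, temperature)
            pos[n][0] = min(width, max(0.0, pos[n][0] + dx / length * step))
            pos[n][1] = min(height, max(0.0, pos[n][1] + dy / length * step))
        temperature *= 0.95
    return {n: (x, y) for n, (x, y) in pos.items()}


layout = force_layout(
    ["acyclic graph", "directed acyclic graph", "cycles"],
    [("acyclic graph", "directed acyclic graph"), ("acyclic graph", "cycles")],
    center="acyclic graph",
)
print(layout["acyclic graph"])
```

Because the pinned node is simply skipped in the update step, the other nodes settle around it while it stays at the center, mirroring the behavior described above.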

We take advantage of several features provided by D3. The first is zoom, which allows students to zoom in or out of the graph. We also use click actions on the nodes: students can select any node in the concept map by clicking on it. A node is highlighted when the mouse hovers over it, to indicate that it is clickable. If the clicked node has its own relationships, then its concept map is generated and displayed in the current concept map window.
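The click behavior reduces to a small decision rule. A minimal sketch, assuming the concept map is indexed as a hypothetical `outgoing` dictionary that maps each term to its labeled outgoing links; the function name `on_node_click` is also hypothetical.

```python
# Hypothetical index: term -> list of (relation label, related term).
outgoing = {
    "acyclic graph": [("types", "directed acyclic graph"), ("has no", "cycles")],
}


def on_node_click(outgoing, current_center, clicked):
    """Return the term whose concept map should be displayed after a click.

    The clicked node becomes the new main concept only if it has its own
    outgoing relationships; otherwise the current map stays on screen.
    """
    return clicked if outgoing.get(clicked) else current_center


print(on_node_click(outgoing, "graph", "acyclic graph"))
print(on_node_click(outgoing, "acyclic graph", "cycles"))
```

The first call switches to the map for "acyclic graph"; the second keeps it, since "cycles" has no outgoing links in this example.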

An important design choice for concept maps is the number of hierarchical levels to display for each node. This choice is complicated by the fact that the number of nodes connected to each concept varies drastically, so there is a trade-off to consider. On one hand, increasing the number of levels allows more nodes, and hence more information, to be displayed. On the other hand, if the number of nodes becomes too large, it hinders understanding of the relationships.

In the current implementation, we limit the number of levels below each node to two. This means the connections coming out from a specific node are followed for at most two levels. We selected this number because it usually displays enough nodes related to each concept without creating a lot of visual noise for students. A future direction we want to explore is to adjust the number of displayed nodes automatically by letting the implementation decide how many levels to display; more levels could then be generated depending on the total number of nodes displayed. In Figure 1, we can see that two levels of hierarchical nodes are displayed for the concept “graph.”

We noticed that some concepts are better understood when the nodes in their previous level (nodes that point toward them) can also be seen. In the current version of our implementation, we use two levels of outgoing connections plus one backward level. The backward level is displayed in certain graphs to support the idea behind their main concepts, or when the total number of nodes in the graph is below a threshold. In Figure 6, only two nodes are directly connected to “primary storage,” one of which connects to a node in the next level. In this case, the backward level is displayed and can be distinguished by the arrows pointing toward the main concept.
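The level limits can be sketched as a depth-limited breadth-first search over the outgoing links, followed by an optional backward level. In this Python sketch the function name, the `backward_threshold` parameter, and the example relations (in particular "used in") are hypothetical; the actual threshold value and the criteria for choosing which graphs get a backward level are not specified here.

```python
from collections import defaultdict, deque

# Hypothetical example relations for illustration only.
CONNECTIONS = [
    ("graph", "types", "acyclic graph"),
    ("acyclic graph", "types", "directed acyclic graph"),
    ("acyclic graph", "has no", "cycles"),
    ("directed acyclic graph", "used in", "topological sort"),
]


def local_concept_map(connections, term, depth=2, backward_threshold=None):
    """Collect edges within `depth` forward hops of `term`.

    If `backward_threshold` is given and the forward map has fewer nodes than
    that, one backward level (edges pointing to `term`) is added as well.
    """
    outgoing, incoming = defaultdict(list), defaultdict(list)
    for source, label, target in connections:
        outgoing[source].append((label, target))
        incoming[target].append((label, source))

    edges, seen = [], {term}
    queue = deque([(term, 0)])
    while queue:
        node, level = queue.popleft()
        if level == depth:
            continue  # do not expand nodes on the last displayed level
        for label, target in outgoing.get(node, []):
            edges.append((node, label, target))
            if target not in seen:
                seen.add(target)
                queue.append((target, level + 1))

    if backward_threshold is not None and len(seen) < backward_threshold:
        for label, source in incoming.get(term, []):
            edge = (source, label, term)
            if edge not in edges:  # avoid duplicating a cycle edge found above
                edges.append(edge)
            seen.add(source)
    return edges, seen


edges, nodes = local_concept_map(CONNECTIONS, "graph")
print(len(edges), sorted(nodes))
```

With `depth=2`, the map for "graph" stops at "directed acyclic graph" and "cycles", so "topological sort" is not shown; passing a `backward_threshold` for "acyclic graph" adds the incoming link from "graph".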

4. Evaluation

We evaluated the effectiveness of our concept map implementation by measuring student interactions with the glossary both before and after including the new design. To this end, we defined the following research questions to help determine our evaluation approach:

• How can we effectively make students aware of the concept map capabilities available in the glossary? In other words, how can we advertise the implementation?

• What data should we collect to represent effective student interactions with the glossaries?

• Based on the data collected, how effective are concept maps vs. standard glossaries?

We start with the first question: how to advertise our work to students using OpenDSA (OpenDSA Project, 2012b). As mentioned earlier, our concept maps were integrated into the data structures courses at Virginia Tech. However, students who are not used to checking an eTextbook's glossary will not notice the concept maps. Therefore, we decided to design special exercises that refer to the concept maps within the course content, in order to familiarize students with the available concept maps while also making them more familiar with certain key concepts.

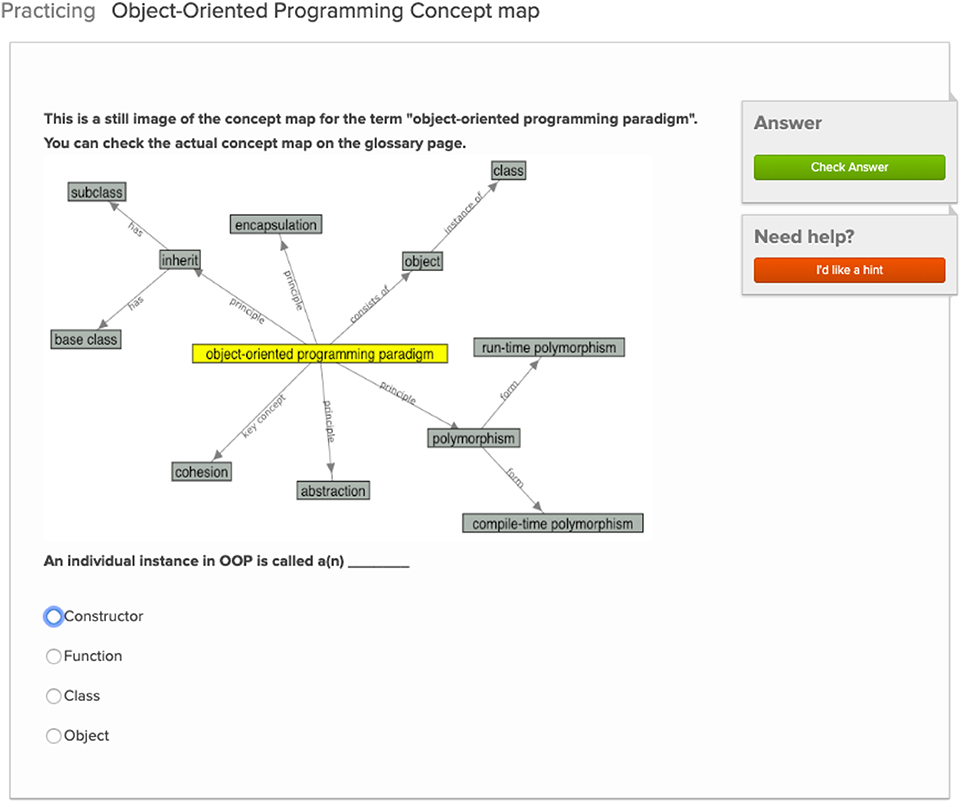

The exercises were included in CS2, but their scores were not reported to the gradebook and so did not affect the course grade. Their functional purpose was to make students aware that the concept map view of the glossary was available. Ideally, they would also spark students' curiosity to explore the concepts in the questions further and, hence, use the concept maps. Although students could tell that these exercises were not graded, almost all students attempted them. To build the exercises, we used the Khan Academy infrastructure (Khan Academy, 2014), which is integrated with the OpenDSA system. The Khan Academy exercise framework provides a robust infrastructure for creating autograded questions, including True/False, multiple choice, and Fill-in-the-Blank. To keep the exercises quick for students, we mainly used True/False and multiple choice questions to guide exploration of the concept maps. The exercises include a static image of the concept map for the glossary term found in the exercise; Figure 7 shows an example. In designing these exercises, we took into consideration that they are not meant to evaluate students. Hence, the questions were not designed to be complicated or to require further exploration. Answering them only requires students to study the picture to identify the correct choice among the given answers. We believe that this way of presenting and interacting with the glossary terms helps students become familiar with the concept maps, so that they will start using the feature.

Finally, we note that we included these exercises in CS2 only, not in CS3. This lets us compare the level of concept map use in the two courses, which might indicate whether these exercises helped direct students toward exploring the concept maps by visiting the glossary page.

Both CS2 and CS3 use OpenDSA content, with OpenDSA being the sole textbook for CS3. Besides the client-side content that students use and interact with, OpenDSA uses a server-side implementation to register and store events and logs of interest. The OpenDSA server (OpenDSA Project, 2012a) is implemented using Ruby on Rails. OpenDSA logs student use of many features, with all interactions stored in a database. This allows us to study student use of specific features within the system, such as the number of interactions with the glossary, visualizations, exercises, etc. It also let us collect data about student use of the original glossary in previous semesters, since this was already stored in the database. We used simple SQL queries to retrieve information about students' use of the glossary from the database, both before and after the concept maps were introduced.
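As an illustration of the kind of query involved, the sketch below uses Python's built-in `sqlite3` module with an in-memory database. The table name `interaction_events`, its columns, and the `glossary-open` event type are hypothetical stand-ins; the actual OpenDSA server schema and database engine may differ.

```python
import sqlite3

# Hypothetical schema; the real OpenDSA tables and event names may differ.
SCHEMA = """
CREATE TABLE interaction_events (
    user_id INTEGER NOT NULL,
    event_type TEXT NOT NULL,
    created_at TEXT NOT NULL  -- ISO-8601 timestamp
);
"""

# Count glossary events per student within a half-open date range.
GLOSSARY_USE_QUERY = """
SELECT user_id, COUNT(*) AS uses
FROM interaction_events
WHERE event_type = 'glossary-open'
  AND created_at >= ? AND created_at < ?
GROUP BY user_id
ORDER BY user_id;
"""


def glossary_use_per_student(conn, start, end):
    """Return {user_id: number of glossary events} within [start, end)."""
    return dict(conn.execute(GLOSSARY_USE_QUERY, (start, end)).fetchall())


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.executemany(
        "INSERT INTO interaction_events VALUES (?, ?, ?)",
        [  # synthetic rows for demonstration only
            (1, "glossary-open", "2019-02-01T10:00:00"),
            (1, "glossary-open", "2019-02-03T11:00:00"),
            (2, "glossary-open", "2019-03-05T09:30:00"),
            (2, "exercise-submit", "2019-03-05T09:35:00"),
        ],
    )
    print(glossary_use_per_student(conn, "2019-01-01", "2019-06-01"))
```

Running the same query with each semester's date range gives the per-student counts used for the before/after comparisons.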

The main focus of this evaluation is to study changes in glossary use due to adding the concept maps. We do not attempt to evaluate the effect of the concept maps on students' understanding, although others have studied such effects (e.g., Novak, 1990; Sanders et al., 2008).

5. Results

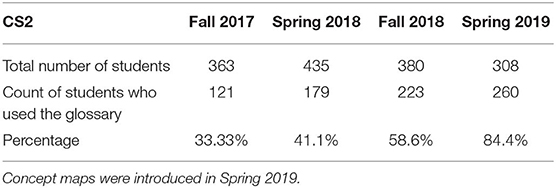

We now present data on student use of the glossary before and after activating the concept maps. In particular, three semesters prior to using the concept maps (Fall 2017, Spring 2018, and Fall 2018) were used to compare with the semester when the concept maps were introduced (Spring 2019).

5.1. Level of Use

The fraction of students who used the glossary in each of the four semesters is shown in Table 1. The percentage of students who used the glossary after we introduced the concept maps is higher than in the previous three semesters. In Spring 2019, about 84% of students used the glossary, compared to an average of 44% in the previous semesters.

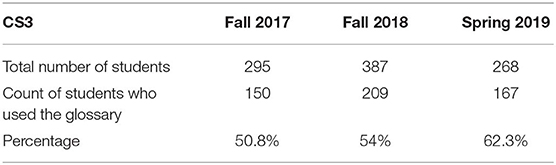

Similarly, Table 2 shows glossary use for CS3 in Fall 2017 and Fall 2018 (when the original glossary was used) and in Spring 2019 (when the concept map was included, but no exercises were provided to train students in concept map use). As in Table 1, the percentage of students who used the glossary increased in Spring 2019, after the concept maps were introduced, compared to the previous semesters. In Spring 2019, about 62% of students used the glossary, compared to an average of 52% in the previous semesters. However, the increase is much smaller than in CS2, where students completed the concept map exercises.

We notice that student use of the glossary in CS2 was higher than in CS3. However, we must consider the effect of the concept map exercises on student use of the glossary in CS2. The key question then becomes: if the exercises are associated with an increase in glossary use, does this mean that (a) the increase is mostly due to completing the exercises themselves, with no real increase in glossary use otherwise, or (b) the exercises trained students to use the glossary, after which they continued to do so?

We found that about 18% of all glossary uses in CS2 occurred while students were solving the exercises. Since this represents a substantial share of the total use, we report the CS2 results both for total use and after excluding uses associated with completing the exercises.

We also study the number of students who used the glossary more than once. The previous results count a student as a glossary user even if that student used the glossary only once. Repeated use suggests that a student found the glossary useful and decided to use the feature again. In the following, we count the students who used the glossary more than 5 times, more than 10 times, more than 20 times, and more than 30 times.

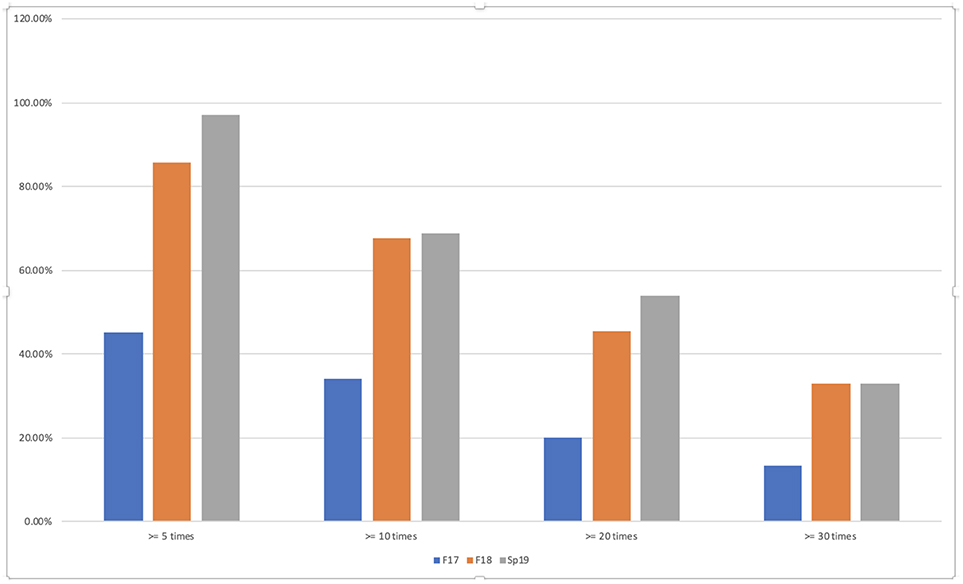

Figure 8 compares the four semesters for CS2. For each number of uses shown, each column gives the percentage of that semester's glossary users who used the glossary more than that many times. Each semester is represented by a column, with Spring 2019 represented twice: once with the complete data, and once after excluding the direct effect of the exercises. We focus our attention on the results after excluding the exercise effect.

Figure 8. Percentage of students who have used the glossary multiple times in CS2. The last two bars show use in Spring 2019; the yellow bar includes uses to complete exercises while the blue bar shows only uses unconnected with the exercises.

In Figure 8, we can see that out of the 260 students who used the glossary in CS2 in Spring 2019, 145 used it more than 5 times (after excluding the exercise effect), or about 55.8%. The percentages for the other numbers of use are calculated similarly. In Figure 8, we notice that the Spring 2019 percentages, after excluding the exercise effect, are higher than those of Fall 2017 and Spring 2018 for all numbers of use. However, the Fall 2018 percentages are higher than those of Spring 2019 for more than 20 and more than 30 uses, and comparable for more than 5 and more than 10 uses.
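The percentages in Figures 8 and 9 can be computed as sketched below. The per-event `context` tag used to exclude exercise-related uses is a hypothetical representation of how such uses might be identified in the logs, and the function names are likewise hypothetical; the denominator is the number of students who used the glossary that semester, as in the 145-of-260 example above.

```python
from collections import Counter

THRESHOLDS = (5, 10, 20, 30)


def glossary_counts(events, exclude_context="exercise"):
    """Count glossary uses per student, skipping uses made while doing exercises.

    `events` is an iterable of (student_id, context) pairs; the context labels
    are hypothetical stand-ins for however the logs mark exercise-related uses.
    """
    return Counter(student for student, ctx in events if ctx != exclude_context)


def repeat_use_percentages(counts, total_users, thresholds=THRESHOLDS):
    """Percentage of glossary users who used it more than t times, per threshold."""
    return {
        t: 100.0 * sum(1 for c in counts.values() if c > t) / total_users
        for t in thresholds
    }
```

For instance, 145 students exceeding 5 uses out of 260 glossary users gives 100 × 145 / 260 ≈ 55.8%.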

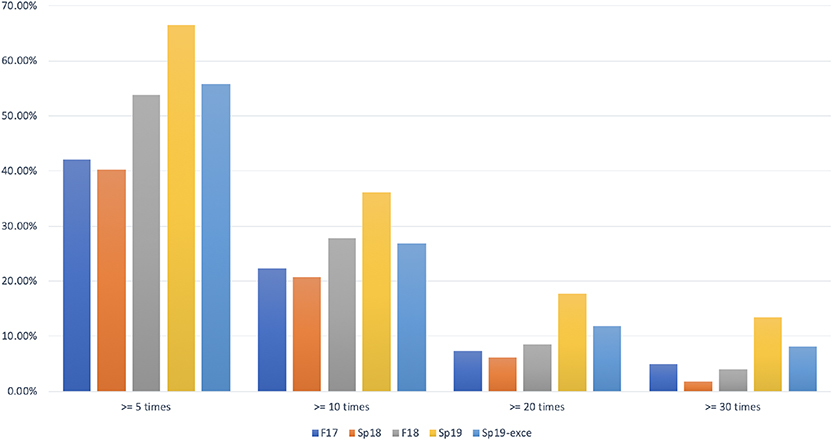

Similarly, Figure 9 shows the percentage of students who used the glossary multiple times in CS3 for Spring 2019 and the previous two semesters. Here too, the Spring 2019 percentages are higher than those of the previous semesters at every threshold, although they are comparable to the Fall 2018 percentages.

For both CS2 and CS3, these results suggest that students who tried the new concept map glossary used the glossary more than students in previous semesters. However, the increase relative to previous semesters, both in the percentage of students who used the glossary at all and in the percentage who used it multiple times, is larger in CS2 than in CS3. One difference between CS2 and CS3 is the concept map exercises, so it could be that our exercises affected the results positively.

5.2. Statistical Analysis: ANOVA Test

Next, we compare students' use of the glossary across semesters in more depth through statistical analysis, starting with the means and variances in each semester. We first examined the interval plot for the complete CS2 usage data, which has four groups representing the four semesters under test (F17, Sp18, F18, Sp19); the group means are not equal. We then computed the interval plot for CS2 using the updated Spring 2019 use after excluding the direct effect of the exercises. Again the means are not equal: the mean updated use in Spring 2019 is still higher than in the previous semesters, though naturally lower than with the complete data. The CS3 results are similar. There are three groups representing the three semesters under test (F17, F18, Sp19), the means are not equal, and the mean updated use in Spring 2019 is higher than in the previous semesters.
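An interval plot summarizes each semester by its mean and a confidence interval for that mean. The minimal sketch below computes those three numbers per group. It assumes a 95% interval and a normal (z) approximation, which is reasonable for class sizes in the hundreds but slightly narrower than the t-based interval that statistics packages usually draw; the `interval_summary` name and the per-student usage lists passed to it are hypothetical.

```python
import math
import statistics
from typing import Dict, List, Tuple


def interval_summary(
    groups: Dict[str, List[float]], confidence: float = 0.95
) -> Dict[str, Tuple[float, float, float]]:
    """Return {group: (lower, mean, upper)} using a normal-approximation CI."""
    z = statistics.NormalDist().inv_cdf(0.5 + confidence / 2)
    summary = {}
    for name, values in groups.items():
        mean = statistics.fmean(values)
        # Standard error of the mean from the sample standard deviation.
        se = statistics.stdev(values) / math.sqrt(len(values))
        summary[name] = (mean - z * se, mean, mean + z * se)
    return summary
```

Non-overlapping intervals hint at a difference in means, but the formal check is the ANOVA that follows.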

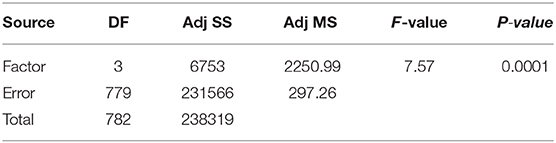

We performed a statistical analysis to check whether the differences in the means are significant, using a one-way ANOVA (Leard Statistics, 2018; Statistics How To, 2019) on the CS2 and CS3 data. In the following tests, the null hypothesis is H0: all means are equal, and the alternative hypothesis is Ha: at least one mean is different. The significance level is α = 0.05, which is the probability of rejecting the null hypothesis when it is true. Table 3 shows the results of the one-way ANOVA for the complete CS2 data. The P-value is 0.0001, much less than α, so we reject the null hypothesis and conclude that mean glossary use differs significantly among the semesters of the CS2 course.
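The one-way ANOVA F test can be sketched with only the Python standard library. This is a minimal illustration, not necessarily the tool the authors used, and the example data are hypothetical. The F statistic is the ratio of the between-group to the within-group mean square, with k - 1 and N - k degrees of freedom; the p-value uses the identity P(F ≥ f) = I_x(d2/2, d1/2) with x = d2 / (d2 + d1·f), where the regularized incomplete beta function I is evaluated by the standard continued-fraction (modified Lentz) method. If SciPy is available, `scipy.stats.f_oneway` performs the same test.

```python
import math
from typing import List, Sequence, Tuple


def _betacf(a: float, b: float, x: float, max_iter: int = 300, eps: float = 3e-14) -> float:
    """Continued fraction for the incomplete beta function (modified Lentz)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even and odd coefficients of the continued fraction for step m.
        for aa in (m * (b - m) * x / ((qam + m2) * (a + m2)),
                   -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))):
            d = 1.0 + aa * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + aa / c
            c = c if abs(c) > tiny else tiny
            h *= d * c
        if abs(d * c - 1.0) < eps:
            break
    return h


def betainc_reg(a: float, b: float, x: float) -> float:
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    log_front = (math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                 + a * math.log(x) + b * math.log1p(-x))
    front = math.exp(log_front)
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def f_sf(f: float, d1: int, d2: int) -> float:
    """Upper-tail probability P(F >= f) for an F(d1, d2) distribution."""
    if f <= 0.0:
        return 1.0
    return betainc_reg(d2 / 2.0, d1 / 2.0, d2 / (d2 + d1 * f))


def one_way_anova(groups: List[Sequence[float]]) -> Tuple[float, int, int, float]:
    """Return (F, df_between, df_within, p_value) for a one-way ANOVA."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    means = [sum(g) / len(g) for g in groups]
    grand = sum(sum(g) for g in groups) / n
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within, f_sf(f, df_between, df_within)
```

For instance, `one_way_anova([[1, 2, 3], [4, 5, 6], [7, 8, 9]])` gives F = 27 with (2, 6) degrees of freedom and p ≈ 0.001, so H0 would be rejected at α = 0.05.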

Next, we performed the one-way ANOVA test on the updated Spring 2019 use, after excluding the exercises, together with the previous semesters. The P-value in this case is 0.01314, which is also less than α = 0.05. Therefore, we again reject the null hypothesis and conclude that mean glossary use differs significantly among the semesters of the CS2 course even after excluding the direct effect of the exercises. For CS3, the one-way ANOVA gives a P-value of 0.2077, which is higher than α. Therefore, we cannot reject the null hypothesis, and there is no significant evidence that the CS3 means differ.

One difference between CS2 and CS3 is that the exercises were given only to CS2 (CS2114) students, so the exercises probably helped increase glossary use in CS2. Although the percentages of use in CS3 are higher after introducing the concept maps, as shown in Figure 9, these differences are not significant. This supports the hypothesis that doing the concept map exercises has a “training effect” that leads to increased use of the glossary, and that these exercises should be added to the CS3 course.

5.3. Statistical Analysis: Multi-Comparison

Because the ANOVA test for CS2 shows a significant difference among the groups, we performed follow-up tests on the means to determine which semesters actually differ from the others; in particular, these multiple-comparison tests check whether use in Spring 2019 differs significantly from the other semesters. We performed both the Tukey test (all pairwise comparisons) and the Dunnett test (comparisons of each group against a single comparison group). We started with the Tukey test, which makes six pairwise comparisons in total among the four groups (semesters). A pair whose confidence interval for the difference in means contains zero is not considered significantly different. Three intervals do not include zero, so those pairs differ significantly in their number of uses; these are the differences between Spring 2019 and each of the three previous semesters. These results corroborate the ANOVA finding of a significant difference between the means. We then performed the Dunnett test with Spring 2019 as the comparison group. The results match the Tukey test: Spring 2019 differs significantly from all three previous semesters.
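The Tukey procedure can be sketched as Tukey-Kramer intervals, which also handle semesters of unequal size: for groups i and j, the interval is (mean_i - mean_j) ± (q / √2) · √(MSW · (1/n_i + 1/n_j)), where MSW is the within-group mean square from the ANOVA and q is the studentized-range critical value for k groups and N - k degrees of freedom. Because the Python standard library has no studentized-range distribution, this hypothetical `tukey_kramer` helper takes q as an argument (from a printed table, or from `scipy.stats.studentized_range` if SciPy is available). The Dunnett test follows the same pattern but compares each semester only with the comparison group and uses Dunnett's critical value in place of q / √2.

```python
import math
from itertools import combinations
from typing import Dict, List, Tuple


def tukey_kramer(
    groups: Dict[str, List[float]], q_crit: float
) -> List[Tuple[str, str, float, float, float, bool]]:
    """Pairwise Tukey-Kramer intervals: (a, b, diff, lower, upper, significant).

    q_crit is the upper critical value of the studentized range distribution
    for k groups and N - k error degrees of freedom, supplied by the caller.
    """
    names = list(groups)
    k = len(names)
    n_total = sum(len(v) for v in groups.values())
    means = {g: sum(v) / len(v) for g, v in groups.items()}
    ss_within = sum((x - means[g]) ** 2 for g, v in groups.items() for x in v)
    msw = ss_within / (n_total - k)  # within-group mean square, as in the ANOVA
    results = []
    for a, b in combinations(names, 2):
        diff = means[a] - means[b]
        half = q_crit / math.sqrt(2) * math.sqrt(msw * (1 / len(groups[a]) + 1 / len(groups[b])))
        lower, upper = diff - half, diff + half
        # An interval that excludes zero marks a significant difference.
        results.append((a, b, diff, lower, upper, not (lower <= 0.0 <= upper)))
    return results
```

With four semesters, `combinations` yields the six pairwise comparisons mentioned above.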

We then performed the multiple comparisons for the updated CS2 results, after excluding the exercise effect. In this case, the Tukey test shows that Spring 2019 differs significantly from only one previous semester, rather than from all three as with the complete data. The Dunnett test with the updated Spring 2019 semester as the comparison group gives similar results: Spring 2019 differs significantly from Spring 2018, but not from Fall 2018.

We conclude that while student use of the glossary increased significantly in the Spring 2019 semester, much of that difference comes from use during the exercises. After removing the glossary use made in direct support of the exercises, CS2 still shows a weaker, though still significant, increase in use. Since this increase occurred immediately after we introduced the concept maps, and we are aware of no other change that would explain it, it is plausible that the concept maps helped increase students' use of the glossary in CS2. As already noted, there was no significant increase in glossary use in CS3, which did not use the exercises and therefore had no “advertising” of the glossary. So it seems likely that the exercises succeeded in drawing students' attention to the concept maps and, hence, to the glossary.

6. Conclusions and Future Work

In this paper, we presented an interactive visualization tool for glossary terms using concept maps. Glossary terms are visualized as nodes in graphs, while their relationships are shown on the edges. The tool was implemented within the OpenDSA eTextbook system. When a glossary term is clicked in the text, the local region of the concept map associated with that term is generated automatically. We then evaluated the effectiveness of the concept maps through statistical analysis of their effect on students' use of the glossary, using ANOVA tests followed by multiple-comparison tests to compare average glossary use before and after the concept maps were introduced. The results show that the concept map design significantly increased students' use of the glossary when students were made aware of its existence.

A future direction for the concept map implementation is to adjust the number of displayed nodes in the graph automatically. This could be done by determining, for each term, the optimal number of levels to display based on the total number of related nodes retrieved at each level.
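The level-selection idea could work roughly as follows: expand the concept map breadth-first from the clicked term, one level at a time, and stop before a level would push the total node count past a display budget. This is a hypothetical sketch, not part of the OpenDSA implementation: the adjacency-list representation, the `bfs_levels` and `choose_depth` names, the default budget of nine nodes (loosely following Miller's 7 ± 2), and the rule that direct neighbours are always shown are all illustrative assumptions. Relations are followed only in their stated direction.

```python
from typing import Dict, List, Set, Tuple

# Hypothetical glossary graph: term -> list of (relation label, related term)
ConceptGraph = Dict[str, List[Tuple[str, str]]]


def bfs_levels(graph: ConceptGraph, root: str, max_depth: int) -> List[Set[str]]:
    """Return [{root}, level-1 terms, level-2 terms, ...] up to max_depth."""
    seen = {root}
    levels = [{root}]
    for _ in range(max_depth):
        next_level = set()
        for term in levels[-1]:
            for _label, related in graph.get(term, []):
                if related not in seen:
                    seen.add(related)
                    next_level.add(related)
        if not next_level:
            break
        levels.append(next_level)
    return levels


def choose_depth(
    graph: ConceptGraph, root: str, budget: int = 9, max_depth: int = 4
) -> Tuple[int, Set[str]]:
    """Pick the deepest level that keeps the node count within budget (level 1 always shown)."""
    levels = bfs_levels(graph, root, max_depth)
    depth, shown = 0, 1  # the root term itself is always shown
    for d, level in enumerate(levels[1:], start=1):
        # Always show direct neighbours; add deeper levels only if they fit.
        if d > 1 and shown + len(level) > budget:
            break
        depth, shown = d, shown + len(level)
    return depth, set().union(*levels[: depth + 1])
```

A fixed budget is the simplest rule; a real implementation might instead weigh relation types or screen size when deciding how many levels to show.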

This study had limited goals: it measured only the changes in glossary use that result from augmenting the glossary with concept maps. In future work, we would like to compare differences in students' understanding of the concepts resulting from different levels of concept map use (and the consequent increase in glossary use, since the glossary is the mechanism through which the concept maps are accessed). This could be driven in part by better integrating the concept maps into the auto-graded questions used throughout the eTextbook system. Another limitation of the current work is that we did not collect direct feedback from students about the usability of the system. Think-aloud interviews with students while they answer the concept map questions would be useful for this purpose, and a satisfaction survey at the end of the course would help show how much students like the concept map approach.

So far, our work has focused exclusively on small-scale relationships between terms within a specific topic. For example, our auto-generated concept maps may help students better understand and structure their knowledge of a specific topic such as object-oriented programming or graphs. Concept maps could also help students understand the relationships between major topics, for example, when to use hash tables vs. search trees. Technically, the same annotation mechanism that shows relationships between terms within a topic could show relationships between broader topics, since both kinds of terms are in the glossary. However, we have not yet addressed whether or how students should be shown these different levels of relationship, or how to emphasize the relationships between topics.

Data Availability Statement

The datasets generated for this study are available on request to the corresponding author.

Ethics Statement

The studies involving human participants were reviewed and approved by IRB for Virginia Tech. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

Author Contributions

CS originally conceived the project and its overall design. EE implemented the described system under the direction of CS and conducted the assessment activities. EE wrote the first draft of the manuscript. CS and EE contributed to manuscript revision, read, and approved the submitted version.

Funding

This work was supported in part by NSF grant DUE-1139861.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Beetham, H., and Sharpe, R. (2013). Rethinking Pedagogy for a Digital Age: Designing for 21st Century Learning. Abingdon, UK: Routledge.

Cmap (2014). Cmap. Available online at: https://cmap.ihmc.us/

Eppler, M. J. (2006). A comparison between concept maps, mind maps, conceptual diagrams, and visual metaphors as complementary tools for knowledge construction and sharing. Inform. Vis. 5, 202–210. doi: 10.1057/palgrave.ivs.9500131

Fouh, E., Akbar, M., and Shaffer, C. A. (2012). The role of visualization in computer science education. Computers Sch. 29, 95–117. doi: 10.1080/07380569.2012.651422

Fouh, E., Hamouda, S., Farghally, M., and Shaffer, C. (2016). “Automating learner feedback in an eTextbook for data structures and algorithms courses,” in Challenges in ICT Education: Formative Assessment, Learning Data Analytics and Gamification, eds S. Caballe and R. Clariso (Elsevier Science), 478–485.

Fouh, E., Karavirta, V., Breakiron, D., Hamouda, S., Hall, S., Naps, T., et al. (2014). Design and architecture of an interactive eTextbook–The OpenDSA system. Sci. Computer Programm. 88, 22–40. doi: 10.1016/j.scico.2013.11.040

Hwang, G.-J., Kuo, F.-R., Chen, N.-S., and Ho, H.-J. (2014). Effects of an integrated concept mapping and web-based problem-solving approach on students' learning achievements, perceptions and cognitive loads. Computers Educ. 71, 77–86. doi: 10.1016/j.compedu.2013.09.013

JSON (2017). The JSON Data Interchange Syntax. Available online at: https://www.json.org/

Khan Academy (2014). Khan Academy Exercises Wiki. Available online at: https://github.com/Khan/khan-exercises/wiki

Kwon, S. Y., and Cifuentes, L. (2007). Using computers to individually-generate vs. collaboratively-generate concept maps. J. Educ. Technol. Soc. 10, 269–280. Available online at: https://www.jstor.org/stable/pdf/jeductechsoci.10.4.269.pdf?seq=1

Leard Statistics (2018). One-way ANOVA. Available online at: https://statistics.laerd.com/statistical-guides/one-way-anova-statistical-guide.php

Lloyd, C. V. (1990). The elaboration of concepts in three biology textbooks: Facilitating student learning. J. Res. Sci. Teach. 27, 1019–1032.

Lucidchart (2008). Lucidchart. Available online at: https://www.lucidchart.com/pages/landing/concept_map_maker

Miller, G. A. (1956). The magical number seven, plus or minus two: Some limits on our capacity for processing information. Psychol. Rev. 63:81.

MindMeister (2007). MindMeister. Available online at: https://www.mindmeister.com/

MindMup (2013). MindMup. Available online at: https://www.mindmup.com/

Mühling, A. (2016). Aggregating concept map data to investigate the knowledge of beginning CS students. Computer Sci. Educ. 26, 176–191. doi: 10.1080/08993408.2016.1241340

Novak, J. D. (1990). Concept mapping: a useful tool for science education. J. Res. Sci. Teach. 27, 937–949. doi: 10.1002/tea.3660271003

Novak, J. D. (2010). Learning, creating, and using knowledge: concept maps as facilitative tools in schools and corporations. J. e-Learn. Knowl. Soc. 6, 21–30.

Novak, J. D., and Cañas, A. J. (2008). The Theory Underlying Concept Maps and How to Construct and Use Them. Technical report. Pensacola, FL: Institute for Human and Machine Cognition.

Novak, J. D., and Musonda, D. (1991). A twelve-year longitudinal study of science concept learning. Am. Educ. Res. J. 28, 117–153. doi: 10.3102/00028312028001117

ODSA Contributors (2012). CS2 Software Design & Data Structures. Available online at: https://opendsa-server.cs.vt.edu/ODSA/Books/CS2/html/Glossary.html

OpenDSA Project (2012a). OpenDSA-LTI Implementation. Available online at: https://opendsa.readthedocs.io/en/latest/OpenDSA-LTI-Implementation.html

OpenDSA Project (2012b). OpenDSA System Documentation. Available online at: https://opendsa.readthedocs.io/en/latest/index.html

Sanders, K., Boustedt, J., Eckerdal, A., McCartney, R., Moström, J. E., Thomas, L., et al. (2008). “Student understanding of object-oriented programming as expressed in concept maps,” in ACM SIGCSE Bulletin (Portland, OR: ACM), 332–336.

Smith, R. (2000). The purpose, design, and evolution of online interactive textbooks: the digital learning interactive model. Hist. Computer Rev. 16, 43–43. Available online at: https://www.learntechlib.org/p/93826/

Statistics How To (2019). ANOVA Test: Definition, Types, Examples. Available online at: https://www.statisticshowto.datasciencecentral.com/probability-and-statistics/hypothesis-testing/anova/

Stewart, J. (1979). Concept maps: a tool for use in biology teaching. Am. Biol. Teacher 41, 171–175. doi: 10.2307/4446530

Turns, J., Atman, C. J., and Adams, R. (2000). Concept maps for engineering education: A cognitively motivated tool supporting varied assessment functions. IEEE Trans. Educ. 43, 164–173. doi: 10.1109/13.848069

Van Bon-Martens, M., van de Goor, L., Holsappel, J., Kuunders, T., Jacobs-van der Bruggen, M., Te Brake, J., et al. (2014). Concept mapping as a promising method to bring practice into science. Public Health 128, 504–514. doi: 10.1016/j.puhe.2014.04.002

Villalon, J., and Calvo, R. A. (2011). Concept maps as cognitive visualizations of writing assignments. J. Educ. Technol. Soc. 14, 16–27. Available online at: https://www.jstor.org/stable/jeductechsoci.14.3.16?seq=1

Keywords: concept maps, glossary, visualization, eTextbook, digital education

Citation: Elgendi E and Shaffer CA (2020) Dynamic Concept Maps for eTextbook Glossaries: Design and Evaluation. Front. Comput. Sci. 2:7. doi: 10.3389/fcomp.2020.00007

Received: 05 December 2019; Accepted: 31 January 2020;

Published: 19 February 2020.

Edited by: Monika Akbar, The University of Texas at El Paso, United States

Copyright © 2020 Elgendi and Shaffer. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Clifford A. Shaffer, shaffer@vt.edu
