Abstract

We present a primer on multisensory experiences, the different components of this concept, and a reflection on its implications for individuals and society. We define multisensory experiences, illustrate how to understand them, elaborate on the role of technology in such experiences, and present the three laws of multisensory experiences, which can guide discussion of their implications. Further, we introduce the case of multisensory experiences in the context of eating and human-food interaction to illustrate how the components are operationalized. We expect this article to provide a first point of contact for those interested in multisensory experiences, as well as multisensory experiences in the context of human-food interaction.

Introduction

Our life experiences are multisensory in nature. Think about this moment. You may be reading this article while immersed in a sound atmosphere. There may be a smell in the environment, even if you are not aware of it, and you may be drinking a cup of coffee or eating something while touching the medium through which you read the article. All these sensory inputs, and perhaps more, influence your experience of reading the article. But what if we could design such a multisensory arrangement to create a given, intended experience?

In this article, we present a primer on multisensory experiences. This term is interdisciplinary and used in multiple research and practice fields, ranging from psychology, through marketing, to human-computer interaction (HCI). Although researchers from such fields have referred to multisensory experiences, there is still no conceptual article focusing exclusively on the term itself, and its implications. After presenting a primer on multisensory experiences, the role of technology in them, and their implications for individuals and society, we move on to discuss an example of multisensory experiences in the context of eating and the growing field of human-food interaction (HFI). At the outset, we would like to clarify that this is not an extensive review of multisensory experiences. Instead, we present a perspective article in which we define the concept and our position about it. We aim to make this article an accessible first point of contact for anyone interested in multisensory experiences and their role in HFI.

What Are Multisensory Experiences?

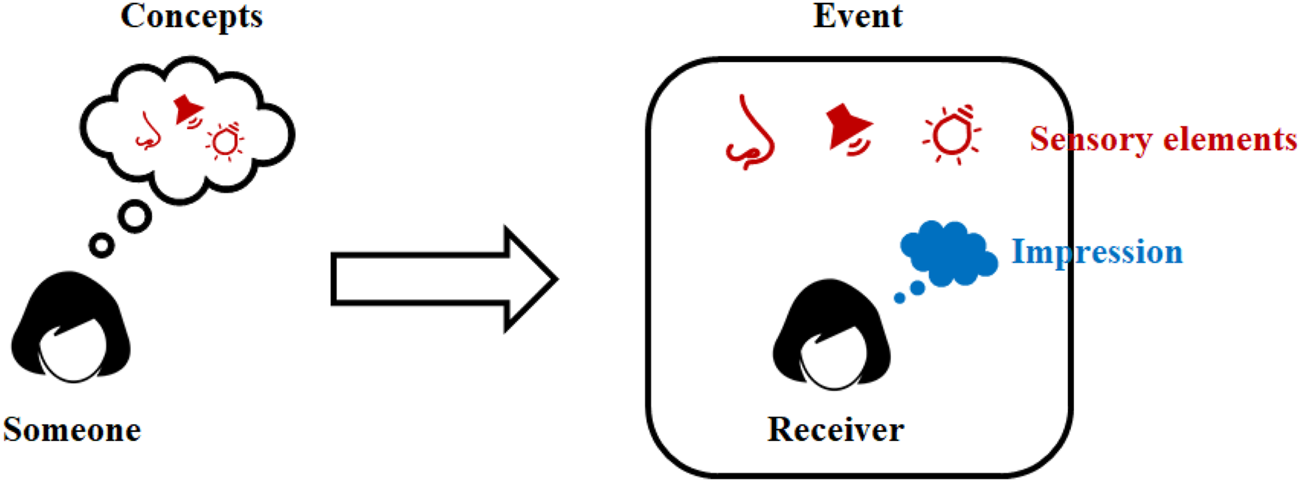

We recently defined multisensory experiences as “…impressions formed by specific events, whose sensory elements have been carefully crafted by someone” (Velasco and Obrist, 2020, p. 15; see Figure 1). For instance, to create the impression of an object, say, a sunflower, colors, textures, and smells can be considered in a specific event (e.g., an art exhibition; Vi et al., 2017). The senses, and their corresponding functioning, are thus situated at the center of the formation of the impression of the object, even in the absence of a real object. We note that other research has suggested concepts that relate to, or at least partly involve, multisensory experiences. These include, among others, multisensory enhancement (Marquardt et al., 2018), multisensory product experience (Ferrise et al., 2017), and mulsemedia (e.g., Ghinea et al., 2014). Discussing all these concepts falls outside the scope of this article; however, future research should review them and identify the conceptual links between them, as some are aimed at describing experiences, technologies, and/or design frameworks.

FIGURE 1

Schematic representation of our definition of multisensory experiences. Multisensory experiences (right of the arrow) are impressions formed by specific events (black square), whose sensory elements (in this case represented by smell, sound, and light, in red) have been carefully crafted by someone (left side of the arrow) for a given receiver, capitalizing on concepts of multisensory perception (in the cloud). Note that, whilst most of people’s experiences are multisensory per se, multisensory experiences are different in that there is intentionality in them. In other words, while, say, walking in a forest or jungle (event) involves a number of sensory elements, a multisensory experience, as we put forward, is carefully designed by someone. Take, as an example, a walk in the jungle that has been designed by a landscape architect to evoke specific impressions in a given receiver. Hence, when one refers to multisensory experiences, the “design” part is implied. For this same reason, multisensory experiences and multisensory experience design are often used interchangeably.

When crafting an impression through a given set of sensory elements, the someone who designs capitalizes on existing research and concepts from multisensory perception (see Footnote 1). These include, though are not limited to, spatiotemporal congruence (e.g., Chen and Vroomen, 2013), semantic congruence (e.g., Doehrmann and Naumer, 2008), crossmodal correspondences (e.g., Spence, 2011; Parise, 2016), sensory dominance (Fenko et al., 2010), and sensory overload (Malhotra, 1984; see also Velasco and Spence, 2019, for a description of these concepts). In other words, the way in which sensory elements are integrated into a given event is inspired by or based on research on sensation and perception, and more particularly, on research suggesting that the multisensory nature of information also changes cognitive processes such as attention (e.g., Talsma et al., 2010) and memory (e.g., Shams and Seitz, 2008).

The Role of Technology in Multisensory Experiences

Multisensory experiences are increasingly changed and enabled through technology; thus, there is growing research on the topic in HCI (Obrist et al., 2017). For this reason, the sensory elements that are crafted in an event can be physical, digital, or a combination of both (mixed reality). In other words, multisensory experiences move along the reality-virtuality continuum, where they can go from fully real, through mixed reality, to fully virtual (see Flavián et al., 2019; Milgram et al., 1995, for reflections on the reality-virtuality continuum). Technology can change how events occur and, potentially, also become the event itself (e.g., technology as experience, McCarthy and Wright, 2004).

To clarify how technology relates to events in our definition of multisensory experiences, let us consider the following example. A group of friends have a new project of growing sunflowers and want you to experience them. They have three options to do so: (1) take you through their sunflower field without any technology, (2) take you through the field with the aid of augmented reality (AR) to obtain information about the sunflowers, or (3) take you through the field in virtual reality (VR). In the first option, there may be no technology; in the second, technology augments the experience; and in the third, the experience is created through technology. In other words, technology can influence the event or become the means for creating the event itself.
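The three options above can be sketched in code by describing each event through its sensory elements and marking each element as physical or digital. The following Python sketch is only an illustration: the class name `SensoryElement`, the `is_digital` flag, the function `continuum_position`, and the rule that maps the mix of elements to a label are assumptions introduced here, not part of the definition.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class SensoryElement:
    sense: str        # e.g. "vision", "smell"
    is_digital: bool  # True if the element is delivered through technology


def continuum_position(elements: List[SensoryElement]) -> str:
    """Label an event as 'real', 'mixed', or 'virtual'.

    Illustrative assumption: an event with no digital elements is 'real',
    one with only digital elements is 'virtual', and anything in between
    is 'mixed'. The continuum itself is not limited to three bins.
    """
    if not elements:
        raise ValueError("an event needs at least one sensory element")
    digital = sum(e.is_digital for e in elements)
    if digital == 0:
        return "real"
    if digital == len(elements):
        return "virtual"
    return "mixed"


# Hypothetical encoding of the three sunflower options.
field_walk = [SensoryElement("vision", False), SensoryElement("smell", False)]
ar_walk = [SensoryElement("vision", True), SensoryElement("smell", False)]
vr_walk = [SensoryElement("vision", True), SensoryElement("sound", True)]

for name, event in [("field", field_walk), ("AR", ar_walk), ("VR", vr_walk)]:
    print(name, continuum_position(event))
```

Reducing the continuum to three labels is a simplification chosen for readability; a graded score (for example, the share of digital elements) would be an equally plausible encoding.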

There are multiple examples of multisensory experiences enabled through technology, which can further illustrate its role in said experiences (see also the emerging role of the chemical senses, smell and taste, in designing multisensory experiences; Maggioni et al., 2020; Vi et al., 2020). Situated towards the real end of the reality-virtuality continuum, a multisensory experience of dark matter is created inside an inflatable dome, enabling people to feel, hear, smell, and taste dark matter while staring into a simulation of the dark matter distribution in the Universe (Trotta et al., 2020). In mixed reality, an example is presented by Tennent et al. (2017), who created a digital version of a swing. Players wear a head-mounted display while sitting on a real, physical swing, and their sense of movement within a virtual environment is exaggerated through the visual feedback. Finally, an example on the virtual end of the reality-virtuality continuum is FaceHaptics, presented by Wilberz et al. (2020), who developed a haptic display based on a robot arm attached to a head-mounted virtual reality display. It provides localized, multi-directional, and movable haptic cues in the form of wind, warmth, moving and single-point touch events, and water spray to dedicated parts of the face not covered by the head-mounted display. Importantly, as technology develops, we may see a shift from multisensory experiences involving human-computer interaction toward human-computer integration (Mueller et al., 2020).

In recent times, there has been a growing digitization of human experiences, accelerated due to the COVID-19 pandemic (Roose, 2020). Purely offline and ‘real’ experiences appear to be shifting faster to mixed and virtual reality experiences where both the physical and digital worlds merge. However, many of the senses are still left ‘unsatisfied’, making it clear that there is still much to be done to create multisensory experiences in an increasingly digital world (Petit et al., 2019).

Implications of Multisensory Experiences and How to Think About Them

The excitement about multisensory experiences in academia and industry opens a plethora of opportunities, but it also comes with a number of challenges and responsibilities. To the best of our knowledge, there is, to date, little discussion of the implications of multisensory experiences, even though there are multiple challenges associated with them. These include, among others, the risk that many businesses, individuals, and communities who are not ready for a digital transition may be left behind. In addition, issues that are already in the public eye, such as privacy, security, universal vs. exclusive access to technology, increased predictability, and controllability, will only become more salient.

Considering the aforesaid challenges and the definition of multisensory experiences, we recently postulated the three laws of multisensory experiences, which are inspired by Asimov’s (1950) three laws of robotics. These laws focus on acknowledging and debating publicly different questions that are at the heart of the definition of multisensory experiences, namely, the why (the rationale/reason), what (the impression), when (the event), how (the sensory elements), who (the someone), and whom (the receiver), associated with a given multisensory experience. With this in mind, the laws indicate (Velasco and Obrist, 2020, p. 79):

I. Multisensory experiences should be used for good and must not harm others.

II. Receivers of a multisensory experience must be treated fairly.

III. The someone and the sensory elements must be known.

The first law aims to guide the thinking process related to the question: Why, and for what impressions and events, do we want to design? The answer to this question should always be: Reasons, events, and impressions must not cause any harm to the receiver, nor to anyone else. Multisensory experiences should be used for good. The second law aims to make people reflect on the questions: Who are we designing for? Should we design differently for different receivers? The first question helps to identify the receiver and their characteristics. The final law seeks to address two questions. First, who is crafting the multisensory experience? Second, what sensory elements do we select, and why? With this law, we call for transparency in terms of who designs, what knowledge guides the design, and what sensory elements are chosen to craft an impression. Although not all information may be provided upfront to the receiver, they must have easy access to such information if they want it.
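The six questions behind the laws (why, what, when, how, who, whom) can be written down as a simple record, which makes it easy to see which of them a given design has left unanswered. The Python sketch below is a hypothetical illustration: the class `MultisensoryExperience` and the functions `open_questions` and `discloses_someone_and_elements` are names assumed here. The last function only checks the disclosure part of the third law, namely whether the someone and the sensory elements are stated; the first two laws call for human judgment and are not something this sketch attempts to evaluate.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class MultisensoryExperience:
    why: str                                       # rationale / reason
    what: str                                      # intended impression
    when: str                                      # the event
    how: List[str] = field(default_factory=list)   # sensory elements
    who: Optional[str] = None                      # the someone who designs
    whom: Optional[str] = None                     # the receiver


QUESTIONS = ("why", "what", "when", "how", "who", "whom")


def open_questions(exp: MultisensoryExperience) -> List[str]:
    """Return the questions that are still unanswered (None, '', or [])."""
    return [name for name in QUESTIONS if not getattr(exp, name)]


def discloses_someone_and_elements(exp: MultisensoryExperience) -> bool:
    """Disclosure check inspired by the third law: someone and elements known."""
    return bool(exp.who) and bool(exp.how)


# Hypothetical example values, loosely based on the sunflower scenario.
exp = MultisensoryExperience(
    why="share a new sunflower project",
    what="impression of a sunflower field",
    when="a walk through the field in virtual reality",
    how=["vision", "smell"],
    whom="a visitor",
)

print(open_questions(exp))                  # the designer is not yet named
print(discloses_someone_and_elements(exp))  # so the disclosure check fails
```

Once `exp.who` is filled in, no questions remain open and the disclosure check passes. Whether the experience is used for good and treats receivers fairly still has to be debated by people.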

Multisensory Experiences in the Context of Human-Food Interaction

In this section, we present multisensory experiences in the context of HFI as a research and practice case. Multisensory experiences have been increasingly studied in the context of food (e.g., Velasco et al., 2018; Spence and Youssef, 2019). One reason for this is that eating and drinking are perhaps among the most multisensory events of everyday life (e.g., Spence, 2017, 2020). Indeed, in the context of HFI research, multisensory human-food interaction (MHFI) is an active area of inquiry contributing to the field (Bertran et al., 2019; see also Nijholt et al., 2016; Nijholt et al., 2018; Velasco et al., 2017). Here, we reflect on what multisensory experiences mean in the context of HFI.

What Are Multisensory HFI Experiences?

In the context of HFI, multisensory experiences refer to impressions formed by specific food-related events, whose sensory elements (e.g., intrinsic and extrinsic to the food; see, for example, Wang et al., 2019) have been carefully crafted by someone for a given receiver (e.g., diners). For instance, to create the impression of a taste, say “sweet”, colors, textures, and specific smells can be considered in a specific event. The senses are thus situated at the center of the formation of the impression, even in the absence of the real object (e.g., sugar).

Moreover, when creating multisensory HFI, it is worth looking at the consumer journey associated with food. Inspired by the research on the customer journey (Lemon and Verhoef, 2016), that is, the stages of interaction associated with products and services that constitute the total customer experience, one may argue that food-related events include at least three broad levels, namely, pre-consumption, consumption, and post-consumption. The first level is the one in which people identify a need associated with food, search for food, and develop expectations about it. The second level is about decision making (e.g., deciding what to do about the food and interacting with it). The final level is about what happens after consumption, which may involve everything from sharing the food event or experience to discarding what remains after consumption. These are suggested as broad levels in the interaction with a given food, though one may look at the interaction in further detail, depending on the specific food and/or food context.

By considering the different moments of experience with food (thus, different events), one may be able to create specific impressions more accurately in either one of the moments or the whole journey (Schifferstein, 2016).

Where Food Meets Multisensory Technology

The journey with food, and in general any consumer experience, is increasingly transformed by technology (Hoyer et al., 2020). There are numerous sensory elements that can be carefully crafted by someone throughout the consumer journey, to create a given impression. Such elements involve both intrinsic (internal) and extrinsic (external) properties of the food such as the aroma and atmospheric sound present in a given food interaction, respectively (e.g., Betancur et al., 2020). In addition, the sensory elements may be real or digital (see Figure 2), and as such, the experiences may occur at any level of the reality-virtuality continuum. In this case, concepts associated with multisensory food perception may be used as a base to craft the sensory elements, to deliver a given impression (e.g., Velasco et al., 2018).

FIGURE 2

Left: traditional eating. Right: eating with a VR headset. Left image: free stock picture. Right image: from the “Tree by Naked” restaurant, https://www.dw.com/en/tokyo-virtual-reality-restaurant-combines-cusine-with-fine-art/g-44898252.

There are multiple examples of multisensory experiences in the context of MHFI that can further help to illustrate what they mean in HFI. Situated on the real end of the reality-virtuality continuum, FoodFab capitalizes on 3D printing to control parameters of the internal structure of foods (infill pattern and infill density) and, thus, influence chewing time and, in turn, satiety (Lin et al., 2020). In mixed reality, an example is MetaCookie+, a system that augments a cookie’s flavor by digitally overlaying colors and textures, as well as specific aromas, on a plain cookie (Narumi et al., 2011). Finally, an example on the virtual end of the reality-virtuality continuum is presented by Brooks et al. (2020), who developed a ‘thermal display’ that, through scents that evoke characteristic trigeminal sensations, integrates thermal sensations into virtual reality.

Responsible Design of Multisensory HFI

There is a broad and promising scope for multisensory experiences when it comes to HFI. As with any other design opportunity, there are challenges and responsibilities to meet. Responsible design of multisensory HFI is even more important than in many other application scenarios, as food is essential to any human being.

To illustrate the need for responsible design, think about the following example. A group of friends in Tokyo develop a multisensory intelligent system that uses 3D printers that carefully control food color, size, and sound to create the impression of satiety and thus reduce both food consumption and waste. The specifications of the sensory elements would probably need to differ depending on whether the receivers of this system are children or adults, as the reactions of these groups may vary. But now consider that this intelligent system was developed by, and based on the behavior of, people from Japan only, who come from an educated, industrialized, rich, and democratic society. If this system were used in a small community on an island in the Pacific, would it evoke the same impression? Humans and intelligent systems can have biases, which need to be considered when designing experiences. In that sense, there is a need not only to treat receivers fairly by balancing their differences and giving them all the same opportunities, but also to empower them by giving them a voice in multisensory experiences. In other words, receivers do not just passively receive, but can adjust experiences to their own needs (see Obrist et al., 2018).

Discussion

We presented a primer on multisensory experiences. We elaborated on multisensory experiences, the role of technology in them, and their implications for individuals and society. In addition, we discussed the case of multisensory experiences in the context of eating and human-food interaction, to illustrate how to put the senses at the center of the experience design process.

We believe that we are just witnessing the first interdisciplinary research wave on multisensory experiences, as the scope for development is extensive. We have suggested, based on the example of HFI, that multisensory experiences can help tackle key challenges that humanity faces, such as those involved in space exploration (Binsted et al., 2008; Obrist et al., 2019). Food is not only important for nutrition; it also serves emotional and social purposes in human contexts, dimensions that are key to the success of space travel (Szocik et al., 2018). As such, food experiences are vital for the success of space travel. Importantly, as space travelers experience changes in their senses in zero gravity and are confined to a very specific context, such as that of a spaceship (Taylor et al., in press), multisensory food experiences can be designed to support those who venture to explore space.

It is important to mention that multiple questions and future directions remain open and require further reflection. For example, with regard to carefully designing and engineering multisensory experiences, we need to think about what devices one may use for specific experiences, how to account for their different stimulation mechanisms and the reactions evoked, and how to ensure replicability and a seamless integration with experiences and users’ interactions. Moreover, how can we predict experiences and personalize them based on individual and cultural differences? These are just some of the many questions that remain unanswered and need future exploration (see Saleme et al., 2019, on challenges in a diverse device ecosystem; and Maggioni et al., 2019, aiming for a device-agnostic design toolkit). We must remember that, despite the current progress, we are still beginning to truly formalize this design space. We hope that this perspective article opens a wide discussion within and across disciplines and thus helps shape its future.

It is reasonable to expect that technology will keep advancing and sensory delivery will become more accurate. In addition, our understanding of the human senses and perception will become more precise through large-scale data from human-computer interaction and integration research. As such, there is the potential to systematize our definition of multisensory experiences through adaptive, computational design. This is exciting but at the same time raises big questions about the implications of multisensory experiences as well as our responsibility in them. The three laws of multisensory experiences we presented can help to think about the implications of designing multisensory experiences in the growing integration between the senses and technology (Mueller et al., 2020), but further debate is undoubtedly needed. We believe that, when it comes to human experience design, both researchers and practitioners should promote an ongoing public ethical discussion.

Statements

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material; further inquiries can be directed to the corresponding author.

Author contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Funding

The contribution by MO has been supported by the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation program under the Grant No.: 638605.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Footnotes

1. Note that such concepts have been used in the context of multisensory integration research, which focuses on how and when our brains integrate information from different senses to produce a singular experience of the world around us (Calvert and Thesen, 2004; Spence, 2011).

References

1

Asimov I. (1950). I, Robot. New York: Bantam Dell.

2

Bertran F. A. Jhaveri S. Lutz R. Isbister K. Wilde D. (2019). “Making sense of human-food interaction,” in Proceedings of the 2019 CHI conference on human factors in computing systems, Glasgow, United Kingdom, May 2, 2019 (New York, NY: ACM), p. 678.

3

Betancur M. I. Motoki K. Spence C. Velasco C. (2020). Factors influencing the choice of beer: a review. Food Res. Int. 137, 109367. 10.1016/j.foodres.2020.109367

4

Binsted K. Auclair S. Bamsey M. Battler M. Bywaters K. Harris J. et al (2008). IAC-08 international astronautical congress (Glasgow). Available online at: http://www2.hawaii.edu/~binsted/papers/Binsted_IAC-08.A1.1.9.pdf (Accessed March 11, 2021).

5

Brooks J. Nagels S. Lopes P. (2020). “Trigeminal-based temperature illusions,” in Proceedings of the 2020 CHI conference on human factors in computing systems, Honolulu, HI, April, 2020 (New York, NY: Association for Computing Machinery), p. 1–12.

6

Calvert G. A. Thesen T. (2004). Multisensory integration: methodological approaches and emerging principles in the human brain. J. Physiol. Paris 98 (1–3), 191–205. 10.1016/j.jphysparis.2004.03.018

7

Chen L. Vroomen J. (2013). Intersensory binding across space and time: a tutorial review. Atten. Percept. Psychophys. 75 (5), 790–811. 10.3758/s13414-013-0475-4

8

Doehrmann O. Naumer M. J. (2008). Semantics and the multisensory brain: how meaning modulates processes of audio-visual integration. Brain Res. 1242, 136–150. 10.1016/j.brainres.2008.03.071

9

Fenko A. Schifferstein H. N. J. Hekkert P. (2010). Shifts in sensory dominance between various stages of user–product interactions. Appl. Ergon. 41 (1), 34–40. 10.1016/j.apergo.2009.03.007

10

Ferrise F. Graziosi S. Bordegoni M. (2017). Prototyping strategies for multisensory product experience engineering. J. Intell. Manuf. 28 (7), 1695–1707. 10.1007/s10845-015-1163-0

11

Flavián C. Ibáñez-Sánchez S. Orús C. (2019). The impact of virtual, augmented and mixed reality technologies on the customer experience. J. Business Res. 100, 547–560. 10.1016/j.jbusres.2018.10.050

12

Ghinea G. Timmerer C. Lin W. Gulliver S. R. (2014). Mulsemedia: State of the art, perspectives, and challenges. ACM Trans. Multimed. Comput. Commun. Appl. 11 (1s), 1–23. 10.1145/2617994

13

Hoyer W. D. Kroschke M. Schmitt B. Kraume K. Shankar V. (2020). Transforming the customer experience through new technologies. J. Interact. Market. 51, 51–71. 10.1016/j.intmar.2020.04.001

14

Lemon K. N. Verhoef P. C. (2016). Understanding customer experience throughout the customer journey. J. Market. 80 (6), 69–96. 10.1509/jm.15.0420

15

Lin Y. J. Punpongsanon P. Wen X. Iwai D. Sato K. Obrist M. et al (2020). “FoodFab: creating food perception illusions using food 3D printing,” in Proceedings of the 2020 CHI conference on human factors in computing systems, Honolulu, HI, April, 2020 (Honolulu, HI: Association for Computing Machinery (ACM)), p. 1–13.

16

Maggioni E. Cobden R. Dmitrenko D. Hornbæk K. Obrist M. (2020). SMELL SPACE: mapping out the olfactory design space for novel interactions. ACM Trans. Comput. Human Interact. 27 (5), 26. 10.1145/3402449

17

Maggioni E. Cobden R. Obrist M. (2019). OWidgets: a toolkit to enable smell-based experience design. Int. J. Human Comput. Stud. 130, 248–260. 10.1016/j.ijhcs.2019.06.014

18

Malhotra N. K. (1984). Information and sensory overload. Information and sensory overload in psychology and marketing. Psychol. Mark. 1 (3, 4), 9–21. 10.1002/mar.4220010304

19

Marquardt A. Trepkowski C. Maiero J. Kruijff E. Hinkenjann A. (2018). “Multisensory virtual reality exposure therapy,” in 2018 IEEE conference on virtual reality and 3D user interfaces (VR), Tuebingen/Reutlingen, Germany, August, 2018 (IEEE), p. 769–770.

20

McCarthy J. Wright P. C. (2004). Technology as experience. Cambridge, MA: MIT Press.

21

Milgram P. Takemura H. Utsumi A. Kishino F. (1995). “Augmented reality: a class of displays on the reality-virtuality continuum,” in Telemanipulator and telepresence technologies, Vol. 2351 (Bellingham, WA: International Society for Optics and Photonics), p. 282–292.

22

Mueller F. Lopes P. Strohmeier P. Ju W. Seim C. Weigel M. et al (2020). “Next steps in human-computer integration,” in Proceedings of the 2020 CHI conference on human factors in computing systems (CHI '20), Honolulu, HI, April, 2020. (New York, NY: ACM).

23

Narumi T. Nishizaka S. Kajinami T. Tanikawa T. Hirose M. (2011). “MetaCookie+,” in 2011 IEEE virtual Reality conference, Singapore, April, 2011 (IEEE), p. 265–266.

24

Nijholt A. Velasco C. Karunanayaka K. Huisman G. (2016). “1st international workshop on multi-sensorial approaches to human-food interaction (workshop summary),” in Proceedings of the 18th ACM international conference on multimodal interaction, Tokyo, Japan, Nov 1, 2016 (New York, NY: ACM), p. 601–603.

25

Nijholt A. Velasco C. Obrist M. Okajima K. Spence C. (2018). “3rd international workshop on multisensory approaches to human-food interaction,” in Proceedings of the 20th ACM international conference on multimodal interaction, Boulder, United States, Oct 16, 2018 (New York, NY: ACM), p. 657–659.

26

Obrist M. Marti P. Velasco C. Tu Y. Narumi T. Møller N. L. H. (2018). “The future of computing and food,” in Proceedings of the 2018 international conference on advanced visual interfaces, Utrecht, The Netherlands, October 2020 (New York, NY: ACM), p. 1–3.

27

Obrist M. Tu Y. Yao L. Velasco C. (2019). Space food experiences: designing passenger's eating experiences for future space travel scenarios. Front. Comput. Sci. 1, 3. 10.3389/fcomp.2019.00003

28

Parise C. V. (2016). Crossmodal correspondences: standing issues and experimental guidelines. Multisensory Res. 29 (1–3), 7–28. 10.1163/22134808-00002502

29

Petit O. Velasco C. Spence C. (2019). Digital sensory marketing: integrating new technologies into multisensory online experience. J. Interactive Marketing 45, 42–61. 10.1016/j.intmar.2018.07.004

30

Roose K. (2020). The coronavirus crisis is showing us how to live online. Retrieved from: https://www.nytimes.com/2020/03/17/technology/coronavirus-how-to-live-online.html (Accessed March 11, 2021).

31

Saleme E. B. Santos C. A. S. Ghinea G. (2019). A mulsemedia framework for delivering sensory effects to heterogeneous systems. Multimed. Syst. 25 (4), 421–447. 10.1007/s00530-019-00618-8

32

Schifferstein H. N. (2016). “The roles of the senses in different stages of consumers’ interactions with food products,” in Multisensory flavor perception: from fundamental neuroscience through to the marketplace. Editors B. Piqueras-Fiszman and C. Spence (Sawston, UK: Woodhead Publishing), p. 297–312.

33

Shams L. Seitz A. R. (2008). Benefits of multisensory learning. Trends Cogn. Sci. 12 (11), 411–417. 10.1016/j.tics.2008.07.006

34

Spence C. (2011). Crossmodal correspondences: a tutorial review. Atten. Percept. Psychophys. 73 (4), 971–995. 10.3758/s13414-010-0073-7

35

Spence C. (2017). Gastrophysics: the new science of eating. London: Penguin.

36

Spence C. (2020). Multisensory flavour perception: blending, mixing, fusion, and pairing within and between the senses. Foods 9 (4), 407. 10.3390/foods9040407

37

Spence C. Youssef J. (2019). Synaesthesia: the multisensory dining experience. Int. J. Gastron. Food Sci. 18, 100179. 10.1016/j.ijgfs.2019.100179

38

Szocik K. Abood S. Shelhamer M. (2018). Psychological and biological challenges of the Mars mission viewed through the construct of the evolution of fundamental human needs. Acta Astronautica 152, 793–799. 10.1016/j.actaastro.2018.10.008

39

Talsma D. Senkowski D. Soto-Faraco S. Woldorff M. G. (2010). The multifaceted interplay between attention and multisensory integration. Trends Cogn. Sci. 14 (9), 400–410. 10.1016/j.tics.2010.06.008

40

Taylor A. J. Beauchamp J. D. Briand L. Heer M. Hummel T. Margot C. et al (in press). “Factors affecting flavor perception in space: does the spacecraft environment influence food intake by astronauts?,” in Comprehensive reviews in food science and food safety.

41

Tennent P. Marshall J. Walker B. Brundell P. Benford S. (2017). “The challenges of visual-kinaesthetic experience,” in Proceedings of the 2017 conference on designing interactive systems (New York, NY: ACM), p. 1265–1276.

42

Trotta R. Hajas D. Camargo-Molina J. E. Cobden R. Maggioni E. Obrist M. (2020). Communicating cosmology with multisensory metaphorical experiences. JCOM 19 (2), N01. 10.22323/2.19020801

43

Velasco C. Nijholt A. Obrist M. Okajima K. Schifferstein R. Spence C. (2017). “MHFI 2017: 2nd international workshop on multisensorial approaches to human-food interaction (workshop summary),” in Proceedings of the 19th ACM international conference on multimodal interaction (New York, NY: ACM), p. 674–676.

44

Velasco C. Obrist M. (2020). Multisensory experiences: Where the senses meet technology. Oxford: Oxford University Press.

45

Velasco C. Obrist M. Petit O. Spence C. (2018). Multisensory technology for flavor augmentation: a mini review. Front. Psychol. 9, 26. 10.3389/fpsyg.2018.00026

46

Velasco C. Spence C. (2019). “The multisensory analysis of product packaging framework,” in Multisensory packaging: designing new product experiences. Editors C. Velasco and C. Spence (Cham: Palgrave MacMillan).

47

Velasco C. Tu Y. Obrist M. (2018). “Towards multisensory storytelling with taste and flavor,” in Proceedings of the 3rd international workshop on multisensory approaches to human-food interaction (New York, NY: ACM), p. 1–7.

48

Vi C. T. Ablart D. Gatti E. Velasco C. Obrist M. (2017). Not just seeing, but also feeling art: mid-air haptic experiences integrated in a multisensory art exhibition. Int. J. Human Comput. Stud. 108, 1–14. 10.1016/j.ijhcs.2017.06.004

49

Vi C. T. Marzo A. Memoli G. Maggioni E. Ablart D. Yeomans M. et al (2020). LeviSense: a platform for the multisensory integration in levitating food and insights into its effect on flavour perception. Int. J. Human Comput. Stud. 139, 102428. 10.1016/j.ijhcs.2020.102428

50

Wang Q. J. Mielby L. A. Junge J. Y. Bertelsen A. S. Kidmose U. Spence C. et al (2019). The role of intrinsic and extrinsic sensory factors in sweetness perception of food and beverages: a review. Foods 8, 211. 10.3390/foods8060211

51

Wilberz A. Leschtschow D. Trepkowski C. Maiero J. Kruijff E. Riecke B. (2020). “FaceHaptics: robot arm based versatile facial haptics for immersive environments,” in Proceedings of the 2020 CHI conference on human factors in computing systems (New York, NY: ACM), p. 1–14.

Summary

Keywords

multisensory experiences, human-computer interaction, technology, senses, psychology, marketing

Citation

Velasco C and Obrist M (2021) Multisensory Experiences: A Primer. Front. Comput. Sci. 3:614524. doi: 10.3389/fcomp.2021.614524

Received

06 October 2020

Accepted

15 February 2021

Published

25 March 2021

Volume

3 - 2021

Edited by

Francesco Ferrise, Politecnico di Milano, Italy

Reviewed by

Pawel J. Matusz, University of Applied Sciences Western Switzerland, Switzerland

Estêvão Bissoli Saleme, Federal Institute of Espírito Santo, Brazil

Copyright

© 2021 Velasco and Obrist.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Carlos Velasco, carlos.velasco@bi.no

This article was submitted to Human-Media Interaction, a section of the journal Frontiers in Computer Science

Disclaimer

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.