- ¹Department of Business Information System, Suratthani Rajabhat University, Suratthani, Thailand

- ²School of Electronic and Computer Science (ECS), The University of Southampton, Southampton, United Kingdom

- ³School of Engineering and Technology, Walailak University, Nakhon Si Thammarat, Thailand

This research developed and evaluated a software development support method to help non-expert developers evaluate or gather requirements and design or evaluate digital technology solutions to the accessibility barriers that people with visual impairment encounter. The Technology Enhanced Interaction Framework (TEIF) Visual Impairment (VI) Method was developed through literature review and interviews with 20 students with visual impairment, 10 adults with visual impairment and five accessibility experts. It is an extension of the Technology Enhanced Interaction Framework (TEIF) and its “HI-Method”, which had been developed, validated and evaluated for hearing impairment. It supports other methods by providing multiple-choice questions to help identify requirements, the answers to which help provide technology suggestions that support the design stage. Four accessibility experts and three developer experts reviewed and validated the TEIF VI-Method. It was experimentally evaluated by 18 developers using the TEIF VI-Method and another 18 developers using their preferred “Other Methods” to identify the requirements and solution to a scenario involving barriers for people with visual impairment. The “Other Methods” group were then shown the TEIF VI-Method and both groups were asked their opinions of its ease of use. The mean number of correctly selected requirements was significantly higher (p < 0.001) for developers using the TEIF VI-Method (X̄ = 8.83) than for those using Other Methods (X̄ = 6.22). Developers using the TEIF VI-Method ranked technology solutions closer to the expert rankings than developers using Other Methods (p < 0.05). All developers found the TEIF VI-Method easy to follow. Developers could evaluate requirements and technology solutions to interaction problems involving people with visual impairment better using the TEIF VI-Method than using existing Other Methods. Developers could benefit from using the TEIF VI-Method when developing technology solutions to interaction problems faced by people with visual impairment.

Introduction

Tools such as simulations, accessibility personas and checklists can be used to gather or evaluate requirements in software development processes. There have also been many purely conceptual or theoretical approaches to designing accessible technologies, as well as many examples of developing particular technology solutions to particular accessibility barriers. However, no standardized, comprehensive and experimentally validated software development accessibility support method was found that helps ‘novice’ or ‘non-expert’ developers gather or evaluate requirements and design or evaluate accessible technology interaction solutions for multiple inaccessible interactions, with any complex combination of technology, people or objects, encountered by people with visual impairment under all conditions. The contribution of the research described in this paper is therefore the development and evaluation of such a method, which fills this important gap by comprehensively and systematically supporting the requirements and design or evaluation stages of any other software development approach. The unique aspect of this method is the linking of requirement questions and answers to suggestions as to how to use technology to make any inaccessible interactions between people, technology and objects (i.e., between people and people, people and technology, and people and objects) in any scenario or context more accessible to a visually impaired person.
Rather than using the available space in this paper to discuss extensively the many previous purely conceptual or theoretical approaches to designing accessible technologies, or examples of developing particular technology solutions to particular accessibility barriers, these are only discussed briefly in Related Work. This paper instead concentrates on explaining, in as much detail as space allows, how our new method can be used and how it was developed, evaluated and validated by visually impaired users, experts and developers.

Designers and developers should always involve visually impaired end users when identifying any interaction barriers they might encounter and when evaluating any solutions developed to overcome these barriers. While personas of visually impaired people¹ can be of assistance to design and development teams, and simulations can provide some awareness of barriers², they are no substitute for working with real visually impaired users³. A designer can develop a technology solution for a specific visually impaired user by spending as much time as necessary with them, finding out about all the barriers they face and evaluating and iterating possible solutions with them until the optimum solution for that user is reached. However, this solution might not be as suitable for other visually impaired users with different abilities and contexts. The use of a software development accessibility support method such as the one described in this paper can help designers and developers with little experience of visually impaired people develop solutions for a wider range of visually impaired users than the particular end users they may co-design with.

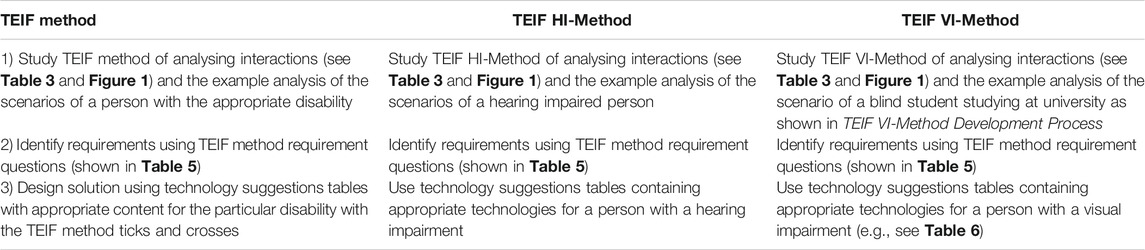

The president of the Blind Association of Thailand (Blind Association of Thailand, 2015) revealed that there were 1,918,867 registered disabled people in Thailand, of whom 181,821 were people with visual impairment (9.48% of all disabilities). For example, twenty Suratthani Rajabhat University students with visual impairment encounter barriers when learning from visual information in lectures or classes, including difficulty reading from a screen, board, paper, book or video, or knowing which object the teacher is pointing to. To reduce discrimination in access to information, particularly in face-to-face situations, technology developers need to create accessible solutions. Many useful technologies are available for helping people with visual impairment accomplish tasks independently (e.g., reading or navigating), often using alternate modalities such as speech or touch, or by sending photos to a sighted person to describe. The iPhone is very accessible for people with visual impairment and has many mobile applications that can help with many simple tasks (e.g., identifying colours, currency or bar codes). Artificial intelligence technologies, such as Seeing AI (Microsoft, 2019), are improving in their abilities to identify objects and faces. This application was created by a blind developer. Although such useful technologies are being developed by talented people with a deep knowledge and understanding of the needs of people with visual impairment, most technology developers do not have such knowledge or understanding and do not learn about disability and accessibility on their university courses. Lee and Lee (1999) used experts and blind users to develop a checklist for identifying problems blind users face when using smartphone applications. They state that the checklist was also intended for non-experts, but they did not investigate whether non-experts could actually use it.
Also, people with visual impairment can have complex interactions with other people, technologies and objects which present barriers not overcome by the use of one or more simple applications. While guidelines exist for developing web or application interfaces (W3C/WAI/WCAG)⁴ and for Software Accessibility (ISO 9241-171), the only framework and method specifically designed to support developers in developing accessible technology solutions for more complex interactions for people with disabilities is the Technology Enhanced Interaction Framework (TEIF) and its “HI-Method”. This was developed, validated and evaluated for hearing impairment by Angkananon et al. (2015) but not for visual impairment, which is the novel contribution of this study and paper. The TEIF VI-Method does not replace established guidelines but can help support their use in developing accessible technology solutions for more complex interactions for people with disabilities. While the TEIF has all the necessary components and sub-components to be a general framework, the TEIF HI-Method was developed for enhancing accessible interactions with people, technology, and objects through the use of technology, particularly in face-to-face situations involving people with disabilities, and was successfully validated by three developer experts, three accessibility experts, and an HCI professor. The TEIF Method involves requirement questions focused on accessible interactions, with multiple-choice answers and technology suggestions. The TEIF Method supports other methods by providing multiple-choice questions to help identify a user’s requirements, the answers to which help provide technology suggestions that support the design stage.
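The link between requirement questions, multiple-choice answers and technology suggestions can be pictured as a small data structure. The following Python sketch is illustrative only: the class names, the `suggest` helper, and the question and suggestion texts are hypothetical and are not taken from the TEIF tables.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Answer:
    """One multiple-choice answer and the technology suggestions linked to it."""
    text: str
    suggestions: tuple[str, ...] = ()


@dataclass(frozen=True)
class RequirementQuestion:
    """A requirement question with its multiple-choice answers."""
    text: str
    answers: tuple[Answer, ...]


def suggest(questions, selected):
    """Collect suggestions for the chosen answers, de-duplicated, in order.

    `selected` maps a question's text to the text of the chosen answer.
    """
    found = []
    for question in questions:
        chosen = selected.get(question.text)
        for answer in question.answers:
            if answer.text == chosen:
                for suggestion in answer.suggestions:
                    if suggestion not in found:
                        found.append(suggestion)
    return found


# Hypothetical content for illustration only (not the actual TEIF questions).
QUESTIONS = (
    RequirementQuestion(
        text="Does the user need to read text shown on a screen?",
        answers=(
            Answer("Yes", ("screen reader", "screen magnifier")),
            Answer("No"),
        ),
    ),
    RequirementQuestion(
        text="Does the user need to know what someone is pointing at?",
        answers=(
            Answer("Yes", ("tactile display", "screen reader")),
            Answer("No"),
        ),
    ),
)

choices = {QUESTIONS[0].text: "Yes", QUESTIONS[1].text: "Yes"}
print(suggest(QUESTIONS, choices))
```

In this sketch each answer carries its own suggestions, so selecting answers is enough to produce a combined, de-duplicated list for the design stage.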

This article describes how the TEIF VI-Method content was created to help developers develop digital technology solutions for people with visual impairment. To distinguish between the TEIF Methods for Hearing Impairment (HI) and Visual Impairment (VI), the terms TEIF HI-Method and TEIF VI-Method are used. The TEIF HI-Method research developed a new methodology for comparing software development support methods, which is also used for this TEIF VI-Method evaluation. Rather than being compared with any specific other software development method, the TEIF VI-Method is compared with whichever method(s) the developers prefer to use, referred to as the “Other Method(s)”. This is the fairest approach to a comparison, as the TEIF VI-Method does not replace other software development methods but can help support their use. The TEIF is a general framework for interactions involving technology, people and objects, whereas the TEIF VI-Method is a specific method for applying the TEIF to help make interactions involving technology, people and objects more accessible for visually impaired people.

This paper is structured in the following way: Related Work provides a brief review of related work including the Technology Enhanced Interaction Framework (TEIF) and Method, while Research Methodology Overview provides a brief overview of the research methodology used. TEIF VI-Method Development Process describes how the TEIF VI-Method was developed and also presents a compressed version of the TEIF VI-Method content as it was presented to the participants in the experimental study that is explained in User Evaluation Experimental Study. While some might find this the hardest section to read, compressing it further or changing the wording or form in which it was actually presented to the participants could detract from the reader’s understanding of the actual TEIF VI-Method. User Evaluation Experimental Design gives details of the experimental design, Results presents the results, Discussion discusses the results, and Conclusion summarises the conclusions.

Related Work

This section briefly reviews related work. Technologies for Supporting People With Visual Impairments and How Can Blind People Get Information? are intended to provide some context for the reader who is not knowledgeable about visual impairment and some of the related assistive technologies. Table 3, Figure 1 and the text in Technology Enhanced Interaction Framework and Method very briefly summarise the TEIF conceptually, what was missing in other existing frameworks and therefore how the TEIF adds to the state of the art. TEIF VI-Method Development Process provides an example of how the TEIF VI-Method applies the Framework. Developers are free to also use whatever formal modeling language they prefer. The interested reader can find a more comprehensive explanation of the development and evaluation of the TEIF and HI-Method, and a review of other frameworks, in the PhD thesis of Angkananon (Angkananon, 2015) and the journal paper (Angkananon et al., 2015). Accessibility Models briefly reviews some previous research on a general Accessibility Model and assistive technologies for visual impairment, including some newer and future technologies the general reader may not be aware of. Software Design Process briefly reviews some traditional software design or development methods. The TEIF HI and VI-Methods could be used to support the requirements and design or evaluation stages of any software design or development method.

Technologies for Supporting People With Visual Impairments

While technologies for supporting people with visual impairments have been researched for many years [e.g., (Burgstahler, 2002; Petrie and Bevan, 2009)], more recently there have been some new ideas to help blind students in lectures. For example, Petrie et al. (2002) trained blind students to use a haptic glove with a raised line version of a diagram and computer-vision-based tracking to provide awareness of deictic gestures: the glove informed the user where on the diagram on the board the teacher was pointing as they spoke. This system required the tactile diagrams to be pre-prepared; however, many companies are now developing tactile touch screens that could be used in real time. MIT have developed a system that automatically provides a real-time tactile display of 3D objects using Microsoft Kinect (Follmer et al., 2013) which, although currently only an expensive prototype, could offer great benefits for blind people in the future. Freire et al. (2010) used a mediator to add screen reader accessible text annotations to the electronic images transmitted from a teacher’s drawings on an interactive whiteboard. Brock et al. (2015) suggested that inclusive maps with simple audio-tactile interaction were a better solution for visually impaired people than braille. It is not only text that causes difficulty for visually impaired people but also emojis. Choi et al. (2020) revealed that image-based tactile emojis provided significantly greater support for visually impaired people in recognising message intention than non-image-based tactile emojis.

How Can Blind People Get Information?

Golledge (1999) revealed that four senses are used in a navigation task:

1) Touch is tactile perception, the ability to get information from objects pressing on the skin. It is activated by mechanoreceptors, neural receptors that detect pressure on human skin when something touches it, e.g., pressure on the hands, feet, hair follicles, tongue and body skin.

2) Sight is visual perception, the ability to focus on, detect and interpret visible light that bounces off objects and is reflected into the eyes. It provides information such as images, colours, brightness, and contrast.

3) Audition is sound perception, the ability to detect vibrations in the inner ears and interpret them as sounds of various frequencies. Hearing also provides the ability to detect orientation (Milne et al., 2014; Wallmeier and Wiegrebe, 2014), e.g., where a sound comes from by using both ears. This technique is called echolocation.

4) Olfaction is odour perception, the ability to smell objects in the environment, which is processed by the olfactory neural receptors.

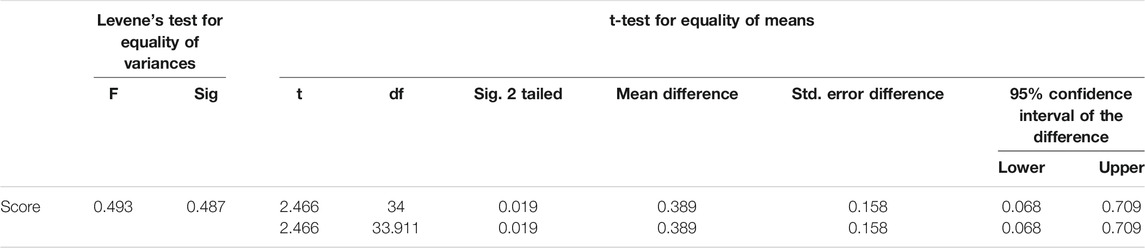

An example of how visually impaired people detect obstructions at different levels is shown in Table 1. For example, visually impaired people use a white cane to detect obstructions at ground level. They use a guide dog to avoid the obstruction, and sighted people can also avoid the obstruction.

TABLE 1. The relationship between activities and internal perceptions (Williams et al., 2013; Wifarer, 2016; Watthanasak, 2019).

The term ‘haptics’ is often used rather than ‘touch’ to include all kinds of kinaesthesis needed for navigation.

Some Problems and Solutions Related to Visual Impairment

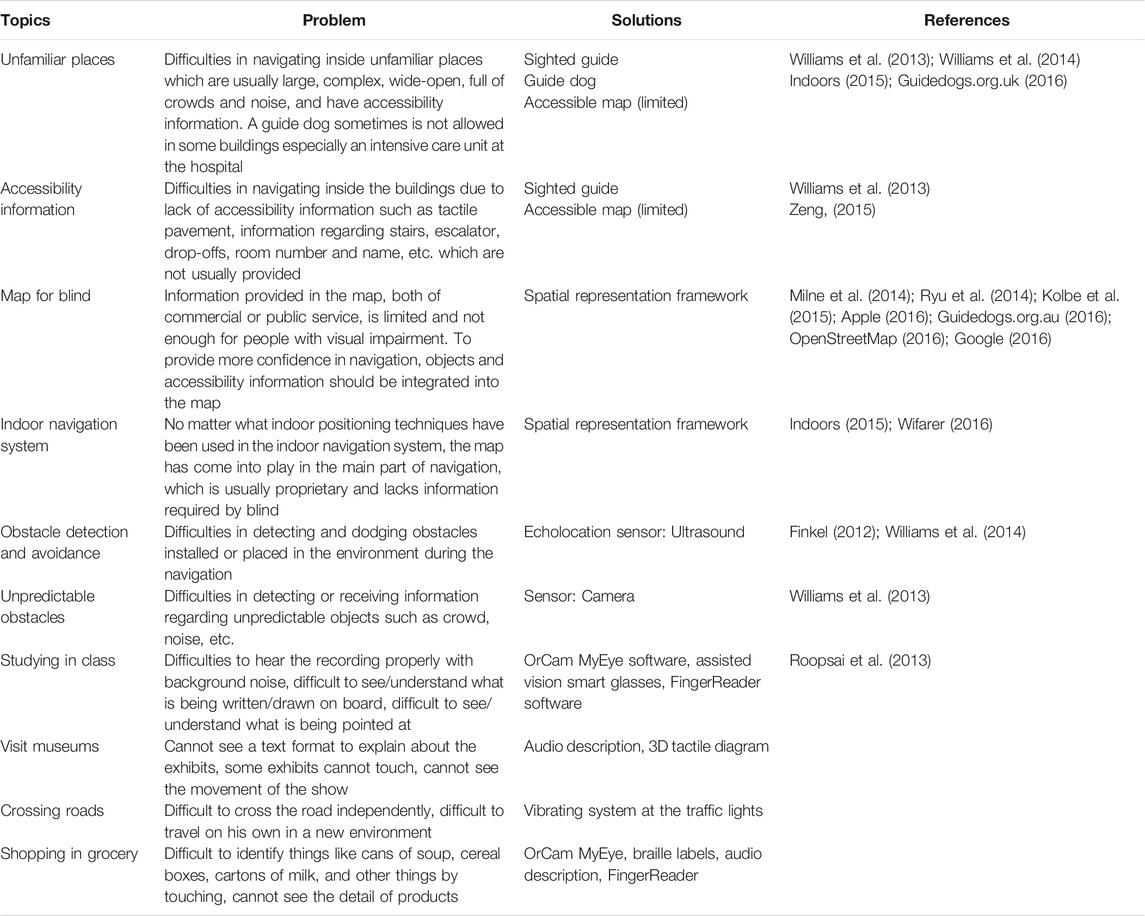

Some problems and solutions experienced by visually impaired and blind people identified from both the literature and interviews with blind people are shown in Table 2.

Interactions

Dix’s Framework (Dix, 1994) for Computer Supported Cooperative Work (CSCW) called the communication among participants “direct communication”. In addition, the participants could interact with or control artefacts and could share artefacts among themselves. The artefacts were not only the topic of the communication but could also be a medium of communication, called “feedthrough”. In the communication, “deixis” referred to indicating artefacts. Although Dix’s Framework mentioned interactions involving technology, it did not include a separate technology component. It also did not consider the same time and same place situations identified by the Technology Enhanced Interaction Framework (TEIF) (Angkananon et al., 2015).

Vyas et al. (2008) studied the role of artefacts as supporting mediated communication in referring to analogue and digital objects which served as a tool in artefacts. They highlighted that the use of communication metaphors was culturally dependent.

Gaines (1988) observed that recommendations based on practical experience of single users operating standard workstations had little to offer developers of complex systems integrating the complex behaviour of people and computers. To address this issue he presented a conceptual framework for person-computer interaction in complex systems, based on an analysis of systems theory literature, to derive design principles for person-computer interaction and a hierarchical model of person-computer systems. The framework has six hierarchical layers: 1) Cultural layer: reflecting purpose and structure; 2) Intentionality layer: of anticipatory nature of an intelligent system that leads to acquisition of knowledge; 3) Knowledge layer: that supports modeling and control activities of the anticipatory system; 4) Action layer: that transmits activities interfacing to the world; 5) Expression layer: that supports encoding of communications and actions; and 6) Physical layer: that addresses how encodings exist physically in the external world.

Norman and Draper (1986) proposed a seven-stage model of interaction between human and computer: establishing a goal, forming intention, specifying action sequence, executing action, perceiving system state, interpreting system state, and evaluating system state with respect to intentions and goals.

A Physical Mobile Interaction Framework was proposed by Rukzio et al. (2008) for using mobile devices as a mediator to interact with a physical object, with four types of interactions: Human–Computer; Human–Real World; Computer–Real World; and Computer–Computer.

Technology Enhanced Interaction Framework and Method

The TEIF built on the work of previous interaction frameworks, particularly those of Dix (1994), Dix (1995), Dix (1997) and Gaines (1988), who were also consulted in the TEIF’s development. The TEIF conceptual framework incorporates the interaction layers from Gaines’ conceptual framework for person-computer interaction in complex systems, which were also shown to map well onto Norman and Draper’s model of human-computer interaction (Norman and Draper, 1986). The development of the TEIF conceptual framework, from which the TEIF VI-Method was derived, carefully considered all the previous conceptual frameworks. The detailed analysis of these in the development and evaluation of the TEIF has been discussed extensively in previous publications by the authors [e.g., (Angkananon, 2015; Angkananon et al., 2015)], so only a few other frameworks are briefly discussed again in this paper.

Kaptelinin et al. (1999) developed the Activity Checklist to make ‘concrete the conceptual system of activity theory for the specific tasks of design and evaluation’ and state ‘users should first do a “quick-and-dirty” perusal of the areas represented in the Checklist that are likely to be troublesome or interesting (or both) in a specific design or evaluation. Then, once those areas have been identified, they can be explored more deeply … the Checklist can be most successfully used together with other tools and techniques to efficiently address issues of context.’ However Duignan et al. (2006) note that ‘Activity theory provides no step-by-step methodology.’ and that the Activity Checklist ‘has seen only limited use’ because ‘HCI practitioners who are not steeped in activity theory literature may find it inaccessible and difficult to apply without significant conceptual work.’ To help overcome this Duignan et al. developed 32 Interview Questions based on the checklist for their domain of computer mediated music production.

The Activity Diamond is a conceptual model developed by Per-Olof Hedvall for his PhD thesis⁵, inspired by Cultural-Historical Activity Theory and incorporating social and artefactual or natural contexts. However, its application does not appear to have been evaluated or validated, either by independent expert review or experimentally.

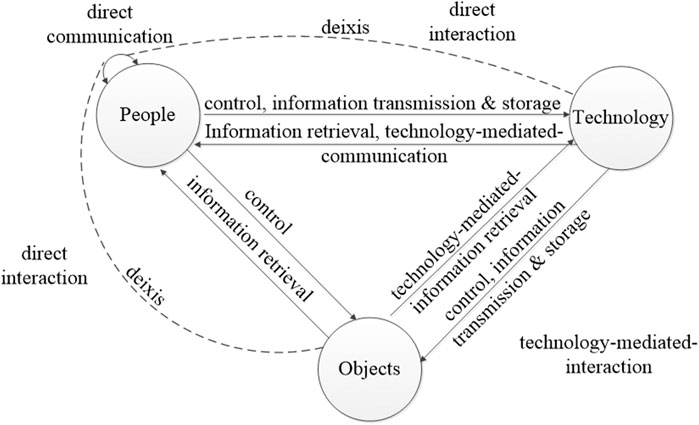

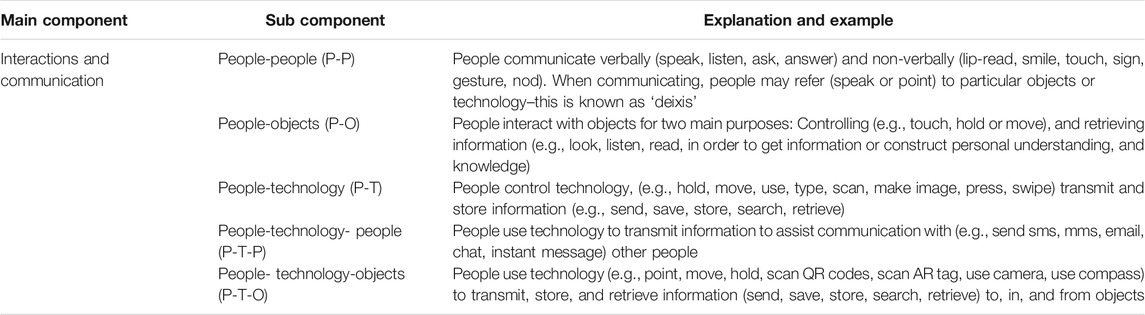

There has, however, been no framework found that helps technology developers to consider all of the possible interactions that occur at the same time and in the same place, although there have been projects concerned with how to develop and use assistive technology to support some of these interactions. To ensure that the TEIF was a general framework that could apply in many situations, a wide range of scenarios and technology solutions were considered during the development process. The TEIF HI-Method provided a technology suggestions table that explained how current technologies met requirements; this table will need updating as new assistive technologies are developed. The TEIF HI-Method could be used to support the requirements and design or evaluation stages of any other method. This paper explains the development and evaluation of the TEIF VI-Method, which was based on the TEIF HI-Method. The early plans for this TEIF VI-Method research study, before any evaluations or experiments were conducted, were outlined in short conference papers by Angkananon and Wald (2016) and Angkananon and Wald (2017). The five TEIF interaction types with explanations and examples are shown in Table 3, while Figure 1 shows the TEIF architecture. How the TEIF HI-Method and TEIF VI-Method relate to the general TEIF Method is shown in Table 4.

An experiment with 36 developers showed that the 18 developers using the TEIF HI-Method evaluated requirements for technology solutions to problems involving interaction with hearing impaired people better than the 18 developers using their preferred Other Methods. The TEIF HI-Method also helped the developers select a best solution significantly more often than the Other Methods and rate the best solution significantly closer to expert ratings than the Other Methods. Questionnaire results showed the TEIF HI-Method helped to evaluate requirements and technology solutions to interaction problems involving hearing impaired people and with further development would also help with gathering requirements and designing technology solutions for people with other disabilities.

Accessibility Models

The Human Activity Assistive Technology (HAAT) Model was proposed by Cook and Hussey (1995) to accommodate assistive technology. The Model consists of: the human, who has abilities and skills; activities, which can be determined by role; context, which involves setting, social, cultural and physical factors; and assistive technology, which involves hardware, software and non-electronic elements.

The EC FP7 project ACCESSIBLE⁶ tried to link the functional characteristics and limitations of disabled users (ICF Framework) with assistive technologies and the Web Content Accessibility Guidelines (WCAG 2.0). The proposed conceptual framework (Chalkia and Bekiaris, 2011) was presented at a conference workshop and is available on the project website⁷, but it does not appear to have been tested experimentally or used to provide actual solutions to actual problems.

Carriço et al. (2011) presented the preliminary results of a study using a questionnaire with 18 visually impaired people that aimed at validating browser configuration patterns for visually impaired users based on the Framework mapping. The nine partially sighted users did not confirm their mapping. The project also developed accessible ontologies and personas. Many other projects have conceptually mapped ontologies to user profiles and undertaken expert reviews (Elias et al., 2020) but none appear to have been tested by experimental method or used to provide actual solutions to actual problems.

Petrie et al. (2002) investigated the use of universal interfaces for multimedia documents. They surveyed participants, including blind and partially sighted people plus experts, using interviews and questionnaires and found that users’ requirements varied depending on their disabilities. For instance, one partially sighted reader may have particular problems due to colour blindness, while another may have no difficulties with colour but require enlargement of text and graphics. They developed a web-based tourist guide with formatted table contents for blind screen reader users and alt-text attributes for images. For partially sighted readers using screen magnification of text and images, they allowed background and foreground colours to be easily adjusted and provided Scalable Vector Graphics (SVG) versions of images and maps, which allow the output to be zoomed by up to 4 times without quality degradation.

Universal design is a concept of ‘design for all’ and represents an approach to designing products or building features which are suitable for many different types of users without the need for adaptation or specialized design (The Center for Universal Design, 1997).

Petrie and Bevan (2009) discussed the concepts of accessibility, usability and user experience as criteria for developers to evaluate their system. They quote ISO usability and accessibility definitions and World Wide Web Consortium (W3C) Web Accessibility Initiative (WAI) definition of accessibility to highlight a current lack of agreement about whether accessibility means universal design or usability for older and disabled people. They also refer to the 2008 draft ISO standard for user experience (UX) which defines UX as ‘A person’s perceptions and responses that result from the use or anticipated use of a product, system or service’ and note that UX will become more important in the future. They also discuss the role of accessibility, usability, and UX evaluations in the design process and group them under the headings: automatic checks, experts, models and simulations, users, and usage data.

Jung (2005) suggested factors which can be used in the design of mobile user interfaces to make them easily accessible for all users, and the heuristic evaluation method was used to evaluate these user interfaces.

Nganji (2012) developed an ontology-driven e-learning system (ONTODAPS) and evaluated it both heuristically by experts (lecturers) and with some students (disabled and non-disabled), including three with a visual impairment. Some visually impaired students sought to change the font type, face and size. The study noted that interfaces for students with severe visual impairment also need to include a screen reader which reads out information to the student. Where this is included, some students stated that they want to be able to control the speed, to stop and pause it, or to turn it off. A screen magnifier is also needed for students with low vision who may rely on magnification of the text in order to view information. One student with visual impairment preferred learning through video and two preferred learning through text. Students liked the personalization offered in terms of aggregating learning resources and presenting them in formats that are suitable for their specific needs.

Software Design Process

There is a wide range of traditional software design methods that developers can use, from linear approaches such as the Waterfall Life Cycle Model and V Model (Balaji and Murugaiyan, 2012) to more iterative approaches that differ in aspects such as the speed and number of iterations, including the Spiral Life Cycle Model, Rapid Application Development (RAD), Agile Model, and Prototype Model. User involvement approaches include the ISO standard User Centred or Participatory Design, and Human-Centred Design for interactive systems (ISO 9241-210, 2010). However, all methods involve some element of design, requirements, and evaluation (Balaji and Murugaiyan, 2012; Martin et al., 2012; Roopsai et al., 2013). Martin et al. (2012), who used scenarios and personas in gathering requirements for a Mobile Accessible Chat System for Synchronous Computer Supported Learning Environments, noted that their study would need experts and real users for requirements evaluation. However, only a few approaches consider the software design process in situations that involve disabled people. For example, Nganji and Nggada (2011) focused on the needs of disabled people in improving disability and usability. They used the Disability-Aware Software Engineering Model in user evaluation with a wide range of disabilities such as hearing impairment, visual impairment and mobility difficulties.

Research Methodology Overview

The TEIF VI-Method was designed to support novice developers designing technology solutions to inaccessible interactions involving people with visual impairment by helping them consider user requirements, the design of interactions to accessibly address these requirements, and the related requirements criteria for evaluating interactions. The TEIF VI-Method is not a replacement for other design and development methods, especially ones that involve participatory design with visually impaired people (Yuan et al., 2019), but can help in the design stage (e.g., providing example requirement questions and answers helps identify requirements, and the answers are linked to technology suggestions). The process indicated by ISO 9241-220 “User centered Design” suggests multiple iterations are needed for designing a user interface, and the TEIF VI-Method can be incorporated into these iterations. Because of time limitations, developer participants in the experimental study evaluated three possible solutions rather than designing solutions; evaluation is in any case always required when designing solutions. It was also not possible to ask the developer participants to interview people with visual impairment to gather the requirements, so a written scenario was used instead.
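The abstract reports that developers’ rankings of the technology solutions were compared with expert rankings. This section does not state which closeness measure was used, so the Python sketch below assumes one simple option, the sum of absolute differences in rank position (Spearman’s footrule). The function name and the example rankings are hypothetical and are not study data.

```python
def rank_distance(ranking, reference):
    """Sum of absolute differences in rank position (Spearman's footrule).

    Both arguments list the same solutions, best first.
    A result of 0 means the two orders are identical.
    """
    if sorted(ranking) != sorted(reference):
        raise ValueError("rankings must contain the same solutions")
    position = {solution: index for index, solution in enumerate(reference)}
    return sum(abs(index - position[solution])
               for index, solution in enumerate(ranking))


# Hypothetical rankings of three solutions, for illustration only.
expert = ["A", "B", "C"]
developer_1 = ["A", "C", "B"]  # swaps the last two solutions
developer_2 = ["C", "B", "A"]  # reverses the expert order

print(rank_distance(developer_1, expert))  # 2
print(rank_distance(developer_2, expert))  # 4
```

A smaller distance means a ranking closer to the expert ranking; per-developer distances from two groups could then be compared with whatever statistical test suits the study design.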

Research Question

The Research Question addressed by the research study described in this paper is:

Does the TEIF VI-Method support novice developers evaluating technology solutions to inaccessible interactions involving people with visual impairment?

TEIF VI-Method Development Process

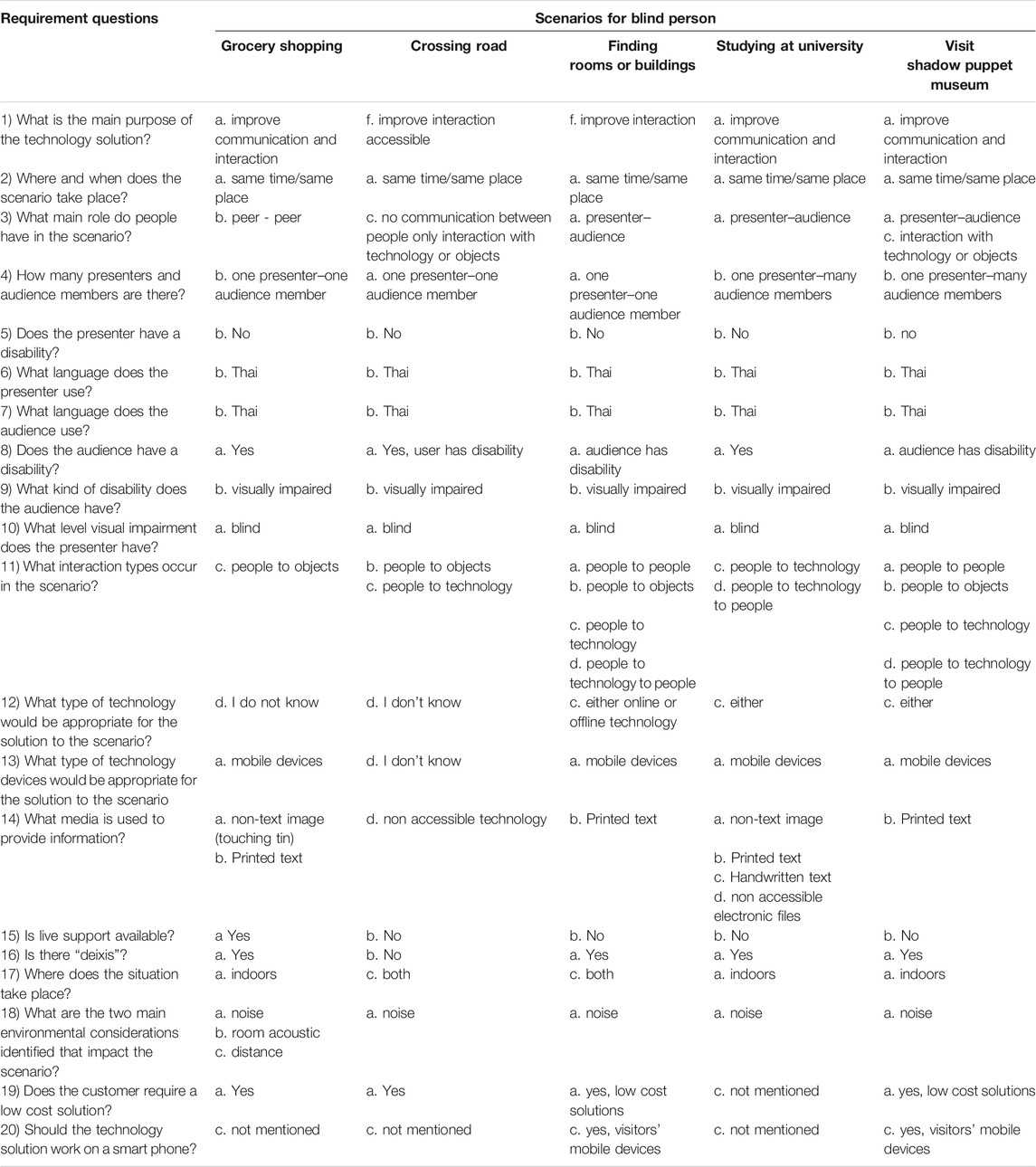

Five visual impairment experts with at least five years’ experience, 20 students with visual impairment and 10 adults with visual impairment were interviewed to gather information about visual impairment for the TEIF VI-Method and develop appropriate questions and multiple-choice answers, requirements, technologies, scenarios and solutions. To ensure that the TEIF VI-Method could be applied in various situations, five scenarios and their technology solutions involving a person with blindness were considered during the development process: shopping for groceries, crossing the road, finding rooms and buildings, problems in studying at the university, and visiting the Shadow Puppet Museum. The TEIF VI-Method was reviewed and validated by four visual impairment accessibility experts and three developer experts and refined based on their comments and any changes confirmed by the experts. Table 5 shows the multiple-choice requirement questions and answers for five scenarios which demonstrate how the questions can be applied. The questions are intended to be ‘generic’ based on the TEIF Framework and were originally used for the TEIF HI-Method and are being used again for the TEIF VI-Method.

Space limitations allow only one of the scenarios to be described in detail to show how the TEIF VI-Method can be applied. The scenario of a blind student studying at the University is as follows:

Golf is the only blind student in the law faculty class. Golf normally sits at the front of the class as he wants to record the lectures. However, ¹there is a lot of noise as teachers do not use the microphone and other students are also talking during the class. Therefore, the sound quality of the media file that he records is not very good. Golf sometimes uses Braille to take notes from the lecture, but not often because he is not very familiar with Braille. During the class, the teacher teaches by talking in Thai because all students in the class are Thai. ²When the teacher writes notes on a blackboard, Golf does not know what the teacher writes. Golf sometimes asks a friend to read it for him. Also, ³when the teacher refers to material by pointing at the board, Golf does not know what the teacher is pointing at. Sometimes, ⁴the teacher asks questions related to information on the board. Golf is not able to answer because he does not understand the question, as he cannot see the board. Sometimes ⁵the teacher gives students a hard copy case study to read and analyse in class individually. Golf cannot read it so the teacher allows Golf to work in a pair. Golf mentions to the teacher that, if she provides him with a Word file or information on the web, he will be able to read it. The teacher tells him that she only has a PDF file. At the end of the class, ⁶the teacher shows an important book that every student needs to read. Golf is not sure what the book looks like, so he asks the teacher to let him touch the book. He can feel the size and thickness of the book. He normally pays friends or professionals to turn books into text files by typing, which is expensive for him as he does not receive financial support from the university or his family. He needs to do this, otherwise he will not pass the course, because there is no accessible material for him. ⁷Golf finds it difficult when pictures, graphs or multimedia appear, as he requires assistance.
For this scenario Golf requires mobile devices that he can use in the class and at home. He does not mind if it is online or offline, as long as it is suitable to solve the problems.

There follows an analysis of the interaction issues arising from the actions (Action numbers refer to the superscript numbers in the scenario) with corresponding possible solutions and changes required for these solutions. This analysis can help the developer decide on the appropriate solution. This extensive analysis of all the possible accessible interaction issues between people, technologies and objects and their possible solutions and the linking of possible technologies to the requirement question answers are unique aspects of the TEIF Method. Developers are free to also use whatever formal modeling language they prefer if they wish.

Action 1: Golf records the teacher's voice in a noisy environment.

Interaction issues: (P-T-P) Golf unable to hear the recording properly with background noise.

Possible solutions:

1) (P-T-P) Teacher uses a microphone when talking to students, which can reduce the noise.

Action 2: Teacher writes/draws on board.

Interaction issues: (P-T-P) Golf unable to see/understand what is being written/drawn on board.

Possible solutions:

1) (P-T-P) Teacher only uses pre-prepared accessible slides which Golf has access to before the lecture.

2) (P-P) Teacher or another student or helper read information aloud/explain it for Golf.

3) (P-T-P) Helper annotates drawing on screen with text information.

4) (P-T-P) Golf uses camera focused on board with Optical Character Recognition (OCR) and Screen Reading Technology (SRT) used to read text.

5) (P-T-P) Teacher and Golf use electronic whiteboard with OCR and SRT to read text.

6) (P-T-P) Golf uses pre-prepared tactile diagram.

7) (P-T-P) Golf uses electronic tactile display.

8) (P-T-P) Golf uses OrCam MyEye, an intuitive wearable device with a smart camera to read from any surface.

9) (P-T-P) Golf uses Assisted Vision Smart Glasses, a wearable device by the University of Oxford (Digital Trends, 2014).

10) Hand Writing Recognition (HWR) and SRT.

Changes required:

1) Teacher behaviour.

2) Teacher or other students’ behaviour or additional helper.

3) Technology with in-class helper.

4) Technology.

5) Technology.

6) Technology pre-prepared by helper.

7) Technology.

8) Technology.

9) Technology.

10) Technology.

Action 3: Teacher points to writing/drawing on board.

Interaction issues: (P-T-P with deixis) Golf unable to see/understand what is being pointed at.

Possible solutions:

1) (P-P) A teacher or another student or helper explains what the teacher is pointing at.

2) (P-T-P) A teacher provides pre-prepared tactile diagram with camera tracking of teacher’s pointing and haptic glove (further development is required before this can be a feasible and affordable solution).

3) (P-T-P) A teacher uses a camera focused on board with OCR used to read text.

4) (P-T-P) A teacher uses an electronic tactile display with camera tracking of teacher’s pointing and haptic glove (further development is required before this can be a feasible and affordable solution).

5) (P-T-P) A teacher uses OrCam MyEye, an intuitive wearable device with a smart camera to read from any surface.

6) (P-T-P) Golf uses Assisted Vision Smart Glasses, a wearable device by the University of Oxford.

Changes required:

1) Teacher/other students: behaviour or additional helper.

2) Technology pre-prepared by helper.

3) Technology.

4) Technology.

5) Technology.

6) Technology.

Action 4: Teacher asks a question that relates to the information on the board.

Interaction issues: (P-T-P with deixis) Golf unable to see/understand what is being referred to.

Possible solutions:

1) (P-P) A teacher or another student or helper explains what the teacher is referring to.

2) (P-T-P) A teacher provides pre-prepared tactile diagram with camera tracking of teacher’s referring and haptic glove (further development is required before this can be a feasible and affordable solution).

3) (P-T-P) A teacher uses camera focused on board with OCR used to read text.

4) (P-T-P) A teacher uses an electronic tactile display with camera tracking of teacher’s pointing and haptic glove (further development is required before this can be a feasible and affordable solution).

5) (P-T-P) Golf uses OrCam MyEye, an intuitive wearable device with a smart camera to read from any surface.

6) (P-T-P) Golf uses Assisted Vision Smart Glasses, a wearable device by the University of Oxford.

Changes required:

1) Teacher/other students: behaviour or additional helper.

2) Technology pre-prepared by helper.

3) Technology.

4) Technology.

5) Technology.

6) Technology.

Action 5: Teacher gives a hard copy case study to Golf to read.

Interaction issues: (P-T-P) Golf unable to see/understand what is being written.

Possible solutions:

1) (P-P) A teacher or another student or helper reads it for Golf.

2) (P-T-P) A teacher uses a camera focused on board with OCR used to read text.

3) (P-T-P) A teacher uses an electronic tactile display with camera tracking of teacher’s pointing and haptic glove (further development is required before this can be a feasible and affordable solution).

4) (P-T-P) Golf uses OrCam MyEye, an intuitive wearable device with a smart camera to read from any surface.

5) (P-T-P) Golf uses Assisted Vision Smart Glasses, a wearable device by the University of Oxford.

6) (P-T-P) Golf uses the MIT ‘FingerReader’ device to read by scanning text with a finger (Finkel, 2012).

Changes required:

1) Teacher/other students: behaviour or additional helper.

2) Technology pre-prepared by helper.

3) Technology.

4) Technology.

5) Technology.

6) Technology.

Action 6: Teacher shows a book to students.

Interaction issues: (P-T-P) Golf unable to see/understand what is being written.

Possible solutions:

1) (P-P) Teacher or another student or helper reads it for Golf.

2) (P-T-P) Camera focused on board with OCR used to read text.

3) (P-T-P) Electronic tactile display with camera tracking of teacher’s pointing and haptic glove (further development is required before this can be a feasible and affordable solution).

4) (P-T-P) Use OrCam MyEye, an intuitive wearable device with a smart camera to read from any surface.

5) (P-T-P) Assisted Vision Smart Glasses, a wearable device by the University of Oxford, could be used in this case.

6) (P-T-P) FingerReader provides the ability to read the book by scanning text with a finger.

Changes required:

1) Teacher/other students: behaviour or additional helper.

2) Technology pre-prepared by helper.

3) Technology.

4) Technology.

5) Technology.

6) Technology.

Action 7: Teacher shows a graph/diagram to students.

Interaction issues: (P-T-P) Golf unable to see/understand what is being written.

Possible solutions:

1) (P-P) Teacher or another student or helper reads it for Golf.

2) (P-T-P) Electronic tactile display with camera tracking of teacher’s pointing and haptic glove (further development is required before this can be a feasible and affordable solution).

3) (P-T-P) Electronic file that has alt tag with detailed explanation using a screen reader to read it out loud.

Changes required:

1) Teacher/other students: behaviour or additional helper.

2) Technology pre-prepared by helper.

3) Technology.
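
The per-action analysis above pairs each numbered possible solution with the change it requires. A developer who wants to keep such an analysis in machine-readable form could record it roughly as follows. This is only a minimal sketch: the class and field names (`Solution`, `ActionAnalysis`, `technology_only`) are hypothetical and are not part of the TEIF VI-Method; the content is copied from Action 7.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Solution:
    interaction: str      # interaction code as written in the analysis, e.g. "P-P" or "P-T-P"
    description: str
    change_required: str  # the matching entry from the "Changes required" list


@dataclass
class ActionAnalysis:
    number: int
    action: str
    issue: str
    solutions: List[Solution] = field(default_factory=list)

    def technology_only(self) -> List[Solution]:
        """Return solutions whose required change is listed simply as 'Technology'."""
        return [s for s in self.solutions if s.change_required == "Technology"]


# Action 7 as listed above (hypothetical encoding of the same content).
action_7 = ActionAnalysis(
    number=7,
    action="Teacher shows a graph/diagram to students",
    issue="Golf unable to see/understand what is being written",
    solutions=[
        Solution("P-P",
                 "Teacher or another student or helper reads it for Golf",
                 "Teacher/other students: behaviour or additional helper"),
        Solution("P-T-P",
                 "Electronic tactile display with camera tracking and haptic glove",
                 "Technology pre-prepared by helper"),
        Solution("P-T-P",
                 "Electronic file with alt text read aloud by a screen reader",
                 "Technology"),
    ],
)

for s in action_7.technology_only():
    print(f"Action {action_7.number}: {s.description}")
```

Keeping the solution and its required change in one record avoids having to match two separately numbered lists by position.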

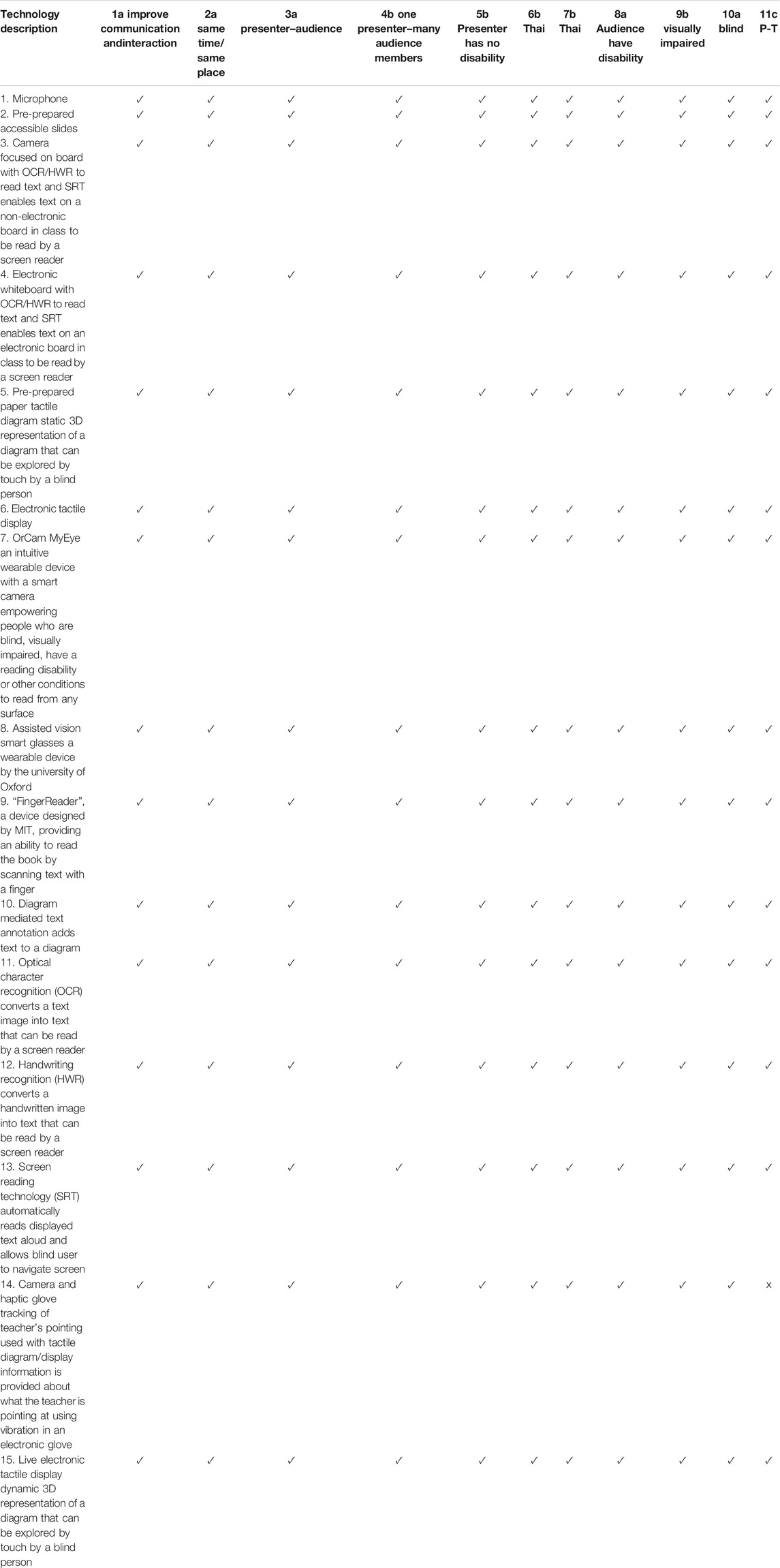

Table 6 shows a few of the suggested technologies that could help address these issues; a tick or cross indicates whether each technology could address the requirements identified. The technology suggestions tables provided to participants in the experiment extensively covered assistive technologies and requirement questions, and only the first 15 rows and 11 columns are shown here. Some of the technology suggestions are still at the prototype stage, so further development would be required before they could provide a feasible solution. The TEIF VI-Method provides a technology suggestions table to the novice developers rather than requiring each novice developer to build this table themselves.
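
To illustrate how a tick/cross suggestions table can be used to shortlist technologies, the following minimal sketch filters a table by the requirements a developer has identified. Everything in it is an assumption made for illustration: the technology names, the requirement keys and the tick/cross values are invented placeholders and are not taken from Table 6, and `matching_technologies` is a hypothetical helper.

```python
from typing import Dict, Iterable, List

# Hypothetical excerpt of a suggestions table: True = tick, False = cross.
# Names and values are placeholders, NOT the contents of Table 6.
SUGGESTIONS: Dict[str, Dict[str, bool]] = {
    "Technology A": {"works_offline": True, "reads_printed_text": True, "conveys_diagrams": False},
    "Technology B": {"works_offline": False, "reads_printed_text": True, "conveys_diagrams": False},
    "Technology C": {"works_offline": True, "reads_printed_text": False, "conveys_diagrams": True},
}


def matching_technologies(table: Dict[str, Dict[str, bool]],
                          required: Iterable[str]) -> List[str]:
    """Return technologies that have a tick for every required item.

    A requirement missing from a row is treated as a cross.
    """
    required = list(required)
    return [name for name, ticks in table.items()
            if all(ticks.get(req, False) for req in required)]


print(matching_technologies(SUGGESTIONS, ["works_offline"]))
print(matching_technologies(SUGGESTIONS, ["works_offline", "reads_printed_text"]))
```

When no single technology has a tick for every requirement, the result is empty, which corresponds to cases where a solution has to combine several technologies.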

A different scenario, visiting the Shadow Puppet Museum, was chosen for the developers to identify requirements and evaluate solutions in the experimental study detailed in User Evaluation Experimental Study of this article. It is described in more detail as follows:

Non is a person with blindness who visits an in-situ shadow puppet museum in Nakhon Si Thammarat, Thailand, which is run by Suchat Trapsin, who only speaks Thai. There are exhibits of puppets, folk art, and pottery inside the museum. Some information is provided in text format inside the museum to explain the puppets’ names and where they are from, to help tourists explore the museum by themselves. Unfortunately, people are not allowed to touch exhibits, so Non cannot gain information from touching the puppets, folk art, and pottery exhibits. Therefore, Non requires someone to explain the information for him. Moreover, fragile exhibits such as pottery and glass could easily be damaged by someone navigating around the rooms without vision, as the museum has not been well designed for persons with blindness. Therefore, a staff member would be required to lead the way, but providing someone to do this would be too expensive for Suchat. Normally, Suchat gives a puppet show to visitors from behind the screen while talking to the visitors. Non can only hear the story of the show but cannot see the movement of the puppets and cannot tell which puppet is on stage at any moment of the show. Non cannot imagine the appearance of each puppet, as each puppet has different characteristics. For example, “Teng” is tall and thin, with a long body, short legs and receding hair. Therefore, Non would also require someone to explain the appearance of each puppet.

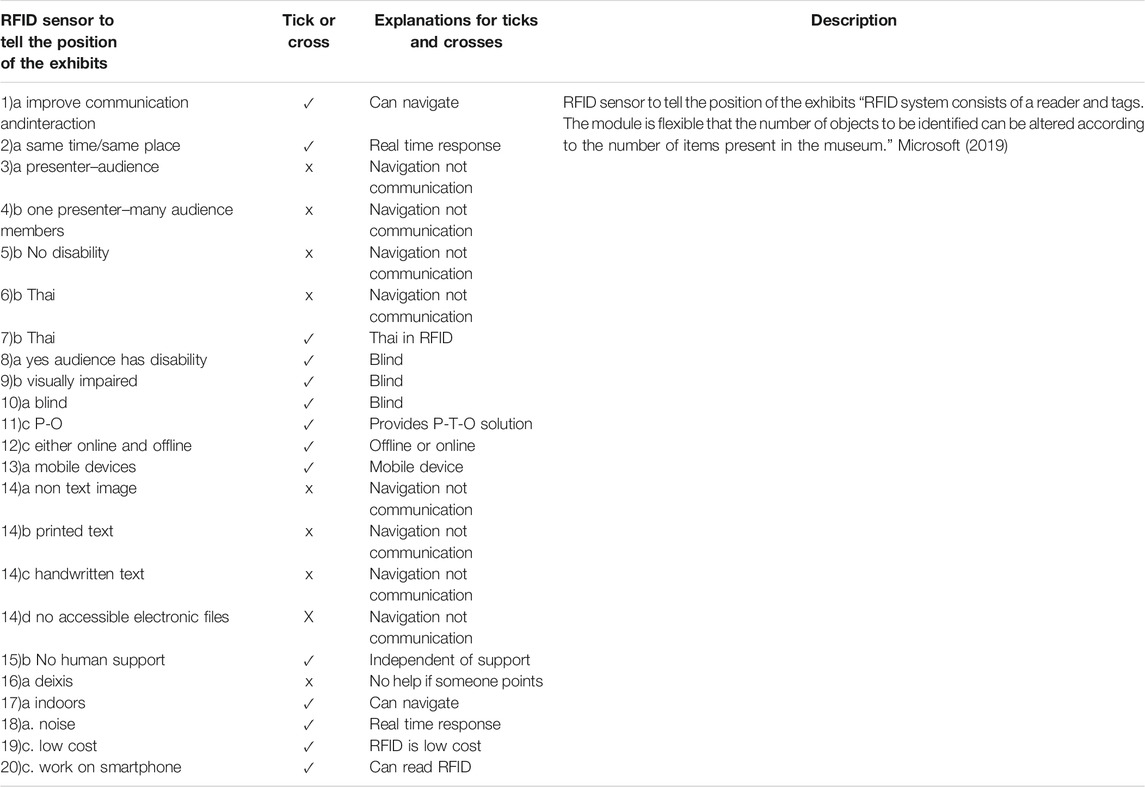

Table 7 shows and explains whether RFID technology is appropriate (tick) or not (cross) with respect to the answers to the requirement questions for that scenario. This table should be read in context with the scenario and Table 5. A similar table was created for every technology. The reader is referred to Angkananon et al. (2015) and Angkananon and Wald (2016) for more details about the use cases and technologies involved.

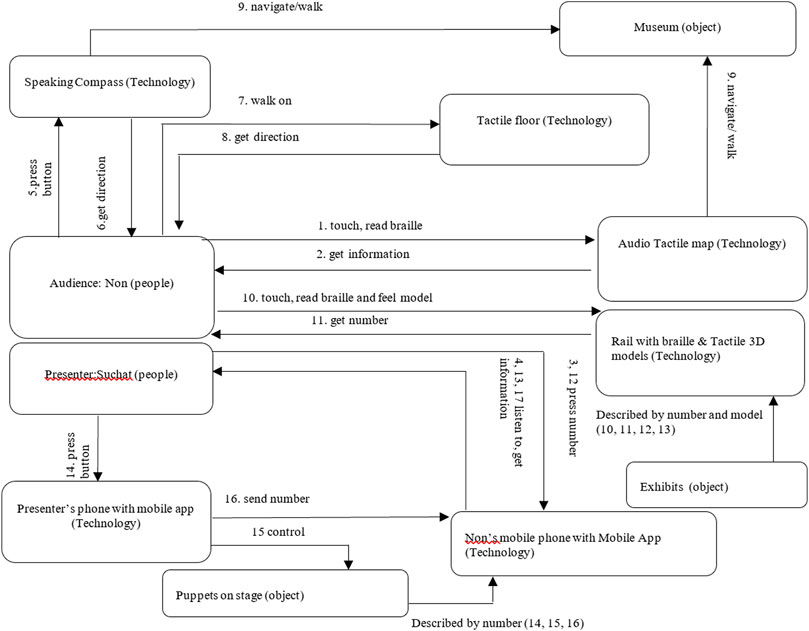

Figure 2 shows an interaction diagram for a possible solution A for the Shadow Puppet Museum scenario interaction barriers, with the following text explanations for solutions A, B and C that the experiment participant developers were required to evaluate. The arrows show the direction of information flow. The numbers in the text explanation refer to the numbers in the interaction diagram. The museum is made of wood. Braille can explain to blind people the presence of a QR code.

Solution A: An audio tactile map helps Non navigate to all parts of the museum independently. The tactile map has braille numbers on it 1) which tell Non 2) which number to press on the smartphone app 3) which explains where he is and what is there 4). The speaking compass helps Non know the direction he is facing 5), 6) and textured ground 7) indicates the path 8) to navigate 9) around the museum. Since Non cannot see the text that explains the puppets’ names and where they are from, a smartphone app explains this to Non 13), who knows which number to press 11) on the app 12) from braille labels on a rail 10). The rail stops him from damaging fragile exhibits such as pottery and glass when navigating around the rooms without vision. The app provides audio description to explain the information about puppets, e.g., No 1 explains “Teng” (puppet’s name). Tactile graphics and 3D models are positioned on or by the rail, as Non cannot touch fragile puppet exhibits. RFID and OCR were not used as they were a more expensive solution than tactile information, and Non would still need to know where to position the RFID and/or OCR technology. Suchat has a smartphone app on which he presses the name 14) of the puppet on stage 15). This is announced to Non on his smartphone 16), 17), because Non cannot see the movement of the puppets and cannot tell which puppet is on stage at that moment.

In this scenario technology solution, Non can touch a tactile map to get information about the museum and exhibits. He can operate a speaking compass to get the direction to each exhibit point and walk following the textured ground. There is a rail to protect Non from walking into the exhibits at each point. Non can read braille at the rail to get the number of the information. Non then presses that number in his mobile app to listen to the information about each object. Moreover, Non can touch tactile graphics and 3D models, which are provided at the rail, to get information about objects. The presenter has an important role in the communication because he can control technology that sends an instant message to Non’s phone to notify him when a puppet is introduced or moved. The technology selected to enable this is instant messaging, which was chosen over SMS because it is free of cost using wireless and smartphones.

Solution B: The Suchat Trapsin Shadow Puppet Museum has a website providing information about the museum: its history, the exhibition on display inside, the characteristics of each shadow play, various household products, travel details, etc. Non, a tourist who has been blind from birth, can access the information on the museum’s website before and after visiting by using a screen reader program. Non can comment or ask questions about the museum on the museum's web board before and after visiting. Non uses a touch-sensitive graphic map to navigate within the museum. In addition, Non wears shoes with obstacle-detecting sensors, which he specially ordered for his daily use. If there is an obstacle in front of him, the shoes give a vibration warning to prevent collisions with obstacles or objects displayed at the museum and let him move around freely. Non can set the detection distance of the sensor attached to the shoe to 50, 100, or 200 m. In addition, the museum provides information via its Facebook Page, where tourists can share photos and travel experiences. When the shadow play begins, Non can listen to the show without problems, since he has already studied some character information on the website. If Non wants to know more about the show, he can ask after it has finished. For activities and shadow puppet carving, Non can join by listening to the narrator's spoken steps about carving. Suchat Trapsin’s Shadow Puppet Museum sells souvenirs: shadow play, t-shirts and various local products, which are a means of earning money to support the museum.

Solution C: Non, a tourist who has been blind from birth, uses GPS technology on his own smartphone to navigate from home to the Suchat Trapsin Shadow Puppet Museum. It is Non's first visit to the museum. The museum is a wooden building with a high basement, in which there are two houses where the exhibition can be visited. Non uses the handrail on the way up to the building. Once in the building, Non uses the indoor navigation system to navigate the exhibition rooms, using a braille compass to give directions. The museum has prepared braille signs to indicate the location of each exhibition. Inside the exhibition room, QR Code technology explains characters and pictures by voice. Visitors can scan the QR Code with their smartphones to access detailed information about each object in the museum. Sound information is used to describe the characteristics and shape of each shadow play. Each exhibition room has 3D models for Non to experience in order to get information about that object. The museum has an accessible bathroom and accessible parking places for tourists who need to use these services. The museum exhibits a shadow play for visitors to see. There is also a service for visually impaired people that explains the repertoire of the story to be displayed. When Non has doubts about a shadow play puppet, he can use screen reading technology on his mobile phone to read more explanation of the script. In addition, visitors can add the museum as a friend on the Line Application and follow its Facebook Page to receive news about the museum’s publicity, such as movie trailer videos played on various occasions and shadow puppet carving activities. Non can type questions through the chat channels of the Line Application and Facebook Messenger when he has questions about the museum. The museum will answer questions via those channels.
If Non needs a helper to guide and explain various information about the exhibition and activities on display within the museum he can book a service for help but there are additional costs for this.

User Evaluation Experimental Study

The experimental study evaluated the TEIF VI-Method for helping technology developers who are not experts in accessibility to evaluate technology solutions to interaction issues involving people with visual impairment. The study took between 1 and 1.5 h for each developer. The sample size was 36, divided into two groups of 18 developers, each with 1 year of experience of digital technology development and no experience of accessibility or assistive technologies. No assistive technology training was provided to developers beyond the information in the technology suggestions table. One group used the TEIF VI-Method and the other group used their preferred Other Methods. The sample size was determined using G*Power (McCrum-Garder, 2010) with the following values:

• effect size: 1 – this represents a relatively large effect size.

• alpha error probability: 0.05–normal convention.

• power: 0.8–normal convention.

• test family: t test–two independent means.

• tails: two–is appropriate when a difference in any direction is expected (it could be higher or lower).

• number of groups: 2.

• statistic test: means difference between two independent means (two groups).

• Levene’s test for equality of variances was computed for the results.
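
As a rough cross-check of the parameters listed above, the per-group sample size for a two-tailed comparison of two independent means can be approximated with the standard normal-approximation formula n = 2((z_{1-α/2} + z_{power}) / d)². The sketch below is not the calculation performed by the power analysis software, which works with the noncentral t distribution and therefore gives a slightly larger n; the function name `n_per_group` is hypothetical.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate per-group n for a two-tailed, two-sample comparison of means.

    Uses the normal approximation, so it slightly underestimates the
    n that an exact t-based power analysis would return.
    """
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # two-tailed critical value
    z_power = std_normal.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


# Parameters from the list above: effect size 1, alpha 0.05, power 0.8.
print(n_per_group(1.0))  # 16 under this approximation
```

The 18 developers per group used in the study are above this approximate minimum.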

Suratthani Rajabhat University has an ethics committee that approves studies involving human participants, and participants must be provided with a participant information document, which they read before giving informed consent.

User Evaluation Experimental Design

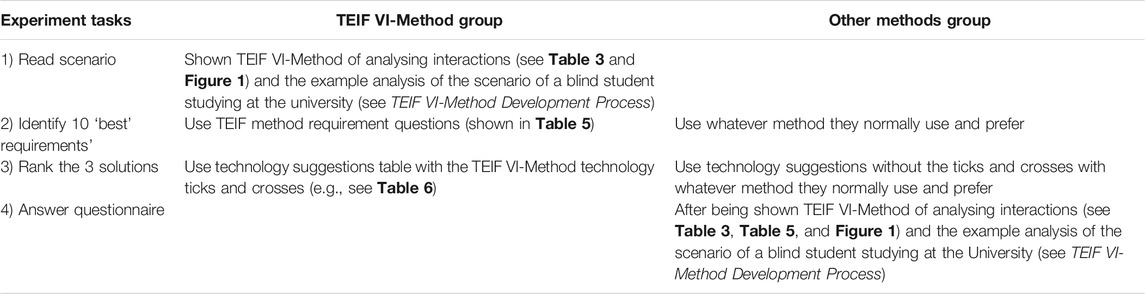

The participants read the scenario identifying issues faced by a user with visual impairment at the Shadow Puppet Museum (described previously in TEIF VI-Method Development Process of this paper) and then evaluated requirements for a technology solution to the visual impairment related problems they identified. This was analysed using an independent samples t-test between the two groups. Next, participants were asked to rank the three provided technology solutions A, B, C (described previously in TEIF VI-Method Development Process of this paper) in order of best meeting the requirements. For the Requirements Task, participants selected the best ten requirements from the 30 requirements provided for a technology solution to the visual impairment related problems they identified from the scenario of visiting the Shadow Puppet Museum. The best ten requirements had been decided by the experts when validating the TEIF VI-Method. The other twenty ‘non-best’ requirements were developed to ensure that there was a clear difference from the “best” requirements. For the Solutions Task, the three technology solutions were presented in a balanced design order: for each group, three participants rated the solutions in each of the six possible solution orders. For the Questionnaire Task, the participants used a 5-point Likert scale to give responses about the TEIF VI-Method steps: clarity of explanations, evaluating if and how the TEIF VI-Method helped, imagining how the TEIF VI-Method might help in the future, and any other comments about the value or usefulness of the TEIF VI-Method. Table 8 shows the experimental tasks all participants were required to complete and the different information provided to the two groups. This is explained in more detail in the following sections.
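
The balanced presentation order described above (six possible orders of three solutions, three participants per order in each group of 18) can be generated mechanically. The following is a minimal sketch; `balanced_orders` is a hypothetical helper, and in practice the resulting list would be randomly assigned to participants rather than used in this fixed sequence.

```python
from collections import Counter
from itertools import permutations
from typing import List, Sequence, Tuple


def balanced_orders(solutions: Sequence[str] = ("A", "B", "C"),
                    group_size: int = 18) -> List[Tuple[str, ...]]:
    """Return one presentation order per participant, each order used equally often."""
    orders = list(permutations(solutions))  # 3! = 6 possible orders
    if group_size % len(orders) != 0:
        raise ValueError("group size must be a multiple of the number of orders")
    repeats = group_size // len(orders)
    return [order for order in orders for _ in range(repeats)]


schedule = balanced_orders()
for order, count in Counter(schedule).items():
    print("".join(order), count)
```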

TEIF VI-Method Group

The 18 TEIF VI-Method group participants were shown the TEIF VI-Method of analysing interactions shown in Table 3 and Figure 1 and the example analysis of how interactions were affected by visual impairment for the scenario of a blind student studying at the University as described in TEIF VI-Method Development Process and also the 20 questions in Table 4 before reading the scenario. For the Requirements Task, the participants selected the ten best requirements for a technology solution to visual impairment related problems found in the scenario. For the Solutions Task, the participants used the technology suggestions table to identify technologies that met the provided requirements. This table had information on whether the technologies in the table addressed the issues identified in the questions about the scenario. Then, the participants were asked to give rankings for how well each of the three solutions (A, B, C) met the requirements. The participants finally completed the Questionnaire Task.

Other Methods Group

The 18 Other Methods group participants read the scenario. For the Requirements Task, the participants selected the ten best requirements for a technology solution to visual impairment related problems they found in the scenario. Then, the participants gave rankings for how well each of the three solutions met the requirements. The technology solutions sheet had the same technology information provided to the TEIF VI-Method group but without the Yes (tick)/No (cross) information on whether the technologies addressed the issues identified in the questions about the scenario provided to the TEIF VI-Method group. For the Questionnaire task, after completing both the requirements and solutions tasks the developers in the Other Methods group were shown the TEIF VI-Method. Then, they were asked to give responses about TEIF VI-Method steps.

Results

The following section reports the statistics, analysis and results for the experiments to show whether the TEIF VI-Method helps developers evaluate technology requirements and solutions for people with visual impairment.

The TEIF VI-Method Helping in Evaluating Requirements

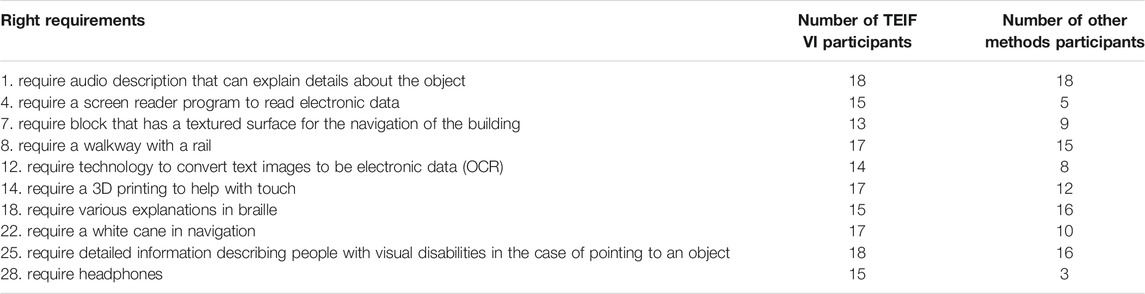

The number of participants in each group selecting the right requirements as identified by experts is shown in Table 9.

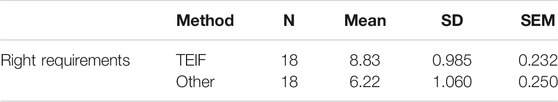

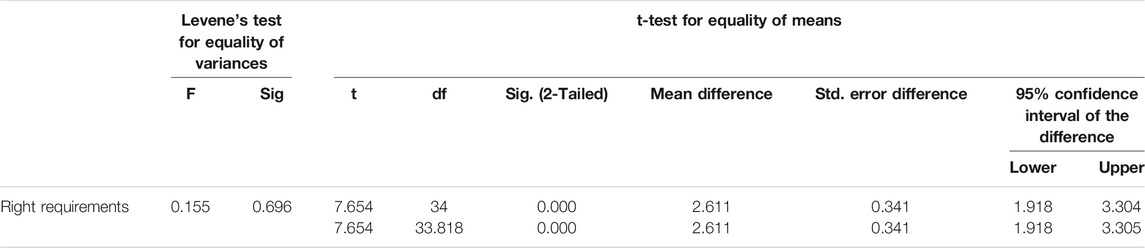

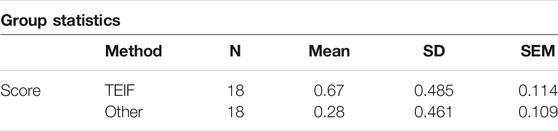

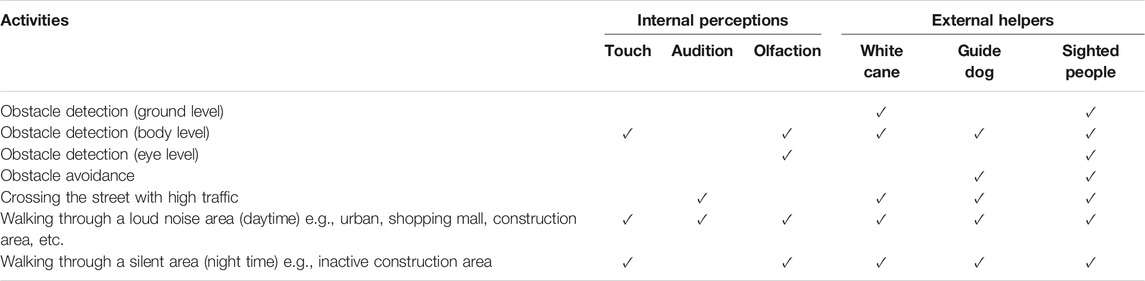

An independent samples t-test (IBM SPSS Statistics) was used to analyse the differences between the TEIF VI-Method group and the Other Methods group in evaluating requirements. Tables 10 and 11 show that the mean number of correct requirements was significantly higher (p < 0.001) for participants using the TEIF VI-Method (X̄ = 8.83) than for those using the Other Methods (X̄ = 6.22).

The TEIF VI-Method Helping in Evaluating Solutions

Experts’ Rating on Solutions A, B, and C

The experts rated the solutions in the order A: 1st, C: 2nd, and B: 3rd. Thirteen participants using the TEIF VI-Method and five participants using the Other Methods ranked the solutions in the same order as the experts. A “1” was awarded if the participant’s ranking was the same as the experts’ ranking of the solutions and a “0” if it was different, and an independent samples t-test was then undertaken on these scores. Table 12 shows the basic statistics of the rankings of solutions A, B, and C by the TEIF VI-Method and Other Methods participants. Table 13 shows a significant difference at the p < 0.05 level between rankings by the two groups. This demonstrates that the TEIF VI-Method helps participants rank technology solutions closer to the expert rankings.
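
The 1/0 coding described above can be reconstructed from the reported counts (13 of 18 and 5 of 18 matching the experts), which allows the t statistic to be recomputed as a sanity check. The sketch below assumes an equal-variance (pooled) Student's t-test; this is an assumption, the helper name `pooled_t` is hypothetical, and the values in Tables 12 and 13 are not reproduced here and may have been computed differently.

```python
import math
from statistics import mean, variance
from typing import Sequence, Tuple


def pooled_t(a: Sequence[float], b: Sequence[float]) -> Tuple[float, int]:
    """Equal-variance independent samples t statistic and degrees of freedom."""
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * variance(a) + (n2 - 1) * variance(b)) / df
    std_err = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean(a) - mean(b)) / std_err, df


# 1 = same ranking as the experts, 0 = different (counts taken from the text).
teif_group = [1] * 13 + [0] * 5
other_group = [1] * 5 + [0] * 13

t_stat, df = pooled_t(teif_group, other_group)
print(f"t({df}) = {t_stat:.2f}")
```

Under these assumptions the statistic is about 2.89 with 34 degrees of freedom, which exceeds the two-tailed 5% critical value of roughly 2.03 and is therefore consistent with the reported p < 0.05.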

How the TEIF VI-Method Helps Developers

Only 11.11% of participants in the TEIF VI-Method group and 5.55% of participants in the Other Methods group thought the TEIF VI-Method steps were complex and difficult to understand. All participants in both groups agreed that the TEIF VI-Method was explained well and easy to follow. Participants in each group rated the TEIF VI-Method out of 5 (Likert scale) for how it helped them evaluate requirements and solutions. A one sample t-test showed a significant difference (p < 0.001) between the mean ratings and the neutral value “3”, indicating that participants found the TEIF VI-Method helpful.

Discussion

The experimental results showed that the TEIF HI-Method, which had previously been successfully developed and evaluated for helping novice developers identify more accessible interactions for hearing impaired people, could be successfully modified to help novice developers identify more accessible interactions for visually impaired people. The TEIF VI-Method involves providing information about existing available technologies and which requirements they can address. This information will therefore need continuous updating to reflect the latest technologies. It was suggested that it might be more helpful if information was provided about how well the technologies met requirements (e.g., not at all, low, medium, and high levels) rather than a simple binary (“yes/no”, “tick/cross”) classification. A limitation of this research study was that, in such a controlled experiment and with the time and resources available, it was not possible to ask the developers to interview people with visual impairment to gather requirements and to develop technology solutions for actual barriers faced by specific people with visual impairment, who would then evaluate these solutions in the actual situations. Future work could therefore involve a case study in which developers use the TEIF VI-Method to develop working technology solutions for interaction barriers faced by specific people with visual impairments, who would evaluate these solutions in their real contexts. The interaction barriers and requirements would be identified by the developers through interviewing these specific people with visual impairments. While the transferability of the TEIF VI-Method to the five contexts described in TEIF VI-Method Development Process was conceptually validated by expert review, this future work would provide face validity of the transferability of the TEIF VI-Method by developers to other contexts and solutions.
The 36 participants who took part in this experimental study had 1 year experience of digital technology development and no experience of accessibility or assistive technologies. They had all studied the same degree at the same University. This helped ensure that the results of the study were not dependent on the different knowledge and experience of the participants in the two groups. Future studies could help identify how the method might be affected by, or modified for, the knowledge and experience of participants. There are many different roles required in any ‘software development lifecycle’ and depending on the size of an organization these roles can be undertaken by one or many people who may or may not be involved in identifying the requirements or designing and evaluating the solution.

This future work could also include a detailed study of which conceptual or practical frameworks or models experienced developers use in current software development approaches when designing for visually impaired people, and how these developers could also benefit from the TEIF VI-Method.

A review of previous interaction frameworks, models and approaches found that none were standardized, comprehensive software development accessibility support methods developed with visually impaired people that helped ‘novice’ or ‘non-expert’ developers gather or evaluate requirements and design or evaluate accessible technology interaction solutions for inaccessible interactions with any complex combinations of technology, people or objects, encountered by people with visual impairment under all conditions and that also have been validated by both expert review and a statistically controlled experiment.

The following were controlled for both groups in our experiment: the knowledge and experience of participants; the tasks the participants completed; the balanced order in which the three possible solutions were presented to participants; the time available to participants to complete the tasks; and the details of the presentation of the TEIF VI-Method.

The contribution of the research described in this paper is therefore the development and evaluation of a method that fills this important gap by systematically supporting the requirements and design or evaluation stages of any other method.

Function analysis⁸ explicitly focuses on what a product should do, to prevent designers fixing on solutions too early and thus to encourage creative thinking. The TEIF VI-Method suggestions table could help a designer who was using function analysis to see whether an existing assistive technology could be used or adapted, so that they do not ‘re-invent the wheel’. For example, solution A, which is presented in Figure 2 of our paper, uses a combination of existing assistive technologies as well as new technologies requiring development.

While it could have been useful to run more comparative tests in which different methods are critically evaluated and compared, this was not possible within the constraints of the statistically valid quantitative experimental study and is left for future work. Similarly, it would have been useful to interview each of the ‘Other Methods’ group participants in depth to find out exactly which resources and methods they used to reach their decisions in the experimental tasks. However, the extra time needed to provide this very detailed information would have made it very difficult to recruit volunteer participants for the experimental study. It was also felt more important to use the available time to show the ‘Other Methods’ group the TEIF-VI Method and obtain their evaluation of it.

Some readers might be slightly confused by the requirements in Table 9, which may seem to be a mix of required functionality and suggested solutions. It was necessary to provide a discriminating task to test participants’ understanding, with 10 definitely ‘correct’ requirements and 20 definitely ‘incorrect’ requirements for which there could be no reasonable interpretation under which they might be considered correct. If all requirements had been expressed simply in the form “Make interaction XXX accessible”, it would have been very difficult to devise a sufficiently discriminating task.
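A discriminating task of this kind can be scored by counting how many of a participant's selections fall in the ‘correct’ set. The Python sketch below is a minimal illustration under stated assumptions: the identifiers R1 to R30 and the choice of R1 to R10 as the correct set are hypothetical placeholders and do not reproduce the requirements in Table 9.

```python
# Hypothetical identifiers standing in for the 30 requirements in the task.
ALL_REQUIREMENTS = [f"R{i}" for i in range(1, 31)]
# Assumption for illustration: the first 10 are the 'correct' requirements.
CORRECT = set(ALL_REQUIREMENTS[:10])
INCORRECT = set(ALL_REQUIREMENTS[10:])


def score_selection(selected):
    """Count correct selections, incorrect selections and missed
    correct requirements for one participant."""
    chosen = set(selected)
    unknown = chosen - set(ALL_REQUIREMENTS)
    if unknown:
        raise ValueError(f"Unknown requirement ids: {sorted(unknown)}")
    return {
        "correct_selected": len(chosen & CORRECT),
        "incorrect_selected": len(chosen & INCORRECT),
        "correct_missed": len(CORRECT - chosen),
    }


# A participant who picks three correct requirements and one incorrect one.
example = score_selection(["R1", "R2", "R3", "R15"])
```

Because every requirement is unambiguously in one set or the other, the count of correct selections can be compared directly between groups.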

It might be thought that there is a potential risk of bias, since the experts involved in selecting the correct requirements had already been involved in validating the method. However, it was felt that the experts could be trusted, and that using half the experts for the method validation and half for the requirements selection would not have made the best use of this ‘scarce resource’.

The TEIF-VI Method does not replace other tools or methods [e.g., simulations, personas, checklists, standards (e.g., WCAG), scenarios etc.] but can be used alongside them to support the design and development of technology solutions to inaccessible interactions encountered by people with visual impairment in the following ways, as explained in detail in TEIF VI-Method Development Process:

a) The TEIF-VI Method helps analyse a scenario involving a visually impaired person into interactions between people, technology and objects.

b) The TEIF-VI Method’s requirement questions help identify in what way the interactions may be inaccessible to a visually impaired person.

c) The TEIF-VI Method’s technology suggestions help identify whether existing assistive technologies are available to assist a visually impaired person overcome each inaccessible interaction.
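The three steps above can be pictured as a small data model. The Python sketch below is an illustration only: the class names, the example barrier and the suggestions table entries are hypothetical placeholders and are not taken from the TEIF VI-Method's actual requirement questions or technology suggestions table.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Step a): the entity kinds named in the text (people, technology, objects).
ENTITY_KINDS = {"person", "technology", "object"}


@dataclass(frozen=True)
class Entity:
    name: str
    kind: str

    def __post_init__(self):
        if self.kind not in ENTITY_KINDS:
            raise ValueError(f"Unknown entity kind: {self.kind}")


@dataclass
class Interaction:
    source: Entity
    target: Entity
    # Step b): barriers identified by answering requirement questions.
    barriers: List[str] = field(default_factory=list)


# Step c): hypothetical suggestions table mapping a barrier to technologies.
SUGGESTIONS: Dict[str, List[str]] = {
    "cannot read printed text": ["text-to-speech reader", "magnifier"],
}


def suggest(interaction, table=SUGGESTIONS):
    """Look up candidate technologies for each barrier of an interaction."""
    return {barrier: table.get(barrier, []) for barrier in interaction.barriers}


visitor = Entity("visitor", "person")
label = Entity("printed label", "object")
reading = Interaction(visitor, label, ["cannot read printed text"])
options = suggest(reading)
```

A barrier with no entry in the table returns an empty list, which would signal that a new technology may need to be developed rather than adapted.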

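While there are many published works related to software development and visually impaired people, it is very difficult to directly compare the TEIF VI-Method with any other published work, since no other published method has been found that provides any of the three forms of support a) to c) listed above: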

Regarding a), no other published method has been found that provides a coherent systematic way to categorise all entities involved in any interaction involving a visually impaired person. For example, Dix (1994) did not consider disability at all, nor any interactions in same-time, same-place situations such as people using technology to interact with real objects. Dix used the term ‘Artefact’, which means a man-made object but more specifically a technology tool, whereas the TEIF uses the terms ‘Technology’ and ‘Object’, where ‘Object’ refers to non-technology objects. Gaines (1988) also did not consider disability and only addressed interactions between people, computers and equipment. Disability was also not considered by Rukzio’s Physical Mobile Interaction Framework (Rukzio et al., 2008) for using mobile devices as mediators to interact with people, things and places, which only considered the context of a mobile tourist guide. Norman and Draper (1986) only considered interaction between human and computer and, once again, did not consider disability. Function analysis analyses and develops a model of a new product rather than focusing on interactions or accessibility, while activity theory provides no step-by-step methodology, does not explicitly consider accessibility or interactions, and would be particularly difficult for novice developers to apply. Requirements Engineering is an integral part of traditional software design methods which, as discussed in Software Design Process, do not normally consider accessible interactions for visually impaired people. Guidelines that do consider disability include the Web Content Accessibility Guidelines, which only address web or application interfaces, and Cook and Hussey’s Human Activity Assistive Technology (HAAT) Model (Cook and Hussey, 1995), which aimed to study human performance in tasks involving technology and only considered interactions between people and technology.

Regarding b), no other published method has been found that provides a coherent systematic way to help novice developers identify in what ways the interactions may be inaccessible to a visually impaired person. For example, the W3C Web Content Accessibility Guidelines only address inaccessible interactions with web or application interfaces, and Cook and Hussey’s Human Activity Assistive Technology (HAAT) Model (Cook and Hussey, 1995) only considered inaccessible interactions between people and technology. Accessibility/universal design personas can help indicate some of the interactions that might be inaccessible to a visually impaired person in some contexts, but are usually not detailed enough to identify all the inaccessible interactions in any context. For example, the United Kingdom government digital services team provides only two personas related to visual impairment9, i.e., ‘Ashleigh (partially sighted screenreader user) wants to be able to use any website she wants. She also wants to be more independent.’ and ‘Claudia (partially sighted screen magnifier user) wants to be able to phone any company she needs to contact–it’s so much quicker and easier for her to call than to write. She also wishes there was less clutter on some websites.’ The Auckland Universal Design Manual10 provides the following information regarding people who are blind or have low vision: “We need … Level, wide and unobstructed footpaths, Strong tonal contrast between street furniture and pavements, Use texture and colour contrast to provide pathway guidance, Use audible or tactile indicators to provide warning or wayfinding information, Clear signage with appropriate colour contrast and font.”

Regarding c), no other published method has been found that provides a coherent systematic way to help novice developers identify whether existing assistive technologies are available to help a visually impaired person overcome each inaccessible interaction. For example, various lists of assistive technologies are available (e.g., Perkins’ A to Z of Assistive Technology for Low Vision11, AbilityNet’s Vision impairment and Computing factsheet12, American Foundation for the Blind Assistive Technology Products13), but these lists do not readily explain to a novice developer, who lacks expertise in the barriers faced by visually impaired people, how the listed technologies would overcome inaccessible interactions.

Conclusion

The TEIF VI-Method was successfully validated and reviewed by 4 accessibility experts, 20 students with visual impairment and 10 adults with visual impairment. Non-expert developers using the TEIF VI-Method evaluated requirements for technology solutions to the interaction barriers faced by people with visual impairment better than non-expert developers using their preferred Other Methods, and also ranked technology solutions closer to the expert rankings. All developers in both groups agreed that the TEIF VI-Method was explained well and was easy to follow, and thought that it helped them to evaluate technology solutions and requirements. This shows that the TEIF VI-Method helps developers with little experience evaluate technology solutions for people with visual impairment, and suggests it should also help them design solutions by evaluating their own designs. The TEIF Framework and Method are general and can be applied to supporting people with any disability, provided the requirements questions and technology suggestions table are adapted to reflect the appropriate requirements and assistive technologies. The TEIF Method was previously validated for people with a hearing impairment, and this paper describes how it has been validated for people with a visual impairment. Future work will be able to validate the TEIF Method for other disabilities and for the range of professionals involved in identifying the requirements or designing and evaluating solutions.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics Statement

The studies involving human participants were reviewed and approved by Suratthani Rajabhat University. Written informed consent for participation was not required for this study in accordance with the national legislation and the institutional requirements.

Author Contributions

All authors listed have made a substantial, direct, and intellectual contribution to the work and approved it for publication.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

I would like to give great thanks to the Thailand Research Fund, the Office of the Higher Education Commission, and Suratthani Rajabhat University, which provided funding for this research project.

Footnotes

1https://www.w3.org/WAI/redesign/personas.

2http://www.inclusivedesigntoolkit.com/.

3https://www.cdrnys.org/blog/disability-dialogue/nothing-about-me-without-me/.

4https://www.w3.org/TR/WCAG21/.

5https://www.researchgate.net/publication/255935434_The_Activity_Diamond-Modeling_an_Enhanced_Accessibility.

6http://www.accessible-eu.org/.

7http://www.accessible-eu.org/documents/ACCESSIBLE_D3.1.pdf.

8http://wikid.io.tudelft.nl/WikID/index.php/Function_analysis.

9https://www.gov.uk/government/publications/understanding-disabilities-and-impairments-user-profiles.

10http://content.aucklanddesignmanual.co.nz/design-subjects/universal_design/Documents/Universal%20Design%20Personas.pdf.

11https://www.perkinselearning.org/technology/blog/z-assistive-technology-low-vision.

12https://abilitynet.org.uk/factsheets/vision-impairment-and-computing.

13https://www.afb.org/blindness-and-low-vision/using-technology/assistive-technology-products.

References

Angkananon, K. (2015). “Technology Enhanced Accessible Interaction Framework and a Method for Evaluating Requirements and Designs,” (United Kingdom: Faculty of Physical Sciences and Engineering, Electronics and Computer Science, University of Southampton). PhD Thesis. Available at: https://eprints.soton.ac.uk/383618/1/final_thesis_correction_v26_final_submit.pdf (Accessed 07 20, 2020).

Angkananon, K., and Wald, M. (2016). “Extending the Technology Enhanced Accessible Interaction Framework Method for Thai Visually Impaired People,” in Computers Helping People with Special Needs. ICCHP 2016. Lecture Notes in Computer Science, 9759. Editors K. Miesenberger, C. Bühler, and P. Penaz (Cham: Springer), 555–559. doi:10.1007/978-3-319-41267-2_78

Angkananon, K., Wald, M., and Gilbert, L. (2015). Evaluation of Technology Enhanced Accessible Interaction Framework and Method for Local Thai Museums. J. Manag. Sci. Suratthani Rajabhat Univ. 2 (2), 19–48. Available at: https://so03.tci-thaijo.org/index.php/msj/issue/view/9710/Vol.2%20No.2 (Accessed 07 20, 2020).

Angkananon, K., and Wald, M. (2017). “Technology-Enhanced Accessible Interactions for Visually Impaired Thai People,” in Universal Access in Human–Computer Interaction. Designing Novel Interactions. UAHCI 2017. Lecture Notes in Computer Science, 10278. Editors M. Antona, and C. Stephanidis (Cham: Springer), 224–241. doi:10.1007/978-3-319-58703-5_17

Apple (2016). Apple Maps. Available at: http://www.apple.com/ios/maps/ (Accessed 06 2, 2020).

Balaji, S., and Murugaiyan, M. S. (2012). Waterfall vs V-Model vs Agile: A Comparative Study on SDLC. Int. J. Inf. Tech. Business Manag. 2 (1).

Blind Association of Thailand (2015). Disability Statistics. Available at: http://tabgroup.tab.or.th/node/91 (Accessed January 5, 2020).

Brock, A., Truillet, P., Oriola, B., Picard, D., and Jouffrais, C. (2015). Interactivity Improves Usability of Geographic Maps for Visually Impaired People. Human-Computer Interaction 30 (2), 56–194. doi:10.1080/07370024.2014.924412

Burgstahler, S. (2002). Distance Learning: Universal Design, Universal Access. Educational Technology Review 10 (1).

Carriço, L., Lopes, R., and Bandeira, R. (2011). “Crosschecking the mobile Web for People with Visual Impairments,” in Proceedings of the International Cross-Disciplinary Conference on Web Accessibility (W4A '11), New York, NY (New York, NY, USA: ACM), 4.

Chalkia, E., and Bekiaris, E. (2011). “A Harmonised Methodology for the Components of Software Applications Accessibility and its Evaluation,” in Universal Access in HCI, Part I, LNCS 6765. Editor C. Stephanidis (Orlando, FL: Springer), 197–205. doi:10.1007/978-3-642-21672-5_22

Choi, Y., Hyun, K. H., and Lee, J.-H. (2020). Image-Based Tactile Emojis: Improved Interpretation of Message Intention and Subtle Nuance for Visually Impaired Individuals. Human-Computer Interaction 35 (1), 40–69. doi:10.1080/07370024.2017.1324305

Cook, A., and Hussey, S. (1995). Assistive Technologies: Principles and Practice. St. Louis, MO: Mosby Elsevier.

Digital Trends (2014). Digital Trends. Available at: http://www.digitaltrends.com/mobile/blind-technologies.