Abstract

Touch as a modality in social communication has been receiving more attention with recent developments in wearable technology and a growing awareness of how limited physical contact can lead to touch starvation and feelings of depression. Although several mediated touch methods have been developed for conveying emotional support, the transfer of emotion through mediated touch has not been widely studied. This work addresses this need by exploring emotional communication through a novel wearable haptic system. The system records physical touch patterns through an array of force sensors, processes the recordings using novel gesture-based algorithms to create actuator control signals, and generates mediated social touch through an array of voice coil actuators. We conducted a human subject study (N = 20) to understand the perception and emotional components of this mediated social touch for common social touch gestures, including poking, patting, massaging, squeezing, and stroking. Our results show that the speed of the virtual gesture significantly alters participants' ratings of valence, arousal, realism, and comfort of these gestures, with faster gestures producing more negative emotions and lower realism. These findings help identify general patterns in how people perceive mediated touch and how mediated social touch can be used to convey emotion. Our system design, signal processing methods, and results can provide guidance for future mediated social touch design.

1. Introduction

Long-distance communication has experienced a tremendous evolution in the past few decades. The invention of the telephone broke the communication barrier for spatially separated individuals (Tillema et al., 2010), and the development of video recording and display technology gives people the ability to explore the colorful world through an electronic screen (Saravanakumar and SuganthaLakshmi, 2012). However, even with the ability to communicate online, over the phone, or through video chat, people can still experience feelings of loneliness or depression due to limited physical contact, especially under the social isolation of the COVID-19 pandemic (Tomova et al., 2020). These negative side effects of touch starvation, a lack of physical contact with others, have also been observed in elderly individuals, hospitalized individuals, and those who live alone (Tomaka et al., 2006; Klinenberg, 2016).

Social touch is an essential component in human development, cognition, communication, and emotional regulation (Cascio et al., 2019). Although social touch is common in our everyday lives, it is currently not possible for individuals to physically interact with each other when spatially separated.

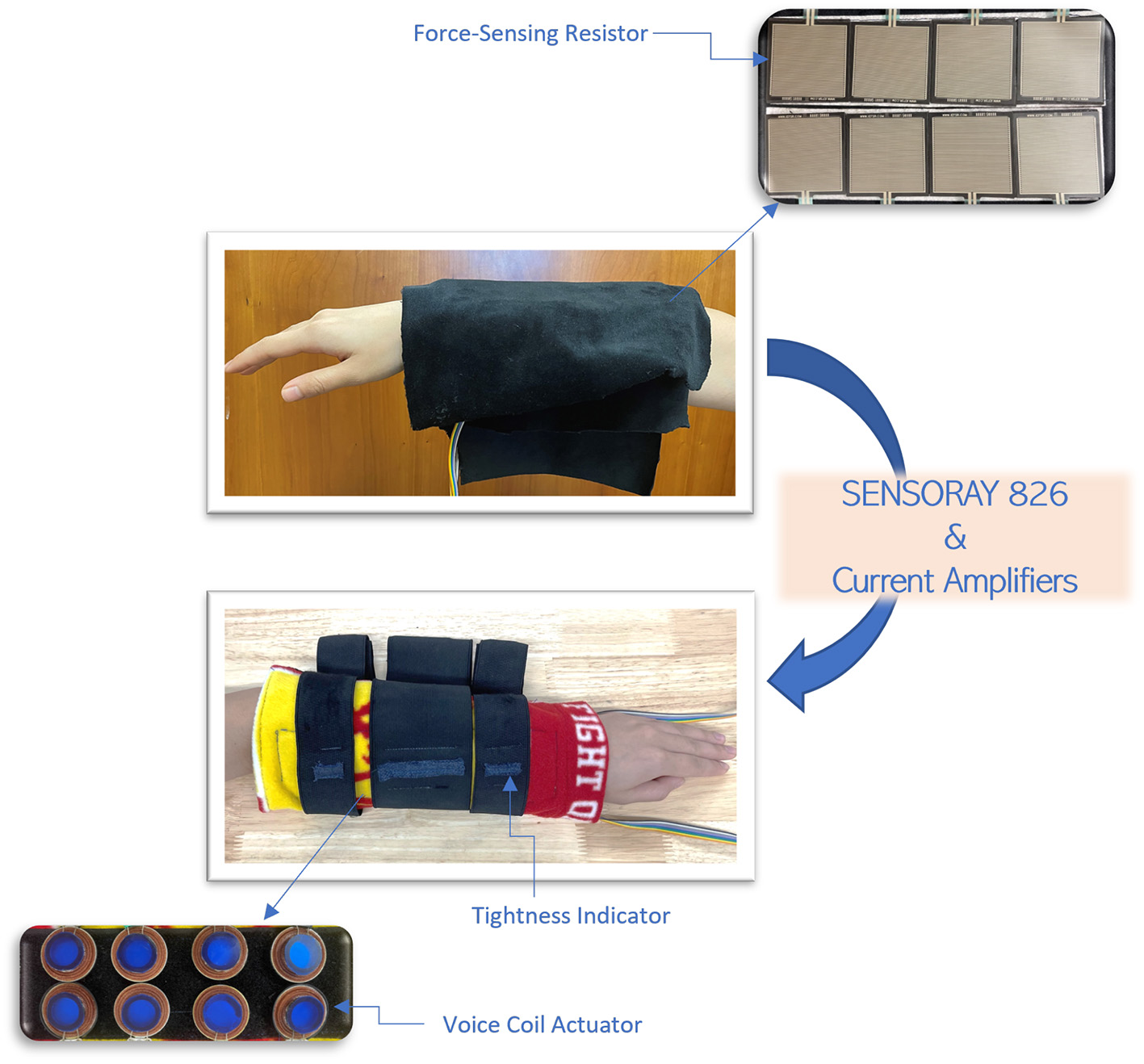

Although prior work has created haptic devices for displaying virtual social touch (Eichhorn et al., 2008; Tsetserukou, 2010; Nakanishi et al., 2014; Culbertson et al., 2018), there has been limited analysis of the acceptability of these virtual touch sensations or the role they play in mediating social interactions between individuals. The goal of this research is to study individuals' emotional responses to mediated social touch, and determine how the touch gesture and its speed affect the acceptability and emotional perception of the interactions. To achieve this goal, we have designed a system that has the ability to record and reproduce social touch, similar to how phones record and replicate voice. The system consists of two parts: one wearable sleeve that records human gesture data using force sensors, and a second sleeve that generates the mediated social touch to the user (Figure 1).

Figure 1

Input device with an array of sensors and output device with an array of actuators. The output device is controlled by a direct one-to-one mapping of sensor to actuator after gesture-specific data processing.

This article evaluates our system's ability to convey emotions using common social touch gestures: poking, patting, massaging, squeezing, and stroking. These gestures were chosen for their ability to express both positive and negative emotions (Hertenstein et al., 2009).

The remainder of the article is organized as follows. In Section 2, we review relevant work in the area of mediated social touch and data-driven methods. Our device design and control methods are explained in Section 3. The study design is introduced in Section 4. The study results are presented in Section 5 and discussed in Section 6.

2. Related Work

Social touch plays an important role in human development and communication, helping individuals maintain relationships (Stafford, 2003) and communicate emotions (Hertenstein et al., 2006), while also directly reflecting physical and psychological closeness between individuals (Andersen, 1998). Research into direct human-human social touch has shown that touch can communicate both positive and negative emotions, including anger, fear, disgust, love, gratitude, and sympathy (Hertenstein et al., 2009). Interpersonal touch is the most commonly used method of providing comfort (Dolin and Booth-Butterfield, 1993), can improve the well-being of older adults in nursing care (Bush, 2001), and reduces patients' stress levels during preoperative procedures (Whitcher and Fisher, 1979). Touch as an expressive behavior also plays an important role in building relationships. Studies have shown that intimate partners benefit from touch on a psychological level (Debrot et al., 2013) and that caregiver touch impacts emotion perception in children as a function of relationship quality (Thrasher and Grossmann, 2021). Emotional perception is affected even by gender asymmetries during touch interaction (Hertenstein and Keltner, 2011). The sense of touch can not only communicate distinct emotions but also elicit (Suk et al., 2009) and modulate human emotion. Communicating and eliciting an emotion are distinct: for example, one can perceive a touch as communicating anger without feeling angry oneself. This profound connection between social touch and emotion opens up a wide range of research areas for haptic researchers to explore.

Social touch can take many forms including shaking hands, hugging, holding hands, and a pat on the back. A major challenge in the field of haptics is how to provide meaningful and realistic sensations similar to what is relayed during social touch interactions (Erp and Toet, 2015). The inability to transmit touch during interpersonal communication leads to a limited feeling of social presence during virtual interactions between people, motivating the design of haptic systems to deliver virtual social touch cues.

Mediated social touch refers to the use of haptic technology to allow people to touch one another over a distance (Haans and Ijsselsteijn, 2006). Researchers have discovered that mediated social touch can compensate for the loss of nonverbal cues that results from the use of current communication methods such as texting and videochat (Tan et al., 2010).

There has been a long history of research on the development of mediated social touch devices to replicate the feelings of social touch through wearable and holdable haptic devices. Most devices have focused on replicating a single form of social touch, such as a handshake (Nakanishi et al., 2014), a hug (Mueller et al., 2005; Cha et al., 2009; Tsetserukou, 2010; Delazio et al., 2018), a high-five (Yarosh et al., 2017), or a stroke on the arm (Eichhorn et al., 2008; Knoop and Rossiter, 2015; Tsalamlal et al., 2015; Culbertson et al., 2018; Israr and Abnousi, 2018; Wu and Culbertson, 2019). Vibration is a commonly adopted modality for social touch rendering, including for conveying specific emotions (Hansson and Skog, 2001) or gestures (Wang et al., 2019). Although these devices are effective at displaying specific touch or motion, they lack the complexity necessary to make clear the intent behind the touch. Social touch signals can be complex and varied, as even a simple stroke can be used to convey a wide range of emotions such as sympathy, sadness, and love (Hertenstein et al., 2009). To create an effective virtual touch system, the signals used to create the gesture are just as important as the device's mechanical design.

Previous research has shown that vibrations (Seifi and Maclean, 2013), thermal feedback (Wilson et al., 2016), and air jets (Tsalamlal et al., 2015) can convey distinct emotions. However, these devices used representational haptic icons that required users to learn associations between each emotion and the displayed sensation. The icons were intended to focus users on the emotional content of the signal rather than to create high-fidelity virtual gestures.

Rather than directly playing pre-tuned signals, some researchers have created data-driven social touch systems that measure an input touch signal from a user touching one device and replay that signal to another user with an output device. These systems have been created for a variety of touch gestures, with varying fidelity and intimacy of touch. One common method for measuring touch in these systems is an array of pressure sensors, with the output varying between pressure (Teh et al., 2012), lateral motion (Eichhorn et al., 2008), vibration (Furukawa et al., 2012; Huisman et al., 2013), and multisensory feedback (Cabibihan and Chauhan, 2015). Researchers have also explored higher-fidelity input-output systems, for example kissing display devices for use between family members (Cheok, 2020) and romantic partners (Samani et al., 2012). There has also been work on creating ultra-realistic input devices (Teyssier et al., 2019). Given the complexity of human social touch and the large number of previous devices that have been created, there is a lack of fundamental knowledge of user preferences for realism, touch intimacy, touch location, and interaction method.

Although previous work has shown that both direct (Hauser et al., 2019) and mediated (Salvato et al., 2021) social touch can convey emotion, there has been limited work studying how these emotions are conveyed or recognized. Specifically, research is currently lacking on how the actuator signals in mediated social touch devices can be controlled to convey distinct emotions. In this work, we aim to explore how temporal changes to the touch signals can alter perceived emotions. To achieve this, we develop a novel haptic system to examine the relationship between mediated touch and emotion.

3. System Design and Control

With the goal of realistically replicating human touch, we created a system capable of both recording and displaying human touch patterns. We use a data-driven approach for creating the actuator control signals, recording touch patterns as an array of forces. This section describes the design of our input and output devices, and signal processing methods that make up our lightweight data-driven haptic system.

3.1. Device Hardware

3.1.1. Input Device

Our system consists of two devices: an input device to record the social touch signals as force and an output device for displaying the virtual touch to the user. Both devices were designed as fabric sleeves to be worn on the forearm. As shown in Figure 1, the input sleeve has eight force sensing resistors (FSRs) arranged in a 2 × 4 array. The FSRs measure the applied force as a change in resistance. These sensors (Sparkfun SEN-09376) were chosen for their square shape (1.5 × 1.5 in), which allowed tight packing of the sensors; their flexibility, which allows easy integration into a wearable device; and their ability to measure forces in the range used during human social touch (Wang et al., 2010). A previous study has shown that recorded pressure data can be used to generate control signals that mimic various gestures with a voice coil device (Salvato et al., 2021). The sensors are embedded in a fabric sleeve. To prevent the sensors from bending and to provide a stiffer material for better force transfer when the sleeve is worn on the lower arm, we added a layer of rigid padding as supporting material between the sensors and the skin.

When recording the signals, the sensor sleeve was freely laid on the arm to avoid noise from pre-loading the sensors. We zeroed the sensors before each recording to remove the forces caused by the wearer's motion, bending in the sensors, and the pressure of the sensors resting on the arm. A voltage divider circuit was used to convert the sensors' change in resistance to change in voltage, and the resulting voltage was sampled at 1 kHz as analog inputs through a Sensoray 826 PCI card. The signals were lowpass filtered at 10 Hz to remove high-frequency noise not caused by human motion (Srinivasan, 1995). Further signal processing to convert the recorded signals to actuator control signals is described below.
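The acquisition chain above (voltage divider, zeroing, 10 Hz low-pass at 1 kHz) can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the divider wiring (FSR as the upper leg, output taken across a fixed resistor), the supply voltage `V_SUPPLY`, and the resistor value `R_FIXED` are hypothetical, and because the paper does not state the filter type or order, a first-order IIR low-pass stands in for whatever filter was actually used. Zeroing is modeled as subtracting the per-channel mean of a baseline captured with the sleeve resting on the arm.

```python
import numpy as np

FS_HZ = 1000.0        # sampling rate reported in the paper (1 kHz)
CUTOFF_HZ = 10.0      # low-pass cutoff reported in the paper
V_SUPPLY = 5.0        # hypothetical divider supply voltage
R_FIXED = 10_000.0    # hypothetical fixed divider resistor (ohms)


def divider_voltage(r_fsr):
    """Voltage across the fixed resistor with the FSR as the upper leg (assumed wiring)."""
    r_fsr = np.asarray(r_fsr, dtype=float)
    return V_SUPPLY * R_FIXED / (R_FIXED + r_fsr)


def zero_channels(raw, baseline):
    """Subtract the per-channel mean of a baseline recorded with the sleeve at rest."""
    raw = np.asarray(raw, dtype=float)
    return raw - np.asarray(baseline, dtype=float).mean(axis=0)


def lowpass(x, fs=FS_HZ, fc=CUTOFF_HZ):
    """First-order IIR low-pass along axis 0 (time); a stand-in for the unspecified filter."""
    x = np.asarray(x, dtype=float)
    dt = 1.0 / fs
    rc = 1.0 / (2.0 * np.pi * fc)
    alpha = dt / (rc + dt)          # smoothing coefficient for the chosen cutoff
    y = np.empty_like(x)
    y[0] = x[0]
    for k in range(1, len(x)):
        y[k] = y[k - 1] + alpha * (x[k] - y[k - 1])
    return y
```

With these constants, a 200 Hz component is attenuated to roughly 5% of its amplitude, while slow hand motions below 10 Hz pass largely unchanged. A zero-phase Butterworth filter would avoid the phase lag of this single-pass filter if the recordings are processed offline.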

3.1.2. Output Device

To generate the sensation of different social touch gestures, we created an output device with an array of voice coil actuators (Tectronic Elements TEAX19C01-8). These actuators are driven at low frequencies to provide force normal to the user's arm. This type of actuator has previously been used to create a comfortable and realistic stroking sensation (Culbertson et al., 2018), and Salvato et al. (2021) further showed that this actuation hardware is effective at displaying pleasant and realistic social touch cues. Eight voice coil actuators are placed in a 2 × 4 array in a fabric sleeve to simplify the mapping from the recorded forces to actuator control signals (Figure 1). The actuator signals are output at 1 kHz from the Sensoray 826 PCI card and are sent through a linear current amplifier with a gain of 1 A/V.

These voice coil actuators are position-controlled, meaning that the control signals specify the amount of actuator motion into the arm, and do not directly control the force that the actuators apply to the arm. Therefore, it is important to ensure the tightness of the sleeve is consistent across users and along the length of the sleeve. We created tightness indicators (TI) at three points along the length of the sleeve. Each TI is a piece of elastic with markings showing the ideal amount of stretch. To create recognizable social touch gestures, the voice coils must be programmed both temporally and spatially. The details of these actuator control signals are discussed below.

3.2. Device Control

This section describes our signal processing algorithms for creating actuator control signals from recorded force, with the goal of replicating touch gestures as realistically as possible. In a previous study by Salvato et al. (2021), a similar 2 × 4 voice coil layout was used for generating social touch, and the study showed promising results for generating realistic social touch with this device. That study used a recording system with finer resolution (1″ × 1″ sensors), so the sensing resolution was greater than the actuator resolution, and this additional resolution helped smooth the gestures both temporally and spatially. In our current system, each force sensor covers the same area as a voice coil actuator (1.5″ × 1.5″). To compensate, we designed the algorithms that map the sensor data to actuator control signals to include blending between actuators. This blending produces the same temporal and spatial smoothing as the greater sensing resolution of the previous system.

3.2.1. Strategies for Different Gestures

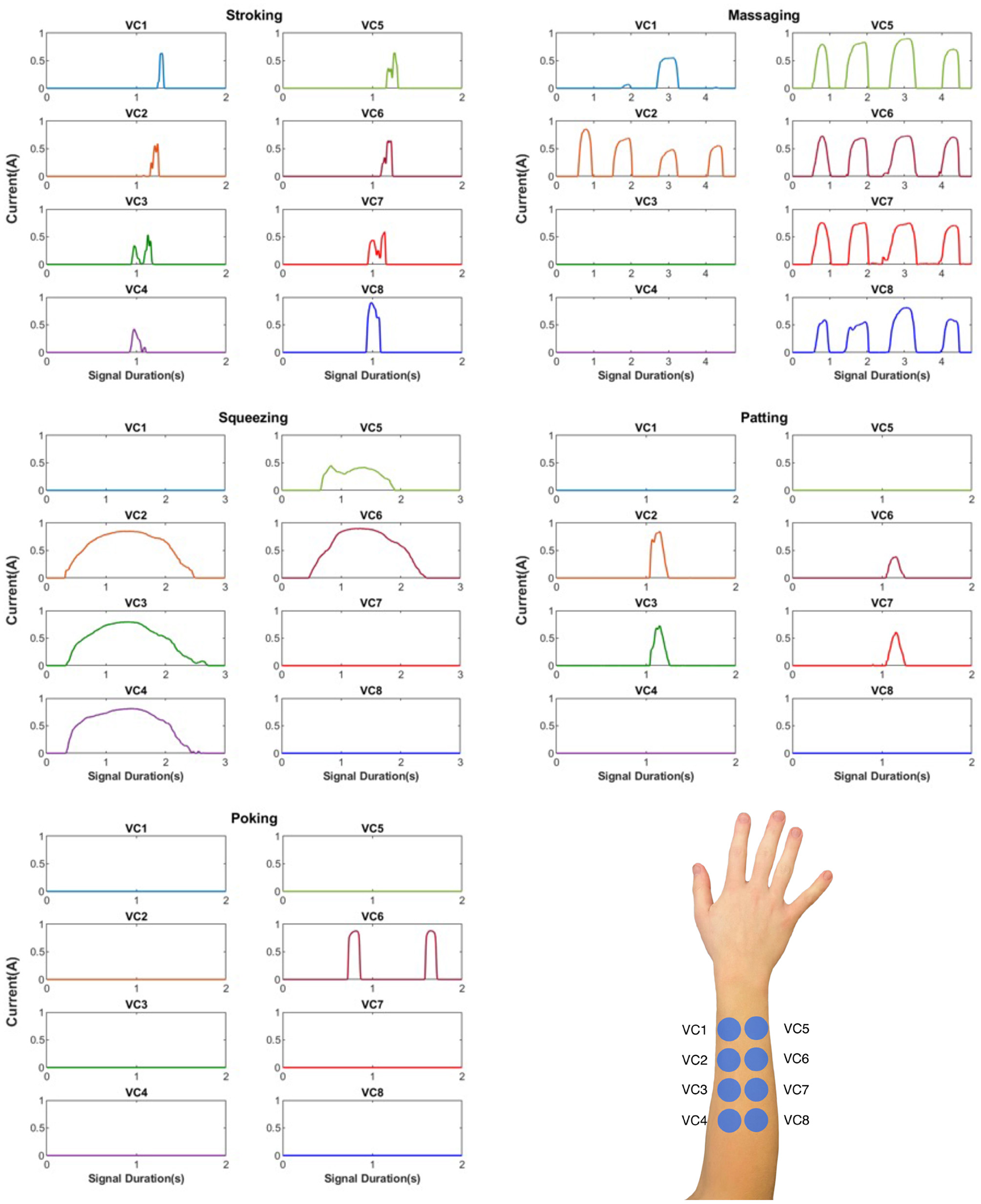

Our system is capable of displaying five social touch gestures: poking, patting, massaging, squeezing, and stroking. These gestures were chosen due to their common appearance in social touch and their ability to convey both positive and negative emotions (Hertenstein et al., 2009). Because the different gestures have unique spatial and temporal patterns, the data is processed and smoothed differently for each gesture type. Since the signals directly control the motion of the voice coil actuators, we apply the principle of exaggeration to maximize the amount of actuator motion, resulting in more noticeable and salient virtual gestures. Exaggeration is a widely used animation principle for amplifying cartoon characters' expressions (Lasseter, 1987). Figure 3 shows the actuator signals for these five gestures. The number of actuators moving at a given time corresponds to the perceived area of contact during the gesture.

3.2.1.1. Poking

Poking involves using a single finger to repeatedly press into the arm. Each poke is made up of a rise in force as the finger presses into the skin, followed by a decrease in force as the finger releases contact. Since each FSR covers an area larger than a fingertip, we expect only a single FSR to be activated during poking, although a poke can also land between two adjacent sensors. During output, we want to move only a single actuator in order to accurately represent the small contact area of a single finger. We first use Touch Point Detection (TPD) to track the number of sensors that have valid contact force with the hand. TPD registers every sensor contact whose cycle T (a measure of contact duration) satisfies T ≥ 0.05 s. This threshold was chosen to ensure that only valid contacts are detected, ignoring fluctuations due to noise. We define Nc as the total number of active sensors determined by TPD. Ideally, TPD for poking results in Nc = 1 active sensor; if the poke occurs between two sensors, Nc = 2. When two sensors are activated, we select the more significant sensor (i.e., the sensor with the higher sensed force) and set the other sensor to zero.
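A minimal sketch of TPD and the poke-isolation step is shown below. It assumes that "contact" means the force on a channel exceeds a threshold and that the cycle T is the length of the longest continuous run above that threshold; `CONTACT_THRESHOLD` and all function names are hypothetical, since the paper gives only the duration criterion T ≥ 0.05 s.

```python
import numpy as np

FS_HZ = 1000                # sampling rate (1 kHz)
MIN_CONTACT_S = 0.05        # minimum contact duration T from the paper
CONTACT_THRESHOLD = 0.1     # hypothetical force level (sensor units) counted as contact


def longest_run(mask):
    """Length of the longest run of consecutive True values in a 1-D boolean array."""
    best = run = 0
    for m in mask:
        run = run + 1 if m else 0
        best = max(best, run)
    return best


def touch_point_detection(forces, fs=FS_HZ, threshold=CONTACT_THRESHOLD):
    """Indices of sensors with at least one contact lasting >= MIN_CONTACT_S.

    forces: array of shape (n_samples, n_sensors); len(result) is Nc.
    """
    forces = np.asarray(forces, dtype=float)
    min_samples = int(round(MIN_CONTACT_S * fs))
    return [s for s in range(forces.shape[1])
            if longest_run(forces[:, s] > threshold) >= min_samples]


def isolate_poke(forces, active):
    """Keep only the active sensor with the highest peak force; zero all other channels."""
    forces = np.asarray(forces, dtype=float)
    out = np.zeros_like(forces)
    if active:
        keep = max(active, key=lambda s: forces[:, s].max())
        out[:, keep] = forces[:, keep]
    return out
```

In this sketch a brief 30 ms spike is ignored by TPD, while any contact of 50 samples or more at 1 kHz is counted, which mirrors the intent of rejecting noise-driven fluctuations.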

To maximize the strength of the poke during actuation, we exaggerate the poke by mapping the sensed force to the full motion range of the actuator. We use a linear mapping where the maximum sensed force corresponds to the largest actuator indentation (Vact = 0.9V) and the minimum force corresponds to the actuator's neutral position (Vact = 0V). All other actuators' movement is constrained so that only a single actuator moves at a time to ensure the poking sensation is strong and only covers a small contact area.
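The exaggeration step is a linear rescaling of force into the actuator command range. The sketch below assumes only what the paragraph states: the minimum force maps to the neutral position (0 V) and the maximum force maps to the largest indentation (0.9 V). The function name `exaggerate` is hypothetical.

```python
import numpy as np

V_MAX = 0.9   # command for the largest actuator indentation (V), from the paper
V_MIN = 0.0   # command for the actuator's neutral position (V)


def exaggerate(force, f_min=None, f_max=None, v_min=V_MIN, v_max=V_MAX):
    """Linearly map force so that f_min -> v_min and f_max -> v_max.

    If f_min / f_max are not given, they are taken over the whole input array.
    """
    force = np.asarray(force, dtype=float)
    f_min = force.min() if f_min is None else f_min
    f_max = force.max() if f_max is None else f_max
    if f_max == f_min:
        # Flat input: nothing to scale, so hold the actuator at its neutral position.
        return np.full_like(force, v_min)
    return v_min + (force - f_min) * (v_max - v_min) / (f_max - f_min)
```

Applied to the single channel kept after TPD, this yields a full-range poke. Because the default minimum and maximum are taken over the entire array, passing all eight channels at once scales them with one shared maximum, which matches the rule described for massaging in Section 3.2.1.4.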

3.2.1.2. Patting

The main difference between poking and patting is the contact area. Patting uses all or part of the palm to apply a gentle force to the arm. As with poking, our first step in processing the signals for patting is to use TPD to determine the number of active sensors. From many recordings, we have determined that Nc = 2–4 sensors for a typical pat. Since a pat has a larger contact area than a poke, we perform a data integrity check on the signals rather than identifying the most significant sensor. This integrity check ensures that the recorded touch signal is plausible for the gesture type. For example, if three activated sensors are not adjacent to each other during a pat, some sensors may not be collecting data correctly. The integrity check has two parts. First, it checks whether all activated sensors are connected, using a simple Depth First Search (DFS) to test graph connectivity, where each sensor is a vertex connected by an edge to each of its two or three neighbors, depending on its location in the 2D layout. Second, we check whether the acceleration of the motion is consistent by taking the derivative of the signals; all values should be either positive or negative. Any signal that does not pass the integrity check is assumed to be an invalid recording and must be collected again. As with poking, we linearly map the sensor signals to actuator control signals by setting the maximum force to the largest actuator indentation and the minimum force to the actuator's neutral position. All actuators corresponding to inactive sensors are set to zero.
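The connectivity half of the integrity check can be sketched as a DFS over the 2 × 4 sensor grid. This sketch assumes sensors are indexed row by row (index = row × 4 + column) and that neighbors are the up/down/left/right sensors, which gives corner sensors two neighbors and the others three, consistent with the description above. The derivative-sign check is not shown because its exact criterion is not fully specified.

```python
ROWS, COLS = 2, 4   # sensor layout from the paper; index = row * COLS + col (assumed ordering)


def neighbors(idx):
    """4-connected neighbors of a sensor in the 2 x 4 grid (2 for corners, 3 otherwise)."""
    r, c = divmod(idx, COLS)
    out = []
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        rr, cc = r + dr, c + dc
        if 0 <= rr < ROWS and 0 <= cc < COLS:
            out.append(rr * COLS + cc)
    return out


def is_connected(active):
    """Iterative DFS restricted to active sensors; True if they form a single component."""
    active = set(active)
    if not active:
        return False
    start = next(iter(active))
    seen, stack = {start}, [start]
    while stack:
        node = stack.pop()
        for nb in neighbors(node):
            if nb in active and nb not in seen:
                seen.add(nb)
                stack.append(nb)
    return seen == active
```

For example, active sensors {0, 1, 5} form one connected patch and would pass, whereas {0, 2} are separated by an inactive sensor and would flag the recording for re-collection.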

3.2.1.3. Squeezing

A squeezing gesture involves movement of multiple fingers during contact. A typical squeeze on the lower arm is made with the thumb on one side of the arm and the other four fingers on the opposite side; all fingers apply force toward a virtual center at the same time. A valid squeezing recording should have the thumb on one row of the FSRs and the rest of the fingers on the other row, as shown in Figure 3. If the fingers are too concentrated in a single area, the information loss will make the transformed signal ambiguous, and the gesture will likely be misinterpreted as poking. Therefore, we first use TPD to determine whether the signals match what we expect from a squeeze, that is, Nc = 1–2 active sensors in one row and Nc = 2–4 active sensors in the other row. If the recording does not match this pattern, it is deemed invalid and must be recorded again.
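The squeeze validity rule reduces to counting active sensors per row. The sketch below assumes the same row-major indexing as a 2 × 4 grid (indices 0–3 in one row, 4–7 in the other) and accepts either row as the thumb row; the function names are hypothetical.

```python
COLS = 4   # sensors per row in the 2 x 4 array (row-major indexing assumed)


def row_counts(active):
    """Number of active sensors in row 0 and row 1."""
    counts = [0, 0]
    for idx in active:
        counts[idx // COLS] += 1
    return counts


def is_valid_squeeze(active):
    """True if one row has 1-2 active sensors (thumb) and the other has 2-4 (fingers)."""
    top, bottom = row_counts(active)

    def thumb_ok(n):
        return 1 <= n <= 2

    def fingers_ok(n):
        return 2 <= n <= 4

    return (thumb_ok(top) and fingers_ok(bottom)) or (thumb_ok(bottom) and fingers_ok(top))
```

A recording with one thumb sensor and three finger sensors on the opposite row passes, while three adjacent sensors in a single row fail and would be re-recorded.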

To increase the saliency of the squeeze, we exaggerate the center of force. If two sensors in the same column are activated at the same time, we scale these signals by setting the maximum sensed force equal to the largest actuator indentation. The rest of the sensors are not exaggerated and simply follow a linear scaling between sensed force and actuator voltage. For a squeeze with a long holding period, we manually identify the start and end of the hold and set the actuator signals equal to the mean of the signals during the entire holding period. This scaling and smoothing exaggerates the squeezing sensation, improving its perceived strength and saliency.
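Stabilizing the hold amounts to flattening the signal between the manually chosen start and end of the hold. The sketch below assumes the hold boundaries are given as sample indices (start inclusive, end exclusive) and works on a single channel or on all channels at once; `stabilize_hold` is a hypothetical name.

```python
import numpy as np


def stabilize_hold(signal, start, end):
    """Replace samples in [start, end) with their mean (per channel for 2-D input)."""
    out = np.array(signal, dtype=float, copy=True)
    out[start:end] = out[start:end].mean(axis=0)
    return out
```

For instance, a hold segment [1, 3, 5] becomes [3, 3, 3], so the actuator presses steadily instead of reproducing small force fluctuations from the recording hand.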

3.2.1.4. Massaging

Massaging shares some patterns with squeezing, but the two are not identical. For processing purposes, we can treat massaging as repeated squeezing that moves across the arm. However, we do not impose the same restriction on Nc across the two rows as we do for squeezing, because during massaging it is common for the entire palm to press on one row while the four fingers press on the other. We exaggerate all sensors by linearly scaling them to the actuator's full range of motion using the maximum force across all sensors. To further improve saliency, we stabilize the hold using the same method as for squeezing.

3.2.1.5. Stroking

Stroking refers to moving one's hand with gentle pressure over the lower arm. The recorded signal shows multiple sensors in the same row being activated in sequence. To make the stroking pattern more noticeable, we emphasize the moving center of the stroke (i.e., the middle of the fingers applying the force). First, we determine the direction of the stroke and the size of the contact area. If two sensors in a row are activated, we amplify the signal of the front sensor with a scaling factor of α = 1.25. If three sensors in a row are activated at the same time, we emphasize the middle sensor, also amplifying it with a scaling factor of α = 1.25. To create a smooth, continuous-feeling stroke, we blend the signals of adjacent actuators. We collect the start, peak, and end time-stamps of each signal to determine the direction of the stroking motion. We then blend the signals of adjacent actuators in the direction of motion by shifting the start time of the next actuator so that it overlaps the previous actuator in time by a set duration. This overlap maintains the shape of the actuator signals but increases the continuity of the stroke by providing a smooth transfer of pressure between actuators. A similar overlap was used by Culbertson et al. (2018) to create a smooth stroking sensation, and that work found that an overlap of 25% of the actuator's total motion duration creates the most continuous sensation. Through pilot testing, we also determined that an overlap of 25% was the best choice for our system.
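The blending step can be sketched as re-timing each actuator's pulse. This is one possible reading of the description, with several assumptions: a pulse is the span where an actuator's command exceeds a hypothetical `THRESHOLD`, the stroke direction is taken from the order of pulse onsets, and the 25% overlap is measured relative to the previous actuator's pulse duration. The α = 1.25 emphasis is shown as a separate helper; which channel counts as the front or middle sensor is left to the caller.

```python
import numpy as np

OVERLAP = 0.25    # overlap as a fraction of the previous pulse's duration (paper's value)
THRESHOLD = 1e-6  # hypothetical level above which an actuator counts as moving
ALPHA = 1.25      # emphasis factor for the front or middle sensor (paper's value)


def active_span(x, threshold=THRESHOLD):
    """(first, one-past-last) sample index where |x| exceeds the threshold, or None."""
    idx = np.flatnonzero(np.abs(x) > threshold)
    return (idx[0], idx[-1] + 1) if idx.size else None


def emphasize(signals, channel, alpha=ALPHA):
    """Scale one actuator channel by alpha (e.g. the front sensor of the stroke)."""
    out = np.array(signals, dtype=float, copy=True)
    out[:, channel] *= alpha
    return out


def blend_stroke(signals, overlap=OVERLAP):
    """Re-time pulses so each one overlaps the previous pulse by `overlap` of its duration.

    signals: array (n_samples, n_actuators). Pulse shapes are preserved; only onsets move.
    """
    signals = np.asarray(signals, dtype=float)
    pulses = []
    for ch in range(signals.shape[1]):
        span = active_span(signals[:, ch])
        if span is not None:
            pulses.append((span[0], ch, signals[span[0]:span[1], ch]))
    pulses.sort(key=lambda p: p[0])           # activation order gives the stroke direction
    placed = []
    for i, (onset, ch, pulse) in enumerate(pulses):
        if i == 0:
            t = onset                         # first actuator keeps its recorded onset
        else:
            prev_t, _, prev = placed[-1]
            t = prev_t + len(prev) - int(round(overlap * len(prev)))
        placed.append((t, ch, pulse))
    length = max([signals.shape[0]] + [t + len(p) for t, _, p in placed])
    out = np.zeros((length, signals.shape[1]))
    for t, ch, pulse in placed:
        out[t:t + len(pulse), ch] = pulse
    return out
```

With three back-to-back 100-sample pulses, the second actuator would start at sample 75 and the third at sample 150, so each handoff shares 25 ms of simultaneous motion.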

4. Study

Speed plays an important role in human perception. Previous research has shown a correlation between perceived emotion and the speed of speech (Lindquist et al., 2006) and the tempo of music (Rigg, 1940). The high arousal caused by fast-paced music has also been shown to increase the risk of traffic violations (Brodsky, 2001). In this research, we examine how users interpret the emotion of mediated social touch gestures and how these emotions can be manipulated by the speed of the gesture.

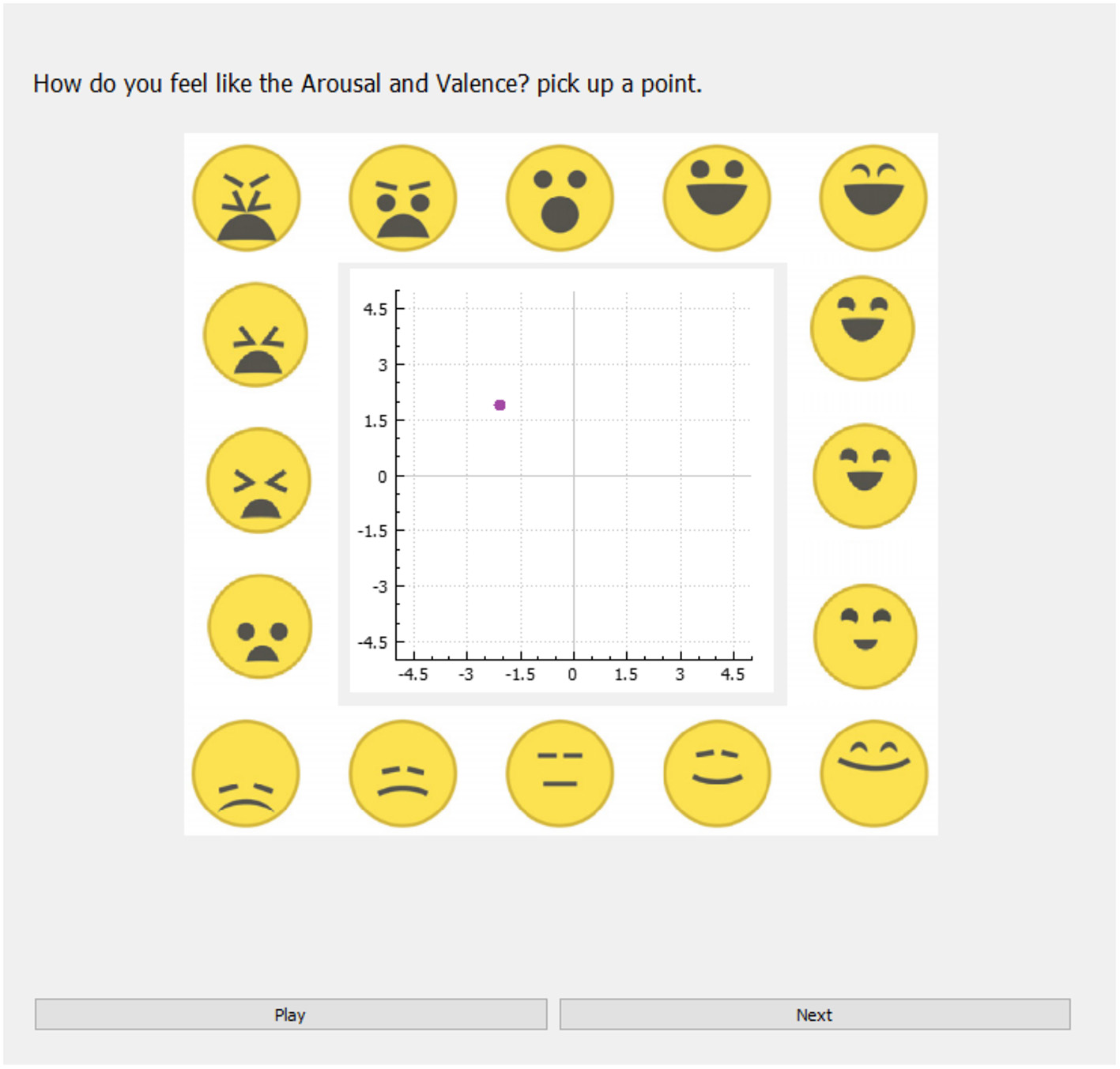

Twenty individuals (8 female and 12 male, 18–35 years old) participated in the study. The experimental protocol was approved by the Institutional Review Board at the University of Southern California under protocol number UP-19-00712, and participants gave informed consent. Participants sat at a desk and wore the output device on their left arm. They provided feedback through the GUI shown in Figure 2 and wore headphones playing white noise to block auditory cues from the actuators. Additionally, the fabric of the sleeve hid the actuators from view, so participants relied only on haptic cues in their ratings. The details of the study setup, conditions, and procedure are discussed below.

Figure 2

Study GUI, adapted from Toet et al. (2018). Participants rated the valence and arousal of each gesture by selecting a point on the 2D plot.

4.1. Experimental Conditions

The social touch gestures were pre-recorded by the experimenter so that all participants received the same feedback. The experimenter wore the input device on his left arm and made the gestures with his right hand. The data was processed as discussed in Section 3.2 and the actuator control signals were stored in a text file. We created and stored a single recording for each gesture, and empirically determined the ideal speed of each gesture using a pilot study.

In this work, we want to evaluate the effect of gesture speed on users' perception and preference. For consistency, we altered the speed of the five recorded gestures by resampling the original signals, ensuring that the touch pattern in the original waveform was maintained. The signals were downsampled to achieve a faster speed and upsampled to achieve a slower speed. To ensure a smooth signal, spline interpolation was used during resampling. This method preserves the shape of the signals and introduces the least amount of noise. We created five speed levels for each gesture type by both speeding up and slowing down the original gesture recordings: original speed (OS), 4 times slower (4S), 2 times slower (2S), 4 times faster (4F), and 2 times faster (2F). Altering the gesture speed only affects the duration and frequency of the gesture signal; most other signal parameters, such as touch intensity and contact area, were preserved by our signal processing algorithm. Five gestures at five speed levels were used in the study, for a total of 25 distinct stimuli. The actuator signals used in the study are shown in Figure 3.
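Speed changes by spline resampling can be sketched as follows. The paper does not specify the spline type, so this sketch assumes a natural cubic spline fitted through the samples (solved with the Thomas algorithm so it stays NumPy-only) and evaluated on a new grid at the same 1 kHz output rate: a speed factor of 2 keeps about half as many samples (2F), and 0.5 produces about twice as many (2S). `SPEED_FACTORS` and the function names are hypothetical. `scipy.interpolate.CubicSpline` with `bc_type='natural'` computes the same kind of spline if SciPy is available.

```python
import numpy as np

# Hypothetical mapping from the study's speed labels to playback-speed factors.
SPEED_FACTORS = {"4S": 0.25, "2S": 0.5, "OS": 1.0, "2F": 2.0, "4F": 4.0}


def _natural_spline_second_derivs(y):
    """Second derivatives of a natural cubic spline through y at unit sample spacing."""
    n = len(y)
    m2 = np.zeros(n)
    if n < 3:
        return m2
    # Interior equations: M[i-1] + 4 M[i] + M[i+1] = 6 (y[i+1] - 2 y[i] + y[i-1]).
    rhs = 6.0 * (y[2:] - 2.0 * y[1:-1] + y[:-2])
    m = n - 2
    c = np.zeros(m)
    d = np.zeros(m)
    c[0] = 0.25
    d[0] = rhs[0] / 4.0
    for i in range(1, m):                     # Thomas algorithm: forward sweep
        denom = 4.0 - c[i - 1]
        c[i] = 1.0 / denom
        d[i] = (rhs[i] - d[i - 1]) / denom
    interior = np.zeros(m)
    interior[-1] = d[-1]
    for i in range(m - 2, -1, -1):            # back substitution
        interior[i] = d[i] - c[i] * interior[i + 1]
    m2[1:-1] = interior                       # natural boundary: M[0] = M[-1] = 0
    return m2


def resample_speed(signal, speed):
    """Play `signal` `speed` times faster by evaluating a cubic spline on a new grid."""
    y = np.asarray(signal, dtype=float)
    n = len(y)
    if n < 2:
        raise ValueError("need at least two samples")
    n_new = int(round((n - 1) / speed)) + 1
    x = np.linspace(0.0, n - 1, n_new)        # query positions in original sample units
    m2 = _natural_spline_second_derivs(y)
    i = np.clip(np.floor(x).astype(int), 0, n - 2)
    b = x - i
    a = 1.0 - b
    return a * y[i] + b * y[i + 1] + ((a**3 - a) * m2[i] + (b**3 - b) * m2[i + 1]) / 6.0
```

Because the spline passes through every original sample, slowing a signal down keeps all recorded values and only fills in smooth intermediate points, while the peak force (and hence touch intensity) is essentially preserved at every speed.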

Figure 3

Actuator signals used in the study for speed OS.

4.2. Phase 1

In Phase 1, participants were asked to evaluate their emotional response to the mediated touches by rating the perceived valence and arousal of each touch. Valence and arousal are two important dimensions in describing how individuals label their own subjectively experienced affective states (Barrett, 1998). Arousal is an evaluation of emotional intensity, and valence refers to the pleasantness (positive valence) or unpleasantness (negative valence) of an emotional stimulus. Participants rated the valence and arousal of the mediated touch together by selecting a single point on the 2D valence-arousal plot shown in Figure 2. This plot was adapted from the EmojiGrid, which was presented and validated in Toet et al. (2018).

Participants rated each mediated touch signal three times for a total of 75 cues, which were presented in randomized order. Participants were presented with a single mediated touch at a time and were allowed to replay the signal before rating. They were asked to rate the emotion that they felt the gesture was trying to convey. There is a difference between perceived emotion and personal emotion: perceived emotion is the emotion the gesture is trying to convey, while personal emotion is the feeling elicited in the user after receiving the touch. We explained this distinction to each participant to avoid confusion. Signals varied in length from 5 to 20 s, and this phase of the study took about 20–30 min in total. After completing all trials, participants were given a 10-minute break before moving on to Phase 2.
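The trial count follows from 5 gestures × 5 speeds × 3 repetitions = 75. A minimal sketch of generating one participant's randomized order is shown below; it assumes a full shuffle of all 75 trials, since the paper does not say whether randomization was blocked or constrained, and `make_trial_order` is a hypothetical name.

```python
import itertools
import random

GESTURES = ["poking", "patting", "massaging", "squeezing", "stroking"]
SPEEDS = ["4S", "2S", "OS", "2F", "4F"]
REPEATS = 3


def make_trial_order(seed=None):
    """All gesture-speed pairs, each repeated REPEATS times, in shuffled order."""
    trials = [pair for pair in itertools.product(GESTURES, SPEEDS) for _ in range(REPEATS)]
    random.Random(seed).shuffle(trials)
    return trials
```

Passing a per-participant seed makes each participant's order reproducible for later analysis.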

4.3. Phase 2

In this phase, we evaluate our system's performance in conveying realistic and pleasant gestures. Additionally, we examine the correlation between the gesture speeds and participants' perception of realism and comfort. We used the same signals from Phase 1, and participants were again asked to rate each mediated gesture three times for a total of 75 trials. Participants were asked “How would you rate the realism of the touch on the following scale?” and provided their answers on a 7-point Likert scale (1 = very unrealistic, 7 = very realistic). The comfort of the touch was similarly rated on a 7-point Likert scale (1 = very uncomfortable, 7 = very comfortable).

5. Results

5.1. Phase 1: Effect of Speed on Valence and Arousal

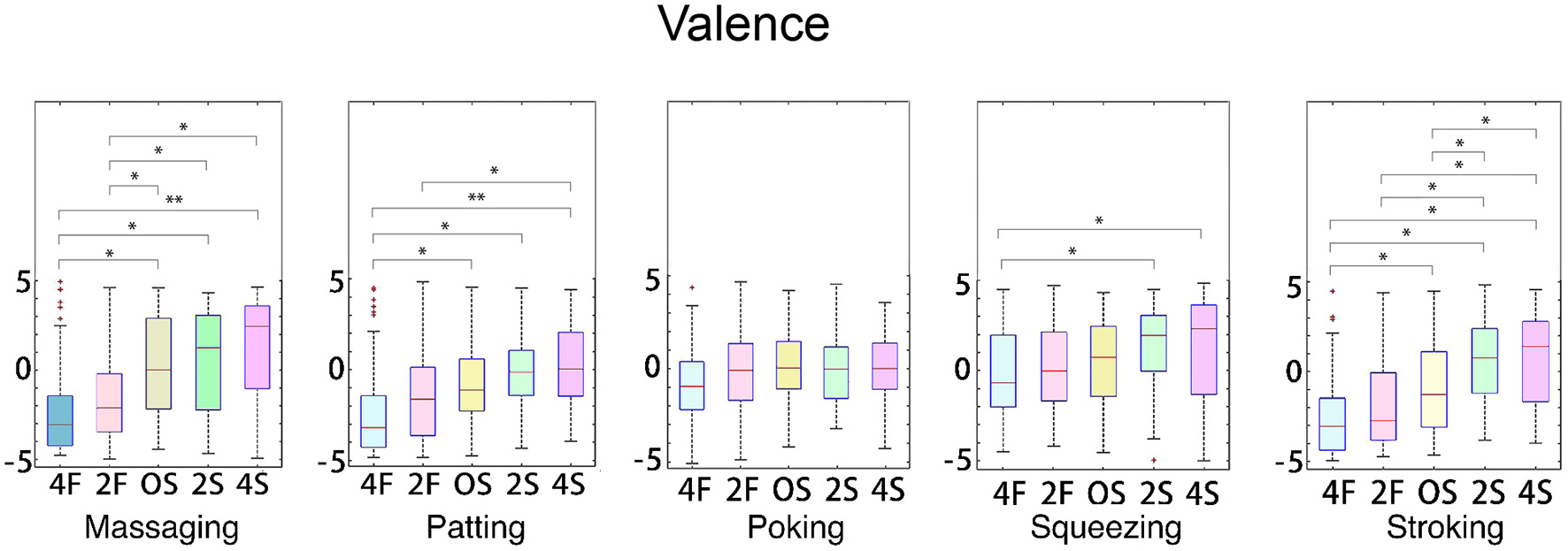

Figure 4 shows the valence ratings for each gesture and speed. We ran a one-way ANOVA on the valence rating for each gesture with the signal speed as factor. This analysis indicated that speed caused a statistically significant difference in the valence rating for stroking [F(4, 295) = 21.81, p < 0.001], massaging [F(4, 295) = 16.26, p < 0.001], squeezing [F(4, 295) = 5.03, p < 0.001], and patting [F(4, 295) = 9.6, p < 0.001]. Speed did not have a significant effect on the valence ratings for poking [F(4, 295) = 1.11, p = 0.35].
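The reported degrees of freedom are consistent with 300 ratings per gesture (20 participants × 3 repetitions × 5 speeds) entering a one-way ANOVA as independent observations: df_between = 5 − 1 = 4 and df_within = 300 − 5 = 295. The sketch below computes the F statistic from its sums of squares to make that arithmetic explicit; `one_way_anova` is a hypothetical helper, and `scipy.stats.f_oneway` returns the same F statistic together with its p-value.

```python
import numpy as np


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of 1-D rating arrays, one per speed."""
    groups = [np.asarray(g, dtype=float) for g in groups]
    k = len(groups)
    n_total = sum(g.size for g in groups)
    grand_mean = np.concatenate(groups).mean()
    # Between-group variation: how far each speed's mean rating is from the grand mean.
    ss_between = sum(g.size * (g.mean() - grand_mean) ** 2 for g in groups)
    # Within-group variation: spread of ratings around each speed's own mean.
    ss_within = sum(((g - g.mean()) ** 2).sum() for g in groups)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

Note that treating the three repetitions from each participant as independent ignores the within-subject structure of the design; a repeated-measures model would account for it.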

Figure 4

Valence ratings separated by gesture and speed. * p < 0.05, ** p < 0.01.

We then ran Tukey's post-hoc test for pairwise comparisons on stroking, massaging, squeezing, and patting to further evaluate the effects of speed on the valence ratings. The results showed a general trend of decreasing valence with increasing speed. For the stroking gesture: 4F and 2F had significantly lower valence than 2S and 4S, and OS had significantly lower valence than 2S and 4S. For the massaging gesture: 4F and 2F had significantly lower valence than OS, 2S, and 4S. For the squeezing gesture: 4F had significantly lower valence than 4S and 2S. For the patting gesture: 4F had significantly lower valence than OS, 2S, and 4S; 2F had significantly lower valence than 4S. There were no significant differences between any other signals.

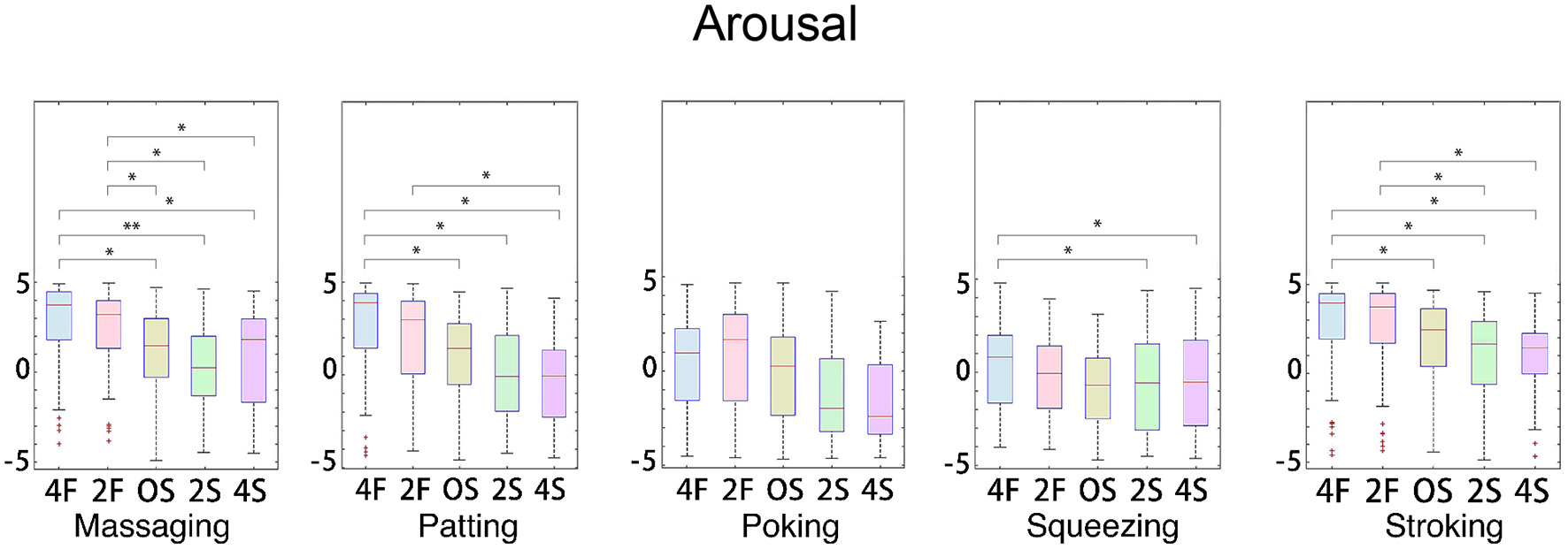

Figure 5 shows the arousal ratings for each gesture and speed. We ran a one-way ANOVA on the arousal rating for each gesture with the signal speed as factor. This analysis indicated that speed caused a statistically significant difference in the arousal rating for stroking [F(4, 295) = 6.72, p < 0.001], massaging [F(4, 295) = 13.51, p < 0.001], patting [F(4, 295) = 16.4, p < 0.001], and poking [F(4, 295) = 11.48, p < 0.001]. Speed did not have a significant effect on the arousal ratings for squeezing [F(4, 295) = 1.79, p = 0.13].

Figure 5

Arousal ratings separated by gesture and speed. *p < 0.05, **p < 0.01.

We also ran Tukey's post-hoc test for pairwise comparisons on stroking, massaging, patting, and poking to further evaluate the effects of speed on the arousal ratings. The results showed a general trend of increasing arousal with increasing speed. For the stroking gesture: 4F and 2F had significantly higher arousal than 2S and 4S. For the massaging gesture: 4F and 2F had significantly higher arousal than OS, 2S, and 4S. For the patting gesture: 4F had significantly higher arousal than OS, 2S, and 4S; 2F had significantly higher arousal than 2S and OS. For the poking gesture: 4F, 2F, and OS had significantly higher arousal than 2S and 4S. There were no significant differences between any other signals.

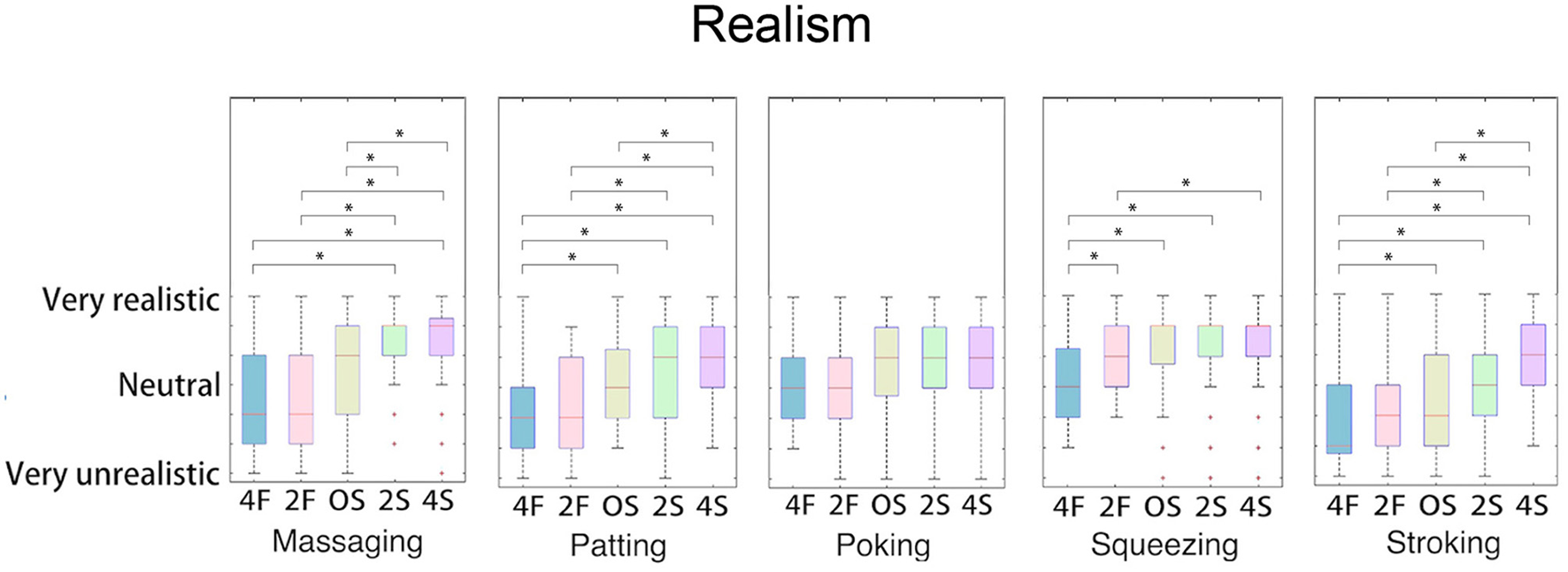

5.2. Phase 2: Effect of Speed Change on Realism and Comfort

Figure 6 shows the realism ratings for each gesture and speed. We ran a one-way ANOVA on the realism rating for each gesture with the signal speed as the factor. This analysis indicated that speed caused a statistically significant difference in the realism rating for stroking [F(4, 295) = 15.92, p < 0.001], massaging [F(4, 295) = 17.41, p < 0.001], squeezing [F(4, 295) = 5.46, p < 0.001], and patting [F(4, 295) = 17.53, p < 0.001]. Speed did not have a significant effect on the realism ratings for poking [F(4, 295) = 1.11, p = 0.35].

Figure 6

Realism ratings separated by gesture and speed. * p < 0.05.

We then ran Tukey's post-hoc pairwise comparison test on stroking, massaging, squeezing, and patting to further evaluate the effects of speed change on the realism ratings. The results showed a general trend of decreasing realism with increasing speed. For the stroking gesture: 4F and 2F had significantly lower realism ratings than 2S and 4S; 4F was significantly less realistic than OS; OS was significantly less realistic than 4S. For the massaging gesture: 4F, 2F, and OS had significantly lower realism than 2S and 4S. For the squeezing gesture: 4F had significantly lower realism than 2F, OS, 2S, and 4S. For the patting gesture: 4F and 2F had significantly lower realism than 2S and 4S. There were no significant differences between any other signals.

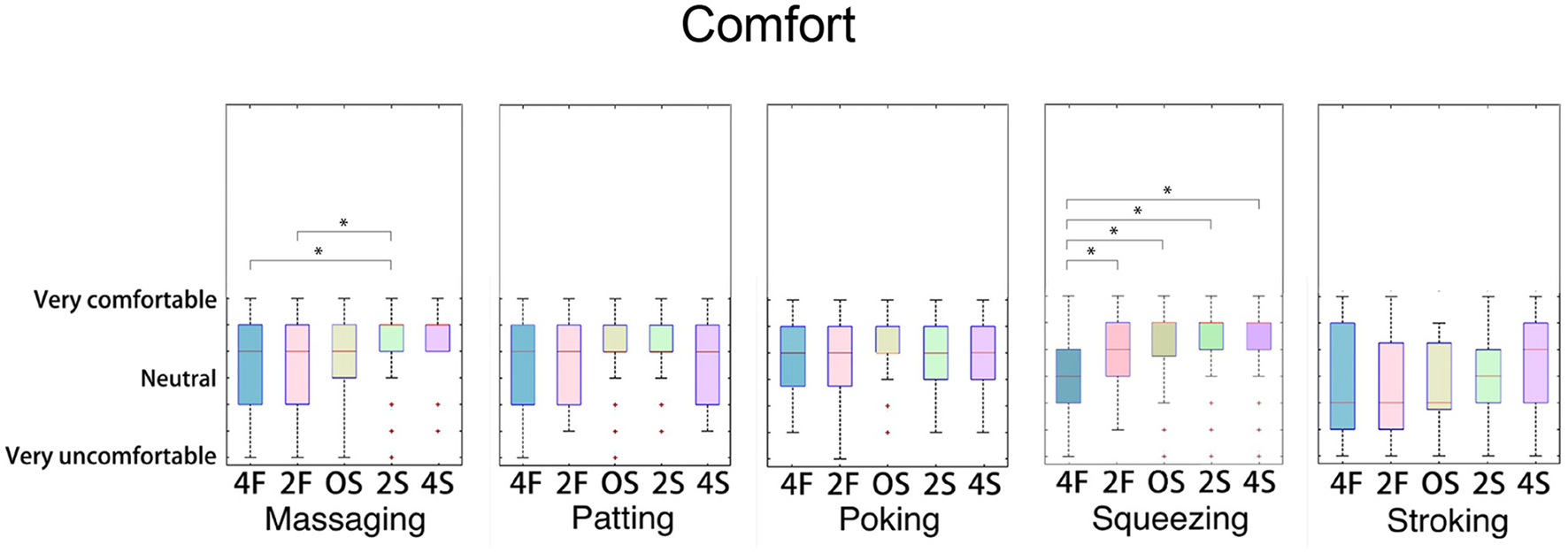

Figure 7 shows the comfort ratings for each gesture and speed. We ran a one-way ANOVA on the comfort rating for each gesture with the signal speed as the factor. This analysis indicated that speed caused a statistically significant change in the comfort rating for massaging [F(4, 280), p = 0.002] and squeezing [F(4, 280) = 6.73, p < 0.001]. Speed did not cause a significant difference in the comfort ratings for stroking [F(4, 280) = 1.47, p = 0.21], patting [F(4, 280) = 1.09, p = 0.36], or poking [F(4, 280) = 1.38, p = 0.23].

Figure 7

Comfort ratings separated by gesture and speed. * p < 0.05.

We then ran Tukey's post-hoc pairwise comparison test on massaging and squeezing to evaluate the effects of speed change on the comfort ratings. For both gestures, the results showed a general trend of decreasing comfort with increasing speed. For the massaging gesture: comfort for 4F and 2F was significantly lower than for 2S and 4S. For the squeezing gesture: comfort for 4F was significantly lower than for the four other speeds. There were no significant differences between any other signals.

6. Discussion

Studies have shown that both visual context (Esposito et al., 2009) and the relationship between toucher and touchee (Thompson and Hampton, 2011) affect the emotional perception of social touch. In our study, however, all visual information and context were removed from the interaction. The touches were not presented in the context of any social interaction, and the gestures were recorded by an experimenter who had no social relationship with the participants. We therefore expect the participants' ratings to reflect only the emotional content of the gesture itself.

Before discussing the individual gestures, we summarize the four main findings of our results:

1. The perceived valence of the touch is more positive for slower gestures and more negative for faster gestures.
2. The perceived arousal of the touch increases with increasing speed.
3. The realism of the mediated social touch improves with decreasing speed.
4. The mediated touch feels more comfortable when the speed is slow.

The current study provides evidence that the speed of mediated social touch plays a crucial role both in the emotion perceived from the touch and in how realistic and comfortable the touch feels.

Stroking has been shown to strongly activate C-tactile (CT) afferents, which respond maximally to stroking at velocities of 1–10 cm/s (Loken et al., 2009). This velocity range has also been shown to be the most pleasant for stroking on the skin. Research has also shown that people tend to move their hand faster when stroking an artificial arm than when stroking their partner's arm (Croy et al., 2016). We therefore expected speed to affect participants' ratings of stroking, with slower speeds preferred, and our results support this expectation. As the stroking speed increased, arousal increased and valence shifted from positive to negative. Realism decreased significantly as stroke speed increased, but we did not see a corresponding change in comfort. The effective stroke speed was 10.9 cm/s for 4S and 87.0 cm/s for 4F, both above the CT afferents' preferred range. This mismatch in speeds may partially explain the low comfort ratings for stroking.
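The comparison with the CT-optimal band is simple arithmetic; the snippet below makes it explicit, using the 1–10 cm/s band from Loken et al. (2009) and the two effective stroke speeds reported above. The helper name `ct_band_position` is hypothetical.

```python
# CT-afferent optimal stroking band from Loken et al. (2009), in cm/s.
CT_BAND_CM_PER_S = (1.0, 10.0)


def ct_band_position(speed_cm_per_s, band=CT_BAND_CM_PER_S):
    """Classify a stroking speed as 'below', 'within', or 'above' the CT-optimal band."""
    low, high = band
    if speed_cm_per_s < low:
        return "below"
    if speed_cm_per_s > high:
        return "above"
    return "within"


# Effective stroke speeds reported in the text for the slowest and fastest signals.
effective_speeds = {"4S": 10.9, "4F": 87.0}
for label, v in effective_speeds.items():
    ratio = v / CT_BAND_CM_PER_S[1]
    print(f"{label}: {v} cm/s is {ct_band_position(v)} the band ({ratio:.2f}x its upper bound)")
```

Even the slowest signal sits just above the band: it would need to be slowed by a factor of about 1.09 (to roughly 92% of its current speed) to reach the band's upper edge, while the fastest signal is almost nine times the upper bound.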

Massaging has been shown to be effective in physical therapy (Field, 1998) and body relaxation (Leivadi et al., 1999). Here, we focus on massaging the arm, which may not be the ideal location for this gesture. Although massaging and stroking likely activate different mechanoreceptors, our results for massaging match those for stroking: increased speed increases the touch's arousal and shifts its valence from positive to negative. Slow massaging feels more comfortable and realistic than fast massaging. The slow massaging signals received ratings in the high comfort range, which indicates that our device can generate comfortable touch. Fast massaging, which produced high arousal and negative valence, was also rated as unrealistic; a similar trend was observed for patting and stroking. This finding suggests a correlation between realism and the gesture's perceived emotion. However, our data are not sufficient to show that decreased realism causes negative emotions to be perceived in the touch. Future work should examine the relationship between realism and emotion, as well as the range of emotional information that a gesture can convey while remaining realistic.

For squeezing, valence shifts from positive to negative as speed increases. Previous studies have shown that squeezing is often associated with anger or fear (Hertenstein et al., 2006). However, our analysis shows that even the fastest squeezes conveyed relatively little negativity (median valence = −1) compared to stroking and massaging. This suggests that squeezing might not be an ideal gesture for expressing anger-related emotions, or that another parameter, such as signal strength, may matter more in altering the valence of this gesture. Our study also shows that speed did not significantly alter the arousal, or intensity, of the conveyed emotion. In future work we will study how other changes to the squeezing gesture, such as increasing its intensity or the duration of the hold, may alter the perceived emotion. Although the arousal of the squeeze did not change with speed, comfort decreased as speed increased, indicating that participants prefer a slower squeeze.

Patting can be used to convey a wide range of emotions, such as anger, happiness, love, gratitude, and sympathy (Hertenstein et al., 2009). Our results support this idea, showing significant variation in the arousal and valence ratings with speed. Notably, although the force of the gesture did not change with speed, several participants commented that the fast pats felt angry. Patting had fairly negative valence ratings overall, and the ratings decreased further with increased speed. The arousal of the pat also increased significantly with speed, meaning that high-speed pats are perceived as conveying strong emotions. Because the contact duration changes with speed, a very fast pat feels more like a slap.

Poking is consistently neutral in valence, realism, and comfort across all speeds. This result is not particularly surprising, since poking is not commonly used to convey emotions (Jones and Yarbrough, 1985). The only rating that changes with speed is arousal, which increases with increasing speed. This may be because poking is considered an attention-getting gesture rather than an emotional one (Baumann et al., 2010).

Across gestures, our results show that speed consistently affects human emotional perception during mediated social touch. It is intuitive that the touch's arousal would increase with speed. Our results were consistent across participants for a given speed and gesture, meaning that, given an emotion we want to convey, we could choose an appropriate speed and gesture to display with our system. Note, however, that the methods and results presented here apply only to our voice coil system; we plan to confirm this effect with other modalities and actuation methods in future work. Linking the valence-arousal ratings to the emotions they represent suggests directions for future emotional communication. When performed quickly, the massaging, patting, and stroking gestures are rated as low-valence and high-arousal, so they could be used to convey emotions such as anger or annoyance. Slower gestures are more appropriate for emotions such as amusement, gladness, or pleasure. Squeezing is associated with positive valence and medium arousal, so it could express relaxation or calm. Poking would be better used for notifications or attracting attention than for conveying emotional information.
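The mapping in the paragraph above can be written as a small lookup table, one possible way a system could pick a gesture and speed for a target emotion. This is a qualitative sketch that paraphrases the discussion: it is not fitted to the ratings, the emotion keys, field names, and `choose_touch` helper are hypothetical, and it inherits the caveat that these results were obtained only with our voice coil system. Leaving patting out of the slow, positive entries is an assumption, based on its fairly negative valence overall.

```python
# Qualitative lookup paraphrasing the Discussion; NOT fitted to the study's ratings.
# Emotion keys and field names are hypothetical, chosen for illustration.
EMOTION_TO_TOUCH = {
    # Fast massaging, patting, and stroking: low valence, high arousal.
    "anger": {"gestures": ("massaging", "patting", "stroking"), "speed": "fast"},
    "annoyance": {"gestures": ("massaging", "patting", "stroking"), "speed": "fast"},
    # Slower gestures: amusement, gladness, pleasure. Patting is omitted here
    # (an assumption) because its valence was fairly negative overall.
    "amusement": {"gestures": ("massaging", "stroking"), "speed": "slow"},
    "gladness": {"gestures": ("massaging", "stroking"), "speed": "slow"},
    "pleasure": {"gestures": ("massaging", "stroking"), "speed": "slow"},
    # Squeezing: positive valence, medium arousal; slower squeezes were more comfortable.
    "calm": {"gestures": ("squeezing",), "speed": "slow"},
    "relaxation": {"gestures": ("squeezing",), "speed": "slow"},
    # Poking: attention-getting rather than emotional.
    "attention": {"gestures": ("poking",), "speed": "any"},
}


def choose_touch(emotion: str) -> dict:
    """Return the suggested gestures and speed for a target emotion."""
    try:
        return EMOTION_TO_TOUCH[emotion]
    except KeyError:
        raise ValueError(f"no touch mapping for emotion {emotion!r}") from None


print(choose_touch("anger"))
```

A lookup table keeps the design choice transparent; a data-driven version would instead choose the gesture and speed whose measured valence-arousal ratings lie closest to the target.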

This study also provides insight into factors to consider when designing realistic and comfortable touch signals. Our squeezing, poking, and massaging signals were rated as highly realistic at slower speeds, but stroking and patting showed lower realism overall. Across gestures, comfort tended to improve as speed decreased, although this effect was significant only for massaging and squeezing. We will explore additional data processing to increase the realism of these gestures.

7. Conclusion

In this article, we present a novel data-driven haptic system that records human touch and outputs the gestures to a wearable array of actuators. The two sleeves of the system were designed to be lightweight and easy to build, making them well suited to prototyping mediated social touch research. We designed heuristic algorithms for transforming touch gestures from sensor signals to actuator signals. Our signal processing algorithms tune the gestures' hyper-parameters, including the speed of motion and the contact frequency, and apply additional processing such as exaggeration or blending.
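This section does not spell out how the speed of a recorded gesture is tuned, so the sketch below is only an assumption: it time-scales every actuator channel by a common factor using linear interpolation, which changes the playback duration without scaling the signal amplitude. The function name `rescale_speed`, the list-of-lists signal layout, and the interpolation choice are all hypothetical and are not the authors' algorithm.

```python
def rescale_speed(channels, factor):
    """Time-scale a multichannel actuator signal by `factor` (hypothetical helper).

    channels: list of equal-length sample lists, one per actuator, same sample rate.
    factor > 1 plays the gesture faster (fewer samples); factor < 1 plays it slower.
    Samples between original points are filled by linear interpolation, so values
    stay within the original amplitude range and only the duration changes
    (rounded to a whole number of samples).
    """
    if factor <= 0:
        raise ValueError("factor must be positive")
    n_in = len(channels[0])
    if n_in < 2 or any(len(ch) != n_in for ch in channels):
        raise ValueError("channels must be equal length with at least 2 samples")
    n_out = max(2, round((n_in - 1) / factor) + 1)

    out = []
    for ch in channels:
        resampled = []
        for k in range(n_out):
            t = min(k * factor, n_in - 1)   # output sample k maps to input time t
            i = min(int(t), n_in - 2)       # left neighbour index
            frac = t - i                    # interpolation weight in [0, 1]
            resampled.append(ch[i] * (1 - frac) + ch[i + 1] * frac)
        out.append(resampled)
    return out


ramp = [[0, 1, 2, 3, 4]]            # one actuator channel, five samples
print(rescale_speed(ramp, 2.0))     # twice as fast
print(rescale_speed(ramp, 0.5))     # half as fast
```

Applying one factor to all channels keeps the relative timing between actuators intact, which preserves the spatial pattern of the gesture while changing how quickly it unfolds.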

More importantly, our study results indicate a clear and consistent effect of speed on human emotion perception through mediated touch: increased speed increases a touch's arousal and decreases its valence. This result can be used to design mediated touch signals that convey specific emotions. We also gained insight into human perception of mediated touch: although slower motion can increase the comfort and realism of a touch, different gesture types respond to speed changes in different ways.

In the future, we will continue improving the hardware and signal processing design to create realistic and comfortable touch gestures. Our study showed that gesture hyper-parameters must be considered when transforming gestures from sensor signals to actuation signals; other parameters, such as force and contact area, are worth exploring. We also want to further identify the optimal parameter ranges for generating comfortable and realistic mediated touch signals.

Funding

This research was supported by the National Science Foundation grant #2047867.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Statements

Data availability statement

The original contributions presented in the study are included in the article/supplementary materials; further inquiries can be directed to the corresponding author/s.

Ethics statement

The studies involving human participants were reviewed and approved by University of Southern California Institutional Review Board. The patients/participants provided their written informed consent to participate in this study.

Author contributions

XZ and TF designed and implemented the hardware. XZ and HC contributed to the signal processing methods, study signals, and study protocol. XZ conducted the user study and data analysis with guidance from HC. All authors contributed to drafting the manuscript and read and approved it.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Andersen, P. A. (1998). The cognitive valence theory of intimate communication. Prog. Commun. Sci. 14, 39–72.

Barrett, L. F. (1998). Discrete emotions or dimensions? The role of valence focus and arousal focus. Cogn. Emot. 12, 579–599. doi: 10.1080/026999398379574

Baumann, M. A., MacLean, K. E., Hazelton, T. W., and McKay, A. (2010). Emulating human attention-getting practices with wearable haptics, in 2010 IEEE Haptics Symposium (Waltham, MA), 149–156. doi: 10.1109/HAPTIC.2010.5444662

Brodsky, W. (2001). The effects of music tempo on simulated driving performance and vehicular control. Transport. Res. Part F Traffic Psychol. Behav. 4, 219–241. doi: 10.1016/S1369-8478(01)00025-0

Bush, E. (2001). The use of human touch to improve the well-being of older adults: a holistic nursing intervention. J. Holist. Nurs. 19, 256–270. doi: 10.1177/089801010101900306

Cabibihan, J.-J., and Chauhan, S. S. (2015). Physiological responses to affective tele-touch during induced emotional stimuli. IEEE Trans. Affect. Comput. 8, 108–118. doi: 10.1109/TAFFC.2015.2509985

Cascio, C. J., Moore, D., and McGlone, F. (2019). Social touch and human development. Dev. Cogn. Neurosci. 35, 5–11. doi: 10.1016/j.dcn.2018.04.009

Cha, J., Eid, M., Barghout, A., Rahman, A., and El Saddik, A. (2009). HugMe: synchronous haptic teleconferencing, in Proceedings of ACM MM (Beijing), 1135–1136. doi: 10.1145/1631272.1631535

Cheok, A. D. (2020). An instrument for remote kissing and engineering measurement of its communication effects including modified Turing test. IEEE Open J. Comput. Soc. 1, 107–120. doi: 10.1109/OJCS.2020.3001839

Croy, I., Luong, A., Triscoli, C., Hofmann, E., Olausson, H., and Sailer, U. (2016). Interpersonal stroking touch is targeted to C tactile afferent activation. Behav. Brain Res. 297, 37–40. doi: 10.1016/j.bbr.2015.09.038

Culbertson, H., Nunez, C. M., Israr, A., Lau, F., Abnousi, F., and Okamura, A. M. (2018). A social haptic device to create continuous lateral motion using sequential normal indentation, in Proceedings of IEEE Haptics Symposium (San Francisco, CA), 32–39. doi: 10.1109/HAPTICS.2018.8357149

Debrot, A., Schoebi, D., Perrez, M., and Horn, A. B. (2013). Touch as an interpersonal emotion regulation process in couples' daily lives: the mediating role of psychological intimacy. Pers. Soc. Psychol. Bull. 39, 1373–1385. doi: 10.1177/0146167213497592

Delazio, A., Nakagaki, K., Klatzky, R., Hudson, S., Lehman, J., and Sample, A. (2018). Force jacket: pneumatically-actuated jacket for embodied haptic experiences, in Proceedings of ACM CHI (Montreal), 1–12. doi: 10.1145/3173574.3173894

Dolin, D. J., and Booth-Butterfield, M. (1993). Reach out and touch someone: analysis of nonverbal comforting responses. Commun. Q. 41, 383–393. doi: 10.1080/01463379309369899

Eichhorn, E., Wettach, R., and Hornecker, E. (2008). A stroking device for spatially separated couples, in Proceedings of ACM Conference on Human Computer Interaction with Mobile Devices and Services (New York, NY: Association for Computing Machinery), 303–306. doi: 10.1145/1409240.1409274

Erp, J. B. V., and Toet, A. (2015). Social touch in human-computer interaction. Front. Digit. Human. 2:2. doi: 10.3389/fdigh.2015.00002

Esposito, A., Carbone, D., and Riviello, M. T. (2009). Visual context effects on the perception of musical emotional expressions, in Proceedings of the European Workshop on Biometrics and Identity Management (Caserta: Springer), 73–80. doi: 10.1007/978-3-642-04391-8_10

Field, T. M. (1998). Massage therapy effects. Am. Psychol. 53:1270. doi: 10.1037/0003-066X.53.12.1270

Furukawa, M., Kajimoto, H., and Tachi, S. (2012). Kusuguri: a shared tactile interface for bidirectional tickling, in Proceedings of the Augmented Human International Conference (Megeve), 1–8. doi: 10.1145/2160125.2160134

Haans, A., and IJsselsteijn, W. (2006). Mediated social touch: a review of current research and future directions. Virt. Real. 9, 149–159. doi: 10.1007/s10055-005-0014-2

Hansson, R., and Skog, T. (2001). The lovebomb: encouraging the communication of emotions in public spaces, in CHI '01 Extended Abstracts on Human Factors in Computing Systems (Seattle, WA). doi: 10.1145/634067.634319

Hauser, S. C., McIntyre, S., Israr, A., Olausson, H., and Gerling, G. J. (2019). Uncovering human-to-human physical interactions that underlie emotional and affective touch communication, in 2019 IEEE World Haptics Conference (WHC) (Tokyo), 407–412. doi: 10.1109/WHC.2019.8816169

Hertenstein, M., Holmes, R., McCullough, M., and Keltner, D. (2009). The communication of emotion via touch. Emotion 9, 566–573. doi: 10.1037/a0016108

Hertenstein, M., Keltner, D., App, B., Bulleit, B., and Jaskolka, A. (2006). Touch communicates distinct emotions. Emotion 6, 528–533. doi: 10.1037/1528-3542.6.3.528

Hertenstein, M. J., and Keltner, D. (2011). Gender and the communication of emotion via touch. Sex Roles 64, 70–80. doi: 10.1007/s11199-010-9842-y

Huisman, G., Darriba Frederiks, A., Van Dijk, B., Heylen, D., and Kröse, B. (2013). The TaSSt: tactile sleeve for social touch, in Proceedings of IEEE World Haptics Conference (Daejeon), 211–216. doi: 10.1109/WHC.2013.6548410

Israr, A., and Abnousi, F. (2018). Towards pleasant touch: vibrotactile grids for social touch interactions, in Proceedings of ACM Conference Extended Abstracts on Human Factors in Computing Systems (Seattle, WA), 1–6. doi: 10.1145/3170427.3188546

Jones, S. E., and Yarbrough, A. (1985). A naturalistic study of the meanings of touch. Commun. Monogr. 52, 19–56. doi: 10.1080/03637758509376094

Klinenberg, E. (2016). Social isolation, loneliness, and living alone: identifying the risks for public health. Am. J. Publ. Health 106:786. doi: 10.2105/AJPH.2016.303166

Knoop, E., and Rossiter, J. (2015). The tickler: a compliant wearable tactile display for stroking and tickling, in Proceedings of ACM CHI Extended Abstracts on Human Factors in Computing Systems, 1133–1138. doi: 10.1145/2702613.2732749

Lasseter, J. (1987). Principles of traditional animation applied to 3D computer animation, in Proceedings of Conference on Computer Graphics and Interactive Techniques (Anaheim, CA), 35–44. doi: 10.1145/37401.37407

Leivadi, S., Hernandez-Reif, M., Field, T., O'Rourke, M., D'Arienzo, S., Lewis, D., et al. (1999). Massage therapy and relaxation effects on university dance students. J. Dance Med. Sci. 3, 108–112.

Lindquist, K. A., Barrett, L. F., Bliss-Moreau, E., and Russell, J. A. (2006). Language and the perception of emotion. Emotion 6:125. doi: 10.1037/1528-3542.6.1.125

Loken, L., Wessberg, J., Morrison, I., McGlone, F., and Olausson, H. (2009). Coding of pleasant touch by unmyelinated afferents in humans. Nat. Neurosci. 12, 547–548. doi: 10.1038/nn.2312

Mueller, F., Vetere, F., Gibbs, M. R., Kjeldskov, J., Pedell, S., and Howard, S. (2005). Hug over a distance, in Proceedings of ACM CHI Extended Abstracts on Human Factors in Computing Systems (Portland), 1673–1676. doi: 10.1145/1056808.1056994

Nakanishi, H., Tanaka, K., and Wada, Y. (2014). Remote handshaking: touch enhances video-mediated social telepresence, in Proceedings of ACM SIGCHI Conference on Human Factors in Computing Systems, CHI '14 (New York, NY: Association for Computing Machinery), 2143–2152. doi: 10.1145/2556288.2557169

Rigg, M. G. (1940). Speed as a determiner of musical mood. J. Exp. Psychol. 27:566. doi: 10.1037/h0058652

Salvato, M., Williams, S. R., Nunez, C. M., Zhu, X., Israr, A., Lau, F., et al. (2021). Data-driven sparse skin stimulation can convey social touch information to humans. IEEE Trans. Hapt. [preprint]. doi: 10.1109/TOH.2021.3129067

Samani, H., Parsani, R., Rodriguez, L., Saadatian, E., Dissanayake, K., and Cheok, A. (2012). Kissenger: design of a kiss transmission device, in Proceedings of the Designing Interactive Systems Conference, 48–57.

Saravanakumar, M., and SuganthaLakshmi, T. (2012). Social media marketing. Life Sci. J. 9, 4444–4451. doi: 10.7537/marslsj090412.670

Seifi, H., and MacLean, K. E. (2013). A first look at individuals' affective ratings of vibrations, in Proceedings of IEEE WHC (Daejeon), 605–610. doi: 10.1109/WHC.2013.6548477

Srinivasan, M. A. (1995). What is Haptics? Laboratory for Human and Machine Haptics: The Touch Lab; Massachusetts Institute of Technology, 1–11.

Stafford, L. (2003). Maintaining romantic relationships: summary and analysis of one research program, in Maintaining Relationships Through Communication: Relational, Contextual, and Cultural Variations, eds D. J. Canary and M. Dainton (New York: Routledge), 51–77. doi: 10.4324/9781410606990-3

Suk, H.-J., Jeong, S.-H., Yang, T.-H., and Kwon, D.-S. (2009). Tactile sensation as emotion elicitor. Kansei Eng. Int. 8, 153–158. doi: 10.5057/E081120-ISES06

Tan, H. Z., Reed, C. M., and Durlach, N. I. (2010). Optimum information transfer rates for communication through haptic and other sensory modalities. IEEE Trans. Hapt. 3, 98–108. doi: 10.1109/TOH.2009.46

Teh, J. K., Tsai, Z., Koh, J. T., and Cheok, A. D. (2012). Mobile implementation and user evaluation of the huggy pajama system, in Proceedings of IEEE Haptics Symposium (Vancouver), 471–478. doi: 10.1109/HAPTIC.2012.6183833

Teyssier, M., Bailly, G., Pelachaud, C., Lecolinet, E., Conn, A., and Roudaut, A. (2019). Skin-on interfaces: a bio-driven approach for artificial skin design to cover interactive devices, in Proceedings of ACM Symposium on User Interface Software and Technology (New Orleans, LA), 307–322. doi: 10.1145/3332165.3347943

Thompson, E., and Hampton, J. (2011). The effect of relationship status on communicating emotions through touch. Cogn. Emot. 25, 295–306. doi: 10.1080/02699931.2010.492957

Thrasher, C., and Grossmann, T. (2021). Children's emotion perception in context: the role of caregiver touch and relationship quality. Emotion 21:273. doi: 10.1037/emo0000704

Tillema, T., Dijst, M., and Schwanen, T. (2010). Face-to-face and electronic communications in maintaining social networks: the influence of geographical and relational distance and of information content. New Media Soc. 12, 965–983. doi: 10.1177/1461444809353011

Toet, A., Kaneko, D., Ushiama, S., Hoving, S., de Kruijf, I., Brouwer, A.-M., et al. (2018). EmojiGrid: a 2D pictorial scale for the assessment of food elicited emotions. Front. Psychol. 9:2396. doi: 10.3389/fpsyg.2018.02396

Tomaka, J., Thompson, S., and Palacios, R. (2006). The relation of social isolation, loneliness, and social support to disease outcomes among the elderly. J. Aging Health 18, 359–384. doi: 10.1177/0898264305280993

Tomova, L., Wang, K. L., Thompson, T., Matthews, G. A., Takahashi, A., Tye, K. M., et al. (2020). Acute social isolation evokes midbrain craving responses similar to hunger. Nat. Neurosci. 23, 1597–1605. doi: 10.1038/s41593-020-00742-z

Tsalamlal, M. Y., Ouarti, N., Martin, J.-C., and Ammi, M. (2015). Haptic communication of dimensions of emotions using air jet based tactile stimulation. J. Multim. User Interact. 9, 69–77. doi: 10.1007/s12193-014-0162-3

Tsetserukou, D. (2010). HaptiHug: a novel haptic display for communication of hug over a distance, in Proceedings of EuroHaptics Conference, eds A. M. L. Kappers, J. B. F. van Erp, W. M. Bergmann Tiest, and F. C. T. van der Helm (Berlin; Heidelberg: Springer Berlin Heidelberg), 340–347. doi: 10.1007/978-3-642-14064-8_49

Wang, D., Guo, Y., Liu, S., Yuru, Z., Xu, W., and Xiao, J. (2019). Haptic display for virtual reality: progress and challenges. Virt. Real. Intell. Hardw. 1, 136–162. doi: 10.3724/SP.J.2096-5796.2019.0008

Wang, R., Quek, F., Teh, J. K., Cheok, A. D., and Lai, S. R. (2010). Design and evaluation of a wearable remote social touch device, in Proceedings of International Conference on Multimodal Interfaces and the Workshop on Machine Learning for Multimodal Interaction (Beijing), 1–4. doi: 10.1145/1891903.1891959

Whitcher, S. J., and Fisher, J. D. (1979). Multidimensional reaction to therapeutic touch in a hospital setting. J. Pers. Soc. Psychol. 37:87. doi: 10.1037/0022-3514.37.1.87

Wilson, G., Dobrev, D., and Brewster, S. A. (2016). Hot under the collar: mapping thermal feedback to dimensional models of emotion, in Proceedings of ACM CHI Conference on Human Factors in Computing Systems (San Jose, CA), 4838–4849. doi: 10.1145/2858036.2858205

Wu, W., and Culbertson, H. (2019). Wearable haptic pneumatic device for creating the illusion of lateral motion on the arm, in Proceedings of IEEE World Haptics Conference (Tokyo), 193–198. doi: 10.1109/WHC.2019.8816170

Yarosh, S., Mejia, K., Unver, B., Wang, X., Yao, Y., Campbell, A., et al. (2017). SqueezeBands: mediated social touch using shape memory alloy actuation. Proc. ACM Hum.-Comput. Interact. 1, 1–18. doi: 10.1145/3134751


Keywords

mediated social touch, emotion, tactile rendering, wearable haptics, data-driven modeling

Citation

Zhu X, Feng T and Culbertson H (2022) Understanding the Effect of Speed on Human Emotion Perception in Mediated Social Touch Using Voice Coil Actuators. Front. Comput. Sci. 4:826637. doi: 10.3389/fcomp.2022.826637

Received

01 December 2021

Accepted

14 February 2022

Published

11 March 2022

Volume

4 - 2022

Edited by

Karon E. MacLean, University of British Columbia, Canada

Reviewed by

Xueni Pan, Goldsmiths University of London, United Kingdom; Solaiman Shokur, Swiss Federal Institute of Technology Lausanne, Switzerland


Copyright

© 2022 Zhu, Feng and Culbertson.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xin Zhu xinzhu@usc.edu

This article was submitted to Human-Media Interaction, a section of the journal Frontiers in Computer Science
