- 1 Independent Researcher, Artist, Inventor, Berlin, Germany

- 2 Intelligent Instruments Lab, University of Iceland, Reykjavik, Iceland

The mainstream development of AI for artistic expression thrives on predictive models that are supposedly capable of abstracting creative ideas and actions into computable features. The assumption is that creativity can be measured, modeled, and computationally reproduced solely by examining digital representations of artistic outputs, such as images or pieces of music. Embodiment, and its role in art and music making, is largely ignored. But as any practicing artist knows, the act of creating an artwork and the fact of being a body are inseparable. This essay is concerned with nurturing a rapprochement of embodiment and AI in music and performing arts. To that end, it argues that it is necessary to transgress norms in engineering, musical composition, and bodily performance, and to engage with modes of expression lying beyond the formal boundaries of those disciplines. The key questions are: What strategies are needed to create embodied approaches to AI that can open up new areas of corporeal knowledge? How can we create musical AI systems that allow performers to transgress musical and bodily borders, systems that allow learning beyond the edges of normative corporeal experience? These issues are broached by means of a transdisciplinary framework combining insights from feminist phenomenology, critical disability studies, and the posthuman with resources from physiological computing, movement-based music technology, and sound studies. Leveraging these assets, I offer an analysis of corporeal knowledge: a pre-cognitive form of perceiving and experiencing the world rooted in the somatics of incorporation, rhythm, and automaticity. Understanding this visceral way of knowing serves as a basis for discussing three strategies for transgression taken from my own artistic practice with robotics, prosthetics, sensors, and sound.
By designing AI instruments and prosthetics that alter and are influenced by a performer’s somatic states, one can develop forms of artistic expression where body and instrument are co-dependent. I refer to this relation as a “configuration” of human and machine parts that, through training in sensorial and vibrational intensities, shapes not only music and performance but embodiment itself.

1 Introduction

With the advent of generative AI and large language models, the fracture between human embodied experience and computation has widened significantly. Today’s dominant computational approaches to AI and creativity are a far cry from the embodiment turn in human-computer interaction exemplified by Dourish (2004), among others; they seem to assume they can shed the baggage of phenomenology, somatics, and corporeal knowledge. But can they? By abandoning embodiment, what are those systems actually producing? And how do their outputs relate to and transform culture? Despite the flood of supposedly “creative” and ever more “intelligent” systems—inevitably in the form of chatbots and prompt-based generative engines—the field appears to be drifting away from meaningful representations of intelligence or creativity (Bender et al., 2021; Bishop, 2021). In music, for example, the prevailing paradigm for generative AI is to mimic or surpass a musician’s cognitive abilities, discarding the complex interaction of expression, embodiment, and cognition that makes musical performance possible (Caramiaux and Bevilacqua, 2025). The issue has dangerous repercussions in the larger cultural arena. The erasure of significant links between datasets and the embodied nature of artistic creation, a foundation of the prediction paradigm, too easily leads to defining creativity as an exclusively cognitive, computation-like activity. As I have argued elsewhere (Donnarumma, 2022), when the corporeal basis of artistic expression is removed from definitions of creation, so is the ecology of cultures, histories, and human and non-human beings that brings art and music into being in the first place. What is left then is an understanding of creation that denies the relations from which creative practices arise and normalizes the copying and smashing together of cultural bits and original ideas.

This essay takes these observations as a stepping stone for a conceptual analysis of some possible strategies to bring embodiment back into AI. The intention is to provide a theoretical and practical scaffold for envisioning creative and challenging relations between human embodiment and AI in music and performing arts. Technical particulars are used sparingly in this text. More detail is devoted to how performative, compositional, and crafting practices with machines can make use of corporeal forms of knowing, sensorial thresholds, and automaticity mechanisms. The arguments expounded here focus on musical and performance technologies designed to work in intimate and visceral relationships with a performer’s body. I suggest that an electronic musical instrument or an interactive technology of this kind can be understood as a prosthesis, insofar as it relies on the performer’s body for its functioning and, at the same time, directly influences that body’s expression. My use of the term “prosthesis” is borrowed from medicine and engineering, but it eschews the characterization, typical of those fields, of the prosthesis as an assistive device. Doing so opens up room for an understanding of prosthetic technologies as tools to inhabit alternate forms of embodiment. To further emphasize this potential, in later sections I will use the term “sonic organ” to refer to particular machines of my invention. In biology, an organ is a distinct grouping of tissues evolved to perform a specialized task. Although not biological in nature, my sonic organs are designed to be attached to specific parts of the human body and to diffuse sound in the form of vibration through its skeletal structure, activating a focused, multimodal perception of sound across bone-conduction, haptic, and auditory channels.

The research elaborated here crystallizes, and hopes to expand, my past fifteen years of practice and theorization. Much of that work, and of this text, is indebted to essential feminist studies on subjects such as the cyborg in culture and theater (Balsamo, 2000; Parker-Starbuck, 2011), the intercorporeal and immaterial aspects of embodiment (Weiss, 1999; Blackman, 2012), and the problems and opportunities of integrating machinic prostheses and organic bodies (Shildrick, 2013; Sobchack, 2010). Complementing those works with resources from the fields of new interfaces for musical expression (NIME) and human-computer/human-robot interaction (HCI and HRI), the other side of my practice, has opened up a fertile territory that I am still exploring. Transdisciplinarity, therefore, is key to the arguments elaborated next. The invitation of this essay is for researchers and artists in music and performing arts to engage in a transdisciplinary rapprochement of embodiment and AI. Integrating artistic practice, scientific research, technological invention, and theory allows for a multi-headed inquiry that can move nimbly beyond the trappings of a single disciplinary standpoint (Caramiaux and Donnarumma, 2020). Such a strategy is also consistent with the contemporary DIY practices of artists in art and technology, new media art, music, and sound art who, by being composers, performers, and creators of their own instruments at the same time, are able to implement unique techno-aesthetic languages (Magnusson, 2019). Making and performing with one’s own instruments, alone or in concert with others, opens up spaces of imagination that exceed the normative frameworks of the music technology and AI industries. New languages and unconventional designs, such as those emerging from feminist HCI, have the power to challenge expectations around gender, bodily forms, and identities (Jawad and Xambó Sedó, 2024).
From this standpoint, a later section of this essay proposes three strategies to transgress disciplinary boundaries, engineering guidelines, and bodily limits. The idea of transgression is useful in that it implies the act of passing over or going beyond those limits. It signals an invitation to access areas of expression that lie beyond the furthest boundary of an artistic practice. This idea of transgression is not concerned with morality or law. Rather, it works as an impulse to overcome norms and limitations (in engineering, musical composition, and physical performance) that may hinder meaningful approaches toward a reconciliation of embodiment and AI.

An equally important invitation made here is to value the insight that critical disability studies offer to analyses of technological forms of embodiment. The reasons are multiple. First, a central preoccupation of critical disability studies is to tackle the modalities and implications of technological incorporation, the process whereby a technology becomes integrated into one’s own morphological image. Hence, the field offers insight that can energize studies of embodiment and AI in other disciplines. Second, many if not most body technologies used in music and performing arts, from sensors to robotic limbs and interactive devices, originate from research into different kinds of disability or bodily conditions. Understanding their development in that context helps one grasp what forms of embodiment they sustain or undermine. Third, attending to critical disability theory also means attending to the experiences of those who know first-hand how technologies worn on or embedded in the body mediate corporeal experience. Shildrick (2013, p. 278) stresses that a “prosthesis may both extend functional agency and radically destabilize specifically human agency as such” (original emphasis), and it is exactly along this axis of tension that I propose to work. To learn from disability is to learn about embodiment’s ability, and struggle, to shift, adapt, and incorporate a technological other. Studying this can inform technical development in ways that escape normative paradigms, in which the body must be unchanging, productive, perfect, and self-sustaining. It can move development toward practices that are inherently relational, open, and vulnerable (Jochum and Donnarumma, 2025).

2 Leave prediction and control, welcome incorporation

My viewpoint on embodiment and AI is manifold, for it draws on the transdisciplinary nature of my own practice. I work as a sound, media, and performance artist, inventor, and theorist, combining art and music making with technological development and theory. I am late-deafened, meaning I was born hearing and have become increasingly deaf in my adult life.1 About 30 years ago I first observed my heart beating through the skin, my tiny skeletal chest shifting shape with each heartbeat. Years later it occurred to me that all muscles must make a sound, just like my heart. This was the beginning of a long-term research project into muscle sounds (also known as mechanomyogram or MMG) that progressively led me to combine performance art and sound art with biomechanics, wearable robotics, and physiological computing. A juvenile curiosity expanded into an interest in the bodies of others and eventually became a decades-long inquiry into body politics, nurtured by feminist body theory and critical disability studies. Integral to my research has always been the practice of making transgressive machines to perform the body’s limits. Thus, over the past 15 years I have conceived, designed, engineered, and handcrafted numerous prosthetics and body technologies. These include a biophysical instrument creating music from a performer’s body, a robotic limb jutting out of one’s face, a self-cutting AI-driven robot arm, prosthetic spines without a body, and alternative sonic organs.

Conceiving and crafting machine learning and AI-driven systems for robots and prostheses of varying sophistication taught me that the most significant challenge in art and technology is not technical development. It is preserving and fostering one’s artistic identity amid the ever more intense hype and standardization of technology. In any creative endeavor, technological development must remain at the service of aesthetics, or it turns into a gimmick. The current predictive turn in machine learning and AI development is unhelpful in that sense, for it produces generative systems that: (a) have highly specific aesthetics embedded in them and little room for significant change; (b) do not “generate” from scratch but rather measure, extract, average, sculpt, and overlay existing features of image, video, or sound into pastiche; (c) treat artistic materials as disembodied traces of expression, erasing all relations to embodiment, culture, and history. Given these preconditions, it should come as no surprise that while generative AI helps non-professionals produce more imaginative content, it also decreases the originality of materials across users, causing a gradual homogenization of creative output (Doshi and Hauser, 2024).

To critically navigate today’s landscape of predictive modeling in view of the aesthetic possibilities it offers or fails to offer, it is useful to look back at some of the early approaches to the creation of machines capable of participating in a creative process. Despite the lack of advanced machine learning techniques, or perhaps thanks to it, early principles of interaction maximized aspects of embodiment to investigate novel modes of expression, and were often transgressive in their approaches to engineering, composition, and physical performance. Seminal examples exist across artistic practices. The work of George Lewis with digital algorithmic players is one such case. His work centers the design of electronic musical instruments on a political stance of resistance against western ideas of control over the “other,” be it technological, human, or non-human. Other instruments of the time used technology as a means of ensuring predictability and repetition. In stark contrast, Lewis’s projects afford a form of improvised musical interaction where player and instrument are both active subjects. Each, in its own modality, can choose whether to follow, be influenced by, or ignore the other. Lewis’s instruments, in particular Voyager,2 implement his claim that “a formal aesthetic can articulate political and social meaning” (Lewis, 2000). In them, design, engineering, and programming do not aim for measured control. They are instead a means to enable performer and instrument to share the stakes in the expressive outcome of a piece. Lewis’s approach is important because it posits an intrinsic and powerful relation between society, technology, and aesthetic practices. This observation is integral to the arguments I will develop in the next sections.

Lewis’s insistence on designing against control is echoed in the practices of the influential composers, performers, and inventors Michel Waisvisz, Pamela Z, David Rokeby, and Laetitia Sonami (Pamela, 2025; Rockeby, 2025; Sonami, 2025). In their work, pushing away from control is a practice that primarily unfolds in the physicality of performance. The deterministic nature of computers or robots makes it hard to embed improvisatory capabilities in them. This is a significant difference from traditional musical instruments. There, control (of bodily energy and instrumental affordances) is in constant negotiation with uncontrollable or unpredictable elements, such as spatial resonances, the elasticity of strings, musical partners, or the risk of injury. The unstable materiality of instruments, spaces, and performers’ bodies is always at play in the work of Waisvisz, Z, Rokeby, and Sonami. This is in particular thanks to a shared approach to touch, gesture, or voice as means to interconnect sensing, computation, and embodiment. In their works, the materiality of embodiment, of both instrument and performer, is galvanized by particular interactive affordances that rely on contact, context, and physical effort (Figure 1).

Figure 1. Michel Waisvisz performing with his instrument, The Hands, Amsterdam. Courtesy of the Michel Waisvisz Archive.

Further insight into the materiality of technological embodiment as a driver for corporeal expression can be gained from the work of Seiko Mikami with body technologies and of Stelarc with robotic prostheses. As practitioners crisscrossing the fields of media art and performance art, they have pursued artistic practices where the commixture of embodiment and technology is both a means and a mode of expression. Their works heralded the creation of technological embodiments as systems. In the performance Exoskeleton (1999), Stelarc inserts his own body inside a 600-kg machine that enacts on stage a hybridized human-user interface. Powered by an external air compressor and 18 pneumatic actuators, the machine walks forward, backward, and sideways. The “machine choreography,” as Stelarc (2002, p. 73) calls it, is controlled by the physical gestures and orientation of Stelarc’s body, tracked using wearable magnetic sensors. Crucially, though, the movement of Stelarc’s arms is translated into the motion of the machine’s legs, creating a disconnect between intention and action. The sounds of the air compressor, the clicks of the switches operated by the performer, and the impact of the pneumatic legs on the floor are acoustically amplified, so that the performer can compose sounds by driving the machine. Live video feeds captured by multiple cameras are projected onto large screens, providing close-ups of parts of Stelarc’s body and of the machine. Human and machine parts appear visually juxtaposed. The work undermines functions of the human body to reenvision its physical and locomotive characteristics through a prosthesis that is not controlled, but obliquely mobilized.

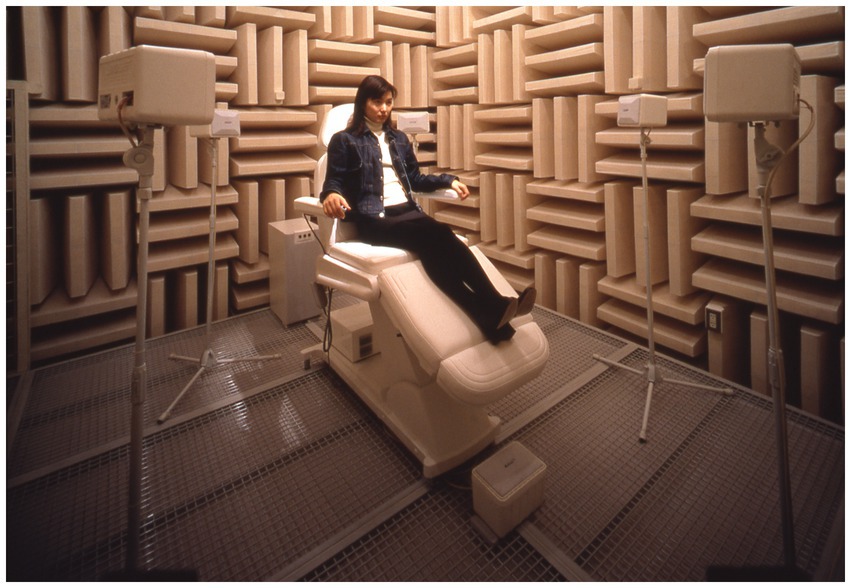

In Mikami’s World, Membrane and the Dismembered Body (1997), a visitor lies on a clinical bed inside an anechoic chamber (Figure 2). The sounds of their heart and lungs are captured with a stethoscope, amplified, manipulated, and diffused in the space with a slight latency. Parameters extracted in real time from the sonic material drive the digital manipulation and spatialization of the sounds. Additionally, the parameters are visualized in the form of an expanding, abstract net projected onto the walls of the chamber. In this way, the evolution of the visitor’s experience and that of the environment are interfaced, and each depends on and reacts to the other. For Mikami (1997), inside the installation the ear acts as a “perceptual link, or code, between the acoustic sense and the space of the room.” Through the ear (and through the body, I would add) the visitor can grasp the aliveness of their own body as a constitutive part of the environment. This is possible because the body, the instrument, and the ear are all co-dependent on the same bioacoustic matter. The body becomes a source of sound and a means of magnifying it. Simultaneously, however, the experience is uncannily extra-personal. The time lag between the original sounds and those diffused in the room creates a perceptual offset that confuses the visitor. It is neither a mode of self-perception nor a way of perceiving someone else. It is an experience in between, where subject and object are confounded.
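The signal chain described above (capture, slight delay, real-time feature extraction, parameter-driven spatialization) can be illustrated with a minimal Python sketch. This is not Mikami’s implementation, which the text does not document. The class name `DelayedDiffuser`, the moving-average envelope, and the linear left/right pan law are all illustrative assumptions. The delay line stands in for the latency between original and diffused sounds, and the envelope stands in for the “parameters extracted in real time.”

```python
from collections import deque


class DelayedDiffuser:
    """Hypothetical sketch: delay a bioacoustic signal and pan it by its envelope."""

    def __init__(self, delay_samples: int, window: int = 4) -> None:
        # Pre-fill the delay line with silence so output lags input by delay_samples.
        self.delay_line = deque([0.0] * delay_samples)
        # Most recent absolute amplitudes, used for a moving-average envelope.
        self.recent = deque(maxlen=window)

    def process(self, sample: float) -> tuple:
        """Consume one input sample; return (left, right, envelope)."""
        self.delay_line.append(sample)
        delayed = self.delay_line.popleft()
        self.recent.append(abs(sample))
        envelope = sum(self.recent) / len(self.recent)
        # Assumed mapping: clamp the envelope to [0, 1] and use it as a linear pan,
        # so louder heartbeats or breaths push the delayed sound to the right.
        pan = min(max(envelope, 0.0), 1.0)
        return delayed * (1.0 - pan), delayed * pan, envelope


if __name__ == "__main__":
    diffuser = DelayedDiffuser(delay_samples=3)
    impulse = [1.0, 0.0, 0.0, 0.0, 0.0]  # a single idealized "heartbeat"
    for n, x in enumerate(impulse):
        print(n, diffuser.process(x))
```

In this sketch the envelope is computed on the live input, while the pan is applied to the delayed signal. As a result, the spatial movement slightly precedes the sound it moves. This is one simple way to echo the perceptual offset described above, not a claim about how the installation works.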

Figure 2. Seiko MIKAMI World, Membrane and the Dismembered Body, Tokyo, 1997. Photo by Takashi OHTAKA, courtesy of NTT InterCommunication Center [ICC].

In Mikami’s and Stelarc’s works, technical devices, such as sensors, screens, stethoscopes, robotic limbs, cables, and pneumatic motors, are enmeshed with the human body to the point that neither can function without the other. In these human-machine systems, technology does not augment or predict, but productively undermines and articulates embodiment.

What this concise review points to is the numerous ways in which interactive technology can be incorporated by the body of a performer (or a visitor), thus prompting new compositional or performative processes. Here I refer to the notion of incorporation developed primarily in critical disability studies and feminist body theory. The notion describes how, as a human body incorporates (takes in and conjoins with) a particular instrument or prosthesis, the latter initiates a process of drastic alteration of embodiment. The two influence and stir each other, effectively becoming one multipart entity in constant, unresolved tension (Shildrick, 2013). This differs from the idea of instruments and body technologies as extensions or appendages that only augment the body or extend the mind. That view has shaped important contributions to embodiment research in music (Leman, 2008) and cognitive philosophy (Clark, 2004). The “extension” argument assumes human and technology to be in a binary relation of control, where the body is a self-enclosed entity that can master or integrate a technological appendage. The human body is the central subject, while the instrument is an object that relates to the body to a more or less mediated degree. Its role is most often limited to relaying or mediating meaning so as to augment predictions and subsequent actions. This conception overlooks that technology does not only do things for us. It does things with and to us. A prosthesis does not solely augment a possible action. It interpolates epistemic, physical, and psychological experience and relations at once, often in ways that bypass volitional control or intent.3 The notion of incorporation accounts for the reciprocal links between body and technology by drawing on the experience of people living with prostheses. In doing so, it shows that the body is open to radical modification, resilient to long-term change, and vulnerable to morphological changes driven by the prosthesis.

These possibilities and problematics, I suggest, should constitute a starting point for reconciling embodiment and AI. As a body technology alters corporeality and, vice versa, corporeality incorporates that body technology, ever-shifting forms of embodiment emerge through co-dependence. This is the arena in which to play out possible interactions between embodiment and AI in music and performing arts. The challenge for researchers and artists is to embed technologies with knowledge of the body. It is also to design instrumental affordances that are sensitive to the malleability, unpredictability, and vulnerability of embodiment.

3 Corporeal knowledge

Corporeal knowledge is a modality of knowing, in the epistemic sense of the term, that is ingrained in the body. It involves bodily skill, muscle memory, and cultural histories, and how these are performed during physical and social practices such as music performance, sports, and skilled trades. Fundamentally epistemology in the flesh, it diverges from and adds to empiricist views—where the understanding of the world emerges from discursive or intellectual practices—by focusing on what is grasped and learned through sensing, sensations, and bodily relations with other subjects. Corporeal knowing, I suggest, can be taken as a scaffold for constructing transgressive interactions of embodiment with AI. This is because it does not solely reveal a physical or material form of sense-making. It also offers insight into the complex net of interactions between the physiological, phenomenological, psychological, and cultural aspects of embodied practices with technology. Once we understand how the body senses and makes sense of its interaction with the world and the machines within it, it becomes possible to envision AI-driven systems that leverage sensorial and experiential aspects of embodiment toward alternative forms of creative expression. Machines play an important role in corporeal modes of knowing because, as we will see, they can steer particular somatic states.

3.1 Rhythm and affect

Henriques (2011) defines corporeal knowing as a way of creating and gathering knowledge from the bodily performance of practices that are relational in nature, such as music playing, dancing, and handcrafting. What differentiates corporeal knowing from other phenomenological modes of knowing is, for Henriques (ibid.), an emphasis on rhythm. Bodies and machines can grow attuned to rhythms, including cycles, waves, and vibrations, that train the body into particular ways of sensing and sense-making. Fuller and Weizman (2021) call this a training in aestheticization. The machines Henriques refers to are the self-built sound systems, made of loudspeakers, membranes, amplifiers, and mixing desks, that populate the Jamaican dancehall scene. I argue this list can be expanded to include computers, sensor systems, traditional and new musical instruments, as well as autonomous systems. All of these in fact function in relation to rhythm, be it in the form of electric or analogue frequency, sampling or learning rate, or, in the case of music, waves and vibration. Henriques draws on the work of Gilbert Simondon to make a crucial and disarmingly intuitive observation. Not only are the specifics of a particular technical milieu key to the ways in which corporeal knowing happens. Rhythms and vibrations also tangibly influence the human psyche, physiology, and phenomenological experience. Examples can be found in diverse fields. The use in modern warfare of sonic booms, or of the engine hum of low-flying drones, is one such example. The extremely loud or persistent low frequencies produced by those machines are strategically used to induce fear and anxiety in a targeted population (Goodman, 2009). In the context of Jamaican dancehall, DJs’ skillful song selections rely on a complex interplay of frequency ranges, bass drops, chorus and flanger modulations, as well as intensive feedback delays, to induce a sense of euphoria and flow in the dancing crowd (Henriques, 2011).

These examples show how sensory input is more than a way of registering experience. It is an inlet and relay point for the ongoing exchanges of intensities (of sound, or light, or touch, or mood) between bodies (in a crowd, in a couple or family) and machines (in a war zone, at a concert or place of work). The phenomenon whereby sensory intensities influence psyche, physiology, and phenomenological experience is known in cultural studies as affect. In the remainder of this text, I will use the term as a noun (affect) to indicate the phenomenon, as a verb (to affect) to refer to the unfolding of the phenomenon, and as an adjective (affective) to indicate what is conducive to the phenomenon. An important aspect of affect is that it cannot be rationally mastered, for it happens without conscious engagement. It is pre-cognitive and literally visceral (think of a “gut feeling”). It can, however, be sensed and practiced: bodies sense, perceive, and remember affective situations, thus acquiring new knowledge for future circumstances (think of muscle memory). Gut feeling and muscle memory show that bodies change and learn both in the immediacy of the moment and in the time following an event. The physiological, somatic, and psychological state experienced in the moment is registered as a reference for the next possible encounter with similar conditions. The recurrence of given affective conditions may provoke the recovery of memorized somatic states, which may or may not help one grasp and respond to unforeseen conditions. Another crucial aspect of affect is that it takes place in multiple forms and diverse temporalities. In everyday situations, affective intensities quietly yet constantly encompass and accompany interactions. In extraordinary situations, peaks of affective energy disturb, boost, or diminish a body’s psychological and somatic state.
Analyzing sense-making and learning through the lens of rhythm and affect helps us see that knowing is not as rational and predictive as conventional humanist or computational approaches would have it. Affect partakes in and perturbs the epistemic process at play in a given interaction because it radiates from and through bodies, sounds, and machines. In effect, it submerges them.

The body learns by itself in many ways, and muscle memory and gut feelings are among the more mundane cases. When interacting with technology, however, the body performs complex processes of sensing, sense-making, and reminiscence. In music and performance with traditional musical instruments, training helps construct body schemata (internal sensorimotor models informed by proprioception and somatics) that depend on the affordances of the instrument at hand. The body and the instrument enter a feedback relation: the body gives energy to the instrument, which returns energy to the player. The affective exchange of sound vibrations between them is a form of attunement. It serves both as a gauge of the quality of the interaction and as a stimulant that excites the feedback. The body of a performer can become accustomed to this circular process and thus learn to test its varied parameters through repetition, error, and variation. When the musical instrument is computational and endowed with machine learning algorithms and biosensing capabilities, it can learn aspects of the performer’s bodily actions or physiological activity. This allows reciprocal forms of interaction to be designed, such as the reorganization of software parts in response to particular movement sequences, or the gradual mutation of a score guided by changes in a feature of muscle biosignals. This approach, however, can expand beyond the level of technical interaction and mapping and actively help to affect a performer’s somatic state. If corporeal knowing happens through rhythmic and affective exchanges between bodies and machines in interaction, then an AI-driven interactive system can be designed to leverage rhythm and affect to reach and learn new somatic states at the edge of conventional experience.
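One of the reciprocal interactions mentioned above, the gradual mutation of a score guided by a feature of muscle biosignals, can be sketched in a few lines of Python. The choices here are illustrative assumptions rather than a description of any existing instrument. The feature is the root-mean-square (RMS) amplitude of successive signal windows, and the score is a list of MIDI pitch numbers. Each note is nudged by one semitone with a probability proportional to the change in RMS between windows. The function names `rms` and `mutate_score` are hypothetical.

```python
import math
import random
from typing import List, Optional


def rms(window: List[float]) -> float:
    """Root-mean-square amplitude of one window of a (hypothetical) muscle signal."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def mutate_score(score: List[int], prev_rms: float, curr_rms: float,
                 sensitivity: float = 2.0,
                 rng: Optional[random.Random] = None) -> List[int]:
    """Return a copy of `score` (MIDI pitches) where each note moves by +/-1
    semitone with probability sensitivity * |curr_rms - prev_rms|, capped at 1."""
    if rng is None:
        rng = random.Random()
    p = min(1.0, sensitivity * abs(curr_rms - prev_rms))
    # The condition is evaluated first, so rng.choice only runs for mutated notes.
    return [pitch + rng.choice((-1, 1)) if rng.random() < p else pitch
            for pitch in score]


if __name__ == "__main__":
    rng = random.Random(7)  # seeded so the run is repeatable
    score = [60, 62, 64, 65, 67]
    # Stand-in signal windows: rest, rest, then a sudden contraction.
    windows = [[0.05, -0.04, 0.05], [0.06, -0.05, 0.04], [0.6, -0.7, 0.65]]
    prev = rms(windows[0])
    for w in windows[1:]:
        curr = rms(w)
        score = mutate_score(score, prev, curr, rng=rng)
        prev = curr
        print(round(curr, 3), score)
```

With these stand-in values, small fluctuations at rest leave the score almost intact, while the sudden contraction drives the mutation probability to its cap. The hypothetical `sensitivity` parameter sets how strongly the body’s activity reshapes the composition.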

3.2 Human-machine configuration

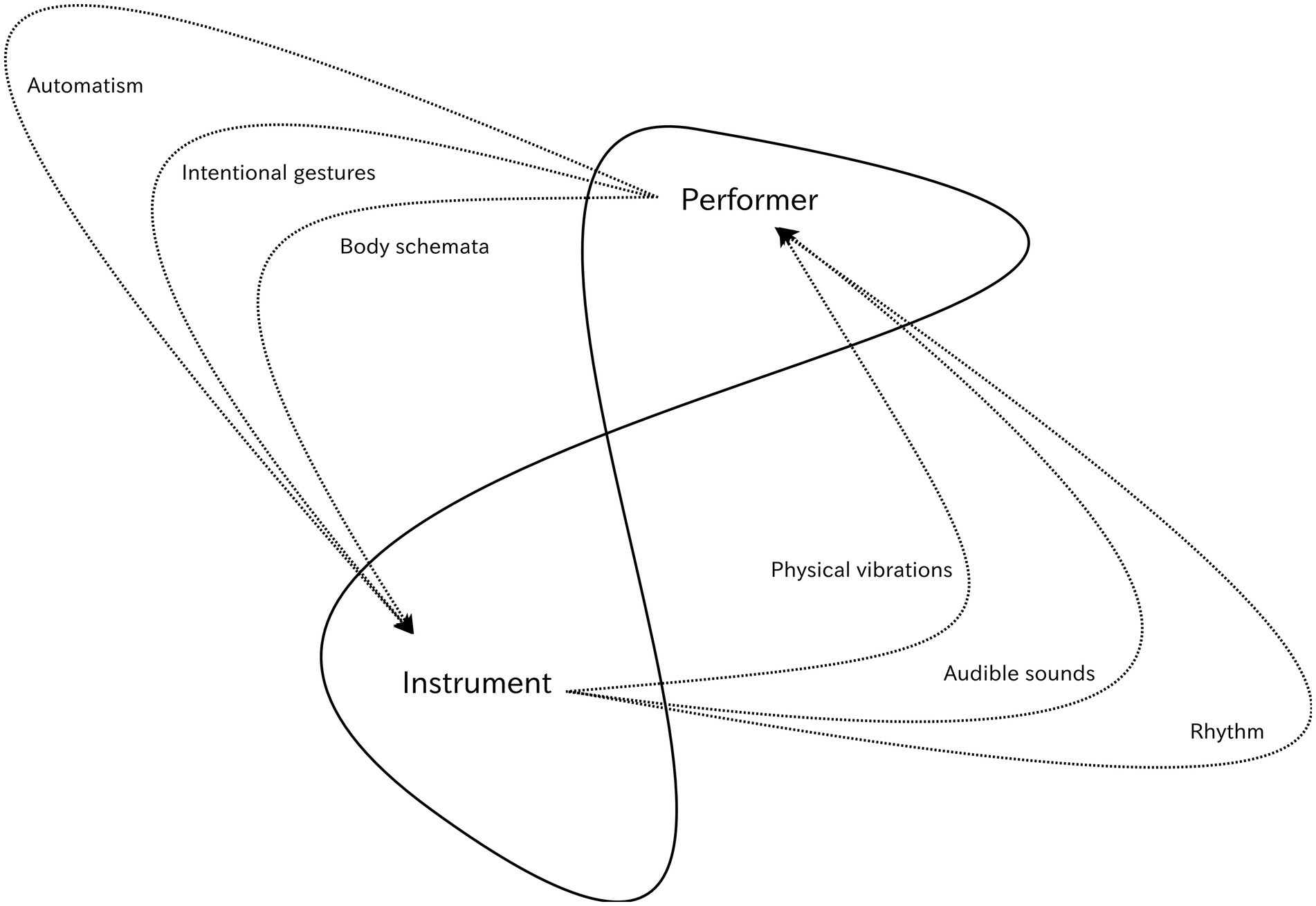

In order to analyze how rhythm, affect, and corporeal knowledge enable human-machine arrangements in music and performing arts, I have developed an analytical tool that I refer to as “configuration” (Donnarumma, 2017a). The notion originates from my work across artistic research, music and performance studies, computation, and robotics. It defines technologically mediated corporeality as an ecology where physiology, physicality, psyche, desire, and programmatic ideas tangle up with the particular hardware and software components of a machine.4 In a configuration, sound, vibration, or haptic rhythm is considered an interface between those human and machinic elements (Figure 3). Configuration also calls upon studies of automaticity and thresholds, which Lisa Blackman discusses in depth. These studies help delve into the epistemic and performative implications of such a relationship, in particular in artistic and musical contexts where expression is crucial.

Figure 3. A diagram illustrating a performer-instrument configuration. The performer and the instrument are distinct, yet they constitute a whole. Arrows illustrate the affective forces that the two exchange during a performance. The inner layer of those forces consists of the performance of body schemata and physical vibrations. The first outer layer includes intentional physical gestures and audible sounds. The third outer layer is where rhythm and automatism take place. These forces circulate back and forth between performer and instrument in a feedback loop that yields their mutual attunement.

Blackman (2014) describes automaticity as a form of psychic attunement with particular technical instruments that is subjective and progressive. The attunement is subjective because it depends on an individual’s training, discipline, and sensitivities. It is progressive because it gradually increases in intensity the more psychosomatic thresholds it overcomes. The example of automatic writing illustrates this process well. It shows how, through repeated training and particular environmental conditions, an individual can be brought to produce involuntary movements that drive a planchette or a pen. For a performer in a state of automaticity, the instrument becomes more than an attachment or an extension of the player’s body. Because the instrument works on, and sometimes guides, the body, the player perceives it as a force that is at once external and alien, and intimate and familiar. As the instrument and the player enter a choreography of shifting intensities, they drive each other in and out of their perceptual, physical, and material thresholds. Musical or bodily expression in performance is the result of the adaptive interplay of a body that is neither only human nor only technical but combines human and technical features in an ever-shifting corporeality.

This is all the more relevant to musical instruments and artistic prostheses equipped with AI-driven computing capabilities. If the structural organization of a traditional musical or electronic instrument is, in a way, fixed during a performance, an AI-driven instrument can reorganize its parts. Its sensing system can be recalibrated, datasets can be created in real time, and models for inference can be adaptively changed. The question then becomes how to envision a computational behavior that can influence, depend on, and remain sensitive to the perceptual, physiological, and material thresholds of a performer’s body. Clearly, the aim is not to discard human agency or computational autonomy, but rather to provide them with a Spielraum, a room for play where they can extend each other’s capabilities into an affective domain. In this form, incorporation is equally based on material and affective qualities, rhythmic and sonic cycles, compositional and programmatic ideas.
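As a minimal illustration of what run-time recalibration might mean in practice, the sketch below assumes, purely for the sake of example, that recalibrating a sensing system amounts to continuously re-estimating the range of an incoming sensor stream. The hypothetical `AdaptiveRange` class normalizes each sample against running minimum and maximum estimates that slowly relax toward recent values, so that the same gesture can yield a different output as the performer’s body fatigues or warms up. The class name and the `decay` parameter are assumptions, not part of any instrument described here.

```python
class AdaptiveRange:
    """Normalize a stream of sensor samples to [0, 1] against a range that
    is re-estimated on every sample (a hypothetical recalibration scheme)."""

    def __init__(self, decay: float = 0.001):
        self.decay = decay  # how fast stale extremes relax toward the signal
        self.lo = None
        self.hi = None

    def update(self, x: float) -> float:
        if self.lo is None:
            self.lo = self.hi = x
        # Expand the range immediately when a new extreme arrives...
        self.lo = min(self.lo, x)
        self.hi = max(self.hi, x)
        # ...and let both bounds drift slowly toward the current sample,
        # so the calibration follows long-term changes in the body.
        self.lo += self.decay * (x - self.lo)
        self.hi += self.decay * (x - self.hi)
        span = self.hi - self.lo
        if span <= 1e-12:
            return 0.0  # no usable range yet (e.g. the very first sample)
        return (x - self.lo) / span
```

A smaller `decay` keeps the calibration stable over a whole piece; a larger one makes the instrument forget quickly, which pushes the performer to keep renegotiating the threshold at which a movement becomes audible.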

If corporeality is the ground where psychic attunement to self and others, action-perception loops, and physical effort play out their relations, then corporeal knowing is relational epistemology in motion. In fact, corporeal knowing always entails someone or something “other.” It begins with a body, but it needs another entity, human, non-human, or technological, to mobilize itself through it. In a performance for a human player and an AI-driven instrument or prosthesis, the latter can be understood as the companion “other.” As a form of configuration, the relationship between them relies on learning through thresholds. But unlike performance with traditional musical instruments or analogue prostheses, in the case of AI-capable instruments, surpassing thresholds can provoke both the human player and the technological instrument to learn from each other, each on its own terms and modalities. From this standpoint, musical and bodily expression ceases to be confined exclusively to intentional actions or the willed volition of a performer, and also becomes a function of relations (Donnarumma, 2020). But I want to delve deeper and propose a somewhat counterintuitive approach. Because hardware and software technologies for the body can aid or undermine corporeal abilities and conditions, this capacity can be leveraged to purposely challenge the abilities of a performer and provoke unfamiliar states of experience.

4 Strategies for transgression

The kind of transgression I propose is a method for creating instruments, prostheses, and artistic performances that aims to overcome conventions of engineering, design, composition, and movement. It has nothing to do with provoking extreme reactions in an audience, causing shock, or breaking a moral code. Rather, it is an invitation for artists and researchers to infringe design rules, experiment with counterintuitive bodily tasks, and develop peripheral compositional thinking in order to engage with modes of expression that exceed the norms of their chosen discipline. It is a more intuitive endeavor than it may seem at first. Every technological system or artistic performance is an ecology, a grouping of different elements in relation with each other. Just as removing a single component of an ecology affects its whole structure, establishing new paths of connection between its components alters its entire functioning. In other words, during the process of conceiving a system or a performance, an initial break with (the transgression of) what may seem an unimportant basic convention can open up new ways of relating to the components at hand: sound, light, movement, sensors, algorithms, models. This, in turn, can give access to areas of expression and creativity that lie beyond the given boundaries of a discipline, unlocking territory that would otherwise have remained hidden.

In what follows I elaborate on three different strategies of transgression drawing on my practice as an artist, performer, and inventor: (1) Appropriate hardware and software to unsettle the body; (2) Invent prosthetics to train new somatic modes; (3) Repurpose normative designs to explore what they discard. These strategies aim to configure machine learning and AI-driven systems with aspects of corporeal knowing in order to set up unfamiliar modes of expression. Each strategy is exemplified below by discussing relevant aspects of some of my performances. These examples are constrained by my own aesthetic and artistic intentions and, therefore, are not intended to stand as generalized methodologies. They can, however, illustrate the conceptual arguments elaborated so far and serve as creative stimuli for others wishing to experiment in similar directions with their instruments and bodies. It is beyond question that, in order to bring embodiment back into AI, one must, quite literally, put the body to work.

4.1 Appropriate hardware and software to unsettle the body

One possible starting point for this kind of creative transgression is engineering. Existing algorithms or hardware blueprints can be repurposed for operations they were not designed for, but which they may nevertheless serve well. Alternatively, computational and sensing components from different fields can be assembled into new structures. Defined as “situated appropriation” by Caramiaux and Bevilacqua (2025), this approach draws on notions of situated knowledge in HCI. It posits modularity as a means to reassemble existing components into systems tailored to the specific needs and context of an artist’s practice. I suggest taking this one step further: using appropriation to create musical instruments or interactive machines that force the body into sensorimotor schemata it has not experienced before. By trespassing hardware and software design conventions, one can set up untested possibilities for movement and play, conditions to which the body of an experienced performer can respond creatively and autonomously. It is precisely the disorientation, the impossibility of reproducing a known movement sequence, that, for example, enables jazz improvisers to continue playing seamlessly despite an occasional wrong note or a slight injury (Berliner, 1994).

This was a strategy I took up around 2010, while researching new instruments for musical expression. I began studying the body and its functioning, in particular biomechanics and physiology, and my attention was caught by a lesser-known physiological signal, the mechanomyogram (MMG), an easily measurable acoustic signal resulting from the contraction of muscle fibers (Donnarumma, 2017b). MMG sensing was, and still is, mostly used in the medical domain to detect involuntary tremors and the onset of volitional movements, with applications in rehabilitation and prosthetics. Surprisingly, and despite a long history of musicians working with biofeedback and physiological signals,5 at that time the MMG had not yet been systematically studied for applications in music. By studying blueprints for MMG sensors, I defined the criteria needed to transform the medical device into a more user-friendly wearable sensor (sturdy but simple, unobtrusive and precise) and designed a simpler circuit and the accompanying wearable components. At the time, software for MMG sensing and analysis was available only as commercially licensed products, so I wrote my own using the open-source framework Pure Data. The software includes: timbre decomposition and feature extraction tools based on machine learning;6 a set of mapping tools for rapid prototyping and composition; a suite of DSP algorithms specifically designed to manipulate the particular qualities of MMG sounds; OSC and MIDI capability; and a graphical UI. The instrument, known as Xth Sense, was completed in 2010 and was my first methodical development of a sonic prosthesis (Figure 4). It served as the basis for a musical practice known as biophysical music (Donnarumma, 2017b) and is today regularly used by diverse artists across disciplines.
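The Xth Sense software was written in Pure Data, and its machine learning feature extraction is not reproduced here. As a hedged sketch of the kind of low-level descriptors such a tool might start from, the Python function below computes the RMS amplitude, spectral centroid, and spectral spread of one frame of a muscle-sound signal. The function name, the Hann window, and the choice of descriptors are assumptions made for illustration.

```python
import numpy as np

def mmg_features(frame, sample_rate: float) -> dict:
    """Compute generic descriptors of one frame of a muscle-sound (MMG)
    signal: RMS amplitude, spectral centroid (Hz) and spectral spread (Hz)."""
    frame = np.asarray(frame, dtype=float)
    frame = frame - frame.mean()                     # remove DC offset
    rms = float(np.sqrt(np.mean(frame ** 2)))
    windowed = frame * np.hanning(len(frame))        # reduce spectral leakage
    mags = np.abs(np.fft.rfft(windowed))
    freqs = np.fft.rfftfreq(len(frame), d=1.0 / sample_rate)
    total = mags.sum()
    if total == 0:                                   # silent frame
        return {"rms": rms, "centroid": 0.0, "spread": 0.0}
    weights = mags / total                           # spectrum as a distribution
    centroid = float(np.sum(freqs * weights))
    spread = float(np.sqrt(np.sum(weights * (freqs - centroid) ** 2)))
    return {"rms": rms, "centroid": centroid, "spread": spread}
```

Frame length and sample rate are left to the caller; longer frames give finer frequency resolution at the cost of responsiveness.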

Figure 4. The author performing Ominous for the Xth Sense instrument, one of the first pieces for biophysical music, at CTM festival, Berlin, 2012. Photo by Stefanie Kulisch.

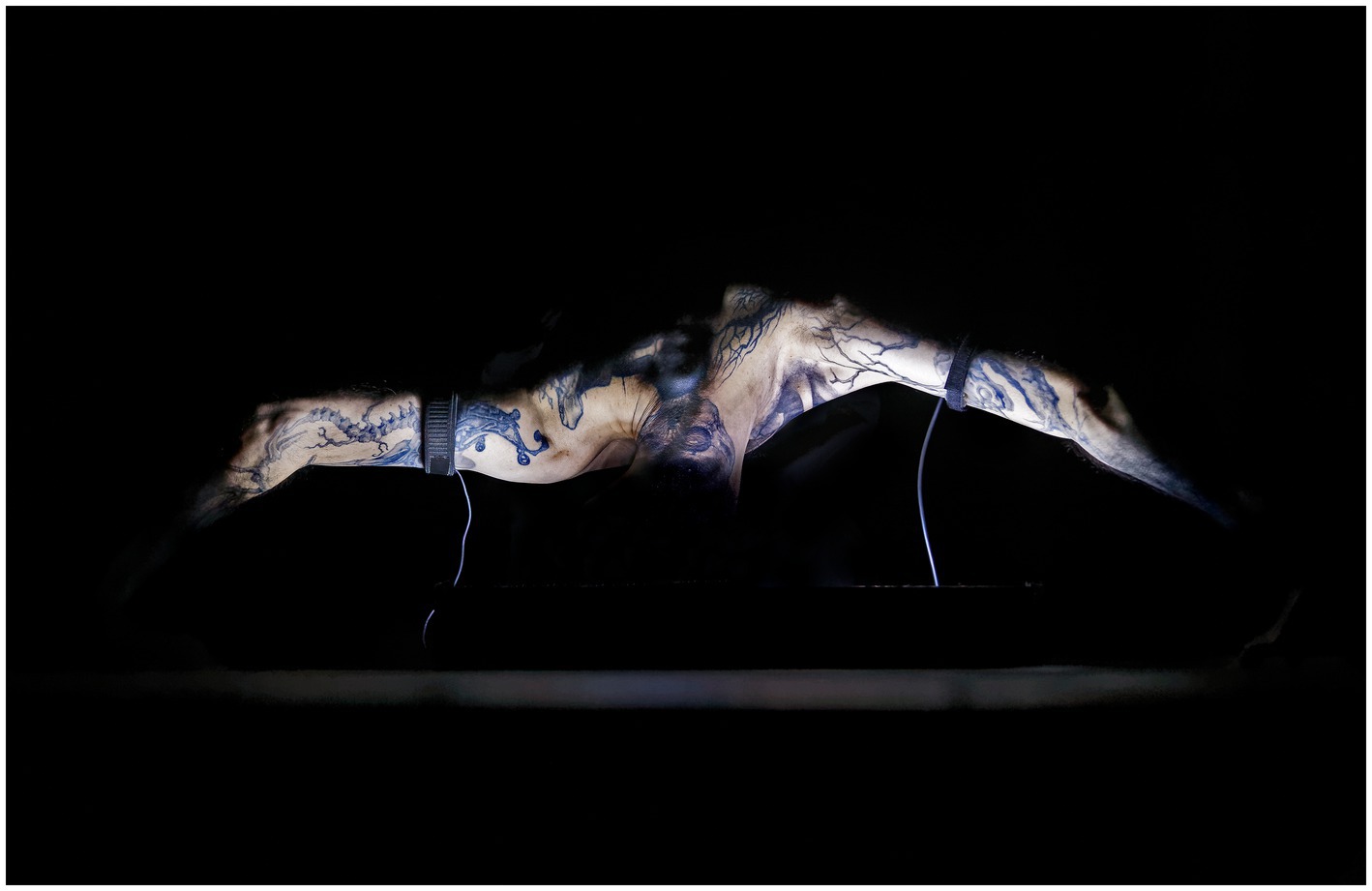

The instrument features in most of my performances and installations to this day, but here I will linger on a particular performance, my solo work entitled Corpus Nil (2016).7 The piece, presented in a black box theater, is a duet between my performer’s body and the Xth Sense instrument. Aesthetically, it is a hybrid performance combining interactive music, sound art, choreography, and machine learning. In the piece I use numerous mechanisms to force my body into an unsettled somatic state, to keep it continuously on alert, so to speak. Choreographic strategies are interfaced with a physiological computing system via a layer of machine learning operators. Biosensors on my upper arms capture electromyogram and MMG signals from my muscles as I perform a semi-improvised choreography that visually deconstructs my body. The software instrument listens to the muscle signals, extracts a set of features, and creates a model of my muscular activity, including a detailed analysis of the muscle sounds in the spectral, time, and amplitude domains. Parameters of the model, such as spectral variance, centroid, and damping, are used to drive a bank of 20 digital oscillators, each tuned to a particular frequency of the muscle sound spectrum. The output takes the form of sound and light patterns that evolve with, and in response to, my movements on stage, conjuring up drone-like sounds with complex overtones, bursts of bass frequencies and noise, and strobe lights at varying rates. This configuration is designed to mobilize a state of automaticity in my body. Music and light cannot be controlled by means of intentional movement, because they result from multiple layers of machine learning algorithms computing the physiological contour of my muscle activity. There is no discrete mapping between a physiological feature and a sound form; interaction happens across a continuous field of interrelations between constantly changing input and output parameters. My performer’s agency is thus occluded, and programmatic actions must be pursued through improvisation performed in attunement with the machine. This also means there is no proper way to perform a movement or trigger a specific response from the system. The most fruitful strategy is to tune into the rhythm of the sound, light, and vibration created by the system and allow my movement to surge and ebb with it (Figure 5).
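The oscillator bank described above can be approximated, under loudly stated assumptions, by picking the strongest bins of a muscle-sound spectrum and summing one sine oscillator per bin. In the sketch below, the hypothetical parameters `level` and `detune` stand in for continuously varying features (for instance RMS and spectral spread); the actual mapping in Corpus Nil passes through several machine learning layers and is not documented here. The `transpose` factor is likewise an assumption, added because raw muscle-sound frequencies may sit very low.

```python
import numpy as np

def strongest_partials(frame, sample_rate, n=20):
    """Frequencies and relative magnitudes of the n strongest non-DC FFT bins."""
    frame = np.asarray(frame, dtype=float)
    mags = np.abs(np.fft.rfft(frame * np.hanning(len(frame))))
    freqs = np.fft.rfftfreq(len(frame), d=1.0 / sample_rate)
    mags[0] = 0.0                                   # ignore the DC bin
    idx = np.argsort(mags)[::-1][:n]                # indices of the n largest bins
    peak = mags[idx].max()
    rel = mags[idx] / peak if peak > 0 else np.zeros(len(idx))
    return freqs[idx], rel

def render_bank(freqs, rel, level, detune, duration, out_rate=44100, transpose=1.0):
    """Sum one sine oscillator per partial, weighted by its relative magnitude.

    level (0..1) scales the peak-normalized output; detune (0..1) spreads the
    oscillators up to +/-5% away from their partials. Both are meant to be fed
    continuously by features (a hypothetical mapping)."""
    t = np.arange(int(duration * out_rate)) / out_rate
    offsets = np.linspace(-1.0, 1.0, len(freqs))    # fixed per-oscillator offsets
    tuned = np.asarray(freqs) * transpose * (1.0 + 0.05 * detune * offsets)
    phases = 2 * np.pi * np.outer(tuned, t)         # shape: (n_osc, n_samples)
    mix = (np.asarray(rel)[:, None] * np.sin(phases)).sum(axis=0)
    peak = np.abs(mix).max()
    if peak > 0:
        mix = mix / peak                            # normalize to [-1, 1]
    return level * mix
```

Because every oscillator is weighted by the current spectrum and every control parameter varies continuously, no single movement maps to a discrete sound event, which is the property the text emphasizes.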

Figure 5. Corpus Nil live performance at ZKM, Center for Art and Media Karlsruhe, 2016. Photo by ONUK.

4.2 Invent prosthetics to train new somatic modes

Another starting point for creative transgression is the conceptualization of alternative sensorimotor schemata that can be tested through experimentation with the body. A machine can then be designed to subtract sensing functions from a performer’s body while offering new ones. Alternative pathways for perception can be explored by creating instruments that use AI-driven techniques to undermine sensing and control, confuse them, or make them impossible by design. I began working with this strategy around 2015, partly inspired by the experiments of artists such as Marcel·lí Antúnez Roca, Orlan, and Stelarc during the late 1990s and early 2000s, whose work was touched upon earlier. The idea, found in their practices in different forms, of drastically re-contextualizing medical technology to reflect on bodily taboos remains seminal. Given the development of computational and robotic technologies since then, along with an increasing fear of the other reinforced by technological development, I felt it was important and fruitful to recover that attitude, reinterpret it, and extend it further.

This effort is perhaps best illustrated in Eingeweide (2018), a duet with artist and performer Margherita Pevere.8 Part of my 7 Configurations cycle, the piece is co-directed and performed by Pevere and myself. Stylistically, it is a dance-theater piece, but it does not fit neatly into any one genre, exceeding the conventional boundaries of the performing arts through its combination of biophysical music, sound-light interaction, butoh, body manipulation, robotics, and microorganisms. For Eingeweide I designed, handcrafted, and programmed a robotic facial prosthesis, while Pevere created her own prosthetic mask from garments of biofilm, a biomaterial created by bacterial cultures that Pevere personally nurtures and manipulates as part of her practice as a bioartist and visual artist (Pevere, 2018). The robotic prosthesis covers my face with a closed helmet, to which is attached a limb that moves on two axes. The robot is endowed with a computational version of a basic sensorimotor system, leveraging the Behavior Design Environment created by Manfred Hild at the Neurorobotics Research Lab, Berlin, my scientific partner for the project. Using biomimetic techniques that I programmed starting from an attractor neural network, the robot generates movement in the form of electrical voltages that drive the servomotors’ gears. The robot’s movement adapts in real time to haptic input recorded by its servomotors, which sense physical interaction with my body and with the robot’s surroundings. A regression algorithm helps the robot learn salient information about specific attractors (and thus, implicitly, about the servomotors’ positions) that may hinder further movement. The machine therefore learns how to move “by doing,” continually testing an improvised range of movement sequences against my body and the stage (Figure 6).
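The Behavior Design Environment and the network used in Eingeweide are not reproduced here. To give a rough sense of what an attractor-based movement generator can look like, the sketch below implements a generic two-neuron discrete-time recurrent network of the kind sometimes called an SO(2) network in the neurorobotics literature: its weight matrix is a rotation scaled by a gain `alpha`, and for `alpha` slightly above 1 the activity neither dies out nor explodes but stays on a bounded attractor. The haptic input term and the `coupling` parameter are assumptions standing in for the servomotor feedback; the regression step mentioned above is not shown.

```python
import numpy as np

def so2_weights(alpha: float, phi: float) -> np.ndarray:
    """Two-neuron weight matrix: a rotation by phi (radians) scaled by alpha.

    With alpha slightly above 1 the origin is unstable while tanh bounds the
    activity, so the network keeps moving instead of decaying to rest."""
    c, s = np.cos(phi), np.sin(phi)
    return alpha * np.array([[c, s], [-s, c]])

def run(weights, steps, haptic=None, state=(0.1, 0.0), coupling=0.5):
    """Iterate o(t+1) = tanh(W o(t) + coupling * h(t)) and return all states.

    haptic, if given, is a sequence of 2-vectors, one per step; here it is a
    stand-in (assumption) for servomotor feedback such as position error."""
    o = np.array(state, dtype=float)
    out = np.empty((steps, 2))
    for t in range(steps):
        h = np.zeros(2) if haptic is None else np.asarray(haptic[t], dtype=float)
        o = np.tanh(weights @ o + coupling * h)     # tanh keeps activity in (-1, 1)
        out[t] = o
    return out
```

Feeding a nonzero `haptic` sequence perturbs the trajectory, which is one simple way in which contact with a body or an object could reshape the rhythm of the generated movement.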

The robotic limb’s morphology copies the dimensions and proportions of my arms so as to offer a coherent morphological image both to myself as a performer and to the audience. It consists of three sections interlinked by five motors, with the base motor attached to the helmet, a few centimeters from my forehead. I conceived this particular robotic morphology according to two principles: subtraction of bodily functions and substitution of vision with haptic feedback. These principles build upon and expand the first strategy discussed above, that is, configuring bodily and machine parts into arrangements that allow for attunement and unpredictability. The prosthesis drastically reduces my sight, entirely blocking frontal vision and allowing only peripheral view. The lack of vision is compensated by the haptic sensations created by the vibrating servomotor at the base of the robot, which sits at the center of my face. In fact, the robot’s neural network generates different types of vibration according to its haptic input. Technically, the vibrations are defined by a series of attractors, particular patterns that the network settles into according to the input it receives and its internal state at a given time. If the robotic limb is moving freely, seeking a surface to grasp, the vibration is regular and constant; when it finds another object or a body part of my performance partner, its vibration stutters at closer intervals, as the robot rapidly moves its tip up and down to probe the consistency of that object; if the limb is pulled by my partner or gets stuck on a prop, the vibration becomes more intense, alternating between slow and fast rates as the robot tries to free itself from the pulling force. Thanks to regular training with the prosthesis, I am able to recognize the different vibration modalities and hence understand what the prosthesis is doing without seeing it. This, together with my mental images of the stage and of the choreography’s progression, allows me to estimate with a certain degree of accuracy where I am on the stage, where my partner is, and whether the interaction expected at a particular moment is taking place.
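The three vibration behaviors described above can be summarized as a small table of pulse patterns, together with a rule for telling them apart from timing alone, loosely analogous to what the wearer learns through training. This is a deliberate simplification: in the piece the patterns emerge from the attractors of the robot’s neural network, whereas here they are simply tabulated, and every interval and intensity value is invented for illustration.

```python
from enum import Enum

class Mode(Enum):
    SEEKING = "limb moving freely"
    PROBING = "limb touching an object or body"
    PULLED = "limb pulled or stuck"

def vibration_pattern(mode: Mode, n_pulses: int = 8):
    """Return (interval_s, intensity) pairs for a hypothetical rendering of
    the three vibration behaviors described in the text."""
    if mode is Mode.SEEKING:
        return [(0.25, 0.4)] * n_pulses              # regular and constant
    if mode is Mode.PROBING:
        return [(0.08, 0.5)] * n_pulses              # stuttering, closer intervals
    # PULLED: stronger pulses alternating between slow and fast rates
    return [(0.30 if i % 2 == 0 else 0.05, 0.9) for i in range(n_pulses)]

def recognize(pattern) -> Mode:
    """Guess the mode from timing alone: irregular intervals mean PULLED,
    otherwise the pulse rate separates PROBING from SEEKING."""
    intervals = [interval for interval, _ in pattern]
    jumps = [abs(a - b) for a, b in zip(intervals, intervals[1:])]
    if jumps and max(jumps) > 0.1:
        return Mode.PULLED
    mean_interval = sum(intervals) / len(intervals)
    return Mode.PROBING if mean_interval < 0.15 else Mode.SEEKING
```

The recognizer relies only on rhythm, not on intensity, in keeping with the text’s point that attunement to periodicity takes the place of vision.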

The accuracy, though, is barely enough for me to perform safely. The robotic system and its neural network are designed to purposely force my body to the edges of its domain of experience, into a somatic state that requires openness to uncertainty, adaptiveness, and improvisation. It is a kind of state that affords the exploration of zones of attunement between a performer’s agency and a robot’s autonomy, predicated on rhythm, instability, and unpredictability. Substituting haptic information from vibrating servomotors for my visual input means attuning to the periodicity of the neural network driving the machine, a complex sensorial operation that does not allow precise movement. What is lost in accuracy is gained in liveness and expression. The struggle of my body to attune to the rhythm of the prosthesis is real and evident; it is a configuration in becoming. While the choreography I have to perform serves the narrative of the piece, the wrestling of my senses as they strive to come to terms with the throbbing movements of the machine serves a different aesthetic purpose: it makes a process of technological incorporation manifest. It shows the ongoing configuration of my senses with the pulsations of the machine as it happens, in all its rawness, complexity, awkwardness, and grace. The human-machine configuration at play is unstable and unpredictable, obscuring the machinic determinacy of technology and sabotaging any human attempt at control or precision.

4.3 Repurpose normative designs to explore what they discard

Turning the analysis of somatic states of attunement with machines to the domain of listening, the final example considers my work Ex Silens (2024).9 A participatory solo performance, it is perhaps the least classifiable of the pieces discussed so far. The performance is presented in theaters, empty warehouses, or other large spaces. It combines biophysical music, sound-light interaction, and prosthetics. Unlike my previous works, it features an open stage where spectators sit around a cross-shaped performance area, in the immediate vicinity of the performer.

For this piece, I crafted a set of unconventional prostheses that translate sounds into the haptic and vibrational domain and make it possible to amplify sounds from one human body into another. These sonic “organs,” as I refer to them in order to emphasize their perceptual task, offer audiences an alternate understanding of listening: through skin, flesh, bones, and the body as a whole. They make use of repurposed AI-based hearing algorithms (HA), tools conventionally employed in hearing aids and cochlear implants. My concept for the piece stemmed from a reflection on the sociopolitical implications of assuming that listening happens only through cochlear hearing, a common audist assumption. This way of thinking implies that d/Deaf and hard-of-hearing people are disabled by their condition because their cochlear hearing is damaged or lacking. In fact, d/Deaf and hard-of-hearing people, myself included, are disabled by a society that disavows different ways of experiencing sound. The sound worlds of cities, cultural spaces, and venues are conceived for only one listening modality, the cochlear. One of the clearest examples is the industry-standard amplification methods for HA, which rely on digital manipulations of sound based on the equal-loudness contour. Technically known as the ISO 226:2023 standard, this is a specification of combinations of sound pressure levels and pure-tone frequencies that is purported to describe a universal hearing profile. As Drever and Hugill (2022) note, the ISO 226:2023 standard is a constructed fiction. It is in fact the result of research experiments conducted between 1983 and 2002 with hearing individuals between 18 and 25 years old, an extremely narrow demographic range that can hardly be argued to stand as a universal reference.

In order to embed this critical reflection into the design of the sonic organs used in Ex Silens, I created a custom software framework that, using open-source tools,10 reconfigures the sound manipulation pipeline commonly found in hearing prostheses. Starting from a dataset of field recordings and voice samples, timbre decomposition is performed by means of non-negative matrix factorization and principal component analysis in order to emphasize spectral components that are commonly considered outliers. These are in turn processed through standard nonlinear amplification methods, but, importantly, the amplification does not use the equal-loudness contour as a reference. Instead, the reference is a contour obtained by averaging the hearing profiles of a group of d/Deaf collaborators, which yields a bias toward the mid-low and low ranges of the frequency spectrum. As a result, the original sound is restructured into a form that is less clearly perceptible via cochlear hearing, if not confusing, and significantly more crystalline in the haptic and vibrational domain. By zooming into the lower range of the sound spectrum, the dynamics of frequency perception are modulated in unconventional ways; as cochlear hearing is sidelined, familiar sounds, such as a live heartbeat or ocean waves, acquire new aesthetic dimensions.
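The pipeline above can be sketched in two parts, under assumptions that should be read as illustrative only; the framework itself was built in Pure Data. First, `nmf` factorizes a non-negative magnitude spectrogram with the standard Lee–Seung multiplicative updates for the Euclidean cost (the PCA step is omitted). Second, `reference_contour` averages per-band hearing thresholds across a group of people, and `band_gains_db` derives a nonlinear per-band gain from that contour: a base gain of half the threshold (the half-gain rule of thumb, used here only as a placeholder) that is reduced above a compression knee. The knee, ratio, and gain rule are assumptions, not the values used in Ex Silens.

```python
import numpy as np

def nmf(V, rank, n_iter=200, eps=1e-9, seed=0):
    """Factorize a non-negative matrix V (frequency x time) as W @ H using
    Lee-Seung multiplicative updates for the squared Euclidean cost."""
    V = np.asarray(V, dtype=float)
    rng = np.random.default_rng(seed)
    W = rng.random((V.shape[0], rank)) + eps    # spectral templates
    H = rng.random((rank, V.shape[1])) + eps    # their activations over time
    for _ in range(n_iter):
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H

def reference_contour(profiles_db):
    """Average hearing thresholds (dB) per frequency band.
    Rows are individuals, columns are bands."""
    return np.mean(np.asarray(profiles_db, dtype=float), axis=0)

def band_gains_db(input_db, contour_db, knee_db=50.0, ratio=3.0):
    """Hypothetical nonlinear per-band gain in dB.

    Base gain is half the reference threshold (placeholder half-gain rule);
    above the knee, the gain drops by (1 - 1/ratio) dB per dB of excess
    input level, so louder bands are amplified less. Gains never go below 0."""
    base = 0.5 * np.asarray(contour_db, dtype=float)
    excess = np.maximum(0.0, np.asarray(input_db, dtype=float) - knee_db)
    return np.maximum(0.0, base - (1.0 - 1.0 / ratio) * excess)
```

Swapping the averaged contour for a different reference changes which bands receive the most gain; in this sketch, the choice of reference profile, not the amplification rule itself, decides whose hearing the system is tuned to.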

The playback system for the processed samples uses a different set of machine learning tools to create a dialogue between performer and instrument, as well as between audience members and instrument. The repurposed HA pipeline outputs the restructured sounds through the sonic organs, which I handcrafted using common acoustic transducers and portable amplifiers. The output is not continuous but occurs only in response to particular features of my muscle signals captured via the Xth Sense. Interactive machine learning software, based on nearest-neighbor search algorithms,11 maps features of my MMG signals to an array of preset parameters for a granular synthesizer. At given times during the performance, I attach the prostheses to the bodies of audience members, ideally on their skulls, necks, or sternums. As I excite the system with particular movements, the prostheses’ engine performs timbre decomposition, maps the output to granular synthesis parameters, and sends the resulting sound to the acoustic transducers. These diffuse the manipulated sound through the bone structure of the spectators’ bodies (Figure 7). Unlike the pieces discussed so far, where human-machine attunement is limited to the experience of a performer in configuration with an instrument, the attunement at play here is multidirectional. It expands beyond the borders of a performer’s body and reaches, literally, into the flesh and bones of the spectators. They stop being witnesses and themselves become parts of a configuration. Their senses are engaged in a multidirectional exchange of rhythms and vibrations that involves my performer’s body, the AI instrument, and their own bodies. While the experience is highly unconventional and can only be interpreted subjectively, the intensity of feeling sound pervading one’s own body invites audience members to acknowledge the limits of cochlear hearing and, in turn, to avow the openness and vulnerability of their bodies.
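Anguilla’s own interface is not reproduced here. As a generic, hedged sketch of nearest-neighbor mapping, the class below stores pairs of MMG feature vectors and granular-synthesis presets, and answers a new feature vector with an inverse-distance-weighted blend of the `k` closest presets. The class name, the three preset fields (for example grain size in milliseconds, grain density, and playback rate), and the weighting scheme are assumptions for illustration.

```python
import numpy as np

class NearestNeighborMapper:
    """Map a feature vector to synthesis parameters by inverse-distance
    weighting of the k nearest stored examples (a generic sketch, not the
    Anguilla API)."""

    def __init__(self, k: int = 3):
        self.k = k
        self.inputs = []
        self.outputs = []

    def add(self, features, params):
        """Store one training pair, e.g. recorded while demonstrating a gesture."""
        self.inputs.append(np.asarray(features, dtype=float))
        self.outputs.append(np.asarray(params, dtype=float))

    def __call__(self, features):
        X = np.stack(self.inputs)
        Y = np.stack(self.outputs)
        d = np.linalg.norm(X - np.asarray(features, dtype=float), axis=1)
        nearest = np.argsort(d)[: self.k]       # indices of the k closest examples
        if d[nearest[0]] < 1e-12:               # exact match: return that preset
            return Y[nearest[0]]
        w = 1.0 / d[nearest]                    # closer examples weigh more
        return (w[:, None] * Y[nearest]).sum(axis=0) / w.sum()
```

Because the output is always a weighted blend of stored presets, the synthesizer stays within the range of sounds demonstrated during training, while intermediate gestures yield intermediate textures.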

5 Conclusion

Casting aside spent ideas of control and prediction affords a renewed understanding of human-machine relations in performance, where the two are co-dependent rather than hierarchically organized. This configuration of body and instrument is not a pairing but an ecology of components connected to and influencing each other, acting as a system. This is possible thanks to processes of incorporation that integrate an instrument, more or less successfully or stably, into the body schemata. When engineering, composing for, and performing with body technologies, operating at the level of incorporation means experimenting with unfamiliar corporeal forms of knowing. Embedding aspects of corporeality, such as sensitivity to somatic thresholds and conditions for automaticity, into the design of computational instruments unlocks an interaction that can be extremely intimate and richly expressive, because it leverages subjective modes of sensing and knowing deeply rooted in the body. But this should be only a starting point. In order to test further the range of expression in musical and performance practice, rules and conventions must be infringed. Drawing on my practice, I suggested three strategies of transgression: the appropriation of hardware and software components to create states of performer-instrument attunement; the invention of prostheses that force a performer’s body into new, algorithmically mediated body schemata; and the subversion of normative hearing technologies to recover a form of corporeal listening commonly discarded by those very same technologies. These strategies are only a few of the many available, and I hope the interested reader will find them generative of other modes of transgression.

The problem of embodiment and AI is multifaceted and extensive. Embodiment is restless, mutable, and volatile; performers are hardly ever literally “in control.” Rather, they expertly negotiate myriad thresholds of psychological and somatic intensity toward given programmatic ideas. Regardless of the amount of available data, corporeal and musical expression remains too difficult, if not impossible, to model comprehensively. Today’s prevailing type of AI may be successful at a given computational task, but it remains immensely clumsy in adapting to the fickleness of human interaction. The issue, as this essay has suggested, may lie with the belief at the core of these systems that anything, including expressivity and creativity, can be modeled and predicted in isolation from corporeal knowing. But it is important to consider that, as assumptions about the nature of human-machine interaction are imbued into a system, so are value systems and political stances. The premise that an LLM-based chatbot can “create” and “reason” like or beyond a human (or non-human) is a proxy for a cultural and political rejection of corporeal knowledge, one that correlates with the current zeitgeist of increasingly normalized social intolerance. Corporeal knowledge is too fuzzy, messy, diverse, and unpredictable for it to be controlled, as the industry strives to do with the data that feed its AI models. Embodiment and the corporeal forms of knowing that sustain it are unique: no one body is or knows like another one.

Artists and researchers should be eager to confront these cultural problematics, for they are inextricably linked to fascinating questions of system design, engineering, interaction, and creative expression. If, as Lewis reminded us, an aesthetic is capable of forming political and social meaning, the inverse is also true: political and social paradigms have the power to articulate an aesthetic. The open question is how to respond to established social and cultural meaning with music and performances that offer alternative imaginations of the relations between self and other, be it technological, human, or non-human. The problem cannot be tackled by purely technical, theoretical, or artistic approaches. Transdisciplinarity can help interpolate aspects of theory, engineering, composition, and performance toward the making of AI-driven systems that do not merely enact an integration of human and machine, but challenge it, manifest its problematics, and probe its fragility.

Ethics statement

Written informed consent was obtained from the individual(s) for the publication of any potentially identifiable images or data included in this article.

Author contributions

MD: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Software, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author declares that no Gen AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1. ^I have been diagnosed with a degenerative sensorineural condition that currently amounts to an average hearing loss of 80% in both ears.

2. ^The first version of Voyager was created at STEIM, Studio for Electro-Instrumental Music, between 1986 and 1988 and is still being developed to this day.

3. ^An example of this process is the case of the phantom limb, where a prosthesis user develops multiple body images to account for what is perceived as a multiform kind of embodiment – a body that exists with and without the prosthesis, with and without a limb.

4. ^It is important to mention here the work of Lucy A. Suchman on human-machine “reconfigurations” (Suchman, 2007). Except for the overlap in their names, my tool of configuration is unrelated to Suchman’s work, which emerges from a different field of research (HCI and sociology) and is concerned with how the agency of machines is understood and performed at a social and technological level.

5. ^These include Ruth Anderson, Pauline Oliveros, Alvin Lucier, David Rosenboom, to name only a few of the early practitioners.

6. ^Partly developed with Baptiste Caramiaux.

7. ^To better grasp this work and the ones discussed in the following sections, I recommend that the reader watch the video trailer of the performance, available at: https://marcodonnarumma.com/works/corpus-nil (accessed February 12, 2025).

8. ^A video trailer is available at: https://marcodonnarumma.com/works/eingeweide and Pevere’s work can be viewed at: https://margheritapevere.com (accessed February 12, 2025).

9. ^A video trailer is available at: https://marcodonnarumma.com/works/ex-silens (accessed February 12, 2025).

10. ^The framework was developed in the programming language Pure Data, leveraging, among others, the Fluid Corpus Manipulation library (FluCoMa).

11. ^The software was implemented by the author using the Python package Anguilla, developed by Victor Shepardson, Jack Armitage and Nicola Privato at the Intelligent Instruments Lab in Reykjavik, the scientific partner for this project. See https://iil.is/research/anguilla (accessed February 12, 2025).

References

Balsamo, A. (2000). “Reading cyborgs writing feminism” in The gendered cyborg: A reader. ed. G. Kirkup (London: Routledge), 148–158.

Bender, E. M., Gebru, T., McMillan-Major, A., and Shmitchell, S. (2021). “On the dangers of stochastic parrots: can language models be too big?” in FAccT '21: Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, New York, NY: ACM.

Berliner, P. F. (1994). Thinking in jazz: The infinite art of improvisation. Chicago, IL: The University of Chicago Press.

Bishop, M. J. (2021). Artificial intelligence is stupid and causal reasoning will not nix it. Front. Psychol. 11:513474. doi: 10.3389/fpsyg.2020.513474

Blackman, L. (2014). Affect and automaticity: towards an analytics of experimentation. Subjectivity 7, 362–384. doi: 10.1057/sub.2014.19

Caramiaux, B., and Bevilacqua, F. (2025). Jouer de la musique, performer l’IA. Ce que l’IA fait à l’Art. Paris: Centre Pompidou.

Caramiaux, B., and Donnarumma, M. (2020). “Artificial intelligence in music and performance: a subjective art-research inquiry” in Handbook of artificial intelligence for music: Foundations, advanced approaches, and developments for creativity. ed. E. R. Miranda (London: Springer).

Clark, A. (2004). Natural-born cyborgs: minds, technologies, and the future of human intelligence. Can. J. Sociol. 29, 471–473.

Donnarumma, M. (2022). Against the norm: othering and otherness in AI aesthetics. Digital Culture Soc. 8, 39–66. doi: 10.14361/dcs-2022-0205

Donnarumma, M. (2020). Across bodily and disciplinary borders. Hybridity as methodology, expression, dynamic. Perform. Res. 25, 36–44. doi: 10.1080/13528165.2020.1842028

Donnarumma, M. (2017a). Beyond the cyborg: performance, attunement and autonomous computation. Int. J. Perform. Arts Digit. Media 13, 105–119. doi: 10.1080/14794713.2017.1338828

Donnarumma, M. (2017b). “On biophysical music” in Guide to unconventional computing for music. ed. E. R. Miranda (London: Springer).

Doshi, A. R., and Hauser, O. P. (2024). Generative AI enhances individual creativity but reduces the collective diversity of novel content. Sci. Adv. 10:eadn5290. doi: 10.1126/sciadv.adn5290

Dourish, P. (2004). Where the action is: The foundations of embodied interaction. Cambridge, MA: MIT Press.

Fuller, M., and Weizman, E. (2021). Investigative aesthetics – Conflicts and commons in the politics of truth. London: Verso.

Goodman, S. (2009). Sonic warfare: Sound, affect, and the ecology of fear. Cambridge, MA: MIT Press.

Henriques, J. (2011). Sonic bodies: Reggae sound systems, performance techniques, and ways of knowing. London: Continuum.

Jawad, K., and Xambó Sedó, A. (2024). Feminist HCI and narratives of design semantics in DIY music hardware. Front. Commun. 8:1345124. doi: 10.3389/fcomm.2023.1345124

Jochum, E., and Donnarumma, M. (2025). “Improper bodies: robots, prosthetics, and disability in contemporary performance” in Robot theater. eds. H. Bergen and E. Mullis (London: Routledge).

Lewis, G. E. (2000). Too many notes: computers, complexity and culture in Voyager. Leonardo Music J. 10, 33–39. doi: 10.1162/096112100570585

Magnusson, T. (2019). Sonic writing: Technologies of material, symbolic, and signal inscriptions. London: Bloomsbury.

Mikami, S. (1997). “World, membrane and the dismembered body.” Department of Information Design, Tama Art University. Available online at: https://www.idd.tamabi.ac.jp/~mikami/artworks/World_Membrane/ear1.html (accessed April 21, 2025).

Pamela Z (2025). Available online at: https://pamelaz.com/ (accessed February 12, 2025).

Parker-Starbuck, J. (2011). Cyborg theatre: Corporeal/technological intersections in multimedia performance. London: Palgrave Macmillan.

Pevere, M. (2018). “Skin studies: leaking. Mattering” in Uncanny interfaces. eds. K. D. Haensch, L. Nelke, and M. Planitzer (Hamburg: Textem Verlag), 138–143.

Rokeby, D. (2025). Available online at: http://www.davidrokeby.com/ (accessed February 12, 2025).

Shildrick, M. (2013). Re-imagining embodiment: prostheses, supplements and boundaries. Somatechnics 3, 270–286. doi: 10.3366/soma.2013.0098

Sobchack, V. (2010). Living a “phantom limb”: on the phenomenology of bodily integrity. Body Soc. 16, 51–67. doi: 10.1177/1357034X10373407

Sonami, L. (2025). Available online at: https://sonami.net/ (accessed February 12, 2025).

Stelarc (2002). “Towards a compliant coupling: pneumatic projects, 1998–2001” in The cyborg experiments: The extension of the body in the media age. ed. J. Zylinska (London: Bloomsbury Academic), 73–77.

Suchman, L. A. (2007). Human-machine reconfigurations: Plans and situated actions. New York, NY: Cambridge University Press.

Keywords: music, performing arts, corporeal knowledge, configuration, AI, prostheses, biophysical music, attunement

Citation: Donnarumma M (2025) AI and corporeal knowledge: inventing and performing with prostheses and sonic organs. Front. Comput. Sci. 7:1575730. doi: 10.3389/fcomp.2025.1575730

Edited by:

Anna Xambó, Queen Mary University of London, United Kingdom

Reviewed by:

Alexander Refsum Jensenius, University of Oslo, Norway
Juan Pablo Martinez Avila, University of Nottingham, United Kingdom

Copyright © 2025 Donnarumma. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Marco Donnarumma, lists@marcodonnarumma.com