- 1 Interactive Media Arts, New York University, New York, NY, United States

- 2 Behavior Neuroscience, University of California, Los Angeles, CA, United States

As artificial intelligence (AI) systems become increasingly integrated into human social environments, their ability to foster meaningful interaction remains an open challenge. Building on research showing that physiological synchrony relates to social bonding, we speculate about an interpersonal musical biofeedback system that promotes synchrony by allowing users to attune to each other’s physiological rhythms. We propose a two‑stage AI training framework for such a system: (1) a Foundational Model trained on diverse listeners’ physiological responses to a music library, and (2) Individualized Tuning, where the system fine-tunes itself to each user’s unique physiological responses. By analyzing musical features alongside real-time physiological responses, the proposed system illustrates a feasible architecture for dynamically personalizing music to facilitate nonverbal, embodied communication between users. This approach highlights the potential for AI-driven personalization that moves beyond individual optimization to actively enhance physiological synchrony, which may promote deeper emotional bonding and expand the role of music as a medium for AI-mediated social connections.

Introduction

This paper contextualizes our previous musical biofeedback systems, speculates on improvements using an Affective Generative Music-AI (AGM-AI) model, and discusses the implications and ethical considerations for such a system to be successfully integrated into current social structures. Our model draws from both Affective Computing and Music Information Retrieval (MIR) research to create an embodied physiological music system that is non-discrete and continuous. This approach addresses limitations in current data-driven methodologies (Dash and Agres, 2024) and emphasizes the embodied nature of human experience.

In the field of Affective Computing – the study and development of technologies that recognize, interpret, process, and simulate subjective experiences (Picard, 2000) – current systems often rely on pre-programmed rules and subjective tagging systems to produce outputs (Kumano et al., 2015; Martinho et al., 2000). However, this method overlooks embodied aspects of human experience, emphasized by Suchman’s (1999) theory of embodied practices, which advocates for designing systems that are reflexive to the interactive nature of human behavior. One current limitation of Affective Computing stems from the idiosyncratic nature of measuring individualized physiological responses, making it challenging to develop models that perform consistently across diverse groups.

MIR research has traditionally focused on the cognitive functions of music on the mind (Kaneshiro and Dmochowski, 2015; Stober, 2017; Ferreri et al., 2015) rather than the embodied physiological effects of music. However, music and related art forms, such as dance, emerged as essential modes of establishing social cohesion and emotional bonding within communities through shared physical, emotional, and social experiences.

In recent years, several commercial devices and platforms have emerged that integrate biometric feedback with music, such as the Muse EEG Headband and Neurable, which provide real-time adaptive audio based on brainwave monitoring, as well as APIs like Soundverse and wellness apps that use physiological data (e.g., heart rate) to tailor musical experiences for individual users. Our research moves beyond individual adaptation by developing a dual-synchrony framework that dynamically generates music in response to the physiological states of multiple users simultaneously. This approach allows for real-time, interpersonal adaptation of musical output—marking an innovative step beyond the primarily single-person focus of existing commercial solutions.

In our speculative model, reflexivity plays a crucial role in fostering physiological synchrony. Just as individual biofeedback allows users to attend to their own physiological states through real-time feedback, we propose a two-person system that allows users to attune to each other’s physiological rhythms. This aims to enhance synchrony between users, which has been speculated to be an underpinning of social bonding. By framing music AI models as extensions of humanity’s intrinsic drive for synchrony and emotional connection, our proposal envisions biofeedback technologies not only as tools of introspection (Markiewicz, 2017) but as catalysts for deeper physiological and social synchrony.

Previous studies have laid important groundwork for understanding physiological synchrony and music’s social effects, yet key limitations remain. For instance, research by Chang et al. (2016) and Huang and Cai (2017) demonstrated promising uses of EEG and heart rate data in music recommendation systems, but these systems primarily targeted individual emotional states and lacked interpersonal or synchrony-driven dimensions. Studies on physiological synchrony—such as Feldman et al. (2011), Dikker et al. (2017), and Codrons et al. (2014)—have confirmed correlations between shared physiological states and social bonding, yet many of these findings remain correlation-based and limited to predefined, often context-restricted scenarios. While music-based research, including Tarr et al. (2014) and Weinstein et al. (2016), supports the role of synchronized musical activity in promoting social cohesion, these studies typically assume fixed stimuli and asynchronous, post-hoc analysis. In contrast, our framework introduces live, bidirectional adaptation of musical output based on real-time biometric inputs, mediated by a dual-layer AI system. By combining a broad Foundational Model with user-specific Individualized Tuning, our approach seeks to dynamically generate music that promotes mutual entrainment, thus addressing both the personalization gap and the lack of real-time interaction in prior literature.

While generative AI music systems such as Google Magenta (Schreiber, 2024), Suno (Yu et al., 2024), and AIVA (Gîrbu, 2024) have gained traction in recent years, most rely on large-scale datasets of audio samples or symbolic music data without integrating real-time biometric input. Commercially available adaptive platforms, such as Brain.fm, attempt to entrain biometric states but do not read them in real time, while Endel incorporates a single biometric—heart rate—alongside environmental factors like time of day and geographic location. These systems do not support direct, multi-modal physiological synchronization between multiple users. Additionally, biofeedback-integrated music applications, such as MindTrack, typically operate at the individual level, targeting single-user states rather than facilitating interpersonal synchrony (Crespo et al., 2018). In contrast, our proposed AGM-AI framework merges real-time, multi-modal biometric inputs (EEG, ECG, GSR, motion data) with dynamic generative music systems, emphasizing continuous, interpersonal adaptation. By training dual-layer AI models—a general Foundational Model that captures average human physiological responses to music, and an Individualized Tuning model that accounts for user-specific responses—our system moves beyond existing approaches to enable personalized, synchronizable musical experiences.

Synchrony and communication in early musical traditions

Music has long served as a universal language for creative expression and social connection, transcending linguistic and cultural boundaries. In early societies, communal music-making likely functioned as a tool for non-verbal communication (Harwood, 2017) and fostering group cohesion (Oesch, 2019). Modern research suggests that the perception of music as agent-driven sound activates motor regions in the brain, potentially leading to “self-other merging,” in which individuals experience their movements synchronizing with others (Tarr et al., 2014). This synchronization is thought to create a shared physiological state that can strengthen interpersonal bonds and influence subsequent positive social feelings (Cheong et al., 2023).

For example, in Javanese gamelan, synchrony emerges through interlocking polyrhythms and cyclical patterns during improvised playing, fostering a shared flow state known as ngeli (“floating together”) (Gibbs et al., 2023). This ecstatic state allows performers to anticipate and adapt to each other’s actions, enhancing physiological synchrony.

This ability to foster connection through physiological synchrony raises important questions about how music-induced synchronization might extend beyond individual experiences to actively shape social bonding at larger scales. In particular, understanding how physiological states align during shared musical activities could provide deeper insights into the mechanisms underlying emotional attunement, trust, and collective identity formation—concepts explored further in Section 1. Section 2 shares personal insights from our work with musical biofeedback systems and speculates on a new system that has potential to further enhance physiological synchrony and emotional bonding. In Sections 3 and 4, we discuss the ethical framework surrounding such a system – including privacy, dependency, and autonomy – and extrapolate potential societal applications.

Section 1: physiological synchrony and social bonding

A growing body of research shows that social connection and emotional bonding may be related to physiological synchrony, such as synchronized heart rates, breathing patterns, or neural activity. Though highly speculative, it is possible that physiological synchrony is not merely a byproduct of social interactions but actively facilitates bonding by increasing empathy, trust, and a sense of shared identity (Palumbo et al., 2017). We suggest that by inducing physiological synchrony through rhythmic entrainment, music may foster emotional bonding and social cohesion.

Forms of physiological synchrony

Physiological synchrony occurs when two or more individuals exhibit aligned physiological states. This synchrony can manifest in various forms, including cardiac synchrony, where individuals’ heart rates align during shared experiences (Konvalinka et al., 2011), respiratory synchrony, where breathing patterns become coordinated, such as in conversation, meditation, or collective singing (Codrons et al., 2014), and neural synchrony, where brain activity becomes synchronized during shared tasks (Dikker et al., 2017).

A number of studies have demonstrated that physiological synchrony is closely linked to social bonding and cohesion. Research on interpersonal interactions, for example, has shown that romantic partners and close friends exhibit higher heart rate synchrony during cooperative tasks, with greater synchrony predicting increased relationship closeness (Helm et al., 2018). Similarly, studies on joint attention have revealed that when two individuals focus on the same stimulus, their neural activity becomes more synchronized, strengthening their sense of social connection (Hirsch et al., 2017). Group-based physiological synchrony has also been shown to enhance collective identity formation. In experimental settings, individuals who engage in synchronized movement, like walking in step or performing coordinated dance, report greater feelings of unity and affiliation compared to those who engage in asynchronous movement (Reddish et al., 2013). These findings suggest synchrony may be a fundamental mechanism by which individuals form and maintain social bonds.

Synchrony’s role in social cohesion

Several mechanisms have been proposed to explain why physiological synchrony promotes social cohesion. One explanation is that synchronization enhances emotional attunement and empathy. Studies have found that physiological synchrony is associated with greater empathic accuracy and cooperative behavior (Goldstein et al., 2018). For example, research on mother-infant interactions has shown episodes of heart rate synchrony during affectionate exchanges are linked to stronger emotional closeness (Feldman et al., 2011).

Another possible mechanism is that synchrony serves as a signal of shared group identity. Individuals who engage in synchronized activities tend to report stronger feelings of group affiliation and cooperation, an effect that has been observed in contexts ranging from military drills to religious rituals (Fischer et al., 2013). Experimental research has demonstrated that engaging in synchronous activities with others leads to greater trust and prosocial behavior, suggesting that physiological alignment fosters a sense of belonging (Tarr et al., 2014).

Music as a catalyst for physiological synchrony

Given the strong link between physiological synchrony and social bonding, it is not surprising that music, a highly structured and rhythmic activity, has been found to facilitate synchrony, which may in turn promote social cohesion. Music has been shown to induce synchronization at multiple physiological levels. Rhythmic entrainment occurs when listeners’ heart rates, breathing patterns, and motor movements become aligned with a musical beat, a phenomenon observed in both individual and group settings (Trost et al., 2015). At the neural level, EEG studies have demonstrated that individuals playing instruments exhibit greater inter-brain synchronization compared to those engaged in non-musical tasks (Lindenberger et al., 2009).

Behavioral evidence of music-induced social cohesion

Behavioral research further supports the role of music in fostering social cohesion. A study by Weinstein et al. (2016) found that individuals who moved in synchrony with music alongside others reported a stronger sense of affiliation than those who moved asynchronously. Similarly, research on drumming has demonstrated that synchronized rhythmic activity leads to increased cooperative behavior and enhanced feelings of social connection (Tarr et al., 2014). These findings suggest that music-induced synchrony may not only reflect but actively contribute to group cohesion.

Section 2: personal insights and technical framework for musical biofeedback

As an artist and researcher, I, Jason J. Snell, offer personal insights from my biofeedback performances, along with qualitative feedback from participants. These performances were minimal-risk, non-interventional public art events where participation and feedback were entirely voluntary. No personal data was collected or stored, and no physiological data was analyzed or published.

Evolution of biofeedback music performance

In my first iteration, I used a Muse biosensor headband (EEG, PPG) to translate my brainwaves and heartbeats into MIDI notes and control signals. I performed techno sets, using my mind to manipulate the sounds, and my heartbeat triggered the kick drum. Performing in these biofeedback loops increased my vulnerability and led me into meditative and transcendent states.

Next, I created ambient “biofeedback soundbaths,” inviting audiences to sit or lie down. Attendees reported profound emotional and sensory experiences.

Recently, I developed “brainwave symphonies” in which 6–8 participants wore Muse headsets. Their meditative states triggered musical notes while I manually shaped the sounds. This established a form of nonverbal communication in which participants communicated through biodata and I responded with musical adjustments.

Limitations of current systems

Despite these powerful experiences, our current biofeedback prototypes have significant limitations: my EEG classification models were trained only on my brain activity, and the sound kits were designed around my personal music preferences. These systems lacked broader applicability and robust generalizability across diverse populations.

Proposed AGM-AI framework

To overcome these limitations and expand biofeedback’s potential for synchrony, emotional bonding, and social cohesion, we propose an Affective Generative Music AI (AGM-AI) framework with two training stages:

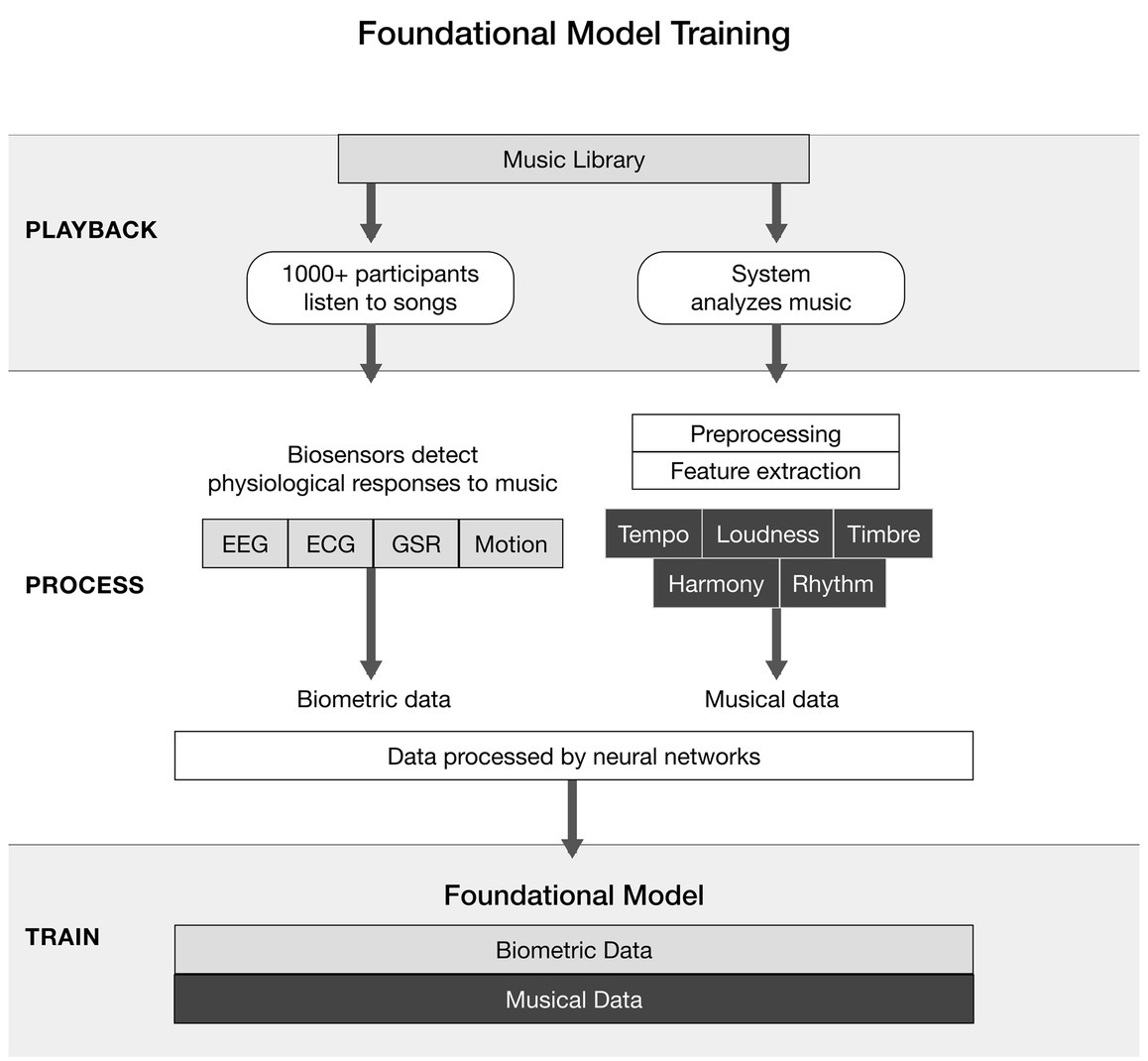

Foundational model training

We propose training a multi-modal neural network on synchronized biometric (EEG, ECG, GSR, accelerometers) and musical data (tempo, loudness, harmony, timbre) from over 1,000 diverse participants, including traditionally underrepresented and neurodivergent individuals, listening to a wide variety of music.

Data collection

Participants listen to curated music selections while their physiological responses are recorded.

Musical data

The system performs a multi-stage analysis of the music, using signal preprocessing (filtering, windowing, Short-Time Fourier Transform) and feature extraction (spectral data, RMS energy, chroma features, inter-onset intervals) to extract tempo, loudness, timbre, harmony, and rhythmic complexity. It uses variable window lengths to capture both transient details and sustained characteristics of the music.
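As a rough illustration of the windowed analysis described above, the sketch below computes two of the simpler descriptors per frame: RMS energy (a loudness proxy) and spectral centroid (a coarse timbre proxy). It is a minimal sketch only. The function names, the frame and hop sizes, and the choice of a naive DFT are assumptions made for readability, and chroma features and inter-onset intervals are omitted.

```python
import cmath
import math


def hann(n):
    """Hann window of length n, used to reduce spectral leakage."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def frames(signal, size, hop):
    """Split a signal into overlapping frames of `size` samples."""
    return [signal[i:i + size] for i in range(0, len(signal) - size + 1, hop)]


def rms(frame):
    """Root-mean-square energy of one frame (a simple loudness proxy)."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def magnitude_spectrum(frame):
    """Magnitudes of DFT bins 0..n/2 of a Hann-windowed frame.

    Uses a naive O(n^2) DFT to stay dependency-free; a real system
    would use an FFT library instead.
    """
    n = len(frame)
    windowed = [x * w for x, w in zip(frame, hann(n))]
    return [
        abs(sum(windowed[t] * cmath.exp(-2j * math.pi * k * t / n)
                for t in range(n)))
        for k in range(n // 2 + 1)
    ]


def spectral_centroid(frame, sample_rate):
    """Magnitude-weighted mean frequency in Hz (0.0 for a silent frame)."""
    mags = magnitude_spectrum(frame)
    total = sum(mags)
    if total == 0:
        return 0.0
    n = len(frame)
    return sum(k * sample_rate / n * m for k, m in enumerate(mags)) / total


def extract_features(signal, sample_rate, size=256, hop=128):
    """Return one feature dictionary per analysis frame."""
    return [
        {"rms": rms(f), "centroid_hz": spectral_centroid(f, sample_rate)}
        for f in frames(signal, size, hop)
    ]
```

Calling `extract_features` twice with a short and a long `size` is one simple way to approximate the variable window lengths mentioned above: short frames follow transients, while long frames resolve sustained spectral content.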

Biometric data

Physiological responses are recorded from EEG (full PSD arrays, including Delta, Theta, Alpha, Beta, Gamma bands), ECG (heart rate, HRV), GSR (skin conductance), and motion sensors (respiratory movement, body sway), and are mapped into a 4-dimensional space. Each sensor’s sampling rate and latency are used to align music events with the corresponding biometric shifts.
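One way to picture this alignment step is to place every sensor sample on the music clock, shifted earlier by that sensor's latency, and then interpolate each stream at the times of the music events. The sketch below assumes a fixed, known latency per sensor and uses linear interpolation. The function names and the `streams` layout are hypothetical, not part of the proposed system.

```python
from bisect import bisect_left


def music_clock_times(n_samples, rate_hz, latency_s):
    """Timestamp each sample on the music clock.

    Assumption: a sample read at i / rate_hz reflects the stimulus
    from `latency_s` seconds earlier, so it is shifted back by that amount.
    """
    return [i / rate_hz - latency_s for i in range(n_samples)]


def interpolate(times, values, t):
    """Linearly interpolate a stream at time t, clamping at both ends."""
    if t <= times[0]:
        return values[0]
    if t >= times[-1]:
        return values[-1]
    j = bisect_left(times, t)  # first index with times[j] >= t
    t0, t1 = times[j - 1], times[j]
    v0, v1 = values[j - 1], values[j]
    return v0 + (v1 - v0) * (t - t0) / (t1 - t0)


def align_streams(streams, event_times):
    """Sample every sensor stream at each music event time.

    `streams` maps a sensor name to (values, rate_hz, latency_s).
    Returns one row (dict) per event, keyed by sensor name plus "t".
    """
    axes = {
        name: music_clock_times(len(values), rate, latency)
        for name, (values, rate, latency) in streams.items()
    }
    rows = []
    for t in event_times:
        row = {"t": t}
        for name, (values, _rate, _latency) in streams.items():
            row[name] = interpolate(axes[name], values, t)
        rows.append(row)
    return rows
```

In practice latencies may vary over time and between participants, so a fixed offset per sensor should be read as a simplifying assumption.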

Data analysis

Our system trains a neural network to associate musical features—tempo, loudness, timbre, harmonic content, and rhythmic complexity—with biometric responses, accounting for analysis latency specific to each sensor.

Synchronized datasets

Our system analyzes these synchronized datasets to identify statistical relationships between musical characteristics and physiological responses, forming our Foundational Model of average human reactions to music.
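The proposed Foundational Model is a neural network, but the kind of statistical relationship it would learn can be illustrated with a much simpler stand-in: a Pearson correlation and a least-squares line between one musical feature and one biometric response. The sketch below is that simplified baseline, not the proposed model, and the function names are hypothetical.

```python
import math


def pearson(xs, ys):
    """Pearson correlation between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0  # correlation is undefined for a constant series
    return cov / math.sqrt(var_x * var_y)


def fit_line(xs, ys):
    """Ordinary least-squares fit: returns (slope, intercept)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    if var_x == 0:
        raise ValueError("cannot fit a line to a constant feature")
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / var_x
    return slope, mean_y - slope * mean_x


def predict(slope, intercept, x):
    """Expected biometric response for a given musical feature value."""
    return slope * x + intercept
```

A fitted line of this kind plays the role of an "expected response" for one feature pair; a neural network generalizes the same idea to many features, nonlinear relationships, and temporal context.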

Avoiding semantic bias

By focusing exclusively on non-discrete, progressively-changing numerical representations of music and biometrics, we avoid the following technical and ethical pitfalls:

1. Losing fidelity by translating musical data into verbal language and back.

2. Using predefined emotional labels (e.g., “calm” or “focused”) that are subjective and have cultural bias (Figure 1).

Figure 1. Foundational Model Training. This diagram illustrates the first stage of the AGM-AI framework. A large cohort of participants listens to a curated music library while biosensors (EEG, ECG, GSR, motion) record their physiological responses. In parallel, the system analyzes the musical features (tempo, loudness, timbre, harmony, rhythm). Neural networks process the synchronized biometric and musical data to form a Foundational Model representing average human physiological responses to music.

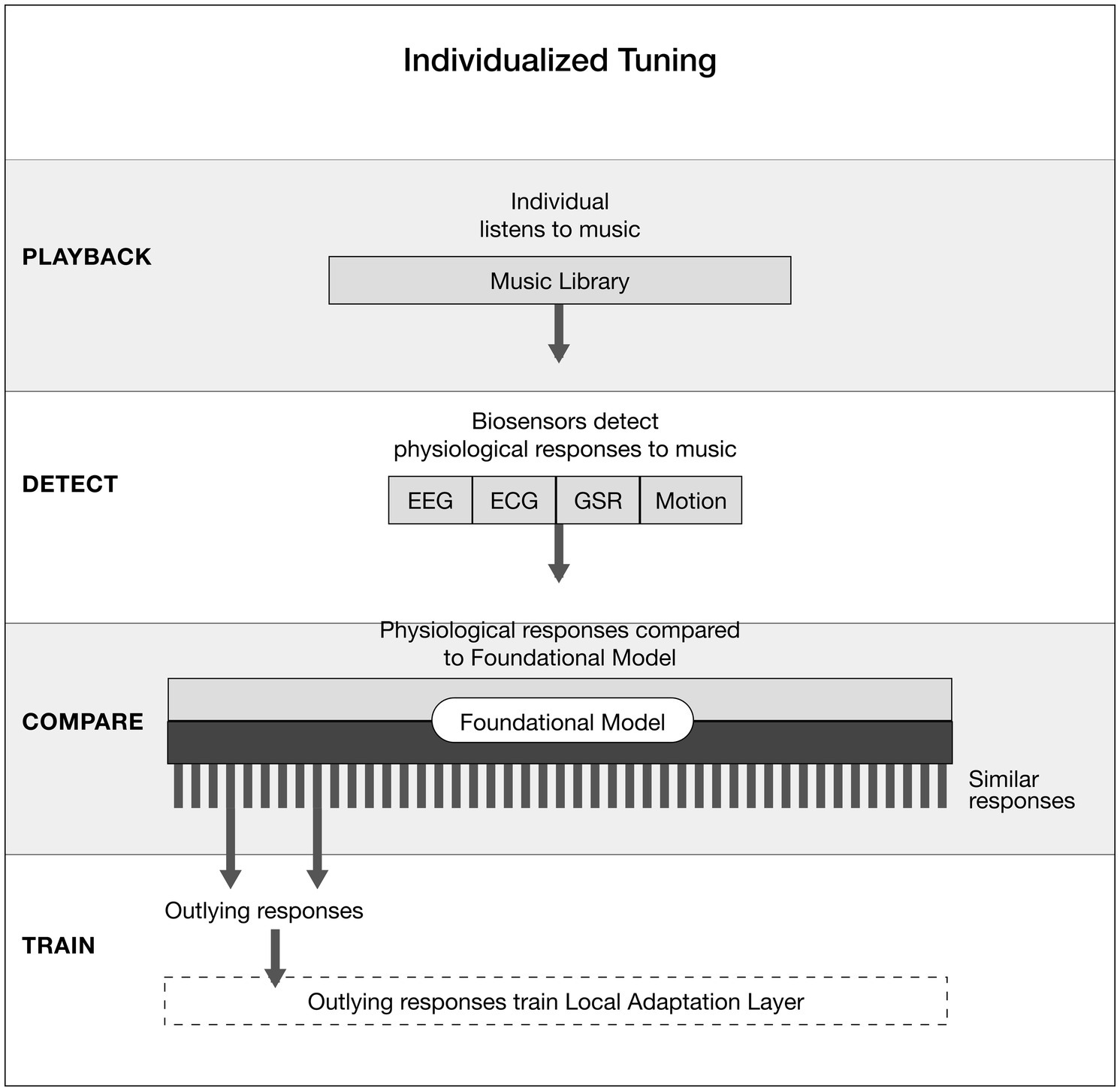

Individualized tuning

While the Foundational Model captures average human responses to music, individual users may exhibit unique physiological reactions. To embrace this variability and minimize normative bias, each user undergoes an Individualized Tuning, consisting of individualized data collection and local adaptation.

Individualized data collection

To personalize the model, the system records a user’s biometric responses to the Foundational Model music. If their responses significantly differ from the Foundational Model across multiple listening sessions, these responses are identified as outliers.
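The text does not specify how "significantly differ" would be measured. One plausible reading, shown below as a sketch, is a z-score test against the Foundational Model's expected mean and spread, combined with a requirement that the deviation recur in most sessions. The threshold values and function names are assumptions chosen for illustration.

```python
def z_score(observed, expected_mean, expected_std):
    """Distance of an observation from the expected value, in standard deviations."""
    return (observed - expected_mean) / expected_std


def is_consistent_outlier(session_responses, expected_mean, expected_std,
                          z_threshold=2.0, min_fraction=0.6):
    """Flag a response pattern that deviates in most listening sessions.

    session_responses: one summary value per session (for example, mean
    heart-rate change while a given passage plays).
    z_threshold and min_fraction are illustrative defaults, not values
    taken from the proposal.
    """
    if not session_responses or expected_std <= 0:
        return False
    flagged = sum(
        1 for r in session_responses
        if abs(z_score(r, expected_mean, expected_std)) > z_threshold
    )
    return flagged / len(session_responses) >= min_fraction
```

Requiring recurrence across sessions is what separates a stable individual trait from a one-off artifact such as a loose electrode or a distracting noise.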

Local adaptation layer

When the system identifies consistent outlier responses, it records these into a Local Adaptation Layer. This layer operates independently of the Foundational Model, gradually adapting with each new response and refining how their biometric data is translated into personalized musical biofeedback. This user-centered approach ensures the system adapts to the individual rather than pressuring the user to conform to a generalized AI model (Figure 2).
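The gradual adaptation described here can be sketched as a per-feature offset that tracks how far the user's observed responses sit from the Foundational Model's predictions, updated as an exponential moving average. The class below is a minimal sketch under that assumption; the class name, the `learning_rate` parameter, and the additive-offset form are hypothetical and much simpler than a trained adaptation layer.

```python
from dataclasses import dataclass, field


@dataclass
class LocalAdaptationLayer:
    """Per-user offsets stored separately from the Foundational Model."""

    learning_rate: float = 0.2
    offsets: dict = field(default_factory=dict)

    def update(self, feature, foundational_prediction, observed):
        """Move the stored offset a fraction of the way toward the new residual."""
        residual = observed - foundational_prediction
        previous = self.offsets.get(feature, 0.0)
        self.offsets[feature] = previous + self.learning_rate * (residual - previous)
        return self.offsets[feature]

    def predict(self, feature, foundational_prediction):
        """Personalized expectation: foundational prediction plus the user's offset."""
        return foundational_prediction + self.offsets.get(feature, 0.0)
```

Because the offsets live in their own object, the Foundational Model's predictions are never modified, which mirrors the independence between the two layers described above.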

Figure 2. Individualized Tuning. This figure shows how individual user responses are compared to the Foundational Model. Biosensors measure each person’s physiological reactions to music, which are then contrasted with the model’s expected patterns. Outlying responses are used to train a Local Adaptation Layer, ensuring the system refines itself to reflect the user’s unique physiological profile rather than enforcing conformity to the general model.

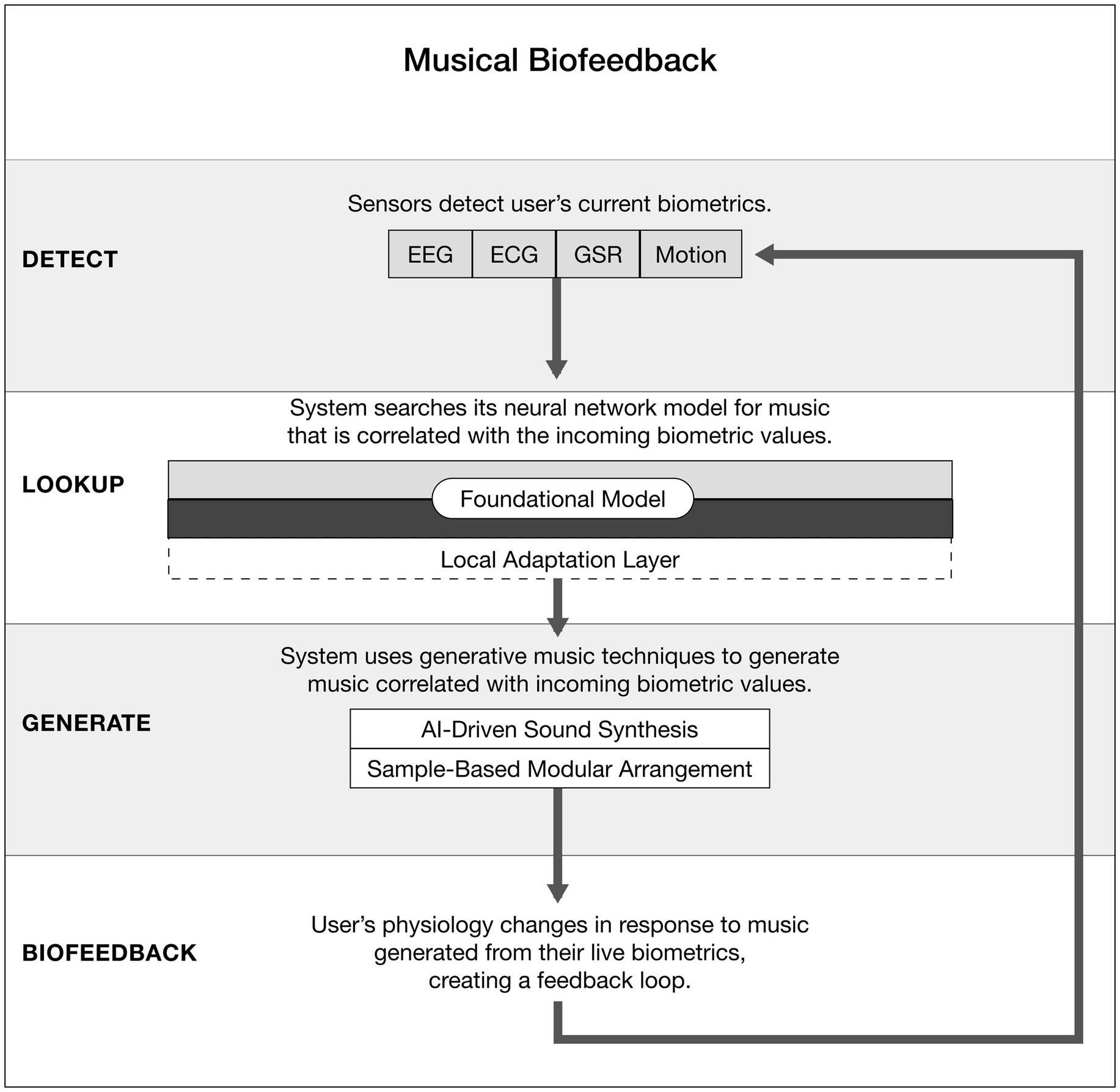

Musical biofeedback

Once personalized, the system dynamically generates music based on live biometrics. If data indicates excitement, for example, it may raise the tempo. For music generation, we employ AI-driven synthesis (direct waveform, neural granular, spectral) for evolving timbres, and a sample-based modular arrangement that consults biometric-to-music correlations (Figure 3).
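The tempo example can be made concrete with a small mapping from a normalized arousal estimate to a target tempo, with a cap on how fast the tempo may change so the music evolves continuously rather than jumping. This is a sketch only: the arousal scale (0 to 1), the BPM range, and the step limit are illustrative assumptions, and how arousal would be derived from the biometric streams is left open.

```python
def clamp(value, low, high):
    """Restrict value to the closed interval [low, high]."""
    return max(low, min(high, value))


def target_tempo(arousal, min_bpm=60.0, max_bpm=140.0):
    """Map arousal in [0, 1] linearly onto a tempo range in BPM."""
    return min_bpm + clamp(arousal, 0.0, 1.0) * (max_bpm - min_bpm)


def next_tempo(current_bpm, arousal, max_step_bpm=2.0):
    """Move the tempo toward its target by at most max_step_bpm per update."""
    delta = target_tempo(arousal) - current_bpm
    return current_bpm + clamp(delta, -max_step_bpm, max_step_bpm)
```

The same rate-limited pattern could be applied to other continuous parameters, such as loudness or filter brightness.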

Figure 3. Musical Biofeedback. This diagram depicts how real-time biofeedback is generated for an individual. Incoming biometric data (EEG, ECG, GSR, motion) are matched against both the Foundational Model and the user’s Local Adaptation Layer. The system then uses generative music techniques (AI-driven sound synthesis and modular sample-based arrangement) to produce music correlated with the user’s physiological state, creating a continuous feedback loop between body and sound.

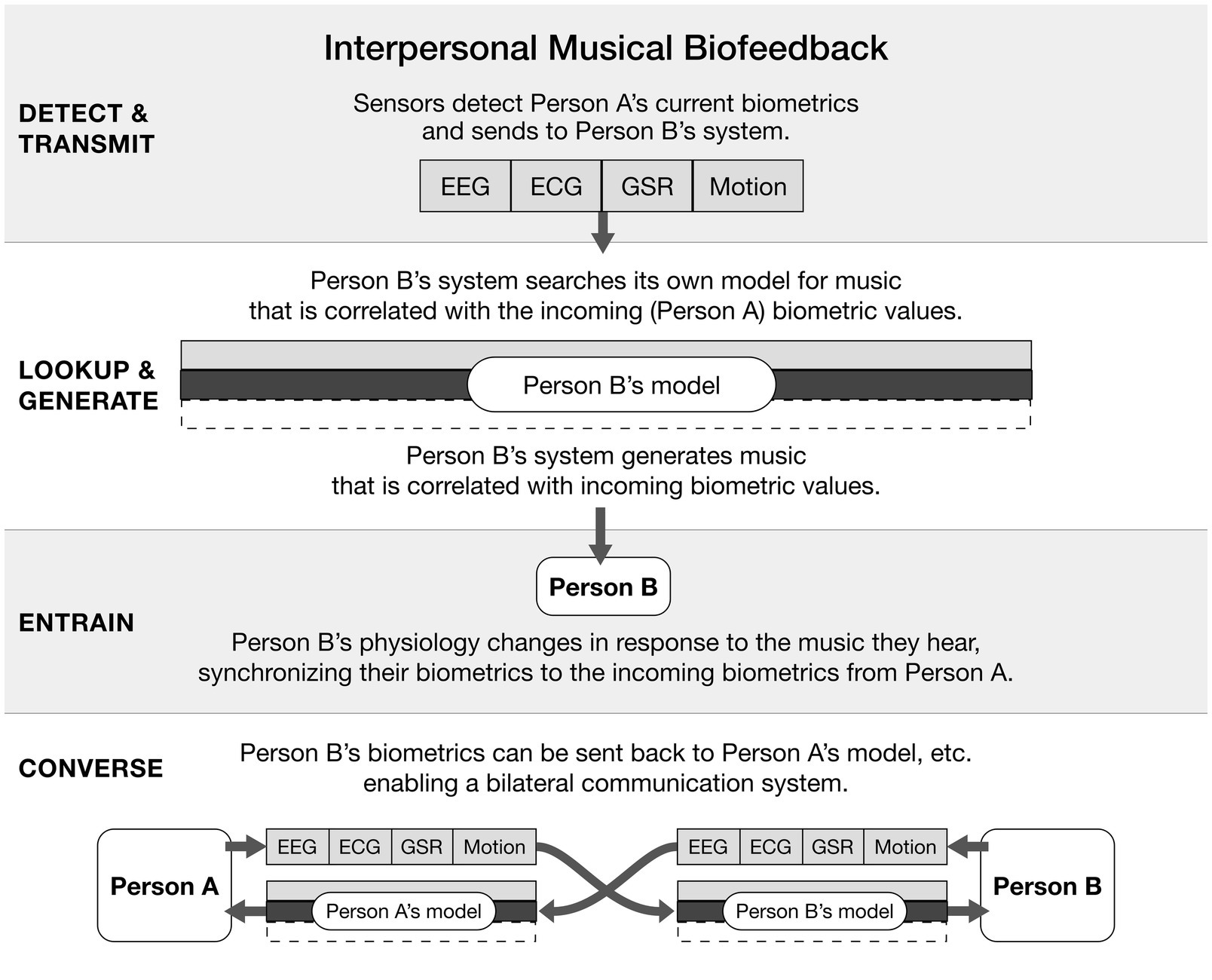

Interpersonal musical biofeedback

Once trained, the system dynamically generates personalized music through AI-driven synthesis methods (e.g., neural granular, spectral synthesis) and modular sample-based arrangements, directly responsive to real-time biometric data. One person’s biometric data could drive music generation for another, enabling physiological entrainment. Bidirectional sharing of biometric data could support cross-cultural, non-verbal communication, potentially inducing physiological synchrony and emotional intimacy (Figure 4).
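The bidirectional loop can be illustrated with a deliberately simple toy simulation in which each person's music is paced by the other's heart rate, and each listener drifts a fixed fraction of the way toward the tempo they hear. This is not a physiological model: the linear drift, the `coupling` value, and the function names are assumptions made only to show the structure of the loop.

```python
def exchange(hr_a, hr_b, coupling=0.1):
    """One round of the loop.

    A's heart rate sets the tempo of B's music and vice versa; each
    listener then moves a fraction `coupling` toward the tempo heard.
    """
    tempo_for_b = hr_a  # Person A's signal drives Person B's music
    tempo_for_a = hr_b  # Person B's signal drives Person A's music
    new_a = hr_a + coupling * (tempo_for_a - hr_a)
    new_b = hr_b + coupling * (tempo_for_b - hr_b)
    return new_a, new_b


def simulate(hr_a, hr_b, rounds, coupling=0.1):
    """Return the absolute heart-rate gap before and after each round."""
    gaps = [abs(hr_a - hr_b)]
    for _ in range(rounds):
        hr_a, hr_b = exchange(hr_a, hr_b, coupling)
        gaps.append(abs(hr_a - hr_b))
    return gaps
```

Under these assumptions the gap shrinks by a factor of `1 - 2 * coupling` each round. Whether real listeners entrain in this way, and how strongly, is exactly the empirical question the proposed system would need to test.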

Figure 4. Interpersonal Musical Biofeedback. This figure illustrates the bidirectional biofeedback process between two users. Person A’s biometric signals are transmitted to Person B’s system, which generates music aligned with Person A’s physiological state. As Person B entrains to this music, their own physiology adapts, creating synchrony between the two. Person B’s biometric data can then be transmitted back to Person A, establishing a reciprocal feedback loop that enables real-time, non-verbal communication through music.

Section 3: ethical considerations

In addition to designing the Foundational Model for diverse data representation and mitigating normative bias in Individualized Tuning, we address the following ethical considerations:

System dependency and human connection

Our proposed system would not attempt to reconstruct affective content in musical stimuli; rather, it aims to acknowledge what the target is likely experiencing and translate those experiences into music. Over-reliance on AGM-AI for fostering human connections can threaten genuine interpersonal skills development. To mitigate risks of dependency, we would design the system as a supplementary tool with interaction circuit-breakers and transparent feedback mechanisms. It would serve as a bridge, rather than a substitute, for personal connections, aiming to help users develop synchrony and empathy on their own. Sensitive applications like couples therapy or conflict resolution would require a trained professional to oversee the AGM-AI’s interpretations and interventions.

Potential for misuse

Physiological-responsive AGM-AI systems introduce significant risks of misuse that threaten individual autonomy, societal trust, and collective well-being, creating pathways for algorithmic manipulation.

We strongly advise against using the system for purposes such as:

• Targeted persuasion: Commercial / political actors using this model to exploit emotion-driven feedback loops to override rational decision-making.

• Intent to harm: Malicious actors exploiting the system to induce heightened negative responses through carefully designed soundscapes.

• Pseudo-intimacy: Recursive emotional mirroring to create a false sense of intimacy.

While this system aims to facilitate understanding and connection across cultural boundaries, it must be carefully designed to avoid creating a false sense of intimacy or replacing genuine human interaction. We want to emphasize that this AI system is meant to encourage and enhance real human-to-human communication, rather than foster dependency on AI-mediated emotional experiences. Implementing these ethical guidelines could help mitigate the risk of bad actors using the AGM-AI system for malicious intent, while still leveraging its potential for enhancing synchrony and social bonding.

Section 4: broader applications of AGM-AI

An AGM-AI assistant in mental healthcare could monitor physiological signals in group therapy sessions (e.g., couples, families), facilitating alternative forms of communication and synchrony between individuals. Our system could also potentially mitigate cross-cultural bias or hostility between people of diverse backgrounds by enabling cross-lingual, nonverbal communication.

Synchronized musical experiences could be paired with storytelling, adaptively generating music that aligns with shared emotional themes, creating a shared affective environment (Shen et al., 2024). We could also add simulated role-playing tasks where participants engage with the system as both “actors” and “observers,” (Shen et al., 2024; Prentki, 2015) further strengthening their ability to empathize through the music produced by their individually tuned models.

While this study presents a promising framework for integrating physiological synchrony and affective generative music AI to enhance empathy and social bonding, there are still limitations to be further explored.

Firstly, the foundational model is built on population-level averages, which may not fully capture the nuanced variability in physiological responses across different individuals or cultural groups. Although the individualized tuning stage aims to address this, it remains challenging to comprehensively account for diverse neurophysiological patterns, particularly among neurodivergent or underrepresented populations.

Additionally, the system’s reliance on biometric data raises concerns about data fidelity and potential artifacts, as physiological signals can be influenced by unrelated environmental or psychological factors. Another limitation is that the relationship between physiological synchrony and empathy is complex and may not be fully reflected in conventional assessments or captured solely through biometric alignment (Qaiser et al., 2023).

Finally, the broader ethical context—such as the risk of dependency on biofeedback technologies and possible misuse for manipulation—highlights the need for ongoing caution in development and deployment to ensure these systems enhance, rather than diminish, genuine human connection.

This interdisciplinary approach, informed by physiological data and embodied cognition principles, offers immense potential for advancing individual and societal well-being beyond current AGM-AI systems, which typically map discrete emotions in a 2-dimensional (2D) or 3-dimensional (3D) plane (Dash and Agres, 2024). By bridging the gap between human emotion and physiological representation, these systems could enhance therapeutic practices, foster social cohesion, and create more intuitive interactions across diverse applications. However, realizing this potential requires careful attention to ethical design and deployment, ensuring that these technologies remain tools for empowerment and connection.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding authors.

Ethics statement

NYU Human Research Protection Program (HRPP/IRB) confirmed that the performances described did not qualify as human subjects research and therefore did not require IRB review or formal consent procedures. No IRB review or formal consent documentation was required, as the activities did not constitute human subjects research. Participation in these performances was entirely voluntary. Feedback, when provided, was also voluntary. No personal data was collected or stored, and no interventions were involved. The level of risk was comparable to attending a standard public art or music event. Written informed consent was obtained from the individuals for the publication of any potentially identifiable images or data included in this article.

Author contributions

SN: Conceptualization, Writing – review & editing, Writing – original draft. KS: Writing – review & editing, Conceptualization, Writing – original draft. JS: Conceptualization, Supervision, Writing – original draft, Writing – review & editing, Project administration.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Chang, H.-Y., Huang, S.-C., and Wu, J.-H. (2016). A personalized music recommendation system based on electroencephalography feedback. Multimed. Tools Appl. 76, 19523–19542. doi: 10.1007/s11042-015-3202-4

Cheong, J. H., Molani, Z., Sadhukha, S., and Chang, L. J. (2023). Synchronized affect in shared experiences strengthens social connection. Commun. Biol. 6:1099. doi: 10.1038/s42003-023-05461-2

Codrons, E., Bernardi, N. F., Vandoni, M., and Bernardi, L. (2014). Spontaneous group synchronization of movements and respiratory rhythms. PloS One 9:e107538. doi: 10.1371/journal.pone.0107538

Crespo, A. B., Idrovo, G. G., Rodrigues, N., and Pereira, A. (2018). MindTrack: using brain–computer interface to translate emotions into music. In: 2018 international conference on digital arts, media and technology (ICDAMT). Thailand: IEEE, pp. 33–37.

Dash, A., and Agres, K. (2024). AI-based affective music generation systems: a review of methods and challenges. ACM Comput. Surv. 56, 1–34. doi: 10.1145/3672554

Dikker, S., Wan, L., Davidesco, I., Kaggen, L., Oostrik, M., McClintock, J., et al. (2017). Brain-to-brain synchrony tracks real-world dynamic group interactions in the classroom. Curr. Biol. 27, 1375–1380. doi: 10.1016/j.cub.2017.04.002

Feldman, R., Magori-Cohen, R., Galili, G., Singer, M., and Louzoun, Y. (2011). Mother and infant coordinate heart rhythms through episodes of interaction synchrony. Infant Behav. Dev. 34, 569–577. doi: 10.1016/j.infbeh.2011.06.008

Ferreri, L., Bigand, E., Bard, P., and Bugaiska, A. (2015). The influence of music on prefrontal cortex during episodic encoding and retrieval of verbal information: a multichannel fNIRS study. Behav. Neurol. 2015, 1–11. doi: 10.1155/2015/707625

Fischer, R., Callander, R., Reddish, P., and Bulbulia, J. (2013). How do rituals affect cooperation? An experimental field study comparing nine ritual types. Hum. Nat. 24, 115–125. doi: 10.1007/s12110-013-9167-y

Gibbs, H. J., Czepiel, A., and Egermann, H. (2023). Physiological synchrony and shared flow state in Javanese gamelan: positively associated while improvising, but not for traditional performance. Front. Psychol. 14:1214505. doi: 10.3389/fpsyg.2023.1214505

Gîrbu, E. (2024). Experimental perspectives on artificial intelligence in music composition. Art. J. Musicol. 30:29–30, 95–99. doi: 10.35218/ajm-2024-0006

Goldstein, P., Weissman-Fogel, I., Dumas, G., and Shamay-Tsoory, S. G. (2018). Brain-to-brain coupling during handholding is associated with pain reduction. Proc. Natl. Acad. Sci. USA 115, E2528–E2537. doi: 10.1073/pnas.1703643115

Harwood, J. (2017). Music and intergroup relations: exacerbating conflict and building harmony through music. Rev. Commun. Res. 5, 1–34. doi: 10.12840/issn.2255-4165.2017.05.01.012

Helm, J. L., Miller, J. G., Kahle, S., Troxel, N. R., and Hastings, P. D. (2018). On measuring and modeling physiological synchrony in dyads. Multivar. Behav. Res. 53, 521–543. doi: 10.1080/00273171.2018.1459292

Hirsch, J., Zhang, X., Noah, J. A., and Ono, Y. (2017). Frontal temporal and parietal systems synchronize within and across brains during live eye-to-eye contact. Neuroimage 157, 314–330. doi: 10.1016/j.neuroimage.2017.06.018

Huang, C.-F., and Cai, Y. (2017). Automated music composition using heart rate emotion data. In: International conference on intelligent information hiding and multimedia signal processing. New York: Springer, pp. 115–120.

Kaneshiro, B., and Dmochowski, J. P. (2015). Neuroimaging methods for music information retrieval: current findings and future prospects. In: Proceedings of the 16th international society for music information retrieval conference (ISMIR). Spain, pp. 538–544.

Konvalinka, I., Xygalatas, D., Bulbulia, J., Schjødt, U., Jegindø, E.-M., Wallot, S., et al. (2011). Synchronized arousal between performers and related spectators in a fire-walking ritual. Proc. Natl. Acad. Sci. USA 108, 8514–8519. doi: 10.1073/pnas.1016955108

Kumano, S., Otsuka, K., Mikami, D., Matsuda, M., and Yamato, J. (2015). Analyzing interpersonal empathy via collective impressions. IEEE Trans. Affect. Comput. 6, 324–336. doi: 10.1109/TAFFC.2015.2417561

Lindenberger, U., Li, S.-C., Gruber, W., and Müller, V. (2009). Brains swinging in concert: cortical phase synchronization while playing guitar. BMC Neurosci. 10:22. doi: 10.1186/1471-2202-10-22

Markiewicz, R. (2017). The use of EEG biofeedback/neurofeedback in psychiatric rehabilitation. Psychiatr. Pol. 51, 1095–1106. doi: 10.12740/PP/68919

Martinho, C., Machado, I., and Paiva, A. (2000). A cognitive approach to affective user modeling. In: Lecture notes in computer science, ed. A. Paiva. Berlin: Springer, pp. 64–75.

Oesch, N. (2019). Music and language in social interaction: synchrony, antiphony, and functional origins. Front. Psychol. 10:1514. doi: 10.3389/fpsyg.2019.01514

Palumbo, R. V., Marraccini, M. E., Weyandt, L. L., Wilder-Smith, O., McGee, H. A., Liu, S., et al. (2017). Interpersonal autonomic physiology: a systematic review of the literature. Personal. Soc. Psychol. Rev. 21, 99–141. doi: 10.1177/1088868316628405

Qaiser, J., Leonhardt, N. D., Le, B. M., Gordon, A. M., Impett, E. A., and Stellar, J. E. (2023). Shared hearts and minds: physiological synchrony during empathy. Affect. Sci. 4, 711–721. doi: 10.1007/s42761-023-00210-4

Reddish, P., Fischer, R., and Bulbulia, J. (2013). Let’s dance together: synchrony, shared intentionality and cooperation. PLoS One 8:e71182. doi: 10.1371/journal.pone.0071182

Schreiber, A., Sander, K., Kopiez, R., and Thöne, R. (2024). The creative performance of the AI agents ChatGPT and Google Magenta compared to human-based solutions in a standardized melody continuation task. Jahrbuch Musikpsychologie 32:e195.

Shen, J., DiPaola, D., Ali, S., Sap, M., Park, H. W., and Breazeal, C. (2024). Empathy toward artificial intelligence versus human experiences and the role of transparency in mental health and social support chatbot design: comparative study. JMIR Ment. Health 11:e62679. doi: 10.2196/62679

Stober, S. (2017). Toward studying music cognition with information retrieval techniques: lessons learned from the OpenMIIR initiative. Front. Psychol. 8:1255. doi: 10.3389/fpsyg.2017.01255

Suchman, L. A. (1999). Plans and situated actions: The problem of human-machine communication. Cambridge: Cambridge University Press.

Tarr, B., Launay, J., and Dunbar, R. I. M. (2014). Music and social bonding: ‘self-other’ merging and neurohormonal mechanisms. Front. Psychol. 5:1096. doi: 10.3389/fpsyg.2014.01096

Trost, W., Frühholz, S., Cochrane, T., Cojan, Y., and Vuilleumier, P. (2015). Temporal dynamics of musical emotions examined through intersubject synchrony of brain activity. Soc. Cogn. Affect. Neurosci. 10, 1705–1721. doi: 10.1093/scan/nsv060

Weinstein, D., Launay, J., Pearce, E., Dunbar, R. I. M., and Stewart, L. (2016). Group music performance causes elevated pain thresholds and social bonding in small and large groups of singers. Evol. Hum. Behav. 37, 152–158. doi: 10.1016/j.evolhumbehav.2015.10.002

Keywords: artificial intelligence, biofeedback, EEG, synchrony, biosensors, musical neurofeedback, affective generative music

Citation: Ng S, Sargent K and Snell JJ (2025) Cyborg synchrony: integrating human physiology into affective generative music AI. Front. Comput. Sci. 7:1593905. doi: 10.3389/fcomp.2025.1593905

Edited by:

Çagri Erdem, University of Oslo, Norway

Reviewed by:

Thanos Polymeneas-Liontiris, National and Kapodistrian University of Athens, Greece

Copyright © 2025 Ng, Sargent and Snell. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Senaida Ng; Kaia Sargent; Jason J. Snell
