- 1Faculty of Dentistry, Applied Science Private University, Amman, Jordan

- 2Faculty of Dentistry, Jordan University of Science and Technology, Irbid, Jordan

- 3Research Associate, King Hussein Cancer Center, Amman, Jordan

- 4Internship, Princess Basma Teaching Hospital, Irbid, Jordan

- 5Applied Science Research Center, Applied Science Private University, Amman, Jordan

- 6Faculty of Medicine, University of Jordan, Amman, Jordan

- 7Faculty of Medicine, Jordan University of Science and Technology, Irbid, Jordan

- 8Damanhour Teaching Hospital, General Organization for Teaching Hospitals and Institutes, Damanhour, Egypt

- 9Department of Prosthodontics, Faculty of Dentistry, Jordan University of Science and Technology, Irbid, Jordan

- 10Faculty of Medicine, University of Aleppo, Aleppo, Syrian Arab Republic

Background: Third molar extraction, a common dental procedure, often involves complications, such as alveolar nerve injury. Accurate preoperative assessment of the extraction difficulty and nerve injury risk is crucial for better surgical planning and patient outcomes. Recent advancements in deep learning (DL) have shown the potential to enhance the predictive accuracy using panoramic radiographic (PR) images. This systematic review evaluated the accuracy and reliability of DL models for predicting third molar extraction difficulty and inferior alveolar nerve (IAN) injury risk.

Methods: A systematic search was conducted across PubMed, Scopus, Web of Science, and Embase until September 2024, focusing on studies assessing DL models for predicting extraction complexity and IAN injury using PR images. The inclusion criteria required studies to report predictive performance metrics. Study selection, data extraction, and quality assessment were independently performed by two authors using the PRISMA and QUADAS-2 guidelines.

Results: Six studies involving 12,419 PR images met the inclusion criteria. DL models demonstrated high accuracy in predicting extraction difficulty (up to 96%) and IAN injury (up to 92.9%), with notable sensitivity (up to 97.5%) for specific classifications, such as horizontal impactions. Geographically, three studies originated in South Korea and one each from Turkey and Thailand, limiting generalizability. Despite high accuracy, demographic data were sparsely reported, with only two studies providing patient sex distribution.

Conclusion: DL models show promise in improving the preoperative assessment of third molar extraction. However, further validation in diverse populations and integration with clinical workflows are necessary to establish their real-world utility, as limited generalizability, potential selection bias, and lack of long-term follow-up remain challenges.

1 Introduction

Third molar or wisdom tooth extraction is a common dental procedure often accompanied by varying degrees of complications. Studies highlight that complications can range from pain and swelling to more severe outcomes, such as mandibular fractures and nerve damage, emphasizing the need for meticulous surgical techniques and patient-specific risk assessments (1, 2). The prevalence of such complications underlines the importance of careful preoperative planning and radiographic evaluation to prevent complications such as tooth displacement into the submandibular space (3).

Therefore, predicting surgical difficulty in third molar extractions is critical for ensuring optimal surgical planning and effective patient management. Accurate preoperative assessments of complexity and potential risks enable dental surgeons to better manage procedural time, anticipate complications, and refine patient counseling (4, 5). Tools such as the Lambade-Dawane-Mali index further assist in aligning operative steps with case complexity, minimizing time, and effectively managing resources (6).

Panoramic radiography (PR) is a critical diagnostic tool in dental practice for assessing the third molars and evaluating their impact on adjacent anatomical structures. It enables clinicians to visualize the orientation, eruption level, and impaction pattern of third molars, which are essential for presurgical planning (7, 8). Although comparisons with cone-beam computed tomography (CBCT) show that CBCT offers higher precision, PR remains valuable for routine monitoring because of its accessibility and efficiency, capturing broad anatomical contexts that facilitate early intervention strategies.

Recent advancements in deep learning have significantly transformed medical imaging and diagnostics, thereby enhancing the accuracy and efficiency of disease detection and patient care. Innovations, such as segmentation models and AI-driven image communication systems, are improving data transmission in telemedicine and real-time diagnosis (9, 10). These advancements underscore the growing role of deep learning in creating data-driven, patient-centric healthcare solutions, highlighting the potential of deep learning models to enhance the prediction of surgical complexities and risks associated with third molar extraction.

The objective of our systematic review was to assess the predictive accuracy of deep learning models for determining the extraction difficulty of third molars and the risk of alveolar nerve injury. We followed PRISMA guidelines and applied inclusion criteria aligned with the study's objectives. We evaluated model performance across multiple studies to understand the reliability and clinical utility of these models in assessing third molar extraction complexity and potential nerve injury from panoramic radiographic images.

2 Methods

We conducted this systematic review following the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines (11).

2.1 Search strategy and eligibility criteria

A comprehensive search was performed across PubMed, Scopus, Web of Science, and Embase from their inception up to September 2024. The search terms were: ((“machine learning” OR “ML” OR “deep learning” OR “DL” OR “neural network” OR “AI” OR “Artificial intelligence” OR “ANN” OR “Automated” OR “deep neural network” OR “DNN”) AND (“alveolar nerve injury” OR “mandibular nerve” OR ((extraction OR removal OR surgical) AND (difficulty OR complication))) AND (“wisdom Tooth” OR “wisdom teeth” OR “third molar”)). No restrictions were applied concerning language or publication date. Additionally, we manually searched the reference lists of relevant original studies and review articles to ensure a thorough search.
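The Boolean structure of this search string can be sketched programmatically. The following is a minimal Python illustration rather than the tooling actually used for the review: the helper names `quote`, `or_group`, and `build_query` are hypothetical, straight quotes replace the typographic ones, and database-specific field tags (which differ between PubMed, Scopus, Web of Science, and Embase) are omitted.

```python
def quote(term):
    """Wrap a phrase in double quotes so it is searched as an exact phrase."""
    return f'"{term}"'


def or_group(terms):
    """Join terms with OR inside a single pair of parentheses."""
    return "(" + " OR ".join(terms) + ")"


# Term lists copied from the search strategy described above.
MODEL_TERMS = [quote(t) for t in [
    "machine learning", "ML", "deep learning", "DL", "neural network", "AI",
    "Artificial intelligence", "ANN", "Automated", "deep neural network", "DNN",
]]
TOOTH_TERMS = [quote(t) for t in ["wisdom Tooth", "wisdom teeth", "third molar"]]


def build_query():
    """Assemble the three concept blocks (model, outcome, tooth) with AND."""
    procedure = or_group(["extraction", "removal", "surgical"])
    problem = or_group(["difficulty", "complication"])
    # Outcome block: nerve terms OR (procedure term AND difficulty term).
    outcome = or_group([
        quote("alveolar nerve injury"),
        quote("mandibular nerve"),
        f"({procedure} AND {problem})",
    ])
    blocks = [or_group(MODEL_TERMS), outcome, or_group(TOOTH_TERMS)]
    return "(" + " AND ".join(blocks) + ")"


if __name__ == "__main__":
    print(build_query())
```

Building the string from nested groups keeps the parentheses balanced by construction, which is easy to get wrong when a long query is typed by hand.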

We included studies that: (1) evaluated the predictive accuracy of deep learning models, (2) assessed the prediction of third molar extraction difficulty or alveolar nerve injury from panoramic radiographic images, and (3) reported specific performance metrics related to the predictive accuracy of the models. Studies that did not meet all of these inclusion criteria, or that reported other types of metrics but not predictive performance, were excluded from the review.

2.2 Study selection and data extraction

Two authors independently screened the titles and abstracts of all identified studies using the predefined eligibility criteria. The same authors then reviewed the full texts of potentially relevant studies in detail. Any discrepancies between the two reviewers were resolved by consulting a third author to reach consensus. Data were independently extracted by the same reviewers using an Excel sheet. Extracted information included study characteristics (e.g., first author, publication year, country, and study design), baseline patient data (age, gender, and sample size), and the sensitivity and specificity of each model.
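For readers less familiar with the extracted metrics, sensitivity, specificity, and accuracy can all be derived from the four cells of a binary confusion matrix. The sketch below is illustrative only: the class name `ConfusionCounts` and the example counts are hypothetical and are not taken from any included study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Cells of a 2x2 confusion matrix for one binary classification task."""
    tp: int  # positive cases predicted positive
    fp: int  # negative cases predicted positive
    tn: int  # negative cases predicted negative
    fn: int  # positive cases predicted negative


def _ratio(numerator, denominator):
    if denominator == 0:
        raise ValueError("metric is undefined when the denominator is zero")
    return numerator / denominator


def sensitivity(c):
    """True positive rate: TP / (TP + FN)."""
    return _ratio(c.tp, c.tp + c.fn)


def specificity(c):
    """True negative rate: TN / (TN + FP)."""
    return _ratio(c.tn, c.tn + c.fp)


def accuracy(c):
    """Share of all cases classified correctly."""
    return _ratio(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn)


if __name__ == "__main__":
    # Hypothetical counts, chosen only to make the arithmetic easy to follow.
    example = ConfusionCounts(tp=90, fp=20, tn=80, fn=10)
    print(sensitivity(example), specificity(example), accuracy(example))
```

For multiclass tasks such as graded difficulty levels, these metrics are usually computed per class in a one-versus-rest fashion, which is one reason values reported by different studies are not always directly comparable.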

2.3 Risk of bias assessment

The methodological quality of the included studies was independently assessed by two authors using the Quality Assessment of Diagnostic Accuracy Studies, Version 2 (QUADAS-2) tool (12). This tool evaluates four domains: (1) patient selection, (2) index test, (3) reference standard, and (4) flow and timing. All four domains were assessed for risk of bias, and the first three domains were also assessed for applicability. The overall risk of bias for each study was categorized as low, some concern, or high.
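The text above does not state how domain-level judgments were combined into an overall rating. The sketch below therefore assumes a simple "worst domain wins" rule, which is an assumption made for illustration and not a rule taken from this review; the names `DOMAINS`, `RANK`, and `overall_risk` are hypothetical.

```python
# The four QUADAS-2 risk-of-bias domains named in the text.
DOMAINS = ("patient_selection", "index_test", "reference_standard", "flow_and_timing")

# Domain-level judgments ordered from best to worst.
RANK = {"low": 0, "unclear": 1, "high": 2}

# Overall categories as named in the Methods (low, some concern, high).
OVERALL_LABEL = {0: "low", 1: "some concern", 2: "high"}


def overall_risk(judgments):
    """Return the overall category under the assumed worst-domain-wins rule."""
    missing = [d for d in DOMAINS if d not in judgments]
    if missing:
        raise ValueError(f"missing domain judgments: {missing}")
    worst = max(RANK[judgments[d]] for d in DOMAINS)
    return OVERALL_LABEL[worst]


if __name__ == "__main__":
    study = {
        "patient_selection": "low",
        "index_test": "unclear",
        "reference_standard": "low",
        "flow_and_timing": "low",
    }
    print(overall_risk(study))
```

Under this assumed rule, a single unclear domain is enough to move a study out of the low-risk category.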

3 Results

3.1 Search results

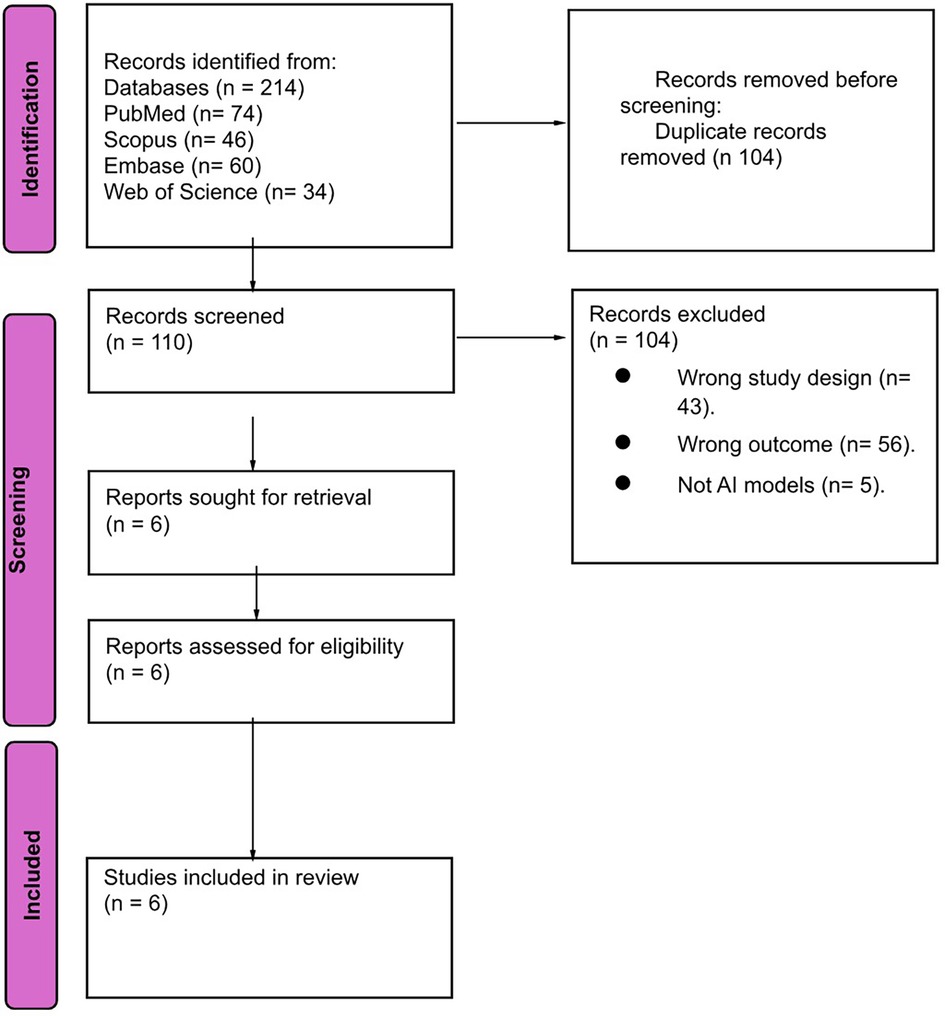

We obtained 214 papers from four electronic databases. Using EndNote software, 104 duplicate papers were removed. After screening by title and abstract, 104 papers were excluded. We evaluated the full texts of the remaining six studies for final eligibility. Finally, six papers were included in our review. The study selection process is shown in Figure 1.
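The selection counts above can be checked with simple arithmetic. The sketch below is a hypothetical helper (`prisma_flow` is not part of any PRISMA tooling); the value of zero full-text exclusions is inferred from the statement that all six remaining studies were included.

```python
def prisma_flow(identified, duplicates, excluded_at_screening, excluded_at_full_text):
    """Return the number of records remaining at each selection stage."""
    screened = identified - duplicates
    full_text_assessed = screened - excluded_at_screening
    included = full_text_assessed - excluded_at_full_text
    if min(screened, full_text_assessed, included) < 0:
        raise ValueError("exclusions exceed the records available at some stage")
    return {
        "screened": screened,
        "full_text_assessed": full_text_assessed,
        "included": included,
    }


if __name__ == "__main__":
    # 214 records identified, 104 duplicates, 104 excluded on title/abstract,
    # and no exclusions at full text (inferred from the text above).
    print(prisma_flow(214, 104, 104, 0))
    # {'screened': 110, 'full_text_assessed': 6, 'included': 6}
```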

3.2 Summary of the included studies

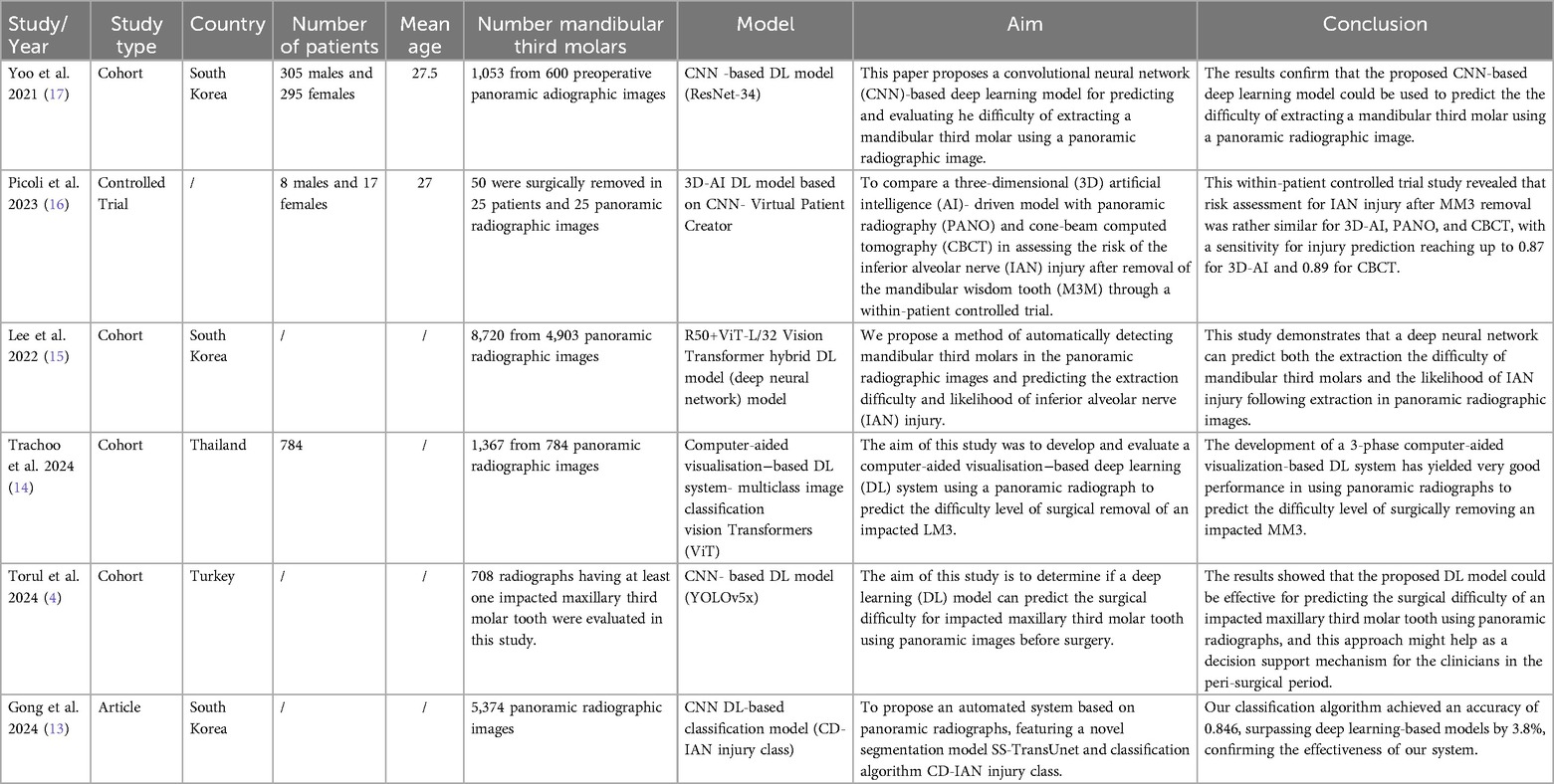

Six studies were included in our systematic review (4, 13–17). They were published between 2021 and 2024. A total of 12,419 panoramic radiographic images were analyzed across the included studies. Of the six studies, three assessed the role of deep learning in predicting extraction difficulty, two assessed its role in predicting alveolar nerve injury, and one evaluated both. Four studies reported the number of extracted third molars assessed, which totaled 11,190. The total number of images analyzed (12,419) exceeds the number of extracted third molars assessed (11,190); this discrepancy arises because some studies may have analyzed multiple radiographs per patient or assessed different tooth types in the panoramic radiographic images, which were not always linked to the exact number of extractions. Only two studies reported the sex of participants (313 males and 312 females). Five studies reported the origin of the included patients: three were conducted in South Korea, one in Turkey, and one in Thailand. Full details of the characteristics of the included studies are reported in Table 1.

3.3 Systematic review

3.3.1 Deep learning models in predicting the extraction difficulty of third molar

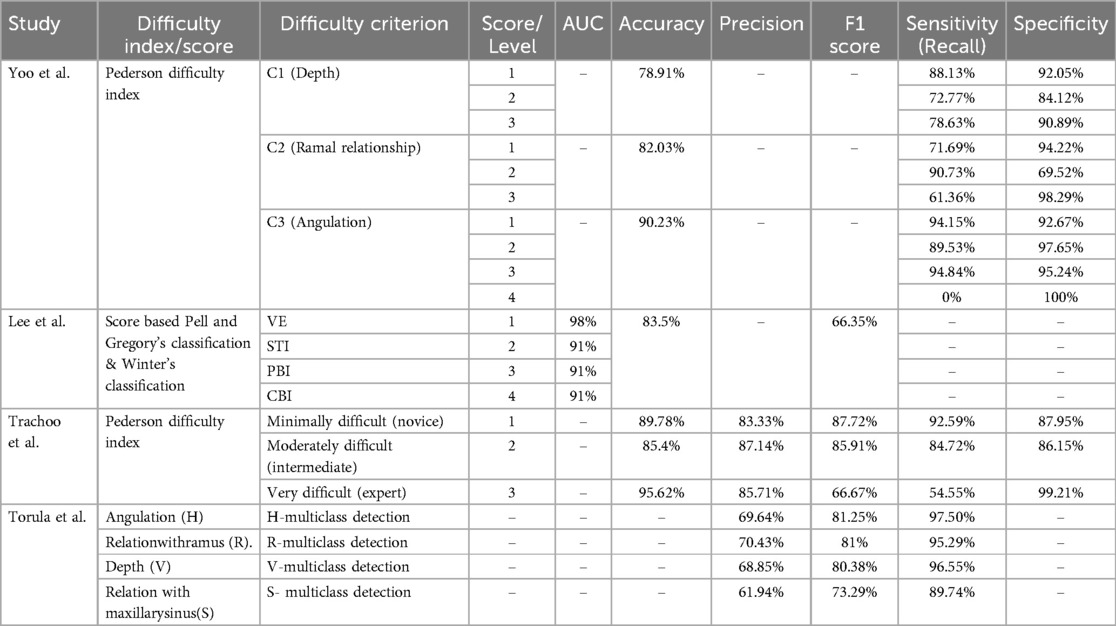

Four studies assessed the role of deep learning in predicting the extraction difficulty of third molars (Table 2). The definition of the difficulty index differed across the included studies; all definitions are reported in Supplementary Table S1. Yoo et al. (17) classified the difficulty of extraction into three levels. The model achieved its highest accuracy (90.23%) for C3, in which the occlusal surface of the mandibular third molar is compared with the distal surface of the mandibular second molar. The highest sensitivity (94.84%) was achieved for C3 score 3, in which the two surfaces were close to perpendicular, whereas the highest specificity (97.65%) was achieved for C3 score 2, in which they were close to parallel.

Table 2. Summary of the four studies that assessed the role of deep learning in predicting the extraction difficulty of third molars.

Lee et al. and Trachoo et al. classified extraction difficulty into classes from the surgeon's perspective (14, 15). In the study by Lee et al., the model achieved its highest accuracy in the first class, in which a simple extraction was performed without gum incision or bone fracture. Notably, the model maintained a high accuracy of 91% in other scenarios, such as extraction following a gum incision, extraction requiring tooth segmentation, or cases in which more than two-thirds of the crown was impacted. Trachoo et al. classified extraction difficulty into three classes (14); the model achieved 96% accuracy for both the novice and expert levels and 85% accuracy for the intermediate level. In contrast, Torul et al. (4) built a multiclass detection system covering horizontal position, relation with the ramus, relation with the sinus, and vertical position. The highest sensitivity (97.5%) was achieved in detecting horizontal positions.

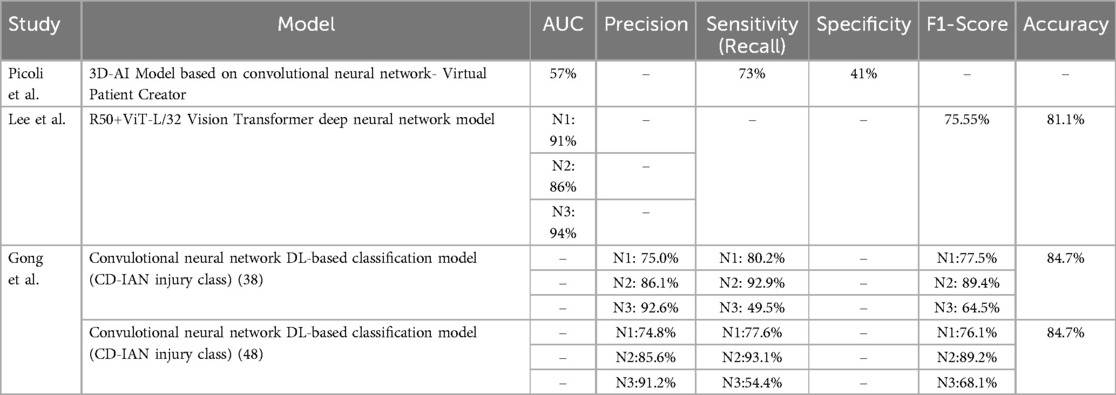

3.3.2 Deep learning models in predicting the alveolar nerve injury

Three studies assessed the role of deep learning in predicting alveolar nerve injury. The definitions of alveolar nerve injury are reported in Supplementary Table S1. Picoli et al. built a deep learning model to predict any nerve injury; the model achieved 73% sensitivity and 41% specificity, with an AUC of 57% (16). Gong et al. and Lee et al. classified nerve injury into three levels (13, 15). Lee et al. (15) showed that the model achieved its highest AUC (94%) in predicting N3, in which the mandibular third molar interrupts two lines of the IAN canal in the panoramic radiographic image. Gong et al. (13) assessed the model on teeth 38 and 48. The model showed high sensitivity in predicting N2, in which the mandibular third molar interrupts one line of the IAN canal in the panoramic radiographic image, for both tooth 38 (92.9%) and tooth 48 (93.1%). The reported performance of deep learning models in predicting alveolar nerve injury in these studies is summarized in Table 3.
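To help interpret the AUC values above, recall that the AUC equals the probability that a randomly chosen positive case receives a higher model score than a randomly chosen negative case, so a value of 50% corresponds to chance-level ranking. The sketch below computes the AUC with this pairwise definition; the function name `auc` and the example scores are hypothetical and are not data from the included studies.

```python
def auc(scores_pos, scores_neg):
    """Area under the ROC curve via pairwise comparison (ties count as half)."""
    if not scores_pos or not scores_neg:
        raise ValueError("both classes need at least one score")
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0      # positive case ranked above negative case
            elif p == n:
                wins += 0.5      # tie contributes half a win
    return wins / (len(scores_pos) * len(scores_neg))


if __name__ == "__main__":
    # Hypothetical predicted risks for injured and uninjured cases.
    injured = [0.9, 0.6, 0.4]
    uninjured = [0.7, 0.5, 0.3]
    print(auc(injured, uninjured))
```

The pairwise form is quadratic in the number of cases, which is acceptable for a small illustration; rank-based implementations are preferable for large datasets.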

3.4 Quality assessment

The QUADAS-2 tool was used to assess the quality of the included studies. Two studies had a low risk of bias in all assessed domains. Two studies had an unclear risk of bias in both the reference standard and index test domains. In addition, one study had an unclear risk of bias in the index test domain alone, and another in the reference standard domain alone. Full information about the quality assessment is reported in Supplementary Figure S1.

4 Discussion

This systematic review synthesized evidence from six studies evaluating deep learning (DL) models for predicting extraction difficulty of third molars and the risk of inferior alveolar nerve (IAN) injury from panoramic radiographic images (4, 13–17). These studies employed various deep learning architectures based on convolutional neural networks (CNNs), achieving overall high accuracy and sensitivity in predicting extraction difficulty (up to 96%) and IAN injury (up to 92.9%). Notably, Yoo et al. (2021) reported the highest accuracy in angulation classification for mandibular extractions using the Pederson difficulty score (17), while Gong et al. (2024) observed high sensitivity in IAN injury classification (13).

The findings align with prior research on AI-driven models in dental imaging, showing that DL models can surpass traditional methods in objective and rapid assessment of complex clinical scenarios (18–27). Traditional indices, such as the Pederson Difficulty Score, rely on clinician interpretation, which may be subject to variability and bias. In contrast, DL models have been shown to automate and standardize risk assessments with high inter-rater reliability. For example, Lee et al. (2022) achieved accuracy levels exceeding 80% across both difficulty and IAN injury predictions, demonstrating that DL algorithms can enhance consistency in diagnostic outcomes across different patient cases (15).

The integration of DL models into clinical practice for preoperative assessment has the potential to significantly improve patient outcomes and resource management (27). By accurately predicting extraction difficulty and IAN injury risk, DL tools could assist surgeons in developing patient-specific treatment plans, potentially minimizing surgical time and complication rates. These models are especially valuable for less experienced clinicians, providing a decision-support mechanism that reinforces their diagnostic judgment (15). Additionally, as demonstrated by Picoli et al. (2023), AI models offer comparable sensitivity to CBCT in assessing IAN injury risk, suggesting that panoramic imaging alone could serve as an effective, lower-cost alternative in certain cases (16).

Recent studies on IAN block injections have further contextualized the importance of precision in medical procedures. These studies highlight the challenges of managing complications and ensuring their effectiveness (28, 29).

The included studies provided substantial insights but varied widely in their sample sizes, imaging protocols, and model architectures, potentially affecting generalizability. These inconsistencies in methods and reported results may introduce bias and limit the reliability of the overall conclusion. Differences in sample sizes and imaging protocols could result in varying model performance across studies, making it difficult to establish a consistent standard for DL-based diagnostic tools. Most studies reported high accuracy and sensitivity metrics; however, performance varied with the complexity of the anatomical structures involved, such as in IAN proximity classifications. Furthermore, only two studies reported patient demographics, and none accounted for the potential influence of these factors on model performance. The limited diversity in patient populations, with the majority being from specific regions such as South Korea and Turkey, may limit the models' transferability to other clinical settings (14, 16).

This review has several limitations. First, the limited number of studies included may result in publication bias, as studies with positive results are more likely to be published. Additionally, variability in the methods used for difficulty and injury prediction limited the possibility of meta-analysis, and the lack of standardized reporting on demographic factors restricts the applicability of findings across broader patient populations. Furthermore, while DL models achieved promising accuracy, real-world deployment would require extensive validation and recalibration on diverse datasets, which was not covered in the studies reviewed. Models should be validated on diverse external datasets and recalibrated using parameters tailored to demographic and clinical variations. Techniques such as transfer learning or domain adaptation can further enhance performance and generalizability in real-world settings. Additionally, only two studies reported the sex of participants, which limited the ability to assess the potential impact of demographic factors, such as sex, on the performance of the models. Finally, the geographic distribution of the studies may limit the generalizability of our findings to other populations.

5 Conclusion

This systematic review provides strong evidence that DL models significantly enhance the prediction accuracy of third molar extraction difficulties and IAN injury risks using panoramic radiographic images. These models outperform traditional methods and offer more precise and reliable preoperative assessments, demonstrating their potential for clinical use that could reduce surgical complications and improve patient outcomes. However, the studies reviewed showed variability in methods and samples, making broader validation essential across diverse populations and geographic locations to ensure global applicability. Future research should focus on standardizing DL model testing, developing clinical guidelines, and integrating these tools with other diagnostic technologies to further refine their effectiveness in the clinical setting.

Future research should aim to standardize DL model development and testing protocols in dental imaging to improve reproducibility and generalizability across diverse patient populations. Further studies are needed to address demographic factors such as gender and geographic factors, enabling results to be generalized and widely applicable. Large-scale, multi-center prospective studies with diverse patient demographics are needed to validate these models' real-world utility. Exploring the integration of CBCT alongside panoramic images within DL models could enhance diagnostic accuracy, especially in complex cases with high-risk nerve proximity. Additionally, research into explainable AI could improve clinical adoption by enhancing model interpretability, addressing the current “black-box” challenge in DL applications in medicine. Finally, studies should investigate ethical, legal, and patient privacy considerations to ensure safe and equitable AI deployment in clinical practice (4, 13).

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material, further inquiries can be directed to the corresponding author.

Author contributions

HA: Writing – original draft, Writing – review & editing. HQ: Writing – original draft, Writing – review & editing. SA: Writing – original draft, Writing – review & editing. NA: Writing – original draft, Writing – review & editing. MA: Writing – original draft, Writing – review & editing. AA: Writing – original draft, Writing – review & editing. FA: Writing – original draft, Writing – review & editing. LK: Writing – original draft, Writing – review & editing. MA: Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Correction Note

This article has been corrected with minor changes. These changes do not impact the scientific content of the article.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fdmed.2025.1534406/full#supplementary-material

References

1. Kashmola MA, Mustafa NS, Kamaludin Latifi FAB, Mohamad Fadzli MMB. Postoperative complications of third molar surgical extraction in a Malaysian sample. Greenfort Int J Appl Med Sci. (2024) 2:107–14. doi: 10.62046/gijams.2024.v02i04.002

2. Almutairi F, Alotaiby F, Alrashid A, Almodhaibri N. Post-Operative complications following third molar extraction in Qassim region Saudi Arabia: a retrospective study. Am J Life Sci Innov. (2024) 3:20–7. doi: 10.54536/ajlsi.v3i1.2440

3. Nadeem A, Vohra LI, Ahsan A, Mushahid H, Tariq R, Rizwan M, et al. A rare case of mandibular third molar displaced into submandibular space: a complication of mandibular third molar extraction. Clin Case Rep. (2023) 11:e8101. doi: 10.1002/ccr3.8101

4. Torul D, Akpinar H, Bayrakdar IS, Celik O, Orhan K. Prediction of extraction difficulty for impacted maxillary third molars with deep learning approach. J Stomatol Oral Maxillofac Surg. (2024) 125(4S):101817. doi: 10.1016/j.jormas.2024.101817

5. Hermida-Cabrera P, Lima-Sánchez B, Montoya-Salazar V, Oliveros-López LG, Alomar-Velasco P, Gutiérrez-Pérez JL, et al. Proposal and validation of a new Index to assess the difficulty of lower third molar extraction. Dent J (Basel). (2024) 12:138. doi: 10.3390/dj12050138

6. Lambade P, Dawane P, Mali D. Assessment of difficulty in mandibular third molar surgery by Lambade-Dawane-Mali’s Index. J Oral Maxillofac Surg. (2023) 81:772–9. doi: 10.1016/j.joms.2023.02.013

7. Le SH, Nguyen NM, Nguyen NTB, Nguyen LTB. Anatomical positions of mesially/horizontally impacted mandibular third molars are significant predictors for distal caries in adjacent second molars. Int J Dent. (2022) 2022:1–6. doi: 10.1155/2022/8482209

8. Polat Balkan E, Deniz HA, Kurt MH, Samunahmetoğlu E, Karahan S. Application of fractal analysis in detecting trabecular bone characteristics around mandibular impacted third molars on dental panoramic radiographs. Eur Ann Dent Sci. (2024) 51:67–73. doi: 10.52037/eads.2024.0008

9. Dodda S, Narne S, Chintala S, Kanungo S, Adedoja T, Sharma S. Exploring AI-driven innovations in image communication systems for enhanced medical imaging applications. J Electr Syst. (2024) 20(3s). doi: 10.52783/jes.1409

10. Malathy V, Maiti N, Kumar N, Lavanya D, Aswath S, Banu SB. Deep learning-enhanced image segmentation for medical diagnostics. 2024 International Conference on Advances in Computing, Communication and Applied Informatics (ACCAI) (2024).

11. Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. Br Med J. (2021) 372:n71. doi: 10.1136/bmj.n71

12. Whiting PF, Rutjes AWS, Westwood ME, Mallett S, Deeks JJ, Reitsma JB, et al. QUADAS-2: a revised tool for the quality assessment of diagnostic accuracy studies. Ann Intern Med. (2011) 155:529–36. doi: 10.7326/0003-4819-155-8-201110180-00009

13. Gong Z, Feng W, Su X, Choi C. System for automatically assessing the likelihood of inferior alveolar nerve injury. Comput Biol Med. (2024) 169:107923. doi: 10.1016/j.compbiomed.2024.107923

14. Trachoo V, Taetragool U, Pianchoopat P, Sukitporn-Udom C, Morakrant N, Warin K. Deep learning for predicting the difficulty level of removing the impacted mandibular third molar. Int Dent J. (2024) 75(1):144–50. doi: 10.1016/j.identj.2024.06.021

15. Lee J, Park J, Moon SY, Lee K. Automated prediction of extraction difficulty and Inferior alveolar nerve injury for mandibular third molar using a deep neural network. Appl Sci. (2022) 12:475. doi: 10.3390/app12010475

16. Picoli FF, Fontenele RC, Van der Cruyssen F, Ahmadzai I, Trigeminal Nerve Injuries research group, Politis C, et al. Risk assessment of inferior alveolar nerve injury after wisdom tooth removal using 3D AI-driven models: a within-patient study. J Dent. (2023) 139:104765. doi: 10.1016/j.jdent.2023.104765

17. Yoo J-H, Yeom H-G, Shin W, Yun JP, Lee JH, Jeong SH, et al. Deep learning based prediction of extraction difficulty for mandibular third molars. Sci Rep. (2021) 11:1954. doi: 10.1038/s41598-021-81449-4

18. Sivari E, Senirkentli GB, Bostanci E, Guzel MS, Acici K, Asuroglu T. Deep learning in diagnosis of dental anomalies and diseases: a systematic review. Diagnostics (Basel). (2023) 13:2512. doi: 10.3390/diagnostics13152512

19. Musri N, Christie B, Ichwan SJA, Cahyanto A. Deep learning convolutional neural network algorithms for the early detection and diagnosis of dental caries on periapical radiographs: a systematic review. Imaging Sci Dent. (2021) 51:237–42. doi: 10.5624/isd.20210074

20. Sadr S, Mohammad-Rahimi H, Motamedian SR, Zahedrozegar S, Motie P, Vinayahalingam S, et al. Deep learning for detection of periapical radiolucent lesions: a systematic review and meta-analysis of diagnostic test accuracy. J Endod. (2023) 49:248–261.e3. doi: 10.1016/j.joen.2022.12.007

21. Rokhshad R, Salehi SN, Yavari A, Shobeiri P, Esmaeili M, Manila N, et al. Deep learning for diagnosis of head and neck cancers through radiographic data: a systematic review and meta-analysis. Oral Radiol. (2024) 40:1–20. doi: 10.1007/s11282-023-00715-5

22. Chaurasia A, Namachivayam A, Koca-Ünsal RB, Lee J-H. Deep-learning performance in identifying and classifying dental implant systems from dental imaging: a systematic review and meta-analysis. J Periodontal Implant Sci. (2024) 54:3–12. doi: 10.5051/jpis.2300160008

23. Dashti M, Ghaedsharaf S, Ghasemi S, Zare N, Constantin E-F, Fahimipour A, et al. Evaluation of deep learning and convolutional neural network algorithms for mandibular fracture detection using radiographic images: a systematic review and meta-analysis. Imaging Sci Dent. (2024) 54:232–9. doi: 10.5624/isd.20240038

24. Wu Z, Yu X, Chen Y, Chen X, Xu C. Deep learning in the diagnosis of maxillary sinus diseases: a systematic review. Dentomaxillofac Radiol. (2024) 53:354–62. doi: 10.1093/dmfr/twae031

25. Hartman H, Nurdin D, Akbar S, Cahyanto A, Setiawan AS. Exploring the potential of artificial intelligence in paediatric dentistry: a systematic review on deep learning algorithms for dental anomaly detection. Int J Paediatr Dent. (2024) 34:639–52. doi: 10.1111/ipd.13164

26. Albano D, Galiano V, Basile M, Di Luca F, Gitto S, Messina C, et al. Artificial intelligence for radiographic imaging detection of caries lesions: a systematic review. BMC Oral Health. (2024) 24:274. doi: 10.1186/s12903-024-04046-7

27. Karalis VD. The integration of artificial intelligence into clinical practice. Appl Biosci. (2024) 3:14–44. doi: 10.3390/applbiosci3010002

28. Aquilanti L, Mascitti M, Togni L, Contaldo M, Rappelli G, Santarelli A. A systematic review on nerve-related adverse effects following mandibular nerve block anesthesia. Int J Environ Res Public Health. (2022) 19:1627. doi: 10.3390/IJERPH19031627

Keywords: alveolar nerve, deep learning, mandibular nerve, panoramic radiographic, third molar

Citation: Al Salieti H, Qasem HM, Alshwayyat S, Almasri N, Alshwayyat M, Aboali AA, Alsarayrah F, Khasawneh L and Al-kurdi MA-m (2025) Predicting alveolar nerve injury and the difficulty level of extraction impacted third molars: a systematic review of deep learning approaches. Front. Dent. Med. 6:1534406. doi: 10.3389/fdmed.2025.1534406

Received: 25 November 2024; Accepted: 28 April 2025; Published: 20 May 2025; Corrected: 4 June 2025.

Edited by:

Hatice Hasturk, The Forsyth Institute, United States

Reviewed by:

Pradeep Kumar Yadalam, Saveetha Dental College And Hospitals, India

Endang Sjamsudin, Padjadjaran University, Indonesia

Copyright: © 2025 Al Salieti, Qasem, Alshwayyat, Almasri, Alshwayyat, Aboali, Alsarayrah, Khasawneh and Al-kurdi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Mohammed Al-mahdi Al-kurdi

†These authors share first authorship

‡ORCID:

Amira A. Aboali

orcid.org/0000-0002-8547-4672
