- ¹Precision and Intelligence Laboratory, Tokyo Institute of Technology, Yokohama, Japan

- ²Department of Functional Brain Research, National Center of Neurology and Psychiatry, National Institute of Neuroscience, Tokyo, Japan

- ³Department of Advanced Neuroimaging, Integrative Brain Imaging Center, National Center of Neurology and Psychiatry, Tokyo, Japan

- ⁴Department of Neurosurgery, Osaka University Medical School, Osaka, Japan

- ⁵Department of Electronics and Mechatronics, Tokyo Polytechnic University, Atsugi, Japan

- ⁶Precursory Research for Embryonic Science and Technology, Japan Science and Technology Agency, Tokyo, Japan

- ⁷Solution Science Research Laboratory, Tokyo Institute of Technology, Yokohama, Japan

With the goal of providing assistive technology for people with communication impairments, we proposed electroencephalography (EEG) cortical currents as a new approach for EEG-based brain-computer interface spellers. EEG cortical currents were estimated with a variational Bayesian method that uses functional magnetic resonance imaging (fMRI) data as a hierarchical prior. EEG and fMRI data were recorded from ten healthy participants during covert articulation of the Japanese vowels /a/ and /i/, as well as during a no-imagery control task. When a sparse logistic regression (SLR) method was applied to classify the three tasks, mean classification accuracy using EEG cortical currents was significantly higher than that using EEG sensor signals and was comparable to accuracies reported in previous studies using electrocorticography. SLR weight analysis revealed, for each participant, vertices of EEG cortical currents that contributed strongly to classification, and these vertices showed time series signals that differed among the three tasks. Furthermore, functional connectivity analysis focusing on these highly contributive vertices revealed positive and negative correlations among areas related to speech processing. As these findings were not observed using EEG sensor signals, our results demonstrate the potential utility of EEG cortical currents not only for engineering purposes such as brain-computer interfaces but also for neuroscientific purposes such as identifying neural signaling related to language processing.

Introduction

Brain-computer interface (BCI) spellers offer hands-free character input for individuals with motor impairments by using brain activity signals (Kubler et al., 2001; Wolpaw et al., 2002; Birbaumer, 2006a,b; Birbaumer and Cohen, 2007; Shih et al., 2012). Most BCI spellers use distinct electroencephalography (EEG) activity such as the P300 event-related potential (Farwell and Donchin, 1988; Nijboer et al., 2008) or steady-state visual evoked potentials (SSVEPs) (Cheng et al., 2002). The P300 is a positive peak potential that appears approximately 300 ms after stimulus onset in response to infrequently presented visual or auditory stimuli (Sutton et al., 1967), whereas SSVEPs are elicited by rapidly flashing light and are characterized by sinusoidal-like waveforms whose frequencies are synchronized to that of the flashing light (Adrian and Matthews, 1934).

BCI spellers based on the P300 and SSVEPs are considered “reactive” BCI spellers, since they utilize potentials arising in reaction to external stimuli, such as the appearance of a desired character on a communication board. Conversely, “active” BCI spellers utilize brain activity consciously controlled by the user (Zander et al., 2010), such as the activity generated when imagining a vowel. As such, active BCI spellers are not subject to limitations associated with providing external stimuli (e.g., the time and space required to display characters). Of the two, reactive BCI spellers are closer to practical application because of their higher information transfer rates and stability. However, with developments in neuroimaging, active BCI spellers have drawn attention from researchers using neural decoding techniques. EEG has been used to decode the English vowels /a/ and /u/ (Dasalla et al., 2009); the Dutch vowels /a/, /i/, and /u/ (Hausfeld et al., 2012); the words “yes” and “no” (Lopez-Gordo et al., 2012); and the Chinese characters for “left” and “one” (Wang et al., 2013). Decoding performance in these studies was higher than chance level but not comparable to that of reactive BCI spellers, owing to the lower signal-to-noise ratio of spontaneous EEG features. Other brain imaging methods, such as semi-invasive electrocorticography (ECoG) and non-invasive functional magnetic resonance imaging (fMRI), have also attracted increasing attention because of their higher spatial discrimination than EEG. Studies using ECoG to decode vowels (Ikeda et al., 2014), vowels and consonants (Pei et al., 2011), and phonemes (Leuthardt et al., 2011), and using fMRI to decode the words “yes” and “no” (Naci et al., 2013), showed higher decoding performance than EEG studies. Although this performance was still lower than that of reactive BCI spellers, these findings indicate that speech intention can be decoded from brain activity signals if the limited spatial discrimination of EEG can be overcome.

In this study, we demonstrated a method to enhance the utility of EEG in speech intention decoding by using EEG cortical current signals to classify imagined Japanese vowels. By applying a hierarchical Bayesian method (Sato et al., 2004; Yoshioka et al., 2008) that incorporates fMRI activity as a hierarchical prior, we improved the spatial discrimination of EEG while preserving its high temporal discrimination. The method is also usable in real-time applications, since fMRI data need to be acquired only once in advance. The efficacy of this method has already been demonstrated in studies decoding motor control (Toda et al., 2011; Yoshimura et al., 2012), visual processing (Shibata et al., 2008), and spatial attention (Morioka et al., 2014). Moreover, since ECoG-based spellers (Leuthardt et al., 2011; Pei et al., 2011; Ikeda et al., 2014) showed higher decoding performance than EEG-based spellers, we hypothesized that EEG cortical current signals would also offer higher decoding performance, because EEG cortical current signals are theoretically equivalent to ECoG signals when current dipoles are assigned to the cortical surface. Ten healthy participants performed covert vowel articulation tasks (i.e., silent production of vowel speech in one's mind; Perrone-Bertolotti et al., 2014), and EEG cortical current signals were estimated from EEG and fMRI data. Classifiers based on sparse logistic regression (SLR) (Yamashita et al., 2008) were trained to discriminate between the tasks, and classification accuracies were compared between EEG cortical currents and EEG sensor signals.

Materials and Methods

Participants

Ten healthy human participants (1 female and 9 males; mean age ± standard deviation: 34.1 ± 9.2 years) participated in this study. All participants had normal hearing. Written informed consent was obtained from all participants prior to the experiment. The experimental protocol was approved by the ethics committees of the National Center of Neurology and Psychiatry and Tokyo Institute of Technology. All participants underwent an fMRI experiment to obtain prior information for EEG cortical current estimation, structural MRI acquisition to create an individual brain model, an EEG experiment, and three-dimensional position measurement of the EEG sensors on the scalp.

Experimental Tasks

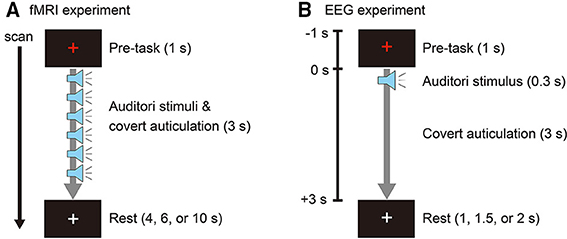

Participants covertly articulated two Japanese vowels (/a/ and /i/) cued by auditory stimuli. Auditory stimuli were obtained from the Tohoku University - Matsushita Isolated Word Database (Speech Resources Consortium, National Institute of Informatics, Tokyo, Japan) and edited using Wave Editor (Abyss Media Company, Ulyanovsk, Russia) to create stimuli consisting of a single vowel. White noise was generated using MATLAB R2012b (The MathWorks, Inc., Natick, MA) and used as the stimulus for a no-imagery (control) task. Each stimulus lasted 400 ms: 300 ms of speech followed by 100 ms of silence. In the fMRI experiment, auditory stimuli were presented during 3-s task periods, each consisting of six repetitions of vowel speech or white noise. Participants covertly repeated the vowel for 3 s when presented with a vowel stimulus and refrained from imagery when presented with white noise. We used six repetitions of the stimulus and covert speech to obtain stronger task-related brain activity signals (Figure 1A). In the EEG experiment, the auditory stimulus was presented only once in each 3-s period, for a single (non-repeated) covert articulation (Figure 1B). In both experiments, a white fixation cross was presented at the center of the screen to prevent eye and head movement artifacts. The fixation cross turned red 1 s before each stimulus interval and remained red for 4 s before returning to white.

Figure 1. (A) Event-related design for the fMRI experiment. One trial consisted of a pre-task (1 s), task (3 s) with six auditory stimuli (0.3 s each), and rest (4, 6, or 10 s). The rest duration was randomly assigned for each trial. The color of the fixation cross was red during the pre-task and task intervals. (B) Experimental design for the EEG experiment. One epoch consisted of a pre-task (1 s), task (3 s) with auditory stimulus (0.3 s), and rest (1, 1.5, or 2 s). The rest duration was randomly assigned for each epoch. The color of the fixation cross was red during the pre-task and task intervals.

fMRI Experiment and Data Acquisition

The fMRI experiment was conducted to identify brain activation areas and their intensities for use as hierarchical priors when estimating cortical currents from EEG (Sato et al., 2004). Participants lay supine on the scanner bed and wore MR-compatible headphones (SereneSound, Resonance Technology Inc., Northridge, CA). Auditory stimuli were presented at a sound pressure level of 100 dB. The fixation cross was projected on a screen and viewed through a mirror attached to the MRI head coil. To confirm task execution, participants pressed a response button with their right hand when they heard the auditory stimuli. Using an event-related design, each trial comprised a pre-task period (1 s), a task period (3 s), and a rest period (4, 6, or 10 s, pseudo-randomly permuted over three consecutive trials; Figure 1A). Participants performed three runs, each consisting of 10 trials per task (/a/, /i/, and no-imagery). Tasks were ordered in pseudo-random permutations over three consecutive trials. The experiment program was created using Presentation version 16.3 (Neurobehavioral Systems, Inc., Berkeley, CA).

A 3 T Magnetom Trio MRI scanner equipped with an 8-channel array coil (Siemens, Erlangen, Germany) was used for the fMRI experiment. Functional data were acquired with a T2*-weighted gradient-echo, echo planar imaging sequence using the following parameters: repetition time (TR) = 3 s; echo time (TE) = 30 ms; flip angle (FA) = 90°; field of view (FOV) = 192 × 192 mm; matrix size = 64 × 64; 43 slices; slice thickness = 3 mm; 118 volumes.

After the fMRI experiment, two types of 3D anatomical images, a sagittal image and an axial image, were acquired using T1-weighted magnetization prepared rapid gradient echo sequences (for sagittal scans: TR = 2 s; TE = 4.38 ms; FA = 8°; FOV = 256 × 256 mm; matrix size = 256 × 256; 224 slices; slice thickness = 1 mm; for axial scans: TR = 2 s; TE = 4.38 ms; FA = 8°; FOV = 192 × 192 mm; matrix size = 192 × 192; 160 slices; slice thickness = 1 mm). The sagittal image covered the whole head, including the face, specifically for use in constructing a polygon model of the cortical surface.

EEG Experiment and Data Acquisition

Participants were seated in a sound-attenuated chamber (AMC-3515, O'HARA & CO., LTD., Tokyo, Japan) and wore earphones (Image S4i, Klipsch Group, Inc., Indianapolis, IN) that presented auditory stimuli at a sound pressure level of 70 dB. Tasks were presented in pseudo-random permutations with randomly assigned interstimulus intervals of 2, 2.5, or 3 s. Participants performed 10 sessions, each consisting of 5 trials per task (50 trials per task in total). Eye blinking was allowed only during the interval period, when a white fixation cross was presented (Figure 1B).

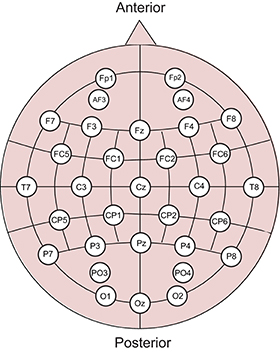

EEG signals were recorded using a g.USBamp amplifier/digitizer system and 32 g.LADYbird active sensors (g.tec medical engineering, Graz, Austria). The resolution and range of the amplifier were 30 nV and ±250 mV, respectively. The sensors were positioned according to the extended international 10–20 system (Figure 2), and the average of both earlobes was used as the reference. The scalp was cleaned with 70% ethanol before the electrode gaps were filled with conductive gel. Signals were acquired at a sampling rate of 256 Hz and band-pass filtered from 0.5 to 100 Hz using an experiment program created in MATLAB R2012b.

Figure 2. Sensor positions in the EEG experiment. Thirty-two electrodes were used based on the extended international 10–20 system.

After the EEG experiment, coordinate positions of EEG sensors were measured using a Polaris Spectra optical tracking system (Northern Digital Inc., Ontario, Canada). The nasion, left pre-auricular point and right pre-auricular point were also measured as reference points. These reference points were used to co-register the EEG sensor positions to voxel coordinates of the T1-weighted sagittal image.

EEG Cortical Current Estimation Using a Hierarchical Bayesian Method

EEG cortical currents were estimated using Variational Bayesian Multimodal Encephalography (VBMEG) toolbox (ATR Neural Information Analysis Laboratories, Japan; http://vbmeg.atr.jp/?lang=en) running on MATLAB R2012b, unless otherwise specified. All VBMEG processes were conducted in accordance with standard procedures described in the toolbox documentation.

Cortical Surface Model and Three-Layer Model

We constructed a cortical surface model composed of current dipoles distributed equidistantly on, and perpendicular to, the cortical surface, including sulci and gyri. We also constructed a three-layer model containing boundary information for the scalp, skull, and cerebrospinal fluid. The T1-weighted sagittal image was used to create both models. A bias-corrected image and a gray-matter volumetric image of the sagittal image were obtained using the “Segment” function in SPM8 (Wellcome Department of Cognitive Neurology, UK; available at http://www.fil.ion.ucl.ac.uk/spm). The bias-corrected image was used to create a polygon model of the cortical surface in FreeSurfer (Martinos Center software, http://surfer.nmr.mgh.harvard.edu/), which was then used to construct the cortical surface model. The gray-matter volumetric image was used together with the FreeSurfer output files to create the three-layer model.

fMRI Data Processing

fMRI data were analyzed with SPM8 and VBMEG. Slice-timing correction was performed on all echo planar images (EPIs), followed by spatial realignment to the mean image of all EPIs. The T1-weighted axial image was co-registered to the T1-weighted bias-corrected sagittal image before all realigned EPIs were co-registered to the T1-weighted axial image. Then, all EPIs were spatially normalized to the Montreal Neurological Institute (MNI) (Montreal, Quebec, Canada) reference brain via the T1-weighted bias-corrected sagittal image, and spatially smoothed with a Gaussian kernel of 8 mm full-width at half-maximum.
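The smoothing kernel is specified by its full-width at half-maximum (FWHM). The sketch below, which is not part of SPM8 itself, shows the standard conversion to the Gaussian standard deviation, FWHM = 2√(2 ln 2) σ ≈ 2.355 σ, so the 8-mm kernel corresponds to σ ≈ 3.40 mm. The 2-mm voxel size used in the second call is a hypothetical value for illustration only.

```python
import math


def fwhm_to_sigma(fwhm_mm: float, voxel_size_mm: float = 1.0) -> float:
    """Convert a Gaussian kernel's FWHM (mm) into its standard deviation.

    The result is in units of voxel_size_mm (mm when voxel_size_mm == 1).
    """
    # FWHM = 2 * sqrt(2 * ln 2) * sigma, i.e. about 2.3548 * sigma
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0))) / voxel_size_mm


sigma_mm = fwhm_to_sigma(8.0)
sigma_vox = fwhm_to_sigma(8.0, voxel_size_mm=2.0)  # hypothetical 2-mm voxels
print(f"sigma = {sigma_mm:.3f} mm = {sigma_vox:.3f} voxels")
```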

In the SPM first-level analysis, we calculated t-values for each participant for two contrasts, task /a/ > task /i/ and task /i/ > task /a/, using uncorrected p-value thresholds of 0.001, 0.005, 0.01, 0.05, and 0.1 (termed “single-activation”). All of the t-values were then used to define two kinds of priors in VBMEG: area (the number of cortical vertices to be estimated) and activity (affecting the current amplitude of each vertex). Using VBMEG, the t-values of the respective contrast images were inverse-normalized into each participant's individual space and co-registered to the cortical surface model as area and activity priors. By merging the priors of the two contrasts at each p-value threshold, we created the final area and activity priors for EEG cortical current estimation for each participant.
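To make the thresholding step concrete, the sketch below converts each uncorrected p threshold into a one-sided critical t-value and merges the two opposite contrasts. It is a hypothetical illustration, not VBMEG's prior-construction code: the degrees of freedom (340) and the merge rule (a vertex enters the area prior if it passes the threshold in either contrast, and its activity value is the larger suprathreshold t) are assumptions.

```python
import numpy as np
from scipy import stats

P_THRESHOLDS = [0.001, 0.005, 0.01, 0.05, 0.1]


def t_threshold(p: float, dof: int) -> float:
    """One-sided critical t-value for an uncorrected p threshold."""
    return float(stats.t.isf(p, dof))


def merge_contrasts(t_a_gt_i, t_i_gt_a, p, dof):
    """Hypothetical merge of the /a/ > /i/ and /i/ > /a/ t-maps.

    Returns a boolean 'area' mask (suprathreshold in either contrast) and a
    non-negative 'activity' map (larger suprathreshold t-value, else 0).
    """
    thr = t_threshold(p, dof)
    t_a = np.where(t_a_gt_i > thr, t_a_gt_i, 0.0)
    t_i = np.where(t_i_gt_a > thr, t_i_gt_a, 0.0)
    activity = np.maximum(t_a, t_i)
    return activity > 0, activity


rng = np.random.default_rng(0)
t_map = rng.normal(0.0, 2.0, size=1000)  # hypothetical vertex-wise t-values
for p in P_THRESHOLDS:
    area, activity = merge_contrasts(t_map, -t_map, p, dof=340)
    print(f"p = {p}: t > {t_threshold(p, 340):.2f}, {int(area.sum())} vertices")
```

Because the second contrast is the negation of the first, lowering the p threshold can only enlarge the merged area prior.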

We also created other sets of area and activity priors for each participant using the results of SPM group (second-level) analyses. A one-sample t-test was performed on the contrast between task /a/ and task /i/ (uncorrected p = 0.01). Group analyses were performed under 11 conditions: one analysis used the images of all participants (“Group-activation”), and each of the remaining 10 analyses used the images of 9 participants, leaving out a different participant each time (“LOOgroup-activation”). The t-value images of the Group-activation and LOOgroup-activations were inverse-normalized into each participant's individual space to create that participant's area and activity priors.
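The leave-one-participant-out scheme can be sketched with a voxel-wise one-sample t-test. This is a minimal illustration under stated assumptions, not SPM's implementation: the input is a hypothetical array of first-level /a/ − /i/ contrast values already in a common (MNI) space, and row k of the LOO output is assumed to serve as the prior for participant k, so that participant's own data do not enter its prior.

```python
import numpy as np
from scipy import stats


def group_t_maps(contrast_images):
    """One-sample t-maps for Group-activation and LOOgroup-activation.

    contrast_images: (n_participants, n_voxels) first-level /a/ - /i/ contrast
    values in a common space. Returns the all-participant t-map and an
    (n_participants, n_voxels) array whose row k omits participant k.
    """
    X = np.asarray(contrast_images, dtype=float)
    group_t = stats.ttest_1samp(X, 0.0, axis=0).statistic
    loo_t = np.empty_like(X)
    for k in range(X.shape[0]):
        others = np.delete(X, k, axis=0)  # the remaining n - 1 participants
        loo_t[k] = stats.ttest_1samp(others, 0.0, axis=0).statistic
    return group_t, loo_t


# Height thresholds for uncorrected p = 0.01 (one-sided):
# 10 participants -> df = 9; 9 participants -> df = 8
t_group_thr = stats.t.isf(0.01, 9)
t_loo_thr = stats.t.isf(0.01, 8)
```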

EEG Data Processing

EEG sensor signals were low-pass filtered at a cutoff frequency of 45 Hz, and 50 epochs per task were extracted, time-locked to auditory stimulus onset. Each epoch lasted 4 s: 1 s before and 3 s after onset. Coordinate positions of EEG sensors were co-registered to the T1-weighted bias-corrected sagittal image using positioning software supplied with VBMEG.
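A minimal sketch of this preprocessing in Python follows. The filter type and order are not specified above, so the zero-phase 4th-order Butterworth filter here is an assumption; at 256 Hz, a 4-s epoch (1 s pre-onset, 3 s post-onset) spans 1024 samples. The function names are hypothetical.

```python
import numpy as np
from scipy.signal import butter, filtfilt

FS = 256  # Hz, EEG sampling rate


def lowpass(eeg, cutoff_hz=45.0, order=4, fs=FS):
    """Zero-phase Butterworth low-pass along the last axis (type/order assumed)."""
    b, a = butter(order, cutoff_hz, btype="low", fs=fs)
    return filtfilt(b, a, eeg, axis=-1)


def extract_epochs(eeg, onsets, pre_s=1.0, post_s=3.0, fs=FS):
    """Cut (n_epochs, n_channels, n_samples) windows around onset sample indices."""
    pre, post = int(round(pre_s * fs)), int(round(post_s * fs))
    return np.stack([eeg[:, s - pre : s + post] for s in onsets])
```

Filtering the continuous recording before epoching, as in the text, keeps filter edge effects away from the epoch boundaries.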

Inverse Filter and Cortical Current Time Course Estimation

To design an inverse filter in VBMEG, several parameters, including two hyper-parameters, must be defined in a current variance estimation step. We determined the best hyper-parameters using the nested cross-validation method described in Section Vowel Classification Analysis Using Sparse Logistic Regression. The other parameters were defined as follows: analysis time range = −0.5 to 3 s; time window size = 0.5 s; shift size = 0.25 s; dipole reduction ratio = 0.2. We then used the inverse filter to calculate cortical current time courses for trial data from 0.5 to 3 s. Parameters differed for area and activity analyses (described in Section Vowel Classification Analysis Using Sparse Logistic Regression). The two hyper-parameters, a prior magnification parameter (m0) and a prior reliability (confidence) parameter (γ0), were selected from m0 ∈ {10, 100, 1000} and γ0 ∈ {1, 10, 100}, respectively. Both parameters control the influence of fMRI data on current variance estimation (Sato et al., 2004). A larger magnification parameter m0 leads to a larger dipole current amplitude for a given fMRI activation. A larger reliability parameter γ0 makes the prior on the current variance more sharply peaked around the value derived from fMRI activation. For both, high values correspond to the assumption that brain activity during the fMRI experiment was the same as that during the EEG experiment. We chose the candidate values following the approach of Morioka et al. (2014), but did not include higher values because our fMRI and EEG data were recorded not simultaneously but on different days, using slightly different experimental protocols.
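As a worked example of the settings above, the sketch below lists the windows implied by a 0.5-s window shifted by 0.25 s over −0.5 to 3 s (13 windows, the last spanning 2.5 to 3.0 s) and enumerates the nine candidate (m0, γ0) pairs. This reflects our reading of the parameters only; how VBMEG handles windows internally may differ, and the function name is hypothetical.

```python
import numpy as np


def time_windows(t_start=-0.5, t_end=3.0, win=0.5, shift=0.25):
    """List (start, end) times of windows of length `win` advanced by `shift`."""
    n = int(np.floor((t_end - t_start - win) / shift + 1e-9)) + 1
    starts = t_start + shift * np.arange(n)
    return [(float(round(s, 6)), float(round(s + win, 6))) for s in starts]


# Candidate hyper-parameter pairs (prior magnification m0, prior reliability gamma0)
HYPER_GRID = [(m0, g0) for m0 in (10, 100, 1000) for g0 in (1, 10, 100)]

for start, end in time_windows():
    print(f"{start:+.2f} to {end:+.2f} s")
```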

Vowel Classification Analysis Using Sparse Logistic Regression

We applied sparse logistic regression (SLR) (Yamashita et al., 2008) for vowel classification, as it can train classifiers on high-dimensional data without prior dimensionality reduction. Three-class classifiers for /a/, /i/, and no-imagery (control) were trained with sparse multinomial logistic regression (SMLR) using SLR Toolbox version 1.2.1 alpha (Advanced Telecommunications Research Institute International, Japan; http://www.cns.atr.jp/~oyamashi/SLR_WEB.html). SMLR learns one weight vector per task and, for each test trial, chooses the class with the highest predicted probability.
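The decision rule of a sparse multinomial classifier can be sketched in NumPy. Note that this is a swapped-in technique: an L1-penalized multinomial logistic regression fitted by proximal gradient descent (ISTA), not the ARD-based variational Bayesian procedure that SLR Toolbox implements. It shares the key properties described above (one weight vector per class, many weights driven exactly to zero, arg-max over class probabilities). The hyper-parameters `lam`, `lr`, and `n_iter` are illustrative assumptions.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract row max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def fit_l1_multinomial(X, y, n_classes, lam=0.01, lr=0.1, n_iter=500):
    """L1-penalized multinomial logistic regression by proximal gradient (ISTA).

    Stand-in for SMLR (SLR Toolbox uses ARD priors instead of an L1 penalty).
    Returns weights W (n_features, n_classes) and biases b (n_classes,).
    """
    n, d = X.shape
    Y = np.eye(n_classes)[y]  # one-hot task labels
    W = np.zeros((d, n_classes))
    b = np.zeros(n_classes)
    for _ in range(n_iter):
        P = softmax(X @ W + b)
        W = W - lr * (X.T @ (P - Y) / n)  # gradient step on cross-entropy
        b = b - lr * (P - Y).mean(axis=0)
        # soft-thresholding (proximal step for L1) sets weak weights exactly to 0
        W = np.sign(W) * np.maximum(np.abs(W) - lr * lam, 0.0)
    return W, b


def predict(X, W, b):
    """One weight vector per class; choose the class with the highest probability."""
    return softmax(X @ W + b).argmax(axis=1)
```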

For both EEG sensor and cortical current signals, the signals were passed through an 8-point moving average filter, and data from 1.0 to 2.0 s after onset were used for analysis to avoid contamination by auditory evoked and event-related potentials, such as the N1 and P300, and by motor-related potentials evoked by pressing the response button. Classification accuracies for EEG cortical current signals were evaluated with nested cross-validation to assess the influence of the hyper-parameters. For EEG sensor signals, nested cross-validation was not applied because there were no hyper-parameters to tune. Instead, 5 × 10-fold cross-validation was used, which repeats 10-fold cross-validation 5 times, randomly reassigning trials to folds in each repetition. This repeated cross-validation was applied to obtain more generalizable accuracy estimates.
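Since 1 s at 256 Hz is 256 samples and 32 time-point features are used per vertex or sensor, one plausible reading is that the 8-point moving average is followed by keeping one value per 8 samples, which is equivalent to averaging non-overlapping 8-sample bins. The sketch below assumes that reading, an epoch with onset at sample 256 (1 s pre-onset), and folds stratified by task; function names are hypothetical.

```python
import numpy as np

FS = 256  # Hz


def window_features(epoch, onset_idx, fs=FS, t0=1.0, t1=2.0, bin_len=8):
    """Average non-overlapping 8-sample bins of the 1.0-2.0 s post-onset segment.

    epoch: (n_signals, n_samples) for one trial, stimulus onset at `onset_idx`.
    Returns a flat vector of n_signals * 32 features (unit-major order).
    """
    seg = epoch[:, onset_idx + int(t0 * fs) : onset_idx + int(t1 * fs)]  # 256 samples
    n_bins = seg.shape[1] // bin_len
    binned = seg[:, : n_bins * bin_len].reshape(seg.shape[0], n_bins, bin_len)
    return binned.mean(axis=2).ravel()


def repeated_kfold(labels, n_splits=10, n_repeats=5, seed=0):
    """Yield (train_idx, test_idx) for 5 x 10-fold CV, stratified by task label."""
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    for _ in range(n_repeats):
        fold_of = np.empty(len(labels), dtype=int)
        for c in np.unique(labels):  # spread each task's trials evenly over folds
            idx = rng.permutation(np.flatnonzero(labels == c))
            fold_of[idx] = np.arange(len(idx)) % n_splits
        for k in range(n_splits):
            yield np.flatnonzero(fold_of != k), np.flatnonzero(fold_of == k)
```

With 50 trials per task, each test fold holds 5 trials of each task (15 in total), and the procedure yields 50 train/test splits.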

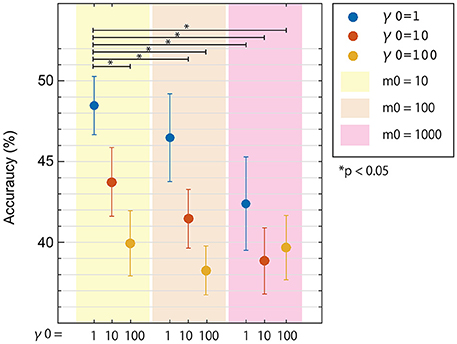

To examine the influence of the hyper-parameters on classification accuracy, we used fixed area/activity priors of p = 0.01 (single-activation), as shown in Figure 3. The 50 trials for each task were randomly divided into 10 groups of 5 trials each. Eight groups were used to train a classifier with one of the nine hyper-parameter pairs (m0 ∈ {10, 100, 1000} and γ0 ∈ {1, 10, 100}). One of the remaining groups was used as validation data to calculate the classification accuracy for that hyper-parameter pair, and the last remaining group was reserved as test data for use after the best hyper-parameters were found. This was repeated 9 times, rotating the validation group among the nine non-test groups, and classification accuracies were averaged across validations. This procedure was repeated for all parameter pairs, and mean accuracies across participants are plotted in Figure 3.

Figure 3. Three-class classification accuracies of EEG cortical current signals obtained from inverse filters with different hyper-parameters. Results using a prior magnification parameter m0 = 10, 100, and 1000 are denoted as yellow, cream, and pink areas, respectively. Results using prior reliability parameters γ0 = 1, 10, and 100 are denoted as blue, red, and yellow dots, respectively. All conditions used the same fMRI area/activity priors from Unc001 (single-activation, uncorrected p = 0.01). Error bars denote standard error. Statistical differences were assessed using non-parametric permutation tests.

Next, the pair of hyper-parameters with the highest accuracy was selected, and a new classifier was trained using both the training and validation data (9 groups), and net classification accuracy was calculated using the test data reserved in the first step. This step was performed to compare accuracies among different area/activity priors. The process was repeated 10 times per pair of fMRI area/activity priors, changing the group used as test data in a leave-one-group-out manner. The entire nested cross-validation process was further repeated 5 times, randomizing the trials in each of the 10 groups.
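The nested cross-validation described above can be sketched as follows. `score_fn` is a hypothetical callback standing in for the full pipeline (building the inverse filter with the given hyper-parameters, training SMLR on the training indices, and returning accuracy on the evaluation indices); the sketch only shows how trials are split and how the best pair is chosen per outer fold.

```python
import numpy as np

HYPER_GRID = [(m0, g0) for m0 in (10, 100, 1000) for g0 in (1, 10, 100)]


def nested_cv(n_trials_per_task, n_tasks, score_fn, n_groups=10, n_repeats=5, seed=0):
    """Return outer-loop test accuracies (n_repeats * n_groups values).

    score_fn(train_idx, eval_idx, params) -> accuracy (hypothetical callback).
    """
    rng = np.random.default_rng(seed)
    labels = np.repeat(np.arange(n_tasks), n_trials_per_task)
    test_acc = []
    for _ in range(n_repeats):
        group = np.empty(labels.size, dtype=int)
        for c in range(n_tasks):  # each group gets 5 trials of every task
            idx = rng.permutation(np.flatnonzero(labels == c))
            group[idx] = np.arange(idx.size) % n_groups
        for test_g in range(n_groups):
            mean_val = {}
            for params in HYPER_GRID:
                accs = []
                for val_g in (g for g in range(n_groups) if g != test_g):
                    train = np.flatnonzero((group != test_g) & (group != val_g))
                    accs.append(score_fn(train, np.flatnonzero(group == val_g), params))
                mean_val[params] = np.mean(accs)  # mean over the 9 validations
            best = max(mean_val, key=mean_val.get)
            # retrain on all 9 non-test groups with the best pair, score on test group
            train_all = np.flatnonzero(group != test_g)
            test_acc.append(score_fn(train_all, np.flatnonzero(group == test_g), best))
    return test_acc
```

Keeping the test group out of hyper-parameter selection is what lets the outer accuracies compare different fMRI priors without optimistic bias.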

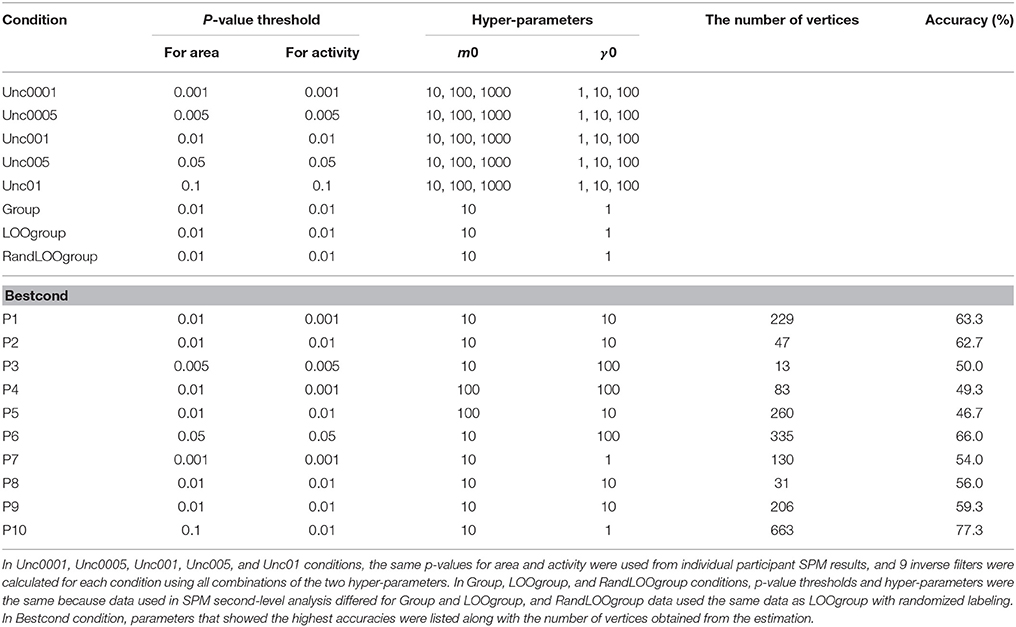

We calculated accuracies for the following 8 fMRI prior pairs (Table 1): 5 single-activations (uncorrected p = 0.001, 0.005, 0.01, 0.05, and 0.1), Group-activation (Group, uncorrected p = 0.01), LOOgroup-activation (LOOgroup, uncorrected p = 0.01), and LOOgroup with data labels randomized during classifier training (RandLOOgroup, uncorrected p = 0.01). We included the RandLOOgroup condition to determine whether accuracies increased simply because of increased data dimensionality.

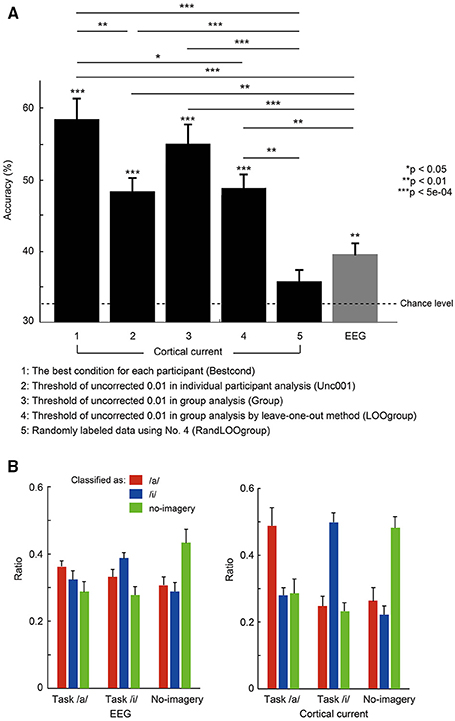

In Figure 4A, we compare net accuracies averaged across participants among the prior pairs. Rather than showing mean accuracies for every single-activation prior, we show the mean of each participant's best accuracy among the five single-activation priors (termed “Bestcond”). The conditions that gave the best accuracy for each participant are shown in Table 1. Statistical significance was assessed using non-parametric permutation tests (Nichols and Holmes, 2002; Stelzer et al., 2013; Yoshimura et al., 2014), which compare the observed mean classification accuracy with a null distribution of mean accuracies computed repeatedly using randomly permuted class labels, and derive p-values from that distribution. The permutation was repeated 10,000 times; therefore, the smallest attainable p-value was 1/10,000 = 1.00e-04.
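A minimal sketch of a label-permutation test with a p-value floor of 1/n_perm follows. `stat_fn` is a hypothetical callback returning the test statistic (e.g., mean accuracy) for a given labeling. In the analysis above each permutation would involve retraining classifiers; the usage in the test only scores fixed predictions against permuted labels, which is a cheaper simplification.

```python
import numpy as np


def permutation_p_value(stat_fn, labels, n_perm=10_000, seed=0):
    """One-sided permutation test on a statistic of the class labels.

    stat_fn(labels) -> float, e.g. mean accuracy obtained with `labels`.
    Returns (observed, p); p is floored at 1/n_perm (1.00e-04 for 10,000).
    """
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    observed = stat_fn(labels)
    null = np.array([stat_fn(rng.permutation(labels)) for _ in range(n_perm)])
    p = np.mean(null >= observed)  # fraction of permutations at least as extreme
    return observed, max(p, 1.0 / n_perm)
```

A common alternative estimator is (count + 1)/(n_perm + 1); the floor used here mirrors the 1/10,000 minimum stated above.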

Figure 4. (A) Mean accuracies averaged across 10 participants for three-class classification using EEG sensor and cortical current data. Error bars denote standard error. The dotted line denotes the chance level of 33.3%. *p < 0.05, **p < 0.01, ***p < 5e-04 using non-parametric permutation tests. Parameters used for each condition (No. 1–No. 5) are shown in Table 1. (B) Comparison of classification output ratios for each task resulting from EEG sensor (left) and cortical current (right) classification. For each classification analysis, the number of trials in which each class had the highest predicted probability was counted for each task. The ratio of that number to the total number of cross-validations was then calculated and averaged across participants. For each task, red bars represent the ratio classified as vowel /a/, blue bars as vowel /i/, and green bars as no-imagery.
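The output ratios in Figure 4B amount to a row-normalized confusion matrix. The sketch below is a minimal illustration with hypothetical names: row k gives, for trials of true task k, the fraction assigned to /a/, /i/, and no-imagery. Averaging these matrices across participants gives the plotted values.

```python
import numpy as np


def output_ratio(true_labels, prob, n_classes=3):
    """Fraction of trials of each true task assigned to each class.

    prob: (n_trials, n_classes) predicted probabilities; the arg-max is the output.
    """
    pred = np.asarray(prob).argmax(axis=1)
    counts = np.zeros((n_classes, n_classes))
    np.add.at(counts, (np.asarray(true_labels), pred), 1)  # count (true, predicted) pairs
    return counts / counts.sum(axis=1, keepdims=True)
```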

Evaluation of EEG Cortical Current Estimation for Contribution to Vowel Classification

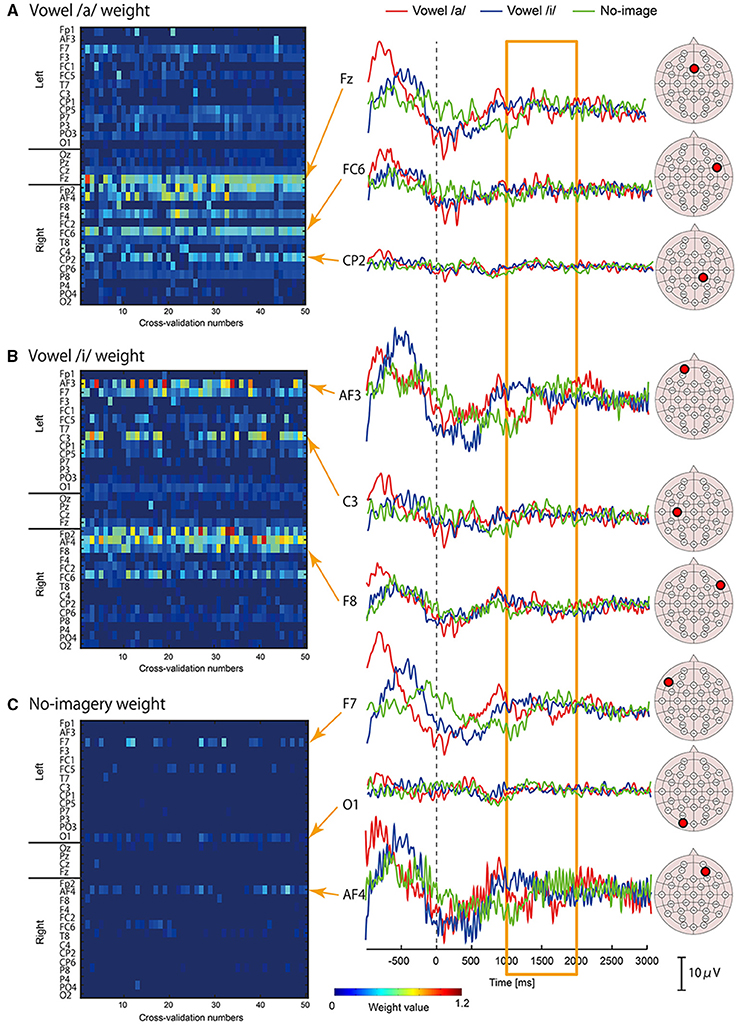

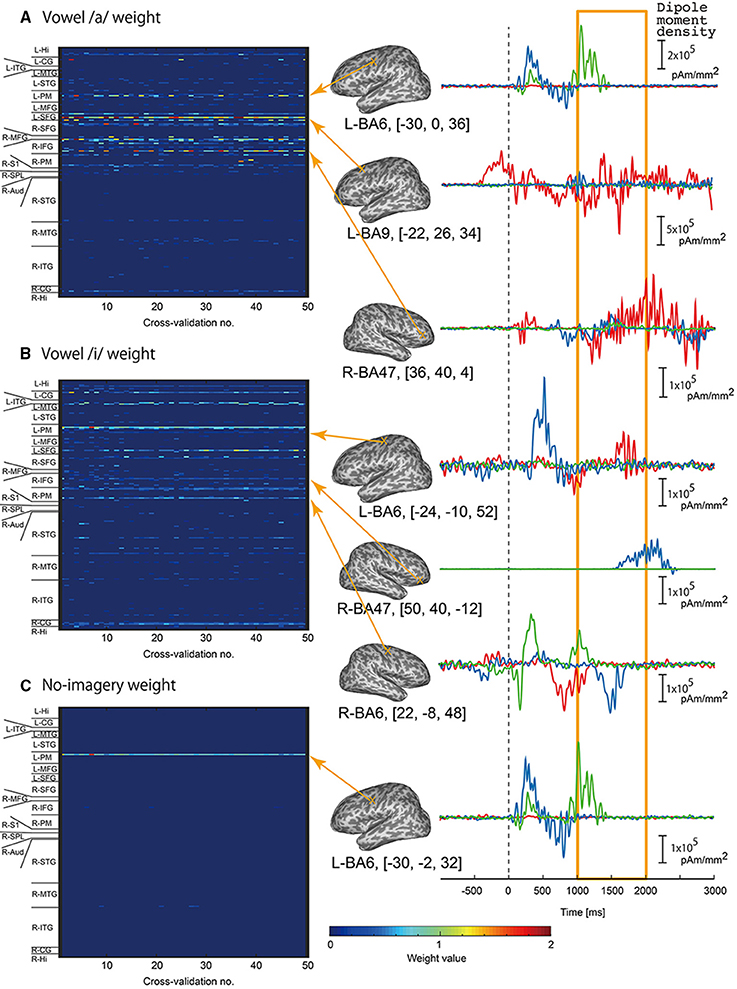

We examined which cortical vertices or EEG sensors contributed to vowel classification by analyzing the weight values of the three-class classifiers. For each classifier (/a/, /i/, and no-imagery), weight values were normalized by the maximum weight value in each cross-validation fold, and the mean weight across time-point features (32 points per vertex or sensor) was then calculated for each vertex or EEG sensor. The mean weight values of all vertices or EEG sensors were plotted as color maps over all cross-validation folds (Figure 5 for EEG sensors, Figure 6 for cortical vertices). For some vertices and EEG sensors that were frequently selected across cross-validation folds, we compared the time course signals among the three tasks. Mean time course signals were calculated across trials and plotted for EEG sensors (Figure 5) and EEG cortical currents (Figure 6).
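The weight summary can be sketched as below. Two details are assumptions not stated in the text: normalization uses the maximum absolute weight in each fold, and features are ordered unit-major (all 32 time points of one vertex or sensor, then the next). The function name is hypothetical.

```python
import numpy as np

N_TIME = 32  # time-point features per vertex or sensor


def mean_weight_per_unit(W_folds, n_time=N_TIME):
    """Per-fold normalized weights averaged over each unit's time points.

    W_folds: (n_folds, n_units * n_time) weights of one class's classifier.
    Returns (n_folds, n_units), the matrix drawn as a color map.
    """
    W = np.asarray(W_folds, dtype=float)
    scale = np.abs(W).max(axis=1, keepdims=True)  # assumed: max absolute weight
    scale[scale == 0] = 1.0  # guard folds in which no feature was selected
    return (W / scale).reshape(W.shape[0], -1, n_time).mean(axis=2)
```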

Figure 5. Examples of EEG sensor signals in a participant who showed the highest accuracy. Left panel: Color maps of mean weight values of all sensors for each cross-validation analysis calculated by classifier for vowel /a/ (A), vowel /i/ (B), and no-imagery (C). Right panel: Frequently selected sensors with high mean weight values (FSHV-sensors) were selected from the color maps and mean time series signals across trials were plotted. Red lines represent signals during vowel /a/ task, blue lines represent vowel /i/ task, and green lines represent no-imagery task. Signals from 1 to 2 s after the auditory stimuli (orange box) were used for classification analyses. EEG sensor positions of the FSHV-sensors are shown beside each signal plot.

Figure 6. Examples of EEG cortical current signals in the same participant as in Figure 5. Left panel: Color maps of mean weight values of all vertices sorted according to brain areas for each cross-validation analysis calculated by classifier for vowel /a/ (A), vowel /i/ (B), and no-imagery (C). Right panel: Frequently selected vertices with high mean weight values (FSHV-vertices) were selected from the color maps, and mean time series signals across trials were plotted. Red lines represent signals during vowel /a/ task, blue lines represent vowel /i/ task, and green lines represent no-imagery task. Signals from 1 to 2 s after the auditory stimuli (orange box) were used for classification analyses. Positions of the FSHV-vertices and their MNI standard coordinates are shown beside each signal plot. MNI standard coordinates of the current vertices were calculated using the normalization matrix obtained from SPM analysis to determine anatomical locations of the current vertices.

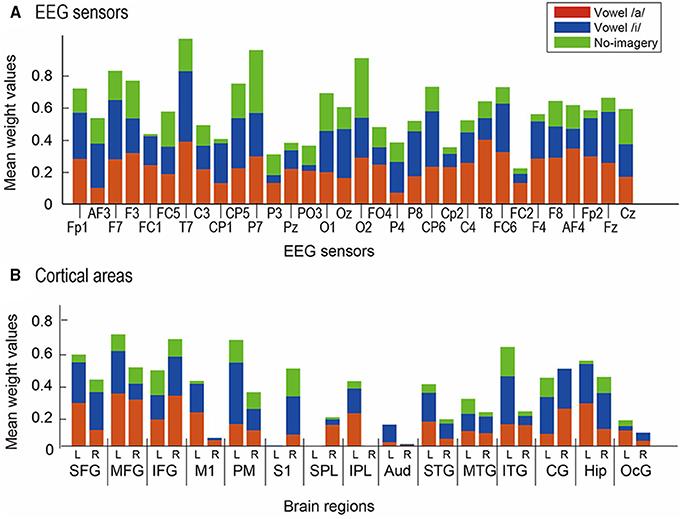

For cortical vertices, we further calculated the mean weight value of each classifier across participants for 30 anatomical regions to find the anatomical areas contributing most to classification (Figure 7). Since the MNI coordinates of cortical vertices were stored when constructing the cortical surface model in VBMEG, we could identify the anatomical regions corresponding to the vertex locations using the SPM Anatomy toolbox (Version 1.7) (Eickhoff et al., 2005) (http://www.fz-juelich.de/inm/inm-1/DE/Forschung/_docs/SPMAnatomy Toolbox/SPMAnatomyToolbox_node.html). We defined the following 30 anatomical regions of interest (ROIs) for the contribution analysis: left and right hemispheric superior frontal gyrus (L/R-SFG), middle frontal gyrus (L/R-MFG), inferior frontal gyrus (L/R-IFG), primary motor cortex (L/R-M1), premotor cortex (L/R-PM), primary somatosensory cortex (L/R-S1), superior parietal lobule (L/R-SPL), inferior parietal lobule (L/R-IPL), primary auditory cortex (L/R-Aud), superior temporal gyrus (L/R-STG), middle temporal gyrus (L/R-MTG), inferior temporal gyrus (L/R-ITG), cingulate gyrus (L/R-CG), hippocampus (L/R-Hip), and occipital gyrus (L/R-OcG).
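Once each vertex has been assigned to one of the 30 ROIs, the ROI-level summary is a grouped mean. The sketch below assumes a hypothetical integer ROI index per vertex (0 to 29, with −1 for vertices outside all ROIs), for example obtained by looking up each vertex's MNI coordinate in an atlas; the atlas lookup itself is not shown. Per-participant results can then be averaged with `np.nanmean`.

```python
import numpy as np


def roi_mean_weights(vertex_weights, vertex_roi, n_rois=30):
    """Average vertex-level weights within each anatomical ROI.

    vertex_weights: (n_vertices,) mean normalized weights for one classifier.
    vertex_roi: (n_vertices,) ROI index 0..n_rois-1, or -1 for no ROI.
    ROIs without any vertex get NaN.
    """
    w = np.asarray(vertex_weights, dtype=float)
    roi = np.asarray(vertex_roi)
    keep = roi >= 0
    sums = np.bincount(roi[keep], weights=w[keep], minlength=n_rois)
    counts = np.bincount(roi[keep], minlength=n_rois)
    with np.errstate(invalid="ignore", divide="ignore"):
        return sums / counts
```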

Figure 7. Mean normalized weight values across participants. Red bars represent weights of the classifier for vowel /a/, blue bars for vowel /i/, and green bars for no-imagery. (A) Values for 32 EEG sensors were compared. (B) Values for 30 ROIs were compared: left (L) and right (R) hemisphere of SFG, MFG, IFG, M1, PM, S1, SPL, IPL, Aud, STG, MTG, ITG, CG, Hip, and OcG.

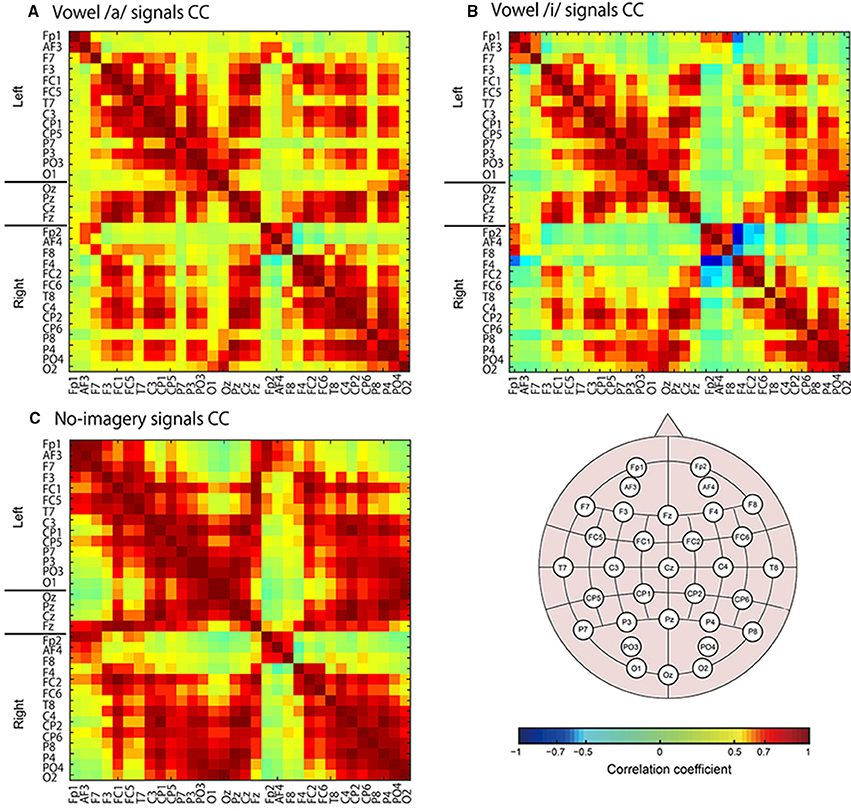

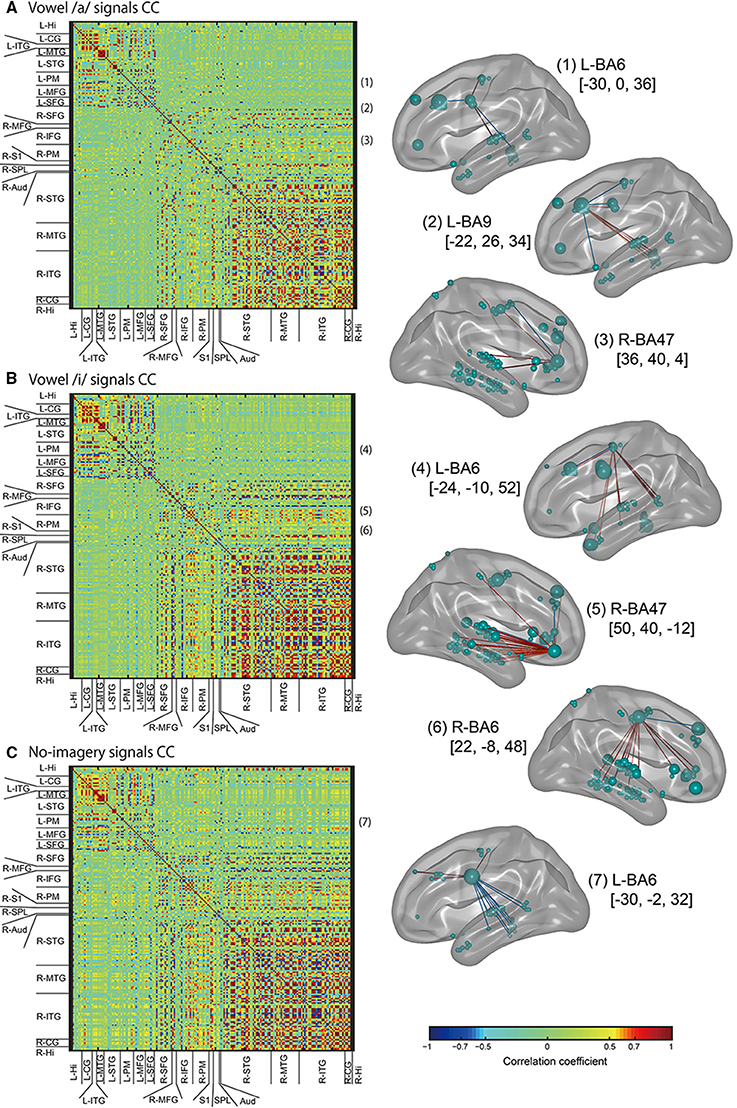

Moreover, we calculated correlation coefficients for all vertex pairs and all EEG sensor pairs to find vertices or sensors that were highly correlated with the frequently selected vertices or sensors (Figures 8, 9).
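A minimal sketch of the seed-based connectivity step, assuming Pearson correlation and the ±0.6 cut-off given in the Figure 9 caption. How trials are concatenated or averaged into the `(n_vertices, n_samples)` input is an assumption left to the caller, and the function name is hypothetical.

```python
import numpy as np


def seed_connections(signals, seeds, thr=0.6):
    """Pearson correlations between seed vertices and all other vertices.

    signals: (n_vertices, n_samples) time series for one task.
    Returns (seed, target, r) tuples with r > thr or r < -thr.
    """
    cc = np.corrcoef(signals)  # (n_vertices, n_vertices) correlation matrix
    edges = []
    for s in seeds:
        for t in range(cc.shape[0]):
            if t != s and abs(cc[s, t]) > thr:
                edges.append((s, t, float(cc[s, t])))
    return edges
```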

Figure 8. Color maps of correlation coefficient (CC) values for EEG sensor signals. Sensor names are listed along the horizontal and vertical axes. CC values were calculated for the signals of each task: vowel /a/ (A), vowel /i/ (B), and no-imagery (C).

Figure 9. Left panel: Color maps of correlation coefficient (CC) values for EEG cortical current signals. The names of the ROIs containing the cortical vertices are listed along the horizontal and vertical axes. CC values were calculated for the signals of each task: vowel /a/ (A), vowel /i/ (B), and no-imagery (C). Right panel: The FSHV-vertices defined in Figure 6 [(1–7) in this figure] were set as seeds, and positive correlations above 0.6 (red lines) and negative correlations below −0.6 (blue lines) were visualized with BrainNet Viewer (Xia et al., 2013) (http://www.nitrc.org/projects/bnv/). Green balls on the brain maps denote vertices, with their sizes representing mean weight values; the Brodmann-style names of the brain areas and the MNI coordinates of the seeds are written beside the brain maps.

Results

Comparison of Hyper-Parameters for EEG Cortical Current Estimation

Figure 3 plots three-class classification accuracies from the nested cross-validation analysis for the 9 hyper-parameter pairs. All mean accuracies were higher than chance level (33.3%) and varied with the hyper-parameters used. The best hyper-parameter pair also differed across cross-validation steps. As expected, lower hyper-parameter values yielded higher accuracies. Because high values of both hyper-parameters assume similar fMRI and EEG activity patterns, this result is reasonable given that EEG and fMRI data were recorded on different days using slightly different experimental protocols. We used the hyper-parameters that provided the highest accuracy, m0 = 10 and γ0 = 1, in the next analysis (Section Comparison between Classification Accuracies for EEG Sensors and Cortical Currents) comparing accuracies for different fMRI activity priors.

Comparison between Classification Accuracies for EEG Sensors and Cortical Currents

Figure 4A compares three-class classification accuracies (/a/, /i/, and no-imagery) of EEG sensor signals and of EEG cortical current signals estimated using different fMRI area/activity priors. All values are mean accuracies across participants. All accuracies except that for randomly labeled data (No. 5) were significantly higher than chance level (Bestcond: 58.5 ± 2.93%, p = 2.00e-04; Unc001: 48.5 ± 1.80%, p = 1.00e-04; Group: 55.0 ± 2.55%, p = 1.00e-04; LOOgroup: 48.8 ± 1.90%, p = 1.00e-04; RandLOOgroup: 35.7 ± 1.43%, p = 0.08; EEG: 39.5 ± 1.62%, p = 7.00e-04; permutation tests). Furthermore, all EEG cortical current data except randomly labeled data showed significantly higher accuracies than EEG sensor data (Bestcond: p = 1.00e-04; Unc001: p = 0.0018; Group: p = 4.00e-04; LOOgroup: p = 0.0024; RandLOOgroup: p = 0.11; permutation tests). Since the randomly labeled data (RandLOOgroup) yielded nearly chance-level accuracy, the accuracy gains obtained with EEG cortical currents are unlikely to be attributable to increased data dimensionality and more likely reflect meaningful information extracted by EEG cortical current estimation.

Among the different fMRI priors (No. 1–No. 4), Bestcond (58.5%) showed significantly higher accuracy than all other conditions except the Group condition. Interestingly, the Group condition (55.0%) tended to show higher accuracy than Unc001 (48.5%), even though the Group activation was obtained from the data of all participants in the Unc001 condition (Bestcond vs. Group: p = 0.39; Unc001 vs. Group: p = 0.050; Unc001 vs. LOOgroup: p = 0.91; Unc001 vs. Bestcond: p = 0.0076; Group vs. LOOgroup: p = 0.065; LOOgroup vs. Bestcond: p = 0.011; using permutation tests).

Classification ratios (Figure 4B) further showed that true-positive classifications were not biased toward any particular task. Specifically, only for EEG cortical currents were true positives significantly more frequent than false positives and false negatives, with no significant difference in true positives between tasks [cortical currents: F(8, 81) = 10.8, p = 3.11e-10; EEG: F(8, 81) = 3.8, p = 0.001; one-way ANOVA]. Therefore, the accuracies in Figure 4A did not result from disproportionately high accuracy in any single task.
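The degrees of freedom F(8, 81) are consistent with a one-way ANOVA over the nine cells of a 3 × 3 classification-ratio matrix with ten participants per cell (9 groups × 10 values gives 8 and 81 degrees of freedom). A minimal numpy sketch of both steps under that reading follows; `scipy.stats.f_oneway` would return the same F statistic together with a p value.

```python
import numpy as np


def classification_ratios(y_true, y_pred, n_classes=3):
    """Row-normalized confusion matrix: entry [t, p] is the fraction of
    trials of true task t that were classified as task p."""
    cm = np.zeros((n_classes, n_classes))
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    return cm / cm.sum(axis=1, keepdims=True)


def one_way_anova_f(groups):
    """One-way ANOVA F statistic and its (between, within) degrees of freedom."""
    groups = [np.asarray(g, dtype=float) for g in groups]
    k = len(groups)
    n = sum(g.size for g in groups)
    grand_mean = np.concatenate(groups).mean()
    ss_between = sum(g.size * (g.mean() - grand_mean) ** 2 for g in groups)
    ss_within = sum(((g - g.mean()) ** 2).sum() for g in groups)
    df_between, df_within = k - 1, n - k
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within
```

Each of the nine groups would hold one cell of the ratio matrix (for example, "true /a/, predicted /i/") collected across the ten participants.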

Contribution of Brain Regions to Classification of Covert Vowel Articulation

The left panels of Figures 5A–C and 6A–C show weight analysis results for the EEG sensor and cortical current classifiers, respectively, for the participant with the highest accuracy. For both EEG sensor and cortical current signals, the same sensors or vertices tended to be selected across cross-validation folds. This tendency was observed in all participants, although the locations of frequently selected sensors or vertices differed between participants.
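How consistently a sensor or vertex is selected across cross-validation folds can be quantified as its selection frequency. The sketch below assumes the per-fold weights of one classifier are available as a folds × features array; the `tol` argument is a hypothetical knob for weights that are pruned to very small rather than exactly zero values.

```python
import numpy as np


def selection_frequency(fold_weights, tol=0.0):
    """Fraction of cross-validation folds in which each feature is selected.

    fold_weights: array of shape (n_folds, n_features) holding the weight
    vector learned in each fold for one classifier (e.g. the /a/ classifier).
    A feature counts as selected when |weight| > tol.
    """
    w = np.asarray(fold_weights, dtype=float)
    return (np.abs(w) > tol).mean(axis=0)
```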

Mean weight values of the 30 ROIs across participants (Figure 7) showed that L-SFG, L-MFG, R-IFG, L-PM, L-ITG, and L-Hip had significantly higher values than R-M1, R-PM, L-S1, LR-SPLs, R-IPL, LR-Auds, R-STG, LR-MTGs, R-ITG, and LR-OcGs [p < 0.05 in multiple-comparison analysis after a three-way ANOVA (main effect of participant: F(9) = 1.63, p = 0.17; main effect of ROI: F(29) = 3.24, p < 0.001; main effect of task: F(2) = 12.8, p < 0.001; interaction between participant and ROI: F(261) = 1.48, p = 0.0001; interaction between ROI and task: F(58) = 0.94, p = 0.60; multiple-comparison results showed no significant differences between participants)].
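Summarizing classifier weights per ROI requires mapping each cortical vertex to an ROI. A minimal sketch follows, assuming an integer ROI label per vertex and that the mean of absolute weights is the summary of interest; whether signed or absolute weights were averaged is not stated in this section, and the subsequent ANOVA is not reproduced.

```python
import numpy as np


def roi_mean_weights(vertex_weights, roi_labels, n_rois):
    """Average absolute classifier weight per ROI.

    vertex_weights: (n_vertices,) weights of one classifier.
    roi_labels: (n_vertices,) integer ROI index (0..n_rois-1) of each vertex.
    ROIs that contain no vertices get NaN.
    """
    w = np.abs(np.asarray(vertex_weights, dtype=float))
    labels = np.asarray(roi_labels)
    sums = np.bincount(labels, weights=w, minlength=n_rois)
    counts = np.bincount(labels, minlength=n_rois)
    with np.errstate(invalid="ignore", divide="ignore"):
        return sums / counts
```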

Mean weight values of EEG sensors across participants (Figure 7B) showed that T7, P7, and O2 had significantly higher values than the other sensors [p < 0.05 in multiple-comparison analysis after a three-way ANOVA (main effect of participant: F(9) = 0.28, p = 0.98; main effect of sensor: F(31) = 1.81, p = 0.005; main effect of task: F(2) = 12.4, p < 0.001; interaction between participant and sensor: F(279) = 1.50, p < 0.001; interaction between sensor and task: F(62) = 1.05, p = 0.37; multiple-comparison results showed no significant differences between participants)].

To examine differences in time series signals between tasks for the EEG sensors and vertices that were frequently selected and assigned high weight values (termed “FSHV-sensors” and “FSHV-vertices”, respectively), we compared mean time series signals across trials for the three tasks in the same participant (orange boxes in the right panels of Figures 5A–C, 6A–C). In this participant, FSHV-sensors tended to be located in the right frontal area (Fz, Fp2, F4, FC6) for vowel /a/ classifiers, in the bilateral frontal area (AF3, F7, C3, Fp2, AF4, F8, and FC6) for vowel /i/ classifiers, and in the bilateral frontal and left occipital areas (F7, AF4, and O1) for no-imagery classifiers. Their signals differed only slightly from one another, even though the sensors were located far apart. FSHV-vertices were located in bilateral frontal areas [L-SFG (BA9), L-PM (BA6), and R-IFG (BA47)] for vowel /a/ classifiers, in left temporal and bilateral frontal areas [L-MTG (BA21), L-PM (BA6), L-SFG (BA9), R-IFG (BA47), R-PM (BA6)] for vowel /i/ classifiers, and in the left frontal area [L-PM (BA6)] for no-imagery classifiers. The most notable difference from the EEG results was that the signal patterns during imagery (orange boxes in the right panels of Figures 6A–C) differed markedly between tasks and locations.
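FSHV features are defined above only qualitatively (frequently selected, high weight). The sketch below makes that definition concrete with hypothetical thresholds (`min_freq` and `top_fraction` are illustrative, not values from the study) and then computes per-task trial-averaged time series for the selected features.

```python
import numpy as np


def fshv_indices(freq, mean_abs_weight, min_freq=0.8, top_fraction=0.1):
    """Indices of 'frequently selected, high-weight' features.

    Hypothetical criteria: selected in at least min_freq of the folds AND
    mean |weight| at or above the (1 - top_fraction) quantile among those
    frequently selected features.
    """
    freq = np.asarray(freq, dtype=float)
    mw = np.asarray(mean_abs_weight, dtype=float)
    frequent = np.flatnonzero(freq >= min_freq)
    if frequent.size == 0:
        return frequent
    cutoff = np.quantile(mw[frequent], 1.0 - top_fraction)
    return frequent[mw[frequent] >= cutoff]


def task_mean_timeseries(signals, labels, feature_idx, n_tasks=3):
    """Trial-averaged time series per task for the chosen features.

    signals: (n_trials, n_features, n_times); labels: (n_trials,) task ids.
    Returns an array of shape (n_tasks, len(feature_idx), n_times).
    """
    x = np.asarray(signals)[:, feature_idx, :]
    labels = np.asarray(labels)
    return np.stack([x[labels == k].mean(axis=0) for k in range(n_tasks)])
```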

Next, we calculated correlation coefficients (CC) for all pairs of EEG sensor signals and all pairs of vertex signals to identify neural signaling related to language processes. For EEG sensors (Figure 8), as expected, many pairs of signals showed high CC values, and high correlations were particularly observed between sensors located close to each other, even when they lay on opposite sides of the midline. For EEG cortical currents (Figure 9, left panels), high correlations were mainly limited to vertices located in the same hemisphere. Furthermore, when we drew connectivity maps using the FSHV-vertices as seeds, with lines connecting each seed to vertices whose absolute CC exceeded 0.6, we found that the FSHV-vertices were positively or negatively correlated with vertices located in Brodmann areas (BA) 6, 9, 20, 21, 22, 38, 46, and 47 (Figure 9, right panels).
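The thresholded seed-based connectivity described above could be computed as follows. This is a sketch assuming one time series per vertex; whether trial-averaged or concatenated single-trial signals were correlated is not stated here, so the input format is an assumption.

```python
import numpy as np


def seed_connectivity(signals, seeds, threshold=0.6):
    """Edges from seed vertices to vertices with |Pearson r| > threshold.

    signals: (n_vertices, n_times) time series, one row per vertex.
    Returns a list of (seed, target, r) tuples, excluding self-pairs;
    the sign of r distinguishes positive from negative correlations.
    """
    cc = np.corrcoef(np.asarray(signals, dtype=float))
    edges = []
    for s in seeds:
        for target in np.flatnonzero(np.abs(cc[s]) > threshold):
            if target != s:
                edges.append((int(s), int(target), float(cc[s, target])))
    return edges
```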

Discussion

The aim of this study was to classify brain activity associated with covert vowel articulation. Using EEG cortical current signals provided significantly higher classification accuracy than using EEG sensor signals. Accuracy using cortical current signals was also comparable to that of existing studies using semi-invasive ECoG (Leuthardt et al., 2011; Pei et al., 2011; Ikeda et al., 2014). These results may be attributable to the enhanced spatial discrimination of EEG afforded by cortical current estimation, since the signal patterns of cortical currents differed greatly from each other (Figures 6A–C). This enhanced spatial discrimination also made it possible to obtain findings on the neural processing of vowel articulation (Figure 9). Although EEG cortical current estimation employs fMRI data as hierarchical priors, real-time fMRI acquisition is not required: the hierarchical priors are used only to design the inverse filter, which is calculated in advance from pre-recorded fMRI and EEG data. Therefore, EEG cortical current signals are applicable to real-time interfaces. To our knowledge, this is the first study to demonstrate the usability of EEG cortical currents for BCI spellers, as well as the contributive anatomical areas and their functional connectivity for covert vowel articulation.
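To make the real-time argument concrete: once the inverse filter is fixed, estimating cortical currents is a single matrix multiplication. The sketch below uses a weighted minimum-norm filter of the form W = A Lᵀ (L A Lᵀ + σ² I)⁻¹, assuming the per-vertex prior variances A have already been estimated. It illustrates the general form only and does not reproduce VBMEG's variational updates or its handling of the fMRI prior; the function names are hypothetical.

```python
import numpy as np


def inverse_filter(leadfield, prior_var, noise_var=1.0):
    """Weighted minimum-norm inverse filter W = A L^T (L A L^T + noise I)^-1.

    leadfield: (n_sensors, n_vertices) forward matrix L.
    prior_var: (n_vertices,) current variance per vertex (diagonal of A).
      In a hierarchical Bayesian method these variances would be estimated
      with the help of an fMRI prior; here they are simply given.
    """
    L = np.asarray(leadfield, dtype=float)
    a = np.asarray(prior_var, dtype=float)
    LA = L * a                      # L @ diag(a): scale each column by its variance
    gram = LA @ L.T + noise_var * np.eye(L.shape[0])
    # gram is symmetric, so W = (gram^-1 (L A))^T = A L^T gram^-1.
    return np.linalg.solve(gram, LA).T


def estimate_currents(W, eeg):
    """Online step: apply the precomputed filter to EEG (n_sensors, n_times)."""
    return W @ eeg                  # (n_vertices, n_times)
```

All of the costly work happens offline in `inverse_filter`; during BCI operation only `estimate_currents` runs for each new EEG window. Vertices with zero prior variance receive zero current, which is one way a prior restricts where activity can appear.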

Brain Regions Contributive to Covert Vowel Articulation Revealed by EEG Cortical Current Signals

Our EEG cortical current results (Figure 7) showed that left MFG (BA46), right IFG (BA47), left PM (BA6), and left ITG (BA20) were highly contributive to the classification of vowels /a/ and /i/ and no-imagery. Though the neural circuit for covert vowel articulation cannot be confirmed from this analysis, our results are consistent with existing findings on phonological processing during language production. Several studies using fMRI reported significant activation of BA46 (left MFG) during phonological processing compared to semantic processing (Price and Friston, 1997; Heim et al., 2003). Considering that our study used auditory stimuli and covert articulation of single vowels, the observed higher contribution in left MFG is reasonable.

The right IFG has been shown to be significantly activated during vowel speech production compared with listening to vowel production and with rest (Behroozmand et al., 2015). Our experimental tasks require similar brain activity, as they involve both vowel speech production and listening. Moreover, IFG includes Broca's area, a well-known language processing area, and studies have shown that these adjacent regions are involved in spoken language processing (Hillert and Buracas, 2009), selective processing of text and speech (Vorobyev et al., 2004), and voice-based inference (Tesink et al., 2009). Considering these findings, the higher contribution of right IFG in our study may reflect our requirement that participants imagine vowel sounds rather than vowel text.

The higher contribution of left PM (BA6) seems reasonable because PM is included in the speech motor areas. Speech motor areas are thought to mediate the effect of visual speech cues on auditory processing and to be associated with phonetic perception (Skipper et al., 2007; Alho et al., 2012; Chu et al., 2013). Left ITG (BA20) is often reported in studies using comprehension tasks (Papathanassiou et al., 2000; Halai et al., 2015). Given the simplicity of our experimental tasks, the higher contribution of left ITG might be associated with selective processing of text and speech (Vorobyev et al., 2004), because we asked participants to imagine the sound of the vowel speech they heard rather than its text. Our functional connectivity analysis (Figure 9) showed negative correlations between some FSHV-vertices in BA6 and other vertices in temporal areas, especially in participants with high classification accuracies. These findings may also support the presence of selective processing of text and speech in BA20 that uses information from BA6 before speech production processes are initiated in the frontal areas.

Use of SLR for Classification Analysis

This study employed SLR for classification analysis mainly for two reasons. The first was simply that it offered higher classification accuracies than conventional methods such as support vector machines (SVMs). An SVM may give better results if an effective feature selection method is applied before SVM analysis. However, we sought to minimize the number of processing steps to ensure robustness in BCI applications. We therefore used SLR because it can train high-dimensional classifiers without prior feature selection to reduce dimensionality, which was the second reason for its use in this study.
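SLR itself uses automatic relevance determination (MacKay, 1992) rather than an L1 penalty. The sketch below swaps in a simpler, non-Bayesian stand-in, L1-penalized multinomial logistic regression trained by proximal gradient descent (ISTA), only to show how a sparse linear classifier with one weight vector per class can be fit directly to high-dimensional features without a separate feature-selection step. The penalty strength `lam`, step size `lr`, and iteration count are arbitrary illustrative values.

```python
import numpy as np


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)    # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def fit_sparse_multinomial(X, y, n_classes, lam=0.1, lr=0.1, n_iter=2000):
    """L1-penalized multinomial logistic regression via proximal gradient (ISTA).

    X: (n_trials, n_features), y: (n_trials,) integer labels.
    Returns W (n_features, n_classes), one sparse weight column per class,
    and an unpenalized bias b (n_classes,).
    """
    n, d = X.shape
    W = np.zeros((d, n_classes))
    b = np.zeros(n_classes)
    Y = np.eye(n_classes)[y]                 # one-hot targets
    for _ in range(n_iter):
        R = softmax(X @ W + b) - Y           # gradient of mean cross-entropy w.r.t. scores
        W -= lr * (X.T @ R) / n
        b -= lr * R.mean(axis=0)
        # Proximal step for lam * ||W||_1: soft-thresholding zeroes weak weights.
        W = np.sign(W) * np.maximum(np.abs(W) - lr * lam, 0.0)
    return W, b


def predict(X, W, b):
    return np.argmax(X @ W + b, axis=1)
```

Features whose rows of W stay at zero are effectively discarded, so feature selection and classifier training happen in one step, which is the property that motivated the choice of SLR here.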

Owing to the ability of SLR to train high-dimensional weights without dimensionality reduction, we were able to compare the contribution of each brain region to classification. Analysis of mean weight values (Figure 7) showed that the areas highly contributive to classification were consistent with existing findings on the neural network for language processing. Furthermore, several studies, including a neurophysiological non-human study, have shown the validity of SLR's feature selection method, called automatic relevance determination (Mackay, 1992), for neuroscientific applications (Miyawaki et al., 2008; Tin et al., 2008; Yamashita et al., 2008). Our results offer additional evidence in support of SLR.
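The pruning behavior of automatic relevance determination is easiest to see in the linear-regression setting. The sketch below implements MacKay-style ARD fixed-point updates for Bayesian linear regression; it is not the variational logistic version used inside SLR, but it shows the same mechanism: each weight has its own prior precision alpha, and the precisions of irrelevant features grow very large (capped here at a hypothetical `alpha_max`) so that their weights shrink to nearly zero.

```python
import numpy as np


def ard_regression(X, t, n_iter=300, alpha_max=1e8):
    """Linear regression with automatic relevance determination (ARD).

    Each weight w_j has its own prior precision alpha_j. MacKay's fixed-point
    updates drive alpha_j toward infinity (capped at alpha_max) for features
    that do not help explain t, which shrinks those weights toward zero.
    """
    n, d = X.shape
    alpha = np.ones(d)       # per-weight prior precisions
    beta = 1.0               # noise precision
    XtX, Xtt = X.T @ X, X.T @ t
    for _ in range(n_iter):
        Sigma = np.linalg.inv(np.diag(alpha) + beta * XtX)   # posterior covariance
        mu = beta * Sigma @ Xtt                               # posterior mean
        # gamma_j in [0, 1]: how well-determined w_j is by the data.
        gamma = np.clip(1.0 - alpha * np.diag(Sigma), 1e-12, 1.0)
        alpha = np.minimum(gamma / np.maximum(mu ** 2, 1e-30), alpha_max)
        resid = t - X @ mu
        beta = (n - gamma.sum()) / (resid @ resid)
    return mu, alpha
```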

Challenges and Future Prospects Toward a BCI Speller

Two steps remain for establishing an active BCI speller. First, classification accuracy must be increased for practical use. Second, the number of syllables to be classified must be increased. This study used vowels /a/ and /i/ to examine the feasibility of classifying the Japanese words “Yes” (hai) and “No” (iie). Mean accuracies of 2-class classification in this study were 82.5% (for the “Group” prior) and 87.7% (for “Bestcond”), which exceed the 70% accuracy deemed sufficient for real-time application (Pfurtscheller et al., 2005). Moreover, a BCI classifying “Yes” and “No” could be useful not only as a speller but also in other applications such as cursor or robot control. For an ideal Japanese BCI speller, however, it is desirable to classify at least the 50-character syllabary, including vowels and consonants. Since even existing reactive BCI spellers using stimulus-evoked potentials have difficulty classifying 50 commands, a realistic solution would be to devise an application paradigm that covers 50 syllables but requires a smaller number of classifications (Fazel-Rezai and Abhari, 2008; Treder and Blankertz, 2010). To increase classification accuracy, more parameter tuning at the individual level may be needed, because the Bestcond results showed that the best-performing priors and the contributive areas differed between participants. This study employed standard VBMEG toolbox procedures for cortical current estimation, which use SPM analysis results as priors. We varied the area and activity priors by defining thresholds in the SPM statistical analysis to find the best priors for each participant. In a separate analysis, we also found a significant correlation between the number of cortical vertices and accuracy (R = 0.73, p = 0.016). This suggests that it may be worthwhile to increase the number of cortical vertices, not only by tuning SPM thresholds but also by introducing anatomical information to cover all areas associated with language and speech.
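The significance of a correlation coefficient such as R = 0.73 can be checked with the standard t statistic t = r·√((n − 2)/(1 − r²)) on n − 2 degrees of freedom. The sketch below assumes the correlation was computed across the ten participants; that sample size is an assumption, as it is not stated in this paragraph.

```python
import math

import numpy as np


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length samples."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    xc, yc = x - x.mean(), y - y.mean()
    return float((xc @ yc) / math.sqrt((xc @ xc) * (yc @ yc)))


def r_to_t(r, n):
    """t statistic with n - 2 degrees of freedom for testing r != 0."""
    return r * math.sqrt((n - 2) / (1.0 - r * r))
```

For r = 0.73 and n = 10, this gives t ≈ 3.02 on 8 degrees of freedom, corresponding to a two-sided p of about 0.017, in line with the reported p = 0.016 once rounding of R is allowed for. `scipy.stats.pearsonr` returns r and the p value directly.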

Conclusion

This study provides the first demonstration of covert vowel articulation classification using EEG cortical currents. The classification accuracy using EEG cortical currents was significantly higher than that using EEG sensor signals and comparable to existing findings using semi-invasive ECoG signals. SLR weight analysis further revealed that the highly contributive brain regions were consistent with existing findings on language processing. Because fMRI data need to be acquired only once in advance to calculate an inverse filter, EEG cortical currents are a potentially effective modality for active BCI spellers.

Author Contributions

NY designed the study, performed experiments, analyses, and literature review, and drafted the manuscript. AN developed experimental programs and performed experiments and analysis. AB developed experimental programs. DS and HK performed the interventions and analyzed the results. TH and YK supervised the research and revised the manuscript. All authors read and approved the final manuscript.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This study was supported in part by JSPS KAKENHI grants 15K01849, 24500163, 15H01659, 26112004, and 26120008. Part of this study is the result of “Brain Machine Interface Development” and “BMI Technologies for Clinical Application” carried out under the Strategic Research Program for Brain Sciences and Brain Mapping by Integrated Neurotechnologies for Disease Studies (Brain/MINDS), and of Health Labor Science Research Grants from the Japan Agency for Medical Research and Development (AMED). The Intramural Research Grant for Neurological and Psychiatric Disorders of National Center of Neurology and Psychiatry also supported the study. We thank Prof. Koji Jimura of Keio University for his assistance with the fMRI experiments.

References

Adrian, E. D., and Matthews, B. H. C. (1934). The Berger rhythm: potential changes from the occipital lobes in man. Brain 57, 355–385. doi: 10.1093/brain/57.4.355

Alho, J., Sato, M., Sams, M., Schwartz, J. L., Tiitinen, H., and Jaaskelainen, I. P. (2012). Enhanced early-latency electromagnetic activity in the left premotor cortex is associated with successful phonetic categorization. Neuroimage 60, 1937–1946. doi: 10.1016/j.neuroimage.2012.02.011

Behroozmand, R., Shebek, R., Hansen, D. R., Oya, H., Robin, D. A., Howard, M. A. III, et al. (2015). Sensory-motor networks involved in speech production and motor control: an fMRI study. Neuroimage 109, 418–428. doi: 10.1016/j.neuroimage.2015.01.040

Birbaumer, N. (2006a). Brain-computer-interface research: coming of age. Clin. Neurophysiol. 117, 479–483. doi: 10.1016/j.clinph.2005.11.002

Birbaumer, N. (2006b). Breaking the silence: brain-computer interfaces (BCI) for communication and motor control. Psychophysiology 43, 517–532. doi: 10.1111/j.1469-8986.2006.00456.x

Birbaumer, N., and Cohen, L. G. (2007). Brain-computer interfaces: communication and restoration of movement in paralysis. J. Physiol. 579, 621–636. doi: 10.1113/jphysiol.2006.125633

Cheng, M., Gao, X. R., Gao, S. G., and Xu, D. F. (2002). Design and implementation of a brain-computer interface with high transfer rates. IEEE Transac. Biomed. Eng. 49, 1181–1186. doi: 10.1109/Tbme.2002.803536

Chu, Y. H., Lin, F. H., Chou, Y. J., Tsai, K. W., Kuo, W. J., and Jaaskelainen, I. P. (2013). Effective cerebral connectivity during silent speech reading revealed by functional magnetic resonance imaging. PLoS ONE 8:e80265. doi: 10.1371/journal.pone.0080265

Dasalla, C. S., Kambara, H., Sato, M., and Koike, Y. (2009). Single-trial classification of vowel speech imagery using common spatial patterns. Neural Netw. 22, 1334–1339. doi: 10.1016/j.neunet.2009.05.008

Eickhoff, S. B., Stephan, K. E., Mohlberg, H., Grefkes, C., Fink, G. R., Amunts, K., et al. (2005). A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. Neuroimage 25, 1325–1335. doi: 10.1016/J.Neuroimage.2004.12.034

Farwell, L. A., and Donchin, E. (1988). Talking off the top of your head: toward a mental prosthesis utilizing event-related brain potentials. Electroencephalogr. Clin. Neurophysiol. 70, 510–523. doi: 10.1016/0013-4694(88)90149-6

Fazel-Rezai, R., and Abhari, K. (2008). “A Comparison between a Matrix-based and a Region-based P300 Speller Paradigms for Brain-Computer Interface,” in 2008 30th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Vol. 1–8 (Vancouver, BC: IEEE), 1147–1150.

Halai, A. D., Parkes, L. M., and Welbourne, S. R. (2015). Dual-echo fMRI can detect activations in inferior temporal lobe during intelligible speech comprehension. Neuroimage 122, 214–221. doi: 10.1016/j.neuroimage.2015.05.067

Hausfeld, L., De Martino, F., Bonte, M., and Formisano, E. (2012). Pattern analysis of EEG responses to speech and voice: influence of feature grouping. Neuroimage 59, 3641–3651. doi: 10.1016/j.neuroimage.2011.11.056

Heim, S., Opitz, B., Muller, K., and Friederici, A. D. (2003). Phonological processing during language production: fMRI evidence for a shared production-comprehension network. Brain Res. Cogn. Brain Res. 16, 285–296. doi: 10.1016/S0926-6410(02)00284-7

Hillert, D. G., and Buracas, G. T. (2009). The neural substrates of spoken idiom comprehension. Lang. Cogn. Process. 24, 1370–1391. doi: 10.1080/01690960903057006

Ikeda, S., Shibata, T., Nakano, N., Okada, R., Tsuyuguchi, N., Ikeda, K., et al. (2014). Neural decoding of single vowels during covert articulation using electrocorticography. Front. Hum. Neurosci. 8:125. doi: 10.3389/fnhum.2014.00125

Kubler, A., Kotchoubey, B., Kaiser, J., Wolpaw, J. R., and Birbaumer, N. (2001). Brain-computer communication: unlocking the locked in. Psychol. Bull. 127, 358–375. doi: 10.1037/0033-2909.127.3.358

Leuthardt, E. C., Gaona, C., Sharma, M., Szrama, N., Roland, J., Freudenberg, Z., et al. (2011). Using the electrocorticographic speech network to control a brain-computer interface in humans. J. Neural Eng. 8:036004. doi: 10.1088/1741-2560/8/3/036004

Lopez-Gordo, M. A., Fernandez, E., Romero, S., Pelayo, F., and Prieto, A. (2012). An auditory brain-computer interface evoked by natural speech. J. Neural Eng. 9:036013. doi: 10.1088/1741-2560/9/3/036013

Mackay, D. J. C. (1992). Bayesian interpolation. Neural Comput. 4, 415–447. doi: 10.1162/Neco.1992.4.3.415

Miyawaki, Y., Uchida, H., Yamashita, O., Sato, M. A., Morito, Y., Tanabe, H. C., et al. (2008). Visual image reconstruction from human brain activity using a combination of multiscale local image decoders. Neuron 60, 915–929. doi: 10.1016/J.Neuron.2008.11.004

Morioka, H., Kanemura, A., Morimoto, S., Yoshioka, T., Oba, S., Kawanabe, M., et al. (2014). Decoding spatial attention by using cortical currents estimated from electroencephalography with near-infrared spectroscopy prior information. Neuroimage 90, 128–139. doi: 10.1016/j.neuroimage.2013.12.035

Naci, L., Cusack, R., Jia, V. Z., and Owen, A. M. (2013). The brain's silent messenger: using selective attention to decode human thought for brain-based communication. J. Neurosci. 33, 9385–9393. doi: 10.1523/JNEUROSCI.5577-12.2013

Nichols, T. E., and Holmes, A. P. (2002). Nonparametric permutation tests for functional neuroimaging: a primer with examples. Hum. Brain Mapp. 15, 1–25. doi: 10.1002/hbm.1058

Nijboer, F., Sellers, E. W., Mellinger, J., Jordan, M. A., Matuz, T., Furdea, A., et al. (2008). A P300-based brain-computer interface for people with amyotrophic lateral sclerosis. Clin. Neurophysiol. 119, 1909–1916. doi: 10.1016/j.clinph.2008.03.034

Papathanassiou, D., Etard, O., Mellet, E., Zago, L., Mazoyer, B., and Tzourio-Mazoyer, N. (2000). A common language network for comprehension and production: a contribution to the definition of language epicenters with PET. Neuroimage 11, 347–357. doi: 10.1006/nimg.2000.0546

Pei, X., Barbour, D. L., Leuthardt, E. C., and Schalk, G. (2011). Decoding vowels and consonants in spoken and imagined words using electrocorticographic signals in humans. J. Neural Eng. 8:046028. doi: 10.1088/1741-2560/8/4/046028

Perrone-Bertolotti, M., Rapin, L., Lachaux, J. P., Baciu, M., and Loevenbruck, H. (2014). What is that little voice inside my head? Inner speech phenomenology, its role in cognitive performance, and its relation to self-monitoring. Behav. Brain Res. 261, 220–239. doi: 10.1016/J.Bbr.2013.12.034

Pfurtscheller, G., Neuper, C., and Birbaumer, N. (2005). “Human Brain-Computer Interface,” in Motor Cortex in Voluntary Movements: a Distributed System for Disturbed Functions, eds. A. Riehle and E. Vaadia (Boca Raton, FL: CRC Press), 405–441.

Price, C. J., and Friston, K. J. (1997). Cognitive conjunction: a new approach to brain activation experiments. Neuroimage 5, 261–270. doi: 10.1006/nimg.1997.0269

Sato, M. A., Yoshioka, T., Kajihara, S., Toyama, K., Goda, N., Doya, K., et al. (2004). Hierarchical Bayesian estimation for MEG inverse problem. Neuroimage 23, 806–826. doi: 10.1016/j.neuroimage.2004.06.037

Shibata, K., Yamagishi, N., Goda, N., Yoshioka, T., Yamashita, O., Sato, M. A., et al. (2008). The effects of feature attention on prestimulus cortical activity in the human visual system. Cereb. Cortex 18, 1664–1675. doi: 10.1093/cercor/bhm194

Shih, J. J., Krusienski, D. J., and Wolpaw, J. R. (2012). Brain-computer interfaces in medicine. Mayo Clin. Proc. 87, 268–279. doi: 10.1016/j.mayocp.2011.12.008

Skipper, J. I., Van Wassenhove, V., Nusbaum, H. C., and Small, S. L. (2007). Hearing lips and seeing voices: how cortical areas supporting speech production mediate audiovisual speech perception. Cereb. Cortex 17, 2387–2399. doi: 10.1093/cercor/bhl147

Stelzer, J., Chen, Y., and Turner, R. (2013). Statistical inference and multiple testing correction in classification-based multi-voxel pattern analysis (MVPA): random permutations and cluster size control. Neuroimage 65, 69–82. doi: 10.1016/j.neuroimage.2012.09.063

Sutton, S., Tueting, P., Zubin, J., and John, E. R. (1967). Information delivery and the sensory evoked potential. Science 155, 1436–1439.

Tesink, C. M. J. Y., Buitelaar, J. K., Petersson, K. M., Van Der Gaag, R. J., Kan, C. C., Tendolkar, I., et al. (2009). Neural correlates of pragmatic language comprehension in autism spectrum disorders. Brain 132, 1941–1952. doi: 10.1093/Brain/Awp103

Tin, J. A., D'souza, A., Yamamoto, K., Yoshioka, T., Hoffman, D., Kakei, S., et al. (2008). Variational Bayesian least squares: an application to brain-machine interface data. Neural Netw. 21, 1112–1131. doi: 10.1016/J.Neunet.2008.06.012

Toda, A., Imamizu, H., Kawato, M., and Sato, M. A. (2011). Reconstruction of two-dimensional movement trajectories from selected magnetoencephalography cortical currents by combined sparse Bayesian methods. Neuroimage 54, 892–905. doi: 10.1016/j.neuroimage.2010.09.057

Treder, M. S., and Blankertz, B. (2010). (C)overt attention and visual speller design in an ERP-based brain-computer interface. Behav. Brain Funct. 6:28. doi: 10.1186/1744-9081-6-28

Vorobyev, V. A., Alho, K., Medvedev, S. V., Pakhomov, S. V., Roudas, M. S., Rutkovskaya, J. M., et al. (2004). Linguistic processing in visual and modality-nonspecific brain areas: PET recordings during selective attention. Cogn. Brain Res. 20, 309–322. doi: 10.1016/J.Cogbrainres.2004.03.011

Wang, L., Zhang, X., Zhong, X. F., and Zhang, Y. (2013). Analysis and classification of speech imagery EEG for BCI. Biomed. Signal Process. Control 8, 901–908. doi: 10.1016/j.bspc.2013.07.011

Wolpaw, J. R., Birbaumer, N., Mcfarland, D. J., Pfurtscheller, G., and Vaughan, T. M. (2002). Brain-computer interfaces for communication and control. Clin. Neurophysiol. 113, 767–791. doi: 10.1016/s1388-2457(02)00057-3

Xia, M., Wang, J., and He, Y. (2013). BrainNet Viewer: a network visualization tool for human brain connectomics. PLoS ONE 8:e68910. doi: 10.1371/journal.pone.0068910

Yamashita, O., Sato, M. A., Yoshioka, T., Tong, F., and Kamitani, Y. (2008). Sparse estimation automatically selects voxels relevant for the decoding of fMRI activity patterns. Neuroimage 42, 1414–1429. doi: 10.1016/j.neuroimage.2008.05.050

Yoshimura, N., Dasalla, C. S., Hanakawa, T., Sato, M. A., and Koike, Y. (2012). Reconstruction of flexor and extensor muscle activities from electroencephalography cortical currents. Neuroimage 59, 1324–1337. doi: 10.1016/j.neuroimage.2011.08.029

Yoshimura, N., Jimura, K., Dasalla, C. S., Shin, D., Kambara, H., Hanakawa, T., et al. (2014). Dissociable neural representations of wrist motor coordinate frames in human motor cortices. Neuroimage 97, 53–61. doi: 10.1016/j.neuroimage.2014.04.046

Yoshioka, T., Toyama, K., Kawato, M., Yamashita, O., Nishina, S., Yamagishi, N., et al. (2008). Evaluation of hierarchical Bayesian method through retinotopic brain activities reconstruction from fMRI and MEG signals. Neuroimage 42, 1397–1413. doi: 10.1016/j.neuroimage.2008.06.013

Keywords: brain-computer interfaces, silent speech, electroencephalography, functional magnetic resonance imaging, inverse problem

Citation: Yoshimura N, Nishimoto A, Belkacem AN, Shin D, Kambara H, Hanakawa T and Koike Y (2016) Decoding of Covert Vowel Articulation Using Electroencephalography Cortical Currents. Front. Neurosci. 10:175. doi: 10.3389/fnins.2016.00175

Received: 09 December 2015; Accepted: 06 April 2016;

Published: 03 May 2016.

Edited by:

Cuntai Guan, Institute for Infocomm Research, Singapore

Reviewed by:

Shigeyuki Ikeda, Tohoku University, Japan

Shin'ichiro Kanoh, Shibaura Institute of Technology, Japan

Copyright © 2016 Yoshimura, Nishimoto, Belkacem, Shin, Kambara, Hanakawa and Koike. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Natsue Yoshimura, yoshimura@pi.titech.ac.jp
