- 1College of Mechanical Engineering, Donghua University, Shanghai, China

- 2Fashion and Art Design Institute, Donghua University, Shanghai, China

Sketching is one of the most important processes in the conceptual stage of design. Previous studies have relied largely on analyses of the sketching process and its outcomes, whereas surface electromyographic (sEMG) signals associated with sketching have received little attention. In this study, we propose a method in which 11 basic one-stroke sketching shapes are identified from the sEMG signals generated by the forearm and upper arm muscles of 4 subjects. Time domain features such as integrated electromyography, root mean square and mean absolute value were extracted with analysis windows of two lengths for pattern recognition. After reducing data dimensionality using principal component analysis, the shapes were classified using Gene Expression Programming (GEP). The performance of the GEP classifier was compared to the Back Propagation neural network (BPNN) and the Elman neural network (ENN). Feature extraction with the short analysis window (250 ms with a 250 ms increment) improved the recognition rate by around 6.4% on average compared with the long analysis window (2500 ms with a 2500 ms increment). The average recognition rate for the eleven basic one-stroke sketching patterns achieved by the GEP classifier was 96.26% in the training set and 95.62% in the test set, which was superior to the performance of the BPNN and ENN classifiers. The results show that the GEP classifier is able to perform well with either length of the analysis window. Thus, the proposed GEP model shows promise for recognizing sketching based on sEMG signals.

1. Introduction

Conceptual design is one of the earliest stages of product development and is responsible for defining key aspects of the final product (Briede-Westermeyer et al., 2014). Designers commonly use freehand sketching to express conceptual design, which is a reflection-in-action process that occurs during design (Schön, 1983). As a natural and efficient method to communicate ideas (Van der Lugt, 2005), the potential advantages of sketching have been widely recognized and exploited in many fields (Pu and Gur, 2009).

Sketching is a visual language, and as with any language, some elements go together to form more structured forms of communication. However, there is no definitive set of basic shapes. The most common are: line/curve/arc, rectangle/square/diamond, circle/ellipse, arrowhead and triangle (Schmieder et al., 2009). However, novice designers are trained to precisely and quickly draw four basic shapes with one stroke: freehand straight lines, ellipses, circles and smooth curves (Olofsson and Sjölén, 2007; Robertson and Bertling, 2013). Design sketches can be sketched with an arbitrary number of these four kinds of basic one-stroke shape.

Pen and touch medium tablets, interactive pen displays, touch screens, tablet computers, and mice have typically been used to record and transmit sketching messages to computers. Many of the most robust sketch recognition systems today focus on gesture or isolated symbol recognition (Field et al., 2010). Hidden Markov models (Anderson et al., 2004; Cao and Balakrishnan, 2005; Sezgin and Davis, 2005), neural networks (Pittman, 1991), feature-based statistical classifiers (Rubine, 1991; Cho, 2006), dynamic programming (Myers and Rabiner, 1981; Tappert, 1982), ad-hoc heuristic recognizers (Wilson and Shafer, 2003; Notowidigdo and Miller, 2004), proportional shape matching (Kristensson and Zhai, 2004) and the $1 recognizer (Wobbrock et al., 2007) have been widely used for gesture recognition. Despite substantial progress in this area, recognizing sketching remains a difficult problem since sketches are informal, ambiguous, and implicit (Li, 2003).

A recent trend is toward more accessible and natural interfaces. Thus, Human Computer Interaction (HCI) has drawn attention to the voice, hand gesture and posture as important perceptual interfaces in addition to the traditional computer peripherals or touch screens (Linderman et al., 2009; Chen et al., 2015). An interface that converts human bioelectric activity to external devices, known as bioelectric interface (Linderman et al., 2009), especially muscle-computer interface (Chowdhury et al., 2013), has become a new research focus in the field of HCI. Sketching and its key role in concept design are identified (Schütze et al., 2003; Tovey et al., 2003) and sketch-based interfaces can achieve more natural HCI (Kara and Stahovich, 2005), which has led to computer-aided sketching (CAS) systems (Sun et al., 2014) and sketch-based interfaces for modeling in computer-aided design (CAD) systems (Olsen et al., 2009). Although much progress has been made, sketching has received little attention from the designers of bioelectric interfaces due to perceived technical limitations (Linderman et al., 2009), the paucity of models (McKeague, 2005), and intra-class variations and inter-class ambiguities of sketches (Li et al., 2015).

Surface electromyographic (sEMG) signals have been used to control computers (Wheeler and Jorgensen, 2003), prostheses (Farina et al., 2014; Jiang et al., 2014), robots (Kiguchi and Hayashi, 2012) and wheelchairs (Andreasen and Gabbert, 2006). Researchers have also used EMG as a new interface (Ahsan et al., 2009; Chowdhury et al., 2013) for the recognition of hand gestures (Chen et al., 2007; Fougner et al., 2014), sign languages (Li et al., 2010), body languages (Chen et al., 2014) and facial expressions (Chen et al., 2015). Moreover, sEMG, which reflects to some extent the underlying neuromuscular activity (Jian, 2000), has been found useful for the recognition of handwriting (Lansari et al., 2003; Linderman et al., 2009; Huang et al., 2010; Chihi et al., 2012). For instance, Linderman et al. (2009) showed a method in which EMG signals generated by hand and forearm muscles during handwriting are reliably translated into both algorithm-generated handwriting traces and font characters using decoding algorithms. Huang (2013) proposed using the dynamic time warping algorithm for the overall recognition of handwriting from EMG signals. The fact that brain, hand, and eye actions are tightly connected in the sketching process (Goel, 1995; Taura et al., 2012; Sun et al., 2014) suggests that bioelectric interfaces could potentially extract normal sketching patterns directly from hand and arm EMG signals. However, this question has rarely been explored so far.

Two things are needed to improve the classification accuracy of subtle sketching movements: feature extraction and recognition model construction (Englehart and Hudgins, 2003; Nielsen et al., 2011). Selecting a proper length of analysis window and increment for feature extraction can improve classification accuracy (Smith et al., 2011; Earley et al., 2016). Any algorithm that enables recognizing the sketching patterns from the features of sEMG signals should be adopted (Nielsen et al., 2011). It is expected that advanced algorithms would satisfy the following requirements (Farina et al., 2014; Hahne et al., 2014): little user training, high computational efficiency and also performing well with few electrodes. Those aspects are addressed in the present study by applying a robust variant of genetic programming, namely Gene Expression Programming (GEP).

This study proposes an sEMG-based method for the recognition of 11 basic one-stroke shapes from sketching in conceptual design. More specifically, our distinctive contributions are: (1) we establish a new experimental protocol to record the sEMG signals from 4 forearm and 2 upper arm muscles of 4 participants, who are instructed to trace and cover each printed one-stroke shape on sketching templates; (2) we extract 180 time domain indices of the sEMG signals with a short analysis window and 18 indices with a long analysis window; (3) after reducing dimensions with principal component analysis (PCA), we use GEP, Back Propagation neural networks (BPNN) and Elman neural networks (ENN) to construct recognition models for comparison.

2. Materials and Methods

2.1. Participants

This study was approved by the Ethics Committee of Donghua University. Four healthy graduate students majoring in industrial design (2 males and 2 females; mean ± SD, age = 23.5 ± 0.58 years, height = 170.75 ± 8.61 cm, and weight = 65 ± 11.01 kg) volunteered to participate in the experiment. All participants underwent a medical examination to exclude upper limb musculoskeletal and nervous diseases, and all were right-handed. Before the experiment, they agreed not to do any strenuous forearm or hand exercise.

2.2. Equipment and Materials

2.2.1. Electromyographer

The sEMG signals were collected, amplified and transmitted using a 12-channel digital EMG system (ZJE-II, ZJE Studio Ltd., China). It has an amplifier gain of 1000, conditioned with a digital band-pass filter between 10 and 500 Hz with a notch filter implemented to remove the 50 Hz line interference. The sEMG signals underwent a 14 bit analog to digital conversion at a sampling frequency of 1000 Hz.

Single disposable Ag/AgCl strip electrodes (5 cm in length and 3.5 cm in width), filled with conductive electrode paste (Jun Kang Medical Supplies Ltd., China), were used to measure sEMG activity. The electrodes snap onto the EMG cable that connects them to the EMG amplifier.

2.2.2. Tested Shapes and Sketching Materials

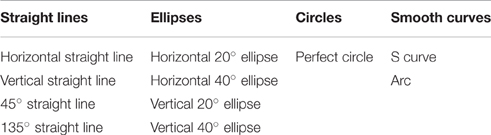

Table 1 shows the tested one-stroke shapes that are variations of the four basic one-stroke shapes. We selected in total 11 one-stroke shapes: horizontal, vertical, 45° and 135° straight lines, horizontal 20° and 40° ellipses, vertical 20° and 40° ellipses, perfect circles, S curves, and arcs. The selection of these tested shapes was based on the fact that these were short, well-known and frequently used shapes among the subjects in product design. The degree of an ellipse is the measure of the angle of the line of sight into the surface of the ellipse (Robertson and Bertling, 2013).

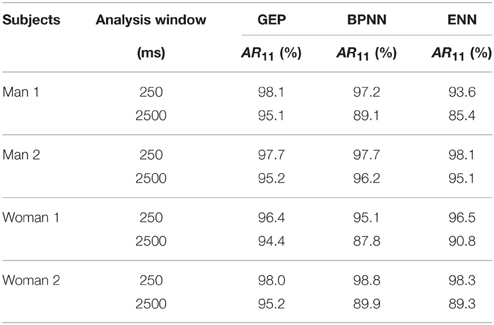

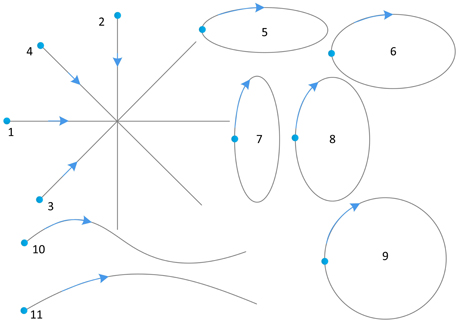

We printed these 11 one-stroke shapes in a light color on a sheet of A4 paper as a sketching template. We prepared ten template patterns, each consisting of the 11 shapes. The order of the 11 shapes on the template was randomized, with a different random order for each pattern. Subjects were required to draw on the paper to trace and cover each printed shape. Figure 1 shows an example of the sketching templates.

Figure 1. One example of the sketching templates (dots represent starting points, arrows represent directions).

A standard mesh computer chair with a 40 cm seat height and rectangular wooden desktop (length: 100 cm, width: 60 cm, height: 75 cm) was used to replicate the conditions that are found in a regular work room. Subjects sketched on a sketching template, using a blue ink ballpoint pen, model BIC Cristal, with a hexagonal barrel, a medium point of 1.0 mm and line width of 0.4 mm.

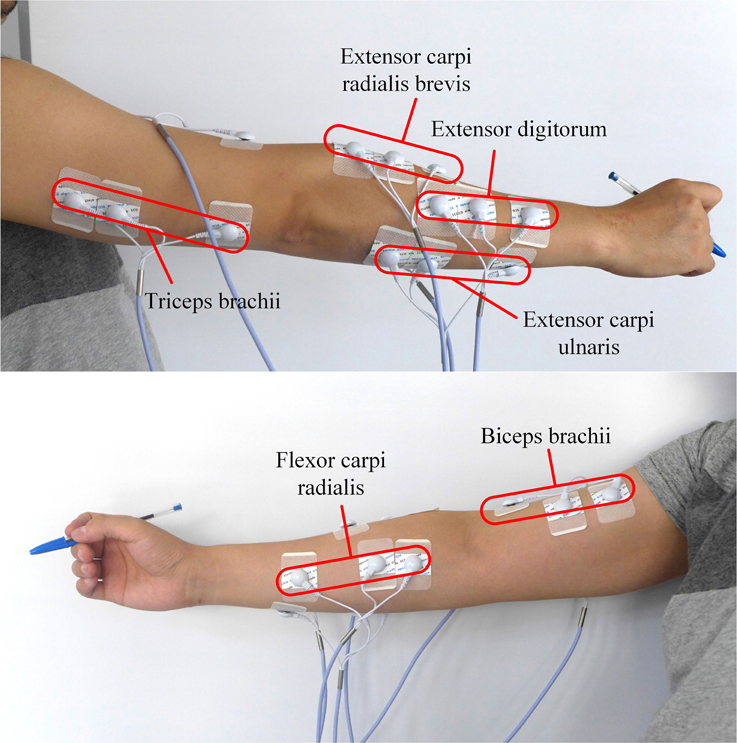

2.2.3. Electrode Placement

After the location of muscles through palpation during voluntary contraction, the skin of each subject was shaved where necessary and then carefully cleaned with alcohol and electrolyte gel to reduce contact impedance and improve the electrical and mechanical contact of the electrodes (de Almeida et al., 2013). The electrodes were fixed to the skin with hypoallergenic tape to reduce movement artifacts and minimize interference in the sketching performance.

Previous research (Lansari et al., 2003; Huang et al., 2010; Chihi et al., 2012) always recorded surface EMGs from hand muscles for handwriting recognition. However, according to Robertson and Bertling (2013) and our experience, designers should move the whole arm to sketch. Researchers have also proposed that increasing the number of tested muscles improves the accuracy of recognition (Linderman et al., 2009). Since sketching involves finger, wrist, and whole arm movements, sEMG signals can be recorded from intrinsic hand, forearm and upper arm muscles. However, the pen tends to touch electrodes attached to the hand and shift them, which introduces noise into the recorded EMG signals and reduces recognition accuracy. Moreover, placing electrodes on the hand is neither convenient nor natural in practical use. Therefore, only EMG activity over the forearm and upper arm muscles was measured for feature extraction. Four forearm muscles, flexor carpi radialis (FCR), extensor digitorum (ED), extensor carpi ulnaris (ECU) and extensor carpi radialis brevis (ECRB), and two upper arm muscles, triceps brachii (TB) and biceps brachii (BB), were selected for their major role in stabilization and movement of the upper limb during fine dexterity activities such as handwriting and sketching (Linderman et al., 2009; de Almeida et al., 2013). The locations of the surface EMG electrodes are shown in Figure 2.

2.3. Experimental Protocol

The experiments were carried out over 10 days in October 2015. Two sessions were held each day, one from 09:00 to 12:00 for women and another from 14:00 to 17:00 for men. The experiment was divided into three stages: a welcome stage, a preparation stage, and a task stage. During the welcome stage, the procedures and the equipment used for the experiment were introduced to the participants. All participants were required to sign a consent form with a detailed description of the experiment and complete a background questionnaire about personal information such as height and weight.

During the preparation stage, the task instructions were read to the participants, and they were given a brief tutorial on how to complete the task. The objective was to reproduce as accurately as possible the natural conditions of designers in a workroom environment. Participants were instructed to practice the task prior to the data acquisition as many times as necessary to feel secure about their performance and were informed that they would be given a sketching template paper. Then they were asked to sketch on the paper to trace and cover each printed one-stroke shape, using their trained pen grasp posture and sketching skill in one stroke.

For the task stage, we prepared 100 sheets of sketching template paper, each consisting of 11 one-stroke shapes, for each participant. We define a trial as a recording epoch during which a subject sketches a single one-stroke shape. Each shape was sketched 100 times. The obtained data were used as the training and test sets. Therefore, each subject sketched 1100 shapes (i.e., performed 1100 trials) during the whole experiment. Since we sought to recognize basic one-stroke shapes, the subjects were asked to pause between the one-stroke shapes. The trials were paced by timer software on a mobile phone, which played a beep sound at the beginning of each trial. The duration of each trial was 3 s, of which 1–2.5 s corresponded to sketching the shape.

At the beginning of the task stage, electrodes were attached to the participants' skin and connected to the EMG system. The locations were marked on skin with a marking pen to ensure the same locations during every session. In each session, new electrodes were attached again on the pen marks. Next, a sheet of sketching template paper was presented to the participant. Each repetition of the task was initiated with the subject holding the pen with their usual grasp pattern at a desk in front of a new sketching template. The subject was instructed to remain in this position until the maximal relaxation point, and simultaneous visualization of the EMG signal was registered, as shown in Figure 3. At this point, the beginning of the task was requested through a beep sound. EMGs of 6 muscles were simultaneously recorded. Each subject was instructed to sketch on the sketching template to trace and cover printed stimuli. To avoid muscle fatigue, subjects would rest for 5 min after each set of tested shapes (i.e., one sheet of sketching template paper) and the collection of EMG signals was stopped, but the electrodes were not removed until the subject finished ten sets of tested shapes.

The design of the experiment was a One-stroke shape (11) × Repetition (10) × Day (10) within-subjects design, amounting to 1100 trials per participant. Participation in the experiment took approximately 600 min.

2.4. Feature Extraction

Among the various ways for sEMG feature extraction, the average Electromyography (aEMG), Root Mean Square (RMS) and Mean Absolute Value (MAV) are able to respond to the changes of signal amplitude characteristics (Jian, 2000; Ren et al., 2004; Poosapadi Arjunan and Kumar, 2010; Geethanjali and Ray, 2011) and are widely used in the sEMG pattern recognition. Therefore, this paper combined these three time domain characteristics as features. These features were extracted separately from 6 channels of sEMG signals during the sketching of each shape. The aEMG, RMS and MAV are computed as follows:

where vi is the voltage at the ith sampling and N is the number of sampling points.
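As a minimal sketch of the windowed time domain features, the following Python computes RMS and MAV with their textbook definitions over adjacent analysis windows. The third feature is represented here by integrated EMG (the sum of rectified samples), which is an assumption: the abstract names integrated electromyography, but the exact aEMG formula used by the authors is not reproduced in this text. The function names and the synthetic epoch are hypothetical.

```python
import math

def rms(window):
    """Root mean square of the samples in one analysis window."""
    return math.sqrt(sum(v * v for v in window) / len(window))

def mav(window):
    """Mean absolute value of the samples in one analysis window."""
    return sum(abs(v) for v in window) / len(window)

def iemg(window):
    """Integrated EMG as the sum of rectified samples (assumed definition)."""
    return sum(abs(v) for v in window)

def window_features(samples, length, increment):
    """Return one (iEMG, RMS, MAV) tuple per analysis window of one channel."""
    feats = []
    for start in range(0, len(samples) - length + 1, increment):
        w = samples[start:start + length]
        feats.append((iemg(w), rms(w), mav(w)))
    return feats

# Synthetic 2.5 s epoch at 1000 Hz (illustrative values, not recorded data).
epoch = [math.sin(0.05 * i) for i in range(2500)]
short = window_features(epoch, 250, 250)     # short analysis window
long_ = window_features(epoch, 2500, 2500)   # long analysis window

# Parameters per shape: windows x features x channels.
n_short = len(short) * 3 * 6
n_long = len(long_) * 3 * 6
```

With six channels, the short window yields 10 × 3 × 6 = 180 values per sketched shape and the long window yields 1 × 3 × 6 = 18, matching the counts reported in the text.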

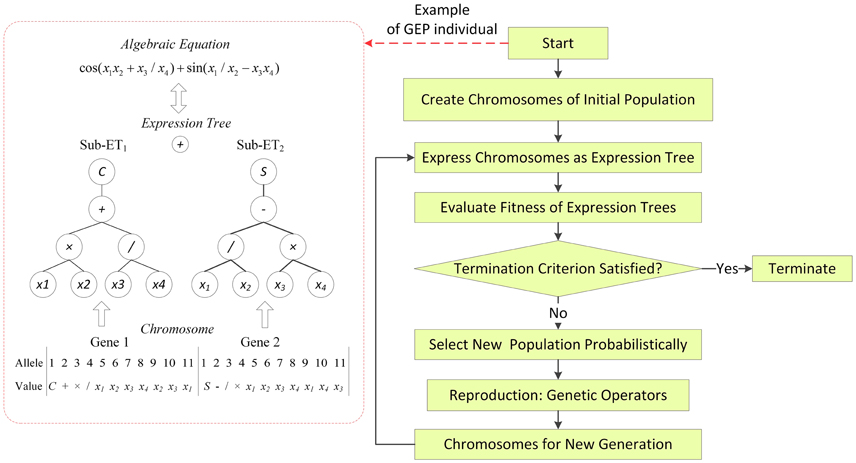

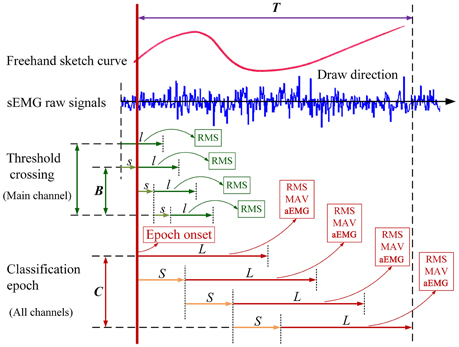

We designed a LabVIEW script to control the feature extraction of sEMG signals. The framework is illustrated in Figure 4. The windowing scheme for feature extraction has two steps. The first step is threshold crossing, which detects the onset of each individual sketching epoch for classification. The start point of the current sliding window is taken as the epoch onset when its RMS value is equal to or greater than the preset threshold and the RMS values of a given number of subsequent sliding windows also cross the threshold. The main channel, which has the highest RMS value, is used for detecting threshold crossing, and the epoch onset of the main channel is applied to all channels. The second step is feature extraction over the classification epoch of each shape. The feature values (RMS, MAV, and aEMG) are calculated using successive analysis windows. The size of the analysis windows differs from that of the sliding windows used for detecting onset.

Figure 4. Windowing scheme of sEMG data in the continuous feature extraction. The size of each epoch of individual shapes is T. The size of sliding window for detecting threshold crossing is first set as l with a window increment of s. When the RMS value of the sliding window crossing the threshold lasts for B segments, the onset of the window will be the epoch onset of individual shapes. Next, the size of successive analysis windows for classification will change to L with a window increment of S. RMS, MAV, and aEMG are calculated at each L intervals. The sEMG data for classification is divided into C segments for every L that is the length of integrated samples as a feature extraction and the start point is shifted every S. Although six channels of sEMG data are used, only the main channel is shown here for illustrative purposes.

There are two major techniques in data windowing: adjacent windowing and overlapped windowing, depending on the difference between increment time and the segment length (Englehart and Hudgins, 2003; Oskoei and Hu, 2007). In this study, the overlapped windowing technique that results in a dense decision stream was used for detecting threshold crossing and onsets precisely, and all data were segmented for feature extraction using the adjacent windowing technique for saving computation time. The main channel in this paper is the channel collecting EMG signals from extensor digitorum (ED). In our study, sliding window for detecting threshold crossing was 20 ms (20 samples at 1000 Hz sampling), and the window increment was 5 ms. Two types of analysis window were set for comparison: short analysis window (window length of 250 ms with a 250 ms increment), and long analysis window (window length of 2500 ms with a 2500 ms increment). Rectified sEMGs from all muscles were segmented into epochs corresponding to individual shapes using a threshold that detected EMG bursts and designated the epoch onset. The threshold was set as 0.6 RMS during each set of tested shapes, which can detect all the trials (sketching epochs) per participant precisely. Then the epoch onset is determined when the RMS of the EMG signals crossing the threshold lasts for 300 ms (57 segments of sliding window for detecting threshold crossing). After these onsets had been determined, the EMG recording was segmented into 2.5 s epochs (10 segments of short analysis window; 1 segment of long analysis window) which represented the sketching of each shape. For the short analysis window, we can collect Segment (10) × Time domain characteristic (3) × Tested muscle (6), amounting to 180 parameters per participant per sketching shape. For the long analysis window, we can collect Segment (1) × Time domain feature (3) × Tested muscle (6), amounting to 18 parameters per participant per sketching shape.
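The onset rule described above can be sketched as follows. This is an illustrative reading of the text, not the authors' LabVIEW implementation: the threshold is passed in as a plain number because the text does not specify how "0.6 RMS" is scaled, and the function names and the synthetic trace are hypothetical.

```python
import math

def sliding_rms(signal, length=20, increment=5):
    """RMS of each overlapped sliding window (20 samples, 5-sample step)."""
    out = []
    for start in range(0, len(signal) - length + 1, increment):
        w = signal[start:start + length]
        out.append(math.sqrt(sum(v * v for v in w) / length))
    return out

def detect_onset(signal, threshold, length=20, increment=5, hold=57):
    """Return the start sample of the first window that begins a run of
    `hold` consecutive windows with RMS >= threshold, or None if no run exists."""
    run = 0
    for k, value in enumerate(sliding_rms(signal, length, increment)):
        run = run + 1 if value >= threshold else 0
        if run == hold:
            return (k - hold + 1) * increment
    return None

# Synthetic main-channel trace at 1000 Hz: 100 ms of rest, then 400 ms of activity.
signal = [0.01] * 100 + [1.0] * 400
onset = detect_onset(signal, threshold=0.6)

# Time covered by 57 overlapped windows of 20 ms with a 5 ms increment.
span_ms = 20 + (57 - 1) * 5
```

The last line checks the arithmetic in the text: 57 sliding windows of 20 ms with a 5 ms increment span exactly 300 ms. In this synthetic trace the detected onset falls slightly before the true burst start because a window is flagged as soon as enough of its samples lie inside the burst.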

After collecting all RMS, MAV, and aEMG values, to reduce data dimensionality, PCA was used to preprocess the EMG data before the classification step.

2.5. Classification

GEP is applied to the patterns extracted from multi-class, multi-channel continuous EMG signals for classification. Subsequently, the classifications were also obtained with two types of artificial neural networks (BPNN and ENN). The computing programs implementing the GEP were written with C++ language in Windows 7 and performed on a computer with a 2.8 G Intel Core Duo CPU and 8 G RAM. The neural network model is built with the simulation software NeuroSolutions 6 on Windows 7. The classifications were carried out with the same training and test sets.

2.5.1. Gene Expression Programming Classifier

GEP is a genetic algorithm (GA) in that it uses populations of individuals, selects them according to fitness, and introduces genetic variation by using one or more genetic operators (Ferreira, 2001). As a global search technique using GA, GEP has exhibited great potential for solving complex problems (Ferreira, 2001). GEP uses fixed-length, linear strings of chromosomes to represent computer programs in the form of expression trees of different shapes and sizes, and implements a GA to find the best program (Zhou et al., 2003). One of the advantages of using GEP over other data-driven techniques is that it can produce explicit formulations of the relationship that rules the physical phenomenon (Martí et al., 2013). The reasons for using GEP for classification are its flexibility, capability, and efficiency (Zhou et al., 2003). The procedure for constructing the sketching recognition model was as follows.

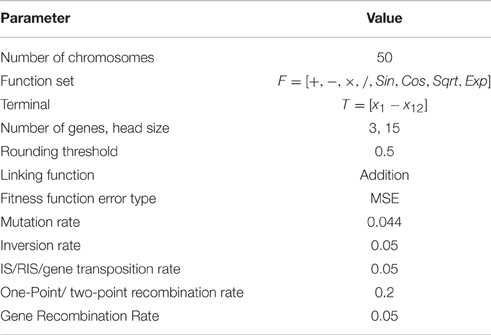

Step 1: Population Initialization

Each GEP chromosome is composed of a fixed-length list of symbols, each of which can be any element from the function set or the terminal set. In our study, eight elements were chosen as the mathematical function set: F = {+, −, ×, /, Sin, Cos, Sqrt, Exp}. The terminal set was then selected as T = {x1, x2, x3, x4, x5, x6, x7, x8, x9, x10, x11, x12} if, for instance, twelve sEMG features (x1 − x12) were extracted from 6 channels. The length of the head was h = 15, the length of the tail was t = 16, and three genes per chromosome were employed. The linking function between the algebraic sub-trees was addition.
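To make the chromosome structure concrete, the sketch below decodes one gene breadth-first into an expression tree and evaluates it, following the general GEP scheme of Ferreira (2001). It is an illustration rather than the authors' C++ program: the protected division, square root and exponential are assumptions added so that every tree evaluates to a finite number, and the short example gene and feature values are hypothetical.

```python
import math

# Arity of each symbol in the function set F = {+, -, x, /, Sin, Cos, Sqrt, Exp}.
ARITY = {"+": 2, "-": 2, "*": 2, "/": 2, "sin": 1, "cos": 1, "sqrt": 1, "exp": 1}

def tail_length(head, max_arity=2):
    """Tail length that guarantees every gene decodes to a complete tree."""
    return head * (max_arity - 1) + 1

def decode(gene):
    """Decode a gene breadth-first into nested lists [symbol, child, ...].
    Symbols after the last one needed by the tree are left unused."""
    root = [gene[0]]
    queue = [root]
    pos = 1
    while queue:
        node = queue.pop(0)
        for _ in range(ARITY.get(node[0], 0)):
            child = [gene[pos]]
            pos += 1
            node.append(child)
            queue.append(child)
    return root

def apply_symbol(symbol, args):
    """Apply one function symbol; the protected variants are assumptions."""
    if symbol == "+":
        return args[0] + args[1]
    if symbol == "-":
        return args[0] - args[1]
    if symbol == "*":
        return args[0] * args[1]
    if symbol == "/":
        return args[0] / args[1] if args[1] != 0 else 1.0
    if symbol == "sin":
        return math.sin(args[0])
    if symbol == "cos":
        return math.cos(args[0])
    if symbol == "sqrt":
        return math.sqrt(abs(args[0]))
    return math.exp(min(args[0], 50.0))  # "exp", clamped to avoid overflow

def evaluate(node, x):
    """Evaluate a decoded tree for a feature dict such as {"x1": 0.3, ...}."""
    if node[0] not in ARITY:
        return x[node[0]]
    return apply_symbol(node[0], [evaluate(child, x) for child in node[1:]])

def chromosome_value(genes, x):
    """Link the sub-trees of all genes by addition."""
    return sum(evaluate(decode(g), x) for g in genes)

# Hypothetical gene with head length 3 and tail length 4: x1 * x2 + sqrt(x3).
gene = ["+", "*", "sqrt", "x1", "x2", "x3", "x1"]
features = {"x1": 2.0, "x2": 3.0, "x3": 16.0}
value = evaluate(decode(gene), features)
```

Because the largest arity in F is 2, a head of h = 15 requires a tail of t = 15 × (2 − 1) + 1 = 16, which agrees with the values stated above.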

Step 2: Genetic Operation

Basic genetic operators were applied in each generation, including mutation, inversion, IS transposition, RIS transposition, one-point recombination, two-point recombination, gene recombination and gene transposition. Details of how these operators are implemented are given in Ferreira (2001).

Step 3: Fitness Calculation

The maximum fitness (fmax) was set to 1000, and then the fitness was calculated as follows:

where MSE represents the mean square error, m is the total number of fitness cases, Fij is the value output by individual program i for fitness case j (out of m fitness cases) and Tj is the target value for fitness case j. For a perfect fit, Fij = Tj.
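The fitness equation itself is not reproduced in this text. As a hedged illustration, the sketch below uses the MSE-based form commonly given for GEP, f = fmax / (1 + MSE), which reaches fmax exactly when every output equals its target. Whether the authors used precisely this form is an assumption; the function names are hypothetical.

```python
def mean_square_error(outputs, targets):
    """MSE over the m fitness cases: mean of (F_ij - T_j)^2."""
    m = len(targets)
    return sum((f - t) ** 2 for f, t in zip(outputs, targets)) / m

def fitness(outputs, targets, f_max=1000.0):
    """Assumed MSE-based fitness: f_max for a perfect fit, lower otherwise."""
    return f_max / (1.0 + mean_square_error(outputs, targets))

perfect = fitness([1.0, 0.0, 1.0], [1.0, 0.0, 1.0])
imperfect = fitness([1.0, 1.0], [0.0, 0.0])
```

Under this form a perfect fit gives the maximum fitness of 1000, which is consistent with the first termination criterion in Step 4.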

Step 4: Termination Criterion

There were two termination criteria: (1) ffitness = fmax; (2) the maximum number of generations reached 2000. If either criterion was satisfied, the run stopped; otherwise, it returned to Step 2. The parameters used per run are summarized in Table 2. The GEP modeling approach is represented in the scheme of Figure 5.

In Figure 5, terminals x1 − x4 represent the variables, and the alleles represent the positions in the genes, since there is a one-to-one relationship between the symbols of the genes and the functions or terminals they represent. According to the GEP rules, the genes are expressed as expression trees (ETs), and the ETs can easily be decoded as an algebraic equation.

For a two-class (binary) problem, the GEP expression performs classification by returning a positive or nonpositive value indicating whether or not a given instance belongs to that class, i.e.,

where X is the input feature vector. For an n-class classification problem, where n > 2, the one-against-all learning method is used to transform the n-class problem into n 2-class problems. These are constructed by using the examples of class j as the positive examples and the examples of classes other than j as the negative examples (Zhou et al., 2003). Our 11-class problem was therefore decomposed into eleven 2-class problems.
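The decision rule and the one-against-all decomposition can be sketched as below. The three lambda expressions are hypothetical stand-ins for evolved GEP expressions, and the tie-break in `predict` (choosing the class with the largest output) is an assumption, since the text does not say how samples claimed by several classes, or by none, are resolved.

```python
def belongs(value):
    """Binary rule: a positive output means the sample belongs to the class."""
    return value > 0

def claimed_classes(models, x):
    """Labels of all one-against-all models that claim the sample."""
    return [label for label, model in models.items() if belongs(model(x))]

def predict(models, x):
    """Assumed tie-break: pick the class whose expression output is largest."""
    return max(models, key=lambda label: models[label](x))

# Hypothetical stand-ins for three of the eleven evolved expressions.
models = {
    "horizontal line": lambda x: x[0] - 0.5,
    "circle": lambda x: x[1] - 0.5,
    "arc": lambda x: 0.2 - x[0] - x[1],
}
sample = [0.9, 0.1]
claimed = claimed_classes(models, sample)
label = predict(models, sample)
```

In the study each of the eleven shapes would have its own binary expression, trained with that shape's samples as positives and the samples of the other ten shapes as negatives.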

2.5.2. Back Propagation Neural Network Classifier

There are many types of artificial neural networks (ANNs). ANNs are suitable for modeling nonlinear data and are able to capture the distinctions among different conditions. As one of the most common ANNs, BPNN has been widely used in pattern recognition models for sEMG signals (Nan et al., 2003). Back propagation (BP) learning comprises two processing steps: forward propagation of the input and back propagation of the error. The BP architecture is the most popular model for complex, multi-layered networks (Xing et al., 2015). A three-layer network consisting of one input layer, one hidden layer with a sigmoid function, and one output layer with a tanh function was used to set up the BPNN classification models.

2.5.3. Elman Neural Network Classifier

Compared to the BPNN, the ENN has not been frequently used for classifying sEMG signals of motion patterns. However, in our earlier work (Chen et al., 2015), the recognition rate of the ENN-based model was slightly superior to that of the BPNN-based model for eyebrow emotional expression recognition. As a subclass of recurrent neural networks, the ENN has a short-term memory function, which has been found to be particularly useful for the prediction of discrete time series, owing to its ability to model nonlinear dynamic systems and to learn time-varying patterns (Ardalani-Farsa and Zolfaghari, 2010). To set up the ENN model, we used a four-layer network consisting of one input layer, one hidden layer with a sigmoid function, one context layer, and one output layer with a tanh function. A more detailed description of the structure of the ENN can be found in Chen et al. (2015).

2.5.4. Performance Evaluation Criteria

In this paper, the accuracy rate (AR) and AR11 were used to evaluate the classification performance of the three classifiers mentioned above. The AR can be calculated as follows:

AR = c / C

where c is the number of correctly classified test samples, and C is the total number of tested samples. A larger AR value (close to one) indicates that the performance of the classifier is better. AR11 was the mean AR of the 11 sketching shapes for each subject.
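The two criteria can be computed as in the following sketch. Treating the per-shape AR as the fraction of that shape's samples that are labeled correctly is an assumption about how c and C are counted per shape; the labels in the example are hypothetical and cover only two shapes for brevity.

```python
def accuracy_rate(correct, total):
    """AR = c / C, a ratio between 0 and 1."""
    return correct / total

def per_shape_ar(actual, predicted):
    """AR for each shape: correctly labeled samples of the shape / its samples."""
    totals, hits = {}, {}
    for a, p in zip(actual, predicted):
        totals[a] = totals.get(a, 0) + 1
        hits[a] = hits.get(a, 0) + (1 if a == p else 0)
    return {shape: accuracy_rate(hits[shape], totals[shape]) for shape in totals}

def mean_ar(ar_by_shape):
    """Mean AR over shapes; with all 11 shapes present this is AR11."""
    return sum(ar_by_shape.values()) / len(ar_by_shape)

# Hypothetical labels for four test samples.
actual = ["line", "line", "circle", "circle"]
predicted = ["line", "circle", "circle", "circle"]
ar = per_shape_ar(actual, predicted)
average = mean_ar(ar)
```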

3. Results and Discussion

Feature extraction and recognition algorithms had to be performed on the data from individual subjects and did not generalize to other subjects because of inter-subject variability (Linderman et al., 2009). The dataset was randomly divided into two subsets, a training set, and test set, for recognition. 70% of the records from each day were used as the training set, and the remainder of the records was used as the test set. The training set contained 770 sEMG samples, whereas the test set contained 330 samples. For our study, the normalization interval was set as [0.05, 0.95].
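The split and normalization described above can be sketched as follows. Linear min-max scaling is assumed because the text gives only the target interval [0.05, 0.95], and the per-day record lists, the seed and the function names are hypothetical.

```python
import random

def normalize(values, low=0.05, high=0.95):
    """Assumed linear min-max scaling of one feature into [low, high]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [(low + high) / 2 for _ in values]
    return [low + (high - low) * (v - lo) / (hi - lo) for v in values]

def split(records, train_fraction=0.7, seed=0):
    """Randomly split one day's records into training and test subsets."""
    shuffled = records[:]
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]

# One subject: 10 days with 110 trials per day (11 shapes x 10 repetitions).
train, test = [], []
for day in range(10):
    day_records = [(day, trial) for trial in range(110)]
    day_train, day_test = split(day_records, seed=day)
    train.extend(day_train)
    test.extend(day_test)

scaled = normalize([0.0, 5.0, 10.0])
```

Splitting each day's 110 trials 70/30 gives 77 training and 33 test records per day, so the totals over 10 days are 770 and 330, as reported.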

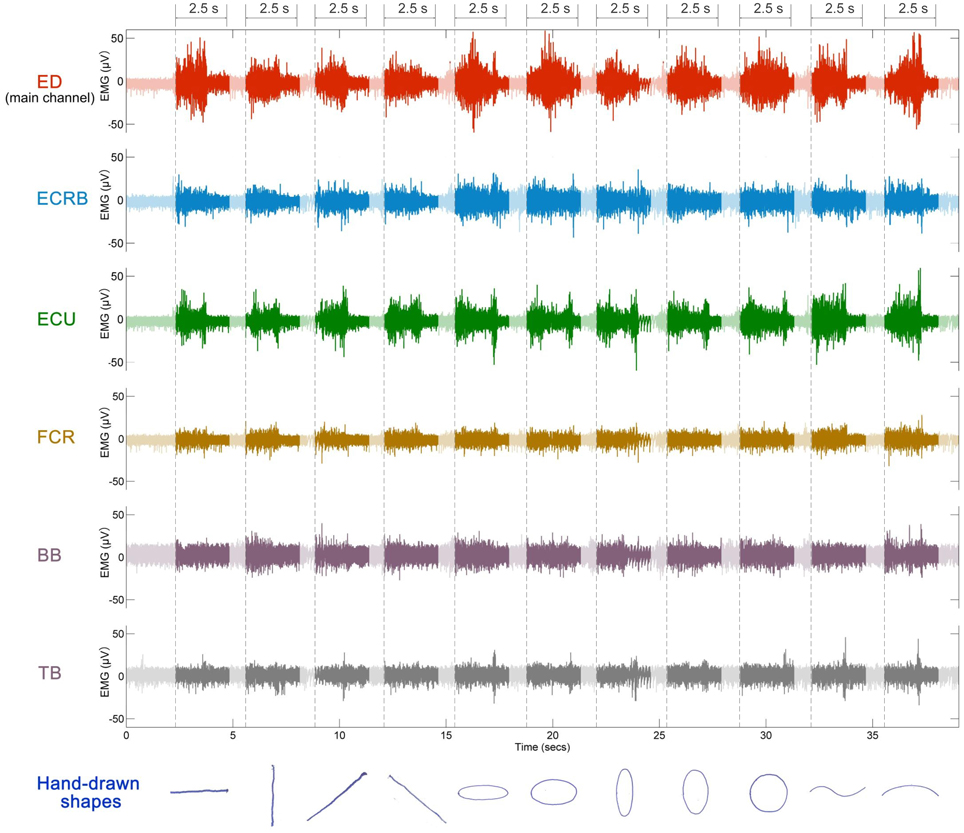

3.1. Results of Feature Extraction

Representative examples of the EMG signals and sketching traces are shown in Figure 6. The channel of ED that had the highest RMS value among the six sEMG channels was used as the main channel for epoch onset discrimination. Setting the threshold as 0.6 RMS can help detect 1100 trials per participant precisely. After these onsets had been determined, the EMG record was segmented into 2.5 s epochs which represented the sketching duration of each shape.

Figure 6. Example of discrimination for a representative recording session. Six sEMG channels were used for sketching recognition. Dotted lines represent epoch onsets. Segments (2.5 s) are marked on the top.

For the short analysis window, the number of parameters was reduced from 180 to 12 principal components using PCA. For the long analysis window, the number of parameters was reduced from 18 to 5 principal components.

3.2. Classification Results

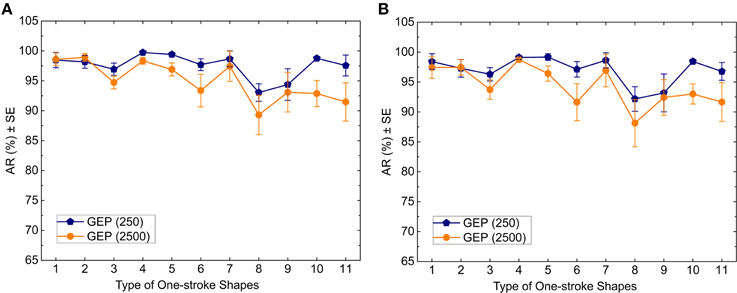

The 12 principal components extracted using short analysis windows (250 ms) and the 5 principal components extracted using long analysis windows (2500 ms) were entered into the GEP classifiers as a 12-element feature vector and a 5-element feature vector, respectively. The averages of AR of the 11 one-stroke shapes are shown in Figure 7. These averages are the means of AR for the four participants. The error bars represent the standard error (SE).

Figure 7. Classification AR achieved by the GEP model vs. type of one-stroke shapes for 250 ms analysis windows and 2500 ms analysis windows as measured with (A) the training data and (B) the test data.

According to Figure 7, for the training set, using the short analysis window, the average values of AR from left to right are 98.47, 98.17, 96.93, 99.74, 99.41, 97.7, 98.67, 93.04, 94.38, 98.76, and 97.56%; using the long analysis window, the average values of AR from left to right are 98.61, 98.91, 94.72, 98.34, 96.91, 93.37, 97.43, 89.31, 93.08, 92.88, and 91.48%. For the test set, using the short analysis window, the average values of AR from left to right are 98.42, 97.46, 96.29, 99.11, 99.17, 97.13, 98.64, 92.17, 93.18, 98.45, and 96.78%; using the long analysis window, the average values of AR from left to right are 97.43, 97.28, 93.73, 98.75, 96.42, 91.64, 96.93, 88.14, 92.42, 93, and 91.66%.

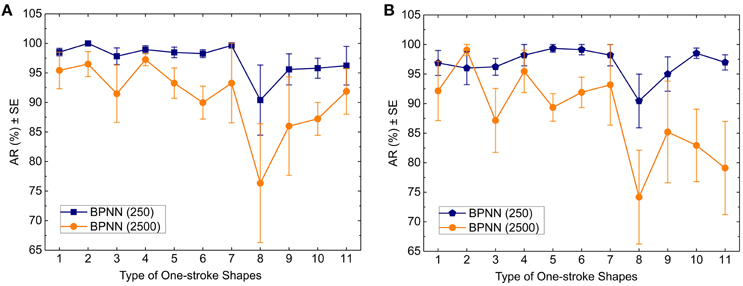

For comparison, we used the same training and test sets for the BPNN classifier. For the short analysis window, we set the number of hidden layers to 1 and the number of hidden layer nodes to 25. For the long analysis window, we set the number of hidden layers to 1 and the number of hidden layer nodes to 11. We defined two termination criteria for the training phase: the maximum number of iterations was 2000, and the minimum mean square error (MSE) was less than 0.01. Training stopped when either criterion was satisfied. The averages of AR of the 11 one-stroke shapes are shown in Figure 8.

Figure 8. Classification AR achieved by the BPNN model vs. type of one-stroke shapes for 250 ms analysis windows and 2500 ms analysis windows as measured with (A) the training data and (B) the test data.

According to Figure 8, for the training set, using the short analysis window, the average values of AR from left to right are 98.53, 100, 97.83, 98.95, 98.48, 98.28, 99.65, 90.4, 95.6, 95.8, and 96.23%; using the long analysis window, the average values of AR from left to right are 95.43, 96.5, 91.48, 97.25, 93.28, 89.98, 93.28, 76.35, 86, 87.23, and 91.88%. For the test set, using the short analysis window, the average values of AR from left to right are 96.88, 96, 96.23, 98.2, 99.35, 99.12, 98.2, 90.45, 95, 98.53, and 96.97%; using the long analysis window, the average values of AR from left to right are 92.15, 99.05, 87.15, 95.45, 89.35, 91.9, 93.17, 74.18, 85.2, 82.93, and 79.1%.

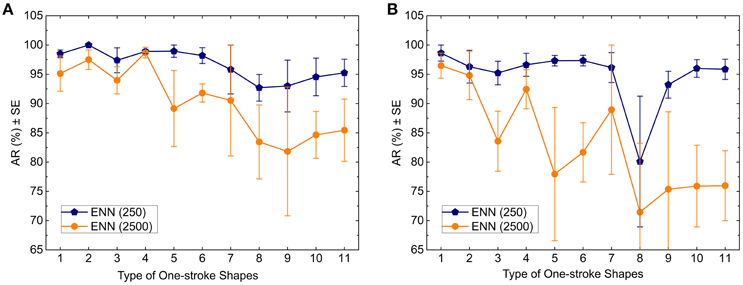

For comparison purposes, we also used the same training and test sets for the ENN classifier, with the same number of hidden layers, number of hidden layer nodes, and termination criteria as for the BPNN. The average AR values for the 11 one-stroke shapes are shown in Figure 9.

Figure 9. Classification AR achieved by the ENN model vs. type of one-stroke shapes for 250 ms analysis windows and 2500 ms analysis windows as measured with (A) the training data and (B) the test data.

According to Figure 9, for the training set, using the short analysis window, the average values of AR from left to right are 98.5, 100, 97.4, 98.9, 98.95, 98.2, 95.83, 92.7, 93, 94.55, and 95.25%; using the long analysis window, the average values of AR from left to right are 95.13, 97.5, 93.98, 98.7, 89.15, 91.8, 90.53, 83.45, 81.83, 84.65, and 85.45%. For the test set, using the short analysis window, the average values of AR from left to right are 98.63, 96.3, 95.23, 96.63, 97.33, 97.35, 96.15, 80.1, 93.23, 96, and 95.85%; using the long analysis window, the average values of AR from left to right are 96.48, 94.8, 83.58, 92.45, 77.95, 81.68, 88.95, 71.45, 75.38, 75.9, and 75.98%.

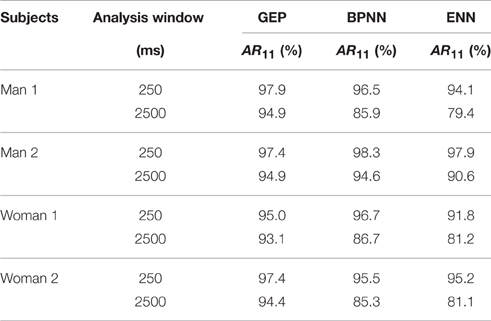

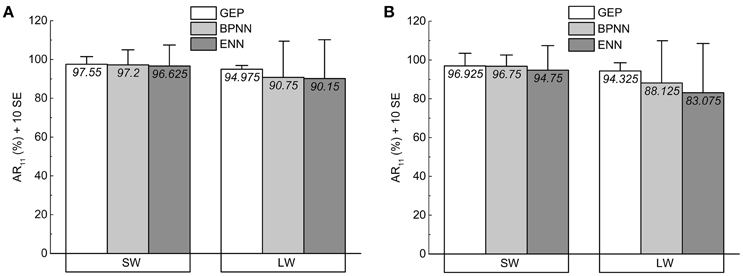

Tables 3, 4 show the AR11 for individual subjects achieved by the three recognition models. The averages of AR11 achieved by the three recognition models are shown in Figure 10. These averages are the means of AR11 for the four participants. The error bars represent the standard error (SE).

Figure 10. Histogram bars and error bars of AR11 as measured with (A) the training data and (B) the test data. (Error bars denote SE of the means; SW, short analysis window; LW, long analysis window).
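For readers who want to reproduce bars of this kind, the mean and standard error across participants can be computed as follows. This is a minimal sketch: the function name `mean_and_se` is hypothetical, and the four AR11 values are made-up placeholders rather than entries from Tables 3, 4.

```python
import math
from statistics import mean, stdev


def mean_and_se(values):
    """Return the mean and the standard error of the mean (sample SD / sqrt(n))."""
    return mean(values), stdev(values) / math.sqrt(len(values))


# Hypothetical AR11 values (%) for four participants; NOT taken from the paper.
ar11_by_subject = [96.0, 94.0, 97.0, 95.0]
bar_height, error_bar = mean_and_se(ar11_by_subject)
print(f"AR11 = {bar_height:.2f} +/- {error_bar:.2f} (SE, n = {len(ar11_by_subject)})")
```

With only four participants the SE is sensitive to a single outlying subject, which is worth keeping in mind when comparing bars.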

We can conclude that, compared with the BPNN and ENN classifiers, the GEP classifier performed best on the training data and generalized well to the test data: for both data sets and both analysis window lengths, all AR11 values achieved by the GEP model are greater than 93%, and the average AR values for the 11 one-stroke shapes achieved by the GEP model are higher than those of the BPNN and ENN.

It can also be observed that, compared with the long analysis window, the short analysis window improved the AR by around 6.4% on average across the three classifiers. For the GEP classifier, however, the short analysis window improved the AR by only around 2.6% on average, considerably less than the improvements for the BPNN and ENN classifiers (7.6 and 9.1%, respectively), which also indicates that the GEP classifier performs well with either length of analysis window.
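These averages can be checked against the per-shape values listed for Figure 7. The sketch below assumes that the reported gain is the short-minus-long difference in mean AR, averaged over the training and test sets; the text does not state this explicitly, and the function name `window_gain` is hypothetical. Under that assumption the GEP gain comes out close to the reported 2.6%.

```python
from statistics import mean

# Per-shape AR values (%) for the GEP classifier, copied from the text for Figure 7.
gep = {
    ("train", "short"): [98.47, 98.17, 96.93, 99.74, 99.41, 97.7,
                         98.67, 93.04, 94.38, 98.76, 97.56],
    ("train", "long"): [98.61, 98.91, 94.72, 98.34, 96.91, 93.37,
                        97.43, 89.31, 93.08, 92.88, 91.48],
    ("test", "short"): [98.42, 97.46, 96.29, 99.11, 99.17, 97.13,
                        98.64, 92.17, 93.18, 98.45, 96.78],
    ("test", "long"): [97.43, 97.28, 93.73, 98.75, 96.42, 91.64,
                       96.93, 88.14, 92.42, 93, 91.66],
}


def window_gain(ar):
    """Mean short-minus-long AR difference, averaged over training and test sets."""
    gains = [mean(ar[(split, "short")]) - mean(ar[(split, "long")])
             for split in ("train", "test")]
    return mean(gains)


gep_gain = window_gain(gep)
# Overall gain: mean of the per-classifier gains reported in the text (GEP, BPNN, ENN).
overall_gain = mean([2.6, 7.6, 9.1])
print(round(gep_gain, 1), round(overall_gain, 1))
```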

3.3. Discussion

Thus, we have shown that EMGs of arm muscles can be converted into sketching patterns. This technique could potentially substitute for current computer input devices or touch screens for sketching transmission. For example, it can provide another method for implementing sketching in the air. In recent years, computer vision technology has been used to recognize handwritten characters and sketches in the air (Chen et al., 2008; Asano and Honda, 2010; Hammond and Paulson, 2011; Vikram et al., 2013). However, it appears that computer-vision-based methods currently attain a high recognition rate only with high-quality input images or videos, and that they are vulnerable to factors such as camera angle, background, and lighting (Zhao, 2012; Chen et al., 2015). The sEMG-based method avoids this disadvantage. sEMG-based approaches have been successfully used for the recognition of handwriting (Linderman et al., 2009). However, to our knowledge, few previous studies have used sEMG to recognize sketching.

Each 2.5 s sketching epoch was detected using 0.6 RMS as the threshold for feature extraction, and the dimensionality was then reduced with PCA. The GEP classifier was able to recognize 11 one-stroke shapes with high accuracy (AR11 above 93% in all cases) using 12 or 5 principal components of sEMG signals as input vectors. Very few studies have used this approach to extract sEMG features for sketching recognition; however, this feature extraction approach (Figure 4) underlies the excellent classification results of the GEP classifier.
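The epoch detection step can be pictured as thresholding a moving RMS envelope. The sketch below is one possible reading, not the authors' implementation: the sampling rate, the 50 ms RMS window, the helper names `moving_rms` and `detect_epoch`, and the interpretation of 0.6 as an absolute threshold on the RMS envelope are all assumptions.

```python
import math


def moving_rms(signal, window):
    """RMS over a trailing window ending at each sample (shorter at the start)."""
    envelope = []
    acc = 0.0
    for i, sample in enumerate(signal):
        acc += sample * sample
        if i >= window:
            acc -= signal[i - window] ** 2
        envelope.append(math.sqrt(max(acc, 0.0) / min(i + 1, window)))
    return envelope


def detect_epoch(signal, fs_hz, threshold, epoch_s=2.5, rms_window_ms=50):
    """Return (start, end) sample indices of the first epoch, or None.

    The epoch starts at the first sample whose moving RMS exceeds `threshold`
    and lasts `epoch_s` seconds.
    """
    window = max(1, int(round(fs_hz * rms_window_ms / 1000)))
    length = int(round(fs_hz * epoch_s))
    for i, value in enumerate(moving_rms(signal, window)):
        if value > threshold:
            return (i, i + length) if i + length <= len(signal) else None
    return None


# Synthetic channel (assumption): 1 s of rest, 3 s of activity, 0.5 s of rest at 1000 Hz.
fs_hz = 1000
channel = [0.0] * 1000 + [1.0, -1.0] * 1500 + [0.0] * 500
epoch = detect_epoch(channel, fs_hz, threshold=0.6)
print(epoch)
```

Because the envelope uses a trailing window, the detected onset lags the true onset by a fraction of the RMS window; a shorter window reduces the lag at the cost of a noisier envelope.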

Some researchers have used sliding and adjacent analysis windows for feature extraction from sEMG signals in the field of real-time myoelectric control of prostheses and exoskeletons (Englehart and Hudgins, 2003; Oskoei and Hu, 2007; Geethanjali and Ray, 2011; Tang et al., 2014). We compared two lengths of adjacent analysis window, and the results indicate that the short analysis window yields higher accuracy rates than the long one (Figures 7–9, Tables 3, 4). This is in accordance with the finding of Englehart and Hudgins (2003), who proposed that a smaller segment increment produces a denser but semi-redundant stream of class decisions that could improve response time and accuracy. The short analysis window divides the one-stroke sketching process into more segments, from which more detailed features can be extracted. A larger amount of data results in features with lower statistical variance and, therefore, greater classification accuracy. The length of the short analysis window (250 ms) also conforms with the optimal window length (150–250 ms) proposed by Smith et al. (2011) for pattern recognition-based myoelectric control.
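The effect of window length on the number of features can be illustrated with adjacent (non-overlapping) windows over one 2.5 s epoch. The sketch below uses common textbook definitions of integrated EMG (IEMG), root mean square (RMS), and mean absolute value (MAV); the 1000 Hz sampling rate, the synthetic signal, and the function names are assumptions for illustration and are not taken from the paper.

```python
import math


def window_features(samples):
    """Return (IEMG, RMS, MAV) for one analysis window of sEMG samples."""
    n = len(samples)
    iemg = sum(abs(s) for s in samples)                 # integrated EMG
    rms = math.sqrt(sum(s * s for s in samples) / n)    # root mean square
    mav = iemg / n                                      # mean absolute value
    return iemg, rms, mav


def extract_features(signal, fs_hz, window_ms, increment_ms):
    """Slide a window over `signal` and compute the features for each position."""
    window = int(round(fs_hz * window_ms / 1000))
    step = int(round(fs_hz * increment_ms / 1000))
    return [window_features(signal[start:start + window])
            for start in range(0, len(signal) - window + 1, step)]


# Assumed sampling rate and a synthetic 2.5 s epoch for one channel.
fs_hz = 1000
epoch = [math.sin(2 * math.pi * 80 * t / fs_hz) for t in range(int(2.5 * fs_hz))]

short = extract_features(epoch, fs_hz, window_ms=250, increment_ms=250)
long_ = extract_features(epoch, fs_hz, window_ms=2500, increment_ms=2500)
print(len(short), len(long_))
```

Under these assumptions the short window yields ten feature triples per channel and epoch, whereas the long window yields one, which is why the two conditions lead to different numbers of principal components after PCA.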

Interestingly, Figures 7–9 show that the vertical 40° ellipse had the lowest recognition rate for every classifier. A possible reason is that the vertical 40° ellipse resembles two other shapes (the vertical 20° ellipse and the perfect circle), which increases its rate of false recognitions.

It can also be seen that the proposed GEP classifier showed the highest accuracy and robustness compared with the BPNN and ENN classifiers. One advantage of the GEP classifier is that it can produce simple explicit formulations (Landeras et al., 2012; Yang et al., 2016), which gives a better understanding of the derived relationship between the sEMG signals and one-stroke sketching shapes and makes the model suitable for real-time application. Although the ENN classifier achieved a higher accuracy rate than the BPNN classifier in our previous work (Chen et al., 2015), it performed worst in this study. With the short analysis window, the recognition results (AR, AR11) of the GEP classifier were only slightly better than those of the BPNN and ENN classifiers. This finding suggests that the sEMG-based sketching recognition method with the short analysis window should be robust to variations of the recognition algorithm.
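To illustrate what an explicit formulation means in GEP, the sketch below decodes a gene written in Karva notation (a K-expression) into an expression tree and evaluates it. It is a generic illustration of the encoding described by Ferreira (2001), not the model evolved in this study: the function set, the terminal names a to d, and the example gene are assumptions.

```python
import math
import operator

# Function set with arities; any other symbol is treated as a terminal (variable).
FUNCTIONS = {
    "+": (2, operator.add),
    "-": (2, operator.sub),
    "*": (2, operator.mul),
    "Q": (1, math.sqrt),  # Q denotes the square root
}


def decode_kexpression(gene):
    """Build an expression tree from a K-expression, filling it level by level."""
    nodes = [[symbol, []] for symbol in gene]
    next_child = 1
    i = 0
    while i < next_child:  # symbols past the coding region are never reached
        arity = FUNCTIONS[nodes[i][0]][0] if nodes[i][0] in FUNCTIONS else 0
        nodes[i][1] = nodes[next_child:next_child + arity]
        next_child += arity
        i += 1
    return nodes[0]


def to_infix(node):
    """Render the tree as a readable formula."""
    symbol, children = node
    if not children:
        return symbol
    if len(children) == 1:
        return f"sqrt({to_infix(children[0])})"
    return f"({to_infix(children[0])} {symbol} {to_infix(children[1])})"


def evaluate(node, inputs):
    """Evaluate the tree for a dict mapping terminal names to values."""
    symbol, children = node
    if symbol in FUNCTIONS:
        return FUNCTIONS[symbol][1](*(evaluate(child, inputs) for child in children))
    return inputs[symbol]


tree = decode_kexpression("Q*+-abcd")
formula = to_infix(tree)
value = evaluate(tree, {"a": 1.0, "b": 2.0, "c": 7.0, "d": 4.0})
print(formula, value)
```

Because the decoded model is an ordinary arithmetic expression, evaluating it at run time costs only a handful of operations, which is the property the text refers to when it mentions suitability for real-time use.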

Overall, our method may contribute to an efficient and natural way to sketch freely and precisely on computers or digital devices, and may be appropriate for applications including computer-aided design, virtual reality, prosthetics, remote control, and entertainment, as well as muscle-computer interfaces in general. To optimize our method further, we plan to address the trade-off between recognition accuracy and natural use by finding the optimal combination of muscle channels, window lengths, and sEMG features. In future work, we plan to develop a new HCI tool with a wearable armband that can be used as a muscle-computer interface (Chowdhury et al., 2013) for sketching in the air. However, our findings and the general approach have several limitations:

(1) Three time domain indices of sEMG signals were extracted as features. To further improve the robustness and discriminatory accuracy of similar shapes (e.g., vertical 20° ellipse, perfect circle and vertical 40° ellipse), other time domain indices, frequency domain indices and time-frequency domain indices could be additionally extracted.

(2) In our study, subjects were required to sketch on a template paper with fixed dimensions (Figure 1). However, these basic shapes can also be shown with different degrees and scales in practical drawings. Whether our method can recognize these additional one-stroke shapes needs further research.

(3) We specified the sequence of shapes, the starting point, and the direction of movements using sketching templates. This simplifies the problem compared with a real-life scenario, in which people have their own habits of drawing the same shape. To prove the actual usability of the method, our future work will consider more flexible and variable ways of freehand sketching in a more general setting.

(4) To test our approach, we selected 11 one-stroke shapes, which are variations of four basic one-stroke shapes (Robertson and Bertling, 2013) and are frequently used in product design. However, there is no definitive set of basic sketching shapes. In future work, we will increase the number of tested shapes and cover more general basic shapes that users may need in the intended context.

(5) Our method recognized 11 one-stroke shapes from the EMG patterns, showing outstanding classification rates on discrete symbols and shapes. However, discrete symbol classification is a relatively easy task. A much more challenging task is the continuous decoding of handwritten or drawn traces. This was attempted by Linderman et al. (2009), Huang et al. (2010), and Li et al. (2013), and improved recently by Okorokova et al. (2015), who used the dynamical properties of the written coordinates to aid continuous decoding of the coordinates from EMG. Another attempt to use more information was made by Rupasov et al. (2012), who complemented EMG during handwriting with EEG recordings but found only weak correlations between the two sets of data. One goal of our future work is continuous sketching recognition or reconstruction. We will therefore try to construct a prediction model between EMG signals and the coordinates (x, y) of pen traces for the reconstruction of free-form curves using linear or nonlinear decoding algorithms.

(6) Although it seems that there was sufficient data to achieve extremely high accuracy with our method, the recognition performance can be further improved with more training samples. Our method is heavily dependent on the size of the training dataset. In future work, we can use some other state-of-the-art methods that can achieve high performance with small training samples, which is convenient for users.

4. Conclusion

In this paper, we attempted to verify whether a robust variant of GP, namely GEP, could be used to recognize sketching patterns from arm sEMG signals. While the results are encouraging, additional research is needed to develop the method further. The technique presented in this work could potentially bring significant change to the conventional human-machine interface, and offer great convenience both to healthy persons and to people with hand impairments. Our future work will concentrate on the development of a wearable armband that can be used as a natural perceptual interface for sketching in the air.

Author Contributions

Substantial contributions to the conception or design of the work; or the acquisition, analysis, or interpretation (ZY, YC). Drafting the work or revising it critically for important intellectual content (ZY, YC). Final approval of the version to be published (ZY, YC). Agreement to be accountable for all aspects of the work in ensuring that questions related to the accuracy or integrity of any part of the work are appropriately investigated and resolved (ZY, YC).

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The authors would like to thank the participants of the experiment. This study was partly supported by the National Natural Science Foundation of China (No. 51305077), the Fundamental Research Funds for the Central Universities (No. CUSF-DH-D-2016068), the Zhejiang Provincial Key Laboratory of integration of healthy smart kitchen system (No. 2014E10014), and the China Scholarship Council (Nos. 201506630036, 201506635030).

References

Ahsan, M. R., Ibrahimy, M. I., and Khalifa, O. O. (2009). Emg signal classification for human computer interaction: a review. Eur. J. Sci. Res. 33, 480–501.

Anderson, D., Bailey, C., and Skubic, M. (2004). “Hidden markov model symbol recognition for sketch-based interfaces,” in AAAI Fall Symposium (Washington, DC), 15–21.

Andreasen, D., and Gabbert, D. (2006). “Electromyographic switch navigation of power wheelchairs,” in Annual Conference of the Rehabilitation Engineering and Assistive Technology Society of North America (Arlington, VA), 1064–1071.

Ardalani-Farsa, M., and Zolfaghari, S. (2010). Chaotic time series prediction with residual analysis method using hybrid elman–narx neural networks. Neurocomputing 73, 2540–2553. doi: 10.1016/j.neucom.2010.06.004

Asano, T., and Honda, S. (2010). “Visual interface system by character handwriting gestures in the air,” in RO-MAN, 2010 IEEE (Viareggio: IEEE), 56–61. doi: 10.1109/roman.2010.5598705

Briede-Westermeyer, J. C., Cabello-Mora, M., and Hernandis-Ortuño, B. (2014). Concurrent sketching model for the industrial product conceptual design. Dyna 81, 199–208. doi: 10.15446/dyna.v81n187.41068

Cao, X., and Balakrishnan, R. (2005). “Evaluation of an on-line adaptive gesture interface with command prediction,” in Proceedings of Graphics Interface 2005 (Waterloo, ON: Canadian Human-Computer Communications Society), 187–194.

Chen, X., Zhang, X., Zhao, Z.-Y., Yang, J.-H., Lantz, V., and Wang, K.-Q. (2007). “Multiple hand gesture recognition based on surface emg signal,” in Bioinformatics and Biomedical Engineering, 2007. ICBBE 2007. The 1st International Conference on (Wuhan: IEEE), 506–509.

Chen, Y., Liu, J., and Tang, X. (2008). “Sketching in the air: a vision-based system for 3d object design,” in Computer Vision and Pattern Recognition, 2008. CVPR 2008. IEEE Conference on (Anchorage, AK: IEEE), 1–6.

Chen, Y., Wang, J., and Chen, Y. (2014). An sEMG-based attitude recognition method of nodding and head-shaking for interactive optimization. J. Comput. Inform. Syst. 10, 7939–7948. doi: 10.12733/jcis11587

Chen, Y., Yang, Z., and Wang, J. (2015). Eyebrow emotional expression recognition using surface emg signals. Neurocomputing 168, 871–879. doi: 10.1016/j.neucom.2015.05.037

Chihi, I., Abdelkrim, A., and Benrejeb, M. (2012). Analysis of handwriting velocity to identify handwriting process from electromyographic signals. Am. J. Appl. Sci. 9:1742. doi: 10.3844/ajassp.2012.1742.1756

Cho, M. G. (2006). A new gesture recognition algorithm and segmentation method of korean scripts for gesture-allowed ink editor. Inform. Sci. 176, 1290–1303. doi: 10.1016/j.ins.2005.04.006

Chowdhury, A., Ramadas, R., and Karmakar, S. (2013). “Muscle computer interface: a review,” in ICoRD'13 (Chennai: Springer), 411–421. doi: 10.1007/978-81-322-1050-4_33

de Almeida, P. H. T. Q., da Cruz, D. M. C., Magna, L. A., and Ferrigno, I. S. V. (2013). An electromyographic analysis of two handwriting grasp patterns. J. Electromyogr. Kinesiol. 23, 838–843. doi: 10.1016/j.jelekin.2013.04.004

Earley, E. J., Hargrove, L. J., and Kuiken, T. A. (2016). Dual window pattern recognition classifier for improved partial-hand prosthesis control. Front. Neurosci. 10:58. doi: 10.3389/fnins.2016.00058

Englehart, K., and Hudgins, B. (2003). A robust, real-time control scheme for multifunction myoelectric control. IEEE Trans. Biomed. Eng. 50, 848–854. doi: 10.1109/TBME.2003.813539

Farina, D., Jiang, N., Rehbaum, H., Holobar, A., Graimann, B., Dietl, H., et al. (2014). The extraction of neural information from the surface emg for the control of upper-limb prostheses: emerging avenues and challenges. IEEE Trans. Neural Syst. Rehabil. Eng. 22, 797–809. doi: 10.1109/TNSRE.2014.2305111

Ferreira, C. (2001). Gene expression programming: a new adaptive algorithm for solving problems. Eprint Arxiv Cs 13, 87–129.

Field, M., Gordon, S., Peterson, E., Robinson, R., Stahovich, T., and Alvarado, C. (2010). The effect of task on classification accuracy: Using gesture recognition techniques in free-sketch recognition. Comput. Graph. 34, 499–512. doi: 10.1016/j.cag.2010.07.001

Fougner, A. L., Stavdahl, Ø., and Kyberd, P. J. (2014). System training and assessment in simultaneous proportional myoelectric prosthesis control. J. Neuroeng. Rehabil. 11:75. doi: 10.1186/1743-0003-11-75

Geethanjali, P., and Ray, K. (2011). Identification of motion from multi-channel emg signals for control of prosthetic hand. Aust. Phys. Eng. Sci. Med. 34, 419–427. doi: 10.1007/s13246-011-0079-z

Hahne, J. M., Biessmann, F., Jiang, N., Rehbaum, H., Farina, D., Meinecke, F. C., et al. (2014). Linear and nonlinear regression techniques for simultaneous and proportional myoelectric control. IEEE Trans. Neural Syst. Rehabil. Eng. 22, 269–279. doi: 10.1109/TNSRE.2014.2305520

Hammond, T., and Paulson, B. (2011). Recognizing sketched multistroke primitives. ACM Trans. Interact. Intell. Syst. 1:4. doi: 10.1145/2030365.2030369

Huang, G. (2013). Modeling, Analysis of Surface Bioelectric Signal and Its Application in Human Computer Interaction. PhD thesis, Shanghai Jiao Tong University.

Huang, G., Zhang, D., Zheng, X., and Zhu, X. (2010). “An emg-based handwriting recognition through dynamic time warping,” in Engineering in Medicine and Biology Society (EMBC), 2010 Annual International Conference of the IEEE (Buenos Aires: IEEE), 4902–4905. doi: 10.1109/IEMBS.2010.5627246

Jian, W. (2000). Some advances in the research of semg signal analysis and its application. Sports Sci. 20, 56–60.

Jiang, N., Lorrain, T., and Farina, D. (2014). A state-based, proportional myoelectric control method: online validation and comparison with the clinical state-of-the-art. J. Neuroeng. Rehabil. 11:1. doi: 10.1186/1743-0003-11-110

Kara, L. B., and Stahovich, T. F. (2005). An image-based, trainable symbol recognizer for hand-drawn sketches. Comput. Graph. 29, 501–517. doi: 10.1016/j.cag.2005.05.004

Kiguchi, K., and Hayashi, Y. (2012). An EMG-based control for an upper-limb power-assist exoskeleton robot. IEEE Trans. Syst. Man Cybern. B Cybern. 42, 1064–1071.

Kristensson, P.-O., and Zhai, S. (2004). “Shark2: a large vocabulary shorthand writing system for pen-based computers,” in Proceedings of the 17th Annual ACM Symposium on User Interface Software and Technology (New York, NY: ACM), 43–52. doi: 10.1145/1029632.1029640

Landeras, G., López, J. J., Kisi, O., and Shiri, J. (2012). Comparison of gene expression programming with neuro-fuzzy and neural network computing techniques in estimating daily incoming solar radiation in the basque country (northern spain). Energy Convers. Manage. 62, 1–13. doi: 10.1016/j.enconman.2012.03.025

Lansari, A., Bouslama, F., Khasawneh, M., and Al-Rawi, A. (2003). “A novel electromyography (emg) based classification approach for arabic handwriting,” in Neural Networks, 2003. Proceedings of the International Joint Conference on, Vol. 3 (Portland, OR: IEEE), 2193–2196. doi: 10.1109/ijcnn.2003.1223748

Li, C., Ma, Z., Yao, L., and Zhang, D. (2013). “Improvements on emg-based handwriting recognition with DTW algorithm,” in 2013 35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) (Santa Fe, NM: IEEE), 2144–2147.

Li, Y. (2003). Incremental Sketch Understanding for Intention Extraction in Sketch-based User Interfaces. Berkeley, CA: University of California.

Li, Y., Chen, X., Tian, J., Zhang, X., Wang, K., and Yang, J. (2010). “Automatic recognition of sign language subwords based on portable accelerometer and emg sensors,” in International Conference on Multimodal Interfaces and the Workshop on Machine Learning for Multimodal Interaction (Beijing: ACM), 17. doi: 10.1145/1891903.1891926

Li, Y., Hospedales, T. M., Song, Y.-Z., and Gong, S. (2015). Free-hand sketch recognition by multi-kernel feature learning. Comput. Vis. Image Understanding. 137, 1–11. doi: 10.1016/j.cviu.2015.02.003

Linderman, M., Lebedev, M. A., and Erlichman, J. S. (2009). Recognition of handwriting from electromyography. PLoS ONE 4:e6791. doi: 10.1371/journal.pone.0006791

Martí, P., Shiri, J., Duran-Ros, M., Arbat, G., De Cartagena, F. R., and Puig-Bargués, J. (2013). Artificial neural networks vs. gene expression programming for estimating outlet dissolved oxygen in micro-irrigation sand filters fed with effluents. Comput. Electron. Agric. 99, 176–185. doi: 10.1016/j.compag.2013.08.016

McKeague, I. W. (2005). A statistical model for signature verification. J. Am. Stat. Assoc. 100, 231–241. doi: 10.1198/016214504000000827

Myers, C. S., and Rabiner, L. R. (1981). A comparative study of several dynamic time-warping algorithms for connected-word recognition. Bell Syst. Tech. J. 60, 1389–1409. doi: 10.1002/j.1538-7305.1981.tb00272.x

Nan, B., Fukuda, O., and Tsuji, T. (2003). Emg-based motion discrimination using a novel recurrent neural network. J. Intell. Inform. Syst. 21, 113–126. doi: 10.1023/A:1024706431807

Nielsen, J. L., Holmgaard, S., Jiang, N., Englehart, K. B., Farina, D., and Parker, P. A. (2011). Simultaneous and proportional force estimation for multifunction myoelectric prostheses using mirrored bilateral training. IEEE Trans. Biomed. Eng. 58, 681–688. doi: 10.1109/TBME.2010.2068298

Notowidigdo, M., and Miller, R. C. (2004). “Off-line sketch interpretation,” in AAAI Fall Symposium (Arlington, VA), 120–126.

Okorokova, E., Lebedev, M., Linderman, M., and Ossadtchi, A. (2015). A dynamical model improves reconstruction of handwriting from multichannel electromyographic recordings. Front. Neurosci. 9:389. doi: 10.3389/fnins.2015.00389

Olofsson, E., and Sjölén, K. (2007). Design Sketching:[Including an Extensive Collection of Inspiring Sketches by 24 Students at the Umeå Institute of Design]. Klippan: KEEOS Design Books.

Olsen, L., Samavati, F. F., Sousa, M. C., and Jorge, J. A. (2009). Sketch-based modeling: a survey. Comput. Graph. 33, 85–103. doi: 10.1016/j.cag.2008.09.013

Oskoei, M. A., and Hu, H. (2007). Myoelectric control systems a survey. Biomed. Signal Process. Control 2, 275–294. doi: 10.1016/j.bspc.2007.07.009

Pittman, J. A. (1991). “Recognizing handwritten text,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (New York, NY: ACM), 271–275. doi: 10.1145/108844.108914

Poosapadi Arjunan, S., and Kumar, D. (2010). Decoding subtle forearm flexions using fractal features of surface electromyogram from single and multiple sensors. J. Neuroeng. Rehabil. 7, 1–26. doi: 10.1186/1743-0003-7-53

Pu, J., and Gur, D. (2009). Automated freehand sketch segmentation using radial basis functions. Comput. Aided Design 41, 857–864. doi: 10.1016/j.cad.2009.05.005

Ren, H., Xu, G., and Kee, S. (2004). “Subject-independent natural action recognition,” in Automatic Face and Gesture Recognition, 2004. Proceedings. Sixth IEEE International Conference on (Seoul: IEEE), 523–528.

Robertson, S., and Bertling, T. (2013). How to Draw: Drawing and Sketching Objects and Environments From Your Imagination. Culver City, CA: Design Studio Press.

Rubine, D. (1991). Specifying gestures by example. Acm Siggraph Comput. Graph. 38, 329–337. doi: 10.1145/127719.122753

Rupasov, V. I., Lebedev, M. A., Erlichman, J. S., Lee, S. L., Leiter, J. C., and Linderman, M. (2012). Time-dependent statistical and correlation properties of neural signals during handwriting. PLoS ONE 7:e43945. doi: 10.1371/journal.pone.0043945

Schmieder, P., Plimmer, B., and Blagojevic, R. (2009). “Automatic evaluation of sketch recognizers,” in Proceedings of the 6th Eurographics Symposium on Sketch-Based Interfaces and Modeling (New Orleans, LA: ACM), 85–92. doi: 10.1145/1572741.1572757

Schön, D. A. (1983). The Reflective Practitioner: How Professionals Think in Action, Vol. 5126. New York, NY: Basic Books.

Schütze, M., Sachse, P., and Römer, A. (2003). Support value of sketching in the design process. Res. Eng. Design 14, 89–97. doi: 10.1007/s00163-002-0028-7

Sezgin, T. M., and Davis, R. (2005). “Hmm-based efficient sketch recognition,” in Proceedings of the 10th International Conference on Intelligent User Interfaces (San Diego, CA: ACM), 281–283. doi: 10.1145/1040830.1040899

Smith, L. H., Hargrove, L. J., Lock, B. A., and Kuiken, T. A. (2011). Determining the optimal window length for pattern recognition-based myoelectric control: balancing the competing effects of classification error and controller delay. IEEE Trans. Neural Syst. Rehabil. Eng. 19, 186–192. doi: 10.1109/TNSRE.2010.2100828

Sun, L., Xiang, W., Chai, C., Wang, C., and Huang, Q. (2014). Creative segment: a descriptive theory applied to computer-aided sketching. Design Studies 35, 54–79. doi: 10.1016/j.destud.2013.10.003

Tang, Z., Zhang, K., Sun, S., Gao, Z., Zhang, L., and Yang, Z. (2014). An upper-limb power-assist exoskeleton using proportional myoelectric control. Sensors 14, 6677–6694. doi: 10.3390/s140406677

Tappert, C. C. (1982). Cursive script recognition by elastic matching. IBM J. Res. Dev. 26, 765–771. doi: 10.1147/rd.266.0765

Taura, T., Yamamoto, E., Fasiha, M. Y. N., Goka, M., Mukai, F., Nagai, Y., et al. (2012). Constructive simulation of creative concept generation process in design: a research method for difficult-to-observe design-thinking processes. J. Eng. Design 23, 297–321. doi: 10.1080/09544828.2011.637191

Tovey, M., Porter, S., and Newman, R. (2003). Sketching, concept development and automotive design. Design Studies 24, 135–153. doi: 10.1016/S0142-694X(02)00035-2

Van der Lugt, R. (2005). How sketching can affect the idea generation process in design group meetings. Design Studies 26, 101–122. doi: 10.1016/j.destud.2004.08.003

Vikram, S., Li, L., and Russell, S. (2013). “Handwriting and gestures in the air, recognizing on the fly,” in Proceedings of the CHI, Vol. 13 (Paris), 1–6.

Wheeler, K. R., and Jorgensen, C. C. (2003). Gestures as input: neuroelectric joysticks and keyboards. IEEE Pervasive Comput. 2, 56–61. doi: 10.1109/MPRV.2003.1203754

Wilson, A., and Shafer, S. (2003). “Xwand: Ui for intelligent spaces,” in Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (Fort Lauderdale, FL: ACM), 545–552. doi: 10.1145/642611.642706

Wobbrock, J. O., Wilson, A. D., and Li, Y. (2007). “Gestures without libraries, toolkits or training: a $1 recognizer for user interface prototypes,” in Proceedings of the 20th Annual ACM Symposium on User Interface Software and Technology (Newport, RI: ACM), 159–168. doi: 10.1145/1294211.1294238

Xing, B., Zhang, K., Sun, S., Zhang, L., Gao, Z., Wang, J., et al. (2015). Emotion-driven chinese folk music-image retrieval based on de-svm. Neurocomputing 148, 619–627. doi: 10.1016/j.neucom.2014.08.007

Yang, Z., Chen, Y., Tang, Z., and Wang, J. (2016). Surface emg based handgrip force predictions using gene expression programming. Neurocomputing 207, 568–579. doi: 10.1016/j.neucom.2016.05.038

Zhao, Y. (2012). Human Emotion Recognition from Body Language of the Head Using Soft Computing Techniques. Ph.D. thesis, University of Ottawa.

Keywords: sketching, surface electromyography, gene expression programming, muscle-computer interface, pattern recognition

Citation: Yang Z and Chen Y (2016) Surface EMG-based Sketching Recognition Using Two Analysis Windows and Gene Expression Programming. Front. Neurosci. 10:445. doi: 10.3389/fnins.2016.00445

Received: 23 June 2016; Accepted: 14 September 2016;

Published: 14 October 2016.

Edited by:

Ning Jiang, University of Waterloo, Canada

Reviewed by:

Giuseppe D'Avenio, Istituto Superiore di Sanità, Italy

Alexei Ossadtchi, St. Petersburg State University, Russia

Copyright © 2016 Yang and Chen. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) or licensor are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Zhongliang Yang, yzl@dhu.edu.cn
