- Institute for Cognitive Neurodynamics, East China University of Science and Technology, Shanghai, China

Based on the Hodgkin–Huxley model, the present study constructed a fully connected structural neural network and used neural energy coding theory to simulate the neural activity and energy consumption of the network. The numerical simulations showed that the periodicity of the network energy distribution was positively correlated with the number of neurons and the coupling strength, but negatively correlated with the signal transmitting delay. Moreover, a relationship was established between the energy distribution feature and the synchronous oscillation of the neural network: when the proportion of negative energy in the power consumption curve was high, the synchronous oscillation of the network was pronounced. In addition, a comparison with simulations of a structural neural network based on the Wang–Zhang biophysical model of neurons showed that the two models were essentially consistent.

Introduction

Currently, neuroscience relies on several conventional encoding theories and decoding technologies (Amari and Nakahara, 2005; Purushothaman and Bradley, 2005; Natarajan et al., 2008; Gazzaniga et al., 2009; Jacobs et al., 2009). However, none of these theories and technologies has become established as a general framework for analyzing brain activity (Laughlin and Sejnowski, 2003; Abbott, 2008; Wang and Zhu, 2016). In our view, energy, which acts as a carrier throughout all brain activity, provides a novel direction for understanding cognitive neuroscience and neural information processing. Both suprathreshold and subthreshold neural activities are accompanied by energy consumption; however, the relationship between the pattern of brain energy consumption and perceptual cognition has not yet been clarified. At the level of global brain activity, the neural activities that respond to environmentally driven demands account for less than 5% of the brain's energy budget, leaving the majority devoted to intrinsic neural signaling (Raichle and Mintun, 2006; Sokoloff, 2008; Zhang and Raichle, 2010). At the level of local neuronal firing, less than 40% of signaling-related energy consumption is used for housekeeping mechanisms and for maintaining the resting potentials of neurons and glial cells, while the remainder is used for action potentials and postsynaptic potentials (Attwell and Laughlin, 2001; Howarth et al., 2012; Yu et al., 2017). This highly mismatched ratio of energy consumption implies that an important mechanism associated with cognitive neural activity underlies the energy consumption of the brain but is yet to be elucidated.

The neural activity and operations of the brain obey the principle of minimizing energy consumption while maximizing signal transmission efficiency (Laughlin and Sejnowski, 2003). In addition, the cooperation of sodium and potassium channels contributes substantially to energy-efficient metabolism by minimizing the overlap of their respective ion fluxes (Alle et al., 2009). Building on these principles, a novel neural coding theory, termed neural energy coding, has been proposed in neuroinformatics in recent years (Wang et al., 2006, 2008, 2016, 2017; Wang and Wang, 2014; Wang R. et al., 2014; Wang Z. et al., 2014; Yan et al., 2016). This theory states that the membrane potential of a neuron corresponds uniquely to the neural energy it consumes, which allows the complex and highly nonlinear spiking pattern of membrane potentials to be transformed into a distribution pattern of energy when analyzing neural activity (Abbott, 2008; Wang et al., 2008; Wang and Wang, 2014; Wang R. et al., 2014; Wang Z. et al., 2014). According to this theory, neural energy coding can serve as the foundation for global neural coding of brain function (Wang et al., 2006; Wang and Zhu, 2016; Zheng et al., 2016) for the following reasons: (1) Energy can be used to analyze and describe neuroscientific experiments at various levels, so that computational results are no longer mutually incompatible, contradictory, or unrelated (Wang and Zhu, 2016). Thus, neural information can be expressed as energy at the level of molecules, neurons, networks, cognition, behavior, and their combinations, which unifies neural models across levels. (2) Neural energy can be combined with the spiking pattern of membrane potentials to decode neural information (Wang et al., 2006, 2008, 2016, 2017; Wang and Wang, 2014; Wang R. et al., 2014; Wang Z. et al., 2014; Kozma, 2016; Yan et al., 2016; Zheng et al., 2016). (3) Neural energy can describe interactions among large populations of neurons, including interactions among multiple brain regions, which no conventional coding theory can achieve (Wang et al., 2009; Vuksanović and Hövel, 2016; Zhang et al., 2016; Déli et al., 2017; Peters et al., 2017). (4) Simultaneous recording from multiple brain regions in traumatic brain injury experiments is currently challenging. Although EEG and MEG can sample neuronal activity from various brain regions, it is difficult to estimate cortical interactions from these extracranial signals. The main obstacle is the lack of a theoretical tool that can effectively analyze interactions between cortices in a high-dimensional space (Hipp et al., 2011) and transform the scalp EEG into cortical potentials; neural energy offers a way to address this issue (Wang and Wang, 2017). (5) Because energy is a scalar, the dynamic response of any neural model, whether it describes a single neuron, a neural population, a network, or behavior, and whether it is linear or nonlinear, can describe the pattern of neural coding through energy superposition (Wang et al., 2006, 2008, 2016, 2017; Wang and Wang, 2014; Wang R. et al., 2014; Wang Z. et al., 2014; Yan et al., 2016; Zheng et al., 2016). Thus, global information about functional brain activity can be acquired, which other traditional coding theories do not achieve. (6) However complex the pattern of network oscillation, the one-to-one correspondence between network oscillations and energy oscillations greatly facilitates the modeling and numerical analysis of large-scale neural networks with high dimensionality and strong nonlinearity. In this way, neural energy coding makes complex neuroinformatics tractable without losing information.

This coding theory has made several research findings possible (Wang et al., 2006, 2008, 2016, 2017; Wang and Wang, 2014; Wang R. et al., 2014; Wang Z. et al., 2014; Du et al., 2016; Wang and Zhu, 2016; Yan et al., 2016; Zheng et al., 2016). Previously, Wang and Zhang proposed a novel biophysical model of neurons that provides the membrane potential function of a single neuron together with the corresponding energy function and its calculation method (Wang et al., 2006). A comparison with the Hodgkin–Huxley (H–H) model revealed that the two models are essentially consistent (Wang R. et al., 2017). Subsequently, we investigated energy coding in a structural neural network and characterized its energy distribution under different parameters (Wang and Wang, 2014; Wang R. et al., 2014; Wang Z. et al., 2014). Recently, we applied this neural coding theory to mental exploration by regarding the spatial distribution of the power of place cells in the hippocampus as a neural energy field. The results showed that a nearly optimal exploration path could be found after only about ten rounds of mental exploration. Compared with conventional studies of mental exploration, this neural energy field gradient method greatly improves exploration efficiency (Wang et al., 2016).

In our previous studies, the structural network model was based on the Wang–Zhang biophysical model of neurons (Wang et al., 2006). Here, we explore the energy consumption properties of structural neural networks based on the classical H–H model in order to establish the role of neural energy in computational neuroscience. Then, based on the dynamic feature that neurons first absorb energy and then consume it while firing action potentials, we propose an index defined as the ratio of cumulatively stored energy to the total cumulative (stored plus consumed) energy in order to quantitatively analyze the synchronization of neural activity. Moreover, the comparison with previous studies (Wang et al., 2006; Wang and Wang, 2014) lays a theoretical foundation for future investigation of global brain function models and large-scale global neural coding.

Computational Model

Energy Consumption of the H–H Neuron

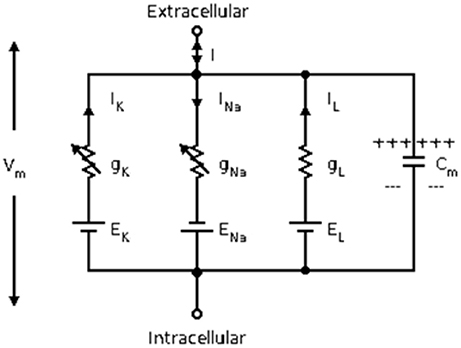

Herein, the classical Hodgkin–Huxley model (Hodgkin and Huxley, 1990) is used to calculate the energy consumption of neurons. The equivalent circuit of the Hodgkin–Huxley model is shown in Figure 1. The membrane equation is as follows:

where Cm is the membrane capacitance, Vm is the membrane potential, ENa and EK are the Nernst potentials of sodium and potassium ions, respectively, and El is the reversal potential of the leakage current, that is, the potential at which the leakage current is zero. In addition, gl is the leakage conductance, and gNa and gK are the maximum conductances of the sodium and potassium channels, respectively. The two variable conductances are described by the following set of nonlinear differential equations:

where:

Vr is the resting potential.
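To make the membrane and gating equations concrete, the following is a minimal sketch that integrates a single H–H neuron with forward Euler under a constant 10 μA/cm² current. It assumes the standard textbook parameters and rate functions in the modern convention (rest near −65 mV; ENa = 50 mV, EK = −77 mV, El = −54.387 mV). These are not the Table 1 values of this study, which may be expressed relative to the resting potential Vr. The names `rates` and `simulate_hh` are hypothetical.

```python
import math

import numpy as np

# Textbook H-H parameters, modern convention (rest near -65 mV).
# Assumed for illustration; NOT the Table 1 values of this study.
C_M = 1.0                              # membrane capacitance, uF/cm^2
G_NA, G_K, G_L = 120.0, 36.0, 0.3      # maximal conductances, mS/cm^2
E_NA, E_K, E_L = 50.0, -77.0, -54.387  # reversal potentials, mV


def _vtrap(x: float, y: float) -> float:
    """x / (1 - exp(-x / y)), replaced by its series limit when x is near 0."""
    if abs(x / y) < 1e-6:
        return y * (1.0 + x / (2.0 * y))
    return x / (1.0 - math.exp(-x / y))


def rates(v: float) -> tuple:
    """Opening (alpha) and closing (beta) rates of the m, h, n gates, in 1/ms."""
    a_m = 0.1 * _vtrap(v + 40.0, 10.0)
    b_m = 4.0 * math.exp(-(v + 65.0) / 18.0)
    a_h = 0.07 * math.exp(-(v + 65.0) / 20.0)
    b_h = 1.0 / (1.0 + math.exp(-(v + 35.0) / 10.0))
    a_n = 0.01 * _vtrap(v + 55.0, 10.0)
    b_n = 0.125 * math.exp(-(v + 65.0) / 80.0)
    return a_m, b_m, a_h, b_h, a_n, b_n


def simulate_hh(i_ext: float = 10.0, t_max: float = 50.0, dt: float = 0.01):
    """Forward-Euler integration of one H-H neuron under constant current i_ext (uA/cm^2).

    Returns time (ms), membrane potential (mV) and a (steps, 3) array of the
    ionic currents i_Na, i_K, i_l (uA/cm^2), each defined as g * (V - E).
    """
    steps = int(round(t_max / dt))
    v = -65.0
    a_m, b_m, a_h, b_h, a_n, b_n = rates(v)
    # Start each gate at its steady-state value at rest.
    m, h, n = a_m / (a_m + b_m), a_h / (a_h + b_h), a_n / (a_n + b_n)
    t = np.arange(steps) * dt
    vs = np.empty(steps)
    currents = np.empty((steps, 3))
    for k in range(steps):
        i_na = G_NA * m**3 * h * (v - E_NA)
        i_k = G_K * n**4 * (v - E_K)
        i_l = G_L * (v - E_L)
        vs[k] = v
        currents[k] = (i_na, i_k, i_l)
        a_m, b_m, a_h, b_h, a_n, b_n = rates(v)
        # Membrane equation: C_m dV/dt = I - (i_Na + i_K + i_l)
        v += dt * (i_ext - (i_na + i_k + i_l)) / C_M
        # Gating kinetics: dx/dt = alpha_x (1 - x) - beta_x x
        m += dt * (a_m * (1.0 - m) - b_m * m)
        h += dt * (a_h * (1.0 - h) - b_h * h)
        n += dt * (a_n * (1.0 - n) - b_n * n)
    return t, vs, currents


if __name__ == "__main__":
    t, v, currents = simulate_hh()
    print(f"peak membrane potential: {v.max():.1f} mV")
```

Forward Euler with dt = 0.01 ms is adequate for a qualitative trace. A smaller step or a higher-order integrator would be preferable when the currents feed into quantitative energy estimates.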

An action potential proceeds through the following steps: (1) Stimulation of the postsynaptic neuron by presynaptic neurons increases the permeability of the cell membrane to sodium ions. Sodium ions begin to flow inward, and the membrane potential approaches the threshold for depolarization (subthreshold activity); (2) The sodium permeability increases further, sodium ions flow inward in large amounts, and the membrane potential rises rapidly (suprathreshold activity); (3) The sodium permeability decreases while the potassium permeability increases, so that potassium ions flow outward; after reaching its peak, the membrane potential begins to fall (repolarization); (4) The potassium permeability increases further, and potassium ions continue to flow outward until the membrane is hyperpolarized; (5) The potassium permeability decreases, and the membrane potential returns to the resting level.

The flow of ions across the membrane includes active and passive transport. In active transport, the sodium–potassium pump consumes ATP to carry potassium ions into the cell and sodium ions out of it, whereas in passive transport ions move under the driving force of the concentration and potential gradients (Zheng et al., 2014). Diffusion of ions through ion channels under the concentration gradient does not consume biological energy, and the flow of ions under the potential gradient is driven by the work of the electric field force rather than by ATP consumption. Thus, only the energy consumed by active transport should be regarded as the biological energy consumed by the neuron.

Since the energy consumed by active transport cannot be calculated directly, we first consider the energy of the whole equivalent circuit of the Hodgkin–Huxley neuron. At any moment, electric energy is stored in the membrane capacitor and in the equivalent batteries formed by the Nernst potentials of the ions (Moujahid et al., 2011), and it can be expressed as:

Because the last three terms are difficult to calculate, we take the first-order time derivative of Eall(t) and focus on the electric power:

where the last three terms are the powers of the equivalent batteries represented by the Nernst potentials of the ions.

While

thus,

in which IVm is the power of the external stimulus, (PNa + PK + Pl) is the power of the voltage sources represented by the Nernst potentials, and Vm(iNa + iK + il) is the power expended by the driving force of the membrane potential gradient (the electric field force), which should be regarded as the power of passive transport. If the energy consumed by changes in membrane permeability is neglected, the energy involved when a neuron fires action potentials comprises the energy provided by the surrounding environment, the energy stored in the potential difference across the cell membrane, and the biological energy (ATP) consumed by the ion pumps. As a subthreshold neuron becomes a functional (firing) neuron, the sum of these three types of energy is dynamically equivalent to the total energy in the circuit of the H–H model. The first two types correspond to IVm and Vm(iNa + iK + il) in the circuit, respectively. Hence, the power (PNa + PK + Pl) of the voltage sources represented by the Nernst potentials is approximately equal to the biological power of the sodium–potassium pump, that is, the power consumed by the neuron. In this process, the sodium–potassium pump continually transports ions and thereby directly consumes biological energy: the hydrolysis of one ATP molecule pumps three sodium ions out and two potassium ions in (Attwell and Laughlin, 2001). This also confirms that the ion pump helps to maintain the Nernst potentials by continuously transporting ions (Laughlin et al., 1998). As sodium ions flow inward and potassium ions flow outward, the membrane potential rises above zero; the sodium–potassium pump can then pump sodium ions out with the help of the potential difference but must pump potassium ions in against it.
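Written out, the power balance described above follows from differentiating the capacitor energy ½CmVm² and substituting the membrane current balance. The derivation below assumes the standard H–H current equation Cm dVm/dt = I − (iNa + iK + il), with I the external current density; it illustrates the reasoning and is not a reproduction of this study's numbered equations.

```latex
\begin{aligned}
\frac{dE_{all}}{dt} &= C_m V_m \frac{dV_m}{dt} + P_{Na} + P_K + P_l \\
C_m \frac{dV_m}{dt} &= I - (i_{Na} + i_K + i_l) \\
\Rightarrow \quad \frac{dE_{all}}{dt} &= I V_m - V_m\,(i_{Na} + i_K + i_l) + (P_{Na} + P_K + P_l)
\end{aligned}
```

The three terms on the last line are, in order, the power of the external stimulus, the power of passive transport, and the power of the voltage sources that the text identifies with the power of the sodium–potassium pump.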
Because the pump moves sodium ions with the potential difference but potassium ions against it, the voltage source represented by the sodium Nernst potential can be regarded as storing energy, while that of potassium ions consumes energy. Consequently, PNa is negative whereas PK is positive, and Pl behaves in the same way as PK. Therefore, the power consumed by the ion pump can be calculated from the power of the voltage sources represented by the Nernst potentials as follows:

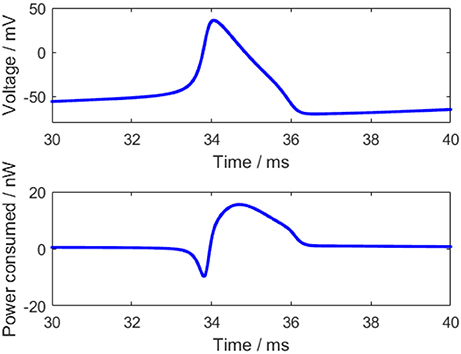

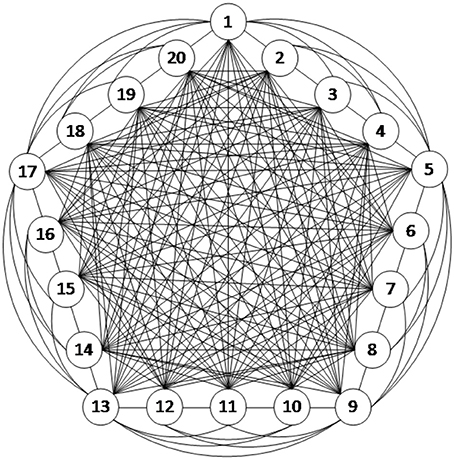

The neuronal energy consumption during an action potential can be calculated with the above equation (Figure 2). All parameter values used in the calculation are experimental values (Hodgkin and Huxley, 1990) and are listed in Table 1.

The power consumption curve of the neuron obtained from Equation (3) is shown in Figure 2. At the start of the action potential, the neuron absorbs some energy from ATP hydrolysis. Meanwhile, the sodium–potassium pump works weakly because there are few sodium ions and many potassium ions inside the membrane, so it consumes only a small amount of energy. Thus, in the initial stage of the action potential the energy absorbed by the neuron exceeds the energy consumed by the pump, which appears as the negative region of the power consumption curve before the peak of the membrane potential. As the membrane potential rises, sodium ions gradually accumulate inside the membrane and some potassium ions flow outward, which increases the workload of the pump as it pumps sodium ions out and potassium ions in to maintain the Nernst potentials (Laughlin et al., 1998). Hence, the power consumption of the neuron turns positive and increases sharply when the membrane potential reaches its peak; the peak of energy consumption lags behind the peak of the membrane potential by about 0.4 ms, which is consistent with previous results (Wang et al., 2006; Wang R. et al., 2014). Importantly, the positive and negative regions of this power consumption curve have profound neurobiological significance: they correspond to the experimental finding that, during stimulation-induced neural activity, blood flow rises by about 31% while the accompanying oxygen consumption increases by only 6% (Lin et al., 2010; Tozzi and Peters, 2017).
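The negative and positive regions of the power curve, and the lag of its peak behind the membrane-potential peak, can be quantified from sampled data. The sketch below is a hypothetical helper, `action_potential_energy`, that assumes uniformly spaced samples and a rectangle-rule integral. It takes the power curve P(t) as input and does not reproduce how Equation (3) computes it.

```python
import numpy as np


def action_potential_energy(t, v, p):
    """Split a sampled power curve into absorbed (negative) and consumed (positive) energy.

    t : uniformly spaced sample times (ms)
    v : membrane potential at each sample (mV)
    p : power of the neuron at each sample; negative values = energy absorbed
    Returns the two energy magnitudes, their difference, and the lag of the
    power peak behind the membrane-potential peak (ms). Rectangle-rule integrals.
    """
    t, v, p = (np.asarray(a, dtype=float) for a in (t, v, p))
    dt = t[1] - t[0]
    absorbed = -np.sum(np.minimum(p, 0.0)) * dt   # area of the negative region
    consumed = np.sum(np.maximum(p, 0.0)) * dt    # area of the positive region
    lag = t[np.argmax(p)] - t[np.argmax(v)]       # > 0 if power peaks after V
    return {
        "absorbed": float(absorbed),
        "consumed": float(consumed),
        "net": float(consumed - absorbed),
        "lag_ms": float(lag),
    }
```

If p is in nW/cm² (mV × μA/cm²) and t is in ms, the energies come out in pJ/cm².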

Structural Neural Network Model

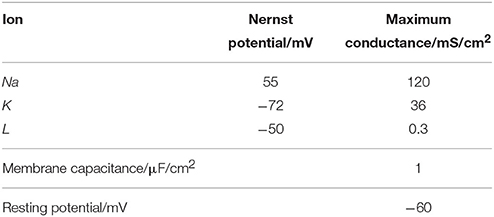

The connection structure of the neural network used in this study is illustrated in Figure 3. The dynamics of each neuron are described by the H–H model above, so the network has a well-defined neurobiological basis. Figure 3 shows an example of a fully connected network of 20 excitatory neurons. Because this article focuses on the energy coding pattern of the network under different parameters, the network connectivity is simplified to some degree. The neurons are connected bidirectionally with asymmetric coupling strengths. Consistent with the principle of synaptic plasticity, experimental statistics indicate that the synaptic coupling strengths between neurons are uniformly distributed (Rubinov et al., 2011).

The coupling strength matrix:

where wi,j is the coupling strength from the ith neuron to the jth neuron, and n is the number of neurons in the network.
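A network like the one in Figure 3 can be set up by drawing an independent coupling strength for every ordered pair of neurons, which makes the coupling bidirectional but asymmetric. This sketch assumes NumPy's random `Generator`, zero self-coupling, and one independently drawn delay per connection. The text does not state how many delay values are drawn, and the function names `coupling_matrix` and `delay_matrix` are hypothetical.

```python
import numpy as np


def coupling_matrix(n, w_max, rng):
    """Fully connected, asymmetric coupling strengths.

    w[i, j] is the strength from neuron i to neuron j, drawn independently from
    U[0, w_max), so w[i, j] and w[j, i] generally differ. Self-coupling is set
    to zero (an assumption; the text does not mention self-connections).
    """
    w = rng.uniform(0.0, w_max, size=(n, n))
    np.fill_diagonal(w, 0.0)
    return w


def delay_matrix(n, tau_min, tau_max, rng):
    """One signal transmitting delay tau[i, j] (ms) per connection, from U[tau_min, tau_max)."""
    tau = rng.uniform(tau_min, tau_max, size=(n, n))
    np.fill_diagonal(tau, 0.0)  # unused because w[i, i] = 0
    return tau


if __name__ == "__main__":
    rng = np.random.default_rng(seed=1)
    w = coupling_matrix(20, 0.5, rng)       # Figure 4 setting: w in [0, 0.5]
    tau = delay_matrix(20, 0.3, 1.8, rng)   # delays in [0.3 ms, 1.8 ms]
    print(w.shape, float(w.max()), float(tau[tau > 0].min()))
```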

The network operates as follows:

where I(t) is the total stimulus current to the neuron at time t, Iin(t) is the input arising from interactions between neurons, and Iext(t) is the external stimulus current; Q(t − τ) = [Q1(t − τ), Q2(t − τ), …, Qj(t − τ), …, Qn(t − τ)] indicates the firing state of each neuron at time t − τ, taking the value 0 at rest and 1 when firing. τ is the interval between a presynaptic neuron firing a spike and the postsynaptic neuron receiving the stimulus, that is, the signal transmitting delay. In this study, τ follows a uniform distribution.
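The network equation is not reproduced in this text, so the sketch below assumes the common delayed-coupling form Ij(t) = Σi wi,j Qi(t − τi,j) + Iext,j(t), with firing states stored on a time grid of step dt. The function `network_input` and its argument layout are hypothetical; it only illustrates how a delayed, weighted sum of 0/1 firing states can be evaluated.

```python
import numpy as np


def network_input(q_history, k, w, delay_steps, i_ext):
    """Total input current to every neuron at time step k.

    Assumed form (hypothetical):
        I_j(t) = sum_i w[i, j] * Q_i(t - tau[i, j]) + I_ext_j(t)
    q_history   : (steps, n) array, q_history[s, i] = 1 if neuron i fires at step s, else 0
    k           : current time step index
    w           : (n, n) coupling matrix, w[i, j] from neuron i to neuron j
    delay_steps : (n, n) integer delays tau[i, j] / dt
    i_ext       : (n,) external current at step k
    """
    n = w.shape[0]
    pre = np.arange(n)[:, None]                 # presynaptic index i, broadcast over j
    lagged = k - np.asarray(delay_steps)        # (n, n) step index of t - tau[i, j]
    valid = lagged >= 0                         # no input from before the simulation started
    q = np.where(valid, q_history[np.clip(lagged, 0, None), pre], 0.0)
    i_in = np.sum(w * q, axis=0)                # sum over presynaptic neurons i
    return i_in + np.asarray(i_ext, dtype=float)
```

Storing firing states on the same grid as the integration keeps the delayed lookup a single indexing operation; delays that are not multiples of dt would need rounding, which is an additional assumption.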

Synchronization Index

To quantitatively estimate the synchronization of network activity, this article uses two synchronization indexes: the traditional mean-max correlation coefficient (MCC) and the novel negative energy ratio.

The MCC is defined as follows:

where Ci,j is the Pearson correlation coefficient between the membrane potentials of the ith and jth neurons. The closer the Pearson correlation coefficient between two neurons is to 1, the stronger their synchronization. Previous studies found that when the network reaches a steady state under a transient stimulus, two or more synchronous oscillation groups may emerge (Wang and Wang, 2014). Accordingly, an MCC approaching 1 indicates that synchronization within the oscillation groups is strong, even if multiple synchronous oscillation groups coexist in the network. Conversely, an MCC approaching 0 indicates that synchronization within the oscillation groups is weak and that only a subset of neurons is synchronized.
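The MCC formula is not reproduced in this text. A common reading of a mean-max correlation coefficient, assumed here, is to take for each neuron its largest Pearson correlation with any other neuron and then average over neurons. This matches the description of MCC as measuring synchronization within oscillation groups. A minimal sketch with NumPy's `corrcoef` follows; `mean_max_correlation` is a hypothetical name.

```python
import numpy as np


def mean_max_correlation(v):
    """Mean-max correlation coefficient of membrane-potential traces.

    Assumed definition: rho_mean = (1/n) * sum_i max_{j != i} C[i, j],
    where C is the Pearson correlation matrix of the traces.
    v : (n_neurons, n_samples) array; each row is one neuron's V(t).
    Constant traces give undefined (NaN) correlations.
    """
    c = np.corrcoef(np.asarray(v, dtype=float))
    np.fill_diagonal(c, -np.inf)      # exclude each neuron's self-correlation
    return float(np.mean(np.max(c, axis=1)))
```

With this reading, two neurons locked in one group and two in another, uncorrelated group still give ρmean close to 1, which illustrates why MCC can be high even when the groups are not synchronized with each other.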

The negative energy ratio is defined as the ratio of the negative energy to the sum of the negative and positive energy consumed by the network from time 0 to t, i.e.,

where Pi(t) is the power consumed by the ith neuron at time t, and the integral of Pi(t) over [0, t] is the energy consumed during this period. sgn(x) is the sign function, defined as sgn(x) = 1 for x > 0, sgn(x) = 0 for x = 0, and sgn(x) = −1 for x < 0. Enegative and Epositive represent the negative and positive energies, respectively, consumed by the network in [0, t].
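The definition above translates directly into array operations. The sketch assumes rectangle-rule integration, applies the sign function to each neuron's power at each time step, and treats Enegative as a magnitude so that α lies in [0, 1]. The names `negative_energy_ratio` and `negative_energy_ratio_curve` are hypothetical.

```python
import numpy as np


def negative_energy_ratio(p, dt):
    """alpha = E_negative / (E_negative + E_positive) over the whole record.

    p : (n_neurons, n_steps) power of each neuron, sampled every dt
    """
    p = np.asarray(p, dtype=float)
    s = np.sign(p)
    e_negative = np.sum(-p * (1.0 - s) / 2.0) * dt   # |P| where P < 0, else 0
    e_positive = np.sum(p * (1.0 + s) / 2.0) * dt    # P where P > 0, else 0
    return float(e_negative / (e_negative + e_positive))


def negative_energy_ratio_curve(p, dt):
    """alpha(t) at every sample, using cumulative integrals over [0, t].

    max(-P, 0) and max(P, 0) are equivalent to the sgn-based factors above.
    Samples where no energy has accumulated yet return NaN.
    """
    p = np.asarray(p, dtype=float)
    neg = np.cumsum(np.sum(np.maximum(-p, 0.0), axis=0)) * dt
    pos = np.cumsum(np.sum(np.maximum(p, 0.0), axis=0)) * dt
    total = neg + pos
    with np.errstate(invalid="ignore", divide="ignore"):
        return np.where(total > 0, neg / total, np.nan)
```

For example, powers [[−1, 3], [2, −2]] sampled at dt = 0.5 give Enegative = 1.5, Epositive = 2.5, and α = 0.375.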

Results and Discussion

Energy Consumption Property During Oscillation in Various Parameters

The Energy Consumption Property of the Neural Network With Different Sizes Under Continuous Stimulus

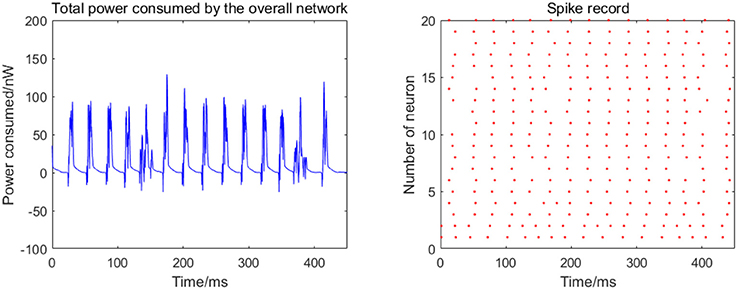

For a fully connected neural network of 20 neurons, the total power consumption of the network and the spike record under continuous stimulus are shown in Figure 4.

Figure 4. Total power consumed by the overall network of 20 neurons and the spike record under continuous stimulus. The coupling strength is uniformly distributed in [0, 0.5]; the signal transmitting delay is uniformly distributed in [0.3 ms, 1.8 ms].

The 20 neurons are numbered from 1 to 20; the coupling strength between neurons is uniformly distributed in [0, 0.5], and the signal transmitting delay is uniformly distributed in [0.3 ms, 1.8 ms]. The 1st and 2nd neurons are continuously stimulated with an intensity of 10 μA/cm² from t = 0–450 ms. The left panel of Figure 4 shows the total power consumption of the network during the simulation, and the right panel shows the spike record within the first 250 ms (a red dot at (t, i) indicates that the ith neuron fires a spike at time t). The more streak-like the record, the stronger the synchronization of the network.
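A spike record such as the right panel of Figure 4 can be extracted from membrane-potential traces by detecting upward threshold crossings. The 0 mV threshold and the function name `spike_raster` are assumptions for illustration, not the detection rule used in this study.

```python
import numpy as np


def spike_raster(t, v, threshold=0.0):
    """Spike times and neuron indices for a raster plot.

    A spike is counted when V crosses `threshold` (mV) from below; the 0 mV
    default is an assumption for the modern sign convention.
    t : (n_samples,) times; v : (n_neurons, n_samples) membrane potentials.
    Returns (times, neuron_indices), one entry per detected spike.
    """
    t = np.asarray(t, dtype=float)
    above = np.asarray(v, dtype=float) >= threshold
    onsets = ~above[:, :-1] & above[:, 1:]      # below at step k, at/above at k + 1
    neuron_idx, k = np.nonzero(onsets)
    return t[k + 1], neuron_idx
```

The result can be plotted with, for example, matplotlib's `plt.scatter(times, idx, s=2, c="red")`.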

As the left panel of Figure 4 shows, the total power consumption curve does not display stable periodicity over time, and the power peaks differ greatly; the right panel shows no clear streaks in the spike record. Taken together, these observations suggest that the synchronization of the network is weak; however, this is an intuitive judgment based on the figure and does not quantify synchronization. Therefore, two synchronization indexes, the mean-max correlation coefficient ρmean and the negative energy ratio α, are used to describe the response of the network under continuous stimulus more precisely.

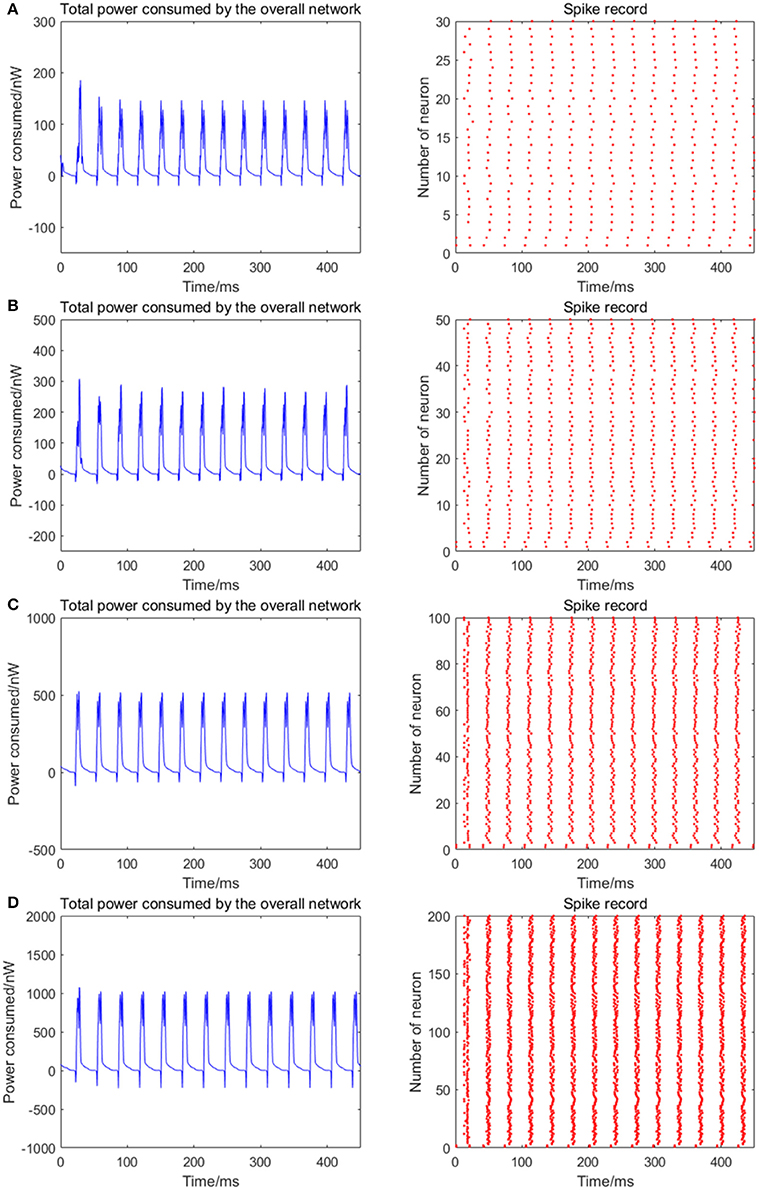

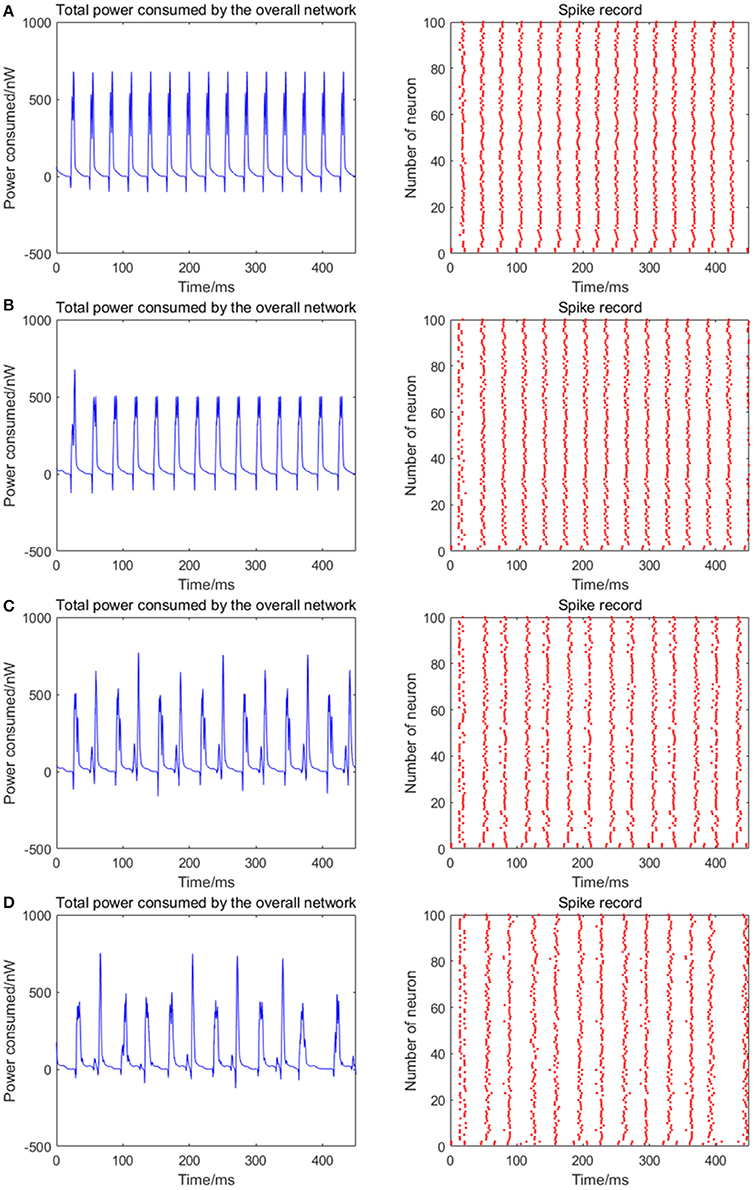

The number of neurons in the network is then increased to 30, 50, 100, and 200, with the 1st and 2nd neurons continuously stimulated at 10 μA/cm² from t = 0–450 ms. The total power consumption and the spike record of the network under continuous stimulus are shown in Figure 5, and the corresponding negative energy ratio and MCC are given in Table 2.

Figure 5. The total power consumption and the spike record of the overall network under continuous stimulus. The numbers of neurons in (A–D) are 30, 50, 100, and 200, respectively. The coupling strength is uniformly distributed in [0, 0.5]; the signal transmitting delay is uniformly distributed in [0.3 ms, 1.8 ms]. As the network grows, the periodicity of the total power consumption curve becomes increasingly apparent and the spike record shows clearer streaks, indicating that the synchronization of neuronal activity strengthens.

Table 2. The corresponding negative energy ratio and MCC for Figure 5.

As Figure 5 shows, when the distribution intervals of the coupling strength and signal transmitting delay are kept at [0, 0.5] and [0.3 ms, 1.8 ms], the periodicity of the total power consumption curve becomes increasingly apparent and the spike record shows clearer streaks as the network grows, indicating that the synchronization of neuronal activity strengthens. According to Table 2, both the MCC and the negative energy ratio increase monotonically with the number of neurons, which agrees with the increased synchronization seen in Figure 5. Taken together, Figure 5 and Table 2 show that the distribution feature of the power consumption curve is closely related to network size: in a mutually coupled neural network under continuous stimulus, the synchronization of the network and the periodicity of its power consumption curve are positively correlated with the number of neurons. Physiologically, the functions of a neural network depend on the collective activity of a large number of neurons, and neural populations with the same function generally show high synchronization, so their energy consumption changes periodically.

Correlation Between Energy Distribution and Coupling Strength

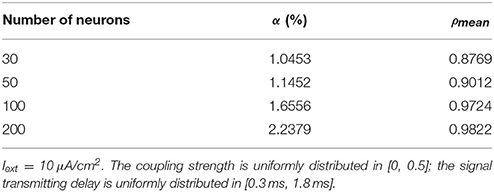

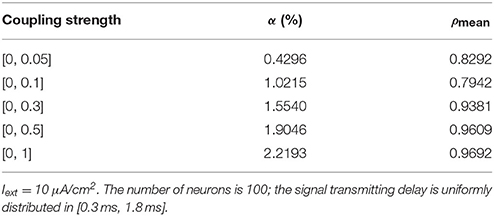

For a fully connected network of 100 neurons with the signal transmitting delay uniformly distributed in [0.3 ms, 1.8 ms], the 1st and 2nd neurons are continuously stimulated at 10 μA/cm² from t = 0–450 ms. Figures 6A–E show the total power consumption and the spike record of the network under continuous stimulus when the coupling strength is uniformly distributed in [0, 0.05], [0, 0.1], [0, 0.3], [0, 0.5], and [0, 1], respectively. The corresponding negative energy ratio and MCC are given in Table 3.

Figure 6. The total power consumption and the spike record of the overall network under continuous stimulus. The distribution intervals of the coupling strength in (A–E) are [0, 0.05], [0, 0.1], [0, 0.3], [0, 0.5], and [0, 1], respectively. The number of neurons is 100 and the signal transmitting delay is uniformly distributed in [0.3 ms, 1.8 ms]. The periodicity of the total power consumption curve and the synchronization of the network are positively correlated with the coupling strength.

Table 3. The corresponding negative energy ratio and MCC for Figure 6.

As Figure 6 and Table 3 show, as the coupling strength between neurons increases, the periodicity of the total power consumption curve, the MCC, the negative energy ratio, and the synchronization reflected by the spike record follow a consistent monotonic trend. This indicates that the synchronous oscillation and energy distribution of the network are closely related to the coupling strength: under otherwise identical conditions (the same number of neurons and signal transmitting delay), the synchronization of the network and the periodicity of its power consumption curve are positively correlated with the coupling strength. This is consistent with the experimental finding that thalamocortical neurons with high synaptic strength show well-synchronized activity during early sleep but weak synchronization during late sleep, when synaptic strength decreases (Esser et al., 2007; Riedner et al., 2007). Since neural energy consumption reflects the law of global brain activity, the synchronous oscillation of neural populations corresponds to periodic energy consumption in brain regions. Therefore, the larger the coupling strength, the more active the brain region and the more pronounced the periodicity of its energy consumption.

Notably, when the coupling strength is distributed in [0, 0.05], the negative energy ratio is extremely small while the corresponding MCC is relatively large, which is inconsistent with the above conclusion. This may be because the coupling strength is so small that some neurons show only subthreshold activity rather than firing spikes, while the others fire; this leads to strong synchronization within their respective oscillation groups but weak synchronization of the network as a whole. Thus, the MCC, which describes synchronization within oscillation groups, is relatively large, whereas the negative energy ratio, which describes synchronization among oscillation groups, is extremely small. The spike records in Figure 6 also show that the firing rate of the neurons increases with the coupling strength, which appears as a shorter oscillation period in the power consumption curve. This further suggests that neural energy can encode neural signals.

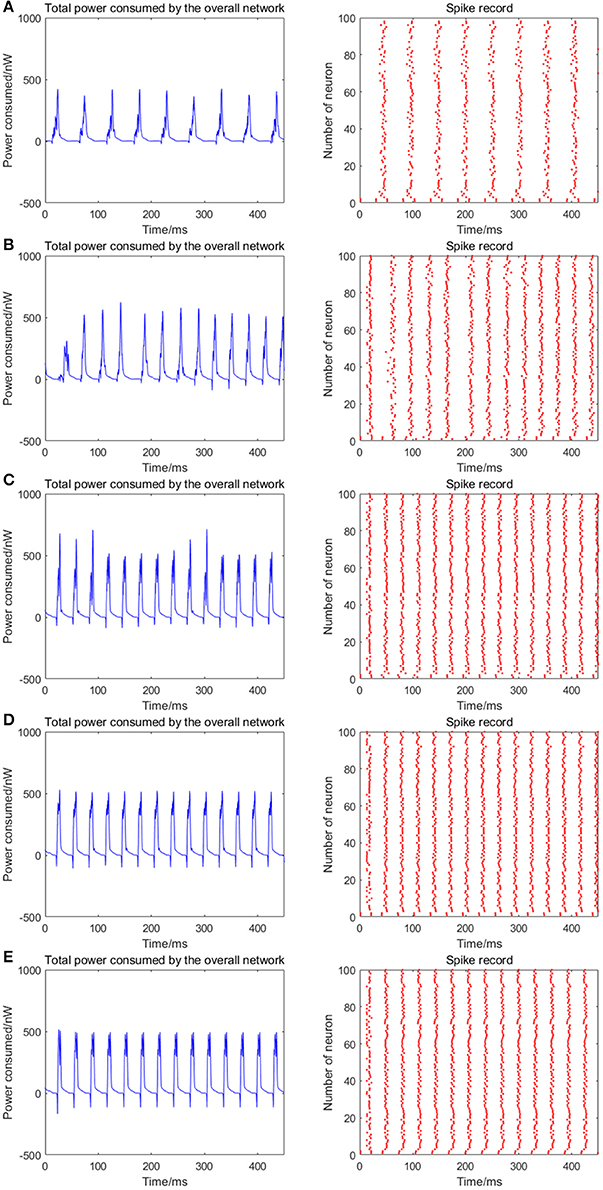

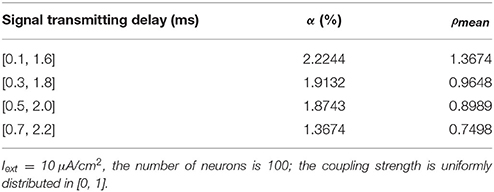

Correlation Between Energy Distribution and Signal Transmitting Delay

For a fully connected network of 100 neurons with the coupling strength uniformly distributed in [0, 1], the 1st and 2nd neurons are continuously stimulated at 10 μA/cm² from t = 0–450 ms. Figures 7A–D show the total power consumption and the spike record of the network under continuous stimulus when the signal transmitting delay is uniformly distributed in [0.1 ms, 1.6 ms], [0.3 ms, 1.8 ms], [0.5 ms, 2.0 ms], and [0.7 ms, 2.2 ms], respectively. The corresponding negative energy ratio and MCC are given in Table 4.

Figure 7. The total power consumption and the spike record of the overall network under continuous stimulus. The distribution intervals of the signal transmitting delay in (A–D) are [0.1 ms, 1.6 ms], [0.3 ms, 1.8 ms], [0.5 ms, 2.0 ms], and [0.7 ms, 2.2 ms], respectively. The number of neurons is 100 and the coupling strength is uniformly distributed in [0, 1]. The periodicity of the total power consumption curve and the synchronization of the network are negatively correlated with the signal transmitting delay.

Table 4. The corresponding negative energy ratio and MCC for Figure 7.

As Figure 7 and Table 4 show, as the signal transmitting delay between neurons decreases, the periodicity of the total power consumption curve becomes more pronounced, the MCC and the negative energy ratio both increase, and the spike record becomes streak-like. This indicates that the signal transmitting delay is closely related to the synchronous oscillation and energy distribution of the network: under otherwise identical conditions (the same number of neurons and coupling strength), the synchronization of the network and the periodicity of its power consumption curve are negatively correlated with the signal transmitting delay. Since the signal transmitting delay represents the lag between the release of excitatory neurotransmitter by the presynaptic neuron and its reception by the postsynaptic neuron, a longer lag weakens the correlation of neuronal activities, thereby reducing the synchronization of the network and the periodicity of its energy consumption. The signal transmitting delay also affects the firing rate of the network: the shorter the delay, the higher the firing rate.

The Relationship Between Network Parameters and Neural Energy

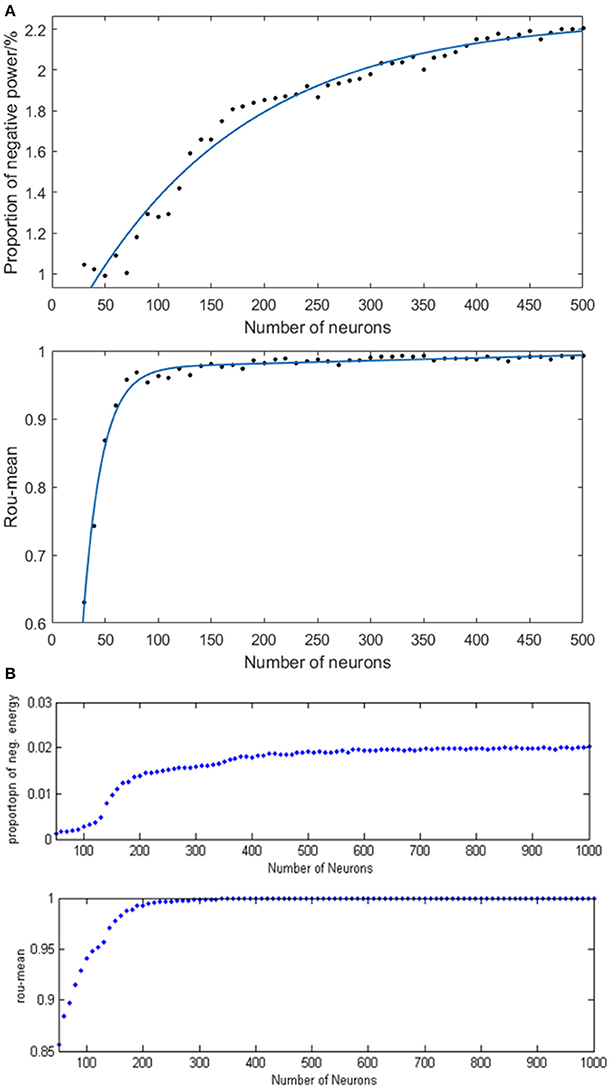

The Relationship Between the Number of Neurons and Neural Energy

To study the specific relationship between the number of neurons (30–500) and neural energy, we simulated networks in which the coupling strength and the signal transmitting delay were uniformly distributed in [0, 0.5] and [0.3 ms, 1.8 ms], respectively. The corresponding negative energy ratio and MCC are shown in Figure 8A, where each point is obtained as follows: for a network of N neurons, two arbitrarily chosen neurons are stimulated at 10 μA/cm² from t = 0–450 ms, and the negative energy ratio α and the MCC ρmean are calculated. This process is repeated five times, and the averages are plotted as the vertical coordinates for N neurons in Figure 8A. Figure 8B shows the corresponding result for the structural neural network based on the Wang–Zhang biophysical model from our previous study.
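The averaging protocol for Figure 8A can be written as a loop over network sizes. In this sketch, `run_trial` is a hypothetical user-supplied function that builds and simulates one network and returns (α, ρmean). The two stimulated neurons are drawn at random without replacement, which is one reading of "two arbitrarily chosen neurons" and an assumption here.

```python
import numpy as np


def averaged_indices(run_trial, sizes, n_trials=5, seed=0):
    """Average (alpha, rho_mean) over repeated trials for each network size N.

    run_trial(n, stimulated, rng) -> (alpha, rho_mean) is a hypothetical,
    user-supplied function that builds and simulates one network of n neurons
    with the neurons in `stimulated` receiving the external current.
    """
    rng = np.random.default_rng(seed)
    results = {}
    for n in sizes:
        trials = []
        for _ in range(n_trials):
            # two distinct neurons receive the external stimulus in each trial
            stimulated = rng.choice(n, size=2, replace=False)
            trials.append(run_trial(n, stimulated, rng))
        alpha_mean, rho_mean = np.mean(np.asarray(trials, dtype=float), axis=0)
        results[n] = (float(alpha_mean), float(rho_mean))
    return results
```

Passing the same `rng` into `run_trial` lets each trial draw fresh coupling strengths and delays while keeping the whole sweep reproducible from one seed.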

Figure 8. (A) Curves of α and ρmean as a function of the number of neurons in the network based on the Hodgkin–Huxley model; (B) curves of α and ρmean as a function of the number of neurons in the network based on the Wang–Zhang biophysical model (Wang et al., 2006; Wang and Wang, 2014).

As Figure 8A shows, the negative energy ratio and the MCC both increase monotonically with the number of neurons, indicating increasingly synchronous oscillation of the network. This suggests that energy coding can also represent the synchronization of network activity and is highly consistent with the traditional correlation coefficient measure. Physiologically, synchronous oscillation of a network with many neurons requires a large energy supply, which means more stored energy; since the negative energy ratio reflects the energy stored during network activity, it can also reveal the state of network synchronization, much like the conventional correlation coefficient. However, the MCC curve saturates early, because the synchronization it measures saturates once the number of neurons reaches 100, whereas the negative energy ratio curve saturates only for N > 400. In this respect, the energy coding analysis outperforms the correlation coefficient: because the negative energy ratio saturates more slowly, it can distinguish networks of different sizes more effectively. A comparison with the results based on the Wang–Zhang biophysical model (Wang et al., 2006; Wang and Wang, 2014) shows that the network properties produced by the two neuronal models are nearly consistent, although the computed values differ because of intrinsic differences between the models. In addition, the simulated H–H networks contain at most 500 neurons because of computational complexity.

The Relationship Between Coupling Strength and Neural Energy

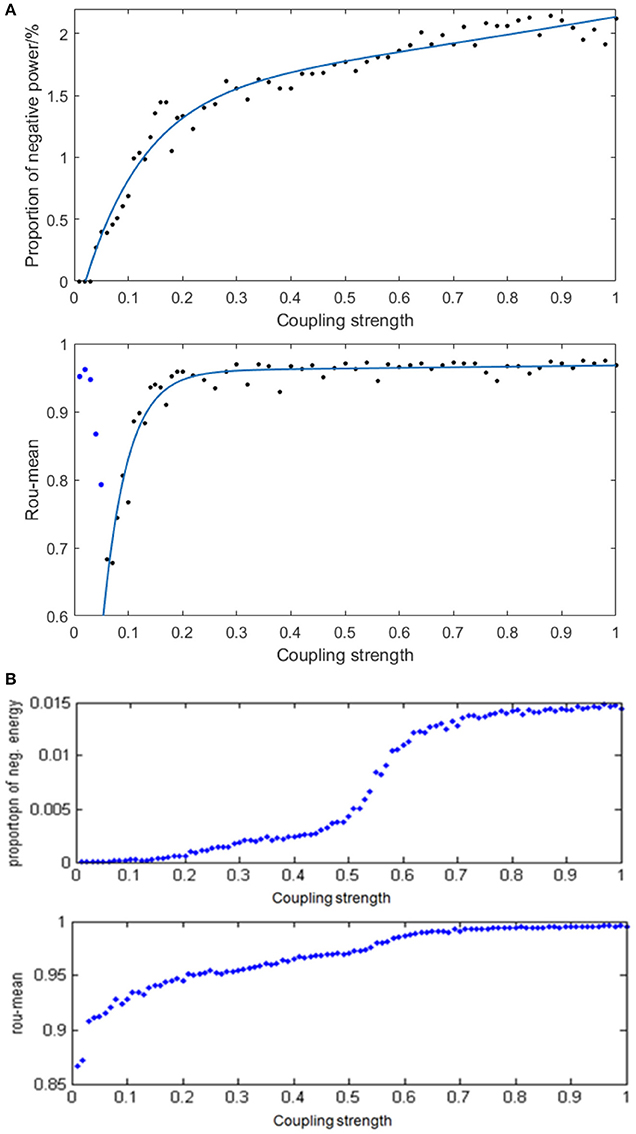

For a fully connected neural network of 100 neurons with signal transmitting delays uniformly distributed in [0.3 ms, 1.8 ms], we simulated the network with different distribution intervals of coupling strength and calculated the corresponding negative energy ratio and MCC. Figure 9A shows the resulting relationship between coupling strength and neural energy. Each point in Figure 9A is obtained as follows: for each distribution interval of coupling strength [0, x], where x takes 60 values in total (0.01, 0.02, …, 0.20, 0.22, …, 1) and gives the horizontal coordinate of the point, two arbitrarily chosen neurons in the network are stimulated with an intensity of 10 μA/cm² from t = 0 to 450 ms, and the negative energy ratio α and the MCC ρmean are calculated. This process is repeated 5 times, and the averages of α and ρmean serve as the vertical coordinates in Figure 9A. Figure 9B shows the corresponding result for the structural neural network based on the Wang–Zhang biophysical model from the previous study.
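The sweep protocol above can be sketched as follows. The grid reproduces the 60 values of x listed in the text (20 values in steps of 0.01 up to 0.20, then 40 values in steps of 0.02 up to 1). The absence of self-connections and the callback `run_trial`, which stands in for one 450 ms network simulation returning (α, ρmean), are hypothetical placeholders rather than the authors' code.

```python
import numpy as np


def coupling_grid():
    """The 60 upper bounds x of the coupling interval [0, x] in Figure 9A."""
    fine = np.round(np.arange(1, 21) * 0.01, 2)     # 0.01, 0.02, ..., 0.20
    coarse = np.round(np.arange(11, 51) * 0.02, 2)  # 0.22, 0.24, ..., 1.00
    return np.concatenate([fine, coarse])


def sample_coupling(n_neurons, x, rng):
    """All-to-all coupling matrix with weights drawn from U[0, x].

    Self-connections are set to zero (an assumption: no autapses).
    """
    w = rng.uniform(0.0, x, size=(n_neurons, n_neurons))
    np.fill_diagonal(w, 0.0)
    return w


def sweep(run_trial, n_neurons=100, n_repeats=5, seed=0):
    """Average (alpha, rho_mean) over repeated trials for each x.

    run_trial(w, rng) -> (alpha, rho_mean) is a hypothetical callback
    standing in for one network simulation.
    """
    rng = np.random.default_rng(seed)
    grid = coupling_grid()
    results = []
    for x in grid:
        trials = [run_trial(sample_coupling(n_neurons, x, rng), rng)
                  for _ in range(n_repeats)]
        results.append(np.mean(trials, axis=0))  # average over repeats
    return grid, np.array(results)
```

Redrawing the coupling matrix on every repeat means the averages reflect variability across network realizations as well as across stimulated neuron pairs; whether the original study resampled the weights per repeat is not stated.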

Figure 9. (A) Variation of α and ρmean with the distribution interval of coupling strength in the network based on the Hodgkin–Huxley model; (B) variation of α and ρmean with the distribution interval of coupling strength in the network based on the Wang–Zhang biophysical model (Wang et al., 2006; Wang and Wang, 2014).

According to Figure 9A, when x > 0.05, both the negative energy ratio and the MCC increase monotonically as the distribution interval of coupling strength widens, indicating increasing synchronization of the network. Both curves also saturate gradually as the coupling strength increases, but the negative energy ratio approaches saturation more slowly than the MCC. When x ≤ 0.05, the negative energy ratio is small while the MCC is abnormally large, which departs from the trend described above. This might be because the coupling between neurons is so weak that most neurons remain in a subthreshold state instead of firing spikes, while the remaining neurons fire. The result is strong synchronization within each oscillation group but weak synchronization across the network as a whole. Consequently, the negative energy ratio, which describes synchronization among oscillation groups, is small, while the MCC, which describes synchronization within oscillation groups, is large. The ability to distinguish these two situations is another advantage of energy coding. Moreover, the comparison with the result based on the Wang–Zhang biophysical model (Figure 9B) shows nearly consistent network dynamics. Because the coupling strength between neurons governs their information exchange, and this exchange consumes energy, it can be speculated that the stronger the coupling, the more synchronous the network activity and the higher its energy demand; this implies more energy storage, which is captured by the negative energy ratio. Thus, the negative energy ratio can be regarded as a measure of network synchronization.
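The explanation offered for x ≤ 0.05 (a subthreshold group and a firing group, each internally synchronized) could be checked on simulated traces with a diagnostic like the one below. It is a hypothetical sketch, not part of the original analysis: it labels a neuron as firing if its membrane potential ever exceeds an assumed threshold of 0 mV, then compares the mean pairwise correlation within groups with that between groups. It uses correlation only and does not compute the negative energy ratio.

```python
import numpy as np


def split_by_firing(v, threshold=0.0):
    """True for neurons whose membrane potential ever exceeds threshold (mV, assumed)."""
    return np.any(v > threshold, axis=1)


def group_correlations(v, threshold=0.0):
    """Mean pairwise correlation within and between firing/subthreshold groups.

    v: array (n_neurons, n_samples) of membrane potentials.
    If all neurons fall into one group, the 'between' value is undefined (nan).
    """
    r = np.corrcoef(v)
    fires = split_by_firing(v, threshold)
    same = fires[:, None] == fires[None, :]       # pair belongs to one group
    off_diag = ~np.eye(len(v), dtype=bool)        # exclude self-correlations
    within = r[same & off_diag].mean()
    between = r[~same].mean()
    return float(within), float(between)
```

A high "within" value combined with a low "between" value would be consistent with the two-group picture proposed in the text.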

The Relationship Between Signal Transmitting Delay and Neural Energy

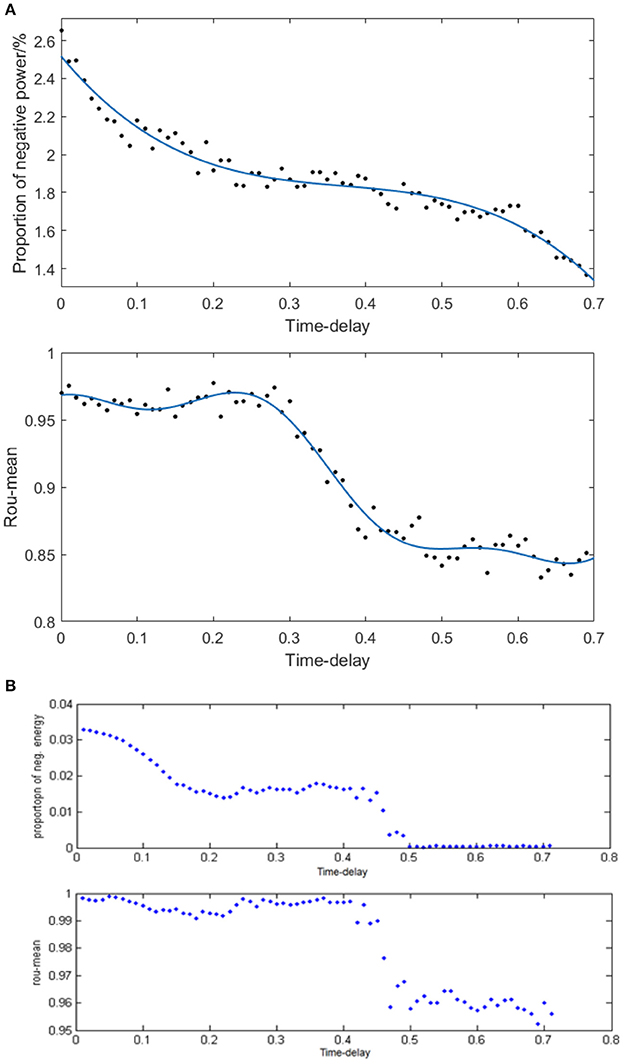

For a fully connected neural network of 100 neurons with coupling strengths uniformly distributed in [0, 1], we simulated the network with various distribution intervals of signal transmitting delay and calculated the corresponding negative energy ratio and MCC, shown in Figure 10A, to study the relationship between signal transmitting delay and neural energy. Each point in Figure 10A is obtained as follows: for each distribution interval of signal transmitting delay [x, x + 1.5] ms, where x takes 70 values (0, 0.01, 0.02, …, 0.69) and gives the horizontal coordinate of the point, two arbitrarily chosen neurons in the network are stimulated with an intensity of 10 μA/cm² from t = 0 to 450 ms, and the negative energy ratio α and the MCC ρmean are calculated. This process is repeated 5 times, and the averages of α and ρmean serve as the vertical coordinates in Figure 10A. Figure 10B shows the corresponding result for the structural neural network based on the Wang–Zhang biophysical model from the previous study.
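One common way to implement heterogeneous transmission delays in a time-stepped network simulation is to quantize each delay to an integer number of steps and read presynaptic values from a circular history buffer. The sketch below assumes that approach; the integration step `dt` and the buffer layout are hypothetical choices, and how presynaptic values enter the synaptic current is left out because the coupling form is specified elsewhere in the article. The grid reproduces the 70 values of x given in the text.

```python
import numpy as np


def delay_grid():
    """The 70 lower bounds x of the delay interval [x, x + 1.5] ms in Figure 10A."""
    return np.round(np.arange(70) * 0.01, 2)  # 0.00, 0.01, ..., 0.69


def sample_delay_steps(n_neurons, x, dt, rng, width=1.5):
    """Delays drawn from U[x, x + width] ms, rounded to integer time steps.

    dt (ms) is an assumed integration step; D[i, j] is the delay from j to i.
    """
    d = rng.uniform(x, x + width, size=(n_neurons, n_neurons))
    return np.rint(d / dt).astype(int)


def delayed_presynaptic(history, step, delay_steps):
    """Read V_j(t - D[i, j]) for every pair from a circular history buffer.

    history: array (buffer_len, n_neurons); row `step % buffer_len` holds the
    current time step. buffer_len must exceed the largest delay in steps.
    Returns an (n, n) array whose [i, j] entry is what neuron i receives from j.
    """
    buffer_len, n = history.shape
    rows = (step - delay_steps) % buffer_len  # past row for each (i, j) pair
    cols = np.arange(n)[np.newaxis, :]        # presynaptic index j per column
    return history[rows, cols]
```

With dt = 0.01 ms, for example, the largest delay in the sweep (0.69 + 1.5 ms) corresponds to at most 219 steps, so a buffer of 220 rows would suffice.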

Figure 10. (A) Variation of α and ρmean with the distribution interval of signal transmitting delay in the network based on the Hodgkin–Huxley model; (B) variation of α and ρmean with the distribution interval of signal transmitting delay in the network based on the Wang–Zhang biophysical model (Wang et al., 2006; Wang and Wang, 2014).

According to Figure 10A, both the negative energy ratio and the MCC decrease as the distribution interval of signal transmitting delay shifts to larger values, indicating weakening synchronization of the network. This can be attributed to the longer lag between the release of excitatory neurotransmitter by the presynaptic neuron and its reception by the postsynaptic neuron, which reduces the correlation between presynaptic and postsynaptic activity. The overall network therefore synchronizes less strongly and requires less energy supply; consequently, less energy is stored during network activity, and the negative energy ratio is low. More specifically, both curves can be roughly divided into three stages. In the first stage, with the horizontal coordinate in [0, 0.3], the negative energy ratio declines continuously as the delay interval moves away from zero, whereas the MCC remains roughly constant. This could be because the synchronization among oscillation groups weakens gradually as the delay increases, while the synchronization within each oscillation group remains high over this interval. In the second stage, [0.3, 0.5], the negative energy ratio shows no apparent decline, whereas the MCC drops rapidly as the delay increases. This indicates that, in this range, a longer delay greatly reduces synchronization within each oscillation group but has almost no influence on the synchronization of the overall network. The third stage, [0.5, 0.7], resembles the first.
These features agree with the results based on the Wang–Zhang biophysical model (Figure 10B), in which the negative energy ratio and the MCC of the network likewise change in stages as the signal transmitting delay varies. Therefore, the signal transmitting delay is closely related to the oscillation groups in the network, and combining energy coding with correlation-coefficient analysis can improve our understanding of how the network operates.
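The three-stage description is qualitative. One simple way to quantify it, offered here as a hypothetical sketch rather than the authors' procedure, is to fit a straight line to each curve within each stage and compare the slopes: under the description above, α would have a clearly negative slope in stages 1 and 3 and a near-zero slope in stage 2, while ρmean would show the opposite pattern in stages 1 and 2. The stage boundaries are taken from the text.

```python
import numpy as np

# Stage boundaries on the horizontal axis of Figure 10A, as given in the text.
STAGES = [(0.0, 0.3), (0.3, 0.5), (0.5, 0.7)]


def stage_slopes(x, y, stages=STAGES):
    """Least-squares slope of y versus x within each stage.

    Points lying exactly on a boundary are included in both neighboring stages.
    """
    slopes = []
    for lo, hi in stages:
        mask = (x >= lo) & (x <= hi)
        slope, _intercept = np.polyfit(x[mask], y[mask], 1)
        slopes.append(slope)
    return np.array(slopes)
```

Applying `stage_slopes` separately to the averaged α and ρmean curves gives three numbers per curve that can be compared across the two neuron models.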

Conclusions

The cognitive neural structure of the brain is complex and multi-hierarchical; thus, investigating neural coding in the cerebral cortex requires combining multiple scales and hierarchical levels (Abbott, 2008). In particular, an effective theory of neural coding should be formulated from a global view of brain activity. Neural energy coding offers an effective way to combine different hierarchical levels and to establish a global model of brain function (Wang R. et al., 2014; Wang and Zhu, 2016).

The current study applied the H–H neuronal model to investigate, from the perspective of neural energy, the action potential of a single neuron and the synchronous oscillation of a structural neural network. We obtained the following preliminary conclusions about the energy consumption of an action potential and about the quantitative relationships among synchronous oscillation, energy consumption, and network parameters (number of neurons, coupling strength, and signal transmitting delay):

(1) While firing an action potential, the neuron first stores energy before the peak of the action potential and then consumes energy. In the power consumption curve, the negative energy, that is, the energy stored by the neuron from ATP hydrolysis, makes up only a small proportion of the total energy consumed by the neuron. This working mechanism can explain the physiological observation that, during stimulation-induced neural activity, blood flow rises by about 31% while the accompanying oxygen consumption increases by only 6%, which is consistent with previous findings (Wang R. et al., 2014).

(2) The synchronization of the network and the periodicity of the network energy distribution are positively correlated with the number of neurons and the coupling strength, but negatively correlated with the signal transmitting delay. In fact, when changes in these network parameters produce well-synchronized network activity, the power consumption curves of the individual neurons become similar over time, which makes the energy consumption of the network periodic.

(3) The proportion of negative energy in the power consumption curve is positively correlated with the synchronous oscillation of the neural network. Biologically, stronger synchronous oscillation of the network demands a larger energy supply, which implies more energy storage, and the negative energy ratio reflects the energy stored during network activity. Therefore, the negative energy ratio can serve as an energy index describing the dynamic properties of the network, and energy coding offers clear advantages for further exploring how the network operates.

(4) In addition, we compared the simulation results of the structural neural network based on the H–H model with those based on the Wang–Zhang biophysical model (Wang et al., 2006; Wang and Wang, 2014) and found almost identical dynamic properties and energy coding characteristics in both models. This suggests that the H–H model is essentially similar to the Wang–Zhang biophysical model even though the two are constructed at different levels: the former at the molecular level, the latter directly at the level of neurons. In terms of computational cost, simulations based on the H–H model take more time than those based on the Wang–Zhang biophysical model, which is a limitation of the H–H model. On the other hand, the H–H model yields precise numerical results, whereas the Wang–Zhang biophysical model provides explicit membrane potential and energy functions of neurons (Wang et al., 2006; Wang R. et al., 2014; Wang and Zhu, 2016); the H–H model admits only numerical solutions.

In experimental neuroscience, recording the membrane potential of every neuron in order to study a cortical network comprising a very large number of neurons is technically challenging and costly (Hipp et al., 2011). However, it is possible to estimate the power consumption of hundreds of neurons in a functional region from electrophysiological recordings of the membrane potentials of a small number of neurons. Because energy is a scalar, additive quantity, the energy consumption of a local cortical network can be estimated from the neuron density, providing a cost-effective way to study how cognitive functional networks form (Wang et al., 2006, 2008, 2016, 2017; Wang and Wang, 2014; Wang R. et al., 2014; Wang Z. et al., 2014; Yan et al., 2016; Zheng et al., 2016). Taken together, the theory of neural energy coding has great potential and can significantly influence the study of encoding and decoding in cognitive neuroscience.
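The additivity argument can be made concrete with a short calculation. The sketch below assumes that the recorded neurons are representative of the region and that neuron density is homogeneous; under those assumptions the regional power is approximated as the estimated neuron count (density × volume) times the mean per-neuron power, and energy follows by integrating over time. The function names are hypothetical.

```python
import numpy as np


def estimate_population_power(sampled_power, neuron_density, volume):
    """Scale the mean power of recorded neurons up to a whole local network.

    sampled_power: array (n_recorded, n_samples) of per-neuron power traces.
    neuron_density: neurons per unit volume (assumed homogeneous).
    volume: region volume, in units matching neuron_density.
    Because energy is additive, total power ~ neuron count x mean power.
    """
    n_total = neuron_density * volume
    return n_total * np.mean(sampled_power, axis=0)


def total_energy(power, dt):
    """Integrate a uniformly sampled power trace with the trapezoidal rule."""
    return float(np.sum((power[1:] + power[:-1]) * 0.5) * dt)
```

For example, two recorded traces with mean power 2 and 3 (arbitrary units) at two time points, scaled to a region of 200 neurons, give an estimated regional power of 400 and 600 at those points. The accuracy of such an estimate depends entirely on how representative the recorded neurons are.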

Author Contributions

Modeling and simulation: ZZ; Result analysis and discussion: ZZ, RW, and FZ; Writing and modification of the paper: ZZ and RW. All authors approved it for publication.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

This work is supported by the National Natural Science Foundation of China (No. 11232005, 11472104).

References

Abbott, L. F. (2008). Theoretical neuroscience rising. Neuron 60, 489–495. doi: 10.1016/j.neuron.2008.10.019

Alle, H., Roth, A., and Geiger, J. R. P. (2009). Energy-efficient action potentials in hippocampal mossy fibers. Science 325, 1405–1408. doi: 10.1126/science.1174331

Amari, S., and Nakahara, H. (2005). Difficulty of singularity in population coding. Neural Comput. 17, 839–858. doi: 10.1162/0899766053429426

Attwell, D., and Laughlin, S. B. (2001). An energy budget for signaling in the grey matter of the brain. J. Cereb. Blood Flow Metab. 21, 1133–1145. doi: 10.1097/00004647-200110000-00001

Déli, E., Tozzi, A., and Peters, J. F. (2017). Relationships between short and fast brain timescales. Cogn. Neurodyn. 11, 539–552. doi: 10.1007/s11571-017-9450-4

Du, M., Li, J., Wang, R., and Wu, Y. (2016). The influence of potassium concentration on epileptic seizures in a coupled neuronal model in the hippocampus. Cogn. Neurodyn. 10, 405–414. doi: 10.1007/s11571-016-9390-4

Esser, S. K., Hill, S. L., and Tononi, G. (2007). Sleep homeostasis and cortical synchronization: I. Modeling the effects of synaptic strength on sleep slow waves. Sleep 30, 1617–1630. doi: 10.1093/sleep/30.12.1617

Gazzaniga, M. S., Ivry, R. B., and Mangun, G. R. (2009). Cognitive Neuroscience: The Biology of the Mind, 3rd Edn. New York, NY: W. W. Norton.

Hipp, J. F., Engel, A. K., and Siegel, M. (2011). Oscillatory synchronization in large-scale cortical networks predicts perception. Neuron 69, 387–396. doi: 10.1016/j.neuron.2010.12.027

Hodgkin, A. L., and Huxley, A. F. (1990). A quantitative description of membrane current and its application to conduction and excitation in nerve. Bull. Math. Biol. 52, 25–71. doi: 10.1007/BF02459568

Howarth, C., Gleeson, P., and Attwell, D. (2012). Updated energy budgets for neural computation in the neocortex and cerebellum. J. Cereb. Blood Flow Metab. 32, 1222–1232. doi: 10.1038/jcbfm.2012.35

Jacobs, A. L., Fridman, G., Douglas, R. M., Alam, N. M., Latham, P. E., Prusky, G. T., et al. (2009). Ruling out and ruling in neural codes. Proc. Natl. Acad. Sci. U.S.A. 106, 5936–5941. doi: 10.1073/pnas.0900573106

Kozma, R. (2016). Reflections on a giant of brain science. Cogn. Neurodyn. 10, 457–469. doi: 10.1007/s11571-016-9403-3

Laughlin, S. B., de Ruyter van Steveninck, R. R., and Anderson, J. C. (1998). The metabolic cost of neural information. Nat. Neurosci. 1, 36–41. doi: 10.1038/236

Laughlin, S. B., and Sejnowski, T. J. (2003). Communication in neuronal networks. Science 301, 1870–1874. doi: 10.1126/science.1089662

Lin, A. L., Fox, P. T., Hardies, J., Duong, T. Q., and Gao, J. H. (2010). Nonlinear coupling between cerebral blood flow, oxygen consumption, and ATP production in human visual cortex. Proc. Natl. Acad. Sci. U.S.A. 107, 8446–8451. doi: 10.1073/pnas.0909711107

Moujahid, A., D'Anjou, A., Torrealdea, F. J., and Torrealdea, F. (2011). Energy and information in Hodgkin-Huxley neurons. Phys. Rev. E 83:031912. doi: 10.1103/PhysRevE.83.031912

Natarajan, R., Huys, Q. J. M., Dayan, P., and Zemel, R. S. (2008). Encoding and decoding spikes for dynamic stimuli. Neural Comput. 20, 2325–2360. doi: 10.1162/neco.2008.01-07-436

Peters, J. F., Tozzi, A., Ramanna, S., and Inan, E. (2017). The human brain from above: an increase in complexity from environmental stimuli to abstractions. Cogn. Neurodyn. 11, 391–394. doi: 10.1007/s11571-017-9428-2

Purushothaman, G., and Bradley, D. C. (2005). Neural population code for fine perceptual decisions in area MT. Nat. Neurosci. 8, 99–106. doi: 10.1038/nn1373

Raichle, M. E., and Mintun, M. A. (2006). Brain work and brain imaging. Annu. Rev. Neurosci. 29, 449–476. doi: 10.1146/annurev.neuro.29.051605.112819

Riedner, B. A., Vyazovskiy, V. V., Huber, R., Massimini, M., Esser, S., Murphy, M., et al. (2007). Sleep homeostasis and cortical synchronization: III. A high-density EEG study of sleep slow waves in humans. Sleep 30, 1643–1657. doi: 10.1093/sleep/30.12.1643

Rubinov, M., Sporns, O., Thivierge, J. P., and Breakspear, M. (2011). Neurobiologically realistic determinants of self-organized criticality in networks of spiking neurons. PLoS Comput. Biol. 7:e1002038. doi: 10.1371/journal.pcbi.1002038

Sokoloff, L. (2008). The physiological and biochemical bases of functional brain imaging. Cogn. Neurodyn. 2, 1–5. doi: 10.1007/s11571-007-9033-x

Tozzi, A., and Peters, J. F. (2017). From abstract topology to real thermodynamic brain activity. Cogn. Neurodyn. 11, 283–292. doi: 10.1007/s11571-017-9431-7

Vuksanović, V., and Hövel, P. (2016). Role of structural inhomogeneities in resting-state brain dynamics. Cogn. Neurodyn. 10, 361–365. doi: 10.1007/s11571-016-9381-5

Wang, R., Tsuda, I., and Zhang, Z. (2014). A new work mechanism on neuronal activity. Int. J. Neural Syst. 25:1450037. doi: 10.1142/S0129065714500373

Wang, R., Wang, Z., and Zhu, Z. (2017). The essence of neuronal activity from the consistency of two different neuron models. Nonlinear Dyn. doi: 10.1007/s11071-018-4103-7. [Epub ahead of print].

Wang, R., Zhang, Z., and Chen, G. (2008). Energy function and energy evolution on neural population. IEEE Trans. Neural Netw. 19, 535–538. doi: 10.1109/TNN.2007.914177

Wang, R., Zhang, Z., and Chen, G. (2009). Energy coding and energy functions for local activities of brain. Neurocomputing 73, 139–150. doi: 10.1016/j.neucom.2009.02.022

Wang, R., Zhang, Z., and Jiao, X. (2006). Mechanism on brain information processing: energy coding. Appl. Phys. Lett. 89:123903. doi: 10.1063/1.2347118

Wang, R., and Zhu, Y. (2016). Can the activities of the large scale cortical network be expressed by neural energy? A brief review. Cogn. Neurodyn. 10, 1–5. doi: 10.1007/s11571-015-9354-0

Wang, Y., and Wang, R. (2017). An improved neuronal energy model that better captures of dynamic property of neuronal activity. Nonlinear Dyn. 91, 319–327. doi: 10.1007/s11071-017-3871-9

Wang, Y., Wang, R., and Xu, X. (2017). Neural energy supply-consumption properties based on Hodgkin-Huxley model. Neural Plast. 2017:6207141. doi: 10.1155/2017/6207141

Wang, Y., Wang, R., and Zhu, Y. (2016). Optimal path-finding through mental exploration based on neural energy field gradients. Cogn. Neurodyn. 11, 99–111. doi: 10.1007/s11571-016-9412-2

Wang, Z., and Wang, R. (2014). Energy distribution property and energy coding of a structural neural network. Front. Comput. Neurosci. 8:14. doi: 10.3389/fncom.2014.00014

Wang, Z., Wang, R., and Fang, R. (2014). Energy coding in neural network with inhibitory neurons. Cogn. Neurodyn. 9, 129–144. doi: 10.1007/s11571-014-9311-3

Yan, C., Wang, R., Qu, J., and Chen, G. (2016). Locating and navigation mechanism based on place-cell and grid-cell models. Cogn. Neurodyn. 10, 353–360. doi: 10.1007/s11571-016-9384-2

Yu, Y., Herman, P., Rothman, D. L., Agarwal, D., and Hyder, F. (2017). Evaluating the gray and white matter energy budgets of human brain function. J. Cereb. Blood Flow Metab. 1:271678X17708691. doi: 10.1177/0271678X17708691

Zhang, D., and Raichle, M. E. (2010). Disease and the brain's dark energy. Nat. Rev. Neurol. 6, 15–28. doi: 10.1038/nrneurol.2009.198

Zhang, Y., Pan, X., Wang, R., and Sakagami, M. (2016). Functional connectivity between prefrontal cortex and striatum estimated by phase locking value. Cogn. Neurodyn. 10, 245–254. doi: 10.1007/s11571-016-9376-2

Zheng, H., Wang, R., and Qu, J. (2016). Effect of different glucose supply conditions on neuronal energy metabolism. Cogn. Neurodyn. 10, 563–571. doi: 10.1007/s11571-016-9401-5

Keywords: neural energy coding, energy distribution, synchronous oscillation, negative energy, power consumption curve

Citation: Zhu Z, Wang R and Zhu F (2018) The Energy Coding of a Structural Neural Network Based on the Hodgkin–Huxley Model. Front. Neurosci. 12:122. doi: 10.3389/fnins.2018.00122

Received: 30 August 2017; Accepted: 15 February 2018;

Published: 01 March 2018.

Edited by:

Yu-Guo Yu, Fudan University, China

Copyright © 2018 Zhu, Wang and Zhu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Rubin Wang, rbwang@163.com

Zhenyu Zhu, Rubin Wang, and Fengyun Zhu