- 1Tecnologico de Monterrey, Escuela de Ingeniería y Ciencias, Monterrey, Mexico

- 2Tecnologico de Monterrey, Escuela de Medicina y Ciencias de la Salud, Monterrey, Mexico

This work presents the design, implementation, and evaluation of a P300-based brain-machine interface (BMI) developed to control a robotic hand-orthosis. The purpose of this system is to assist patients with amyotrophic lateral sclerosis (ALS) who cannot open and close their hands by themselves. The user of this interface can select one of six targets, which represent the flexion-extension of one finger independently or the movement of the five fingers simultaneously. We tested our BMI offline and online on eighteen healthy subjects (HS) and eight ALS patients. In the offline test, we used the calibration data of each participant recorded in the experimental sessions to estimate how accurately the BMI classifies single epochs as target or non-target trials. On average, the system accuracy was 78.7% for target epochs and 85.7% for non-target trials. Additionally, we observed significant P300 responses in the calibration recordings of all the participants, including the ALS patients. For the BMI online test, each subject performed from 6 to 36 attempts of target selections using the interface. In this case, around 46% of the participants obtained 100% accuracy, and the average online accuracy was 89.83%. The maximum information transfer rate (ITR) observed in the experiments was 52.83 bit/min, whereas the average ITR was 18.13 bit/min. The contributions of this work are the following. First, we report the development and evaluation of a mind-controlled robotic hand-orthosis for patients with ALS. To our knowledge, this BMI is one of the first P300-based assistive robotic devices with multiple targets evaluated on people with ALS. Second, we provide a database with calibration data and online EEG recordings obtained in the evaluation of our BMI. This data is useful to develop and compare other BMI systems and test the processing pipelines of similar applications.

1. Introduction

Since the early developments of BMIs, one of the most promising applications of this technology is the use of neuroprosthetic devices to assist people with reduced mobility. There is a consensus among researchers of this area that BMIs may significantly improve the lives of patients who suffer neuromuscular disorders such as ALS. Even so, despite all the efforts in the last three decades to design and implement reliable BMI systems, the goal of developing functional neuroprostheses has not been reached yet. Researchers and engineers must solve many technical and practical problems before bringing this technology into everyday life. Some open issues concerning the development of robust brain-controlled applications are the ability of the system to interpret the user's intentions accurately, the time to process and analyze brain signals, and the stability of performance over time (Murphy et al., 2016).

A BMI is a system that translates cerebral activity into commands to communicate with an external device, bypassing the normal neuromuscular pathways (Wolpaw et al., 2002; Aydin et al., 2018). There are various techniques to register brain signals, but the non-invasive neuroimaging modality most widely used in BMI applications is electroencephalography (EEG) because of its high temporal resolution, low cost, and mobility (Flores et al., 2018; Xiao et al., 2019). Among EEG-based BMIs, the P300 paradigm is one of the most popular techniques for building applications with multiple options because it allows achieving high accuracies without the need for long calibration sessions (Hwang et al., 2013; De Venuto et al., 2018). Compared with other paradigms, P300-based BMIs have higher bit rates than motor imagery interfaces, while the stimulation technique for evoking P300 potentials is less visually fatiguing than the method used to elicit steady-state visually evoked potentials (Cattan et al., 2019).

The P300 signal is an event-related potential (ERP) component observed in the electroencephalogram elicited about 300 ms after the perception of an oddball or relevant auditory, visual, or somatosensory stimulus (Cattan et al., 2019). Typically, in a P300-based BMI, characters, syllables, or icons presented on a computer screen flash randomly one at a time while the user focuses attention on one particular graphical element (target stimulus). Each flashing stimulus represents an option, action, or command that the system can execute. The user selects one option of the interface by counting or performing a cognitive task every time the target stimulus is highlighted. Because the target option flashes randomly, this stimulus produces a P300 evoked potential synchronized with the flickering event in the timeline. In this way, a P300-based BMI identifies which option is evoking an ERP to decode the user's intentions and perform the desired action.

Numerous published works have reported examples of P300-based BMIs for communication and control, including spellers (Kleih et al., 2016; Poletti et al., 2016; Okahara et al., 2017; Flores et al., 2018; Guy et al., 2018; Deligani et al., 2019; Shahriari et al., 2019), authentication systems (Yu et al., 2016; Gondesen et al., 2019), assistive robots (Arrichiello et al., 2017), smart home environments (Achanccaray et al., 2017; Masud et al., 2017; Aydin et al., 2018), neurogames (Venuto et al., 2016), remote vehicles (De Venuto et al., 2017; Nurseitov et al., 2017), wheelchairs (De Venuto et al., 2018), and robotic arms (Tang et al., 2017; Garakani et al., 2019). Because the development of assistive technologies for motor-impaired people is one of the major purposes of BMI research, some groups have evaluated similar applications in clinical environments on people with neurological disorders or reduced mobility. Regarding medical applications, we can find P300-based BMIs for ALS (Liberati et al., 2015; Schettini et al., 2015; Poletti et al., 2016; Guy et al., 2018; Deligani et al., 2019; Shahriari et al., 2019; McFarland, 2020), Alzheimer's (Venuto et al., 2016), spinocerebellar ataxia (Okahara et al., 2017), and post-stroke paralysis (Kleih et al., 2016; Achanccaray et al., 2017; Flores et al., 2018). Recently, P300-based BMIs have also been proposed for rehabilitation contexts (Kleih et al., 2016), and diagnosis/evaluation purposes (Poletti et al., 2016; Venuto et al., 2016; Deligani et al., 2019; Shahriari et al., 2019).

Some studies have stated the benefits of orthoses for ALS patients (Tanaka et al., 2013; Ivy et al., 2014); however, the implementation of BMI-controlled robotic hand-orthoses for this target population remains underexplored in comparison to the application of these systems for other neuromotor disorders. Moreover, most recently published BMIs for ALS are designed for communication purposes (Vaughan, 2020). Similarly, while the use of BMI-controlled hand-orthoses is well known in other neuromotor conditions (e.g., stroke recovery), the effect of these systems on ALS patients remains poorly investigated. A critical step toward the development of practical robotic neuroprostheses for people with ALS is the evaluation of this technology in different scenarios. It is essential to determine if ALS patients can operate this particular mind-controlled application and evaluate the possible effect of a hand-orthosis on the user's experience and performance.

This work presents the development and evaluation of a P300-based BMI coupled with a robotic hand-orthosis device. The purpose of this system is to assist people with ALS to perform movements of individual fingers of one hand, or more complex tasks that involve a sequence of actions of one or more fingers. Eighteen healthy participants and eight ALS patients conducted an experiment in which they tested the proposed BMI selecting a sequence of actions that the robotic hand-orthosis executed. In the evaluation of this BMI, we considered six types of operations: the flexion-extension of individual fingers, and the flexion-extension of the five fingers simultaneously.

In the experiments, we recorded the data used in the training phase of the BMI, and the EEG signals measured during the online tests. The training data was used to evaluate offline how well the classification model implemented in the BMI discriminates between target and non-target epochs. Additionally, we analyzed the P300 responses of the participants to determine if there are subjects without clear evoked potentials. In the online tests, we calculated the classification accuracy and the selection times of the BMI. Notably, some selections were made without connecting the hand-orthosis to the system, so that we could evaluate the effect of the robotic device on the online accuracy of the BMI.

To our knowledge, our system is the first P300-based BMI that allows ALS patients to perform sequences of movements of individual digits or of two or more digits simultaneously. The ability to move the digits individually is an important advantage because ALS is associated with the degeneration of the corticospinal tract (Sarica et al., 2017), which enables fine finger motor tasks (Levine et al., 2012). Besides, being a P300-based system, it requires only a short calibration, which reduces patient fatigue in comparison with other systems.

Another contribution of this work is the dataset obtained in the experimental sessions of the proposed BMI. This dataset contains the training data and the online recordings of 26 participants. The calibration samples are useful to evaluate different machine learning models of P300-based BMIs, whereas the online signals can be used to test practical systems without the need for real participants. The relevance of this database resides in the importance of providing high-quality EEG observations that represent both control and ALS groups. Any researcher may evaluate other P300-based BMIs and verify if their proposals can correctly identify the user's intentions in online conditions.

The remainder of this paper is divided into three sections. Section 2 describes the hardware and software components of the mind-controlled hand-orthosis, and the experimental setup under which we tested the BMI. Section 3 shows the results obtained from the system evaluation, while section 4 discusses the implications of the results and the conclusions derived from this work.

2. Materials and Methods

2.1. Brain-Machine Interface

The proposed system consists of a P300-based BMI coupled with a Hand of Hope robotic arm (Rehab-Robotics Company, China). This hand-orthosis is a therapeutic device with five DC linear motors designed initially for the rehabilitation of post-stroke patients (Aggogeri et al., 2019). A detailed description of the Hand of Hope and its functionality is given in Ho et al. (2011). To connect the orthosis to the BMI, we enabled a wireless communication channel to send the position of each motor during the execution of one movement or sequence of movements. In this way, the user selects one action to perform with the hand-orthosis using the P300-based interface.

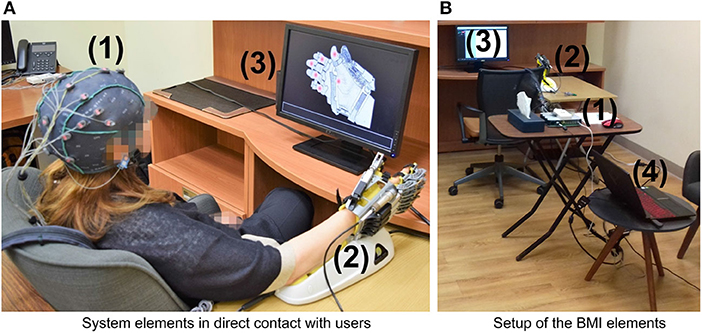

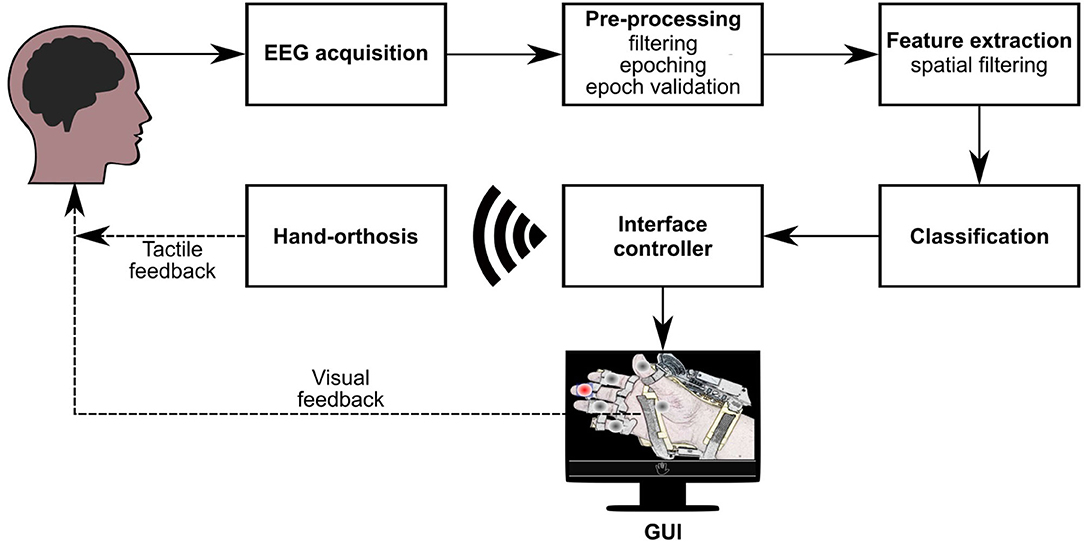

Figure 1 sketches the components of the mind-controlled hand-orthosis, and how the users interact with them. The main hardware components of the interface are:

• An EEG recording system (a g.GAMMASYS active wet electrode arrangement and a g.USBamp amplifier provided by g.tec medical engineering GmbH, Austria). For this study, the sampling rate was 256 Hz, and we used eight monopolar electrodes, placed according to the 10–20 international system at positions Fz, Cz, P3, Pz, P4, PO7, PO8, and Oz. The ground electrode was located at AFz, and the reference electrode on the right earlobe.

• A Hand of Hope robotic arm. The users can wear the robotic device on either hand.

• A monitor that displays the graphical user interface (GUI) of the BMI.

• A computer that processes the EEG signals, synchronizes the stimulus presentation, and sends the control commands to the hand-orthosis.

Figure 1. Hardware components of the BMI: (1) EEG recording system (EEG cap, active electrodes, and amplifier), (2) Hand of Hope orthosis, (3) monitor to display the GUI, and (4) computer to process EEG signals, synchronize the stimuli, and send control commands. (A) System elements in direct contact with users. (B) Setup of the BMI elements.

The software elements of this system, including the GUI, were implemented in-house using C++.

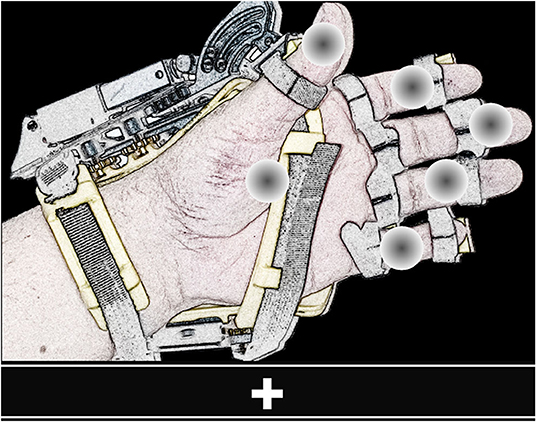

The GUI of the BMI (shown in Figure 2) provides the instructions to operate the system, presents the flashing elements, and displays visual feedback. In this GUI, gray circles positioned on a graphical illustration of the hand-orthosis represent the available options (i.e., actions or movements of the robotic device). Since the orthosis can be used on either hand, the GUI can display the image of a left or right hand, according to the side where the robotic device is placed.

Figure 2. View of the GUI. The screen shows six flashing gray circles (possible options) placed over the image of a left or right hand wearing the orthosis. The bar located at the lower part of the GUI presents the instructions to operate the BMI and feedback.

It is possible to program different movements or actions for the hand-orthosis. The system can move each finger independently or perform multiple movements at the same time. For this study, we evaluated the BMI using six options: the individual flexion-extension of each finger, and the simultaneous flexion-extension of the five fingers. The five gray circles placed over the fingers represent the individual movements, whereas the circle over the palm corresponds to the hand opening and closing.

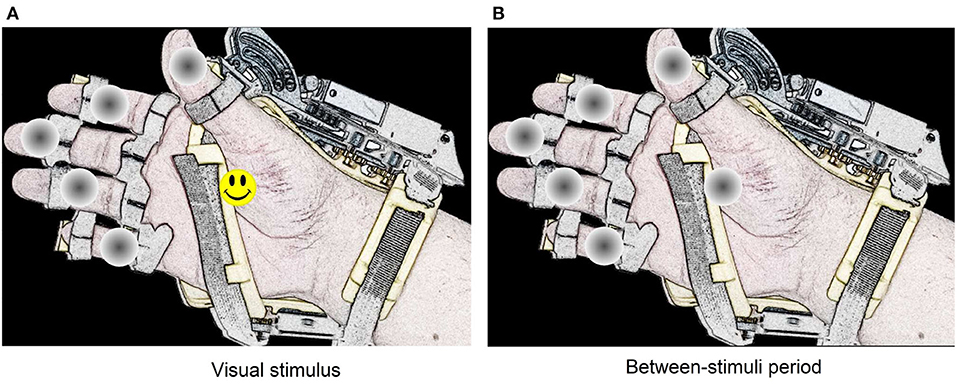

In this system, the stimulation method used to elicit evoked responses is the dummy face pattern (Chen et al., 2015, 2016), which consists of a yellow happy face icon that replaces for a short time a gray circle selected randomly. In one flashing cycle, the happy face icon is shown for 75 ms, and then all the gray circles appear for 75 ms (see Figure 3). The users are instructed to choose freely one movement or action of the robotic device by counting how many times the happy face is displayed on the desired option. If the system detects a P300 response for one action, the flashing stops for 4 s, while the hand-orthosis performs the corresponding movement. Then, the interface restarts the random flashing and waits for another evoked response. The same routine is repeated continuously during the regular operation of the BMI.

Figure 3. Representation of the dummy face pattern method for visual stimulation. The visual stimulus remains active for 75 ms on one option selected randomly (A). Between consecutive stimuli, there is a period of 75 ms in which all circles remain gray (B).

The detection of evoked responses consists of a sequence of processing steps necessary to extract relevant information from the measured EEG signals. Figure 4 summarizes the different stages implemented in our BMI to analyze and classify electrophysiological data. Firstly, when one option flashes, the system extracts the EEG epoch (or trial) around this event and applies some pre-processing and feature extraction techniques on this data segment. Then, a classification model evaluates the computed characteristics to obtain the label that represents the class of the processed epoch (target or non-target). A third class is also considered in this design (artifact) to indicate if a trial is contaminated by noise or muscle artifacts. Finally, the BMI processes this label to determine whether the flashing stimulus is eliciting evoked responses. If there is a P300 evoked potential, the BMI sends the respective control signals to the robotic device. This processing pipeline is based on the classification approach described in Mendoza-Montoya (2017).

Figure 4. Processing stages of the BMI. Once the EEG signals are acquired, they go through the pre-processing, feature extraction, and classification stages. Based on the results of the classifier, the interface controller synchronizes the visual stimuli and sends control commands via WiFi to the orthosis. If the BMI detects a target option, the GUI provides visual feedback to the user, and the orthosis provides tactile feedback.

The following describes the processing stages of the BMI, and the component of the interface that interacts with the robotic device. Also, we present the calibration routine implemented to train the system.

2.1.1. EEG Pre-processing

In the pre-processing stage, the BMI extracts three band-limited components using FIR filters with pass bands of 4–14, 20–40, and 4–40 Hz. Then, when a flash occurs, the samples around the time window of the event are separated so that the epoched signals contain 800 ms of post-stimulus samples, starting from the stimulus onset. The results of this processing step are the signals $X^{4-14}_{e,t}$, $X^{20-40}_{e,t}$, and $X^{4-40}_{e,t}$, where $e$ represents the electrode position ($e = 1, 2, 3, \ldots, n_e$), $n_e$ is the number of electrodes or channels, $t$ is the time index ($t = 1, 2, 3, \ldots, n_t$), and $n_t$ is the number of samples.

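The next step is the epoch validation, which determines whether the EEG trial is contaminated by muscle artifacts or other sources of noise. Here, the BMI calculates the peak-to-peak voltage $V^{pp}_e$, the standard deviation $\sigma_e$, and the power ratio $r_e$ of each channel as follows:

$$V^{pp}_e = \max_{t} X^{4-40}_{e,t} - \min_{t} X^{4-40}_{e,t} \tag{1}$$

$$\sigma_e = \sqrt{\frac{1}{n_t} \sum_{t=1}^{n_t} \left( X^{4-40}_{e,t} - \mu_e \right)^2} \tag{2}$$

$$r_e = \frac{\sum_{t=1}^{n_t} \left( X^{20-40}_{e,t} \right)^2}{\sum_{t=1}^{n_t} \left( X^{4-40}_{e,t} \right)^2} \tag{3}$$

where

$$\mu_e = \frac{1}{n_t} \sum_{t=1}^{n_t} X^{4-40}_{e,t} \tag{4}$$

The system classifies as “artifact” any epoch with one or more channels for which the peak-to-peak voltage $V^{pp}_e$ reaches its threshold (in μV), $\sigma_e \geqslant 50$ μV, or $r_e \geqslant 0.7$. In this case, the trial is not processed or evaluated by the machine learning model of the BMI. On the other hand, if the epoch passes the validation, i.e., the calculated metrics for all channels are below the threshold levels, the system downsamples $X^{4-14}$ using a decimation factor of four to obtain the signal $\tilde{X} \in \mathbb{R}^{n_e \times \tilde{n}_t}$, where $\tilde{n}_t$ is the number of time points after the downsampling.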
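The validation rule can be illustrated with a minimal sketch. It assumes that the peak-to-peak voltage and the standard deviation are computed on the 4–40 Hz component and that the power ratio compares the 20–40 Hz power with the 4–40 Hz power. The 50 μV and 0.7 limits come from the text, whereas `PP_LIMIT_UV` is a hypothetical placeholder because the peak-to-peak limit is not stated here. All function names are illustrative.

```python
from statistics import pstdev

# 50 uV and 0.7 are taken from the text. The peak-to-peak limit is NOT
# given there, so PP_LIMIT_UV is a hypothetical placeholder value.
PP_LIMIT_UV = 200.0
SD_LIMIT_UV = 50.0
RATIO_LIMIT = 0.7


def channel_metrics(wide, high):
    """Return (peak-to-peak, standard deviation, power ratio) of one channel.

    wide: samples of the 4-40 Hz component (microvolts)
    high: samples of the 20-40 Hz component (microvolts)
    """
    v_pp = max(wide) - min(wide)
    sigma = pstdev(wide)  # population standard deviation (1/n normalization)
    power_wide = sum(x * x for x in wide)
    power_high = sum(x * x for x in high)
    ratio = power_high / power_wide if power_wide > 0 else 0.0
    return v_pp, sigma, ratio


def is_artifact(epoch_wide, epoch_high):
    """Reject the epoch if any channel reaches any of the three limits."""
    for wide, high in zip(epoch_wide, epoch_high):
        v_pp, sigma, ratio = channel_metrics(wide, high)
        if v_pp >= PP_LIMIT_UV or sigma >= SD_LIMIT_UV or ratio >= RATIO_LIMIT:
            return True
    return False


def decimate(channel, factor=4):
    """Keep every `factor`-th sample of an already band-limited channel."""
    return channel[::factor]
```

Only epochs for which `is_artifact` returns `False` would be decimated and passed on to the feature extraction stage.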

2.1.2. Feature Extraction

The system implements a spatial filtering algorithm based on canonical correlation analysis (CCA) for feature extraction. This approach is effective in reducing the data dimensionality and increasing the classification accuracy (Spüler et al., 2014; Mendoza-Montoya, 2017). Spatial filtering is a technique that finds linear combinations or projections of a set of signals in such a way that the new signals in the projected space have better separability between classes or another improved property. Given a column vector $w \in \mathbb{R}^{n_e}$ with $n_e$ spatial weights, the projected signal $y(t)$ of a pre-processed epoch $\tilde{X}$ is obtained as follows:

$$y(t) = \sum_{e=1}^{n_e} w_e \tilde{X}_{e,t} \tag{5}$$

In our BMI, the spatial weights increase the correlation between epochs of the target option and the expected ERP response of this class. Let $\{\tilde{X}^{(1)}, \tilde{X}^{(2)}, \ldots, \tilde{X}^{(n_{target})}\}$ be a set with $n_{target}$ pre-processed, artifact-free observations of the target class obtained from raw calibration data ($\tilde{X}^{(i)} \in \mathbb{R}^{n_e \times \tilde{n}_t}$, with $\tilde{n}_t$ time points after downsampling). The average ERP waveform of these observations is:

$$\bar{X} = \frac{1}{n_{target}} \sum_{i=1}^{n_{target}} \tilde{X}^{(i)} \tag{6}$$

The training epochs of the target class and their average ERP waveform are concatenated to build matrices $U = [\tilde{X}^{(1)}, \tilde{X}^{(2)}, \ldots, \tilde{X}^{(n_{target})}]^T$ and $V = [\bar{X}, \bar{X}, \ldots, \bar{X}]^T$ of dimensions $(n_{target}\,\tilde{n}_t) \times n_e$, where $T$ denotes transpose. CCA is applied to calculate vectors $w$ and $v$ that maximize the correlation between $Uw$ and $Vv$. Here, the system uses $w$ as the spatial filter to transform the pre-processed epoch.

Because CCA produces $n_e$ spatial filters, the system selects the best $n_w$ projections, which correspond to the highest correlation values ($1 \leqslant n_w \leqslant n_e$). To evaluate the system performance, we set $n_w = 4$. In this way, after the spatial filtering, the BMI obtains the projected signal $Y \in \mathbb{R}^{n_w \times \tilde{n}_t}$.
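The following NumPy sketch illustrates one way to compute such CCA-based spatial filters. It assumes the stacked epochs and the repeated average waveform form two tall matrices of equal size, and it uses a QR and SVD formulation of CCA; the function names and the array layout are illustrative and are not taken from the authors' C++ implementation.

```python
import numpy as np


def cca_spatial_filters(epochs, n_w=4):
    """Estimate CCA spatial filters from target epochs.

    epochs: array of shape (n_target, n_e, n_t) holding artifact-free,
            pre-processed target epochs.
    Returns (W, rho): W has shape (n_e, n_w), one filter per column, and
    rho holds the corresponding canonical correlations.
    """
    n_target, n_e, n_t = epochs.shape
    average = epochs.mean(axis=0)                    # (n_e, n_t)
    # Stack the epochs along time: both matrices are (n_target * n_t, n_e).
    U = epochs.transpose(0, 2, 1).reshape(-1, n_e)
    V = np.tile(average.T, (n_target, 1))
    U = U - U.mean(axis=0)
    V = V - V.mean(axis=0)
    # QR-based CCA: the singular values of Qu^T Qv are the correlations.
    Qu, Ru = np.linalg.qr(U)
    Qv, _ = np.linalg.qr(V)
    A, rho, _ = np.linalg.svd(Qu.T @ Qv)
    W = np.linalg.solve(Ru, A)                       # back to channel space
    return W[:, :n_w], rho[:n_w]


def apply_filters(epoch, W):
    """Project one (n_e, n_t) epoch onto the filters, giving (n_w, n_t)."""
    return W.T @ epoch
```

Because the singular values are returned in descending order, keeping the first `n_w` columns selects the projections with the highest correlation values.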

2.1.3. Classification

To classify one flashing event, the machine learning model of the BMI evaluates the corresponding spatially filtered signal $Y$ and returns a label or category $L \in$ {target, non-target}, indicating whether the flickering option is a target stimulus. This operation is only applied to trials free of noise or artifacts. For non-valid epochs, the assigned label is “artifact.”

The system uses the regularized version of linear discriminant analysis (RLDA) (Lotte and Guan, 2009) to distinguish between the target and non-target epochs. This binary model has been employed previously to detect P300 potentials (Zhumadilova et al., 2017) and classify other electrophysiological responses (Cho et al., 2018). In this stage, the classifier evaluates only a small subset $Z = \{z_1, z_2, \ldots, z_{n_f}\}$ of $n_f$ features, selected from the entries of the spatially filtered signal. This dimensionality reduction is necessary to prevent over-fitting and reduce the complexity of the machine learning model (Tyagi and Nehra, 2017).
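As an illustration of the classifier, the sketch below fits a two-class LDA whose pooled covariance is shrunk toward a scaled identity matrix. The shrinkage form, the `shrinkage` value, and the equal-prior bias term are assumptions made for this example; the text does not specify how the regularization is set.

```python
import numpy as np


def fit_rlda(X, y, shrinkage=0.1):
    """Fit a two-class LDA with a shrunk covariance estimate.

    X: (n_samples, n_features) feature matrix
    y: labels, 1 for target and 0 for non-target
    shrinkage: hypothetical regularization weight in [0, 1]
    Returns the weight vector w and the bias b of the decision function.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y)
    mean_non = X[y == 0].mean(axis=0)
    mean_tar = X[y == 1].mean(axis=0)
    centered = np.vstack([X[y == 0] - mean_non, X[y == 1] - mean_tar])
    cov = centered.T @ centered / (len(X) - 2)       # pooled covariance
    n_features = cov.shape[0]
    identity_target = np.trace(cov) / n_features * np.eye(n_features)
    cov_reg = (1.0 - shrinkage) * cov + shrinkage * identity_target
    w = np.linalg.solve(cov_reg, mean_tar - mean_non)
    b = -0.5 * w @ (mean_tar + mean_non)             # equal priors assumed
    return w, b


def predict(X, w, b):
    """Return 1 (target) where the discriminant is positive, otherwise 0."""
    return (np.asarray(X, dtype=float) @ w + b > 0).astype(int)
```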

During the system calibration, the BMI chooses the features to evaluate in the classification stage using the forward-backward stepwise (SW) method for variable selection (James et al., 2015). This algorithm starts with an empty classification model without variables and incorporates the one that contributes best to the model performance according to a scoring criterion. Then, the best feature that is not in the model and improves the performance criterion significantly is incorporated. If none of the candidate variables helps to enhance the classifier, the model is not modified. In the next step, the variable in the model that can be excluded without reducing the current score significantly is removed. Again, the feature set is not altered when it is not possible to discard one feature without worsening the model. These steps are repeated until no more changes in the feature set are possible. In this framework, the feature selection and the model training are performed simultaneously.
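The forward-backward loop described above can be sketched generically. In this example, `score` is a hypothetical callable standing in for the scoring criterion (which the text does not name), and `min_gain` is a hypothetical threshold for what counts as a significant change.

```python
def stepwise_select(candidates, score, min_gain=0.01, max_iter=100):
    """Forward-backward stepwise feature selection.

    candidates: iterable of feature identifiers
    score: callable mapping a tuple of selected features to a number
           (higher is better); hypothetical stand-in for the criterion
    min_gain: hypothetical threshold for a significant change in score
    """
    selected = []
    remaining = list(candidates)
    for _ in range(max_iter):
        changed = False
        current = score(tuple(selected))
        # Forward step: add the best candidate if its gain is large enough.
        if remaining:
            best = max(remaining, key=lambda f: score(tuple(selected + [f])))
            if score(tuple(selected + [best])) - current >= min_gain:
                selected.append(best)
                remaining.remove(best)
                changed = True
        # Backward step: drop the feature whose removal costs the least,
        # but only when that loss is not significant.
        if selected:
            current = score(tuple(selected))

            def without(f):
                return tuple(x for x in selected if x != f)

            cheapest = max(selected, key=lambda f: score(without(f)))
            if current - score(without(cheapest)) < min_gain:
                selected.remove(cheapest)
                remaining.append(cheapest)
                changed = True
        if not changed:
            break
    return selected
```

The `max_iter` guard is a design choice for the sketch: it bounds the loop in case a scoring function makes the forward and backward steps alternate indefinitely.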

2.1.4. Interface Controller

The label obtained in the classification stage might be used directly to determine the action or movement to produce with the robotic device. However, because the accuracy of the machine learning model is typically below 90%, the risk of executing an incorrect action is high. It is essential to consider that there is only one target stimulus and multiple non-target options so that before evaluating an epoch of the desired option, the model must detect correctly multiple instances of the non-target class. For this reason, the system processes the history of labels to determine if there is enough evidence that the user wants to select one particular option.

The interface controller is the element of the BMI that receives the labels of the flashing events and determines which action the hand-orthosis must perform. Additionally, it generates the control signals necessary to perform the selected movements or actions and synchronizes the state of the GUI to produce visual feedback. This component decides when the hand-orthosis must be activated and which movement or sequence of movements it must execute.

When the system processes one flashing event, the interface controller evaluates the number of times that each option has been classified as target and non-target responses. Only the last ten flashing events of each flashing symbol free of artifacts are considered in this counting. One option is selected if the following conditions are satisfied:

• The corresponding gray circle of the analyzed option has flashed at least five times (minimum number of processed epochs).

• At least 70% of the flashing events of this option have been classified as target stimuli (target class threshold).

• The responses of each of the other flashing circles have been classified as non-target at least 60% of the time (non-target class threshold).

If the controller detects a P300 response for one particular option, the flashing sequence is interrupted, providing visual feedback to the user about the selection. Subsequently, the hand-orthosis executes the chosen routine, and the flashing sequence restarts for another selection.
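The selection rule can be sketched as a small state machine. The thresholds and the ten-flash history come from the text. Two details are assumptions made for this example: each of the other options must have at least one valid flash before a selection is accepted, and the history is cleared after a selection. The class and method names are illustrative.

```python
from collections import deque

MIN_EPOCHS = 5              # minimum number of processed epochs
TARGET_THRESHOLD = 0.7      # target class threshold
NON_TARGET_THRESHOLD = 0.6  # non-target class threshold
HISTORY = 10                # last artifact-free flashes kept per option


class InterfaceController:
    def __init__(self, n_options=6):
        self.history = [deque(maxlen=HISTORY) for _ in range(n_options)]

    def update(self, option, label):
        """Register one classified flash; return the selected option or None."""
        if label == "artifact":
            return None  # artifact epochs are not counted
        self.history[option].append(label == "target")
        return self.decide()

    def decide(self):
        for candidate, labels in enumerate(self.history):
            if len(labels) < MIN_EPOCHS:
                continue
            if sum(labels) / len(labels) < TARGET_THRESHOLD:
                continue
            # Assumption: every other option needs at least one valid flash.
            others_ok = all(
                len(h) > 0
                and (len(h) - sum(h)) / len(h) >= NON_TARGET_THRESHOLD
                for i, h in enumerate(self.history)
                if i != candidate
            )
            if others_ok:
                self.reset()  # assumption: start fresh after a selection
                return candidate
        return None

    def reset(self):
        for h in self.history:
            h.clear()
```

Calling `update` once per classified flash returns `None` until the three conditions hold for one option, at which point the index of that option is returned.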

2.1.5. Calibration Routine

The operation of the BMI requires a set of spatial filters and a classification model to process and evaluate the epochs of the flashing events. To find these components, the system provides a calibration routine in which the user focuses attention on target options while the BMI records the subject's brain signals. This routine replicates the operational conditions of the BMI without activating the hand-orthosis. It uses the same GUI with six options, the stimulation method is the dummy face pattern, and the happy face icon appears for 75 ms alternating with 75 ms of no visual stimulus. Because the hand-orthosis is not necessary to train the interface, this device is disabled, and the user is not instructed to wear it.

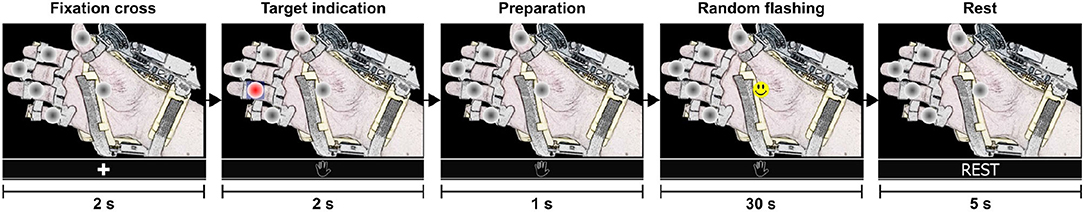

The calibration routine is divided into eight training sequences or runs. A run (shown in Figure 5) starts with a fixation cross to indicate that a training sequence has begun. Then, the interface presents a target option (selected randomly by the interface), followed by a short preparation time. Next, the options flash randomly one after another for 30 s. Here, the user must mentally count how many times the happy face icon appears on the specified target option. Finally, the user rests for a few seconds before the next run. At the end, the training dataset contains 264 epochs of the target class and 1,320 trials of the non-target class.

Figure 5. Steps of a single training sequence (training run). The complete calibration consists of eight runs and lasts 320 s. At the end of the calibration routine, the training dataset contains 264 epochs of the target class and 1,320 trials of the non-target class.
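As a consistency check, the reported epoch counts can be related to the flashing period of 150 ms (75 ms of stimulus plus 75 ms without stimulus). The worked arithmetic below uses only the figures stated above; that each of the six options flashes equally often in a run is inferred from the counts, not stated explicitly.

```latex
\begin{aligned}
\text{target flashes per run} &= 264 / 8 = 33 \\
\text{non-target flashes per run} &= 1320 / 8 = 165 = 5 \times 33 \\
\text{flashing time per run} &= (33 + 165) \times 0.150\ \text{s} = 29.7\ \text{s} \approx 30\ \text{s} \\
\text{duration of one run} &= 320\ \text{s} / 8 = 40\ \text{s}
\end{aligned}
```

The remaining time of roughly 10 s per run is then available for the fixation cross, the target presentation, the preparation time, and the rest period. The counts also show that the two classes appear in a 1:5 ratio.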

After completing the calibration routine, the system processes and validates the dataset to train the machine learning model of the BMI (see Figure 6). Firstly, the system pre-processes the complete dataset to obtain downsampled epochs free of artifacts of both classes. Next, the spatial filters are calculated using the set of observations of the target class. Then, the calculated filters are applied to the extracted epochs of both classes. Finally, the spatially filtered observations are used to find the optimal subset of features of the classifier and the parameters of this model. Once the classification model is trained, the BMI is ready to operate online and send control commands to the hand-orthosis.

Figure 6. Processing stages for system calibration. The information contained in a calibration dataset is pre-processed and analyzed to obtain a set of spatial filters and a classification model. These two components are necessary to operate the BMI and control the hand-orthosis.

2.2. Participants

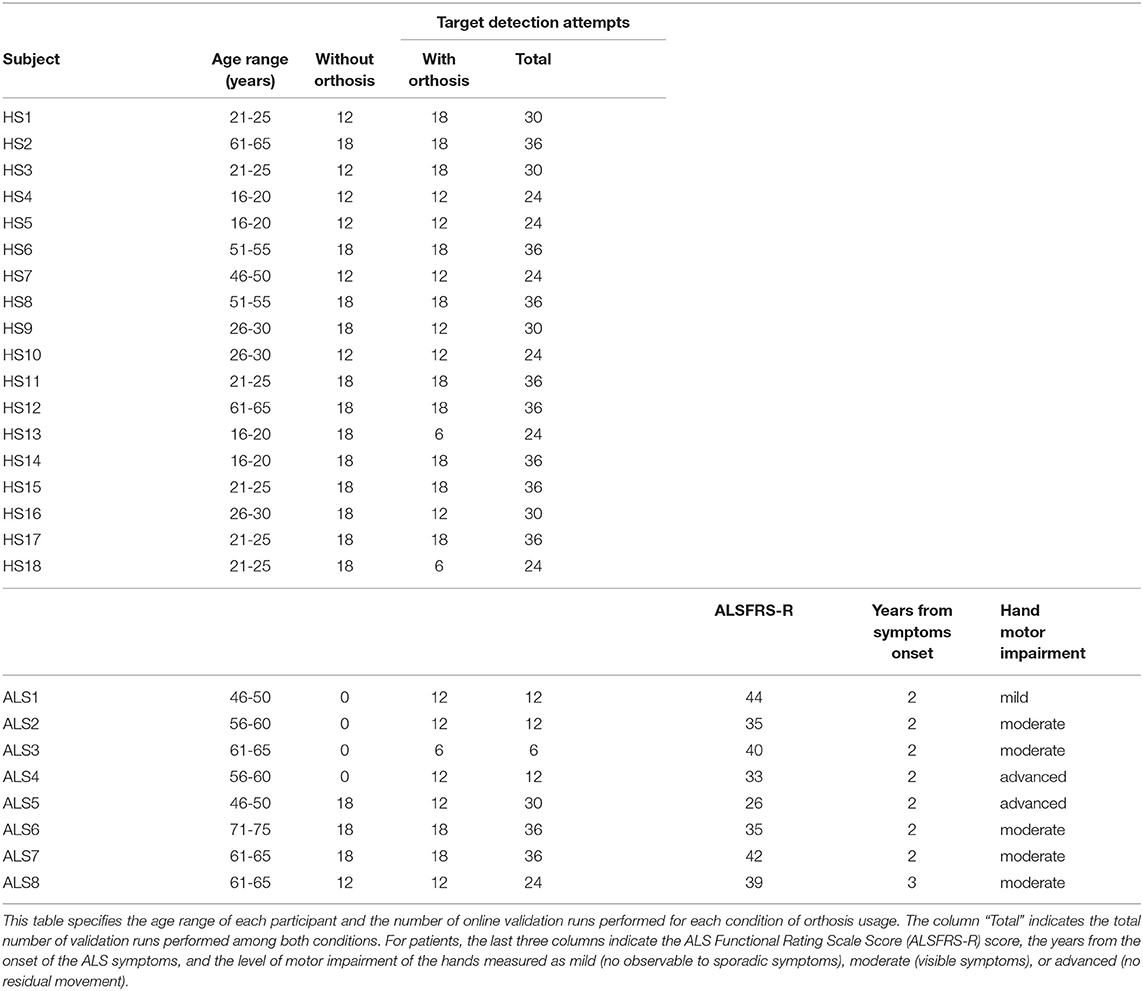

To evaluate the proposed mind-controlled hand-orthosis, we conducted an experiment in which HS and people with ALS tested the brain-machine interface. In this study, we included eighteen healthy participants (10 females and eight males, aged between 19 and 63 years old, mean age 32.7) and eight ALS patients (three females and five males, aged between 49 and 72 years old, mean age 59.6), all with normal or corrected vision. Table 1 shows the age range of each participant, and the characteristics of the ALS patients. Study subjects had no previous experience with any brain-machine interface.

ALS participants were selected from the patients attending the TecSalud ALS Multidisciplinary Clinic (Martínez et al., 2020), considering the disease duration and disability level as inclusion criteria. According to these criteria, the eight participants had, at the time of the tests, a disease duration of 2 to 3 years and a general disability level ranging from mild to moderate (according to the ALSFRS-R scores). Both the HS and ALS groups volunteered for the study and provided informed consent before the experimental sessions. This study followed the ethical principles of the World Medical Association (WMA) Declaration of Helsinki (WMA, 2013).

2.3. Experimental Design

The experiments were carried out in a dedicated medical room at Zambrano-Hellion Medical Center. HS and patients who could walk without help or a wheelchair were asked to sit in a comfortable chair approximately one meter away from the 22-inch LCD monitor of the BMI. For patients who needed assistance, the room space was adapted to accommodate a wheelchair close to the robotic device in front of the monitor. Before starting the experiments, participants were informed about the general instructions of the different tasks and were asked to avoid unnecessary movements when they had to focus attention on the interface.

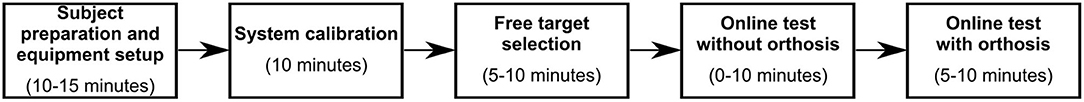

Figure 7 summarizes the different stages of one experimental session. After placing the EEG cap and preparing the wet electrodes, the experimenter instructed the participants to calibrate the BMI and perform a short free validation. In this test, subjects freely selected any option of the interface and reported whether the system detected the desired action correctly. The purpose of the free selections was to obtain information about the detection times and to demonstrate to the users that the BMI effectively responds to their intentions. Participants repeated the free target selection at least three times before continuing with the experiment.

Figure 7. Different stages of the experiment designed for evaluating the mind-controlled hand-orthosis. An experimental session started with the subject preparation and system setup. Then, the participant trained the BMI and tested the interface freely. Finally, the experiment concluded with the online tests. A complete experiment lasted approximately from 30 to 55 min.

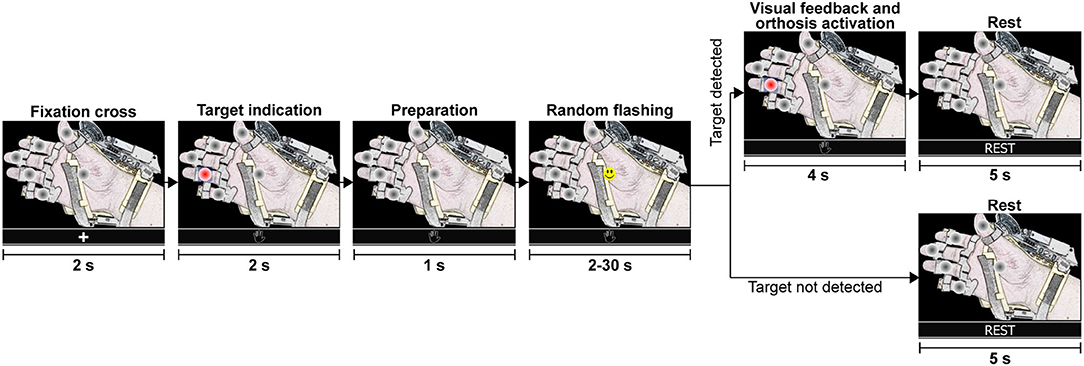

In the next stages of the experiment (online tests), subjects were instructed to focus attention on the specified target option until the BMI recognized a P300 response for one of the flashing elements. All online attempts (shown in Figure 8) are similar to the calibration runs. The interface presented a fixation cross to indicate the beginning of a test run, followed by the presentation of the target option and preparation time. Then, the random flashing started, and the BMI tried to recognize an evoked response. If the system detected the correct option in less than 30 s, the hand-orthosis performed the selected movement; otherwise, nothing happened. Finally, there were 5 s of resting time before starting another attempt.

Figure 8. Graphical representation of a single test run. During the random flashing, the BMI tried to detect an evoked potential while the participant focused attention on the specified target option. If the system detected the correct option, the robotic device performed the selected action. If the target was not detected within 30 s during the random flashing, the system went directly to the rest period before starting a new test run with the fixation cross.

Some online runs were performed with the robotic device disabled. In these cases, subjects did not wear the hand-orthosis, and the system did not send the control signals to the device. Table 1 indicates the number of online attempts performed by each participant with and without the Hand of Hope. In this way, we collected three datasets for each participant: the calibration data, the online test data without the robotic device, and the EEG recordings with the hand-orthosis.

2.4. Data Analysis

2.4.1. ERP Analysis

The calibration data recorded in our experiments was used to analyze the ERP responses of each participant. In this study, we pre-processed and validated the training epochs of the target and non-target classes to calculate the average waveforms of both conditions. To determine the ERPs, we used the filtered signals obtained with the bandpass filter of 4–40 Hz. We considered 200 ms of pre-stimulus samples and 800 ms of post-stimulus time points.

Significant ERP peaks were identified through a statistical test of the ERP amplitude at each time point and channel with the corresponding probability density function (PDF) of the pre-stimulus interval. We estimated the PDF of the pre-stimulus segment of each channel with the non-parametric kernel density estimation method (Bowman and Azzalini, 1997). The upper and lower limits of the PDFs were then computed for a significance level of α = 0.05, i.e., significant ERP responses are those for which the probability values under the PDF of the corresponding pre-stimulus are higher than 1−α/2 or smaller than α/2.

Significant ERP responses in the target class indicate that the interface elicits evoked potentials when the subject perceives a flashing event of a target option. In contrast, we do not expect to observe significant evoked potentials in the non-target class because the subject is not attending to these events.

2.4.2. Classification Model Evaluation

In this study, we evaluated the accuracy of the machine learning model of the BMI for each subject by applying five-fold cross-validation on the calibration data (Berrar, 2019). This method is useful to estimate the prediction error and accuracy of a model when the number of available observations is limited, and it is not possible to split the complete dataset into training data and test data. For this assessment, we report the accuracy $acc_i$ of each class $i \in \{\text{target}, \text{non-target}\}$ (the proportion of samples of class $i$ correctly predicted as class $i$), and the weighted model accuracy $acc_w = 0.5 \times (acc_{\text{target}} + acc_{\text{non-target}})$. We used the weighted accuracy because the training datasets are highly unbalanced, and we want to avoid a bias toward the non-target class.
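The following sketch illustrates why the weighted accuracy is preferred with unbalanced classes. The labels and the 1:5 class ratio are made up for illustration (assuming one target among six flashing options), and the function names are hypothetical.

```python
def class_accuracy(y_true, y_pred, label):
    """acc_i: fraction of samples whose true class is `label` that were predicted as `label`."""
    predictions = [p for t, p in zip(y_true, y_pred) if t == label]
    return sum(p == label for p in predictions) / len(predictions)


def weighted_accuracy(y_true, y_pred):
    """acc_w = 0.5 * (acc_target + acc_non-target)."""
    return 0.5 * (class_accuracy(y_true, y_pred, "target")
                  + class_accuracy(y_true, y_pred, "non-target"))


def plain_accuracy(y_true, y_pred):
    """Overall fraction of correct predictions, ignoring class balance."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Made-up labels with a 1:5 class ratio (illustrative assumption).
y_true = ["target"] * 2 + ["non-target"] * 10
always_non_target = ["non-target"] * 12  # a degenerate classifier

naive = plain_accuracy(y_true, always_non_target)        # 10 of 12 correct
balanced = weighted_accuracy(y_true, always_non_target)  # 0.5 * (0.0 + 1.0)
```

A classifier that never detects a target still reaches a plain accuracy above 80% in this example, whereas its weighted accuracy stays at the 50% chance level.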

Additionally, the significance levels of the model accuracies were calculated with a permutation test (Good, 2006). In this methodology, the null hypothesis states that the observations of both classes are exchangeable, so that any random permutation of the class labels produces accuracies similar to those obtained with the non-permuted data. The alternative hypothesis is accepted when the model accuracy is an extreme value in the empirical distribution built with m random permutations. When the alternative hypothesis is accepted, we can say that the cross-validated accuracy is above the chance level.
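A minimal sketch of this permutation procedure is shown below. It is not the authors' pipeline: a toy one-dimensional nearest-class-mean classifier, fitted and scored on the same data, stands in for the cross-validated BMI model, and all names and values are hypothetical. The p-value is estimated as (hits + 1) / (m + 1), a common convention that is assumed here.

```python
import random


def nearest_mean_score(features, labels):
    """Toy stand-in for the cross-validated model: weighted accuracy of a nearest-class-mean rule."""
    targets = [x for x, y in zip(features, labels) if y == 1]
    others = [x for x, y in zip(features, labels) if y == 0]
    mean_t = sum(targets) / len(targets)
    mean_n = sum(others) / len(others)
    pred = [1 if abs(x - mean_t) < abs(x - mean_n) else 0 for x in features]
    acc_t = sum(p == 1 for p, y in zip(pred, labels) if y == 1) / len(targets)
    acc_n = sum(p == 0 for p, y in zip(pred, labels) if y == 0) / len(others)
    return 0.5 * (acc_t + acc_n)


def permutation_test(features, labels, score_fn, m=999, seed=0):
    """Return the observed score and the fraction of label permutations scoring at least as high."""
    rng = random.Random(seed)
    observed = score_fn(features, labels)
    shuffled = list(labels)
    hits = 0
    for _ in range(m):
        rng.shuffle(shuffled)  # break the link between features and labels
        if score_fn(features, shuffled) >= observed:
            hits += 1
    return observed, (hits + 1) / (m + 1)


# Made-up feature values: 6 target epochs and 18 non-target epochs.
features = [2.1, 1.8, 2.4, 1.9, 2.2, 2.0,
            0.1, -0.2, 0.3, 0.0, -0.1, 0.2, 0.4, -0.3, 0.1,
            0.0, -0.4, 0.2, 0.3, -0.1, 0.1, -0.2, 0.0, 0.2]
labels = [1] * 6 + [0] * 18

observed, p_value = permutation_test(features, labels, nearest_mean_score)
```

The smallest p-value this estimator can return is 1 / (m + 1), so the number of permutations bounds the significance level that can be reported.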

2.4.3. Online Evaluation

We assessed the online BMI performance in terms of selection accuracy, detection time, and ITR. These parameters are computed through Equations (7)–(9), where $acc_{\text{online}}$ is the online accuracy, $n_{\text{sel}}$ is the number of correctly selected targets, $n_{\text{att}}$ is the number of attempts to select a target (test runs), $B$ is the information transmitted (bits), $n_c$ is the number of flashing circles, and $t_{\text{avg}}$ is the average time from target indication to target selection (detection time in seconds).
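Equations (7)–(9) are not reproduced in this text. The sketch below assumes the definitions commonly used for P300 interfaces: accuracy as the ratio of correct selections to attempts, the bits-per-selection formula of Wolpaw et al. (2002), and an ITR obtained by scaling the bits per selection to one minute. The function names are hypothetical, and the formulas should be checked against the original equations.

```python
import math


def online_accuracy(n_sel, n_att):
    """Assumed Eq. (7): fraction of attempts that ended in the correct selection."""
    return n_sel / n_att


def bits_per_selection(acc, n_c):
    """Assumed Eq. (8): B = log2(N) + P*log2(P) + (1-P)*log2((1-P)/(N-1)), with N = n_c and P = acc."""
    bits = math.log2(n_c)
    if acc > 0.0:
        bits += acc * math.log2(acc)
    if acc < 1.0:
        bits += (1.0 - acc) * math.log2((1.0 - acc) / (n_c - 1))
    return bits


def itr_bits_per_min(acc, n_c, t_avg):
    """Assumed Eq. (9): bits per selection scaled by the number of selections per minute."""
    return bits_per_selection(acc, n_c) * 60.0 / t_avg


# Illustration with six options, perfect accuracy and a 2.98 s detection time.
acc = online_accuracy(n_sel=12, n_att=12)
itr = itr_bits_per_min(acc, n_c=6, t_avg=2.98)
```

Under these assumptions, a perfect run with six options carries log2(6) ≈ 2.58 bits per selection, so the ITR is bounded by the detection time alone.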

3. Results

3.1. ERP Responses

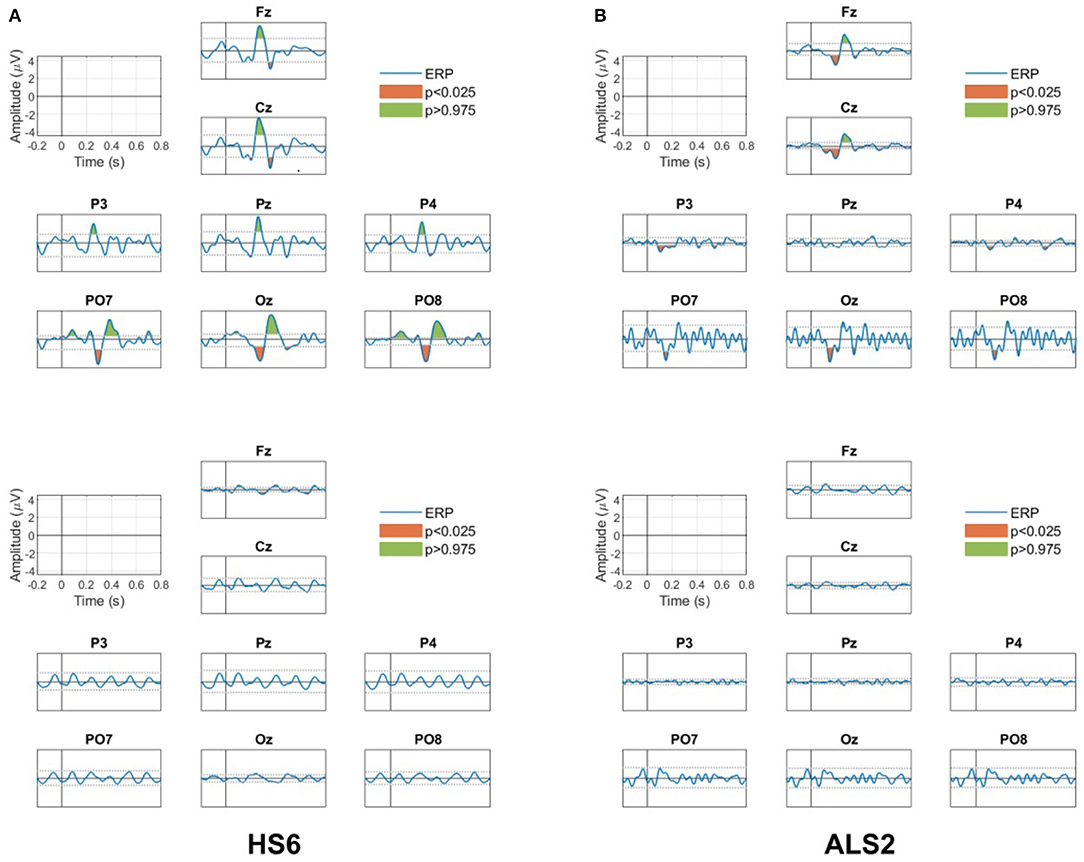

Figure 9 shows the results of the ERP analysis for one of the healthy subjects (HS6) and one of the ALS patients (ALS2). This analysis is presented for all channels, separately for the target and non-target conditions. For both participants, significant positive and negative peaks (p < 0.05, two-tailed test) are observed in the ERP for the target condition (upper panels), while no significant ERP peaks (p > 0.05, two-tailed test) are observed in the non-target condition (lower panels).

Figure 9. ERP responses for all channels for the target (upper panels) and non-target (lower panels) conditions for (A) the healthy participant HS6 and (B) the patient ALS2. Green and red areas in the ERP for the target condition are the positive and negative peaks that presented significant differences (p < 0.05, two-tailed test) with the estimated PDF of the baseline period. No significant peaks are observed in the ERP for the non-target condition.

For the healthy subject HS6, the ERP in the target condition shows (i) a positive peak between 250 and 450 ms in all channels (the P300 response), (ii) a negative peak between 450 and 550 ms in the frontal Fz and the central Cz channels (possibly a late negativity), and (iii) an early negativity between 200 and 250 ms in the parieto-occipital (PO7 and PO8) and occipital (Oz) channels. Note that none of these features is observed in the non-target condition.

For the patient ALS2, the ERP in the target condition shows (i) the positive peak representing the P300 response between 250 and 450 ms in the frontal Fz and the central Cz channels, and (ii) an early negativity between 200 and 250 ms in the frontal and central (Fz and Cz), the parieto-occipital (PO7 and PO8), and the occipital (Oz) channels. Note that these significant peaks are not observed in the non-target condition.

Similar observations are also present in the rest of the participants and indicate the existence of significant task-related evoked activity that is used by the proposed BMI system to recognize the stimulus the user is attending.

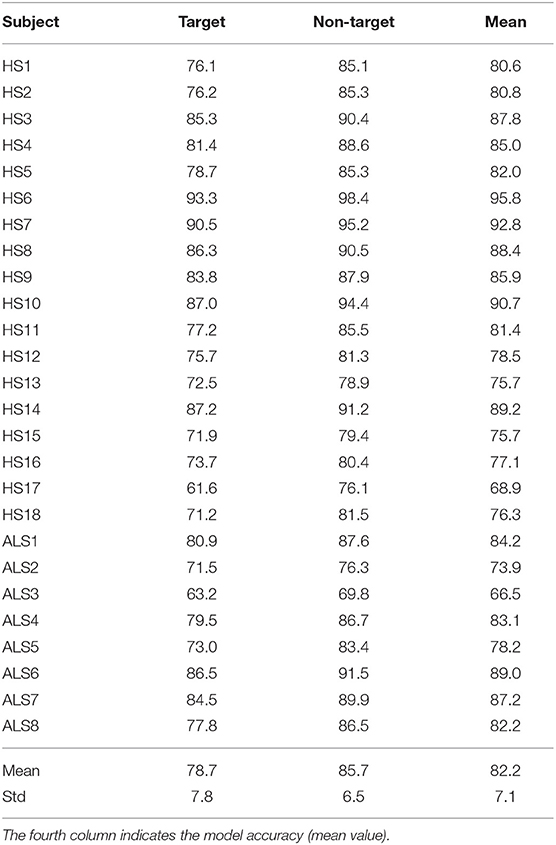

3.2. Classification Model Accuracy

Table 2 contains the classification accuracies estimated with five-fold cross-validation for each participant. The mean accuracy was 78.7% for the target class and 85.7% for the non-target class, and the mean weighted accuracy was 82.2%. The model performance was below 70% for only two participants (HS17 and ALS3), whereas three participants obtained accuracies above 90% (HS6, HS7, and HS10). The best classifier performance was 95.8%, and the worst was 66.5%. These results are comparable to those reported in related works (Wang and Chakraborty, 2017; Won et al., 2018).

Table 2. Classification accuracies (%) estimated with cross-validation for the target and non-target classes.

In the permutation tests, the classification accuracies for all participants were significant (p < 0.001, 10,000 random permutations). These results indicate that the machine learning model implemented in our BMI can discriminate between EEG epochs of the target and non-target classes. However, if we want to avoid selection errors in the online operation, it is important to consider a multi-trial strategy because the error rates are not zero. For this reason, the interface controller processes consecutive labels returned by the classification stage to determine the desired option.

Finally, we performed a Wilcoxon rank sum test, and no significant differences were observed between the classification accuracies of the HS group and the ALS group (p = 0.60). This result suggests that ALS participants can operate the BMI as well as HS.

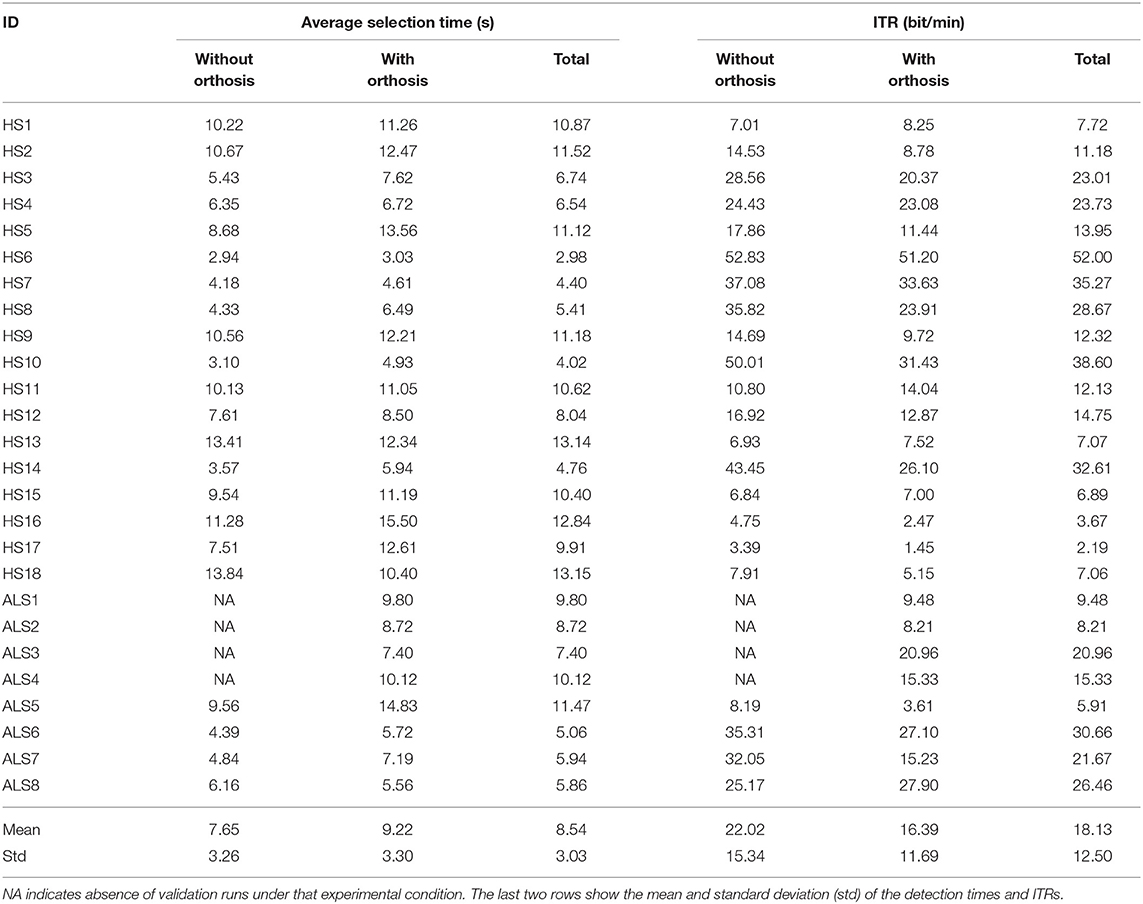

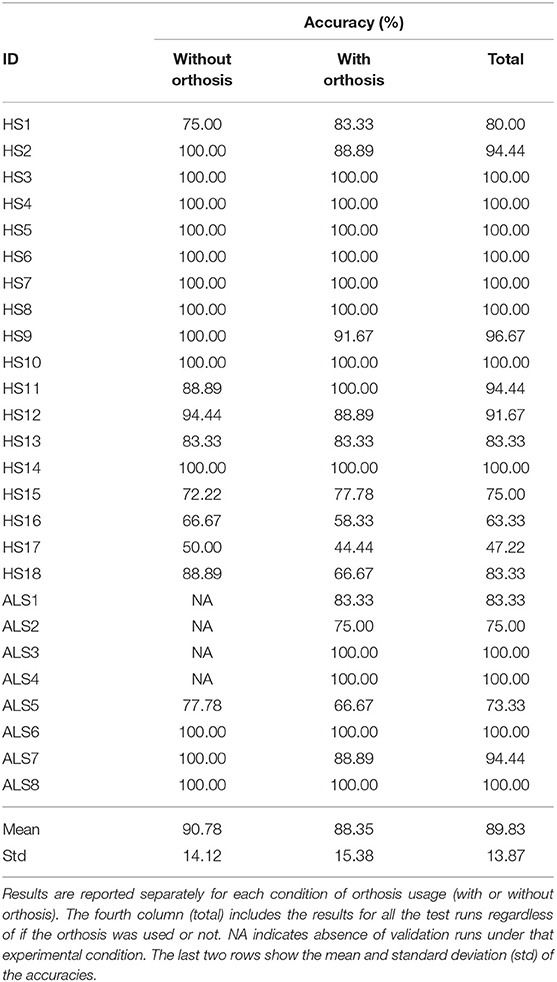

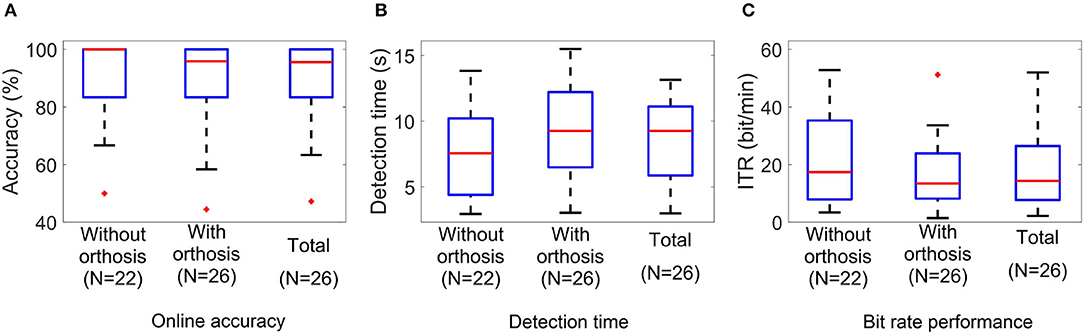

3.3. Online Performance and Detection Times

Tables 3 and 4 summarize the results obtained in the online evaluation of the proposed BMI. The distributions of the online accuracies, detection times, and ITRs are shown in Figure 10. From these results, we can observe that around 46% of the participants achieved an accuracy of 100% in the online tasks. The mean online accuracy was 89.83%, and only three participants obtained accuracies below 75% (HS16, HS17, and ALS5). This performance evaluation indicates that the implemented BMI decodes the user's intentions effectively in most cases, and that users could operate the hand-orthosis without much difficulty in more complex tasks. However, it is essential to improve the system performance for those users who cannot achieve high detection rates.

Table 3. Online classification performance obtained in the evaluation of the mind-controlled hand-orthosis.

Figure 10. Boxplots of the (A) accuracy, (B) target detection time, and (C) ITR values of the BMI online test. N indicates the number of participants who performed the experiment under each condition.

One way to increase online accuracy is to modify the detection criteria of the interface controller. The number of processed epochs and the thresholds for the target and non-target classes determine the balance between detection times and classification errors. For instance, if we decrease the non-target class threshold, we can reduce the number of online errors, but detection times may increase. Our BMI can customize these parameters for each subject, but for this study, we used the same parameters for all participants.

The average detection time observed in our experiments was 8.54 s, and the average ITR was 18.13 bit/min. The best and worst detection times were 2.98 and 13.15 s, and the minimum and maximum ITRs were 2.19 and 52.83 bit/min. Other studies have reported similar results for P300-based BMIs. If we consider that the target population of this technology is people with ALS, these response times are acceptable for many applications such as spellers and smart homes. In the case of a hand-orthosis, it is clear that active fine control of the robotic device cannot be implemented with these response times. However, users can select complete movements or sequences of actions using our interface. For this reason, we consider that the detection times and ITRs of our system are suitable for the movements or actions contemplated in our BMI.

Using a Wilcoxon rank sum test to compare the HS group and the ALS group, we did not observe significant differences in any of the three performance metrics studied in this work. Online accuracies (p = 0.95), detection times (p = 0.52), and ITRs (p = 0.93) are similar between groups; consequently, we cannot say that the BMI performance is significantly affected by the disease, at least for the disability level of the participants included in this study.
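For reference, a rank sum comparison between two independent groups can be sketched as follows. This is a simplified illustration with made-up values, not the analysis code of the study: it uses the normal approximation without tie or continuity corrections, so p-values may differ slightly from those of a statistics package.

```python
import math


def rank_sum_test(x, y):
    """Wilcoxon rank sum test (normal approximation, no tie or continuity correction)."""
    combined = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * len(combined)
    i = 0
    while i < len(combined):
        j = i
        # Extend j over a run of tied values so they share the average rank.
        while j + 1 < len(combined) and combined[j + 1][0] == combined[i][0]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[k] = average_rank
        i = j + 1
    n1, n2 = len(x), len(y)
    w = sum(r for r, (_, group) in zip(ranks, combined) if group == 0)
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value
    return w, z, p


# Made-up accuracies (%) for two groups of different sizes.
group_a = [100, 95, 90, 100, 85, 80]
group_b = [100, 90, 75, 95]
w, z, p = rank_sum_test(group_a, group_b)
```

Because the test uses ranks only, it does not require the metrics to be normally distributed, which suits bounded quantities such as accuracies.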

Finally, considering the 22 subjects who performed the experiment both without and with the orthosis, we carried out a paired t-test to analyze the differences in system performance between not wearing and wearing the hand-orthosis. While no significant differences in accuracy were found between these two conditions (t = 1.69, df = 21, p = 0.1), wearing the orthosis had a significant effect on the detection times (t = −3.67, df = 21, p = 0.001) and ITRs (t = 3.82, df = 21, p = 0.001). These differences may be explained by induced noise mixed with the EEG when the participant wears the hand-orthosis. The linear motors and the power supply of the robotic device produce noise components that can be observed in the electroencephalogram. As a result, the system detects and rejects contaminated epochs more often when the device is turned on and in contact with the user's skin, which increases the detection time. Fortunately, the penalty in detection time is only 1.57 s, which is not a problem in a P300-based BMI if we consider the typical reaction times of these systems.
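The paired comparison can be sketched as below. The detection times are made up for illustration, the function name is hypothetical, and the sign convention (without orthosis minus with orthosis) is an assumption. The p-value is omitted because the Python standard library does not provide the Student's t distribution.

```python
import math
import statistics


def paired_t_statistic(without_device, with_device):
    """Return (t, degrees of freedom, mean difference) for paired samples."""
    diffs = [a - b for a, b in zip(without_device, with_device)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample standard deviation of the differences
    t = mean_d / (sd_d / math.sqrt(n))
    return t, n - 1, mean_d


# Made-up detection times (s) for four participants in both conditions.
without_device = [7.0, 8.0, 6.5, 9.0]
with_device = [8.5, 9.0, 8.5, 10.5]
t_stat, df, mean_diff = paired_t_statistic(without_device, with_device)
```

With this convention, longer detection times when wearing the device give a negative t value, and the degrees of freedom equal the number of paired participants minus one.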

4. Discussion

In this work, we presented the development and evaluation of a P300-based BMI coupled with a robotic hand-orthosis. With this system, ALS patients can move each finger of a hand mentally or perform a sequence of movements of one or more fingers. Because the BMI uses the P300 paradigm, the number of possible movements is not limited, and the BMI can provide a range of options for different needs. For example, the system can perform the thumb opposition movement or movements with any combination of fingers. The orthosis can also be configured to start closed and perform the extension-flexion of the fingers, and the initial position and angular range of its movements can be adjusted. This flexibility allows the system to be adapted to the individual characteristics of the users (e.g., spasticity, rigidity, level of hand motor impairment). However, for this initial evaluation, we wanted to test the general performance of the interface at the most individual level (single-finger movements) and with the most complex movement (all fingers simultaneously), for a total of six possible movements.

In the experiments conducted with HS and ALS patients, we observed event-related activity for the target class in the EEG recordings of all the participants. Additionally, the classification accuracies estimated with cross-validation were above the chance level for all subjects. Finally, in the online tests, both HS and ALS participants were able to control the hand-orthosis with the interface. Only three subjects obtained online accuracies below 75%, and 46% of the study subjects completed all the test runs without errors. These results indicate that our interface can discriminate successfully between target and non-target flashing events, and we can expect that most healthy people and ALS patients with mild to moderate general disability levels (according to the ALSFRS-R scale) are potential users of this assistive technology. After the tests, the users were informally asked about their experience. Since this was their first experience with a BMI technology, most of them expressed amazement, and many were deeply interested and asked about the details of operation and the current state of this technology. Some users reported mild eyestrain during the BMI training stage, but all reported feeling physically comfortable during the test.

In this kind of application, it is essential to achieve high accuracies to avoid user frustration and increase the chance of acceptance of this technology for daily use. Although most of the participants obtained low error rates in the conducted experiments, we must find strategies to improve the system performance for users with low classification accuracies. As long as the training data contains observable event-related activity for the target class and the classification model accuracy is above the chance level, we can modify the detection criteria implemented in the interface controller to improve the online performance and adapt the interface to the user's needs. Another possibility would be to modify the stimulus presentation and the graphical user interface. Some studies have suggested that variations in the characteristics of the visual stimuli produce variations in the ERP waveforms, and thus have an impact on the BMI performance (Speier et al., 2017; Li et al., 2020).

To our knowledge, this is the first report of a non-invasive P300-based system with multiple possible selections coupled with a robotic hand-orthosis that has been tested with ALS patients. Although there are recent reports of P300-BMIs to control hand-orthoses or artificial hands (Stan et al., 2015; Syrov et al., 2019), these systems were tested only with healthy people and consider applications mainly for stroke survivors. Stan et al. (2015) presented a system where a hand-orthosis is controlled through a P300-based BMI; however, the system contains only three possible selections (turn on, close, and open orthosis) that allow the flexion-extension of the five fingers simultaneously, while our system allows the passive flexion-extension of a single finger at a time. The evaluation of these fine motor movements is particularly important in ALS patients since this disease is directly associated with the degeneration of the corticospinal tract (Sarica et al., 2017), which is involved in fine digital movements (Levine et al., 2012). Syrov et al. (2019) developed a P300-BMI approach to control each finger of a wired, artificial phantom hand, which does not perform the passive flexion-extension of the user's fingers. In their system, the visual stimuli are shown through LEDs placed directly on the fingers of the artificial hand; this configuration, in addition to the absence of wireless communication with the robotic hand, could bring additional difficulties when testing the system with ALS patients due to their motor limitations. On the other hand, Gull et al. (2018) proposed a prototype intended to be used with ALS patients that includes a BMI and a robotic glove to assist hand grasping; nevertheless, the robotic glove (Nilsson et al., 2012) covers only three fingers (thumb, middle, and ring), and the implementation of the BMI paradigm, glove control, and clinical tests were reported as inconclusive.

The datasets of each participant collected in this study are publicly available with the aim of contributing to the development of new processing and classification methods for BMI systems. The inclusion of datasets of ALS participants increases the available EEG recordings for BMI purposes and facilitates the improvement of BMI-based tools for patients. Furthermore, the ERPs could be used to investigate potential electrophysiological biomarkers of ALS (McCane et al., 2015; Lange et al., 2016), which would help to understand the neurodegenerative mechanisms of the disease.

In conclusion, the results presented in this work show that our mind-controlled hand-orthosis can be used, without adaptations, by ALS patients with a moderate level of disability. Future work will focus on increasing the sample size of ALS users and investigating the effect of longitudinal use of the system on patients. We will also modify the available options of the interface to test more realistic scenarios. Our system could represent the basis for developing more practical tools, such as a portable orthosis that responds to other biosignals in addition to the EEG and that is adaptable to the degree of disability of the users. Our system could also be modified to communicate with other wireless systems (e.g., smart homes).

For this initial evaluation, we tested our system's effectiveness and efficiency in terms of accuracy and ITR, respectively. In future work, we will adopt a user-centered design (UCD) approach (Liberati et al., 2015; Schettini et al., 2015; Riccio et al., 2016; Kübler et al., 2020) and include the evaluation of satisfaction by consulting and recording the opinions of primary (ALS patients) and secondary (caregivers) end-users through formal interviews. Feedback from patients and their caregivers will help to develop a more customizable system according to the individual characteristics and needs of each user. The UCD approach will also help us to properly identify and correct the present limitations in order to improve the usability of our system in daily life.

Data Availability Statement

The datasets generated and analyzed for this study are available upon request to the corresponding author.

Ethics Statement

All participants volunteered for the study and provided informed consent before the experimental sessions.

Author Contributions

JD, OM-M, JG, RC, HM, and JMA participated in the study design, experiments, and manuscript writing. JD and OM-M designed and implemented the brain-machine interface. JD, OM-M, and JA performed the acquisition and analysis of data. JD recruited the healthy participants. RC and HM selected the amyotrophic lateral sclerosis patients.

Funding

This research has been funded by the National Council of Science and Technology of Mexico (CONACyT) through grant PN2015-873.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

References

Achanccaray, D., Flores, C., Fonseca, C., and Andreu-Perez, J. (2017). “A P300-based brain computer interface for smart home interaction through an ANFIS ensemble,” in 2017 IEEE International Conference on Fuzzy Systems (FUZZ-IEEE) (Naples), 1–5. doi: 10.1109/FUZZ-IEEE.2017.8015770

Aggogeri, F., Mikolajczyk, T., and O'Kane, J. (2019). Robotics for rehabilitation of hand movement in stroke survivors. Adv. Mech. Eng. 11:168781401984192. doi: 10.1177/1687814019841921

Arrichiello, F., Di Lillo, P., Di Vito, D., Antonelli, G., and Chiaverini, S. (2017). “Assistive robot operated via p300-based brain computer interface,” in 2017 IEEE International Conference on Robotics and Automation (ICRA) (Singapore), 6032–6037. doi: 10.1109/ICRA.2017.7989714

Aydin, E. A., Bay, O. F., and Guler, I. (2018). P300-based asynchronous brain computer interface for environmental control system. IEEE J. Biomed. Health Informatics 22, 653–663. doi: 10.1109/JBHI.2017.2690801

Berrar, D. (2019). “Cross-validation,” in Encyclopedia of Bioinformatics and Computational Biology, eds S. Ranganathan, M. Gribskov, K. Nakai, and C. Schönbach (Oxford: Academic Press), 542–545. doi: 10.1016/B978-0-12-809633-8.20349-X

Bowman, A., and Azzalini, A. (1997). Applied Smoothing Techniques for Data Analysis. Number 18 in Oxford statistical science series. Oxford: Clarendon Press.

Cattan, G. H., Andreev, A., Mendoza, C., and Congedo, M. (2019). A comparison of mobile VR display running on an ordinary smartphone with standard pc display for p300-bci stimulus presentation. IEEE Trans. Games 2019:2957963. doi: 10.1109/TG.2019.2957963

Chen, L., Jin, J., Daly, I., Zhang, Y., Wang, X., and Cichocki, A. (2016). Exploring combinations of different color and facial expression stimuli for gaze-independent BCIs. Front. Comput. Neurosci. 10:5. doi: 10.3389/fncom.2016.00005

Chen, L., Jin, J., Zhang, Y., Wang, X., and Cichocki, A. (2015). A survey of the dummy face and human face stimuli used in BCI paradigm. J. Neurosci. Methods 239, 18–27. doi: 10.1016/j.jneumeth.2014.10.002

Cho, J., Jeong, J., Shim, K., Kim, D., and Lee, S. (2018). “Classification of hand motions within EEG signals for non-invasive BCI-based robot hand control,” in 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC) (Miyazaki), 515–518. doi: 10.1109/SMC.2018.00097

De Venuto, D., Annese, V. F., and Mezzina, G. (2017). “An embedded system remotely driving mechanical devices by P300 brain activity,” in Design, Automation Test in Europe Conference Exhibition (DATE) (Lausanne), 2017, 1014–1019. doi: 10.23919/DATE.2017.7927139

De Venuto, D., Annese, V. F., and Mezzina, G. (2018). Real-time P300-based bci in mechatronic control by using a multi-dimensional approach. IET Softw. 12, 418–424. doi: 10.1049/iet-sen.2017.0340

Deligani, R. J., Hosni, S. I., Vaughan, T. M., McCane, L. M., Zeitlin, D. J., McFarland, D. J., et al. (2019). “Neural alterations during use of a P300-based BCI by individuals with amyotrophic lateral sclerosis,” in 2019 9th International IEEE/EMBS Conference on Neural Engineering (NER) (San Francisco, CA), 899–902. doi: 10.1109/NER.2019.8717044

Flores, C., Fonseca, C., Achanccaray, D., and Andreu-Perez, J. (2018). “Performance evaluation of a P300 brain-computer interface using a kernel extreme learning machine classifier,” in 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC) (Miyazaki), 3715–3719. doi: 10.1109/SMC.2018.00629

Garakani, G., Ghane, H., and Menhaj, M. B. (2019). Control of a 2-DOF robotic arm using a P300-based brain-computer interface. AUT J. Model. Simul. doi: 10.22060/miscj.2019.15569.5136

Gondesen, F., Marx, M., and Kycler, A. (2019). “A shoulder-surfing resistant image-based authentication scheme with a brain-computer interface,” in 2019 International Conference on Cyberworlds (CW) (Kyoto), 336–343. doi: 10.1109/CW.2019.00061

Gull, M. A., Bai, S., Mrachacz-Kersting, N., and Blicher, J. (2018). “Wexo: Smart wheelchair exoskeleton for ALS patients,” in Proceedings of the 12th International Convention on Rehabilitation Engineering and Assistive Technology, i-CREATe 2018 (Midview City: Singapore Therapeutic, Assistive & Rehabilitative Technologies (START) Centre), 97–100.

Guy, V., Soriani, M.-H., Bruno, M., Papadopoulo, T., Desnuelle, C., and Clerc, M. (2018). Brain computer interface with the P300 speller: usability for disabled people with amyotrophic lateral sclerosis. Ann. Phys. Rehabil. Med. 61, 5–11. doi: 10.1016/j.rehab.2017.09.004

Ho, N. S. K., Tong, K. Y., Hu, X. L., Fung, K. L., Wei, X. J., Rong, W., et al. (2011). “An EMG-driven exoskeleton hand robotic training device on chronic stroke subjects: task training system for stroke rehabilitation,” in 2011 IEEE International Conference on Rehabilitation Robotics (Zurich), 1–5. doi: 10.1109/ICORR.2011.5975340

Hwang, H.-J., Kim, S., Choi, S., and Im, C.-H. (2013). EEG-based brain-computer interfaces: a thorough literature survey. Int. J. Human. Comput. Interact. 29, 814–826. doi: 10.1080/10447318.2013.780869

Ivy, C. C., Smith, S. M., and Materi, M. M. (2014). Upper extremity orthoses use in amyotrophic lateral sclerosis/motor neuron disease: three case reports. HAND 9, 543–550. doi: 10.1007/s11552-014-9626-x

James, G., Witten, D., Hastie, T., and Tibshirani, R. (2015). An Introduction to Statistical Learning: With Applications in R. New York, NY: Springer Publishing Company, Incorporated.

Kleih, S. C., Gottschalt, L., Teichlein, E., and Weilbach, F. X. (2016). Toward a P300 based brain-computer interface for aphasia rehabilitation after stroke: presentation of theoretical considerations and a pilot feasibility study. Front. Hum. Neurosci. 10:547. doi: 10.3389/fnhum.2016.00547

Kübler, A., Nijboer, F., and Kleih, S. (2020). “Chapter 26 - hearing the needs of clinical users,” in Brain-Computer Interfaces, Vol. 168 of Handbook of Clinical Neurology, eds N. F. Ramsey and J. del R. Millán (Amsterdam: Elsevier), 353–368. doi: 10.1016/B978-0-444-63934-9.00026-3

Lange, F., Lange, C., Joop, M., Seer, C., Dengler, R., Kopp, B., et al. (2016). Neural correlates of cognitive set shifting in amyotrophic lateral sclerosis. Clin. Neurophysiol. 127, 3537–3545. doi: 10.1016/j.clinph.2016.09.019

Levine, A., Lewallen, K., and Pfaff, S. (2012). Spatial organization of cortical and spinal neurons controlling motor behavior. Curr. Opin. Neurobiol. 22, 812–821. doi: 10.1016/j.conb.2012.07.002

Li, S., Jin, J., Daly, I., Zuo, C., Wang, X., and Cichocki, A. (2020). Comparison of the ERP-based BCI performance among chromatic (RGB) semitransparent face patterns. Front. Neurosci. 14:54. doi: 10.3389/fnins.2020.00054

Liberati, G., Pizzimenti, A., Simione, L., Riccio, A., Schettini, F., Inghilleri, M., et al. (2015). Developing brain-computer interfaces from a user-centered perspective: assessing the needs of persons with amyotrophic lateral sclerosis, caregivers, and professionals. Appl. Ergon. 50, 139–146. doi: 10.1016/j.apergo.2015.03.012

Lotte, F., and Guan, C. (2009). “An efficient p300-based brain-computer interface with minimal calibration time,” in Assistive Machine Learning for People With Disabilities Symposium (NIPS'09 Symposium) (Whistler).

Martínez, H. R., Figueroa-Sánchez, J. A., Cantú-Martínez, L., Caraza, R., de la Maza, M., Escamilla-Garza, J. M., et al. (2020). A multidisciplinary clinic for amyotrophic lateral sclerosis patients in northeast mexico. Rev. Mex. Neuroci. 21, 66–70. doi: 10.24875/RMN.19000144

Masud, U., Baig, M. I., Akram, F., and Kim, T. (2017). “A P300 brain computer interface based intelligent home control system using a random forest classifier,” in 2017 IEEE Symposium Series on Computational Intelligence (SSCI) (Honolulu), 1–5. doi: 10.1109/SSCI.2017.8285449

McCane, L., Heckman, S., McFarland, D., Townsend, G., Mak, J., Sellers, E., et al. (2015). P300-based brain-computer interface (BCI) event-related potentials (ERPs): people with amyotrophic lateral sclerosis (ALS) vs. age-matched controls. Clin. Neurophysiol. 126, 2124–2131. doi: 10.1016/j.clinph.2015.01.013

McFarland, D. J. (2020). Brain-computer interfaces for amyotrophic lateral sclerosis. Muscle Nerve 61, 702–707. doi: 10.1002/mus.26828

Mendoza-Montoya, O. (2017). Development of a hybrid brain-computer interface for autonomous systems (Ph.D. thesis). Freie Universitat Berlin, Berlin, Germany.

Murphy, M. D., Guggenmos, D. J., Bundy, D. T., and Nudo, R. J. (2016). Current challenges facing the translation of brain computer interfaces from preclinical trials to use in human patients. Front. Cell. Neurosci. 9:497. doi: 10.3389/fncel.2015.00497

Nilsson, M., Ingvast, J., Wikander, J., and von Holst, H. (2012). “The soft extra muscle system for improving the grasping capability in neurological rehabilitation,” in 2012 IEEE-EMBS Conference on Biomedical Engineering and Sciences (Langkawi), 412–417. doi: 10.1109/IECBES.2012.6498090

Nurseitov, D., Serekov, A., Shintemirov, A., and Abibullaev, B. (2017). “Design and evaluation of a P300-ERP based BCI system for real-time control of a mobile robot,” in 2017 5th International Winter Conference on Brain-Computer Interface (BCI) (Jeongseon), 115–120. doi: 10.1109/IWW-BCI.2017.7858177

Okahara, Y., Takano, K., Komori, T., Nagao, M., Iwadate, Y., and Kansaku, K. (2017). Operation of a P300-based brain-computer interface by patients with spinocerebellar ataxia. Clin. Neurophysiol. Pract. 2, 147–153. doi: 10.1016/j.cnp.2017.06.004

Poletti, B., Carelli, L., Solca, F., Lafronza, A., Pedroli, E., Faini, A., et al. (2016). Cognitive assessment in amyotrophic lateral sclerosis by means of P300-brain computer interface: a preliminary study. Amyotr. Lateral Scler. Frontotemp. Degener. 17, 473–481. doi: 10.1080/21678421.2016.1181182

Riccio, A., Pichiorri, F., Schettini, F., Toppi, J., Risetti, M., Formisano, R., et al. (2016). “Chapter 12 - interfacing brain with computer to improve communication and rehabilitation after brain damage,” in Brain-Computer Interfaces: Lab Experiments to Real-World Applications, Vol. 228 of Progress in Brain Research, ed D. Coyle (Amsterdam: Elsevier), 357–387. doi: 10.1016/bs.pbr.2016.04.018

Sarica, A., Cerasa, A., Valentino, P., Yeatman, J., Trotta, M., Barone, S., et al. (2017). The corticospinal tract profile in amyotrophic lateral sclerosis. Hum. Brain Mapp. 38, 727–739. doi: 10.1002/hbm.23412

Schettini, F., Riccio, A., Simione, L., Liberati, G., Caruso, M., Frasca, V., et al. (2015). Assistive device with conventional, alternative, and brain-computer interface inputs to enhance interaction with the environment for people with amyotrophic lateral sclerosis: a feasibility and usability study. Arch. Phys. Med. Rehabil. 96(3 Suppl.), S46–S53. doi: 10.1016/j.apmr.2014.05.027

Shahriari, Y., Vaughan, T. M., McCane, L. M., Allison, B. Z., Wolpaw, J. R., and Krusienski, D. J. (2019). An exploration of BCI performance variations in people with amyotrophic lateral sclerosis using longitudinal EEG data. J. Neural Eng. 16:056031. doi: 10.1088/1741-2552/ab22ea

Speier, W., Deshpande, A., Cui, L., Chandravadia, N., Roberts, D., and Pouratian, N. (2017). A comparison of stimulus types in online classification of the P300 speller using language models. PLoS ONE 12:e175382. doi: 10.1371/journal.pone.0175382

Spüler, M., Walter, A., Rosenstiel, W., and Bogdan, M. (2014). Spatial filtering based on canonical correlation analysis for classification of evoked or event-related potentials in EEG data. IEEE Trans. Neural Syst. Rehabil. Eng. 22, 1097–1103. doi: 10.1109/TNSRE.2013.2290870

Stan, A., Irimia, D. C., Botezatu, N. A., and Lupu, R. G. (2015). “Controlling a hand orthosis by means of P300-based brain computer interface,” in 2015 E-Health and Bioengineering Conference (EHB) (Iasi), 1–4. doi: 10.1109/EHB.2015.7391389

Syrov, N., Novichikhina, K., Kirayanov, D., Gordleeva, S., and Kaplan, A. (2019). The changes of corticospinal excitability during the control of artificial hand through the brain-computer interface based on the P300 component of visual evoked potential. Hum. Physiol. 45, 152–157. doi: 10.1134/S0362119719020117

Tanaka, K., Horaiya, K., Akagi, J., and Kihoin, N. (2013). Timely manner application of hand orthoses to patients with amyotrophic lateral sclerosis: a case report. Prosthet. Orthot. Int. 38, 239–242. doi: 10.1177/0309364613489334

Tang, J., Zhou, Z., and Liu, Y. (2017). “A 3D visual stimuli based p300 brain-computer interface: for a robotic arm control,” in Proceedings of the 2017 International Conference on Artificial Intelligence, Automation and Control Technologies, AIACT'17 (New York, NY: Association for Computing Machinery), 1–6. doi: 10.1145/3080845.3080863

Tyagi, A., and Nehra, V. (2017). A comparison of feature extraction and dimensionality reduction techniques for EEG-based BCI system. IUP J. Comput. Sci. 11, 51–66.

Vaughan, T. M. (2020). “Chapter 4 - brain-computer interfaces for people with amyotrophic lateral sclerosis,” in Brain-Computer Interfaces, Vol. 168 of Handbook of Clinical Neurology, eds N. F. Ramsey and J. del R. Millán (Amsterdam: Elsevier), 33–38. doi: 10.1016/B978-0-444-63934-9.00004-4

Venuto, D. D., Annese, V. F., Mezzina, G., Ruta, M., and Sciascio, E. D. (2016). “Brain-computer interface using P300: a gaming approach for neurocognitive impairment diagnosis,” in 2016 IEEE International High Level Design Validation and Test Workshop (HLDVT) (Santa Cruz, CA), 93–99.

Wang, W., and Chakraborty, G. (2017). “Probes minimization still maintaining high accuracy to classify target stimuli P300,” in 2017 IEEE 8th International Conference on Awareness Science and Technology (iCAST) (Taichung), 13–17. doi: 10.1109/ICAwST.2017.8256431

WMA (2013). World Medical Association Declaration of Helsinki. Ethical principles for medical research involving human subjects. JAMA 310, 2191–2194. doi: 10.1001/jama.2013.281053

Wolpaw, J. R., Birbaumer, N., McFarland, D. J., Pfurtscheller, G., and Vaughan, T. M. (2002). Brain-computer interfaces for communication and control. Clin. Neurophysiol. 113, 767–791. doi: 10.1016/S1388-2457(02)00057-3

Won, K., Kwon, M., Lee, S., Jang, S., Lee, J., Ahn, M., et al. (2018). “Seeking RSVP task features correlated with P300 speller performance,” in 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC) (Miyazaki), 1138–1143. doi: 10.1109/SMC.2018.00201

Xiao, X., Xu, M., Jin, J., Wang, Y., Jung, T., and Ming, D. (2019). Discriminative canonical pattern matching for single-trial classification of ERP components. IEEE Trans. Biomed. Eng. 67, 2266–2275. doi: 10.1109/TBME.2019.2958641

Yu, M., Kaongoen, N., and Jo, S. (2016). “P300-BCI-based authentication system,” in 2016 4th International Winter Conference on Brain-Computer Interface (BCI) (Gangwon-do), 1–4. doi: 10.1109/IWW-BCI.2016.7457443

Zhumadilova, A., Tokmurzina, D., Kuderbekov, A., and Abibullaev, B. (2017). “Design and evaluation of a P300 visual brain-computer interface speller in Cyrillic characters,” in 2017 26th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN) (Lisbon), 1006–1011. doi: 10.1109/ROMAN.2017.8172426

Keywords: brain-machine interface, electroencephalography, evoked potentials, P300, amyotrophic lateral sclerosis, signal processing, artificial intelligence, hand-orthosis

Citation: Delijorge J, Mendoza-Montoya O, Gordillo JL, Caraza R, Martinez HR and Antelis JM (2020) Evaluation of a P300-Based Brain-Machine Interface for a Robotic Hand-Orthosis Control. Front. Neurosci. 14:589659. doi: 10.3389/fnins.2020.589659

Received: 31 July 2020; Accepted: 22 October 2020; Published: 27 November 2020.

Edited by:

Davide Valeriani, Harvard Medical School, United States

Reviewed by:

Rupert Ortner, g.tec Medical Engineering Spain S.L., Spain

Floriana Pichiorri, Santa Lucia Foundation (IRCCS), Italy

Copyright © 2020 Delijorge, Mendoza-Montoya, Gordillo, Caraza, Martinez and Antelis. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Jonathan Delijorge, delijorge@tec.mx
