- Foundations of Natural and Stochastic Computing, Donders Institute for Brain, Cognition and Behaviour, Radboud University, Nijmegen, Netherlands

The implementation of inference (i.e., computing posterior probabilities) in Bayesian networks using a conventional computing paradigm turns out to be inefficient in terms of energy, time, and space, due to the substantial resources required by floating-point operations. A departure from conventional computing systems to make use of the high parallelism of Bayesian inference has attracted recent attention, particularly in the hardware implementation of Bayesian networks. These efforts have led to several implementations, ranging from digital and mixed-signal circuits to analog circuits that leverage emerging nonvolatile devices. Several stochastic computing architectures using Bayesian stochastic variables have been proposed, from FPGA-like architectures to brain-inspired architectures such as crossbar arrays. This comprehensive review paper discusses different hardware implementations of Bayesian networks considering different devices, circuits, and architectures, and offers an outlook on how existing hardware implementation problems may be solved.

Introduction

Bayesian inference (i.e., the computation of a posterior probability given a prior probability and new evidence; Jaynes, 2003) is one of the most crucial problems in artificial intelligence (AI), in areas as varied as statistical machine learning (Tipping, 2003; Theodoridis, 2015), causal discovery (Heckerman et al., 1999), automatic speech recognition (Zweig and Russell, 1998), spam filtering (Gómez Hidalgo et al., 2006), and clinical decision support systems (Sesen et al., 2013). It is a powerful method for fusing independent (possibly conflicting) data for decision-making in robotic, biological, and multi-sensorimotor systems (Bessière et al., 2008). Bayesian networks (Pearl, 1988) allow for a concise representation of stochastic variables and their independence and the computation of any posterior probability of interest in the domain spanned by the variables. The structure and strength of the relationships can be elicited from domain experts (Druzdzel and van der Gaag, 1995) or, more commonly, learned from data using algorithms such as expectation-maximization or maximum likelihood estimation (Heckerman et al., 1995; Ji et al., 2015). However, both the inference problem (Cooper, 1990) and the learning problem (Chickering, 1996) are NP-hard problems in general.

As a result, an efficient implementation of Bayesian networks is highly desirable. Although the implementation of inference on a large Bayesian network on conventional general-purpose computers provides high precision, it is inefficient in terms of time and energy consumption. Several complex floating-point calculations are required to estimate the probability of occurrence of a variable since the network is composed of various interacting causal variables (Shim et al., 2017). Moreover, the high parallelism of Bayesian inference is not exploited efficiently in conventional computing systems (F. Kungl et al., 2019). Conventional systems need exact values throughout the computation, preventing the use of the stochastic computing paradigm that consumes less power (Khasanvis et al., 2015a). To realize stochastic computing-based Bayesian inference, especially with emerging nanodevices, a robust hardware structure is needed to overcome the characteristic imperfections of these new technologies. In addition, the practical realization and usage of large Bayesian networks has been problematic due to the abovementioned intractability of inference (Faria et al., 2021). Therefore, any hardware implementation of Bayesian inference needs to pay attention to a hierarchy of device, circuit, architecture, and algorithmic improvements.

Various approaches and architectures for Bayesian network hardware implementations have been developed; in the literature, approaches such as probabilistic computing platforms based on Field Programmable Gate Arrays (FPGAs), fully digital systems with stochastic digital circuits, analog-based probabilistic circuits, mixed-signal approaches, stochastic computing platforms with scaled nanomagnets, and Intel’s Loihi chip have been proposed.

In this overview paper, we describe these different approaches as well as the pros and cons of each of them. To this end, Section “Bayesian Networks and the Inference Problem” explains some basic preliminaries on Bayesian networks. Section “Probabilistic Hardware-Based Implementation of Bayesian Networks” describes several probabilistic neuronal circuits for Bayesian network variables using different nonvolatile devices, and explains neural sampling machines (NSMs) for approximate Bayesian inference. Section “New Computing Architecture With Nonvolatile Memory Elements for Bayesian Network Implementation” discusses different systems for the implementation of Bayesian networks that make use of new nonvolatile magnets and CMOS circuit elements. Section “Bayesian Inference Hardware Implementation With Digital Logic Gates” explains digital implementations of Bayesian inference algorithms as well as the definition of a standard cell-based implementation; at the end of that section, probabilistic nodes based on CMOS technology are discussed. Section “Crossbar Arrays for Bayesian Networks Implementation” presents two brain-inspired hardware implementations of naïve Bayesian classifiers in the crossbar array architecture, in which memristors are employed as nonvolatile elements for algorithm implementation. It also describes Bayesian reasoning machines with magneto-tunneling junction-based Bayesian networks. Section “Bayesian Neural Networks” presents the use of Bayesian features in neural networks. First, Bayesian neural networks are explained. Then, Gaussian synapses for probabilistic neural networks (PNNs) are introduced. Afterward, PNNs with memristive crossbar circuits are described. Finally, approximate computing to provide hardware-friendly PNNs and an application of probabilistic artificial neural networks (ANNs) for analyzing transistor process variation are explained.
Section “Hardware Implementation of Probabilistic Spiking Neural Networks” presents the use of Bayesian features in spiking neural networks (SNNs). The feasibility of nonvolatile devices as synapses in SNN architectures is discussed for Bayesian-based inference algorithms. A scalable sampling-based probabilistic inference platform with spiking networks is explained, followed by a probabilistic spiking neural computing platform with MTJs. Afterward, the high learning capability of a probabilistic spiking neural network implementation and a hardware implementation of SNNs utilizing a probabilistic spike propagation mechanism are described. At the end of that section, memristor-based stochastic neurons for probabilistic SNN computing and a Loihi-based Bayesian inference implementation are presented. In Section “Discussion,” we provide an overall discussion of the different approaches. Finally, Section “Conclusion” concludes the paper.

Bayesian Networks and the Inference Problem

A discrete joint probability distribution defined over a set of random (or stochastic) variables assigns a probability to each joint value assignment to the set of variables; this representation, as well as any inference over it, is exponential in the number of variables. For most practical applications, however, there are many independences in the joint probability distribution that allow for a more concise representation. Such independences can be represented in several ways by probabilistic graphical models, which describe a probabilistic model with a graph structure (Korb and Nicholson, 2010). The most commonly described graphical models are Hidden Markov Models (HMMs), Markov Random Fields (MRFs), and Bayesian networks. MRFs use undirected graphs to represent conditional independences and capture stochastic relations in potentials. Bayesian networks use directed acyclic graphs, capturing stochastic relations in conditional probability tables (CPTs). The two structures can represent different subsets of conditional independence relations. HMMs are dynamic Bayesian networks that efficiently model endogenous changes over time, under the assumption of the Markov property.

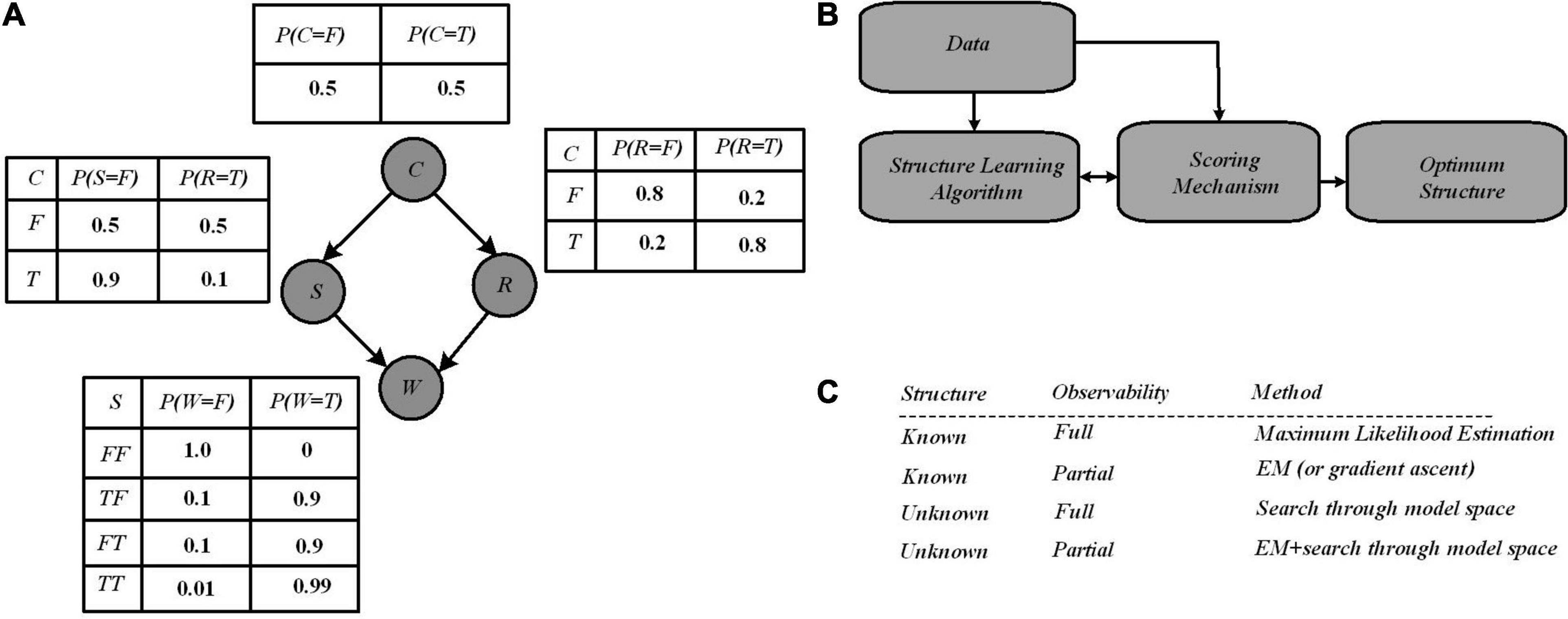

A simple Bayesian network with four variables (Pearl, 1988) is shown as a running example in Figure 1. A Bayesian network is represented by a directed acyclic graph composed of nodes and edges, together with a set of CPTs. The nodes in the graph model random variables, whereas the edges model direct dependencies among the variables.

Figure 1. (A) A Bayesian network with four variables, where the dependencies between variables are specified via conditional probability tables (CPTs). All posterior probabilities of interest in this network can be computed using the laws of probability theory, notably Bayes’ rule (1), which allows inferring the causes of effects observed in the network. (B) Structure learning flow in Bayesian networks: a learning algorithm proposes graphs from data, which are scored by the scoring mechanism. Finally, an optimum structure is selected after iterative improvements of the score over the graph structure (Kulkarni et al., 2017b). (C) Several learning cases in Bayesian networks (Murphy, 1998).

The four binary variables (denoted True or False, or equally “1” or “0”) “C,” “R,” “S,” and “W” represent whether it is cloudy, it is rainy, the sprinkler is on, and the grass is wet, respectively. The conditional probabilities (given in the CPTs) describe the conditional dependencies between parent and child nodes. Based on the network structure, the inference operation estimates the probability of the hidden variables, based on the observed variables (Pearl, 1988). For example, suppose one observes that the grass is wet; the inference operation then seeks to compute the probability distribution over the possible causes. There are two possible hidden causes for the grass being wet: either it is raining or the sprinkler is on. Bayes’ rule, defined in Equation (1), is used to calculate the posterior probability of each cause when wet grass has been observed; it allows us to compute this distribution from the parameters available in the CPTs:

$$P(A \mid B) = \frac{P(B \mid A)\,P(A)}{P(B)} \tag{1}$$

where $A$ is a hypothesized cause (e.g., rain) and $B$ is the observed evidence (e.g., wet grass).
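As a minimal illustration of this inference step, the sketch below computes the posterior of each cause given wet grass by brute-force enumeration of the joint distribution. The CPT values are assumptions taken from the commonly used textbook version of the sprinkler network (the numbers of Figure 1 are not reproduced in the text), and all function names are hypothetical.

```python
from itertools import product

# Assumed CPTs (textbook sprinkler example; not taken from Figure 1).
P_C = 0.5                                   # P(C=1)
P_S_GIVEN_C = {0: 0.5, 1: 0.1}              # P(S=1 | C)
P_R_GIVEN_C = {0: 0.2, 1: 0.8}              # P(R=1 | C)
P_W_GIVEN_SR = {(0, 0): 0.0, (1, 0): 0.9,   # P(W=1 | S, R)
                (0, 1): 0.9, (1, 1): 0.99}


def bernoulli(p_true, value):
    """Probability that a binary variable with P(1)=p_true takes `value`."""
    return p_true if value == 1 else 1.0 - p_true


def joint(c, s, r, w):
    """Joint probability factorized along the graph C -> {S, R} -> W."""
    return (bernoulli(P_C, c)
            * bernoulli(P_S_GIVEN_C[c], s)
            * bernoulli(P_R_GIVEN_C[c], r)
            * bernoulli(P_W_GIVEN_SR[(s, r)], w))


def posterior(query, evidence):
    """P(query=1 | evidence) by summing the joint over all assignments."""
    names = ("C", "S", "R", "W")
    num = den = 0.0
    for values in product((0, 1), repeat=4):
        a = dict(zip(names, values))
        if any(a[k] != v for k, v in evidence.items()):
            continue  # assignment contradicts the evidence
        p = joint(a["C"], a["S"], a["R"], a["W"])
        den += p
        if a[query] == 1:
            num += p
    return num / den


p_rain = posterior("R", {"W": 1})
p_sprinkler = posterior("S", {"W": 1})
print(f"P(R=1 | W=1) = {p_rain:.4f}")       # 0.7079 with the assumed CPTs
print(f"P(S=1 | W=1) = {p_sprinkler:.4f}")  # 0.4298 with the assumed CPTs
```

The loop visits every one of the 2^4 joint assignments, which is exactly the exponential cost that motivates the hardware approaches reviewed here.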

For data analysis, the graphical model provides several benefits. Methods utilized for data analysis include rule bases, decision trees, and ANNs, and the corresponding techniques include density estimation, classification, regression, and clustering. What, then, do Bayesian methods provide? One, a Bayesian network readily handles missing data entries since the model encodes dependencies among all variables. Two, it paves the way to understanding a problem domain and predicting the consequences of intervention via learning the causal relationships. Three, the model has both causal and probabilistic semantics, which makes it an ideal representation for combining prior knowledge and data. Four, Bayesian statistics in conjunction with Bayesian networks offer a principled way to avoid overfitting the data (Heckerman, 2020).

Learning a Bayesian network from data has two major aspects: discovering the optimal structure of the graph (structure learning) and learning the parameters in the CPTs (parameter learning). Only a few works focus on hardware implementation of structure learning. Finding an optimal structure in principle requires exploring all possible graph structures for a given dataset. As shown in Figure 1B, a structure learning algorithm starts with a random graph; a scoring mechanism then determines how well the structure explains the data, where this quality is typically a mix of simplicity and likelihood. The graph structure is updated based on the score, and a new graph is accepted when it provides a better score. Several algorithms have been proposed in the literature for structure learning, with the two major scoring mechanisms being Bayesian scoring and information-theoretic scoring (Kulkarni et al., 2017b). Most information-theoretic scoring methods are analytical and therefore require complex mathematical computations. These methods are currently performed in software, which significantly increases the time required for structure learning. Equation (2) represents the Bayesian scoring, which uses Bayes’ rule to compute the quality of a given Bayesian network structure. Using Bayes’ rule, for given data $D$ and a structure $G$, the Bayes score is defined by:

$$\mathrm{Score}(G : D) = P(G \mid D) = \frac{P(D \mid G)\,P(G)}{P(D)} \tag{2}$$

The score of a structure as given by Equation (2) is proportional to how well it describes the observed data and to the prior probability of the structure (which could be uniform or provided by a domain expert). The Bayesian score is calculated via stochastic sampling: a model of the graph is generated with the CPT values set, and each node is sampled for several iterations. For example, a node with a probability value of 0.5 is expected to show “True” in about 5 of 10 sampling iterations. The inference data taken from the stochastic sampling process are then used to calculate the Bayesian score of the graph (i.e., the degree of correlation between the sampled data and the learning data).

Once the structure of the network has been learned from the data, parameter learning (i.e., using data to learn the distributions of a Bayesian network) can be performed efficiently by estimating the parameters of the local distributions implied by the structure obtained in the previous step. There are two main approaches to the estimation of those parameters in the literature: one based on maximum likelihood estimation and the other based on Bayesian estimation (Heckerman, 2020). Parameter estimation can still be challenging when the sample size is much smaller than the number of variables in the model. This situation is called “small n, large p,” and it brings high variability unless particular care is taken in both structure and parameter learning. As mentioned above, both the graph topology (structure) and the parameters of each CPT can be inferred from data. However, learning the structure is in general much harder than learning the parameters. Also, learning when some of the nodes are hidden, or when data are missing, is harder than when everything is observed. This gives rise to four distinct cases of increasing difficulty, shown in Figure 1C.
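The sampling and parameter-learning steps can be sketched for the fully observed case with a known structure. The code below draws samples from the four-node network by sampling parents before children and then recovers one CPT by maximum likelihood, which reduces to a ratio of counts. The CPT values are assumed textbook numbers rather than those of Figure 1, and the function names are hypothetical.

```python
import random


def sample_network(rng):
    """Draw one joint sample (c, s, r, w), sampling parents before children."""
    c = int(rng.random() < 0.5)
    s = int(rng.random() < (0.1 if c else 0.5))
    r = int(rng.random() < (0.8 if c else 0.2))
    p_w = {(0, 0): 0.0, (1, 0): 0.9, (0, 1): 0.9, (1, 1): 0.99}[(s, r)]
    w = int(rng.random() < p_w)
    return c, s, r, w


def mle_s_given_c(samples):
    """Maximum likelihood estimate of P(S=1 | C=c) as a ratio of counts."""
    estimates = {}
    for c_val in (0, 1):
        rows = [s for (c, s, _, _) in samples if c == c_val]
        estimates[c_val] = sum(rows) / len(rows)
    return estimates


rng = random.Random(0)                 # fixed seed for repeatability
data = [sample_network(rng) for _ in range(20000)]
est = mle_s_given_c(data)
print(est)  # close to the generating values {0: 0.5, 1: 0.1}
```

With only a handful of samples the same estimator becomes very noisy, which is the small-sample difficulty described above.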

Bayesian network learning involves the development of both the structure and the parameters of Bayesian networks from observational and interventional datasets; Bayesian inference on the other hand is often a follow-up to Bayesian network learning and deals with inferring the state of a set of variables given the state of others as evidence. The computation of the posterior probabilities shown above (Figure 1A) is a fundamental problem in the evaluation of queries. This allows for diagnosis (computing P(cause| symptoms)), prediction (computing P(symptoms| cause)), classification (computing P(class| data)), and decision-making when a cost function is involved. In summary, Bayesian networks allow for a very rich and structured representation of dependencies and independencies within a joint probability distribution. This comes at the price of the intractability of both inference (i.e., the computation of posterior probabilities conditioned on some observations in the network) and learning (i.e., the establishment of the structure of the model and/or the conditional probabilities based on data and a learning algorithm). One can deal with this intractability either by reducing the complexity of the model or by accepting approximate results. Examples of the former are reducing the tree width of the network model (Kwisthout et al., 2010), reducing the structure of the model to a polytree describing a hidden state model and observable sensors (Hidden Markov model) (Baum and Petrie, 1966), or assuming mutual independence between features (Naïve Bayesian classifiers) (Maron and Kuhns, 1960). Examples of the latter are approximation algorithms such as Metropolis-Hastings (Hastings, 1970) and Likelihood weighting (Shachter and Peot, 1990).
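As a sketch of the approximate route, the code below applies likelihood weighting to the query P(R = 1 | W = 1) on the four-node network. Non-evidence variables are sampled from their CPTs, the evidence variable is clamped, and each sample is weighted by the probability of the evidence given its sampled parents. The CPT values are assumed textbook numbers and the function name is hypothetical.

```python
import random


def likelihood_weighting(n_samples, rng):
    """Estimate P(R=1 | W=1) from weighted samples of C, S, and R."""
    p_w1 = {(0, 0): 0.0, (1, 0): 0.9, (0, 1): 0.9, (1, 1): 0.99}
    weighted_rain = total_weight = 0.0
    for _ in range(n_samples):
        c = int(rng.random() < 0.5)
        s = int(rng.random() < (0.1 if c else 0.5))
        r = int(rng.random() < (0.8 if c else 0.2))
        weight = p_w1[(s, r)]      # evidence W=1 is clamped, not sampled
        total_weight += weight
        weighted_rain += weight * r
    return weighted_rain / total_weight


lw_estimate = likelihood_weighting(50000, random.Random(1))
print(f"P(R=1 | W=1) is approximately {lw_estimate:.3f}")
```

The estimate converges to the exact posterior (about 0.708 for these assumed CPTs) as the number of samples grows, trading precision for a computation that consists only of random draws, multiplications, and accumulations.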

Probabilistic Hardware-Based Implementation of Bayesian Networks

This section presents several probabilistic neuron circuits for Bayesian network variables that use different nonvolatile devices connected to CMOS circuit elements. To this end, first two abstraction-layer-based implementations and then a direct implementation of probabilistic circuits will be discussed. Then, an NSM for approximate Bayesian inference is explained.

Probabilistic Spin Logic-Based Implementation of Bayesian Networks

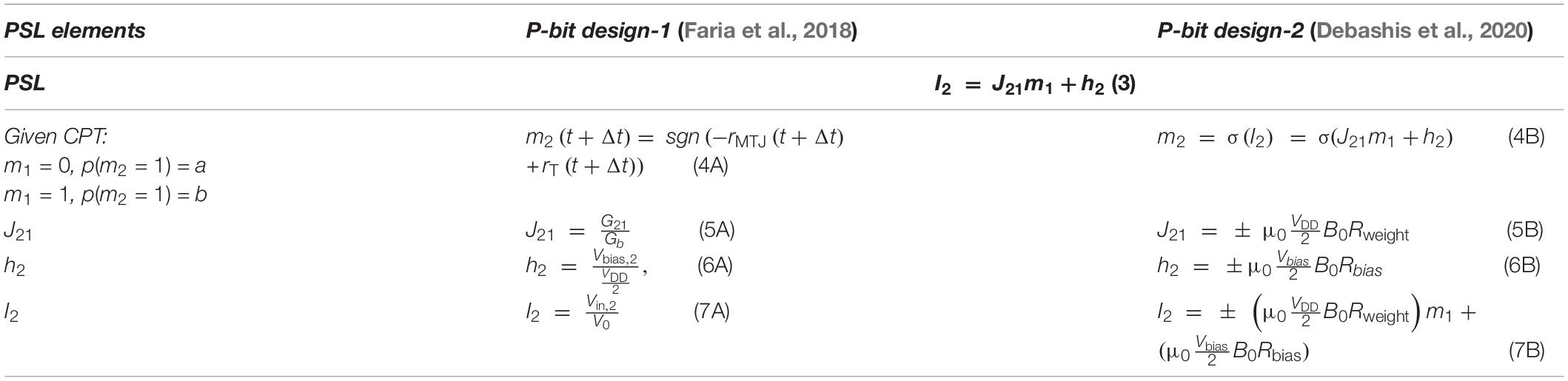

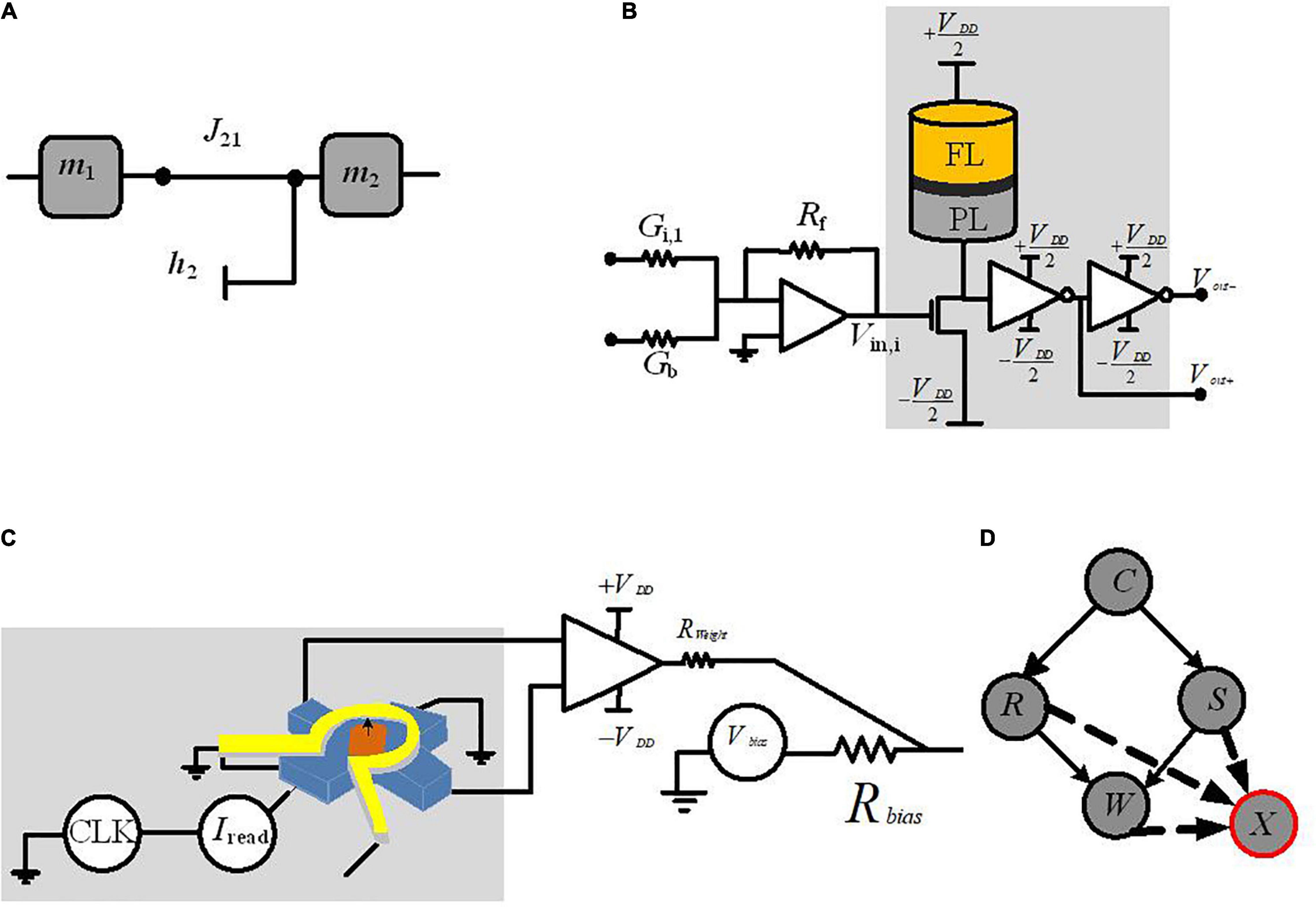

The first approach we will discuss is the mapping of CPTs to probabilistic circuits constructed by probabilistic bits (p-bits) (Faria et al., 2018; Debashis et al., 2020). In this approach, each variable in a Bayesian network is modeled by a stochastic circuit, representing a specific conditional probability. This probability is represented by the input that comes from its parent nodes, via the weights of the links between nodes. For the p-bit implementation, the Bayesian network is translated into probabilistic spin logic (PSL). PSL is a behavioral model, represented by biasing (h) and interconnection (J) coefficients (shown in Figure 2A). Then, PSL is translated into electronic devices.

Figure 2. Circuit implementation of a p-bit block. (A) PSL-based representation of two-node Bayesian network. (B) The p-bit design based on MTJ p-circuit with connection weight and bias to be connected to another node (Faria et al., 2018). (C) The p-bit design, based on nanomagnet p-circuit with connection weight and bias to be connected to another node (Debashis et al., 2020). (D) The required auxiliary node, X, for representing the four-node Bayesian network.

The reported p-bit implementation in Faria et al. (2018) as shown in Figure 2B uses a stochastic spintronic device, i.e., magnetic tunnel junction (MTJ), connected to the drain of a transistor.

Table 1 reports the equations required for translating the PSL model into a circuit whose output m1 is related to its input I2 by Equation (3) (the synapse generates the input I2 from a weighted state of m2, Figure 2A). According to Equation (4A), m2 is constructed from a random number generator (RNG) (rand) and a tunable element (tanh). The RNG is the MTJ and the tunable component is the NMOS transistor; rMTJ is a correlated RNG, and the NMOS transistor resistance rT is approximated by a tanh function obtained by fitting its I–V characteristics. The PSL model is then translated into electronic components (shown in Figure 2B), where each node (represented by m) is connected to other nodes and biased through voltages Vbias and conductances G; V0 is a fitting parameter. The biasing (h) and interconnection (J) coefficients of the PSL model for the corresponding p-bit circuit in Figure 2B are given in Table 1 by Equations (5A)–(7A).
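The interplay of the random element and the tunable tanh element can be illustrated with a behavioral sketch. It assumes the commonly used PSL form in which a bipolar state is the sign of tanh(I) plus a uniform random number in [-1, 1], and a synapse that adds a bias h to the parent state weighted by J. The exact equations of Table 1 are not reproduced here, and the numerical values of J and h are hypothetical.

```python
import math
import random


def pbit(input_current, rng):
    """Return a bipolar state m in {-1, +1} with P(m=+1) = (1 + tanh(I)) / 2."""
    r = rng.uniform(-1.0, 1.0)            # role played by the stochastic MTJ
    return 1 if math.tanh(input_current) + r > 0 else -1


def synapse(j_coupling, h_bias, m_parent):
    """Input to the child p-bit: weighted parent state plus bias."""
    return j_coupling * m_parent + h_bias


rng = random.Random(0)
J, h = 1.0, -0.5                          # hypothetical coupling and bias
n = 20000
ups = sum(pbit(synapse(J, h, +1), rng) == 1 for _ in range(n))
empirical = ups / n
expected = (1 + math.tanh(J * 1 + h)) / 2
print(f"empirical {empirical:.3f} vs. expected {expected:.3f}")
```

Choosing J and h so that the expected value equals a CPT entry is how, in this simplified picture, a conditional probability is mapped onto the circuit.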

Note that individual p-bits require sequencers in software implementations to be programmed in a directed order. The p-bit in Faria et al. (2018) and Faria et al. (2021) is an autonomous, asynchronous circuit that can operate correctly without any clocks or sequencers, in which the individual p-bits need to be carefully designed and the interconnect delays, from one node to another node, must be much shorter than the nanomagnet fluctuations of the stochastic device. This condition is met as magnetic fluctuations occur at approximately the 1-ns time scale. However, in asynchronous operations, updating the network as well as dealing with variations in the thermal barriers or interconnect delays necessitates further study.

Debashis et al. (2020) present an alternative p-bit implemented with inherently stochastic spintronic devices based on a nanomagnet with perpendicular magnetic anisotropy. This device utilizes the spin-orbit torque from a heavy metal (HM) under-layer to initialize the nanomagnet along its hard axis. Equations (4B)–(7B) in Table 1 show the relation between the stochastic variables m1 and m2 based on the corresponding p-bit circuit in Figure 2C. Here, σ denotes the sigmoidal activation function of the device for m2. Equation (4B) expresses the conditional dependencies: the probability of m2 being 1 given m1 being 1 is obtained from Equation (4B) by setting m1 = 1. The parameters B0 and h2 in Equations (5B)–(7B) can be tuned to change the shape and offset of the sigmoidal activation function (while the CPTs are represented via the connection weights).

Implementing the four-node Bayesian network with p-bits (Figure 2D), using the PSL behavioral models in Faria et al. (2018) and Debashis et al. (2020), requires an auxiliary p-bit defined by node “X.” The CPT of node “W” contains four conditional probabilities (one for each combination of the states of nodes “R” and “S,” see Figure 1); based on the principles of linear algebra, this CPT therefore needs four independent parameters. The interconnection weights JWR and JWS and the bias parameter of node “W” (hW) provide three of the four parameters. The fourth parameter is implemented with the interconnection to node “X.” In general, a node with N parents has a total of (N + 1) parameters but must satisfy $2^N$ equations to meet the PSL model requirement. The number of linearly independent equations determines the appropriate number of auxiliary variables (Faria et al., 2018). The auxiliary nodes in p-bit-based implementations of Bayesian networks add extra area/energy overhead, which requires further study.
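The parameter count can be made concrete with a short worked sketch. It assumes bipolar states $r, s \in \{-1, +1\}$ and an input to node “W” that is linear in its parents, which is an illustrative simplification rather than the exact form used in Table 1. Matching the four CPT entries of “W” then requires four target inputs $I_W(r, s)$:

$$
\begin{aligned}
I_W(+1,+1) &= h_W + J_{WR} + J_{WS}, & I_W(+1,-1) &= h_W + J_{WR} - J_{WS},\\
I_W(-1,+1) &= h_W - J_{WR} + J_{WS}, & I_W(-1,-1) &= h_W - J_{WR} - J_{WS}.
\end{aligned}
$$

Adding the two equations on the diagonal and the two off the diagonal gives the same value, $2h_W$, so three parameters can only realize CPTs that satisfy $I_W(+1,+1) + I_W(-1,-1) = I_W(+1,-1) + I_W(-1,+1)$. A general CPT violates this constraint, which is why a fourth degree of freedom, here supplied through the auxiliary node “X,” is needed.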

Spintronic Devices for Direct Hardware Implementation of Bayesian Networks

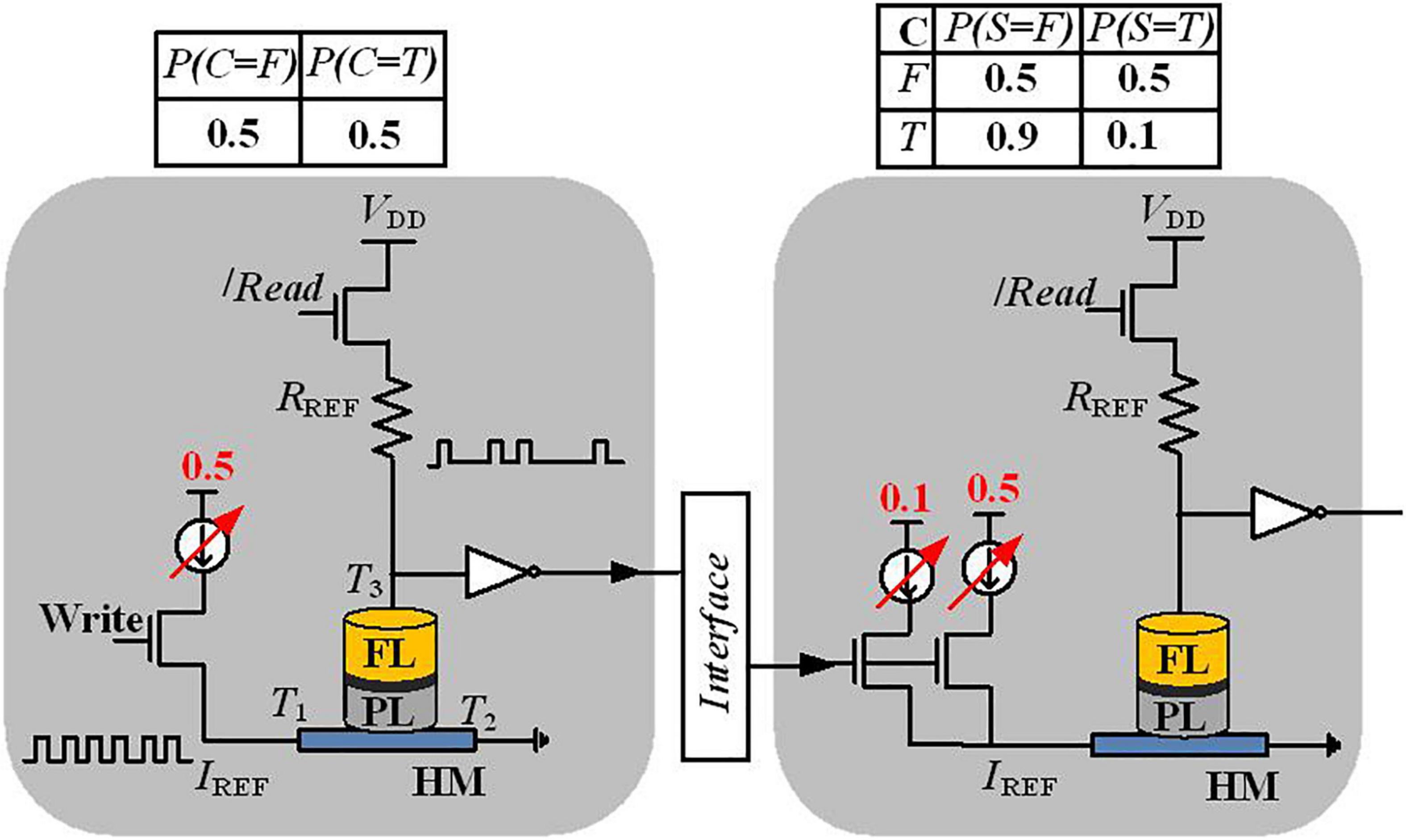

In Shim et al. (2017), a direct implementation of Bayesian networks has been proposed with a stochastic device based on a three-terminal structure, shown in Figure 3. The device can be realized by fabricating an MTJ stacked on top of ferromagnet-HM layers. The stochastic switching of the device in the presence of thermal noise is employed to implement a Bayesian network. The MTJ has two stable states that correspond to two different resistance levels, and stored values are represented by these resistance levels (Shim et al., 2017). The MTJ is composed of a tunneling barrier (TB) sandwiched between two ferromagnetic layers, namely, the free layer (FL) and the pinned layer (PL). The relative magnetization direction of the two ferromagnetic layers defines the MTJ state; the MTJ shows low (or high) resistance when the relative magnetization direction is parallel (or anti-parallel) (Bagheriye et al., 2018). The write current through terminals T1 and T2 probabilistically switches the magnet (with a probability controlled by the current magnitude), and the read path through terminals T3 and T2 is used to sense the final state of the magnet.

Figure 3. Detailed implementation view of a Bayesian network variable and the interconnection between the two nodes C and S in the four-node Bayesian network of Figure 1.

In order to represent a variable of the Bayesian network, a Poisson pulse train generator translates the probability data into the frequency of the output pulses. Thanks to the controllable stochastic switching of the nanomagnet, along with current sources and some circuit elements [reference resistor (Rref) and separate write and read paths], the Poisson spikes can be generated as shown in Figure 3. The reference resistor is used to generate the Poisson spike train, in which the number of spikes encodes the probability. For instance, if 30 spikes are observed at the output of the “S” node in 100 write cycles, the probability of “S is True” is estimated as 30%. Moreover, for more complex inference, extra arithmetic building blocks implemented with CMOS circuits and operating on two Poisson pulse trains are needed.
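The rate encoding can be sketched in a few lines. The code below treats each write cycle as an independent switching attempt that produces a spike with a fixed probability (a per-cycle Bernoulli approximation of the Poisson generator, assumed here for simplicity) and decodes the probability from the spike count. The function names are hypothetical.

```python
import random


def spike_train(p_switch, n_cycles, rng):
    """One entry per write cycle: 1 if the magnet switched (a spike), else 0."""
    return [int(rng.random() < p_switch) for _ in range(n_cycles)]


def decode_probability(train):
    """Spike count divided by the number of write cycles."""
    return sum(train) / len(train)


rng = random.Random(0)
train_s = spike_train(0.3, 100, rng)          # node "S" with P(S is True) = 0.3
print(sum(train_s), "spikes in", len(train_s), "write cycles")

long_estimate = decode_probability(spike_train(0.3, 10000, rng))
print(f"estimate from 10000 cycles: {long_estimate:.3f}")
```

The estimate from 100 cycles fluctuates by several percentage points around 30%, so the number of write cycles sets the precision of the encoded probability.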

Neural Sampling Machine for Approximate Bayesian Inference

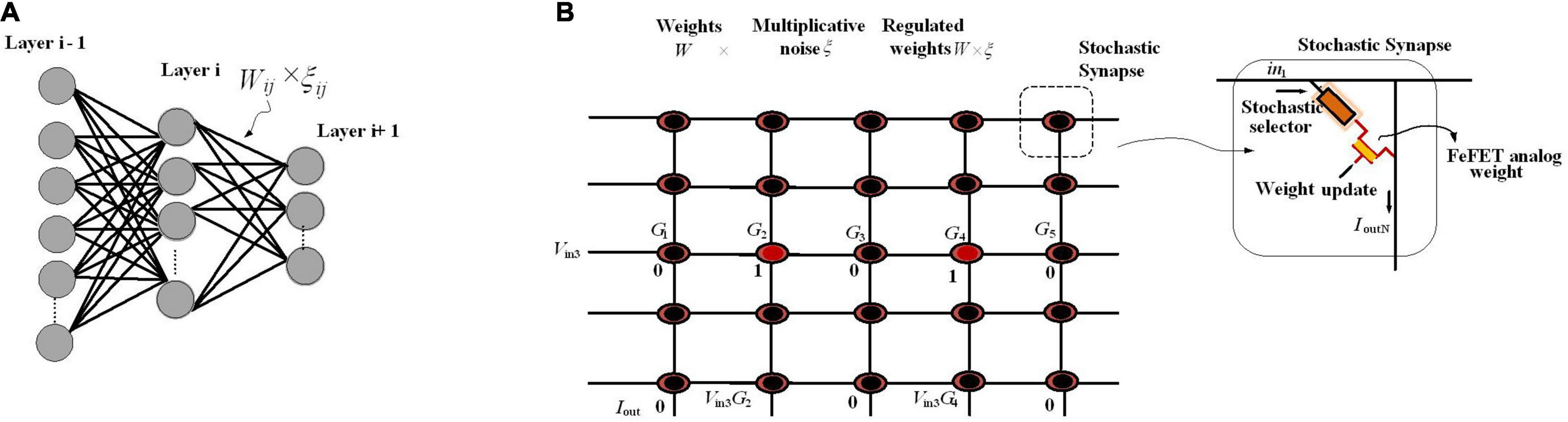

In biological neural networks, synaptic stochasticity occurs at the molecular level: following a presynaptic neuronal spike, neurotransmitters are released at the synaptic release site with a probability of approximately 0.1. Dutta et al. (2021) presented a neuromorphic hardware framework to support a recently proposed class of stochastic neural networks called the neural sampling machine (NSM), which mimics the dynamics of noisy biological synapses. The NSM incorporates Bernoulli or “blank-out” multiplicative noise in the synapse, which plays an important role in learning and probabilistic inference dynamics. This noise acts as a continuous DropConnect mask on the synaptic weights, where a subset of the weights is continuously forced to be zero. Stochasticity is switched off during inference in DropConnect, whereas it is always on in an NSM, providing probabilistic inference capabilities to the network. Figure 4 shows the hardware implementation of the NSM using hybrid stochastic synapses. These synapses consist of an embedded non-volatile memory, eNVM [a doped HfO2 ferroelectric field-effect transistor (FeFET)-based analog weight cell], in series with a two-terminal Ag/HfO2 stochastic selector element. By exploiting the inherent stochastic switching of the selector element between the insulating and the metallic state, Bernoulli sampling of the conductance states of the FeFET can be performed. Moreover, the multiplicative noise dynamics has a key side effect of self-normalization, which performs automatic weight normalization and prevents internal covariate shift in an online fashion. The conductance states of the eNVM in the crossbar array (which performs row-wise weight updates and column-wise summation operations in a parallel fashion) are set according to the weights of the Deep Neural Network (DNN).
In order to implement an NSM with the same existing hardware architecture, the synaptic weights Gij must be selectively sampled or read with some degree of uncertainty. A selector device is therefore employed as a switch that stochastically switches between an ON state (representing ξij = 1, where ξij is a random binary variable generated for each synapse) and an OFF state (ξij = 0). Figure 4B depicts an input voltage Vin3 applied to a row of the synaptic array with conductance states G = {G1, G2, G3, G4,…, GN}; depending on the state of the selectors at the cross-points, an output weighted-sum current Iout = {0, G2.Vin3, 0, G4.Vin3, 0} is obtained, which is equivalent to multiplying the weighted sum WijZj (where Zj is the activation of neuron j) by the multiplicative noise ξij.
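The masked read can be sketched as follows. Each selector is modeled as an independent Bernoulli variable, so every column current is either zero or the product of the stored conductance and the applied voltage. The conductance values, read voltage, and ON probability are hypothetical placeholders, not device data.

```python
import random


def stochastic_row_read(conductances, v_in, p_on, rng):
    """Per-column currents xi_j * G_j * V_in with xi_j drawn from Bernoulli(p_on)."""
    xi = [int(rng.random() < p_on) for _ in conductances]
    currents = [x * g * v_in for x, g in zip(xi, conductances)]
    return xi, currents


rng = random.Random(0)
G = [1.0e-6, 2.0e-6, 3.0e-6, 4.0e-6, 5.0e-6]   # hypothetical conductances (S)
V_IN3 = 0.2                                     # hypothetical read voltage (V)
xi, i_out = stochastic_row_read(G, V_IN3, p_on=0.5, rng=rng)
print("selector states:", xi)
print("column currents:", i_out)
```

Repeating the read gives a different mask each time, which is what keeps the network stochastic during inference as well as during training.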

Figure 4. An NSM implemented in hardware using a crossbar array architecture. (A) The utilized NSM with an input layer, three hidden layers, and an output layer. (B) The stochastic selector device used for injecting Bernoulli multiplicative noise is placed at each cross-point and connected to an analog weight cell implemented using eNVMs. The stochastic selector element enables selective sampling or reading of the synaptic weights Gij with some degree of uncertainty, controlled by random binary variables ξij.

For Bayesian inference, the hardware NSM captures uncertainty in data and produces classification confidence. To this end, in Dutta et al. (2021), the hardware NSM has been trained on the full MNIST dataset. In inference mode, the performance of the trained NSM has been evaluated on continuously rotated images: for each rotated image, 100 stochastic forward passes are performed, and the softmax input (the output of the last fully connected hidden layer in Figure 4A) as well as the softmax output are recorded. The NSM correctly predicts the class if the softmax input of the corresponding neuron is larger than that of all the other neurons. However, as the images are rotated more, even though the softmax output can be arbitrarily high for, e.g., neuron 2 or 4 (predicting that the image is a 2 or a 4, respectively), the uncertainty in the softmax output is high, showing that the NSM can account for the uncertainty in the prediction. The uncertainty of the NSM has been quantified by the entropy of the prediction, defined as $H = -\sum_i p_i \log p_i$, where p is the probability distribution of the prediction. When the NSM makes a correct prediction, the uncertainty measured in terms of the entropy remains 0. However, in the case of wrong predictions, the uncertainty associated with the prediction becomes large. This is in contrast to the results obtained from a conventional MLP network (deterministic neural network), which cannot account for any uncertainty in the data.
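The entropy measure can be illustrated with a small sketch. It assumes that the predictive distribution is obtained by averaging the softmax outputs of the stochastic forward passes, which is one common choice and not necessarily the exact procedure of Dutta et al. (2021). The logits are synthetic values chosen for illustration.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predictive_entropy(passes):
    """Entropy of the class distribution averaged over stochastic passes."""
    n_classes = len(passes[0])
    mean = [sum(p[k] for p in passes) / len(passes) for k in range(n_classes)]
    return -sum(p * math.log(p) for p in mean if p > 0.0)


# 100 passes that all agree, versus 100 passes split between two classes.
confident = [softmax([20.0, 0.0, 0.0])] * 100
disagreeing = ([softmax([20.0, 0.0, 0.0])] * 50
               + [softmax([0.0, 20.0, 0.0])] * 50)

h_confident = predictive_entropy(confident)
h_disagreeing = predictive_entropy(disagreeing)
print(f"agreeing passes:    H = {h_confident:.2e}")
print(f"disagreeing passes: H = {h_disagreeing:.3f}")
```

In the second case every individual pass is highly confident, yet the passes disagree; the entropy of the averaged prediction, close to log 2, exposes an uncertainty that a single deterministic pass would hide.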

New Computing Architecture With Nonvolatile Memory Elements for Bayesian Network Implementation

In this section, several Bayesian network implementation systems will be discussed that make use of new nonvolatile magnets and CMOS circuit elements. We will first explain FPGA-like architectures and then discuss developed spintronic-based inference systems.

Direct Physical Equivalence Implementation of Bayesian Networks

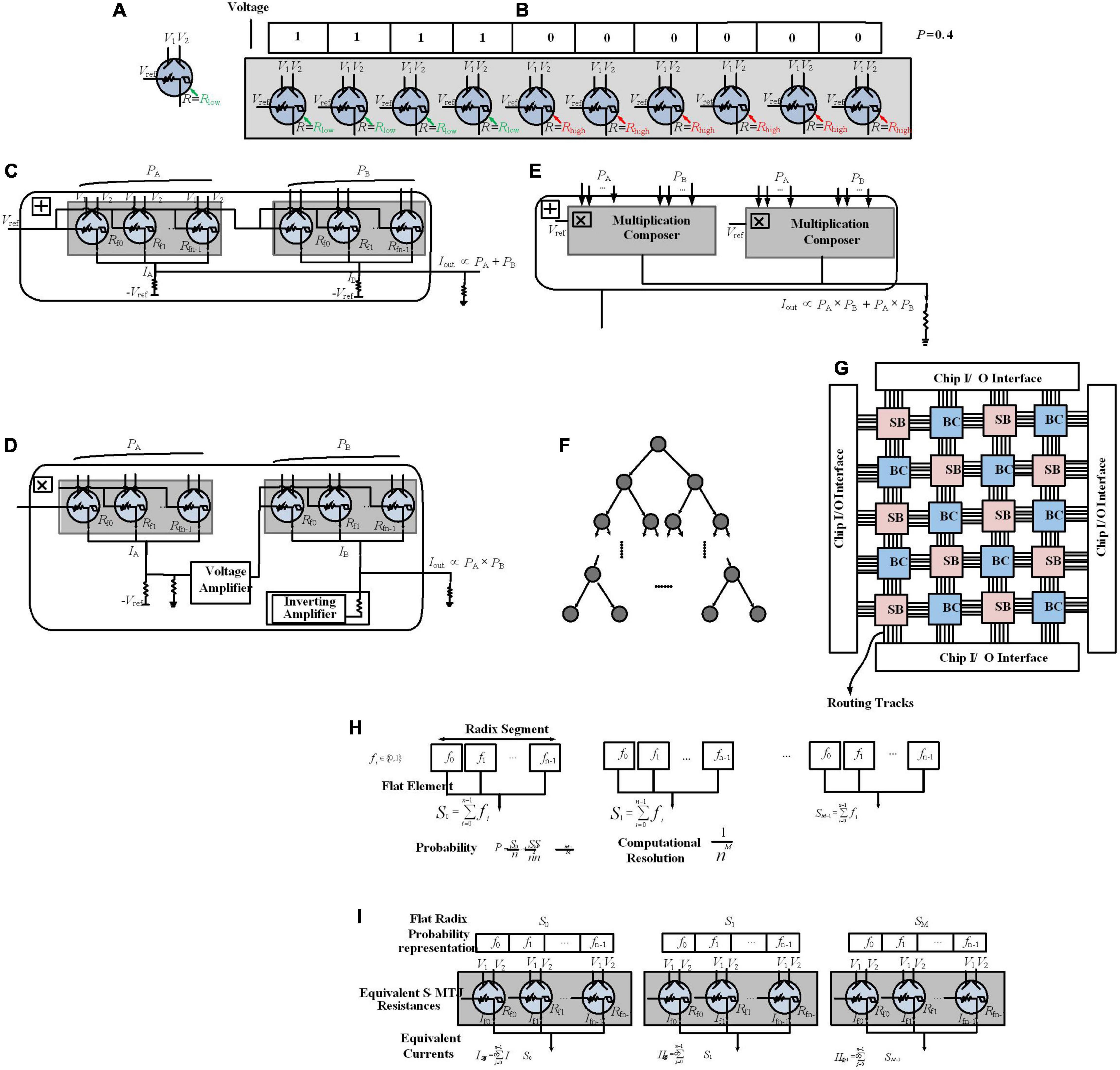

In Khasanvis et al. (2015a), in addition to transistors, strain-switched magneto-tunneling junctions (S-MTJs) are used for a Bayesian hardware implementation. S-MTJs are nonvolatile devices that provide low switching energy (Atulasimha and Bandyopadhyay, 2013). As shown in Figure 5A, an S-MTJ has four terminals, and the resistance between the reference and output terminals can be changed through the two digital input voltage terminals. Its resistance vs. voltage characteristic shows hysteresis, which provides non-volatility. Khasanvis et al. (2015a) adopt a mindset of physical equivalence, which means that each digit in the probability representation is mapped directly (without any abstraction layer) to an S-MTJ resistance with an equivalent digital voltage representation (Figure 5B), whereas the approaches proposed in Faria et al. (2018) and Debashis et al. (2020) need the PSL abstraction level to map Bayesian networks to hardware.

Figure 5. Probability encoding via Strain-switched Magneto-Tunneling Junction (S-MTJ) device. (A) A S-MTJ Device. (B) Probability data encoding by spatially distributed digits, and two-state S-MTJs for physically equivalent representations. Probability value P = 0.4 has been encoded with 10 digits (resolution of 0.1). Probability composer framework. (C) Addition composer. (D) Multiplication composer. (E) Add–Multiply composer. Physically Equivalent Architecture for Reasoning (PEAR). (F) Tree Bayesian Network. (G) Mapping every node in a Bayesian network graph to a Bayesian cell (BC) on PEAR. (H) Flat-Radix information representation pattern. Probability is encoded in segments where each segment has a radix arrangement and contains flat elements. (I) Probability data encoding by the proposed information representation scheme, and S-MTJs for physically equivalent representations.

For encoding, n spatially distributed digits p1, p2,…, pn are defined (Figure 5B); each digit pi can take any one of k values, where k is the number of states of the physical device (e.g., for devices with two states, k = 2 and pi ∈ {0, 1}). The encoded probability P is defined by $P = \frac{1}{n}\sum_{i=1}^{n} p_i$, which is called a flat linear representation (the resolution is determined by the number of digits n). These digits are physically represented in the resistance domain using two-state S-MTJs, where Rlow represents digit 1 and Rhigh represents digit 0. For Bayesian computations in hardware, it is necessary to have analog arithmetic functions such as probability addition and multiplication. Figures 5C–E depict arithmetic composers, which operate intrinsically on probabilities as elementary building blocks. Figures 5C–E show the addition composer, multiplication composer, and add–multiply operation composer, respectively, as well as support circuits such as amplifiers, implemented with CMOS operational amplifiers.
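A minimal sketch of the flat linear code for two-state devices follows. It assumes that the encoded probability is the fraction of digits set to 1, which is consistent with the example of P = 0.4 encoded with 10 digits in Figure 5B. The function names and the string labels for the resistance states are hypothetical.

```python
def encode_flat(p, n):
    """Encode p with n two-state digits: round(p * n) of them are set to 1."""
    ones = round(p * n)
    return [1] * ones + [0] * (n - ones)


def decode_flat(digits):
    """Recover the probability as the fraction of digits equal to 1."""
    return sum(digits) / len(digits)


def to_resistance_states(digits):
    """Map digit 1 to the low-resistance state and digit 0 to the high one."""
    return ["R_low" if d == 1 else "R_high" for d in digits]


digits = encode_flat(0.4, 10)
print(digits)                        # four 1s followed by six 0s
print(decode_flat(digits))           # 0.4
print(to_resistance_states(digits)[:5])
```

Because each additional resolution step costs one more device, halving the step size doubles the number of S-MTJs per probability value.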

In Khasanvis et al. (2015a), the analog arithmetic composers enable a departure from the Von Neumann paradigm in the form of a distributed Bayesian cell (BC) architecture, named Physically Equivalent Architecture for Reasoning (PEAR). In this architecture, each BC is the physical equivalent of one Bayesian variable in hardware, as shown in Figures 5F,G. BCs are constructed from probability arithmetic composers and hold the CPTs, likelihood vectors, belief vectors, and prior vectors; they store these values locally and continuously and perform the inference operation, removing the need for external memory (Khasanvis et al., 2015a). Metal routing layers are used for BC interconnection. This connectivity is programmable through reconfigurable switch boxes (SBs), similar to FPGAs, so that arbitrary graph structures can be mapped.

Bayesian networks using binary trees, as shown in Figures 5F,G, have been mapped directly in hardware on PEAR. This computing architecture scales to a million variables. For a resolution of 0.1, it gains three orders of magnitude in runtime, power, and area efficiency over an implementation on 100-core microprocessors, but it does not scale efficiently to higher resolutions. Increasing the resolution requires changing the abovementioned flat linear representation, whose area grows linearly with resolution (a single probability value requires multiple physical signals). To this end, as shown in Figures 5H,I, another S-MTJ-based circuit paradigm leveraging physical equivalence with a new approximate circuit style has been reported (Kulkarni et al., 2017a), in which the computation resolution is 1/n^M, where M is the number of radix segments and each segment is composed of flat elements. This hybrid method for representing probabilities is a new direction for scaling computational resolution, aiming at networks with millions of random variables. Here, precision scaling comes at a much lower power and performance cost than in Khasanvis et al. (2015b) for the PEAR implementation, because the area overhead grows logarithmically rather than linearly. Results show a 30× area reduction for a precision of 0.001 vs. the prior direction (Khasanvis et al., 2015a), while retaining three orders of magnitude benefits over 100-core processor implementations.

Stochastic Hardware Frameworks for Learning Bayesian Network Structure

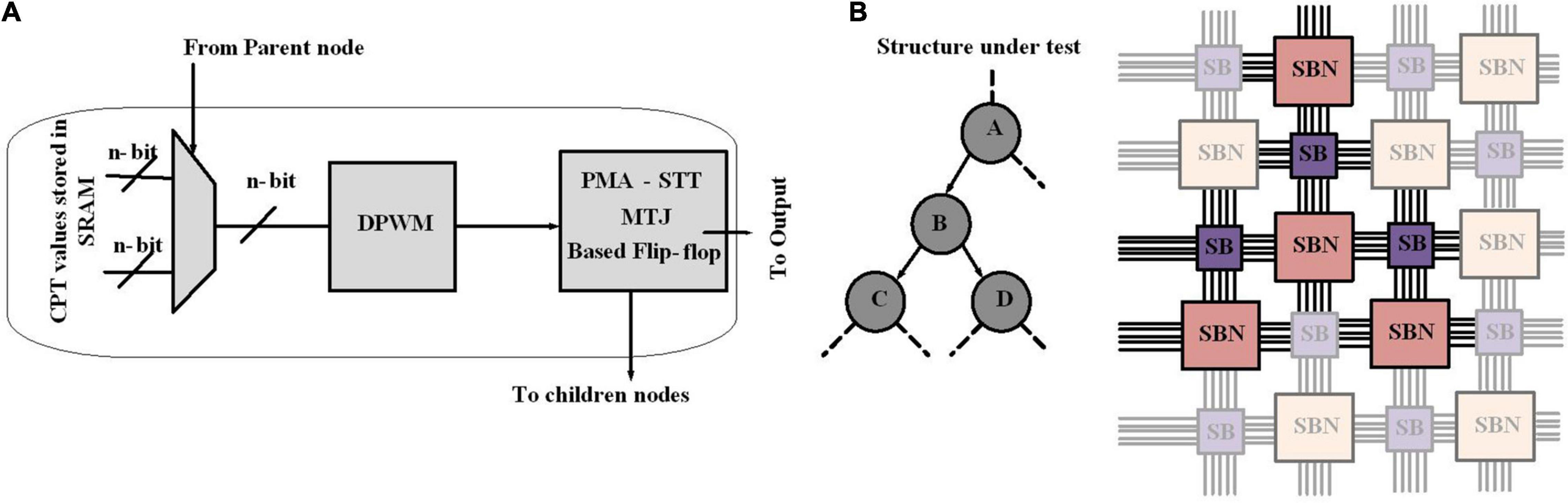

A Bayesian network has two major aspects: the structure of the graph and the parameters in the CPTs. Determining the structure of a Bayesian network is known as structure learning. In Kulkarni et al. (2017b), the stochastic behavior of emerging magneto-electric devices is used to accelerate the structure learning process of Bayesian learning, which reduces the runtime for Bayesian inference by five orders of magnitude. For structure learning, an algorithm starts with a random graph; a scoring mechanism then determines how well the structure explains the data, where this quality is typically a mix of simplicity and likelihood. The graph structure is updated based on the score: when a graph provides a better score, it is accepted. To perform scoring, the framework should support mapping of arbitrary Bayesian networks; hence, configurability is necessary. The proposed design employs an FPGA-like reconfigurable architecture constructed from a set of programmable SBs and Stochastic Bayesian Nodes (SBNs). For scoring a Bayesian structure, nodes are mapped onto SBNs and the connectivity between nodes is implemented by SBs (Figures 6A,B).

Figure 6. Design flow for structure learning in Bayesian networks. (A) Building blocks of Stochastic Bayesian Nodes (SBN). (B) Every node in a Bayesian network is mapped to an SBN reconfigurable framework. The links of the Bayesian network are implemented with metal routing layers and the connectivity programmed through switch boxes. Stochastic bitstream generator blocks for Bayesian network implementation.

A Stochastic Bayesian Node (SBN) represents a node in a Bayesian network. It consists of multiplexers, a digital pulse width modulator (DPWM), and perpendicular magnetic anisotropy spin transfer torque magnetic tunnel junctions (PMA-STT MTJs). The switching of a PMA-STT MTJ is probabilistic and directly controlled by modulating the duration of the applied current; this property has been employed to design circuits that perform probabilistic operations. As shown in Figure 6A, the CPT values are preconfigured in the SRAM cell. Based on the output of the parent SBN, multiplexers select the appropriate CPT value to be sent to the DPWM, which generates voltage pulses of precise duration. Once the pulse corresponding to the CPT value is fed to the MTJ, the output is stored in a flip-flop. The output of the flip-flop is available for read-out and is also sent to the next node. The configured Bayesian structure is stochastically sampled until sufficient statistics are reached. The sampled data are then used to calculate the Bayesian score of that structure through Equation (2).
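The sampling flow of an SBN can be mimicked in software. The sketch below is an illustration under stated assumptions, not the circuit of Kulkarni et al. (2017b): a dictionary lookup stands in for the multiplexer that selects a CPT entry, a Bernoulli draw stands in for the probabilistic MTJ switching, and the two-node network A → B with its CPT values is hypothetical.

```python
import random


def sample_sbn(cpt, parent_bits, rng):
    """One SBN evaluation: parent outputs select a CPT entry (multiplexer role),
    then a Bernoulli draw stands in for the probabilistic MTJ switching."""
    p_one = cpt[tuple(parent_bits)]
    return 1 if rng.random() < p_one else 0


def sample_chain(n_samples, seed=0):
    """Repeatedly sample a hypothetical network A -> B and count joint outcomes."""
    rng = random.Random(seed)
    cpt_a = {(): 0.3}                # P(A = 1), illustrative value
    cpt_b = {(0,): 0.1, (1,): 0.8}   # P(B = 1 | A), illustrative values
    counts = {}
    for _ in range(n_samples):
        a = sample_sbn(cpt_a, [], rng)
        b = sample_sbn(cpt_b, [a], rng)   # parent output feeds the child node
        counts[(a, b)] = counts.get((a, b), 0) + 1
    return counts


counts = sample_chain(20000)
print({k: v / 20000 for k, v in sorted(counts.items())})
```

The resulting counts play the role of the sufficient statistics from which a structure score would be computed.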

Through this hardware acceleration of the structure discovery (via scoring mechanism) process of Bayesian learning, the runtime for Bayesian network inference has been highly reduced (Kulkarni et al., 2017b). This property attracts more attention to structure learning acceleration and turns out to be a promising field to be studied.

Stochastic Bitstream Generator Blocks for Bayesian Network Implementation

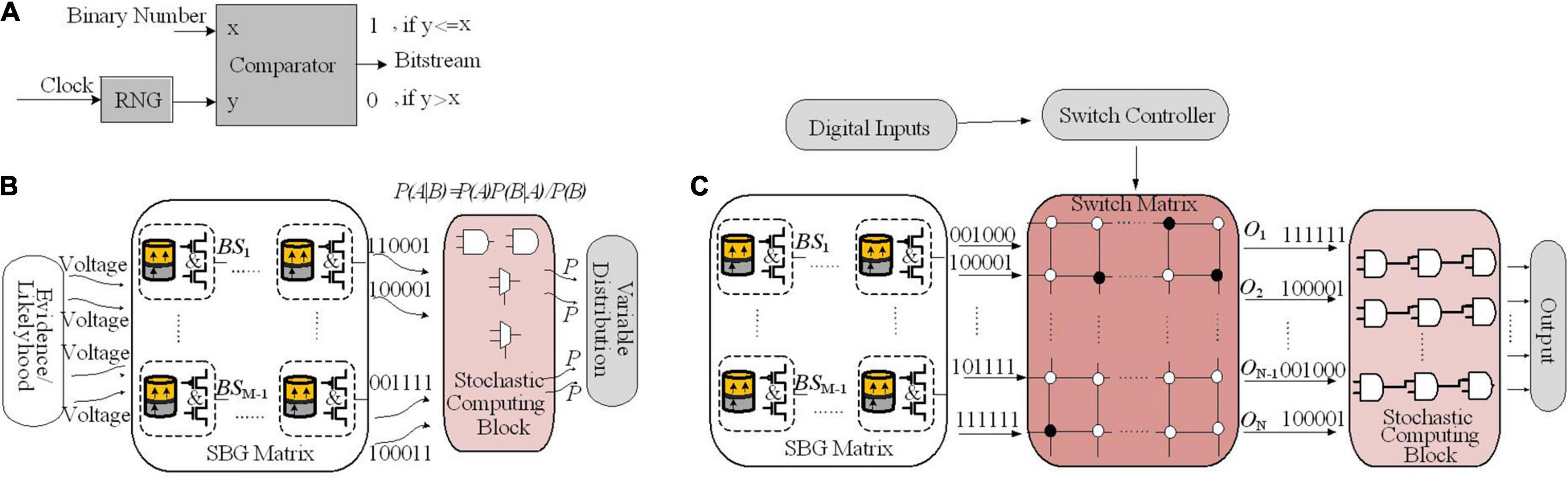

In Jia et al. (2018), the inherent stochastic behavior of a spintronic device, the MTJ, has been used to build a stochastic bitstream generator (SBG), which is critical for the Bayesian inference system (BIS).

Figures 7A,B show the diagram of the BIS proposed in Jia et al. (2018). An SBG block consists of an RNG and a comparator, which together generate the corresponding bitstream (Figure 7A). The input of the BIS, shown in Figure 7B, is a series of bias voltages proportional to evidence or likelihood values, which may come from sensors on different platforms. The SBG matrix and the stochastic computing (SC) architecture are the two key components of a BIS. The SBG matrix translates the input voltages into stochastic bitstreams. The SC architecture is built from simple logic gates, such as an AND gate and a scaled addition implemented by a multiplexer (MUX), and takes the stochastic bitstreams as inputs. The SC block implements Bayesian inference through a novel arrangement of logic gates.
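As an illustration of the two stochastic computing primitives named above, the following sketch models an SBG as a software RNG plus comparator and shows how an AND gate multiplies, and a MUX performs a scaled addition of, the probabilities carried by independent bitstreams. The function names and the probabilities used are assumptions for illustration; the spintronic RNG of Jia et al. (2018) is replaced by Python's pseudo-random generator.

```python
import random


def sbg(p, length, rng):
    """RNG plus comparator: emit 1 whenever the random number falls below p."""
    return [1 if rng.random() < p else 0 for _ in range(length)]


def and_gate(a, b):
    """Bitwise AND of two independent streams; encodes p_a * p_b."""
    return [x & y for x, y in zip(a, b)]


def mux(a, b, select):
    """2-to-1 multiplexer; with P(select = 1) = 0.5 it encodes (p_a + p_b) / 2."""
    return [y if s else x for x, y, s in zip(a, b, select)]


def estimate(bits):
    """Decode a bitstream as the fraction of 1 bits."""
    return sum(bits) / len(bits)


rng = random.Random(0)
a, b, s = sbg(0.6, 50000, rng), sbg(0.5, 50000, rng), sbg(0.5, 50000, rng)
print(estimate(and_gate(a, b)), estimate(mux(a, b, s)))
```

The estimates approach 0.30 and 0.55 as the streams get longer, but only if the input streams are uncorrelated, which is why shared generators must be assigned with care.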

Figure 7. (A) Stochastic bitstream generator (SBG) block design. (B) Stochastic Bayesian inference system. (C) Diagram of the spintronic-based Bayesian inference system (SPINBIS), including the switch matrix.

For an SBG, the small margin of the input voltages (Jia et al., 2018) is highly problematic when generating the output probability. High-precision digital-to-analog converters (DACs) are needed to map digital probabilities precisely to voltages. In addition, the nonlinear relationship between probabilities and voltages is difficult to handle, and slight noise or process variation may translate a probability to a wrong voltage value. To address these limitations, a prebuilt SBG array with an SBG sharing strategy is employed for the specified applications. It is implemented in hybrid CMOS/MTJ technology and named the spintronic-based Bayesian inference system (SPINBIS) (Figure 7C). The aim of SPINBIS is to enhance the stability of the SBGs and to use fewer of them (Jia et al., 2020). The output probability of each SBG is predetermined and is then multiplexed through the switch matrix, which is a crossbar array. This crossbar array is built from transistors placed at the cross points and controlled by the switch controller. Since the SBG array is prebuilt, it should provide enough distinct bitstreams for the stochastic computing block to be accurate. To improve the energy efficiency and speed of the SBG circuit, a state-aware self-control mechanism is utilized. Thanks to SBG sharing, inputs with the same evidence can be modeled by the bitstream of the same SBG. However, inputs that are related through one or more logic gates, called conflicting inputs, are not allowed to share the same SBG. Because non-conflicting terminals may share the same bitstream, the sharing mechanism needs far fewer SBGs than there are input terminals in the stochastic computing logic.

For data fusion applications, SPINBIS consumes 12× less energy than the MTJ-based approach (Jia et al., 2018) at a 45% area overhead, and about 26× less than the FPGA-based implementation. On the other hand, the relation between probability and voltage is not very smooth; as a result, the stability of the proposed SBG needs improvement. Although the scale can be reduced, the switch matrix can show a congestion problem; hence, reducing the scale of SPINBIS is also worth exploring.

Bayesian Inference Hardware Implementation With Digital Logic Gates

In this section, digital implementations of Bayesian inference are discussed. First, we describe an implementation of Bayesian inference on HMM structures in digital logic gates. Next, an approximate inference algorithm based on a novel abstraction defined by stochastic logic circuits, and some other hardware implementations of MRFs, are explained. Then, we describe Muller C-element circuits implemented with standard cells for Bayesian inference. Finally, we discuss probabilistic nodes based on CMOS technology.

Hardware Implementation of Bayesian Inference Employing Hidden Markov Model Network

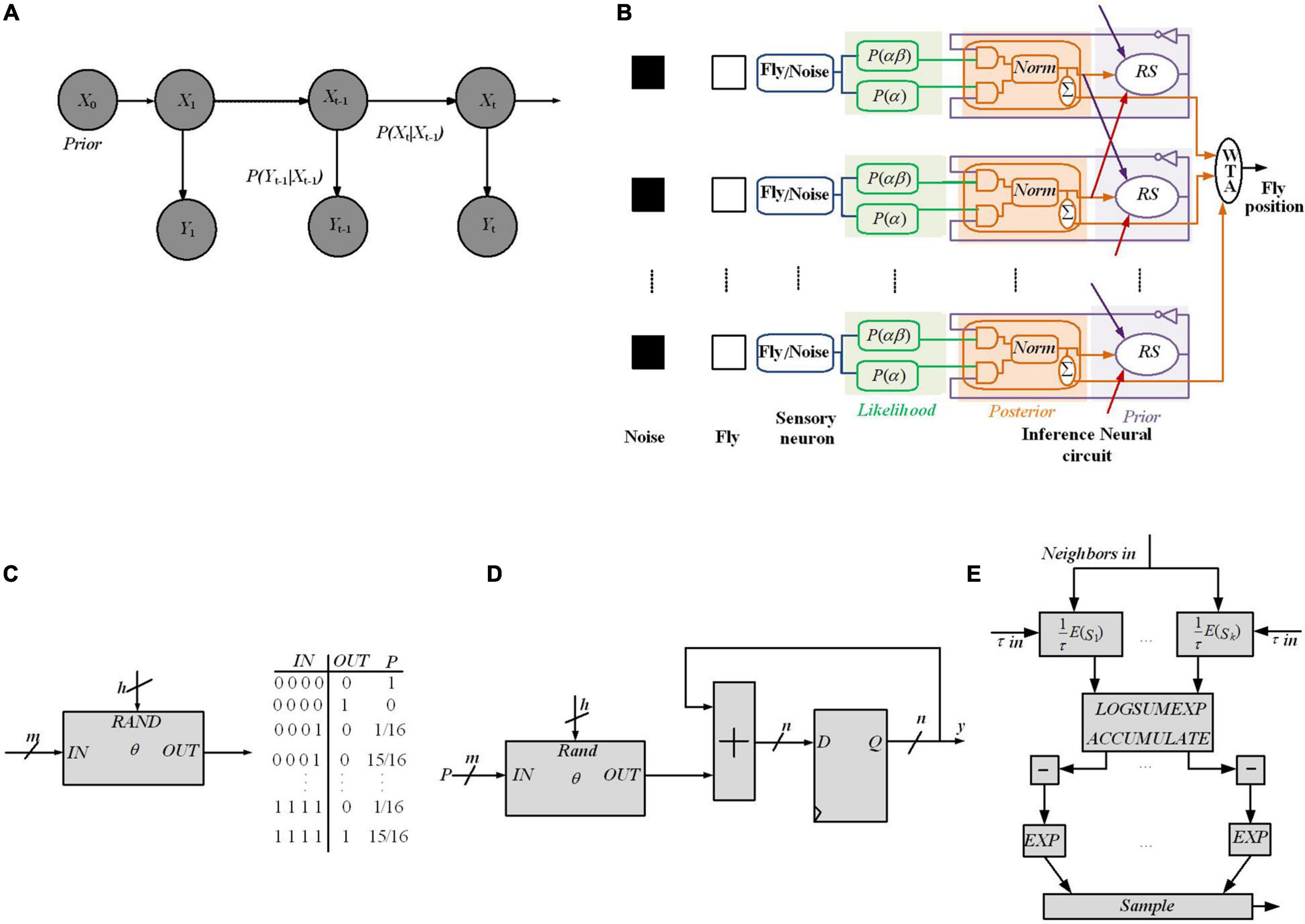

In Thakur et al. (2016), a hardware implementation of an HMM network has been proposed that utilizes sequential Monte Carlo (SMC) in SNNs. An HMM, shown in Figure 8A, models a system defined by a process that generates an observable sequence depending on an underlying hidden process (Yu et al., 2018). In an HMM, Xt and Yt represent the signal process and the observation, respectively. In a first-order HMM, Yt is considered a noisy function of Xt, and the evolution of the hidden state depends only on its current state. Xt is estimated through its posterior distribution given the noisy measurements Yt. For a discrete-time problem, Equation (8) defines the first-order HMM, in which dt and vt denote random noise sequences.

Figure 8. FPGA implementation of different Bayesian networks (A) An HMM architecture, in which the random variables Xt and Yt are the hidden state at time t and the observation at time t, respectively (conditional dependencies are shown by arrows). (B) Digital Hardware implementation of HMM algorithm for Bayesian inference in Thakur et al. (2016). Implement the Gibbs sampling algorithms for Markov random fields in Mansinghka et al. (2008). (C) The schematic and CPT for a O gate, which flips a coin by specifying the weight on IN as a binary number [e.g., for IN = 0111, P(OUT = 1| IN) = 7/16]. A comparator that outputs 1 if RAND ≤ IN has been employed for implementing the O gate. (D) The proposed circuit for sampling, for a binomial distribution, utilizes nh bits of entropy to perform sampling while considering n flips of a coin of weight. (E) Gibbs pipeline, for Gibbs sampler depicting the required operations to numerically sample an arbitrary-size variable.

The posterior density function P(Xt | Y1:t) is computed recursively in two steps: (i) prediction and (ii) update. In the prediction step, the next state is estimated from the current state using the state transition model, without making use of new observations [see Equation (9)]. In the update step, the predicted state is corrected using the new observation at time t, as shown in Equation (10).

In Thakur et al. (2016), a digital framework based on the HMM rule, shown in Figure 8B, is utilized to estimate a fruit fly’s position at time t; it models a dragonfly tracking a fruit fly against a randomly flickering background. The sensory afferent neurons of the dragonfly fire probabilistically when there is a fruit fly or a false target (noise).

The state space (Xt) is divided into M discrete states that reflect the fly’s (discretized) position at time t. Each discrete state is represented by a sensory neuron and an inference neuronal circuit. The dragonfly’s central nervous system predicts the fruit fly’s position at time t from the statistics of the output spikes of the sensory afferent neurons up to time (t−1), and updates the prediction when it receives a new observation (Yt) at time t. Combining the prediction and update Equations (9) and (10), the posterior can be written as:

$$P(X_t \mid Y_{1:t}) \propto P(Y_t \mid X_t) \sum_{X_{t-1}} P(X_t \mid X_{t-1})\, P(X_{t-1} \mid Y_{1:t-1}) \tag{11}$$

where P(Yt | Xt) is the likelihood, P(Xt | Xt–1) is the transition probability, and P(Xt–1 | Y1:t–1) is the posterior at the previous time step. Here, Y ∈ R^M, and M denotes the total number of states. At each time step, the probability that the fly occupies each state is computed. A WTA circuit then predicts the fruit fly position by finding the maximum a posteriori state of the probability distribution over states.
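The prediction, update, and winner-take-all steps can be sketched numerically for a discrete state space. This is a plain floating-point illustration of the recursive Bayesian filter, not the spike-based circuit of Thakur et al. (2016); the three-state transition matrix and the likelihood values are hypothetical.

```python
def predict(prior, transition):
    """Prediction step; transition[i][j] = P(X_t = j | X_{t-1} = i)."""
    m = len(prior)
    return [sum(prior[i] * transition[i][j] for i in range(m)) for j in range(m)]


def update(predicted, likelihood):
    """Update step: weight by P(Y_t | X_t) and renormalize."""
    unnorm = [l * p for l, p in zip(likelihood, predicted)]
    total = sum(unnorm)
    return [u / total for u in unnorm]


def winner_take_all(posterior):
    """Index of the maximum a posteriori state."""
    return max(range(len(posterior)), key=posterior.__getitem__)


# Hypothetical values for M = 3 positions.
prior = [1 / 3, 1 / 3, 1 / 3]
transition = [[0.8, 0.2, 0.0],
              [0.1, 0.8, 0.1],
              [0.0, 0.2, 0.8]]
likelihood = [0.1, 0.2, 0.9]  # P(Y_t | X_t) for the current observation

posterior = update(predict(prior, transition), likelihood)
print(posterior, winner_take_all(posterior))
```

Feeding each posterior back in as the next prior gives the recursion over time steps.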

To predict the position of the fruit fly, the posterior probabilities over the state space are employed. To this end, an algorithm similar to the SMC technique, a Monte Carlo method useful for sequential Bayesian inference (Gordon, 1993), is utilized. In the proposed framework, spikes denote a probability distribution over a set of states (i.e., the probability of a state is proportional to the sum of its spikes), and the RS (resampling) neuron block encodes the transition model, P(Xt | Xt–1), through spatial connections (Figure 8B).

The likelihood generator block has a Poisson neuron (PN) that generates spike trains based on its intrinsic firing rates, α and αβ (α is the probability that the kth sensory neuron fires, either due to a fruit fly or a distractor; αβ is the probability of a spike when there is no fly, but a distractor instead). The posterior generator block is implemented with two subblocks: the Coincidence Detector (CD) neurons and the normalization (norm) neural circuits. Since the likelihood spike train does not depend on the prior spike train, a simple AND logic gate can serve as the CD neuron for the posterior implementation. The output spike trains of the CD neurons, representing the posterior probabilities of not having a fly and of having a fly, respectively, are sent to the norm block to be normalized.

Recurrent connection weights in the framework (shown by red, orange, and purple arrows in Figure 8B) are based on the transition probabilities. For each pathway, the RS block samples spikes from the posterior distributions of adjacent norm neural circuits according to their transition probabilities, using inverse transform sampling on a discrete distribution.

The observation model parameters, α and αβ, are computed by collecting statistics of the spikes over many HMM time steps. At the start of the learning process, α and αβ are initialized through stochastic exponential moving average filters (SEMAs), and they are then updated at each HMM time step for each location. The RNG circuit is implemented with the commonly used linear feedback shift register (LFSR). The neuronal building blocks used for implementing the HMM in Figure 8B are the PN, the CD neuron, the division and normalization neural circuits, the LFSR, and the SEMA; all of them, and all pathways in parallel, are implemented on the FPGA. Implementing these frameworks with simple logic gates paves the way for stochastic computing to provide digital hardware implementations of Bayesian inference with other approximate inference algorithms in spiking networks.
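For readers unfamiliar with LFSRs, the sketch below shows a 16-bit Fibonacci LFSR in software. The register width and the feedback polynomial x^16 + x^14 + x^13 + x^11 + 1 are a common textbook choice and an assumption here; the source does not state which configuration Thakur et al. (2016) used.

```python
def lfsr16_step(state):
    """Advance a 16-bit Fibonacci LFSR by one shift (taps at bits 16, 14, 13, 11)."""
    bit = (state ^ (state >> 2) ^ (state >> 3) ^ (state >> 5)) & 1
    return (state >> 1) | (bit << 15)


def lfsr16_period(seed=0xACE1):
    """Count the steps until the register returns to its (nonzero) seed."""
    state, steps = lfsr16_step(seed), 1
    while state != seed:
        state = lfsr16_step(state)
        steps += 1
    return steps


def lfsr16_bits(seed, n):
    """Collect n pseudo-random bits from the low bit of successive states."""
    bits, state = [], seed
    for _ in range(n):
        state = lfsr16_step(state)
        bits.append(state & 1)
    return bits


print(lfsr16_period())           # maximal-length sequence: 2**16 - 1 states
print(lfsr16_bits(0xACE1, 16))
```

Because the sequence is deterministic and periodic, streams derived from the same LFSR are correlated, which matters whenever bitstreams are combined in logic gates.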

Hardware Implementation of Approximate Inference Algorithm Using MCMC With Stochastic Logic Gates

By employing a novel abstraction, called combinational stochastic logic, probabilities are directly mapped to digital hardware in a massively parallel fashion (Mansinghka et al., 2008). On each work cycle, the output of a Boolean logic gate is a Boolean function of its inputs. Each gate represents a truth table whereas stochastic gates represent CPTs. Figure 8C shows the CPT and schematic for a gate called O, which generates flips of a weighted coin by specifying the weight on its input lines (IN) with h random bits on RAND. A comparator is utilized to implement the O gates where the output will be 1 if RAND ≤ IN.
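A weighted-coin gate of this kind can be modeled as a comparator between h random bits and the binary weight. The sketch below uses the strict comparison RAND < IN with RAND drawn uniformly from 0 to 2^h − 1; this convention is an assumption, chosen so that IN = 0111 with h = 4 yields 7/16 as in the Figure 8C example. The function names are hypothetical.

```python
import random


def coin_gate(in_weight, h, rng):
    """Stochastic gate: compare h random bits against the binary weight IN."""
    rand = rng.getrandbits(h)          # uniform integer in [0, 2**h - 1]
    return 1 if rand < in_weight else 0


def gate_probability(in_weight, h):
    """Exact P(OUT = 1 | IN), obtained by enumerating every value of RAND."""
    return sum(1 for rand in range(2 ** h) if rand < in_weight) / 2 ** h


rng = random.Random(0)
flips = [coin_gate(0b0111, 4, rng) for _ in range(10000)]
print(gate_probability(0b0111, 4), sum(flips) / len(flips))
```

The enumeration makes explicit that the gate's CPT has resolution 1/2^h, so h sets the precision of the represented probabilities.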

Figure 8D shows a serial circuit composed of a stochastic logic gate, an accumulator, and a D flip-flop, used to implement the Gibbs sampling algorithms for MRFs. To sample from a binomial distribution, this circuit uses nh bits of entropy for n flips of a coin of a given weight. It has O(log(n)) space and O(n) time complexity. To implement a Gibbs MCMC kernel for a given variable, the pipeline depicted in Figure 8E has been proposed (Mansinghka et al., 2008). The pipeline scores each possible setting of the variable, given its neighbors, under the joint density of the MRF, and tempers those scores. It then computes the (log) normalizing constant and normalizes the energies. The normalized energies are translated to probabilities, and finally the pipeline outputs a sample. Using standard techniques and a stochastic accumulator for sampling (the circuit in Figure 8D), this pipeline achieves time complexity linear in the size of the variable. A fixed-point format is used to represent the state values, energies (i.e., unnormalized log probabilities), and probabilities. The logsumexp(e1, e2) function used for adding and normalizing the energies and the exp(e1) function used for converting the energies to probabilities are approximated. The pipeline then samples by exact accumulation. Moreover, numerically tempering a distribution, i.e., exponentiating it to some power, can be implemented as bit shifting of the energies.
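The stages of such a Gibbs kernel (temper, normalize, exponentiate, sample by accumulation) can be sketched in software. The version below uses floating-point arithmetic and exact `math` functions, whereas the hardware pipeline described above uses fixed-point values and approximated logsumexp and exp; the function names and the example energies are hypothetical.

```python
import math
import random


def logsumexp(energies):
    """Numerically stable log of the sum of exponentials."""
    m = max(energies)
    return m + math.log(sum(math.exp(e - m) for e in energies))


def gibbs_kernel(energies, temperature, rng):
    """Sample one variable given the energies (unnormalized log probabilities)
    of each of its possible settings under the current neighbor values."""
    tempered = [e / temperature for e in energies]       # temper the scores
    log_z = logsumexp(tempered)                          # log normalizing constant
    probs = [math.exp(e - log_z) for e in tempered]      # energies -> probabilities
    u, acc = rng.random(), 0.0
    for value, p in enumerate(probs):                    # sample by accumulation
        acc += p
        if u < acc:
            return value, probs
    return len(probs) - 1, probs                         # guard against round-off


rng = random.Random(0)
value, probs = gibbs_kernel([math.log(1.0), math.log(3.0)], 1.0, rng)
print(value, probs)
```

When the temperature is a power of two, dividing a fixed-point energy by it reduces to a bit shift, which is the hardware shortcut mentioned in the text.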

The proposed stochastic circuits have been implemented on Xilinx Spartan 3 family FPGAs. Typically large quantities of truly random bits are needed for stochastic circuit implementation. In almost all Monte Carlo simulations high quality pseudorandom numbers are used. For the FPGA implementation in Mansinghka et al. (2008), the XOR-SHIFT pRNG (Marsaglia, 2003) is used.
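A minimal software version of a 32-bit XOR-shift generator is shown below. The shift triple (13, 17, 5) is one of the combinations listed by Marsaglia (2003); whether the FPGA implementation used this particular triple or word size is not stated in the source, so it is an assumption here.

```python
MASK32 = 0xFFFFFFFF


def xorshift32(x):
    """One step of a 32-bit XOR-shift generator with shifts (13, 17, 5)."""
    x ^= (x << 13) & MASK32   # left shifts are masked to stay within 32 bits
    x ^= x >> 17
    x ^= (x << 5) & MASK32
    return x


def xorshift32_stream(seed, n):
    """Return n successive outputs starting from a nonzero seed."""
    out, x = [], seed
    for _ in range(n):
        x = xorshift32(x)
        out.append(x)
    return out


print(xorshift32_stream(1, 3))
```

Only shifts and XORs are needed, which is why this family of generators maps cheaply onto FPGA logic.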

Developing more sophisticated circuits, such as circuits for approximate inference in hierarchical Bayesian models, which is a challenging research field, requires combining the stochastic samplers with stack-structured memories and content-addressable memories (Shamsi et al., 2018; Guo et al., 2019). Moreover, directly using sub-parts of the proposed Gibbs pipeline to implement more sophisticated algorithms, including SMC methods and cluster techniques like Swendsen-Wang, is a promising direction for future research.

Several works provide MRF implementations for different applications using FPGAs, application-specific integrated circuits (ASICs), graphics processing units (GPUs), and hybrid CPU+FPGA platforms. Gibbs sampling is a probabilistic algorithm used to solve problems represented by an MRF. In the Gibbs sampling method, all random variables in the MRF are iteratively explored and updated until the result converges (Bashizade et al., 2021). Ko and Rutenbar (2017) explore sound source separation under real-time execution and power constraints, to isolate the human voice from background noise on mobile phones. The implementation uses MRFs with Gibbs sampling inference and demonstrates a real-time streaming FPGA implementation that achieves a 20× speedup over a conventional software implementation. In addition, a preliminary ASIC design requires fewer than 10 million gates, with power consumption 52× better than an ARM Cortex-A9 software reference design. Further ASIC optimization would require a lower-power technology library and design optimization for lower memory usage.

In Seiler et al. (2009), an optimization framework based on a hierarchical Markov random field (HMRF) and implemented on a GPU is presented for the prediction/simulation of soft tissue deformations on medical image data. The method incorporates mechanical concepts into a Bayesian optimization framework, which is defined and solved under an HMRF approach. The HMRF formulation is appealing for this stochastic problem because the hierarchical approach was found to avoid local minima; in addition, its hierarchical nature leads to a straightforward GPU implementation. The number of hierarchical levels is assumed to strongly influence the number of iterations the model needs to converge, which can be explored further in the future.

In Choi and Rutenbar (2013, 2016), to meet the demand for fast and high-quality stereo vision, a custom hardware-accelerated MRF system has been proposed for 3D gesture recognition and automotive navigation. The stereo task is modeled as statistical inference on an MRF, and the work shows how to implement streaming tree-reweighted message-passing style inference at video rates. To provide the required speed, the stereo matching procedure is partitioned between the CPU and the FPGAs. This partitioning exploits both function-level pipelining and frame-level parallelism. Experimental results show that this system is faster than several recent GPU implementations of similar stereo inference methods based on belief propagation.

As can be seen, there are still open windows to utilize new emerging nonvolatile devices and crossbar arrays to implement MRFs rather than just utilizing FPGA, ASIC, GPU, and hybrid implementations (CPU + FPGA). Moreover, refining the algorithms to make them more amenable to hardware implementations is needed while keeping the accuracy high.

Muller C-Element Based Bayesian Inference

In order to calculate the probability of an event V, Bayesian inference incorporates the probability of V given the prior P(V) and evidence input E1 as in Equation (12), where, with parameter as defined by Equation (13), Equation (14) gets rewritten as Equation (15).

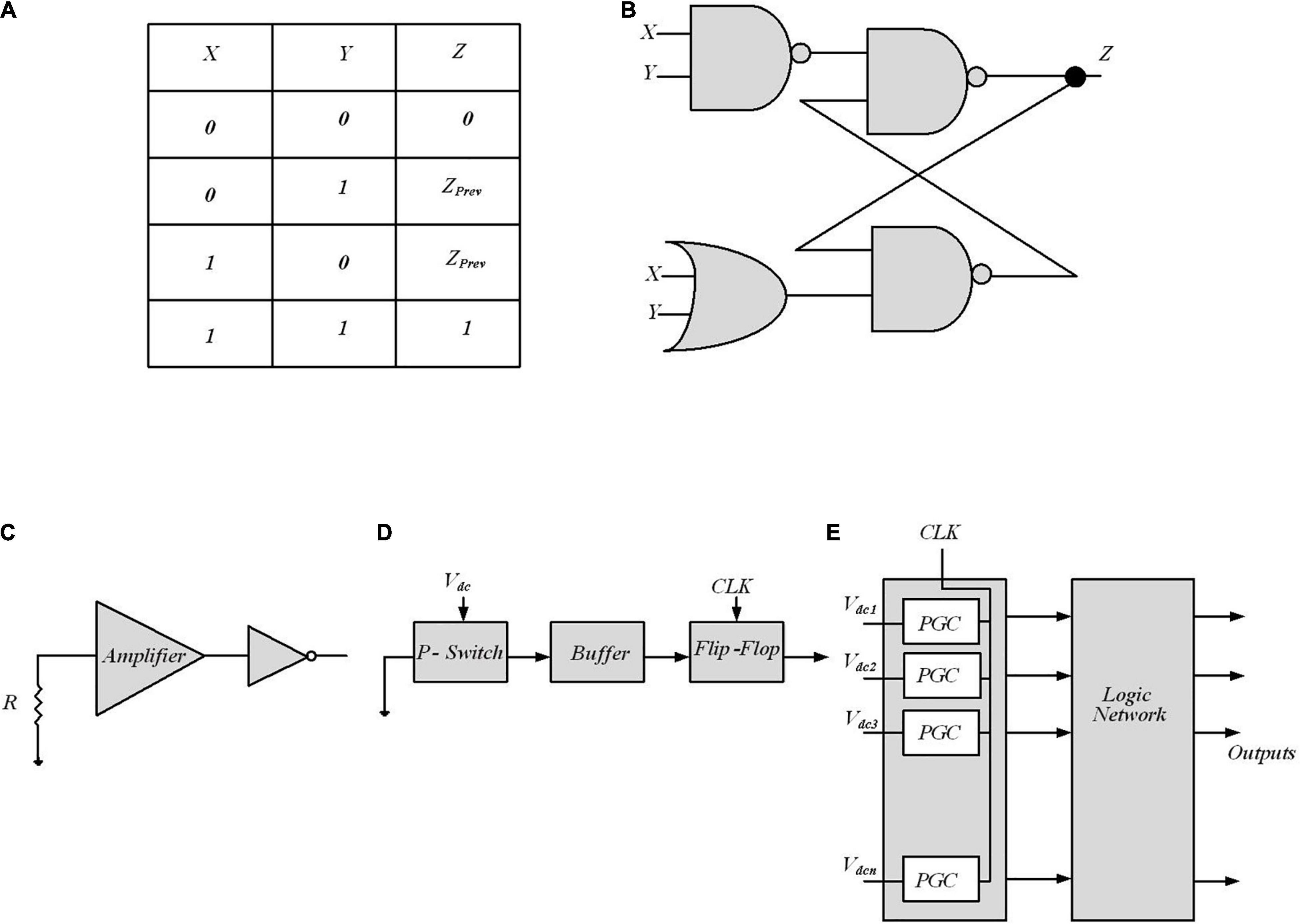

The Muller C-element reported in Friedman et al. (2016) is a two-input memory element, characterized by the truth table of Figure 9A and shown in Figure 9B, that performs the complete inference of Bayes’ rule. The output Z keeps its state, Zprev, until both inputs X and Y are opposite to the current output state; it then switches to the shared input value. A Muller C-element is able to compute Equation (14), thereby enabling efficient inference circuits. Note that an input signal i with switching probabilities ai and bi for 0 → 1 and 1 → 0 switching, respectively, shows no autocorrelation if ai + bi = 1. Assuming no autocorrelation in the input signals, the output probability of the C-element is defined by Equation (15), where P*(E1), P(V), and P(V | E1) are substituted for P(X), P(Y), and P(Z). Equation (15) is therefore equivalent to Equation (14), which is the Bayesian inference provided by C-elements.
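The behavior of a C-element on stochastic bitstreams can be checked with a short simulation. The sketch assumes independent, uncorrelated input bits and compares the fraction of 1s at the output with the steady-state value P(Z) = P(X)P(Y) / [P(X)P(Y) + (1 − P(X))(1 − P(Y))], which follows from the hold-or-follow rule; we take this to be the relation the text refers to, but the equation itself is not reproduced in the source. The function names and the input probabilities are illustrative.

```python
import random


def c_element_stream(x_bits, y_bits, z0=0):
    """Muller C-element: follow the inputs when they agree, otherwise hold."""
    z, out = z0, []
    for x, y in zip(x_bits, y_bits):
        if x == y:
            z = x
        out.append(z)
    return out


def steady_state(px, py):
    """Steady-state P(Z = 1) for independent, uncorrelated input bitstreams."""
    return px * py / (px * py + (1 - px) * (1 - py))


def bitstream(p, n, rng):
    """Uncorrelated bitstream in which each bit is 1 with probability p."""
    return [1 if rng.random() < p else 0 for _ in range(n)]


rng = random.Random(0)
n = 100000
z = c_element_stream(bitstream(0.7, n, rng), bitstream(0.6, n, rng))
print(sum(z) / n, steady_state(0.7, 0.6))
```

Because the output holds its value whenever the inputs disagree, it is autocorrelated even when the inputs are not, which is the source of the slow convergence discussed below.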

Figure 9. Digital implementation of Bayesian inference. (A) Muller C-element truth table in Friedman et al. (2016). (B) Standard cell C-element design of Muller C-element. PCMOS-based Bayesian network in Weijia et al. (2007). (C) p-switch circuit implementation block. (D) Probabilistic generating cells (PGCs) block. (E) The inference system utilizes a probabilistic generating block and a logic network.

Clocked bitstreams are used in stochastic computing to encode probabilistic signals, permitting complex computations with minimal hardware and significantly improving power consumption and inference speed compared with conventional methods. Stochastic computing is not an exact computing technique, and its slight loss of accuracy has several causes. Compared to fixed- or floating-point methods, the probability values P are usually translated to stochastic bitstreams with lower quantization accuracy, and correlations between bitstreams, which are usually generated by pseudo RNGs, lead to a further loss of accuracy. Addressing this inherent imprecision and these correlations requires novel design techniques.

In Friedman et al. (2016), the number of “1”s in a bitstream encodes its probability, independent of the positions of the 1 bits. In a stochastic bitstream with state switching probabilities a and b for 0 → 1 and 1 → 0 switching, respectively, the probability R is defined as R = a/(a+b). For an uncorrelated bitstream (i.e., a+b = 1), this reduces to R = a, where each bit is “1” with probability R and “0” with probability 1−R. The switching rate of an uncorrelated bitstream is then defined by Equation (16).

The C-element outputs a stochastic bitstream, which is probabilistic and converges more slowly toward the exact Bayesian inference. If the switching rate of the output is low, longer “domains” of consecutive “0”s and “1”s occur, which leads to a more imprecise bitstream. Hence, more computation time is required to provide a precise output.

For multi-input Bayesian inference calculation, utilizing multi-stage C-element circuits is necessary, which would need one additional cycle per stage to compute a bitstream. On the other hand, the floating-point circuit provides a highly precise output while needing multiple pipelined computations and a long characteristic delay time. Hence, the C-element structure’s performance benefit is dependent on the required precision for the specific application.

For embedded decision circuits that compute the probability of an event from several independent sources of evidence, C-element trees can provide a direct stochastic hardware implementation. However, autocorrelation and inertia mitigation through signal randomization require further study. For extreme inputs with low switching rates, the loss of accuracy increases significantly. As the length of the bitstream increases, the output signals converge polynomially to the exact Bayesian inference. In addition, C-element trees have larger errors for opposing extreme input combinations. Such inputs can be regarded as strongly conflicting evidence, and the resulting error as inference uncertainty.

The standard cells from the Synopsys (Synopsys, 2012) SAED-EDK90-CORE library are used (Tziantzioulis et al., 2015) for the Muller C-element module implementation. For a two-input Bayesian inference implementation built from these standard cells, simulation results showed that the floating-point circuit requires 16,000× more area than a C-element. This is because a two-input inference problem needs just one C-element, while the conventional floating-point circuit needs addition, multiplication, and division units. For multi-input Bayesian inference, the C-element still outperforms the floating-point circuit.

Probabilistic CMOS Based Bayesian Inference

In Weijia et al. (2007), probabilistic CMOS (PCMOS) technology has been used to implement RNGs to create a highly randomized bit sequence suitable for inference in a Bayesian network. A PCMOS-based RNG is composed of the PCMOS switch or p-switch, which is a CMOS switch with a noise source coupled at its input node. Figure 9C shows a p-switch block. The resistor is employed as a source of thermal noise, which follows the Gaussian distribution. An amplifier is used to amplify the noise signal to have a comparable signal with supply voltage.

The inference system is shown in Figure 9E, composed of probabilistic generating block and logic network. The probabilistic generating block generates random bits with different probabilities, and the logic network defines the edges between the nodes in a Bayesian network. The probabilistic generating block is composed of a number of probabilistic generating cells (PGCs), each of which generates a “1” bit with a probability. A PGC shown in Figure 9D is made up of a p-switch, a buffer, and a flip-flop. The buffer constructed from two inverters strengthens the output signal of the switch. The flip-flop, formed by two D latches, synchronizes the PGCs. Arithmetic operations (addition and multiplication in Bayesian network) computed in computers require a lot of time and energy. Here, two simple logic gates (an AND gate and an OR gate), together with some inverters, are employed to construct the logic network. To determine the approximate probability of the output at each node, a simulation has been performed to generate a 10,000-bits sequence at each node and then measure the “1”-bits in each sequence. The PCMOS-based hardware implementation of the Bayesian network outperforms the software counterpart in terms of energy consumption, performance, and quality of randomness. However, making use of mixed-signal implementations needs paying attention to noise and variation sources as well as examining the multiple independent sources of evidence for embedded decision circuits that require circuit design remedies.

Crossbar Arrays for Bayesian Networks Implementation

In this section, two brain-inspired hardware implementations of inference in naïve Bayesian (NB) classifiers are discussed. These implementations use memristors as nonvolatile elements for implementing the inference algorithm. A Bayesian reasoning machine with a magneto-tunneling-junction-based Bayesian graph is also explained.

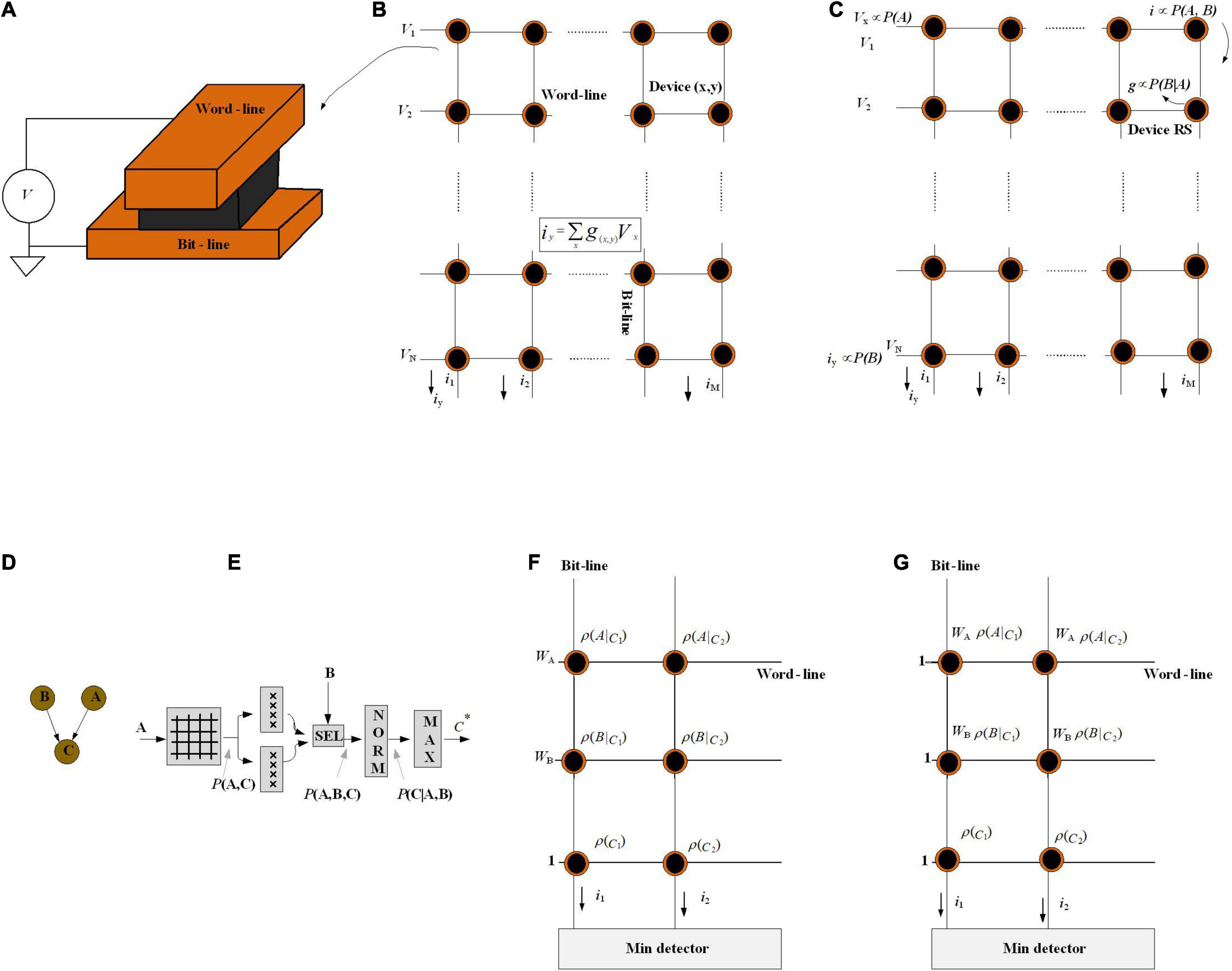

Crossbar Arrays for Naïve Bayesian Classifiers

A crossbar array of memristors is a promising hardware platform for implementing Bayesian processing in a massively parallel and energy-efficient way (Yang et al., 2020). Figure 10A depicts a schematic view of a memristor cell, in which a storage layer is sandwiched between the top and bottom electrodes and the conductance of the device depends on the applied voltage. Figure 10B shows a crossbar array, which offers a maximum area efficiency of 4F² per cell (Wu et al., 2019). Memristor crossbar arrays provide a natural implementation of matrix-vector multiplication (MVM). The current flowing through the memristor cell at wordline x and bitline y is equal to Vx·g(x, y), where Vx is the voltage applied to wordline x and g(x, y) is the conductance of the cell. The total current through bitline y is ∑x Vx·g(x, y), which is the dot product of the voltage vector with the conductances of column y. The algorithmic complexity of MVM is thereby reduced from O(n²) to O(1), which makes crossbar arrays a promising computing paradigm for different machine learning applications (Wu et al., 2019).

Figure 10. (A) Schematic of the memristor device in which the device’s active material is surrounded by two electrodes [top (wordline) and bottom (bitline)]. (B) Ohm’s law, i = g·V, is utilized to perform the multiplication operation. (C) The crossbar array is used as a Bayesian inference system. (D) Graphical model for Bayesian network. (E) Implementation of the Bayesian classifier. (F) Implementation of the naïve Bayesian classifier for a network of two attributes. (G) Implementation of a different way to calculate Ic. In this method, the weight of the ith attribute (wi) is stored in the cell resistance.

To perform Bayesian inference, Figure 10C shows a memristive crossbar array in which a discrete distribution P(A), represented by voltages, is applied to the wordlines, the conditional probabilities P(B | A) are mapped to the memristor conductances (gk), and all bitlines are virtually grounded. The inputs are multiplied by the memristor conductances, so the current through each memristor is proportional to P(B | A)⋅P(A) = P(A, B). The current-summing action of the crossbar bitlines then marginalizes these products to P(B), which exits as the bitline currents.
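The marginalization performed by the crossbar is a matrix-vector product, which the following idealized sketch reproduces. Voltages and conductances are treated as dimensionless probabilities, and noise, wire resistance, and device variation are ignored; the distributions used are hypothetical.

```python
def crossbar_currents(voltages, conductances):
    """Ideal crossbar: bitline current I_y = sum over wordlines x of V_x * g(x, y)."""
    n_bitlines = len(conductances[0])
    return [sum(v * row[y] for v, row in zip(voltages, conductances))
            for y in range(n_bitlines)]


# Hypothetical distributions: three values of A, two values of B.
p_a = [0.2, 0.5, 0.3]            # wordline voltages encode P(A)
p_b_given_a = [[0.9, 0.1],       # conductances encode P(B | A), one row per value of A
               [0.6, 0.4],
               [0.3, 0.7]]

p_b = crossbar_currents(p_a, p_b_given_a)
print(p_b)  # bitline currents, proportional to P(B)
```

In hardware all products and sums happen simultaneously in the analog domain, whereas this loop visits every cell in turn.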

In analog systems, noise, mismatch, and other sources of variation mean that the input vectors do not necessarily satisfy the requirement that a probability distribution sums to 1. A “normalizer” circuit is therefore employed as a supporting module. Moreover, a linear mapping from probabilities to voltage levels or memristor resistive states limits the dynamic range of the representable probabilities: very small probability values may be translated into voltages below the noise level of the system (Serb et al., 2017). The normalizer can rescale such values when all entries of a distribution are very low but similar; the problem arises when the same distribution contains both very large and very small probability values. To solve this issue, it has been suggested that the resistive state or voltage be mapped to the log-probability domain (Wu et al., 2019).
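The dynamic-range argument can be made concrete with a small sketch. The noise floor, the clipping value `p_min`, and the function names below are assumptions made for illustration; they do not describe any specific circuit from the cited works.

```python
import numpy as np

def normalize(currents):
    """Idealized 'normalizer': rescale non-negative readings to sum to 1."""
    currents = np.asarray(currents, dtype=float)
    return currents / currents.sum()

def linear_encode(p, v_max=1.0):
    """Linear mapping: voltage proportional to probability."""
    return v_max * np.asarray(p, dtype=float)

def log_encode(p, p_min=1e-6, v_max=1.0):
    """Log mapping: voltage proportional to -log(p), clipped at p_min."""
    p = np.clip(np.asarray(p, dtype=float), p_min, 1.0)
    return v_max * (-np.log(p)) / (-np.log(p_min))

NOISE_FLOOR = 1e-3  # hypothetical smallest resolvable voltage difference

# One large value together with two very small ones.
p = np.array([0.9989, 1e-3, 1e-4])

v_lin = linear_encode(p)
v_log = log_encode(p)

# Linear mapping: the two small entries differ by less than the noise floor.
lin_gap = abs(v_lin[1] - v_lin[2])
# Log mapping: the same two entries are separated by a much larger margin.
log_gap = abs(v_log[1] - v_log[2])

print(lin_gap, log_gap)
print(normalize([0.52, 0.31, 0.21]))  # noisy readings rescaled to sum to 1
```

In this sketch the linear mapping cannot distinguish a probability of 1e-3 from one of 1e-4, whereas the log mapping keeps them well separated.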

Naïve Bayesian (NB) classifiers assume that the feature variables are all independent of each other given the class (Serb et al., 2017), and the classification is based on Bayes' theorem. For a test instance x, represented by an attribute value vector (A, B), the NB classifier finds the class label c that has the maximum conditional probability given A and B.

In Serb et al. (2017), the implementation of a small graphical model for the prediction of potential health issues (Figure 10D) in memristor crossbar arrays is considered. Here, A denotes the air quality, A ∈ {bad, medium, good}, and B denotes the corresponding heartbeat of the patient for two different activities, B ∈ {resting, exercising}. Given the random variables A and B, the aim is to predict the probability of a health crisis, C ∈ {safe, crisis}, and thus to decide whether to warn the patient. With a classic NB classifier, the goal is to find the class label c that has the maximum conditional probability given the attributes A and B [Equation (17)].

C* is defined as the maximum a posteriori estimate.
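Under the naïve independence assumption, the maximum a posteriori rule takes the standard naïve Bayes form. It is written here in generic notation, which may differ from the typesetting of Equation (17) in the cited work:

$$C^{*} = \arg\max_{c \in C} P(c \mid A, B) = \arg\max_{c \in C} P(c)\, P(A \mid c)\, P(B \mid c)$$

The second equality holds because the normalizing term P(A, B) does not depend on c and therefore does not affect which class attains the maximum.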

Figure 10E depicts the hardware implementation process of the proposed NB framework. With the air quality level A as input and the crisis level prediction C as output, a first crossbar stores P(A, C) = P(A| C)P(C), before the heart rate B is factored in. The output is then sent in parallel to two arrays of memristors that hold P(B = resting | C) and P(B = exercising | C), respectively. Based on the heartbeat B, one of the two outputs is selected and passed to the normalizer to calculate P(C| A, B). Finally, the max-finder module finds the estimate C*. This inference platform shows that, by cascading small crossbar-based modules, the approach can scale to more complicated graphical models.
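The cascade can be traced in software with a minimal sketch. All probability values are hypothetical, and the one-hot encoding of the observed air quality is an assumption about how the input is applied; the sketch follows only the sequence of stages described above, not the circuit itself.

```python
import numpy as np

CLASSES = ["safe", "crisis"]
AIR = ["bad", "medium", "good"]
HEART = ["resting", "exercising"]

# Hypothetical probability tables (illustrative numbers only).
p_c = np.array([0.8, 0.2])                  # P(C)
p_a_given_c = np.array([[0.1, 0.3, 0.6],    # P(A | C = safe)
                        [0.6, 0.3, 0.1]])   # P(A | C = crisis)
p_b_given_c = np.array([[0.7, 0.3],         # P(B | C = safe)
                        [0.2, 0.8]])        # P(B | C = crisis)

def classify(air, heart):
    # Stage 1: the first crossbar stores P(A, C) = P(A | C) P(C); a one-hot
    # input for the observed air quality reads out P(A = a, C) per class.
    joint_ac = p_a_given_c * p_c[:, None]   # shape (class, air)
    one_hot_a = np.eye(len(AIR))[AIR.index(air)]
    stage1 = joint_ac @ one_hot_a           # shape (class,)

    # Stage 2: two arrays hold P(B = resting | C) and P(B = exercising | C);
    # both are evaluated, then the observed heartbeat selects one output.
    outputs = {b: stage1 * p_b_given_c[:, i] for i, b in enumerate(HEART)}
    selected = outputs[heart]

    # Stage 3: the normalizer gives P(C | A, B); the max-finder picks C*.
    posterior = selected / selected.sum()
    return CLASSES[int(np.argmax(posterior))], posterior

label, posterior = classify("bad", "exercising")
print(label, posterior)
```

With these illustrative tables, bad air quality combined with an exercising heartbeat yields the class "crisis".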

As discussed above, inference can be performed by directly employing the multiply-accumulate capabilities of the crossbar array. During learning, as new data arrive, the conditional probability matrix needs to be updated; thus, the devices in the crossbar need to be reprogrammed. The conductance stability and the energy efficiency of memristor switching, i.e., how many attempts are needed to reach the memristor's desired state, determine the energy, speed, and circuit complexity cost of the probability updates (Serb et al., 2017). Equation (17) assumes that, given the class, all attributes (A, B) are fully independent of each other. The classification accuracy is harmed when this assumption is violated in practice.

Wu et al. (2019) propose another analog crossbar computing architecture to implement the NB algorithm while addressing the abovementioned concerns. It assigns each attribute a weight that reflects its relative importance, which relaxes the conditional independence assumption. The prediction formula is given by Equation (18), in which wA and wB are the weights of attributes A and B, respectively. The NB classifier in Equation (17) is the special case of the weighted NB (WNB) classifier in which wA and wB are both equal to 1.
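In the usual weighted naïve Bayes formulation, each weight acts as an exponent on the corresponding conditional probability. Assuming that form, which is consistent with the dot-product mapping used for the crossbar, the prediction rule can be written as:

$$C^{*} = \arg\max_{c \in C} P(c)\, P(A \mid c)^{w_A}\, P(B \mid c)^{w_B}$$

Setting w_A = w_B = 1 recovers the unweighted naïve Bayes rule.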

Transformation of the naïve Bayesian formula [Equation (18)] to the crossbar array. Equation (18) cannot be directly applied to the memristor crossbar array (concern 1). A log(•) operation is therefore applied. Because P(•) ∈ (0, 1), log P(•) is a negative value, which cannot be represented by the conductance of memristor cells since conductance is always positive. Hence, ρ(•) is defined as −log P(•), and Equation (18) is rewritten as Equation (19), which assigns a score q(c) to each class.

The score q(c) can be written as a dot product v→·g→, where v→ = [1, wA, wB] and g→ = [ρ(c), ρ(A|c), ρ(B|c)]. Hence, it is feasible to compute q for every class by MVM.
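Taking the negative logarithm of a weighted product turns it into a weighted sum. Assuming the weights enter as exponents on the conditional probabilities, the score for class c and the resulting decision rule can be sketched as:

$$q(c) = \rho(c) + w_A\,\rho(A \mid c) + w_B\,\rho(B \mid c) = \vec{v}\cdot\vec{g}, \qquad C^{*} = \arg\min_{c \in C} q(c)$$

Because ρ(•) = −log P(•) decreases monotonically as P(•) increases, maximizing the weighted posterior is equivalent to minimizing q(c).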

After training, every prior term ρ(c) and every conditional term ρ(A| c), where c ∈ C, is stored in the crossbar array in the form of memristor conductance.

For attribute A, the voltage wA is applied to the wordline (Figure 10F), and the resulting current is gathered on the corresponding sub-bitline (IAc). With the addition of ρ(c), the final result is obtained as the current on one bitline, and multiple bitlines together evaluate Equation (19) for all classes. An optimization of the input voltage has also been proposed: because of the I–V nonlinearity of the ReRAM cell, an analog input voltage (i.e., wi) might result in inaccuracy, so the weight wi is instead included in the cell conductance, as shown in Figure 10G.
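The two mappings can be compared in a short sketch of an ideal crossbar. The probability values and weights are hypothetical, and driving the wordlines with a fixed unit voltage in the second variant is an assumption made for illustration.

```python
import numpy as np

def rho(p):
    """Non-negative quantity stored as conductance: rho = -log(P)."""
    return -np.log(np.asarray(p, dtype=float))

# Hypothetical trained probabilities for two classes and one observed (A, B).
p_c = np.array([0.8, 0.2])           # P(c)
p_a_given_c = np.array([0.1, 0.6])   # P(A = observed | c)
p_b_given_c = np.array([0.3, 0.8])   # P(B = observed | c)
w_a, w_b = 0.7, 1.2                  # hypothetical attribute weights

# Variant 1 (weights as input voltages): rows are wordlines, columns are
# bitlines (one per class); the input vector is v = [1, wA, wB].
g = np.vstack([rho(p_c), rho(p_a_given_c), rho(p_b_given_c)])
v = np.array([1.0, w_a, w_b])
q_voltage = v @ g

# Variant 2 (weights folded into conductances): cells store w_i * rho and
# every wordline is driven with the same unit voltage (an assumption).
g_weighted = np.vstack([rho(p_c),
                        w_a * rho(p_a_given_c),
                        w_b * rho(p_b_given_c)])
q_conductance = np.ones(3) @ g_weighted

# The smallest q corresponds to the largest weighted posterior.
predicted = int(np.argmin(q_voltage))
print(q_voltage, q_conductance, predicted)
```

Both variants give the same scores in the ideal case; the second only ever applies a single voltage level, which is why it avoids the inaccuracy caused by the I–V nonlinearity of the cell.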

The simulations show that the design offers a high runtime speedup with negligible accuracy loss over the software-implemented NB classifier. This brain-inspired hardware implementation of the NB algorithm, together with insights from techniques such as mean-field approximation (Yu et al., 2020), can help to find an optimal balance between structure and independence by treating hardware feasibility considerations and independence assumptions as mutually constraining objectives, which is a promising research direction.

Bayesian Reasoning Machine With Magneto-Tunneling Junction-Based Bayesian Network

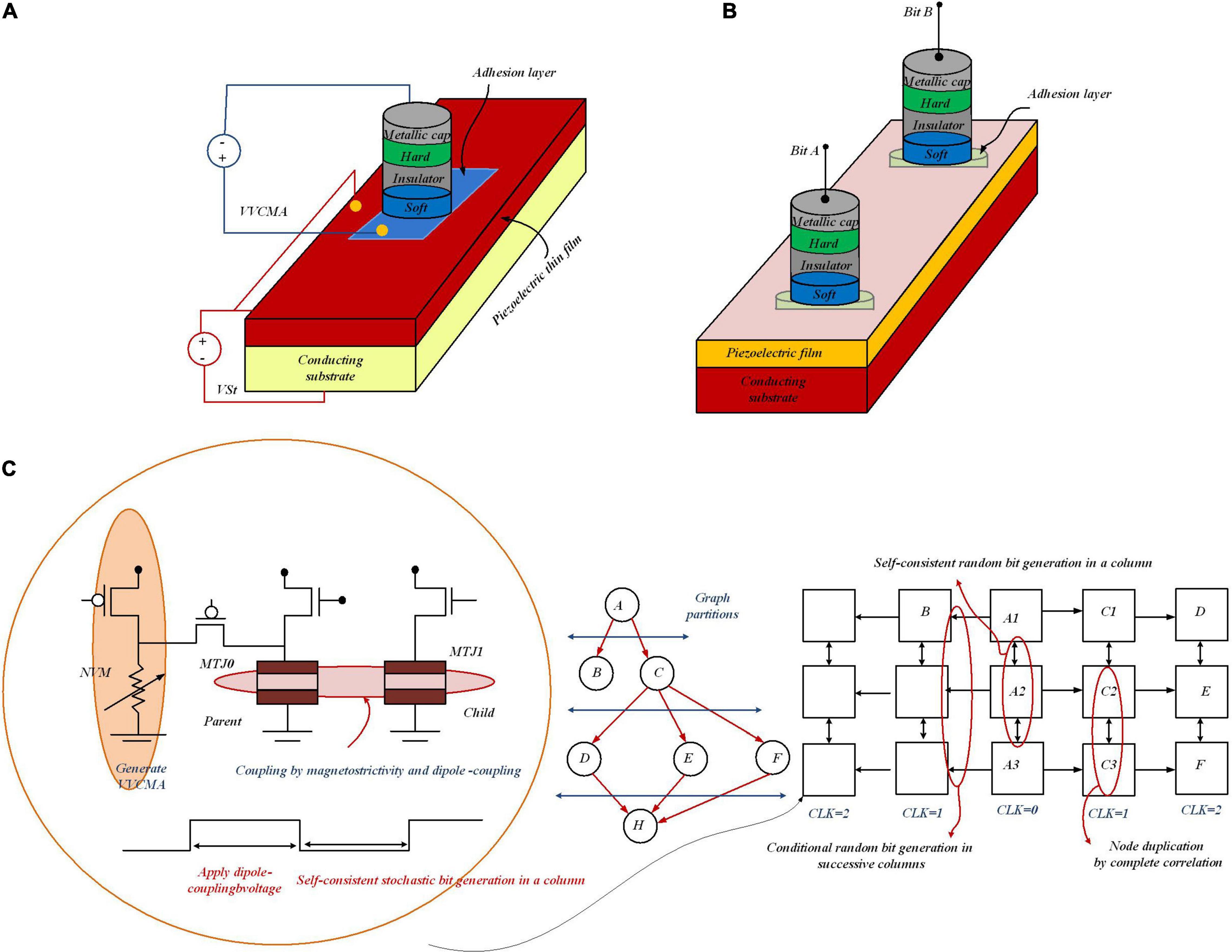

Predictions from Bayesian networks can be accelerated by a computing substrate that allows high-speed sampling from the network. Nasrin et al. (2020) develop such a platform, which maps an arbitrary Bayesian network onto an architecture of networked MTJs, along with circuits for writing, switching, and interactions among the MTJs. To achieve electrically programmable sub-nanosecond probability sample generation, voltage-controlled magnetic anisotropy (VCMA) and spin-transfer torque (STT) are co-optimized. As Figure 11A shows, for programmable random number generation, VCMA and STT (applied via the voltage VVCMA) and magnetostriction, i.e., strain (injected with the voltage VSt), are co-optimized in an MTJ. To make the switching probability of one MTJ depend stochastically on the state of another, as Figure 11B depicts, MTJ integration is required, in which dipole coupling, controlled with local stress, is applied to one MTJ. This results in an electrically tunable correlation between the bits “A” and “B” (encoded in the resistance states of the two MTJs), without requiring energy-inefficient hardware like op-amps, gates, and shift registers for correlation generation.

To compute posterior and marginal probabilities in Bayesian networks via stochastic simulation methods, samples of the random variables are drawn and used to estimate the probabilities of interest. For such a platform, mere stochasticity in devices is not enough: a scalable Bayesian network requires “electrically programmable” stochasticity to encode arbitrary probability functions, P(x); x = 0 or 1, and the same programmability is necessary for the stochastic interaction among devices that encodes a conditional probability, P(x| y). In the presence of thermal noise at room temperature, the “flipping” is stochastic, i.e., the magnetization precesses when VVCMA is turned on and can either return to its original orientation or flip to the other orientation. By adjusting the magnitude of VVCMA, the probability of flipping can be tuned. Therefore, the voltage VVCMA acts as a knob that controls the probability of obtaining either “0” or “1.” The MTJ grid in Figure 11C only enables nearest-neighbor correlation, and each node can only have binary states. For nodes with more than two states, splitting by binary coding is required. In order to run a general Bayesian network on the 2D grid, new mapping and graph partitioning/restructuring algorithms must be developed.
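As a software analogy only, sampling-based inference with programmable stochastic bits can be sketched as follows. The function `sample_bits` stands in for a device whose probability of reading “1” is set electrically; the two-node network and its probabilities are hypothetical, and simple rejection of samples that contradict the evidence is used here as one possible stochastic simulation method.

```python
import numpy as np

rng = np.random.default_rng(0)

def sample_bits(p):
    """Stand-in for programmable stochastic bits: element i is 1 with probability p[i]."""
    p = np.asarray(p, dtype=float)
    return (rng.random(p.shape) < p).astype(int)

# Hypothetical two-node network A -> B with binary states.
P_A1 = 0.3                            # P(A = 1)
P_B1_GIVEN_A = np.array([0.1, 0.8])   # P(B = 1 | A = 0), P(B = 1 | A = 1)

n = 200_000
a = sample_bits(np.full(n, P_A1))     # parent bits
b = sample_bits(P_B1_GIVEN_A[a])      # child bits, conditioned on each parent

# Posterior by counting: keep only samples consistent with the evidence B = 1.
posterior_estimate = a[b == 1].mean()

# Exact P(A = 1 | B = 1) from Bayes' rule, for comparison.
num = P_B1_GIVEN_A[1] * P_A1
exact = num / (num + P_B1_GIVEN_A[0] * (1 - P_A1))

print(posterior_estimate, exact)
```

The estimate converges to the exact posterior as more samples are drawn, which is why the rate of sample generation directly limits inference speed.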

Figure 11. Stochastic random number generation utilizing MTJs with programmable probability. (A) MTJ with VCMA and STT (applied via the voltage VVCMA). For programmable random number generation, the magnetostriction, i.e., strain (controlled with the voltage VSt) can be co-optimized. (B) MTJ integration utilizing the effect of dipole coupling (controlled with local stress applied to one MTJ) can be used to couple the switching probability of one MTJ depending on the state of the other. Thereby, the correlation between the bits “A” and “B” can be controlled via the resistance states of the two MTJs. (C) MTJ network-based Bayesian reasoning machine to show an example mapping of Bayesian graph on 2D nanomagnet grid.

In Figure 11C, an example mapping strategy is shown to run general edges in a graph. Graph nodes are duplicated by setting the coupling voltages for perfect anti-correlation. To perform independent sampling on the MTJ grid, it is required to map the parent variables on the parent MTJ column and the children on the successive columns. In the stochastic simulation, different sampling algorithms on the grid are tested to speed up the process of sample generation of random variables in a Bayesian network to compute the posterior probabilities. These algorithms speed up the inference in Bayesian networks but can still fall short of the escalating pace and scale of Bayesian network-based decision engines in many Internet of Things (IoT) and cyber-physical systems (CPS). With a higher degree of process variability, prediction error for P(F) increases. By increasing the size of the components (resistive memory, current biasing transistor, etc.) as well as post-fabrication calibration, tolerance to process variability in the proposed design can be increased. The discussed platform would pave the way for a transformational advance in a novel powerful generation of ultra-energy-efficient computing paradigms, like stochastic programming and Bayesian deep learning.

Bayesian Features in Neural Networks

In this section, the use of Bayesian features in neural networks is presented. To this end, Bayesian neural networks are explained first. Then, Gaussian synapses for probabilistic neural networks (PNNs) are introduced. Afterward, a PNN with memristive crossbar circuits is described. At the end of this section, approximate computing to provide hardware-friendly PNNs and an application of a probabilistic ANN for analyzing transistor process variation are explained.

Bayesian Neural Networks

Bayesian deep networks define each synaptic weight as a sample drawn from a probability distribution (in most cases, a Gaussian distribution) with learnt mean and variance, and inference is performed with the sampled weights. In Malhotra et al. (2020), the gradual reset process and cycle-to-cycle resistance variation of oxide-based resistive random access memories (RRAMs) and memristors have been utilized to perform such a probabilistic sampling function.

Unlike standard deep networks, Bayesian deep networks define the network parameters as probability distributions, which allows the network outputs to be characterized by an uncertainty measure (the variance of the distribution) instead of just point estimates. These uncertainty considerations are necessary in autonomous agents for decision-making and self-assessment in the presence of continuous streaming data. In the Bayesian formulation, defined by Equation (20), P(W) represents the prior probability of the latent variables before any data are presented to the network, and P(D| W) is the likelihood, corresponding to the feedforward pass of the network. P(W| D) is the posterior probability density; two popular approaches, variational Bayes inference and Markov chain Monte Carlo methods, are used to make its estimation tractable.
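The quantities named above are related by Bayes' rule, stated here in its standard form, where P(D) denotes the evidence, i.e., the normalizing constant obtained by integrating P(D| W)P(W) over W:

$$P(W \mid D) = \frac{P(D \mid W)\, P(W)}{P(D)}, \qquad P(D) = \int P(D \mid W)\, P(W)\, dW$$

The integral over all weight configurations is what makes the exact posterior intractable for networks of realistic size.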

In Malhotra et al. (2020) and Yang et al. (2020), the variational inference approach has been used since it is scalable to large-scale problems. In the variational inference approach, a Gaussian distribution, q(W, θ), is used to approximate the posterior distribution. q(W, θ) is characterized by the parameters θ = (μ, σ), in which μ and σ are, respectively, the mean and standard deviation vectors of the probability distributions representing P(W| D) [see Equation (21)]. The main hardware design concerns for the implementation of Bayesian neural networks are the Gaussian random number generation block and the dot-product operation between the inputs and the sampled synaptic weights.

A Normal distribution with a particular mean and variance is equivalent to a scaled and shifted version of a Normal distribution with zero mean and unit variance. This allows the inference equation to be partitioned as shown in Equation (22), where μjk and σjk are the mean and standard deviation of the probability distribution of the corresponding synaptic weight. As shown in Figure 12A, the resulting system uses two crossbar arrays based on domain-wall MTJ memory devices to implement μjk and σjk, respectively. The inputs of a particular layer are applied directly to the crossbar array storing the mean values, whereas they are first scaled by the random numbers generated by the RNG unit before being applied to the crossbar array storing the σjk values.
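The partitioning can be checked numerically with a minimal sketch. It assumes that each weight is sampled as w_jk = μ_jk + σ_jk·ε_jk with ε_jk drawn from a zero-mean, unit-variance Normal distribution; the layer sizes and values are hypothetical, and ideal crossbars are modeled as plain matrix products.

```python
import numpy as np

rng = np.random.default_rng(1)

n_in, n_out = 4, 3
x = rng.random(n_in)                      # inputs to the layer
mu = rng.normal(size=(n_in, n_out))       # learnt means (first crossbar)
sigma = rng.random((n_in, n_out)) * 0.1   # learnt standard deviations (second crossbar)
eps = rng.standard_normal((n_in, n_out))  # zero-mean, unit-variance samples (RNG unit)

# Direct form: sample every weight, then take the dot product.
w = mu + sigma * eps
y_direct = x @ w

# Partitioned form: the mean term uses the raw inputs, while the
# standard-deviation term uses inputs scaled by the random numbers.
y_mean = x @ mu
y_std = np.einsum("j,jk,jk->k", x, eps, sigma)
y_partitioned = y_mean + y_std

print(np.allclose(y_direct, y_partitioned))
```

The two forms agree, so the stored μ and σ values never need to be rewritten during sampling; only the inputs to the second array change from one sample to the next.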

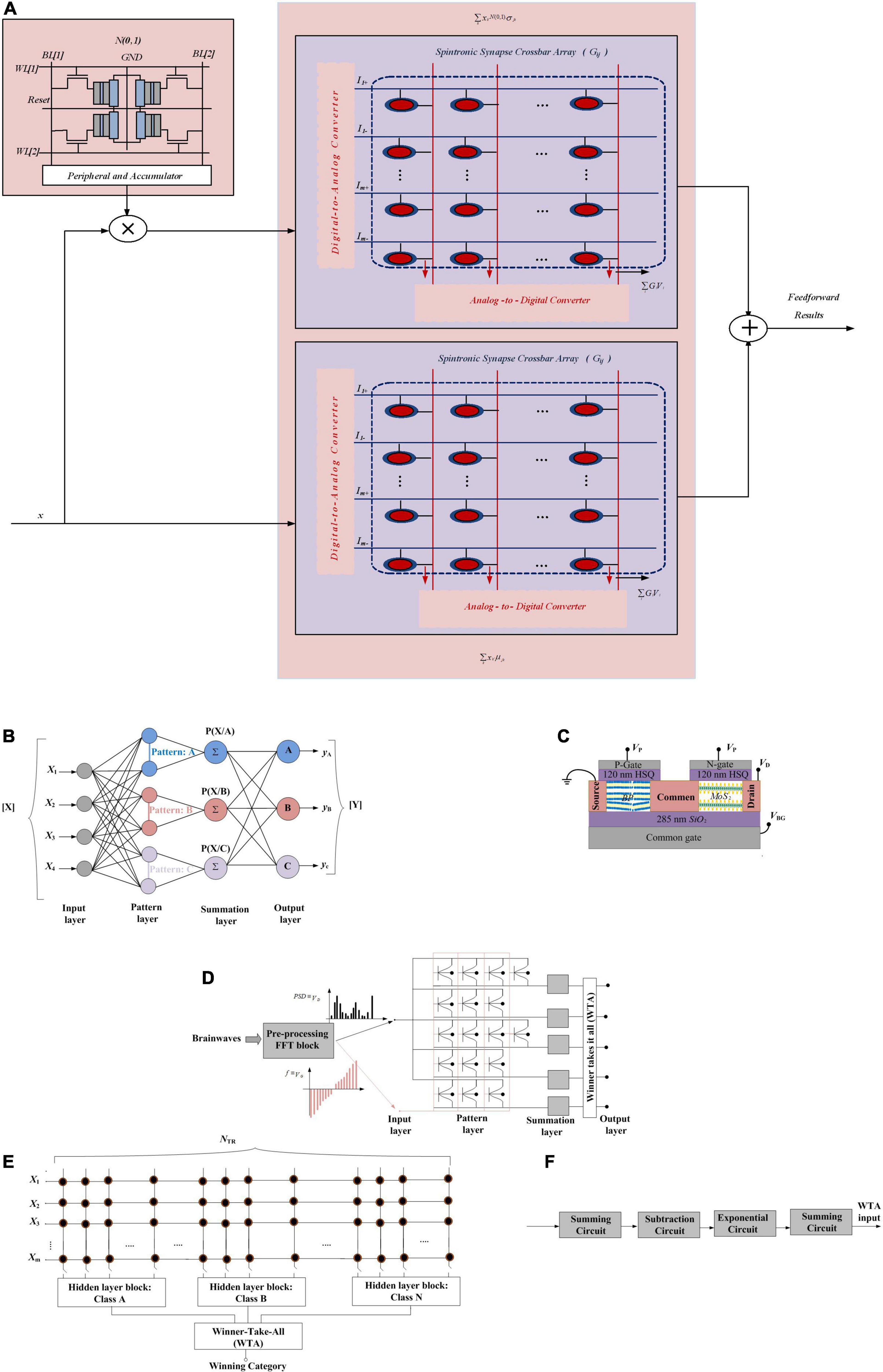

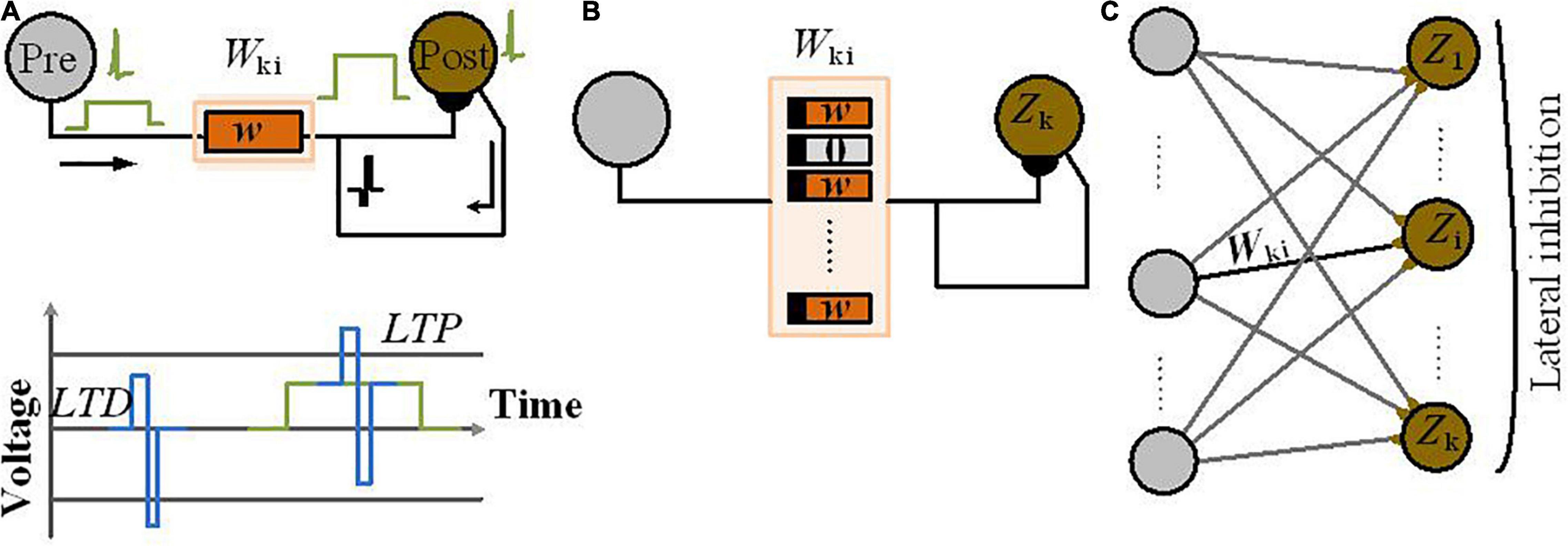

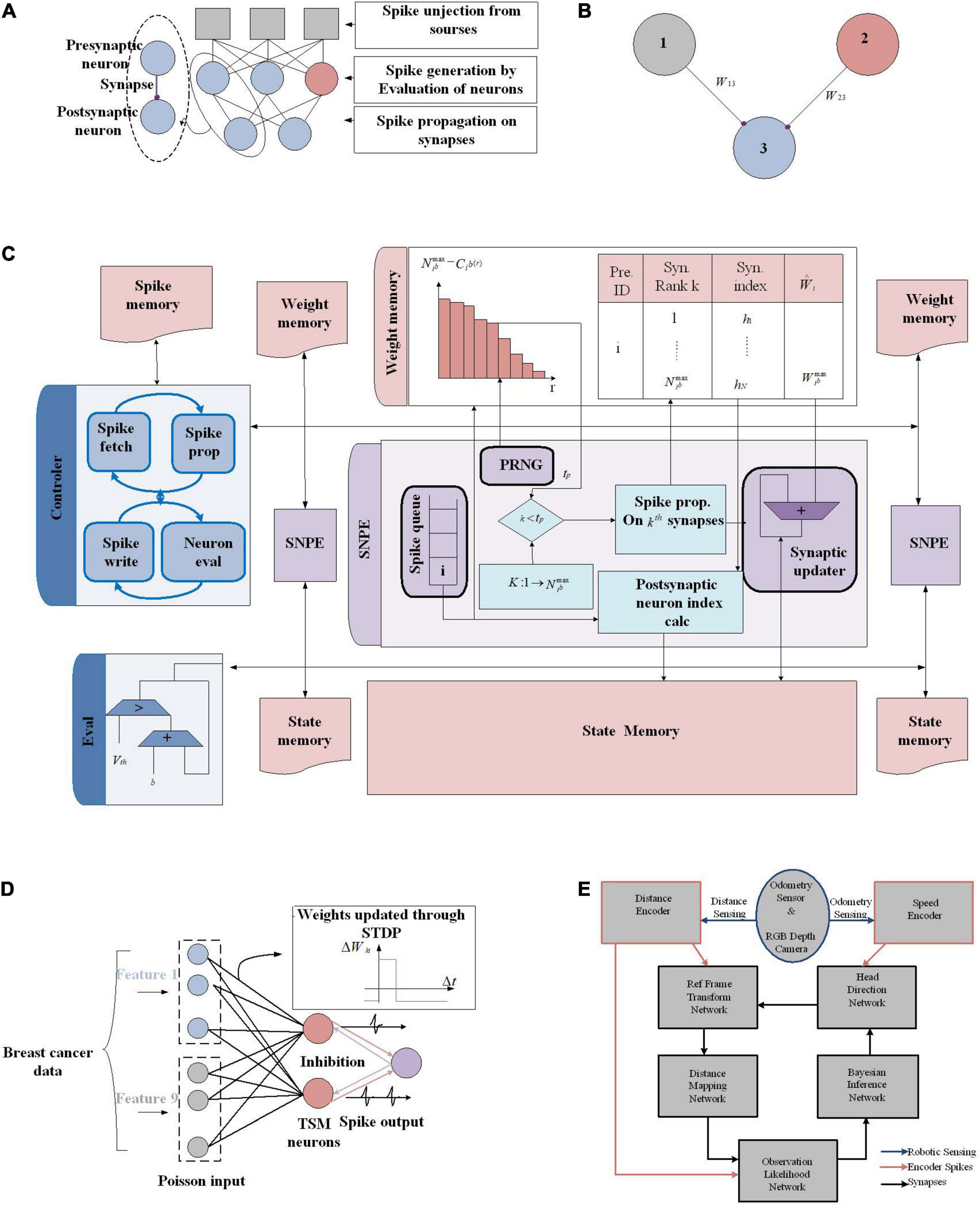

Figure 12. (A) All-spin Bayesian neural network implementation. The RNG unit performs sampling operation from Gaussian random number generators and the two crossbar arrays provide the “In-Memory” computing kernels (Malhotra et al., 2020). (B) The structure of the Probabilistic Neural Network (PNN) model (Gaussian Synapse based PNN) translates any input pattern to any number of output classifications through using a pattern layer and a summation layer. (C) Schematic of a reconfigurable Gaussian synapse composed of dual-gated n-type MoS2 and p-type black phosphorus (BP) back-gated field-effect transistors (FETs). Hydrogen silsesquioxane (HSQ) was used as the top-gate dielectric and nickel/gold (Ni/Au) was used as the top-gate electrode. (D) PNN Architecture for Brainwave recognition. The amplitude of the FFT data is passed from the input layer to the pattern layer as drain voltage (VD) of the Gaussian synapses, and the frequency range is mapped to the back-gate voltage (VG) range. The summation layer integrates the current over the full swing of VG from the individual pattern blocks and communicates with the winner-takes-it-all (WTA) circuit and then the output layer recognizes the brainwave patterns. (E) The architecture of the PNN (Akhmetov and Pappachen, 2019) is implemented with crossbar arrays where each class has been implemented with a crossbar array where NTR denotes the total size of the training set (classes). (F) Block diagram of the hidden layer block.