- 1 Department of Electrical and Computer Engineering, Auburn University, Auburn, AL, United States

- 2 Alabama Micro/Nano Science and Technology Center, Auburn University, Auburn, AL, United States

- 3 Department of Physics, University of California, San Diego, San Diego, CA, United States

- 4 Department of Materials Engineering, Auburn University, Auburn, AL, United States

- 5 Department of Mathematics and Statistics, Auburn University, Auburn, AL, United States

We explore the use of superconducting quantum phase slip junctions (QPSJs), an electromagnetic dual to Josephson junctions (JJs), in neuromorphic circuits. These small circuits could serve as the building blocks of neuromorphic circuits for machine learning applications because they exhibit desirable properties such as inherently ultra-low energy per operation, high speed, dense integration, negligible loss, and natural spiking responses. In addition, they have a relatively straightforward micro/nano fabrication, which shows promise for implementing the enormous number of lossless interconnections required to realize complex neuromorphic systems. We simulate QPSJ-only as well as hybrid QPSJ + JJ circuits for neuromorphic applications, including artificial synapses and neurons as well as fan-in and fan-out circuits. We also design and simulate learning circuits in which a simplified spike timing dependent plasticity rule is realized to provide potential learning mechanisms. We also take an alternative approach that shows potential to overcome some of the expected challenges of QPSJ-based neuromorphic circuits: QPSJ-based charge islands are coupled together to generate non-linear charge dynamics that result in a large number of programmable weights or non-volatile memory states. Notably, we show that these weights are a function of the timing and frequency of the input spiking signals and can be programmed using a small number of DC voltage bias signals, therefore exhibiting spike-timing and rate dependent plasticity, which are mechanisms to realize learning in neuromorphic circuits.

1. Introduction

Neuromorphic computing has been a rich area of study over the past several decades, bringing together the fields of electronics, biology, materials, and computer science, among others (Mead, 1990). The von Neumann (or Princeton) architecture (Burks et al., 1982) and the closely related Harvard architecture have been the basis of most computational systems since their conception. These architectures employ a central processing unit that works alongside a dedicated memory, which stores data and instructions together in the von Neumann architecture and separately in the Harvard architecture. To process information, the processor and memory must communicate with each other, which requires moving data and instructions between them; the resulting information flow bottleneck is one limit on the speed of computation. Neuromorphic hardware attempts to mimic a biological brain, specifically a human brain, and distributes both processing and memory throughout the system, with the goal of reducing the latency inherent in von Neumann-like systems. Although our current understanding of the brain is far from complete, efforts to mimic nature are expected to lead to more efficient computational architectures and a deeper understanding of the human brain. It has been claimed that efficient emulation of scalable biological neural networks could allow for computation that avoids the information bottleneck associated with von Neumann-like architectures and provides a low-power platform better suited to neural networks and parallel processing (Monroe, 2014). 
Different approaches have been taken to realize physical electronic hardware for neuromorphic circuits that imitate some of the useful functions of the brain, primarily semiconductor-based electronics such as complementary metal oxide semiconductor (CMOS) approaches (Mead, 1990; Seo et al., 2011; Merolla et al., 2014), memristive devices (Jo et al., 2010; Sung et al., 2018), and organic electronics (van de Burgt et al., 2017; Pecqueur et al., 2018). While the performance and scale of some of these systems are impressive, reaching the extremely low power consumption (or energy per operation) and the massive interconnectivity of the human brain remain major challenges. Compared with semiconducting devices, superconductive devices dissipate drastically less power, nearly zero, and remain competitive even when the necessary cooling to cryogenic temperatures is taken into account (Holmes et al., 2013). Superconductive circuit elements, such as Josephson junctions (JJs), magnetic JJs (MJJs), superconducting nanowire single photon detectors (SNSPDs), and quantum phase slip junctions (QPSJs), have been shown to compete with the ultralow power consumption of the brain (Crotty et al., 2010; Schneider et al., 2018a,b). Superconductive electronics (SCE), with lossless superconducting interconnects, can also provide the massive interconnectivity needed to realize complex neuromorphic systems. Furthermore, the non-linear switching dynamics of superconductive devices allow realization of spiking behavior with non-volatile memory in the form of spike timing dependent plasticity (STDP), a biologically plausible learning mechanism. With these benefits in mind, we are exploring circuits based on superconductive electronics to create a scalable system of neurons and synapses that can be integrated to form learning circuits.

One-dimensional (or quasi-one-dimensional; 1D) superconductivity has been an active subject of research because of the interesting physical effects it produces. In a superconducting 1D nanowire, quantum phase slip (QPS) causes the wire to exhibit an insulating, zero-current state when the applied voltage is below a critical value and resistive behavior when it is above that value (Mooij and Harmans, 2005). Phase slips can appear as a measurable resistive tail at temperatures significantly below the superconducting critical temperature (Giordano, 1988), or as a charging effect analogous to single-electron charging in nano-scale tunnel junctions (Fulton and Dolan, 1987). Quantum phase slip is accompanied by coherent tunneling of fluxons across the superconducting nanowire: the phase difference across the phase slip region changes by 2π, and the superconducting order parameter is reduced to zero within that region (Kerman, 2013). This tunneling of magnetic flux through the superconducting nanowire has been identified as a quantum dual to Josephson tunneling of Cooper pairs across an insulating tunnel barrier (Mooij and Nazarov, 2006). Several experiments over the past few years have demonstrated coherent quantum phase slip behavior in superconducting nanowires (Astafiev et al., 2012; Webster et al., 2013; Constantino et al., 2018). These phase slip events have been suggested for applications such as a quantum current standard (Wang et al., 2019), single charge (Hongisto and Zorin, 2012) and single flux transistors (Kafanov and Chtchelkatchev, 2013), superconducting qubits (Mooij and Harmans, 2005), and digital computing (Goteti and Hamilton, 2018; Hamilton and Goteti, 2018). Beyond these applications, the stochastic occurrence of coherent quantum phase slips in nanowires may be particularly useful for neuromorphic computing. 
Recently, promising digit recognition results have been reported at the algorithm level using models of QPSJ-based superconductive circuitry, further illustrating the growing interest in this area (Zhang et al., 2021).

Quantum phase slip junctions (QPSJs) are promising superconductive electronic devices for applications in high-speed and low-power neuromorphic computing (Cheng et al., 2018, 2019, 2021). Coherent quantum phase slip can be leveraged through overdamped QPSJs to create individual quantized current pulses, which are analogs of neuron spiking events. Compared with Josephson junction based neuromorphic hardware, simulated QPSJ neuromorphic circuits have been shown to consume less power and require smaller chip area while maintaining a similar operation speed (Cheng et al., 2018). To begin, we briefly review the simulation model and previously reported neuromorphic circuits. We then present results from SPICE simulations of multiple new QPSJ-based neuromorphic circuit elements and demonstrate their utility through a long term depression (LTD) circuit and a long term potentiation (LTP) circuit for use in simplified STDP learning. STDP is a form of asynchronous Hebbian learning that uses temporal correlations between the spikes of presynaptic and postsynaptic neurons and is believed to underlie learning and information storage in biological brains (Bi and Poo, 2001). Previously described hardware capable of STDP learning includes memristor-based approaches (Serrano-Gotarredona et al., 2013) and hybrid superconductive-optoelectronic circuits based on Josephson junctions combined with single photon detectors (Shainline et al., 2019). Although not shown here, neuromorphic circuit elements exhibiting STDP behavior can be systematically connected to construct a large neural network capable of supervised learning, with weights programmed by pulsed “write” signals to each synapse, or of unsupervised learning, with long term potentiation and depression circuits. This paper presents results from our recent explorations of new versions of these circuits based on QPSJs and on QPSJ + JJ combinations. 
We also present an alternative approach to constructing neural networks by coupling QPSJ-based circuit elements together such that the weights of multiple synapses can be collectively programmed using only a few adjustable parameters. While individual weights cannot be deterministically programmed in such networks, we show that the collective network configuration can be programmed and that the network exhibits STDP learning behavior with respect to the input spiking signals. We therefore establish that QPSJ-based neural network elements have the potential to achieve fully supervised and semi-supervised learning, with the possibility of unsupervised learning in hardware, which we expect to lead to more capable neuromorphic and artificial intelligence systems with lower energy per operation.

2. QPSJ-Based Neuromorphic Circuit Elements

In this section, we briefly re-introduce the circuit configuration and principles of operation of a single QPSJ and of previously reported QPSJ-based neuromorphic circuits, including neuron, synapse, and fan-out circuits (Cheng et al., 2018, 2021). The simulations were carried out in WRspice using a QPSJ SPICE model introduced in Goteti and Hamilton (2015), together with Python programs that automate large numbers of simulations. SPICE is an open-source analog electronic circuit simulator (Nagel, 1975) that performs time-dependent nodal analysis of an equivalent circuit to determine the resulting electrical behavior. SPICE is useful for simulating a wide range of dynamic systems (Hewlett and Wilamowski, 2011), including neuromorphic systems.

2.1. QPSJ SPICE Model

The equivalent electronic circuit model of a QPSJ is defined by an intrinsic QPSJ in series with a resistor R and an inductor L (Mooij et al., 2015). The equations that govern QPSJ behavior and are the basis of our SPICE model are:

$$V = V_c \sin(2\pi q), \qquad I = 2e\,\frac{dq}{dt},$$

where V and I are the voltage across and the current through the intrinsic QPSJ, and q is the charge equivalent in the QPSJ normalized to the charge of a Cooper pair (2e). The critical voltage Vc is defined by:

$$V_c = \frac{2\pi E_s}{2e},$$

where Es is the phase-slip energy. The junction exhibits a Coulomb blockade when the applied voltage is below its critical voltage and becomes resistive when the voltage is above its critical voltage (Hriscu and Nazarov, 2011). The critical voltage is a device parameter, analogous to the critical current of a JJ, that can be tuned through the device material, design (i.e., geometrical parameters), and fabrication processes. Under appropriate operating conditions, a single QPSJ can be treated as a series RLC oscillator. When the oscillator is overdamped, a quantized current pulse (spike) can be generated and propagated, which can be used to emulate neuron spiking behavior. A QPSJ-based neuromorphic system generates, processes, and transmits narrow, high-frequency spike-shaped current signals to emulate the dynamics of a biological neural network and to perform computational functions based on its input and output definitions.
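To make the quantized charge transfer concrete, the following is a minimal Python sketch; it is not the WRspice model used in this work. It assumes the standard QPS relations V = Vc sin(2πq) and I = 2e dq/dt for the intrinsic junction, neglects the series inductance L (a strongly overdamped limit), and uses illustrative values Vc = 0.5 mV and R = 10 kΩ that are not taken from a specific circuit in this paper. The helpers `simulate_qpsj` and `square_pulse` are hypothetical names.

```python
import math

E_CHARGE = 1.602176634e-19  # elementary charge, C
TWO_E = 2 * E_CHARGE        # Cooper-pair charge, C


def square_pulse(amplitude, start, width):
    """Return v(t) for a rectangular voltage pulse (volts, seconds)."""
    return lambda t: amplitude if start <= t < start + width else 0.0


def simulate_qpsj(v_in, vc=0.5e-3, r=10e3, t_end=50e-12, dt=10e-15):
    """Forward-Euler integration of an overdamped QPSJ in series with R.

    Assumption: the series inductance is neglected, so Kirchhoff's voltage
    law gives v_in(t) = Vc*sin(2*pi*q) + R*I with I = 2e*dq/dt.
    Returns the final normalized charge q (in units of 2e).
    """
    q = 0.0
    for step in range(int(round(t_end / dt))):
        t = step * dt
        current = (v_in(t) - vc * math.sin(2 * math.pi * q)) / r
        q += current * dt / TWO_E
    return q


if __name__ == "__main__":
    strong = simulate_qpsj(square_pulse(0.8e-3, 1e-12, 5e-12))  # above Vc
    weak = simulate_qpsj(square_pulse(0.4e-3, 1e-12, 5e-12))    # below Vc
    print(f"supercritical pulse: q = {strong:.3f}")
    print(f"subcritical pulse:   q = {weak:.3f}")
```

Under these assumptions, a supercritical pulse that pushes q past 1/2 lets the junction relax to q = 1, so exactly one Cooper pair (2e) is transferred, while a pulse below Vc only displaces q temporarily and it returns to 0 (Coulomb blockade). A much longer supercritical pulse would transfer additional pairs, so pulse width matters as well as amplitude.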

2.2. Integrate-and-Fire Neuron

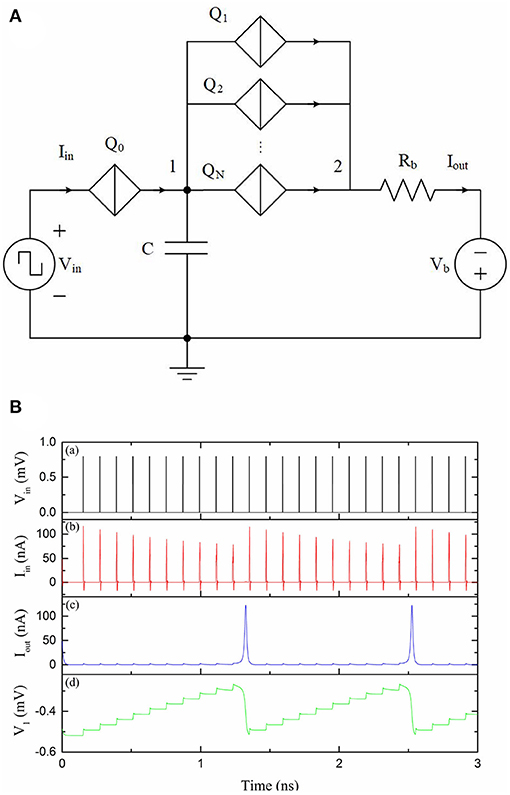

Integrate-and-fire neurons (IFNs) integrate an input signal up to a threshold, after which an output signal (pulse) is generated (fired). A QPSJ-based IFN, as shown in Figure 1A, integrates charge (through QPSJ Q0) from input signals, for example from other neurons or control circuitry, onto a membrane capacitor C and fires a spike once the number of accumulated electron pairs reaches the threshold (Cheng et al., 2018). The threshold is set in hardware by N, the number of parallel QPSJs (Q1 to QN), and by the capacitance of capacitor C, as shown in Figure 1A. The simulation results of this IFN circuit, with 10 parallel QPSJs (i.e., a threshold of 10), are shown in Figure 1B. Each input voltage pulse from Vin switches Q0 and generates a current pulse that carries a charge of 2e, so the voltage at capacitor C increases step by step as quantized charge accumulates. Once the voltage across the parallel QPSJs reaches their critical voltage, the capacitor discharges a charge of 20e: each of the ten parallel QPSJs transmits a 2e charge pulse, and these ten pulses are summed at node 2 into a single current pulse containing 20e. The circuit therefore operates like a digital IFN circuit with a pre-defined threshold of N. In this example, the time constant for discharging capacitor C through the normal resistances of the parallel QPSJs is designed to be longer than the switching time of the QPSJs, which allows all 10 parallel junctions to switch simultaneously. We note that this parallel combination of nominally identical QPSJs is sensitive to device-to-device variation. While identical devices can be used in simulation, real hardware will exhibit a spread of device parameters. According to the simulation results discussed in Cheng et al. (2021), the device-to-device tolerance of these parallel QPSJs is ~1%. This is an important consideration for advancing this technology and will require close attention in future device fabrication and circuit design efforts. The following subsection briefly introduces a multi-weight synaptic circuit that provides a weighted connection between neuron circuits.

Figure 1. A QPSJ-based IFN circuit that has a firing threshold of N (Cheng et al., 2018). Vin was 0.8 mV and Vb was 1 mV. The Vc values used for Q1–QN were 0.7 mV. Rb was 9 kΩ. (A) Circuit schematic. (B) Simulation results of an IFN circuit with a threshold of 10. (a) Input voltage. (b) Input current. (c) Output current. (d) Voltage at node 1.
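The counting behavior of the IFN can be summarized with a purely behavioral Python sketch. It is an idealization, assuming every input pulse delivers exactly 2e, no charge leaks away through Rb between inputs, and the N parallel junctions are identical and switch together; the class name `IntegrateAndFireNeuron` is hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class IntegrateAndFireNeuron:
    """Behavioral sketch of a QPSJ integrate-and-fire neuron (idealized)."""

    threshold: int = 10       # N, the number of parallel QPSJs
    stored_pairs: int = 0     # Cooper pairs currently stored on capacitor C
    spike_times: list = field(default_factory=list)

    def receive(self, t):
        """Apply one input pulse at time t; return output charge in units of e."""
        self.stored_pairs += 1  # each input switching of Q0 adds one pair (2e)
        if self.stored_pairs >= self.threshold:
            # All N junctions switch together and C releases N pairs at once.
            self.stored_pairs = 0
            self.spike_times.append(t)
            return 2 * self.threshold  # e.g. 20e for N = 10
        return 0


neuron = IntegrateAndFireNeuron(threshold=10)
outputs = [neuron.receive(k * 10e-12) for k in range(25)]
print("output charges (units of e):", [c for c in outputs if c])
print("firing times (s):", neuron.spike_times)
print("pairs still stored:", neuron.stored_pairs)
```

With a threshold of 10, the sketch fires on the 10th and 20th inputs and leaves 5 pairs stored, which mirrors the digital-counter view of the circuit but ignores the analog timing and device variation discussed in the text.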

2.3. Multi-Weight Synapse

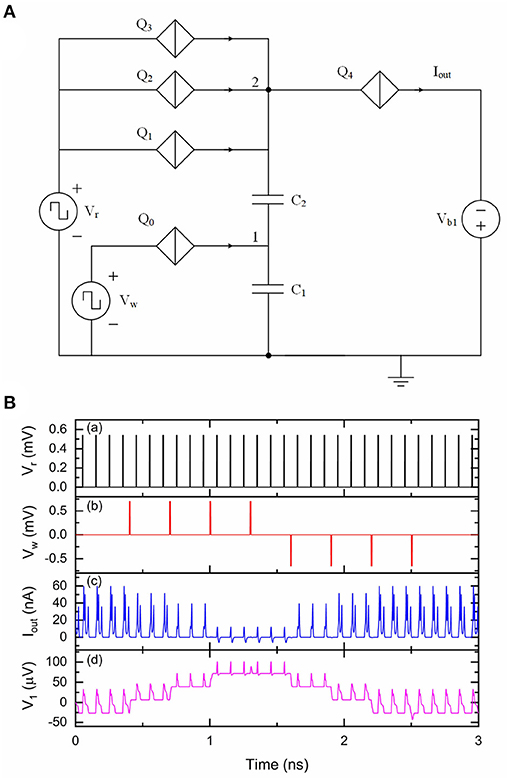

A synapse connects two neurons and transmits weighted spiking signals between them. We previously designed and presented a QPSJ-based multi-weight synaptic circuit that transmits weighted current pulses between neuron circuits; it is briefly reviewed here (Cheng et al., 2021). The multi-weight synaptic circuit shown in Figure 2A can generate different numbers of sequential current pulses, corresponding to a weight of 0, 1, 2, or 3. Here, the weight is defined as the number of electron pairs at the output for each input pulse: N sequential current pulses contain N electron pairs, even though the individual pulse shapes may not look significantly different. For the circuit to function correctly, parallel QPSJs Q1, Q2, and Q3 must have different critical voltages VC1, VC2, and VC3, respectively, with VC1 < VC2 < VC3. Ideally, the critical voltage difference between Q1 and Q2, or between Q2 and Q3, should equal the voltage change at node 1 after an input voltage pulse from Vw, which is ~2e/C1. In simulation, the tolerance of these parallel QPSJs was found to be below ~1% (Cheng et al., 2021). The weight can be increased by applying negative pulses at Vw or decreased by applying positive pulses at Vw. Applying positive pulses at Vr reads the stored synaptic state without destroying it: upon the arrival of one short voltage pulse at Vr, a number of sequential current pulses that depends on the number of electrons stored on capacitor C1 is generated at Iout. The simulation results of this circuit are shown in Figure 2B. The initial weight of the synaptic circuit is set to 3, so a voltage pulse from Vr switches all three parallel QPSJs Q1, Q2, and Q3, resulting in three sequential current pulses at Iout. Applying a positive voltage pulse at Vw adds two electrons onto capacitor C1, and the voltages at node 1 and node 2 increase accordingly. 
In this case, the next voltage pulse from Vr can switch only two of the three parallel QPSJs, which produces two sequential current pulses at Iout; the synaptic weight has thus been decreased by 1. Further positive pulses at Vw decrease the weight in the same way, and once the synaptic weight reaches 0, it does not decrease any further. Similarly, applying a negative voltage pulse at Vw takes two electrons from capacitor C1, and the voltages at node 1 and node 2 decrease accordingly, so the synaptic weight is increased by 1. This can be repeated until the maximum weight is reached. Different weights result in different numbers of sequential current pulses at Iout during each read operation. This weight modulation scheme allows us to design learning circuits that generate appropriate positive and negative pulses, based on specific learning rules, to control the synaptic weight.

Figure 2. A multi-weight QPSJ-based synaptic circuit that has two inputs Vr and Vw and one output Iout. The weight can be modified by applying positive or negative pulses at Vw, and can be read-out by applying positive pulses at Vr. Vr was 0.54 mV and Vw was 0.7 mV. The Vc values used for Q0–Q4 were 0.3, 0.5, 0.52, 0.54, and 0.31 mV, respectively. C1 was 9.2 fF and C2 was 1.2 fF. Vb1 was 0.5 mV. (A) Circuit schematic. (B) Simulation results of the synaptic circuit. (a) Read signal Vr. (b) Write signal Vw. (c) Output current Iout. (d) Voltage at node 1.
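The read/write semantics of the multi-weight synapse can be captured in a short behavioral Python sketch. It assumes ideal, saturating weight updates between 0 and 3 and a non-destructive read, and it follows the sign convention described for Vw (a positive pulse depresses, a negative pulse potentiates). `MultiWeightSynapse`, `write`, and `read` are hypothetical names, not part of any simulation tool.

```python
class MultiWeightSynapse:
    """Behavioral sketch of the QPSJ multi-weight synapse (weights 0..3)."""

    def __init__(self, weight=3, max_weight=3):
        self.max_weight = max_weight
        self.weight = max(0, min(weight, max_weight))

    def write(self, polarity):
        """Pulse at Vw: positive polarity depresses, negative potentiates."""
        if polarity > 0:
            self.weight = max(0, self.weight - 1)   # saturates at 0
        elif polarity < 0:
            self.weight = min(self.max_weight, self.weight + 1)  # saturates at max

    def read(self):
        """Pulse at Vr: number of sequential output pulses (each carrying 2e).

        The read is non-destructive, so the stored weight is unchanged.
        """
        return self.weight


syn = MultiWeightSynapse(weight=3)
history = [syn.read()]
for pulse in (+1, +1, +1, +1, -1):
    syn.write(pulse)
    history.append(syn.read())
print(history)  # [3, 2, 1, 0, 0, 1]
```

The fourth positive write has no effect because the weight is already 0, matching the saturation described for the circuit.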

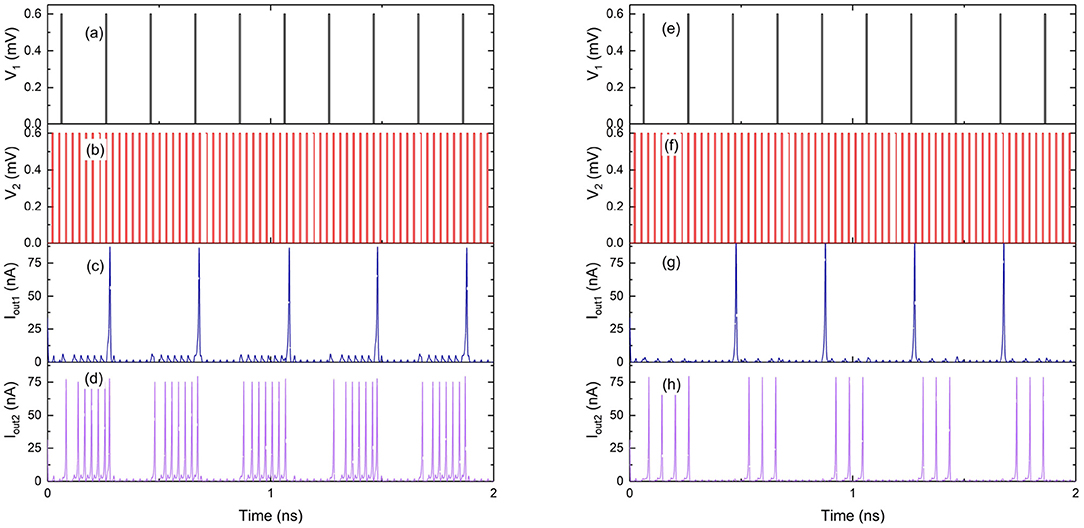

2.4. Fan-Out Circuit

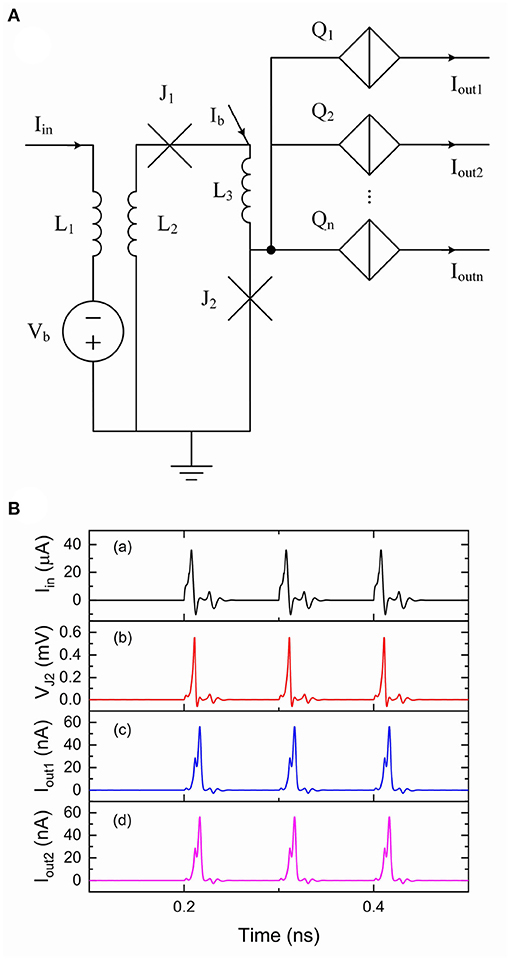

In biological neural systems, neurons are typically connected to thousands of other neurons (von Bartheld et al., 2016). A fan-out circuit allows one neuron to connect to multiple other neurons. We previously designed and presented a fan-out circuit that splits current pulses from an IFN circuit so that they can drive other IFN circuits; it is briefly reviewed here (Cheng et al., 2021). Charge-flux converters (Goteti and Hamilton, 2019) were used to convert quantized current pulses into single flux quantum (SFQ) pulses that can in turn switch multiple QPSJs, as shown in Figure 3A. The current pulse Iin from an IFN circuit flows into inductor L1, is coupled through mutual inductance to inductor L2, and is injected into Josephson junctions J1 and J2. Because J2 is biased near its critical current by bias current Ib, the additional current pulse from L2 can switch J2 and generate an SFQ pulse, which can in turn switch multiple parallel QPSJs. Once J2 has switched and is in the resistive state, J1 can be switched by Ib, and the system recovers to its initial state. This circuit can be designed to provide a large fan-out. As an example, Figure 3B shows simulation results for a fan-out-of-ten circuit. An IFN circuit with a threshold of 500 generates the current pulses flowing into Iin. The current pulses induced through the mutual inductors are injected into J2, producing SFQ pulses across J2. Because all the parallel QPSJs (Q1 to Q10) switch at the same time, the output current pulses at Iout1 and Iout2 in Figure 3Bc,d are identical and synchronized to the input current pulses. These results demonstrate the fan-out function of this circuit. In simulation, the circuit shows no apparent limit on the maximum fan-out, although in practice the fan-out may be limited by fabrication challenges (Cheng et al., 2021).

Figure 3. A fan-out circuit composed of flux-charge and charge-flux circuits (Cheng et al., 2021). The Vc values used for Q1 to Q10 were 0.5 mV. The critical current Ic value used for J1 was 40 μA and the Ic value used for J2 was 50 μA. The inductance values used for L1 and L2 were 0.1 nH with a coupling coefficient of 0.9. The inductance value used for L3 was 0.01 nH. Bias current Ib was 70 μA and bias voltage Vb was 0.5 mV. Input current Iin was from the output of a QPSJ-based IFN circuit that has a threshold of 500. (A) Circuit schematic. (B) Simulation results of the fan-out circuit. (a) Input current. (b) Voltage at J2. (c) Output current 1. (d) Output current 2.

3. QPSJ-Based Learning Circuits

The human brain can be viewed as an energy-efficient learning machine that solves demanding computational tasks while consuming little energy. One feature of the human brain that enables it to adapt to its environment and to solve complex problems is synaptic plasticity (Haykin, 2010). To mimic this synaptic plasticity in neuromorphic computing, synaptic weights must be adjustable through learning processes. While there are multiple learning strategies in neuromorphic computing, in this work we focus on STDP learning to provide potential learning functions for QPSJ-based superconductive neuromorphic systems. Early neuroscience experiments on synaptic plasticity suggested that the relative timing of presynaptic and postsynaptic action potentials, on a timescale of milliseconds, has significant effects on plasticity (Levy and Steward, 1983). This effect, now well known as spike timing dependent plasticity (STDP), was observed in cortical neurons (Cooke and Bliss, 2006). In neuromorphic hardware systems, STDP-type learning rules are widely used as an unsupervised learning method (Linares-Barranco et al., 2011; Lee et al., 2018; Srinivasan et al., 2018). In this paper, we introduce a method of realizing a simplified STDP rule using QPSJ-based circuits, in which the weight change is either +1 or −1 during each learning event. The learning circuit combines a long term depression circuit and a long term potentiation circuit to realize the simplified STDP rule. Each of these circuits is described in more detail in the following sections.

3.1. A Long Term Depression Circuit

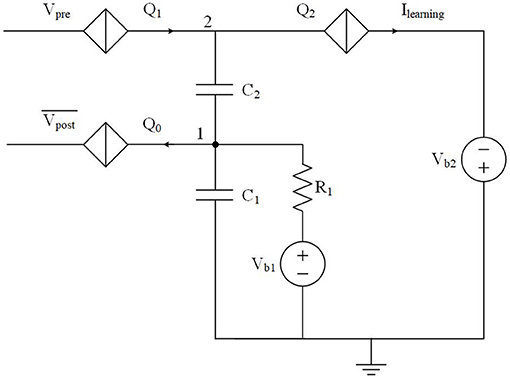

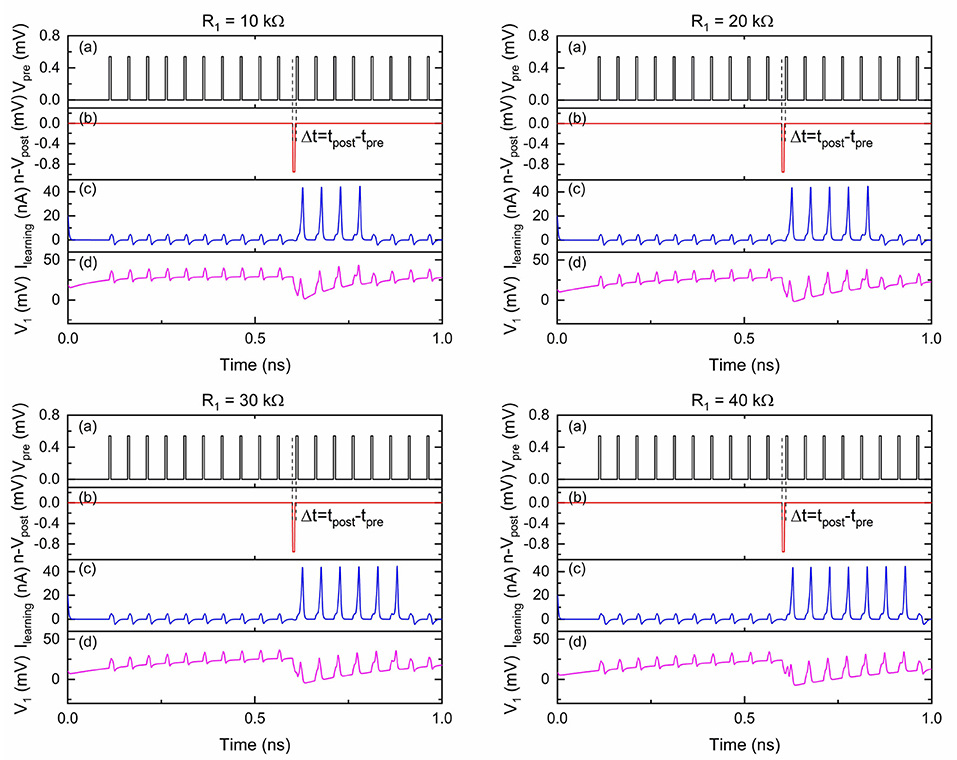

In a biological neural system, long term depression (LTD) occurs when a postsynaptic spike leads a presynaptic spike by ~20–100 ms (Ito and Kano, 1982; Markram et al., 1997). The synaptic weight between these two neurons is thus depressed, as they are considered to be uncorrelated. The LTD circuit shown in Figure 4 generates positive pulses that depress the synaptic weight if the timing difference Δt = tpost − tpre is within a short learning window. Because this circuit operates much faster than its biological counterpart (tens of GHz vs. kHz), LTD is designed to be effective within a correspondingly shorter, ps-scale learning window. In Figure 4, the initial voltage at node 1 is set by bias voltage Vb1 when there are no inputs at . The critical voltage of Q1 is chosen such that Q1 cannot be switched by Vpre if a voltage pulse from Vpre arrives first; in that case, no current pulses are generated at Ilearning. On the other hand, if a negative voltage pulse from arrives first, Q0 is switched and a pair of electrons is taken from capacitor C1. The voltages at node 1 and node 2 drop by 2e/C1, where C1 is the capacitance of capacitor C1. This slight voltage change at node 2 allows a subsequent pulse from Vpre to switch Q1 and in turn Q2, resulting in a positive current pulse at Ilearning. The voltages at nodes 1 and 2 then recover to their initial values, because C1, R1, and Vb1 behave like a series RC circuit with a corresponding voltage decay time. As a result, pulses appear at Ilearning only if signals at Vpre and are close enough in time. The width of the learning window is determined by the resistance value of R1, and the simulation results in Figure 5 illustrate how the learning window changes with R1.

Figure 4. An LTD circuit that generates depression pulses to a synapse. A pulse will be generated at Ilearning when the timing difference Δt = tpost − tpre is within a short learning window.

Figure 5. Simulation results of the circuit shown in Figure 4 with different R1 values. Vpre was 0.54 mV and was 0.95 mV. The critical voltage values used for Q0–Q2 were 0.75, 0.56, and 0.31 mV, respectively. C1 was 9.2 fF and C2 was 1.2 fF. Vb1 was 0.03 mV and Vb2 was 0.5 mV. R1 was 10/20/30/40 kΩ. (a) Input signal Vpre. (b) Input signal . (c) Output signal Ilearning. (d) Voltage at node 1.

In Figure 5, the voltage at node 1 drops upon the arrival of a negative pulse at . Each subsequent pulse from Vpre is then followed by a current pulse at Ilearning until the voltage at node 1 gradually recovers to a stable point. The time window over which the circuit responds in this way is taken as the learning window of this LTD function. In this LTD circuit design, the width of the learning window increases as R1 increases. This is because the node voltage recovers with an RC time constant set by R1 and C1: a larger R1 slows the recovery, so the voltage stays low enough for Vpre to switch Q1 for a longer time.
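A rough way to see why the window grows with R1 is to treat node 1 as a simple RC recovery, as the circuit description suggests. The Python sketch below assumes a voltage deficit ΔV(t) = (2e/C1)·exp(−t/(R1·C1)) after a postsynaptic pulse, ignores loading by C2 and the rest of the circuit, and introduces a hypothetical switching margin δ: a presynaptic pulse can still switch Q1 while ΔV(t) > δ. The window width is then R1·C1·ln[(2e/C1)/δ], which is linear in R1. C1 = 9.2 fF is taken from the Figure 5 parameters; δ = 20 μV and the function name `ltd_window` are illustrative assumptions, so only the scaling with R1, not the absolute window, is meaningful.

```python
import math

E_CHARGE = 1.602176634e-19  # elementary charge, C


def ltd_window(r1, c1=9.2e-15, margin=20e-6):
    """Estimated LTD learning-window width (s) for series resistance r1 (ohm).

    Assumptions (not from the circuit netlist):
    - the negative postsynaptic pulse removes one Cooper pair from C1,
      so node 1 drops by dv0 = 2e/C1;
    - node 1 recovers as dv0 * exp(-t / (R1 * C1));
    - Q1 can still be switched by Vpre while the remaining drop exceeds
      `margin`, a hypothetical illustrative value.
    """
    dv0 = 2 * E_CHARGE / c1
    if dv0 <= margin:
        return 0.0  # the drop is never large enough to open a window
    tau = r1 * c1
    return tau * math.log(dv0 / margin)


for r1 in (10e3, 20e3, 30e3, 40e3):
    print(f"R1 = {r1 / 1e3:.0f} kOhm -> window ~ {ltd_window(r1) * 1e12:.0f} ps")
```

Doubling R1 doubles the estimated window, which agrees qualitatively with the trend in Figure 5; a quantitative window would require the full circuit simulation.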

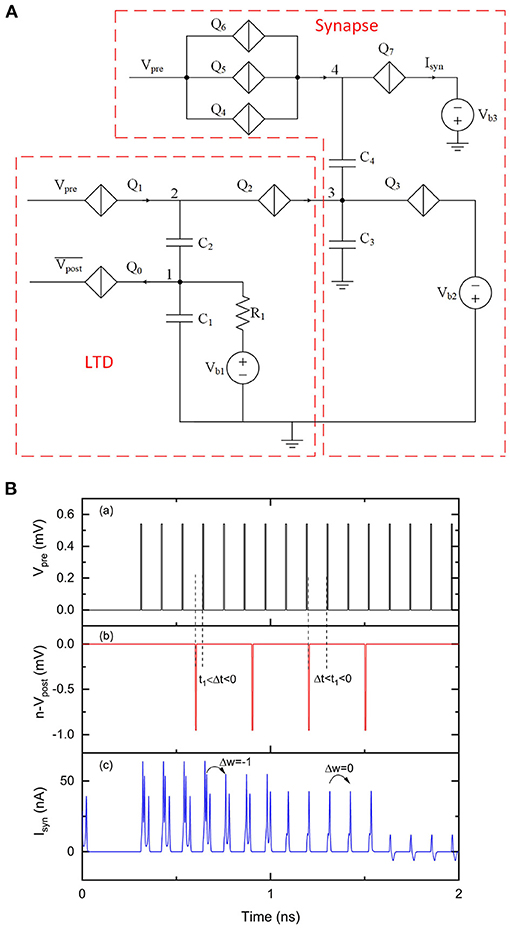

This LTD circuit works seamlessly with a synaptic circuit, as shown in Figure 6A. LTD occurs when the circuit detects t1 < Δt < 0, where t1 ≈ −50 ps defines the maximum LTD learning window. Charge (electrons) is then injected onto capacitor C3, which depresses the synaptic weight. A simulation was performed to show how the synaptic weight changes according to the LTD rule; the results are shown in Figure 6B. The width of the LTD learning window was not a concern in this simulation, as the circuit parameters were chosen to demonstrate the LTD function rather than a specific LTD learning window. The initial weight was set to 3 based on the device parameters used for this simulation, so each presynaptic pulse initially results in three sequential current pulses (containing a charge of six electrons) at Isyn. When the first negative voltage pulse from is presented, the voltage at node 1 drops due to the switching of Q0, which takes two electrons from C1. The voltage at node 2 also drops, which allows the fourth voltage pulse from Vpre to switch Q1 and in turn Q2, injecting two electrons onto C3. As a result, the synaptic weight is depressed by 1. The weight change is not immediate but is observed at the next pulse from Vpre, which results in two sequential current pulses (containing a charge of four electrons) at Isyn. In contrast, the interval between the third pulse from and the tenth pulse from Vpre is relatively large (~100 ps) and does not result in a weight depression, because the voltages at node 1 and node 2 recover to their initial states (set by the bias voltages) before the tenth pulse from Vpre arrives. These simulation results demonstrate that the LTD circuit can realize a weight depression function based on the relative timing of presynaptic and postsynaptic pulses.

Figure 6. An LTD circuit with a multi-weight synaptic circuit. The number of sequential current pulses at Isyn can be reduced when the timings of pulses from Vpre and trigger an LTD learning event. Vpre was 0.54 mV and was 0.95 mV. The critical voltage values used for Q0–Q7 were 0.75, 0.55, 0.3, 2, 0.54, 0.52, 0.5, and 0.34 mV, respectively. C1 and C3 were 9.2 fF, and C2 and C4 were 1.2 fF. Vb1, Vb2 and Vb3 were 0.03, 0.77, and 0.53 mV, respectively. R1 was 10 kΩ. (A) Circuit schematic. (B) Simulation results. (a) Input signal Vpre. (b) Input signal . (c) Output signal Isyn.

3.2. A Long Term Potentiation Circuit

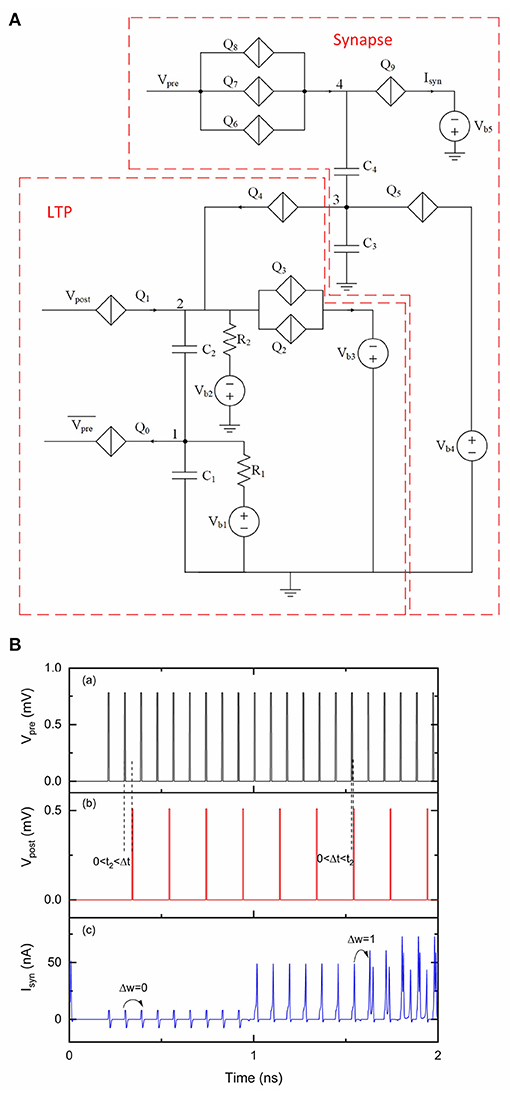

In a biological neural system, long term potentiation (LTP) occurs when a presynaptic spike leads a postsynaptic spike by up to 20 ms (Bliss and Lømo, 1973; Markram et al., 1997). The synaptic weight between these two neurons is thus potentiated, as they are considered correlated. In the synaptic circuit shown in Figure 2, the weight can be potentiated by applying negative pulses at Vw. Here we propose an LTP circuit that generates negative current pulses to potentiate the synaptic weight according to the relative timing of a presynaptic neuron and a postsynaptic neuron. The circuit shown in Figure 7A is an LTP circuit combined with a multi-weight synaptic circuit. Similar to the LTD circuit shown in Figure 4, the LTP circuit has two inputs and Vpost. Q2 and Q3 are identical and biased by voltage Vb3. The initial voltage at node 1 is set by bias voltage Vb1, and the voltage at node 2 (V2) is set by bias voltage Vb2 so that the voltage across Q2 and Q3 is near their critical voltages. When there are no inputs at , Vpost cannot switch Q1. A negative voltage pulse from can switch Q0, taking two electrons from capacitor C1. The resulting voltage drop at node 1 also lowers the voltage at node 2. This slight voltage change at node 2 allows a subsequent voltage pulse from Vpost to switch Q1 and in turn Q2 and Q3, resulting in a current pulse that contains a charge of 4e. Since only two electrons come from Vpost, the voltage drop at node 2 allows Q4 to switch, and C3 supplies the other pair of electrons. This circuit behaves like an “inverter” that converts positive voltage pulses (or current pulses from an upstream neuron) into negative current pulses. By choosing appropriate biasing conditions and an appropriate critical voltage for Q4, we allow Q4 to switch at most three times, which corresponds to a maximum weight change of 3. 
Each time Q4 is switched, a pair of electrons flows from C3 to C2, and the voltage at node 3 drops by 2e/C3, which increases the synaptic weight by 1.

Figure 7. An LTP circuit with a multi-weight synaptic circuit. The number of sequential current pulses at Isyn can be increased when the timings of pulses from Vpre and Vpost trigger an LTP learning event. Vpre was 0.78 mV and was 0.54 mV. Vpost was 0.51 mV. The critical voltage values used for Q0–Q9 were 0.4, 0.5, 1, 1, 0.58, 2, 1.04, 1.02, 1, and 0.28 mV, respectively. C1, C2, C3, and C4 were 9 fF, 1 fF, 9.2 fF and 2 fF, respectively. Vb1, Vb2, Vb3, Vb4 and Vb5 were 0.05, 0.2, 1.1, 1.01, and 0.6 mV, respectively. R1 and R2 were 10 kΩ. (A) Circuit schematic. (B) Simulation results. (a) Input signal Vpre. (b) Input signal Vpost. (c) Output signal Isyn.
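As a quick check of the charge bookkeeping in the LTP circuit, the node-3 voltage step of 2e/C3 per Q4 switching event can be computed directly. This Python sketch assumes an ideal capacitor with no other charge exchange, uses C3 = 9.2 fF from the Figure 7 parameters, and uses the hypothetical helper name `cumulative_drops`.

```python
E_CHARGE = 1.602176634e-19  # elementary charge, C


def cumulative_drops(capacitance, events):
    """Cumulative voltage change (V) after each of `events` Cooper-pair transfers.

    Assumes an ideal, otherwise isolated capacitor, so each transfer of 2e
    changes its voltage by 2e/C.
    """
    step = 2 * E_CHARGE / capacitance
    return [k * step for k in range(1, events + 1)]


# C3 = 9.2 fF (Figure 7); Q4 is allowed to switch at most three times.
for k, dv in enumerate(cumulative_drops(9.2e-15, 3), start=1):
    print(f"after {k} switching event(s): {dv * 1e6:.1f} uV")
```

Each event shifts node 3 by roughly 35 μV, so three events add up to roughly 0.1 mV, which is the scale the biasing of Q4 has to resolve.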

In Figure 7B, we assume LTP is effective when 0 < Δt < t2, where t2 ≈ 34 ps is primarily determined by the resistance of R1 in Figure 7A. The width of the LTP learning window was not a concern in this simulation, as the circuit parameters were chosen to demonstrate the LTP function rather than a specific LTP learning window. The initial weight of the synapse was set to 0, and different periodic pulse trains were applied at Vpre and Vpost to demonstrate LTP learning. For example, the sixteenth pulse from Vpre arrives slightly ahead of the seventh pulse from Vpost, which triggers LTP for the multi-weight synapse. As a result, the weight changes from 1 to 2, and the next pulse from Vpre triggers two sequential current pulses at Isyn. In contrast, the second pulse from Vpre is separated from the first pulse from Vpost by a relatively large interval (~100 ps), which does not trigger a weight change.

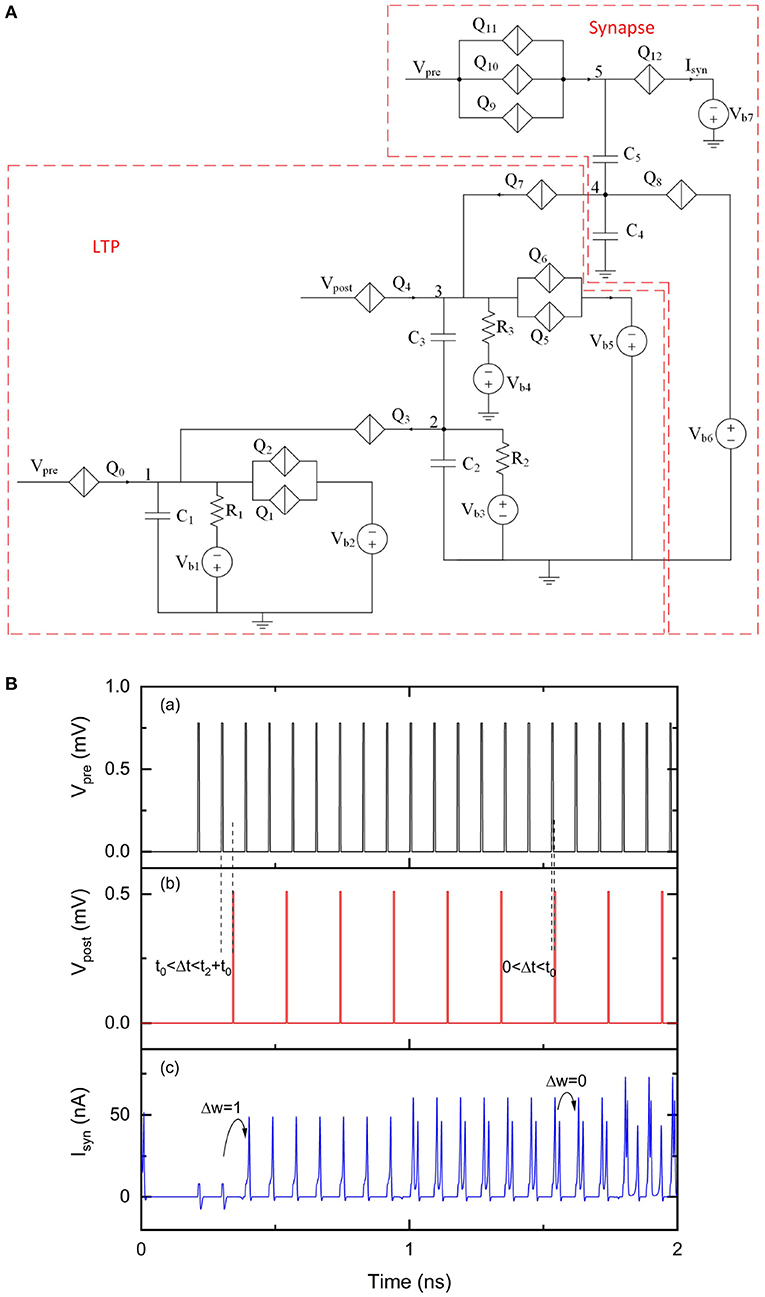

We replaced the negative input voltage pulses from with positive input voltage pulses from Vpre in the circuit shown in Figure 8A. This circuit contains another “inverter” circuit to convert positive voltage pulses from Vpre into negative current pulses. As explained earlier, the “inverter” circuit can take electrons from capacitor C2 to temporarily reduce the voltage at node 2. As in many other technologies, signal transmission and processing in QPSJ-based circuits incur delays, and the extra “inverter” circuit in Figure 8A adds further delay. The learning window shifts by t0 ≈ 10 ps and becomes approximately t0 < Δt < t2 + t0, as shown in Figure 8B. Although the input signals are identical in both simulations, the output Isyn in Figure 8B differs from that in Figure 7B: LTP occurs in Figure 8B where Δt is relatively large (t2 < Δt < t2 + t0) but not where Δt is very small (0 < Δt < t0). Proper choice of resistance values, and potentially a delay circuit (e.g., a QPSJ transmission line circuit) on some of the input signals, can adjust the LTP learning window to desired values.
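The effect of the extra delay can be illustrated by comparing the idealized windows 0 < Δt < t2 and t0 < Δt < t2 + t0. The Python sketch below assumes sharp window edges and uses t2 ≈ 34 ps and t0 ≈ 10 ps from the text; the helper `in_ltp_window` is hypothetical and only classifies Δt values, it does not model the circuit.

```python
T0_PS = 10.0   # extra delay from the added "inverter" stage (Figure 8)
T2_PS = 34.0   # approximate LTP window width (Figure 7)


def in_ltp_window(dt_ps: float, delay_ps: float = 0.0, width_ps: float = T2_PS) -> bool:
    """True if delay_ps < dt_ps < delay_ps + width_ps (idealized, sharp-edged window)."""
    return delay_ps < dt_ps < delay_ps + width_ps


if __name__ == "__main__":
    for dt in (5.0, 20.0, 40.0):
        fig7 = in_ltp_window(dt)                   # idealized 0 < dt < t2
        fig8 = in_ltp_window(dt, delay_ps=T0_PS)   # idealized t0 < dt < t2 + t0
        print(f"dt = {dt:4.1f} ps  Figure 7 window: {fig7}  Figure 8 window: {fig8}")
```

A Δt of 5 ps potentiates only in the undelayed window, 40 ps only in the delayed one, and 20 ps in both, which mirrors the qualitative difference between Figures 7B and 8B.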

Figure 8. A modified LTP circuit with a multi-weight synaptic circuit. The number of sequential current pulses at Isyn can be increased when the timings of pulses from Vpre and Vpost trigger an LTP learning event. Vpre was 0.78 mV and Vpost was 0.51 mV. The critical voltage values used for Q0–Q12 were 0.8, 0.95, 0.95, 0.36, 0.5, 1, 1, 0.58, 2, 1.04, 1.02, 1, and 0.28 mV, respectively. C1, C2, C3, C4, and C5 were 1, 9, 1, 9.2, and 2 fF, respectively. Vb1, Vb2, Vb3, Vb4, Vb5, Vb6, and Vb7 were 0.2, 1.1, 0.05, 0.2, 1.1, 1.01, and 0.6 mV, respectively. R1, R2, and R3 were 10 kΩ. (A) Circuit schematic. (B) Simulation results. (a) Input signal Vpre. (b) Input signal Vpost. (c) Output signal Isyn.

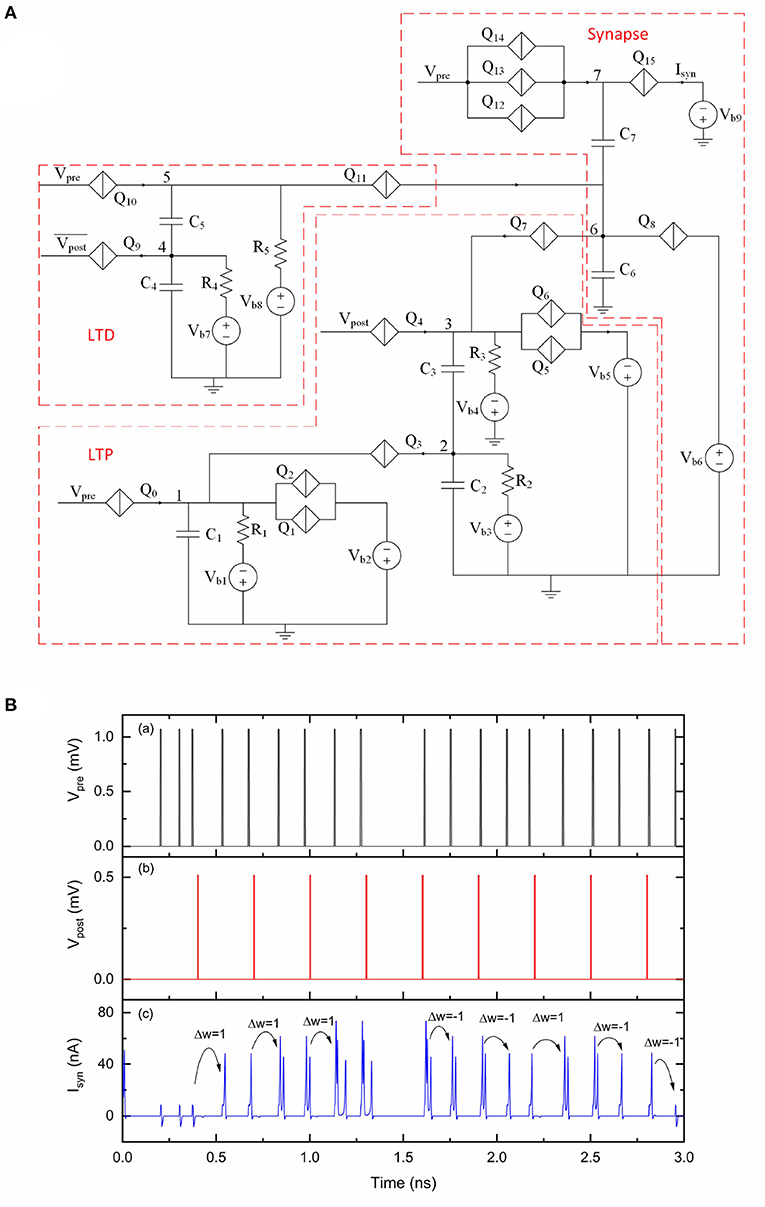

3.3. A Spike Timing Dependent Plasticity Circuit

A simplified STDP rule can be realized by combining the proposed LTD and LTP circuits, as shown in Figure 9A. Charge can be injected onto or taken from capacitor C6, resulting in depression or potentiation of the multi-weight synapse. Unlike the original LTD circuit shown in Figure 4, the LTD portion has an additional bias voltage Vb8 and a resistor R5 to bias Q10 and Q11. The simulation results for this circuit are shown in Figure 9B. We use a customized spike train applied at Vpre and a periodic spike train applied at Vpost and to demonstrate STDP learning functionality. The initial weight of the synapse is set to 0, so at the beginning of the simulation no current pulses appear at Isyn when voltage pulses are applied at Vpre. As the synaptic weight changes according to the relative timing of presynaptic and postsynaptic pulses, the output current pulses at Isyn also change over time. Both presynaptic and postsynaptic voltage pulses are transmitted to the LTD and LTP units. However, with the device parameter values used in this simulation, the LTD unit generates depression pulses to the synaptic circuit only if −10 ps < Δt < −2 ps, and the LTP unit generates potentiation pulses only if 16 ps < Δt < 41 ps. These results demonstrate the simplified learning rule realized by this STDP circuit.
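The simplified rule can be written as a piecewise function of Δt using the two windows reported for Figure 9B. The Python sketch below assumes Δt = t_post − t_pre, sharp window edges, one unit of weight change per pulse pair, and hypothetical clamp bounds [0, 3]; it abstracts the learning rule and is not a model of the LTD and LTP circuits themselves.

```python
LTD_WINDOW_PS = (-10.0, -2.0)   # LTD window in the Figure 9B simulation
LTP_WINDOW_PS = (16.0, 41.0)    # LTP window in the Figure 9B simulation


def stdp_delta(dt_ps: float) -> int:
    """Weight change for one pre/post pair; dt = t_post - t_pre (assumed convention)."""
    lo, hi = LTD_WINDOW_PS
    if lo < dt_ps < hi:
        return -1   # depression pulse from the LTD unit
    lo, hi = LTP_WINDOW_PS
    if lo < dt_ps < hi:
        return 1    # potentiation pulse from the LTP unit
    return 0        # outside both windows: no change


def apply_stdp(weight: int, dts, w_min: int = 0, w_max: int = 3) -> int:
    """Apply stdp_delta for each dt in order, clamping to hypothetical bounds [w_min, w_max]."""
    for dt in dts:
        weight = min(w_max, max(w_min, weight + stdp_delta(dt)))
    return weight


if __name__ == "__main__":
    print(apply_stdp(0, [20.0, 30.0, -5.0, 0.0, 50.0, 25.0]))  # 2
```

Note the gap between −2 ps and 16 ps where neither unit fires; in a biologically shaped STDP curve the two lobes meet near Δt = 0.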

Figure 9. An STDP circuit with a multi-weight synaptic circuit. The number of sequential current pulses at Isyn can be updated according to the timings of pulses from Vpre and Vpost. Vpre was 1.07 mV. Vpost was 0.51 mV and was 0.51 mV. The critical voltage values used for Q0–Q15 were 0.8, 0.95, 0.95, 0.36, 0.5, 1, 1, 0.46, 2, 0.75, 0.55, 0.3, 1.37, 1.35, 1.33, and 0.28 mV, respectively. C1, C2, C3, C4, C5, C6, and C7 were 1, 9, 1, 9.2, 1.2, 9.2, and 2 fF, respectively. Vb1, Vb2, Vb3, Vb4, Vb5, Vb6, Vb7, Vb8 and Vb9 were 0.2, 1.1, 0.05, 0.2, 1.1, 0.89, 0.46, 0.3, and 0.6 mV, respectively. R1, R2, R3, R4, and R5 were 10, 10, 20, 10, and 20 kΩ, respectively. (A) Circuit schematic. (B) Simulation results. (a) Input signal Vpre. (b) Input signal Vpost. (c) Output signal Isyn.

The simplified learning rule presented in this paper provides a simple method to update synaptic weights according to the relative timing of presynaptic and postsynaptic pulses, but it differs from its biological counterpart in several interesting respects. First, the superconducting circuit processes signals with pulse rates on the order of tens of GHz, many orders of magnitude faster than the human brain, which typically operates at tens of Hz. Second, the effective learning window for the circuit in Figure 9A is −10 to −2 ps for LTD and 16 to 41 ps for LTP with the specific parameters used in this simulation. Although this learning window does not have the exact shape of a biologically realistic STDP window, it may still be useful for solving practical problems. The learning window can also be adjusted by slightly modifying the STDP circuit in Figure 9A, in addition to the delay-related adjustments mentioned earlier. For example, adding QPSJs in parallel with Q9 and increasing the resistance of R4 could extend the effective learning window for LTD. We have not yet combined the input and output neuron circuits, synaptic circuit, fan-out circuit and STDP circuit to demonstrate a large network application. While voltage biasing in QPSJ-based circuits has advantages, challenges related to biasing and impedance matching will likely become more critical as circuits grow larger and more complex (Cheng et al., 2021). We believe that these challenges, which also arise in other technologies (e.g., current distribution in large JJ-based circuits), have engineering solutions but require additional work. These solutions may involve trade-offs with circuit operation speed and may affect overall power or energy efficiency. Circuit modifications and new circuit configurations to realize interconnection circuits for synapse feedback loops may also be needed.
These aspects are expected to be the focus of future studies.

4. Neural Networks With Coupled Charge-Island Synapses

In the previous sections, we introduced multi-weight synapse circuits (Figure 2) whose weight can either be programmed using voltage pulses Vw for supervised learning, or be coupled to long term potentiation and depression circuits to form an STDP circuit (Figure 9A) as a route to unsupervised learning. Such synapses can be connected to neurons (Figure 1) to construct neural networks in which individual synapses can be programmed. While this approach is desirable for several applications, it is also possible to construct simpler neural networks with fewer circuit elements by coupling several dissimilar synaptic elements together, analogous to the neural network architectures presented in Goteti et al. (2021) and Goteti and Dynes (2021). This approach takes advantage of the exponential scaling of memory capacity with network size that arises from complex (and possibly random) connectivity between nodes, similar to that of biological neural networks (Hopfield and Herz, 1995). While such coupled synapses cannot be programmed individually, the circuit provides a mechanism to update the weights of all the synapses in the network simultaneously using only a small number of bias voltage terminals. Additionally, we show that this approach allows weights to be programmed within a continuous set of values, and it therefore shows potential to be robust to the variation and noise associated with wider device parameter margins. This approach may thus be useful in certain applications to implement aspects of spike-timing and rate-dependent plasticity, with algorithms implemented by coupling the bias voltages to the output signals. Small randomly connected networks could also be used as multi-weight synapse components in larger, specifically organized networks, though this is not explored in this work.

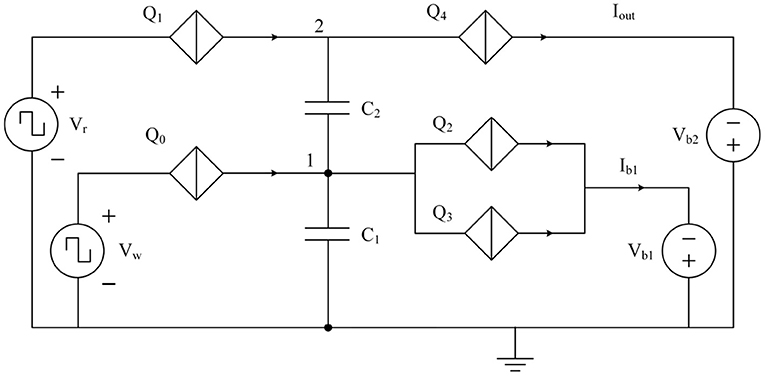

The coupled synapse network approach can be demonstrated using the binary synapse circuits previously introduced in Cheng et al. (2021). An equivalent circuit that can operate as a simplified 2 × 2 synapse network is shown in Figure 10. The circuit can be described as two charge island circuits (Goteti and Hamilton, 2018) coupled together, with the charge on one island affecting the switching dynamics of the other. The weight of the synapse can be switched between 0 and 1 using voltage pulse signals at Vw. When an incoming write pulse Vw exceeds the critical voltage Vc of Q0, the junction switches to a resistive state, allowing a charge of 2e onto capacitor C1. The capacitance C1 is chosen such that it can hold only a charge of 2e; any further charge raises the voltage at node 1 above the critical voltage of junctions Q2 and Q3, and the total charge on C1 discharges through the output current Ib1. When the charge on capacitor C1 is zero, voltage pulse excitations from Vr do not induce transport of charge 2e across junction Q1 (Cheng et al., 2021), resulting in a weight of zero. When the charge on capacitor C1 is 2e, the resulting weight is 1, with one output spike per input pulse, as shown in Figures 11a–d. The weight can be decreased by increasing the capacitances of C1 and C2, as shown in Figures 11e,f. When both capacitances are doubled, the resulting weight becomes 0.5, with one output spike for every two input pulses.
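The weight reported for Figure 11 is the ratio of output spikes to input pulses. A minimal Python sketch of that bookkeeping, assuming an integrate-and-fire style counting abstraction in which each input pulse adds one Cooper pair and the island fires once every N pulses, is shown below. The mapping N = 1 for 3 fF and N = 2 for 6 fF is taken from the simulation results described above, not derived from circuit physics, and the function names are hypothetical.

```python
def count_output_spikes(num_inputs: int, pulses_per_spike: int) -> int:
    """Count output spikes when the island fires once every `pulses_per_spike` input pulses."""
    stored_pairs = 0   # Cooper pairs accumulated on the island since the last output spike
    spikes = 0
    for _ in range(num_inputs):
        stored_pairs += 1                      # each input pulse delivers one pair (2e)
        if stored_pairs >= pulses_per_spike:   # island reaches its switching threshold
            spikes += 1
            stored_pairs = 0                   # charge leaves through the output
    return spikes


def weight(num_inputs: int, pulses_per_spike: int) -> float:
    """Weight = output spikes / input pulses."""
    return count_output_spikes(num_inputs, pulses_per_spike) / num_inputs


if __name__ == "__main__":
    # Mapping taken from Figure 11: 3 fF -> one spike per input; 6 fF -> one per two inputs.
    for label, n in (("C1 = C2 = 3 fF", 1), ("C1 = C2 = 6 fF", 2)):
        print(label, weight(10, n))
```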

Figure 10. Binary synapse circuit reported in Cheng et al. (2021) reconfigured to operate as a 2 × 2 synapse network.

Figure 11. Simulation results of the 2 × 2 synapse network shown in Figure 10. (a–d) Capacitors C1 and C2 are both chosen to be 3 fF. (e–h) Capacitors C1 and C2 are both chosen to be 6 fF. (a,e) Voltage pulse input at Vw of the binary synapse circuit shown in Figure 10. (b,f) Voltage pulse input at Vr. (c,g) Current output at Ib1. (d,h) Current output at Iout.

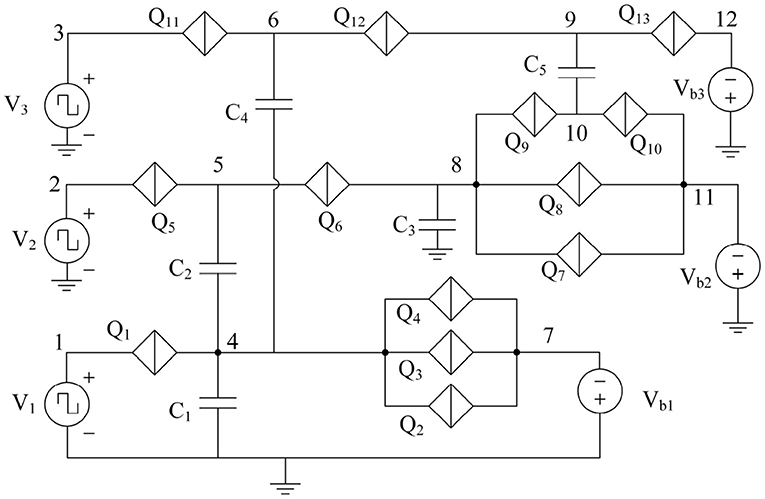

The binary synapse circuit described in Figures 10, 11 shows that the weight depends on the capacitance values as well as on the instantaneous charge on the capacitors. Therefore, a multi-weight synapse network can be constructed by similarly coupling several charge islands with different capacitance values and corresponding QPSJ parameters. An example 3 × 3 network with 5 charge islands capacitively coupled to each other is shown in Figure 12. While the circuit parameter values determine the weights achieved for different input signals, the particular choice of parameters is not crucial to demonstrating the properties of the 3 × 3 network. The input junctions in the network are excited using voltage pulses of constant (i.e., not variable) amplitude. Each of these voltage pulses induces a charge of 2e into the network and therefore represents a quantized charge current spike from an input neuron. The critical voltages of the junctions labeled Q1 to Q13 are chosen randomly within the range 0.2–1.2 mV. The capacitance values used in the simulations are C1 = 6, C2 = 3, C3 = 8, C4 = 2, and C5 = 5 fF. The charge capacity of a charge island is given by $Q_{\max} = C V_c$, where C is the capacitance of the capacitor on the island and Vc is the critical voltage of the smallest QPSJ in the island. Therefore, for the values chosen, each of the islands in the 3 × 3 network can accommodate a maximum charge ≥10. As the charge on one or more of the islands changes by 2e at any instant, the weights of all the synapses in the network are updated simultaneously. The coupled synapse network can be connected directly to the neuron circuits described in Figure 1 at each of its inputs and outputs to construct a fully connected neural network.
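Taking the charge capacity of an island as Q = C·Vc, with C the island capacitance and Vc the critical voltage of its smallest QPSJ, the Python sketch below expresses the capacity in units of e for the Figure 12 capacitances. Because the text does not list the smallest critical voltage on each island, the sketch evaluates only the two bounds of the random 0.2–1.2 mV range; the actual per-island values lie between these bounds, and the helper name `capacity_in_e` is hypothetical.

```python
E_CHARGE = 1.602176634e-19  # elementary charge, C

# Island capacitances from Figure 12, in farads
ISLAND_CAPS_F = {"C1": 6e-15, "C2": 3e-15, "C3": 8e-15, "C4": 2e-15, "C5": 5e-15}


def capacity_in_e(capacitance_f: float, vc_volts: float) -> float:
    """Charge capacity Q = C * Vc, expressed in units of the elementary charge e."""
    return capacitance_f * vc_volts / E_CHARGE


if __name__ == "__main__":
    # Bounds of the random critical-voltage range; the actual smallest Vc per island is not listed.
    for vc_mv in (0.2, 1.2):
        caps = {name: round(capacity_in_e(c, vc_mv * 1e-3), 1) for name, c in ISLAND_CAPS_F.items()}
        print(f"Vc = {vc_mv} mV:", caps)
```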

Figure 12. 3 × 3 synapse network with 5 charge islands capacitively coupled to each other. Capacitance values are given by: C1 = 6 fF, C2 = 3 fF, C3 = 8 fF, C4 = 2 fF, and C5 = 5 fF. Voltage pulse inputs are applied at V1, V2, and V3. Weights are programmed using bias voltages Vb1, Vb2, and Vb3.

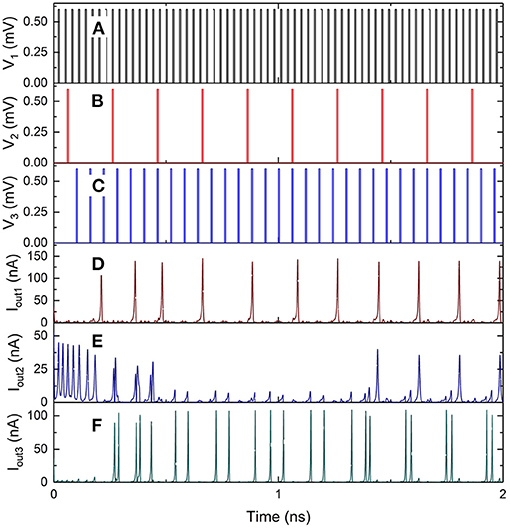

The inputs V1, V2, and V3 to the 3 × 3 network shown in Figure 12 are excited using voltage pulses of constant amplitude of 0.7 mV but with different frequencies. The resulting output spiking signals are shown as a function of time in the simulation results in Figures 13A–F. The bias voltages Vb1, Vb2 and Vb3 are held constant at 0.7, 1.9, and 1.6 mV, respectively. Each voltage pulse at one of the inputs updates the charge on the islands, resulting in dynamically varying output currents. These appear as current pulse trains at the outputs, each pulse carrying a charge of 2e, with a continuously changing interval between consecutive spikes. The weight is calculated as the ratio of the number of output current pulses to the number of input pulses applied. The variation of the interval between consecutive output spikes is therefore evidence that the synaptic weights in the network are updated dynamically.
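The weight definition above (output pulses divided by input pulses) and the inter-spike intervals used as evidence of dynamic updating can both be computed from spike times. The Python sketch below shows that bookkeeping; the spike times are hypothetical values chosen for illustration, not data from Figure 13, and the function names are our own.

```python
from typing import List, Sequence


def synaptic_weight(num_input_pulses: int, output_spike_times: Sequence[float]) -> float:
    """Weight = (number of output current pulses) / (number of input pulses)."""
    if num_input_pulses <= 0:
        raise ValueError("need at least one input pulse")
    return len(output_spike_times) / num_input_pulses


def inter_spike_intervals(spike_times: Sequence[float]) -> List[float]:
    """Intervals between consecutive output spikes (same time unit as the input)."""
    ts = sorted(spike_times)
    return [later - earlier for earlier, later in zip(ts, ts[1:])]


if __name__ == "__main__":
    # Hypothetical spike times in ps, for illustration only (not Figure 13 data)
    out_times = [10.0, 70.0, 160.0, 280.0]
    print(synaptic_weight(8, out_times))      # 0.5
    print(inter_spike_intervals(out_times))   # [60.0, 90.0, 120.0]
```

A growing or shrinking interval sequence, as in this toy example, is what signals that the effective weight is drifting during the run.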

Figure 13. Simulation results of the 3 × 3 coupled synapse network shown in Figure 12. (A) Input voltage pulses of 0.6 mV with time period of 60 ps at V1. (B) Input voltage pulses of 0.6 mV with time period of 200 ps at V2. (C) Input voltage pulses of 0.6 mV with time period of 30 ps at V3. (D) Spiking current output at Iout1. (E) Spiking current output at Iout2. (F) Spiking current output at Iout3.

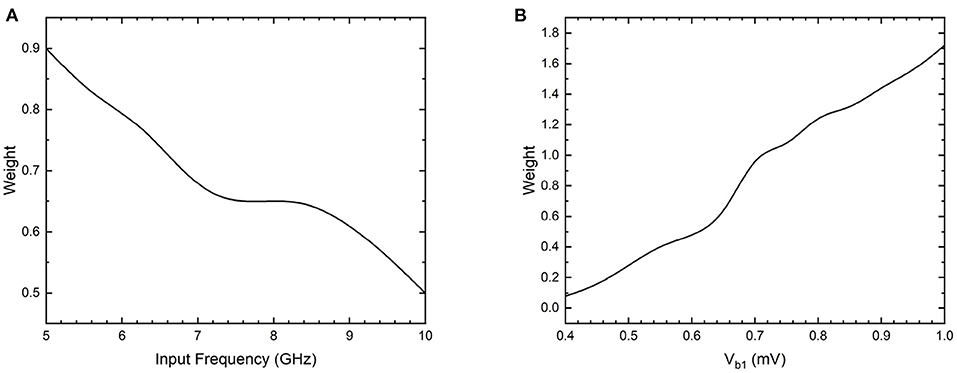

The synapse networks can also be configured to exhibit spike timing dependent plasticity with respect to input spike timing by including resistors across the capacitors of the network, similar to the LTD and LTP circuits in section 3. The resistors discharge the charge on each island with a fixed time constant, thereby enabling STDP behavior with respect to input pulse frequency. When the bias voltage is constant, the synaptic weight between input V1 and output Iout1 depends on the time period between input voltage pulses, as shown in Figure 14A. The weight decreases from 0.9 to 0.5 as the input frequency increases from 5 to 10 GHz. Similarly, the weight can be configured using the bias voltage Vb1 when the input pulse frequency is constant, as shown in Figure 14B; in this case, the weight increases from 0.1 to 1.7 as the bias voltage is increased from 0.4 to 1 mV. The bias voltage and the input frequency therefore have opposing effects on the weight of the synapse between this input-output pair. The bias voltage can either be coupled to the output signal in a feedback loop or induce back-propagating charges with current flowing in the opposite direction. The synapse then exhibits spike timing and rate dependent plasticity with respect to both the input and the output signals.
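To see how the added resistor's time constant compares with the input periods, the Python sketch below computes τ = R·C1 for the 100 kΩ resistor in parallel with C1 = 6 fF (Figures 12 and 14) and the fraction of island charge that would remain after one input period at 5 and 10 GHz. It assumes pure exponential RC decay and ignores discharge through the junctions and coupling to other islands, so it indicates only the relevant time scale, not the weights in Figure 14A.

```python
import math

R_LEAK = 100e3   # ohms: resistor added in parallel with C1 (Figure 14 caption)
C1 = 6e-15       # farads: C1 in Figure 12

TAU_S = R_LEAK * C1   # RC time constant, about 600 ps


def retained_fraction(period_s: float, tau_s: float = TAU_S) -> float:
    """Fraction of island charge remaining after one input period, assuming pure RC decay."""
    return math.exp(-period_s / tau_s)


if __name__ == "__main__":
    print(f"tau = {TAU_S * 1e12:.0f} ps")
    for f_ghz in (5, 10):   # input frequency range of Figure 14A
        period = 1.0 / (f_ghz * 1e9)
        print(f"{f_ghz} GHz: period {period * 1e12:.0f} ps, retained {retained_fraction(period):.3f}")
```

Because τ (about 600 ps) is several times longer than the 100–200 ps input periods, the island retains most of its charge between pulses under this simplification, so the leak acts as a frequency-dependent rather than a pulse-by-pulse reset.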

Figure 14. Simulation results of the weight between input V1 and output Iout1 in the 3 × 3 coupled synapse network shown in Figure 12, with an additional 100 kΩ resistor in parallel with C1. (A) Weight as a function of the input frequency of V1 with constant bias voltages. (B) Weight as a function of bias voltage Vb1 with input voltage pulse excitations of constant amplitude and frequency.

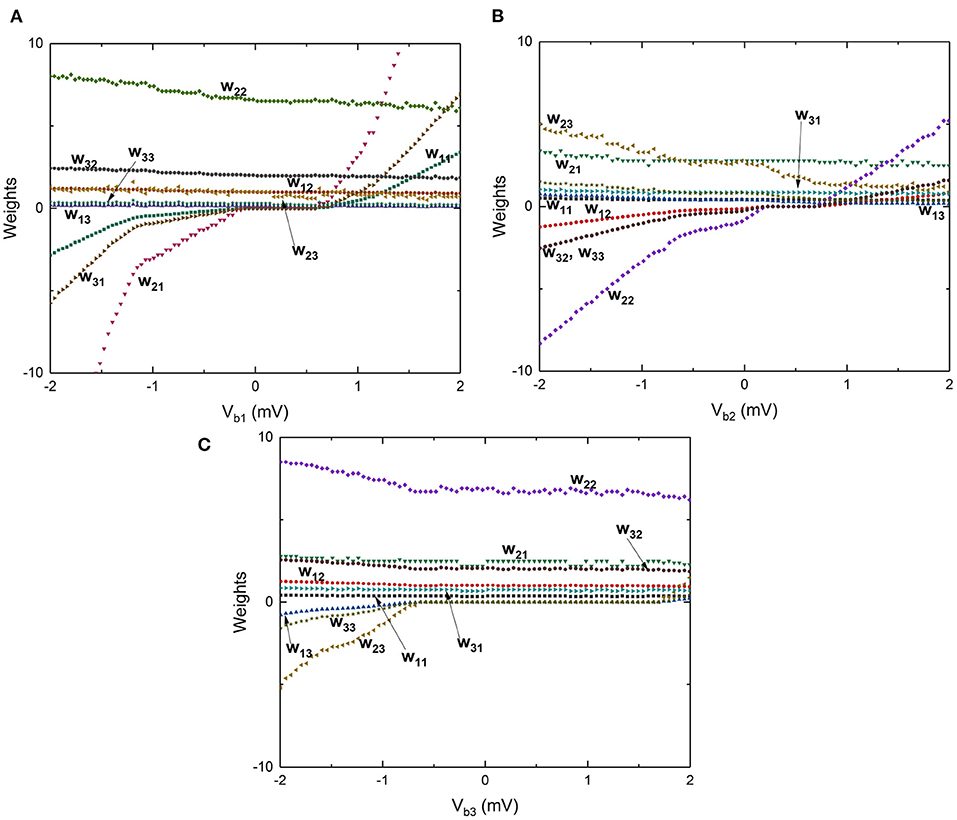

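The 3 × 3 network shown in Figure 12 can exhibit similar STDP learning behavior in the weights of all 9 input-output connections, represented by the weight matrix

$$W = \begin{pmatrix} w_{11} & w_{12} & w_{13} \\ w_{21} & w_{22} & w_{23} \\ w_{31} & w_{32} & w_{33} \end{pmatrix}$$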

These weights are affected by each of the 3 input voltage pulse excitations and can also be programmed using the three bias voltage signals. To demonstrate this behavior, the circuit is simulated while independently varying each bias voltage between −2 and 2 mV, and the weights of all the synapses in the 3 × 3 network, given by the weight matrix, are plotted as a function of Vb1, Vb2, and Vb3 in Figures 15A–C. Voltage pulses of constant amplitude 0.6 mV are applied with time periods of 30, 200, and 60 ps to inputs V1, V2, and V3, respectively. The results in Figure 15 show that a large number of continuously varying weights can be realized using only a few junctions by capacitively coupling different charge islands, and that these weights can be controlled using bias voltages. Because the input pulse periods are held constant, STDP behavior is not explicitly observed. However, all the weights in the weight matrix are expected to be updated dynamically as a function of the input frequency at each input, similar to the results shown in Figure 14A. The weights also exhibit different dynamical behaviors, as shown in Figure 15. Weights w11, w21, and w31 increase with bias voltage Vb1, with a plateau between −0.25 and 0.5 mV where the weights converge, corresponding to a stable charge configuration on the islands. Similar behavior is observed in weights w12, w22, w32, and w33 with respect to bias voltage Vb2, while the weights between other input-output nodes vary continuously with the bias voltages. These stable convergent regions are specific to the junction critical voltages and capacitance values chosen for the 3 × 3 network shown in Figure 12.
Nevertheless, these results indicate that coupled synapse networks can be designed to exhibit stable configurations that can be programmed using the bias voltages, as desired by circuit designers and neural network programmers for specific neural network applications, thereby setting the stage for integrating coupled charge-island synapses into more complex neuromorphic circuits.
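Locating the convergent regions in a bias sweep, such as the plateau in w11, w21, and w31 versus Vb1, amounts to finding runs of samples where the weight stays within a small band. The Python sketch below is one simple way to do this; the tolerance, minimum run length, and the synthetic curve in the usage example are hypothetical choices, not values taken from Figure 15.

```python
from typing import List, Sequence, Tuple


def find_plateaus(bias_mv: Sequence[float], weights: Sequence[float],
                  tol: float = 0.01, min_points: int = 3) -> List[Tuple[float, float]]:
    """Return (start, end) bias ranges where the weight stays within `tol` of the run's first sample."""
    plateaus = []
    start = 0
    for i in range(1, len(weights) + 1):
        # Close the current run at the end of the data or when the weight leaves the band.
        if i == len(weights) or abs(weights[i] - weights[start]) > tol:
            if i - start >= min_points:
                plateaus.append((bias_mv[start], bias_mv[i - 1]))
            start = i
    return plateaus


if __name__ == "__main__":
    # Synthetic curve for illustration only; not taken from Figure 15
    bias = [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7]
    w = [0.1, 0.3, 0.5, 0.5, 0.5, 0.5, 0.7, 0.9]
    print(find_plateaus(bias, w))   # [(0.2, 0.5)]
```

Comparing each sample with the first sample of the run, rather than with its neighbor, prevents a slow drift from being mistaken for a plateau.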

Figure 15. Large scale simulations performed by varying the bias voltages between −2 and 2 mV and measuring the weights in the matrix associated with the 3 × 3 network shown in Figure 12. (A) Weights vs. bias voltage Vb1 with Vb2 and Vb3 held constant. (B) Weights vs. bias voltage Vb2 with Vb1 and Vb3 held constant. (C) Weights vs. bias voltage Vb3 with Vb1 and Vb2 held constant.

5. Discussion

In this section, we briefly discuss QPSJ operating temperatures, power or energy dissipation, and some of the expected design and experimental challenges related to QPSJ technology. Quantum phase-slip events have been observed at temperatures up to hundreds of mK (Aref et al., 2012), and recent experiments suggested coherent quantum phase slips in NbN nanowires at temperatures up to 1.92 K (Constantino et al., 2018). We expect that further work in this area will lead to materials, device structures and fabrication processes that allow coherent QPS to be realized at temperatures closer to 4 K. In our simulations, the QPSJ model was temperature independent; once these dependencies are known, they can be included in more advanced QPSJ circuit models. As discussed in our previous papers (Cheng et al., 2018, 2021), QPSJ-based circuits, if fabricated properly, should have negligible static energy dissipation, because the QPSJs are assumed to be in Coulomb blockade when the voltage across them is less than their critical voltage. The primary energy dissipation during normal operation is assumed to come from the switching energy of each QPSJ that undergoes a switching event. Apart from the QPSJs, nominally only the resistors dissipate a small amount of energy, since the other circuit elements such as inductors and capacitors are assumed to be nearly ideal superconducting circuit elements. Because the currents in these circuits are generally exceedingly small, the dissipation in the resistors is also small. For example, we performed simulations to determine the energy dissipation in the circuit shown in Figure 4. The results show that the energies dissipated at Vpre, , Vb1 and Vb2 are 79.8, 134, 9.45, and 159 yJ, respectively, during each learning event.
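The per-event figures above can be totaled and compared with a rough switching-energy scale. The Python sketch below sums the four reported values (the second source's label is missing in the text, so it is left unnamed) and evaluates 2e·Vc for Vc = 1 mV. Treating 2e·Vc as the QPSJ switching energy is our assumption, by analogy with the Ic·Φ0 estimate commonly used for Josephson junctions, and is intended only as an order-of-magnitude reference.

```python
E_CHARGE = 1.602176634e-19  # elementary charge, C
YOCTOJOULE = 1e-24          # J

# Per-learning-event dissipation reported for the Figure 4 circuit, in yJ.
# The second source's label is missing from the text, so it is left unnamed.
REPORTED_YJ = {"Vpre": 79.8, "(unlabeled input)": 134.0, "Vb1": 9.45, "Vb2": 159.0}


def switching_energy_yj(vc_volts: float) -> float:
    """Rough QPSJ switching-energy scale E ~ 2e * Vc (assumption), returned in yJ."""
    return 2 * E_CHARGE * vc_volts / YOCTOJOULE


if __name__ == "__main__":
    total = sum(REPORTED_YJ.values())
    print(f"total per learning event: {total:.2f} yJ ({total * YOCTOJOULE:.2e} J)")
    print(f"2e x 1 mV scale: {switching_energy_yj(1e-3):.0f} yJ")
```

The total, about 382 yJ (roughly 4 × 10⁻²² J) per learning event, is of the same order as a single 2e·Vc switching event at 1 mV under this assumption.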

In addition to the biasing and impedance matching challenges discussed in a previous section, device tolerance is also a concern for practical applications. For example, we performed a small number of simulations to determine the tolerance of each QPSJ in the previously presented multi-weight synaptic circuit (Cheng et al., 2021). The results indicated that the tolerance for identical parallel QPSJs is generally low (< 1%), while the tolerance for the other QPSJs in the circuit usually ranges from a few percent to tens of percent. Device-to-device variation could therefore affect the overall performance of the proposed circuit configurations, as it does in many electronic circuits. Once fabrication technology advances to the point where relatively uniform, repeatable QPSJ devices can be realized, it will be important to optimize circuit designs with these tolerances taken into account.
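To illustrate why a < 1% tolerance on identical parallel QPSJs is demanding, the Python sketch below assumes each device's relative critical-voltage error is an independent Gaussian with standard deviation σ (a hypothetical process model, not measured data). A single device then falls within ±tol with probability erf(tol/(σ√2)), and the mismatch between two independent devices has standard deviation σ√2, so a pair matches within tol with probability erf(tol/(2σ)).

```python
import math


def p_within(tol: float, sigma: float) -> float:
    """P(|dVc/Vc| < tol) for one device whose relative error is N(0, sigma^2)."""
    return math.erf(tol / (sigma * math.sqrt(2.0)))


def p_pair_matched(tol: float, sigma: float) -> float:
    """P(|relative mismatch| < tol) for two independent devices; the difference has std sigma*sqrt(2)."""
    return math.erf(tol / (2.0 * sigma))


if __name__ == "__main__":
    # Hypothetical process spreads; 1% and 10% tolerances echo the ranges quoted above.
    for sigma in (0.005, 0.01, 0.05):
        print(f"sigma = {sigma:.1%}: matched pair within 1%: {p_pair_matched(0.01, sigma):.3f}, "
              f"single device within 10%: {p_within(0.10, sigma):.3f}")
```

Under this model, even a 1% process spread leaves a substantial fraction of parallel pairs outside a 1% matching window, while a 10% single-device tolerance is met almost always.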

6. Conclusion

We have reviewed QPSJ-based superconducting neuromorphic circuits such as neurons and synapses, and we have introduced new designs that enable STDP learning behavior. These circuits operate with spiking inputs and produce equivalent spiking outputs, with each spike or current pulse comprising a quantized charge of 2e. Circuits for various neuromorphic network elements, such as integrate-and-fire neurons, multi-weight synapses and fan-out mechanisms, are discussed and demonstrated using SPICE circuit simulations. The simulation results indicate that artificial neural networks capable of learning through spike timing dependent plasticity can be constructed using QPSJs. STDP can be achieved in individual synapses through the LTD and LTP circuits presented, which allow deterministic control of the weights and update them dynamically in response to input excitations. Alternatively, similar spike timing dependent plasticity can be observed in QPSJ-based coupled synapse networks, as demonstrated with the 3 × 3 synapse network discussed in section 4. While these networks do not allow deterministic control of all the network parameters, neural networks constructed with this approach may be useful for achieving spike-timing and rate dependent plasticity. In summary, QPSJs present a promising hardware platform for power-efficient, high-speed spiking neural networks capable of both supervised and unsupervised learning.

Data Availability Statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author Contributions

RC created circuit designs and performed simulations for the circuits presented in sections 2 and 3. UG created circuit designs and performed simulations for the circuits presented in sections 2 and 4. HW, KK, and LO participated in discussions and contributed to manuscript preparation. MH led the project, participated in circuit design, simulation, analysis, led discussions, and manuscript preparation. All authors participated in manuscript preparation and revision processes.

Funding

Funding and computing resources for this work were provided by the Alabama Micro/Nano Science and Technology Center (AMNSTC) at Auburn University.

Conflict of Interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's Note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Acknowledgments

We would like to acknowledge support from the AMNSTC at Auburn University. We also thank Robert Dynes for insights and helpful discussions.

Supplementary Material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fnins.2021.765883/full#supplementary-material

References

Aref, T., Levchenko, A., Vakaryuk, V., and Bezryadin, A. (2012). Quantitative analysis of quantum phase slips in superconducting Mo76Ge24 nanowires revealed by switching-current statistics. Phys. Rev. B 86:024507. doi: 10.1103/PhysRevB.86.024507

Astafiev, O., Ioffe, L., Kafanov, S., Pashkin, Y. A., Arutyunov, K. Y., Shahar, D., et al. (2012). Coherent quantum phase slip. Nature 484, 355–358. doi: 10.1038/nature10930

Bi, G.-Q., and Poo, M.-M. (2001). Synaptic modification by correlated activity: Hebb's postulate revisited. Annu. Rev. Neurosci. 24, 139–166. doi: 10.1146/annurev.neuro.24.1.139

Bliss, T. V., and Lømo, T. (1973). Long-lasting potentiation of synaptic transmission in the dentate area of the anaesthetized rabbit following stimulation of the perforant path. J. Physiol. 232, 331–356. doi: 10.1113/jphysiol.1973.sp010273

Burks, A. W., Goldstine, H. H., and Von Neumann, J. (1982). “Preliminary discussion of the logical design of an electronic computing instrument,” in The Origins of Digital Computers (Berlin; Heidelberg: Springer), 399–413.

Cheng, R., Goteti, U., and Hamilton, M. C. (2021). High-speed and low-power superconducting neuromorphic circuits based on quantum phase-slip junctions. IEEE Trans. Appl. Superconduct. 31, 1–8. doi: 10.1109/TASC.2021.3091094

Cheng, R., Goteti, U. S., and Hamilton, M. C. (2018). Spiking neuron circuits using superconducting quantum phase-slip junctions. J. Appl. Phys. 124:152126. doi: 10.1063/1.5042421

Cheng, R., Goteti, U. S., and Hamilton, M. C. (2019). Superconducting neuromorphic computing using quantum phase-slip junctions. IEEE Trans. Appl. Superconduct. 29, 1–5. doi: 10.1109/TASC.2019.2892111

Constantino, N. G., Anwar, M. S., Kennedy, O. W., Dang, M., Warburton, P. A., and Fenton, J. C. (2018). Emergence of quantum phase-slip behaviour in superconducting NbN nanowires: DC electrical transport and fabrication technologies. Nanomaterials 8:442. doi: 10.3390/nano8060442

Cooke, S., and Bliss, T. (2006). Plasticity in the human central nervous system. Brain 129, 1659–1673. doi: 10.1093/brain/awl082

Crotty, P., Schult, D., and Segall, K. (2010). Josephson junction simulation of neurons. Phys. Rev. E 82:011914. doi: 10.1103/PhysRevE.82.011914

Fulton, T. A., and Dolan, G. J. (1987). Observation of single-electron charging effects in small tunnel junctions. Phys. Rev. Lett. 59, 109. doi: 10.1103/PhysRevLett.59.109

Giordano, N. (1988). Evidence for macroscopic quantum tunneling in one-dimensional superconductors. Phys. Rev. Lett. 61, 2137. doi: 10.1103/PhysRevLett.61.2137

Goteti, U. S., and Dynes, R. C. (2021). Superconducting neural networks with disordered Josephson junction array synaptic networks and leaky integrate-and-fire loop neurons. J. Appl. Phys. 129, 073901. doi: 10.1063/5.0027997

Goteti, U. S., and Hamilton, M. C. (2015). SPICE model implementation of quantum phase-slip junctions. Electron. Lett. 51, 979–981. doi: 10.1049/el.2015.0904

Goteti, U. S., and Hamilton, M. C. (2018). Charge-based superconducting digital logic family using quantum phase-slip junctions. IEEE Trans. Appl. Superconduct. 28, 1–4. doi: 10.1109/TASC.2018.2803123

Goteti, U. S., and Hamilton, M. C. (2019). Complementary quantum logic family using Josephson junctions and quantum phase-slip junctions. IEEE Trans. Appl. Superconduct. 29, 1–6. doi: 10.1109/TASC.2019.2904695

Goteti, U. S., Zaluzhnyy, I. A., Ramanathan, S., Dynes, R. C., and Frano, A. (2021). Low-temperature emergent neuromorphic networks with correlated oxide devices. Proc. Natl. Acad. Sci. U.S.A. 118:e2103934118. doi: 10.1073/pnas.2103934118

Hamilton, M. C., and Goteti, U. S. (2018). Superconducting quantum logic and applications of same. U.S. Patent 9,998,122, June 12.

Haykin, S. (2010). Neural Networks and Learning Machines, 3/E. Upper Saddle River, NJ: Pearson Education India.

Hewlett, J. D., and Wilamowski, B. M. (2011). SPICE as a fast and stable tool for simulating a wide range of dynamic systems. Int. J. Eng. Educ. 27, 217.

Holmes, D. S., Ripple, A. L., and Manheimer, M. A. (2013). Energy-efficient superconducting computing—power budgets and requirements. IEEE Trans. Appl. Superconduct. 23:1701610. doi: 10.1109/TASC.2013.2244634

Hongisto, T., and Zorin, A. (2012). Single-charge transistor based on the charge-phase duality of a superconducting nanowire circuit. Phys. Rev. Lett. 108, 097001. doi: 10.1103/PhysRevLett.108.097001

Hopfield, J. J., and Herz, A. V. (1995). Rapid local synchronization of action potentials: Toward computation with coupled integrate-and-fire neurons. Proc. Natl. Acad. Sci. U.S.A. 92, 6655–6662. doi: 10.1073/pnas.92.15.6655

Hriscu, A., and Nazarov, Y. V. (2011). Coulomb blockade due to quantum phase slips illustrated with devices. Phys. Rev. B 83:174511. doi: 10.1103/PhysRevB.83.174511

Ito, M., and Kano, M. (1982). Long-lasting depression of parallel fiber-Purkinje cell transmission induced by conjunctive stimulation of parallel fibers and climbing fibers in the cerebellar cortex. Neurosci. Lett. 33, 253–258. doi: 10.1016/0304-3940(82)90380-9

Jo, S. H., Chang, T., Ebong, I., Bhadviya, B. B., Mazumder, P., and Lu, W. (2010). Nanoscale memristor device as synapse in neuromorphic systems. Nano Lett. 10, 1297–1301. doi: 10.1021/nl904092h

Kafanov, S., and Chtchelkatchev, N. (2013). Single flux transistor: The controllable interplay of coherent quantum phase slip and flux quantization. J. Appl. Phys. 114:073907. doi: 10.1063/1.4818706

Kerman, A. J. (2013). Flux-charge duality and topological quantum phase fluctuations in quasi-one-dimensional superconductors. New J. Phys. 15:105017. doi: 10.1088/1367-2630/15/10/105017

Lee, C., Panda, P., Srinivasan, G., and Roy, K. (2018). Training deep spiking convolutional neural networks with STDP-based unsupervised pre-training followed by supervised fine-tuning. Front. Neurosci. 12:435. doi: 10.3389/fnins.2018.00435

Levy, W., and Steward, O. (1983). Temporal contiguity requirements for long-term associative potentiation/depression in the hippocampus. Neuroscience 8, 791–797. doi: 10.1016/0306-4522(83)90010-6

Linares-Barranco, B., Serrano-Gotarredona, T., Camuñas-Mesa, L. A., Perez-Carrasco, J. A., Zamarreno-Ramos, C., and Masquelier, T. (2011). On spike-timing-dependent-plasticity, memristive devices, and building a self-learning visual cortex. Front. Neurosci. 5:26. doi: 10.3389/fnins.2011.00026

Markram, H., Lübke, J., Frotscher, M., and Sakmann, B. (1997). Regulation of synaptic efficacy by coincidence of postsynaptic APs and EPSPs. Science 275, 213–215. doi: 10.1126/science.275.5297.213

Merolla, P. A., Arthur, J. V., Alvarez-Icaza, R., Cassidy, A. S., Sawada, J., Akopyan, F., et al. (2014). A million spiking-neuron integrated circuit with a scalable communication network and interface. Science 345, 668–673. doi: 10.1126/science.1254642

Monroe, D. (2014). Neuromorphic computing gets ready for the (really) big time. Commun. ACM 57, 13–15. doi: 10.1145/2601069

Mooij, J., and Harmans, C. (2005). Phase-slip flux qubits. New J. Phys. 7:219. doi: 10.1088/1367-2630/7/1/219

Mooij, J., and Nazarov, Y. V. (2006). Superconducting nanowires as quantum phase-slip junctions. Nat. Phys. 2, 169–172. doi: 10.1038/nphys234

Mooij, J., Schön, G., Shnirman, A., Fuse, T., Harmans, C., Rotzinger, H., et al. (2015). Superconductor-insulator transition in nanowires and nanowire arrays. New J. Phys. 17:033006. doi: 10.1088/1367-2630/17/3/033006

Nagel, L. W. (1975). SPICE2: A Computer Program to Simulate Semiconductor Circuits (Ph. D. dissertation). University of California at Berkeley.

Pecqueur, S., Vuillaume, D., and Alibart, F. (2018). Perspective: organic electronic materials and devices for neuromorphic engineering. J. Appl. Phys. 124:151902. doi: 10.1063/1.5042419

Schneider, M. L., Donnelly, C. A., and Russek, S. E. (2018a). Tutorial: High-speed low-power neuromorphic systems based on magnetic Josephson junctions. J. Appl. Phys. 124:161102. doi: 10.1063/1.5042425

Schneider, M. L., Donnelly, C. A., Russek, S. E., Baek, B., Pufall, M. R., Hopkins, P. F., et al. (2018b). Ultralow power artificial synapses using nanotextured magnetic Josephson junctions. Sci. Adv. 4:e1701329. doi: 10.1126/sciadv.1701329

Seo, J.-S., Brezzo, B., Liu, Y., Parker, B. D., Esser, S. K., Montoye, R. K., et al. (2011). “A 45nm CMOS neuromorphic chip with a scalable architecture for learning in networks of spiking neurons,” in 2011 IEEE Custom Integrated Circuits Conference (CICC) (San Jose, CA: IEEE), 1–4.

Serrano-Gotarredona, T., Masquelier, T., Prodromakis, T., Indiveri, G., and Linares-Barranco, B. (2013). STDP and STDP variations with memristors for spiking neuromorphic learning systems. Front. Neurosci. 7:2. doi: 10.3389/fnins.2013.00002

Shainline, J. M., Buckley, S. M., McCaughan, A. N., Chiles, J. T., Jafari Salim, A., Castellanos-Beltran, M., et al. (2019). Superconducting optoelectronic loop neurons. J. Appl. Phys. 126:044902. doi: 10.1063/1.5096403

Srinivasan, G., Panda, P., and Roy, K. (2018). STDP-based unsupervised feature learning using convolution-over-time in spiking neural networks for energy-efficient neuromorphic computing. ACM J. Emerg. Technol. Comput. Syst. 14, 1–12. doi: 10.1145/3266229

Sung, C., Hwang, H., and Yoo, I. K. (2018). Perspective: a review on memristive hardware for neuromorphic computation. J. Appl. Phys. 124:151903. doi: 10.1063/1.5037835

van de Burgt, Y., Lubberman, E., Fuller, E. J., Keene, S. T., Faria, G. C., Agarwal, S., et al. (2017). A non-volatile organic electrochemical device as a low-voltage artificial synapse for neuromorphic computing. Nat. Mater. 16, 414–418. doi: 10.1038/nmat4856

von Bartheld, C. S., Bahney, J., and Herculano-Houzel, S. (2016). The search for true numbers of neurons and glial cells in the human brain: a review of 150 years of cell counting. J. Compar. Neurol. 524, 3865–3895. doi: 10.1002/cne.24040

Wang, Z. M., Lehtinen, J., and Arutyunov, K. Y. (2019). Towards quantum phase slip based standard of electric current. Appl. Phys. Lett. 114:242601. doi: 10.1063/1.5092271

Webster, C., Fenton, J., Hongisto, T., Giblin, S., Zorin, A., and Warburton, P. (2013). NbSi nanowire quantum phase-slip circuits: DC supercurrent blockade, microwave measurements, and thermal analysis. Phys. Rev. B 87:144510. doi: 10.1103/PhysRevB.87.144510

Keywords: quantum phase slip junction, Josephson junction, neuromorphic computing, spike timing dependent plasticity, unsupervised learning, coupled synapse networks

Citation: Cheng R, Goteti US, Walker H, Krause KM, Oeding L and Hamilton MC (2021) Toward Learning in Neuromorphic Circuits Based on Quantum Phase Slip Junctions. Front. Neurosci. 15:765883. doi: 10.3389/fnins.2021.765883

Received: 27 August 2021; Accepted: 11 October 2021;

Published: 08 November 2021.

Edited by:

Kenneth Segall, Colgate University, United States

Reviewed by:

Ahmedullah Aziz, The University of Tennessee, United States

Abhinav Parihar, Columbia University, United States

Copyright © 2021 Cheng, Goteti, Walker, Krause, Oeding and Hamilton. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Michael C. Hamilton, mchamilton@auburn.edu

†These authors have contributed equally to this work and share first authorship

Ran Cheng†, Uday S. Goteti†, Harrison Walker, Keith M. Krause1,2, Luke Oeding, and Michael C. Hamilton