- 1Institute of Plasma Physics, Hefei Institutes of Physical Science, Chinese Academy of Sciences, Hefei, China

- 2University of Science and Technology of China, Hefei, China

- 3Mechanical Department, School of Energy Systems, Lappeenranta University of Technology (LUT), Lappeenranta, Finland

Introduction: Within the development of brain-computer interface (BCI) systems, it is crucial to consider the impact of brain network dynamics and neural signal transmission mechanisms on electroencephalogram-based motor imagery (MI-EEG) tasks. However, conventional deep learning (DL) methods cannot reflect the topological relationship among electrodes, thereby hindering the effective decoding of brain activity.

Methods: Inspired by the forward-forward (F-F) mechanism of brain neurons, a novel DL framework based on a Graph Neural Network combined with the forward-forward mechanism (F-FGCN) is presented. The F-FGCN framework aims to enhance EEG signal decoding performance by exploiting functional topological relationships and the signal propagation mechanism. The fusion process involves converting the multi-channel EEG into a sequence of signals and constructing a network grounded on the Pearson correlation coefficient, effectively representing the associations between channels. Our model first pre-trains the Graph Convolutional Network (GCN) and fine-tunes the output layer to obtain the feature vector. The F-F model is then used for advanced feature extraction and classification.

Results and discussion: The performance of F-FGCN is assessed on the PhysioNet dataset for four-class classification and compared with various classical and state-of-the-art models. The learned features of the F-FGCN substantially improve the performance of downstream classifiers, achieving the highest accuracy of 96.11% and 82.37% at the subject and group levels, respectively. Experimental results affirm the potency of F-FGCN in enhancing EEG decoding performance, thus paving the way for BCI applications.

1 Introduction

Brain-Computer Interface (BCI) technology facilitates information exchange between the human brain and external devices, enabling information transmission that bypasses the traditional nerve and muscle pathways (Hou et al., 2022b). By circumventing conventional neural pathways and muscle systems, BCI has successfully established itself in diverse domains such as exoskeleton-assisted rehabilitation, fatigue monitoring, and industrial process control (Huang et al., 2023; Liu et al., 2023; Zhang R. et al., 2023). A prominent subset of BCI, benefiting from advances in signal processing and deep learning (DL), is based on Electroencephalography (EEG) (Gao and Mao, 2021; Zhao et al., 2022; Li H. et al., 2023). EEG-based BCI primarily aims to identify and categorize motor imagery (MI) signals, a vital aid for individuals with mobility impairments such as stroke victims. EEG's high accuracy, real-time response and cost-effectiveness distinguish it from other neuroimaging techniques like magnetoencephalography and functional magnetic resonance imaging (Huang et al., 2021; Mirchi et al., 2022; Tong et al., 2023).

Conventional algorithms for MI-EEG classification employ spatial decoding techniques, leveraging multichannel EEG data recorded from the scalp to identify motor intentions (Xu et al., 2021). To classify signals from multi-channel MI-EEG, various methods have been proposed that capture their temporal, spectral and spatial characteristics (Tang et al., 2019; Wang and Cerf, 2022; Hamada et al., 2023; Li Y. et al., 2023). Given the rhythmic and non-linear nature of EEG signals, several feature extraction techniques leveraging wavelet modulation and fuzzy entropy have been proposed. Grosse-Wentrup and Buss (2008) introduced a methodology that incorporates common spatial pattern (CSP) for spatial filtering and dimensionality reduction, supplemented with the filter bank technique to divide the spatially refined signals into multiple frequency sub-bands. On a similar note, Malan and Sharma (2022) developed a filter bank based on the dual-tree complex wavelet transform to separate EEG signals into sub-bands. Once the EEG signals have been segmented into these sub-bands, spatial characteristics are derived from each sub-band through CSP and subsequently refined with a supervised learning framework. A multi-layer twin support vector machine leveraging phase space and wavelet transform is presented by Fei and Chu (2022). Despite their potential, these methodologies overlook the topological relationships among electrodes, necessitating further optimization to improve MI classification accuracy.

Recognizing the growing emphasis in neuroscience on brain network dynamics and the neural signal propagation mechanism, Graph Convolutional Networks (GCNs) have been introduced to decode EEG signals (Wang et al., 2021; Du G. et al., 2022; Gao et al., 2022). The GCN of Kipf and Welling (2016) combines graph theory and DL to capture the relationships between nodes. Meanwhile, the forward-forward (F-F) mechanism, a groundbreaking concept in neurotransmission introduced by Hinton (2022), is garnering attention. This mechanism provides an efficient method to process sequential data in neural networks without the need to store neural activities or pause for error propagation. Our study aims to integrate the F-F mechanism with GCN for EEG-based BCI, proposing a significant advance in motor imagery classification.

In this research, we put forward an innovative F-FGCN framework for MI classification. The salient contributions of our research are as follows:

1. A novel EEG classification model for four-class MI intentions, called F-FGCN, is presented. Driven by brain network dynamics and the neural signal propagation mechanism, it incorporates the brain-inspired F-F mechanism and exploits the functional topological relationships of EEG electrodes.

2. F-FGCN utilizes both pre-training and fine-tuning phases in GCN. By leveraging the pre-training process of the GCN, it effectively recognizes the relationships between multichannel EEG signals from the subjects, thus significantly enhancing the performance and robustness of our approach.

3. F-FGCN, motivated by medical domain knowledge, departs from the typical model in which neurons explicitly propagate error derivatives or store neural activities for a subsequent backward pass. We replace backpropagation with the F-F mechanism, generating a hybrid EEG feature by merging the feature with a mask. Negative data are created with a mask containing large regions of binary ones and zeros, and two consecutive forward passes iterate over the parameters with positive and negative data.

4. Our model is benchmarked against a range of traditional and contemporary models through comprehensive experimental comparisons. Experimental results on the PhysioNet dataset demonstrate that the proposed method achieved the highest accuracy of 96.11% and 82.37% at the subject and group levels, respectively.

This paper is organized in the following manner: Section 1 outlines the BCI development and contributions of our research. Section 2 presents a detailed review of relevant background knowledge and related works. The structure of our model is delineated in Section 3. The results from our model and comparison tests are elaborated in Section 4. The discussion takes place in Section 5. The paper wraps up with concluding remarks and a glimpse into future work in Section 6.

2 Related work

BCI systems pivot around feature extraction and classification for executing MI tasks. Recent studies have largely focused on the feature extraction and classification of EEG signals within the DL framework.

2.1 Feature extraction

In recent times, DL has achieved remarkable performance across various fields due to its ability to extract underlying features from signals, thus mitigating the necessity for manual feature engineering. The Convolutional Neural Network (CNN) has found extensive usage in classifying Euclidean-structured signals, credited to its capability to learn informative features through local receptive fields (Du Y. et al., 2022; Mughal et al., 2022; de Oliveira and Rodrigues, 2023).

Tang et al. (2023a) developed a Multi-Scale Hybrid CNN to isolate temporal and spatial EEG signal attributes. Advanced temporal features were captured through the utilization of one-dimensional convolution, yielding impressive accuracies of 85.25% and 84.86% on BCI competition IV datasets 2b and 2a, respectively. Similarly, Jia et al. (2023) engineered a multi-branch CNN module for learning spectral-temporal domain characteristics. By incorporating a channel attention mechanism, more discriminative features were extracted, leading to 74% average accuracy on four class data. Moreover, Hu et al. (2023) employed band common spatial pattern coupled with duplex mean-shift clustering to extract diverse features across temporal, spectral and spatial domains. By combining these with CNN, features from different domains were consolidated, significantly improving the classification results.

However, conventional CNN encounters challenges in processing non-Euclidean structured data, primarily because discrete convolution cannot preserve translation invariance on non-Euclidean domains. To overcome this issue, GCN can process graph-structured signals and extract features from non-Euclidean data, taking into account the relationship properties between nodes. When coupled with functional topological relationships between electrodes, GCN can enhance the decoding efficiency of EEG tasks.

The concept of spatial GCN was initially introduced by studies (Song et al., 2020; Yang et al., 2020; Bui et al., 2022). In a noteworthy development, Liang et al. (2022) designed a GCN model for channel classification, treating all EEG channels as graph nodes, thus transforming channel selection into a graph node classification problem. In a different approach, Hou et al. (2022a) formulated a model that leveraged bidirectional LSTM with attention mechanism. By coordinating the GCN with the topological structure of features extracted from the total data, they significantly improved decoding performance. Similarly, Ye et al. (2022) introduced a hierarchical dynamic GCN that investigates dynamic spatial information at multiple levels across EEG channels. Their method consistently delivered superior results in extensive tests on the SJTU emotion EEG dataset.

2.2 Feature classification

The superiority of DL in signal processing has prompted researchers to adopt end-to-end algorithms based on the backpropagation mechanism for classification (Wang et al., 2020; Tang et al., 2023b; Zhang H. et al., 2023; Zhang J. et al., 2023). A multitude of models have been devised which transfigure unprocessed EEG signals into spatial-spectral-temporal forms for categorization, including CNN (Hossain et al., 2023), ANN (Subasi, 2005), and EEGNET (Lawhern et al., 2018).

Xiao et al. (2022) converted unprocessed EEG data into a 4D representation encompassing spatial, spectral, and temporal dimensions. Their method, enhanced by spectral and spatial attention mechanisms, selectively assigns distinct weights to various brain regions and frequency bands. On the other hand, Schirrmeister et al. (2017) developed three distinct CNN architectures, namely ShallowNet (Hu et al., 2021), DeepNet (Wang H. et al., 2022), and HybridNet (Dai, 2019), which decode MI-EEG directly from raw EEG signals.

Wang Q. et al. (2022) put forth Anes-MetaNet, a model that applies CNN to extract power spectrum features and incorporates LSTM-based temporal modeling to identify temporal dependencies. In parallel, Akmal (2022) employed tensor-based Canonical/Polyadic Weighted Optimization alongside ANN for both rectifying missing data and performing classification tasks. Capitalizing on the strengths of the transformer architecture and the inherent spatial-temporal traits of EEG signals, Xie et al. (2022) developed transformer-centric models for classifying motor imagery EEG signals using the PhysioNet dataset. When applied to 3 s EEG data, this model achieved classification accuracies of 83.31%, 74.44%, and 64.22% for different MI classification tasks. Further, a unique graph sequence neural network was presented by Cai et al. (2022) to precisely decode motor imagery patterns from EEG data even amidst environmental distractions. Lastly, Umrani and Harshavardhanan (2022) harnessed ANN for anxiety detection, trained through their bespoke trace and forage optimization algorithm, which merges characteristics from rescue search agents and finches to enhance detection efficacy.

Nevertheless, most of the current DL methods heavily rely on backpropagation. These methods require a comprehensive understanding of computations performed during the forward pass, which can be a daunting task when the exact details of the forward computation are not available. The F-F mechanism enables learning by streaming sequential data through a neural network without the need to retain neural activities or interruption for error backpropagation. Consequently, the application of GCN based on the F-F mechanism for MI classification presents a novel approach.

3 Methods

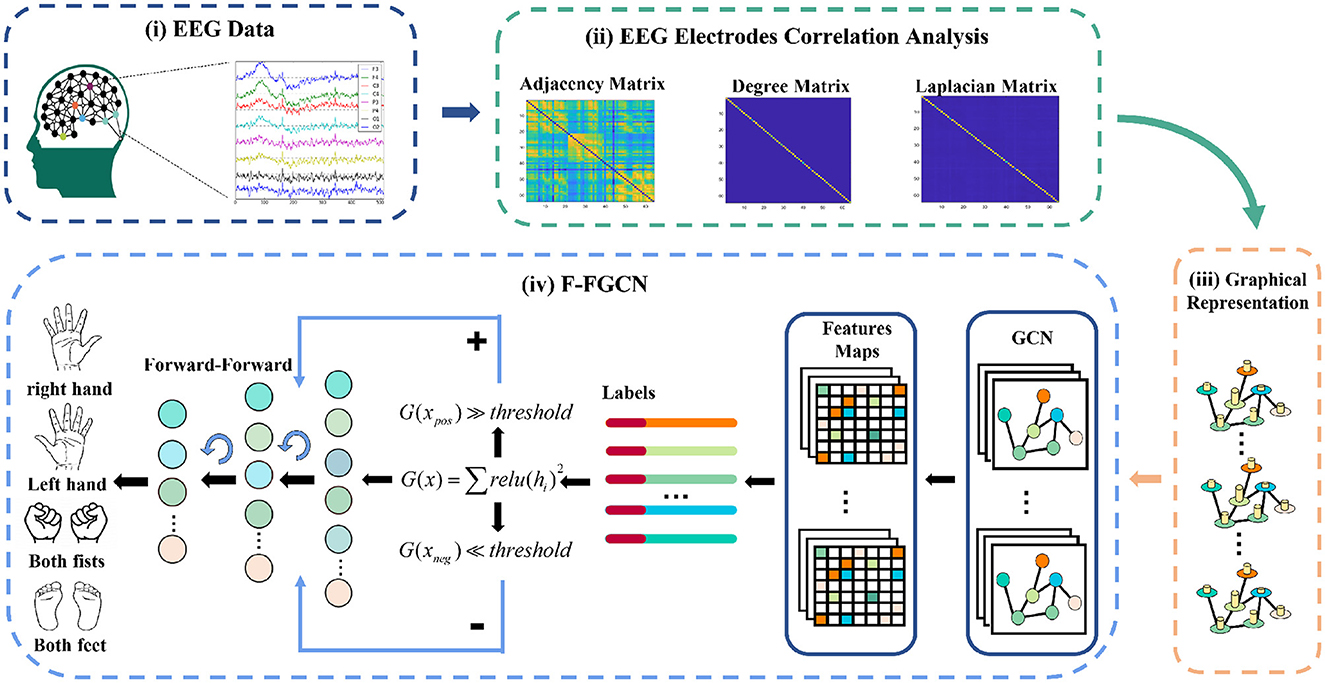

In this section, we thoroughly detail each block, and the overall framework. Firstly, we provide an explanation for the representation of EEG data in the Graph representation block, which forms the input for our proposed F-FGCN. Next, we delve into the structural components of the GCN block. Lastly, we leverage the F-F mechanism block to further extract EEG features for classification. Figure 1 provides a visual representation of the F-FGCN framework, consisting of three main blocks: the Graph representation block, the GCN block, and the F-F mechanism block.

Figure 1. The system framework encompasses: (i) the collection of raw EEG signals, (ii) conducting correlation analysis for graph weights and degrees, demonstrated through the adjacency matrix, PCC matrix degree, and graph Laplacian, (iii) representing the international 10-10 EEG system in a graph, and (iv) integrating a new DL structure of the F-FGCN.

3.1 Graph representation block

GCN is an appropriate choice for non-Euclidean data because of its ability to capture topological structure information. EEG signals are naturally regarded as data with a graph structure. The electrodes that collect EEG signals are arranged on the scalp following the guidelines of the 10-10 system.

An undirected weighted graph is denoted $G = \{V, E, A\}$, where $V = \{v_1, v_2, \ldots, v_N\}$ is the set of $N$ nodes and $E$ is the set of edges, with $e_i \in E$. The weighted adjacency matrix $A \in \mathbb{R}^{N \times N}$ indicates the linkage between any pair of nodes.

The Laplacian matrix $L$ of the graph $G$ is defined as Equation (1):

$$L = D - A \tag{1}$$

where $D \in \mathbb{R}^{N \times N}$ is the diagonal degree matrix of the graph $G$, with $D_{ii} = \sum_{j} A_{ij}$.

The symmetric normalized graph Laplacian, $L_{normal}$, is defined as Equation (2):

$$L_{normal} = I - D^{-1/2} A D^{-1/2} \tag{2}$$

where $I$ is the identity matrix. The normalized graph Laplacian $L_{normal}$ represents the correlations between nodes.

To build the graph's degree matrix, we consider only the magnitude of the graph weights and disregard the sign of the correlations. Consequently, the absolute value of the Pearson correlation coefficient (PCC) matrix is adopted. Each electrode is represented as a node in the graph, with edge weights determined by the correlations observed among the time-series signals, as in Equation (3):

$$w_{i,j} = \frac{\left| \sum_{t=1}^{T} \big(x_i(t) - \bar{x}_i\big)\big(x_j(t) - \bar{x}_j\big) \right|}{\sqrt{\sum_{t=1}^{T} \big(x_i(t) - \bar{x}_i\big)^2}\,\sqrt{\sum_{t=1}^{T} \big(x_j(t) - \bar{x}_j\big)^2}} \tag{3}$$

where $x_i$, $x_j$ are the signal vectors from nodes $v_i$ and $v_j$, $\bar{x}_i$, $\bar{x}_j$ are their means over time, and $T$ is the total number of samples. $w_{i,j} \in [0, 1]$ quantifies the relationship between two channels and the strength of their correlation. A higher $w_{i,j}$ value indicates a stronger correlation between the channels.
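The graph construction described in this subsection can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `pcc_graph`, the random test signals, and the choice to zero the diagonal of the adjacency matrix (no self-loops) are all hypothetical.

```python
import numpy as np

def pcc_graph(signals):
    """Build graph matrices from EEG signals of shape (channels, samples)."""
    # Absolute Pearson correlation between every pair of channels, in [0, 1].
    adjacency = np.abs(np.corrcoef(signals))
    # Assumption: remove self-loops so a node is not counted as its own neighbour.
    np.fill_diagonal(adjacency, 0.0)
    degree = np.diag(adjacency.sum(axis=1))
    laplacian = degree - adjacency                       # L = D - A
    d_inv_sqrt = np.diag(1.0 / np.sqrt(np.diag(degree)))
    # Symmetric normalized Laplacian: I - D^{-1/2} A D^{-1/2}
    normalized = np.eye(len(degree)) - d_inv_sqrt @ adjacency @ d_inv_sqrt
    return adjacency, degree, laplacian, normalized

rng = np.random.default_rng(0)
eeg = rng.standard_normal((4, 640))   # 4 hypothetical channels, 640 samples
eeg[1] += 0.8 * eeg[0]                # make channels 0 and 1 correlated
A, D, L, L_norm = pcc_graph(eeg)
```

In this toy example the weight between channels 0 and 1 is much larger than the weights between unrelated channels, which is the property the PCC graph relies on to encode functional relationships between electrodes.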

3.2 GCN block

Spectral graph theory is rooted in the study of the structural attributes of graph data. The graph filter and graph convolution are constructed using the Laplacian matrix. A signal on the nodes of the graph can be expressed as $f = (f_1, f_2, \ldots, f_N)^T$ with $f \in \mathbb{R}^N$, where $f_i$ is the value at the $i$th node. With the eigendecomposition of the graph Laplacian matrix $L = U \Lambda U^T$, the eigenvector matrix $U = [u_0, u_1, \ldots, u_{N-1}]$ is obtained, where $\Lambda = \mathrm{diag}([\lambda_0, \lambda_1, \ldots, \lambda_{N-1}])$ is a diagonal matrix with the corresponding eigenvalues.

The Graph Fourier Transform (GFT) for the initial signal $x$ on the graph is expressed as Equation (4):

$$\hat{x} = U^T x \tag{4}$$

The inverse GFT is Equation (5):

$$x = U \hat{x} \tag{5}$$

Per the convolution theorem, a convolution involving two signals can be transformed into a point-wise multiplication in the Fourier domain.
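As a small numerical illustration of the transform pair and of point-wise multiplication in the graph Fourier domain, the following sketch uses a hypothetical 4-node path graph and an arbitrary low-pass spectral response `g_hat`; both are assumptions chosen only to keep the example short.

```python
import numpy as np

# Hypothetical 4-node path graph, used only for illustration.
A = np.array([[0, 1, 0, 0],
              [1, 0, 1, 0],
              [0, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
L = np.diag(A.sum(axis=1)) - A

# Eigendecomposition L = U diag(lam) U^T; eigh returns orthonormal eigenvectors.
lam, U = np.linalg.eigh(L)

def gft(x):
    """Graph Fourier transform: project the signal onto the eigenvectors."""
    return U.T @ x

def igft(x_hat):
    """Inverse graph Fourier transform."""
    return U @ x_hat

x = np.array([1.0, -2.0, 0.5, 3.0])
x_hat = gft(x)
x_rec = igft(x_hat)            # round trip recovers the signal

# Filtering is a point-wise product in the spectral domain.
g_hat = np.exp(-lam)           # hypothetical low-pass response
y = igft(g_hat * x_hat)
```

Because `U` is orthonormal, the round trip is exact up to floating-point error, and the filtered signal equals `U @ np.diag(g_hat) @ U.T @ x`.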

In accordance with the convolution theorem, considering a signal $x$ as input and another signal $g$ acting as a filter, the graph convolution $*_G$ can be expressed as Equation (6):

$$x *_G g = U\big((U^T x) \odot (U^T g)\big) \tag{6}$$

In this context, $\odot$ signifies the elementwise Hadamard product and $g \in \mathbb{R}^N$ operates as a convolutional filter. Moreover, $g$ is nonparametric and denoted as $g_\theta(\Lambda) = \mathrm{diag}(\theta)$, with $\theta \in \mathbb{R}^N$ acting as the vector of Fourier coefficients. The convolution operation carried out in the GCN is given by Equation (7):

$$x *_G g_\theta = U g_\theta(\Lambda) U^T x \tag{7}$$

Spectral graph convolutions differ primarily in the choice of filter $g_\theta$. Because a nonparametric filter is not spatially localized and bears substantial computational complexity, we resort to polynomial approximation. Chebyshev polynomials are commonly employed for filter approximation. Consequently, $g_\theta$ is parameterized as a truncated expansion, Equation (8):

$$g_\theta(\Lambda) = \sum_{k=0}^{K-1} \theta_k T_k(\tilde{\Lambda}) \tag{8}$$

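In this equation, $\theta \in \mathbb{R}^K$ represents a set of Chebyshev coefficients, $T_k(\tilde{\Lambda})$ is the $k$th-order Chebyshev polynomial evaluated at $\tilde{\Lambda} = 2\Lambda/\lambda_{max} - I_N$, and $\tilde{\Lambda}$ is a diagonal matrix of scaled eigenvalues. Then, the signal $x$ is convolved by the defined filter $g_\theta$ as in Equation (9):

$$x *_G g_\theta = \sum_{k=0}^{K-1} \theta_k T_k(\tilde{L})\, x \tag{9}$$

$T_k(\tilde{L})$ denotes the $k$th-order Chebyshev polynomial evaluated at the rescaled Laplacian $\tilde{L} = 2L/\lambda_{max} - I_N$. Here, $\bar{x}_k = T_k(\tilde{L})\, x$, and a recursive relationship is employed to compute $\bar{x}_k$, namely $\bar{x}_k = 2\tilde{L}\bar{x}_{k-1} - \bar{x}_{k-2}$ with initial conditions $\bar{x}_0 = x$ and $\bar{x}_1 = \tilde{L} x$.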

Approximating convolutional filters with Chebyshev polynomials is advantageous because it removes the need to compute the graph Fourier basis. This lowers the computational complexity from $O(N^2)$ to a much more feasible $O(KN)$.
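The Chebyshev recursion can be checked numerically against the explicit spectral filter. The sketch below is illustrative only: the 5-node ring graph, the signal, and the coefficients `theta` are hypothetical, and the largest eigenvalue is computed exactly here for clarity, whereas practical implementations usually estimate or bound it to avoid an eigendecomposition.

```python
import numpy as np
from numpy.polynomial import chebyshev as cheb

def chebyshev_filter(L, x, theta):
    """Apply sum_k theta[k] * T_k(L_tilde) @ x using the Chebyshev recursion."""
    n = L.shape[0]
    lam_max = np.linalg.eigvalsh(L).max()      # exact here; often estimated in practice
    L_tilde = 2.0 * L / lam_max - np.eye(n)    # rescale the spectrum into [-1, 1]
    x_prev, x_curr = x, L_tilde @ x            # T_0 x = x, T_1 x = L_tilde x
    out = theta[0] * x_prev
    if len(theta) > 1:
        out = out + theta[1] * x_curr
    for k in range(2, len(theta)):
        x_next = 2.0 * L_tilde @ x_curr - x_prev   # T_k = 2 L_tilde T_{k-1} - T_{k-2}
        out = out + theta[k] * x_next
        x_prev, x_curr = x_curr, x_next
    return out

# Hypothetical 5-node ring graph.
n = 5
A = np.zeros((n, n))
for i in range(n):
    A[i, (i + 1) % n] = A[(i + 1) % n, i] = 1.0
L = np.diag(A.sum(axis=1)) - A
x = np.array([1.0, 0.0, -1.0, 2.0, 0.5])
theta = np.array([0.5, -0.3, 0.2])             # K = 3 hypothetical coefficients

y_cheb = chebyshev_filter(L, x, theta)

# Reference: the same filter applied explicitly in the spectral domain.
lam, U = np.linalg.eigh(L)
lam_tilde = 2.0 * lam / lam.max() - 1.0
y_spec = U @ (cheb.chebval(lam_tilde, theta) * (U.T @ x))
```

Both routes give the same output, but the recursive form only needs matrix-vector products with the rescaled Laplacian, which is where the computational saving comes from.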

3.3 Forward-forward block

Inspired by Boltzmann machines and Noise Contrastive Estimation, F-F provides speedy multi-layer learning, functioning even when forward computation specifics are obscured. F-F implements two similar forward passes instead of backpropagation's forward and backward passes, using different data and opposing objectives. The positive pass uses authentic data to increase the goodness of each hidden layer. In contrast, the negative pass uses negative data generated by the network itself to adjust the weights in a manner that decreases the goodness in each hidden layer. Instead of calculating error gradients and propagating them back through the network as in backpropagation, the F-F algorithm uses these two types of forward passes to adjust weights directly based on the goodness measure.

In experiments, only directions are used for subsequent layer transmission for several reasons. Firstly, to prevent the escalation of values, which could destabilize the network, especially in deep architectures. Secondly, focusing on the orientation rather than the magnitude encourages the network to discriminate features based on patterns of neuron activations. The activity vectors are normalized before entering the next layer to accelerate training convergence, prevent vanishing or exploding gradients, enhance generalization, mitigate overfitting, and maintain numerical stability, ensuring consistent learning progress.
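The effect of passing only the direction of an activity vector to the next layer can be seen in a minimal sketch. The helper name `normalize_activity` and the small constant `eps` (added to avoid division by zero) are assumptions for illustration.

```python
import numpy as np

def normalize_activity(h, eps=1e-8):
    """Scale an activity vector to unit length, keeping only its direction."""
    return h / (np.linalg.norm(h) + eps)

h = np.array([3.0, 0.0, 4.0])
h_big = 10.0 * h                 # same pattern of activation, 100x the goodness

u = normalize_activity(h)
u_big = normalize_activity(h_big)
```

Both vectors map to the same unit-length output, so the next layer receives the pattern of activation but not its magnitude, and values cannot grow from layer to layer.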

The journey of an input vector x through the F-F commences with its propagation through the network to yield the hidden layer's output, h, articulated as Equation (10):

This step marks the start of the model's response to the input data. Central to F-F is the concept of goodness, a metric that quantifies the hidden layer's reaction to input data. It is computed as the sum of the squares of the hidden units' activities within the layer. Mathematically, the current goodness $S_L$ of a layer with output $h$ is represented as Equation (11):

$$S_L = \sum_{j} h_j^2 \tag{11}$$

To navigate toward the desired state of the network, a target goodness level $S^*$ is pre-established. The learning rate $\alpha$ is pivotal for steering the weight adjustments in alignment with this target. It is calculated using Equation (12):

$$\alpha = \sqrt{\frac{S^*}{S_L}} - 1 \tag{12}$$

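The activity vector of the first hidden layer has both a length and an orientation: the length defines the layer-specific goodness, and only the orientation is passed on to the next layer. F-F utilizes real and corrupted data vectors that merge labels with the feature. With $y_j$ denoting the activity of hidden neuron $j$ and $p$ the probability that the input is positive, the incoming weight increments for hidden neuron $j$ are given by Equation (13):

$$\Delta w_j = 2\alpha \frac{\partial \log(p)}{\partial \sum_j y_j^2}\, y_j\, x \tag{13}$$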

After the weight update, the change in the activity of neuron $j$ is the scalar product $\Delta w_j x$. The only term in it that depends on $j$ is $y_j$, so all hidden activities change by the same proportion and the orientation of the activity vector is unaffected.
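The claim that such an update rescales all hidden activities by the same factor can be checked on a toy layer. The sketch below makes several assumptions that are not stated in the text: a ReLU layer without bias, a unit-length input, a step size of the form $\sqrt{S^*/S_L} - 1$, and a simplified increment `alpha * y_j * x` in which the gradient factor is absorbed into the step size. The weights, input, and target goodness are hypothetical.

```python
import numpy as np

def activities(W, x):
    """ReLU hidden activities of a bias-free layer (an assumption of this sketch)."""
    return np.maximum(W @ x, 0.0)

x = np.array([0.6, 0.8])                  # unit-length input
W = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [-1.0, 0.0]])               # hypothetical layer with 3 hidden units

y = activities(W, x)                      # [0.6, 0.8, 0.0]
S_L = np.sum(y ** 2)                      # current goodness
S_star = 4.0                              # hypothetical target goodness
alpha = np.sqrt(S_star / S_L) - 1.0

# Simplified increment: each row j of W moves along x in proportion to y_j.
W_new = W + alpha * np.outer(y, x)
y_new = activities(W_new, x)
S_new = np.sum(y_new ** 2)
```

Each active unit is scaled by `1 + alpha` and inactive units stay at zero, so the new goodness reaches the target in one step while the direction of the activity vector is unchanged.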

An integral step involves estimating the probability that the input vector is positive, obtained by applying the logistic function $\sigma$ to the goodness minus a threshold $\theta$, as in Equation (14):

$$p(\text{positive}) = \sigma\Big(\sum_{j} y_j^2 - \theta\Big) \tag{14}$$

Each layer's performance is gauged using a loss function designed to bifurcate the layer mean activity around a tunable threshold value θ. The function is Equation (15):

$h_{j,P}$ represents the activity of the $j$th hidden unit for the positive sample, and $h_{j,N}$ represents the activity for the negative sample.
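To make the roles of goodness, threshold, and positive/negative activities concrete, here is a minimal sketch. The goodness and logistic probability follow the description in the text; the loss, however, is an assumption: it uses a softplus form that is common in public forward-forward implementations and is not necessarily the exact loss used in this paper. All function names and sample values are hypothetical.

```python
import numpy as np

def goodness(h):
    """Sum of squared hidden activities, one value per sample."""
    return np.sum(h ** 2, axis=-1)

def prob_positive(h, theta):
    """Logistic function applied to goodness minus the threshold."""
    return 1.0 / (1.0 + np.exp(-(goodness(h) - theta)))

def ff_layer_loss(h_pos, h_neg, theta):
    """Assumed softplus loss: push positive goodness above theta, negative below."""
    g_pos, g_neg = goodness(h_pos), goodness(h_neg)
    # np.logaddexp(0, z) computes log(1 + exp(z)) in a numerically stable way.
    return (np.mean(np.logaddexp(0.0, theta - g_pos))
            + np.mean(np.logaddexp(0.0, g_neg - theta)))

theta = 2.0
h_pos = np.array([[2.0, 2.0], [3.0, 0.0]])   # high-goodness activities
h_neg = np.array([[0.1, 0.2], [0.0, 0.5]])   # low-goodness activities

loss_separated = ff_layer_loss(h_pos, h_neg, theta)
loss_swapped = ff_layer_loss(h_neg, h_pos, theta)
```

The loss is small when positive samples sit above the threshold and negative samples below it, and large when the roles are swapped, which is the separation each layer is trained to achieve.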

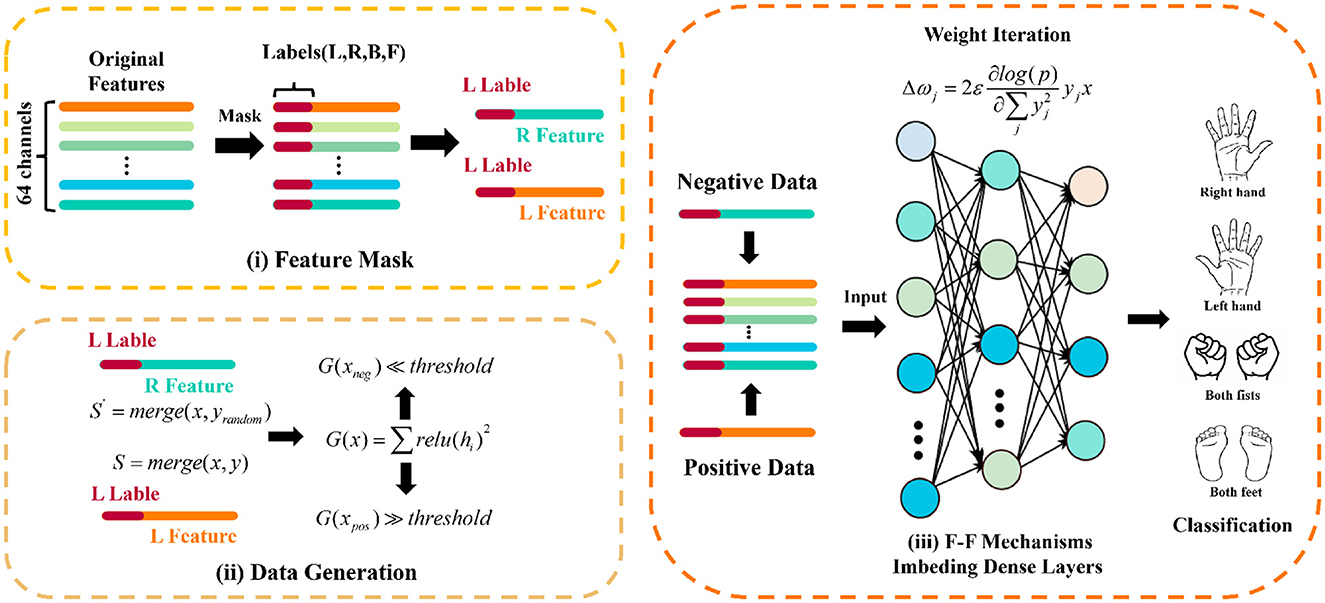

The iterative optimization process uses the normalized outputs of positive and negative data from preceding layers as inputs for successive layers. The process continues until the loss value saturates, a hallmark of network convergence and of its ability to differentiate between positive and negative data. The specific implementation of the F-F block is shown in Figure 2.

Figure 2. The F-F block is composed of (i) masking the features, (ii) generating training data, and (iii) training by the F-F mechanism embedded in dense layers.
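One way to generate a mask with large regions of ones and zeros, and to merge two feature vectors with it, is sketched below. The procedure (repeatedly blurring random bits with a [1/4, 1/2, 1/4] filter and thresholding at 0.5) follows the recipe described by Hinton (2022) for images, adapted here to 1-D features as an assumption; the function names, the number of blur steps, and the feature length are hypothetical and may differ from the authors' implementation.

```python
import numpy as np

def make_mask(length, n_blur=10, rng=None):
    """Binary mask with contiguous runs of ones and zeros."""
    rng = np.random.default_rng() if rng is None else rng
    mask = rng.integers(0, 2, size=length).astype(float)   # random bits
    kernel = np.array([0.25, 0.5, 0.25])
    for _ in range(n_blur):
        padded = np.pad(mask, 1, mode="edge")               # keep the length fixed
        mask = np.convolve(padded, kernel, mode="valid")
    return (mask > 0.5).astype(float)                       # threshold back to {0, 1}

def hybrid_feature(feat_a, feat_b, mask):
    """Negative sample: feat_a where mask is 1, feat_b where mask is 0."""
    return mask * feat_a + (1.0 - mask) * feat_b

rng = np.random.default_rng(0)
feat_a = rng.standard_normal(64)     # hypothetical feature vector of one trial
feat_b = rng.standard_normal(64)     # hypothetical feature vector of another trial
mask = make_mask(64, rng=rng)
negative = hybrid_feature(feat_a, feat_b, mask)
```

Blurring before thresholding produces long runs rather than isolated bits, so the hybrid keeps short-range structure from each source while breaking long-range consistency.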

4 Experiments

4.1 Dataset and evaluation approaches

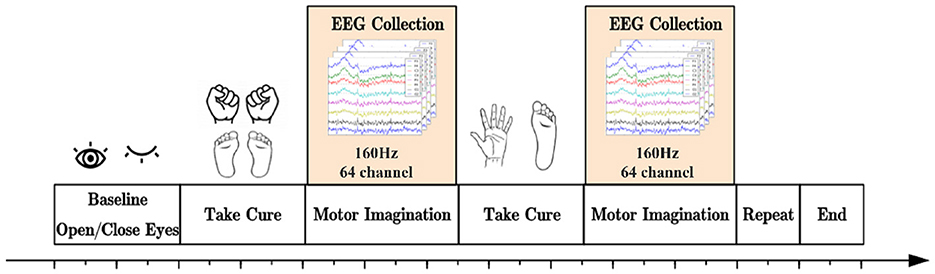

PhysioNet Dataset: This dataset comprises more than 1,500 EEG recordings from 109 participants, collected using 64 electrodes following the 10-10 system. It encompasses four distinct motor imagery tasks: L (left fist), R (right fist), B (both fists), and F (both feet), as depicted in Figure 3. Each subject performed 84 trials split into 3 runs of 7 trials in 4 tasks.

BCI Competition III 3a: This dataset focuses on cued motor imagery tasks with a multi-class approach, encompassing four distinct classes: left hand, right hand, foot, and tongue movements. The recorded data is extensive, with EEG readings taken from 60 channels, providing a comprehensive array of brain activity signals. Each of the four classes is well-represented, with 60 trials per class, offering a robust dataset for analysis and application in brain-computer interface research and development.

The effectiveness of our models is primarily evaluated on the basis of accuracy, determined by Equation (16):

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{16}$$

Here, TP corresponds to true positives, FP refers to false positives, FN stands for false negatives, and TN indicates true negatives.
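As a quick worked example of the accuracy metric, the sketch below computes it from confusion counts and, for the four-class setting, directly from label sequences. The counts and labels are made up for illustration.

```python
def accuracy(tp, tn, fp, fn):
    """Fraction of correct predictions from confusion-matrix counts."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("at least one sample is required")
    return (tp + tn) / total

def multiclass_accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("label lists must be non-empty and of equal length")
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)

binary_acc = accuracy(tp=40, tn=45, fp=5, fn=10)   # (40 + 45) / 100

y_true = ["L", "R", "B", "F", "L", "R", "B", "F"]  # hypothetical trial labels
y_pred = ["L", "R", "B", "L", "L", "B", "B", "F"]
four_class_acc = multiclass_accuracy(y_true, y_pred)
```

For the multi-class case, accuracy reduces to the number of correctly labeled trials divided by the total number of trials.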

4.2 Training procedure

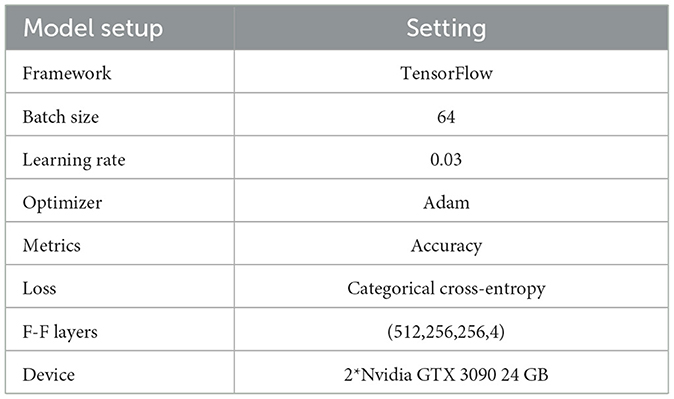

The model is trained using the TensorFlow framework for 300 epochs on two Nvidia RTX 3090 GPUs with 24 GB of memory each. Detailed parameters are outlined in Table 1.

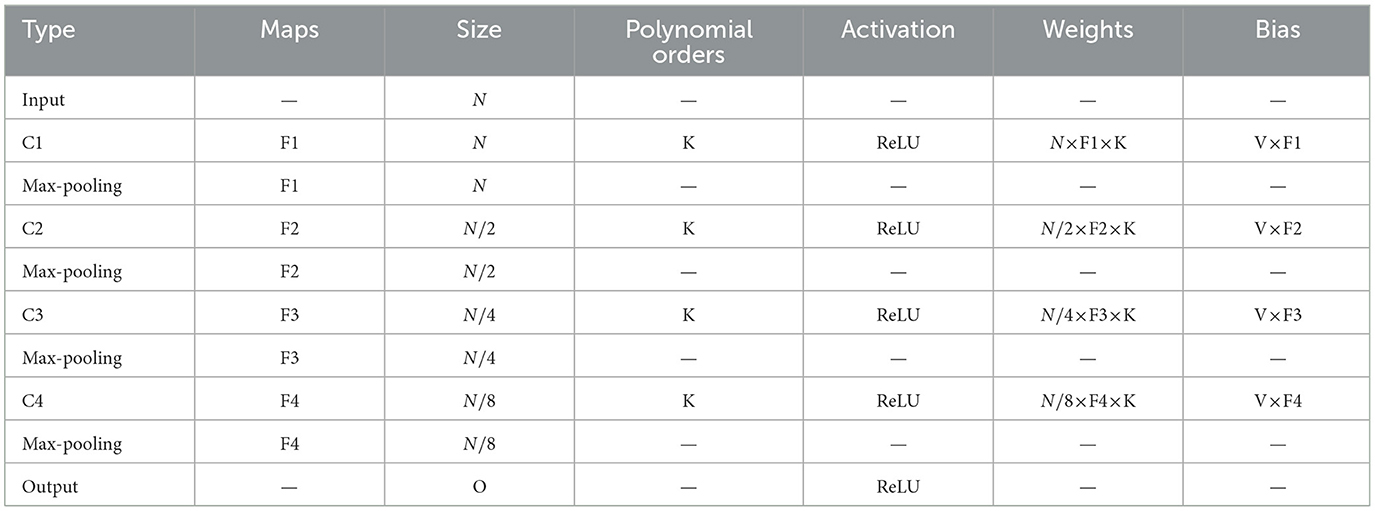

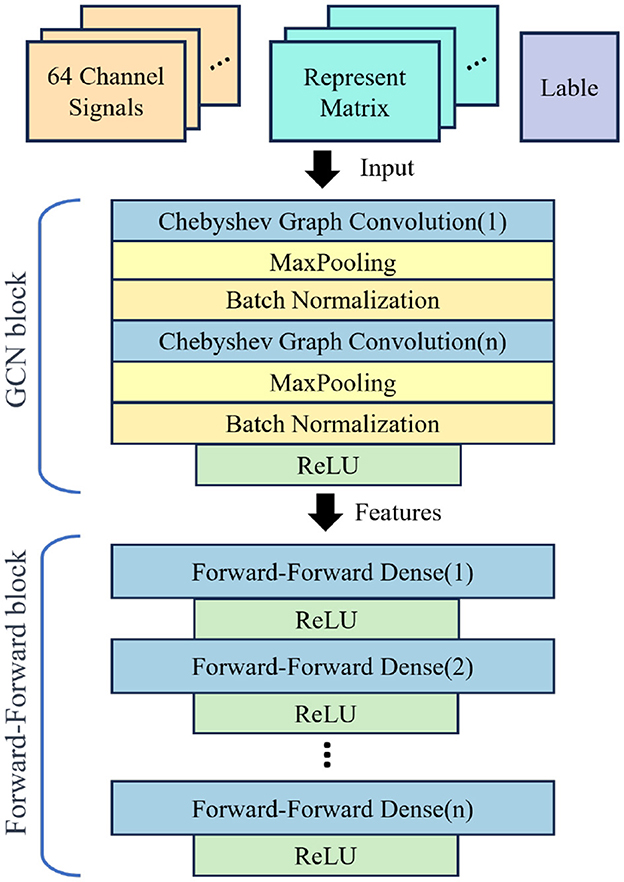

These specific hyperparameters are meticulously chosen after a series of comparative experiments, ensuring an optimal balance between performance and generalization of the models. Our experiments utilize a GCN model equipped with four graph convolution layers, with Chebyshev polynomial serving as the convolution kernel for GCN. The chosen structure for the GCN is depicted in Figure 4.

Figure 4. Architecture of F-FGCN. It consists of Chebyshev Graph Convolution, MaxPooling, and Forward-Forward Dense layers. The dense layers featuring the Forward-Forward mechanism are placed behind the GCN for enhanced feature extraction and categorization.

Detailed aspects of the model are provided in Table 2. “Maps” refers to the count of neurons present in each layer, “Size” signifies the dimension of the input feature for every corresponding layer, “N” is the number of node features, “K” corresponds to the order of the Chebyshev polynomial, and “O” denotes the number of classification categories. In the devised model, we incorporate dense layers featuring the F-F mechanism behind the GCN for enhanced feature extraction and categorization. The efficacy of this hierarchical design forms the key to our experimental analysis.

4.3 Experimental results

The performance of F-FGCN is benchmarked against both traditional and SOTA models. The data is randomly shuffled, creating datasets with identical data but in different orders. Subsequently, the data is partitioned into training, validation, and testing sets with a ratio of 7:2:1, respectively.
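A shuffle followed by a 7:2:1 partition can be sketched as follows. The function name, the fixed seed, and the use of integer trial indices are assumptions for illustration; integer arithmetic is used for the split sizes to avoid floating-point rounding.

```python
import random

def split_7_2_1(samples, seed=0):
    """Shuffle samples and split them into train/validation/test at 7:2:1."""
    items = list(samples)
    random.Random(seed).shuffle(items)       # reproducible shuffle
    n_train = len(items) * 7 // 10
    n_val = len(items) * 2 // 10
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]           # remainder goes to the test set
    return train, val, test

trials = list(range(100))                    # hypothetical trial indices
train, val, test = split_7_2_1(trials)
```

With 100 trials this yields 70, 20, and 10 samples; changing the seed gives the same data in a different order, as described above.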

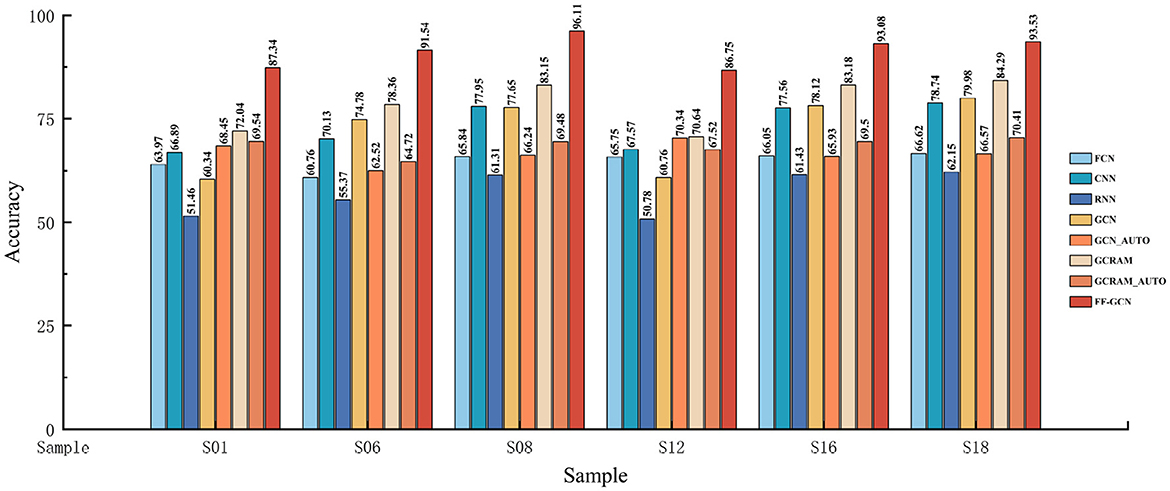

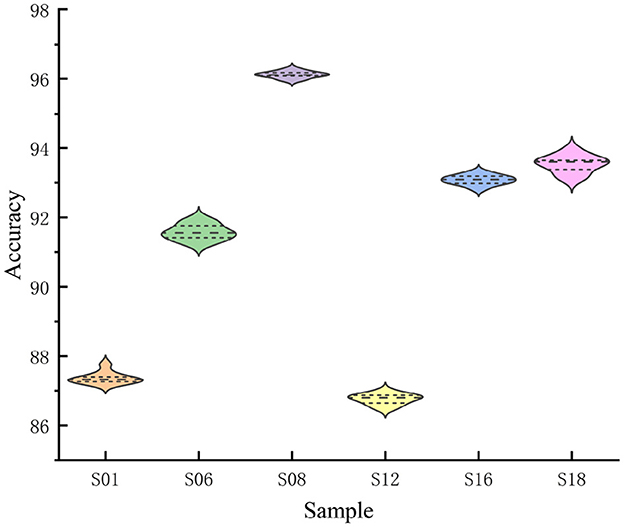

We conducted cross-individual trials on the PhysioNet dataset utilizing our proposed network structure to evaluate the adaptability of F-FGCN to individual subjects. We select six subjects at random. As illustrated in Figure 5, F-FGCN demonstrated strong competitiveness, garnering an average classification accuracy of 89.39% across the six subjects.

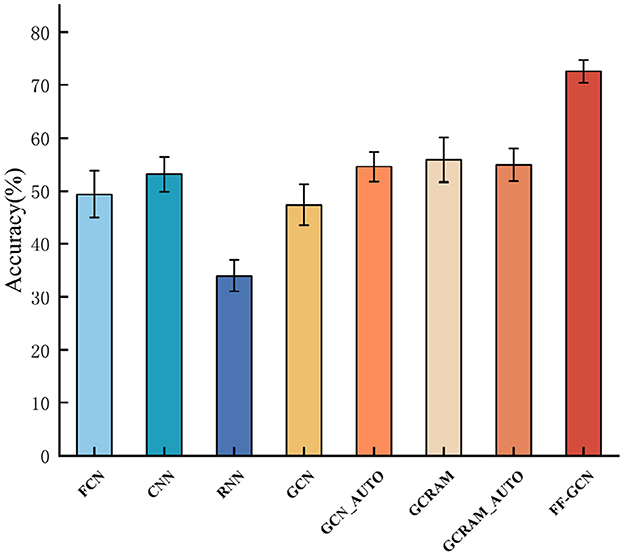

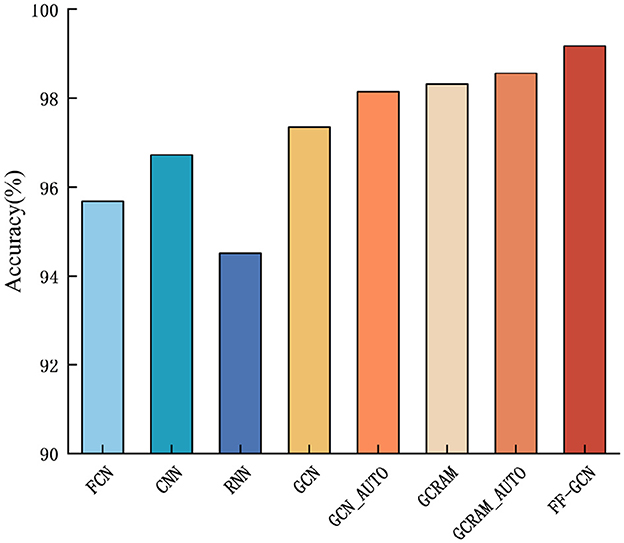

In the context of the experiment, the convolution kernel sizes of the other networks are aligned with that of F-FGCN, with identical hyperparameters applied as provided in the experimental method section. Figure 6 exhibits the comparative results.

The precision of our model is juxtaposed with the results achieved by traditional models, including FCN, CNN, RNN, GCN, GCN_AUTO, GCRAM, and GCRAM_AUTO in Figure 6. Notably, GCN_AUTO and GCRAM_AUTO amalgamate a GCN and an autoencoder block to capture graph structures by reconstructing node-wise transformations from original and transformed graph features.

Classification accuracy for the six subjects is presented in violin plots in Figure 7. The mean is represented by a horizontal line, while a solid diamond shows the classification accuracy distribution for each test. The kernel density representation outside the violin signifies a greater distribution probability surrounding more extensive graph regions. The F-FGCN model demonstrated commendable stability across different individual tests. However, the classification accuracy slightly reduces as the S12 dataset appears to be scattered.

Figure 7. The violin plot illustrates the accuracy for six subjects, with the horizontal line symbolizing the average value, and every solid diamond signifies the classification precision for each specific subject.

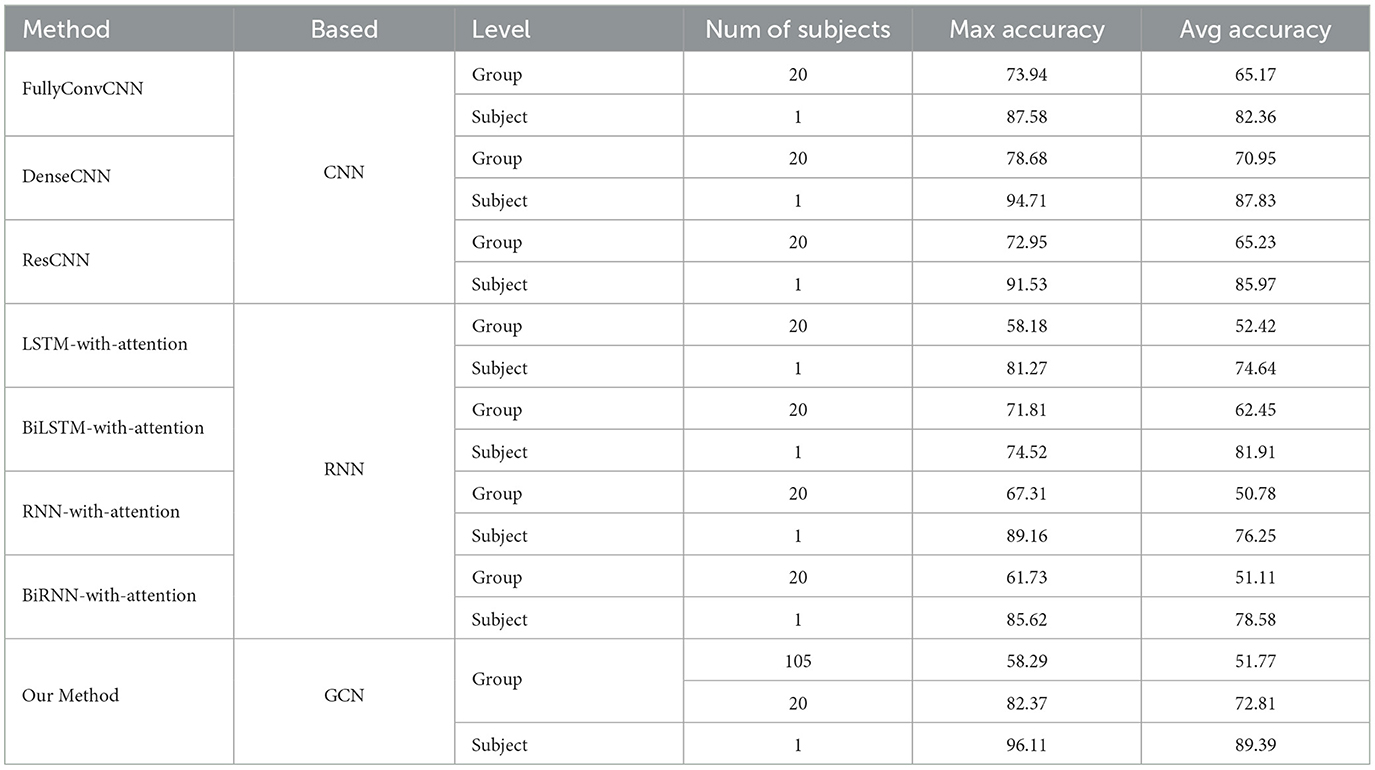

Table 3 presents the classification results of recent SOTA approaches on the PhysioNet Dataset, with F-FGCN consistently outperforming other methods. F-FGCN achieves average accuracies of 89.39% and 72.81% on the PhysioNet dataset at the subject and group levels, respectively, demonstrating the complex feature learning ability of DL models. Due to its versatility, the F-FGCN model has a moderate error rate, signifying a high level of accuracy with only a 6.72% discrepancy.

In comparison to CNN-related methods like ResCNN and DenseCNN, which demonstrate high performances, our model exhibited comparable results. It achieved 82.37% top accuracy for a 20 participant group and 96.11% at the subject level. This underscores the efficacy of graph representation learning for EEG signal interpretation. Notably, at the group level, the accuracy between F-FGCN and the CNN models has a large difference, indicating a significant advantage in the predictive capabilities of F-FGCN, and establishing superiority in forecasting EEG tasks.

To rigorously ascertain the generalizability and efficacy of our proposed algorithm, we have undertaken a series of comprehensive tests on BCI Competition III dataset. The outcome of these tests is graphically represented as Figure 8, clearly illustrating the performance benchmarks. It is noteworthy to mention that, as demonstrated in the accompanying figures, our algorithm has a clear accuracy advantage. This evidence indicates that our algorithm maintains a consistent and superior performance across various datasets, highlighting its potential for widespread application in the field.

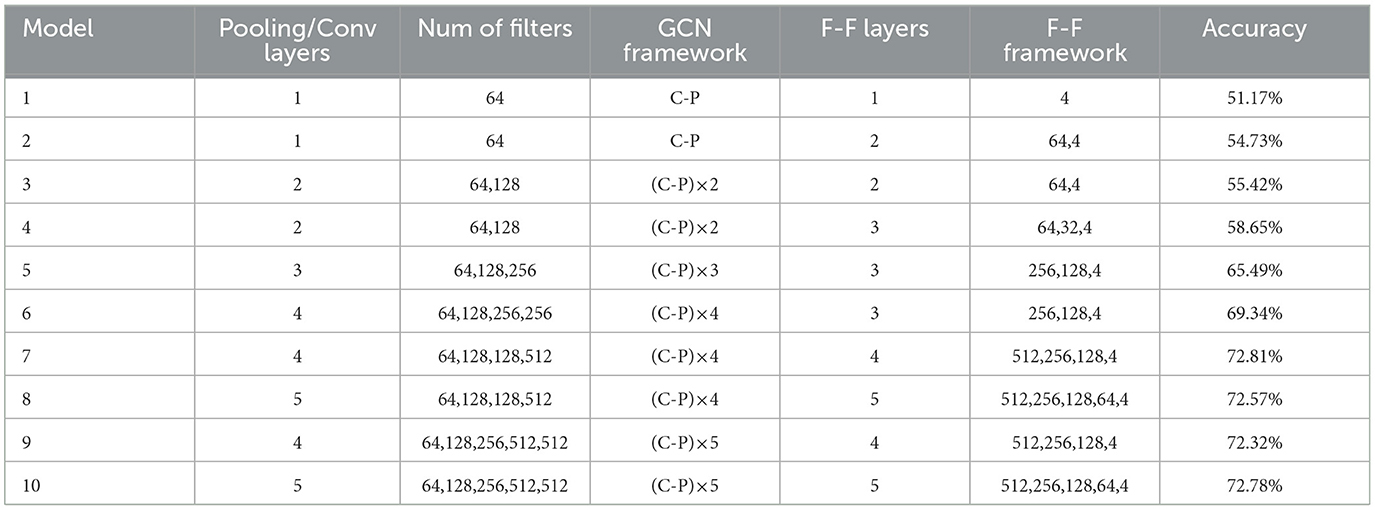

Each experiment is conducted five times to derive the average accuracy rate. It is crucial to emphasize that our assessments were exclusively concerned with the number of graph convolutional layers, max pooling layers, and F-F layers. Model 7 demonstrated exceptional performance across all evaluated datasets.

As presented in Table 4, fixing the number of graph convolutional layers at four enhances the effectiveness of the F-F mechanism in interpreting features. Increasing the number of these layers does not appreciably improve accuracy but extends the duration of the feature extraction phase. In parallel, the model yielded superior outcomes when using four F-F layers for feature classification.

The F-FGCN training, which compares positive and negative samples to adjust weights, adds complexity and sensitivity to the training process due to its dual objective and dependence on the quality of negative samples. It increases the computational load because both sample types are processed, potentially slowing down each epoch.

5 Discussion

Our novel F-FGCN effectively decodes brain activity by leveraging the GCN model grounded in the F-F mechanism, thereby enhancing the accuracy of the algorithm.

F-FGCN manages to harmonize its performance across diverse subject data, thereby attaining extraordinary accuracy. When benchmarked against a multitude of alternative algorithms, our proposed model consistently demonstrates optimal classification performance. These results substantiate the assertion that the integration of topology and forward propagation in DL continues to exhibit formidable competitiveness in the MI-BCI decoding field.

Still, our study has certain limitations, particularly regarding the translation of electrode positioning and topology. Changes in electrode placements between different individuals can infuse distinct attributes in the features of the trained network. For different types of electrode locations used during an EEG examination, our network would need to be retrained to extract feature vectors effectively. Taking inspiration from related work (Hou et al., 2022b), we aim to explore methods to enhance versatility in future work.

6 Conclusion

In our study, we comprehensively investigate the task of MI EEG categorization, with consideration of brain network dynamics and the neural signal transmission mechanism. We introduced the innovative F-FGCN model designed for four-class MI intents. F-FGCN amalgamates high-level individual interactions while considering EEG signal topology. By employing pre-training and the F-F mechanism, our model further extracts feature vectors and improves the accuracy of the downstream classifier, resulting in the best detection results for the PhysioNet dataset. Our approach exhibited exemplary performance, recording the highest accuracy rates of 96.11% and 82.37% at the subject and group levels in the PhysioNet dataset, respectively.

In the future, we plan to integrate the F-F mechanism into the design of an end-to-end GCN that could further improve the accuracy of multi-class MI tasks. We also intend to explore the parallels between the human brain's signal propagation mechanism and the propagation process in DL. By efficiently leveraging EEG and label information, we aspire to apply this technology in areas such as humanoid robot control and the development of medical auxiliary equipment.

Data availability statement

Publicly available datasets were analyzed in this study. This data can be found here: https://www.physionet.org/content/eegmmidb/1.0.0/.

Author contributions

QX: Writing—original draft. YS: Writing—review & editing. HW: Writing—review & editing. YC: Writing—review & editing. HP: Writing—review & editing.

Funding

The author(s) declare financial support was received for the research, authorship, and/or publication of this article. This work is supported by the Comprehensive Research Facility for Fusion Technology Program of China under Contract no. 2018-000052-73-01-001228.

Acknowledgments

The authors would like to express their great thanks to all the members of the ASIPP remote handling team.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Akmal, M. (2022). Tensor factorization and attention-based CNN-LSTM deep-learning architecture for improved classification of missing physiological sensors data. IEEE Sensors J. 23, 1286–1294. doi: 10.1109/JSEN.2022.3223338

Bui, K.-H. N., Cho, J., and Yi, H. (2022). Spatial-temporal graph neural network for traffic forecasting: an overview and open research issues. Appl. Intell. 52, 2763–2774. doi: 10.1007/s10489-021-02587-w

Cai, S., Li, H., Wu, Q., Liu, J., and Zhang, Y. (2022). Motor imagery decoding in the presence of distraction using graph sequence neural networks. IEEE Trans. Neural Syst. Rehabil. Eng. 30, 1716–1726. doi: 10.1109/TNSRE.2022.3183023

Dai, X. (2019). Hybridnet: a fast vehicle detection system for autonomous driving. Signal Proc. 70, 79–88. doi: 10.1016/j.image.2018.09.002

de Oliveira, I. H., and Rodrigues, A. C. (2023). Empirical comparison of deep learning methods for EEG decoding. Front. Neurosci. 16:1003984. doi: 10.3389/fnins.2022.1003984

Du, G., Su, J., Zhang, L., Su, K., Wang, X., Teng, S., et al. (2022). A multi-dimensional graph convolution network for EEG emotion recognition. IEEE Trans. Instrument. Measur. 71, 1–11. doi: 10.1109/TIM.2022.3204314

Du, Y., Huang, J., Huang, X., Shi, K., and Zhou, N. (2022). Dual attentive fusion for EEG-based brain-computer interfaces. Front. Neurosci. 16:1044631. doi: 10.3389/fnins.2022.1044631

Fei, S.-W., and Chu, Y.-B. (2022). A novel classification strategy of motor imagery EEG signals utilizing WT-PSR-SVD-based MTSVM. Expert Syst. Applic. 199:116901. doi: 10.1016/j.eswa.2022.116901

Gao, M., and Mao, J. (2021). A novel active rehabilitation model for stroke patients using electroencephalography signals and deep learning technology. Front. Neurosci. 15:780147. doi: 10.3389/fnins.2021.780147

Gao, Y., Fu, X., Ouyang, T., and Wang, Y. (2022). EEG-GCN: spatio-temporal and self-adaptive graph convolutional networks for single and multi-view EEG-based emotion recognition. IEEE Signal Proc. Lett. 29, 1574–1578. doi: 10.1109/LSP.2022.3179946

Grosse-Wentrup, M., and Buss, M. (2008). Multiclass common spatial patterns and information theoretic feature extraction. IEEE Trans. Biomed. Eng. 55, 1991–2000. doi: 10.1109/TBME.2008.921154

Hamada, H., Wen, W., Kawasaki, T., Yamashita, A., and Asama, H. (2023). Characteristics of EEG power spectra involved in the proficiency of motor learning. Front. Neurosci. 17:1094658. doi: 10.3389/fnins.2023.1094658

Hinton, G. (2022). The forward-forward algorithm: some preliminary investigations. arXiv preprint arXiv:2212.13345.

Hossain, K. M., Islam, M. A., Hossain, S., Nijholt, A., and Ahad, M. A. R. (2023). Status of deep learning for EEG-based brain-computer interface applications. Front. Comput. Neurosci. 16:1006763. doi: 10.3389/fncom.2022.1006763

Hou, Y., Jia, S., Lun, X., Hao, Z., Shi, Y., Li, Y., et al. (2022a). GCNS-net: a graph convolutional neural network approach for decoding time-resolved EEG motor imagery signals. IEEE Trans. Neural Netw. Learn. Syst. 2022, 1–12. doi: 10.1109/TNNLS.2022.3202569

Hou, Y., Jia, S., Lun, X., Zhang, S., Chen, T., Wang, F., et al. (2022b). Deep feature mining via the attention-based bidirectional long short term memory graph convolutional neural network for human motor imagery recognition. Front. Bioeng. Biotechnol. 9:706229. doi: 10.3389/fbioe.2021.706229

Hu, X., Wu, D., Li, H., Jiang, F., and Lu, H. (2021). “Shallownet: an efficient lightweight text detection network based on instance count-aware supervision information,” in International Conference on Neural Information Processing (Springer), 633–644. doi: 10.1007/978-3-030-92185-9_52

Hu, Y., Liu, Y., Zhang, S., Zhang, T., Dai, B., Peng, B., et al. (2023). A cross-space CNN with customized characteristics for motor imagery EEG classification. IEEE Trans. Neural Syst. Rehabil. Eng. 31, 1554–1565. doi: 10.1109/TNSRE.2023.3249831

Huang, J.-S., Liu, W.-S., Yao, B., Wang, Z.-X., Chen, S.-F., and Sun, W.-F. (2021). Electroencephalogram-based motor imagery classification using deep residual convolutional networks. Front. Neurosci. 15:774857. doi: 10.3389/fnins.2021.774857

Huang, Y., Zheng, J., Xu, B., Li, X., Liu, Y., Wang, Z., et al. (2023). An improved model using convolutional sliding window-attention network for motor imagery EEG classification. Front. Neurosci. 17:1204385. doi: 10.3389/fnins.2023.1204385

Jia, H., Yu, S., Yin, S., Liu, L., Yi, C., Xue, K., et al. (2023). A model combining multi branch spectral-temporal CNN, efficient channel attention, and LightGBM for MI-BCI classification. IEEE Trans. Neural Syst. Rehabil. Eng. 31, 1311–1320. doi: 10.1109/TNSRE.2023.3243992

Kipf, T. N., and Welling, M. (2016). Semi-supervised classification with graph convolutional networks. arXiv preprint arXiv:1609.02907.

Lawhern, V. J., Solon, A. J., Waytowich, N. R., Gordon, S. M., Hung, C. P., and Lance, B. J. (2018). EEGNet: a compact convolutional neural network for EEG-based brain-computer interfaces. J. Neural Eng. 15:056013. doi: 10.1088/1741-2552/aace8c

Li, H., Liu, M., Yu, X., Zhu, J., Wang, C., Chen, X., et al. (2023). Coherence based graph convolution network for motor imagery-induced EEG after spinal cord injury. Front. Neurosci. 16:1097660. doi: 10.3389/fnins.2022.1097660

Li, Y., Zhang, X., and Ming, D. (2023). Early-stage fusion of EEG and fNIRS improves classification of motor imagery. Front. Neurosci. 16:1062889. doi: 10.3389/fnins.2022.1062889

Liang, W., Jin, J., Daly, I., Sun, H., Wang, X., and Cichocki, A. (2022). Novel channel selection model based on graph convolutional network for motor imagery. Cogn. Neurodyn. 17, 1283–1296. doi: 10.1007/s11571-022-09892-1

Liu, C., You, J., Wang, K., Zhang, S., Huang, Y., Xu, M., et al. (2023). Decoding the EEG patterns induced by sequential finger movement for brain-computer interfaces. Front. Neurosci. 17:1180471. doi: 10.3389/fnins.2023.1180471

Malan, N., and Sharma, S. (2022). Motor imagery EEG spectral-spatial feature optimization using dual-tree complex wavelet and neighbourhood component analysis. IRBM 43, 198–209. doi: 10.1016/j.irbm.2021.01.002

Mirchi, N., Warsi, N. M., Zhang, F., Wong, S. M., Suresh, H., Mithani, K., et al. (2022). Decoding intracranial EEG with machine learning: a systematic review. Front. Hum. Neurosci. 16:913777. doi: 10.3389/fnhum.2022.913777

Mughal, N. E., Khan, M. J., Khalil, K., Javed, K., Sajid, H., Naseer, N., et al. (2022). EEG-fNIRS-based hybrid image construction and classification using CNN-LSTM. Front. Neurorob. 16:873239. doi: 10.3389/fnbot.2022.873239

Schirrmeister, R. T., Springenberg, J. T., Fiederer, L. D. J., Glasstetter, M., Eggensperger, K., Tangermann, M., et al. (2017). Deep learning with convolutional neural networks for EEG decoding and visualization. Hum. Brain Mapp. 38, 5391–5420. doi: 10.1002/hbm.23730

Song, C., Lin, Y., Guo, S., and Wan, H. (2020). “Spatial-temporal synchronous graph convolutional networks: a new framework for spatial-temporal network data forecasting,” in Proceedings of the AAAI Conference on Artificial Intelligence, 914–921. doi: 10.1609/aaai.v34i01.5438

Subasi, A. (2005). Automatic recognition of alertness level from EEG by using neural network and wavelet coefficients. Expert Syst. Applic. 28, 701–711. doi: 10.1016/j.eswa.2004.12.027

Tang, X., Yang, C., Sun, X., Zou, M., and Wang, H. (2023a). Motor imagery EEG decoding based on multi-scale hybrid networks and feature enhancement. IEEE Trans. Neural Syst. Rehabil. Eng. 31, 1208–1218. doi: 10.1109/TNSRE.2023.3242280

Tang, X., Zhang, W., Wang, H., Wang, T., Tan, C., Zou, M., et al. (2023b). Dynamic pruning group equivariant network for motor imagery EEG recognition. Front. Bioeng. Biotechnol. 11:917328. doi: 10.3389/fbioe.2023.917328

Tang, Z.-C., Li, C., Wu, J.-F., Liu, P.-C., and Cheng, S.-W. (2019). Classification of EEG-based single-trial motor imagery tasks using a B-CSP method for BCI. Front. Inf. Technol. Electr. Eng. 20, 1087–1098. doi: 10.1631/FITEE.1800083

Tong, L., Qian, Y., Peng, L., Wang, C., and Hou, Z.-G. (2023). A learnable EEG channel selection method for MI-BCI using efficient channel attention. Front. Neurosci. 17:1276067. doi: 10.3389/fnins.2023.1276067

Umrani, A. T., and Harshavardhanan, P. (2022). Hybrid feature-based anxiety detection in autism using hybrid optimization tuned artificial neural network. Biomed. Signal Proc. Control 76:103699. doi: 10.1016/j.bspc.2022.103699

Wang, D., Lei, C., Zhang, X., Wu, H., Zheng, S., Chao, J., et al. (2021). “Identification of depression with a semi-supervised GCN based on EEG data,” in 2021 IEEE International Conference on Bioinformatics and Biomedicine (BIBM) (IEEE), 2338–2345. doi: 10.1109/BIBM52615.2021.9669572

Wang, G., and Cerf, M. (2022). Brain-computer interface using neural network and temporal-spectral features. Front. Neuroinform. 16:952474. doi: 10.3389/fninf.2022.952474

Wang, H., Ma, S., Dong, L., Huang, S., Zhang, D., and Wei, F. (2022). Deepnet: Scaling transformers to 1,000 layers. arXiv preprint arXiv:2203.00555.

Wang, H., Su, Q., Yan, Z., Lu, F., Zhao, Q., Liu, Z., et al. (2020). Rehabilitation treatment of motor dysfunction patients based on deep learning brain-computer interface technology. Front. Neurosci. 14:595084. doi: 10.3389/fnins.2020.595084

Wang, Q., Liu, F., Wan, G., and Chen, Y. (2022). Inference of brain states under anesthesia with meta learning based deep learning models. IEEE Trans. Neural Syst. Rehabil. Eng. 30, 1081–1091. doi: 10.1109/TNSRE.2022.3166517

Xiao, G., Shi, M., Ye, M., Xu, B., Chen, Z., and Ren, Q. (2022). 4D attention-based neural network for EEG emotion recognition. Cogn. Neurodyn. 16, 805–818. doi: 10.1007/s11571-021-09751-5

Xie, J., Zhang, J., Sun, J., Ma, Z., Qin, L., Li, G., et al. (2022). A transformer-based approach combining deep learning network and spatial-temporal information for raw EEG classification. IEEE Trans. Neural Syst. Rehabil. Eng. 30, 2126–2136. doi: 10.1109/TNSRE.2022.3194600

Xu, L., Xu, M., Jung, T.-P., and Ming, D. (2021). Review of brain encoding and decoding mechanisms for EEG-based brain-computer interface. Cogn. Neurodyn. 15, 569–584. doi: 10.1007/s11571-021-09676-z

Yang, J., Zheng, W.-S., Yang, Q., Chen, Y.-C., and Tian, Q. (2020). “Spatial-temporal graph convolutional network for video-based person re-identification,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 3289–3299. doi: 10.1109/CVPR42600.2020.00335

Ye, M., Chen, C. P., and Zhang, T. (2022). Hierarchical dynamic graph convolutional network with interpretability for EEG-based emotion recognition. IEEE Trans. Neural Netw. Learn. Syst. 2022, 1–12. doi: 10.1109/TNNLS.2022.3225855

Zhang, H., Ji, H., Yu, J., Li, J., Jin, L., Liu, L., et al. (2023). Subject-independent EEG classification based on a hybrid neural network. Front. Neurosci. 17:1124089. doi: 10.3389/fnins.2023.1124089

Zhang, J., Li, K., Yang, B., and Han, X. (2023). Local and global convolutional transformer-based motor imagery EEG classification. Front. Neurosci. 17:1219988. doi: 10.3389/fnins.2023.1219988

Zhang, R., Chen, Y., Xu, Z., Zhang, L., Hu, Y., and Chen, M. (2023). Recognition of single upper limb motor imagery tasks from EEG using multi-branch fusion convolutional neural network. Front. Neurosci. 17:1129049. doi: 10.3389/fnins.2023.1129049

Keywords: brain computer interface (BCI), Electroencephalography (EEG), motor imagery (MI), forward-forward mechanism, Graph Convolutional Network (GCN)

Citation: Xue Q, Song Y, Wu H, Cheng Y and Pan H (2024) Graph neural network based on brain inspired forward-forward mechanism for motor imagery classification in brain-computer interfaces. Front. Neurosci. 18:1309594. doi: 10.3389/fnins.2024.1309594

Received: 08 October 2023; Accepted: 04 March 2024;

Published: 28 March 2024.

Edited by: S. Abdollah Mirbozorgi, University of Alabama at Birmingham, United States

Reviewed by: Jiancai Leng, Qilu University of Technology, China; Fan Gao, University of Kentucky, United States

Copyright © 2024 Xue, Song, Wu, Cheng and Pan. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Yuntao Song, songyt@ipp.ac.cn; Huapeng Wu, Huapeng.Wu@lut.fi
