- 1School of Computer Science and Digital Technologies, Aston University, Birmingham, United Kingdom

- 2Warwick Manufacturing Group, University of Warwick, Coventry, United Kingdom

- 3China Mobile System Integration Co. Ltd, Beijing, China

- 4School of Computer Science and Engineering, South China University of Technology, Guangzhou, China

The integration of Artificial Intelligence (AI) and Digital Twin (DT) technology is reshaping modern manufacturing by enabling real-time monitoring, predictive maintenance, and intelligent process optimisation. This paper presents the design and partial implementation of an AI-enabled Digital Twin System (AI-DT) for manufacturing, focusing on the deployment of Generative AI (GAI) and Predictive AI (PAI) modules. The GAI component is used to augment training data, perform geometric inspection, and generate 3D virtual testing environments from multiview video input. Meanwhile, PAI leverages sensor data to enable proactive defect detection and predictive quality analysis in welding processes. These integrated capabilities significantly enhance the system's ability to anticipate issues and support decision-making. While the framework also envisions incorporating Explainable AI (EAI), Context-Aware AI (CAI), and Agentic AI (AAI) for future extensions, the current work establishes a robust foundation for scalable, intelligent digital twin systems in smart manufacturing. Our findings contribute toward improving operational efficiency, quality assurance, and early-stage digital-physical convergence.

1 Introduction of digital twins

Manufacturing has undergone a significant transformation from manual operations to automated, data-driven systems. Traditional manufacturing relies heavily on static models, rule-based control, and reactive maintenance, which limits adaptability and efficiency. The development of AI-enabled digital twin systems in manufacturing follows a logical progression that starts from rigorous design verification and validation models, which include both the integration and co-evolution of products and processes (Tolio et al., 2010). These models are further extended to consider the product lifecycle (Maropoulos, 2010). Advanced sensing is then necessary for effective process monitoring, with capabilities to enable root cause analysis of quality defects (Ding et al., 2003). This ultimately leads to AI-driven control and autonomy of manufacturing processes (Ding et al., 2019; Gao et al., 2024). Early work on systematic validation frameworks established the conceptual foundation for virtual models of processes and products (Maropoulos, 2010; Tolio et al., 2010), while research in sensing led to (i) the development of novel sensors for in-line/in-process monitoring of manufacturing systems (Shi, 2006); (ii) methods for determining the placement of in-line monitoring station(s) within multi-stage manufacturing systems, synergistically integrated with measurement coverage analysis for more effective process monitoring, e.g., reducing mean-time-to-detection by advancing process monitoring from off-line inspection toward in-line and in-process monitoring (Modoni et al., 2022) and system diagnosability (Ding et al., 2003); and (iii) an increase in monitoring data variety (e.g., ranging from categorical to spatio-temporal data) and volume (from small samples to a 100% inspection rate), collected in-process with sufficient fidelity (i.e., within the required accuracy, repeatability and reproducibility) and veracity (i.e., accurate reflections of the process “defect condition” patterns). These advances provided the real-time input needed for high-fidelity, high-veracity analysis of the manufacturing process (Babu et al., 2019; Wang et al., 2021).

Subsequent methodologies for root cause analysis (RCA) and process variation modeling have provided a robust foundation for diagnosing product quality issues and quantifying sources of process variability, and have as such evolved into critical components of intelligent manufacturing systems (Ceglarek et al., 2004; e Oliveira et al., 2023). RCA methodologies facilitate the systematic identification of latent defect drivers, while variation modeling quantifies stochastic process behavior and parameter sensitivities (Ceglarek et al., 1994; Franciosa et al., 2020). These analytical frameworks, when synergistically integrated with deep learning architectures and statistical reasoning techniques for predictive quality estimation (Sinha et al., 2020) and closed-loop process control, enable the development of high-fidelity digital twins (Soori et al., 2023). Simultaneously, the emergence of the smart manufacturing theme, supported by the “Factory of the Future”, then Industry 4.0/5.0, and the “twin transition” (i.e., integration of digital and green transformations) initiatives (European Commission, 2020; Thelen et al., 2023), has further reinforced the need for real-time process monitoring, predictive analytics, and intelligent decision-making, i.e., the enablers that currently drive the development of Digital Twin (DT) technology (Wang et al., 2021; Villalonga et al., 2021).

Digital twin (DT) systems have emerged as a groundbreaking innovative framework in manufacturing (Leng et al., 2021; Soori et al., 2023), offering a virtual replica of physical processes and systems to enable real-time process monitoring (Franciosa et al., 2020; Ceglarek et al., 2015), optimization (Thelen et al., 2023), process control (Stavropoulos et al., 2021; Stavropoulos and Sabatakakis, 2024), and decision-making (Bocklisch Franziska, 2023). By integrating advanced sensor networks (Javaid et al., 2021), robust data management (Schadt et al., 2010), and artificial intelligence (AI)-driven analytics (Ertel, 2024), digital twins empower manufacturers to simulate, predict, and proactively resolve potential issues. While significant progress has been made in enabling in-line and in-process monitoring, comparatively fewer studies have addressed control-oriented digital twins, and even fewer have systematically examined the robustness of their underlying models when faced with uncertainties such as sensor noise, parameter variability, and environmental disturbances (Stavropoulos et al., 2021). Addressing these challenges remains critical for ensuring reliable and resilient digital twin deployment in industrial environments.

AI-driven approaches are expected to revolutionize digital twin technology by moving beyond the current reliance on traditional simulations and enhancing real-time decision-making, predictive modeling, and process optimization. Traditional simulation-based methods (Mourtzis et al., 2015) often rely on predefined scenarios and assumptions, and their time-consuming calculations limit responsiveness in dynamic environments (Tao et al., 2024). In contrast, AI has the potential to learn from vast amounts of historical information, physical simulation and real-time monitoring data to generate accurate predictions, adapt dynamically to changing conditions, and provide context-based insights and ultimately better-informed decisions (Nti et al., 2022). AI-driven defect detection (Ren et al., 2022), predictive maintenance (Achouch et al., 2022), and quality assurance (Sinha et al., 2020) can leverage various sources of data, information, and knowledge without relying only on a single resource-intensive simulation, offering manufacturers a more scalable and efficient approach.

In the context of current manufacturing trends (i.e., Industry 4.0 and twin transition) where manufacturing ecosystems are increasingly characterized by cyber-physical integration, interoperability, and data-driven decision-making (Ceglarek, 2014; Herrera Vidal, 2021), the limitations of traditional simulation-based digital twins in addressing scalability, responsiveness, and contextual awareness have become evident. To address these challenges, we propose an AI-enabled Digital Twin (AI-DT) framework that operationalizes key enablers of Industry 4.0/twin transition through the integration of advanced AI modalities: Generative AI for synthetic data augmentation and design-space exploration; Predictive AI for real-time forecasting and anomaly detection; Explainable AI for model interpretability and traceability; Context-Aware AI for environment-sensitive adaptability; and Autonomous Agentic AI for decentralized, self-governing control and decision-making. This composite AI-DT architecture enhances the semantic interoperability, cognitive autonomy, and adaptive intelligence of digital twins, thereby supporting resilient, self-optimizing manufacturing in line with Industry 4.0 and “twin transition” objectives.

2 AI-enabled digital twin framework

The framework provided in Figure 1 outlines a comprehensive and modular architecture for the AI-enabled Digital Twin (AI-DT) system. It integrates physical and virtual systems, ensuring seamless interaction between real-world processes and their digital counterparts. The framework is designed to leverage cutting-edge technologies like sensors, advanced data management, AI and interactive user interfaces.

Figure 1. Framework of the AI-enabled Digital Twin (AI-DT) System for Manufacturing. The system is composed of four core layers: the Sensor Data Acquisition Layer collects multimodal inputs (e.g., photodiodes, high-speed cameras, 3D scanners); the Data Management Layer handles data preprocessing, storage, access, and compliance; the Multi-Model AI Layer integrates diverse AI modules; and the User Interface Layer provides visualization, monitoring, and third-party interfacing capabilities. This modular and scalable architecture bridges physical and virtual systems to enable intelligent, real-time manufacturing insights.

1. Sensor data acquisition layer (physical system, SDAL) forms the foundation of a digital twin system by accurately mirroring the physical system through real-time acquisition of diverse types of data from various sensors. This layer plays a critical role in monitoring, analyzing and simulating real-world processes by providing high-fidelity, precise, and contextually relevant inputs. The SDAL primarily focuses on data collection and transmission, serving as the entry point for real-world data into the virtual ecosystem. While the sensor hardware (e.g., photodiodes, cameras, and 3D scanners) is typically pre-selected and fixed based on system requirements, the emphasis lies on how data is efficiently captured and transmitted to meet system demands. This ensures the quality and timeliness of the data flow that underpins the accuracy and effectiveness of the Digital Twin.

2. Data management layer (bridging physical and virtual systems, DAML) acts as the bridge between the physical and virtual domains, responsible for processing, organizing, and managing the data collected by the sensors. Its main objective is to handle the vast amounts of data generated by the SDAL and make it usable for the Multi-Model AI Layer and the User Interface Layer.

3. Multi-model AI layer (virtual system, MMAL) is the core intelligence engine of the AI-DT system. It leverages multiple AI techniques to process different kinds of data, generate insights, predict outcomes, and provide actionable recommendations. This layer operates on data provided by the DAML and supports real-time and proactive decision-making in manufacturing.

Generative AI (GAI) helps simulate scenarios, generate synthetic data, and create virtual representations of real-world systems. It is often the starting point in creating a Digital Twin system, as it lays the foundation for building the virtual model of the physical system (Gozalo-Brizuela and Garrido-Merchan, 2023; Bandi et al., 2023; Feuerriegel et al., 2024). The main tasks include: (1) Synthetic Data Generation (Lu et al., 2023): generates realistic training datasets to augment defect detection models, addressing challenges like data scarcity or class imbalances. (2) 3D Model Reconstruction (Liu et al., 2024): utilizes video or sensor data to generate high-resolution 3D models of manufacturing components for inspection and 3D environment building. GAI can effectively simulate manufacturing scenarios to test and validate predictive models without disrupting real-world operations.

Predictive AI (PAI) focuses on forecasting future system states, such as defects, based on historical and real-time sensor data. Its primary role is to analyse and infer probable outcomes, supporting proactive decision-making (e.g., predicting porosity or spatter formation based on welding video). The key tasks include (1) Defect Classification (Dai et al., 2024): categorizes defects into specific types (e.g., spatter, porosity, cracks) based on labeled data and predefined classes. This involves analyzing sensor data and assigning defect categories to improve the efficiency of quality inspection. (2) Defect Detection (Ren et al., 2022): identifies the presence and location of defects in manufacturing components using sensor data, video streams, or 3D models. It employs computer vision and sensor fusion techniques to locate defect areas in real-time. (3) Defect Prediction (Liu et al., 2022): predicts the occurrence and type of defects based on process parameters, sensor data, and historical defect records.

Beyond defect classification, detection, and prediction, PAI methods are also widely applied to non-intrusive or indirect feature measurement. For example, in welding, PAI can infer weld quality metrics without destructive testing, while in additive manufacturing, it can estimate over-deposition or surface geometry deviations from sensor data (Stavropoulos and Sabatakakis, 2024; Bourlesas et al., 2024). This extended role demonstrates the flexibility of PAI in providing quality assurance through indirect, real-time measurements that avoid part removal or offline inspection.

Explainable AI (EAI) is critical for interpreting predictions and decisions made by the system, fostering trust and transparency. It provides insights into the “why” and “how” behind system behaviors, which is important for audits, compliance, and user trust (Hrnjica and Softic, 2020; Chen, 2023; Ahmed et al., 2022). The key tasks include: (1) Model Explainability (Dai et al., 2024; Li et al., 2018): provides clear explanations of how AI models arrive at their classifications or predictions. (2) Causal Analysis (e Oliveira et al., 2023): identifies the underlying factors influencing AI decisions by establishing cause-and-effect relationships between input variables and predictions. This helps in understanding why specific defects occur, how process variations impact outcomes, and what corrective actions can mitigate identified issues. (3) Trust Building (Choung et al., 2023): increases trust in AI systems by offering insights into decision-making processes, especially in high-stakes scenarios.

Context-aware AI (CAI) ensures adaptability and robustness in real-time decision-making, making predictions more relevant and actionable by incorporating environmental and operational variations (Hribernik et al., 2021). The key tasks include: (1) Dynamic Decision-Making (Villalonga et al., 2021): adjusts recommendations based on current process parameters (e.g., speed, material type, temperature) and evolving conditions. (2) Context Integration (Reiman et al., 2021): incorporates external factors such as environmental conditions, operator inputs, or production goals to tailor AI actions. (3) Real-Time Feedback (Pantelidakis et al., 2022): provides adaptive feedback loops for optimizing processes as they occur.

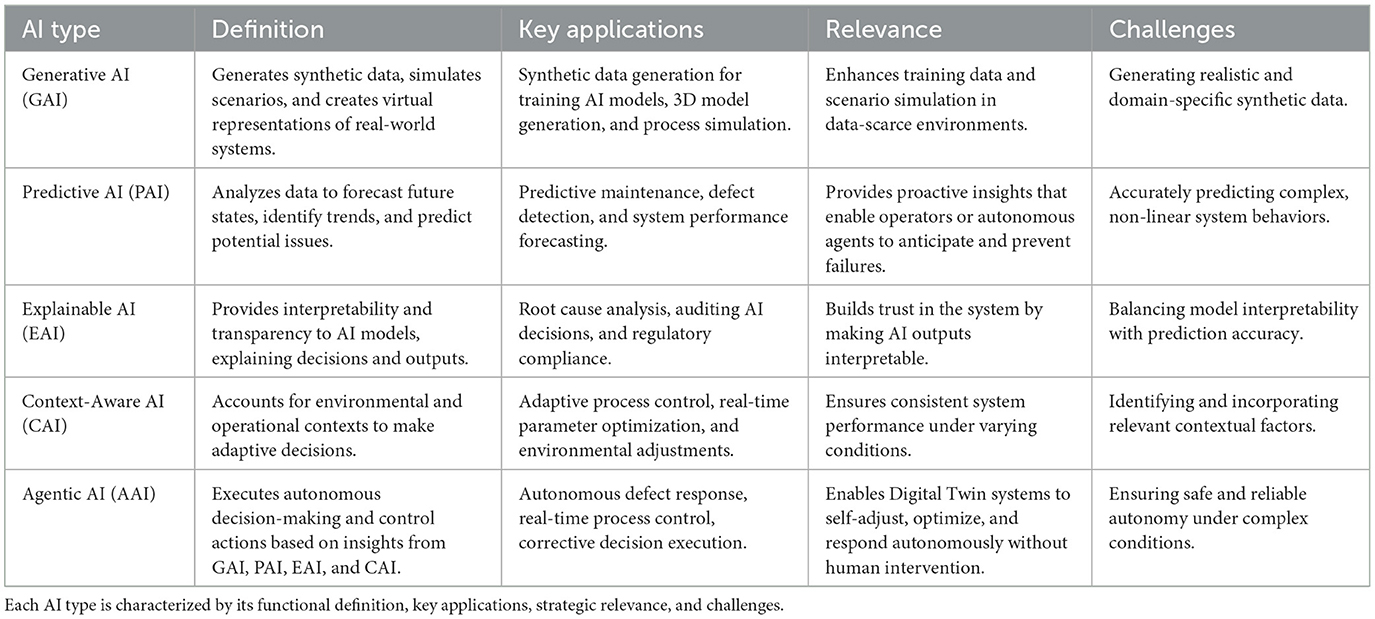

Agentic AI (AAI) represents an autonomous decision-making agent that can act based on insights from modules such as GAI, PAI, EAI, and CAI, shown in Table 1. AAI is designed to close the loop by executing decisions. It empowers the system to act without human intervention, enabling full autonomy in complex environments (Acharya et al., 2025). The key tasks include: (1) Autonomous Operations (Leng et al., 2023): executes routine tasks like defect classification, report generation, or process adjustments without human input. (2) Decision Support (Psarommatis and Kiritsis, 2022): provides ranked recommendations or options for operators to choose from, balancing automation with human oversight. (3) Learning and Adaptation (Li C. et al., 2022): continuously learns from feedback and evolving scenarios to improve its decision-making capabilities. For example, an Agentic AI module in a welding application can operate as a self-correcting welding robot that autonomously adjusts process parameters, such as laser power, welding speed, or focal position, in response to defect signals. Unlike traditional automation systems that follow predefined rules, this self-correcting behavior allows the robot to learn from sensor feedback and adapt dynamically to process variations, ensuring consistent weld quality without human intervention.

4. User interface layer (virtual system, USIL) forms the virtual interface of the AI-DT System and acts as the primary point of interaction between operators and the system's decisions (Banaeian Far and Imani Rad, 2022). This layer is designed to ensure seamless communication, real-time feedback, and actionable insights for effective decision-making and process optimization. In addition to its role as the primary interaction layer, the USIL enables bi-directional engagement with the MMAL. For example, operators can initiate tasks such as 3D reconstruction and synthetic data augmentation through the USIL, directly visualizing outputs generated by GAI. Likewise, PAI results such as defect classification, detection outputs, and predictive trends (e.g., spatter evolution) are presented via real-time dashboards, supporting informed decision-making. Operator feedback provided through the USIL can be fed back into GAI and PAI, for example by refining simulation parameters, adjusting predictive thresholds, or prioritizing specific defect categories. This continuous feedback loop ensures that AI outputs are not only accessible and interpretable but can also be iteratively improved and adapted within production workflows.
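The data flow through the four layers can be sketched as a simple pipeline. This is a minimal illustration rather than the system's implementation: every name (`SensorFrame`, `acquire`, `manage`, `predict`, `render`), the sample values, and the threshold rule standing in for a PAI model are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class SensorFrame:
    """One raw reading from the Sensor Data Acquisition Layer (SDAL)."""
    sensor: str
    timestamp_s: float
    value: float


def acquire() -> list[SensorFrame]:
    # SDAL: stand-in for real photodiode acquisition (values are made up).
    return [
        SensorFrame("photodiode", 0.00, 0.21),
        SensorFrame("photodiode", 0.01, 0.93),
        SensorFrame("photodiode", 0.02, 0.25),
    ]


def manage(frames: list[SensorFrame]) -> list[SensorFrame]:
    # DAML: preprocessing, here dropping out-of-range values and sorting by time.
    valid = [f for f in frames if 0.0 <= f.value <= 1.0]
    return sorted(valid, key=lambda f: f.timestamp_s)


def predict(frames: list[SensorFrame], threshold: float) -> list[float]:
    # MMAL (hypothetical PAI stand-in): flag timestamps whose signal exceeds a threshold.
    return [f.timestamp_s for f in frames if f.value > threshold]


def render(flagged: list[float]) -> str:
    # USIL: present the result to the operator.
    return f"{len(flagged)} suspected defect(s) at t = {flagged}"


threshold = 0.9
report = render(predict(manage(acquire()), threshold))
print(report)

# Operator feedback through the USIL adjusts the predictive threshold.
threshold = 0.2
report_after_feedback = render(predict(manage(acquire()), threshold))
print(report_after_feedback)
```

The second call illustrates the feedback loop: the operator's adjustment changes what the AI layer reports without any change to the acquisition or data management steps.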

3 Designing and building test system

In this paper, we use welding as a case study to present a comprehensive framework for designing and implementing a DT system. Our approach integrates data collection and advanced AI, developed and applied to industrial processes. The primary objective of this virtual system is to enable operators to carry out their tasks within an augmented or virtual reality environment and to evaluate whether welding products meet the required tolerances based on specified process parameters. This framework not only bridges the gap between theory and practical application but also demonstrates the potential of DT systems to transform manufacturing processes by improving operational efficiency and product quality.

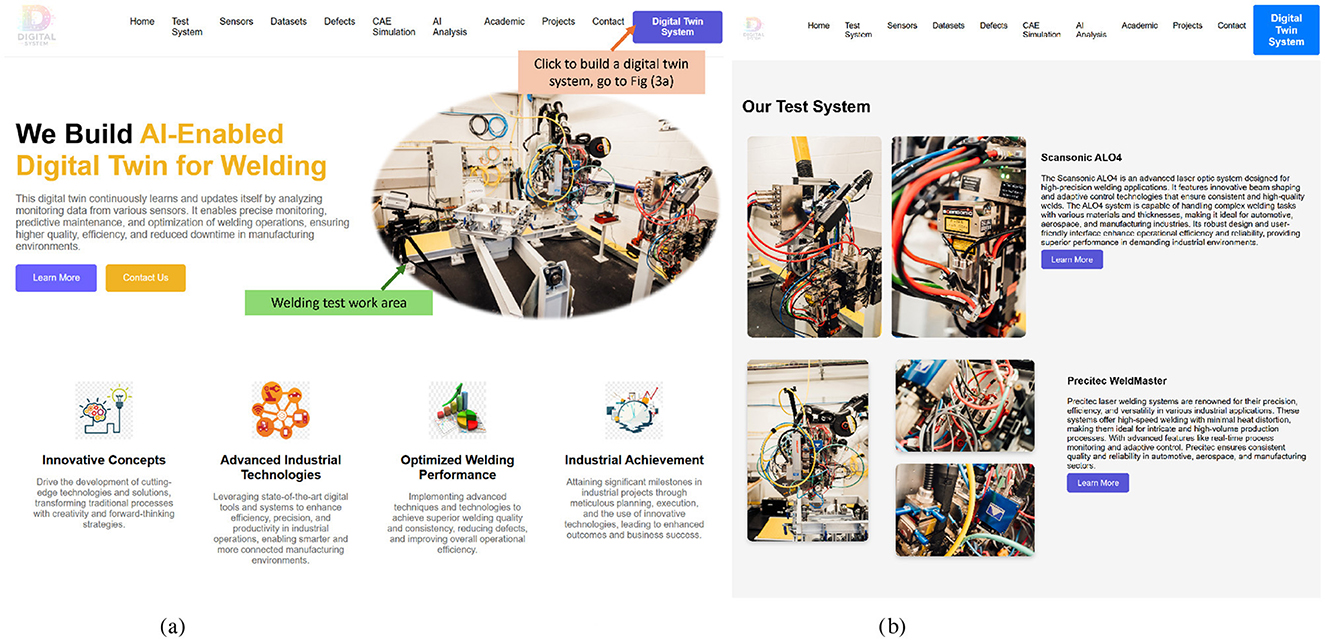

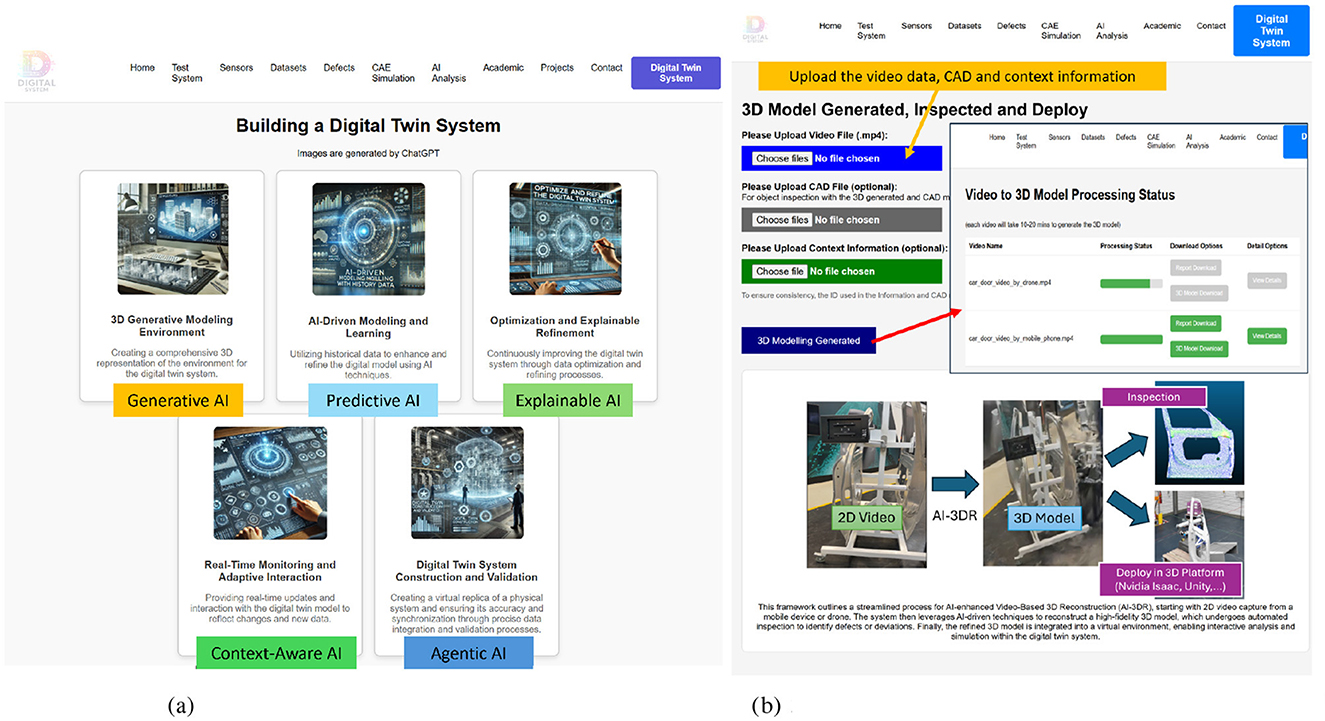

Figure 2a serves as the home page of the AI-DT System for welding, offering an overview of the welding system (Figure 2b), sensors, datasets, defects and AI analysis. Building on this foundational overview, the detailed technical framework (Figure 3a) provides a structured methodology for the design and implementation of the AI-DT system. This framework leverages AI-driven methodologies to enhance modeling, monitoring, and decision-making capabilities, ensuring a seamless interaction between physical and virtual systems. (1) 3D Generative Modeling Environment: the workflow starts with the creation of a 3D virtual model that accurately replicates the welding environment. Using Generative AI, the model is constructed from multi-view videos, integrating spatial, geometric, and contextual data for an immersive digital representation. (2) AI-Driven Modeling and Learning: process behavior is predicted using Predictive AI while incorporating physics-informed constraints to ensure realistic process simulations. AI learns from historical welding data, simulation outputs, and real-world observations, enabling precise quality assessment and defect forecasting. (3) Optimization and Explainable Refinement: the model undergoes iterative root cause analysis and performance optimization through Explainable AI. This step ensures that AI decisions, such as defect predictions and recommended process adjustments, are transparent and interpretable, fostering trust in automated decision-making while improving accuracy over time. (4) Real-Time Monitoring and Adaptive Interaction: the digital twin remains dynamic through real-time monitoring, capturing live data from sensors, video feeds, and contextual information. Context-Aware AI adapts the digital twin's behavior in response to changes in environmental conditions, process variations, or external influences, ensuring continuous feedback and actionable insights. (5) Digital Twin System Construction and Validation: the system integrates Agentic AI for autonomous decision-making, allowing it to proactively adjust parameters, detect defects, and implement corrective actions without direct human intervention. This ensures that the digital twin can self-optimize and maintain peak efficiency in dynamic operational environments.

Figure 2. (a) A web-based interface showcasing the integration of AI modules, sensor data, and digital twin functionalities for welding process optimisation and defect monitoring. (b) The physical welding system setup comprising the Scansonic ALO4 and Precitec WeldMaster modules used for laser welding experiments and data acquisition to support digital twin development and evaluation. (a) AI-enabled digital twin system for welding. (b) Welding test system for AI-DT.

Figure 3. (a) Modular AI Interface of the Digital Twin System, showcasing the integration of Generative AI, Predictive AI, Explainable AI, Context-Aware AI, and Agentic AI to support end-to-end quality assurance and autonomous decision-making. (b) Interactive 3D Model Generation Interface, illustrating the workflow for uploading video data (e.g., from mobile phones or drones), converting it into high-fidelity 3D models, and deploying it within the Digital Twin environment for inspection and validation. (a) AI-driven digital twin system modules. (b) 3D models generated interface powered by the GAI module.

3.1 3D generative modeling environment for welding object reconstruction

To create a virtual environment that accurately replicates the physical one, it is essential to incorporate detailed 3D models of various objects, including their geometric shapes, functional attributes, and precise spatial locations. This process captures the structural and operational characteristics of each object, ensuring the virtual representation aligns seamlessly with the physical environment. Such fidelity is critical for enabling effective interaction, analysis, and simulation within the virtual space. There are multiple methods to generate 3D models, including: (1) Computer-Aided Design (CAD): CAD models are manually created by professionals based on design specifications, blueprints, or technical drawings. (2) Reverse Engineering: This approach involves reconstructing a digital 3D model from a physical object through the use of technologies such as 3D scanning and mesh processing. It is commonly applied to replicate existing parts, perform quality analysis, or generate digital representations of legacy components without existing CAD data (Helle and Lemu, 2021). (3) Artificial Intelligence Generated Content: AI techniques such as Image-to-3D reconstruction [e.g., Neural Radiance Fields (Mildenhall et al., 2021)], Text-to-3D [e.g., DreamFusion (Poole et al., 2022)], 3D Gaussian Splatting (3DGS) (Kerbl et al., 2023), and Direct3D (Wu et al., 2024) allow automated generation of 3D models from inputs like images, sketches, or point clouds. Among these, GAI is particularly promising for its ability to handle diverse input formats and automate the 3D model generation process. As AI technologies evolve, GAI-generated 3D models are expected to become a primary approach due to their scalability, efficiency, and adaptability.

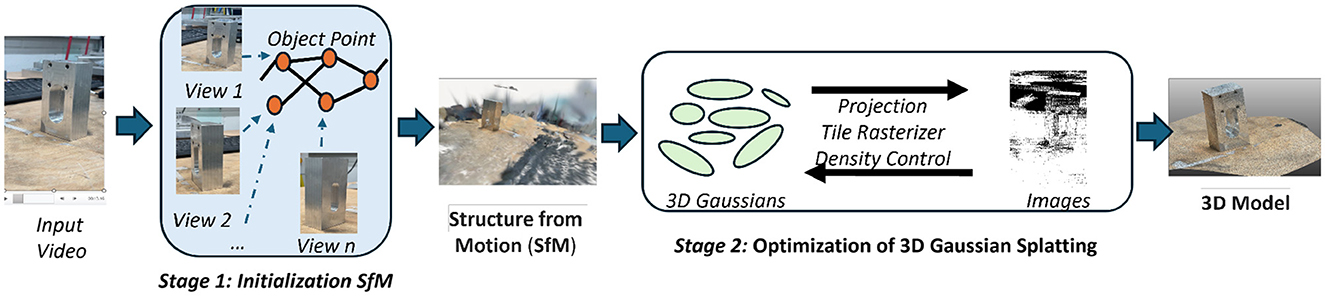

AI-enhanced video-to-3D reconstruction (AI-3DR): Our system focuses on leveraging video data to generate 3D models for inspection and deployment into a virtual environment (see the system page in Figure 3b). The AI-enhanced Video-to-3D Reconstruction process includes: (1) Video Acquisition: video data is captured from various perspectives using mobile cameras or drones. (2) 3D Model Generation: the AI-3DR module converts input video from mobile devices, drones, or fixed cameras into accurate and high-fidelity 3D models. As illustrated in Figure 4, the reconstruction process involves two main stages. Stage 1 performs Structure from Motion (SfM), which extracts object points by analyzing multiple video frames from different viewpoints (e.g., View 1, View 2, and View n), resulting in a sparse 3D structure that captures the global geometry of the object. Stage 2 involves the optimisation of this structure using 3D Gaussian Splatting (3DGS) (Kerbl et al., 2023), which enhances spatial fidelity through a series of steps including projection, tile rasterisation, and density control. These steps refine the point cloud and produce a continuous surface representation, ultimately generating a dense, photorealistic 3D model suitable for downstream tasks such as defect inspection, simulation, or integration into digital twin environments. This workflow highlights the integration of motion capture and advanced AI-driven optimization for precise 3D reconstruction. (3) Inspection and Deployment: by analyzing the generated 3D models for defects or deviations, our system generates a quality analysis report that shows the local areas containing a large degree of deformation.
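To make the density-control step of Stage 2 more concrete, the sketch below follows the general idea described by Kerbl et al. (2023): nearly transparent Gaussians are pruned, small Gaussians in under-reconstructed regions are cloned, and large ones are split into smaller ones. It is a heavily simplified illustration, not the AI-3DR implementation. The `Gaussian` class, the isotropic `scale`, and the threshold values in the example are assumptions, and the position updates applied in the original method when cloning or splitting are omitted.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Gaussian:
    position: tuple
    scale: float    # isotropic size for brevity; 3DGS uses anisotropic covariances
    opacity: float
    grad: float     # mean magnitude of the view-space positional gradient


def density_control(gaussians, grad_threshold, scale_threshold,
                    min_opacity, split_factor=1.6):
    """Prune, clone, or split Gaussians (simplified, illustrative only)."""
    result = []
    for g in gaussians:
        if g.opacity < min_opacity:
            continue  # prune: nearly transparent Gaussians contribute little
        if g.grad <= grad_threshold:
            result.append(g)  # well reconstructed: keep unchanged
        elif g.scale <= scale_threshold:
            result.extend([g, g])  # clone: small Gaussian, under-reconstructed region
        else:
            child = replace(g, scale=g.scale / split_factor)
            result.extend([child, child])  # split: replace by two smaller Gaussians
    return result


# Made-up Gaussians: one to prune, one to keep, one to clone, one to split.
cloud = [
    Gaussian((0.0, 0.0, 0.0), scale=0.05, opacity=0.001, grad=0.0),
    Gaussian((1.0, 0.0, 0.0), scale=0.05, opacity=0.8, grad=0.0001),
    Gaussian((2.0, 0.0, 0.0), scale=0.005, opacity=0.8, grad=0.001),
    Gaussian((3.0, 0.0, 0.0), scale=0.16, opacity=0.8, grad=0.001),
]
refined = density_control(cloud, grad_threshold=0.0002,
                          scale_threshold=0.01, min_opacity=0.005)
print(len(cloud), "->", len(refined))
```

In a full pipeline this step would be interleaved with gradient-based optimisation of the Gaussian parameters, so that the representation becomes denser only where the reconstruction error remains high.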

Figure 4. Framework of the AI-enhanced Video-to-3D Reconstruction (AI-3DR) Model for the GAI module. The pipeline includes Stage 1: Initialization using Structure from Motion (SfM), where multiple video views are used to extract object points and build a sparse 3D structure, followed by Stage 2: Optimization via 3D Gaussian Splatting, which refines the geometry through projection, tile rasterisation, and density control. The final output is a high-fidelity 3D model suitable for virtual inspection, simulation, and digital twin integration.

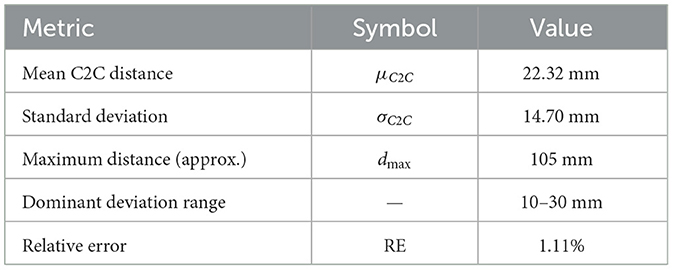

Quantitative evaluation of 3D reconstruction: To evaluate the fidelity of the AI-3DR generated 3D models, we perform a Cloud-to-Cloud (C2C) distance analysis between the AI-3DR output point cloud $P = \{p_i\}_{i=1}^{N}$ and a ground-truth CAD model point cloud $Q = \{q_j\}_{j=1}^{M}$. For each point $p_i \in P$, the shortest Euclidean distance to the reference point cloud $Q$ is computed as:

$$d(p_i, Q) = \min_{q_j \in Q} \lVert p_i - q_j \rVert_2$$

The set of all such distances defines the C2C deviation:

$$D_{C2C} = \{\, d(p_i, Q) \mid p_i \in P \,\}$$

From this, we compute the Mean distance, Standard deviation, and Maximum observed distance:

$$\mu = \frac{1}{N} \sum_{i=1}^{N} d(p_i, Q), \qquad \sigma = \sqrt{\frac{1}{N} \sum_{i=1}^{N} \bigl(d(p_i, Q) - \mu\bigr)^2}, \qquad d_{\max} = \max_{p_i \in P} d(p_i, Q)$$

To contextualize the geometric accuracy, we compare the mean C2C distance to the object's bounding box diagonal as Relative Error (RE):

$$RE = \frac{\mu}{\sqrt{L^2 + W^2 + H^2}} \times 100\%$$

where $L$, $W$, $H$ are the length (height), width, and thickness/depth of the object. The resulting Relative Error is a percentage expressing the average deviation with respect to object size; a low relative error indicates high geometric fidelity, suitable for quality inspection and Digital Twin deployment in industrial settings.
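The C2C metrics described above can be computed directly from two point clouds. The following is a minimal sketch using a brute-force nearest-neighbour search, which is only practical for small clouds; the function name `c2c_metrics`, the toy coordinates, and the use of the population standard deviation (division by the number of points) are assumptions made for illustration.

```python
import math


def c2c_metrics(P, Q, dims):
    """Return mean, std, max C2C distance and relative error (%) of P w.r.t. Q.

    P, Q: lists of (x, y, z) points; dims: (L, W, H) of the object,
    in the same unit as the points.
    """
    # Shortest Euclidean distance from each reconstructed point to the reference cloud.
    distances = [min(math.dist(p, q) for q in Q) for p in P]
    n = len(distances)
    mean = sum(distances) / n
    # Population standard deviation (divides by n); an assumption of this sketch.
    std = math.sqrt(sum((d - mean) ** 2 for d in distances) / n)
    # Bounding-box diagonal used to normalise the mean deviation.
    diagonal = math.sqrt(sum(side ** 2 for side in dims))
    return {
        "mean": mean,
        "std": std,
        "max": max(distances),
        "relative_error_pct": 100.0 * mean / diagonal,
    }


# Toy clouds (made-up coordinates, in mm).
reference = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (0.0, 10.0, 0.0)]
reconstructed = [(0.0, 0.0, 1.0), (10.0, 0.0, 3.0), (0.0, 10.0, 2.0)]
metrics = c2c_metrics(reconstructed, reference, dims=(10.0, 10.0, 0.0))
print(metrics)
```

For clouds with many points, the inner `min` would normally be replaced by a spatial index such as a k-d tree, since the brute-force search scales with the product of the two cloud sizes.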

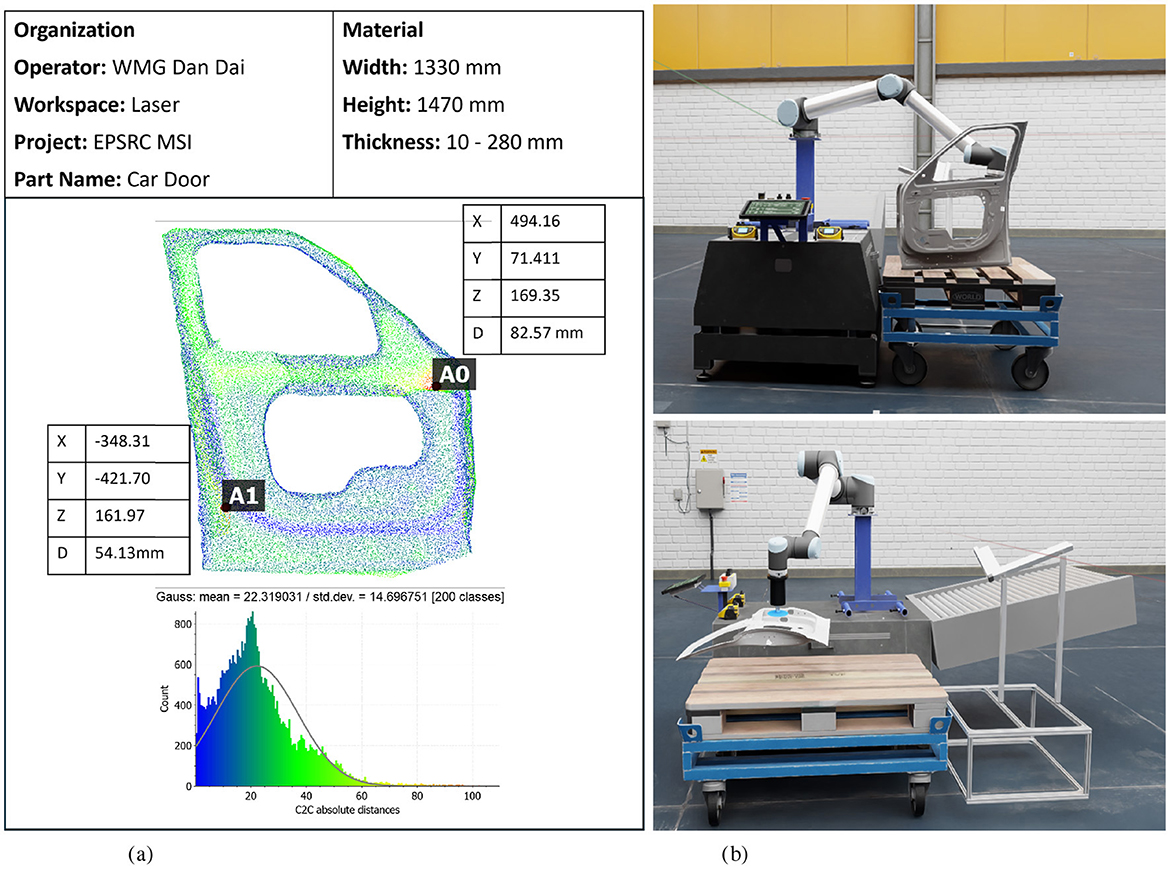

For our car door, the dimensions are width = 1,330 mm, height = 1,470 mm, and thickness = 280 mm. The results of our evaluation, based on C2C analysis, are shown in Table 2. The mean deviation of 22.32 mm and standard deviation of 14.70 mm indicate a relatively tight spread in deviation, though the tail (max 105 mm) suggests some outlier points. The dominant deviation range (10–30 mm) indicates that the majority of points in the AI-3DR model deviate from the ground-truth CAD model by only 10 to 30 mm, reflecting consistent and accurate reconstruction across most of the object's surface. A relative error of 1.11% with respect to object scale demonstrates that the reconstructed geometry is highly accurate, validating its use in precision manufacturing workflows such as inspection, virtual assembly, and process optimisation.
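As a check on the reported figure, substituting the car door dimensions and the mean deviation into the relative-error definition (mean C2C distance divided by the bounding-box diagonal) gives:

$$\sqrt{1330^2 + 1470^2 + 280^2}\ \text{mm} = \sqrt{4{,}008{,}200}\ \text{mm} \approx 2002.0\ \text{mm}, \qquad RE = \frac{22.32\ \text{mm}}{2002.0\ \text{mm}} \times 100\% \approx 1.11\%$$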

To provide practical insights into the system's deployment readiness, we evaluated the processing times for key modules. The video-to-3D reconstruction pipeline, which includes frame extraction, feature matching, point cloud generation, and mesh refinement, takes approximately 12–15 min per 10-second video, depending on resolution and scene complexity. The Quality Analysis Report (Figure 5a) presents a point cloud-based inspection of a car door, detailing dimensional measurements, deviations, and statistical analysis. The report includes key material specifications such as width, height, and thickness, along with precise spatial coordinates (X, Y, Z) and deviation (D) values at critical points (A0, A1). The bottom histogram represents the absolute distance distribution C2C, illustrating the deviation pattern across the scanned model. This analysis enables defect detection, structural validation, and process optimization in laser-based manufacturing environments. The 3D car door model can be imported into a virtual platform (Figure 5b), allowing simulation, inspection, and process validation within a digital twin environment. The system utilizes AI-driven 3D reconstruction to replicate the physical car door in a realistic virtual workspace, allowing for robotic path planning, quality analysis, and automated assembly simulations. This integration enhances manufacturing precision, defect analysis, and workflow optimization in smart factory settings.

Figure 5. (a) Quality analysis report of a car door based on the AI-3DR-generated 3D model for the GAI module. The report includes detailed spatial deviation measurements (e.g., at points A0 and A1), part dimensions, and a histogram of cloud-to-cloud (C2C) distance deviations, supporting defect detection and dimensional validation. (b) Virtual integration of the 3D Car Door Model into a digital twin platform, enabling robotic simulation, in-process inspection, and quality validation in a smart manufacturing environment. (a) Quality analysis report about the car door. (b) Virtual integration of 3D car door model.

3.2 AI-driven modeling and learning for welding defect detection

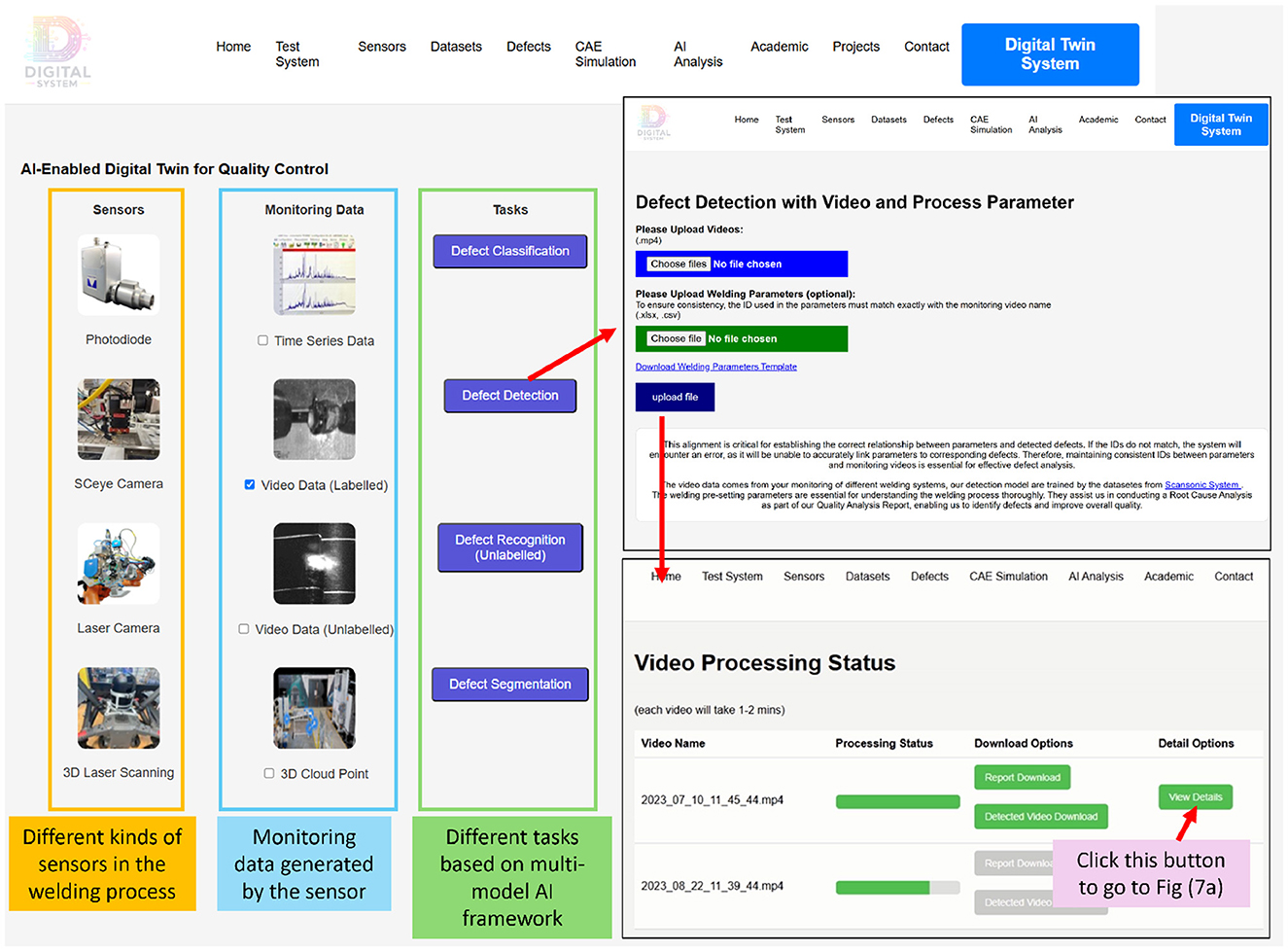

AI-driven modeling and learning form the backbone of a robust digital twin system by combining advanced machine learning techniques and physics-informed constraints to ensure high accuracy and physical consistency. The system integrates multi-modal sensor data, including photodiodes, high-speed cameras, laser scanners, and 3D laser scanning, to capture monitoring data such as time series, video, and 3D point clouds. Users upload their data and process parameters, which are processed by AI-based defect classification, detection, and segmentation models (Figure 6). We will demonstrate this AI-driven modeling and learning through defect detection.

Figure 6. AI-driven defect identification workflow for Welding Quality Control in the PAI module. The interface guides users through uploading sensor data and process parameters, initiating defect detection, and visualizing results. The processing status and outputs, such as detection videos and downloadable defect reports, enhance traceability and support actionable insights for quality assurance.

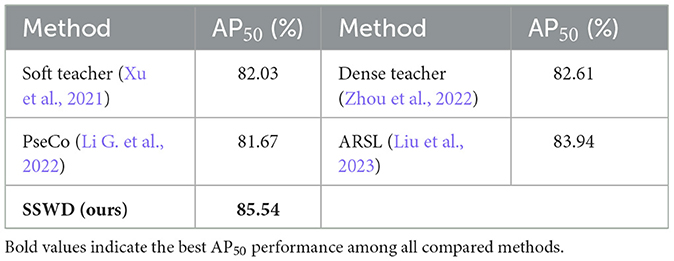

Semi-supervised welding defect detection (SSWD): The process of annotating welding defect data is both labor-intensive and time-consuming, especially in industrial environments where large volumes of unlabeled data are continuously generated. To address this challenge, we propose a semi-supervised defect detection framework based on a Transformer-based teacher-student architecture (Hu et al., 2023), designed to leverage labeled and unlabeled data effectively. The framework comprises two core components: the Detection Transformer Teacher and the Detection Transformer Student. The teacher model is trained using a limited set of labeled welding data, while the student model is trained primarily on the unlabeled data, using the teacher's predictions as soft targets. Through this teacher–student paradigm, the system effectively propagates reliable knowledge from labeled to unlabeled domains, improving defect detection accuracy while significantly reducing the dependency on manual annotation. The integration of transformer architectures ensures that both spatial and contextual information is preserved, further enhancing the system's generalization capabilities in real-world welding scenarios.
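One common way to use a teacher's predictions as soft targets is to minimise the cross-entropy between the teacher's and the student's class distributions. The sketch below illustrates this for the class scores of a single predicted box. It is an assumption made for illustration and not necessarily the loss used in the SSWD framework; the function names, logits, class order, and temperature parameter are all hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def soft_target_loss(teacher_logits, student_logits, temperature=1.0):
    """Cross-entropy of the student distribution against the teacher's soft targets."""
    targets = softmax(teacher_logits, temperature)
    predictions = softmax(student_logits, temperature)
    return -sum(t * math.log(p) for t, p in zip(targets, predictions))


# Hypothetical class logits for one predicted box: [spatter, discontinuity, background].
teacher = [2.0, 0.5, -1.0]
agreeing_student = [1.8, 0.4, -0.9]
disagreeing_student = [-1.0, 0.5, 2.0]

loss_agree = soft_target_loss(teacher, agreeing_student)
loss_disagree = soft_target_loss(teacher, disagreeing_student)
print(f"agreeing student: {loss_agree:.3f}, disagreeing student: {loss_disagree:.3f}")
```

The loss is smaller when the student's distribution is close to the teacher's, so minimising it on unlabeled frames pushes the student toward the teacher's predictions without requiring manual annotations.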

Quantitative evaluation of defect detection: To assess the performance of defect detection models on labeled data, we adopt the Average Precision at IoU = 0.50 (AP50) metric. AP50 measures the model's accuracy in detecting and localizing defects by evaluating how well the predicted bounding boxes align with ground-truth annotations, based on a fixed Intersection over Union (IoU) threshold of 0.50. Mathematically, AP is computed as the area under the precision–recall curve:

$$\mathrm{AP} = \int_{0}^{1} P(R)\, dR$$

where P(R) denotes the precision as a function of recall R. In practice, this integral is approximated by a summation over all discrete recall levels at which precision drops occur. A higher AP50 value reflects better performance, indicating that the model maintains high precision across a wide range of recall levels, a crucial property for reliable defect identification in manufacturing systems.
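As a rough illustration of how this summation works, the sketch below computes AP at a fixed IoU threshold for a single image and a single defect class. The box format (x1, y1, x2, y2), the greedy matching rule, and the function names are assumptions made for the example; it is not the evaluation code used in this study.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def average_precision(predictions, ground_truths, iou_threshold=0.5):
    """AP for one class. predictions: list of (score, box); ground_truths: list of boxes."""
    if not ground_truths:
        return 0.0
    matched = [False] * len(ground_truths)
    tp_flags = []
    # Visit predictions from most to least confident.
    for _, box in sorted(predictions, key=lambda p: p[0], reverse=True):
        best_iou, best_idx = 0.0, -1
        for idx, gt in enumerate(ground_truths):
            if matched[idx]:
                continue  # each ground-truth box can be matched only once
            overlap = iou(box, gt)
            if overlap > best_iou:
                best_iou, best_idx = overlap, idx
        is_tp = best_iou >= iou_threshold
        if is_tp:
            matched[best_idx] = True
        tp_flags.append(is_tp)

    # Precision and recall after each ranked prediction.
    precisions, recalls, tp = [], [], 0
    for rank, is_tp in enumerate(tp_flags, start=1):
        tp += int(is_tp)
        precisions.append(tp / rank)
        recalls.append(tp / len(ground_truths))

    # Make precision non-increasing in recall (the usual interpolation step).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    # Sum precision over the recall increments: a discrete form of the integral.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


if __name__ == "__main__":
    gts = [(0, 0, 10, 10), (20, 20, 30, 30)]
    preds = [(0.9, (0, 0, 10, 10)), (0.8, (50, 50, 60, 60)), (0.7, (20, 20, 30, 30))]
    print(average_precision(preds, gts, iou_threshold=0.5))
```

Calling `average_precision` with `iou_threshold=0.5` gives AP50 for that class; a full benchmark would pool predictions across all test images before ranking them.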

Our dataset consists of 450 welding videos, which collectively yield approximately 146,000 image frames, of which only 1,247 are manually labeled. We compare SSWD with Soft Teacher (Xu et al., 2021), Dense Teacher (Zhou et al., 2022), PseCo (Li G. et al., 2022), and ARSL (Liu et al., 2023); these models are trained using 1,247 labeled images and 145,138 unlabeled images. Table 3 presents a comparative evaluation of AP50 across the five semi-supervised defect detection methods. Despite the limited labeled data, SSWD achieves strong performance, with an AP50 of 85.54%. This result highlights the effectiveness of our teacher–student architecture in leveraging large-scale unlabeled data to improve detection accuracy while significantly reducing reliance on manual annotation.

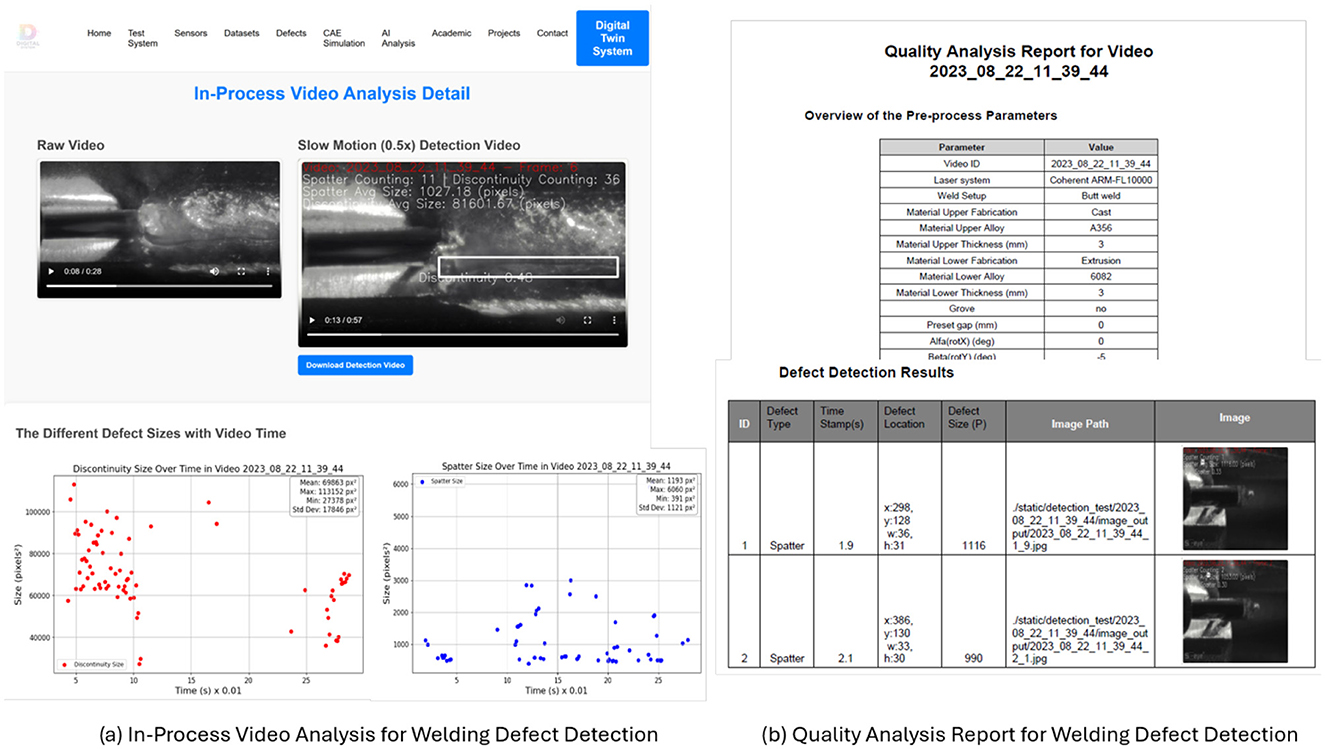

The processed results are shown on the video processing status dashboard (Figure 7). It includes a raw welding video and a slow-motion detection video that highlights detected defects such as spatter and discontinuities, along with real-time defect statistics. The size distribution of defects over time is visualized through discontinuity and spatter size plots, allowing for a quantitative assessment of welding quality. Users can download processed detection videos, defect size data, and defect analysis reports for further inspection and analysis.

The defect analysis report (Figure 7b) includes an overview of pre-process parameters, detailing material properties, welding setup, and laser system configurations. Its defect detection results section presents automatically identified defects, including their timestamps, spatial locations, and sizes, alongside corresponding annotated images. This structured analysis supports process optimization and quality assurance by enabling precise defect tracking and corrective actions. The interface enables users to access detailed defect reports, visualize defect trends, and refine detection accuracy.

The system currently requires about one minute to analyse a 15-second video (roughly four times slower than real time), making it more suitable for post-process inspection. In practical manufacturing environments with edge computing devices, video data may be partially occluded and sensor signals can become noisy or misaligned; the system could incorporate multi-sensor data fusion to enhance robustness under these conditions. Future developments will focus on improving reliability through training on noise-rich datasets and integrating physics-informed, context-aware adaptation mechanisms, thereby enabling early defect warnings through the combination of historical trends and live data streams.

Figure 7. (a) In-process video analysis interface for welding defect detection in the PAI module. This interface displays raw and slow-motion annotated videos, alongside visual plots of defect size trends (e.g., discontinuities and spatter) over time, enabling quantitative analysis and traceability of defect progression during the welding process. (b) The report includes a comprehensive summary of pre-process parameters, spatial-temporal defect metadata (type, location, timestamp, size), and annotated defect images to support automated quality assessment and corrective action planning.
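The defect metadata listed for the report (type, location, timestamp, size) maps naturally onto a small record structure. The following sketch shows one way such records could be aggregated into per-type statistics and size-over-time series; the class name, field names, and units are hypothetical and are not taken from the system's actual data schema.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class DefectRecord:
    """One detected defect; all field names and units are illustrative."""
    defect_type: str    # e.g. "spatter" or "discontinuity"
    timestamp_s: float  # position in the video, in seconds
    x: float            # horizontal location (assumed pixel coordinates)
    y: float            # vertical location (assumed pixel coordinates)
    size: float         # defect size (assumed pixel area)


def summarize(records):
    """Return per-type count, mean size, max size, and first occurrence."""
    grouped = defaultdict(list)
    for record in records:
        grouped[record.defect_type].append(record)
    summary = {}
    for defect_type, items in grouped.items():
        sizes = [r.size for r in items]
        summary[defect_type] = {
            "count": len(items),
            "mean_size": sum(sizes) / len(sizes),
            "max_size": max(sizes),
            "first_seen_s": min(r.timestamp_s for r in items),
        }
    return summary


def size_series(records, defect_type):
    """Return (timestamp, size) pairs for one defect type, sorted by time."""
    return sorted(
        (r.timestamp_s, r.size) for r in records if r.defect_type == defect_type
    )


if __name__ == "__main__":
    detections = [
        DefectRecord("spatter", 1.20, 310.0, 145.0, 42.0),
        DefectRecord("discontinuity", 0.80, 280.0, 150.0, 110.0),
        DefectRecord("spatter", 0.40, 305.0, 140.0, 18.0),
    ]
    print(summarize(detections))
    print(size_series(detections, "spatter"))
```

Keeping each detection as an immutable record makes it straightforward to export the same data both to the size-trend plots and to a downloadable report.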

4 Conclusion and next steps

This paper presents a modular and scalable AI-enabled Digital Twin (AI-DT) framework that integrates Generative AI (GAI) and Predictive AI (PAI) to enhance real-time monitoring and defect detection in manufacturing. Although it is demonstrated in the context of welding, with an implementation that includes sensor data acquisition, multi-model AI layers, and user interface components, the proposed framework can be adapted to other manufacturing scenarios, such as machining, assembly, and additive manufacturing, by reconfiguring sensor inputs, retraining the AI modules with domain-specific data, and updating process parameters to reflect task-specific operational conditions.

As manufacturing environments are inherently noisy and unpredictable, several limitations remain. Future developments will focus on enhancing the robustness and adaptability of our AI models and on incorporating metamodeling approaches and automation workflows (Stavropoulos et al., 2023). Training on diverse datasets that capture noise, occlusion, and variability will strengthen model resilience, while metamodels and workflow databases can accelerate Digital Twin development by enabling modular reuse, systematic workflow design, and alignment with business and policy constraints. Furthermore, we plan to integrate Explainable AI (XAI) for actionable root cause analysis, deploy Context-Aware AI to support real-time parameter optimization, and incorporate Agentic AI to facilitate autonomous decision-making and adaptive control. Collectively, these enhancements will advance the scalability, reliability, and practical applicability of the proposed framework across a wide range of industrial scenarios.

Data availability statement

The datasets presented in this article are not readily available because the data are used for BMW, and permission to share them must be obtained from BMW. Requests to access the datasets should be directed to ZC5kYWlAYXN0b24uYWMudWs=.

Author contributions

DD: Investigation, Software, Methodology, Writing – original draft, Visualization, Writing – review & editing, Validation, Conceptualization. BZ: Validation, Writing – review & editing, Visualization, Investigation. ZY: Writing – review & editing, Visualization, Conceptualization. PF: Resources, Validation, Project administration, Methodology, Writing – review & editing, Funding acquisition. DC: Funding acquisition, Writing – review & editing, Project administration, Resources, Validation.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was supported by the EPSRC MSI: Made Smarter Innovation - Research Centre for Smart, Collaborative Industrial Robotics (EP/V062158/1).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that Gen AI was used in the creation of this manuscript. The authors take full responsibility for the use of generative AI in the preparation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frai.2025.1655470/full#supplementary-material

References

Acharya, D. B., Kuppan, K., and Divya, B. (2025). Agentic AI: autonomous intelligence for complex goals-a comprehensive survey. IEEE Access 13, 18912–18936. doi: 10.1109/ACCESS.2025.3532853

Achouch, M., Dimitrova, M., Ziane, K., Sattarpanah Karganroudi, S., Dhouib, R., Ibrahim, H., et al. (2022). On predictive maintenance in industry 4.0: overview, models, and challenges. Appl. Sci. 12:8081. doi: 10.3390/app12168081

Ahmed, I., Jeon, G., and Piccialli, F. (2022). From artificial intelligence to explainable artificial intelligence in industry 4.0: a survey on what, how, and where. IEEE Trans. Industr. Inform. 18, 5031–5042. doi: 10.1109/TII.2022.3146552

Babu, M., Franciosa, P., and Ceglarek, D. (2019). Spatio-temporal adaptive sampling for effective coverage measurement planning during quality inspection of free form surfaces using robotic 3d optical scanner. J. Manufact. Syst. 53, 93–108. doi: 10.1016/j.jmsy.2019.08.003

Banaeian Far, S., and Imani Rad, A. (2022). Applying digital twins in metaverse: user interface, security and privacy challenges. J. Metaverse 2, 8–15. doi: 10.48550/arXiv.2204.11343

Bandi, A., Adapa, P. V. S. R., and Kuchi, Y. E. V. P. K. (2023). The power of generative AI: a review of requirements, models, input-output formats, evaluation metrics, and challenges. Fut. Internet 15:260. doi: 10.3390/fi15080260

Bocklisch, F., and Huchler, N. (2023). Humans and cyber-physical systems as teammates? Characteristics and applicability of the human-machine-teaming concept in intelligent manufacturing. Front. Artif. Intell. 6:1247755. doi: 10.3389/frai.2023.1247755

Bourlesas, N., Tzimanis, K., Sabatakakis, K., Bikas, H., and Stavropoulos, P. (2024). Over-deposition assessment of direct energy deposition (DED) using melt pool geometric features and machine learning. Procedia CIRP 124, 797–802. doi: 10.1016/j.procir.2024.08.228

Ceglarek, D. (2014). Key enabling technologies (KETs) can ‘strengthen European industry’. Parliam. Mag. 397, 79–80.

Ceglarek, D., Colledani, M., Váncza, J., Kim, D.-Y., Marine, C., Kogel-Hollacher, M., et al. (2015). Rapid deployment of remote laser welding processes in automotive assembly systems. CIRP Ann. 64, 389–394. doi: 10.1016/j.cirp.2015.04.119

Ceglarek, D., Huang, W., Zhou, S., Ding, Y., Kumar, R., and Zhou, Y. (2004). Time-based competition in multistage manufacturing: stream-of-variation analysis (Sova) methodology. Int. J. Flexible Manufact. Syst. 16, 11–44. doi: 10.1023/B:FLEX.0000039171.25141.a4

Ceglarek, D., Shi, J., and Wu, S. (1994). A knowledge-based diagnostic approach for the launch of the auto-body assembly process. ASME J. Eng. Ind. 116, 491–499. doi: 10.1115/1.2902133

Chen, T.-C. T. (2023). “Explainable artificial intelligence (XAI) in manufacturing,” in Explainable Artificial Intelligence (XAI) in Manufacturing: Methodology, Tools, and Applications (Springer), 1–11. doi: 10.1007/978-3-031-27961-4_1

Choung, H., David, P., and Ross, A. (2023). Trust in AI and its role in the acceptance of AI technologies. Int. J. Hum. Comput. Interact. 39, 1727–1739. doi: 10.1080/10447318.2022.2050543

Dai, D., Mohan, A., Franciosa, P., Zhang, T., Chen, C. L. P., and Ceglarek, D. (2024). “Adaptive domain-enhanced transfer learning for welding defect classification,” in 2024 IEEE International Conference on Systems, Man, and Cybernetics (SMC), 3152–3158. doi: 10.1109/SMC54092.2024.10832066

Ding, K., Chan, F. T., Zhang, X., Zhou, G., and Zhang, F. (2019). Defining a digital twin-based cyber-physical production system for autonomous manufacturing in smart shop floors. Int. J. Prod. Res. 57, 6315–6334. doi: 10.1080/00207543.2019.1566661

Ding, Y., Kim, P., Ceglarek, D., and Jin, J. (2003). Optimal sensor distribution for variation diagnosis in multistation assembly processes. IEEE Trans. Robot. Autom. 19, 543–556. doi: 10.1109/TRA.2003.814516

e Oliveira, E., Miguéis, V. L., and Borges, J. L. (2023). Automatic root cause analysis in manufacturing: an overview & conceptualization. J. Intell. Manuf. 34, 2061–2078. doi: 10.1007/s10845-022-01914-3

Ertel, W. (2024). Introduction to Artificial Intelligence. Cham: Springer Nature. doi: 10.1007/978-3-658-43102-0_1

European Commission (2020). A New Industrial Strategy for Europe. Brussels: European Commission. Available online at: https://eur-lex.europa.eu/legal-content/EN/TXT/?uri=CELEX:52020DC0102

Feuerriegel, S., Hartmann, J., Janiesch, C., and Zschech, P. (2024). Generative AI. Bus. Inf. Syst. Eng. 66, 111–126. doi: 10.1007/s12599-023-00834-7

Franciosa, P., Sokolov, M., Sinha, S., Sun, T., and Ceglarek, D. (2020). Deep learning enhanced digital twin for closed-loop in-process quality improvement. CIRP Ann. 69, 369–372. doi: 10.1016/j.cirp.2020.04.110

Gao, R. X., Krüger, J., Merklein, M., Möhring, H.-C., and Váncza, J. (2024). Artificial intelligence in manufacturing: State of the art, perspectives, and future directions. CIRP Ann. 73, 723–749. doi: 10.1016/j.cirp.2024.04.101

Gozalo-Brizuela, R., and Garrido-Merchan, E. C. (2023). ChatGPT is not all you need. A state of the art review of large generative AI models. arXiv preprint arXiv:2301.04655.

Helle, R. H., and Lemu, H. G. (2021). A case study on use of 3D scanning for reverse engineering and quality control. Mater. Today 45, 5255–5262. doi: 10.1016/j.matpr.2021.01.828

Herrera, V., and Germán, C. H. J. R. (2021). Complexity in manufacturing systems: a literature review. Prod. Eng. 15, 321–333. doi: 10.1007/s11740-020-01013-3

Hribernik, K., Cabri, G., Mandreoli, F., and Mentzas, G. (2021). Autonomous, context-aware, adaptive digital twins–state of the art and roadmap. Comput. Ind. 133:103508. doi: 10.1016/j.compind.2021.103508

Hrnjica, B., and Softic, S. (2020). “Explainable AI in manufacturing: a predictive maintenance case study,” in IFIP International Conference on Advances in Production Management Systems (Springer), 66–73. doi: 10.1007/978-3-030-57997-5_8

Hu, C., Li, X., Liu, D., Wu, H., Chen, X., Wang, J., et al. (2023). Teacher-student architecture for knowledge distillation: a survey. arXiv preprint arXiv:2308.04268.

Javaid, M., Haleem, A., Singh, R. P., Rab, S., and Suman, R. (2021). Significance of sensors for industry 4.0: roles, capabilities, and applications. Sensors Int. 2:100110. doi: 10.1016/j.sintl.2021.100110

Kerbl, B., Kopanas, G., Leimkühler, T., and Drettakis, G. (2023). 3D Gaussian splatting for real-time radiance field rendering. ACM Trans. Graph. 42, 139:1–139:14. doi: 10.1145/3592433

Leng, J., Wang, D., Shen, W., Li, X., Liu, Q., and Chen, X. (2021). Digital twins-based smart manufacturing system design in industry 4.0: a review. J. Manufact. Syst. 60, 119–137. doi: 10.1016/j.jmsy.2021.05.011

Leng, J., Zhong, Y., Lin, Z., Xu, K., Mourtzis, D., Zhou, X., et al. (2023). Towards resilience in industry 5.0: a decentralized autonomous manufacturing paradigm. J. Manufact. Syst. 71, 95–114. doi: 10.1016/j.jmsy.2023.08.023

Li, C., Chen, Y., and Shang, Y. (2022). A review of industrial big data for decision making in intelligent manufacturing. Eng. Sci. Technol. Int. J. 29:101021. doi: 10.1016/j.jestch.2021.06.001

Li, G., Li, X., Wang, Y., Wu, Y., Liang, D., and Zhang, S. (2022). “Pseco: pseudo labeling and consistency training for semi-supervised object detection,” in European Conference on Computer Vision (Springer), 457–472. doi: 10.1007/978-3-031-20077-9_27

Li, H., Xu, Z., Taylor, G., Studer, C., and Goldstein, T. (2018). “Visualizing the loss landscape of neural nets,” in Advances in Neural Information Processing Systems, 31.

Liu, C., Zhang, W., Lin, X., Zhang, W., Tan, X., Han, J., et al. (2023). “Ambiguity-resistant semi-supervised learning for dense object detection,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 15579–15588. doi: 10.1109/CVPR52729.2023.01495

Liu, D., Du, Y., Chai, W., Lu, C., and Cong, M. (2022). Digital twin and data-driven quality prediction of complex die-casting manufacturing. IEEE Trans. Ind. Inf. 18, 8119–8128. doi: 10.1109/TII.2022.3168309

Liu, J., Huang, X., Huang, T., Chen, L., Hou, Y., Tang, S., et al. (2024). A comprehensive survey on 3d content generation. arXiv preprint arXiv:2402.01166.

Lu, Y., Shen, M., Wang, H., Wang, X., van Rechem, C., Fu, T., et al. (2023). Machine learning for synthetic data generation: a review. arXiv preprint arXiv:2302.04062.

Maropoulos, P. G. (2010). Design verification and validation in product lifecycle. CIRP Ann. 59, 740–759. doi: 10.1016/j.cirp.2010.05.005

Mildenhall, B., Srinivasan, P. P., Tancik, M., Barron, J. T., Ramamoorthi, R., and Ng, R. (2021). Nerf: representing scenes as neural radiance fields for view synthesis. Commun. ACM 65, 99–106. doi: 10.1145/3503250

Modoni, G. E., Stampone, B., and Trotta, G. (2022). Application of the digital twin for in process monitoring of the micro injection moulding process quality. Comput. Ind. 135:103568. doi: 10.1016/j.compind.2021.103568

Mourtzis, D., Papakostas, N., Mavrikios, D., Makris, S., and Alexopoulos, K. (2015). The role of simulation in digital manufacturing: applications and outlook. Int. J. Comput. Integr. Manufact. 28, 3–24. doi: 10.1080/0951192X.2013.800234

Nti, I. K., Adekoya, A. F., Weyori, B. A., and Nyarko-Boateng, O. (2022). Applications of artificial intelligence in engineering and manufacturing: a systematic review. J. Intell. Manuf. 33, 1581–1601. doi: 10.1007/s10845-021-01771-6

Pantelidakis, M., Mykoniatis, K., Liu, J., and Harris, G. (2022). A digital twin ecosystem for additive manufacturing using a real-time development platform. Int. J. Adv. Manufact. Technol. 120, 6547–6563. doi: 10.1007/s00170-022-09164-6

Poole, B., Jain, A., Barron, J. T., and Mildenhall, B. (2022). Dreamfusion: text-to-3D using 2D diffusion. arXiv preprint arXiv:2209.14988.

Psarommatis, F., and Kiritsis, D. (2022). A hybrid decision support system for automating decision making in the event of defects in the era of zero defect manufacturing. J. Industr. Inf. Integr. 26:100263. doi: 10.1016/j.jii.2021.100263

Reiman, A., Kaivo-oja, J., Parviainen, E., Takala, E.-P., and Lauraeus, T. (2021). Human factors and ergonomics in manufacturing in the industry 4.0 context-a scoping review. Technol. Soc. 65:101572. doi: 10.1016/j.techsoc.2021.101572

Ren, Z., Fang, F., Yan, N., and Wu, Y. (2022). State of the art in defect detection based on machine vision. Int. J. Prec. Eng. Manuf. Green Technol. 9, 661–691. doi: 10.1007/s40684-021-00343-6

Schadt, E. E., Linderman, M. D., Sorenson, J., Lee, L., and Nolan, G. P. (2010). Computational solutions to large-scale data management and analysis. Nat. Rev. Genet. 11, 647–657. doi: 10.1038/nrg2857

Shi, J. (2006). Stream of Variation Modeling and Analysis for Multistage Manufacturing Processes. CRC press. doi: 10.1201/9781420003901

Sinha, S., Franciosa, P., and Ceglarek, D. (2020). Object shape error response using Bayesian 3-D convolutional neural networks for assembly systems with compliant parts. IEEE Trans. Industr. Inform. 17, 6676–6686. doi: 10.1109/TII.2020.3043226

Soori, M., Arezoo, B., and Dastres, R. (2023). Digital twin for smart manufacturing, a review. Sustain. Manufact. Serv. Econ. 2:100017. doi: 10.1016/j.smse.2023.100017

Stavropoulos, P., Papacharalampopoulos, A., Michail, C. K., and Chryssolouris, G. (2021). Robust additive manufacturing performance through a control oriented digital twin. Metals 11:708. doi: 10.3390/met11050708

Stavropoulos, P., Papacharalampopoulos, A., Sabatakakis, K., and Mourtzis, D. (2023). Metamodelling of manufacturing processes and automation workflows towards designing and operating digital twins. Appl. Sci. 13:1945. doi: 10.3390/app13031945

Stavropoulos, P., and Sabatakakis, K. (2024). Quality assurance in resistance spot welding: state of practice, state of the art, and prospects. Metals 14:185. doi: 10.3390/met14020185

Tao, F., Zhang, H., and Zhang, C. (2024). Advancements and challenges of digital twins in industry. Nat. Comput. Sci. 4, 169–177. doi: 10.1038/s43588-024-00603-w

Thelen, A., Zhang, X., Fink, O., Lu, Y., Ghosh, S., Youn, B. D., et al. (2023). A comprehensive review of digital twin–part 2: roles of uncertainty quantification and optimization, a battery digital twin, and perspectives. Struct. Multidisc. Optim. 66:1. doi: 10.1007/s00158-022-03476-7

Tolio, T., Ceglarek, D., ElMaraghy, H. A., Fischer, A., Hu, S. J., Laperriére, L., et al. (2010). Species—co-evolution of products, processes and production systems. CIRP Ann. 59, 672–693. doi: 10.1016/j.cirp.2010.05.008

Villalonga, A., Negri, E., Biscardo, G., Castano, F., Haber, R. E., Fumagalli, L., et al. (2021). A decision-making framework for dynamic scheduling of cyber-physical production systems based on digital twins. Annu. Rev. Control 51, 357–373. doi: 10.1016/j.arcontrol.2021.04.008

Wang, K.-J., Lee, Y.-H., and Angelica, S. (2021). Digital twin design for real-time monitoring-a case study of die cutting machine. Int. J. Prod. Res. 59, 6471–6485. doi: 10.1080/00207543.2020.1817999

Wu, S., Lin, Y., Zhang, F., Zeng, Y., Xu, J., Torr, P., et al. (2024). Direct3D: scalable image-to-3D generation via 3D latent diffusion transformer. arXiv preprint arXiv:2405.14832.

Xu, M., Zhang, Z., Hu, H., Wang, J., Wang, L., Wei, F., et al. (2021). “End-to-end semi-supervised object detection with soft teacher,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 3060–3069. doi: 10.1109/ICCV48922.2021.00305

Keywords: AI-enabled digital twin, Generative AI, Predictive AI, quality assurance, smart manufacturing

Citation: Dai D, Zhao B, Yu Z, Franciosa P and Ceglarek D (2025) Generative and Predictive AI for digital twin systems in manufacturing. Front. Artif. Intell. 8:1655470. doi: 10.3389/frai.2025.1655470

Received: 27 June 2025; Revised: 16 October 2025; Accepted: 17 November 2025; Published: 17 December 2025.

Edited by:

Jichao Bi, Zhejiang Institute of Industry and Information Technology, China

Reviewed by:

Tian Feng, Zhejiang University, China

Panagiotis Stavropoulos, University of Patras, Greece

Jingqi Liu, University of Leeds, United Kingdom

Tianyang Zhang, University of Birmingham, United Kingdom

Copyright © 2025 Dai, Zhao, Yu, Franciosa and Ceglarek. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Dariusz Ceglarek, ZC5qLmNlZ2xhcmVrQHdhcndpY2suYWMudWs=
