- 1Two Chairs, San Francisco, CA, United States

- 2The Donald and Barbara Zucker School of Medicine at Hofstra/Northwell, Hempstead, NY, United States

- 3JG Research and Evaluation, Boseman, MT, United States

Introduction: Measurement-based care (MBC) is an evidence-based practice; however there are challenges associated with implementing and sustaining this practice in care. This study examined the outcomes of an organization-wide implementation of MBC in a technology-supported psychotherapy practice. Outcomes were patient symptom change, clinician behaviors, and clinician performance.

Methods: A total of n = 18,721 patients and 755 clinicians were included in the 6-month implementation. Change efforts targeted organizational alignment, technology integration, education and support, and cultural and operational change. Outcomes were assessed across three phases: pre-implementation, implementation, and post-implementation. Primary outcome measures for patients were percent change on the PHQ-9 and GAD-7. Estimates of differences between phases of implementation were computed using linear mixed effects models, adjusted for patient characteristics. Clinician behaviors associated with MBC were extracted from progress notes. Changes in individual clinician performance were assessed for clinicians with sufficient data across the implementation phases.

Results: Patient outcomes improved significantly from pre- to post-implementation by approximately 5 percentage points for all outcomes. This represents a relative improvement of 23.5% on a combined PHQ-9 and GAD-7 measure. Clinicians demonstrated significant increases in MBC-related documentation behaviors. Among clinicians with sufficient data, 95% showed evidence of improved performance. Notably, clinicians whose baseline performance was superior showed greater improvements in performance.

Discussion: Overall, this study suggests that structured MBC implementation was associated with improved patient outcomes, clinician behavior change, and clinician performance, although causal attributions are not possible given the retrospective non-randomized design. These results have implications for scalable implementation approaches in regular practice settings.

1 Introduction

1.1 Introduction to measurement based care

Measurement-based care (MBC), also known as routine outcome monitoring (ROM), is an evidence-based practice (EBP) that has been associated with improved outcomes for individuals with mental health conditions (1). MBC involves the systematic collection of clinical data throughout treatment to guide clinical decision-making and adapt treatment strategies (2). As an EBP, MBC has been endorsed by major professional organizations, including the American Psychology Association (APA) and the Substance Abuse and Mental Health Services (SAMHSA), for its role in enhancing treatment efficacy, patient engagement, and overall care quality (1, 2).

MBC follows a structured process where clinicians: (1) collect standardized assessments on patient symptoms, functioning, and treatment processes; (2) share this information with patients to enhance communication and engagement; and (3) adapt interventions based on insights from the patient-reported data (1, 3). This approach allows for personalized, responsive treatment, and reduces the risk of stagnation or deterioration in care (1, 4). Research indicates that MBC enhances therapy outcomes by enabling clinicians to identify and address care delivery and alliance challenges in real time, leading to reduced dropout, improved therapeutic alliance, and faster symptom resolution (5, 6).

1.2 Challenges and pitfalls in MBC implementation

Despite the benefits, implementation of MBC remains limited, with adoption rates among behavioral health providers estimated at less than 20% and only about 5% adhering to MBC following any evidence-based schedule (e.g., every session) (7). Various systemic barriers, including financial costs, time constraints, and the burden of ongoing monitoring may have contributed to the slow uptake of MBC in clinical practice (7, 8). One of the primary obstacles identified in the existing research literature on MBC implementation is the burden associated with the set up and maintenance of the practice (9). Establishing MBC infrastructure requires an investment in assessment tools, data management systems and clinician training, which can be costly, particularly for smaller clinics and community mental health providers (9, 10). Sustaining this practice requires consistent efforts from both patients and clinicians, which can be difficult in settings with high staff turnover, limited resources, or patient populations facing barriers to regular participation (3, 4).

Although strong evidence supports MBC as a gold standard practice, improper implementation or superficial adoption of MBC can negate its benefits and may even lead to adverse effects on both patients and clinicians (11). When routine assessment is mandated without effective training or support for clinicians, it can create negative experiences for both patients and clinicians (12). Administering measures without discussing their purpose or relevance can lead to decreased patient engagement, and lower therapeutic alliance and satisfaction with care (12). Clinicians may also experience frustration and burnout when required to collect data without proper training or guidance on how measurement should be integrated into care (12). Put simply, gathering data without integrating feedback into clinical care does not align with best practices in MBC, and may even be harmful (13). Conversely, patients who have been educated on the purpose of MBC and whose clinicians regularly discuss self-reported data in session report improved trust, self-awareness and strengthened therapeutic alliance (14).

The implementation science literature acknowledges the substantial set-up and maintenance costs incurred to install a new EBP, as well as the need for ongoing clinician support to drive the sustained clinical behavior change necessary before the impact of the EBP may be fully realized. Effective implementations often rely on resource and time-intensive interventions, including synchronous training, direct supervision, and individualized coaching of clinicians, which can limit the scalability of this approach (15). Time to outcomes can also be lengthy; authors caution that the initial installation of a new EBP typically takes 2–4 years (16). Furthermore, achieving full, sustainable implementation—to the point where it demonstrably improves patient outcomes—is a multi-stage process often cited as taking 3–7 years (15, 17). Understandably, leaders might be reluctant to invest in MBC without certainty about the impact or sustainability of the implementation within their organizations and the long timelines needed before impact might be observed (14).

Although MBC is a gold-standard EBP, the challenges associated with implementation, including effectiveness, scalability, and time to outcome, highlight the need for new models of implementation that can drive clinical behavior change efficiently and sustainably.

1.3 The current study

The extant literature reflects a gap in the science: there are few validated models for efficient, MBC implementation that are scalable and lead to improved patient outcomes on timelines that demonstrate and confirm impact. This study analyzes the patient and provider outcomes associated with MBC implementation in a real-world, large-scale, technology-enabled psychotherapy practice. MBC implementation occurred across the entire organization with a large, distributed, and remote workforce (755 teletherapy clinicians and over 18,000 patients). Implementation focused on motivating clinical behavior change on a short time scale, with the initial implementation intended to be completed within six months and observable impact on clinician behaviors and patient outcomes expected within one year (this is in contrast to the 3–7 year timeline cited in the literature). To achieve these goals, this model leveraged technology platforms to support the use and interpretation of outcome measures, provide feedback to clinicians, promote MBC adherence, and track and promote clinical behavior associated with MBC. Existing and new clinicians used learning platforms to support self-lead asynchronous training and to evaluate changes in their clinical skills. There was minimal direct oversight, supervision, or coaching in conjunction with self-directed learning. The technology suite for collecting, sharing, and providing clinical decision support to clinicians has been described in a previous manuscript (18). This study focuses on a pre-training vs. post-training comparison of patient treatment outcomes. The secondary outcomes of interest measure fidelity to the MBC model, and include indicators of training effectiveness and MBC-consistent clinician behaviors and performance.

2 Methods

2.1 Setting and context

The MBC implementation was conducted at Two Chairs, a hybrid technology-enabled behavioral health company founded in 2017 that provides psychotherapy to self-pay, commercially insured, and publicly insured individuals. During the period of data collection, services were available in California and Washington. Patients served by Two Chairs are aged 18 years or older and receive outpatient psychotherapy for anxiety, depression, and related conditions. All study procedures were deemed exempt by the Sterling institutional review board, Atlanta, Georgia, United States.

Two Chairs' clinical model allows clinicians to practice using their preferred theoretical orientation and approach. In 2021, MBC was selected as an EBP to implement because it can apply across different theoretical therapeutic approaches and clinical diagnoses (19, 20). During the initial implementation of MBC, the management team at Two Chairs launched a clinician-facing software platform and a set of automated patient-reported measures, the PHQ-9, GAD-7, Satisfaction with Life Scale, and a therapeutic alliance measure, accompanied by a brief self-led training in how to use these measures in care. Surveys measuring adherence to MBC were also implemented to generate a fidelity performance metric. In some ways this implementation was successful, in that it led to MBC survey completion rates of greater than 90%. However, anecdotal reports after the implementation suggested that clinicians had low buy-in, and there was also limited impact on patient outcomes, consistent with the known issues related to MBC implementation (19, 20). In light of this limited success, a quality improvement team at Two Chairs was tasked in late 2022 with understanding the problem and developing a quality improvement effort to strengthen the MBC implementation. The ultimate goal of the quality improvement effort was to improve clinical outcomes.

2.2 EPIS framework

The quality improvement team organized their approach around elements of the Exploration, Preparation, Implementation and Sustainment (EPIS) framework, a comprehensive model used to guide the integration of EBPs into real world service settings. EPIS consists of four phases, described as follows: (1) Exploration: identify unmet clinical or community needs, survey available EBPs to address these gaps, and identify whether an intervention aligns with their goals and resources; (2) Preparation: lay groundwork for successful implementation by assessing organizational readiness, developing strategies, and leveraging strengths; (3) Implementation: introduce the EBP, and iterate and refine based on feedback; and (4) Sustainment: the EBP is embedded into routine practice to ensure benefits are maintained and strengthened over time (21). The quality team was focused on identifying and leveraging known drivers of success, including clinical competency, leadership, and organizational factors, within and across EPIS phases (22).

2.3 Exploration

The quality improvement team began by identifying specific gaps in the initial implementation, including ineffective efforts at generating clinician buy-in and inadequate education, supervision, and support for clinical behavior change. Other gaps included inappropriate use of alliance scores as a clinician performance metric, low perceived clinical utility of some standardized measures, and inadequate clinical decision support. Several existing strengths were also identified including the robust software package, very high levels of MBC survey completion, and strong mission alignment across levels of the organization. Further details on the Two Chairs software package and outcomes can be found in a previous publication (18).

2.4 Preparation

2.4.1 MBC implementation design

Two Chairs developed a formal plan based on the findings of the exploration period. Quality improvement team members shared evidence of the current training gaps and examples of best practice training with company leadership and linked improvement in clinical outcomes to company goals. Similar efforts were made to drive alignment among upper and lower levels of clinical management. The senior leader of the clinical organization served as an internal advocate of this work.

2.4.2 Training development

A four-part training series was developed, each focused on a specific aspect of using MBC within Two Chairs' organizational context. Each self-led module took about 45 min to complete. Training focused on addressing: (1) the rationale and evidence for MBC and why the company chose to adopt it; (2) effective practices for using patient-reported data to guide care decisions; and (3) pragmatic information on how to interpret and use the standardized measure set.

The training modules were as follows:

• Module 1: MBC overview

• Module 2: Therapeutic alliance

• Module 3: The PHQ9 and GAD7

• Module 4: Putting it all together

2.4.3 Skills assessment

After modules 2–4, clinicians participated in hour-long practice groups facilitated by the quality team focused on demonstration of the clinical skills they learned. After module 4, clinicians submitted two recordings to the quality team demonstrating: (1) how to introduce MBC to patients, and (2) how to respond to specific changes in symptoms. These recordings were rated by the quality team using a structured scoring system developed in-house. Clinicians who received non-passing scores were given coaching and required to submit new recordings.

2.4.4 MBC system improvements

A set of technical adjustments were planned for the MBC system to improve functionality. These included the development and testing of a new measure to replace the Satisfaction with Life Scale and the adjustment of MBC system rules regarding alerts around meaningful symptom change. These changes were scoped and implemented across the training period in 2023 and into early 2024.

2.5 Implementation

The implementation of the training program began in May 2023 and continued until the end of 2023, with all training and skill assessment activities complete within six months. Additional support included dedicated time for training, skills practice, and manager evaluation of clinician skills. Two Chairs' employment model allowed for adequate and compensated time to be set aside for clinicians to complete required training and oversight. Completion of training exercises and skills assessments were tracked and if clinicians did not complete the required training they would be reminded, and eventually their managers would be informed. Training completion ranged from 75% to 79% across the four modules. The quality improvement team collected feedback from clinicians on training design and effectiveness and adjusted training approaches as the implementation progressed. The use of average alliance score as a performance metric was de-implemented, and peer-led consultation spaces on the use of MBC were launched.

2.6 Sustainment

Several steps were taken to ensure that clinician behavioral changes could be continued after the initial implementation was completed. The goal was to develop an efficient and cost-effective process that was self-sustaining. The quality improvement team leveraged technology platforms as much as feasible during this phase to promote scalability as new clinicians were onboarded into the system. The following sustainment activities were developed and implemented:

• Hiring criteria were adjusted to identify clinicians who are open to practicing MBC;

• The four-part training series and skills assessments were built into clinician onboarding;

• Manager training on how to supervise their clinicians in MBC was developed and conducted in April 2024;

• Consultation spaces for clinicians to receive peer feedback on MBC were set up and staffed; and

• Continuous organization-wide monitoring of patient outcomes was stood up to evaluate the durability of implementation impacts.

2.7 Sample

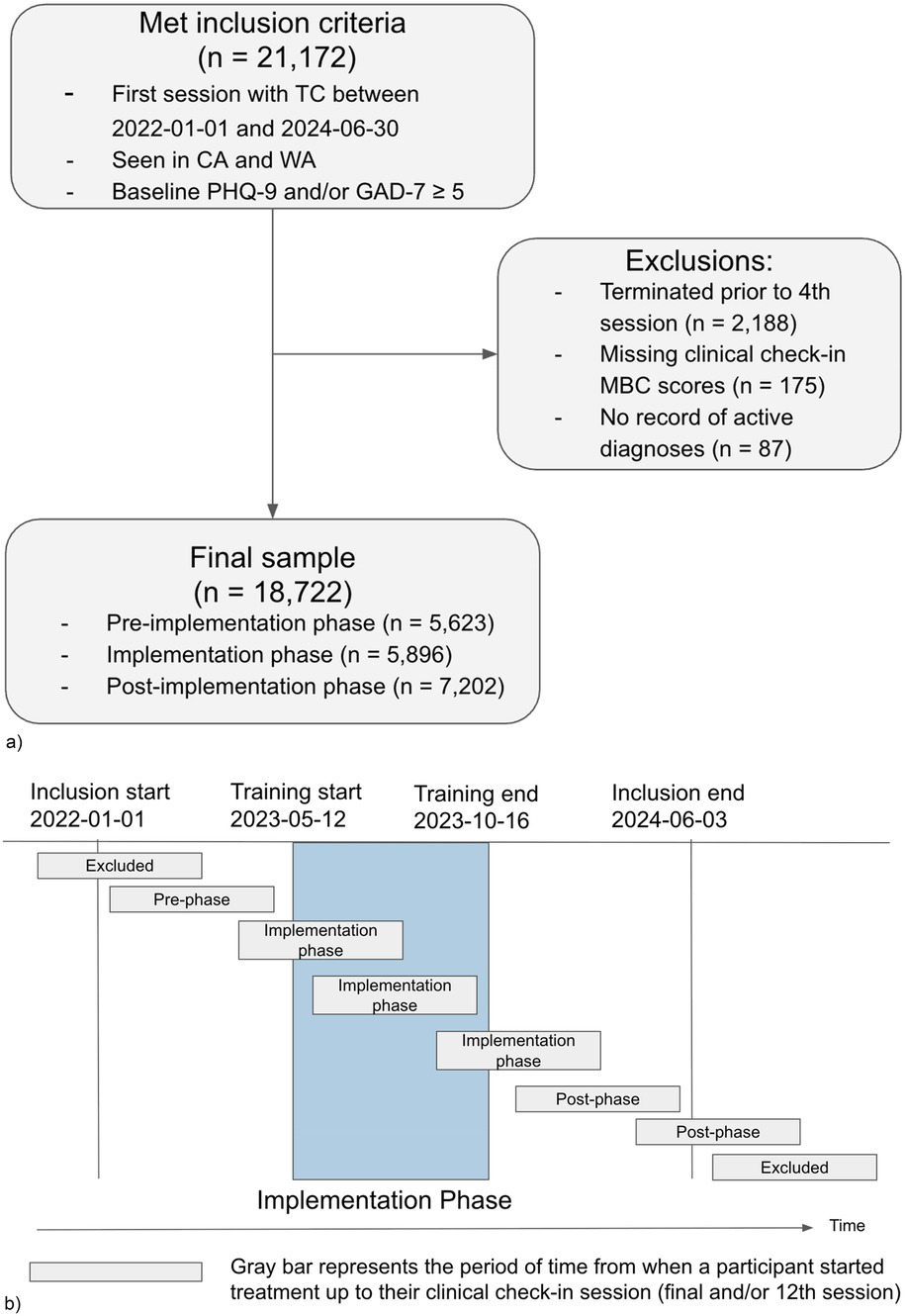

Clinicians were licensed master's and doctorate-level providers who were full-time employed (W2 employees) by Two Chairs. All clinicians practicing at Two Chairs were eligible for inclusion. Patients were eligible for the study if they began treatment between 01-01-2022 and 06-30-2024 in California or Washington and their baseline PHQ-9 and/or GAD-7 was ≥5 (n = 21,172). Patients were excluded if they terminated treatment prior to the fourth session (n = 2,188), were missing all MBC data between sessions 4 and 12 (n = 175), or had no record of an active diagnosis (n = 87), leaving a final analytic sample of 18,722 (88.4% of the original included population, Figure 1a).

Figure 1. (a) diagram of participant inclusion and exclusion. (b) Participant group assignment according to the participants’ treatment start and clinical check-in dates relative to the timing of the implementation.

2.8 Measures

Patients completed a standardized set of measures prior to every therapy session that included the PHQ-9, GAD-7, and a measure of therapeutic alliance (21, 22). The PHQ-9 is a nine-item self-report of depressive symptoms (21, 22). Items are rated on a scale ranging from 0 (not at all) to 3 (nearly every day) (21). Total scores range from 0 to 27, and cut-off scores for mild, moderate, moderately severe, and severe depressive symptoms are 5, 10, 15, and 20, respectively (21). The GAD-7 is a seven-item self-report measure of anxiety (22). Items are rated on a scale ranging from 0 (not at all) to 3 (nearly every day) (22). Total scores range from 0 to 21, and the cut-off scores for mild, moderate, and severe anxiety symptoms are 5, 10, and 15, respectively (22).

Alliance between patient and clinician was measured using a 5-item scale developed at Two Chairs that measured the following established elements of the therapeutic relationship: agreement on goals, shared understanding of tasks, and clinical bond. Items were rated on a scale of 1–5 from strongly disagree to strongly agree and averaged to create a total score ranging from 1 to 5.

2.9 Clinical baseline and outcomes

The first attended therapy session was defined as baseline for each patient; in the event that MBC data were not available at the first session (7% of cases), the intake assessments or second session were used, in that order. Clinical outcomes were assessed at the patient's last available session between 4 and 12, termed the “clinical check-in.” (18)

2.10 Phases

Patients were separated into three groups based on their session tenure relative to the timing of the project: “pre-implementation” for patients who completed their clinical check-in session prior to the launch of training on 05-12-2023, “post-implementation” for patients whose first session was after 10-16-2023, when all training activities had been completed; and “implementation” for all patients who met inclusion criteria but whose treatment didn't fall neatly into either pre or post periods (Figure 1b).

2.11 Implementation outcomes

To evaluate the effectiveness of the implementation in changing clinician attitudes, clinicians were asked to respond to a survey prior to the start of the project and after each module was completed. The survey asked clinicians to rate their responses to the following questions on a scale from 1 to 10 with 1 = not at all and 10 = very:

1. How important is it for you to be able to provide measurement based care to your patients?

2. How confident are you that you are able to provide measurement based care to your patients?

3. How ready are you to provide measurement based care to your patients?

2.12 Clinical outcomes

Three primary measures were used to measure symptom improvement at the clinical check-in session compared with baseline: percent improvement on PHQ-9, percent improvement on GAD-7, and percent improvement on PHQ-9/GAD-7 combined (PHQ-9 improvement + GAD-7 improvement)/(baseline PHQ-9 + baseline GAD-7). Adding PHQ-9 and GAD-7 scores together is a reasonable method for assessing symptoms of general affective illness, and the aggregate measure has acceptable psychometric properties (22). Percent change was selected as an outcome measure because it normalizes change across clinical and subclinical participants, and permits reasonable aggregation across these groups. To avoid large negative percent change values for patients who began with minimal initial symptoms and worsened, all outcomes were bounded between 100% improvement and 150% decline.1

2.13 Clinician behavioral outcomes

Markers of clinician behaviors associated with adherence to the MBC model were extracted from the clinical progress notes. Clinicians are required to indicate whether they discussed any measures in session and if so, which ones (PHQ-9, GAD-7, alliance). A set of binary measures (yes/no) were used to evaluate the frequency of conversations about the MBC measures across the implementation phases.

3 Analysis

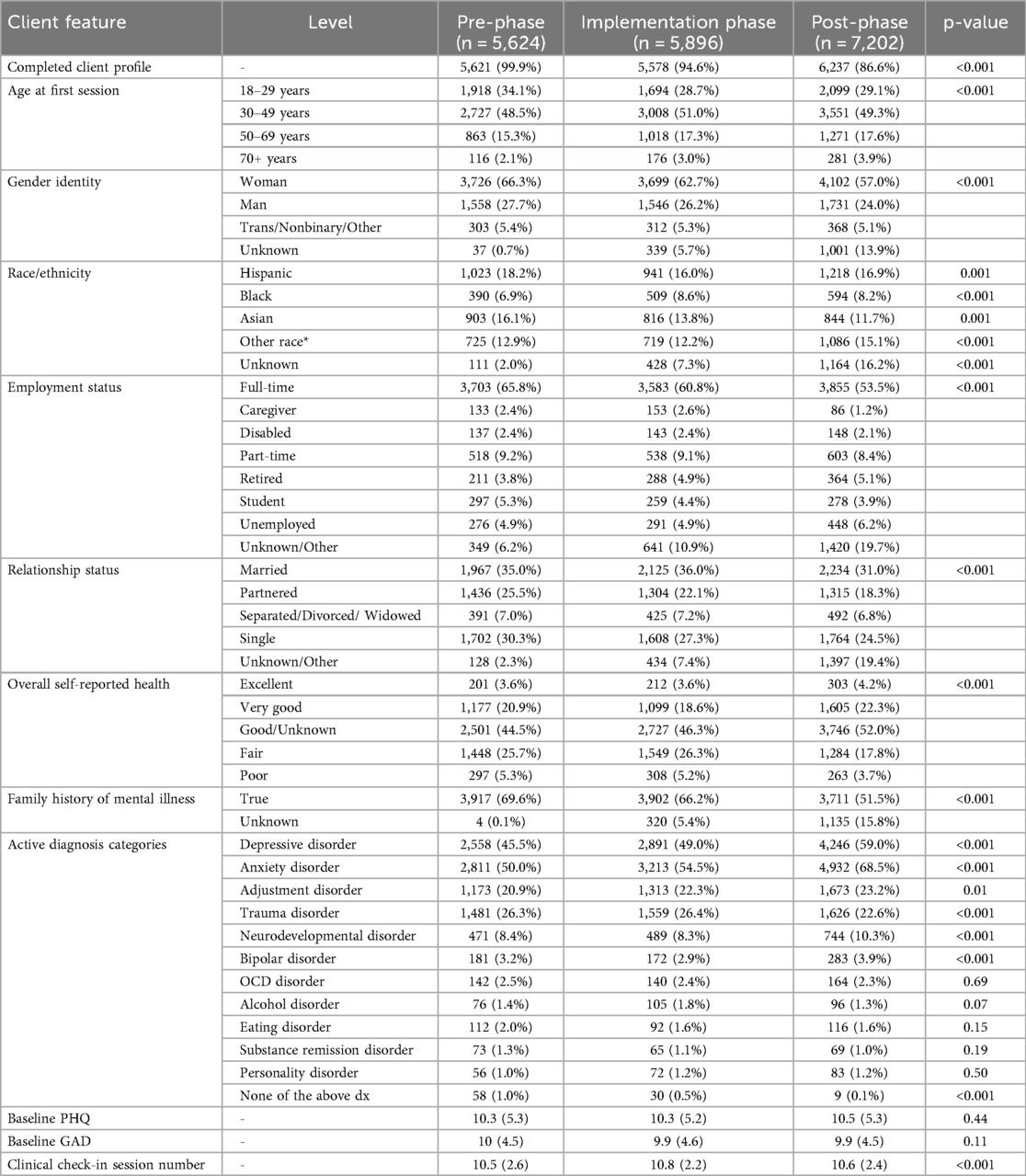

The study population was characterized using means, standard deviations, and frequencies with statistical tests to evaluate differences between the three phases (Chi-squared tests, ANOVA) (Table 1).

3.1 Implementation outcomes

Scores from each assessment of clinician attitudes across the implementation period were reported as averages.

3.2 Clinical outcomes

The primary analysis contrasts change in anxiety and depression symptoms across the three implementation phases. Raw averages are provided for participant-level percent change in each of the three phases, stratified by baseline severity using a lower-bound cutoff score of 10 on either the PHQ-9 or GAD-7. The decision to stratify outcomes was made in order to better understand the impact of implementation across levels of severity of intake symptoms. Estimates of differences between the post-implementation and implementation phase outcomes relative to the pre-implementation outcomes were computed using linear mixed effects models. A mixed-effects model with a random intercept and indicator for treatment period was selected to account for clustering of effect by clinician. Models included random intercepts and random treatment group effects per clinician to account for clinician-level clustering of effects and unbalanced clinician contribution in the treatment group.

There are no random effects at the patient level because each patient is included using only a single observation in a single time period. These models controlled for factors known or suspected to be related to clinical outcomes, including patient diagnoses, race/ethnicity, age, gender, insurance type, state, employment status, relationship status, self-reported health, family history of mental illness, baseline PHQ-9 and GAD-7 severity categories, service state, and insurance type. The features selected for the models were chosen to minimize the amount of potential residual confounding in the contrast between the three time periods given the data available about patients. Adjustment using patient features was necessary to address potential shifts in the patient population over time. Given the very large sample sizes and the fact that parameter estimates for demographic features were not of primary importance, models were chosen to be conservative in the estimation of our primary contrast rather than parsimonious in terms of patient features. For GAD-7 and PHQ-9 outcomes, only patients whose baseline assessment was ≥5 were included. During the implementation phase, some intake data collection was changed from mandatory to optional, which resulted in decreased frequency of baseline patient information over time. Missing intake data were accounted for explicitly by using a dummy variable to encode missingness. The large transition group was included in the analysis for the purpose of providing stable estimates for these parameters that create separability between the pre-implementation period and the post-implementation with regard to these missing profile features. Given the increasing frequency of missing patient information, a sensitivity analysis was performed limited to just patients who had completed the intake data collection; the results were nearly identical.

3.3 Within clinician outcomes

To address a potential bias based on changes in the clinician population, a secondary analysis repeated the primary analysis of patient level-outcomes contrasting the post-phase with the pre-phase but restricted to clinicians who were present during both phases and contributed at least 10 unique patient outcomes to each phase (n = 80 clinicians). Clinician-specific slopes and intercepts were constructed based on the combination of fixed components and random clinician-specific components. To assess the relationship between baseline clinician performance and improvement across the implementation phases, the association between clinician-specific random intercepts and treatment effects were assessed with a Pearson correlation coefficient.

3.4 Clinical behavior change

A final analysis evaluated markers of clinician behavior change across the implementation phases. Clinician behavior change was evaluated using indicators of discussing MBC with patients in session derived from the therapy notes. Frequencies of MBC discussions reported in therapy notes from sessions 1 through 12 are shown for each of the three assessment periods along with 95% confidence intervals computed with clustered standard errors (clinician as cluster).

4 Results

4.1 Sample characteristics

4.1.1 Patients

A total of n = 18,722 participants met requirements for analysis with 5,624, 5,896, and 7,202 in the pre-, implementation-, and post-phases, respectively (Table 1). Most participant characteristics varied significantly across the three phases (p < 0.05) with the exception of average baseline PHQ-9 and GAD-7 scores, which remained stable. The participants provided a diverse cross-section of treatment-seeking adults with variation across age, race, self-reported health, and employment. The most common diagnoses across all three phases were anxiety disorders, depressive disorders, and trauma disorders, respectively.

4.1.2 Clinicians

A total of 755 unique clinicians contributed to the data: 361 in the pre-implementation phase, 468 during the implementation phase, and 574 post-implementation. The median number of patient observations per clinician was 13, 10, and 11 in the pre-, implementation-, and post-phases, respectively.

4.2 Implementation outcomes

4.2.1 Clinician attitudes

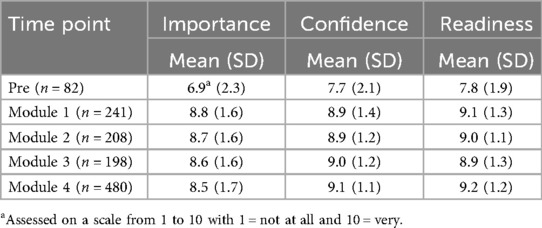

Table 2 represents the average of clinician attitude scores from pre-training and after each training module. The relatively lower scores in the pre-implementation period likely represent the general lower clinician engagement in MBC prior to the initiation of the implementation. Conversely, clinicians reported high scores for importance, confidence, and readiness at all time points after the training series began.

4.2.2 MBC adherence

Adherence to session-level MBC evaluation assessments in sessions 1–12 was high across all three time periods but did increase by 1.6 percentage points in the transition period and 2.4 percentage points in the post-period (pre-phase: 92.9%, implementation phase: 94.5%, post-phase: 95.3%). These differences are significant (p < .001), indicating improved adherence across the phases of implementation.

4.3 Clinical outcomes

Each individual participant contributes a percent improvement for each of the three metrics (combined PHQ-9/GAD-7, PHQ-9, and GAD-7) which reflects their relative improvement compared with their baseline assessment. An average of these individual-level percentages reflects the typical improvement seen within the group, in percentage points. An estimated difference between groups reflects an absolute difference between groups.

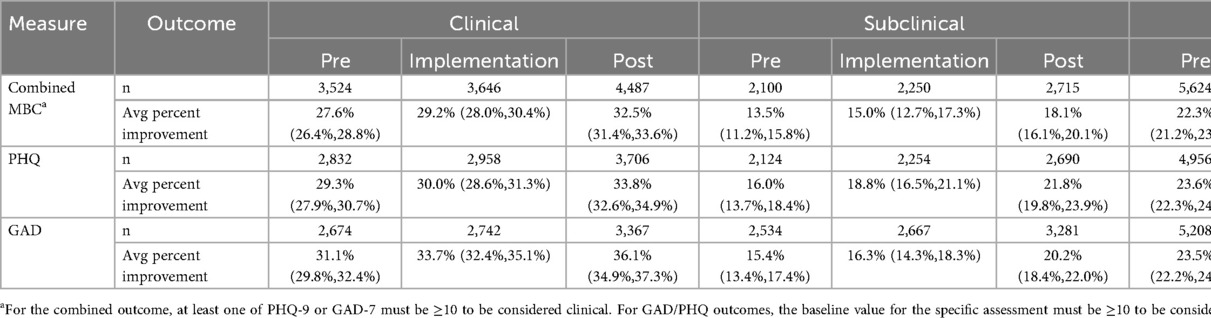

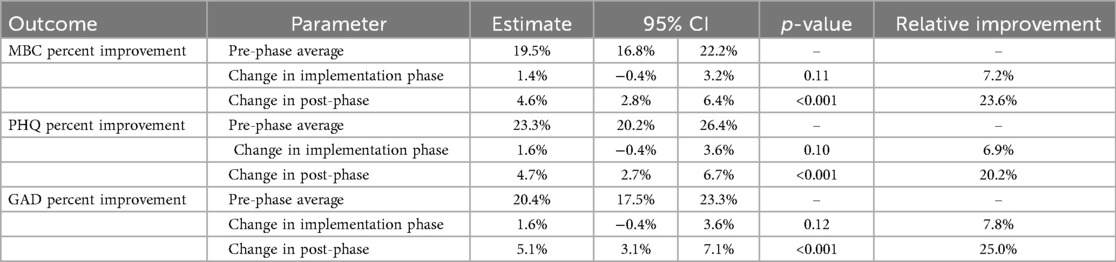

Table 3 characterizes the sample sizes and unadjusted clinical outcomes at the clinical check-in session across the three phases, stratified by whether the participant met the clinical cutoff for the assessment. In general, patient outcomes in the clinical group were approximately twice the size of those seen in the subclinical group across all time periods. Despite these differences, the magnitude of change associated with the pre-post comparison was a consistent approximately 5 percentage points for both clinical and subclinical groups.

Table 3. Raw average of percent improvement outcomes across the three phases stratified by baseline clinical status.

Adjusted analysis was performed on the combined MBC (PHQ-9/GAD-7) percent improvement, PHQ-9 percent improvement, and GAD-7 percent improvement outcomes with results in Table 4 in absolute terms. For all three outcomes, the post-implementation phase outcomes were significantly improved over the pre-phase outcomes, with average percent improvements of approximately 5 percentage points compared to the pre-implementation period. This finding is consistent with the range seen in both the clinical and subclinical groups in unadjusted analysis. This represents a relative improvement of 23.6% on combined MBC improvement, 20.2% on PHQ-9 improvement, and 25.0% on GAD-7 improvement in the post-phase relative to the pre-phase. Modest but non-significant improvements were observed in the transition phase relative to the pre-phase.

Table 4. Adjusted differences in post-phase percent symptom improvement compared with the pre-phase.

4.4 Within-clinician outcomes

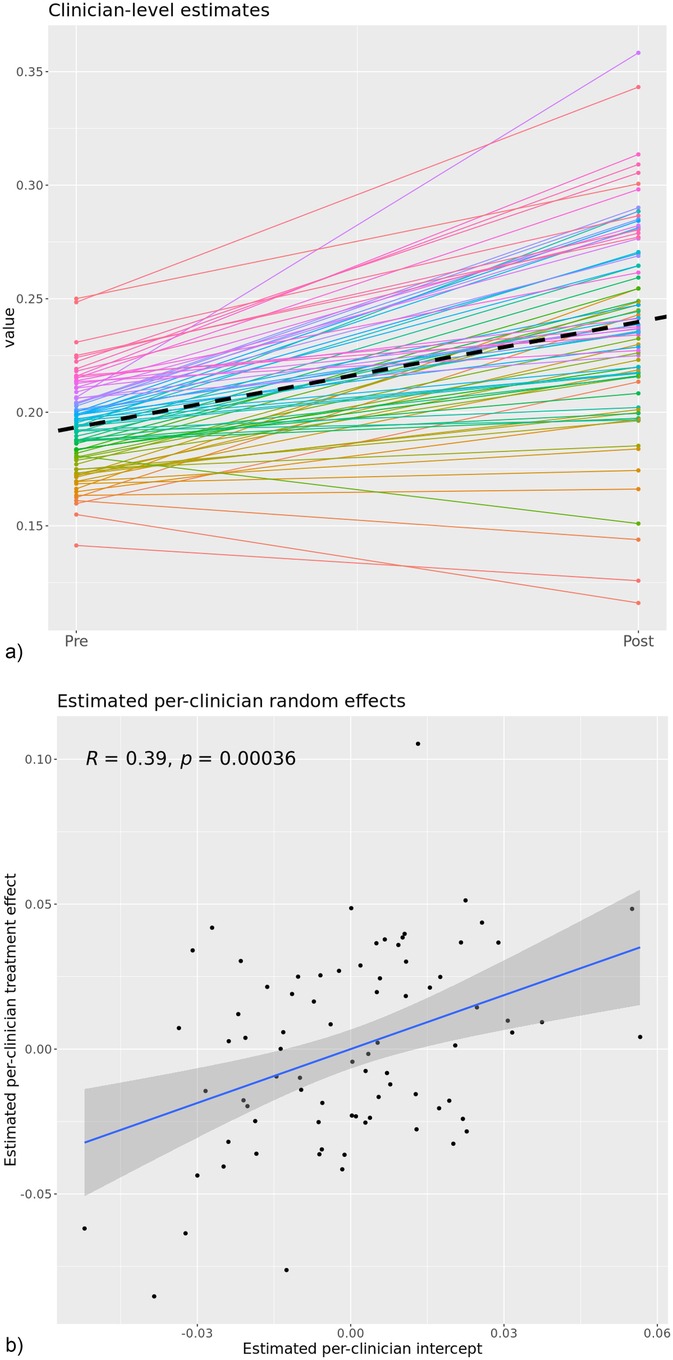

The secondary analysis restricted to clinicians who were present at both the pre- and post- phases reinforces the stability of the overall result; the post-phase was associated with an average improvement of 4.6 percentage points on the combined MBC outcome (p = 0.01) which is the same change observed in the primary analysis. Figure 2 depicts two different views of clinician-specific change from this analysis; 2a contrasts clinician-specific estimates at the pre- and post- phases based on fixed and random parameter estimates. Figure 2b plots the random treatment effect as a function of the random slope. Figure 2a demonstrates that nearly all clinicians (76 of 80; 95%) had positive clinician-specific slopes (fixed + random components), suggesting that the overall change is being driven by widespread change rather than a large change in a small number of clinicians. Figure 2b demonstrates a strong linear relationship (Pearson correlation coefficient, r = 0.39) between estimated random intercepts and random treatment effects such that clinicians who were already performing better than their colleagues during the pre-phase actually improved by a larger amount in the post-phase.

Figure 2. (a) estimated clinician-level outcomes across the pre- to post-implementation phases for n = 80 clinicians with sufficient pre and post data. (b) Clinician-specific random slopes versus random intercepts with a linear regression applied. This image represents the within-clinician change of the 80 clinicians who had sufficient data to estimate their pre vs. post phase clinical outcomes. The dashed line represents the fixed-effects slope which was significant in the model and similar in magnitude with the estimated effect in the overall model.

4.5 Clinical behavior change

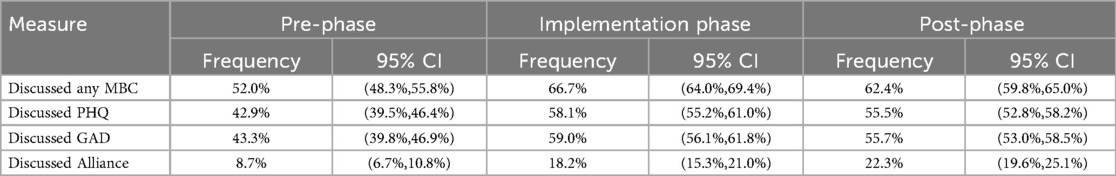

Clinical progress notes contain structured questions about whether any MBC measures were discussed in session with the patient (and specifically whether PHQ-9, GAD-7, and/or alliance were discussed) provide a tangible measure of behavior change in clinicians. Analysis was based on 201,326 sessions across all three phases, restricted to sessions 1–12. The implementation-phase and post-phase sessions had significantly higher frequency of such discussions across all measures. In general, the frequency of discussion was higher during the implementation phase and fell slightly during the post-phase but remained substantially higher than the pre-phase (Table 5). A notable exception was discussion of therapeutic alliance, which increased across all three phases. Discussion of measures at any point during the course of care improved from 79.8% of cases during the pre-phase to 96.2% of cases during the post-phase.

5 Discussion

This study reports on the effect of an efficient implementation of MBC in a large-scale, technology-enabled psychotherapy practice on patient outcomes and clinician behaviors. Results showed that the implementation was associated with improvements in patients' depression and anxiety outcomes. Clinician behaviors associated with fidelity to the MBC model also increased during this time. This study suggests that an implementation completed over a relatively brief period (6 months) with primarily low-touch, self-lead training interventions can drive widespread clinical adoption of MBC and promote improvement in patient outcomes in a diverse clinical practice.

5.1 Improvements in depression and anxiety outcomes

The goal of implementing MBC at Two Chairs was to improve patient outcomes. Compared to patient outcomes measured prior to the project start, patient outcomes measured after the completion of the training improved by nearly 24% on a composite measure of depression and anxiety. These gains were observed among patients at both higher and lower symptom severity at baseline, and across measures of both anxiety and depression. Our analyses suggest that these gains are not attributable to changes in the composition of either the patient or clinician population. Progressive improvement in outcomes occurred across all three phases of the implementation, suggesting that these results reflect more than a transient change in provider behaviors and that these improvements may be durable. Of note, previous implementations of MBC or related practices have found that impact on patient outcomes often take up to 3–7 years to emerge and require high levels of sustained clinical oversight, suggesting that this method of implementation may be more rapid and more efficient than other approaches explored in the literature (23). These results may be attributable to combined effects of the training program, organizational alignment, technology platform, and ongoing clinical support offered.

These findings align with existing literature demonstrating the efficacy of MBC in enhancing treatment outcomes. A systematic review and meta-analysis by Scott and Lewis found that MBC was associated with significantly greater remission rates in patients with depression compared to standard care (19). The uniformity of gains across different severity levels and symptom domains underscores the transdiagnostic and transtheoretical utility of MBC and its effectiveness across diverse clinical settings (13). We observed modest and non-significant improvement during the implementation phase, which grew to robust improvements during the post-implementation phase, suggesting the benefits of the MBC implementation were sustained beyond the training period. This is consistent with findings from Lewis et al., who reported that tailored implementation of MBC led to sustained improvements in depression outcomes over time (20).

5.2 Evidence of change in clinician behaviors

We also found evidence that clinicians engaged in greater rates of clinical behaviors associated with adherent practice of MBC. In clinical progress notes, providers reported greater rates of discussing measures in therapy sessions (from 52.0% of sessions during the pre-implementation period to 62.4% during the post implementation period). These data help support the hypothesis that discussion of patient-reported data in session is an important factor in MBC's effectiveness (2). The evident impact of clinician-reported discussion of measures in session stands in contrast to minimal impact of merely collecting MBC data, even at very high rates. This finding highlights the limits of attending only to the organizational or technical aspects of an implementation without addressing clinical competence or leadership drivers that are required to drive meaningful and sustained change in clinical behavior.

Notably, whereas discussion of symptom measures peaked during the implementation period and dropped slightly during post-implementation, discussion of alliance started at a much lower rate at the pre-implementation period and then improved both during implementation and again at post implementation. A decrease in intervention fidelity after the cessation of implementation activities has been observed in other studies, and has been termed “voltage drop” or “program drift.” (24) Waller and Turner (2016) note that even well-intentioned clinicians may gradually drift from evidence-based practices in the absence of continued support, monitoring, or reinforcement (25). However, the continued improvement in both patient outcomes and discussion of alliance in the post-implementation period may suggest a different interpretation. The training provided during the implementation intentionally focused on the importance of alliance in promoting positive therapy outcomes and the utility of using alliance-related patient feedback in care; and further Two Chairs ceased a potentially harmful practice of using alliance as a clinician performance metric. Numerous studies suggest that sustained clinician attention to therapeutic alliance is one of the most powerful mechanisms of change in psychotherapy (14). This shift observed among providers examined in this study toward alliance focused MBC may reflect clinicians internalizing the principles of feedback informed care, moving from mechanical use of screening instruments to a more nuanced, relational integration of patient reported data (6, 14, 25). Further research is needed to disentangle the unique contributions of in-session focus on symptoms vs. alliance.

5.3 Improvement of individual clinicians

Among the most noteworthy findings in the current study is that of the clinicians with sufficient data to estimate changes in their pre- to post-implementation clinical outcomes, 95% showed evidence of improved outcomes (76 of 80). The magnitude of this improvement was similar to the size of the effect in the full population, providing strong support that the effect in the overall population is not simply due to a shift in the underlying clinician population but instead represents individual improvement. This analysis suggests that the implementation had a generalized positive effect on clinician performance and was not the result of large improvements for just a few providers.

Although nearly all clinicians in this subsample improved, we observed evidence of a differential impact of the implementation on groups of clinicians within this sample. As displayed in Figure 2b, the providers with the highest pre-implementation clinical performance (as determined by their clinical outcomes) also experienced the most improvement in clinical outcomes. This pattern is counter to what is typically observed in previous studies of MBC implementations, where gains are often most pronounced among lower performing clinicians (26). For example Delgadillo et al. found that routine outcome monitoring and feedback systems tend to improve outcomes primarily for clinicians with lower initial effectiveness (26). In contrast, our results align with a smaller but growing body of work suggesting that even high-performing clinicians can benefit meaningfully from feedback informed implementation efforts (27).

One possible explanation for these findings may lie in the training approach taken by Two Chairs, which utilized primarily self-directed learning on virtual modules. Given this relatively light-touch intervention, those individuals with the most motivation or innate skill may be the most able to learn and implement new skills from self-directed content. Prior studies have found that clinician engagement and motivation are key factors in mediating the impact of implementation efforts (27). If this result is replicated in other settings, systems seeking to enhance clinical quality outcomes may benefit from bifurcating training programs, with some training exercises aimed at existing high-performing staff and others aimed at medium- to low-performing staff. High-performing staff may gain organization-wide benefits when given self-directed training that is easily scalable and repeatable across cohorts.

5.4 Organizational strengths

There were existing organizational and leadership factors that may have enabled the success of the implementation (22). The organization had an existing robust software platform and high levels of MBC adherence. Furthermore, there was an existing commitment among clinical and company leadership to MBC as an evidence-based practice. The success of implementation may reflect strong buy-in across all organizational levels, from leadership to frontline clinicians, which helped to align strategy, ensure resource commitment, and embed MBC practices into routine workflows. This multi-level implementation strategy likely played a critical role in accelerating adoption and supporting sustained behavior change over time.

5.5 Limitations

Limitations of this study include its retrospective non-randomized design. This design precludes drawing clear causal inferences about the effect of implementation on patient outcomes.

Other factors, such as co-occurring organizational changes or other external events, may also have influenced the results. Future studies could use quasi-experimental designs that stagger training among staff or a truly randomized design within a set of cohorts of clinicians. A second limitation is the loss of demographic characteristics for some patients in the follow-up period, which limited our ability to control for changes in the patient population over time that may have affected the results; however, this limitation is mitigated somewhat by the results of the sensitivity analysis and inclusion of the “transition” group in the analyses.

There are also several potential threats to generalizability. First, as noted above, the organization had a strong internal commitment to MBC, a robust and proprietary software platform, and an employment model that allowed clinicians and support staff to dedicate time to training and oversight, and allowed the agency to enforce standards around MBC adherence. The agency's commitment to MBC may also have attracted clinicians who were already open to this practice, making clinician adoption of MBC practices smoother. Organizations without these features in place, including organizations where clinicians are employed on a contractual basis, may need to do more foundational work before an implementation such as the one described in this paper can be effective. Finally, it is possible that improvements in rates of discussing MBC captured in the notes could have been influenced by social desirability bias or improved diligence in documentation in the context of the training, instead of indicating clinical behavior change.

5.6 Implications and conclusion

This study shows that MBC implementation can be successful and sustainable, at scale, when organizations invest in and organize training and support activities in line with the best practices, including: (1) investing in organizational alignment among all key stakeholders; (2) developing intuitive, user-friendly software platforms that automate key MBC practices and provide decision support; (3) aligning messaging and setting clear expectations for clinician behaviors; (4) reinforcing the utilization of MBC principles in onboarding and ongoing staff development; and (5) proactively implementing elements designed to sustain the evidence based practice in a scalable and cost-effective way.

Beyond our primary findings, the results also illustrate the high cost of an ineffective MBC implementation. The organization's initial state, characterized by high MBC completion rates but low clinician buy-in and understanding, yielded limited impact on patient outcomes. This “implementation-in-name-only” represents a poor return on investment, incurring technological and operational costs without the corresponding clinical benefits. This distinction is critically relevant for payers and the broader shift toward value-based care. As reimbursement models increasingly focus on outcomes rather than service volume, our study suggests that to realize the value of MBC, payers and policy makers must look beyond merely mandating standardized assessments. Instead, these stakeholders should demand evidence of effective MBC implementation, including impact on clinical improvement, an outcome that aligns most closely with the goals of value-based care.

Overall, this study suggests that the structured implementation approach for MBC employed within this study was associated with improved clinical outcomes across a broad range of patients, clinical presentations, and clinicians. It also provides support for the clinical utility of MBC as an evidence-based practice that – if adequately implemented – can improve clinical outcomes in diverse clinical settings. These findings suggest a model for implementing a sustainable and effective practice of MBC.

Data availability statement

The datasets presented in this article are not readily available because these data is held by Two Chairs and therefore is not shareable. Requests to access the datasets should be directed tobmZvcmFuZEB0d29jaGFpcnMuY29t.

Ethics statement

The studies involving humans were approved by Sterling Institutional Review Board. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required from the participants or the participants' legal guardians/next of kin in accordance with the national legislation and institutional requirements.

Author contributions

NF: Conceptualization, Methodology, Writing – original draft, Writing – review & editing. JN: Data curation, Methodology, Formal analysis, Visualization, Writing – original draft, Writing – review & editing. AB: Investigation, Writing – original draft, Writing – review & editing. MA: Conceptualization, Writing – original draft, Writing – review & editing. RT: Writing – original draft, Writing – review & editing. BG: Writing – original draft, Writing – review & editing. CM: Investigation, Funding acquisition, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Acknowledgments

We would like to thank Brittany Gautreau, Louann Toscano, Maura Church and Marisa Perera for their contributions to the MBC implementation and conception and drafting of this manuscript.

Conflict of interest

NRF, MTA, JN, AB, and CM are all employees of and have equity interest in Two Chairs.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frhs.2025.1659238/full#supplementary-material

Footnote

1. ^Bounding the percent change outcomes to the range [-150%, 100%] resulted in minimal impact among the population meeting at least one clinical threshold at baseline ( <1% of participants were impacted by bounding). There was a larger but still small impact in the subclinical population (1.5-3.5% of participants impacted) due to the potential for larger regressions percentage-wise given lower baseline scores.

References

1. Substance Abuse and Mental Health Services Administration (SAMHSA). Use of Measurement-Based Care for Behavioral Health Care in Community Settings: A Brief Report. Interdepartmental Serious Mental Illness Coordinating Committee (ISMICC). (2022). p. 1–11. https://www.samhsa.gov/sites/default/files/ismicc-measurement-based-care-report.pdf (Accessed June 2, 2025).

2. American Psychological Association. Measurement-Based Care. APA Services. Available online at: https://www.apaservices.org/practice/measurement-based-care (Accessed June 2, 2025).

3. Barkham M, De Jong K, Delgadillo J, Lutz W. Routine outcome monitoring (ROM) and feedback: research review and recommendations. Psychother Res. (2023) 33(7):841–55. doi: 10.1080/10503307.2023.2181114

4. Bickman L, Kelley SD, Breda C, de Andrade ARV, Riemer M. Effects of routine feedback to clinicians on mental health outcomes of youth: results of a randomized trial. Psychiatr Serv. (2011) 62(12):1423–9. doi: 10.1176/appi.ps.002052011

5. Schuckard E, Miller SD, Hubble MA. Feedback-informed treatment: historical and empirical foundations. In: Prescott DS, Maeschalck CL, Miller SD, editors. Feedback-Informed Treatment in Clinical Practice: Reaching for Excellence. Washington, DC: American Psychological Association (2017). p. 13–35. doi: 10.1037/0000039-002

6. Lewis CC, Boyd M, Puspitasari A, Navarro E, Howard J, Kassab H, et al. Implementing measurement-based care in behavioral health: a review. JAMA Psychiatry. (2019) 76(3):324–35. doi: 10.1001/jamapsychiatry.2018.3329

7. Fortney JC, Unützer J, Wrenn G, Pyne JM, Smith GR, Schoenbaum M, et al. A tipping point for measurement-based care. Psychiatr Serv. (2017) 68(2):179–88. doi: 10.1176/appi.ps.201500439

8. Jensen-Doss A, Haimes EMB, Smith AM, Lyon AR, Lewis CC, Stanick CF, et al. Monitoring treatment progress and providing feedback is viewed favorably but rarely used in practice. Adm Policy Ment Health. (2018) 45(1):48–61. doi: 10.1007/s10488-016-0763-0

9. Connors EH, Douglas S, Jensen-Doss A, Landes SJ, Lewis CC, McLeod BD, et al. What gets measured gets done: how mental health agencies can leverage measurement-based care for better patient care, clinician supports, and organizational goals. Adm Policy Ment Health. (2021) 48(2):250–65. doi: 10.1007/s10488-020-01063-w

10. Meadows Mental Health Policy Institute. Increasing Measurement-Based Assessment and Care for People with Serious Mental Illness. (2024). Available online at: https://mmhpi.org/wp-content/uploads/2024/04/Increasing-Measurement-Based-Assessment-and-Care-for-People-with-Serious-Mental-Illness.pdf (Accessed June 2, 2025).

11. Ridout K, Vanderlip ER, Alter CL, Carlo AD, Kadriu B, Livesey C, et al. Resource Document on Implementation of Measurement-Based Care. Washington, DC: American Psychiatric Association (2023). https://www.psychiatry.org/getattachment/3d9484a0-4b8e-4234-bd0d-c35843541fce/Resource-Document-on-Implementation-of-Measurement-Based-Care.pdf (Accessed June 2, 2025).

12. Solstad SM, Kleiven GS, Castonguay LG, Moltu C. Clinical dilemmas of routine outcome monitoring and clinical feedback: a qualitative study of patient experiences. Psychother Res. (2021) 31(2):200–10. doi: 10.1080/10503307.2020.1788741

13. Meyer-Kalos P, Owens G, Fisher M, Wininger L, Williams-Wengerd A, Breen K, et al. Putting measurement-based care into action: a multi-method study of the benefits of integrating routine patient feedback in coordinated specialty care programs for early psychosis. BMC Psychiatry. (2024) 24:871. doi: 10.1186/s12888-024-06258-1

14. Flückiger C, Del Re AC, Wampold BE, Horvath AO. The alliance in adult psychotherapy: a meta-analytic synthesis. Psychotherapy. (2018) 55(4):316–40. doi: 10.1037/pst0000172

15. Prescott DS, Maeschalck CL, Miller SD. Feedback-informed Treatment in Clinical Practice: Reaching for Excellence. Washington, DC: American Psychological Association (2017).

16. Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F. Implementation Research: A Synthesis of the Literature. Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network (2005).

17. Fixsen DL, Blase KA, Naoom SF, Friedman RM, Wallace F. Core Implementation Components. Chapel Hill, NC: National Implementation Research Network, University of North Carolina at Chapel Hill (2009).

18. Forand NR, Nettiksimmons J, Anton M, Truxson R, Vanderwood K, Green B. Depression and anxiety outcomes in a technology-EnabledPsychotherapy practice: retrospective cohort study. JMIR Form Res. (2025).

19. Scott K, Lewis CC. The efficacy of measurement-based care for depressive disorders: a systematic review and meta-analysis. J Clin Psychiatry. (2021) 82(1):20r13457. doi: 10.4088/JCP.20r13457

20. Lewis CC, Marti CN, Scott K, Walker MR, Boyd M, Puspitasari A, et al. Standardized versus tailored implementation of measurement-based care for depression in community mental health clinics. Psychiatr Serv. (2022) 73(1):6–13. doi: 10.1176/appi.ps.202100284

21. Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Adm Policy Ment Health. (2011) 38(1):4–23. doi: 10.1007/s10488-010-0327-7

22. Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F. Implementation Research: A Synthesis of the Literature. (FMHI Publication #231). Tampa, FL: University of South Florida, Louis de la Parte Florida Mental Health Institute, The National Implementation Research Network (2005). p. 1–101.

23. Brattland H, Koksvik JM, Burkeland O, Gråwe RW, Klöckner C, Linaker OM, et al. The effects of routine outcome monitoring (ROM) on therapy outcomes in the course of an implementation process: a randomized clinical trial. J Couns Psychol. (2018) 65(5):641–52. doi: 10.1037/cou0000286

24. Waller G, Turner H. Therapist drift redux: why well-meaning clinicians fail to deliver evidence-based therapy, and how to get back on track. Behav Res Ther. (2016) 77:129–37. doi: 10.1016/j.brat.2015.12.005

25. Chiauzzi E, Wicks P. Digital measurement-based care in behavioral health: the rise of digital therapeutics and the need for a roadmap. Psychiatr Serv. (2020) 71(12):1214–6. doi: 10.1176/appi.ps.202000236

26. Delgadillo J, Deisenhofer A-K, Probst T, Shimokawa K, Lambert MJ, Kleinstäuber M. Progress feedback narrows the gap between more and less effective therapists: a therapist effects meta-analysis of clinical trials. J Consult Clin Psychol. (2022) 90(7):559–67. doi: 10.1037/ccp0000747

Keywords: measurement-based care, implementation, training, quality improvement, sustainment, depression, anxiety, psychotherapy outcomes

Citation: Forand NR, Nettiksimmons J, Brownell A, Anton MT, Truxson R, Green B and Marshall C (2025) The impact of measurement based care at scale: examining the effects of implementation on patient outcomes and provider behaviors. Front. Health Serv. 5:1659238. doi: 10.3389/frhs.2025.1659238

Received: 25 August 2025; Revised: 31 October 2025;

Accepted: 4 November 2025;

Published: 28 November 2025.

Edited by:

Abdu A. Adamu, South African Medical Research Council, South AfricaReviewed by:

Aline Rocha, Federal University of São Paulo, BrazilVassilis Martiadis, Department of Mental Health, Italy

Copyright: © 2025 Forand, Nettiksimmons, Brownell, Anton, Truxson, Green and Marshall. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Nicholas R. Forand, bmZvcmFuZEB0d29jaGFpcnMuY29t

Nicholas R. Forand

Nicholas R. Forand Jasmine Nettiksimmons

Jasmine Nettiksimmons Amanda Brownell1

Amanda Brownell1 Margaret T. Anton

Margaret T. Anton Raven Truxson

Raven Truxson Brandn Green

Brandn Green