Laboratoire C2S (Cognition, Santé, Société) EA6291, Université de Reims Champagne Ardenne, Reims, France

While the embodied nature of human cognition is still debated in cognitive science and epistemology, recent findings in neuroscience suggest that the cognitive processes involved in social interaction are based on the simulation of others’ cognitive states as well as our own. However, until recently, most research in social cognition studied the mental processes at work in social interaction in deliberate isolation from each other, following 19th-century scientific reductionism. Lately, it has been proposed that social cognition, which emerges in interactive situations, cannot be fully understood with experimental paradigms and stimuli that put subjects in a passive stance towards social stimuli. Moreover, social neuroscience seems to concur with the idea that a simulation of the possible outcomes of a social interaction occurs before the action takes place. In this “perspective” article, we propose that past and current research in social neuroscience on the mirror neuron system and empathy can be interpreted, taken together, as a framework for embodied social cognition. We also propose that if the simulation process of the mentalization network works in concert with the mirror neuron system, human experiments on facial recognition and empathy need a new kind of stimuli. After presenting embodied social cognition, we discuss the methodological prerequisites for future studies of social cognition in this area. On this matter, we argue that affective and reactive virtual agents are central to conducting such research.

Introduction

We owe to Fodor (1983) one of the most influential proposals of a functional topography of the cognitive mechanisms underlying behavior. By proposing the modularity of mind, Fodor (1983) acknowledged that there are different levels of information processing, namely input systems and higher-level processing. On this account, the input-system level is modular, that is, made of self-contained modules performing lower-level object identification (perceptual, linguistic, or other), whereas higher-level processes such as reasoning have no specific modules.

This view of human cognition led scholars to study almost exclusively module-centric expressions of human cognition, from a symbolic point of view, until the advent of embodied cognition as a new account of the human mind. Before embodied cognition became a framework for studying the human mind, the cognitive sciences therefore had a long experience of scientific reductionism. This approach tends to study and describe the mental processes underlying conscious or unconscious behavior by creating experimental situations that exclude most factors and stimuli and retain only one or a few of interest, so that it addresses primarily input-system level modules.

By contrast, embodied cognition defines human cognition as holistically situated: it is part of the physical world, grounded in mechanisms that evolved for interaction with the environment, and it relies on action both for receiving input and for responding (Wilson, 2002). On this matter, it has already been proposed that even abstract domains, such as concepts and metaphors, always have sensorimotor properties associated with them. The simulation system seems to be a key component in manipulating concepts, not only those related to sensorimotor representations but also intentions and beliefs, which are components of social cognition (for a review, see Marmolejo-Ramos et al., 2017).

The study of social cognition has also suffered from the main paradigm that prevails until now: features composing social cognition have been investigated by separating psychological constructs and by designing paradigms that require the observation and interpretation of a unique social stimulus. The most documented constructs such as theory of mind, empathy and emotion recognition have been tested by presenting picture stimuli, drawings, or videos that place the subject in a passive stance adopting the third person perspective. Such an approach was criticized by Schilbach et al. (2013): “social cognition is fundamentally different when we are emotionally engaged with someone as compared to adopting an attitude of detachment and when we are interacting with someone as compared to merely observing her” (pg. 396).

In line with this claim, we consider in this perspective paper that the phenomena of interpersonal interaction are central to research in social cognition, and we assume that they should not be reduced to the separate constructs cited above. Moreover, following the advent of embodied cognition, we will show that new intersubjective stimuli, namely virtual agents, can be proposed for studying embodied social cognition. First, we present what is called embodied social cognition, following the neuroscientific evidence in the literature. Then, we show why and how affective virtual agents seem to be a tailor-made technology in the service of the embodied social cognition framework, particularly for psychiatry and mental health studies.

Embodied social cognition

Embodied social cognition as a theoretical construct followed the emergence of embodied cognition, which is a major break from Fodor’s (1983) view of human cognition. Within embodied cognition, some authors, such as Clark (1997; cited by Chemero, 2011), favor a radical approach and claim that there is no abstract information processing between the perceptual features of an object and its symbolic representation; it is thus inconceivable to separate action and perception in bodily experience. Moreover, in this view, cognition is also enactive, a concept proposed by Varela et al. (1991), which states that our understanding of an object in a given environment is solely determined by mutual interactions between the organism (and its neuropsychological functioning) and the environment. Thus, phenomenologically, the meaning that we attribute to objects is not the result of an abstraction process from visual features to a representation but an enactment of bodily activities. Moreover, according to Wilson (2002), embodied cognition is situated; it “involves interaction with the thing that the cognitive activity is about…in the context of task-relevant inputs and outputs” (pg. 626). This would suggest that cognitive activity is always “on-line” with the elements of the environment, the action-perception coupling, and the bodily experience, through a simulation process (Wilson, 2002).

Naturally, applying the embodied cognition framework to the field of social cognition has been proposed over the last 15 years. For instance, Gallese (2007) paved the way by proposing that the mirror neuron system in monkeys and humans, together with simulation (Gallese and Sinigaglia, 2011), can ground an embodied social cognition framework. The mirror neuron system was discovered in the 1990s in the ventral premotor cortex of the macaque monkey (Di Pellegrino et al., 1992; Gallese et al., 1996), although the other areas it involves are still under investigation (for a review, see Kilner and Lemon, 2013). These neurons were found to be active both when a monkey performs an action such as grasping and when it merely observes another individual performing a similar action (Di Pellegrino et al., 1992; Gallese et al., 1996), hence the name mirror neuron system (for a review, see Rizzolatti et al., 2001). The extent of the mirror neuron system (MNS) in humans seems to be broader, involving the inferior frontal gyrus (IFG) (Parsons et al., 1995; see also Keysers and Gazzola, 2006). According to Oberman et al. (2007), the MNS works together with the superior temporal sulcus (also known for facial expression recognition; see Iacoboni et al., 2001), the inferior parietal lobule (also known for body image; Buccino et al., 2001), and the limbic system (also known for emotion; Singer et al., 2004). According to Oberman et al. (2007), these systems altogether “may play a key role in multiple aspects of social cognition from biological action perception to empathy” (pg. 62). However, as Heyes et al. (2022) noted, the MNS framework in social cognition seems to have lost the interest of scholars over the last decade, although there is “still much to be discovered about the sources and developmental timing of the sensorimotor experience that builds mirror neurons and how this information might be used for clinical and educational interventions” (pg. 162).

Social cognition has been defined as any cognitive process “that involves conspecifics, either at a group level or on a one-to-one basis” (Blakemore et al., 2004, pg. 216); according to these authors, understanding others’ actions, intentions, and emotions, also called mentalization, is a key object of social cognition. Indeed, other authors such as Van Overwalle and Baetens (2009) and Iacoboni et al. (2005) propose that the MNS and the mentalizing network (the precuneus, the temporo-parietal junction and the medial prefrontal cortex) cooperate, in that the MNS provides crucial information to the mentalizing network, particularly when moving parts of a body are observed1.

Moreover, there seems to be a further cooperation between the MNS and emotion recognition (Bastiaansen et al., 2009). According to these authors, motor simulation may trigger the simulation of associated feeling states. In other words, simulating and imitating other people’s facial configurations can trigger the corresponding emotional states. This proposal is in line with the work of Niedenthal et al. (2001), who reported that blocking facial mimicry leads to slower detection of facial expressions. Bastiaansen et al. (2009) conclude that “regions involved in stimulating facial expressions indeed seem to trigger an affective simulation of the hidden inner states of others” (pg. 2397). On this subject, it is worth mentioning that Holstege et al. (1996) report a case study of a patient with a small infarction in the white matter that interrupted the corticobulbar fibers originating in the face part of the motor cortex. Holstege et al. (1996) highlight the fact that the patient, although unable to voluntarily use the oral muscles on the left side of her mouth, was able to use the same muscles when she reacted spontaneously to a joke or a funny remark. According to Holstege et al. (1996), this provides an argument for the existence of two distinct motor systems, one voluntary and the other emotional (the latter can also be characterized as automatic).

Regarding the recognition of others’ facial expressions, Van der Gaag et al. (2007) proposed that the MNS can, in concert with the limbic regions and the somatosensory system, become active during the monitoring of facial expressions as well as during the production of similar facial expressions. However, whether mimicry plays a central role in emotion recognition is still an open question (see Niedenthal et al., 2010); for instance, “people with bilateral facial paralysis stemming from Moebius syndrome can categorize the facial expressions of others without difficulty” (Rives Bogart and Matsumoto, 2010; cited by Morsella et al., 2010, pg. 456). Moreover, mimicry (or the lack thereof) has already been studied in neuroatypical children using virtual agents as stimuli (Forbes et al., 2016).

Studying embodied social cognition with virtual agents

Affective and reactive virtual agents have the ability to simulate human behaviors, and to present stimuli that trigger social interaction while providing a strong degree of experimental control and reproducibility (Wilms et al., 2010). The opportunity of using virtual agents, also called embodied conversational agents—ECA (Cassell et al., 2001), has been highlighted by Gratch (2014). According to Gratch (2014), virtual agents simulate embodied aspects of human behavior, particularly in non-verbal behavior. In this section, we will present how and why virtual agents and virtual characters are part of virtual reality and virtual environments, then how they can be an asset in the field of cognitive psychopathology.

Virtual agents provide interaction

Until the last decade, studies used either static stimuli such as photographs or dynamic stimuli such as movie clips of actors expressing an emotion; in both cases, participants were inactive, mere observers. Researchers point out that studying the recognition of emotions with static images is not consistent with what happens in everyday life (Isaacowitz and Stanley, 2011). Recognizing emotions and contextualizing them in discourse are dynamic processes that integrate several sensory modalities and in which both interlocutors are active, unlike the passive-observer situations used in previous social cognition studies. Recently, the importance of interactive and immersive environments for investigating social cognition has been highlighted (Zaki and Ochsner, 2009; Schilbach et al., 2013). Schilbach et al. (2013) advocate using social interactions to study social behaviors and knowledge (including recognition) because, in their view, there is a difference between observing an interaction and participating in it. Participating in social interaction involves emotional engagement, which is absent from traditional emotion recognition tasks (Schilbach et al., 2013).

For example, one study conducted with affective and reactive virtual agents showed that the ocular strategies used for information gathering in social cognition differ depending on whether the experimental setting is interactive or not and on whether healthy aging individuals listen to or answer the questions asked by virtual avatars (Pavic et al., 2021); the avatars were animated by a platform named “Virtual Interactive Behaviour” (Pecune et al., 2014). As another example, Giraud et al. (2021) present the design of a virtual interactive system in which a virtual agent plays a mediator role in the training of children with autism spectrum disorder (ASD). The proposed system is composed of a virtual character projected onto a surface on which a tangible object is magnetized: both the user and the anthropomorphic virtual character hold the object, thus simulating a joint action. Although realizing a joint action is a difficult task for children with ASD, preliminary feedback from this study is encouraging.

Virtual agents provide automatic immersion

As pointed out by Pan and Hamilton (2018), virtual reality is not just “things viewed in a head-mounted display” but means, among other things, “a computer-generated world” (pg. 395). Of course, virtual agents can be part of worlds presented in a head-mounted display; however, this is not why they provide immersion and interaction to the user. Regarding immersion, it should be noted that the arguments presented above regarding the MNS explain why the mere presentation of a real emotional face triggers simulation in the cognitive system. Regarding the automatic immersion provided by virtual agents, there is a widely known phenomenon called “the uncanny valley,” proposed by Mori (1970). According to Mori (1970), humanlike objects, such as robots, provoke positive emotional responses that increase with their degree of human likeness. However, once a certain degree of likeness is reached, the humanlike object is perceived as repulsive. Among the several explanations of this phenomenon, one is important for the main contribution of this paper: components of social cognition, particularly empathy, would be involved when viewing inanimate objects depicting humans (Misselhorn, 2009; Stein and Ohler, 2017).

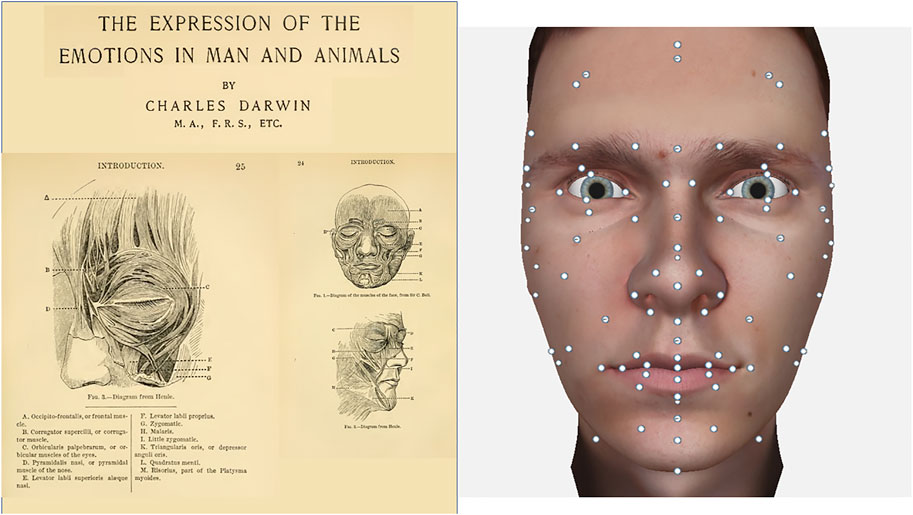

However, it can be argued that, since virtual agents are 3D graphical animations, the immersion they provide is weaker than that provided by real humans. Against this objection, it is worth noting that, in the most advanced virtual agent platforms, the simulation of emotional facial expressions is based on real human facial muscle contractions, following a taxonomy provided by the work of Paul Ekman. The relevance of ECAs is that they allow researchers to manipulate action units, which are supposed to be universal and are based on actual human facial muscle contractions (Ekman and Friesen, 1978; Ekman, 2002). Ekman and Friesen (1978) proposed that expressions have prototypical features, some of which would also be universal (Ekman, 1994). To this end, Ekman and Friesen (1978) also proposed a taxonomic system of facial muscle movements named FACS (Facial Action Coding System), which would standardize the physical expression of emotions. Its 44 codes, called “Action Units,” are used to animate most, but not all, conversational virtual agents today. In fact, these authors relied largely (but not only) on the description of human facial muscles given by Darwin in 1872. In his book “The expression of the emotions in man and animals,” Darwin (1872) depicted the facial muscles involved in facial expressions. Although the animation of action units in virtual environments can differ depending on the technology used, it can be proposed that the simultaneous use of these units at different intensities can create more than 7,000 different combinations (Scherer and Ekman, 1982). For instance, one of these ECA platforms, the MARC (Multimodal Affective and Reactive Characters) framework (Courgeon et al., 2008; Courgeon and Clavel, 2013), can be used to animate a realistic 3D character. It is virtual agent software capable of expressing emotions through facial expressions, based on action units, while interacting with the user. MARC is a multi-character animation platform including body and facial animations in real time. Its architecture is composed of three modules: interactive real-time 3D animation software, an offline editor of facial expressions, and an offline body animation editor (see Figure 1).

FIGURE 1. On the left, original pictures from Darwin’s “The expression of the emotions in man and animals” (1872); on the right, an example of an embodied conversational agent with the action units presented on the neutral model, each of which can be activated at several intensities (Courgeon et al., 2008; Courgeon and Clavel, 2013).
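
To make the idea of action units activated at several intensities concrete, the following minimal sketch represents a facial configuration as a mapping from action unit numbers to intensities and interpolates between two configurations. It is an illustration only and not the MARC implementation: the `Expression` class, the `blend` function, the normalized 0 to 1 intensity scale, and the choice of AU6 plus AU12 as a smile are assumptions made for this example; only the action unit names are taken from FACS.

```python
from dataclasses import dataclass, field
from typing import Dict

# Illustrative subset of FACS action units (names as in Ekman and Friesen, 1978).
AU_NAMES: Dict[int, str] = {
    1: "inner brow raiser",
    4: "brow lowerer",
    6: "cheek raiser",
    12: "lip corner puller",
    15: "lip corner depressor",
}


@dataclass
class Expression:
    """Hypothetical facial configuration: action unit number -> intensity in [0, 1]."""
    activations: Dict[int, float] = field(default_factory=dict)

    def activate(self, au: int, intensity: float) -> "Expression":
        if au not in AU_NAMES:
            raise KeyError(f"unknown action unit: AU{au}")
        # Clamp to the assumed normalized range so the model is never over-driven.
        self.activations[au] = max(0.0, min(1.0, intensity))
        return self


def blend(start: Expression, end: Expression, t: float) -> Expression:
    """Linearly interpolate each action unit between two expressions (t in [0, 1])."""
    t = max(0.0, min(1.0, t))
    result = Expression()
    for au in sorted(set(start.activations) | set(end.activations)):
        a = start.activations.get(au, 0.0)  # an absent unit counts as relaxed
        b = end.activations.get(au, 0.0)
        result.activations[au] = (1.0 - t) * a + t * b
    return result


# Assumed example: a smile built from AU6 and AU12, reached halfway from neutral.
neutral = Expression()
smile = Expression().activate(6, 0.8).activate(12, 1.0)
halfway = blend(neutral, smile, 0.5)
for au, level in halfway.activations.items():
    print(f"AU{au} ({AU_NAMES[au]}): {level:.2f}")
```

Keeping each unit as an independent, graded parameter is what allows an experimenter to vary one muscle contraction while holding the others constant, which is the kind of control that photographs or video clips of actors do not offer.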

Finally, the embodied view of social cognition assumes that intentionality and perception cannot be explained apart from the action that accompanies them (Barsalou, 2009). This is also the case for spontaneous discourse comprehension and inferences, which can be made through the same process of action simulation (Cevasco and Marmolejo-Ramos, 2013). According to embodied social cognition, we can understand the intentionality of the other only through internal simulation, which is an automatic and nonconscious reconstitution of events. This reconstruction may be possible only if the virtual agent shares our physical characteristics, such as the same facial muscle contractions, and if these muscle contractions have a meaning in the context of events. Thus, our claim is that embodied conversational agents animated with action units are tailor-made stimuli for affective cognitive science studies. The use of the same human facial muscles can trigger the MNS more directly and automatically than any other stimuli, and the fact that virtual agents can adapt their behavior to the user’s input makes them situated (a prerequisite of embodied social cognition), which cannot be achieved with videos or animations.

Virtual agents are tailor-made stimuli for affective cognitive sciences and mental health studies

One of the biggest issues in experimental research in the affective cognitive sciences is the difficulty of designing interactive paradigms that are, at the same time, ecological, controlled, and reproducible. Progress in affective computing is overcoming these constraints. Embodied conversational agents (ECAs; Cassell et al., 2001) meet all these requirements: they can simulate human intelligence, communicate by expressing affects via facial expressions and affective prosody, react according to the user’s actions, and contextualize a human interaction. Thus, ECAs can be used in facial expression experiments (Grynszpan et al., 2011; Marcos-Pablos et al., 2016). For instance, Grynszpan et al. (2011) showed that, when interacting with a virtual agent, people with high-functioning autism spectrum disorders showed a weaker modulation of eye movements, suggesting impairments in the self-monitoring of gaze (for a debate regarding autism and the MNS, see Heyes et al., 2022). Interestingly enough, Marcos-Pablos et al. (2016) showed that, although people with schizophrenia globally present deficits in the recognition of facial expressions, patients outperformed the control group in recognizing happiness when it was expressed by the virtual agent.

Other attempts to investigate facial expression recognition, empathy, and intentionality performance in psychiatry using ECAs have been made (Oker et al., 2015; Berrada-Baby et al., 2016). Oker et al. (2015) evaluated whether a virtual card game with affective and reactive virtual agents can be proposed to people with schizophrenia. In their study, created with the MARC platform presented above, people with schizophrenia played a game in which they met a female virtual agent and had to infer from her facial expressions which card to choose to match the color of another card. The authors suggest that interaction with virtual agents can be fully functional for patients with schizophrenia. Another study, following the same virtual card game, showed that patients with schizophrenia are less prone to interpret virtual characters’ empathetic questioning as helpful during an interaction (Berrada-Baby et al., 2016).

Moreover, although this is beyond the scope of this paper, virtual reality and virtual avatars have also been proposed for cue-exposure therapy in substance use disorders (Hone-Blanchet et al., 2014). Other studies with virtual agents have addressed social anxiety (Kiser et al., 2022), social phobia (Ruch et al., 2014), or the assessment of depression and anxiety (Egede et al., 2021). Regarding mental health issues and the therapeutic interventions that virtual agents can deliver, one cannot ignore the advances made in the field of emotional artificial intelligence. Emotional AI is a term for artificial intelligence conceived as a biologically inspired cognitive architecture that can interact with people according to their emotions. These emotions can be inferred, for instance, from the facial expression configurations (Ko, 2018) displayed during interaction. Implemented in virtual agents, emotional AI would let the agents adapt their behavior, discourse, and facial expressions autonomously to the user’s mood and induced emotional states, in order to motivate users and obtain a more engaging social interaction (Rodríguez and Ramos, 2014; Barrett et al., 2019), which could lead to more successful therapeutic outcomes.

In conclusion, the importance of interpreting others’ emotional states, needs and desires for functional social interaction is well documented (Beer and Ochsner, 2006; Frith and Frith, 2012). These emotional states can mostly be interpreted through facial expression recognition (Izard, 2001; Kohler et al., 2002) and the direction of one’s gaze (Pfeiffer et al., 2013). People who have difficulty processing and integrating all this information have been reported to show impaired social interactions and quality of life, with outcomes such as depression and social isolation. In the literature, these impairments in adults have been reported mostly in schizophrenia (Brüne, 2005; Penn et al., 2008) and schizotypy (Wang et al., 2015), but also in aging individuals (Chaby and Narme, 2009; Ziaei et al., 2016). The use of virtual agents can achieve a major leap by taking advantage of human neurology in an attempt to understand, evaluate and rehabilitate these pathologies more efficiently than with any other stimuli.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material, further inquiries can be directed to the corresponding author.

Author contributions

The author confirms being the sole contributor of this work and has approved it for publication.

Conflict of interest

The author declares that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1This might also be the case of bodily expressions of emotions, as De Gelder et al. (2004) described.

References

Barrett, L. F., Adolphs, R., Marsella, S., Martinez, A. M., and Pollak, S. D. (2019). Emotional expressions reconsidered: Challenges to inferring emotion from human facial movements. Psychol. Sci. Public Interest 20 (1), 1–68. doi:10.1177/1529100619832930

Barsalou, L. W. (2009). Simulation, situated conceptualization, and prediction. Phil. Trans. R. Soc. B 364 (1521), 1281–1289. doi:10.1098/rstb.2008.0319

Bastiaansen, J. A., Thioux, M., and Keysers, C. (2009). Evidence for mirror systems in emotions. Phil. Trans. R. Soc. B 364 (1528), 2391–2404. doi:10.1098/rstb.2009.0058

Beer, J. S., and Ochsner, K. N. (2006). Social cognition: A multi level analysis. Brain Res. 1079 (1), 98–105. doi:10.1016/j.brainres.2006.01.002

Berrada-Baby, Z., Oker, A., Courgeon, M., Urbach, M., Bazin, N., Amorim, M. A., et al. (2016). Patients with schizophrenia are less prone to interpret virtual others' empathetic questioning as helpful. Psychiatry Res. 242, 67–74. doi:10.1016/j.psychres.2016.05.022

Blakemore, S. J., Winston, J., and Frith, U. (2004). Social cognitive neuroscience: Where are we heading? Trends Cogn. Sci. 8 (5), 216–222. doi:10.1016/j.tics.2004.03.012

Brüne, M. (2005). Emotion recognition, ‘theory of mind,’ and social behavior in schizophrenia. Psychiatry Res. 133 (2-3), 135–147. doi:10.1016/j.psychres.2004.10.007

Buccino, G., Binkofski, F., Fink, G. R., Fadiga, L., Fogassi, L., Gallese, V., et al. (2001). Action observation activates premotor and parietal areas in a somatotopic manner: An fMRI study. Eur. J. Neurosci. 13, 400–404.

Cassell, J. (2001). Embodied conversational agents: Representation and intelligence in user interfaces. AI Mag. 22 (4), 67–83. doi:10.1609/aimag.v22i4.1593

Cevasco, J., and Marmolejo-Ramos, F. (2013). The importance of studying the role of prosody in the comprehension of spontaneous spoken discourse. Rev. Latinoam. Psicol. 45 (1), 21–33.

Chaby, L., and Narme, P. (2009). Processing facial identity and emotional expression in normal aging and neurodegenerative diseases. Psychol. Neuropsychiatr. Vieil. 7 (1), 31–42. doi:10.1684/pnv.2008.0154

Courgeon, M., and Clavel, C. (2013). Marc: A framework that features emotion models for facial animation during human–computer interaction. J. Multimodal User Interfaces 7 (4), 311–319. doi:10.1007/s12193-013-0124-1

Courgeon, M., Martin, J. C., and Jacquemin, C. (2008). “Marc: A multimodal affective and reactive character,” in Proceedings of the 1st workshop on affective interaction in natural environments (Chania, Greece: Springer).

Darwin, C. (1872). The expression of the emotions in man and animals. London: John Murray.

De Gelder, B., Snyder, J., Greve, D., Gerard, G., and Hadjikhani, N. (2004). Fear fosters flight: A mechanism for fear contagion when perceiving emotion expressed by a whole body. Proc. Natl. Acad. Sci. U. S. A. 101 (47), 16701–16706.

Di Pellegrino, G., Fadiga, L., Fogassi, L., Gallese, V., and Rizzolatti, G. (1992). Understanding motor events: A neurophysiological study. Exp. Brain Res. 91 (1), 176–180. doi:10.1007/bf00230027

Egede, J. O., Price, D., Krishnan, D. B., Jaiswal, S., Elliott, N., Morriss, R., et al. (2021). “Design and evaluation of virtual human mediated tasks for assessment of depression and anxiety,” in Proceedings of the 21st ACM international Conference on intelligent virtual agents (New York, United States: Association for Computing Machinery), 52–59.

Ekman, P. (2002). Facial action coding system (FACS). A human face. Salt Lake City: Paul Ekman Group.

Ekman, P., and Friesen, W. V. (1978). Facial action coding system. Environ. Psychol. Nonverbal Behav.

Ekman, P. (1994). Strong evidence for universals in facial expressions: A reply to Russell's mistaken critique. Psychol. Bull. 115, 268–287. doi:10.1037/0033-2909.115.2.268

Forbes, P. A., Pan, X., and de C Hamilton, A. F. (2016). Reduced mimicry to virtual reality avatars in autism spectrum disorder. J. Autism Dev. Disord. 46 (12), 3788–3797. doi:10.1007/s10803-016-2930-2

Frith, C. D., and Frith, U. (2012). Mechanisms of social cognition. Annu. Rev. Psychol. 63, 287–313. doi:10.1146/annurev-psych-120710-100449

Gallese, V. (2007). Before and below ‘theory of mind’: Embodied simulation and the neural correlates of social cognition. Phil. Trans. R. Soc. B 362 (1480), 659–669. doi:10.1098/rstb.2006.2002

Gallese, V., Fadiga, L., Fogassi, L., and Rizzolatti, G. (1996). Action recognition in the premotor cortex. Brain 119 (2), 593–609. doi:10.1093/brain/119.2.593

Gallese, V., and Sinigaglia, C. (2011). What is so special about embodied simulation? Trends Cogn. Sci. 15 (11), 512–519. doi:10.1016/j.tics.2011.09.003

Giraud, T., Ravenet, B., Tai Dang, C., Nadel, J., Prigent, E., Poli, G., et al. (2021). “‘Can you help me move this over there?’: Training children with ASD to joint action through tangible interaction and virtual agent,” in Proceedings of the fifteenth international conference on tangible, embedded, and embodied interaction (New York, United States: Association for Computing Machinery), 1–12.

Gratch, J. (2014). “Understanding the mind by simulating the body: Virtual humans as a tool for cognitive science research,” in Oxford handbook of cognitive science (Oxford: Oxford University Press).

Grynszpan, O., Nadel, J., Jacques, C., Le Barillier, F., Carbonell, N., Simonin, J., et al. (2011). A new virtual environment paradigm for high-functioning autism intended to help attentional disengagement in a social context. J. Phys. Ther. Educ. 25 (1), 42–47. doi:10.1097/00001416-201110000-00008

Heyes, C., and Catmur, C. (2022). What happened to mirror neurons? Perspect. Psychol. Sci. 17 (1), 153–168.

Holstege, G., Bandler, R., and Saper, C. B. (1996). The emotional motor system. Prog. Brain Res. 107, 3–6. doi:10.1016/s0079-6123(08)61855-5

Hone-Blanchet, A., Wensing, T., and Fecteau, S. (2014). The use of virtual reality in craving assessment and cue-exposure therapy in substance use disorders. Front. Hum. Neurosci. 8, 844. doi:10.3389/fnhum.2014.00844

Iacoboni, M., Koski, L. M., Brass, M., Bekkering, H., Woods, R. P., Dubeau, M. C., et al. (2001). Reafferent copies of imitated actions in the right superior temporal cortex. Proc. Natl. Acad. Sci. U. S. A. 98 (24), 13995–13999. doi:10.1073/pnas.241474598

Iacoboni, M., Molnar-Szakacs, I., Gallese, V., Buccino, G., Mazziotta, J. C., and Rizzolatti, G. (2005). Grasping the intentions of others with one's own mirror neuron system. PLoS Biol. 3 (3), e79. doi:10.1371/journal.pbio.0030079

Isaacowitz, D. M., and Stanley, J. T. (2011). Bringing an ecological perspective to the study of aging and recognition of emotional facial expressions: Past, current, and future methods. J. Nonverbal Behav. 35 (4), 261–278. doi:10.1007/s10919-011-0113-6

Keysers, C., and Gazzola, V. (2006). Towards a unifying neural theory of social cognition. Prog. Brain Res. 156, 379–401. doi:10.1016/S0079-6123(06)56021-2

Kilner, J. M., and Lemon, R. N. (2013). What we know currently about mirror neurons. Curr. Biol. 23 (23), R1057–R1062. doi:10.1016/j.cub.2013.10.051

Kiser, D., Gromer, D., Pauli, P., and Hilger, K. (2022). A virtual reality social conditioned place preference paradigm for humans: Does trait social anxiety affect approach and avoidance of virtual agents?

Ko, B. C. (2018). A brief review of facial emotion recognition based on visual information. Sensors 18 (2), 401. doi:10.3390/s18020401

Kohler, E., Keysers, C., Umilta, M. A., Fogassi, L., Gallese, V., and Rizzolatti, G. (2002). Hearing sounds, understanding actions: Action representation in mirror neurons. Science 297 (5582), 846–848. doi:10.1126/science.1070311

Marcos-Pablos, S., González-Pablos, E., Martín-Lorenzo, C., Flores, L. A., Gómez-García-Bermejo, J., and Zalama, E. (2016). Virtual avatar for emotion recognition in patients with schizophrenia: A pilot study. Front. Hum. Neurosci. 10, 421. doi:10.3389/fnhum.2016.00421

Marmolejo-Ramos, F., Khatin-Zadeh, O., Yazdani-Fazlabadi, B., Tirado, C., and Sagi, E. (2017). Embodied concept mapping: Blending structure-mapping and embodiment theories. Pragmat. Cognition 24 (2), 164–185. doi:10.1075/pc.17013.mar

Misselhorn, C. (2009). Empathy with inanimate objects and the uncanny valley. Minds Mach. (Dordr). 19 (3), 345–359. doi:10.1007/s11023-009-9158-2

Morsella, E., Montemayor, C., Hubbard, J., and Zarolia, P. (2010). Conceptual knowledge: Grounded in sensorimotor states, or a disembodied deus ex machina? Behav. Brain Sci. 33 (6), 455–456. doi:10.1017/s0140525x10001573

Niedenthal, P. M., Brauer, M., Halberstadt, J. B., and Innes-Ker, Å. H. (2001). When did her smile drop? Facial mimicry and the influences of emotional state on the detection of change in emotional expression. Cognition Emot. 15 (6), 853–864. doi:10.1080/02699930143000194

Niedenthal, P. M., Mermillod, M., Maringer, M., and Hess, U. (2010). The Simulation of Smiles (SIMS) model: Embodied simulation and the meaning of facial expression. Behav. Brain Sci. 33, 417–433. doi:10.1017/s0140525x10000865

Oberman, L. M., Winkielman, P., and Ramachandran, V. S. (2007). Face to face: Blocking facial mimicry can selectively impair recognition of emotional expressions. Soc. Neurosci. 2 (3-4), 167–178. doi:10.1080/17470910701391943

Oker, A., Prigent, E., Courgeon, M., Eyharabide, V., Urbach, M., Bazin, N., et al. (2015). How and why affective and reactive virtual agents will bring new insights on social cognitive disorders in schizophrenia? An illustration with a virtual card game paradigm. Front. Hum. Neurosci. 9, 133. doi:10.3389/fnhum.2015.00133

Pan, X., and Hamilton, A. F. D. C. (2018). Why and how to use virtual reality to study human social interaction: The challenges of exploring a new research landscape. Br. J. Psychol. 109 (3), 395–417. doi:10.1111/bjop.12290

Parsons, L. M., Fox, P. T., Downs, J. H., Glass, T., Hirsch, T. B., Martin, C. C., et al. (1995). Use of implicit motor imagery for visual shape discrimination as revealed by PET. Nature 375 (6526), 54–58. doi:10.1038/375054a0

Pavic, K., Oker, A., Chetouani, M., and Chaby, L. (2021). Age-related changes in gaze behaviour during social interaction: An eye-tracking study with an embodied conversational agent. Q. J. Exp. Psychol. 74 (6), 1128–1139. doi:10.1177/1747021820982165

Pecune, F., Cafaro, A., Chollet, M., Philippe, P., and Pelachaud, C. (2014). “Suggestions for extending SAIBA with the VIB platform,” in 14th international conference on intelligent virtual agents (Boston: Springer), 16–20.

Penn, D. L., Sanna, L. J., and Roberts, D. L. (2008). Social cognition in schizophrenia: An overview. Schizophr. Bull. 34 (3), 408–411. doi:10.1093/schbul/sbn014

Pfeiffer, U. J., Vogeley, K., and Schilbach, L. (2013). From gaze cueing to dual eye-tracking: Novel approaches to investigate the neural correlates of gaze in social interaction. Neurosci. Biobehav. Rev. 37 (10), 2516–2528. doi:10.1016/j.neubiorev.2013.07.017

Rives Bogart, K., and Matsumoto, D. (2010). Facial mimicry is not necessary to recognize emotion: Facial expression recognition by people with Moebius syndrome. Soc. Neurosci. 5 (2), 241–251. doi:10.1080/17470910903395692

Rizzolatti, G., Fogassi, L., and Gallese, V. (2001). Neurophysiological mechanisms underlying the understanding and imitation of action. Nat. Rev. Neurosci. 2 (9), 661–670.

Rodríguez, L. F., and Ramos, F. (2014). Development of computational models of emotions for autonomous agents: A review. Cogn. Comput. 6 (3), 351–375. doi:10.1007/s12559-013-9244-x

Ruch, W. F., Platt, T., Hofmann, J., Niewiadomski, R., Urbain, J., Mancini, M., et al. (2014). Gelotophobia and the challenges of implementing laughter into virtual agents interactions. Front. Hum. Neurosci. 8, 928. doi:10.3389/fnhum.2014.00928

Scherer, K. R., and Ekman, P. (1982). Methodological issues in studying nonverbal behavior. Handb. methods nonverbal Behav. Res. 1, 44.

Schilbach, L., Timmermans, B., Reddy, V., Costall, A., Bente, G., Schlicht, T., et al. (2013). Toward a second-person neuroscience. Behav. Brain Sci. 36 (4), 393–414.

Singer, T., Seymour, B., O’Doherty, J., Kaube, H., Dolan, R. J., and Frith, C. D. (2004). Empathy for pain involves the affective but not sensory components of pain. Science 303 (5661), 1157–1162.

Stein, J. P., and Ohler, P. (2017). Venturing into the uncanny valley of mind—The influence of mind attribution on the acceptance of human-like characters in a virtual reality setting. Cognition 160, 43–50. doi:10.1016/j.cognition.2016.12.010

Van der Gaag, C., Minderaa, R. B., and Keysers, C. (2007). Facial expressions: What the mirror neuron system can and cannot tell us. Soc. Neurosci. 2 (3-4), 179–222. doi:10.1080/17470910701376878

Van Overwalle, F., and Baetens, K. (2009). Understanding others' actions and goals by mirror and mentalizing systems: A meta-analysis. Neuroimage 48 (3), 564–584. doi:10.1016/j.neuroimage.2009.06.009

Varela, F., Thompson, E., and Rosch, E. (1991). The embodied mind: Cognitive science and human experience. Cambridge, MA: MIT Press.

Wang, Y., Liu, W. H., Li, Z., Wei, X. H., Jiang, X. Q., Neumann, D. L., et al. (2015). Dimensional schizotypy and social cognition: An fMRI imaging study. Front. Behav. Neurosci. 9, 133. doi:10.3389/fnbeh.2015.00133

Wilms, M., Schilbach, L., Pfeiffer, U., Bente, G., Fink, G. R., and Vogeley, K. (2010). It’s in your eyes—Using gaze-contingent stimuli to create truly interactive paradigms for social cognitive and affective neuroscience. Soc. Cogn. Affect. Neurosci. 5 (1), 98–107. doi:10.1093/scan/nsq024

Wilson, M. (2002). Six views of embodied cognition. Psychonomic Bull. Rev. 9 (4), 625–636. doi:10.3758/bf03196322

Zaki, J., and Ochsner, K. (2009). The need for a cognitive neuroscience of naturalistic social cognition. Ann. N. Y. Acad. Sci. 1167, 16–30. doi:10.1111/j.1749-6632.2009.04601.x

Keywords: embodied social cognition, virtual agents, social brain, social interaction, affective cognition

Citation: Oker A (2022) Embodied social cognition investigated with virtual agents: The infinite loop between social brain and virtual reality. Front. Virtual Real. 3:962129. doi: 10.3389/frvir.2022.962129

Received: 05 June 2022; Accepted: 04 October 2022;

Published: 13 October 2022.

Edited by:

Regis Kopper, University of North Carolina at Greensboro, United States

Reviewed by:

Fernando Marmolejo-Ramos, University of South Australia, Australia

Copyright © 2022 Oker. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: A. Oker
