- ¹Department of Psychological and Brain Sciences, University of California, Santa Barbara, Santa Barbara, CA, United States

- ²Department of Neurobiology and Behavior, University of California, Irvine, Irvine, CA, United States

- ³Department of Cognitive Sciences, University of California, Irvine, Irvine, CA, United States

Spatial perspective taking is an essential cognitive ability that enables people to imagine how an object or scene would appear from a perspective different from their current physical viewpoint. This process is fundamental for successful navigation, especially when people use navigational aids (e.g., maps) that present information from a different perspective. Research on spatial perspective taking is primarily conducted using paper-and-pencil tasks or computerized figural tasks. However, in daily life, navigation takes place in three-dimensional (3D) space and involves moving one’s body through that space, and people need to map the perspective indicated by a 2D, top-down, external representation onto their current 3D surroundings to guide their movements to goal locations. In this study, we developed an immersive viewpoint transformation task (iVTT) using ambulatory virtual reality (VR) technology. In the iVTT, people physically walked to a goal location in a virtual environment, from a first-person perspective, after viewing a map of the same environment from a top-down perspective. When this task was compared with a computerized version of a popular paper-and-pencil perspective taking task (SOT: Spatial Orientation Task), the results indicated that the SOT is highly correlated with angle production error, but not distance error, in the iVTT. Overall angular error in the iVTT was higher than in the SOT. People used intrinsic body axes (front/back axis or left/right axis) similarly in the SOT and the iVTT, although there were some minor differences. These results suggest that the SOT and the iVTT capture common variance and cognitive processes but are also subject to unique sources of error caused by different cognitive processes. The iVTT provides a new immersive VR paradigm for studying perspective taking ability in a space encompassing human bodies and advances our understanding of perspective taking in the real world.

1 Introduction

Spatial perspective taking is the ability to adopt a spatial perspective different from one’s own. It enables people to imagine themselves in a space from a different vantage point without physically seeing the view. People frequently rely on this fundamental cognitive ability in daily life, for example when interpreting the orientation shown on a navigational aid or when describing target locations from another person’s or an imagined perspective. Previous research has highlighted its connections to social cognition outside the spatial domain, such as social perspective taking, theory of mind, and empathy (Johnson, 1975; Baron-Cohen et al., 2005; Kessler and Wang, 2012; Shelton et al., 2012; Tarampi et al., 2016; Gunalp et al., 2019). Within the spatial cognition domain, it has been shown to be partially dissociated from other small-scale spatial abilities (e.g., object-based spatial transformations as measured by mental rotation tasks) and to be more closely related to large-scale spatial or navigation abilities than mental rotation is (Kozhevnikov and Hegarty, 2001; Hegarty and Waller, 2004; Weisberg et al., 2014; Holmes et al., 2017; Galati et al., 2018). Some evidence suggests that this ability is an important mediator of the relationship between small-scale spatial ability and navigation ability (Allen et al., 1996; Hegarty and Waller, 2004; Hegarty et al., 2006).

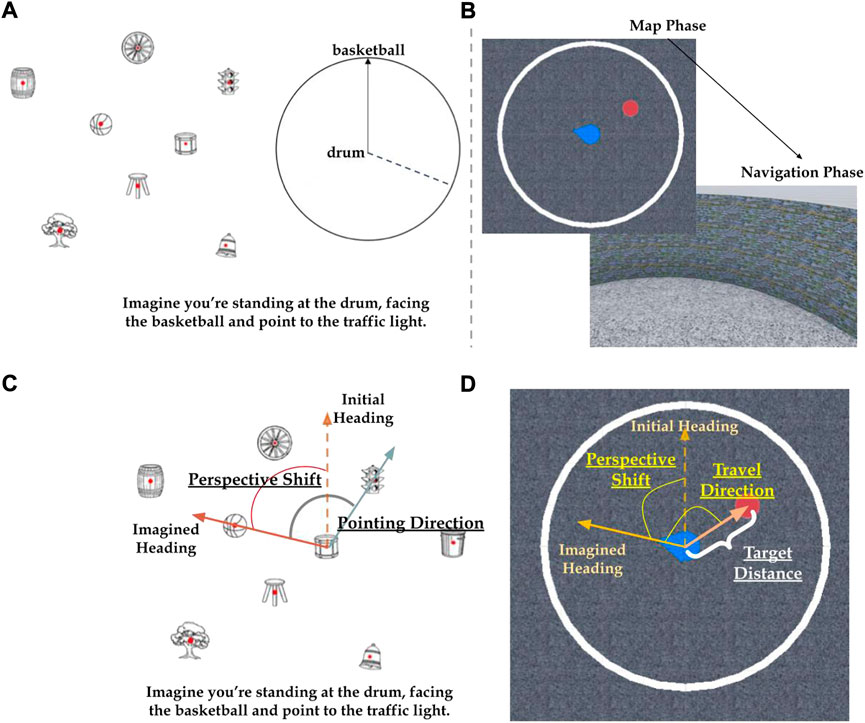

To measure perspective taking ability, cognitive psychologists have developed several psychometric tasks (Brucato et al., 2022). One commonly used task is the Spatial Orientation Task (Kozhevnikov and Hegarty, 2001; Hegarty and Waller, 2004; Friedman et al., 2020; Gunalp et al., 2021), in which participants are presented with an overhead view of an object array and are asked to imagine standing at object A, facing object B, and to indicate the direction of object C (see Figure 1A). Performance is measured by the absolute angular error between the correct direction and the direction they indicate. To arrive at the correct direction, people first need to shift from their current perspective to the imagined perspective (Step 1) and then estimate the direction to the target relative to that perspective (Step 2). Thus, two angles, corresponding to the trial attributes Perspective Shift and Pointing Direction, influence performance in perspective taking (see Figure 1C). Perspective Shift (Step 1) refers to the angular deviation from the original orientation of the array (or the initial heading of participants) to the perspective to be imagined. Normally, people assume that the upward direction of the display is aligned with their current facing or heading direction, which is called the map alignment effect or front-up effect (Levine et al., 1982; Presson and Hazelrigg, 1984; Montello et al., 2004). Pointing Direction (Step 2) refers to the angular deviation from the imagined perspective to the direction of the target object.
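
The two-step scoring of an SOT trial can be made concrete with a small geometric sketch. It is only an illustration under stated assumptions, not the authors' scoring code: objects are given as hypothetical (x, y) display coordinates with y increasing upward, bearings follow a compass convention (0° = display up, clockwise positive), and the response is read off the arrow circle relative to the imagined heading. The function names are hypothetical.

```python
import math


def bearing(frm, to):
    """Compass bearing in degrees (0 = display up, clockwise positive) from frm to to.

    Assumes x increases to the right and y increases upward on the display.
    """
    dx, dy = to[0] - frm[0], to[1] - frm[1]
    return math.degrees(math.atan2(dx, dy)) % 360


def wrap(angle):
    """Wrap an angle in degrees into the interval [-180, 180)."""
    return (angle + 180) % 360 - 180


def score_sot_trial(a, b, c, response_deg):
    """Score one 'stand at A, face B, point to C' trial (illustrative only).

    response_deg is the pointed direction relative to the imagined heading
    (0 = straight ahead, clockwise positive), as read off the arrow circle.
    Returns (perspective_shift, correct_direction, absolute_error) in degrees.
    """
    imagined_heading = bearing(a, b)
    # Step 1: deviation of the imagined heading from display-up (the initial heading).
    perspective_shift = abs(wrap(imagined_heading))
    # Step 2: direction to C relative to the imagined heading (Pointing Direction).
    correct_direction = wrap(bearing(a, c) - imagined_heading)
    # Absolute angular error, taking the shorter way around the circle.
    absolute_error = abs(wrap(response_deg - correct_direction))
    return perspective_shift, correct_direction, absolute_error


# Stand at (0, 0), face an object directly below, point to an object on the right.
shift, correct, error = score_sot_trial((0, 0), (0, -1), (1, 0), response_deg=-80)
```

In this example the imagined heading points straight down the display, so the Perspective Shift is 180°, the target lies to the imagined left (a Pointing Direction of -90°), and a response of -80° scores a 10° absolute error. Wrapping differences into [-180°, 180°) is what keeps the absolute error within the 0° to 180° range used throughout the paper.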

FIGURE 1. Sample trials for (A) the Spatial Orientation Task, with the correct answer shown as a dotted line, and (B) the immersive Viewpoint Transformation Task; (C,D) show their trial features. Perspective Shift (C,D) is the difference in angle between the initial heading and the imagined heading required by each trial. Pointing Direction (C) is the difference in angle between the imagined heading and the target, and Travel Direction (D) is the difference in angle between the imagined heading and the target. Finally, Target Distance (D) is the straight-line distance from the participant’s standing location to the target location.

In daily navigation scenarios, people need to transform a direction indicated on a 2D external representation (e.g., a map) to their 3D surroundings in order to guide their navigation (including movement through the environment). For instance, when people consult a map to find a store in a mall, they need to 1) transform their viewpoint from the bird’s-eye (allocentric) view shown on the map to a first-person (egocentric) view in the environment, 2) determine the angular deviation to the target location (Travel Direction), and 3) update their position as they move through the environment, continuously tracking their viewpoint to make sure they are walking far enough and in the right direction to reach the target. These common uses of perspective taking motivate the development of a perspective taking task in an immersive virtual environment that requires transforming the perspective shown on a 2D display to guide movement in 3D surroundings.

Perspective taking to guide movement through 3D surroundings requires spatial updating during self-motion, which depends on proprioception, motor efference, and the vestibular system, collectively called body-based cues. Previous research has shown that body-based cues are critical for spatial perception and for spatial knowledge acquired by path integration (e.g., Campos et al., 2014; Chance et al., 1998; Chrastil et al., 2019; Chrastil and Warren, 2013; Grant and Magee, 1998; Hegarty et al., 2006). For example, Campos and colleagues (2014) manipulated visual and proprioceptive gains while testing participants’ distance estimation and found that, overall, proprioception was weighted more heavily than vision. Other studies have also demonstrated that proprioception, a critical sensory input for computing distances and angles, either tends to dominate vision in spatial updating (Klatzky et al., 1998; Kearns et al., 2002) or contributes about as much as vision (Chrastil et al., 2019). However, no previous research has evaluated perspective taking ability in a task where people need to physically walk to the target object from a first-person view. Based on these considerations, we expected that adding body-based information during perspective taking could influence performance.

In the present study, we developed a new task, the immersive Viewpoint Transformation Task (iVTT), which involves body movements in a 3D environment. In this task (see Figure 1B), participants see a map from a bird’s-eye view (map phase), take the perspective indicated by the blue pointer (perspective shift phase), estimate the relative location of the red dot, and, after the map disappears, walk to the location of the red dot, which is not visible in the immersive environment (travel phase). The primary outcome measures are absolute distance error and absolute angular error. The distance error is the difference between Target Distance (see Figure 1D) and the distance actually traveled. The angular error is the angular deviation, in degrees, between the travel direction indicated on the map and the direction to the participant’s ending position.
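
A minimal sketch of how the two iVTT outcome measures could be computed from a participant's start and end positions is shown below. It is not taken from the authors' analysis scripts; the function name `ivtt_errors`, the ground-plane coordinate frame (x right, y forward), the compass-style angles (0° = +y, clockwise positive), and the choice to measure distance traveled as the straight-line start-to-end distance are all assumptions made for illustration.

```python
import math


def ivtt_errors(start, end, travel_direction_deg, target_distance,
                body_heading_deg=0.0):
    """Angular and distance error for one iVTT trial (illustrative sketch).

    travel_direction_deg: direction to the target relative to the imagined
    heading on the map; because the imagined heading is mapped onto the
    participant's actual heading, the intended walking bearing is
    body_heading_deg + travel_direction_deg.
    """
    dx, dy = end[0] - start[0], end[1] - start[1]
    walked_bearing = math.degrees(math.atan2(dx, dy))
    intended_bearing = body_heading_deg + travel_direction_deg
    # Signed angular error wrapped into [-180, 180); positive = clockwise deviation.
    signed_angle = (walked_bearing - intended_bearing + 180) % 360 - 180
    # Assumption: distance traveled = straight-line distance from start to end.
    walked_distance = math.hypot(dx, dy)
    signed_distance = walked_distance - target_distance  # negative = undershoot
    return {
        "abs_angle": abs(signed_angle),
        "signed_angle": signed_angle,
        "abs_distance": abs(signed_distance),
        "signed_distance": signed_distance,
    }


# Target 2 m away, 90 deg to the right of the imagined heading; participant stops at (1.5, 0.5).
result = ivtt_errors(start=(0.0, 0.0), end=(1.5, 0.5),
                     travel_direction_deg=90, target_distance=2.0)
```

In this example the participant veers about 18.4° counterclockwise of the intended direction and stops roughly 0.42 m short, which would count as an undershoot.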

In order to successfully reach the goal in the iVTT, the participant must 1) take the perspective indicated by the map, which involves transforming from an overhead viewpoint to a first-person viewpoint; 2) compute the direction and distance to the target object relative to the imagined heading; and 3) successfully update their orientation and position in space as they walk to the goal location.¹

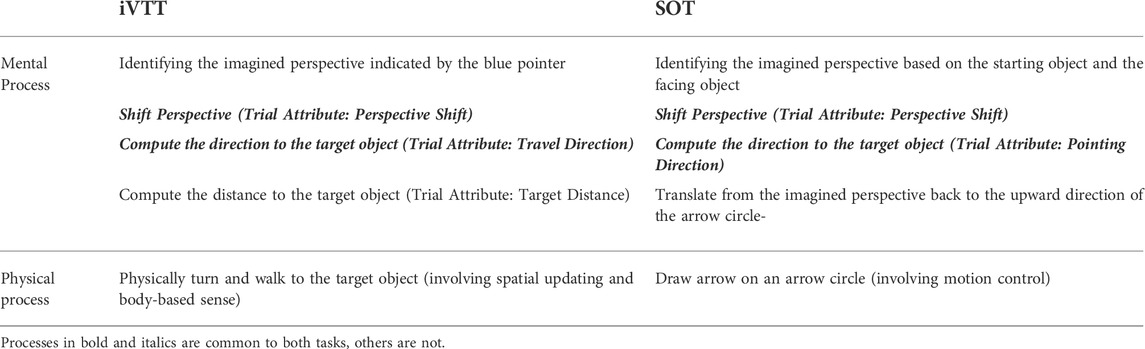

Theoretically, as shown in Table 1, the iVTT and the SOT share common processes of shifting perspective and computing the direction to the target object, but the iVTT differs from the SOT in the following ways: the iVTT additionally requires participants 1) to estimate the distance to the target; and 2) to physically walk and update their position as they walk to the target. Thus, updating could contribute to errors in the iVTT. In addition, the dominant strategy in the SOT requires participants to translate back from the egocentric view to an overhead view to indicate the direction on the arrow circle.

TABLE 1. Task Process Analysis for immersive Viewpoint Transformation Task (iVTT) and Spatial Orientation Task (SOT).

In this study, we first examined the correlation between the iVTT and the Spatial Orientation Task (SOT) at the participant level to see if performance in one task can be used to predict performance in the other task. The key hypotheses and the corresponding predictions are:

1) Perspective-taking hypothesis: If the common processes, including perspective shift and target direction estimation, are the dominant sources of individual differences in the iVTT, then

a) An individual’s absolute angular error in the iVTT should be correlated with their absolute angular error in the SOT;

b) Angular errors on the iVTT will be similar to those on the SOT;

c) An individual’s absolute distance error in the iVTT should not be strongly correlated with angular error in the iVTT or in the SOT, because distance is not part of the perspective taking process.

2) Spatial-updating hypothesis: If the common processes are not the dominant sources of individual differences, and spatial updating processes unique to the iVTT are also a source of individual differences, then

a) An individual’s absolute angular error in the iVTT should not be highly correlated with their absolute angular error in the SOT;

b) Angular errors on the iVTT will be greater than those on the SOT;

c) An individual’s absolute distance error in the iVTT should be correlated with their absolute angular error in the iVTT.

Second, we analyzed performance at the trial level (i.e., participants’ average performance on trials with different trial attributes) to test specific strategies that may account for performance variance in the two tasks. The iVTT and SOT both require participants to shift their perspective (Perspective Shift) and then compute the relative direction to the target object (Pointing or Travel Direction). In previous research on the SOT, participants’ angular error increased linearly as the magnitude of Perspective Shift increased from 0° to 180° (Kozhevnikov and Hegarty, 2001; Gunalp et al., 2021). This finding has been interpreted as indicating either that perspective shifting (Step 1) is an analog process (e.g., Rieser, 1989) or that it is more difficult to inhibit one’s current perspective when it differs more from the perspective to be imagined (May, 2004).

In terms of Pointing or Travel Direction (Step 2), previous perspective taking studies have also demonstrated that pointing to locations in front is easier than pointing to the side or back, and that the front-back axis is easier for people to transform than the left/right axis (e.g., Gunalp et al., 2021). One interpretation of these results is that pointing is easier when it is aligned with the body axes (Franklin and Tversky, 1990; Bryant and Tversky, 1999; de Vega and Rodrigo, 2001; Gunalp et al., 2021; Hintzman et al., 1981; Kozhevnikov and Hegarty, 2001; Wraga, 2003).

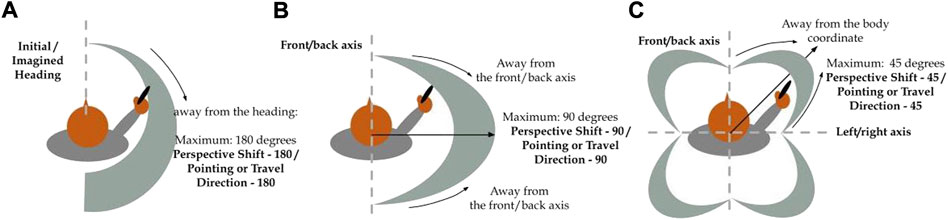

Here we examined the influences of deviations from three different reference frames: (1) the front, with deviations ranging from 0° to 180°; (2) the front-back axis, ranging from 0° to 90°; and (3) the front-back and left-right axes, ranging from 0° to 45°, as shown in Figure 2 (cf. Montello et al., 1999). Based on previous research indicating that larger perspective shifts are associated with more error (Kozhevnikov and Hegarty, 2001; Gunalp et al., 2021), we expected participants to have larger errors on trials with larger perspective shifts (ranging from 0° to 180°), leading to a positive correlation between the mental transformation required on a trial and participants’ average angular error on that trial. We term this transformation Perspective Shift - 180 to indicate that maximum error is expected at 180°.

FIGURE 2. Illustrations of the three different reference frames that might affect the relative difficulty across trials (A) front (initial heading), (B) front/back axis or (C) both the front/back and left/right axes. Maximum errors are expected when furthest from the reference axis. Thus, when using just the initial heading direction for reference (A), maximum errors are expected at 180°. When using the front/back axis (B), maximum errors are expected at 90°. When using both the front/back and left/right axes (C), maximum errors are expected at 45°.

As shown in Figures 2B,C, for both the iVTT and the SOT, if participants use the body coordinate system as a reference frame during the travel or pointing phase, as suggested by previous research, the canonical angles (0°, 90°, 180°, 270°) should be relatively easy to transform to. For example, when participants use the front/back axis as a reference frame, their pointing or travel accuracy should be higher when the required transformation is close to that axis. As shown in Figure 2B, if participants use the front/back axis, the required mental transformation increases from 0° (aligned with front or back) to a maximum of 90° (left or right). Thus, participants’ average angular error on a trial should be positively correlated with how far the prompted direction deviates from their front or back, which we term Pointing/Travel Direction - 90 to indicate that maximum error is expected at 90°. Finally, if participants use both body axes, their average angular error on a trial should increase linearly as the prompted direction deviates from both the front/back and left/right axes (Figure 2C). Because these axes are 90° apart, maximum effort and error are expected at 45°. We term this transformation Pointing/Travel Direction - 45 to indicate that maximum error is expected at 45°.
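
The three reference frames can be expressed as successive "folds" of a trial angle. The sketch below is one plausible way to compute the - 180, - 90, and - 45 versions of Perspective Shift or Pointing/Travel Direction from a signed trial angle in degrees; the function names are hypothetical, and the authors' exact coding may differ.

```python
def fold_180(angle_deg):
    """Deviation from the front (0 deg), in [0, 180]; max at 180 (behind)."""
    return abs((angle_deg + 180) % 360 - 180)


def fold_90(angle_deg):
    """Deviation from the nearer end of the front/back axis, in [0, 90]; max at 90 (left/right)."""
    a = fold_180(angle_deg)
    return min(a, 180 - a)


def fold_45(angle_deg):
    """Deviation from the nearest of front, right, back, or left, in [0, 45]; max at 45 (diagonals)."""
    a = fold_90(angle_deg)
    return min(a, 90 - a)


# Example trial angles (degrees); each fold yields a candidate predictor of trial difficulty.
for angle in (0, 30, 100, 135, 180):
    print(angle, fold_180(angle), fold_90(angle), fold_45(angle))
```

For instance, a 100° angle maps to 100, 80, and 10 in the 180, 90, and 45 frames, respectively, while a 135° angle maps to 135, 45, and 45, reflecting that it lies on a diagonal between the body axes.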

We are interested in whether the SOT and/or the iVTT involve analog or embodied processes, but even more directly relevant to this study is whether they involve the same embodied processes. Although we primarily expect Perspective Shift to be related to the analog process and Pointing/Travel Direction to be related to the embodied process, we test for relationships at all levels (180, 90, and 45) for both phases. Thus, the key hypotheses and the corresponding predictions for the trial-level analysis are the following:

3) Common-embodied process hypothesis: If participants use similar embodied processes for the iVTT and the SOT, then

a) We would expect angular error on the SOT and the iVTT to have similar correlations with Perspective Shift - 180, Perspective Shift - 90, and Perspective Shift - 45 (see Figure 2);

b) Likewise, we would expect angular error on the SOT and the iVTT to have similar correlations with Pointing/Travel Direction - 180, Pointing/Travel Direction - 90, and Pointing/Travel Direction - 45.

4) Differential-embodied process hypothesis: If participants use different processes for the iVTT than for the SOT, then

a) We would expect different correlations between angular error and Perspective Shift - 180, Perspective Shift - 90, and Perspective Shift - 45 for the SOT and the iVTT (see Figure 2);

b) Likewise, we would expect different correlations with Pointing/Travel Direction for the SOT and the iVTT.

Notably, the trial-level analysis complements the individual differences analysis. Even if perspective taking or spatial updating dominates the variance at the individual level, the other, non-dominant processes may still play a role and differentially influence the strategies or embodied processes involved in different phases (e.g., the perspective-shift/map-viewing phase or the pointing/travel phase) or in different estimates (i.e., direction or distance estimation). Thus, our hypotheses are not necessarily mutually exclusive: we could find evidence for common processes as well as differential processes at both the individual and trial levels.

2 Methods

2.1 Participants

The participants were 48 undergraduates (24 female; age M = 18.8 years, SD = 1.15) who received course credit for participation. An a priori power analysis showed that, with 48 participants, the study had sufficient power (1 − β > .80, α = .05) to detect a correlation of .40 between measures.
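
The power claim can be checked with the common Fisher z approximation for a two-tailed test of a correlation. This is a sketch of that approximation only; the paper does not say which tool was used for its power analysis, and exact methods (e.g., those in dedicated power software) can differ slightly. The function name is hypothetical.

```python
import math
from statistics import NormalDist


def correlation_power(r, n, alpha=0.05):
    """Approximate two-tailed power to detect a population correlation r with n
    participants, using the Fisher z transform (standard error 1 / sqrt(n - 3))."""
    nd = NormalDist()
    z_effect = math.atanh(r) * math.sqrt(n - 3)
    z_crit = nd.inv_cdf(1 - alpha / 2)
    # Probability of landing beyond either critical value under the alternative.
    return nd.cdf(z_effect - z_crit) + nd.cdf(-z_effect - z_crit)


power_48 = correlation_power(0.40, 48)
```

Under this approximation, 48 participants give a power of roughly .81 for r = .40 at α = .05, which is consistent with the stated 1 − β > .80.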

2.2 Materials

2.2.1 Apparatus

The virtual environment (VE) was displayed using an HTC VIVE Pro Eye VR head-mounted display (HMD) with dual 3.5-in. (diagonal) OLED displays (1440 × 1600 pixels per eye, or 2880 × 1600 pixels combined), a 90 Hz refresh rate, a 110° field of view, and removable headphones capable of delivering high-resolution audio. In addition to the HMD, the VR interface included two HTC VIVE wireless handheld controllers for interacting with the experiment and four HTC Base Station 2.0 infrared tracking sensors for large-scale open-space tracking. The system used wireless room tracking via a 60 GHz WiGig VIVE Wireless Adapter and was run on an iBuyPower desktop computer with an eight-core, 3.60 GHz Intel Core i9-9900K central processing unit (CPU), an NVIDIA GeForce RTX 2070 Super graphics processing unit (GPU), and 16 GB of system memory.

The VE was built and rendered in Unity using custom scripts. Participants physically walked in the environment while wearing the HMD; thus, in addition to visual information, the system provided vestibular and proprioceptive information.

2.2.2 Immersive viewpoint transformation task

The ambulatory VE for this task was a virtual desert containing a circular walled arena with a radius of 3 m (see Figure 1). Each trial included a map presentation phase and a navigation phase (Figure 1B). First, the participant was shown a map for 2 s indicating their location and imagined perspective at the start of the trial, as well as the location of their goal. Then, during the navigation phase, the participant was returned to the first-person view at the center of the arena, where the target was invisible. They then had to turn and walk to where they thought the goal was located and pull the trigger of the handheld controller when they thought they had reached it. Critically, on most trials the imagined perspective indicated by the map did not align with the participant’s actual facing direction.

The three main trial attributes were Perspective Shift, Travel Direction, and Target Distance (Figure 1D). Perspective Shift ranged from 0.0° (i.e., the imagined perspective was aligned with the initial heading) to 179.5°, counterbalanced between right and left sides. Travel Direction was the direction to the target relative to the imagined heading, that is, after the perspective shift had taken place. It ranged from 2.5° to 176.0°, counterbalanced between right and left. Target Distance ranged from 1.5 to 2.7 m.

The primary outcome measures were absolute angular error and absolute distance error. Signed angular error and signed distance error were also calculated to check for biases in the spatial updating processes, such as overshooting small turn angles and undershooting large turn angles (Loomis et al., 1993; Schwartz, 1999; Petzschner and Glasauer, 2011; Chrastil and Warren, 2017). Absolute angular error could range from 0° to 180°. If participants’ pointing directions are uniformly distributed, chance performance is 90° (see Huffman and Ekstrom, 2019 for an alternative approach to characterizing chance performance). Given that the radius of the virtual arena was 3 m, chance performance for absolute distance error was 1.05 m if participants moved randomly. There were 48 trials in total: 4 quadrants of Perspective Shift (0°–45°, 45°–90°, 90°–135°, 135°–180°) × 3 directions of travel (front, left/right, back) × 2 levels of Target Distance (1.5–1.7 m, 2.5–2.7 m) × 2 repeats. Five practice trials were given, and participants had unlimited time to complete the test trials.²
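
The 90° chance level for absolute angular error follows because, if the response direction is uniform around the circle, the absolute angular error is uniform on [0°, 180°], whose mean is 90°. The Monte Carlo sketch below checks this reasoning; it covers only the angular case and does not attempt to reproduce the 1.05 m distance chance level, because the assumptions behind "moved randomly" are not specified here. The function name is hypothetical.

```python
import random


def simulated_chance_abs_error(n=100_000, seed=0):
    """Monte Carlo estimate of the mean absolute angular error (degrees) when the
    response direction is uniformly random relative to a fixed correct direction."""
    rng = random.Random(seed)
    correct = 0.0
    total = 0.0
    for _ in range(n):
        response = rng.uniform(0.0, 360.0)
        # Shorter-way-around angular difference, then its absolute value (0 to 180).
        total += abs((response - correct + 180.0) % 360.0 - 180.0)
    return total / n


chance_estimate = simulated_chance_abs_error()
```

With 100,000 simulated responses, the estimate should land within a fraction of a degree of 90°.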

2.2.3 Spatial orientation task

The SOT is a 32-item computerized perspective-taking task adapted by Gunalp and colleagues (2021) from the standard 12-item version used as a psychometric test (Kozhevnikov and Hegarty, 2001; Hegarty and Waller, 2004; Friedman et al., 2020). It was displayed through E-Prime 2.0 (Schneider et al., 2012) on Dell 24-in. P24124 monitors (60 Hz refresh rate) with NVIDIA GeForce GTX 660 graphics cards. As shown in Figure 1A, participants viewed a layout of objects on the screen. They were asked to imagine standing at one object, facing a second object, and then to point to a third object using an arrow circle. Perspective Shift and Pointing Direction (see Figure 1C) also ranged from 0° to 180°, counterbalanced between right and left sides. There were 32 test trials in total: 4 quadrants of Perspective Shift × 4 quadrants of Pointing Direction (front, left, right, back) × 2 repeats. Three practice trials were given, and participants were allowed 20 min to complete the 32 test trials.³ The difficulty of the test trials was equivalent to that of the iVTT in terms of Perspective Shift and the direction to the target. The main outcome measure was the absolute angular error on each trial, ranging from 0° to 180°.⁴

2.2.4 Post-virtual reality experience scale

A 5-item survey was used to assess the usability of the VR system for our participants, including feelings of motion sickness (see Supplementary Material). Only 3 (of 48) participants reported several mild feelings of discomfort and were not fully satisfied with the usability of the VR system. Their performance in the task was not at the lowest or highest end of the distribution. Thus, these 3 participants were neither excluded from nor discussed separately in the following analyses.

2.3 Procedure

The local Institutional Review Board (IRB) reviewed and approved the study as adhering to ethical guidelines. All participants were tested individually, with an experimenter giving instructions. After giving informed consent, half of the participants did the SOT first and the other half did the iVTT first. A preliminary analysis found no significant effect of task order on performance, so data from the two orders were combined for the remaining analyses. Before starting the iVTT, participants were introduced to the VR system through a task-irrelevant VR tutorial in which they physically walked through a virtual maze and picked up three bubbles; this tutorial familiarized them with the immersive VR system and calibrated their perception to the setup.

After completing both the SOT and the iVTT, participants answered the post-VR questionnaire on Qualtrics. Finally, participants were debriefed and compensated for their participation.

2.4 Analyses

We first compared performance on the SOT and iVTT at the participant level. We calculated each individual’s average absolute angular error for the SOT, and average absolute angular error and absolute distance error for the iVTT. We then examined the internal reliability (permutation-based split-half reliability) of these measures before testing their correlations (Hedge et al., 2018; Parsons et al., 2019; Ackerman and Hambrick, 2020). These correlation analyses test the Perspective-taking hypothesis and the Spatial-updating hypothesis outlined in the Introduction.
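
A minimal sketch of permutation-based split-half reliability follows, assuming a participants × trials array of errors. It repeatedly splits the trials into random halves, correlates participants' half means, applies the Spearman-Brown correction, and averages across splits. The function name and defaults are hypothetical; the authors report using R, and implementations differ in details (for example, some report the median, or average the correlations before correcting), so this is an illustration of the general technique rather than their exact procedure.

```python
import numpy as np


def permutation_split_half(errors, n_splits=1000, seed=0):
    """Permutation-based split-half reliability (Spearman-Brown corrected).

    errors: array of shape (participants, trials).
    """
    rng = np.random.default_rng(seed)
    errors = np.asarray(errors, dtype=float)
    n_trials = errors.shape[1]
    estimates = []
    for _ in range(n_splits):
        order = rng.permutation(n_trials)
        half_a = errors[:, order[: n_trials // 2]].mean(axis=1)
        half_b = errors[:, order[n_trials // 2:]].mean(axis=1)
        r = np.corrcoef(half_a, half_b)[0, 1]
        # Spearman-Brown: project the half-length correlation to full test length.
        estimates.append(2 * r / (1 + r))
    return float(np.mean(estimates))
```

Because each split uses only half the trials, the raw half-to-half correlation underestimates the reliability of the full measure; the Spearman-Brown step corrects for that.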

Next, we examined the cognitive processes involved in the iVTT by testing the relations between trial attributes (Perspective Shift - 180, Travel Direction - 180, Perspective Shift - 90, Travel Direction - 90, Perspective Shift - 45, Travel Direction - 45, and Target Distance) and trial-level performance (i.e., average absolute and signed angular error, and average absolute and signed distance error in meters, across participants). For comparison, trial-level angular error in the Spatial Orientation Task (SOT) was also analyzed. As for the iVTT, we tested the linear correlations between each trial attribute and average absolute angular error, considering that embodied processing could be used in different phases (Perspective Shift - 180, Perspective Shift - 90, Perspective Shift - 45, Pointing Direction - 180, Pointing Direction - 90, Pointing Direction - 45). All analyses were carried out using R scripts. All experimental materials, raw data, and analysis scripts are available on GitHub (https://github.com/CarolHeChuanxiuyue/HumanViewpointTransformationAbility).
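
The trial-level analysis averages each trial's error across participants and then correlates those trial means with a trial attribute. The sketch below illustrates this in Python rather than the authors' R scripts; the function name is hypothetical, the input layout (participants × trials, one attribute value per trial) is an assumption, and missing responses are assumed to be coded as NaN.

```python
import numpy as np


def trial_level_correlation(errors, attribute):
    """Pearson r between a trial attribute and mean error per trial.

    errors: array (participants, trials), NaN for missing responses.
    attribute: one value per trial (e.g., a folded Perspective Shift or Travel Direction).
    """
    errors = np.asarray(errors, dtype=float)
    trial_means = np.nanmean(errors, axis=0)  # average over participants
    attribute = np.asarray(attribute, dtype=float)
    return float(np.corrcoef(attribute, trial_means)[0, 1])
```

Note that the unit of analysis here is the trial, so the number of observations is the number of trials (48 for the iVTT, 32 for the SOT), not the number of participants.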

3 Results

3.1 Individual differences

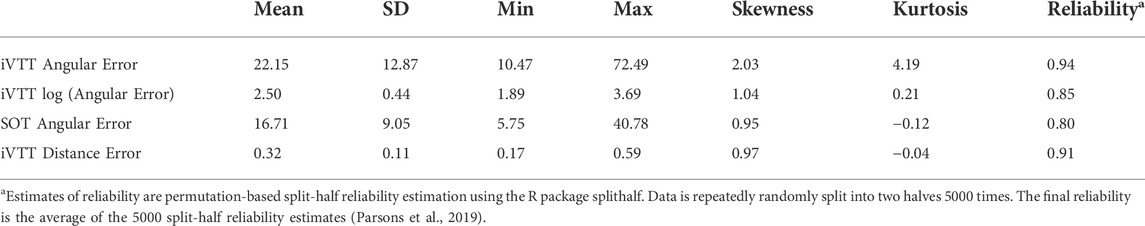

Descriptive statistics for all measures, computed across participants, are shown in Table 2. The distribution of the average absolute angular error for the immersive Viewpoint Transformation Task (iVTT) was positively skewed. To remedy this departure from normality, the iVTT data were log-transformed for the correlation analysis. All measures had good internal reliability (permutation-based split-half reliability ranged from .80 to .95).

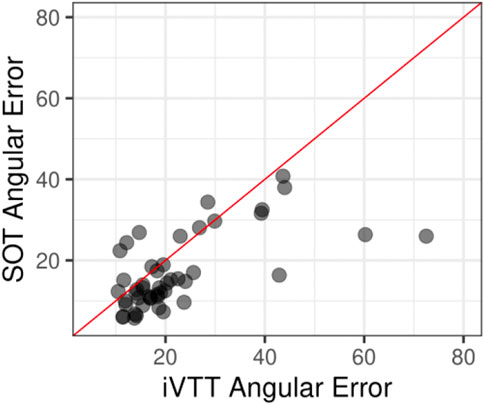

As shown in Figure 3, angular error was higher in the iVTT than in the SOT (paired t(47) = 3.80, p < .001, mean difference = 5.5°, 95% CI [2.56°, 8.33°]). This result suggests that the additional processes listed in Table 1, namely action and spatial updating, might be sources of angular error in the iVTT, in addition to the processes shared by the two tasks.
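
For readers unfamiliar with the paired comparison, the sketch below shows how a paired t statistic is formed from per-participant differences. It is a generic illustration, not the authors' code, and the example inputs are toy numbers rather than study data. In practice, `scipy.stats.ttest_rel` returns the same statistic along with its p value.

```python
import math
from statistics import mean, stdev


def paired_t(x, y):
    """Paired t statistic for x vs. y (one value per participant in each list).

    Returns (t, degrees_of_freedom, mean_difference); positive t means x > y on average.
    """
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    d_bar = mean(diffs)
    se = stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    return d_bar / se, n - 1, d_bar


# Toy numbers only: four hypothetical participants' errors on two tasks.
t_stat, df, mean_diff = paired_t([3, 5, 7, 9], [1, 2, 4, 5])
```

With 48 participants, the degrees of freedom are 47, and a 95% confidence interval for the mean difference is the mean difference plus or minus the critical t value times the same standard error.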

FIGURE 3. A scatter plot of individual average absolute angular error for the immersive viewpoint transformation task (iVTT) and the spatial orientation task (SOT). Points on the red line correspond to participants with exactly the same average error on both tasks. Most points fall below the red line, indicating that most people performed worse on the iVTT.

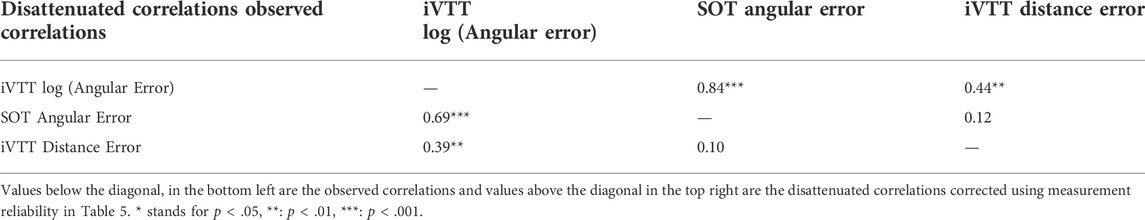

As shown in Table 3, the absolute angular errors for the iVTT and for the Spatial Orientation Task (SOT) were highly correlated (r = 0.69, t(46) = 6.43, p < .001, 95% CI [0.50, 0.81]). Note that these correlations were computed after correcting for the right-skewed distribution of absolute angular error, that is, on log-transformed absolute angular error. Moreover, the disattenuated correlation, which takes the reliability of the measures into account, was 0.86. The strong correlation between the two absolute angular errors supports the perspective-taking hypothesis, indicating that the processes common to the two tasks (shifting perspective and computing the direction to the target object) were the dominant sources of individual differences in these tasks.
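
The reported statistics follow standard formulas: t = r·√(n − 2)/√(1 − r²) with n − 2 degrees of freedom, a 95% confidence interval from the Fisher z transform, and the disattenuated correlation r/√(rₓₓ·r_yy). The sketch below implements these textbook formulas; the function names are hypothetical, and the reliabilities in the usage line are illustrative placeholders, not the study's values.

```python
import math
from statistics import NormalDist


def correlation_summary(r, n, conf=0.95):
    """t statistic (df = n - 2) and Fisher-z confidence interval for a Pearson r."""
    t = r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)
    z = math.atanh(r)
    half_width = NormalDist().inv_cdf(0.5 + conf / 2) / math.sqrt(n - 3)
    return t, (math.tanh(z - half_width), math.tanh(z + half_width))


def disattenuate(r, rel_x, rel_y):
    """Correct an observed correlation for unreliability in both measures."""
    return r / math.sqrt(rel_x * rel_y)


t_val, (ci_low, ci_high) = correlation_summary(0.69, 48)
example_corrected = disattenuate(0.50, 0.80, 0.80)  # illustrative reliabilities only
```

With r = 0.69 and n = 48, this gives t ≈ 6.47 and a 95% CI of about [0.50, 0.81]; the small gap from the reported t(46) = 6.43 is presumably due to r being rounded to two decimals. In the illustrative usage line, an observed r of .50 with reliabilities of .80 for both measures disattenuates to .625.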

Distance error and angular error in the iVTT were significantly but only moderately correlated (r = 0.39, t(46) = 2.85, p = .007, 95% CI [0.12, 0.60]; 0.44 after disattenuation). This result suggests that spatial updating of distance and direction also accounted for a significant proportion of the variance, although it did not override the strong correlation driven by the common processes. Moreover, distance error in the iVTT was not correlated with angular error in the SOT (r = 0.10, t(46) = 0.69, p = .49, 95% CI [−0.19, 0.38]). These results suggest that distance estimation and angle estimation are dissociated sources of individual differences.

In sum, analyses of performance across individuals indicated that individual differences in the iVTT largely tracked individual differences in the SOT, but spatial updating also contributed to the variance in the iVTT, so errors were greater overall in the iVTT. Thus, we found evidence for both hypotheses. To understand these results further, we analyzed performance across trials to examine the degree to which it reflected embodied processes.

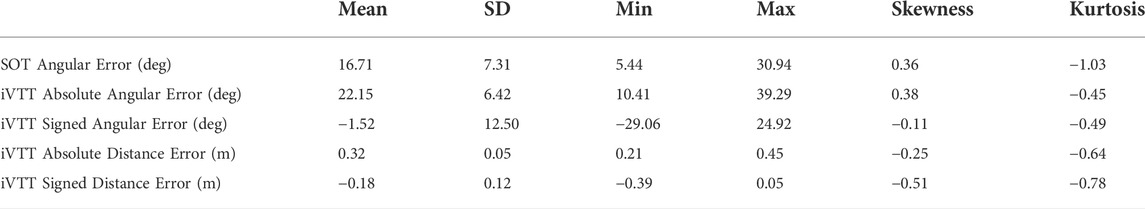

3.2 Measures at the trial level—underlying embodied processes

Descriptive statistics for all trial-level measures (both absolute and signed error) are shown in Table 4. For angle estimation, a positive signed error indicated a clockwise deviation and a negative signed error a counterclockwise deviation; for distance estimation, a positive signed error indicated an overshoot and a negative signed error an undershoot. Overall, signed error (bias) for angle estimation was negligible and did not show any linear relations with trial attributes, so we report only its descriptive statistics without further investigation. In contrast, the signed distance error indicated that participants tended to underestimate distance.

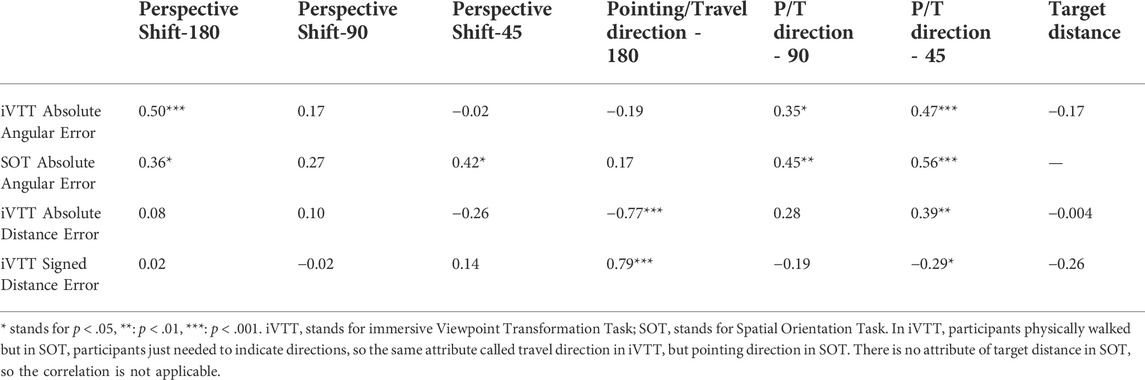

The correlations between performance measures and trial attributes (with and without considering the constraints of the body coordinate frame) are shown in Table 5. Angular error in the iVTT increased linearly as the perspective shift (reflecting phase 1 of the perspective taking process) increased from 0° to 180° (Perspective Shift - 180). In contrast, angular error in the SOT was influenced both by the magnitude of the perspective shift away from the initial heading (Perspective Shift - 180) and by the body coordinate frame, such that angular error also increased linearly as the imagined perspective deviated from the body axes (Perspective Shift - 45). This unexpected finding suggests that the perspective shift phase of the SOT was more influenced by the alignment of the imagined perspective with the body axes.

As for the second trial attribute (the deviation of the pointing or travel direction from the perspective to be assumed, reflecting phase 2 of the perspective taking process), all errors showed strong linear correlations with deviation from the body axes. This suggests that embodied processing was the key process during the pointing phase of the SOT and the travel phase of the iVTT, a cognitive process common to both tasks despite their different modes of responding (see Table 1).

Distance estimation in the iVTT indicated that participants tended to undershoot on most trials (see Table 4). However, when the target was behind them, participants were less likely to undershoot, which led to lower absolute distance error (Table 5). Note that no body-axis effect of the perspective shift phase was detected on distance estimation in the iVTT, suggesting that during the map-viewing phase, angular estimation was dissociated from distance estimation, although a similar body-axis effect was found during the execution (travel) phase. Interestingly, Target Distance had no effect on distance estimation either.

4 Discussion

The newly developed immersive viewpoint transformation task (iVTT) has high internal reliability and validity for measuring individual differences in viewpoint transformation ability. We compared the iVTT with the Spatial Orientation Task (SOT; Kozhevnikov and Hegarty, 2001; Hegarty and Waller, 2004; Friedman et al., 2020; Gunalp et al., 2021) to examine the relationship between the two tasks and to elucidate the underlying cognitive processes revealed by the iVTT. Overall, absolute angular error in the iVTT was highly correlated with absolute angular error in the SOT, suggesting that perspective taking is a key source of individual differences in both tasks and supporting the perspective-shift hypothesis. At the trial level, both tasks involved embodied processing, such that the pointing/travel direction (step 2) was influenced by the body axes in both (cf. Gunalp et al., 2021). These results add to the validity of both tasks as measures of perspective taking and suggest that they measure common processes.
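The individual-differences comparison above rests on correlating each participant's mean absolute angular error in one task with that in the other. The sketch below shows that computation with a plain Pearson correlation. The data layout (a dict from participant ID to per-trial signed angular errors) and the function names are assumptions, and the authors' exact statistical procedure may differ.

```python
import math


def mean_abs_error(errors_deg):
    """Mean absolute angular error over one participant's trials."""
    return sum(abs(e) for e in errors_deg) / len(errors_deg)


def pearson_r(xs, ys):
    """Pearson correlation between two equal-length sequences.
    Raises ZeroDivisionError if either sequence is constant."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def cross_task_correlation(ivtt_errors, sot_errors):
    """Correlate per-participant mean absolute errors across the two tasks,
    using only participants who completed both. Each argument maps
    participant ID -> list of per-trial angular errors in degrees."""
    ids = sorted(set(ivtt_errors) & set(sot_errors))
    ivtt = [mean_abs_error(ivtt_errors[p]) for p in ids]
    sot = [mean_abs_error(sot_errors[p]) for p in ids]
    return pearson_r(ivtt, sot)
```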

On the other hand, spatial updating also contributed to distance estimation and execution in the iVTT, and angular errors were greater overall in the iVTT (although common processes still accounted for most of the variance, as indicated by the correlation between angular errors). These findings support the spatial-updating hypothesis. At the trial level, we also found some minor differences in the particular embodied processes involved. Together, these results suggest that the two tasks do not overlap completely and that spatial updating does play a role in individual differences in the iVTT.

As expected, and consistent with previous research (Gunalp et al., 2021; Kozhevnikov and Hegarty, 2001), SOT errors increased with the deviation of the perspective to be imagined from the initial heading (Perspective Shift - 180), and this was also true for the iVTT, suggesting common processes. Surprisingly, SOT errors were also correlated with the deviation of the imagined perspective from the front/back/left/right axes (Perspective Shift - 45), whereas iVTT errors were not, suggesting that the perspective shift (step 1) in the SOT was more influenced by the body axes than in the iVTT. One possible explanation is that assuming the imagined heading is more difficult in the SOT than in the iVTT, because in the SOT the heading is defined by two objects ("imagine you are at A facing B"), whereas in the iVTT it is shown directly by the blue pointer. The additional coordinate framework may have helped participants to estimate the prompted perspective shift, making it easier to imagine a perspective in which the body axis was aligned with the major axes (up-down, left-right) of the map displays. This speculation calls for further research.

Distance estimation in the iVTT is a separate process from perspective taking. First, distance estimation was partially dissociated from angle estimation in the iVTT and was not correlated with angular estimation in the SOT. Second, distance estimation was influenced by the body axes during the travel phase but not during the perspective-shift/map-viewing phase, suggestive of errors during spatial updating. These results echo the findings of Chrastil and Warren (2017, 2021) that execution errors, that is, errors produced by walking and spatial updating to make a response, make a large contribution to performance in distance and angle estimation. Third, we found an overall distance-underestimation bias in the iVTT, but this bias was less evident for targets behind participants. This might be explained by ambiguity in mapping the center of participants' own body to the location of the blue pointer. Because of the triangular part of the blue pointer, participants may have mapped their center closer to the front of the icon. The target distance indicated on the map would then have appeared longer when the target was behind the blue pointer. This speculation calls for further study; in future research, we will add a center dot to the blue pointer and instruct participants to imagine that they are located at this dot, to eliminate the ambiguity.
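The proposed explanation for the reduced undershooting on behind-target trials can be checked with simple geometry. Suppose, hypothetically, that participants treat a point shifted forward by an offset d along the pointer's heading as their own location. Measured from that point, a target directly behind the pointer appears farther away by d, and a target directly ahead appears closer by d. The offset below is purely illustrative, not a measured quantity, and the function name is hypothetical.

```python
import math


def apparent_distance(target_xy, offset_m):
    """Distance to a target measured from a self-location shifted forward by
    offset_m along the heading (+y), with the pointer's true center at the
    origin. offset_m = 0 gives the true map distance."""
    return math.hypot(target_xy[0], target_xy[1] - offset_m)


offset = 0.1  # hypothetical forward shift toward the pointer's tip, in meters
ahead, behind = (0.0, 2.0), (0.0, -2.0)
for label, target in (("ahead", ahead), ("behind", behind)):
    print(label, apparent_distance(target, 0.0), apparent_distance(target, offset))
```

With d = 0.1 m, a target 2 m ahead appears about 1.9 m away and a target 2 m behind about 2.1 m away; if participants walked the apparent distance, behind-target trials would partly offset a general undershooting bias, in line with the pattern described above.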

The current study showed no evidence of effects of Perspective Shift or Target Distance on distance errors in the iVTT. Producing the target distance may not have been challenging in this task because 1) the distances participants needed to reproduce were fairly short (less than 2.7 m, although not far from the distances used in many path integration studies; Loomis et al., 1993; Loomis and Knapp, 2003; Chrastil and Warren, 2021), making them relatively easy to estimate and produce; and 2) the walls of the circular arena could serve as a cue for gauging distance, which may have made long distances as easy to produce as short distances close to the body. Previous research has shown that environmental boundaries and geometry enable mammals, including humans, to learn the scale and functional affordances of the environment (Barry et al., 2006; Solstad et al., 2008; Lever et al., 2009; Ferrara and Park, 2016). Although target distance did not make a difference in this task, individual differences in distance error may relate to the general ability to perceive and produce distances. Future studies will relate distance error in the current task to performance on other large-scale spatial tasks involving distance estimation.

There are several differences between the SOT and the iVTT, including the immersive nature of the iVTT and the fact that the iVTT involves distance estimation as well as angular estimation. The effects of these different task attributes should be teased apart in future research, for example by comparing performance on the iVTT with a more immersive version of the SOT, as studied by Gunalp, Moossaian and Hegarty (2019), and by including some trials in the iVTT that involve only turning one's body to face the target, to better understand the separate influences of direction and distance estimation on performance in this task. These studies will further advance our knowledge of the cognitive processes involved in perspective taking in more realistic environments and of how perspective taking guides action.

The present study advances our understanding of the cognitive processes underlying perspective taking in a 3D environment to guide physical navigation to a target. Perspective taking was the key underlying process in this immersive virtual environment task and accounted for the majority of the variance in angle estimation. At the same time, we also captured processes beyond perspective shift that are commonly embedded in real-life perspective taking, such as the transformation from a 2D (top-down) representation to 3D (first-person view) surroundings, walking, and spatial updating based on body senses. Therefore, for future researchers who aim to measure spatial perspective-taking ability in terms of direction estimation per se, the present research supports the construct validity of the SOT. However, the iVTT contributes a useful new instrument for researchers who aim to link spatial perspective taking to real-world navigation tasks in which perspective taking guides movement to goal locations in the environment.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found below: https://github.com/CarolHeChuanxiuyue/HumanViewpointTransformationAbility.git.

Ethics statement

The studies involving human participants were reviewed and approved by the University of California Santa Barbara Human Subjects Committee. The participants provided their written informed consent to participate in this study.

Author contributions

CH designed and programmed the immersive viewpoint transformation task and was responsible for data collection, data analysis, and writing and revising the manuscript. EC and MH supervised CH, proposed the concept of the study, and were responsible for discussing and revising the manuscript.

Funding

This work was supported by the National Science Foundation (NSF-FO award ID 2024633).

Acknowledgments

We would like to thank Mengyu Chen, Scott Matsubara and Fredrick (Rongfei) Jin for helping with programming the task. Fredrick Jin helped with data collection as well.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frvir.2022.971502/full#supplementary-material

Footnotes

1Notably, the transformation process can occur either during the map-view phase or during the navigation phase. When viewing the map, participants can either adopt the top-down view that the map presents or adopt a first-person view by imagining themselves as the blue pointer. If they adopt a top-down view, the overhead-to-first-person transformation would occur while they walk to the target; if they adopt a first-person view, the transformation would occur during the map-view phase. Determining the timing of this transformation is beyond the scope of the current study and is left for future research. For the SOT, previous research has shown that the egocentric strategy is the dominant strategy used by participants (Kozhevnikov and Hegarty, 2001; Hegarty and Waller, 2004; Gunalp et al., 2021). That is, although the object array is displayed from an overhead view, participants tend to imagine themselves within the array and then estimate the direction from a first-person view.

2The 5 practice trials differed from the testing trials. For the first 2 practice trials, the target objects were visible in the virtual arena, so participants could check whether they understood the task correctly. For the first 3 practice trials, the map-view phase lasted 4 s; for the remaining 2 practice trials, it was the same as in the testing trials. The practice phase also helped participants become familiar with the virtual environment before they started the actual task. The task program will be made available to future researchers.

3In the standard SOT (Hegarty and Waller, 2004; Friedman et al., 2020), participants must complete 12 trials within 5 min. The current task gave participants more time, and all participants completed the task within the time limit.

4Note that the SOT had 32 trials but the iVTT had 48 trials, because the SOT did not include target distance as a factor. For both tasks, we balanced the numbers of trials on the left and right sides but combined them for analysis, because previous studies did not show a substantial difference between the left and right sides (Gunalp et al., 2021).

References

Ackerman, P. L., and Hambrick, D. Z. (2020). A primer on assessing intelligence in laboratory studies. Intelligence 80, 101440. doi:10.1016/j.intell.2020.101440

Allen, G. L., Kirasic, K. C., Dobson, S. H., Long, R. G., and Beck, S. (1996). Predicting environmental learning from spatial abilities: An indirect route. Intelligence 22 (3), 157–158. doi:10.1016/s0160-2896(96)90010-0

Baron-Cohen, S., Knickmeyer, R. C., and Belmonte, M. K. (2005). Sex differences in the brain: Implications for explaining autism. Science 310 (5749), 819–823. doi:10.1126/science.1115455

Barry, C., Lever, C., Hayman, R., Hartley, T., Burton, S., O'Keefe, J., et al. (2006). The boundary vector cell model of place cell firing and spatial memory. Rev. Neurosci. 17 (1-2), 71–97. doi:10.1515/revneuro.2006.17.1-2.71

Brucato, M., Frick, A., Pichelmann, S., Nazareth, A., and Newcombe, N. S. (2022). Measuring spatial perspective taking: Analysis of four measures using item response theory. Top. Cogn. Sci. doi:10.1111/tops.12597

Bryant, D. J., and Tversky, B. (1999). Mental representations of perspective and spatial relations from diagrams and models. J. Exp. Psychol. Learn. Mem. Cognition 25 (1), 137–156. doi:10.1037/0278-7393.25.1.137

Campos, J. L., Butler, J. S., and Bülthoff, H. H. (2014). Contributions of visual and proprioceptive information to travelled distance estimation during changing sensory congruencies. Exp. Brain Res. 232 (10), 3277–3289. doi:10.1007/s00221-014-4011-0

Chance, S. S., Gaunet, F., Beall, A. C., and Loomis, J. M. (1998). Locomotion mode affects the updating of objects encountered during travel: The contribution of vestibular and proprioceptive inputs to path integration. Presence. (Camb). 7 (2), 168–178. doi:10.1162/105474698565659

Chrastil, E. R., Nicora, G. L., and Huang, A. (2019). Vision and proprioception make equal contributions to path integration in a novel homing task. Cognition 192, 103998. doi:10.1016/j.cognition.2019.06.010

Chrastil, E. R., and Warren, W. H. (2013). Active and passive spatial learning in human navigation: acquisition of survey knowledge. J. Exp. Psychol. Learn. Mem. Cognition 39 (5), 1520–1537. doi:10.1037/a0032382

Chrastil, E. R., and Warren, W. H. (2021). Executing the homebound path is a major source of error in homing by path integration. J. Exp. Psychol. Hum. Percept. Perform. 47 (1), 13–35. doi:10.1037/xhp0000875

Chrastil, E. R., and Warren, W. H. (2017). Rotational error in path integration: encoding and execution errors in angle reproduction. Exp. Brain Res. 235 (6), 1885–1897. doi:10.1007/s00221-017-4910-y

de Vega, M., and Rodrigo, M. J. (2001). Updating spatial layouts mediated by pointing and labelling under physical and imaginary rotation. Eur. J. Cognitive Psychol. 13 (3), 369–393. doi:10.1080/09541440126278

Ferrara, K., and Park, S. (2016). Neural representation of scene boundaries. Neuropsychologia 89, 180–190. doi:10.1016/j.neuropsychologia.2016.05.012

Franklin, N., and Tversky, B. (1990). Searching imagined environments. J. Exp. Psychol. General 119 (1), 63–76. doi:10.1037/0096-3445.119.1.63

Friedman, A., Kohler, B., Gunalp, P., Boone, A. P., and Hegarty, M. (2020). A computerized spatial orientation test. Behav. Res. Methods 52 (2), 799–812. doi:10.3758/s13428-019-01277-3

Galati, A., Diavastou, A., and Avraamides, M. N. (2018). Signatures of cognitive difficulty in perspective-taking: Is the egocentric perspective always the easiest to adopt? Lang. Cognition Neurosci. 33 (4), 467–493. doi:10.1080/23273798.2017.1384029

Grant, S. C., and Magee, L. E. (1998). Contributions of proprioception to navigation in virtual environments. Hum. Factors 40 (3), 489–497. doi:10.1518/001872098779591296

Gunalp, P., Chrastil, E. R., and Hegarty, M. (2021). Directionality eclipses agency: How both directional and social cues improve spatial perspective taking. Psychon. Bull. Rev. 28 (4), 1289–1300. doi:10.3758/s13423-021-01896-y

Gunalp, P., Moossaian, T., and Hegarty, M. (2019). Spatial perspective taking: Effects of social, directional, and interactive cues. Mem. Cogn. 47 (5), 1031–1043. doi:10.3758/s13421-019-00910-y

Hedge, C., Powell, G., and Sumner, P. (2018). The reliability paradox: Why robust cognitive tasks do not produce reliable individual differences. Behav. Res. Methods 50 (3), 1166–1186. doi:10.3758/s13428-017-0935-1

Hegarty, M., Montello, D. R., Richardson, A. E., Ishikawa, T., and Lovelace, K. (2006). Spatial abilities at different scales: Individual differences in aptitude-test performance and spatial-layout learning. Intelligence 34 (2), 151–176. doi:10.1016/j.intell.2005.09.005

Hegarty, M., and Waller, D. (2004). A dissociation between mental rotation and perspective-taking spatial abilities. Intelligence 32 (2), 175–191. doi:10.1016/j.intell.2003.12.001

Hintzman, D. L., O'Dell, C. S., and Arndt, D. R. (1981). Orientation in cognitive maps. Cogn. Psychol. 13 (2), 149–206. doi:10.1016/0010-0285(81)90007-4

Holmes, C. A., Marchette, S. A., and Newcombe, N. S. (2017). Multiple views of space: Continuous visual flow enhances small-scale spatial learning. J. Exp. Psychol. Learn. Mem. Cognition 43 (6), 851–861. doi:10.1037/xlm0000346

Huffman, D. J., and Ekstrom, A. D. (2019). Which way is the bookstore? A closer look at the judgments of relative directions task. Spatial Cognition Comput. 19 (2), 93–129. doi:10.1080/13875868.2018.1531869

Johnson, D. W. (1975). Cooperativeness and social perspective taking. J. Personality Soc. Psychol. 31 (2), 241–244. doi:10.1037/h0076285

Kearns, M. J., Warren, W. H., Duchon, A. P., and Tarr, M. J. (2002). Path integration from optic flow and body senses in a homing task. Perception 31 (3), 349–374. doi:10.1068/p3311

Kessler, K., and Wang, H. (2012). Spatial perspective taking is an embodied process, but not for everyone in the same way: differences predicted by sex and social skills score. Spatial Cognition Comput. 12 (2-3), 133–158. doi:10.1080/13875868.2011.634533

Klatzky, R. L., Loomis, J. M., Beall, A. C., Chance, S. S., and Golledge, R. G. (1998). Spatial updating of self-position and orientation during real, imagined, and virtual locomotion. Psychol. Sci. 9 (4), 293–298. doi:10.1111/1467-9280.00058

Kozhevnikov, M., and Hegarty, M. (2001). A dissociation between object manipulation spatial ability and spatial orientation ability. Mem. Cognition 29 (5), 745–756. doi:10.3758/bf03200477

Lever, C., Burton, S., Jeewajee, A., O'Keefe, J., and Burgess, N. (2009). Boundary vector cells in the subiculum of the hippocampal formation. J. Neurosci. 29 (31), 9771–9777. doi:10.1523/jneurosci.1319-09.2009

Levine, M., Jankovic, I. N., and Palij, M. (1982). Principles of spatial problem solving. J. Exp. Psychol. General 111 (2), 157–175. doi:10.1037/0096-3445.111.2.157

Loomis, J. M., Klatzky, R. L., Golledge, R. G., Cicinelli, J. G., Pellegrino, J. W., and Fry, P. A. (1993). Nonvisual navigation by blind and sighted: Assessment of path integration ability. J. Exp. Psychol. General 122 (1), 73–91. doi:10.1037/0096-3445.122.1.73

Loomis, J. M., and Knapp, J. M. (2003). Visual perception of egocentric distance in real and virtual environments. Virtual Adapt. Environ. 11, 21–46. doi:10.1201/9781410608888.pt1

May, M. (2004). Imaginal perspective switches in remembered environments: Transformation versus interference accounts. Cogn. Psychol. 48 (2), 163–206. doi:10.1016/s0010-0285(03)00127-0

Montello, D. R., Richardson, A. E., Hegarty, M., and Provenza, M. (1999). A comparison of methods for estimating directions in egocentric space. Perception 28 (8), 981–1000. doi:10.1068/p280981

Montello, D. R., Waller, D., Hegarty, M., and Richardson, A. E. (2004). “Spatial memory of real environments, virtual environments, and maps,” in Human spatial memory (London: Psychology Press), 271–306.

Parsons, S., Kruijt, A. W., and Fox, E. (2019). Psychological science needs a standard practice of reporting the reliability of cognitive-behavioral measurements. Adv. Methods Pract. Psychol. Sci. 2 (4), 378–395. doi:10.1177/2515245919879695

Petzschner, F. H., and Glasauer, S. (2011). Iterative bayesian estimation as an explanation for range and regression effects: a study on human path integration. J. Neurosci. 31 (47), 17220–17229. doi:10.1523/jneurosci.2028-11.2011

Presson, C. C., and Hazelrigg, M. D. (1984). Building spatial representations through primary and secondary learning. J. Exp. Psychol. Learn. Mem. Cognition 10 (4), 716–722. doi:10.1037/0278-7393.10.4.716

Rieser, J. J. (1989). Access to knowledge of spatial structure at novel points of observation. J. Exp. Psychol. Learn. Mem. Cognition 15 (6), 1157–1165. doi:10.1037/0278-7393.15.6.1157

Schneider, W., Eschman, A., and Zuccolotto, A. (2012). E-Prime 2.0 reference guide manual. Pittsburgh, PA: Psychology Software Tools.

Schwartz, M. (1999). Haptic perception of the distance walked when blindfolded. J. Exp. Psychol. Hum. Percept. Perform. 25 (3), 852–865. doi:10.1037/0096-1523.25.3.852

Shelton, A. L., Clements-Stephens, A. M., Lam, W. Y., Pak, D. M., and Murray, A. J. (2012). Should social savvy equal good spatial skills? The interaction of social skills with spatial perspective taking. J. Exp. Psychol. General 141 (2), 199–205. doi:10.1037/a0024617

Solstad, T., Boccara, C. N., Kropff, E., Moser, M. B., and Moser, E. I. (2008). Representation of geometric borders in the entorhinal cortex. Science 322 (5909), 1865–1868. doi:10.1126/science.1166466

Tarampi, M. R., Heydari, N., and Hegarty, M. (2016). A tale of two types of perspective taking: Sex differences in spatial ability. Psychol. Sci. 27 (11), 1507–1516. doi:10.1177/0956797616667459

Wraga, M. (2003). Thinking outside the body: An advantage for spatial updating during imagined versus physical self-rotation. J. Exp. Psychol. Learn. Mem. Cognition 29 (5), 993–1005. doi:10.1037/0278-7393.29.5.993

Keywords: perspective taking, immersive virtual reality, spatial cognition, embodied cognition, navigation

Citation: He C, Chrastil ER and Hegarty M (2022) A new psychometric task measuring spatial perspective taking in ambulatory virtual reality. Front. Virtual Real. 3:971502. doi: 10.3389/frvir.2022.971502

Received: 17 June 2022; Accepted: 16 September 2022;

Published: 06 October 2022.

Edited by:

Alexander Klippel, Wageningen University and Research, Netherlands

Reviewed by:

Sarah H. Creem-Regehr, The University of Utah, United States

Jeanine Stefanucci, The University of Utah, United States

Timothy P. McNamara, Vanderbilt University, United States

Copyright © 2022 He, Chrastil and Hegarty. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Chuanxiuyue He, c_he@ucsb.edu
