- 1 Centre for Clinical Brain Sciences, Edinburgh University, Edinburgh, United Kingdom

- 2 Department of Trauma and Orthopaedic Surgery, Leeds General Infirmary, Leeds, United Kingdom

- 3 Department of Management, London School of Economics, London, United Kingdom

- 4 Department of Neurosurgery, Trauma and Gamma Knife Center, Cannizzaro Hospital, Catania, Italy

- 5 Digital Surgery, Medtronic Ltd, London, United Kingdom

- 6 Division of Surgery and Interventional Science, Research Department of Targeted Intervention, University College London, London, United Kingdom

- 7 Institute of Translational Medicine, ETH Zurich, Zurich, Switzerland

- 8 Wellcome/EPSRC Centre for Interventional and Surgical Sciences (WEISS), University College London, London, United Kingdom

- 9 Division of Neurosurgery, UCL Queen Square Institute of Neurology, University College London, London, United Kingdom

Background: Advances in machine learning and robotics have allowed the development of increasingly autonomous robotic systems which are able to make decisions and learn from experience. This distribution of decision-making away from human supervision poses a legal challenge for determining liability.

Methods: The iRobotSurgeon survey aimed to explore public opinion towards the issue of liability with robotic surgical systems. The survey included five hypothetical scenarios where a patient comes to harm and the respondent needs to determine who they believe is most responsible: the surgeon, the robot manufacturer, the hospital, or another party.

Results: A total of 2,191 completed surveys were collected, comprising 10,955 individual scenario responses from 78 countries spanning 6 continents. Respondents were sensitive to the shift from fully surgeon-controlled scenarios to scenarios in which the robotic system played a larger role in decision-making, and blamed surgeons less as that role grew. However, this shift had a limit: human surgeons were still ascribed blame in scenarios involving autonomous robotic systems in which humans had no role in decision-making. Importantly, there was no clear consensus among respondents on where to allocate blame when harm resulted from a fully autonomous system.

Conclusions: The iRobotSurgeon Survey demonstrated a dilemma among respondents about whom to blame when harm is caused by a fully autonomous surgical robotic system. Importantly, it also showed that the surgeon is ascribed blame even when they have had no role in decision-making, which adds weight to concerns that human operators could act as “moral crumple zones” and bear the brunt of legal responsibility when a complex autonomous system causes harm.

Introduction

Advances in machine learning and robotics have allowed the development of increasingly autonomous robotic systems that are able to make decisions and learn from experience. As these systems become more independent, this distribution of decision-making away from human supervision poses a legal challenge for determining liability (1). This is particularly the case with surgical robotic systems because of the inherent risks of surgery. Several human-controlled surgical robotic systems are currently on the market, of which the da Vinci system (Intuitive Surgical, USA) is one of the best known. In recent years, however, more advanced systems have been developed that assist the surgeon by executing specific tasks such as navigating the gut, directing screw insertion into the spine or suturing (2–4). Given the rapid pace of technological advancement, a fully autonomous surgical robotic system may be developed in the not-so-distant future. The performance of such a system may prove superior to that of human surgeons, bringing improvements in patient care and outcomes. However, with decision-making shifted away from the human surgeon and towards the robotic system, how should liability be ascribed if the patient comes to harm? Surveys of public opinion have found that legal liability is a major concern with autonomous vehicles (5). In particular, there is concern that human actors will bear a disproportionate burden of responsibility in complex human-robot systems, a situation described as the human operator becoming the “moral crumple zone”: humans may absorb the moral and legal responsibility when accidents occur, while the reputation of the technology is protected (6). We believe there is a need to explore public attitudes towards liability with surgical robotic systems (7).
Here we report the findings of the iRobotSurgeon Survey focusing on how the burden of responsibility is allocated with increasing robotic system autonomy and the effect of demographic and geographic factors on these preferences.

Materials and methods

Development of the iRobotSurgeon survey

The study was approved by the ethics board of the London School of Economics (Ref 08387). The iRobotSurgeon survey was developed through an iterative and consultative process with a range of stakeholders, including clinicians, ethicists, members of industry and public engagement professionals. Individual scenarios were designed to test attitudes towards increasing robotic system autonomy, with decision-making across three domains (management decision, operative planning and technical execution) shifting away from the human surgeon (Table 1). These domains were defined as follows: management decision (the primary party deciding on the need for surgery to treat the underlying pathology), operative planning (the primary party deciding on the type of surgery and how it should be approached) and technical execution (the primary party making intraoperative technical decisions, such as where or what to cut). A pilot version of the survey was given to a group of patients and their relatives to obtain feedback on its content and language and on ways to improve it (Supplementary material). Once finalised, the survey included five hypothetical scenarios in which a patient comes to harm and the respondent must decide who they believe is most responsible: the surgeon, the robot manufacturer, the hospital, or another party (Figure 1A). Several demographic parameters were collected, including age, gender, country, education level, occupation and whether the respondent had previously undergone surgery. At the end of the survey, a simple question was posed to the respondent as an attention check.
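The scenario design can be summarised as a mapping from each decision domain to the party that primarily makes that decision. The sketch below is a hypothetical encoding inferred from the scenario descriptions in the Results (Table 1 remains the authoritative definition). The names `Maker`, `SCENARIOS` and `autonomy_level`, and the rule that robot-only technical execution counts as level 2 while robot control of planning or management counts as level 3, are assumptions made for illustration.

```python
from enum import Enum


class Maker(Enum):
    """Primary decision maker for one decision domain (hypothetical labels)."""
    SURGEON = "surgeon"
    ROBOT = "robot"


DOMAINS = ("management_decision", "operative_planning", "technical_execution")
S, R = Maker.SURGEON, Maker.ROBOT

# Hypothetical encoding of the five scenarios (values in DOMAINS order),
# inferred from the scenario descriptions in the Results section.
SCENARIOS = {
    1: dict(zip(DOMAINS, (S, S, S))),  # surgeon-controlled tele-robotic system
    2: dict(zip(DOMAINS, (S, S, S))),  # smart telescope informs, surgeon decides
    3: dict(zip(DOMAINS, (S, S, R))),  # robot executes the surgeon's plan
    4: dict(zip(DOMAINS, (S, R, R))),  # surgeon decides to operate, robot plans and operates
    5: dict(zip(DOMAINS, (R, R, R))),  # robot makes all decisions
}


def autonomy_level(assignment):
    """Assumed rule: level 1 if the surgeon makes every decision, level 2 if the
    robot only executes, level 3 if the robot also plans or decides to operate."""
    robot_domains = {d for d, who in assignment.items() if who is Maker.ROBOT}
    if not robot_domains:
        return 1
    if robot_domains == {"technical_execution"}:
        return 2
    return 3


if __name__ == "__main__":
    for number, assignment in SCENARIOS.items():
        print(f"Scenario {number}: level {autonomy_level(assignment)}")
```

Under this encoding, scenarios 1 and 2 fall into level 1, scenario 3 into level 2 and scenarios 4 and 5 into level 3. This is consistent with how the level-wise counts in the Results combine: the level 2 robot-manufacturer count equals scenario 3's count, and the level 3 surgeon count equals the sum of scenarios 4 and 5.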

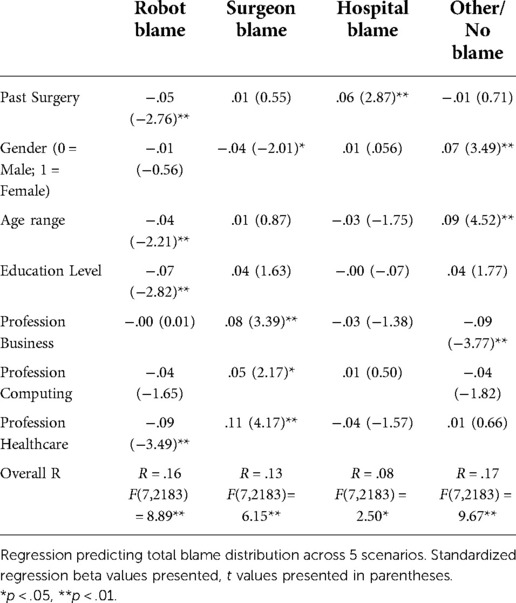

Figure 1. The iRobotSurgeon survey poses five scenarios to respondents and asks them to decide who they believe is most liable: the surgeon, the robot manufacturer, the hospital or another party (A). A total of 2,191 completed surveys were collected from 78 countries spanning 6 continents (B).

Distribution of the iRobotSurgeon survey

The survey was launched on 1 January 2020 and remained open for one year, until 31 December 2020. It was delivered using the SurveyMonkey™ platform and distributed through two approaches. The first was a collaborator-led model using social media and messaging networks (Twitter Inc, Facebook Inc and WhatsApp by Facebook Inc): study collaborators were recruited to distribute the survey through their own social networks. The second was a compensation-per-response approach using the Amazon Mechanical Turk (mTurk) platform, in which respondents were paid $1 per response.

Data analysis

Responses were included in the final analysis if all questions had been answered and the attention check question at the end of the survey had been answered correctly. To assess internal validity, a concordance analysis was performed using mTurk responses, in which individual respondents could be identified by their mTurk identification numbers. Respondents who completed the survey more than once were used to check whether their individual scenario responses were concordant; concordance was defined as agreement between all of their responses to a given scenario. Two-tailed Pearson correlations between variables were performed. Linear ordinary least squares (OLS) regression models were developed to test the explanatory power of multiple demographic variables, including having had surgery in the past, gender, age range, education level and profession. Statistical analysis and graphical representation were performed using GraphPad Prism 9.1.0 (GraphPad Software, LLC) and IBM SPSS Statistics for Windows, Version 25.0 (IBM Corp., Armonk, NY; released 2017).
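The inclusion criteria and the concordance check described above can be sketched as follows. The record layout (`worker_id`, `answers`, `attention_ok`) and the function names are hypothetical. The sketch treats a scenario as concordant for a repeat mTurk respondent only when all of that respondent's answers to it are identical, which is one reading of the definition above.

```python
from collections import defaultdict

N_SCENARIOS = 5


def is_included(record, n_scenarios=N_SCENARIOS):
    """Keep a survey only if every scenario was answered and the attention check was passed."""
    answers = record["answers"]
    complete = all(answers.get(s) is not None for s in range(1, n_scenarios + 1))
    return complete and record["attention_ok"]


def concordance_by_scenario(records, n_scenarios=N_SCENARIOS):
    """Share of repeat respondents whose answers to each scenario all agree.

    Only records with a worker ID (i.e. mTurk responses) can be linked across
    submissions; records without one are ignored.
    """
    by_worker = defaultdict(list)
    for record in records:
        if record.get("worker_id") is not None:
            by_worker[record["worker_id"]].append(record["answers"])
    repeats = [subs for subs in by_worker.values() if len(subs) > 1]
    if not repeats:
        return {}
    return {
        s: sum(len({answers[s] for answers in subs}) == 1 for subs in repeats) / len(repeats)
        for s in range(1, n_scenarios + 1)
    }


if __name__ == "__main__":
    records = [
        {"worker_id": "W1", "attention_ok": True,
         "answers": {1: "surgeon", 2: "robot", 3: "robot", 4: "surgeon", 5: "hospital"}},
        {"worker_id": "W1", "attention_ok": True,
         "answers": {1: "surgeon", 2: "robot", 3: "robot", 4: "hospital", 5: "hospital"}},
        {"worker_id": None, "attention_ok": True,   # incomplete: excluded
         "answers": {1: "surgeon", 2: None, 3: "robot", 4: "robot", 5: "robot"}},
        {"worker_id": "W2", "attention_ok": False,  # failed attention check: excluded
         "answers": {1: "robot", 2: "robot", 3: "robot", 4: "robot", 5: "robot"}},
    ]
    included = [r for r in records if is_included(r)]
    print(len(included), concordance_by_scenario(included))
```

Filtering happens before the concordance step, so a repeat submission that failed the attention check does not count towards agreement.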

Results

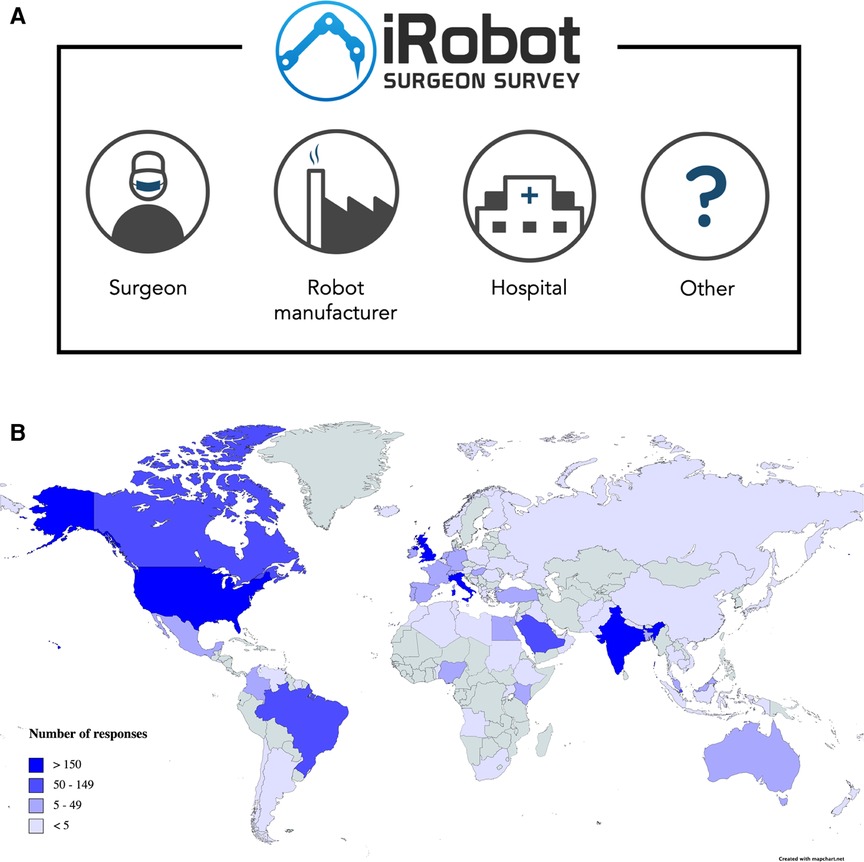

A total of 2,191 completed surveys were collected, comprising 10,955 individual scenario responses from 78 countries spanning 6 continents (Figure 1B). Basic demographic data, including each respondent's country of residence, were collected (Supplementary materials). Across the 10,955 responses to the five scenarios, the surgeon (n = 4,404; 40.2%) was the most frequently identified responsible party, followed by the robot manufacturer (n = 4,186; 38.2%), the hospital (n = 1,554; 14.2%) and another party (n = 811; 7.4%) (Figure 2A). When respondents allocated blame to “other”, they were invited to leave a text comment. These comments were analysed qualitatively, and five themes emerged: no party is responsible (n = 308; 37.9%), one or more parties are responsible (n = 166; 20.5%), another party is responsible (n = 121; 14.9%), more information is required to decide (n = 118; 14.5%) and irrelevant comments (n = 98; 12.1%) (Figure 2D).
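The pooled percentages above use all 10,955 responses as the denominator, because each of the 2,191 completed surveys contributed one answer to each of the five scenarios. A minimal check of that arithmetic, using only the counts reported in the text (the function name is illustrative):

```python
# Overall blame counts reported above, pooled over all five scenarios.
BLAME_COUNTS = {
    "surgeon": 4404,
    "robot manufacturer": 4186,
    "hospital": 1554,
    "another party": 811,
}
N_RESPONDENTS = 2191
N_SCENARIOS = 5


def percentage_shares(counts):
    """Each party's share of all responses, as a percentage rounded to one decimal place."""
    total = sum(counts.values())
    return {party: round(100 * n / total, 1) for party, n in counts.items()}


# Every completed survey contributes one response per scenario.
assert sum(BLAME_COUNTS.values()) == N_RESPONDENTS * N_SCENARIOS == 10955

if __name__ == "__main__":
    print(percentage_shares(BLAME_COUNTS))
```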

Figure 2. A total of 10,955 individual scenario responses were captured from 2,191 respondents. Bar charts show the overall responses across the five scenarios (A), by level of autonomy (B) and by individual scenario (C). Comments left with the “another party” option were collated, analysed qualitatively and then quantified (D).

Blame distribution across levels of autonomy and scenarios

The individual scenarios were then grouped by the autonomy level of the surgical robotic system, using the three-level classification described by Jamjoom et al. (Table 1) (7). For level 1 systems, in which the human surgeon is the primary decision maker across all parts of the patient management process, the surgeon was the most commonly ascribed responsible party, with 2,145 (48.9%) responses placing the blame with them (Figure 2B). For level 2 systems, in which the robotic system begins to take on more autonomy by technically executing an operative plan defined by the surgeon, the robot manufacturer was the most commonly identified responsible party (n = 1,390; 63.4%) when a technical malfunction led to patient harm. For level 3 systems, in which most or all of the decision-making was taken on by the surgical robotic system, there was no clear consensus on who should shoulder responsibility: the surgeon was chosen in 1,729 responses (39.5%), the robot manufacturer in 1,103 (25.1%) and the hospital in 931 (21.3%).
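The level-wise percentages are shares of pooled responses rather than of respondents: level 2 corresponds to a single scenario (2,191 responses), whereas levels 1 and 3 each pool two scenarios (4,382 responses). The sketch below assumes the scenario grouping inferred from the text (scenarios 1 and 2, scenario 3, scenarios 4 and 5) and reproduces two of the reported level-wise figures from the scenario counts given in the next paragraph. `pooled_share` is a hypothetical helper.

```python
N_RESPONDENTS = 2191

# Scenario-level counts quoted in the individual-scenario results.
SURGEON_BLAME = {4: 998, 5: 731}
ROBOT_BLAME = {3: 1390}

# Scenario-to-level grouping inferred from the text (Table 1 is authoritative).
LEVEL_SCENARIOS = {1: (1, 2), 2: (3,), 3: (4, 5)}


def pooled_share(counts, scenarios, n_respondents=N_RESPONDENTS):
    """Pool one party's counts over several scenarios.

    The denominator is the number of pooled responses, i.e. the number of
    scenarios multiplied by the number of respondents.
    """
    pooled = sum(counts[s] for s in scenarios)
    denominator = n_respondents * len(scenarios)
    return pooled, round(100 * pooled / denominator, 1)


if __name__ == "__main__":
    print(pooled_share(SURGEON_BLAME, LEVEL_SCENARIOS[3]))  # level 3, surgeon
    print(pooled_share(ROBOT_BLAME, LEVEL_SCENARIOS[2]))    # level 2, robot manufacturer
```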

Examining individual scenarios showed how the distribution of decision-making affects perceptions of who holds responsibility (Figure 2C). In scenario 1, a patient comes to harm after a complication arises during an operation performed by a surgeon (Surgeon A) using a tele-robotic system, despite the efforts of a support surgeon (Surgeon B). This system is completely under the control of the surgeon, who is the primary decision maker for both the management approach and technical execution. In this scenario, a clear majority (n = 1,482; 67.6%) identified Surgeon A as the primary bearer of responsibility, and a one-sample chi-square test showed a statistically significant deviation from an equal distribution across the five blame categories (χ²(4) = 3,140.03, p < .01). By contrast, in scenario 2, where a patient comes to harm because a smart robotic telescope provides inaccurate information to the surgeon, the majority of respondents (n = 1,524; 69.6%) considered the robot manufacturer most to blame, despite the surgeon being the primary decision maker for the patient's management (χ²(3) = 2,482.22, p < .01). Similarly, in scenario 3, a patient comes to harm due to a technical malfunction of the robotic system despite a correctly planned procedure by the surgeon; here, a majority (n = 1,390; 63.4%) felt that the robot manufacturer was most responsible (χ²(3) = 1,935.92, p < .01). In scenario 4, a patient does not get a satisfactory outcome after an operation performed independently by a surgical robotic system, following a human surgeon's decision to operate. In this case, no party was chosen by more than 50% of respondents, although the surgeon was held responsible by 998 (45.6%) respondents (χ²(3) = 521.96, p < .01). Finally, in scenario 5, the surgical robotic system makes all the decisions on how the patient is managed and executes the operation. In this scenario, blame was distributed relatively evenly across the three named parties: robot manufacturer (n = 803; 36.7%), surgeon (n = 731; 33.4%) and hospital (n = 510; 23.3%) (χ²(3) = 476.05, p < .01).
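The one-sample chi-square tests compare the observed count in each blame category with an equal expected count of n/k per category, with k − 1 degrees of freedom. The sketch below is plain Python, not the SPSS or GraphPad procedure the authors used. For scenario 5, the count for “another party” is not reported directly; here it is derived as 2,191 − 803 − 731 − 510 = 147, and with it the statistic evaluates to about 476.05, matching the reported χ²(3). The p-value uses the standard recurrence for the chi-square upper tail with integer degrees of freedom.

```python
import math


def chi_square_uniform(observed):
    """One-sample (goodness-of-fit) chi-square against equal expected counts."""
    n = sum(observed)
    expected = n / len(observed)
    statistic = sum((o - expected) ** 2 / expected for o in observed)
    return statistic, len(observed) - 1


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for a chi-square distribution with integer df >= 1."""
    if x <= 0:
        return 1.0
    half = x / 2.0
    if df % 2 == 0:
        q, k = math.exp(-half), 2               # closed form for df = 2
    else:
        q, k = math.erfc(math.sqrt(half)), 1    # closed form for df = 1
    while k < df:
        # Recurrence: Q(x; k + 2) = Q(x; k) + (x/2)^(k/2) * e^(-x/2) / Gamma(k/2 + 1)
        q += math.exp((k / 2) * math.log(half) - half - math.lgamma(k / 2 + 1))
        k += 2
    return q


# Scenario 5: robot manufacturer, surgeon, hospital, another party (derived by subtraction).
SCENARIO_5 = [803, 731, 510, 2191 - 803 - 731 - 510]

if __name__ == "__main__":
    stat, df = chi_square_uniform(SCENARIO_5)
    print(f"chi2({df}) = {stat:.2f}, p = {chi2_sf(stat, df):.3g}")
```

The same functions apply to scenario 1, which has five categories (Surgeon A, Surgeon B, robot manufacturer, hospital, another party) and therefore four degrees of freedom.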

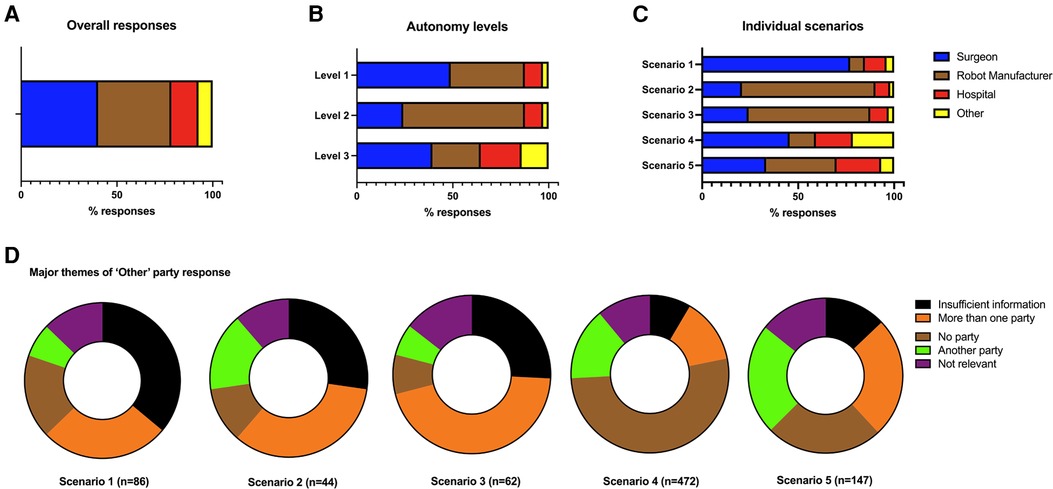

Effect of demographics on blame distribution

Regarding the effect of demographics on blame allocation, past experience of surgery was negatively correlated with robot blame (r = −.06, p < .01) and positively correlated with hospital blame (r = .05, p < .01). Female respondents were more likely to select the “other” category as the target of blame (r = .08, p < .01) (Table 2). Employment in healthcare was negatively correlated with robot blame (r = −.12, p < .05) and positively correlated with surgeon blame (r = .09, p < .01). Age was negatively correlated with robot blame and positively correlated with “other” blame. Education level was negatively correlated with robot blame (r = −.12, p < .05) and positively correlated with surgeon blame (r = .08, p < .01). Using linear ordinary least squares (OLS) regression, two models were developed to predict total blame allocation across the five scenarios (Table 3). Model 1 identified significant predictors of robot blame: past surgery (b = −.05, t = −2.76, p < .01), age (b = −.04, t = −2.21, p < .01), education level (b = −.07, t = −2.82, p < .01) and employment in healthcare (b = −.09, t = −3.49, p < .01) were all negatively related to robot blame. In Model 2, predicting surgeon blame, women were less likely to blame surgeons (b = −.04, t = −2.01, p < .05), while occupations in business (b = .08, t = 3.39, p < .01), computing (b = .05, t = 2.17, p < .05) and healthcare (b = .11, t = 4.17, p < .01) were associated with increased surgeon blame.
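The correlation and regression analyses above were performed in statistical software; the following plain-Python sketch only illustrates what each computes and is not the authors' exact setup. It assumes one row per respondent, with binary indicators (for example, 1 = has had surgery) and an outcome such as the number of scenarios, out of five, in which that respondent blamed the robot manufacturer; this outcome coding and the toy data are hypothetical. The p-value function refers the usual t statistic for a correlation to a standard normal distribution, a large-sample approximation substituted for the exact t-distribution, and `ols` returns unstandardised coefficients (intercept first) by solving the normal equations.

```python
import math


def pearson_r(x, y):
    """Pearson correlation; with 0/1 variables this is the phi / point-biserial coefficient."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def two_tailed_p_approx(r, n):
    """Two-tailed p for H0: rho = 0, normal approximation to t (large n, |r| < 1)."""
    t = r * math.sqrt((n - 2) / (1 - r * r))
    return math.erfc(abs(t) / math.sqrt(2))


def ols(X, y):
    """OLS coefficients [intercept, b1, ..., bp] from the normal equations X'X b = X'y."""
    rows = [[1.0] + [float(v) for v in row] for row in X]
    p = len(rows[0])
    A = [[sum(r[i] * r[j] for r in rows) for j in range(p)] for i in range(p)]
    rhs = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(p)]
    # Gaussian elimination with partial pivoting.
    for col in range(p):
        pivot = max(range(col, p), key=lambda k: abs(A[k][col]))
        A[col], A[pivot] = A[pivot], A[col]
        rhs[col], rhs[pivot] = rhs[pivot], rhs[col]
        for row in range(col + 1, p):
            factor = A[row][col] / A[col][col]
            for c in range(col, p):
                A[row][c] -= factor * A[col][c]
            rhs[row] -= factor * rhs[col]
    # Back substitution.
    coef = [0.0] * p
    for i in reversed(range(p)):
        coef[i] = (rhs[i] - sum(A[i][j] * coef[j] for j in range(i + 1, p))) / A[i][i]
    return coef


if __name__ == "__main__":
    # Hypothetical toy data: past surgery (0/1), healthcare job (0/1), robot-blame count (0-5).
    past_surgery = [0, 1, 0, 1, 0, 1, 0, 1]
    healthcare = [0, 0, 1, 1, 0, 0, 1, 1]
    robot_blame = [3, 2, 2, 1, 4, 2, 3, 1]
    print(f"r = {pearson_r(past_surgery, robot_blame):.2f}")
    print(ols(list(zip(past_surgery, healthcare)), robot_blame))
```

The normal approximation in `two_tailed_p_approx` is only reasonable for samples of the size collected in this survey, not for toy data of eight rows.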

Discussion

Our findings suggest a dilemma in ascribing responsibility as surgical robotic systems become more autonomous. As the robotic system takes on more of the decision-making across the patient management process, opinion increasingly diverges on who shoulders responsibility when the system fails. When a patient came to harm while the surgeon was controlling the robotic system, the surgeon was viewed as the most responsible. This supports the finding by Furlough and colleagues that human actors received the most blame in scenarios involving non-autonomous robotic systems (8). Conversely, in the event of a technical fault with the robotic system, whether providing inaccurate information to the surgeon or failing to execute a pre-planned operation correctly, there was consensus among respondents that the robot manufacturer was most responsible. However, with autonomous systems, there was no clear majority on where to allocate the blame, reflecting growing uncertainty and disagreement among respondents about whom to hold responsible. That said, the surgeon was still the most frequently identified responsible party across the two autonomous scenarios, despite having a limited role in the decision-making process for the patient's care. This adds weight to the concept of the “moral crumple zone”, coined by Madeleine Elish to describe humans bearing the consequences of failure in complex human-robot systems (6). This has been shown quantitatively by Awad and colleagues, who examined blame distribution in semi-autonomous vehicle accidents and found that when a human and a machine share control of a car and both make an error, more blame is ascribed to the human driver (9). These findings are evidence of bias in how the public distributes blame in autonomous systems; however, there is also legal precedent in medicine that tilts liability away from the manufacturer and towards the physician. The “Learned Intermediary” doctrine limits recovery from a manufacturer when the manufacturer has provided adequate information about the risks of its device or drug (10).

Machine learning approaches permit robotic systems to learn and to solve problems with solutions previously unknown to human operators. This degree of independence in decision-making, as in scenario 5, can lead to unpredictable actions, which pose significant legal challenges in determining liability. In tort law, for example, negligence is an action that leads to unreasonable harm or risk to property or an individual (11). As these systems become more autonomous, moving away from predetermined instructions and arriving at novel decisions, it becomes difficult to determine and define what counts as reasonable conduct. This “black box” challenge in machine learning has driven calls to make the solutions reached by these algorithms explainable to humans. Hacker and colleagues argue that current tort liability provides incentives to make machine learning algorithms explainable in order to protect professional actors such as doctors (12). This view is supported by a study by Kim and Hinds, who found reduced blame attribution to human participants when an autonomous robotic system was more transparent about its decision-making (13). Our finding that the public tends to ascribe blame to the surgeon, even when the surgeon has a limited role in decision-making, has important policy implications. As suggested by Awad et al. (9), it highlights that a bottom-up regulatory system, in which tort law is adjudicated by juries, may fail to regulate complex autonomous systems effectively. Recently published WHO guidance on the governance of artificial intelligence for health recommended establishing international norms and legal standards to ensure national accountability and protect patients (14).

In this study, several demographic factors appeared to influence respondents' likelihood of attributing blame to the surgeon: surgeon blame was higher among male respondents and among those working in healthcare, computing and business. Conversely, robot manufacturer blame was lower among respondents who had undergone surgery, were older, had higher levels of education or worked in healthcare. This suggests that those with experience of the healthcare system, whether as a patient or professionally, tend to direct blame towards the surgeon rather than the robot manufacturer. For healthcare professionals, this may be explained by an understanding that the onus of responsibility typically lies with the surgeon. For respondents who had previously undergone surgery, the experience of placing trust in a surgeon may have influenced their perception of blame attribution in the study.

The study has several limitations that need to be considered when drawing conclusions from our results. Firstly, the respondent population was tilted towards male, medically trained and highly educated respondents. In addition, despite responses from a wide range of countries, 27 countries contributed only one response each, which limits the generalisability of the findings. The scenarios posed to respondents contained limited information, which several respondents felt was insufficient to make a decision, as reflected in some of the text comments. Furthermore, our decision to use categorical answers prevented respondents from ascribing proportions of blame to multiple parties.

Conclusion

As decision-making becomes distributed across increasingly intelligent autonomous systems, there is a growing challenge in determining liability. In this study, we provide the first empirical evidence of current public attitudes towards this problem and demonstrate a liability dilemma as surgical robotic systems become increasingly autonomous. This highlights the challenges facing policy makers and regulators in developing legal frameworks around these new technologies, as well as the importance of engaging the public in this process.

Data availability statement

The iRobotSurgeon raw data are available from the University of Edinburgh's DataShare service via: https://datashare.ed.ac.uk/handle/10283/4481.

Ethics statement

The study was approved by the ethics board of the London School of Economics (Ref 08387). Written informed consent for participation was not required for this study as it involved a voluntary anonymised questionnaire.

iRobotSurgeon Collaborators

D. Dasgupta, Y.J. Tan, Y.T. Lo, A. Asif, F. Samad, A. Negida, R. McNicholas, L. McColm, A. Chari, H. Al Abdulsalam, S. Venturini, T. Hasan, G.H. Majernik, S. Sravanam, B. Vasey, D. Taylor, H. Lambert.

Author contributions

AABJ, Conceptualization; Methodology; Investigation; Formal analysis; Writing - Original Draft; Writing - Review / Editing; Visualization; Project administration; Supervision; AMAJ, Conceptualization; Methodology; Investigation; Formal analysis; Writing - Original Draft; Writing - Review / Editing; JT, Methodology; Investigation; Formal analysis; Writing - Original Draft; Writing - Review / Editing; PP, Methodology; Writing - Review / Editing; KK, Methodology; Writing - Review / Editing; JWC, Methodology; Writing - Review / Editing; EV, Conceptualization; Methodology; Writing - Review / Editing; DS, Conceptualization; Methodology; Writing - Review / Editing; HJM, Conceptualization; Methodology; Investigation; Formal analysis; Writing - Original Draft; Writing - Review / Editing; Supervision; Funding acquisition. All authors contributed to the article and approved the submitted version.

Funding

HJM is supported by the Wellcome/EPSRC Centre for Interventional and Surgical Sciences (Wellcome 203145Z/16/Z; EPSRC NS/A000050/1) and the NIHR Biomedical Research Centre, University College London.

Acknowledgments

We would like to extend our appreciation to JD for his input and expertise on editing the iRobotSurgeon Survey.

Conflict of interest

KK is employed by Digital Surgery Medtronic Ltd. JWC is employed part-time by CMR Surgical Ltd. DS is employed part-time by Digital Surgery Medtronic Ltd and is a shareholder in Odin Vision Ltd. None of these companies provided any funding for the study.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fsurg.2022.1015367/full#supplementary-material.

References

1. Yang GZ, Cambias J, Cleary K, Daimler E, Drake J, Dupont PE, et al. Medical robotics-regulatory, ethical, and legal considerations for increasing levels of autonomy. Sci Robot. (2017) 2(4):1–2. doi: 10.1126/scirobotics.aam8638

3. Ahmed AK, Zygourakis CC, Kalb S, Zhu AM, Molina CA, Jiang B, et al. First spine surgery utilizing real-time image-guided robotic assistance. Comput Assist Surg. (2019) 24(1):13–7. doi: 10.1080/24699322.2018.1542029

4. Shademan A, Decker RS, Opfermann JD, Leonard S, Krieger A, Kim PCW. Supervised autonomous robotic soft tissue surgery. Sci Transl Med. (2016) 8(337):337ra64-337ra64. doi: 10.1126/scitranslmed.aad9398

5. Piao J, McDonald M, Hounsell N, Graindorge M, Graindorge T, Malhene N. Public views towards implementation of automated vehicles in urban areas. Transp Res Procedia. (2016) 14(0):2168–77. doi: 10.1016/j.trpro.2016.05.232

6. Elish MC. Moral crumple zones: cautionary tales in human-robot interaction. Engag Sci Technol Soc. (2019) 5:40–60. doi: 10.17351/ests2019.260

7. Jamjoom AAB, Jamjoom AMA, Marcus HJ. Exploring public opinion about liability and responsibility in surgical robotics. Nat Mach Intell. (2020) 2(4):194–6. doi: 10.1038/s42256-020-0169-2

8. Furlough C, Stokes T, Gillan DJ. Attributing blame to robots: I. The influence of robot autonomy. Hum Factors. (2021) 63(4):592–602. doi: 10.1177/0018720819880641

9. Awad E, Levine S, Kleiman-Weiner M, Dsouza S, Tenenbaum JB, Shariff A, et al. Blaming humans in autonomous vehicle accidents: shared responsibility across levels of automation. Arxiv. (2018):1–40. doi: 10.48550/arXiv.1803.07170

10. Husgen J. Product liability suits involving drug or device manufacturers and physicians: the learned intermediary doctrine and the physician’s duty to warn. Mo Med. (2014) [cited 2021 Aug 9] 111(6):478. PMID: 25665231

12. Hacker P, Krestel R, Grundmann S, Naumann F. Explainable AI under contract and tort law: legal incentives and technical challenges. Artif Intell Law. (2020) [cited 2021 Jun 12] 28(4):415–39. doi: 10.1007/s10506-020-09260-6

Keywords: robotics, surgery, liability, public opinion, autonomous

Citation: Jamjoom AA, Jamjoom AM, Thomas JP, Palmisciano P, Kerr K, Collins JW, Vayena E, Stoyanov D and Marcus HJ (2022) Autonomous surgical robotic systems and the liability dilemma. Front. Surg. 9:1015367. doi: 10.3389/fsurg.2022.1015367

Received: 9 August 2022; Accepted: 29 August 2022;

Published: 16 September 2022.

Edited by:

Pasquale Cianci, Azienda Sanitaria Locale della Provincia di Barletta Andria Trani (ASL BT), Italy

Reviewed by:

Vincenzo Neri, University of Foggia, Italy

Marina Minafra, Ospedale civile “Lorenzo Bonomo”, Italy

© 2022 Jamjoom, Jamjoom, Thomas, Palmisciano, Kerr, Collins, Vayena, Stoyanov, Marcus and Collaboration. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Aimun A.B. Jamjoom

Specialty Section: This article was submitted to Surgical Oncology, a section of the journal Frontiers in Surgery
