- 1 Department of Anesthesiology, Wayne State University, Detroit, MI, United States

- 2 Outcomes Research Consortium®, Houston, TX, United States

- 3 Department of Anesthesiology and Critical Care, Johns Hopkins University School of Medicine, Baltimore, MD, United States

- 4 Imperial College London, South Kensington Campus, South Kensington, United Kingdom

- 5 Michigan State University College of Osteopathic Medicine, East Lansing, MI, United States

Background: Medical applications of artificial intelligence (AI) range from diagnostic support and electronic health record optimization to personalized treatment and administrative automation. Despite these advances, successful integration of AI into healthcare depends on the acceptance and trust of clinicians and patients. Understanding their perspectives is critical to guiding effective and ethical AI adoption in medicine.

Methods: We conducted a nationwide, anonymous, online survey of self-identified physicians and patients in the United States using the Clinician and Patient Experience Registry (CaPER) platform. The survey employed Random Domain Intercept Technology (RDIT) and Random Device Engagement (RDE) to collect nationally representative online responses while minimizing known survey biases. Respondents were stratified into physicians (n = 382) or patients (n = 760) and completed a series of questions assessing demographics, comfort with AI-supported decision-making, trust in AI vs. human clinicians, and perceived impact of AI on the physician-patient relationship. Data were analyzed descriptively and comparatively, including specialty-specific sub-analyses among physicians.

Results: A total of 1,142 complete responses were analyzed. Both physicians and patients reported generally positive attitudes toward AI-supported medical decision-making, with the majority expressing comfort or neutrality. Approximately one-third of both groups favored a collaborative model integrating both human and AI input. Specialty-specific analysis revealed higher comfort with AI among procedure-based disciplines, while diagnostic-oriented specialties expressed more reservations. Respondents were generally evenly divided regarding the anticipated impact of AI on the physician-patient relationship, with many predicting a strengthening effect.

Conclusions: This large-scale online survey highlights a generally favorable outlook toward AI integration among both physicians and patients, with notable variation by medical specialty for physicians. The findings underscore the importance of tailoring AI implementation strategies to specific clinical contexts and maintaining a focus on human-AI collaboration.

1 Introduction

Artificial intelligence (AI), using modalities such as machine learning (ML) and neural networks, is being incorporated into systems and applications that support healthcare as well as other industries (1). Such solutions are developing rapidly and are increasingly embedded as tools in healthcare. Examples include image and tissue analysis (2, 3), electronic health record (EHR) optimization with decision support and error flagging (4–7), personalized treatment leveraging genetics (8–10), surgical assistance for precision procedures (11), and administrative offloading within healthcare facilities (12).

The applications of AI in medicine aim to improve patient care, increase diagnostic precision, and reduce complications. Practical examples include diagnostic support systems, treatment options for resource-limited settings, and predictive models aimed at prognosis, disease prevention, and epidemic control. These applications still require human oversight and ethical safeguards (2, 13). Despite their potential, the effective integration of AI systems into medical practice hinges on human factors, particularly the acceptance and trust of those directly affected: clinicians and patients. The current landscape of AI adoption in healthcare presents a unique inflection point, where technological development is rapidly outpacing our understanding of stakeholder readiness and concerns. At the same time, healthcare systems must weigh the promise of AI-enhanced diagnostics and treatment against complex issues of liability, transparency, and the preservation of human clinical judgment. This period of swift technological development makes it particularly important to understand how frontline participants in healthcare, both clinicians and patients, perceive the integration of AI tools that could fundamentally reshape medical practice. Without comprehensive insight into these perceptions, AI solutions may fail to gain the trust and adoption necessary to realize their potential benefits (14).

Clinicians play a crucial role in accepting, selecting, evaluating, and implementing AI technology in healthcare. By actively participating in these processes, they can help ensure that AI tools are effectively integrated into clinical workflows, address real-world challenges, and improve patient care (15). Clinician insights are vital for advancing AI systems by assessing their accuracy, reliability, and usability; however, clinicians' ambivalence and concerns about liability, loss of autonomy, and overreliance on these systems are legitimate and warrant further investigation (16). At the same time, clinician engagement is critical for building trust in AI, ensuring ethical practices, and fostering the overall acceptance and integration of AI-driven solutions into medical practice.

Likewise, patient perspectives are essential for evaluating the usability, accessibility, and ethical implications of AI tools. Research highlights the importance of active patient involvement in the evaluation and feedback process. The limited research on patient perceptions of AI suggests mixed feelings of trust and openness toward AI systems as supportive tools in healthcare. Patients express concerns over transparency, data privacy, and, above all, reduced human oversight in decision-making (16). Building trust and tailoring AI technologies to individual needs, preferences, and abilities requires understanding and addressing these concerns and barriers (17). Consequently, collecting and leveraging patient feedback is vital for the effective development and deployment of future AI tools in healthcare, enabling continuous adaptive learning from real-world experiences and outcomes.

Despite the growing integration of AI in healthcare and the critical need for clinician and patient engagement, comprehensive data on how these groups perceive the impact of such technological advances remain scarce. This gap reflects both the relative novelty of AI technologies and the shortage of empirical studies examining what shapes clinician and patient perceptions and acceptance of AI integration. Particularly little is known about how clinician perspectives on AI integration vary across medical specialties, and how such variation might call for implementation strategies that are specialty-specific as well as patient-centered. To address this gap, we surveyed physicians and patients in the United States to explore their attitudes and perspectives regarding the use of AI in healthcare (18). A mass random sampling methodology allowed the collection of sentiment data, theme analysis, and head-to-head comparison of specific data points between the two groups, offering insight into this dynamic subject.

2 Methods

The Clinician and Patient Experience Registry, or CaPER (https://riwi.com/caper), was used to conduct an electronic, anonymous, opt-in, non-incentivized survey of random internet users in the United States. Respondents self-identified as recipients of clinical care (“patients”) or as clinicians; within the clinician category, only physician responses were included. This study used two distinct “recruitment” methodologies: Random Domain Intercept Technology (RDIT) and Random Device Engagement (RDE). RDIT is a patented tool (RIWI Corp, Toronto, Canada) that delivers anonymous opt-in surveys to random web users. RDIT mitigates self-selection, social desirability, acquiescence, and online coverage biases through proprietary algorithms, and compares responses with census data to maximize generalizability (19). The technology does not use ad-tracking pixels, so ad-blocking software does not interfere with survey distribution. While a survey is in the field, RDIT applies bot filtering, anomaly detection (such as straight-lining detection), fraud detection, and rotation of survey entry points to ensure unique respondents. RDE extends reach to users across applications, websites, and pop-ups, further diversifying the sample. This technology has been used successfully to collect respondent perceptions across diverse healthcare topics (20, 21).

All potential participants were presented with an opt-in screen indicating that the survey was secure, anonymous, non-incentivized, and non-committal. Qualifying respondents (physicians and patients) were directed along two separate answer trees, with some questions common to both groups. Data were analyzed both descriptively and comparatively and evaluated against census data. The analysis was limited to fully completed surveys. This project did not constitute human participant research as defined by the Common Rule (45 CFR 46) and FDA regulations. WSU IRB HPR Number: 2023 126.

The results reported herein represent a sub-analysis of 1,142 responses focusing on general demographics, trust in AI-supported systems, and the relationship between medical specialty and comfort with AI integration in medicine.

2.1 Statistical analysis

Data Preparation and Grouping: Data were imported and cleaned using R. Participants were classified by role as physicians or patients. Age groups were created to facilitate demographic analysis and were defined as 16–24, 25–34, 35–44, 45–54, 55–64, and 65 or over. Physicians and patients were analyzed separately for demographic summaries and subgroup analyses.
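
A minimal sketch of the age-banding step, written in Python rather than the R the authors used. It assumes ages were recorded as whole years and that anyone under 16 is left unbanded; the function name `age_group` and the sample records are hypothetical, not study data.

```python
from typing import Optional

# Inclusive upper bound and label for each age band named in the Methods.
AGE_BANDS = [(24, "16–24"), (34, "25–34"), (44, "35–44"), (54, "45–54"), (64, "55–64")]


def age_group(age: int) -> Optional[str]:
    """Map an age in whole years to its band; returns None for ages under 16."""
    if age < 16:
        return None  # assumption: under-16 respondents fall outside the reported bands
    for upper, label in AGE_BANDS:
        if age <= upper:
            return label
    return "65 or over"


# Hypothetical respondents as (role, age) pairs; not study data.
respondents = [("physician", 38), ("patient", 19), ("patient", 67), ("physician", 55)]

# Group the banded ages by role, since physicians and patients are summarized separately.
by_role = {}
for role, age in respondents:
    by_role.setdefault(role, []).append(age_group(age))
print(by_role)
```

Checking each boundary (24/25, 64/65) explicitly is the main thing to get right when binning, since an off-by-one error silently shifts respondents between bands.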

Descriptive Statistics: Demographic characteristics for physicians (n = 382) and patients (n = 760) were summarized in separate tables, including age, age group, gender, medical specialty for physicians, and industry for patients. Frequencies and percentages were calculated for categorical variables and means and standard deviations for continuous variables.
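
The frequency and mean/SD summaries described above can be sketched with Python's standard library. This is not the authors' R code; the helper names and sample values are hypothetical. `statistics.stdev` uses the n - 1 (sample) denominator, which matches R's `sd()`.

```python
from collections import Counter
from statistics import mean, stdev


def frequency_table(values):
    """Return {category: (count, percent)}, ordered from most to least frequent."""
    counts = Counter(values)
    total = len(values)
    return {cat: (n, round(100 * n / total, 1)) for cat, n in counts.most_common()}


def mean_sd(values):
    """Mean and sample standard deviation (n - 1 denominator)."""
    return mean(values), stdev(values)


# Hypothetical physician records; not study data.
genders = ["female", "male", "male", "female", "male"]
ages = [29, 41, 52, 36, 47]
print(frequency_table(genders))
print(mean_sd(ages))
```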

Statistical Analyses: Comparisons between physicians and patients on AI-related survey responses were performed using non-parametric tests because of the ordinal nature of Likert-scale data. For comparisons of response distributions by group, chi-square or Fisher's exact tests were used for categorical proportions. Wilcoxon rank-sum tests assessed differences in the distributions of ordinal response scores between physicians and patients. Kruskal–Wallis tests evaluated differences in responses across medical specialties, followed by Bonferroni-adjusted pairwise Wilcoxon post-hoc tests when significant. Statistical significance was set at a two-sided p-value < 0.05.
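
To illustrate the rank-based group comparison and the Bonferroni post-hoc step, here is a stdlib-only Python sketch. It is a simplified stand-in for R's `wilcox.test()` and `pairwise.wilcox.test()`, not the authors' code. It uses the large-sample normal approximation with a tie-corrected variance and no continuity correction, whereas R applies a continuity correction by default, so p-values can differ slightly. Likert answers are assumed to be coded 1–7, and the sample data are hypothetical.

```python
import math
from collections import Counter
from itertools import combinations


def midranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum (Mann–Whitney U) test, normal approximation."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = list(x) + list(y)
    u = sum(midranks(pooled)[:n1]) - n1 * (n1 + 1) / 2
    # Tie correction: Likert data contain many tied answers.
    ties = sum(t ** 3 - t for t in Counter(pooled).values())
    var_u = n1 * n2 / 12 * ((n + 1) - ties / (n * (n - 1)))
    if var_u <= 0:
        return u, 1.0  # every response identical: no evidence of a difference
    z = (u - n1 * n2 / 2) / math.sqrt(var_u)
    return u, math.erfc(abs(z) / math.sqrt(2))


def pairwise_bonferroni(groups):
    """Rank-sum test for every pair of named groups, with Bonferroni-adjusted p-values."""
    pairs = list(combinations(sorted(groups), 2))
    return {
        (a, b): min(1.0, rank_sum_test(groups[a], groups[b])[1] * len(pairs))
        for a, b in pairs
    }


# Hypothetical Likert codes (1 = extremely uncomfortable ... 7 = extremely comfortable).
physicians = [7, 6, 4, 4, 5, 7, 3]
patients = [6, 6, 5, 4, 6, 2, 5]
print(rank_sum_test(physicians, patients))
```

The test ranks the pooled responses instead of averaging the numeric codes, so it uses only the ordering of answer categories. That property is why it suits ordinal scales.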

Software: All data analyses and visualizations were conducted using R (version 4.3.2).

3 Results

The online survey was released electronically nationwide between January 29 and March 26, 2024. A total of 1,500 responses were collected for the parent survey, of which 1,142 included complete answers to the three questions examined in this analysis. Of these, 382 respondents were classified as physicians and 760 as patients.

3.1 Survey logic and demographics

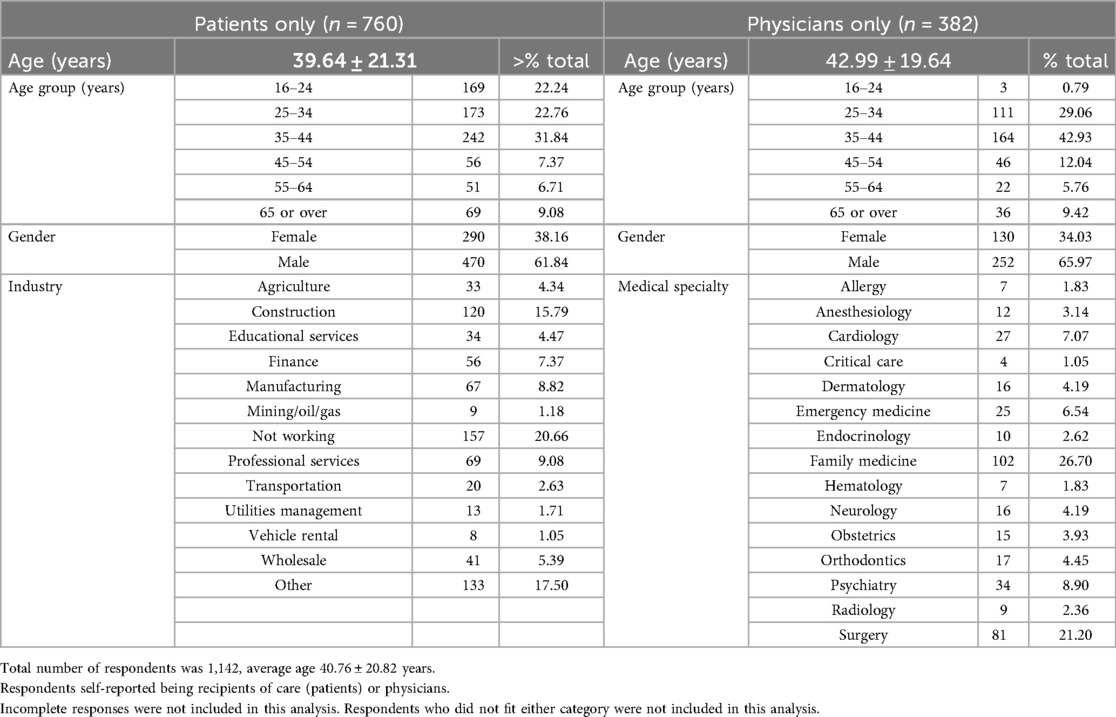

Participants were first asked to report their age, gender, and occupation, yielding 1,499 complete responses. Occupation was selected from an inclusive list of general categories (Table 1). Respondents who reported “Healthcare” as their occupation were further asked to specify their role, and those who answered “Physician” were included in the final analysis. Non-healthcare respondents answered the survey questions from the perspective of recipients of care, or “patients”. Respondent demographics are shown in Table 1, and physician specialty data in Figure 1.

Figure 1. Physician specialty data. (A) All respondents who indicated “Healthcare” as an occupation were further asked to specify field of work. Those who answered “Physician” were further sub-stratified into medical and surgical sub-specialties. (B) All physician respondents who indicated “Surgery” as a specialty were further sub-divided into surgical sub-specialties.

3.2 Comfort in AI-supported decision making and trust in AI-supported systems

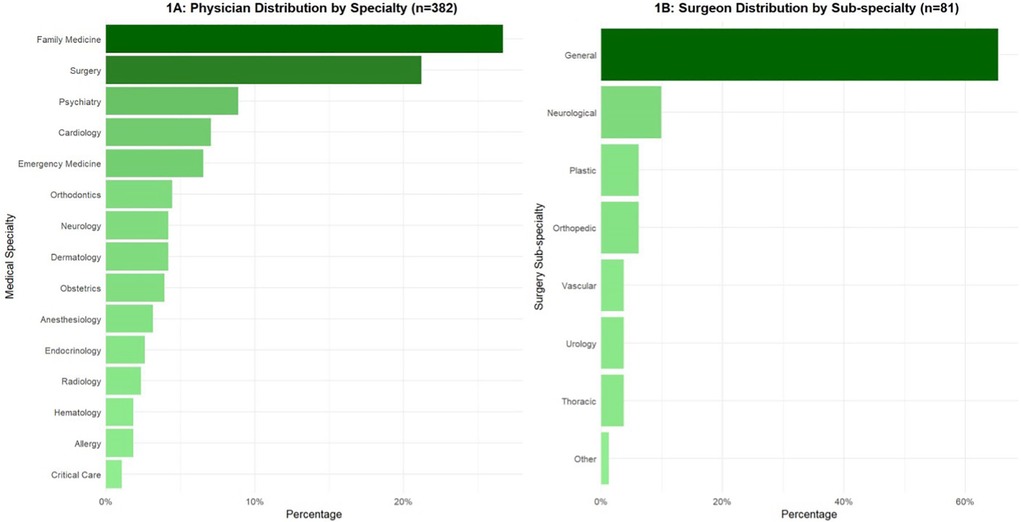

Respondents were asked about their level of comfort with the use of AI to support medical decision-making. Responses were recorded on a 7-point scale ranging from “extremely uncomfortable” to “extremely comfortable”. The same question was posed to both survey groups, each framed from the respondent's own perspective (Figure 2).

Figure 2. How comfortable are you with reliance on AI systems for medical decision-making support? Each survey group (patients vs. physicians) received the same question tailored to their respective views on the matter. The question was prefaced with “As a patient…” or “As a physician…”. (A) Inter-group comparisons per answer category. (B) Overall group comparison. The Wilcoxon rank-sum test (Mann–Whitney U test) was conducted to determine whether comfort levels with reliance on AI systems for medical decision-making differed significantly between physicians and patients. This non-parametric test was selected due to the ordinal nature of the Likert-scale data.

Among both patients and physicians, each category indicating discomfort received about 10% or less of the group's responses. Answers were comparable between patients and physicians, except for a significantly higher proportion of “neutral” responses among physicians and of “quite comfortable” responses among patients. The most frequent response was “extremely comfortable” for physicians and “quite comfortable” for patients (Figure 2A). Overall, there was no statistically significant difference between the two groups, indicating that physicians and patients held comparable attitudes toward the use of AI in supporting medical decision-making (Figure 2B).
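
Each per-category comparison above can be viewed as a 2×2 table: group (physician or patient) by whether the respondent chose that answer. The Python sketch below computes a Pearson chi-square test on such a table. It is illustrative only, assumes per-category counts are available, and uses hypothetical counts. With `yates=True` it applies the continuity correction that R's `chisq.test()` uses by default for 2×2 tables. Fisher's exact test, also listed in the Methods, is not shown.

```python
import math


def chi_square_2x2(a, b, c, d, yates=True):
    """Pearson chi-square test (1 df) for the 2x2 table [[a, b], [c, d]].

    Rows are groups (e.g., physicians, patients); columns are
    "chose this answer category" vs. "chose any other category".
    """
    n = a + b + c + d
    margins = (a + b) * (c + d) * (a + c) * (b + d)
    if margins == 0:
        raise ValueError("every row and column of the 2x2 table needs a nonzero total")
    diff = abs(a * d - b * c)
    if yates:
        # Continuity correction, floored at zero (as R does).
        diff = max(0.0, diff - n / 2)
    stat = n * diff ** 2 / margins
    p_value = math.erfc(math.sqrt(stat / 2))  # upper tail of chi-square with 1 df
    return stat, p_value


# Hypothetical counts: 30 of 100 in group 1 vs. 20 of 100 in group 2 chose "neutral".
stat, p = chi_square_2x2(30, 70, 20, 80)
print(round(stat, 2), round(p, 4))
```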

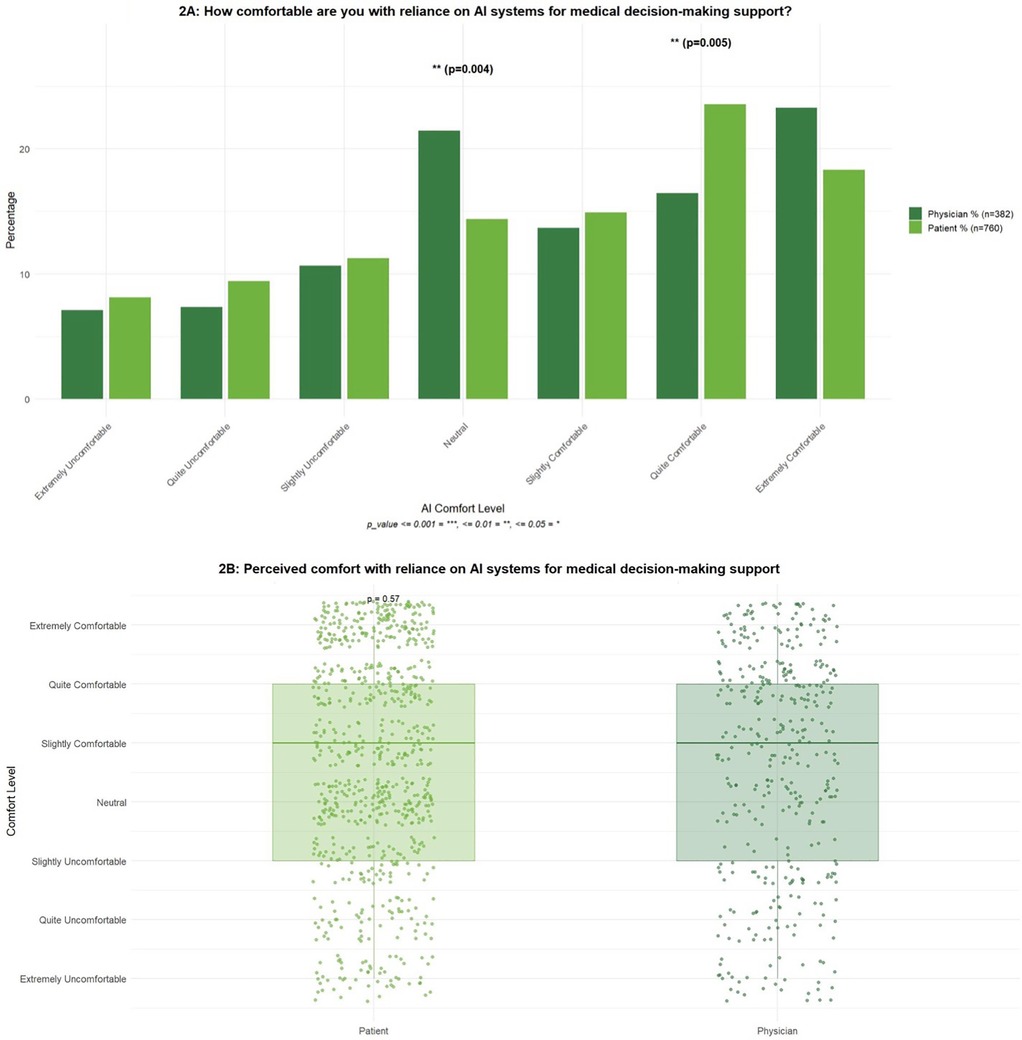

Respondents were also asked about the extent of their trust in AI-supported medical systems compared with traditional clinician-led systems. Responses were categorized as AI-favoring, human-favoring, collaboration-favoring, or no preference. The same question was posed to both survey groups, each framed from the respondent's own perspective (Figure 3).

Figure 3. To what extent do you trust AI systems in comparison to human physicians when it comes to making medical decisions such as treatment choices and plan of care? Each survey group (patients vs. physicians) received the same question tailored to their respective views on the matter. The question was prefaced with “As a patient…” or “As a physician…”. (A) Inter-group comparisons per answer category. (B) Overall group comparison. A Wilcoxon rank-sum test (Mann–Whitney U test) was conducted to assess whether levels of trust in AI systems, relative to human physicians, differed between physicians and patients. Given the ordinal structure of the trust scale, this non-parametric approach was appropriate for the analysis.

The largest share of respondents in both groups (around 30%) favored human-AI collaboration, and a similar proportion of patients favored AI-supported systems, but within limits. By answer category, the groups differed significantly in the proportions who “trusted AI more” and “trusted physicians more”, with physicians choosing these options more often (Figure 3A). There was no statistically significant overall difference in trust levels between the two groups, suggesting that physicians and patients hold similar attitudes toward the use of AI in medical decision-making. Overall, both groups expressed the greatest confidence in models emphasizing collaboration between AI systems and human physicians (Figure 3B).

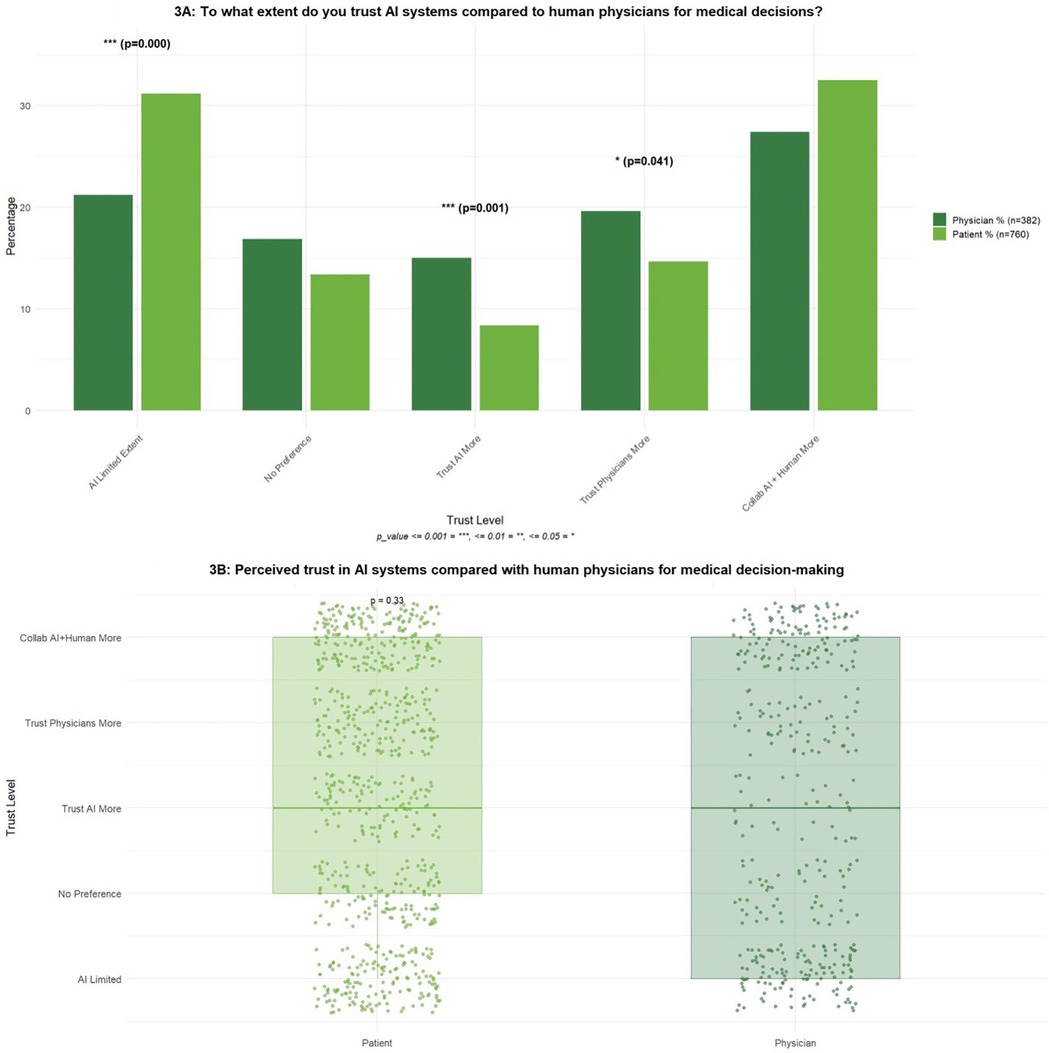

3.3 Medical specialty stratification

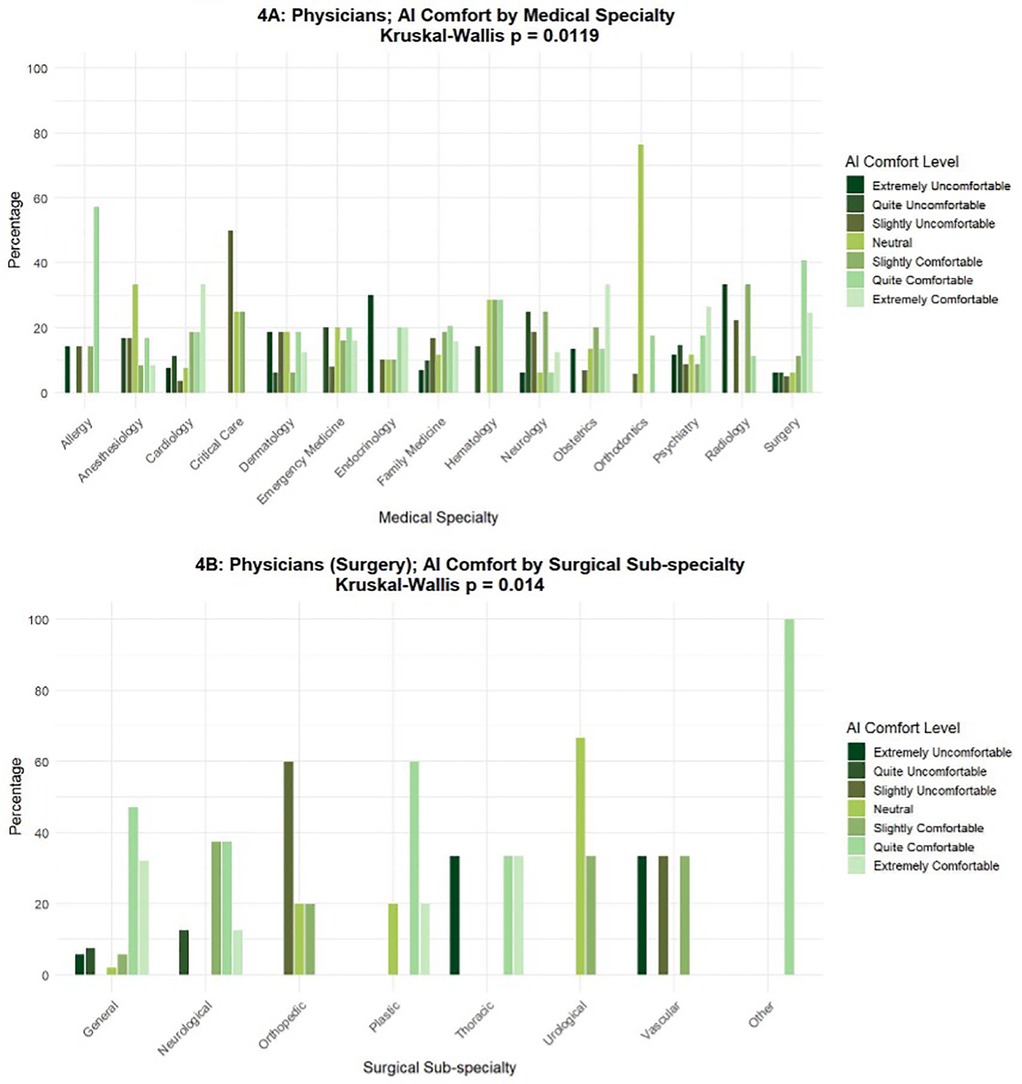

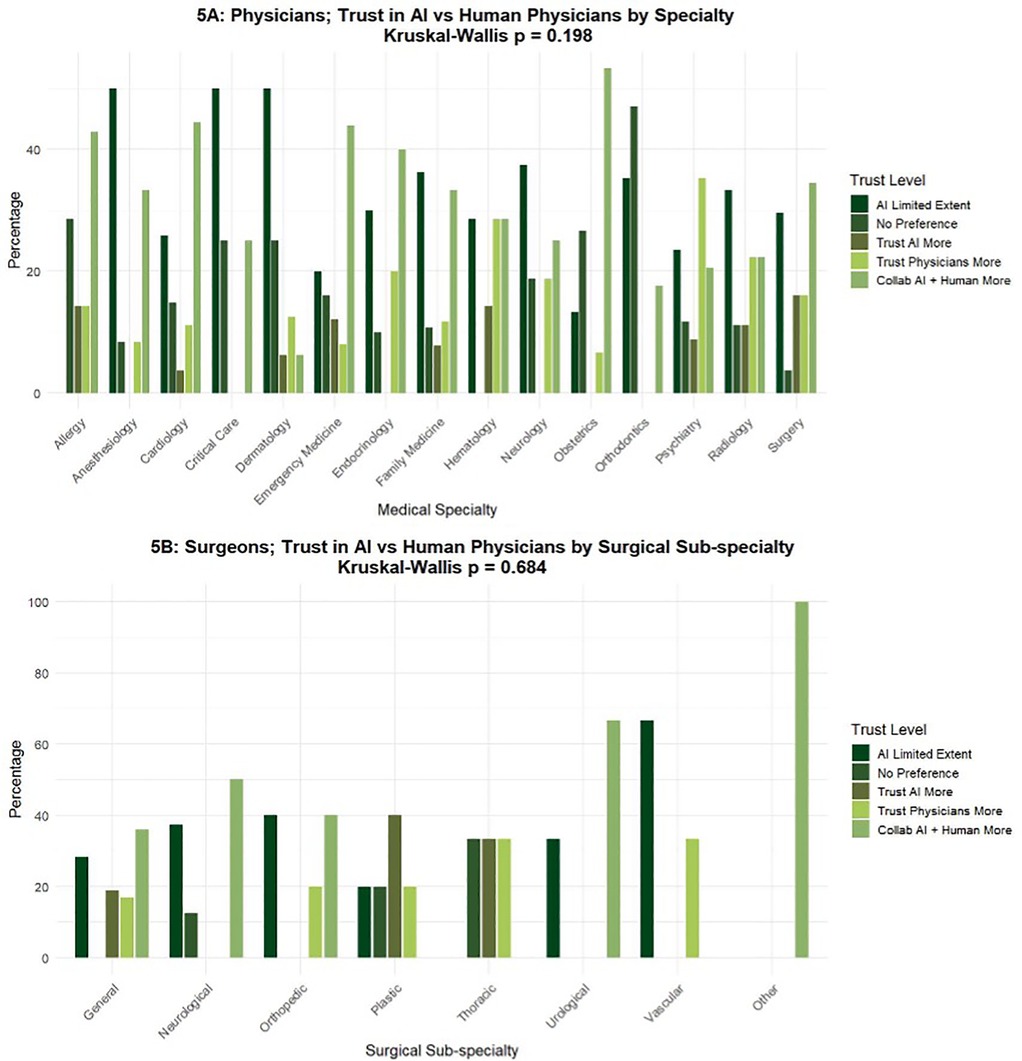

When physician responses were stratified by specialty, they were fairly widely spread (Figures 4, 5). Notable findings included the following:

Figure 4. “How comfortable are you with reliance on AI systems for medical decision-making support?” (medical and surgical specialties). The total number of respondents in the “Physician” group was 382, including 301 in medical (A) and 81 in surgical (B) sub-specialties. A Kruskal–Wallis test was conducted to assess whether physicians' comfort levels with AI in medical decision-making differed significantly across medical specialties. This non-parametric approach was deemed appropriate due to the ordinal nature of the 7-point Likert scale used to measure comfort. A separate Kruskal–Wallis test applied to physicians within surgical disciplines also demonstrated similar findings.

Figure 5. To what extent do you trust AI systems in comparison to human physicians when it comes to making medical decisions such as treatment choices and plan of care? (medical and surgical specialties). The total number of respondents in the “Physician” group was 382 (A), including 81 in surgical sub-specialties (B). A Kruskal–Wallis test was employed to examine whether physicians' levels of trust in AI systems relative to human physicians differed significantly across medical specialties. This non-parametric approach was selected due to the ordinal nature of the five-point categorical trust scale. A separate Kruskal–Wallis test applied to physicians within surgical disciplines also demonstrated similar findings.

Most Allergists indicated comfort with reliance on AI-supported systems, while the majority of Orthodontic surgeons were neutral, and most Critical Care physicians expressed slight discomfort. Regarding preferences in trust between AI-supported systems and humans, Anesthesiologists, Dermatologists, Neurologists, and Critical Care physicians trusted AI-supported systems, but within limitations. Orthodontic surgeons generally had no preference. Allergists, Cardiologists, Emergency physicians, Endocrinologists, and Obstetricians preferred human-AI collaborative systems. There was a statistically significant difference among medical as well as surgical specialties, indicating that comfort with AI varied by field of practice (Figure 4).
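
The across-specialty comparison rests on the Kruskal–Wallis H statistic. Below is a stdlib-only Python sketch that computes the chi-square upper tail in closed form for integer degrees of freedom. It is not the authors' R code, but it applies the same standard tie correction as R's `kruskal.test()`. The specialty labels and scores are hypothetical.

```python
import math
from collections import Counter


def midranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def chi2_sf(x, df):
    """Upper-tail probability P(X > x) for a chi-square variable with integer df >= 1."""
    y = x / 2
    if df % 2 == 0:
        return math.exp(-y) * sum(y ** i / math.factorial(i) for i in range(df // 2))
    tail = sum(y ** (i - 0.5) / math.gamma(i + 0.5) for i in range(1, (df - 1) // 2 + 1))
    return math.erfc(math.sqrt(y)) + math.exp(-y) * tail


def kruskal_wallis(groups):
    """Kruskal–Wallis H test with tie correction; groups is a list of score lists."""
    pooled = [v for g in groups for v in g]
    n = len(pooled)
    ranks = midranks(pooled)
    h, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        h += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n * (n + 1)) * h - 3 * (n + 1)
    correction = 1 - sum(t ** 3 - t for t in Counter(pooled).values()) / (n ** 3 - n)
    if correction == 0:
        return 0.0, 1.0  # every response identical: no evidence of a difference
    h = max(h / correction, 0.0)  # guard against tiny negative rounding error
    return h, chi2_sf(h, len(groups) - 1)


# Hypothetical 7-point comfort scores for three specialties; not study data.
specialties = {"Specialty A": [6, 7, 7, 5], "Specialty B": [4, 4, 5, 3], "Specialty C": [2, 3, 4, 3]}
h, p = kruskal_wallis(list(specialties.values()))
print(round(h, 2), round(p, 4))
```

A significant H indicates only that at least one specialty differs. Identifying which pairs differ requires the Bonferroni-adjusted pairwise post-hoc tests named in the Methods.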

When evaluating physician level of trust in AI systems relative to humans, there was no statistically significant difference among medical or surgical specialties, suggesting that trust in AI was relatively consistent across fields (Figure 5).

3.4 The physician-patient relationship

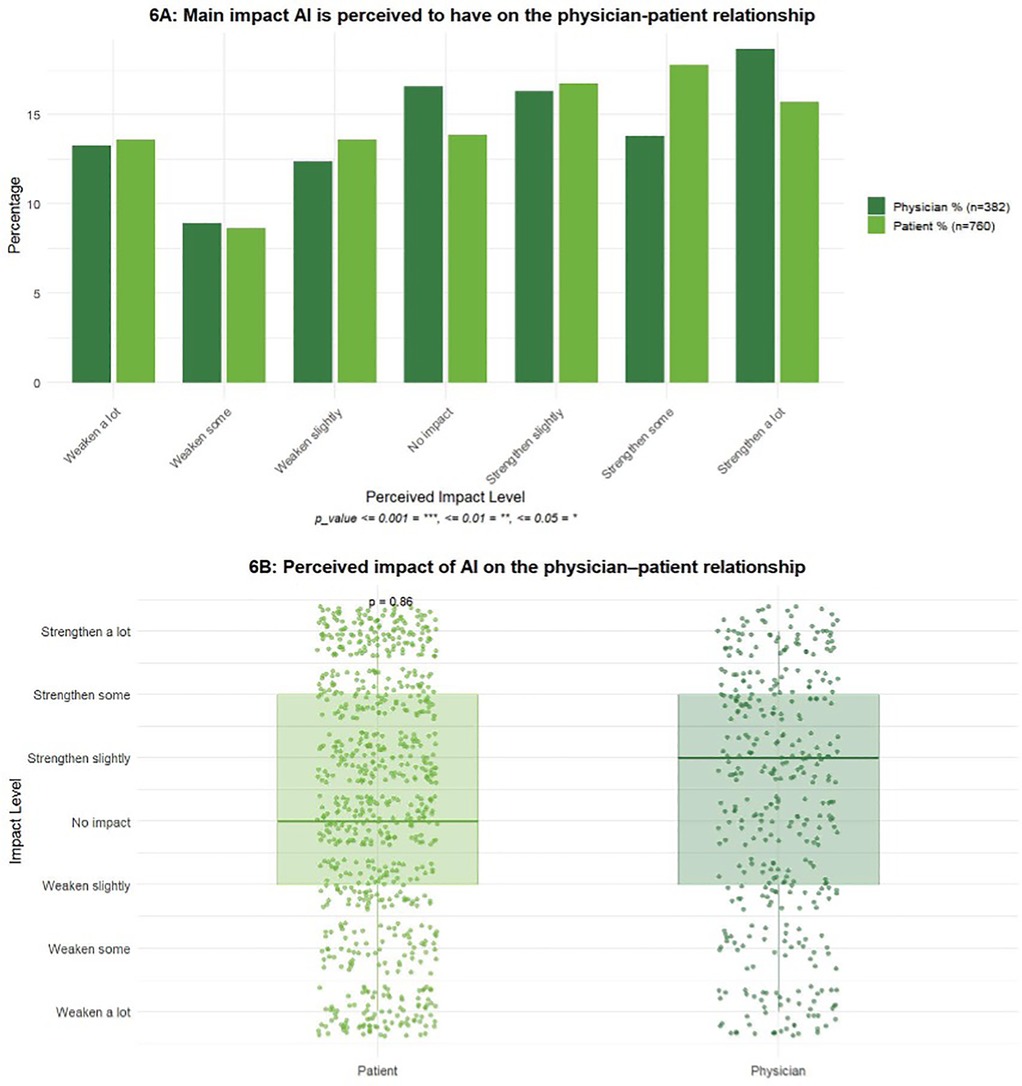

Respondents were asked to predict the impact of AI-supported medical systems on the physician-patient relationship. Responses were recorded on a 7-point scale ranging from “Strengthen a lot” to “Weaken a lot”. The two groups were generally in agreement, with responses spread fairly evenly across all answer choices and no statistically significant differences between groups (Figure 6A).

Figure 6. What is the main impact you consider that AI will have on the physician-patient relationship? Each survey group (patients vs. physicians) received the same question tailored to their respective views on the matter. The question was prefaced with “As a patient…” or “As a physician…”. (A) Inter-group comparisons per answer category. (B) Overall group comparison. A Wilcoxon rank-sum test (Mann–Whitney U test) was performed to assess differences in perceived impact of AI on the physician–patient relationship between physicians and patients. This non-parametric approach was selected due to the ordinal structure of the response scale.

There was no statistically significant difference between the two groups regarding perceived impact of AI on the physician-patient relationship, suggesting that physicians and patients hold broadly similar perceptions regarding the influence of AI on this relationship. Overall, the responses of both groups were concentrated around neutral to slightly positive categories, reflecting a general expectation that AI will either have minimal effect or may modestly strengthen the physician–patient relationship (Figure 6B).

4 Discussion

We surveyed a sample of self-identified patients and physicians across the US in 2024 and collected 1,499 responses regarding the use of AI-supported medical systems. Our aim was to address the gap in understanding the perspectives of two key stakeholder groups in medicine, patients and physicians, regarding the use of AI in medicine. This sub-analysis of the parent survey included 382 physicians and 760 recipients of healthcare, here termed “patients”.

Our survey was delivered via web technology, and respondents spanned multiple age groups. Respondents were concentrated in the 16–44-year age range, likely reflecting the demographics of internet users. The physician group represented multiple medical and surgical specialties, the largest being Family Medicine.

Overall, we noted varying degrees of comfort and trust among both physicians and patients regarding the use of AI in medicine. Comfort with reliance on AI-supported systems trended positive in both groups, and about a third of respondents in each group preferred a system involving human-AI collaboration (Figures 2, 3). Interestingly, the two statistically significant per-category comparisons indicated that physicians were more likely than patients to take an extreme view, either trusting AI more or trusting physicians more (Figure 3A).

Within the physician group, different specialties, as might be expected, showed different affinities for integrating AI into their workflow. The specialty that appeared most comfortable with reliance on AI-supported systems was Allergy, while Endocrinology and Cardiology expressed less trust in those systems (Figure 4). With respect to trust in AI-supported systems, specialties such as Allergy, Cardiology, Emergency Medicine, Endocrinology, and Obstetrics favored physician-AI collaboration, or the “human-in-the-center” model (Figure 5). Physicians who most often chose “Trust physicians more (than AI)” belonged to Family Medicine and Psychiatry. These results suggest greater comfort with AI-supported systems in procedure-based disciplines that already integrate technology closely. They also point to apprehension among physicians in diagnostic-oriented specialties, which may reflect concern that AI could replace current human skill sets and devalue clinical expertise. Orthodontics was the specialty most often reporting a “neutral” view; AI integration there has been limited and does not yet raise such concerns for clinicians. Overall, the variation in responses across specialties underscores the importance of tailoring AI integration to each specialty.

Perhaps surprisingly, patients' and physicians' views on the impact of AI on the physician-patient relationship were evenly distributed. The overall sentiment appears to be that introducing AI would strengthen this relationship, presumably by allowing physicians to devote more time and energy to being present in their interactions with patients. Another perceived benefit of integrating AI into medical systems may be relief from the logistical burden of tasks best suited to automation.

The integration of AI in healthcare presents a mixed yet overall positive outlook for both physicians and patients. While AI is widely acknowledged for its potential to enhance diagnostic accuracy and efficiency, its use raises significant concerns regarding privacy, empathy, and overreliance (16, 22). It is nonetheless prudent to identify areas where AI and ML can assist in data analysis and the identification of complex patterns. For example, nucleic acid-level interactions can be prohibitively complex, yet they become tractable with the aid of advanced models such as heterogeneous information networks (HINs) (23). Similarly, novel indications for established drugs can be discovered with computational drug repositioning models (24, 25).

This study has several limitations inherent to survey research, particularly when conducted online. First, the voluntary and anonymous nature of participation introduces the potential for self-selection bias, as individuals who choose to respond may differ systematically from those who do not, potentially limiting the generalizability of the findings. Additionally, the reliance on self-reported data is subject to recall bias, social desirability bias, and inaccuracies in participant responses, which may affect the validity of the results. Online surveys present unique challenges, including limited control over the survey environment and the inability to verify the identity or eligibility of respondents. This raises the possibility of multiple submissions by the same individual or participation by individuals outside the intended target population. Furthermore, online surveys may exclude individuals with limited internet access or lower digital literacy, introducing sampling bias and potentially underrepresenting certain demographic groups. The cross-sectional design of the survey precludes the establishment of causal relationships between variables. Non-response bias is also a concern, as those who did not complete the survey may differ in meaningful ways from those who did. Finally, the use of closed-ended questions, while facilitating quantitative analysis, may restrict the depth and nuance of responses.

Our survey methodology differs from traditional survey research in key respects. Using RDIT, RDE, and mass sampling across a wide geographical base is intended to yield a more representative sample than traditional targeted survey research. The proprietary algorithm used to collect and analyze responses is designed to minimize the influence of typical online survey outliers through bot filtering, anomaly detection (such as straight-lining), and comparison with census data. Unlike traditional surveys, this methodology also allows rapid collection of responses, reducing the delays typically encountered in survey fielding. Despite these limitations, we believe this analysis provides valuable insights into the research question and highlights areas for future investigation using complementary methodologies.

5 Conclusion

This study aimed to collect and evaluate physician and patient perspectives on AI in medicine. The results suggest that comfort with AI integration in healthcare may be associated with prior exposure to technology, with clinicians in technology-intensive specialties and younger patients reporting greater acceptance. In contrast, physicians in diagnostic fields such as radiology and older patients reported lower comfort with AI in medicine. Understanding these opinions can help researchers design AI systems that are trusted and accepted by both providers and patients, supporting the overall goal of advancing healthcare and improving patient outcomes.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

WS: Conceptualization, Data curation, Formal analysis, Funding acquisition, Investigation, Methodology, Project administration, Resources, Supervision, Validation, Visualization, Writing – original draft, Writing – review & editing. KG: Project administration, Writing – original draft, Writing – review & editing. CG: Writing – review & editing. CR: Writing – original draft, Writing – review & editing. AG: Conceptualization, Investigation, Methodology, Writing – original draft, Writing – review & editing. MH: Data curation, Visualization, Writing – review & editing. MS: Resources, Writing – review & editing. RI: Investigation, Supervision, Validation, Visualization, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

WS is the Founding Owner of Ether Innovations LLC (co-owner of CaPER).

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

2. Jiang F, Jiang Y, Zhi H, Dong Y, Li H, Ma S, et al. Artificial intelligence in healthcare: past, present and future. Stroke Vasc Neurol. (2017) 2:230–43. doi: 10.1136/svn-2017-000101

3. Kanan M, Alharbi H, Alotaibi N, Almasuood L, Aljoaid S, Alharbi T, et al. AI-driven models for diagnosing and predicting outcomes in lung cancer: a systematic review and meta-analysis. Cancers (Basel). (2024) 16(3):674. doi: 10.3390/cancers16030674

4. Miller DD, Brown EW. Artificial intelligence in medical practice: the question to the answer? Am J Med. (2018) 131:129–33. doi: 10.1016/j.amjmed.2017.10.035

5. Choudhury A, Asan O. Role of artificial intelligence in patient safety outcomes: systematic literature review. JMIR Med Inform. (2020) 8(7):e18599. doi: 10.2196/18599

6. Rajpurkar P, Chen E, Banerjee O, Topol EJ. AI in health and medicine. Nat Med. (2022) 28:31–8. doi: 10.1038/s41591-021-01614-0

7. Romero-Brufau S, Wyatt KD, Boyum P, Mickelson M, Moore M, Cognetta-Rieke C. A lesson in implementation: a pre-post study of providers’ experience with artificial intelligence-based clinical decision support. Int J Med Inform. (2020) 137:104072. doi: 10.1016/j.ijmedinf.2019.104072

8. Chua IS, Gaziel-Yablowitz M, Korach ZT, Kehl KL, Levitan NA, Arriaga YE, et al. Artificial intelligence in oncology: path to implementation. Cancer Med. (2021) 10(12):4138–49. doi: 10.1002/cam4.3935

9. Aggarwal R, Farag S, Martin G, Ashrafian H, Darzi A. Patient perceptions on data sharing and applying artificial intelligence to health care data: cross-sectional survey. J Med Internet Res. (2021) 23(8):e26162. doi: 10.2196/26162

10. Scheetz J, Rothschild P, McGuinness M, Hadoux X, Soyer HP, Janda M, et al. A survey of clinicians on the use of artificial intelligence in ophthalmology, dermatology, radiology and radiation oncology. Sci Rep. (2021) 11(1):5193. doi: 10.1038/s41598-021-84698-5

11. Arakaki S, Takenaka S, Sasaki K, Kitaguchi D, Hasegawa H, Takeshita N, et al. Artificial intelligence in minimally invasive surgery: current state and future challenges. JMA Journal. (2025) 8(1):86–90. doi: 10.31662/jmaj.2024-0175. Available online at: https://www.jstage.jst.go.jp/article/jmaj/8/1/8_86/_article/-char/en

12. Kaplan A, Haenlein M. Siri, Siri, in my hand: who’s the fairest in the land? On the interpretations, illustrations, and implications of artificial intelligence. Bus Horiz. (2019) 62(1):15–25. doi: 10.1016/j.bushor.2018.08.004

13. Saasouh W, Manafi N, Manzoor A, McKelvey G. Mitigating intraoperative hypotension: a review and update on recent advances. Adv Anesth. (2024a) 42(1):67–84. doi: 10.1016/j.aan.2024.07.006

14. Davenport T, Kalakota R. The potential for artificial intelligence in healthcare. Future Healthc J. (2019) 6(2):94–8. doi: 10.7861/futurehosp.6-2-94

15. Allen MR, Webb S, Mandvi A, Frieden M, Tai-Seale M, Kallenberg G. Navigating the doctor-patient-AI relationship—a mixed-methods study of physician attitudes toward artificial intelligence in primary care. BMC Prim Care. (2024) 25:42. doi: 10.1186/s12875-024-02282-y

16. Gundlack J, Thiel C, Negash S, Buch C, Apfelbacher T, Denny K, et al. Patients’ perceptions of artificial intelligence acceptance, challenges, and use in medical care: qualitative study. J Med Internet Res. (2025) 27:e70487. doi: 10.2196/70487

17. Westerink HJ, Garvelink MM, van Uden-Kraan CF, Zouitni O, Bart HAJ, van der Wees PJ, et al. Evaluating patient participation in value-based healthcare: current state and lessons learned. Health Expect. (2024) 27(1):e13945. doi: 10.1111/hex.13945

18. Fritsch SJ, Blankenheim A, Wahl A, Hetfeld P, Maassen O, Deffge S, et al. Attitudes and perception of artificial intelligence in healthcare: a cross-sectional survey among patients. Digit Health. (2022) 8:20552076221116772. doi: 10.1177/20552076221116772

19. Adus S, Macklin J, Pinto A. Exploring patient perspectives on how they can and should be engaged in the development of artificial intelligence (AI) applications in health care. BMC Health Serv Res. (2023) 23:1163. doi: 10.1186/s12913-023-10098-2

20. Abdalla SM, Assefa E, Rosenberg SB, Hernandez M, Koya SF, Galea S. Perceptions of the determinants of health across income and urbanicity levels in eight countries. Commun Med. (2024) 4:107. doi: 10.1038/s43856-024-00493-z

21. Saasouh W, Ghanem K, Al-Saidi N, LeQuia L, McKelvey G, Jaffar M. Patient-centered perspectives on perioperative care. Front Anesthesiol. (2024) 3:1267127. doi: 10.3389/fanes.2024.1267127

22. Jones C, Thornton J, Wyatt JC. Artificial intelligence and clinical decision support: clinicians’ perspectives on trust, trustworthiness, and liability. Med Law Rev. (2023) 31(4):501–20. doi: 10.1093/medlaw/fwad013

23. Zhao BW, Su XR, Yang Y, Li DX, Li GD, Hu PW, et al. A heterogeneous information network learning model with neighborhood-level structural representation for predicting lncRNA-miRNA interactions. Comput Struct Biotechnol J. (2024) 23:2924–33. doi: 10.1016/j.csbj.2024.06.032

24. Zhao BW, Su XR, Li DX, Li GD, Hu PW, Zhao YG, et al. A graph deep learning-based framework for drug-disease association identification with chemical structure similarities. J Comput Biophys Chem. (2025) 24:331–43. doi: 10.1142/S2737416523410053

Keywords: artificial intelligence, healthcare, medicine, physician, patient, survey, attitude, perspective, sentiment

Citation: Saasouh W, Ghanem K, Ghanem C, Robinson C, Gupta A, Hasan MS, Schostak M and Ismail R (2025) Assessment of patient and physician sentiment on artificial intelligence use in US healthcare. Front. Anesthesiol. 4:1676819. doi: 10.3389/fanes.2025.1676819

Received: 31 July 2025; Accepted: 3 November 2025;

Published: 24 November 2025.

Edited by:

Thomas Schricker, McGill University, Canada

Reviewed by:

Ammar Younas, University of Chinese Academy of Sciences, China

Sucheendra K. Palaniappan, The Systems Biology Institute, Japan

Bo-Wei Zhao, Zhejiang University, China

Copyright: © 2025 Saasouh, Ghanem, Ghanem, Robinson, Gupta, Hasan, Schostak and Ismail. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Wael Saasouh, wael.saasouh@wayne.edu
