- 1 Key Laboratory of Ministry of Industry and Information Technology of Biomedical Engineering and Translational Medicine, Chinese PLA General Hospital, Beijing, China

- 2 Beijing Key Laboratory for Precision Medicine of Chronic Heart Failure, Chinese PLA General Hospital, Beijing, China

- 3 Medical Big Data Research Center, Chinese PLA General Hospital, Beijing, China

- 4 Division of Pharmacoepidemiology and Clinical Pharmacology, Utrecht Institute for Pharmaceutical Sciences, Utrecht University, Utrecht, Netherlands

- 5 Department of Pulmonary and Critical Care Medicine, Chinese PLA General Hospital, Beijing, China

- 6 BioMind Technology, Zhongguancun Medical Engineering Center, Beijing, China

Background: Coronary artery disease (CAD) is a progressive, life-threatening disease of the blood vessels supplying the heart that leads to coronary artery stenosis or obstruction. Early diagnosis of CAD is essential for timely intervention. Imaging tests are widely used to diagnose CAD, and artificial intelligence (AI) is increasingly used to develop new imaging diagnostic markers.

Objective: We aim to investigate and summarize how AI algorithms are used in the development of diagnostic models of CAD with imaging markers.

Methods: This scoping review followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Scoping Reviews (PRISMA-ScR) guideline. Eligible articles were searched for in PubMed and Embase. Based on predefined inclusion criteria, articles on CAD were selected for this scoping review. Data extraction was conducted independently by two reviewers, and a narrative synthesis approach was used in the analysis.

Results: A total of 46 articles were included in the scoping review. The most common imaging methods complemented by AI were single-photon emission computed tomography (15/46, 32.6%) and coronary computed tomography angiography (15/46, 32.6%). Deep learning (DL) algorithms (41/46, 89.1%) were used more often than conventional machine learning algorithms (5/46, 10.9%). The models showed good performance in terms of accuracy, sensitivity, specificity, and AUC. However, most of the primary studies developed their models on relatively small samples (n < 500), and only a few studies (4/46, 8.7%) carried out external validation of the AI model.

Conclusion: As non-invasive diagnostic methods, imaging markers integrated with AI have shown considerable potential in the diagnosis of CAD. External validation of model performance and evaluation of clinical use are needed to confirm the added value of these markers in practice.

Systematic review registration: [https://www.crd.york.ac.uk/prospero/display_record.php?ID=CRD42022306638], identifier [CRD42022306638].

Introduction

Cardiovascular disease (CVD), broadly defined, refers to a group of disorders of the heart and blood vessels and is the leading cause of death globally. Among its subtypes, coronary artery disease (CAD) is the most prevalent and remains one of the main causes of morbidity and mortality (1). CAD, which includes acute myocardial infarction (MI, heart attack), stable and unstable angina pectoris (AP), and sudden cardiac death (2), can affect heart function and brain processing (3) and further lead to cognitive impairment (4). As a result, CAD has become one of the major economic burdens on healthcare worldwide.

Invasive coronary angiography (ICA) is the reference standard for the diagnosis of CAD, especially obstructive disease; however, patients undergoing ICA may experience complications (5) such as bleeding, pseudoaneurysm, and hematoma. Non-invasive medical imaging, especially radiological methods, has evolved from lesion recognition toward functional imaging that supports the diagnosis and evaluation of disease (6). Previous studies showed that the diagnostic accuracy of coronary computed tomographic angiography (CCTA) for coronary atherosclerosis is comparable to that of invasive techniques because CCTA can identify and characterize plaques (7), and MRI techniques are now widely used in many aspects of CAD (8). The rapid growth of medical imaging data is accelerating the discovery of new imaging markers for the diagnosis, prediction, or stratification of CAD, an approach known as radiomics. Artificial intelligence (AI), a technology that enables problem-solving by simulating human intelligence (9), plays an important role in imaging marker derivation and model development in this field.

The application of AI in medical imaging is interdisciplinary and involves researchers from different backgrounds. As a result, diagnostic models of CAD differ substantially in study design, medical imaging technique, AI algorithm, and performance evaluation. In this scoping review, we aim to investigate and summarize how AI algorithms are used in the development of diagnostic models of CAD with imaging markers, and to identify knowledge gaps that can guide future research.

Methods

This scoping review followed the Preferred Reporting Items for Systematic Reviews and Meta-Analyses extension for Scoping Reviews (PRISMA-ScR) guideline (10); a completed PRISMA-ScR checklist is provided in Supplementary Material 1. The protocol of the systematic review and methodological quality assessment was registered with the International Prospective Register of Systematic Reviews (PROSPERO) under registration number CRD42022306638.

This scoping review is part of a larger project that aims to clarify the role of medical imaging markers integrated with AI in the diagnosis of CAD. For the purpose of this scoping review, the term CAD includes AP, coronary artery disease, coronary stenosis, myocardial infarction, coronary artery atherosclerosis, and coronary artery vulnerable plaque, all of which can completely or partially block blood flow in the major arteries of the heart. In the International Classification of Diseases (ICD-10), these terms describe the same medical condition, in which lesions in the blood vessels supplying the heart lead to ischemic heart disease (Supplementary Material 2).

Inclusion and exclusion criteria

Publications of primary research on the development of diagnostic models of CAD using AI techniques based on imaging were included in the review, regardless of target population, data sources, or study design. Exclusion criteria were (1) publications not in English, not using human data, or not based on imaging tests; (2) models not developed for diagnosis; (3) meta-research studies (e.g., reviews of prediction models); (4) conference abstracts; (5) studies focused only on automatic image segmentation or extraction of medical image parameters; and (6) diagnostic models developed or validated for conditions other than CAD.

Identification of eligible publications

Eligible publications for this scoping review were selected from a systematic review and methodological quality assessment of image-based diagnostic models with AI in CVD performed by the same research group. The systematic literature search was conducted in PubMed and Embase, and the search strategy is available in the publicly registered protocol.

Records identified by the search strategy were imported into EndNote to check for duplicates. After duplicates were removed, titles and abstracts were screened independently by two authors to identify eligible studies. The full texts of potentially eligible studies were then assessed independently by the same two researchers for final inclusion. Finally, studies developing models for the diagnosis of CAD were selected for this scoping review.

Data extraction

Data were collected on the general information of articles (first author, year of publication, title, journal, and DOI), study characteristics (dates of submission, acceptance, and publication; country of the author and of the study), population characteristics (age group, clinical setting, and participant inclusion), AI technique characteristics (purpose/use of the AI technique and AI models/algorithms), data set characteristics (data set size, data types, type of imaging, number of image features, reference/gold standard, competitor, data sources, study design, internal validation, and external validation), and diagnostic model characteristics (clinical effectiveness). Double data extraction was performed for all included articles on the basis of detailed explanations for each item (Supplementary Material 3). If multiple models were established in an article, only one model was selected by applying the following criteria in order: (1) the largest total sample size, (2) the largest number of events, and (3) the highest predictive performance. Two reviewers (any two of WW, HG, JD, JS, YD, MZ, DZ, and XW) independently extracted data from each article using a data extraction form designed for this review. Disagreements were resolved through discussion and, if necessary, the final judgment was made by a third reviewer (JW).
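The three-step rule for picking one model per article is lexicographic: a later criterion is consulted only when all earlier ones are tied. The Python sketch below illustrates that ordering; it is not the authors' extraction tool. The `CandidateModel` class and its fields are hypothetical, and treating "predictive performance" as a single scalar such as AUC is an assumption, since the review does not state which metric was used for this step.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class CandidateModel:
    """One model reported in an article (field names are hypothetical)."""
    name: str
    total_sample_size: int
    n_events: int
    performance: float  # assumed to be a single summary metric, e.g. AUC


def select_model(candidates: List[CandidateModel]) -> CandidateModel:
    """Return the one model to extract from an article.

    Criteria are applied in order: largest total sample size, then largest
    number of events, then highest predictive performance. Python compares
    tuples element by element, so a later criterion only matters when all
    earlier ones are tied. If every criterion ties, the first listed model wins.
    """
    if not candidates:
        raise ValueError("the article reports no model")
    return max(
        candidates,
        key=lambda m: (m.total_sample_size, m.n_events, m.performance),
    )


# Hypothetical article reporting three models.
models = [
    CandidateModel("A", total_sample_size=500, n_events=120, performance=0.91),
    CandidateModel("B", total_sample_size=500, n_events=150, performance=0.85),
    CandidateModel("C", total_sample_size=300, n_events=200, performance=0.95),
]
print(select_model(models).name)  # B: ties with A on size, wins on events
```

Encoding the rule as a single sort key keeps the precedence explicit: model C has the best performance and the most events but is never chosen, because sample size is checked first.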

Data synthesis

Because of the heterogeneity among the selected studies, a narrative synthesis of the extracted data was performed. Categorical data were described using numbers and percentages, and continuous data were assessed for their distribution and described using the median and IQR. We also summarized the characteristics of the included articles using descriptive statistics and data visualization. All statistical analyses were performed using R version 3.6.1 and RStudio version 1.2.5001, and the results are presented in graphic charts and tables.
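The review's analyses were run in R; purely as an illustration, the Python sketch below shows the two kinds of summary described above: counts with percentages for categorical items, and the median with IQR for continuous items. The helper names are hypothetical. Quartiles can be computed in several ways, so the sketch assumes R's default interpolation (type 7), which Python's `statistics.quantiles` reproduces with `method="inclusive"`.

```python
import statistics


def format_proportion(count: int, total: int) -> str:
    """Format a categorical count as 'n/N, x%' with one decimal place."""
    return f"{count}/{total}, {100 * count / total:.1f}%"


def median_iqr(values):
    """Return (median, (Q1, Q3)) for a continuous variable.

    method="inclusive" uses linear interpolation between order statistics,
    the same rule as R's default quantile() (type 7).
    """
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return median, (q1, q3)


print(format_proportion(41, 46))             # 41/46, 89.1%
print(median_iqr([1, 2, 3, 4, 5, 6, 7, 8]))  # (4.5, (2.75, 6.25))
```

Fractional quartiles such as 2.75 arise from the interpolation rule, which is why the choice of quartile method should be reported alongside an IQR.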

Results

Selection of publications

After duplicates were removed, titles and abstracts were screened, and full texts were checked, a total of 110 eligible articles were identified for the systematic review and methodological quality assessment of image-based diagnostic models with AI in CVD; 46 of these addressed the diagnosis of CAD and were selected for this scoping review. A complete list of the included studies and their characteristics is available in Supplementary Material 4.

Characteristics of the included studies

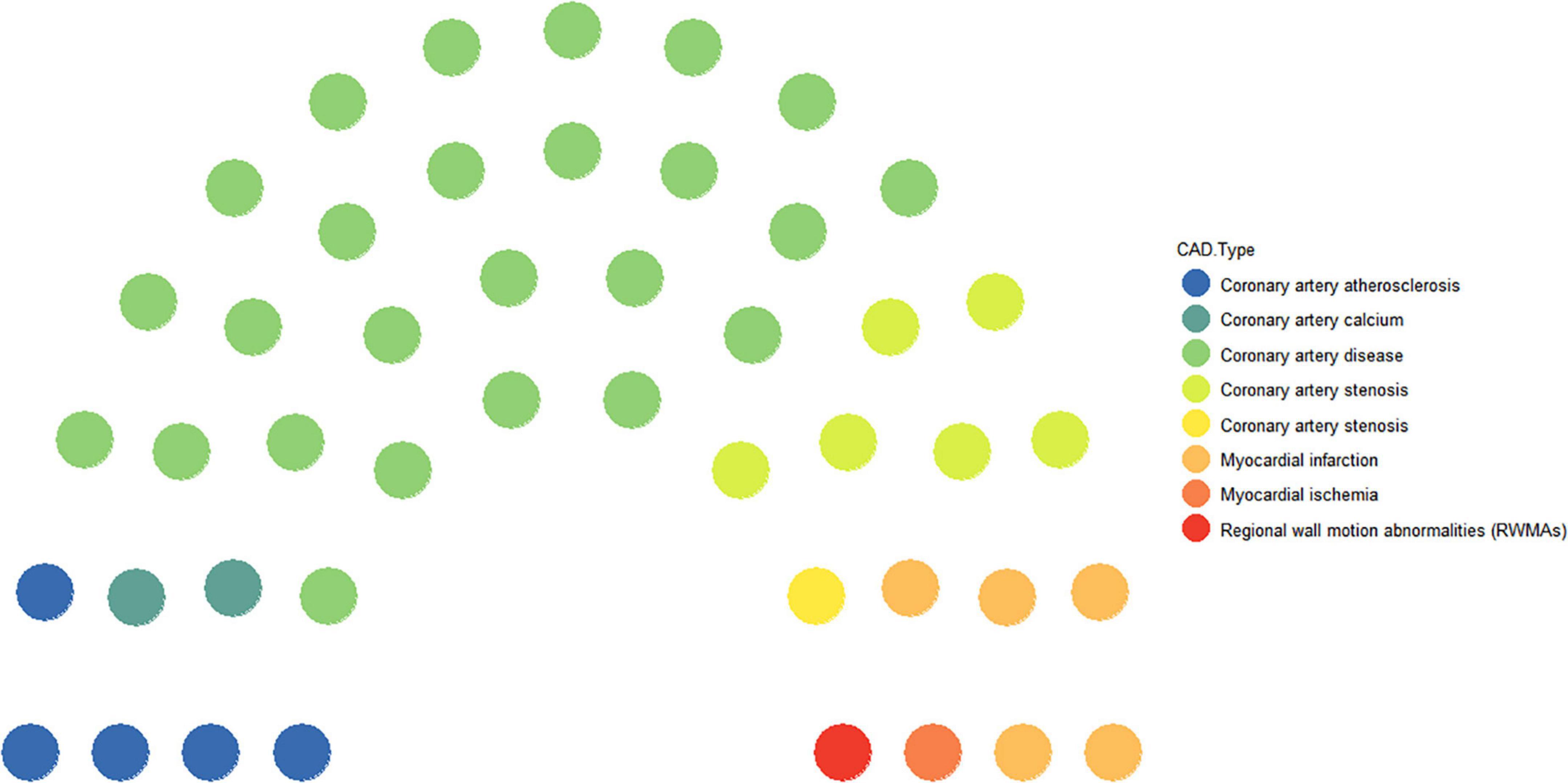

Coronary artery disease is a progressive disease and also a general term for a class of diseases. Of the 46 included studies, 25 (54.3%) targeted CAD itself as the research disease (11–35), and the others targeted more specific conditions: coronary artery atherosclerosis (5/46, 10.9%) (36–40), coronary artery stenosis (7/46, 15.2%) (41–47), coronary artery calcium (2/46, 4.3%) (48, 49), MI (5/46, 10.9%) (50–54), myocardial ischemia (1/46, 2.2%) (55), and regional wall motion abnormalities (1/46, 2.2%) (56) (Figure 1).

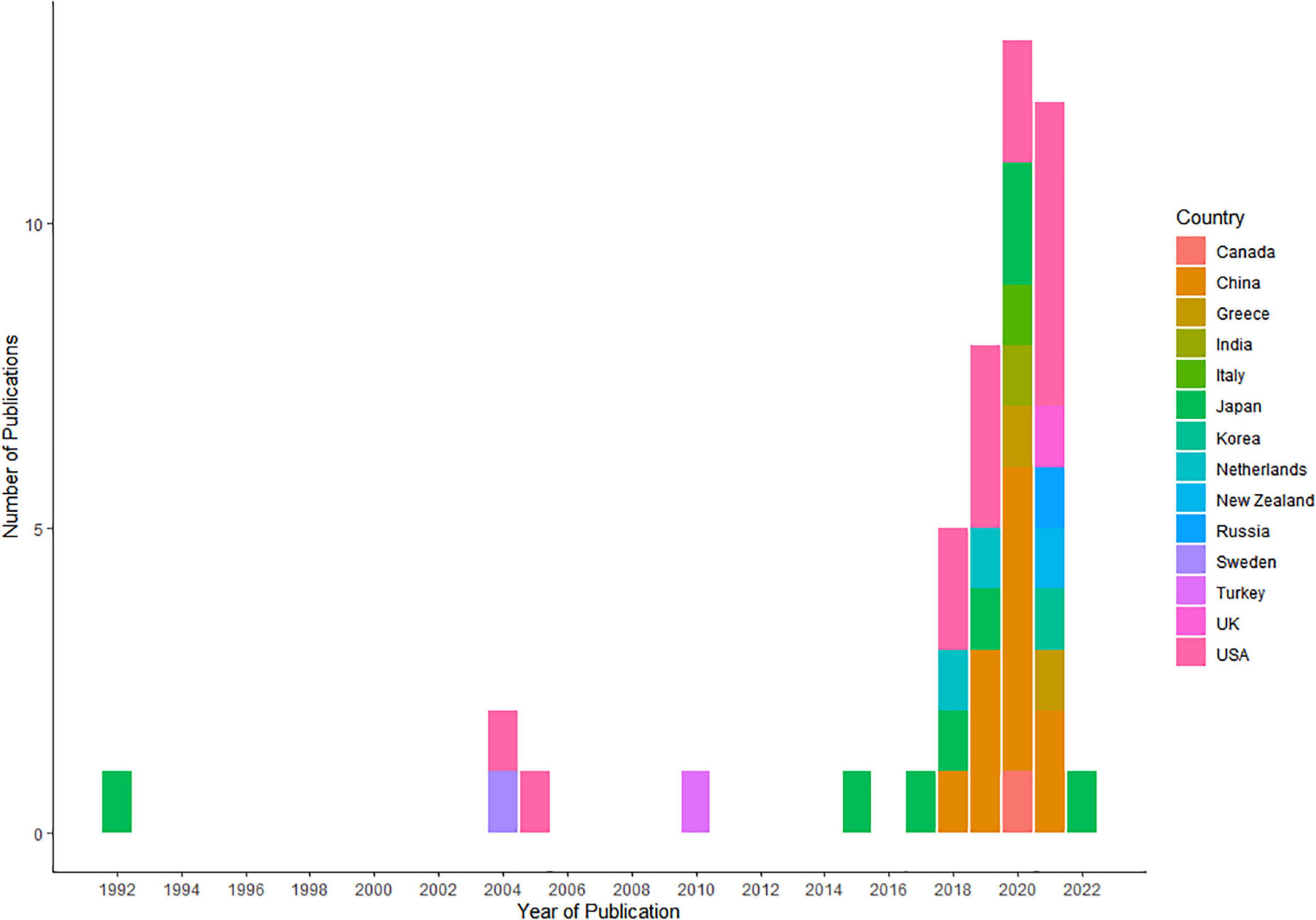

Approximately half of the included studies were published in 2020 (12/46, 26.1%) (14, 15, 17, 19, 20, 25, 26, 30, 36, 37, 45, 56) and 2021 (12/46, 26.1%) (13, 16, 21, 24, 29, 33, 39, 40, 43, 46, 50, 53) (Figure 2). The corresponding authors of the included studies were from 14 countries, including the United States (14/46, 30.4%) (12, 16, 21, 22, 29, 31, 34, 36, 39, 41, 42, 48, 49, 53), China (11/46, 23.9%) (15, 19, 20, 35, 37, 38, 40, 45, 46, 52, 54), Japan (8/46, 17.4%) (17, 23, 25, 27, 28, 32, 55, 56), Greece (2/46, 4.3%) (13, 14), and the Netherlands (2/46, 4.3%) (44, 47), whereas Italy (26), Canada (11), India (30), Korea (24), New Zealand (50), Russia (43), Sweden (51), Turkey (18), and the United Kingdom (33) each contributed only one study (1/46, 2.2%). In most articles, the corresponding authors and study cohorts were from the same country. Only one study involved cross-country collaboration: its authors were from India, whereas its study cohort was from China. Supplementary Table 1 shows all characteristics of the studies included in our review.

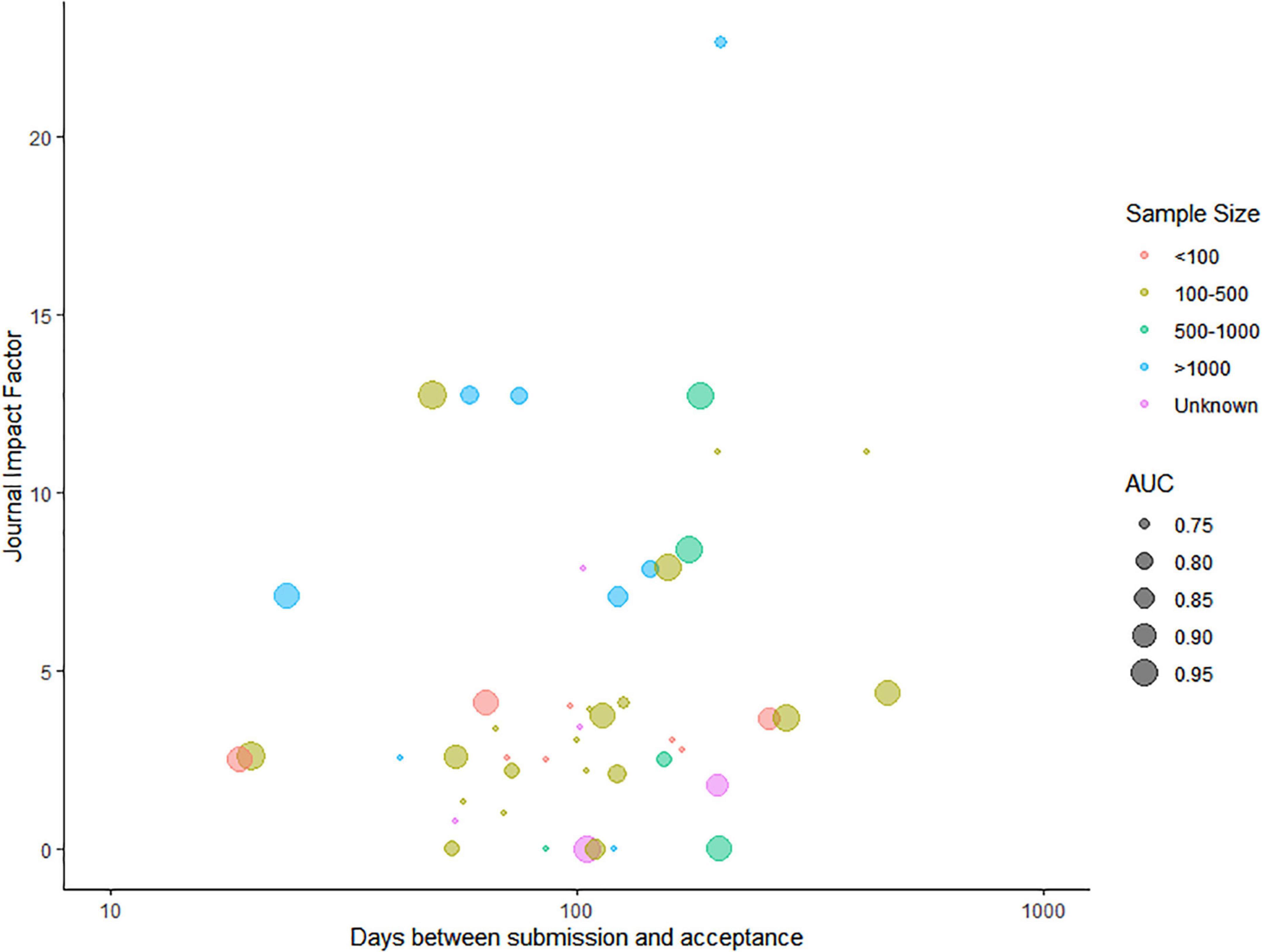

Of the included articles, 44 reported both the date of submission and the date of acceptance; the time from submission to acceptance ranged from 19 to 466 days, with a median of 105 days (IQR 66.25–162.75). Excluding six articles published in journals that did not yet have an impact factor (IF), the IF of the other 40 articles ranged from 0.785 to 22.673, with a median of 3.6645 (IQR 2.52775–7.887). As shown in Figure 3, the time needed for an acceptance decision was weakly positively correlated with the journal IF (Spearman rank correlation = 0.24). Supplementary Table 2 shows the time from submission to acceptance and the IF of all included articles.

Figure 3. Relationship between the time needed for acceptance for publication and the journal impact factor.

The colors represent the sample size of the model training data set, and the AUC of each model is presented as the radius of the bubble.
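Spearman's rank correlation, the statistic reported above for submission-to-acceptance time versus journal IF, is the Pearson correlation computed on ranks, so it captures any monotonic relationship rather than only a linear one. The dependency-free Python sketch below shows that computation, averaging ranks across ties; it is an illustration rather than the review's R code, and the example pairs are hypothetical. In practice, `scipy.stats.spearmanr` or R's `cor(x, y, method = "spearman")` compute the same coefficient.

```python
from math import sqrt


def average_ranks(values):
    """Rank values 1..n; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend `end` across a run of tied values.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        mean_rank = (start + end) / 2 + 1  # 0-based positions -> 1-based ranks
        for k in range(start, end + 1):
            ranks[order[k]] = mean_rank
        start = end + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: the Pearson correlation of the tie-averaged ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long sequences with at least 2 items")
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    sxx = sum((a - mx) ** 2 for a in rx)
    syy = sum((b - my) ** 2 for b in ry)
    if sxx == 0 or syy == 0:
        raise ValueError("rho is undefined when one variable is constant")
    return sxy / sqrt(sxx * syy)


# Hypothetical (days to acceptance, journal IF) pairs, not the review's data.
days = [45, 90, 105, 160, 300]
impact = [1.2, 3.5, 2.8, 7.9, 5.0]
print(round(spearman_rho(days, impact), 2))  # 0.8
```

Because rho depends only on ranks, applying any strictly increasing transformation (for example, a log transform of the IF) leaves the coefficient unchanged.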

Data sources and study designs in the included studies

For data sources, private data (collected by the study centers) (35/46, 76.1%) (11–21, 23, 24, 27, 33–50, 53, 54, 56) were the most commonly used for the development of AI models. Apart from one article whose data source is unclear (28), the remaining studies used public data (10/46, 21.7%) (22, 25, 26, 29–32, 51, 52, 55). Most studies were single-center studies (35/46, 76.1%), and 19.6% (9/46) were multi-center studies (16, 20, 29, 33, 41, 42, 51, 53, 55). There were three major types of study design: cohort study (30/46, 65.2%) (11–28, 36–38, 40–43, 46–50), case–control (8/46, 17.4%) (34, 35, 39, 44, 52–54, 56), and nested case–control (1/46, 2.2%) (33); for the other 15.2% of the studies (7/46) (29–32, 45, 51, 55), the study design could not be determined from the information in the article.

Of the 41 (89.1%) studies that reported sample size at the patient level, eight (17.4%) used data sets of fewer than 100 samples (11, 12, 19, 34, 35, 37, 43, 46), 20 (43.5%) used data sets with 100–500 samples (14–16, 18, 23, 26, 31, 32, 36, 39, 40, 44, 47, 48, 51–56), five (10.9%) used data sets with 500–1,000 samples (13, 24, 25, 30, 33), and eight (17.4%) used data sets with more than 1,000 samples (20–22, 27–29, 41, 42). The other five (10.9%) studies used medical imaging scans or videos, rather than patients, as training samples, with sample sizes between 63 and 4,664 (17, 38, 45, 49, 50) (Figure 3).

Population characteristics in the included studies

Most studies had no age restrictions on the study population (39/46, 84.8%), while the others studied people older than 18 years (4/46, 8.7%) (19, 35, 39, 45), people older than 40 years (1/46, 2.2%) (15), or older adults (above the age of 65 years) (2/46, 4.3%) (32, 55). In the included articles, the study population was mostly hospitalized patients (34/46, 73.9%); some studies included the general population (3/46, 6.5%) (24, 26, 27) or outpatients (3/46, 6.5%) (25, 31, 39), one study dealt with coronial postmortem examinations (1/46, 2.2%) (50), and the population of the remaining studies was unclear (5/46, 10.9%) (22, 38, 45, 49, 52).

Outcome and reference standards in the included studies

The main outcome of the diagnostic models was classified into three types: binary (e.g., presence or absence of CAD) (34/46, 73.9%), ordinal (e.g., severity grading of CAD) (8/46, 17.4%) (16, 19, 29–33, 55), and multinomial (e.g., multiple diseases or subtypes of CAD) (4/46, 8.7%) (18, 46, 50, 51).

Reference standards for determining the outcomes were reported in only 36 of the 46 studies. Experts such as cardiologists or radiologists (11/46, 23.9%) (11, 16, 20, 21, 36, 38, 39, 45, 48, 49, 52) and coronary angiography (13/46, 28.3%) (12–15, 17–19, 33, 41–43, 46, 53) were the two main reference standards. Other studies used coronary angiography combined with assessment by experienced physicians (6/46, 13.0%) (28, 29, 31, 32, 51, 55), fractional flow reserve (FFR) (4/46, 8.7%) (34, 35, 44, 47), or a combination of clinical characteristics, electrocardiogram, and laboratory test results (2/46, 4.3%) (40, 54) as the reference standard for CAD.

Types of medical imaging and artificial intelligence algorithms in the included studies

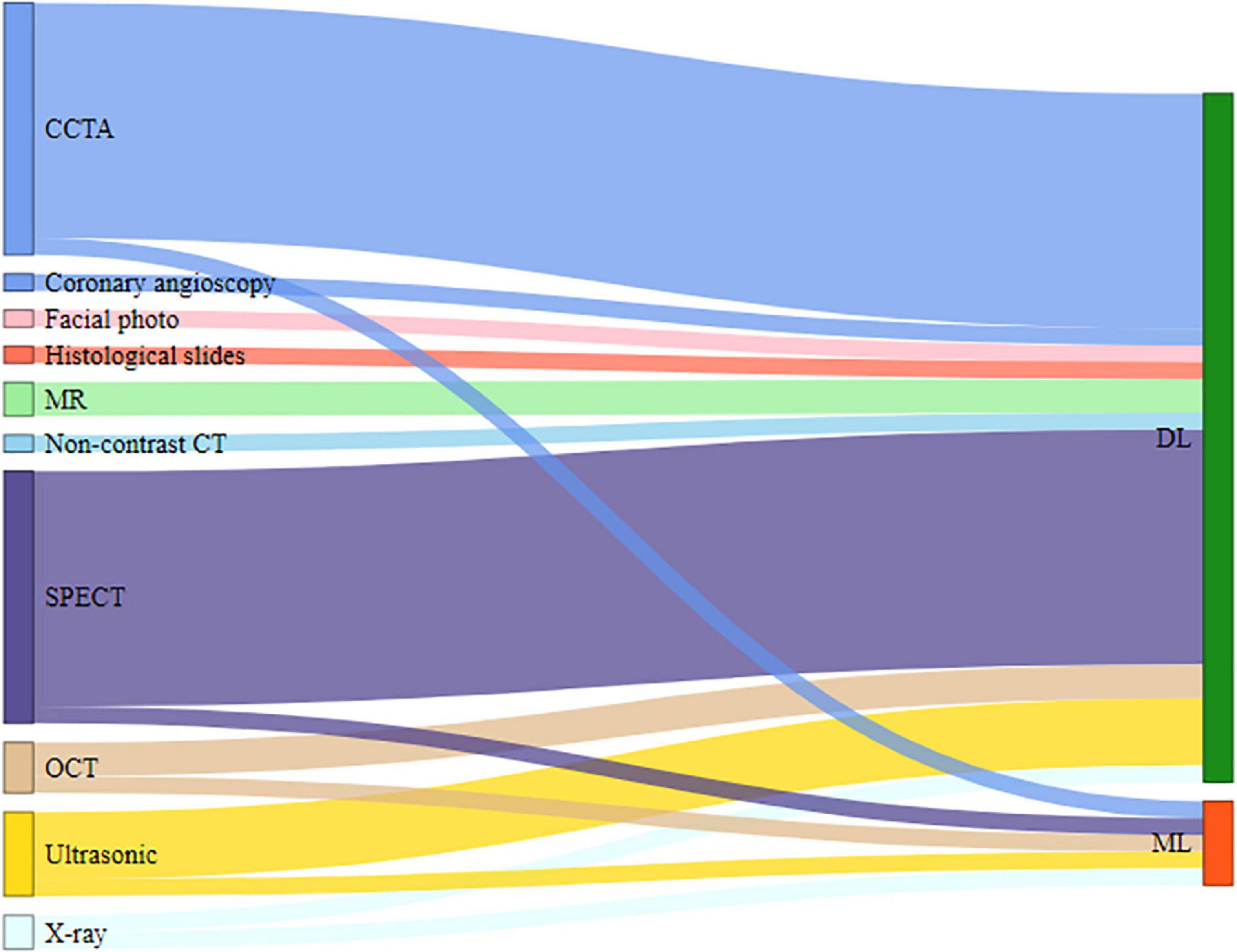

The included studies used 10 types of medical imaging to diagnose CAD with AI techniques. The most common category was tomographic imaging, used in 73.9% (34/46) of the studies, which included single-photon emission computed tomography (SPECT) (15/46, 32.6%) (12–14, 17, 18, 21, 27–29, 31, 32, 41, 42, 51, 55), coronary computed tomography angiography (CCTA) (15/46, 32.6%) (15, 16, 19, 23, 26, 30, 34–36, 39, 40, 44, 46–48), optical coherence tomography (OCT) (3/46, 6.5%) (11, 37, 38), and non-contrast computed tomography (CT) (1/46, 2.2%) (49). Other imaging techniques used were ultrasonography (5/46, 10.9%) (22, 24, 33, 53, 56), MR (2/46, 4.3%) (52, 54), and X-ray (2/46, 4.3%) (43, 45). The least commonly used images were coronary angioscopy (1/46, 2.2%) (25), histological slides (1/46, 2.2%) (50), and facial photographs (1/46, 2.2%) (20). In model development, most studies used only features derived from participants' medical images, although few articles clearly defined these image features. Some studies also combined imaging with other data, such as demographic data (5/46, 10.9%) (18, 20, 21, 29, 30), clinical data (2/46, 4.3%) (13, 27), and laboratory data (1/46, 2.2%) (31), to evaluate their effect on the performance of the AI models in diagnosing CAD.

Many different AI algorithms were applied to explore the diagnostic value of information from images. The algorithms were classified as deep learning (DL) (41/46, 89.1%) (12, 14–29, 31, 32, 34–42, 44–56) or conventional machine learning (ML) (5/46, 10.9%) (11, 13, 30, 33, 43), as shown in Figure 4.

Model performance measures used in the included studies

Different models were validated with different indicators; we summarized only the most commonly used ones: accuracy, sensitivity, specificity, and the area under the receiver operating characteristic curve (AUC).
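The four indicators summarized in this section have standard definitions for a binary outcome: accuracy is the share of all cases classified correctly, sensitivity the share of diseased cases detected, specificity the share of non-diseased cases correctly cleared, and AUC the area under the ROC curve. The Python sketch below computes them from labels and model scores. The 0.5 decision threshold and the six-patient data are hypothetical, and ordinal or multinomial outcomes would need different (e.g., per-class) versions.

```python
def binary_metrics(y_true, scores, threshold=0.5):
    """Accuracy, sensitivity, and specificity at a fixed decision threshold.

    y_true: 1 = CAD present, 0 = absent; scores: model outputs in [0, 1].
    The 0.5 threshold is an assumption; studies choose their own cut-offs.
    """
    y_pred = [1 if s >= threshold else 0 for s in scores]
    pairs = list(zip(y_true, y_pred))
    tp = pairs.count((1, 1))  # diseased, flagged
    tn = pairs.count((0, 0))  # healthy, cleared
    fp = pairs.count((0, 1))  # healthy, flagged
    fn = pairs.count((1, 0))  # diseased, missed
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn),  # true-positive rate
        "specificity": tn / (tn + fp),  # true-negative rate
    }


def roc_auc(y_true, scores):
    """AUC as P(score of a random diseased case > score of a random healthy case).

    Ties count as one half. This pairwise (Mann-Whitney) form equals the area
    under the empirical ROC curve; it is O(n_pos * n_neg), fine for a sketch.
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical labels and scores for six patients.
y = [1, 1, 1, 0, 0, 0]
s = [0.9, 0.8, 0.4, 0.6, 0.2, 0.1]
print(binary_metrics(y, s))  # accuracy, sensitivity, specificity all 2/3
print(roc_auc(y, s))         # 8/9, about 0.889
```

Accuracy, sensitivity, and specificity change with the chosen threshold, whereas the AUC summarizes performance across all thresholds, which is one reason it is convenient for comparing models across studies.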

The accuracy of the diagnostic models was reported in 26 studies, and the accuracy level ranged from 57 to 100%. The accuracy level was < 70% in three studies (18, 26, 28), 70–90% in 12 studies (13, 14, 19, 20, 32, 35, 38, 40, 45, 47, 48, 53), and > 90% in 11 studies (11, 15, 16, 22, 24, 36, 39, 46, 49, 50, 52).

Sensitivity was reported in 32 studies and ranged from 47 to 97.14%. The sensitivity level was < 70% in five studies (26, 32, 36, 42, 53), 70–90% in 19 studies (13, 14, 18–21, 29, 31, 33, 34, 39–41, 43–47, 54), and > 90% in eight studies (11, 12, 15, 16, 35, 48, 52, 55). Moreover, specificity was reported in only 30 studies and ranged from 48.4 to 99.8%. The specificity level was < 70% in nine studies (15, 18, 20, 29, 31, 40–42, 44), 70–90% in 11 studies (12–14, 19, 21, 26, 32, 33, 35, 47, 48), and > 90% in 10 studies (11, 16, 34, 36, 39, 46, 52–55).

The AUC was reported in only 29 studies and ranged from 0.74 to 0.98 (Figure 3). In seven studies, the AUC was below 0.80 (13, 15, 18, 20, 44, 47, 53); in nine studies, it was between 0.80 and 0.90 (19, 21, 23, 29, 32, 33, 38, 41, 42); and in 13 studies, it was above 0.90 (24, 25, 27, 33–36, 39, 49, 51, 54–56). However, only four of the 46 included articles (8.7%) carried out external validation of the AI model (20, 29, 33, 39).
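The counts above place each study's reported value into bands (for AUC: below 0.80, between 0.80 and 0.90, and above 0.90), but the text does not say where a value exactly on a boundary such as 0.80 or 0.90 is counted. The Python sketch below tallies values into bands under an explicitly stated assumption: half-open intervals in which boundary values go to the higher band. The `tally_bands` helper and the AUC values are hypothetical. Tallying from one value per study in this way guarantees that each study is counted in exactly one band.

```python
from bisect import bisect_right


def tally_bands(values, cutoffs, labels):
    """Count how many studies fall into each performance band.

    cutoffs must be sorted ascending; labels needs one more entry than cutoffs.
    Assumption: bands are half-open, so a value exactly on a cutoff is
    counted in the higher band. Each value lands in exactly one band.
    """
    if len(labels) != len(cutoffs) + 1:
        raise ValueError("need exactly one more label than cutoffs")
    counts = {label: 0 for label in labels}
    for v in values:
        counts[labels[bisect_right(cutoffs, v)]] += 1
    return counts


# Hypothetical per-study AUCs, banded at the cutoffs used in the text.
aucs = [0.74, 0.85, 0.95, 0.90, 0.80]
print(tally_bands(aucs, [0.80, 0.90], ["<0.80", "0.80 to <0.90", ">=0.90"]))
# {'<0.80': 1, '0.80 to <0.90': 2, '>=0.90': 2}
```

Swapping `bisect_right` for `bisect_left` would instead send boundary values to the lower band; whichever convention is chosen, stating it makes band counts reproducible.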

Competitor and clinical effectiveness of developed models in the included studies

After model development, 11 articles compared the performance of the model with that of clinicians, including experts (10/46, 21.7%) (12–18, 33, 53, 56) and less experienced clinicians (1/46, 2.2%) (49). Some models in the included studies (13/46, 28.3%) were compared with previously existing or published models (20–23, 27, 28, 37, 38, 43, 45, 47, 52, 54). Other comparators included total perfusion deficit (2/46, 4.3%) (41, 42), CCTA (1/46, 2.2%) (19), and conventional 120 kVp images (1/46, 2.2%) (46); the remaining studies (18/46, 39.1%) (11, 24–26, 29–32, 34–36, 39, 40, 44, 48, 50, 51, 55) reported no competitor for the AI models.

However, few of the developed CAD models have been used in clinical practice or prospective studies to demonstrate their clinical applicability. Only one article (1/46, 2.2%) (51) mentioned that physicians at the invited hospitals had used the model system and generally found it easy to use and valuable in their clinical practice.

Discussion

Principal findings and the implications for practice and research

In this review, we explored the use of imaging disease markers with AI in the diagnosis of CAD. The review highlights several salient points and research gaps that could guide future research and enhance the value of new imaging disease markers for medical decision-making.

First, among the 46 included studies, the number of studies clearly increased over the past 20 years, especially in the most recent 2 years (12 in 2020 and 12 in 2021), which is not surprising given that the use of AI in medical care, especially in the diagnosis of common diseases, has become a prominent research topic. Some developed countries, such as Japan (1992) and the United States (2004), have a long history of research on AI-based diagnostic models of CAD. In recent years (2018–2021), China has been the fastest-growing contributor of such AI models, accounting for 23.9% of the included articles, second only to the United States (30.4%).

Second, study designs were highly heterogeneous. More than half of the articles used a cohort design, and the included primary studies were predominantly retrospective. Most studies relied on private, single-center data drawn from different clinical settings, hospitals, and countries, and these data are not publicly available. As a result, the performance of models built on these data is difficult to verify independently, and the models cannot readily be applied to data from other sources. The generalizability of data and the reproducibility of methods (57) are crucial for making new imaging disease markers derived from AI diagnostic models interpretable and translatable to clinical care.

Third, most included studies used experts, such as cardiologists or radiologists, or coronary angiography as the reference standard. CAD, the most common clinical heart disease, is a progressive pathological process whose severity and clinical symptoms vary between patients. Although coronary angiography is often used as the gold standard for CAD in clinical settings, it may be unreliable, especially in patients with stenosis of intermediate severity (58–60). When establishing CAD diagnostic models that use imaging as disease markers, researchers should select the reference standard carefully so that the model achieves more accurate diagnostic performance in prospective research or clinical practice.

Fourth, the most frequently used outcome in studies of imaging markers integrated with AI was binary (disease versus no disease), without classifying diseases or grading their severity. This reflects the relatively simple, single-purpose application of AI-derived imaging disease markers in the reviewed studies. Future research should explore methods for fusing image features with AI techniques to characterize patients' coronary lesions and grade the severity of CAD more accurately.

Fifth, we identified the features of AI techniques as observed in the literature. DL techniques were used much more often than conventional ML techniques. DL can learn directly from unstructured data such as images, and the representations it learns are of great help in interpreting image data. It is therefore understandable that most researchers used DL, given that it has achieved far better results in image recognition than other related techniques.

Sixth, a variety of imaging types were used together with AI in the diagnosis of CAD. Ordinarily, experts in different hospitals make their own judgments about CAD based on the types of medical imaging they specialize in, so the choice of modality may reflect the strengths of different hospitals' imaging services or the professional habits of individual physicians. In our findings, CCTA and SPECT were the most frequently used non-invasive imaging modalities for AI applications. One possible explanation is that radiomics features extracted from CCTA and SPECT have shown good diagnostic accuracy for identifying coronary lesions, coronary plaques, and coronary stenosis.

Seventh, fewer than one-fifth of the articles used data other than image features during model development, such as clinical and demographic data, which can contribute to the early prediction of CAD. Future studies should also evaluate the potential of combining laboratory and genetic data with image features for the early diagnostic prediction of CAD. The earlier CAD is predicted, the more effective the medical or surgical treatment physicians can offer patients, which can substantially reduce the risk of death.

Eighth, the sample size was less than 1,000 in most of the included articles, regardless of whether the units of analysis were patients or medical imaging scans or videos. In Figure 3, sample size appeared to be more closely associated than model performance with the impact of the study, as quantified by the journal IF. In future studies, AI models should be trained and validated on larger data sets with larger healthy control samples, preferably from public sources.

Ninth, several articles claimed that their AI models outperformed existing models or methods (20–22, 27, 28, 38, 45–47, 49, 52, 54). Furthermore, some articles that compared their models with experts (experienced radiologists) and readers (board-certified radiologists) indicated that image-based AI improved the non-invasive diagnosis of CAD (12–16, 23, 33, 53, 56). Although most of the included diagnostic models were internally validated, different performance measures were used to validate different models. By our count, more than 90% (42/46) of the AI diagnostic models using imaging as a marker for CAD in the included articles were not externally validated. We therefore suggest that clinicians and researchers conduct external validation or prospective studies to explore the use of imaging markers integrated with AI in clinical settings, and compare the performance of different imaging models for diagnosing CAD using relatively uniform indicators.

Last and interestingly, a weak positive correlation (Spearman rank correlation = 0.24) was observed between the time needed for acceptance and the journal IF: journals with a higher IF tended to take longer to reach a decision. The IF is the average number of citations received in a given year by the articles a journal published in the two preceding years, and it is usually seen as an indicator of a journal's influence. One possible explanation is that lower-impact journals applied less stringent review than higher-impact journals and therefore reached acceptance decisions faster. Researchers who aim to publish their models in high-impact journals may need to accept the risk of delayed publication.

Strengths and limitations

The present review addressed the use of all types of imaging disease markers developed with AI in the diagnosis of CAD, with no restrictions on target population, data sources, or study design. We also explored the features of the AI techniques and data sources used to develop these models.

Recent reviews have focused on the detection of CAD using AI techniques (61) or on machine learning quantitation of CVD, including CAD (62). A previous review assessed the clinical effectiveness of using medical imaging, such as computed tomography angiography (CTA), instead of ICA (63). This review explored and summarized the application of new AI-derived imaging disease markers in the diagnosis of CAD, providing deeper insight into the fusion of imaging and AI in medicine.

To reduce selection bias, we included all primary research publications in English on image-based AI diagnostic models of CAD. Furthermore, study selection and data extraction were performed independently by two reviewers, disagreements were resolved through discussion, and, if necessary, the final judgment was made by a third, senior reviewer.

This review searched only the PubMed and Embase databases, so some gray literature and potentially relevant studies indexed in other databases may have been missed. Excluding non-English studies may also have caused relevant articles in other languages to be overlooked. For some of the included articles, the description and reporting of the diagnostic model did not provide all the information required by the data extraction form. Authors should be encouraged to adhere to the Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis (TRIPOD) Statement (64, 65) and the Standards for Reporting of Diagnostic Accuracy Studies (STARD) Statement (66, 67). In this scoping review, we only summarized the types of imaging disease markers developed with AI; we did not compare models using different types of imaging or the performance of different models using the same type of imaging. Because this review is part of our overall systematic review project, the assessment of methodological quality and risk of bias in the included studies is reserved for subsequent work.

Conclusion

The current scoping review included 46 studies that focused on the use of imaging markers integrated with AI as diagnostic methods for CAD across clinical settings. We explored and summarized the types of images and the classes of AI used in these models. We also provided information about the data sources and study designs commonly used for the diagnostic models, and we strongly recommend external validation of the models and prospective clinical studies in the future. With advances in medical imaging data, AI has shown considerable potential for clinical decision support and analysis in multiple medical fields. The integrated development of imaging and AI can help clinicians make more accurate medical decisions for different diseases, including CAD, which can improve clinical efficiency while avoiding the waste of medical resources and reducing the economic burden on patients.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary material; further inquiries can be directed to the corresponding author/s.

Author contributions

XW, JW, WW, and KH contributed to the conception and design of the study. XW, WW, MZ, HG, JD, JS, DZ, and YD organized the database. XW and JW performed the statistical analysis and wrote the first draft of the manuscript. WW, XC, PZ, and ZW wrote sections of the manuscript. KH supervised the study. All authors contributed to manuscript revision, read, and approved the submitted version.

Funding

This work was supported by the Science and Technology Innovation 2030 - Major Project (2021ZD0140406) and the Ministry of Industry and Information Technology of China (2020-0103-3-1).

Conflict of interest

Authors PZ and ZW were employed by the company BioMind Technology.

The remaining authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fcvm.2022.945451/full#supplementary-material

References

1. WHO. Cardiovascular diseases (CVDs). (2017). Available online at: https://www.who.int/news-room/fact-sheets/detail/cardiovascular-diseases-(cvds) (accessed May 17, 2017)

2. Wong ND. Epidemiological studies of CHD and the evolution of preventive cardiology. Nat Rev Cardiol. (2014) 11:276–89. doi: 10.1038/nrcardio.2014.26

3. Abete P, Della-Morte D, Gargiulo G, Basile C, Langellotto A, Galizia G, et al. Cognitive impairment and cardiovascular diseases in the elderly. A heart-brain continuum hypothesis. Ageing Res Rev. (2014) 18:41–52. doi: 10.1016/j.arr.2014.07.003

4. Barekatain M, Askarpour H, Zahedian F, Walterfang M, Velakoulis D, Maracy MR, et al. The relationship between regional brain volumes and the extent of coronary artery disease in mild cognitive impairment. J Res Med Sci. (2014) 19:739–45.

5. Knuuti J, Wijns W, Saraste A, Capodanno D, Barbato E, Funck-Brentano C, et al. 2019 ESC Guidelines for the diagnosis and management of chronic coronary syndromes. Eur Heart J. (2020) 41:407–77.

6. Xukai, MO, Lin M, Liang J, Center MI. Coronary artery disease and myocardial perfusion imaging evaluation of coronary heart disease meta-analysis. J Clin Radiol. (2019):1020–24.

7. Murgia A, Balestrieri A, Crivelli P, Suri JS, Conti M, Cademartiri F, et al. Cardiac computed tomography radiomics: an emerging tool for the non-invasive assessment of coronary atherosclerosis. Cardiovasc Diagn Ther. (2020) 10:2005–17. doi: 10.21037/cdt-20-156

8. Vick GW III. The gold standard for noninvasive imaging in coronary heart disease: magnetic resonance imaging. Curr Opin Cardiol. (2009) 24:567–79. doi: 10.1097/HCO.0b013e3283315553

9. Chartrand G, Cheng PM, Vorontsov E, Drozdzal M, Turcotte S, Pal CJ, et al. Deep learning: a primer for radiologists. Radiographics. (2017) 37:2113–31. doi: 10.1148/rg.2017170077

10. Tricco AC, Lillie E, Zarin W, O’Brien KK, Colquhoun H, Levac D, et al. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. (2018) 169:467–73. doi: 10.7326/M18-0850

11. Abdolmanafi A, Cheriet F, Duong L, Ibrahim R, Dahdah N. An automatic diagnostic system of coronary artery lesions in Kawasaki disease using intravascular optical coherence tomography imaging. J Biophotonics. (2020) 13:e201900112. doi: 10.1002/jbio.201900112

12. Allison JS, Heo J, Iskandrian AE. Artificial neural network modeling of stress single-photon emission computed tomographic imaging for detecting extensive coronary artery disease. Am J Cardiol. (2005) 95:178–81. doi: 10.1016/j.amjcard.2004.09.003

13. Apostolopoulos ID, Apostolopoulos DI, Spyridonidis TI, Papathanasiou ND, Panayiotakis GS. Multi-input deep learning approach for cardiovascular disease diagnosis using myocardial perfusion imaging and clinical data. Phys Med. (2021) 84:168–77. doi: 10.1016/j.ejmp.2021.04.011

14. Apostolopoulos ID, Papathanasiou ND, Spyridonidis T, Apostolopoulos DJ. Automatic characterization of myocardial perfusion imaging polar maps employing deep learning and data augmentation. Hell J Nucl Med. (2020) 23:125–32.

15. Chen M, Wang X, Hao G, Cheng X, Ma C, Guo N, et al. Diagnostic performance of deep learning-based vascular extraction and stenosis detection technique for coronary artery disease. Br J Radiol. (2020) 93:20191028. doi: 10.1259/bjr.20191028

16. Choi AD, Marques H, Kumar V, Griffin WF, Rahban H, Karlsberg RP, et al. CT evaluation by artificial intelligence for atherosclerosis, stenosis and vascular morphology (CLARIFY): a multi-center, international study. J Cardiovasc Comput Tomogr. (2021) 15:470–6. doi: 10.1016/j.jcct.2021.05.004

17. Fujita H, Katafuchi T, Uehara T, Nishimura T. Application of artificial neural network to computer-aided diagnosis of coronary artery disease in myocardial SPECT bull’s-eye images. J Nucl Med. (1992) 33:272–6.

18. Guner LA, Karabacak NI, Akdemir OU, Karagoz PS, Kocaman SA, Cengel A, et al. An open-source framework of neural networks for diagnosis of coronary artery disease from myocardial perfusion SPECT. J Nucl Cardiol. (2010) 17:405–13. doi: 10.1007/s12350-010-9207-5

19. Han D, Liu J, Sun Z, Cui Y, He Y, Yang Z. Deep learning analysis in coronary computed tomographic angiography imaging for the assessment of patients with coronary artery stenosis. Comput Methods Programs Biomed. (2020) 196:105651. doi: 10.1016/j.cmpb.2020.105651

20. Lin S, Li Z, Fu B, Chen S, Li X, Wang Y, et al. Feasibility of using deep learning to detect coronary artery disease based on facial photo. Eur Heart J. (2020) 41:4400–11. doi: 10.1093/eurheartj/ehaa640

21. Liu H, Wu J, Miller EJ, Liu C, Liu Y, et al. Diagnostic accuracy of stress-only myocardial perfusion SPECT improved by deep learning. Eur J Nucl Med Mol Imaging. (2021) 48:2793–800. doi: 10.1007/s00259-021-05202-9

22. Madani A, Ong JR, Tibrewal A, Mofrad MRK. Deep echocardiography: data-efficient supervised and semi-supervised deep learning towards automated diagnosis of cardiac disease. NPJ Digit Med. (2018) 1:59. doi: 10.1038/s41746-018-0065-x

23. Masuda T, Nakaura T, Funama Y, Oda S, Okimoto T, Sato T, et al. Deep learning with convolutional neural network for estimation of the characterisation of coronary plaques: validation using IB-IVUS. Radiography. (2022) 28:61–7. doi: 10.1016/j.radi.2021.07.024

24. Min HS, Ryu D, Kang SJ, Lee JG, Yoo JH, Cho H, et al. Prediction of coronary stent underexpansion by pre-procedural intravascular ultrasound-based deep learning. JACC Cardiovasc Interv. (2021) 14:1021–9. doi: 10.1016/j.jcin.2021.01.033

25. Miyoshi T, Higaki A, Kawakami H, Yamaguchi O. Automated interpretation of the coronary angioscopy with deep convolutional neural networks. Open Heart. (2020) 7:e001177. doi: 10.1136/openhrt-2019-001177

26. Muscogiuri G, Chiesa M, Trotta M, Gatti M, Palmisano V, Dell’Aversana S, et al. Performance of a deep learning algorithm for the evaluation of CAD-RADS classification with CCTA. Atherosclerosis. (2020) 294:25–32. doi: 10.1016/j.atherosclerosis.2019.12.001

27. Nakajima K, Kudo T, Nakata T, Kiso K, Kasai T, Taniguchi Y, et al. Diagnostic accuracy of an artificial neural network compared with statistical quantitation of myocardial perfusion images: a Japanese multicenter study. Eur J Nucl Med Mol Imaging. (2017) 44:2280–9. doi: 10.1007/s00259-017-3834-x

28. Nakajima K, Matsuo S, Wakabayashi H, Yokoyama K, Bunko H, Okuda K, et al. Diagnostic performance of artificial neural network for detecting ischemia in myocardial perfusion imaging. Circ J. (2015) 79:1549–56. doi: 10.1253/circj.CJ-15-0079

29. Otaki Y, Singh A, Kavanagh P, Miller RJH, Parekh T, Tamarappoo BK, et al. Clinical deployment of explainable artificial intelligence of SPECT for diagnosis of coronary artery disease. JACC Cardiovasc Imaging. (2021) 15:1091–102. doi: 10.1016/j.jcmg.2021.04.030

30. Saikumar K, Rajesh V, Hasane Ahammad SK, Sai Krishna M, Sai Pranitha G, Ajay Kumar Reddy R. CAB for heart diagnosis with RFO artificial intelligence algorithm. Int J Res Pharm Sci. (2020) 11:1199–205. doi: 10.26452/ijrps.v11i1.1958

31. Scott JA, Aziz K, Yasuda T, Gewirtz H. Integration of clinical and imaging data to predict the presence of coronary artery disease with the use of neural networks. Coron Artery Dis. (2004) 15:427–34. doi: 10.1097/00019501-200411000-00010

32. Shibutani T, Nakajima K, Wakabayashi H, Mori H, Matsuo S, Yoneyama H, et al. Accuracy of an artificial neural network for detecting a regional abnormality in myocardial perfusion SPECT. Ann Nucl Med. (2019) 33:86–92. doi: 10.1007/s12149-018-1306-4

33. Upton R, Mumith A, Beqiri A, Parker A, Hawkes W, Gao S, et al. Automated echocardiographic detection of severe coronary artery disease using artificial intelligence. JACC Cardiovasc Imaging. (2021). doi: 10.1016/j.jcmg.2021.10.013

34. von Knebel Doeberitz PL, De Cecco CN, Schoepf UJ, Duguay TM, Albrecht MH, van Assen M, et al. Coronary CT angiography-derived plaque quantification with artificial intelligence CT fractional flow reserve for the identification of lesion-specific ischemia. Eur Radiol. (2019) 29:2378–87. doi: 10.1007/s00330-018-5834-z

35. Wang ZQ, Zhou YJ, Zhao YX, Shi DM, Liu YY, Liu W, et al. Diagnostic accuracy of a deep learning approach to calculate FFR from coronary CT angiography. J Geriatr Cardiol. (2019) 16:42–8.

36. Candemir S, White RD, Demirer M, Gupta V, Bigelow MT, Prevedello LM, et al. Automated coronary artery atherosclerosis detection and weakly supervised localization on coronary CT angiography with a deep 3-dimensional convolutional neural network. Comput Med Imaging Graph. (2020) 83:101721. doi: 10.1016/j.compmedimag.2020.101721

37. He C, Wang J, Yin Y, Li Z. Automated classification of coronary plaque calcification in OCT pullbacks with 3D deep neural networks. J Biomed Opt. (2020) 25:095003. doi: 10.1117/1.JBO.25.9.095003

38. Liu R, Zhang Y, Zheng Y, Liu Y, Zhao Y, Yi L. Automated detection of vulnerable plaque for intravascular optical coherence tomography images. Cardiovasc Eng Technol. (2019) 10:590–603. doi: 10.1007/s13239-019-00425-2

39. White RD, Erdal BS, Demirer M, Gupta V, Bigelow MT, Dikici E, et al. Artificial intelligence to assist in exclusion of coronary atherosclerosis during CCTA evaluation of chest pain in the emergency department: preparing an application for real-world use. J Digit Imaging. (2021) 34:554–71. doi: 10.1007/s10278-021-00441-6

40. Zhao H, Yuan L, Chen Z, Liao Y, Lin J. Exploring the diagnostic effectiveness for myocardial ischaemia based on CCTA myocardial texture features. BMC Cardiovasc Disord. (2021) 21:416. doi: 10.1186/s12872-021-02206-z

41. Betancur J, Commandeur F, Motlagh M, Sharir T, Einstein AJ, Bokhari S, et al. Deep learning for prediction of obstructive disease from fast myocardial perfusion SPECT: a multicenter study. JACC Cardiovasc Imaging. (2018) 11:1654–63. doi: 10.1016/j.jcmg.2018.01.020

42. Betancur J, Hu LH, Commandeur F, Sharir T, Einstein AJ, Fish MB, et al. Deep learning analysis of upright-supine high-efficiency SPECT myocardial perfusion imaging for prediction of obstructive coronary artery disease: a multicenter study. J Nucl Med. (2019) 60:664–70. doi: 10.2967/jnumed.118.213538

43. Danilov VV, Klyshnikov KY, Gerget OM, Kutikhin AG, Ganyukov VI, Frangi AF, et al. Real-time coronary artery stenosis detection based on modern neural networks. Sci Rep. (2021) 11:7582. doi: 10.1038/s41598-021-87174-2

44. van Hamersvelt RW, Zreik M, Voskuil M, Viergever MA, Išgum I, Leiner T. Deep learning analysis of left ventricular myocardium in CT angiographic intermediate-degree coronary stenosis improves the diagnostic accuracy for identification of functionally significant stenosis. Eur Radiol. (2019) 29:2350–9. doi: 10.1007/s00330-018-5822-3

45. Wu W, Zhang J, Xie H, Zhao Y, Zhang S, Gu L. Automatic detection of coronary artery stenosis by convolutional neural network with temporal constraint. Comput Biol Med. (2020) 118:103657. doi: 10.1016/j.compbiomed.2020.103657

46. Yi Y, Xu C, Guo N, Sun J, Lu X, Yu S, et al. Performance of an artificial intelligence-based application for the detection of plaque-based stenosis on monoenergetic coronary CT angiography: validation by invasive coronary angiography. Acad Radiol. (2021) 29(Suppl. 4):S49–58. doi: 10.1016/j.acra.2021.10.027

47. Zreik M, Lessmann N, van Hamersvelt RW, Wolterink JM, Voskuil M, Viergever MA, et al. Deep learning analysis of the myocardium in coronary CT angiography for identification of patients with functionally significant coronary artery stenosis. Med Image Anal. (2018) 44:72–85. doi: 10.1016/j.media.2017.11.008

48. Fischer AM, Eid M, De Cecco CN, Gulsun MA, van Assen M, Nance JW, et al. Accuracy of an artificial intelligence deep learning algorithm implementing a recurrent neural network with long short-term memory for the automated detection of calcified plaques from coronary computed tomography angiography. J Thorac Imaging. (2020) 35(Suppl. 1):S49–57. doi: 10.1097/RTI.0000000000000491

49. Huo Y, Terry JG, Wang J, Nath V, Bermudez C, Bao S, et al. Coronary calcium detection using 3D attention identical dual deep network based on weakly supervised learning. Proc SPIE Int Soc Opt Eng. (2019) 10949:1094917. doi: 10.1117/12.2512541

50. Garland J, Hu M, Duffy M, Kesha K, Glenn C, Morrow P, et al. Classifying microscopic acute and old myocardial infarction using convolutional neural networks. Am J Forensic Med Pathol. (2021) 42:230–4. doi: 10.1097/PAF.0000000000000672

51. Ohlsson M. WeAidU-a decision support system for myocardial perfusion images using artificial neural networks. Artif Intell Med. (2004) 30:49–60. doi: 10.1016/S0933-3657(03)00050-2

52. Xu C, Xu L, Gao Z, Zhao S, Zhang H, Zhang Y, et al. Direct delineation of myocardial infarction without contrast agents using a joint motion feature learning architecture. Med Image Anal. (2018) 50:82–94. doi: 10.1016/j.media.2018.09.001

53. Zaman F, Ponnapureddy R, Wang YG, Chang A, Cadaret LM, Abdelhamid A, et al. Spatio-temporal hybrid neural networks reduce erroneous human “judgement calls” in the diagnosis of Takotsubo syndrome. EClinicalMedicine. (2021) 40:101115. doi: 10.1016/j.eclinm.2021.101115

54. Zhang N, Yang G, Gao Z, Xu C, Zhang Y, Shi R, et al. Deep learning for diagnosis of chronic myocardial infarction on nonenhanced cardiac cine MRI. Radiology. (2019) 291:606–17. doi: 10.1148/radiol.2019182304

55. Nakajima K, Okuda K, Watanabe S, Matsuo S, Kinuya S, Toth K, et al. Artificial neural network retrained to detect myocardial ischemia using a Japanese multicenter database. Ann Nucl Med. (2018) 32:303–10. doi: 10.1007/s12149-018-1247-y

56. Kusunose K, Abe T, Haga A, Fukuda D, Yamada H, Harada M, et al. A deep learning approach for assessment of regional wall motion abnormality from echocardiographic images. JACC Cardiovasc Imaging. (2020) 13(2 Pt 1):374–81. doi: 10.1016/j.jcmg.2019.02.024

57. Hlatky MA, Greenland P, Arnett DK, Ballantyne CM, Criqui MH, Elkind MS, et al. Criteria for evaluation of novel markers of cardiovascular risk: a scientific statement from the American Heart Association. Circulation. (2009) 119:2408–16. doi: 10.1161/CIRCULATIONAHA.109.192278

58. Miller DD, Donohue TJ, Younis LT, Bach RG, Aguirre FV, Wittry MD, et al. Correlation of pharmacological 99mTc-sestamibi myocardial perfusion imaging with poststenotic coronary flow reserve in patients with angiographically intermediate coronary artery stenoses. Circulation. (1994) 89:2150–60. doi: 10.1161/01.CIR.89.5.2150

59. Pijls NH, De Bruyne B, Peels K, Van Der Voort PH, Bonnier HJ, Bartunek J, Koolen JJ, et al. Measurement of fractional flow reserve to assess the functional severity of coronary-artery stenoses. N Engl J Med. (1996) 334:1703–8. doi: 10.1056/NEJM199606273342604

60. White CW, Wright CB, Doty DB, Hiratzka LF, Eastham CL, Harrison DG, et al. Does visual interpretation of the coronary arteriogram predict the physiologic importance of a coronary stenosis? N Engl J Med. (1984) 310:819–24. doi: 10.1056/NEJM198403293101304

61. Alizadehsani R, Khosravi A, Roshanzamir M, Abdar M, Sarrafzadegan N, Shafie D, et al. Coronary artery disease detection using artificial intelligence techniques: a survey of trends, geographical differences and diagnostic features 1991-2020. Comput Biol Med. (2021) 128:104095. doi: 10.1016/j.compbiomed.2020.104095

62. Boyd C, Brown G, Kleinig T, Dawson J, McDonnell MD, Jenkinson M, et al. Machine learning quantitation of cardiovascular and cerebrovascular disease: a systematic review of clinical applications. Diagnostics. (2021) 11:551. doi: 10.3390/diagnostics11030551

63. Mowatt G, Cummins E, Waugh N, Walker S, Cook J, Jia X, et al. Systematic review of the clinical effectiveness and cost-effectiveness of 64-slice or higher computed tomography angiography as an alternative to invasive coronary angiography in the investigation of coronary artery disease. Health Technol Assess. (2008) 12:iii–iv, ix–143. doi: 10.3310/hta12170

64. Collins GS, Reitsma JB, Altman DG, Moons KG. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): the TRIPOD statement. BMJ. (2015) 350:g7594. doi: 10.1136/bmj.g7594

65. Moons KG, Altman DG, Reitsma JB, Ioannidis JP, Macaskill P, Steyerberg EW, et al. Transparent reporting of a multivariable prediction model for individual prognosis or diagnosis (TRIPOD): explanation and elaboration. Ann Intern Med. (2015) 162:W1–73. doi: 10.7326/M14-0698

66. Bossuyt PM, Reitsma JB, Bruns DE, Gatsonis CA, Glasziou PP, Irwig L, et al. STARD 2015: an updated list of essential items for reporting diagnostic accuracy studies. BMJ. (2015) 351:h5527. doi: 10.1136/bmj.h5527

Keywords: coronary artery disease, artificial intelligence, diagnosis, prediction model, imaging, scoping review

Citation: Wang X, Wang J, Wang W, Zhu M, Guo H, Ding J, Sun J, Zhu D, Duan Y, Chen X, Zhang P, Wu Z and He K (2022) Using artificial intelligence in the development of diagnostic models of coronary artery disease with imaging markers: A scoping review. Front. Cardiovasc. Med. 9:945451. doi: 10.3389/fcvm.2022.945451

Received: 16 May 2022; Accepted: 31 August 2022;

Published: 04 October 2022.

Edited by:

Gianluca Rigatelli, Hospital Santa Maria della Misericordia of Rovigo, Italy

Reviewed by:

Natallia Maroz-Vadalazhskaya, Belarusian State Medical University, Belarus

Antonis Sakellarios, University of Ioannina, Greece

Filippo Cademartiri, Gabriele Monasterio Tuscany Foundation (CNR), Italy

Copyright © 2022 Wang, Wang, Wang, Zhu, Guo, Ding, Sun, Zhu, Duan, Chen, Zhang, Wu and He. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Kunlun He, kunlunhe@301hospital.com.cn; orcid.org/0000-0002-3335-5700

†These authors have contributed equally to this work
