- 1Department of Ophthalmology, The Second Affiliated Hospital, Harbin Medical University, Harbin, China

- 2National Key Laboratory of Laser Spatial Information, Harbin Institute of Technology, Harbin, China

- 3Harbin University, Harbin, China

Purpose: To design an artificial intelligence (AI) algorithm based on the Lens Opacities Classification System III (LOCS III) to realize automatic diagnosis and classification of cataracts.

Methods: This retrospective study developed an AI-based neural network to diagnose cataracts and grade lens opacity. According to LOCS III, cataracts are classified by Nuclear Opalescence (NO), Nuclear Color (NC), Cortical (C) and Posterior subcapsular (P) opacity. The newly developed neural network system uses grayscale conversion, binarization, cluster analysis, dilation and erosion, and other methods to process and analyze the images; the generalization ability of the system was then tested and evaluated.

Results: The new neural network system identified the lens anatomy in 100% of images. Its accuracy in diagnosing nuclear, cortical and posterior subcapsular cataract ranged from 92.28% to 100%. Its grading accuracy for NO, NC, C and P ranged from 90.88% to 100%, and the Area Under the Curve (AUC) ranged from 96.68% to 100%.

Conclusion: A novel cataract diagnostic and grading system can be developed based on the AI recognition algorithm, which establishes an automatic cataract diagnosis and grading scheme. The system facilitates rapid and accurate cataract diagnosis and grading.

1 Introduction

Cataract is a leading cause of visual impairment and blindness worldwide (Lee and Afshari, 2023). Surgery is the most effective treatment for cataract. Severe cataract can cause complications such as lens nucleus dislocation and glaucoma (Guan et al., 2022), significantly increasing surgical risks. Thus, early and precise diagnosis is clinically critical. In clinical practice, cataract is typically diagnosed under a slit lamp (Brown et al., 1987) and graded using the Lens Opacities Classification System II or III (Chylack et al., 1989; Chylack et al., 1993). However, the accurate diagnosis and grading of lens diseases depend on ophthalmologists’ clinical experience. Different ophthalmologists may evaluate the same eye differently depending on their years of experience (Lu et al., 2025). In remote areas, limited access to professional ophthalmologists and ophthalmic equipment, along with inconvenient medical conditions, leads to delayed diagnosis and treatment for patients (Mundy et al., 2016).

Artificial intelligence (AI), as an interdisciplinary technological domain, is dedicated to developing computational systems that emulate human cognitive processes (Liu et al., 2021). Its medical applications predominantly use machine learning (ML) and deep learning (DL) frameworks for imaging diagnostics (Castiglioni et al., 2021). Ophthalmology currently represents one of the most dynamic frontiers in AI research (Yang et al., 2023), where image-based diagnostic systems are well suited to traditional ML and DL implementations (Wang et al., 2024). Owing to their capability to extract high-level features and latent patterns from massive datasets, DL systems now match clinicians’ performance in feature-based diagnostic tasks (Yu et al., 2018). The scope of applications has expanded significantly, progressing beyond the initial focus on diagnosing retinal pathologies (e.g., diabetic retinopathy, age-related macular degeneration, and retinopathy of prematurity) (Oganov et al., 2023; Zhang et al., 2023; Xu et al., 2024) to screening for anterior segment conditions such as glaucoma, cataracts, iris abnormalities, and corneal diseases (Ting et al., 2021; Wu et al., 2022). Machine learning and image processing are now widely used to develop cataract detection methods (Wan Zaki et al., 2022; Gali et al., 2019). Many researchers employ various machine learning and deep learning algorithms (Xu et al., 2020; Wu et al., 2019), such as Convolutional Neural Networks (CNN), Residual Neural Networks (ResNet) and Support Vector Machines (SVM) (Imran et al., 2020), to classify images as non-cataract, mild, moderate, or severe cataract. However, few studies have used deep learning algorithms to simultaneously diagnose and grade the various types of cataract in anterior segment images, including nuclear (N) (Li et al., 2010; Li et al., 2009), cortical (C) (Lu et al., 2022), and posterior subcapsular (P) cataract, based on the Lens Opacities Classification System III (LOCS III).

Building an AI model involves several steps, including data preparation (image preprocessing), dataset partitioning, model construction, optimization, and evaluation (Shao et al., 2023). Before applying their algorithms, many researchers preprocess images to eliminate noise and thereby improve the accuracy of feature extraction (Xu et al., 2020). Reflections from the eye and locally uneven illumination degrade the quality of the original images, which may decrease the accuracy of feature extraction and consequently the reliability of cataract diagnosis and grading. Xu et al. converted the original images from the RGB color space to green-component images to eliminate uneven illumination (Xu et al., 2020; Linglin et al., 2017), but they did not preprocess specific areas of the original images in detail. Gan et al. proposed two artificial intelligence diagnostic platforms for cortical cataract classification, dividing the cataract into four stages: incipient, intumescent, mature, and hyper-mature (Gan et al., 2023). The platforms did not consider the influence of bright spots in the images and did not provide a more detailed classification of cortical cataract. In addition, preprocessing includes extracting regions of interest to mitigate the influence of surrounding redundant information. In 1997, researchers proposed a method based on deep learning algorithms to classify the severity of nuclear cataract, which extracted second-order gray-level statistics from circular regions of the nucleus as image features (Duncan et al., 1997). However, the algorithm did not consider the information in elliptical lens regions, resulting in incomplete extraction of feature information. Li et al. (2010) investigated an algorithm for the automatic diagnosis of nuclear cataract based on LOCS III that automatically detects the nucleus region in slit-lamp images using a modified active shape model (ASM) method (Li and Chutatape, 2003), which is critical for assessing nuclear cataract. Hu et al. presented an automated nuclear cataract severity classification algorithm that uses the YOLOv3 algorithm to locate the nuclear region of the lens (Hu et al., 2020). However, the complexity and large computational burden of YOLOv3 make it challenging to implement.

Currently, no algorithm can comprehensively diagnose and grade all types of cataracts based on LOCS III during initial screening, and the aforementioned studies also lack a detailed description of methods for accurately localizing the lens (Litjens et al., 2017; Li et al., 2021; Yousefi et al., 2020). Additionally, existing research has not adequately addressed the impact of extremely bright spots in images on the extraction of ocular features, which may lead to inaccurate diagnostic results. To address these issues, the primary contributions of this paper are as follows.

1. Based on deep learning, this study proposes a systematic algorithm that accurately classifies and grades various types of cataracts according to the Lens Opacities Classification System III (LOCS III).

2. The article presents a lens localization algorithm for nuclear-type images. The algorithm is based on expanded ellipse traversal, which improves the accuracy of region-of-interest localization and thereby the precision of feature extraction from nuclear cataract images.

3. The paper proposes a color-based multivariate clustering technique for filling image highlights, which improves the precision of feature extraction from Cortical (C) and Posterior Subcapsular (P) cataract images and thereby the precision of C and P diagnosis and grading.

2 Materials and methods

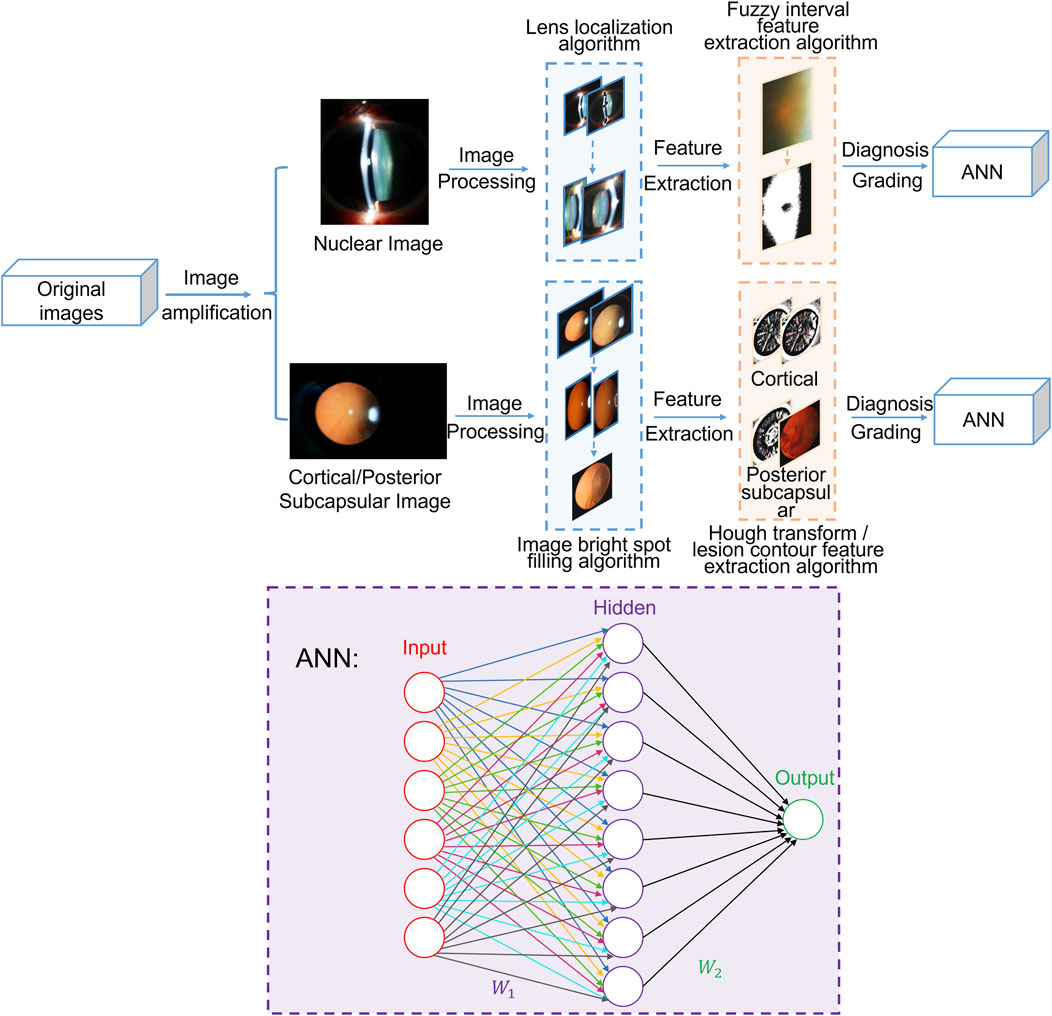

The experimental protocol was established according to the ethical guidelines of the Declaration of Helsinki and was approved by the Ethics Committee of The Second Affiliated Hospital of Harbin Medical University. Written informed consent was obtained from all patients before image collection. This section describes the two modules of the system: the nuclear cataract diagnosis and grading module, and the cortical and posterior subcapsular cataract diagnosis and grading module. As shown in Figure 1, the overall algorithm framework includes preprocessing, feature extraction, and a cataract grading neural network. The artificial neural network (ANN) consists of two layers and employs the sigmoid function as its activation function.

For nuclear images, the preprocessing stage involves eliminating bright spots caused by the flash and employing the expanded ellipse traversal method to achieve lens localization. During feature extraction, the fuzzy interval scale method is adopted to obtain feature information regarding Nuclear Opalescence (NO) and Nuclear Color (NC). Finally, the extracted feature information is input into the ANN to enable the automated diagnosis and grading of nuclear cataracts.

For cortical and posterior subcapsular images, the preprocessing stage includes image segmentation using the minimum circumscribed circle method and filling image bright spots through color multivariate cluster analysis. In the subsequent feature extraction phase, the Hough transform is employed to detect line information in the images as feature information for cortical cataracts, and lesion contour information is extracted as feature information for posterior subcapsular cataracts. Ultimately, this extracted feature information is fed into the ANN to facilitate the diagnosis and grading of cortical and posterior subcapsular cataracts.
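To make the structure of the grading network concrete, the following minimal sketch shows a forward pass through a two-layer ANN with sigmoid activations and a single output node. The layer sizes, the weights, and the rule that maps the output to a grade (scaling by the maximum grade and rounding) are assumptions made for illustration; they are not taken from the trained model.

```python
import math

def sigmoid(z):
    """Logistic activation, mapping any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def dense(inputs, weights, biases):
    """Fully connected layer followed by a sigmoid on each unit."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]

def predict_grade(features, params, max_grade):
    """Forward pass: one hidden layer, then a single output node.

    Assumption: the output in (0, 1) is scaled to [0, max_grade] and
    rounded to the nearest grade.
    """
    hidden_w, hidden_b, out_w, out_b = params
    hidden = dense(features, hidden_w, hidden_b)
    (output,) = dense(hidden, [out_w], [out_b])
    return round(output * max_grade)

# Hypothetical, untrained parameters: 1 input feature, 2 hidden units.
params = ([[4.0], [-2.0]], [-1.0, 0.5], [3.0, -3.0], 0.0)
grade = predict_grade([0.35], params, max_grade=6)
```

In practice the weights would be fitted on the training set; the sketch only fixes the shape of the computation.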

2.1 Dataset and statistical methods

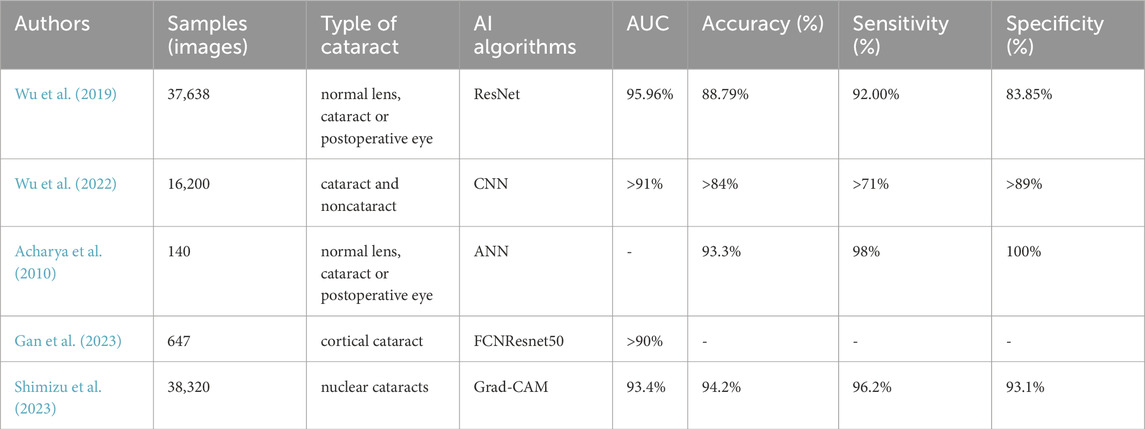

All slit-lamp photographs were obtained from the Department of Ophthalmology of the Second Affiliated Hospital of Harbin Medical University from 2019 to 2022, including 1,003 photographs of normal lenses and cataracts of different severities. Each photograph was taken under mydriatic conditions. Different modes were used: slit-beam mode was used for NO and NC evaluation, and retro-illuminated photographs were used to assess C and P based on LOCS III (Figure 2). Slit-beam photos were taken with an angle greater than 15° between the illumination arm and the viewing arm, while the retro-illuminated photographs were taken with a frontal view of the lens.

Figure 2. Lens Opacities Classification System III (LOCS III) (Chylack et al., 1989).

The exclusion criteria for photographs were: (1) pupil diameter ≤ 5 mm under mydriatic conditions or an unclear image; (2) other special types of cataract; (3) presence of other anterior segment diseases, trauma, or surgical history.

The dataset was partitioned so that each type of anterior segment image was divided into two disjoint subsets: a training set containing 70% of the data and a test set containing 30% (Krizhevsky et al., 2017). The dataset comprised 1,003 anterior segment images, including 215 from healthy lenses and 788 from cataractous lenses classified per LOCS III criteria. All images were categorized by modality: slit-beam illumination (n = 717) and retro-illumination (n = 286). Healthy lenses were uniformly graded as NO0/NC0, while cataract severity followed LOCS III grading (NO1-6, NC1-6, C0-5, P0-5) (Supplementary Tables S1-S2).
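A 70/30 partition into disjoint subsets can be sketched as follows. Splitting within each grade, so that every grade appears in both subsets, is an assumption of this sketch, as are the function name and the `(image_id, grade)` record layout.

```python
import random
from collections import defaultdict

def split_by_grade(samples, train_fraction=0.7, seed=0):
    """Split (image_id, grade) records into disjoint train and test lists.

    Assumption: the split is done separately within each grade.
    """
    by_grade = defaultdict(list)
    for image_id, grade in samples:
        by_grade[grade].append((image_id, grade))

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    train, test = [], []
    for grade in sorted(by_grade):
        group = by_grade[grade]
        rng.shuffle(group)
        cut = round(len(group) * train_fraction)
        train.extend(group[:cut])
        test.extend(group[cut:])
    return train, test
```

With ten hypothetical images in each of two grades, this returns 14 training records and 6 test records with no overlap.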

Because the original image dataset is limited, we augmented it to prevent model overfitting and improve algorithm performance. Multiple augmentation methods were used, including adding salt-and-pepper noise and Gaussian noise, dimming and brightening the image, rotation, mirror flipping, and mix-up.
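Three of the listed augmentations are simple enough to sketch directly. The example below works on a grayscale image stored as a list of rows of 0-255 integers; the function names, the noise amount, and the brightness factor are illustrative assumptions rather than the settings used in this study.

```python
import random

def mirror_flip(image):
    """Flip each row left to right."""
    return [row[::-1] for row in image]

def adjust_brightness(image, factor):
    """Scale intensities; factor < 1 dims, factor > 1 brightens. Clipped to 0-255."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]

def salt_and_pepper(image, amount=0.05, seed=0):
    """Set roughly `amount` of the pixels to pure black or pure white."""
    rng = random.Random(seed)
    noisy = [row[:] for row in image]  # copy so the input is left untouched
    for row in noisy:
        for x in range(len(row)):
            r = rng.random()
            if r < amount / 2:
                row[x] = 0        # pepper
            elif r < amount:
                row[x] = 255      # salt
    return noisy
```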

2.2 Nuclear cataract diagnosis and grading module

2.2.1 Preprocessing

2.2.1.1 Removing bright spots

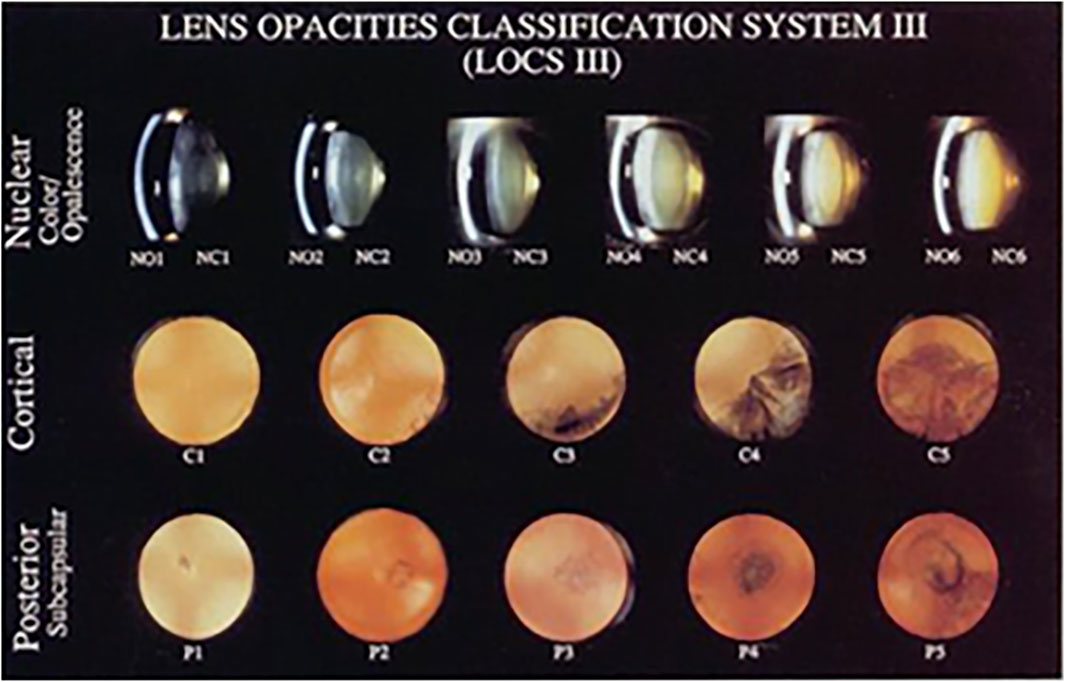

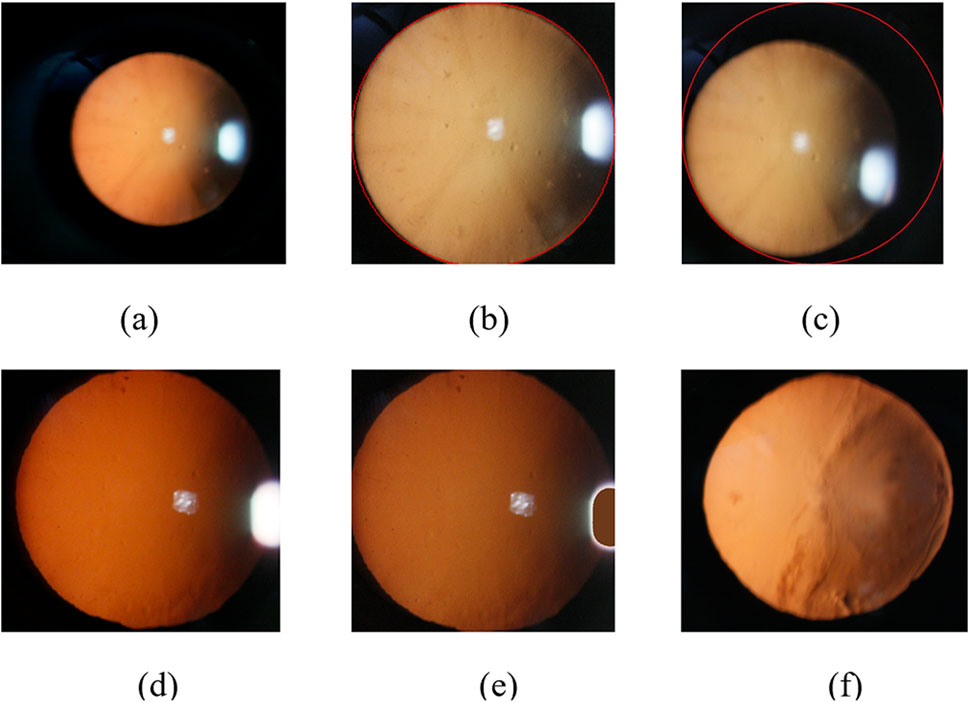

The original image is obtained in slit-beam mode. Because of the flash, nuclear images usually contain two kinds of light spots: white spots caused by corneal reflection and yellow spots on the skin near the eye, as shown in Figure 3a. These reflections and locally uneven illumination degrade the quality of the original images, which may hinder precise detection and grading of cataract.

Figure 3. Preprocessing of nuclear images from slit-beam photographs. (a) Original image; (b) Binarized result of (a), affected by highlight noise; (c) Oversized lens localization result; (d) Incorrect lens localization result; (e) Result of drawing black dots; (f) Lens localization result based on expanded ellipse traversal; (g) Cropped lens area.

Figure 3c shows the lens contour obtained by applying a basic contour extraction algorithm to Figure 3b; it contains a large amount of interference information. The highlighted area in Figure 3d is the region where the lens is localized inaccurately because of bright spots. Therefore, we first draw black dots on bright areas whose three RGB values are all above 250 to suppress the high-brightness light spots, as shown in Figure 3e. This prevents a large inscribed ellipse from forming in the bright area, so that the largest contour region is the one containing the lens. Then, the algorithm converts the original image into a grayscale image and binarizes it with a dynamic threshold.
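The three steps in this paragraph (blacking out highlights, grayscale conversion, binarization) can be sketched on an RGB image stored as rows of `(r, g, b)` tuples. The dynamic threshold is not specified in the text, so the sketch substitutes the mean gray level as a stand-in; the luma weights are the common ITU-R BT.601 coefficients, also an assumption here.

```python
def mask_bright_spots(image, limit=250):
    """Paint a pixel black when its R, G and B values all exceed `limit`."""
    return [[(0, 0, 0) if min(px) > limit else px for px in row] for row in image]

def to_gray(image):
    """Weighted RGB-to-gray conversion (BT.601 luma weights, assumed)."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row]
            for row in image]

def binarize(gray):
    """Threshold at the mean gray level (stand-in for the dynamic threshold)."""
    pixels = [p for row in gray for p in row]
    threshold = sum(pixels) / len(pixels)
    return [[255 if p > threshold else 0 for p in row] for row in gray]

# Tiny hypothetical image: one highlight, one lens-like pixel, one dark pixel,
# and one bright pixel that stays because its blue value is not above 250.
image = [[(255, 255, 255), (200, 180, 90)],
         [(10, 10, 10), (251, 251, 240)]]
binary = binarize(to_gray(mask_bright_spots(image)))
```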

2.2.1.2 Lens localization algorithm of nuclear image based on expanded ellipse traversal

Figure 3c illustrates the imprecise lens contour obtained using basic contour detection methods, which contains a lot of useless information and thereby compromises the accuracy of feature extraction. Therefore, before the DL model is applied, the region of interest must be accurately extracted from the original image. This section proposes a lens localization method for nuclear images based on expanded ellipse traversal, which processes the binarized image in Figure 3b.

Before localization, we use a contour search function to find the approximate position of the lens contour, and then find the minimum bounding rectangle covering this contour to obtain the coordinates of its upper left corner together with its width and height. We assume that the lens contour returned by the contour search function is approximately the maximum contour. Next, we use these approximate coordinates to locate the lens accurately. During localization, we first traverse all rectangles within the maximum contour and obtain their inscribed ellipses. For each ellipse, we traverse all points in its bounding rectangle and determine whether they lie inside the ellipse; if so, we check whether the point is white. If any point inside the ellipse is not white, the ellipse does not meet the condition and the next ellipse is examined. The goal of the algorithm is to find the largest elliptical area that is completely filled with white, which it obtains after many iterations, as shown in Figure 3f.

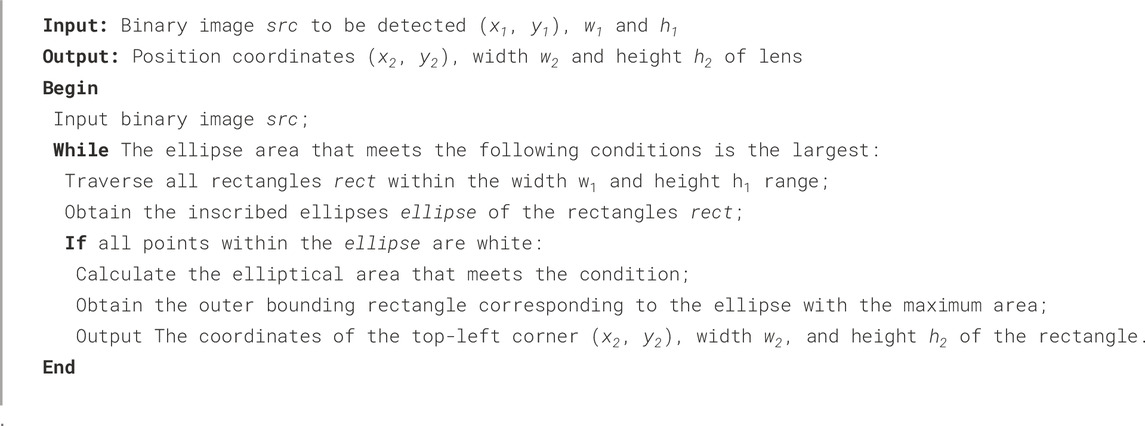

The specific implementation of the lens localization algorithm is shown in Algorithm 1. Its input is the largest candidate lens area returned by the contour search function in computer vision, described by the coordinates (x1, y1) of the top left corner of its bounding rectangle together with the width w1 and height h1.

Because the lens is not strictly elliptical, the ellipse found in this way may not contain the complete lens. Therefore, the size of the ellipse is fine-tuned to obtain the final lens localization result. Finally, the algorithm crops the lens portion for subsequent feature extraction, as shown in Figure 3g.
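The search for the largest all-white ellipse can be sketched as a brute-force traversal over candidate rectangles inside the bounding box (x1, y1, w1, h1). This is an illustrative reading of the description above, not a transcription of Algorithm 1; the function names and the `min_size` parameter are assumptions, and a real image would need a coarser step to keep the search tractable.

```python
def ellipse_is_white(binary, x, y, w, h):
    """True if every pixel inside the ellipse inscribed in rect (x, y, w, h) is white."""
    cx, cy = x + (w - 1) / 2, y + (h - 1) / 2   # ellipse centre in pixel coordinates
    a, b = w / 2, h / 2                         # semi-axes
    for py in range(y, y + h):
        for px in range(x, x + w):
            inside = ((px - cx) / a) ** 2 + ((py - cy) / b) ** 2 <= 1.0
            if inside and binary[py][px] == 0:
                return False
    return True

def largest_white_ellipse(binary, x1, y1, w1, h1, min_size=3):
    """Return (x, y, w, h) of the rectangle whose inscribed ellipse is the
    largest one lying entirely on white pixels, or None if there is none."""
    sizes = [(w, h) for w in range(min_size, w1 + 1)
                    for h in range(min_size, h1 + 1)]
    # Ellipse area is proportional to w * h, so try the biggest candidates first.
    sizes.sort(key=lambda s: s[0] * s[1], reverse=True)
    for w, h in sizes:
        for y in range(y1, y1 + h1 - h + 1):
            for x in range(x1, x1 + w1 - w + 1):
                if ellipse_is_white(binary, x, y, w, h):
                    return (x, y, w, h)
    return None

# Hypothetical 9 x 7 binary image with a 5 x 5 white block at columns 2-6, rows 1-5.
binary = [[255 if 2 <= x <= 6 and 1 <= y <= 5 else 0 for x in range(9)]
          for y in range(7)]
lens_rect = largest_white_ellipse(binary, 0, 0, 9, 7)
```

The returned rectangle would then be fine-tuned and used to crop the lens region, as described above.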

2.2.2 Nuclear cataract diagnosis and grading neural network

2.2.2.1 Feature extraction

The diagnosis and grading of nuclear cataract cover NO and NC. We classify by color proportion: cyan pixels are used to assess NO and yellow pixels to assess NC. In this part, we use a color feature extraction algorithm for nuclear images based on a fuzzy interval scale. The specific process is as follows.

We first set the standard color (cyan or yellow) and the offset interval Offset. Let the RGB value of the standard color be (r, g, b). The corresponding fuzzy intervals are R = [r - Offset, r + Offset], G = [g - Offset, g + Offset], and B = [b - Offset, b + Offset]; different standard colors and offsets yield different fuzzy intervals. We then traverse all pixels in the image and compute the proportion of pixels whose RGB values all fall within the fuzzy intervals; this proportion is the color feature of the nuclear image.
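The fuzzy-interval color feature translates almost directly into code. In the sketch below, the pure yellow (255, 255, 0) and pure cyan (0, 255, 255) standard colors and the offset of 40 are placeholder assumptions, since the values used in the study are not stated; clamping the intervals to 0-255 is a convenience that does not change the result.

```python
def fuzzy_interval(standard_rgb, offset):
    """Per-channel [c - offset, c + offset] bounds, clamped to the 0-255 range."""
    return [(max(0, c - offset), min(255, c + offset)) for c in standard_rgb]

def color_proportion(image, standard_rgb, offset):
    """Fraction of pixels whose R, G and B all fall inside the fuzzy intervals."""
    bounds = fuzzy_interval(standard_rgb, offset)
    pixels = [px for row in image for px in row]
    hits = sum(all(lo <= c <= hi for c, (lo, hi) in zip(px, bounds))
               for px in pixels)
    return hits / len(pixels)

# Hypothetical 2 x 2 lens crop: two yellowish pixels, one cyan-like, one gray.
crop = [[(250, 240, 20), (0, 250, 250)],
        [(128, 128, 128), (255, 255, 0)]]
yellow_ratio = color_proportion(crop, (255, 255, 0), offset=40)  # NC feature
cyan_ratio = color_proportion(crop, (0, 255, 255), offset=40)    # NO feature
```

For this toy crop the yellow proportion is 0.5 and the cyan proportion is 0.25.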

2.2.2.2 Lens nucleus diagnosis and grading method based on neural network

The input of the neural network is the proportion of cyan or yellow pixels in the lens image after image augmentation, localization and cropping. The input data set is divided into a training set and a test set at a ratio of 7:3. The output layer of the neural network is a single node representing the grade of the nuclear cataract. After the above processing, we obtain a complete nuclear cataract diagnosis and grading model covering both Nuclear Opalescence and Nuclear Color.

2.3 Cortical and posterior subcapsular cataract diagnosis and grading module

2.3.1 Preprocessing

Before feature extraction, the original image is preprocessed in two main steps: image localization and segmentation, and bright spot filling. Because the eyeball is three-dimensional, unidirectional illumination generates luminance gradients across the image. At the same time, the flash makes bright spots unavoidable. Both effects interfere with feature extraction, so preprocessing is needed.

2.3.1.1 Image segmentation technology based on minimum circumscribed circle

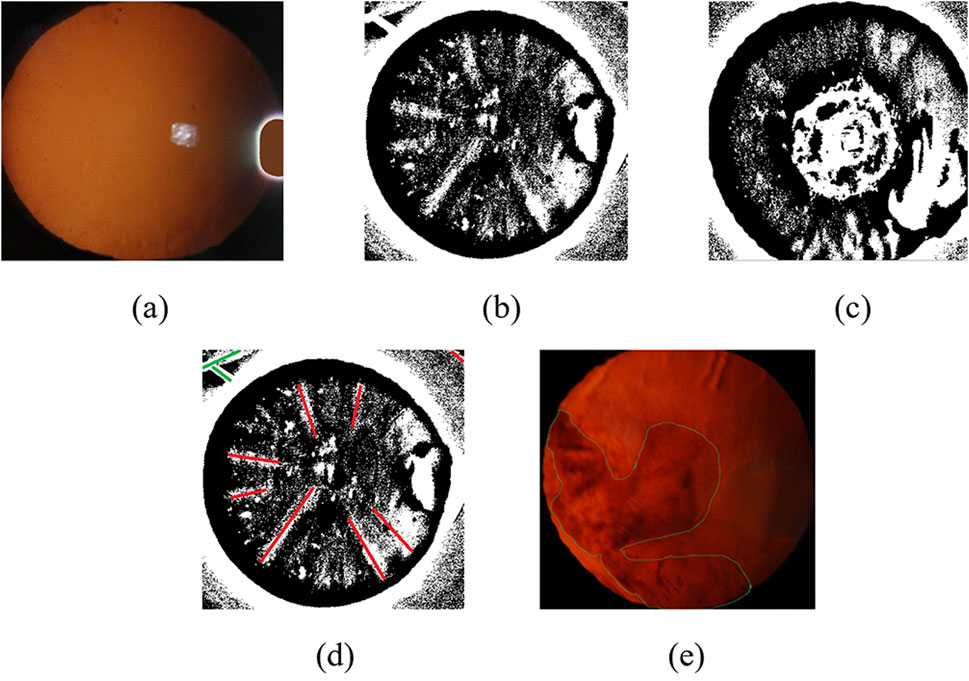

The eyeball occupies only a small part of the original image. To obtain the features of the eyeball, the eye region must first be located in the original red reflex image. In this paper, we use the minimum circumscribed circle method to segment the original images and remove the dark surrounding regions. Figure 4b shows the ideal segmentation result, in which the eyeball region is accurately extracted.

Figure 4. Slit-beam photos preprocessing. (a) Original image; (b) Ideal result using minimum circumscribed circle; (c) Inaccurate eye region positioning results; (d) Unfilled Image; (e) Filled image; (f) Filled color image after debugging.

However, real cataract images often contain several bright spots caused by the flash, and their brightness is often unevenly distributed because of the three-dimensional shape of the eyeball. As a result, the above method localizes some images inaccurately, as shown in Figure 4c.

Therefore, to avoid these inaccurate results, we first use the bright spot filling technique to remove noise and then locate the eyeball.

2.3.1.2 Image bright spot filling technology based on color multivariate cluster analysis

Local uneven illumination and reflections from the eye degrade the quality of the original images, which may hinder precise detection and grading of cataract. Therefore, we fill the bright spot areas with a color whose RGB values are approximately consistent with those of the surrounding pixels to eliminate the effect of the bright spots.

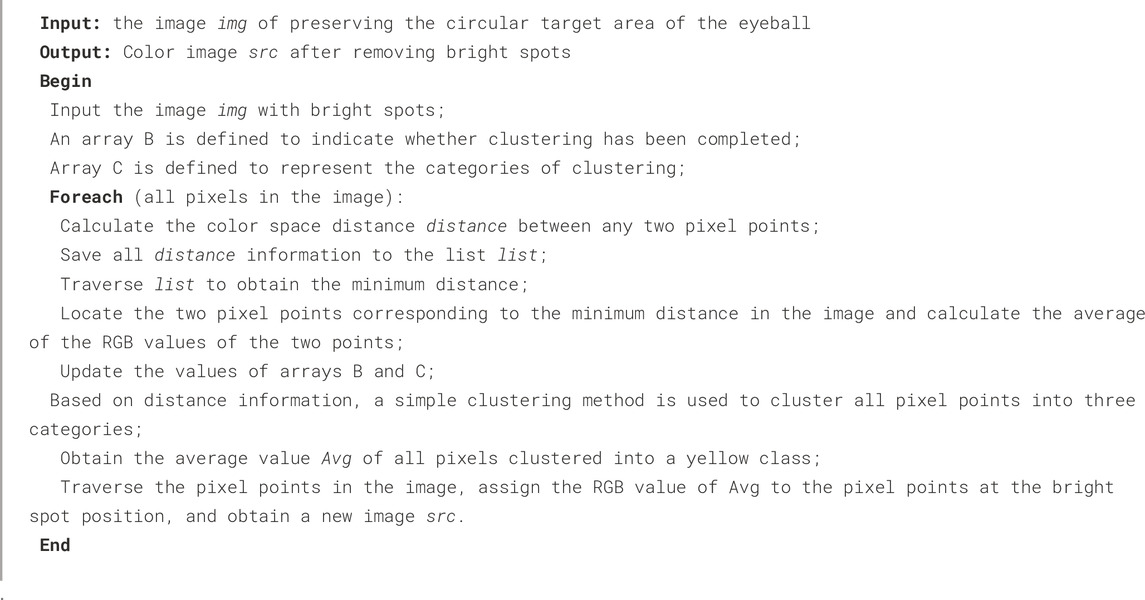

We use the idea of averaging to select the required fill color. The algorithm first classifies the image pixels in the circular domain using the idea of clustering, which can be roughly divided into three categories: yellow, white and black. Then, the algorithm calculates the mean value of the RGB values of all pixels in the yellow classification. Finally, the algorithm fills the bright spot area with the color corresponding to the mean value, as shown in Figure 4e. The specific implementation process of the algorithm is as follows (Algorithm 2).

After the above processing, the image color is restored while the image information in the bright areas is retained. The preprocessing results for cortical or posterior subcapsular images after multiple adjustments are shown in Figure 4f.
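The fill step can be illustrated with a simplified stand-in for the clustering: each pixel is assigned to the nearest of three reference colors (yellow, white, black), the mean RGB of the yellow class is computed, and pixels in the white class are replaced by that mean. The reference colors and the single nearest-color assignment are assumptions of this sketch and do not reproduce Algorithm 2.

```python
# Hypothetical reference colours for the three pixel classes.
REFERENCE_COLORS = {
    "yellow": (200, 160, 60),
    "white": (255, 255, 255),
    "black": (0, 0, 0),
}

def nearest_label(px):
    """Label of the reference colour closest to `px` in squared RGB distance."""
    return min(REFERENCE_COLORS,
               key=lambda name: sum((a - b) ** 2
                                    for a, b in zip(px, REFERENCE_COLORS[name])))

def fill_bright_spots(image):
    """Replace white-class pixels with the mean colour of the yellow class."""
    labels = [[nearest_label(px) for px in row] for row in image]
    yellow = [px for row, lab_row in zip(image, labels)
              for px, lab in zip(row, lab_row) if lab == "yellow"]
    if not yellow:  # nothing to average, return an unchanged copy
        return [row[:] for row in image]
    mean = tuple(round(sum(px[i] for px in yellow) / len(yellow)) for i in range(3))
    return [[mean if lab == "white" else px for px, lab in zip(row, lab_row)]
            for row, lab_row in zip(image, labels)]

# Hypothetical 2 x 2 eyeball crop with one bright spot and one dark pixel.
crop = [[(210, 170, 50), (190, 150, 70)],
        [(254, 254, 250), (5, 5, 5)]]
filled = fill_bright_spots(crop)
```

In this toy crop the bright pixel is replaced by (200, 160, 60), the mean of the two yellow pixels, while the dark pixel is left unchanged.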

2.3.1.3 Image circular contour detection technology based on Hough transform

In this section, we conduct more precise eyeball localization on the image after removing the bright spots. We first convert the colored eye image into a grayscale image, then set a suitable fixed threshold to remove some noise, and finally use Hough transform to detect the circular contour of the eyeball image.

2.3.2 Cortical and posterior subcapsular cataract diagnosis and grading neural network

2.3.2.1 Feature extraction

To preserve the image features of the lesion in the eyeball, we use an adaptive threshold method to process the images. We first convert the color image obtained after removing the bright spots into a grayscale image Grey, and then use a fixed threshold method to obtain a binary image with the largest circular contour, which contains the complete lesion information. Finally, we apply an adaptive binarization method to the grayscale image Grey, combined with the above circular contour, to obtain binarized images that preserve the lesion information, as shown in Figures 5b,c.

Figure 5. Slit-beam photos feature extraction results. (a) Filled color image after debugging; (b) Suspected cortical binarization image preserving lesion information; (c) Suspected posterior subcapsular binary image preserving lesion information; (d) Result of binary original image line fitting based on Hough transform method; (e) Original image contour extraction results.

The binary images of cortical and posterior subcapsular cataract carry different feature information, so a different feature extraction method is needed for each. We use the Hough transform to detect line information in the binary images, including the length, position, and distance between the line and the center of the circle, which are used as feature information of cortical cataract, as shown in Figure 5d. We then extract the lesion contour information from the binary image, including the area ratio and perimeter ratio of the lesion contour to the circular contour of the eyeball and the contour centroid, which are used as feature information of posterior subcapsular cataract, as shown in Figure 5e.
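The contour features for posterior subcapsular cataract can be sketched on a small binary mask. The sketch assumes that lesion pixels are the non-zero entries, treats all lesion pixels as one region, approximates the perimeter by counting boundary pixels, and expresses the centroid as its distance from the circle center relative to the radius; all of these choices, and the function name, are illustrative assumptions.

```python
import math

def lesion_features(binary, circle):
    """Area ratio, perimeter ratio and centroid offset of the lesion pixels
    relative to the eyeball circle given as (cx, cy, r)."""
    cx, cy, r = circle
    lesion = {(x, y) for y, row in enumerate(binary)
              for x, v in enumerate(row) if v}
    if not lesion:
        return {"area_ratio": 0.0, "perimeter_ratio": 0.0, "centroid_offset": 0.0}
    # Boundary pixels: lesion pixels with at least one 4-neighbour outside the lesion.
    boundary = [p for p in lesion
                if any((p[0] + dx, p[1] + dy) not in lesion
                       for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)))]
    mean_x = sum(x for x, _ in lesion) / len(lesion)
    mean_y = sum(y for _, y in lesion) / len(lesion)
    return {
        "area_ratio": len(lesion) / (math.pi * r * r),
        "perimeter_ratio": len(boundary) / (2 * math.pi * r),
        "centroid_offset": math.hypot(mean_x - cx, mean_y - cy) / r,
    }

# Hypothetical 11 x 11 mask with a central 3 x 3 lesion inside a circle of radius 5.
mask = [[1 if 4 <= x <= 6 and 4 <= y <= 6 else 0 for x in range(11)]
        for y in range(11)]
features = lesion_features(mask, (5, 5, 5))
```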

2.3.2.2 Cortical and posterior subcapsular cataract diagnosis and grading method based on neural network

The input of the neural network is the feature information obtained from the cortical and posterior subcapsular cataract images in the previous section. The input data set is divided into a training set and a test set at a ratio of 7:3. The output layer of the neural network is a single node representing the grade of the cortical or posterior subcapsular cataract. After the above processing, we obtain a complete cortical and posterior subcapsular cataract diagnosis and grading model.

3 Results and analysis

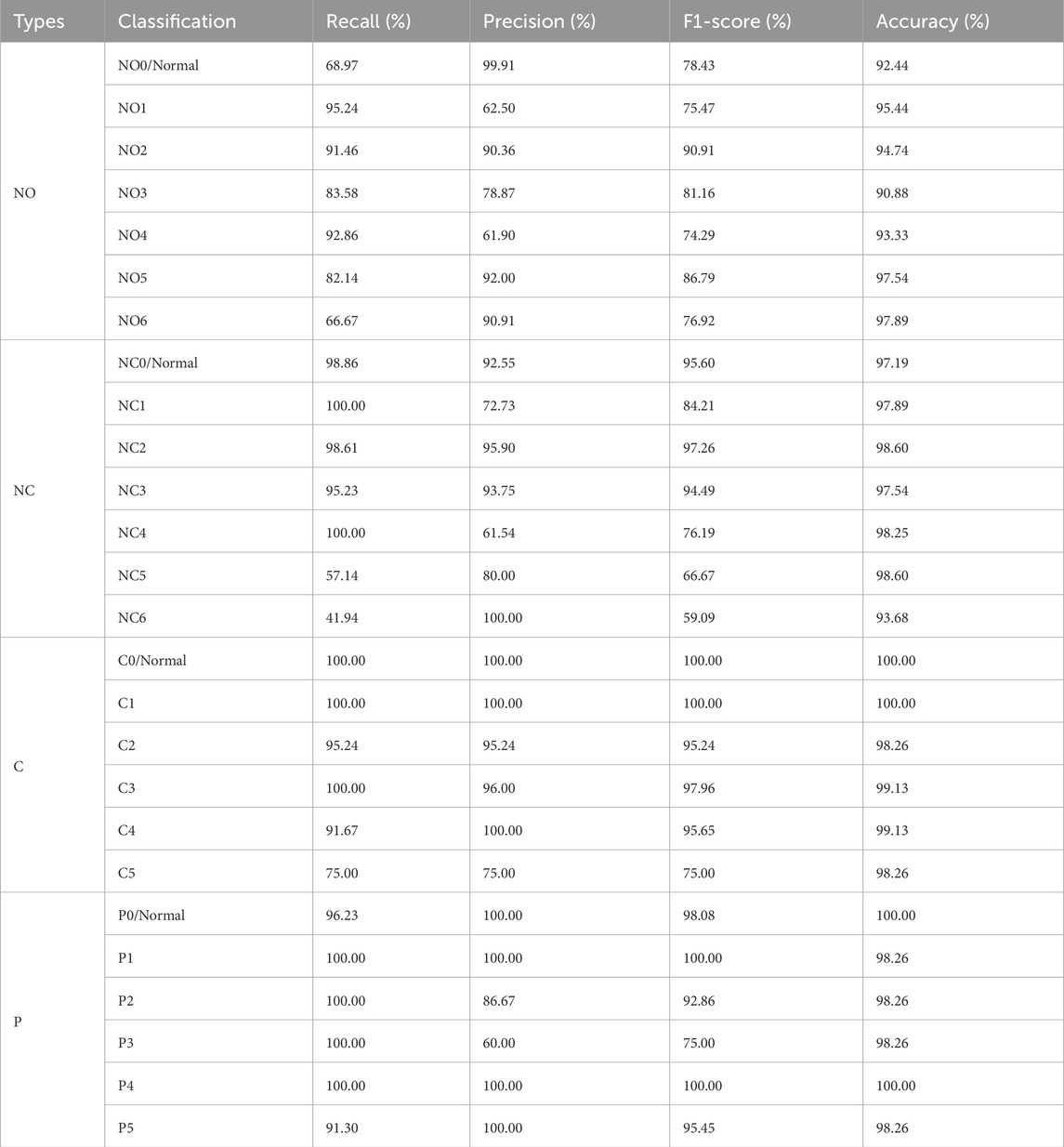

The diagnosis and grading results were evaluated using a confusion matrix. To comprehensively assess the diagnostic and grading performance of the neural network, the following metrics were employed: Precision, Recall, F1-score, Accuracy, Receiver Operating Characteristic (ROC) curves and the Area Under the Curve (AUC). Precision, recall, and F1-score were primarily used to evaluate the classification effectiveness for each category, while Accuracy and AUC were used to assess the overall performance of the system. A total of 1,003 original images were labeled, with 715 slit-lamp images used to differentiate between NO and NC, and an additional 288 retro-illumination images used to differentiate between C and P. In this study, grade zero denotes a normal lens: NO0 is a transparent lens nucleus, NC0 is a lens nucleus of normal color, and C0 and P0 are transparent lens cortex and posterior subcapsular areas, respectively.
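For reference, the metrics named above can be computed from a confusion matrix as in the following sketch. The matrix and scores are toy values, not results from this study, and the pairwise-ranking AUC shown is for a single binary (for example one-vs-rest) comparison, which is an assumption about how a multi-class AUC would be assembled.

```python
def classification_report(confusion):
    """confusion[i][j] counts samples of true class i predicted as class j.
    Returns (per-class precision/recall/F1, overall accuracy)."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    accuracy = sum(confusion[k][k] for k in range(n)) / total
    per_class = []
    for k in range(n):
        tp = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))  # column sum
        actual_k = sum(confusion[k])                          # row sum
        precision = tp / predicted_k if predicted_k else 0.0
        recall = tp / actual_k if actual_k else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        per_class.append({"precision": precision, "recall": recall, "f1": f1})
    return per_class, accuracy

def binary_auc(scores, labels):
    """AUC as the probability that a positive sample outscores a negative one
    (ties count one half)."""
    pos = [s for s, y in zip(scores, labels) if y]
    neg = [s for s, y in zip(scores, labels) if not y]
    wins = sum(1.0 if p > q else 0.5 if p == q else 0.0 for p in pos for q in neg)
    return wins / (len(pos) * len(neg))

# Toy two-class example.
per_class, accuracy = classification_report([[8, 2], [1, 9]])
auc = binary_auc([0.9, 0.8, 0.3, 0.1], [1, 0, 1, 0])
```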

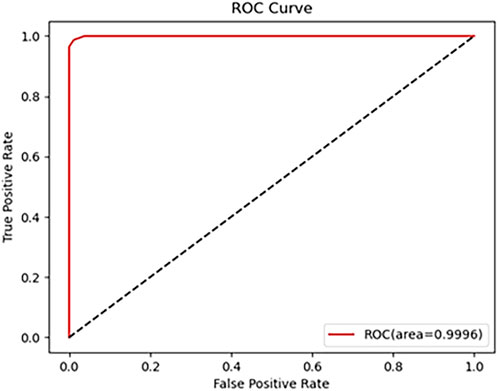

As shown in Table 1, the metrics for C and P outperform those for NO and NC: apart from a classification Precision of 96.88%, an F1-score of 98.41% and an Accuracy of 98.26%, all C and P metrics reach 100%. The overall diagnostic accuracy of the neural network is at least 92.28%, and the accuracy for C is the highest, at 100%. The AUC is 99.96% (shown in Figure 6). These results indicate that the proposed algorithm performs well in the classification of various cataract types.

3.1 Result of NO grade

In this study, a neural network was utilized to categorize anterior segment images captured by slit-lamp photography into seven levels ranging from NO0/Normal to NO6. The overall accuracy exceeded 90.88%, with an AUC of 96.68% (shown in Figure 7). As illustrated in Table 2, concerning the NO classification outcomes of the neural network, the recall for NO1, NO2, and NO4 all exceeded 91.46%, indicating satisfactory performance of the system in these NO classifications. While the model demonstrated high sensitivity for early nuclear opacity (NO1, 95.24%), its performance was more limited for normal lenses (NO0, 68.97%). This suggests inherent challenges in detecting subtle features of normal lenses from static images compared to clinical dynamic evaluation.

3.2 Result of NC grade

As shown in Table 2, for the classification ranging from NC0 to NC6, the overall accuracy surpassed 93.68%, with an AUC reaching 99.55% (as illustrated in Figure 7). Specifically, the classification recall for NC0-NC4 all exceeded 95.23%. These results indicate excellent performance of the proposed neural network in the task of NC classification.

3.3 Result of C grade

The proposed algorithm subdivides anterior segment images under red reflex into levels ranging from C0/Normal to C5. The overall grading accuracy exceeds 98.26%, with an AUC value of 99.98%. As demonstrated in Table 2, the recall for levels C0 to C4 all remain above 91.67%, while precision exceeds 95.24%. This algorithm exhibits significant advantages in distinguishing non-severe cortical cataract.

3.4 Result of P grade

The overall accuracy for the six grades P0 to P5 reached 98.26%, according to Table 2, with an AUC of 100% (as illustrated in Figure 7). The recall in the P classification exceeded 91.30%. These results demonstrate a significant advantage of the algorithm in P classification.

4 Discussion

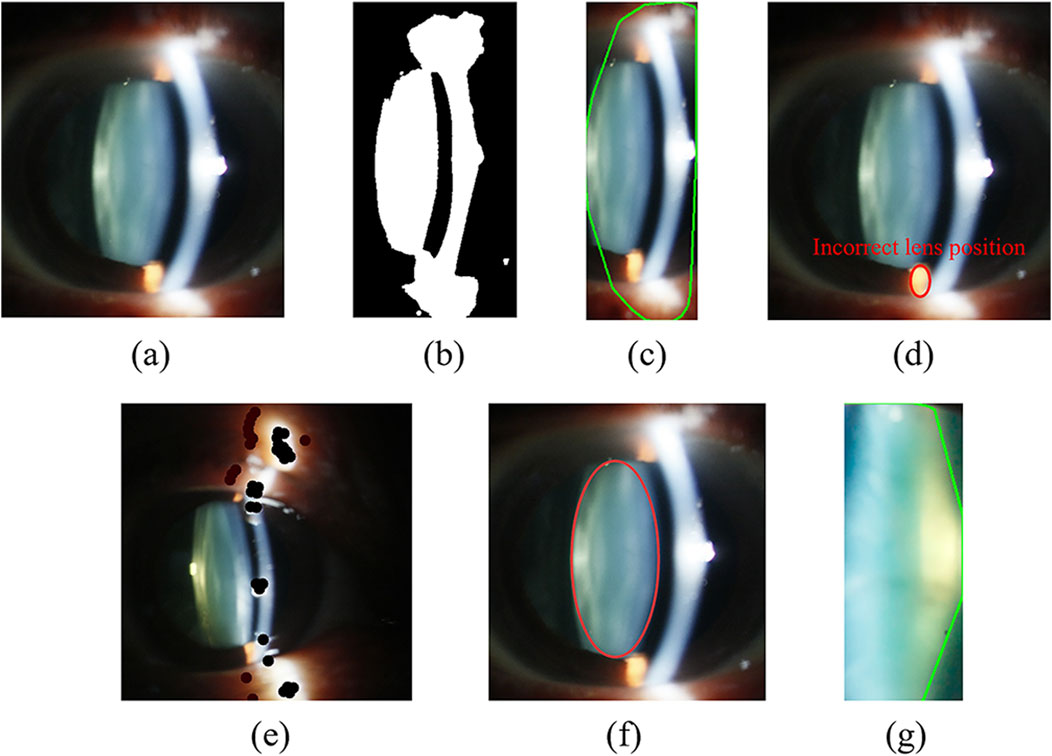

In this paper, we propose a novel paradigm for automatic cataract detection. This study first expanded the dataset and divided it in a 7:3 ratio. Subsequently, several image preprocessing methods were proposed, including a lens localization algorithm for nuclear cataract images and a bright spot filling algorithm for cortical and posterior subcapsular cataract images (Son et al., 2022). The processed images were then diagnosed and graded using deep learning algorithms. In terms of diagnostic results, the neural network achieved accuracies of 92.28%, 97.19%, 100%, and 98.26% for the NO, NC, C, and P classifications, respectively, demonstrating good image recognition performance under retro-illumination conditions. Regarding grading, the algorithm achieved accuracies exceeding 92.28% for NO grading and 97.19% for NC grading, and reached 100% and 98.26% for C and P grading, respectively, particularly excelling in C and P grading. To further contextualize our findings, we compare our results with recent state-of-the-art approaches in automatic cataract detection (Table 3). While previous studies predominantly focused on specific cataract types (e.g., Wu et al., 2019 on mixed cases or Shimizu et al., 2023 on nuclear cataracts), our method achieves both high accuracy (up to 100% for C grading) and broad generalization across NO, NC, C, and P categories. Our model consistently outperforms these benchmarks while maintaining strong sensitivity and specificity.

After systematic analysis of diagnostic error causes, three primary biases were identified: Firstly, inconsistencies in exposure intensity impair feature extraction efficacy, particularly in underexposed regions. Secondly, unilateral illumination in slit-lamp systems induces image shadows that mimic pathological lesions, significantly complicating accurate lesion identification in shadowed areas. Thirdly, dataset limitations critically constrain artificial intelligence performance: recognition accuracy exhibits strong dependence on both training data volume and feature diversity, adhering to the scaling laws demonstrated in ophthalmic AI studies (Zhao et al., 2023).

To date, artificial intelligence algorithms have been applied to the diagnosis and grading of cataract in fundus images. Xu et al. employed a CNN model to analyze fundus images for cataract diagnosis and grading (Xu et al., 2020). In clinical practice, however, ophthalmologists rely more on observing anterior segment images under a slit lamp for intuitive and accurate cataract diagnosis. Wu et al. used anterior segment images to develop a remote cataract screening platform based on deep learning algorithms, specifically targeting nuclear cataract (Wu et al., 2019). That study categorized cataract into mild, moderate, and severe, providing corresponding treatment recommendations. However, it focused solely on nuclear cataract and did not provide precise grading results, which somewhat limits the AI system’s ability to follow up and assess patients’ conditions. In contrast, our algorithm design offers several advantages. Firstly, we employ the more precise LOCS III criteria for cataract diagnosis and grading, ensuring diagnostic accuracy. Secondly, our study explicitly delineates the current severity of cataract, providing robust support for patient follow-up. Lastly, we investigate not only the diagnosis and grading of nuclear cataract but also cortical and posterior subcapsular cataract, thus achieving a more comprehensive study of cataract.

This study explores the application of artificial intelligence in the diagnosis and grading of cataract. This technology has the potential to significantly improve healthcare delivery for remote areas, impoverished communities, and elderly patients by addressing challenges such as long-distance travel and high costs, thereby reducing the economic burden on patients (Ting et al., 2019). For patients diagnosed with cataract, the technology provides a relatively standardized severity index, which facilitates follow-up visits and improves patient management. In clinical practice, the system is expected to enhance the efficiency of healthcare providers, allowing ophthalmologists to serve more patients and increase screening rates. Furthermore, the objective parameters provided by the system can offer standardized guidance for surgeons, thereby enhancing surgical safety. In conclusion, this study provides novel insights for future research and underscores the significance of integrating emerging artificial intelligence technologies into clinical practice.

During the image collection process, we encountered several challenges. (1) The eyeball is a three-dimensional structure, whereas anterior segment images are two-dimensional. When the camera is focused on a specific point, surrounding features may appear blurred to varying degrees. Image acquisition therefore required repeated focus adjustments to capture images at different planes, which demands precise alignment of the focus on specific points of the lens. (2) Most patients in the clinic present with more than one type of cataract. When two or more types coexist, the images obtained in practice are often not as clear and discernible as the standard images in the LOCS III. (3) In clinical practice, the number of images collected for each grade varies considerably, and the study lacks sufficient data support.
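One common way to soften the imbalance described in challenge (3) is to weight each grade inversely to its image count during training. The Python sketch below is illustrative only: the grade labels and image counts are hypothetical and are not taken from this study, and the study does not state that such weighting was applied.

```python
from collections import Counter


def inverse_frequency_weights(labels):
    """Return one weight per class: n_samples / (n_classes * class_count).

    Rare classes receive weights above 1 and frequent classes below 1,
    so that each class contributes equally to a weighted loss in total.
    """
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * cnt) for cls, cnt in counts.items()}


# Hypothetical image counts per cortical grade (not data from this study).
labels = ["C1"] * 60 + ["C2"] * 30 + ["C3"] * 10
weights = inverse_frequency_weights(labels)

for grade in sorted(weights):
    print(grade, round(weights[grade], 3))
```

With this scheme, the weighted image count is the same for every grade, so a grade with few images is not dominated by the better-represented grades. Weighting does not replace collecting more images for the sparse grades.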

5 Conclusion

This study developed a novel artificial intelligence system for identifying nuclear, cortical, and posterior subcapsular cataract. Among these types, the system is most accurate in identifying cortical cataract. The neural network system not only diagnoses the type of cataract but also accurately grades its severity across the NO, NC, C, and P categories, with particularly strong grading performance for C and P. Furthermore, the system is more sensitive in cataract grading at lower levels of lens opacity than at higher levels. These findings underscore the promising prospects of artificial intelligence in cataract diagnosis and grading. Continued efforts should therefore be directed towards developing and optimizing more precise algorithms to facilitate widespread clinical application.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material. Further inquiries can be directed to the corresponding authors.

Ethics statement

The studies involving humans were approved by the Ethics Committee of The Second Affiliated Hospital of Harbin Medical University. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

GT: Data curation, Formal Analysis, Investigation, Project administration, Supervision, Writing – original draft, Writing – review and editing. JZ: Conceptualization, Formal Analysis, Methodology, Writing – original draft. YD: Formal Analysis, Investigation, Writing – original draft. DJ: Formal Analysis, Writing – original draft. YQ: Conceptualization, Methodology, Writing – review and editing. NZ: Conceptualization, Funding acquisition, Methodology, Resources, Supervision, Writing – review and editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was supported by the Natural Science Foundation of Heilongjiang Province of China (grant number LH2023H041, NZ) and the Heilongjiang Medical Development Foundation (2022YSWL-001, NZ).

Acknowledgments

We would like to thank the Ophthalmic Diagnostic and Treatment Center of the Second Affiliated Hospital of Harbin Medical University (Harbin, China). During the data collection phase of this study, the advanced equipment and expertise provided by the Center were instrumental in ensuring the accuracy and reliability of the data. We would also like to thank Dr. Xiaofeng Zhang, Ph.D. in Statistics, for his valuable guidance on statistical data analysis.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fcell.2025.1669696/full#supplementary-material

References

Acharya, R. U., Yu, W., Zhu, K., Nayak, J., Lim, T. C., and Chan, J. Y. (2010). Identification of cataract and post-cataract surgery optical images using artificial intelligence techniques. J. Med. Syst. 34 (4), 619–628. doi:10.1007/s10916-009-9275-8

Brown, N. A., Bron, A. J., Ayliffe, W., Sparrow, J., and Hill, A. R. (1987). The objective assessment of cataract. Eye (Lond) 1 (Pt 2), 234–246. doi:10.1038/eye.1987.43

Castiglioni, I., Rundo, L., Codari, M., Di Leo, G., Salvatore, C., Interlenghi, M., et al. (2021). Ai applications to medical images: from machine learning to deep learning. Phys. Med. 83, 9–24. doi:10.1016/j.ejmp.2021.02.006

Chylack, L. T., Leske, M. C., McCarthy, D., Khu, P., Kashiwagi, T., and Sperduto, R. (1989). Lens opacities classification system Ii (Locs Ii). Arch. Ophthalmol. 107 (7), 991–997. doi:10.1001/archopht.1989.01070020053028

Chylack, L. T., Wolfe, J. K., Singer, D. M., Leske, M. C., Bullimore, M. A., Bailey, I. L., et al. (1993). The Lens opacities classification System Iii. The longitudinal Study of Cataract Study Group. Arch. Ophthalmol. 111 (6), 831–836. doi:10.1001/archopht.1993.01090060119035

Duncan, D. D., Shukla, O. B., West, S. K., and Schein, O. D. (1997). New objective classification System for nuclear opacification. J. Opt. Soc. Am. A Opt. Image Sci. Vis. 14 (6), 1197–1204. doi:10.1364/josaa.14.001197

Gali, H. E., Sella, R., and Afshari, N. A. (2019). Cataract grading systems: a review of past and present. Curr. Opin. Ophthalmol. 30 (1), 13–18. doi:10.1097/icu.0000000000000542

Gan, F., Liu, H., Qin, W. G., and Zhou, S. L. (2023). Application of artificial intelligence for automatic cataract staging based on anterior segment images: comparing automatic segmentation approaches to manual segmentation. Front. Neurosci. 17, 1182388. doi:10.3389/fnins.2023.1182388

Guan, J. Y., Ma, Y. C., Zhu, Y. T., Xie, L. L., Aizezi, M., Zhuo, Y. H., et al. (2022). Lens Nucleus dislocation in hypermature Cataract: case report and literature review. Med. Baltim. 101 (35), e30428. doi:10.1097/md.0000000000030428

Hu, S., Wang, X., Wu, H., Luan, X., Qi, P., Lin, Y., et al. (2020). Unified diagnosis framework for automated nuclear cataract grading based on Smartphone slit-lamp images. IEEE Access 8, 174169–174178. doi:10.1109/ACCESS.2020.3025346

Imran, A., Li, J., Pei, Y., Akhtar, F., Yang, J.-J., and Dang, Y. (2020). Automated identification of cataract severity using retinal fundus images. Comput. Methods Biomechanics Biomed. Eng. Imaging and Vis. 8 (6), 691–698. doi:10.1080/21681163.2020.1806733

Krizhevsky, A., Sutskever, I., and Hinton, G. E. (2017). Imagenet classification with deep convolutional neural networks. Commun. ACM 60 (6), 84–90. doi:10.1145/3065386

Lee, B. J., and Afshari, N. A. (2023). Advances in drug therapy and delivery for cataract treatment. Curr. Opin. Ophthalmol. 34 (1), 3–8. doi:10.1097/icu.0000000000000910

Li, H., and Chutatape, O. (2003). Boundary detection of optic disk by a modified asm method. Pattern Recognit. 36 (9), 2093–2104. doi:10.1016/S0031-3203(03)00052-9

Li, H., Lim, J. H., Liu, J., Wong, D. W. K., Tan, N. M., Lu, S., et al. (2009). An automatic diagnosis System of nuclear cataract using slit-lamp images. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2009, 3693–3696. doi:10.1109/iembs.2009.5334735

Li, H., Lim, J. H., Liu, J., Mitchell, P., Tan, A. G., Wang, J. J., et al. (2010). A computer-aided diagnosis System of nuclear cataract. IEEE Trans. Biomed. Eng. 57 (7), 1690–1698. doi:10.1109/tbme.2010.2041454

Li, Z., Jiang, J., Chen, K., Chen, Q., Zheng, Q., Liu, X., et al. (2021). Preventing corneal blindness caused by Keratitis using artificial intelligence. Nat. Commun. 12 (1), 3738. doi:10.1038/s41467-021-24116-6

Linglin, Z., Jianqiang, Li, Zhang, i, He Han, Bo Liu, Yang, J., et al. (2017). Automatic cataract detection and grading using deep convolutional neural network, 60–65. doi:10.1109/icnsc.2017.8000068

Litjens, G., Kooi, T., Bejnordi, B. E., Setio, A. A. A., Ciompi, F., Ghafoorian, M., et al. (2017). A Survey on deep learning in medical image analysis. Med. Image Anal. 42, 60–88. doi:10.1016/j.media.2017.07.005

Liu, P. R., Lu, L., Zhang, J. Y., Huo, T. T., Liu, S. X., and Ye, Z. W. (2021). Application of artificial intelligence in medicine: an overview. Curr. Med. Sci. 41 (6), 1105–1115. doi:10.1007/s11596-021-2474-3

Lu, Q., Wei, L., He, W., Zhang, K., Wang, J., Zhang, Y., et al. (2022). Lens opacities classification System Iii-Based Artificial Intelligence Program for automatic cataract grading. J. Cataract. Refract Surg. 48 (5), 528–534. doi:10.1097/j.jcrs.0000000000000790

Lu, S., Ba, L., Wang, J., Zhou, M., Huang, P., Zhang, X., et al. (2025). Deep learning-driven approach for cataract management: towards precise identification and predictive analytics. Front. Cell Dev. Biol. 13, 1611216. doi:10.3389/fcell.2025.1611216

Mundy, K. M., Nichols, E., and Lindsey, J. (2016). Socioeconomic disparities in cataract prevalence, characteristics, and management. Semin. Ophthalmol. 31 (4), 358–363. doi:10.3109/08820538.2016.1154178

Oganov, A. C., Seddon, I., Jabbehdari, S., Uner, O. E., Fonoudi, H., Yazdanpanah, G., et al. (2023). Artificial intelligence in retinal image analysis: development, advances, and challenges. Surv. Ophthalmol. 68 (5), 905–919. doi:10.1016/j.survophthal.2023.04.001

Shao, Y., Jie, Y., and Liu, Z. G.Expert Workgroup of Guidelines for the application of artificial intelligence in the diagnosis of anterior segment diseases 2023; Ophthalmic Imaging and Intelligent Medicine Branch of Chinese Medicine Education Association; Ophthalmology Committee of International Association of Translational Medicine; Chinese Ophthalmic Imaging Study Groups (2023). Guidelines for the application of artificial intelligence in the diagnosis of anterior segment diseases (2023). Int. J. Ophthalmol. 16 (9), 1373–1385. doi:10.18240/ijo.2023.09.03

Shimizu, E., Tanji, M., Nakayama, S., Ishikawa, T., Agata, N., Yokoiwa, R., et al. (2023). Ai-Based diagnosis of nuclear cataract from slit-lamp videos. Sci. Rep. 13 (1), 22046. doi:10.1038/s41598-023-49563-7

Son, K. Y., Ko, J., Kim, E., Lee, S. Y., Kim, M. J., Han, J., et al. (2022). Deep learning-based cataract detection and grading from slit-lamp and retro-illumination photographs: model development and validation Study. Ophthalmol. Sci. 2 (2), 100147. doi:10.1016/j.xops.2022.100147

Ting, D. S. W., Pasquale, L. R., Peng, L., Campbell, J. P., Lee, A. Y., Raman, R., et al. (2019). Artificial intelligence and deep learning in ophthalmology. Br. J. Ophthalmol. 103 (2), 167–175. doi:10.1136/bjophthalmol-2018-313173

Ting, D. S. J., Foo, V. H., Yang, L. W. Y., Sia, J. T., Ang, M., Lin, H., et al. (2021). Artificial intelligence for anterior segment diseases: emerging applications in ophthalmology. Br. J. Ophthalmol. 105 (2), 158–168. doi:10.1136/bjophthalmol-2019-315651

Wan Zaki, W. M. D., Abdul Mutalib, H., Ramlan, L. A., Hussain, A., and Mustapha, A. (2022). Towards a connected Mobile cataract screening System: a future approach. J. Imaging 8 (2), 41. doi:10.3390/jimaging8020041

Wang, R. Y., Zhu, S. Y., Hu, X. Y., Sun, L., Zhang, S. C., and Yang, W. H. (2024). Artificial intelligence applications in ophthalmic optical coherence tomography: a 12-Year bibliometric analysis. Int. J. Ophthalmol. 17 (12), 2295–2307. doi:10.18240/ijo.2024.12.19

Wu, X., Huang, Y., Liu, Z., Lai, W., Long, E., Zhang, K., et al. (2019). Universal artificial intelligence platform for collaborative management of cataracts. Br. J. Ophthalmol. 103 (11), 1553–1560. doi:10.1136/bjophthalmol-2019-314729

Wu, X., Xu, D., Ma, T., Li, Z. H., Ye, Z., Wang, F., et al. (2022). Artificial intelligence model for antiinterference cataract automatic diagnosis: a diagnostic accuracy Study. Front. Cell Dev. Biol. 10, 906042. doi:10.3389/fcell.2022.906042

Xu, X., Zhang, L., Li, J., Guan, Y., and Zhang, L. (2020). A hybrid global-local representation Cnn model for automatic cataract grading. IEEE J. Biomed. Health Inf. 24 (2), 556–567. doi:10.1109/jbhi.2019.2914690

Xu, X., Zhang, M., Huang, S., Li, X., Kui, X., and Liu, J. (2024). The application of artificial intelligence in diabetic retinopathy: progress and prospects. Front. Cell Dev. Biol. 12, 1473176. doi:10.3389/fcell.2024.1473176

Yang, W. H., Shao, Y., and Xu, Y. W.Expert Workgroup of Guidelines on Clinical Research Evaluation of Artificial Intelligence in Ophthalmology 2023, Ophthalmic Imaging and Intelligent Medicine Branch of Chinese Medicine Education Association, Intelligent Medicine Committee of Chinese Medicine Education Association (2023). Guidelines on clinical research evaluation of artificial intelligence in ophthalmology (2023). Int. J. Ophthalmol. 16 (9), 1361–1372. doi:10.18240/ijo.2023.09.02

Yousefi, S., Takahashi, H., Hayashi, T., Tampo, H., Inoda, S., Arai, Y., et al. (2020). Predicting the likelihood of need for future keratoplasty intervention using artificial intelligence. Ocul. Surf. 18 (2), 320–325. doi:10.1016/j.jtos.2020.02.008

Yu, K. H., Beam, A. L., and Kohane, I. S. (2018). Artificial intelligence in healthcare. Nat. Biomed. Eng. 2 (10), 719–731. doi:10.1038/s41551-018-0305-z

Zhang, L., Tang, L., Xia, M., and Cao, G. (2023). The application of artificial intelligence in glaucoma diagnosis and prediction. Front. Cell Dev. Biol. 11, 1173094. doi:10.3389/fcell.2023.1173094

Keywords: cataract, neural network, artificial intelligence, anterior segment image, Lens Opacities Classification System III (LOCS III)

Citation: Tang G, Zhang J, Du Y, Jiang D, Qi Y and Zhou N (2025) Artificial intelligence in cataract grading system: a LOCS III-based hybrid model achieving high-precision classification. Front. Cell Dev. Biol. 13:1669696. doi: 10.3389/fcell.2025.1669696

Received: 20 July 2025; Accepted: 27 August 2025;

Published: 09 September 2025.

Edited by:

Weihua Yang, Southern Medical University, China

Reviewed by:

Zhe Zhang, Shenzhen Eye Hospital, China

Sujatha Kamepalli, Technology and Research, India

Copyright © 2025 Tang, Zhang, Du, Jiang, Qi and Zhou. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Nan Zhou, nzhou_hmu@126.com; Yanhua Qi, qyh86605643@126.com

†These authors have contributed equally to this work and share first authorship
