Abstract

Introduction:

In precision agriculture, Wireless Sensor Networks (WSNs) are essential for real-time monitoring and informed decision-making. Nevertheless, increased node density, constrained energy supplies, and unstable environmental circumstances present barriers to resource allocation and communication efficiency.

Methods:

To address these limitations, a hybrid system combining deep learning and metaheuristic optimization was developed, integrating Bidirectional Long Short-Term Memory (Bi-LSTM) with Ant Colony Optimization (ACO). Real-time multivariate data, including temperature, humidity, soil moisture, and power usage, were collected using a custom embedded sensing system deployed in an agricultural field. Z-score normalization was used for preprocessing, followed by Principal Component Analysis (PCA) for feature extraction and Particle Swarm Optimization (PSO) for feature subset selection. The Bi-LSTM model was optimized using ACO to improve temporal learning and energy-efficient scheduling among sensor nodes.

Results:

The assessment of the proposed Bi-LSTM-ACO system resulted in an accuracy of 98.61%, precision of 92.16%, recall of 98.06%, and an F1-score of 91.41%, outperforming baseline models including LSTM, GRU, and CNN-LSTM.

Discussion:

The findings indicate that the proposed framework substantially reduces energy consumption, improves resource utilization, and supports low-latency actuation in Agri-IoT deployments. The proposed work provides a scalable and intelligent system for real-time, energy-efficient agricultural monitoring.

1 Introduction

Modern agriculture must meet growing global food demand while promoting sustainable farming methods and coping with constrained resources (Jararweh et al., 2023). Population growth and a shifting climate further strain traditional farming practices, calling for a shift toward more environmentally friendly and resilient methods (Wakweya, 2023). The complex interactions among these problems have spurred research in smart agriculture, a technology-driven concept that aims to reduce environmental impact, improve operational effectiveness, and maximize resource use. Unlike traditional methods, smart agriculture incorporates advanced solutions to build a more data-driven and networked agricultural environment (Vishnoi and Goel, 2024), with the goal of reducing the ecological footprint of conventional farming while increasing productivity. Because conventional practices cannot satisfy the demands of a growing population under changing climatic conditions, Internet of Things (IoT)-based sensing offers a scalable, data-driven approach to real-time environmental monitoring. Adopting IoT in agriculture is one of the most important initial steps toward an intelligent, networked ecosystem. An IoT-based surveillance system aims to transform how farmers collect and use data by carefully placing sensors and communication devices throughout the agricultural infrastructure to gather the data needed for precise decision-making.

The IoT-based monitoring system provides farmers with real-time data on energy use, soil moisture, and atmospheric conditions, allowing them to make informed decisions. This information can improve resource allocation, streamline operations, and boost efficiency. The technology also enables more adaptive and responsive practices, such as improved energy management and precise irrigation. In particular, real-time insight into soil moisture levels supports resource optimization and sustainable agricultural practices (Liu, 2022).

Resource allocation, the assignment of limited resources such as time, energy, bandwidth, and processing capacity among competing demands, is an essential procedure. Many domains, including energy management, manufacturing, transportation networks, and communication systems, depend on efficient resource allocation (Sun et al., 2020). Resource allocation is particularly important in wireless sensor network (WSN)-based agricultural systems because resources are inherently limited and sensor energy consumption must be minimized to extend network lifetime. Optimal resource allocation can improve system performance, lower energy usage, and increase reliability and scalability.

In agri-IoT networks, efficient resource allocation is essential for maintaining scalability, lowering latency, and ensuring energy efficiency. Numerous techniques, including heuristic, optimization-based, reinforcement learning (RL), and clustering-based approaches, have been proposed for this purpose. Clustering protocols such as the threshold-sensitive energy-efficient sensor network (TEEN) protocol, hybrid energy-efficient distributed (HEED) clustering, and low-energy adaptive clustering hierarchy (LEACH) are frequently used to rotate roles among sensor nodes and aggregate data, thereby extending network lifetime (Daanoune et al., 2021; Jabbar et al., 2023). Metaheuristics such as ant colony optimization (ACO), particle swarm optimization (PSO) (Prakash et al., 2024), genetic algorithms (GAs), the gray wolf optimizer (GWO), and the whale optimization algorithm (WOA) (Chandrasekaran and Rajasekaran, 2024) have been used to schedule sensing tasks, optimize routing paths, and manage energy resources. For tasks such as irrigation and sensor scheduling, RL methods such as Q-learning, deep Q-networks (DQNs), and multi-agent RL support decision-making under changing conditions (Ganesh et al., 2023; Zhao et al., 2022). In less complex settings, rule-based approaches such as round-robin and priority scheduling are employed for load balancing and job ordering. Together, these algorithms enable scalable, adaptive, and intelligent resource management in smart agriculture systems.
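To make the idea of role rotation concrete, the sketch below implements the cluster-head election rule of the original LEACH protocol: in round r, each node that has not yet served as cluster head in the current epoch of 1/p rounds elects itself with probability T = p / (1 − p·(r mod 1/p)), where p is the desired fraction of cluster heads. The node count, p, and the random seed are illustrative assumptions, and LEACH is shown only as background; it is not part of the proposed Bi-LSTM–ACO system.

```python
import random


def leach_threshold(p: float, r: int) -> float:
    """LEACH election threshold for round r with desired cluster-head fraction p."""
    epoch = round(1 / p)  # after this many rounds every node becomes eligible again
    return p / (1 - p * (r % epoch))


def elect_cluster_heads(n_nodes: int, p: float, n_rounds: int, seed: int = 0):
    """Simulate LEACH cluster-head rotation; return the heads chosen in each round."""
    rng = random.Random(seed)
    epoch = round(1 / p)
    eligible = set(range(n_nodes))  # G: nodes not yet cluster head in this epoch
    history = []
    for r in range(n_rounds):
        if r % epoch == 0:
            eligible = set(range(n_nodes))  # a new epoch starts: reset eligibility
        t = leach_threshold(p, r)
        heads = [n for n in sorted(eligible) if rng.random() < t]
        eligible -= set(heads)  # a node serves at most once per epoch
        history.append(heads)
    return history


rounds = elect_cluster_heads(n_nodes=20, p=0.1, n_rounds=10)
for r, heads in enumerate(rounds):
    print(f"round {r}: cluster heads {heads}")
```

Because the threshold reaches 1 in the last round of an epoch, every node serves as cluster head exactly once per epoch, which spreads the energy cost of the role across the network.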

Recent studies indicate that combining optimization techniques with predictive modeling greatly improves resource allocation in agri-IoT systems. Numerous machine learning and deep learning models have been used to predict environmental factors and make the best use of scarce agricultural resources. For example, IoT-based frameworks have employed convolutional neural network (CNN) and long short-term memory (LSTM) models to forecast crop diseases and insect outbreaks, enabling timely pesticide application and reducing chemical use (Wang et al., 2024). To support accurate irrigation and minimize water waste, models such as random forest, XGBoost, and LSTM have been used to forecast weather and soil moisture (Li et al., 2023). Deep reinforcement learning approaches such as DQNs have been used to learn irrigation strategies that balance crop water requirements against energy consumption. In addition, bidirectional long short-term memory (Bi-LSTM) models have been combined with simple scheduling methods to predict future soil moisture levels and improve control over irrigation cycles. These methods show how prediction-driven, context-aware decision-making is increasingly used in smart agriculture to optimize the use of energy, water, and chemical resources, ultimately improving cost-effectiveness and sustainability.

The following justifies the use of optimization in the proposed Bi-LSTM model:

• Resource allocation in agri-IoT systems within WSNs necessitates the creation of scalable, robust, and dependable deep learning models.

• The Bi-LSTM model has numerous hyperparameters that substantially influence its predictive performance. Optimizing these hyperparameters is therefore essential for improving accuracy and efficiency.

The primary contributions of this work are as follows:

• The proposed Bi-LSTM–ACO model is trained on time-series data collected from an agricultural field by a real-time embedded sensor system deployed in the field.

• Ant colony optimization (ACO), a nature-inspired technique for complex optimization problems that is modeled on the foraging behavior of ants, is applied to make efficient use of the power of sensor devices deployed in the wireless sensor network.

• A novel Bi-LSTM–ACO methodology is proposed to address specific resource allocation challenges in IoT networks, such as power utilization and energy optimization.

• This methodology addresses the crucial problem of resource allocation in Internet of Things networks by efficiently distributing resources to IoT applications and devices according to different priorities and needs.

The remainder of this paper is organized as follows. Section 2 reviews related work and presents the proposed Bi-LSTM with ACO algorithm. Section 3 (Results and Discussion) analyzes model performance and presents the results. Finally, Section 4 (Conclusion) provides the discussion and summary.

2 Related work

Energy efficiency and predictive intelligence in smart agriculture have emerged as vital research domains owing to the growing use of WSNs and IoT-enabled devices. Conventional approaches often fail to adequately address the dynamic management of power consumption and the accurate forecasting of energy usage in these systems. Recent studies have investigated hybrid models and optimization methods to mitigate these limitations. Han et al. (2021) proposed a joint optimization approach that uses deep reinforcement learning to manage power and channel allocation in agricultural wireless sensor networks. The network control problem is formulated as a Markov decision process and solved with the deep deterministic policy gradient (DDPG) algorithm, resulting in higher network rewards and balanced energy use under signal-to-interference-plus-noise ratio (SINR) constraints.

A hybrid LSTM–GRU architecture was proposed by Rahman et al. (2024) for real-time environmental and electricity forecasting in IoT systems. The integration of both models substantially diminished prediction errors, yielding an MAE of 3.78% and an RMSE of 8.15%, hence providing enhanced reliability compared to individual LSTM or GRU models.

To address privacy concerns in distributed IoT settings, Sharma and Kaur (2024) introduced a fog-based federated learning framework for time-series prediction. The solution achieved performance comparable to centralized models despite non-independent and identically distributed (non-IID) data, while safeguarding user data privacy and reducing network traffic.

In building energy prediction, Wang et al. (2025) introduced the BE-LSTM model, which integrates backward elimination (BE) with LSTM for efficient forecasting on limited datasets. The method outperformed CNN–LSTM and Bi-LSTM models, especially in settings with non-periodic human behavior patterns.

Pandiyaraju et al. (2023) proposed a clustering algorithm that combines a hybrid optimization technique, termed EAO, with an enhanced CNN to improve training accuracy and precision. The method outperformed existing approaches, achieving a 99% packet delivery ratio, 76.92% throughput, 98.24% network lifetime, 50% maximum energy consumption, and 99.23% classification accuracy.

Vashisht et al. (2024) proposed a GRU–Bi-LSTM model for crop yield prediction, integrating data preprocessing, an improved shearlet transform for feature extraction, and enhanced gray wolf optimization for feature selection. The hybrid model attained a high accuracy of 97% and demonstrated robust performance in precision, recall, and F-measure, surpassing conventional techniques. This underscores its efficacy in improving precision agriculture via precise yield forecasts.

Ullah et al. (2020) introduced a hybrid deep learning framework that combines a CNN with a multi-layer bidirectional LSTM (M-BDLSTM) for short-term residential energy consumption prediction. The model comprises data preparation, sequential learning, and prediction comparison phases. It captures temporal trends effectively and outperforms conventional models by reducing prediction errors such as MSE and RMSE, as demonstrated by 10-fold cross-validation and hold-out validation.

Tace et al. (2022) developed an intelligent irrigation system that uses IoT and machine learning to improve water efficiency in agriculture. By integrating environmental sensors (humidity, temperature, and precipitation) with models such as KNN, SVM, and neural networks, and by deploying the system with Node-RED and MongoDB, they achieved precise, cost-effective, and adaptable irrigation management. The KNN model outperformed the others, with an accuracy of 98.3% and a low RMSE, illustrating the promise of ML–IoT integration for precision irrigation.

Liu et al. (2024) addressed Bi-LSTM hyperparameter optimization for short-term power load forecasting by introducing a hybrid differential evolution–improved Harris hawk optimization (DE–IHHO) approach. By integrating evolutionary and metaheuristic techniques, the method improves the global search ability and convergence rate of Bi-LSTM training. The results showed significant improvements in MAE, MAPE, and RMSE, confirming the model's effectiveness for dynamic load forecasting in smart grid applications.

Mbamba and Batstone (2023) developed a genetic algorithm-driven optimization framework for deep learning models in the water sector. The study combines feature selection methods (Pearson correlation coefficient (PCC), PCA, and GA) with Bi-LSTM networks to forecast effluent quality and biogas production. The findings indicate that shallow network designs with optimized hyperparameters yield efficient, interpretable, and accurate forecasts, making the technique suitable for multi-objective optimization and predictive analytics in environmental systems.

Mustaffa et al. (2024) introduced a hybrid deep learning model for predicting Earth's surface temperature, optimized with the Barnacles Mating Optimizer (BMO). The BMO tunes both the weights and biases to improve predictive accuracy on a global temperature dataset. Compared with other optimization methods such as PSO, HSA, and ACO and with a traditional ARIMA model, the BMO-optimized model achieved better MAE, RMSE, and R², underscoring its potential for climate-sensitive applications such as agriculture and meteorology.

These studies underscore the increasing implementation of deep learning, metaheuristic optimization, and decentralized learning frameworks in time-series forecasting and energy management. Nonetheless, a research gap persists in the application of these approaches to real-time agri-IoT systems featuring multivariate sensor–actuator feedback. This study tackles the existing gap by employing an integrated Bi-LSTM–ACO model designed for energy-efficient scheduling and resource optimization in agricultural sensor networks.

The proposed agri-IoT wireless sensor network depends on several factors, including node placement, hardware status, and dynamic power consumption. Energy-efficient communication requires a strategy that ensures each node has sufficient energy to complete its tasks. The goal of this integrated approach is to improve communication reliability and energy efficiency within the agricultural IoT network. Figure 1 depicts the overall system architecture. Several sensor nodes deployed in the agricultural field collect data. Each sensor node monitors the field and performs necessary actions, such as controlling the water pump, and includes sensors for temperature, humidity, and soil moisture.

FIGURE 1

Overall proposed system architecture.

The sensor data are sent to the IoT cloud. The dataset consists of sensor values, actuator states, and the power consumption of the sensor node. The Bi-LSTM deep neural network, optimized with ACO, is trained on this dataset to predict the power consumption of the sensor nodes and guide their energy optimization. The overall approach uses Z-score normalization for preprocessing, principal component analysis (PCA) for feature extraction, and particle swarm optimization (PSO) for feature selection.

2.1 Dataset preparation

A real-time dataset was generated using a custom-built embedded system to support predictive energy optimization in the agri-IoT setting. The system consisted of environmental sensors connected to a microcontroller and linked to a cloud dashboard for continuous data capture and logging. The sensor suite comprised a DHT11 for temperature and humidity measurement, a capacitive soil moisture sensor, and a water level sensor for irrigation monitoring. The sensors were connected to a NodeMCU ESP8266 microcontroller, chosen for its integrated Wi-Fi and energy-efficient operation, which make it well suited to IoT applications.

The system periodically collected environmental parameters and actuator states (e.g., water pump ON/OFF) and relayed the data to a cloud-based IoT platform for storage and analysis. The dataset was created by recording time-stamped sensor readings together with the power consumption computed for each sensor transmission cycle. The cloud platform provides an API for accessing historical records, which was used to retrieve the data for model training and evaluation.

2.2 Preprocessing: Z-score data normalization

Z-score normalization (standardization) is applied to the dataset to ensure consistent feature scales and improve the prediction model's effectiveness. The dataset includes features such as time, latency, temperature, humidity, soil moisture, and pump status, stored as strings, integers, and floats, with possibly missing (NaN) values. These values are first cleaned and converted into a uniform numerical representation.
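A minimal cleaning sketch, assuming each raw record arrives as a dictionary of strings (as it might from a cloud feed export) and that the pump status is logged as "ON"/"OFF". The field names, the subset of features shown, and the median imputation of missing values are illustrative assumptions, not the authors' documented procedure.

```python
import math
from statistics import median

# Hypothetical raw records with mixed strings, numbers, and missing values.
RAW = [
    {"temperature": "29.5", "humidity": "61", "soil_moisture": "412", "pump": "OFF"},
    {"temperature": "30.1", "humidity": None, "soil_moisture": "398", "pump": "ON"},
    {"temperature": "nan", "humidity": "58", "soil_moisture": "405", "pump": "on"},
]
NUMERIC = ["temperature", "humidity", "soil_moisture"]


def to_float(value):
    """Convert a raw value to float; unparsable or empty values become NaN."""
    try:
        return float(value)
    except (TypeError, ValueError):
        return math.nan


def clean(records):
    rows = []
    for rec in records:
        row = {k: to_float(rec.get(k)) for k in NUMERIC}
        # Encode the actuator state as 1 (ON) / 0 (OFF); anything else becomes NaN.
        status = str(rec.get("pump", "")).strip().upper()
        row["pump"] = {"ON": 1.0, "OFF": 0.0}.get(status, math.nan)
        rows.append(row)
    # Replace each missing value with the median of the observed values in its column.
    for col in NUMERIC + ["pump"]:
        observed = [r[col] for r in rows if not math.isnan(r[col])]
        fill = median(observed) if observed else 0.0
        for r in rows:
            if math.isnan(r[col]):
                r[col] = fill
    return rows


for row in clean(RAW):
    print(row)
```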

When feature variables have different magnitudes or units (such as temperature in degrees Celsius versus humidity in %), Z-score normalization is particularly helpful. It helps the Bi-LSTM model learn more effectively by transforming the data so that each feature has a mean of 0 and a standard deviation of 1.

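The Z-score normalization of a temperature data point is calculated using Equation 1:

$$Z = \frac{x - \mu}{\sigma} \tag{1}$$

Here, x is the raw temperature reading, μ is the mean of the temperature feature, and σ is its standard deviation.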

This standardization ensures that each input feature contributes proportionally to the model’s learning process and improves convergence during training.
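A minimal NumPy sketch of Equation 1 applied column-wise, assuming the population standard deviation (ddof = 0) and a small illustrative data matrix. The guard for zero-variance columns is our own precaution, not a step stated in the paper.

```python
import numpy as np


def zscore_fit(X: np.ndarray):
    """Return per-feature mean and population standard deviation (ddof=0)."""
    mu = X.mean(axis=0)
    sigma = X.std(axis=0)
    sigma[sigma == 0] = 1.0  # guard: constant features are centred but not scaled
    return mu, sigma


def zscore_transform(X: np.ndarray, mu: np.ndarray, sigma: np.ndarray) -> np.ndarray:
    """Apply Z = (x - mu) / sigma to every column."""
    return (X - mu) / sigma


# Illustrative readings: temperature (°C), humidity (%), soil moisture (raw sensor units).
X_train = np.array([[28.0, 60.0, 410.0],
                    [30.0, 55.0, 395.0],
                    [32.0, 65.0, 425.0]])
mu, sigma = zscore_fit(X_train)
Z = zscore_transform(X_train, mu, sigma)
print(Z.mean(axis=0).round(6), Z.std(axis=0).round(6))
```

In practice, μ and σ fitted on the training split would be reused to transform validation and test data, so that no information from held-out samples leaks into preprocessing.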

2.3 Feature extraction: PCA

PCA is a statistical method that reduces dimensionality while retaining as much of the variance in the data as possible. It transforms the original correlated features into principal components, a set of linearly uncorrelated variables that correspond to the directions of maximum variance in the feature space. For multivariate sensor data, such as the temperature, humidity, soil moisture, and actuator status in agri-IoT datasets, PCA reduces the complexity of the feature space while preserving important predictive information.

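Given N input records $x_1, x_2, \dots, x_N$, where each $x_i \in \mathbb{R}^m$ is a random m-dimensional sensor reading (e.g., temperature, humidity, and soil moisture), the mean is computed using Equation 2:

$$\mu = \frac{1}{N}\sum_{i=1}^{N} x_i \tag{2}$$

Equation 3 gives the covariance matrix of the input:

$$C = \frac{1}{N}\sum_{i=1}^{N} (x_i - \mu)(x_i - \mu)^{\top} \tag{3}$$

PCA proceeds by solving the eigenvalue problem of the covariance matrix, as shown in Equation 4:

$$C u_k = \lambda_k u_k, \quad k = 1, 2, \dots, m \tag{4}$$

where $\lambda_k$ is the k-th eigenvalue, ordered so that $\lambda_1 \ge \lambda_2 \ge \dots \ge \lambda_m$, and $u_k$ is the corresponding eigenvector.

To describe data records with low-dimensional vectors, we retain the n eigenvectors (n < m), also called principal directions, that correspond to the n largest eigenvalues. These are the directions along which the data vary the most: the first principal direction captures more variance than any other direction, the second captures the most variance among directions orthogonal to the first, and so on. This property makes the principal directions critical for efficient dimensionality reduction and analysis.

The matrix of retained eigenvectors is formed as defined in Equation 5:

$$U = [u_1, u_2, \dots, u_n] \in \mathbb{R}^{m \times n} \tag{5}$$

The relationship between the eigenvectors and eigenvalues is expressed in Equation 6:

$$C U = U \Lambda, \quad \Lambda = \mathrm{diag}(\lambda_1, \lambda_2, \dots, \lambda_n) \tag{6}$$

Equation 7 defines the threshold condition for retaining the top principal components, where v is a selected precision value (the minimum fraction of the total variance to keep):

$$\frac{\sum_{k=1}^{n} \lambda_k}{\sum_{k=1}^{m} \lambda_k} \ge v \tag{7}$$

The number of eigenvectors n is chosen as the smallest value that satisfies Equation 7. A new input record y is then assigned a low-dimensional feature vector z by projecting it onto the retained principal directions, which substantially reduces dimensionality while preserving as much of the variance as possible in the extracted features. The transformed data are computed using Equation 8:

$$z = U^{\top}(y - \mu) \tag{8}$$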
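The PCA steps above can be sketched in NumPy as follows. This is an illustrative implementation under our own assumptions: a synthetic three-feature dataset in which two features are strongly correlated, a 1/N covariance normalization, and a retained-variance threshold v = 0.95.

```python
import numpy as np


def pca_fit(X: np.ndarray, v: float = 0.95):
    """Fit PCA on X of shape (N, m); keep the fewest components reaching variance fraction v."""
    mu = X.mean(axis=0)                          # Eq. 2: sample mean
    Xc = X - mu
    C = Xc.T @ Xc / X.shape[0]                   # Eq. 3: covariance with 1/N normalisation
    eigvals, eigvecs = np.linalg.eigh(C)         # Eq. 4: eigh suits the symmetric matrix C
    order = np.argsort(eigvals)[::-1]            # sort eigenpairs by decreasing eigenvalue
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    ratio = np.cumsum(eigvals) / eigvals.sum()   # retained-variance fraction for n = 1..m
    n = min(int(np.searchsorted(ratio, v)) + 1, len(eigvals))  # smallest n with ratio >= v
    U = eigvecs[:, :n]                           # Eq. 5: m x n matrix of principal directions
    return mu, U, eigvals


def pca_transform(Y: np.ndarray, mu: np.ndarray, U: np.ndarray) -> np.ndarray:
    """Project each row y of Y onto the principal directions: z = U^T (y - mu)."""
    return (Y - mu) @ U


# Synthetic example: feature 2 is almost a multiple of feature 1, feature 3 is tiny noise.
rng = np.random.default_rng(0)
t = rng.normal(size=200)
X = np.column_stack([t, 2 * t + 0.01 * rng.normal(size=200), 0.01 * rng.normal(size=200)])
mu, U, eigvals = pca_fit(X, v=0.95)
Z = pca_transform(X, mu, U)
print("components kept:", U.shape[1], "projected shape:", Z.shape)
```

Because the eigenvectors of a symmetric matrix are determined only up to sign, the projected values may differ in sign between implementations without affecting the retained variance.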

2.4 Feature selection: PSO

PSO is a nature-inspired metaheuristic for complex optimization problems. This study uses PSO for feature selection, identifying the most relevant features in the agri-IoT dataset to reduce dimensionality and improve the effectiveness of the subsequent Bi-LSTM prediction model.

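PSO begins by initializing a population (swarm) of particles, each representing a candidate solution (a subset of features). The particles iteratively adjust their positions in the search space, guided by their own best positions found so far (pbest) and the best position found by the whole swarm (gbest). The velocity of each particle is updated according to Equation 9, and Equation 10 determines the particle's new position from its velocity:

$$v_i^{t+1} = w\,v_i^{t} + c_1 r_1 \left(\mathit{pbest}_i - x_i^{t}\right) + c_2 r_2 \left(\mathit{gbest} - x_i^{t}\right) \tag{9}$$

$$x_i^{t+1} = x_i^{t} + v_i^{t+1} \tag{10}$$

Here, $x_i^{t}$ and $v_i^{t}$ are the position and velocity of particle i at iteration t, w is the inertia weight, $c_1$ and $c_2$ are the cognitive and social acceleration coefficients, and $r_1$ and $r_2$ are random numbers drawn uniformly from [0, 1].

The inertia weight w decreases linearly with the iteration count between its maximum and minimum values, $w_{max}$ and $w_{min}$:

$$w(t) = w_{max} - \frac{(w_{max} - w_{min})\,t}{T_{max}} \tag{11}$$

where $T_{max}$ is the maximum number of iterations.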

Through these velocity and position updates, the cognitive (pbest) and social (gbest) components guide the swarm through the search space. PSO can solve complex engineering optimization problems, but it is prone to premature convergence when particles become trapped in local optima.
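A compact NumPy sketch of PSO-based feature selection that implements Equations 9 and 10 and a linearly decreasing inertia weight. The paper does not state how continuous particle positions are mapped to feature subsets or which fitness function is used, so both are assumptions here: positions are kept in [0, 1] and a feature is selected when its coordinate exceeds 0.5, and the fitness is a hypothetical relevance-minus-size score. In a real pipeline, it would be replaced by the validation performance of a model trained on the selected features. The coefficient values (c1 = c2 = 2, w from 0.9 to 0.4) are common defaults, assumed rather than taken from the paper.

```python
import numpy as np

# Hypothetical relevance scores for five candidate features (illustrative only).
RELEVANCE = np.array([1.0, 0.05, 0.8, 0.02, 0.6])


def fitness(mask) -> float:
    """Toy objective to maximise: reward relevant features, penalise subset size."""
    mask = np.asarray(mask, dtype=float)
    return float(RELEVANCE @ mask - 0.1 * mask.sum())


def pso_select(n_feat, n_particles=20, iters=50, c1=2.0, c2=2.0,
               w_max=0.9, w_min=0.4, seed=0):
    rng = np.random.default_rng(seed)
    x = rng.random((n_particles, n_feat))            # positions in [0, 1]
    v = np.zeros((n_particles, n_feat))              # velocities
    pbest = x.copy()
    pbest_fit = np.array([fitness(p > 0.5) for p in x])
    g = int(pbest_fit.argmax())
    gbest, gbest_fit = pbest[g].copy(), float(pbest_fit[g])
    history = [gbest_fit]
    for t in range(iters):
        w = w_max - (w_max - w_min) * t / iters      # linearly decreasing inertia weight
        r1, r2 = rng.random(x.shape), rng.random(x.shape)
        v = w * v + c1 * r1 * (pbest - x) + c2 * r2 * (gbest - x)   # Eq. 9
        x = np.clip(x + v, 0.0, 1.0)                                # Eq. 10, kept in [0, 1]
        fit = np.array([fitness(p > 0.5) for p in x])
        improved = fit > pbest_fit                   # update each particle's personal best
        pbest[improved], pbest_fit[improved] = x[improved], fit[improved]
        g = int(pbest_fit.argmax())
        if pbest_fit[g] > gbest_fit:                 # update the swarm's global best
            gbest, gbest_fit = pbest[g].copy(), float(pbest_fit[g])
        history.append(gbest_fit)
    return gbest > 0.5, gbest_fit, history


mask, best, history = pso_select(len(RELEVANCE))
print("selected features:", np.flatnonzero(mask), "fitness:", round(best, 3))
```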

2.5 Bi-LSTM

2.5.1 LSTM

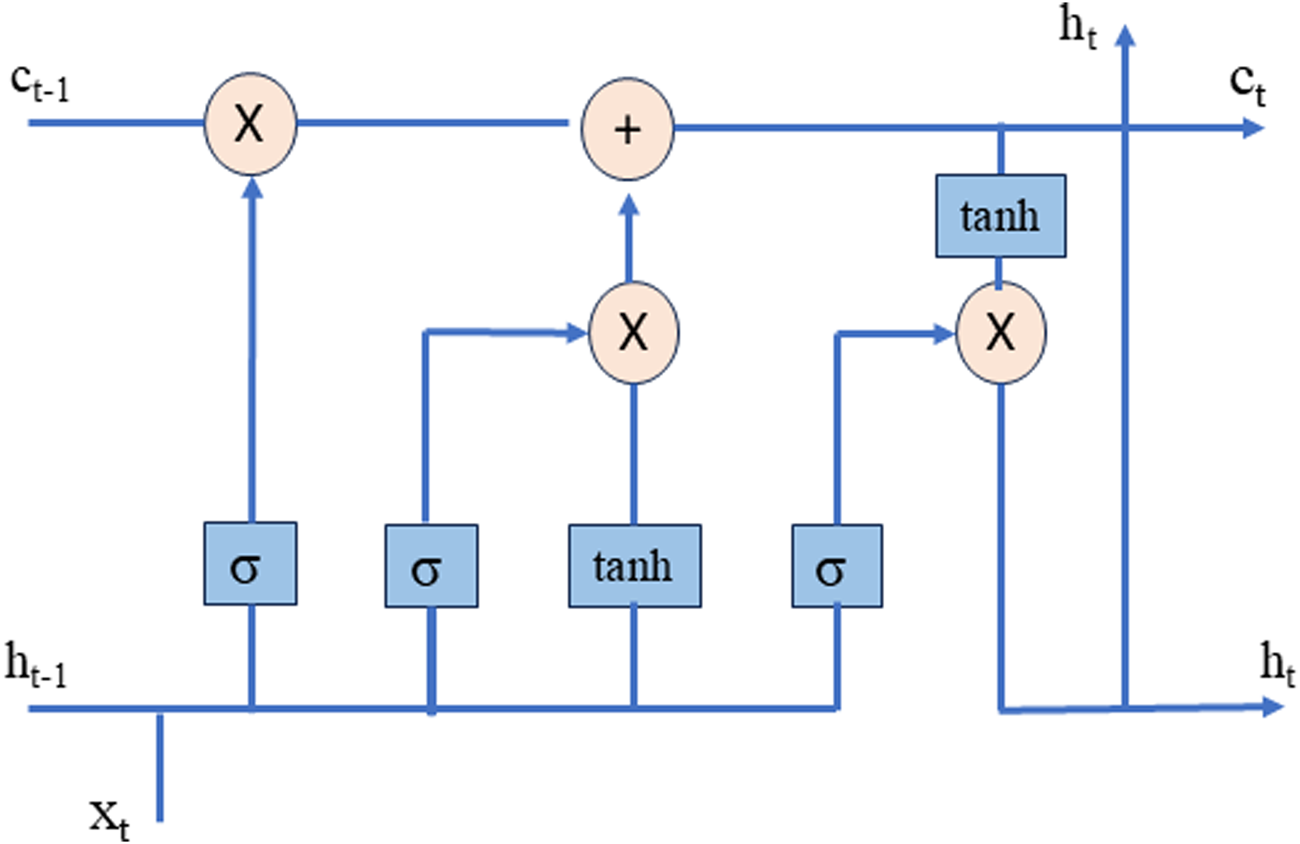

LSTM networks, a type of recurrent neural network (RNN), are well suited to sequential input because they can learn long-term dependencies. Their gate structure, which regulates the flow of information, has three primary components: the input gate, the forget gate, and the output gate. The forget gate uses a sigmoid function to determine which information in the memory cell should be discarded or retained. The input gate combines a sigmoid function with a tanh function to control which new information is added to the memory cell. The output gate uses a sigmoid function to select the information that contributes to the current output. Figure 2 shows the LSTM architecture.

FIGURE 2

Architecture of LSTM

The forget gate first determines which information should be removed from the cell state. It takes the current input $x_t$ and the previous hidden state $h_{t-1}$ and outputs a value between 0 and 1 for each element of the cell state, where 1 means the information is fully retained and 0 means it is fully discarded. Equation 12 gives the computation:

$$f_t = \sigma\left(W_f \cdot [h_{t-1}, x_t] + b_f\right) \tag{12}$$

The next stage determines which new information is stored in the cell state. This stage has two components: a sigmoid layer, known as the "input gate," decides which values to update, and a tanh layer generates a vector of candidate values, $\tilde{C}_t$, that can be added to the cell state. The computations are given in Equations 13 and 14:

$$i_t = \sigma\left(W_i \cdot [h_{t-1}, x_t] + b_i\right) \tag{13}$$

$$\tilde{C}_t = \tanh\left(W_C \cdot [h_{t-1}, x_t] + b_C\right) \tag{14}$$

Here, σ is the sigmoid function, $W_f$, $W_i$, and $W_C$ are weight matrices, $b_f$, $b_i$, and $b_C$ are bias vectors, and $[h_{t-1}, x_t]$ denotes the concatenation of the previous hidden state and the current input.

The third step updates the previous cell state, $C_{t-1}$, to the current cell state, $C_t$, as shown in Equation 15, where $i_t \odot \tilde{C}_t$ contributes the new candidate values and $f_t \odot C_{t-1}$ determines which information from the old cell state is retained:

$$C_t = f_t \odot C_{t-1} + i_t \odot \tilde{C}_t \tag{15}$$

The last stage determines the LSTM's output. A sigmoid layer (the output gate) first decides which parts of the cell state to output; the cell state is then passed through tanh, which scales its values to the range (−1, 1), and multiplied by the output of the gate to produce the new hidden state. The computations are given in Equations 16 and 17:

$$o_t = \sigma\left(W_o \cdot [h_{t-1}, x_t] + b_o\right) \tag{16}$$

$$h_t = o_t \odot \tanh(C_t) \tag{17}$$

Here, $W_o$ and $b_o$ are the weight matrix and bias vector of the output gate, and ⊙ denotes element-wise multiplication.
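The gate computations in Equations 12–17 can be traced in a single NumPy time step. The weight layout (all four gates stacked in one matrix, in forget/input/candidate/output order), the layer sizes, and the random initialization are illustrative assumptions; deep learning libraries use their own internal layouts.

```python
import numpy as np


def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def lstm_step(x_t, h_prev, c_prev, W, b):
    """One LSTM time step following Eqs. 12-17.

    W maps the concatenation [h_{t-1}, x_t] to the four gate pre-activations,
    stacked as forget, input, candidate, output; shape (4H, H + D).
    """
    H = h_prev.shape[0]
    z = W @ np.concatenate([h_prev, x_t]) + b
    f_t = sigmoid(z[0:H])              # Eq. 12: forget gate
    i_t = sigmoid(z[H:2 * H])          # Eq. 13: input gate
    c_hat = np.tanh(z[2 * H:3 * H])    # Eq. 14: candidate cell state
    c_t = f_t * c_prev + i_t * c_hat   # Eq. 15: cell-state update
    o_t = sigmoid(z[3 * H:4 * H])      # Eq. 16: output gate
    h_t = o_t * np.tanh(c_t)           # Eq. 17: new hidden state
    return h_t, c_t


# Illustrative sizes: D = 4 input features, H = 8 hidden units, random weights.
rng = np.random.default_rng(0)
D, H = 4, 8
W = rng.normal(scale=0.1, size=(4 * H, H + D))
b = np.zeros(4 * H)
h, c = np.zeros(H), np.zeros(H)
for x_t in rng.normal(size=(20, D)):   # a sequence of 20 time steps
    h, c = lstm_step(x_t, h, c, W, b)
print(h.shape, bool(np.all(np.abs(h) < 1.0)))
```

Because $h_t$ is the product of a sigmoid output and a tanh output, every element of the hidden state stays strictly between −1 and 1.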

2.5.2 Bi-LSTM

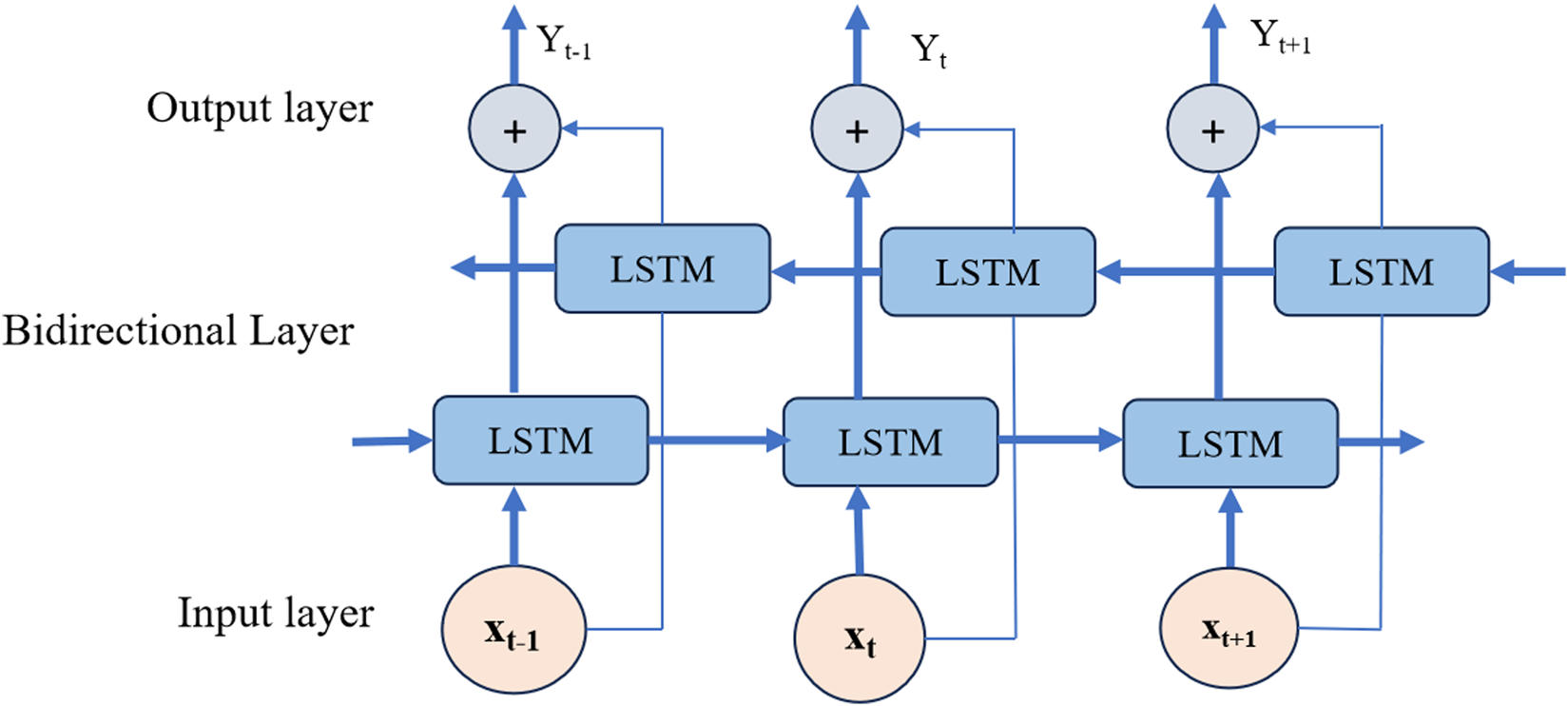

Bi-LSTM is an extension of the LSTM network that captures information from both past and future context in sequential input. A conventional LSTM processes a sequence step by step in chronological order, from start to end. In some sequences, however, backward dependencies, in which later time steps carry information relevant to earlier ones, are significant. Bi-LSTM addresses this by adding a reverse layer that scans the sequence in the opposite direction, enabling bidirectional processing. Figure 3 shows the Bi-LSTM architecture.

FIGURE 3

Bi-LSTM architecture.

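Bi-LSTM consists of two LSTM layers, one processing the sequence forward and one in reverse. The hidden states from both directions can be combined and passed to subsequent layers to extract temporal information. The forward and backward hidden states are computed using Equations 18 and 19, and they are concatenated to form the Bi-LSTM output, as shown in Equation 20:

$$\overrightarrow{h}_t = \mathrm{LSTM}\left(x_t, \overrightarrow{h}_{t-1}\right) \tag{18}$$

$$\overleftarrow{h}_t = \mathrm{LSTM}\left(x_t, \overleftarrow{h}_{t+1}\right) \tag{19}$$

$$h_t = \left[\overrightarrow{h}_t ; \overleftarrow{h}_t\right] \tag{20}$$

Here, $\overrightarrow{h}_t$ is the forward (left-to-right) hidden state, $\overleftarrow{h}_t$ is the backward (right-to-left) hidden state, and $[\cdot\,;\cdot]$ denotes concatenation.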
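A minimal NumPy sketch of Equations 18–20: one LSTM pass runs over the sequence in time order, a second, independently parameterized pass runs over the reversed sequence, and the backward outputs are reversed again so that both halves of the concatenated vector refer to the same time step. The compact cell, sizes, and random weights are illustrative assumptions.

```python
import numpy as np


def _sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))


def lstm_pass(X, W, b):
    """Run a single-direction LSTM over X (shape T x D); return hidden states (T x H)."""
    H = b.shape[0] // 4
    h, c, out = np.zeros(H), np.zeros(H), []
    for x_t in X:
        z = W @ np.concatenate([h, x_t]) + b      # gates stacked as forget, input, candidate, output
        f, i, o = _sigmoid(z[:H]), _sigmoid(z[H:2 * H]), _sigmoid(z[3 * H:])
        c = f * c + i * np.tanh(z[2 * H:3 * H])
        h = o * np.tanh(c)
        out.append(h)
    return np.array(out)


def bilstm(X, params_fwd, params_bwd):
    h_fwd = lstm_pass(X, *params_fwd)                # Eq. 18: left-to-right pass
    h_bwd = lstm_pass(X[::-1], *params_bwd)[::-1]    # Eq. 19: right-to-left pass, re-aligned in time
    return np.concatenate([h_fwd, h_bwd], axis=1)    # Eq. 20: [forward ; backward] per time step


def make_params(rng, D, H):
    """Random weights and zero biases for one direction (illustrative initialisation)."""
    return rng.normal(scale=0.1, size=(4 * H, H + D)), np.zeros(4 * H)


rng = np.random.default_rng(1)
T, D, H = 20, 4, 8
p_f, p_b = make_params(rng, D, H), make_params(rng, D, H)
X = rng.normal(size=(T, D))
out = bilstm(X, p_f, p_b)
print(out.shape)  # (20, 16): 8 forward + 8 backward units per time step
```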

2.6 Ant colony optimization

The ACO-based approach works as follows. In a theoretically fully connected Bi-LSTM network, in which each node can connect to every node in the succeeding layer and to a corresponding node in the recurrent layer, every neuronal connection is a potential path for an ant. The master process initializes each potential connection with a base pheromone level and records the pheromone on each connection. It assigns a chosen number of ants to worker processes, and each ant selects a path through the fully connected network, biased by the amount of pheromone on each connection; several ants may select the same connections. The ant paths are then combined, and the resulting network design is sent to a worker process for training with backpropagation, evolutionary algorithms, or other neural network training techniques on the given data. The master process maintains a population of the best network designs. If a worker reports that a newly trained network is more accurate than those in the existing population, the pheromone on that network's connections is increased. As in the standard ACO algorithm, the master process also reduces (evaporates) pheromone levels over time. This approach enables the construction of recurrent neural networks with multiple hidden layers and nodes and the identification of well-performing designs for the prediction task. To balance computational cost against accuracy, this study combines ACO with Bi-LSTM.
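The connection-level search described above can be sketched as follows, with several simplifications that are our own assumptions: the candidate network is a small feed-forward layer graph (recurrent connections are omitted), the master and worker processes are collapsed into one loop, and the hypothetical `toy_score` function stands in for training each design and measuring its accuracy. The sketch shows pheromone-biased path construction, acceptance into a fixed-size population, reinforcement of accepted designs, and evaporation.

```python
import numpy as np

LAYERS = [3, 4, 4, 1]  # hypothetical candidate network: 3 inputs, two hidden layers of 4, 1 output


def construct_design(tau, n_ants, rng):
    """Each ant walks input -> hidden -> ... -> output; the union of walks is the design."""
    design = [np.zeros_like(t, dtype=bool) for t in tau]
    for _ in range(n_ants):
        node = rng.integers(LAYERS[0])               # start at a random input node
        for layer, t in enumerate(tau):
            p = t[node] / t[node].sum()              # choice biased by pheromone level
            nxt = rng.choice(len(p), p=p)
            design[layer][node, nxt] = True
            node = nxt
    return design


def toy_score(design):
    """Hypothetical stand-in for 'train this network and measure validation accuracy'."""
    n_edges = sum(int(d.sum()) for d in design)
    return -abs(n_edges - 8)                         # pretend about 8 connections is ideal


def aco_search(iters=30, n_ants=4, pop_size=5, rho=0.1, tau0=1.0, deposit=0.5, seed=0):
    rng = np.random.default_rng(seed)
    tau = [np.full((a, b), tau0) for a, b in zip(LAYERS[:-1], LAYERS[1:])]
    population = []                                  # (score, design) pairs, best first
    for _ in range(iters):
        design = construct_design(tau, n_ants, rng)
        score = toy_score(design)
        if len(population) < pop_size or score > population[-1][0]:
            population.append((score, design))
            population.sort(key=lambda item: item[0], reverse=True)
            del population[pop_size:]
            for t, d in zip(tau, design):
                t[d] += deposit                      # reinforce connections of the accepted design
        for t in tau:
            t *= (1.0 - rho)                         # evaporation on every connection
    return population, tau


population, tau = aco_search()
print("best score:", population[0][0])
```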

In the proposed method, ACO optimizes the Bi-LSTM model by tuning its parameters and allocating resources more effectively. The Bi-LSTM network processes input data in both the forward and backward directions to forecast sequential patterns such as energy use and resource requirements. However, the network's parameters (such as weights, biases, and hyperparameters) must be tuned to achieve the best possible performance. ACO improves this process by mimicking ants searching for shortest paths, where each path corresponds to a candidate configuration of the Bi-LSTM model. After evaluating these paths against a performance criterion such as prediction accuracy or energy efficiency, the ACO algorithm iteratively updates pheromone levels to steer the search toward better solutions. By dynamically adjusting parameters and identifying efficient pathways, ACO helps the Bi-LSTM model achieve higher accuracy, lower energy consumption, and low latency. Combining ACO with Bi-LSTM thus yields an optimized model that provides better forecasts and resource management in smart agriculture.

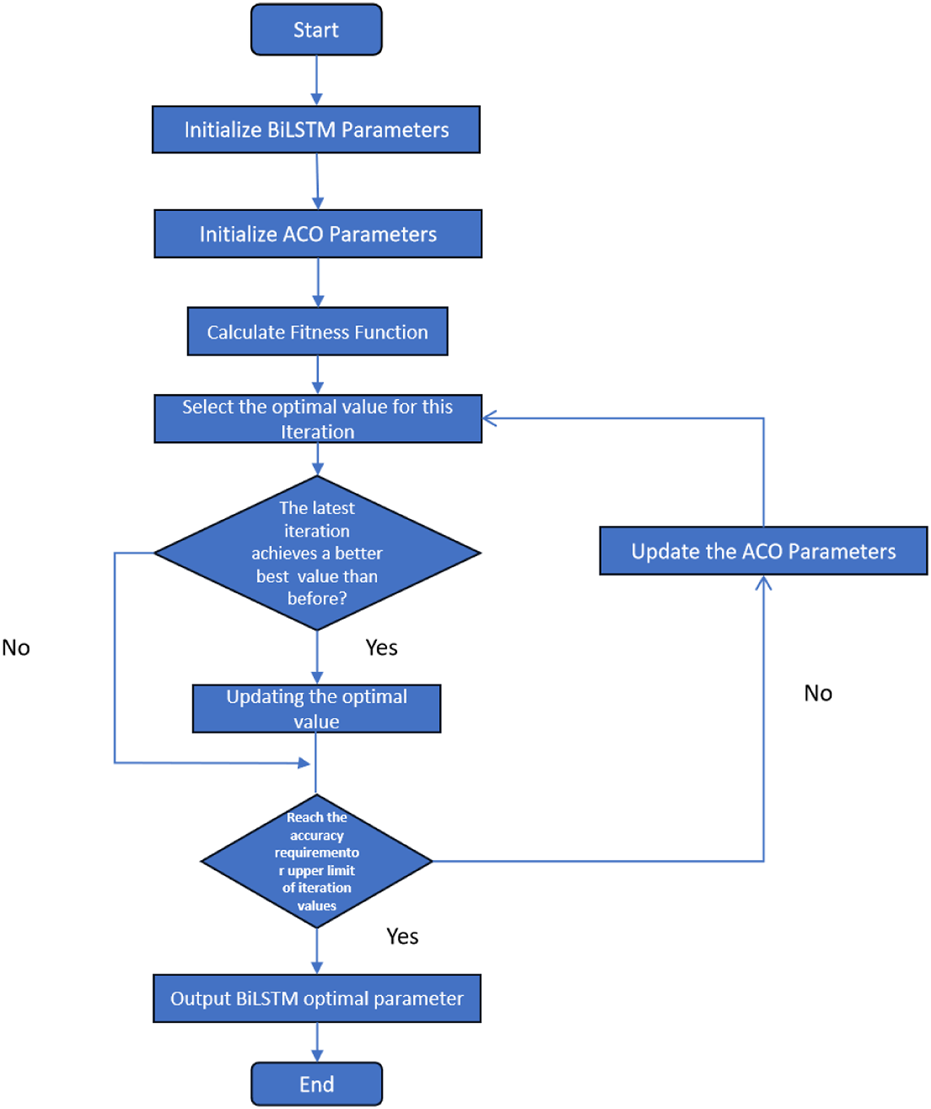

The flow diagram in Figure 4 shows how the ACO algorithm and the Bi-LSTM neural network are integrated for resource optimization and performance enhancement. The procedure begins by initializing the Bi-LSTM parameters and the ACO pheromone levels. The ACO algorithm then sets the number of ants (agents) that explore the possible paths (solutions). Worker processes evaluate the routes using the pheromone trails and solution quality, and the Bi-LSTM network is trained using the selected routes to improve its prediction accuracy. The solutions are refined iteratively by updating pheromone levels and assessing the trained network's performance. Over successive iterations, the population of solutions improves, raising forecast accuracy and improving resource allocation. This hybrid approach combines the sequential data processing capacity of Bi-LSTM with the optimization capabilities of ACO.

FIGURE 4

Flow diagram of the ACO optimizer.

The following pseudocode shows the steps for combining Bi-LSTM with the ACO optimizer.

Pseudocode for the ACO-optimized Bi-LSTM model

Define the number of ants N_ants, number of iterations Max_iter, pheromone importance α, heuristic importance β, evaporation rate ρ, and initial pheromone level τ0.

Define the search space of hyperparameters:

- LSTM units

- Learning rate

- Dropout rate

Initialization:

Initialize pheromone trails τ(h) = τ0 for every candidate value h of each hyperparameter, and set t ← 0

Define the fitness function f as a cost that combines prediction error and energy consumption (lower values are better)

While termination condition not met (t<Max_iter):

For each ant k = 1 to N_ants:

Construct a solution Sk by probabilistically selecting hyperparameters h

using the transition rule:

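P(h) = [τ(h)]^α · [η(h)]^β / Σ_h′ [τ(h′)]^α · [η(h′)]^β, with the sum taken over the candidate values h′ of the same hyperparameter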

where η(h) is the heuristic desirability (e.g., based on prior performance)

Train a Bi-LSTM model with the selected hyperparameters Sk

Evaluate fitness f(Sk)

Update pheromones for each hyperparameter h:

τ(h) ← (1 − ρ) · τ(h) + Δτ(h)

where Δτ(h) = Σ_k 1/f(S_k), summed over the ants k whose solution S_k selected h

Optionally apply elitism to preserve the best solution found so far

t ← t + 1

End While

Return best hyperparameter configuration S_best with minimum f(S)
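The pseudocode above can be turned into a runnable NumPy sketch. The candidate values in `SPACE` are illustrative assumptions (they include the values reported in Table 1), the heuristic η is set to 1 for every value, elitism is omitted, and the hypothetical `surrogate_cost` function replaces the expensive step of training and evaluating a Bi-LSTM for each ant.

```python
import numpy as np

# Hypothetical search space for the three tuned hyperparameters.
SPACE = {
    "units": [16, 32, 64],
    "learning_rate": [0.001, 0.005, 0.01],
    "dropout": [0.1, 0.2, 0.3],
}


def surrogate_cost(cfg):
    """Hypothetical stand-in for training a Bi-LSTM and scoring it (lower is better).

    A real run would train the model with cfg and combine validation error with an
    energy term; this toy cost has its minimum of 1.0 at 32 units, lr 0.005, dropout 0.3.
    """
    return (1.0 + abs(np.log2(cfg["units"] / 32))
            + abs(np.log10(cfg["learning_rate"] / 0.005))
            + abs(cfg["dropout"] - 0.3))


def aco_tune(n_ants=5, max_iter=20, alpha=1.0, beta=1.0, rho=0.2, tau0=1.0, seed=0):
    rng = np.random.default_rng(seed)
    tau = {k: np.full(len(v), tau0) for k, v in SPACE.items()}
    eta = {k: np.ones(len(v)) for k, v in SPACE.items()}     # uninformative heuristic
    best_cfg, best_cost = None, np.inf
    for _ in range(max_iter):
        deposits = {k: np.zeros(len(v)) for k, v in SPACE.items()}
        for _ in range(n_ants):
            choice = {}
            for k, values in SPACE.items():
                weights = tau[k] ** alpha * eta[k] ** beta    # transition rule
                choice[k] = rng.choice(len(values), p=weights / weights.sum())
            cfg = {k: SPACE[k][i] for k, i in choice.items()}
            cost = surrogate_cost(cfg)
            for k, i in choice.items():
                deposits[k][i] += 1.0 / cost                  # Δτ(h) = Σ 1/f(S_k)
            if cost < best_cost:
                best_cfg, best_cost = cfg, cost
        for k in tau:
            tau[k] = (1.0 - rho) * tau[k] + deposits[k]       # evaporation plus deposit
    return best_cfg, best_cost


cfg, cost = aco_tune()
print(cfg, round(float(cost), 4))
```

Depositing 1/f rewards low-cost configurations, so values that appear in good solutions accumulate pheromone and are sampled more often in later iterations, while evaporation keeps older choices from dominating indefinitely.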

3 Results and discussion

The proposed Bi-LSTM–ACO framework significantly enhances resource allocation in agri-IoT networks by integrating predictive intelligence with energy-aware optimization. The Bi-LSTM model captures complex temporal dependencies in multivariate sensor data, enabling precise forecasting of environmental conditions and optimal scheduling of actuator responses such as irrigation. This predictive capability reduces unnecessary activations, conserving energy and water. Simultaneously, ACO is employed to fine-tune Bi-LSTM hyperparameters and optimize sensor operation schedules, allowing dynamic adjustment of sensing intervals and communication paths based on energy trends. The hyperparameters of the proposed model are listed in Table 1. Applying PCA and PSO during preprocessing further reduces computational overhead by retaining only the most relevant features, thereby lowering bandwidth and power consumption. By leveraging real-time sensor–actuator feedback and adaptive control strategies, the framework provides a scalable and context-aware solution for efficient resource management. Overall, this research offers a robust and intelligent approach to sustainable agriculture through optimized use of energy, bandwidth, and sensing resources.

TABLE 1

| Parameter | Specification |
|---|---|
| Learning rate | 0.005 (selected by ACO) |
| Optimizer | Ant colony optimization (ACO) |
| Loss function | Binary cross-entropy |
| Batch size | 32 |
| Epochs | 100 |
| Number of LSTM units | 32 (selected by ACO) |
| Dropout rate | 0.3 (selected by ACO) |
| Sequence length (time steps) | 20 |
| Hidden layers | Bidirectional LSTM |
| Activation function (output) | Sigmoid |
| Fitness function | Accuracy |

Hyperparameters of the proposed model.

The system collects parameters such as temperature, humidity, soil moisture, and pump status and periodically uploads them to the Adafruit IO cloud. The data collection process produces a sizeable dataset that can be used in downstream deep learning applications to improve the efficiency of farming practices and decision-making. The integration of these technologies highlights the potential for improved energy efficiency and optimal power resource management in smart farming.
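Because Table 1 specifies a sequence length of 20 time steps, the collected readings have to be sliced into fixed-length windows before they reach the Bi-LSTM. A minimal sketch follows, assuming each window is labeled with the pump status of the step immediately after it; this label alignment is an assumption, and `make_windows` is a hypothetical helper.

```python
import numpy as np


def make_windows(features, labels, seq_len=20):
    """Slice a (T, F) multivariate series into overlapping windows of length
    seq_len, pairing each window with the label of the following step."""
    features = np.asarray(features, dtype=float)
    labels = np.asarray(labels)
    if len(features) != len(labels):
        raise ValueError("features and labels must have the same length")
    n_windows = len(features) - seq_len
    if n_windows <= 0:
        raise ValueError("series must be longer than seq_len")
    X = np.stack([features[i:i + seq_len] for i in range(n_windows)])
    y = labels[seq_len:]  # label of the step right after each window
    return X, y  # X: (n_windows, seq_len, F), y: (n_windows,)


if __name__ == "__main__":
    # Hypothetical series: 100 readings of 4 sensor channels, binary pump status
    feats = np.random.default_rng(1).normal(size=(100, 4))
    pump = (feats[:, 2] < 0).astype(int)
    X, y = make_windows(feats, pump)
    print(X.shape, y.shape)
```

The resulting `(samples, 20, features)` array matches the 3-D input shape that recurrent layers such as a Bi-LSTM expect.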

3.1 Performance metrics

3.1.1 Accuracy

Accuracy is the proportion of correct predictions (true positives plus true negatives) among all test samples. It is calculated using Equation 21.

3.1.2 Precision

Precision is the ratio of true positives to all samples the model predicts as positive (true positives plus false positives). It is computed using Equation 22.

3.1.3 Recall

Recall is the ratio of true positives to all actual positive samples (true positives plus false negatives). It is calculated using Equation 23.

3.1.4 F1 score

The F1 score is the harmonic mean of precision and recall. It is calculated as shown in Equation 24.

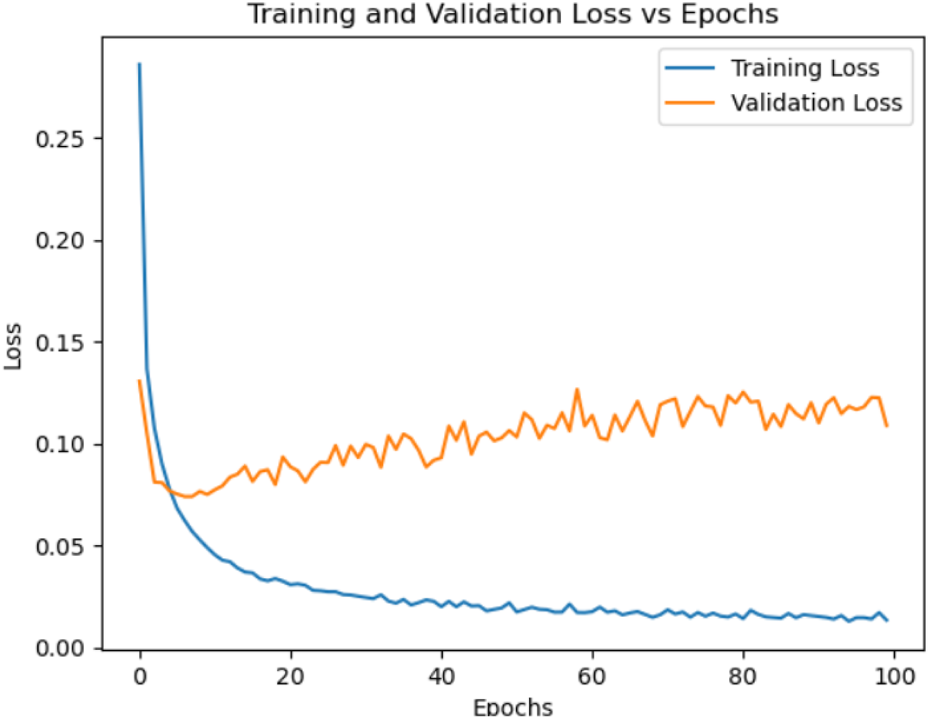

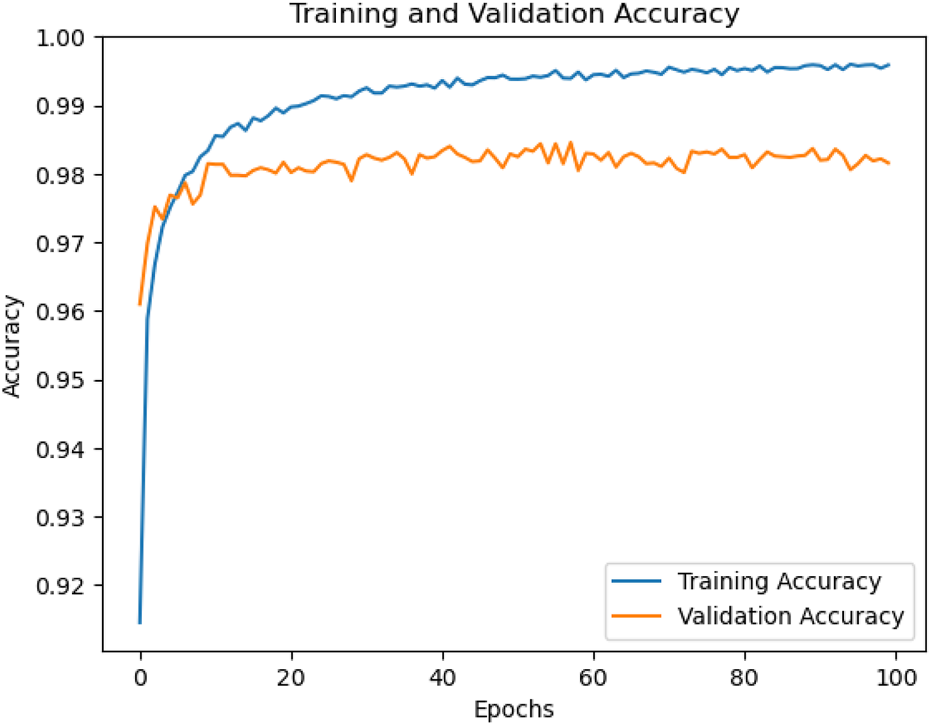
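The four metrics can be computed directly from confusion-matrix counts. The sketch below uses the standard definitions, which Equations 21–24 are assumed to follow; the function names are illustrative.

```python
def f1_from_pr(precision, recall):
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def classification_metrics(tp, tn, fp, fn):
    """Accuracy, precision, recall and F1 from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1_from_pr(precision, recall),
    }


if __name__ == "__main__":
    # Hypothetical counts for illustration only
    print(classification_metrics(tp=8, tn=85, fp=2, fn=5))
    # Cross-check against the MLP row of Table 2
    print(round(f1_from_pr(0.818, 0.9598), 4))
```

Applied to the MLP row of Table 2, `f1_from_pr(0.818, 0.9598)` gives about 0.883, in line with the reported 0.8833; the same identity can be used to cross-check the other rows.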

The training and validation losses were monitored to assess the performance of the ACO-optimized Bi-LSTM model. These loss curves, shown in Figure 5, indicate how well the model learns from the data, and their trend shows how the ACO-optimized Bi-LSTM reduces prediction errors and improves overall model performance.

FIGURE 5

Training loss of the proposed deep learning model (Bi-LSTM–ACO).

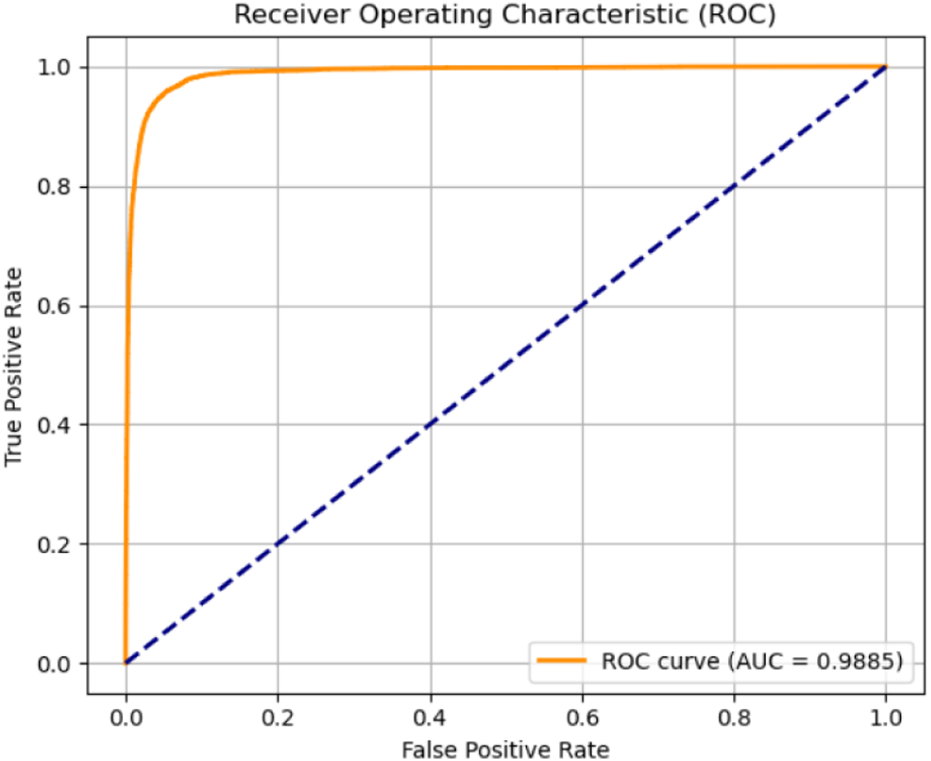
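Table 1 lists binary cross-entropy as the loss function, so the curves in Figure 5 track this quantity. A plain-Python sketch of the mean binary cross-entropy over a batch follows; the clipping constant `eps` is an assumption added to avoid log(0), and the function name is illustrative.

```python
import math


def binary_cross_entropy(y_true, y_prob, eps=1e-7):
    """Mean of -(y*log(p) + (1-y)*log(1-p)) over a batch."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)  # clip probabilities away from 0 and 1
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


if __name__ == "__main__":
    # Hypothetical sigmoid outputs for four samples (1 = pump on)
    print(binary_cross_entropy([1, 0, 1, 0], [0.9, 0.2, 0.7, 0.1]))
```

Confident, correct predictions drive each term toward zero, while a confident wrong prediction is penalized heavily, which is why this loss pairs naturally with the sigmoid output in Table 1.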

Additionally, the trained model was evaluated class by class on every sample in the validation set, and the results report the number of correctly predicted instances in each class. Figure 6 shows the accuracy of the model over 100 training and testing epochs; accuracy rises rapidly as the number of iterations increases. Figure 7 shows the ROC curve (AUC = 0.9885), which confirms the strong classification performance of the proposed Bi-LSTM–ACO model, combining high sensitivity with a low false-positive rate.

FIGURE 6

Accuracy of the proposed model over 100 epochs.

FIGURE 7

ROC curve of the proposed model.
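The AUC reported with Figure 7 can be read as the probability that a randomly chosen positive sample receives a higher score than a randomly chosen negative one. A minimal pairwise (Mann–Whitney) sketch follows; `roc_auc` is a hypothetical helper, and its O(n_pos × n_neg) loop is meant for illustration rather than large-scale evaluation.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly;
    ties count as half a correct ranking."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Hypothetical labels and sigmoid scores
    print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
```

An AUC of 1.0 means every positive outranks every negative, and 0.5 corresponds to random ranking.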

As indicated in Table 2, the models were evaluated using accuracy, precision, recall, and F1 score. For comparative benchmarking, the proposed Bi-LSTM–ACO model was compared with established deep learning methods, specifically GRU, LSTM, MLP, CNN-LSTM, and Bi-LSTM, which are widely used in energy-efficient wireless sensor networks and time-series forecasting. The Bi-LSTM with ACO outperforms all other models on every metric, reaching an accuracy of 98.61%, whereas the best baseline (GRU) reaches 94.96%. We attribute this gain primarily to the ACO-based optimization of model weights and hyperparameters, which allows better adaptation to time-dependent sensor data patterns.

TABLE 2

| Model | Accuracy | Precision | Recall | F1 score |
|---|---|---|---|---|
| MLP | 0.9417 | 0.818 | 0.9598 | 0.8833 |
| LSTM | 0.9466 | 0.8358 | 0.9552 | 0.8915 |
| GRU | 0.9496 | 0.8516 | 0.9455 | 0.8961 |
| CNN-LSTM | 0.9481 | 0.8377 | 0.9598 | 0.8946 |
| Bi-LSTM | 0.9452 | 0.8285 | 0.9426 | 0.8815 |
| Bi-LSTM + ACO | 0.9861 | 0.9216 | 0.9806 | 0.9141 |

Performance analysis of the proposed model with other models.

We assessed the efficacy of different metaheuristic optimization strategies by comparing Bi-LSTM models optimized with GWO, PSO, GA, and ACO, as presented in Table 3. The ACO-tuned Bi-LSTM attained the best performance across all metrics, with an accuracy of 98.61%, a precision of 92.16%, a recall of 98.06%, and an F1 score of 91.41%. PSO and GWO also performed well, achieving F1 scores of 89.75% and 89.50%, respectively, underscoring their efficacy in resource-constrained contexts. The GA-based model, despite having the lowest accuracy of the four (94.38%), attained the highest recall among the alternative optimizers (96.44%), making it especially suitable for irrigation tasks in which missed positive cases (i.e., instances requiring watering) must be minimized. These findings underscore the role of ACO in attaining an optimal balance between detection precision and system efficacy in agri-IoT networks.

TABLE 3

| Model | Accuracy | Precision | Recall | F1 score |
|---|---|---|---|---|
| Bi-LSTM + GWO | 0.9489 | 0.8513 | 0.9432 | 0.8950 |
| Bi-LSTM + PSO | 0.9507 | 0.8585 | 0.9403 | 0.8975 |
| Bi-LSTM + GA | 0.9438 | 0.8819 | 0.9644 | 0.8875 |
| Bi-LSTM + ACO | 0.9861 | 0.9216 | 0.9806 | 0.9141 |

Performance analysis of the proposed model with various optimization algorithms.

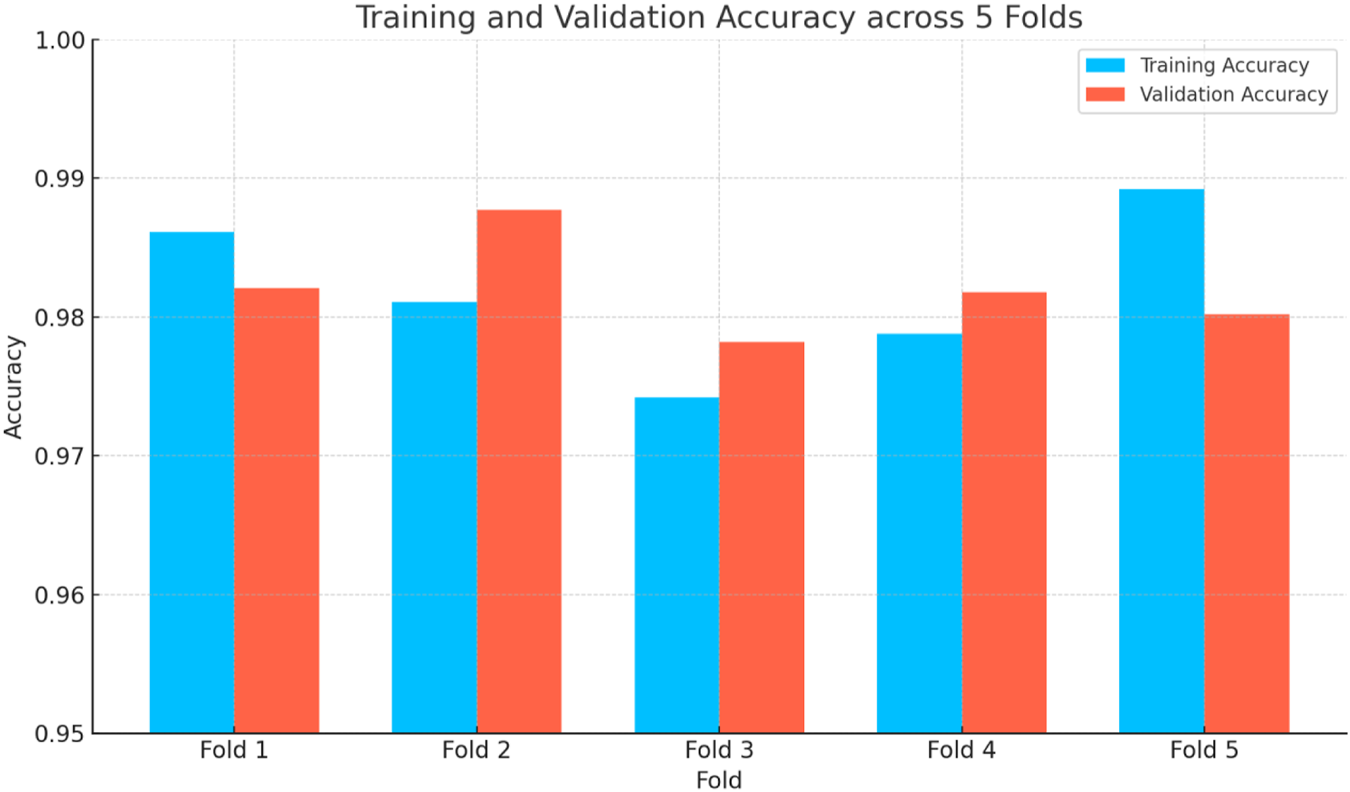

3.2 Performance evaluation of the model using K-fold validation

Cross-validation is a popular resampling method for assessing machine learning models when data are limited. It helps detect overfitting by ensuring that, in each iteration, the model is evaluated on a segment of the data it has not seen during training. This study employed five-fold cross-validation to evaluate the proposed Bi-LSTM model. The dataset was randomly divided into five equal subsets; in each fold, four subsets were used for training and the remaining subset served as the validation set. The procedure was repeated five times so that each subset was used once for validation.
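The five-fold procedure described above can be sketched as follows, assuming a random shuffle with a fixed seed; the function name and seed are illustrative. When the sample count is not divisible by k, the first `n_samples % k` folds receive one extra sample.

```python
import random


def kfold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)  # random division into folds
    # Fold sizes differ by at most one when n_samples is not divisible by k
    sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    folds, start = [], 0
    for size in sizes:
        folds.append(indices[start:start + size])
        start += size
    for i, val_idx in enumerate(folds):
        # All other folds form the training set for this split
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, val_idx


if __name__ == "__main__":
    for fold, (train_idx, val_idx) in enumerate(kfold_indices(1000), start=1):
        print(f"Fold {fold}: {len(train_idx)} train, {len(val_idx)} validation")
```

Because sensor readings are time-ordered, a contiguous (blocked) split is a common alternative; this sketch follows the random division described in the text.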

The model's performance in each fold was assessed using training and validation accuracy and training and validation loss. As Table 4 shows, the Bi-LSTM–ACO model achieved consistently high accuracy across all folds, with a mean training accuracy of 98.19% and a mean validation accuracy of 98.20%; the mean training and validation losses were similarly low, at 0.0181 and 0.0180, respectively. The small gap between training and validation accuracy in every fold (at most 0.9 percentage points) indicates that the model is appropriately fitted, shows no sign of overfitting, and generalizes well to unseen samples across different training subsets. This underscores the effectiveness of the ACO-tuned Bi-LSTM architecture in modeling intricate temporal dependencies and producing precise predictions in smart agriculture IoT applications (Figure 8).

TABLE 4

| Fold | Training accuracy | Validation accuracy | Training loss | Validation loss |
|---|---|---|---|---|
| Fold 1 | 0.9861 | 0.9821 | 0.0139 | 0.0179 |
| Fold 2 | 0.9811 | 0.9877 | 0.0189 | 0.0123 |
| Fold 3 | 0.9742 | 0.9782 | 0.0258 | 0.0218 |
| Fold 4 | 0.9788 | 0.9818 | 0.0212 | 0.0182 |
| Fold 5 | 0.9892 | 0.9802 | 0.0108 | 0.0198 |
| Average | 0.9819 | 0.9820 | 0.0181 | 0.0180 |

K-fold validation of the proposed work.
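The Average row of Table 4 can be reproduced from the per-fold values. The sketch below transcribes the table, computes the column means, and reports the largest gap between training and validation accuracy across folds; `FOLDS` and `summarize` are illustrative names.

```python
from statistics import mean

# Per-fold values transcribed from Table 4:
# (training accuracy, validation accuracy, training loss, validation loss)
FOLDS = [
    (0.9861, 0.9821, 0.0139, 0.0179),
    (0.9811, 0.9877, 0.0189, 0.0123),
    (0.9742, 0.9782, 0.0258, 0.0218),
    (0.9788, 0.9818, 0.0212, 0.0182),
    (0.9892, 0.9802, 0.0108, 0.0198),
]


def summarize(folds):
    """Return the column means and the largest |train - validation| accuracy gap."""
    means = [mean(column) for column in zip(*folds)]
    max_gap = max(abs(train_acc - val_acc) for train_acc, val_acc, _, _ in folds)
    return means, max_gap


if __name__ == "__main__":
    means, gap = summarize(FOLDS)
    print([round(m, 4) for m in means], round(gap, 4))
```

This yields means of about 0.9819, 0.9820, 0.0181, and 0.0180, matching the Average row, and a maximum accuracy gap of 0.009 (Fold 5).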

FIGURE 8

Performance analysis of the model using K-fold validation.

4 Conclusion

This paper presents a Bi-LSTM-based deep learning framework enhanced with the ACO method for effective resource allocation and energy management in agri-IoT settings. The system uses real-time sensor data (temperature, humidity, water level, and soil nutrients) gathered by microcontroller-based sensing nodes and stored on the Adafruit IO cloud platform. By combining time-series learning with Bi-LSTM and hyperparameter optimization through ACO, the model forecasts actuator behavior, such as pump activation, with high accuracy, supporting informed irrigation decisions. Comparative assessments against conventional LSTM, GRU, and Bi-LSTM models show that the proposed Bi-LSTM–ACO method markedly improves performance, attaining an accuracy of 98.61%, a precision of 92.16%, a recall of 98.06%, and an F1 score of 91.41%. Cross-validation and supplementary measures, including latency and network longevity, further substantiate the system's resilience and operational efficacy. The proposed strategy improves prediction reliability and contributes to energy-efficient sensor operation, making it well suited to real-time, large-scale smart agriculture deployments. Future studies will focus on optimizing communication characteristics, including path loss and delay, to provide scalable performance in dense IoT networks.

Statements

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

MR: writing – original draft. CG: supervision and writing – review and editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The reviewer KE declared a shared parent affiliation with the authors to the handling editor at the time of review.

Generative AI statement

The authors declare that no Generative AI was used in the creation of this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Chandrasekaran S. K., Rajasekaran V. A. (2024). Energy-efficient cluster head using modified fuzzy logic with WOA and path selection using enhanced CSO in IoT-enabled smart agriculture systems. J. Supercomput. 80, 11149–11190. doi: 10.1007/s11227-023-05780-5

2. Daanoune I., Abdennaceur B., Ballouk A. (2021). A comprehensive survey on LEACH-based clustering routing protocols in Wireless Sensor Networks. Ad Hoc Netw. 114, 102409. doi: 10.1016/j.adhoc.2020.102409

3. Ganesh G. D., Senthil M. N., Ramana T. V., Vignesh T., Ghosh U., Alnumay W. (2023). DDNSAS: deep reinforcement learning based deep Q-learning network for smart agriculture system. Sustain. Comput. Inf. Syst. 39, 100890. doi: 10.1016/j.suscom.2023.100890

4. Han X., Wu H., Zhu H., Chen C. (2021). Joint power and channel optimization of agricultural wireless sensor networks based on hybrid deep reinforcement learning. Processes 9 (11), 1919. doi: 10.3390/pr9111919

5. Jabbar M. S., Issa S. S., Ali A. H. (2023). Improving WSNs execution using energy-efficient clustering algorithms with consumed energy and lifetime maximization. Indonesian J. Electr. Eng. Comput. Sci. 29, 1122. doi: 10.11591/ijeecs.v29.i2.pp1122-1131

6. Jararweh Y., Fatima S., Jarrah M., AlZu’bi S. (2023). Smart and sustainable agriculture: fundamentals, enabling technologies, and future directions. Comput. Electr. Eng. 110, 108799. doi: 10.1016/j.compeleceng.2023.108799

7. Li Y., Zeng H., Zhang M., Wu B., Zhao Y., Yao X., et al. (2023). A county-level soybean yield prediction framework coupled with XGBoost and multidimensional feature engineering. Int. J. Appl. Earth Obs. Geoinf. 118, 103269. doi: 10.1016/j.jag.2023.103269

8. Liu S. (2022). Towards a sustainable agriculture: achievements and challenges of Sustainable Development Goal Indicator 2.4.1. Glob. Food Secur. 37, 100694. doi: 10.1016/j.gfs.2023.100694

9. Liu X., Ma Z., Guo H., Xu Y., Cao Y. (2024). Short-term power load forecasting based on DE-IHHO optimized BiLSTM. IEEE Access 12, 145341–145349. doi: 10.1109/ACCESS.2024.3437247

10. Mbamba C. K., Batstone D. J. (2023). Optimization of deep learning models for forecasting performance in the water industry using genetic algorithms. Comput. Chem. Eng. 175, 108276. doi: 10.1016/j.compchemeng.2023.108276

11. Mustaffa Z., Sulaiman M. H., Mohamad M. A. (2024). Improving Earth surface temperature forecasting through the optimization of deep learning hyper-parameters using Barnacles Mating Optimizer. Frankl. Open 8, 100137. doi: 10.1016/j.fraope.2024.100137

12. Pandiyaraju V., Ganapathy S., Mohith N., Kannan A. (2023). An optimal energy utilization model for precision agriculture in WSNs using multi-objective clustering and deep learning. J. King Saud Univ. Comput. Inf. Sci. 35, 101803. doi: 10.1016/j.jksuci.2023.101803

13. Prakash V., Singh D., Pandey S., Singh P. K. (2024). Energy-optimization route and cluster head selection using M-PSO and GA in wireless sensor networks. Wirel. Pers. Commun. doi: 10.1007/s11277-024-11096-1

14. Rahman M. M., Joha M. I., Nazim M. S., Jang Y. M. (2024). Enhancing IoT-based environmental monitoring and power forecasting: a comparative analysis of AI models for real-time applications. Appl. Sci. 14 (24), 11970. doi: 10.3390/app142411970

15. Sharma M., Kaur P. (2024). Fog-based federated time series forecasting for IoT data. J. Netw. Syst. Manage. 32, 26. doi: 10.1007/s10922-024-09802-2

16. Sun L., Wan L., Wang X. (2020). Learning-based resource allocation strategy for industrial IoT in UAV-enabled MEC systems. IEEE Trans. Industrial Inf. 17 (7), 5031–5040. doi: 10.1109/tii.2020.3024170

17. Tace Y., Tabaa M., Elfilali S., Leghris C., Bensag H., Renault E. (2022). Smart irrigation system based on IoT and machine learning. Energy Rep. 8 (S9), 1025–1036. doi: 10.1016/j.egyr.2022.07.088

18. Ullah F. U. M., Ullah A., Haq I. U., Rho S., Baik S. W. (2020). Short-term prediction of residential power energy consumption via CNN and multi-layer Bi-directional LSTM networks. IEEE Access 8, 123369–123380. doi: 10.1109/ACCESS.2019.2963045

19. Vashisht S., Kumar P., Trivedi M. C. (2024). Enhanced GRU-BiLSTM technique for crop yield prediction. Multimed. Tools Appl. 83, 89003–89028. doi: 10.1007/s11042-024-18898-2

20. Vishnoi S., Goel R. K. (2024). Climate smart agriculture for sustainable productivity and healthy landscapes. Environ. Sci. Policy 151, 103600. doi: 10.1016/j.envsci.2023.103600

21. Wakweya R. B. (2023). Challenges and prospects of adopting climate-smart agricultural practices and technologies: implications for food security. J. Agric. Food Resour. 14, 100698. doi: 10.1016/j.jafr.2023.100698

22. Wang W., Shimakawa H., Jie B., Sato M., Kumada A. (2025). BE-LSTM: an LSTM-based framework for feature selection and building electricity consumption prediction on small datasets. J. Build. Eng. 102, 111910. doi: 10.1016/j.jobe.2025.111910

23. Wang Y., Li T., Chen T., Zhang X., Taha M. F., Yang N., et al. (2024). Cucumber downy mildew disease prediction using a CNN-LSTM approach. Agriculture 14 (7), 1155. doi: 10.3390/agriculture14071155

24. Zhao N., Ye Z., Pei Y., Liang Y.-C., Niyato D. (2022). Multi-agent deep reinforcement learning for task offloading in UAV-assisted mobile edge computing. IEEE Trans. Wirel. Commun. 21 (9), 6949–6960. doi: 10.1109/TWC.2022.3153316


Keywords

wireless sensor network, internet of things, bidirectional long short-term memory, principal component analysis, particle swarm optimization, ant colony optimization

Citation

Rathi M and Gomathy C (2025) Smart agriculture resource allocation and energy optimization using bidirectional long short-term memory with ant colony optimization (Bi-LSTM–ACO). Front. Commun. Netw. 6:1587402. doi: 10.3389/frcmn.2025.1587402

Received

04 March 2025

Accepted

28 April 2025

Published

03 June 2025

Volume

6 - 2025

Edited by

Oluwakayode Onireti, University of Glasgow, United Kingdom

Reviewed by

Ahmed Aftan, Middle Technical University, Iraq

Kaliappan E., Easwari Engineering College, India

Shreekant Salotagi, Dayananda Sagar University, India


Copyright

© 2025 Rathi and Gomathy.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: M. Rathi, rm3910@srmist.edu.in
