- 1 College of Engineering and Computer Science, Jazan University, Jazan, Saudi Arabia

- 2 Department of Computer and Information, Applied College, Jazan University, Jazan, Saudi Arabia

- 3 College of Computing and Information Technology, University of Doha for Science and Technology, Doha, Qatar

- 4 Department of Computer Science, Hekma School of Engineering, Computing and Informatics, Dar Al-Hekma University, Jeddah, Saudi Arabia

- 5 Department of Computing, University of Turku, Turku, Finland

Introduction: The migration of business and scientific operations to the cloud and the surge in data from IoT devices have intensified the complexity of cloud resource scheduling. Ensuring efficient resource distribution in line with user-specified SLA and QoS demands novel scheduling solutions. This study scrutinizes contemporary Virtual Machine (VM) scheduling strategies, sheds light on the complexities and future prospects of VM design, and aims to propel further research by highlighting existing obstacles and untapped potential in the ever-evolving realm of cloud and multi-access edge computing (MEC).

Method: Implementing a Systematic Literature Review (SLR), this research dissects VM scheduling techniques. A meticulous selection process distilled 67 seminal studies from an initial corpus of 722, spanning from 2008 to 2022. This critical filtration has been pivotal for grasping the developmental trajectory and current tendencies in VM scheduling practices.

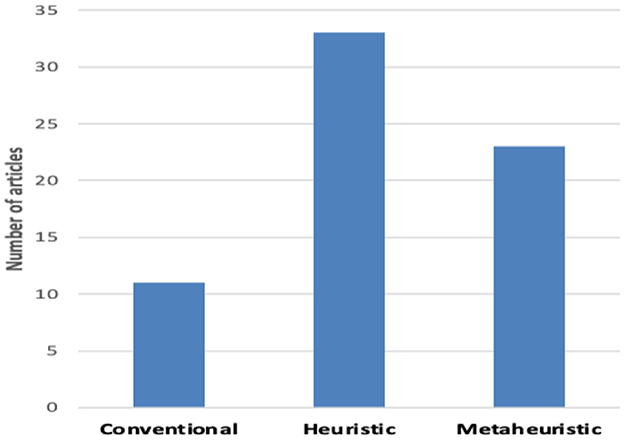

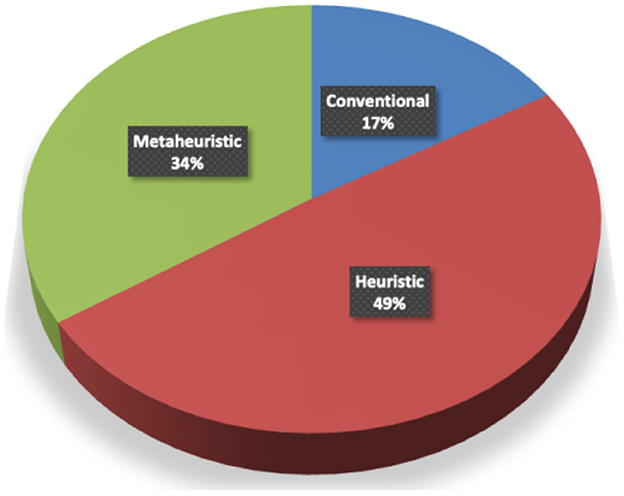

Result: The in-depth examination of 67 studies on VM scheduling has produced a taxonomic breakdown into three principal methodologies: traditional, heuristic, and meta-heuristic. The review underscores a marked shift toward heuristic and meta-heuristic methods, reflecting their growing significance in the advancement of VM scheduling.

Conclusion: Although VM scheduling has progressed markedly, the focus remains predominantly on metaheuristic and heuristic approaches. The analysis illuminates ongoing challenges and the direction of future developments, highlighting the necessity for persistent research and innovation in this sector.

1 Introduction

Virtual machine (VM) scheduling in cloud computing refers to the process of assigning virtual machines to physical servers in a way that optimizes the utilization of resources, complies with service level agreements (SLA), and ensures the quality of service (QoS). The goal is to manage the computing resources efficiently, handling tasks such as load balancing, reducing energy consumption, and minimizing response times (Beloglazov and Buyya, 2012; Xu et al., 2017; Sayadnavard et al., 2022). VM scheduling can be categorized into two types: static and dynamic. Static VM scheduling typically involves pre-determined placement of virtual machines on physical hosts, often based on initial load estimates and without consideration for changing workloads. Dynamic VM scheduling, conversely, adapts to real-time conditions, continuously optimizing resource allocation as demands fluctuate in order to improve efficiency and performance. This Systematic Literature Review (SLR) primarily focuses on dynamic VM scheduling (Ajmera and Tewari, 2023; Ajmera and Kumar Tewari, 2024). As a consequence of the advancement of cloud computing, many computing resources are provisioned as utilities on a metered basis to the client over the Internet (Buyya and Ranjan, 2010; Manvi and Shyam, 2014). Based on user demand, the cloud provider may easily and dynamically allocate and release these resources (Li W. et al., 2017). VMs play the most critical role in the virtual cloud environment as resource containers that encapsulate business services. Due to ever-changing conditions, VM scheduling and optimization in a heterogeneous environment remains a challenging issue for cloud resource providers (Khosravi et al., 2017).

From the perspective of cloud providers, a massive number of resources are provisioned on VMs. In the cloud, thousands of users share the same pool of available resources fairly and dynamically. VM scheduling, at the same time, aims at ensuring the Quality of Service (QoS) along with cost-effectiveness (Qi et al., 2020). Some major issues that are interconnected with Infrastructure-as-a-Service (IaaS) in cloud computing are resource organization (Mustafa et al., 2015), data management (Wang et al., 2018), network infrastructure management (Ahmad et al., 2017), virtualization and multi-tenancy (Duan and Yang, 2017), application programming interfaces (APIs), interoperability (Challita et al., 2017), VM security (Uddin et al., 2015; Aikat et al., 2017), and load balancing (Mousavi et al., 2018).

VM scheduling ensures a balanced scenario in which VMs are allocated to the available Physical Machines (PMs) as per resource requirements (Shaw and Singh, 2014). Moreover, VM scheduling techniques are utilized to schedule the VM requests of a particular data center (DC) according to the required computing resources. In essence, the optimization of VM scheduling techniques to achieve efficient and effective resource scheduling has gained growing attention from researchers in cloud computing (Rodriguez and Buyya, 2017).

The present literature on cloud computing scheduling can be categorized by performance metrics and by scheduling methods. The performance-based surveys focus on specific issues such as (i) energy-aware scheduling, (ii) cost-aware scheduling, (iii) load balancing-aware scheduling, and (iv) utilization-aware scheduling. The method-based surveys cover (i) VM allocation, (ii) VM consolidation or placement, (iii) VM migration, (iv) VM provisioning, and (v) VM scheduling. This classification is discussed in Section 4 of this study. To the best of the authors' knowledge, several surveys and studies have been conducted on the themes discussed above. However, an extensive study on VM scheduling is missing from the available cloud computing literature. Hence, this study conducts an extensive systematic survey on VM scheduling and presents the following contributions:

• To provide the outline of the techniques in VM scheduling in the same manner as these techniques have been applied in cloud computing.

• To present syntheses of contemporary issues and challenges and mention the problems related to VM scheduling.

• To present a comparative analysis of VM scheduling methods and parameters in cloud and multi-access edge computing (MEC).

• To evaluate various VM scheduling approaches critically while highlighting their drawbacks and advantages.

• To emphasize the importance of VM scheduling as a baseline for researchers to solve issues in the near future.

Extensive examination and analysis of existing literature on contemporary issues and research gaps are crucial for generating ideas. This study tries to disseminate the most relevant VM scheduling techniques and approaches available in the literature and anticipates that they can effectively improve modern VM scheduling methods. This study attempts to present recent trends, requirements, and future scopes in the development of VM scheduling techniques in cloud computing.

The structure of the study is organized as follows: Section 2 discusses literature reviews in cloud scheduling. Section 3 presents the research methodology. Section 4 illustrates VM management methods and systems models. Section 5 presents the analysis of VM scheduling approaches and their parameters. Section 6 discusses scheduling in mobile edge computing and the validity of the research. Section 7 illustrates research issues and opportunities. Finally, Section 8 concludes with the findings of the literature review.

2 Literature review

Numerous studies are presented in the area of cloud scheduling, and some generic challenges are discussed, such as resource scheduling, resource provisioning, and load balancing. However, current studies have no extensive systematic survey on VM scheduling. This section refers to some studies in the area of cloud scheduling. When allocating dynamic, heterogeneous, and shared resources, resource scheduling in cloud environments is considered one of the most crucial challenges. To provide reliable and cost-effective access, overloading of those resources must be prevented by proper load balancing and effective scheduling techniques.

Kumar et al. (2019) presented a comprehensive survey of resource scheduling algorithms, offering an analysis that categorizes parameters including load balancing, energy management, and makespan. The study observed that no scheduling algorithm has the potential to effectively address all parameters of VM scheduling. Furthermore, the study discussed some task scheduling algorithms, limitations, and future problems. However, the scope of the study is restricted to grid computing. Similar studies (Beloglazov et al., 2012; Rathore and Chana, 2014; Li H. et al., 2017; Uddin et al., 2021) examined energy-aware resource allocation methods focusing on QoS. They mentioned some critical and open challenges in resource scheduling, particularly energy management in a cloud data center. According to their analysis of previous studies, the challenges are enumerated as follows: (1) fast and energy-efficient VM placement processes that can anticipate workload peaks to prevent performance degradation in a heterogeneous environment, (2) energy-based optimization of the virtual network topology among VMs to find the best placement and lessen network traffic congestion, (3) new heat management algorithms to properly regulate temperature and energy use, (4) balanced workloads and workload-aware resource allocation processes, and (5) decentralized and distributed scalability and fault-tolerance techniques for VM placement (VMP) challenges.

In a similar study, Li et al. (2010) delved into VM scheduling issues within cloud data centers. They also offered an overview of contemporary technologies in the realm of cloud computing, encompassing aspects such as virtualization, resource allocation, VM migration, security measures, and performance evaluation. Parallel to this, they highlighted emerging challenges and complexities, including CPU design, resource governance, security maintenance strategies, and evaluation methods in multi-VM environments. Nevertheless, their research fell short in areas such as structured categorization, problem definition, parametric study, and a comprehensive exploration of the techniques, as pointed out in previous studies.

Analyzing the cloud computing architecture, Zhan et al. (2015) systematically presented a two-level taxonomy of cloud resources and critically examined the problems and solutions of cloud scheduling. Additionally, they investigated evolutionary computation (EC) methodologies and discussed several cutting-edge evolutionary algorithms and their potential to solve the cloud scheduling problem. Based on their categorization, they also identified some problems and research fields, such as distributed parallel scheduling, adaptive dynamic scheduling, large-scale scheduling, and multi-objective scheduling. They further highlighted some of the most cutting-edge future themes, including the Internet of Things and the convergence of cyber and physical systems with big data. However, they did not describe the mathematical modeling of the problem or include any parametric analysis in the study.

In another investigation, Xu et al. (2013) described the causes of the performance overhead problem while scheduling virtual resources under several scenarios, i.e., from single server virtualization to multiple server virtualization in distributed data centers. The review presents a detailed comparison of contemporary migration techniques and modeling approaches to manage performance overhead problems. However, the authors suggest that a lot remains to be resolved to ensure the predictable performance of VM with guaranteed SLA. Similarly, Madni et al. (2016) examine the difficulties and possibilities in resource scheduling for cloud infrastructure as a service (IaaS). They categorize the previous scheduling schemes according to the issues addressed and performance metrics and present a classification scheme. Furthermore, some essential parameters are evaluated and their strengths and weaknesses are highlighted. Finally, they suggest some innovative ideas for future enhancements in resource scheduling techniques.

Meta-heuristic strategies have set a standard in VM scheduling because they produce efficient and nearly optimal outcomes in a feasible time frame. Numerous studies have been conducted to evaluate the performance of these advanced meta-heuristic algorithms. Kalra and Singh (2015) examined five prominent meta-heuristic optimization methods: Ant Colony Optimization (ACO), Genetic Algorithm (GA), Particle Swarm Optimization (PSO), League Championship Algorithm (LCA), and the Bat algorithm. These techniques are presented within a taxonomic structure, with comparisons made based on several scheduling metrics such as task recognition, SLA observance, and energy consciousness. They further delve into the practical applications of these meta-heuristic methods and the existing challenges in grid or cloud scheduling. Nevertheless, their review is confined to specific meta-heuristic methods and optimization metrics.

In another study, Madni et al. (2017) investigated the potential of existing state-of-the-art meta-heuristic techniques for resource scheduling in a cloud computing environment to maximize the cloud provider's financial benefit and minimize cost for cloud users. In their research, they selected 23 meta-heuristic technique studies between 1954 and 2015. They compared meta-heuristic techniques with traditional techniques to evaluate the performance criteria of the algorithms. They claimed that there are several ways to enhance the performance of these algorithms, which can further help solve the resource scheduling problem. However, the focus of the study is only on meta-heuristic methods.

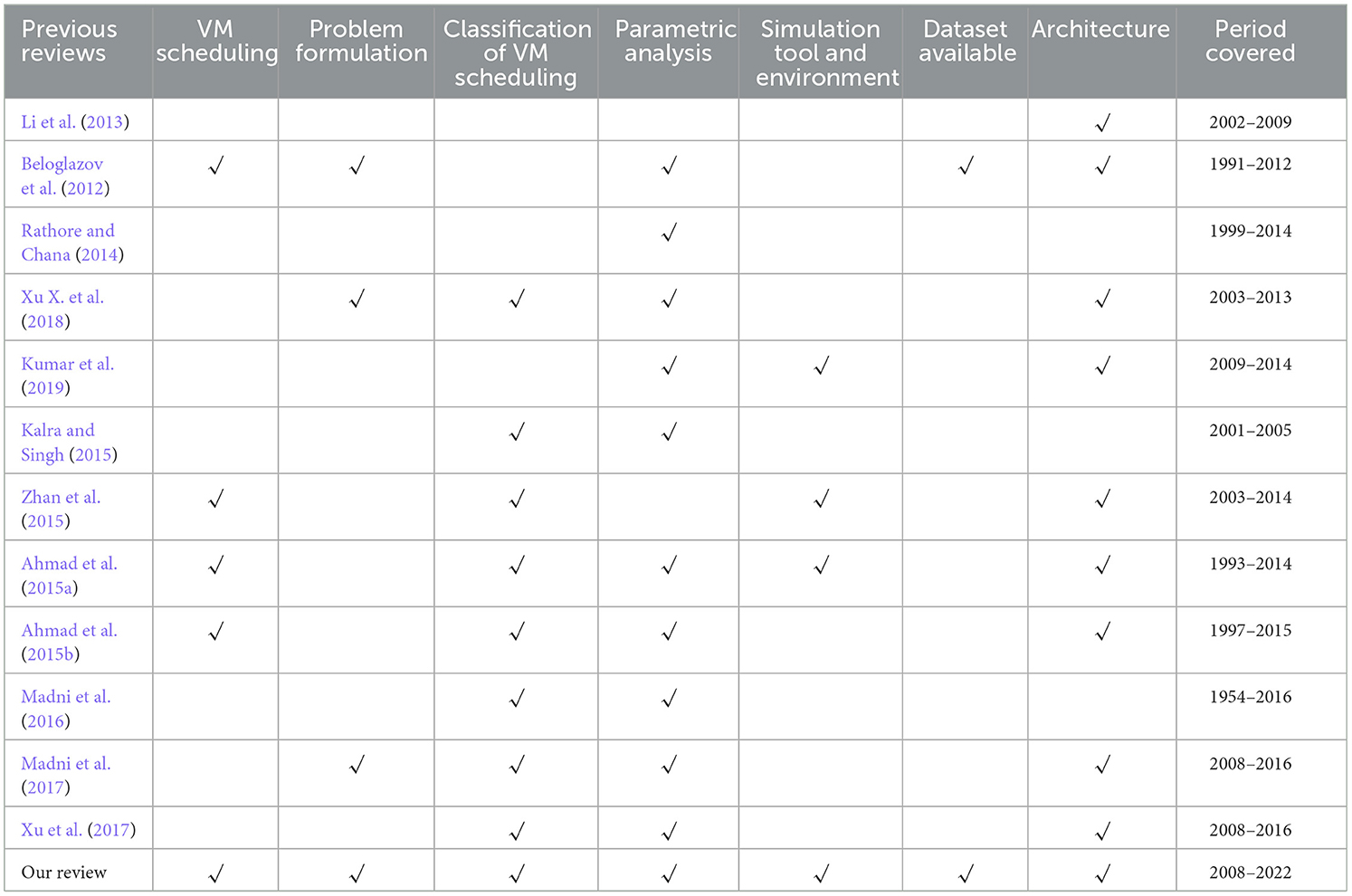

Unlike the previous studies shown in Table 1, our research presents an extensive (not exhaustive) review of VM scheduling techniques and presents the most appropriate categorization, problem formulation, architecture, and future challenges. Based on our research, we formalize three questions and choose the most important studies from the most trustworthy research databases to address them. Furthermore, we delineate the importance of VM scheduling techniques, current issues and challenges, and future directions to support future research.

3 Research methodology

According to the guidelines mentioned in Kitchenham et al. (2007) and Kitchenham (2004), the presented SLR employs a tried-and-true procedure to examine earlier research by other researchers, which should provide sufficient details for other researchers to reproduce in the future (Charband and Navimipour, 2016; Navimipour and Charband, 2016). Following the best practice and guidelines, this study developed a protocol to accumulate the necessary details for VM scheduling techniques, approaches, and their parameters. Three research questions are established based on the analysis of collected literature on the main concerns with VM scheduling in cloud computing. The research questions are presented in the section below.

3.1 Research questions

In this section, the most important problems and challenges related to cloud-based scheduling were discussed, including resource provisioning, resource scheduling, task scheduling, VM scheduling, resource utilization, load balancing, and prospective balancing solutions. Therefore, the effort of this research is to address the following important research questions:

Research Question (RQ1): What is the significance of VM scheduling in light of the increase in cloud usage? RQ1 will try to survey several VM scheduling studies published over the period under study, to underline the importance of VM scheduling along with increasing cloud usage.

RQ2: How many of the current scheduling strategies achieve the primary VM scheduling goals concerning the particular parameters? RQ2's objective is to assess current VM scheduling strategies in a cloud computing system based on the key VM scheduling parameters.

RQ3: What problems and potential solutions were found concerning VM scheduling for upcoming research trends? RQ3's goal is to classify the difficulties in VM scheduling in cloud computing and the methods utilized to ensure QoS in the system.

The specific responses to the questions posed within the scope of this study are obtained through a multi-stage approach. Once the necessity for the research has been established, a standardized process has been used to frame the research topic. The research must go through several processes to adhere to the protocol, including the search request, source selection, quality assessment criteria, extraction, and information analysis approach.

For the selected online academic libraries and databases, search strings were created from keywords defined according to the inclusion and exclusion criteria. The Boolean “OR” and “AND” operators are used to connect similar and alternative spellings for each of the question elements (Milani and Navimipour, 2016). The search string is created using a combination of synonyms and alternate spellings for each element of the inquiry to find the pertinent topic. The best keywords for our subject have been chosen based on the established search string to obtain the desired outcome from the databases. Thus, the terms “Virtual Machine,” “VM,” “Cloud,” “Scheduling,” and “Scheduler” have been chosen as the five keywords. The query was defined after several iterations, using a preliminary pilot search to assess the coverage of the results. When the original query did not yield the required results in the pilot search, we modified it using terms such as (“Virtual machine” OR “VM”) AND (“scheduling” OR “scheduler”). The search was carried out in August 2018 and covered the years 2008 to 2022.
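The way such a Boolean search string is assembled can be sketched in a few lines of Python. This is a minimal illustration, not the exact query submitted to any database: the grouping of the five keywords into three concept groups and the helper name `build_query` are assumptions made here.

```python
def build_query(groups):
    """Join synonyms with OR inside each group, then join the groups with AND."""
    clauses = []
    for terms in groups:
        quoted = " OR ".join(f'"{term}"' for term in terms)
        clauses.append(f"({quoted})")
    return " AND ".join(clauses)


# The five keywords named in the text; the grouping itself is an assumption.
keyword_groups = [
    ["Virtual Machine", "VM"],
    ["Scheduling", "Scheduler"],
    ["Cloud"],
]

query = build_query(keyword_groups)
print(query)
```

Keeping each concept in its own parenthesized group avoids the precedence ambiguity that arises when OR and AND are mixed without brackets.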

3.2 Selection of sources

In the process of article selection, we have chosen some of the most relevant journal articles and conference papers from the most relevant academic databases for our search query. Subsequently, the selected results have been classified based on the publishers. We have searched through Web of Science, Scopus, and Google Scholar as our primary search engines. As a result, practically all of the articles published in the most reputable online journals and conferences that have undergone technical and scientific peer review were covered by the search process: Springer Link, ScienceDirect, IEEE Infocom, IEEE Xplore, ACM Digital Library, and ICDCS.

3.3 Selection criteria

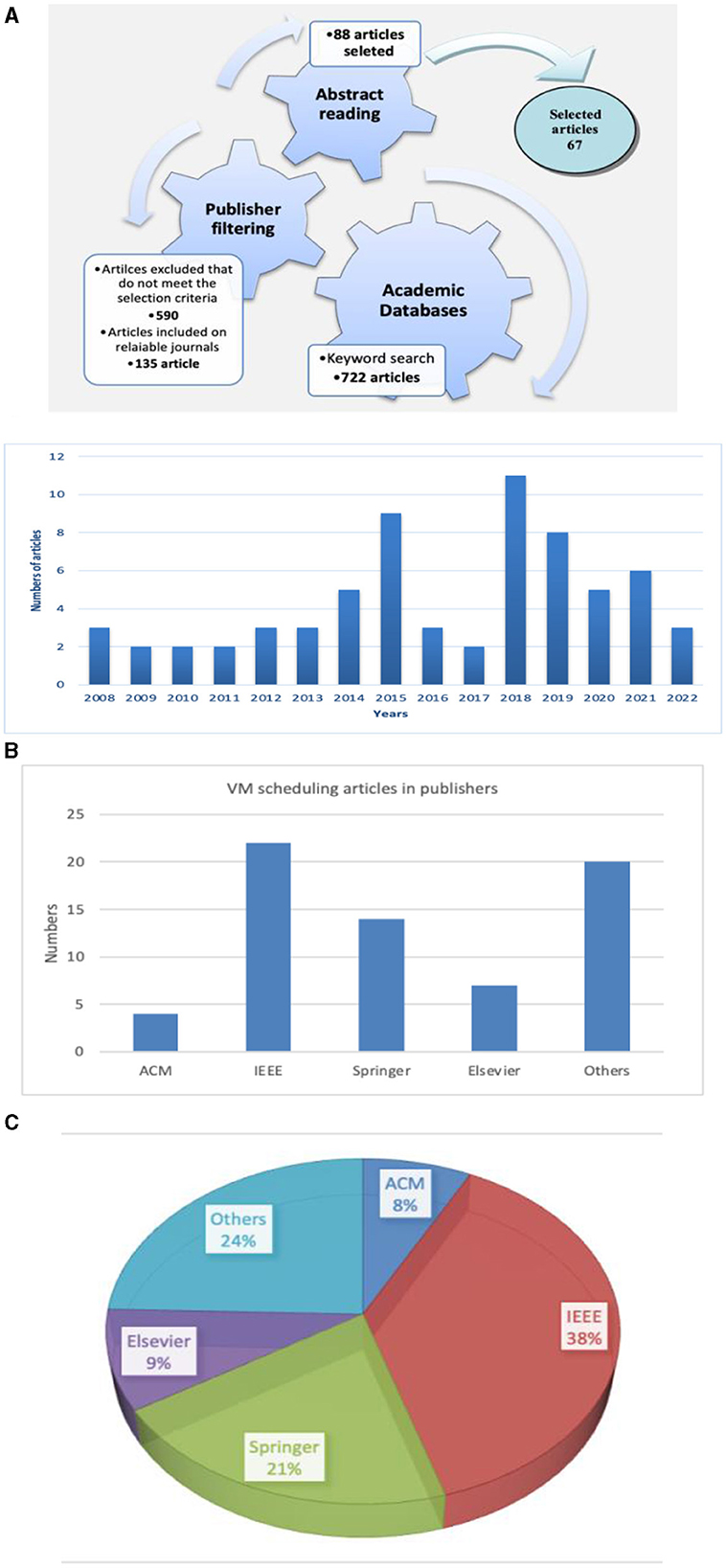

An assessment method has been followed for the inclusion of the studies based on the quality assessment checklist (QAC) prepared in Kitchenham et al. (2009), so that only articles from peer-reviewed journals published between 2008 and 2022 are assessed, as shown in Figure 1. Based on this filtering and the checklist-based analysis of the articles, a list of questions is prepared: (a) Does the research approach depend on the research article? (b) Is the research approach appropriate for the issue covered in the article? (c) Is the analysis of the study adequately done? (d) Does the survey meet the requirements for evaluation?

Figure 1. Virtual machine scheduling article identification and selection process. (A) Number of studies selected in each phase. (B) Number of articles present in publishers. (C) Percentage of articles in publisher out of 67 selected studies.

3.4 Extraction of data and quality evaluation process

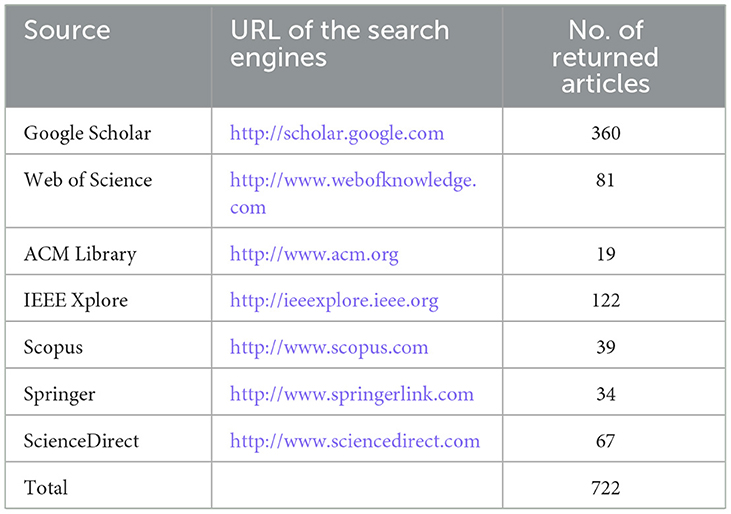

During the data extraction process, we compile the data from the chosen research for additional analysis. Initially, we selected a total of 722 articles from all relevant databases. Then, we read the keywords, abstracts, and concepts to check whether they matched our topic of study. Consequently, 88 articles were selected based on the abstract, and the rest of the studies were discarded. Then, the full body of each article was studied, and studies found unsuitable on the basis of their full text were also removed. After summarizing the studies based on the inclusion and exclusion criteria and the QAC, 67 articles were selected for our review. Figure 1 demonstrates the overall inclusion and exclusion process followed in this study to identify the most suitable articles. As per the analysis of the data retrieved from relevant sources, significant growth can be observed in the articles published in the field of cloud scheduling between 2008 and 2022, as shown in Figures 1A–C. Among them, most of the publications were published in 2018.
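The selection funnel reported above (722, then 88, then 67 articles) can be summarized as retention rates. The short sketch below only restates those three counts as percentages; the stage labels and the helper name `retention` are illustrative assumptions.

```python
# Counts are taken from the text; the stage labels are illustrative.
stages = [
    ("identified", 722),
    ("after abstract screening", 88),
    ("after full-text review and QAC", 67),
]


def retention(stages):
    """Return (stage, count, % of initial corpus, % of previous stage)."""
    initial = stages[0][1]
    previous = initial
    rows = []
    for name, count in stages:
        rows.append((name, count,
                     round(100 * count / initial, 1),
                     round(100 * count / previous, 1)))
        previous = count
    return rows


for row in retention(stages):
    print(row)
```

Under these counts, roughly 9.3% of the initial corpus survives to the final set, and about 76.1% of the abstract-screened articles pass the full-text stage.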

3.5 Keyword search

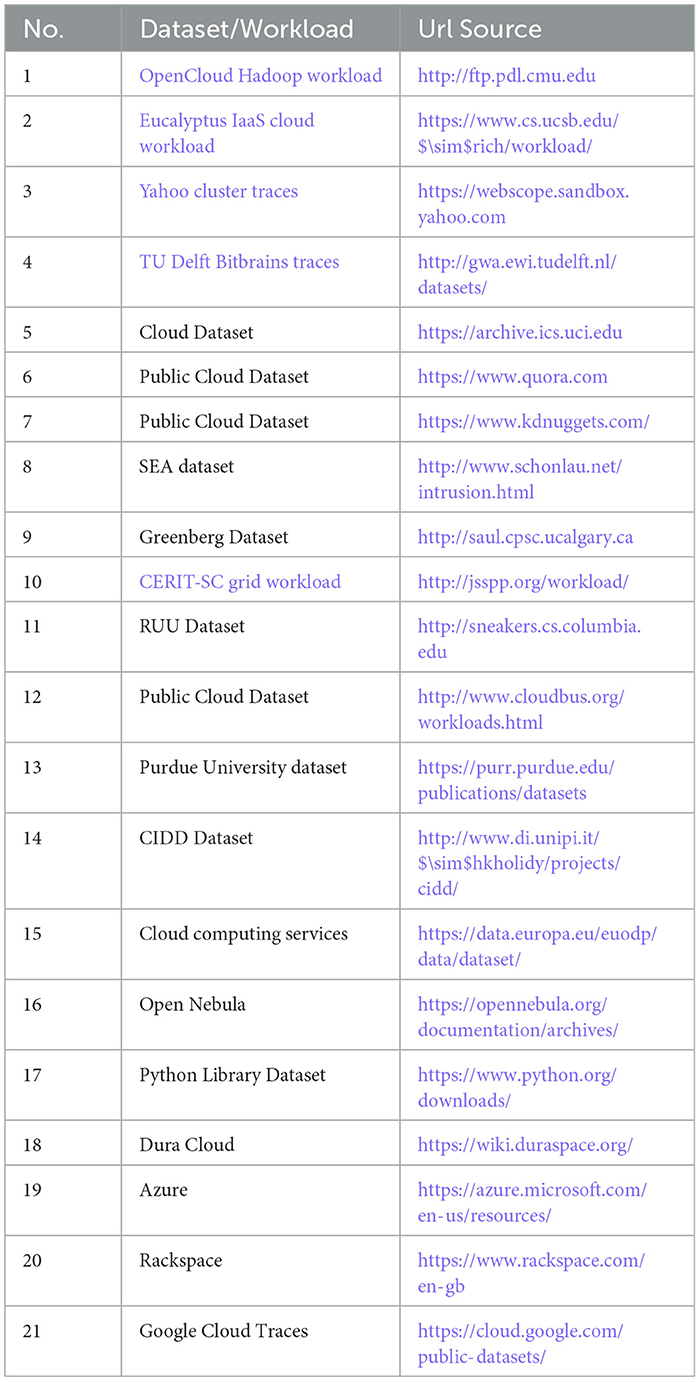

In the formulation of our first question (RQ1), we particularly outlined the importance of VM scheduling and the necessity to improve its mechanisms due to the sharp rise in data accumulation and resource utilization. Based on this perspective and the growing interest of researchers in VM scheduling, we only included peer-reviewed journal articles and conference papers from the most relevant digital libraries, as shown in Table 2. However, since we assumed that researchers and practitioners frequently use journals to obtain knowledge and disseminate new findings, we rejected conference papers that were not from trustworthy sources.

3.6 Scope of the study

Based on the standards outlined in the study's procedure, the major studies were included. The 67 articles included in this study are further divided into two categories: those that specifically address the VM scheduling challenge and those that examine various problem-solving strategies.

The literature review addresses the following questions:

1. What is the present status of VM scheduling in cloud computing?

2. What are the various methods used in VM management?

3. What types of research are carried out in this area?

4. Why is VM scheduling important in the area of cloud computing?

5. What are the approaches prevalent to solving VM scheduling problems?

6. Which approach may be opted for in the current cloud computing scenario?

7. How and why do VM scheduling approaches impact the performance of resource management in cloud data centers?

8. What are the challenges in the design and development of VM scheduling techniques in cloud computing?

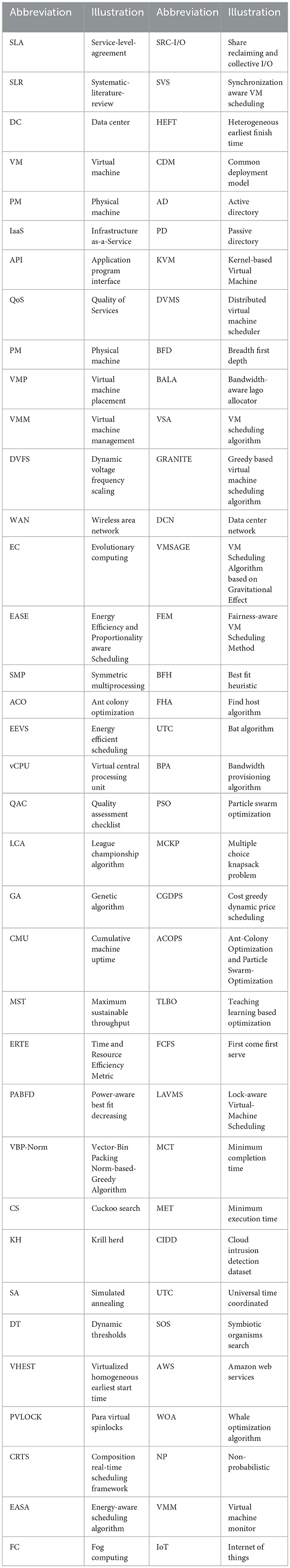

Here, it is important to mention that the primary focus of this study is VM scheduling, its architecture, and the techniques used in the literature to solve the VM scheduling problem. Hence, we do not concentrate on the other underlying elements of cloud scheduling such as task or job scheduling, workload scheduling, and workflow scheduling. In addition, the study does not consider VM migration in most cases. In the forthcoming section, the VM management methods are explained, and the abbreviations used throughout the review are listed in Table 3.

4 Virtual machine management

Virtual machine management is a solution for VM scheduling in the data center that makes it possible to create and deploy virtual hosts or VMs, allocate or de-allocate VMs, and map VMs to PMs to provide better QoS as per user demand. VMs can be managed by different methods to achieve optimal resource utilization and cost saving. Many VM management methods consolidate the VMs on the physical machines without considering heterogeneity, which is one of the main aspects of modern-day data centers. Since accounting for the system's heterogeneity is essential to achieving considerable performance and effective resource management, it must be considered in designing VM management schemes. Many studies have been done on management strategies in cloud data centers; however, much remains to be explored regarding schemes that can improve the effectiveness of the data center.

4.1 Classification of VM management method

In this section, we put forward the underlying methods for VM management and their possible classifications. According to the investigation of the surveyed literature in this study, the methods or techniques involved in VM management can be classified as VM Scheduling, VM Allocation, VM Placement, VM Migration, VM Consolidation, and VM Provisioning. Note that these terms are often used interchangeably in the literature, which makes the actual method used difficult to identify. However, the main focus of this study is VM scheduling, since ill-managed VM scheduling in a data center with a heterogeneous environment not only leads to performance degradation of computing resources but also lowers energy efficiency, which results in more energy consumption (Sharifi et al., 2012).

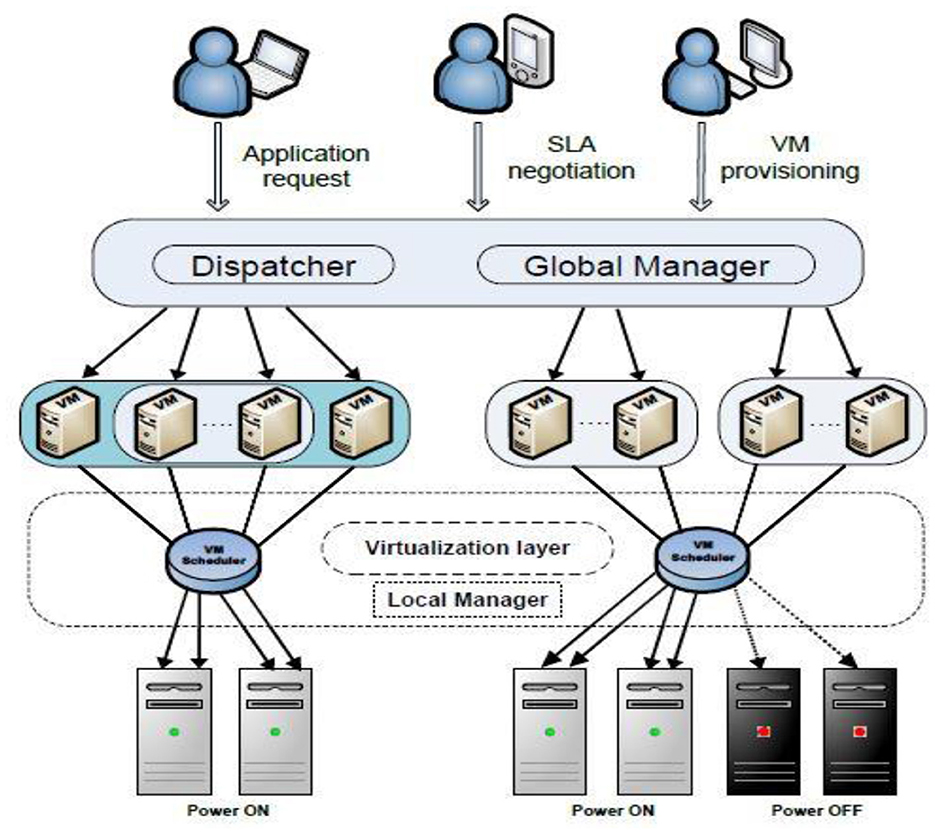

This article focuses on VM scheduling since it has the following advantages: scalability, QoS, a particular environment, decreased overheads and latency, enhanced throughput, cost-effectiveness, and a more straightforward user interface. The VM management methods (see Figure 2) can be classified as below, whereas an overview of VM scheduling is shown in Figure 3.

• VM Scheduling: Allocating a group of VMs to a group of physical machines is the definition of a VM scheduling problem (Prajapati, 2013; Khan et al., 2018).

• VM Allocation: Allocating the user tasks to VMs is known as “VM allocation,” and it often takes CPU, network, and storage requirements into account (Bouterse and Perros, 2017).

• VM Placement: It is a method for deciding which VM belongs to which physical machines (Chauhan et al., 2018).

• VM Migration: Relocating a VM means shifting it from one server or storage facility to another (Leelipushpam and Sharmila, 2013).

• VM Consolidation: Strategically placing the VMs so that the number of necessary PMs is reduced (Corradi et al., 2014).

• VM Provisioning: Configurable actions linked to deploying and personalizing VMs following organizational needs (Patel and Sarje, 2012).

Figure 3. Virtual machine scheduling overview (Rana and Abd Latiff, 2018).

4.2 Systems model of VM scheduling

Figure 3 demonstrates the association between VMs and PMs. The set of all PMs in the system is represented as $\rho = \{\rho_1, \rho_2, \ldots, \rho_{\aleph}\}$, where $\aleph$ is the number of PMs and $\rho_i$ $(1 \le i \le \aleph)$ represents PM $i$. The set of VMs on PM $\rho_i$ is $\vartheta_i = \{\vartheta_{i1}, \vartheta_{i2}, \ldots, \vartheta_{im_i}\}$, in which $m_i$ is the number of VMs assigned to PM $i$. Let $S = \{S_1, S_2, \ldots, S_{\aleph}\}$ be the solution set generated by deploying the VM $\vartheta$ on each physical machine; $S_i$ is the resultant solution when VM $\vartheta$ is mapped to PM $\rho_i$.
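The solution set $S$ can be pictured as one candidate placement per PM. The sketch below is a minimal illustration under the assumption that a placement is a list of per-PM VM lists; the VM names and the helper `candidate_solutions` are hypothetical.

```python
from copy import deepcopy


def candidate_solutions(placement, new_vm):
    """Return [S_1, ..., S_N], where S_i is the placement after adding new_vm to PM i."""
    solutions = []
    for i in range(len(placement)):
        candidate = deepcopy(placement)  # leave the current placement untouched
        candidate[i].append(new_vm)
        solutions.append(candidate)
    return solutions


# Current VMs on rho_1, rho_2, rho_3 (hypothetical names).
placement = [["vm11", "vm12"], ["vm21"], []]
S = candidate_solutions(placement, "vm_new")

for i, s in enumerate(S, start=1):
    print(f"S_{i}: {s}")
```

Each $S_i$ differs from the current placement only in the VM list of PM $i$, which is what a scheduler would then score.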

4.2.1 The formulation of the load

The workload of a PM can generally be derived by summing the workloads of the VMs executing on it. We assume that the best time span monitored through historical data is $\tau$; that is, the monitoring zone for historical data is the period of length $\tau$ preceding the current time. According to the changing pattern of the PM workload, we divide $\tau$ into $n$ intervals and define $\tau = [(t_1 - t_0), (t_2 - t_1), \ldots, (t_n - t_{n-1})]$. In this notation, $t_1, t_2, \ldots, t_n$ are the end points of the $n$ intervals and $t_0$ is the starting point. Each bracketed value is the duration of one interval, calculated as the difference between consecutive end points $(t_k - t_{k-1})$. The sum of these durations equals the total duration of the period $\tau$.

In this definition, $(t_k - t_{k-1})$ denotes interval $k$. Assuming the workload of a VM is fairly constant within each interval, we define the workload of VM $i$ in interval $k$ as $\vartheta(i, k)$. Over the cycle $\tau$, where $n$ is the number of intervals and $\vartheta(i, k)$ is the workload value for the $k$th interval, the workload of the VM $\vartheta_i$ on PM $\rho_i$ is calculated by Equation 1:

Following the system structure, the workload of a PM is derived by summing the workloads of the VMs executing on it, as shown in Equation 2. Hence, the workload of PM $\rho_i$ is obtained from its VMs, where $m_i$ is the number of VMs on PM $\rho_i$.

Let $\vartheta$ denote the VM that currently requires placement. The scheduler uses the existing VM configuration, and the workload of this VM is estimated as $\vartheta'$ based on historical data. Therefore, when $\vartheta$ is mapped to a PM, the workload of each PM is given by Equation 3.
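The three quantities introduced so far can be written compactly. The following is a sketch under stated assumptions rather than a verbatim statement of Equations 1 to 3: the interval end points are written as $t_0, \ldots, t_n$, the VM workload is assumed to be a duration-weighted average over the $n$ intervals, $\tau$ in the denominator denotes the total duration $t_n - t_0$, and the symbols $W$ and $W'$ for the PM workload before and after placement are notation introduced here for illustration.

```latex
\begin{align}
  \bar{\vartheta}(i,\tau) &= \frac{1}{\tau}\sum_{k=1}^{n}\vartheta(i,k)\,(t_k - t_{k-1}) \\
  W(\rho_i,\tau) &= \sum_{j=1}^{m_i}\bar{\vartheta}_{ij}(\tau) \\
  W'(\rho_j,\tau) &=
    \begin{cases}
      W(\rho_j,\tau) + \vartheta' & \text{if } \vartheta \text{ is mapped to } \rho_j \\
      W(\rho_j,\tau) & \text{otherwise}
    \end{cases}
\end{align}
```

Here $\bar{\vartheta}_{ij}(\tau)$ is the first expression evaluated for the $j$th VM on PM $\rho_i$, so the PM workload is simply the sum of the averaged workloads of its $m_i$ VMs.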

Typically, when $\vartheta$ is allocated to $\rho_i$, the system workload changes. Consequently, load adjustment is required to achieve load balancing. The load discrepancy of the mapping solution $S_i$ over the period $\tau$ after $\vartheta$ is assigned to $\rho_i$ is given by Equations 4 and 5.

where
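The load model of this subsection can be sketched in code. The example below is a minimal illustration, assuming that the VM workload is a duration-weighted average over the intervals and that the load discrepancy is the population standard deviation of the PM workloads after placement. Both are assumptions made here, and the function names and numeric values are hypothetical.

```python
from statistics import pstdev


def vm_average_load(loads, boundaries):
    """Duration-weighted average load of one VM over the whole cycle.

    loads[k] is the load in interval k; boundaries holds t_0..t_n.
    """
    total = boundaries[-1] - boundaries[0]
    weighted = sum(load * (boundaries[k + 1] - boundaries[k])
                   for k, load in enumerate(loads))
    return weighted / total


def pm_load(vm_loads):
    """Workload of a PM as the sum of the workloads of its VMs."""
    return sum(vm_loads)


def best_pm(pm_loads, new_vm_load):
    """Return (index, deviation) of the PM that yields the smallest load deviation."""
    best = None
    for i in range(len(pm_loads)):
        after = list(pm_loads)
        after[i] += new_vm_load          # workload of PM i after placement
        sigma = pstdev(after)            # assumed load discrepancy measure
        if best is None or sigma < best[1]:
            best = (i, sigma)
    return best


avg = vm_average_load([0.5, 0.2, 0.4], [0, 2, 6, 10])
index, sigma = best_pm([0.9, 0.3, 0.6], 0.3)
print(avg, index, sigma)
```

With these hypothetical numbers the new VM is mapped to the second PM, which brings the three PM workloads closest together.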

5 VM scheduling approaches

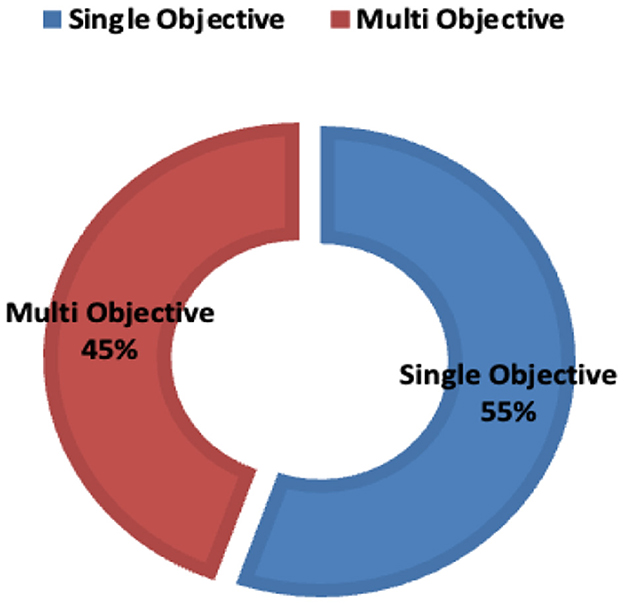

This review methodically segments the body of research on VM scheduling into three distinct methodologies. Initially, the traditional approach is detailed, highlighting fundamental scheduling techniques. The subsequent section digs into heuristic methods, which tailor problem-specific heuristic strategies for optimization challenges. The final section examines metaheuristic methodologies, embracing advanced, intelligent algorithms to tackle intricate VM scheduling in cloud computing. The objective of this study is to put forward the different methodologies tackling the same objectives from unique angles. Figures 4, 5 illustrate the distribution of these methodologies, while Tables 4–6 provide an exhaustive examination of the literature pertaining to each method, which is thoroughly discussed herein.

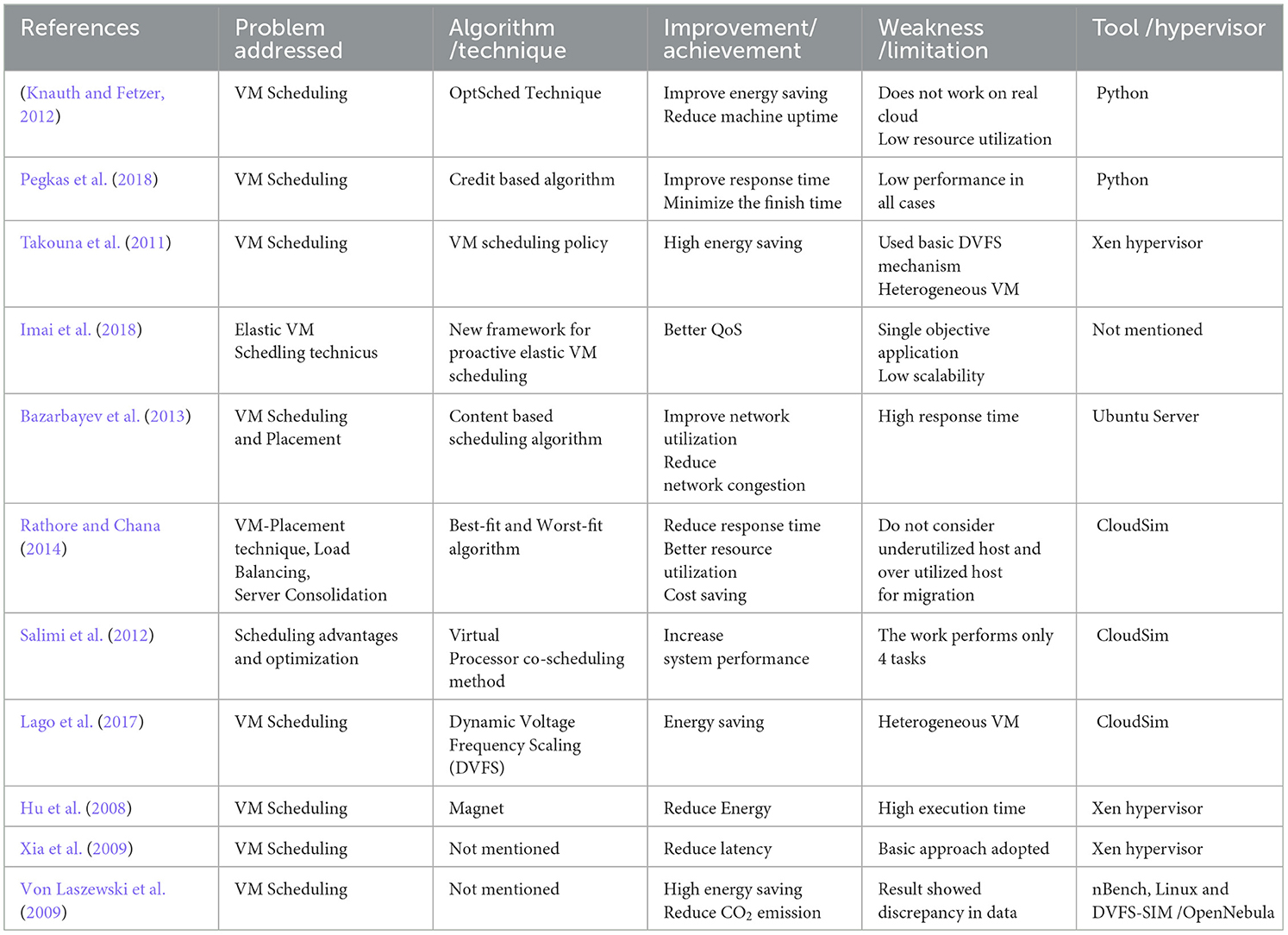

5.1 Conventional approach

VM scheduling techniques have proven efficient in solving problems such as high task response times, distribution of the VMs across physical hosts to achieve optimal load balancing, equal resource consumption, and server consolidation in data centers. These problems are addressed using the Best-Fit and Worst-Fit algorithms, which follow two different mechanisms. The reaction time is reduced by a factor of (log n) for the best-fit method and by a factor of (log n) for the worst-fit method (1). In the worst-fit technique, the load on the PMs is equally distributed, but it requires additional VMs, such that every single host has to execute the processes. In the best-fit process, every physical machine has equal resources left for the execution of the remaining tasks. The simulations suggest that better response times and more evenly distributed workloads on VMs are possible. However, the scheduling technique does not consider VM migration for underutilized or overutilized hosts (Rahimikhanghah et al., 2022).
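The contrast between the two policies can be illustrated with the classic bin-packing definitions, which are assumed here and may differ in detail from the algorithms in the surveyed study: best-fit picks the feasible host with the least capacity left after placement, while worst-fit picks the one with the most. The function name, demands, and capacities are hypothetical.

```python
def place(vm_demands, capacities, policy):
    """Assign each VM demand to a host index using best-fit or worst-fit."""
    free = list(capacities)
    assignment = []
    for demand in vm_demands:
        candidates = [i for i, f in enumerate(free) if f >= demand]
        if not candidates:
            assignment.append(None)      # no host can take this VM
            continue
        if policy == "best":
            host = min(candidates, key=lambda i: free[i] - demand)
        elif policy == "worst":
            host = max(candidates, key=lambda i: free[i] - demand)
        else:
            raise ValueError(f"unknown policy: {policy}")
        free[host] -= demand
        assignment.append(host)
    return assignment, free


demands = [4, 2, 2, 4]
hosts = [8, 8, 8]
best_assignment, best_free = place(demands, hosts, "best")
worst_assignment, worst_free = place(demands, hosts, "worst")
print(best_assignment, best_free)
print(worst_assignment, worst_free)
```

In this small case best-fit fills the first host before touching the others, whereas worst-fit spreads the VMs over all three hosts, matching the load-spreading behavior attributed to worst-fit above.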

Salimi et al. (2012) elaborate on the distinction between VM scheduling and processor task scheduling in a traditional computing environment and point out some key advantages and challenges of VM scheduling. Their proposed gang scheduling-based co-scheduling algorithm works in two ways. First, it schedules the coherent processes to run simultaneously on different processors. At the same time, it maps the related virtual CPUs (vCPUs) to the real processors. The simulation results show faster execution of processes running on VMs, higher performance, and avoidance of unnecessary VM blocking.
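The core idea of gang scheduling, running all vCPUs of a VM in the same time slot, can be sketched as follows. This is a simplified first-fit illustration and not the algorithm of the cited study; the function name, VM names, and core count are hypothetical.

```python
def gang_schedule(vcpus_per_vm, cores):
    """Pack VMs into time slots so that all vCPUs of a VM run in the same slot."""
    slots = []  # each slot tracks its free cores and the VMs scheduled in it
    for vm, needed in vcpus_per_vm.items():
        if needed > cores:
            raise ValueError(f"{vm} needs more vCPUs than there are cores")
        for slot in slots:
            if slot["free"] >= needed:   # first slot with enough free cores
                slot["vms"].append(vm)
                slot["free"] -= needed
                break
        else:
            slots.append({"free": cores - needed, "vms": [vm]})
    return [slot["vms"] for slot in slots]


schedule = gang_schedule({"A": 2, "B": 3, "C": 2, "D": 1}, cores=4)
print(schedule)
```

Because a VM is never split across slots, its vCPUs always run together, which is the property that avoids one vCPU waiting on a sibling that is not currently scheduled.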

Hu et al. (2008) presented a novel scheme for VM scheduling that uses live migration of VMs to under-loaded server clusters. The scheme, named Magnet, achieves a greater reduction in energy consumption and can be applied to both homogeneous and heterogeneous physical machines in the data center. The authors also claim a positive impact on average job slowdown and a negative impact on the execution time for task processing. Xia et al. (2008) measured the performance of interactive desktops and tried to solve the latency peak problem that arises during peak server workload. The proposed method enhances the Xen credit scheduler to analyze latency during peak operation. They claim that their scheduler reduces latency and frequency compared with the default one.

Von Laszewski et al. (2009) applied the Dynamic Voltage Frequency Scaling (DVFS) technique to address the problem of energy consumption in computer clusters. The proposed design focuses on the allocation of VMs on DVFS-enabled clusters, and the simulation results show an acceptable reduction in energy consumption. Lago et al. (2017) presented an optimization algorithm for VM scheduling that considers bandwidth constraints in a heterogeneous network environment. The technique works in two steps. First, the Find Host Algorithm (FHA), executed by the cloud broker, finds the optimum host on which to allocate the available VM. Second, the Bandwidth Provisioning Algorithm (BPA) provisions the network bandwidth for the VM that is to run on the host machine. In the simulation results, the proposed algorithm showed a significant reduction in energy consumption and a better makespan.

In VM scheduling for heterogeneous multicore processor environments, two key issues must be addressed to achieve efficient performance: identifying the characteristics of a VM so that it can be placed on the most suitable core, and identifying the actual sources of delay that impede cloud performance so that they can be eliminated. Takouna et al. (2011) developed a plan to allocate resources among several VMs. The authors discuss performance dependence on the physical host and responsiveness to CPU clock frequency. The simulation outcomes show that the proposed scheduling policy is effective in saving energy in a cloud environment. In a cloud data center, an excessive amount of energy is consumed by the VM scheduler. Knauth and Fetzer (2012) suggested the energy-aware scheduling algorithm OptSched to reduce energy consumption in cloud computing. Simulation results show that the method can reduce CMU by up to 61.1% compared with the default round-robin scheduler and is considered the best fit for OpenStack, OpenNebula, and Eucalyptus.

Another study proposes a credit-aware VM scheduling method to reduce data center overhead. The mechanism has a simplified design and appears easy to implement. However, the experimental results do not show optimal performance in all cases, and the method has not been implemented in a real cloud (Pegkas et al., 2018). In stream data processing, workload demand changes over time. To maintain seamless processing, the VM Manager (VMM) needs to allocate and deallocate VMs frequently. In this stream processing scenario, maintaining QoS is challenging and requires adaptive scheduling techniques to handle uncertainties. Imai et al. (2018) provided a proactive elastic VM scheduling framework that forecasts the arrival of workloads; once the arrival of the highest workload has been estimated, the minimum number of VMs needed to handle that workload is allocated. To capture the uncertainties arising from the VMs and the application, they used the maximum sustainable throughput (MST) model. The authors applied their framework to three different workloads and achieved 98.62% QoS satisfaction and 48% lower cost compared with static scheduling.
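The sizing step of such a proactive framework can be illustrated with a short sketch. It is a simplification and not the model of Imai et al. (2018): it assumes that MST is a single constant throughput per VM and that total capacity scales linearly with the number of VMs. The function name `vms_needed` and the `headroom` parameter are hypothetical.

```python
import math
from typing import Sequence


def vms_needed(forecast: Sequence[float], mst_per_vm: float,
               headroom: float = 0.0) -> int:
    """Smallest VM count whose combined throughput covers the forecast peak.

    forecast   -- predicted arrival rates (e.g. tuples/s) over the horizon
    mst_per_vm -- assumed maximum sustainable throughput of a single VM
    headroom   -- optional safety margin, as a fraction of the peak
    """
    if mst_per_vm <= 0:
        raise ValueError("mst_per_vm must be positive")
    peak = max(forecast)
    # At least one VM is kept so that the stream is never left unserved.
    return max(1, math.ceil(peak * (1.0 + headroom) / mst_per_vm))


if __name__ == "__main__":
    predicted = [1200.0, 3400.0, 2900.0]  # assumed forecast values
    print(vms_needed(predicted, mst_per_vm=1000.0))
    print(vms_needed(predicted, mst_per_vm=1000.0, headroom=0.5))
```

A nonzero `headroom` trades extra cost for a lower risk of QoS violations when the forecast underestimates the peak.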

VM scheduling can also exploit the high degree of content similarity and the identical disk blocks found among VM images that share a similar operating system and reside on the same host. The researchers observed that the similarity between VM images can be as high as 60%–70%, which reduces the amount of data transferred during VM deployment. Based on this observation, Bazarbayev et al. (2013) developed a content-based scheduling scheme to reduce the network congestion associated with transferring VM disk images inside data centers. The evaluation of the algorithms shows a reduction in data transfer of up to 70% during VM migration and virtual disk image transfer, which significantly reduces data center network usage and congestion.

Conventional VM scheduling methods, including Best-Fit and Worst-Fit algorithms, have effectively addressed core issues within cloud computing environments, such as task response time optimization, equitable VM distribution for load balancing, and server consolidation. These techniques, grounded in simplicity, have proven to be quite robust, with Best-Fit methods reducing reaction times significantly and Worst-Fit approaches ensuring even workload distribution across physical machines. Additionally, innovations such as the gang scheduling-based co-scheduling algorithm have introduced improvements in running coherent processes simultaneously, thereby enhancing the overall execution performance.

However, these traditional methods are not without their limitations. They often do not account for the dynamic aspects of cloud computing, such as VM migration to balance the load on underutilized or overutilized hosts, which can be crucial for maintaining efficiency and responsiveness in data centers. While these methods have laid a strong foundation for VM scheduling, their lack of adaptability in rapidly changing environments and their potential for increased resource requirements highlight the need for more advanced approaches to address the evolving complexities of VM management.

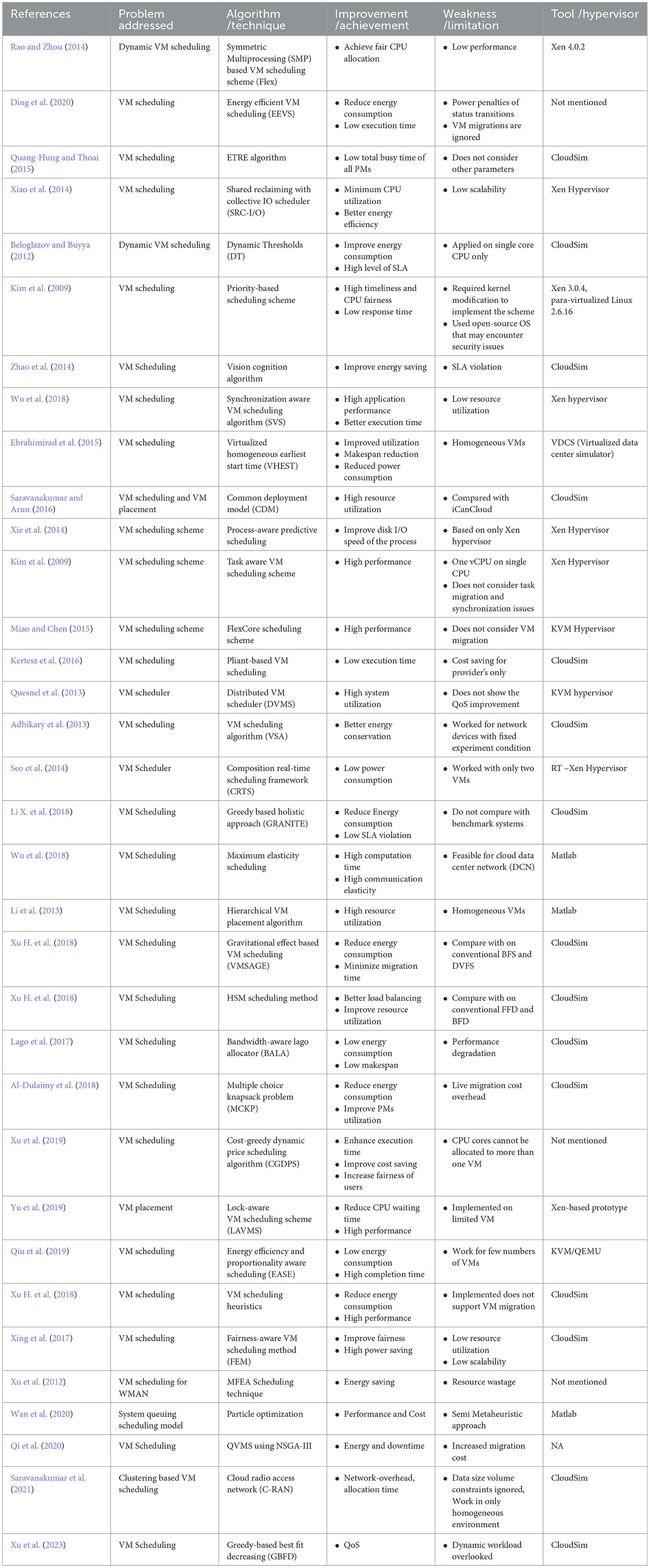

5.2 Heuristic approach

The heuristic approach to handling complex optimization problems can be described as searching among a number of candidate solutions to an NP-hard problem and suggesting the best one with respect to a specific objective function. It is usually bound by hard and soft constraints, which must not be overlooked in the optimization design. Heuristic approaches perform well where traditional approaches fail, especially in high-dimensional or multimodal search spaces, where a problem admits more than one solution. In this context, many researchers have applied heuristic approaches in their studies and achieved effective solutions to their problems. Table 5 shows a descriptive analysis of the heuristic approach. We discuss here the heuristic approaches used to solve VM scheduling problems.

In Symmetric Multiprocessing (SMP) VM scheduling, dynamic load balancing and CPU capping techniques are used, which result in significant inefficiencies for parallel workloads. In a virtualized system where tenants rent resources, fairness among tenants is considered key to running their applications effectively. However, the available virtualization platforms do not enforce fairness when some VMs contain several virtual CPUs running on different CPUs. Based on this observation, Rao and Zhou (2014) developed an innovative vCPU scheduling technique, Flex, which enforces fairness at the VM level and also increases the efficiency of parallel applications running on the host servers.

In another development, an efficient dynamic VM scheduling algorithm was developed to address the energy-consumption problem under deadline constraints (Uddin et al., 2014). The study presents a robust energy-efficient scheduling technique, EEVS, which can deal with various physical nodes and performs equally well in a dynamic voltage environment. Furthermore, the algorithm considers scheduling periods and the optimal performance-power ratio as performance parameters. Experimental analysis shows that, in the best instances, VMs can reduce their energy consumption by over 20% while increasing their processing power by 8%.

Quang-Hung and Thoai (2015) suggested the Time and Resource Efficiency Metric (ERTE), a technique for scheduling VMs that takes energy efficiency into account to reduce data center idle time. The approach was evaluated in terms of power consumption against two state-of-the-art algorithms, namely, power-aware best fit decreasing (PABFD) and the vector bin packing norm-based greedy algorithm (VBP-Norm L1/L2). Based on the experimental results, the suggested scheduling method not only improves performance by 48% but also reduces average energy usage by 49%.

In a virtualized environment with an intensive mixed workload, reducing energy consumption is a challenging task. Xiao et al. (2014) developed a mixed-workload, energy-aware VM scheduling technique to reduce the energy consumption caused by I/O virtualization. They also developed a novel scheduler called SRC-I/O by fusing two newly designed techniques, namely, share-reclaiming and communal I/O. The share-reclaiming method aims to increase CPU utilization, while the communal I/O method aims to reduce the context-switching costs caused by I/O-intensive workloads. Simulation results reveal that the SRC-I/O scheduler outperforms its rival on several performance metrics.

Advances in virtualization technologies have allowed for massive VM consolidation in data centers. Services that depend on rapid responses could suffer from a lack of availability without support for latency-sensitive tasks. In this regard, Kim et al. (2009) devised a priority-based VM scheduling method that accommodates latency-sensitive tasks by taking the needs of guests into account. The method schedules the required VMs for workload allocation based on the priority of the VMs and the current state of the guest-level tasks running on each VM. In addition, it selects for scheduling those VMs that are capable of running latency-sensitive applications with the quickest possible response to I/O events. Zhao et al. (2014) aim to reduce the carbon footprint of VMs by putting forward a cognitive scheduling method based on its camera's eye. The suggested method seeks to identify the optimal PM to allocate to a VM so that it may run within a specified response time. Compared with other algorithms, it is 17% more efficient at saving power. However, with SLA violations of up to 14%, the proposed algorithm does not achieve optimal response-time performance.

Owing to its high flexibility and cost-effectiveness, the virtual cloud runs multiple applications concurrently. Running tightly coupled parallel applications on a clustered cloud environment is a feasible way to achieve better resource utilization. However, recent research has taken into consideration the performance degradation caused by over-commitment in the cloud and by the Virtual Machine Monitor (VMM) ignoring the synchronization constraints of VMs. To overcome this problem, Wu et al. (2018) emphasized the role of dynamic workload on the VMs in a Data Center Network (DCN) and presented a VM scheduling method that improves elasticity as a new QoS parameter. Ebrahimirad et al. (2015) proposed a new precedence-constrained parallel VM consolidation algorithm, which improves the resource utilization level of physical machines and also minimizes energy consumption. Simulation results show that their algorithm outperforms Heterogeneous Earliest Finish Time (HEFT) in reducing the energy consumption and makespan of the services.

Based on a brokering mechanism, Saravanakumar and Arun (2016) proposed a Common Deployment Model (CDM) to manage VMs in cloud data centers efficiently. After a task has been completed, the current state of the VM is preserved using the active directory (AD) and passive directory (PD). These directories are used for two processes, VM migration and VM rollback, ensuring that VMs have the correct configuration mapping to the physical computers. The suggested model takes into account VM downtime for various job types. For managing unused VMs in a repository, the CDM model is contrasted with the iCanCloud concept. Keeping the inactive VM in the hypervisor eliminates the latency that arises when moving VMs between the hypervisor and the VM repository. The experimental results show that the CDM-based model incurs lower latency in VM management. The authors also proposed two algorithms, one for VM scheduling and one for VM placement, to achieve effective utilization of VMs, and compared each with other scheduling and placement algorithms, respectively. The VM scheduling algorithm shows better CPU utilization than the other algorithms, whereas the VM placement algorithm improves the completion time of VM placement and resource utilization.

I/O performance degradation is a common phenomenon in a virtualized environment. The VM is not able to distinguish the different processes coming from the same physical machine. Because the process information is located in the higher layers, obtaining it can be challenging. To address this problem, Xie et al. (2014) suggested a predictive disk scheduling method that takes running processes into account to solve the disk I/O issue. With the assistance of a predictive model, the VMM in this approach learns about the processes and then uses that knowledge to categorize I/O requests. The connection between a process and its address space is used to infer process awareness. The simulation results validate the practicability of the proposed strategy and show the resulting increase in disk I/O speed.

In a multi-core virtualized environment, Symmetric Multiprocessing (SMP) is increasingly being used to improve resource utilization and limit performance degradation. A separate scheduler exists in the hypervisor and in the guest, resulting in a double scheduling problem. To overcome this problem, Miao and Chen (2015) evaluated a scheduling scheme, FlexCore, that uses vCPU ballooning. The scheme dynamically adjusts the number of vCPUs of a VM at runtime and eliminates unnecessary scheduling within the hypervisor layer to improve performance considerably. Experiments on a KVM-based hypervisor show an average performance improvement of approximately 52.9%, ranging from 35.4% to 79.6%, for PARSEC applications on a 12-core Intel machine. Along similar lines, Kertesz et al. (2016) presented an improved pliant-based VM scheduling scheme for solving energy consumption problems. The authors used industrial application workloads to evaluate the performance of their improved CloudSim framework. The results show a significant improvement in energy saving and a better trade-off in execution time.

Because of the hard scalability problem in a distributed virtualized cloud environment, it is difficult for the VM manager to manage VMs on a pool of physical machines, and the situation becomes worse in the case of VM image transfer. In this regard, Quesnel et al. (2013) provided a new Distributed Virtual Machine Scheduler (DVMS), which acts as a decentralized and preemptive scheduler in a massive-scale distributed environment. The validation results indicate that the scheduler adequately solves the resource violation problem.

In another development, Adhikary et al. (2013) suggested a distributed and localized VM scheduling algorithm (VSA) to address energy consumption problems in data centers. The proposed algorithm performs intra-cluster and inter-cluster scheduling and addresses major parameters such as energy, resource estimation, and availability. It schedules VMs so that energy consumption is minimized for both servers and networking devices. The results show that the algorithm outperforms other existing algorithms in terms of energy reduction.

VM consolidation is often used to solve energy consumption problems. Alternatively, energy consumption can be managed by sending the real-time resource requirements to the VMM and controlling the frequency of resource demand. In this spirit, Hu et al. (2010) introduced a power-aware framework for compositional real-time scheduling. The method encapsulates each VM into a single component to minimize resource utilization and thus reduce energy. The framework is implemented on the Xen hypervisor on a Linux kernel, resulting in better performance.

Efficient VM scheduling improves the performance of the data center and increases the profitability of cloud providers. In this regard, Li X. et al. (2017) offered a greedy-based VM scheduling algorithm, GRANITE, to reduce data center energy consumption through two major strategies, namely, VM placement and VM migration. They used computational fluid dynamics techniques to address the cooling model of the data center. Moreover, they claim to be the first to address CPU temperature along with the other infrastructure devices and nodes. The results show that the algorithm outperforms other existing algorithms in terms of energy reduction. In a different study, Li W. et al. (2017) extended the optimal solution from a semi-homogeneous tree to a general heterogeneous tree. Using a hose model, the proposed maximum elasticity scheduling optimizes both maximum elasticity computation and maximum elasticity communication. Inspired by the gravitational model of physics, Xu et al. (2019) presented a VM Scheduling Algorithm based on Gravitational Effect (VMSAGE) to handle the issue of energy consumption in data centers. This study is an extension of the work of Rahbari (2022), in which the authors presented a heuristic-based approach to VM scheduling for the fog-cloud. To ensure optimum utilization of resources, their method addressed the issue of load balancing and achieved better resource utilization on the edge network.

Using a Virtual Machine Management (VMM) strategy, Al-Dulaimy et al. (2018) proposed an improved energy-efficient VM scheduling technique for the dynamic consolidation and placement of VMs in data centers. In this strategy, the Multiple Choice Knapsack Problem (MCKP) is first used to decide the set of VMs to migrate, based on criteria for underloaded and overloaded PMs. Then, VM selection is performed from the generated candidate solutions, and finally, the selected VMs are placed on a number of PMs. The proposed method outperforms similar strategies in terms of energy saving.

In a similar study, Xu H. et al. (2018) investigated the VM scheduling problem and proposed an incentive-aware scheduling technique for both cloud providers and cloud users with guaranteed QoS. In this study, the improved meta-heuristic method, Cost Greedy Dynamic Price Scheduling (CGDPS), prioritizes VM requests according to user demand and generates several candidate solutions. Finally, the VMs are assigned to the candidate node with the minimum computation cost. The comparative results show a competitive improvement in user satisfaction.
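The general idea of prioritizing requests and then assigning each one to the cheapest feasible node can be sketched as follows. This is a generic cost-greedy illustration, not the published CGDPS algorithm: the linear cost model (unit cost times requested CPU), the single CPU resource, and the name `greedy_assign` are all assumptions made for the example.

```python
from typing import Dict, List, Optional, Tuple

Request = Tuple[str, int, int]  # (request id, priority, requested CPU units)


def greedy_assign(requests: List[Request],
                  free_cpu: Dict[str, int],
                  unit_cost: Dict[str, float]) -> Dict[str, Optional[str]]:
    """Serve requests in descending priority; pick the cheapest feasible node.

    Returns a mapping from request id to node name (None if nothing fits).
    """
    free = dict(free_cpu)  # work on a copy of the capacities
    assignment: Dict[str, Optional[str]] = {}
    for req_id, _priority, cpu in sorted(requests, key=lambda r: -r[1]):
        feasible = [n for n, cap in free.items() if cap >= cpu]
        if not feasible:
            assignment[req_id] = None
            continue
        # Assumed cost model: cost of hosting = unit cost of node * CPU units.
        best = min(feasible, key=lambda n: unit_cost[n] * cpu)
        free[best] -= cpu
        assignment[req_id] = best
    return assignment


if __name__ == "__main__":
    reqs = [("r1", 1, 4), ("r2", 3, 3), ("r3", 2, 6)]
    print(greedy_assign(reqs, {"A": 4, "B": 8}, {"A": 1.0, "B": 2.0}))
```

Because the rule is greedy, a low-priority request can be left unserved even when a different ordering would have fit every request, which is the usual trade-off of such schemes against exhaustive search.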

Yu et al. (2019) addressed a synchronization problem in VM scheduling to avoid the extra-long waiting time incurred by a vCPU spinning on locks. The proposed Lock-aware Virtual Machine Scheduling (LAVMS) provides additional scheduling chances for processors to avoid locks and ensures scheduling without wasting the waiting time of the vCPU. The scheme outperforms the contemporary para-virtual spinlocks (PVLOCK) in terms of performance. Along the same lines, Qiu et al. (2019) introduced an energy efficiency and proportionality-aware VM scheduling framework (EASE). The framework first performs standard benchmarking according to the specified configuration components of the servers. It then characterizes the real workload, which is likewise specific to the server configuration. Next, real-time server data are collected, efficiency is identified, and finally, workload classification is performed to achieve optimum VM scheduling. The simulation results show reductions in energy and completion time of up to 49.98% and 8.49%, respectively, in a homogeneous cluster. In heterogeneous clusters, the observed reductions in energy and completion time are 44.22% and 53.80%, respectively.

Considering resource provisioning a major concern for IoT applications, Xing et al. (2017) adapted a fairness-aware VM scheduling method (FEM) to achieve fairness and energy saving. The system is evaluated on three IoT datasets and compared with the benchmark energy-efficient VM scheduling (EVS) method. The experimental graphs show superior performance in resource fairness and power saving. In the same context, Xu et al. (2020) considered the balance between energy saving and guaranteed performance and introduced a novel VM scheduling technique for cyber-physical systems. The joint-optimization model-based method uses live migration of VMs to underloaded PMs to offload the overhead, consequently reducing power consumption and performance degradation. Xu H. et al. (2018) examined the power management problem in Wireless Metropolitan Area Networks (WMAN) and put forward a VM mapping strategy to reduce power consumption. The proposed method, MFEA, is optimized to reduce the number of VMs on the physical servers after migrating the underutilized VMs. The experimental graph shows energy reduction comparable to that of other benchmark techniques.

In a different study, Wan et al. (2020) offered a system queuing scheduling model to analyze the performance of cloud systems by switching VMs off and on (hot and cold shutdown). The proposed method uses multi-objective particle optimization to optimize the most critical parameters in the cloud scheduling process, such as performance and cost. However, the heuristic approach is not used in the true sense, and the description is lacking. Similarly, Qi et al. (2020) developed a QoS-aware cloud scheduling system that applies the NSGA-III algorithm to find the optimal VMs to migrate to the PMs in a cyber-physical system (CPS). The algorithm generates multiple VM scheduling solutions and selects the best strategy for mapping the VMs. In another study, Saravanakumar et al. (2021) proposed a VM clustering method to monitor VM performance metrics, such as network-overhead cost. It dynamically allocates the submitted tasks to the VMs to deal with the network overload problem and reduce the allocation time. However, the proposed method does not deal with data volume constraints. Furthermore, Xu et al. (2023) addressed reliability, a significant factor in VM scheduling, and presented a fault-tolerant scheduling system that satisfies several QoS requirements. They designed a greedy-based technique to identify suitable computing nodes to execute users' tasks with improved performance.

The heuristic approach to VM scheduling has been instrumental in addressing the challenges of resource management in cloud computing environments. Methods such as Flex, which prioritizes fairness, and EEVS, which is designed for energy efficiency, demonstrate the versatility and effectiveness of heuristic strategies. These approaches consider a variety of performance parameters, such as load balancing, energy consumption, and CPU utilization, to improve the overall performance of cloud services. They excel particularly in scenarios requiring dynamic load balancing and in systems where resources are rented, ensuring fairness and efficient application performance.

However, heuristic methods are not without drawbacks. For instance, some may not fully support the complexities of VM migration or may not effectively address the latency issues during peak server workloads. Moreover, while these methods aim to reduce energy consumption and improve resource utilization, they sometimes fall short in terms of scalability and in addressing the synchronization constraints of VMs in cloud environments.

In conclusion, while heuristic methods for VM scheduling have shown a better response to the dynamic nature of cloud computing and have provided solutions to specific optimization problems, they still face challenges. Improvements are needed to enhance their adaptability to rapid changes in workload and better address the intricacies of VM migration and consolidation. Despite these challenges, heuristic approaches remain a critical component of the VM scheduling toolkit, offering a range of solutions that traditional methods cannot provide.

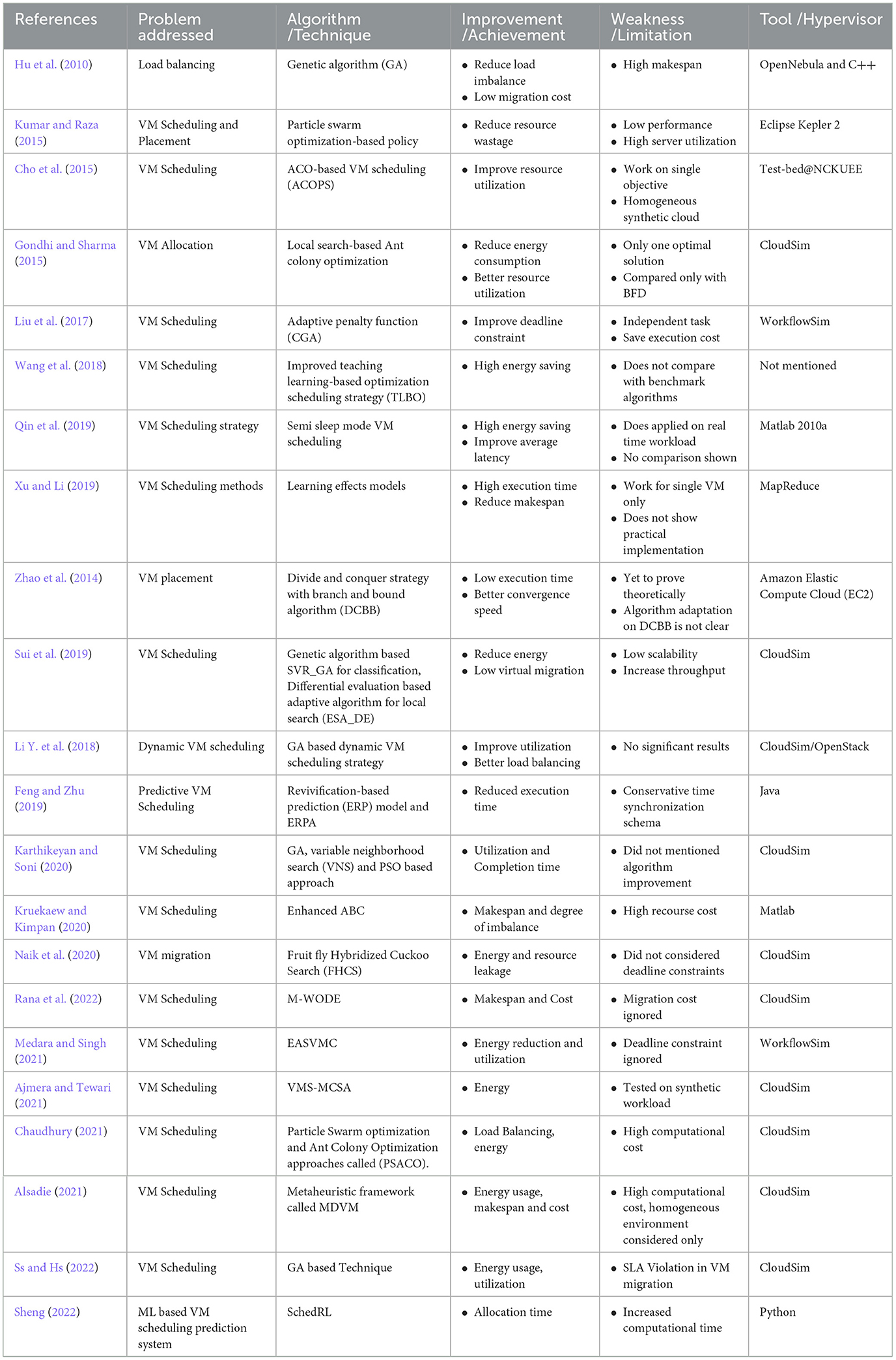

5.3 Meta-heuristic approach

The distinction between heuristic and meta-heuristic approaches can be overwhelming. Both are used to solve high-dimensional and multimodal problems and provide near-optimal solutions. Heuristic approaches are problem-specific, whereas meta-heuristic approaches are more generalizable and adaptable. The latter can guide, modify, and hybridize with other heuristic approaches in the process of local optima generation (Mukherjee and Ray, 2006).

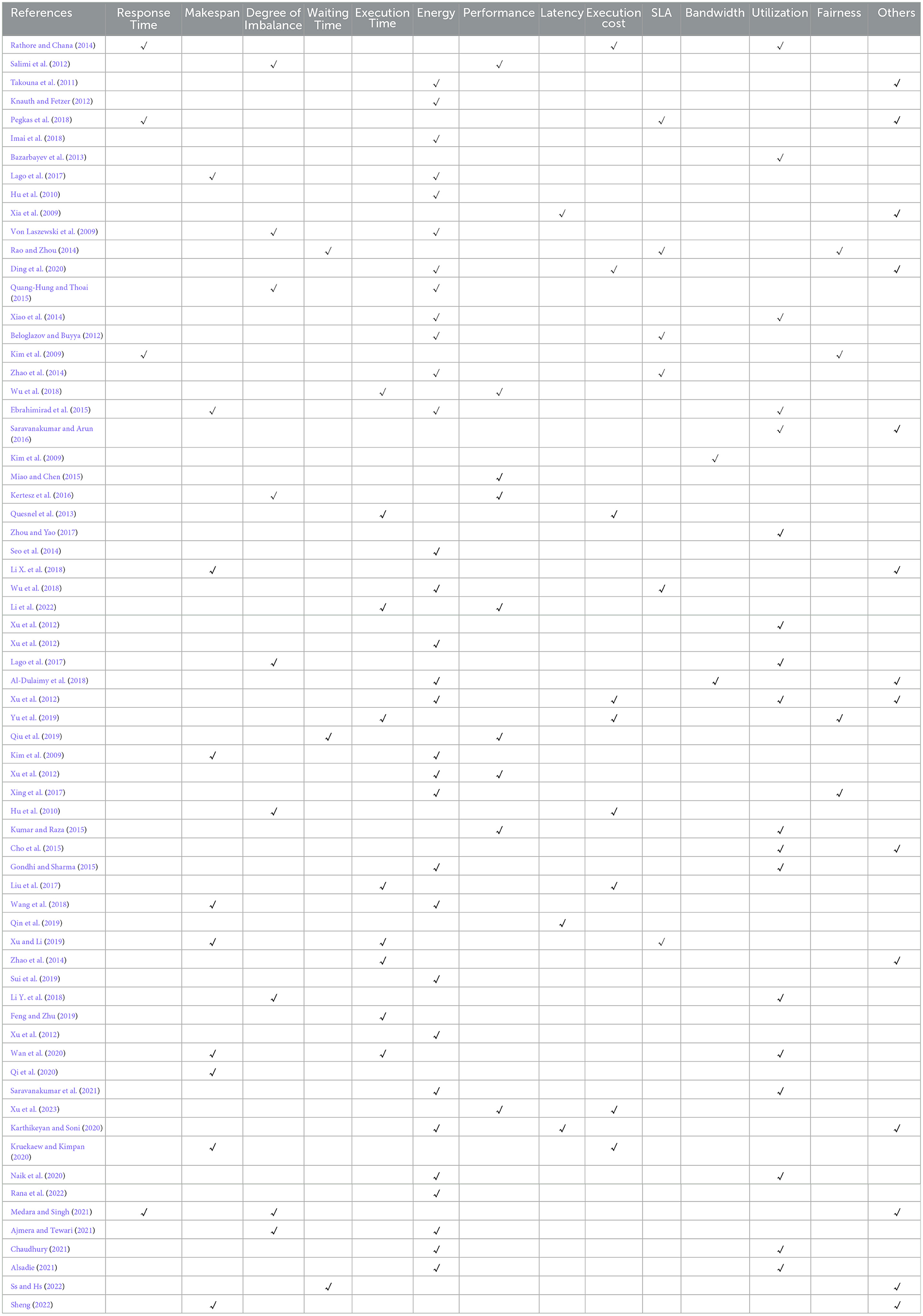

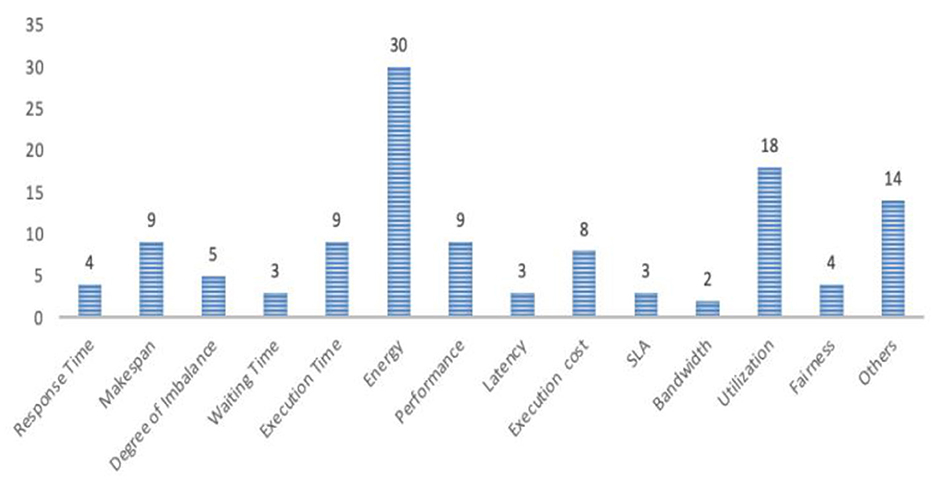

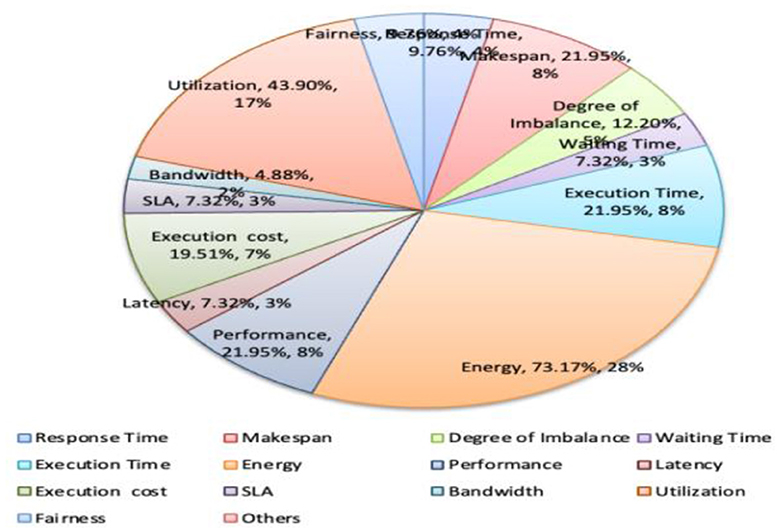

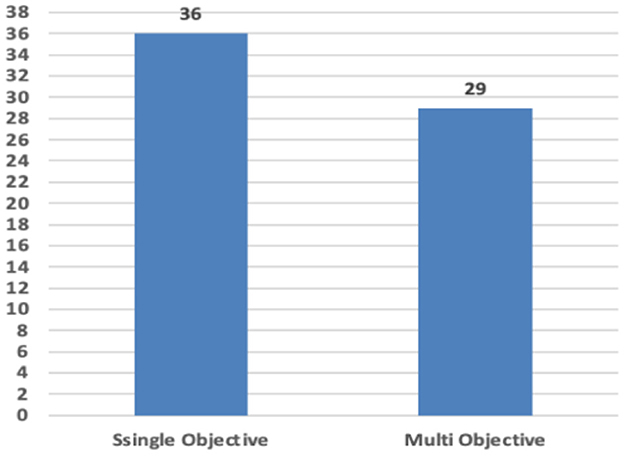

Nature-inspired meta-heuristics are powerful tools for solving complex engineering problems. Meta-heuristic approaches are distinctive in striking a balance between the exploration and exploitation phases and in avoiding local optima stagnation (Ss and Hs, 2022). Owing to these promising features, researchers across the world prefer meta-heuristic approaches for solving optimization problems. In this section, we discuss the most relevant metaheuristic approaches used in solving VM scheduling problems. Table 6 shows a brief analysis of the meta-heuristic approach, and the parameters used in the surveyed literature are shown in Table 7. Figures 6 and 7 show the distribution of the literature by the parameters used, in numbers and percentages. Figures 8 and 9 compare the single-objective and multi-objective optimization problems used in the literature. Table 8 lists the available datasets for cloud computing.

In a virtualized cloud environment, the incoming requests change frequently. The types of requests a VM may receive and the tasks it will carry out are unknown to the system. A technique that either considers a fixed number of tasks or requires detailed information about the tasks is therefore of little use. In this regard, Cho et al. (2015) introduced a hybrid meta-heuristic approach that combines ACO and PSO, two well-established algorithms, to tackle the VM scheduling problem. To anticipate the incoming workload and adapt to changing settings, the proposed ACOPS algorithm uses historical information stored on the server. It does not require additional task information and, to save computing time, rejects requests that cannot be satisfied before scheduling. The simulation graphs demonstrate that the suggested algorithm outperforms other comparable systems and maintains a balanced load. Gondhi and Sharma (2015) developed a solution to the VM allocation problem based on the ACO algorithm. Because the combinatorial bin-packing problem is NP-hard, the authors modified ACO with a local search algorithm to improve the allocation result.

VM scheduling can be perceived as the allocation and placement of several VMs to a set of PMs. In this regard, Kumar and Raza (2015) proposed an enhanced VM scheduling policy for VM allocation in cloud data centers based on particle swarm optimization (PSO). The suggested policy intelligently distributes the VMs among the fewest possible physical hosts, reducing resource costs. According to the findings, the strategy not only reduces the number of VMs allocated to the host machines but also improves performance and scalability.

Existing evolutionary algorithms share common pitfalls, such as the need to define problem-specific parameters for constrained optimization problems and their static nature, which can lead to premature convergence. Liu et al. (2017) provide a metaheuristic approach that uses an adaptive penalty function for workflow scheduling under time constraints. Compared with existing state-of-the-art algorithms, the presented algorithm performs well and produces reasonable results under constraints such as time and cost.

In another development, Zhou and Yao (2017) developed a novel scheduling method based on teaching-learning-based optimization (TLBO) to reduce energy use. It divides the VMs into two pools. One pool is kept in active mode to serve the arrival of a dynamic workload. The second pool is kept in reserve and put into a low-energy or sleep mode. VMs in the reserve pool are allocated and deallocated based on resource demand. In a different study, Rana and Abd Latiff (2018) presented a whale optimization algorithm (WOA)-based cloud framework for multi-objective VM scheduling in data centers.
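The two-pool idea can be illustrated independently of the optimizer. The sketch below does not implement TLBO or the method of Zhou and Yao (2017); it only shows, under the assumption that demand is expressed as a required number of active VMs, how VMs might move between an active pool and a sleeping reserve pool. The function name `rebalance` is hypothetical.

```python
from typing import Tuple


def rebalance(active: int, reserve: int, demand: int) -> Tuple[int, int]:
    """Return the new (active, reserve) pool sizes for the given demand.

    active  -- VMs currently running and serving the workload
    reserve -- VMs currently parked in a low-energy (sleep) mode
    demand  -- number of active VMs the current workload requires
    """
    if demand < 0:
        raise ValueError("demand must be non-negative")
    if demand > active:
        # Wake only as many reserve VMs as needed and available.
        wake = min(demand - active, reserve)
        return active + wake, reserve - wake
    # Demand dropped: park the surplus active VMs to save energy.
    surplus = active - demand
    return active - surplus, reserve + surplus


if __name__ == "__main__":
    pools = (4, 6)
    for load in (7, 2, 12):  # assumed demand trace
        pools = rebalance(*pools, load)
        print(load, pools)
```

The total number of VMs is conserved by construction, so when demand exceeds the combined pool size the function simply activates everything it has; a real scheduler would additionally have to decide which specific VMs to wake or park.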

Qin et al. (2019) considered the semi-sleep mode issue in VM scheduling and offered a plan to decrease the average latency of resource requests while helping to save power in data centers. In their proposed system, the authors introduced a cost function to optimize the semi-sleep parameter using Ant Colony Optimization (ACO) and were able to reduce the cost function of the system. In another study, Xu and Li (2019) addressed the problem of calculating the total execution time of processes on a VM. They treated this problem as NP-hard and introduced a learning effect-based weighted model. Their model accurately estimates the total completion time and minimizes the maximum lateness. The proposed schedule-based rule yields better near-optimal results.

In another development, Zhao et al. (2019) investigated an improved scheduling technique to reduce the high upfront cost of the systems. The proposed dynamic bin packing model used a divide-and-conquer strategy with a branch and bound algorithm (DCBB) to minimize the number of VMs on the physical servers. The method is evaluated on three different real-time workloads and also on synthetic workloads. The experimental results show its superiority over comparable techniques in execution time and convergence rate.

By applying a machine learning technique for load balancing, Sui et al. (2019) established an intelligent technique for scheduling VMs in data centers. First, the incoming workload on the servers is predicted using a hybridization of the genetic algorithm with a Support Vector Machine (SVM), named SVR_GA. Then, to improve the local search capability, a Differential Evolution (DE)-based adaptive algorithm (ESA_DE) is used to overcome the problem of load balancing. Compared with the benchmark algorithms, the proposed method achieves greater energy savings by minimizing VM migration. Li et al. (2019) proposed an intelligent Genetic Algorithm (GA)-based metaheuristic technique for dynamic VM scheduling with optimum resource allocation. In this study, memory and CPU utilization are considered equally for VM migration in the scheduling process. The study claims improvements in load balancing and resource utilization; however, the results are not reported. Similarly, Yao et al. (2019) implemented a GA-based, Revivification-based prediction (ERP) model to estimate the execution time of applications on VMs. Then, another method, ERPA, is used to minimize the execution times of parallel and distributed applications running on the optimized set of VMs. The simulation results confirm better execution times for the selected VMs.

Karthikeyan and Soni (2020) proposed a hybrid of GA, variable neighborhood search (VNS), and PSO to address the VM allocation problem, improving resource utilization and minimizing completion time. However, they did not explain how the algorithm improved these parameters. In a similar study, Kruekaew and Kimpan (2020) proposed an ABC-based scheduling algorithm, HABC, to reduce the average makespan of task allocation and the degree of load imbalance in the VMs. The algorithm is designed to work in both homogeneous and heterogeneous systems. Naik et al. (2020) combined the fruit fly algorithm with cuckoo search to overcome entrapment in local optima, perform better in local search, and find the optimal solution for VM mapping in cloud data centers. The proposed method performs well compared with similar techniques in reducing energy and resource leakage. Rana et al. (2022) combined WOA with DA to develop VM scheduling techniques in the cloud environment. This study uses WOA as a global optimizer to generate optimal solutions, whereas DA replaces the substandard solutions generated by WOA and improves the search speed in the local search space. Medara and Singh (2021) presented a solution that addresses energy consumption and resource utilization across workflow scheduling and VM scheduling in the data center. The method uses a nature-inspired water wave optimization (WWO) algorithm to find the optimal solution for VM migration on the host machines. Ajmera and Tewari (2021) modified an artificial immune-based clonal selection algorithm to cope with the ever-changing cloud environment for VM scheduling. A randomized mutation operator is introduced to handle the dynamic load on the VMs during scheduling. The simulation graphs show that the presented method performs better than benchmark methods in energy reduction.

In a similar work, Chaudhury (2021) proposed a metaheuristic-based algorithm for VM scheduling that combines PSO and ACO. The proposed method retains the historical details of the scheduling components in its search process and uses them to predict the incoming load on the cloud, reducing the load imbalance on the servers. Similarly, Alsadie (2021) modified the NSGA-II metaheuristic algorithm to cope with the dynamic environment of cloud scheduling. The technique works on two levels: first, the algorithm finds the optimal mapping of tasks to suitable VMs; second, optimal solutions are generated for allocating VMs to the best-fitted hosts in the data centers. The method outperforms other similar techniques but works only in a homogeneous environment. Because recent techniques do not consider the NUMA architecture when designing VM scheduling, Sheng (2022) proposed multi-NUMA VM scheduling techniques based on a machine learning approach. The authors first converted the VM scheduling problem into a combinatorial optimization problem and then used reinforcement learning to guide the schedule based on sample data. According to the results, the proposed techniques efficiently reduce the task allocation time on the host node.

Meta-heuristic approaches in VM scheduling have shown a remarkable ability to adapt and find near-optimal solutions in the dynamic landscape of cloud computing. Techniques such as the ACOPS algorithm, which combines Ant Colony Optimization (ACO) and Particle Swarm Optimization (PSO), are particularly noteworthy for their use of historical server data to predict and adapt to changing workloads without the need for additional job information. Similarly, methods such as the Teaching-Learning-Based Optimization (TLBO) algorithm have demonstrated significant energy savings by managing VMs in active and reserve modes to handle dynamic workloads efficiently.

The meta-heuristic methods stand out for their problem-solving versatility, with algorithms such as the Adaptive Penalty Function and the Whale Optimization Algorithm (WOA) offering robust solutions under constraints such as time and cost. The parameters used in these studies, detailed in the surveyed literature, reflect a focus on performance-power ratios, scalability, and energy conservation. Techniques including hybrid models that integrate the Genetic Algorithm (GA) with local search capabilities and nature-inspired algorithms such as Water Wave Optimization (WWO) emphasize the importance of intelligent scheduling in enhancing resource utilization and reducing operational costs in cloud environments.

Furthermore, the application of meta-heuristic algorithms has transcended traditional boundaries, addressing the semi-sleep mode in VM scheduling to decrease resource request latency and optimizing execution time through learning-effect models. These advanced scheduling techniques, such as the hybrid GA and PSO or the incorporation of machine learning for load prediction, highlight the evolution of cloud resource management. They showcase a shift toward more intelligent, adaptive frameworks capable of meeting the high demands of cloud services, reducing energy consumption, and ensuring cost-effective VM management while maintaining service level agreements and user satisfaction.

However, meta-heuristics are not without their limitations. Some of these methods may struggle with problem-specific parameter definition, leading to premature convergence and suboptimal solutions. There are challenges in maintaining the balance between exploration and exploitation, particularly in rapidly changing environments where static models fail to keep up.

In conclusion, while meta-heuristic methods have advanced the field of VM scheduling through their generalizability and ability to hybridize, they must continue to evolve to overcome their inherent weaknesses. Advancements in adaptive penalty functions and algorithmic hybridization show promise in handling time and cost constraints, but further innovation is needed to refine these methods for better accuracy, reduced energy consumption, and efficient resource utilization in the dynamic and diverse realm of cloud computing.

6 VM scheduling in mobile edge computing

6.1 Mobile edge computing

MEC, commonly known as mobile edge computing or multi-access edge computing, is a distributed computing ecosystem that moves processing and data storage closer to the network's edge. It has been envisaged to relieve mobile devices of running heavy and power-hungry algorithms. Among other things, MEC is used to offload traffic from the core network, allowing operators to save money while expanding network capacity (Pham et al., 2020). In the Internet of Things (IoT) context, MEC enables seamless integration of IoT and 5G (Qi et al., 2020).

6.2 Scheduling in MEC

In MEC, VM scheduling is essential for task offloading and resource allocation. Dynamic resource allocation uses Lyapunov optimization, a decision engine, and deep reinforcement learning. In priority scheduling, tasks are scheduled based on their priority (Wei et al., 2017; Alfakih et al., 2020). Mao et al. (2016) and Gao and Moh (2018) proposed joint offloading and priority-based task scheduling, with the goal of reducing task completion time and the cost of edge server VM use. Lei et al. (2019) used the same approach, extended the scope to multiple users in a narrow-band IoT environment, and solved the offloading problem using dynamic programming techniques. Chiang et al. (2020) investigated cotask offloading and scheduling. The authors formulated the problem of cotask offloading as a non-linear program and solved it using the deep dual learning method. Similarly, Choi et al. (2019) presented a deadline-aware task offloading algorithm for mobile edge computing environments. The algorithm classifies tasks according to their latency requirements and offloads them to the most appropriate edge server. It is designed to minimize the overall completion time of the tasks while satisfying the deadlines and maximizing resource utilization.
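As an illustration of priority scheduling in general, the sketch below serves offloaded tasks on a single edge VM in priority order and reports when each task completes. It is not the method of any study cited above. It assumes that all tasks are available at time zero, that a smaller priority value means a more urgent task, and that ties are broken by arrival order. The function name `priority_schedule` and the tuple layout are hypothetical.

```python
import heapq
from typing import Dict, List, Tuple


def priority_schedule(tasks: List[Tuple[str, int, float]]) -> Dict[str, float]:
    """Serve tasks on one edge VM in priority order and return completion times.

    Each task is (name, priority, service_time). Assumptions: all tasks are
    ready at time zero, a smaller priority value is served first, and ties
    are broken by position in the input list.
    """
    heap = [
        (priority, index, name, service_time)
        for index, (name, priority, service_time) in enumerate(tasks)
    ]
    heapq.heapify(heap)

    clock = 0.0
    completion: Dict[str, float] = {}
    while heap:
        _, _, name, service_time = heapq.heappop(heap)
        clock += service_time       # the VM runs one task at a time
        completion[name] = clock
    return completion
```

Under these assumptions, urgent tasks complete early at the expense of low-priority tasks, whose completion times grow with the amount of higher-priority work queued ahead of them.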

Zhu et al. (2023) proposed a new approach for offloading in mobile edge computing that uses an improved multi-objective immune cloning algorithm. The method aims to enhance offloading efficiency by optimizing multiple objectives, including maximizing computational performance and minimizing energy consumption. Similarly, Li et al. (2023) put forth a joint non-cooperative game-based offloading and dynamic service migration approach for mobile edge computing. The approach uses game theory to optimize system performance by making offloading and migration decisions under limited resources, such as bandwidth and computation capacity. Naouri et al. (2021) put forward a framework for mobile edge computing that optimizes the offloading of tasks from mobile devices to edge servers. The framework employs optimization techniques to improve the offloading decision-making process, leading to better performance and reduced energy consumption. The results show that the proposed framework outperforms existing solutions in terms of efficiency and effectiveness.

In the same vein, Cui et al. (2021) presented a task offloading scheduling approach for mobile edge computing applications. The approach considers factors such as device resources, network conditions, and service requirements when making offloading decisions. The experimental results show that the proposed method outperforms existing solutions in terms of task completion time and energy consumption. Sheng et al. (2019) proposed a computation offloading strategy for mobile edge computing that aims to optimize the offloading of computationally intensive tasks from mobile devices to edge servers. The strategy takes into account factors such as network conditions, device resources, and task requirements when making offloading decisions. The results show that the proposed strategy improves performance and reduces energy consumption compared with existing solutions. Hao et al. (2019) examined a formal concept analysis approach to VM scheduling in mobile edge computing. The authors aim to address the challenge of resource allocation when mobile devices offload tasks to edge servers. The proposed approach uses formal concept analysis to model the scheduling problem and find optimal solutions for task offloading.

Deadline-aware scheduling is another problem, in which tasks are scheduled based on the time by which each task should be completed. Zhu et al. (2018) addressed the problem of scheduling multiple mobile devices under various MEC servers. Lakhan et al. (2022) devised an algorithm for scheduling fine-grained tasks in mobile edge computing environments. The algorithm considers both the tasks' deadlines and the edge servers' energy efficiency, aiming to minimize the total energy consumption while satisfying the deadlines of the tasks and maximizing resource utilization. The authors evaluate the proposed algorithm using simulations, and the results show that it outperforms existing algorithms in terms of energy efficiency and meeting deadlines. Ali and Iqbal (2022) proposed a task scheduling technique for offloading microservices-based applications in mobile cloud computing environments. The technique considers both cost and energy efficiency and is designed to minimize the total cost while maintaining energy efficiency and meeting the deadlines of the tasks. The authors evaluate the proposed technique using simulations, and the results show that it outperforms existing techniques in terms of cost and energy efficiency. In the same vein, Bali et al. (2023) considered the priority of the tasks when scheduling tasks to offload data to edge and cloud servers. The technique is designed to minimize the total completion time while respecting the priorities and meeting the deadlines of the tasks. The authors evaluate the proposed technique using simulations, showing that it outperforms existing techniques in terms of completion time and meeting priorities.
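The basic idea of deadline-aware scheduling can be sketched with the classical earliest-deadline-first (EDF) rule. The example below is a generic, non-preemptive EDF pass on a single edge server and does not reproduce any of the algorithms surveyed above. It assumes that all tasks are available at time zero and that deadlines are absolute times. The names `Task` and `edf_schedule` are hypothetical.

```python
from typing import List, NamedTuple, Tuple


class Task(NamedTuple):
    name: str
    service_time: float  # processing time on the edge server
    deadline: float      # absolute deadline, measured from time zero


def edf_schedule(tasks: List[Task]) -> Tuple[List[str], List[str]]:
    """Order tasks by earliest deadline and report which ones finish late.

    Assumptions: one server, no preemption, all tasks ready at time zero.
    Returns (execution order, names of tasks that miss their deadline).
    """
    order: List[str] = []
    missed: List[str] = []
    clock = 0.0
    for task in sorted(tasks, key=lambda t: t.deadline):
        clock += task.service_time
        order.append(task.name)
        if clock > task.deadline:
            missed.append(task.name)
    return order, missed
```

The list of missed tasks is the signal a scheduler of this kind could use to decide which tasks to offload elsewhere; energy and cost objectives, as considered in the surveyed studies, would require additional terms.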

Qureshi et al. (2022) and Yadav and Sharma (2023) developed a method for improving the sustainability of mobile edge computing through blockchain technology. The presented method uses blockchain to secure cooperative task scheduling in these environments, enhancing task scheduling security through the blockchain's decentralized and immutable nature. The results show improved security and sustainability of task scheduling in mobile edge computing. Li et al. (2022) proposed a solution to enhance the efficiency of mobile edge computing through collaboration between User Plane Functions (UPFs) and edge servers. Their proposed UPF selection algorithm considers the current load and computing capacities of UPFs and edge servers for optimal resource utilization. The simulation results show that this approach improves system performance compared with traditional methods. The authors conclude that collaboration between UPFs and edge servers can significantly improve mobile edge computing performance. In a different study, Lou et al. (2023) addressed the problem of scheduling dependent tasks in a mobile edge computing environment while considering the startup latency caused by limited bandwidth on edge servers. The authors propose an algorithm named Startup-aware Dependent Task Scheduling (SDTS), which selects the edge server with the earliest finish time for each dependent task. The selection process considers the edge servers' downloading workload, computation workload, and processing capability. Additionally, the algorithm employs a cloud clone for each task to utilize the scalable computation resources in the cloud. The results of simulations using real-world datasets show that SDTS outperforms existing baselines in terms of makespan. In future work, the authors plan to study the dependent task scheduling problem in more dynamic edge computing networks.
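The earliest-finish-time selection described for SDTS can be illustrated with a simplified sketch. This is not the algorithm of Lou et al. (2023): the cost model below is an assumption made for illustration, in which a task can start once its image has been downloaded behind the server's pending downloads and its predecessors have finished, and it then waits behind the server's pending computation. Cloud clones are omitted. All names (`EdgeServer`, `estimated_finish`, `select_server`) and fields are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class EdgeServer:
    name: str
    bandwidth: float               # download rate, e.g. MB/s
    capability: float              # processing rate, e.g. Gcycles/s
    download_backlog: float = 0.0  # MB already queued for download
    compute_backlog: float = 0.0   # Gcycles already queued for execution


def estimated_finish(server: EdgeServer, image_size: float, cycles: float,
                     ready_time: float = 0.0) -> float:
    """Estimate when a task would finish on a server (assumed cost model)."""
    # Startup latency: the task image is downloaded after the queued downloads.
    startup = (server.download_backlog + image_size) / server.bandwidth
    # Execution: the task runs after the computation already queued.
    compute = (server.compute_backlog + cycles) / server.capability
    # The task cannot start before its predecessors finish (ready_time).
    return max(ready_time, startup) + compute


def select_server(servers: List[EdgeServer], image_size: float, cycles: float,
                  ready_time: float = 0.0) -> EdgeServer:
    """Pick the server with the earliest estimated finish time."""
    return min(
        servers,
        key=lambda s: estimated_finish(s, image_size, cycles, ready_time),
    )
```

Even in this simplified form, a server with a faster processor can lose to a slower one when its download queue is long, which is the effect of startup latency that the study highlights.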