- 1School of Computing Science and Engineering (SCOPE), Vellore Institute of Technology, Vellore, India

- 2Vietnam-Korea University of Information and Communications Technology, University of Danang, Da Nang, Vietnam

- 3Saint-Petersburg Institute for Information and Automation RAS, St. Petersburg, Russia

- 4Department of Computer Science and Engineering, Saint Petersburg Electrotechnical University, LETI (ETU), St. Petersburg, Russia

Introduction: There is a growing need for advanced systems to monitor patients in both hospital and home settings, but existing solutions are often costly and require specialized hardware. This article presents a method for building “Intellectual Rooms,” a cost-effective and intelligent environment based on Ambient Intelligence (AmI) and Internet of Things (IoT) technologies. The objective is to enhance patient care by using machine learning to process data from readily available devices.

Methods: We developed a method for creating Intellectual Rooms that utilize a complex model integrating medical domain knowledge with machine learning for image processing. To ensure cost-effectiveness, data were gathered from various sources including public cameras, smartphones, and medical sensors. Machine learning tasks were distributed across edge devices, fog, and cloud platforms based on technical constraints. The system's effectiveness was evaluated using simulated test data representing various patient scenarios and abnormal actions, comparing four conditions with incrementally added data sources (public camera, smartphone, sensors, and environmental objects).

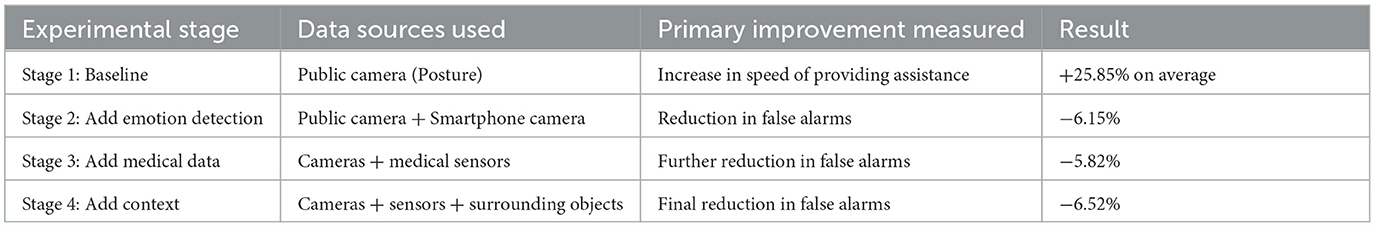

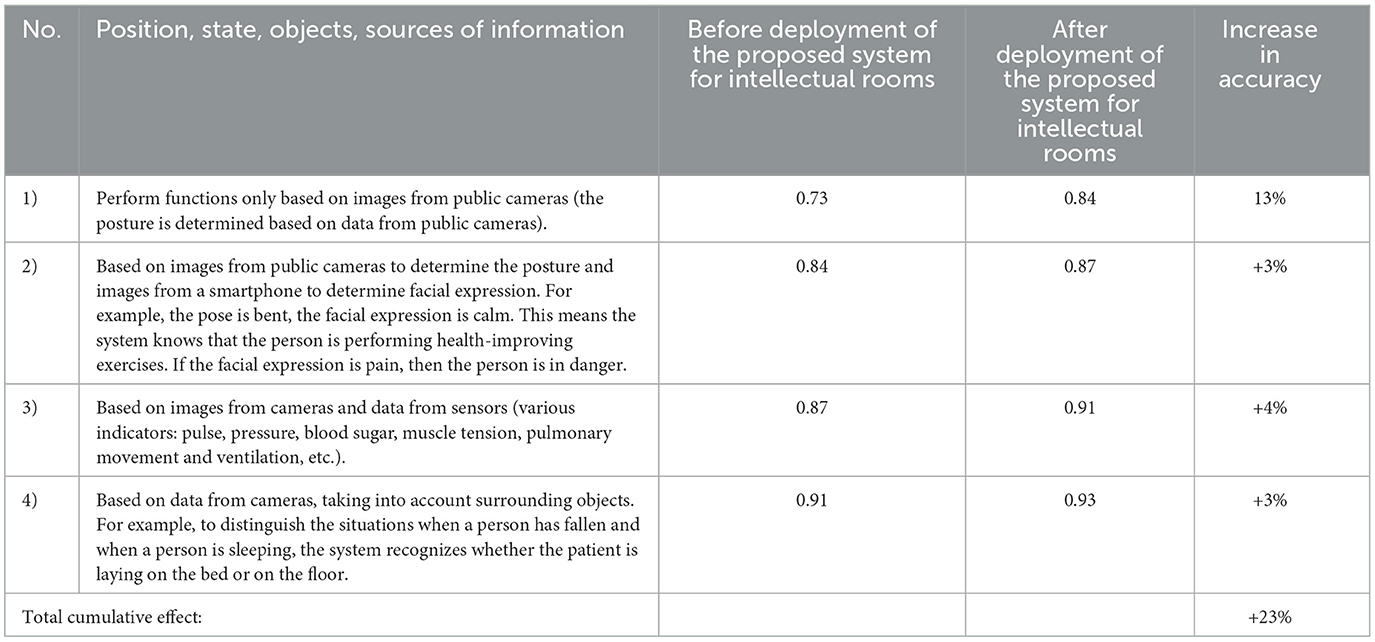

Results: The system demonstrated a significant increase in the speed of providing assistance, with an average improvement of over 25%. The integration of multiple data sources progressively reduced false alarms: adding smartphone camera data reduced false alarms by 6.15%, incorporating sensor data led to a further 5.82% reduction, and considering surrounding objects achieved an additional 6.52% reduction. The cumulative effect of using all data sources resulted in a 23% overall improvement in the accuracy of identifying patient states.

Discussion: The results validate that the proposed method for building Intellectual Rooms is a feasible and effective approach for patient monitoring. By leveraging existing, low-cost hardware, the system offers a non-intrusive and intelligent solution suitable for both hospitals and home care. This study successfully demonstrated the core functionalities using simulated data; future work will involve deployment and evaluation in real-world clinical environments to confirm its practical utility.

1 Introduction

In recent years, demands for human care have increased, leading to the development of smart electronic medical devices that can connect to the internet and exchange information with each other. These devices produce multiple streams of heterogeneous real-time data that contain different information about the state and the behavior of the patients. This has paved the way for the development of patient care and support systems, in particular, smart rooms, intelligent rooms, etc.

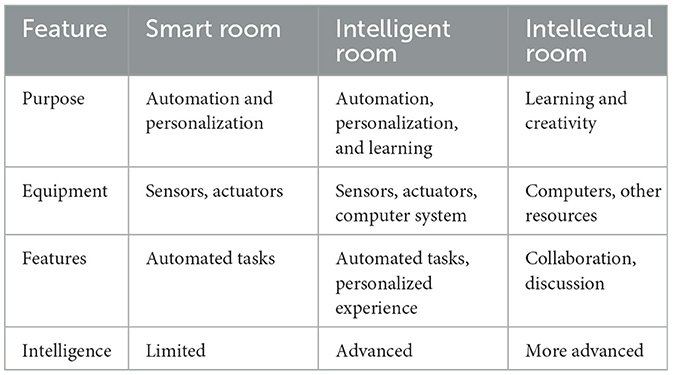

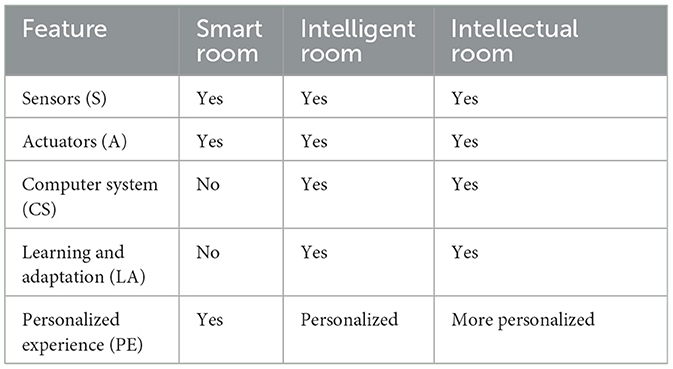

The terms “Smart Room” and “Intelligent Room” are often used interchangeably, but there are some subtle differences between them.

A smart room is a room that is equipped with sensors and actuators that can be used to automate tasks (Zhukova et al., 2023). For example, a smart room might have sensors that can detect the presence of people, the level of light, and the temperature. These sensors can then be used to automate tasks such as turning on the lights when someone enters the room or adjusting the temperature to a comfortable level.

An intelligent room is a more advanced version of a smart room. In addition to sensors and actuators, an intelligent room also has a computer system that can learn and adapt to the user's behavior (Brooks, 1997). This means that the intelligent room can make predictions about what the user wants or needs, and it can take action to fulfill those needs. For example, an intelligent room might learn that a user always turns on the lights at a certain time, and it can then turn on the lights automatically at that time.

The next step in the development of hospital rooms should be the creation of Intellectual Rooms. An Intellectual Room is a space that is designed to promote learning and creativity. It is typically equipped with computers and other resources, such as cameras and sensors, that can be used for research and study. Intellectual Rooms may also have features that promote collaboration and discussion.

Intellectual Rooms have the potential to revolutionize the way we interact with our environment.

Here are some examples to differentiate between rooms:

Smart rooms provide:

1. Voice-controlled lighting and temperature adjustments.

2. Automated window blinds and curtains opening and closing.

3. Smart appliances that can be remotely controlled or scheduled.

4. Security systems with motion sensors and cameras.

Intelligent rooms provide:

1. AI-powered assistants that can answer questions, play music, or provide information.

2. Lighting systems that adjust based on the time of day or occupant activity.

3. Temperature control that learns individual preferences and adjusts accordingly.

4. Proactive suggestions for music, movies, or other entertainment based on past choices.

Intellectual Rooms provide:

1. Interactive displays that support access to educational content and tools.

2. Ambient soundscapes that enhance focus or relaxation.

3. Smart furniture that adapts to different activities, such as working or reading.

4. AI-powered tutors or mentors that provide personalized guidance and support.

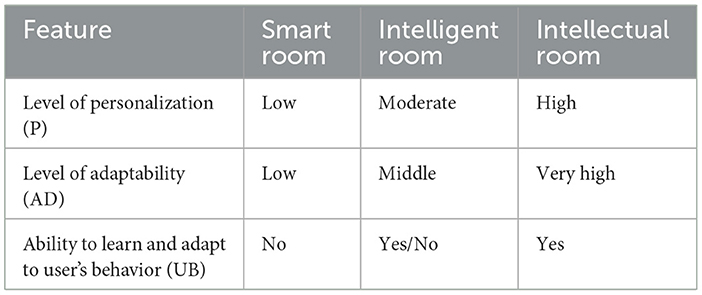

The evolution of smart, intelligent, and intellectual rooms represents a significant shift in how we interact with our homes. These spaces have the potential to revolutionize our daily lives, making them more efficient, enjoyable, and fulfilling. The distinctions between these environments are summarized in Tables 1–3. As technology continues to advance, we can expect even more innovative and transformative features to emerge, shaping the future of living spaces for generations to come.

Table 1. Summary of the key differences between smart rooms, intelligent rooms, and Intellectual Rooms.

Table 2. Comparative summary of the key features of smart rooms, intelligent rooms, and Intellectual Rooms.

The combination of Ambient Intelligence (AmI) and Internet of Things (IoT) technologies makes it possible to create Intellectual Rooms that are more comfortable, efficient, and secure than existing intelligent rooms.

As these technologies continue to develop, we can expect to see even more innovative and user-friendly intellectual rooms being built.

The mathematical notation of state machines can be used to describe the rooms. A state machine is a mathematical model that describes the possible states of a system and the transitions between those states.

In the context of a room, the states of the system can be the physical state of the room, such as the temperature, the lighting, the sound, and the presence of people. The transitions between states can be caused by events such as someone entering the room, the lights turning on, or the sound being turned off. The following mathematical notation describes all three rooms.

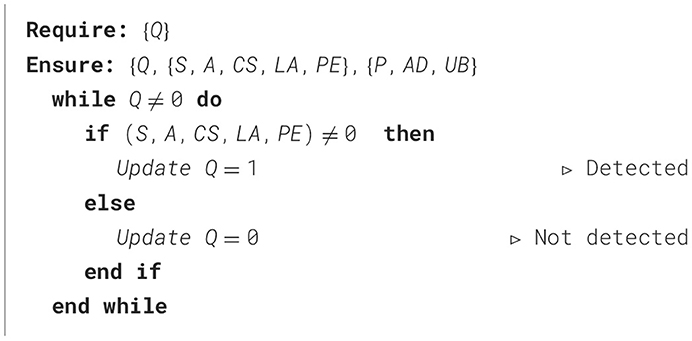

Let $Q$ be the state of the room, $E$ be the environment, and $U$ be the user's preferences and habits.

In a smart and intelligent room, the feedback function would be:

$$Q' = f(Q, E, U), \quad E = E(S, A, CS, LA, PE)$$

where $Q'$ is the next state of the room, $E$ is the current state of the environment, which depends on sensors ($S$), actuators ($A$), computers ($CS$), learning and adaptation ($LA$), and personalized experiences ($PE$), and $U$ is the user's preferences and habits.

In an Intellectual Room, the feedback function would be:

$$Q' = f(Q, E, U), \quad U = U(P, AD, UB)$$

In this room, $U$ is the user's preferences and habits, the support of which depends on the level of personalisation ($P$), the level of adaptability ($AD$), and the ability to learn and adapt to the user's behavior ($UB$).
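To make the notation concrete, the following minimal Python sketch treats a room as a state machine whose next state Q' is computed by a feedback function f(Q, E, U). The field names (`lights_on`, `temperature_c`, `occupied`, `measured_temperature_c`, `preferred_temperature_c`) and the simple rules inside `feedback` are illustrative assumptions and are not part of the model described in this article.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class RoomState:
    """Q: the current state of the room (hypothetical fields)."""
    lights_on: bool
    temperature_c: float


@dataclass(frozen=True)
class Environment:
    """E: what the sensors currently report (hypothetical fields)."""
    occupied: bool
    measured_temperature_c: float


@dataclass(frozen=True)
class UserProfile:
    """U: the user's preferences and habits (hypothetical field)."""
    preferred_temperature_c: float


def feedback(q: RoomState, e: Environment, u: UserProfile) -> RoomState:
    """Return the next state Q' = f(Q, E, U) under assumed toy rules."""
    # Lights follow the occupancy reported by the presence sensor.
    lights_on = e.occupied
    # While the room is occupied, move the set point by at most one degree
    # per step toward the user's preferred temperature.
    target = q.temperature_c
    if e.occupied:
        if e.measured_temperature_c < u.preferred_temperature_c:
            target = min(q.temperature_c + 1.0, u.preferred_temperature_c)
        elif e.measured_temperature_c > u.preferred_temperature_c:
            target = max(q.temperature_c - 1.0, u.preferred_temperature_c)
    return replace(q, lights_on=lights_on, temperature_c=target)
```

Each event (someone entering, a new sensor reading) produces a new `Environment` value, and repeated calls to `feedback` trace the transitions between room states.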

As smart and intelligent room systems continue to evolve, they become more efficient and therefore more in demand, both among users with modest requirements and among users with very high requirements.

The first studies aimed at creating smart and intelligent rooms relate to health care support systems that automatically measured blood oxygen, cholesterol level, markers of inflammation, etc. These systems were able to identify infections in the patient, automatically supply oxygen to the lungs and glucose through a drip, and record the cardiogram in electronic form with dynamic diagnostics displayed on the screen. It is necessary to distinguish between three kinds of health care support systems: systems for elderly patients, which are aimed at prolonging the life of elderly people and improving its quality; systems that help people with sprains and people recovering from operations on the joints; and systems oriented toward treating patients with various diseases, in particular diseases of the gastrointestinal tract, spinal injuries, lesions of the nervous system, bone marrow, chemical lesions of the body, brain injuries, and severe heart damage. In each case, the system for health care support and for the prevention of threshold and near-death conditions is different.

One of the main deterrents to the widespread use of health care support systems is the need to install special technical equipment and, as a result, their high cost. Modernization of technical facilities, as well as the deployment of new devices, leads to additional costs.

In addition, existing life support systems need further development of the functionality that they provide. In particular, they are required to carry out a complex analysis of all the patient data provided by different sources, to consider data about the patient's states over long time intervals, and to take the surroundings into account. Such new requirements for intelligent systems, raised by specialists in the medical domain, call for advanced use of artificial intelligence (AI).

Today, artificial intelligence (AI) is in demand in different fields, where it increases confidence in decisions and improves their quality over time. AI systems make decisions based on machine learning algorithms and neural networks. They are characterized by the degree to which they can replicate human cognitive features. Systems that can perform more human-like functions are considered to have more evolved AI. To solve the modern tasks of the medical domain and change healthcare for the better, AI-based medical systems are required that ensure timely data collection, are capable of consuming incoming medical data streams, perform deep analyses of the gathered data, and provide context-dependent evaluation of the obtained results.

Intellectual Rooms with a high AI level can be constructed using modern advanced technologies: in particular, IoT is used for gathering data from the devices and interacting with humans, and machine learning (ML) is used for performing deep analyses of the collected data. In recent years, a significant number of advanced ML techniques applicable to building Intellectual Rooms have been developed in the domain of computer vision, including facial expression recognition (FER) (Mao et al., 2023; Zeng et al., 2022; Xue et al., 2021), gender identification (Savchenko, 2021; Haseena et al., 2022), and fall motion tracking (Cao et al., 2021; Sun et al., 2019; Toshev and Szegedy, 2014).

The distinguishing features of the proposed Intellectual Rooms are the following:

1. Allows processing data from all available devices.

2. Joint data processing is supported, in particular, joint processing of video streams, images and sensor data.

3. Data processing is executed based on using medical domain knowledge and knowledge of the ML domain (Arrotta et al., 2023, 2024; Arrotta, 2024).

4. Deployment of new specialized medical devices or additional computing facilities is not required.

5. Data processing is executed on devices, in fog or cloud depending on the network parameters, computing performance of the devices, and limitations on time. Computational load is redistributed in case the conditions change.

6. A mobile application was developed for the Intellectual Rooms to ensure easy interaction with the system.
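The placement rule in item 5 can be illustrated with a small sketch. It estimates the end-to-end latency of a task on each tier from the network parameters and the computing performance, then picks the fastest tier and reports whether it meets the time limit. The `Tier` fields, the latency formula, and all numbers are hypothetical assumptions chosen for illustration; the article does not specify the actual decision procedure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    """One place where a task can run (all fields are assumed parameters)."""
    name: str               # "device", "fog", or "cloud"
    compute_gflops: float   # sustained computing performance
    uplink_mbps: float      # bandwidth from the data source to this tier
    round_trip_ms: float    # network round-trip time to this tier


def estimated_latency_ms(tier: Tier, task_gflop: float, payload_mbit: float) -> float:
    """Transfer time plus compute time plus round trip, in milliseconds."""
    transfer_ms = payload_mbit / tier.uplink_mbps * 1000.0
    compute_ms = task_gflop / tier.compute_gflops * 1000.0
    return transfer_ms + compute_ms + tier.round_trip_ms


def choose_tier(tiers, task_gflop, payload_mbit, deadline_ms):
    """Return (fastest tier, whether it meets the deadline)."""
    best = min(tiers, key=lambda t: estimated_latency_ms(t, task_gflop, payload_mbit))
    meets = estimated_latency_ms(best, task_gflop, payload_mbit) <= deadline_ms
    return best, meets


# Local processing needs no transfer, so the device uplink is modelled as infinite.
device = Tier("device", compute_gflops=5.0, uplink_mbps=float("inf"), round_trip_ms=0.0)
fog = Tier("fog", compute_gflops=50.0, uplink_mbps=100.0, round_trip_ms=5.0)
cloud = Tier("cloud", compute_gflops=500.0, uplink_mbps=20.0, round_trip_ms=60.0)

# Normal conditions: the fog node is the fastest option for this task.
normal_choice, normal_ok = choose_tier([device, fog, cloud], 2.0, 2.0, deadline_ms=200.0)

# The link to the fog node degrades, so the load is redistributed.
slow_fog = Tier("fog", compute_gflops=50.0, uplink_mbps=1.0, round_trip_ms=5.0)
degraded_choice, degraded_ok = choose_tier([device, slow_fog, cloud], 2.0, 2.0, deadline_ms=200.0)
```

Re-running `choose_tier` whenever the measured bandwidth, latency, or device load changes gives a simple form of the redistribution described above.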

Key differences of the Intellectual Rooms from the existing Intelligent Rooms:

1. Knowledge-based joint data processing.

2. Usage of existing devices for gathering data.

3. Context-dependent redistribution of the computational load over the system elements (devices, fog, cloud).

Key benefits of using Intellectual Room:

1. High accuracy due to using data from all available data sources and processing it using ML methods.

2. Low cost as it is not necessary to deploy additional technical means.

3. The system can be used by doctors, nurses, and patients in hospitals, and by elderly people and their relatives at home, as it does not require special knowledge of the medical or ML domains and the Intellectual Room mobile application has a user-friendly interface.

The key scenarios of using the proposed Intellectual Rooms are the following:

1. Continuous monitoring of the patient's states in hospitals and at home.

2. Remote examination of the patient's states using essential information about the patients gathered by the devices.

3. Providing support for patient care depending on the patients' states.

In the present research, we use AI technologies to build Intellectual Rooms that apply the ambient intelligence concept to a typical hospital room, making hospital rooms sensitive and adaptive to patients' states and needs. In the proposed intelligent environment, real-time data about the patients is gathered from all available IoT devices and analyzed using machine learning techniques.

The main contributions of the paper are the following:

1. The method for building Intellectual Rooms enables their widespread use both in hospitals and at home, as it significantly reduces the building cost through the use of readily available hardware devices and technologies.

2. The Intellectual Room has a high intellectual level, which makes it possible to implement an intelligent environment that is sensitive to the patients' states and adaptive to their specific needs, owing to deep analyses of real-time and statistical medical data based on the extensive use of up-to-date machine learning methods.

3. The developed smartphone application for monitoring patients by the hospital staff runs on the Android 11 operating system and is provided in “*.apk” format, which is easily launched from the smartphone's file system.

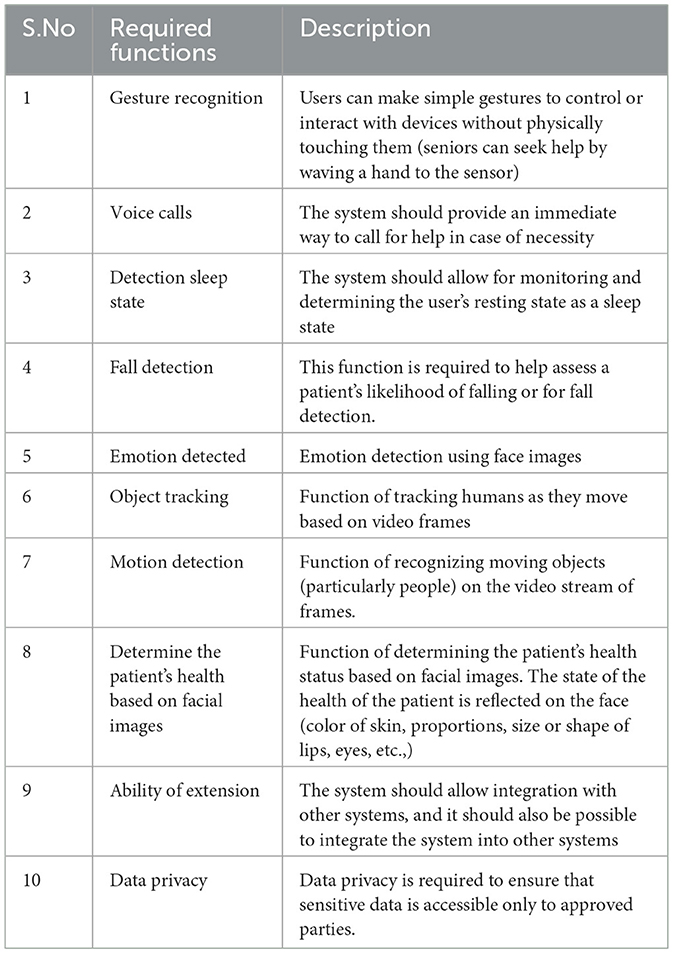

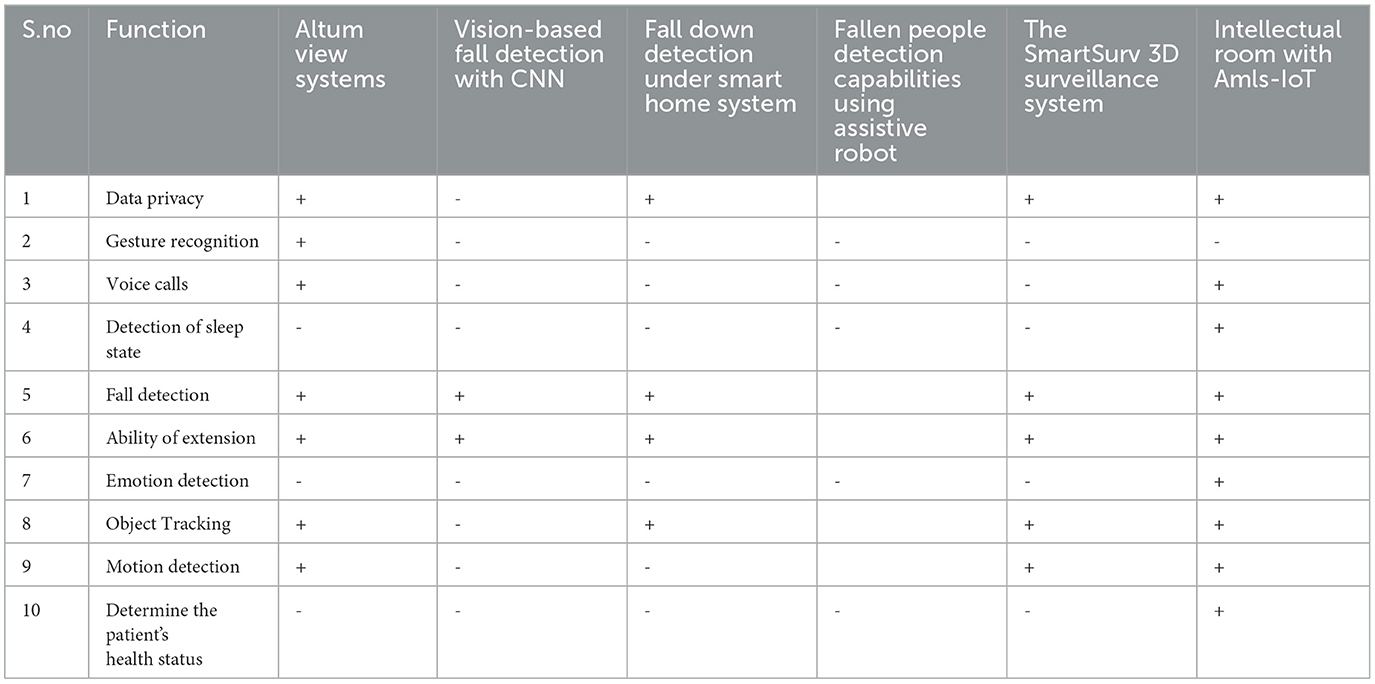

In this paper, we review existing systems, paying special attention to vision-based systems as they are widely used now, and highlight the essential functions that they should have (Table 4). Based on this, we propose a method for building Intellectual Rooms based on AmI and IoT technologies that provides all the necessary functions at low cost. We develop a smartphone application for the medical staff and conduct extensive experimental research on the proposed method for building Intellectual Rooms that confirms its effectiveness and efficiency.

2 Related work

We first analyze various medical systems for monitoring the health status of patients and then focus on identifying the capabilities of modern vision-based systems.

In Balasubramanian et al. (2022), the authors introduce an innovative telemedicine system designed for efficient monitoring of COVID-19 quarantine facilities in India. This system relies on near-field communication (NFC) and natural language processing technology, which combine the usage of NFC chips with intelligent cloud analytics to facilitate contactless or minimal-contact communication with the system. Each patient is provided with an NFC chip, granting physicians easy access to the patient's medical history stored in the cloud (Supriya et al., 2017; Sujadevi et al., 2016). The cloud-based data organization tool assists healthcare personnel in structuring and integrating patient data into existing databases with minimal effort.

In Wen et al. (2022), a novel intelligent patient care system is introduced. Its primary purpose is to alert the nurses when a patient requires assistance. The system transmits all signals directly to the nurses' mobile phones, ensuring prompt communication, and provides a three-stage notification system for bed leave. This feature is particularly crucial since preventing patient falls is a top priority and a key expected capability of the care system (Bouldin et al., 2013; Hempel et al., 2013).

In Cai and Pan (2022), a novel approach is presented for the development of hospital rooms. This approach combines neurocomputer interface (NCI) and IoT technology, utilizing hybrid signals. The system is composed of subsystems that incorporate hybrid asynchronous electroencephalography (EEG), electrooculography (EOG), gyroscope-based NCI control, and IoT monitoring and control. It employs a graphical interface controlled through the NCI, comprising a cursor and multiple buttons. The gyroscope is employed to select the cursor's area, while blink-related EOG signals are used to initiate cursor clicks. The system captures eye movements (blinking) and records this information simultaneously. Additionally, wearable devices and cameras are used to collect physiological and monitoring data, allowing for passive patient control through comprehensive assessment.

In the study (Yadav et al., 2022), the authors introduce a fluid level control system for IV drip vials, particularly suitable for use in intensive care units and postoperative units. This system relies on a GSM-based message-exchange mechanism to alert healthcare staff when the vial is running low. It incorporates a strain gauge to measure the weight of the fluid, with a microcontroller reading and transmitting this data to the GSM module (Gayathri and Ganesh, 2017). The real-time liquid level within the vial is displayed through an Android app, indicating the remaining fluid as a percentage. When the level drops below 100 mL, a warning appears, accompanied by SMS notifications sent to the attendant at 10-second intervals until the system is deactivated. Simultaneously, an audible alert is sounded. Additionally, a team of healthcare professionals can monitor fluid levels in real time from their workstations via the IoT module. If the personnel or attendants cannot respond immediately to the alarm, an electromagnetic valve automatically blocks fluid outflow.
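The alerting logic described for this drip monitor can be sketched as follows. The 100 mL threshold and the 10-second SMS interval come from the description above; the function names, the fluid density of 1.0 g/mL, and the example weights are assumptions made for illustration and are not taken from Yadav et al. (2022).

```python
def remaining_volume_ml(gross_weight_g, empty_vial_weight_g, density_g_per_ml=1.0):
    """Convert a strain-gauge weight reading into remaining fluid volume.

    The density of 1.0 g/mL is an assumed approximation for the fluid.
    """
    net_weight_g = max(0.0, gross_weight_g - empty_vial_weight_g)
    return net_weight_g / density_g_per_ml


def level_percent(volume_ml, capacity_ml):
    """Remaining fluid as a percentage of the vial capacity."""
    return 100.0 * volume_ml / capacity_ml


def is_low(volume_ml, threshold_ml=100.0):
    """True when the level has dropped below the warning threshold."""
    return volume_ml < threshold_ml


def sms_due(seconds_since_last_sms, deactivated, interval_s=10.0):
    """Repeat the SMS every `interval_s` seconds until the alarm is deactivated."""
    return (not deactivated) and seconds_since_last_sms >= interval_s


# Hypothetical reading: a 500 mL vial that weighs 150 g when empty.
volume = remaining_volume_ml(gross_weight_g=230.0, empty_vial_weight_g=150.0)
percent = level_percent(volume, capacity_ml=500.0)
warning = is_low(volume)
```

In a real device these checks would run inside the microcontroller's polling loop, with the valve closure handled as a separate fallback step.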

Scientists from Malawi (Kadam'manja et al., 2022) presented an IoT-based system designed to measure vital signs of patients waiting in outpatient units. The sensors used for gathering these vital signs communicate with computers and mobile devices accessible to medical staff. The pulse rate provides an estimate of body temperature and blood pressure (Dalal et al., 2019), while oxygen saturation indicates respiratory rate. Any values outside the normal range can be rapidly detected, enabling medical staff to deliver timely patient care.

In Liu et al. (2022), a cervical monitoring system based on wearable devices is introduced. This study examines the current status and medical prospects of using smart wearable devices to prevent and treat cervical spondylosis in office workers. The system employs cloud-based IoT technology for intelligent management and control of equipment in hospital rooms. It is designed to integrate various toolsets based on the specific requirements of the hospital room scenario. The cloud IoT platform and system technology offer scalable storage and efficient processing capabilities. The IoT system includes monitoring and controller nodes, each comprising key control, Wi-Fi connectivity, power, sensor, and controller modules. Additionally, the system incorporates analytical tools to display process dynamics and manage sensor nodes.

In Feng et al. (2021), a patient localization system is discussed. Advances in device-free localization technologies, coupled with the development of machine learning, have significantly improved localization accuracy. The authors utilize readily available Wi-Fi signals within intelligent rooms and perform patient localization while ensuring confidentiality. They achieve this through multiscale convolutional neural networks (CNNs) and long short-term memory (LSTM) models, resulting in high localization accuracy. The system can also be expanded to detect emergencies, enabling swift responses from medical personnel.

2.1 Vision-based hospital rooms

AltumView Systems (AltumView, 2023) is a smart medical alert system for senior care that comprises the Cypress smart visual sensor, cloud server, and mobile app. The Cypress sensor is an IoT device that runs advanced deep learning algorithms, making it possible to monitor seniors' activities. In the event of emergencies, such as falls, the sensor sends immediate alerts to family members or healthcare workers. To preserve users' privacy while visualizing incidents, the alerts include stick-figure animations of the incidents instead of raw videos, making the sensor suitable for bedrooms and bathrooms. Other features of the sensor include face recognition, gesture recognition (seniors can seek help by waving a hand to the sensor), geo-fence (to alert carers when dementia patients leave the safe zone), voice calls, and statistics of daily activities to keep track of seniors' health over time. It also has a built-in fall risk assessment feature, which allows seniors to perform fall risk assessments at home instead of going to hospitals. In the future, the company plans to develop gait analysis algorithms for the early diagnosis of Parkinson's disease and dementia. Additionally, the system also provides a cloud API that allows users to integrate other sensors into the system or integrate the Cypress sensor into other systems.

Vision-Based Fall Detection with Convolutional Neural Networks (Núñez-Marcos et al., 2017) proposes a vision-based solution for fall detection using Convolutional Neural Networks (CNNs). To model the video motion and make the system scenario independent, the authors use optical flow images as input to the networks, followed by a novel three-step training phase. More precisely, they introduce a CNN that learns how to detect falls from optical flow images. Because typical fall datasets are small, they take advantage of the capacity of CNNs to be sequentially trained on different datasets. Training of the model on the ImageNet dataset, which allows it to acquire relevant features for image recognition, is followed by training on the UCF101 action dataset to teach the network how to detect different actions using optical flow images. Finally, they apply transfer learning by reusing the network weights and fine-tuning the classification layers so the network focuses on the binary problem of fall detection. The vision-based fall detector is a solid step toward safer, smarter environments. The system has been shown to be generic; it works on camera images using a few image samples to determine the occurrence of a fall. This vision-based fall detector is a promising candidate for deployment in various environments, making them smart environments and providing means to assist the elderly in a wide range of scenarios, including non-home scenarios.

In Fall Down Detection Under Smart Home System (Juang and Wu, 2015), the triangular pattern rule is proposed for detecting the fall-down motion of human beings in real time. The fall detection process applies a number of image pre-processing techniques to the images received from the security cameras in order to remove image noise and acquire the posture change variance between a normal state and a fall, and then uses feature extraction to obtain identifying information. Binarization is used as a pre-processing step to extract posture features. Fall identification is based on extracting features that distinguish between a normal state and a fall for standing and non-standing postures. Identifying the fall-down motion is difficult because the varying angle of the posture affects the correctness of the identification. Therefore, to improve the identification rate, the system analyzes a series of continuous postures in motion and uses an SVM classifier.

Fallen People Detection Using an Assistive Robot, proposed in Maldonado-Bascon et al. (2019), is a vision-based solution for fall detection using a mobile patrol robot. The process of fall detection consists of two stages: person detection and fall classification. Person detection relies on deep learning-based computer vision, and fall classification is done using a Support Vector Machine (SVM) classifier. This approach meets the following design requirements: easy to use, real-time, adaptable, high performance, independent of person size, clothes, and environment, and low cost. One of the main contributions of this research is the input feature vector for the SVM-based classifier, which can distinguish between a resting position and a real fall scene.

The SmartSurv 3D Surveillance System (Fleck and Straßer, 2008) is a distributed surveillance and visualization system that consists of three levels: the sensor analysis level [scene acquisition and distributed scene analysis (smart camera network)], the virtual world (server) level, and the visualization level. The tracking engine is based on particle filter units and considers color distributions in hue, saturation, and value space. The tracking engine is also capable of adapting the target's appearance during tracking using the probability density function's (pdf) unimodality.
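As a rough illustration of how a particle filter can use color distributions in hue, saturation, and value space, the sketch below weights candidate image patches by the similarity of their HSV histograms to a target histogram. The Bhattacharyya coefficient, the Gaussian weighting with `sigma`, and the bin counts are common choices assumed here for illustration; they are not claimed to be the exact formulation used in SmartSurv.

```python
import colorsys
import math


def hsv_histogram(pixels_rgb, bins=(8, 8, 4)):
    """Normalized joint HSV histogram of an iterable of (r, g, b) pixels in 0..255."""
    h_bins, s_bins, v_bins = bins
    hist = [0.0] * (h_bins * s_bins * v_bins)
    for r, g, b in pixels_rgb:
        h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        # Clamp so that a value of exactly 1.0 falls into the last bin.
        hi = min(int(h * h_bins), h_bins - 1)
        si = min(int(s * s_bins), s_bins - 1)
        vi = min(int(v * v_bins), v_bins - 1)
        hist[(hi * s_bins + si) * v_bins + vi] += 1.0
    total = sum(hist)
    return [x / total for x in hist] if total else hist


def bhattacharyya(p, q):
    """Similarity of two normalized histograms (1.0 means identical)."""
    return sum(math.sqrt(a * b) for a, b in zip(p, q))


def particle_weights(target_hist, candidate_hists, sigma=0.2):
    """Normalized particle weights: closer color distributions get higher weight."""
    raw = [
        math.exp(-(1.0 - bhattacharyya(target_hist, h)) / (2.0 * sigma ** 2))
        for h in candidate_hists
    ]
    total = sum(raw)
    return [w / total for w in raw]


# Toy example: the target is a red patch; one particle covers red, one covers blue.
target = hsv_histogram([(255, 0, 0)] * 16)
red_patch = hsv_histogram([(255, 0, 0)] * 16)
blue_patch = hsv_histogram([(0, 0, 255)] * 16)
weights = particle_weights(target, [red_patch, blue_patch])
```

In a full tracker, each particle would correspond to a hypothesized target position, and these weights would drive the resampling step.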

2.2 Disadvantages of the existing works

The majority of the systems reviewed above can be related to smart medical systems or to intelligent systems that can adapt to the user's behavior.

The disadvantages of the existing works are as follows:

• Individual solutions: many of the reviewed systems provide individual solutions that address only one or a few specific requirements for patient care and support.

• Need for new systems: implementing individual solutions often requires building entirely new systems with specialized software and hardware, including new medical sensors and computational facilities.

• High cost: the need for specialized architecture and infrastructure can make these systems very expensive to deploy, especially for medical facilities with limited funding.

These drawbacks highlight the need for a more efficient and effective solution that provides a comprehensive range of functions for hospital rooms, uses available devices and machine learning, implements a knowledge-based approach, and can redistribute the computing load across devices, fog, and cloud resources. The common feature of individual solutions is that in each case it is necessary to build a new system with specialized software and new technical means, in particular, new medical sensors and new computational facilities. Some of the systems use fog and cloud resources for data processing, but to ensure their high efficacy, it is necessary to develop a specialized architecture for each of the systems, taking into account the computational and network infrastructure; consequently, medical facilities with little funding are not able to deploy such systems.

Thus, a new efficient and effective solution should provide the full list of functions required in hospital rooms, based on gathering data from the available devices and processing it using ML. To ensure a high level of efficiency, the new system should be knowledge-based. To ensure a high level of effectiveness, the system should be able to redistribute the computing load over the devices, fog, and cloud depending on the state of the network, the task being solved, and the overall load of the system. To keep the cost of the system low, it should use available devices and computing facilities. This paper sets out the task of building such Intellectual Rooms.

The main challenges to the development of the required Intellectual Rooms are the following:

1. The devices in hospital rooms are of different types and have different technical capabilities; the list of the devices and their number change over time. The system should be able to collect data from the devices and use their technical capabilities for data processing.

2. The data about the patients gathered from the devices is heterogeneous, context-dependent, and of huge volume. Processing of the data should be adaptive; to support the complex examination of the patients, joint data processing should be performed (Ni et al., 2016).

3. The complexity of the tasks of continuously monitoring patients' states and providing personalized health care support requires using various ML and deep learning methods or their combinations, depending on the context in which the tasks are solved.

4. The application for monitoring the patients' states should be user-friendly, simple to install, and always available to the medical staff and relatives of the elderly, i.e., a mobile application should be developed.

The proposed Intellectual Room considered below ensures implementation of the enumerated requirements due to the usage of AmI and IoT technologies and the extensive application of ML techniques.

3 Design of an Intellectual Room based on AmI and IoT technologies

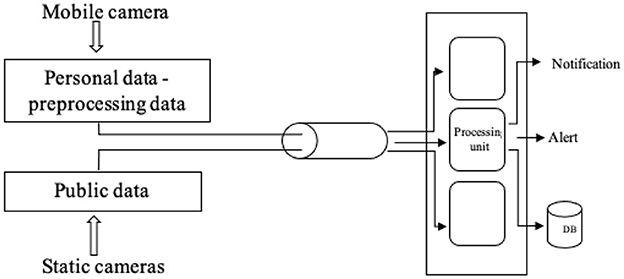

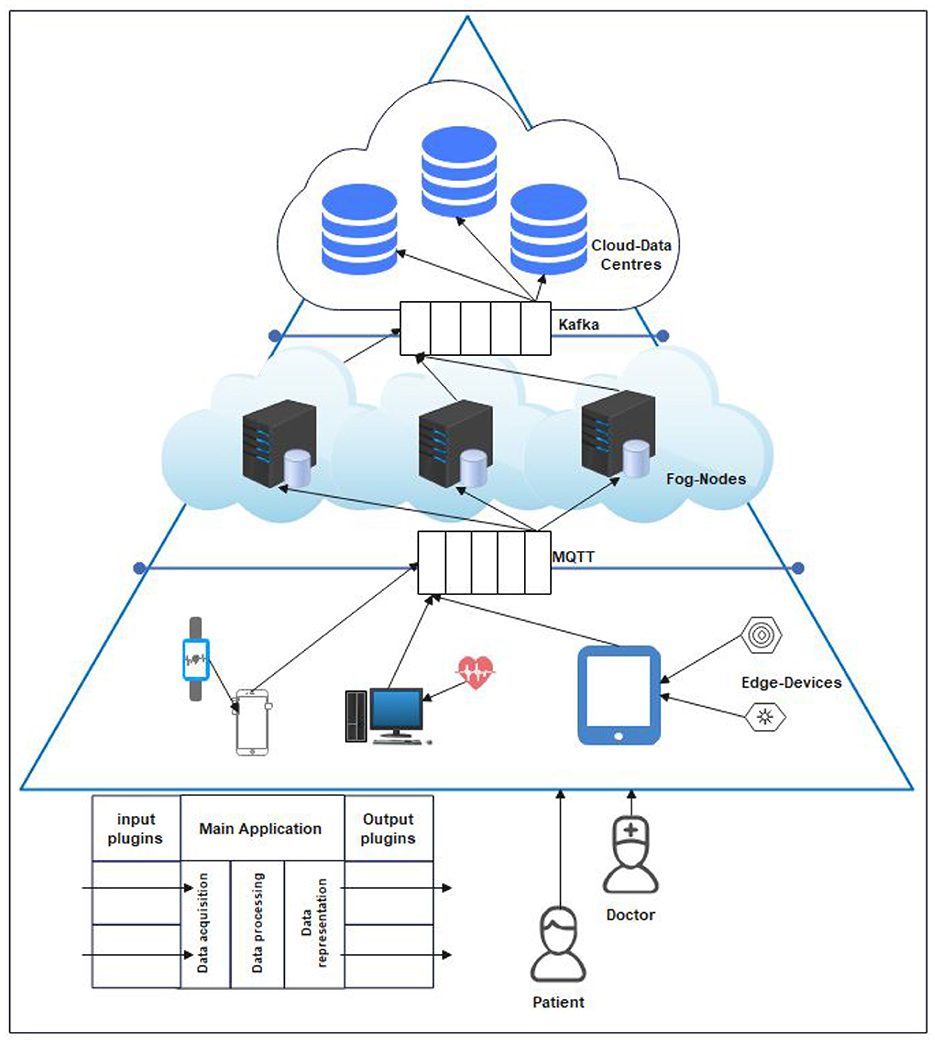

Intellectual Rooms assume continuous monitoring of patients' states and provide support for personalized patient care (Levonevskiy and Motienko, 2023). The proposed system for Intellectual Rooms, which takes advantage of available surveillance cameras and other devices to collect data about patients and the environment, is presented in Figure 1. It can be applied in medical facilities that lack the financial resources for modern and expensive equipment, or used by families who need to take care of someone who is sick, has limited mobility, or needs monitoring. According to Figure 1, the input data includes two main groups. The first group contains data obtained from public cameras or security cameras that capture the whole scene. The data is transferred to the server, where it is processed. The data streams provided by video cameras are processed as a series of frames (15 fps) separately in the fog and together in the cloud.

The second group of data contains data from one or more personal cameras that are located near the patients and can easily capture facial images. This data is commonly gathered using smartphones. Analyses and processing are executed on smartphones. The gathered data is processed using machine learning models and neural networks, and the results of its processing are used for the implementation of the functions provided by the Intellectual Rooms.

The data from the public cameras is used to perform the functions of object tracking to detect people and track as humans appear in the tracking space. It is also used to perform such functions as body pose detection and fall detection. The functions that run on the smartphone include face detection and emotion detection. The emotion detection function allows users to monitor and evaluate the patient's emotions, thereby allowing them to make judgments about the trend in the state of the patient, identifying whether it has become worse or better. Mobile phone devices can also perform alarm or communication functions.

System functions such as body pose detection and fall detection are performed on the system's server. Identifying the posture of a person from the input video streams is the core task of the recognition server. Machine learning algorithms are executed on the server to classify and identify human activities. If the image storage option is enabled via the mobile application, the server also stores the images of the identified postures with a user ID and timestamp for later use.

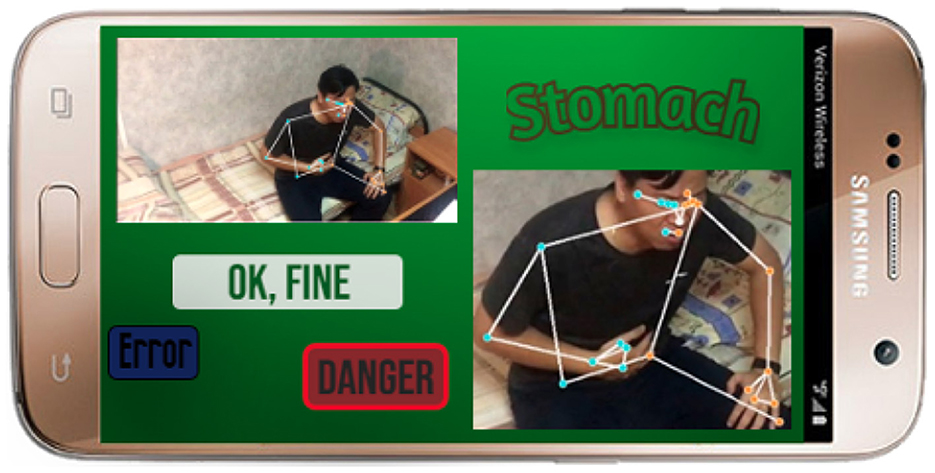

According to the determined states of the patients, various types of events are generated, e.g., falls, heart attacks, or contractions in the lower abdomen, and sent to the users' smartphones along with the images that correspond to the events. When an event is generated, an audible signal is played and a colored overlay (frames and an inscription) is displayed on the smartphone screen, for example, the inscription “pain in the abdomen” and a red border around the ribs, pelvis, and entire abdominal cavity. Based on the events received from the server, the medical staff provides prompt help to the patients.

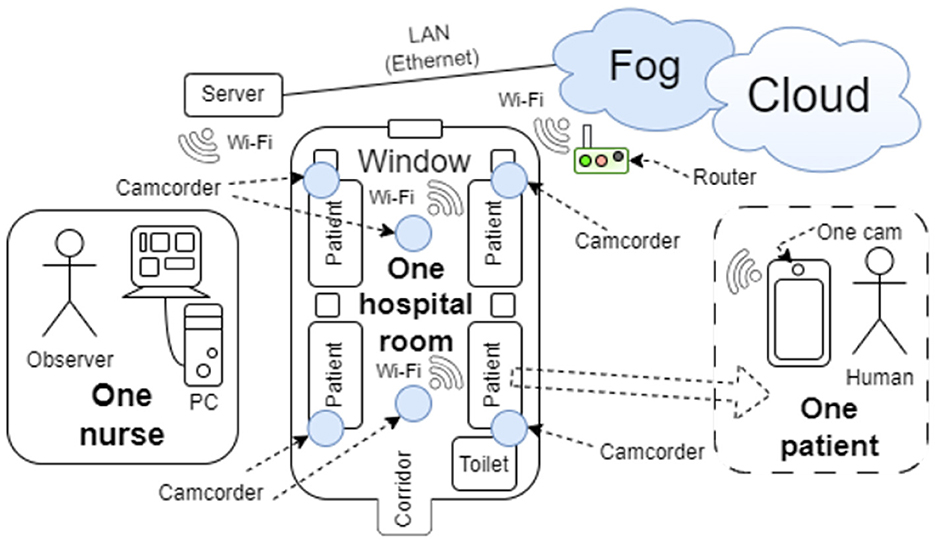

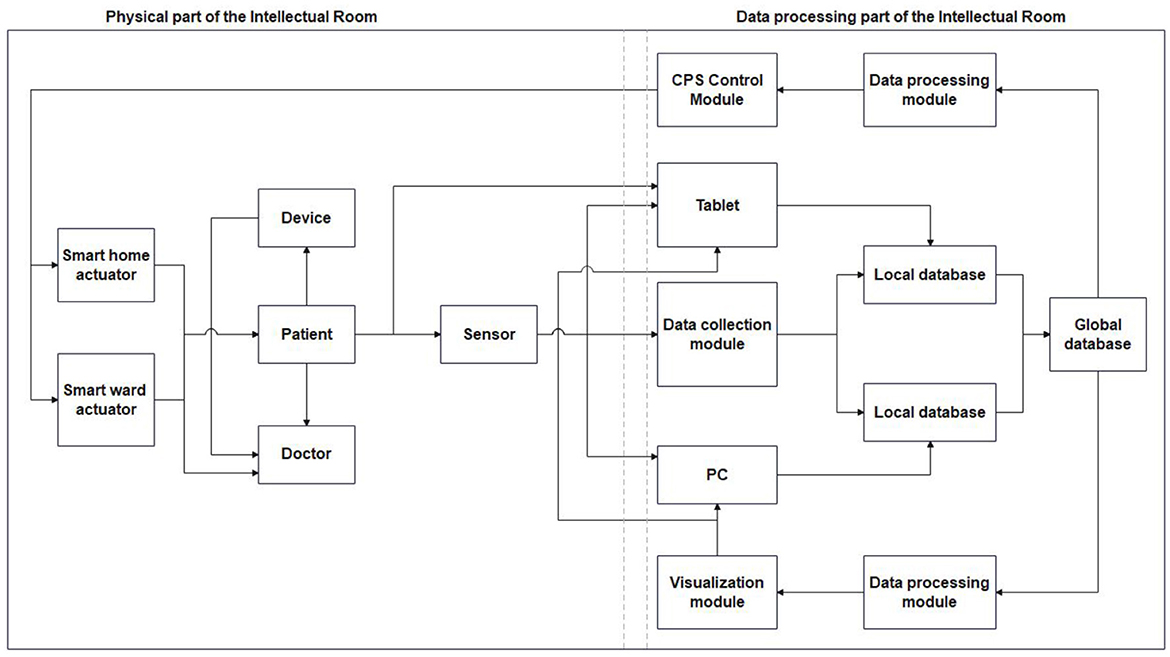

The general structure of the Intellectual Room is shown in Figure 2. In the center of the figure is a hospital room with patients. On the left side of the figure, the nurses who monitor the patients are presented as a conventional unit, and the conventional unit that represents a patient is placed on the right side of the figure. Figure 2 presents one room with four patients, but the system can be expanded to monitor multiple hospital rooms and multiple patients and to involve several nurses as well as other medical staff (Poppe, 2010; Aggarwal and Ryoo, 2011; Guo and Lai, 2014).

Intellectual Rooms assume continuous monitoring of patients' states and provide support for personalized patient care. The diagram of the patient monitoring process in the Intellectual Rooms is shown in Figure 1.

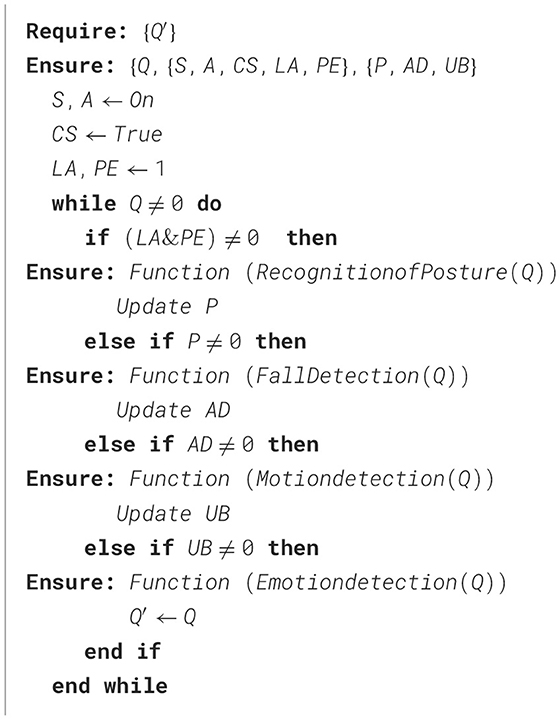

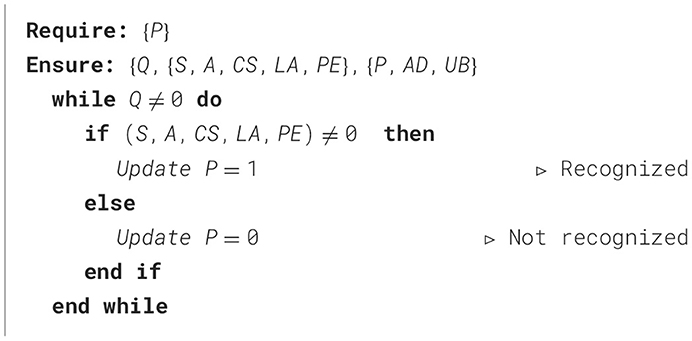

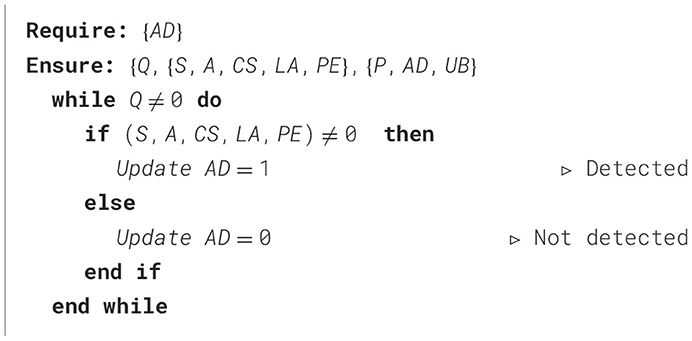

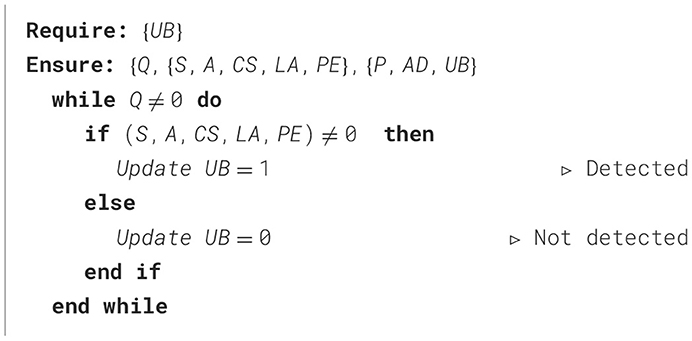

Implementation of the proposed method for Intellectual Rooms (Algorithm 1) ensures timely assistance for the elderly, the chronically ill, and the situationally ill, which can help prolong their lives.

The proposed method ensures the system provides personalized, complex processing of real data from all devices (public cameras, the front camera of a smartphone) based on using machine learning methods and neural networks.

The key functions of the proposed Intellectual Room based on AmI and IoT technologies are presented below in Section 4, and the architecture of the system is described in Section 5.

4 The functions of the Intellectual Room based on AmI and IoT technologies

4.1 Person detection and tracking

The function of person detection and tracking is applied to videos obtained from security cameras (public cameras). This function, based on the MediaPipe Pose solution (Mediapipe, 2023), helps to detect the body shape and build the human skeleton in the observable area quickly and accurately.

4.2 Recognition of posture

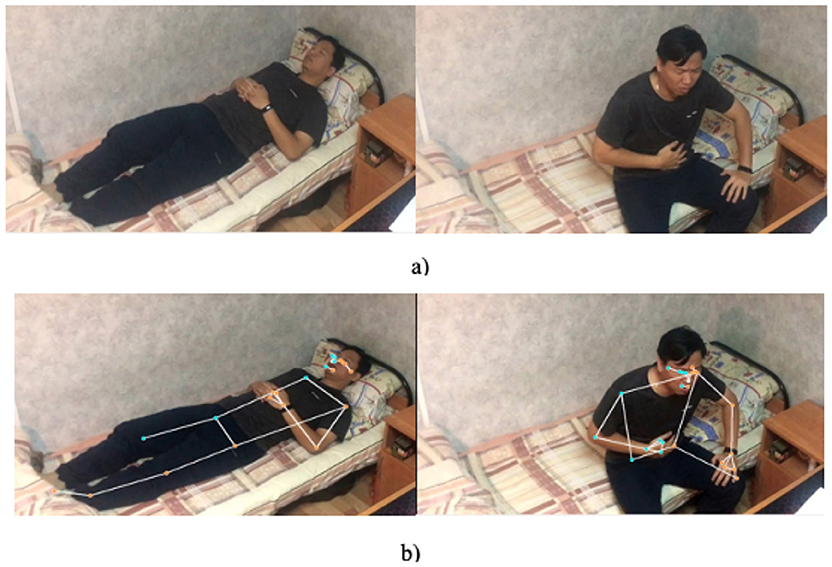

The process of posture recognition, detailed in Algorithm 2, assumes that first the image is captured by the camera (Figure 3a), then when the person is detected, the pose and skeleton identification function is performed, and the image is sent to the server to classify the activity (Figure 3b).

Figure 3. Pose and skeleton detection process: (a) input image from the public camera; (b) human skeleton identification.

The server can recognize different postures, in particular, sitting, standing, lying, and resting or sleeping. Since there is no complete recognition model for this task in the existing literature, a custom model was developed and trained using ImageAI. ImageAI provides four convolutional neural networks (CNNs), namely ResNet50, DenseNet121, SqueezeNet, and InceptionV3. From these networks, ResNet50 was selected due to its time efficiency and higher accuracy (Lin et al., 2014).

For training the customized model, ~500 images per posture were used, and a dataset of 100 images per posture was used for testing. In the posture recognition process, the human is first detected in the image to avoid redundant processing; after that, the cropped images are passed to the customized model for activity recognition.
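The detect-then-crop step described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `crop_person`, the `margin` parameter, and the assumption that landmarks arrive as (x, y) pairs normalized to [0, 1] (the convention used by MediaPipe pose landmarks) are introduced here only for the example.

```python
import numpy as np


def crop_person(image, landmarks, margin=0.1):
    """Crop the image region that contains the detected person.

    image:     H x W x C array (one video frame).
    landmarks: iterable of (x, y) pairs normalized to [0, 1].
    margin:    hypothetical padding, as a fraction of the landmark box size.
    Returns None when no landmarks are given, so the caller can skip the frame.
    """
    pts = np.asarray(list(landmarks), dtype=float)
    if pts.size == 0:
        return None  # no person detected: nothing to classify
    h, w = image.shape[:2]
    x_min, y_min = pts.min(axis=0)
    x_max, y_max = pts.max(axis=0)
    pad_x = (x_max - x_min) * margin
    pad_y = (y_max - y_min) * margin
    # Clip to the frame, then convert normalized coordinates to pixels.
    left = int(max(0.0, x_min - pad_x) * w)
    top = int(max(0.0, y_min - pad_y) * h)
    right = int(np.ceil(min(1.0, x_max + pad_x) * w))
    bottom = int(np.ceil(min(1.0, y_max + pad_y) * h))
    return image[top:bottom, left:right]
```

Only the cropped region would then be passed to the posture classifier, which keeps frames without a person from being processed at all.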

4.3 Fall detection

The system can identify four poses: sitting, standing, lying, and resting or sleeping. The first three are easy to identify, and the model was trained on them directly. However, the lying and resting poses are very similar, so bed detection was also included to distinguish between lying, resting, and falling, as outlined in Algorithm 3. A person is considered to be lying or resting if he or she is detected on the bed.
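The bed-based rule can be illustrated with a short sketch. It is not Algorithm 3 itself; the box format (left, top, right, bottom in pixels), the helper names, and the `on_bed_threshold` value are assumptions made for the example.

```python
def overlap_ratio(person, bed):
    """Fraction of the person box that lies inside the bed box.

    Both boxes are (left, top, right, bottom) tuples in pixels.
    """
    left = max(person[0], bed[0])
    top = max(person[1], bed[1])
    right = min(person[2], bed[2])
    bottom = min(person[3], bed[3])
    inter = max(0, right - left) * max(0, bottom - top)
    area = (person[2] - person[0]) * (person[3] - person[1])
    return inter / area if area > 0 else 0.0


def refine_posture(posture, person_box, bed_boxes, on_bed_threshold=0.6):
    """Keep 'lying'/'resting' only if the person is on a bed, else report 'fall'.

    on_bed_threshold is a hypothetical cut-off, not a value from the paper.
    """
    if posture not in ("lying", "resting"):
        return posture  # sitting and standing are kept as classified
    on_bed = any(overlap_ratio(person_box, bed) >= on_bed_threshold
                 for bed in bed_boxes)
    return posture if on_bed else "fall"
```

For example, a horizontal posture whose bounding box has no overlap with any detected bed would be reported as a fall, while the same posture inside the bed box stays "lying".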

4.4 Motion detection

If the person stays in the same position in bed for a long time, the system determines that he or she is asleep and records the time spent lying still. It is also possible to determine the state of the eyes, i.e., whether they are open or closed, using data from the smartphone cameras. Combining the results of data processing from the smartphone camera and the public camera makes it possible to identify the sleeping and waking states of the patient.

The system monitors for significant movement to identify potentially important events, a process formalized in Algorithm 4.
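One way to picture the stillness tracking described in this subsection is shown below. This is only a sketch and not Algorithm 4: the landmark format, the `move_threshold` and `sleep_after_s` values, and all function names are assumptions; only the 15 fps frame rate comes from the text.

```python
from math import hypot


def mean_displacement(prev, curr):
    """Mean Euclidean distance between matching landmarks of two frames.

    Both arguments are non-empty lists of (x, y) pairs of equal length.
    """
    total = sum(hypot(cx - px, cy - py)
                for (px, py), (cx, cy) in zip(prev, curr))
    return total / len(curr)


def still_durations(frames, fps=15, move_threshold=0.02):
    """Yield (frame_index, seconds_still) for every frame after the first.

    frames is a list of landmark lists in normalized coordinates.
    move_threshold is a hypothetical limit for 'significant' movement.
    """
    still_frames = 0
    for i in range(1, len(frames)):
        if mean_displacement(frames[i - 1], frames[i]) > move_threshold:
            still_frames = 0  # significant movement resets the counter
        else:
            still_frames += 1
        yield i, still_frames / fps


def is_asleep(seconds_still, in_bed, sleep_after_s=600):
    """Treat the patient as asleep after a long still period in bed."""
    return in_bed and seconds_still >= sleep_after_s
```

A frame in which the counter resets to zero is also a natural place to raise a "significant movement" event for further analysis.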

4.5 Emotion detection

The face detection function and emotion analysis function, implemented as shown in Algorithm 5, are based on processing images from personal cameras (mobile cameras). Emotion recognition from facial expressions makes it possible to detect the following emotional states: sadness, anger, happiness, fear, surprise, and neutral, and to track changes in emotional states caused by specific conditions and events. It is important to determine the emotions, as they form the basis on which the patient's state can be estimated (Zhang et al., 2024). Sadness together with a complete halt in activity can indicate pain that the person is experiencing at that moment. Quick detection of the patient's health status from the face image obtained using the personal phone's front camera assists the medical staff in collecting and assessing the patient's daily health status. The facial analysis models are implemented in Python using the TensorFlow library. These models support continuous monitoring of the patient's condition, thereby supporting the nurse's work through the application of machine learning. Additionally, emotion detection enables the examination of patient feedback, the assessment of patients' health in the context of medical factors, and the evaluation of the efficacy of first aid.

The implementation of the function is based on using the factor model of a person's face (An et al., 2021). Images of patients' faces are converted into feature vectors. According to these vectors, the patient's condition is classified using machine learning methods. The process of collecting images of the patient's face over time also helps to personalize the patient's face in the system. Due to the use of a factor model that ensures low computational complexity in face image processing, facial emotion analysis can be performed using video streams from cameras in real-time.

For additional analysis of data on patient faces, the MediaPipe Face Mesh solution can also be used (Alamoodi et al., 2020).

4.6 Reporting of results

The detected postures and emotions of the patients are logged with timestamps and can be provided to the users on request (Motienko, 2023). This data shows how the posture and emotions of the patient change over time.
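A timestamped log of this kind could be represented as in the sketch below. The record fields and the function names `history` and `changes` are hypothetical and serve only to illustrate how a per-patient report might be derived from the log.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class StateRecord:
    """One logged observation (hypothetical schema)."""
    patient_id: str
    timestamp: datetime
    posture: str
    emotion: str


def history(records, patient_id, start, end):
    """Return one patient's records within [start, end], oldest first."""
    selected = [r for r in records
                if r.patient_id == patient_id and start <= r.timestamp <= end]
    return sorted(selected, key=lambda r: r.timestamp)


def changes(records):
    """Keep only records whose posture or emotion differs from the previous one."""
    result, last = [], None
    for record in records:
        state = (record.posture, record.emotion)
        if state != last:
            result.append(record)
            last = state
    return result
```

Applying `changes` to the output of `history` gives a compact timeline of state transitions, which is what a user reviewing the patient's day would typically want to see.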

To effectively implement these diverse functions and ensure seamless integration of AmI and IoT technologies, a robust and adaptable architecture is essential. The following section details the architectural design of the Intellectual Room, highlighting how its components and their interactions enable the realization of the aforementioned functionalities.

5 Architecture of the system for the Intellectual Room based on AmI and IoT technologies

5.1 General architecture of the Intellectual Rooms

The Intellectual Room operates as a complex system that implements both physical and digital processes. It operates as an information system with various elements responsible for gathering, storing, processing, retrieving, and producing information. Simultaneously, it operates as a physical system equipped with sensors, which are devices that collect data from the environment, and actuators, which carry out physical actions based on this information.

The proposed system supports data acquisition in several ways. Data can be obtained automatically from smartphones and wearable devices or from general cameras. It can also be entered manually by users. Devices such as cameras, health monitors, and microphones gather different data periodically according to their configuration settings. The data can be partially processed by the devices themselves or in the fog, and it is then transferred to the cloud. On a cloud server, machine learning algorithms determine the patient's state and generate various types of events according to that state. The event and the image corresponding to it are then transmitted to the medical staff, who provide prompt help to the patients.

The server provides an Application Programming Interface (API) for devices and client applications. The devices can run applications and support input and output plugins.

Main types of output plugins:

1. None (default): data are displayed on the screen of the user device.

2. Plugins for databases: they transfer data to external sources (databases and medical information systems).

Filling in the data model and connecting plugins is performed on the basis of a typical data collection and processing application. The application is developed using the cross-platform Flutter framework (Fleck and Straßer, 2008), so it can be built for various types of host devices and operating systems. Given the auxiliary models and concepts above, the process of creating the required application consists of the following steps:

1. Define the initial data: indicators; scoring rules; rules for generating results.

2. Determine the required input plugins for each indicator. If the required plugins are not available, they need to be developed.

3. Determine the required plugins for data output and develop them if necessary.

4. Assemble the application with connected plugins for the required platform.

5. Install the application.

6. Fill the application database with information about indicators and rules.

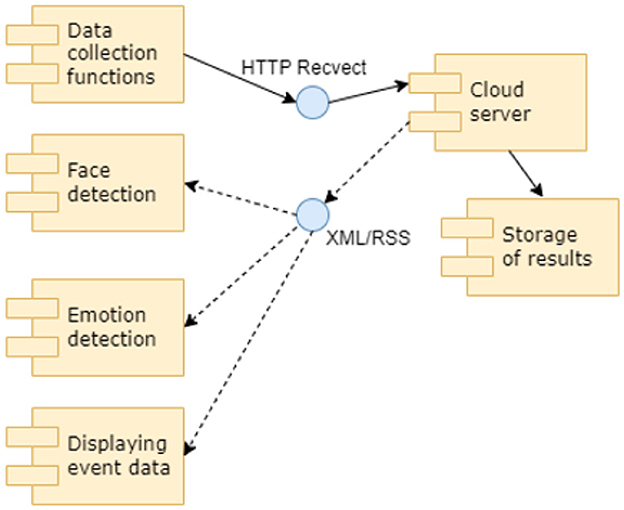

Figure 4 describes the application's structure and its use as a part of the medical infrastructure. The medical infrastructure can be delineated into three primary layers: Edge Devices, Fog Nodes, and Cloud Data Centers. Edge Devices encompass various terminals such as computers, smartphones, and tablets, which interface with Internet of Things (IoT) devices like touch sensors, heart rate monitors, and wearable smart devices including smartwatches and smart rings. Signals from these devices are transmitted to the Fog Nodes layer, where they are classified and stored using queues with the MQTT protocol, and then relayed to server clusters. Subsequently, data from these Fog Nodes clusters is processed and sent to Cloud Data Centers via the Kafka protocol. To enhance operational efficiency, the system integrates multiple layers of artificial intelligence at each device level, effectively reducing response times.
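The fog-layer step described above (classifying incoming device signals into queues and relaying them onward) can be pictured with a small in-memory sketch. It deliberately uses no MQTT or Kafka client: the `FogNode` class, the topic format "kind/patient", and the `cloud_batch` list are hypothetical stand-ins for the broker, the topic scheme, and the cloud ingestion endpoint of a real deployment.

```python
from collections import defaultdict, deque


class FogNode:
    """In-memory stand-in for a fog node that queues signals by type."""

    def __init__(self):
        # One FIFO queue per signal type, created on first use.
        self.queues = defaultdict(deque)

    def receive(self, topic, payload):
        """Classify a message by the first topic level and enqueue it.

        Example topic (assumed format): "heart_rate/patient-1".
        """
        kind = topic.split("/", 1)[0]
        self.queues[kind].append(payload)

    def flush_to_cloud(self, cloud_batch):
        """Drain all queues in arrival order per type and relay them onward."""
        for kind, queue in self.queues.items():
            while queue:
                cloud_batch.append((kind, queue.popleft()))
```

Keeping the classification on the fog node means the cloud side receives data already grouped by signal type, which is one plausible reading of how the layered design reduces response times.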

Key modules and data streams of the Intellectual Room are shown in Figure 5. The organization of the physical structure of the Intellectual Rooms depends on the set of modules and connections used.

Data processing modules have the versatility to operate independently or as an integral part of the Intellectual Rooms ecosystem. When used independently, these modules allow data input and result retrieval directly by medical staff or patients. Conversely, when integrated as a component of the Intellectual Rooms, these modules serve as intelligent information hubs. They collect, store, process, and present data within the broader Intellectual Rooms infrastructure. This approach offers several advantages, including:

• Automation of data entry: This is the first step, where data is automatically collected from various sources like sensors and databases.

• Automation of data presentation: This is the second step. In this context, “presentation” refers to the process of transferring the collected data to the data processing and storage modules. The key outcome here is that this transfer makes it possible for the new data to be processed along with other data already in the databases. Think of it as “presenting” the data to the next stage of the system in a usable form, rather than presenting it to a human user.

• Merging data: This is the final step described. The processed data is then stored consistently with data from other sources. This merging enhances the completeness and accuracy of the data, reduces the need for repeated measurements, and expands the potential for analysis.

5.2 Smartphone application for medical staff of Intellectual Rooms

The authors of the study developed a smartphone application for medical staff to monitor the states of the patients and provide timely care for them.

A component diagram with the components of the smartphone application for the Intellectual Room based on AmI and IoT technologies is shown in Figure 6. The mobile application is designed to handle multiple cameras and multiple users. The application provides different control functions, e.g., start capturing images, stop capturing images, and enable or disable image storage.

Figure 6. A component diagram with the components of the smartphone application of the system for Intellectual Room.

The application requires the user to undergo the authorization procedure before accessing the user's private information. The user needs to register by providing personal details such as email, ID, and name in order to access the information through the application. These details are used to create the profile of the user, provide authorization to the user, and allow him/her to access the data about the patients on the cloud. The access of the users to the cameras and to the data about the patients is provided according to their access rights.

The Android Studio environment (https://developer.android.com/studio) was chosen as the development environment for the smartphone application, as it allows developers to write program code for smartphones running the Linux-based Android 11 operating system, which is more popular than the Android 13 version (https://www.android.com), which is currently not stable. Smartphones with the Android operating system are widespread, and thus the majority of the medical staff can use the application. Subsequently, the developed application for monitoring patients and providing timely assistance can be published in such stores as Google Play (https://play.google.com), RuStore (https://www.rustore.ru), and many other application catalogs.

On the main screen of the developed smartphone application, information about the patient's state in the hospital room is provided in the left (general view) and right (detailed view) areas. On the main screen presented in Figure 7, the system detected a patient posture typical of a stomach ache. The artificial intelligence determined that the probability of the “stomach ache event” was 0.78. The medical staff is asked to confirm the event (“DANGER” button) or to deny it (“OK, FINE” button). It is also possible to send the data related to the identified event to the expert system (“ERROR” button) for further analysis by machine learning specialists. If the event is confirmed, the medical staff will provide assistance to the patient immediately.
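The three-button review flow can be summarized in a few lines. This sketch is not taken from the application code (which is written in Java and Kotlin); the `Event` fields, the status strings, and the returned action names are hypothetical and only mirror the behavior described above.

```python
from dataclasses import dataclass
from enum import Enum


class Review(Enum):
    """Buttons offered to the medical staff on the main screen."""
    DANGER = "confirmed"
    OK_FINE = "denied"
    ERROR = "sent_to_experts"


@dataclass
class Event:
    patient_id: str
    label: str
    probability: float
    status: str = "pending"


# Follow-up action for each review decision (names are illustrative).
ACTIONS = {
    Review.DANGER: "dispatch_assistance",
    Review.OK_FINE: "close_event",
    Review.ERROR: "forward_to_expert_system",
}


def apply_review(event, review):
    """Record the staff decision on the event and return the follow-up action."""
    event.status = review.value
    return ACTIONS[review]
```

For the example in Figure 7, an `Event("p1", "stomach ache", 0.78)` reviewed with `Review.DANGER` would be marked as confirmed and lead to immediate assistance.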

5.3 Analysis of the advantages of the Intellectual Rooms

The proposed Intellectual Rooms take advantage of available technical equipment (security cameras, internet connections, servers for data processing, personal cameras on smartphones, etc.). The construction of the system does not require new equipment, thus saving costs. It also uses the advantages of each of the existing devices. Smartphones have enough computing capacity to process images from their cameras, but their transmission capacity is limited, so the data is first pre-processed on the smartphones and then transferred to the server. A security camera (Alrebdi et al., 2022) is not capable of preprocessing data but has a good connection to the server, and there are servers that can receive and process data, so data from these cameras is transferred to the servers. Thus, by taking advantage of the available devices and networks to organize connections and processing, it becomes possible to create a practical system with a full set of functions in accordance with the modern requirements for Intellectual Rooms. The proposed Intellectual Rooms can be easily applied both in medical facilities and at home.

The results of the comparison of how the existing systems and the proposed system for Intellectual Rooms meet the functional requirements of the systems for monitoring the patient's states and providing support for patient treatment are given in Table 5.

Table 5. Comparison of the functions of existing systems and the proposed system for Intellectual Rooms.

According to Table 5, the proposed system for Intellectual Rooms supports almost all functions required in modern systems for patient monitoring and treatment support.

6 Experiment setting

6.1 Datasets

The developed Intellectual Room system was tested by processing images 1) from general cameras and 2) from a smartphone. The images were taken from the open image datasets library of the Max Planck Institute for Human Development in Germany (https://www.mpib-berlin.mpg.de/en), with a size of 800 × 600 pixels and a resolution of 80 dpi, in PNG format (since this format preserves the depicted objects more accurately than JPEG). The images were black and white. Images from open sources, in particular from the More.Tv system (https://more.tv), which contains video clips and series of frames showing certain symptoms of diseases, were added to the dataset. Data from the same open datasets library was also used as the sensor data. The sensor data and the images were combined, and the dataset was supplemented with images generated by the authors. Fragments of images with a size of 200 × 200 pixels and a resolution of 15 dpi, built from images in the dataset, were used. In total, 54,957 pictures were used that contained 12,592 unique events; each of the events was described in 3–5 series of frames. Events were divided into several groups:

• diseases of the stomach;

• kidney disease;

• liver disease;

• joint problems;

• heart disease;

• falls;

• damage to the nervous system.

The quality of the patient care was assessed based on the time that was required to provide assistance to the patients and on the number of false alarms.

The repository provided by the authors (https://github.com/alex1543/subbotin/tree/main/roomsAI) contains the code of the application for visualizing events related to patients' diseases, written in Java and Kotlin for the Android Studio IDE (https://developer.android.com/studio), in the “app*” and “dop” directories; the dataset and trained models are placed in the “ds” and “medal” directories.

Steps to run the experiment:

1. Download, install and open PyCharm 2024.3.1 (https://www.jetbrains.com/pycharm/)

2. Open application from directories and compile *.apk in debug mode

3. Connect to the fog computing server

4. Check the program's functionality

5. Define a test image

6. Retrain the model on NumPy 2.2.4 (https://numpy.org/)

7. Pre-download all dependent packages via the “pip” utility bundled with Python 3.13.2 (https://www.python.org/)

8. Upload the trained model to the fog or cloud server

9. Correct the connection scripts (addresses and ports)

10. Run the APK application

11. Collect statistics for 3 months

12. Open logs in Excel 365

13. Apply filtering, aggregation, average

14. Fill in the resulting tables.

6.2 Method

The goal of the experiment was to determine the patients' states based on images received from different cameras and data from other sensors and also data about surroundings. The patients' states were assessed under the following conditions:

1) (option 1): only based on data from public cameras.

2) (option 2): based on data from public cameras that was used for posture recognition and data from a smartphone camera used for emotion detection.

3) (option 3): based on data from public cameras, a smartphone camera, and data from sensors (pulse, pressure, oxygen saturation, etc.).

4) (option 4): based on data from public cameras, smartphone camera, sensors, and surrounding objects (bed, table, walker, scattered crutches, etc.)
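The four conditions differ only in which data sources are made available to the system, which can be written down as a small configuration. The source names and helper functions below are hypothetical labels chosen for this sketch; they do not come from the authors' code.

```python
# Data sources available under each experimental option (labels are illustrative).
OPTIONS = {
    1: ("public_camera",),
    2: ("public_camera", "smartphone_camera"),
    3: ("public_camera", "smartphone_camera", "sensors"),
    4: ("public_camera", "smartphone_camera", "sensors", "surrounding_objects"),
}


def select_inputs(option, observation):
    """Keep only the data sources that the given option is allowed to use."""
    return {src: observation[src] for src in OPTIONS[option] if src in observation}


def added_sources(option):
    """List the sources introduced by this option relative to the previous one."""
    previous = set(OPTIONS.get(option - 1, ()))
    return [src for src in OPTIONS[option] if src not in previous]
```

Because each option adds exactly one source to the previous one, any change in the results between consecutive options can be attributed to that source.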

A neural network from the Keras library was used (https://keras.io/). The model was trained on 7 types of events (stomach diseases, kidney diseases, liver diseases, joint problems, heart diseases, falls, damage to the nervous system), but the main focus was on gastroenterology. To train the neural network, a Python script was developed that uses the following imports: “from keras.datasets import mediLETI; from keras.models import Model; from keras.layers import Input, Convolution2D, Dense, Dropout, MaxPooling2D, Flatten; from keras.utils import np_utils; import numpy as np”. The following parameters were used when training the model: “hidden_size, num_epochs, batch_size, kernel_size, pool_size, drop_prob_1, conv_depth_2, etc.” At the end of training, the functions “Flatten()(drop_2)” and “model.evaluate(X_test, Y_test, verbose=1)” were applied. Detailed information about parameters and cost functions is available in the documentation (https://numpy.org/).

6.3 Results of determining the states of patients

6.3.1 Results when using data from public camera

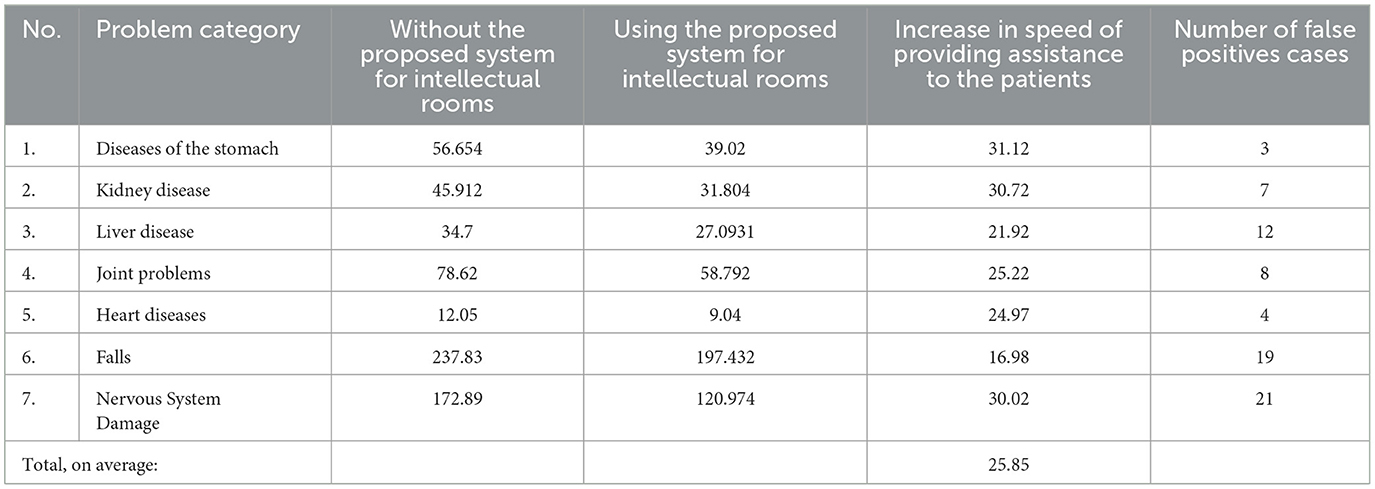

This subsection presents the results of determining the patients' states when only data from public cameras is used. The state of the patient was determined based on posture recognition. After conducting experiments for all types of events, it was revealed that the speed of assistance increased the most in cases where patients had a stomach ache (31.12%). In general, the proposed system for Intellectual Rooms showed good results (the speed of assistance increased on average by more than 25%) compared with the current practice in which nurses monitor the states of the patients in the hospital rooms. In Table 6, the results of determining the patient states only according to data from public cameras are presented.

Table 6. The increase in the speed of providing assistance to the patients (sec.) during patient care (%) using images from a public camera (option 1).

6.3.2 Results when using data from public camera together with data from other data sources

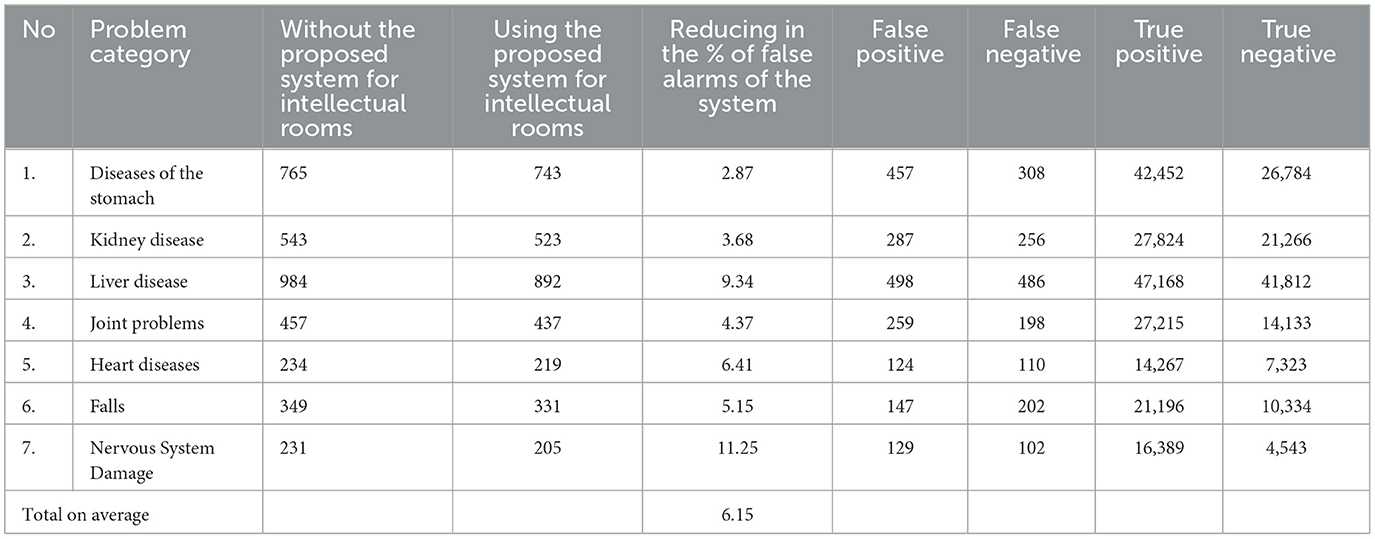

When determining the posture of the patients, it is also important to determine their facial expression, so as not to generate alarms in situations when a patient is doing gymnastics, etc. The posture was detected based on processing images from a public camera, and for the detection of facial expression, images from a smartphone camera were used. In Table 7, the results of determining patients' states based on posture recognition and emotion detection are presented.

Table 7. Reduction in the number of system false alarms during patient care (%) using images from public cameras and smartphone cameras (option 2).

The results obtained (Table 7) were compared with the results of experiments conducted in conditions where images only from public cameras were used to determine the patient's states; it was revealed that the use of images from smartphones as additional data made it possible to reduce the percentage of false alarms by 6.15%.

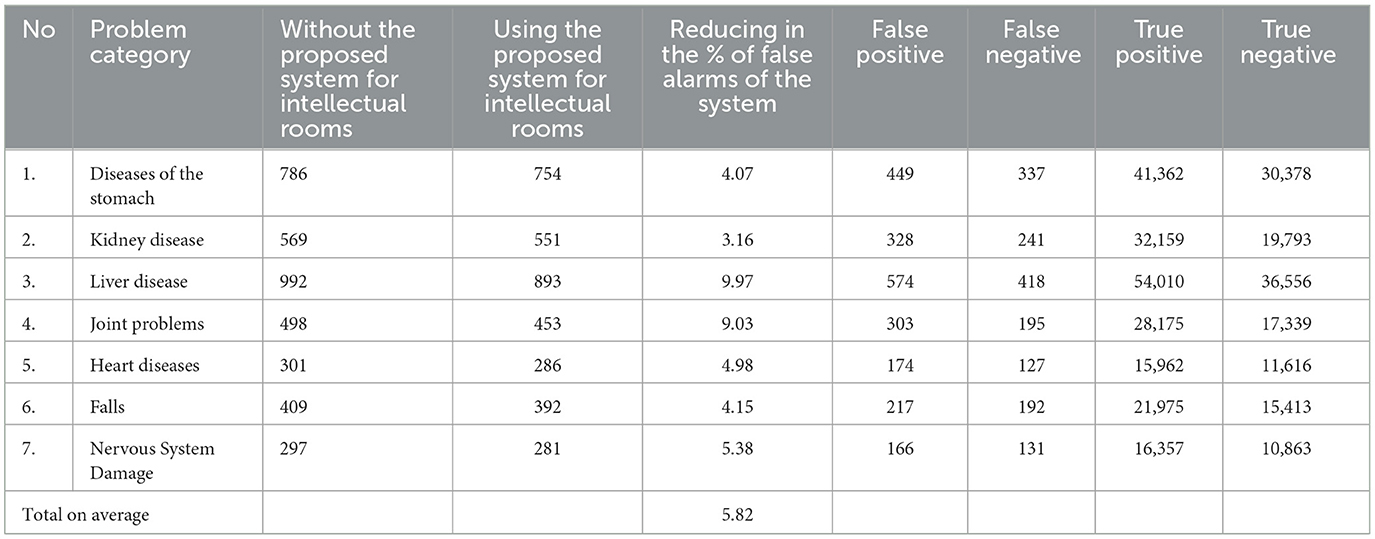

To further increase the degree of confidence in the determined patients' states, data from sensors was used. The data from the following groups of sensors was used:

1) data provided by life support devices (ventilation, heartbeat, markers of critical human states based on the chemical content of blood, etc.);

2) data gathered within supportive rehabilitation treatment (blood sugar level, pulse, oxygen saturation, blood chemical indicators of general parameters, etc.).

The results of determining patients' states based on data from public cameras, a smartphone camera, and sensors are given in Table 8.

Table 8. Reduction in the number of system false alarms during patient care (%) using images from public and smartphone cameras and sensors (option 3).

The experiment conducted according to option 3 (Table 8) showed that using sensor data as additional information reduces the false alarms of the system by 5.82% compared to option 2.

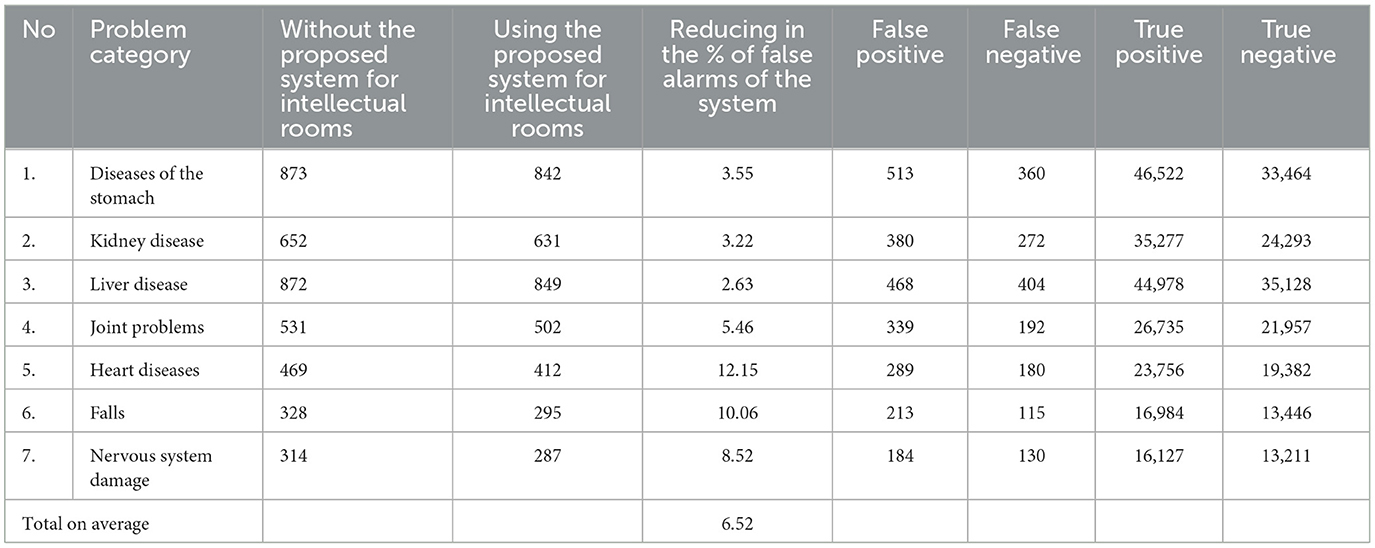

The use of information about the surrounding objects also increases the degree of confidence in the determination of patients' states.

The surrounding objects that were considered when determining the patients' states were divided into two groups:

1) furniture (beds, bedside tables, chairs, tables for eating, etc.);

2) assistive devices for people with disabilities and patients recovering from operations (crutches, toilet chairs, ladders, walkers, walkways, wheelchairs, gowns, stretchers for legs and spine, bandages for the abdomen and back, elastic bandages and casts for legs and arms, etc.).

The results of the experiment are given in Table 9. After conducting the experiment in which information about surrounding objects was used (Table 9), the results were compared with the results obtained in option 3 (Table 8). It was identified that due to using additional information about surrounding objects, the number of false alarms in the system was reduced by 6.52%.

Table 9. Reduction in the number of system false alarms during patient care (%) using images from public and smartphone cameras, sensor data, and information about surrounding objects (option 4).

Table 10 provides a summary of the results obtained in the experiments conducted according to options 1–4. The results support the use of the proposed method for building Intellectual Rooms. They also show that the use of additional information sources significantly improves the results of determining the states of the patients: the additional data sources improve the results by 23%.

6.3.3 Statistical validation of performance improvements

The t-test values were: t = 29.4 and p = 0.0065 (6.5/1000). Since p is much less than 0.05, we conclude that there are statistically significant differences.

The analysis of variance (ANOVA) yielded η = 0.1289 and f = 0.31, which indicates an above-average effect. The Fisher F-statistic was F = 14 with Fcrit = 6.2 at a significance level of α = 0.06, so F > Fcrit. Additional metrics of the model: accuracy = 0.933, precision = 0.9436, recall = 0.933, F1 score = 0.9327, AUC = 0.75, mean absolute error = 1.72, mean squared error = 3.98, root mean squared error = 1.995, and mean absolute percentage error = 0.02334.
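For readers less familiar with these metrics, the sketch below shows how the classification and error metrics listed above are defined. It is a simplified illustration on binary labels (the study itself covers seven event types, where precision and recall would be averaged over classes), and the function names are introduced here for the example. Note that the root mean squared error is the square root of the mean squared error, which matches the reported pair (√3.98 ≈ 1.995).

```python
from math import sqrt


def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for labels in {0, 1}."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def error_metrics(y_true, y_pred):
    """Mean absolute error, mean squared error, and their root (RMSE)."""
    n = len(y_true)
    mae = sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n
    mse = sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n
    return {"mae": mae, "mse": mse, "rmse": sqrt(mse)}
```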

6.3.4 Model performance and experimental environment

In the experiment, a Samsung Galaxy S7 (Verizon) was used as the smartphone. Statistics were aggregated on an MSI Katana GF76 12UC-258RU laptop (Full HD 1920 × 1080 IPS display, Intel Core i5-12450H, 4 cores, 12 GB RAM, 256 GB SSD, GeForce RTX 3050 for laptops with 2 GB) running Windows 11.

The study evaluated the “Intellectual Room” system through a series of experiments to determine its effectiveness in identifying patient states using various data sources. The evaluation was conducted using a combination of images from open datasets, author-generated images, and sensor data, totaling 54,957 pictures representing 12,592 unique events. The experiment was designed in four progressive stages to measure the specific benefit of adding each new data source.

Stage 1: baseline performance with public cameras

The initial experiment assessed the system's performance using only data from public cameras to recognize a patient's posture. Compared to the standard practice of manual nurse monitoring, the system demonstrated a significant improvement in the speed of providing assistance.

• Key Finding: The average speed of providing assistance to patients increased by over 25%.

• Specific Case: The most significant improvement was seen in cases of stomach aches, where assistance speed increased by 31.12%.

Stage 2: improving accuracy by adding smartphone camera data

To reduce false alarms that might occur from posture analysis alone (e.g., misinterpreting gymnastics for a fall), this stage added data from smartphone cameras to detect facial expressions. By combining posture with emotion detection, the system could more accurately interpret a situation.

• Key Finding: The addition of smartphone camera data reduced the percentage of false alarms by an average of 6.15% across all problem categories.

Stage 3: enhancing reliability with sensor data

To further increase the system's confidence in determining patient states, the third stage incorporated data from medical sensors. This included information from life support devices (like ventilation and heartbeat monitors) and rehabilitation treatment sensors (measuring pulse, blood sugar, and oxygen saturation).

• Key Finding: Adding sensor data further reduced the system's false alarms by an additional 5.82% compared to using cameras alone.

Stage 4: achieving contextual awareness with surrounding objects

The final stage of the experiment introduced information about surrounding objects to provide context. The system was trained to recognize furniture (beds, tables) and assistive devices (crutches, walkers) to better distinguish between events, such as a patient sleeping in bed versus having fallen on the floor.

• Key Finding: The inclusion of environmental object data lowered the number of false alarms by another 6.52% compared to the previous stage.

Overall summary of improvements

The multi-stage experiment demonstrates that layering additional data sources progressively enhances the system's performance. Each new source of information—from smartphone cameras, medical sensors, and environmental context—served to significantly reduce false alarms and improve the reliability of the patient state determination. The final model achieved an accuracy of 0.933. We conclude that the cumulative effect of using all data sources resulted in a 23% overall improvement in patient state identification accuracy.

Table 10 summarizes this progression, and Table 11 summarizes the experimental results.

7 Discussion

The conducted study demonstrates the potential of the proposed Intellectual Room system to increase the speed of assistance and reduce false alarms, based on the evaluations performed. The system's strengths lie in its joint data processing capabilities and its use of machine learning and existing cost-effective hardware.

The key findings of the study, key features of the Intellectual room system, and ethical considerations of the study are as follows:

Key Findings of the study:

1. Increased speed of assistance: the intellectual room system significantly improved the speed at which assistance can be provided to patients, in particular, the speed of assistance to patients experiencing stomach aches has been improvemd on 31.12%.

2. Reduced false alarms: the system effectively reduced false alarms by combining data from multiple sources. Using images from both public cameras and smartphone cameras led to a 6.15% reduction in false alarms. Incorporating sensor data reduced false alarms by a further 5.82%. Finally, including information about surrounding objects yielded an additional 6.52% reduction.

3. Improved accuracy: overall, the use of additional data sources (smartphone images, sensor data, and surrounding object information) resulted in a 23% improvement in patient state identification accuracy.

Key features of the Intellectual Room system:

1. Joint data processing: the system leverages data from various sources, including public cameras, smartphone cameras, sensors, and information about surrounding objects to enhance the accuracy and reliability of patient state identification.

2. Machine learning: the system employs machine learning algorithms and neural networks to analyze data, detect events, and provide real-time support for patient care.

3. Smartphone application: the developed smartphone application allows medical staff to monitor patients, receive alerts, and provide timely assistance.

4. Cost-effectiveness: the system utilizes existing technical equipment, such as security cameras, smartphones, and servers, to minimize costs while providing a comprehensive solution for patient monitoring and care.

Ethical considerations of the study:

1. The study emphasizes the importance of continuous patient monitoring, especially in situations where immediate assistance is crucial.

2. The integration of Federated Learning and data-centric AI is highlighted as a potential avenue for future development, promising enhanced privacy, security, and model performance.
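To make the privacy argument concrete, the following sketch shows the aggregation step of federated averaging (FedAvg), in which each site shares only its model weights and a sample count, never patient data. It is a generic illustration and not part of the evaluated system; the weight vectors and sample counts in the usage lines are invented for the example.

```python
from typing import List, Sequence


def federated_average(
    client_weights: Sequence[Sequence[float]],
    client_sizes: Sequence[int],
) -> List[float]:
    """Average client weight vectors, weighting each by its local sample count."""
    if not client_weights or len(client_weights) != len(client_sizes):
        raise ValueError("need at least one client and one sample count per client")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(client_weights[0])
    if any(len(w) != dim for w in client_weights):
        raise ValueError("all weight vectors must have the same length")
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


if __name__ == "__main__":
    # Two hypothetical hospital rooms with locally trained weights.
    room_a = [1.0, 2.0]   # trained on 1 local sample
    room_b = [3.0, 4.0]   # trained on 3 local samples
    print(federated_average([room_a, room_b], [1, 3]))
```

A full federated setup would repeat this step over many rounds and would typically add safeguards such as secure aggregation, which are outside the scope of this sketch.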

Currently, no pilot deployment of the Intellectual Room system has been conducted in a real-world clinical setting. The evaluations presented in this paper are based on simulated test data and publicly available datasets, designed to validate the core functionalities and the proposed method for building such rooms. We acknowledge this as a limitation of the current study. Our future plans include progressing toward pilot deployments in clinical environments to rigorously assess the system's performance, usability, and practical benefits in real-world healthcare scenarios. This will be a crucial step for further validation and refinement of the system.

8 Conclusion

Intellectual Rooms based on Ambient Intelligence and Internet of Things technologies are urgently needed today (Motienko, 2023; Silhavy and Silhavy, 2023). The high demand for Intellectual Rooms stems from the fact that, in many cases, how promptly assistance is provided determines whether the patient's health can be preserved. If assistance is provided in a timely manner, complete or partial recovery is often possible: the person becomes practically healthy and can live for years or decades, depending on his or her age. For example, sudden cardiac arrest can cause cerebral hypoxia, and the person can fall into a vegetative state within several minutes. Urgent action is therefore essential, and this requires constant monitoring of the patient's state. Previously, this monitoring was performed by the medical staff. The proposed system for Intellectual Rooms makes it possible to monitor patients' states continuously in hospital rooms and to support patients' complex personalized treatment.

Intellectual Rooms provide a range of benefits, including improved patient safety, enhanced comfort, higher treatment quality, and reduced costs. A key advantage lies in their high potential for practical application, as the system is low-cost due to its use of existing technical equipment, while also being highly efficient thanks to the wide application of machine learning techniques.

The future effectiveness of Intellectual Rooms in healthcare will be significantly amplified by Federated Learning and data-centric AI. Combining these technologies will make these intelligent spaces more effective, offering enhanced privacy, better security, and improved model performance. This integration represents a major step toward creating healthcare systems that are more efficient, effective, and centered around the patient.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Author contributions

RD: Writing – review & editing. PT: Formal analysis, Writing – review & editing. NZ: Conceptualization, Supervision, Methodology, Writing – original draft, Funding acquisition. AS: Software, Investigation, Writing – original draft, Funding acquisition.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was supported by the state budget, project no. FFZF-2025-0019. This work is also supported by a VIT SEET grant.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1. ^https://www.ncbi.nlm.nih.gov/pmc/articles/PMC8850169/; https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3478791/

References

Aggarwal, J. K., and Ryoo, M. S. (2011). Human activity analysis: a review. ACM Comput. Surv. 43:16. doi: 10.1145/1922649.1922653

Alamoodi, A. H., Benter, A., Zaidan, B. B., Al-Masawa, M. R., Zaidan, A. A., Albahri, O. S., et al. (2020). A systematic review into the assessment of medical apps: motivations, challenges, recommendations and methodological aspect. Health Technol. 10, 1045–1061. doi: 10.1007/s12553-020-00451-4

Alrebdi, N., Alabdulatif, A., Iwendi, C., and Lian, Z. (2022). SVBE: searchable and verifiable blockchain-based electronic medical records system. Sci. Rep. 12:266. doi: 10.1038/s41598-021-04124-8

AltumView. (2023). AltumView: Sentinare 2. Available online at: https://altumview.ca/ (accessed March 30, 2023).

An, F. T., Zhukova, N. A., and Evnevich, E. L. (2021). Factorial model for detecting and recognizing the contour and basic elements of a human face. Sci. Tech. J. Inf. Technol. Mech. Opt. 21, 482–489. doi: 10.17586/2226-1494-2021-21-4-482-489

Arrotta, L. (2024). Neuro-Symbolic AI Approaches For Sensor-Based Human Activity Recognition (Ph.D. thesis). Università degli Studi di Milano-Bicocca.

Arrotta, L., Civitarese, G., and Bettini, C. (2023). Neuro-symbolic approaches for context-aware human activity recognition. arXiv preprint arXiv:2306.05058. doi: 10.48550/arXiv.2306.05058

Arrotta, L., Civitarese, G., and Bettini, C. (2024). Semantic loss: a new neuro-symbolic approach for context-aware human activity recognition. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 7, 1–29. doi: 10.1145/3631407

Balasubramanian, V., Vivekanandhan, S., and Mahadevan, V. (2022). Pandemic tele-smart: a contactless tele-health system for efficient monitoring of remotely located COVID-19 quarantine wards in India using near-field communication and natural language processing system. Med. Biol. Eng. Comput. 60, 1–19. doi: 10.1007/s11517-021-02456-1

Bouldin, E. D., Andresen, E. M., Dunton, N. E., Simon, M., Waters, T. M., Liu, M., et al. (2013). Falls among adult patients hospitalized in the United States: prevalence and trends. J. Patient Saf. 9, 13–17. doi: 10.1097/PTS.0b013e3182699b64

Brooks, R. A. (1997). “The Intelligent Room project,” in Proceedings Second International Conference on Cognitive Technology Humanizing the Information Age, Aizu-Wakamatsu, Japan, 271–278. doi: 10.1109/CT.1997.617707

Cai, X., and Pan, J. (2022). Toward a brain-computer interface-and internet of things-based smart ward collaborative system using hybrid signals. J. Healthc. Eng. 2022:6894392. doi: 10.1155/2022/6894392

Cao, Z., Hidalgo, G., Simon, T., Wei, S. E., and Sheikh, Y. (2021). OpenPose: realtime multi-person 2D pose estimation using part affinity fields. IEEE Trans. Pattern Anal. Mach. Intell. 43, 172–186. doi: 10.1109/TPAMI.2019.2929257

Dalal, J., Dasbiswas, A., Sathyamurthy, I., Maddury, S. R., Kerkar, P., Bansal, S., et al. (2019). Heart rate in hypertension: review and expert opinion. Int. J. Hypertens. 2019:2087064. doi: 10.1155/2019/2087064

Feng, Y. S., Liu, H. Y., Hsieh, M. H., Fung, H. C., Chang, C. Y., Yu, C. C., et al. (2021). “An RSSI-based device-free localization system for smart wards,” in 2021 IEEE International Conference on Consumer Electronics-Taiwan (ICCE-TW) (IEEE), 1–2. doi: 10.1109/ICCE-TW52618.2021.9603249

Fleck, S., and Straßer, W. (2008). Smart camera-based monitoring system and its application to assisted living. Proc. IEEE. 96, 1698–1714. doi: 10.1109/JPROC.2008.928765

Gayathri, S., and Ganesh, C. S. (2017). Automatic indication system of glucose level in glucose trip bottle. Int. J. Multidiscip. Res. Mod. Educ. 3, 148–151. Available online at: http://rdmodernresearch.org/wp-content/uploads/2017/04/30.pdf

Guo, G., and Lai, A. (2014). A survey on still image based human action recognition. Pattern Recognit. 47, 3343–3361. doi: 10.1016/j.patcog.2014.04.018

Haseena, S., Jameel, A., Al-Muhaish, H., Al-Mughanam, T., Javid, O., and Al-Wesabi, F. N. (2022). Prediction of the age and gender based on human face images based on deep learning algorithm. Comput. Math. Methods Med. 2022:1413597. doi: 10.1155/2022/1413597

Hempel, S., Newberry, S., Wang, Z., Booth, M., Shanman, R., Johnsen, B., et al. (2013). Hospital fall prevention: a systematic review of implementation, components, adherence, and effectiveness. J. Am. Geriatr. Soc. 61, 483–494. doi: 10.1111/jgs.12169

Juang, L. H., and Wu, M. N. (2015). Fall down detection under smart home system. J. Med. Syst. 39, 1–12. doi: 10.1007/s10916-015-0286-3

Kadam'manja, J., Mukamurenzi, S., and Umuhoza, E. (2022). “Smart vital signs monitoring system for patient triage: a case of Malawi,” in 2022 International Conference on Computer Communication and Informatics (ICCCI) (IEEE), 1–6. doi: 10.1109/ICCCI54379.2022.9740751

Levonevskiy, D. K., and Motienko, A. I. (2023). Modeling and automation of data collection and processing in a smart medical ward. Programmn. Inzh. 14, 502–512. doi: 10.17587/prin.14.502-512

Lin, T. Y., Maire, M., Belongie, S., Hays, J., Perona, P., Ramanan, D., et al. (2014). “Microsoft COCO: common objects in context,” in Computer Vision-ECCV 2014: 13th European Conference, Zurich, Switzerland, September 6–12, 2014, Proceedings, Part V 13 (New York, NY: Springer International Publishing), 740–755. doi: 10.1007/978-3-319-10602-1_48

Liu, Q., Hou, S., and Wei, L. (2022). Design and implementation of intelligent monitoring system for head and neck surgery care based on internet of things (IoT). J. Healthc. Eng. 2022:4822747. doi: 10.1155/2022/4822747

Maldonado-Bascon, S., Garcia-Moreno, P., Lafuente-Arroyo, S., Lopez-Ferreras, F., and Martinez-del-Rincon, J. (2019). Fallen people detection capabilities using assistive robot. Electronics. 8:915. doi: 10.3390/electronics8090915

Mao, J., Wang, J., Liu, J., and Lu, J. (2023). POSTER V2: a simpler and stronger facial expression recognition network. arXiv preprint arXiv:2301.12149. doi: 10.48550/arXiv.2301.12149

Mediapipe (2023). Available online at: https://developers.google.com/mediapipe/solutions/guide (accessed March 30, 2023).

Motienko, A. (2023). “Remote monitoring of patient health indicators using cloud technologies. Software engineering methods in systems and network systems,” in Proceedings of 7th Computational Methods in Systems and Software 2023, Vol 1, eds. D. Levonevskiy, A. Motienko, L. Tsentsiper, I. Terekhov (Cham: Springer). doi: 10.1007/978-3-031-54813-0_20

Ni, Q., Garcia-Ceja, E., and Brena, R. (2016). A foundational ontology-based model for human activity representation in smart homes. J. Ambient Intell. Smart Environ. 8, 47–61. doi: 10.3233/AIS-150359

Núñez-Marcos, A., Azkune, G., and Arganda-Carreras, I. (2017). Vision-based fall detection with convolutional neural networks. Wirel. Commun. Mob. Comput. 2017:9474806. doi: 10.1155/2017/9474806

Poppe, R. (2010). A survey on vision-based human action recognition. Image Vis. Comput. 28, 976–990. doi: 10.1016/j.imavis.2009.11.014

Savchenko, A. V. (2021). “Facial expression and attributes recognition based on multi-task learning of lightweight neural networks,” in 2021 IEEE 19th International Symposium on Intelligent Systems and Informatics (SISY) (Subotica: IEEE), 119–124. doi: 10.1109/SISY52375.2021.9582508

Silhavy, R., and Silhavy, P., (eds.). (2023). “Software engineering research in system science, CSOC 2023,” in Lecture Notes in Networks and Systems, Vol. 722 (Cham: Springer). doi: 10.1007/978-3-031-35311-6

Sujadevi, V. G., Kumar, T. H., Arunjith, A. S., Hrudya, P., and Prabaharan, P. (2016). “Effortless exchange of personal health records using near field communication,” in 2016 International Conference on Advances in Computing, Communications and Informatics (ICACCI) (Jaipur: IEEE), 1764–1769. doi: 10.1109/ICACCI.2016.7732303

Sun, K., Xiao, B., Liu, D., and Wang, J. (2019). “Deep high-resolution representation learning for human pose estimation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (Long Beach, CA: IEEE/CVF), 5693–5703. doi: 10.1109/CVPR.2019.00584

Supriya, A., Ramgopal, S., and George, S. M. (2017). “Near field communication based system for health monitoring,” in 2017 2nd IEEE International Conference on Recent Trends in Electronics, Information and Communication Technology (RTEICT) (Bangalore: IEEE), 653–657. doi: 10.1109/RTEICT.2017.8256678

Toshev, A., and Szegedy, C. (2014). “Deeppose: human pose estimation via deep neural networks,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (Columbus, OH: IEEE), 1653–1660. doi: 10.1109/CVPR.2014.214

Wen, M. H., Bai, D., Lin, S., Chu, C. J., and Hsu, Y. L. (2022). Implementation and experience of an innovative smart patient care system: a cross-sectional study. BMC Health Serv. Res. 22, 1–11. doi: 10.1186/s12913-022-07511-7