- 1 Department of Computer Science, The University of Manchester, Manchester, United Kingdom

- 2 Centre for Practice and Research in Science and Music, Royal Northern College of Music, Manchester, United Kingdom

- 3 Alliance Manchester Business School, The University of Manchester, Manchester, United Kingdom

- 4 NOVARS Research Centre, The University of Manchester, Manchester, United Kingdom

Modern sensor and gesture tracking technologies (e.g., Myo armbands) give us access to novel data measuring musical performance at a nuanced level, allowing us to “see” otherwise unseen musical techniques. Meanwhile, advances in machine learning have given us the ability to make accurate predictions from the often complex data capturing such techniques. At the same time, instrumental music education in the UK faces major accessibility challenges, with cost, standardized (non-personalized) curricula and health issues acting as barriers to learning. As e-learning becomes a low-cost and personalized alternative to mainstream education, popular music education apps (e.g., Yousician) use gamification, leveraging game design principles to teach abstract musical concepts, such as timing and pitch, and to stimulate learning. This ongoing project seeks to understand the challenges and opportunities in pairing modern sensor technologies with AI to develop an accessible AI guitar assistant. To do this, we collect and analyse survey data from 21 guitarists across the UK to understand such accessibility issues and how we may design an AI system to address them. Our results show clear scope for developing a flexible and adaptive approach to music tuition via our developing AI guitar assistant, which could address specific accessibility issues for individuals who feel excluded by expensive, standardized and homogeneous music education systems. Our contribution is a set of thematic insights, derived from survey data, for building an AI guitar assistant; adjacent fields using AI can also benefit from these insights. Our survey insights inform and stimulate a developing conversation around how to effectively integrate AI into music education. 
Our insights also indicate potential alternative approaches to mainstream and longstanding music education when leveraging emerging technologies, such as AI, to solve pressing social issues.

1 Introduction

Today, music education in the UK is in a critical state, according to emerging evidence outlined in numerous organizational reports, including those of the Royal Philharmonic Orchestra (Royal Philharmonic Orchestra, 2018), the Musicians' Union (Savage and Barnard, 2019) and, more recently, Ofsted (Office for Standards in Education, Children's Services and Skills), the UK government office dedicated to scrutinizing educational standards, which states: “The inequalities in provision that we highlighted in our last subject report in 2012 persist. There remains a divide between the opportunities for children and young people whose families can afford to pay for music tuition and for those who come from lower socio-economic backgrounds.” (Ofsted, 2023). This is evidently a long-standing and pressing issue in the UK. Factors contributing to this crisis in music education appear to include funding shortages (Stone, 2023; Savage, 2021b), the cost of instrument tuition and access to instruments (Child Poverty Action Group, 2022), the quality and variability of provision in schools, including teaching by non-specialists (Stone, 2023), and a reduction in, or lack of, subsidized music lessons (Ofsted, 2023). These shortfalls matter because music education has myriad benefits, including positive impacts on social aspects of schooling (Sala and Gobet, 2020), improved mental well-being and confidence (Department for Education, 2021), and positive emotional, intellectual and creative outcomes (Hallam, 2010).

Outside formal education, ubiquitous technologies such as smartphone apps (e.g., Yousician) and video streaming services (e.g., YouTube) are enabling budding music students to take their education into their own hands, for example, by watching videos of people playing guitar online and following along as closely as they can. This behavior has several compounding causes: public music education funding shortages, the expectation that parents fund music lessons in schools (Savage, 2021b), and the soaring cost of private instrumental lessons and the privatization of learning musical instruments (Sharkey, 2024), set against the low cost and growing market success of educational apps (catalyzed by the COVID-19 pandemic), the growing popularity of e-learning platforms and the ubiquity of digital devices. The online music education market, valued at $2.1 billion in 2025, is expected to more than double, reaching $4.9 billion by 2030 (Knowledge Sourcing Intelligence, 2024). Beyond music, Duolingo is a successful example of an educationally focused app that aims to increase access to language education by being free to use. These insights resonate with the Musicians' Union's recommendation to use technologies in schools to teach music and help everyone access a broad range of music education opportunities, noting that music education networks must be strengthened in the digital space as well as the physical (Savage and Barnard, 2019).

Although much more accessible to the public than costly private music lessons, online music-learning apps (e.g., Yousician) are less useful than a pairing of two emerging technologies, machine learning (AI) and wearable sensors, which together allow for bespoke and detailed user feedback based on capturing the nuances of performing with a musical instrument. Modern wearable interfaces measuring biometrics (biosensors), such as the Myo armbands used in our work, have become more accessible to consumers, although widespread integration and public accessibility remain a challenge. Such biosensors are very useful for music performance research because they allow us to ‘see' musical techniques on instruments via novel biometrics: electromyographic (EMG) data measuring muscle activity. In this sense, we can use such sensor information to understand intricate and granular performance behavior, which often gives rise to specific forms of musical expression (e.g., legato and staccato), as noted in our earlier work (Rhodes et al., 2023). Moreover, we can pair such performance data with computing systems and create a relationship between musical gesture and digital output (e.g., the classification of musical technique). However, the biometric data returned is complex to relate to nuanced musical technique, so algorithms are needed to deconstruct this complexity and identify patterns; in other words, AI. Deep learning has been applied in the last few years to predict gestures used in instrumental music performance, such as the violin bowing techniques studied by Dalmazzo et al. (2021). Thus, pairing increasingly accessible wearable technologies (which elucidate musical technique) with advances in machine learning may help us increase accessibility to music education.

This area of research is motivated by the affordances of such current technologies and their usefulness in addressing pressing social issues with music education accessibility. However, it remains unclear which features would make an AI guitar assistant most effective. Open questions include the optimal pedagogical context (e.g., supporting a human teacher or functioning as a standalone tutor), the accessibility needs of users, and device availability, cost and ubiquity; the last of these is a problem that current research (Wilson et al., 2023) is also seeking to address by finding a suitable alternative to the Myo interface. In other words, the challenges and opportunities in developing an AI guitar assistant have yet to be mapped.

This paper analyses survey data collected from 21 guitarists from established music schools across the UK to inform the design and execution of our wider, ongoing research enquiry: developing a smart, personalized, musical instrument feedback system. Through this developing project, we aim to deliver a bespoke and accessible guitar feedback tool by leveraging advances in AI, pairing emerging technical affordances with societal challenges. Our initial research (Rhodes et al., 2023) sought to understand and validate whether such a guitar feedback system could be constructed using performance data; building on it, this work instead seeks to understand which factors affect the development and usability of our AI guitar assistant, and stakeholders' need for it. We seek to address the following research questions:

• What barriers do people face when accessing music education?

• How can an AI tool be designed to increase accessibility of learning the guitar, using modern advances in deep learning?

• Which features of guitar performance are most pertinent for an AI to evaluate?

• What pedagogical context is suitable for using an AI assistant in music learning—as a teaching assistant/practice companion in between instrumental lessons, within the classroom (human-AI partnership), or as a standalone system (without a human tutor)?

As AI becomes more pervasive in digital education and e-learning, our contribution in this paper is a set of insights, derived from a thematic analysis of the survey data we collected, for the scholarly and practice-based research community developing an AI guitar assistant. The paper is structured as follows. Section 2 reviews literature in the field that motivates our enquiry. Section 3 outlines the method we used to collect qualitative data from the participants in this study. Section 4 then reports the participant data we captured, and Section 5 analyses and contextualizes these data to address our research questions. Section 6 addresses the limitations of our study and offers recommendations for improving study design to minimize bias and overcome challenges related to the sample. Finally, Section 7 summarizes our findings and limitations and discusses future work.

2 Literature review

This section discusses themes which are pertinent to establishing a theoretical framework for our work. Section 2.1 looks at research within the field of wearables (biometrics) and performance monitoring, Section 2.2 investigates machine learning applied to predicting musical gestures, Section 2.3 then examines how humans and AI systems can work in tandem, Section 2.4 looks at the current state of music education in the UK and key reports outlining this, Section 2.5 outlines studies using AI as a tutor in different domains and, lastly, Section 2.6 looks at how gamification can be used in delivering music education.

2.1 Biometrics and monitoring performance

Biometrics from wearable devices are useful in performative applications because they allow us to be more aware of the caliber of our performance in tasks, and respond accordingly. Wearable sensors that measure biometrics have been used in particular in sports to individually monitor athletes with respect to their functional movements, biometric markers and workloads to maximize performance and minimize injury (Li et al., 2016). We see the same logic applied in different fields that place value on maximizing performance from individuals, such as within military applications, healthcare, transportation and fields affiliated with Industry 4.0 (Svertoka et al., 2020).

Music is no different when the question of maximizing performance is concerned. Research has shown that using wearable interfaces in musical performance can allow performers to create new instruments (Hamilton, 2015), develop novel compositional methods (Rhodes, 2022) and gain analytical insights into the nuances of instrumental performance in order to create digital feedback systems (Dalmazzo et al., 2021). Wearable interfaces are typically selected based on the affordances they provide, particularly in terms of the types of data they capture, and some interfaces are more suitable than others depending on the task at hand. For example, gross motor movements (e.g., bow strokes) may be best captured using inertial sensors, while fine motor movements (e.g., fingering) may require more precise measurements via muscle-sensing equipment.

In 2015, Thalmic Labs released the Myo armband, a consumer-level armband capable of monitoring nuanced muscle behavior in the form of EMG data, which proved useful for music researchers and musical applications. Compared with motion data, EMG data is useful because it reveals nuanced muscular movements. Compared with optical sensors, Myos are also better suited to capturing musical actions, because EMG data is more directly indicative of such actions and is unaffected by occlusion and poor lighting. Other interfaces have been used in the past to capture musical information, such as MIDI controllers and cameras (e.g., LeapMotion).

However, the Myo was discontinued in 2018, leaving music researchers to find a suitable alternative. Because our work uses Myo armbands, we surveyed the consumer market and found two viable alternatives that use EMG sensors: the SiFiBand from SiFi Labs (SiFi Labs, n.d.) and the MindRove Armband (MINDROVE, n.d.). However useful, these wearable interfaces remain unaffordable for the general public, with an average cost of £732.

Looking at wearables currently on the consumer market, we see that most devices do not expose EMG biometrics. Apple, a major wearables manufacturer, has made its Core Motion application programming interface (API) available to developers for other forms of movement data, such as inertial measurement unit (IMU) data. In 2023, Apple released the Apple Watch Series 9, which allowed high-frequency motion data to be accessed via Core Motion (Apple Developer, 2023). This is promising and shows an avenue for transferring findings from the lab to the real world via ubiquitous devices, although affordability remains an issue. Also promising for the future consumer space is that Apple and Meta have filed patents in the last couple of years for including EMG sensors in their wearables (Purcher, 2023; Osborn et al., 2021), suggesting a shift in focus toward gestural interaction with future technologies. One study noted numerous benefits of measuring gestural information from the wrist rather than the forearm (Botros et al., 2022), and another (Wilson et al., 2023) compared the Myo armband with the Apple Watch for musical applications, with favorable results (discussed in Section 2.2). Together with this industry behavior (the patents above), the increased ownership of wearables and the preference for personal assistants in smartwatches (Birch, 2023) imply that modern wearables could become next-generation interfaces for public use in musical applications. Evidently, there is a clear gap between lab-based research and low-cost wearables, but the growing ownership of smartwatches and public attitudes toward digital personal assistants are encouraging. In light of the above, exploring widely available wearable devices with accessible APIs, such as Apple's Core Motion, and developing a low-cost interface appears to be a promising direction for further investigation.

2.2 Machine learning to predict musical gesture

Machine learning is used to classify performance gestures in music because algorithms can learn the complex patterns in data captured when we perform different musical techniques. This is useful because we can then accurately predict musical gestures, feed such predictions into digital systems and provide multimodal insights (e.g., visualizations) of musical performance, which are especially useful in pedagogy.

Visi and Tanaka (2021) provide an overview of the relationship between musical performance, gesture and machine learning, discussing machine learning techniques and positioning some of them within the context of four of their own creative works. The authors first explore the motivation for using machine learning to predict musical gesture, discussing the key relationship between musical gesture, intent and mapping, where mapping refers to connecting gestural data representing musical actions to sound parameters. They argue that gestural interaction design (i.e., the process of retrieving and processing gestural signals based on musical performance behavior and mapping them, in many possible ways, to sound) is an evidently complex task that benefits from the application of machine learning. Significantly, the authors describe the main components of an interactive machine learning system that pairs gestures with musical/sonic output: motion sensing, analysis and feature extraction, machine learning techniques, and sound synthesis approaches. Their discussion of these components is highly informative and practical for navigating how to appropriately sense complex musical gestures given their characteristics (e.g., motion-oriented or bodily), extract meaningful information from them (i.e., feature extraction) and feed them into musical systems via machine learning techniques (e.g., classification, regression and temporal modeling).
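
To make these four components concrete, the following is a minimal sketch in Python, not the authors' implementation. It assumes a single-channel sensed stream, root-mean-square (RMS) amplitude as the only feature, a nearest-centroid classifier standing in for the machine learning stage, and a simple lookup standing in for sound synthesis; all names, labels, centroids and gain values are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def sliding_windows(signal: Sequence[float], size: int, hop: int) -> List[List[float]]:
    """Analysis stage: split a sensed 1-D stream into (possibly overlapping) windows."""
    return [list(signal[i:i + size]) for i in range(0, len(signal) - size + 1, hop)]


def rms(window: Sequence[float]) -> float:
    """Feature extraction stage: root-mean-square amplitude of one window."""
    return math.sqrt(sum(x * x for x in window) / len(window))


def nearest_centroid(feature: float, centroids: Dict[str, float]) -> str:
    """Machine learning stage: label a window with its closest class centroid."""
    return min(centroids, key=lambda label: abs(feature - centroids[label]))


# Mapping/synthesis stage: a hypothetical gesture-to-gain lookup.
GAIN_FOR_LABEL = {"relaxed": 0.2, "tense": 0.9}

# Hypothetical sensed stream: low activity followed by high activity.
stream = [0.1, -0.1] * 4 + [1.0, -1.0] * 4
# In practice the centroids would be learned from labeled training windows.
centroids = {"relaxed": 0.1, "tense": 1.0}

windows = sliding_windows(stream, size=4, hop=4)
labels = [nearest_centroid(rms(w), centroids) for w in windows]
gains = [GAIN_FOR_LABEL[label] for label in labels]
print(labels)  # ['relaxed', 'relaxed', 'tense', 'tense']
print(gains)   # [0.2, 0.2, 0.9, 0.9]
```

Each stage is a separate function so that, for example, the single RMS feature or the nearest-centroid rule could be swapped for a richer feature set or a trained model without touching the other stages.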

Wilson et al. (2022) used neural-network classification on EMG, inertial and audio data to see whether violin performance techniques could be accurately predicted from combinations of these data types, using an open-source dataset of 880 performances. Music information retrieval techniques were used to classify the audio data, and gestural analysis was applied to the biometrics. The study found that IMU data gave the best prediction accuracy for violin techniques (77%), with EMG + IMU close behind (74%) and EMG alone the poorest of the biometrics (70%). The biometric data far outperformed audio data for prediction. However, feature extraction was not applied to the EMG data despite its reported benefits (Arief et al., 2015), which affected the accuracy of EMG classification in that work.
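
Feature extraction in this sense usually means summarizing each window of raw EMG with a handful of descriptors before classification. The sketch below computes several commonly used time-domain EMG features for one window of one channel; it is illustrative only, and we do not claim these are exactly the features evaluated by Arief et al. (2015) or what Wilson et al. (2022) would have used. The `threshold` parameter and the example window are assumptions.

```python
import math
from typing import Dict, Sequence


def emg_time_domain_features(window: Sequence[float], threshold: float = 0.0) -> Dict[str, float]:
    """Common time-domain descriptors for one window of a single EMG channel.

    MAV: mean absolute value       RMS: root mean square
    WL:  waveform length (summed absolute sample-to-sample change)
    ZC:  zero crossings            SSC: slope sign changes
    `threshold` lets ZC and SSC ignore small, noise-driven fluctuations.
    """
    n = len(window)
    mav = sum(abs(x) for x in window) / n
    rms = math.sqrt(sum(x * x for x in window) / n)
    wl = sum(abs(window[i + 1] - window[i]) for i in range(n - 1))
    zc = sum(
        1
        for i in range(n - 1)
        if window[i] * window[i + 1] < 0 and abs(window[i] - window[i + 1]) >= threshold
    )
    ssc = sum(
        1
        for i in range(1, n - 1)
        if (window[i] - window[i - 1]) * (window[i] - window[i + 1]) > threshold
    )
    return {"MAV": mav, "RMS": rms, "WL": wl, "ZC": float(zc), "SSC": float(ssc)}


# Hypothetical four-sample window; real windows would hold many more samples.
features = emg_time_domain_features([0.0, 2.0, -2.0, 2.0])
print(features)
```

For a multichannel armband, these descriptors would typically be computed per channel and concatenated into one feature vector per window before being passed to a classifier.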

Dalmazzo et al. (2021) compared different machine learning algorithms to assess how effective they were at predicting violin techniques, using biometric IMU (motion) data from wearables (Myo armbands) worn by eight participants. The study found deep learning (CNN and LSTM) to be most effective for predicting musical gestures on the violin, with prediction rates above 97% using motion data from the Myo sensors; EMG, however, was not investigated for classification.

A study by Wilson et al. (2023) explored the feasibility of using a smartwatch as an alternative to the Myo armband, which measures EMG data. The study recorded six violinists, each wearing an Apple Watch and a Myo armband, performing two-octave G and D major scales with two bow articulation techniques (spiccato and legato); a microphone also captured their performances. A classifier (a multilayer perceptron, MLP), trained on various combinations of performance data, was used to predict the exercises and bow articulations. The study found the smartwatch could be a prospective alternative to the Myo armband in terms of IMU capabilities. However, the study noted that no comparison can be drawn with the EMG capabilities of the Myo, which can detect fine motor movements rather than the gross movements, such as bowing, detected by an IMU. Above all, the study notes that the smartwatch could indeed be a ubiquitous alternative to the Myo and, in turn, could help democratize the delivery of audio-gestural products, as discussed in our work.
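
Training a classifier "on various performance data combinations" can be pictured as enumerating every subset of modalities and building one input vector per subset. The sketch below assumes early (feature-level) fusion by concatenation; Wilson et al.'s exact fusion strategy may differ, and the modality names and feature values are hypothetical.

```python
from itertools import combinations
from typing import Dict, List, Sequence, Tuple


def modality_subsets(names: Sequence[str]) -> List[Tuple[str, ...]]:
    """Every non-empty combination of modalities (e.g. IMU, EMG, EMG+IMU, ...)."""
    return [combo for r in range(1, len(names) + 1) for combo in combinations(names, r)]


def fuse(sample: Dict[str, Sequence[float]], modalities: Sequence[str]) -> List[float]:
    """Early (feature-level) fusion: concatenate per-modality feature vectors in order."""
    fused: List[float] = []
    for name in modalities:
        fused.extend(sample[name])
    return fused


# Hypothetical per-modality feature vectors for one recorded exercise.
sample = {"audio": [0.3, 0.7], "imu": [1.2, -0.4, 0.9], "emg": [0.05, 0.11]}

for combo in modality_subsets(["audio", "imu", "emg"]):
    # Each fused vector would be the input to a classifier such as an MLP,
    # and accuracy would then be compared across combinations.
    print("+".join(combo), fuse(sample, combo))
```

With three modalities this yields seven candidate inputs, which is why such studies can report separate accuracies for IMU alone, EMG alone and EMG + IMU.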

Finally, Dalmazzo and Ramírez (2020) classified seven bow gestures on the violin, played by violinists at three levels of expertise (experts, high-level and middle-level), toward building a computer-modeled assistant that gives real-time feedback to novice students. Nine violinists were recorded, capturing motion (from Myo armbands) and audio data of violin techniques, as well as of a piece of music. A hierarchical hidden Markov model was used to classify the recorded data. The study returned accurate classification of violin techniques, particularly for expert performers, but less so for middle-level performers, showing how prediction accuracy based on motion data varies with player ability. This outcome is therefore key to consider for our EMG-focused research with our sample of guitarists, regarding future model generalization.
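
A full hierarchical HMM is beyond a short example, so the sketch below shows the simpler building block that hierarchical variants generalize: Viterbi decoding of the most likely hidden-state sequence in a flat HMM with discrete observations. The hidden states (bowing gestures), observation symbols and all probabilities are invented for illustration and are not taken from Dalmazzo and Ramírez (2020).

```python
import math
from typing import Dict, List, Sequence


def viterbi(
    observations: Sequence[str],
    states: Sequence[str],
    start_p: Dict[str, float],
    trans_p: Dict[str, Dict[str, float]],
    emit_p: Dict[str, Dict[str, float]],
) -> List[str]:
    """Most likely hidden-state path for a flat, discrete-observation HMM.

    Scores are kept in log space to avoid numerical underflow on long sequences.
    """

    def log(p: float) -> float:
        return math.log(p) if p > 0 else float("-inf")

    # best[t][s]: log-probability of the best path that ends in state s at time t
    best: List[Dict[str, float]] = [
        {s: log(start_p[s]) + log(emit_p[s][observations[0]]) for s in states}
    ]
    back: List[Dict[str, str]] = [{}]  # back[t][s]: predecessor of s on that path
    for t in range(1, len(observations)):
        best.append({})
        back.append({})
        for s in states:
            prev = max(states, key=lambda r: best[t - 1][r] + log(trans_p[r][s]))
            best[t][s] = best[t - 1][prev] + log(trans_p[prev][s]) + log(emit_p[s][observations[t]])
            back[t][s] = prev

    # Start from the most likely final state and follow the back-pointers.
    path = [max(states, key=lambda s: best[-1][s])]
    for t in range(len(observations) - 1, 0, -1):
        path.append(back[t][path[-1]])
    return path[::-1]


# Hypothetical model: hidden bowing gestures emit coarse motion symbols.
states = ["legato", "spiccato"]
start_p = {"legato": 0.5, "spiccato": 0.5}
trans_p = {"legato": {"legato": 0.8, "spiccato": 0.2},
           "spiccato": {"legato": 0.2, "spiccato": 0.8}}
emit_p = {"legato": {"smooth": 0.9, "bouncy": 0.1},
          "spiccato": {"smooth": 0.2, "bouncy": 0.8}}

path = viterbi(["smooth", "smooth", "bouncy", "bouncy"], states, start_p, trans_p, emit_p)
print(path)  # ['legato', 'legato', 'spiccato', 'spiccato']
```

The transition probabilities favor staying in the same gesture, so the decoder smooths over isolated noisy observations rather than switching labels at every frame; this temporal modeling is one reason HMMs suit continuous performance data.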

2.3 Humans in the loop

As AI technologies are deployed into society at a rapid rate, it is important that they are “humanized” through public participation in their design. The studies discussed in this section elucidate some risks of not incorporating public input into AI design, especially for systems intended for public use.

Floridi et al. (2021) analyzed 27 AI-based projects and reported seven factors that should be considered when designing AI for social good. Two of these factors concern the importance of public autonomy when encountering AI systems. The first, Receiver-contextualized intervention, centers on consulting users when building decision-making systems; if users are not consulted, smart systems can, at the extreme, cause human fatalities, as the authors illustrate with the case of Boeing pilots who could not override a software malfunction due to a lack of safety features. The second, Human-friendly semanticization, focuses on not hindering people's ability to make sense of a system.

Another study, by Toivonen et al. (2020), worked with 34 schoolchildren to design machine learning algorithms in workshops via Google Teachable Machine, based on the idea of meta-design: “positioning children as designers and creators in the evolving process of learning”. Given the task of applying machine learning to real-world situations, the children designed and tested their own smart applications. Although the algorithms were not particularly effective, this study shows the benefit of involving stakeholders in designing AI apps to promote an understanding of how they work. This improves the transparency of model behavior (the ‘black box' of model operation being a known issue) and equips stakeholders to question how ML models operate in daily life. The latter is especially significant for raising public awareness of how applied AI works when addressing concerns related to bias, fairness and inclusivity.

A study by Tomasev et al. (2020) discusses the importance of consulting subject-specific experts in the design of AI systems aimed at social good, particularly regarding ethical considerations and inclusivity. This is important to consider when debating how AI should model domain-specific skills, such as those required in musical practices. Field-specific human input is therefore vital not only to fully realize the benefits of AI technology but also to avoid misalignment with users' real-world needs, reducing the risk of biases, inaccuracies or unintended consequences in systems deployed within specialized practices.

2.4 Current state of music education in the UK

An industry report by Savage and Barnard (2019) designed four online surveys aimed at those responsible for delivering the UK National Plan for Music Education (NPME) (Ofsted, 2021), including instrumental teachers, classroom teachers, music managers and head teachers, and received responses from over 1,000 participants. The authors also conducted 42 telephone interviews with participants from the three main survey groups. They report significant challenges facing music organizations, such as regional inequalities and ineffective training and financial support, particularly for self-employed instrumental teachers, with a majority of participants across all groups criticizing the design of the NPME and voicing many flaws in its approach.

Another report, by the Department for Education (2021), discusses responses from over 5,000 individuals, including young people, their parents and carers, school or college teachers and those working for a music education hub or service. Many respondents voice the need for learning opportunities to be more inclusive and accessible, and stress the inconsistency of delivery across educational institutions. The report highlights the inability of some educational institutions and music services/hubs to meet the aims that the NPME sets out, despite support from governing bodies, and emphasizes that building the capacity to meet these aims will be crucial if existing inequality is to be reduced. Inequality is still evident in Ofsted's more recent report (Ofsted, 2023), suggesting that a revision of the approach is needed to better address the inconsistencies and deficiencies voiced.

A market report by Beesley (2024) sought insight into the current state of music education by collecting and coding data from stakeholders in the UK music industry. The report examines participants' (N = 15) perceptions of and attitudes toward technology and its effectiveness in teaching and learning. Key findings draw attention to significant variation in the quality of music pedagogy and identify financial limitations as the root problem (80% of participants chose this as a limitation they faced themselves). The second most prevalent theme, geographical limitations, highlights the “postcode lottery” (discussed by Savage, 2021a), whereby stakeholders experience variable access to music education provision (e.g., schools, Music Education Hubs, charities) and associated funding depending on their location, which in turn affects the quality of music education and the associated opportunities received. The report concludes that there is an opportunity to help bridge inequality by investigating where technology fits alongside ongoing efforts. With technology use generally well received in the report (87% of participants advocated for its use), the findings motivate the development of an AI music assistant as a potential solution.

In response to these problems of access to music education, many people turn to virtual learning platforms in pursuit of directed and personalized tuition. Digital music creation has become increasingly prevalent over the past decade, supporting both curricular and extra-curricular learning of music theory and instrumentation (Haning, 2016). Prospective learners can now access music resources from any compatible smart device, broadening the scope of information they can receive outside of, and complementary to, a formal educational setting. Since COVID-19 restrictions strained face-to-face teaching, there have been strong grounds for integrating music software as a pedagogical tool, given its potential to augment the teaching and learning experience. Koehler et al. (2013) describe the Technological Pedagogical Content Knowledge (TPACK) framework for integrating technology into teaching practice, highlighting the complex and layered interplay between technology and facets of teacher knowledge. This framework offers a lens through which to examine how smart systems might be integrated within an emerging paradigm of music teaching practice.

2.5 AI as a tutor and design ethics

AI is increasingly being used in education; however, how AI should be used within a pedagogical setting remains unclear because the field is evolving rapidly. At the same time, the ubiquity of smart devices creates a clear opportunity for personalized technologies in education. By questioning the relationship between human and algorithm, potential ethical issues can also be mitigated when designing and using AI tutoring systems.

An online music education market report by Knowledge Sourcing Intelligence (2024) highlights the increasing demand for personalized learning in the online music education sector. Companies such as PianoVision (PianoVision, n.d.) are exploring personalized learning approaches, though currently without integrating wearable technologies. This demand corresponds to our observations on pairing deep learning with wearable technologies to create an advanced AI guitar assistant capable of delivering personalized feedback.

A study by Dalmazzo et al. (2021) highlights the potential of AI to predict performance techniques in support of instrumental music education, focusing on a quantitative approach to classifying violin techniques. The study positions its AI system as a musical feedback tool intended to supplement lessons with human teachers, rather than to replace music teachers. This distinction is significant, as it invites further exploration of the tensions between AI functioning as a tutor, as a personal technological practice aid (akin to a metronome), or as a pedagogical tool used by educators. Despite such contributions, there remains a notable lack of qualitatively driven inquiry into how these systems should be designed if AI is to be meaningfully integrated into music education, including the context and intended use cases of such systems.

Another study, by Luo et al. (2020), examined the role of AI in coaching sales agents to improve their job skills, across three field experiments. This work is relevant because the results reveal different aspects of human-AI interaction in tuition. The first experiment showed that middle-ranked agents benefited most from AI coaching, whereas lower- and upper-ranked agents did not, reportedly because of knowledge overload among lower-ranked agents coached by an AI and a strong aversion among upper-ranked agents to learning from an AI rather than a human. The second experiment sought to alleviate the difficulties of lower-ranked agents by redesigning the AI's feedback level, resulting in improved agent performance. The final experiment revealed that combined AI-human coaching outperformed AI or human coaching alone, effectively combining the AI's coaching of hard skills with human managers' coaching of soft skills.

Finally, a study by Wang et al. (2019) created an AI coach for personalized athletic training, using 63 skiing training video clips from 30 sports enthusiasts. Their AI system comprises three distinct steps: trajectory extraction, human pose estimation and pose correction. The first step, trajectory extraction, uses a tool to detect humans in a video, applies a bounding box to each detected human, and then uses a tracking model to follow them from the second frame of the video to the last. When tracking is finished, each identified human is enclosed in a tubelet for the duration of the video. The second step, human pose estimation, applies a single-person video pose estimation model to each tubelet to extract the human pose in every frame. The final step, pose correction, uses a classification model to recognize ‘bad poses', highlighting them to warn athletes (the system's users) and showing them standard poses instead (i.e., providing feedback). The usability of the AI coach was evaluated with 44 participants, who were asked to rate it in comparison with other sports apps. Results show that participants viewed the AI coach favorably because it detected errors and provided feedback, especially in comparison with other apps that show videos to users rather than using AI. However, participants rated it unfavorably regarding its applicability to sports other than skiing. This is very promising for the future of personalized learning via AI coaches within skilled professions.
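
The pose-correction step can be illustrated by comparing joint angles, computed from pose-estimation keypoints, with those of a standard pose. Note that this sketch substitutes a simple rule-based angle comparison for the learned classification model Wang et al. (2019) describe; the joint names, the 90° reference angle and the 15° tolerance are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]  # 2-D keypoint (x, y) from a pose estimator


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Angle in degrees at keypoint b, between the segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    cos_theta = (v1[0] * v2[0] + v1[1] * v2[1]) / (math.hypot(*v1) * math.hypot(*v2))
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_theta))))  # clamp rounding error


def flag_bad_joints(
    pose: Dict[str, Point],
    joints: Dict[str, Tuple[str, str, str]],
    reference_angles: Dict[str, float],
    tolerance: float = 15.0,
) -> List[str]:
    """Joints whose angle deviates from the reference pose by more than `tolerance` degrees."""
    flagged = []
    for joint, (a, b, c) in joints.items():
        angle = joint_angle(pose[a], pose[b], pose[c])
        if abs(angle - reference_angles[joint]) > tolerance:
            flagged.append(joint)
    return flagged


# Hypothetical joint definition and reference ("standard pose") angle.
JOINTS = {"left_knee": ("left_hip", "left_knee", "left_ankle")}
REFERENCE = {"left_knee": 90.0}

bent = {"left_hip": (0.0, 0.0), "left_knee": (0.0, 1.0), "left_ankle": (1.0, 1.0)}
straight = {"left_hip": (0.0, 0.0), "left_knee": (0.0, 1.0), "left_ankle": (0.0, 2.0)}
print(flag_bad_joints(bent, JOINTS, REFERENCE))      # []
print(flag_bad_joints(straight, JOINTS, REFERENCE))  # ['left_knee']
```

The same idea would transfer to instrumental posture (e.g., wrist or elbow angles on the guitar), although a learned classifier, as in the original study, can capture deviations that a single angle threshold cannot.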

Ethical issues should always be considered and pre-empted when a human is in a feedback loop with an AI system. This is especially true in educational contexts, where students work closely under the guidance of an agent and rely on its instruction. Maia et al. (2024) conducted a systematic review of 43 studies and identified key factors for designing responsible and effective AI tutoring systems. These include selecting appropriate evaluation metrics to support personalization, ensuring privacy through secure storage and encryption of student data, collecting less sensitive data (e.g., activity logs instead of video), fostering human-AI collaboration to share instructional responsibility, and accounting for how institutional governance influences deployment. These considerations are directly relevant to our work on AI-assisted music learning, particularly as we explore biometric data collection, develop feedback mechanisms, and consider deployment pathways beyond academic settings.

Thomas et al. (2024) explored how large language models can support tutor development by suggesting improvements to tutors' student-facing feedback. The model was trained on a corpus of tutor-student transcripts from remote teaching sessions. In the study, tutors were asked to give feedback to students solving maths problems; the AI then evaluated each tutor's response and provided explanatory suggestions, guiding the tutor to rephrase it in line with research-based best practices, such as praising effort over outcome. To support ethical use, the system's feedback to tutors was clearly labeled (“AI-generated feedback suggests…”), limited to pre-approved templates and phrases vetted by human experts, and subject to tutor scoring to refine the relevance of its output. The authors also maintain a human-in-the-loop review process and note that bias could be alleviated through random sampling across school sites and regular auditing of model performance. These safeguards inform our own thinking on how to deliver tutor- or performer-facing feedback in a way that maintains trust, clarity, and accountability.
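As a rough illustration of the safeguards described above (vetted templates, an explicit disclosure label, and tutor scoring), the hypothetical Python sketch below only ever emits suggestions drawn from a fixed template set, prefixes each with the label, and logs tutor ratings per template so that low-relevance templates can be reviewed. It is not Thomas et al.'s implementation: the template texts and identifiers, the class name `TemplateFeedback`, and the 1-5 rating scale are assumptions for illustration.

```python
from statistics import mean
from typing import Dict, List, Optional

# Disclosure prefix, as quoted in the description of Thomas et al. (2024).
LABEL = "AI-generated feedback suggests"

# Hypothetical stand-ins for expert-vetted phrasings.
APPROVED_TEMPLATES: Dict[str, str] = {
    "praise_effort": "praising the student's effort rather than only the outcome.",
    "name_strategy": "naming the specific strategy the student used.",
}


class TemplateFeedback:
    """Emit only pre-approved, clearly labeled suggestions and log tutor ratings."""

    def __init__(self, templates: Dict[str, str]) -> None:
        self.templates = dict(templates)
        self.ratings: Dict[str, List[int]] = {key: [] for key in self.templates}

    def suggest(self, template_id: str) -> Optional[str]:
        # Anything outside the vetted set is suppressed rather than generated freely.
        text = self.templates.get(template_id)
        return f"{LABEL} {text}" if text is not None else None

    def rate(self, template_id: str, score: int) -> None:
        # Tutors score each suggestion (assumed 1-5 scale) to refine relevance.
        if template_id not in self.ratings or not 1 <= score <= 5:
            raise ValueError("unknown template or score outside 1-5")
        self.ratings[template_id].append(score)

    def mean_rating(self, template_id: str) -> Optional[float]:
        # Average tutor score for a template, or None if it has not been rated.
        scores = self.ratings.get(template_id, [])
        return mean(scores) if scores else None
```

Restricting output to a closed template set trades expressiveness for auditability: every sentence a tutor can see has been reviewed by a human beforehand.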

Together, these studies highlight the ethical imperatives of transparency, data protection, privacy, fairness and bias mitigation, shared responsibility and co-operation, and accountability in AI-assisted education. We aim to incorporate these practices into the development of our AI guitar assistant.

2.6 Gamification in learning

Gamification is used in a variety of fields, such as language learning, sports, and music. In education, gamification applies game design principles, elements, and mechanics to engage and motivate students and to promote learning and problem solving (Manzano-León et al., 2021), while also affording opportunities to communicate abstract concepts.

Rock Band is a prominent example of music education software that offers a large, varied song library in an accessible, gamified, and inexpensive format. With such applications, however, players tend to focus on the gameplay rather than on the pedagogical content (Graham and Schofield, 2018). This tension is worth noting when creating music education applications that use interactive, audiovisual components.

Beat Saber is a virtual reality (VR) application in which the player's tracked gestures are used to strike objects in time with music. Marketed as a VR rhythm game, it requires users to hit the approaching objects in synchrony with the song's beat. A study by Tammy Lin et al. (2023) examined Beat Saber gameplay among 240 students allocated to three different game settings. It found benefits of this immersive, interactive game for psychological and physiological health, and highlighted “exergaming” as a means of motivating people to maintain their physical health.

PianoVision (PianoVision, n.d.) is a mixed reality tool for personalized, real-time piano learning. It can be used either with the user's existing piano or with a virtual keyboard generated by the software itself. To do this, it combines an AI system called Learning Engine with the Meta Quest 3 pass-through cameras, from which it receives hand-tracking and note-detection data. This generates real-time feedback on the player's technique, allowing learners of all abilities to progress. Work in this space highlights the importance of such applications being guided by qualified music teachers and, with this guidance, proposes AR-assisted music education as a viable approach to alleviating the shortage of qualified music teachers (Simion et al., 2021).

Yousician is a music education app that provides real-time feedback on instrumental playing through a device's built-in microphone. Lessons are tailored to the user's progress, and the app gamifies the learning experience (i.e., it uses interactive audiovisual elements to represent musical information, such as pitch and rhythm, and scores users on their accuracy); players can also compete on a global leaderboard for in-game achievements. A study by Yun Yi and Thiruvarul (2021) explored the role of Yousician as a tool to enhance music learning and practice. Studying participants with beginner and intermediate levels of formal experience, they found that participants felt motivated to continue practicing because of the app's gamification elements (completing challenges, receiving scores, and accessing a global leaderboard). They concluded that the app's game mechanics give learners a sense of fulfillment and engagement from their progression in learning.

Duolingo is a successful example of gamified language learning and has used a gamification strategy since its release. The platform rewards commitment and accurate lesson completion with in-game achievements. A study by Huynh et al. (2016) collected information on completed language courses and their corresponding in-app skill commendation features. They concluded that the app provides a non-game learning environment in which all users, from novice to more advanced, can tailor their progress via ‘milestone' selection. Recently, Duolingo has released a music learning feature that offers a piano keyboard for playing notes on a musical stave (i.e., using sheet music).

Pedagogical advantages of using game engines to teach include visualization, hearing concepts (sonification), and seeing abstract concepts in new, multimodal ways. Teaching abstract musical concepts in lessons has been noted as particularly difficult for music teachers (Beesley, 2024). A study by Bremmer and Nijs (2020) investigated the role of the body in music teaching, emphasizing gestures as key to engagement and effective delivery. Therefore, gamification in music learning may help bridge the gap between communicating abstract musical concepts and learners' comprehension of them.

3 Method

In this project, we collected guitar performance and survey data from 21 guitarists (2 female, 19 male) enrolled in prominent music schools across the UK, including the Guildhall School of Music & Drama, the Royal Northern College of Music, and music departments at the Universities of Oxford, Manchester, and Liverpool. This recruitment approach aligns with established research methodologies in this field (Wilson et al., 2023). While this project involved collecting both multimodal guitar performance data and survey responses, the present work focuses exclusively on insights derived from the survey data. The multimodal performance data, gathered to support the development of the AI guitar assistant (which is an ongoing thread related to this work), is not covered in this analysis but will be examined in future publications. The remit of this work is to analyse the survey data collected in order to inform, understand and contextualize the development of the AI guitar assistant, and at the same time inform the research community of the findings.

To recruit participants, we contacted music school administrators across the UK and asked them to circulate a recruitment advertisement within their institutions. For this study, we aimed to recruit two distinct performer categories: amateurs and professionals. This classification enabled us to explore differences in performance ability and technique between these groups while also capturing diverse perspectives on the requirements of an AI guitar assistant. To place participants within these categories, we designated undergraduates as amateur performers and graduates/postgraduates as professional performers. Our final sample comprised 11 amateur participants (all male) and 10 professional participants (8 male and 2 female), a total of 21 participants. To ensure transparency and informed consent, participants were given an information sheet at the beginning of each session outlining the research aims and the context of the study. Participants also completed and returned consent forms detailing their expected involvement, potential uses of their data, and personal data storage rules. We acknowledge the gender imbalance in our sample and address this limitation in Section 6. Participants attended individual recording sessions at the NOVARS Research Centre, University of Manchester. Each recording session was structured into two distinct parts to ensure systematic data collection.

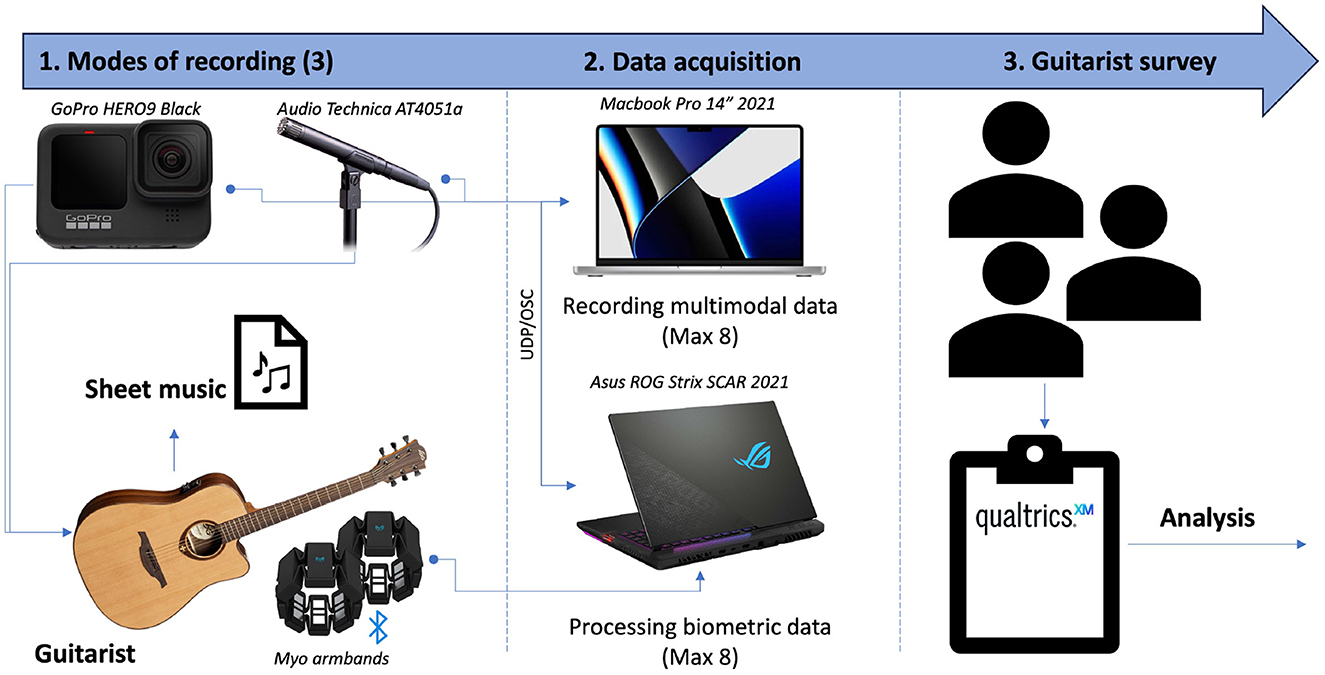

Firstly, the guitarists were instructed to perform a series of musical exercises specifically designed for this study, informed by observations from music examination boards in the UK (see Rhodes et al., 2023). During these performances, we recorded three distinct types of data to assess their guitar playing: biometric data (via two Myo armbands), video data (captured using a GoPro camera positioned directly in front of the performer to provide a clear, frontal view of their playing technique and posture), and audio data (via an Audio Technica microphone). See Figure 1 for an overview.

To facilitate this, we developed two custom software programs (referred to as ‘patches') within Max 8 (hereafter referred to as Max) to receive, process, record, and store the multimodal performance data. These patches were deployed across two separate laptops connected via Ethernet and communicating through the UDP/OSC protocol (see Figure 1). This design ensured smooth, low-latency recording by distributing the computational load across two machines.
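The OSC link between the two laptops can be sketched in Python using only the standard library. The OSC addresses, ports and message layouts actually used by the Max patches are not described here, so the address `/myo/emg` below is hypothetical; the encoding itself follows the OSC 1.0 message format (null-terminated strings padded to a multiple of four bytes, a type-tag string beginning with a comma, and big-endian 32-bit float arguments), with each message sent as one UDP datagram.

```python
import socket
import struct
from typing import Sequence


def osc_string(text: str) -> bytes:
    """Encode an OSC-string: ASCII bytes plus a null terminator, padded to a multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, values: Sequence[float]) -> bytes:
    """Build an OSC 1.0 message whose arguments are all big-endian float32 ('f')."""
    type_tags = "," + "f" * len(values)
    args = b"".join(struct.pack(">f", float(v)) for v in values)
    return osc_string(address) + osc_string(type_tags) + args


def send_frame(sock: socket.socket, host: str, port: int, address: str, values: Sequence[float]) -> int:
    """Send one frame of sensor values as a single UDP datagram; returns bytes sent."""
    return sock.sendto(osc_message(address, values), (host, port))
```

For example, `send_frame(sock, host, port, "/myo/left/emg", values)` would send one frame of values to a receiving laptop, where `sock` is a `socket.socket(socket.AF_INET, socket.SOCK_DGRAM)` and the host, port and address are placeholders. UDP suits this kind of streaming because it has no connection handshake or retransmission, at the cost of tolerating an occasional dropped packet.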

The first Max patch, named ‘The Brain', received biometric data from the Myo armbands via Bluetooth, then parsed and processed it. This processed data was then transmitted to the second patch, named ‘Data Acquisition', which was responsible for collecting and recording the performance data: it recorded the additional data streams (i.e., video through a USB connection and audio via a MOTU audio interface) and maintained tempo alignment using a metronome.

For each exercise performed, four files were generated: two .csv files (one for each Myo armband), an audio file, and a video file. In total, 61.93 GB of data was collected during data acquisition. These data types are examined further in Rhodes et al. (2023), which details their use in informing the development of an AI guitar assistant.
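A hypothetical sketch of how one armband's stream might be written to its .csv file is given below. The column layout (a millisecond timestamp followed by the Myo's eight EMG channels) and the file-naming scheme are assumptions for illustration only; the data output by ‘The Brain' may have included other processed features, and the audio and video formats (.wav, .mp4) are likewise assumed.

```python
import csv
import io
from typing import Iterable, List, Sequence, TextIO

EMG_CHANNELS = 8  # each Myo armband has eight surface-EMG sensors


def exercise_filenames(participant: str, exercise: int) -> List[str]:
    """Hypothetical naming scheme for the four files saved per exercise."""
    stem = f"{participant}_ex{exercise:02d}"
    return [
        f"{stem}_myo_1.csv",  # first armband
        f"{stem}_myo_2.csv",  # second armband
        f"{stem}_audio.wav",
        f"{stem}_video.mp4",
    ]


def write_emg_csv(out: TextIO, rows: Iterable[Sequence[float]]) -> int:
    """Write a header and (timestamp_ms, emg1..emg8) rows; return the number of rows."""
    writer = csv.writer(out)
    writer.writerow(["timestamp_ms"] + [f"emg{i}" for i in range(1, EMG_CHANNELS + 1)])
    count = 0
    for row in rows:
        if len(row) != EMG_CHANNELS + 1:
            raise ValueError(f"expected {EMG_CHANNELS + 1} values per row, got {len(row)}")
        writer.writerow(row)
        count += 1
    return count


def emg_csv_text(rows: Iterable[Sequence[float]]) -> str:
    """Render the CSV into a string, e.g. for inspection or testing."""
    buffer = io.StringIO()
    write_emg_csv(buffer, rows)
    return buffer.getvalue()
```

When writing to disk rather than to a string, the file should be opened with `newline=""`, as the `csv` module documentation recommends, e.g. `with open(name, "w", newline="") as f: write_emg_csv(f, rows)`.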

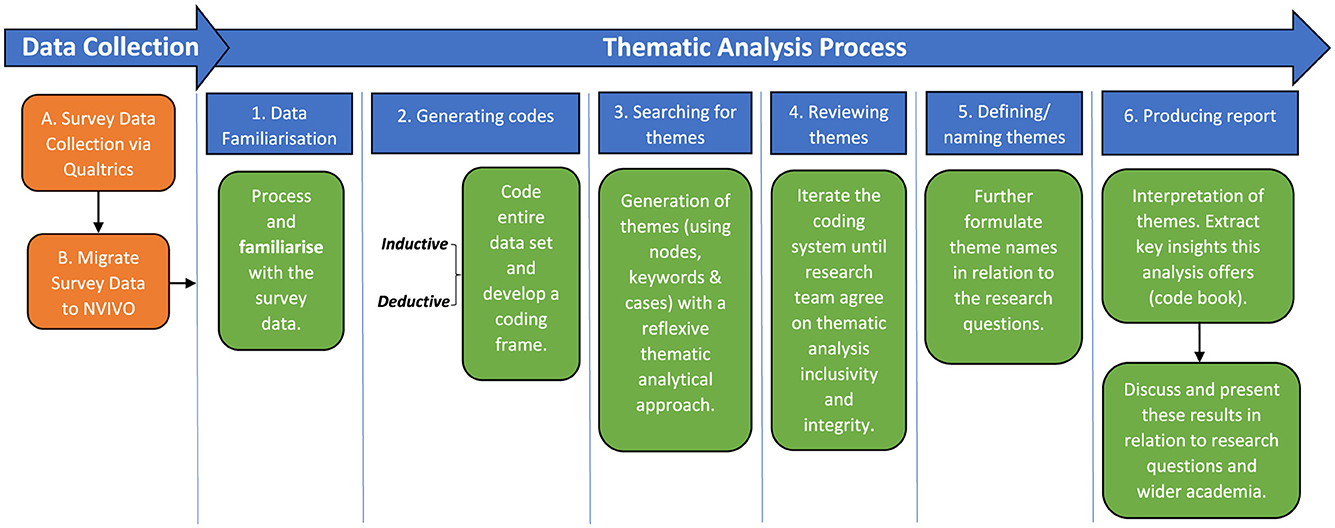

In the second half of each recording session, participants completed a survey via Qualtrics; this survey is the focus of the subsequent results and discussion sections (see Sections 4, 5). An overview of the qualitative method used is shown in Figure 2. Survey data were collected and stored safely and securely via the Qualtrics platform, in compliance with the University of Manchester's research ethics guidelines. The project, its methodology, and data acquisition procedures received formal approval from the University of Manchester's Research Ethics Committee.

Figure 2. A figure showing the thematic analysis method we used to generate codes and themes for participant data insights.

To facilitate a comprehensive analysis, encompassing both qualitative and quantitative dimensions, the survey was designed to include 22 multiple-choice and 24 open-ended questions. This mixed methods approach, exemplified by the work of Cajander and Grünloh (2019) and prevalent in contemporary HCI research, was deliberately chosen to leverage the complementary strengths of both descriptive and discrete data types. Specifically, the inclusion of open-ended questions alongside structured multiple-choice items enabled a nuanced thematic analysis. This strategy allowed us to simultaneously capture broad trends and patterns through pre-defined responses, while affording participants the opportunity to articulate their reasoning and experiences in their own words, thereby providing a rich, granular dataset.

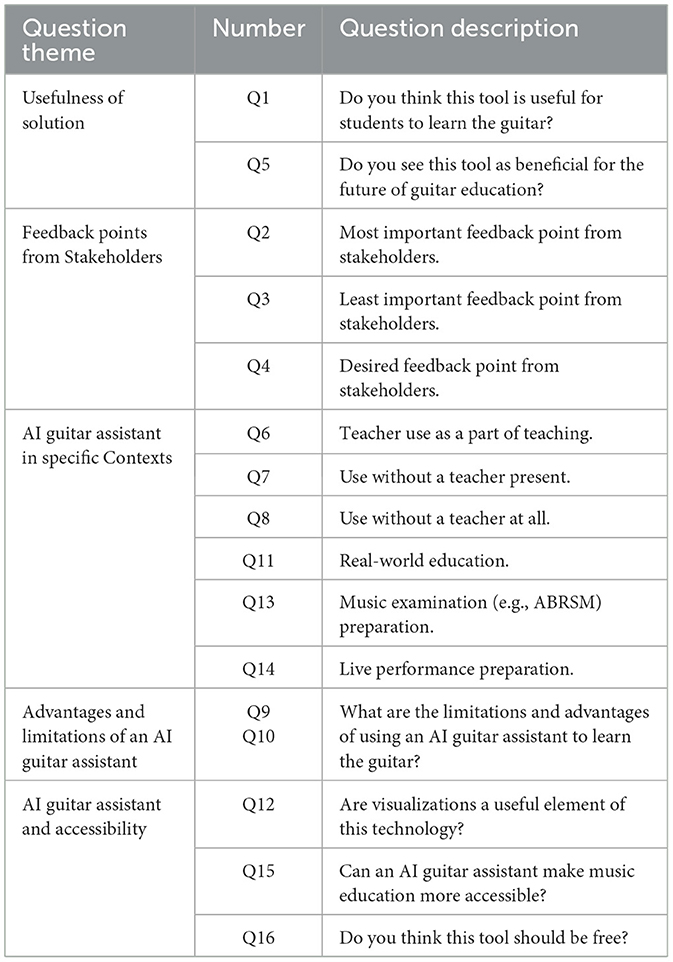

The following analysis adopted a qualitative approach, leveraging the rich, open-ended responses from participants, which provided deeper insights than a quantitative analysis of the limited sample size would allow. The specific open-ended survey questions pertinent to the qualitative analysis are detailed in Table 1. The corresponding survey instrument was structured into five distinct areas: 1. Usefulness of Solution (2 questions), 2. Feedback Points from Stakeholders (3 questions), 3. AI guitar assistant in Specific Contexts (6 questions), 4. Advantages and Limitations of an AI guitar assistant (2 questions), and 5. AI guitar assistant and Accessibility (3 questions).

Following data collection, a structured working chronology (see Figure 2) was established to facilitate collaboration, task coordination, progress tracking, and to maintain a focused approach to our research questions. Central to this process was the application of thematic analysis to the survey data. Specifically, we employed a reflexive thematic analysis framework, adhering to the six-phase process outlined by Braun and Clarke (2006). This reflexive approach, as described by Campbell et al. (2021), enabled a nuanced analysis that integrated both inductive and deductive coding methodologies.

Following the identification of the open-ended survey questions relevant to the qualitative analysis (Table 1), responses to these questions were imported into NVivo. This facilitated the analysis by enabling systematic generation, categorization, and iterative refinement of codes (Castleberry and Nolen, 2018). The research team continuously reviewed the codes to ensure accurate categorization and representation of participant responses, actively working to mitigate individual researcher biases in the categorization of responses. This process began with line-by-line coding and grouping based on conceptual similarities, which led to the development of preliminary themes. Theme review ensured both internal coherence and external distinctiveness, resulting in well-defined themes. A comprehensive codebook, detailing themes, codes, and illustrative examples, facilitated the identification of hierarchical relationships.
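The hierarchical relationship between themes, codes and coded excerpts can be pictured as a simple nested data structure. The Python sketch below only illustrates that hierarchy (it is not how NVivo stores a project); the class and function names are hypothetical, while the theme and code names and the two excerpts are those reported in Section 4.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Code:
    name: str
    excerpts: List[str] = field(default_factory=list)  # illustrative participant quotes


@dataclass
class Theme:
    name: str
    codes: Dict[str, Code] = field(default_factory=dict)

    def add_reference(self, code_name: str, excerpt: str) -> None:
        """File a coded excerpt under a code, creating the code on first use."""
        self.codes.setdefault(code_name, Code(code_name)).excerpts.append(excerpt)


def references_per_theme(codebook: Dict[str, Theme]) -> Dict[str, int]:
    """Count coded excerpts under each theme, across all of its codes."""
    return {
        name: sum(len(code.excerpts) for code in theme.codes.values())
        for name, theme in codebook.items()
    }


codebook = {name: Theme(name) for name in ("Re-imagining Instrumental Education", "Accessibility")}
codebook["Re-imagining Instrumental Education"].add_reference(
    "Feedback", 'P19: "play the right notes at the right time"'
)
codebook["Accessibility"].add_reference("Financial", 'P7: "exam entrance fees"')
```

Keeping excerpts attached to their codes mirrors the codebook's role in the analysis: any theme-level claim can be traced back to the participant responses that support it.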

4 Results

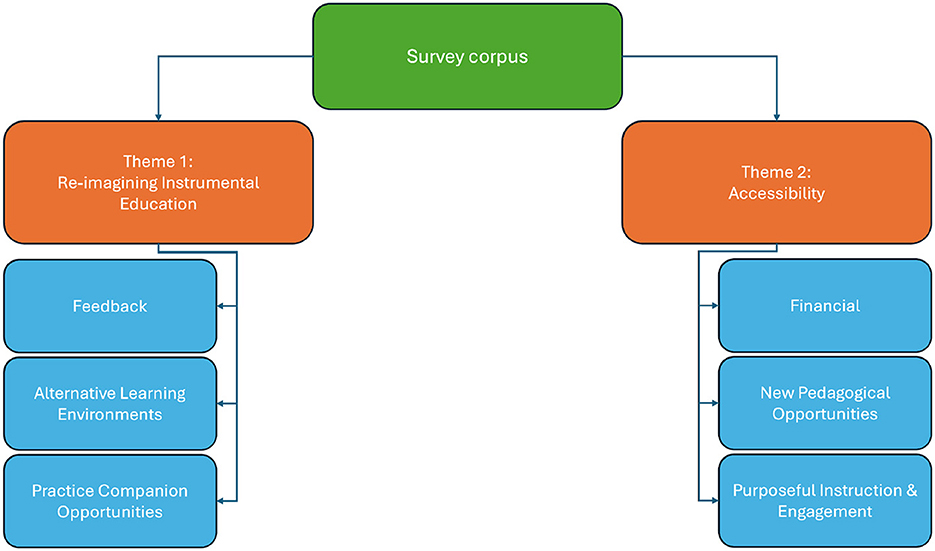

The following results section outlines key findings from the thematic analysis we conducted on the survey data. The analysis utilized a hierarchical coding framework in which themes serve as overarching categories, encompassing distinct codes (see Figures 3–5). Each theme includes specific codes that capture more granular patterns in the data. These finer details are crucial for uncovering nuanced insights, enabling a deeper understanding of the participants' perspectives and informing more targeted and effective design decisions. These codes capture the complexity and diversity of the responses, emphasizing the importance of maintaining the richness of the data within this holistic analytical framework.

Figure 3. A figure showing a high-level overview of the themes and codes we generated from the corpus of survey data.

The results are presented using an integrative approach: each code is introduced within its theme, followed by illustrative participant responses that provide depth and contextual richness. Particular focus is placed on responses that encapsulate complex or multifaceted views, often shedding light on subtle aspects of the data that might otherwise remain obscured. This strategy highlights the interplay between broader patterns and individual insights, illustrating the ways AI assistants could both enhance and hinder accessibility, depending on their implementation. By integrating thematic representation with contextualized examples, the results provide a comprehensive foundation for the discussion.

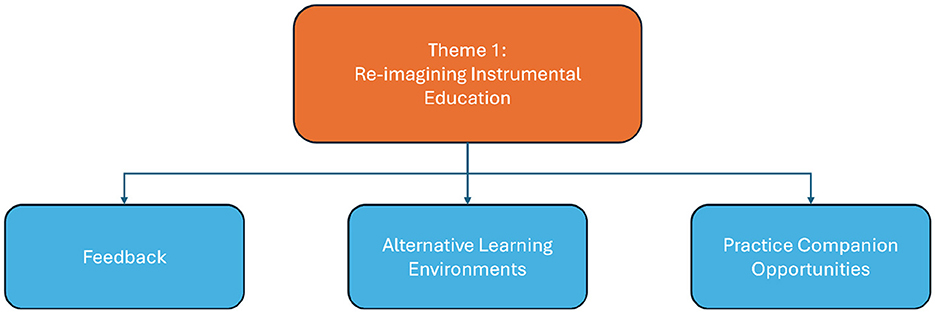

4.1 Theme 1: re-imagining instrumental education

The theme Re-imagining Instrumental Education comprises three distinct codes: Feedback, Alternative Learning Environments, and Practice Companion Opportunities (illustrated in Figure 4). This theme encompasses responses related to the feedback capabilities and limitations of an AI guitar assistant, as well as its potential use cases and implementation. In this section, we present data from each code to show how we arrived at relating it to this theme.

Figure 4. A figure illustrating the high-level thematic map for Re-imagining Instrumental Education.

4.1.1 Feedback

The first code within the Re-imagining Instrumental Education theme was Feedback. Several factors discussed by participants contributed to its identification: technique improvement; musicality and performance readiness; rhythm, tempo, and sound quality; precision and accuracy; and limitations for advanced students. These areas were seen as essential for evaluating the AI assistant's effectiveness and identifying where its feedback capabilities may fall short. We now present these factors in turn.

Technique improvement emerged as a particularly engaging aspect of this code and was frequently highlighted in participant discussions. P1 pointed to the advantages of personalized exercises, such as targeting specific weaknesses like “right-hand technique.” Another popular focus was posture, including critical aspects such as arm, hand, and body alignment, as well as breathing. P10 specifically noted the importance of addressing the movement of the picking hand, linking it to the motor neuron relationships essential in guitar playing. P12 highlighted the AI's potential to democratize access to specialized techniques, such as those required for classical guitar performance. Despite these advantages, some concerns were raised. Criticisms centered on the AI's ability to accommodate unconventional playing styles, with P15 referencing legendary guitarists like Hendrix, whose “sloppy technique” is both aspirational and possibly difficult for a homogeneous AI to evaluate. Others emphasized the importance of supporting the intuitive and creative dimensions of playing, with P8 describing this as the capacity to “envision a specific sound and then create it”: a nuanced, embodied process that is fundamental to musical performance.

Musicality emerged as a significant concern, with participants emphasizing the importance of feedback on expression and interpretation. P11 underscored the need for such feedback to be meaningful and effective, while P8 questioned the AI's ability to provide critique on inherently intersubjective elements. Additionally, participants discussed the role of AI in preparing learners for performance, particularly in developing the technical foundations essential for performance readiness. However, concerns about the AI's limitations were evident. Participants questioned its ability to address performance-related challenges, with P9 expressing skepticism about the AI's potential to help learners manage “pressure/anxiety” during live performances. Others raised doubts about whether an AI guitar assistant could effectively critique the subjective and aesthetic aspects of playing, with P8 suggesting that human teachers may be better equipped to address “musicality” and other interpretive dimensions of performance.

Rhythm, tempo, and sound quality were identified as critical areas for feedback. Participants emphasized the importance of rhythm and timing, alongside the need for guidance on sound quality. These elements were regarded as foundational to musical ability, with expectations that the AI assistant would address these aspects to support well-rounded musical development. There was a pronounced demand for high levels of precision and accuracy. P3 emphasized that the AI assistant should assist teachers with a “high level of precision,” while P5 highlighted the necessity of accurate feedback as fundamental to the AI's effectiveness. Participants linked the AI's utility to its ability to provide precise feedback, with P9 and P11 stressing its role in articulating specific embodied musical elements such as “posture, attack, and hand position.” Additionally, P19 stressed the importance of verifying note and timing accuracy, described as ensuring musicians “play the right notes at the right time.” These findings suggest that precision and accuracy are not merely desirable features but central to the AI's role in supporting effective musical instruction.
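Participants' request to verify that musicians “play the right notes at the right time” can be made concrete with a small scoring function. The sketch below assumes that the played notes have already been transcribed (for example, from the audio recording) into MIDI pitch numbers and onset times; it counts an expected note as correct when an unused played note of the same pitch starts within a tolerance window. The `Note` type, the function name and the 50 ms default tolerance are hypothetical choices, not a description of the AI guitar assistant.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Note:
    pitch: int    # MIDI note number
    onset: float  # seconds from the start of the exercise


def note_accuracy(expected: List[Note], played: List[Note], tolerance: float = 0.05) -> float:
    """Fraction of expected notes matched by a played note of the same pitch
    whose onset lies within +/- tolerance seconds. Each played note may
    satisfy at most one expected note; the closest eligible onset wins."""
    if not expected:
        return 1.0
    used = [False] * len(played)
    hits = 0
    for target in expected:
        best, best_err = None, tolerance
        for i, note in enumerate(played):
            err = abs(note.onset - target.onset)
            if not used[i] and note.pitch == target.pitch and err <= best_err:
                best, best_err = i, err
        if best is not None:
            used[best] = True
            hits += 1
    return hits / len(expected)
```

For instance, with expected notes E4, G4 and A4 (MIDI 64, 67, 69) and a performance that plays E4 on time, G4 80 ms late and B♭4 instead of A4, the function returns 1/3. A fixed window is a simplification; a deployed system might scale the tolerance with tempo and report pitch and timing errors separately.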

A recurring point was the AI guitar assistant's perceived limitations for advanced learners. P7 noted that advanced students, who focus on subtle and complex elements of playing, might “struggle to get much use out of it.” Broader skepticism also emerged, with participants questioning the AI's ability to provide meaningful feedback as subjective and nuanced aspects of musicality become more central at higher levels. These findings suggest that while the AI assistant shows promise for beginner and intermediate learners, its applicability for advanced musicians appears more limited, particularly when addressing high-level interpretive and technical challenges.

4.1.2 Alternative learning environments

The Alternative Learning Environments code focused on how AI assistants might reshape instrumental learning dynamics and environments. This code revealed diverse perspectives on the AI's potential to complement or substitute traditional teaching methods.

A significant theme that emerged was the AI's potential role as a complementary tool for teachers. P13, for example, envisioned the AI as a monitoring system, allowing teachers to identify and address areas where students are struggling, thus enabling them to “target these areas in lessons.” Similarly, P8 described the AI's potential to deliver “personalized feedback” during group lessons on technical concepts previously introduced by a human teacher, enhancing individualized learning without replacing the teacher's foundational role or requiring additional human teaching resources to provide equivalent levels of personalized feedback. Participants also explored the AI's utility for learners who are uninterested in or unable to access traditional teaching methods. This group saw potential in the AI's ability to democratize music education by supporting independent learners. P1, in particular, emphasized its importance for self-taught learners, highlighting that it could offer guidance surpassing current self-learning resources, by providing a more structured and tailored approach.

However, skepticism regarding the AI's standalone effectiveness was also voiced. Participants expressed concerns about its limitations in addressing subjective elements of music education, such as “musical aesthetic decisions,” which, according to P5, still require human input and guidance. Interestingly, some participants highlighted the AI's potential to increase student retention and expedite progress. They argued that by enabling learners to achieve technical proficiency more quickly, the AI could free them to explore a more diverse repertoire, fostering greater engagement and, in turn, creating a need for more specialized music instruction. These findings reveal a complex interplay between the potential benefits of AI in diversifying learning environments and the perceived limitations of its standalone application. While participants acknowledged the AI's potential when combined with human teaching and in supporting self-taught learners, they emphasized the need for human input to address the nuanced, interpretive aspects of musical learning.

4.1.3 Practice companion opportunities

The Practice Companion Opportunities code within the Re-imagining Instrumental Education theme highlighted the AI guitar assistant's potential to enhance practice sessions by accelerating learning, fostering effective habits, and providing actionable insights.

P13, for instance, remarked that “the extra support and accuracy will make them learn quicker,” reflecting expectations for the AI to streamline progress. Others emphasized its value in exam preparation, noting that the AI could simulate examiner criteria to help students identify weaknesses and improve their exam performance. P11 discussed the potential for the AI to prevent “bad habits in between lessons,” suggesting the use of “measurable data, such as volume and force of the attack on the string” to deliver precise feedback. The AI's ability to monitor practice sessions and provide teachers with insights to tailor lessons more effectively was also highlighted.

Visual learning tools emerged as a key focus. P10, for example, described “being able to visualize” chords on the fretboard as particularly beneficial for engaging visual learners during practice. However, P12 expressed skepticism, arguing that watching human demonstrations on platforms like YouTube offers a more relatable learning experience than “computer-generated animation.” This code emphasizes an AI practice companion's potential to complement traditional instruction by accelerating progress, reinforcing good habits, and providing targeted feedback, while also revealing a preference among some participants for human-centered learning resources, demonstrations, and visualizations.

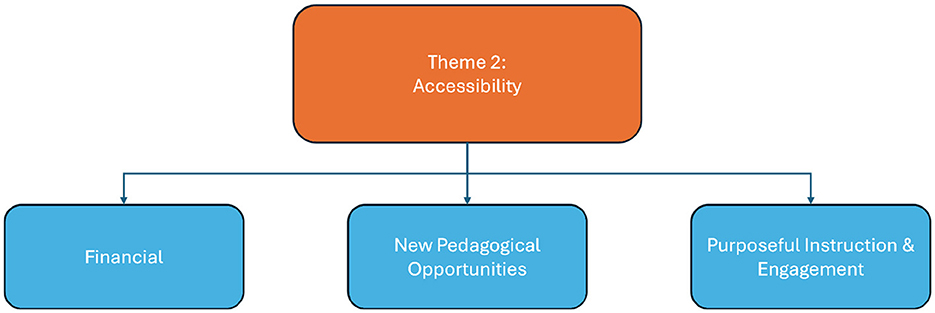

4.2 Theme 2: accessibility

The theme Accessibility is made up of three codes: Financial, New Pedagogical Opportunities, and Purposeful Instruction and Engagement (illustrated in Figure 5). This theme explores both the barriers and the opportunities related to AI assistants in expanding access to music education.

4.2.1 Financial

The Financial code emerged as a key topic within Accessibility. Participants discussed the economic challenges associated with music education and the potential implications of an AI guitar assistant's pricing on accessibility. Affordability was a recurrent theme, with P11 remarking, “If it will be cheap to access, it will make receiving a music education for underprivileged children much easier”. While the relative affordability of the technology is a significant consideration within the ensuing discussion, a clearer yardstick for comparison with human lesson costs will become more readily available as the AI guitar assistant system is further developed.

Additionally, some participants described the AI assistant as a cost-effective alternative to traditional music lessons, while others highlighted the prohibitive financial barriers of conventional music education. P7 noted unexpected financial barriers such as “exam entrance fees,” while others pointed to the expense of instruments (e.g., guitars), technology, and sheet music as additional financial obstacles. Further references acknowledged financial obstacles in music education but did not provide sufficient detail to be classified more specifically. These findings underscore affordability as a potential benefit of an AI guitar assistant, depending on how its pricing model is structured.

4.2.2 New pedagogical opportunities (affordances of AI)

The New Pedagogical Opportunities code highlights the transformative potential of AI assistants to innovate teaching practices and broaden access through adaptive methods. A key insight came from P16, who highlighted the AI's ability to deliver real-time, adaptive feedback tailored to individual student needs, enabling learners to “focus their practice to their weaknesses.” This was framed as a significant improvement over traditional periodic assessments, which can slow student progress due to delayed feedback.

P3 further identified the AI assistant's capacity to support learners unable to leave home or those facing social challenges, emphasizing its role in fostering confidence and accessibility. Additionally, some participants explored the benefits of detailed visualizations, which were described as enhancing focus and enjoyment for visual learners. P3 noted, “It could also help make practizing more enjoyable for people with attention disorders, as it could make practizing easier to focus.”

Despite these benefits, some participants raised concerns about the lack of human interaction. P3 warned that “lessons may feel somewhat lifeless,” and others highlighted the absence of the emotional and anecdotal support typically provided by human teachers. This also includes the absence of live human demonstration, in which students can see and hear the instrument played in real time, providing a model of the sound quality to strive for. As with the earlier codes, further references each described a distinct additional opportunity, underscoring the diverse ways in which AI assistants could enhance accessibility without replacing traditional instruction.

4.2.3 Purposeful instruction and engagement

The final code, Purposeful Instruction and Engagement, relates to responses that examined the potential of the AI guitar assistant to facilitate goal setting and sustain student motivation, thus enhancing accessibility for individuals who struggle with traditional benchmarking and goal setting conventions. P8 proposed that the AI could support teachers by organizing structured practice sessions or even act autonomously in their absence, ensuring consistent progression in students' learning journeys.

Additionally, some references highlighted the AI assistant's ability to engage students through diverse and adaptive strategies, with varied practice regimes sustaining motivation. P3 suggested that such approaches “could help maintain interest over time.” However, further references acknowledged the AI assistant's organizational capabilities without detailing the mechanisms or effects involved. These findings illustrate the potential of an AI guitar assistant to complement traditional teaching by enhancing student engagement and self-direction, while also revealing gaps in understanding how such features would function in practice and thus, their potential impact.

5 Discussion

This section examines the key themes identified in our participant data within the broader context of advancements in AI and music pedagogy, with the aim of understanding the challenges and opportunities of using AI to develop a virtual guitar assistant. Building on the theoretical framework we established, our focus is on evaluating the viability of an AI-driven music assistant designed to enhance accessibility to instrumental education while providing high-quality feedback. Through a reflexive thematic analysis of the survey data, we identified two themes: Re-imagining Instrumental Education and Accessibility. In this section, we offer a nuanced analysis of these themes and their associated codes, exploring their implications and situating them within the theoretical framework outlined in Section 2, which underpinned the formulation of our research questions (see Section 1). The insights from this discussion not only contribute to the ongoing development of our AI guitar assistant (as discussed in Rhodes et al., 2023) but also inform broader advancements in the design of AI-based educational feedback systems.

5.1 Theme 1: re-imagining instrumental education

This section critically analyses the findings from our thematic analysis under the Re-imagining Instrumental Education theme. It explores the types of feedback considered essential for an AI guitar assistant and examines participant views on the contexts (whether as a teaching assistant, practice companion, or standalone system) in which such a tool could be most effectively implemented. Additionally, it highlights gaps and opportunities identified in the data we collected.

5.1.1 Feedback

In addressing our research question regarding the forms of musical feedback stakeholders perceive as vital for an AI guitar assistant to provide, the discussion begins with the findings under the Feedback code. The references within this code underscored the critical importance of feedback in learning, musical assessment, and guidance. Participants consistently highlighted the need for tools capable of addressing posture, breathing, hand positioning, body alignment, and intricate hand movements. For example, P11 stated that the AI should offer feedback on critical aspects like “posture, attack, and hand position,” underlining the importance of such precise feedback for effective learning. This need is supported by the work of Dalmazzo and Ramírez (2020), who demonstrated that AI can classify violin gestures and predict techniques, suggesting that an AI guitar assistant could feasibly recognize nuanced musical gestures. Such capabilities are integral to delivering the precise technical feedback that participants identified as desirable. Additional areas of feedback included accuracy, articulation, musicality, rhythm, tempo, and sound quality, as well as support for performance preparation. Together, these elements form a diverse and comprehensive set of features that should be central to the design of an AI assistant if it is to function as an accurate and effective teaching tool.

Interestingly, some responses in this code were categorized as conditional, reflecting stakeholders' hesitation to form concrete opinions without access to a prototype. A recurring concern was the potential inability of an AI assistant to deliver high-resolution feedback, particularly for advanced players requiring detailed insights into musicality and technique. As P11 noted, there was concern about whether the AI could account for context or “the nuances of musicality,” which are often key to the development of an advanced player. Respondents were also skeptical about the assistant's capacity to account for anecdotal advice and subjectivity, with some participants explicitly questioning its ability to recognize individual playing styles. For instance, P15 cited Jimi Hendrix as an example of a guitarist whose “sloppy technique” coexisted with a distinctive style and tone that many musicians seek to emulate.

Notably, some responses in this code either offered only conditional support or were skeptical of an AI assistant's capability to deliver adequate feedback. As P8 pointed out, while AI tools might be effective in certain areas, “it might struggle with the more intuitive and creative elements of playing,” highlighting the limitations of AI in capturing the full depth of musical expression. This skepticism may partly reflect selection bias within the data set and participants' emphasis on anecdotal and subjective guidance, shaped by their own journeys through music education. Despite these concerns, P9 acknowledged the potential for AI to assist with foundational technique, commenting, “For beginners or those struggling with basic concepts, this could be a game-changer.” This is consistent with the view that AI tools may be especially effective for entry-level or self-directed learning, where foundational skills are developed.

5.1.2 Alternative learning environments

This section addresses our research question concerning the appropriate contexts for implementing an AI assistant (i.e., as a teaching assistant, as a tool within the classroom, or as a standalone system independent of a human tutor). This inquiry is significant because it raises broader questions about AI's role in human-centered domains, which call for careful evaluation. Responses within the Alternative Learning Environments code provide valuable insights into how an AI assistant might reshape or reinforce the dynamics of instrumental learning.

A notable proportion of responses expressed skepticism about the feasibility of deploying AI assistants as standalone tools. This concern was largely attributed to the subjective and human-centric aspects of teaching, such as emotional, anecdotal, and aesthetic guidance, which are challenging for AI to replicate. P5, for example, pointed out that “musical aesthetic decisions” still require human input. Participants also highlighted limitations in providing performance-related feedback, an area seen as essential to instrumental education. P13, for instance, emphasized the importance of human guidance when addressing these subjective elements, noting that “emotional connection and musical context” are aspects that an AI assistant may be incapable of providing. Consequently, the design of an AI guitar assistant must address these concerns by keeping humans in the loop, as discussed in Section 2.3, and by supporting, rather than displacing, human connection and constructive guidance, both in how it is deployed and in the learning outcomes it targets.

Despite these reservations, some responses indicated that AI assistants could positively impact music education by increasing student enrollment and improving teacher retention rates, for example by supporting students outside of lessons and helping them overcome entry-level obstacles more quickly. Furthermore, some responses recognized the potential of AI assistants as tools for self-directed learning, particularly for self-taught individuals. P1 remarked that self-taught students could use AI assistants to reach higher levels of skill more efficiently, offering beginners a quicker and more effective learning route. This suggests a potential benefit for teachers: if students progress faster with AI support, teachers can focus on more advanced concepts in lessons, as touched upon by Maia et al. (2024) in Section 2.5. P8 also highlighted that AI assistants could help “self-motivated learners progress at their own pace” without the restrictions of traditional teaching environments, offering an alternative that complements existing learning strategies.

Moreover, some responses favored a hybrid approach, in which AI assistants complement human instruction rather than replace it. Among these, some responses emphasized the potential value of AI assistants in classroom settings, particularly where individualized support from teachers is limited. P8, for instance, envisioned the AI delivering “personalized feedback” during group lessons on technical concepts previously introduced by a human teacher. This would enhance individualized learning without replacing the teacher's foundational role. P13 further noted that AI could serve as a “monitoring system,” helping teachers identify where students need additional attention and ultimately allowing teachers to focus on more nuanced aspects of learning. Responses also described AI as a classroom teaching tool, in which a guitar teacher supervises while the AI companion provides personalized feedback across a class, enhancing both accessibility and engagement in music education, especially where teacher attention is limited.

Another group of responses identified AI assistants as effective practice companions, capable of providing real-time feedback and generating practice reports. P19 highlighted that such reports could enable teachers to tailor their instructional strategies to address specific weaknesses observed during students' independent practice sessions, thereby fostering adaptive and personalized learning. P14 noted that AI's real-time feedback during practice could allow learners to “immediately correct mistakes,” improving the effectiveness of independent practice sessions. The strong preference for hybrid use aligns with recommendations from Luo et al. (2020) and Dalmazzo et al. (2021), who advocate integrating AI with human teaching as the most effective model. In answer to the research question posed at the outset of this section, the hybrid model, which leverages the complementary strengths of AI and human instruction, emerged as the alternative most supported by participants for enhancing instrumental music education.

5.1.3 Practice companion opportunities

The Practice Companion Opportunities code extends from the conclusion of the previous section and similarly addresses our research question regarding the appropriate pedagogical contexts for implementing an AI guitar assistant. It encompasses references highlighting an AI practice companion's potential to support independent practice, thereby accelerating learning, and to aid continuous exam preparation. Some participants emphasized its ability to increase learning speed, with P13 noting that “the extra support and accuracy will make them learn quicker.” Others recognized its value for exam preparation, citing the AI's ability to simulate examiner criteria and guide students in identifying areas for improvement during practice. Additionally, some participants discussed its potential to prevent bad practice habits, with P11 suggesting it could leverage measurable data, such as the volume and force of string attacks, to provide precise feedback.

Participants explored the opportunities and risks of AI-generated feedback and visualizations in this context, with some highlighting the benefits of animated and interactive visuals such as fretboard diagrams. P10, for example, emphasized how visualizing chords could help visual learners. However, a few participants preferred videos of human performers over computer-generated animations, finding them more accessible and easier to replicate. Further investigation is needed into how musicians, and humans more broadly, interpret and react to AI feedback and demonstration, as explored by Thomas et al. (2024). Overall, the findings in this code indicate that an AI practice companion could complement traditional teaching by accelerating progress, reinforcing good practice habits, and offering targeted feedback and demonstration, although some learners prefer human-centered resources.

5.2 Theme 2: accessibility

The following discussion is a critical analysis of the results from our thematic analysis, specifically under the Accessibility theme, examining their implications for accessibility in music education and addressing gaps in the data we recorded.

5.2.1 Financial

This section addresses our research question asking what barriers people face in society when accessing music education. Consistent with Beesley's (2024) market report, our findings highlight Financial Inaccessibility as the most prominent code under the Accessibility theme. Financial challenges accounted for over half of participant responses, underscoring the pressing need for more affordable pathways to instrumental music education. Participants frequently emphasized that an AI guitar assistant could alleviate these barriers, provided it remains low-cost. As P11 noted, “If it will be cheap to access, it will make receiving a music education for underprivileged children much easier.”

The Instrumental Tuition Unaffordability code emerged as particularly salient, with participants highlighting not only the high costs of tuition, technology, sheet music, and equipment, but also unexpected financial burdens, such as exam entrance fees. For instance, P7 specifically cited “exam entrance fees” as an overlooked but significant obstacle. These findings extend beyond tuition and equipment costs, exposing systemic financial barriers within traditional music education. This aligns with Salaman's (1994) analogy of musical examinations as hurdles in a sprint: while they provide measurable benchmarks, they also introduce obstacles, which in music education take the form of financial and psychological pressures that may deter learners. Because examinations often serve as external validation of skill level, students unable to afford them may be discouraged from pursuing music education.

The interplay between examination pressures and student well-being further complicates the issue. Steare et al. (2023) found a positive correlation between academic pressure and mental health challenges, highlighting the unintended consequences of traditional examination systems. This suggests that current structures may prioritize examinations over music-making, a concern echoed by P3, who emphasized the need for inclusive, alternative approaches to music education. AI assistants offer a potential solution by providing personalized progress tracking and skill development, which could reduce reliance on costly, high-pressure examination systems.

Taken together, these findings suggest that AI assistants could provide the benefits traditionally associated with examinations, such as progress benchmarking, without imposing the same financial or psychological burdens. This is particularly significant for underprivileged or non-traditional learners who may view current systems as exclusionary. However, the dataset consists exclusively of university-level guitarists with substantial prior experience of examination-based learning, introducing a selection bias that may obscure the broader impact of financial and examination-related barriers on less experienced learners or those who have discontinued music education. Future research should address these gaps to provide a more comprehensive understanding of how AI assistants can enhance accessibility in music education. Nonetheless, within our sample, financial barriers emerged as the most significant obstacle to accessing music education.

5.2.2 New pedagogical opportunities (affordances of AI)

Beyond financial constraints, indirect barriers such as the limitations of traditional pedagogical approaches significantly affect accessibility to music education. Addressing these barriers relates directly to our research question: how can an AI tool be designed to increase the accessibility of learning the guitar, using modern advances in deep learning? Advances in AI and machine learning present opportunities to address these gaps, offering personalized and continuous learning frameworks that align with modern educational needs.

Dalmazzo and Ramírez (2020) argue that AI assistants can mitigate risks such as the development of poor practice habits by providing consistent feedback outside formal lessons. P16 highlighted the value of real-time, adaptive feedback, which allows learners to “focus their practice to their weaknesses.” Unlike periodic teacher assessments, such AI-enabled features could provide timely insights that may accelerate skill development and sustain motivation.