- Communication Study Program, School of Communication and Social Science, Telkom University, Bandung, Indonesia

This study explores the interaction between humans and artificial intelligence (AI) through the lens of affordance theory, focusing on how Indonesian users experience and interpret ChatGPT in their daily lives. Adopting a socioconstructivist approach to affordance, the research investigates how users perceive, adapt to, and assign meaning to ChatGPT’s capabilities beyond its technical design. Using a qualitative descriptive method with light netnographic observation, this study examines user interactions and discussions within online communities. The findings reveal that affordances are not solely embedded in the AI system but emerge relationally, shaped by users’ intentions, contexts, and social interpretations. ChatGPT is variously perceived as a thinking assistant, productivity enhancer, confidence booster, and reflective partner. These perceptions are informed by diverse user motivations, such as improving work efficiency, overcoming cognitive barriers, or seeking emotional support in moments of solitude. The study identifies a dynamic interaction between technological features and human agency, highlighting how users’ lived experiences co-construct the functional and symbolic value of AI. This research contributes to the evolving discourse on human–AI relationships by emphasizing the subjective and socially situated nature of affordances, offering insights into how users domesticate AI tools in everyday contexts. Ultimately, the study challenges deterministic views of technology by demonstrating that the perceived value of AI is co-created through experiential engagement rather than solely defined by its technical affordances.

Introduction

Artificial Intelligence (AI) has emerged as a pivotal force in digital transformation, playing a central role in the Fourth Industrial Revolution (Danso et al., 2023). AI can process large volumes of data, manage complex tasks, and produce outputs with high accuracy and efficiency. As highlighted in a McKinsey report, AI can help organizations formulate strategic options based on data-driven insights while accelerating critical decision-making (D’Amico et al., 2025). AI is designed not only to automate tasks previously performed exclusively by humans but also to enhance data analysis, thereby generating more nuanced and accurate insights. Given projections that digitalization and AI will generate 149 million new jobs by 2025, it is essential to understand how human–AI interaction, particularly in communication, may influence Indonesia’s social and economic dynamics (Yesidora, 2024). Notably, Indonesia ranks sixth globally in ChatGPT usage, with 32% of consumers engaging with the platform through text-based interactions (Dewi, 2024). These figures point to a rapid transformation of professional communication practices.

Market data show significant growth: the global AI market rose from USD 22.6 billion in 2020 (Ishak, 2022) to USD 233.46 billion in 2024 and is projected to reach USD 1,771.62 billion by 2032, with a compound annual growth rate (CAGR) of 29.2%. North America currently leads with a 32.93% market share (Fortunebusinessinsights, 2025). This growth indicates that AI is not merely a trend but a foundational force driving cross-sectoral transformation, including in the field of communication. AI refers to systems capable of mimicking aspects of human intelligence, such as reasoning, learning, pattern recognition, and decision-making (Shin, 2021; Verma, 2018). With capabilities in cognitive reasoning and data-driven decision-making, AI enables the automation of complex tasks traditionally performed by humans, thereby reshaping workflows and creating opportunities for more adaptive communication systems (Chen et al., 2020).
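The market figures can be related through the standard compound annual growth rate formula. The worked check below is a sketch that assumes the 2024 value as the starting point and eight compounding years to 2032; under that assumption the implied rate is roughly 28.8%, slightly below the reported 29.2%, which suggests the source measures its CAGR over a different forecast window (for example, from a later base year).

```latex
\mathrm{CAGR} = \left(\frac{V_{\text{end}}}{V_{\text{start}}}\right)^{1/n} - 1
\quad\Rightarrow\quad
\left(\frac{1771.62}{233.46}\right)^{1/8} - 1 \approx 7.589^{0.125} - 1 \approx 0.288
```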

AI systems heavily rely on machine learning technologies, particularly deep learning, which allows the identification of patterns in big data and the automation of analytical processes for various tasks (Janiesch et al., 2022; Shinde and Shah, 2021). These advancements have driven major progress in natural language processing (NLP), allowing computers to understand and analyze human language at scale (Xie et al., 2021). NLP enhances human–machine communication by enabling machines to comprehend linguistic structures. Deep learning has also accelerated the rise of conversational agents, chatbots, and social robots that facilitate more personal and authentic interactions (Westerman et al., 2020). One of the key innovations in this space is Generative AI, which is fundamentally transforming human communication by offering experiences that resemble human interaction, surpassing conventional digital platforms (Grimes et al., 2021). A leading example is ChatGPT, which has rapidly become a global phenomenon.

Since its launch, user adoption of ChatGPT has surged dramatically. Released by OpenAI in November 2022, ChatGPT marks a major milestone in the development of communicative AI (Fui-Hoon Nah et al., 2023). This language model is designed to generate human-like responses across many languages (Kalla and Kuraku, 2023). It is built on the Generative Pre-trained Transformer (GPT) architecture, a neural network approach to NLP that produces contextually relevant output. A study by Al Lily et al. (2023) found that users perceived ChatGPT as “more humanlike” than conventional AI systems. As of August 2024, ChatGPT had over 200 million active users and received 1.6 billion monthly visits, according to Kompas.com. A survey by the Boston Consulting Group ranks Indonesia among the countries with the highest adoption rates, at 32% (Riyanto, 2024). Designed for natural interaction via text and voice, ChatGPT enhances communication efficiency and text production (Vinet and Zhedanov, 2011). However, its popularity also raises new challenges, particularly regarding ethics and social impact.

Although ChatGPT enhances communication efficiency, its rising popularity has sparked debate over its benefits and risks, including emotional dependency and the ethical urgency surrounding AI use (Kalla and Kuraku, 2023). Brandtzaeg et al. (2022) observed users’ tendency to form parasocial relationships with chatbots, often feeling more comfortable confiding in AI than in humans. Such attachments raise concerns about excessive social dependence. Meanwhile, educators have expressed fears about threats to academic integrity and the spread of disinformation, reinforcing the need for critical literacy when engaging with AI-generated content (Archibald and Clark, 2023; Sundar and Liao, 2023). Within this context, Jobin et al. (2019) emphasize the importance of implementing core ethical principles for AI (transparency, fairness, non-maleficence, responsibility, and privacy), which, despite varying interpretations, reveal the need for accountability and equity in the design and use of systems like ChatGPT. Consequently, AI adoption entails not only technical dimensions but also emotional, cultural, and ethical implications.

AI design must strike a balance between personalization and ethical constraints, as user perceptions of ChatGPT are shaped by cultural and social contexts. For example, users from collectivist East Asian cultures tend to express higher trust in AI, while in developing countries ChatGPT is often used for administrative tasks as a substitute for limited access to professional services (Androutsopoulou et al., 2019; Cheng et al., 2024). These patterns show that AI adoption is both technical and socio-cultural. Regulatory frameworks are needed to address algorithmic bias and system transparency (Q. V. Liao and Sundar, 2022). Prompt engineering poses a further challenge: ChatGPT’s response quality depends heavily on users’ prompting skills and on strategies such as few-shot learning, the IDEA-PARTS framework, or CLEAR language to reduce hallucinated outputs (Korzynski et al., 2023; Park and Choo, 2024; Reynolds and McDonell, 2021). Human–AI interaction is influenced by user personality, awareness, and system design (Caci and Dhou, 2020), yet early adopters often hold unrealistic expectations because they lack an understanding of how LLMs work (Knoth et al., 2024; Zamfirescu-Pereira et al., 2023). AI literacy and the REFINE approach, which emphasizes continuous evaluation and iteration, are therefore critical to optimizing use, as affordances are shaped not only by technology but also by users’ social and economic needs (Ashktorab et al., 2019; Nagy and Neff, 2015).

Artificial intelligence such as ChatGPT has shifted the role of technology from a passive tool to an active communicative partner that co-constructs meaning within social interactions (Hancock et al., 2020; Littlejohn et al., 2021; Westerman et al., 2020). In this context, the concept of cognitive offloading (Risko and Gilbert, 2016) explains the human tendency to delegate cognitive tasks to AI in order to reduce mental load. Parra et al. (2025) further show, through MyndFood, a mindful conversational agent, that AI can enhance sensory awareness during meals and foster emotional connection, affirming the affective role AI now plays in everyday life.

The presence of artificial intelligence has transformed the way humans communicate. With increasing interaction between humans and AI-based systems such as ChatGPT in both personal and professional contexts, it is essential to understand its impact from technical, social, and communicative perspectives (Westerman et al., 2020). These interactions are no longer merely transactional; users have begun to treat AI as a social entity capable of providing emotional responses (Guzman and Lewis, 2020). Human-AI interaction has thus evolved beyond the use of technology as a mere tool into more complex relationships, which calls for a multidisciplinary approach to grasp its social and psychological implications.

To understand human interaction with AI systems like ChatGPT, the concept of affordance, referring to the potential actions a technology offers its users, becomes central (Hutchby, 2001). Users often interpret AI’s capabilities through the lens of personal expectations and experiences, which may create a gap between anticipated and actual AI performance and thereby affect user trust (Ardón et al., 2021; Hopkinson et al., 2023). In this context, media equation theory explains that humans tend to respond to media as if interacting with real people (Littlejohn et al., 2021; Soash, 1999). This tendency is further reinforced by anthropomorphism, the attribution of human traits to machines (Guzman and Lewis, 2020; Utari et al., 2024). Text-based interfaces that mimic human conversation encourage users to perceive AI as social partners (Araujo, 2018; A. Guzman, 2020), and these social responses often occur automatically, without users recognizing that AI lacks consciousness or emotion (Littlejohn et al., 2021). This raises ethical questions regarding the extent to which AI should emulate human behavior.

Therefore, understanding human-AI interaction, especially with tools like ChatGPT, requires integrating the perspectives of affordance, media equation theory, anthropomorphism, and mindlessness. These frameworks mark a shift from traditional Human-Computer Interaction (HCI) to Human-Machine Communication (HMC), in which AI is seen not just as a tool, but as an active communication partner (Brandtzaeg et al., 2022; Guzman, 2020; Littlejohn et al., 2021). This shift reflects an evolution from transactional relationships to more dynamic and collaborative interactions. However, despite the increasingly natural quality of AI conversations, user awareness of AI’s contributions remains limited (Skulmowski, 2024). Studies show that using agents with visual embodiments, such as avatars and human-like voices, can enhance anthropomorphism and strengthen users’ emotional closeness to the system (Deshpande et al., 2023). These interactions shape a complex socio-technical relationship between humans and robotic entities, implying that AI design must account for both visual and emotional aspects to enrich user engagement and experience.

Several studies have examined how humans interact with artificial intelligence (AI). Human-AI interaction increasingly resembles interpersonal communication, in which AI is perceived not merely as a tool but as a communicative partner capable of modifying and generating messages (Hancock et al., 2020; Haqqu and Rohmah, 2024; Westerman et al., 2020). In this context, Jobin et al. (2019) emphasize the importance of global ethical principles, such as transparency and accountability, in assessing the potential social and emotional impact of these relationships. Furthermore, Pergantis et al. (2025) demonstrate that AI tools such as chatbots can act as metacognitive facilitators that enhance users’ executive functions, reinforcing the view that AI now functions not only as a technical medium but also as a cognitive and social agent.

This study aims to explore the experiences of active ChatGPT users in Indonesia by categorizing them into three groups: early adopters (Caci and Dhou, 2020), users who employ prompt engineering strategies (Knoth et al., 2024), and those who treat ChatGPT as a communication partner (Westerman et al., 2020). Using a case study approach, the research investigates: (1) how users interpret the affordances of ChatGPT in their daily lives; (2) how ChatGPT’s features and responses shape emotional and social relationships; and (3) how local Indonesian cultural values influence the patterns of human-AI interaction.

Literature review

AI-mediated communication and the mediating relationship in human-AI communication

Hancock et al. (2020) introduced the concept of Artificial Intelligence-Mediated Communication (AI-MC), a form of interpersonal communication in which intelligent agents operate on behalf of a communicator to modify, augment, or generate messages to achieve communicative goals. AI-MC not only extends classical theories of technology-based communication such as Computer-Mediated Communication (CMC) but also challenges fundamental assumptions about agency, mediation, and self-representation in digital communication. Their article outlines several dimensions of AI-MC, including the degree of AI autonomy, the purpose of message optimization, the medium used (text, audio, video), and the role of AI in communication (as sender or receiver). In the context of affordance, AI-MC shows that chatbots like ChatGPT are not merely passive intermediaries but communicative actors capable of influencing perception, trust, and the dynamics of interpersonal relationships, especially in affective and social domains. This is particularly relevant to research on human-AI interaction, which involves users’ affective dimensions, imaginative projections, and social expectations of AI performance, as well as ethical and representational issues within today’s digital culture.

The human-likeness dimension in human-machine communication

Westerman et al. (2020) argue that in Human-Machine Communication (HMC), the perceived “human-likeness” of AI plays a crucial role in shaping interpersonal relationships. The article builds on Martin Buber’s philosophical distinction between “I-It” and “I-Thou” relationships, applying it to HMC to show that users may perceive AI agents not merely as functional objects (“It”) but as social entities (“Thou”) worthy of dialogic engagement. This perspective aligns with the Computers Are Social Actors (CASA) paradigm, which posits that humans often respond to technology as they would to other humans, employing social scripts typically used in interpersonal interactions. These findings deepen our understanding of affordances in human-AI interaction, particularly in the affective and perceptual dimensions, highlighting how linguistic features, anthropomorphic design, and interactivity contribute to social perception, intimacy, and even parasocial relationships with AI agents such as ChatGPT. Accordingly, social presence, social acceptance, and empathic responses in communication with machines must be considered integral to the relational affordances of digital environments.

Ethics and global principles of AI usage

Ethical responsibility in the use of artificial intelligence (AI) has become a central focus in global discourse. Jobin et al. (2019), through a review of more than 80 AI policy documents from various countries and international organizations, identified convergence around five core principles: transparency, fairness, non-maleficence, responsibility, and privacy. These principles not only serve as normative foundations for the development of AI systems but also provide a framework for evaluating the potential social and emotional impacts of human-AI interaction, such as with ChatGPT. In the context of affordance, these principles are particularly relevant for understanding how AI should be designed and used responsibly, especially in collectivist cultures like Indonesia, where interpersonal relationships are highly valued. Research further indicates that users may develop emotional attachment or even parasocial relationships with chatbots, underscoring the ethical urgency in AI design (Brandtzaeg et al., 2022; Kalla and Kuraku, 2023). Users in collectivist East Asian cultures show a greater tendency to trust AI, and in developing countries ChatGPT is often used for administrative tasks, whereas in other regions adoption focuses more on efficiency (Androutsopoulou et al., 2019; Cheng et al., 2024). Thus, AI adoption is not merely a technical matter but a social practice deeply intertwined with users’ cultural values, ethics, and emotional dynamics.

Chatbots in the cognitive and educational domain

Research also highlights the potential of AI chatbots to support executive cognitive functions and learning strategies. Pergantis et al. (2025), in their systematic review, show that interaction with chatbots can improve working memory, cognitive flexibility, and decision-making, making chatbots not only information aids but also metacognitive facilitators. They conclude that AI chatbots can serve as “digital assistants” that support the development of users’ cognitive skills in educational contexts and in daily life. These findings strengthen the materiality and mediation dimensions of the affordance framework, because the interactive and adaptive features of chatbots like ChatGPT can mediate users’ mental stimulation and reflection. Moraiti and Drigas (2023) add that generative AI such as ChatGPT has the potential to become an inclusive learning tool for individuals with neurodevelopmental disorders such as autism and ADHD, although they emphasize that AI is not a replacement for medical professionals. Together, these studies affirm the practical value of AI in education and cognitive intervention and open discussions about ethical responsibility and design sensitivity to the diversity of users’ needs.

User interaction and capability

The concept of cognitive offloading introduced by Risko and Gilbert (2016) explains the human tendency to delegate cognitive processes such as decision-making, memory, and task completion to external technologies in order to reduce mental load. In the context of human interaction with AI such as ChatGPT, this concept is key to understanding the shift in AI’s function from a technical aid to a cognitive partner that users rely on even for thinking, writing, and constructing personal arguments. Tschopp et al. (2023) note that users often anthropomorphize chatbots, interpreting them as entities with social presence and affective capabilities such as empathy or humor, which fosters emotional closeness in the offloading process. Novice and experienced users adopt different strategies: novices tend to use ChatGPT as a passive information source, while experienced users actively refine prompts to elicit more appropriate responses (Gupta et al., 2025; Nagy and Neff, 2015). Within the affordance framework, this phenomenon shows how ChatGPT is interpreted not only technologically but also affectively and socially, as a system that shapes how humans allocate attention, memory, and intentionality in everyday communicative practices.

Mindfulness applications and lifestyle

Parra et al. (2025) developed MyndFood, a conversational agent designed to enhance awareness during cooking and eating through a mindful approach. Through experiments on two user groups (mindful and non-mindful), they found that interaction with the mindful agent not only increased sensory awareness (aroma, taste, texture) but also strengthened hedonic dimensions such as feelings of joy and emotional connection with the agent. This study shows that affordances in AI technology, particularly conversational agents, are not limited to instrumental functions but also include affective and social aspects. In the context of this research, these findings enrich the understanding of how the cognitive and emotional affordances of ChatGPT enable users to form personal and reflective relationships, going beyond its role as a mere technical tool.

Theoretical framework

Anthropomorphism

The concept of anthropomorphism, the human tendency to attribute human-like qualities to non-human entities, can be traced to the Greek philosopher Xenophanes in the 6th century BCE, who criticized how humans created gods in their own image. This idea was later developed by Hume (1757), who explained anthropomorphism as a natural human inclination to understand the world through self-projection. In modern scholarship, Epley et al. (2007) systematized anthropomorphism as a psychological theory based on three factors: elicited agent knowledge, effectance motivation, and sociality motivation. In the context of human-AI communication, anthropomorphism becomes increasingly significant when technological interfaces are designed to mimic human interaction. Araujo (2018) emphasized that chatbots simulating human conversational patterns increase users’ tendency to perceive AI as a social partner. As shown by Guzman and Lewis (2020) and Littlejohn et al. (2021), this tendency creates complex social and affective relationships, even forming emotional attachments to AI. Deshpande et al. (2023) further demonstrated that this inclination also opens up the potential for manipulation, illusions of credibility, and ethical risks, particularly when AI is designed to resemble authoritative or intimate figures. Therefore, anthropomorphism reflects not only a natural human response but also a social practice and a design choice that influence perception, trust, and affective relationships in human-AI communication.

Human-machine communication

Human-Machine Communication (HMC) is a field of study that positions technology, including artificial intelligence, as a communicative subject that actively participates in the exchange of meaning (Littlejohn et al., 2021). Within this framework, HMC examines not only technical interactions between humans and tools but also the ontological relationships and the social and cultural dimensions of communication with digital entities such as virtual agents, robots, and AI operating in both physical and virtual spaces (Guzman and Lewis, 2020). One theoretical foundation for this phenomenon is the media equation theory developed by Reeves and Nass (1996), which shows that humans tend to treat technology as if it were a social participant. This approach underlies the CASA (Computers Are Social Actors) paradigm, in which technology like ChatGPT is treated as a social partner through interpersonal interaction scripts. Complementing this approach, Guzman (2020) introduced the theory of Communicative AI, which emphasizes the shift of technology from mediator to active communicator, challenging the ontological boundaries between humans and machines. Meanwhile, Nagy and Koles (2014) developed the Structural Model of Virtual Identity to explain how self-identity is formed and negotiated in virtual environments, showing that human-machine interaction shapes not only new communication patterns but also the meaning of identity in digital contexts. Taken together, these theories affirm that technology is not merely a tool but part of a living, dynamic communication system imbued with social meaning.

Media equation theory

The Media Equation Theory, developed by Reeves and Nass (1996), provides a crucial foundation for understanding how humans treat technology in communication contexts. The theory posits that people automatically respond to communication media, including computers, television, and AI, as if they were real humans, even when they are fully aware that they are interacting with machines (Littlejohn et al., 2021; Reeves and Nass, 1996). These social responses emerge automatically and unconsciously: even minimal human-like cues in media, such as friendly voices or animations, are sufficient to evoke politeness, empathy, or even emotional attachment toward machines. These findings are highly relevant to human interaction with AI such as ChatGPT, which is often treated as a conversational partner, a listener, or even a “friend.” The media equation concept helps explain why the affective and social affordances of technology, even if imagined, have real consequences in shaping users’ perceptions, emotional responses, and behaviors in increasingly mediated and personal digital communication practices.

Affordance

The concept of affordance, as introduced by Gibson (1979), refers to the possible actions an environment offers to an organism; in the context of technology, it describes the dynamic relationship between users and media. In the study of human-machine communication, affordance is understood as the result of interaction between technological characteristics, user perception, and the surrounding social context (Evans et al., 2017; Hutchby, 2001). To capture this complexity, Nagy and Neff (2015) proposed the framework of imagined affordances, emphasizing three core dimensions: mediation, materiality, and affect, which refer respectively to how technology shapes perception, how it possesses both physical and digital attributes, and how it elicits emotional responses. In the context of AI, affordance describes the potential actions a system offers to users (Polster et al., 2024), yet users often interpret AI capabilities based on personal expectations, which can create a gap between expectation and reality and affect trust in the technology (Ardón et al., 2021; Hopkinson et al., 2023). Affordance is therefore a key concept for understanding how users imagine, respond to, and make meaning of AI such as ChatGPT in ways that are social, affective, and contextual (Bucher and Helmond, 2017; Pentzold and Bischof, 2019).

Method

This study explores user interaction with ChatGPT in depth. A qualitative case study approach examines contemporary phenomena in real-life contexts, especially when the boundaries between phenomenon and context are not clearly evident (Yin, 2016). In human-AI interaction, the user experience is closely tied to its socio-cultural and technological context. Case studies are therefore well suited to understanding the complexity of the relationships formed, as argued by Guzman (2020) and Hirsch et al. (2024).

The study focused on three categories of users: novice users, active users with prompting skills, and users who treat ChatGPT as a communication partner. A multiple-case design allows researchers to analyze several cases side by side to understand the phenomenon comprehensively (Stake, 2013). This approach supports the identification of patterns across the experiences of different user categories, following the recommendations of Creswell and Poth (2016) for studying complex technological phenomena. It also aligns with Jiang et al. (2024), who emphasize the importance of in-depth analysis of variations in usage patterns and user experiences for understanding human-AI interaction.

Data were collected primarily through in-depth interviews with informants, complemented by observation and documentation. In-depth interviews were chosen because they can uncover the complexity of users’ experiences with ChatGPT and allow exploration of the psychological and social aspects of human-AI interaction (Yildirim et al., 2023), while observation provides an understanding of how users interact with ChatGPT in everyday contexts. Documentation was used to complement and reinforce the interview and observation data; it includes transcripts of conversations between users and ChatGPT, as well as other relevant materials.

Interview data were analyzed systematically using NVivo 12. NVivo allows researchers to code data in a structured way, identify key themes in interview transcripts, and visualize relationships between concepts in the user experience. Features such as word frequency analysis and query tools help reveal interaction patterns that may not be apparent in manual analysis. In addition, managing qualitative data digitally improves analytical efficiency, helps limit subjective bias, and supports the validity of the findings.
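NVivo's word frequency query is a built-in feature of the software; the sketch below only illustrates the underlying idea (tokenizing transcripts, filtering out stop words and very short words, and counting the remaining terms) in plain Python. The stop-word list, the minimum word length, the function name `word_frequencies`, and the sample excerpts are all hypothetical and do not reflect the settings or data used in this study.

```python
import re
from collections import Counter

# Hypothetical stop-word list; NVivo applies its own configurable list.
STOP_WORDS = {"the", "and", "for", "with", "that", "this", "it", "is", "to", "of", "my", "me", "i"}


def word_frequencies(transcripts, top_n=10, min_length=3):
    """Return the top_n most frequent content words across all transcripts.

    Words shorter than min_length or listed in STOP_WORDS are skipped,
    loosely mirroring the filters of a word frequency query.
    """
    counts = Counter()
    for text in transcripts:
        # Lowercase, then extract alphabetic tokens (apostrophes kept, e.g. "don't").
        tokens = re.findall(r"[a-z']+", text.lower())
        counts.update(t for t in tokens if len(t) >= min_length and t not in STOP_WORDS)
    return counts.most_common(top_n)


if __name__ == "__main__":
    # Hypothetical interview excerpts, for illustration only.
    excerpts = [
        "ChatGPT saves time compared with Google.",
        "I rely on ChatGPT for ideas, maybe too much; it is a dependency.",
        "ChatGPT gives quick answers and saves time.",
    ]
    for word, count in word_frequencies(excerpts, top_n=5):
        print(f"{word}: {count}")
```

In a real analysis, frequent terms like these would only be a starting point; the themes reported in the Results come from interpretive coding, not from raw counts.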

The analysis of the documentation, including user–ChatGPT conversation transcripts, aims to identify interaction patterns, understand the context of use, and verify findings that emerge from interviews and observations. Combining these three techniques (interviews, observation, and documentation) enables data triangulation, which strengthens the validity and reliability of the findings, as Merriam and Tisdell (2016) recommend for qualitative research.

Result

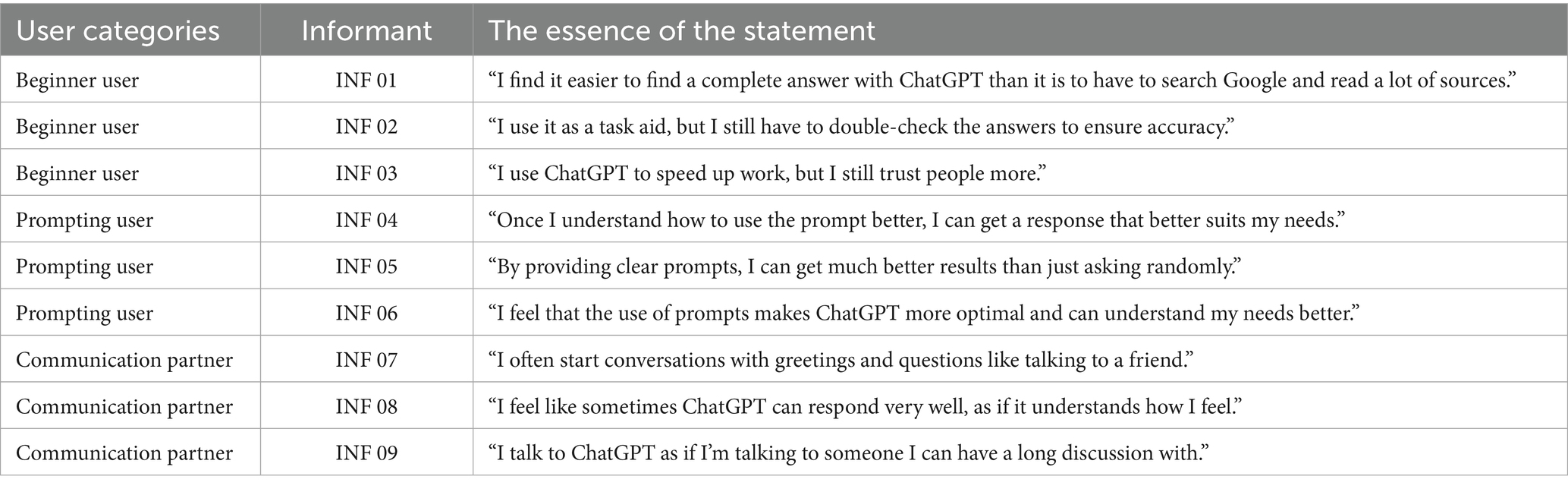

The study involved nine informants with more than one year of experience interacting with ChatGPT, who were divided into three categories: users who used ChatGPT as a communication tool to speed up information searches, users skilled in formulating prompts to obtain optimal results, and users who interacted with ChatGPT as if talking to a human, which allowed analysis of the emotional connections formed. The following sections present the interview findings organized by the three dimensions of affordance (Guzman and Lewis, 2020; Nagy and Neff, 2015).

Mediation

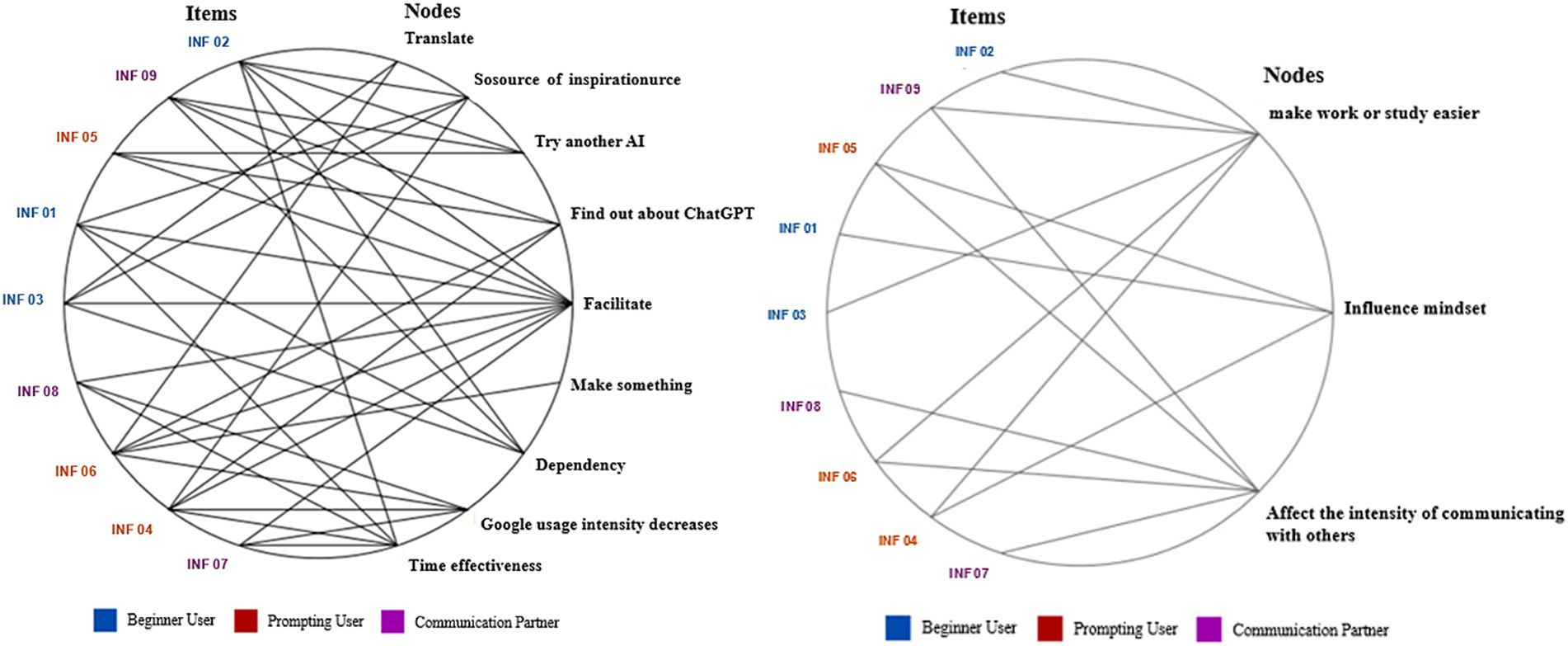

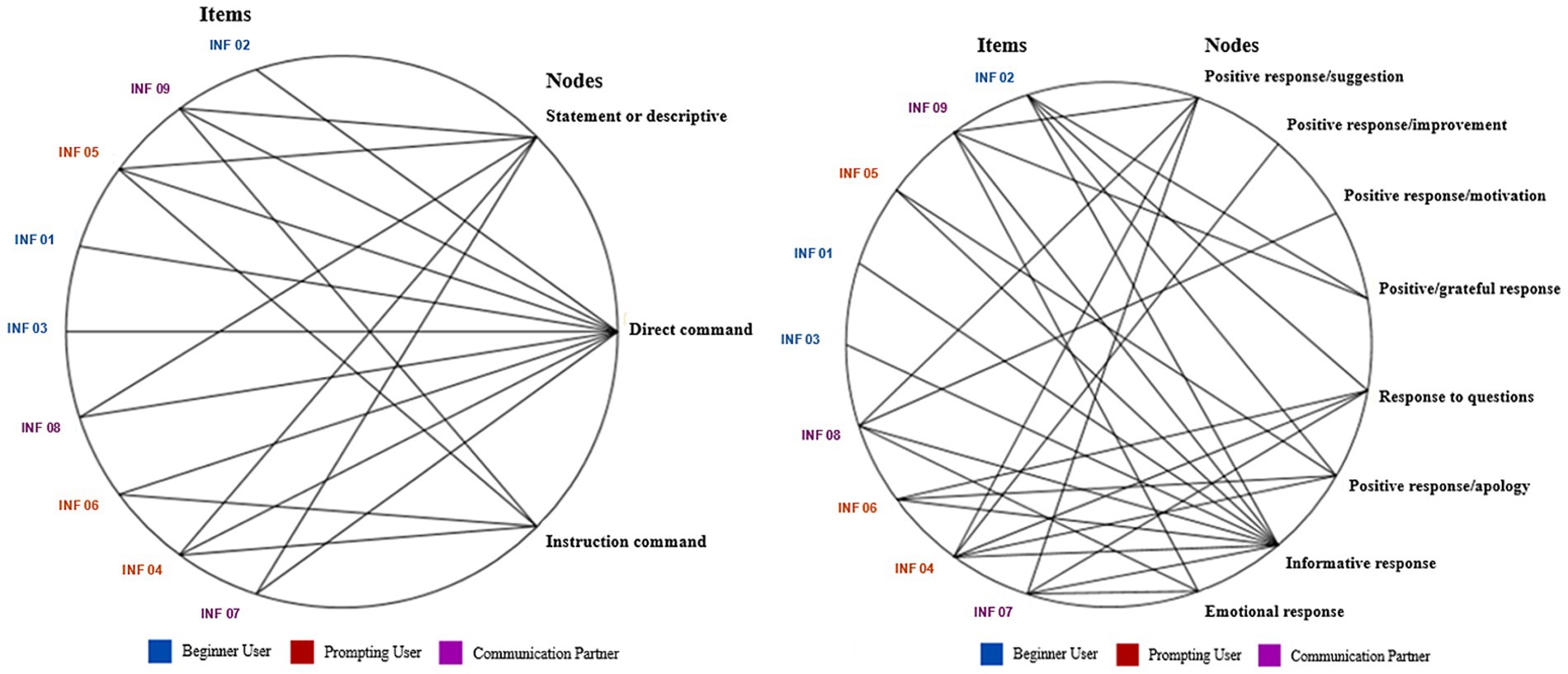

Mediation in this context refers to how technologies like ChatGPT shape their users’ cultural practices and social lives. In other words, technology affects how individuals perceive and interact with the world around them, including themselves, while social practices and values in turn influence how the technology is used and developed (see Figure 1).

The word cloud above visualizes the role of ChatGPT in shaping user perceptions and its impact on their social lives. Words such as “Eimplient,” “Time Effectiveness,” and “Source of Inspiration” indicate that users see ChatGPT as a tool that provides ease and efficiency and encourages creativity. The appearance of the word “Dependency” reflects concerns about potential dependence on this technology. “Trying Other AI” and “Finding out about ChatGPT” indicate that interactions with ChatGPT encourage further exploration of AI technologies, while “Google usage intensity decreases” indicates a shift in information-seeking behavior. Overall, the word cloud illustrates how ChatGPT acts as a mediator that shapes users’ perceptions of technology and influences practices in their social lives.

Table 1 summarizes how ChatGPT bridges the interaction between users and their environment, both in shaping technology perceptions and influencing users’ social lives.

Table 1 indicates that new users who view ChatGPT as a communication tool perceive significant benefits in terms of the speed and completeness of information. The interview results show that ChatGPT acts as a mediator that influences how individuals interact with information and technology and shapes their perception of the role of technology in social life.

For beginner users, ChatGPT as a communication tool has significantly changed how they access information, offering advantages in speed and completeness over traditional search engines such as Google. Users report that ChatGPT provides concise and focused answers, improving efficiency and saving time, which is consistent with findings that highlight a preference for platforms that facilitate quick information retrieval (Stojanov, 2023; Subbaramaiah and Shanthanna, 2023).

This is also consistent with Matei (2013), who shows that users tend to choose platforms that provide quick and easy access to information. However, this ease of access raises concerns about reliance on technology, which can reduce critical thinking skills and cognitive independence (Subbaramaiah and Shanthanna, 2023; Sundar and Liao, 2023). Research shows that while ChatGPT can improve learning and increase engagement, it can also lead users to overestimate their understanding because its responses are sometimes superficial and inconsistent (Stojanov, 2023).

In addition, reliance on AI-generated content poses ethical challenges, including academic integrity issues and the risk of generating unreliable information, which call for careful consideration of its implications in educational and research contexts (Subbaramaiah and Shanthanna, 2023). This concern is reinforced by Matei (2013), who links dependence on technology to a decline in cognitive skills and independence.

Users skilled in prompt engineering actively optimize their interactions with ChatGPT to align them with their specific needs. Mastery of this technique allows them not only to receive information passively but also to actively shape and direct the technology (Hargadon and Sutton, 1997). The interaction thus evolves into a dynamic dialogue in which users tailor responses and refine outputs through successive requests. In clinical settings, for instance, ChatGPT has been used effectively to generate accurate medical documentation and support decision-making, demonstrating its adaptability to user needs (Liu et al., 2023).

In the educational context, users have reported that ChatGPT facilitates learning by providing relevant feedback, although caution is advised due to occasional inconsistencies in generated content (Stojanov, 2023). Furthermore, research indicates that when guided by well-structured prompts, ChatGPT can deliver high-quality, unbiased information on complex topics such as fertility, highlighting its potential as a reliable resource (Beilby and Hammarberg, 2023). Thus, the ability to shape interactions with ChatGPT underscores the importance of user expertise in maximizing the benefits of AI technology (Balmer, 2023; Lyu et al., 2023).

For users who interact with ChatGPT as if communicating with a human, a deeper shift occurs in human-machine relationships. ChatGPT is no longer merely viewed as a tool but is increasingly positioned as a communication partner capable of engaging naturally, even being assigned social roles such as a friend or personal assistant. This phenomenon reflects the tendency toward anthropomorphism, where humans attribute human-like qualities to machines (Guzman and Lewis, 2020). Research indicates that users often perceive their interactions with AI in terms of authority and information exchange rather than mere friendship (Tschopp et al., 2023).

Furthermore, the integration of ChatGPT in knowledge production raises ethical considerations and underscores the need for reflexivity in understanding its social implications (Balmer, 2023). The perceived agency of AI also influences users’ trust and their willingness to follow AI-generated recommendations, demonstrating that AI identity can significantly affect interpersonal influence dynamics (Liao et al., 2023). Interviews with the nine informants on the mediation dimension reveal that AI advancements such as ChatGPT not only facilitate access to information but also shape perceptions, interaction patterns, and relationships between humans and technology. This confirms that technology does not exist in isolation but is intrinsically linked to social and cultural practices (Nagy and Neff, 2015). These findings further highlight the importance of ethical considerations in AI deployment, given its impact on social dynamics and human communication (Dergaa et al., 2023).

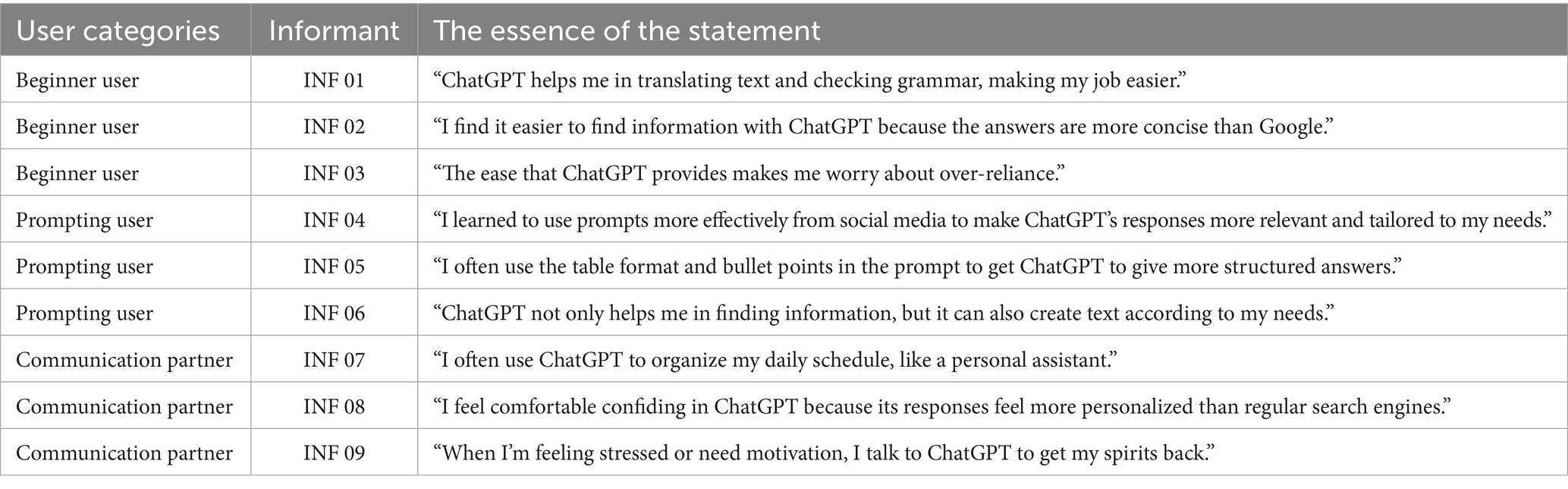

Materiality

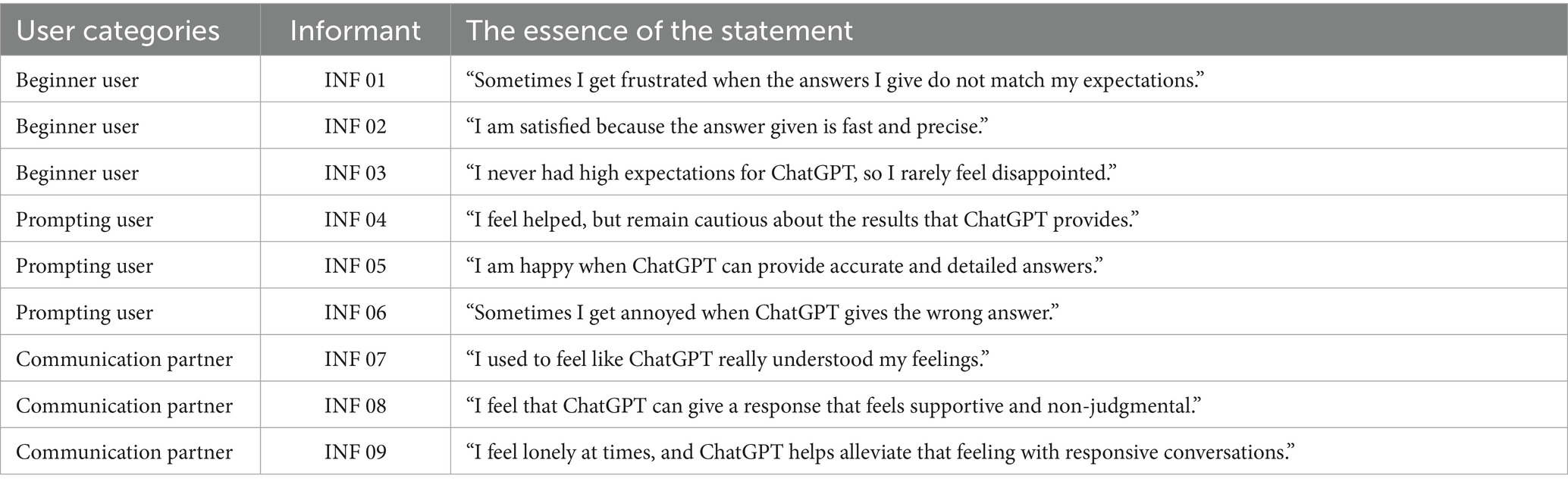

Materiality in this context refers to how specific features of a technology, in this case ChatGPT, affect interaction and the formation of meaning between humans and machines. It also concerns how users employ these features to achieve their goals and how the features shape their experience of interacting with the technology (see Figure 2).

The diagram above illustrates the material aspects of ChatGPT that stand out in users’ interactions. The label “Informative Response” shows that ChatGPT’s ability to provide complete and detailed information is one of its main attractions. “Question Response,” “Positive Response (Suggestion, Improvement, Motivation, Thanks, Apology),” and “Emotional Response” indicate that ChatGPT not only provides information but also gives responses that more closely resemble human interaction. These labels suggest that users view ChatGPT as an entity with which they can interact in greater depth. Overall, the diagram shows that ChatGPT’s materiality, including the different types of responses it gives, shapes how users interact with the technology and integrate it into their lives.

Table 2 highlights how ChatGPT's features and characteristics, such as the language style and the type of responses given, shape user interactions and experiences in communicating with technology.

Table 2 shows that users from various categories use ChatGPT’s features to meet diverse needs, ranging from practical tasks such as translation and grammar checking to emotional support and motivation. The materiality of ChatGPT, which includes its ability to provide informative responses, suggestions, and even encouragement, plays a crucial role in shaping how users interact with the technology and integrate it into their daily lives.

Furthermore, ChatGPT’s materiality not only influences everyday use but also has implications across various domains, including healthcare and emotional support. In clinical settings, users leverage ChatGPT for practical tasks such as generating medical documentation and answering health-related queries, contributing to increased efficiency and accuracy in healthcare services (Liu et al., 2023; Nguyen and Pepping, 2023). Research indicates that ChatGPT can provide high-quality, unbiased information on fertility, demonstrating its usefulness in patient education (Beilby and Hammarberg, 2023). Additionally, in nursing practice, ChatGPT helps simplify complex medical language, enhancing communication between healthcare professionals and patients (Scerri and Morin, 2023). However, while ChatGPT effectively supports practical needs, emotional support remains a challenge. Traditional conversational agents often struggle to deliver nuanced emotional responses, which are essential in mental health contexts (Wang Q. et al., 2023). Overall, the integration of ChatGPT into daily practices reflects a significant shift in how technology fulfils both practical and emotional needs, shaping user experiences and expectations of AI.

The language style used when interacting with ChatGPT varies with user categories and objectives, reflecting broader trends in conversational AI usage. New users who perceive ChatGPT as a communication tool tend to use direct and specific command sentences, prioritizing efficiency and clarity in obtaining information. This aligns with research indicating that conversational systems are designed to meet user needs effectively (Brabra et al., 2022). Conversely, users skilled in prompt engineering tend to employ more complex instructional commands and descriptive statements, directing the conversation by providing human-like stimuli and controlling ChatGPT’s responses. This practice is supported by the TRISEC framework, which emphasizes the importance of context in optimizing conversational agent design (Blazevic and Sidaoui, 2022).

Meanwhile, users who communicate with ChatGPT as if conversing with a human are more likely to adopt a natural and expressive language style, reflecting their desire to establish a more personal relationship with the technology. This pattern aligns with findings that highlight human-like interaction as a highly sought-after feature of advanced AI systems (Grosz, 2018). The evolution of user interactions with ChatGPT not only underscores the adaptability of conversational agents but also emphasizes the need for ongoing research into dialogue management and user preferences to enhance the effectiveness of this technology (Muench et al., 2014).

The responses provided by ChatGPT are highly diverse and significantly influence user perceptions and experiences. Detailed and informative responses are widely regarded as beneficial by all user categories, particularly in fields such as healthcare, where chatbots have demonstrated effectiveness in improving lifestyle behaviors such as physical activity and sleep quality (Singh et al., 2023). Additionally, users appreciate more natural responses, such as suggestions, motivation, and emotional expressions, which create the impression that ChatGPT is not merely an information provider but also a conversational agent capable of engaging in more human-like interactions.

Conversational AI’s ability to facilitate human-like exchanges fosters a sense of connection, with many users perceiving these systems as more than just tools but as partners in an exchange relationship (Tschopp et al., 2023). This anthropomorphism plays a crucial role in shaping user expectations and engagement, particularly in mental health applications where emotional support is essential (Wang Q. et al., 2023).

ChatGPT’s ability to provide adaptive and diverse responses is a key factor in shaping its materiality. This aligns with research indicating that the materiality of technology is not solely determined by its physical attributes but also by its capabilities and functionality in facilitating interaction (Nagy and Neff, 2015). Furthermore, the ongoing discourse surrounding AI’s role in communication highlights the need for a nuanced understanding of its capabilities and limitations, emphasizing the importance of human agency in the evolving landscape of AI interactions (Sundar and Liao, 2023).

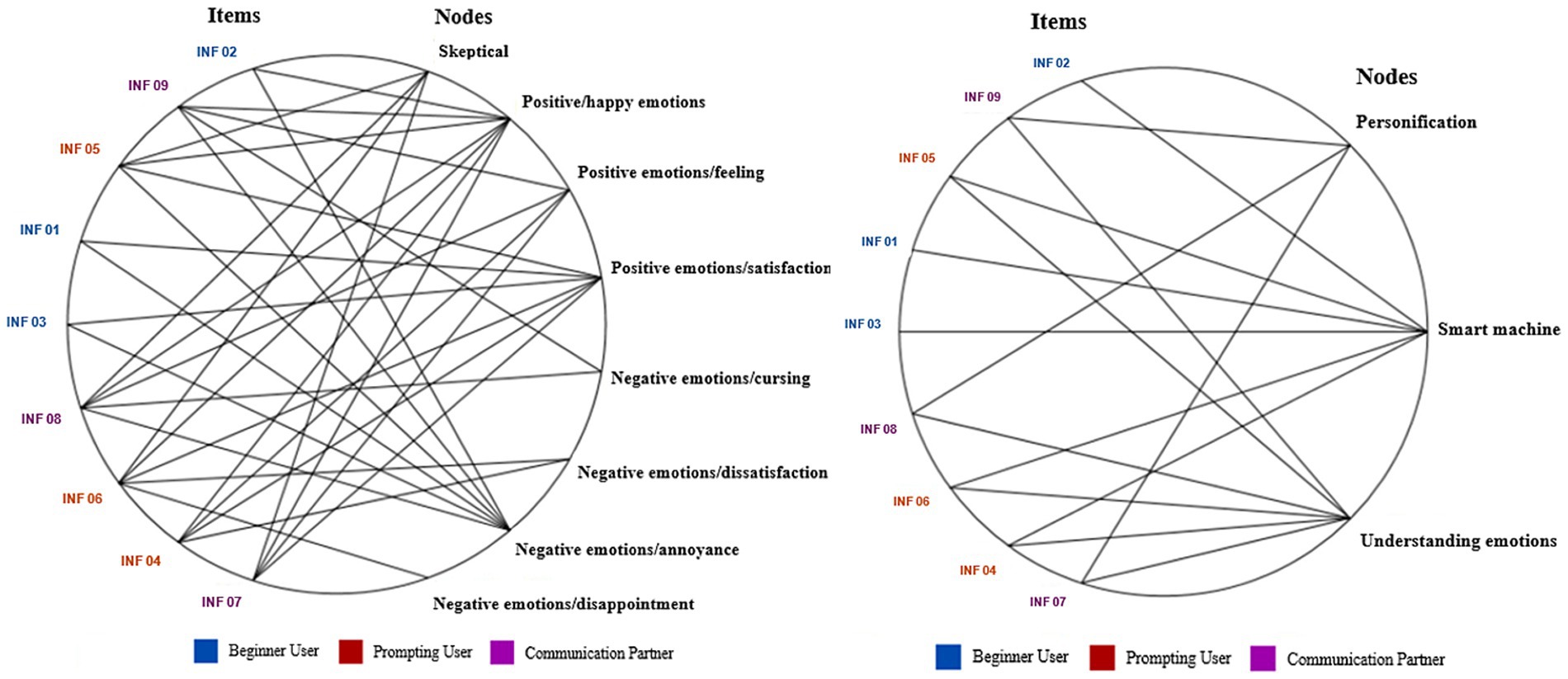

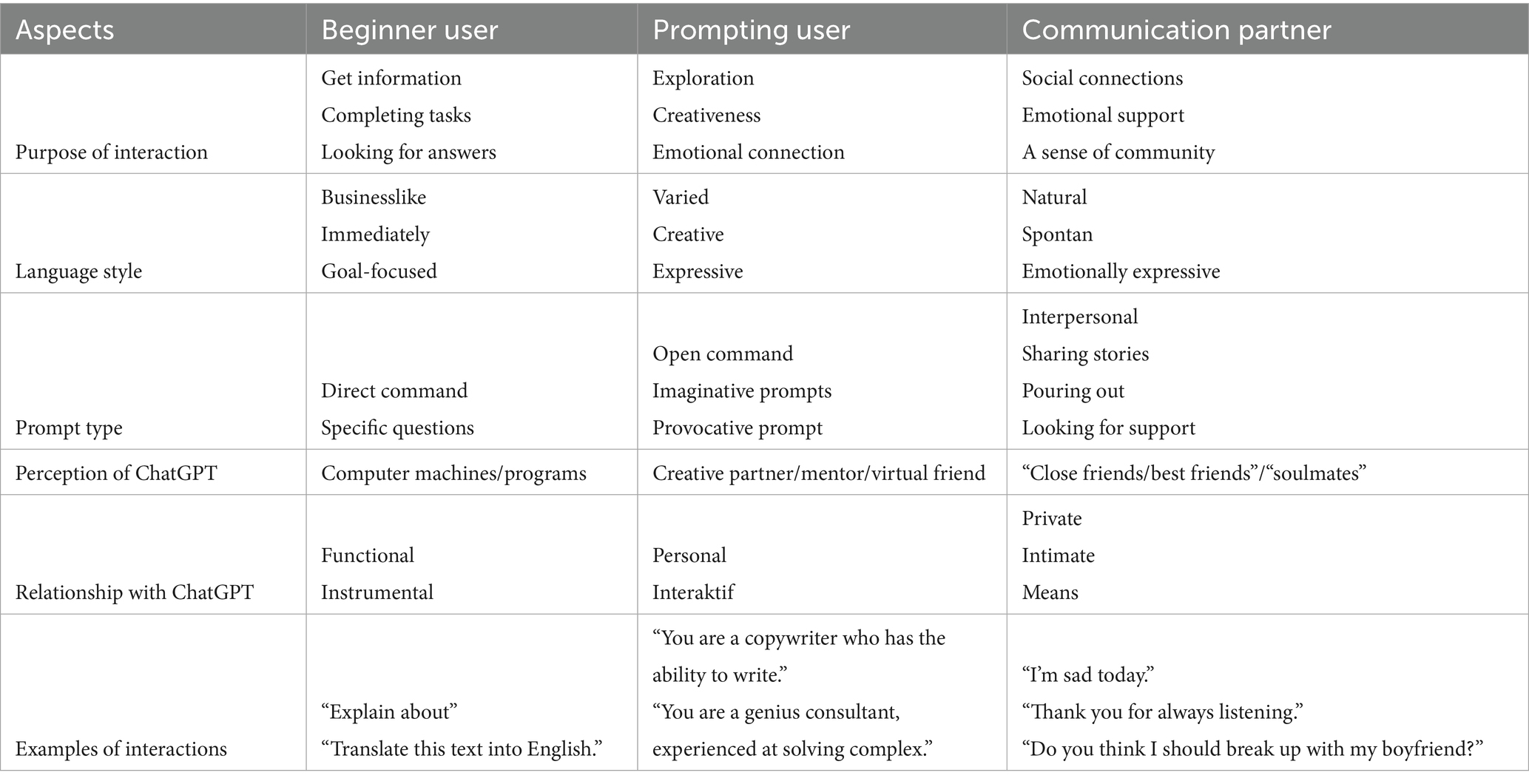

Affection

Affection in this context concerns the emotional aspects of human interaction with ChatGPT: how users’ feelings and emotions affect the way they perceive and use the technology, and how the technology, in turn, can affect users’ emotional states (see Figure 3).

The diagram above visualizes the affective dimension of human interaction with ChatGPT. Labels such as “Positive Emotions (Happy, Feeling Helped, Satisfied)” indicate that ChatGPT can evoke positive feelings in users, either satisfaction at obtaining the information they need or a sense of being helped when ChatGPT provides solutions or support. The appearance of “Negative Emotions (Cursing, Dissatisfaction, Upset, Disappointment)” indicates that interactions with ChatGPT can also trigger negative emotions, especially when users’ expectations are not met or ChatGPT gives an unexpected response. The diagram thus shows that interactions with ChatGPT involve emotional engagement from users, and these affective experiences can affect how they interact with the technology.

Table 3 shows how users' emotions and fantasies towards ChatGPT affect the way they interact with technology and integrate it into their lives.

Based on interview results, users from various categories experience a wide range of emotions when interacting with ChatGPT. Positive emotions such as happiness, satisfaction, and feeling supported emerge when ChatGPT provides accurate, relevant, and expectation-aligned responses. Conversely, negative emotions such as frustration, disappointment, and irritation arise when ChatGPT delivers incorrect, irrelevant, or unsatisfactory responses.

These emotional responses are significantly influenced by the accuracy and relevance of ChatGPT’s answers, as highlighted in various studies (Biassoni and Gnerre, 2025; Saviano et al., 2025). Emotional engagement is crucial, as it not only affects users’ perceptions of the technology but also shapes their interactions with it. Therefore, AI systems must effectively manage emotional aspects to prevent disengagement or dissatisfaction (Saviano et al., 2025).

Additionally, ChatGPT’s ability to adjust its communication style based on users’ concerns enhances its empathetic engagement, particularly in healthcare contexts, where responsive interactions can improve user satisfaction (Biassoni and Gnerre, 2025). The integration of affective computing in AI, including ChatGPT, presents a growing potential for fostering deeper emotional connections with users. However, ethical considerations regarding emotional manipulation and privacy remain critical concerns (Vashishth et al., 2024). Overall, the emotional dynamics in AI-mediated interactions underscore the importance of aligning AI capabilities with user expectations to enhance their overall experience (Padmavathy and Alamelu, 2025; Šumak et al., 2025).

This pattern, in which positive emotions follow accurate, relevant, and expected responses while negative emotions follow incorrect, irrelevant, or disappointing ones, is consistent with prior studies (Batubara et al., 2024; Chin et al., 2024). The emotional landscape is further complicated by users’ tendency to anthropomorphize ChatGPT: some perceive it merely as an intelligent machine, while others attribute human-like qualities such as empathy and emotional understanding to it (Chin et al., 2024; Chinmulgund et al., 2023; Rawat et al., 2024).

These projected fantasies influence how users communicate with ChatGPT, such as using greetings, expressing gratitude, or even sharing personal feelings. This phenomenon aligns with the media equation theory, which suggests that humans tend to respond to media in the same way they respond to other humans (Chin et al., 2024; Reeves and Nass, 1996). Moreover, advancements in emotion recognition models further highlight the importance of personalizing AI interactions to enhance user experience, indicating that emotional awareness in AI can significantly impact user satisfaction (Kovacevic et al., 2024). Overall, this analysis shows that interactions with ChatGPT involve a rich and complex affective dimension. The emotions and fantasies that emerge not only shape user perceptions and interaction patterns but also reflect how technology can “touch” the emotional and psychological aspects of humans. These findings underscore the need for AI development that is more emotionally responsive to enhance user experience in human-machine interactions (Batubara et al., 2024; Rawat et al., 2024).

ChatGPT user interaction model

This model illustrates the spectrum of human interactions with ChatGPT, divided into three main categories. First, users who perceive ChatGPT as a tool focus on its practical functions for obtaining information and completing tasks. Their interactions are straightforward, direct, and goal-oriented, viewing ChatGPT purely as an advanced machine or computer program (Tschopp et al., 2023). Second, users who utilize prompts as stimuli demonstrate a deeper understanding of ChatGPT’s capabilities, leveraging it for exploration and creativity. They engage with ChatGPT using more varied and expressive language, attributing human-like qualities to it and perceiving it as a creative partner or virtual companion (Rapp et al., 2023) (see Table 4).

Third, users who consider ChatGPT a communication partner exhibit high emotional attachment, using it to fulfill social and emotional needs through natural, spontaneous, and expressive interactions. In these cases, ChatGPT is perceived as a close friend, confidant, or even a “virtual soulmate,” creating deep, intimate, and meaningful relationships (Seaborn et al., 2022). This model highlights the diverse and dynamic nature of human interactions with ChatGPT, shaped by factors such as user goals, perceptions, and emotional attachment. It provides a deeper understanding of how AI technologies like ChatGPT not only transform the way humans interact with information but also influence their social and emotional relationships (Awais et al., 2020; Choudhury and Shamszare, 2023).

Discussion

The development of AI such as ChatGPT has driven a transformation in human-machine communication (HMC), shifting from transactional relationships to more dialogic and relational interactions. This study examines how three user categories (beginners, prompt users, and communicative partners) interpret their interactions through the dimensions of affordance: mediation, materiality, and affect. The mediation dimension shows that ChatGPT not only delivers information but also shapes how users view, access, and use information. Beginner users, for instance, appreciate the speed and accuracy of ChatGPT in summarizing information, in line with Nagy and Neff’s (2015) view that affordances are situational and influenced by social context and user expectations. This interaction strengthens the argument that ChatGPT has shifted from being merely a tool to becoming a social entity that shapes communication dynamics (Guzman and Lewis, 2020; Westerman et al., 2020).

On the other hand, the use of ChatGPT in educational contexts raises ethical and pedagogical concerns, particularly regarding academic integrity and critical thinking processes (Archibald and Clark, 2023; Sundar and Liao, 2023). Users who anthropomorphize AI, treating ChatGPT like a human, affirm the presence of affective relationships in communication, as noted by Tschopp et al. (2023). These findings indicate that ChatGPT offers not only technical efficiency but also emotional and cognitive affordances that affect behavior, social relationships, and learning patterns. Therefore, the role of AI in shaping communication, interaction, and social institutions must continue to be critically examined, especially in increasingly digital societies (Balmer, 2023).

Active users who drive interaction show more complex mediation patterns, in which they do not passively receive information from ChatGPT, but actively shape outputs through refined dialogue techniques. This pattern supports Hutchby’s (2001) notion of communicative affordance, which asserts that technological affordances are both functional and constrained by system structures. A deep understanding of ChatGPT’s capabilities and limitations enables more effective interaction, as described by Gupta et al. (2025). At a higher level, users in the communicative partner category do not merely position ChatGPT as a tool, but as a social entity capable of engaging in natural dialogue, reinforcing the concept of anthropomorphism in HMC (Guzman and Lewis, 2020). The human-like language capabilities encourage users to perceive AI as an interactive partner (Chinmulgund et al., 2023; Gomes et al., 2025), even forming parasocial relationships involving emotional attachment akin to interpersonal relationships (Guingrich, 2024; Luger and Sellen, 2016; Skjuve et al., 2021). These findings indicate that conversational AI not only mediates information exchange but also shapes users’ social and affective engagement with technology.

The materiality dimension of ChatGPT’s affordance is evident in how its specific features such as a text-based interface, language capabilities, and types of responses shape user interactions and experiences. Findings show that different user categories display varying linguistic styles: beginner users tend to use direct and specific command sentences, while communicative partners engage in more natural and expressive dialogue. This aligns with Araujo’s (2018) claim that text-based interfaces mimicking human conversation patterns encourage users to interact in a more personal manner (McTear et al., 2016; Rapp et al., 2023).

ChatGPT’s ability to produce varied responses, including informative suggestions, motivational cues, and emotional expressions, creates a sense of materiality that influences user perception. Mozafari et al. (2021) argue that conversational AI capable of providing contextual and diverse responses enhances social presence, reinforcing the perception of AI as an interactive entity rather than a static tool (Croes et al., 2023).

In addition, the adaptability of large language models (LLMs) plays a crucial role in shaping user experience. Their ability to adjust to various conversational contexts without extensive retraining facilitates versatile and dynamic interactions, enhancing user engagement and satisfaction (Wang B. et al., 2023). This adaptability, combined with effective prompting strategies, enables a smoother and more personalized user experience, underscoring the importance of material features in defining how users understand and interact with conversational AI (Liu et al., 2020; Maddigan and Susnjak, 2023).

The affective dimension in interactions with ChatGPT indicates that AI is not only a technical aid but also a trigger for complex emotions, both positive (such as feeling helped and satisfied) and negative (such as frustration and disappointment), depending on how well the responses align with user expectations. These findings are consistent with the Media Equation theory (Reeves and Nass, 1996), which explains that humans tend to respond to media as they would to other humans. Lomas et al. (2024) and Balmer (2023) affirm that the more natural the AI interaction becomes, the more likely users are to engage emotionally as they would in interpersonal communication. Users even tend to project human-like qualities such as empathy and emotional understanding onto AI, reinforcing anthropomorphic tendencies and opening the door for parasocial relationships to form (Guerreiro and Loureiro, 2023; Liu-Thompkins et al., 2022; Qi et al., 2025).

Different types of AI can evoke various emotional responses, making it important to design AI that can bridge the emotional gap between humans and machines (Pantano and Scarpi, 2022). In contexts such as mental health support, the role of AI in facilitating empathetic conversations becomes increasingly significant (Sharma et al., 2022). This study highlights that the complexity of human-AI communication increases alongside users’ technological literacy and anthropomorphic tendencies, particularly among those who treat AI as a communication partner (Westerman et al., 2020). These findings align with those of Košir and Strle (2017) and Liew et al. (2022), which show that even simple affective elements such as emoticons or visual anthropomorphism can enhance emotional engagement and reduce cognitive load, although they may not always directly influence intrinsic motivation or learning outcomes.

An important contribution of this study lies in the integration of the affective dimension in Human-Machine Communication (HMC), where users not only experience functional convenience but also intense emotional engagement, both positive and negative (Bucher and Helmond, 2017). This expands the concept of imagined affordances (Nagy and Neff, 2015), by demonstrating that mediation, materiality, and affect interact in shaping users’ perceptions of ChatGPT as a communicative partner. The informative and emotional responses from ChatGPT reinforce anthropomorphic tendencies, especially among users who view it as a social subject. These findings also challenge the limitations of the Media Equation Theory (Reeves and Nass, 1996), by showing that anthropomorphism is not universal, but influenced by user literacy, expectations, and interaction intensity. Esposito et al. (2014) also emphasize the importance of multimodal interfaces that are affectively adaptive to support successful HMC interactions.

In the context of Indonesia’s collectivist culture, this affective engagement appears more complex and culturally embedded. Indonesian users tend to display norms of politeness and social warmth in their interactions, for example by greeting, saying thank you, or even “comforting” ChatGPT when its responses are inadequate, reflecting strong values of harmony and social hierarchy (Awais et al., 2020; Hirsch et al., 2024). This shows that affordances are not only shaped by technology but also constructed by the social and cultural values embedded in users. Therefore, the development of AI like ChatGPT in Indonesia must consider ethically, emotionally, and culturally responsive design, in order to avoid emotional dependence while supporting healthy relationships between humans and machines (Brandtzaeg et al., 2022; Liao and Sundar, 2022).

Limitations and future research

This study is limited by its small number of participants: only nine informants (n = 9), selected purposively. Each informant represents a different faculty at Telkom University and comes from various regional backgrounds across Indonesia, with adaptive characteristics in using ChatGPT. The participants were classified according to the three AI user types identified in this study: novice users, prompt-based users, and users who treat ChatGPT as a communication partner. Although this approach provides an in-depth exploration of active user behavior, the generalizability of the findings remains limited. Furthermore, demographic variables such as age, gender, and professional background were not explored in depth, and inter-coder reliability in the thematic analysis was not assessed. Future research is recommended to use a longitudinal design, a mixed-method approach, and a more socio-culturally and geographically diverse sample. A particular focus on vulnerable groups such as adolescents, the elderly, and persons with disabilities is also important to broaden the ethical, inclusive, and justice-oriented perspectives on human-AI interaction, particularly with tools like ChatGPT.

Conclusion

This research reveals that human interaction with ChatGPT is not limited to instrumental functions but also reflects affective and social aspects. The three categories of users studied showed variations in how they communicate with AI. Beginner users rely more on ChatGPT as an information retrieval tool with certain limitations, while users who understand prompt techniques are able to optimize AI for more specific and complex purposes. Users who make ChatGPT a communication partner show greater emotional attachment, which reflects the phenomenon of anthropomorphism in human-machine interactions. These findings confirm that the affordance of technology is determined not only by technical features but also by users’ social expectations and experiences. In addition, parasocial relationships with AI are increasingly evident in certain categories of users, which has implications for future AI design. Therefore, technology developers should consider the emotional and ethical aspects of AI system design in order to improve the user experience without incurring the risk of excessive emotional dependency.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

Ethical approval was not required for the study involving humans in accordance with the local legislation and institutional requirements. The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

RH: Validation, Formal analysis, Data curation, Methodology, Writing – review & editing, Supervision, Conceptualization, Project administration, Investigation, Writing – original draft, Software, Resources, Visualization, Funding acquisition. AZ: Investigation, Software, Writing – original draft, Conceptualization. AW: Supervision, Resources, Writing – review & editing. FE: Methodology, Writing – review & editing, Supervision. AA: Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that Gen AI was used in the creation of this manuscript. The authors verify and declare that the use of generative AI in the preparation of this manuscript was conducted responsibly and based entirely on research data independently collected and analyzed by the authors. AI tools were employed solely to assist with language processing, structuring arguments, and refining the manuscript’s expression, without replacing the authors’ role in scientific decision-making, data interpretation, and drawing conclusions. The authors assume full responsibility for the originality, integrity, and accuracy of the content presented in this manuscript.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Al Lily, A. E., Ismail, A. F., Abunaser, F. M., Al-Lami, F., and Abdullatif, A. K. A. (2023). ChatGPT and the rise of semi-humans. Human. Soc. Sci. Commun. 10, 1–12. doi: 10.1057/s41599-023-02154-3

Androutsopoulou, A., Karacapilidis, N., Loukis, E., and Charalabidis, Y. (2019). Transforming the communication between citizens and government through AI-guided chatbots. Gov. Inf. Q. 36, 358–367. doi: 10.1016/j.giq.2018.10.001

Araujo, T. (2018). Living up to the chatbot hype: the influence of anthropomorphic design cues and communicative agency framing on conversational agent and company perceptions. Comput. Human Behav. 85, 183–189. doi: 10.1016/j.chb.2018.03.051

Archibald, M. M., and Clark, A. M. (2023). ChatGTP: what is it and how can nursing and health science education use it? J. Adv. Nurs. 79, 3648–3651. doi: 10.1111/jan.15643

Ardón, P., Pairet, È., Lohan, K. S., Ramamoorthy, S., and Petrick, R. P. A. (2021). Building affordance relations for robotic agents – a review. IJCAI International Joint Conference on Artificial Intelligence, 4302–4311. doi: 10.24963/ijcai.2021/590

Ashktorab, Z., Jain, M., Liao, Q. V., and Weisz, J. D. (2019). Resilient chatbots: repair strategy preferences for conversational breakdowns. Conference on Human Factors in Computing Systems – Proceedings, 1–12. doi: 10.1145/3290605.3300484

Awais, M., Saeed, M. Y., Malik, M. S. A., Younas, M., and Rao Iqbal Asif, S. (2020). Intention based comparative analysis of human-robot interaction. IEEE Access 8, 205821–205835. doi: 10.1109/ACCESS.2020.3035201

Balmer, A. (2023). A sociological conversation with ChatGPT about AI ethics, affect and reflexivity. Sociology 57, 1249–1258. doi: 10.1177/00380385231169676

Batubara, M. H., Nasution, A. K. P., Burmalia, and Rizha, F. (2024). ChatGPT in communication: a systematic literature review. Appl. Comput. Sci. 20, 96–115. doi: 10.35784/acs-2024-31

Beilby, K., and Hammarberg, K. (2023). Using ChatGPT to answer patient questions about fertility: the quality of information generated by a deep learning language model. Hum. Reprod. 38:154. doi: 10.1093/humrep/dead093.103

Biassoni, F., and Gnerre, M. (2025). Exploring ChatGPT’s communication behaviour in healthcare interactions: a psycholinguistic perspective. Patient Educ. Couns. 134:108663. doi: 10.1016/j.pec.2025.108663

Blazevic, V., and Sidaoui, K. (2022). The TRISEC framework for optimizing conversational agent design across search, experience and credence service contexts. J. Serv. Manag. 33, 733–746. doi: 10.1108/JOSM-10-2021-0402

Brabra, H., Baez, M., Benatallah, B., Gaaloul, W., Bouguelia, S., and Zamanirad, S. (2022). Dialogue management in conversational systems: a review of approaches, challenges, and opportunities. IEEE Trans. Cogn. Dev. Syst. 14, 783–798. doi: 10.1109/TCDS.2021.3086565

Brandtzaeg, P. B., Skjuve, M., and Følstad, A. (2022). My AI friend: how users of a social chatbot understand their human-AI friendship. Hum. Commun. Res. 48, 404–429. doi: 10.1093/hcr/hqac008

Bucher, T., and Helmond, A. (2017). “The affordances of social media platforms” in The SAGE handbook of social media. eds. J. Burgess, A. Marwick, and T. Poell (India: SAGE), 233–253.

Caci, B., and Dhou, K. (2020). Intelligence and users’ personalities: A new scenario for human-computer, vol. 1. Switzerland: Springer International Publishing.

Chen, L., Chen, P., and Lin, Z. (2020). Artificial intelligence in education: a review. IEEE Access 8, 75264–75278. doi: 10.1109/ACCESS.2020.2988510

Cheng, Q., Sun, T., Liu, X., Zhang, W., Yin, Z., Li, S., et al. (2024). Can AI assistants know what they don’t know? Proc. Mach. Learn. Res. 235, 8184–8202. Available online at: https://www.scopus.com/inward/record.uri?eid=2-s2.0-85203844366&partnerID=40&md5=172597ee791ed1f57923629e066e5be7

Chin, H., Zhunis, A., and Cha, M. (2024). Behaviors and perceptions of human-chatbot interactions based on top active users of a commercial social chatbot. Proc. ACM Hum.-Comput. Interact. 8, 1–28. doi: 10.1145/3687022

Chinmulgund, A., Khatwani, R., Tapas, P., Shah, P., and Sekhar, R. (2023). Anthropomorphism of AI based chatbots by users during communication. 2023 3rd International Conference on Intelligent Technologies (CONIT), 1–6. doi: 10.1109/CONIT59222.2023.10205689

Choudhury, A., and Shamszare, H. (2023). Investigating the impact of user trust on the adoption and use of ChatGPT: survey analysis. J. Med. Internet Res. 25:e47184. doi: 10.2196/47184

Creswell, J. W., and Poth, C. N. (2016). Qualitative inquiry and research design: Choosing among five approaches. United States: Sage Publications.

Croes, E. A. J., Antheunis, M. L., Goudbeek, M. B., and Wildman, N. W. (2023). “I am in your computer while we talk to each other”: a content analysis on the use of language-based strategies by humans and a social chatbot in initial human-chatbot interactions. Int. J. Hum.-Comput. Interact. 39, 2155–2173. doi: 10.1080/10447318.2022.2075574

D’Amico, A., Delteil, B., and Hazan, E. (2025). Artificial intelligence is set to revolutionize strategy activities. But as AI adoption spreads, strategists will need proprietary data, creativity, and new skills to develop unique options. McKinsey & Company. Available online at: https://www.mckinsey.com/capabilities/strategy-and-corporate-finance/our-insights/how-ai-is-transforming-strategy-development#/ (Accessed February 5, 2025).

Danso, S., Annan, M. A. O., Ntem, M. T. K., Baah-Acheamfour, K., and Awudi, B. (2023). Artificial intelligence and human communication: a systematic literature review. World J. Adv. Res. Rev. 19, 1391–1403. doi: 10.30574/wjarr.2023.19.1.1495

Dergaa, I., Chamari, K., Zmijewski, P., and Ben Saad, H. (2023). From human writing to artificial intelligence generated text: examining the prospects and potential threats of ChatGPT in academic writing. Biol. Sport 40, 615–622. doi: 10.5114/biolsport.2023.125623

Deshpande, A., Rajpurohit, T., Narasimhan, K., and Kalyan, A. (2023). Anthropomorphization of AI: opportunities and risks. Proceedings of the Natural Legal Language Processing Workshop 2023, 1–7. doi: 10.18653/v1/2023.nllp-1.1

Dewi, I. R. (2024). Peringkatnya Tak Disangka, Warga RI Ternyata Paling Rajin Pakai AI [Unexpected ranking: Indonesians turn out to be the most diligent AI users]. CNBC Indonesia. Available online at: https://www.cnbcindonesia.com/tech/20240903121342-37-568715/peringkatnya-tak-disangka-warga-ri-ternyata-paling-rajin-pakai-ai (Accessed February 15, 2025).

Epley, N., Waytz, A., and Cacioppo, J. T. (2007). On seeing human: a three-factor theory of anthropomorphism. Psychol. Rev. 114, 864–886. doi: 10.1037/0033-295X.114.4.864

Esposito, A., Fortunati, L., and Lugano, G. (2014). Modeling emotion, behavior and context in socially believable robots and ICT interfaces. Cogn. Comput. 6, 623–627. doi: 10.1007/s12559-014-9309-5

Evans, S. K., Pearce, K. E., Vitak, J., and Treem, J. W. (2017). Explicating affordances: a conceptual framework for understanding affordances in communication research. J. Comput.-Mediat. Commun. 22, 35–52. doi: 10.1111/jcc4.12180

Fortune Business Insights (2025). Artificial intelligence market size and future outlook. Fortune Business Insights. Available online at: https://www.fortunebusinessinsights.com/industry-reports/artificial-intelligence-market-100114 (Accessed February 15, 2025).

Fui-Hoon Nah, F., Zheng, R., Cai, J., Siau, K., and Chen, L. (2023). Generative AI and ChatGPT: applications, challenges, and AI-human collaboration. J. Inf. Technol. Case Appl. Res. 25, 277–304. doi: 10.1080/15228053.2023.2233814

Gibson, J. J. (1979). The ecological approach to visual perception. United States: Houghton Mifflin.

Gomes, S., Lopes, J. M., and Nogueira, E. (2025). Anthropomorphism in artificial intelligence: a game-changer for brand marketing. Future Bus. J. 11, 1–15. doi: 10.1186/s43093-025-00423-y

Grimes, G. M., Schuetzler, R. M., and Giboney, J. S. (2021). Mental models and expectation violations in conversational AI interactions. Decis. Support. Syst. 144:113515. doi: 10.1016/j.dss.2021.113515

Grosz, B. J. (2018). Smart enough to talk with us? Foundations and challenges for dialogue capable AI systems. Comput. Ling. 44, 1–15. doi: 10.1162/COLI_a_00313

Guerreiro, J., and Loureiro, S. M. C. (2023). I am attracted to my cool smart assistant! Analyzing attachment-aversion in AI-human relationships. J. Bus. Res. 161:113863. doi: 10.1016/j.jbusres.2023.113863

Guingrich, R. E. (2024). Chatbots as social companions: how people perceive consciousness, human likeness, and social health.

Gupta, P., Nguyen, T. N., Gonzalez, C., and Woolley, A. W. (2025). Fostering collective intelligence in human–AI collaboration: laying the groundwork for COHUMAIN. Top. Cogn. Sci. 17, 189–216. doi: 10.1111/tops.12679

Guzman, A. (2020). Ontological boundaries between humans and computers and the implications for human-machine communication. Hum.-Mach. Commun. 1, 37–54. doi: 10.30658/hmc.1.3

Guzman, A. L., and Lewis, S. C. (2020). Artificial intelligence and communication: a human–machine communication research agenda. New Media Soc. 22, 70–86. doi: 10.1177/1461444819858691

Hancock, J. T., Naaman, M., and Levy, K. (2020). AI-mediated communication: definition, research agenda, and ethical considerations. J. Comput.-Mediat. Commun. 25, 89–100. doi: 10.1093/jcmc/zmz022

Haqqu, R., and Rohmah, S. N. (2024). Interaction process between humans and ChatGPT in the context of interpersonal communication. J. Ilm. LISKI (Lingkar Studi Komun.) 10, 23–35. doi: 10.25124/liski.v10i1.7216

Hargadon, A., and Sutton, R. I. (1997). Technology brokering and innovation in a product development firm. Admin. Sci. Q. 42:716. doi: 10.2307/2393655

Hirsch, L., Paananen, S., Lengyel, D., Häkkilä, J., Toubekis, G., Talhouk, R., et al. (2024). Human–computer interaction (HCI) advances to re-contextualize cultural heritage toward multiperspectivity, inclusion, and sensemaking. Appl. Sci. 14, 1–25. doi: 10.3390/app14177652

Hopkinson, P. J., Singhal, A., Perez-Vega, R., and Waite, K. (2023). “The transformative power of artificial intelligence for managing customer relationships: an abstract” in Developments in marketing science: Proceedings of the academy of marketing science (Asterios Bakolas, Switzerland: Springer Nature), 307–308.

Hutchby, I. (2001). Technologies, texts and affordances. Sociology 35, 441–456. doi: 10.1177/S0038038501000219

Ishak (2022). Bagaimana Perkembangan Kecerdasan Buatan di Luar Negeri [How artificial intelligence is developing abroad]. Digital Transformation Indonesia. Available online at: https://digitaltransformation.co.id/bagaimana-perkembangan-kecerdasan-buatan-di-luar-negeri/ (Accessed February 15, 2025).

Janiesch, C., Zschech, P., and Heinrich, K. (2022). “Machine learning and deep learning” in Elgar Encyclopedia of Technology and Politics (Edward Elgar Publishing), 114–118.

Jiang, T., Sun, Z., Fu, S., and Lv, Y. (2024). Human-AI interaction research agenda: a user-centered perspective. Data Inf. Manag. 8:100078. doi: 10.1016/j.dim.2024.100078

Jobin, A., Ienca, M., and Vayena, E. (2019). The global landscape of AI ethics guidelines. Nat. Mach. Intell. 1, 389–399. doi: 10.1038/s42256-019-0088-2

Kalla, D., and Kuraku, S. (2023). Study and analysis of chat GPT and its impact on different fields of study. Int. J. Innov. Sci. Res. Technol. 8, 827–833. Available online at: www.ijisrt.com

Knoth, N., Tolzin, A., Janson, A., and Leimeister, J. M. (2024). AI literacy and its implications for prompt engineering strategies. Comput. Educ. Artif. Intell. 6:100225. doi: 10.1016/j.caeai.2024.100225

Korzynski, P., Mazurek, G., Krzypkowska, P., and Kurasinski, A. (2023). Artificial intelligence prompt engineering as a new digital competence: analysis of generative AI technologies such as ChatGPT. Entrep. Bus. Econ. Rev. 11, 25–37. doi: 10.15678/EBER.2023.110302

Košir, A., and Strle, G. (2017). Emotion elicitation in a socially intelligent service: the typing tutor. Computers 6:14. doi: 10.3390/computers6020014

Kovacevic, N., Holz, C., Gross, M., and Wampfler, R. (2024). On multimodal emotion recognition for human-chatbot interaction in the wild. International Conference on Multimodal Interaction, 12–21. doi: 10.1145/3678957.3685759

Liao, W., Oh, Y. J., Feng, B., and Zhang, J. (2023). Understanding the influence discrepancy between human and artificial agent in advice interactions: the role of stereotypical perception of agency. Commun. Res. 50, 633–664. doi: 10.1177/00936502221138427

Liao, Q. V., and Sundar, S. S. (2022). Designing for responsible trust in AI systems: a communication perspective. ACM International Conference Proceeding Series, 1257–1268. doi: 10.1145/3531146.3533182

Liew, T. W., Pang, W. M., Leow, M. C., and Tan, S.-M. (2022). Anthropomorphizing malware, bots, and servers with human-like images and dialogues: the emotional design effects in a multimedia learning environment. Smart Learn. Environ. 9:5. doi: 10.1186/s40561-022-00187-w

Littlejohn, S., Foss, K. A., and Oetzel, J. (2021). Theories of human communication. 12th Edn. United States: Waveland Press.

Liu, Q., Chen, Y., Chen, B., Lou, J.-G., Chen, Z., Zhou, B., et al. (2020). You impress me: dialogue generation via mutual persona perception. Proceedings of the 58th annual meeting of the Association for Computational Linguistics, 1417–1427. doi: 10.18653/v1/2020.acl-main.131

Liu, J., Wang, C., and Liu, S. (2023). Utility of ChatGPT in clinical practice. J. Med. Internet Res. 25:e48568. doi: 10.2196/48568

Liu-Thompkins, Y., Okazaki, S., and Li, H. (2022). Artificial empathy in marketing interactions: bridging the human-AI gap in affective and social customer experience. J. Acad. Mark. Sci. 50, 1198–1218. doi: 10.1007/s11747-022-00892-5

Lomas, J. D., van der Maden, W., Bandyopadhyay, S., Lion, G., Patel, N., Jain, G., et al. (2024). Evaluating the alignment of AI with human emotions. Adv. Design Res. 2, 88–97. doi: 10.1016/j.ijadr.2024.10.002

Luger, E., and Sellen, A. (2016). “Like having a really bad PA”: the gulf between user expectation and experience of conversational agents. Conference on Human Factors in Computing Systems – Proceedings, 5286–5297. doi: 10.1145/2858036.2858288

Lyu, Q., Tan, J., Zapadka, M. E., Ponnatapura, J., Niu, C., Myers, K. J., et al. (2023). Translating radiology reports into plain language using ChatGPT and GPT-4 with prompt learning: results, limitations, and potential. Vis. Comput. Ind. Biomed. Art 6:9. doi: 10.1186/s42492-023-00136-5

Maddigan, P., and Susnjak, T. (2023). Chat2VIS: generating data visualizations via natural language using ChatGPT, codex and GPT-3 large language models. IEEE Access 11, 45181–45193. doi: 10.1109/ACCESS.2023.3274199

Matei, S. A. (2013). The shallows: what the internet is doing to our brains, by Nicholas Carr. Inf. Soc. 29, 130–132. doi: 10.1080/01972243.2013.758481

McTear, M., Callejas, Z., and Griol, D. (2016). The conversational interface. Switzerland: Springer International Publishing.

Merriam, S. B., and Tisdell, E. J. (2016). Qualitative research: A guide to design and implementation. 4th Edn. United States: Jossey-Bass.

Moraiti, I., and Drigas, A. (2023). AI tools like ChatGPT for people with neurodevelopmental disorders. Int. J. Online Biomed. Eng. (IJOE) 19, 145–155. doi: 10.3991/ijoe.v19i16.43399

Mozafari, N., Weiger, W. H., Arabia, S., and Hammerschmidt, M. (2021). That’s so embarrassing! When not to design for social presence in human–chatbot interactions (Forty-Second International Conference on Information Systems, Association for Information Systems