- Air Force Research Laboratory, Dayton, OH, United States

Artificial intelligence (AI) and machine learning (ML) are rapidly changing the landscape of almost every environment. Despite the burgeoning attention on this subject matter, limited human-centered research has focused on understanding how users interact with AI and ML to facilitate greater trust toward these systems, leveraging classic human-machine interaction principles to investigate human interaction with these emerging complex systems. The current paper incorporates literature from Social Psychology, Computer Science, Information Sciences, and Human Factors Psychology to create a single comprehensive model for understanding user interactions with AI/ML-enabled systems. This paper expands previous theoretical models by explicating transparency, incorporating individual differences in the information processing model of cognition, and summarizing the different attitudes and personality variables that can facilitate use and disuse of AI and ML. The theoretical model proposed explicitly demarcates the referent algorithm from the human user, detailing the processes that eventuate a user’s reliance on and compliance with an AI/ML-enabled system. Actual and potential applications of the literature review and theorized model are discussed.

Introduction

Artificial intelligence (AI) and machine learning (ML) are rapidly changing the technological landscape of work. AI/ML have been utilized in a variety of tasks such as image classification (e.g., Hendrycks et al., 2019), route planning (e.g., Hu et al., 2020), and parole recommendations (e.g., Hübner, 2021), to name a few (see Kaplan et al., 2020). These applications of AI/ML have brought increased productivity and alleviated aspects of work that were previously reserved for human oversight and assumed to be outside the realm of machine capability. However, the applications of AI/ML have also brought questions as to how to facilitate proper trust in and use of these algorithms.

The current paper seeks to build a theoretical model of human-AI/ML interaction, incorporating the underlying information that both AI/ML algorithms and explainable AI (XAI)1 tools use as input and provide as output, and explicate the psychological processes that lead to trust in AI/ML/XAI from an information processing theory perspective. In this paper we leverage research from many fields including Computer Science, Psychology, and Information Sciences. The current paper seeks to add clarity to the field to help guide research on interface design(s) relevant to human-AI/ML/XAI interaction, with the goal of increasing appropriate use of AI/ML/XAI-enabled systems. Importantly, our proposed model can be applied to all human-AI/ML/XAI interactions. Our model expands information processing theory (Wickens and Hollands, 2000; Wickens, 2002) by situating individual differences from the Organizational, Personality, and Social Psychology literatures as meaningful constructs that may shape users’ processing of information from AI/ML/XAI referents. We also expand on the different attitudes that can result from this information processing. Lastly, our theoretical model also expands the theory on machine transparency, explaining how individual differences influence the cognitive processing of the outputs of the AI/ML/XAI information. The goal of the paper is to incorporate all previous knowledge into one workable framework that can be utilized across disciplines.

AI, ML, and XAI

AI/ML

AI/ML are advanced algorithms that enable software systems with human-like cognitive capacity for decision-making (Dwivedi et al., 2021; Herm et al., 2021; Hradecky et al., 2022). AI/ML are powerful generalizers and predictors (Burrell, 2016) that have demonstrated their use in a variety of settings (Barreto Arrieta et al., 2020). The application of AI/ML to prediction cases is not new. It is both the rapid increase of AI/ML into a variety of fields and the opaqueness of many of the emerging algorithms that are relatively new (Ali et al., 2023). Newer algorithms utilize complex networks of nodes and hidden layers that predict outcomes. These nodes and hidden layers are much more complex than traditional algorithms like regression. The increased complexity leads to a lack of comprehension as to how the model came to a decision, either locally (e.g., a given instance) or globally (e.g., how the model works overall; see Zhou et al., 2021).

At its simplest form, the Computer Science literature has largely demarcated algorithms into white- and black-box models (Herm et al., 2023).2 White-box models are relatively less complex AI/ML-enabled algorithms, where the underlying decision processes are understandable to the user (e.g., regression, decision trees, and generalized additive models). Their processes for reaching a given classification, and the variables that are or are not important to reach a given prediction, are comparatively interpretable by human users (Barreto Arrieta et al., 2020). White-box models typically require feature selection of pertinent characteristics for the development of an algorithm. In contrast, black-box models utilize all relevant data and interactions in non-linear models to predict and generalize. Black-box models are often so complex they provide little to no information about the underlying decision process to the user (Lundberg, 2017; Rudin, 2019; Samek et al., 2021).

We turn to deep neural networks (DNNs; Samek et al., 2021) to illustrate an example of the opacity of black-box models. DNNs are a family of algorithms that make decisions typically through a complex series of nodes. Nodes are combinations of input data with a set of weights or coefficients that either increase or decrease that input. There are often so many nodes in the algorithm that a human cannot understand all the information, even if it was provided, making them theoretically explainable but not necessarily interpretable or understandable (Angelov and Soares, 2020). Given their complexity, they do not provide meaningful information about the algorithm’s underlying decision processes to the user (see also Samek et al., 2021). This resulting lack of human understanding as to the underlying decision-making complexities has led researchers (Barreto Arrieta et al., 2020; Sanneman and Shah, 2022; Vilone and Longo, 2021) and governments (European Union Act, 2024/1689; Air Force Doctrine Note, 2024) to call for transparency in these algorithms as they are leveraged to make key decisions.

There is a tradeoff between white- and black-box models. Black-box models are often the most predictive / accurate, yet they are the opaquest, whereas white-box models provide all or most of the information used in reaching a given classification but are less accurate (Herm et al., 2023). Differences between black- and white-box models are non-linear with numerous parameters that are not easily interpreted (Li et al., 2022; Mahmud et al., 2021). However, the tradeoffs for black- and white-box models are not as straightforward as previously thought, such that there is nuance between models (Herm et al., 2023). Traditionally, researchers and practitioners have needed to balance the tradeoff between the performance and explainability of these algorithms (Rudin, 2019). The advent of XAI in the last 20 years has attempted to alleviate some of the explainability issues in black-box models, applying these algorithms to highlight the important features of a black-box model’s decision-making process to afford human understanding (Adadi and Berrada, 2018; Herm et al., 2023).

XAI

XAI are algorithms that make it possible for humans to keep intellectual oversight of AI/ML (Adadi and Berrada, 2018; Gunning and Aha, 2019; Longo et al., 2024). The focus of the literature surrounding XAI is to create algorithms that provide explanations for AI/ML decision processes in a manner that is interpretable for human users (Ali et al., 2023; Sanneman and Shah, 2022; Visser et al., 2023; Hassija et al., 2024). XAI explanations are meant to facilitate appropriate reliance and proper use of AI/ML systems, ensure fairness in the resulting decisions informed by those systems’ outcomes, and provide an understanding of where these systems are lacking in performance (Barreto Arrieta et al., 2020; Visser et al., 2023). However, even the concepts of explainability versus interpretability within the XAI literature are nuanced, and the unique challenges for affording both are not necessarily one and the same (Guidotti et al., 2018; Tocchetti and Brambilla, 2022).

A key aspect to XAI is the theory that if users can interpret the behavior of the algorithm, whether correct or incorrect, they will be more willing to act on the suggestions of the algorithm appropriately, especially in instances where predictions are not consistent with the user’s expectations (Berger et al., 2021; Ribeiro et al., 2016b). In other words, explanations can bridge the information gap between the AI/ML model and the user (Baird and Maruping, 2021; Barreto Arrieta et al., 2020). However, research has found less than 1% of XAI studies in the literature contain user interactions with these models (Keane and Kenny, 2019; Suh et al., 2025).

Current paper

The research on human interpretations of AI/ML/XAI has been largely atheoretical in previous research. Black-box models were created largely without the user in mind as much of the early research was focused on model accuracy. The advent of XAI has sought to remedy these limitations but has not focused on human-machine interactions (Keane and Kenny, 2019; Suh et al., 2025). Although research has been conducted on increasing interpretability of AI/ML/XAI models, there remains a relative dearth of theoretical frameworks for creating these models. A comprehensive theory of how users comprehend AI/ML/XAI is necessary to help understand how and why users trust a system so that systems can be designed with the user in mind. The current paper bridges the gap between the human-computer interaction literature in social sciences and the AI/ML/XAI literature in the computer sciences. We sought to create a framework based on information processing theory in the social sciences and of AI/ML/XAI and experimentation to determine if the advances of new models meet the creator’s criteria of facilitating trust. The theoretical model provided below provides key testable hypotheses for researchers when developing AI/ML/XAI for users and developers.

User-centered trust towards AI model

Deciding to trust or rely on a machine is inherently an information processing model (Alarcon et al., 2023; Chiou and Lee, 2023), where system transparency leads to more information about the system that should inform the user and thereby facilitate calibrated trust, assuming the appropriate amount of information—both its perceptibility and veridicality—is displayed.

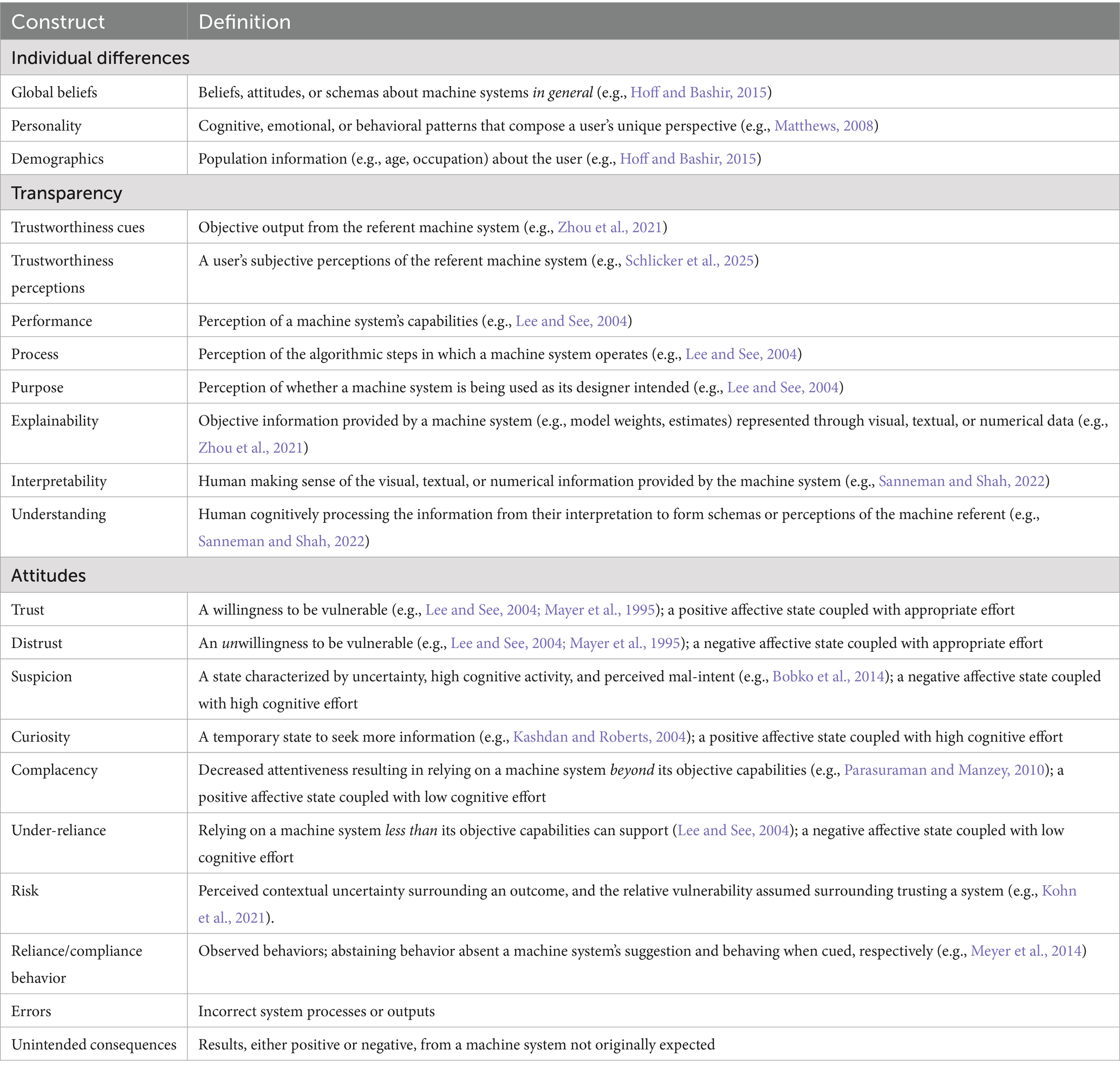

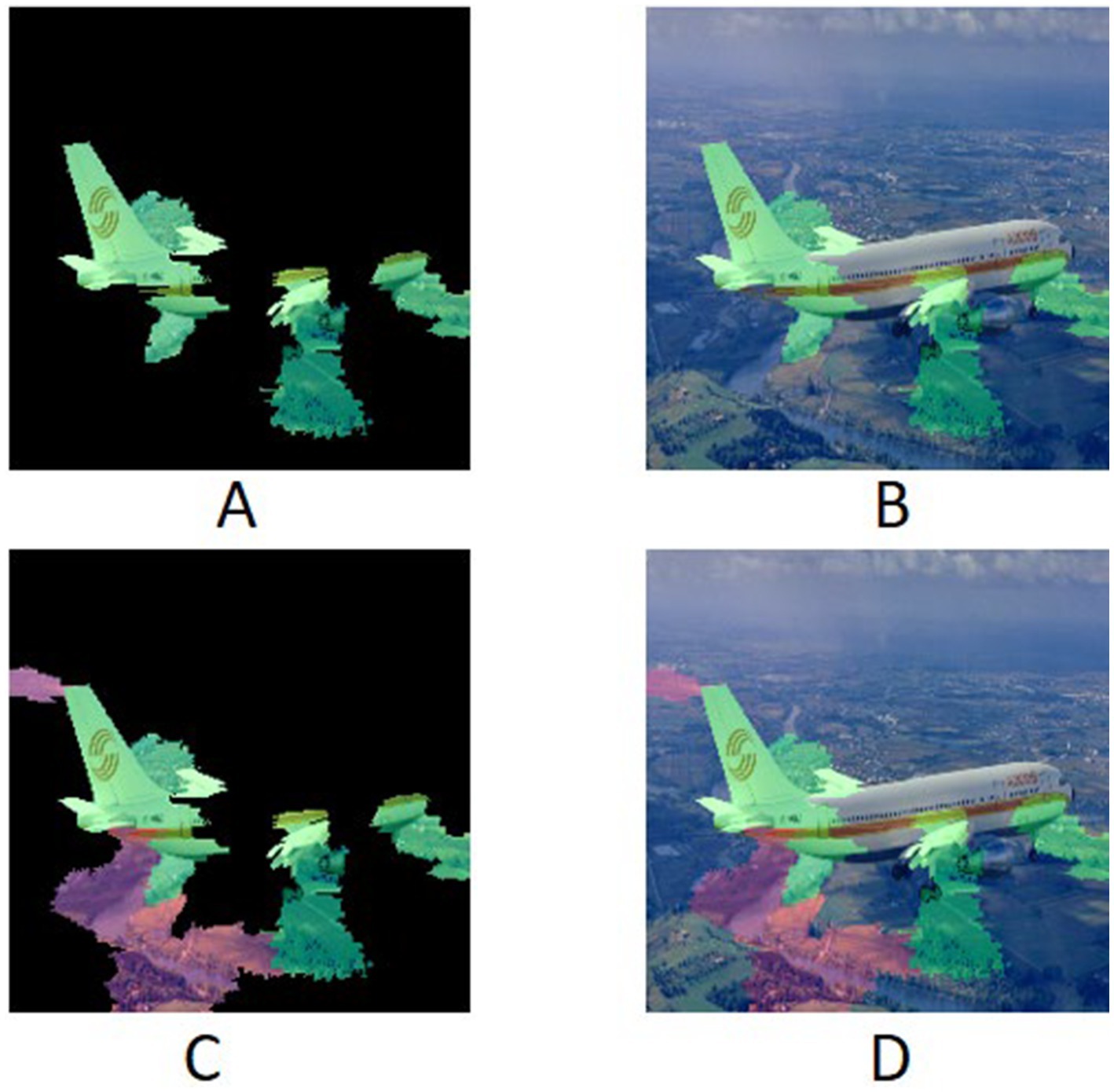

Although we do view much of the trust process as previous researchers do, we expand on the previous research explicating the mechanisms for many variables in the model. Figure 1 illustrates our theoretical model of trust in human-AI/ML/XAI interaction. Information is perceived from the environment, processed by the user, and mental models of the system are formed. Information below the black line illustrates aspects of the machine, which have been drawn with squares. Aspects of the model above the black line illustrate aspects of the user, which are drawn with circles. Information processing occurs on the black line, with the user interpreting information about the system as illustrated with grey rounded boxes. The mental models about the referent lead to attitudes about the system which result in behaviors.

Figure 1. User-centered trust towards AI model. Objective aspects of the model are illustrated as squares. Subjective aspects of the model are illustrated as circles. Subjective user interpretation of objective information is illustrated as rounded boxes. The figure illustrates the information from AI/ML models in the form of trustworthiness cues. Trustworthiness cues are then interpreted by the human, and the demarcation line through the rounded boxes denotes the emphasis on the AI/ML referent (below) or user (above). The subjective interpretation of the referent system leads to subjective trustworthiness perceptions. These perceptions facilitate attitudes toward the AI/ML-enabled system. The attitudes facilitate behaviors. Trustworthiness cues were left blank as they depend on the models used.

In our theoretical model, we demarcate between antecedents to trust, trust, and behaviors based on previous models (Hoff and Bashir, 2015; Lee and See, 2004; Mayer et al., 1995; Schlicker et al., 2025; see also Kohn et al., 2021). Antecedents to trust in our model comprise individual differences and trustworthiness perceptions, much like previous literature (Kohn et al., 2021).

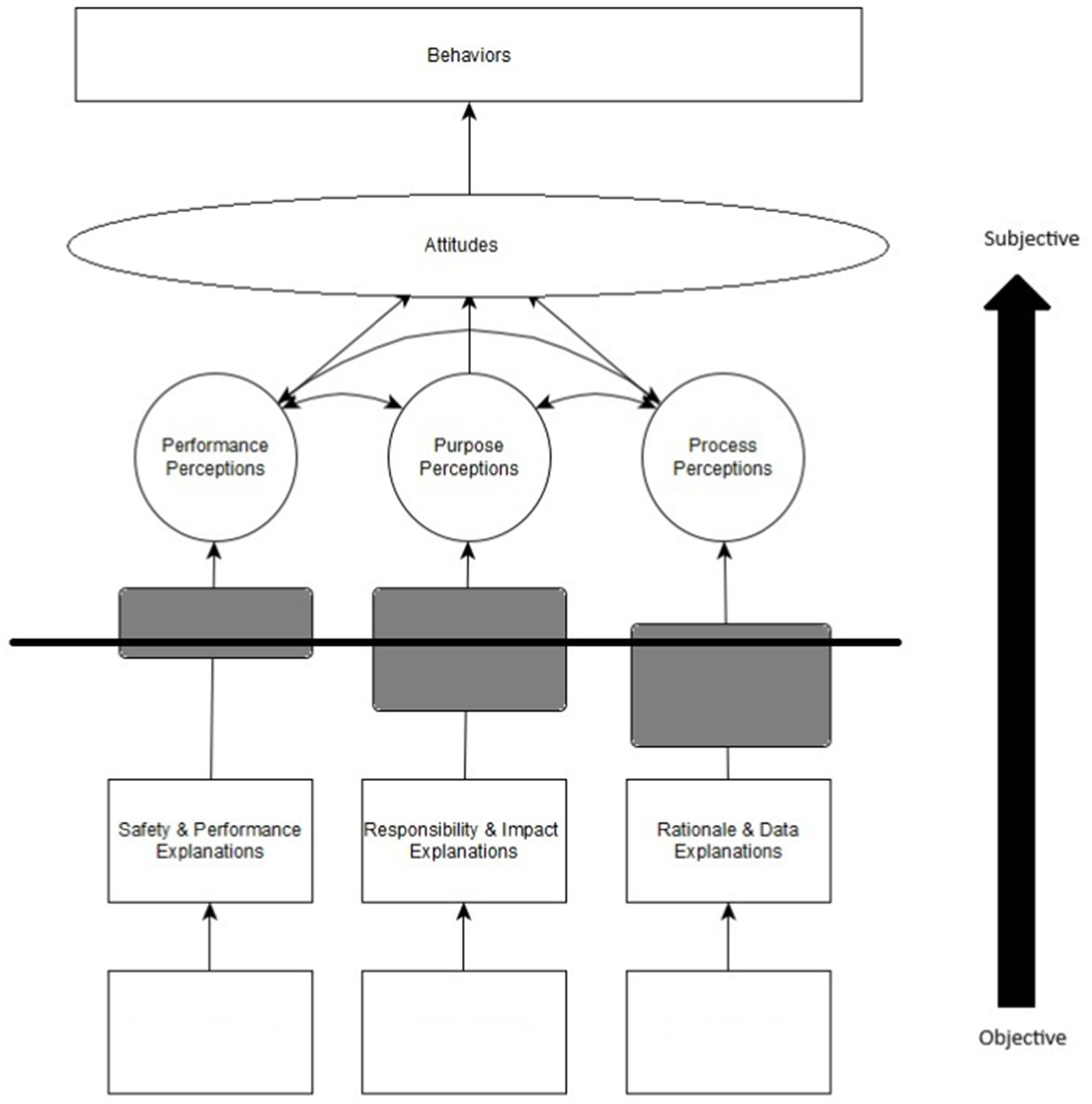

Moving beyond previous research, we expand on the various individual differences and their theoretical effects on the trust process. We also expand on the process of transparency, describing how transparency of the referent machine model influences cognitive processing of information in the environment and links to relevant literature in the computer science research, as illustrated in Figure 2. Information from the environment is processed and comprises trustworthiness perceptions, which influence attitudes toward the system. Importantly, we do not theorize trust is the only antecedent to behaviors. We expand on the different attitudes that can influence behavioral outcomes with a system.

Figure 2. Transparency process as a function of explainability, interpretability, and understanding. We match the terms explainability, interpretability and understanding from the computer science literature to relevant constructs from the social sciences. Explainability is the machine output of relevant criteria (i.e., weights, estimates, etc.) in terms of textual, visual or numerical representation from the model. Interpretability is the cognitive processing of information by the user of the machine output. Understanding is the schema/mental model that is formed about the referent machine that facilitates attitudes in the system.

Figure 1 incorporates all the previous research that we have described into one cohesive model. First, we note that unlike other models (but see Schlicker et al., 2025), there is a clear demarcation between the user and the machine. The bottom of the figure illustrates the AI/ML/XAI algorithm and the information it provides, which we call trustworthiness cues. The upper half of the image illustrates the information perceived by the user, which we call trustworthiness perceptions. This was done to emphasize the difference between objective aspects of the machine referent and the user’s perceptions of the machine. Although previous theoretical models implied the demarcation of trustworthiness cues from trustworthiness perceptions (e.g., Alarcon and Willis, 2023; Hoff and Bashir, 2015; Lee and See, 2004), we explicitly state and illustrate these differences in our model. We have used squares to represent objective information, circles to represent subjective perceptions, and squares with rounded edges to illustrate the utilization/processing of information in the figure (see Figure 1). Table 1 defines all constructs and provides citations for each construct.

Individual differences and information processing

The information processing approach to human perception and cognition has been utilized to much acclaim in the Human Factors literature (Wickens and Hollands, 2000; Wickens, 2002; Wickens and Carswell, 2012). However, the role of individual differences in the information processing theory has largely been ignored (Endsley, 2023; Wickens and Carswell, 2012). In this section we outline the types of individual differences, and the three proposed influences individual differences can have on information processing: (1) individual differences as information, (2) individual differences in the processing of information, and (3) individual differences as direct effects on behaviors.

There are individual differences between users, which can theoretically explain different perceptions of and interactions with machine systems in general (Hoff and Bashir, 2015; Lee and See, 2004) and AI/ML-enabled systems specifically (Kaplan et al., 2023). We demarcate these into general beliefs, personality variables, and demographics. We theorize individual differences play a role across all aspects of the decision-making process when humans interact with AI/ML/XAI. We expand on this throughout the theoretical model. In this section, we discuss the three types of individual differences; then, we discuss the three mechanisms through which they operate on the trust process.

Global beliefs

Global beliefs can influence perceptions through a variety of variables. We define global beliefs as any general belief or cognitive schema about AI/ML/XAI that are preconceived prior to working with a specific AI/ML/XAI referent. Perhaps the two most researched global beliefs variables in relation to the human-machine trust process are propensity to trust machines (Jessup et al., 2019; Li et al., 2017; Merritt, 2011) and perfect automation schema (Dzindolet et al., 2002; Gibson et al., 2023; Merritt et al., 2015). Propensity to trust machines is defined as a general tendency to utilize machines or view them favorably (Jessup et al., 2019). The propensity to trust machines is a global heuristic about machines in general that influences initial perceptions of a new system particularly when there is little information about the system available to the user (Hoff and Bashir, 2015; Siau and Wang, 2018). Perfect automation schema is like propensity to trust machines in that it pertains to global perceptions of machines; however, this construct comprises high expectations and all-or-none thinking (Merritt et al., 2015). High expectations are the initial beliefs that machine systems should perform well and thus has strong overlap with propensity to trust machines (Gibson et al., 2023; Merritt, 2011). All-or-none thinking is the bias that machine systems should be abandoned if they commit an error.

Importantly, these are all global beliefs about systems which are informed by experience with the world, rather than personality constructs. We note these two global beliefs are not theorized to be an exhaustive list of beliefs but rather just two examples of beliefs in the literature.

Personality

Personality is defined as any cognitive, emotional, or behavioral patterns that comprise an individual’s unique perspective in life. Personality psychology is focused on individual differences in mental processes and how they develop (Roberts and Yoon, 2022). There are several personality structures in the literature that can influence human perceptions and behaviors, such as the Five-Factor Model (Costa Jr and McCrae, 1992). We note that relatively little research has been conducted on the effects of personality on human-AI/ML/XAI interaction (Kaplan et al., 2023). However, personality has been related to aspects of the information processing model. For example, the Five-Factor Model has been related to aspects of information processing theory such as working memory (Waris et al., 2018) and short-term memory (Matthews, 2008). Indeed, research has noted that task and person characteristics should not be explored in isolation but instead explored concurrently to determine their effects (Matthews, 2008; Szalma, 2008, 2009; Szalma and Taylor, 2011). As such, personality variables may help explain individual differences in how users process information from the system.

Demographics

Recent meta-analytic work shows the importance of demographic variables on human trust toward AI/ML (Ehsan et al., 2024; Kaplan et al., 2023).3 Knowledge, skills, and abilities (KSAs) of the human user play a key role in understanding how they perceive AI/ML-enabled systems (Hoff and Bashir, 2015; Schaefer et al., 2016). For example, Ehsan et al. (2024) found novices had different heuristics for XAI than computer science majors, leading to different interpretation of the stimuli provided by the XAI. The age of the user may also be important in cognitive processing. Research has noted that younger users are more likely to have a positive view of technology and are more likely to use technology than older individuals. This effect has been noted in a variety of research. For example, Casey and Vogel (2019) found millennials were more likely to use technology than any other generation. Males have also demonstrated a stronger propensity to use technology than females across a variety of situations. Thus, we see the import of simple demographic variables normally gathered in research as playing a role in baseline interactions with machine human systems (see Kaplan et al., 2023).

Mechanism for individual differences on perceptions

Individual differences have demonstrated the ability to act as information when little to no information about the referent or situation is present. Specifically, global beliefs such as propensity to trust have been theorized to act as information when little is known about the referent partner in interpersonal research (Alarcon et al., 2016, 2018; Alarcon and Jessup, 2023; Jones and Shah, 2016). A person that has a global belief that people are trustworthy will be more trusting of others when information is lacking. As information becomes salient about the referent, the loci of information transitions from the trustor to the trustee (Alarcon et al., 2016; Jones and Shah, 2016). We theorize similar relationships in human-AI/ML/XAI interactions.

Specifically, global beliefs can act as information about the environment or referent AI/ML/XAI algorithm when there is little to no information available. Demographic variables can also play a role in this initial perception. For example, Alarcon et al. (2017) found computer programmers were reticent to trust code from an unknown source, specifically if the use case was high in vulnerability. The training, experience, and personality variables the user has can act as information about the situation when information about the referent is lacking. The differences they found may be due to different cognitive heuristics developed through training and expertise (Ehsan et al., 2024). This information that is inherent in the user facilitates initial perceptions about the referent AI/ML/XAI.

Proposition 1: Global beliefs about AI/ML/XAI, demographic variables, and personality influence initial perceptions of the referent system.

Proposition 2: As information about the referent AI/ML/XAI-enabled system is made salient over time, the influence of global beliefs will have less of an impact in the human-AI/ML/XAI interaction.

Second, individual differences can influence the processing of information as the user receives information from the AI/ML/XAI output. As information becomes salient in the interaction with the AI/ML/XAI, the user will process this information which can be influenced by individual differences. First, global beliefs and personality differences can influence the processing of information. These constructs may influence the cognitive processing of information, and include personality variables that influence one’s perceptions such as need for cognition (i.e., the extent to which individuals enjoy and engage in effortful cognitive activity; Cacioppo et al., 1996; Cacioppo and Petty, 1982), curiosity (i.e., the general desire for knowledge, to resolve knowledge gaps, to solve problems, and a motivation to learn new ideas and engage in effortful cognitive activity; Berlyne, 1960, 1978; Loewenstein, 1994), and complacency potential (i.e., a general propensity to not engage in effortful thinking; Merritt et al., 2019; Singh et al., 1993), along with global beliefs like all-or-none thinking. Other personality characteristics such as neuroticism, extraversion, and risk aversion can also play a role. For example, people high in neuroticism tend to view the world negatively (Bunghez et al., 2024), which can influence their perceptions about the AI/ML/XAI, especially if stimuli are ambiguous. Conversely, people high in extraversion tend to experience high positive affect (Costa Jr and McCrae, 1992), which may influence their perceptions of AI/ML/XAI. Alarcon and Jessup (2023) found risk aversion, a general disinclination towards risk, influences the processing of information in a modified version of the trust game. Participants high in risk aversion were less apt to view their referent partner as more trustworthy and took fewer risks with their partner.

Importantly, demographic variables such as knowledge, skills and abilities can also influence the processing of this information (Hoff and Bashir, 2015). For example, expertise can influence how information from the model is perceived through previously formed heuristics (Ehsan et al., 2024). Take the extant work investigating algorithm aversion which is a reluctance toward relying on algorithms for decision-making compared to humans, even if the algorithm is more accurate than a human assistant (Capogrosso et al., 2025; Dietvorst et al., 2015). The research paradigm by Dietvorst et al. (2015) on algorithm aversion has noted that people are reticent to use algorithms in a GPA prediction task. Jessup et al. (2024) have noted algorithm aversion was strong for individuals who engaged in tasks with the GPA prediction as the focus, but if the focus of the task was classifying Github repositories, participants over-trusted the algorithm. Jessup et al. (2024) also note that participants may not have had the requisite knowledge, skills, or abilities in the Github repository task. Ribeiro et al. (2016b) note that interpretability of algorithm is dependent on the target user. Thus, the reason for algorithm aversion’s effect in human-machine interaction may be due to the individual’s knowledge of, skills pertaining to, and abilities using AI/ML-enabled algorithm systems.

Proposition 3: Users’ global beliefs, personality, and demographics will influence their processing of information, thereby impacting their perceptions of and attitudes toward AI/ML/XAI referents.

Lastly, we theorize that some individual differences can have a direct impact on behaviors. Personality variables such as complacency potential, curiosity, and need for cognition may all have direct influences on behaviors because of the nature of the constructs. As noted in the Psychology literature, behaviors are the outcome of many different psychological processes. In this instance, whether a user does or does not perform monitoring behaviors may be indicative of personality rather than aspects of the system (Gibson et al., 2023). Notably, need for cognition is typically associated with positive emotions under the broaden and build theory of emotions (Fredrickson, 2001), which can further lead to greater exploration. This exploration can lead to increasing or decreasing trust behaviors in human-AI/ML/XAI interactions, depending on the results of the exploration. There may be contexts in which combinations of these variables amplify, suppress, or have no effect on trust-relevant criteria of interest, which we discuss later in the Attitudes section. For example, someone with a high complacency potential may be more likely to rely on an AI/ML system at baseline and more so given contextual factors (e.g., time pressure, system opacity; Singh et al., 2021). However, if one has a high need for cognition and enjoys thinking deeply, they may be more apt to spend time trying to figure out the underlying processes by which an AI/ML system serves their needs in said context, regardless of how the system operates. This is just one example of these variables interacting to shape human-AI/ML interaction, and their effects likely have different impacts given the context.

Proposition 4: Individual difference variables associated with high or low cognitive engagement will directly influence a user’s engagement with AI/ML/XAI referents.

Transparency

There remains confusion in the XAI literature between explainability, interpretability, and understanding often with the terms being used interchangeably (Guidotti et al., 2018; Tocchetti and Brambilla, 2022). We view all of these as the process of establishing transparency. Transparency as typically referred to in the literature is related to the user’s ability to detect how a referent system operates based on cues of the system (Lyons, 2013). However, transparency is also treated as a subjective construct in the literature (Alarcon and Willis, 2023; Chiou and Lee, 2023). Transparency pertains to the degree to which human-users are able to perceive system performance (what is the system doing), purpose (is the system being used as it was designed to be used), and process cues (how is the system doing what it is doing) as information (Chiou and Lee, 2023). A system that is transparent to one user may be opaque to another user because they lack specific knowledge, skills, or abilities (Ehsan et al., 2024; Jessup et al., 2024). Below we demarcate explainability, interpretability, and understanding within the context of transparency, with explainability being the most objective form of transparency, interpretability being a mix of objective information and subjective cognitive processing, and understanding being mainly a subjective perception or schema, as illustrated in Figure 2. In other words, transparency is the process of establishing ascriptions of an AI/ML-XAI-enabled system through information provided by cues in the environment.

Explainability—machine transparency and trustworthiness cues

An explanation is something that is provided by a referent, for instance, the details of an AI/ML-enabled system by an XAI tool or beta weights provided by a regression. Explainability is a representation of aspects of the algorithm, such as weights or noting important aspects of the stimuli, through visual, textual, or numerical representation. Explanations are a set of details or elements that illustrate or elucidate the causes, contexts, or outcomes of those details or elements (Drake, 2018). In our model, the trustworthiness cues provide different types of explanations. Importantly, the information provided by the trustworthiness cues may provide more than one type of explanation.

As Alarcon and Willis (2023) note, most of the taxonomies in the Computer Science literature refer to the underlying mathematical processes for computation. The different AI/ML/XAI taxonomies all attempt to classify the different algorithms utilized to create the various AI/ML/XAI methods. We acknowledge these differences are important as they help to describe how the various AI/ML (e.g., decision trees, DNNs) and XAI (e.g., local interpretable model-agnostic explanation, or LIME) algorithms are computing different weights and calculations for their respective predictions, classifications, or descriptions of a given data set. However, the individual model taxonomies are all classifications of how the algorithms function rather than the information provided to the user. The algorithms that are being created are objective, in that they provide some information about their inner workings (or no information in the case of black-box models) and then display this information to the user. The method used for analyzing what was important in the algorithm may vary, but often they result in similar output such as numerical, visual, or text output (Zhou et al., 2021). The differences of the model methods are not necessary for most users. It is the meaning of the numerical, visual, or text output within the context that is important for interpretations (Broniatowski and Broniatowski, 2021). As such, we placed the different AI/ML/XAI taxonomies for model methods in the squares at the bottom of the model in Figure 1, as they are the most objective.

The taxonomy provided by Zhou et al. (2021) differentiates algorithms by their explanation types as described in the previous section. Although they intended their taxonomy to only apply to XAI methods, we theorize it can be extended to all AI/ML/XAI methods and algorithms. The explanation types provide information to the user which is displayed in various manner. Black- and white-box models both adhere to these classifications, as the former provides little information besides performance information and the latter are fully or almost fully transparent as to their processes. These explanation types are similar to previous demarcations of objective referent trust from subjective trust perceptions (Schlicker et al., 2025).

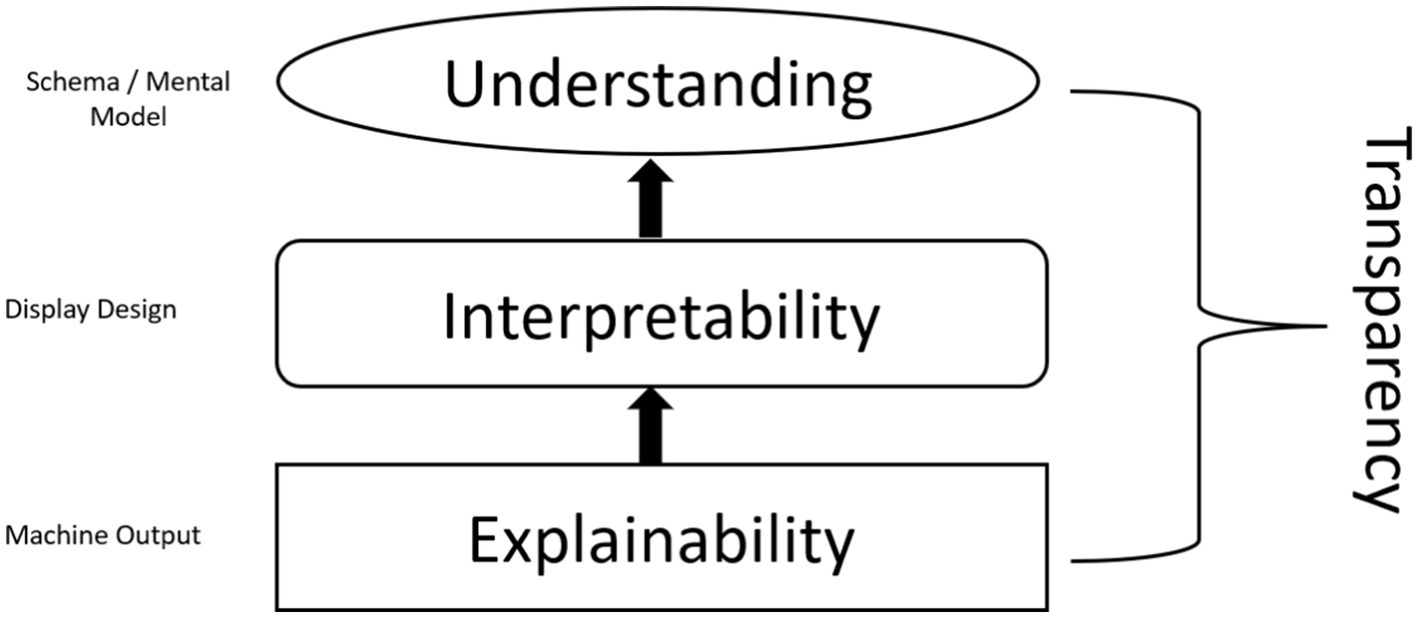

Zhou et al. (2021) demarcated six explanation types: rationale, data, responsibility, impact, fairness, and safety-performance. Their study focused on the type of explanation the algorithm was providing to the user. The rationale explanation focuses on explaining why the algorithm made a specific decision to the user. For example, a LIME algorithm output can provide rationale for a certain classification by highlighting the relevant information. Data explanations illustrate what contextual data the algorithm used to make its decision. Figure 3 illustrates an image that has been classified as a civilian airplane, and the LIME data explanation highlights the relevant information for the classification in green.

Figure 3. Locally interpretable model-agnostic explanation of civilian airplane. (A) Obfuscated image with positive information highlighted; (B) non-obfuscated image with positive information highlighted; (C) obfuscated image with positive and negative information highlighted; (D) non-obfuscated image with positive and negative information highlighted.

Responsibility explanations focus on the development of the algorithm, how the algorithm was managed, and how the algorithm was implemented. This type of explanation is focused on the accountability of the algorithm and who has ultimate responsibility for the implementation of the algorithm. Responsibility explanations have largely driven laws regarding AI/ML in Europe (Hoofnagle et al., 2019). Impact explanations illustrate the societal impact of utilizing algorithms and possible consequences of using them in certain arenas. For example, researchers and governments have discussed the possible implications of self-driving cars (which utilize AI/ML for their autonomous function; Holstein et al., 2018). Self-driving cars will have various impacts on the legal system, ethical decision-making, and loci of responsibility (human or machine), to name a few.

Fairness explanations can be viewed as a subset of impact explanations as they are specifically focused on possible bias pertaining to their use. As mentioned, AI/ML has been utilized in parole decisions in recent years. However, these algorithms have been shown to be biased against minorities based on various variables such as zip codes (Hübner, 2021). The impacts of these biases and the broader implications of using AI/ML in sensitive areas have been discussed at length by both researchers and governments (Goodman and Flaxman, 2017; Hoofnagle et al., 2019). Lastly, safety and performance explanations illustrate the process of increasing the accuracy, security, reliability, and robustness of the decisions of and output from AI/ML algorithms. An example of this is when a researcher finds there are data in the data set that the algorithm has not been trained on or that the use of said data has unintended consequences. For example, Target utilized AI/ML for targeting coupons toward their customers. In one instance, the algorithm noticed a 16-year-old girl was pregnant and sent her various coupons for baby supplies, angering her father who was unaware of her pregnancy. As such, Target recalibrated the AI/ML to only offer coupons to those over 18 years of age (Oprescu et al., 2020).

The explanation types described by Zhou et al. (2021) are the outputs of the AI/ML/XAI algorithms, which we illustrate in Figure 2. We note that even a black-box model will have trustworthiness cues related to it, but in comparison to an XAI or white-box model, there will be relatively fewer as the traditional black-box models do not typically illustrate much information other than performance in their output (Adadi and Bouhoute, 2023; Vilone and Longo, 2021). In other words, black-box models are simply low in process cues as they do not illustrate their decision processes for humans. Alarcon et al. (2024) note that all algorithms lie somewhere on the information spectrum and that a lack of information, such as with black-box DNNs, does not negate the theory.

The explanation types are closest to the objective reality of the algorithm in our theoretical model. AI/ML/XAI often comprise weights and errors for the algorithm but can be illustrated differently through natural language, color, or text (Zhou et al., 2021). It is how the information is displayed that facilitates effective human processing of the information. To illustrate this point, we use another example of LIME algorithms for image classification. There are several different ways to view the output of a LIME algorithm. Utilizing graphical images, we can increase the interpretability of the XAI output. Figure 3 illustrates several LIME outputs from the same algorithmic output. First, Figures 3A,C present LIME output that illustrates the important aspects of the image in making its classification of the stimuli on a black background. In this instance, LIME XAI displays the relevant information, but the context is not clear. In Figures 3B,D, the LIME XAI is transposed on top of the image, with translucent color so the relevant aspects of the image and the total image are clear. In this example, the user may be able to better understand the aspects of the image that helped the AI/ML classify the image. Third, Figures 3C,D offer two types of relevance to the image classification, green which is associated with information that influences the classification as civilian, and red which illustrates information that is not relevant to the classification. All these outputs were based on the same algorithmic output but are displayed differently.

Zhou et al. (2021) do not explicitly state whether the explanation types are orthogonal or not. We, however, contend these are not orthogonal. The example of the Target shopper above illustrates this point: the AI/ML sending the coupons to an underage person contributes to both impact and performance explanations. The performance explanation was correct, in that the daughter was pregnant. However, the impact explanation of sending the coupons to anyone under the age of 18 was also clear from the output of the algorithm. Thus, the same output can have different impacts based on different explanation types. We note that there is no influence of individual differences on the trustworthiness cues as they are inherent in machines. The output is the objective information but how that information is displayed is important from the human perspective, as noted in the LIME XAI examples above. We do acknowledge that user inputs at the algorithm level can occur, but these are more important when creating and training the algorithm.

Proposition 5: AI/ML/XAI-enabled systems that provide more information on their underlying processes pertaining to their respective explanation types will be viewed as more informative.

Interpretability—cognitive processing of information

We define interpretability as a human making sense of the information provided by the AI/ML-enabled algorithm or XAI, as illustrated in Figure 2. In the human-AI/ML/XAI interaction context, it is the human that ascribes meaning to the information provided by the algorithm. Just because information is explained does not mean it is interpreted or understood by the user. How the information is displayed helps the user interpret the data. As such, per Audi (1999) we define interpretation as the mental processing of information provided from the system (i.e., explained information) by the user to establish an (in)accurate understanding of the system referent (e.g., Ribeiro et al., 2016b). Indeed, Ehsan et al. (2024) found differences in interpretation of XAI between novices and computer science students with the same XAI stimuli.

To illustrate our point, Figure 3 illustrates a LIME algorithm representation of the information that led to the classification of a civilian airplane with the relevant weights being highlighted in green and red. Green illustrates the top five most relevant aspects of the image to classify it as a civilian plane. Red illustrates the five least relevant aspects of the image to the classification as a civilian plane. Although these are illustrated as colors over the image, it is the numerical value that the algorithm weights for each pixel that is important. The LIME algorithm describes the visual information that is used to generate a classification of each aspect of the image. The data being explained by the machine are pixels. The color is a quick visual cue for easy information processing for humans to interpret (Xing, 2006). As such, if the algorithm simply listed the pixels used in making the determination, the information would be uninterpretable but explainable because there is too much information for users to process (e.g., Young et al., 2015).

In Figure 1, the line across the middle of the figure illustrates the demarcation of subjective user perceptions and objective machine output. In the lower portion of the figure, performance, purpose, and process cues from the machine are illustrated with square boxes. There is a direct path from the trustworthiness cues to the user’s trustworthiness perceptions, which are noted in the model with performance, purpose, and process perceptions, instantiated with circles to indicate subjective perceptions. Although the current figure only illustrates direct lines from each trustworthiness cue to its respective trustworthiness perception, this is only done for clarity of the theoretical model.

The grey boxes on the lines between the trustworthiness cues and perceptions are the information the user is employing to interpret the trustworthiness cues (Schlicker et al., 2025). Individual differences are an integral part of how the user perceives and interprets the information. For example, Ehsan et al. (2024) found participants in a computer science program perceived information provided from an AI/ML algorithm differently than novices. In the first line from performance cues to performance perceptions, the grey box is mostly on the upper portion of the figure indicating the user is relying on information within oneself (e.g., personal beliefs, experience with AI/ML) to make the assessment rather than the cues from the machine. This illustrates an instance when individual differences such as propensity to trust technology or previous experience with AI/ML will be driving the decisions, as the loci of information for the assessment resides primarily in the user and not the system. This is what Hoff and Bashir (2015) refer to as dispositional trust. The grey box representing the performance cue information the user is perceiving is smaller than the boxes for purpose and process, representing less trustworthiness information when making the decision because they are relying on individual beliefs.

Proposition 6: Users deferring solely to their global beliefs / cognitive schemas to inform their trustworthiness perceptions of AI/ML/XAI will understand less about these systems.

On the line from the purpose cues to purpose perceptions, the grey box indicating information for perceiving the system is split hallway between the user’s perceptions and the information from the algorithm. In this instance, we also see the box is much larger as more information is being utilized, with equal information from the user and the machine. The grey box on the purpose line might indicate an expert user with adequate domain knowledge is properly utilizing balanced information from both subjective perceptions and objective cues in the environment. This example would be representative of a statistician that understands and is utilizing regression weights in making an informed decision in a domain for which these models were designed to be applied.

Proposition 7: Users who leverage their domain knowledge coupled with relevant information from AI/ML/XAI systems will more appropriately calibrate their trust toward the system.

Lastly, process perceptions are illustrated with a grey box with most of the variance for information being held on the objective side of the figure. In this instance, the algorithm may be providing information that requires little to no interpretation by the user. We can think of image classification for easy images which are often used to develop new models, such as classifying animals, as they require little cognitive processing from the developer. Across Figures 3A–D, we can see that the algorithm primarily utilizes information about the airplane in its classification. However, there are aspects of the image background that also are included in making the determination. Figures 3A–D would be representative of the box on the process line because it requires little cognitive processing by the user, as most users would be familiar with and comfortable classifying the image as a plane. These three examples illustrate the differences in interpretability across individual differences.

Proposition 8: Users leveraging objective information solely from the AI/ML/XAI will benefit in their interaction with the AI/ML/XAI system so long as that information provided is veridical.

Some important aspects of the performance, purpose, and process perceptions should be discussed. First, XAI may not reduce overreliance on AI/ML (Müller et al., 2024). Instead, it may increase overreliance as research has demonstrated users are more likely to agree with an AI/ML algorithm if it provides an explanation, regardless of accuracy (Poursabzi-Sangdeh et al., 2021). Indeed, Ehsan et al. (2024) found novices trusted the numeric output of an algorithm simply because it was numeric, inferring that the numbers were based on algorithmic thinking. However, this may be moderated by personality variables such that those with greater attention to detail may not over-trust. Situational variables such as workload can also influence trust as users that have too many demands placed on them may over-trust because of lack of monitoring resources (Biros et al., 2004). The information provided by the algorithm can facilitate many aspects of understanding. Colin et al. (2022) found attention maps helped facilitate user understanding of biases in the AI/ML algorithm, but it did not facilitate understanding of the failure cases. As such, information provided by the algorithms may not facilitate a full understanding but rather understanding of different outcomes.

Understanding

The user exploits the textual, numeric, image, or natural language output to facilitate their understanding of the decision process of the algorithm (Roscher et al., 2020). It is the ability of the human to cognitively process the output of the algorithm that leads to understanding (i.e., cognitive perceptions). Thus, understanding is the perception of the system as a result of the interpretation of the explained information. If the algorithm does not display the information in a format that is interpretable to the user, or if the user does not have the requisite knowledge, skills, and abilities to interpret, it may have high explainability, low interpretability (i.e., the user is not able to make sense of the explained output), and lead to misunderstanding (i.e., a misperception of what the model is explaining, how it functions etc.). However, if the user does have the requisite knowledge, skills, and ability, this can facilitate appropriate sense-making of the data and ultimately properly calibrated understanding of the system (Ehsan et al., 2024; Klein et al., 2006). This expression of the information facilitates interpretation which enables an understanding about what will happen in the future, as illustrated in Figure 2 (Koehler, 1991; Lombrozo and Carey, 2006; Mitchell et al., 1989). These perceptions result in an understanding of the stimuli, which is highly subjective. Thus, understanding is the schema or mental model that is created by the user from interpreting information in the environment, which can be used as a lens of analysis in future interactions with AI/ML and XAI systems. Typically, these schemas and mental models are assessed by measures of users’ performance, purpose and process perceptions of machine systems (Lee and See, 2004; Stevens and Stetson, 2023), in this case AI/ML/XAI.

Proposition 9: Knowledge, skills, and abilities (KSAs) will be important for facilitating understanding, such that information from the AI/ML/XAI should be displayed in relation to the users’ KSAs.

As noted above, the construct of understanding from the computer science literature is synonymous with trustworthiness perceptions (comprised of performance, process and purpose) from the social sciences literature. Performance perceptions concern the degree to which the user perceives the system can perform a specific task within a given context (Lee and See, 2004), what Hoff and Bashir (2015) would term situational trust. Some machine systems may be perceived to have good performance for certain tasks but not others. It may also be the context of the system’s use that moderates situational trust. For instance, an AI/ML-enabled system may perform well at classifying images from classes it was trained but not out of distribution classes to perceive algorithm performance differently depending on how the algorithms are applied4. The risk of misclassification may be tolerable in scenarios such as recycling management but not in high risk instances such as computer vision for autonomous vehicles. Process perceptions describe the user’s understanding of a machine system’s underlying algorithmic function (Lee and See, 2004). In human-AI/ML contexts, process perceptions may vary depending on what information a user perceives on how the AI/ML reached a decision, and these perceptions may be shaped by XAI which unpacks how the AI/ML functions (Alarcon and Willis, 2023). Purpose perceptions pertain to a user’s perceptions of why the system was designed (Lee and See, 2004). Lee and See note systems are often used outside of contexts they were built or used in contexts that have more diverse information than that which the model was trained on, which can influence users’ purpose perceptions. With regards to the above example, systems designed to classify specific classes such as cats and dogs, but not other classes (e.g., squirrels), may shape users’ perceptions of system purpose, which may or may not relate to other trustworthiness perceptions and intentions to rely on (i.e., trust) the system as shown in human-autonomy interaction work (Capiola et al., 2023; Lyons et al., 2021, 2023).

Providing information is not a catch-all that once provided will increase trust and reliance on the system. The explanations provided can reveal problems in the system which can help the user calibrate when to use the algorithm (Kästner et al., 2021). For example, Alarcon et al. (See footnote 4) found participants were able to more quickly detect out-of-distribution data and noted the open set recognition models were better able to classify appropriate images for within-distribution data; in comparison, the convolutional neural networks (CNNs) had issues because they were confident in their classifications regardless of the stimuli. Additionally, the user can misinterpret the information provided by the model, falsely attributing actionability (i.e., what can be done with the information) even when the explanation is unclear (Ehsan et al., 2024).

The explanation of the algorithm’s decision processes when the algorithm fails can also provide information as to improvements that need to be made to the algorithm. For example, adversarial attacks are used to determine ways to deceive the algorithm. Researchers noted that adding black and white bars to the stop sign, as illustrated in Figure 4, can make a convolutional neural network classify the image as a 35-mph sign instead of a stop sign. This helps the developer find issues in the algorithm that can be alleviated or fixed with updates and understand the flaws in the algorithm and improve it in future iterations.

Attitudes

There are numerous definitions of trust in the AI/ML/XAI literature. Many of these definitions have come from the interpersonal trust literature and have also conflated trust and reliance. Blanco (2025) recently theorized trust comprises (a) positive expectations, (b) some risk of the trustee not behaving as the trustor wants them to (c) a potential or need / wish of delegation, and (d) a basis in motives (i.e., motives based). She distinguishes this from reliance, which is the dependence of the trustor toward the trustee when the trustor needs to delegate. In her definition, the first two postulates of trust are the same as most definitions in the literature. Her third postulate of trust distinguishes that needing to delegate is not a necessary condition of trust, but rather the potential wish for delegation can necessitate trust. Additionally, she explicitly notes that trust is motives based, i.e., trustors have a goal in mind when ascribing trust. In her theoretical paper, she notes these motives are based on both cognitive (normative) and affective antecedents. Indeed, in the interpersonal trust literature, there has also been a demarcation of affect- and cognition-based trust (McAllister, 1995). However, the interpersonal trust literature has focused on differentiating antecedents to trust as affect- and cognition-based aspects of trustworthiness perceptions (Colquitt et al., 2007; Lee et al., 2022).

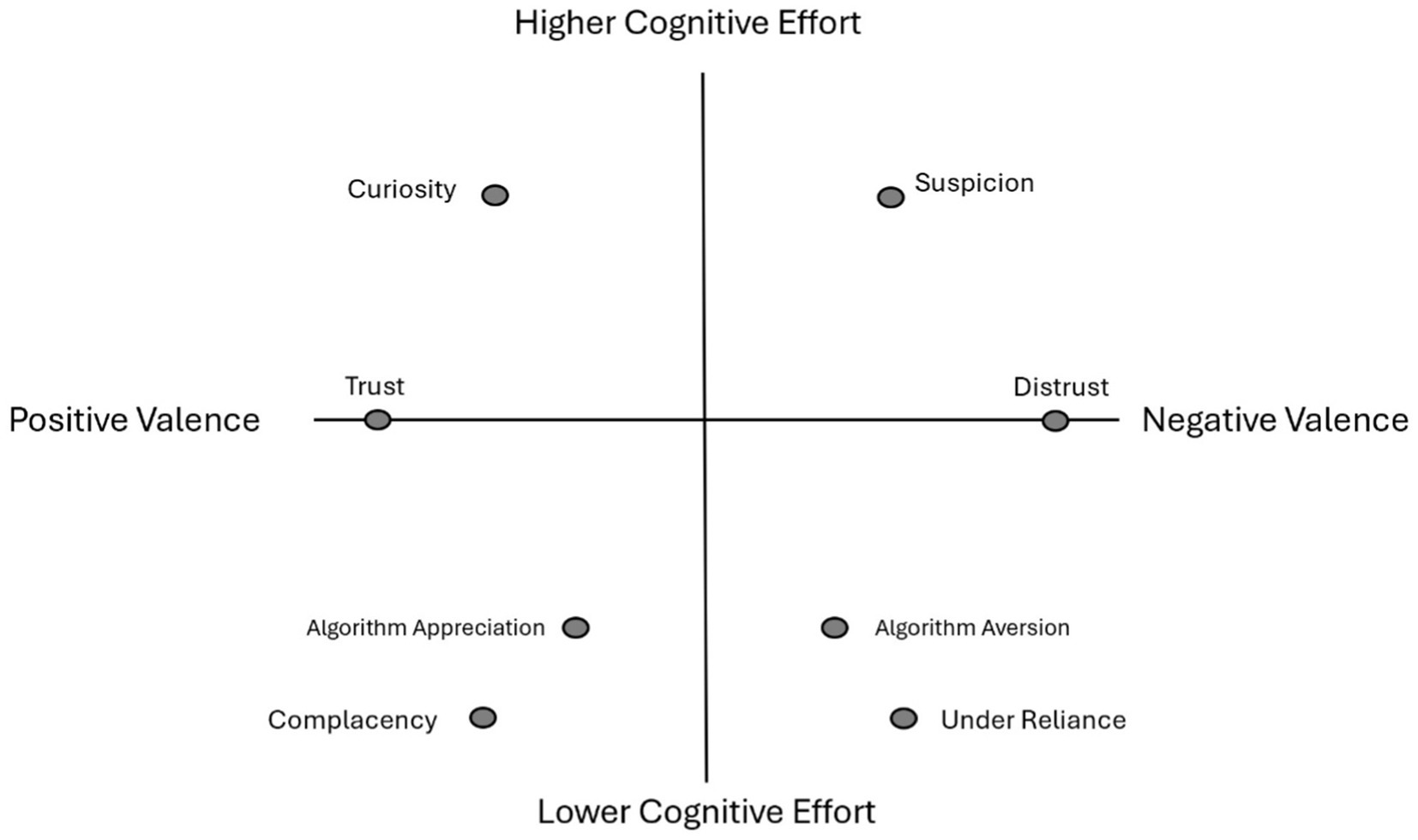

We theorize two underlying factors that influence attitudes toward AI/ML-enabled systems: valence and cognitive effort. Valence describes the attractiveness or aversiveness the user ascribes to the situation or referent (Russell, 1980). Valence comprises a spectrum of emotions ranging from positive to negative, with neutrality or indifference in the middle (Jessup et al., 2025; Li et al., 2017). Research on emotions has demonstrated that when users experience positive emotions, they tend to process information more holistically (Fredrickson, 2001; Kazén et al., 2015). In the human-machine interaction literature, this can facilitate adoption and acceptance of the system (Hoong et al., 2017; Jessup et al., 2023). Positive emotions also lead the user to explore the machine’s capabilities, experiment with features, and engage more with the system (Isen and Geva, 1987). Additionally, positive emotions can buffer against system failures or errors (Maroto-Gómez et al., 2023). In contrast, negative emotions can facilitate narrow or segmented cognitive processing (Kazén et al., 2015). Initial negative interactions are difficult to overcome as they lead to a negative mental model of that referent system (Jessup et al., 2020), which takes time and effort to change (Kim et al., 2023).

Cognitive effort characterizes the mental exertion necessary to evaluate a referent. Though there is no consensus on a definition (Li et al., 2017; Shepherd, 2022; Westbrook and Braver, 2015), cognitive effort is often described as the amount of controlled processing needed to understand, reason, and make decisions. This overlaps with dual-processing models of cognition (Kahneman, 2011) and persuasion (Chaiken, 1980; Petty and Cacioppo, 1986), which have also been applied to trust research (Alarcon and Ryan, 2018; Stoltz and Lizardo, 2018). System 1 processing, or heuristic processing, is quick, automatic, and relies on cognitive shortcuts. System 2 processing is more effortful, intentional, and accurate. Importantly people bring heuristics about referents into the decision-making process based on knowledge domain, experience, and pattern recognition (Ehsan et al., 2024). Aspects of the environment such as task complexity, familiarity, and contextual distractions can also influence the amount of cognitive processing an individual utilizes (Wickens and Carswell, 2012). For example, research has demonstrated that people choose to exert cognitive effort when machine systems are coupled with explanations for their decisions by weighing the cost of the cognitive effort compared to simply deferring to the overall system (Vasconcelos et al., 2023). This is what Hoff and Bashir (2015) term situational trust. In addition, a user’s motivation and cognitive ability (e.g., working memory capacity, processing speed) can influence the amount of cognitive effort a user is willing to exert on the task (Cacioppo et al., 1996). That is, cognitive effort facilitates or inhibits information processing, leading to resulting attitudes.

We theorize multiple attitudes toward AI/ML/XAI fall on a two-dimensional plane of valence and cognitive effort as they are a function of both dimensions, as illustrated in Figure 5. Lee and See (2004) are most famous for defining and explicating what it means to have calibrated trust, which is the correspondence between objective system capability and the user’s subjective trust toward that system informed by their perceptions of that system. Trust calibration was originally introduced in the Human Factors literature concerning trust in automation and has since been adopted and expanded in the human-machine interaction literatures, including human-robot interaction (e.g., Alarcon et al., 2023), human-autonomy interaction (e.g., Capiola et al., 2023), and human-AI/ML interaction (e.g., Harris et al., 2024; Schlicker et al., 2025; for a review, see Kohn et al., 2021). In the present model, calibrated attitudes fall on the x-axis, as they entail appropriate cognitive effort, and depending on the situation, comprise positive or negative valence resulting in trust and distrust, respectively. Calibrated trust is the goal, but other psychological attitude states may occur that fall on these two axes. When high or low cognitive effort is inappropriately employed, other states such as suspicion, curiosity, complacency, and under reliance can occur. We talk about each of these constructs in relation to the model in Figure 5.

Figure 5. Trust and trust related attitudes along valence and cognitive effort factors. Contextual risk negatively affects attitudes; high and low workload contexts can affect processing resources and effort.

The trust process outlined above is an iterative rather than static process (Chiou and Lee, 2023; Lee and See, 2004). Many different cues from the AI/ML/XAI influence perceptions over time. As the user obtains more information about the AI/ML via use and observation, the trustworthiness perceptions are updated. These trustworthiness perceptions are then updated based on how the system responds. This is what Hoff and Bashir (2015) refer to as learned trust.

Importantly, learned trust is contextually specific in relation to trust. Trust in the system for one context may not be transferred to other contexts. Instead, different attitudes may arise depending on the time spent with the system, the risk involved with the task and the affective valence associated with the algorithm. This may be for a variety of reasons such as differential risk between scenarios. For example, Lyons et al. (2024) found failure of one autonomous system lowered the trust in that specific system but not to other systems with the same operating constraints. The learned trust is the information processing and updating of the mental models/schemas of the human in the human-AI/ML/XAI interaction over time. This creates a feedback loop where trustworthiness perceptions are updated over time and information becomes salient. However, other findings can emerge as well. System-wide trust literature notes that trust decay can bleed over into other independent systems (Keller and Rice, 2010). Across many studies in contexts ranging from in-flight aircraft services (e.g., Rice et al., 2016) to robotic swarm interactions (e.g., Capiola et al., 2024b), perceived perturbations of one system aspect can bring down trust toward another orthogonal system.

Trust and distrust

Workers attempt to make sense of the technology they utilize at the same time they are doing a given task (Muir, 1987). This links trust theoretically to trust toward AI/ML-enabled systems, and researchers have meta-analyzed data on trust-relevant constructs in human-AI/ML interactions (see Kaplan et al., 2023). As noted, XAI was developed because of the lack of transparency of black-box models like DNNs to help facilitate (dis)trust formation toward opaque systems (Sanneman and Shah, 2022). The construct of trust has been defined as an attitude (Lee and See, 2004) or willingness to be vulnerable to a referent (Mayer et al., 1995), and we adopt that definition for our model (see also Kohn et al., 2021). If the user has adequate transparency provided about the system’s performance, purpose, and process, sufficient to outweigh the uncertainty and risk of the situation, they will trust the system appropriately. Individual differences, such as domain knowledge or task knowledge, may also play a role in this process as they provide information for perceiving the referent (Hoff and Bashir, 2015). The reason “appropriate” is used denotes the importance of veridical perceptions of the system (Lee and See, 2004), and this perhaps more important as referent machine systems leveraging AI/ML/XAI capabilities increase in ubiquity and opacity (Chiou and Lee, 2023; Schlicker et al., 2025). Indeed, if a user perceives the system to have low performance, purpose, and process, to the extent that their perceptions do not outweigh the perceived risk, then it is beneficial for them to not trust, i.e., distrust, the system (assuming their perceptions of the referent system are accurate). Trust should not be assumed to be beneficial in and of itself: what is important is that the user perceives the system accurately to form an intention to and ultimately rely on that system, again, what Lee and See (2004) note as calibrated trust, i.e., a correspondence between objective system capability and the user’s subjective trust toward that system informed by their perceptions.

Appropriate trust calibration is not a function of one type of processing; instead, cognitive processing can occur at different stages such as during initial interactions or when new information is made salient (Stoltz and Lizardo, 2018; Tutić et al., 2024). Trust calibration and recalibration implies making appropriate use of the relevant information in the environment to make the assessment. For this reason, we place appropriate trust and distrust in the middle of the cognitive effort y-axis but on their respective sides of the valence x-axis. If new information is made salient, effortful processing increases to incorporate the information in the user’s schema/attitude. However, the attitude of trust or distrust has been formed based on previous interactions which have resulted from cognitive effort and affective responses. Again, this is similar to what Hoff and Bashir (2015) term learned trust. This trust learned trust is a function of both system 1 and system 2 processing as the trust or distrust has been formed, aiding the user in processing information more efficiently, but new information that is not part of the schema will active system 2 processing. We note that recalibration is not illustrated in Figure 5 because it would depend on the previous perception. For example, if the user trusted the AI/ML/XAI and new information was provided, the user may be in the upper left quadrant.

Proposition 10: Calibrated trust and distrust are a function of moderate cognitive effort and their respective valence.

Suspicion

Suspicion is defined as a state of increased cognitive activity, uncertainty, and perceiving machine system mal-intent (Bobko et al., 2014). Whereas trust and distrust are a willingness to rely or not rely on the system respectively, suspicion is the withholding of the evaluation due to uncertainty as to how the referent will behave. As such, the uncertainty leads to increased cognitive effort to determine whether to utilize the system. A system can also be perceived as suspicious because of an individual’s tendency to be suspicious of machine systems (Calhoun et al., 2017), and contextual factors which facilitate suspicion may lead to distrust of a system with regards to its objective reliability (Bobko et al., 2014).

Little research has been conducted on suspicion toward AI/ML, despite recent calls for research on the construct in relation to AI/ML (Peters and Visser, 2023). Gay et al. (2017) found alerts from the AI/ML/XAI alone do not create suspicion. Instead, alerts facilitate information search through the users’ increasing cognitive effort. Gay et al. note situational differences facilitate suspicion, such that negative information can lead to increased suspicion. This suspicion led to decreased user performance in their scenario. Similarly, Strang (2020) found suspicion is high in cyberspace operations. These results indicate it is the context, relative risk, and information gathered from the environment that facilitates suspicion. As such, suspicion is placed in the upper right quadrant characterized by high cognitive effort and negative valence.

Proposition 11: High cognitive effort and negative valence will result in suspicion.

Curiosity

State curiosity is a temporary, situational state of intrinsic motivation or desire to learn or explore (Kashdan and Roberts, 2004; Spielberger, 1979). State curiosity is externally triggered by aspects of the environment such as novelty, ambiguity, or gaps in knowledge. State curiosity involves both feelings (i.e., interest, excitement) and mental engagement with the referent (i.e., cognitive effort). Oudeyer et al. (2016) note that curiosity is formed when the agent’s predictions are improving. Information gap theory (Loewenstein, 1994) theorizes state curiosity arises from an inconsistency or disparity between what is known and what is unknown. Curiosity is the drive for information through active intrinsic desire to obtain more information (de Abril and Kanai, 2018). Importantly, curiosity is driven by positive emotions in contrast to suspicion which is driven by negative emotions.

Research on curiosity in human-computer interaction research is relatively sparse. However, some researchers have developed models that personalize a user’s curiosity appetite. Abbas and Niu (2019) found personalization of the system to users’ openness to experience influenced more information gathering. Hoffman et al. (2023) also noted curiosity as an important factor in human-XAI interaction, noting that seeking information is driven by curiosity. Hoffman et al. note XAI should promote curiosity for increasing the accuracy of mental models, but no research to date has explored and demarcated the psychological “triggers” for curiosity. Still, it stands to reason that AI/ML/XAI can facilitate curiosity in the task. Researchers have found students interacting with ChatGPT led to more curiosity and creativity in the classroom (Essel et al., 2024). General curiosity can be triggered by a violation of expectations (Maheswaran and Chaiken, 1991), but it is the type of violation that distinguishes curiosity from suspicion. Curiosity may occur in less risky environments, when information obtained is not negative in the task, or when the context is relatively benign. Importantly, curiosity entails both positive valence (or at least a lack of mal-intent) and high cognitive effort for information seeking. However, some individuals may have a tendency to offer less cognitive effort (Petty and Cacioppo, 1986), and some situations may be so benign or so high in workload that exerting cognitive effort is not possible.

Proposition 12: High cognitive effort and positive valence will result in curiosity.

Complacency

Complacency is defined as decreased vigilance, attentiveness, and situation awareness resulting in a user relying too heavily on a system (Parasuraman and Manzey, 2010). Complacency with the system can be due to many different aspects, but in the instance of over-trust, the user becomes overly confident that machine systems will handle task responsibilities (Lee and See, 2004). The lack of cognitive effort associated with complacency leads to less task vigilance. That is, complacency can lead to missed errors, reduced situation awareness, slower reaction times, increased risk of accidents, and skill degradation (Parasuraman and Manzey, 2010). Instances of this are easily accessible in the news with Tesla owners not paying attention to the car while it is in its autonomous mode (Shepardson and Sriram, 2024). Lack of system oversight has led to accidents, including deaths. However, the lack of oversight results in over use as the individual expects a positive outcome, which is why complacency is in the lower left quadrant of Figure 5.

One related construct to complacency that we depict in Figure 5 is algorithm appreciation (Logg et al., 2019), which describes users’ tendency to rely on advice from algorithm referents compared to humans. Across several experiments, Logg et al. document cases of algorithm appreciation in tasks ranging from visual stimuli estimates and forecasting the popularity of content. Similar results are shown in foundational work in human-automation interaction (Dzindolet et al., 2002), where individuals demonstrate more positive attitudes toward machine decision support systems compared to humans before human-machine interaction commences.

Still, Logg et al. (2019) found appreciation for algorithms over humans when the latter forecast came from the participant themselves, or the participant was an expert in the forecasting context. Similarly, Dzindolet et al. (2002) found appreciation decreased after task interaction progressed, resulting in user under reliance on machine systems compared to humans when either was perceived to be imperfect.

Proposition 13: Low cognitive effort and positive valence will result in complacency.

Under reliance

Under reliance on a system refers to insufficient or inadequate use of the machine despite its objective capabilities (Lee and See, 2004). Importantly, this disinclination to use a system is a function of a lack of cognitive effort as there is evidence that the system is reliable and beneficial (Parasuraman and Manzey, 2010). Still, a user may forego the potential benefits of relying on the system such as increased performance and decreased decision time due to the user’s increased workload and potential fatigue. Thus, under reliance is comprised of lower cognitive effort exerted towards the system and an expectation of negative outcome, which is why under reliance is in the lower right corner of Figure 5. A good example of this can be found in the literature on algorithm aversion, which is the reluctance to use algorithms even when they demonstrate better accuracy and reliability than a human (Dietvorst et al., 2015). Negative experiences with other algorithms lead to the development of a negative bias toward algorithms when they are not perfect (Liu et al., 2023; Slovic et al., 2013). Additionally, negative emotions have been associated with less use of algorithms (Gogoll and Uhl, 2018; Prahl and Van Swol, 2017), leading to under reliance (i.e., algorithm aversion). This is driven by not only a bias or heuristic but also a function of one’s emotional state or valence.

Gaube et al. (2024) found under reliance toward AI/ML was more harmful to performance than over-reliance. Moreover, they found that XAI reduced under reliance on AI/ML referents, especially when users were expected to classify difficult images, but under reliance on the system still led to lower task performance. Under reliance on machine systems can be the result of many different variables such as user lack of error tolerance (Dietvorst et al., 2015; Dzindolet et al., 2002), perceived low controllability of the AI/ML/XAI (Cheng and Chouldechova, 2023), low transparency (Schemmer et al., 2023), or poor mental models of the AI/ML by the user (Kaplan et al., 2023).

Proposition 14: Low cognitive effort and negative valence will result in under reliance.

Personality can influence these attitudes through the mechanisms we described above as well as how users perceive the environment (Lazarus and Folkman, 1984). Personality is the lens through which humans view the world and process the information (McGuire, 1968). Different personality variables can influence the final attitude formation toward a given referent. However, the underlying processes of all the mechanisms through which personality can influence attitudes is beyond the scope of the current review. Interested readers are encouraged to review Albarracin and Shavitt (2018), Ajzen (2005), and Howe and Krosnick (2017).

Risk

The relevant uncertainty in utilizing an AI/ML/XAI algorithm represents an inherent risk in the task (Gulati, 1995). Risk is the uncertainty of the outcome and the relative vulnerability of relying on the system in the given context. Often times, this risk in an experimental task is instantiated as monetary payouts in the psychological trust literature (see Johnson and Mislin, 2011). However, this also has relevance to the use of AI/ML/XAI. The advent of XAI literature is in response to using black-box models in areas with high risk. The call for more transparent AI/ML is in direct response to the risk of utilizing these algorithms in parole decisions, autonomous vehicles, and other risky scenarios (Rudin, 2019). The role of situational risk is closely related to trust, suspicion, and over reliance. Risk augments how users trust and utilize the AI/ML by affecting how they perceive the fairness of the process/decision. Trust has also been viewed as a cognitive mechanism through which people process, interpret, and respond to informational risk. This risk perception varies by situation. A programmer developing an AI/ML algorithm to create a spam filter for email may have considerably less risk than a programmer utilizing AI/ML for customer payment processing systems. The risk inherent with each scenario will moderate how the user perceives the system (McComas, 2006; Thielmann and Hilbig, 2015), influencing their likelihood trusting that system given the tradeoff of accepting vulnerability toward that system in contexts of increased risk (Chiou and Lee, 2023; see also Kohn et al., 2021). Following the interpersonal trust literature (Mayer et al., 1995), contextual or perceived risk can also influence the processing resources users allocate toward making their decision to (dis)trust a machine referent (Kohn et al., 2021) including AI/ML/XAI (Chiou and Lee, 2023). Additionally, the risk inherent with each scenario can also establish which personality variables are activated in interactions with each algorithm. In the latter payment algorithm, there is considerably more risk; as such, personality variables such as risk aversion may play a larger role in the cognitive processing of information from the system. The former low risk scenario of the spam filter may not activate risk aversion because the risk is so low. Instead, personality variables such as complacency potential may be active in the cognitive processing because of the low inherent risk (Zhou et al., 2020).

Reliance behaviors

The cognitive processes (or lack thereof) described prior all lead to eventual decision-making which is referred to as reliance / compliance behaviors (Meyer, 2001; Meyer et al., 2014) or trust behaviors (Alarcon et al., 2021, 2023) in the Human Factors literature. As noted earlier, reliance is the actual dependence of the trustor on the trustee (i.e., delegation of a task). Much of the research has focused on attributing trust to reliance behaviors, such that appropriately trusting AI/ML means utilizing the system when it is accurate and disregarding the system when it is inaccurate. However, in real world applications pertinent in our theoretical model, the user will not know the actual state of the AI/ML/XAI decision. It is important that algorithms are designed so that users can most accurately trust them when it is applicable and appropriate.

An important issue in Psychology is that behaviors are not due to a single cognitive state. The Human Factors literature has noted several issues that may lead to a user’s decision to utilize a system. Our discussion of attitudes in the previous section illustrates some of the different attitudes that can influence behaviors. Importantly, one additional reason for reliance behaviors may simply be user errors. A user may accidentally perform the correct behavior, either without knowing they were going to or because they “hit the wrong button,” which happened to be correct. These human errors are often not accounted for in the literature, as it is assumed a user is performing the behavior on purpose. Instead, it may be that some of the behaviors are accounted for by simple mistakes.