- School of Dentistry, Faculty of Medicine and Dentistry, University of Alberta, Edmonton, AB, Canada

Introduction: Accurate assessment of midpalatal suture (MPS) maturation is critical in orthodontics, particularly for planning treatment strategies in patients with maxillary transverse deficiency (MTD). Although cone-beam computed tomography (CBCT) provides detailed imaging suitable for MPS classification, manual interpretation is often subjective and time-consuming.

Methods: This study aimed to develop and evaluate a lightweight two-dimensional convolutional neural network (2D CNN) for the automated classification of MPS maturation stages using axial CBCT slices. A retrospective dataset of CBCT images from 111 patients was annotated based on Angelieri's classification system and grouped into three clinically relevant categories: AB (Stages A and B), C, and DE (Stages D and E). A 9-layer CNN architecture was trained and evaluated using standard classification metrics and receiver operating characteristic (ROC) curve analysis.

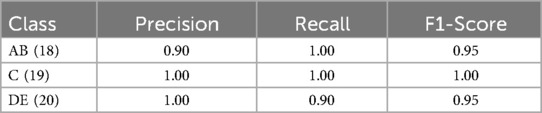

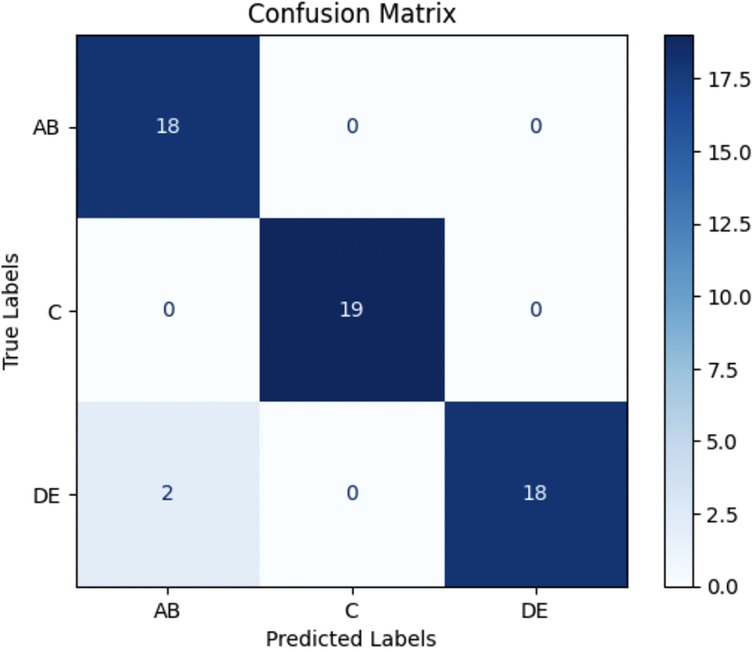

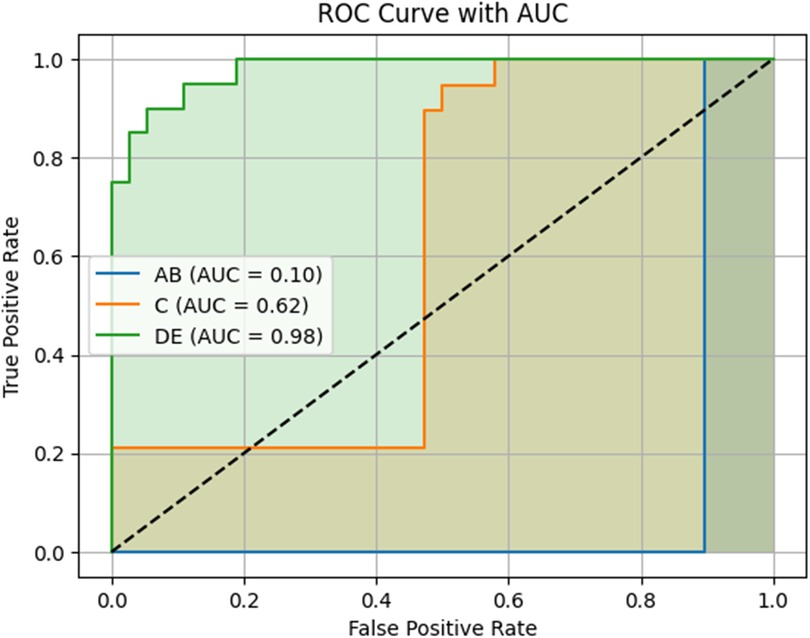

Results: The model achieved a test accuracy of 96.49%. Class-wise F1-scores were 0.95 for category AB, 1.00 for C, and 0.95 for DE. Area under the ROC curve (AUC) scores were 0.10 for AB, 0.62 for C, and 0.98 for DE. Lower AUC values in the early and transitional stages (AB and C) likely reflect known anatomical overlap and subjectivity in expert labeling.

Discussion: These findings indicate that the proposed 2D CNN demonstrates high accuracy and robustness in classifying MPS maturation stages from CBCT images. Its compact architecture and strong performance suggest it is suitable for real-time clinical decision-making, particularly in identifying cases that may benefit from surgical intervention. Moreover, its lightweight design makes it adaptable for use in resource-limited settings. Future work will explore volumetric models to further enhance diagnostic reliability and confidence.

1 Introduction

Maxillary transverse deficiency (MTD) is characterized by a reduced width of the upper jaw relative to the mandible. It can present clinically as posterior crossbite, dental crowding, altered tongue position, and impaired nasal airflow, and has been associated with compromised airway dimensions and increased risk for obstructive sleep apnea (1–8). Treatment options depend heavily on whether the midpalatal suture (MPS), a key growth site in the maxilla, has fused. In growing patients with an open or partially ossified MPS, non-surgical expansion techniques such as rapid maxillary expansion (RME), slow maxillary expansion (SME), or microimplant-assisted rapid palatal expansion (MARPE) are typically effective (9–13). In contrast, patients with complete MPS fusion often require surgically assisted rapid palatal expansion (SARPE) or segmental Le Fort I osteotomy, which carry additional cost, morbidity, and recovery time (14, 15).

Misclassifying the MPS stage can lead to complications such as relapse, pain, and unnecessary surgeries. Therefore, an objective evaluation of MPS maturation is essential for optimizing treatment outcomes and ensuring that patients receive the most effective and least invasive care (16–19). Conventional assessment methods, such as the five-stage CBCT-based classification proposed by Angelieri et al. (Stages A to E), rely on visual interpretation of axial slices and are inherently subjective. Reported inter-examiner agreement ranges from only 43% to 68%, highlighting diagnostic variability that may lead to overtreatment or undertreatment (20–22).

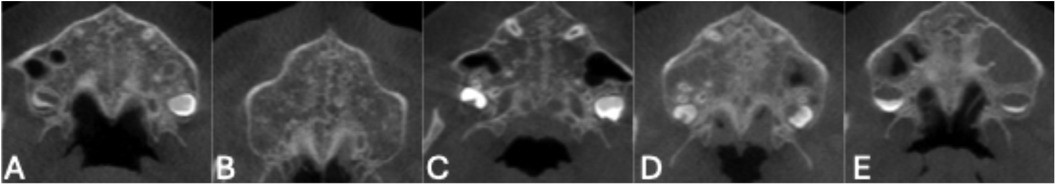

In the system of Angelieri et al. (Figure 1), Stage A represents a straight, high-density suture line with no interdigitation (Figure 1A); Stage B shows increased scalloping (Figure 1B); Stage C features two parallel high-density lines (Figure 1C); Stage D marks partial fusion in the palatine bone (Figure 1D); and Stage E indicates complete fusion throughout the palate (Figure 1E), rendering the suture invisible. While this method is widely accepted, staging remains subjective, and clinical decisions often depend not on the specific stage but on whether the suture is open, transitional, or fused. As such, clinicians commonly group these stages into three actionable categories: AB (immature), C (uncertain), and DE (fused), which directly influence treatment strategy (23).

Figure 1. Representative axial CBCT slices showing the five stages of midpalatal suture (MPS) maturation according to Angelieri et al. (A) Stage A: Straight high-density line with no interdigitation. (B) Stage B: Scalloped appearance with early interdigitation. (C) Stage C: Two parallel high-density lines. (D) Stage D: Partial fusion in the palatine bone, with reduced suture visibility. (E) Stage E: Complete fusion; suture no longer visible.

Recent advances in artificial intelligence (AI), particularly convolutional neural networks (CNNs), have shown promise in automating radiographic interpretation in medicine and dentistry (24–29). CNNs are particularly suited to image classification tasks due to their ability to extract complex features from raw pixel data (30–33). In orthodontics, Zhu et al. (34) recently demonstrated the use of a ResNet-based CNN to classify MPS maturation stages from CBCT images, offering proof of concept. However, ResNet architectures require substantial computational resources and are not easily integrated into routine clinical practice.

Furthermore, prior AI-based studies have generally maintained the five-category structure proposed by Angelieri, which may not align with how clinicians make treatment decisions. In practice, MPS stages are commonly consolidated into three actionable groups:

• Stages A and B (AB): immature suture, favorable for non-surgical expansion

• Stage C: transitional morphology, uncertain outcome

• Stages D and E (DE): fused suture, requiring surgical expansion (23)

Given the importance of early and accurate diagnosis of MTD in ensuring efficient treatment, this study aims to develop and evaluate a 2D CNN model for the automated classification of MPS maturation stages from CBCT images. The model is designed to classify MPS into three groups: AB (stages A and B), C, and DE (stages D and E), corresponding to different treatment strategies. By leveraging a lightweight CNN architecture, this study seeks to balance high classification accuracy with low computational demand, offering a practical tool to improve diagnostic consistency and patient care in orthodontics.

2 Materials and methods

2.1 Data collection and preparation

This study was approved by the Health Research Ethics Board of the University of Alberta (study number: Pro00125920). Anonymized and de-identified CBCT scans were retrospectively obtained from 155 patients who underwent imaging as part of routine orthodontic treatment between 2014 and 2022 at the University of Alberta Orthodontics Clinic. Patients included in the study were between 7 and 21 years of age. All data were fully anonymized prior to the study, and individual demographic identifiers such as gender were not available. Although CBCT produces volumetric data, all labeling, image selection, and model training in this study were performed exclusively on 2D axial slices.

Scans were excluded if patients had prior orthodontic treatment, impacted upper teeth in the mid-palatal region, congenital craniofacial anomalies (e.g., cleft palate), if the MPS was not clearly visible in a single axial slice, or if image quality was compromised by motion artifacts or scatter. A total of 44 scans were excluded: 34 due to poor visualization of the suture, and 10 due to scatter or artifacts.

The rationale for these exclusions was to ensure that the deep learning model was trained on diagnostically interpretable images, where the MPS could be reliably visualized in a single slice and labeled. Including scans with unclear sutures or confounding anatomy would have introduced label noise, reduced model performance, and undermined study validity. In cases where the presence of the suture was ambiguous, multiple slices were reviewed by orthodontists before exclusion was confirmed. This approach prioritized label accuracy and model generalizability to real-world clinical CBCTs with sufficient image quality for diagnosis.

The remaining 111 CBCT scans from 111 patients were included in the study. Each scan consisted of approximately 450 axial slices, which were converted from DICOM to PNG format using ITK-SNAP software, yielding images of 726 × 644 pixels. All CBCTs were acquired using a full field-of-view i-CAT scanner (Imaging Sciences International, Hatfield, PA, USA) at medium dimension, with a voxel size of 0.3 mm and an acquisition time of 8–9 s. Two orthodontists classified the axial images of each patient into the five MPS maturation stages described by Angelieri et al. In case of disagreement, a third orthodontist evaluated the images to determine the MPS stage. Once the patients were classified, the slices showing the palate were selected and saved in a separate folder.

The palate slices were then grouped into three categories: AB (maturation stages A and B), C, and DE (maturation stages D and E). This grouping was intended to reduce label variability for the deep learning (DL) model, since the aim of this study was to support the diagnosis that informs an optimal treatment plan (23).
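The consolidation of Angelieri stages into the three study groups amounts to a fixed label mapping. A minimal Python sketch follows. The dictionary and function names, and the AB, C, DE index order used for one-hot labels, are illustrative assumptions rather than the authors' code.

```python
# Illustrative mapping from Angelieri stages (A-E) to the three study groups.
STAGE_TO_GROUP = {"A": "AB", "B": "AB", "C": "C", "D": "DE", "E": "DE"}

# Hypothetical class-index order (AB, C, DE) for a three-unit softmax output.
GROUP_TO_INDEX = {"AB": 0, "C": 1, "DE": 2}


def stage_to_group(stage: str) -> str:
    """Map a single Angelieri stage letter to its clinical group."""
    key = stage.strip().upper()
    if key not in STAGE_TO_GROUP:
        raise ValueError(f"Unknown Angelieri stage: {stage!r}")
    return STAGE_TO_GROUP[key]


def one_hot(group: str) -> list[int]:
    """One-hot label for categorical cross-entropy, in GROUP_TO_INDEX order."""
    vec = [0, 0, 0]
    vec[GROUP_TO_INDEX[group]] = 1
    return vec
```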

2.2 Data preprocessing

To improve model performance and ensure consistency across samples, several preprocessing steps were applied to the CBCT axial slices prior to CNN training. The goal of preprocessing was to focus the model's attention on the midpalatal suture (MPS), reduce irrelevant variation, and standardize image inputs.

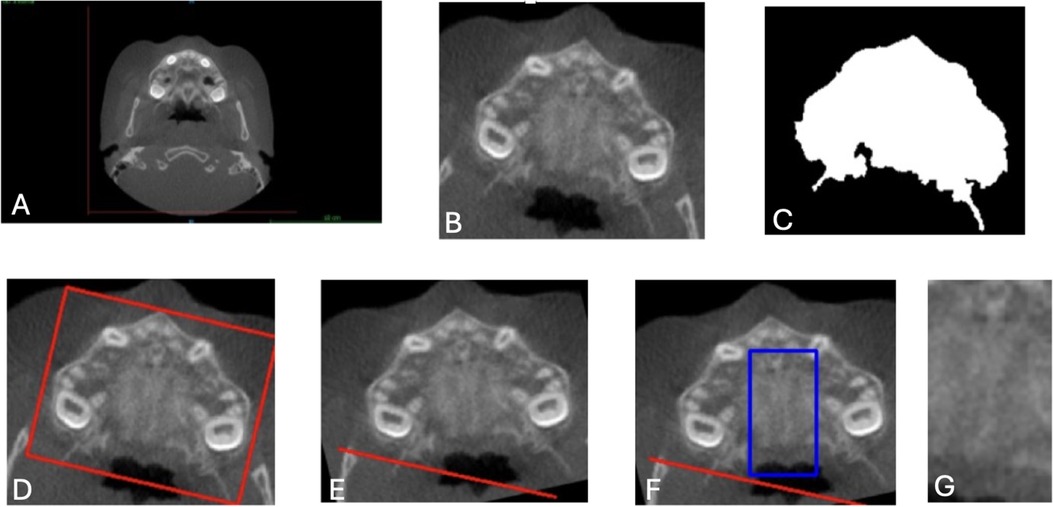

First, each slice was cropped to isolate the maxilla using fixed, predefined coordinates, producing a 190 × 220 pixel region (Figure 2B). The coordinates were chosen so that the entire maxilla was included in the region of interest (ROI). Next, a Gaussian blur was applied using the OpenCV and NumPy libraries to reduce noise and smooth image contours (35, 36). A binary mask of the maxilla was also generated for reference (Figure 2C).

Figure 2. Image preprocessing steps for CNN input preparation. (A) Original axial CBCT scan. (B) Cropped slice isolating the maxilla using fixed coordinates (190 × 220 pixels). (C) Binary mask identifying the maxillary region. (D) Angular correction was performed by drawing a rectangle around the widest maxillary contour and estimating the rotation angle. (E) Aligned image following rotation to standardize transverse orientation. (F) Region of interest (ROI) selection for the midpalatal suture, defined as 140 pixels vertically and 47 pixels horizontally, centered over the MPS. (G) Final cropped ROI used as model input. These preprocessing steps were applied uniformly to all axial slices to ensure anatomical consistency, reduce noise, and improve model focus on the midpalatal suture (MPS). CNN, convolutional neural network; ROI, region of interest; CBCT, cone-beam computed tomography.

To correct angular differences, the images were rotated based on the transverse dimension of the maxilla. A rectangle was drawn around the widest contour (Figure 2D), and the rotation angle was calculated and applied to align the images horizontally (Figure 2E). After rotation, the MPS region was cropped to 47 pixels along the x-axis and 140 pixels along the y-axis, ensuring that the MPS was fully captured in all CBCT images. The resulting 140 × 47 pixel ROI was verified manually for accuracy (Figures 2F,G).

These cropping dimensions were chosen to capture the MPS consistently across all CBCT images, reducing irrelevant information while maintaining computational efficiency. Each image was manually checked to ensure that the MPS was fully contained within the final region of interest (ROI), thereby maximizing the model's ability to learn relevant features for accurate classification. While this preprocessing pipeline was applied uniformly across all samples, the coordinate parameters and ROI dimensions were based on this specific dataset and may not be directly transferable to other CBCT datasets. Manual verification or dataset-specific adjustments would likely be required when applying the pipeline elsewhere, due to variations in patient anatomy, image acquisition protocols, and field of view. This highlights the importance of validating preprocessing procedures in the context of each new clinical dataset to ensure model reliability and generalizability.

2.3 ROI classifier development using DL

To identify the optimal axial slices containing the MPS, a separate binary classification CNN was developed to distinguish between slices that clearly displayed the MPS (preferred) and those that did not (non-preferred).

2.3.1 Data collection

A dataset of 996 axial slices was constructed from the full CBCT volumes of 111 patients. Of these, 575 were labeled “preferred” (the MPS was clearly visible) and 421 “non-preferred” (selected randomly from other regions of the scans).

2.3.2 Data splitting

The dataset was divided into training (70%), validation (20%), and testing (10%) subsets, stratified by class to preserve the proportions of preferred and non-preferred slices (37). This resulted in 402 preferred and 294 non-preferred slices for training, 115 preferred and 84 non-preferred for validation, and 57 preferred and 42 non-preferred for testing.
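A class-stratified 70/20/10 split can be sketched in a few lines of NumPy. Each share is rounded down per class, which is an assumption. This rounding reproduces the subset sizes reported above and leaves one slice per class unassigned (402 + 115 + 57 = 574 of 575, and 294 + 84 + 42 = 420 of 421). The function name and seed are illustrative.

```python
import numpy as np


def stratified_split(labels, percents=(70, 20, 10), seed=0):
    """Shuffle and split indices per class so each subset keeps the class proportions.

    Subset sizes are floor(n_class * percent / 100), computed with integer arithmetic.
    """
    labels = np.asarray(labels)
    rng = np.random.default_rng(seed)
    splits = {"train": [], "val": [], "test": []}
    for cls in np.unique(labels):
        idx = rng.permutation(np.flatnonzero(labels == cls))
        n_train, n_val, n_test = (len(idx) * p // 100 for p in percents)
        splits["train"].extend(idx[:n_train])
        splits["val"].extend(idx[n_train:n_train + n_val])
        splits["test"].extend(idx[n_train + n_val:n_train + n_val + n_test])
    return {name: np.array(ids, dtype=int) for name, ids in splits.items()}
```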

2.3.3 Model architecture

The architecture consisted of two convolutional layers with 32 and 64 filters respectively, each using a 3 × 3 kernel and ReLU activation. Each convolutional layer was followed by a 2 × 2 max-pooling layer to reduce spatial dimensions and computational load. The output was flattened and passed through a fully connected dense layer with 64 units and ReLU activation, followed by a final dense layer with a sigmoid activation function to output the probability of belonging to the “preferred” class. This compact architecture was designed to balance accuracy with computational efficiency and to minimize the risk of overfitting, given the binary nature of the task and relatively small dataset size.
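The ROI classifier described above maps directly onto a Keras `Sequential` model. The sketch below follows the stated layer sizes. The paper does not report the input size or padding, so the 128 × 128 single-channel input and the default "valid" padding are assumptions.

```python
from tensorflow import keras

layers = keras.layers


def build_roi_classifier(input_shape=(128, 128, 1)):
    """Binary CNN: preferred (MPS visible) vs non-preferred axial slice.

    input_shape is an assumption; the paper does not report the input size.
    """
    model = keras.Sequential([
        keras.Input(shape=input_shape),
        layers.Conv2D(32, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),
        layers.Conv2D(64, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),
        layers.Flatten(),
        layers.Dense(64, activation="relu"),
        layers.Dense(1, activation="sigmoid"),  # P(slice is "preferred")
    ])
    # Adam optimizer and binary cross-entropy, as stated in Section 2.3.4.
    model.compile(optimizer="adam", loss="binary_crossentropy", metrics=["accuracy"])
    return model
```

A slice could then be flagged as preferred when the predicted probability exceeds 0.5. This is a conventional threshold that the paper does not state.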

2.3.4 Training

The model was trained with the Adam optimizer and binary cross-entropy loss function. Early stopping was applied after 5 epochs of no improvement, with the best model weights saved using checkpointing.
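A small, framework-free function makes the early-stopping rule concrete. It follows the same patience logic as Keras' `EarlyStopping` callback with its default `min_delta=0`: training stops once `patience` consecutive epochs fail to improve on the best validation loss. The checkpoint then holds the weights from the returned best epoch. The function name is hypothetical.

```python
def early_stopping_epochs(val_losses, patience=5, min_delta=0.0):
    """Return (best_epoch, stop_epoch), 1-indexed, for a validation-loss history.

    Training stops once `patience` consecutive epochs fail to lower the best
    loss by more than `min_delta`; checkpointing keeps the best epoch's weights.
    """
    best_loss = float("inf")
    best_epoch = 0
    wait = 0
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, wait = loss, epoch, 0  # improvement: checkpoint here
        else:
            wait += 1
            if wait >= patience:
                return best_epoch, epoch
    return best_epoch, len(val_losses)  # epoch budget exhausted before triggering
```

With a patience of 15, a best epoch of 75 ends training at epoch 90. This matches the run described in Section 3.2.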

2.4 2D CNN model for MPS classification

After the two-layer ROI classifier had selected the preferred slices containing the suture, a second 2D CNN was developed to classify MPS maturation stages into three categories: AB (Stages A and B), C, and DE (Stages D and E).

2.4.1 Architecture

The model consisted of nine convolutional layers, designed to progressively extract features from preprocessed axial CBCT slices. The input to the model was a grayscale image of 140 × 47 pixels.

The first convolutional layer applied 64 filters of size 3 × 3, followed by batch normalization, ReLU activation, and max pooling. Each convolutional layer was followed by batch normalization and ReLU activation, and dropout layers (with a rate of 0.5) were interspersed throughout to reduce overfitting.

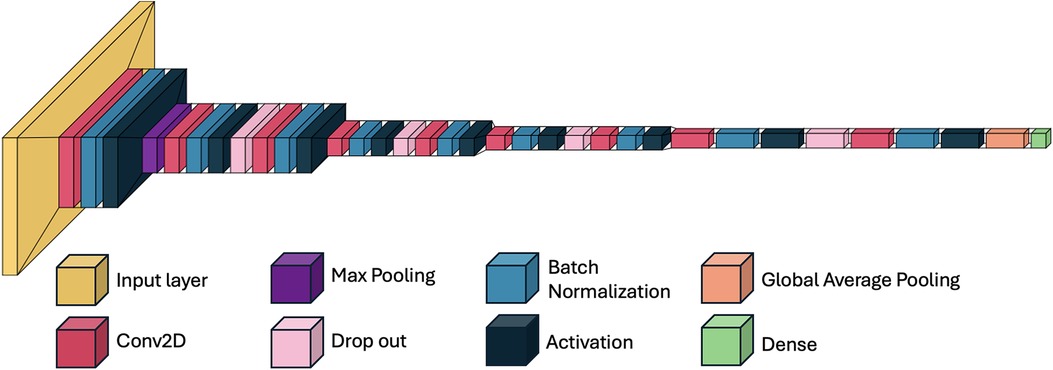

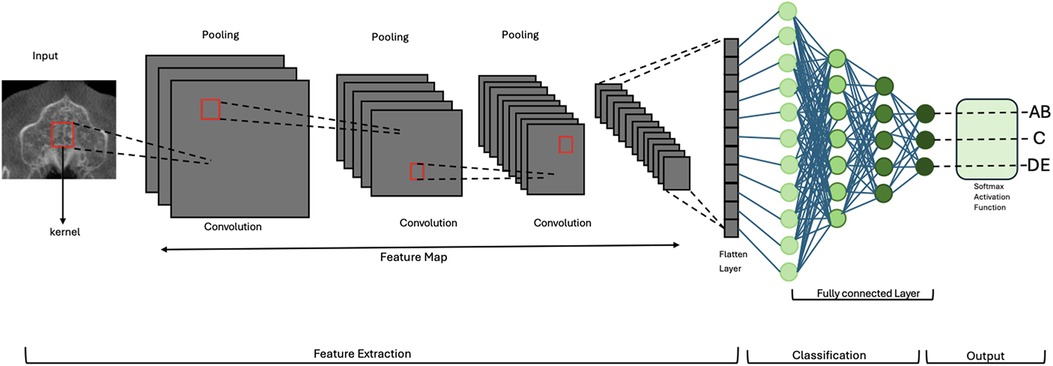

The output from the final convolutional block was passed through a global average pooling layer, followed by a dense layer with three output units and a softmax activation function, which provided the probability distribution across the three classes. The total number of trainable parameters was approximately 4.7 million. The model was implemented using TensorFlow (v2.12) and Keras. A schematic of the architecture is shown in Figures 3, 4.

Figure 3. Schematic overview of the 2D convolutional neural network (CNN) architecture for MPS classification. Axial CBCT slices serve as input. Feature extraction is performed through a series of convolutional and pooling layers. Outputs are passed to a fully connected layer and classified into one of three clinically grouped stages: AB, C, or DE. Softmax activation provides class probabilities. MPS, midpalatal suture; CNN, convolutional neural network.

Figure 4. Schematic overview of the 2D CNN used for midpalatal suture maturation classification. The model includes nine convolutional layers, each followed by batch normalization, ReLU activation, and dropout. Max pooling and global average pooling reduce dimensionality before the dense layers. This architecture enabled high classification performance while maintaining low model complexity (4.7 million trainable parameters).
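A Keras sketch of a nine-layer network with the components described above is shown below. These components are 3 × 3 convolutions, batch normalization, ReLU, max pooling, 0.5 dropout, global average pooling and a three-way softmax. The text gives only the first layer's 64 filters and the 140 × 47 input. The remaining filter counts and the placement of pooling and dropout are hypothetical, so the parameter count will not match the reported 4.7 million exactly.

```python
from tensorflow import keras

layers = keras.layers

# Hypothetical filter schedule: only the first layer's 64 filters are reported.
FILTERS = (64, 64, 128, 128, 256, 256, 256, 512, 512)
POOL_AFTER = {0, 2, 4, 6}   # hypothetical: 140x47 -> 70x23 -> 35x11 -> 17x5 -> 8x2
DROPOUT_AFTER = {2, 5, 8}   # hypothetical: dropout (rate 0.5) after these conv layers


def build_mps_classifier(input_shape=(140, 47, 1), n_classes=3):
    """Nine Conv-BN-ReLU blocks, then global average pooling and a softmax head."""
    inputs = keras.Input(shape=input_shape)
    x = inputs
    for i, n_filters in enumerate(FILTERS):
        # Bias omitted because the following BatchNormalization adds its own offset.
        x = layers.Conv2D(n_filters, 3, padding="same", use_bias=False)(x)
        x = layers.BatchNormalization()(x)
        x = layers.Activation("relu")(x)
        if i in POOL_AFTER:
            x = layers.MaxPooling2D(2)(x)
        if i in DROPOUT_AFTER:
            x = layers.Dropout(0.5)(x)
    x = layers.GlobalAveragePooling2D()(x)
    outputs = layers.Dense(n_classes, activation="softmax")(x)  # probabilities for AB, C, DE
    return keras.Model(inputs, outputs, name="mps_2d_cnn")
```

Calling `model.summary()` reports the parameter count of whichever schedule is chosen.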

2.4.2 Training setup

A total of 111 CBCT scans from 111 patients were included. The ROI classifier was applied to these scans to build a dataset of 580 images of the ideal slices containing the MPS. The dataset was split patient-wise into training (70%), validation (20%), and test (10%) sets, so that all slices from a given patient belonged to a single subset.
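A patient-wise split assigns whole patients, not individual slices, to each subset. This guarantees that slices from one patient never appear in both training and test data. A minimal NumPy sketch follows. Patients contribute different numbers of slices, so the slice-level proportions only approximate 70/20/10. For example, Section 3 reports 405 training and 57 test slices out of 580, which leaves 118 for validation. The function name and seed are illustrative.

```python
import numpy as np


def patient_wise_split(patient_ids, train_pct=70, val_pct=20, seed=0):
    """Split slice indices so that each patient falls in exactly one subset.

    patient_ids: one (pseudonymous) patient identifier per slice.
    The test subset receives all patients left after train and val.
    """
    patient_ids = np.asarray(patient_ids)
    rng = np.random.default_rng(seed)
    patients = rng.permutation(np.unique(patient_ids))
    n_train = len(patients) * train_pct // 100
    n_val = len(patients) * val_pct // 100
    groups = {
        "train": patients[:n_train],
        "val": patients[n_train:n_train + n_val],
        "test": patients[n_train + n_val:],
    }
    # Map each patient group back to the indices of that group's slices.
    return {name: np.flatnonzero(np.isin(patient_ids, ids)) for name, ids in groups.items()}
```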

The model was trained using the Adam optimizer with a learning rate of 0.001, and the categorical cross-entropy loss function. Training was conducted for up to 50 epochs with a batch size of 32. Early stopping with a patience of 5 epochs was applied based on validation loss to prevent overfitting. The best-performing weights were saved for evaluation.
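The training configuration can be expressed with standard Keras components, as sketched below. The defaults follow this subsection: Adam with a learning rate of 0.001, categorical cross-entropy, and a patience of 5 on validation loss. Section 3.2 reports different values for the final run (a patience of 15, up to 100 epochs, and a batch size of 16), so these values are left as parameters. The checkpoint file name is hypothetical.

```python
from tensorflow import keras


def configure_training(model, learning_rate=1e-3, patience=5,
                       checkpoint_path="mps_best.weights.h5"):
    """Compile a three-class model and return early-stopping and checkpoint callbacks."""
    model.compile(
        optimizer=keras.optimizers.Adam(learning_rate=learning_rate),
        loss="categorical_crossentropy",  # expects one-hot labels
        metrics=["accuracy"],
    )
    return [
        # Stop after `patience` epochs without a lower validation loss; restore best weights.
        keras.callbacks.EarlyStopping(monitor="val_loss", patience=patience,
                                      restore_best_weights=True),
        # Also keep the best weights on disk.
        keras.callbacks.ModelCheckpoint(checkpoint_path, monitor="val_loss",
                                        save_best_only=True, save_weights_only=True),
    ]
```

Usage would look like `model.fit(x_train, y_train, validation_data=(x_val, y_val), epochs=50, batch_size=32, callbacks=configure_training(model))`, where the array names are placeholders.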

2.5 Evaluation metrics

Model performance was evaluated using accuracy, precision, recall, and F1-score metrics for each class (AB, C, DE), providing a comprehensive assessment of the model's ability to classify MPS maturation stages.

To further assess the model's discriminative ability and ranking confidence, one-vs.-rest receiver operating characteristic (ROC) curves were generated for each class. The area under the curve (AUC) was calculated to quantify the model's ability to distinguish each class from the others across a range of probability thresholds.
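One-vs.-rest AUC treats one class as positive and uses that class's softmax probability as the score. It can be computed without a plotting library. The AUC equals the probability that a randomly chosen positive slice scores higher than a randomly chosen negative one, with ties counted as one half. The minimal NumPy sketch below uses that definition, and it should agree with scikit-learn's `roc_auc_score` on binary labels. The function names are illustrative. For reference, an AUC of 0.5 corresponds to chance-level ranking. Values below 0.5 indicate that the positive class is systematically scored lower than the other classes.

```python
import numpy as np


def binary_auc(y_true, scores):
    """AUC as P(score of a random positive > score of a random negative); ties count 1/2."""
    y_true = np.asarray(y_true, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    pos, neg = scores[y_true], scores[~y_true]
    if pos.size == 0 or neg.size == 0:
        raise ValueError("AUC needs at least one positive and one negative case")
    diff = pos[:, None] - neg[None, :]  # all positive-negative score differences
    return float(((diff > 0).sum() + 0.5 * (diff == 0).sum()) / diff.size)


def one_vs_rest_auc(y_true, probs, class_names=("AB", "C", "DE")):
    """Per-class AUC: class k is positive, all others negative, score = probs[:, k]."""
    y_true = np.asarray(y_true)
    probs = np.asarray(probs, dtype=float)
    return {name: binary_auc(y_true == k, probs[:, k]) for k, name in enumerate(class_names)}
```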

Additionally, 95% confidence intervals for AUC scores were computed using bootstrapping with 1,000 iterations, providing insight into the statistical reliability of model predictions. All performance metrics were computed on the held-out test set and are reported in detail in the Results section.
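The bootstrap procedure can be sketched as follows. The test cases are resampled with replacement 1,000 times, the one-vs.-rest AUC is recomputed on each resample, and the 2.5th and 97.5th percentiles are taken. The paper does not name the bootstrap variant, so the percentile variant used here is an assumption. Resamples containing only one class are skipped, because AUC is undefined for them. To obtain a per-class interval, pass `y == k` and the class-`k` probability column. The function names are illustrative.

```python
import numpy as np


def binary_auc(y_true, scores):
    """Rank-based AUC: P(positive score > negative score), ties count 1/2."""
    y_true = np.asarray(y_true, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    diff = scores[y_true][:, None] - scores[~y_true][None, :]
    return float(((diff > 0).sum() + 0.5 * (diff == 0).sum()) / diff.size)


def bootstrap_auc_ci(y_true, scores, n_boot=1000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for a binary (one-vs.-rest) AUC."""
    y_true = np.asarray(y_true, dtype=bool)
    scores = np.asarray(scores, dtype=float)
    rng = np.random.default_rng(seed)
    n = len(y_true)
    aucs = []
    for _ in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample test cases with replacement
        if np.unique(y_true[idx]).size < 2:
            continue  # AUC is undefined when a resample contains only one class
        aucs.append(binary_auc(y_true[idx], scores[idx]))
    lo, hi = np.percentile(aucs, [100 * alpha / 2, 100 * (1 - alpha / 2)])
    return float(lo), float(hi)
```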

3 Results

Manual classification of the 111 CBCT scans by the two orthodontists showed an inter-rater agreement of 68%, higher than the 43% reported in a comparable study (21). Discrepancies were resolved by a third orthodontist with experience in midpalatal suture (MPS) assessment.

3.1 ROI classifier performance

A separate CNN model was developed to classify slices as “preferred” (containing the MPS) or “non-preferred.” Evaluated on a test set of 57 preferred and 42 non-preferred images, the ROI classifier achieved a test accuracy of 99%. Training and validation accuracies both reached 100%, with negligible loss values, indicating effective model training. Manual verification of the model-selected slices confirmed agreement with expert selection in 99% of cases. This enabled construction of a final dataset of 580 ideal axial slices for MPS stage classification.

3.2 CNN model training and validation

The CNN model was trained to classify midpalatal maturation stages based on CBCT images. The input shape was set to 140 × 47, with a total of 405 training samples categorized into three groups: AB (127 samples), C (158 samples), and DE (120 samples).

Training used early stopping with a patience of 15 epochs and model checkpointing to save the best-performing weights. Although training was initially set for 100 epochs with a batch size of 16, it stopped at epoch 90 because validation loss had not improved since epoch 75. At that point, the model achieved a training accuracy of 97.81%, a validation loss of 0.0626, and a validation accuracy of 98.08%. The final epoch took approximately 5 s, with a processing time of 218 ms per step.

3.3 Testing results

The trained model was evaluated on a separate test dataset consisting of 57 images, distributed among the three groups: AB (18 samples), C (19 samples), and DE (20 samples). The testing results revealed a test loss of 0.2286 and test accuracy of 96.49%. The classification performance was further assessed using precision, recall, and F1-score metrics for each class (AB, C, DE) as demonstrated in Table 1 and Figure 5.

Table 1. Classification performance metrics (precision, recall, and F1-score) for each class (AB, C, DE).

Figure 5. Confusion matrix for 2D CNN model testing results. The model achieved a test accuracy of 96.49%. It classified AB with 100% recall (18/18), C with 100% recall (19/19), and DE with 90% recall (18/20). Both misclassifications were DE cases predicted as AB. AB = Stages A and B; DE = Stages D and E.
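The class-wise metrics can be recomputed from the confusion matrix as a check. The matrix below is reconstructed from the reported recalls (18/18, 19/19, 18/20) and the AB precision of 0.90 given in the Discussion. Together, these imply that both errors were DE slices predicted as AB. The function name is illustrative.

```python
import numpy as np

# Rows: true class; columns: predicted class; order AB, C, DE (reconstructed from reported values).
cm = np.array([[18, 0, 0],
               [0, 19, 0],
               [2, 0, 18]])


def per_class_metrics(cm):
    """Precision, recall, and F1 per class, plus overall accuracy, from a confusion matrix."""
    tp = np.diag(cm).astype(float)
    precision = tp / cm.sum(axis=0)  # column sums = predicted counts per class
    recall = tp / cm.sum(axis=1)     # row sums = true counts per class
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = tp.sum() / cm.sum()
    return precision, recall, f1, accuracy


precision, recall, f1, accuracy = per_class_metrics(cm)
print(np.round(precision, 2), np.round(recall, 2), np.round(f1, 2), round(accuracy * 100, 2))
```

Working through the arithmetic gives an AB precision of 18/20 = 0.90 and a DE recall of 18/20 = 0.90. Both F1-scores are 2(0.90)(1.00)/1.90 ≈ 0.95, and the accuracy is 55/57 ≈ 96.49%. These values are consistent with the figures reported above.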

3.4 ROC and AUC analysis

ROC curves were generated for each class using a one-vs.-rest approach. The model achieved an AUC of 0.98 for class DE, indicating near-perfect separation from the other classes. The AUCs for AB and C were 0.10 and 0.62, respectively. Full ROC curves and associated metrics are shown in Figure 6.

Figure 6. Receiver operating characteristic (ROC) curves and area under the curve (AUC) scores for the three MPS maturation classes. The model's performance is shown using a one-vs.-rest approach. The DE class achieved a high AUC of 0.98, indicating excellent discriminative ability and model confidence. In contrast, the AB and C classes had lower AUC values (0.10 and 0.62, respectively), reflecting reduced certainty in predictions, particularly in early-stage classification. This may be due to anatomical overlap and subtle feature transitions between early and intermediate maturation stages. AB = Stages A and B; DE = Stages D and E.

4 Discussion

In this study, a dataset of 580 CBCT slices containing the MPS was used to train a lightweight 2D CNN for automated MPS maturation stage classification into three clinically relevant groups: AB, C, and DE. The proposed CNN achieved a training accuracy of 97.81%, a validation accuracy of 98.08%, and a test accuracy of 96.49%. Class-wise performance metrics demonstrated high precision (AB: 0.90, C: 1.00, DE: 1.00) and recall (AB: 1.00, C: 1.00, DE: 0.90), with F1-scores ranging from 0.95 to 1.00. These results suggest that our CNN architecture effectively supports early detection of MPS fusion stages from axial CBCT images. Clinically, the AB, C, and DE stages also broadly correspond to pre-pubertal, pubertal, and post-pubertal phases of growth, respectively, which are key considerations in determining the timing and method of maxillary expansion.

ROC analysis revealed AUC values of 0.10 for AB, 0.62 for C, and 0.98 for DE. While DE classification was highly confident and well-separated, the comparatively lower AUCs for AB and C suggest that the model had more difficulty distinguishing these early and transitional stages with consistent confidence. This may reflect the anatomical overlap and subtlety between AB and C stages, which are often difficult to distinguish even among experienced clinicians. In our dataset, inter-rater agreement between two orthodontists was 68%, underscoring the inherent subjectivity and variability in labeling these stages. Although the model predicted AB and C correctly in most cases—as reflected in the high F1-scores—the ROC-derived AUC indicates that the model often assigned similar probabilities across neighboring classes, reducing its overall ranking confidence.

Our results can be compared with those of Zhu et al., who also used a CNN-based approach (ResNet18) to classify MPS maturation from CBCT images; the difference in model complexity is notable. Zhu et al.'s ResNet18 model comprises 11.69 million parameters and was trained on samples from 785 patients, whereas our custom model contains only 4.7 million parameters, approximately 60% fewer. Despite this reduction in complexity, our model achieved a substantially higher training accuracy (97.81% vs. 79.10%), underscoring the efficacy of a lightweight architecture for this classification task. Our model illustrates an alternative approach: developing small, task-specific DL models that can be deployed in resource-constrained environments, such as clinical settings with limited samples or computational power, or on mobile devices.

Our findings align with previous research highlighting the effectiveness of DL techniques, particularly CNNs, in medical image analysis. Other studies have demonstrated CNNs' ability to interpret complex biomedical images effectively, including tasks like interstitial lung disease pattern classification and dental diagnostics. These studies further validate the utility of CNNs in healthcare, particularly in automating diagnostic processes and reducing human error (25, 26, 38–40).

Moreover, the lightweight nature of our CNN architecture offers practical advantages, especially in orthodontic clinics where computational resources may be limited. The model's efficiency, coupled with its high accuracy, demonstrates its potential for real-world applications, where rapid and accurate assessment of midpalatal suture maturation could significantly enhance treatment planning.

However, some limitations of this study should be acknowledged. Although the sample size is in line with many medical imaging studies, all scans came from a single clinic and a single CBCT scanner, which may limit the generalizability of our findings to larger and more diverse populations.

Future studies should focus on evaluating the model's performance with larger and more diverse datasets to ensure its robustness and applicability across different demographics.

A further limitation is that scans in which the MPS was not visible in a single axial slice were excluded (34 of the 155 scans), because the method classifies individual slices only. Future research should explore three-dimensional (3D) approaches to provide a more comprehensive analysis of MPS maturation stages. It should also investigate optimized imaging protocols or AI-based enhancement techniques that improve suture detectability and reduce the need for image exclusion.

Another limitation of this study is the higher radiation dose associated with CBCT compared to occlusal radiographs. However, CBCT offers significantly greater image quality and precision, which was essential for the development of our small models (41). The ability to use smaller models stems from the superior clarity provided by CBCT, allowing for more precise visualization and classification. While occlusal radiographs were previously used to visualize the MPS (42), their clarity and accuracy were limited, as they provided a less detailed view of a potentially thin structure embedded deep within the palatal bone, making classification more challenging (43). As small model development becomes a growing focus in the field, the enhanced resolution of CBCT imaging will continue to play a crucial role in advancing research and clinical applications.

It is critical to recognize that our findings rely on Angelieri's staging system for midpalatal suture maturation, which could affect the validity of our results if future studies challenge this classification's accuracy. Additionally, building on this work, future research will explore volumetric (3D) CNN models to capture full spatial context. Preliminary results from our follow-up study suggest improved AUC values for the AB and C stages, supporting the potential of 3D architectures to enhance diagnostic confidence in these stages. By incorporating advanced imaging techniques and larger datasets, we aim to further enhance the accuracy and clinical relevance of AI-driven tools in orthodontic diagnosis and treatment planning.

5 Conclusion

In conclusion, this study developed a lightweight 2D convolutional neural network (CNN) to automate the classification of midpalatal suture (MPS) maturation stages from axial CBCT slices. The proposed model demonstrated high accuracy, with strong precision and F1-scores across all three clinically grouped classes: AB, C, and DE. ROC analysis further confirmed excellent performance for the DE stage, which is critical for identifying cases that may require surgically assisted expansion.

The model's efficient architecture, combined with its robust classification ability, highlights its potential for real-world clinical use—particularly in orthodontic settings where rapid and reliable MPS assessment could aid treatment planning. While classification of early and transitional stages (AB and C) presented lower AUC values, these findings reflect known diagnostic ambiguity and suggest areas for further development. Future research will explore 3D CNN models to improve diagnostic confidence and generalizability through volumetric context and larger, more diverse datasets.

Data availability statement

The data analyzed in this study is subject to the following licenses/restrictions: The dataset consists of patient CBCT images which are the property of Dr. Manuel Lagravere Vich from University of Alberta, School of Dentistry, Orthodontics Clinic. Requests to access these datasets should be directed to manuel@ualberta.ca.

Ethics statement

The studies involving humans were approved by University of Alberta Health Research Ethics Board—Health Panel. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation was not required from the participants or the participants' legal guardians/next of kin in accordance with the national legislation and institutional requirements.

Author contributions

MN: Conceptualization, Formal analysis, Methodology, Project administration, Validation, Visualization, Writing – original draft, Writing – review & editing, Data curation, Investigation, Software. NA: Writing – review & editing, Writing – original draft. ML: Writing – review & editing, Data curation, Investigation, Resources, Supervision. HL: Conceptualization, Resources, Writing – review & editing, Methodology, Supervision, Validation.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was funded by a CAD 5,000 grant from the Fund for Dentistry, University of Alberta, Mike Petryk School of Dentistry (2023).

Acknowledgments

The authors would like to sincerely thank Dr. Victor Ladewig and Dr. Silvia Capenakas for their invaluable contribution to this study. Dr. Ladewig and Dr. Capenakas assisted in classifying the stages of the MPS in the CBCT images and determining the optimal slice for analysis. Their expertise and dedication were instrumental in the success of this research.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

The author(s) declared that they were an editorial board member of Frontiers, at the time of submission. This had no impact on the peer review process and the final decision.

Generative AI statement

The author(s) declare that Generative AI was used in the creation of this manuscript. ChatGPT was used to grammar check the manuscript.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. McNamara JA. Maxillary transverse deficiency. Am J Orthod Dentofacial Orthop. (2000) 117(5):567–70. doi: 10.1016/S0889-5406(00)70202-2

2. Darawsheh AF, Khasawneh IM, Saeed AM, Al-Dwairi ZN. Applicability of fractal analysis for quantitative evaluation of midpalatal suture maturation. J Clin Med. (2023) 12(13):4189. doi: 10.3390/jcm12134189

3. Nervina JM, Kapila SD, Flores-Mir C. Assessment of maxillary transverse deficiency and treatment outcomes by cone beam computed tomography. In: Kapila SD, editor. Cone Beam Computed Tomography in Orthodontics: Indications, Insights, and Innovations. Chichester, Oxford: Wiley-Blackwell (2014). p. 383–409. doi: 10.1002/9781118674888.ch17

4. Duque-Urióstegui C, Vargas-Sanchez R, Lopez-Franco A. Treatment of maxillary transverse deficiency with rapid expansion of the palate with mini-implant: a review. Int J Res Med Sci. (2023) 11(5):1858. doi: 10.18203/2320-6012.ijrms20231375

5. Iodice G, Visciano A, Perroni G. Association between posterior crossbite, skeletal, and muscle asymmetry: a systematic review. Eur J Orthod. (2016) 38(6):638–51. doi: 10.1093/ejo/cjw003

6. Akbulut S, Kaya B, Altan A, Ozturk C. Prediction of rapid palatal expansion success via fractal analysis in hand-wrist radiographs. Am J Orthod Dentofacial Orthop. (2020) 158(2):192–8. doi: 10.1016/j.ajodo.2019.07.018

7. Jimenez-Valdivia LM, López-Ramos R, Sánchez-Sánchez J. Midpalatal suture maturation stage assessment in adolescents and young adults using cone-beam computed tomography. Prog Orthod. (2019) 20(1):38. doi: 10.1186/s40510-019-0291-z

8. Feu D, de Oliveira BH, de Oliveira Almeida MA, Kiyak HA, Miguel JAM. Oral health-related quality of life and orthodontic treatment seeking. Am J Orthod Dentofacial Orthop. (2010) 138(2):152–9. doi: 10.1016/j.ajodo.2008.09.033

9. Haghanifar S, Mahmoudi S, Foroughi R, Poorsattar Bejeh Mir A, Mesgarani A, Bijani A. Assessment of midpalatal suture ossification using cone-beam computed tomography. Electron Physician. (2017) 9(3):4035. doi: 10.19082/4035

10. Shayani A, Merino-Gerlach MA, Garay-Carrasco IA, Navarro-Cáceres PE, Sandoval-Vidal HP. Midpalatal suture maturation stage in 10- to 25-year-olds using cone-beam computed tomography: a cross-sectional study. Diagnostics. (2023) 13(8):1449. doi: 10.3390/diagnostics13081449

11. Zong C, Tang B, Hua F, He H, Ngan P. Skeletal and dentoalveolar changes in the transverse dimension using microimplant-assisted rapid palatal expansion (MARPE) appliances. Semin Orthod. (2019) 25:46–59. doi: 10.1053/j.sodo.2019.02.006

12. Quintela M, Rossi SB, Vera KL, Peralta JC, Silva ID, Souza L, et al. Miniscrew-assisted rapid palatal expansion (MARPE) protocols applied in different ages and stages of maturation of the midpalatal suture: case reports. Res Soc Dev. (2021) 10(11):e503101119480. doi: 10.33448/rsd-v10i11.19480

13. Brunetto DP, Sant’Anna EF, Machado AW, Moon W. Non-surgical treatment of transverse deficiency in adults using microimplant-assisted rapid palatal expansion (MARPE). Dent Press J Orthod. (2017) 22:110–25. doi: 10.1590/2177-6709.22.1.110-125.sar

14. Bloomquist DS, Joondeph DR. Orthognathic surgical procedures on non-growing patients with maxillary transverse deficiency. Semin Orthod. (2019) 25:248–63. doi: 10.1053/j.sodo.2019.08.004

15. Rachmiel A, Turgeman S, Shilo D, Emodi O, Aizenbud D. Surgically assisted rapid palatal expansion to correct maxillary transverse deficiency. Ann Maxillofac Surg. (2020) 10(1):136–41. doi: 10.4103/ams.ams_163_19

16. Suri L, Taneja P. Surgically assisted rapid palatal expansion: a literature review. Am J Orthod Dentofacial Orthop. (2008) 133(2):290–302. doi: 10.1016/j.ajodo.2007.01.021

17. Agarwal A, Mathur R. Maxillary expansion. Int J Clin Pediatr Dent. (2010) 3(3):139–46. doi: 10.5005/jp-journals-10005-1069

18. Isfeld D, Flores-Mir C, Leon-Salazar V, Lagravère M. Evaluation of a novel palatal suture maturation classification as assessed by cone-beam computed tomography imaging of a pre- and postexpansion treatment cohort. Angle Orthod. (2019) 89(2):252–61. doi: 10.2319/040518-258.1

19. Isfeld D, Lagravere M, Leon-Salazar V, Flores-Mir C. Novel methodologies and technologies to assess mid-palatal suture maturation: a systematic review. Head Face Med. (2017) 13:1–15. doi: 10.1186/s13005-017-0144-2

20. Angelieri F, Neves L, Coelho H. Prediction of rapid maxillary expansion by assessing the maturation of the midpalatal suture on cone beam CT. Dent Press J Orthod. (2016) 21(6):115–25. doi: 10.1590/2177-6709.21.6.115-125.sar

21. Barbosa NMV, Silva P, Costa F. Reliability and reproducibility of the method of assessment of midpalatal suture maturation: a tomographic study. Angle Orthod. (2019) 89(1):71–7. doi: 10.2319/121317-859.1

22. Chhatwani S, Arman A, Möhlhenrich SC, Ludwig B, Jackowski J, Danesh G. Performance of dental students, orthodontic residents, and orthodontists for classification of midpalatal suture maturation stages on cone-beam computed tomography scans: a preliminary study. BMC Oral Health. (2024) 24(1):373. doi: 10.1186/s12903-024-04163-3

23. Ladewig V, Capelozza-Filho L, Almeida-Pedrin RR, Guedes FP, de Almeida Cardoso M, de Castro Ferreira Conti AC. Tomographic evaluation of the maturation stage of the midpalatal suture in postadolescents. Am J Orthod Dentofacial Orthop. (2018) 153(6):818–24. doi: 10.1016/j.ajodo.2017.09.019

24. Salunke D, Shendye P, Borkar R. Deep learning techniques for dental image diagnostics: a survey. 2022 International Conference on Augmented Intelligence and Sustainable Systems (ICAISS); IEEE (2022).

25. Al-Khuzaie MI, Al-Jawher WAM. Enhancing medical image classification: a deep learning perspective with multi wavelet transform. J Port Sci Res. (2023) 6(4):365–73. doi: 10.36371/port.2023.4.7

26. Aljuaid A, Anwar M. Survey of supervised learning for medical image processing. SN Comput Sci. (2022) 3(4):292. doi: 10.1007/s42979-022-01166-1

27. Alqazzaz S, Sun X, Nokes LD, Yang H, Yang Y, Xu R, et al. Combined features in region of interest for brain tumor segmentation. J Digit Imaging. (2022) 35(4):938–46. doi: 10.1007/s10278-022-00602-1

28. Huang S, Lee F, Miao R, Si Q, Lu C, Chen Q. A deep convolutional neural network architecture for interstitial lung disease pattern classification. Med Biol Eng Comput. (2020) 58:725–37. doi: 10.1007/s11517-019-02111-w

29. Esteva A, Kuprel B, Novoa RA, Ko J, Swetter SM, Blau HM, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. (2017) 542(7639):115–8. doi: 10.1038/nature21056

30. Schwendicke F, Cejudo Grano de Oro J, Garcia Cantu A, Meyer-Lueckel H, Chaurasia A, Krois J. Artificial intelligence for caries detection: value of data and information. J Dent Res. (2022) 101(11):1350–6. doi: 10.1177/00220345221113756

31. Mehta C, Shah R, Yanamala N, Sengupta PP. Cardiovascular imaging databases: building machine learning algorithms for regenerative medicine. Curr Stem Cell Rep. (2022) 8(4):164–73. doi: 10.1007/s40778-022-00216-x

32. Mehta S, Patnaik KS. Improved prediction of software defects using ensemble machine learning techniques. Neural Comput Appl. (2021) 33(16):10551–62. doi: 10.1007/s00521-021-05811-3

33. Monsarrat P, Bernard D, Marty M, Cecchin-Albertoni C, Doumard E, Gez L, et al. Systemic periodontal risk score using an innovative machine learning strategy: an observational study. J Pers Med. (2022) 12(2):217. doi: 10.3390/jpm12020217

34. Zhu M, Yang P, Bian C, Zuo F, Guo Z, Wang Y, et al. Convolutional neural network-assisted diagnosis of midpalatal suture maturation stage in cone-beam computed tomography. J Dent. (2024) 141:104808. doi: 10.1016/j.jdent.2023.104808

35. Harris CR, Millman KJ, van der Walt SJ, Gommers R, Virtanen P, Cournapeau D, et al. Array programming with NumPy. Nature. (2020) 585(7825):357–62. doi: 10.1038/s41586-020-2649-2

37. Al-Sarem M, Al-Asali M, Alqutaibi AY, Saeed F. Enhanced tooth region detection using pre-trained deep learning models. Int J Environ Res Public Health. (2022) 19(22):15414. doi: 10.3390/ijerph192215414

38. Isensee F, Jäger PF, Kohl SAA, Petersen J, Maier-Hein KH. Automated design of deep learning methods for biomedical image segmentation. arXiv [Preprint]. (2019). arXiv:1904.08128.

39. Ker J, Wang L, Rao J, Lim T. Deep learning applications in medical image analysis. IEEE Access. (2018) 6:9375–89. doi: 10.1109/ACCESS.2017.2788044

40. Singha A, Thakur RS, Patel T. Deep learning applications in medical image analysis. In: Dash S, Pani SK, Balamurugan S, Abraham A, editors. Biomedical Data Mining for Information Retrieval: Methodologies, Techniques, and Applications. Hoboken: John Wiley & Sons, Inc. (2021). p. 293–350. doi: 10.1002/9781119711278.ch11

41. Grünheid T, Kolbeck Schieck JR, Pliska BT, Ahmad M, Larson BE. Dosimetry of a cone-beam computed tomography machine compared with a digital x-ray machine in orthodontic imaging. Am J Orthod Dentofacial Orthop. (2012) 141(4):436–43. doi: 10.1016/j.ajodo.2011.10.024

42. Wehrbein H, Yildizhan F. The mid-palatal suture in young adults: a radiological histological investigation. Eur J Orthod. (2001) 23(2):105–14. doi: 10.1093/ejo/23.2.105

43. Forst D, Nijjar S, Khaled Y, Lagravere M, Flores-Mir C. Radiographic assessment of external root resorption associated with jackscrew-based maxillary expansion therapies: a systematic review. Eur J Orthod. (2014) 36(5):576–85. doi: 10.1093/ejo/cjt090

44. Hernández-Alfaro F, Valls-Ontañón A. SARPE and MARPE. In: Stevens MR, Ghasemi S, Tabrizi R, editors. Innovative Perspectives in Oral and Maxillofacial Surgery. Cham: Springer (2021). p. 321–5. doi: 10.1007/978-3-030-75750-2

45. Hernandez-Alfaro F, Mareque Bueno J, Diaz A, Pagés CM. Minimally invasive surgically assisted rapid palatal expansion with limited approach under sedation: a report of 283 consecutive cases. J Oral Maxillofac Surg. (2010) 68(9):2154–8. doi: 10.1016/j.joms.2009.09.080

46. Franchi L, Baccetti T, Lione R, Fanucci E, Cozza P. Modifications of midpalatal sutural density induced by rapid maxillary expansion: a low-dose computed tomography evaluation. Am J Orthod Dentofacial Orthop. (2010) 137(4):486–8. doi: 10.1016/j.ajodo.2009.10.028

Keywords: deep learning, convolutional neural networks, midpalatal suture, CBCT, orthodontics, maxillary transverse deficiency

Citation: Nik Ravesh M, Ameli N, Lagravere Vich M and Lai H (2025) Automated classification of midpalatal suture maturation using 2D convolutional neural networks on CBCT scans. Front. Dent. Med. 6:1583455. doi: 10.3389/fdmed.2025.1583455

Received: 25 February 2025; Accepted: 9 June 2025; Published: 26 June 2025.

Edited by:

Satoshi Yamaguchi, Osaka University, Japan

Reviewed by:

Iara Frangiotti Mantovani, Federal University of Santa Catarina, Brazil

Oskar Komisarek, Nicolaus Copernicus University in Toruń, Poland

Copyright: © 2025 Nik Ravesh, Ameli, Lagravere Vich and Lai. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Hollis Lai, hollis1@ualberta.ca
