- ¹Acupuncture and Tuina School, Chengdu University of Traditional Chinese Medicine, Chengdu, Sichuan, China

- ²National Clinical Research Center for Chinese Medicine Acupuncture and Moxibustion, First Teaching Hospital of Tianjin University of Traditional Chinese Medicine, Tianjin, China

- ³Nanyang Medical College, Nanyang, Henan, China

- ⁴Postgraduate School, Tianjin University of Traditional Chinese Medicine, Tianjin, China

Background: There has been a significant increase in the number of diagnostic and predictive models for myelitis. These models aim to provide clinicians with more accurate diagnostic tools and predictive methods through advanced data analysis and machine learning techniques. However, despite the growing number of such models, their effectiveness in clinical practice and their quality and applicability in future research remain unclear.

Objective: To conduct a comprehensive methodological assessment of published diagnostic and prognostic prediction models for myelitis.

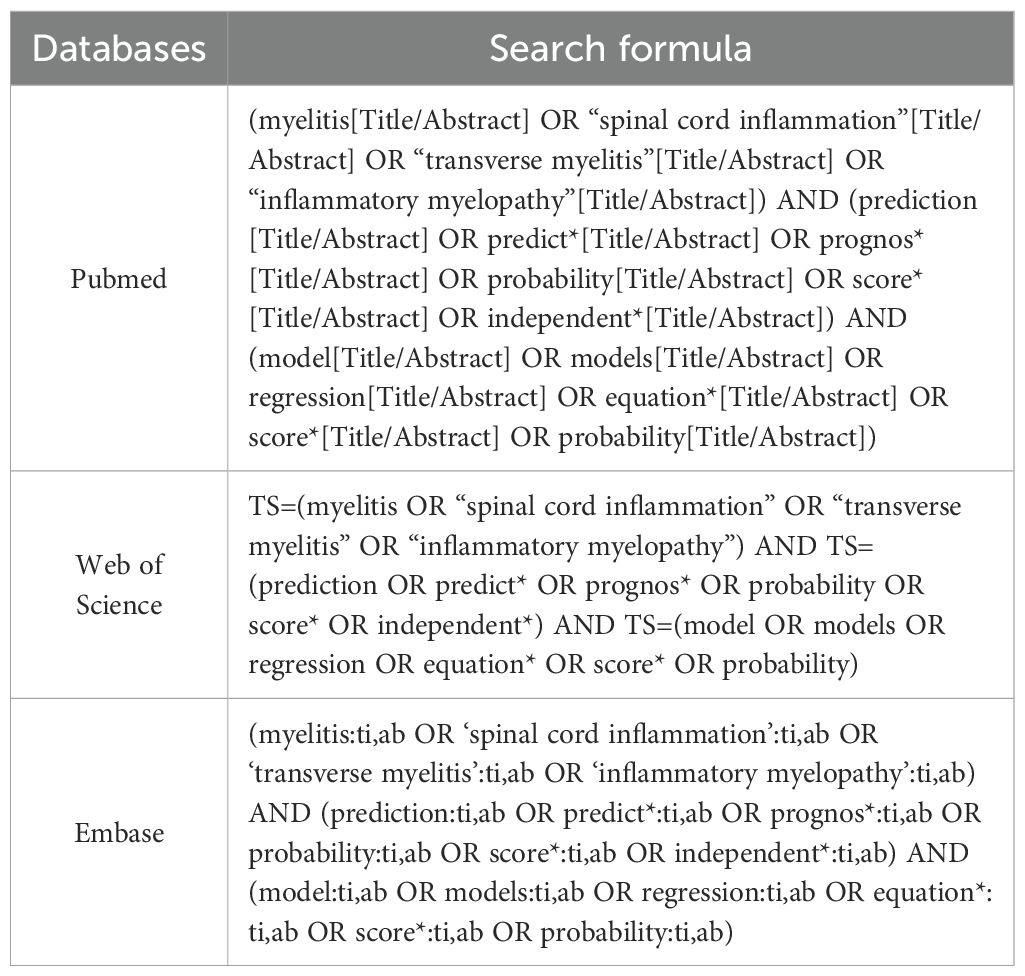

Methods: We searched PubMed, Web of Science, and Embase for publications through October 23, 2024. Extracted data included study design, data source, outcome definitions, cohort size, predictors, modeling techniques, and validation metrics. Methodological quality was evaluated with the PROBAST tool, which assesses risk of bias and clinical applicability.

Results: Of the 11 included studies, six developed diagnostic prediction models and five developed prognostic models. Modeling approaches comprised logistic regression (n=6), Cox regression (n=2), deep learning (n=1), joint modeling (n=1), and hybrid machine learning/scoring algorithms (n=1); multivariable logistic regression was thus the most frequently used algorithm in this field. The predictors most commonly used to train diagnostic or prognostic models in myelitis were sex (n=6) and age (n=4). PROBAST evaluation indicated (1) high risk of bias (n=6), arising primarily from suboptimal data sources and gaps in analytical reporting; (2) unclear risk (n=4), mainly due to non-transparent analytical workflows; and (3) low risk (n=1). The pooled AUC of eight validated models was 0.83 (95% CI: 0.75–0.91), indicating good discriminative capacity.

Conclusion: Although existing models demonstrate good discrimination in predicting myelitis, only one study had a low risk of bias according to PROBAST, and an analysis of data accessibility showed that only one study's model was directly available for public use. Future research should therefore prioritize models developed in larger cohorts with rigorous methodological design, high reporting transparency, and multicenter external validation, enabling direct clinical translation that enhances their value in clinical practice and improves healthcare delivery.

Systematic Review Registration: https://www.crd.york.ac.uk/prospero/, identifier CRD42024623714.

Introduction

Myelitis is an inflammatory disease of the nervous system that primarily involves the gray and white matter of the spinal cord. Its etiology is diverse, encompassing viral and bacterial infections, autoimmune diseases, and associations with certain systemic disorders. Clinical manifestations vary with the spinal cord regions affected; typical symptoms include limb weakness, neuropathic pain, sensory deficits, autonomic dysfunction, and varying degrees of motor impairment (1–3). The disease can have an acute onset and, in severe cases, may cause permanent neurological damage. Common types of myelitis include acute transverse myelitis (4); autoimmune myelitis, such as neuromyelitis optica (NMO) or neuromyelitis optica spectrum disorders (NMOSD) (5, 6), multiple sclerosis (MS) (7), and systemic lupus erythematosus (SLE) (8, 9); and infectious myelitis caused by pathogens such as viruses, bacteria, or fungi (10–13). Among these, poliomyelitis and NMOSD have received particular attention and extensive research. Studies suggest that the various forms of myelitis are highly disabling. Patients often bear a substantial disease burden from symptoms such as pain (14), which severely impair their emotional well-being and quality of life (15–18).

The etiology of myelitis ranges from immune-mediated mechanisms to infections, and each cause requires distinct management strategies and carries a different prognosis. Regardless of cause, early and effective diagnosis, risk assessment, and outcome prediction are critical for timely intervention, preventing disease progression, and improving patient quality of life (19). Developing models to predict the progression, risk, and classification of myelitis has therefore become a major focus of current research. Although the number of myelitis prediction models has grown in recent years, their methodological rigor and clinical translatability have not been systematically evaluated. This systematic review synthesizes existing prediction models for patients with myelitis to generate evidence-based guidance for clinical deployment and further research.

Methods

The study protocol was registered on PROSPERO (CRD42024623714).

Search strategy

Three biomedical databases (PubMed, Web of Science, and Embase) were systematically searched from inception through October 23, 2024, with results limited to English-language publications. Keywords included terms such as “myelitis,” “prediction model,” “predictive factors,” “predictors,” “model,” and “scoring.” The complete search strategy is detailed in Table 1. Following the CHARMS guidelines (20), we used the PICOTS framework, which standardizes review objectives, search methodology, and eligibility criteria (21). In addition, we used the CHARMS-PROBAST integrated Excel template developed by B. M. Fernandez-Felix and colleagues to streamline data extraction and risk-of-bias appraisal for clinical prediction models (22). The PICOTS components were defined as follows:

Population (P): Patients with any type of myelitis.

Intervention (I): Developed and published diagnostic or prognostic models (with ≥2 predictors).

Comparator (C): No competing models.

Outcome (O): Any outcomes related to myelitis.

Timing (T): Outcomes are predicted from baseline information at admission, clinical features, clinical rating scale results, imaging characteristics, and laboratory indicators, or from changes in these features during follow-up.

Setting (S): Diagnostic prediction models are intended to predict the type of myelitis in individual patients at an early stage, supporting early diagnosis and improving early clinical outcomes; risk prediction models are intended to predict the later progression and changes of myelitis in individual patients, helping clinicians implement preventive measures and avoid adverse events.

Inclusion criteria

1. Myelitis patient cohorts;

2. Observational designs;

3. Development of a diagnostic or prognostic prediction model;

4. Myelitis-related outcomes.

Exclusion criteria

1. Non-predictive model studies;

2. Non-English publications;

3. Unretrievable full texts despite author outreach.

Study selection and screening

Two investigators (Huang JJ, Zhang ZZ) independently conducted study selection. Titles and abstracts were screened first to determine eligibility; full-text articles were then assessed against the inclusion and exclusion criteria, and duplicates were removed. Disagreements were resolved by consensus discussion among three investigators (Huang JJ, Zhang ZZ, Wu BQ).

Data extraction

Two researchers independently evaluated the search results. Discrepancies in full-text eligibility assessments were resolved through discussion or arbitration by a third reviewer.

Data were recorded in a standardized Excel template (22) and grouped into two categories:

1. Basic Information: authors, year, design, participants, data sources, sample size;

2. Model Information: feature selection methods, model derivation techniques, validation approaches, performance metrics, handling of missing data, transformation of continuous covariates, final predictors, and model presentation formats.

Data extraction was performed by one researcher, and all data were then verified by a second researcher for consistency and accuracy, with corrections made where necessary.

Quality assessment

Risk of bias and applicability were evaluated using the Prediction Model Risk of Bias Assessment Tool (PROBAST) (23). Two investigators (Huang JJ, Zhang ZZ) performed the appraisals independently. PROBAST is a critical appraisal framework for studies that develop, validate, or update prediction models; it comprises 20 signaling questions across four domains: Participants, Predictors, Outcomes, and Analysis. Each question is answered “Yes,” “Probably Yes,” “No,” “Probably No,” or “No Information.” A domain was rated as high risk of bias when at least one question was answered “No” or “Probably No,” and the overall risk of bias was rated low only when all domains were rated low risk.
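The domain-level and overall rules stated above can be expressed as a small decision function. The Python sketch below is illustrative only and is not part of the authors' workflow, which used an Excel template. It assumes the abbreviations Y/PY/N/PN/NI for the five answers, applies the “No”/“Probably No” rule described above, and additionally assumes, following general PROBAST guidance, that a domain with a “No Information” answer but no negative answer is rated unclear. PROBAST leaves room for reviewer judgment, so a mechanical rule like this can only approximate an actual appraisal.

```python
from typing import Dict, List

# Abbreviations (assumed here) for the five PROBAST answer options.
VALID_ANSWERS = {"Y", "PY", "N", "PN", "NI"}


def rate_domain(answers: List[str]) -> str:
    """Rate one PROBAST domain from its signaling-question answers."""
    for answer in answers:
        if answer not in VALID_ANSWERS:
            raise ValueError(f"unknown answer: {answer!r}")
    # Rule stated in the review: any "No"/"Probably No" makes the domain high risk.
    if any(answer in ("N", "PN") for answer in answers):
        return "high"
    # Assumption: missing information without a negative answer -> unclear.
    if "NI" in answers:
        return "unclear"
    return "low"


def rate_overall(domain_ratings: Dict[str, str]) -> str:
    """Overall risk is low only when every domain is rated low."""
    ratings = set(domain_ratings.values())
    if "high" in ratings:
        return "high"
    if "unclear" in ratings:
        return "unclear"
    return "low"


# Hypothetical study; PROBAST has 2 + 3 + 6 + 9 = 20 signaling questions.
example_study = {
    "Participants": rate_domain(["Y", "Y"]),
    "Predictors": rate_domain(["Y", "NI", "PY"]),
    "Outcomes": rate_domain(["Y"] * 6),
    "Analysis": rate_domain(["Y"] * 8 + ["PN"]),
}
print(example_study)
print(rate_overall(example_study))  # -> high
```

In this hypothetical example, a single “Probably No” in the Analysis domain is enough to make the whole study high risk, which mirrors how analysis problems drove most of the high-risk ratings reported later in this review.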

Data synthesis and statistical analysis

The AUC values of the validated models were pooled by meta-analysis in RStudio (https://posit.co/download/rstudio-desktop/). Heterogeneity was quantified with the I² index and Cochran’s Q test, with I² thresholds of 25%, 50%, and 75% denoting low, moderate, and high heterogeneity, respectively (24). A fixed- or random-effects model was selected according to the magnitude of heterogeneity. Publication bias was assessed with Egger’s test, with P > 0.05 indicating no significant evidence of bias (25).
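The random-effects pooling and heterogeneity statistics described above can be sketched as follows. This is an illustrative DerSimonian-Laird implementation in Python, not the authors' R code (the R package they used is not stated). It assumes each study reports an AUC with a symmetric 95% CI on the raw AUC scale, from which the standard error is back-calculated; some analyses pool AUCs on a logit scale instead, which this sketch does not do.

```python
import math
from typing import List, Tuple

Z_975 = 1.959963984540054  # 97.5th percentile of the standard normal distribution


def se_from_ci(lower: float, upper: float) -> float:
    """Back-calculate a standard error from a symmetric 95% confidence interval."""
    return (upper - lower) / (2 * Z_975)


def random_effects_pool(
    estimates: List[float], ses: List[float]
) -> Tuple[float, Tuple[float, float], float, float, float]:
    """DerSimonian-Laird random-effects meta-analysis.

    Returns (pooled estimate, 95% CI, Cochran's Q, I^2 in %, tau^2).
    """
    if len(estimates) != len(ses) or len(estimates) < 2:
        raise ValueError("need at least two studies with matching standard errors")
    k = len(estimates)
    # Inverse-variance (fixed-effect) weights and pooled estimate.
    w = [1.0 / se**2 for se in ses]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sum_w
    # Cochran's Q: weighted squared deviations from the fixed-effect estimate.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))
    df = k - 1
    # DerSimonian-Laird moment estimate of the between-study variance tau^2.
    c = sum_w - sum(wi**2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c)
    # I^2: share of total variability attributed to between-study heterogeneity.
    i2 = max(0.0, (q - df) / q) * 100.0 if q > 0 else 0.0
    # Random-effects weights add tau^2 to each within-study variance.
    w_re = [1.0 / (se**2 + tau2) for se in ses]
    pooled = sum(wi * yi for wi, yi in zip(w_re, estimates)) / sum(w_re)
    se_pooled = math.sqrt(1.0 / sum(w_re))
    ci = (pooled - Z_975 * se_pooled, pooled + Z_975 * se_pooled)
    return pooled, ci, q, i2, tau2


# Made-up AUCs and 95% CIs for illustration only (NOT the values in Table 8).
aucs = [0.70, 0.90]
cis = [(0.6608, 0.7392), (0.8608, 0.9392)]
pooled, ci, q, i2, tau2 = random_effects_pool(aucs, [se_from_ci(lo, hi) for lo, hi in cis])
print(f"pooled AUC {pooled:.3f}, 95% CI {ci[0]:.3f}-{ci[1]:.3f}, Q={q:.1f}, I2={i2:.1f}%")
```

With two precise but discordant toy studies, I² comes out near 98%, and the random-effects CI becomes much wider than either study's own interval. This illustrates why the extreme heterogeneity reported in this review widens the pooled interval.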

Results

Study selection

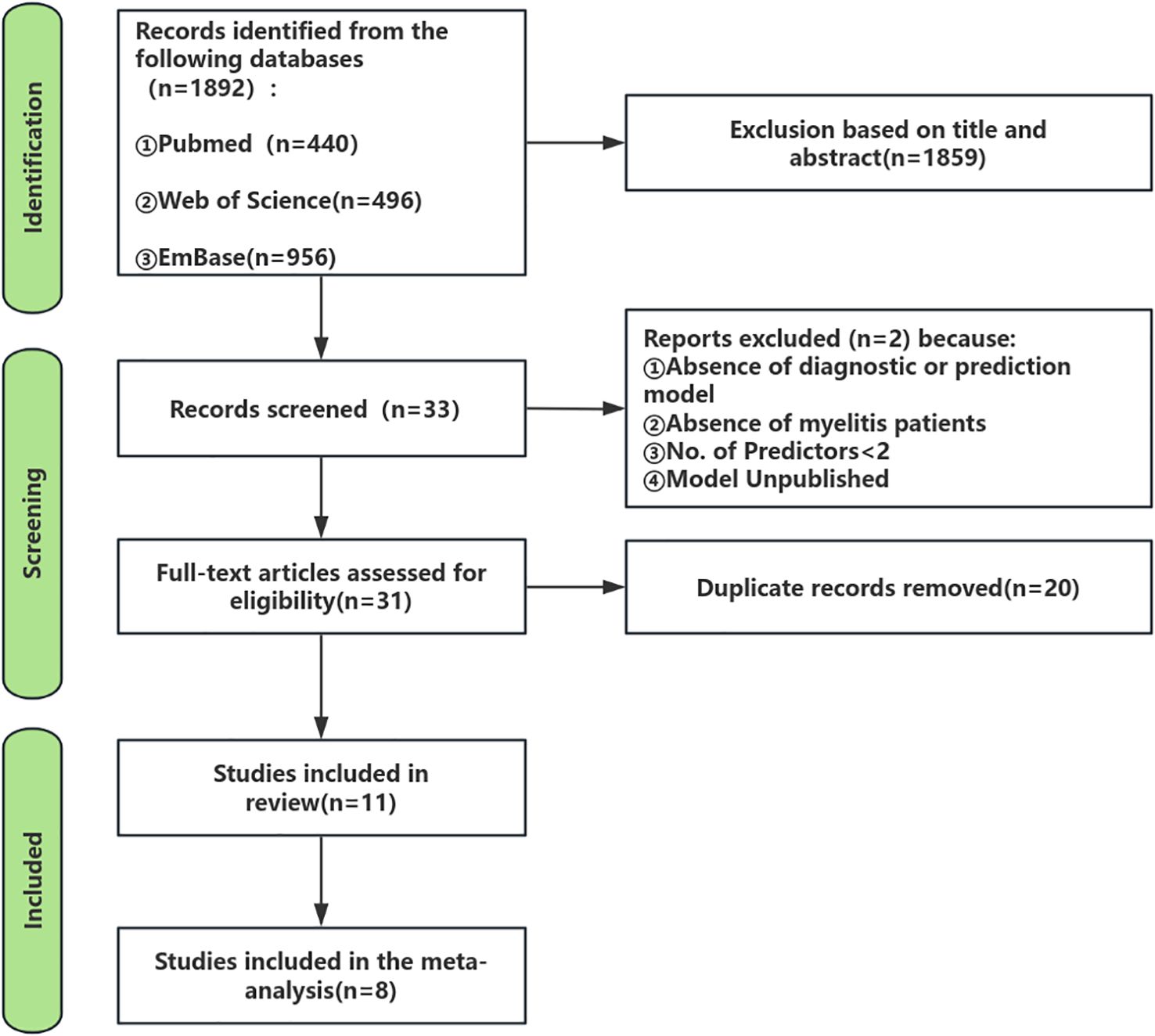

Figure 1 shows the flowchart of the literature search and study selection for this systematic review and meta-analysis.

As of October 23, 2024, the initial search of the three databases yielded 1,892 records. In the first stage, title and abstract screening identified 33 articles relevant to the study topic; at this stage, articles that clearly did not develop prediction models or that focused solely on risk factors were excluded, while borderline articles proceeded to the next stage. In the second stage, the inclusion and exclusion criteria were applied: after discussion and assessment, 2 studies were excluded because they did not constitute prediction models in the strict sense and had fewer than two predictors, leaving 31 articles for further evaluation. In the third stage, after 20 duplicate records were removed, 11 studies reporting 11 models were included in the final review.

Study characteristics

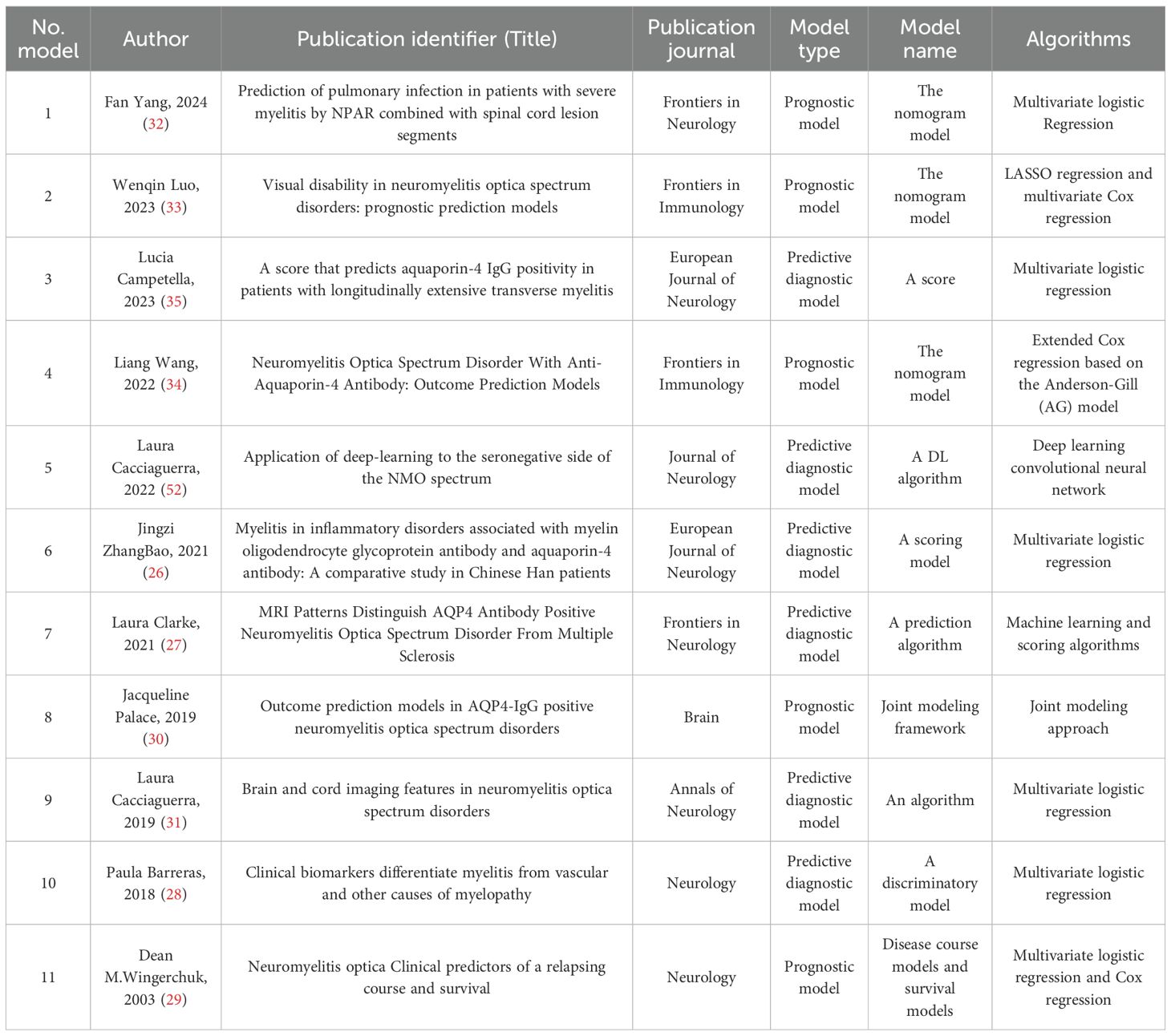

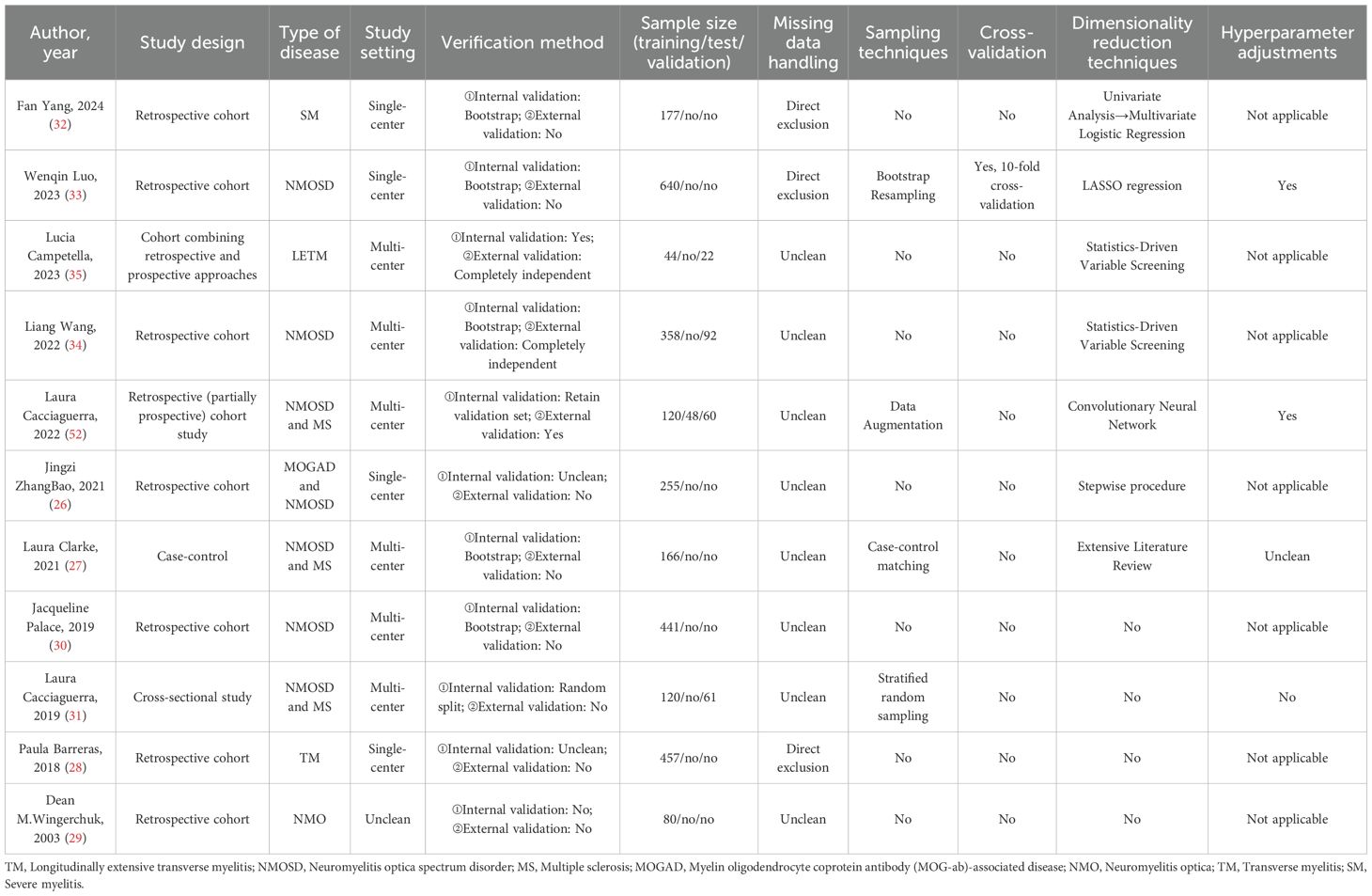

Details regarding the literature, model names, and key algorithmic applications of the 11 included studies are summarized in Table 2; the study designs, validation methods, sample sizes, data processing, and comprehensive analytical techniques are detailed in Table 3. These studies, published between 2003 and 2024, include six multicenter collaborative studies, four single-center studies, and one study with unclear institutional reporting. Methodologically, the majority of studies (n=7) adopted a retrospective design, two incorporated both retrospective and prospective designs, and the remaining two consisted of one case-control study and one cross-sectional study. The study populations predominantly involved patients with neuromyelitis optica (NMO) (8 studies), three of which also included cohorts with multiple sclerosis (MS), and one included patients with myelin oligodendrocyte glycoprotein antibody-associated disease (MOGAD). The other studies focused on transverse myelitis (2 studies) and severe myelitis (1 study). The sample sizes across all studies ranged from 66 to 640 participants.

Of the included studies, six developed diagnostic prediction models and five developed prognostic models. Six studies used multivariable logistic regression to develop their models, and one used Cox regression. Notably, Dean M. Wingerchuk et al. combined multivariable logistic regression and Cox regression. In addition, Wenqin Luo et al. combined LASSO regression with Cox regression, Laura Cacciaguerra et al. (52) applied a deep learning convolutional neural network, Jacqueline Palace et al. adopted a joint modeling strategy, and Laura Clarke et al. used both machine learning and scoring algorithms.

The most frequent predictor across the models was gender (6 models), followed by age (4 models). Other common predictors included number of relapses, central cord lesion, and longitudinally extensive transverse myelitis (LETM) (3 models each). Predictors appearing in 2 models each included aquaporin-4 (AQP4) antibody serostatus (antibody titer), treatment duration, type of treatment regimen, spasticity, brainstem lesion, cervical cord lesion, and occurrence of optic neuritis.

Model validation

Among the included studies, only three conducted both internal and external validation, while five performed internal validation only. Two studies did not carry out external validation, and their reporting on internal validation was unclear in the articles. Additionally, one study performed neither internal nor external validation (see Table 3).

Technology application

Table 3 details the sample sizes used for training and testing the models in the 11 included studies. Only three studies described how they handled missing data, all by direct exclusion; the remaining eight did not report any approach to missing values. Only four studies used sampling techniques, one each using bootstrap resampling, data augmentation, case-control matching, and stratified random sampling. Only one study (Wenqin Luo et al. (33)) implemented 10-fold cross-validation.
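To make the resampling terms above concrete, the following Python sketch shows how 10-fold cross-validation partitions a cohort into training and test sets and how a single bootstrap resample is drawn. It only generates index sets; model fitting and evaluation on each split are omitted, and the function names are hypothetical rather than taken from any included study. In a bootstrap optimism correction, for example, a model would be refit on each resample and evaluated on both the resample and the original data.

```python
import random
from typing import List, Tuple


def kfold_splits(n: int, k: int = 10, seed: int = 0) -> List[Tuple[List[int], List[int]]]:
    """Shuffle indices 0..n-1 once and partition them into k disjoint folds.

    Each fold serves once as the test set while the other k-1 folds form the
    training set, so every observation is used for testing exactly once.
    """
    if not 2 <= k <= n:
        raise ValueError("k must satisfy 2 <= k <= n")
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # fold sizes differ by at most one
    splits = []
    for i, test in enumerate(folds):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        splits.append((sorted(train), sorted(test)))
    return splits


def bootstrap_sample(n: int, rng: random.Random) -> List[int]:
    """Draw n indices with replacement (one bootstrap resample)."""
    return [rng.randrange(n) for _ in range(n)]


# Hypothetical cohort of 25 patients.
splits = kfold_splits(n=25, k=10, seed=42)
print([len(test) for _, test in splits])
resample = bootstrap_sample(25, random.Random(42))
print(len(resample), len(set(resample)))  # some patients repeat, others are left out
```

Unlike a single random split, every patient contributes to both training and testing across the ten folds, which is one reason cross-validation and bootstrapping are generally preferred for internal validation in small cohorts.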

In addition, seven studies applied variable selection or dimensionality reduction techniques: Lucia Campetella and Liang Wang both used statistics-driven variable screening; Wenqin Luo applied LASSO regression; Laura Cacciaguerra et al. (52) used a convolutional neural network for feature reduction; Jingzi ZhangBao adopted a stepwise procedure; Laura Clarke selected variables through an extensive literature review; and Fan Yang first screened variables with p < 0.05 in univariate analysis and then entered the significant predictors into multivariable logistic regression for further selection.

Furthermore, only Wenqin Luo et al. and Laura Cacciaguerra et al. (52) explicitly reported the use of hyperparameter tuning in their studies, while Laura Clarke et al. did not provide clear information on whether hyperparameter optimization was performed.

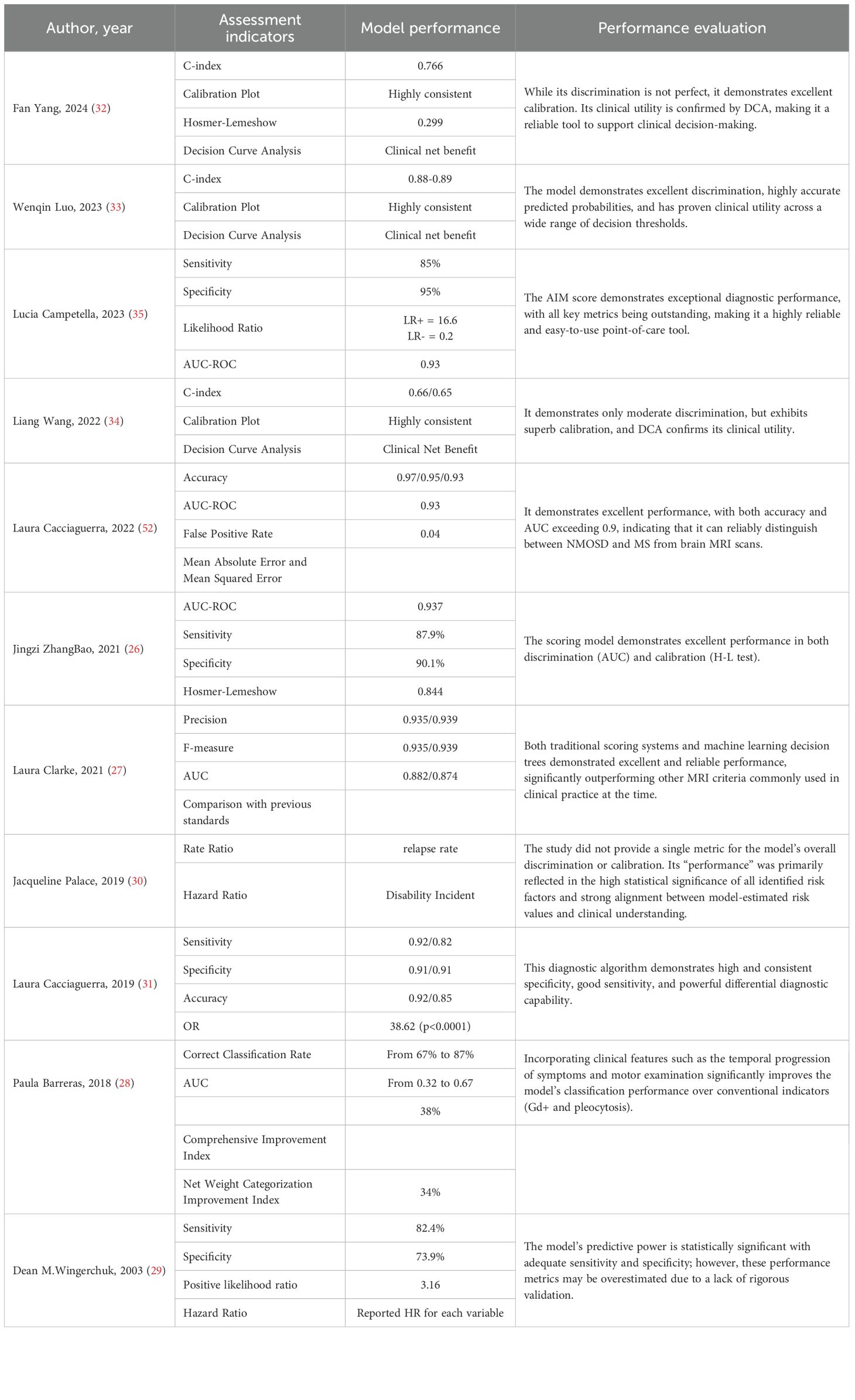

Performance metrics

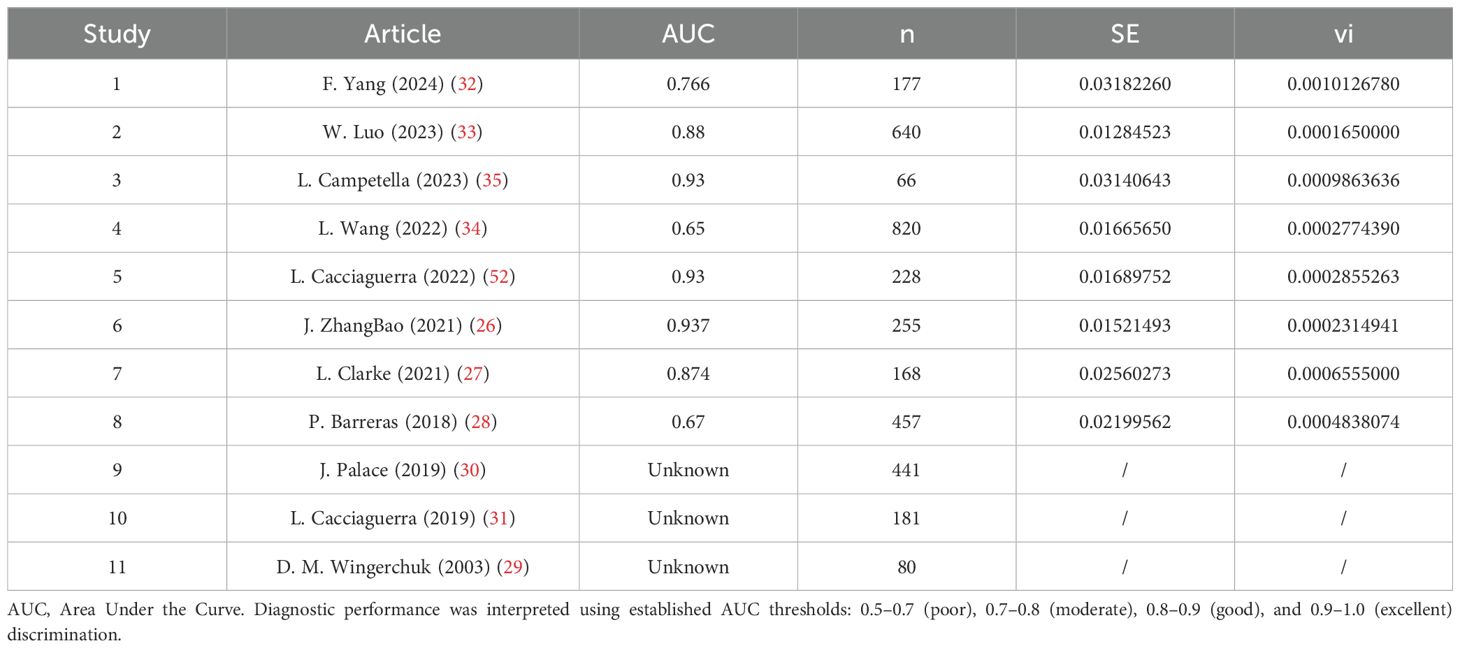

Table 4 presents the core performance metrics and performance evaluations of the 11 included studies. The model by Jingzi ZhangBao et al. showed excellent discriminative ability (AUC 0.94), making it a strong diagnostic tool. The model by Laura Clarke et al. showed superior overall performance (high precision and high F-score) and was significantly better than existing standards. The model by Fan Yang et al. had acceptable discriminative ability (AUC 0.77) but excellent calibration and clinical utility, making it a reliable prognostic risk prediction tool. In terms of methodological rigor and model performance, the models by Wenqin Luo et al. and Laura Cacciaguerra et al. (31) are considered reliable because they used stringent internal validation and a comprehensive set of evaluation metrics. The respectable performance reported in earlier studies (e.g., Dean M. Wingerchuk et al.) should be interpreted with caution because of their higher risk of overfitting. The models by Laura Cacciaguerra et al. (2022) and Lucia Campetella et al. showed exceptional discriminative ability (AUC > 0.9); the former is designed for classification and the latter for diagnosis. The model by Liang Wang et al. showed moderate discriminative ability but excellent calibration and clinical utility, which is crucial for risk prediction models. The study by Jacqueline Palace et al. focused on interpreting risk factors rather than predicting individual outcomes; its performance is therefore reflected in the precision and significance of its effect estimates.
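The discrimination and classification metrics cited above can be made concrete with a short sketch. The Python below computes the AUC as the Mann-Whitney concordance probability and the F-score from confusion-matrix counts. It uses made-up toy data and is not the evaluation code of any included study, which may have used different software, classification thresholds, or confidence-interval methods.

```python
from typing import Sequence, Tuple


def auc_mann_whitney(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUC as the probability that a randomly chosen case (label 1) receives a
    higher predicted score than a randomly chosen non-case (label 0); ties count 0.5."""
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one case and one non-case")
    concordant = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                concordant += 1.0
            elif p == n:
                concordant += 0.5
    return concordant / (len(positives) * len(negatives))


def precision_recall_f1(tp: int, fp: int, fn: int) -> Tuple[float, float, float]:
    """Precision, recall (sensitivity), and F1 score from confusion-matrix counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Toy data: two cases and two non-cases; 3 of 4 case/non-case pairs are ranked correctly.
print(auc_mann_whitney([0.9, 0.4, 0.6, 0.2], [1, 1, 0, 0]))  # -> 0.75
print(precision_recall_f1(tp=8, fp=2, fn=4))
```

Because the AUC depends only on how predictions are ranked, a model can discriminate well and still be poorly calibrated. This is why calibration and clinical utility are reported alongside the AUC for the models of Fan Yang et al. and Liang Wang et al.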

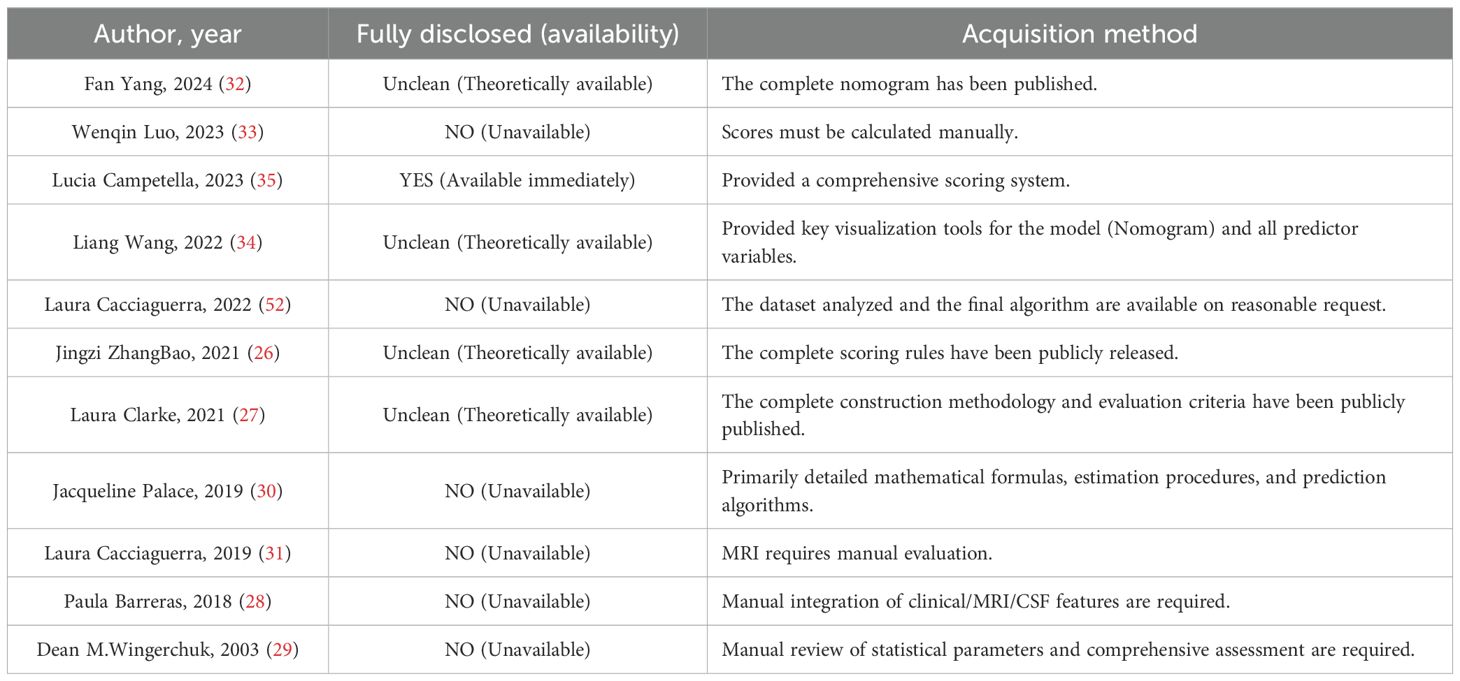

Data availability

Of the 11 included studies, only one (Lucia Campetella et al. (35)) provided a model directly accessible to the public. None of the remaining studies offered a prediction model that could be downloaded or used online. Of these, four fully published their model construction details or scoring rules, but researchers, clinicians, or peers wishing to use these models must manually extract the information from the articles and implement the models themselves. Because no downloadable code or tools are available, this process is cumbersome and error-prone, so these models are considered “theoretically applicable.” The other six studies presented highly complex models lacking core parameters or code; these are usable only as research instruments and are therefore considered “unusable” (see Table 5).

In summary, although most of the studies have made valuable methodological contributions and point the way for future clinical practice, their primary contribution lies in generating new medical knowledge rather than in serving as direct diagnostic or treatment tools. From the perspective of clinical applicability, they have not yet become mature tools that are “truly ready for direct use by others.”

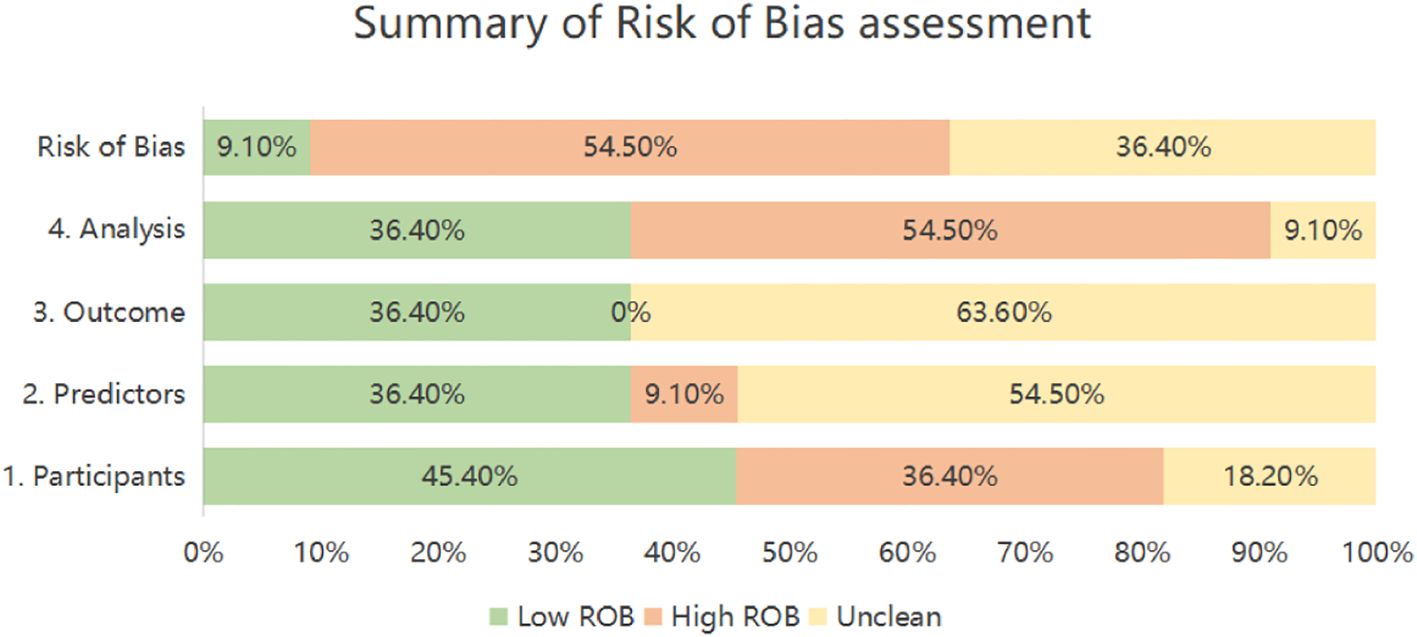

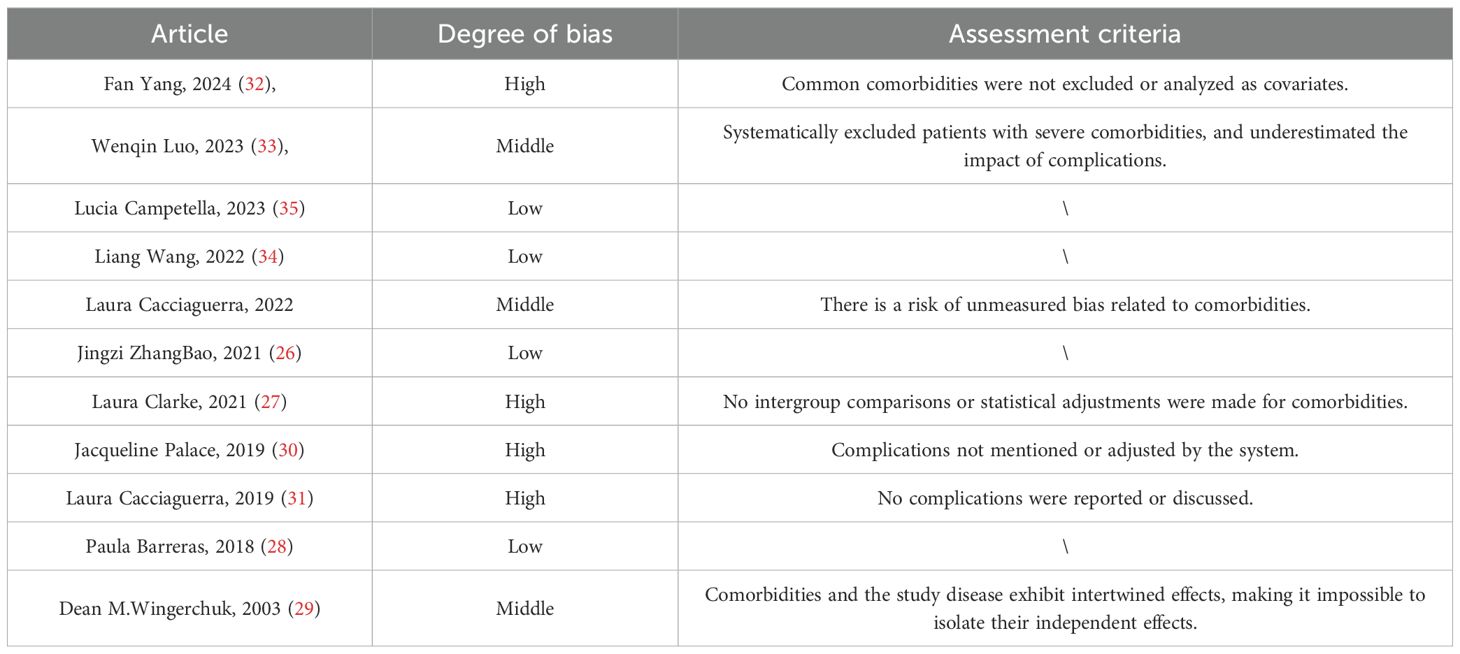

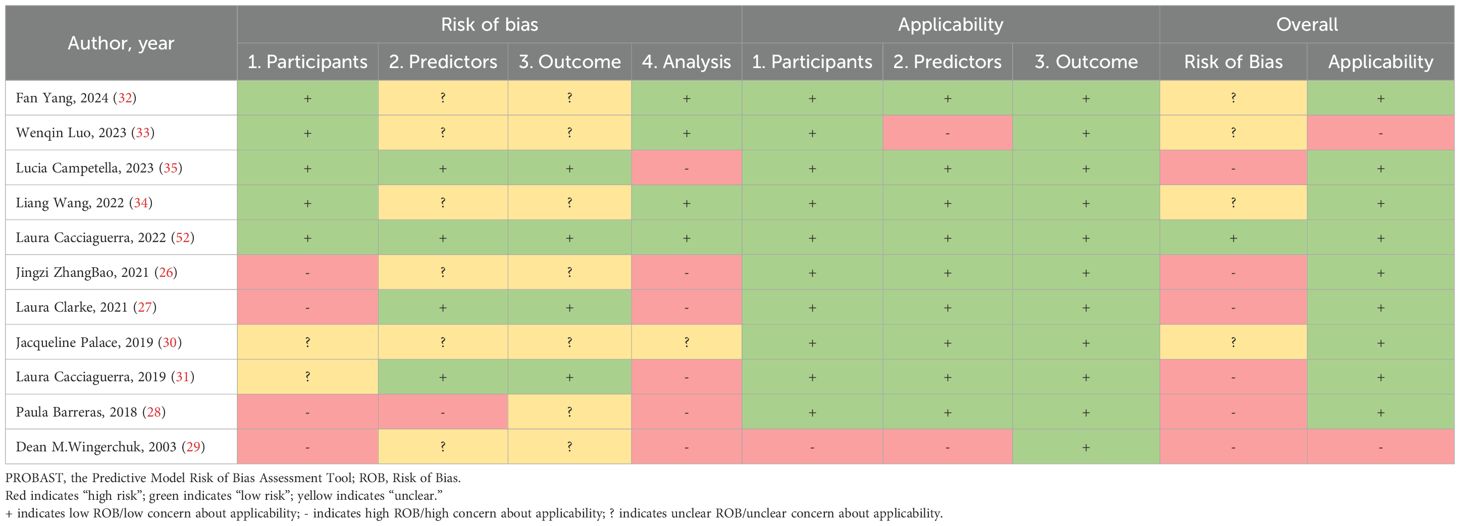

Results of quality assessment

The PROBAST-based quality assessment revealed substantial methodological limitations. Only one study (9.1%) had a low overall risk of bias. Conversely, six studies (54.5%) had a high risk of bias, signaling deficiencies in development or validation methodology, while four studies (36.4%) had an unclear risk owing to insufficient reporting transparency. In the Participants domain, four studies (36.4%) were at high risk of bias, principally because of inadequately specified inclusion/exclusion criteria (26–29), and two studies (18.2%) were at unclear risk owing to incomplete descriptions of data sources or participant selection methods (30, 31). In the Predictors domain, one study (9.1%) was rated high risk, possibly reflecting non-blinded predictor assessment (28), while six studies (54.5%) were at unclear risk because they did not report quality control procedures for predictor measurement, a lapse potentially attributable to their retrospective designs (26, 28–30, 32–34). In the Outcomes domain, seven studies (63.6%) were at unclear risk because none documented whether outcomes were assessed blind to predictor information (26, 28–30, 32–34). Concerns were most pronounced in the Analysis domain, where six studies (54.5%) were at high risk of bias. Specific issues included inadequate handling of missing data (n=4 studies) (26, 28, 31, 35), exclusive use of apparent validation without further testing (n=5 studies) (27–29, 31, 35), problematic conversion of continuous variables to categorical variables (n=2 studies) (26, 27), and reliance on a single random split for internal validation (n=1 study) (31). One additional study (9.1%) had an unclear analytical risk because statistical details were not reported (30) (Table 6, Figure 2).

Table 6. PROBAST results: risk of bias and applicability of the included studies.

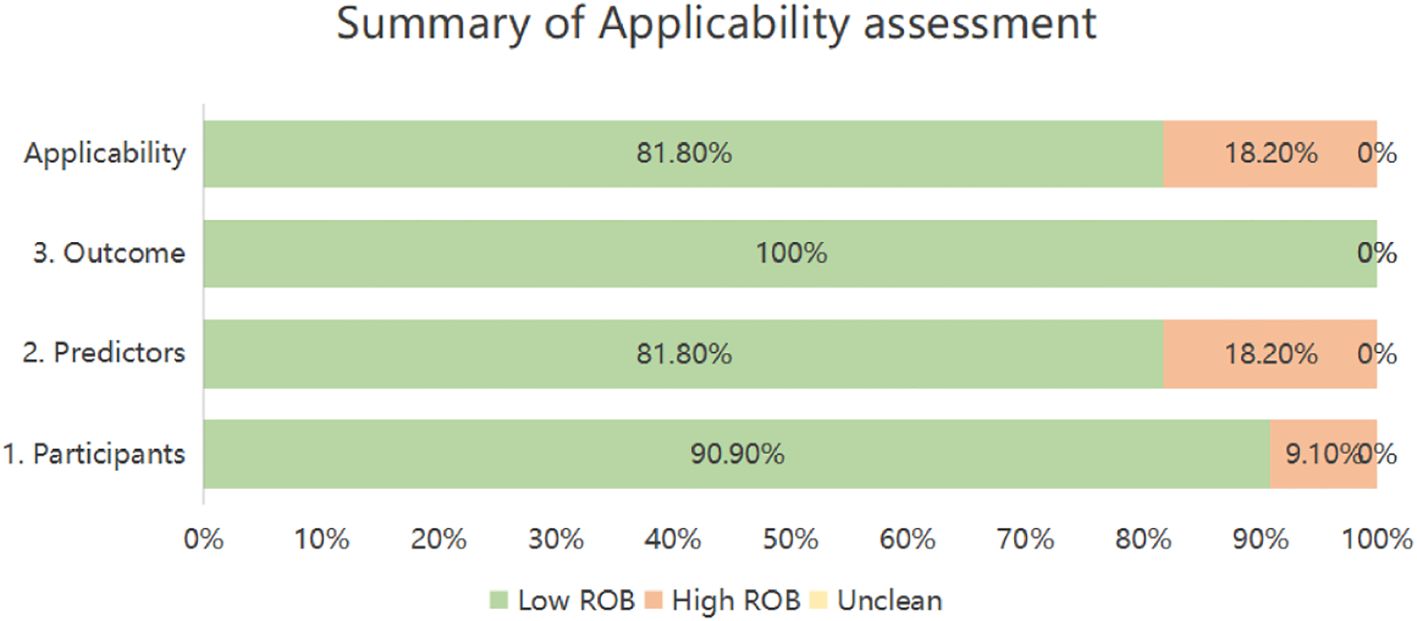

The applicability assessment found high overall risk in two studies (18.2%) and low applicability concern in the remaining nine (81.8%). By domain, one study (9.1%) was rated high risk in Participants because of a notably limited sample size (29). In Predictors, two studies (18.2%) were rated high risk because of suboptimal timing of predictor measurement (29, 33). All eleven studies had low applicability risk in the Outcomes domain (Table 6, Figure 3).

Assessment of comorbidity-related bias

Some of the included studies did not explicitly address comorbidity-related bias during modeling. Such bias may introduce unmeasured confounding, limiting the accuracy and generalizability of the study results and models. The evaluation identified four studies with high, three with moderate, and four with low comorbidity-related bias (see Table 7). Studies with low bias clearly documented autoimmune or other underlying comorbidities and incorporated them into the analysis, demonstrating better bias control. Studies with moderate bias either systematically excluded patients with severe comorbidities, which leads to underestimating the negative impact of comorbidities on adverse outcomes; carried a risk of unmeasured comorbidity-related bias; or involved complex interactions between comorbidities and the disease under investigation. Studies with high bias either did not report comorbidities or did not systematically adjust for them.

Meta-analysis of validation models included in the review

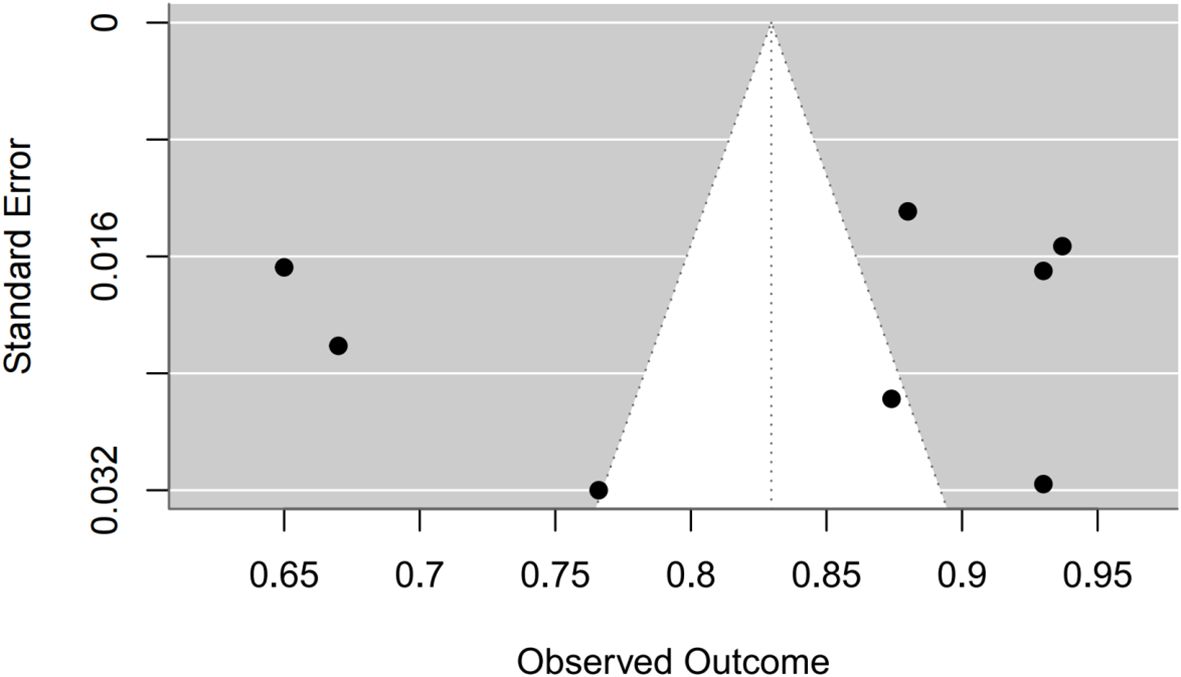

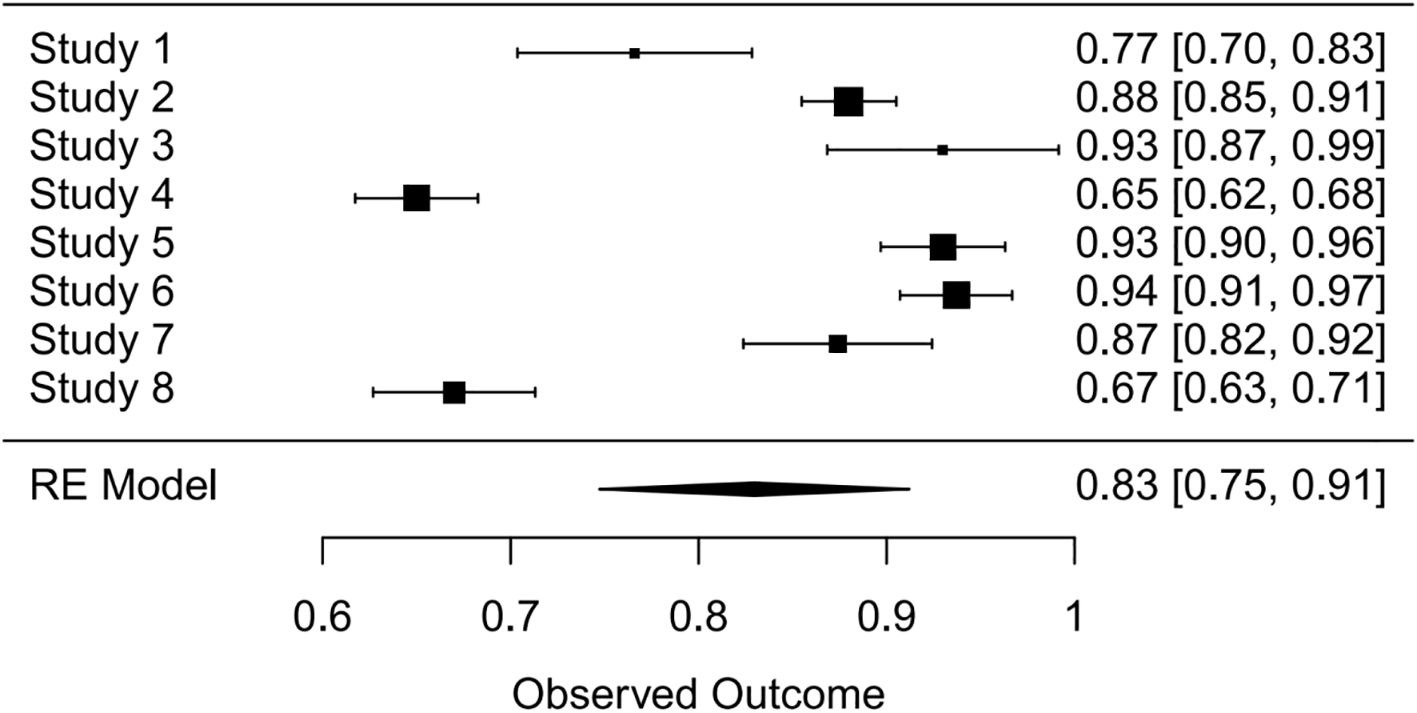

Eight studies provided sufficient data for synthesis of their validation models (Table 8); insufficient methodological reporting precluded inclusion of the others. Although Clarke et al. (27) used multiple modeling methods, all were derived from a single cohort; consequently, only the objectively developed machine learning model from that study was included in the meta-analysis. Under a random-effects model, the pooled AUC was 0.83 (95% CI: 0.75–0.91), with substantial heterogeneity (I² = 97.4%, p < 0.0001). Egger’s test showed no significant evidence of publication bias (z ≈ -0.47; intercept ≈ 0.91, p = 0.656), consistent with visual inspection of the funnel and forest plots (Figures 4, 5, Supplementary Figures 1–2).
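The idea behind Egger’s test can be illustrated with a short sketch of its classic form, in which each study’s standardized effect (estimate divided by its standard error) is regressed on its precision (one divided by the standard error) by ordinary least squares; an intercept far from zero suggests funnel-plot asymmetry. Software packages implement variants of this test (for example, weighted regressions with the standard error as a predictor, reported with a z statistic), so this Python sketch may not reproduce the values reported above. A two-sided p-value could be obtained from a t distribution with `df` degrees of freedom, for example with SciPy’s `scipy.stats.t.sf`, which the sketch does not call. The input data are made up.

```python
import math
from typing import List, NamedTuple


class EggerResult(NamedTuple):
    intercept: float
    slope: float
    se_intercept: float
    t_stat: float
    df: int


def egger_test(estimates: List[float], ses: List[float]) -> EggerResult:
    """Classic Egger regression: standardized effect (y/se) on precision (1/se).

    An intercept far from zero suggests funnel-plot asymmetry (small-study effects).
    """
    k = len(estimates)
    if k < 3 or len(ses) != k:
        raise ValueError("need at least three studies with matching standard errors")
    x = [1.0 / se for se in ses]                   # precision
    z = [y / se for y, se in zip(estimates, ses)]  # standardized effect
    x_mean = sum(x) / k
    z_mean = sum(z) / k
    sxx = sum((xi - x_mean) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("standard errors must not all be equal")
    sxz = sum((xi - x_mean) * (zi - z_mean) for xi, zi in zip(x, z))
    slope = sxz / sxx
    intercept = z_mean - slope * x_mean
    # Residual variance with k - 2 degrees of freedom (ordinary least squares).
    residuals = [zi - (intercept + slope * xi) for xi, zi in zip(x, z)]
    s2 = sum(r**2 for r in residuals) / (k - 2)
    se_intercept = math.sqrt(s2 * (1.0 / k + x_mean**2 / sxx))
    if se_intercept > 0:
        t_stat = intercept / se_intercept
    else:
        t_stat = math.copysign(math.inf, intercept)
    return EggerResult(intercept, slope, se_intercept, t_stat, k - 2)


# Made-up AUCs and standard errors for illustration only (NOT the Table 8 data).
print(egger_test([0.72, 0.88, 0.81, 0.79, 0.93], [0.06, 0.03, 0.04, 0.05, 0.025]))
```

With only eight studies, as in this review, the test has few degrees of freedom and limited power, which is consistent with the caution about publication bias assessment expressed in the Limitations.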

Figure 4. Forest plot of the pooled AUC of the validation models (n=8) under a random-effects model.

Table 8. AUC values of the individual prediction models included in the systematic review.

Discussion

Myelitis is a broad concept encompassing various subtypes, which makes a clear diagnosis particularly important (4). Among the many diagnostic indicators, MOG-related antibodies (such as IgG) (36, 37) and AQP4-related antibodies (such as IgG) (38, 39) play a central role in the diagnosis of myelitis. Preliminary statistics indicate that there is currently a wealth of research on poliomyelitis and NMOSD, likely reflecting the prevalence and severity of these two diseases. The clinical symptoms of the various forms of myelitis are largely similar, manifesting primarily as a series of symptoms following nervous system injury. Common treatments include antiviral therapy, steroids, immunosuppressants, and plasma exchange (40–42), but these are associated with certain adverse reactions and side effects (43). Therefore, early identification of effective clinical biomarkers, risk factor assessment, and the establishment of prediction models are clinically important strategies for predicting disease occurrence, progression, prognosis, and recurrence, and for monitoring treatment response. These strategies facilitate early identification and intervention, thereby reducing adverse outcomes. This systematic review identified a growing number of myelitis prediction models, predominantly derived from single-center datasets. Across the 11 evaluated models, internal or external validation demonstrated moderate-to-good predictive performance (AUC range: 0.65–0.937). However, the PROBAST assessment revealed significant methodological limitations: six studies carried a high risk of bias and four had an unclear risk, substantially constraining clinical applicability. Meta-analysis of eight validated models produced a pooled AUC of 0.83 (95% CI: 0.75–0.91), although heterogeneity was extreme (I² = 97.4%), likely owing to variations in populations, predictors, and methods.
Further compromising reliability, inconsistent adherence to the TRIPOD reporting guidelines was noted across studies, introducing transparency deficits and potential bias (44, 45). Consequently, advancing this field necessitates: (1) development of models with expanded cohorts; (2) methodologically rigorous designs; (3) multicenter external validation; and (4) stringent reporting transparency.

The model development processes of the included studies offer several insights. Only three studies conducted both internal and external validation, and five performed internal validation only, even though some of them used multicenter data. For example, although Cacciaguerra et al. (31) used multicenter data, they did not perform rigorous external validation. Moreover, random split-sample validation is an internal validation technique, but it does not adequately address model overfitting (46). Wenqin Luo et al. used LASSO regression and multivariable Cox regression for data analysis, demonstrating careful model handling, an aspect often overlooked in other models. However, some of their predictors involved variables that could not be obtained in a timely manner. Liang Wang et al. also used an extended Cox regression model and conducted both internal and external validation, but the study was retrospective and did not report the use of blinding, resulting in an unclear risk of bias. Laura Clarke et al. used both machine learning and scoring algorithms during model development. Some studies suggest that, compared with traditional logistic regression, machine learning methods often produce more objective and more accurate results (47). Machine learning approaches offer solutions to common methodological challenges of conventional modeling, including limited samples, handling of continuous variables, and predictor selection. However, these techniques introduce new limitations: inadequate visualization frameworks and persistent deficits in reporting transparency. The current review also identified many undisclosed analytical details. Methodological choices should therefore be evidence-based and context-driven. Although all evaluated models demonstrated moderate-to-high discriminative capacity, significant risks of bias remain.
Critical improvement areas include: (1) strengthening data provenance (prospective/retrospective; cohort/case-control designs); (2) standardizing predictor-outcome temporal alignment; (3) implementing blinding protocols; (4) ensuring adequate event rates; (5) optimizing continuous variable treatment; (6) refining predictor selection; (7) addressing data complexity; and (8) enhancing calibration and fitting procedures.

The included prediction models demonstrate translational relevance through clinically informative predictors. Notably, gender emerged as a predictor in six studies, consistent with established epidemiological evidence of a higher incidence of myelitis in females than in males (48, 49). Nevertheless, differences in age and sex distributions across cohorts challenge the universal generalizability of these predictors; for instance, two of the included studies reported a slightly higher risk in males (26, 33). Second, age is an important and commonly used predictor of myelitis prognosis, as it is associated with numerous diseases. Age at onset spans the entire lifespan, from pediatric to elderly populations, and some studies have shown a close relationship between older age at onset and poorer prognosis (50). For example, Romina Mariano et al. (49) found that patients aged 50 years and above had greater disability after onset, whereas patients under 50 had higher relapse rates. Aditya Banerjee et al. (51) reported that patients under 35 years old may have better prognoses after relapse than those over 35. Therefore, early detection, diagnosis, and intervention strategies should be adopted for younger populations, while older populations require increased attention and care. In addition, the number of relapses, central spinal cord lesions, and longitudinally extensive transverse myelitis (LETM) are commonly used predictors. The number of relapses is often used to predict the risk and prognosis of myelitis: the more relapses, the greater the risk of adverse outcomes and the poorer the prognosis (29, 33, 34). Imaging features such as central cord lesions and LETM are frequently used in the classification and diagnosis of myelitis.
Moreover, predictors identified across the 11 models constitute valuable candidates for future diagnostic, prognostic, and risk-modeling research. While urgent clinical implementation of myelitis prediction tools remains imperative, rigorous validation trials are essential to confirm their efficacy in: (1) enhancing early diagnostic accuracy, and (2) mitigating recurrence/progression risks. Successful validation will enable deployment across broader populations, ultimately improving patient prognoses and rehabilitation outcomes.

However, it is noteworthy that there is often an inverse relationship between model complexity and its clinical usability. Although these studies have yielded significant scientific discoveries, most of their models lack immediate accessibility, which substantially limits their dissemination, validation, and application in clinical practice and subsequent research. Therefore, enhancing model transparency and accessibility is of paramount importance to advance the field from “publishing papers” to “serving clinical needs”.

Limitations

Several limitations warrant consideration. First, the predominantly single-center derivation of the included studies may limit generalizability across institutions and regions. Application in diverse settings may therefore require population-specific recalibration, underscoring the need for future models developed in multi-ethnic cohorts. Second, methodological and reporting heterogeneity permitted meta-analysis of only eight validation models from distinct studies. This restricted synthesis precluded comprehensive exploration of the sources of heterogeneity and reduced the statistical power of the publication bias assessment. Importantly, these constraints do not invalidate the model evaluations but directly mirror the methodological gaps identified elsewhere in our analysis. Third, because some models are not directly accessible, our conclusions regarding their clinical applicability are based primarily on performance metrics reported in the literature; future prospective validation of the available models in independent cohorts is needed to corroborate these findings. Fourth, the restriction to English-language publications may have excluded relevant research.

Conclusion

This systematic review synthesized 11 models derived from 11 studies. Meta-analysis of eight validated models demonstrated good discriminative capacity (pooled AUC 0.83; 95% CI: 0.75–0.91). However, the PROBAST assessment revealed substantial methodological concerns: six studies carried a high risk of bias, four had an unclear risk, and two raised applicability concerns. In addition, most current myelitis prediction models have limited open accessibility, which may restrict their independent validation in external cohorts and their widespread adoption in clinical practice. Current reporting practices often fall short of PROBAST standards, underscoring the urgent need for researchers to apply PROBAST checklists rigorously and adhere to the TRIPOD reporting guidelines. We also encourage authors to report complete model coefficients in published papers or share code on open-source platforms to enhance research transparency and reproducibility. Future model development must prioritize multicenter designs, larger cohorts, methodologically robust validation, and enhanced transparency.

Data availability statement

The original contributions presented in the study are included in the article/Supplementary Material. Further inquiries can be directed to the corresponding author.

Author contributions

JH: Data curation, Writing – original draft, Writing – review & editing. YZ: Data curation, Writing – original draft, Writing – review & editing. ZZ: Data curation, Writing – original draft, Writing – review & editing. YC: Visualization, Writing – review & editing. BW: Conceptualization, Funding acquisition, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This work was supported by the Scientific Research Program of Hebei Provincial Administration of Traditional Chinese Medicine (Project Number: T2025122).

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Generative AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/fimmu.2025.1669338/full#supplementary-material

References

1. Abboud H, Sun R, Modak N, Elkasaby M, Wang A, and Levy M. Spinal movement disorders in NMOSD, MOGAD, and idiopathic transverse myelitis: a prospective observational study. J Neurol. (2024) 271. doi: 10.1007/s00415-024-12527-6

2. Li X, Xu H, Zheng Z, Ouyang H, Chen G, Lou Z, et al. The risk factors of neuropathic pain in neuromyelitis optica spectrum disorder: a retrospective case-cohort study. BMC Neurol. (2022) 22. doi: 10.1186/s12883-022-02841-9

3. Noori H, Marsool MDM, Gohil KM, Idrees M, Subash T, Alazzeh Z, et al. Neuromyelitis optica spectrum disorder: Exploring the diverse clinical manifestations and the need for further exploration. Brain Behav. (2024) 14. doi: 10.1002/brb3.3644

4. Tisavipat N and Flanagan EP. Current perspectives on the diagnosis and management of acute transverse myelitis. Expert Rev Neurother. (2023) 23. doi: 10.1080/14737175.2023.2195095

5. Amatya S, Khan E, Kagzi Y, Akkus S, Gupta R, Hansen N, et al. Disease characteristics of NMOSD and their relationship with disease burden: Observations from a large single-center cohort. J Neurol Sci. (2024) 467. doi: 10.1016/j.jns.2024.123311

6. Jarius S, Paul F, Weinshenker BG, Levy M, Kim HJ, and Wildemann B. Neuromyelitis optica. Nat Rev Dis Primers. (2020) 6. doi: 10.1038/s41572-020-0214-9

7. McGinley MP, Goldschmidt CH, and Rae-Grant AD. Diagnosis and treatment of multiple sclerosis: A review. JAMA. (2021) 325. doi: 10.1001/jama.2020.26858

8. Wang MH, Wang ZQ, Zhang SZ, Zhang L, Zhao JL, Wang Q, et al. Relapse rates and risk factors for unfavorable neurological prognosis of transverse myelitis in systemic lupus erythematosus: A systematic review and meta-analysis. Autoimmun Rev. (2022) 21. doi: 10.1016/j.autrev.2021.102996

9. Wen X, Xu D, Yuan S, and Zhang J. Transverse myelitis in systemic lupus erythematosus: A case report and systematic literature review. Autoimmun Rev. (2022) 21. doi: 10.1016/j.autrev.2022.103103

10. Mangudkar S, Nimmala SG, Mishra MP, and Giduturi VN. Post-viral longitudinally extensive transverse myelitis: A case report. Cureus. (2024). doi: 10.7759/cureus.62033

11. Namageyo-Funa A, Greene SA, Henderson E, Traoré MA, Shaukat S, Bigouette JP, et al. Update on vaccine-derived poliovirus outbreaks – worldwide, January 2023–June 2024. MMWR Morb Mortal Wkly Rep. (2024) 73. doi: 10.15585/mmwr.mm7341a1

12. Medavarapu S, Goyal N, and Anziska Y. Acute transverse myelitis (ATM) associated with COVID 19 infection and vaccination: A case report and literature review. AIMS Neurosci. (2024) 11. doi: 10.3934/Neuroscience.2024011

13. Yeh EA, Yea C, and Bitnun A. Infection-related myelopathies. Annu Rev Pathol. (2022) 17. doi: 10.1146/annurev-pathmechdis-040121-022818

14. Bradl M, Kanamori Y, Nakashima I, Misu T, Fujihara K, Lassmann H, et al. Pain in neuromyelitis optica–prevalence, pathogenesis and therapy. Nat Rev Neurol. (2014) 10. doi: 10.1038/nrneurol.2014.129

15. Kong Y, Okoruwa H, Revis J, Tackley G, Leite MI, Lee M, et al. Pain in patients with transverse myelitis and its relationship to aquaporin 4 antibody status. J Neurol Sci. (2016) 368. doi: 10.1016/j.jns.2016.06.041

16. Kanamori Y, Nakashima I, Takai Y, Nishiyama S, Kuroda H, Takahashi T, et al. Pain in neuromyelitis optica and its effect on quality of life: a cross-sectional study. Neurology. (2011) 77. doi: 10.1212/WNL.0b013e318229e694

17. Ayzenberg I, Richter D, Henke E, Asseyer S, Paul F, Trebst C, et al. Pain, depression, and quality of life in neuromyelitis optica spectrum disorder: A cross-sectional study of 166 AQP4 antibody-seropositive patients. Neurol Neuroimmunol Neuroinflamm. (2021) 8. doi: 10.1212/NXI.0000000000000985

18. Beekman J, Keisler A, Pedraza O, Haramura M, Gianella-Borradori A, Katz E, et al. Neuromyelitis optica spectrum disorder: Patient experience and quality of life. Neurol Neuroimmunol Neuroinflamm. (2019) 6. doi: 10.1212/NXI.0000000000000580

19. Jarius S, Aktas O, Ayzenberg I, Bellmann-Strobl J, Berthele A, Giglhuber K, et al. Update on the diagnosis and treatment of neuromyelits optica spectrum disorders (NMOSD) - revised recommendations of the Neuromyelitis Optica Study Group (NEMOS). Part I: Diagnosis and differential diagnosis. J Neurol. (2023) 270. doi: 10.1007/s00415-023-11634-0

20. Moons KGM, de Groot JAH, Bouwmeester W, Vergouwe Y, Mallett S, Altman DG, et al. Critical appraisal and data extraction for systematic reviews of prediction modelling studies: the CHARMS checklist. PLoS Med. (2014) 11. doi: 10.1371/journal.pmed.1001744

21. Debray TPA, Damen JAAG, Snell KIE, Ensor J, Hooft L, Reitsma JB, et al. A guide to systematic review and meta-analysis of prediction model performance. BMJ. (2017) 356. doi: 10.1136/bmj.i6460

22. Fernandez-Felix BM, López-Alcalde J, Roqué M, Muriel A, and Zamora J. CHARMS and PROBAST at your fingertips: a template for data extraction and risk of bias assessment in systematic reviews of predictive models. BMC Med Res Methodol. (2023) 23. doi: 10.1186/s12874-023-01849-0

23. Wolff RF, Moons KGM, Riley RD, Whiting PF, Westwood M, Collins GS, et al. PROBAST: A tool to assess the risk of bias and applicability of prediction model studies. Ann Intern Med. (2019) 170. doi: 10.7326/M18-1376

24. Higgins JPT, Thompson SG, Deeks JJ, and Altman DG. Measuring inconsistency in meta-analyses. BMJ. (2003) 327. doi: 10.1136/bmj.327.7414.557

25. Egger M, Davey Smith G, Schneider M, and Minder C. Bias in meta-analysis detected by a simple, graphical test. BMJ. (1997) 315. doi: 10.1136/bmj.315.7109.629

26. ZhangBao J, Huang W, Zhou L, Wang L, Chang X, Lu C, et al. Myelitis in inflammatory disorders associated with myelin oligodendrocyte glycoprotein antibody and aquaporin-4 antibody: A comparative study in Chinese Han patients. Eur J Neurol. (2021) 28:1308–15. doi: 10.1111/ene.14654

27. Clarke L, Arnett S, Bukhari W, Khalilidehkordi E, Sanchez SJ, O’Gorman C, et al. MRI patterns distinguish AQP4 antibody positive neuromyelitis optica spectrum disorder from multiple sclerosis. Front Neurol. (2021) 12. doi: 10.3389/fneur.2021.722237

28. Barreras P, Fitzgerald KC, Mealy MA, Jimenez JA, Becker D, Newsome SD, et al. Clinical biomarkers differentiate myelitis from vascular and other causes of myelopathy. Neurology. (2018) 90:e12–21. doi: 10.1212/WNL.0000000000004765

29. Wingerchuk DM and Weinshenker BG. Neuromyelitis optica: clinical predictors of a relapsing course and survival. Neurology. (2003) 60:848–53. doi: 10.1212/01.WNL.0000049912.02954.2C

30. Palace J, Lin DY, Zeng D, Majed M, Elsone L, Hamid S, et al. Outcome prediction models in AQP4-IgG positive neuromyelitis optica spectrum disorders. Brain. (2019) 142:1310–23. doi: 10.1093/brain/awz054

31. Cacciaguerra L, Meani A, Mesaros S, Radaelli M, Palace J, Dujmovic-Basuroski I, et al. Brain and cord imaging features in neuromyelitis optica spectrum disorders. Ann Neurol. (2019) 85:371–84. doi: 10.1002/ana.25411

32. Yang F, Dong R, Wang Y, Guo J, Zang Q, Wen L, et al. Prediction of pulmonary infection in patients with severe myelitis by NPAR combined with spinal cord lesion segments. Front Neurol. (2024) 15:1364108. doi: 10.3389/fneur.2024.1364108

33. Luo W, Kong L, Chen H, Wang X, Du Q, Shi Z, et al. Visual disability in neuromyelitis optica spectrum disorders: prognostic prediction models. Front Immunol. (2023) 14:1209323. doi: 10.3389/fimmu.2023.1209323

34. Wang L, Du L, Li Q, Li F, Wang B, Zhao Y, et al. Neuromyelitis optica spectrum disorder with anti-aquaporin-4 antibody: outcome prediction models. Front Immunol. (2022) 13:873576. doi: 10.3389/fimmu.2022.873576

35. Campetella L, Papi C, Spagni G, Sabatelli E, Mariotto S, Gastaldi M, et al. A score that predicts aquaporin-4 IgG positivity in patients with longitudinally extensive transverse myelitis. Eur J Neurol. (2023) 30:2534–8. doi: 10.1111/ene.15863

36. Matsumoto Y, Kaneko K, Takahashi T, Takai Y, Namatame C, Kuroda H, et al. Diagnostic implications of MOG-IgG detection in sera and cerebrospinal fluids. Brain. (2023) 146. doi: 10.1093/brain/awad122

37. Banwell B, Bennett JL, Marignier R, Kim HJ, Brilot F, Flanagan EP, et al. Diagnosis of myelin oligodendrocyte glycoprotein antibody-associated disease: International MOGAD Panel proposed criteria. Lancet Neurol. (2023) 22. doi: 10.1016/S1474-4422(22)00431-8

38. Zhao-Fleming HH, Sanchez CV, Sechi E, Inbarasu J, Wijdicks EF, Pittock SJ, et al. CNS demyelinating attacks requiring ventilatory support with myelin oligodendrocyte glycoprotein or aquaporin-4 antibodies. Neurology. (2021) 97. doi: 10.1212/WNL.0000000000012599

39. Taheri N, Sarrand J, and Soyfoo MS. Neuromyelitis optica: pathogenesis overlap with other autoimmune diseases. Curr Allergy Asthma Rep. (2023) 23. doi: 10.1007/s11882-023-01112-y

40. Chan K-H and Lee C-Y. Treatment of neuromyelitis optica spectrum disorders. Int J Mol Sci. (2021) 22. doi: 10.3390/ijms22168638

41. Contentti EC and Correale J. Neuromyelitis optica spectrum disorders: from pathophysiology to therapeutic strategies. J Neuroinflamm. (2021) 18. doi: 10.1186/s12974-021-02249-1

42. Ambrosius W, Michalak S, Kozubski W, and Kalinowska A. Myelin oligodendrocyte glycoprotein antibody-associated disease: current insights into the disease pathophysiology, diagnosis and management. Int J Mol Sci. (2020) 22. doi: 10.3390/ijms22010100

43. Jasiak-Zatonska M, Kalinowska-Lyszczarz A, Michalak S, and Kozubski W. The immunology of neuromyelitis optica-current knowledge, clinical implications, controversies and future perspectives. Int J Mol Sci. (2016) 17. doi: 10.3390/ijms17030273

44. Collins GS, Moons KGM, Dhiman P, Riley RD, Beam AL, Van Calster B, et al. TRIPOD+AI statement: updated guidance for reporting clinical prediction models that use regression or machine learning methods. BMJ. (2024) 385. doi: 10.1136/bmj-2023-078378

45. Snell KIE, Levis B, Damen JAA, Dhiman P, Debray TPA, Hooft L, et al. Transparent reporting of multivariable prediction models for individual prognosis or diagnosis: checklist for systematic reviews and meta-analyses (TRIPOD-SRMA). BMJ. (2023) 381. doi: 10.1136/bmj-2022-073538

46. Steyerberg EW, Harrell FE, Borsboom GJJM, Eijkemans MJC, Vergouwe Y, and Habbema JDF. Internal validation of predictive models: Efficiency of some procedures for logistic regression analysis. J Clin Epidemiol. (2001) 54. doi: 10.1016/S0895-4356(01)00341-9

47. Churpek MM, Yuen TC, Winslow C, Meltzer DO, Kattan MW, and Edelson DP. Multicenter comparison of machine learning methods and conventional regression for predicting clinical deterioration on the wards. Crit Care Med. (2016) 44. doi: 10.1097/CCM.0000000000001571

48. Arnett S, Chew SH, Leitner U, Hor JY, Paul F, Yeaman MR, et al. Sex ratio and age of onset in AQP4 antibody-associated NMOSD: a review and meta-analysis. J Neurol. (2024) 271. doi: 10.1007/s00415-024-12452-8

49. Mariano R, Messina S, Kumar K, Kuker W, Leite MI, and Palace J. Comparison of clinical outcomes of transverse myelitis among adults with myelin oligodendrocyte glycoprotein antibody vs aquaporin-4 antibody disease. JAMA Netw Open. (2019) 2. doi: 10.1001/jamanetworkopen.2019.12732

50. Moura J, Samões R, Sousa AP, Figueiroa S, Mendonça T, Abreu P, et al. Prognostic factors associated with disability in a cohort of neuromyelitis optica spectrum disorder and MOG-associated disease from a nationwide Portuguese registry. J Neurol Sci. (2024) 464. doi: 10.1016/j.jns.2024.123176

51. Banerjee A, Ng J, Coleman J, Ospina JP, Mealy M, and Levy M. Outcomes from acute attacks of neuromyelitis optica spectrum disorder correlate with severity of attack, age and delay to treatment. Mult Scler Relat Disord. (2019) 28. doi: 10.1016/j.msard.2018.12.010

Keywords: myelitis, diagnostic model, prediction model, performance evaluation, clinical applicability

Citation: Huang J, Zhao Y, Zhang Z, Cheng Y and Wu B (2025) Exploratory analysis of predictive models in the field of myelitis: a systematic review and meta-analysis. Front. Immunol. 16:1669338. doi: 10.3389/fimmu.2025.1669338

Received: 19 July 2025; Accepted: 22 September 2025;

Published: 02 October 2025.

Edited by:

Samar S. Ayache, Hôpitaux Universitaires Henri Mondor, France

Reviewed by:

Lorna Galleguillos, Clínica Alemana, Chile

Adriano Lages Dos Santos, Federal Institute of Education, Science and Technology of Minas Gerais, Brazil

Copyright © 2025 Huang, Zhao, Zhang, Cheng and Wu. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Bangqi Wu, wbqwbq1980@outlook.com

†These authors have contributed equally to this work and share first authorship

Jingjie Huang, Yusheng Zhao2†, Ziyi Zhang, Yupei Cheng and Bangqi Wu