- ¹School of Biomedical Sciences, The University of New South Wales (UNSW Sydney), Sydney, NSW, Australia

- ²Neuroscience Research Australia, Sydney, NSW, Australia

- ³Center for Social and Affective Neuroscience, Department of Biomedical and Clinical Sciences, Linköping University, Linköping, Sweden

- ⁴Bionics and Bio-Robotics, Tyree Foundation Institute of Health Engineering, The University of New South Wales (UNSW Sydney), Sydney, NSW, Australia

Both hearing and touch are sensitive to the frequency of mechanical oscillations—sound waves and tactile vibrations, respectively. The mounting evidence of parallels in temporal frequency processing between the two sensory systems led us to directly address the question of perceptual frequency equivalence between touch and hearing using stimuli of simple and more complex temporal features. In a cross-modal psychophysical paradigm, subjects compared the perceived frequency of pulsatile mechanical vibrations to that elicited by pulsatile acoustic (click) trains, and vice versa. Non-invasive pulsatile stimulation designed to excite a fixed population of afferents was used to induce desired temporal spike trains at frequencies spanning flutter up to vibratory hum (>50 Hz). The cross-modal perceived frequency for regular test pulse trains of either modality was a close match to the presented stimulus physical frequency up to 100 Hz. We then tested whether the recently discovered “burst gap” temporal code for frequency, that is shared by the two senses, renders an equivalent cross-modal frequency perception. When subjects compared trains comprising pairs of pulses (bursts) in one modality against regular trains in the other, the cross-sensory equivalent perceptual frequency best corresponded to the silent interval between the successive bursts in both auditory and tactile test stimuli. These findings suggest that identical acoustic and vibrotactile pulse trains, regardless of pattern, elicit equivalent frequencies, and imply analogous temporal frequency computation strategies in both modalities. This perceptual correspondence raises the possibility of employing a cross-modal comparison as a robust standard to overcome the prevailing methodological limitations in psychophysical investigations and strongly encourages cross-modal approaches for transmitting sensory information such as translating pitch into a similar pattern of vibration on the skin.

Introduction

The frequency of environmental mechanical oscillations is sampled by two human sensory systems, auditory and tactile. Notwithstanding that these signals are transduced through quite different physical media and separate sensory epithelia, there are a surprising number of physiological commonalities between them (von Békésy, 1959; Saal et al., 2016). Both require the mechanical displacement of frequency-tuned receptors to transduce physical events into neural signals, and both modalities generate temporally-precise spiking responses in their primary afferents capable of conveying rapid time-varying signals (Saal et al., 2016). Furthermore, in both sensory modalities, low-frequency stimuli (<50 Hz) elicit a sensation of flutter where individual stimulus pulses are discriminable (Pollack, 1952), while high-frequency stimuli (>50 Hz) evoke a sensation of vibratory hum or pitch where stimulus pulses fuse into a singular or a continuous percept (Talbot et al., 1968; Krumbholz et al., 2000). The range of frequencies detectable by the skin (2–1,000 Hz) is noted to overlap partially with that sensed by the ear (20–20,000 Hz) (Bolanowski et al., 1988).

Temporal frequency analysis is a fundamental part of sensory processing in both modalities: in audition, temporal frequency analysis is required for perception of speech and music (Javel and Mott, 1988); in touch, it is essential for perception of surface texture (Mackevicius et al., 2012) and sensing of the environment through hand-held tools (Brisben et al., 1999). The relative first spike latencies across primary afferents are used to locate sound sources in audition (Grothe et al., 2010), and in touch to determine features of objects, such as curvature and force direction upon first contact (Johansson and Birznieks, 2004). Though sensitive to different stimulus energies, these two sensory modalities may implement similar coding strategies to extract behaviourally relevant stimulus information (Pack and Bensmaia, 2015).

Aside from the correspondences between auditory and tactile processing of environmental vibrations, various behavioural experiments have demonstrated reciprocal perceptual interactions between the two sensory modalities, suggesting an intimate link in the perception of frequency signals (Occelli et al., 2011). For example, concurrent acoustic stimuli have been shown to influence the detection of tactile vibrations (Wilson et al., 2010), the perception of vibrotactile frequency (Ro et al., 2009; Yau et al., 2009a), and even the perception of surface texture (Guest et al., 2002). Reciprocally, simultaneous tactile cues influenced auditory pitch perception (Convento et al., 2019), loudness perception (Yau et al., 2010), and thereby, speech comprehension (Gick and Derrick, 2009). The observed behavioural interactions are further substantiated by the evidence of neuronal responses in the sensory cortices to stimuli not of their principal modality. The human auditory cortex has been shown to respond to tactile stimulation (Foxe et al., 2002; Kayser et al., 2005; Schürmann et al., 2006), and reciprocally, human participants performing an auditory frequency discrimination task demonstrated auditory frequency representation responses distributed over somatosensory cortical areas (Pérez-Bellido et al., 2018; Rahman et al., 2020). Additionally, lesions to the somatosensory cortex in rats were found to systematically impact the processing of auditory information in the auditory cortex (Escabí et al., 2007), corroborating the evidence of anatomical projections connecting the two brain regions (Ro et al., 2013).

The similarities in temporal frequency processing, the significant cross-sensory influence on frequency perception, and the evidence of neural responses in the alternate sensory cortex during stimulus presentation, invite the possibility of perceptual frequency equivalence between touch and hearing. The question has not previously been directly addressed, but in light of the parallels outlined above, we sought to investigate whether a perceptual equivalence of frequency could be achieved between auditory and tactile pulse stimuli. For instance, what would be the auditory equivalence of a 50 Hz vibrotactile pulse train and vice versa? And can cross-sensory comparison stimuli be used to assess the perceived frequency of stimuli delivered to the other sensory modality? A demonstrated equivalency of perceptual frequency may permit cross-modal methods for transmitting sensory information, for example, translating pitch into a similar pattern of vibration on the skin (Marks, 1983). The ability to use an alternate sense as a standard in psychophysical experiments opens new experimental possibilities.

In this study, we aimed to determine whether perceived frequency of pulsatile mechanical vibrations can be matched with that elicited by acoustic pulse (click) trains, and vice versa by performing cross-modal psychophysical frequency discrimination experiments. In these experiments, we first tested stimuli spanning the flutter and vibratory hum range in their simplest form (regular acoustic and vibrotactile pulse trains). Second, we compared stimuli organised as bursts of pulses in one modality with regular stimuli in the other, with the hypothesis that the frequency of the matching regular pulse train will equal that predicted from the inter-burst gap, as previously shown in unimodal studies (Birznieks and Vickery, 2017; Ng et al., 2020, 2021; Sharma et al., 2022). The pulsatile stimulation technique employed in this study allows precise control of the timing of spikes in a fixed population of primary afferents responding to the stimulus modality. Each mechanical and acoustic pulse is a repeatable and uniform event, ensuring that the same population of afferents is activated regardless of how frequently these pulses are repeated (Vickery et al., 2020).

Materials and methods

The study was a controlled laboratory experiment involving behavioural measurements of the ability of human subjects to discriminate frequencies between vibrotactile and acoustic pulse trains. The participants performed a two-alternative forced-choice (2AFC) task where they discriminated which of two sequentially presented audio-tactile stimuli in a pair was perceived as higher in frequency. These judgements were recorded by a button press.

Subjects

Twelve healthy subjects (aged 19–42, six females) volunteered for the study. None had any known history or presenting clinical signs of auditory or somatosensory disorders, as screened by questionnaire. The experimental protocols were approved by the Human Research Ethics Committee of UNSW Sydney (HC210271), and written informed consent was obtained from all subjects before the experiments were conducted.

Mechanical pulse train generation and delivery

The required pulse trains, always of 1 s duration, were generated using custom scripts written in MATLAB (MathWorks, Natick, MA, United States) and Spike2 (Cambridge Electronic Design, Cambridge, United Kingdom) software. The generated stimulus waveforms (pulse trains) were converted to analogue voltage signals using a CED Power1401 mk II (Cambridge Electronic Design, Cambridge, United Kingdom), and then amplified by a PA 100E Power Amplifier (Data Physics, San Jose, CA, United States) to drive the SignalForce GW-V4 shaker (Data Physics, San Jose, CA, United States). A probe with a 5 mm diameter metal ball attached to its tip delivered the mechanical pulses to the fingertip skin along the axis perpendicular to the skin surface. An OptoNCDT 2200–10 laser displacement sensor (Micro-Epsilon, Ortenburg, Germany) with a resolution of 0.3 μm at 10 kHz was used to detect the displacement of the stimulation probe.

Stimuli were delivered to the finger pad of the right index finger (dominant hand) which was secured to a finger rest. The participant’s forearm was immobilised with the aid of a GermaProtec vacuum pillow (AB Germa, Kristianstad, Sweden) which was moulded around the arm and deflated to maintain its shape and hold the arm in position. The probe was positioned to contact the skin with a pre-indentation force of around 0.1 N, as determined by the calibrated displacement of the probe from the laser recordings. The stimulus site was approximately halfway between the distal interphalangeal joint and the fingertip. The unstimulated fingers of the tested hand were not in contact with any surface.

Each vibrotactile pulse was a reproducible and uniform event with a protraction time <2 ms. With the pulse duration being comparable to the refractory period of an action potential, each mechanical stimulation event elicited only a single time-controlled spike in responding afferents, which was previously validated by microneurographic recordings (Birznieks and Vickery, 2017). The amplitude of each mechanical pulse vibration was set to 32 μm so as to activate both fast adapting type I (FAI) and fast adapting type II (FAII) afferents (Birznieks et al., 2019; Ng et al., 2022). Any sound produced by the shaker was masked using white noise delivered via headphones to the subjects.

Acoustic pulse train generation and delivery

Like the vibrotactile stimuli, 1 s acoustic pulse trains of the desired temporal characteristics were generated using MATLAB (MathWorks, Natick, MA, United States) and Spike2 (Cambridge Electronic Design, Cambridge, United Kingdom) software and converted to analogue voltage signals using a CED Power1401 (Cambridge Electronic Design, Cambridge, United Kingdom). The output signal was fed to a Vonyx STM500BT 2-channel mixer (Tronios, Netherlands), where it was mixed with white noise before being delivered binaurally to the subjects via wired Bose QuietComfort 35 noise-cancelling headphones (Bose, United States). The auditory stimuli were thus presented against a background of continuous white noise, with great care taken to ensure that the auditory clicks remained salient and easily perceivable.

Each acoustic pulse was a 1 ms, fixed amplitude, Gaussian-modulated 5 kHz sinewave designed to excite a fixed population of cochlear afferents, thereby precluding place-cues code for pitch perception (Sharma et al., 2022). This atypical auditory stimulation technique allows participants to hear pulsatile qualities akin to vibrotactile stimulations.
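As a rough illustration of the click described above, the following sketch synthesises a single 1 ms Gaussian-windowed 5 kHz sine pulse. Only the duration and carrier frequency come from the text; the sample rate (`fs`), the envelope width (`sigma_fraction`), and the function name are illustrative assumptions and do not describe the actual stimulus-generation scripts.

```python
import math

def gaussian_click(carrier_hz=5000.0, duration_s=0.001, fs=48000, sigma_fraction=1 / 6):
    """Return the samples of one Gaussian-windowed sine click.

    carrier_hz and duration_s follow the text (5 kHz, 1 ms).
    fs and sigma_fraction are assumed values chosen for illustration.
    """
    n = int(round(duration_s * fs))      # number of samples in the click
    centre = (n - 1) / 2.0               # envelope peak at the middle of the click
    sigma = sigma_fraction * n           # envelope standard deviation, in samples (assumed)
    samples = []
    for i in range(n):
        envelope = math.exp(-0.5 * ((i - centre) / sigma) ** 2)
        carrier = math.sin(2.0 * math.pi * carrier_hz * (i - centre) / fs)
        samples.append(envelope * carrier)
    return samples

click = gaussian_click()
print(len(click), max(abs(s) for s in click))
```

Because every click is the same short waveform, changing the repetition rate alters only the timing of the pulses, not their spectral content, which is the property the stimulus design relies on.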

Psychophysical experiment: Audio-tactile cross-modal frequency discrimination

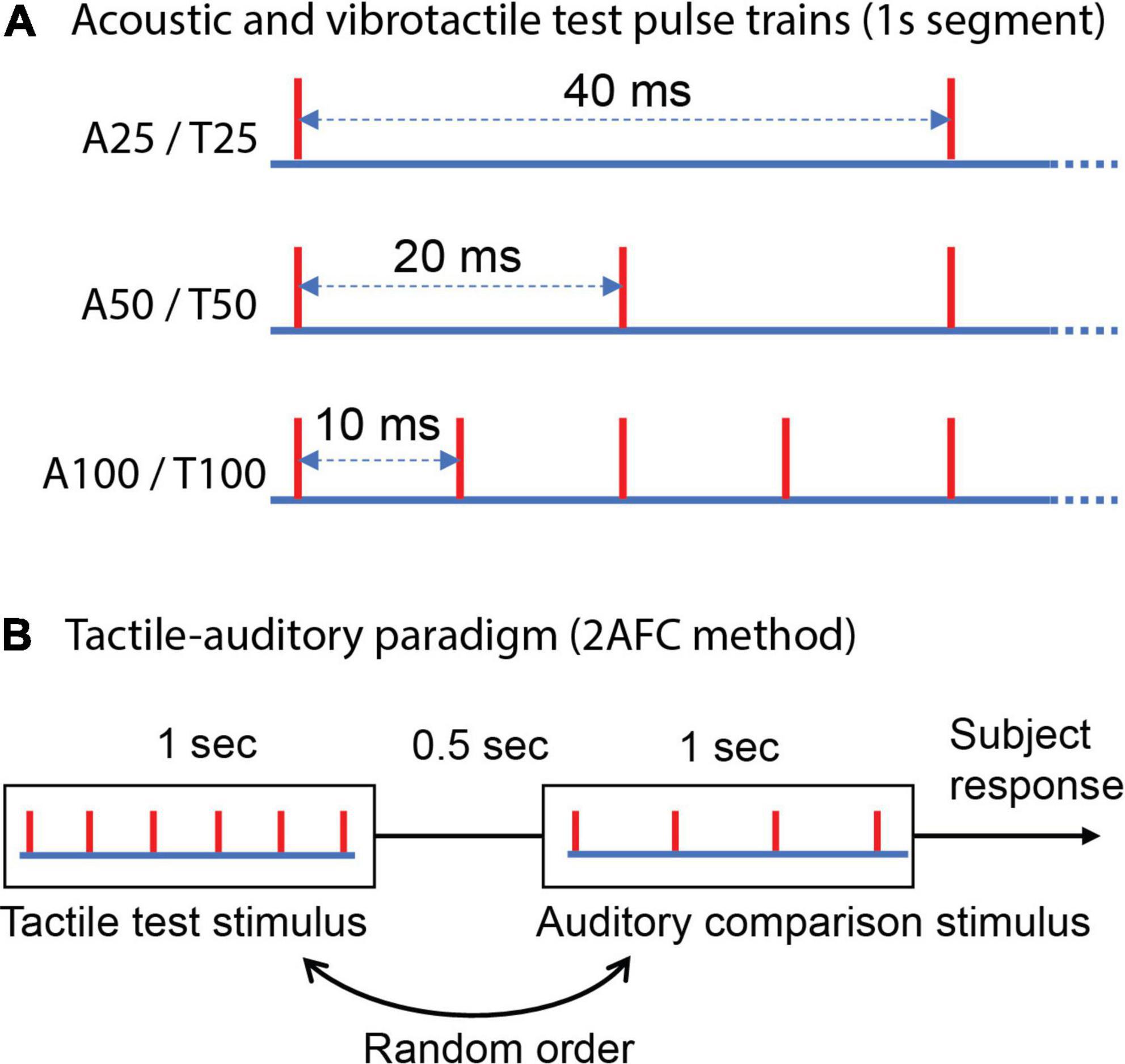

A 2AFC method was used to determine the perceptually equivalent frequency in one sensory modality for a test stimulus delivered to the other modality (Figure 1B). Each test stimulus (acoustic or tactile) was compared against six different regular pulse trains (trains of evenly spaced individual pulses) delivered to the other sensory system in a cross-modal manner (i.e., for a tactile test stimulus, acoustic pulse trains were used, and vice versa). This gave us two psychophysical paradigms: auditory-tactile, where the test stimulus is auditory and the comparisons are tactile, and tactile-auditory, where the test stimulus is tactile and the comparisons are auditory.

Figure 1. Schematic representation of test stimuli and the experimental protocol. (A) Auditory and vibrotactile 1 s regular test pulse trains (evenly spaced individual pulses); “A” denotes auditory and “T” tactile; a subsequent number indicates the frequency. Each red vertical line indicates the time of a mechanical pulse or an acoustic pulse. (B) Two alternative forced-choice (2AFC) method used to estimate cross-modal equivalent or matched frequency for test stimuli, tactile-auditory paradigm is presented as an example [i.e., a tactile test pulse train compared with six different regular acoustic pulse trains spanning a range of frequencies to obtain an auditory point of subjective equality (PSE)].

We matched the perceived intensity of a tactile pulse and an acoustic pulse (on the white noise background) for each subject so that the attention shift across modalities would not be dominated by one type. This was achieved by first finding a white noise volume for each subject that completely masked sounds from the vibrotactile shaker. Then subjects were presented trains of auditory clicks at 25 Hz of varying voltage and asked to compare intensity with that of tactile taps presented at 25 Hz and a fixed amplitude of 32 μm.

A pair of 1 s stimuli was delivered on each trial: a test and one of the six cross-modal comparison trains selected randomly, presented in random order and separated by 0.5 s. The subject indicated which of the two stimuli had the higher perceived frequency by button press. Subjects’ responses were acquired by the Power1401 and recorded in Spike2 for further analysis. Continuous white noise was delivered throughout the trials via the same headphones used for presenting auditory stimuli (including during the two stimulus intervals and the 500 ms gap between them) to mask any auditory cues from the operation of the vibrotactile stimulation equipment. Subjects were instructed to ignore any changes in the perceptual quality, loudness, or intensity elicited by the pulse trains and to focus specifically on the frequency or repetition rate when making frequency judgements. The participants practised the cross-modal frequency discrimination task with a 25 Hz regular tactile test train compared five times against each of four regular acoustic pulse trains to ensure they understood the directions and could perform the task. No feedback was provided to the subjects during either the practice trials or the experimental trials.

We designed two experiments: one comparing regular pulse trains across modalities, and one comparing doublet (paired-pulse) test trains with regular comparison trains. Crossing test modality (tactile or auditory), test pattern (regular or doublet), and test frequency (25, 50, or 100 Hz) yielded 12 test blocks. All blocks were completed by a given subject on a single day and presented in random order. Subjects were given a short break after every three blocks.
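The block structure can be made explicit with a short sketch that enumerates the 2 × 2 × 3 design and shuffles it into a session. The variable names and the fixed random seed are assumptions for illustration only; the source does not describe how the randomisation was implemented.

```python
import itertools
import random

modalities = ["auditory", "tactile"]   # modality of the test stimulus
patterns = ["regular", "doublet"]      # Experiment 1 vs. Experiment 2 test pattern
frequencies_hz = [25, 50, 100]         # test frequencies

# Full factorial crossing of the three factors gives the 12 test blocks.
blocks = list(itertools.product(modalities, patterns, frequencies_hz))

rng = random.Random(0)                 # fixed seed only so the sketch is repeatable (assumption)
session = blocks[:]
rng.shuffle(session)                   # random block order for one subject

# Group the session into runs of three blocks, with a short break after each run.
runs = [session[i:i + 3] for i in range(0, len(session), 3)]
print(len(blocks), len(runs))
```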

To obtain psychometric curves, each test stimulus was compared 20 times against each of the six cross-modal comparison stimuli, giving rise to 120 trials per test condition. The 120 trials were randomised within each test condition and between subjects. For each comparison frequency, the proportion of times the participant responded that it was higher in frequency than the test was calculated (PH). The logit transformation ln(PH/(1-PH)) was then applied to the data to obtain a linear psychometric function. The frequency at which the regression line fitted to the logit-transformed data crossed zero on the logit axis gave the point of subjective equality (PSE), which is the comparison frequency equally likely to be judged higher or lower than the test stimulus. This PSE value corresponds to the equivalent perceived, or matched, frequency in the other modality for a given test stimulus. Discrimination sensitivity was measured by the Weber fraction, which was taken as one-half of the difference between the 25% and 75% points on the psychometric function, divided by the frequency of the test stimulus (LaMotte and Mountcastle, 1975).
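The analysis just described can be sketched in a few lines: logit-transform the proportions, fit a straight line by ordinary least squares, and read the PSE and Weber fraction off the fitted line. This is a minimal sketch, not the analysis code used in the study. It assumes the regression is against frequency in Hz on a linear axis, and it clips proportions of exactly 0 or 1 so the logit stays finite (the source does not say how such cases were handled). The comparison frequencies and response counts in the example are hypothetical.

```python
import math

def logit(p):
    return math.log(p / (1.0 - p))

def fit_pse_and_weber(comparison_hz, prop_higher, test_hz, n_trials=20):
    """Return (PSE in Hz, Weber fraction) from a linear fit to logit(P_H)."""
    # Clip away from 0 and 1 so the logit is finite (assumed handling).
    eps = 0.5 / n_trials
    y = [logit(min(max(p, eps), 1.0 - eps)) for p in prop_higher]
    x = list(comparison_hz)
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    pse = -intercept / slope           # frequency where logit(P_H) = 0, i.e. P_H = 0.5
    # logit(0.75) = ln 3 and logit(0.25) = -ln 3, so half the 25%-75% spread is ln(3) / slope.
    weber = math.log(3.0) / slope / test_hz
    return pse, weber

# Hypothetical data for a 25 Hz test: six comparison frequencies, 20 trials each.
comparison_hz = [12, 18, 24, 30, 36, 44]
n_higher = [1, 4, 8, 13, 17, 19]       # times the comparison was judged higher (made up)
pse, weber = fit_pse_and_weber(comparison_hz, [k / 20 for k in n_higher], test_hz=25)
print(round(pse, 1), round(weber, 2))
```

A steeper fitted slope yields a smaller Weber fraction, so the same fit provides both the matched frequency and the discrimination sensitivity.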

Experiment 1: Regular stimuli

This experiment investigated the cross-modal perceptual frequency equivalence between simple auditory and tactile stimuli of regular pulses.

A cross-modal frequency discrimination paradigm was used to assess the matching or equivalent frequency across audition and touch. The test stimuli were regular vibrotactile and acoustic 1 s pulse trains of 25, 50, and 100 Hz (Figure 1A). A PSE derived using auditory regular comparisons (which we term the auditory PSE) gives the matched/equivalent perceived frequency of an acoustic pulse train for a tactile test stimulus (the tactile-auditory paradigm, Figure 1B). Similarly, a tactile PSE measures the tactile equivalence of an acoustic pulse train (the auditory-tactile paradigm). The cross-modal regular comparison stimuli ranged over 12–44, 24–88, and 48–176 Hz for the 25, 50, and 100 Hz test stimuli, respectively, regardless of the sensory modality used for the comparison. We tested whether each cross-modally obtained PSE differed from the expected unimodal perceived frequency, which for a regular train equals its physical frequency.

Experiment 2: Doublet burst patterns

This experiment investigated the perceptual frequency equivalence between complex temporal stimuli delivered in one modality and regular stimuli in the other.

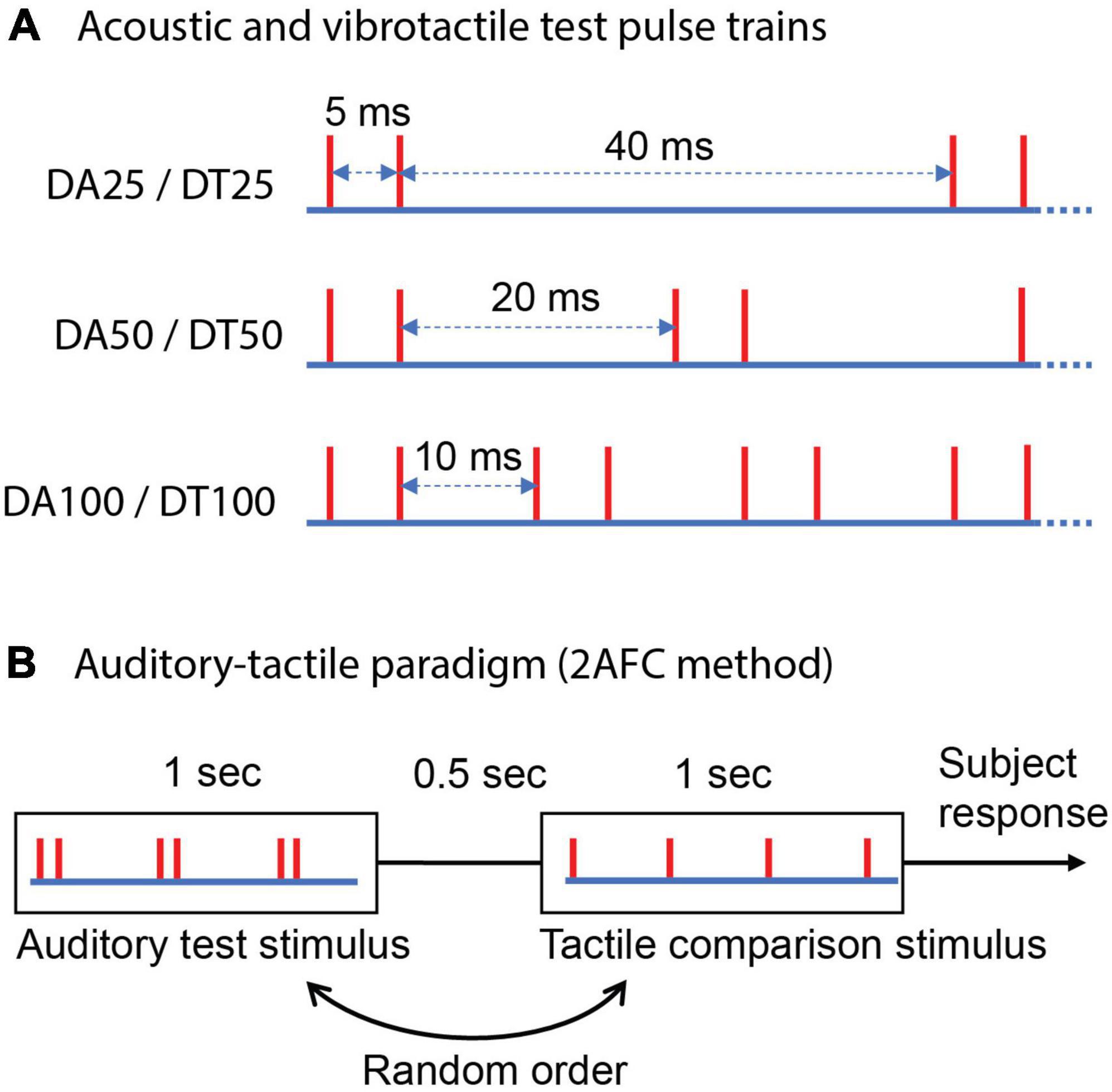

In this experiment, we had subjects match the perceptual frequency between bursting stimuli in one sensory modality (tactile or auditory) and regular stimuli in the other modality. The bursting stimuli were 1 s pulse trains with periodic bursts of two pulses (doublet pattern). The PSE was determined using cross-modal regular trains, employing the same 2AFC method as in the first set of experiments (differing in test stimulus patterns, Figure 2B). The three doublet test stimuli are schematically illustrated in Figure 2A, and were identical for auditory and tactile. Each vertical line represents the timing of an acoustic pulse (auditory test) or a mechanical pulse (tactile test). Based on experimental results that help define the time envelope of a burst in the tactile system (Birznieks and Vickery, 2017; Ng et al., 2018, 2021), the two pulses in a burst were spaced 5 ms apart in all the test trains.

Figure 2. Schematic representation of test stimuli and the experimental procedure. (A) Acoustic and vibrotactile 1 s test pulse trains consisting of periodic bursts of two pulses spaced 5 ms apart. Each red vertical line indicates the time of a mechanical pulse or an acoustic pulse. “DA” denotes doublet auditory and “DT” doublet tactile; a subsequent number indicates frequency that is reciprocal of the inter-burst interval in a train. Test trains differ in the duration between two consecutive bursts. (B) Two alternative forced-choice (2AFC) method used to estimate cross-modal equivalent frequency, auditory-tactile paradigm is presented as an example.

The inter-burst intervals in test trains were set at 40, 20, and 10 ms to correspond to expected perceived frequencies of 25, 50, and 100 Hz, respectively, for the doublet acoustic (DA25, DA50, and DA100) (Sharma et al., 2022) and vibrotactile (DT25, DT50, and DT100) test trains (Ng et al., 2020). The cross-modal regular comparisons that spanned 12–44, 24–88, and 48–176 Hz for stimuli DA/DT25–100, respectively, were identical to experiment 1, regardless of sensory modality used for the comparison.
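To make the timing of the doublet trains concrete, the sketch below lists pulse onset times for a given inter-burst interval and derives the three candidate frequency cues. It assumes the inter-burst interval is the silent gap from the second pulse of one burst to the first pulse of the next, and that each train starts with a burst at time zero; the function name is hypothetical.

```python
def doublet_train(inter_burst_ms, intra_burst_ms=5.0, duration_ms=1000.0):
    """Pulse onset times (ms) for a train of two-pulse bursts.

    inter_burst_ms is taken as the silent gap between the last pulse of one
    burst and the first pulse of the next (assumed definition).
    """
    times = []
    period = intra_burst_ms + inter_burst_ms   # burst onset to next burst onset
    t = 0.0                                    # first burst at time zero (assumption)
    while t + intra_burst_ms < duration_ms:    # keep only complete bursts within the train
        times.extend([t, t + intra_burst_ms])
        t += period
    return times

for gap_ms in (40.0, 20.0, 10.0):
    pulses = doublet_train(gap_ms)
    burst_gap_hz = 1000.0 / gap_ms             # burst-gap prediction: reciprocal of the silent gap
    bursts_per_train = len(pulses) // 2        # burst rate over the 1 s train
    pulses_per_train = len(pulses)             # mean pulse rate over the 1 s train
    print(gap_ms, burst_gap_hz, bursts_per_train, pulses_per_train)
```

Under these assumptions the 1 s trains contain 23, 40, and 67 bursts (46, 80, and 134 pulses), which is how the burst-gap prediction (25, 50, and 100 Hz) separates from predictions based on burst rate or mean pulse rate.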

Statistical analysis

The coefficient of determination (R²) of the logit-transformed psychophysical data was calculated to determine goodness of fit for the linear psychometric functions. A one-sample two-tailed t-test was performed to determine whether the experimentally obtained cross-modal PSEs differed significantly from the expected unimodal perceived frequency of the test stimulus. Within each experiment, a two-way repeated-measures ANOVA was used to examine whether the paradigm employed influenced the PSE. Similarly, a two-way repeated-measures ANOVA was used to analyse whether the presentation order of auditory and tactile stimuli in psychophysical trials affected PSEs. Tukey’s multiple comparisons test was used to compare mean Weber fractions across frequencies within a paradigm, and the effect of paradigm on Weber fractions was analysed using a two-way repeated-measures ANOVA. Unless otherwise specified, data are presented as mean with 95% confidence interval.

Results

Perceptual equivalence between regular auditory and tactile stimuli (experiment 1)

Participants were able to make reliable comparisons of frequency across the two modalities, as determined from the regression fits for the psychometric curves under both the auditory-tactile (R² for logit-transformed psychophysical data, mean ± SD: 0.90 ± 0.07) and tactile-auditory (0.92 ± 0.06) paradigms.

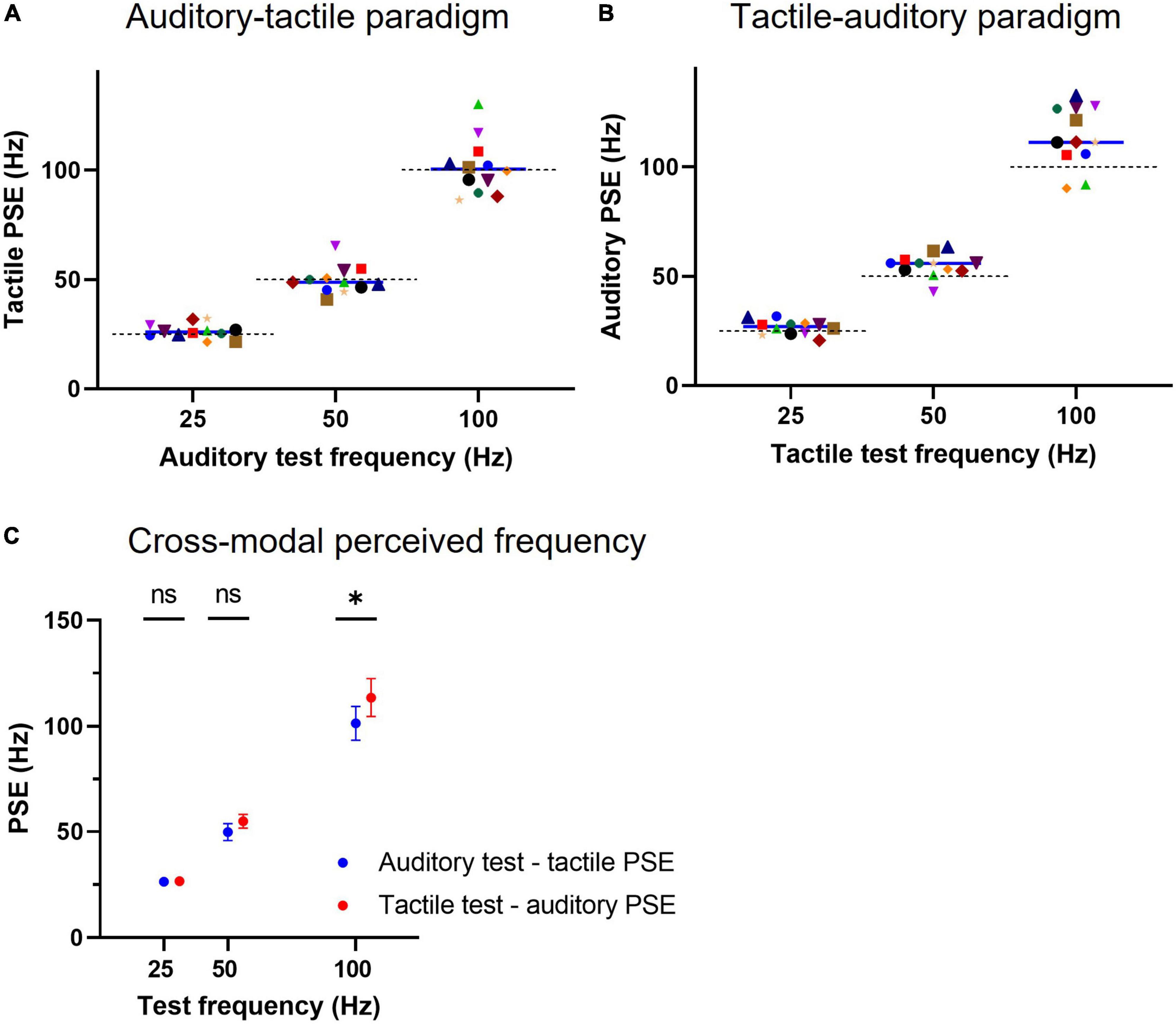

The individual subject tactile PSEs for the auditory-tactile paradigm, where a test auditory stimulus was compared against a range of tactile frequencies, are illustrated in Figure 3A. The mean PSEs across subjects were not statistically different from the physical frequencies of the presented acoustic pulse trains: 26.3 (95% CI: 24.2–28.5) Hz for the 25 Hz test (p = 0.19, n = 12, one-sample two-tailed t-test); 49.8 (45.8–53.8) Hz for the 50 Hz test (p = 0.91, n = 12), and 101.4 (93.4–109.4) Hz for the 100 Hz (p = 0.70, n = 12) test stimulus. The physical frequency (or unimodal expected perceived frequency) of each test stimulus is represented by the dashed lines in the figure for comparison with the cross-modal obtained individual PSEs.

Figure 3. Cross-modal point of subjective equality (PSE) for regular tactile and auditory stimuli. (A) Individual subject PSEs obtained for auditory test conditions using tactile comparisons (auditory-tactile paradigm). A tactile PSE represents the frequency of a vibrotactile pulse train that is equally likely to be judged higher or lower than the auditory test stimulus. A solid line indicates a median PSE (n = 12) value for a test frequency, whereas a dashed line represents the expected unimodal PSE (or the physical frequency) of the test stimulus. (B) Individual subject PSEs for tactile test conditions using auditory comparisons (tactile-auditory paradigm), conventions as in panel (A). (C) Cross-modal equivalent mean frequencies for both auditory and tactile stimuli of identical frequency, * represents p < 0.05 and error bars indicate ±95% CI.

The individual subject auditory PSEs for the tactile-auditory paradigm are shown in Figure 3B. The auditory equivalent frequency (mean PSE) for the 25 Hz vibrotactile test was 26.6 (95% CI: 24.5–28.7) Hz, which was not different from its physical frequency (p = 0.12, n = 12, one-sample two-tailed t-test). The mean equivalent frequencies for the other two vibrotactile pulse trains: 54.9 (51.6–58.2) for the 50 Hz test and 113.6 (104.7–122.4) for the 100 Hz test did, however, differ from their corresponding test physical frequencies (p = 0.007 and p = 0.006, respectively, n = 12, one-sample two-tailed t-test).
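As a consistency check on these values, a one-sample t statistic can be recovered approximately from a reported mean and its 95% confidence interval. This is a back-of-envelope sketch, not the authors' analysis: it assumes the intervals are symmetric t-based intervals with n − 1 = 11 degrees of freedom, and it works from the rounded values quoted in the text. The labels T25, T50, and T100 follow the naming convention of Figure 1.

```python
T_CRIT_DF11 = 2.201  # two-tailed 5% critical value of Student's t, 11 degrees of freedom

def t_from_ci(mean, ci_low, ci_high, null_value, t_crit=T_CRIT_DF11):
    """Approximate one-sample t statistic from a mean and its 95% CI."""
    standard_error = (ci_high - ci_low) / (2.0 * t_crit)   # CI half-width = t_crit * SE
    return (mean - null_value) / standard_error

# (mean PSE, CI low, CI high, physical test frequency), all in Hz, as reported above.
reported = {
    "T25": (26.6, 24.5, 28.7, 25.0),
    "T50": (54.9, 51.6, 58.2, 50.0),
    "T100": (113.6, 104.7, 122.4, 100.0),
}

for label, (mean, low, high, null) in reported.items():
    t = t_from_ci(mean, low, high, null)
    print(label, round(t, 2), "exceeds critical value:", abs(t) > T_CRIT_DF11)
```

With these inputs only the 50 and 100 Hz tests exceed the critical value, in line with the reported significance pattern.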

Two-way repeated-measures ANOVA (test stimulus and cross-modal paradigm) on the data presented in Figure 3C indicated that choice of paradigm (auditory-tactile or tactile-auditory) accounts only for 0.7% of the total variation in PSEs [F(1, 11) = 5.147, p = 0.045], with test stimulus causing 93.3% [F(2, 22) = 1,062, p < 0.0001] and no interaction effect between factors. Post-hoc Šidák’s multiple comparisons test showed a statistical difference only between cross-modal mean PSEs of 100 Hz test stimulus (101.4 vs. 113.6 Hz, p = 0.01).

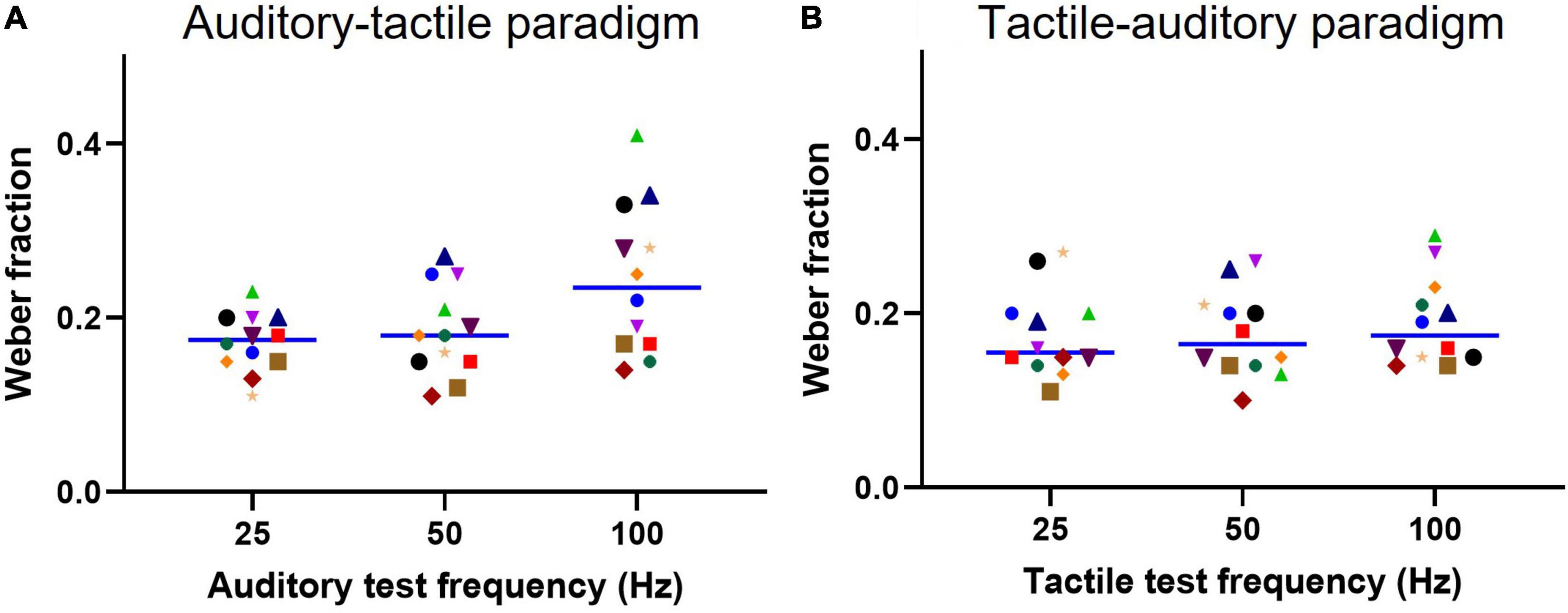

We also compared the discriminative ability of subjects when making cross-modal comparisons by calculating the Weber fraction. This value represents the sensitivity to changes in frequency. We plot the results in Figures 4A,B using the same conventions as for Figure 3. The mean Weber fractions for the auditory-tactile paradigm were 0.17 (95% CI: 0.15–0.19) for the 25 Hz test, 0.18 (0.15–0.21) for the 50 Hz test, and 0.24 (0.18–0.29) for the 100 Hz test stimulus. Tukey’s multiple comparisons test showed a significant difference between the 25 Hz and 100 Hz Weber fractions (p = 0.0146). The Weber fractions are similar across the three frequencies within the tactile-auditory paradigm: 0.17 (95% CI: 0.14–0.20) for the 25 Hz test; 0.17 (0.14–0.21) for the 50 Hz test, and 0.19 (0.15–0.22) for the 100 Hz test stimulus. A two-way repeated measures ANOVA (test frequency and paradigm) showed no significant effect of paradigm on Weber fractions [F(1.000, 11.00) = 4.484, p = 0.06] with test frequency accounting for 10.7% of the total variation [F(1.727, 19.00) = 6.300, p = 0.01]. The Weber fraction for a given test frequency was not statistically different across paradigms.

Figure 4. Weber fractions for each test stimulus. (A) Individual subject Weber fractions for each auditory test frequency using tactile comparisons (Auditory-tactile paradigm). (B) Similarly, individual subject Weber fractions for tactile test conditions using auditory comparisons (tactile-auditory paradigm). A solid line indicates a median (n = 12) Weber fraction value for a test frequency.

Presentation order of auditory and tactile stimuli does not influence point of subjective equality

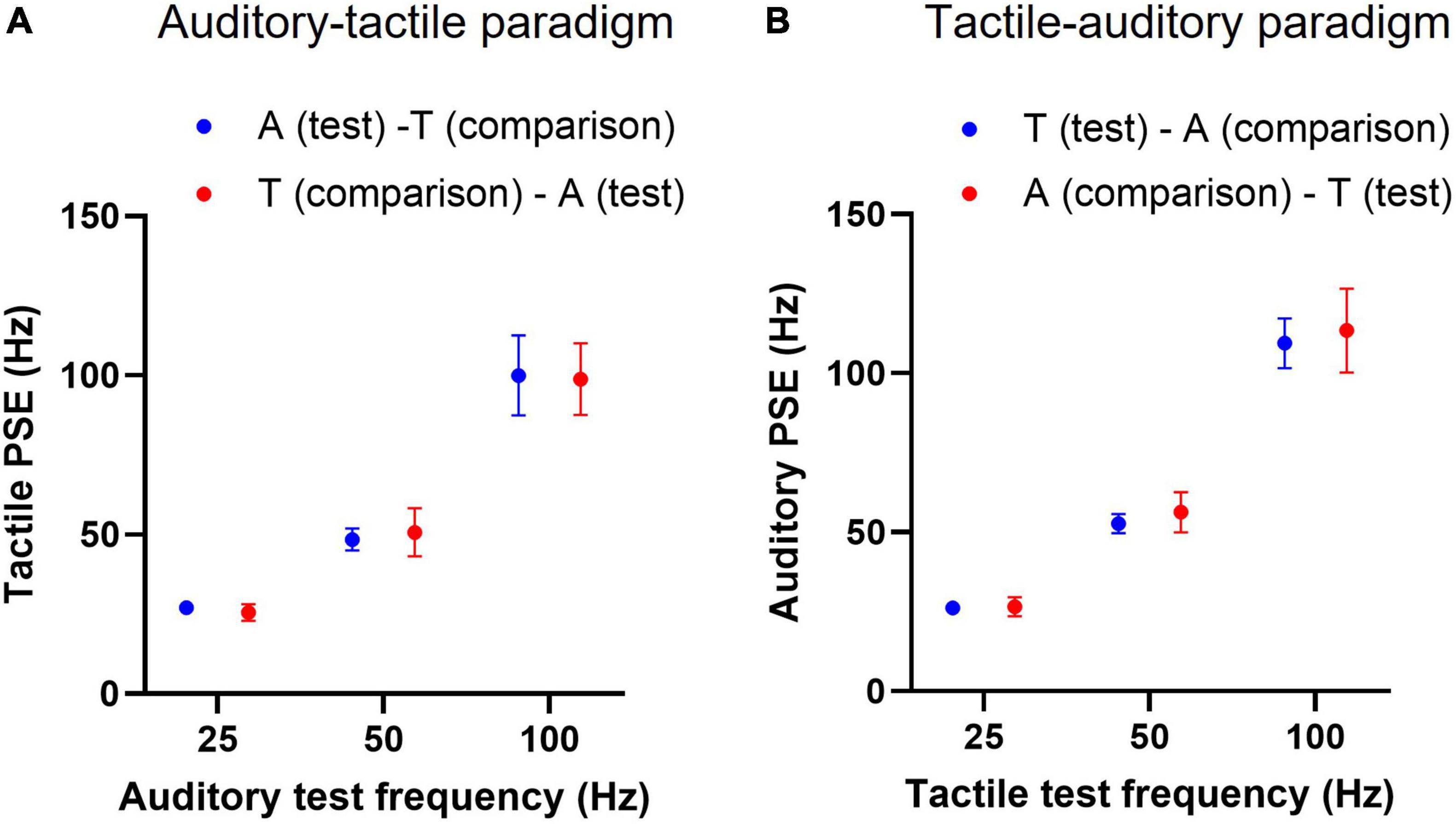

To determine whether the presentation order of a test stimulus in cross-modal frequency discrimination trials (consisting of audio-tactile pairs) alters the PSE, trials in which the test stimulus preceded the cross-modal comparison, and trials in which it followed, were sorted for each subject in both paradigms and then analysed separately to obtain the corresponding PSEs. Each paradigm thus yielded two conditions, for example in the auditory-tactile paradigm: [A (test)–T (comparison)] and [T (comparison)–A (test)]. In each condition, each test stimulus was compared ten times against each of the six comparison stimuli to obtain PSEs.

The mean tactile PSEs (n = 12) of an auditory test stimulus, whether it precedes or follows the cross-modal tactile comparisons, were not statistically different; the presentation order accounted only for 0.0005% of total variation [F(1, 11) = 0.0018, p = 0.96, two-way RM ANOVA, Figure 5A]. Similarly, the order in which tactile tests were presented in the tactile-auditory paradigm had no effect on mean PSEs; tactile test order accounted for 0.13% of total variation in observed PSEs [F(1, 11) = 1.596, p = 0.23, two-way RM ANOVA, Figure 5B].

Figure 5. Cross-modal point of subjective equality (PSE) with test stimulus presentation order. (A) Mean tactile PSEs (n = 12) of an auditory test train when it precedes or follows the tactile comparisons in psychophysical frequency discrimination trials. “A (test)–T (comparison)” indicates an auditory test stimulus preceding the tactile comparison stimuli, and “T (comparison)–A (test)” denotes the opposite presentation order. (B) Similarly, mean auditory PSEs for a tactile test train at two conditions, conventions as in panel (A). Error bars indicate ±95% CI.

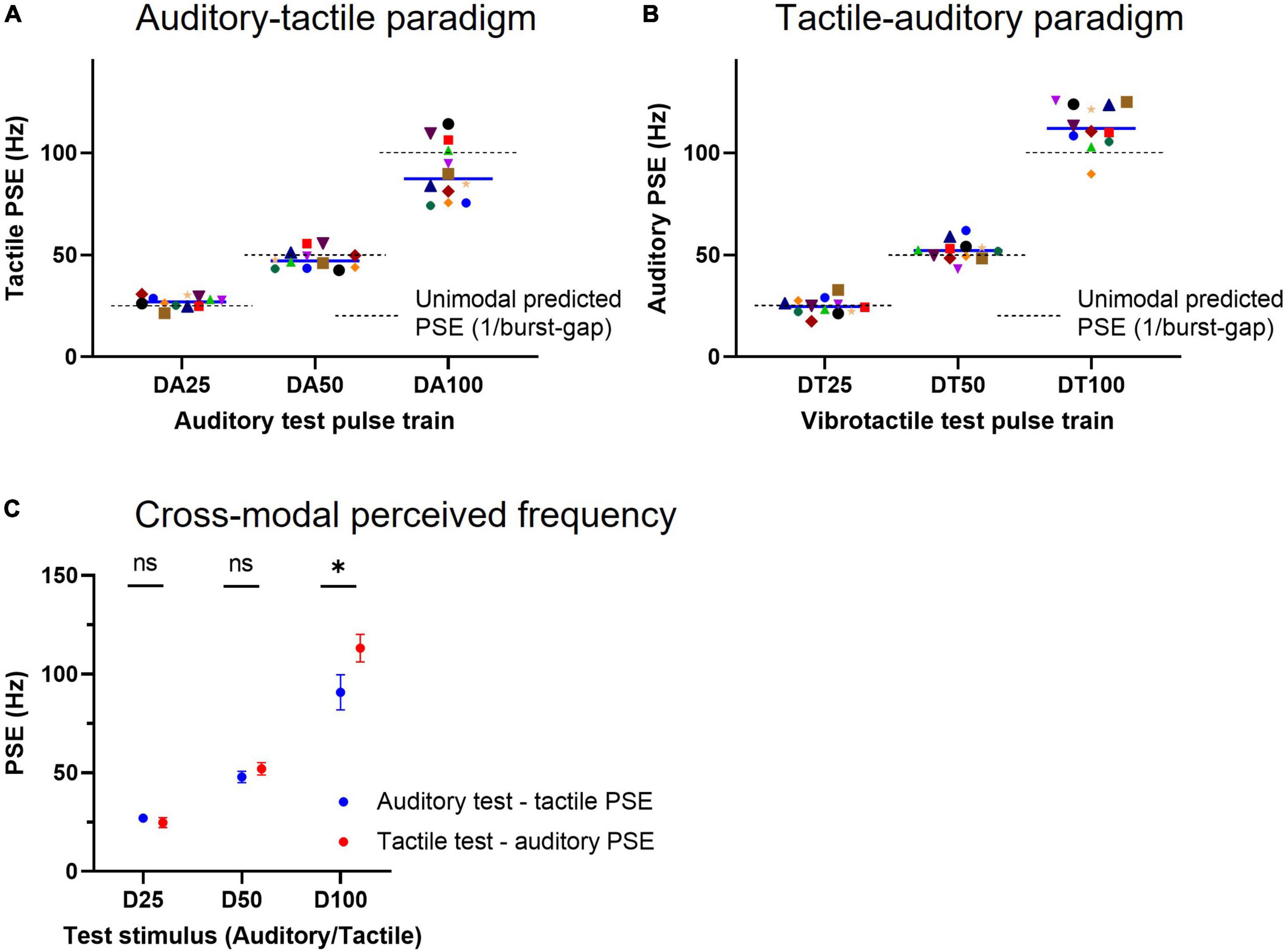

Perceptual equivalence between cross-modal bursting and regular stimuli (experiment 2)

Having observed in Experiment 1 that cross-modal matching of equivalent frequencies was possible for regular test pulse trains, in the second set of experiments we explored whether the same holds for more complex stimuli, such as trains of bursts consisting of a doublet of pulses. All participants could perform cross-modal frequency discrimination between bursting and regular stimuli in either the auditory-tactile (mean psychometric function fit R² ± SD: 0.89 ± 0.08) or tactile-auditory (R² ± SD: 0.93 ± 0.05) paradigm, with similar ability to that observed for regular vs. regular cross-modal stimuli.

The individual subject tactile PSEs for the three test acoustic doublet trains (schematically presented in Figure 2A), which vary both in burst rate/periodicity (23, 40, and 67 Hz) and mean pulse rate (46, 80, and 134 Hz, test trains DA25–DA100, respectively), are illustrated in Figure 6A. The mean equivalent tactile perceived frequency was 27.0 Hz (95% CI: 25.3–28.8) for stimulus DA25, 47.9 Hz (45.0–50.8) for stimulus DA50, and 90.9 Hz (81.9–99.8) for stimulus DA100. Although these values are close to the burst-gap model predictions depicted by the dashed lines [which correspond to the reciprocal of the inter-burst interval in the test train (Birznieks and Vickery, 2017; Ng et al., 2020, 2021)], the data for DA25 and DA100 differed from the predicted values of 25 Hz (p = 0.029, n = 12, one-sample t-test) and 100 Hz (p = 0.047, n = 12, one-sample t-test), while for stimulus DA50 there was no statistical difference from the burst-gap predicted value (50 Hz, p = 0.13, n = 12). However, the data for DA25 and DA100 were much more poorly predicted by their periodicity (23 Hz, p = 0.0004; and 67 Hz, p = 0.0001; one-sample t-test) or mean pulse rate (46 Hz, p < 0.0001; and 134 Hz, p < 0.0001) than by the burst-gap predictions, suggesting that the burst-gap model offers a better explanation.

Figure 6. Cross-modal equivalent frequency for acoustic and tactile doublet-burst pulse trains. (A) Individual subject tactile PSEs obtained for acoustic test pulse trains schematically depicted in Figure 2A (Auditory-tactile paradigm). A tactile point of subjective equality (PSE) represents the frequency of a vibrotactile pulse train that is equally likely to be judged higher or lower than the acoustic test stimulus, indicating an equivalent frequency for a given acoustic train. Each solid line indicates median PSE (n = 12) values for test trains, while the dashed lines represent unimodal (i.e., test and comparisons from the same sensory modality) predicted PSEs estimated by the burst-gap model (1/burst-gap). (B) Individual subject auditory PSEs for vibrotactile test pulse trains shown in Figure 2A (Tactile-auditory paradigm), conventions as in panel (A). (C) Cross-modal mean equivalent frequencies (n = 12) for both auditory and tactile pulse trains that are identical. * represents p < 0.05 and error bars denote ±95% CI.

When we determined auditory equivalent frequencies for the same patterns, we found 24.8 Hz (95% CI: 22.3–27.3) for stimulus DT25, 52.1 Hz (48.9–55.2) for stimulus DT50, and 113.2 Hz (106.2–120.3) for stimulus DT100, as shown in Figure 6B. The DT25 and DT50 PSEs matched the burst-gap model predictions of 25 Hz (p = 0.87, n = 12, one-sample t-test) and 50 Hz (p = 0.17). Stimulus DT100, however, had a PSE higher than the burst-gap model prediction of 100 Hz (p = 0.001, n = 12, one-sample t-test), yet this prediction remains a closer match to the observed PSE than the mean pulse rate (134 Hz) and periodicity (67 Hz) predictions.
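The relative fit of the three candidate codes can be summarised numerically from the mean PSEs reported above. The sketch below uses mean absolute error purely as a descriptive summary chosen for illustration; it is not a statistic from the original analysis, and the dictionary layout is an assumption.

```python
# Mean cross-modal PSEs (Hz) reported in the text for each doublet test train.
observed = {
    "DA25": 27.0, "DA50": 47.9, "DA100": 90.9,
    "DT25": 24.8, "DT50": 52.1, "DT100": 113.2,
}

# Predicted perceived frequency (Hz) under each candidate code,
# keyed by the nominal frequency in the stimulus label.
predictions = {
    "burst gap": {25: 25, 50: 50, 100: 100},
    "periodicity": {25: 23, 50: 40, 100: 67},
    "mean pulse rate": {25: 46, 50: 80, 100: 134},
}

def mean_abs_error(model):
    """Average absolute gap between observed PSEs and one model's predictions."""
    errors = []
    for label, pse in observed.items():
        nominal = int(label[2:])           # "DA100" -> 100
        errors.append(abs(pse - predictions[model][nominal]))
    return sum(errors) / len(errors)

for model in predictions:
    print(model, round(mean_abs_error(model), 1))

best = min(predictions, key=mean_abs_error)
print("closest model:", best)
```

On these six means the burst-gap prediction has the smallest average error, including for DT100, where the observed PSE overshoots 100 Hz but is still nearer to it than to 134 Hz or 67 Hz.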

Two-way repeated measures ANOVA (test train and cross-modal paradigm) indicated that choice of paradigm accounts for 1.48% of the total variation [F(1, 11) = 17.88, p = 0.001], with maximum variation caused by the test train [90.74%, F(2, 22) = 469.4, p < 0.0001] and interaction between factors accounting only for 2.43% [F(2, 22) = 23.13, p < 0.0001]. Post-hoc Šidák’s multiple comparisons test showed a significant difference between cross-modal PSEs of stimulus D100 (90.87 vs. 113.2 Hz, p < 0.0001, Figure 6C).

Discussion

We directly addressed the question of whether a perceptual equivalence of temporal frequency could be determined between auditory and tactile pulsatile stimuli for frequencies that span the flutter range up to the vibration range.

The first set of experiments explored the cross-modal perceptual equivalent frequency for regular auditory (auditory-tactile paradigm) and tactile (tactile-auditory paradigm) stimuli of 25, 50, and 100 Hz. The equivalent perceived tactile frequency for each auditory test train accurately matched its physical frequency, whereas the auditory equivalences for 50 and 100 Hz vibrotactile test pulse trains were marginally higher at 55 and 114 Hz, respectively. It may be that flutter and vibration are elaborated along different processing streams within each modality [as in touch (Talbot et al., 1968)], and show a close cross-modal match only in the flutter frequency stream (Romo and Salinas, 2003; Bendor and Wang, 2007) whereas the very different ranges between vibratory hum (up to 1 kHz) and pitch (up to 20 kHz) lead to a progressive mismatch in touch and hearing. This is also consistent with the results of Experiment 2 where tactile stimuli are matched with slightly higher auditory frequencies at 100 Hz, while there is close agreement at 25 and 50 Hz (Figures 6A,B).

We measured the Weber fractions for subjects performing our tasks, and found no difference between the tactile-auditory and auditory-tactile paradigms. The mean value of 0.18 at 50 Hz across the two paradigms is a close match to our previously reported value of 0.19 for a straight tactile-tactile comparison at 40 Hz using the same pulsatile stimuli (Birznieks et al., 2019). This is an important observation, as it shows not only that the perceived frequencies are equivalent across the two sensory modalities, but also that the ability to make cross-modal judgements is as good as that for a unimodal judgement.

The deviations of PSEs from the physical frequencies in the present study are within our calculated Weber fraction, and within the Weber fraction of 0.2–0.3 reported in the literature for vibrotactile stimuli (Goff, 1967; Bensmaïa et al., 2005; Li et al., 2018), which implies at most a small perceptual difference. As each auditory and tactile pulse was designed to elicit a single spike in the respective activated afferents, the matched pulse trains would create similar temporal spike trains in the responding primary afferents. The close agreement in perceived frequencies across the two modalities suggests that inter-spike intervals in the spike trains are analysed analogously in higher centres of the two senses to extract similar frequency information.
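As a worked check of this statement, the relative deviation of a PSE from the physical frequency can be compared with the Weber fraction. The sketch below uses the auditory equivalences for the 50 and 100 Hz regular vibrotactile trains quoted in the Discussion (55 and 114 Hz) and the mean Weber fraction of 0.18 measured at 50 Hz. Applying that same value at 100 Hz is an assumption made here for illustration; `relative_deviation` is a hypothetical helper name.

```python
WEBER_FRACTION = 0.18  # mean value at 50 Hz reported in the text

# Physical frequency (Hz) -> auditory equivalent frequency (Hz), from the text.
REGULAR_TRAINS = {50.0: 55.0, 100.0: 114.0}


def relative_deviation(pse_hz, physical_hz):
    """Deviation of the PSE from the physical frequency, as a fraction."""
    return abs(pse_hz - physical_hz) / physical_hz


if __name__ == "__main__":
    for physical, pse in REGULAR_TRAINS.items():
        dev = relative_deviation(pse, physical)
        verdict = "within" if dev <= WEBER_FRACTION else "outside"
        print(f"{physical:.0f} Hz train: PSE {pse:.0f} Hz, "
              f"relative deviation {dev:.2f} ({verdict} Weber fraction)")
```

The deviations come out at 0.10 and 0.14, both below 0.18 and below the 0.2–0.3 range cited from the literature.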

We did not observe biases in the frequency discrimination tasks arising from the presentation sequence of tactile and auditory stimuli in the psychophysical trials that consisted of audio-tactile pairs. This finding is consistent with a previous study in which trained monkeys discriminated frequency between two sequentially presented auditory and tactile stimuli (Lemus et al., 2010). Notably, our findings also indicate that perceptual frequency interactions between touch and audition are unlikely when the stimuli are separated in time. The finding that the perceived frequency elicited by any given auditory or tactile stimulus (measured in cross-modal psychophysical experiments using a range of frequencies) did not deviate from the expected unimodal perceived frequency provides evidence that there was little interference present, with no cross-modal transfer of effects. However, for the perceptual interactions that have been reported between concurrently delivered auditory and tactile stimuli (Yau et al., 2009a, 2010; Convento et al., 2019), the interactions may have arisen from a division of attention over both senses, suggesting that audio-tactile interplay may require attentional binding that is cued by synchronicity (Schroeder et al., 2010; Convento et al., 2018).

We extended our study in the second set of psychophysical experiments to investigate the perceptual equivalence between cross-modal audio-tactile bursting (1 s periodic doublet train) and regular pulse trains, with the hypothesis that the inter-pulse interval of the cross-modal matched regular pulse train equates to the burst gap interval in the bursting test stimulus, whether auditory or tactile. Even though the stimulus pair of varying patterns (bursting vs. regular) was expected to evoke different perceptual qualities across the two modalities (audition and touch) and was cross-matched to a different sense, subjects reliably discriminated the frequencies of cross-modal stimulus pairs, as indicated by the large R² values (>0.89) of the psychometric function fits applied to the psychophysical data. As hypothesised, the inter-pulse interval of the equated cross-modal regular pulse train was well matched to the perceived frequency of the bursting stimuli for D25 and D50. The cross-modal PSEs for stimulus D100 (with a 10 ms burst gap), though not precisely fitting the burst gap prediction, were better explained by the burst gap than by other properties of the test train such as the simple mean pulse rate or burst periodicity.
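To illustrate how a PSE and an R² value can be read off a psychometric function, the following is a minimal sketch. It assumes a logistic psychometric function fitted by ordinary least squares on the logit scale, which is a convenient textbook approach and not necessarily the fitting procedure used in this study. The response proportions are hypothetical and chosen only to demonstrate the calculation; `fit_logistic_pse` is an illustrative name.

```python
import math


def fit_logistic_pse(freqs_hz, p_higher, eps=1e-3):
    """Fit p = 1 / (1 + exp(-(a + b * f))) via a straight line on logits.

    Returns (pse_hz, r_squared), where the PSE is the frequency at which
    the fitted probability of a "higher" judgement equals 0.5.
    """
    # Clip proportions away from 0 and 1 so the logit is finite.
    clipped = [min(max(p, eps), 1.0 - eps) for p in p_higher]
    logits = [math.log(p / (1.0 - p)) for p in clipped]

    n = len(freqs_hz)
    mean_f = sum(freqs_hz) / n
    mean_l = sum(logits) / n
    sxx = sum((f - mean_f) ** 2 for f in freqs_hz)
    sxy = sum((f - mean_f) * (l - mean_l) for f, l in zip(freqs_hz, logits))
    slope = sxy / sxx
    intercept = mean_l - slope * mean_f

    pse = -intercept / slope  # a + b * f = 0 at the 50% point

    # Goodness of fit on the probability scale.
    fitted = [1.0 / (1.0 + math.exp(-(intercept + slope * f))) for f in freqs_hz]
    mean_p = sum(p_higher) / n
    ss_res = sum((p - q) ** 2 for p, q in zip(p_higher, fitted))
    ss_tot = sum((p - mean_p) ** 2 for p in p_higher)
    return pse, 1.0 - ss_res / ss_tot


if __name__ == "__main__":
    # Hypothetical data: comparison frequencies (Hz) and the proportion of
    # trials on which the comparison was judged higher than the test train.
    comparison_hz = [30.0, 40.0, 50.0, 60.0, 70.0]
    prop_higher = [0.05, 0.25, 0.50, 0.75, 0.95]
    pse, r2 = fit_logistic_pse(comparison_hz, prop_higher)
    print(f"PSE = {pse:.1f} Hz, R^2 = {r2:.3f}")
```

A maximum-likelihood fit would weight each point by its trial count, so this least-squares version should be read as a sketch of the idea only.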

Analogous temporal frequency processing mechanisms

The cerebral substrate underpinning the analysis of frequency signals is beyond the purview of this work; however, our findings make a strong case that the temporal frequency analysis mechanism is analogous across audition and touch, as the perceived frequency of doublet trains in either paradigm best corresponded to a single temporal property: the duration of the burst gap in the test trains. Preliminary evidence from Nagarajan et al. (1998) suggests that temporal information processing may be mediated by common mechanisms in the auditory and tactile systems. Participants in their study were presented with pairs of vibratory tactile pulses and trained to distinguish the temporal interval between them. The findings revealed not just a drop in threshold as a function of training, but also that the improved interval discrimination generalised to the auditory modality. Even though the generalisation was limited to an auditory base interval comparable to the one trained in touch, the results are noteworthy in that they show that temporal interval coding mechanisms could be shared centrally (Fujisaki and Nishida, 2010). Another study demonstrated that prolonged exposure to acoustic stimuli (3 min adaptation) improves subsequent tactile frequency discrimination thresholds, and went as far as to propose that the two senses share or have overlapping neural circuits that facilitate basic frequency decoding (Crommett et al., 2017). In fact, the neural population that analogously signals flutter in the cortices of both sensory modalities has been identified [for somatosensory (Romo and Salinas, 2001, 2003) and auditory cortices (Lu et al., 2001; Bendor and Wang, 2007)], and an identical neuronal coding strategy was speculated to represent auditory and tactile flutter (Bendor and Wang, 2007; Saal et al., 2016).
Furthermore, a recent study that used decoding analysis on brain activity discovered the common neural underpinnings of conscious perception between sensory modalities, including audition and touch (Sanchez et al., 2020). It has been suggested that the inner ear evolved as a highly frequency-specific responder from the skin, and the tactile system expanded the spectrum of low-frequency hearing (Fritzsch et al., 2007).

Others have argued for analogous processing mechanisms across senses for other properties; higher-order representations of object shape and motion were also found to be strikingly analogous in touch and vision (Pei et al., 2008; Yau et al., 2009b, 2016). The coding similarities across modalities provide evidence for the existence of canonical computations: the nervous system seems to have evolved to implement similar computations across modalities to extract similar information about the environment, regardless of the source of inputs (Pack and Bensmaia, 2015; Crommett et al., 2017).

Cross-modal perceptual judgements

When subjects discriminate the difference in frequency between two sequentially applied stimuli, the discrimination task can be thought of as a chain of neural operations that include encoding information of the two successive stimuli, storing the first stimulus in working memory, comparing the second stimulus to the memory trace left by the first stimulus, and finally communicating the result (Romo and Salinas, 2001; Hernández et al., 2002).

The fact that perceptual equivalence of frequency could be achieved not only between cross-modal stimuli with closely matching afferent temporal firing patterns (regular vs. regular), but also between those with varying patterns (regular vs. bursting) that have different underlying spike patterns, suggests that the audio-tactile cross-modal perceptual decision might occur central to the primary sensory cortices—as they decode only information for their individual modality and do so only during the stimulus presentation period, and not during the delay between the two stimuli (Romo et al., 2002; Lemus et al., 2010). The neurons of the primary somatosensory cortex (S1) do not engage in the working memory component of the task, nor do they compare the difference between the two input frequencies (Hernández et al., 2000). Therefore, the cross-modal perceptual decision or convergence of two distinct processing channels must occur outside these cortical areas (Fuster et al., 2000; Schroeder and Foxe, 2002).

The intriguing question is where and how the modality-specific information is transformed into a supramodal signal that allows for cross comparison. Are these two sensory modalities encoded in working memory by the same group of neurons? For example, the neurons in the pre-supplementary motor area, an area shown to participate in perceptual judgements (Tanji, 1994; Hernández et al., 2002; Hernandez et al., 2010), were found to encode tactile and acoustic frequency information in working memory using the same representation for both modalities (Vergara et al., 2016). Similarly, the medial premotor cortex was also shown to contribute to supramodal perceptual decision making (Haegens et al., 2017). Thus, single neurons from the frontal cortical region are good candidates to encode more than one sensory modality, contributing to multimodal processing during perceptual judgements. Such supramodal representations may be quite restricted, as they may exist only for task parameters such as stimulus frequency that are strongly congruent and similarly discriminable across modalities (tactile and acoustic) (Vergara et al., 2016). Nevertheless, the comparison of stored and ongoing sensory information has been reported to occur widely, and no single location can be designated as the unique locus of decision making (Romo and Salinas, 2003).

Methodological implications for future psychophysics research

Psychophysics is an essential tool in neuroscience to investigate underlying neural mechanisms for sensory perceptions. It must have a comparison standard that can be reliably and consistently equated to the test stimulus of interest (Laming and Laming, 1992). Conventionally, comparisons have been established using standardised stimuli in the same modality as the test stimuli of interest. But as the complexity of enquiries into human frequency perception advances, the same-modality comparison standard becomes challenging as an increasing number of confounders for frequency, such as varying stimulus intensities, stimulation sites and several stimulating electrodes (McIntyre et al., 2016) are introduced into research designs. Our findings of cross-modal matching of stimuli at identical frequencies raise the possibility of employing a cross-modal comparison standard for future psychophysical investigations in the two modalities, thereby overcoming the prevailing methodological limitations. Jelinek and McIntyre’s (2010) work provides an insight, as they used auditory noises of varied volumes to assess the perceived intensities of tactile stimuli. Similarly, the method of cross-modality matching to compare the perceived magnitude of electrical stimulation on the abdomen to that of a 500 Hz tone has been deployed (Sachs et al., 1980). In early pioneering work, von Békésy (1957, 1959) had already flagged that the sensation of touch may be used as a model for studying functional features of hearing (see also Gescheider, 1970). As stimulation properties (except frequency) in the auditory system have different coding schemes to their counterparts in the tactile system (Chatterjee and Zwislocki, 1997; Bollini et al., 2021), tactile confounders for vibrotactile frequency are less likely to bias pitch perception of auditory stimuli and vice versa. 
The perceived frequencies of tactile stimuli applied at different locations on the skin or with different shaped probes could all be matched to auditory stimuli of equivalent perceived pitch, allowing standardisation across different labs, and enabling these results to be compared in absolute terms regardless of skin location or probe shape.

Conclusion

Identical acoustic and vibrotactile pulse trains of simple and complex temporal features produce equivalent perceived frequencies with precise accuracy within the flutter range. This applied even for temporally-complex stimuli, indicating analogous frequency computation mechanisms deployed in higher centres, which supports the notion of a canonical computation. The findings suggest new experimental possibilities where the perceived frequency elicited by tactile stimuli, either regular or complex, within the flutter range can be measured explicitly using cross-modal auditory comparisons, and vice versa.

Data availability statement

The original contributions presented in this study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

The studies involving human participants were reviewed and approved by Human Research Ethics Committee UNSW Sydney. The patients/participants provided their written informed consent to participate in this study.

Author contributions

DS performed the experiments, analysed the data, drafted the manuscript, and prepared the figures. DS, IB, and RV interpreted the experimental results. DS, KN, IB, and RV conceived and designed the study, edited and revised the manuscript, and approved the final submitted version.

Funding

This study was funded by the Australian Research Council discovery project grant (DP200100630).

Acknowledgments

We thank Meng (Evelyn) Jin for her assistance with conducting pilot experiments and collecting the first six subjects’ data.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Bendor, D., and Wang, X. (2007). Differential neural coding of acoustic flutter within primate auditory cortex. Nat. Neurosci. 10, 763–771. doi: 10.1038/nn1888

Bensmaïa, S., Hollins, M., and Yau, J. (2005). Vibrotactile intensity and frequency information in the Pacinian system: A psychophysical model. Percept. Psychophys. 67, 828–841. doi: 10.3758/BF03193536

Birznieks, I., and Vickery, R. M. (2017). Spike timing matters in novel neuronal code involved in vibrotactile frequency perception. Curr. Biol. 27, 1485–1490. doi: 10.1016/j.cub.2017.04.011

Birznieks, I., McIntyre, S., Nilsson, H. M., Nagi, S. S., Macefield, V. G., Mahns, D. A., et al. (2019). Tactile sensory channels over-ruled by frequency decoding system that utilizes spike pattern regardless of receptor type. eLife 8:e46510. doi: 10.7554/eLife.46510

Bolanowski, S. J. Jr., Gescheider, G. A., Verrillo, R. T., and Checkosky, C. M. (1988). Four channels mediate the mechanical aspects of touch. J. Acoust. Soc. Am. 84, 1680–1694. doi: 10.1121/1.397184

Bollini, A., Esposito, D., Campus, C., and Gori, M. (2021). Different mechanisms of magnitude and spatial representation for tactile and auditory modalities. Exp. Brain Res. 239, 3123–3132. doi: 10.1007/s00221-021-06196-4

Brisben, A. J., Hsiao, S. S., and Johnson, K. O. (1999). Detection of vibration transmitted through an object grasped in the hand. J. Neurophysiol. 81, 1548–1558. doi: 10.1152/jn.1999.81.4.1548

Chatterjee, M., and Zwislocki, J. J. (1997). Cochlear mechanisms of frequency and intensity coding. I. The place code for pitch. Hear. Res. 111, 65–75. doi: 10.1016/s0378-5955(97)00089-0

Convento, S., Rahman, M. S., and Yau, J. M. (2018). Selective attention gates the interactive crossmodal coupling between perceptual systems. Curr. Biol. CB 28, 746–752.e745. doi: 10.1016/j.cub.2018.01.021

Convento, S., Wegner-Clemens, K. A., and Yau, J. M. (2019). Reciprocal interactions between audition and touch in flutter frequency perception. Multisens. Res. 32, 67–85. doi: 10.1163/22134808-20181334

Crommett, L. E., Perez-Bellido, A., and Yau, J. M. (2017). Auditory adaptation improves tactile frequency perception. J. Neurophysiol. 117, 1352–1362. doi: 10.1152/jn.00783.2016

Escabí, M. A., Higgins, N. C., Galaburda, A. M., Rosen, G. D., and Read, H. L. (2007). Early cortical damage in rat somatosensory cortex alters acoustic feature representation in primary auditory cortex. Neuroscience 150, 970–983. doi: 10.1016/j.neuroscience.2007.07.054

Foxe, J. J., Wylie, G. R., Martinez, A., Schroeder, C. E., Javitt, D. C., Guilfoyle, D., et al. (2002). Auditory-somatosensory multisensory processing in auditory association cortex: An fMRI study. J. Neurophysiol. 88, 540–543. doi: 10.1152/jn.2002.88.1.540

Fritzsch, B., Beisel, K. W., Pauley, S., and Soukup, G. (2007). Molecular evolution of the vertebrate mechanosensory cell and ear. Int. J. Dev. Biol. 51, 663–678. doi: 10.1387/ijdb.072367bf

Fujisaki, W., and Nishida, S. (2010). A common perceptual temporal limit of binding synchronous inputs across different sensory attributes and modalities. Proc. Biol. Sci. 277, 2281–2290. doi: 10.1098/rspb.2010.0243

Fuster, J. M., Bodner, M., and Kroger, J. K. (2000). Cross-modal and cross-temporal association in neurons of frontal cortex. Nature 405, 347–351. doi: 10.1038/35012613

Gescheider, G. A. (1970). Some comparisons between touch and hearing. IEEE Trans. Man-Mach. Syst. 11, 28–35. doi: 10.1109/TMMS.1970.299958

Gick, B., and Derrick, D. (2009). Aero-tactile integration in speech perception. Nature 462, 502–504. doi: 10.1038/nature08572

Goff, G. D. (1967). Differential discrimination of frequency of cutaneous mechanical vibration. J. Exp. Psychol. 74, 294–299. doi: 10.1037/h0024561

Grothe, B., Pecka, M., and McAlpine, D. (2010). Mechanisms of sound localization in mammals. Physiol. Rev. 90, 983–1012. doi: 10.1152/physrev.00026.2009

Guest, S., Catmur, C., Lloyd, D., and Spence, C. (2002). Audiotactile interactions in roughness perception. Exp. Brain Res. 146, 161–171. doi: 10.1007/s00221-002-1164-z

Haegens, S., Vergara, J., Rossi-Pool, R., Lemus, L., and Romo, R. (2017). Beta oscillations reflect supramodal information during perceptual judgment. Proc. Natl. Acad. Sci. U.S.A. 114, 13810–13815. doi: 10.1073/pnas.1714633115

Hernandez, A., Nacher, V., Luna, R., Zainos, A., Lemus, L., Alvarez, M., et al. (2010). Decoding a perceptual decision process across cortex. Neuron 66, 300–314. doi: 10.1016/j.neuron.2010.03.031

Hernández, A., Zainos, A., and Romo, R. (2000). Neuronal correlates of sensory discrimination in the somatosensory cortex. Proc. Natl. Acad. Sci. U.S.A. 97:6191. doi: 10.1073/pnas.120018597

Hernández, A., Zainos, A., and Romo, R. (2002). Temporal evolution of a decision-making process in medial premotor cortex. Neuron 33, 959–972. doi: 10.1016/S0896-6273(02)00613-X

Javel, E., and Mott, J. B. (1988). Physiological and psychophysical correlates of temporal processes in hearing. Hear. Res. 34, 275–294. doi: 10.1016/0378-5955(88)90008-1

Jelinek, H. F., and McIntyre, R. (2010). Electric pulse frequency and magnitude of perceived sensation during electrocutaneous forearm stimulation. Arch. Phys. Med. Rehabil. 91, 1378–1382. doi: 10.1016/j.apmr.2010.06.016

Johansson, R. S., and Birznieks, I. (2004). First spikes in ensembles of human tactile afferents code complex spatial fingertip events. Nat. Neurosci. 7, 170–177. doi: 10.1038/nn1177

Kayser, C., Petkov, C. I., Augath, M., and Logothetis, N. K. (2005). Integration of touch and sound in auditory cortex. Neuron 48, 373–384. doi: 10.1016/j.neuron.2005.09.018

Krumbholz, K., Patterson, R. D., and Pressnitzer, D. (2000). The lower limit of pitch as determined by rate discrimination. J. Acoust. Soc. Am. 108(3 Pt. 1) 1170–1180. doi: 10.1121/1.1287843

Laming, D., and Laming, J. (1992). F. Hegelmaier: On memory for the length of a line. Psychol. Res. 54, 233–239. doi: 10.1007/BF01358261

LaMotte, R. H., and Mountcastle, V. B. (1975). Capacities of humans and monkeys to discriminate vibratory stimuli of different frequency and amplitude: A correlation between neural events and psychological measurements. J. Neurophysiol. 38, 539–559. doi: 10.1152/jn.1975.38.3.539

Lemus, L., Hernández, A., Luna, R., Zainos, A., and Romo, R. (2010). Do sensory cortices process more than one sensory modality during perceptual judgments? Neuron 67, 335–348. doi: 10.1016/j.neuron.2010.06.015

Li, M., Zhang, D., Chen, Y., Chai, X., He, L., Chen, Y., et al. (2018). Discrimination and recognition of phantom finger sensation through transcutaneous electrical nerve stimulation. Front. Neurosci. 12:283. doi: 10.3389/fnins.2018.00283

Lu, T., Liang, L., and Wang, X. (2001). Temporal and rate representations of time-varying signals in the auditory cortex of awake primates. Nat. Neurosci. 4, 1131–1138. doi: 10.1038/nn737

Mackevicius, E. L., Best, M. D., Saal, H. P., and Bensmaia, S. J. (2012). Millisecond precision spike timing shapes tactile perception. J. Neurosci. 32, 15309–15317. doi: 10.1523/jneurosci.2161-12.2012

Marks, L. E. (1983). Similarities and differences among the senses. Int. J. Neurosci. 19, 1–11. doi: 10.3109/00207458309148640

McIntyre, S., Birznieks, I., Andersson, R., Dicander, G., Breen, P. P., and Vickery, R. M. (2016). “Temporal integration of tactile inputs from multiple sites,” in Haptics: Perception, devices, control, and applications, eds F. Bello, H. Kajimoto, and Y. Visell (Cham: Springer), 204–213.

Nagarajan, S. S., Blake, D. T., Wright, B. A., Byl, N., and Merzenich, M. M. (1998). Practice-related improvements in somatosensory interval discrimination are temporally specific but generalize across skin location, hemisphere, and modality. J. Neurosci. 18, 1559–1570. doi: 10.1523/JNEUROSCI.18-04-01559.1998

Ng, K. K. W., Birznieks, I., Tse, I. T. H., Andersen, J., Nilsson, S., and Vickery, R. M. (2018). Perceived frequency of aperiodic vibrotactile stimuli depends on temporal encoding. Haptics Sci. Technol. Appl. Pt I 10893, 199–208. doi: 10.1007/978-3-319-93445-7_18

Ng, K. K. W., Olausson, C., Vickery, R. M., and Birznieks, I. (2020). Temporal patterns in electrical nerve stimulation: Burst gap code shapes tactile frequency perception. PLoS One 15:e0237440. doi: 10.1371/journal.pone.0237440

Ng, K. K. W., Snow, I. N., Birznieks, I., and Vickery, R. M. (2021). Burst gap code predictions for tactile frequency are valid across the range of perceived frequencies attributed to two distinct tactile channels. J. Neurophysiol. 125, 687–692. doi: 10.1152/jn.00662.2020

Ng, K. K. W., Tee, X., Vickery, R. M., and Birznieks, I. (2022). The relationship between tactile intensity perception and afferent spike count is moderated by a function of frequency. IEEE Trans. Haptics 15, 14–19. doi: 10.1109/Toh.2022.3140877

Occelli, V., Spence, C., and Zampini, M. (2011). Audiotactile interactions in temporal perception. Psychon. Bull. Rev. 18, 429–454. doi: 10.3758/s13423-011-0070-4

Pack, C. C., and Bensmaia, S. J. (2015). Seeing and feeling motion: Canonical computations in vision and touch. PLoS Biol. 13:e1002271. doi: 10.1371/journal.pbio.1002271

Pei, Y. C., Hsiao, S. S., and Bensmaia, S. J. (2008). The tactile integration of local motion cues is analogous to its visual counterpart. Proc. Natl. Acad. Sci. U.S.A. 105, 8130–8135. doi: 10.1073/pnas.0800028105

Pérez-Bellido, A., Anne Barnes, K., Crommett, L. E., and Yau, J. M. (2018). Auditory frequency representations in human somatosensory cortex. Cereb. Cortex 28, 3908–3921. doi: 10.1093/cercor/bhx255

Rahman, M. S., Barnes, K. A., Crommett, L. E., Tommerdahl, M., and Yau, J. M. (2020). Auditory and tactile frequency representations are co-embedded in modality-defined cortical sensory systems. Neuroimage 215:116837. doi: 10.1016/j.neuroimage.2020.116837

Ro, T., Ellmore, T. M., and Beauchamp, M. S. (2013). A neural link between feeling and hearing. Cereb. Cortex (New York, N.Y. 1991) 23, 1724–1730. doi: 10.1093/cercor/bhs166

Ro, T., Hsu, J., Yasar, N. E., Elmore, L. C., and Beauchamp, M. S. (2009). Sound enhances touch perception. Exp. Brain Res. 195, 135–143. doi: 10.1007/s00221-009-1759-8

Romo, R., and Salinas, E. (2001). Touch and go: Decision-making mechanisms in somatosensation. Annu. Rev. Neurosci. 24, 107–137. doi: 10.1146/annurev.neuro.24.1.107

Romo, R., and Salinas, E. (2003). Flutter discrimination: Neural codes, perception, memory and decision making. Nat. Rev. Neurosci. 4, 203–218. doi: 10.1038/nrn1058

Romo, R., Hernández, A., Zainos, A., Lemus, L., and Brody, C. D. (2002). Neuronal correlates of decision-making in secondary somatosensory cortex. Nat. Neurosci. 5, 1217–1225. doi: 10.1038/nn950

Saal, H. P., Wang, X., and Bensmaia, S. J. (2016). Importance of spike timing in touch: An analogy with hearing? Curr. Opin. Neurobiol. 40, 142–149. doi: 10.1016/j.conb.2016.07.013

Sachs, R. M., Miller, J. D., and Grant, K. W. (1980). Perceived magnitude of multiple electrocutaneous pulses. Percept. Psychophys. 28, 255–262. doi: 10.3758/BF03204383

Sanchez, G., Hartmann, T., Fusca, M., Demarchi, G., and Weisz, N. (2020). Decoding across sensory modalities reveals common supramodal signatures of conscious perception. Proc. Natl. Acad. Sci. U.S.A. 117, 7437–7446. doi: 10.1073/pnas.1912584117

Schroeder, C. E., and Foxe, J. J. (2002). The timing and laminar profile of converging inputs to multisensory areas of the macaque neocortex. Brain Res. Cogn. Brain Res. 14, 187–198. doi: 10.1016/s0926-6410(02)00073-3

Schroeder, C. E., Wilson, D. A., Radman, T., Scharfman, H., and Lakatos, P. (2010). Dynamics of Active Sensing and perceptual selection. Curr. Opin. Neurobiol. 20, 172–176. doi: 10.1016/j.conb.2010.02.010

Schürmann, M., Caetano, G., Hlushchuk, Y., Jousmäki, V., and Hari, R. (2006). Touch activates human auditory cortex. NeuroImage 30, 1325–1331. doi: 10.1016/j.neuroimage.2005.11.020

Sharma, D., Ng, K. K. W., Birznieks, I., and Vickery, R. M. (2022). The burst gap is a peripheral temporal code for pitch perception that is shared across audition and touch. Sci. Rep. 12:11014. doi: 10.1038/s41598-022-15269-5

Talbot, W. H., Darian-Smith, I., Kornhuber, H. H., and Mountcastle, V. B. (1968). The sense of flutter-vibration: Comparison of the human capacity with response patterns of mechanoreceptive afferents from the monkey hand. J. Neurophysiol. 31, 301–334. doi: 10.1152/jn.1968.31.2.301

Tanji, J. (1994). The supplementary motor area in the cerebral cortex. Neurosci. Res. 19, 251–268. doi: 10.1016/0168-0102(94)90038-8

Vergara, J., Rivera, N., Rossi-Pool, R., and Romo, R. (2016). A neural parametric code for storing information of more than one sensory modality in working memory. Neuron 89, 54–62. doi: 10.1016/j.neuron.2015.11.026

Vickery, R. M., Ng, K. K. W., Potas, J. R., Shivdasani, M. N., McIntyre, S., Nagi, S. S., et al. (2020). Tapping into the language of touch: Using non-invasive stimulation to specify tactile afferent firing patterns. Front. Neurosci. 14:500. doi: 10.3389/fnins.2020.00500

von Békésy, G. (1957). Neural volleys and the similarity between some sensations produced by tones and by skin vibrations. J. Acoust. Soc. Am. 29, 1059–1069. doi: 10.1121/1.1908698

von Békésy, G. (1959). Similarities between hearing and skin sensations. Psychol. Rev. 66, 1–22. doi: 10.1037/h0046967

Wilson, E. C., Reed, C. M., and Braida, L. D. (2010). Integration of auditory and vibrotactile stimuli: Effects of frequency. J. Acoust. Soc. Am. 127, 3044–3059. doi: 10.1121/1.3365318

Yau, J. M., Kim, S. S., Thakur, P. H., and Bensmaia, S. J. (2016). Feeling form: The neural basis of haptic shape perception. J. Neurophysiol. 115, 631–642. doi: 10.1152/jn.00598.2015

Yau, J. M., Olenczak, J. B., Dammann, J. F., and Bensmaia, S. J. (2009a). Temporal frequency channels are linked across audition and touch. Curr. Biol. 19, 561–566. doi: 10.1016/j.cub.2009.02.013

Yau, J. M., Pasupathy, A., Fitzgerald, P. J., Hsiao, S. S., and Connor, C. E. (2009b). Analogous intermediate shape coding in vision and touch. Proc. Natl. Acad. Sci. U.S.A. 106, 16457–16462. doi: 10.1073/pnas.0904186106

Keywords: vibrotactile, audio-tactile equivalence, burst-gap code, temporal code, auditory click, frequency perception, cross-modal comparison, pitch perception

Citation: Sharma D, Ng KKW, Birznieks I and Vickery RM (2022) Auditory clicks elicit equivalent temporal frequency perception to tactile pulses: A cross-modal psychophysical study. Front. Neurosci. 16:1006185. doi: 10.3389/fnins.2022.1006185

Received: 29 July 2022; Accepted: 24 August 2022;

Published: 09 September 2022.

Edited by:

Benedikt Zoefel, UMR 5549 Centre de Recherche Cerveau et Cognition (CerCo), France

Reviewed by:

Arindam Bhattacharjee, University of Tübingen, Germany

Lincoln Gray, James Madison University, United States

Copyright © 2022 Sharma, Ng, Birznieks and Vickery. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Deepak Sharma
