- 1School of Electrical Engineering, Vellore Institute of Technology (VIT) Chennai, Tamil Nadu, India

- 2Center for e-Automation Technologies and School of Electrical Engineering, Vellore Institute of Technology Chennai, Tamil Nadu, India

Introduction: Alzheimer's disease (AD) is a progressive neurological disorder that impairs memory and cognitive function in elderly individuals. Early detection is vital to slow disease progression and enable timely therapeutic intervention. Traditional diagnostic approaches for AD, however, often involve high time complexity and significant computational resource utilization, highlighting the need for more efficient automated solutions.

Methods: This study introduces a Resource Efficient Convolutional Neural Network (RECNN) framework for AD detection and diagnosis using brain MRI images. The methodology incorporates three main modules: Gabor transformation, data augmentation, and classification with an anomalous pixel segmentation algorithm. Gabor transforms are employed to enhance spatial frequency features and improve detection rates. Data augmentation techniques are applied to increase the diversity of training samples. The RECNN classifier is then used for image classification, and functional morphological segmentation is applied to classify affected pixels into mild or advanced stages of AD. Two benchmark datasets are utilized for training and testing the proposed framework.

Results: The proposed RECNN-based system demonstrated superior detection and classification performance compared with conventional AD detection methods. The model achieved improved accuracy and robustness, with segmentation results enabling the differentiation between mild and advanced AD cases. Comparative evaluation confirmed that RECNN significantly reduces computational complexity while maintaining high diagnostic reliability.

Discussion: The findings suggest that the RECNN framework offers a resource-efficient and accurate approach for AD detection using MRI data. By combining Gabor-based feature transformation, augmented data diversity, and advanced segmentation, the proposed method provides a scalable and clinically applicable tool for early diagnosis. Future work will extend the model to larger and more diverse datasets and explore hybrid architectures to further enhance diagnostic performance.

1 Introduction

Alzheimer's disease (AD) is a progressive neurodegenerative disorder that predominantly affects the elderly population. The disease often begins with subtle, asymptomatic changes, followed by a gradual decline in cognitive function. One of the earliest and most defining clinical features is impairment in memory function, which arises as healthy neurons progressively deteriorate. As the condition advances, many patients develop mild cognitive impairment (MCI), a transitional state between normal aging and dementia.

According to the Neuroimaging Society (NIS) 2021 report (Helaly et al., 2022; Jain et al., 2019; Wang Y. et al., 2018; Wang S. H. et al., 2018), AD is still incurable, even if detected at an early stage. However, timely diagnosis is considered crucial, as it allows for interventions that can delay disease progression and preserve quality of life. In particular, cognitive therapies and symptomatic treatments may help stabilize memory performance and slow the loss of functional independence.

Neuroimaging plays an essential role in AD detection and monitoring. Among the available modalities, Magnetic Resonance Imaging (MRI) and Positron Emission Tomography (PET) are the most widely adopted. While PET provides metabolic and molecular-level insights, its use is limited by the risk of radiation exposure. MRI, on the other hand, offers high-resolution structural information without the use of ionizing radiation, making it the preferred modality for longitudinal and clinical studies (Noor et al., 2020; Pulido et al., 2020; Altinkaya et al., 2020; Chaddad et al., 2018; Vatanabe et al., 2020).

MRI scans can be further divided into structural MRI (sMRI) and functional MRI (fMRI). Structural MRI provides superior pixel resolution and enables precise assessment of cortical and subcortical atrophy, which are key biomarkers of AD. Functional MRI, although lower in spatial resolution, captures dynamic neural activity and serves as a complementary tool for understanding disease-related brain dysfunction (Mahanty et al., 2024; Mandawkar and Diwan, 2024; Yao et al., 2023).

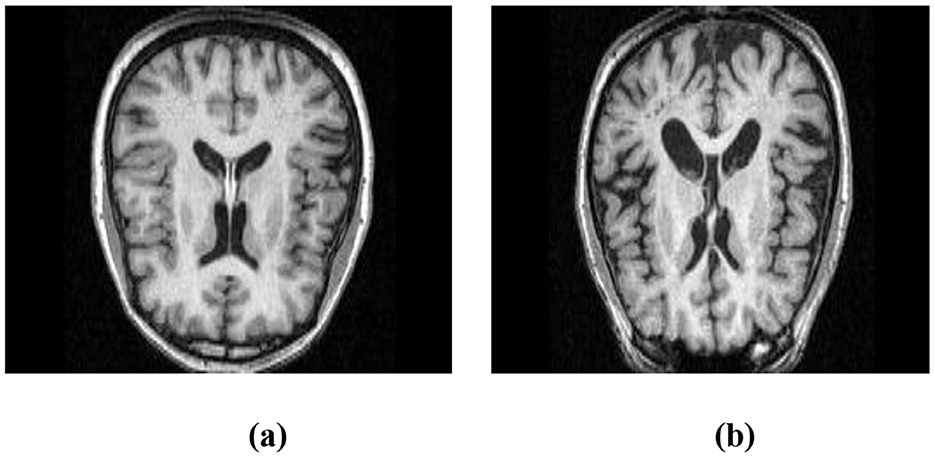

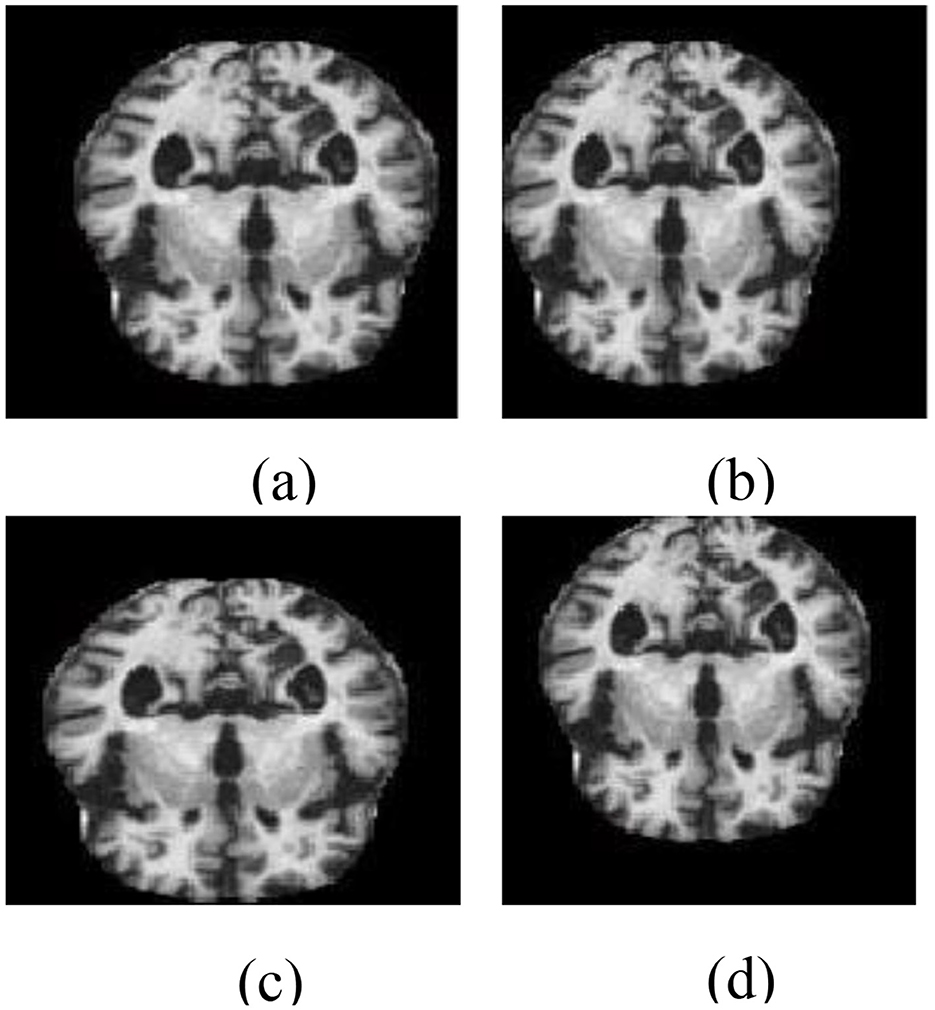

To demonstrate the utility of MRI in this context, Figure 1a shows a structural MRI scan of a healthy subject, while Figure 1b illustrates an AD-affected brain. Clear differences in structural integrity can be observed, highlighting the importance of MRI for early-stage detection.

Figure 1. Structural MRI scans illustrating brain morphology. (a) Non-AD brain image. (b) AD brain image.

Despite advances in imaging, traditional diagnostic approaches often fail to capture the diffuse and subtle structural variations that characterize early AD. Conventional CNN-based models used for image classification primarily rely on sequential feature extraction and may overlook weak but clinically significant signals. This limitation underscores the need for a resource-efficient and clinically adaptable deep learning framework.

In this study, we propose a Resource-Efficient Convolutional Neural Network (RECNN) that integrates Functional Gabor Transform (FGT) preprocessing, multi-path convolutional feature extraction, and Fuzzy C-Means clustering-based classification. By combining these innovations, the framework is designed to improve sensitivity to early-stage AD biomarkers while maintaining computational efficiency. Furthermore, the model not only distinguishes AD from non-AD cases but also provides a dual-stage categorization (mild vs. advanced), thereby enhancing its clinical applicability for assessing disease progression.

2 Literature survey

Recent years have witnessed a surge in interest in the application of deep learning and machine learning approaches for detecting and classifying Alzheimer's disease (AD) using neuroimaging data. Various strategies have been proposed, ranging from reinforcement learning frameworks to hybrid and hierarchical CNN-based models, each aiming to enhance accuracy and address the challenges of limited data, overfitting, and disease heterogeneity.

For instance, (Hatami et al. 2025) employed a deep reinforcement learning framework to improve AD image classification. Their non-linear learning strategy provided enhanced adaptability, yielding improvements in classification accuracy. Similarly, (Abhaya and Rajkumar 2025) proposed a hybrid architecture, Spike Google-Deep CNN SEViT, which successfully mitigated overfitting and produced reliable diagnostic outcomes. These contributions highlight the growing role of adaptive and hybrid methods in advancing AD detection.

Other studies have focused on traditional machine learning pipelines combined with neuroimaging. (Aberathne et al. 2023) utilized logistic regression on multimodal MRI and PET scans, reporting very high classification scores on benchmark datasets (KAD and MIRIAD). Along similar lines, (Diogo et al. 2022) introduced a multi-stage diagnostic framework based on support vector machines (SVM) with multi-kernel learning. By systematically varying kernel functions, their model demonstrated competitive sensitivity, specificity, and precision, highlighting the effectiveness of classical ML methods when carefully optimized.

Efficient architectures have also been explored. (Buvaneswari and Gayathri 2023) applied a linear variable model, achieving high accuracy with reduced computational demands. Meanwhile, (Liang and Gu 2020) incorporated weakly supervised deep learning with an attention mechanism to capture subtle disease cues, while (Lian et al. 2020) proposed a hierarchical CNN to localize atrophy patterns in MRI scans. Both approaches emphasized the importance of capturing pixel-level structural differences, with reported accuracies above 93% across multiple datasets.

Although these studies demonstrate remarkable progress, they share certain limitations. Many approaches prioritize overall classification accuracy but do not fully address the need for robust feature extraction across scales, reduction of overfitting in limited clinical datasets, or stage-specific diagnosis that could inform disease monitoring. Furthermore, while reported performance metrics are consistently high, these models are often tested on relatively homogeneous datasets, leaving questions about their generalizability to diverse clinical conditions, scanner types, and noise levels.

Building on these advancements, the present study introduces a Resource-Efficient Convolutional Neural Network (RECNN). Unlike prior research, RECNN integrates parallel multi-path convolution for rich feature extraction, introduces a feature integration stage to retain complementary signals, replaces dense layers with fuzzy C-means clustering to improve adaptability, and incorporates dual-stage diagnostic capability to separate mild and advanced AD cases. In doing so, it directly addresses the limitations identified in earlier studies and offers a unified, clinically meaningful framework for AD diagnosis.

In this study, we present a Resource-Efficient Convolutional Neural Network (RECNN) specifically designed for the classification of Alzheimer's disease (AD) images. The proposed framework introduces several distinct innovations that set it apart from conventional CNN-based approaches:

1. Multi-path convolutional design: the architecture incorporates parallel convolution–pooling streams that capture both fine-grained textural cues and broader structural patterns from brain images, enabling richer feature learning compared to traditional sequential CNN models.

2. Feature integration (FI) mechanism: features obtained from multiple abstraction levels are fused before classification, ensuring that complementary local and global information is preserved for more accurate detection of Alzheimer's-related changes.

3. Fuzzy C-Means (FCM)–based classification: instead of conventional fully connected layers, the model leverages FCM clustering to define adaptive decision boundaries, thereby improving robustness while mitigating overfitting in medical datasets with limited sample sizes.

4. Dual-stage diagnostic capability: beyond distinguishing AD from non-AD, the model provides additional diagnostic support by separating abnormal cases into mild and advanced stages, thereby enhancing its clinical utility for disease monitoring and progression assessment.

Together, these innovations establish RECNN as a technically distinctive and clinically meaningful framework for Alzheimer's disease classification.

The key contributions of this research are as follows:

• Functional Gabor transform (FGT): applied to capture spatial–frequency pixel properties, enhancing feature extraction from MRI images.

• Mitigation of overfitting: directional data augmentation (DA) strategies were employed during training to improve the model's robustness and generalization.

• Design of a novel RECNN architecture: a resource-efficient CNN framework specifically tailored for Alzheimer's disease (AD) image classification.

• Integration of Fuzzy C-Means (FCM): replaced traditional dense layers, providing adaptive decision boundaries and reducing overfitting risks.

3 Methodology

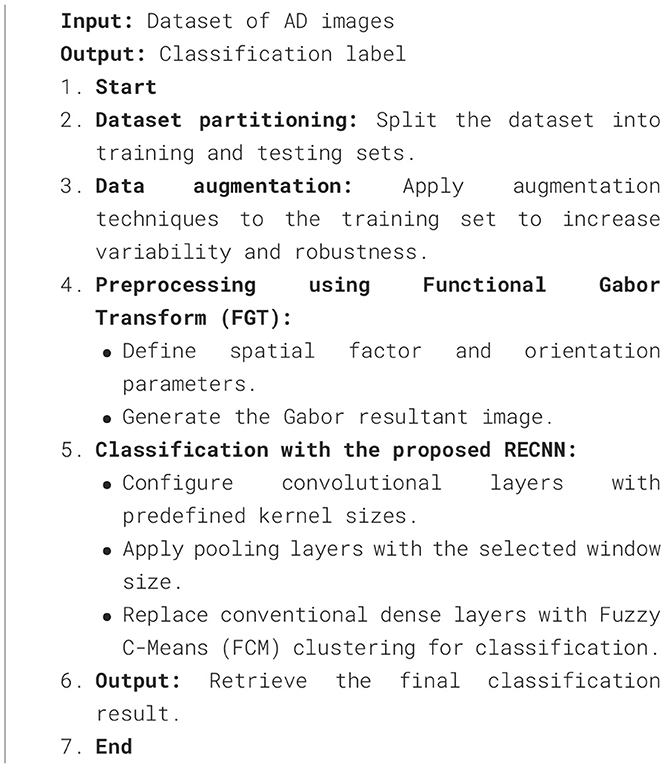

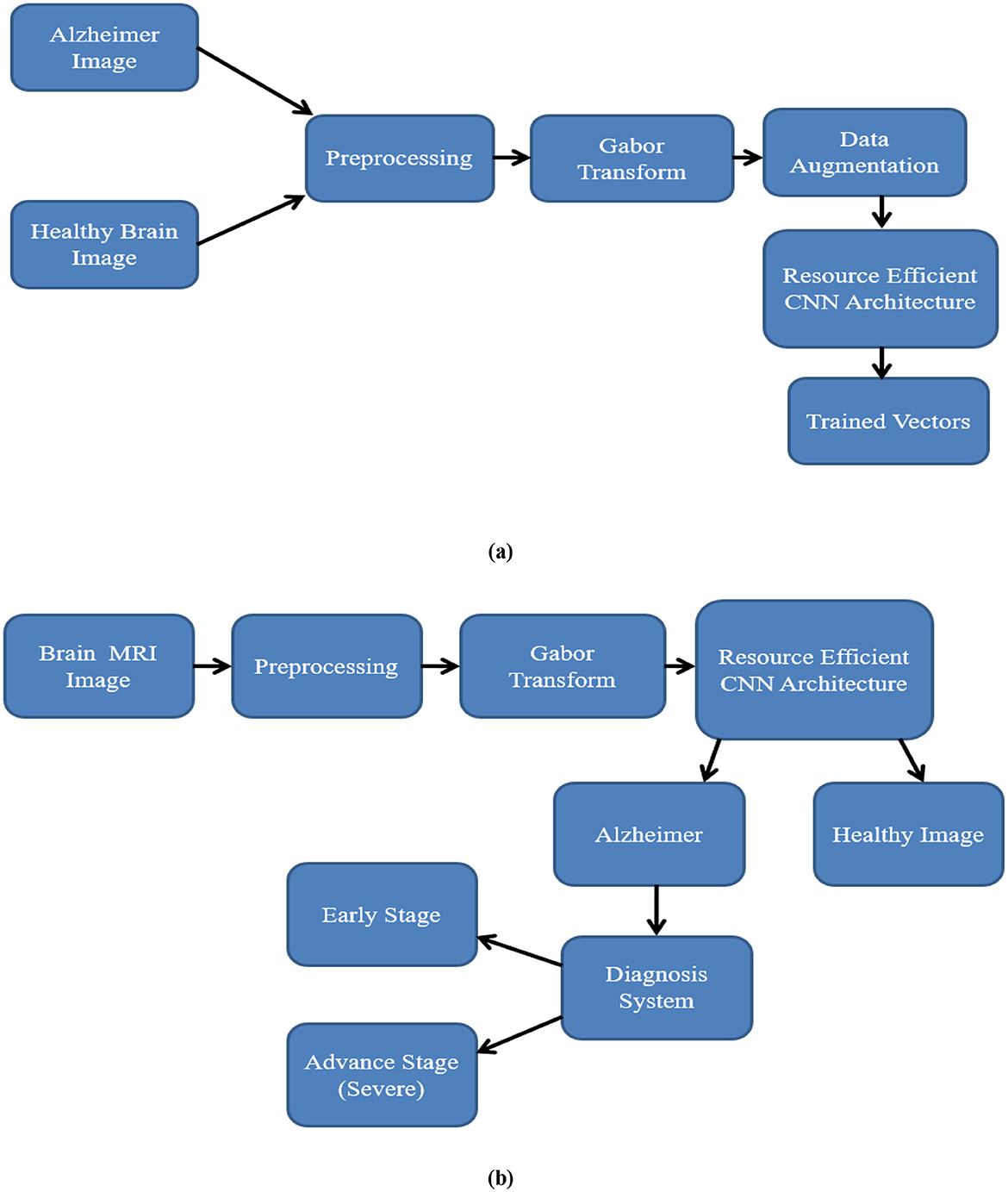

A RECNN-based deep learning approach is used in this method for the detection and diagnosis of AD images. Figures 2a, b illustrate the training and testing models. Brain MRI images of AD and non-AD subjects are obtained and preprocessed. The preprocessing module performs resizing and noise removal, followed by spatial frequency pixel property transformation using the functional Gabor transform. The data augmentation module in Figure 2a is used to increase the number of brain MRI images for training but is not applied during testing. As shown in Figure 2b, the testing stage classifies Gabor-transformed images with the RECNN architecture as either AD or non-AD. Subsequent diagnosis further categorizes AD images as mild or advanced.

Figure 2. Workflow of the proposed RECNN during (a) training and (b) testing. In training, T1-weighted sMRI images are resized, Gabor-transformed, augmented, and then input into RECNN for learning. During testing, preprocessed images are fed into the trained RECNN for classification.

The process involved in the training and testing stage is discussed as follows:

3.1 Preprocessing

In the preprocessing stage, images from the Kaggle1 and MIRIAD2 T1-weighted sMRI datasets were resized to a standardized resolution, ensuring uniformity across datasets and compatibility with the CNN input format.

3.2 Gabor transform

The brain MRI images obtained and utilized in this study exhibit spatial pixel representations in each image. The spatial variation of each pixel with respect to its surrounding pixels has a temporal relationship with the amplitude component, which reduces the detection rate of the AD image classification system. To address this, conversion from the spatial domain to the time and frequency domains with amplitude properties (Hosseini-Asl et al., 2016) is required to improve detection accuracy. To accomplish this, various conventional functional transformation models, such as Contourlet and Curvelet, are available; however, these models often result in a significant loss of pixel components during pixel property transformation. To improve the pixel property relationship during conversion, the functional Gabor transform (FGT) is used to perform spatial-frequency pixel property transformation.

The FGT has a unique two-dimensional kernel, which is multiplied by the two-dimensional brain MRI image to perform spatial-frequency pixel property conversion. The FGT kernel is given in Equation 1:

where x1 and y1 are the pixel coordinates in the brain MRI image, s is the spatial factor, and f is the frequency factor.

The spatial factor is set to 1, and the frequency factor is set to {1, 2, 3, and 4}.

The pixel coordinates in the brain MRI image are illustrated in the following Equations 2 and 3 with respect to its angle of orientation (θ).

where x and y are the pixel intensity values in the source brain MRI image with respect to the angle of orientation.

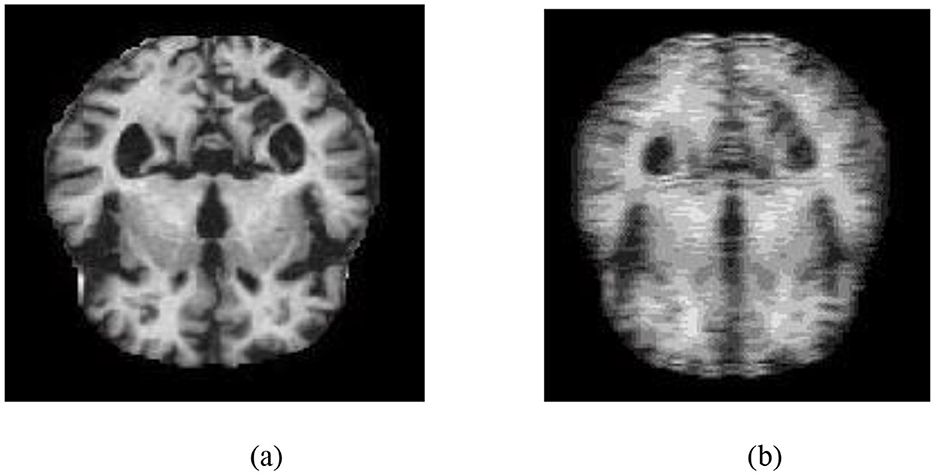

The angle of orientation of each pixel varies from −90 degrees to +90 degrees in 1-degree increments. Hence, for each frequency factor with a spatial factor, there is a 180-degree range of orientations, which creates 180 GK. These 180 GK are linearly convolved with the input source brain MRI image, producing 180 Gabor output images. These 180 Gabor output images are combined into a single Gabor image by selecting the maximum pixel intensity in each image. Figure 3 depicts an sMRI image of an AD subject and its corresponding Gabor-transformed image.

Figure 3. (a) T1-weighted sMRI image of an AD subject. (b) Corresponding Gabor-transformed image highlighting texture and frequency-domain features.

3.3 Data augmentation (DA)

As part of the training pipeline, data augmentation was employed by applying directional translations to the input images, including shifts to the right, left, upward, and downward (Li et al., 2018), to increase dataset diversity and mitigate overfitting. Directional transformations were chosen because of their ability to maintain the anatomical integrity of brain areas while introducing spatial variation. Unlike other augmentation methods, such as random rotations, elastic deformations, or intensity-based modifications, directional translations preserve the alignment and structural consistency required for reliable AD classification using structural MRI. This method keeps the enhanced data clinically relevant while improving the model's robustness, generalization capability, and functional efficiency during training. The data-augmented images are shown in Figure 4.

Figure 4. Data augmentation outputs illustrating directional transformations: (a) left-shifted image, (b) right-shifted image, (c) upward-shifted image, and (d) downward-shifted image. These augmented images are created to improve diversity during the training phase.

3.4 RECNN architecture for AD classifications

This module presents the architecture of the proposed Resource-Efficient Convolutional Neural Network (RECNN), which is designed for classifying Alzheimer's disease using brain MRI images. The classification of a brain image as either AD or a healthy brain image is performed by the CNN classifier. The CNN classifier consists of a set of convolutional layers and pooling layers, along with neural network (NN) layers. The convolutional layers are designed with a set of filter kernels of varying sizes, and the NN layer has been equipped with various numbers of neurons to produce the desired output response.

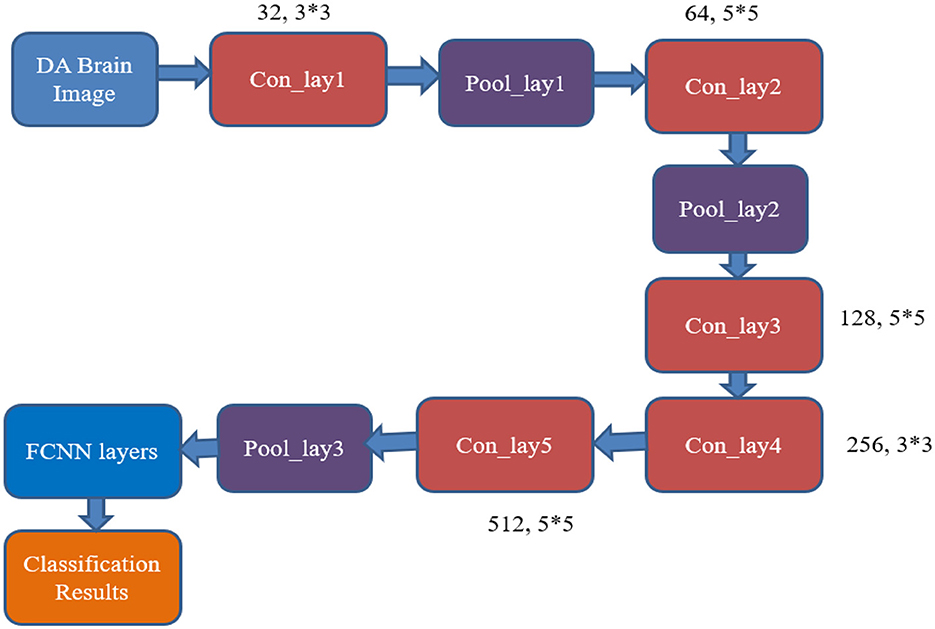

Figure 5 shows the existing CNN classifier for AD image classification (AlexNet). It includes five convolutional layers, three pooling layers, and a fully connected neural network (FCNN) layer.

Figure 5. Architecture of the conventional AlexNet classifier used for AD image classification. The model processes brain MRI images through a series of convolutional, pooling, and fully connected layers for feature extraction and classification.

The mathematical equations of the conventional classifier are as follows:

From Figure 5, it can be inferred that the desired output is obtained by utilizing a larger number of resources, like convolutional and pooling layers, with huge neuron requirements in the NN layer. This consumes more time and resources to produce the output responses. Hence, there is a need for developing a resource-optimized CNN architecture for the AD image classification process. The RECNN architecture is considered to overcome the limitations.

The proposed RECNN utilizes a pipelined framework to enhance the classification of AD from brain MRI images. This architecture combines both feature extraction and classification into a unified, continuous flow. Unlike conventional sequential systems—where extraction and classification are performed as independent processes—this method replaces the conventional Fully Connected Neural Network (FCNN) with a Fuzzy C-Means (FCM) clustering technique, ensuring a more cohesive and optimized flow of operations. Based on the streamlined architecture, the model is expected to reduce computational load and latency, thereby improving efficiency, execution speed, and scalability. This makes the RECNN particularly suitable for real-time analysis in clinical AD diagnosis scenarios.

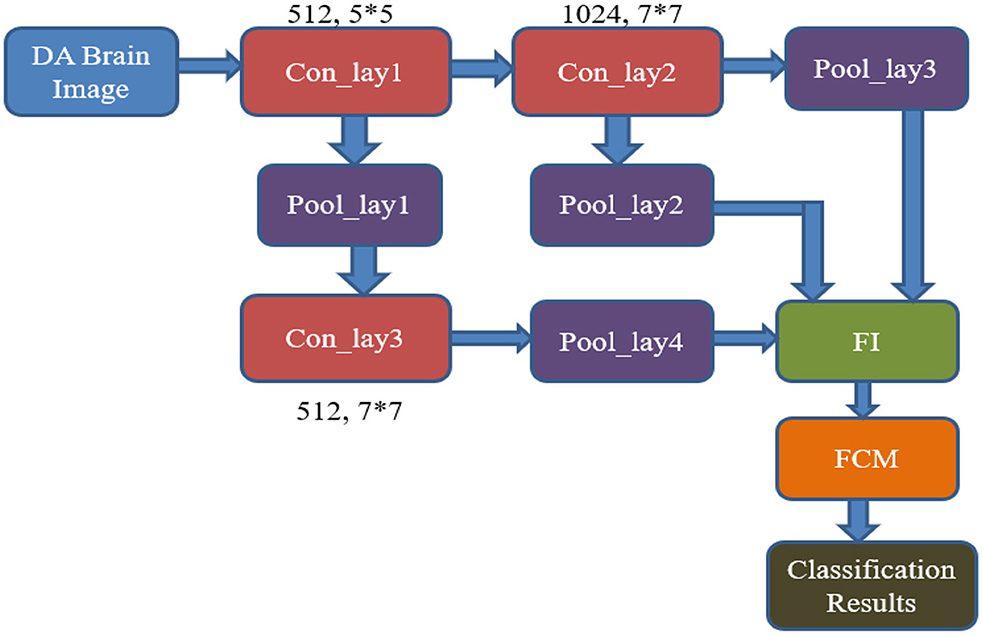

The internal design of the RECNN classifier features three convolutional layers, four pooling layers, and concludes with an FCM-based classification module, as shown in Figure 6. The pipeline starts by feeding a data-augmented brain MRI image into the first convolutional block (Con_lay1).

• Con_lay1 applies 512 filters, each measuring 5 × 5, to perform basic feature extraction through convolution. The resulting feature maps are forwarded simultaneously to both Con_lay2 and the first pooling block (Pool_lay1).

• Pool_lay1 performs a max-pooling operation with a 2 × 2 kernel, down-sampling the feature maps to reduce spatial size and computational load.

• Con_lay2 consists of 1,024 filters and uses a 7 × 7 kernel. This layer is strategically placed deeper in the pipeline to extract complex and abstract spatial features, ensuring that early-stage layers remain computationally lightweight for all resource efficiencies.

• The output from Con_lay2 is processed by Pool_lay2 and Pool_lay3, both of which apply 2 × 2 max pooling to reduce dimensionality and retain prominent features.

• Simultaneously, the output from Pool_lay1 is passed into Con_lay3, which uses 512 filters and a 7 × 7 kernel to extract more refined patterns. These are then processed by Pool_lay4 using max pooling.

Outputs from Pool_lay2, Pool_lay3, and Pool_lay4 are then combined by a Feature Integrator (FI), which merges information across scales to create a rich representation of the brain scan.

The final stage involves the FCM classifier, which applies soft clustering techniques to differentiate between AD and non-AD (NAD) images. By replacing the traditional FCNN with FCM, the model gains improved interpretability and enhanced performance in handling uncertain or overlapping data distributions, which are common in early or borderline AD cases.

The steps involved in the FCM logical approach are as follows:

The integrated features are included in the new feature vector “X,”, which contains “n” features generated through the layers of the proposed RECNN architecture. The new feature vector is illustrated by the following equation.

Each feature in “x” is assigned to a cluster, and the set of clusters is denoted in the following equation.

where k is the number of clusters that are assigned to the generated features.

The membership function “M” with p rows and q columns is represented by the following matrix.

where the following conditions need to be met to achieve the clustering functions.

Step 1: Assign M = [Mi., j] with zero iterations.

Step 2: Center vector of the generated features:

Step 3: Update the membership function M using the following equation.

Step 4: The stop condition is fixed if the iteration of Mite attains the maximum value.

3.5 Functional morphological algorithm

Functional Morphological Algorithms (FMA), when associated with pixel-level segmentation, enable the exact detection of localized brain alterations in MRI images. This enables reliable classification of Alzheimer's stages by revealing small structural differences in affected regions.

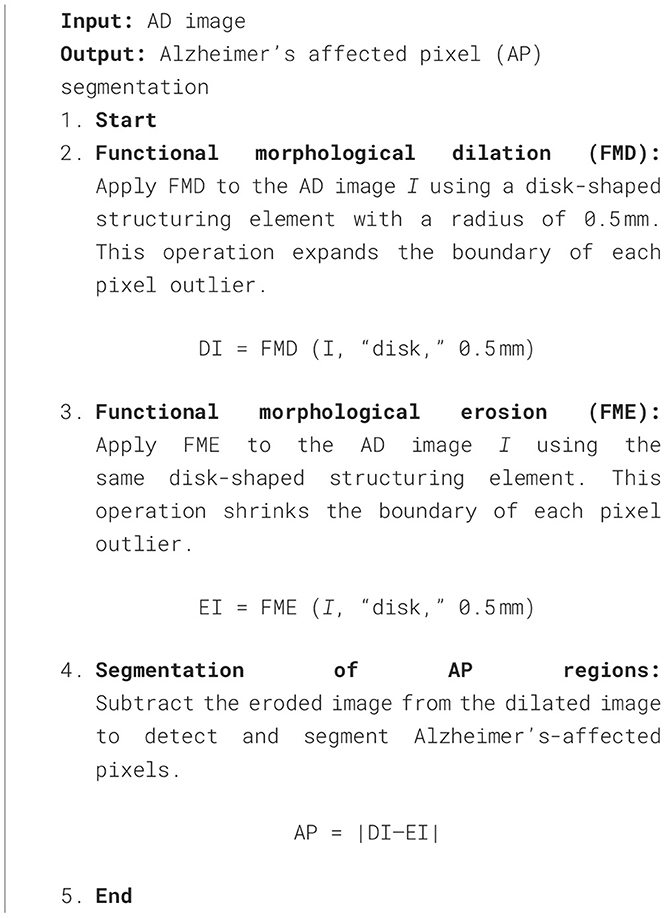

The stepwise procedure for Alzheimer's-affected pixel segmentation is outlined in Algorithm 1.

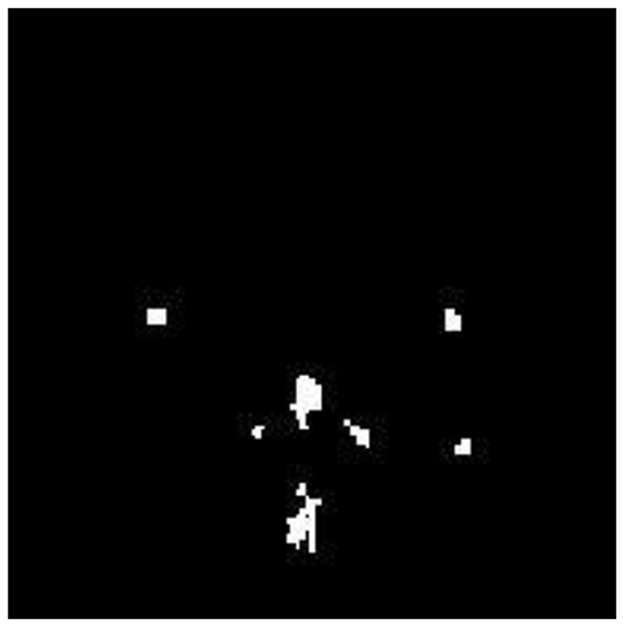

The FMA uses the detected AD image as its input image. Functional Morphological Dilation (FMD) is applied to the AD image using a disk-shaped structuring element with a radius of 0.5 mm, which increases or expands the outlier boundary of each pixel. The same image is then subjected to Functional Morphological Erosion (FME) using the same structuring element, which shrinks the outlier boundary of each pixel. AP pixels are detected and segmented in the AD image by subtracting the EI from DI. Figure 7 illustrates the segmentation of Alzheimer-affected pixel (AP) regions in an AD brain image, as classified by the RECNN model.

Figure 7. Segmentation output highlighting Alzheimer's-affected pixel (AP) regions in an AD brain image.

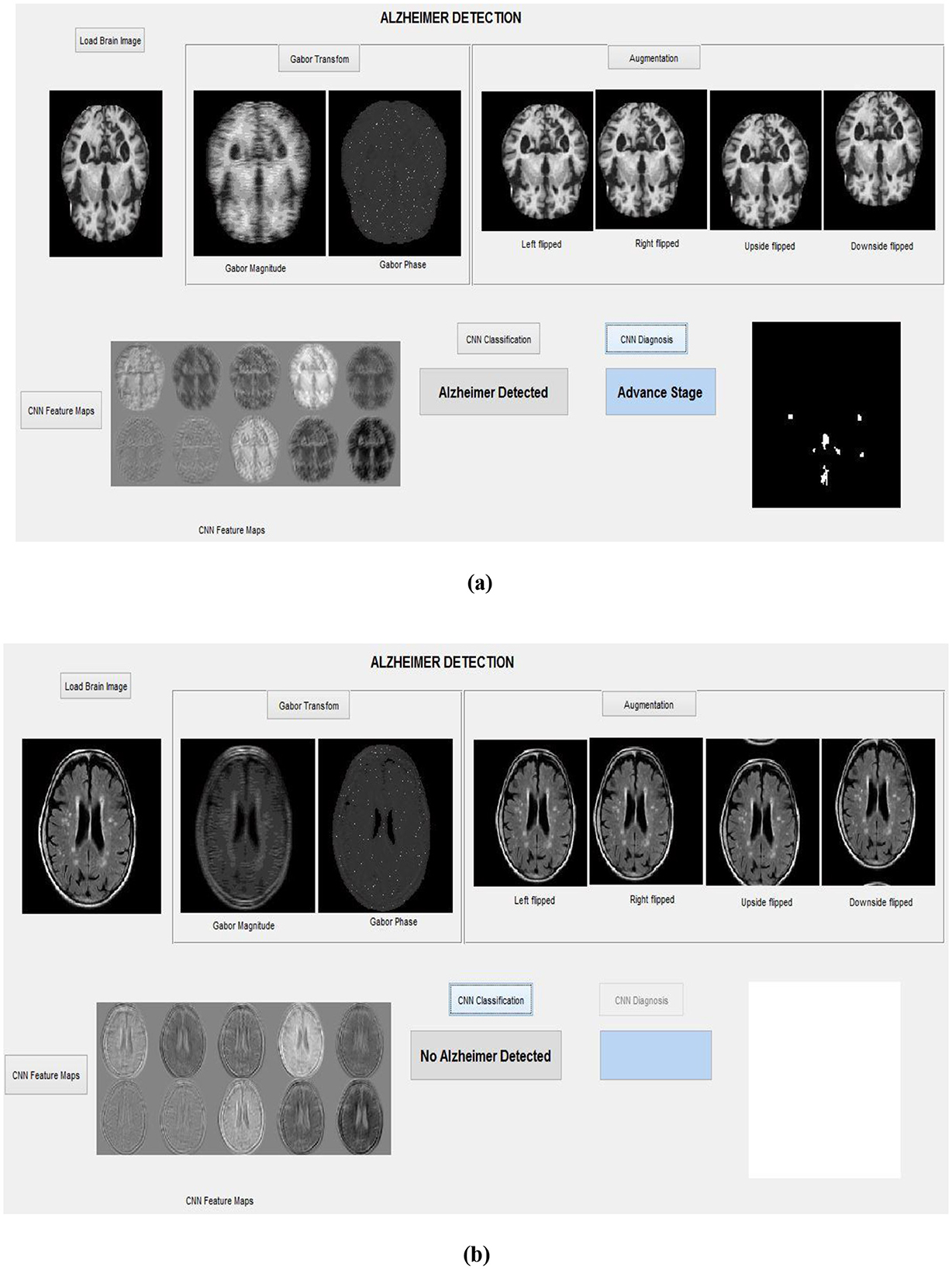

These segmented AP regions in the classified AD image are input into the RECNN classification algorithm for further analysis. Specifically, AP pixels from mild AD images and AP pixels from advanced AD images are trained separately by the RECNN algorithm to produce individual training patterns (ITPs). During testing, the AP regions in the classified AD image are processed by the RECNN algorithm against the ITPs, producing an output classified as either mild or advanced AD. Figures 8a, b present the simulation results from the testing phase, illustrating detection and classification outcomes for both AD and non-AD images. The results from each module are visualized through a graphical user interface.

Figure 8. Simulation results with the graphical interface: (a) Detection of an advanced-stage AD image, (b) Classification of a non-AD (healthy) brain image.

To illustrate the overall workflow of the proposed system, the complete process is summarized in Algorithm 2.

4 Results and discussions

The proposed Alzheimer's disease (AD) image detection and diagnosis framework based on the RECNN approach was evaluated using two publicly available datasets: Kaggle AD (KAD) and Minimal Interval Resonance Imaging in Alzheimer's Disease (MIRIAD). Both datasets are openly accessible and therefore do not require prior licensing agreements for research use. The KAD dataset includes MRI scans of both AD and non-AD subjects, each annotated by expert radiologists. It contains a total of 6,400 MRI brain images, of which 3,540 correspond to AD cases and 2,560 to non-AD cases. All images are provided at a uniform spatial resolution of 512 × 512 pixels.

In addition, this study also employed the MIRIAD dataset, curated by the Dementia Research Center (DRC) in the UK. This dataset comprises MRI scans from individuals aged 69–72 years, regardless of gender, with radiologist-provided annotations. The scans were obtained using a 1.5T Signa scanner with a 24 cm field of view and a 15° flip angle. The images are stored at a resolution of 256 × 256 pixels. In total, 8,500 images were used in this study, comprising 3,500 AD scans and 5,000 non-AD scans. All experiments and simulations were conducted in MATLAB R2024 on a Windows 10 Pro 64-bit system with an Intel Core i7-11700K processor (3.60 GHz, 8 cores/16 threads), 32 GB RAM, and 1 TB NVMe SSD. The inclusion of these two independent datasets was intentional, as they differ in both image resolution and acquisition settings. This diversity allows for a more rigorous evaluation of the robustness of the proposed approach.

The mathematical equations for calculating the performance of the AD image detection system are defined in Equations 8, 9:

Utilizing the equation mentioned above, the computational performance indicates a detection rate of 98.1% for ADDR and 99.1% for NADDR on the Kaggle dataset, while it reaches 99.7% for ADDR and 99.4% for NADDR on the MIRIAD dataset.

Furthermore, the performance of the AD detection and diagnosis system has been calculated and analyzed using the following mathematical equations:

where TP, TN, FP, and FN stand for true positive, true negative, false positive, and false negative, respectively. TP and TN indicate the correctly identified abnormal Alzheimer's pixels and healthy pixels. Incorrectly identified abnormal pixels and healthy pixels are represented by FP and FN, respectively.

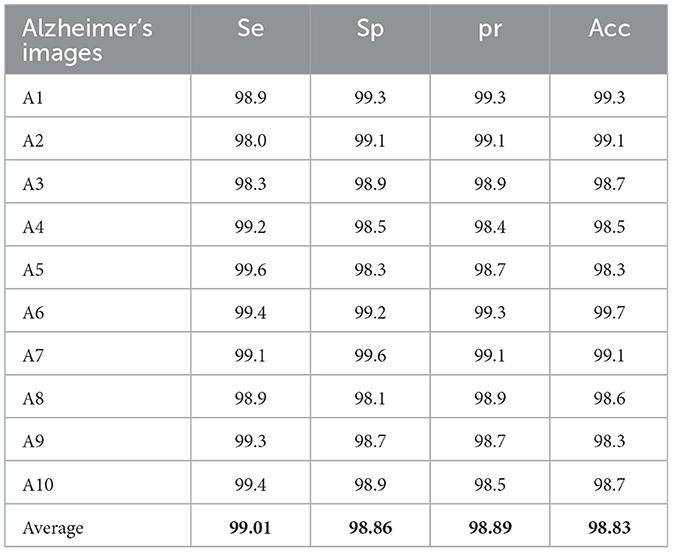

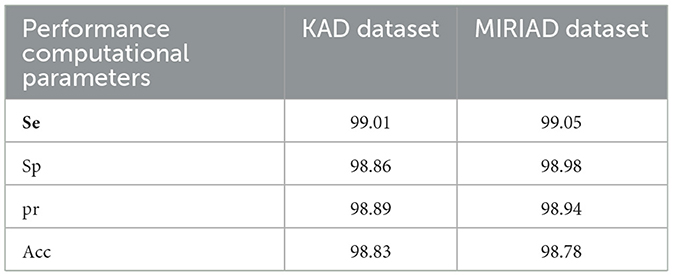

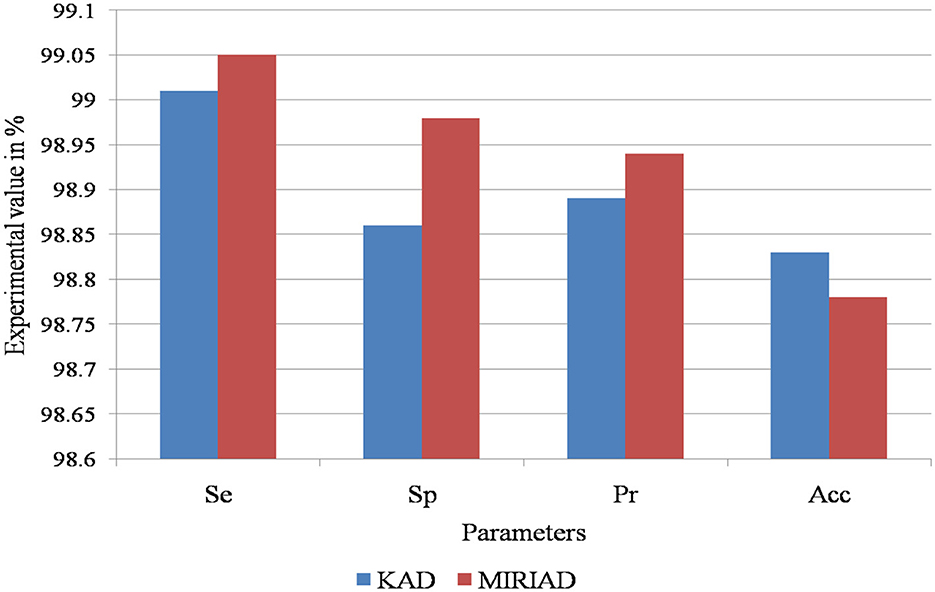

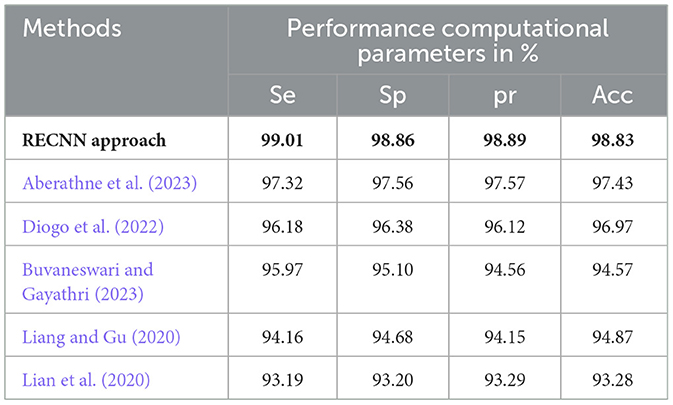

Table 1 presents the experimental results of the computational performance of the AD image detection system on the KAD dataset. The proposed RECNN-based AD imaging detection and diagnosis system achieves 99.01%Se, 98.86%Sp, 98.89%pr, and 98.83%Acc, respectively.

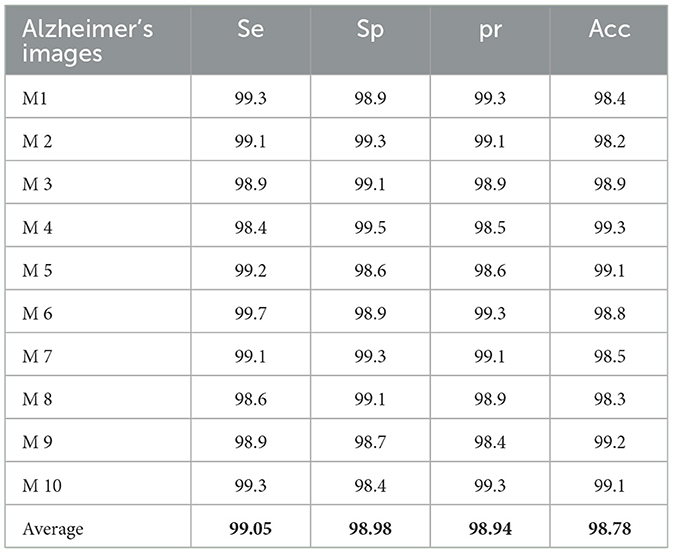

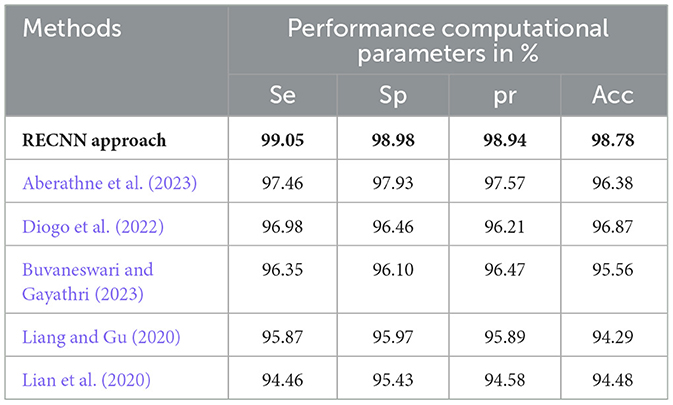

Table 2 presents the experimental results of the performance computation for the AD image detection system on the MIRIAD dataset. The proposed RECNN-based AD imaging detection and diagnosis system achieves 99.01%Se, 98.86%Sp, 98.89%pr, and 98.83%Acc, respectively.

This study utilizes k-fold cross-validation as a robust statistical method to evaluate the model's performance across different assessment criteria. The computational analysis of the AD detection and diagnosis system using the RECNN approach on the KAD and MIRIAD datasets is shown in Figure 9.

Figure 9. Performance evaluation of the proposed RECNN model on the KAD and MIRIAD datasets, illustrating classification metrics such as sensitivity, specificity, accuracy, and precision.

Table 3 presents the computational analysis of the AD detection and diagnosis system using the RECNN approach.

Table 4 presents a comparative analysis of the proposed RECNN approach with other similar AD detection and diagnosis methods with respect to the AD images in the KAD dataset.

Table 4. Comparative analysis of the proposed RECNN approach with other similar AD detection and diagnosis methods with respect to the AD images in the KAD dataset.

The experimental results of the proposed RECNN classification approach are compared with those of conventional AD detection methods in this study. (Aberathne et al. 2023) attained 97.32%Se, 97.56%Sp, 97.57%pr, and 97.43%Acc on the set of AD images on the KAD dataset. (Diogo et al. 2022) attained 96.18%Se, 96.38%Sp, 96.12%pr, and 96.97%Acc on the set of AD images on the KAD dataset. (Buvaneswari and Gayathri 2023) attained 95.97%Se, 96.10%Sp, 94.56%pr, and 94.57%Acc on the set of AD images on the KAD dataset. (Liang and Gu 2020) attained 94.16%Se, 94.68%Sp, 94.15%pr, and 94.87%Acc on the set of AD images on the KAD dataset. (Lian et al. 2020) attained 93.19%Se, 93.20%Sp, 93.29%pr, and 93.28%Acc on the set of AD images on the KAD dataset. The functional morphological algorithm proposed in this work, when integrated with the proposed RECNN classification algorithm, improves the overall performance efficiency with respect to the various evaluation parameters based on different datasets, as represented in Table 4. The data augmentation module in this proposed study enhances the dataset samples during the training stage of the classification algorithm, helping to eliminate overfitting. This is one of the key reasons for the improved experimental performance

Table 5 presents a comparative analysis of the proposed RECNN approach with other similar AD detection and diagnosis methods, specifically with respect to the AD images in the MIRIAD dataset.

Table 5. Comparative analysis of the proposed RECNN approach with other similar AD detection and diagnosis methods for AD images in the MIRIAD dataset.

The experimental results of the proposed RECNN classification approach have been compared with the conventional AD detection methods in this paper. (Aberathne et al. 2023) attained 97.46%Se, 97.93%Sp, 97.57%pr, and 96.38%Acc on the set of AD images on the MIRIAD dataset. (Diogo et al. 2022) attained 96.98%Se, 96.46%Sp, 96.21%pr, and 96.87%Acc on the set of AD images on the MIRIAD dataset. (Buvaneswari and Gayathri 2023) attained 96.35%Se, 96.10%Sp, 96.47%pr, and 95.56%Acc on the set of AD images on the MIRIAD dataset. (Liang and Gu 2020) attained 95.87%Se, 95.97%Sp, 95.89%pr, and 94.29%Acc on the set of AD images on the MIRIAD dataset. (Lian et al. 2020) attained 94.46%Se, 95.43%Sp, 94.58%pr, and 94.48%Acc on the set of AD images on the MIRIAD dataset.

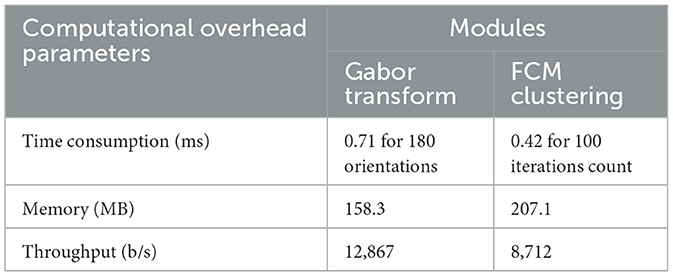

Based on the comparative analysis, it can be observed that the RECNN approach achieves better results than the other similar methods. Computational overhead refers to the consumption of computing resources for a specific task. The computational overhead includes computational time, memory utilization, bandwidth usage, and throughput. The determination of computational overhead is important for the Gabor transform and FCM due to their high computational cost and the large number of iterations. Table 6 presents the computational overhead of the proposed algorithm.

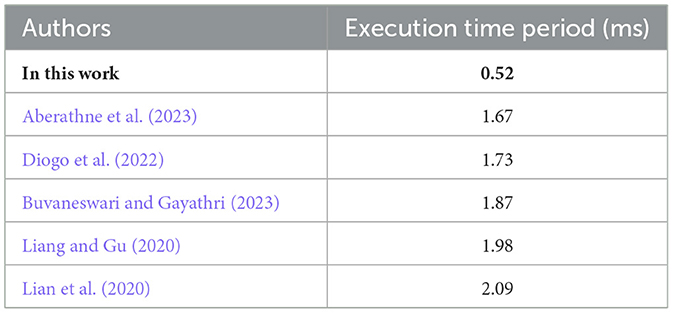

The execution time of the proposed method was evaluated in comparison with existing state-of-the-art approaches using the same computing environment. To ensure fairness, both the proposed framework and the baseline methods were implemented and tested on an identical simulation platform. The results demonstrate that the proposed approach achieves lower execution time, thereby reflecting better computational efficiency. A detailed comparison with recent state-of-the-art techniques is presented in Table 7.

5 Conclusion

AD primarily affects healthy brain cells, leading to memory loss and dependence on others for daily activities. Slowing disease progression can help patients live more independently and improve their quality of life. The RECNN architecture proposed in this study detects and diagnoses AD images in an optimized manner, enabling early identification. Integrated with the Gabor transform and a data augmentation module, the RECNN architecture performs spatial transformations and enhances image samples, thereby improving classification rates. Using this framework, AD and non-AD images are classified with efficient resource utilization, while FMA further distinguishes mild and advanced stages of AD. The proposed RECNN-based AD detection and diagnosis system achieved 99.01%Se, 98.86%Sp, 98.89%pr, and 98.83%Acc on the KAD dataset, and 99.05%Se, 98.98%Sp, 98.94%pr, and 98.78%Acc on the MIRIAD dataset. The experimental outcomes of the RECNN method are significantly compared with other existing AD detection methods, confirming that the RECNN approach used in this study produces better results. This method may also be extended in future studies to identify and examine the impacts of AD on those with head injuries and brain tumors.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material, further inquiries can be directed to the corresponding author.

Author contributions

PT: Conceptualization, Investigation, Methodology, Software, Writing – original draft. SV: Formal analysis, Supervision, Validation, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. The authors would like to thank the VIT management for supporting fund toward the publication of the work.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Footnotes

1. ^https://www.kaggle.com/datasets/marcopinamonti/alzheimer-mri-4-classes-dataset (Accessed on Jul 2023).

2. ^https://www.ucl.ac.uk/drc/research-clinical-trials/minimal-interval-resonance-imaging-alzheimers-disease-miriad

References

Aberathne, I., Don, K., and Samarasinghe, S. (2023). Detection of Alzheimer's disease onset using MRI and PET neuroimaging: longitudinal data analysis and machine learning. Neural Regen. Res. 18, 2134–2140. doi: 10.4103/1673-5374.367840

Abhaya, S. L., and Rajkumar, T. D. (2025). Spark-based Alzheimer's disease classification using hybrid spike Google-deep CNN-SEViT model. Eur. Phys. J. Plus 140:756. doi: 10.1140/epjp/s13360-025-06673-7

Altinkaya, E., Polat, K., and Barakli, B. (2020). Detection of Alzheimer's disease and dementia states based on deep learning from MRI images: a comprehensive review. J. Inst. Electron. Comput. 1, 39–53. doi: 10.33969/JIEC.2019.11005

Buvaneswari, P., and Gayathri, R. (2023). Detection and Classification of Alzheimer's disease from cognitive impairment with resting-state fMRI. Neural. Comput. Applic. 35, 22797–22812. doi: 10.1007/s00521-021-06436-2

Chaddad, A., Desrosiers, C., and Niazi, T. (2018). Deep radiomic analysis of MRI related to Alzheimer's disease. IEEE Access 6, 58213–58221. doi: 10.1109/ACCESS.2018.2871977

Diogo, V. S., Ferreira, H. A., and Prata, D. (2022). Early diagnosis of Alzheimer's disease using machine learning: a multi-diagnostic, generalizable approach. Alzheimers Res. Ther. 14:107. doi: 10.1186/s13195-022-01047-y

Hatami, M., Yaghmaee, F., and Ebrahimpour, R. (2025). Improving Alzheimer's disease classification using novel rewards in deep reinforcement learning. Biomed. Signal Process. Control 100, 106920–106934. doi: 10.1016/j.bspc.2024.106920

Helaly, H. A., Badawy, M., and Haikal, A. Y. (2022). Deep learning approach for early detection of Alzheimer's disease. Cogn. Comput. 14, 1711–1727. doi: 10.1007/s12559-021-09946-2

Hosseini-Asl, E., Keynto, R., and El-Baz, A. (2016). “Alzheimer's disease diagnostics by adaptation of 3D convolutional network,” in Proceedings of the 2016 IEEE International Conference on Image Processing (ICIP) (Phoenix, AZ: IEEE). doi: 10.1109/ICIP.2016.7532332

Jain, R., Jain, N., Aggarwal, A., and Hemanth, D. J. (2019). ScienceDirect Convolutional neural network-based Alzheimer's disease classification from magnetic resonance brain images. Cogn. Syst. Res. 57, 147–159. doi: 10.1016/j.cogsys.2018.12.015

Li, S., Xiao, B., Li, W., and Wang, G. (2018). Diagnosis of Alzheimer's disease based on 3D-PCANet. Comput. Sci. 45, 140–142. Available online at: https://www.jsjkx.com/EN/Y2018/V45/I6A/140

Lian, C., Liu, M., Zhang, J., and Shen, D. (2020). Hierarchical fully convolutional network for joint atrophy localization and Alzheimer's disease diagnosis using structural MRI. IEEE Trans. Pattern Anal. Mach. Intell. 42, 880–893. doi: 10.1109/TPAMI.2018.2889096

Liang, S., and Gu, Y. (2020). Computer-aided diagnosis of Alzheimer's disease through weak supervision deep learning framework with attention mechanism. Sensors 21:220. doi: 10.3390/s21010220

Mahanty, C., Rajesh, T., Govil, N., Venkateswarulu, N., Kumar, S., Lasisi, A., et al. (2024). Effective Alzheimer's disease detection using enhanced Xception blending with snapshot ensemble. Sci. Rep. 14:29263. doi: 10.1038/s41598-024-80548-2

Mandawkar, U., and Diwan, T. (2024). Hybrid cuttle Fish-Grey wolf optimization tuned weighted ensemble classifier for Alzheimer's disease classification. Biomed. Signal Process. Control. 92:106101. doi: 10.1016/j.bspc.2024.106101

Noor, M. B. T., Zenia, N. Z., Kaiser, M. S., Al Mamun, S., and Mahmud, M. (2020). Application of deep learning in detecting neurological disorders from magnetic resonance images: a survey on the detection of Alzheimer's disease, Parkinson's disease, and schizophrenia. Brain Inform. 7:11. doi: 10.1186/s40708-020-00112-2

Pulido, M. L. B., Hernández, J. B. A., Ballester, M. A. F., González, C. M. T., Mekyska, J., Smékal, Z., et al. (2020). Alzheimer's disease and automatic speech analysis: a review. Expert Syst Appl. 150:113213. doi: 10.1016/j.eswa.2020.113213

Vatanabe, P., Manzine, P. R., and Cominetti, M. R. (2020). Historic concepts of dementia and Alzheimer's disease: from ancient times to the present. Rev. Neurol. 176, 140–147. doi: 10.1016/j.neurol.2019.03.004

Wang, S. H., Phillips, P., Sui, Y., Liu, B., Yang, M., Cheng, H., et al. (2018). Classification of Alzheimer's disease based on an eight-layer convolutional neural network with leaky rectified linear unit and max pooling. J. Med. Syst. 42:85. doi: 10.1007/s10916-018-0932-7

Wang, Y., Yang, Y., Guo, X., Ye, C., Gao, N., Fang, Y. A., et al. (2018). A novel multimodal MRI analysis for Alzheimer's disease based on convolutional neural network. Annu. Int. Conf. IEEE Eng. Med. Biol. Soc. 2018, 754–757. doi: 10.1109/EMBC.2018.8512372

Keywords: Alzheimer's disease, Gabor transformation, data augmentation, CNN classifications, fuzzy C-mean

Citation: Prasath T and Sumathi V (2025) A pipelined, resource-efficient convolutional neural network architecture for detecting and diagnosing Alzheimer's disease using brain sMRI. Front. Neurosci. 19:1653565. doi: 10.3389/fnins.2025.1653565

Received: 25 June 2025; Accepted: 22 September 2025;

Published: 15 October 2025.

Edited by:

Rashelle Hoffman, Creighton University, United StatesReviewed by:

Raghavendran Venkatesan, Vels Institute of Science Technology and Advanced Studies, IndiaBanalaxmi Brahma, Dr. B. R. Ambedkar National Institute of Technology Jalandhar, India

Copyright © 2025 Prasath and Sumathi. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: V. Sumathi, dnN1bWF0aGlAdml0LmFjLmlu

T. Prasath

T. Prasath V. Sumathi

V. Sumathi