- School of Management, Jinan University, Guangzhou, China

Introduction: The emergence of Artificial Intelligence (AI) has revolutionized decision-making in human resource management. Because humans and AI each possess distinct strengths in decision-making, synergy between humans and AI agents has the potential to significantly enhance both the efficiency and the quality of managerial decision-making. Although assigning decision weights to AI agents opens new avenues for human-AI collaboration, the mechanisms driving the allocation of decision weights to AI agents remain poorly understood. To elucidate these mechanisms, this paper draws on the Socio-Cognitive Model of Trust (SCMT) to examine how trust in AI influences the allocation of decision weight to AI in human-AI cooperation.

Methods: We conducted a series of survey studies involving scenario-based decision-making tasks. Study 1 examined the relationship between trust in AI and AI weight among 111 managers using an employee recruitment task. Study 2 surveyed 210 managers using an employee performance evaluation task.

Results: The results of Study 1 indicated that trust in AI increases the decision weight attributed to AI agents, and that willingness to collaborate with AI mediates the relationship between trust in AI and the weight of AI in personnel selection. The findings of Study 2 revealed that the perceived free will of AI agents negatively moderates the relationship between trust in AI and willingness to collaborate with AI, such that the relationship is weaker when individuals perceive a higher rather than a lower degree of free will in AI agents.

Discussion: Theoretically, this paper advances understanding of the function of trust in human-AI interaction by tracing how trust develops from attitude to act in human-AI cooperative decision-making. Practically, it offers insights into the design of AI agents and into organizational management in the context of human-AI collaboration.

1 Introduction

Artificial intelligence (AI) agents are computer-generated entities that are graphically represented and can either simulate fictional characters or emulate real human behaviors through AI-driven control (Alabed et al., 2022; Jeon, 2024). The advent of AI agents aligns with the emerging trend of organizations integrating human-AI collaboration into their decision-making frameworks (Lu, 2019). Over recent decades, AI has progressively developed cognitive capabilities comparable to those of humans through advances in neural networks, machine learning, and human-computer interaction (Mbunge and Batani, 2023; Wang et al., 2020). Consequently, AI agents can efficiently aggregate and analyze vast amounts of historical data for rapid evaluation. Driven by the exponential growth of digital data and continuous breakthroughs in AI technologies, decision-making processes are increasingly being automated (Ahmad Husairi and Rossi, 2024; Ashoori and Weisz, 2019; Lomborg et al., 2023), in applications such as personalized shopping recommendations, news curation, medical diagnostics, and financial portfolio management (Chua et al., 2023; Dilsizian and Siegel, 2014; Thurman and Schifferes, 2012).

Trust in humans is fundamentally characterized by one's beliefs about another's ability, benevolence, and integrity (Mayer et al., 1995). In contrast, trust in technology primarily stems from perceptions of its functionality, reliability, and helpfulness (Mcknight et al., 2011). The critical distinction between these two forms of trust lies in whether the trustee possesses consciousness and moral agency (Mcknight et al., 2011). However, as AI agents increasingly exhibit human-like attributes, this distinction is becoming less pronounced. Consequently, scholars have proposed that trust in AI encompasses trust in both AI's human-like qualities and its functional capabilities (Choung et al., 2023). This conceptualization suggests that trust in AI represents both an extension and an evolution of traditional interpersonal trust. These developments underscore the need to examine the dynamics of trust in AI and their implications for organizational management.

Trust in AI is the attitude that an agent will help an individual achieve a goal in a situation characterized by uncertainty and vulnerability (Lee and See, 2004). In human-AI cooperation, trust is not only the basis for cooperation (Esterwood and Robert, 2021) but also affects the performance and efficiency of the human-AI team (McNeese et al., 2021). People's trust in AI agents can significantly increase their adoption of AI agents and of AI recommendations in decision-making (Chua et al., 2023; Frank et al., 2023; Xu et al., 2024). In organizational management, AI agents can be allocated weight in managerial decisions (Chowdhury et al., 2023; Köchling, 2023; Liu et al., 2023). Although human-AI joint decision-making has been applied in the workplace (Keding and Meissner, 2021), the allocation of decision weights between humans and AI agents has received inadequate attention. AI weight is the decision weight people assign to AI in human-AI joint decision-making (Haesevoets et al., 2021). In some decision-making situations AI agents hold full decision weight, whereas in others they hold only partial weight or none at all (Shrestha et al., 2019). In managerial decision-making, people do not want to exclude AI agents entirely; instead, they assign them a certain weight (25%−30%) (Haesevoets et al., 2021, 2024). However, the mechanisms behind this allocation have not yet been fully established.

AI is already being used to offer solutions for managerial tasks such as interviewing and evaluating job applicants, allocating work, and predicting employees' performance (Chowdhury et al., 2023; Köchling, 2023; Liu et al., 2023; Raisch and Krakowski, 2021). Because recruitment and performance evaluation are core parts of human resource management, studying human-AI joint decision-making in these areas is of great significance for the development of human resource management as human-AI cooperation spreads. In addition, previous research on human-AI joint decision-making in recruitment and performance evaluation provides a basis for this study (Haesevoets et al., 2021). It is therefore necessary to explore the mechanism by which people assign decision weight to AI agents in recruitment and performance evaluation scenarios.

Specifically, drawing on the Socio-Cognitive Model of Trust, this study examines the mechanism by which trust in AI shapes the weight allocated to AI in human-AI cooperation. In addition, by considering the degree to which people perceive AI as having free will, we examine the contingencies that might alter the impact of trust in AI on the weight allocated to AI.

This research has significant theoretical and practical implications. First, it reveals how trust develops in human-AI interaction and extends the application of trust models to human-AI cooperation. Second, it identifies boundary conditions that influence how trust nudges human-AI cooperation. Finally, it presents a practical approach to human-AI joint decision-making in organizational management, introducing a weight allocation method that may foster the future development of organizational management.

2 Literature review

When humans and AI make decisions together, they naturally form a human-AI team (McNeese et al., 2021; De Visser et al., 2020). The human-centered AI perspective holds that effective human-AI teams must leverage the unique capabilities of humans and AI while overcoming each member's limitations, enhancing human capabilities, and improving joint performance (Xu and Gao, 2024). AI's computing power and algorithmic logic exceed the bounded rationality of humans, giving it a significant advantage in analytical decision-making (Gershman et al., 2015). In terms of speed, AI rapidly acquires, processes, and analyzes data without trading off accuracy against speed (Forstmann et al., 2010; Shrestha et al., 2019). Humans, in turn, possess qualities that AI cannot fully replicate, such as imagination, sensitivity, and creativity, which give them an edge in intuitive decision-making (Vincent, 2021). When facing uncertain, complex, and ambiguous problems, combining humans and AI helps organizational decision-making adapt (Jarrahi, 2018). Thus, human-AI collaboration could perform better than either AI or humans alone (Heer, 2019).

As AI permeates the workplace, it is used to accomplish a variety of managerial tasks (Chowdhury et al., 2023; Liu et al., 2023; Raisch and Krakowski, 2021). It is therefore necessary to understand comprehensively the role AI plays in human-AI managerial decision-making. Generally, AI agents play a key role in shaping organizational decision-making processes and performance. They serve as decision-making tools by collecting information, interpreting data, recognizing patterns, generating results, answering questions, and evaluating results to improve decision-making (Ferràs-Hernández, 2018). Neiroukh et al. (2024) found that AI capability significantly and positively affects decision-making speed, decision quality, and overall organizational performance.

Trust in AI is the attitude that an AI agent will help achieve an individual's goals in a situation characterized by uncertainty and vulnerability (Lee and See, 2004). The interpretability of AI plays a key role in trust in AI (Mi, 2024). When AI algorithms support decision-making, they often remain opaque to decision makers and offer no clear explanation for the decisions made (Burrell, 2016). By contrast, explainable AI (XAI) can help decision makers detect incorrect algorithmic suggestions and make better decisions (Janssen et al., 2020). Other characteristics related to AI interpretability, such as tangibility, immediacy, and transparency, have also been shown to have significant positive effects on trust in AI (O'Neill et al., 2022; Suen and Hung, 2023). In addition, the role of trust in human-AI interaction has received much attention (Ezer et al., 2019; Montague, 2010). Lee and See (2004) proposed a theoretical model of the dynamics of trust in automation. In human-AI interaction, cognitive trust forms progressively, moving from a trusting stance to trusting intention: a trusting stance toward AI agents strengthens trusting beliefs in AI, and these beliefs significantly increase trusting intention, that is, the likelihood of adopting or using AI agents (Tussyadiah, 2020). Research has shown that trust in AI can improve employee-AI collaboration, for example by increasing people's adoption of AI (Frank et al., 2023; Xu et al., 2024), their behavioral intention to accept AI-based recommendations (Chua et al., 2023), and their intention to cooperate with AI teammates (Hou et al., 2023; Kong et al., 2023).

Yet over-reliance on AI also carries risks (Janssen et al., 2020). To mitigate these risks, people allocate different decision weights in human-AI decision-making. Regarding AI weight, two questions arise: whether AI holds decision-making rights at all (Ashoori and Weisz, 2019; Chua et al., 2023), and how much weight AI carries in the decision (Haesevoets et al., 2021). According to Shrestha et al. (2019), human-AI managerial decision-making takes three structural forms: (1) full AI delegation, in which AI agents have full authority to make decisions; (2) hybrid-sequential decision-making, in which the AI agent assists managers in making decisions but holds no decision-making rights; and (3) aggregated human-AI decision-making, in which the AI agent acts as a “member” of the decision-making group and its decisions count toward the outcome. Empirical evidence shows that human managers are willing to grant AI agents about 30% of the decision weight, which lies at the lower end of the spectrum (Haesevoets et al., 2021).

In prior research, the technology acceptance model (TAM) has been used to explain individuals' adoption of new technologies (McLean and Osei-Frimpong, 2019). The original TAM identified perceived usefulness and perceived ease of use as the central constructs explaining technology adoption (Davis, 1989). As TAM has evolved, additional external factors such as social norms, perceived enjoyment, and trust have been incorporated to investigate individuals' intentions to use AI agents (Venkatesh and Bala, 2008; Venkatesh and Davis, 2000; Choung et al., 2023). In TAM, trust is a significant predictor of behavior, yet its complexity remains unexplored. The Trust in Automation model posits that trust comprises three overarching layers of variability: dispositional trust, situational trust, and learned trust (Hoff and Bashir, 2015). Although various factors influence each layer, people's trust in automated systems ultimately hinges on their integrated perceptions of the system's capabilities and reliability. However, this model treats the layers of trust as relatively independent and offers little insight into the connections among them. The Socio-Cognitive Model of Trust (SCMT) provides a more dynamic framework, conceiving trust as a composite, hierarchical concept with three components: Trust Attitude, Decision to Trust, and Act of Trust (Castelfranchi and Falcone, 2010). These components form a sequential continuum, evolving from an initial trust propensity to active behavior. We therefore employ the SCMT to examine the psychological mechanisms underlying how individuals assign weight to AI in decision-making.

The free will of AI has been hotly debated. Free will can be defined as an independent force able to determine its own purpose, create its own intentions, and change them deliberately and unpredictably (Romportl et al., 2013). The present investigation takes no position on the reality of free will, nor is it directly concerned with whether free will exists; rather, it investigates the consequences of belief in free will (Baumeister et al., 2009). Telling people they lack free will can increase cheating and aggression (Alquist et al., 2013; Bergner and Ramon, 2013; Vohs and Schooler, 2008), decrease helping behavior, and reduce self-control (Rigoni et al., 2012). Conversely, stronger belief in free will is related to positive outcomes such as higher job satisfaction (Feldman et al., 2018), better job performance (Stillman et al., 2010), and better academic performance (Feldman et al., 2016). Robots are governed by the laws of physics and by their programming, yet they can be designed to exhibit free will (Ashrafian, 2015). Some scholars argue that a mechanism of free will should form a necessary part of AI (Manzotti, 2011). A person or machine that can achieve various goals can be said to have some degree of free will (Farnsworth, 2017; Saltik et al., 2021; Wallkötter et al., 2020). AI free will refers to the ability of AI to determine goals, create and adjust intentions based on actual situations, and choose strategies to take action (Krausová and Hazan, 2013). Autonomy refers to an AI system's capability to carry out its own processes and operations (Beer et al., 2014). Agency refers to AI's capacity to plan and act (Gray and Wegner, 2012). The autonomy, free will, and agency of AI agents share the common characteristics of a plurality of possibilities and freedom of choice.
Given the impact of autonomy and agency, the free will of an AI agent may trigger realistic and identity threats in people (Złotowski et al., 2017) and affect the extent to which they are willing to use it (Stafford et al., 2014) or work with it (Weiss et al., 2009).

3 Hypotheses development

Trust is defined as a psychological state in which the trustor believes in the trustee's reliability and willingly accepts vulnerability to potential risks (Mayer et al., 1995). Trust pervades the establishment and development of human relationships, such as intimate relationships (Rempel et al., 1985) and organizational relationships (Meng and Berger, 2019; Petrocchi et al., 2019). As AI becomes increasingly integrated into daily life, the growing frequency of human-AI interactions has prompted the application of trust theory to human-AI collaboration (Georganta and Ulfert, 2024; Sanders et al., 2019). Trust plays a critical role in decision-making within human-AI teams (Lemmers-Jansen et al., 2019; Tran et al., 2022; Zhang et al., 2023). As a general and principled theory of trust, the Socio-Cognitive Model of Trust (SCMT; Castelfranchi and Falcone, 2010) posits that trust can occur only between cognitive agents, that is, entities capable of forming goals and beliefs. Trust is fundamentally a mental state of a cognitive agent, reflecting a complex evaluative attitude toward another agent's ability to act in ways that align with shared objectives. In managerial decision-making, employees, as cognitive agents, have job objectives (such as work performance, flourishing, and career success) and hold core or initial beliefs about the AI agent's competence and predictability. Trust in AI is thus an employee's attitude that the AI agent will help achieve his or her job objectives. According to the SCMT, trust is a composite, layered notion with three components: Trust Attitude, Decision to Trust, and Act of Trust. Trust Attitude denotes a simple evaluation of the agent before relying on it (core trust), Decision to Trust denotes the decision to rely on the agent (trust as “reliance”), and Act of Trust refers to the action of trusting (delegation).
The theory posits a process link among these components: “trust develops from dispositional one to the active one” (Castelfranchi and Falcone, 2010).

The SCMT holds that trust attitude is a determinant and precursor of the decision to trust, which in turn is a precondition for the act of trust (Henrique, 2024). In our study, trust in AI represents an evaluation of and disposition toward AI. In human-AI interaction, trust is the premise for humans' intention to use and accept algorithms (Sanders et al., 2017) and the basis for human-AI collaboration (Esterwood and Robert, 2021). The success of integrating AI into organizations critically depends on employees' trust in the AI agent (Müller et al., 2019). Human-AI interaction is a process in which a human employee and an AI system form a team to complete complex work tasks through physical or non-physical contact (such as voice, gesture, or facial-expression communication) (Hentout et al., 2019). Willingness to collaborate can be treated as a communication tendency, conceived as active communicative involvement with another party during decision-making (Anderson and Martin, 1993). Accordingly, willingness to collaborate with AI is people's tendency to collaborate with AI agents to complete work tasks (Cao et al., 2021; Paluch et al., 2022). Research indicates that people's willingness to collaborate with AI is largely shaped by their cognition of AI technology. Specifically, perceived human-AI similarity has been shown to enhance trust in AI agents and, consequently, users' willingness to engage in cooperative work with these systems (You and Robert, 2018). Moreover, trust is a pivotal factor in the willingness to engage with AI and in the intention to continue using AI (Lv et al., 2022; Ostrom et al., 2019). We therefore propose Hypothesis 1.

Hypothesis 1: Trust in AI has a positive effect on willingness to collaborate with AI.

Human managers and AI agents can hold different weights in managerial decision-making (Haesevoets et al., 2021). Thus, AI weight is the decision weight people assign to AI in human-AI joint decision-making. The SCMT states that trust is the mental counterpart of delegation, and that delegation represents the deepest level of trust in a fully autonomous agent (Castelfranchi and Falcone, 2010). When employees delegate some of the work goals in their own plans to an AI agent, they signal that they trust it. Empirical studies of human-AI cooperative decision-making have allowed people to choose their preferred relative weights for human decision makers and AI; employees prefer to assign 30% of the decision-making rights to AI and retain 70% for themselves (Haesevoets et al., 2021). According to the SCMT, the Decision to Trust eventually translates into an Act of Trust. We automatically and unconsciously adjust the strength and value of the trust beliefs on which a decision is based in order to feel consistent and coherent (Castelfranchi and Falcone, 2010). As a result, when employees make decisions, they tend to adopt choices that reinforce their trust beliefs and favor their preferences. For instance, when people make online purchases, their trusting beliefs significantly enhance their (Lim et al., 2016). Trust in AI significantly increases humans' intentions to collaborate with AI agents and their usage of AI agents (Choung et al., 2023; Li et al., 2024b). We therefore expect that human managers who intend to work with AI agents will give those agents more weight in decision-making to maintain the consistency and coherence of their trust beliefs. We propose Hypotheses 2 and 3:

Hypothesis 2: Willingness to collaborate with AI has a positive effect on the weight of AI.

Hypothesis 3: Willingness to collaborate with AI mediates the relationship between trust in AI and the weight of AI.

AI free will refers to the ability of AI to determine goals, create and adjust intentions based on actual situations, and choose strategies to take action (Krausová and Hazan, 2013). Humans frequently evaluate the trustworthiness of AI agents by their resemblance to human characteristics, and AI agents exhibiting human-like traits are often perceived as more reliable (Natarajan and Gombolay, 2020). However, once human managers believe that AI agents have a high level of free will, they may feel scared, fearful, and threatened, a response known as the uncanny valley effect (Gray and Wegner, 2012). This effect describes the relationship between a character's degree of human likeness and the emotional response of the human observer (MacDorman et al., 2009). The uncanny valley effect can evoke negative emotions when people interact with AI agents and hinder the adoption of AI agents (Arsenyan and Mirowska, 2021; Ciechanowski et al., 2019; Lou et al., 2023; Lu et al., 2021). The SCMT holds that risks affect employees' trust attitude and decision to trust (Castelfranchi and Falcone, 2010). Given the uncanny valley effect, an AI agent's free will may undermine and erode employees' positive expectations of collaborative outcomes (Araujo et al., 2020; Romeo et al., 2022). A survey experiment indicates that people would rather entrust their schedule to a person than to an AI agent (Cvetkovic et al., 2024). In human-AI joint decision-making tasks, excessive perceived mind in AI agents reduces employees' trust in AI and makes them more cautious about the agent's suggestions (Romeo et al., 2022). We therefore expect that a high perceived degree of free will in AI weakens the positive link between trust in AI and willingness to collaborate. We propose Hypothesis 4:

Hypothesis 4: Perceived AI free will negatively moderates the relationship between trust in AI and willingness to collaborate with AI. When individuals perceive the AI as having lower free will, the more they trust the AI, the more willing they are to collaborate with it, and thus the more weight they assign to it.
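Taken together, Hypotheses 3 and 4 describe a first-stage moderated mediation model. The sketch below, assuming simple linear paths, shows how the indirect effect of trust in AI (X) on AI weight (Y) through willingness to collaborate (M) would shift with perceived free will (W). The function names and all coefficient values are hypothetical illustrations, not estimates from this study; a negative interaction coefficient `a3` corresponds to the negative moderation predicted in Hypothesis 4.

```python
# Hypothetical first-stage moderated mediation model (illustration only):
#   M = a0 + a1*X + a2*W + a3*X*W   (M = willingness to collaborate)
#   Y = b0 + c_prime*X + b*M        (Y = weight of AI)
# X = trust in AI, W = perceived AI free will (assumed mean-centered).


def conditional_indirect_effect(a1: float, a3: float, b: float, w: float) -> float:
    """Indirect effect of X on Y through M when the moderator equals w."""
    return (a1 + a3 * w) * b


def index_of_moderated_mediation(a3: float, b: float) -> float:
    """Change in the indirect effect per one-unit increase in W."""
    return a3 * b


# Made-up coefficients; a3 < 0 mirrors the direction of Hypothesis 4.
a1, a3, b = 0.60, -0.20, 0.35
for label, w in [("low free will (-1 SD)", -1.0), ("high free will (+1 SD)", 1.0)]:
    print(label, round(conditional_indirect_effect(a1, a3, b, w), 3))
print("index of moderated mediation", round(index_of_moderated_mediation(a3, b), 3))
```

With these invented values the indirect effect is 0.28 at low free will and 0.14 at high free will, which is the weakening pattern the hypothesis describes.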

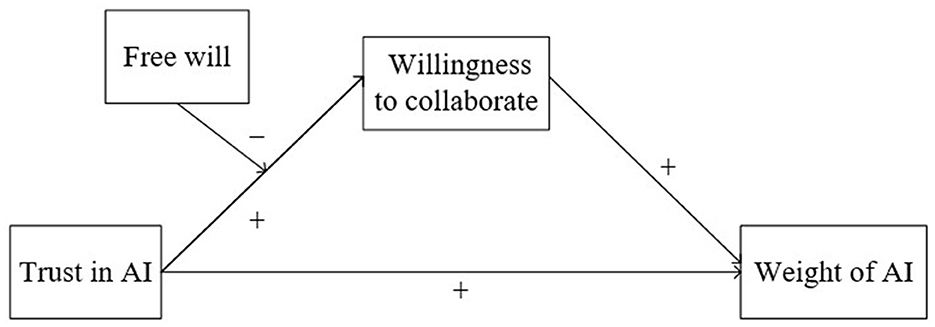

The research model is depicted in Figure 1. Study 1 aimed to investigate the impact of trust in AI on the weight assigned to AI in human-AI cooperation, as well as the mediating role of willingness to collaborate, in managerial recruitment decision-making. Study 2 explored the boundary conditions by examining the moderating effect of perceived AI free will in performance management decision-making.

4 Study 1

4.1 Method

4.1.1 Sample and procedure

We recruited 111 MBA students (mean age = 36.74 years, SD = 7.69; 76.58% female) from a business college in south China. Students were invited to complete an online recruitment decision task during breaks between MBA classes. They volunteered for the study and were compensated with course credits. Participants came from different industries, mainly finance, services, and manufacturing (43%); 56% were managers and 44% were regular employees, and 70.3% had worked for more than 5 years. Regarding experience with AI, 27.0% of participants had experience working with AI, and 40% were familiar or very familiar with AI. The participants were well educated and had substantial work and managerial experience, which allowed them to understand the experimental scenarios quickly and accurately.

Participants were presented with an introductory statement describing a scenario in which humans and AI jointly decide on employee recruitment. After reading it, participants answered two comprehension check questions about the material. Next, we measured their trust in AI and willingness to collaborate. Participants then dragged a slider to assign the AI's weight in human-AI cooperative hiring decisions. Finally, they completed the measures of the control variables.

This research was approved by the Ethics Committee on Human Subjects Ethics Sub-Committee of Jinan University and complied with the Declaration of Helsinki. Before the survey, participants were given a consent form explaining the purpose and procedures of the research. Participants were assured of the confidentiality of their information, and the data were collected solely for research purposes. The survey began once participants had given their consent. The ethical approval number is 753948.

4.2 Measurements

4.2.1 Scenario material

At the start of the study, participants read a statement describing human-AI cooperation, adapted from Haesevoets et al. (2021). We used a recruitment decision as an example to explain the collaborative approach to weight distribution. The statement read as follows:

In your role as a manager, you are mainly responsible for making recruitment decisions. With the development of AI technology, AI also has decision-making capabilities. Therefore, your company has introduced an AI agent as your colleague to make joint decisions with you. AI agents are computer-generated, graphically displayed entities that represent either imaginary characters or real humans controlled by AI. Importantly, human managers and AI agents can have different weights in managerial decision-making, and the final decision is determined by weighting your decision and the AI agent's decision.

For example, in recruiting employees, job applicants with higher interview scores are more likely to be hired. Suppose you and the AI agent each have 50% of the decision-making power. You give job applicant A an interview score of 80 and job applicant B a score of 90, while the AI agent gives applicant A a score of 90 and applicant B a score of 80. The final interview score for applicant A is 80 × 50% + 90 × 50% = 85, and the final score for applicant B is 90 × 50% + 80 × 50% = 85.
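The aggregation rule in this scenario is a weighted average of the two ratings, with the manager holding the complement of the AI's weight. A minimal Python sketch of that rule, assuming weights are expressed as proportions between 0 and 1 (the function name `weighted_score` is hypothetical, not part of the study materials):

```python
def weighted_score(human_score: float, ai_score: float, ai_weight: float) -> float:
    """Combine a human rating and an AI rating using the AI's decision weight."""
    if not 0.0 <= ai_weight <= 1.0:
        raise ValueError("ai_weight must lie between 0 and 1")
    # The human manager keeps the complementary share of the decision weight.
    return (1.0 - ai_weight) * human_score + ai_weight * ai_score


# Scenario from the text: both parties hold 50% of the decision weight.
score_a = weighted_score(human_score=80, ai_score=90, ai_weight=0.5)
score_b = weighted_score(human_score=90, ai_score=80, ai_weight=0.5)
print(score_a, score_b)  # 85.0 85.0
```

Setting `ai_weight=0.3`, close to the roughly 30% weight managers tend to grant AI (Haesevoets et al., 2021), would instead give applicant A 83 and applicant B 87, so the manager's own ratings would dominate the outcome.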

To assess participants' comprehension of the material, we asked two questions after they read it: (1) What is your role in the above situation? (Manager or Employee) (2) Who would you collaborate with in the above scenario? (AI or Human). Participants who answered either question incorrectly were excluded from the valid sample.

4.2.2 Trust in AI

We measured trust in AI using a three-item scale adapted from Gillath et al. (2021). The items were “How likely are you to accept decision-making advice from AI?”, “How likely are you to trust AI?”, and “How secure do you feel following AI's decisions?” Responses ranged from 1 (not at all) to 6 (very), α = 0.88.

4.2.3 Willingness to collaborate

Willingness to collaborate was measured with a three-item scale developed by Cao et al. (2021), which we adapted to our research context. The items were: (1) In the future, I plan to collaborate with AI for decision-making. (2) I will always try to collaborate with AI for decision-making in the workplace. (3) I plan to collaborate with AI frequently for decision-making. Responses ranged from 1 (strongly disagree) to 6 (strongly agree), α = 0.93.
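The reliability coefficients reported for the trust (α = 0.88) and willingness (α = 0.93) scales are presumably Cronbach's alpha, computed as k/(k − 1) × (1 − Σ item variances / variance of the summed score). A minimal sketch, assuming a respondents × items score matrix; the responses below are invented and are not the study data:

```python
import numpy as np


def cronbach_alpha(items) -> float:
    """Cronbach's alpha for a (respondents x items) matrix of scale scores."""
    scores = np.asarray(items, dtype=float)
    if scores.ndim != 2 or scores.shape[1] < 2:
        raise ValueError("expected a 2-D array with at least two item columns")
    k = scores.shape[1]
    item_variances = scores.var(axis=0, ddof=1)      # sample variance of each item
    total_variance = scores.sum(axis=1).var(ddof=1)  # variance of the summed scale
    return (k / (k - 1)) * (1.0 - item_variances.sum() / total_variance)


# Made-up responses from five respondents to a three-item, 6-point scale.
responses = [
    [5, 5, 6],
    [4, 4, 4],
    [2, 3, 2],
    [6, 5, 6],
    [3, 3, 2],
]
print(round(cronbach_alpha(responses), 2))
```

Sample variances (`ddof=1`) are used for both the items and the total score so that the ratio is internally consistent.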

4.2.4 Weight of AI

Weight of AI was measured with a slider ranging from 0% to 100% (Haesevoets et al., 2021). Participants were asked, “How much decision-making weight would you expect AI to have when making management decisions about recruitment?” They responded using a slider that ranged from 0% (no weight at all) to 100% (complete weight) in steps of 1%.

4.2.5 Control variables

Because experience with and knowledge about AI might vary between participants and might be associated with their trust in AI (Gillath et al., 2021), we measured participants' previous experience with and familiarity with AI. Experience with AI was measured by asking whether participants had experience collaborating with AI. Familiarity with AI was measured with one item, “How familiar are you with AI?”, on a 5-point Likert-type scale (1 = extremely unfamiliar, 6 = extremely familiar). In addition, we controlled for demographic variables including gender, age, work position, and years of work.

4.3 Results

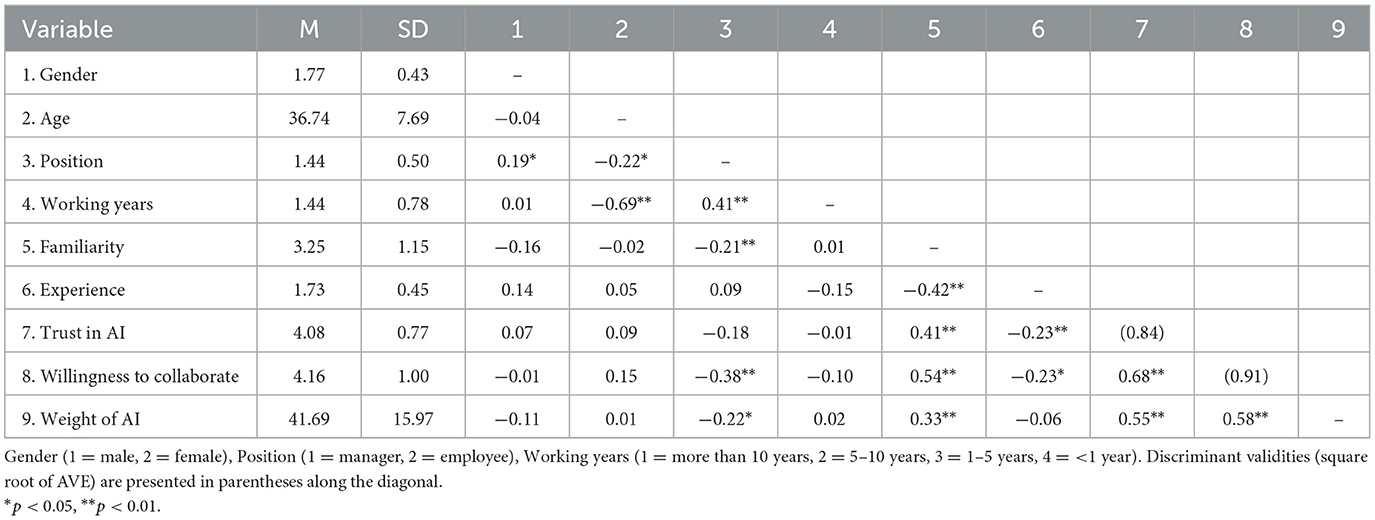

Descriptive statistics and correlations among all variables are presented in Table 1. All AVE values exceeded 0.5, and the square root of each construct's AVE exceeded its correlations with the other constructs, indicating good convergent and discriminant validity (Fornell and Larcker, 1981). Gender, age, and years of work were not correlated with trust in AI, willingness to collaborate with AI, or weight of AI. Position was negatively correlated with willingness to collaborate with AI and weight of AI. Familiarity with AI was positively correlated with trust in AI, willingness to collaborate with AI, and weight of AI. Experience with AI was negatively correlated with trust in AI and willingness to collaborate with AI. Furthermore, trust in AI was significantly positively correlated with willingness to collaborate with AI (r = 0.68, p < 0.01) and weight of AI (r = 0.55, p < 0.01), and willingness to collaborate with AI was significantly positively correlated with weight of AI (r = 0.58, p < 0.01), providing preliminary support for Hypotheses 1, 2, and 3.
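The convergent and discriminant validity criteria described above (Fornell and Larcker, 1981) can be checked mechanically. The sketch below assumes standardized factor loadings are available for each multi-item construct; the loadings are invented for illustration, whereas the correlation of 0.68 between trust in AI and willingness to collaborate is the value reported above. The function names are hypothetical.

```python
import math


def average_variance_extracted(loadings) -> float:
    """AVE: mean of the squared standardized loadings of one construct."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def fornell_larcker_ok(aves: dict, correlations: dict) -> bool:
    """Check AVE > 0.5 for every construct and sqrt(AVE) > |r| for every pair."""
    if any(ave <= 0.5 for ave in aves.values()):
        return False  # convergent validity fails
    for (c1, c2), r in correlations.items():
        if abs(r) >= min(math.sqrt(aves[c1]), math.sqrt(aves[c2])):
            return False  # discriminant validity fails for this pair
    return True


# Hypothetical standardized loadings for the two three-item scales.
aves = {
    "trust_in_ai": average_variance_extracted([0.85, 0.82, 0.86]),
    "willingness": average_variance_extracted([0.90, 0.91, 0.89]),
}
print(aves, fornell_larcker_ok(aves, {("trust_in_ai", "willingness"): 0.68}))
```

The sketch covers only the two multi-item scales, since weight of AI was measured with a single slider.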

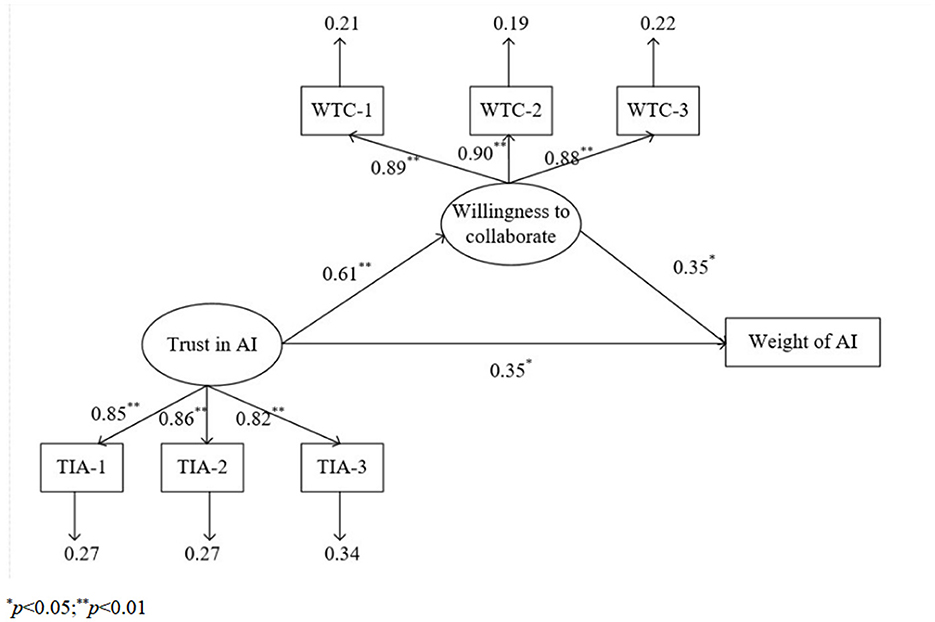

To test our hypotheses, we used Mplus 7.0 to conduct structural equation modeling (Byrne, 2013). The full model showed a good fit (χ2/df = 1.75, CFI = 0.94, TLI = 0.92, RMSEA = 0.08). Results (see Figure 2) showed that trust in AI positively influenced the weight of AI [β = 0.35, p < 0.05; 95% CI (1.85, 14.95)], trust in AI positively influenced willingness to collaborate [β = 0.61, p < 0.01; 95% CI (0.48, 0.92)], and willingness to collaborate positively influenced the weight of AI [β = 0.35, p < 0.05; 95% CI (0.69, 13.40)]. The indirect effect of trust in AI on the weight of AI was 0.21 [95% CI (0.03, 0.40)]. Thus, Hypotheses 1, 2, and 3 were supported.
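The reported indirect effect is consistent with the product-of-coefficients logic: 0.61 × 0.35 ≈ 0.21. The sketch below illustrates that logic together with a percentile bootstrap confidence interval. It is a simplification, not the authors' Mplus model: it uses ordinary least squares on observed scores instead of latent-variable SEM, omits the control variables, and runs on simulated data. The function names and simulation parameters are hypothetical.

```python
import numpy as np


def ols_coefficients(predictors, outcome):
    """OLS coefficients (intercept first) via least squares."""
    design = np.column_stack([np.ones(len(outcome)), predictors])
    coef, *_ = np.linalg.lstsq(design, outcome, rcond=None)
    return coef


def indirect_effect(x, m, y):
    """Product-of-coefficients indirect effect a * b for X -> M -> Y."""
    a = ols_coefficients(x, m)[1]                        # path X -> M
    b = ols_coefficients(np.column_stack([x, m]), y)[2]  # path M -> Y, controlling for X
    return a * b


def bootstrap_indirect(x, m, y, n_boot=2000, level=0.95, seed=0):
    """Point estimate and percentile bootstrap CI of the indirect effect."""
    rng = np.random.default_rng(seed)
    n = len(x)
    draws = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample respondents with replacement
        draws[i] = indirect_effect(x[idx], m[idx], y[idx])
    tail = (1.0 - level) / 2.0
    lower, upper = np.quantile(draws, [tail, 1.0 - tail])
    return indirect_effect(x, m, y), (lower, upper)


# Simulated standardized scores for 111 hypothetical respondents.
rng = np.random.default_rng(42)
trust = rng.standard_normal(111)
willingness = 0.6 * trust + 0.8 * rng.standard_normal(111)
weight = 0.3 * trust + 0.35 * willingness + 0.8 * rng.standard_normal(111)
estimate, ci = bootstrap_indirect(trust, willingness, weight)
print(round(estimate, 3), np.round(ci, 3))
```

A confidence interval that excludes zero, as with the reported interval (0.03, 0.40), is the usual bootstrap criterion for a significant indirect effect.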

Figure 2. Structural model with standardized regression coefficients and residual variances. *p < 0.05; **p < 0.01.

5 Study 2

5.1 Method

5.1.1 Sample and procedure

The participants in this study were recruited from MBA classes at a business college in southern China. The MBA students volunteered for this study and received course credit as compensation. We obtained 210 valid questionnaires in Study 2 from 112 men and 98 women, with an average age of 30.6 years (SD = 6.427). Participants came from different industries, such as finance, services, and manufacturing (45%); 60.5% were managers (39.5% were regular employees), and 60.5% had worked for more than 5 years. Regarding experience with AI, 39.0% of participants had experience working with AI, and 53.3% were familiar or very familiar with AI.

Participants were presented with an introductory statement describing a scenario in which humans and AI collaborate to evaluate employees' performance. After reading this statement, participants answered two comprehension check questions about the material. Next, we measured their trust in AI and willingness to collaborate. Participants then dragged a slider to assign the weight of AI in human-AI cooperative performance evaluation decisions and completed the free will scale. Finally, they completed the measures of the control variables.

5.2 Measurements

5.2.1 Scenario material

At the start of this study, participants were asked to read a statement describing human-AI cooperation, adapted from Haesevoets et al. (2021). We used a performance decision as an example to explain how decision weights are distributed in the collaboration. The statement read as follows:

In your role as a manager, you are mainly responsible for making decisions about employees' compensation and performance evaluation. With the development of AI technology, AI also has decision-making capabilities. Therefore, your company introduces an AI agent as your colleague to collaborate with you in making joint decisions. AI agents are computer-generated, graphically displayed entities that represent either imaginary characters or real humans controlled by AI. Importantly, human managers and AI agents can have different weights in managerial decision-making processes, and the final decision is determined by weighting your decision and the AI agent's decision.

For example, in performance evaluation decisions, employees with higher performance scores are more likely to receive higher compensation. You and the AI agent each have 50% of the decision-making power. You give Employee A a performance score of 80 and Employee B a score of 90. The AI agent gives Employee A a score of 90 and Employee B a score of 80. The final performance score of Employee A is 80 × 50% + 90 × 50% = 85, and that of Employee B is 90 × 50% + 80 × 50% = 85.
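The weighting rule in the scenario amounts to a weighted average of the manager's and the AI agent's scores. The sketch below assumes the AI weight is entered in whole percentage points, as on the 0% to 100% slider used in this study; the function name `aggregate_score` is hypothetical.

```python
def aggregate_score(human_score: float, ai_score: float, ai_weight_pct: int) -> float:
    """Weighted joint decision: (100 - w)% of the manager's score plus w% of the AI's.

    ai_weight_pct mirrors the 0-100% slider (1% steps) used to measure the weight of AI.
    """
    if not 0 <= ai_weight_pct <= 100:
        raise ValueError("AI weight must lie between 0 and 100 percent")
    return ((100 - ai_weight_pct) * human_score + ai_weight_pct * ai_score) / 100


# Scenario example with equal (50%) weights
print(aggregate_score(80, 90, 50))  # Employee A -> 85.0
print(aggregate_score(90, 80, 50))  # Employee B -> 85.0
# Hypothetical case: the manager gives the AI a 30% weight
print(aggregate_score(80, 90, 30))  # Employee A -> 83.0
```

Working in integer percentages keeps the arithmetic exact for whole-number scores, which avoids floating-point noise such as 0.7 × 80 not equalling 56 exactly.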

To assess participants' comprehension of the reading material, we asked two questions after they had read it: (1) What is your role in the above situation? (Manager or Employee) (2) Who would you collaborate with in the above scenario? (AI or Human). Participants who answered either question incorrectly were excluded from the valid sample.

5.2.2 Trust in AI and Willingness to collaborate

Trust in AI and willingness to collaborate were measured as in Study 1. In this study, Cronbach's α was 0.90 for trust in AI and 0.91 for willingness to collaborate.
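The α values reported here are conventionally Cronbach's alpha coefficients. As a hedged sketch of how such a coefficient is computed, the function below applies the standard formula α = k/(k − 1) × (1 − Σσ²ᵢ / σ²ₜ), where k is the number of items, σ²ᵢ the item variances, and σ²ₜ the variance of the summed score. The six response rows are invented for illustration and are not the study's data.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents x items matrix (list of rows).

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total score),
    using sample variances (n - 1 denominator) throughout.
    """
    k = len(responses[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    items = list(zip(*responses))  # transpose: one tuple per item
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)


# Hypothetical answers of six respondents to a three-item scale
# (invented for illustration; not the study's data)
responses = [
    [4, 5, 4],
    [2, 2, 3],
    [5, 5, 5],
    [3, 4, 3],
    [1, 2, 1],
    [4, 4, 5],
]
print(f"alpha = {cronbach_alpha(responses):.2f}")
```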

5.2.3 Weight of AI

Similar to Study 1, the weight of AI was measured with a slider. Participants were asked, “How much decision-making weight would you expect AI to have when making management decisions about performance?” They answered using a slider ranging from 0% (no weight at all) to 100% (complete weight) in steps of 1%.

5.2.4 Free will

Perceived free will of AI was measured with a five-item scale developed by Nadelhoffer et al. (2014). The items were: (1) AI has the ability to make decisions. (2) AI has free will. (3) How AI decisions unfold is entirely up to them. (4) AI could eventually take full control of their decisions and actions. (5) Even if the AI's choices are completely limited by the external environment, they have free will. Responses ranged from 1 (strongly disagree) to 6 (strongly agree), α = 0.88.

5.2.5 Control variables

As in Study 1, participants' experience and familiarity with AI, gender, age, work position, and years of work experience were controlled for in Study 2.

5.3 Results

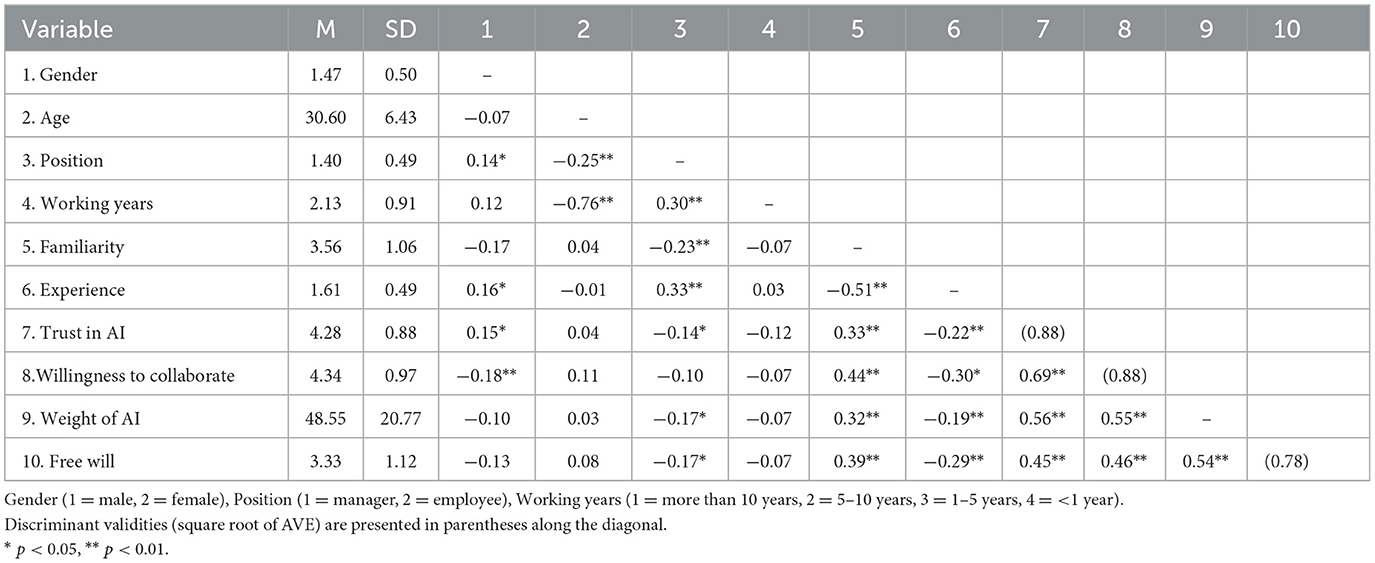

Descriptive statistics, correlations among all variables, and the square root of AVE are reported in Table 2; the results indicate good convergent and discriminant validity. The descriptive analysis partly replicated the results of Study 1. Age and years of work experience were not significantly correlated with trust in AI, willingness to collaborate with AI, weight of AI, or perceived AI free will. Familiarity with AI was positively correlated with trust in AI, willingness to collaborate with AI, and weight of AI. However, gender was positively correlated with trust in AI and negatively correlated with willingness to collaborate with AI. Position was negatively correlated with trust in AI, weight of AI, and perceived AI free will. Experience with AI was negatively correlated with trust in AI, willingness to collaborate with AI, weight of AI, and perceived AI free will. Most importantly, trust in AI was significantly correlated with willingness to collaborate with AI (r = 0.69, p < 0.01) and weight of AI (r = 0.56, p < 0.01), and willingness to collaborate with AI was significantly positively correlated with weight of AI (r = 0.55, p < 0.01), providing preliminary support for Hypotheses 1, 2, and 3.

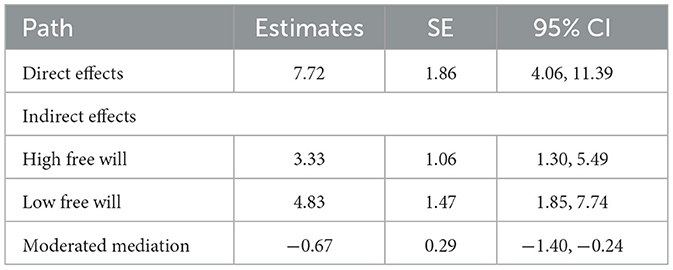

We used PROCESS (Model 7; Hayes, 2017) to test our hypotheses. The procedure used 5,000 bootstrap samples, and effects were interpreted based on 95% confidence intervals (CIs). The direct, indirect, and moderating effects are presented in Table 3. Trust in AI increased the weight of AI [95% CI (4.06, 11.39)], and willingness to collaborate mediated the relationship between trust in AI and weight of AI [95% CIs (1.30, 5.49) and (1.85, 7.74)]. Thus, Hypotheses 1, 2, and 3 were also supported in the context of performance management.
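PROCESS evaluates indirect effects with percentile bootstrap confidence intervals. The sketch below is a simplified, standard-library re-implementation of that idea for a simple mediation model (X → M → Y), not PROCESS itself and not Model 7: it resamples cases with replacement, re-estimates a × b by OLS in each resample, and takes the 2.5th and 97.5th percentiles. The data are simulated, and the effect sizes (0.6, 0.3, 0.4), sample size, and seeds are arbitrary assumptions.

```python
import random


def indirect_effect(x, m, y):
    """a * b for the simple mediation model X -> M -> Y, estimated by OLS."""
    n = len(x)
    sx, sm, sy = sum(x), sum(m), sum(y)
    # Centered sums of squares and cross-products
    sxx = sum(v * v for v in x) - sx * sx / n
    smm = sum(v * v for v in m) - sm * sm / n
    sxm = sum(u * v for u, v in zip(x, m)) - sx * sm / n
    sxy = sum(u * v for u, v in zip(x, y)) - sx * sy / n
    smy = sum(u * v for u, v in zip(m, y)) - sm * sy / n
    a = sxm / sxx                                         # slope of M on X
    b = (sxx * smy - sxm * sxy) / (sxx * smm - sxm ** 2)  # slope of Y on M, controlling for X
    return a * b


def bootstrap_ci(x, m, y, n_boot=5000, level=0.95, seed=1):
    """Percentile bootstrap CI for the indirect effect (resampling cases)."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = rng.choices(range(n), k=n)
        estimates.append(indirect_effect([x[i] for i in idx],
                                         [m[i] for i in idx],
                                         [y[i] for i in idx]))
    estimates.sort()
    lower = estimates[int((1 - level) / 2 * n_boot)]
    upper = estimates[int((1 + level) / 2 * n_boot) - 1]
    return lower, upper


# Simulated (hypothetical) data: X = trust in AI, M = willingness, Y = weight of AI
sim = random.Random(2024)
n = 210
x = [sim.gauss(0, 1) for _ in range(n)]
m = [0.6 * xi + sim.gauss(0, 1) for xi in x]
y = [0.3 * xi + 0.4 * mi + sim.gauss(0, 1) for xi, mi in zip(x, m)]

point = indirect_effect(x, m, y)
lower, upper = bootstrap_ci(x, m, y)
print(f"indirect = {point:.3f}, 95% CI [{lower:.3f}, {upper:.3f}]")
```

An interval that excludes zero is read as evidence of mediation; this is the same decision rule applied to the CIs reported above.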

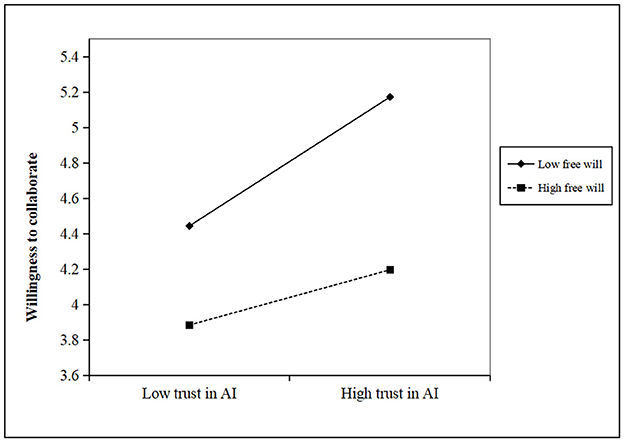

Furthermore, Hypothesis 4 proposed that perceived free will of AI moderates the relationship between trust in AI and willingness to collaborate. The moderated mediation was significant [index of moderated mediation = −0.67, 95% CI (−1.40, −0.24)]. As Figure 3 shows, trust in AI was more strongly and positively associated with willingness to collaborate when perceived free will was low rather than high. Thus, Hypothesis 4 was supported.
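In Model 7, the moderator W acts on the first stage only: M = a0 + a1X + a2W + a3XW and Y = c0 + c′X + bM. Under this structure the index of moderated mediation is a3 × b, and the conditional indirect effect at a given moderator value is (a1 + a3W) × b; a negative index, as reported above, means the indirect effect shrinks as perceived free will rises. The sketch below estimates these quantities by OLS on simulated data. All coefficients, the seed, and probing at the mean ± 1 SD are assumptions for illustration; it reports point estimates only, and a percentile bootstrap would be needed for confidence intervals.

```python
import random
import statistics


def ols(y, predictors):
    """OLS coefficients [intercept, b1, ..., bk] from the normal equations,
    solved by Gaussian elimination with partial pivoting."""
    rows = [[1.0] + [p[i] for p in predictors] for i in range(len(y))]
    k = len(rows[0])
    # Augmented matrix [X'X | X'y]
    aug = [[sum(row[i] * row[j] for row in rows) for j in range(k)]
           + [sum(row[i] * yi for row, yi in zip(rows, y))] for i in range(k)]
    for col in range(k):
        piv = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, k):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, k + 1):
                aug[r][c] -= factor * aug[col][c]
    beta = [0.0] * k
    for i in reversed(range(k)):
        beta[i] = (aug[i][k] - sum(aug[i][j] * beta[j] for j in range(i + 1, k))) / aug[i][i]
    return beta


def model7(x, w, m, y):
    """First-stage moderated mediation (the structure of PROCESS Model 7)."""
    xw = [xi * wi for xi, wi in zip(x, w)]
    _, a1, _, a3 = ols(m, [x, w, xw])  # M = a0 + a1*X + a2*W + a3*X*W
    _, _, b = ols(y, [x, m])           # Y = c0 + c'*X + b*M
    w_mean, w_sd = statistics.fmean(w), statistics.stdev(w)
    return {
        "index": a3 * b,                             # index of moderated mediation
        "low_w": (a1 + a3 * (w_mean - w_sd)) * b,    # conditional indirect effect at mean - 1 SD
        "high_w": (a1 + a3 * (w_mean + w_sd)) * b,   # conditional indirect effect at mean + 1 SD
    }


# Simulated (hypothetical) data with a negative X*W interaction on M
sim = random.Random(7)
n = 2000
x = [sim.gauss(0, 1) for _ in range(n)]  # trust in AI
w = [sim.gauss(0, 1) for _ in range(n)]  # perceived AI free will
m = [0.6 * xi + 0.1 * wi - 0.2 * xi * wi + sim.gauss(0, 1) for xi, wi in zip(x, w)]
y = [0.3 * xi + 0.4 * mi + sim.gauss(0, 1) for xi, mi in zip(x, m)]

print({name: round(value, 3) for name, value in model7(x, w, m, y).items()})
```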

6 Discussion

Through two studies of human-AI interaction in managerial decision-making, we found that trust in AI increased the weight people assign to AI in human-AI cooperation and that willingness to collaborate with AI played a mediating role. Drawing on the SCMT as applied to human-AI interaction (Li et al., 2024a), we traced how trust in AI develops in human-AI interaction, proposing a new approach to enhancing human-AI cooperation.

We further identified a boundary condition for the influence of trust in AI on weight of AI. Specifically, perceived AI free will moderated the association between trust in AI and willingness to collaborate with AI, and the indirect influence of trust in AI on weight of AI (via willingness to collaborate) was weaker when perceived free will was high rather than low. This result may stem from the uncanny valley effect, which poses a potential threat to human uniqueness. Prior research has demonstrated that physical resemblance between humans and machines, coupled with the perception that machines can perform advanced cognitive tasks, elicits concerns about threats to human uniqueness (Ferrari et al., 2016). AI agents perceived as having high free will may be seen as a tangible threat to human jobs, safety, resources, and identity, which significantly affects people's intention to use them (Yogeeswaran et al., 2016).

6.1 Theoretical and practical implications

Our first contribution is to link employees' trust in AI to the decisional weight they allocate to AI, drawing on the SCMT. Researchers have proposed different ways to assign AI weight in decision-making (Haesevoets et al., 2021, 2024; Shrestha et al., 2019), whereas we reveal the internal mechanism that affects AI weight from the perspective of the cognitive development of trust in AI. When an AI agent works as a member of a decision-making team, human-AI interaction follows norms similar to those of interpersonal interaction (Georganta and Ulfert, 2024; Sanders et al., 2019). In addition, we identified the crucial role that willingness to collaborate with AI plays in this process: trust in AI affects how much weight people assign to AI in decision-making by increasing their willingness to collaborate with it. Employees who trust an AI agent are willing to accept vulnerability and risk by allocating decisional weight to it. We thereby extend the application of the SCMT to human-AI managerial cooperation. Moreover, going beyond employees' adoption of AI agents and their recommendations (Chua et al., 2023; Frank et al., 2023; Xu et al., 2024), we quantitatively measure the decisional weight employees allocate to AI agents, which contributes to the understanding of human-AI team dynamics.

Second, this study incorporates the uncanny valley effect of AI into the Socio-Cognitive Model of Trust through the boundary effect of perceived AI free will. Perceiving AI as having high free will increases people's perception of risk (Romeo et al., 2022; Złotowski et al., 2017), which, from the SCMT perspective, threatens employees' trust attitudes and decisions to trust (Castelfranchi and Falcone, 2010). Our research found that high perceived AI free will weakened the impact of trust in AI on willingness to collaborate. Existing research has not reached a consistent conclusion on whether AI has free will (Farnsworth, 2017; Manzotti, 2011; Romportl et al., 2013). What really matters in human-AI interaction is the perception of AI free will, autonomy, and agency (Hu et al., 2021; Sanchis, 2018; Wang and Qiu, 2024). When people perceive that AI can choose its own actions and has free will, they may feel threatened, experiencing realistic threat, identity threat, and job insecurity (He et al., 2023; Złotowski et al., 2017), which fosters negative attitudes toward AI. Our research is a preliminary exploration of the impact of AI free will on human-AI collaboration in human resource management, depicting the uncanny valley effect in human-AI teamwork.

Third, our findings enhance understanding of how trust develops in human-AI interactions, which may promote better collaboration between humans and AI in organizational management. Human-AI interaction experiences not only enhance managerial decision-making competence but also shape job design and job crafting in the workplace (He et al., 2023; Tursunbayeva and Renkema, 2023). This research explores new possibilities for human-AI cooperation through weight distribution. At the same time, organizations need to continuously explore appropriate decision-weight frameworks to adapt to changing external circumstances.

These results have several practical implications for managers and organizations. First, our study highlights the powerful influence of trust in AI in human-AI interactions. To make effective use of trust in AI, organizations need to take steps to increase employees' trust in AI. Before AI is brought into the organization, managers can assess employees' pre-entry knowledge of AI systems and create a formal AI onboarding plan (Bauer, 2010). During human-AI interaction, managers can support employees' comprehension and use of AI, for example by clarifying roles, building task mastery, and helping employees navigate social interactions (Bauer et al., 2007), and can improve employees' skills and knowledge (Rodgers et al., 2023). In practice, the design of explainable AI (XAI) greatly affects people's trust in AI (Janssen et al., 2020). Therefore, it is necessary to improve the tangibility, immediacy, and transparency of AI to improve its interpretability (Glikson and Woolley, 2020). For instance, when AI video interviews are adopted in recruitment, the tangibility, immediacy, and transparency of AI agents significantly enhance job seekers' cognitive and affective trust in AI, thereby influencing their final recruitment intentions (Suen and Hung, 2023).

Second, in the era of Industry 5.0, people pay more attention to human-centered technologies in industrial practice and emphasize human-AI collaboration (Tanjung et al., 2025). From the perspective of human-AI trust, our findings offer valuable insights into enhancing human-AI collaboration in organizational decision-making. By introducing a weight-distribution mechanism, this study challenges traditional human-AI decision-making paradigms and offers an innovative collaborative decision-making framework. This approach not only reinforces the human-centered development philosophy but may also elevate the quality of human-AI cooperation in future organizational management.

Third, since the advent of artificial intelligence, the rationale behind AI development and deployment has attracted significant attention from scholars, policymakers, and practitioners. This multidisciplinary discourse has yielded numerous constructive proposals addressing the ethical challenges inherent in AI systems (Jobin et al., 2019; Floridi and Cowls, 2021). When AI possesses a higher degree of free will, it raises greater ethical concerns among stakeholders. Our findings suggest steps to reduce the side effects of AI free will on management decisions. On the one hand, governments can impose ethical policies and legal constraints on the design and use of AI (Astobiza et al., 2021; Trotta et al., 2023). On the other hand, companies ought to develop AI usage policies that comply with existing legislation to standardize and supervise employee interactions with AI systems.

6.2 Limitations and future research directions

Our study has several limitations. First, we conducted scenario-based experiments (recruitment and performance evaluation scenarios) to test our hypotheses. It would be worthwhile to extend this work to other human resource management decision-making tasks, such as salary management and personnel promotion (Mehrabad and Brojeny, 2007). Although the scenarios were designed to simulate reality, a gap remains between the experimental scenarios and actual work situations, which may limit the external validity of the results (Podsakoff and Podsakoff, 2019).

Another possible methodological limitation of our work is that the robustness of our measures could be improved. To measure the weight distribution between employees and AI agents, we used a single item, a slider ranging from 0% (no weight at all) to 100% (full weight), which may be prone to measurement error and low reliability. Our trust-in-AI scale was limited to three items, which may not fully capture the complexity of trust formation in human-AI collaboration. We therefore encourage future studies to use multi-item measures of AI weight to reduce measurement bias and to use longer, validated trust scales. The scales currently used to measure AI free will may also not fully capture the nuances of individual perception, and future research could develop more comprehensive scales to assess the complexity of this construct.

Second, our results are based on a convenience sample, which weakens external validity. We surveyed MBA students primarily because they have relevant management experience and use AI to make certain management decisions in their daily work. Although this sampling approach is reasonable, it limits external validity, which could influence the experimental outcomes. The filtering mechanism inherent in MBA programs tends to produce homogeneous educational and professional backgrounds among participants, which may hinder the detection of cross-industry and cross-cultural variations in the results. In addition, given the potential indirect effect of experience on job allocation (Gillath et al., 2021), future research should replicate and extend these results with professionals who have extensive experience in human-AI managerial decision-making.

Third, our research explores only trust as a crucial factor affecting the weight of AI. Trust is not the sole influence on the weight of AI, and future research can analyze other contributing factors. At the individual level, people's cognitive ability, knowledge, self-efficacy, personal experience, and personality type may affect the weight of AI. On the AI side, its transparency, interpretability, reliability, and positioning may affect the weight of AI (Glikson and Woolley, 2020; Han et al., 2023; Kong et al., 2024). We suggest that future research broaden our understanding by investigating the relationships between these factors and the weight of AI.

Finally, our cross-sectional studies identified a linear boundary effect of perceived AI free will in decision-making. Although studies have shown that people feel anxious and uneasy when faced with AI that has a will and a mind (Gray and Wegner, 2012; Shank et al., 2019), other studies have shown that it can also increase individuals' closeness to AI and willingness to adopt AI recommendations (Chen et al., 2023; Lee et al., 2020). Considering the inverted U-shaped effect of the uncanny valley, it remains to be explored whether this negative moderating effect of AI free will in human-AI interaction changes as people become more familiar with AI (MacDorman et al., 2009). We encourage future research to use longitudinal designs to explore the non-linear or dynamic impact of free will on human-AI cooperation as humans and AI become deeply integrated. Furthermore, the potential influence of AI free will on other forms of human-AI interaction, such as human-AI co-creation and co-learning (Swan et al., 2023), is worth investigating.

7 Conclusion

The emergence of artificial intelligence (AI) has revolutionized workplace dynamics, igniting a fervent discourse on the interaction between humans and AI. Our research investigated the managerial decision-making weights that human employees delegate to AI agents. A series of empirical results revealed that trust in AI agents constitutes the foundational element of human-AI collaboration, with willingness to collaborate with AI agents emerging as a pivotal factor. Furthermore, when employees perceive an AI agent as having a high capacity for free will, the role of trust in human-AI cooperation is undermined. We advance the understanding of the function of trust in human-AI interaction and offer managerial and practical implications for human-AI team dynamics in organizational management. Collaboration depends heavily on collaborators' trust and willingness to collaborate.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material, further inquiries can be directed to the corresponding author.

Ethics statement

This study was approved by the Ethics Committee on Human Subjects Ethics Sub-Committee of Jinan University. The studies were conducted in accordance with the local legislation and institutional requirements. Written informed consent for participation in this study was provided by the participants' legal guardians/next of kin.

Author contributions

YW: Data curation, Methodology, Conceptualization, Writing – review & editing. JW: Data curation, Investigation, Methodology, Writing – original draft. XC: Conceptualization, Writing – review & editing.

Funding

The author(s) declare that financial support was received for the research and/or publication of this article. This research was funded by the National Natural Science Foundation of China (Grant Nos. 31970990 and 71701080) and the Guangdong Key Laboratory of Neuromanagement.

Acknowledgments

We would like to thank the associate editor Dr Marco De Angelis and the reviewers at Frontiers in Organizational Psychology for their helpful and constructive feedback throughout the review process.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary Material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/forgp.2025.1419403/full#supplementary-material

References

Ahmad Husairi, M., and Rossi, P. (2024). Delegation of purchasing tasks to AI: the role of perceived choice and decision autonomy. Decis. Support Syst. 179:114166. doi: 10.1016/j.dss.2023.114166

Alabed, A., Javornik, A., and Gregory-Smith, D. (2022). AI anthropomorphism and its effect on users' self-congruence and self–AI integration: a theoretical framework and research agenda. Technol. Forecast. Soc. Change 182:121786. doi: 10.1016/j.techfore.2022.121786

Alquist, J. L., Ainsworth, S. E., and Baumeister, R. F. (2013). Determined to conform: disbelief in free will increases conformity. J. Exp. Soc. Psychol. 49, 80–86. doi: 10.1016/j.jesp.2012.08.015

Araujo, T., Helberger, N., Kruikemeier, S., and De Vreese, C. H. (2020). In AI we trust? Perceptions about automated decision-making by artificial intelligence. AI Soc. 35, 611–623. doi: 10.1007/s00146-019-00931-w

Arsenyan, J., and Mirowska, A. (2021). Almost human? A comparative case study on the social media presence of virtual influencers. Int. J. Hum.–Comput. Stud. 155:102694. doi: 10.1016/j.ijhcs.2021.102694

Ashoori, M., and Weisz, J. D. (2019). In AI we trust? Factors that influence trustworthiness of AI-infused decision-making processes. arXiv [Preprint] arXiv:1912.02675. doi: 10.48550/arXiv.1912.02675

Ashrafian, H. (2015). Artificial intelligence and robot responsibilities: innovating beyond rights. Sci. Eng. Ethics 21, 317–326. doi: 10.1007/s11948-014-9541-0

Astobiza, A. M., Toboso, M., Aparicio, M., and López, D. (2021). AI ethics for sustainable development goals. IEEE Technol. Soc. Mag. 40, 66–71. doi: 10.1109/MTS.2021.3056294

Bauer, T. N., Bodner, T., Erdogan, B., Truxillo, D. M., and Tucker, J. S. (2007). Newcomer adjustment during organizational socialization: a meta-analytic review of antecedents, outcomes, and methods. J. Appl. Psychol. 92, 707–721. doi: 10.1037/0021-9010.92.3.707

Baumeister, R. F., Masicampo, E. J., and DeWall, C. N. (2009). Prosocial benefits of feeling free: disbelief in free will increases aggression and reduces helpfulness. Pers. Soc. Psychol. Bull. 35, 260–268. doi: 10.1177/0146167208327217

Beer, J. M., Fisk, A. D., and Rogers, W. A. (2014). Toward a framework for levels of robot autonomy in human-robot interaction. J. Hum.-Robot Interact. 3:74. doi: 10.5898/JHRI.3.2.Beer

Bergner, R. M., and Ramon, A. (2013). Some implications of beliefs in altruism, free will, and nonreductionism. J. Soc. Psychol. 153, 598–618. doi: 10.1080/00224545.2013.798249

Burrell, J. (2016). How the machine ‘thinks’: understanding opacity in machine learning algorithms. Big Data Soc. 3:2053951715622512. doi: 10.1177/2053951715622512

Byrne, B. (2013). Structural Equation Modeling with Mplus: Basic Concepts, Applications, and Programming. doi: 10.4324/9780203807644

Cao, G., Duan, Y., Edwards, J. S., and Dwivedi, Y. K. (2021). Understanding managers' attitudes and behavioral intentions towards using artificial intelligence for organizational decision-making. Technovation 106:102312. doi: 10.1016/j.technovation.2021.102312

Castelfranchi, C., and Falcone, R. (2010). Trust Theory: A Socio-Cognitive and Computational Model, 1st Edn. Hoboken, NJ: Wiley. doi: 10.1002/9780470519851

Chen, Q., Yin, C., and Gong, Y. (2023). Would an AI chatbot persuade you: an empirical answer from the elaboration likelihood model. Inf. Technol. People 38, 937–962. doi: 10.1108/ITP-10-2021-0764

Choung, H., David, P., and Ross, A. (2023). Trust in AI and its role in the acceptance of AI technologies. Int. J. Hum.–Comput. Interact. 39, 1727–1739. doi: 10.1080/10447318.2022.2050543

Chowdhury, S., Dey, P., Joel-Edgar, S., Bhattacharya, S., Rodriguez-Espindola, O., Abadie, A., et al. (2023). Unlocking the value of artificial intelligence in human resource management through AI capability framework. Hum. Resour. Manag. Rev. 33:100899. doi: 10.1016/j.hrmr.2022.100899

Chua, A. Y. K., Pal, A., and Banerjee, S. (2023). AI-enabled investment advice: will users buy it? Comput. Hum. Behav. 138:107481. doi: 10.1016/j.chb.2022.107481

Ciechanowski, L., Przegalinska, A., Magnuski, M., and Gloor, P. (2019). In the shades of the uncanny valley: an experimental study of human–chatbot interaction. Future Gener. Comput. Syst. 92, 539–548. doi: 10.1016/j.future.2018.01.055

Cvetkovic, A., Savela, N., Latikka, R., and Oksanen, A. (2024). Do we trust artificially intelligent assistants at work? An experimental study. Hum. Behav. Emerg. Technol. 2024, 1–12. doi: 10.1155/2024/1602237

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 13:319. doi: 10.2307/249008

De Visser, E. J., Peeters, M. M. M., Jung, M. F., Kohn, S., Shaw, T. H., Pak, R., et al. (2020). Towards a theory of longitudinal trust calibration in human–robot teams. Int. J. Soc. Robot. 12, 459–478. doi: 10.1007/s12369-019-00596-x

Dilsizian, S. E., and Siegel, E. L. (2014). Artificial intelligence in medicine and cardiac imaging: harnessing big data and advanced computing to provide personalized medical diagnosis and treatment. Curr. Cardiol. Rep. 16:441. doi: 10.1007/s11886-013-0441-8

Esterwood, C., and Robert, L. (2021). Do You Still Trust Me? Human-Robot Trust Repair Strategies. doi: 10.1109/RO-MAN50785.2021.9515365

Ezer, N., Bruni, S., Cai, Y., Hepenstal, S. J., Miller, C. A., and Schmorrow, D. D. (2019). Trust engineering for human-AI teams. Proc. Hum. Factors Ergon. Soc. Ann. Meet. 63, 322–326. doi: 10.1177/1071181319631264

Feldman, G., Chandrashekar, S. P., and Wong, K. F. E. (2016). The freedom to excel: belief in free will predicts better academic performance. Personal. Individ. Differ. 90, 377–383. doi: 10.1016/j.paid.2015.11.043

Feldman, G., Farh, J.-L., and Wong, K. F. E. (2018). Agency beliefs over time and across cultures: free will beliefs predict higher job satisfaction. Pers. Soc. Psychol. Bull. 44, 304–317. doi: 10.1177/0146167217739261

Ferrari, F., Paladino, M. P., and Jetten, J. (2016). Blurring human–machine distinctions: anthropomorphic appearance in social robots as a threat to human distinctiveness. Int. J. Soc. Robot. 8, 287–302. doi: 10.1007/s12369-016-0338-y

Ferràs-Hernández, X. (2018). The future of management in a world of electronic brains. J. Manag. Inq. 27, 260–263. doi: 10.1177/1056492617724973

Floridi, L., and Cowls, J. (2021). “A unified framework of five principles for AI in Society,” in Ethics, Governance, and Policies in Artificial Intelligence. Philosophical Studies Series, Vol. 144, ed. L. Floridi (Cham: Springer). doi: 10.1007/978-3-030-81907-1_2

Fornell, C., and Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 18:39. doi: 10.1177/002224378101800313

Forstmann, B. U., Anwander, A., Schäfer, A., Neumann, J., Brown, S., Wagenmakers, E.-J., et al. (2010). Cortico-striatal connections predict control over speed and accuracy in perceptual decision making. Proc. Natl. Acad. Sci. 107, 15916–15920. doi: 10.1073/pnas.1004932107

Frank, D.-A., Jacobsen, L. F., Søndergaard, H. A., and Otterbring, T. (2023). In companies we trust: consumer adoption of artificial intelligence services and the role of trust in companies and AI autonomy. Inf. Technol. People 36, 155–173. doi: 10.1108/ITP-09-2022-0721

Georganta, E., and Ulfert, A. (2024). Would you trust an AI team member? Team trust in human–AI teams. J. Occup. Organ. Psychol. 97, 1212–1241. doi: 10.1111/joop.12504

Gershman, S. J., Horvitz, E. J., and Tenenbaum, J. B. (2015). Computational rationality: a converging paradigm for intelligence in brains, minds, and machines. Science 349, 273–278. doi: 10.1126/science.aac6076

Gillath, O., Ai, T., Branicky, M. S., Keshmiri, S., Davison, R. B., and Spaulding, R. (2021). Attachment and trust in artificial intelligence. Comput. Hum. Behav. 115:106607. doi: 10.1016/j.chb.2020.106607

Glikson, E., and Woolley, A. W. (2020). Human trust in artificial intelligence: review of empirical research. Acad. Manag. Ann. 14, 627–660. doi: 10.5465/annals.2018.0057

Gray, K., and Wegner, D. M. (2012). Feeling robots and human zombies: mind perception and the uncanny valley. Cognition 125, 125–130. doi: 10.1016/j.cognition.2012.06.007

Haesevoets, T., De Cremer, D., Dierckx, K., and Van Hiel, A. (2021). Human-machine collaboration in managerial decision making. Comput. Hum. Behav. 119:106730. doi: 10.1016/j.chb.2021.106730

Haesevoets, T., Verschuere, B., Van Severen, R., and Roets, A. (2024). How do citizens perceive the use of artificial intelligence in public sector decisions? Gov. Inf. Q. 41:101906. doi: 10.1016/j.giq.2023.101906

Han, B., Deng, X., and Fan, H. (2023). Partners or opponents? How mindset shapes consumers' attitude toward anthropomorphic artificial intelligence service robots. J. Serv. Res. 26, 441–458. doi: 10.1177/10946705231169674

Hayes, A. F. (2017). Introduction to Mediation, Moderation, and Conditional Process Analysis: A Regression-Based Approach. Guilford Publications.

He, C., Teng, R., and Song, J. (2023). Linking employees' challenge-hindrance appraisals toward AI to service performance: the influences of job crafting, job insecurity and AI knowledge. Int. J. Contemp. Hosp. Manag. 36, 975–994. doi: 10.1108/IJCHM-07-2022-0848

Heer, J. (2019). Agency plus automation: designing artificial intelligence into interactive systems. Proc. Natl. Acad. Sci. 116:201807184. doi: 10.1073/pnas.1807184115

Henrique, B. M. (2024). Trust in artificial intelligence: literature review and main path analysis. Comput. Hum. Behav. Artif. Hum. 2:100043. doi: 10.1016/j.chbah.2024.100043

Hentout, A., Aouache, M., Maoudj, A., and Akli, I. (2019). Human–robot interaction in industrial collaborative robotics: a literature review of the decade 2008. Adv. Robot. 33, 764–799. doi: 10.1080/01691864.2019.1636714

Hoff, K. A., and Bashir, M. (2015). Trust in automation: integrating empirical evidence on factors that influence trust. Hum. Factors 57, 407–434. doi: 10.1177/0018720814547570

Hou, K., Hou, T., and Cai, L. (2023). Exploring trust in human–AI collaboration in the context of multiplayer online games. Systems 11:217. doi: 10.3390/systems11050217

Hu, Q., Lu, Y., Pan, Z., Gong, Y., and Yang, Z. (2021). Can AI artifacts influence human cognition? The effects of artificial autonomy in intelligent personal assistants. Int. J. Inf. Manag. 56:102250. doi: 10.1016/j.ijinfomgt.2020.102250

Janssen, M., Hartog, M., Matheus, R., Ding, A., and Kuk, G. (2020). Will algorithms blind people? The effect of explainable AI and decision-makers' experience on AI-supported decision-making in government. Soc. Sci. Comput. Rev. 40:089443932098011. doi: 10.1177/0894439320980118

Jarrahi, M. H. (2018). Artificial intelligence and the future of work: human-AI symbiosis in organizational decision making. Bus. Horiz. 61, 577–586. doi: 10.1016/j.bushor.2018.03.007

Jeon, J.-E. (2024). The effect of AI agent gender on trust and grounding. J. Theor. Appl. Electron. Commer. Res. 19, 692–704. doi: 10.3390/jtaer19010037

Jobin, A., Ienca, M., and Vayena, E. (2019). The global landscape of AI ethics guidelines. Nat. Mach. Intell. 1, 389–399. doi: 10.1038/s42256-019-0088-2

Keding, C., and Meissner, P. (2021). Managerial overreliance on AI-augmented decision-making processes: how the use of AI-based advisory systems shapes choice behavior in R&D investment decisions. Technol. Forecast. Soc. Change 171:120970. doi: 10.1016/j.techfore.2021.120970

Köchling, A. (2023). Can I show my skills? Affective responses to artificial intelligence in the recruitment process. Rev. Manag. Sci. 17, 2109–2138. doi: 10.1007/s11846-021-00514-4

Kong, H., Yin, Z., Baruch, Y., and Yuan, Y. (2023). The impact of trust in AI on career sustainability: the role of employee–AI collaboration and protean career orientation. J. Vocat. Behav. 146:103928. doi: 10.1016/j.jvb.2023.103928

Kong, X., Xing, Y., Tsourdos, A., Wang, Z., Guo, W., Perrusquia, A., et al. (2024). Explainable interface for human-autonomy teaming: a survey. arXiv [Preprint]. arXiv:2405.02583. doi: 10.48550/arXiv.2405.02583

Krausová, A., and Hazan, H. (2013). “Creating free will in artificial intelligence,” in Beyond AI: Artificial Golem Intelligence (Pilsen), 96.

Lee, J. D., and See, K. A. (2004). Trust in automation: designing for appropriate reliance. Hum. Factors 46, 50–80. doi: 10.1518/hfes.46.1.50.30392

Lee, S., Lee, N., and Sah, Y. J. (2020). Perceiving a mind in a chatbot: effect of mind perception and social cues on co-presence, closeness, and intention to use. Int. J. Hum. Comput. Interact. 36, 930–940. doi: 10.1080/10447318.2019.1699748

Lemmers-Jansen, I. L. J., Fett, A.-K. J., Shergill, S. S., van Kesteren, M. T. R., and Krabbendam, L. (2019). Girls-boys: an investigation of gender differences in the behavioral and neural mechanisms of trust and reciprocity in adolescence. Front. Hum. Neurosci. 13:257. doi: 10.3389/fnhum.2019.00257

Li, Y., Li, Y., Chen, Q., and Chang, Y. (2024a). Humans as teammates: the signal of human–AI teaming enhances consumer acceptance of chatbots. Int. J. Inf. Manag. 76:102771. doi: 10.1016/j.ijinfomgt.2024.102771

Li, Y., Wu, B., Huang, Y., Liu, J., Wu, J., and Luan, S. (2024b). Warmth, competence, and the determinants of trust in artificial intelligence: a cross-sectional survey from China. Int. J. Hum. Comput. Interact. 41, 5024–5038. doi: 10.1080/10447318.2024.2356909

Lim, K. H., Sia, C. L., Lee, M. K. O., and Benbasat, I. (2016). Do I trust you online, and if so, will I buy? An empirical study of two trust-building strategies.

Liu, B., Wei, L., Wu, M., and Luo, T. (2023). Speech production under uncertainty: how do job applicants experience and communicate with an AI interviewer?

Lomborg, S., Kaun, A., and Scott Hansen, S. (2023). Automated decision-making: toward a people-centred approach. Sociol. Compass 17:e13097. doi: 10.1111/soc4.13097

Lou, C., Kiew, S. T. J., Chen, T., Lee, T. Y. M., Ong, J. E. C., and Phua, Z. (2023). Authentically fake? How consumers respond to the influence of virtual influencers. J. Advert. 52, 540–557. doi: 10.1080/00913367.2022.2149641

Lu, L., Zhang, P., and Zhang, T. (2021). Leveraging “human-likeness” of robotic service at restaurants. Int. J. Hosp. Manag. 94:102823. doi: 10.1016/j.ijhm.2020.102823

Lu, Y. (2019). Artificial intelligence: a survey on evolution, models, applications and future trends. J. Manag. Anal. 6, 1–29. doi: 10.1080/23270012.2019.1570365

Lv, X., Yang, Y., Qin, D., Cao, X., and Xu, H. (2022). Artificial intelligence service recovery: the role of empathic response in hospitality customers' continuous usage intention. Comput. Hum. Behav. 126:106993. doi: 10.1016/j.chb.2021.106993

MacDorman, K. F., Green, R. D., Ho, C.-C., and Koch, C. T. (2009). Too real for comfort? Uncanny responses to computer generated faces. Comput. Hum. Behav. 25, 695–710. doi: 10.1016/j.chb.2008.12.026

Manzotti, R. (2011). “Machine free will: is free will a necessary ingredient of machine consciousness?” in From Brains to Systems, eds. C. Hernández, R. Sanz, J. Gómez-Ramirez, L. S. Smith, A. Hussain, A. Chella, et al. (Cham: Springer), 181–191. doi: 10.1007/978-1-4614-0164-3_15

Mayer, R. C., Davis, J. H., and Schoorman, F. D. (1995). An integrative model of organizational trust. Acad. Manag. Rev. 20, 709–734. doi: 10.2307/258792

Mbunge, E., and Batani, J. (2023). Application of deep learning and machine learning models to improve healthcare in sub-Saharan Africa: emerging opportunities, trends and implications. Telemat. Inform. Rep. 11:100097. doi: 10.1016/j.teler.2023.100097

McKnight, D. H., Carter, M., Thatcher, J. B., and Clay, P. F. (2011). Trust in a specific technology: an investigation of its components and measures. ACM Trans. Manag. Inf. Syst. 2, 1–25. doi: 10.1145/1985347.1985353

McLean, G., and Osei-Frimpong, K. (2019). Hey Alexa ... examine the variables influencing the use of artificial intelligent in-home voice assistants. Comput. Hum. Behav. 99, 28–37. doi: 10.1016/j.chb.2019.05.009

McNeese, N. J., Demir, M., Chiou, E. K., and Cooke, N. J. (2021). Trust and team performance in human–autonomy teaming. Int. J. Electron. Commer. 25, 51–72. doi: 10.1080/10864415.2021.1846854

Mehrabad, M. S., and Brojeny, M. F. (2007). The development of an expert system for effective selection and appointment of the jobs applicants in human resource management. Comput. Ind. Eng. 53, 306–312. doi: 10.1016/j.cie.2007.06.023

Meng, J., and Berger, B. K. (2019). The impact of organizational culture and leadership performance on PR professionals' job satisfaction: testing the joint mediating effects of engagement and trust. Public Relat. Rev. 45, 64–75. doi: 10.1016/j.pubrev.2018.11.002

Mi, J.-X. (2024). Toward explainable artificial intelligence: a survey and overview on their intrinsic properties. Neurocomputing 563:126919. doi: 10.1016/j.neucom.2023.126919

Montague, E. (2010). Validation of a trust in medical technology instrument. Appl. Ergon. 41, 812–821. doi: 10.1016/j.apergo.2010.01.009

Müller, L., Mattke, J., Maier, C., Weitzel, T., and Graser, H. (2019). “Chatbot acceptance: a latent profile analysis on individuals' trust in conversational agents,” in Proceedings of the 2019 on Computers and People Research Conference (New York, NY: ACM), 35–42. doi: 10.1145/3322385.3322392

Nadelhoffer, T., Shepard, J., Nahmias, E., Sripada, C., and Ross, L. T. (2014). The free will inventory: measuring beliefs about agency and responsibility. Conscious. Cogn. 25, 27–41. doi: 10.1016/j.concog.2014.01.006

Natarajan, M., and Gombolay, M. (2020). “Effects of anthropomorphism and accountability on trust in human robot interaction,” in ACM/IEEE International Conference on Human-Robot Interaction (New York, NY: ACM), 33–42. doi: 10.1145/3319502.3374839

Neiroukh, S., Emeagwali, O. L., and Aljuhmani, H. Y. (2024). Artificial intelligence capability and organizational performance: unraveling the mediating mechanisms of decision-making processes. Manag. Decis. doi: 10.1108/MD-10-2023-1946. [Epub ahead of print].

O'Neill, T., McNeese, N., Barron, A., and Schelble, B. (2022). Human–autonomy teaming: a review and analysis of the empirical literature. Hum. Factors 64, 904–938. doi: 10.1177/0018720820960865

Ostrom, A. L., Fotheringham, D., and Bitner, M. J. (2019). “Customer acceptance of AI in service encounters: understanding antecedents and consequences,” in Handbook of Service Science, Volume II, eds. P. P. Maglio, C. A. Kieliszewski, J. C. Spohrer, K. Lyons, L. Patrício, and Y. Sawatani (Cham: Springer International Publishing), 77–103. doi: 10.1007/978-3-319-98512-1_5

Paluch, S., Tuzovic, S., Holz, H. F., Kies, A., and Jörling, M. (2022). “My colleague is a robot” – exploring frontline employees' willingness to work with collaborative service robots. J. Serv. Manag. 33, 363–388. doi: 10.1108/JOSM-11-2020-0406

Petrocchi, S., Iannello, P., Lecciso, F., Levante, A., Antonietti, A., and Schulz, P. J. (2019). Interpersonal trust in doctor-patient relation: evidence from dyadic analysis and association with quality of dyadic communication. Soc. Sci. Med. 235:112391. doi: 10.1016/j.socscimed.2019.112391

Podsakoff, P. M., and Podsakoff, N. P. (2019). Experimental designs in management and leadership research: strengths, limitations, and recommendations for improving publishability. Leadersh. Q. 30, 11–33. doi: 10.1016/j.leaqua.2018.11.002

Raisch, S., and Krakowski, S. (2021). Artificial intelligence and management: the automation–augmentation paradox. Acad. Manag. Rev. 46, 192–210. doi: 10.5465/amr.2018.0072

Rempel, J. K., Holmes, J. G., and Zanna, M. P. (1985). Trust in close relationships. J. Pers. Soc. Psychol. 49, 95–112. doi: 10.1037/0022-3514.49.1.95

Rigoni, D., Kühn, S., Gaudino, G., Sartori, G., and Brass, M. (2012). Reducing self-control by weakening belief in free will. Conscious. Cogn. 21, 1482–1490. doi: 10.1016/j.concog.2012.04.004

Rodgers, W., Murray, J. M., Stefanidis, A., Degbey, W. Y., and Tarba, S. Y. (2023). An artificial intelligence algorithmic approach to ethical decision-making in human resource management processes. Hum. Resour. Manag. Rev. 33:100925. doi: 10.1016/j.hrmr.2022.100925

Romeo, M., McKenna, P. E., Robb, D. A., Rajendran, G., Nesset, B., Cangelosi, A., et al. (2022). “Exploring theory of mind for human-robot collaboration,” in 2022 31st IEEE International Conference on Robot and Human Interactive Communication (RO-MAN) (Napoli: IEEE), 461–468. doi: 10.1109/RO-MAN53752.2022.9900550

Romportl, J., Ircing, P., Zackova, E., Polak, M., and Schuster, R. (2013). Beyond AI: Artificial Golem Intelligence.

Saltik, I., Erdil, D., and Urgen, B. A. (2021). “Mind perception and social robots: the role of agent appearance and action types,” in Companion of the 2021 ACM/IEEE International Conference on Human-Robot Interaction (New York, NY: ACM), 210–214. doi: 10.1145/3434074.3447161

Sanders, T., Kaplan, A., Koch, R., Schwartz, M., and Hancock, P. A. (2019). The relationship between trust and use choice in human-robot interaction. Hum. Factors 61, 614–626. doi: 10.1177/0018720818816838

Sanders, T., MacArthur, K., Volante, W., Hancock, G., Macgillivray, T., Shugars, W., et al. (2017). Trust and prior experience in human-robot interaction. Proc. Hum. Factors Ergon. Soc. Ann. Meet. 61, 1809–1813. doi: 10.1177/1541931213601934

Shank, D. B., Graves, C., Gott, A., Gamez, P., and Rodriguez, S. (2019). Feeling our way to machine minds: people's emotions when perceiving mind in artificial intelligence. Comput. Hum. Behav. 98, 256–266. doi: 10.1016/j.chb.2019.04.001

Shrestha, Y. R., Ben-Menahem, S. M., and Von Krogh, G. (2019). Organizational decision-making structures in the age of artificial intelligence. Calif. Manag. Rev. 61, 66–83. doi: 10.1177/0008125619862257

Stafford, R. Q., MacDonald, B. A., Jayawardena, C., Wegner, D. M., and Broadbent, E. (2014). Does the robot have a mind? Mind perception and attitudes towards robots predict use of an eldercare robot. Int. J. Soc. Robot. 6, 17–32. doi: 10.1007/s12369-013-0186-y

Stillman, T. F., Baumeister, R. F., Vohs, K. D., Lambert, N. M., Fincham, F. D., and Brewer, L. E. (2010). Personal philosophy and personnel achievement: belief in free will predicts better job performance. Soc. Psychol. Personal. Sci. 1, 43–50. doi: 10.1177/1948550609351600

Suen, H.-Y., and Hung, K.-E. (2023). Building trust in automatic video interviews using various AI interfaces: tangibility, immediacy, and transparency. Comput. Hum. Behav. 143:107713. doi: 10.1016/j.chb.2023.107713

Swan, E. L., Peltier, J. W., and Dahl, A. J. (2023). Artificial intelligence in healthcare: the value co-creation process and influence of other digital health transformations. J. Res. Interact. Mark. 18, 109–126. doi: 10.1108/JRIM-09-2022-0293

Tanjung, T., Ghazali, I., Mahmood, W. H. N. W., and Herawan, S. G. (2025). Drivers and barriers to Industrial Revolution 5.0 readiness: a comprehensive review of key factors. Green Technol. Sustain. doi: 10.1016/j.grets.2025.100217

Thurman, N., and Schifferes, S. (2012). The future of personalization at news websites: lessons from a longitudinal study. Journal. Stud. 13, 775–790. doi: 10.1080/1461670X.2012.664341

Tran, T. T. H., Robinson, K., and Paparoidamis, N. G. (2022). Sharing with perfect strangers: the effects of self-disclosure on consumers' trust, risk perception, and behavioral intention in the sharing economy. J. Bus. Res. 144, 1–16. doi: 10.1016/j.jbusres.2022.01.081

Trotta, A., Ziosi, M., and Lomonaco, V. (2023). The future of ethics in AI: challenges and opportunities. AI Soc. 38, 439–441. doi: 10.1007/s00146-023-01644-x

Tursunbayeva, A., and Renkema, M. (2023). Artificial intelligence in health-care: implications for the job design of healthcare professionals. Asia Pac. J. Hum. Resour. 61, 845–887. doi: 10.1111/1744-7941.12325

Tussyadiah, I. P. (2020). Do travelers trust intelligent service robots? Ann. Tour. Res. 81:102886. doi: 10.1016/j.annals.2020.102886

Venkatesh, V., and Bala, H. (2008). Technology acceptance model 3 and a research agenda on interventions. Decis. Sci. 39, 273–315. doi: 10.1111/j.1540-5915.2008.00192.x

Venkatesh, V., and Davis, F. D. (2000). A theoretical extension of the technology acceptance model: four longitudinal field studies. Manag. Sci. 46, 186–204. doi: 10.1287/mnsc.46.2.186.11926

Vincent, V. (2021). Integrating intuition and artificial intelligence in organizational decision-making. Bus. Horiz. 64. doi: 10.1016/j.bushor.2021.02.008

Vohs, K. D., and Schooler, J. W. (2008). The value of believing in free will: encouraging a belief in determinism increases cheating. Psychol. Sci. 19, 49–54. doi: 10.1111/j.1467-9280.2008.02045.x

Wallkötter, S., Stower, R., Kappas, A., and Castellano, G. (2020). A robot by any other frame: framing and behaviour influence mind perception in virtual but not real-world environments. doi: 10.1145/3319502.3374800

Wang, J., Molina, M. D., and Sundar, S. S. (2020). When expert recommendation contradicts peer opinion: relative social influence of valence, group identity and artificial intelligence. Comput. Hum. Behav. 107:106278. doi: 10.1016/j.chb.2020.106278