- Chair of Ergonomics and Innovation, Department of Mechanical Engineering, Chemnitz University of Technology, Chemnitz, Germany

Introduction: Recent technological advances in human-robot collaboration (HRC) allow for increased efficiency and flexibility of production in Industry 5.0 while providing a safe workspace. Despite objective safety, research has shown subjective trust in robots to shape the interaction of humans and robots. While antecedents of trust have been broadly examined, empirical studies in HRC investigating the relationship between trust and industry-relevant outcomes are scarce and the importance of trust regarding its precise effects remains unclear. To advance human-centered automation, this paper investigates the affective, cognitive, and behavioral consequences of trust in robots, and explores whether trust mediates the relationship between industry-relevant characteristics and human-centered HRC outcomes.

Methods: In a pseudo real-world test environment, 48 participants performed a manufacturing task in collaboration with a heavy-load robot. Trust, affective experience over time, intention to use, and safety-critical behavior were examined. A 2 × 2 × 2 mixed design varied the availability of feedback, time pressure, and system failures, each expected to affect the level of trust.

Results: In the control group, trust remained consistently high across all conditions. System failures and feedback significantly reduced trust, whereas time pressure had no effect. System failures further increased negative affective experience, while feedback reduced safety-critical behavior. Trust was unrelated to affective experience but positively related to safety-critical behavior and intention to use. The relationship between feedback and safety-critical behavior, as well as intention to use, was significantly mediated by trust.

Discussion: Highly relevant for implementation, the control group showed a tendency toward overtrust during collaboration, evidenced by disregarding system failures. The results indicate that implementing a feedback system alongside the simulation of safe system failures has the potential to adjust trust toward a more appropriate level, thereby reducing safety-critical behavior. Based on these findings, the paper posits several implications for the design of HRC and gives directions for further research.

1 Introduction

Due to demographic changes and the declining prestige and attractiveness of workplaces involving physical labor among younger generations, meeting personnel demands in manufacturing companies is becoming an increasingly critical challenge for success. Furthermore, manufacturing companies experience an increasing demand for customized products. Economical production of small batch sizes and variant flexibility have become key to success (Buxbaum and Sen, 2021). Conventional automation reaches its limits here. However, technological advancements within Industry 4.0 such as adaptive sensor systems (e.g., Bdiwi et al., 2017a) enable humans to interact with robots in a shared workspace without safety fences and to work simultaneously on the same task or component (ISO International Organization for Standardization, 2011). This so-called human-robot collaboration (HRC) is directly associated with Industry 5.0 (Alves et al., 2023) and is expected to unlock significant potential in the productive industry: (i) combining the repetitive skills of robots to improve quality with the ability of humans to solve ill-defined problems (ISO International Organization for Standardization, 2016), (ii) improving flexibility of production facilities (Oubari et al., 2018), (iii) improving ergonomic work conditions, both physical and mental, as robots execute monotonous or heavy-load tasks (Buxbaum and Sen, 2021; Pietrantoni et al., 2024), and (iv) simplifying programming and operation, enabling operators without programming expertise to train robots (Buxbaum and Sen, 2021). However, shared-space interaction with robots is still rarely implemented on the shop floor (Kopp et al., 2021; Giallanza et al., 2024). In addition to the technical complexity, this is partly due to the expectation that HRC holds a potential risk for humans (Buxbaum and Sen, 2021), as robot malfunctions are possible even in standardized collaborations in industrial manufacturing (Schäfer et al., 2024).
To both overcome these concerns and realize the potential of HRC, Industry 5.0 focuses on human-centered automation, sustainability, and resilient production, instead of the narrower “techno-economic vision” (Alves et al., 2023), which aims to maximize production speed and economic profit. Thus, the consideration of human factors is now central in HRC (Alves et al., 2023; Giallanza et al., 2024; Paliga, 2023), as is the understanding of the experience of humans during interactions with intelligent automation, such as robots (Alves et al., 2023).

From a human factors perspective, trust in robots is considered a key factor, as it determines the way humans interact with the robot (Coronado et al., 2022; Hancock et al., 2021; Schäfer et al., 2024). One of the most frequently cited contributions in the field of trust in HRC is the review of trust in automation by Lee and See (2004), written at a time when HRC was still a young domain. The authors emphasize that, when interacting with automation, trust must be calibrated appropriately, as under- and overtrust are both associated with negative outcomes such as the misuse, disuse and abuse of automated systems. To this day, research emphasizing the importance of trust in HRC still largely refers to this foundational work (e.g., Hancock et al., 2011; Hopko et al., 2023), although Lee and See (2004) primarily focused on information and decision support automation rather than robots. Two recent reviews of shared-space HRC (Hopko et al., 2022; Coronado et al., 2022) concluded that the characteristics of robots as antecedents of human factors such as trust are widely studied. They have also shown that human factors are only used as dependent variables, whereas their interrelations and potential effects on other covariate human factors, as well as their effects on further industry-relevant outcomes in shared-space HRC, have so far been largely neglected. Given that research indicates trust to play a central role in HRC (e.g., Hancock et al., 2021; Schäfer et al., 2024), it seems pertinent that trust should be examined more closely as a predictor of industry-relevant outcomes. Therefore, in addition to studying the antecedents of trust in HRC, research in the field needs to further investigate the actual consequences and outcomes of trust relevant to the shop floors of Industry 5.0. Industry-relevant outcomes can be divided into two categories: performance-centered and human-centered.
Industry 4.0 focused primarily on performance-centered outcomes (e.g., productivity, production costs, resource efficiency, product customization) of technological advancements. Beyond that, Industry 5.0 emphasizes the importance of human-centered outcomes like wellbeing and human safety, which are key to achieving the goals of Industry 4.0. Investigating human-centered outcomes empirically will help to nuance the discussion in the field and contribute to the realization of HRC, overcoming the tendency to argue for the importance of trust mainly in light of earlier results gained from the more general field of trust in automation. When working toward the goal of human-centered automation, it is essential to look beyond performance criteria and consider the affective, cognitive and behavioral consequences for the individual human in shared-space HRC.

This paper contributes to the state of the art with an empirical study investigating the relationships with and potential mediating effects of trust in robots during HRC on affective, cognitive, and behavioral human-centered outcomes. The following chapter provides an overview of the state of the art regarding the concept of trust in robots in HRC, specific human-centered outcomes of trust in HRC for Industry 5.0, and antecedents of trust that can be used as strategies to adjust trust levels.

2 State of the art

Despite an extensive body of research in the field, there is still no consensus on how trust is defined (Hopko et al., 2022). Proposing an inclusive, human-focused definition, Schäfer et al. (2024) “define human trust in robots as an asymmetric way of relating that is characterized by dependence, risk, vulnerability, positive expectations, and free choice” (p. 9). Transferring this definition to industrial HRC, it follows that humans (i) depend on the robot to fulfill their work task (dependence), (ii) perceive a risk as they cannot predict future actions of the robot (risk), (iii) are vulnerable due to potential physical harm or a lack of knowledge regarding the robot's capabilities (vulnerability), (iv) expect the robot to support the shared task (positive expectation), and (v) are free to engage in trust toward the robot (free choice) even if they are obliged to use it in the workplace. In the same manner that it is possible to interact with another person without establishing trust, it is also possible to interact with a robot without establishing trust. Trust, which is based on positive expectations, leads to different behavior than mistrust in both human-human and human-robot constellations (Schäfer et al., 2024). For example, as with human-human interactions, humans interacting with robots can choose to observe their interaction partner's every physical or mental move in the case of mistrust, or choose not to monitor them based on positive expectations in the case of trust. Therefore, in HRC, humans have a certain degree of freedom in how they interact with the robot.

In a specific situation, humans choose to trust or choose not to trust (Schäfer et al., 2024). This situational aspect of the definition by Schäfer et al. leads to the conclusion that trust levels differ depending on situational circumstances and context. Firstly, this mirrors the argument that an “appropriate” or “calibrated” level of trust is necessary for effective HRC (Lee and See, 2004; Hancock et al., 2011, 2021; Chang and Hasanzadeh, 2024). Secondly, trust levels can be adjusted depending on situational circumstances and context (Hancock et al., 2011).

Going beyond antecedents of trust, Schäfer et al. (2024) also include affective (dependence and vulnerability), cognitive (positive expectations) and behavioral (free choice) components. It can be concluded that trust should have consequences on all three components, respectively. This aligns with the human-centered focus of Industry 5.0, where individual wellbeing is prioritized (Alves et al., 2023; Giallanza et al., 2024).

On an affective level, subjective wellbeing is highly relevant in human-centered automation. Negative emotions and stress experienced at the workplace impact employees' wellbeing (Khalid and Syed, 2024). However, affective experience remains understudied in HRC (Coronado et al., 2022). When affective experience is addressed, anxiety is a commonly studied affective state in shared-space HRC (Hopko et al., 2022). In a comprehensive review, Mauss and Robinson (2009) conclude that there is no “gold standard” for measuring emotions, as existing methods fail to capture both subjective experiences and physiological or behavioral reactions. The authors advocate for a dimensional approach to affective assessment, emphasizing valence and arousal as essential components. They also stress the importance of measuring emotions in conjunction with significant events to ensure accurate results. According to the circumplex model of affect (Russell, 1980), negative affective experiences are characterized by negative valence and increased arousal. To the best of our knowledge, there are no theoretical models that explicitly consider the relationship of trust and affective experience. Therefore, it is still unclear whether trust is an antecedent, covariate or consequence of affective states, and studies that track affective states over time and in relation to specific events are scarce in the context of HRC. As both trust and affective experience are examined by changes in physiological measures (e.g., Arai et al., 2010; Hopko et al., 2022), it can be assumed that both concepts are related. In the trust literature, it has been found that (i) well-studied factors influencing trust (e.g., reliability) also affect physiological responses (Hopko et al., 2023), (ii) emotions have been suggested to be useful markers for under- and overtrust (Schoeller et al., 2021), and (iii) humans react with negative affective states following trust violations by robots (Alarcon et al., 2024).
Applying the definition of Schäfer et al. (2024), if humans show low trust in a robot, it can be assumed that feelings of “vulnerability” arise, which might be accompanied by negative affective experiences such as anxiety—even if the robot performs perfectly. From these empirical and theoretical findings, we deduce that trust is an antecedent for affective experiences. Therefore, we exploratorily hypothesize:

Hypothesis 1: Lower trust is related to reduced valence and increased arousal (representing negative affective experience) during HRC.

On a cognitive level, for HRC to be implemented sustainably and resiliently in industrial workplaces, human workers should have a willingness to use the technology and have positive expectations of it. This is expected to be related to their belief that a certain type of automation, e.g., a robot in HRC, is beneficial to their goals (cf. “positive expectations,” Schäfer et al., 2024). However, trust is distinct from both acceptance and actual utilization of technology. While trust can enhance both, they have been found to be strongly influenced by contextual moderating factors (Kopp, 2024). A recent meta-analysis suggests that trust is actually an antecedent of intention to use a robot (Razin and Feigh, 2024). Additionally, trust has been shown to mediate the relationship between usability and willingness to use a robot (Babamiri et al., 2022). Therefore, intention to use is a relevant criterion, influenced by positive expectations as part of trust. However, most studies on trust in automation have focused on the relationship between automation reliability and operator usage, often neglecting to assess trust as a mediating variable (Lewis et al., 2018). We intend to further knowledge in this area and hypothesize:

Hypothesis 2: Trust is positively related to intention to use.

On a behavioral level, safety is paramount for human-centered automation (cf. “risk” and “vulnerability,” Schäfer et al., 2024). While shared-space HRC increases the risk of being physically harmed by the robot (Onnasch and Hildebrandt, 2022), it has been found that people are subject to a “positivity bias” (Dzindolet et al., 2003): they expect automated systems to work well and reliably. A recent study of technical experts in HRC even found concern about potential over-reliance on technology (Pietrantoni et al., 2024). The resulting overtrust reduces situation awareness and is related to complacency (Parasuraman and Manzey, 2010). On an objective and observable behavioral level, higher trust has been found to result in reduced monitoring behavior, with an increase in trust during interaction leading to even less monitoring (Hergeth et al., 2016). This shows that humans objectively react differently during interactions depending on their level of trust (cf. “free choice,” Schäfer et al., 2024). For example, workers might stop monitoring a cobot's actions if their trust is too high (Hopko et al., 2022). As malfunctions are rare and trust increases over time during failure-free interaction, the resulting reduction in monitoring may lead to safety-critical situations. Workers may, due to reduced monitoring, be unaware of system states and unable to react to sudden off-nominal events (Hopko et al., 2022) like system failures. This concept of overtrust in robots has been defined as a situational state in which humans misjudge the risks associated with their own actions due to an underestimation of the likelihood of a robot performing its functions ineffectively or unsafely (Wagner et al., 2018; Aroyo et al., 2021). Therefore, it is important to better understand objective safety-critical behavior of humans as a behavioral consequence of trust. In conclusion, we hypothesize:

Hypothesis 3: Trust is positively related to safety-critical behavior during shared-space industrial HRC.

As mentioned above, the body of knowledge on antecedents of trust is extensive (Hopko et al., 2022). Previous results point to differences in the antecedents of trust depending on the domain. For example, although anthropomorphism has generally been found to have a positive impact on trust in robots, this holds particularly true for social robots and not necessarily for industrial robots (Onnasch and Hildebrandt, 2022). Research in the domain of industrial HRC thus has to consider industry-relevant characteristics as antecedents of trust, as proposed, e.g., by Chang and Hasanzadeh (2024), who underline the need to investigate distinctive features relevant to the respective field of robots. Findings on trust-influencing factors can further be used to create variance in human trust levels by purposefully adjusting them, and subsequently to explore relationships with expected consequences of trust. In industrial application, two robot characteristics (system failures and feedback via communication) and the environmental characteristic time pressure have been argued to be important trust antecedents (Chang and Hasanzadeh, 2024). The following section briefly describes the effects of these antecedents on trust. Based on the previously explained relationship between trust and human-centered outcomes in industry, the links between antecedents of trust and these outcomes are discussed.

Much of the literature on trust focuses on system reliability and the effects of system failures; the latter have been shown to have a negative impact on trust (e.g., Parasuraman and Manzey, 2010; Wickens et al., 2015; Legler et al., 2023). This can be explained by the definition of trust itself: As trust involves positive expectations, these are violated in the event of a system failure. Akalin et al. (2023) state that trust loss is especially caused by robot failures, which include design failures, expectation failures and system failures. In this paper, we follow Akalin et al. (2023) and define system failures as failures in either the hardware or the software of a robot system. Furthermore, system failures increase perceptions of vulnerability (Schäfer et al., 2024). Higher monitoring and situation awareness have been observed following failures of an automated system (Wickens et al., 2015). As trust is positively related to intention to use and safety-critical behavior, it can be assumed that system failures also reduce these. Further, as trust has been shown to be related to affective experience (e.g., Alarcon et al., 2024; Hopko et al., 2023), it can be assumed that unexpected system failures are related to negative affective experience, probably mediated by trust. Given that studies on affective experiences are rare, the affective experiences resulting from trust-related antecedents and consequences of trust will be assessed in an exploratory manner. With regard to system failures and intention to use, we hypothesize:

Hypothesis 4: System failures reduce trust during industrial HRC, and thereby intention to use.

Providing visual feedback on the state of an automated system, such as a robot, has been shown to increase situation awareness and the predictability of robots' actions, which enhances trust, thereby strengthening intention to use and reducing safety-critical behavior. Visual feedback systems have been implemented to increase users' situation awareness (Maurtua et al., 2017), displaying visual cues to signal danger and capture human attention (Goldstein, 2010). A meta-analysis showed that feedback directly affects trust in automation (Schaefer et al., 2016). Additionally, trust mediates the relationship between feedback and actual reliance on the system (cf. “dependence,” Schäfer et al., 2024), as feedback renders the robot's actions more predictable (Dzindolet et al., 2003). This minimizes human vulnerability, while increasing acceptance and intention to use. Therefore, although feedback is expected to enhance trust, and trust is positively related to safety-critical behavior, it is expected that feedback will lower safety-critical behavior. To better understand these relations, we hypothesize:

Hypothesis 5: Feedback increases trust during industrial HRC.

Hypothesis 6: Trust mediates the relationship of feedback with intention to use and safety-critical behavior.

Hypothesis 7: Feedback shows a direct effect on reducing safety-critical behavior.

Pacing in industrial settings can cause time pressure, which has been found to increase mental workload during human-automation interactions. The framework of Chang and Hasanzadeh (2024) highlights time pressure as a key environmental factor affecting trust in robots in industrial settings. Surprisingly, effects of time pressure have remained understudied. Most research assumes that time pressure increases trust, as humans experience an increased subjective workload under time pressure (Wang et al., 2016) and compensate for this complexity and uncertainty by increasing trust in automation (Luhmann, 1979; van der Waa et al., 2021). As trust is positively related to intention to use and safety-critical behavior alike, it can be assumed that time pressure enhances both due to increased positive expectations and dependence on the robot. Therefore, we hypothesize:

Hypothesis 8: Time pressure increases trust during industrial HRC.

Hypothesis 9: Trust mediates the relationship of time pressure with intention to use and safety-critical behavior.
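
The mediation hypotheses above share the structure of a simple mediation model. As a sketch in standard regression notation (the symbols are illustrative assumptions and do not describe the specific model estimated in this study), let $X$ be an antecedent such as feedback or time pressure, $M$ trust, and $Y$ an outcome such as intention to use or safety-critical behavior:

```latex
\[
\begin{aligned}
M &= i_M + a\,X + \varepsilon_M \\
Y &= i_Y + c'\,X + b\,M + \varepsilon_Y \\
c &= c' + a\,b
\end{aligned}
\]
```

Here $a\,b$ is the indirect effect via trust, $c'$ the direct effect, and $c$ the total effect. For feedback and safety-critical behavior, Hypotheses 3 and 5 imply $a > 0$ and $b > 0$, so the indirect path is positive, whereas Hypothesis 7 expects a negative direct effect $c'$. In this notation, the two paths are expected to point in opposite directions.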

Summing up the current state of trust research, three elements are apparent that require closer consideration: (i) The concept of trust in HRC has characteristics at affective, cognitive and behavioral levels, which leads to the assumption that trust also manifests in affective, cognitive, and behavioral consequences relevant for the design of efficient HRC in industrial settings. (ii) Investigating the concepts of affective experience, intention to use and safety-critical behavior is highly relevant to achieve human-centered automation as intended within Industry 5.0. (iii) Trust has the potential to mediate the relationship between characteristics typically apparent in industrial robots, such as system failures, feedback and time pressure, and important human-centered outcomes of industry, such as affective experience, intention to use and safety-critical behavior. This puts trust at the center of attention to explain relationships between industrial characteristics of HRC workplaces and affective, cognitive, and behavioral consequences. Until now, human factors such as trust have rarely been studied as covariates of other human factors, and the potential mediating effects on outcomes relevant for a safe HRC have been largely neglected (Hopko et al., 2023). Therefore, our overall research question is: In an industrial HRC setting, how do changes in trust, prompted by the trust-relevant antecedents system failures, feedback and time pressure, impact the human-centered outcomes affective experience, intention to use and safety-critical behavior?

To address the research question, an experimental study was conducted, comprising a realistic industrial collaboration task with a heavy-load robot within a pseudo real-world test environment. Method and results of the study are subsequently presented, closing with practical implications for industrial HRC.

3 Materials and methods

3.1 Test environment

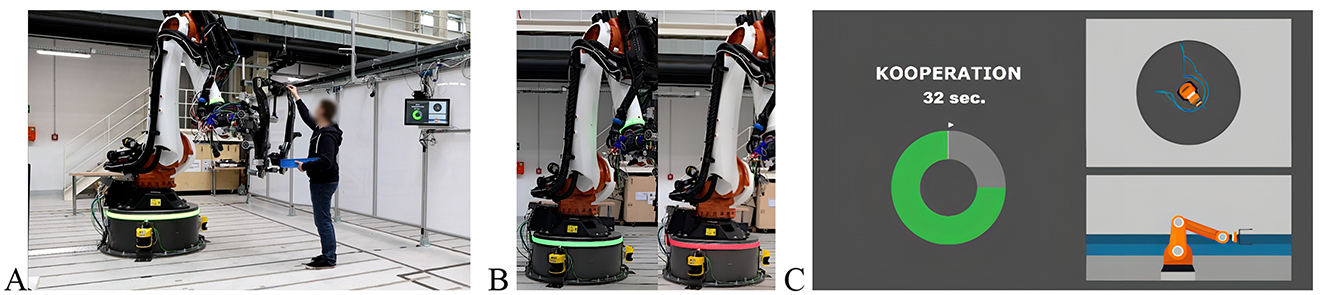

The study was conducted in a model factory. The robot cell in which participants operated was surrounded by various other robotic and automation systems. A KUKA Quantec prime KR 180 industrial robot, classified as a heavy-load robot, was used as a test bed with a feedback system implemented (see Figure 1A). The visual feedback system contained an LED lighting system at the flange and base of the robot (see Figure 1B) and an information display (see Figure 1C). Three colors showed different robot states: white for automated robot actions, green for the collaboration mode, and orange for system failure states. The dynamic information display contained a countdown showing the remaining collaboration time in seconds, as well as top and side views of the robot to help anticipate actual and future movement and paths. There was also a pop-up window providing written information on failure states. The information display was mounted at an angle on the wall that ensured visibility from both the safety zone and the assembly position. Still, from the assembly position, the human operator had to turn sideways and away from the robot to see the entire display (see Figure 2A). Redundant information on the robot's state and failure modes was presented by the LED lighting system and the feedback display. Further details regarding the development and user evaluation of the feedback system can be found in Legler et al. (2022).
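
The color coding and display content described above can be summarized as a small lookup. This is a minimal sketch for illustration only: the names `RobotState`, `LED_COLOR` and `feedback_for` are hypothetical and not taken from the actual feedback system, whose implementation is described in Legler et al. (2022).

```python
from enum import Enum


class RobotState(Enum):
    """Robot states distinguished by the feedback system (names are hypothetical)."""
    AUTOMATED = "automated robot action"
    COLLABORATION = "collaboration mode"
    SYSTEM_FAILURE = "system failure"


# Color coding as described in the text.
LED_COLOR = {
    RobotState.AUTOMATED: "white",
    RobotState.COLLABORATION: "green",
    RobotState.SYSTEM_FAILURE: "orange",
}


def feedback_for(state, remaining_s=None, failure_text=None):
    """Return the redundant LED and display feedback for a robot state.

    The dictionary layout is an assumption made for this sketch.
    """
    feedback = {"led": LED_COLOR[state], "display_state": state.value}
    # Countdown of the remaining collaboration time in whole seconds.
    if state is RobotState.COLLABORATION and remaining_s is not None:
        feedback["countdown_s"] = max(0, int(remaining_s))
    # Pop-up window with written information on the failure state.
    if state is RobotState.SYSTEM_FAILURE and failure_text:
        feedback["popup"] = failure_text
    return feedback
```

For example, `feedback_for(RobotState.COLLABORATION, remaining_s=12.7)` yields a green LED state together with a countdown of 12 s.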

Figure 1. (A) View of test environment with KUKA robot and participant during collaboration, and (B) visualization of feedback system containing LED lighting system and (C) an information display.

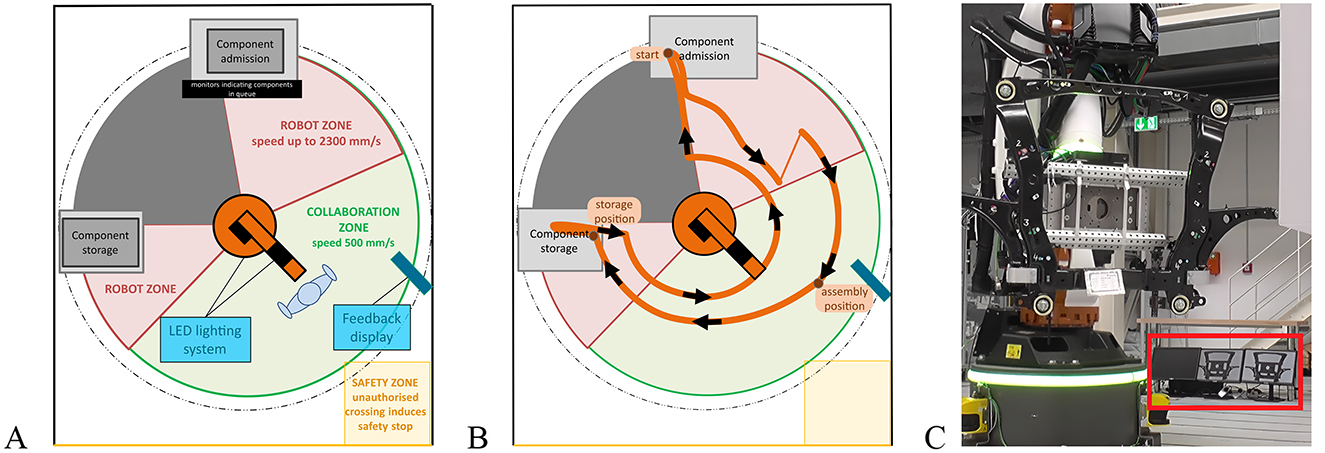

Figure 2. (A) Schematic presentation of the robot cell containing different zones and associated robot speeds, (B) visualization of robot's orbital path, and (C) monitors for simulating an assembly line with two components in the queue and green LED lighting system of the robot indicating collaboration mode.

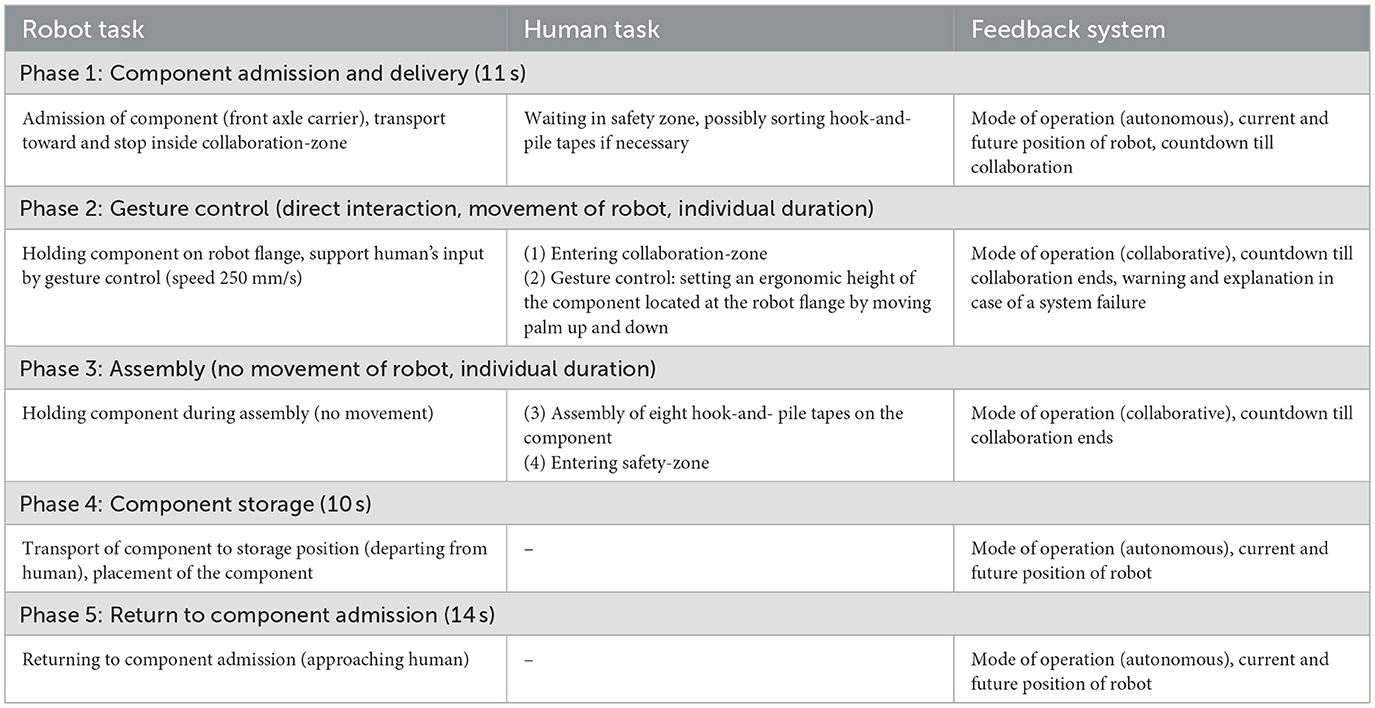

Table 1 describes the tasks performed by the human operator and the robot within a complete assembly cycle, as well as the information provided by the feedback system. Each cycle contains five phases involving the robot: component admission and delivery (phase 1), gesture control (phase 2), assembly (phase 3), component storage (phase 4), and return to component admission (phase 5). The gesture control phase (used to set an ergonomic working height) and the assembly phase can be referred to as “collaboration,” as participants left the safety zone during these phases. Figure 2B illustrates the robot's orbital path. The robot (i) picked up the front axle carrier at the back; (ii) approached the assembly position in the front; (iii) remained in the assembly position, holding the front axle carrier for human gesture control and assembly; (iv) moved to the component storage position on the left; and (v) returned to the home position in the back.

The study was conducted following the approval of a formal risk assessment. The robot cell comprised collaboration, robot and safety zones (see Figure 2A, cf. Bdiwi et al., 2017a for further details on the safety concept in the robot cell). Participants remained inside the robot cell throughout the experiment, waiting in the safety zone until the collaboration mode began (otherwise triggering an emergency stop). The robot's speed was associated with particular zones; it moved at a maximum speed of 2,300 mm/s in the robot zone and slowed down to 500 mm/s when entering the collaboration zone (see Figure 2B) to ensure that the robot system was able to stop immediately in case of an emergency stop triggered by humans. The collaboration mode only started when the robot came to a standstill within the collaboration zone at the assembly position. The LiDAR sensors (see Figure 1A) were deactivated during collaboration mode. The robot was capable of supporting a collaborative assembly task modeled on a real automotive industry workplace. The task involved attaching eight hook-and-pile tapes to a front axle carrier. The robotic system was equipped with an optical gesture control interface for height adjustment, which is a typical ergonomic feature in HRC (Pluchino et al., 2023). This interface is based on a combination of depth, color and thermal imaging (for technical details cf. Al Naser et al., 2022). In practice, the algorithm performed best when participants extended their palm toward the robot. Movements of the palm in the vertical direction relative to the initial detection position were then translated into corresponding upward or downward motions of the robot arm. During collaboration mode, the robot moved at a speed of 250 mm/s. Because the robot moved at minimal physical distance to the human in the collaboration zone to support the height-setting task and held the component for assembly, the setup represents HRC level 3 according to Bdiwi et al. (2017b).
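
The zone-dependent speeds and the palm-to-arm mapping described above can be sketched as follows. This is an illustrative sketch only, not the robot controller used in the study: the function names, the zone labels and in particular the `deadband_mm` parameter are assumptions introduced here.

```python
# Speeds taken from the text (mm/s).
ROBOT_ZONE_MAX_MM_S = 2300       # maximum speed in the robot zone
COLLAB_ZONE_ENTRY_MM_S = 500     # speed when entering the collaboration zone
COLLAB_MODE_MM_S = 250           # speed during collaboration mode


def speed_limit_mm_s(zone, collaboration_mode=False):
    """Return the speed associated with a zone ("robot" or "collaboration")."""
    if collaboration_mode:
        # Collaboration mode only starts inside the collaboration zone.
        if zone != "collaboration":
            raise ValueError("collaboration mode requires the collaboration zone")
        return COLLAB_MODE_MM_S
    limits = {"robot": ROBOT_ZONE_MAX_MM_S, "collaboration": COLLAB_ZONE_ENTRY_MM_S}
    if zone not in limits:
        raise ValueError(f"unknown zone: {zone!r}")
    return limits[zone]


def arm_direction(initial_palm_y_mm, current_palm_y_mm, deadband_mm=5.0):
    """Map vertical palm displacement to an arm motion direction.

    Displacement is measured relative to the initial detection position.
    ASSUMPTION: the dead band that suppresses small movements is hypothetical;
    the text does not specify how small displacements were handled.
    """
    displacement = current_palm_y_mm - initial_palm_y_mm
    if displacement > deadband_mm:
        return "up"
    if displacement < -deadband_mm:
        return "down"
    return "hold"
```

In the simulated system failures described later, the experimenters moved the arm opposite to the direction such a mapping would return.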

Although the automated gesture control was functional, the gesture control algorithm sometimes failed in prior study series as a result of incorrect human input or adverse environmental influences such as light reflections. Algorithm failures resulted in a lack of response from the system or jerky, uncontrolled movements of the robot arm due to repeated failed tracking of the palm. Therefore, to ensure standardized conditions, the test environment enabled the experimenters to manually control the robot arm during gesture control. Participants were unable to detect the manual gesture control (Wizard-of-Oz), allowing experimenters to simulate a failure of the gesture control system. As the automated gesture control was based on a very simple human gesture, moving the extended palm upwards and downwards was equally used as the input gesture for the Wizard-of-Oz gesture control.

To simulate an assembly line, the test environment also included three monitors positioned behind the robot and beneath the component admission table (see Figure 2C). The monitors were in the person's direct field of vision during the assembly task, ensuring that participants could evaluate their performance in the current assembly.

3.2 Experimental design

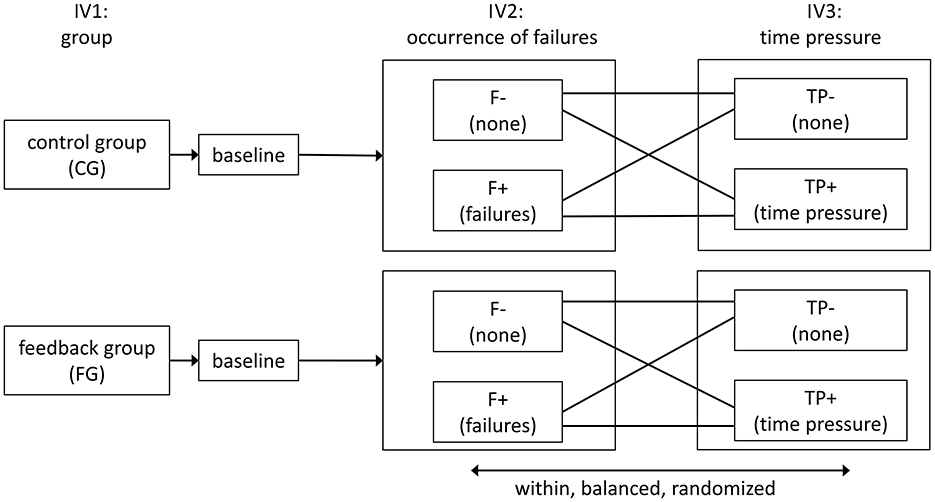

A mixed design with three factors was used: feedback, system failure and time pressure. “Feedback” was a between-subjects factor (CG = control vs. FB = feedback group); in the control group, the LED lighting system and information display were kept off. A balanced design of two within-subjects factors, “system failures” (F– = none vs. F+ = occurrence of failures) and “time pressure” (TP– = none vs. TP+ = time pressure), was applied within both groups. The experimental design resulted in four conditions, which were performed in randomized order after a baseline condition to allow habituation to the assembly task and to the assessment of affective experience. The design of the study is visualized in Figure 3.
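
The structure of this design can be illustrated with a short sketch that builds one session plan per participant. It rests on assumptions: the alternating group assignment and the seeded random generator are hypothetical choices for this example, as the text only states that the four within-subjects conditions were run in randomized order after a baseline.

```python
import itertools
import random

# The four within-subjects conditions: system failures x time pressure.
WITHIN_CONDITIONS = [
    f"{failure}/{pressure}"
    for failure, pressure in itertools.product(("F-", "F+"), ("TP-", "TP+"))
]


def condition_sequence(rng):
    """Baseline first, then the four conditions in randomized order."""
    order = WITHIN_CONDITIONS.copy()
    rng.shuffle(order)
    return ["baseline"] + order


def build_plan(participant_ids, seed=0):
    """Assign each participant a group and a condition sequence.

    ASSUMPTION: alternating assignment to CG and FB is used here only to
    obtain equal group sizes; the actual assignment procedure is not
    described in the text.
    """
    rng = random.Random(seed)
    groups = ("CG", "FB")
    return {
        pid: {"group": groups[i % 2], "sequence": condition_sequence(rng)}
        for i, pid in enumerate(participant_ids)
    }
```

Calling `build_plan(range(1, 49))` produces 48 plans, each starting with the baseline and containing every combination of the two within-subjects factors exactly once.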

System failures were simulated in gesture control mode while participants were setting the assembly height. As the robot delivered the front axle carrier at a height that prevented participants from reaching the highest hook-and-pile tapes, participants' intention was to lower the robot arm. The experimenters were instructed to manually control the robot arm with noticeably jerky movements in the direction opposite to the participants' palm movements for about 10 s, resulting in a position far too high to reach all hook-and-pile tapes. Afterwards, gesture control was supported correctly and participants were able to lower the robot arm. Time pressure was introduced by adding a component to the assembly line monitors (see Figure 2C) after an individual cycle time had elapsed, resulting in a component in the queue that was visible to the participants. To this end, the time taken for collaboration mode, including time for gesture control and assembly of the component, was measured during participants' baseline assembly cycles, and the fastest collaboration time was used as an individual reference for each participant. The constant robot period (35 s) and the individual reference time (M = 26.8 s, SD = 4.7, min = 20, max = 39) were added together and used as the individual cycle time (resulting in an average of 61.8 s). To account for interindividual differences in assembly times and to ensure that time pressure was objectively operationalized and equally perceived across participants, the individual reference time was halved during time pressure conditions. Consequently, the individual reference time during time pressure conditions averaged 13.4 s, resulting in an average individual cycle time of 48.4 s. From the participants' perspective, the individual reference time showed them how much time they had available to collaborate with the robot, and this time was displayed on the information display (see Figure 1C).
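
The cycle time arithmetic described above can be written out as a brief sketch. The function names are hypothetical; the constants and the halving rule are taken from the text.

```python
ROBOT_PERIOD_S = 35.0  # constant robot period per cycle, as stated in the text


def reference_time_s(baseline_collaboration_times_s):
    """Individual reference: fastest collaboration time in the baseline cycles."""
    return min(baseline_collaboration_times_s)


def individual_cycle_time_s(reference_s, time_pressure=False):
    """Robot period plus the (halved, under time pressure) reference time."""
    collaboration_s = reference_s / 2 if time_pressure else reference_s
    return ROBOT_PERIOD_S + collaboration_s
```

With the reported mean reference time of 26.8 s, `individual_cycle_time_s(26.8)` gives about 61.8 s and `individual_cycle_time_s(26.8, time_pressure=True)` about 48.4 s, matching the averages reported above.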

3.3 Measures

3.3.1 Demographics

Demographic information such as sex, age, highest educational degree, and experience with industrial robots and production work was captured in a pre-survey. Additionally, Affinity for Technology Interaction (ATI; Franke et al., 2019; 6-point Likert scale; α = 0.85) was assessed for sample specification.

3.3.2 Time progression measures of affective experience

According to Werth and Förster (2015), specific emotions are intense, directed toward an object, and fleeting. Since collaborations with robots involve alternating phases of separate and cooperative activities, and robots approach and depart from humans, various affective states can occur. In accordance with Mauss and Robinson (2009), a dimensional self-report measure of affect over time was used, as it has been argued that this is sensitive to changes in valence and arousal, whereas physiological measurements such as heart rate or skin conductance have only been shown to be sensitive to arousal. The circumplex model of affect (Russell, 1980), for example, describes anxiety as an emotion characterized by a strong negative valence and high arousal. Therefore, it is possible to assess a tendency toward specific emotions based on valence and arousal values. The Feeling Scale (FS; Hardy and Rejeski, 1989; scale range −5 to 5) and Felt Arousal Scale (FAS; Svebak and Murgatroyd, 1985; scale range 1 to 12) were used to quantify self-reported affective experience. Combined single-item scales have also been used in other studies to measure affect continuously over time (Murray et al., 2016). Valence and arousal values were assessed every 10 s, and the original FAS scale was doubled to 12 points because of limited variance in the pre-test values (cf. Legler et al., 2023 for further details on the pre-test). The pre-test also indicated that an assessment interval of 10 s still allowed participants to concentrate on their primary task. Both scales were available to the participants at all times, visualized by a fabric sticker on their left or right arm.

3.3.3 Outcome measures (post-scenario)

Further, several measures for dependent variables were applied. Trust in automation was measured subjectively via a German translation (Pöhler et al., 2016) of the Jian scale, following each of the five scenarios. Only the subscale “trust” (6 items; 7-point Likert scale) was used because the two subscales were highly correlated and previous work had questioned the two-factor structure (Pöhler et al., 2016; Legler et al., 2020). Across the scenarios, mean reliability for trust was α = 0.82. For Intention to Use, a German translation of the subscale “intention to use” (Brauer, 2017) was applied (scale range 1 to 5; mean reliability α = 0.78) to measure overall willingness to interact with the robot in the future (cf. UTAUT Model; Brauer, 2017), representing the pre-conditional stage of actual usage and an indicator of technology acceptance. Safety-critical behavior was defined as disregarding system failures. After completion of data collection, the time taken for gesture control across all scenarios without simulated system failures was empirically calculated and showed a 5th percentile of 0.5 s. This value was used as the lower limit of the expected time for gesture control. Therefore, in failure scenarios, a minimum gesture control time of 10.5 s was expected (10 s for the failure simulation plus an expected lower limit of 0.5 s for the actual height adjustment). Because failures were controlled manually and slight inaccuracies of the experimenters in simulating the failure duration were expected, a tolerance period of 2 s was set. Therefore, in failure scenarios, a duration of less than 8.5 s between the start of collaboration mode and the start of assembly was defined as disregard of failures. In these cases, participants did not complete the height-setting task and started to assemble while the robot was still moving, which was not permitted by the instructions given to participants.
To control for the manipulation of time pressure, one item covering temporal demands of the NASA-TLX measuring subjective workload (Hart, 2006; scale 0–100) was applied. Additionally, the number of components in the queue after finishing all five assembly cycles of a scenario was recorded for manipulation check.
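The operationalization of safety-critical behavior described in this section can be sketched as follows. The constants are taken from the text (10 s failure simulation, 0.5 s lower limit, 2 s tolerance); the function names and the example durations are hypothetical.

```python
FAILURE_DURATION_S = 10.0  # duration of the simulated failure
LOWER_LIMIT_S = 0.5        # 5th percentile of failure-free gesture control
TOLERANCE_S = 2.0          # allowance for manual timing inaccuracies

# Cut-off below which a failure counts as disregarded (8.5 s)
CUTOFF_S = FAILURE_DURATION_S + LOWER_LIMIT_S - TOLERANCE_S


def disregarded_failure(gesture_control_s: float) -> bool:
    """True if assembly started before the cut-off in a failure cycle."""
    return gesture_control_s < CUTOFF_S


def disregard_rate(durations_s):
    """Proportion of failure cycles in which the failure was disregarded."""
    flags = [disregarded_failure(d) for d in durations_s]
    return sum(flags) / len(flags)


# Hypothetical gesture control durations (s) from four failure cycles
example_durations = [6.0, 9.0, 12.8, 8.4]
print(CUTOFF_S, disregard_rate(example_durations))
```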

3.4 Sample

The participants were recruited from the participant database of our partner research institution, which includes students from technical degree programmes and individuals working in technical professions, e.g., employees from companies located in the vicinity. Participants in the study had to be at least 18 years old and free from any motor impairments. Given the technical focus of the study, an above-average affinity for technology was expected. In the experiment, 48 persons participated and were randomly assigned to two groups of equal size: control group and feedback group. Both groups were gender balanced. Participants were aged between 18 and 58; the mean age was 26.2 years (SD = 7.54) in the control group and 25.6 years (SD = 7.84) in the feedback group. Bayes factors (cf. Section 3.6) did not show differences in age (BF10 = 0.52 ± 0%, rscale = 0.3). Overall, affinity for technology interaction was at a medium to high level and did not differ between groups (Mcontrol = 3.79, Mfeedback = 3.88, BF10 = 0.78 ± 0%, rscale = 0.3, scale range 1–6). The majority of participants stated that they had no vocational qualification or bachelor's degree. These individuals were probably students at the time of the study. The remaining participants stated that they had a vocational qualification or technician/foreman's degree (11%) or a master's degree (17%). Therefore, the effect of participant group (students vs. non-students) on baseline trust, the most important outcome of this study, was tested. Bayes factors favored the null hypothesis in the overall sample (BF10 = 0.55 ± 0%, rscale = 0.3). Slightly more participants in the feedback group (29%) had interacted with an industrial robot before (vs. 20%) and were currently working or had previously worked in the production sector (29% vs. 25%). Again, the effect of previous personal experience in production (experienced vs. non-experienced) on baseline trust was tested.
Bayes factors favored the null hypothesis in the overall sample (BF10 = 2.06 ± 0%, rscale = 0.3). Participants received financial compensation.

3.5 Procedures

Participants received the participant information, signed a declaration of consent and filled in the pre-survey. All materials used to conduct the study, such as experimenter protocols and surveys, are publicly available (cf. Data Availability Statement).

Participants watched two videos showing a real workplace with a handling device, as well as an equivalent task performed collaboratively with the robot in a test environment. This was intended to support the cover story of workplace design in the productive industry. Participants were then given instructions on the collaborative task, which consisted of setting the height via gesture control and carrying out the assembly task (see Table 1, phase 2). Participants in the feedback group also received a brief introduction to using the feedback system. All participants were instructed to:

• Stay within the marked safety zone before the robot stops inside the collaboration zone; leaving the zone would result in an emergency stop,

• Use gesture control for height setting in each assembly cycle,

• Stop the assembly and enter the safety zone as soon as anything abnormal or critical is noticed,

• And minimize their assembly time, as financial compensation would depend on it (compensation manipulation).

All participants performed a baseline condition involving a minimum of ten assembly cycles (see Table 1) in order to familiarize themselves with the assembly task, learn how to control the robot using gestures and become trained with the feedback system and the affective experience assessment. Afterwards, each participant performed four interaction scenarios with the robot in randomized order. Prior to each condition, participants were asked to rate their current valence and arousal values to establish a baseline (t0). Valence and arousal values were verbally rated by participants every 10 s (see Section 3.3) following an acoustic signal and recorded by experimenters.

Each experimental scenario comprised five assembly cycles, lasting around 5 min in total. The duration of an assembly cycle resulted from the robot periods (35 s) and the individual times taken for gesture control and component assembly. A typical complete assembly cycle without simulated failures lasted 57.5 s (SD = 4.04), whereas one containing simulated failures lasted 67.5 s (SD = 3.97). System failures were simulated for all participants in the first and third assembly cycle of the respective experimental scenario. The position of simulated failures within the five assembly cycles was fixed rather than randomized in order to reduce the mental load for the experimenters, thereby ensuring that all planned and manually simulated system failures were carried out in accordance with the specified procedure. Additionally, the fixed order of the simulated failures occurred only twice for each participant during the experiment; therefore, it can be assumed that the participants were unable to perceive the fixed order.

Each scenario was followed by a short survey to measure outcomes. At the end, participants were debriefed about the financial compensation manipulation and received compensation regardless of their assembly time. Overall, the study lasted about 60 min.

3.6 Data analysis

The statistical software R (version 4.5.0; R Core Team, 2025) was used for data analysis. Bayes factor analysis was applied using the R package “BayesFactor” (Morey and Rouder, 2024). In accordance with Berger (2006), a very broad prior distribution was used as an uninformed prior. It was operationalized by a zero-centered Cauchy distribution with a “medium” prior scale, reflecting common effect sizes in psychological studies (Speekenbrink, 2023). It was implemented by a corresponding rscale parameter of r = 1/√2 (Morey and Rouder, 2024) in the ttestBF() function to compare independent groups. The parameterization of anovaBF() followed the same theoretical considerations and was used to examine main and interaction effects of the three independent variables. Unlike ttestBF(), the rscale parameter in anovaBF() is based on standardized treatment effects rather than Cohen's d. Therefore, rscale in anovaBF() was set to r = 0.5, analogously representing a “medium” prior scale (Speekenbrink, 2023). Individual scores were defined as a random effect to account for the within-subjects variables system failure and time pressure. Finally, the approximation method “simple” was used to account for the relatively small sample size for complex models (Navarro, 2015). BF10 is reported unless otherwise stated and represents the relative likelihood of the alternative hypothesis over the null hypothesis. For the interpretation of Bayes factors, we apply Jeffreys's scale of evidence (Jeffreys, 1961). For equivalence testing, the parameter rscale was set to 0.3 in this paper to test the null hypothesis against a rather small effect. For interpretation, BF10 < 1 favors the null hypothesis over the alternative hypothesis, supporting equivalence of groups or conditions.
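How a reported BF10 value translates into a verbal evidence category can be sketched as below. The thresholds (3, 10, 30, 100) and the labels follow a commonly used adaptation of Jeffreys's scale; that the authors used exactly these categories is an assumption, and the function name is hypothetical.

```python
def evidence_label(bf10: float) -> str:
    """Map a Bayes factor BF10 to a verbal evidence category.

    Thresholds and labels are an assumed, commonly used adaptation of
    Jeffreys's scale; they are not taken from the paper itself.
    """
    if bf10 <= 0:
        raise ValueError("A Bayes factor must be positive.")
    if bf10 == 1:
        return "no evidence for either hypothesis"
    favoured = "H1" if bf10 > 1 else "H0"
    # Express the strength on a common scale, whichever hypothesis is favoured
    strength = bf10 if bf10 > 1 else 1 / bf10
    for upper_bound, label in [(3, "anecdotal"), (10, "moderate"),
                               (30, "strong"), (100, "very strong")]:
        if strength < upper_bound:
            return f"{label} evidence for {favoured}"
    return f"extreme evidence for {favoured}"


# Values as reported in the Results section
for bf in (17.85, 14367.0, 0.26):
    print(bf, "->", evidence_label(bf))
```

Values below 1 are inverted before classification, so BF10 = 0.26 is read as BF01 ≈ 3.8 in favor of the null hypothesis.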

Package “ggplot2” (Wickham, 2016) was used to visualize time progression data. Valence and arousal values of each participant were normalized as deviations from the initial values queried prior to each scenario at t0. Additionally, because the durations of gesture control and component assembly differed between individuals, data of phases 2 and 3 (see Table 1) were also normalized using the median values across participants, separately for each scenario. Robot periods also varied as a result of minor technical issues. Therefore, all data within a specified phase were normalized to the means across comparable conditions. To smooth data curves, a generalized additive model (GAM; Wood et al., 2016) was used. Owing to the relatively low number of data points, the adaptation of the GAM in Wood et al. (2016) was applied, and the function parameter “formula = y ~ s(x, bs = "cs", fx = TRUE, k = 10)” was used within the function geom_smooth(), with the k value set to 10 for all graphs to ensure visual comparability. Due to missing robot log files, the resulting diagrams are based on a different subset of the total sample. However, due to the use of relative rather than absolute values and the approximation based on around 900 data points per line, the resulting distortion is expected to be low. Package “lavaan” (Rosseel, 2012) was used for structural equation modeling with maximum likelihood estimation.
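The two normalization steps can be illustrated with a minimal sketch. The baseline correction follows the description directly. The linear rescaling of time stamps within a phase to a common reference duration is an assumption about how the phase normalization might be implemented. All names and example values are hypothetical.

```python
from statistics import median


def deviations_from_t0(ratings, t0):
    """Express each rating as its deviation from the initial value t0."""
    return [rating - t0 for rating in ratings]


def rescale_phase_times(times_s, phase_start_s, phase_end_s,
                        ref_start_s, ref_duration_s):
    """Linearly map time stamps of one phase onto a reference duration.

    Assumed approach: positions within the phase are kept proportional,
    so phases of different length can be overlaid on a common timeline.
    """
    own_duration_s = phase_end_s - phase_start_s
    if own_duration_s <= 0:
        raise ValueError("Phase must have a positive duration.")
    return [ref_start_s + (t - phase_start_s) / own_duration_s * ref_duration_s
            for t in times_s]


# Hypothetical valence ratings of one participant, with t0 = 2
print(deviations_from_t0([2, 1, -1, 0], t0=2))

# Hypothetical gesture control durations (s); the median is the reference
reference_s = median([12.0, 9.5, 14.0])
print(rescale_phase_times([35.0, 40.0, 44.5], 35.0, 44.5,
                          ref_start_s=35.0, ref_duration_s=reference_s))
```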

4 Results

4.1 Manipulation check

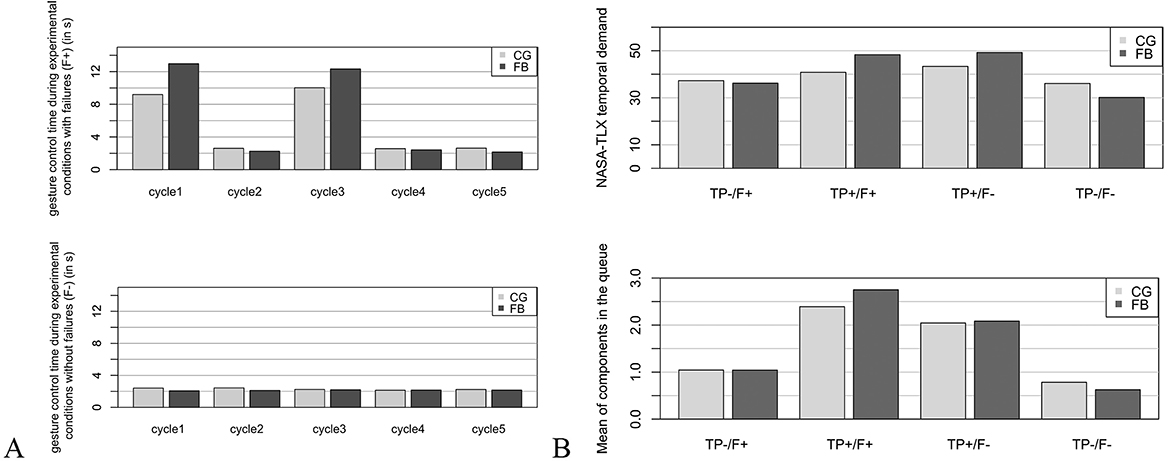

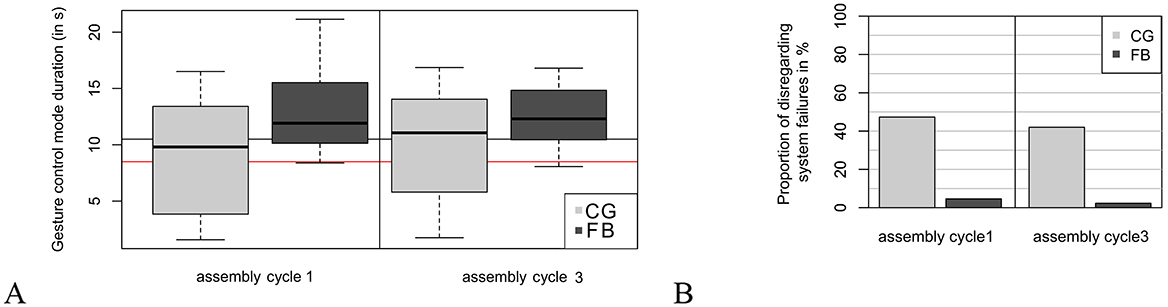

Due to the two within-subjects variables used, participants experienced two manipulations during the experiment. System failures were simulated for about 10 s during the first and third assembly cycle. Consequently, the duration of gesture control should be 10 s longer in experimental conditions involving simulated failures (F+). Figure 4A shows the duration of gesture control depending on the experimental condition, assembly cycle and group. The duration of gesture control was longer in cycles 1 and 3 in experimental conditions with failures (F+; top) than in conditions without simulated failures (F–; bottom), demonstrating the success of the planned manipulation.

Figure 4. Results for manipulation check regarding (A) the realization of system failures operationalized by durations for gesture control dependent on experimental conditions, assembly cycle and group, and (B) time pressure measured as perceived time pressure and objective number of components in the queue at assembly line. CG, control group; FB, feedback group; TP+, with time pressure; TP–, without time pressure; F+, with failures; F–, without failures.

Regarding the manipulation of time pressure, the available assembly time for each individual (cf. Section 3.2) was reduced. Figure 4B (top) shows the subjective ratings for the NASA-TLX control item “temporal demands.” Descriptively, the manipulation of time pressure appeared more effective in the feedback group. However, the Bayes factors provided no evidence for differences between the groups in either the condition involving time pressure and failures (TP+/F+; BF10 = 0.46 ± 0.01%) or the condition involving time pressure without simulated failures (TP+/F–; BF10 = 0.39 ± 0.01%). A Bayesian ANOVA applied to the feedback group showed no effect of time pressure (BF10 = 0.26 ± 1.07%) compared to a denominator model using individual scores as a random effect. The control group showed no effect of time pressure, either. Conversely, the objective manipulation check for time pressure (see Figure 4B, bottom), operationalized as the number of components in the queue, supports the success of the manipulation, as participants would have been able to reduce their assembly time in order to avoid components in the queue.

4.2 Trust

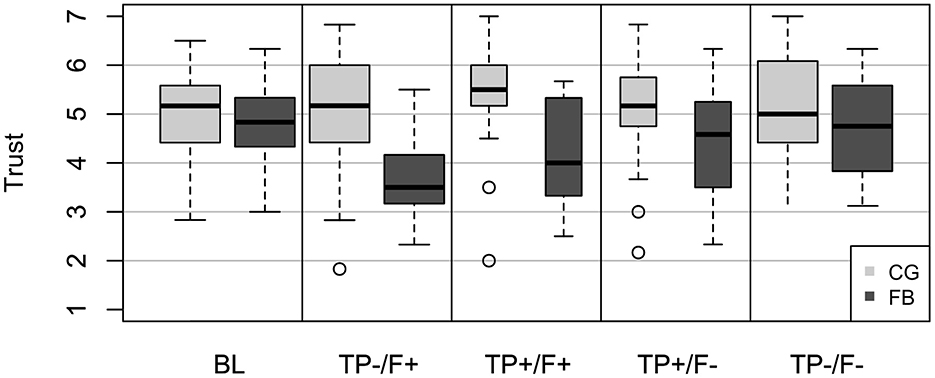

Figure 5 shows the descriptive results for trust ratings, clustered in group and experimental condition. In the control group, mean trust consistently remained high across all scenarios, whereas trust decreased in the feedback group following a system failure. In the feedback group, trust dropped below the scale mean during experimental condition TP-/F+ (M = 3.72, SD = 0.90).

Figure 5. Results of trust ratings depending on experimental conditions and group. Narrow bars show conditions with time pressure (TP+), wide bars without time pressure (TP–). F+ indicates conditions with simulated failure, F– without failure. The lower and upper boundaries of the y-axis represent the scale range. BL, baseline; CG, control group; FB, feedback group.

Following completion of the baseline condition, trust levels were found to be similar for both groups (MCG = 4.92, MFB = 4.74, BF10 = 0.54, rscale = 0.3). A Bayesian ANOVA was applied. Table 2 shows the results of selected Bayesian models compared to a random model, with participants as the random factor. Including only the independent variables “feedback” (row 1) or “system failure” (row 2) significantly improved both models. The effect of “failure” was stronger than of “feedback.” The model including the independent variable “time pressure” (row 3) favored the null hypothesis. Accordingly, the model including the two significant main effects (row 4) showed strong evidence in favor of the alternative hypothesis, and including an interaction of both main effects (row 5) showed an additional significant improvement. Relating Bayesian model results to the descriptive results, (i) overall, trust was lower in the feedback group; (ii) system failures were found to reduce trust, and (iii) system failures had a stronger effect in the feedback group than in the control group. The results support hypothesis 4 (system failures reduce trust), but contradict hypotheses 5 (feedback increases trust) and 8 (time pressure increases trust).

Table 2. Bayesian ANOVA results modeling trust compared to a denominator model using participants ID as random factor.

4.3 Affective experience

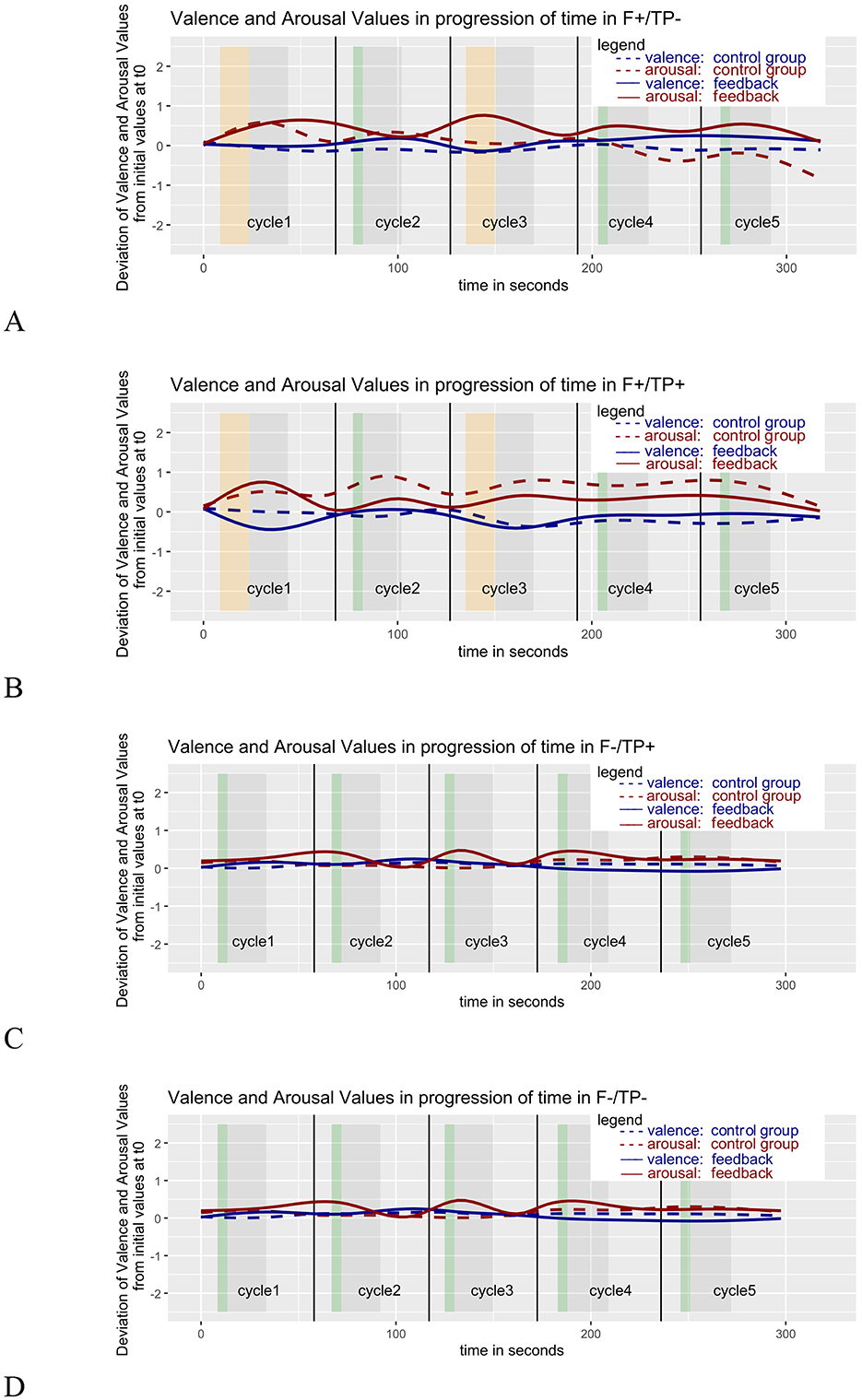

Figure 6 shows how subjective valence and arousal values progressed over time in all four experimental conditions. The valence and arousal values of each participant were normalized as a deviation from the initial values queried prior to the start of each condition, at t0. Timelines show the actual real-time robot periods (phases 1, 4, and 5), all normalized within the periods across conditions for the purpose of comparison, as well as the normalized data for gesture control (phase 2; visualized in green or orange in the event of simulated system failures) and assembly (phase 3; visualized in gray) (cf. Section 3.6). A decrease in valence and a simultaneous increase in arousal indicate a negative affective experience (cf. Section 3.3). Changes in valence and arousal that indicate negative affective experience and occur at the same time result in an elliptical shape formed by the lines for valence and arousal.

Figure 6. Time progression of valence and arousal values depending on experimental condition and group. Duration of gesture control (phase 2) is highlighted in green in conditions without simulated failures and highlighted in orange in case of simulated failures. Assembly time (robot phase 3) is highlighted in gray. t0 = initial values queried prior to each experimental condition. Graphs belong to condition (A) with simulated failure (F+) but without time pressure (TP-), (B) with simulated failure (F+) and time pressure (TP+), (C) without simulated failures (F-) but time pressure (TP+), and (D) with neither simulated failures (F–) nor time pressure (TP–).

Comparing all subfigures reveals that conditions involving simulated failures (Figures 6A, B) resulted in stronger fluctuations in valence and arousal values than conditions without simulated failures (Figures 6C, D). Conversely, valence and arousal values were not influenced by time pressure, as can be seen by comparing Figures 6C, D. A detailed analysis of the conditions involving simulated failures (Figures 6A, B) shows that the expected elliptical form of the valence and arousal lines mainly occurred during the assembly cycles involving simulated failures (cycles 1 and 3). This form was more evident in the feedback group than in the control group. Nevertheless, both groups showed a trend toward decreased valence and increased arousal in the first part of each assembly cycle, resulting in maximum deviation in the gesture control or assembly phase. At the end of each assembly cycle (phases 4 and 5), arousal values drop again.

To allow for further quantitative comparisons between the experimental conditions, maximum changes in valence and arousal relative to the preceding value over time were calculated, and the frequency of occurrence depending on the phase was analyzed. Of particular interest to the research questions are negative valence deviations (maximum change hereafter referred to as “valence drop”) and positive arousal deviations (maximum change hereafter referred to as “arousal surge”), as these indicate negative affective experiences.
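A minimal sketch of how a "valence drop" or "arousal surge" and the phase in which it occurs could be identified from a rating series is shown below. The function name, the tie-breaking rule (first occurrence wins) and the example data are assumptions made for illustration.

```python
def max_change(values, phases, direction):
    """Find the largest change relative to the preceding value.

    values:    ratings in temporal order
    phases:    phase label for each rating (same length as values)
    direction: -1 for the largest decrease (e.g., valence drop),
               +1 for the largest increase (e.g., arousal surge)
    Returns (change, phase); (0, None) if no change in that direction occurs.
    """
    best_change, best_phase = 0, None
    for previous, current, phase in zip(values, values[1:], phases[1:]):
        change = current - previous
        # Strict comparison keeps the first occurrence when changes tie
        if direction * change > direction * best_change:
            best_change, best_phase = change, phase
    return best_change, best_phase


# Hypothetical ratings of one assembly cycle, sampled every 10 s
phases = ["delivery", "gesture control", "gesture control", "assembly", "return"]
valence = [1, 1, -1, 0, 0]
arousal = [3, 3, 4, 7, 6]

print(max_change(valence, phases, direction=-1))  # valence drop
print(max_change(arousal, phases, direction=+1))  # arousal surge
```

Counting the returned phase labels across cycles and participants would yield relative frequencies of the kind reported in the frequency tables.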

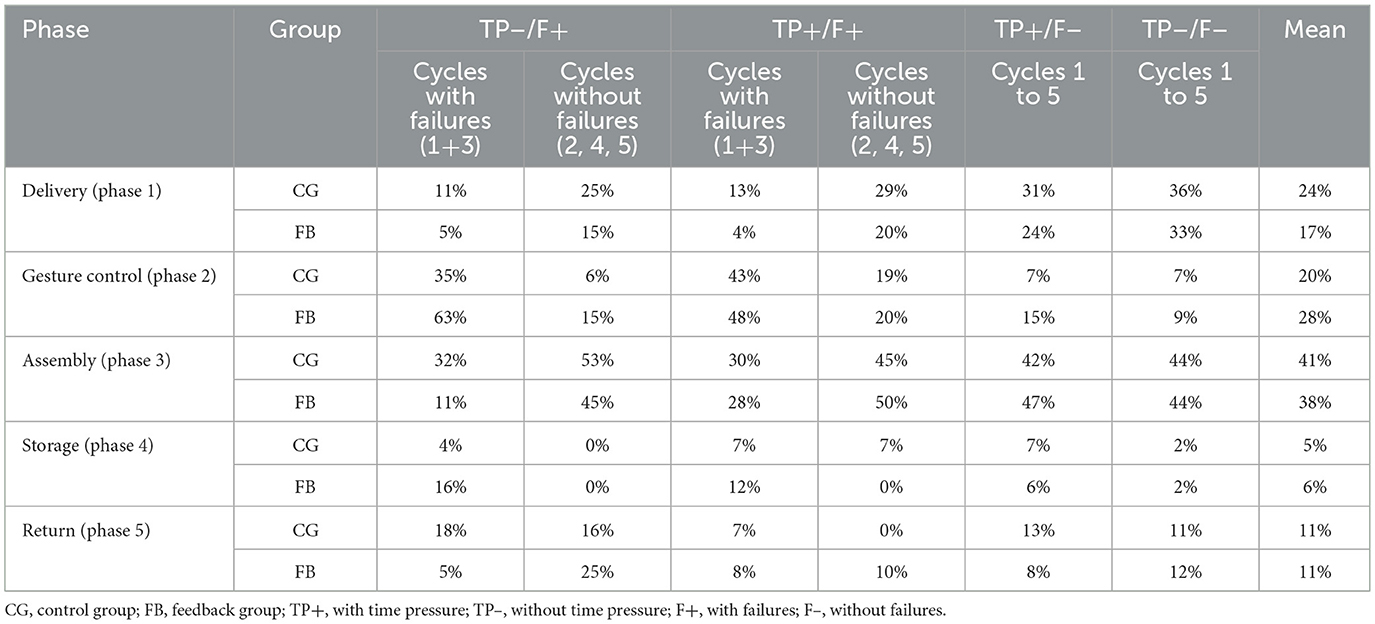

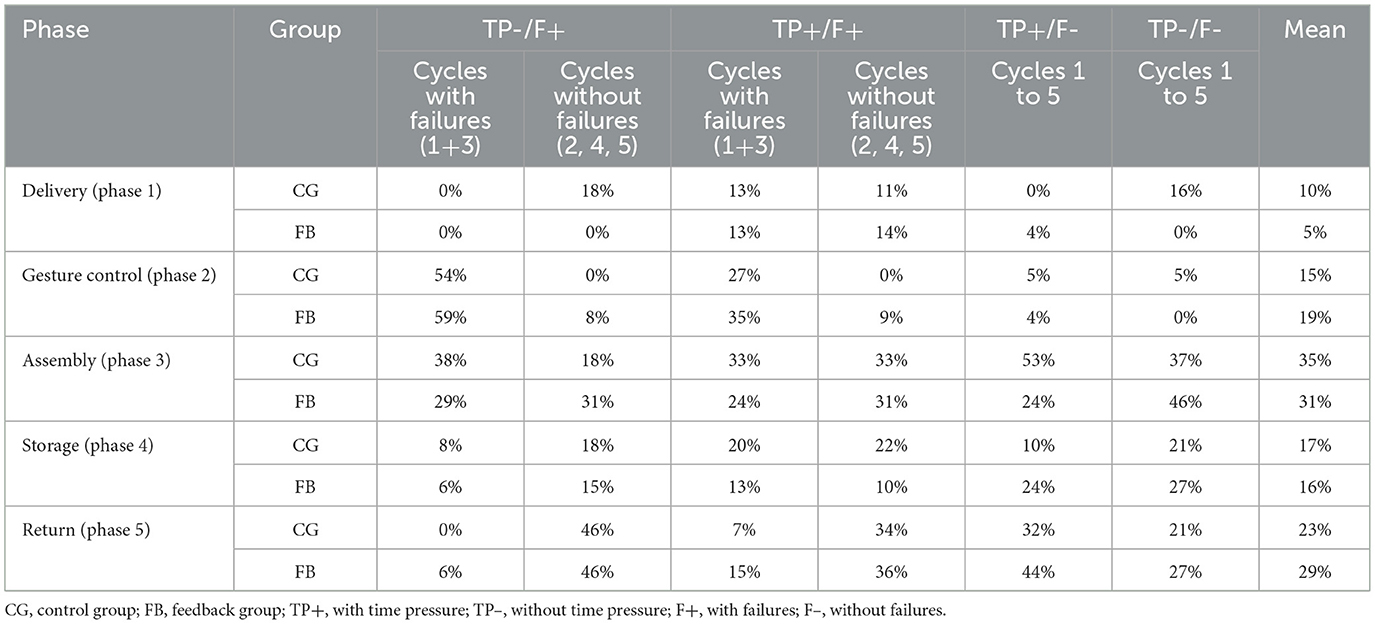

Table 3 shows the relative frequencies of arousal surges depending on the experimental condition and, where applicable, the simulation of system failures in the assembly cycles. Frequency patterns can be identified. Overall, it can be seen from column 9 (“mean”) that arousal surges typically occurred with the following frequency distribution: assembly > gesture control > delivery. Only a few arousal surges occurred during storage and return. Furthermore, arousal surges occurred more frequently during gesture control in cycles with simulated system failures than in cycles without failures, in both experimental groups (cf. columns 3 and 4, and 5 and 6, respectively). However, greater differences were observed in the feedback group. In experimental conditions without failure simulation (cf. columns 7 and 8), arousal surges were rarely caused by gesture control, but were more frequently observed in assembly (cf. Section 5.3 for a discussion of this result). Phase return did not frequently result in arousal surges, even though the robot approached the human at a speed of 500 mm/s while they waited within the safety zone (see Figures 2A, B).

Table 3. Relative frequency of arousal surges depending on experimental condition, assembly cycles and group.

Table 4 shows the relative frequencies of valence drops depending on experimental conditions and, where applicable, the simulation of system failures during assembly cycles. Again, a pattern of frequencies can be identified. Overall, referring to column 9 (“mean”), it is apparent that valence drops typically occurred with the following frequency distribution: assembly > return > gesture control. Only a few valence drops occurred during delivery. Furthermore, in both experimental groups, valence drops during gesture control were much more frequent in cycles with simulated system failures than in cycles without (cf. columns 3 and 4, and 5 and 6, respectively), and there were no differences between the groups during gesture control. In the absence of failure simulations (cf. columns 7 and 8), gesture control rarely caused valence drops, but more valence drops were observed during assembly (see Section 5.3 for discussion of this result). Return caused a high frequency of valence drops in both experimental groups in cycles and conditions without failures comparable to the frequencies found during assembly.

Table 4. Relative frequency of valence drops depending on experimental condition, assembly cycles and group.

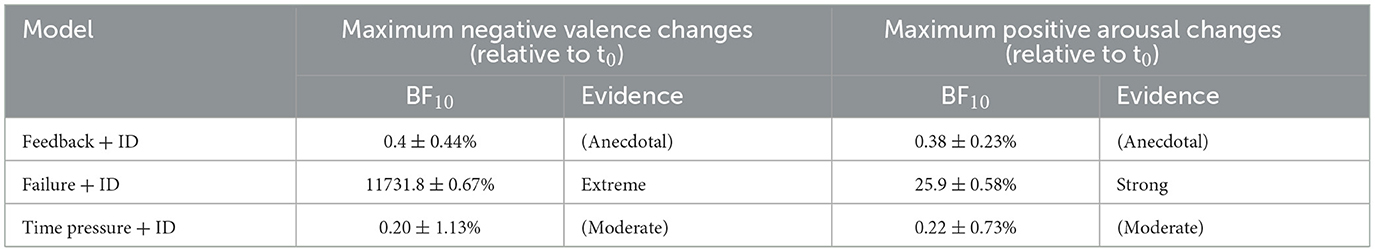

To further analyze the occurrence of negative affective experience, the maximum negative deviations of valence and the maximum positive deviations of arousal relative to the initial values (t0) were calculated. Overall, the maximum valence and arousal changes remained relatively small across all robot phases (compare also Figures 6A–D). A Bayesian 2 × 2 × 2 mixed ANOVA was used to model the maximum deviation in valence and arousal values. Table 5 shows the results of selected Bayesian models compared to a random model, with participants as the random factor. When modeling both valence and arousal changes, it was found that only the model including the independent variable “failure” (row 2) showed significant improvement on the random model. As the models that took the other two independent variables into account favored the null hypothesis (rows 1 and 3), no more complex models are reported. The effect of “failure” was stronger for valence changes than for arousal changes. This result shows that arousal values change to some extent also in conditions without system failures, while valence values mostly remain constant (see Figures 6C, D). As reduced valence and increased arousal were found following system failures, the exploratory hypothesis that system failures induce negative affective experience is supported by the data.

Table 5. Bayesian ANOVA results modeling maximum negative valence changes and maximum positive arousal changes relative to t0 compared to a denominator model using participants ID as random factor.

4.4 Intention to use

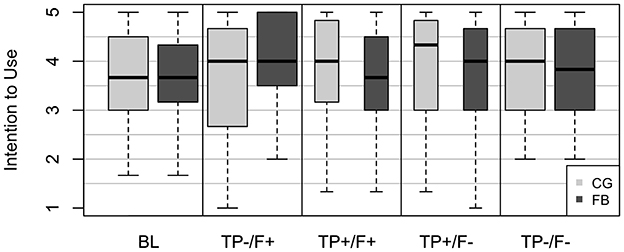

Figure 7 shows the descriptive results for the ratings of intention to use, depending on group and experimental condition. The mean intention to use score was consistently higher than the scale mean, showing comparable results across experimental variations and group. Additionally, high variance was found in all conditions and in both groups.

Figure 7. Results for intention to use depending on experimental conditions and group. Narrow bars show conditions with time pressure (TP+), wide bars without time pressure (TP–). F+ indicates conditions with simulated failure, F– without failure. The lower and upper boundaries of the y-axis represent the scale range. BL, baseline; CG, control group; FB, feedback group.

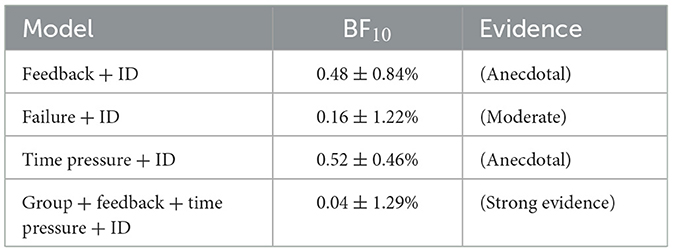

Following completion of the baseline condition, intention to use was found to be similar for both groups (MCG = 3.64, MFB = 3.60, BF10 = 0.52, rscale = 0.3). Again, a Bayesian ANOVA was applied. Table 6 shows results for selected Bayesian models when compared to a random model with participants as random factor. None of the models showed Bayes factors in favor of the alternative. Therefore, the model that only includes participants as a random factor best fits the data.

Table 6. Bayesian ANOVA results modeling intention to use compared to a denominator model using participants ID as random factor.

4.5 Safety-critical behavior

Safety-critical behavior was defined as disregarding simulated system failures during assembly cycles 1 and 3 (cf. Section 3.3). The duration of gesture control during assembly cycles involving system failures was longer for the feedback group (Mdn = 12.84, SD = 3.52) than for the control group (Mdn = 9.62, SD = 5.49), with the Bayes factor showing strong evidence for different durations of gesture control between groups (BF10 = 17.85 ± 0%). Figure 8A shows in more detail that, in the feedback group, durations rarely fell below the defined cut-off value of 8.5 s for “disregarding system failures.” No differences were observed in the disregard of simulated system failures dependent on the time pressure condition. Therefore, when both experimental conditions involving simulated system failures were combined, the control group disregarded 47% of all simulated system failures in assembly cycle 1 and 42% in assembly cycle 3. By contrast, the feedback group disregarded only 5% of failures in assembly cycle 1 and 3% in assembly cycle 3. Bayes factor analysis showed extreme evidence for a difference between the groups (BF10 = 14367.0 ± 0%) (see Figure 8B). As can be seen in Figure 8B, the frequency of disregarding system failures decreased only slightly from assembly cycle 1 to assembly cycle 3.

Figure 8. (A) Duration of gesture control mode during assembly cycles involving system failures depending on group, black line shows expected minimum duration, red line shows cut-off value for operationalization of disregarding system failures, (B) proportion of disregarding system failures across the experiment depending on group. CG, control group; FB, feedback group.

4.6 Effects of trust: relationship of trust and human-centered outcome criteria

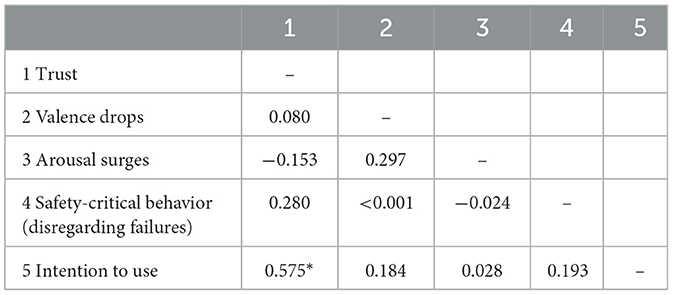

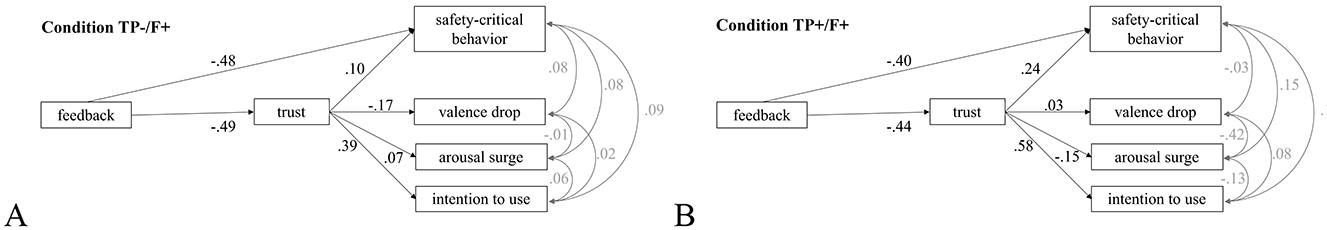

To analyze the relationship between trust and the human-centered outcomes, the experimental condition with the greatest variance in trust ratings was selected. Therefore, non-parametric correlations were calculated for the control group (cf. Table 7) and the feedback group (cf. Table 8) for all dependent variables using experimental condition TP+/F+. In the control group, trust shows a weak positive correlation with safety-critical behavior. The data support hypothesis 3, and not surprisingly, this correlation disappears in the feedback group because hardly any safety-critical behavior was observed in this group (see Section 4.5). In the control group, a strong positive correlation was found between trust and intention to use (data supporting hypothesis 2). Again, this correlation decreases in the feedback group, although a weak positive correlation is still evident. While the correlations show the expected direction for arousal surge, trust was non-significantly related to valence drops and arousal surge (data contradict hypothesis 1).

Table 7. Non-parametric correlation matrix (Kendall's τ) of all dependent variables in the control group (*p < 0.05).

Table 8. Non-parametric correlation matrix (Kendall's τ) of all dependent variables in the feedback group.
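For readers unfamiliar with Kendall's τ, the following sketch computes the tie-corrected variant (τ-b) by counting concordant and discordant pairs. Whether the reported coefficients are τ-b is an assumption, and the example data are hypothetical.

```python
from math import sqrt


def kendall_tau_b(x, y):
    """Kendall's tau-b: rank correlation with a correction for ties."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length.")
    concordant = discordant = ties_x_only = ties_y_only = 0
    n = len(x)
    for i in range(n):
        for j in range(i + 1, n):
            dx, dy = x[i] - x[j], y[i] - y[j]
            if dx == 0 and dy == 0:
                continue              # tied on both variables: ignored
            if dx == 0:
                ties_x_only += 1
            elif dy == 0:
                ties_y_only += 1
            elif dx * dy > 0:
                concordant += 1
            else:
                discordant += 1
    denominator = sqrt((concordant + discordant + ties_x_only)
                       * (concordant + discordant + ties_y_only))
    if denominator == 0:
        raise ValueError("Tau is undefined if a variable is constant.")
    return (concordant - discordant) / denominator


# Hypothetical trust ratings and number of disregarded failures (0 to 2)
trust = [5.2, 4.8, 5.5, 4.1, 5.8, 4.6]
disregarded = [1, 0, 2, 0, 2, 1]
print(round(kendall_tau_b(trust, disregarded), 3))
```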

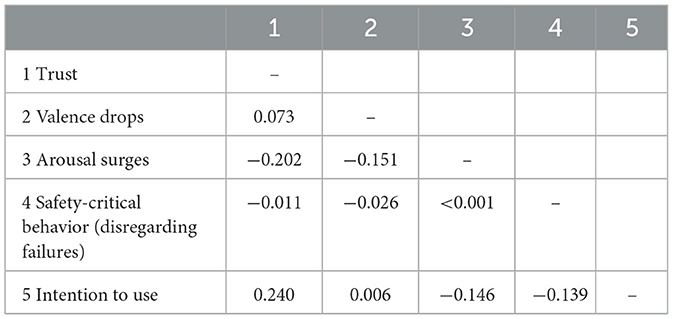

To uncover any potential suppressing effects, a structural equation model was calculated using all variables as manifest variables to estimate the models with trust as a mediator. As safety-critical behavior was only operationalized in experimental conditions involving simulated failures, and as time pressure had no effect on the human-centered outcome criteria (contradicting hypothesis 9, trust mediates the relationship between time pressure and intention to use, as well as safety-critical behavior), separate models were calculated for the experimental conditions involving system failures. These models included the independent variable “feedback” as an exogenous variable, trust as a mediator, and valence drop, arousal surge, safety-critical behavior, and intention to use as endogenous variables. All variables were z-standardized to obtain standardized beta weights.

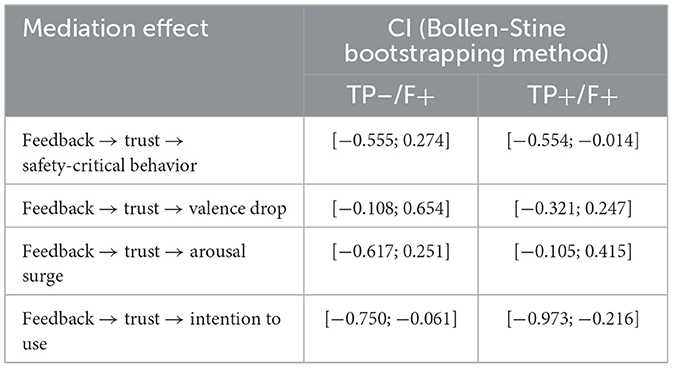

Figure 9 shows the models for the two experimental conditions involving simulated system failures. In condition TP–/F+, the model fit improved significantly when a direct path was added between feedback and safety-critical behavior (CFI = 0.908, RMSEA = 0.156, SRMR = 0.075) (Figure 9A). The same was true for the model for condition TP+/F+ (CFI = 0.994, RMSEA = 0.038, SRMR = 0.057) (Figure 9B). Results show a strong direct, negative effect of feedback on safety-critical behavior (supporting hypothesis 7: feedback shows an independent effect on reducing safety-critical behavior) and a strong direct, negative effect of feedback on trust (contradicting hypothesis 5: feedback increases trust). In addition, the model for condition TP+/F+ shows a significant mediation effect of trust on safety-critical behavior, as the bootstrap confidence interval for the indirect effect (Bollen-Stine bootstrap method; Kim and Millsap, 2014) does not include zero (CI = [−0.554; −0.014], cf. Table 9). These data support hypothesis 6 (trust mediates the relationship of feedback with safety-critical behavior). The data do not support this mediation effect in experimental condition TP–/F+ (CI = [−0.555; 0.274]), contradicting hypothesis 6 for this condition. For both experimental conditions involving simulated failures, the models support the significant mediation effect of trust on intention to use (data support hypothesis 6: trust mediates the relationship between feedback and intention to use). However, there were no mediation effects of trust on valence drop or arousal surge.

Figure 9. Structural equation models with between-subjects variable ‘feedback' as exogenous variable (coding 0 = control group, 1 = feedback group) and trust as mediator for human-centered outcomes. Please note that values for valence and arousal present relative values compared to t0. Models calculated for (A) experimental condition TP-/F+, and (B) experimental condition TP+/F+. TP+, with time pressure; TP–, without time pressure; F+, with failures; F–, without failures.

Table 9. Bootstrap confidence interval for the mediation effect of “trust” in two experimental conditions involving simulated system failures.

5 Discussion

5.1 Summary and interpretation of results

This empirical study aimed to understand how changes in trust, caused by antecedents of trust (system failures, visual feedback and time pressure) impact human-centered outcomes (affective experience, intention to use and safety-critical behavior). The results showed most surprisingly that time pressure had no effect on trust or any other human-centered outcome. On the other hand, both system failures and the availability of feedback, as expected, were shown to have a significant effect on trust. However, the availability of feedback did not affect trust in the absence of system failures. Providing more information to participants about the current and future state of the robot or the time available for assembly did not have a positive effect on trust (cf. Figure 1; cf. Legler et al., 2022 for details on the feedback system). On the contrary, feedback had a negative effect on trust after the occurrence of system failure—a result that was the opposite of that predicted by our literature-derived hypothesis. This probably indicates that the feedback system focused participants' attention (Goldstein, 2010) on the system failure. For safety reasons, the failure was simulated as a technical malfunction in gesture control, ensuring that participants would not be physically harmed if they disregarded the failure. The reduction in trust observed in the feedback group demonstrates that even a less salient and harmless simulated system failure can significantly diminish trust in robots. As trust remained consistently high in the control group across conditions, the results suggest that the level of trust exceeded an appropriate trust level—a state known as overtrust (Lee and See, 2004). As the participants had no secondary task, they were able to focus exclusively on the robot. As they were also not permitted to assemble in the event of any robot movement, it can be assumed that the participants were able to perceive the malfunction but chose not to react. 
Such complacency is a typical indicator of overtrust (Parasuraman and Manzey, 2010). The data therefore suggest that workers who overtrust a robot may notice system failures but, because of their inappropriately high level of trust, choose not to react (cf. “free choice,” Schäfer et al., 2024), as they do not expect the robot to work imperfectly or to be harmful.

System failures were also found to affect the occurrence of negative affective experiences, measured by valence drops and arousal surges. These negative affective experiences occurred during specific HRC phases. In the case of an experimental condition involving simulated failures, these phases were gesture control and assembly in both groups (see Tables 3, 4). Results show that the negative affective experiences were caused by the simulated system failures. This further supports the assumption that participants in the control group also noticed the system failures, as they showed equivalent changes in valence and arousal compared to the feedback group. Overall, changes in valence and arousal values were within a range of ±2 points on an 11- or 12-point scale, respectively. Although these time-synchronized deviations in both values indicate a negative affective experience during system failures, the intensity of the resulting affects is assumed to be within a range that does not cause distress to humans.

Regarding intention to use, no effect of any of the independent variables was found in the data. Scores remained high across conditions and showed high variance due to individual dispositions, as indicated by the high intraindividual consistency of intention to use. However, intention to use did not correlate with negative affective experience (see Tables 7, 8), indicating that low intention to use was not caused by perceived distress in participants, but rather by an individual tendency to reject (new) technology in the workplace.

Safety-critical behavior was rarely observed in the feedback group, but frequently in the control group. This result again highlights the importance of feedback in HRC. The negative correlation between trust and safety-critical behavior was found only in the control group, demonstrating that feedback has the potential to counteract individual tendencies toward overtrust.

Overall, the effect sizes of the relationships between trust, affective experience, intention to use, and safety-critical behavior demonstrate the distinctiveness of all human factors constructs applied. Trust was found to be unrelated to self-reported changes in valence and arousal. There are several possible explanations for this result. Firstly, changes in affective experience were assessed during the interaction, whereas trust was measured after the interaction. As emotions are fleeting (Werth and Förster, 2015), this result may have been confounded by this methodological choice. Secondly, the changes in valence and arousal may have been too small to detect a significant correlation. Thirdly, from a theoretical perspective, the results could suggest that trust is a concept with stronger cognitive than affective aspects, as evidenced by the variety of definitions of trust in robots (cf. Schaefer, 2013 for a detailed overview), which conceptualize trust as an “expectation,” “belief” or “attitude.” This could also explain the strong correlation between trust and intention to use. Beyond trust, the other human-centered outcomes showed no significant correlations with each other. These results further support the need to integrate a variety of human factors criteria when studying HRC with the advent of Industry 5.0.

Finally, it was demonstrated that trust in robots mediated the effect of feedback on human-centered outcomes relevant to industry, i.e., safety-critical behavior and intention to use. As strategies for intentionally adjusting trust levels have already been studied (de Visser et al., 2020), our results show that such strategies have the potential to affect industry-relevant outcomes such as safety-critical behavior, thus going beyond the adjustment of trust levels alone, whose effects on HRC have remained unclear. This further highlights the importance of considering trust in HRC research to realize the human-centered vision of Industry 5.0.

5.2 Practical implications for HRC design in industrial workplaces

Several implications for industrial HRC design can be derived from the presented study results.

1. Feedback is highly relevant in ensuring situation awareness during HRC. It enables humans to perceive system failures and react properly to these off-nominal, infrequent and unexpected situations (Hopko et al., 2022). As robot malfunctions are infrequent but always possible (Schäfer et al., 2024), and given the high costs that manufacturing companies incur from both health impairments of employees and reduced product quality, high situation awareness of humans in HRC is key to success. Although this was not assessed in the study, situation awareness is especially important in the case of parallel human tasks (“dual tasks”), which are expected to be the norm in industrial HRC workplaces in order to make effective use of the periods in which the robot acts autonomously. However, dual tasks prevent humans from constantly monitoring the robot's actions, which again underlines the importance of feedback.

2. Simulating a set of safe system failures that do not negatively impact performance outcomes could maintain trust at an “appropriate level” by encouraging workers to pay attention to and monitor the behavior of the robot. This reduces the potential risk of overtrust arising from longer interactions with failure-free automation. These simulated failures must be made salient using a feedback system to reduce the risk of them being missed. This proposition aligns with HRI research which suggests familiarizing users with potential robot failures (Wagner et al., 2018) and applying “deceptive practices” of robots (Aroyo et al., 2021) to prevent overtrust.

3. Trust was unrelated to self-reported changes in valence and arousal. This calls into question frequently suggested operationalizations of trust measurement based on physiological arousal (Arai et al., 2010; Hopko et al., 2022). Additionally, physiological measures require a person to be wired with sensors, which raises questions not only of comfort, but also of protecting personal data. Instead, aiming at a recognition of human trust by an automated robotic system in the future, behavioral indicators of trust (Chang and Hasanzadeh, 2024) should preferably be used, as these are non-invasive and do not distract workers from their tasks.

5.3 Limitations and future research directions

The study presented had some limitations. From a content perspective, as the study focused on human-centered outcomes, performance-centered outcomes were not considered. While the importance of human-centered outcomes is recognized, performance-centered outcomes remain an indispensable target variable in Industry 5.0, as performance is key to success in manufacturing. Investigations into assembly time as a performance-centered outcome can be found in Legler et al. (2022). No effects of industry-relevant antecedents of trust on assembly time were found. There are two possible explanations for this null finding. Firstly, even under time pressure, the assembly task may not have been complex enough to affect objective performance measurements when performed without a secondary task. Secondly, it might not be possible to detect human adaptation of assembly time within a total duration of 5 min per scenario. As trust is also known to develop over a longer period of time, studies involving longer, undisturbed interaction with the robot are required. Nevertheless, high levels of trust were already evident in baseline conditions, indicating positive expectations toward robots prior to the interaction. Furthermore, in real industrial scenarios, trust is equally expected to increase during failure-free HRC.

Due to the post-scenario measurement and laboratory setting, it can be assumed that participants were aware of the experimental variations and anticipated some form of manipulation. Flook et al. (2019) summarized that ecological validity is low in laboratory settings, especially in the case of failure simulation, as participants perceive the setting as artificial, controlled, and therefore safe. However, it can be assumed that workers in industrial HRC workplaces also believe their workplaces to be safe due to occupational safety examinations prior to workplace release. Time pressure had no effect on the human-centered outcomes, which likewise calls the ecological validity into question, as the participants probably did not expect consequences for insufficient performance.

Finally, this study does not allow conclusions about the long-term effects of implementing safe system failures to adjust trust toward a more appropriate level, or about the possible habituation of workers to “safe” system failures at real HRC workplaces. Although participants were instructed to assemble at the component on the robot flange only when the robot had stopped moving, participants in the control group may have perceived the simulated system failures but judged them as non-critical and therefore disregarded them. Behavioral observations during the experiment and correct gesture control during the baseline support this explanation. Still, the instruction of participants prior to interaction combined with the baseline condition in this study is comparable to workplace training, which often includes verbal or written instructions for behavior in exceptional situations, while practical training is only carried out for normal work processes.

As trust and affective experience were shown to be unrelated in this study, the findings indicate a need for a theoretical framework that can explain how and under which circumstances trust impacts emotions.

Several methodological limitations due to the laboratory study design also need to be discussed in order to interpret the results.

Given the relatively small sample of the study, a Bayes factor analysis was performed to compare the relative evidence for the null and the alternative hypothesis. Overall, the effects observed in the study clearly favored either the null or the alternative hypothesis. However, the relatively small sample size could account for some effects in this study being classified as anecdotal evidence (cf. Jeffreys, 1961), which means that the data show a certain degree of uncertainty as to whether they favor the null or the alternative hypothesis. These effects should be investigated in further studies. Additionally, the sample did not consist of actual workers from the productive industry. Based on participants' highest educational degree, it can be assumed that more than two-thirds of participants were students at the time of the study. Although no statistical effects were found when comparing students and non-students, or participants with and without prior production experience, the generalizability of the results has to be judged as limited, and the results of the study need to be replicated with actual workers from the productive industry. As a starting point, a sample of experienced workers from workplaces with fenceless human-robot coexistence (no shared work task) seems promising.
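To make the notion of “anecdotal” evidence concrete, the following minimal sketch maps a Bayes factor onto verbal evidence categories. The cut-offs (1, 3, 10, 30, 100) follow a commonly used adaptation of the Jeffreys scheme and are an assumption; the exact thresholds and labels applied in this study are not restated here. The function name `classify_bf10` is hypothetical.

```python
def classify_bf10(bf10):
    """Return (favored hypothesis, evidence label) for a Bayes factor BF10.

    BF10 > 1 favors the alternative hypothesis (H1), BF10 < 1 favors the null (H0).
    Cut-offs are an assumed, commonly used adaptation of Jeffreys' categories.
    """
    if bf10 <= 0:
        raise ValueError("A Bayes factor must be positive.")
    if bf10 == 1:
        return "neither", "no evidence"
    favored = "H1" if bf10 > 1 else "H0"
    strength = bf10 if bf10 > 1 else 1.0 / bf10  # evidence for the favored hypothesis
    if strength < 3:
        label = "anecdotal"
    elif strength < 10:
        label = "moderate"
    elif strength < 30:
        label = "strong"
    elif strength < 100:
        label = "very strong"
    else:
        label = "extreme"
    return favored, label


# Illustrative values only (not results from the study).
for bf in (0.05, 0.5, 2.0, 12.0):
    print(bf, classify_bf10(bf))
```

Under these assumed cut-offs, a Bayes factor between 1/3 and 3 counts as anecdotal: the data shift belief only slightly toward either hypothesis, which is why such effects warrant replication with larger samples.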

The cut-off value used to operationalize the disregard of system failures has a significant impact on the results regarding safety-critical behavior. Nevertheless, the difference between groups in terms of disregarding system failures was large enough for the effect to remain when the defined tolerance was deducted.