- ¹Department of Work and Organizational Psychology, International Institute for Management and Economic Education, Europa-Universitaet Flensburg, Flensburg, Germany

- ²Department of Computer Science, Faculty of Mathematics and Natural Science, Humboldt University of Berlin, Berlin, Germany

- ³Discipline of Business Information Systems, The University of Sydney Business School, Sydney, NSW, Australia

Introduction: This study examines how job design and worker motivation shape task performance and platform commitment in microtasking. Microtasking, a form of crowdsourcing in which work is coordinated by internet platforms and completed in very small units, poses challenges for designing motivating jobs that yield high-quality results. Drawing on the job characteristics model and self-determination theory, we link task and knowledge characteristics, autonomous motivation, and amotivation to quantitative and qualitative performance as well as commitment.

Methods: We test our model in a free simulation experiment with 558 microtasking workers, combining survey data with objectively assessed performance and self-rated commitment.

Results: The analysis shows that while certain job characteristics are positively related to autonomous motivation, unexpectedly, the latter does not mediate their effects on performance or commitment. Instead, amotivation plays the central role: certain task and knowledge characteristics are associated with reduced amotivation, which in turn relates to better performance and higher commitment.

Discussion: This finding indicates that in microtasking, mitigating amotivation is more predictive than fostering autonomous motivation—a significant departure from traditional work motivation theory. The study contributes to theory by highlighting the distinct role of amotivation in platform work and offers practical guidance for designing microtasks that sustain worker engagement and performance.

1 Introduction

The rise in opportunities for gig work on digital labor platforms is both a global trend and a challenge (Nevo and Kotlarsky, 2020; Wu and Huang, 2024). This paper is concerned with microtasking, a particular form of crowdsourcing. Crowdsourcing refers to the outsourcing of tasks to a “crowd” of internet users in the form of an open call (Brabham, 2013; Howe, 2008), which, today, millions of people use to find work (Datta et al., 2023; Kässi et al., 2021). Microtask “gigs” are accomplished online and are web-based (Wu and Huang, 2024) and fall into categories such as verification and validation, surveys, or quality assessment and testing (Zulfiqar et al., 2022), which is why these gig workers are also called “gig data providers” (Watson et al., 2021). Microtasking refers to crowdsourcing instances in which the tasks are small and require minimal time and skills (Morris et al., 2012). Microtask platforms provide many benefits to requesters such as rapid results, wide scalability, and low cost (Zulfiqar et al., 2022). However, a widely shared concern is the variation in task performance (especially quality), limiting the wider use of microtasking. A key corresponding research challenge is the identification of factors that explain this variance so as to address them via improved design.

Conventional work theories, developed in the context of classical types of work (offline, employed), explain how core job characteristics relate to employee attitudes (e.g., motivation) and to employee behavior (e.g., performance; Oldham and Fried, 2016), yet it remains unclear to what extent such theories apply to gig work (Wu and Huang, 2024) and to microtasking crowdsourcing specifically. Several characteristics of microtasking differ markedly from classic types of work. Microtasking work is extremely short in duration (Kaganer et al., 2013). Microtasking platforms use algorithmic management and performance evaluation (Lee et al., 2015; Rosenblat and Stark, 2016). Microtaskers are categorized as independent contractors, not as employees (Kuhn and Maleki, 2017). Microtasking platforms usually exhibit high competition (Woodcock and Graham, 2019) and high turnover rates (Jabagi et al., 2019). Crowdsourcing workers seek high flexibility (Churchill and Craig, 2019; Oyer, 2020). The commitment of gig workers toward platforms might be rather flexible and fluid (Cropanzano et al., 2023). As a result of these differences in the nature of work and its context, it remains unclear to what extent existing theories transfer to and explain motivation, task performance, and platform commitment in microtasking crowdsourcing. Indeed, Nevo and Kotlarsky (2020) highlight the motivation of crowd members (during task execution) and the (ongoing) maintenance of continuing crowd engagement as an open research problem.

To date, there are only a few studies on crowdsourcing, and even fewer in microtasking specifically, that consider (1) the relation of certain job characteristics and motivation in local-based gig work (Zaman et al., 2020; Zhang et al., 2024); (2) the relation of certain job characteristics and performance as well as commitment and retention (but not in microtasking; e.g., Behl et al., 2021; Cram et al., 2020; Mousa and Chaouali, 2022; Zhang et al., 2024); (3) motivation and performance (Lukyanenko et al., 2014; Zaman et al., 2020) as well as commitment (Jiang et al., 2021; Rockmann and Ballinger, 2017); and (4) mediations between specific job characteristics and performance as well as commitment, for instance, mental health as a mediator, but only one for motivation as a mediator (Zhang et al., 2024). While many of these studies focused, for instance, on local-based online work, none except for the study by Jiang et al. (2021) were conducted in the context of microwork. Additionally, performance ratings were usually self-rated, with Zhang et al. (2024) providing one of the few studies with customer-rated performance. Furthermore, studies so far have focused on specific job characteristics, such as autonomy and algorithmic control (Wan et al., 2024), and not a comprehensive set. Thus, evidence on the relevance of job design for microtasking is still limited.

In this paper, we address these knowledge gaps and contribute to a better understanding of how job design characteristics and worker motivation relate to task performance and commitment to platforms in the context of microtasking crowdsourcing. To this end, we ask and answer the research question:

How do job characteristics and worker motivation influence task performance and commitment in microtasking crowdsourcing?

To answer this question, we conceptually developed a model based on work psychology theories as well as insights from prior studies in the microtasking and crowdsourcing context. Specifically, for conceptualizing job design, we draw on the job characteristics model (JCM; Hackman and Oldham, 1976, 1980) and its extensions (Morgeson and Humphrey, 2006). For conceptualizing motivation types, we draw on self-determination theory (SDT; Ryan and Deci, 2000a,b). We tested the theoretical model in a free simulation experiment (i.e., quasi-experimental design) with a sample of 558 microtasking workers, measuring job design (i.e., task and knowledge characteristics) and motivational factors as well as objective task performance (quantitative and qualitative) and self-rated commitment to the platform at two points in time. The analysis contributes to our theoretical understanding of task design and motivation in microtasking work and has practical implications for microtasking design and its management.

Microtasking is a form of crowdsourcing (Kaganer et al., 2013) and hence open innovation (Schlagwein et al., 2017), which breaks knowledge work down into small “microtasks” that are completed in seconds or minutes by human workers (Paolacci et al., 2010). As such, it offers a work format that challenges traditional “employed vs. self-employed” categorizations and needs to be considered as a new form of working (Kuhn, 2016; Kuhn and Maleki, 2017). Like other crowdsourcing forms, three primary actors exist in microtasking: the requesters who outline the tasks, the intermediaries providing the platform for task allocation, and the workers performing the tasks.

Crowdsourcing research on requesters has focused on the benefits and applicability of crowdsourcing from the company's or client's perspective. Requesters use crowdsourcing for conducting work, solving problems, or innovating products (Estellés-Arolas and González-Ladrón-De-Guevara, 2012). Studies have compared open innovation approaches to conventional “closed” approaches, finding that crowd approaches may be more effective (Afuah and Tucci, 2012, 2013) or less costly (Baldwin and Von Hippel, 2011). Organizations typically aim to achieve superior work outputs rather than minimize costs (Almirall and Casadesus-Masanell, 2010; Schlagwein and Bjørn-Andersen, 2014). Microtasking requesters are often individuals, such as researchers recruiting survey and experimental participants (Buhrmester et al., 2011; Crone and Williams, 2017; Shank, 2016).

Research on crowdsourcing platforms focuses on their mediating function. Governance of platforms is critical to keep the crowdsourcing community alive (Mindel et al., 2018). Microtasks require very short actions or judgments by humans that cannot or have not been automated, and hence are sometimes called “human computation” (Law and Von Ahn, 2011; Quinn and Bederson, 2011). Research suggests that allowing crowds to define data categories leads to better performance (Lukyanenko et al., 2014), and that results can be improved through appropriate information quality management strategies (Lukyanenko et al., 2019) and crowd review processes (Brynjolfsson et al., 2016). However, algorithmic human resource management is perceived as a form of control and a challenge by crowdworkers (Duggan et al., 2020, 2023), and algorithmic management seems to collide with autonomy perceptions and workers' motivation (Gagné et al., 2022a; Jarrahi et al., 2020). Despite task design being the second most important aspect after payment (Durward et al., 2020; Kuang et al., 2019), designing tasks that keep workers motivated while providing high-quality results has been and remains a key challenge in microtasking crowdsourcing (De Vreede and Briggs, 2019; Horton and Chilton, 2010; Zheng et al., 2011). Comparable dynamics have been documented in contest settings, where sponsorship increases solver participation but shifts the composition of entrants rather than uniformly enhancing outcomes (Mo et al., 2025).

Research on crowdsourcing workers has examined diverse aspects of worker experience and behavior, including value systems (Deng et al., 2016), potential exploitation (Kuhn and Maleki, 2017; Irani and Silberman, 2013, 2014; Tavakoli et al., 2017), and community leadership (Jiahui et al., 2018; Johnson et al., 2015). Research on motivation commonly builds on SDT (Deci and Ryan, 1990; Ryan and Deci, 2000b) and focuses on extrinsic and intrinsic motivations (Alam and Campbell, 2017; Spindeldreher and Schlagwein, 2016). Common extrinsic motivations include monetary compensation (e.g., Borst, 2010; Brabham, 2010; Gerber and Hui, 2013; Hayes et al., 2017; Leimeister et al., 2009; Ye and Kankanhalli, 2013) and recognition (Brabham, 2008, 2010; Choy and Schlagwein, 2016; Füller, 2006; Rode, 2016; Väätäjä, 2012; Zheng et al., 2011). Intrinsic motivations include pleasure, fun, entertainment (Brabham, 2008; Füller, 2006; Ipeirotis, 2010; Rode, 2016; Ståhlbröst and Bergvall-Kåreborn, 2011; Sun et al., 2011; Väätäjä, 2012; Zhang et al., 2015), learning (Zhang et al., 2019), altruism (Alam and Campbell, 2017; Choy and Schlagwein, 2016; Gerber and Hui, 2013; Jackson et al., 2015; Liu et al., 2011), and identity alignment (Choy and Schlagwein, 2016; Gerber and Hui, 2013; Hong et al., 2018; Jackson et al., 2015; Massung et al., 2013). However, SDT emphasizes that work contexts may never be entirely intrinsically motivating (Gagné and Deci, 2005). The current crowdsourcing literature outlines various motivating factors but falls short in linking job design (alterable by the requester), worker motivation, and task performance as well as platform commitment. A theoretical explanation guiding effective task design for the microtasking context specifically is lacking.

Job characteristics refer to the attributes of the job or task itself as well as to the characteristics of the social and organizational environment in which the task is performed (Morgeson and Humphrey, 2006). The JCM holds that such job or task characteristics impact worker behavior and attitude (Hackman and Oldham, 1976); in its original form, the JCM lays out five job or task characteristics that are relevant for workers' motivation, satisfaction, and performance (Hackman and Oldham, 1975; Oldham and Fried, 2016). These five motivating task characteristics are: (1) autonomy: the task provides substantial freedom to workers; (2) task variety: the task requires a variety of different skills; (3) task significance: the task has an impact on human lives; (4) task identity: the task is a coherent, identifiable piece of work; and (5) feedback: the task provides performance information to workers. Job characteristics theory holds that high levels of these characteristics (i.e., tasks or jobs strongly exhibiting them) impact positively on job outcomes, mediated by workers' “psychological states” such as perceptions of meaningfulness, responsibility, and satisfaction (Hackman and Oldham, 1976; Oldham and Fried, 2016). Via this mediation, the job characteristics are held to positively impact behavioral outcomes such as the quality of the work performance. Empirical work has mostly supported the basic tenets of job characteristics theory (Oldham, 2013; Oldham and Fried, 2016). Recent theoretical extensions suggest that there are elements missing in job characteristics theory and add four “knowledge characteristics” to the discussion (Morgeson and Humphrey, 2006); “social characteristics” were also introduced in this work, but they are of little applicability to microtasking, which is performed mostly in isolation.
While the above five task characteristics are concerned with how the task itself is accomplished, knowledge characteristics reflect the kind of knowledge, skills, and abilities demanded by humans during work, through the work itself. The four motivating knowledge characteristics are: (1) information processing: the task is cognitively demanding due to information handling; (2) problem solving: the task demands novel ideas and solutions; (3) skill variety: the task requires a range of different skills; and (4) specialization: the task requires a depth in knowledge or skills.

Empirical research has shown that including both task and knowledge characteristics allows for better prediction of both worker attitudes and behavioral outcomes (Gagné et al., 2015; Morgeson and Humphrey, 2006). However, the level of applicability and level of predictiveness of the theory varies between different work types (e.g., non-professional vs. professional work; Stegmann et al., 2010). Hence, while there is empirical evidence for the relevance of job design for work outcomes in conventional employed work (Morgeson and Humphrey, 2006; Oldham and Fried, 2016), it is unclear to what degree, or if at all, this applies to microtasking crowdsourcing. On the one hand, there is a general focus on higher autonomy in crowdsourcing as compared to traditional work settings, for instance, by self-selecting work tasks (Ashford et al., 2018). On the other hand, autonomy perceptions by gig workers also include being controlled by algorithmic management, including low autonomy in the execution of tasks (Jarrahi et al., 2020). Bellesia et al. (2024) discuss algorithmic embeddedness as a constraint for the effects of the traditional job characteristics (i.e., the extended JCM, Oldham and Fried, 2016) on the motivation of gig workers. Additionally, gig workers might experience greater restrictions on their job behaviors because algorithmic management links their reputation and, thus, their income prospects to customer satisfaction (Wood and Graham, 2019). Furthermore, given the hyper-specialization in performing small components of a task, job characteristics such as skill and task variety are underexplored in the crowdsourcing context (Keith et al., 2020), and particularly in microtasking.

The “psychological states” part of the JCM has seen empirical support only for perceived meaningfulness (Oldham, 2013). However, SDT (with its focus on the extent of self-regulated motivation) has found substantial support for workers' reactions to job design in a wide range of contexts (Gagné and Deci, 2005). Hence, the combination of JCM and SDT provides a promising foundation for explaining the job characteristics of microtasking and its motivational potential.

SDT distinguishes between amotivation and intrinsic and extrinsic motivations on a continuum ranging from controlled to autonomous regulation, and three basic human needs (i.e., autonomy, competence, and relatedness; Deci and Ryan, 2000; Gagné and Deci, 2005; Gagné et al., 2015; Ryan and Deci, 2000b). Trépanier et al. (2023) generally supported the factor structure reflecting autonomous motivation, introjected and external regulation, and amotivation. On the favorable, autonomous end of the continuum is “motivation” as a voluntary choice with internal regulation. Here, the motivation is more intrinsic because individuals enjoy autonomy and a sense of choice, congruence, and volition. There is an inherent tendency in humans to seek out novelty and challenges; to extend and exercise one's capacity; and to explore and learn (Ryan and Deci, 2000b). Autonomous motivation is linked to optimal employee functioning (e.g., vigor/vitality, satisfaction, lower turnover intention; Trépanier et al., 2023). On the unfavorable, controlled side of the continuum are externally regulated motivations (ranging from integrated, identified, and introjected to external) and finally “amotivation” (Gagné and Deci, 2005). Controlled motivation is extrinsically driven because it drives individuals to withstand pressure, tension, and demand. Humans perform such activities in order to attain a desired and separable outcome (Deci and Ryan, 2000). While autonomous and controlled motivations involve intentional regulation of behavior, amotivation is characterized by a lack of intention and motivation (Gagné and Deci, 2005). Amotivation involves having no particular positive, explicable motivation for the behavior one is exhibiting (Gagné and Deci, 2005). The consequences are emotional exhaustion, intention to quit, and lower performance and job effort. 
The basic human needs for autonomy, competence, and relatedness are critical for autonomous motivation (Gagné et al., 2015; Van den Broeck et al., 2008). On the contrary, the less these needs are fulfilled, the higher the amotivation. In order to contrast these differences in the quality of motivations, in our study we concentrate on the far ends of the proposed continuum, that is, on autonomous motivation and amotivation.

What does this mean for microtasking? As reviewed above, prior research on microtasking and other forms of crowdsourcing is fragmented and cannot clearly answer to what degree such concepts are applicable. In a sample of local-based online crowdworkers (i.e., drivers), Zaman et al. (2020) reported a positive relationship between basic need satisfaction (combined measure) and both extrinsic and intrinsic motivation. On the one hand, basic human needs for competence and autonomy might be difficult to fulfill in microtasking due to the brevity of the tasks. Microwork may reduce the satisfaction of competence needs due to a lack of variety, skill use, and meaning (Gagné et al., 2022b). Regarding autonomy, Gagné et al. (2022a) reviewed mostly negative effects of algorithmic management on workers' need satisfaction and motivation. On the other hand, microtasking workers can self-select the tasks to be performed to a much higher degree than other workers and then repeatedly work on the same tasks for the same project. If basic human needs were fulfilled, on-demand workers were intrinsically motivated and subsequently reported higher organizational identification (Rockmann and Ballinger, 2017). As reviewed above, empirical results available so far suggest that both intrinsic and extrinsic motivations are present in microtasking, with intrinsic motivations particularly impacting positively on the task performance and quality of work, while extrinsic motivation impacts positively on the quantity of work undertaken (Banerjee et al., 2023; Buhrmester et al., 2011; Criscuolo et al., 2014; Crone and Williams, 2017). Overall, we lack empirical evidence on how and which specific task and knowledge characteristics (i.e., the overall job design) relate to specific types of worker motivation and, ultimately, task performance and platform commitment in microtasking crowdsourcing.

To conceptualize job design in microtasking crowdsourcing, the above five task characteristics and four knowledge characteristics are plausibly applicable and useful. Also, job characteristics are pragmatically important antecedents as they can be designed and changed by the work requester. While the impact of some task and knowledge characteristics might be limited due to the small scope of each individual microtasking work item, it appears that a variation is sufficient for a significant variance in motivation and performance even in short-term online work (Kuhn and Maleki, 2017).

Job characteristics and performance as well as platform commitment. For digital work tasks, requesters can often easily and substantially modify the job design, for instance, with the aim of increasing worker motivation or otherwise improving the performance of the task at hand (e.g., reducing error rates). As one example, Aghayi and LaToza (2023) compared traditional and microtask programming directly. Besides a reduction in onboarding time and an increase in project velocity, microtask programming decreased individual developer productivity, while the quality of the created code was comparable. Focusing on gig work challenges in a sample of (local-based) drivers, Zhang et al. (2024) found that viability challenges were negatively related to service performance (service, travel, safety) as rated by customers. Behl et al. (2021) reported that gamification increased performance and reduced intention to quit. Mousa and Chaouali (2022) found work meaningfulness to be positively related to affective commitment to the platform. Algorithmic controls were related to gig workers' techno-stressors, which in turn related to the continuance intentions of Uber drivers: positively if techno-stressors were perceived as a challenge, and negatively if they were perceived as a hindrance (Cram et al., 2020). However, evidence is limited by the cross-sectional design. Overall, there is preliminary evidence for a link between job characteristics and performance as well as commitment for the crowdworking context, though evidence for microtasking is lacking.

Job characteristics and work motivation. In microtasking, given the limited scope of the task, decreasing amotivation and thus avoiding a lack of motivation (e.g., workers not taking on microtasks of the same type repeatedly and thus not using their skill gain) through job design appears to be prima facie a goal equally important to the conventionally more common aim of job design to increase autonomous motivation. Studies of non-crowdsourcing contexts reported that good job design appears to be significantly related to lowering amotivation (Gagné et al., 2015). Yet, job design seems to be largely unexplored in prior studies of crowdsourcing, which have often treated the task as “given” rather than as “designed.” In one of the rare studies that included JCT and SDT, the five original job (task) characteristics (combined in one measure) were positively related to extrinsic motivation and negatively related to intrinsic motivation and joy (Zaman et al., 2020). Zhang et al. (2024) reported that viability challenges were negatively related to autonomous motivation (in one out of two studies), while algorithm evaluation was not. Thus, results from traditional worker samples seem challenged. However, both cross-sectional studies focused on local-based crowdwork, that is, drivers. Overall, the relationships between job characteristics such as autonomy, skill/task variety, and motivation remain largely unknown for crowdworkers (Keith et al., 2020). Considering the arguments about job design (i.e., JCM), worker motivation (i.e., SDT), and the specifics of the microtasking context, we assume that task and knowledge characteristics will positively relate to autonomous motivation and negatively to amotivation.

Motivation, performance, and platform commitment. There is some empirical evidence that autonomous motivation relates to performance in crowdsourcing (Lukyanenko et al., 2014), and that better design leads to higher motivation, participation, and performance (Leimeister et al., 2009). Further, while not empirically examined, amotivation may also play a central role. The tasks have a limited scope, they are fragmented, non-enriched, short-term, and do not allow individuals to work for a meaning-giving “higher cause.” Meaningfulness may thus be perceived as low, on a personal as well as on a societal level. Thus, if low task and knowledge characteristics relate to low experiences of meaningfulness, they are amotivating. However, in two cross-sectional studies about Uber drivers, autonomous motivation and other-rated service performance were not related (Zhang et al., 2024). In one cross-sectional study about microtasking, microworkers' motivational orientations (i.e., compensation, enjoyment, microtime structure) were positively related to microworkers' intention to continue microworking, via the perceived relative advantage of microworking (Jiang et al., 2021). Overall, empirical results are far from conclusive.

Work motivations as mediators. Studies about motivation mediating between job characteristics and performance or platform commitment in microtasking are lacking. While Zhang et al. (2024) tested an indirect relationship between viability and performance via autonomous motivation (with limited evidence, see above), three other cross-sectional studies find evidence for mediations between job characteristics and commitment via mental health in online work. Umair et al. (2023) found feedback, not job autonomy, to be positively related to overload and job insecurity, while the latter, in turn, were related to strain, which related to (higher) discontinuous intention. Likewise, Wang et al. (2020) reported that feedback and cognitive demands related to exhaustion and—via crowdwork experiences—to lower platform commitment. Finally, challenges related to gig work (e.g., income insecurity, loneliness) were related to gig workers' withdrawal intentions, again mediated by stressor perceptions (Huang et al., 2024). In summary, mediations in cross-sectional studies can only be interpreted with caution. Furthermore, the mediation between job characteristics and performance as well as commitment by motivation has not yet been investigated in microtasking.

Through their impacts on worker motivation, we expect task and knowledge characteristics to ultimately influence performance, both in terms of quality and extent, as well as platform commitment (e.g., Jabagi et al., 2019). More specifically, we argue that beneficial job design (high scores for task and knowledge characteristics) will be associated with higher quantitative and qualitative performance and with higher platform commitment via their relations to autonomous motivation. Thus, we expect autonomous motivation to mediate the positive associations between task and knowledge characteristics and performance as well as commitment in microtasking. However, as job design by its nature will also directly influence performance and commitment, we assume a partial mediation.

H1: Autonomous work motivation partially mediates the positive relationship between task characteristics and (a) task performance and (b) platform commitment. That is, task characteristics are positively related to autonomous motivation, which in turn is positively related to task performance and platform commitment.

H2: Autonomous work motivation partially mediates the positive relationship between knowledge characteristics and (a) task performance and (b) platform commitment. That is, knowledge characteristics are positively related to autonomous motivation, which is positively related to task performance and platform commitment.

Furthermore, we expect that poor job design (“low” task and knowledge characteristics) will be associated with lower performance and platform commitment, mediated through their relation to amotivation. Research on conventional work suggests that performance will suffer and platform commitment will decrease when people are amotivated. Though overall evidence is lacking in microtasking, there are some empirical indications of this effect (Paolacci et al., 2010). Thus, we expect that high task and knowledge characteristics relate to low amotivation, and low amotivation in turn relates to higher performance and platform commitment in microtasking.

H3: Amotivation partially mediates the positive relationship between task characteristics and (a) task performance and (b) platform commitment. That is, task characteristics are negatively related to amotivation, which in turn is negatively related to task performance and platform commitment.

H4: Amotivation partially mediates the positive relationship between knowledge characteristics and (a) task performance and (b) platform commitment. That is, knowledge characteristics are negatively related to amotivation, which in turn is negatively related to task performance and platform commitment.
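
One common way to formalize the partial mediation stated in H1 to H4 is the regression-based single-mediator model. The notation below is introduced here purely for illustration: $X$ stands for a task or knowledge characteristic, $M$ for the mediator (autonomous motivation or amotivation), and $Y$ for the outcome (task performance or platform commitment). It is a sketch of the logic of the hypotheses and does not necessarily mirror the estimation approach used in this study.

$$
\begin{aligned}
M &= i_M + aX + \varepsilon_M,\\
Y &= i_Y + c'X + bM + \varepsilon_Y,\\
\text{indirect effect} &= ab, \qquad \text{total effect: } c = c' + ab.
\end{aligned}
$$

Under this sketch, H1 and H2 correspond to $a > 0$ and $b > 0$, whereas H3 and H4 correspond to $a < 0$ and $b < 0$. In both cases the indirect effect $ab$ is positive, and partial (rather than full) mediation additionally implies a nonzero direct effect $c'$.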

2 Research method

2.1 Study design

Our research design focused on a free simulation experiment, combined with an upfront survey. Notably, free simulation experiments are different from classical controlled experiments because treatment levels are not predetermined (Fromkin and Streufert, 1976). They correspond to the quasi-experimental study designs conducted in field research in the area of work and organizational psychology. They have been used in various studies in order to capture the rich variation of the independent variables in a natural setting (Burton-Jones and Straub Jr, 2006). Typically, participants are placed in a real-world situation (Gefen et al., 2000) in which they respond to real or realistic events (Söllner et al., 2016) and where they act freely according to what is natural (Vance et al., 2008) in the given setting. Free simulation experiments are specifically effective for guaranteeing ecological validity (Pallud and Straub, 2014). We combined this with survey data. The strategy to administer an upfront survey is inspired by Polites and Karahanna (2012). In the first part of the study, we collected data via a cross-sectional, quantitative, self-report online survey administered as a microtask in the natural environment of a crowdsourcing platform. In the second part of the study, we added workers' objective real-life performance in microtasks over the month following the survey (released by the crowdsourcing platform). Both data sources were matched by the worker IDs with the consent of the participants. The participation was anonymous, voluntary, and confidential. Sociodemographic data was only provided for sample description.
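
The matching of survey responses and platform-released performance data can be thought of as a simple join on the worker ID. The following is a minimal sketch only: the field names (`worker_id`, `consent`, `amotivation`, `approved_tasks`) and the toy records are hypothetical and do not reflect the platform's actual data format.

```python
def match_by_worker_id(survey_rows, performance_rows):
    """Join survey and performance records that share a worker ID.

    Only survey rows with consent are linked (hypothetical `consent` flag).
    """
    performance_by_id = {row["worker_id"]: row for row in performance_rows}
    matched = []
    for survey in survey_rows:
        if not survey.get("consent", False):
            continue  # no consent: never link this worker's data
        performance = performance_by_id.get(survey["worker_id"])
        if performance is None:
            continue  # no performance record available for this worker
        matched.append({**survey, **performance})
    return matched


# Toy records, invented purely for illustration.
survey = [
    {"worker_id": "w1", "consent": True, "amotivation": 2.0},
    {"worker_id": "w2", "consent": False, "amotivation": 4.0},
    {"worker_id": "w3", "consent": True, "amotivation": 1.5},
]
performance = [
    {"worker_id": "w1", "approved_tasks": 40},
    {"worker_id": "w2", "approved_tasks": 12},
]
matched = match_by_worker_id(survey, performance)
```

In this toy example only worker `w1` is retained, because `w2` did not consent and `w3` has no performance record.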

2.2 Sampling procedure and description

Workers on a German microtasking platform were invited to fill in a quantitative survey in the form of a microtask. In order to enhance variability in performance, the invitation to participate was sent to 800 workers with either high or low quantity and quality of prior performance. The 564 participating workers correspond to an initial response rate of 70.5% (N = 564). The sample included persons with high quality (i.e., task approval rate above 80%) and either high (n = 149) or low (n = 149) quantity (i.e., more or less than 250 realized tasks, respectively), and persons with lower quality and either high (n = 118) or low (n = 148) quantity. Data was complete for 558 questionnaires. One-third (33.5%) of the participants were women (n = 187), 56.8% were men (n = 317), and 54 did not specify their gender. The highest level of education was university for 137 participants (24.6%), secondary school for 72 participants (12.9%), high school for 185 participants (33.2%), and apprenticeship for 74 participants (13.3%), while 90 participants did not indicate their level of education. A total of 80 participants held a Bachelor's degree (14.3%), and 22 held a Master's degree (3.9%). The majority (76.0%) of the participants were of German origin (n = 424), followed by 38 Austrians (6.8%), six Swiss, and one to three participants each from 16 other countries. For 69.7% of participants, the country of residence was Germany (n = 389). Regarding their employment status, 33.9% of participants were employed (n = 189), 23.8% were students (n = 133), 8.1% were pupils (n = 45), 5.2% were freelancers (n = 29), 3.2% were entrepreneurs (n = 18), 2.7% were stay-at-home parents (n = 15), 2.3% were unemployed (n = 13), and one indicated that they were an intern (115 not specified).
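
The reported sample figures can be cross-checked with a few lines of arithmetic. The sketch below uses only the counts stated in this section; the variable names are illustrative. It suggests that the percentages are computed on the 558 complete questionnaires, while the response rate is based on all 564 respondents.

```python
# Counts as reported in the sampling description.
invited = 800
cells = {
    ("high quality", "high quantity"): 149,
    ("high quality", "low quantity"): 149,
    ("lower quality", "high quantity"): 118,
    ("lower quality", "low quantity"): 148,
}
respondents = sum(cells.values())
response_rate = round(100 * respondents / invited, 1)

complete = 558
gender = {"women": 187, "men": 317, "not specified": 54}


def pct(count, base):
    """Share of `count` in `base`, in percent, rounded to one decimal."""
    return round(100 * count / base, 1)


gender_pct = {label: pct(n, complete) for label, n in gender.items()}

print(respondents, response_rate)
print(gender_pct)
```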

2.3 Operationalization

To measure the theoretical constructs, items were rated on a five-point Likert scale ranging from 1 (= I agree) to 5 (= I do not agree). All scales (except platform commitment) were re-coded so that higher values mirror agreement.
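The re-coding step can be illustrated with a minimal sketch. It assumes the usual mirroring rule for a 1 to 5 scale (new value = 6 minus old value); the function name `reverse_code` and the sample ratings are hypothetical and not taken from the study materials.

```python
import numpy as np

def reverse_code(ratings, low=1, high=5):
    """Mirror ratings on a low..high scale so that higher values mean more agreement."""
    ratings = np.asarray(ratings)
    return (low + high) - ratings

# Hypothetical raw ratings: 1 = "I agree" ... 5 = "I do not agree"
raw = np.array([1, 2, 3, 4, 5])
recoded = reverse_code(raw)  # after re-coding, 5 = agreement ... 1 = disagreement
print(recoded.tolist())
```

Applying the function twice returns the original ratings, which is a quick sanity check that the mirroring is symmetric.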

Job design was assessed via the Work Design Questionnaire (Morgeson and Humphrey, 2006), using a German version (Stegmann et al., 2010). This standardized questionnaire is based on the extended JCM as introduced in Section 1. It comprises 21 scales for task design, some of which are not applicable to microtasking (e.g., ergonomic or social aspects). We included the following five subscales for task characteristics:

• Autonomy (including work scheduling, decision-making, work methods) was measured using nine items (e.g., “The job allows me to make my own decisions about how to schedule my work”).

• Task variety was assessed using four items (e.g., “The job involves a great deal of task variety”).

• Task significance comprised four items (e.g., “The results of my work are likely to significantly affect the lives of other people”).

• Task identity was measured using four items (e.g., “The job involves completing a piece of work that has an obvious beginning and an end”).

• Feedback from the job was assessed using three items (e.g., “The work activities themselves provide direct and clear information about the effectiveness of my job performance”).

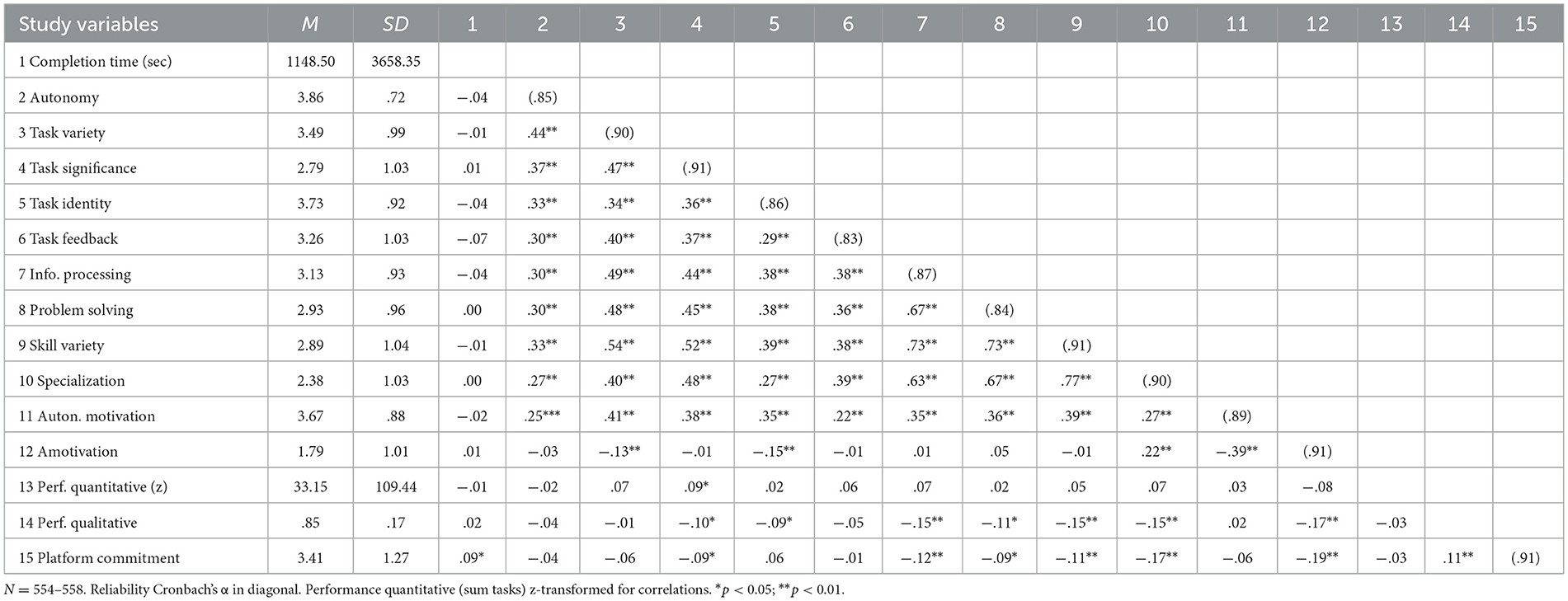

From knowledge characteristics, the subscale job complexity was excluded as the items are not applicable to microtasking. Indeed, complexity is intentionally avoided for microtasks. We use four subscales, each measured using four items: information processing (e.g., “The job requires me to monitor a great deal of information”), problem solving (e.g., “The job involves solving problems that have no obvious correct answer”), skill variety (e.g., “The job requires a variety of skills”), and specialization (e.g., “The job is highly specialized in terms of purpose, tasks, or activities”). Cronbach's α reliability for all subscales ranged between α = 0.83 and α = 0.91 (see Table 1).

Work motivation was measured with scales from the Multidimensional Work Motivation Scale (Gagné et al., 2015). This standardized questionnaire is based on SDT. Scales had to be answered following the stem “Why do you or would you put efforts into your job?” The autonomous motivation composite was assessed with the three items from the component identification (e.g., “Because I personally consider it important to put efforts in this job”) and three items from the component intrinsic motivation (e.g., “Because I have fun doing this job”). Amotivation was measured with three items, a sample item being “I do little because I don't think this job is worth putting efforts into.” Cronbach's α reliabilities were α = 0.89 and α = 0.91, respectively (see Table 1).
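For readers less familiar with the reliability coefficient reported here, the following is a minimal sketch of how Cronbach's α can be computed from an item matrix using the standard formula α = k/(k − 1) · (1 − Σ item variances / variance of the sum score). The simulated three-item scale is purely illustrative and is not the study data.

```python
import numpy as np

def cronbach_alpha(items):
    """Cronbach's alpha for an (n_respondents, k_items) matrix of item scores."""
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    item_vars = items.var(axis=0, ddof=1)       # variance of each item
    total_var = items.sum(axis=1).var(ddof=1)   # variance of the sum score
    return k / (k - 1) * (1.0 - item_vars.sum() / total_var)

# Illustrative data only: three noisy indicators of one latent trait
rng = np.random.default_rng(0)
trait = rng.normal(size=500)
items = trait[:, None] + rng.normal(scale=0.6, size=(500, 3))
alpha = cronbach_alpha(items)
print(round(alpha, 2))
```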

The three dependent variables, (quantitative and qualitative) task performance and platform commitment, were assessed as follows: Quantitative performance was operationalized as the total of the submitted tasks (sum) in the month of the survey, and the qualitative performance was the number of the approved tasks relative to the submitted tasks (percentage). These data were matched with the participating workers' IDs. As the common scales assessing organizational commitment seemed less appropriate for online workers, relevant items of platform commitment were developed with the platform providers and operationalized as working for competitors (i.e., non-commitment). Following the statement “I work parallel on other microtasking platforms, because...,” the participants had to rate four reasons for doing so, that is, “I earn more money there,” “this way I have more variety,” “otherwise I have not enough tasks,” and “I have more fun at work there.” Cronbach's α reliability was 0.91.
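The two performance measures described above can be sketched as follows. The record type `WorkerMonth`, its field names, and the example counts are hypothetical; the handling of workers with zero submitted tasks (returning `None`) is an assumption, since the text does not say how such cases were treated.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class WorkerMonth:
    """Hypothetical platform record for one worker in the observation month."""
    worker_id: str
    submitted: int  # number of tasks submitted
    approved: int   # number of submitted tasks that were approved

def quantitative_performance(w: WorkerMonth) -> int:
    """Quantity: total number of submitted tasks."""
    return w.submitted

def qualitative_performance(w: WorkerMonth) -> Optional[float]:
    """Quality: approved tasks as a percentage of submitted tasks."""
    if w.submitted == 0:
        return None  # assumption: quality is undefined without submissions
    return 100.0 * w.approved / w.submitted

example = WorkerMonth(worker_id="w1", submitted=200, approved=170)
print(quantitative_performance(example), qualitative_performance(example))
```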

Additionally, we included two control variables. As a proxy for the conscientious completion of the survey, the completion time was included as a continuous control variable. Additionally, when analyzing the antecedents of performance quality, the performance quantity was included as a control variable. Following the recommendations of Mason and Suri (2012) for crowdsourcing research, we added an open question in order to ensure quality control. We asked: “At which place, respectively, in which situation are you typically when working on [name of platform]?”

2.4 Analysis

Besides zero-order correlations for descriptive purposes, regression-based path analyses provided by the PROCESS software, model 4, for SPSS (Hayes et al., 2017) were conducted for the mediation hypotheses. Using ordinary least squares regression, this macro applies bootstrapped confidence intervals (CI) and generates conditional indirect effects in mediation models (Preacher et al., 2007). Given the 54 separate tests, for the mediation analyses the CI was set to 99% and the significance level to p < .001 (= .05/54).
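The logic of a simple mediation test with a bootstrapped confidence interval can be sketched as below. This is not the PROCESS macro itself: it is a minimal NumPy illustration that assumes a percentile bootstrap, omits covariates, and uses simulated data with made-up path coefficients. All function and variable names are hypothetical.

```python
import numpy as np

def ols_coefs(X, y):
    """OLS coefficients with an intercept in position 0."""
    X1 = np.column_stack([np.ones(len(y)), X])
    coefs, *_ = np.linalg.lstsq(X1, y, rcond=None)
    return coefs

def indirect_effect(x, m, y):
    """a*b: path a (x -> m) times path b (m -> y, controlling for x)."""
    a = ols_coefs(x, m)[1]
    b = ols_coefs(np.column_stack([x, m]), y)[2]
    return a * b

def bootstrap_ci(x, m, y, n_boot=2000, level=0.99, seed=0):
    """Percentile bootstrap CI for the indirect effect (assumed CI type)."""
    rng = np.random.default_rng(seed)
    n = len(x)
    estimates = np.empty(n_boot)
    for i in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample cases with replacement
        estimates[i] = indirect_effect(x[idx], m[idx], y[idx])
    tail = (1.0 - level) / 2.0 * 100.0
    return tuple(np.percentile(estimates, [tail, 100.0 - tail]))

# Bonferroni-type adjustment for 54 tests, as described in the text
alpha_adjusted = 0.05 / 54

# Simulated data only (n matches the sample size; coefficients are invented)
rng = np.random.default_rng(1)
n = 558
x = rng.normal(size=n)                 # e.g., a job characteristic
m = -0.4 * x + rng.normal(size=n)      # e.g., amotivation
y = -0.5 * m + rng.normal(size=n)      # e.g., an outcome
point = indirect_effect(x, m, y)
lo, hi = bootstrap_ci(x, m, y)
print(round(alpha_adjusted, 6), round(point, 3), (round(lo, 3), round(hi, 3)))
```

An indirect effect is treated as statistically supported when the interval excludes zero, which is how the CIs in the Results section are read.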

The relationships between job characteristics and motivation were additionally analyzed in an exploratory manner. As an indicator of relative effect size, relative weight analysis (Johnson, 2000) was applied by means of a program by Tonidandel and LeBreton (2011). This ordinary least squares-based procedure provides raw and rescaled relative weights (RW) for each variable regarding the variance explanation of the criterion (including the CI of the raw weights). In addition, statistical significance of the weights was assessed by means of bootstrapping (i.e., CI for the test of significance).
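The core computation behind relative weights can be sketched in a few lines. The sketch follows the general approach of Johnson (2000), in which predictors are replaced by their closest orthogonal counterparts, and it omits the bootstrapped CIs and significance tests that the program by Tonidandel and LeBreton (2011) additionally provides. The function name and the simulated data are hypothetical.

```python
import numpy as np

def relative_weights(X, y):
    """Raw and rescaled relative weights for predictors X and criterion y."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    R = np.corrcoef(np.column_stack([X, y]), rowvar=False)
    Rxx, rxy = R[:-1, :-1], R[:-1, -1]
    # Symmetric square root of the predictor correlation matrix:
    # correlations between the predictors and their orthogonal counterparts
    evals, evecs = np.linalg.eigh(Rxx)
    lam = evecs @ np.diag(np.sqrt(evals)) @ evecs.T
    # Standardized weights of y regressed on the orthogonal counterparts
    beta = np.linalg.solve(lam, rxy)
    raw = (lam ** 2) @ (beta ** 2)   # raw weights sum to R^2
    return raw, raw / raw.sum()      # rescaled weights sum to 1

# Simulated, correlated predictors (illustrative only)
rng = np.random.default_rng(2)
common = rng.normal(size=(400, 1))
X_demo = 0.6 * common + rng.normal(size=(400, 3))
y_demo = 0.5 * X_demo[:, 0] + 0.2 * X_demo[:, 1] + rng.normal(size=400)
raw_rw, rescaled_rw = relative_weights(X_demo, y_demo)
print(np.round(raw_rw, 3), np.round(rescaled_rw, 3))
```

Because the raw weights sum to the model R², the rescaled weights can be read as each predictor's share of the explained variance.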

3 Results

Zero-order correlations and descriptive statistics of all study variables are displayed in Table 1. As completion time correlated significantly only with platform commitment, it was used as a covariate only in the analyses with platform commitment as the dependent variable.

The open question for quality control provided valuable insights into the locations and settings where the 558 participants carried out their microtasks. The most frequent answers were: at home (n = 441), at work (n = 65), at computer or laptop (n = 57), in trains, buses or taxi (n = 48), at home office (n = 45), in transit (n = 31), at university or school (n = 23), after work (n = 22), and on the couch (n = 22). The answers mirror the heterogeneity of situations in which workers accomplish their microtasks.

3.1 Autonomous motivation as mediator (H1, H2)

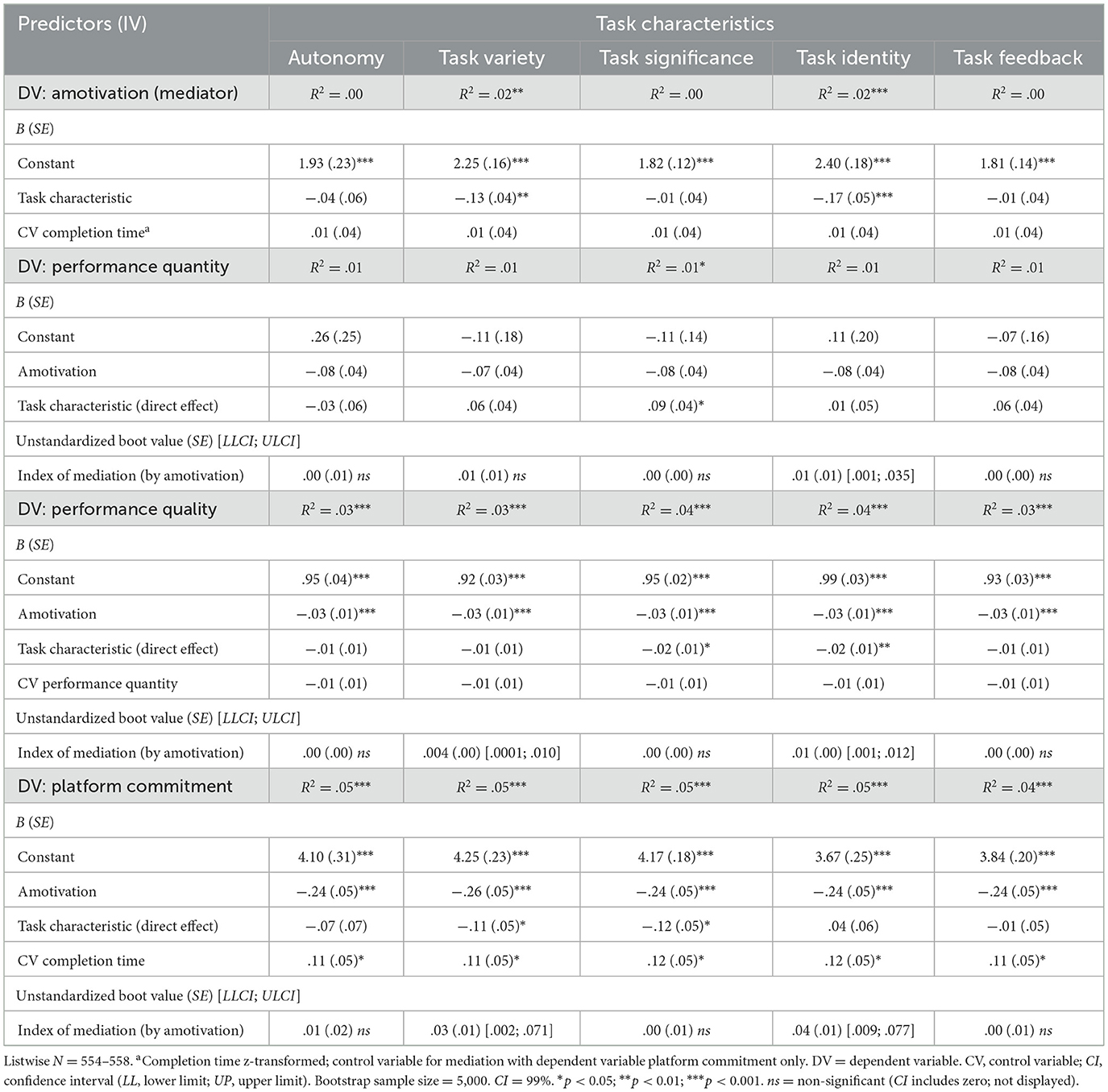

Though all task characteristics were significantly positively related to autonomous motivation, the latter was not related to any of the dependent variables (Table 2). Hence, the mediation hypothesis H1 for the relationships between task characteristics and (a) quantitative performance, qualitative performance and (b) platform commitment was not supported. Additionally, none of the direct relationships between task characteristics and performance or platform commitment reached p < .001.

Table 2. Direct and indirect effects: task characteristics, autonomous motivation, and task performance (H1).

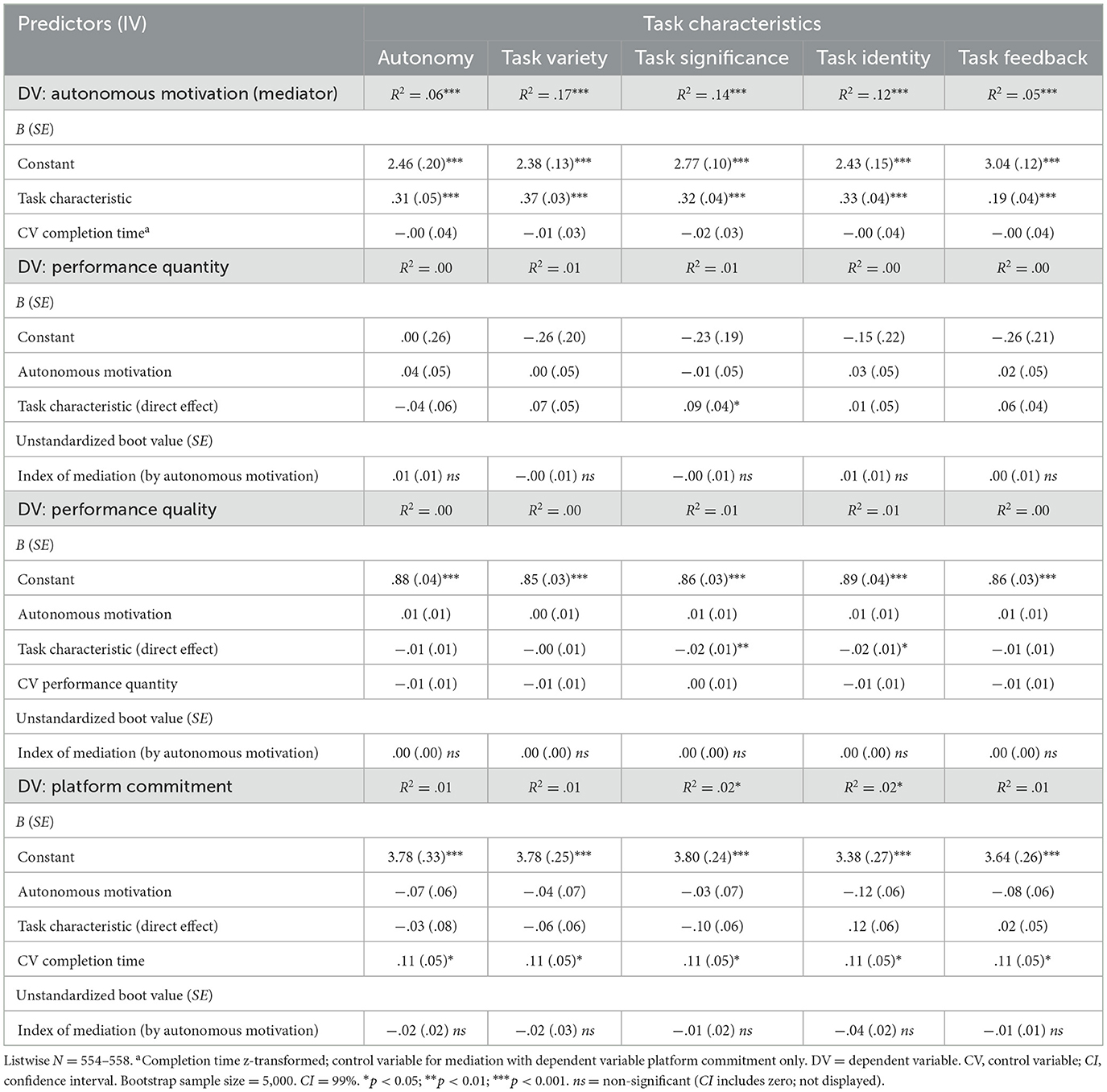

Likewise, all knowledge characteristics were significantly positively related to autonomous motivation, but the mediation hypothesis H2 for the relationship between knowledge characteristics and (a) quantitative performance, qualitative performance and (b) platform commitment was not supported (Table 3). Regarding the direct effects of knowledge characteristics, none was significantly related to performance quantity, while all but problem solving were significantly (i.e., p < .001) negatively related to performance quality (i.e., information processing, skill variety, specialization all B = −0.03, SE = .01, p < .001), and specialization was also significantly negatively related to platform commitment (B = −0.21, SE = .05, p < .001).

Table 3. Direct and indirect effects: knowledge characteristics, autonomous motivation, and task performance (H2).

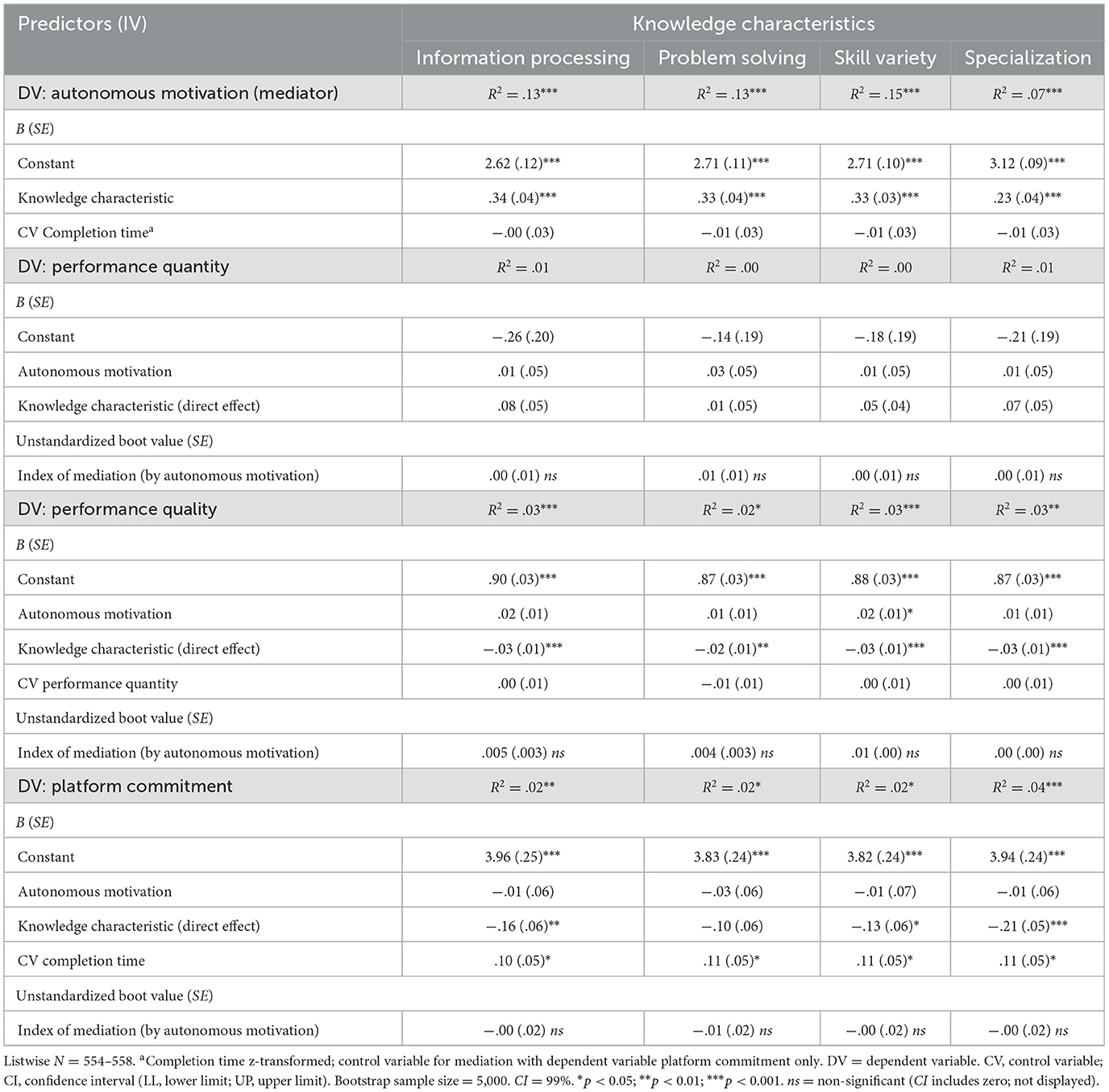

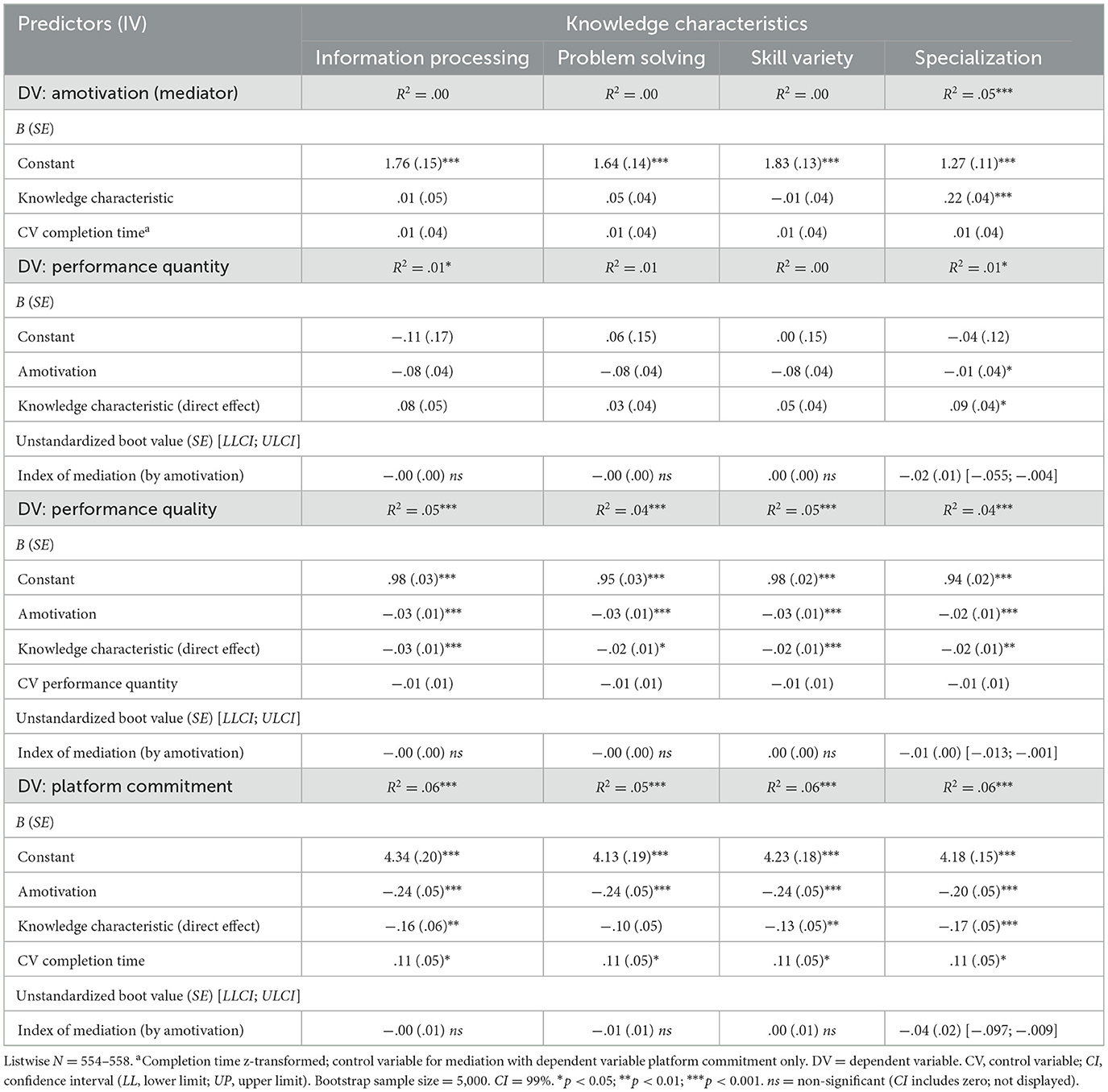

3.2 Amotivation as mediator (H3, H4)

While only task identity (B = −0.17, SE = .05, p < .001) was significantly negatively related to amotivation (Table 4), amotivation in turn was not significantly related to performance quantity, but was negatively related to performance quality (B = −0.03, SE = .01, p < .001) and platform commitment (B = −0.24, SE = .05, p < .001). Amotivation mediated the associations of task identity with performance quantity (CI [.001; .035]), of task variety and task identity with performance quality (CI [.0001; .010], resp. [.001; .012]), and with platform commitment (CI [.002; .071], resp. [.009; .077]). Thus, the mediation hypothesis H3 for the relationships between task characteristics and (a) quantitative performance, qualitative performance and (b) platform commitment was partially supported for task variety and task identity.

While specialization was positively related to amotivation (B = 0.22, SE = .04, p < .001), the mediation hypothesis H4 for the relationship between knowledge characteristics and (a) quantitative performance, qualitative performance and (b) platform commitment was only supported for this one knowledge characteristic (see Table 5). The association of specialization with quantitative performance (CI [−.055; −.004]), qualitative performance (CI [−.013; −.001]) and platform commitment (CI [−.097; −.009]) showed significant indirect effects via amotivation.

Table 5. Direct and indirect effects: knowledge characteristics, amotivation, and task performance (H4).

In summary, all task and knowledge characteristics were significantly related to higher autonomous motivation, but only task identity and specialization were (negatively vs. positively) related to amotivation. While autonomous motivation did not mediate (H1 and H2 not supported), amotivation mediated between three job characteristics and performance as well as platform commitment. That is, in partial support of H3 and H4, amotivation mediated the relationships of task identity and specialization with all three dependent variables, and also those of task variety with qualitative performance and platform commitment.

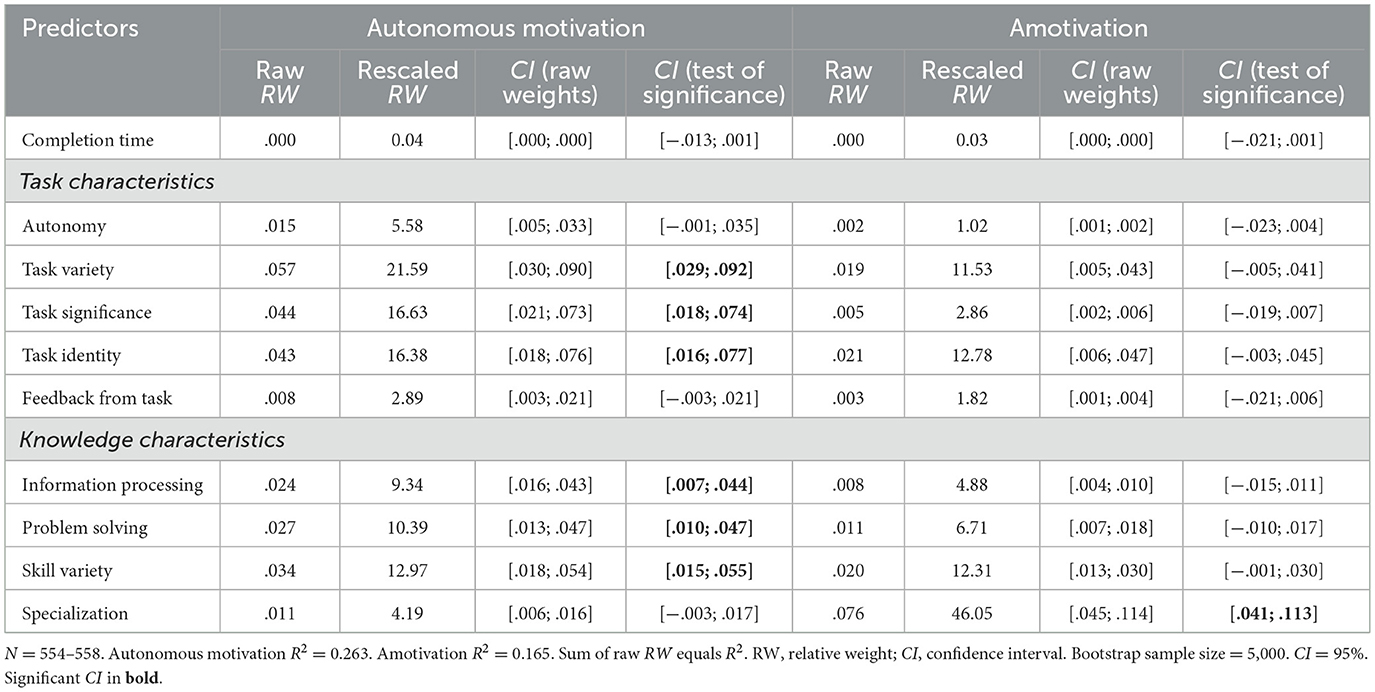

3.3 Direct relationship between job characteristics and work motivation

As a measure of relative effect size (Johnson, 2000; Tonidandel and LeBreton, 2011), Table 6 shows relative weight (RW) analyses including task and knowledge characteristics. For autonomous motivation, we found (a) that task variety, task significance, and task identity had the highest RW and explained more than half (55%, rescaled RW) of the explained variance (R² = .26), and (b) that those three characteristics as well as information processing, problem solving, and skill variety were significant statistical predictors of autonomous motivation (i.e., CI for the test of significance did not include zero). Regarding amotivation, task variety, task identity, skill variety, and specialization had the highest RW and explained more than 80% of the explained variance (R² = .17). Specialization, with the rescaled RW of 46.1%, was a significant predictor of amotivation.

Table 6. Relative weight analysis of relationships between task and knowledge characteristics and autonomous motivation/amotivation.

Taken together, three task characteristics (task variety, significance, identity) each explained between 4% and 6% of the variance in autonomous motivation. One knowledge characteristic, specialization, explained 7.6% of the variance in amotivation.

4 Discussion

This study provides empirical insights into the associations between job design, worker motivation, task performance, and platform commitment in microtasking crowdsourcing. The empirical results generally support the associations between job characteristics, worker motivation, and task performance as well as platform commitment. Both task and knowledge characteristics are significantly related to autonomous motivation, and task identity and specialization are also related to amotivation. However, mediations of autonomous motivation, per H1 and H2, were not significant, and only a few statistical mediations for amotivation were supported. That is, amotivation mediated the positive relationships between two task characteristics (i.e., task variety, task identity) and performance and commitment (H3 partially supported) and the negative relationships between the knowledge characteristic specialization and all three dependent variables (H4 partially supported). Overall, while all job characteristics are related to higher autonomous motivation, thus supporting an important claim of JCM, autonomous motivation seems less relevant for performance and commitment. However, while the majority of the job characteristics are not related to amotivation, the few that are related show associations with performance and commitment that are mediated by amotivation. Additionally, only a few job characteristics (and only knowledge characteristics) are directly significantly related to qualitative performance and platform commitment, especially specialization. Unexpectedly, specialization did not have a beneficial impact on motivation of microtask workers and was related to lower performance quality and platform commitment. This is contrary to earlier findings (e.g., Oldham and Fried, 2016). The low-skill level of microtasks suggests that knowledge characteristics may be detrimental to quality.

When juxtaposing our findings with existing literature, contrasts and similarities emerge. Zhang et al. (2024) did not find a relationship between autonomous motivation of crowdworkers and service performance—neither self-rated nor other-rated. This aligns with our findings and suggests that perhaps amotivation would have been a more relevant focus than autonomous motivation. Zaman et al. (2020) found that job characteristics were positively associated with extrinsic motivation but negatively associated with intrinsic motivation, showing that some associations known for traditional work might not be as straightforward as assumed. However, our results diverge from Zaman et al.'s findings, as we observed positive relationships with autonomous motivation, suggesting that the dynamics of motivation in microtasking environments may be more complex than previously understood. Finally, like Banerjee et al. (2023) we found differences between quantitative and qualitative performance. While the authors reported a higher relevance of extrinsic reward for quantity rather than quality, we found job characteristics being more relevant for quality as compared to quantity. This might underline the intrinsic notion of job characteristics. Analogously, Mo et al. (2025) found that interventions in contest design, such as sponsorship, do not consistently improve performance quality, underscoring how platform-level mechanisms can reshape participation without guaranteeing better outcomes.

The lack of statistical mediation by autonomous motivation might be related to inherent platform characteristics like task fragmentation (Duggan et al., 2020) and algorithmic control (Dunn, 2020), which seem to jeopardize worker motivation (e.g., Gagné et al., 2022a; Jarrahi et al., 2020) and may lead to feelings of powerlessness, loneliness and alienation (Glavin et al., 2021; Wan et al., 2024).

4.1 Theoretical contributions

This study supports the applicability of job characteristics theory (Hackman and Oldham, 1976; Morgeson and Humphrey, 2006) and SDT (Deci and Ryan, 2000; Gagné and Deci, 2005) to microtasking crowdsourcing. This is important because it has been questioned how far general work and human resource theories (Kuhn, 2016; Kuhn and Maleki, 2017; Ryan and Deci, 2000b) and job design theory, including the JCM in particular (Gagné et al., 2015; Morgeson and Humphrey, 2006; Oldham and Fried, 2016; Stegmann et al., 2010), apply to new, open, and crowd-based forms of working such as microtasking. In this regard, the motivation classification stemming from SDT (autonomous motivation vs. amotivation; Deci and Ryan, 1990, 2000; Ryan and Deci, 2000b) proved useful when combined with the JCM (Hackman and Oldham, 1975, 1976).

The lack of significant mediation by autonomous motivation suggests that traditional conceptualizations of work enrichment and empowerment (Oldham and Fried, 2016) may not fully apply to microtasking crowdsourcing. In contrast to trends around work enrichment and empowerment, microtasking essentially returns to Taylorist principles (Deng et al., 2016; Kuhn, 2016; Kuhn and Maleki, 2017). This is consistent with research showing that microtasking crowdsourcing lacks the structure necessary to sustain intrinsic motivation (Deng and Joshi, 2016).

This implies that the very structure of microtasks—characterized by brevity, fragmentation, and limited scope—creates an environment fundamentally different from traditional employment. Because microtasking work is extremely short in duration, it may prevent workers from developing the sustained engagement that typically fosters autonomous motivation (Kaganer et al., 2013). This aligns with observations that microtasking reduces the satisfaction of competence needs due to limited variety, skill use, and meaning (Gagné et al., 2022b).

Our findings align with research questioning the role of autonomy in gig work (Kuhn and Maleki, 2017), where algorithmic management significantly constrains workers' abilities to make meaningful choices. Unlike traditional work environments, where autonomy fosters engagement (Gagné et al., 2015), in microtasking, perceived autonomy may not lead to higher performance or platform commitment. The algorithmic management prevalent on microtasking platforms may undermine autonomous motivation (Wan et al., 2024). Algorithmic control can negatively affect workers' need satisfaction and motivation (Jarrahi et al., 2020).

A notable finding is that specialization was positively associated with amotivation and negatively with performance quality, contrary to expectations. When tasks are already small and require minimal skills (Morris et al., 2012), further specialization may reduce rather than enhance the worker's sense of meaningful contribution. This reflects the “duality of empowerment and marginalization” in microtasking crowdsourcing (Deng et al., 2016). One possible explanation is that microtasking does not provide meaningful skill development or deep engagement opportunities, leading to task fatigue or disengagement (Keith et al., 2020). This is consistent with findings that low-skill microtasks often fail to satisfy workers' need for competence, a key component of SDT (Ryan and Deci, 2000b).

The (limited) statistical mediating role of amotivation rather than autonomous motivation aligns with the finding that autonomous motivation did not relate to service performance in platform work (Zhang et al., 2024). This suggests that in microtasking environments, reducing negative motivational states may be more effective than attempting to enhance positive ones. This insight challenges traditional motivational theories but may have broader applicability to platform work (Wu and Huang, 2024).

Finally, the statistical mediation of relationships between task variety, task identity, and performance outcomes suggests that microtaskers may view these job characteristics as “hygiene factors” (Herzberg, 1966) rather than motivators. When these characteristics are absent, workers become amotivated, but their presence does not necessarily create autonomous motivation strong enough to influence performance. This interpretation is consistent with Zaman et al.'s (2020) finding that job characteristics related differently to extrinsic vs. intrinsic motivation in platform work.

4.2 Practical implications

A straightforward practical implication of our findings is that microtasking crowdsourcing requesters and platform designers should prioritize reducing amotivation rather than attempting to enhance autonomous motivation. From the requesters' perspective, job design aimed at increasing autonomous motivation appears to offer limited benefits, whereas reducing amotivation is more effective. In other words, performance suffers when workers are amotivated; hence, it is in the requesters' interest to focus on design and specifications that minimize amotivation. At the same time, following human-centered design principles that jointly optimize job design and technology remains warranted (Parker and Grote, 2022).

This emphasis on reducing negative motivational states has direct implications for job and task design in microtasking. While traditional job design interventions emphasize job enrichment (Oldham and Fried, 2016), in microtasking, reducing task monotony and ensuring clear task identity may be more effective (Buhrmester et al., 2011). This aligns with findings that task clarity and structure are critical for sustaining engagement in digital markets (Jiang et al., 2021).

The finding that specialization correlates with higher amotivation suggests that platforms should balance specialization with opportunities for variation. In line with research on gamification in gig work (Behl et al., 2021), this could involve designing microtask sequences that progressively increase in complexity or offering skill-based task recommendations, where the nature of work allows. Our findings, and those of others, that algorithmic control can undermine worker motivation (Duggan et al., 2020), suggest that platform designers should ensure that performance evaluation and task allocation mechanisms are transparent and provide constructive feedback. Implementing real-time feedback loops and meaningful worker recognition may help mitigate the negative effects of algorithmic management (see also, Gagné et al., 2022a).

In short, within the scope of what can be designed and changed with typical microtasking jobs, design and specification effort should focus on four key areas: task significance (articulating how the work will be used), task variety (broadening the scope of flexibility of the work where possible), task identity (designing tasks as clearly delineated pieces of work), and opportunities for information processing and problem solving (higher-level skills).

4.3 Limitations and future research

The strengths of this study are the unique mixed-method data, including survey and actual performance data, as well as a large sample size; however, the design also comes with certain limitations. First, job characteristics, work motivation, and platform commitment are self-reported measures. To address this concern, we built on validated and established measurement constructs, but nonetheless, issues of self-reporting apply. Second, survey data were assessed at only one point in time, not longitudinally. This cross-sectional part of the data limits firm causal conclusions on the relationships between job characteristics and motivation and platform commitment. Thus, although mediation analyses were conducted statistically, the results present associations rather than causal claims. Our findings need to be tested with a longitudinal study design. However, the combination with quasi-experimental performance data in the study reduces the threat of common method variance.

Additionally, our study focuses on a German microtasking platform. This may limit generalizability to other platforms and countries. While psychological associations generally hold across different comparable contexts and microtask workers are commonly an international cohort, cross-country and cross-platform studies are needed to validate whether the observed patterns hold across different contexts (Mindel et al., 2018).

Platform commitment was only indirectly assessed, by means of asking for (not) wanting to work for other platforms. While this proxy should be sufficient for accounting for calculative organizational commitment, that is, commitment based on instrumental need (Meyer and Allen, 1991), it leaves out normative and affective organizational commitment. Though both latter types could be expected to be rather low, due to a lack of perceived obligation (relevant for normative commitment) and a lack of direct social relationships and thus a lack of human targets crowdworkers might feel emotionally attached to (core of affective commitment, Meyer and Allen, 1991), including all three dimensions might provide answers on how to actually foster emotional attachment (i.e., countering negative algorithmic control effects).

The lack of a significant mediating role of autonomous motivation is surprising and warrants further study. This is especially so, given that the link between intrinsic motivation and performance in other work contexts is well established (Lukyanenko et al., 2014). One possible explanation is that microtasking inherently lacks the continuity and depth needed to foster sustained intrinsic motivation (Zaman et al., 2020). Also, Bellesia et al. (2024) propose algorithmic embeddedness as an explanation of why traditional job characteristics may not unfold their motivational potential. Future research could explore whether different microtasking platforms exhibit variations in motivation-related effects based on the nature of the tasks offered. Another aspect requiring future research is the negative impact of specialization on performance quality. This may reflect task fatigue or a lack of perceived meaning in repetitive, specialized work (Keith et al., 2020), which may cause alienation (Wan et al., 2024). That is, future studies are needed to examine whether introducing elements of skill progression or knowledge-building could counteract these effects. Moreover, alternative conceptualizations of motivation, including extrinsic and strategic motivations (Zhang et al., 2024), may be needed to better capture worker engagement in microtasking environments. While we focused on the contrast of amotivation and autonomous motivation, future research may include the continuum of extrinsic motivations (Deci and Ryan, 2000; Ryan and Deci, 2000b) as mediators between job design and performance and commitment. Finally, while we based our arguments on JCM and SDT, Watson et al. (2021) recommend applying the job demands-resources model (JD-R model) to gig work, which could, for instance, mean recognizing the role of wellbeing as a mediator between job demands and performance (i.e., the health path of the JD-R model).
In fact, the relationship between job characteristics and motivation is a core part of the JCM as well as the (motivational path of the) JD-R model: within the latter, a range of job resources (e.g., autonomy, feedback) lead to work engagement (Demerouti et al., 2001). The investigation of the health path of the JD-R model for microtask crowdsourcing might focus on algorithmic control as a job demand decreasing workers' wellbeing (e.g., Schlicher et al., 2021). Furthermore, the distinction of job demands as hindrance or challenge stressors (LePine et al., 2004, 2005) within microtask crowdsourcing might be valuable with regard to motivation.

As an alternative theoretical framework addressing potentially exploitative management strategies, labor process theory (LPT) describes platforms' control mechanism on a rather systemic level (Gandini, 2018). While LPT was applied to explain the mechanisms of labor control of platforms for local-based gig work (e.g., Heiland, 2022; Wu et al., 2019), similar evidence for microtasking crowdsourcing and the motivation of workers would be welcome.

5 Conclusion

In conclusion, this study highlights the importance of job design in microtasking crowdsourcing and calls for a shift in focus from fostering intrinsic motivation to mitigating amotivation. The findings are informative for crowdsourcing requesters and platform designers in terms of how to enhance performance and commitment but also indicate further research needs.

Data availability statement

The raw data supporting the conclusions of this article will be made available by the authors, without undue reservation.

Ethics statement

Ethical approval was not required for the studies involving humans because the sample consists of adult workers, data was collected completely anonymously, and no questions in the survey could be considered harmful (no intervention whatsoever). The studies were conducted in accordance with the local legislation and institutional requirements. The participants provided their written informed consent to participate in this study.

Author contributions

TS: Conceptualization, Data curation, Formal analysis, Investigation, Methodology, Writing – original draft, Writing – review & editing. JM: Conceptualization, Investigation, Methodology, Project administration, Writing – original draft, Writing – review & editing. DS: Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Acknowledgments

The authors wish to thank Fabian Pittke for helping with collecting the data and preparing it for data analysis.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The author(s) declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher's note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

Afuah, A., and Tucci, C. L. (2012). Crowdsourcing as a solution to distant search. Acad. Manage. Rev. 37, 355–375. doi: 10.5465/amr.2010.0146

Afuah, A., and Tucci, C. L. (2013). Value capture and crowdsourcing. Acad. Manage. Rev. 38, 457–460. doi: 10.5465/amr.2012.0423

Aghayi, E., and LaToza, T. D. (2023). A controlled experiment on the impact of microtasking on programming. Empirical Softw. Eng. 28:10. doi: 10.1007/s10664-022-10226-2

Alam, S. L., and Campbell, J. (2017). Temporal motivations of volunteers to participate in cultural crowdsourcing work. Inf. Syst. Res. 28, 744–759. doi: 10.1287/isre.2017.0719

Almirall, E., and Casadesus-Masanell, R. (2010). Open versus closed innovation: a model of discovery and divergence. Acad. Manage. Rev. 35, 27–47. doi: 10.5465/AMR.2010.45577790

Ashford, S. J., Caza, B. B., and Reid, E. M. (2018). From surviving to thriving in the gig economy: a research agenda for individuals in the new world of work. Res. Org. Behav. 38, 23–41. doi: 10.1016/j.riob.2018.11.001

Baldwin, C. Y., and Von Hippel, E. (2011). Modeling a paradigm shift: from producer innovation to user and open collaborative innovation. Org. Sci. 22, 1399–1417. doi: 10.1287/orsc.1100.0618

Banerjee, S., Bhattacharyya, S., and Bose, I. (2023). The motivation paradox: understanding contradictory effects of awards on contribution quantity versus quality in virtual community. Inf. Manage. 60:103855. doi: 10.1016/j.im.2023.103855

Behl, A., Sheorey, P., Jain, K., Chavan, M., Jajodia, I., and Zhang, Z. J. (2021). Gamifying the gig: transitioning the dark side to bright side of online engagement. Austral. J. Inf. Syst. 25, 1–34. doi: 10.3127/ajis.v25i0.2979

Bellesia, F., Mattarelli, E., Bertolotti, F., and Sobrero, M. (2024). Algorithmic embeddedness and the 'gig' characteristics model: examining the interplay between technology and work design in crowdwork. J. Manage. Stud. doi: 10.1111/joms.13130

Borst, I. (2010). Understanding crowdsourcing: effects of motivation and rewards on participation and performance in voluntary online activities (Doctoral dissertation), Erasmus University Rotterdam, Rotterdam, NL.

Brabham, D. C. (2008). Moving the crowd at iStockphoto: the composition of the crowd and motivations for participation in a crowdsourcing application. First Monday 13. doi: 10.5210/fm.v13i6.2159

Brabham, D. C. (2010). Moving the crowd at Threadless: motivations for participation in a crowdsourcing application. Inf. Commun. Soc. 13, 1122–1145. doi: 10.1080/13691181003624090

Brynjolfsson, E., Geva, T., and Reichman, S. (2016). Crowd-squared: amplifying the predictive power of search trend data. MIS Q. 40, 941–961. doi: 10.25300/MISQ/2016/40.4.07

Buhrmester, M., Kwang, T., and Gosling, S. D. (2011). Amazon's Mechanical Turk: a new source of inexpensive, yet high-quality, data? Perspect. Psychol. Sci. 6, 3–5. doi: 10.1177/1745691610393980

Burton-Jones, A., and Straub Jr, D. W. (2006). Reconceptualizing system usage: an approach and empirical test. Inf. Syst. Res. 17, 228–246. doi: 10.1287/isre.1060.0096

Choy, K., and Schlagwein, D. (2016). Crowdsourcing for a better world: on the relation between IT affordances and donor motivations in charitable crowdfunding. Inf. Technol. People 29, 221–247. doi: 10.1108/ITP-09-2014-0215

Churchill, B., and Craig, L. (2019). Gender in the gig economy: men and women using digital platforms to secure work in Australia. J. Sociol. 55, 741–761. doi: 10.1177/1440783319894060

Cram, W. A., Wiener, M., Tarafdar, M., and Benlian, A. (2020). “Algorithmic controls and their implications for gig worker well-being and behavior,” in Proceedings of the 41st International Conference on Information Systems (ICIS, India) (Atlanta, GA: Association for Information Systems).

Criscuolo, P., Salter, A., and Ter Wal, A. L. J. (2014). Going underground: bootlegging and individual innovative performance. Org. Sci. 25, 1287–1305. doi: 10.1287/orsc.2013.0856

Crone, D., and Williams, L. (2017). Crowdsourcing participants for psychological research in Australia: a test of Microworkers. Aust. J. Psychol. 69, 39–47. doi: 10.1111/ajpy.12110

Cropanzano, R., Keplinger, K., Lambert, B. K., Caza, B., and Ashford, S. J. (2023). The organizational psychology of gig work: an integrative conceptual review. J. Appl. Psychol. 108, 492–519. doi: 10.1037/apl0001029

Datta, N., Rong, C., Singh, S., Stinshoff, C., Iacob, N., Nigatu, N. S., et al. (2023). Working Without Borders: The Promise and Peril of Online Gig Work. World Bank. Available online at: https://openknowledge.worldbank.org/handle/10986/40066 (Accessed November 29, 2024).

De Vreede, G.-J., and Briggs, R. O. (2019). A program of collaboration engineering research and practice: contributions, insights, and future directions. J. Manage. Inf. Syst. 36, 74–119. doi: 10.1080/07421222.2018.1550552

Deci, E. L., and Ryan, R. M. (1990). Intrinsic Motivation and Self-determination in Human Behavior. New York, NY: Plenum Press.

Deci, E. L., and Ryan, R. M. (2000). The ‘what’ and ‘why’ of goal pursuits: human needs and the self-determination of behavior. Psychol. Inq. 11, 227–268. doi: 10.1207/S15327965PLI1104_01

Demerouti, E., Bakker, A. B., Nachreiner, F., and Schaufeli, W. B. (2001). The job demands-resources model of burnout. J. Appl. Psychol. 86, 499–512. doi: 10.1037/0021-9010.86.3.499

Deng, X., and Joshi, K. (2016). Why individuals participate in micro-task crowdsourcing work environment: revealing crowdworkers' perceptions. J. Assoc. Inf. Syst. 17, 648–673. doi: 10.17705/1jais.00441

Deng, X., Joshi, K., and Galliers, R. D. (2016). The duality of empowerment and marginalization in microtask crowdsourcing: giving voice to the less powerful through value sensitive design. MIS Q. 40, 279–302. doi: 10.25300/MISQ/2016/40.2.01

Duggan, J., Carbery, R., McDonnell, A., and Sherman, U. (2023). Algorithmic HRM control in the gig economy: the app-worker perspective. Hum. Resour. Manage. 62, 883–899. doi: 10.1002/hrm.22168

Duggan, J., Sherman, U., Carbery, R., and McDonnell, A. (2020). Algorithmic management and app-work in the gig economy: a research agenda for employment relations and HRM. Hum. Resour. Manag. J. 30, 114–132. doi: 10.1111/1748-8583.12258

Dunn, M. (2020). Making gigs work: digital platforms, job quality and worker motivations. New Technol. Work Employ. 35, 232–249. doi: 10.1111/ntwe.12167

Durward, D., Blohm, I., and Leimeister, J. M. (2020). The nature of crowd work and its effects on individuals' work perception. J. Manage. Inf. Syst. 37, 66–95. doi: 10.1080/07421222.2019.1705506

Estellés-Arolas, E., and González-Ladrón-De-Guevara, F. (2012). Towards an integrated crowdsourcing definition. J. Inf. Sci. 38, 189–200. doi: 10.1177/0165551512437638

Fromkin, H. L., and Streufert, S. (1976). “Laboratory experimentation,” in Handbook of Industrial and Organizational Psychology, ed. M. D. Dunnette (Chicago, IL: Rand McNally College Publishing Company), 415–465.

Füller, J. (2006). Why consumers engage in virtual new product developments initiated by producers. Adv. Consum. Res. 31, 639–646.

Gagné, M., and Deci, E. L. (2005). Self-determination theory and work motivation. J. Organ. Behav. 26, 331–362. doi: 10.1002/job.322

Gagné, M., Forest, J., Vansteenkiste, M., Crevier-Braud, L., Van den Broeck, A., Aspeli, A. K., et al. (2015). The Multidimensional Work Motivation Scale: validation evidence in seven languages and nine countries. Euro. J. Work Org. Psychol. 24, 178–196. doi: 10.1080/1359432X.2013.877892

Gagné, M., Parent-Rocheleau, X., Bujold, A., Gaudet, M. C., and Lirio, P. (2022a). How algorithmic management influences worker motivation: a self-determination theory perspective. Can. Psychol. 63, 247–260. doi: 10.1037/cap0000324

Gagné, M., Parker, S. K., Griffin, M. A., Dunlop, P. D., Knight, C., Klonek, F. E., et al. (2022b). Understanding and shaping the future of work with self-determination theory. Nat. Rev. Psychol. 1, 378–392. doi: 10.1038/s44159-022-00056-w

Gandini, A. (2018). Labour process theory and the gig economy. Hum. Relat. 72, 1039–1056. doi: 10.1177/0018726718790002

Gefen, D., Straub, D., and Boudreau, M. (2000). Structural equation modeling and regression: guidelines for research practice. Commun. Assoc. Inf. Syst. 4:7. doi: 10.17705/1CAIS.00407

Gerber, E. M., and Hui, J. (2013). Crowdfunding: motivations and deterrents for participation. ACM Trans. Comput. Hum. Interact. 20, 1–31. doi: 10.1145/2530540

Glavin, P., Bierman, A., and Schieman, S. (2021). Über-alienated: powerless and alone in the gig economy. Work Occup. 48:07308884211024711. doi: 10.1177/07308884211024711

Hackman, J. R., and Oldham, G. R. (1975). Development of the job diagnostic survey. J. Appl. Psychol. 60, 159–170. doi: 10.1037/h0076546

Hackman, J. R., and Oldham, G. R. (1976). Motivation through the design of work: test of a theory. Organ. Behav. Hum. Perform. 16, 250–279. doi: 10.1016/0030-5073(76)90016-7

Hackman, J. R., and Oldham, G. R. (1980). Work redesign and motivation. Prof. Psychol. 11, 445–455. doi: 10.1037/0735-7028.11.3.445

Hayes, A. F., Montoya, A. K., and Rockwood, N. (2017). The analysis of mechanisms and their contingencies: PROCESS versus structural equation modeling. Austral. Market. J. 25, 76–81. doi: 10.1016/j.ausmj.2017.02.001

Heiland, H. (2022). Neither timeless, nor placeless: control of food delivery gig work via place-based working time regimes. Hum. Relat. 75, 1824–1848. doi: 10.1177/00187267211025283

Hong, Y., Hu, Y., and Burtch, G. (2018). Embeddedness, prosociality, and social influence: evidence from online crowdfunding. MIS Q. 42, 1211–1224. doi: 10.25300/MISQ/2018/14105

Horton, J. J., and Chilton, L. B. (2010). “The labor economics of paid crowdsourcing,” in Proceedings of the ACM Conference on Electronic Commerce (New York, NY: ACM), 209–218. doi: 10.1145/1807342.1807376

Howe, J. (2008). Crowdsourcing: Why the Power of the Crowd is Driving the Future of Business. New York, NY: Crown.

Huang, X.-J., Sun, Z.-Y., Li, J.-M., and Li, J. H. (2024). Will fun and care prevent gig workers' withdrawal? A moderated mediation model. Asia Pacific J. Hum. Resourc. 62:e12425. doi: 10.1111/1744-7941.12425

Ipeirotis, P. (2010). “Demographics of Mechanical Turk,” in NYU Working Paper No. CEDER-10-01 (New York, NY).

Irani, L., and Silberman, M. (2013). “Turkopticon,” in Proceedings of the ACM CHI Conference on Human Factors in Computing Systems (CHI) (New York, NY: ACM). doi: 10.1145/2470654.2470742

Irani, L., and Silberman, M. (2014). From critical design to critical infrastructure: lessons from Turkopticon. Interactions 21, 32–35. doi: 10.1145/2627392

Jabagi, N., Croteau, A.-M., Audebrand, L. K., and Marsan, J. (2019). Gig-workers' motivation: thinking beyond carrots and sticks. J. Manag. Psychol. 34, 192–213. doi: 10.1108/JMP-06-2018-0255

Jackson, C. B., Osterlund, C., Mugar, G., Hassman, K. D., and Crowston, K. (2015). “Motivations for sustained participation in crowdsourcing: case studies of citizen science on the role of talk,” in Proceedings of the Hawaii International Conference on System Sciences (HICSS) (Hawaii: University of Hawaii at Manoa, Association for Information Systems IEEE Computer Society Press), 1624–1634. doi: 10.1109/HICSS.2015.196

Jarrahi, M. H., Sutherland, W., Nelson, S. B., and Sawyer, S. (2020). Platformic management, boundary resources for gig work, and worker autonomy. Comput. Support. Cooper. Work 29, 153–189. doi: 10.1007/s10606-019-09368-7

Jiahui, M., Sarkar, S., and Menon, S. (2018). Know when to run: recommendations in crowdsourcing contests. MIS Q. 42, 919–944. doi: 10.25300/MISQ/2018/14103

Jiang, L., Wagner, C., and Chen, X. (2021). Taking time into account: understanding microworkers' continued participation in microtasks. J. Assoc. Inf. Syst. 22, 893–930. doi: 10.17705/1jais.00684

Johnson, J. W. (2000). A heuristic method for estimating the relative weight of predictor variables in multiple regression. Multivariate Behav. Res. 35, 1–19. doi: 10.1207/S15327906MBR3501_1

Johnson, S. L., Safadi, H., and Faraj, S. (2015). The emergence of online community leadership. Inf. Syst. Res. 26, 165–187. doi: 10.1287/isre.2014.0562

Kaganer, E., Carmel, E., Hirschheim, R., and Olsen, T. (2013). Managing the human cloud. MIT Sloan Manage. Rev. 54, 23–32.

Kässi, O., Lehdonvirta, V., and Stephany, F. (2021). How many online workers are there in the world? A data-driven assessment. Soc. Sci. Res. Netw. doi: 10.31235/osf.io/78nge

Keith, M. G., Harms, P. D., and Long, A. C. (2020). Worker health and well-being in the gig economy: a proposed framework and research agenda. Entrepreneurial Small Business Stressors Exp. Stress Well Being 18, 1–33. doi: 10.1108/S1479-355520200000018002

Kuang, L., Huang, N., Hong, Y., and Yan, Z. (2019). Spillover effects of financial incentives on non-incentivized user engagement: evidence from an online knowledge exchange platform. J. Manage. Inf. Syst. 36, 289–320. doi: 10.1080/07421222.2018.1550564

Kuhn, K. M. (2016). The rise of the “gig economy” and implications for understanding work and workers. Ind. Organ. Psychol. 9, 157–162. doi: 10.1017/iop.2015.129

Kuhn, K. M., and Maleki, A. H. (2017). Micro-entrepreneurs, dependent contractors, and instaserfs: understanding online labor platform workforces. Acad. Manage. Perspect. 31, 183–200. doi: 10.5465/amp.2015.0111

Law, E., and Von Ahn, L. (2011). Human Computation. San Rafael, CA: Morgan and Claypool Publishers. doi: 10.1007/978-3-031-01555-7

Lee, M. K., Kusbit, D., Metsky, E., and Dabbish, L. (2015). “Working with machines,” in Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (New York, NY: ACM), 1603–1612. doi: 10.1145/2702123.2702548

Leimeister, J. M., Huber, M., Bretschneider, U., and Krcmar, H. (2009). Leveraging crowdsourcing: activation-supporting components for IT-based ideas competition. J. Manage. Inf. Syst. 26, 197–224. doi: 10.2753/MIS0742-1222260108

LePine, J. A., LePine, M. A., and Jackson, C. L. (2004). Challenge and hindrance stress: relationships with exhaustion, motivation to learn, and learning performance. J. Appl. Psychol. 89, 883–891. doi: 10.1037/0021-9010.89.5.883

LePine, J. A., Podsakoff, N. P., and LePine, M. A. (2005). A meta-analytic test of the challenge stressor-hindrance stressor framework: an explanation for inconsistent relationships among stressors and performance. Acad. Manage. J. 48, 764–775. doi: 10.5465/amj.2005.18803921

Liu, Y., Lehdonvirta, V., Alexandrova, T., and Nakajima, T. (2011). Drawing on mobile crowds via social media. Multimedia Syst. 18, 53–67. doi: 10.1007/s00530-011-0242-0

Lukyanenko, R., Parsons, J., and Wiersma, Y. F. (2014). The IQ of the crowd: understanding and improving information quality in structured user-generated content. Inf. Syst. Res. 25, 669–689. doi: 10.1287/isre.2014.0537

Lukyanenko, R., Parsons, J., and Wiersma, Y. F. (2019). Expecting the unexpected: effects of data collection design choices on the quality of crowdsourced user-generated content. MIS Q. 43, 623–647. doi: 10.25300/MISQ/2019/14439

Mason, W., and Suri, S. (2012). Conducting behavioral research on Amazon's Mechanical Turk. Behav. Res. Methods 44, 1–23. doi: 10.3758/s13428-011-0124-6