- 1 Graduate School of Arts and Sciences, Columbia University, New York, NY, United States

- 2 School of Humanities, Beijing University of Posts and Telecommunications, Beijing, China

- 3 Asian Family Services, Auckland, New Zealand

- 4 Graduate School of Arts and Sciences, Georgetown University, Washington, DC, United States

- 5 Department of Media and Communications, London School of Economics, London, United Kingdom

- 6 Department of Economics, Albert-Ludwigs-Universität Freiburg, Freiburg im Breisgau, Germany

- 7 Sun Yat-sen University, Guangzhou, China

This paper explores the transformative potential of big data and data science in global governance, with particular emphasis on their application in international organizations addressing sustainable development challenges. Through comprehensive analysis of theoretical frameworks, current applications, and future directions, we examine how big data technologies enhance decision-making processes and operational efficiency in global governance frameworks, particularly within United Nations agencies and affiliated international organizations. The research identifies the “4Vs” of big data (Volume, Velocity, Variety, and Veracity) as fundamental characteristics reshaping governance approaches while highlighting innovative applications like UN Global Pulse, SDG tracking systems, and AI-driven predictive analytics in crisis prevention. We assess technical, ethical, and organizational challenges, including data quality inconsistencies, interoperability issues, privacy concerns, algorithmic bias, and resource constraints that impede the full integration of big data into governance systems. The paper proposes forward-looking strategies for infrastructure development, skills enhancement, and policy frameworks that can maximize big data's benefits while addressing ethical considerations and regulatory requirements. Our findings suggest that big data, when properly governed through international cooperation and ethical frameworks, can significantly enhance crisis response capabilities, improve resource allocation, and accelerate progress toward sustainable development goals. This research contributes to the evolving understanding of big data's role in addressing transnational challenges through improved monitoring systems, predictive capabilities, and evidence-based policy interventions.

1 Introduction

1.1 Context and importance

Big data and data science offer new opportunities for managing global issues, increasing the effectiveness and speed of implementation (Hansen and Porter, 2017). These technologies gather information from diverse sources, ranging from satellite imagery and remote sensors to social media, and translate it into actionable insights for addressing global challenges. By enabling real-time analysis and predictive modeling, big data assists policymakers and governments in planning for and devising solutions to current and future global challenges (Giest, 2017).

The United Nations Sustainable Development Goals (SDGs), which aim to eradicate poverty, eliminate hunger, expand access to clean water, and combat climate change among other goals, exemplify the types of complex global issues that can benefit from data-driven solutions. For instance, satellite observation coupled with modeling enables stakeholders to track the dynamics of deforestation and urbanization processes and evaluate agricultural productivity for some SDG indicators.

As the world becomes increasingly interconnected, traditional policy responses often struggle to keep pace with the scale, speed, and complexity of global phenomena such as pandemics, natural disasters, and military conflicts (Kuzio et al., 2022). In this context, big data provides a means to enhance evidence-based governance across national borders, facilitating smarter, more inclusive international responses. According to Sîrbu et al. (2021), real-time data analytics are useful because they provide additional context about crises and help track movements and trends. This contextual importance underscores the need for big data and data science in the future of global governance.

1.2 Research objectives and key questions

This paper explores the potential of big data and data science in global governance, with an emphasis on future needs and applications. It provides a comprehensive review of current applications of big data in international organizations; limitations in technological infrastructure, ethical guidelines, and political frameworks; solutions to address current challenges; and future directions for emerging technologies in multilateral institutions.

The main research questions of the paper are:

(1) How are international organizations currently applying big data to support global governance goals such as the SDGs, humanitarian relief, and crisis prevention?

(2) What technical and organizational infrastructures are required to implement big data systems at scale within international organizations?

(3) What ethical, legal, and political challenges arise from the cross-border use of big data for global governance?

(4) How can big data and AI enhance early warning systems for crises, from food insecurity to political conflict?

(5) What policy reforms, standards, and capacity-building strategies are necessary to ensure equitable and responsible use of big data in international governance?

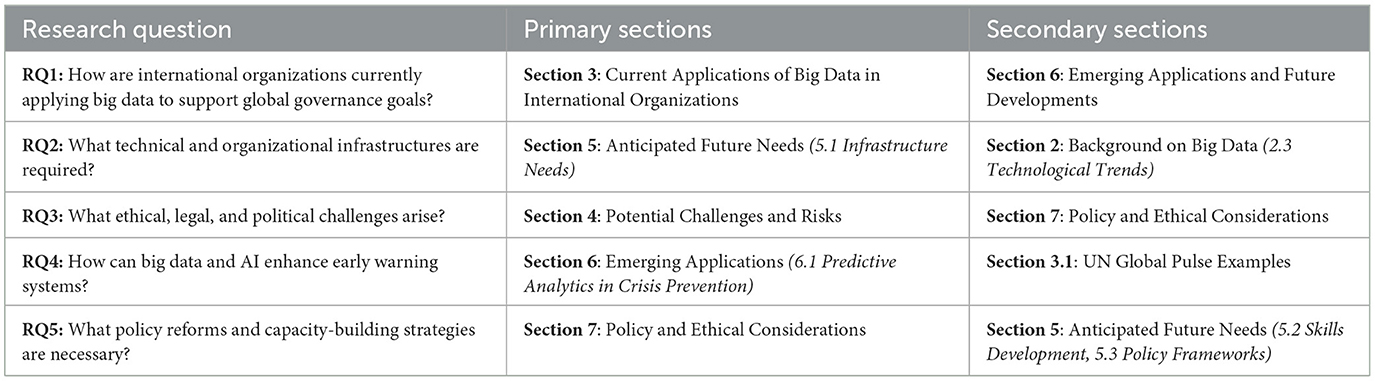

By answering these questions, the paper aims to offer a theoretical and practical understanding of how big data can help address global challenges (Table 1).

1.3 Justification of scope and sampling strategy

To explore these questions, we focus on a purposive sample of international organizations and their projects that represent the diversity of big data-driven governance initiatives globally. This includes:

• Multilateral organizations such as the UN, World Bank, and WHO—selected for their central roles in shaping global development, health, and humanitarian agendas.

• Key projects and initiatives utilizing big data, such as UN Global Pulse, the Global Information and Early Warning System on Food and Agriculture (GIEWS), and WHO's NEXO weather stations—chosen because they exemplify cutting-edge integration of data science into institutional workflows and operate at a relatively large scale with demonstrable influence.

• Specific big data applications, spanning domains like climate change, food security monitoring, public health analytics, and conflict prediction—selected to demonstrate the breadth of use cases and the evolving toolkit of data governance.

This sampling strategy enables a nuanced exploration of both technical innovations and institutional pathways, ensuring the paper remains grounded in practical experiences while offering insights transferable across sectors and regions.

1.4 Structure overview

This paper adopts a systematic approach to examine the role of big data in global governance and is divided into eight sections. Section 1 introduces the topic, whereas Section 8 discusses the research findings and outlines recommendations concerning policy implications and future research prospects (Figure 1). The remaining sections address different issues concerning big data and global governance:

Section 2: Background introduces the foundational concepts of big data and data science, including related technologies such as AI and Internet of Things, as well as emerging technological trends such as data democratization, cloud storage evolution, and visualization advances. It establishes the conceptual and historical groundwork necessary for understanding pathways for smarter, real-time decision-making.

Section 3: Current Applications examines how major international organizations (UN, World Bank, WHO) are deploying big data. Case studies include SDG monitoring through satellite imagery, AI-assisted social welfare targeting, and global health AI standards.

Section 4: Challenges and Risks analyzes the technical, ethical, and organizational barriers to big data adoption, including data quality, algorithmic bias, interoperability issues, and digital authoritarianism.

Section 5: Anticipated Needs outlines future requirements in infrastructure, training, and regulatory development for expanding data-driven governance. It identifies bottlenecks in cloud computing, data literacy, and policy coordination.

Section 6: Emerging Applications explores the latest emerging applications of big data within international organizations and identifies areas where further development and innovation are needed, particularly following progress in infrastructure, training, and regulatory development. It also highlights new uses of AI and predictive analytics.

Section 7: Policy and Ethical Considerations evaluates normative frameworks governing data use, including data sovereignty, international guidelines and regulations, General Data Protection Regulation (GDPR) compliance, and accountability mechanisms.

2 Background on big data and data science

2.1 Definitions and core concepts

2.1.1 Big data definition

Big data has emerged as a transformative concept in the digital age, driven by the exponential growth of data generated through various digital activities. The McKinsey Global Institute defines big data as “data sets whose size is beyond the ability of typical database software tools to capture, store, manage, and analyze” (Manyika et al., 2011). The inherent value of big data lies in its ability to reveal patterns through the interconnections of data points concerning individuals, groups, or the underlying structure of information itself (Vargas-Solar et al., 2016).

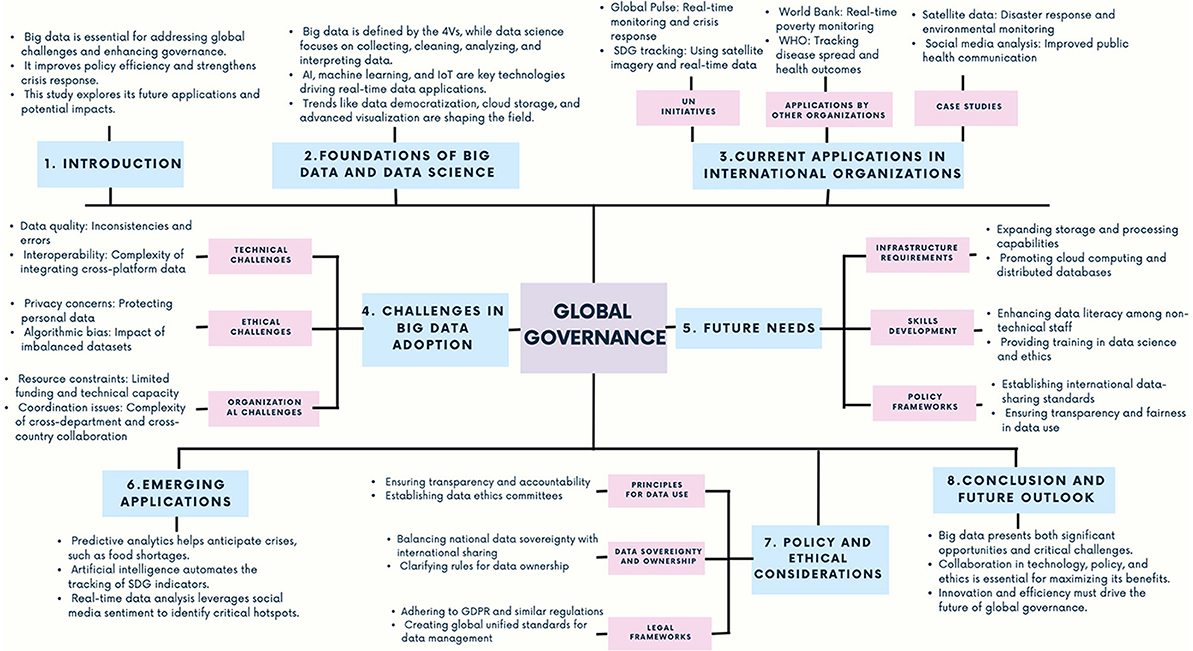

The concept of big data is also commonly characterized by the 4Vs framework, which effectively offers a comprehensive understanding of its nature (Kulkarni et al., 2016; Huang et al., 2015) (Figure 2).

Figure 2. This figure illustrates the 4Vs framework–Volume, Velocity, Variety, and Veracity–that characterizes big data, demonstrating how these dimensions interconnect and collectively distinguish big data from traditional data structures.

2.1.1.1 Volume: scale and size of data

Volume represents the unprecedented scale and magnitude of data in the modern era. The world's data storage capacity has exhibited exponential growth, doubling approximately every 40 months since the 1980s. The COVID-19 pandemic catalyzed a sharp surge in information demand, resulting in the creation of 64.2 zettabytes of data in 2020, a 314% increase from 2015 levels. A significant portion of this consists of “data exhaust”, that is, data passively collected from daily interactions with digital products and services, including mobile devices, credit cards and social media platforms. Because people also generate data actively through a variety of activities, including creating documents, downloading media and using applications, demand has grown for sophisticated storage solutions and enhanced data processing capabilities.
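
The growth figures quoted above can be turned into annual rates with a few lines of arithmetic. The following is a minimal sketch that uses only the numbers stated in the text; the variable names are illustrative, and the comparison is indicative only, since storage capacity and data created are different quantities.

```python
# Figures taken from the text above.
DOUBLING_MONTHS = 40         # storage capacity doubles roughly every 40 months
DATA_2020_ZB = 64.2          # zettabytes of data created in 2020
INCREASE_SINCE_2015 = 3.14   # a 314% increase means 2020 = 2015 * (1 + 3.14)

# Annual growth factor implied by a 40-month doubling period: 2^(12/40).
annual_factor = 2 ** (12 / DOUBLING_MONTHS)

# 2015 level implied by the 2020 figure and the quoted percentage increase.
implied_2015_zb = DATA_2020_ZB / (1 + INCREASE_SINCE_2015)

# Compound annual growth rate over the five years from 2015 to 2020.
cagr_2015_2020 = (1 + INCREASE_SINCE_2015) ** (1 / 5) - 1

print(f"Annual growth from 40-month doubling: {annual_factor - 1:.1%}")
print(f"Implied 2015 data volume: {implied_2015_zb:.1f} ZB")
print(f"Compound annual growth 2015-2020: {cagr_2015_2020:.1%}")
```

Under these figures, a 40-month doubling period corresponds to roughly 23% growth per year, while the 2015 to 2020 increase implies a noticeably faster compound rate.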

2.1.1.2 Velocity: speed of data generation and processing

Velocity encompasses both the rapid pace of data generation and the critical need for swift processing capabilities in the big data era. Real-time systems, particularly in financial trading, depend heavily on high-velocity data processing. Stock exchanges, for example, handle thousands of transactions per second, while financial institutions conduct real-time market data analysis for informed decision-making. In industrial applications, sensor networks continuously generate high-speed data streams, exemplified by manufacturing plant sensors monitoring various metrics. A notable example is the LHC ATLAS detector at CERN, which employs approximately 80 readout channels and generates up to 1 petabyte of unfiltered data per second, subsequently reduced to around 100 megabytes per second. This sophisticated system is engineered to record up to 40 million collision events every second (Demchenko et al., 2024).
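
To give a sense of the filtering involved, the ATLAS rates quoted above can be compared directly. This is a back-of-the-envelope sketch that assumes decimal units (1 PB = 10^15 bytes) and, for the per-event figure, that the unfiltered stream is spread evenly across collision events; neither assumption comes from the source.

```python
PB = 10**15  # bytes per petabyte (decimal units assumed)
MB = 10**6   # bytes per megabyte (decimal units assumed)

raw_rate = 1 * PB               # unfiltered data per second, from the text
stored_rate = 100 * MB          # data retained per second after filtering
events_per_second = 40_000_000  # collision events per second, from the text

# How many times smaller the retained stream is than the raw stream.
reduction_factor = raw_rate // stored_rate

# Average unfiltered bytes per collision event (even-spread assumption).
raw_bytes_per_event = raw_rate // events_per_second

print(f"Reduction factor: {reduction_factor:,}x")
print(f"Raw data per event: {raw_bytes_per_event / MB:.0f} MB")
```

On these figures the retained stream is ten million times smaller than the raw stream, which illustrates why velocity is as much a filtering problem as a storage problem.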

2.1.1.3 Variety: different types and sources of data

Variety addresses the diverse spectrum of data types in the contemporary landscape. Whereas traditional data structures were primarily confined to structured data in relational databases, the big data era has introduced numerous forms of unstructured data, including text, audio, and video formats. These diverse data types require sophisticated preprocessing techniques to extract meaningful insights and generate appropriate metadata (Demchenko et al., 2024).

2.1.1.4 Veracity: data quality and reliability

The veracity dimension of big data encompasses two aspects: data consistency, determined by statistical reliability, and data trustworthiness, influenced by factors including data origin, collection methodologies and infrastructure integrity. Veracity entails ensuring the trustworthiness and authenticity of the data used while protecting it against unauthorized access and modification. Data security must be maintained throughout the data lifecycle, from collection from trusted sources to processing on verified computing facilities and storage on secure systems. Essential considerations in maintaining data veracity include data and linked data integrity, data authenticity and trusted origin, identification of both data and source, computing and storage platform trustworthiness, availability and timeliness, as well as accountability and reputation management (Demchenko et al., 2024).
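
One common way to check integrity and trusted origin in practice is a keyed message authentication code. The sketch below uses HMAC-SHA256 from the Python standard library; the shared key, the record format, and the function names `sign` and `verify` are hypothetical, and the example illustrates the idea rather than any specific system described in the cited literature.

```python
import hashlib
import hmac

# Hypothetical key shared between data producer and consumer (illustration only).
SHARED_KEY = b"demo-key-agreed-out-of-band"

def sign(record: bytes, key: bytes = SHARED_KEY) -> str:
    """Return an HMAC-SHA256 tag that binds the record to the key holder."""
    return hmac.new(key, record, hashlib.sha256).hexdigest()

def verify(record: bytes, tag: str, key: bytes = SHARED_KEY) -> bool:
    """Check that the record is unmodified and was tagged with the same key."""
    return hmac.compare_digest(sign(record, key), tag)

# Hypothetical sensor record and a tampered copy of it.
record = b"station=A17;date=2024-03-01;rainfall_mm=12.4"
tag = sign(record)
tampered = record.replace(b"12.4", b"72.4")

print(verify(record, tag))    # original record passes the check
print(verify(tampered, tag))  # modified record fails the check
```

A tag of this kind covers integrity and origin only; availability, timeliness, and reputation management require separate mechanisms.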

2.1.2 Historical evolution of the concept

The evolution of big data represents a gradual progression aligned with information technology advancement. Initially, limited data storage and processing capabilities restricted the understanding of large-scale data management. With the advancement of computer technology, the amount of generated and stored data gradually increased, and people began to recognize the potential value of data. The 1990s witnessed the emergence of data warehousing and business intelligence technologies, providing enterprises with enhanced data management and analysis tools. During this period, the scale and complexity of data grew steadily, though not yet reaching big data proportions. The early twenty-first century, marked by Internet proliferation and e-commerce growth, saw an exponential increase in data generation rates and scale. The emergence of search engines, social media platforms and similar technologies led to unprecedented accumulation of user behavior data, focusing attention on large-scale data processing and analysis. In 2012, the US government launched the “Big Data Research and Development Initiative”, elevating big data to the national strategic level. This move attracted extensive attention from various organizations and countries around the world, and the relevant infrastructure, industrial applications and theoretical systems of big data have been continuously developed and improved. Since then, big data has gradually transformed from a single technical concept into a new element, strategy and mindset (Data Strategy Key Laboratory, 2017).

2.1.3 Current understanding of big data in global governance

Big data has transformed global governance by automating governance processes, reshaping interactions among nations, and empowering non-state actors. It transcends national boundaries, establishes new connection patterns, and employs algorithms and automation to influence governance structures. “Big data relies on the globalization operation of new media and strengthens, extends, obscures and confounds power in new ways” (Hansen and Porter, 2017), presenting a governance model distinct from traditional sovereignty. Cross-border data flows have spurred competition for control, with countries and enterprises establishing new “boundaries” to manage data access, altering traditional governance patterns. However, challenges persist: privacy concerns, “data nationalism,” and unequal access to critical data hinder global cooperation, particularly affecting marginalized populations (Castro, 2013).

Despite these challenges, big data offers opportunities to address global issues. The UN Global Pulse Initiative exemplifies this potential through real-time food price monitoring, providing governments with timely decision-making tools for crisis prevention (UN Global Pulse, 2024). Additionally, big data analysis reveals previously hidden social inequalities, such as the marginalization of women in informal sectors, offering insights for more inclusive policy-making (United Nations, 2023). Through the integration of algorithms, automation, and cross-border collaboration, big data continues to redefine governance, amplifying the roles of technology platforms, private companies, and non-human actors (Barnett et al., 2021).

2.1.4 Data science components

Data science components are the fundamental building blocks of workflows and systems in the field of data science. They work together to help data scientists extract valuable insights from data, construct models, and facilitate data-driven decision-making.

2.1.4.1 Data collection methodologies

Data collection represents the initial phase of any data science project. Sources vary widely and include databases (both relational, such as MySQL, and non-relational, such as MongoDB), file systems (CSV files, JSON files, etc.), web crawlers (extracting data from web pages), and sensors (data collected by Internet of Things devices). For example, an e-commerce company might obtain user purchase records from its sales databases, browsing behavior data from website log files, and competitor pricing information through web crawlers, establishing a comprehensive foundation for subsequent analysis (Wang et al., 2019).
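
The e-commerce example can be sketched as combining two differently formatted sources into one per-user view. In the minimal illustration below, the CSV export and the JSON-lines log are hypothetical in-memory stand-ins for a sales database and a web server log, and all field and function names are assumptions made for the example.

```python
import csv
import io
import json

# Hypothetical stand-ins for a sales database export and a website log file.
SALES_CSV = """user_id,item,price
u1,book,12.50
u2,lamp,30.00
u1,pen,1.20
"""
LOG_JSONL = """{"user_id": "u1", "page": "/books"}
{"user_id": "u3", "page": "/lamps"}
"""

def load_sales(text):
    """Parse CSV purchase records, converting prices to floats."""
    return [
        {"user_id": row["user_id"], "item": row["item"], "price": float(row["price"])}
        for row in csv.DictReader(io.StringIO(text))
    ]

def load_logs(text):
    """Parse one JSON object per non-empty line."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]

def combine(sales, logs):
    """Build one profile per user from both sources."""
    profiles = {}
    for sale in sales:
        profile = profiles.setdefault(sale["user_id"], {"spend": 0.0, "pages": []})
        profile["spend"] += sale["price"]
    for entry in logs:
        profile = profiles.setdefault(entry["user_id"], {"spend": 0.0, "pages": []})
        profile["pages"].append(entry["page"])
    return profiles

profiles = combine(load_sales(SALES_CSV), load_logs(LOG_JSONL))
for user, profile in sorted(profiles.items()):
    print(user, profile)
```

Users who appear in only one source (here `u2` and `u3`) still receive a profile, which makes gaps between sources visible before analysis begins.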

2.1.4.2 Data cleaning techniques

Raw data often has various problems, such as missing values, duplicate values, incorrect values, and inconsistent data formats. Data cleaning addresses these problems through systematic approaches. Missing values can be handled by deleting the affected records or by filling them with the mean or median; duplicate values need to be identified and deleted; incorrect values may need to be corrected based on business rules; and inconsistent data formats, such as different date formats, need to be unified. For example, in a medical data set, the patient's age field may contain incorrect inputs (such as a negative age) that need to be corrected. Likewise, spelling errors or duplicate records in patient names need to be cleaned (Kulkarni et al., 2016).
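
The cleaning steps described above can be illustrated on a small, hypothetical patient table. The sketch assumes two input date formats, a plausible age range of 0 to 120 as the business rule, and median imputation for missing ages; real pipelines would choose these rules per data set.

```python
from datetime import datetime
from statistics import median

# Hypothetical patient records exhibiting the problems named above.
raw = [
    {"name": "Ana Lee",  "age": 34,   "visit": "2023-01-05"},
    {"name": "ana lee ", "age": 34,   "visit": "05/01/2023"},  # duplicate, other date format
    {"name": "Bo Chan",  "age": -7,   "visit": "2023-02-10"},  # impossible age
    {"name": "Cy Diaz",  "age": None, "visit": "10/03/2023"},  # missing age
    {"name": "Di Evans", "age": 52,   "visit": "2023-03-11"},
]

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")  # assumed input formats

def parse_date(text):
    """Convert any accepted date format to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")

def clean(records):
    # 1. Unify formats: collapse whitespace, normalize name casing, ISO dates.
    rows = [
        {"name": " ".join(r["name"].split()).title(),
         "age": r["age"],
         "visit": parse_date(r["visit"])}
        for r in records
    ]
    # 2. Business rule (assumed): ages outside 0..120 are treated as missing.
    for row in rows:
        if row["age"] is not None and not 0 <= row["age"] <= 120:
            row["age"] = None
    # 3. Remove duplicates, keyed on normalized name and visit date.
    seen, unique = set(), []
    for row in rows:
        key = (row["name"], row["visit"])
        if key not in seen:
            seen.add(key)
            unique.append(row)
    # 4. Fill missing ages with the median of the known ages.
    fill = median(r["age"] for r in unique if r["age"] is not None)
    for row in unique:
        if row["age"] is None:
            row["age"] = fill
    return unique

cleaned = clean(raw)
for row in cleaned:
    print(row)
```

The order of steps matters: formats are unified before deduplication so that the two records for the same visit are recognized as duplicates.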

2.1.4.3 Analysis approaches

Analysis primarily involves preliminary data examination to understand distribution patterns and feature relationships. This includes calculating fundamental statistics (including means, medians, and standard deviations) and generating visual representations (such as histograms, scatter plots, and box plots). Through data exploration, outliers and skewed distributions in the data can be discovered. For example, when analyzing telecom customer data, a histogram of monthly call duration shows how call durations are distributed and reveals whether a few customers have extremely long call durations (possibly business users), thus providing clues for subsequent customer classification.
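
The telecom example can be reproduced in miniature with summary statistics, a text histogram, and a simple outlier rule. The call durations below are invented for illustration, and the 1.5 × IQR rule is one common convention for flagging outliers, not a method prescribed by the text.

```python
from statistics import mean, median, quantiles, stdev

# Hypothetical monthly call durations (minutes) for twelve customers.
minutes = [120, 95, 130, 110, 105, 98, 125, 115, 102, 990, 108, 1200]

def histogram(values, bin_width):
    """Count values per bin; keys are the lower edge of each bin."""
    counts = {}
    for value in values:
        start = (value // bin_width) * bin_width
        counts[start] = counts.get(start, 0) + 1
    return dict(sorted(counts.items()))

def iqr_outliers(values, k=1.5):
    """Return values lying more than k * IQR outside the quartiles."""
    q1, _, q3 = quantiles(values, n=4)
    spread = k * (q3 - q1)
    return [v for v in values if v < q1 - spread or v > q3 + spread]

print(f"mean={mean(minutes):.1f} median={median(minutes)} stdev={stdev(minutes):.1f}")
for start, count in histogram(minutes, 200).items():
    print(f"{start:>5}-{start + 199:<5} {'#' * count}")
print("possible heavy users:", iqr_outliers(minutes))
```

A mean far above the median, as here, is itself a signal of a right-skewed distribution driven by a few very large values.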

2.1.4.4 Interpretation frameworks

Interpretation frameworks comprise methodologies and strategies for understanding and communicating analysis results and model outputs, helping stakeholders (such as business decision-makers, customers, etc.) understand the significance and value of data science work.

For machine learning models, especially complex ones (such as deep learning models), it is important to explain their working principles and output results. For example, for an image classification model based on deep learning, feature importance analysis (such as calculating the importance scores of features) can be used to explain how the model classifies according to different features of the image (such as color, shape, etc.). For decision tree models, the decision-making process of the model can be explained by showing the structure of the decision tree (nodes and branches).
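
Feature importance scores can be computed in several ways. The sketch below shows permutation importance, a model-agnostic technique chosen here for illustration rather than one named in the text: a feature's score is the increase in prediction error when that feature's values are shuffled. The `predict` function is a hypothetical fixed model standing in for a trained one, and the data are synthetic.

```python
import random

def predict(row):
    """Hypothetical model: uses features 0 and 1 and ignores feature 2."""
    return 3.0 * row[0] + 0.5 * row[1]

def mse(rows, targets):
    """Mean squared error of the model on the given rows."""
    return sum((predict(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)

def permutation_importance(rows, targets, n_repeats=5, seed=0):
    """Average increase in MSE after shuffling each feature column."""
    rng = random.Random(seed)
    baseline = mse(rows, targets)
    scores = []
    for col in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            shuffled = [r[col] for r in rows]
            rng.shuffle(shuffled)
            permuted = [r[:col] + (v,) + r[col + 1:] for r, v in zip(rows, shuffled)]
            increases.append(mse(permuted, targets) - baseline)
        scores.append(sum(increases) / n_repeats)
    return scores

# Synthetic data: three features per row, targets generated by the model itself.
rows = [(float(i), float((i * 7) % 5), float((i * 3) % 4)) for i in range(30)]
targets = [predict(r) for r in rows]

importance = permutation_importance(rows, targets)
for index, score in enumerate(importance):
    print(f"feature {index}: {score:.2f}")
```

The ignored feature receives a score of exactly zero, while the feature with the largest effect on predictions receives the highest score, which is the kind of summary that can be communicated to non-technical stakeholders.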

2.1.5 Application of data science in international organizations

The application of data science in international organizations demonstrates its vital role in decision-making, policy monitoring, and fostering global collaboration. These organizations leverage statistical data across domains such as trade, unemployment, and public health to provide critical insights to the global community. These analytical tools not only measure development progress but also define international challenges, from identifying “least developed countries” to establishing global debt benchmarks. Additionally, quantitative research plays a pivotal role in driving public policy reforms through international oversight. The statistical operations within international organizations involve complex collaborations among intergovernmental bodies, expert committees, and secretariats, achieved through sophisticated cross-national coordination.

Moreover, the quantitative approaches of these organizations are often viewed as authoritative tools, valued for their neutrality and independence from domestic political conflicts, while promoting policy innovation and cross-border cooperation. Data science, through the analysis of statistical methodologies and data collection techniques, offers a unique perspective on the historical evolution and impact of international organizations, as exemplified by UNESCO's adaptation of its statistical framework to align with neoliberal policy objectives (Cussó and Piguet, 2023).

In practical applications, data science supports humanitarian aid, health monitoring, and the achievement of Sustainable Development Goals (SDGs) (Kirkpatrick and Vacarelu, 2018). The United Nations optimizes resource allocation through population displacement data in conflict zones, WHO employs data modeling for epidemic trends, and UNICEF uses data analysis to enhance service coverage for vulnerable groups. Similarly, the International Organization for Migration monitors migration pathways and evaluates policy impacts, while platforms like OCHA's ReliefWeb integrate data from multiple sources to aid disaster response and information dissemination.

Across disciplines such as economics and agriculture, data science facilitates investigation of complex issues such as war-induced displacement and human rights violations. With the integration of big data and artificial intelligence, data science has become indispensable for managing global affairs and improving service delivery in international organizations, providing robust support for global governance and policy design (Cussó and Piguet, 2023; Dixit and Gill, 2024).

2.2 Technologies related to big data

2.2.1 AI and machine learning

The integration of AI and machine learning algorithms with big data enables advanced analytical capabilities, including the generation of predictive insights, the automation of decision-making processes, and support for innovative applications spanning recommendation systems, fraud detection, and personalized marketing strategies (Demchenko et al., 2024).

In the domain of artificial intelligence and machine learning, there are three primary methodologies (supervised learning, unsupervised learning, and reinforcement learning), each addressing distinct aspects of data-driven problem-solving.

2.2.1.1 Supervised learning

Supervised learning involves the training of models using labeled datasets to establish input-output mappings. This methodology finds extensive application in classification tasks (such as spam detection) and regression problems (such as house price prediction), where the goal is to optimize the model's performance by minimizing prediction errors. Notable supervised learning algorithms include XGBoost, LightGBM, and k-Nearest Neighbors, widely used across finance, healthcare, and e-commerce sectors. Additionally, supervised learning contributes significantly to feature engineering and time series forecasting through specialized algorithms including Object2Vec and DeepAR.
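
As a concrete instance of learning an input-output mapping from labeled data, the following sketch implements k-Nearest Neighbors classification from scratch. The two features (links and exclamation marks per message) and the labeled examples are hypothetical; a production system would use an established library and far more data.

```python
import math
from collections import Counter

def knn_predict(train, query, k=3):
    """Label the query by majority vote among its k nearest labeled examples."""
    nearest = sorted(train, key=lambda item: math.dist(item[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Hypothetical labeled messages: (links, exclamation marks) -> label.
train = [
    ((0, 1), "ham"), ((1, 0), "ham"), ((1, 1), "ham"),
    ((8, 6), "spam"), ((9, 7), "spam"), ((7, 9), "spam"),
]

print(knn_predict(train, (8, 8)))  # close to the spam examples
print(knn_predict(train, (0, 0)))  # close to the ham examples
```

k-NN has no explicit training phase; the labeled examples themselves serve as the model, which makes it a convenient minimal illustration of supervised classification.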

2.2.1.2 Unsupervised learning

Unsupervised learning focuses on processing unlabeled data to discover inherent patterns and structures within the data. This approach aims to identify data characteristics through various techniques like clustering, dimensionality reduction, and anomaly detection. Prominent algorithms in this category include Principal Component Analysis (PCA) for dimensionality reduction, K-Means for clustering, and Random Cut Forest (RCF) for anomaly detection. These techniques are particularly effective in customer segmentation, genetic data analysis, and fraud detection. Unsupervised learning is well-suited for handling complex high-dimensional data, and methods such as IP Insights (used for recognizing network patterns) have proven valuable in specialized fields (Demchenko et al., 2024).
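
K-Means clustering can be sketched in a few lines to show how structure is found without labels. The points and initial centroids below are hypothetical, and the initial centroids are supplied explicitly so that the example is deterministic; practical implementations typically use randomized or k-means++ initialization.

```python
import math

def kmeans(points, centroids, iterations=10):
    """Alternate between assigning points to the nearest centroid and
    moving each centroid to the mean of its assigned points."""
    clusters = []
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for p in points:
            nearest = min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        updated = [
            tuple(sum(axis) / len(cluster) for axis in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if updated == centroids:  # assignments have stabilized
            break
        centroids = updated
    return centroids, clusters

# Hypothetical unlabeled observations forming two visible groups.
points = [(1, 1), (1, 2), (2, 1), (8, 8), (9, 8), (8, 9)]
centroids, clusters = kmeans(points, centroids=[points[0], points[3]])

for center, members in zip(centroids, clusters):
    print(center, members)
```

No labels are provided at any point; the grouping emerges solely from distances between observations.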

2.2.1.3 Reinforcement learning

Reinforcement learning, in contrast to the previous two methods, does not rely on labeled data but instead learns through trial and error by interacting with the environment. The methodology emphasizes cumulative reward optimization through action adjustment based on environmental feedback. In reinforcement learning, an agent takes actions in an environment and refines its strategy over time to maximize long-term benefits. Contemporary algorithms such as Deep Q-Networks (DQN) and policy gradient methods are primarily applied in areas like robotics, game AI, and autonomous driving. Reinforcement learning emphasizes dynamic decision-making processes and adapts strategies to complex environments, driving innovation in a variety of applications.
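
The trial-and-error loop described above can be illustrated with tabular Q-learning, a simpler precursor of Deep Q-Networks. The environment is a hypothetical five-state corridor in which the agent starts at the left end and receives a reward of 1 on reaching the right end; the learning rate, discount factor, and exploration rate are illustrative choices.

```python
import random

N_STATES = 5      # states 0..4; state 4 is the rewarded goal
ACTIONS = (0, 1)  # 0 = move left, 1 = move right

def step(state, action):
    """Environment dynamics: return (next_state, reward, done)."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.3, seed=0):
    """Learn action values by epsilon-greedy interaction with the corridor."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):  # cap on steps per episode
            explore = rng.random() < epsilon or q[state][0] == q[state][1]
            if explore:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, action)
            # Q-learning update: move the estimate toward the bootstrapped target.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q

q_table = train()
policy = [max(ACTIONS, key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print("greedy action per state (1 = right):", policy)
```

The agent is never told that moving right is correct; the preference emerges because the reward propagates backward through the value estimates over repeated episodes.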

2.2.2 Applications of AI and machine learning in global governance

AI and machine learning demonstrate significant impact across multiple domains of global governance. In the economic field, when the global food supply chain is severely disrupted by abnormal events such as trade conflicts, natural disasters, and pandemics, AI methodologies (including machine learning, reinforcement learning, and deep learning) can identify regular, irregular, and contextual components, improving understanding of event outcomes and guiding decision-making for suppliers, farmers, processors, wholesalers, retailers, and policymakers to promote welfare improvement (Batarseh and Gopinath, 2020).

In the technological field, particularly network development, the evolution from 5G to next-generation 6G networks increasingly relies on intelligent network orchestration and management, and AI will play a key role in the emerging 6G paradigm. Moreover, although most AI systems lack perception, as systems that simulate human thinking they can reason and calculate in human-like ways through algorithms and operate in conjunction with technologies such as machine learning (Yarali, 2023).

In addition, in international migration governance, AI technology is widely applied in various scenarios such as the prediction management of migration flows, automated decision-making, identity recognition, machine learning and matching, sentiment analysis, border monitoring, and robotics. While technological iterations drive governance concepts and model transformation, they simultaneously impact governance systems through capability disparities among governance subjects, fairness considerations, and normative frameworks (Chen and Wu, 2021).

2.2.3 Internet of Things

The Internet of Things (IoT) is also closely related to big data, as it generates vast volumes of real-time data from connected devices that require advanced analytics to process and interpret. The IoT architecture represents an ecosystem comprising interconnected physical objects accessible via Internet protocols. This architecture embodies the continuous integration between digital and physical realms, incorporating various technologies to form the Internet of Things (Margaret Mary et al., 2021). The purpose of designing the IoT architecture is to meet system requirements in specific application fields. Implementing an IoT system based on a given architecture to achieve required characteristics is essential.

The main IoT architecture categories include software IoT architecture, hardware IoT architecture, and general IoT architecture. Research suggests an end-to-end IoT architecture based on a five-layer model with various enabling technologies (Hafdi, 2019).

The IoT system contains several key components. Devices (Things), as the core element, evolve in aspects like user interface, appearance, performance, energy consumption, and security. New IoT devices explore applications with enhanced intelligence, incorporating various sensors and actuators, microcontroller architectures (such as ARM Cortex-M), input/output interfaces, programming models, and real-time operating systems (Firouzi et al., 2020).

2.2.4 Real-world applications of IoT in governance

IoT applications in governance continue to expand, transforming social governance. In smart city initiatives, IoT devices monitor environmental indicators like air and water quality in real time, providing data-driven support for decision-making. Wearable devices collect health data for personalized healthcare services. In traffic management, IoT optimizes signal control through real-time monitoring, while smart parking systems improve efficiency by helping drivers quickly locate available parking spaces (Nimkar and Khanapurkar, 2021). Water quality monitoring supports ecological goals through scientific management solutions (Lan and Fan, 2002). In agriculture, IoT sensors monitor soil conditions for precision farming while ensuring food safety through product traceability. Industrial applications improve production efficiency through equipment monitoring and intelligent management. In transportation, IoT enhances logistics by tracking shipments in real-time (Verma et al., 2022).

2.3 Technological trends

As countries and organizations increasingly depend on data-driven strategies and digital infrastructures, several technological trends have emerged. Among them, data democratization, the evolution of cloud storage, and advancements in data visualization are transforming how information is accessed, processed, and interpreted. Understanding these trends offers critical insight into both current capabilities and future trajectories across industries.

2.3.1 Data democratization

Data Democratization (DD) enables broader employee access to data understanding and usage within organizations. Its core features include wider data access with security controls, self-service analytics tools, enhanced data literacy training, collaborative knowledge sharing, and promoting data value recognition (Lefebvre et al., 2021).

This concept has seen notable implementation in digital-native companies such as Airbnb, Uber, and Netflix, where data-driven thinking is deeply embedded in organizational culture. In contrast, traditional enterprises, despite investments in IT infrastructure, often grapple with cultural inertia—stemming from limited managerial awareness and underestimated employee data capabilities (Lefebvre et al., 2021).

Research on data democratization remains focused on specific areas without a comprehensive theoretical framework. Key dimensions include data accessibility, analytical skill development, and knowledge sharing, with applications in healthcare and urban planning.

Looking ahead, data democratization continues to evolve with exponential data growth projected for the next 5 years. The advancement in data analytics enables the extraction of meaningful context from external data sources for better business decisions. Future trends show that technology, especially AI and machine learning, will accelerate the process by automatically identifying patterns, generating insights, and handling data, thus lowering the usage threshold. For enterprises, benefits include improved decision-making, enhanced cohesion, and deeper customer understanding. Challenges remain in organizational silos, specialist dependence, and data visualization limitations (Lefebvre et al., 2021).

2.3.2 Cloud storage evolution

Building upon the data access revolution, the evolution of cloud storage marks another foundational shift in digital infrastructure. Originally celebrated for overcoming traditional limitations in data capacity and accessibility, cloud platforms like Google Drive, OneDrive, and Dropbox have become integral to storing and sharing information efficiently (Rawat, 2020).

Cloud storage technology continues to evolve with cutting-edge developments. AIOps platforms leverage machine learning and analytics to enhance IT operations through proactive insights (Levin et al., 2019). In electrical automation, cloud storage facilitates efficient data processing for control systems, combining work data from production and manufacturing with big data storage to guide work processes and inform operational decisions (Yu, 2020). Meanwhile, in the security system sector, the integration of cloud computing and cloud storage technologies has deepened, significantly enhancing the performance and reliability of security systems to meet growing demands for safety (Zhou, 2018).

However, this progress brings security challenges. Data encryption faces challenges with duplicate detection, while convergent encryption remains vulnerable to attacks. Client-side deduplication technology presents security risks despite storage efficiency benefits (Park et al., 2015). Key management issues also persist, particularly in secure deduplication systems as user numbers increase (Babu and Babu, 2016).
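To make the tension between deduplication and confidentiality concrete, the following is a minimal sketch of the idea behind convergent encryption, in which the key is derived from the content itself. It is an illustration under stated assumptions, not the scheme of any cited work: the function names `convergent_key` and `dedup_tag` and the sample data are hypothetical, and no actual cipher is applied.

```python
import hashlib


def convergent_key(plaintext: bytes) -> bytes:
    """Derive the key from the content itself (SHA-256 of the plaintext)."""
    return hashlib.sha256(plaintext).digest()


def dedup_tag(plaintext: bytes) -> str:
    """Tag a server could store to detect duplicates (hypothetical design:
    a hash of the convergent key, so the key itself is never uploaded)."""
    return hashlib.sha256(convergent_key(plaintext)).hexdigest()


# Two users upload the same file: the tags match, so one copy can be stored.
report = b"quarterly budget: 1,000,000"
tag_alice = dedup_tag(report)
tag_bob = dedup_tag(report)
same_copy = tag_alice == tag_bob

# The same determinism is the weakness: anyone who can enumerate likely
# contents can confirm which one was uploaded by recomputing the tag.
candidates = [b"quarterly budget: 900,000", b"quarterly budget: 1,000,000"]
confirmed = [c for c in candidates if dedup_tag(c) == tag_alice]
```

Because identical plaintexts always produce identical keys and tags, storage savings and the ability to confirm guessed content come from the same property, which is one way to read the vulnerability described above.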

To counteract these concerns, flexible distributed schemes can enhance data accuracy and security. Fuzzy authorization schemes can also improve access control through encryption and third-party auditor mechanisms, reducing storage consumption while maintaining public auditing capabilities (Puranik et al., 2016).

2.3.3 Visualization advances

As more organizations gain access to data and the storage infrastructure becomes more powerful, the ability to interpret and communicate complex information becomes crucial. This is where data visualization technologies have made their mark, offering dynamic and intuitive ways to translate raw data into meaningful insights.

The latest tools and techniques for big data visualization have advanced significantly, offering powerful functionalities tailored to diverse analytical scenarios. Tableau connects to various data sources, providing intuitive interfaces for complex analyses. RapidMiner excels in data mining and machine learning visualization. RStudio, with packages like ggplot2, enables detailed statistical visualization for fields such as finance and market analysis (Okechukwu, 2022).

Current visualization technologies can handle large-scale datasets efficiently, support diverse data types, and enable interactive exploration. Tools like Tableau and RapidMiner process millions of records in real time, managing structured and unstructured data while enabling collaborative decision-making through platforms like Tableau Public (Okechukwu, 2022).

Future developments will further enhance usability and impact. Technologically, visualization tools will become smarter, capable of identifying patterns, suggesting suitable chart types, and highlighting key data points, making analysis more efficient and user-friendly. Enhanced interactivity, such as gesture controls and voice commands, will enable users to explore data more intuitively (Xie et al., 2022). Moreover, the integration of AI, VR, and AR will deliver richer, more immersive visualization experiences (Sharma et al., 2021).

The applications of these tools will also expand significantly. In business, they will support decision-making by visualizing sales or customer data to guide precise strategies (Hu and Hao, 2020). In healthcare, they will help doctors analyze patient records and imaging data, improving diagnosis accuracy and enabling personalized treatments (Nazir et al., 2019). In journalism, data visualization will become a powerful storytelling tool, presenting trends and impacts through intuitive charts (Liang, 2017).

2.4 Summary

A solid understanding of big data, data science, and their associated technologies is foundational for navigating today's digital transformation. Key trends such as data democratization, cloud storage evolution, and advancements in data visualization are revolutionizing how institutions collect, share, and analyze information. These shifts not only enable more efficient data use but also pave the way for smarter, real-time decision-making.

With the fundamentals of these technologies established, the following sections explore the concrete applications of big data within international organizations such as the UN system, highlighting how big data is being harnessed to support sustainable development goals and enhance humanitarian response.

3 Current applications of big data in international organizations

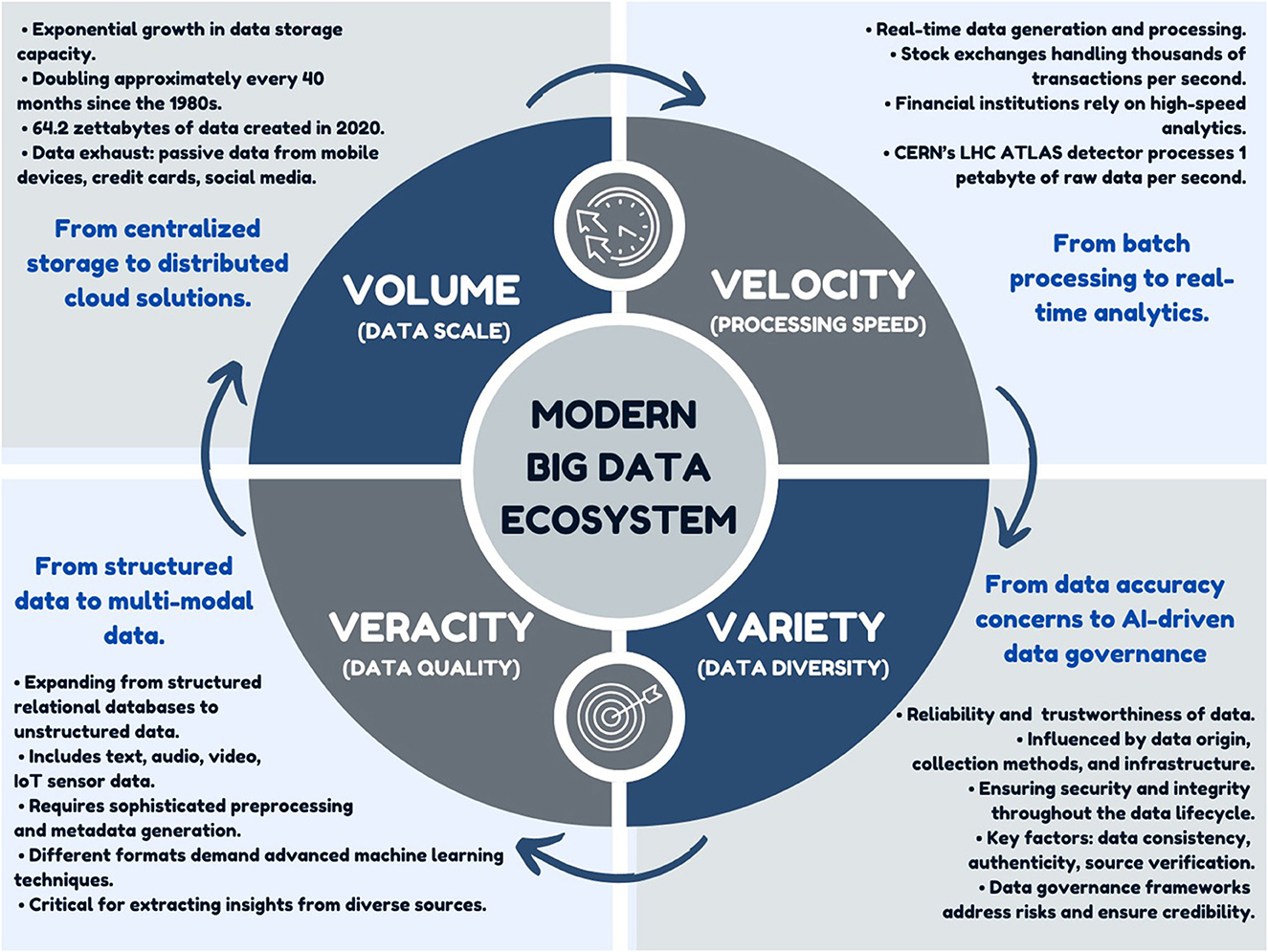

This section directly addresses our first research question by examining concrete applications of big data across major international organizations. We analyze how the UN, World Bank, and WHO are leveraging big data technologies to advance SDGs, enhance humanitarian relief efforts, and strengthen crisis prevention mechanisms.

3.1 UN initiatives in big data applications

3.1.1 UN global pulse

UN Global Pulse was launched in 2009 to explore how big data and data science can support the Sustainable Development Goals (SDGs) and humanitarian action. The initiative harnesses emerging technologies to understand global challenges, enabling real-time decision-making and improving the efficiency of UN programs (United Nations, 2023; UN Global Pulse, 2023). Its core objectives include: harnessing big data for real-time monitoring of SDG progress (UN Global Pulse, 2023); using mobile phone data, satellite imagery, and social media to improve humanitarian aid effectiveness (Legner, 2021); and strengthening partnerships between international organizations, governments, and technology companies (Bandola-Gill et al., 2022). Global Pulse also partners with technology firms like Microsoft, Google, and IBM for data analytics capabilities, machine learning, and cloud storage (Soares, 2012). Furthermore, collaborations with the World Bank, WHO, and UNICEF ensure alignment with global development agendas (Aguerre et al., 2024).

Global Pulse is coordinated by the UN Secretary-General's office in New York, with regional hubs in Jakarta (Indonesia), Kampala (Uganda), and Helsinki (Finland), focusing on food security, migration, and urbanization (UN Global Pulse, 2023). These hubs collaborate with stakeholders to apply data analytics to local challenges (Hennen et al., 2023).

Pulse Lab Jakarta, a regional hub jointly operated by UN Global Pulse and the Government of Indonesia, serves the broader Asia-Pacific region as a data innovation facility. It has been instrumental in utilizing mobile data and satellite imagery for disaster risk assessment and urban development. The lab analyzes real-time data to track population movement during floods and guide resource allocation (UN Global Pulse, 2023; United Nations, 2023). This project underscores the importance of data-driven urban resilience (Aguerre et al., 2024).

Similarly, Pulse Lab Kampala, the UN Global Pulse regional hub based in Uganda, focuses on food security by integrating mobile phone data with weather forecasts. During the 2020 drought, the lab's predictions helped NGOs allocate food aid more efficiently (United Nations, 2023; Hennen et al., 2023).

3.1.1.1 Project analysis

Leveraging social media data from platforms like Twitter and Facebook, Global Pulse has developed systems for monitoring public sentiment and identifying emerging crises, including Ebola outbreak monitoring and public health messaging (UN Global Pulse, 2013; United Nations, 2023). The initiative utilizes Natural Language Processing (NLP), sentiment analysis, and machine learning to analyze unstructured social media data, helping track trends and gauge public sentiment in real time (Legner, 2021). The data includes social media posts, user-generated content, and geospatial information. These are analyzed to track crises, public opinion, and information diffusion (United Nations, 2023).

Sentiment analysis and geospatial mapping are core techniques used to identify regions of high activity or concern. These analyses enable the UN to monitor global events in real time and optimize resource allocation during disasters (UN Global Pulse, 2023). Geolocation helps map crisis impact, while text analysis provides insights into public health, migration trends, and social unrest (Soares, 2012).
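As a rough illustration of how sentiment scores and location tags can be combined to surface regions of concern, the sketch below scores short posts against a tiny word list and averages the scores by region. This is a toy example only and does not represent the methods used by Global Pulse: the word lists, the region labels, and the helper names `score` and `concern_by_region` are all assumptions made for illustration.

```python
from collections import defaultdict

# Hypothetical word lists; a real system would use trained NLP models.
NEGATIVE = {"flood", "shortage", "sick", "trapped", "damage"}
POSITIVE = {"safe", "rescued", "restored", "help"}


def score(text: str) -> int:
    """Count positive words minus negative words in a post."""
    words = [w.strip(".,!?") for w in text.lower().split()]
    return sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)


def concern_by_region(posts):
    """Average sentiment per region for (region, text) pairs."""
    totals = defaultdict(list)
    for region, text in posts:
        totals[region].append(score(text))
    return {region: sum(s) / len(s) for region, s in totals.items()}


posts = [
    ("district_a", "Water shortage and flood damage everywhere"),
    ("district_a", "Families trapped, need help"),
    ("district_b", "Power restored, everyone safe"),
]
result = concern_by_region(posts)
# The region with the lowest average score is flagged first.
most_concerning = min(result, key=result.get)
```

In this sample, `district_a` averages a lower score than `district_b`, so it would be the first candidate for closer review by an analyst.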

With social media analytics, Global Pulse's projects have successfully improved response times and resource allocation, as demonstrated during the Nepal earthquake and Uganda food security initiatives (Legner, 2021; Bandola-Gill et al., 2022). During the 2015 Nepal earthquake, social media data helped track aftershocks and coordinate rescue efforts more effectively (United Nations, 2023; UN Global Pulse, 2023). In the Ebola outbreak, it helped monitor misinformation for better public health communication, while during Hurricane Maria in Puerto Rico, social media analysis directed aid to the hardest-hit areas (Legner, 2021).

Despite these successes, challenges persist in data privacy, data quality, and cross-border data sharing. Global Pulse employs anonymization techniques to protect privacy, but concerns about data sovereignty remain a significant hurdle (Aguerre et al., 2024). Integrating datasets from countries with different data infrastructures also presents logistical challenges (Soares, 2012).

Key lessons from Global Pulse's projects include the need to collaborate with local actors to ensure data relevance and accuracy, and the importance of data interoperability in facilitating cross-sector collaboration (Hennen et al., 2023). Moreover, ethical data collection and usage frameworks must be carefully designed to balance development goals with privacy and security concerns (Aguerre et al., 2024).

3.1.1.2 SDG tracking and monitoring

Big data also plays an important role in tracking SDG progress, and the UN has developed several platforms to support this effort. The primary platform is the Global SDG Indicator Platform by the UN Statistics Division (UNSD), which consolidates data from national governments and international organizations. The SDG Tracker by Our World in Data also provides real-time visualizations of SDG indicators (UN Big Data, 2025).

SDG monitoring combines traditional statistics (census data, national surveys) with alternative sources like satellite imagery and mobile data. Poverty and health indicators are monitored through household surveys, while environmental indicators rely on remote sensing data (Legner, 2021). Big data and sensor networks enable real-time monitoring.

Countries report through the Voluntary National Reviews (VNRs) at the UN High-Level Political Forum. These are complemented by the SDG Progress Report, which aggregates data to assess global progress (United Nations, 2023).

UNSD works with national statistical offices to ensure standardized data collection. Many countries have established national SDG monitoring frameworks, though challenges remain in aligning diverse data sources, particularly in low-resource settings (Aguerre et al., 2024).

3.1.1.3 Applications of satellite imagery in SDG monitoring

For SDG 2 (Zero Hunger), satellite imagery monitors agricultural productivity and food security. Remote sensing tracks crop yields, drought conditions, and soil moisture. FAO uses this data to monitor global food production and assess climate impacts on food systems (Bandola-Gill et al., 2022).

Satellite data can also track urban development in rapidly growing cities, supporting the tracking of SDG 11 (Sustainable Cities and Communities). The Global Urban Monitoring Initiative observes urban growth patterns and environmental impact (Aguerre et al., 2024).

Additionally, satellite data plays a vital role in monitoring SDG 13 (Climate Action). The European Space Agency (ESA) and NASA monitor global temperature changes, sea level rise, and glacier melt. Sentinel satellites track changes in vegetation, ice caps, and water bodies (Hennen et al., 2023).

Furthermore, satellite imagery provides near-instantaneous data for disaster impact analysis. After natural disasters like hurricanes, floods, or earthquakes, satellite data helps responders quickly identify the scale of destruction and humanitarian needs (United Nations, 2023). After the 2015 Nepal earthquake, for example, satellite data helped assess infrastructure damage and guide humanitarian aid deployment. This technology is essential for ensuring that relief efforts are targeted efficiently, saving lives and resources (UN Global Pulse, 2023).

3.1.1.4 Future development

Future developments in big data applications in the UN should focus on enhancing data accuracy. The integration of 5G networks, edge computing, and blockchain technologies may revolutionize remote data collection. New satellite constellations will provide more frequent imagery, improving SDG monitoring capabilities (Aguerre et al., 2024).

Equally important is addressing the challenge of interoperability. Current limitations include data interoperability between platforms and standardization of collection methods. Data gaps exist between satellite observations and ground-level information, particularly in rural or conflict areas. Ensuring technology sustainability in low-resource settings remains critical for inclusive and equitable data-driven development (Bandola-Gill et al., 2022; Soares, 2012) (Figure 3).

Figure 3. This ecosystem diagram maps the relationships between UN initiatives (Global Pulse, SDG Tracking) and other international organizations (World Bank, WHO), highlighting data flows, collaborative projects, and technological implementations.

3.2 Current applications of big data in World Bank

The integration of big data and artificial intelligence (AI) enables decision-makers to address complex global challenges with precision and efficiency. At the World Bank, AI is applied both internally and externally, from project design to policy advice (Okahashi and Blanco, 2020). Lutz Lersch, the Bank's Senior AI Officer, emphasizes the Bank's responsibility to explore tools like ChatGPT and Google's Gemini while ensuring responsible implementation (Saldinger, 2023). Presented below are key applications of big data and AI at the World Bank.

3.2.1 Project development

The World Bank's Environmental and Social Framework (ESF) includes 10 Environmental and Social (E&S) Standards for sustainable development projects. The AI-powered ESF Risk Assessment Toolkit leverages big data to generate baseline information and flag environmental and social risks. The resulting reports help project teams facilitate strategic dialogues, assist consultants, and support client preparation work.

3.2.2 Poverty reduction efforts

Since 2018, the World Bank Group has implemented nearly 45 projects including MALENA, Mai, and Impact AI (IFC Opens Public Access to MALENA: An AI-Powered Accelerator for Sustainable Investments). Impact AI enhances decision-making by utilizing big data to provide actionable solutions and evidence for development professionals and policymakers. The MALENA platform processes ESG data to optimize investments in poverty-stricken regions (IFC Opens Public Access to MALENA: An AI-Powered Accelerator for Sustainable Investments).

The Bank's big data and AI integration extends to real-time economic monitoring through satellite imagery and financial data (Real-Time Welfare Monitoring for Development Impact). A notable example is the $70 million Novissi cash transfer initiative in Togo during COVID-19. Using AI and satellite imagery, the program identified disadvantaged regions and leveraged mobile phone metadata to assess consumption patterns for 70% of the population, reaching over 57,000 beneficiaries between 2020 and 2021 (Prioritizing the Poorest and Most Vulnerable in West Africa).

For food insecurity, the Development Impact Group AI uses natural language processing on news articles as an early warning system. This approach improves crisis forecast accuracy by up to 50% for up to 1 year ahead, enabling proactive measures, especially in data-limited areas (Fraiberger; Development Impact Group).

3.2.3 Climate finance and environmental sustainability

The integration of big data and AI in climate-smart decision-making offers governments a promising tool for navigating climate resilience and sustainable development (UNDP, 2025). As climate change accelerates, governments face the dual challenge of protecting infrastructure from rising sea levels, extreme heat, and storm surges while ensuring investments contribute to emissions reduction. A key challenge is the gap between the vast availability of climate data and governments' capacity to process and act on it. The World Bank highlights AI's potential to bridge this gap by analyzing large datasets and presenting actionable insights in user-friendly formats, enhancing climate-smart decision-making (Peixoto et al., 2023).

AI enables governments to move beyond simple data collection to proactive, context-specific decisions that advance climate resilience. For example, the Green Economy Diagnostic (GED) under the Climate-Smart Development Initiative integrates datasets on air quality, extreme weather patterns, and economic performance to guide sub-national regions in climate-conscious investments. AI identifies trends, such as temperature and air quality variations, offering concrete recommendations on emissions standards and infrastructure resilience. As AI advances, it could further optimize resource allocation for climate mitigation and adaptation while fostering stakeholder collaboration.

However, AI's effectiveness in climate governance depends on multidisciplinary teams translating data into actionable solutions. Despite AI's ability to process large datasets, human and institutional barriers must be addressed. Governments need to invest in skilled teams for AI tool development and deployment, requiring substantial funding and support. Additionally, a “demos-driven” approach—focusing on practical results rather than theoretical frameworks—is crucial for refining AI applications. Hands-on experimentation and iterative learning allow AI tools to evolve in alignment with climate and development goals (Peixoto et al., 2023).

3.2.3.1 Case study: digital tools to safeguard the climate resilience of smallholder farmers

The Digital Farm initiative, funded by the World Bank's Trust Fund for Statistical Capacity Building III, empowered smallholder farmers in East Africa with real-time climate data for climate-smart agriculture (2018–2020). In partnership with the International Centre for Tropical Agriculture (CIAT), Climate Edge, and local cooperatives, the project trained lead farmers and youth agents in using digital tools such as a digital record-keeping app and NEXO weather stations (World Bank).

NEXO weather stations, using IoT M2M SIM cards, tracked bioclimatic variables affecting coffee and tea crops. Integrated with satellite and farm-level data, the initiative provided farmer-friendly dashboards for decision-making. To address challenges like data literacy and connectivity, the project emphasized human-centered design to ensure usability.

Outcomes included training over 4,000 smallholders, installing 20 weather stations, and integrating climate data into FarmDirect's app, improving profitability and resilience. This success highlights the need for ongoing investment in digital literacy and data accessibility for smallholder farmers.

3.2.4 Social norms and behavior monitoring

The World Bank recognizes big data's role in addressing harmful social norms. The Development Impact Group (DIME) AI, supported by a Bill & Melinda Gates Foundation grant, enhances research and policy in India, Kenya, and Nigeria. This initiative examines media consumed by Adolescent Girls and Young Women (AGYW), develops monitoring tools, and evaluates media's influence on social norms. To improve accessibility, the group is using machine learning to automate content analysis and monitoring tools. Additionally, policy dialogues will be conducted to maximize the effectiveness of these efforts, particularly within major entertainment hubs (Development Impact Group).

A key component is the development of Hate Speech Detection (HSD) models to combat harmful online content. Traditional HSD models, often trained on U.S. data, struggle with regional dialects, and biased datasets can distort moderation performance. To address this, the World Bank and academic partners developed culturally tailored HSD models, improving accuracy with human oversight. A study found that human reviewers monitoring just 1% of flagged tweets enabled AI to moderate 60% of hateful content effectively.

Understanding social media usage patterns helps policymakers address interethnic violence and societal trends. Leveraging detection models, the World Bank provides real-time policy tools. In Nigeria, a targeted intervention used the best-performing HSD model to identify users sharing hate content and delivered ads with prosocial messages. Early findings show a 15% reduction in hate content among those exposed to the ads, likely due to decreased sharing within their networks (Tonneau et al., 2024).

3.3 World Health Organization (WHO) applications of big data

WHO's Director General, Tedros Adhanom Ghebreyesus, highlights AI's growing role in digital healthcare, particularly in diagnosis, clinical care, drug development, disease monitoring, outbreak management, and overall health system organization (Harnessing Artificial Intelligence for Health) (Taylor, 2023).

WHO's AI strategy is built on three pillars: setting standards, governance, and policies for evidence-based AI in health; fostering pooled investments and global expert collaboration; and implementing sustainable AI programs at the national level (Artificial Intelligence for Health).

In collaboration with the International Telecommunication Union (ITU), WHO established the ITU/WHO Focus Group on “AI for Health” (FG-AI4H) in July 2018. The initiative aims to develop international evaluation standards for AI solutions in healthcare, focusing on machine learning, medicine, regulation, public health, statistics, and ethics. This multidisciplinary effort consolidates national expertise to create universal benchmarks for AI in health.

FG-AI4H operates through iterative, collaborative efforts, with bi-monthly meetings producing deliverables on AI ethics, regulatory best practices, software lifecycle specifications, data management, AI training, evaluation criteria, and AI adoption strategies. Specialized working groups generate documents on specific use cases and guidelines for AI deployment in healthcare settings (Kan et al., 2024).

A key outcome of the FG-AI4H is the development of guidelines and specifications designed for replicability. These guidelines support AI evaluation and contribute to an online platform for benchmarking AI applications. Leveraging global expertise, the Group ensures AI solutions are developed, tested, and implemented with consistency, transparency, and ethical consideration.

Several factors have contributed to FG-AI4H's progress, including active participation from national and regional health regulators, public health agencies, medical professionals, AI developers, and ITU and WHO inter-agency collaborations. However, challenges remain, such as ensuring cross-border coordination and balancing innovation with regulation. Despite these constraints, the Focus Group's collaborative approach and commitment to standardized, replicable AI evaluation frameworks promise to drive sustainable and scalable advancements in healthcare AI worldwide (Figure 3).

3.4 Summary

Recent years have witnessed a significant expansion in the application of big data across international organizations, particularly within the United Nations (UN), the World Bank, and the World Health Organization (WHO). The UN's Global Pulse initiative exemplifies the integration of mobile data, satellite imagery, and social media analytics to enhance humanitarian action, monitor public sentiment during crises, and track progress toward the Sustainable Development Goals (SDGs). At the World Bank, artificial intelligence and big data have been incorporated across sectors, from poverty alleviation programs to climate resilience initiatives and hate speech monitoring. Similarly, the WHO focuses on AI for healthcare and develops global standards for its application.

While this section highlights the concrete applications of big data in advancing sustainable development, disaster resilience, public health, and global governance, the next section will critically examine the potential challenges and risks associated with integrating big data into global governance frameworks, including privacy concerns, infrastructural and human resource limitations, ethical dilemmas, and the potential misuse of data.

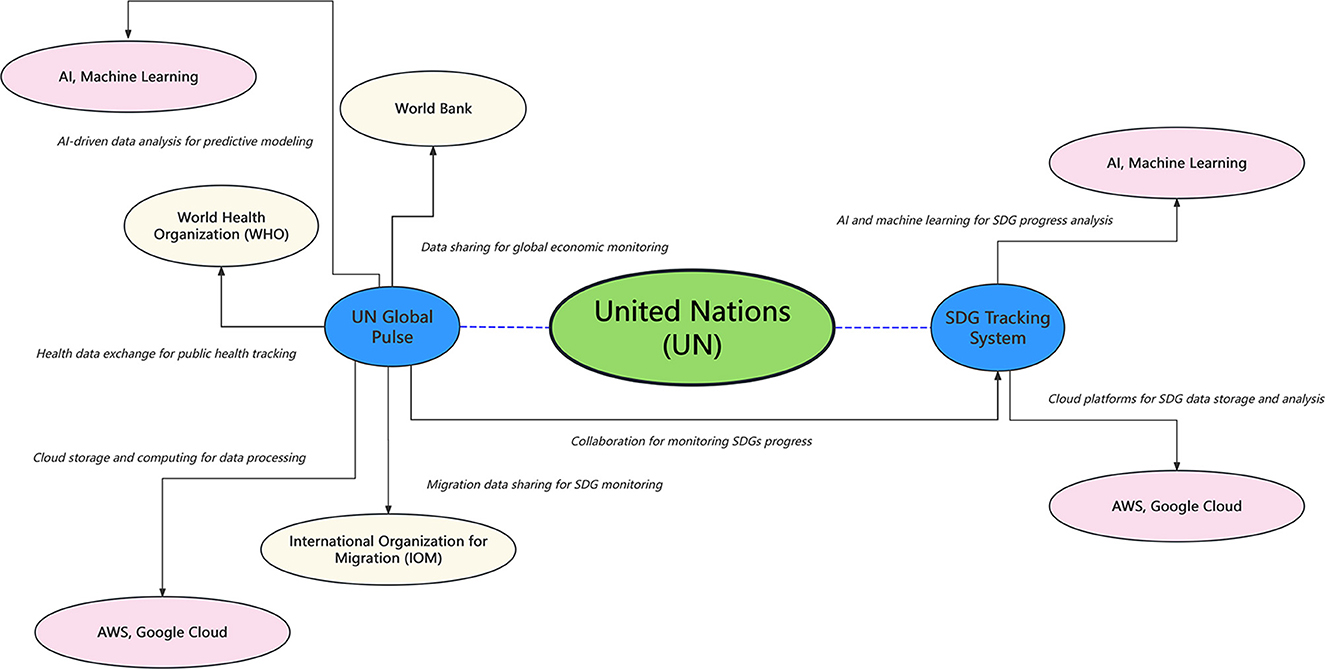

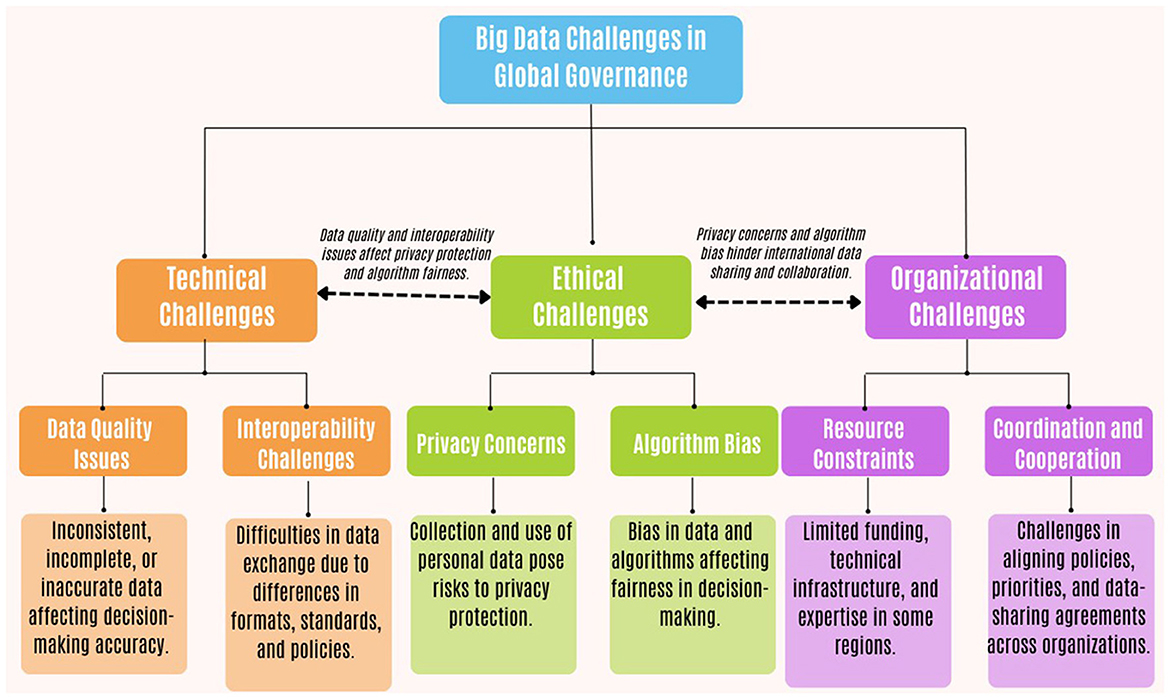

4 Potential challenges and risks in adopting big data for global governance

In the current era of increasing informatization and rapid technological development, both modern science and industrial development are increasingly driven by data. The use of Big Data now extends beyond pioneering technology sectors into most market sectors, enhancing overall operational efficiency (Figure 4). For major international organizations, the effective design and development of integrated Big Data systems is pivotal to adopting Big Data for global governance (Demchenko et al., 2024). However, the adoption of big data for governance is not without substantial challenges. This section critically examines the technical, ethical, organizational, and political risks associated with integrating big data into global governance frameworks.

Figure 4. This framework visualizes the three core challenge dimensions (technical, ethical, and organizational) that impede big data implementation in global governance.

4.1 Technical challenges

The establishment of a big data platform to serve international organizations, such as the United Nations, is paramount for realizing the Sustainable Development Goals (SDGs). Such platforms can provide real-time information for monitoring SDG progress and facilitate data sharing between member states (United Nations, 2023). However, new paths often come with challenges, particularly in managing data quality and overcoming interoperability limitations.

4.1.1 Data quality issues

Data quality varies for multiple reasons, and inconsistent or low-quality data undermines the accuracy of decision-making. According to the Journal of Accountancy, error rates of 1–5% can be attributed to human input, including spelling errors, incomplete entries, and duplicate entries. The absence of standardized data management can result in inconsistent and fragmented data sets (Elahi, 2022). Fragmentation arises because data is stored in isolated repositories maintained by individual departments and organizations, which creates data silos and impedes the harmonization and sharing of information (Tsidulko, 2024). In addition, integrating multiple data resources may produce discrepancies, because data merged from diverse systems or external sources follows different quality standards (Batini and Scannapieco, 2016). Poorly integrated third-party data is another significant contributor to data quality problems within organizations, because the organization lacks oversight and control over the underlying data sources (David, 2022). As technology advances, older systems may also prove incompatible with the latest data formats, which can generate unclean data. The data quality issues common in international governance are similar to those faced by most organizations. According to the United Nations Data Quality Assurance Framework (UN DQAF) factsheet (International Monetary Fund, 2003), the issues described above need to be taken seriously.

High-quality data must possess both accuracy and validity to reflect the real situation. Data integrity is crucial, as missing data can affect decision outcomes and lead to bias. Data consistency must be maintained, especially in cross-database systems. Moreover, timeliness is essential, as only recent data can reliably reflect current situations.
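The quality dimensions described here can be checked mechanically. The following is a minimal sketch, assuming a small list of hypothetical records and illustrative thresholds; the field names, the `quality_report` helper, and the 365-day freshness limit are assumptions rather than part of any cited framework.

```python
from datetime import date

# Hypothetical indicator records collected from several sources.
records = [
    {"id": 1, "country": "KE", "value": 10.5, "updated": date(2024, 5, 1)},
    {"id": 2, "country": "UG", "value": None, "updated": date(2024, 4, 20)},
    {"id": 1, "country": "KE", "value": 10.5, "updated": date(2024, 5, 1)},
    {"id": 3, "country": "NP", "value": 7.0, "updated": date(2019, 1, 15)},
]


def quality_report(rows, required, as_of, max_age_days):
    """Flag incomplete, duplicated, and stale records by id."""
    # Completeness: any required field missing.
    incomplete = [r["id"] for r in rows if any(r.get(f) is None for f in required)]

    # Duplicates: the same values in every required field seen before.
    seen, duplicates = set(), []
    for r in rows:
        key = tuple(r.get(f) for f in required)
        if key in seen:
            duplicates.append(r["id"])
        seen.add(key)

    # Timeliness: last update older than the allowed age.
    stale = [
        r["id"]
        for r in rows
        if r.get("updated") is not None
        and (as_of - r["updated"]).days > max_age_days
    ]
    return {"incomplete": incomplete, "duplicates": duplicates, "stale": stale}


report = quality_report(
    records,
    required=("id", "country", "value", "updated"),
    as_of=date(2024, 6, 1),
    max_age_days=365,
)
```

Checks of this kind do not establish accuracy or validity, which require comparison against a trusted reference, but they catch the missing, repeated, and outdated entries discussed above before data reaches decision-makers.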

4.1.2 Interoperability challenges

Beyond internal data quality, the challenge of interoperability across systems, sectors, and national borders is critical. Cultural, linguistic, and technical differences exacerbate difficulties in establishing standardized governance frameworks (Sargiotis, 2024). Interoperability challenges arise when data systems across organizations, regions, and countries need to communicate or integrate data. Different countries use varying data formats, including basic units of measurement and date formats, which can lead to incompatibility during data exchange. Overcoming these barriers requires the development of internationally agreed-upon data standards and robust protocols for ensuring compatibility across diverse platforms.
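The date and unit mismatches mentioned above can be illustrated with a short sketch that converts records to a shared representation before exchange. The record layout, the `date_style` labels, and the `normalize` helper are hypothetical; ISO 8601 dates and kilometres are used here only as one plausible common target.

```python
from datetime import datetime

# Assumed source conventions; real systems would need a fuller registry.
DATE_FORMATS = {"US": "%m/%d/%Y", "EU": "%d/%m/%Y", "ISO": "%Y-%m-%d"}
TO_KM = {"km": 1.0, "mi": 1.609344}


def normalize(record):
    """Convert a record to ISO 8601 dates and distances in kilometres."""
    parsed = datetime.strptime(record["date"], DATE_FORMATS[record["date_style"]])
    return {
        "date": parsed.date().isoformat(),
        "distance_km": round(record["distance"] * TO_KM[record["unit"]], 3),
    }


# The same date string means different days under different conventions.
us = normalize({"date": "03/04/2024", "date_style": "US", "distance": 10, "unit": "mi"})
eu = normalize({"date": "03/04/2024", "date_style": "EU", "distance": 16.09344, "unit": "km"})
```

Here the identical string `03/04/2024` resolves to 4 March in the first record and 3 April in the second, while the two distances turn out to be the same once expressed in kilometres. Without an agreed standard, neither fact is visible to the receiving system.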

4.2 Ethical issues

As global governance increasingly relies on big data analytics, ethical concerns regarding privacy, transparency, and algorithmic fairness have also come to the forefront.

4.2.1 Privacy concerns

The vast amount of personal information collected through big data systems poses significant privacy challenges. Individuals often have little insight into how their data is used or whether sufficient protections are in place, particularly when data is transferred across jurisdictions with differing legal standards (Zuboff, 2019). Additionally, in the global governance context, data comes from different countries with varying privacy standards and regulations, further complicating efforts to protect personal information.

4.2.2 Algorithm bias

Algorithmic bias remains another significant ethical risk. Complex algorithms based on big data analytics can obscure the calculation process, leading to questions about impartiality. Without transparency, affected populations cannot challenge erroneous or discriminatory outcomes.

Bias in algorithmic systems can be categorized into three types: data source bias, sampling bias, and algorithm design bias. Data source bias occurs when training data is concentrated in certain regions, while sampling bias results from unbalanced category distribution in datasets. Algorithm design bias stems from the design of the algorithm itself and can affect decision-making outcomes. These biases can reinforce social inequities or distort policy interventions.

For example, during the pandemic, the World Health Organization (WHO) used big data to calculate spread rates and predict infection numbers (Rossouw and Greyling, 2024). However, better data availability in Europe and the United States led to algorithmic bias, affecting resource distribution in developing regions (Bayati et al., 2022). Addressing such biases requires deliberate efforts to diversify datasets, audit algorithmic processes, and ensure inclusive model development practices.
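One simple way to begin auditing a dataset for this kind of regional imbalance is to compare each region's share of the sample with its share of the population the model is meant to serve. The sketch below assumes made-up sample counts and population shares; the `representation_ratio` helper and the 0.5 threshold are illustrative choices, not an established standard.

```python
from collections import Counter


def representation_ratio(sample_regions, population_share):
    """Ratio of each region's sample share to its population share.

    A value near 1 means proportional coverage; values well below 1
    indicate under-representation.
    """
    counts = Counter(sample_regions)
    total = sum(counts.values())
    return {
        region: (counts[region] / total) / share
        for region, share in population_share.items()
    }


# Hypothetical training sample and population shares (not real figures).
sample = ["europe"] * 6 + ["north_america"] * 3 + ["africa"] * 1
population_share = {"europe": 0.25, "north_america": 0.25, "africa": 0.5}

ratios = representation_ratio(sample, population_share)
underrepresented = [region for region, ratio in ratios.items() if ratio < 0.5]
```

A check like this only detects imbalance in the data source. It says nothing about bias introduced by the model design itself, which requires separate auditing of outcomes.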

4.3 Organizational inefficiencies and resource shortages

Beyond technological and ethical considerations, one of the most significant barriers to integrating big data into global governance lies in organizational inefficiencies and resource shortages.

The successful application of big data requires seamless coordination among a wide range of stakeholders, including international organizations, national governments, and private entities. However, this coordination is often hindered by competing interests, fragmented management systems, and communication barriers between countries (Kuzio et al., 2022).

Additionally, even with big data integrated into its work, the UN system can remain ineffective because of longstanding structural issues, including deep-seated divides between the Global North and the Global South, which shape disparities in resources, influence, and data access. Furthermore, overlapping jurisdictions among UN agencies, competing mandates, fragmented accountability structures, and entrenched bureaucratic processes create barriers to effective coordination and decision-making (Weiss, 2016). As mentioned earlier, many UN bodies, such as UNDP and WHO, collect and store data independently, using incompatible formats and systems. This lack of interoperability limits the ability to conduct integrated analyses. Despite the potential for data-driven innovation, these systemic inefficiencies often hinder the UN's ability to act cohesively and responsively across complex global issues.
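
To illustrate what incompatible formats mean in practice, the sketch below maps records from two hypothetical agency exports onto one shared schema so they can be analyzed together. All field names (`country_iso3`, `Country`, `period`, `obs`), the lookup table, and the values are assumptions made for illustration; they do not describe actual UNDP or WHO data formats.

```python
# Tiny illustrative lookup; a real system would use a full ISO 3166 table.
COUNTRY_NAME_TO_ISO3 = {"Kenya": "KEN", "Peru": "PER"}


def from_agency_a(row):
    """Hypothetical agency A: ISO3 code, numeric year, numeric value."""
    return {
        "iso3": row["country_iso3"],
        "year": int(row["year"]),
        "value": float(row["value"]),
    }


def from_agency_b(row):
    """Hypothetical agency B: country name, year as text, comma decimal."""
    return {
        "iso3": COUNTRY_NAME_TO_ISO3[row["Country"]],
        "year": int(row["period"]),
        "value": float(row["obs"].replace(",", ".")),
    }


def harmonize(rows_a, rows_b):
    """Convert both sources to the shared schema and sort for joint analysis."""
    merged = [from_agency_a(r) for r in rows_a]
    merged += [from_agency_b(r) for r in rows_b]
    return sorted(merged, key=lambda r: (r["iso3"], r["year"]))


rows_a = [{"country_iso3": "KEN", "year": 2021, "value": 12.5}]
rows_b = [{"Country": "Peru", "period": "2021", "obs": "7,3"}]

combined = harmonize(rows_a, rows_b)
print(combined)
```

Even in this toy case, each source needs its own adapter, which suggests why agreeing on a common schema in advance is cheaper than reconciling formats after the fact.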

Furthermore, establishing big data infrastructure demands substantial upfront investment and specialized human resources. Many developing countries lack the technical infrastructure and data science expertise necessary to implement large-scale data initiatives, often depending on developed countries to supply resources and foster capacity-building partnerships.

Therefore, while big data holds great promise for enhancing global governance, its full integration is currently obstructed by institutional fragmentation, coordination failures, and uneven resource distribution across countries and agencies.

4.4 Misuse of data and risks of surveillance

Lastly, big data is not a neutral technological force, but one that is entangled with political power structures, capable of either increasing authoritarian surveillance or enhancing democratic transparency.

When misused, data streams such as facial recognition, social media activity, geolocation data, and satellite imagery can be harnessed to monitor dissent, track individual mobility, and enforce population control, thereby supporting what scholars call digital authoritarianism. Conversely, when strong legal safeguards, institutional transparency, and meaningful public participation are embedded within data governance frameworks, big data can become a powerful tool for governments to identify underserved populations, better allocate resources, plan infrastructure, inform equitable policy interventions, and monitor sustainable development.

Research by Feldstein (2019) found that at least 75 of 176 countries (roughly 43%) are actively employing artificial intelligence technologies for surveillance purposes. By regime type, AI surveillance is deployed in 51% of advanced democracies, 37% of closed autocracies, and 41% each of competitive authoritarian regimes and electoral democracies. While the adoption of AI surveillance tools does not automatically imply the abuse of data, the breadth of deployment across regime types raises serious concerns about the potential for repression, data misuse, and rights violations. These developments underscore the urgent need for clear ethical standards and robust regulatory oversight in global data governance.

4.5 Summary

Technical challenges such as data quality and interoperability, ethical dilemmas around privacy and bias, organizational inefficiencies, resource disparities, and the threat of political misuse must all be carefully navigated.

Moving forward, the development of digital infrastructure, investment in human capital, the establishment of robust governance frameworks, and a strong commitment to ethical principles are essential to ensuring that big data serves as a tool for inclusive, equitable, and democratic global development.

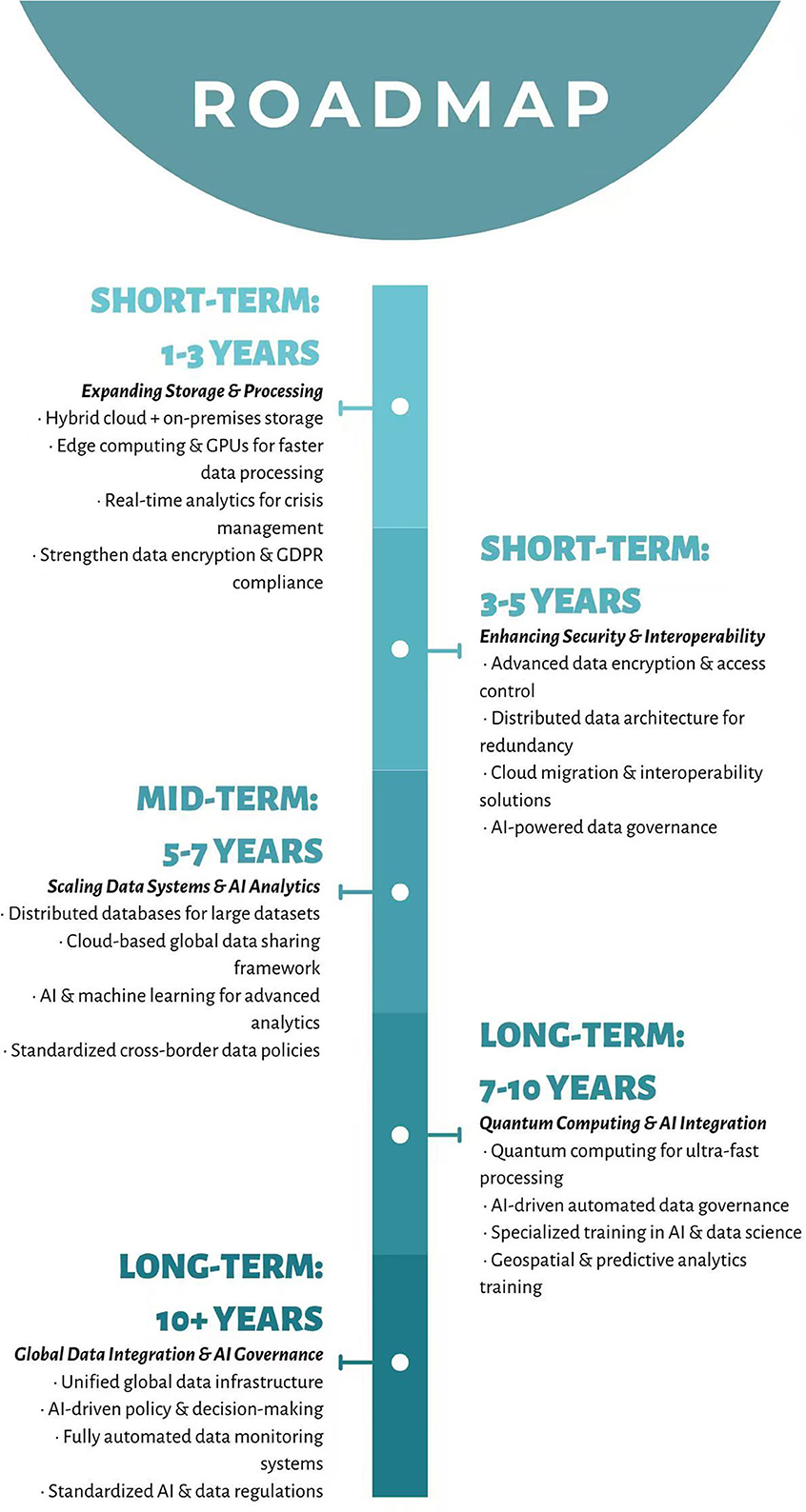

5 Anticipated future needs for big data in international organizations

Building on the current applications discussed, this section addresses our second research question by identifying the essential technical and organizational infrastructures required for scaling big data systems in international organizations.

5.1 Infrastructure needs

With the exponential growth of real-time data—from satellite imagery and sensor networks to social media and mobile devices—international organizations must anticipate and proactively address emerging needs for infrastructure, human capital, and policy frameworks.

5.1.1 Data storage solutions

Many international organizations, including the UN, depend on cloud storage solutions to manage big data. As more data is generated through high-resolution satellite imagery, sensor networks, social media analytics, and mobile data, existing infrastructure may not suffice (Singh and Sidhu, 2023). Future systems must scale to accommodate exabytes of information. Hybrid models involving cloud storage and on-premises infrastructure are likely to become more widespread (Soares, 2012; Aguerre et al., 2024). Distributed databases will be crucial for storing vast datasets efficiently, and emerging technologies such as quantum computing may also come to play a role.

Furthermore, future data storage systems should feature low-latency access, robust encryption, and advanced compression techniques to ensure quick data processing while maintaining security (Hennen et al., 2023).

5.1.2 Data processing capabilities

The growing demand for real-time analytics, particularly during crises and humanitarian emergencies, calls for significant increases in computing power. Technologies such as Graphics Processing Units (GPUs) and edge computing will be essential to manage the growing volume of data (Singh and Sidhu, 2023). Cloud computing will also play a key role in ensuring swift data processing (Legner, 2021).

A reliable data processing system also requires distributed architectures that can operate across multiple regions and handle redundancy. For global organizations that rely on real-time data—such as the UN's SDG monitoring systems—maintaining continuous service is crucial. Building in automatic failover capabilities and geo-redundancy will ensure data resilience (Soares, 2012).
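
The failover idea can be sketched in a few lines: a client tries regional replicas in priority order and serves the first successful response. This is a simplified illustration, not a description of any UN system; the region names, the `RegionUnavailable` exception, and the `fetch_with_failover` helper are hypothetical.

```python
class RegionUnavailable(Exception):
    """Raised by a fetcher when its region cannot serve the request."""


def fetch_with_failover(key, regional_fetchers):
    """Try each (region_name, fetch) pair in order.

    Returns (region_name, value) from the first region that succeeds and
    raises RuntimeError if every region fails.
    """
    errors = {}
    for region, fetch in regional_fetchers:
        try:
            return region, fetch(key)
        except RegionUnavailable as exc:
            errors[region] = str(exc)
    raise RuntimeError(f"all regions failed for {key!r}: {errors}")


def primary(key):
    # Simulate an outage in the primary region.
    raise RegionUnavailable("primary region offline")


# Simulated geo-redundant replica holding a copy of the data.
replica_store = {"sdg_indicator": 0.42}


def secondary(key):
    return replica_store[key]


served_by, value = fetch_with_failover(
    "sdg_indicator",
    [("region-a", primary), ("region-b", secondary)],
)
print(served_by, value)
```

Production systems typically add health checks, timeouts, and replication lag handling, all of which are omitted here for brevity.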

5.1.3 Cloud solutions

As organizations increasingly migrate to cloud environments, cybersecurity becomes a top priority. Strong encryption, strict access control protocols, and compliance with data privacy frameworks—such as the General Data Protection Regulation (GDPR)—are essential safeguards (United Nations, 2023).

Equally important is keeping cloud-based platforms accessible to authorized users across borders without compromising data security. This is crucial for international cooperation and timely decision-making during global crises (Soares, 2012).

However, the transition to cloud solutions presents several challenges, particularly when migrating from legacy systems. Key issues include data migration complexities, ensuring data interoperability, and training staff on new technologies. Organizations must carefully manage the change process while meeting local legal requirements for data protection (Legner, 2021).

5.2 Skills development

As technical infrastructure evolves, parallel investments in human capacity are critical. Bridging the global data literacy gap is essential for the equitable and effective use of big data in international governance.

5.2.1 Data literacy programs

A significant barrier to the effective use of big data is the lack of data literacy among policymakers and staff. Many individuals lack the skills to interpret complex data from sources like satellite imagery and social media analytics. According to a report by UN Global Pulse (2023), this gap hinders the capacity to leverage data-driven insights for achieving the SDGs.

Addressing this requires systematic training needs assessments to identify skill gaps at all levels of the organization. This includes assessing staff capabilities, familiarity with data science tools, and capacity to apply data insights to policy-making. The assessment should consider institutional needs, focusing on types of data typically used and specific sector demands (Hennen et al., 2023).

Data literacy programs should focus on both fundamental data skills and advanced analytical capabilities. For entry-level learners, programs should introduce basic concepts in data management and data visualization tools. For senior professionals, programs should emphasize data-driven decision-making and predictive analytics (Legner, 2021).

5.2.2 Specialized training

Advanced technical roles require training in programming languages such as Python, R, and SQL, as well as skills in machine learning, geospatial analysis, and ethical data use (Soares, 2012).

Course modules should cover foundational data science, advanced analytics, data visualization and communication, and data governance frameworks, with a strong emphasis on ethics and equity. Training initiatives must be inclusive and scalable, ensuring that personnel in both developed and developing countries have equal access to learning opportunities. Additionally, courses should be designed around real-world case studies and development challenges, equipping professionals with practical tools to apply in the field (Singh and Sidhu, 2023) (Figure 5).

Figure 5. This roadmap presents the evolutionary trajectory for big data integration in global governance, outlining short-term, medium-term, and long-term infrastructure developments and skill requirements.

5.3 Policy frameworks

As the scale and sensitivity of data use increase, robust policy frameworks must ensure ethical, secure, and interoperable applications.

5.3.1 Ethical guidelines

Ethical guidelines for big data usage are essential to ensure data is collected, processed, and applied responsibly. Key principles include privacy, transparency, data sovereignty, fairness, and beneficence (Aguerre et al., 2024; Soares, 2012).

Organizations should establish ethics committees and develop a data ethics code of conduct. Training programs should ensure staff understand ethical standards (Legner, 2021).

Enforcement of ethical standards requires clear sanctions for violations, such as disciplinary actions, and effective whistleblower protections to encourage the reporting of unethical practices. Regular audits and the use of automated systems to monitor compliance will help ensure that ethical breaches are detected and addressed promptly (Hennen et al., 2023).

Ethical guidelines must also be regularly reviewed and updated to address emerging challenges in big data, such as AI ethics and machine learning transparency. Feedback from stakeholders, including local communities and tech experts, should be incorporated to ensure the guidelines stay relevant and adaptable to new technologies (United Nations, 2023; Soares, 2012).

5.3.2 International standards

Although several global data governance frameworks exist, including the General Data Protection Regulation (GDPR), OECD principles, and ISO/IEC 27001 for information security, gaps remain, particularly in addressing emerging issues like AI, blockchain, data sovereignty, and cross-border data flows (Aguerre et al., 2024).

The lack of harmonization between different regional frameworks creates challenges for global organizations. A unified global framework is needed to standardize data protection, privacy, and sharing rules. Such a framework would facilitate international cooperation, streamline data-sharing agreements, and enhance trust in global governance systems (Hennen et al., 2023).

Implementing this vision will require overcoming significant political resistance and regulatory fragmentation. Balancing innovation and regulation remains critical for global data governance (Legner, 2021; Soares, 2012).

5.4 Summary

In sum, unlocking the full potential of big data in international governance depends on scalable infrastructure, skilled human resources, sound policy frameworks, and strong ethical safeguards.

The following section explores the latest emerging applications of big data within international organizations and identifies areas where further development and innovation are needed, particularly following progress in infrastructure, capacity-building, and regulatory alignment.

6 Emerging applications and future developments of big data in international organizations

With the rapid advancement of data science, emerging techniques such as predictive analytics, machine learning, and Natural Language Processing (NLP) are increasingly transforming the landscape of modern monitoring systems in international affairs. These cutting-edge technologies are improving early warning systems and automating the tracking of progress toward the Sustainable Development Goals (SDGs).

6.1 Predictive analytics in crisis prevention

This section specifically addresses our fourth research question by examining how AI and machine learning technologies are revolutionizing early warning systems for various types of crises.

According to the UN Office for Disaster Risk Reduction, an Early Warning System (EWS) is a comprehensive system that integrates hazard monitoring, forecasting, prediction, disaster risk assessment, communication, and preparedness efforts (UN Office for Disaster Risk Reduction). Traditional EWS often face limitations, including reliance on outdated data and slow analysis processes. However, the incorporation of big data and predictive analytics offers promising solutions.

6.1.1 Food insecurity early warning systems