- Department of Information and Communication Systems Engineering, School of Engineering, University of the Aegean, Samos, Greece

The increasing integration of AI-driven interfaces into public service channels has catalyzed a vibrant discourse on the interplay between technological innovation and the traditional values of public governance. This discussion invites a critical exploration of how emerging chatbot architectures can be aligned with ethical principles and resilient public sector practices. While there is research assessing the potential benefits of integrating chatbots in service delivery, existing evaluation approaches often lack specificity to the unique context of public administration, failing to adequately balance technical performance with crucial ethical considerations, safety requirements, and core public service principles like transparency, fairness, and accountability. This research addresses this critical gap by developing and applying a structured evaluation framework specifically designed for assessing diverse chatbot architectures within the public sector. The methodology offers actionable insights to guide the selection and implementation of chatbot solutions that enhance citizen engagement, streamline government services, and uphold key public service values. A key contribution is the introduction of fifteen pre-assessed evaluation criteria, encompassing areas such as input understanding, error handling, legal compliance, safety, and personalization, which are applied to four distinct chatbot architectures. Our findings indicate that while no single architecture is universally optimal, hybrid retrieval-augmented generation (RAG) systems emerge as the most balanced approach, effectively mitigating the risks of pure generative models while retaining their adaptability. Ultimately, this work provides actionable guidance for policymakers and researchers, supporting informed decisions on the responsible use of chatbots and emphasizing the critical balance between innovation and public trust.

1 Introduction

In recent decades, digitization and automation are promoted as key drivers in the long-established efforts to streamline and automate public service delivery, that had already begun in previous decades by the New Public Management dictates. The intensity of these efforts has intensified and reinforced by a wide combination of factors, among which, technological advancement, the wide adoption of digital interfaces in market and commerce, the latest economic and health crises, and recently, the growing uptake and normalization of the applications of artificial intelligence (AI).

Chatbot systems that had already found their way in the commercial sector and markets as a means for enhancing customer service and reducing costs, experienced an explosive increase in their adoption with the emergence of generative AI, soon finding their way to the public sector. These systems transform how citizens interact with public administrations, providing constant support, automating the response to citizens' queries or providing interactive interfaces for online service delivery. By facilitating communication and improving access to services, chatbots are bridging the gap between citizens and the state, allowing for more responsive and efficient public services. However, the underlying technologies supporting these chatbots vary significantly, affecting their performance, security, user experience and crucially, their alignment with public values. As governments increasingly integrate these technologies, understanding their capabilities and limitations becomes critical to ensure effective implementation, informed decision-making, and maintaining public trust.

1.1 Research focus and objectives

This research addresses the challenge of integrating chatbots into public services in a responsible way. While chatbots present significant opportunities for modernizing citizen-state interactions—personalizing processes, improving accessibility and citizen engagement—their underlying architectures differ in critical aspects, presenting distinct advantages and disadvantages related in particular to key parameters of public services. Nevertheless, there is a significant gap in the current literature on how to systematically assess these aspects in the context of public service delivery. This gap consists of two parts. First, existing evaluations tend to focus narrowly on technical performance measures, overlooking the rigorous assessment required to align a chatbot's capabilities with the fundamental principles of public administration. Moreover, comparative analyses across the full range of potential chatbot architectures relevant to public sector development remain rare.

To address this gap, we propose and apply a comprehensive framework aimed at evaluating chatbot architectures, particularly for public service delivery. A key contribution is the multidimensional structure of the framework, which contains fifteen distinct evaluation criteria, selected to reflect public service priorities. These criteria include technical dimensions (e.g., input comprehension, error handling, scalability, etc.), user-centric aspects (e.g., multilingual support, accessibility, personalization, etc.), as well as broader parameters such as cross-sectoral adaptability and ethical compliance, so as to ensure evaluations relevant to the public service context. We analyze and evaluate these criteria across four prevalent chatbot architectural paradigms—rule-based, retrieval-based, generative and the increasingly important hybrid retrieval-augmented generation (RAG) systems. By integrating these multifaceted factors, the research offers a structured methodology for decision makers and managers to select chatbot systems that not only enhance service delivery but also support the core values of public services and mitigate potential risks.

The remainder of this paper is structured as follows. Section 2 establishes the foundational context by reviewing the evolution of conversational AI, outlining the ethical and legal landscape for its use in public administration, and synthesizing the key literature that informs our evaluation framework. Section 3 then presents a detailed typology of the four primary chatbot architectures that are the focus of this study. Building upon this, Section 4 outlines the multi-phase comparative methodology developed for this research, detailing the process for framework development, architectural profiling, and heuristic evaluation. Section 5 presents the comprehensive results of this evaluation, followed by Section 6, which discusses the implications of these findings and offers key recommendations for policymakers and public managers. Finally, Section 7 acknowledges the limitations of this study and suggests directions for future research, before Section 8 offers the concluding remarks.

2 Background

2.1 The evolution of conversational AI

Chatbots can be defined as conversational agents that employ natural language to interact with users, replacing the traditional interfaces often used by digital service providers. Chatbots can operate as standalone software or be embedded into physical equipment such as speakers, screens or serving desks.

Chatbots have evolved significantly since the introduction of early systems like ELIZA in 1966 (Weizenbaum, 1966). Constrained by the computational and technological limitations of their time chatbots of that era were based on simple script-based interactions, operating within a narrow parameter space. The turn of the 21st century with the emergence of the internet and the exponential availability of digital data marked a transformative shift for the abilities and the interfaces of chatbots. The appearance of virtual assistants like Siri and Alexa, integrating speech recognition with Natural Language Understanding (NLU), significantly enhanced chatbot functionalities and their potential use cases. Chatbots soon found their way into social media and messaging platforms and gradually expanded to customer service, marketing, and e-commerce applications, by companies seeking automation of routine inquiries, reduced costs and response times and improved customer satisfaction.

In the past decade, advances in Machine Learning (ML) and Natural Language Processing (NLP) have enabled chatbots to handle ever more complex context-aware interactions, and perform diverse tasks, such as multi-lingual communication, translation and problem solving. The introduction of Transformers architecture (Vaswani et al., 2017) has led to the development of sophisticated language models such as GPT (Radford et al., 2018). These innovations, combined with improvements in computer hardware and rapidly increasing availability of digitized text, have given rise to the “era” of Large Language Models (LLMs), a new generation of language models, pre-trained in enormous amounts of text data that provide the foundational capabilities needed by chatbots to support multiple use cases and applications. Pre-trained LLM-driven chatbots can generate more dynamic and contextually aware responses, excel in maintaining dialogues and addressing complex queries, while exhibiting better contextual understanding and adaptability.

Recent advances in multi-modal AI architecture, together with much more powerful computing systems have further improved their capabilities, introducing a new era of chatbots that not only are able to understand and respond to user requests, but can reason, plan, act, and adapt their learning autonomously. This new era of AI agents enables chatbots to plan and execute complex tasks, adapting their behavior to achieve specific goals without requiring constant human oversight. This autonomous operation signifies a departure from traditional, purely reactive chatbot paradigms, toward goal-oriented, intelligent agents, and is poised to revolutionize numerous industries, restructuring established workflows, and redefining the dynamics of human-AI collaboration.

2.2 The ethical and legal landscape for AI in public administration

During the last decade, chatbots have become increasingly popular across various industries playing a significant role in digital transformation. This is particularly evident in the provision of public services where an increasing number of chatbots are being deployed, transforming public sector interactions by serving as an intuitive user interface for citizens interactions. Public service chatbots generally fall into two main categories. The first category consists of informative chatbots, which provide policy information, emergency alerts, real-time updates and public service guidelines. The second category includes service-completion chatbots, designed to facilitate administrative tasks such as appointment scheduling, form submission, and application processing. By automating routine transactions, these bots help streamline operations, reduce operational costs and the burden on traditional service channels.

According to a 2022 study, chatbots represent the most frequently employed AI-based tool within the European Union, constituting 22.8 percent of all use cases (Van Noordt and Misuraca, 2022). A meta-analysis of 30 studies (Ma'rup et al., 2024), indicates that chatbots have a significant positive effect on the efficiency of public services, reducing response time and increased user satisfaction.

However, while the integration of AI chatbots in public administration is a change that improves the quality and accessibility in citizen-state interactions, their deployment also introduces several technical, legal, and ethical risks that require careful consideration, robust legal frameworks, interdisciplinary oversight, and risk mitigation mechanisms, to ensure safe and effective implementation. The following sections identify and discuss how these emerging risks affect established legal norms and ethical imperatives, highlighting the pressing need for mitigation strategies to reconcile the capabilities of modern chatbots with the foundational requirements of public service governance.

2.2.1 Risks associated with the deployment of chatbots in public administration

In recent years, the adoption of chatbot systems in public services has been driven and accelerated by the emergence of LLM models. These models allow even more natural and fluent interactions and have reduced user concerns and resistance, which has led to growth in adoption. This increased integration, however, is now highlighting several risks that need to be addressed to preserve the principles of good governance. Modern LLMs are based on generative AI and operate probabilistically, generating responses based on the statistical likelihood of word sequences. This lack of determinism, along with their reliance on training data, introduces risks such as inconsistencies, factual inaccuracies, unpredictable outputs, and inherited biases, reducing reliability and posing challenges for their use in high-stakes domains of public services and governance.

Generative AI models tend to produce inaccurate or even completely fabricated outputs, often referred to as “hallucinations”, due to their probabilistic nature. Such risks may lead to incorrect legal guidance or misleading information, thus exposing public organization to liability risks. Dahl et al. (2024) showed that LLMs hallucinate at least 58% of the time in legal tasks and concluded by cautioning against the rapid and unsupervised integration of popular LLMs into high-stakes environments. A notorious example is the Mata v. Avianca case, in which attorney Steven A. Schwartz used ChatGPT for legal research and filed a federal brief containing fabricated cases, citations, and holdings—an oversight that led to sanctions from United States District Court (2023). This case underscores the significant challenges in detecting and mitigating hallucinations, particularly in public-service deployment scenarios where legal accuracy and accountability are critical.

In addition, reliance on vast amounts of training data means that generative chatbots can inadvertently amplify biases present in their training datasets. While hallucinations refer to instances when a model produces factually incorrect or entirely fabricated content, biases represent systematic distortions in outputs that mirror pre-existing prejudices or stereotypes hidden in the data. Such biases, stemming from skewed or unrepresentative datasets, may result in discriminatory outcomes, harmful stereotypes and even incite hate speech. Skewed datasets, whether representatively valid or not, produce skewed models, which in turn can affect the output of the chatbots. Moreover, biases may also emerge during fine-tuning processes like Reinforcement Learning from Human Feedback (RLHF) where subjective interpretations of “human values” can pass from evaluators to the model. Combined with the opaque nature of deep learning architectures, these issues hinder transparency and accountability, complicating efforts to identify and mitigate the origins of erroneous outputs (O'Neil, 2016; Biggio and Roli, 2018).

Adversarial attacks present an additional risk to chatbot systems, particularly those based on large language models. In these attacks, malicious actors craft subtle hard-to-detect input modifications—often referred to as “jailbreaks” or “prompt injections”—that exploit vulnerabilities and design glitches in the model to bypass safety mechanisms and trigger inappropriate or harmful outputs. Such manipulations can cause the chatbot to generate misleading or damaging responses, potentially revealing sensitive data and undermining public trust in government services. Adversarial techniques have been used to force chatbots to reveal confidential details or produce content that violates established guidelines (Liu et al., 2023; Zhuo et al., 2023). The integration of models of other modalities (voice, image) into LLMs may introduce additional risks of adversarial attacks (Ye et al., 2023). The combination of generative models with retrieval capabilities further increases the risks -blurring the line between data and instructions- permitting attacks that can remotely affect other users' systems by strategically injecting the prompts into data likely to be retrieved at inference time (Greshake et al., 2023).

The integration of LLM-based chatbots within public services also raises significant data privacy and security concerns. Chatbot systems interacting with citizens, receive and process sensitive personal information, including personal details, and even health records. Potential vulnerabilities in the chatbot's architecture, data storage, or APIs can be exploited by malicious actors to gain unauthorized access to this data, leading to identity theft, financial fraud, and other harmful consequences. The increasing sophistication of cyberattacks targeting AI systems, as highlighted in recent research (Biggio and Roli, 2018), underscores the urgent need for robust security measures. Furthermore, reliance on third-party LLMs and commercial cloud infrastructure limits public service organizations' control over data usage. User queries and other related data may be utilized for model training or fine-tuning purposes, in ways that conflict with privacy regulations such as the General Data Protection Regulation (GDPR). To address these challenges, it is crucial for public administration to adopt privacy-by-design frameworks and enforce strict contractual data usage agreements.

2.2.2 Ethical requirements

These technical and operational risks associated with chatbots stand in stark conflict with the ethical and legal standards traditionally upheld in public service delivery. The deployment of chatbot introduces complexities that can undermine core principles of public administration such as accountability, transparency, and fairness, eroding public trust and exposing public organizations to legal liability.

Fairness as a fundamental principle of public sector mandates the impartial, equitable, and unbiased delivery of government services and policies. Legal frameworks, ethical codes, and administrative protocols require public servants to act objectively, transparently, with respect to the rights and dignity of all citizens. But this principle is directly engaged in the case of chatbots, by the manifestation of the bias risk, as prejudices present in training data may yield discriminatory outputs that contravene the fairness mandate. A notable example, as described by Lippens (2024), involves ChatGPT, which, during simulated job application assessments, exhibited discriminatory tendencies against certain ethnic and gender groups. The model assigned lower suitability scores to applicants from specific backgrounds, reflecting societal stereotypes embedded within the training data. Additionally, chatbot interfaces can create accessibility disparities for individuals with disabilities, while the digital divide may further marginalize those lacking technological access or digital literacy.

Transparency, another foundational requirement in public administration, requires that government processes and decision-making mechanisms remain open and comprehensible to citizens. Applied in the context of chatbots deployment for public service delivery, this principle mandates clear communication regarding the nature of automated interactions, including disclosure of the chatbot's operational methods, training data sources, and inherent limitations, as well as, clear explanation of any decision and information provided. But, the opaque, “black box” architecture and the probabilistic nature of generative models obscure the reasoning behind chatbot responses, impeding citizens' ability to scrutinize and challenge responses, decisions or recommendations. This lack of explainability directly challenges transparency, potentially eroding trust and hindering engagement with public services. To address this, public administrations must implement measures that demystify the underlying algorithms, establish accessible channels for feedback and fallback mechanisms to human representatives for unresolved issues. Felzmann et al. (2019) argue that for AI systems to be considered trustworthy, transparency requirement should be tailored to the stakeholder more broadly, including developers, users, regulators, deployers, and society in general. This is echoed in a broader analysis by Hohmann (2021), which examines how major intergovernmental organizations interpret transparency, reinforcing its status as a fundamental principle in any public-facing system.

The principle of accountability, a cornerstone of responsible governance, is also directly challenged by the integration of chatbot technology into public services. Demanding that public institutions and their representatives are answerable for their actions, decisions, and outcomes, this principle requires clear lines of responsibility, robust oversight mechanisms, and accessible avenues for redress when failures occur or harm is inflicted. But the complexity and obscurity of LLMs, coupled with the potential for automated decision-making processes, impedes the identification of who is responsible in case of misinformation or wrong decisions. This can erode public trust and expose organizations to legal liability, as demonstrated by a ruling against Air Canada, where the company was held accountable and liable for misrepresentations made by its chatbot regarding bereavement travel policies (Civil Resolution Tribunal, 2024).

Respect for citizen's privacy and data protection constitute a major set of ethical and legal obligations of public administration. Given the chatbots' role in handling personal, and in some cases even sensitive information, risks of violation of those principles can materialize. Vulnerabilities in chatbot systems can expose sensitive data during breaches and cyberattacks. For instance, ChatGPT publicly admitted that its famous chatbot system leaked chat history of users due to vulnerabilities in the Redis client open-source library (OpenAI, 2023). The volume, velocity, and variety of data collected, coupled with the potential for automated analysis and profiling, increases the risks for user privacy and data security. To ensure respect for user privacy and robust data protection within chatbot deployments, public administrations must prioritize adherence to established data protection principles. This entails obtaining informed consent prior to data collection, minimizing data collection to what is strictly necessary for specified purposes, implementing robust security measures to safeguard against unauthorized access or disclosure, and affording individuals the rights to access, rectify, and erase their personal data. Adherence to these tenets is critical for sustaining public trust, upholding constitutional rights, and ensuring compliance with data protection regulations.

2.2.3 Ethical and regulatory frameworks

Deploying AI in public services introduces risks that not only undermine the integrity of public interactions but also challenge the adequacy of current regulatory frameworks designed to protect citizens and ensure equitable service delivery. The integration of emerging technologies into public administration has strained traditional mechanisms for enforcing principles of ethical governance, underscoring the need for new legal and administrative frameworks adapted to this evolving landscape.

In the past 5 years alone, nearly a hundred different non-legally binding ethical codes or statements have been adopted by public, private, and non-governmental organizations, all promoting similar principles, such as transparency, fairness, respect for human autonomy, and privacy (Maclure and Morin-Martel, 2025). Often articulated by international organizations and expert bodies, those frameworks provide principles, ethical compliance standards and guidelines for addressing crucial ethical challenges and uphold the rights of citizens. For example, the OECD's “Recommendations on AI” establish internationally recognized principles for trustworthy AI, emphasizing transparency, accountability, fairness, and robustness (OECD, 2019). Similarly, the European Commission's “Ethics Guidelines for Trustworthy AI” outline essential requirements for AI systems to be lawful, ethical, and robust, stressing human agency and oversight, technical robustness, and data governance (EC, 2019). UNESCO's “Recommendation on AI Ethics”, adopted in November 2021, also promotes a human-centered approach, prioritizing inclusivity, human dignity, and accountability (UNESCO, 2021).

However, there is growing skepticism regarding the potential of ethical principles to help enact responsible development of AI technologies (Maclure and Morin-Martel, 2025). Most of these ethical frameworks are largely conceptual; although they offer guidance and promote ethical awareness, their abstract, general, and voluntary nature limits their practical effectiveness in protecting citizens' rights. The realization that existing legal rules were insufficient to protect people's rights against AI's risks, and that the promulgation of nonbinding AI ethics guidelines did not provide a satisfactory solution either (Smuha, 2025), ultimately led to the introduction of the first enforceable regulatory measures. In the European context, the advancement of AI technologies has prompted the European Union to proactively establish regulatory frameworks that ensure the ethical deployment of AI. A cornerstone of these efforts is the Artificial Intelligence Act (AI Act), which categorizes AI applications based on their risk levels. Representing a significant milestone in the regulation of artificial intelligence, the Act aims to drive the development, deployment, and use of AI across Europe, with a substantial focus on safety, protection of fundamental rights. It introduces a risk-based classification system, assigning obligations proportional to the risks posed by various AI applications, with a particular emphasis on high-risk and unacceptable use-cases. Under the provisions of the Act, chatbots are generally categorized as limited-risk systems, but generative Large Language Models, depending on their scale and societal impact, can be classified as General Purpose AI (GPAI) systems, for which special obligations are introduced. These include preparing detailed technical documentation for submission to the AI Office upon request, creating deployer-oriented guidelines, ensuring compliance with EU copyright law, and providing summaries of training data sources. For high-impact GPAI models with systemic risks (identified as those involving computational resources exceeding 10∧25 FLOPs), additional measures include continuous risk assessment, model evaluations, cybersecurity safeguards, and mandatory notifications to the European Commission regarding new qualifying systems. Traditional rule and retrieval based chatbots, in contrast, face fewer regulatory burdens under the AI Act due to their limited scope and deterministic nature. While they must comply with general data protection standards, including the GDPR, they are exempt from the extensive risk management and transparency obligations imposed on generative LLMs.

However, policymakers should exercise caution in relying solely on the EU AI Act's provisions for assessing chatbot risks. As Smuha and Yeung (2025) argue, while the Act aims to address “systemic risks” from General Purpose AI (GPAI) models, its focus on computational metrics and data size thresholds for identifying such risks is problematic as this threshold is rather arbitrary, potentially excluding influential GPAI models that fall below it, particularly as the industry shifts toward smaller, more potent models. Moreover, limiting “systemic risks” to GPAI models overlooks the potential for even traditional rule-based AI systems to pose significant risks. A more comprehensive risk assessment should encompass diverse model types and consider potential impacts on public health, safety, fundamental rights, and society, beyond mere computational resource criteria.

2.3 Literature review: evaluating chatbots in public service

This section reviews relevant empirical and theoretical literature to inform the development of a practical evaluation methodology. While the preceding discussion outlined the broad risks and governance principles, a focused review of empirical studies on existing chatbot implementations is necessary to identify the specific parameters and criteria that determine their effectiveness and alignment with public values. This section, therefore, surveys the empirical and theoretical literature and serves as the direct foundation for the evaluation framework proposed in Chapter 4, ensuring that the selected evaluation criteria are substantiated by real-world challenges and scholarly insights into the deployment of chatbots in the public sector.

Recent empirical research provides a granular account of the challenges inherent in chatbot implementation within public organizations. Chen et al. (2024) examined chatbot adoption across 22 U.S. state governments, distinguishing between the drivers of technology adoption and the determinants of successful implementation. In their study Chen et al. (2024) highlight real-world benefits such as 24/7 service and multilingual support, noting that chatbots can “reduce staff workloads” and improve access across service lines when properly deployed and designed. The authors identify knowledge-base creation and maintenance, managing technology skills, securing adequate resources, navigating safety regulations, and meeting citizen expectations as the most crucial determinants of a chatbot's success. These findings suggest that technical performance is intertwined with organizational capacity, ethical considerations, and user-centric design, supporting the need for a multidimensional evaluation approach. The need for a multidimensional and domain-specific approach is further corroborated by recent research in other high-stakes sectors. Gupta et al. (2025) likewise propose an evaluation framework for financial-sector chatbots that assesses cognitive intelligence, user experience, operational efficiency, and ethical compliance. Their findings reinforce the inadequacy of generic evaluations and the need for a domain-specific, multidimensional approach. Their framework, which assesses chatbots across cognitive intelligence, user experience, operational efficiency, and ethical compliance, reinforces the validity of a holistic evaluation model. The emergence of such specialized frameworks underscores a critical consensus: generic, one-size-fits-all evaluations are insufficient. Meaningful assessment requires a multi-dimensional methodology tailored to the unique operational realities, user expectations, and ethical obligations of the specific sector.

Government chatbots are expected to provide accurate, reliable, and consistent information, but studies highlight challenges in maintaining data quality and handling complex citizen queries (Ma'rup et al., 2024; Cortés-Cediel et al., 2023). The risk of “hallucinations” (factually incorrect outputs) is well documented in LLMs, especially in high-stakes domains such as healthcare and legal services (Dahl et al., 2024). Rule-based systems, by contrast, provide consistency and predictability but lack the flexibility of LLMs. Hybrid retrieval-augmented generation (RAG) architectures, which ground responses in a curated knowledge base, are emerging as an effective approach to reduce hallucinations. For example, Vemulapalli (2025) finds that RAG systems can reduce hallucination rates by roughly 70% compared to pure generative models. These findings emphasize the importance of criteria such as Input Comprehension (how well the system decodes varied language) and Response Accuracy and Factual Integrity (checking answers against official data) for comprehensive architecture selection.

While accuracy and reliability define the baseline for performance, literature consistently shows that user perception and trust ultimately determine whether such systems succeed in practice. Beyond functional performance, the success of a digital government service is contingent on public trust and a positive user experience. A seminal experimental study by Aoki (2020) directly investigated the drivers of initial public trust in government chatbots. Her research revealed that trust is highly context-dependent, with the public showing significantly less trust in chatbots for service areas requiring empathy and complex situational judgment, such as parental support, compared to more procedural tasks like waste separation. This crucial finding empirically demonstrates that “performance” in the public sector is judged not only on technical accuracy but also on the perceived ability to handle nuanced human needs. Furthermore, Aoki's discovery that communicating citizen-centric purposes—such as ensuring “uniformity in response quality” or “24-h, 365-day, timely responses”—measurably enhances public trust, even if the effect sizes are small, provides a direct link between strategic communication and user acceptance. These findings are consistent with research by Abbas et al. (2023), which highlights the importance of clarity and effective error recovery, and shows that government chatbot users demand not only efficiency but also trustworthiness, ease of use, and nuanced conversation handling. They report that a positive user experience “is heavily dependent on the chatbot's ability to provide clear, understandable responses and to offer effective recovery mechanisms when errors occur”. Recent experimental work reinforces this: Zhou et al. (2025) find that empathetic chatbot communication significantly enhances user trust and satisfaction in digital public services. In short, peer-reviewed evidence shows that fluent, context-aware dialogue and robust recovery from misunderstandings are essential for user satisfaction, and thus must be explicitly evaluated for the selection of chatbot architecture. These insights motivate our User Experience and Communication category, which includes criteria like Conversational Fluency (grammatical, coherent responses), Error Handling and Recovery (fallbacks and clarification), and Response Timing (speed and consistency).

A growing body of work has begun to categorize and analyze the risks related to generative or RAG implementations of chatbot systems. The literature highlights numerous ethical and safety dimensions that public agencies must consider. Gan et al. (2024) present a comprehensive survey that addresses the expanding security, privacy, and ethical challenges associated with LLM–based agents, focusing on implementations that use transformer models as control hubs to perform complex tasks. The authors propose a novel taxonomy that organizes threats by both their sources—such as malicious inputs, model misuse, or data vulnerabilities—and their impacts across different agent modules and operational stages. Yu et al. (2025) propose taxonomies for threats across agent components and stages. Cui et al. (2024) developed a risk framework for LLM models, focusing on the risks of four LLM modules: the input module, language model module, toolchain module, and output module. Ammann et al. (2025), have conducted research focusing on the vulnerabilities of RAG pipelines, and outlining the attack surface from data pre-processing and data storage management to integration with LLMs. The identified risks are then paired with corresponding mitigations in a structured overview. Greshake et al. (2023) provided an overview of safety threats and design vulnerabilities of LLMs, including prompt engineering and hallucinations, and highlighted the risks associated with their inclusion in chatbots. Bommasani et al. (2021) surveyed some of the risks that accompany the widespread adoption of foundation models, ranging from their technical underpinnings to their societal consequences. Their research highlights important risks related to the use of LLMs in chatbots, such as the handling of output liability and discrimination and noting that the use of such models by governmental entities—at a local, state or federal level—necessitates special considerations. A survey by Chu et al. (2024) delivers a structured and comprehensive taxonomy of research on fairness in LLMs. A notable insight stressed by the study is that LLMs can produce accurate outputs grounded in flawed rationale, thereby amplifying discriminatory patterns despite surface-level correctness. This underscores the complex challenge of ensuring both fairness and transparency in LLM-generated text—highlighting the need for rigorous, multi-stage evaluation and diverse mitigation strategies. These findings directly inform our Ethics and Safety dimension, which includes sub-criteria such as Bias Mitigation and Fairness, Ethical Compliance, and Data Protection and Privacy, grounded in the literature. By embedding these ethical requirements into the framework, we address the “expanding security, privacy, and ethical challenges” identified in scholarly surveys.

Practical deployments demand that chatbots be adaptable across domains and scalable to workload. Studies of conversational AI architectures underscore this need: for example, Mechkaroska et al. (2024) demonstrate that as user demand grows, maintaining responsiveness and low latency requires both vertical and horizontal scaling of the system. This justifies criteria for Scalability and Resource Efficiency in our framework, ensuring a chatbot can handle large user loads without degrading performance. Additionally, public service chatbots can adapt to different domains and offer full-service delivery, when designed to allow integration with existing information systems and data sources. This architectural paradigm was formally introduced in the foundational work of Lewis et al. (2020), who proposed an end-to-end model that combines a pre-trained retriever to find relevant documents with a pre-trained generator to synthesize an answer. The key advantage of this approach for adaptability is that it decouples the model's linguistic capabilities from its domain-specific knowledge. To adapt the system to a new public service domain, administrators can update or replace the external knowledge base without the need for costly and time-consuming model retraining. These observations support our Adaptability criteria: the chatbot's ability to incorporate new data domains, connect to APIs, and be updated by non-experts. In sum, the literature suggests that evaluating domain versatility, integration flexibility, and update efficiency is crucial for public-sector chatbots, since they must scale out to varied tasks and growing usage without undue cost or complexity.

Finally, the principles of digital equity and seamless service integration emerge as foundational themes in the literature. Public sector chatbots cannot be considered successful if they fail to serve all members of the public, including those with disabilities, limited digital literacy, or different language needs. Scholarship on the “digital divide” warns that new technologies can inadvertently exacerbate inequalities if not designed with universal access in mind (Helsper, 2021). Therefore, a chatbot's value is intrinsically tied to its inclusivity, justifying a direct evaluation of its accessibility (e.g., compliance with WCAG standards) and multilingual capabilities. Moreover, the literature on digital government transformation emphasizes a shift from siloed information provision to integrated, end-to-end service delivery (Wirtz et al., 2018). The ultimate goal is to automate entire service workflows, not just answer queries. Research indicates that a chatbot's true value is realized when it is seamlessly integrated into existing government processes, allowing citizens to perform tasks such as scheduling appointments or checking application statuses directly within the conversational interface (Androutsopoulou et al., 2019). Practitioner-focused reports, such as those from the Center for Technology in Government at the University at Albany, echo this, noting that chatbots can significantly “improve citizens' access to public services” by breaking down language and time barriers. This body of work provides a clear mandate for our “Inclusivity and Service Delivery” dimension, which, with its criteria of “Multilingual Support and Accessibility” and “Service Delivery and Process Automation,” ensures that the evaluation captures a chatbot's alignment with the core public values of universal service and administrative efficiency.

The evaluation framework presented in Chapter 4 is directly informed by these findings. Our framework, therefore, synthesizes these empirically and theoretically derived dimensions into a structured and comprehensive methodology which includes multi-dimensional criteria for assessing chatbot architectures in the public sector.

3 A typology of chatbot architectures

A prerequisite for evaluating alternative approaches to chatbot systems is the establishment of a clear and coherent typology of chatbots that defines the application scope of the methodology. For practical usability, this typology should encompass all major chatbot implementation techniques while filtering out unnecessary details and variations. Chatbots can be classified on a multitude of parameters, such as domain knowledge, service type, interaction modality (text, voice, or multimodal), architectural design, personalization abilities, cognitive capabilities, or learning adaptability. Among these, the underlying architectural design is a fundamental parameter which examines the technologies used by chatbots to process user input and generate responses. A typical chatbot architecture consists of at least three components: natural Language Understanding (NLU) component, Natural Language Generation (NLG) component and User Interface (UI). From these three components NLU and NLG are the most important for both the classification of chatbots, but also, for their actual performance and capabilities. NLU is responsible for interpreting and processing user inputs, whereas NLG focuses on constructing appropriate responses. But details of operation, mixing of approaches and variations in comprehension and response synthesis, allows distinguishing subcategories and introduction of hybrid approaches such as modern RAG implementations that integrate external knowledge with AI-driven responses.

To ensure a comprehensive and practical evaluation, our methodology adopts a typology based on four architectural categories of chatbots, namely rule-based, retrieval-based, generative and hybrid. These categories effectively account for key differences in comprehension, response synthesis, and underlying knowledge base, making them the most suitable for the application of the evaluation. The following sections will outline the core characteristics and operational principles of these four categories, which will serve as the primary focus of the evaluation methodology.

3.1 Rule-based architectures

Rule-based chatbots are among the earliest paradigms of conversational AI. Typically operating using predefined sets of rules and patterns, are implemented using decision trees and finite-state machines. Systems in this category trigger responses based on simple keyword detection rules and predefined conversation flows. Scripting languages such as AIML (Artificial Intelligence Markup Language), RiveScript, and ChatScript, are often being used to facilitate the definition of keyword pattern-matching rules, response templates and decision flows.

The NLU component in rule-based chatbots is deterministic; it relies on predefined rulesets to identify keywords and intent, without any contextual understanding capabilities. As a result, these chatbots require highly structured input to function effectively, lacking adaptability to unexpected queries or paraphrased expressions. Their NLG component relies on template-based responses, selecting pre-written outputs based on the identified input. While this guarantees predictability and reliability, it also limits their flexibility, making them more effective for narrowly defined, structured, and repetitive interactions, such as automated customer support, FAQ systems, and basic transactional workflows.

Variations among rule-based chatbots stem from their structural complexity and the sophistication level of their rulesets. Simpler approaches rely on detecting specific words or phrases in user inputs to trigger predetermined responses hardcoded in the source code. Decision tree based chatbots follow a pre-defined tree structure in which each branch represents a distinct dialogue path, while template-based use pre-written scripts or conversation templates, often implemented in languages such as AIML.

3.2 Retrieval-based architectures

While rule-based chatbots rely on a predetermined set of rules and response templates to guide their interactions, retrieval-based chatbots use existing data to generate responses. Instead of relying on rulesets and keyword detection, these systems leverage semantic similarity techniques to identify the user intent, matching input vectors with relevant responses residing in locally stored knowledge bases, FAQ, document corpora, and/or knowledge graphs. This allows for more flexibility and adaptability than rigid rule-based approaches.

The NLU component in retrieval-based chatbots typically employs statistical similarity techniques, such as TF-IDF, cosine similarity, or word embeddings (e.g., Word2Vec, GloVe). For NLG, retrieval-based systems rely on response selection rather than generation, ranking and retrieving the most contextually similar response from their underlying knowledge base. Overall, retrieval-based chatbots improve over rule-based, with their ability to handle more complex and paraphrased user queries while maintaining factual accuracy.

Retrieval-based chatbots can be further subdivided by the underlying retrieval techniques. More recent approaches employ dense embeddings from models like BERT (Devlin et al., 2019), RoBERTa (Liu et al., 2019), and ELECTRA (Clark et al., 2020), to capture semantic similarity instead of using traditional methods based on simple vector space techniques such as TF-IDF. These models improve on the intent and entities recognition of syntactically complex inputs, leading to better contextual understanding. Additionally, retrieval-based chatbots can be classified as static or dynamic depending on how they process and rank responses. Static retrieval systems select the best-matching response from a fixed, pre-indexed corpus using a single-pass assessment. In contrast, dynamic retrieval systems continuously refine response ranking in real time, employing techniques such as context-aware re-ranking, query expansion, and neural search models to enhance response relevance based on conversational history and user intent. Other variations include personalization-enhanced models, which adapt responses based on historical user interactions, and systems that integrate structured knowledge—such as knowledge graphs—to improve the relevance and precision of retrieved information.

3.3 Generative-based architectures

In contrast to retrieval-based chatbots, where the response is retrieved from predefined sources, generative-based chatbots produce responses dynamically relying on deep learning techniques and pre-trained Large Language Models. A milestone for their success was the advent of Transformer models (such as GPT), which, based on decoder-only architectures1 enabled context-aware self-adaptive text generation, dramatically improving the fluency and naturalness of the generated text and revolutionizing the chatbot landscape.

The NLU component in these systems is inherently integrated within the generative process, where a single model both interprets and produces text in a single pipeline. For NLG, generative models use single-step decoding, predicting each token based on prior context in an autoregressive manner. This allows the generation of coherent, contextually appropriate, and novel responses, making these models highly effective for open-ended dialogues and creative outputs. However, this generative capacity often produces “hallucinations”,2 as responses are generated probabilistically rather than retrieved from a validated source.

Generative-based chatbots can be further classified into open-domain models, trained on massive and diverse datasets, capable of engaging in unconstrained conversations, and closed-domain models, fine-tuned on specific datasets to ensure domain relevance and enhanced factual accuracy. Recent research approaches introduce Transformer variations with memory, that integrate long-term contextual awareness and dialogue history, allowing for more coherent multi-turn dialogue interactions (Wu et al., 2022; Bulatov et al., 2024).

3.4 Hybrid approaches using retrieval-augmented generation (RAG)

Hybrid approaches address the risks of purely generative models by integrating retrieval-based knowledge with generative response synthesis. Retrieval augmented generation is an architectural approach for optimizing the performance of generative models by connecting them with external knowledge bases. RAG helps large language models (LLMs) deliver more relevant responses of higher quality, combining the factual accuracy of retrieval-based systems with the fluency and creativity of generative models.

The NLU component in RAG-based chatbots first retrieves contextually relevant documents, knowledge snippets, or structured data from an external corpus (e.g., a vector database, a knowledge graph), leveraging semantic similarity techniques like those used in retrieval-based chatbots. The NLG component then synthesizes responses using a generative model, leveraging the retrieved knowledge as additional context. This approach enhances factual consistency, reduces the risk of hallucinations associated with purely generative models, and allows the chatbot to provide more informative and grounded responses.

Similarly to static and dynamic retrieval systems, two primary hybrid approaches are based on the flow of interaction between the retriever and the generator components. Passive RAG operates through a single interaction in which the retriever supplies data to the generator, and the generator produces a response without further feedback. Conversely, in active RAG, a two-way exchange between the retriever and the generator, is taking place, during which, the retriever continuously refines its data selection based on the evolving text generated, while the generator can request additional information to clarify uncertainties. This process enhances the integration of context and improves the overall accuracy and relevance of the responses generated. More variations of hybrid RAG models can stem from their retrieval strategies (e.g., vector search, knowledge graph traversal), retrieval source (differentiating between offline document-based RAG, which rely on pre-indexed, static corpora and online internet-based RAG, leveraging dynamic web content via APIs and search engines to incorporate real-time information), the granularity of the retrieved information (e.g., full documents, paragraphs, sentences), and the generative constraints applied during response synthesis (e.g., prompt engineering, fact verification).

4 Methodology: a framework for comparative architectural evaluation

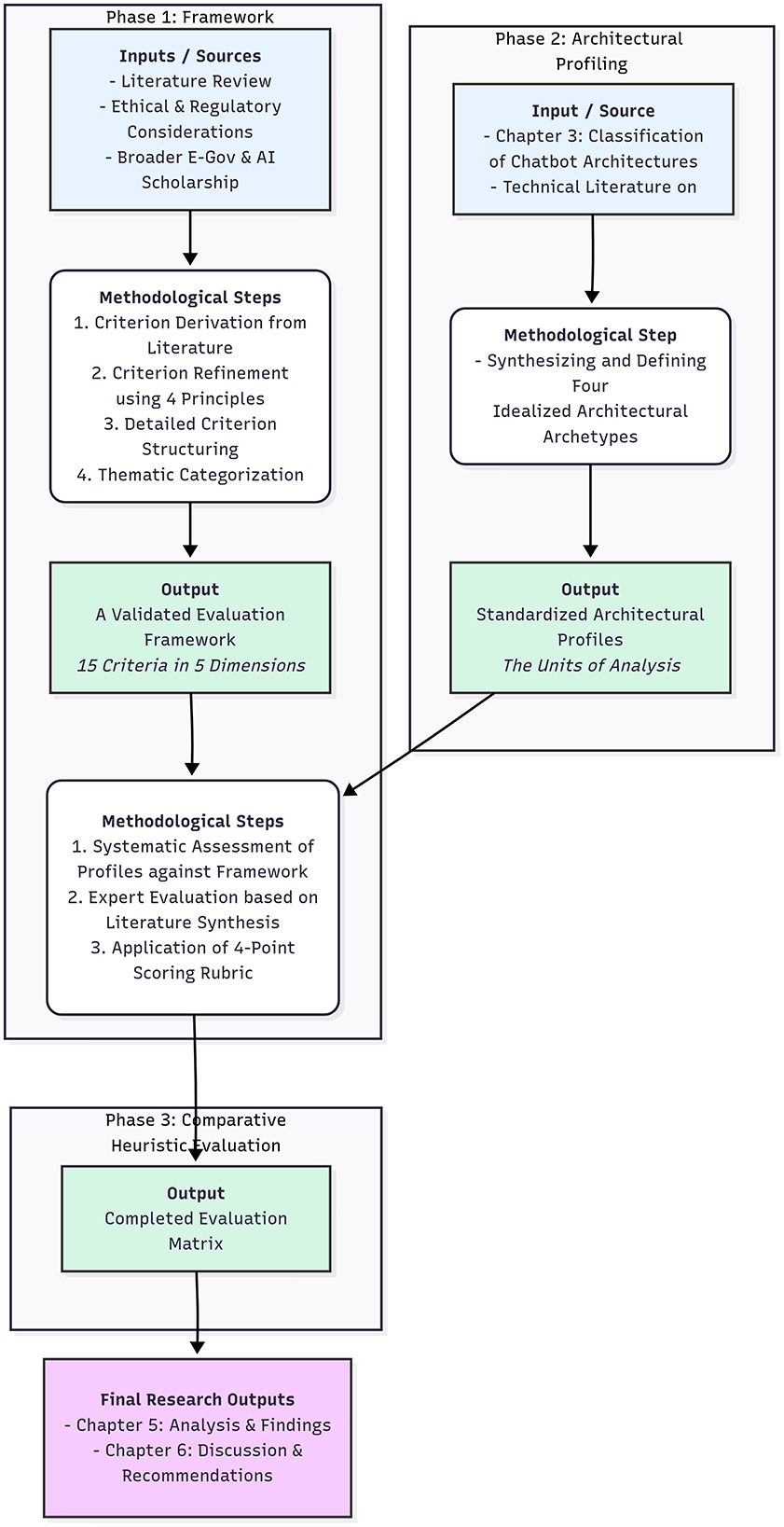

To address the research gap, we developed and applied a multi-stage comparative analytical methodology designed to systematically evaluate the four primary chatbot architectures focusing particularly on the needs, particularities and use cases of public administration. This targeted approach ensures that the proposed framework not only evaluates the operational performance of chatbot architectures, but also their alignment with values and ethical standards for the provision of public services, providing guidance toward robust, citizen-focused AI deployments. The methodology was executed in three distinct phases, as illustrated in Figure 1.

4.1 Phase 1: development of the evaluation framework

The foundation of this research is a robust evaluation framework derived directly by the key challenges and requirements identified in the ethical and legal considerations survey (Section 2.2) and the literature review (Section 2.3). This phase translated the identified key challenges into a set of structured, measurable criteria.

1. Criterion derivation: An initial long-list of potential evaluation criteria was derived from the key themes identified in the literature: functional efficacy, user-centricity, ethical governance, and inclusivity. The process ensured that the criteria were grounded in documented real-world challenges (Chen et al., 2024), user expectations (Abbas et al., 2023; Aoki, 2020), technical benchmarks (Vemulapalli, 2025), and ethical principles (Gan et al., 2024; Chu et al., 2024). By grounding the framework in this extensive literature, we ensure that its foundational dimensions are robustly justified by both theory and empirical evidence on public-sector chatbot deployments.

2. Criterion refinement and validation: The initial list was systematically refined into a final set of fifteen criteria by applying four rigorous selection principles:

• Universal applicability: Ensuring each criterion is relevant to all four architectures. Criteria depending on architecture-specific features are excluded to ensure that comparisons remain valid and unbiased.

• Differentiability: Selecting criteria that highlight meaningful performance variations. A criterion is considered differentiable if it provides measurable variation across architectures rather than yielding uniform or indistinct results.

• Granular measurability: Disaggregating broad concepts into specific, assessable components.

• Contextual relevance and value alignment: Prioritizing criteria that reflect the unique needs and values of the public sector.

3. Detailed criterion structuring: To ensure each criterion was unambiguous, contextually relevant, and assessable, every refined criterion was then formally operationalized using a four-part structure:

• Focus: Defines the specific aspect of the chatbot being evaluated (e.g., the ability to interpret user input).

• Objective: Outlines the intended role and importance of this criterion within the public service context (e.g., to ensure citizens' needs are correctly understood).

• Key determinants: Identifies the underlying technical and architectural elements that influence performance for that criterion (e.g., the sophistication of the NLU model).

• Assessment methods: Proposes potential methods for how the criterion could be empirically measured, adding a layer of practical applicability to the framework.

This structured definition process adds analytical rigor and ensures that every evaluation is based on a clear, multi-faceted understanding of the criterion.

4. Thematic categorization: For analytical clarity, the final fifteen validated criteria were organized into five distinct thematic dimensions: (I) Core Functionality and Understanding, (II) User Experience and Communication, (III) Ethics and Safety, (IV) Adaptability and Scalability, and (V) Inclusivity and Service Delivery, ensuring a holistic and well-balanced assessment.

4.2 Phase 2: architectural profiling

To ensure a fair and consistent comparison, we first established standardized, idealized profiles for each of the four architectures, as detailed in Section 3. Without this architectural profiling, the research would be vulnerable to a major methodological flaw: comparing idiosyncratic, real-world implementations that have too many confounding variables. These profiles—Rule-Based, Retrieval-Based, Generative, and Hybrid (RAG)—served as the consistent units of analysis for the evaluation. Each profile was defined by a consistent set of five core characteristics derived from the technical literature:

• Core operational logic: How does it process input and generate a response? (e.g., deterministic keyword matching vs. probabilistic token prediction).

• Underlying technology: What are the key components? (e.g., decision trees, finite-state machines vs. Transformer models, vector databases, a combination of underlying technologies).

• Knowledge management: How does it store and use information? (e.g., hard-coded scripts vs. a curated document corpus vs. parametric knowledge learned during pre-training).

• Inherent strengths: What is the architecture naturally good at? (e.g., Rule-Based systems excel at consistency and predictability).

• Inherent weaknesses: What are its systemic risks? (e.g., Generative models have an inherent risk of hallucination).

This step prevents the comparison of specific, idiosyncratic implementations and instead focuses the analysis on the fundamental capabilities of each architectural paradigm.

4.3 Phase 3: comparative heuristic evaluation and scoring

The core of the methodology involved a comparative heuristic evaluation where the four architectural profiles were systematically assessed against the fifteen criteria. This heuristic approach was selected as it is ideally suited for a conceptual, comparative study focused on evaluating the inherent capabilities and systemic risks of idealized architectural types, rather than the empirical performance of a single, specific implementation,

1. Evaluation process: The scoring was conducted through an expert evaluation, based on a comprehensive synthesis of the evidence presented in the literature review. For each criterion, we assessed the inherent capacity of each architecture to meet its demands. The judgment was based not on a single implementation but on the documented potential and systemic risks associated with each architectural approach. For instance, the scoring for “Response Accuracy” considered both the high risk of hallucination in pure generative models (Dahl et al., 2024) and the documented mitigation effect of RAG architectures (Vemulapalli, 2025).

2. Scoring rubric: To ensure consistency, a 4-point ordinal scale was used, with each level having a clear operational definition:

• Limited: The architecture has inherent design characteristics that make it fundamentally ill-suited to meet the criterion without significant and unnatural modifications.

• Moderate: The architecture can meet the criterion but has significant constraints or requires substantial effort, and performance may be inconsistent.

• High: The architecture is naturally suited to meet the criterion, and it represents a core strength.

• Very high: The architecture represents the state-of-the-art for the criterion; its design is optimized to excel in this area.

The output of this phase is the comprehensive evaluation matrix presented in the Results section (Table 1), which provides the empirical basis for the subsequent analysis, discussion, and policy recommendations.

4.4 Criteria for evaluating chatbot architectures in public service delivery

Building on the literature review and the preceding framework operationalization, this section outlines the criteria used to evaluate chatbot architectures in public service contexts. The criteria are organized into five overarching dimensions: core Functionality and Understanding, User Experience and Communication, Ethics and Safety, Adaptability and Scalability, and Inclusivity and Service Delivery. Each dimension reflects a cluster of concerns repeatedly emphasized in the literature, ranging from technical accuracy to broader sociotechnical values such as fairness, accessibility, and democratic legitimacy.

• Core functionality and understanding focuses on a chatbot's capacity to provide accurate, reliable, and contextually appropriate responses. This includes not only response accuracy but also the system's ability to correctly interpret diverse citizen queries.

• User experience and communication addresses how effectively the chatbot interacts with users. Dimensions such as conversational fluency, error handling, and responsiveness are critical in shaping trust and satisfaction with digital public services.

• Ethics and safety captures the governance-related risks of deploying AI-driven systems. Criteria in this category encompass alignment with ethical and regulatory standards such as data protection, fairness, bias mitigation.

• Adaptability and scalability evaluates the ability of chatbot architectures to transfer across service domains and to operate efficiently under varying workloads. This dimension reflects the need for public-sector systems to remain sustainable and flexible in rapidly changing administrative environments.

• Inclusivity and service delivery ensures that chatbot deployments advance digital equity and enhance service integration. Criteria include accessibility for citizens with diverse needs and the ability to support multilingual interactions while embedding chatbots into end-to-end service processes.

To ensure transparency and replicability, each criterion is further elaborated through four analytical layers: focus, Objective, Key Determinants, and Assessment Methods. This structure translates high-level evaluation concerns into operational guidance, specifying both the intended role of each criterion and the mechanisms by which it can be empirically assessed.

4.4.1 Core functionality and understanding

Input comprehension and intent recognition

• Focus: The chatbot's ability to accurately interpret user inputs, encompassing natural language variations, implicit needs, complex or ambiguous queries, and underlying intent, including context maintenance across multi-turn conversations.

• Objective: Ensure the chatbot correctly comprehends user queries and their underlying intent to enable relevant and accurate responses, while effectively managing conversational context.

• Key Determinant: The architecture's sophistication in employing Natural Language Understanding, including techniques such as named entity recognition, intent classification, semantic analysis, and leveraging conversational history for contextual awareness and fallback handling.

• Assessment Methods: A/B testing with varied question complexity, human evaluation using predefined complex queries, automated testing with diverse input datasets, confusion matrix analysis, NLU score evaluation.

Response accuracy and factual integrity

• Focus: The chatbot's capacity to provide factually correct, current, and reliable information pertinent to the user's request and specific to the public service domain, including the consistent referencing of official sources.

• Objective: Ensure the factual accuracy and currency of chatbot responses, based on reliable data, official sources, and verifiable information.

• Key Determinant: The robustness and currency of the knowledge base, the integrity and completeness of rule logic, and the quality and verification processes of the training datasets, including automated updating mechanisms.

• Assessment Methods: Manual expert review, automated verification against curated databases, error analysis, precision/recall metrics.

Consistency and reliability

• Focus: The chatbot's ability to deliver consistent, predictable, and reliable outputs across repeated or similar queries, ensuring stability under varying conditions and over time.

• Objective: Build trust by ensuring uniform responses for similar user inputs, minimizing confusion and guaranteeing uniform service delivery.

• Key Determinant: The architecture's ability to maintain response uniformity through structured data, predefined workflows, robust logic, version control, and quality control processes. This includes mechanisms to detect and address inconsistent behavior.

• Assessment Methods: Regression testing with predefined queries, stress testing under variable loads, statistical variance analysis, stability metrics, automated consistency scoring.

4.4.2 User experience and communication

Conversational fluency and contextual awareness

• Focus: The ability to produce responses that are fluent, grammatically correct, coherent, and contextually appropriate within ongoing dialogue, including the management of multi-turn conversations and conversational transitions.

• Objective: Foster user confidence and engagement through intuitive, natural, and fluid conversational interactions that effectively track conversation history and intent.

• Key Determinant: Reliance on advanced Natural Language Generation models that produce contextually relevant and adaptive responses, while also managing conversational memory and transitions.

• Assessment Methods: User surveys, human evaluation scales, conversational log analysis, multi-turn dialogue simulation.

Error handling and recovery

• Focus: The chatbot's ability to gracefully handle errors (e.g., misunderstandings, invalid inputs, technical issues) and guide users toward resolution or alternative paths, including effective communication when an answer cannot be found.

• Objective: Prevent user frustration and maintain smooth interactions through clear feedback, alternative suggestions, and robust recovery mechanisms.

• Key Determinant: The architecture's robustness in error detection and handling, supported by fallback strategies and recovery mechanisms.

• Assessment Methods: Simulated user tests with flawed inputs, error log analysis, fault injection tests, post-error user feedback, evaluation of fallback mechanisms.

Response timing and responsiveness

• Focus: The speed at which the chatbot provides responses, including consistency under varying conditions.

• Objective: Enhance user experience by ensuring timely answers, especially in high-pressure or emergency scenarios.

• Key Determinant: The efficiency of the underlying architecture in processing queries, data access, and response delivery with minimal latency.

• Assessment Methods: Automated timing measurements, load testing, throughput analysis, time-to-response distribution analysis, user perception studies.

4.4.3 Ethics and safety

Bias mitigation and fairness

• Focus: The chatbot's ability to avoid and mitigate biases in its outputs, ensuring equitable treatment of all users, regardless of demographic characteristics (e.g., race, gender, religion, etc.).

• Objective: Guarantee non-discriminatory service delivery and promote fairness, equity, and inclusion.

• Key Determinant: The architecture's inherent design elements that may introduce bias, alongside the practicability of implementation of bias detection mechanisms, debiasing techniques, and regular fairness audits.

• Assessment Methods: Bias audits of training data and outputs, A/B testing with diverse inputs, statistical bias analysis, fairness metric computations.

Ethical compliance and liability safety

• Focus: Avoidance of offensive or misleading outputs extending beyond factual accuracy to include the prevention of harm.

• Objective: Protect users and maintain ethical integrity and liability safety in public service delivery.

• Key Determinant: The nature of output generation (deterministic vs. probabilistic), combined with safeguards and compliance protocols embedded within the architecture.

• Assessment Methods: Legal compliance reviews, scenario-based liability simulations, red-teaming exercises and content filtering effectiveness analysis, documentation analysis, audit trail evaluation.

Data protection and privacy

• Focus: The inherent design characteristics and operational mechanisms of each chatbot architecture that contribute to specific vulnerabilities in, handling, storage, and processing of user data.

• Objective: Identify and mitigate unique vulnerabilities arising from each architecture's inherent design, ensuring user data is protected and handled responsibly.

• Key Determinant: Features that influence data breaches, leaks, or privacy violations, including data storage practices, external data dependencies, and the risk of unintended data regurgitation.

• Assessment Methods: Security audits, data flow analysis, penetration testing, privacy impact assessments, vulnerability scanning.

4.4.4 Adaptability and scalability

Domain versatility and integration

• Focus: The chatbot's capacity to operate effectively across multiple public service areas and integrate seamlessly with existing systems and databases.

• Objective: Ensure scalable performance across diverse domains and interoperability with public service infrastructures.

• Key Determinant: Flexibility in incorporating domain-specific datasets, workflows, APIs, and external systems, along with the ability to transition between domains.

• Assessment Methods: Pilot testing in new domains, API integration tests, cross-domain scenario simulations, case studies

Scalability and resource efficiency

• Focus: The ability to scale and manage large user volumes and complex requests, while maintaining high performance and efficient resource use.

• Objective: Deliver cost-effective service that avoids bottlenecks, optimizes resources, and minimizes environmental impact.

• Key Determinant: Efficient resource utilization, optimization strategies, and load balancing techniques that enable high concurrency without performance degradation..

• Assessment Methods: Load testing, resource monitoring, cost-benefit analysis, performance benchmarking, stress testing

Maintainability and update efficiency

• Focus: The ease with which the chatbot can be updated and maintained in response to evolving data, procedures, and legal changes, while minimizing effort, energy and need of specialized skills.

• Objective: Minimize operational overhead while keeping the system relevant, accurate, and compliant with evolving requirements and standards.

• Key Determinant: The architecture's modularity, ease of use in its design and adaptability to incremental updates, version control practices, and the presence of automated model retraining protocols, along with user-friendly maintenance interfaces.

• Assessment Methods: Code and documentation reviews, version control analysis, update frequency tracking, automated regression testing, system log analysis.

4.4.5 Inclusivity and service delivery

Multilingual support and accessibility

• Focus: The ability to process and respond to queries in multiple languages, ensuring equitable access for users with diverse abilities, literacy levels, cultural backgrounds and technological access.

• Objective: Ensure inclusivity and consistent service quality across various languages and interfaces.

• Key Determinant: Advanced NLP for multilingual support, adherence to accessibility standards (e.g., WCAG), and support for diverse interaction modes considering device and bandwidth constraints.

• Assessment Methods: Multilingual user testing, accessibility audits, cross-device testing, user surveys across diverse groups

Personalization and contextualization

• Focus: The chatbot's capacity to tailor interactions based on individual user needs, contexts, and preferences while maintaining privacy and fairness.

• Objective: Enhance service relevance and user satisfaction through dynamic, personalized interactions.

• Key determinant: Utilization of adaptive models, contextual data services, and user segmentation techniques that enable dynamic adjustment of responses.

• Assessment methods: A/B testing personalized versus generic responses, user studies, analysis of user logs, context retention testing.

Service delivery and process automation

• Focus: The ability to support structured workflows, integrate with other public service channels, and automate routine tasks, such as form filling and application submissions.

• Objective: Enhance efficiency and reduce administrative burdens in handling complex, multi-step public service procedures.

• Key determinant: The integration capabilities with external systems, API support, workflow management, and the robustness of process automation features.

• Assessment methods: User-centered design tests, workflow analysis, API integration testing, A/B testing of user interfaces, process completion rate measurement.

5 Results

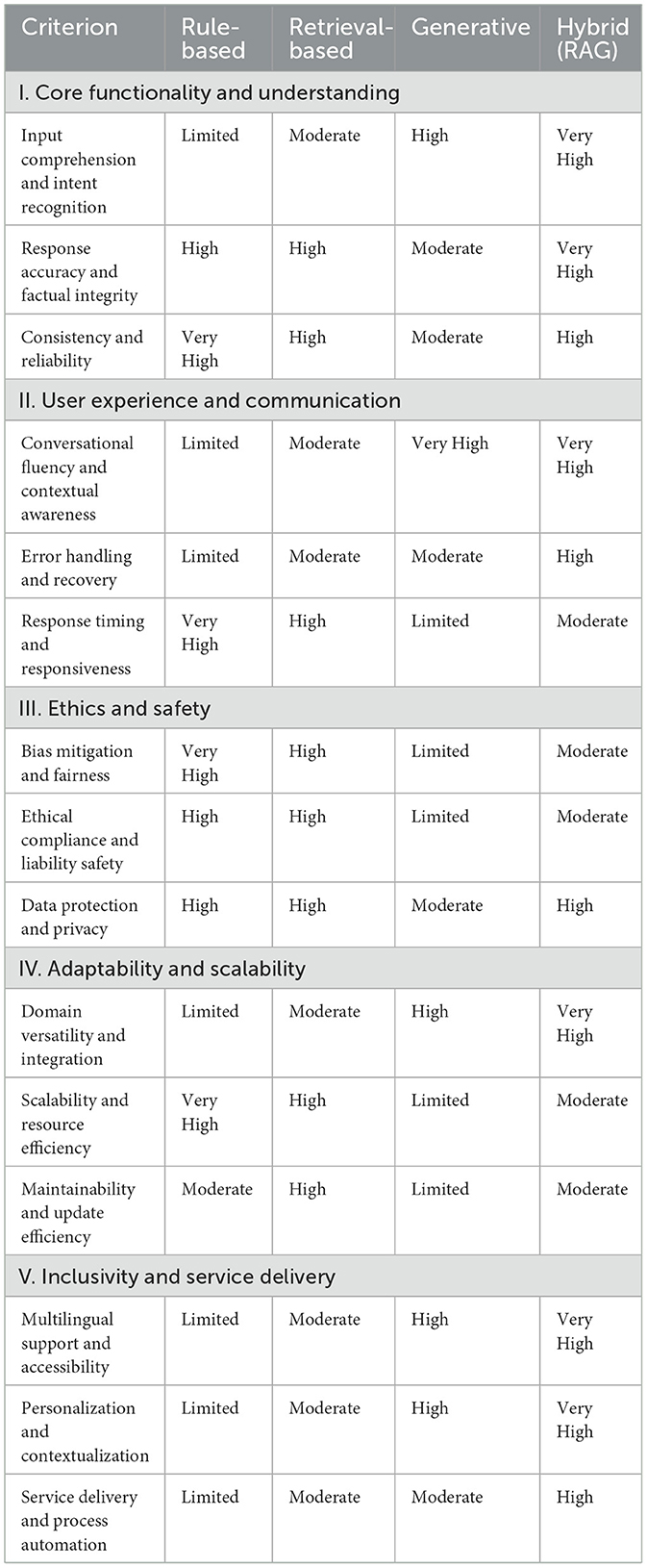

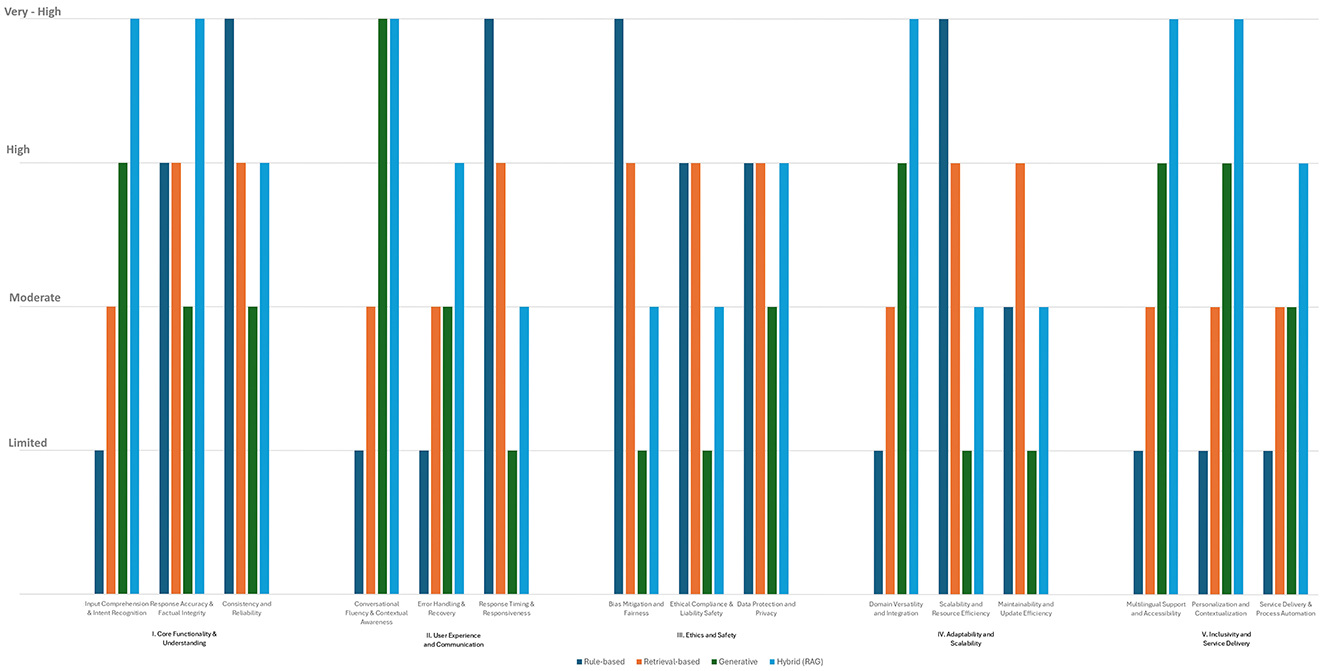

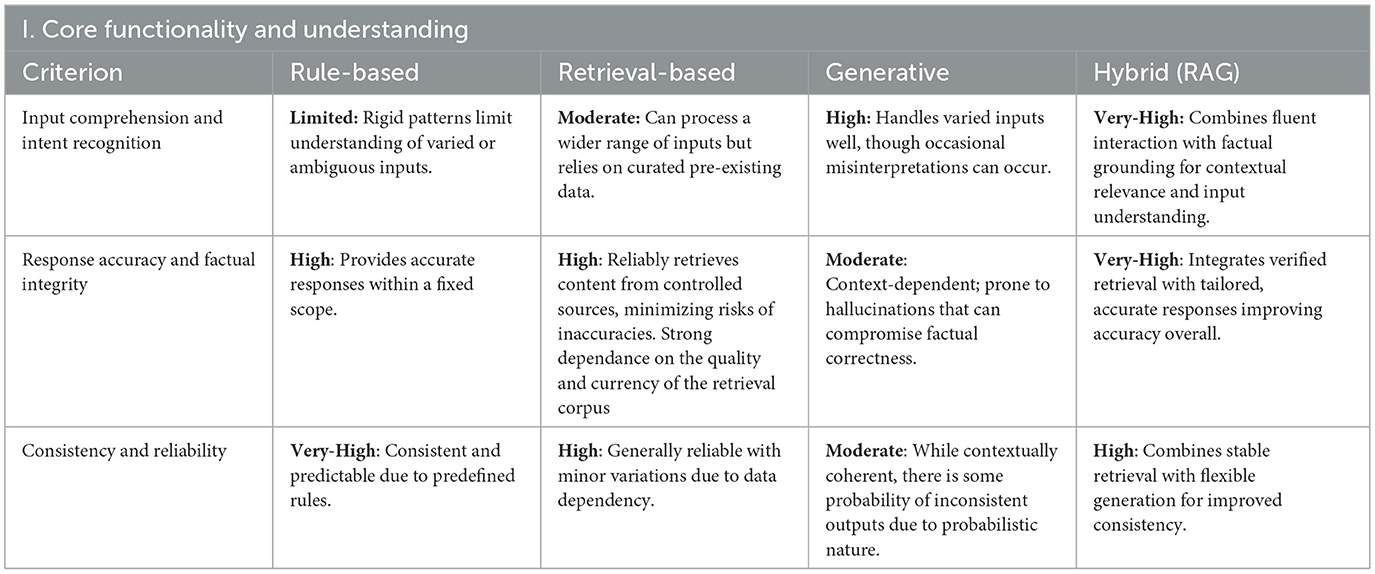

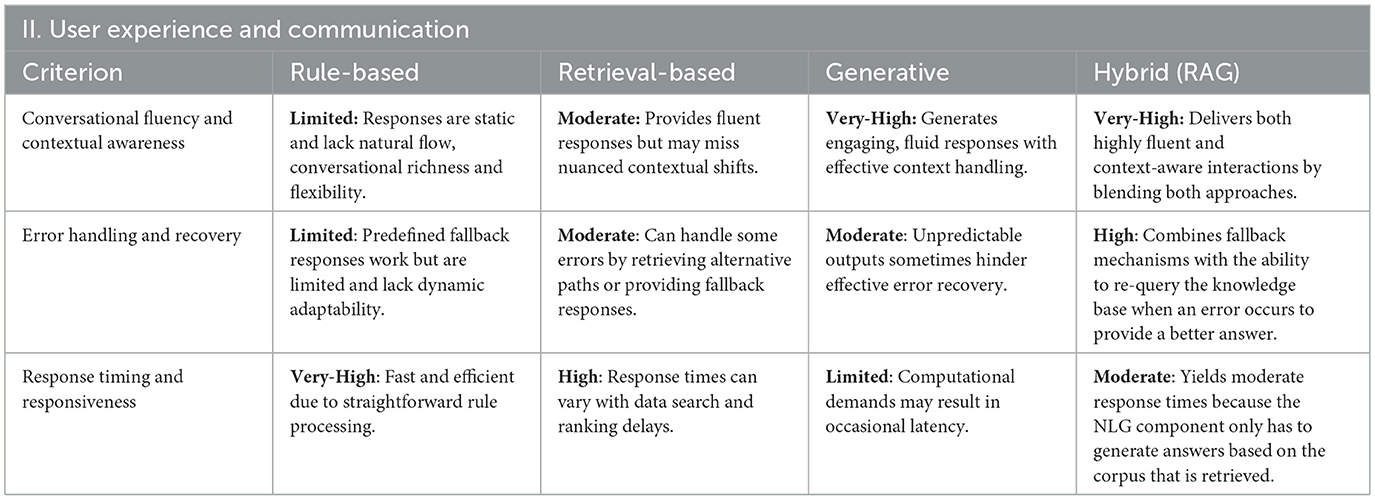

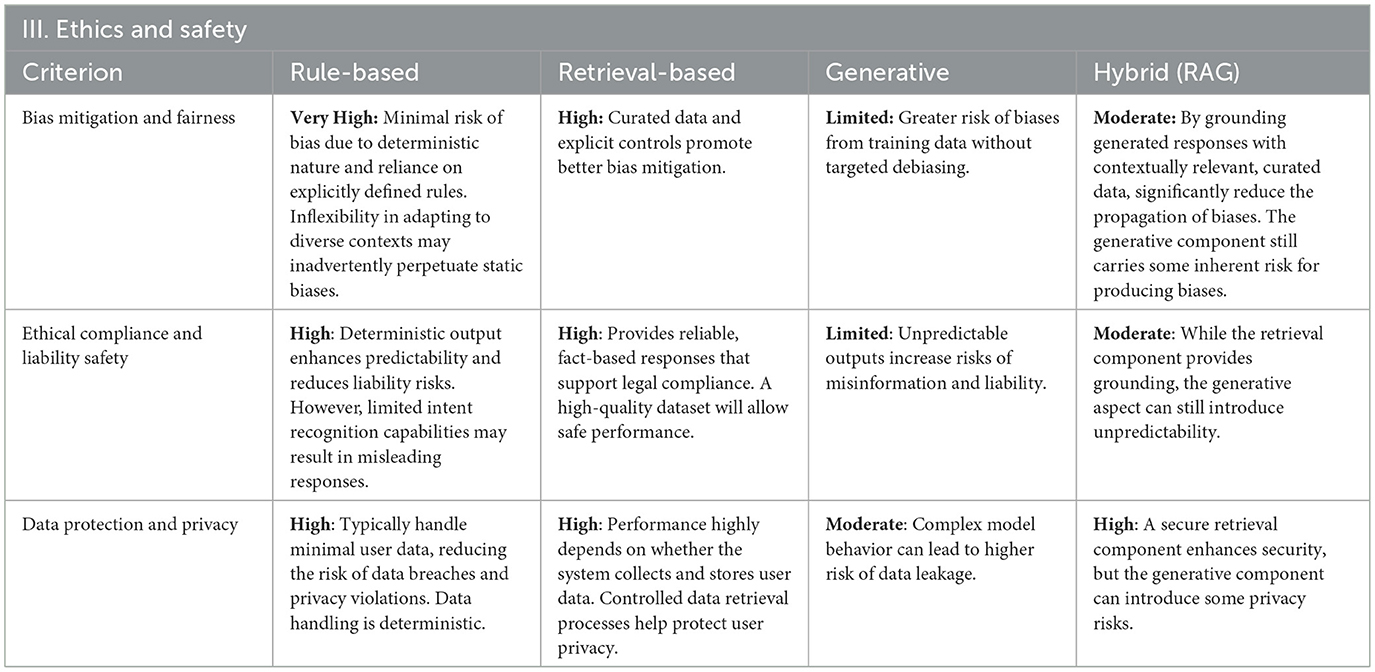

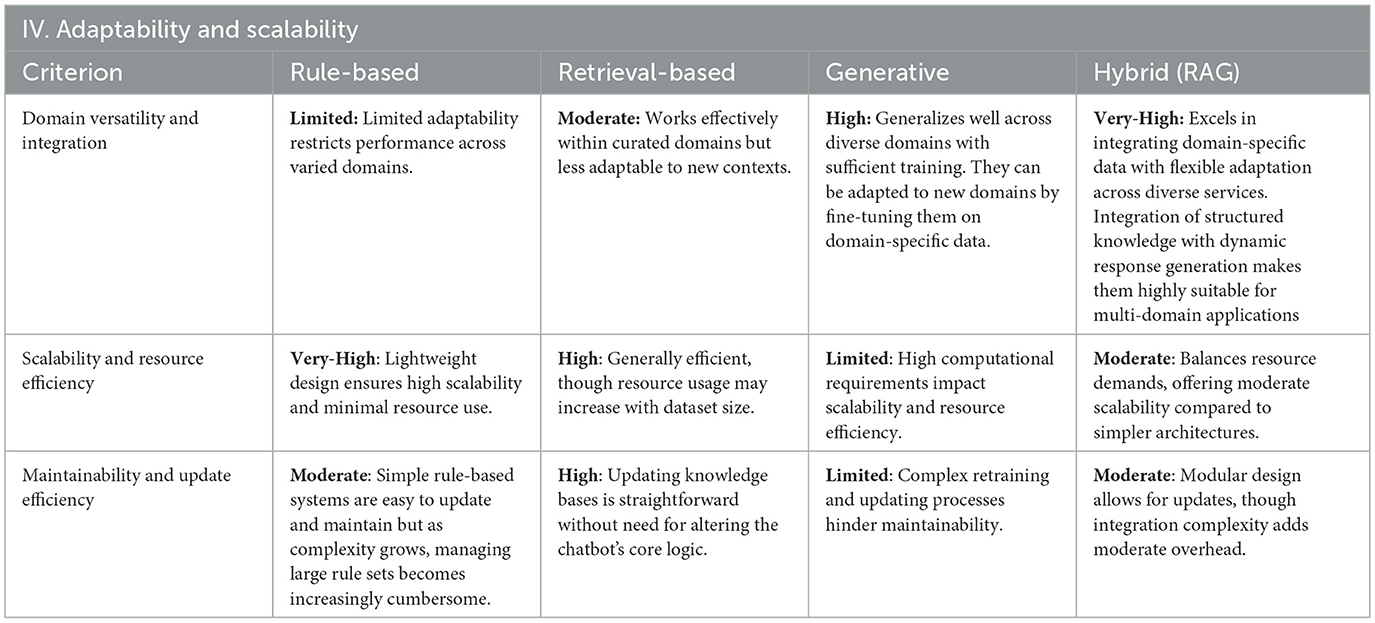

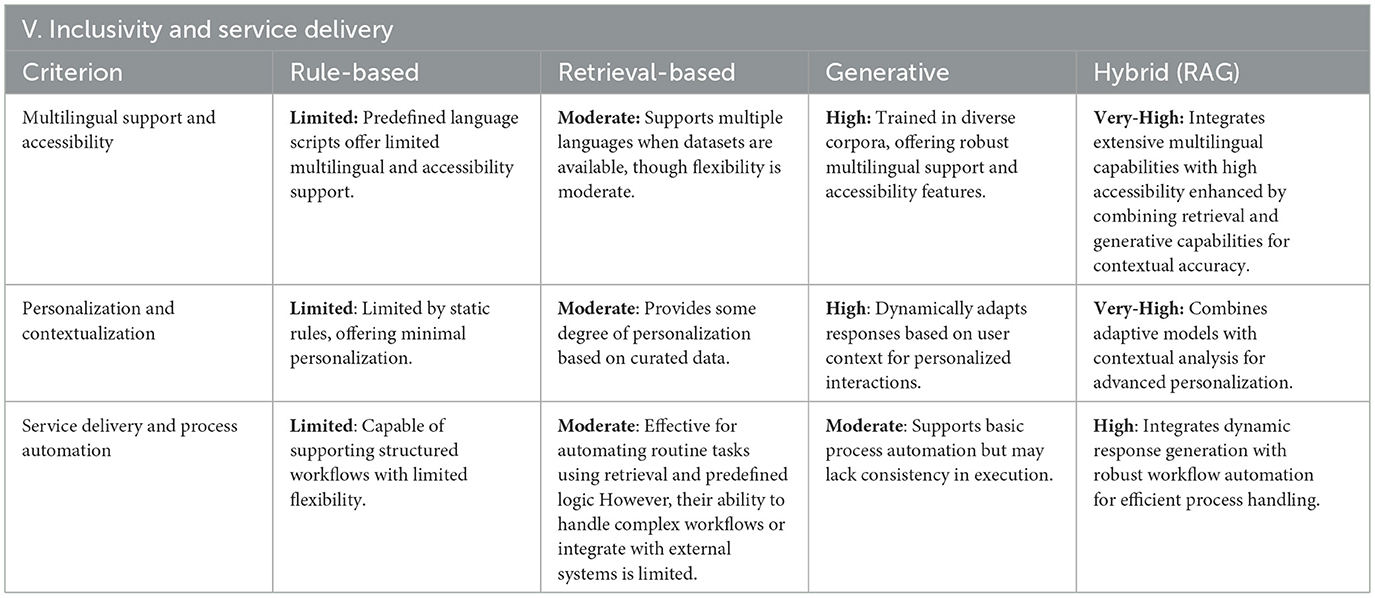

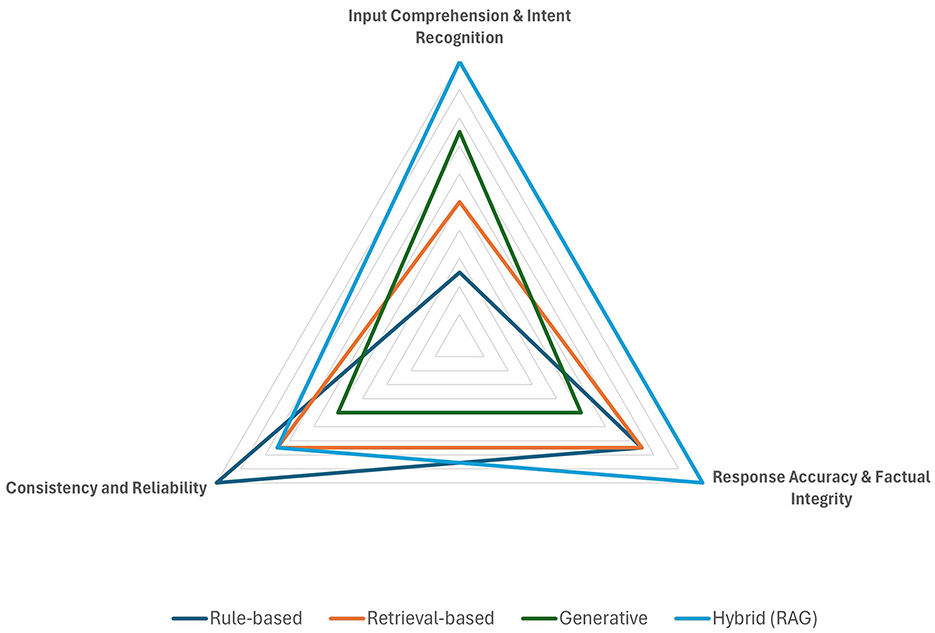

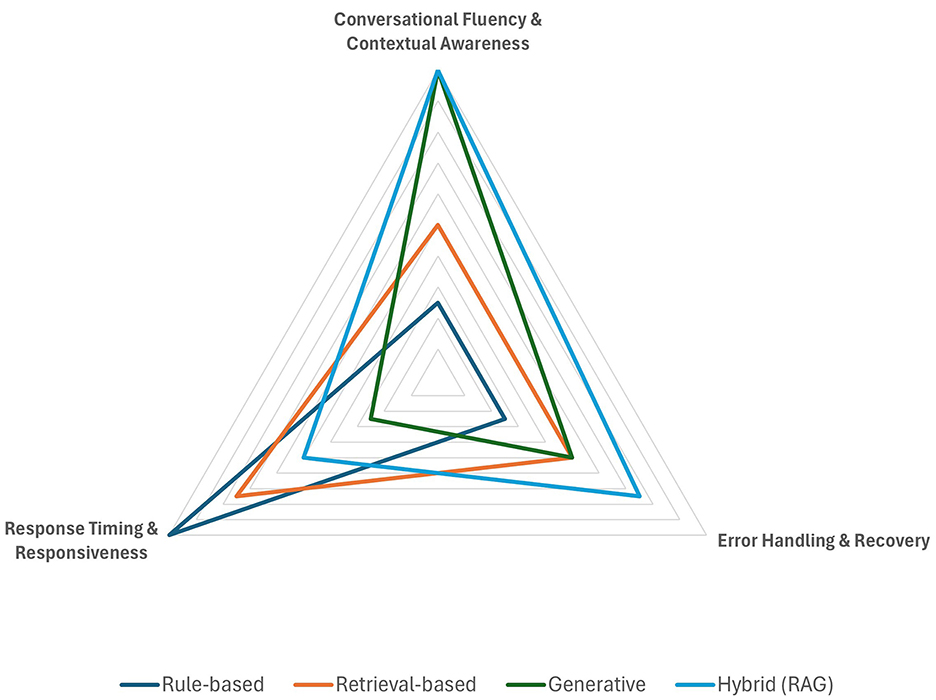

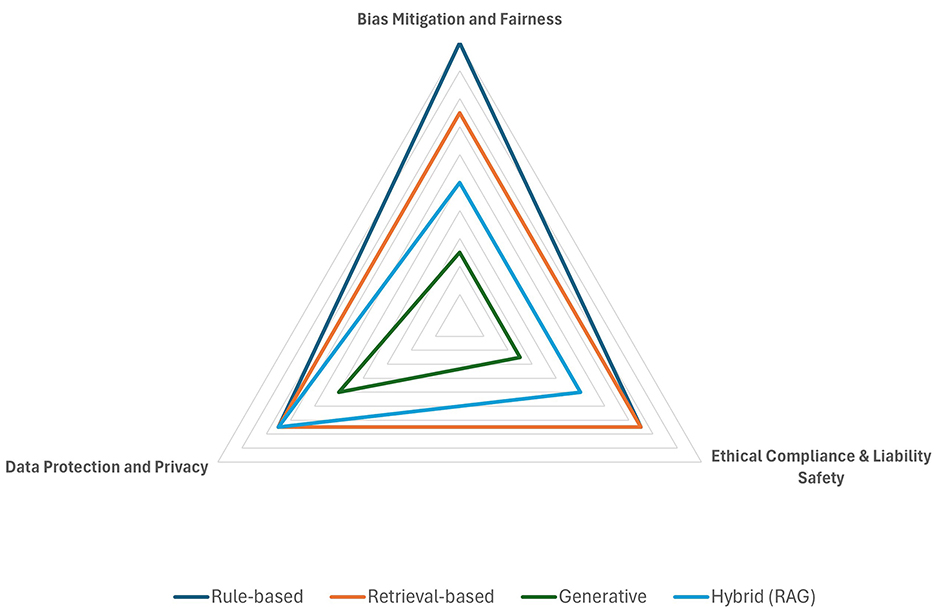

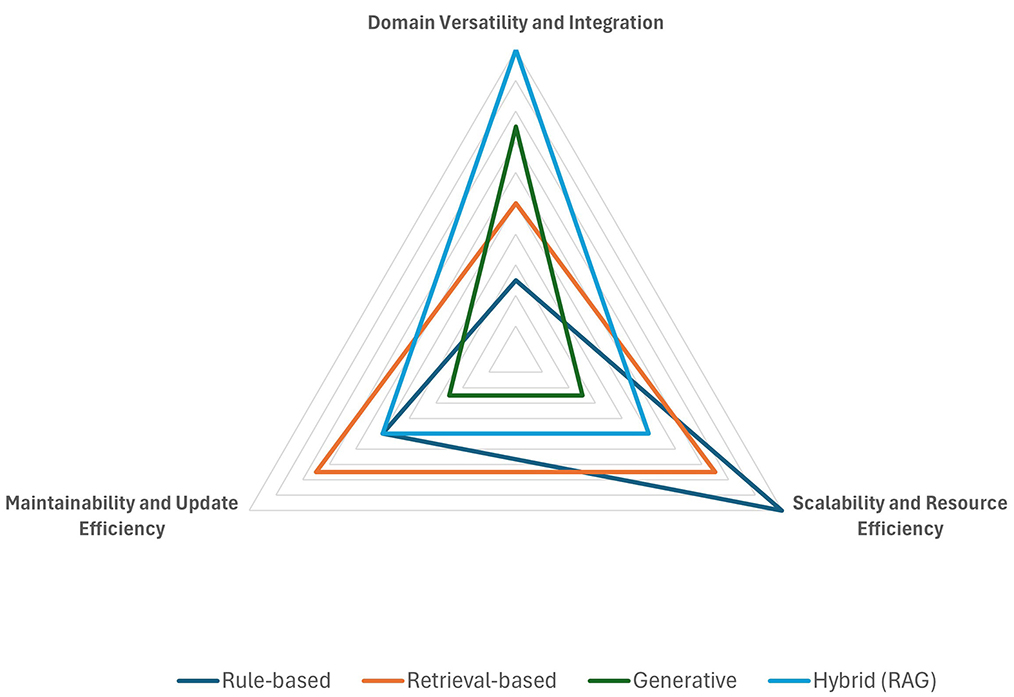

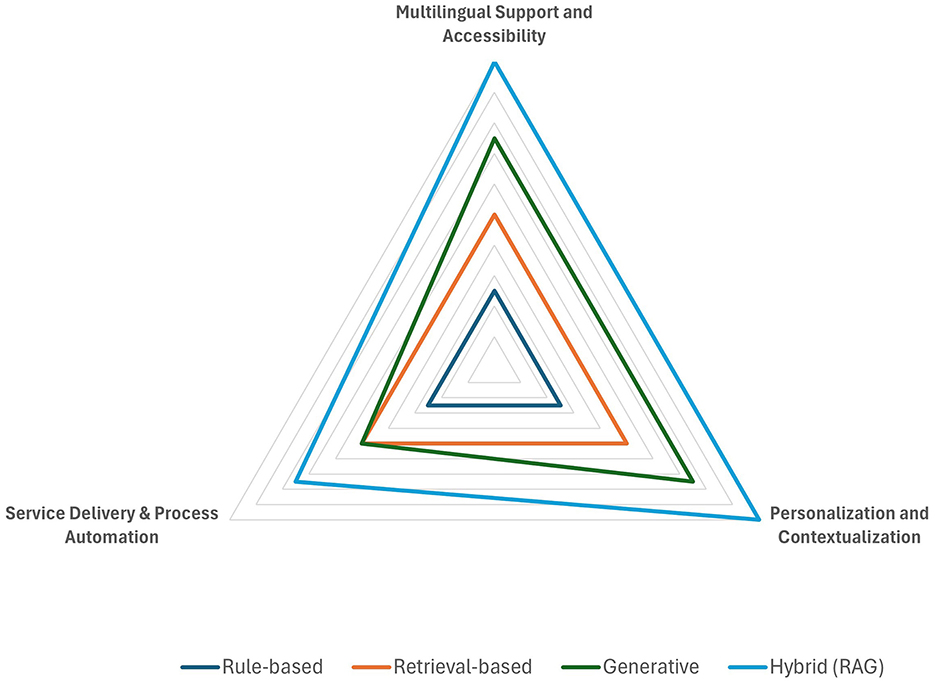

This chapter presents the findings from the comparative evaluation of four chatbot architectures using our proposed framework. An overview of the results is provided in Table 1 and Figure 2, presenting the assigned score (ranging from Limited to Very-High) for each architectural profile and evaluation criterion. Note that for presentation purposes this table contains only the assigned score (Limited to Very-High) for each architecture and criterion combination.

The next section offers a summary of the results. Detailed explanations and justifications for each criterion category are then provided in subsequent sections. These analyses, organized across the five evaluation categories, highlight how different architectures perform and offer key policy recommendations for decision-makers in public administration. For each category, the detailed evaluation results are presented in both a table format (Tables 2–6) and through radar charts (Figures 3–7), where the length of each spoke is proportional to the magnitude of the assigned value (after quantification of discrete values and scaling up to the highest value).

5.1 Overview

Overall, the results, highlight that each architecture exhibits distinct strengths and limitations aligned with their inherent design principles. Rule-based systems demonstrate exceptional consistency and reliability due to their deterministic, predefined workflows, yet they are constrained by limited flexibility and contextual sensitivity. Retrieval-based chatbots offer enhanced factual accuracy by leveraging curated databases, though their performance is closely tied to the currency and comprehensiveness of their underlying data sources. Generative models excel in conversational fluency and contextual engagement, providing dynamic and human-like interactions; however, they are occasionally susceptible to errors and biases inherent in probabilistic outputs. Notably, the hybrid retrieval-augmented generation (RAG) approach emerged as the most balanced architecture. By integrating the robustness of retrieval-based methods with the adaptability of generative models, the hybrid approach achieves superior performance across multiple evaluation criteria, including accuracy, scalability, and ethical safeguards.

In the subsequent sections, detailed analyses of individual criteria will further illuminate these findings and guide the formulation of targeted policy recommendations for the effective and responsible deployment of chatbot technologies in public service delivery.

5.2 Findings by evaluation dimension

5.2.1 Core functionality and understanding

The analysis of the results in this category reveals that rule-based systems offer high consistency due to their fixed logic and workflows but exhibit limitations in handling complex, ambiguous inputs, whereas retrieval-based systems improve factual accuracy by leveraging curated data; however, their effectiveness depends largely on the currency and completeness of their underlying knowledge bases. Generative systems show superior adaptability in interpreting diverse queries and maintaining context over multi-turn interactions, yet, their probabilistic output can lead to occasional inaccuracies or hallucinations. LLMs can produce consistent answers, particularly in the case of short and relatively simple queries. However, for more complex input, they are generally unable to produce the same answer to the same query over time. Hybrid chatbot architectures stand out by combining the strengths of retrieval and generative approaches—delivering enhanced input comprehension and reliable response generation with solid consistency.

Recommendations for core functionality:

• Adopt hybrid architectures for services requiring both adaptability and factual accuracy, particularly in complex administrative tasks.

• Supplement rule-based systems with retrieval components to enhance flexibility without sacrificing consistency in areas where strict compliance is essential.

• Regularly update knowledge bases when deploying retrieval-based systems to maintain high accuracy and relevance.

5.2.2 User experience and communication

Findings in this category reveal that conversational quality and depth is highest in generative and hybrid systems, which produce fluid, natural language and maintain context over extended dialogues. But generative systems can be significantly slow, due to the computational demands of generating the text. In contrast, rule-based systems, though exceptionally fast, often yield simplistic, less adaptive exchanges that lack conversational depth, while retrieval-based systems provide moderate fluency, primarily due to reliance on pre-constructed responses. Error handling is more effective in hybrid systems, which integrate dynamic recovery mechanisms and fallback strategies, whereas generative models, while capable of generating creative error messages, may suffer from hallucinations and offer unreliable guidance. Rule-based systems demonstrate even lower adaptability when facing errors and unexpected inputs.

Overall, the results underscore that while rapid response is characteristic of rule-based systems, the superior engagement and adaptability of generative and hybrid models make them more suited for delivering a user-centric experience in public services.

Recommendations for user experience:

• Prioritize user-centric designs that integrate hybrid systems to enhance conversational quality and ensure dynamic error recovery.

• Implement continuous user feedback mechanisms to refine error handling and response timing, especially in critical or emergency service scenarios.

• Tailor interface designs to specific public service contexts, ensuring that systems meet the unique interaction needs of diverse citizen groups.

5.2.3 Ethics and safety