- ¹Center for Research in Cognition and Neuroscience (CRCN), ULB Neuroscience Institute (UNI), Université libre de Bruxelles, Brussels, Belgium

- ²Brain, Mind, and Consciousness Program, Canadian Institute for Advanced Research (CIFAR), Toronto, ON, Canada

- ³School of Psychological Sciences, Tel-Aviv University, Tel Aviv, Israel

- ⁴Sagol School for Neuroscience, Tel-Aviv University, Tel Aviv, Israel

- ⁵Sussex Centre for Consciousness Science, University of Sussex, Brighton, United Kingdom

- ⁶School of Engineering and Informatics, University of Sussex, Brighton, United Kingdom

Abstract

Understanding the biophysical basis of consciousness remains a substantial challenge for 21st-century science. This endeavor is becoming even more pressing in light of accelerating progress in artificial intelligence and other technologies. In this article, we provide an overview of recent developments in the scientific study of consciousness and consider possible futures for the field. We highlight how several novel approaches may facilitate new breakthroughs, including increasing attention to theory development, adversarial collaborations, greater focus on the phenomenal character of conscious experiences, and the development and use of new methodologies and ecological experimental designs. Our emphasis is forward-looking: we explore what “success” in consciousness science may look like, with a focus on clinical, ethical, societal, and scientific implications. We conclude that progress in understanding consciousness will reshape how we see ourselves and our relationship to both artificial intelligence and the natural world, usher in new realms of intervention for modern medicine, and inform discussions around both nonhuman animal welfare and ethical concerns surrounding the beginning and end of human life.

Key points

- Understanding consciousness is one of the most substantial challenges of 21st-century science and is urgent due to advances in artificial intelligence (AI) and other technologies.

- Consciousness research is gradually transitioning from empirical identification of neural correlates of consciousness to encompass a variety of theories amenable to empirical testing.

- Future breakthroughs are likely to result from the following: increasing attention to the development of testable theories; adversarial and interdisciplinary collaborations; large-scale, multi-laboratory studies (alongside continued within-lab effort); new research methods (including computational neurophenomenology, novel ways to track the content of perception, and causal interventions); and naturalistic experimental designs (potentially using technologies such as extended reality or wearable brain imaging).

- Consciousness research may benefit from a stronger focus on the phenomenological, experiential aspects of conscious experiences.

- “Solving consciousness”—even partially—will have profound implications across science, medicine, animal welfare, law, and technology development, reshaping how we see ourselves and our relationships to both AI and the natural world.

- A key development would be a test for consciousness, allowing a determination or informed judgment about which systems/organisms—such as infants, patients, fetuses, animals, organoids, xenobots, and AI—are conscious.

Introduction

Understanding consciousness is one of the greatest scientific challenges of the 21st century, and potentially one of the most impactful for society. This challenge reflects many factors, including (i) the many philosophical puzzles involved in characterizing how conscious experiences relate to physical processes in brains and bodies; (ii) the empirical challenge of obtaining objective, reliable, and complete data about phenomena that appear to be intrinsically subjective and private; (iii) the conceptual/theoretical challenge of developing a theory of consciousness that is sufficiently precise and not only accounts for empirical data and clinical cases but is also sufficiently comprehensive to account for all functional and phenomenological properties of consciousness; and (iv) the epistemological and methodological challenges of developing valid tests for consciousness that can determine if a given organism/system is conscious. The potential impact of understanding consciousness stems from the many interlinked implications this can have for science, technology, medicine, law, and other critical aspects of society. Existentially, a complete scientific account of consciousness is likely to profoundly change our understanding of the position of humanity in the universe.

Accordingly, consciousness has become an object of intense scrutiny from different disciplines. While the connection between mind and body is an ancient philosophical conundrum, in recent decades, the metaphysical issues have been accompanied by a set of empirical questions, with neuroscience and psychology attempting to discover and explain the connections between conscious experiences and neural activity. Yet, strikingly, the core problem had already been formulated in scientific terms at the turn of the 20th century: certain articles from that period read almost as though they had been written today. For instance, in 1902, Minot wrote a Science article titled “The problem of consciousness in its biological aspects” in which he “[…] hopes to convince you that the time has come to take up consciousness as a strictly biological problem …” (1).

Eighty-eight years later, Crick and Koch called for renewed inquiry into “the neural correlates of consciousness” (2, 3), prompted in part by the increasing availability of novel brain imaging methods that could link the biological activity of the brain with subjective experience. This empirical program continues apace, together with theory development and ever deeper interactions with philosophy. But today, there is also a sense that the field has reached an uneasy stasis. For example, a recent review (4) taking a highly inclusive approach identified over 200 distinct approaches to explaining consciousness, exhibiting a breathtaking diversity in metaphysical assumptions and explanatory strategies. In such a landscape, there is a danger that researchers talk past each other rather than to each other. Empirically, Yaron et al. (5) showed that most extant experimental research on theories of consciousness is geared toward supporting them rather than attempting to falsify or compare them, reflecting a confirmatory posture that hinders progress. This manifested both in the low percentage of experiments that ended up challenging theories, as opposed to supporting them (15%), and in the low percentage of experiments that were designed a priori to test theoretical predictions (35%, with only 7% testing more than one theory in the same experiment).

Beyond the genuine and highly complex scientific challenges that the study of consciousness must address, sociological factors may also contribute to the current sense of entrenchment: nobody likes to change their mind (6)! Emerging collaborative frameworks, especially adversarial collaborations, may help alleviate this concern, at least to some extent. But there is also a further factor: the possibility that consciousness research is not sufficiently addressing why it feels like anything at all to be conscious and the role that conscious phenomenology plays in our mental, and indeed biological, lives (7–9).

This paper is structured in a forward-looking manner, moving from the past, through the present, and on to the future. First, we clarify terms and make some essential conceptual distinctions. Then, we briefly review what has been achieved so far in elucidating the neural and theoretical basis of consciousness. Next, we consider the future of our field, outlining some promising directions, approaches, methods, and applications, and advocating for a renewed focus on the phenomenological/experiential aspects of consciousness. Finally, we imagine a time in which we have “solved consciousness” and explore some of the key consequences of such an understanding for science and society.

Three distinctions about consciousness

Consciousness is a broad construct—a “mongrel” concept (10)—used by different people to mean different things. In this paper, we stress three distinctions.

The first distinction is between the notion of the level of consciousness and the notion of the contents of consciousness. In the first sense, consciousness is a property associated with an entire organism (a creature) or system: one is conscious (for example, when in a normal state of wakefulness) or not (for example, when in deep dreamless sleep or a coma). There is an ongoing vibrant debate about whether one should think of levels of consciousness as degrees of consciousness or whether they are best characterized in terms of an array of dimensions (11) or as “global states” (12). In the second sense, consciousness is always consciousness of something: our subjective experience is always “contentful”—it is always about something, a property philosophers call intentionality (3, 13). Here, again, there is some debate over the terms, for example, whether there can be fully contentless global states of consciousness (14) and whether consciousness levels (or global states) and contents are fully separable (11, 15).

The second distinction is between perceptual awareness and self-awareness (note that in this article, we use the terms consciousness and awareness interchangeably). Perceptual awareness simply refers to the fact that when we are perceptually aware, we have a qualitative experience of the external world and of our bodies within it (though of course, some perceptual experiences can be entirely fictive, such as when dreaming, vividly imagining, or hallucinating). Importantly, mere sensitivity to sensory information is not sufficient for perceptual awareness: the carnivorous plant Dionaea muscipula and the camera on your phone are both sensitive to their environment, but we have little reason to think that either has perceptual experiences. It does not necessarily feel like something to be sensitive, and this experiential character is precisely what makes a sensation a conscious sensation (16).

We take self-awareness, on the other hand, to mean experiences of “being a self.” These experiences can be of many different kinds, from low-level experiences of mood and emotion (17) to high-level experiences of being the subject of our experiences, which might be supported by some inner (metacognitive) model of ourselves and our mental states (18–20). This kind of high-level reflective self-awareness is associated with the “I” and with a sense of personal identity over time (21).

The distinction between self-awareness and perceptual awareness is not sharp. Some aspects of the experience of “being a self” seem not to involve reflective self-awareness, such as experiences of emotion, mood, body ownership, agency, and of having a first-person perspective (22, 23). Some of these aspects may arguably have perceptual features. For example, emotional experience may depend on interoception (24–26). In addition, some perspectives, such as the higher-order theories described below, suggest that a form of metacognition might play a constitutive role in all instances of perceptual awareness, not only in self-awareness (18, 27, 28).

Human beings normally possess both perceptual awareness and self-awareness, but this is probably not true at all times or for all species. In humans, reflective self-awareness may be absent in specific conscious states, such as absorption or flow (29), or in states of minimal phenomenal experience (14). Other species may lack this reflective capability altogether. For example, few will doubt that dogs have perceptual experiences as well as various non-reflective self-related experiences—though this can be contested as we currently lack a way to directly test for consciousness in other species [see (30–32) for recent attempts to tackle this problem]. Nevertheless, there is no convincing evidence that dogs have reflective self-awareness in the sense defined above. Putting these debates aside, consciousness research has thus far largely focused, with exceptions (26, 33, 34), on trying to explain perceptual awareness as a first, albeit notoriously difficult, step toward understanding other aspects of consciousness. This emphasis most likely stems from the fact that perceptual awareness is generally easier to manipulate in experiments.

The third distinction contrasts the phenomenological (i.e., experiential) aspects of consciousness with its functions. This discussion has been largely shaped by Block’s (35) influential, yet controversial (36, 37), distinction between phenomenal consciousness and access consciousness (informally, what consciousness feels like and what it does). Access consciousness is associated with the various functions that consciousness enables, such as global availability, verbal report, reasoning, and executive control. Phenomenal consciousness, on the other hand, refers to the felt qualities of conscious mental states: the complex mixture of bitterness and sweetness of a Negroni cocktail, the distinctive hue of International Klein Blue, the anxiety prompted by one’s to-do list. All such conscious mental states have phenomenal character (often referred to by the philosophical term “qualia”): there is something it is like for us to be in each of these states. By contrast, there was nothing it was like for the neural network AlphaGo (38) to win against the South Korean world Go champion Lee Sedol (it was Sir Demis Hassabis and the DeepMind team who drank the champagne instead). Despite its seductive use of language, we think there is also nothing it is like for GPT-5 to engage in a conversation (39, 40).

Just as there has been greater emphasis within consciousness science on studying perceptual awareness compared with self-awareness, there has also been a greater emphasis on studying the functional rather than the phenomenological aspects of consciousness. This, again, may be due to the relative ease with which functional properties related to conscious access can be studied empirically compared with phenomenological aspects (41–43). With respect to the neural underpinnings of consciousness, we have been more focused on finding the mechanisms that differentiate between a consciously processed and an unconsciously processed stimulus than on explaining the difference between two conscious experiences, again with exceptions (44–48). Additionally, with respect to the functions of consciousness, we have been more oriented toward documenting what we can do without awareness rather than because of it (49–52). The potential for complex behavior in the absence of awareness has been further emphasized by the rapid advances in artificial intelligence (AI), where complicated functions can be executed without any accompanying phenomenology, at least as far as we can tell.

What have we achieved so far?

Following this clarification of terms, we briefly review where things stand today in consciousness research. Given the enormous challenge that explaining consciousness represents, it is easy to underestimate the significant progress that has already been made. This progress has been particularly visible over the last 30 or so years, but in fact it extends much further back, with highlights including seminal work on split-brain patients, neurological patients, work with brain stimulation, research on nonhuman primates, and much more (53–55).

Some basic facts are now well established. In humans and other mammals, the thalamocortical system is strongly involved in consciousness, whereas the cerebellum (despite having many more neurons) is not. Different regions of the cortex are associated with different aspects of conscious content, whether these are distinct perceptual modalities (56), experiences of volition or agency (34), emotions (57), or other aspects of the sense of “self” (58). Researchers have identified a myriad of candidate signatures of consciousness in humans, focusing on global neural patterns [e.g., neuronal complexity (59), nonlinear cortical ignitions (60), stability of neural activity patterns (61)], on specific electrophysiological markers of consciousness [e.g., the perceptual awareness negativity (62), alpha suppression (63), late gamma bursts (64)], and on relevant brain areas such as the “posterior hot zone” (65) or frontoparietal areas (66), as well as subcortical structures and brainstem arousal systems that may contribute to and modulate awareness (67–70). For some of these regions, notably brainstem arousal systems, there is debate about whether they represent necessary enabling conditions for consciousness and/or whether they contribute to the material basis of consciousness (67, 69).

At the same time, some previously popular hypotheses have now been empirically excluded. For example, the idea that consciousness is uniquely associated with 40 Hz (gamma band) oscillations has fallen out of favor based on substantial evidence (71, 72). In parallel, there has been a growing recognition that various confounds need to be carefully ruled out in order to interpret these findings, including those related to the enabling conditions for conscious experience, post-perceptual processes such as memory and report, and the concern that consciousness is often (but not always) correlated with greater signal strength and performance capacity (73–76). In this regard, phenomena such as blindsight, in which consciousness can be partly dissociated from performance capacity, are particularly intriguing [(77–79); but see (80, 81), for critiques].

Complementing these empirical findings, many theories of consciousness have been developed over recent years. These vary greatly in their aims and scope, in the degree of traction they have gained in the community, and in their level of empirical support (5, 12, 82–84). A selection of these theories provides a useful lens through which to focus attention on the progress made so far in the scientific study of consciousness.

Global workspace theory

One prominent theory, named “global workspace theory” (GWT), originated from “blackboard” architectures in computer science. Such architectures contain many specialized processing units that share and receive information from a common centralized resource—the “workspace.” The first version of GWT (85) was a cognitive theory that assumed that consciousness depends on global availability: just like blackboard architectures, the cognitive system consists of a set of specialized modules capable of processing their inputs automatically and unconsciously, but they are all connected to a global workspace that can broadcast information throughout the entire system and make its contents available to a wide range of specialized cognitive processes such as attention, evaluation, memory, and verbal report (86). The core claim of GWT is thus that it is the wide accessibility and broadcast of information within the workspace that constitutes conscious (as opposed to unconscious) contents. Since the 1990s, GWT has developed into a neural theory (referred to as global neuronal workspace theory) in which neural signals that exceed a threshold cause “ignition” of recurrent interactions within a global workspace distributed across multiple cortical regions—this being the process of “broadcast” (64, 87). Importantly, GWT is what is called a first-order theory: what makes a mental state conscious depends on properties of that mental state (and its neural underpinnings) only and not on some other process relating to that mental state in some way. Thus, in contrast with the assumptions of higher-order theories (HOTs, introduced below), GWT does not postulate that consciousness depends on higher-order representation or indexing of some kind.
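The threshold-and-ignition idea described above can be illustrated with a deliberately minimal sketch. It is not the global neuronal workspace model itself: it assumes a single self-exciting unit with a sigmoidal response, and the function name `simulate_ignition` and all parameter values (gain, threshold, pulse length) are hypothetical choices made only for illustration. The point is the nonlinearity: a brief input below a critical strength fades away, whereas a stronger input of the same duration pushes the unit into a self-sustaining high-activity state that outlasts the stimulus.

```python
import math

def simulate_ignition(pulse_amplitude, pulse_steps=50, total_steps=300,
                      gain=10.0, threshold=0.5, dt=0.1):
    """Toy self-exciting unit; returns its activity long after a brief input pulse.

    All parameter values are arbitrary illustrative assumptions.
    """
    activity = 0.0
    for step in range(total_steps):
        # External input is present only during the initial pulse
        external = pulse_amplitude if step < pulse_steps else 0.0
        # Sigmoidal response to recurrent (self) input plus external input
        drive = gain * (activity + external - threshold)
        target = 1.0 / (1.0 + math.exp(-drive))
        # Leaky Euler update toward the sigmoidal target
        activity += dt * (target - activity)
    return activity

weak = simulate_ignition(pulse_amplitude=0.1)
strong = simulate_ignition(pulse_amplitude=0.4)
print(f"activity after weak pulse:   {weak:.3f}")
print(f"activity after strong pulse: {strong:.3f}")
```

In this toy setting the weak pulse leaves activity near its low resting state, while the strong pulse leaves it near saturation even though the input has long since ended, which is the qualitative signature that "ignition" refers to.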

GWT is primarily a theory of conscious access (88), focused on how mental states gain access to consciousness and how they accrue functional utility as a result. This is characterized largely in terms of supporting flexible, content-dependent behavior, including the ability to deliver subjective verbal reports [but see (89) for a discussion of the phenomenal aspect of consciousness and how the theory explains it, and see Dehaene’s section in (90)]. GWT’s clear neurophysiological predictions (centering on nonlinear “ignition” and on the involvement of frontoparietal regions) have led to a wealth of supportive experimental evidence (64). For example, divergences of activity ~250–300 ms post-stimulus have been associated with ignition (91), and measures of long-distance information sharing among cortical regions have been associated with broadcast (92). However, a major challenge for GWT lies in specifying what exactly counts as a “global workspace” (12): does it depend on the nature of the “consuming” systems, the type of broadcast, and/or on other factors?

Higher-order theories

A second prominent theory of consciousness is Rosenthal’s (93) higher-order thought theory, which proposes that a mental state is a conscious mental state when one has a “higher-order” thought that one is in that mental state. This core idea has now been elaborated on in different ways, resulting in a family of higher-order theories (HOTs). Unlike first-order theories, higher-order theories all claim that mental states are conscious when they are the target of a “higher-order” mental state of a specific kind (18, 93–95). The nature of the relationship between first-order and higher-order states varies among HOTs, but they all share the basic notion that for a first-order mental state X to be conscious, there must be a higher-order state Y that in some way monitors or meta-represents X. Take the experience of consciously seeing a red chair. According to HOTs, the first-order representation (perhaps instantiated as a pattern of neural activity in the visual cortex) of red is not by itself sufficient to produce a conscious experience. Instead, there need to be additional “higher-order” states that point to or (meta)represent the first-order representation for it to be experienced as red. Crucially, such higher-order states need not be conscious themselves (i.e., we do not need to be aware of a mental state with content like, “I am now seeing red”). Rather, it is their very existence that makes the target content conscious. HOTs capture the intuitively plausible notion that a mental state is a conscious mental state as soon as I am aware of being in that mental state. This offers an equally intuitive distinction between conscious and unconscious mental states: I am conscious of some situation when I know about that situation; otherwise, I am unconscious of that situation.

Many HOTs locate the neural basis of the relevant meta-representations in anterior regions of the human brain, with an emphasis on the prefrontal cortex (96). Future “neural HOTs” will likely develop richer mappings between brain states and the theoretical distinction between first- and higher-order states (97). These theories are therefore supported by evidence implicating these regions in consciousness and undermined by evidence that anterior regions are not necessary for consciousness. As such, they have motivated studies investigating the neural correlates of consciousness (NCCs) with this question in mind (98). Of particular note are experiments that attempt to control for how well participants perform at a perceptual task: such studies (including in “blindsight” participants) have shown that when conditions are matched for performance, differences between conscious and unconscious perception are found in anterior cortical regions (75, 99) and interference with prefrontal function using transcranial magnetic stimulation (TMS) or multivariate neurofeedback affects subjective aspects of perception (such as confidence) without changing performance (100, 101). Studies associating perceptual metacognitive abilities with anterior prefrontal function also provide intriguing supportive evidence, albeit less direct (e.g., 102, 103). Additional support can be drawn from demonstrations of decoding of the content of consciousness from frontal areas (104).

However, HOTs currently do not fully specify the actual neural mechanism(s) mediating the implementation of first- versus higher-order states: how exactly does one brain state “point” at another, and what motivates the choice of which first-order state to point at or re-represent? Another challenge is that they focus on the contents of consciousness and provide less explanation for the level of consciousness. These under-specifications reflect the relatively limited empirical formulation of HOTs, despite their considerable philosophical backbone (105), as compared with other theories (5). These aspects of the theory are currently being developed (45), and an ongoing adversarial collaboration (ETHoS) is specifically aimed at comparing the empirical predictions of four HOT variants.

Integrated information theory

A very different perspective is provided by “integrated information theory” (IIT), developed by Giulio Tononi and colleagues since the 1990s (44, 106, 107). Rather than asking what in the brain gives rise to consciousness, IIT identifies features of conscious experience (described in five axioms) that it assumes are essential and then asks what properties a physical substrate of consciousness must have for these features to be present. A striking claim of IIT is that any physical substrate that possesses these properties will exhibit some level of consciousness (108). The two most illustrative essential features, or axioms, are (unsurprisingly) information and integration. According to IIT, every conscious experience is necessarily both informative (in virtue of ruling out many alternative experiences; i.e., every experience is the way it is, and not some other way) and integrated (every experience is a unified scene). IIT introduces a mathematical measure, phi (Φ), which, broadly speaking, measures the extent to which a physical system entails irreducible maxima of integrated information and thereby, according to the theory, provides a full measure of consciousness. Different versions of IIT introduce different varieties of Φ, with the latest being IIT 4.0 (107), but all associate consciousness with the underlying “cause–effect structure” of a physical system and not just with the dynamics (e.g., neural activity) that the physical system supports. IIT is arguably the most ambitious theory we discuss because it addresses both the level and content of consciousness, proposes a sufficient basis for consciousness, and explicitly addresses phenomenological aspects of consciousness, such as spatiality (109) and temporality (110).

IIT has been criticized on the grounds that measurement of Φ is challenging or infeasible for anything other than very simple systems. Other “weak” versions of IIT have been proposed in which Φ is easier to measure, but this comes at the cost of abandoning claims of an identity relationship between Φ and consciousness (111). Another line of criticism is that the axioms proposed by full IIT do not satisfy standard philosophical criteria of being self-evidently true (112). Concerns like these have led to robust debate over whether the core claims of IIT are empirically testable and over what should be expected from a scientific theory of consciousness (40, 113, 114).

The most commonly referenced experimental support for IIT comes from evidence examining empirically applicable proxies for integrated information (Φ) under different global states of consciousness. In a canonical series of studies (115, 116), Massimini and colleagues have developed a measure of consciousness, called the “perturbation complexity index” (PCI), which quantifies the complexity of the brain’s response to cortical stimulation. Most commonly, the method uses TMS to inject a brief pulse of energy into the cortex, electroencephalography (EEG) to measure the response, and the information-theoretic metric of Lempel–Ziv complexity (which quantifies the diversity of patterns within a signal) to quantify the complexity of the response. High PCI values arguably correspond to high levels of integration and information in the underlying dynamics. However, it is important to emphasize that the PCI, while inspired by and based on IIT, is not a measure or approximation of Φ, and differences in PCI across conscious levels may also be affected by differences in how unconscious processes operate at these levels. The PCI results, while fascinating, cannot be taken to directly support the distinctive aspects of IIT that rely on the definition of Φ, and are also compatible with or supportive of other theories, notably GWT. Nevertheless, the PCI method has shown exciting promise in important practical scenarios, such as detecting residual consciousness in unresponsive patients following severe brain injury (59).
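To make the notion of “diversity of patterns within a signal” concrete, the following is a minimal sketch of Lempel–Ziv complexity computed on a binary string, using the 1976 phrase-counting definition. It is not the PCI pipeline: the actual method involves TMS-evoked EEG responses, statistical binarization, and normalization steps that are not reproduced here. The helper names `lempel_ziv_complexity` and `binarize`, and the choice of a median threshold, are assumptions made purely for illustration.

```python
import random

def lempel_ziv_complexity(bits):
    """Count the phrases in the Lempel-Ziv (1976) parsing of a string."""
    i, phrases, n = 0, 0, len(bits)
    while i < n:
        length = 1
        # Extend the candidate phrase while it can be copied from earlier text
        while i + length <= n and bits[i:i + length] in bits[:i + length - 1]:
            length += 1
        phrases += 1
        i += length
    return phrases

def binarize(signal):
    """Illustrative binarization: '1' above the median of the signal, else '0'."""
    ordered = sorted(signal)
    median = ordered[len(ordered) // 2]
    return "".join("1" if x > median else "0" for x in signal)

# A strictly periodic signal versus a pseudo-random one of the same length
periodic = binarize([step % 2 for step in range(200)])
rng = random.Random(0)
noisy = binarize([rng.random() for _ in range(200)])

print("periodic:", lempel_ziv_complexity(periodic))
print("noisy:   ", lempel_ziv_complexity(noisy))
```

A repetitive signal is parsed into very few phrases because each new stretch can be copied from what came before, whereas an irregular signal requires many more. For instance, the string `0001101001000101` is parsed as `0|001|10|100|1000|101`, giving a complexity of 6.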

In terms of neural correlates, IIT theorists claim that brain activity sufficient for conscious perception is localized to posterior regions (e.g., the posterior cortical “hot zone”) rather than to the anterior regions favored by HOTs and GWT. This claim is based on the argument that neural connectivity in these posterior regions is well suited to generating high levels of (irreducible) integrated information (117).

Predictive (and recurrent) processing theory

The final theory we mention here is not really (or at least not primarily) a theory of consciousness but rather a general theory of brain function (of perception, cognition, and action) from which more specific connections between brain processes and aspects of consciousness can be derived and tested (118). According to “predictive processing” (PP), the brain continually minimizes sensory “prediction error” signals, either by updating its predictions about the causes of sensory signals or by performing actions to bring about predicted or desired sensory inputs (the latter process being termed “active inference”) (119–121). This ongoing process of prediction error minimization provides a mechanism for implementing the view of perception as a process of Bayesian inference, or “best-guessing” (122), and as a means of predictive regulation of physiological variables (123, 124). In its most ambitious and all-encompassing version, the “free energy principle,” the mechanism of prediction error minimization arises out of fundamental constraints regarding control and regulation that apply to all physical systems that maintain their organization over time in the face of external perturbations (125, 126).
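The link between prediction error minimization and Bayesian “best-guessing” can be shown in a small worked sketch. It assumes the simplest possible case, which is not taken from the text: a single hidden cause with a Gaussian prior, one noisy Gaussian observation, and an identity mapping from cause to sensation. The function names `infer_cause` and `exact_posterior_mean`, the learning rate, and the numbers used are hypothetical. Under these assumptions, repeatedly nudging the estimate to reduce precision-weighted prediction errors converges on the exact Bayesian posterior mean. Only the perceptual (prediction-updating) route is sketched; active inference is not modeled.

```python
def infer_cause(observation, prior_mean, prior_var, obs_var,
                learning_rate=0.1, steps=200):
    """Estimate a hidden cause by descending precision-weighted prediction errors."""
    estimate = prior_mean  # start from the prior prediction
    for _ in range(steps):
        # Error between the sensory input and the current prediction
        sensory_error = (observation - estimate) / obs_var
        # Error between the current estimate and the prior expectation
        prior_error = (estimate - prior_mean) / prior_var
        # Move the estimate so as to reduce both weighted errors
        estimate += learning_rate * (sensory_error - prior_error)
    return estimate

def exact_posterior_mean(observation, prior_mean, prior_var, obs_var):
    """Closed-form Bayesian posterior mean for the same Gaussian model."""
    prior_precision = 1.0 / prior_var
    obs_precision = 1.0 / obs_var
    return (prior_precision * prior_mean + obs_precision * observation) / (
        prior_precision + obs_precision)

# A more reliable observation (smaller obs_var) pulls the estimate toward the data
for obs_var in (1.0, 0.25):
    iterative = infer_cause(2.0, prior_mean=0.0, prior_var=1.0, obs_var=obs_var)
    exact = exact_posterior_mean(2.0, prior_mean=0.0, prior_var=1.0, obs_var=obs_var)
    print(f"obs_var={obs_var}: iterative={iterative:.4f}, exact={exact:.4f}")
```

The design choice worth noting is the weighting by inverse variance (precision): it determines how strongly sensory evidence, as opposed to prior expectation, shapes the final estimate.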

Several distinct theories of consciousness fall under the umbrella of PP (e.g., 23, 127, 128). These typically share the claim that the contents of conscious experiences arise from (top-down) predictions rather than from a “read out” of (bottom-up) sensory signals. Informally, the contents of perceptual experience are given by the brain’s “best guess” of the causes of its sensorium or, even more informally, as a “controlled hallucination” in which the brain’s predictions are reined in by sensory signals arising from the world and the body (23).

One particularly influential theory under the PP umbrella deserves mention. Recurrent processing theory (RPT), also known as “local recurrency” or “re-entry” theory, associates consciousness with top-down (recurrent) signaling in the brain but does not appeal directly to the Bayesian aspects of PP (129, 130). Instead, RPT uses neurophysiological evidence to motivate the view that local recurrence (e.g., in visual cortex) is sufficient for phenomenal experience to occur and that feedforward (bottom-up) activity is always insufficient for conscious perception, no matter how “deep” into the brain this activity reaches (36). RPT’s focus on local recurrence is usually used to contrast the theory with other theories that involve widespread broadcast (GWT) or higher-order processes (HOT) (90), but as theories gain precision, it could be that aspects of RPT also surface in other theories (83). For example, the “ignition” process central to GWT might involve local recurrence. Nonetheless, a key difference between RPT and these other theories remains that RPT allows that phenomenal experience could be present without cognitive access (36).

The core commitments of PP do not directly specify a necessary or sufficient basis for consciousness to happen, nor do they specify how to distinguish conscious from unconscious processing (RPT is an exception here, proposing sufficient conditions, given the right enabling background conditions). Instead, the value of PP for theories of consciousness may largely reside in providing resources for developing and testing systematic or explanatory correlations between brain processes and properties of conscious experience, both functional and experiential (118). PP accounts tend to focus on conscious content rather than conscious level (e.g., 131, 132); they speak to both phenomenological (in terms of the nature of top-down predictions) and functional aspects of consciousness and address aspects of selfhood and embodiment more directly than other theories discussed here (e.g., 40, 133). Notably, variants of the theories discussed above can be expressed within the framework of PP, so there can be ‘PP versions’ of, for example, GWT and HOT (95, 134).

Whether PP succeeds as a theory in consciousness science will depend both on evidence that prediction error minimization is indeed a core brain operation and on its ability to draw explanatorily and predictively powerful links between elements of predictive processing and aspects of conscious experience. While there is substantial evidence linking top-down signaling to conscious perception (135, 136), evidence for explicit sensory prediction error signals playing the roles proposed by PP remains mixed (137), at least when compared to the well-studied dopaminergic reward prediction error signal (138). Further, while abundant evidence shows that participant expectations can shape conscious perception (139), much remains to be done to causally connect the computational entities of PP with specific forms of consciousness. For some, this is a shortcoming of the theory: it might be too general and accordingly not informative enough to explain consciousness. Conversely, more specific formulations of the top-down principle, such as RPT, have been criticized for being too narrow, for example, focusing on visual processing only and failing to explain how this relates to other modalities and how conscious information is integrated across modalities.

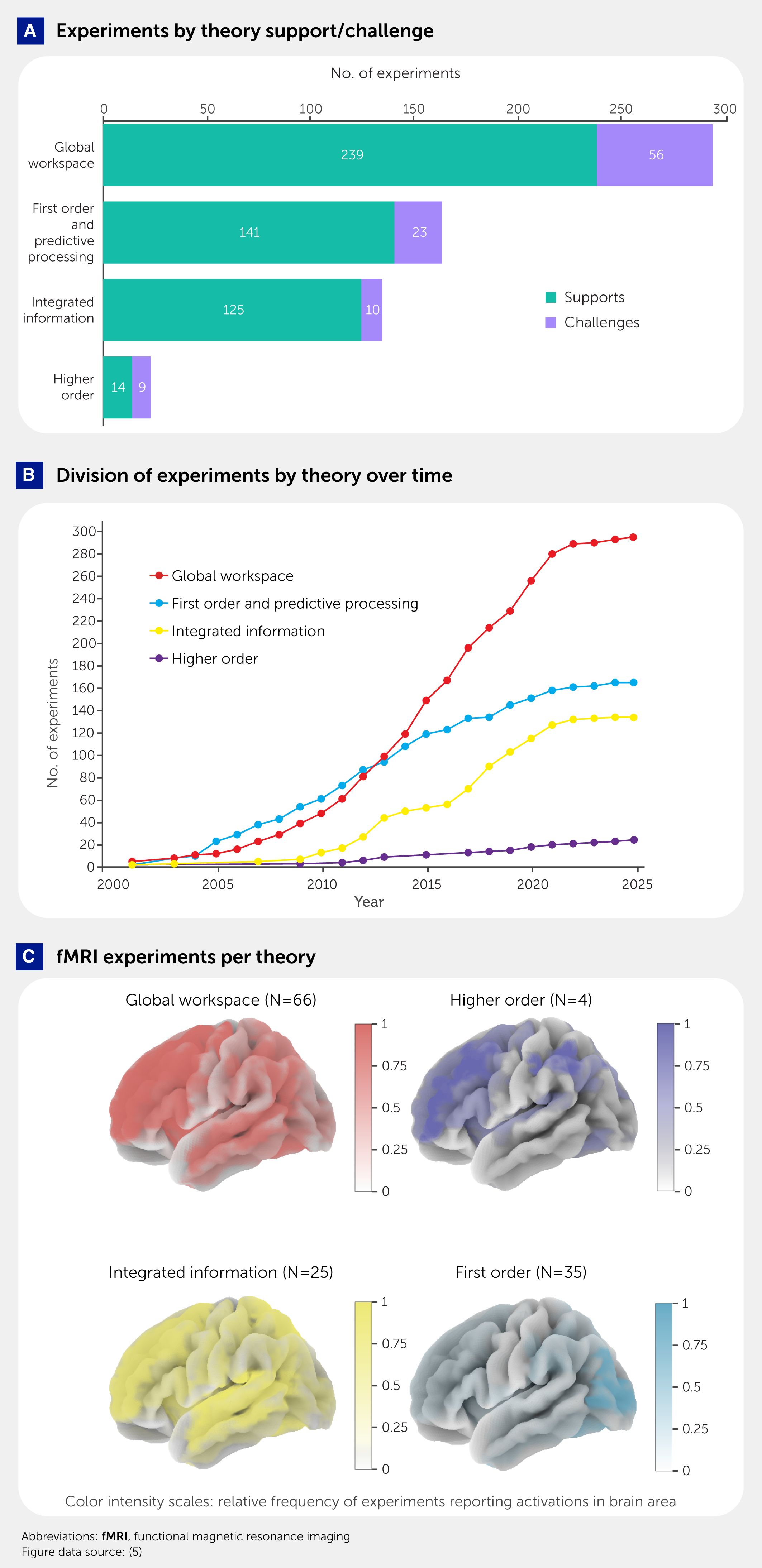

This short tour of several of the many theories of consciousness [for a recent comprehensive survey, see (4)] highlights that there is not only a lack of agreement about the answers in consciousness science but also a lack of consensus about approaches and relevant questions. This does not mean there has been no progress. On the contrary, the last two decades have witnessed an enlightening move away from a simple search for NCCs in a comparatively theory-free and therefore explanatorily impoverished way to a rich landscape of different theories with varying degrees of experimental support. The Consciousness Theories Studies (ConTraSt) (https://contrastdb.tau.ac.il) database study has recently quantified the differences in the extent of research relating to the four theories of consciousness described above, and demonstrated how research results tend to align with the predictions of the supported theory [see Figure 1 and (5)]. There are also some striking commonalities as well as differences among theories. For example, recurrent processing emerges as a key principle in GWT, IIT, PP, and some versions of HOT as well as other theories. Such unifying principles might point toward a “minimal unifying model” of consciousness, at least in biological systems (140).

Figure 1. Results of the Consciousness Theories Studies (ConTraSt) database study (5). Updated results of the ConTraSt database, now including 511 experiments published until mid-2025, which interpreted their findings in light of four prominent theories of consciousness: global workspace theory (GWT), higher-order theories (HOT), integrated information theory (IIT), and recurrent processing theory (RPT). Notably, there are currently no papers in the database for predictive processing theory (PPT). This is mainly because the database is based on the work done by Yaron et al. (5), where PPT was not included, and new uploads referring to this theory have not been made yet. (A) Distribution of experiments across theories. Green sections in the bars represent the number of experiments interpreted as supporting the theory; purple sections represent experiments interpreted as challenging it. (B) Effects over time: a cumulative distribution of experiments supporting the theories. (C) Functional magnetic resonance imaging (fMRI) findings for experiments supporting each of the theories. The same conventions used by Yaron et al. (5) are used here: for each activation, the color intensity indicates the relative frequency of experiments reporting activations in that brain area. While overlaying all findings demonstrates that most of the cortex has been implicated in consciousness, the breakdown by theory presents four different pictures, each aligning with the predictions of the supported theory. This further illustrates the confirmatory posture that most authors in the field have—intentionally or not—espoused.

Where are we going?

Thus far, we have surveyed some of the current main directions in the study of consciousness. As our overview makes clear, the sheer diversity of approaches and theories that characterize the field raises questions about how it can best make progress. In this section, we consider the most promising directions to follow in this ongoing quest, which some consider potentially endless (141). What will be the state of our field 50 years from now? Will our successors look back with satisfaction at the progress made toward “solving consciousness,” or will they feel that the research has been going in circles, not getting any closer?

Considering that prophecy is given to fools, we will refrain from making a prediction here. But we note that the history of science abounds with unfulfilled scientific promises to solve one mystery or another, like producing cold fusion (142), curing cancer (143), achieving room temperature superconductivity, or indeed fully simulating the human brain (144). On the other hand, science often outperforms human predictions: 50 years ago, it probably seemed unthinkable that a computer would ever beat a human chess champion (145), converse fluently (146), or be able to create art (147). Bearing this in mind, what will the future of consciousness science look like? In the following sections, we sketch out nascent trends that will most likely shape the field in the coming decade: a shift toward theory-driven research, the necessity of collaborative and interdisciplinary work, the adoption of new methods, and an emphasis on applications. We hope that developments like these may help the field move beyond the current “uneasy stasis” we mentioned earlier.

From correlates to testable theories

The first major shift is a transition from “searching for the NCCs” to an increased focus on theory-driven empirical research (12, 82–84). While the former has been largely dominated by a data-driven, bottom-up approach consisting, for instance, of manipulating consciousness in hopes of identifying neural contrasts between consciously perceived and non-consciously perceived stimuli, the latter is driven by empirical predictions derived from specific theories of consciousness. Generally speaking, the agenda seems to be gradually transitioning toward providing explanations that go beyond descriptions [see (9), for a critical review]. This seems to be a step in the right direction, though more work is needed to potentially turn this simple step into a major leap.

First, theories must be thoroughly scrutinized to identify both their core constructs (148) and testable predictions that have high explanatory power. Most, if not all, theories include claims and concepts that are somewhat fuzzy—often almost metaphorical—and these are then translated into neural terms in ways that are sometimes too simplistic, for example by debating whether consciousness is subserved by the front or the back of the brain (149, 150). Further elucidation and formalization are needed to make it possible for the theories to be fully tested. Addressing such issues would open up another research strategy, focused on the “search for computational correlates of consciousness” (151)—that is, identifying which computational differences best characterize the distinction between conscious and unconscious information processing. This in turn requires further precision. For example, what does it mean for information to be globally broadcast (152), and how do the receiving neurons understand the message? Similarly, how exactly does a higher-order brain state point at first-order brain states (96)? Or how is the unfolded cause–effect structure of a certain conscious state (107) physically implemented in neural terms? Only when predictions are fully fleshed out will we be able to assess their explanatory power using clear measures (153, 154).

Second, the explananda (explanatory targets) of the theories should be better defined, especially given claims that they might not be explaining the same things and the fact that they are supported by different types of empirical data, at least to some degree (5, 82). We believe that a greater focus on the phenomenological, experiential aspects of consciousness—for example, by studying quality spaces (45, 48, 155) or by pursuing computational phenomenology (14, 156–158)—is likely to yield substantial dividends here, by making the explananda more precise and thereby sharpening the distinctions among theories.

Third, as Seth and Bayne (12) argue, current theories should become not only more precise (for example, by using computational modeling) and more testable (for example, by developing new measures) but also more comprehensive. That is, theories should progressively be able to explain more distinct aspects of consciousness, and a good theory should explain as many aspects of consciousness as possible (82). An alternative and potentially complementary strategy is to focus on explaining the minimal, universally present features of consciousness (140)—perhaps reflecting a kind of “minimal phenomenal experience” (159).

Another shift in emphasis encouraged by theory-driven predictions is a focus on causal as well as on correlational evidence. Causal predictions generally provide stricter tests of a theory and hence more informative evidence. An example of a theory-based causal prediction can be found in INTREPID (https://arc-intrepid.com/about/), one of the current crop of adversarial collaborations. There, the team is using optogenetics in mice to contrast the effects of merely inactive versus optogenetically inactivated neurons in the visual cortex on visual perception, testing a prediction derived from IIT. Outside the context of theory testing, some have used optogenetics to examine the dependence of conscious perception on cortico-cortical and cortico-thalamic connectivity (157).

Finally, to allow us to home in on promising theories and reduce our credence in less useful ones, the field should focus on evaluating these theories through experiments designed a priori to test their predictions. At least some of these experiments should probe multiple theories simultaneously, to create meaningful contrasts between them. This leads us to the next suggested move.

From isolation to collaboration

Until recently, consciousness has been studied by dozens of laboratories around the world, mostly independently. Each scholar has addressed the problem using their own tools, ideas, and theoretical approaches and pursued their research alone or with a small group. Yet, other fields have taught us that big questions often cannot be solved by individuals or small groups and that such questions may be better addressed through collaborative science (e.g., 160–162). Applied to our field, collaborative approaches can be used at multiple levels.

Selecting research questions

Defining key questions that are worth pursuing can be taken up by the community at large (163) or by a joint process involving multiple researchers and scholars. One form of such collaboration that we have already mentioned is adversarial collaboration, championed by Kahneman (6). Here, theoretical opponents work together to design experiments that would test their approaches, pushing each other toward better theoretical and experimental definitions of their claims.

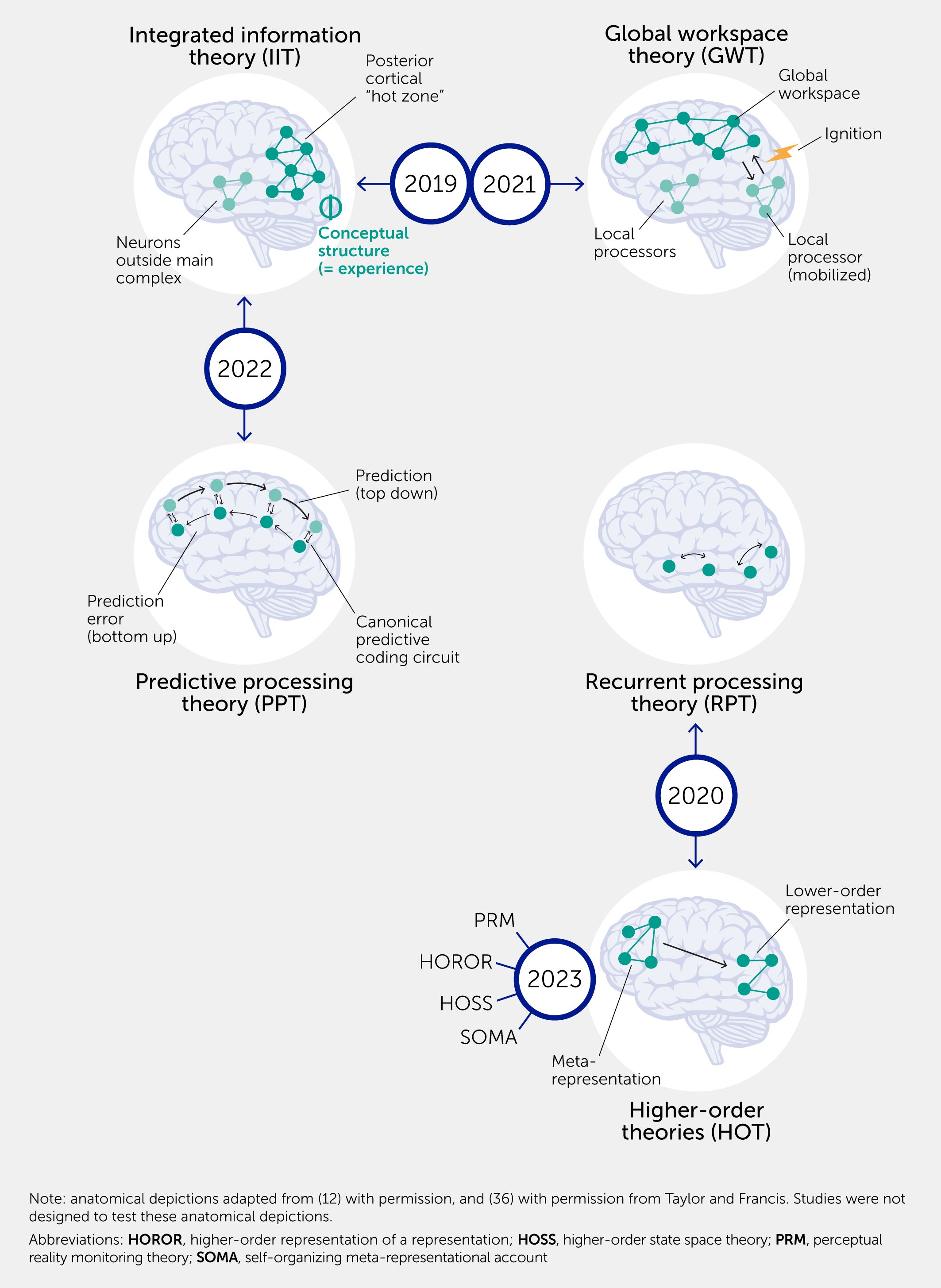

A recent program initiated by the Templeton World Charity Foundation (TWCF) adopted this method in an attempt to “accelerate research on consciousness” by encouraging theory leaders to mutually engage and design experiments likely to arbitrate between competing theories. A series of such adversarial collaborations is now underway, pioneered by the Cogitate Consortium (Figure 2; 117). Time will tell if these collaborations allow us to arbitrate between theories. The first results of the Cogitate Consortium interestingly—and perhaps unsurprisingly—do not fully align with the predictions made by either of the theories in question, namely IIT and GWT. A challenge for this consortium, and likely for future adversarial collaborations, is that the agreed-upon experiments did not directly test the core aspects of either theory—a problem that in turn may follow from each theory making different assumptions and having distinct explananda. Yet, the experiments provided meaningful tests of the neuroscientific predictions of these theories, and the failure to confirm some of these predictions will hopefully lead to self-correction by the theories and to shifting the credence assigned by the community to each theory (164).

Figure 2. An illustration of the ongoing adversarial collaborations funded by the Templeton World Charity Foundation. Such collaborations invite theory leaders to jointly conceive experiments aimed at falsifying the core tenets of different theories. The experiment designs and theoretical predictions to be tested are preregistered and the experiments are performed and replicated by independent teams. In total, eight theories (see text) will be tested. To date, five adversarial collaborations have been launched. Cogitate (initiated in 2019) tested predictions of integrated information theory (IIT) and global neuronal workspace theory (GWT). Data collection is complete and the first experimental results have been published (117). A second adversarial collaboration (2021) is comparing IIT and GWT in nonhuman animals. Thirdly, INTREPID (2022) is testing IIT against predictive processing theory (PPT) and neurorepresentationalism. A fourth collaboration (2020) contrasts higher-order theories (HOTs) of consciousness—specifically higher-order representation of a representation (HOROR) (94)—with some first-order theories, in particular recurrent processing theory (RPT) (130) and perceptual reality monitoring (PRM) (75). Finally, ETHoS (2023) aims to test four HOT variants: HOROR, PRM, higher-order state space (HOSS) (95), and the self-organizing metarepresentational account (SOMA) (18, 27). The outcomes of such vast empirical programs will likely shape the field over the next decade, but whether they will decisively rule out specific theories remains to be seen.

Defining research methods

Since its inception, the field of consciousness science has been characterized by controversies about how best to operationalize, manipulate, and measure consciousness (e.g., 165–168). Unsurprisingly, this lack of consensus on practices is accompanied by a myriad of conflicting findings and claims, for example, on the scope of unconscious processing (169–171). Developing new methods and protocols that achieve broad uptake and consensus by virtue of having demonstrated validity would significantly advance the field (172), akin, for example, to collaborative attempts to define the goals of research in metacognition (173). Beyond making progress toward resolving key questions about what consciousness is and how it should be best studied, this would also allow direct comparisons between datasets obtained in different laboratories across the world and hopefully increase the chances of converging on agreed-upon claims about conscious versus unconscious processing. Notably, a single consensus approach would not suffice by itself and is likely to be extremely hard to obtain given the inherent complexities in studying consciousness. Rather, field-specific standardized approaches could usefully complement the rich variety of experimental and theoretical approaches currently flourishing. A relevant example is a recent collaborative effort (174) to define best practices for characterizing unconscious processing, such as which awareness scale is preferable in each context and when tests of awareness should be administered.

Collecting data

The plea for better-powered, multi-laboratory studies has been made in many fields, and in recent years such attempts have abounded (175–177). This might be even more crucial for consciousness research and psychological science more generally, where effects are typically weak and short-lived (178). Indeed, several such initiatives are already underway, some benefitting from the engagement and involvement of large swathes of the public. Examples include The Perception Census, a large-scale citizen science study of perceptual diversity (https://perceptioncensus.dreamachine.world), the SkuldNet COST Consortium (http://skuldnet.org/), and the Cogitate Consortium (117).

Interdisciplinarity

Consciousness is one of the most complex phenomena known to science, and understanding it requires the collaboration of scholars of different disciplines. In many ways, our field seems to have been a frontrunner in interdisciplinarity, as seen already in the “Towards a science of consciousness” conferences and at the annual meetings of the Association for the Scientific Study of Consciousness. Collaborations between neuroscientists, psychologists, and philosophers (11, 12, 48, 179–181); psychologists and computational neuroscientists (182); neuroscientists, philosophers, and physicists (183); and psychiatrists and psychologists (184) are just a few examples of the ways an interdisciplinary approach can advance the field of consciousness. Crucially, effective interdisciplinarity takes decades; this is perhaps one of the core arguments in support of bottom-up, curiosity-driven fundamental research: to allow interdisciplinary connections to form and flourish.

One aspect of interdisciplinarity worth highlighting is the benefit of involving philosophers of science (not only philosophers of mind). The challenge of understanding consciousness is of such magnitude that, even with substantial progress being made, there remain robust discussions about appropriate definitions, conceptual foundations, and constraints on empirical research, as illustrated by the recent debate over IIT (40, 113, 114). Here, philosophy of science can provide a systematic meta-theory that can help the community to converge around exactly what should be explained and how.

Embracing new methods

Consciousness science could also benefit greatly from the development of new experimental methods, just as the field of neuroscience has benefited from functional brain imaging and other innovations. One promising arena for new methods is the opportunity to study consciousness in less constrained, more naturalistic environments. Given the challenge of studying consciousness, and a historical skepticism toward our ability to do so (185), the field has thus far mainly focused on finding the most controlled and simplified paradigms and operational definitions. Yet, recently, research has been conducted in more ‘real world’-like settings, relying on state-of-the-art technologies that continue to evolve (186). Studies using virtual/augmented reality (187–190) suggest new ways to study consciousness, potentially in tandem with wearable brain imaging technologies such as optically pumped magnetometers (191). A particular advantage of these “extended reality” technologies is that they provide powerful new ways of investigating aspects of conscious experience that would otherwise remain difficult to study. Examples include experiences of embodiment through the use of avatars or virtual/augmented body parts (192–194), the influence of social context on the neural basis of consciousness, and the ability to suppress real-life objects (not merely computer screen images) from consciousness (195). In general, methods like these enable us to get closer to studying conscious (and unconscious) processes as they happen in real life, for example, when we are walking down a street—a situation of enormous sensory richness compared with a typical laboratory experiment (196).

Much can also be gained from combining the old and the new. An example here is the emerging approach of computational neurophenomenology, which merges new methods in computational models of neurocognitive systems together with relatively old philosophical and behavioral methods from phenomenological research to help build more informative bridges between brain mechanisms and conscious experience (151, 197). The key to doing so successfully may lie in flexibly recognizing how “old” and “new” approaches might benefit each other, rather than treating them in an either/or fashion, or as beholden to certain paradigms or explanatory targets.

One useful approach is to focus on the phenomenological aspects of consciousness. Here, a promising approach to understanding what an experience is like for an organism—how it is similar to or different from other possible experiences—is to investigate empirical mappings between (objective) neural and (subjective) perceptual similarity structures in high-dimensional spaces (45–48). As von Uexküll (198) and, more recently, Yong (199) point out, the world looks, smells, and tastes very different to a fly than it does to us: each organism is sensing its environment through sensory modalities that have been shaped by different evolutionary constraints and hence yield conscious experiences that are markedly different. Recent investigations using intracranial recordings have linked visual experiences to stable points in a high-dimensional neural state space (200–204). Such empirical investigations, in turn, bear on the philosophical claim that the qualitative nature of conscious experience arises by virtue of its relational similarity to other experiences—the “quality space” hypothesis (48, 205, 206). However, it remains unclear how these proposals for the neural encoding of qualitative features of (and relationships between) sensory experiences can be integrated into the theories of consciousness reviewed above [though see IIT for one approach (107)].
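One generic way to compare neural and perceptual similarity structures is to build a pairwise dissimilarity matrix for each and then correlate the two. The sketch below is a minimal illustration of that idea and is not the analysis used in the cited studies. It assumes one neural activity pattern per stimulus and a matrix of subjective dissimilarity ratings, and all function names are hypothetical.

```python
import numpy as np


def dissimilarity_matrix(patterns):
    """Pairwise Euclidean distances between rows (one row per stimulus)."""
    differences = patterns[:, None, :] - patterns[None, :, :]
    return np.sqrt((differences ** 2).sum(axis=-1))


def upper_triangle(matrix):
    """Off-diagonal entries above the diagonal, as a flat vector."""
    rows, cols = np.triu_indices(matrix.shape[0], k=1)
    return matrix[rows, cols]


def ranks(values):
    """Ranks 0..n-1; ties are broken arbitrarily, which suffices for a sketch."""
    return np.argsort(np.argsort(values))


def similarity_structure_match(neural_patterns, perceptual_dissimilarity):
    """Rank correlation between neural and perceptual dissimilarities."""
    neural = upper_triangle(dissimilarity_matrix(neural_patterns))
    perceptual = upper_triangle(perceptual_dissimilarity)
    return np.corrcoef(ranks(neural), ranks(perceptual))[0, 1]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Synthetic stand-ins: 6 stimuli placed in a 2-dimensional "quality space".
    quality_space = rng.normal(size=(6, 2))
    perceptual = dissimilarity_matrix(quality_space)
    # Hypothetical neural patterns that preserve the same geometry, rescaled.
    neural = 3.0 * quality_space
    print(similarity_structure_match(neural, perceptual))
```

A rank correlation is used here because it only asks whether the ordering of dissimilarities agrees across the two spaces, which is a weaker and often more defensible assumption than a linear relation between neural distances and subjective ratings.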

While these new methods offer exciting opportunities, they should not lead us to forget hard-won lessons from earlier epochs of psychology (207). Of particular relevance to consciousness research is the issue of demand characteristics, which refers to how attributes of experimental context may implicitly influence participant behavior and experience (207). If experimental conditions are not carefully matched for participants’ expectations, observed differences may arise from mixtures of compliance and changes in experience caused by suggestibility and not from whatever other underlying process may be under examination (208, 209). Even more problematic may be experimenters’ own expectations about their study participants (210–212).

A focus on methods should also pay attention to the general problems of reliability and replicability that have plagued many areas of science, including psychology, where initiatives aiming to address these issues have become particularly prominent. Therefore, both old and new methods should be developed with appropriate rigor and should embrace open science principles, such as pre-registration and open data and materials, wherever possible. This probably means that most experiments will require larger samples and more work, such as measuring and controlling for demand characteristics, compared with what has typically been the case. This seems like a small price to pay to ensure that the field generates research of lasting value.

What if we succeed?

The final section of this paper ventures from the near future to the far horizon: imagine a time in which we have “solved consciousness,” whenever that may be. What would be the consequences of such an understanding? How would a complete explanation of the mechanisms of consciousness change the way we understand ourselves? One aspect seems clear—the more certainty we gain in our methods and in our theories, the more we will be able to translate findings from consciousness research into applications that address real-world problems. The impetus to apply consciousness science to address practical problems is increasing, given the accelerating progress in AI, developments in neuronal organoids (213, 214), and increasing societal attention to ethical concerns relating to nonhuman animal welfare and to the beginning and end of human life. For instance, a potential contribution of particular importance to society will be the development of a test (or tests) for consciousness, allowing one to determine, or at least provide an informed judgment about, which entities—infants, patients, fetuses, animals, lab-grown brain organoids, xenobots, AI—are conscious (32, 215, see also the section on “Clinical implications”).

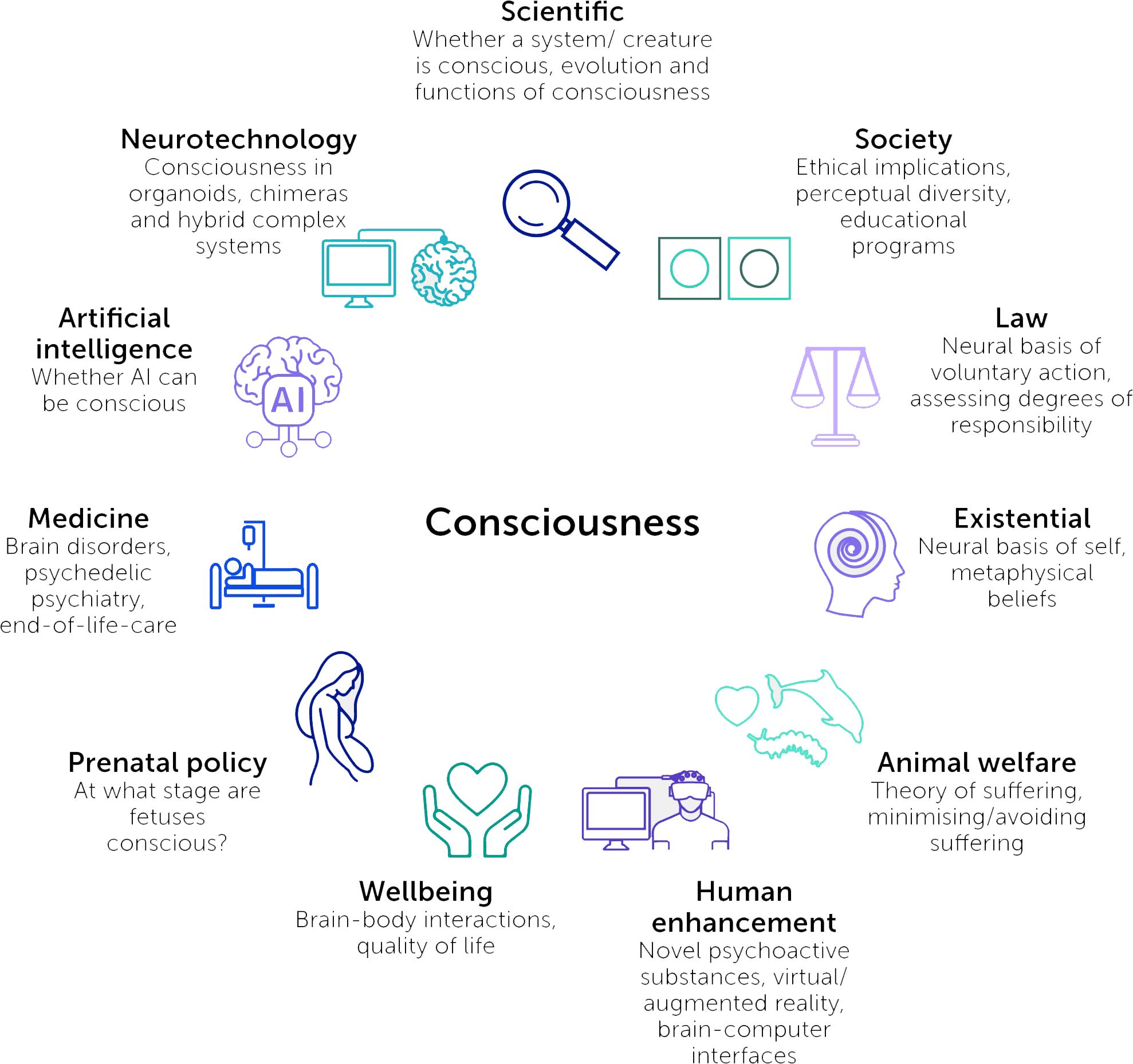

Akin to calls to consider what may ensue if we succeed in building artificial general intelligence (216), here we consider the potential implications of success in consciousness science in four areas: scientific, clinical, ethical, and societal (Figure 3). Importantly, many of these implications may apply already even for partial “solutions” as we, hopefully, incrementally approach a full scientific understanding of consciousness.

Scientific implications

Consciousness science remains somewhat marginal relative to the wider ecosystem of neuroscience and cognitive science (217). Every year, tens of thousands of neuroscientists attend the Society for Neuroscience Annual Meeting in the United States, but only a small fraction of their abstracts mention consciousness or subjective experience (in 2023, for instance, 92 abstracts included “consciousness”, compared with 4,297 that mentioned “behavior” and 7,237 that mentioned “brain”). Instead, the community’s approach to the brain remains mostly engineering-like: geared toward understanding how its component parts interact to produce behavior. This mechanistic approach will continue to accelerate in the next decades with the increased precision of tools such as optogenetics to probe and stimulate circuit function, affording unprecedented control over brain states and behavior. However, without progress in consciousness science, we may reach a point at which the brain, animal or human, is understood at a level similar to that of current AI systems. We might understand, at least in part, how it works, yet still not know how, or even whether, its mechanisms are linked to conscious experience. In contrast, success in consciousness science will provide a rich account of the biological basis of behavior and allow us to precisely determine when and whether certain brain states are conscious or underpin conscious experiences. In turn, it will provide neuroscience with the tools to control consciousness with the same precision as is currently being pursued for behavior.³

Interfacing mechanistic neuroscience with consciousness science will be aided by asking what function(s) consciousness serves. Why should it matter that certain mental states are conscious and others are not if both are able to guide behavior? Indeed, much research on behavioral control and decision-making has proceeded without heeding consciousness as a variable (85). The key concepts of feeling, reward, value, valence, and utility have been approached differently in different fields, and have seldom been connected with consciousness research (33, 69, 218, 219). Debate continues about which broader behavioral functions specifically depend on phenomenal experience. Meanwhile, there is renewed interest in approaching this crucial “why” question from evolutionary (220, 221), philosophical (222), and psychological perspectives (8).

Clinical implications

Success in consciousness science will usher in a new realm of interventions in modern medicine. Consciousness science already has a substantial practical impact on the clinical approach to neurological disorders of consciousness (223). Pioneering neuroimaging work over the past two decades has allowed communication with non-responsive patients previously considered to be in a vegetative state (224, 225). As discussed above, other approaches have applied indices of neural complexity, inspired by theories such as IIT, to distinguish between subgroups of patients with and without neural signatures that imply minimal levels of conscious experience (116, 226, 227). Thankfully, such cases of nonresponsive consciousness following coma are relatively rare in the population as a whole. In contrast, in an increasingly ageing population, the incidence of nonresponsive advanced dementias is likely to rise substantially. We know relatively little about what subjective experience is like in advanced dementia (228, 229), but progress in consciousness science will enable similar measures of consciousness level to be applied to such patients, to guide their care and to provide information to families about what their loved one’s experience may be like.

Consciousness science also has considerable potential to improve our understanding and management of mental health conditions. These conditions, including depression, anxiety, schizophrenia, and autism spectrum disorders, are leading drivers of the global disease burden (230). In the European Union alone, the healthcare and socioeconomic costs of mental health conditions are estimated to total €600 billion/year (equivalent to >4% of gross domestic product) (231). Major unmet needs remain in this field, with first-line pharmacological and psychotherapeutic interventions, often discovered through serendipity alone, having remained unchanged for decades and showing limited effect sizes overall (232). One concern is that drug discovery efforts focus on behavioral markers of a certain condition (e.g., anxiety or chronic pain) in model animals such as mice, which may not accurately represent the conscious experience of these conditions in humans (233–235). Indeed, in 2017, Thomas Insel, a former head of the United States National Institute of Mental Health, conceded that even US$20 billion of investment had failed to “move the needle” on mental health (236).

From this perspective, it is striking that emotion and affective states in general have been comparatively neglected in consciousness research (though see 25, 33, 209, 237–241), especially if phenomenal experience is indeed about it feeling like something to be a conscious creature. Experimental medicine in this space is hampered by the fact that we still know very little about the neural mechanisms that underpin debilitating changes in subjective experience (242). Another interesting approach is to harness unconscious processes to develop more effective treatments for phobias. In one proof-of-concept study, researchers were able to decode fear-related representations that occurred unconsciously and reward them using neurofeedback (243). This, in turn, was reported to reduce physiological fear responses to consciously perceived stimuli without the participant having to undergo (often aversive) conscious exposure therapy.

The next frontier is to create an effective bridge from mechanisms to new interventions in mental health by studying markers of subjective experience in both human and animal models. Both sides of this interaction are crucial, as psychiatry and neuroscience need consciousness science and vice versa. Dysfunctions of consciousness in mental health disorders are probably distinctive in humans, having idiosyncratic content—mice may not become consciously depressed, for instance. At the same time, however, many of the same computational primitives supporting the neural control of behavior are conserved across species, raising the potential for biomarkers of conscious experience to be validated in humans and back-translated into animal models of mental health conditions (239–241). More broadly, if we gain a precise understanding of the biological basis of consciousness, it should be possible to design circuit-level interventions, for instance, brain–computer interfaces, that directly target, remediate, and potentially enhance aspects of conscious experience.

Ethical implications

Consciousness in nonhuman animals

Consideration of consciousness is often intertwined with ethical and moral obligations toward (presumed) conscious organisms (244). In ancient traditions, such as the Dhārmic religions, moral obligations toward nonhuman animals often rested on conscious aspects of experience (245, 246). For example, damage caused to an animal or system would only be ethically problematic if it caused that animal or system to be consciously in pain. Today, the corresponding philosophical term is “sentientism”—the notion that moral status follows from the capacity for phenomenal experience. Note that some versions of sentientism restrict the claim to the capacity for valenced experience, for example, the ability to feel some form of “good” or “bad” such as “pleasure” or “pain” [for a discussion of the importance of a general theory of suffering, see Lee (247) and Metzinger (248)]. Most consciousness researchers now reject the notion that only humans have consciousness (163)—a notion that can be traced in different ways to Aristotle and Descartes (249). However, they disagree on the dividing line between conscious and non-conscious entities, or indeed on whether such a dividing line exists at all. IIT, for instance, implies that consciousness is probably a widespread feature of all living and potentially also some non-living complex systems (108). Conversely, global cognitive theories such as HOT suggest that a meta-representational neural system is a prerequisite for consciousness, one that might be limited in scope to those creatures who also have the capacity for, perhaps implicit, metacognition (75, 250, 251). As theoretical and experimental progress refines our credence in these various theories, we will be able to better characterize our confidence in which animals are conscious and what kinds of conscious experiences they may have (32). A consensual theory of consciousness would crystallize this dividing line, possibly affecting not only the use of model animals in neuroscience itself (244) but also societal perceptions of animals’ suffering and their use by humans as sources of food, clothing, and medical products (252–255).

The ethical implications of success in consciousness science are intertwined with the kind of scientific explanation of consciousness that would correspond to such success. The example of life is informative. Vitalists held that there was a firm dividing line between the living and the non-living and that this was associated with some additional property, élan vital, that supported life. Biomedical science dissolved the need for such an extra property and led to a move away from “living versus non-living” being a central dividing line of moral status; for example, this can be seen in the adoption of brain death by Western medicine as the more relevant criterion for moral obligations toward sustaining life support. Indeed, consciousness itself now takes a more central stage in discussions of moral obligations toward humans and other animals. In the future, however, advances in consciousness science may begin to dissolve or reformulate those dividing lines based on consciousness, leading them to be replaced by other as yet unknown considerations.

Law

The law presents another broad area of societal implications. Many legal frameworks distinguish between notions of mens rea (“guilty mind”) and actus reus (“guilty act”), in which mens rea picks out the conscious intent to engage in particular conduct. The neuroscience and biology of voluntary action, along with a deeper philosophical understanding of the concept of “free will”, can be perceived as undermining the foundational notion that conscious intent is under the individual’s control. Already, since the 1960s, brain injuries and diseases have been leveraged for legal defenses or post-hoc exoneration, as in the case of Charles Whitman, the “Texas Tower Sniper” found to have a large brain tumor impacting his amygdala (256). Today, the “my brain made me do it” defense is becoming increasingly popular, despite inherent conceptual difficulties (257). As we elucidate the neural basis of voluntary action (34, 258, 259) and the effects of unconscious processes on decision-making (260), it will become increasingly difficult to discern when moral and criminal responsibility should, if ever, apply (34, 261). These issues are not abstract. Whatever one’s philosophical position on free will may be, judgments are being passed every day on people whose brain development and operation will have been affected by factors outside their control.

Artificial consciousness

Success in consciousness science may also result in a detailed understanding of mechanisms that can, in principle, be recreated in an artificial system (40, 262, 263). In philosophy, the position that mental states—including conscious ones—can be instantiated, rather than merely simulated, in an artificial system with different structural and/or material properties is known as substrate independence (or, in more restrained versions, substrate flexibility). Substrate independence/flexibility is closely related to multiple realizability—the idea that similar mental states can be implemented in different ways, although not necessarily in different kinds of material (264). Both substrate independence/flexibility and multiple realizability are, in turn, related to functionalism—the idea that mental states depend on the functional organization of a system, which can include its internal causal structure, rather than on its material properties (265). Within the category of functionalism, the more specific notion of computational functionalism claims that computation provides a sufficient basis for consciousness (266), so that consciousness could be implemented in non-biological information processing devices such as artificial neural networks of the sort deployed in cutting-edge AI systems (262). However, even if some version of computational functionalism is true, which remains debated (40, 267), abstracting the biological information processing sufficient for consciousness away from the messy realities of sensory and motor systems may be difficult, if not impossible, leading any artificial consciousness to end up looking animal-like rather than existing disembodied in software (268, 269). Further, functionalism, whether computational or otherwise, may not be true in the end, in which case other factors may be necessary for consciousness, such as being “biological” or “alive”—a position broadly known as biological naturalism (40, 270).

If artificial consciousness were achieved, whether by design or inadvertently, it would of course bring about a huge shift in allowing consciousness to decouple from biological life, which would in turn herald major ethical challenges on at least a similar scale to those discussed in relation to animals. The ethical problems could even be more severe in some regards since we humans might not be able to recognize or have any relevant intuitions about artificial consciousness or its qualitative character. There may also be the potential to mass-produce artificial consciousness systems perhaps with the click of a mouse, leading to the possibility (even if very low probability) of introducing vast quantities of new suffering into the world, potentially of a form we could not recognize. These observations provide some reasons for why we should not deliberately pursue the goal of creating artificial consciousness (271). There are other reasons too. Artificially conscious systems may be more likely to have their own interests, as distinct from the “interests” endowed by human designers. This could exacerbate the problem of ensuring their behavior is guaranteed to be in line with human and broader planetary interests: this being the “value alignment” problem already prominent in discussions of AI ethics.

In the near future, it is more likely that artificial intelligence, in the form of machine learning, and consciousness will continue to decouple. Such decoupling is likely to be counterintuitive and affect what we can conceive. For instance, the philosopher John Searle wrote in 2015, “[...] I am convinced that consciousness matters enormously. Try to imagine me writing this book unconsciously, for example.” (272). Now, with the advent of large language models such as the GPT series, generative AI can write coherent and novel prose, and we can imagine how it could write a book unconsciously—though it might not yet be a very good one (273). Here, a different ethical question comes into focus. It is likely that artificial systems such as large language models will continue to improve in their mimicry of humans, leading a large sector of the population to adopt the “intentional stance” (274) toward such systems, attributing to them psychological properties such as beliefs, desires, and intentions, or perhaps even to perceive and believe these systems to be conscious. In these scenarios, people will intuitively assume that the systems are conscious, even if scientists and engineers protest otherwise (40, 275–277). Such misfirings of mindreading machinery may sometimes be benign, for instance when children believe their favorite Pixar character is real (278–280), but this will not always be true. Significant challenges arise when people invest emotional significance into their relationships with seemingly conscious agents, as in the case of a Belgian man who died by suicide after interacting with a chatbot (281).

More generally, the growth and societal penetrance of more powerful pseudo-conscious artefacts could have considerable societal impact (40, 282). People may be more open to psychological and behavioral manipulation if they believe the AI systems they are interacting with really feel and understand things. There may be calls to restructure our moral and legal systems around an intuition that an AI is conscious, even when it is not, thereby potentially diverting resources and moral attention away from humans and animals that are actually conscious. Alternatively, we may learn to treat AI systems as if they are not conscious, even though we cannot help feeling that they are [perhaps illusions of artificial consciousness will be cognitively impenetrable, in the same way that some visual illusions are (40)]. In situations like this, we risk brutalizing our minds, a danger long ago identified by Kant among others (283).

A key question running through all these issues is whether AI systems are more similar to humans in ways that turn out not to matter for consciousness (e.g., seducing our anthropic biases through linguistic ability) and less similar in ways that turn out to be critical (e.g., lacking the biological basis common to all known instances of consciousness, or not implementing the right kind of functional recurrency). A mature science of consciousness, guided by experiment and theory, will play a critical role in these debates.

Societal implications

An increasingly mechanistic understanding of consciousness is likely to reshape how humans see themselves and their place in the universe. We anticipate that this reshaping will be gradual, driven by the accumulating results of experimental science. The fields of evolution, genetics, and comparative cognition have eroded notions of mysterious human uniqueness in favor of explanations in terms of biological mechanisms. We envisage a continuation of this process as consciousness research continues to mature (though of course, a backlash is always possible, if elements of society feel threatened). This form of “success” that we envisage in consciousness science may lead the moral and ethical implications of consciousness to become more nuanced. In 1950, Alan Turing suggested that “[…] at the end of the 20th century the use of words and general educated opinion will have altered so much that one will be able to speak of machines thinking without expecting to be contradicted” (284). Perhaps a similar process will lead us to soon no longer be vexed by the question, “But is it conscious?”