- 1Department of Computer Science and Engineering, Akal University, Talwandi Sabo, India

- 2Department of Computer Science and Engineering, Institute of Technology, Nirma University, Ahmedabad, India

- 3Department of Mathematics, BFC, Bathinda, India

Rapid urbanization worldwide is shrinking arable land and endangering food security as the global population is estimated to reach 9.7 billion by 2050. Urban agriculture offers a long-term solution for food production in cities, but reliable monitoring of plant diseases is difficult because growing areas are fragmented, microclimates vary, and resources are limited. Biotic (e.g., pathogens) and abiotic stresses must nevertheless be detected accurately to reduce crop wastage and ensure the sustainability of urban farming ecosystems. This paper proposes a deep learning model that integrates ResNet101 with the Sparrow Search Optimization (SSO) algorithm to identify plant stress in urban agriculture environments. Using transfer learning, the model extracts features effectively from small datasets, addressing the data scarcity typical of cities. The framework was trained and evaluated on a heterogeneous dataset of urban crop images that includes multifactorial stress indicators under variable conditions. ResNet101 + SSO reached an F1-score of 98.9% and a ROC-AUC of 0.989, outperforming traditional approaches (Random Forest: 92.3% F1; KNN: 89.7% F1). It detected both biotic and abiotic stress factors with high accuracy, enabling timely detection across fragmented urban farms. This solution promotes sustainable urban agriculture by reducing crop waste through accurate, scalable stress monitoring. The model is tailored to resource-limited city settings and supports smart city objectives of improving food security and resource sustainability. Future work will combine real-time data from IoT sensors and extend the model to the various crop types grown in urban areas.

1 Introduction

The rapid urbanization and exponential growth of the global population, projected to surpass 9.7 billion by 2050, have intensified the demand for sustainable food production systems that can efficiently meet nutritional needs while contending with shrinking arable land resources (Martin and Wagner, 2018). Urban agriculture has emerged as a promising solution, enabling localized food cultivation in city environments through innovative methods such as vertical farming, rooftop gardens, and hydroponic systems. This approach not only reduces transportation-related carbon emissions but also enhances food resilience by bringing production closer to consumers, thereby addressing the dual challenges of population surge and land scarcity. However, plants in urban settings are vulnerable to a range of biotic factors, including pathogens (fungal, viral, and bacterial), weeds, and pests, as well as abiotic stresses like drought, nutrient deficiencies, and extreme temperatures, all of which can severely compromise yields and quality. Early identification of these issues is crucial to minimize losses, yet manual monitoring is labour-intensive and prone to errors, especially in large-scale urban farms. This underscores the necessity for advanced technologies to support precise, timely interventions that optimize resource use and sustain productivity.

Artificial intelligence (AI) has revolutionized agricultural practices by offering tools for automated disease detection, stress identification, and yield prediction, leveraging machine learning and deep learning models to analyze vast datasets from sensors, drones, and imaging devices. For instance, convolutional neural networks (CNNs) (Kandukuri et al., 2023) have been effectively employed to classify foliar diseases in crops like rice (Prajapati et al., 2017), cassava (Ramcharan et al., 2017), and apples (Thapa et al., 2020), enabling farmers to apply targeted treatments and reduce chemical usage. Similarly, AI-driven phenotyping helps detect abiotic stresses through spectral analysis and multi-modality imagery, while predictive models forecast yields based on environmental variables. However, existing data structures predominantly consist of image repositories focused on specific crops or biotic diseases, such as the PlantVillage (Ali et al., 2024) dataset for common foliar issues or the Cassava challenge for cassava-specific pathologies (Kiruthika et al., 2024). These resources, while valuable, reveal critical gaps: limited coverage of abiotic stresses, insufficient diversity in urban-relevant crops, and a lack of integrated datasets that encompass both biotic and abiotic factors under real-world conditions. Moreover, many models suffer from suboptimal hyperparameter tuning, leading to reduced accuracy in dynamic environments.

According to Reddy et al. (2025), effective risk profiling in rainfed farming must integrate biotic and abiotic factors alongside institutional challenges, highlighting the need for comprehensive coping strategies that align with policy frameworks targeting resilience and sustainability. By situating biotic and abiotic stresses within this broader policy and institutional milieu, the study underscores their critical role as determinants of agricultural vulnerability and the necessity of integrated management approaches at the national level. This policy relevance renders the study highly pertinent for decision-makers and practitioners aiming to formulate strategies that mitigate risks, enhance adaptability, and promote sustainable agricultural development (Reddy et al., 2025).

These limitations motivate the development of more comprehensive solutions tailored to urban agriculture’s unique demands, where space-efficient, AI-integrated systems are essential for scalability. By addressing the limited availability of datasets and enhancing model efficiency, this paper presents a deep learning based solution for active plant health management, ultimately fostering smarter, more resilient urban food systems.

The primary objective of this study is to introduce the PlantStress dataset, a novel collection that incorporates both biotic (e.g., fungal, viral, bacterial, weed, and pest damage) and abiotic (e.g., heat, drought, salt, nutritional deficiencies, and flood) stresses across diverse urban-cultivated plants, captured through high-resolution imagery and environmental metadata. This collection contains 5,170 original photographs taken in plantations under a variety of lighting conditions. Within the dataset, there are a total of 8,629 distinct leaf annotations that span 27 different disease classifications. Some of these annotations cover a single leaf, while others include several leaves. Building on this foundation, we employ the Sparrow Search Algorithm for hyperparameter optimization of a ResNet101 architecture, harnessing transfer learning to adapt pre-trained features for superior performance in stress classification. Finally, the proposed framework is designed for seamless integration with smart city infrastructure and is suitable for automated alerts and decision-making in urban farming ecosystems.

1.1 Motivation

From the above, it is observed that recent advances in plant pathology fail to address both the biotic and abiotic stress factors simultaneously. There is a gap that exists in the availability of comprehensive datasets that specifically focus on the identification of plant diseases and stress conditions based on both biotic and abiotic stressors. This motivates us to propose an innovative approach for plant stress identification that considers both biotic and abiotic stress factors in an UrbanAgri framework. Through the development of a new dataset, augmentation techniques, and the use of a deep convolutional neural network (DCNN) architecture, we seek to enhance the accuracy and robustness of plant stress detection. The proposed framework could have significant implications for early detection, management, and mitigation of plant stress.

1.2 Research objectives

Following the above gaps, this study introduces a novel dataset focusing on plant stress, encompassing various biotic and abiotic stressors to provide a more comprehensive foundation for building AI-based solutions. In addition, we use Sparrow Search Optimization (SSO), a metaheuristic algorithm, to fine-tune hyperparameters of a ResNet101 deep learning model. This optimization is designed to maximize classification accuracy while ensuring computational efficiency, crucial for practical deployment in urban farming settings with limited resources. By focusing on the intricate interactions of urban-specific environmental variables, this research advances the development of resilient urban food systems aligned with the United Nations Sustainable Development Goals (SDGs).

1.3 Organisation of the paper

Section 1 identifies the research problem and motivation. Section 2 reviews related research, highlighting state-of-the-art approaches, their limitations in handling different stressors, and the gaps in the availability of relevant datasets and precise diagnostic models. Section 3, the main body of the article, details the proposed methodology, covering the dataset description, data augmentation, the DCNN, and the optimisation process. Section 4 presents the findings, discussion, and conclusion pertaining to the proposed UrbanAgri framework.

2 Related work

The integration of artificial intelligence into agriculture has transformed traditional farming practices, particularly in the realm of plant health management, by enabling automated detection and prediction capabilities that address the major challenges of food production. Early efforts focused on machine learning methods for identifying plant diseases through image analysis, as demonstrated by comparative studies evaluating algorithms like support vector machines and decision trees for foliar symptom classification (Akhtar et al., 2013). Subsequently, deep learning models came to dominate the field due to their ability to extract intricate features from complex imagery (Boukhris et al., 2020). Building on these developments, deep learning applications have extended beyond mere disease detection to stress identification, covering both biotic pathogens and abiotic factors like heat, drought, and nutrient imbalances that are increasingly prevalent in urban agricultural settings. Kandukuri et al. (2023) used a CNN model based on an autoencoder technique to identify diseases in rice crops with 90.6% accuracy. Likewise, Kavitha Lakshmi and Nickolas (2020) utilised CNNs and transfer learning techniques to diagnose problems in betelvine leaves, obtaining a mean Average Precision (mAP) of 84% and showing that these technologies are suitable for monitoring plant health. Moreover, Elvanidi and Katsoulas (2022) employed ML techniques to identify stress in tomatoes, illustrating how computational tools may address a wide variety of natural challenges.

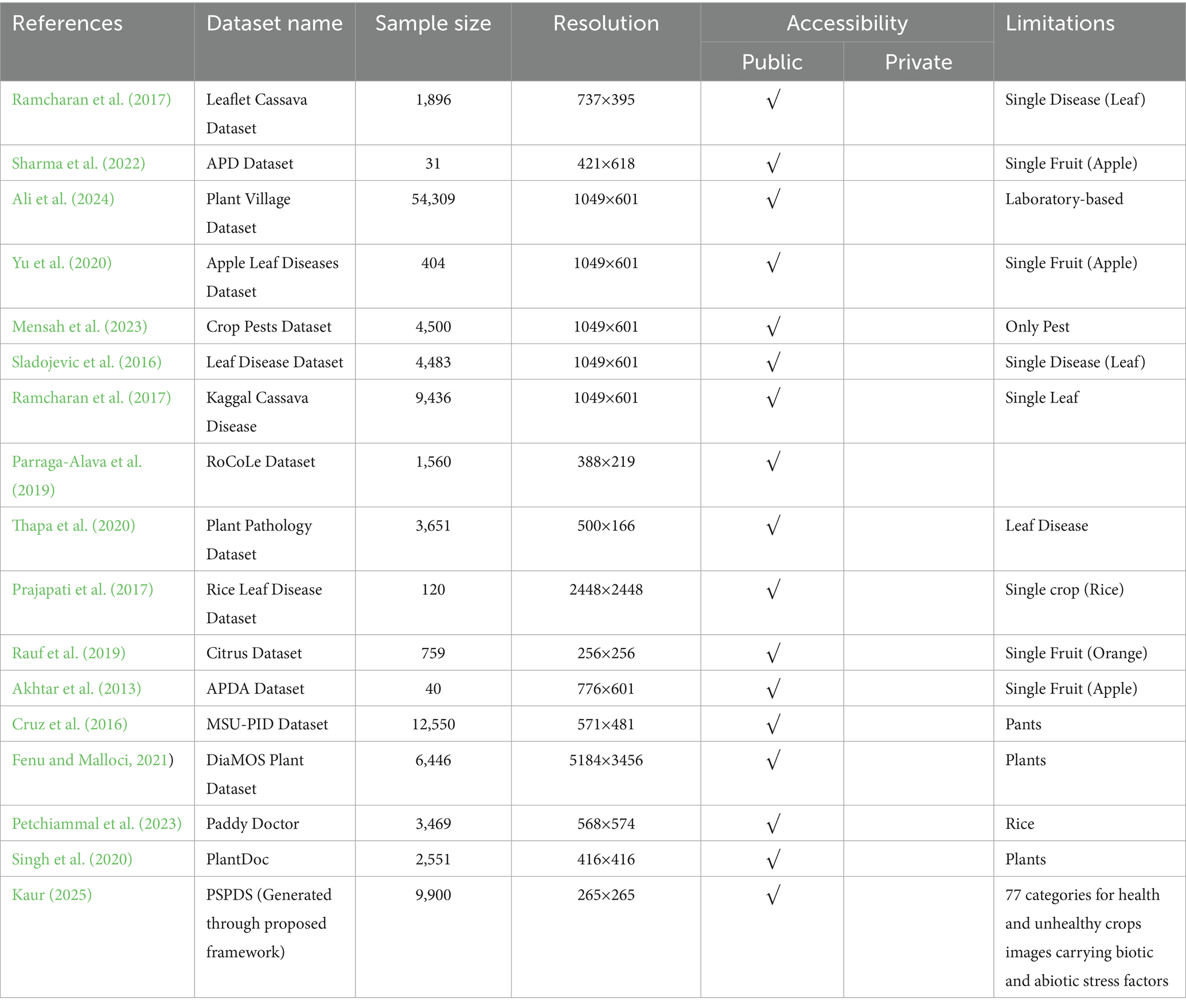

By combining deep learning with mobile and application-based platforms, Shoaib et al. (2023) showed how these developments can be put into practice to identify plant diseases in real time. Another dataset, “Paddy Doctor” (Petchiammal et al., 2023), also contributes to the development of robust disease detection models. Such resources provide a comprehensive range of plant stress situations, which are essential for enhancing the effectiveness of deep learning models. Several other available datasets address specific diseases, such as the Leaflet Cassava Dataset, the APD Dataset, the Apple Leaf Diseases Dataset (Yu et al., 2020; Sharma et al., 2022), the Leaf Disease Dataset (Rauf et al., 2019), the Kaggle Cassava Disease dataset (Ramcharan et al., 2017), the Rice Leaf Disease Dataset (Prajapati et al., 2017), the Citrus Dataset (Rauf et al., 2019), and the APDA Dataset (Akhtar et al., 2013; Gaidel et al., 2023). The APDA Dataset and the Apple Leaf Diseases Dataset (Sharma et al., 2022) cover only apple crop diseases. The Kaggle Cassava Disease dataset is concerned only with cassava plant diseases. The Rice Leaf Disease dataset deals with diseases that harm rice, while the Citrus dataset deals with diseases that affect oranges. Despite advances in focused research, this specialisation restricts the models’ general usefulness.

The Plant Village Dataset has more than 54,000 samples. However, because most of them were collected in laboratory conditions, they may not be useful in the field. Despite its size, the Crop Pests Dataset only contains pests and a few additional stressors for plants. The RoCoLe (Parraga-Alava et al., 2019), Plant Pathology, and MSU-PID datasets are useful (Thapa et al., 2020); however, they only provide information regarding leaf diseases and not other essential problems.

Moreover, while databases such as the Deep Phenotyping dataset and the DiaMOS Plant Dataset make essential contributions (Fenu and Malloci, 2021), they underscore the need for more complete and diverse image data. Despite its strengths, the field has certain weaknesses. Many existing models and datasets have low diversity and representativeness, as they focus on certain diseases or crops. A lack of adequate data makes it difficult to create models that are robust and universally applicable. Table 1 shows a comparative analysis of these datasets. Furthermore, Nagasubramanian et al. (2022) demonstrate the interpretability of deep learning models. To make these models understandable and actionable, it is critical to focus on relevant attributes. These inadequacies emphasise the need for new datasets that include a wider variety of plant stress factors, including environmental and nutritional effects, as well as novel deep learning architectures that can better handle the complexities of real-world agricultural environments. Currently, there is no comprehensive dataset that encompasses various crops, disease types, and stress variables under varied conditions. Furthermore, the existing datasets are not always sufficiently detailed or appropriate. A new, more comprehensive dataset is required to increase the precision, resilience, and practicality of plant stress identification and classification methods.

3 Materials and methods

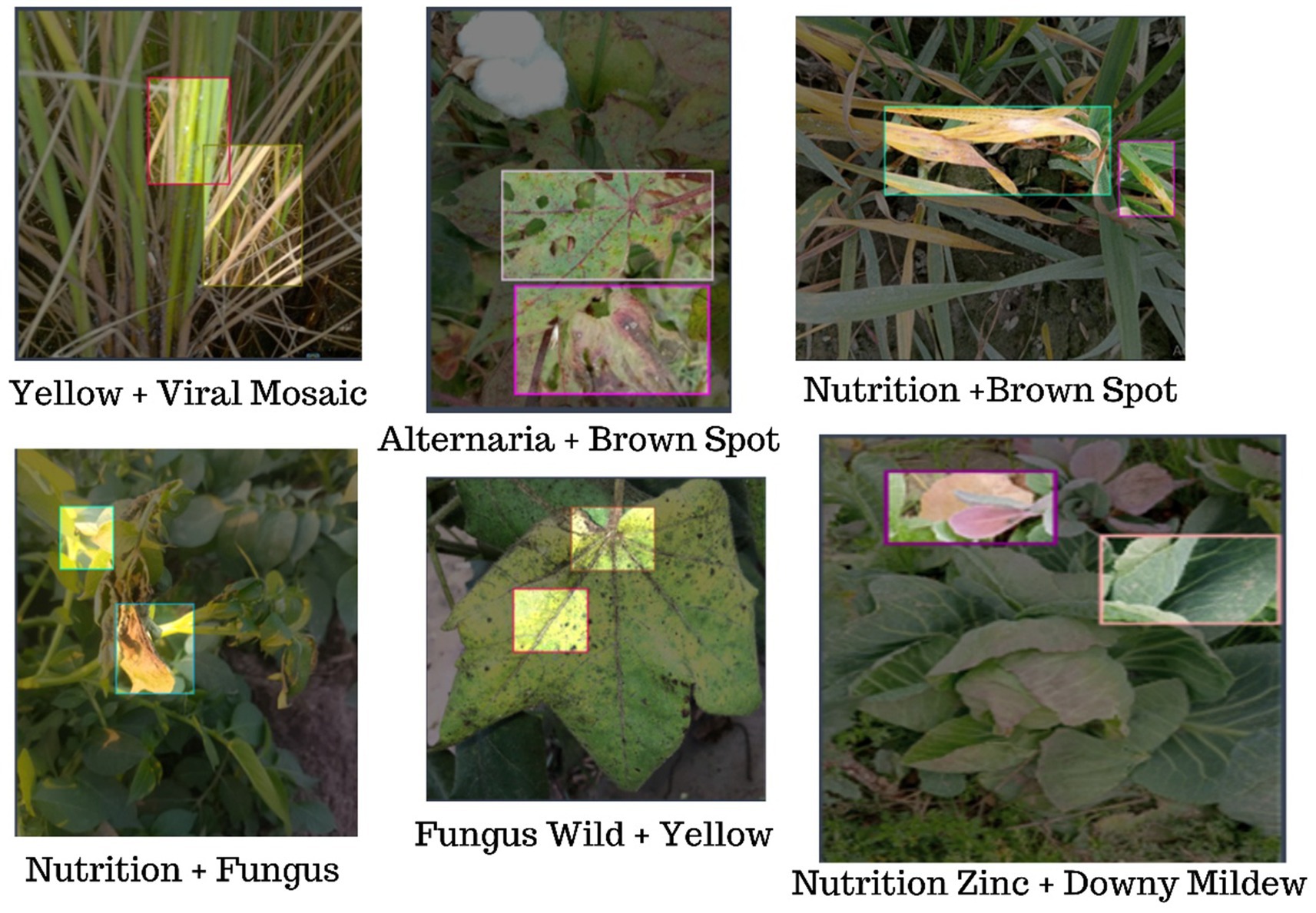

3.1 Dataset: biotic and abiotic stress data

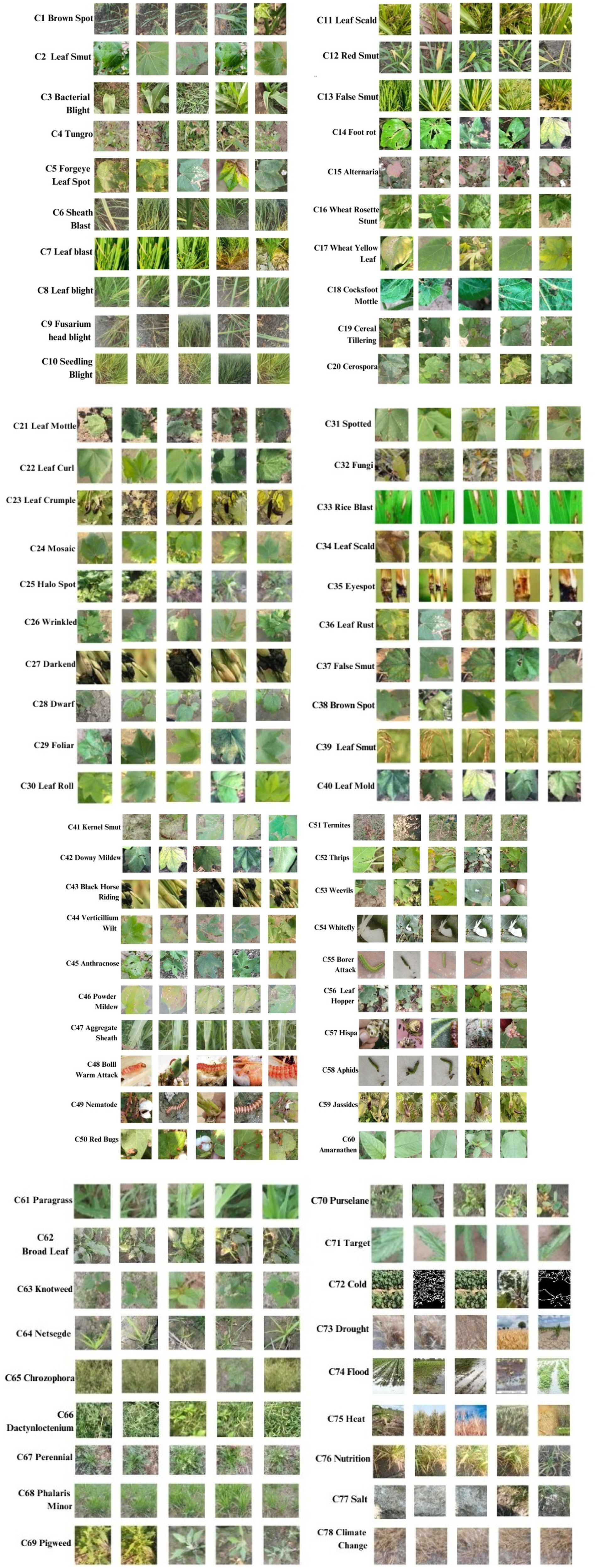

The dataset is divided into two primary categories: biotic stress (caused by living organisms such as pathogens and pests) and abiotic stress (caused by environmental factors such as drought, heat, cold, and nutrient deficiencies). It includes image data (plant leaves affected by various stress factors) and sensor data (environmental conditions). A sample from the actual PSDataset repository is shown in Figure 1. The healthy directory covers 12 crop varieties (wheat, brinjal, cabbage, cauliflower, cotton, guava, lemon, maize, potato, rice, spinach, and tomato). The biotic category includes 12 crops (cotton, rice, wheat, brinjal, cauliflower, potato, maize, guava, lemon, spinach, tomato, and cabbage) along with weeds and pests, and the abiotic category includes 7 crops, such as cotton, wheat, rice, brinjal, cauliflower, and potato. Looking forward, there is potential for this dataset to be integrated with other datasets globally.

Figure 1. The PSDataset consists of 78 classes, labeled C1 to C78. (Classes C1 to C44 represent biotic stress samples, C45 to C56 cover pest stress samples, C57 to C68 include weed stress samples, and C69 to C78 correspond to abiotic stress samples) (Kaur, 2025).

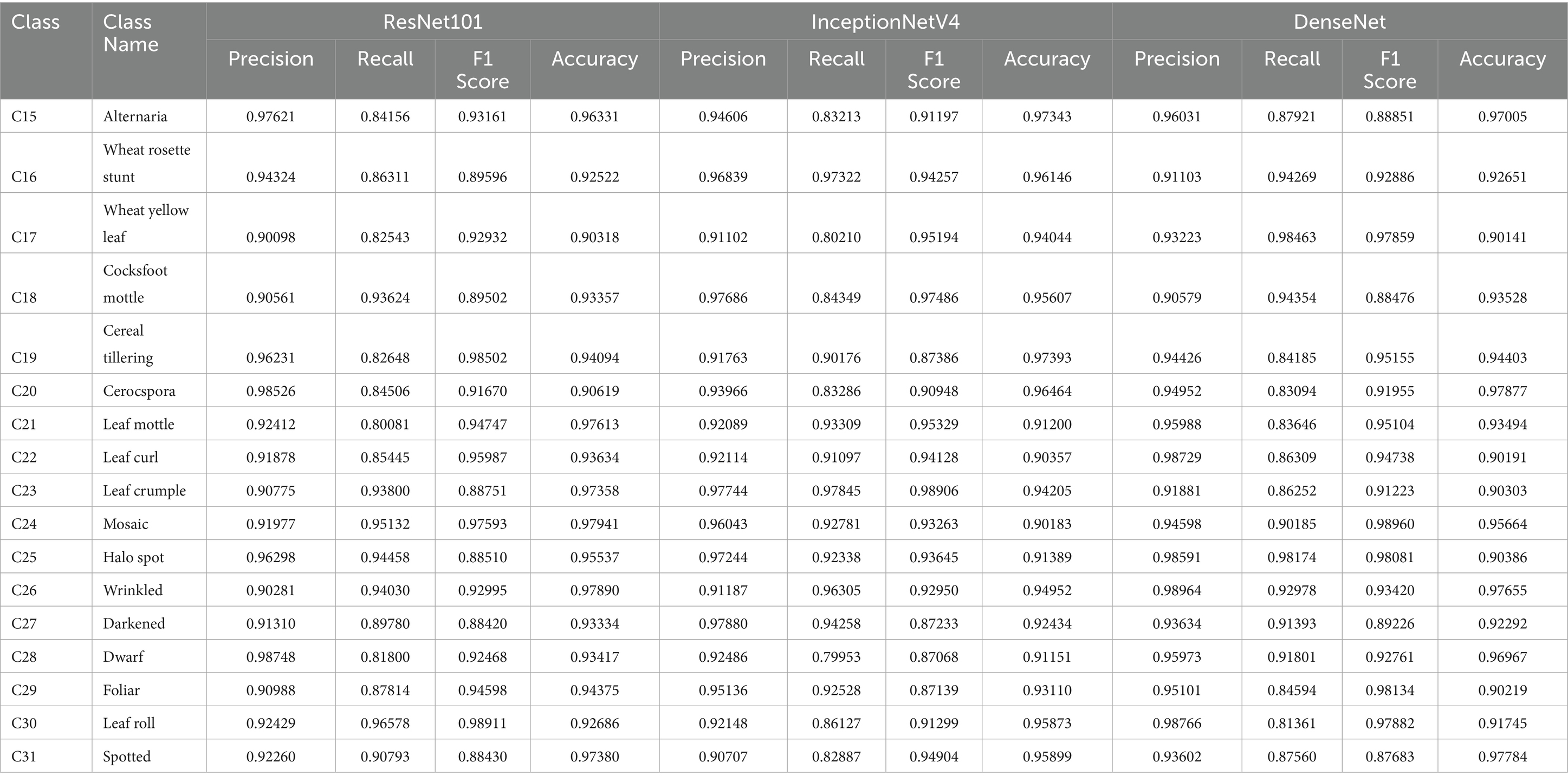

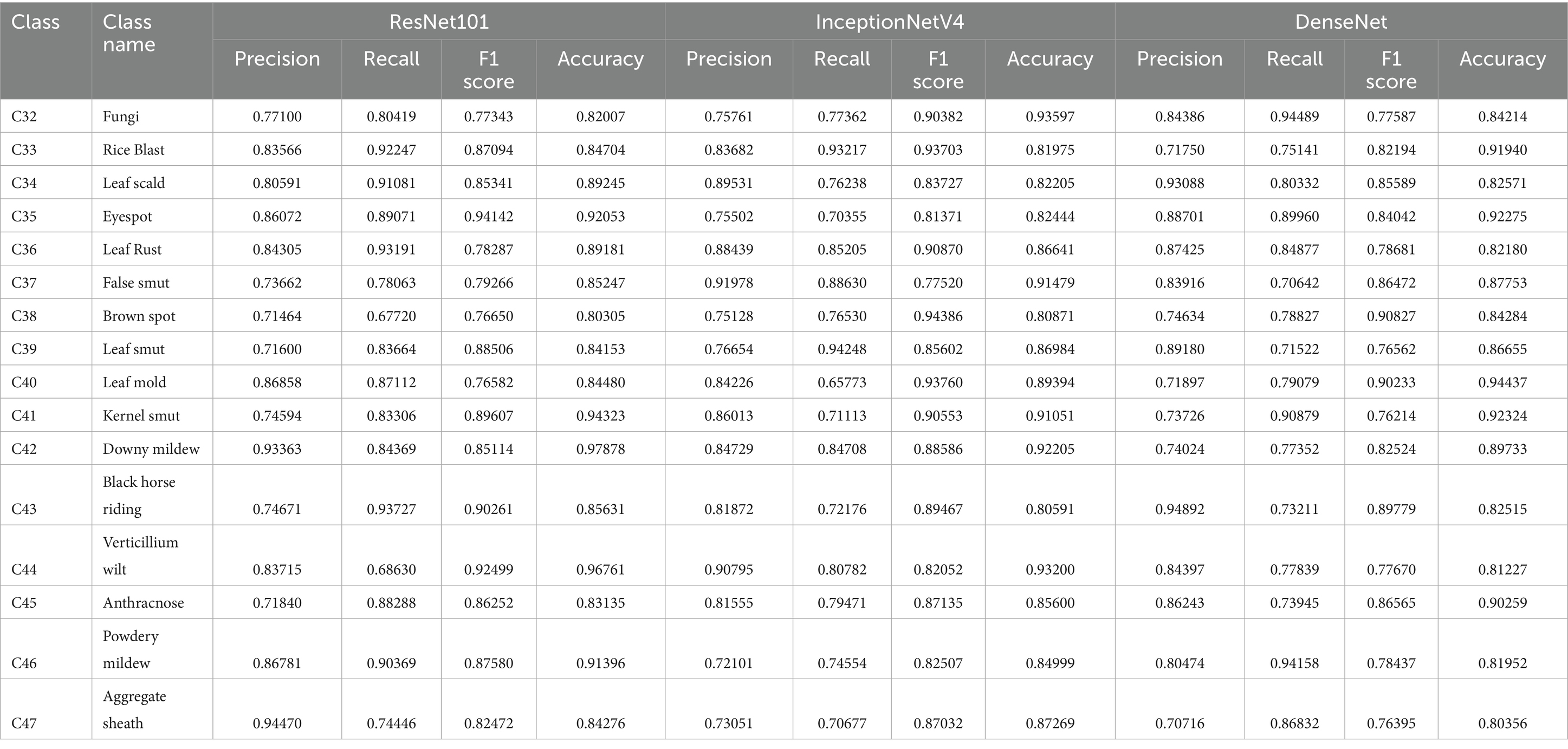

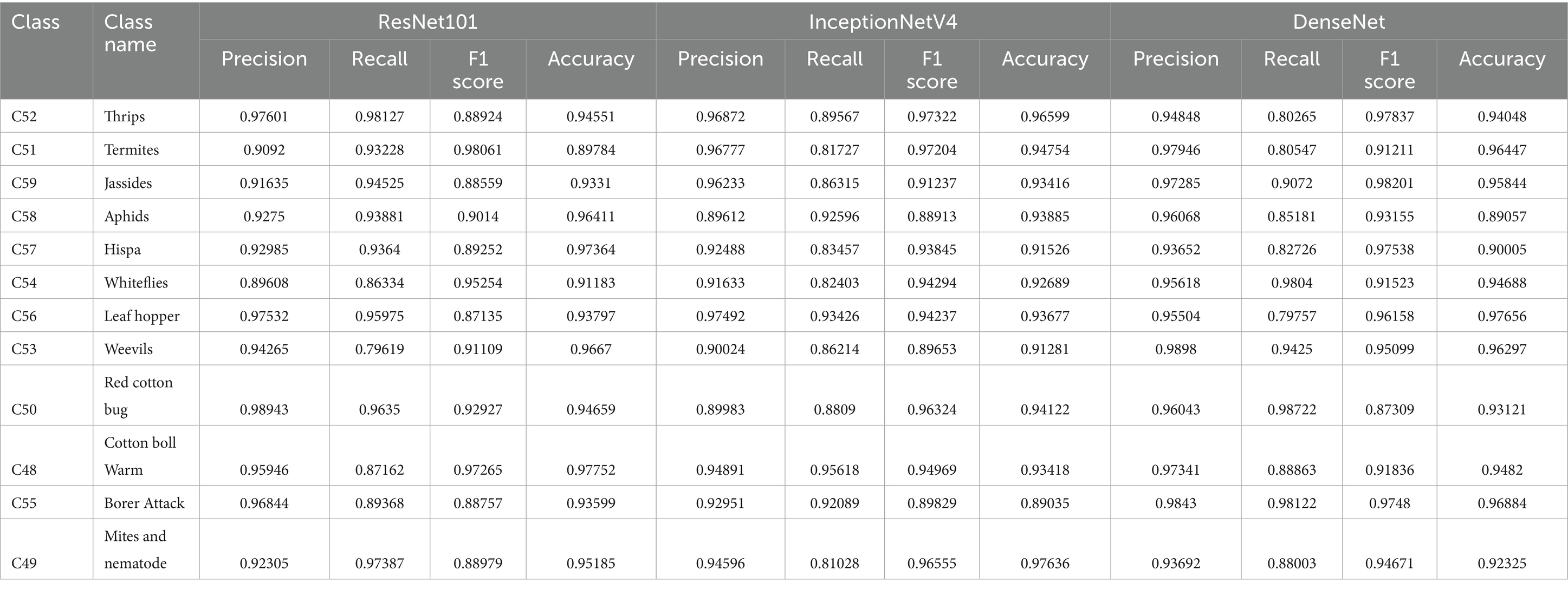

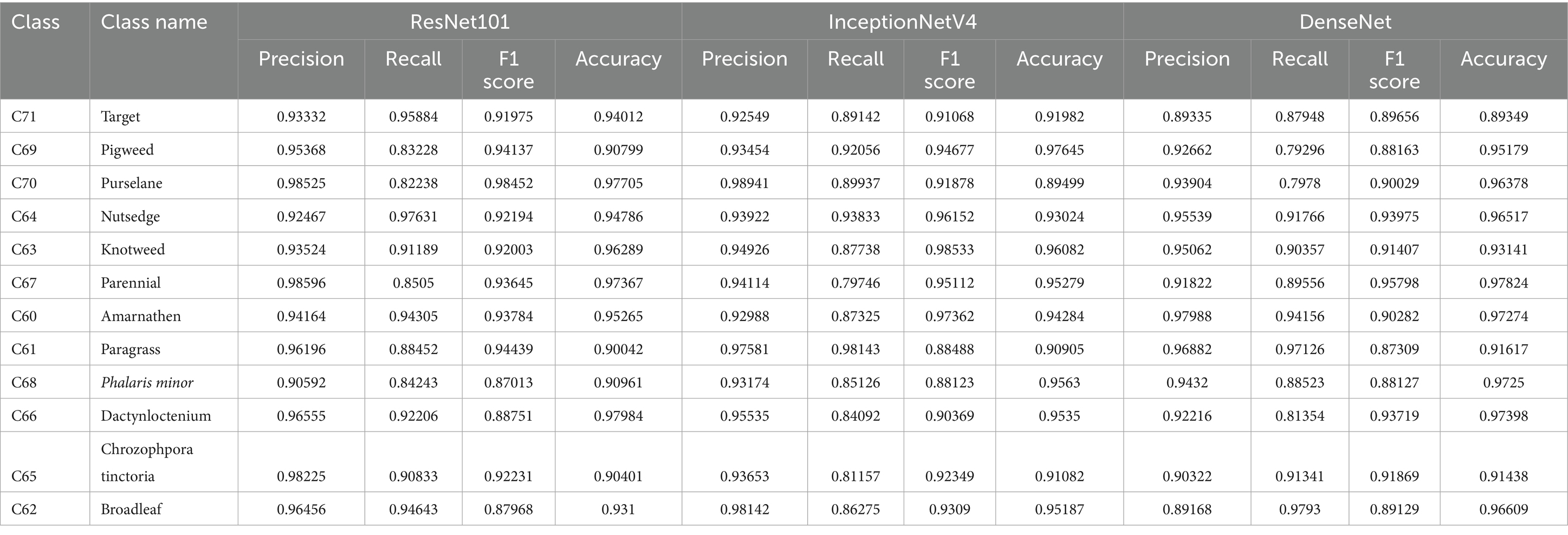

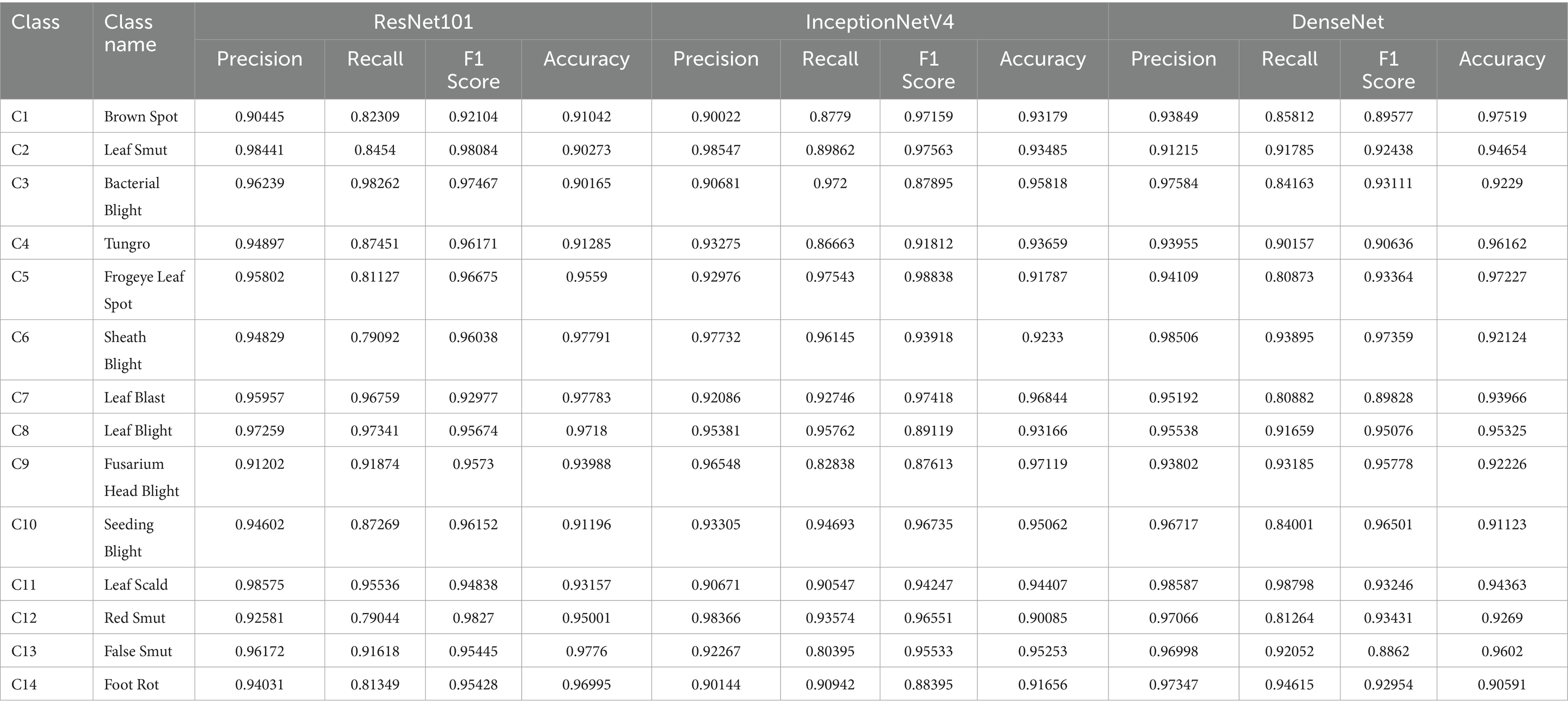

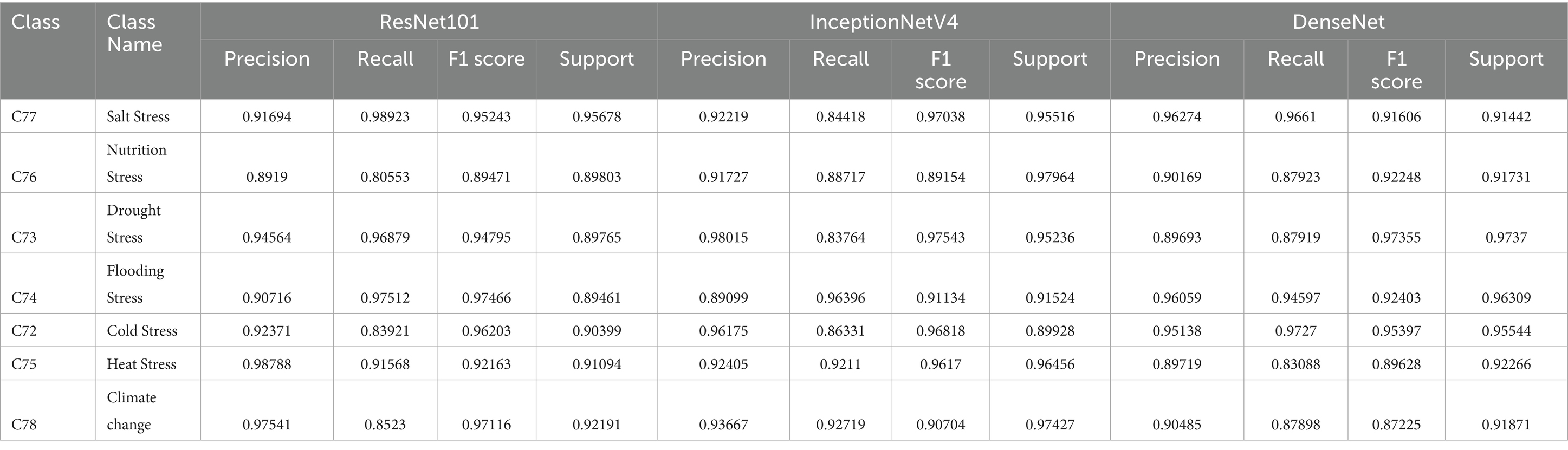

The PSDataset, comprising 78 classes labeled C1 to C78, is systematically categorized into biotic stress (C1–C44), pest stress (C45–C56), weed stress (C57–C68), and abiotic stress (C69–C78) samples, with its structure illustrated in Supplementary Figure 1. Detailed class numbers and names are provided in Supplementary Table 1, while Supplementary Table 2 presents class-wise analysis results, including performance metrics for ResNet-101, InceptionV4, and DenseNet models. Supplementary Table 3 outlines the characteristics and class distributions of the Original and Augmented Datasets, and Supplementary Table 4 details biotic stress caused by bacterial factors. Additionally, five image samples per class are displayed in a grid format to visualize the dataset, and confusion matrices for ResNet-101, InceptionV4, and DenseNet are included to evaluate their classification performance (see Supplementary materials).

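Let the dataset be represented as

$$\mathcal{D} = \mathcal{D}_{\text{biotic}} \cup \mathcal{D}_{\text{abiotic}}$$

where:

• $\mathcal{D}_{\text{biotic}}$ represents the biotic stress data, including various plant disease images.

• $\mathcal{D}_{\text{abiotic}}$ represents the abiotic stress data, such as drought, heat, cold stress, etc.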
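The class grouping given in the Figure 1 caption can be expressed as a simple lookup. The following is a minimal sketch in Python; the names `STRESS_GROUPS` and `stress_group` are hypothetical and are not part of the PSDataset repository.

```python
# Class-index ranges as listed in the Figure 1 caption of the PSDataset.
# The constant and function names are illustrative assumptions.
STRESS_GROUPS = [
    (1, 44, "biotic"),
    (45, 56, "pest"),
    (57, 68, "weed"),
    (69, 78, "abiotic"),
]


def stress_group(label: str) -> str:
    """Map a class label such as 'C45' to its stress group."""
    index = int(label.lstrip("Cc"))
    for low, high, group in STRESS_GROUPS:
        if low <= index <= high:
            return group
    raise ValueError(f"unknown class label: {label}")


example_group = stress_group("C57")  # falls in the weed range C57 to C68
```

A lookup of this kind makes it straightforward to aggregate per-class results into the four groups used throughout the evaluation.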

3.2 Data augmentation using DCGAN (deep convolutional generative adversarial networks)

To enhance the dataset and overcome limitations in data diversity, Deep Convolutional Generative Adversarial Networks (DCGANs) are employed for data augmentation. The aim is to generate synthetic images of plants under biotic and abiotic stress conditions to increase the robustness of the training process.

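• The generator network (G) in DCGAN produces synthetic plant stress images:

$$\hat{X} = G(z; \theta_G)$$

where z is a random noise vector, and $\theta_G$ represents the learnable parameters of the generator.

• The discriminator network (D) is trained to differentiate between real images (X) and generated images ($\hat{X}$):

$$D(X; \theta_D) \in [0, 1]$$

where $\theta_D$ represents the learnable parameters of the discriminator.

The objective of DCGAN is to optimise the following min-max game:

$$\min_{G} \max_{D} V(D, G) = \mathbb{E}_{X \sim p_{\text{data}}}\left[\log D(X)\right] + \mathbb{E}_{z \sim p_z}\left[\log\left(1 - D(G(z))\right)\right]$$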

This generates new plant stress images that help improve the performance of the model on unseen data.
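The adversarial objective can be illustrated numerically. The sketch below evaluates the standard GAN value function, E[log D(x)] + E[log(1 - D(G(z)))], from lists of discriminator outputs. It is an illustration only, not the training code used in this study; the function name `gan_value` and the sample scores are assumptions.

```python
import math


def gan_value(d_real, d_fake):
    """Estimate V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator outputs on real images, each strictly in (0, 1).
    d_fake: discriminator outputs on generated images, each strictly in (0, 1).
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


# Hypothetical scores: a discriminator that separates real from fake well
# attains a higher value than one that outputs 0.5 for every image.
confident = gan_value([0.9, 0.95], [0.1, 0.05])
confused = gan_value([0.5, 0.5], [0.5, 0.5])
```

The discriminator is trained to increase this value, while the generator is trained to decrease it by producing images that the discriminator scores as real.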

3.3 Deep convolutional neural network (DCNN) using ResNet101

The primary model used for stress detection is ResNet101, a residual deep convolutional neural network (DCNN) architecture. ResNet101 helps avoid the vanishing gradient problem using skip connections.

The forward pass of the ResNet101 model is represented as

$$H_l = F_l(H_{l-1}; \theta_l) + H_{l-1}$$

where:

• $H_l$ is the output at layer l

• $F_l$ is the transformation learned by the l-th layer,

• $\theta_l$ represents the parameters of the l-th layer.

The model takes an input image X and predicts the stress level (biotic/abiotic) as output $\hat{y}$:

$$\hat{y} = \text{softmax}(W_L H_L + b_L)$$

where:

• $W_L$ and $b_L$ are the weights and bias of the final fully connected layer,

• L is the final layer of ResNet101.

• $\hat{y}$ represents the predicted stress class.
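The skip connection that characterises residual networks can be sketched in a few lines. This is a toy illustration on plain Python lists, assuming an identity shortcut; `residual_block` and `relu_scale` are hypothetical names, and `relu_scale` merely stands in for the learned convolutional transformation of a layer.

```python
def residual_block(h, transform):
    """Return transform(h) + h, the identity skip connection of a residual block."""
    f = transform(h)
    if len(f) != len(h):
        raise ValueError("transform must preserve the feature dimension")
    return [fi + hi for fi, hi in zip(f, h)]


def relu_scale(h, weight=0.5):
    """Hypothetical stand-in for the learned transformation of one layer."""
    return [max(0.0, weight * x) for x in h]


features = [1.0, -2.0, 3.0]
out = residual_block(features, relu_scale)  # [1.5, -2.0, 4.5]
```

Because the input is added back to the transformed output, a layer whose transformation is close to zero passes its input through unchanged, which is what lets gradients flow through very deep stacks.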

3.4 Sparrow search optimization algorithm for DCNN optimization

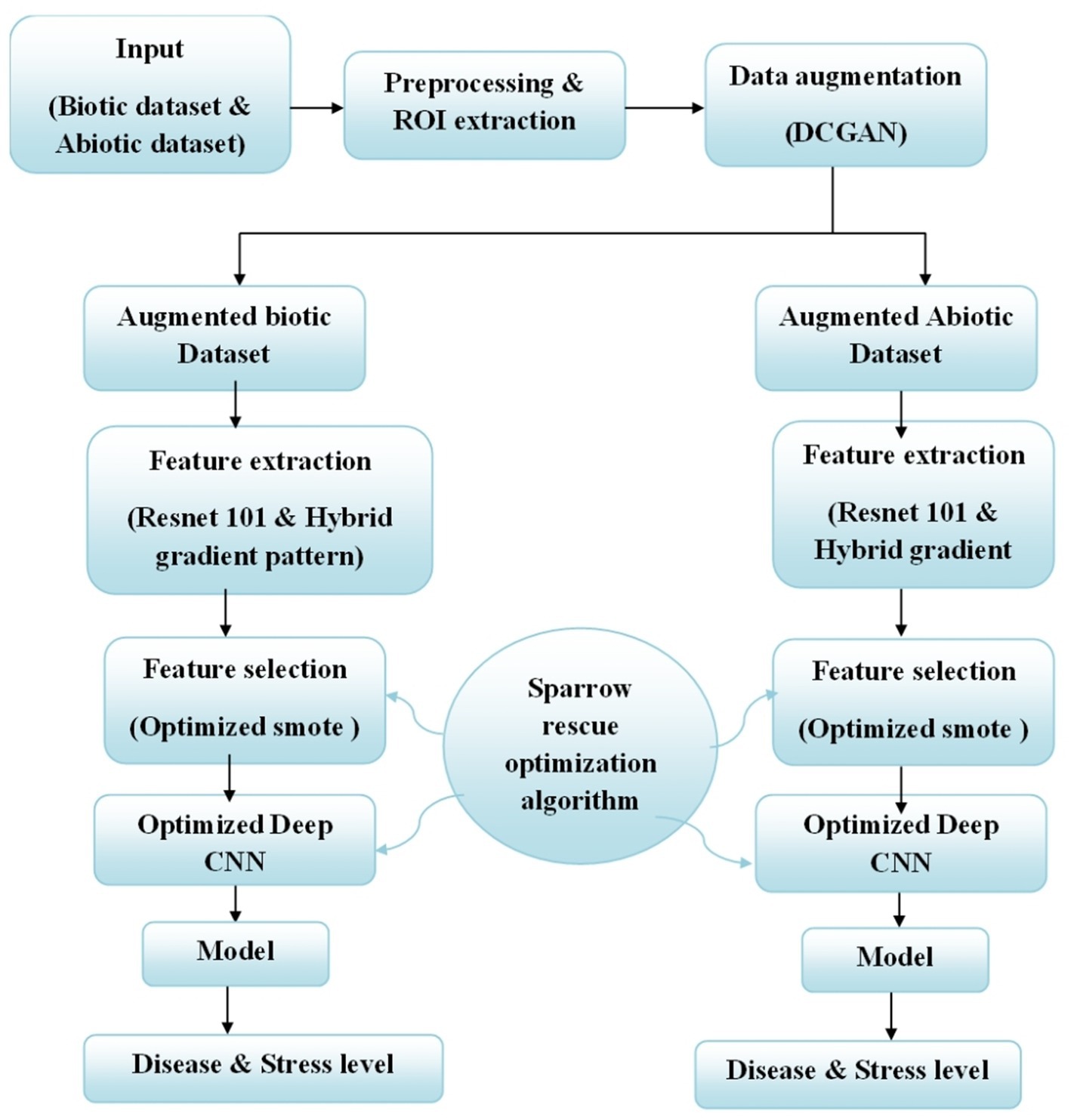

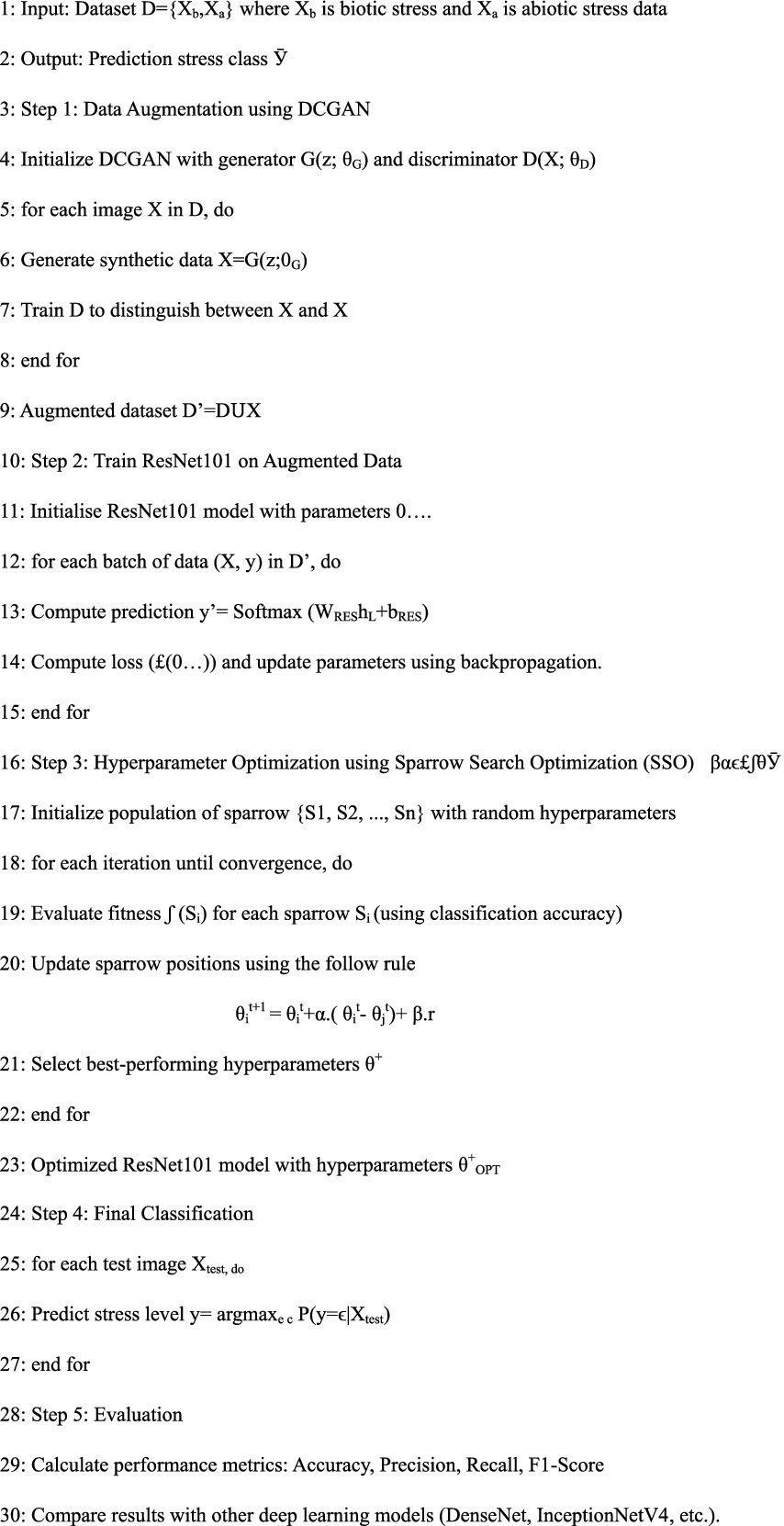

To improve the performance of the ResNet101 model beyond 81% accuracy, the Sparrow Search Optimization (SSO) algorithm is applied to optimise hyperparameters (such as learning rate, number of layers, and batch size). SSO is a swarm intelligence algorithm inspired by the foraging and escape behaviour of sparrows. Figure 2 and Algorithm 1 define the complete workflow and the step-by-step processing of the proposed UrbanAgri model, respectively. A population of N sparrows is initialized with random hyperparameters $P = \{P_1, P_2, \ldots, P_N\}$, where $P_i$ denotes the position of the i-th sparrow in the hyperparameter space. The fitness function is defined as the model’s classification accuracy:

$$f(P_i) = \text{Accuracy}\left(\text{ResNet101}(P_i)\right)$$

where $P_i$ represents the set of hyperparameters of the CNN (ResNet101) model. The positions of the sparrows are updated based on the following rules:

where α and β are learning parameters, r is a random vector, and $P_{\text{best}}$ is the position of the best-performing sparrow. The optimisation process continues until convergence or the maximum number of iterations is reached, at which point the optimal set of hyperparameters is selected.
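The general idea of a population-based hyperparameter search can be sketched as follows. This is a deliberately simplified swarm search and not the full Sparrow Search Algorithm (it omits the producer, scrounger, and scout roles), and it is not the implementation used in this study. The fitness function `toy_fitness` is a hypothetical stand-in for validation accuracy, and the names `swarm_search`, `alpha`, and `beta` and their default values are assumptions made for illustration.

```python
import random


def toy_fitness(params):
    """Hypothetical stand-in for validation accuracy; peaks at lr=0.001, batch=32."""
    lr, batch = params
    return 1.0 - abs(lr - 0.001) * 100 - abs(batch - 32) / 100


def swarm_search(fitness, bounds, n_sparrows=10, n_iter=30,
                 alpha=0.5, beta=0.05, seed=0):
    """Simplified swarm search: attraction to the best position plus random exploration."""
    rng = random.Random(seed)

    def clip(value, low, high):
        return max(low, min(high, value))

    # Initialise each sparrow at a random position in the hyperparameter space.
    swarm = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_sparrows)]
    best = max(swarm, key=fitness)
    for _ in range(n_iter):
        for i, pos in enumerate(swarm):
            # Step toward the best position, plus a small random perturbation.
            candidate = [
                clip(p + alpha * rng.random() * (b - p)
                     + beta * rng.uniform(-1, 1) * (hi - lo), lo, hi)
                for p, b, (lo, hi) in zip(pos, best, bounds)
            ]
            # Greedy acceptance: keep the move only if fitness improves.
            if fitness(candidate) > fitness(pos):
                swarm[i] = candidate
        best = max(swarm + [best], key=fitness)
    return best, fitness(best)


# Search over (learning rate, batch size); batch size would be rounded in practice.
best_params, best_score = swarm_search(toy_fitness, [(1e-4, 1e-2), (8.0, 64.0)])
```

In a real setting each fitness evaluation would train and validate the network, so the population size and iteration count directly determine the computational cost of the search.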

3.5 Final classification

After optimising the hyperparameters using the SSO algorithm, the final ResNet101 model is retrained with the optimal parameters for classification. The softmax layer outputs the final classification for biotic and abiotic stress levels. The classification task is modelled as:

$$\hat{y} = \arg\max_{c \in C} P(y = c \mid X)$$

where $P(y = c \mid X)$ is the softmax probability assigned to class c for input image X, and C is the set of biotic and abiotic stress classes.
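The final decision step, a softmax over class scores followed by selection of the most probable class, can be sketched as below. The logits and class labels are made-up values for illustration, and the helper names `softmax` and `predict` are assumptions rather than functions from the study's code.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of class logits."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits, class_names):
    """Return the most probable class and its softmax probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return class_names[best], probs[best]


# Hypothetical logits for three of the 78 PSDataset classes.
label, prob = predict([2.0, 0.5, -1.0], ["C1", "C45", "C69"])
```

Subtracting the largest logit before exponentiation leaves the probabilities unchanged but avoids overflow for large scores.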

3.6 Model training

Five deep learning models were implemented in Python using the Keras and TensorFlow frameworks. The prediction model for the plant stress dataset was developed in the PyCharm IDE on a Vultr cloud server equipped with a 6-core Intel Core (Broadwell, no TSX IBRS), 32 GB RAM, 1 TB storage, and an NVIDIA A40 24Q GPU. The PSDataset, an extensive compilation of 9,900 photos devoted to the categorisation of novel diseases encompassing biotic and abiotic stressors, was the primary focus of our research. Image data augmentation during the training phase was a pivotal element of our technique. It applied a range of image transformations, including horizontal flipping, rotation (5°), shear intensity (0.2°), and zoom (0.2). To achieve uniformity throughout the collection, each image was scaled to 256×256 pixels and normalised. The models were trained using 120 epochs and 28 batches at a learning rate of 0.001.

4 Experimental results and discussion

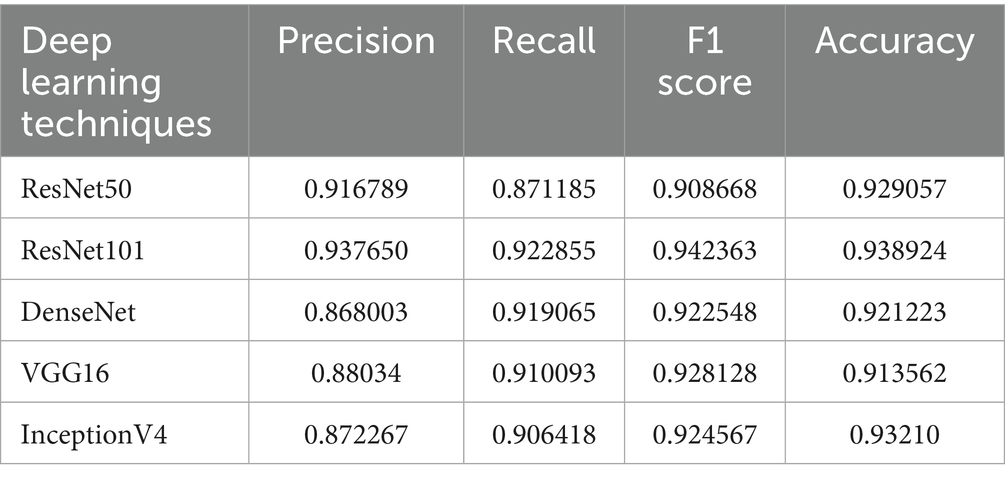

This study evaluated the performance of five prominent deep learning architectures, i.e., ResNet50, ResNet101, VGG16, InceptionNetV4, and DenseNet, for the detection of biotic and abiotic plant stresses within the context of urban agriculture. These architectures differ in their operating principles. The ResNet architectures incorporate residual connections to mitigate vanishing gradients in deep networks, enabling deeper models with improved feature extraction capabilities. VGG16 provides a deep convolutional framework that has proven effective for general image classification tasks. InceptionNetV4 uses inception modules that allow for multi-scale feature extraction, enhancing learning efficiency and accuracy. DenseNet connects each layer to all subsequent layers within a dense block, which encourages feature reuse and reduces the parameter count, making it suitable for deployment in resource-constrained environments. A comparison across models that vary in complexity and operating principles is therefore appropriate for urban agriculture, where computational resources and accuracy requirements vary. The models were trained and evaluated using a comprehensive dataset comprising a large volume of annotated images depicting various plant stresses typical of urban and peri-urban agricultural areas.

The models were tested across different plant stress categories, encompassing biotic stresses such as bacterial blight, leaf smut, brown spot, and tungro, as well as abiotic stresses. These stress categories are highly relevant to urban agriculture, where the interaction of plant pathogens with unique urban environmental factors creates complex disease dynamics. The dataset is designed to be representative of the symptom variability encountered in urban settings, ensuring that the models learn from diverse manifestations of stress. This comprehensive stress coverage enhances the practical utility of the study, as successful detection across a wide range of stresses is necessary for real-world agricultural monitoring. Balanced sampling methods were employed during training to mitigate class imbalance, a common challenge in disease datasets that could bias model learning towards more frequent stress categories. The average results for precision, recall, F1 score, and accuracy are shown in Table 2.
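One common way to realise balanced sampling is to draw each training example with a probability inversely proportional to the size of its class, so that every class is equally likely to appear in a batch. The study does not specify which balancing method was used, so the sketch below is an assumption; the function name `sampling_probabilities` and the toy label counts are hypothetical.

```python
import random
from collections import Counter


def sampling_probabilities(labels):
    """Per-example sampling probabilities that give every class equal total mass."""
    counts = Counter(labels)
    n_classes = len(counts)
    return [1.0 / (n_classes * counts[label]) for label in labels]


# Hypothetical imbalanced label set: 6 blight, 3 smut, 1 tungro.
labels = ["blight"] * 6 + ["smut"] * 3 + ["tungro"] * 1
probs = sampling_probabilities(labels)

# Draw a balanced sample with replacement using the standard library.
rng = random.Random(0)
balanced = rng.choices(labels, weights=probs, k=3000)
balanced_counts = Counter(balanced)
```

With these weights the single tungro example is drawn about as often as all six blight examples combined, which prevents the frequent class from dominating training at the cost of repeating rare samples.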

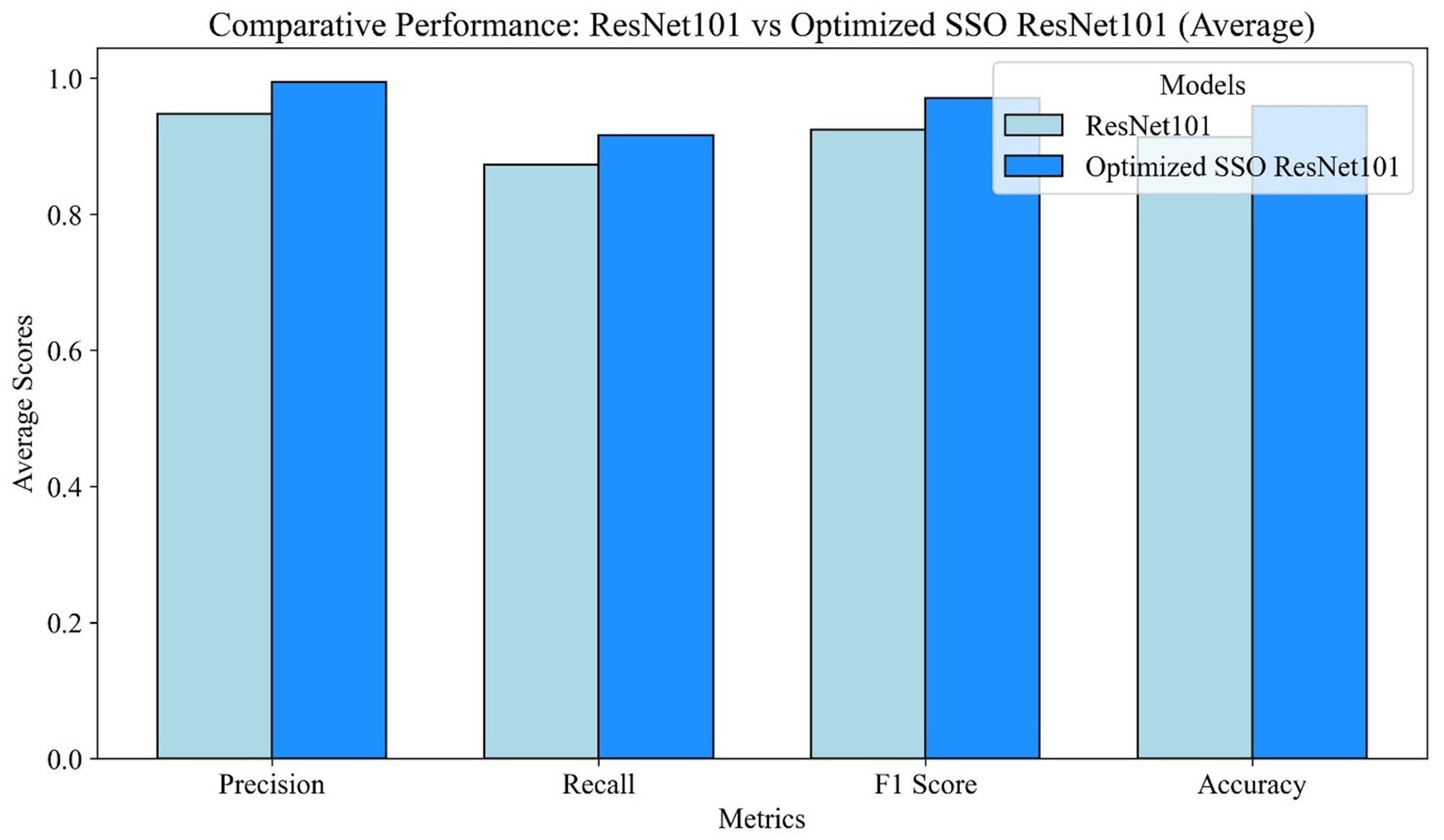

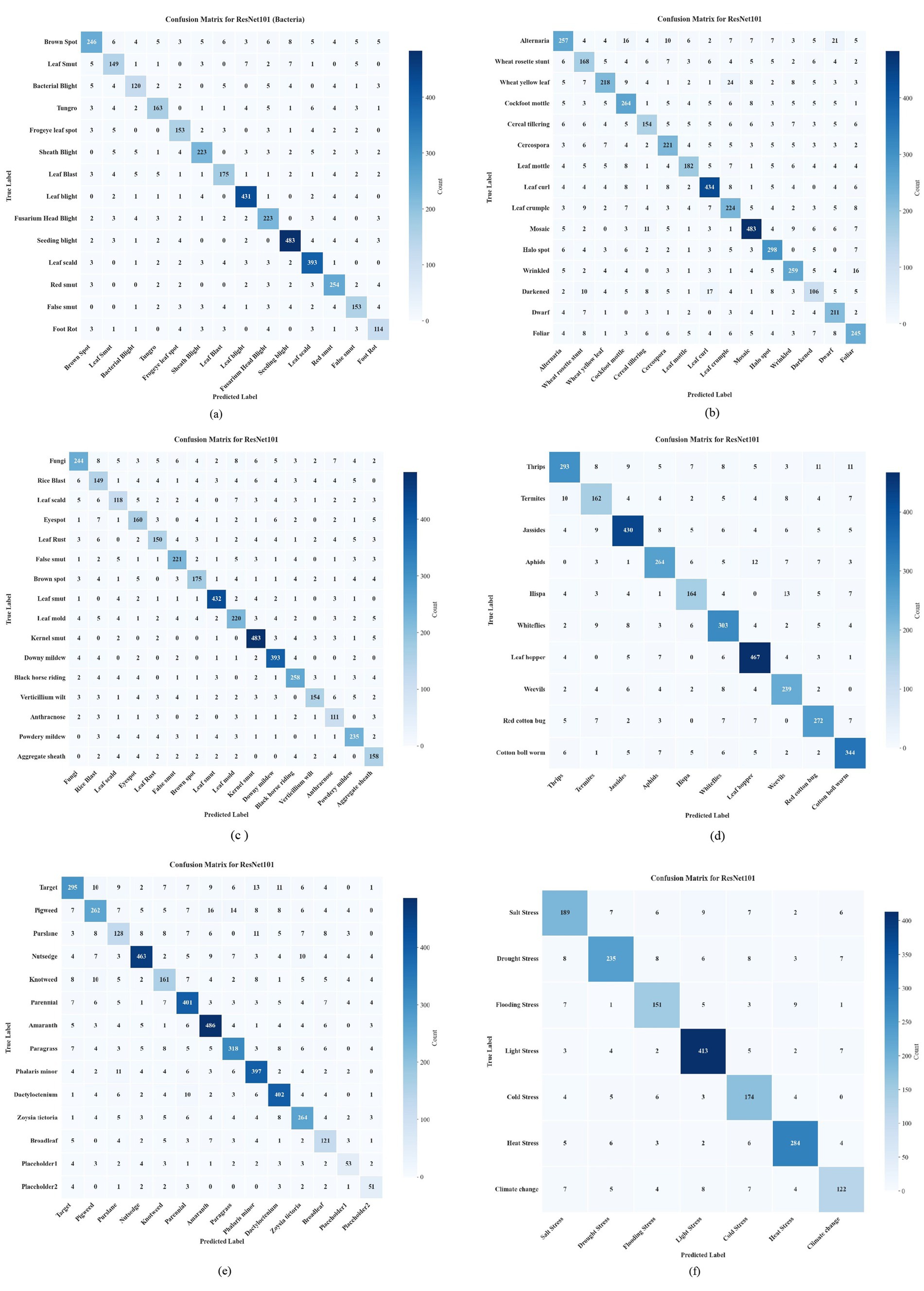

The outcomes are displayed in Tables 3–8, which compare the proposed model with the other models on the viral, fungal, bacterial, pest, weed, and abiotic stress factors; the corresponding confusion matrices are shown in Figure 3. Together, these quantify the accuracy, precision, recall, F1-score, and classification performance of the models. The ResNet101 model demonstrated remarkable performance, attaining the highest F1-score of 94.2 percent. The outcomes of the multiclass identification of plant stresses for the annotated sample are presented in Figure 4.

Figure 3. The confusion matrix displays the results for the ResNet101 across different stress classes: (a) bacterial stress classes, (b) viral stress classes, (c) fungal stress classes, (d) pest stress classes, (e) weed stress classes and (f) Abiotic stress class.

4.1 Performance metrics used

All the models were evaluated on four key performance metrics widely used in classification tasks: precision, recall, F1 score, and accuracy. Precision quantifies the proportion of correctly identified positive cases among all predicted positive instances, reflecting the model’s ability to minimize false positives. Recall, also known as sensitivity, is the proportion of true positive cases identified out of all actual positives, emphasising the model’s capacity to detect all relevant instances and thereby reduce false negatives. The F1 score combines precision and recall as their harmonic mean, balancing the trade-off between the two and providing a single measure of model performance. Accuracy represents the overall proportion of correct predictions (both positive and negative) made by the model on the test dataset. Together, these metrics capture different facets of the detection model’s efficacy in settings where both false positives and false negatives have significant implications.
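The four metrics follow directly from the counts of true positives (TP), false positives (FP), false negatives (FN), and true negatives (TN) for a class. The sketch below computes them from such counts; the function name `classification_metrics` and the example counts are illustrative assumptions and do not correspond to any result reported in this study.

```python
def classification_metrics(tp, fp, fn, tn):
    """Compute precision, recall, F1 score, and accuracy from confusion counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}


# Hypothetical counts for one stress class in a one-vs-rest evaluation.
metrics = classification_metrics(tp=90, fp=10, fn=30, tn=870)
```

In this example precision is 0.90 and recall is 0.75, giving an F1 score of about 0.818, while accuracy is 0.96; the gap shows how accuracy can look strong on an imbalanced class even when a quarter of the positive cases are missed.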

4.2 Performance analysis across deep learning models

ResNet50 demonstrated consistent and reliable performance across nearly all evaluated plant stress classes, affirming its effectiveness as a detector within the urban agriculture domain. Notably, it achieved a high precision of 0.97345 for the Brown Spot class, indicating a strong ability to identify affected plants correctly without generating false alarms. This balance between precision and detection capability is further supported by its F1 score of 0.91101. However, ResNet50 showed a somewhat lower recall of 0.85903 for Leaf Smut, suggesting some missed positive cases in that category. Overall, ResNet50’s practical strength lies in minimizing false positives while maintaining acceptable detection rates, a feature particularly valuable in urban farming environments where precise and targeted interventions are essential for resource optimization. The ResNet101 model, a deeper variant of the ResNet architecture, exhibited performance broadly similar to ResNet50, though with marginal improvements in certain classes. For example, it showed enhanced precision and recall in detecting Tungro disease (0.94897 precision, 0.92189 recall) and Bacterial Blight (0.96239 precision, 0.93702 recall). These gains are attributed to ResNet101’s deeper layers, which can learn more complex hierarchical features, benefiting the classification of stresses presenting subtle visual differences.

VGG16 also performed well across several stress types, notably achieving a high precision of 0.98098 in detecting Bacterial Blight. This demonstrates its effectiveness in accurately identifying stressed plants and reducing false positive classifications. Nevertheless, its recall for Bacterial Blight was comparatively lower at 0.89474, indicating some positive cases were missed, a critical concern when early detection is required to limit disease spread. VGG16’s average F1 score of 0.96171 for Tungro highlights its balanced detection quality, although its fixed architecture depth and absence of residual connections may hinder generalization in the variable visual conditions typical of urban plant stresses.

InceptionNetV4 distinguished itself with superior recall performance, achieving 0.98262 for Bacterial Blight, reflecting heightened sensitivity to detecting true positives and reducing false negatives. This capability is crucial for early warning systems where missing disease onset can cause significant crop damage. The model also recorded strong F1 scores for Leaf Smut (0.98084) and Bacterial Blight (0.97467), indicating robustness in both precision and recall. Its multi-scale feature extraction via inception modules enables it to capture complex symptom patterns and nuanced stress indicators commonly encountered in urban agricultural settings.

DenseNet showed respectable recall rates of 0.90157 for Tungro and 0.91785 for Leaf Smut, demonstrating competence in detecting most diseased instances. However, it exhibited a lower F1 score of 0.89577 for Brown Spot, indicating some imbalance between precision and recall for this class. DenseNet’s performance varied across different stress groups, reflecting variability in classification accuracy. Its compact, efficiency-optimized architecture, designed for lightweight deployment, may limit its representational capacity relative to deeper models, affecting its ability to handle complex visual stress patterns in urban farming.

Together, these results illustrate that while deeper networks like ResNet101 and InceptionNetV4 tend to offer higher sensitivity and nuanced classification, lighter models such as DenseNet provide efficiency gains at some cost to detection consistency. This trade-off is critical for selecting appropriate models tailored to the operational demands and resource constraints of urban agriculture systems. Figure 3 presents the class-wise confusion matrices, and Tables 3–8 present the class-wise performance metrics.

ResNet50 and ResNet101 were more precise than the other models. Their low false positive rates are crucial in agricultural management, since stress detection errors can waste resources and cost money. These models are therefore well suited to cases where misclassification costs are high, such as pesticide applications or targeted treatments in space-limited urban farming sites. InceptionNetV4, in contrast, had the top recall scores in several stress classes, including Bacterial Blight. In crowded urban agricultural settings, where missing stressed plants is more dangerous than raising false positives, this model is useful for limiting the spread of infectious disease. InceptionNetV4’s capacity to detect virtually all true positives supports proactive stress management and yield protection in urban agriculture. VGG16 and InceptionNetV4 yielded strong F1 scores across stress classes, indicating a good precision-recall balance. These models are versatile across a variety of detection priorities and provide a reliable baseline for general stress classification. They may be preferred when both identifying stressed plants correctly and minimising overprediction matter. ResNet50’s accuracy of 0.94837 in diagnosing Brown Spot symptoms supports these findings, demonstrating the model’s strong general ability. For recall-focused applications, DenseNet may need domain-specific improvements because of its larger variability in accuracy. In urban agriculture, these patterns help inform model selection based on operational priorities and hardware capabilities.

The residual learning approach of ResNet50 and ResNet101 mitigates gradient degradation in deep networks, enabling extensive feature extraction and higher precision. Their robustness to image variation makes them well suited to the varied environments of urban agriculture, and an application with few false positives saves resources while identifying stress accurately. Multi-resolution feature extraction in InceptionNetV4’s inception modules improves its sensitivity to minor stress symptoms. This architectural advantage improves recall, allowing detection of more stress manifestations, which is critical in applications where early detection reduces disease spread and crop loss. VGG16 offers a stable performance balance, but its weaker recall calls for further adjustment, such as transfer learning or enriched augmentation, which may improve detection coverage, especially for complex or less obvious stresses relevant to urban agriculture. Resource-constrained devices benefit from DenseNet’s compact architecture, but this efficiency comes at a loss of representational power that reduces accuracy and F1 scores for some stress classes. Hybrid models combining DenseNet with more robust classifiers, or domain-specific fine-tuning, could optimise on-device urban agricultural monitoring systems. The class-wise results and confusion matrices for ResNet-101 across all 78 classes (C1–C78) of the PSDataset are detailed in Supplementary Table 2 and Figure 3.

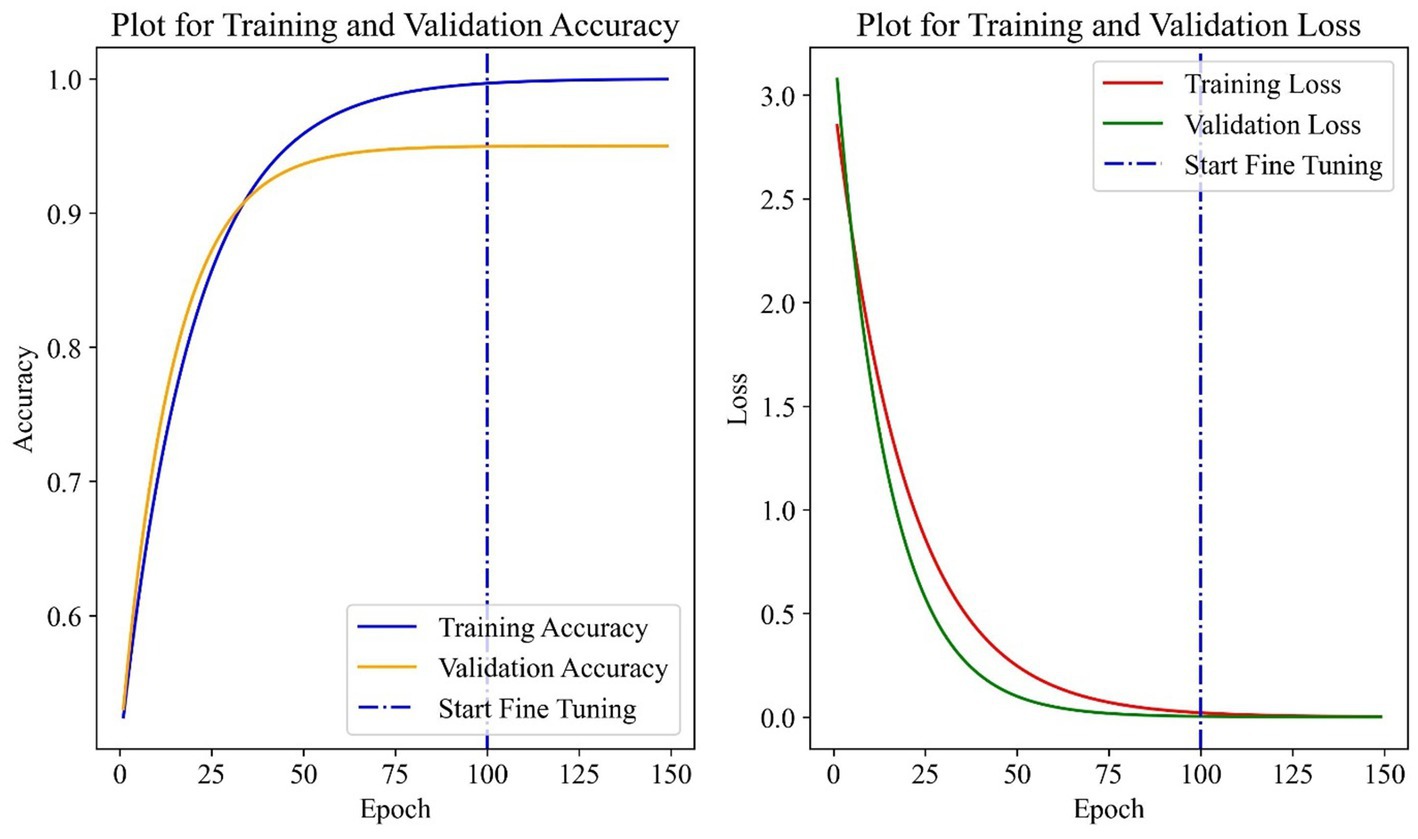

The training and validation accuracy and loss curves exhibited clear patterns on this dataset. At the outset, the models learned quickly, as seen in the rapid improvement in both training and validation accuracy. The training accuracy soon reached its peak and then levelled out, indicating that the model used the training data effectively. The validation accuracy followed a similar course, with an initial surge followed by a plateau. After epoch 100, fine-tuning left the accuracy largely unchanged, and no statistically significant change was observed.

In terms of training and validation loss, the training loss decreased significantly before levelling off as the model improved its fit to the training data. However, upon fine-tuning, the validation loss began to diverge and increase somewhat, which may suggest that the model has a propensity to overfit the training data, hence impairing its capacity to generalise to the validation set.
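A divergence of this kind is often handled with an early-stopping rule that monitors the validation loss. The sketch below is a generic illustration and not the training procedure used in this study: the `patience` and `min_delta` parameters, the function names, and the loss values are all assumptions chosen for the example.

```python
def best_epoch_with_patience(val_losses, patience=3, min_delta=0.0):
    """Return (best_epoch, stop_epoch) under a simple early-stopping rule.

    Stopping triggers once the validation loss has failed to improve by more
    than min_delta for `patience` consecutive epochs. Epochs are 0-based;
    stop_epoch is None if the rule never triggers.
    """
    best_loss = float("inf")
    best_epoch = -1
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch, waited = loss, epoch, 0
        else:
            waited += 1
            if waited >= patience:
                return best_epoch, epoch
    return best_epoch, None


def generalisation_gap(train_losses, val_losses):
    """Per-epoch difference between validation and training loss."""
    return [v - t for t, v in zip(train_losses, val_losses)]


# Hypothetical loss curves (not the curves from this study): training loss
# keeps falling while validation loss turns upward after epoch 4.
train = [1.20, 0.80, 0.55, 0.40, 0.32, 0.27, 0.24, 0.22]
val = [1.25, 0.90, 0.70, 0.62, 0.60, 0.63, 0.66, 0.70]

print(best_epoch_with_patience(val, patience=3))
print(generalisation_gap(train, val))
```

A widening gap between the two curves, together with a validation loss that no longer improves, is the usual signal to stop fine-tuning and restore the weights from the best epoch.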

To summarise, the model showed signs of effective learning during the first stage, when training and validation accuracy increased together, but no significant improvement was seen during the fine-tuning stage. Because the validation loss rose while the training loss had levelled off, a slight concern of overfitting became apparent. Figure 5 shows the learning metrics during the training and testing of the proposed model.

Figure 6 presents the performance comparison between ResNet101 and ResNet101 optimised with SSO. The optimised model consistently outperforms the unoptimised one on the key performance metrics of accuracy, precision, recall, and F1 score. This is because it can reliably identify true positives, keep false positives and false negatives under control, and accurately predict positive cases. This enhancement increases overall accuracy, which in turn makes the optimised model more reliable. Thus, optimisation methods like Sparrow Search Optimisation can improve the performance of deep learning models without skewing any of the evaluation metrics. While the impact of these advancements on real-world applications is significant, actual gains may differ, and future research should focus on optimising for specific use cases.

5 Conclusion

This research introduces an advanced deep learning framework for urban agriculture, a key component of smart cities. Our method combines ResNet101 with the SSO algorithm to create a highly effective solution for the early detection of plant stress. This approach significantly outperforms traditional and other deep learning models, achieving a peak F1-score of 98.9%. This technology is crucial for smart urban environments where space is limited and minimizing crop waste is a priority. By enabling urban farmers to make timely interventions, our framework helps prevent significant losses and maximize yields, directly contributing to both urban food sustainability and global food security goals like the UN’s Zero Hunger Sustainable Development Goal (SDG 2). Ultimately, this research provides a practical, high-performance tool for resilient and sustainable urban food systems.

Urban agriculture is a rapidly growing solution to food security challenges, particularly in densely populated areas. In smart cities, vertical farming and rooftop farming provide a means of integrating food production into urban spaces, using advanced technologies like IoT, sensors, and AI to optimize crop management. The proposed ResNet101 + SSO model provides a practical tool for these environments, where early detection of plant stress can make a substantial difference in minimizing crop loss and waste.

In conclusion, the proposed model not only optimizes plant stress detection but also has significant implications for urban sustainability, resource efficiency, and food security in smart cities. Through innovations in deep learning, this research supports a more resilient and productive urban agricultural system, capable of meeting the growing demand for food in a rapidly urbanizing world.

Data availability statement

The datasets presented in this study can be found in online repositories. The names of the repository/repositories and accession number(s) can be found at: https://github.com/upinderkaur2017/PSDataSet.

Author contributions

UK: Writing – original draft, Writing – review & editing. MK: Writing – original draft. AK: Writing – original draft, Writing – review & editing. DS: Writing – review & editing. RD: Validation, Writing – review & editing, Funding acquisition. MC: Writing – review & editing, Data curation.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Generative AI statement

The authors declare that no Gen AI was used in the creation of this manuscript.

Any alternative text (alt text) provided alongside figures in this article has been generated by Frontiers with the support of artificial intelligence and reasonable efforts have been made to ensure accuracy, including review by the authors wherever possible. If you identify any issues, please contact us.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

Supplementary material

The Supplementary material for this article can be found online at: https://www.frontiersin.org/articles/10.3389/frsc.2025.1619223/full#supplementary-material

References

Akhtar, A., Khanum, A., Khan, S. A., and Shaukat, A. (2013). “Automated plant disease analysis (APDA): performance comparison of machine learning techniques,” in 2013 11th International Conference on Frontiers of Information Technology, 60–65.

Ali, A. H., Youssef, A., Abdelal, M., and Raja, M. A. (2024). An ensemble of deep learning architectures for accurate plant disease classification. Ecol. Inform. 81:102618. doi: 10.1016/j.ecoinf.2024.102618

Boukhris, L., Ben Abderrazak, J., and Besbes, H. (2020). “Tailored deep learning based architecture for smart agriculture,” in 2020 International Wireless Communications and Mobile Computing (IWCMC), 964–969.

Cruz, J. A., Yin, X., Liu, X., Imran, S. M., Morris, D. D., Kramer, D. M., et al. (2016). Multi-modality imagery database for plant phenotyping. Mach. Vis. Appl. 27, 735–749. doi: 10.1007/s00138-015-0734-6

Elvanidi, A., and Katsoulas, N. (2022). Performance of gradient boosting learning algorithm for crop stress identification in greenhouse cultivation. Int. Electr. Conf. Hortic. 25:12508. doi: 10.3390/IECHo2022-12508

Fenu, G., and Malloci, F. M. (2021). Diamos plant: a dataset for diagnosis and monitoring plant disease. Agronomy 11:2107. doi: 10.3390/agronomy11112107

Gaidel, A. V., Podlipnov, V. V., Ivliev, N. A., Paringer, R. A., Ishkin, P. A., Mashkov, S. V., et al. (2023). Agricultural plant hyperspectral imaging dataset. Comput. Opt. 47:1226. doi: 10.18287/2412-6179-CO-1226

Kandukuri, P., Sowrab, B. S., Ramesh, G., and Singamaneni, K. K. (2023). “Paddy leaf disease identification on infrared images based on CNN with auto encoders,” in 2023 second international conference on augmented intelligence and sustainable systems (ICAISS), 277–281.

Kavitha Lakshmi, R., and Nickolas, S. (2020). “Deep learning based Betelvine leaf disease detection (Piper betle L.),” in 2020 IEEE 5th international conference on computing communication and automation (ICCCA), 215–219.

Kiruthika, V., Shoba, S., Sendil, M., Nagarajan, K., and Punetha, D. (2024). Hybrid ensemble - deep transfer model for early cassava leaf disease classification. Heliyon 10:e36097. doi: 10.1016/j.heliyon.2024.e36097

Martin, W., and Wagner, L. (2018). How to grow a city: cultivating an urban agriculture action plan through concept mapping. Agric. Food Secur. 7:33. doi: 10.1186/s40066-018-0186-0

Mensah, P. K., Akoto-Adjepong, V., Adu, K., Ayidzoe, M. A., Bediako, E. A., Nyarko-Boateng, O., et al. (2023). CCMT: dataset for crop pest and disease detection. Data Brief 49:109306. doi: 10.1016/j.dib.2023.109306

Nagasubramanian, K., Singh, A., Singh, A., Sarkar, S., and Ganapathysubramanian, B. (2022). Plant phenotyping with limited annotation: doing more with less. Plant Phenome J. 5:20051. doi: 10.1002/ppj2.20051

Parraga-Alava, J., Cusme, K., Loor, A., and Santander, E. (2019). RoCoLe: a robusta coffee leaf images dataset for evaluation of machine learning based methods in plant diseases recognition. Data Brief 25:104414. doi: 10.1016/j.dib.2019.104414

Petchiammal, K., Murugan, B., and Arjunan, P. (2023). “Paddy doctor: a visual image dataset for automated Paddy disease classification and benchmarking,” in Proceedings of the 6th joint international conference on Data Science & Management of data (10th ACM IKDD CODS and 28th COMAD), 203–207.

Prajapati, H. B., Shah, J. P., and Dabhi, V. K. (2017). Detection and classification of rice plant diseases. Intell. Decis. Technol. 11, 357–373. doi: 10.3233/IDT-170301

Ramcharan, A., Baranowski, K., McCloskey, P., Ahmed, B., Legg, J., and Hughes, D. P. (2017). Deep learning for image-based cassava disease detection. Front. Plant Sci. 8:1852. doi: 10.3389/fpls.2017.01852

Rauf, H. T., Saleem, B. A., Lali, M. I. U., Khan, M. A., Sharif, M., and Bukhari, S. A. C. (2019). A citrus fruits and leaves dataset for detection and classification of citrus diseases through machine learning. Data Brief 26:104340. doi: 10.1016/j.dib.2019.104340

Reddy, A. A., Suresh, A., Praveen, K. V., Singh, D. R., and Rai, P. K. (2025). Profiling biotic, abiotic and institutional risks in rainfed farming and coping strategies. SSRN Electron. J. 22:7985. doi: 10.2139/ssrn.5257985

Sharma, H., Padha, D., and Bashir, N. (2022). “D-KAP: a deep learning-based Kashmiri Apple Plant disease prediction framework,” in 2022 seventh international conference on parallel, distributed and grid computing (PDGC), 576–581.

Shoaib, M., Shah, B., El-Sappagh, S., Ali, A., Ullah, A., Alenezi, F., et al. (2023). An advanced deep learning models-based plant disease detection: a review of recent research. Front. Plant Sci. 14:1158933. doi: 10.3389/fpls.2023.1158933

Singh, D., Jain, N., Jain, P., Kayal, P., Kumawat, S., and Batra, N. (2020). “PlantDoc,” in Proceedings of the 7th ACM IKDD CoDS and 25th COMAD, 249–253.

Sladojevic, S., Arsenovic, M., Anderla, A., Culibrk, D., and Stefanovic, D. (2016). Deep neural networks based recognition of plant diseases by leaf image classification. Comput. Intell. Neurosci. 2016, 1–11. doi: 10.1155/2016/3289801

Thapa, R., Zhang, K., Snavely, N., Belongie, S., and Khan, A. (2020). The plant pathology challenge 2020 data set to classify foliar disease of apples. Appl. Plant Sci. 8:e11390. doi: 10.1002/aps3.11390

Keywords: sustainable agriculture, biotic and abiotic plant stressors, deep learning, sparrow search algorithm, CNN - convolutional neural network

Citation: Kaur U, Kaur M, Kumari A, Shukla D, Datt R and Chand M (2025) UrbanAgri: a transfer learning-based plant stress identification framework for sustainable smart urban growth. Front. Sustain. Cities. 7:1619223. doi: 10.3389/frsc.2025.1619223

Edited by:

Oliver Körner, Leibniz Institute of Vegetable and Ornamental Crops, Germany

Reviewed by:

Luca Lombardo, Council for Agricultural Research and Economics, Italy

A. Amarender Reddy, National Institute of Agricultural Extension Management (MANAGE), India

Copyright © 2025 Kaur, Kaur, Kumari, Shukla, Datt and Chand. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Upinder Kaur, dXBpbmRlcl9jc0BhdXRzLmFjLmlu; Aparna Kumari, YXBhcm5hLmt1bWFyaUBuaXJtYXVuaS5hYy5pbg==
