Abstract

In the field of biomedical research, rats are widely used as experimental animals due to their short gestation period and strong reproductive ability. Accurate monitoring of the estrous cycle is crucial for the success of experiments. Traditional methods are time-consuming and rely on the subjective judgment of professionals, which limits the efficiency and accuracy of experiments. This study proposes an EfficientNet model to automate the recognition of the estrous cycle of female rats using deep learning techniques. The model optimizes performance through systematic scaling of the network depth, width, and image resolution. A large dataset of physiological data from female rats was used for training and validation. The improved EfficientNet model effectively recognized different stages of the estrous cycle. The model demonstrated high-precision feature capture and significantly improved recognition accuracy compared to conventional methods. The proposed technique enhances experimental efficiency and reduces human error in recognizing the estrous cycle. This study highlights the potential of deep learning to optimize data processing and achieve high-precision recognition in biomedical research. Future work should focus on further validation with larger datasets and integration into experimental workflows.

1 Introduction

Understanding the physiological and behavioral changes that occur in female animals at different stages of the estrous cycle is critical for interpreting experimental data. Female animals, including rats, experience significant variations in biological aspects such as gene expression (1), protein levels (2, 3), electrophysiological properties (4, 5), behavior (6, 7), and drug responses (8) due to their estrous cycle. Recognizing this, the National Institutes of Health (NIH) emphasized in 2015 the necessity to consider sex as a variable in studies and to include both male and female animals (9).

Rats are widely used in research due to their ease of care and short reproductive cycle. The estrous cycle, also known as the cycle, is the period of time between the onset of two estrus (10). As model organisms, rats play a key role in biomedical research. By studying rats, scientists have been able to gain insight into the mammalian reproductive system, revealing fundamental mechanisms related to the cell cycle, hormonal regulation, and reproductive behavior. These studies have provided important insights into deepening our understanding of human reproductive health. Their estrous cycle, comprising four main phases—proestrus (P), estrus (E), metestrus (M), and diestrus (D) (11, 12), is particularly important for studies on the female reproductive system. Accurate determination of these phases is crucial, as each phase can exhibit different responses to medications and in disease modeling, enhancing the reliability and applicability of experimental data.

In experiments, the stage of the estrous cycle in rats is usually determined by cytological analysis of vaginal smear samples. Specifically for each phase, the analysis is mainly based on the type, number, shape, and size of the cells in the smear, as well as their relative proportions to each other (13–17), as shown in Figures 1A–D. Briefly, the P Stage is in the follicular stage of development, with rising estrogen levels, lasting 12–14 h, and vaginal smears showing mainly nucleated cells and erythrocytes. The E Stage, in which rats show sexual receptivity, is the ovulatory stage and lasts for 9–15 h, with vaginal smears showing mainly keratinized cells. Immediately following the M Stage, which lasts 21–24 h, the corpus luteum begins to form in the ovary at this stage, estrogen levels decrease, and progesterone levels increase. Keratinized cells, nucleated cells, and leukocytes can be seen in the vaginal smear. D Stage has the longest cycle, lasting 55–57 h, in which the corpus luteum matures and secretes large amounts of progesterone to maintain the endometrium, and the vaginal smear shows mainly leukocytes (18, 19). Although vaginal cytological analysis is the traditional method for localizing the estrous cycle in rats, manual assessment has some limitations. For example, the process often requires prolonged training so that examiners can become proficient in recognizing the different phases; it is time-consuming and costly to manually identify estrous phases from images; and even professionally trained personnel may sometimes differ in their assessments. These limitations highlight the potential inconvenience and inconsistency of this approach.

Figure 1

Vaginal cytology presenting each stage of the rat estrous cycle. Stages of the estrous cycle include the (A) proestrus, (B) estrus, (C) metestrus, and (D) diestrus.

Deep learning, especially research methods relying on artificial neural networks, has been widely used in several fields (20, 21), and the field of veterinary science and medicine is no exception (12, 22, 23). For this research area, we have conducted a comprehensive review of the relevant literature, with a special focus on the niche area of pathology. Pathology is the study of disease-induced changes in tissues and their causes. A large number of studies in the research literature based on convolutional neural network (CNN) technology have explored the composition and properties of various types of tissues through deep learning. For example, Sun et al. (24) classified endometrial tissue images and their findings classified pathological diagnoses into four categories (i.e., normal endometrium, endometrial polyp, endometrial hyperplasia, and endometrial adenocarcinoma) and achieved significant progress in classification results. In contrast, a hybrid convolutional deep neural network model developed by Yan et al. (25) focused on classifying breast cancer tumors using a wider range of image resources, demonstrating the potential of the model to be applied in this field.

In this research project, we applied deep learning algorithms to analyze stained samples collected from vaginal exfoliated cells. In this paper, we use the EfficientNet deep learning model for sample training and accurate classification of images. Currently, pathologists are still using microscopes to examine these digitally converted pathology samples. EfficientNet, as a cutting-edge image-processing network, is particularly well-suited to accurately identify and differentiate between various cell types from complex cellular images using its deep learning architecture (26). Adopting this approach effectively solves the problem of consistency associated with tedious training, time-consuming and subjective judgments required when manually determining the phases of the estrous cycle.The addition of EfficientNet enabled us to rapidly and accurately analyze large amounts of image data to accelerate the determination of the various phases of the estrous cycle, significantly improving the efficiency of the study and the reliability of the results (27, 28). During implementation, it was found that training using our proposed model took only 8–10 min, and the detection speed reached the second level, realizing significant time savings compared to traditional microscopy methods.

The main contributions are summarized as follows.

-

In this study, we constructed a raw dataset dedicated to the study of vaginal decidual cell staining images for understanding the rat's estrous cycle, a collaborative research project spanning medicine, pathology, and computer science. By conducting research in this cutting-edge, cross-disciplinary field, we are able to explore in-depth aspects of microscopic image recognition and animal physiology.

-

We used the EfficientNet model, which is usually used for target detection, as a classifier and achieved high accuracy in the identification of vaginal exfoliated cells and judgment of motility cycle, bridging the gap of research in this field. At the same time, such a model can provide a second opinion to pathologists to support their clinical diagnosis.

-

We have made significant advances in fine-grained recognition by using the EfficientNet model to be able to extract more deep information from micrographs of vaginal exfoliated cells, further reducing the possibility of misjudging the motility cycle.

2 Materials and methods

2.1 Animals

At 8 weeks old, 40 female specific-pathogen-free (SPF) Sprague-Dawley (SD) rats were obtained from Changsha Tianqin Biotechnology Co., Ltd. in China. Following a 2-week acclimation period, 36 SD rats with stable estrous cycles were chosen for the study.

2.2 Data acquisition

In this paper, we used vaginal exfoliated cell staining to obtain microscopic images of the rat's estrous cycle. The specific operation was as follows: firstly, the cotton ball of the smaller end of the medical swab was completely moistened with saline, and the vaginal opening was fully exposed by gently lifting the rat's tail, and the moistened swab was gently put into the rat's vagina and slowly rotated for 2 or 3 times and then taken out; secondly, the swab taken out was evenly rotated and coated on an ordinary slide, with an area of about the size of the thumb's nail cap, and then the vaginal secretion on the slide was dried up and then stained with 0.2% methylene blue staining solution for about 15 min, and then gently rinsed with tap water. Then, after the slides were air-dried, an appropriate amount of neutral gum could be added to the slides, and then the slides were covered with a coverslip, avoiding the production of bubbles as much as possible, and then stored at room temperature; Lastly, each vaginal exfoliated cell smear was observed under a microscope and labeled. In order to obtain sufficient data, the experimental rats were injected with cyclophosphamide and leucovorin. Cyclophosphamide was used to induce immunosuppression, which can affect the estrous cycle and provide a model for studying the effects of immunosuppression on reproductive health. Leucovorin was administered to mitigate the toxic effects of cyclophosphamide. Data were collected every day for 4 consecutive weeks. In view of the fact that the rat's estrous cycle consists of four consecutive phases, namely, the pre-motility phase, the motility phase, the post-motility phase, and the inter-motility phase, the motility cycle was determined according to the presence and the number of keratinized epithelial cells, leucocytes, and nucleated epithelial cells on the vaginal smears, and the pictures were combined with the expert prior knowledge to correspond one-to-one with the motility cycle. The target classification results are labeled one-to-one, constituting the dataset for the EfficientNet model. Obtained 646 for P stage, 672 for E stage, 670 for M stage, and 667 for D stage, for a total of 2,267. The datasets used and/or analyzed during the current study available from the corresponding author on reasonable request.

2.3 Image preprocessing

Before training for deep learning, it is crucial to properly preprocess the collected image data related to the rat estrous cycle. This step ensures that our dataset is not only clean but also consistent, enabling the trained model to achieve optimal performance. Therefore, we adopted a series of standard operations for data preprocessing: first, in the image quality control stage, all microscopic images were carefully examined to exclude those samples whose image quality was degraded due to improper sampling, focusing errors, or motion blur. The remaining images were subjected to an initial judgment of cellular structure recognition, with the aim of obtaining training samples for the model, and samples that contributed substantially to the subsequent analysis were retained.

Subsequently, image enhancement techniques were applied to enhance the details of the images. By adjusting the brightness and contrast, we enhanced the visual contrast of the cellular structures to provide clear inputs for subsequent modeling. Similarly, the sharpening process further enhances the recognition of local details without losing the realism of the image. Image format conversion is another part of the preprocessing, and in this step we strictly control the conversion quality loss by uniformly converting all images to JPEG format suitable for processing by the machine learning framework. This processing also includes a uniform resizing of the images, which ensures that each image meets the input specifications of the training model (29, 30), as shown in the following equation, where the image data is uniformly resized to 224 dimensions. The original image also maintained to preserve key biological feature information.

In order to scale the image from the original resolution to the new resolution, we use Equation (1) to (4). Firstly, we need to calculate the scale factor

We want to keep the aspect ratio of the image constant, choose the smaller of the width and height scale factors as the uniform scale factor:

This scale factor is then used to calculate the scaled width and height:

If the scaled width and height do not reach the target resolution, the target resolution can be reached by padding (padding). We use zero padding, the amount of padding can be calculated as follows:

Because we need to fill an odd number, we can adjust the fill amount slightly so that the left and right (top and bottom) fills are as even as possible.

In order to further enhance the generalization ability of the model and reduce the possibility of overfitting, data enhancement means were applied to the image dataset, including rotation, scaling, flipping, and cropping of the images. These operations generated variants of the training images, thus simulating experimental environments with high diversity. Finally, to ensure the accuracy and validity of the model evaluation, we divided the entire dataset into training, validation, and testing sets, with uniformly distributed samples in each subset to ensure fairness and comprehensiveness of the evaluation.

2.4 EfficientNet model

We use the EfficientNet model as a classifier based on the convolutional neural networkCNN (CNN) architecture. EfficientNet is an advanced CNN architecture that was first proposed by Tan and Le in their 2019 paper (31). The core concept of EfficientNet lies in equalizing the depth, width, and image resolution, which are systematically coordinated through a method known as the compound scaling method (32). Traditional CNN architectures are designed with a given resource budget and scaled to improve accuracy as more resources become available (33). However, this simple scaling is often done for a single dimension, either by increasing the width of the network (the number of neurons in a layer), or the depth of the network (the number of layers), or by increasing the resolution of the input image.EfficientNet proposes a different approach: the composite scaling method is able to take into account all three dimensions at once, as shown in Figure 2, and to unify scaling by a fixed scale factor ϕ to unify the scaling, as shown in the following Equation (5), as a way to maintain the balance between the dimensions. This balanced approach proves to be effective in improving the performance of the model while maintaining high efficiency of the parameters.

Figure 2

A composite scaling method for feature extraction strategy.

Where, w, d, r are the scaling coefficients of the scaling network for width, depth, and resolution, respectively; α, β, γ are all constants obtained from a very small range of network searches; intuitively, ϕ is a specific coefficient that controls the amount of resources used for a resource, and α, β, γ determines exactly how the resource is allocated.

The EfficientNet model adopts the mobile bottleneck convolution (MBConv) of MobileNet V2 (34) as its core network architecture and draws on the squeeze-and-excitation technique of SENet (35) to further optimize the network architecture. The details of the EfficientNet architecture adopted in this study are demonstrated in Table 1. The starting phase, i.e., Phase 1, uses a standard convolutional layer of 3x3 size with a step size of 2; from Phase 2 to Phase 8, a duplicate MBConv structure is used for stacking; and Phase 9, consists of a standard convolutional layer of 1x1 size, an average pooling layer, and a fully-connected layer.

Table 1

| Stage | Operator | Resolution | Channels | Layers |

|---|---|---|---|---|

| 1 | Conv3 × 3 | 224 × 224 | 64 | 1 |

| 2 | MBConv1, k3 × 3 | 112 × 112 | 32 | 1 |

| 3 | MBConv6, k3 × 3 | 112 × 112 | 48 | 2 |

| 4 | MBConv6, k5 × 5 | 56 × 56 | 80 | 2 |

| 5 | MBConv6, k3 × 3 | 28 × 28 | 160 | 3 |

| 6 | MBConv6, k5 × 5 | 14 × 14 | 224 | 3 |

| 7 | MBConv6, k5 × 5 | 14 × 14 | 384 | 4 |

| 8 | MBConv6, k3 × 3 | 7 × 7 | 640 | 4 |

| 9 | Conv1 × 1& Pooling & FC | 7 × 7 | 2,560 | 1 |

Description of each stage in the network architecture.

3 Experimental results

3.1 Performance indicators

In this study, a set of specific evaluation criteria was established to determine the performance of our model for detection during the estrous cycle phase. Therefore, we chose metrics such as accuracy, precision, recall, and F1 score for effectiveness assessment. Among them, the confusion matrix (12) serves as a tool, which can be constructed based on the values of the parameters mentioned above, to evaluate the success of the classification algorithm.

Accuracy is a key measure of how correct the algorithm is in performing the classification (36). The resulting accuracy value is derived by comparing the ratio of the number of correctly classified samples to the total number of samples. It is often used as an important measure of the model's performance. The value of the accuracy rate is between 0 and 1. The closer the value is to 1, the higher the classification success rate of the model (37). The specific Equation (6) is demonstrated below.

where TP denotes True Positives, i.e., true class positive instances; TN denotes True Negatives, i.e., true class negative instances; FP denotes False Positives, i.e., false positive instances; and FN denotes False Negatives, i.e., false negative instances.

The assessment metric of Precision is primarily used to measure the ability of a neural network to correctly identify positive samples (38). It is specifically defined as the ratio of the number of true positive predictions of the model to the number of all positive predictions. As this ratio rises, the probability of successful recognition by the network increases. The corresponding calculation is shown in the following Equation (7).

where TP denotes True Positives, i.e., true class positive instances; FP denotes False Positives, i.e., false positive instances.

The metric Recall measures the proportion of samples correctly identified by the model as belonging to their respective classes. Specifically, it quantifies how many of the actual positive samples are correctly predicted as positive, and how many of the actual negative samples are correctly predicted as negative. This metric is crucial for assessing how well the model identifies samples in both positive and negative categories. The Equation (8) for recall is shown below.

where TP denotes True Positives, i.e., true class positive instances; FN denotes False Negatives, i.e., false negative instances.

The F1 Score is a metric that combines precision and recall, providing a single measure that reflects the balance between these two metrics. It is particularly useful in scenarios where we want to consider both the completeness (recall) and correctness (precision) of the model's predictions. The Equation (9) for F1 Score integrates these components to give a comprehensive assessment of the model's performance.

3.2 Model setting

In deep learning, model training is both complex and computationally intensive. By deploying Graphics Processing Unit (GPU) acceleration in our daily research or development environments, we can significantly improve the efficiency of model training and quickly achieve better training results. For all experiments, NVIDIA Corporation (NVIDIA) 3050ti GPU was used for model training and testing. In addition, in order to optimize the model training, Adam was chosen as the optimization algorithm in this study, and the training Epochs were set to 100, the initial learning rate was set to 0.001, and the batch size was adjusted to 16, and the detailed configurations can be found in Table 2. In addition, it is worth mentioning that the use of GPU acceleration is especially critical for dealing with large-scale datasets and complex model architectures, which not only reduces the training time, but also helps the experiments to iterate rapidly and optimize the model performance.

Table 2

| Parameters | Value |

|---|---|

| Rate of learning | 0.001 |

| Number of epochs | 100 |

| Batch size | 16 |

| Image size | 224 |

| Optimization algorithm | Adam |

Training parameter values for EfficientNet model.

3.3 Discussion and results

As can be seen in Figure 3, the model loss (a metric characterizing poor model performance) drops rapidly from a high value of slightly above 2.5 in the early stages of training, indicating that the EfficientNet model quickly begins to learn and improve its performance in the application of rat motility classification. The training and validation loss curves are very close to each other and remain consistent over time, indicating that the model has good generalization ability, i.e., it can be effectively applied to unseen data, and also that there is no overfitting. After about 50 epochs, the two loss curves are essentially stable with only minor fluctuations, which means that the model may be approaching the limit of its learning potential. At this stage, the performance improvement of the model becomes marginal, and further training may not result in significant performance gains unless the model configuration or training process is changed. The loss value eventually stabilizes at around 0.4, a point that indicates that the EfficientNet model performs well on the task of classifying rat motility on vaginal micro-smears.

Figure 3

Training-validation loss graphs according to the EfficientNet model.

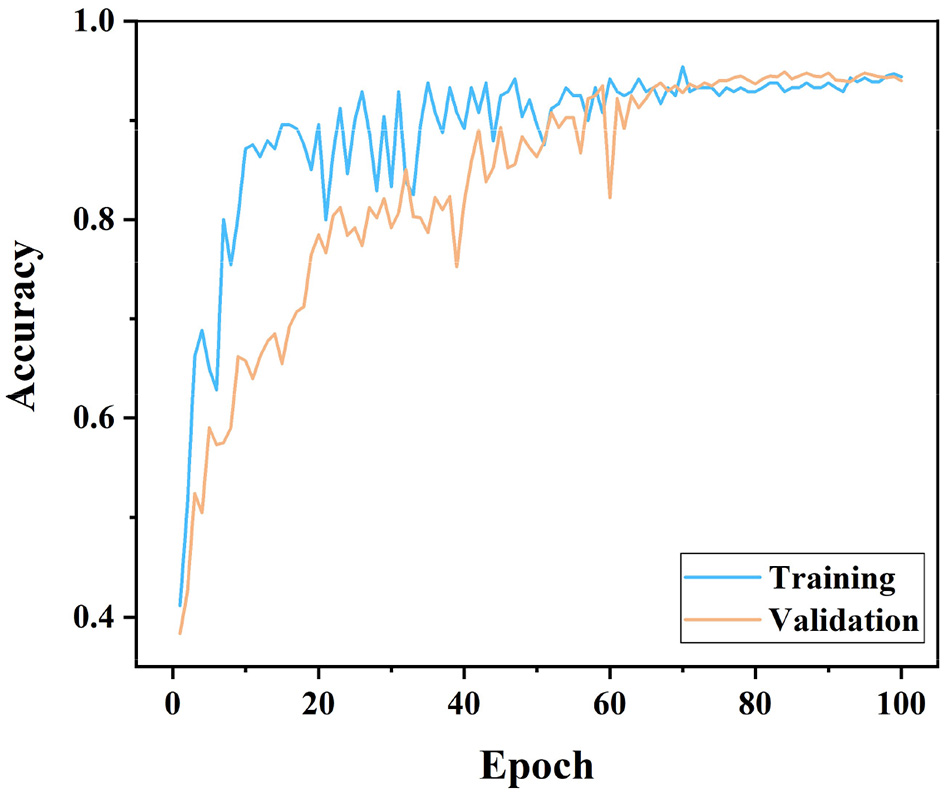

As can be seen in Figure 4, both training and validation accuracies seem to increase from about 0.4 when starting from an Epoch of 0. This indicates that initially the model learns from a base level and improves its performance very quickly, which is consistent with the early behavior of typical deep learning model training curves. It is worth noting that the training curve increases faster than the validation curve and reaches an accuracy close to 1.0 earlier. As can be seen from the curves, the training curve has more fluctuations than the validation curve. This can be due to the fact that the model encounters some difficult to fit data during the learning process. The validation curve is usually smoother, but overall the accuracy is lower than the training set. It does not reach the high point of the training accuracy and shows some degree of generalization.

Figure 4

Training-validation accuracy graphs according to the EfficientNet model.

Based on the analysis results of these two figures, it is evident that the EfficientNet model has good learning ability and generalization performance in dealing with specific biomedical image classification problems, showing its advantages in complex image recognition tasks.

Table 3 shows the distribution and evaluation metrics of the results obtained from the data trained on the EfficientNet model. It can be seen that the overall accuracy of the EfficientNet model is 95.8% and the F1 score, which is the reconciled mean of precision and recall, is 0.968, indicating a good balance between precision and recall. In terms of category stage performance: the Proestrus stage, the model performs slightly lower than the overall in this stage, with an accuracy of 97.0%, a precision of 0.933, a recall of 0.949, and an F1 score of 0.941. The precision is lower than in the other stages, indicating that there are more false positives; the Estrus stage: the model performs very well in this stage, having the highest accuracy (99.1%) and perfect recall (1.0). It correctly identifies all the actual positive examples with the highest F1 score of all phases at 0.983; Metestrus phase: here the model has 98.2% accuracy, very high precision (0.983) and recall (0.950). The F1 score of 0.966 is still very high and shows a balanced performance; Diestrus phase: performance is Estrus stage, with 99.1% accuracy, 0.983 precision, 0.983 recall, and an F1 score of 0.983. the model has a very high ability to correctly recognize and classify pictures in this stage.

Table 3

| Stage | Number of images | Accuracy (%) | Precision | Recall | F1 score |

|---|---|---|---|---|---|

| Whole | 239 | 95.8 | 0.967 | 0.971 | 0.968 |

| Proestrus | 59 | 97.0 | 0.933 | 0.949 | 0.941 |

| Estrus | 58 | 99.1 | 0.967 | 1.0 | 0.983 |

| Metestrus | 62 | 98.2 | 0.983 | 0.950 | 0.966 |

| Diestrus | 60 | 99.1 | 0.983 | 0.983 | 0.983 |

Test results of data trained with EfficientNet model.

Based on the results of the confusion matrix in Figure 5, we can see that the performance of this classification model in recognizing the four categories; Diestrus, Estrus, Metestrus and Proestrus is good. Specifically, for the Diestrus category, the model correctly identified 56 cases, but three cases were misidentified as Metestrus; in the Estrus category, the model performs well, correctly identifying all 58 cases without misidentification; for the Metestrus category, the model correctly identifies 57 cases, but four cases were misidentified as Diestrus and one case was misidentified as Proestrus; in the Proestrus category, the model also performed well, correctly identifying 59 cases, but two cases were misidentified as Estrus. in addition, the model predicted Estrus and Proestrus very accurately, with no misidentifications to other stages. There was some confusion between Diestrus and Metestrus, especially Metestrus was misidentified as Diestrus more often, which may be due to the greater similarity in features between these two categories. At the same time, there was also some confusion between Metestrus and Proestrus, albeit in small numbers, which indicates that the model may need further improvement in distinguishing between these two stages.

Figure 5

Confusion matrices for the EfficientNet model.

Overall, the model's performance on the classification task remains reliable, with most predictions being correct. However, in order to further improve the model's performance, focusing on reducing misclassification between Diestrus and Metestrus, as well as the few confusions between Estrus and Proestrus, and Metestrus and Proestrus, may be the focus of the next improvement efforts.

4 Conclusion

In this study, the EfficientNet deep learning model was successfully introduced to the field of biomedical image analysis. In particular, the model was used for the first time to identify and classify vaginal microscopic smears of rats during the estrous cycle on a large scale and in an automated manner. In the four key stages of Proestrus, Estrus, Metestrus, and Diestrus, EfficientNet showed excellent recognition ability, achieving a high accuracy of 95.8%, as well as F1 scores of more than 0.94 in all stages, especially in the Estrus and Diestrus stages. This breakthrough innovation not only demonstrates the great potential of EfficientNet in the field of biomedical image processing, but also provides a reliable methodological tool for subsequent related research.

Statements

Data availability statement

Missing values, inconsistent data, and erroneous records may exist in the data set. These issues may affect the accuracy of analysis results. Requests to access these datasets should be directed to: xiaodipu2022@163.com.

Ethics statement

The animal study was approved by Ethics Committee of Huaihua Maternal and Child Health Hospital. The study was conducted in accordance with the local legislation and institutional requirements.

Author contributions

XP: Writing – original draft. LL: Validation, Investigation, Writing – review & editing. YZ: Conceptualization, Writing – review & editing. ZX: Supervision, Formal analysis, Visualization, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research, authorship, and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1.

DiCarlo LM Vied C Nowakowski RS . The stability of the transcriptome during the estrous cycle in four regions of the mouse brain. J Compar Neurol. (2017) 525:3360–87. 10.1002/cne.24282

2.

Spencer-Segal JL Waters EM Bath KG Chao MV McEwen BS Milner TA . Distribution of phosphorylated TrkB receptor in the mouse hippocampal formation depends on sex and estrous cycle stage. J Neurosci. (2011) 31:6780–90. 10.1523/JNEUROSCI.0910-11.2011

3.

Zenclussen ML Casalis PA Jensen F Woidacki K Zenclussen AC . Hormonal fluctuations during the estrous cycle modulate heme oxygenase-1 expression in the uterus. Front Endocrinol. (2014) 5:32. 10.3389/fendo.2014.00032

4.

Scharfman HE Mercurio TC Goodman JH Wilson MA MacLusky NJ . Hippocampal excitability increases during the estrous cycle in the rat: a potential role for brain-derived neurotrophic factor. J Neurosci. (2003) 23:11641–52. 10.1523/JNEUROSCI.23-37-11641.2003

5.

Adams C Chen X Moenter SM . Changes in GABAergic transmission to and intrinsic excitability of gonadotropin-releasing hormone (GnRH) neurons during the estrous cycle in mice. Eneuro. (2018) 5:2018. 10.1523/ENEURO.0171-18.2018

6.

Meziane H Ouagazzal AM Aubert L Wietrzych M Krezel W . Estrous cycle effects on behavior of C57BL/6J and BALB/cByJ female mice: implications for phenotyping strategies. Genes Brain Behav. (2007) 6:192–200. 10.1111/j.1601-183X.2006.00249.x

7.

Milad MR Igoe SA Lebron-Milad K Novales J . Estrous cycle phase and gonadal hormones influence conditioned fear extinction. Neuroscience. (2009) 164:887–95. 10.1016/j.neuroscience.2009.09.011

8.

Lebron-Milad K Milad MR . Sex differences, gonadal hormones and the fear extinction network: implications for anxiety disorders. Biol Mood Anxiety Disord. (2012) 2:1–12. 10.1186/2045-5380-2-3

9.

Mazure CM . Our evolving science: studying the influence of sex in preclinical research. Biol Sex Diff . (2016) 7:1–3. 10.1186/s13293-016-0068-8

10.

Bozoğlu H . Deneysel hipertiroidi oluşturulmuş sıçanlarda östrus siklusunun değişik evrelerinde dişi genital organlarda (ovaryum ve uterus) östrojen ve progesteron reseptör dağılımının immünohistokimyasal olarak incelenmesi. Edirne: Trakya Üniversitesi Sağlık Bilimleri Enstitüsü (2013).

11.

Vanagondi UR Velichety SD . Histological observations in human ovaries from embryonic to menopausal age. Int J Anat Res. (2016) 4:3203–08. 10.16965/ijar.2016.439

12.

Çeçen Ş Çeribaşı S Erkuş M Özer AB Tuncer T Çınar A . Classification of Estrus Cycles in Rats by Using Deep Learning. Traitement du Signal. (2024) 41:122. 10.18280/ts.410122

13.

Byers SL Wiles MV Dunn SL Taft RA . Mouse estrous cycle identification tool and images. PLoS ONE. (2012) 7:e35538. 10.1371/journal.pone.0035538

14.

Becker JB Arnold AP Berkley KJ Blaustein JD Eckel LA Hampson E et al . Strategies and methods for research on sex differences in brain and behavior. Endocrinology. (2005) 146:1650–73. 10.1210/en.2004-1142

15.

Gal A Lin PC Barger AM MacNeill AL Ko C . Vaginal fold histology reduces the variability introduced by vaginal exfoliative cytology in the classification of mouse estrous cycle stages. Toxicol Pathol. (2014) 42:1212–20. 10.1177/0192623314526321

16.

MacDonald JK Pyle WG Reitz CJ Howlett SE . Cardiac contraction, calcium transients, and myofilament calcium sensitivity fluctuate with the estrous cycle in young adult female mice. Am J Physiol Heart Circulat Physiol. (2014) 2014:730. 10.1152/ajpheart.00730.2013

17.

Cora MC Kooistra L Travlos G . Vaginal cytology of the laboratory rat and mouse: review and criteria for the staging of the estrous cycle using stained vaginal smears. Toxicol Pathol. (2015) 43:776–93. 10.1177/0192623315570339

18.

Smith M Freeman M Neill J . The control of progesterone secretion during the estrous cycle and early pseudopregnancy in the rat: prolactin, gonadotropin and steroid levels associated with rescue of the corpus luteum of pseudopregnancy. Endocrinology. (1975) 96:219–26.

19.

Marcondes F Bianchi F Tanno A . Determination of the estrous cycle phases of rats: some helpful considerations. Brazil J Biol. (2002) 62:609–14. 10.1590/S1519-69842002000400008

20.

Yang K Yu Z Wen X Cao W Chen CP Wong HS et al . Hybrid classifier ensemble for imbalanced data. IEEE Trans Neural Netw Learn Syst. (2019) 31:1387–400. 10.1109/TNNLS.2019.2920246

21.

Liu S Cao W Fu R Yang K Yu Z . RPSC: robust pseudo-labeling for semantic clustering. In: Proceedings of the AAAI Conference on Artificial Intelligence, Vol. 38. Palo Alto, CA (2024). p. 14008–16.

22.

Furkan A Akalin B . Onkoloji Alanında Yapay Zeka Yöntemleri. Gevher Nesibe J Med Health Sci. (2022) 7:81–92. 10.46648/gnj.387

23.

Temurtas F Gorur K Cetin O Ozer I . Machine learning for thyroid cancer diagnosis. In:NayakJPelusiDAl-DabassD, editors. Computational Intelligence in Cancer Diagnosis. Amsterdam: Elsevier (2023). p. 117–45.

24.

Sun H Zeng X Xu T Peng G Ma Y . Computer-aided diagnosis in histopathological images of the endometrium using a convolutional neural network and attention mechanisms. IEEE J Biomed Health Informat. (2019) 24:1664–76. 10.1109/JBHI.2019.2944977

25.

Yan R Ren F Wang Z Wang L Zhang T Liu Y et al . Breast cancer histopathological image classification using a hybrid deep neural network. Methods. (2020) 173:52–60. 10.1016/j.ymeth.2019.06.014

26.

Shi Y Yang K Wang M Yu Z Zeng H Hu Y . Boosted unsupervised feature selection for tumor gene expression profiles. CAAI Trans Intell Technol. (2024) 2024:12317. 10.1049/cit2.12317

27.

Chen W Yang K Yu Z Shi Y Chen C . A survey on imbalanced learning: latest research, applications and future directions. Artif Intell Rev. (2024) 57:1–51. 10.1007/s10462-024-10759-6

28.

Li G Yu Z Yang K Lin M Chen CP . Exploring feature selection with limited labels: a comprehensive survey of semi-supervised and unsupervised approaches. IEEE Trans Knowl Data Eng. (2024) 2024:3397878. 10.1109/TKDE.2024.3397878

29.

He K Zhang X Ren S Sun J . Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern RecognitionLas Vegas, NV: IEEE (2016). p. 770–8.

30.

Ronneberger O Fischer P Brox T . U-NET: convolutional networks for biomedical image segmentation. In: Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5–9, 2015, Proceedings, Part III 18. Berlin: Springer (2015). p. 234–41.

31.

Tan M Le Q . Efficientnet: rethinking model scaling for convolutional neural networks. In: International Conference on Machine Learning. (2019). p. 6105–14.

32.

Yang K Liu Y Yu Z Chen CP . Extracting and composing robust features with broad learning system. IEEE Trans Knowl Data Eng. (2021) 35:3885–96. 10.1109/TKDE.2021.3137792

33.

Yu Z Zhong Z Yang K Cao W Chen CP . Broad learning autoencoder with graph structure for data clustering. IEEE Trans Knowl Data Eng. (2023) 2023:3283425. 10.1109/TKDE.2023.3283425

34.

Sandler M Howard A Zhu M Zhmoginov A Chen LC . MobileNetV2: inverted residuals and linear bottlenecks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Los Alamitos, CA (2018). p. 4510–20.

35.

Hu J Shen L Sun G . Squeeze-and-excitation networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2018) Salt Lake City, UT:IEEE. p. 7132–41.

36.

Dang AT . Accuracy and loss: things to know about the top 1 and top 5 accuracy. Medium. (2021).

37.

Westwood FR . The female rat reproductive cycle: a practical histological guide to staging. Toxicol Pathol. (2008) 36:375–84. 10.1177/0192623308315665

38.

MENTEŞE E HANÇER E . Histopatoloji görüntülerde derin öğrenme yöntemleri ile çekirdek segmentasyonu. Avrupa Bilim ve Teknoloji Dergisi. (2020) 2020:95–102. 10.31590/ejosat.819409

Summary

Keywords

deep learning, EfficientNet, histopathology, estrus cycle, estrus staging, pathological image, classification

Citation

Pu X, Liu L, Zhou Y and Xu Z (2024) Determination of the rat estrous cycle vased on EfficientNet. Front. Vet. Sci. 11:1434991. doi: 10.3389/fvets.2024.1434991

Received

22 May 2024

Accepted

01 July 2024

Published

23 July 2024

Volume

11 - 2024

Edited by

Amal M. Aboelmaaty, National Research Centre, Egypt

Reviewed by

Ashraf Soror, National Research Centre, Egypt

Abd El-Nasser Madboli, National Research Centre, Egypt

Updates

Copyright

© 2024 Pu, Liu, Zhou and Xu.

This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Xiaodi Pu xiaodipu2022@163.comZihan Xu xhao117@163.com

†These authors have contributed equally to this work and share first authorship

Disclaimer

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article or claim that may be made by its manufacturer is not guaranteed or endorsed by the publisher.