- ¹ Department of Clinical Sciences, Foster Hospital for Small Animals at Tufts Cummings School of Veterinary Medicine, North Grafton, MA, United States

- ² Department of Biostatistics, Boston University School of Public Health, Boston, MA, United States

- ³ Department of Small Animal Clinical Sciences, Texas A&M University, College Station, TX, United States

Introduction: The purpose of this retrospective study was to determine the inter- and intra-rater agreement among novice raters, as well as the agreement between novice raters and an experienced consensus, when using the Gächter grading scale to evaluate the severity of septic joints in dogs.

Methods: Three surgical residents served as novice raters, and two American College of Veterinary Surgeons (ACVS) diplomates experienced in the arthroscopic evaluation of canine joints served as the experienced consensus. Arthroscopy images were first evaluated and scored on the Gächter scale by the experienced consensus. After two supervised training sessions, the novices applied the scale twice to the same images, 2 weeks apart.

Results: The application of the Gächter grading scale was unreliable in dogs when utilized by novice raters.

Discussion: Both the intra-rater agreement of the three novice raters and their agreement with an expert consensus were consistently low when tested at two separate time intervals. Inter-rater agreement among the novices, while initially moderate, decreased between the two time intervals. Limited arthroscopic skill, limited familiarity with the anatomy and its potential variations, and inadequate training in the Gächter grading scheme could all play a large part in a novice's ability to apply the grading scale to a septic joint.

1 Introduction

Septic joints are only rarely reported in dogs, but their actual frequency is unknown (1). In humans, the reported annual incidence of septic arthritis ranges from 2 to 5 cases per 100,000 individuals in the general population to 28–38 cases per 100,000 among patients with rheumatoid arthritis and 40–68 cases per 100,000 among patients with joint prostheses (2, 3). Depending on the severity of infection and the specific pathogen, sepsis can cause rapid and potentially permanent damage to the joint surface, with poor long-term function (3–6). In human medicine, the most commonly reported predisposing factors are immunocompromise, pre-existing joint disease, the presence of a joint prosthesis, and/or prior joint surgery (3). In veterinary patients, commonly reported causes are penetrating wounds, spread from a surrounding infection, and hematogenous spread (most often from skin infection or dental disease) (2).

Septic joints are generally suspected based on clinical findings, including fever, local hyperemia, joint effusion, lameness, and pain. Confirmation requires ancillary diagnostic testing such as joint fluid analysis, joint fluid culture, and/or synovial biopsy with tissue culture (1, 4). In humans, the gold standard treatment of septic arthritis is arthroscopic debridement and lavage in combination with appropriate antimicrobial therapy (3, 7).

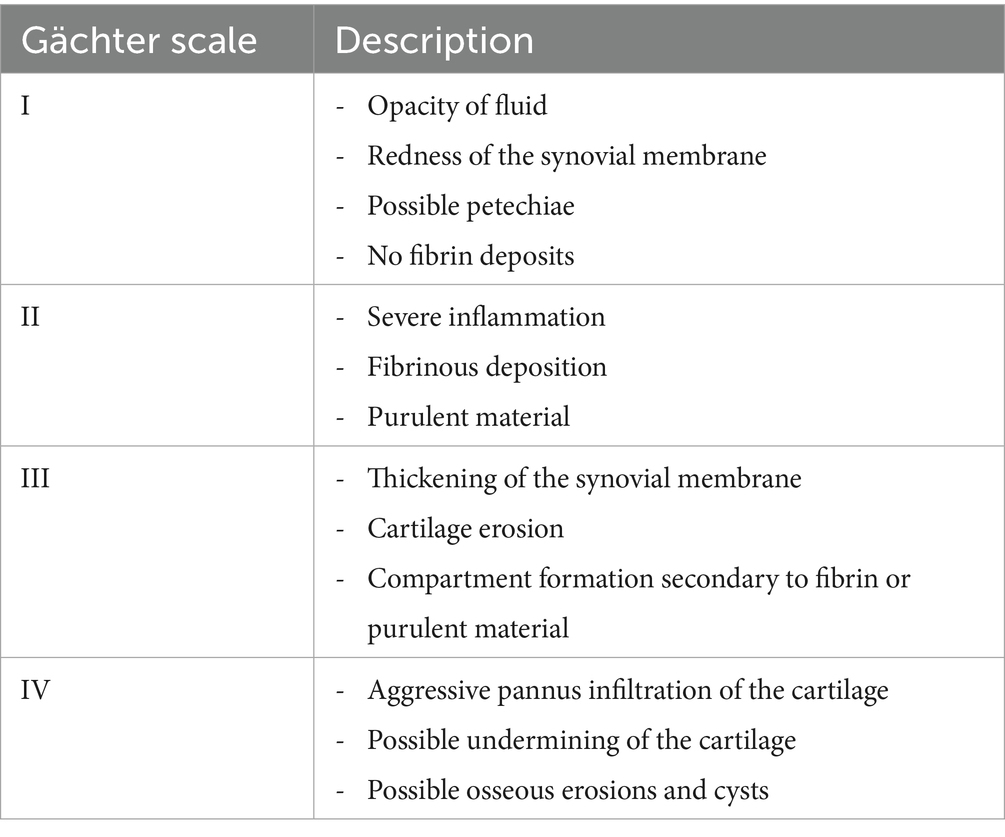

In 1985, Andre Gächter described a classification system for septic joints in humans that uses arthroscopic findings to grade severity from I to IV (8). The Gächter scale can be applied to any joint disease but has most often been applied to septic knee joints (6, 9). Gächter grading has been shown to have both prognostic and therapeutic significance in humans (6, 9). Applied to veterinary patients, the scale could yield similar information and possibly help guide clinical decision-making.

Intra- and inter-rater agreement are important for any diagnostic test to have value in clinical decision-making. Intra-rater agreement is the degree of agreement between repeated sessions by the same rater and allows assessment of the reliability of a single rater's judgment. Inter-rater agreement is the degree of agreement among multiple raters who independently evaluate the same subject and helps determine the consistency of assessment across raters. The purpose of this study was to evaluate the inter- and intra-rater agreement of the Gächter grading scale when applied by novice raters and to compare agreement between novice raters and an expert consensus. We hypothesized that, when applying the Gächter grading scale, novices would show moderate intra- and inter-rater variability between the time periods and good agreement with an expert consensus.
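To make the distinction concrete, a minimal Python sketch follows. It assumes grades are stored per rater and per grading session (in this study each novice graded every case twice) and uses raw percentage agreement purely for illustration; the study itself used chance-corrected kappa statistics (Section 2.3). The data and the names `grades`, `intra_rater`, and `inter_rater` are hypothetical.

```python
from itertools import combinations

# Hypothetical grades for illustration only (NOT the study data):
# grades[rater][session] holds one Gächter grade per joint, in the same case order.
grades = {
    "A": {1: [2, 2, 1, 2], 2: [2, 1, 1, 2]},
    "B": {1: [2, 2, 2, 2], 2: [2, 2, 2, 3]},
    "C": {1: [2, 1, 1, 2], 2: [2, 1, 1, 2]},
}


def percent_agreement(a, b):
    """Proportion of joints given the same grade in two equal-length grade lists."""
    if len(a) != len(b) or not a:
        raise ValueError("grade lists must be non-empty and the same length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def intra_rater(grades):
    """Intra-rater: each rater's session-1 grades vs. that same rater's session-2 grades."""
    return {rater: percent_agreement(s[1], s[2]) for rater, s in grades.items()}


def inter_rater(grades, session):
    """Inter-rater: every pair of different raters within one grading session."""
    return {
        (r1, r2): percent_agreement(grades[r1][session], grades[r2][session])
        for r1, r2 in combinations(sorted(grades), 2)
    }


print(intra_rater(grades))     # {'A': 0.75, 'B': 0.75, 'C': 1.0}
print(inter_rater(grades, 1))  # {('A', 'B'): 0.75, ('A', 'C'): 0.75, ('B', 'C'): 0.5}
```

The only difference between the two measures is which pairs of grade lists are compared: the same rater across sessions, or different raters within a session.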

2 Materials and methods

2.1 Study design

Medical records from 2010 to 2022 from two surgical referral practices (XXX and XXX) were retrospectively searched for dogs diagnosed with septic joints. Inclusion criteria were a confirmed positive joint culture result (synovial fluid and/or synovial tissue biopsy and culture), availability of videos or still frames from arthroscopic assessment of the joint, and procedures performed by a board-certified surgeon or a resident under direct supervision of a board-certified surgeon. Additional data included age, breed, weight, physical examination findings, and the affected joint.

2.2 Arthroscopy image evaluation

Arthroscopy still frames and videos were assigned a random number (Random.org, Random Sequence Generator, Dublin, Ireland). Two American College of Veterinary Surgeons (ACVS) diplomates, blinded to case data and experienced in the arthroscopic evaluation of canine joints, first reviewed all arthroscopy still frames and videos. Cases were excluded if they judged the image quality inadequate for definitive evaluation. The diplomates then evaluated the videos and still frames of the remaining cases together and graded each on the Gächter scale (Table 1), forming the expert consensus. They initially disagreed on several cases, but discussion of key features allowed them to settle on an agreed-upon grade.

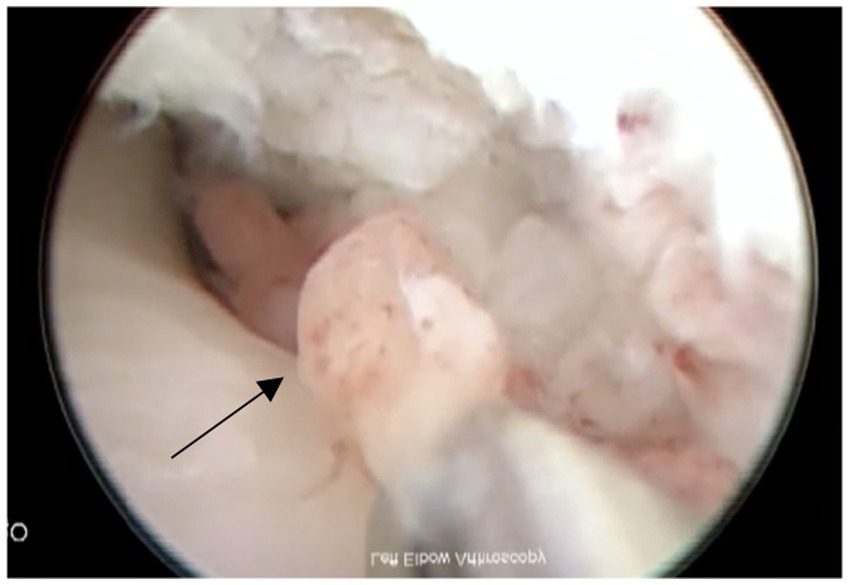

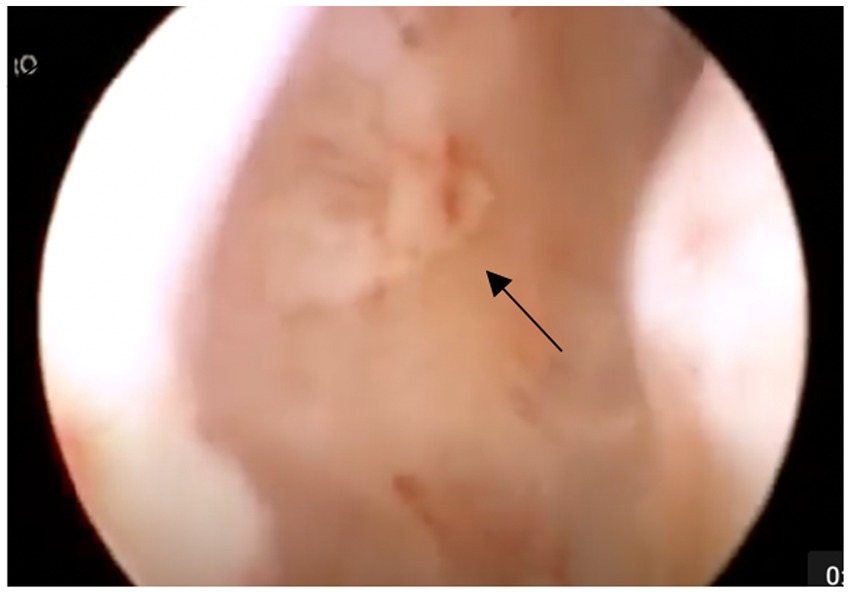

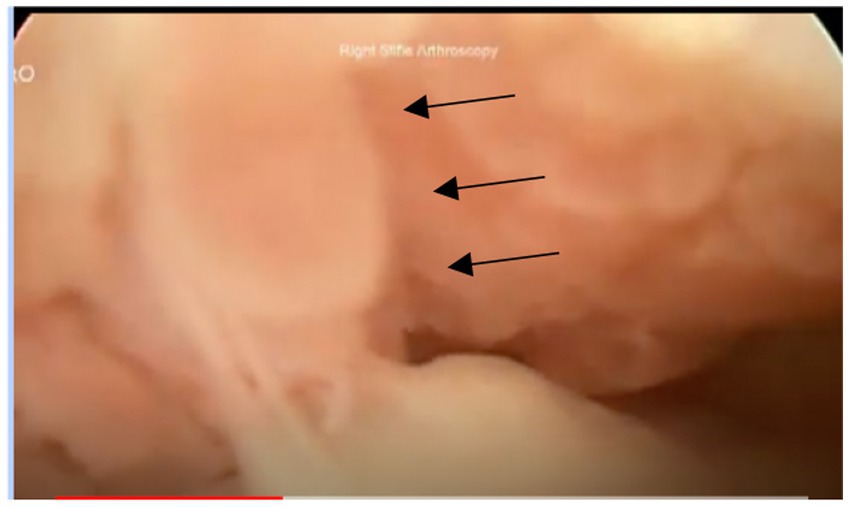

Three novice raters (ACVS residents) received two supervised, lecture-format training sessions (approximately 60 min each) on applying the Gächter scale, followed by supervised evaluation of five arthroscopy cases to practice applying the scale. For study grading, the novice raters were supplied with a description of the Gächter scale and example images (Figures 1–3). No example image was included for grade IV because no arthroscopic images of that grade were available in this case series. The novice raters used the Gächter scale to evaluate the same septic joint cases twice, 2 weeks apart. The images were presented in the same order in both grading sessions, and each case consisted of two videos, one video and one still frame, or two still frames. The primary author was not blinded and recorded all data.

2.3 Statistical analysis

The frequency and percentage describing the distribution of the rating scale in this sample were reported. To assess inter-rater and intra-rater agreement, we computed Light's kappa coefficient and 95% confidence intervals based on an absolute agreement two-way mixed effects model in which the subjects (dogs) and raters were modeled as random effects (10–12). All analyses were conducted using the “irr” and “DescTools” packages in RStudio (13–15). The statistical significance of inter- and intra-rater agreement was examined; p-values less than 0.05 were considered statistically significant.
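Light's kappa is the average of Cohen's kappa over every pair of raters (11). The sketch below computes it from scratch in Python rather than reproducing the R “irr”/“DescTools” calls used in the study; it assumes unweighted kappa (grades treated as nominal categories), omits confidence intervals and p-values, and uses hypothetical grades. The function names are illustrative.

```python
from collections import Counter
from itertools import combinations
from statistics import mean


def cohen_kappa(a, b):
    """Unweighted Cohen's kappa for two raters' nominal grades of the same cases."""
    if len(a) != len(b) or not a:
        raise ValueError("grade lists must be non-empty and the same length")
    n = len(a)
    # Observed agreement: proportion of cases given identical grades.
    p_obs = sum(x == y for x, y in zip(a, b)) / n
    # Chance agreement from each rater's marginal grade frequencies.
    count_a, count_b = Counter(a), Counter(b)
    p_exp = sum(count_a[g] * count_b[g] for g in count_a.keys() | count_b.keys()) / n**2
    if p_exp == 1:
        # Both raters used one identical grade for every case: kappa is undefined.
        return float("nan")
    return (p_obs - p_exp) / (1 - p_exp)


def light_kappa(ratings):
    """Light's kappa: mean Cohen's kappa over every pair of raters."""
    if len(ratings) < 2:
        raise ValueError("need at least two raters")
    return mean(cohen_kappa(a, b) for a, b in combinations(ratings, 2))


# Hypothetical Gächter grades for six joints from three raters (NOT study data).
rater_1 = [2, 2, 1, 2, 3, 2]
rater_2 = [2, 2, 2, 2, 3, 1]
rater_3 = [2, 1, 1, 2, 3, 2]

print(round(light_kappa([rater_1, rater_2, rater_3]), 3))  # 0.397
```

The same `cohen_kappa` function can be applied to one rater's session-1 and session-2 grades to obtain an intra-rater kappa for that rater. When nearly all cases share one grade, as in this sample, the expected chance agreement is high and kappa becomes sensitive to a few discordant grades.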

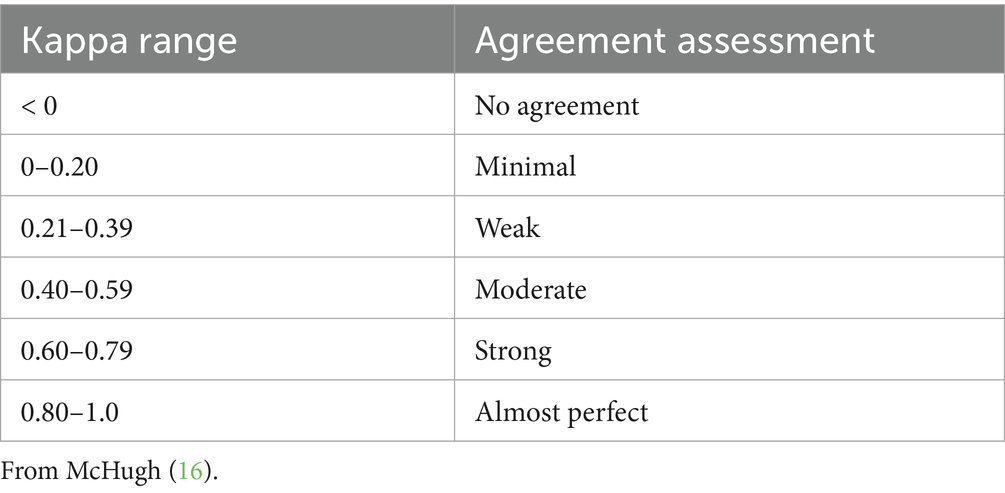

Kappa values were interpreted according to the categories listed in Table 2 (16). Any kappa below 0.60 indicates inadequate agreement among the raters, and little confidence should be placed in the results. A negative kappa represents disagreement, or agreement worse than expected by chance. Low negative values (0 to −0.10) indicate no agreement (16).
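The interpretation rule can be expressed as a small lookup. The sketch below assumes the band edges published by McHugh (16): none up to 0.20, minimal 0.21–0.39, weak 0.40–0.59, moderate 0.60–0.79, strong 0.80–0.90, and almost perfect above 0.90. Because Table 2 is available here only as an image, these cut-offs are assumed rather than confirmed to match it; the 0.60 adequacy threshold and the treatment of values from 0 to −0.10 as no agreement follow the text above. The function and constant names are hypothetical.

```python
ADEQUATE_KAPPA = 0.60  # the text treats any kappa below 0.60 as inadequate


def mchugh_level(kappa):
    """Map a kappa value to an agreement label (McHugh 2012 bands, assumed)."""
    if not -1.0 <= kappa <= 1.0:
        raise ValueError("kappa must lie in [-1, 1]")
    if kappa < -0.10:
        return "disagreement (worse than chance)"
    if kappa < 0.21:  # includes low negative values, 0 to -0.10
        return "none"
    if kappa < 0.40:
        return "minimal"
    if kappa < 0.60:
        return "weak"
    if kappa < 0.80:
        return "moderate"
    if kappa <= 0.90:
        return "strong"
    return "almost perfect"


def is_adequate(kappa):
    """True when kappa reaches the 0.60 threshold stated in the text."""
    return kappa >= ADEQUATE_KAPPA


for k in (-0.30, -0.05, 0.65, 0.95):
    print(k, mchugh_level(k), is_adequate(k))
```

Half-open intervals are used so that values falling between the published bands (for example 0.205) still receive a single label.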

3 Results

3.1 Case data

Twenty-six cases were reviewed, and 18 met the final inclusion criteria; 8 cases were excluded by the expert consensus for inadequate image quality. Body weight of the final study population ranged from 30 to 78 kg (median: 43 kg), and age ranged from 2.5 to 11 years (median: 5 years). Fourteen dogs were male (12 castrated, 2 intact) and 4 were female (3 spayed, 1 intact). Breeds included Labrador Retriever (n = 4, 22%), German Shepherd (n = 3, 17%), Mastiff (n = 3, 17%), Newfoundland (n = 2, 11%), Saint Bernard (n = 2, 11%), mixed breed (n = 2, 11%), Bernese Mountain Dog (n = 1, 6%), and Boxer (n = 1, 6%). The most commonly affected joint was the stifle (n = 14, 78%), followed by the elbow (n = 3, 17%) and hip (n = 1, 6%). The cause of infection was unknown or unrecorded in most cases and was therefore not evaluated in this study.

Arthroscopic images consisted of still frames (n = 6, 33%), videos (n = 11, 61%), or a combination of both (n = 1, 6%). Each case included either two still frames, one still frame and one video, or two videos. The videos ranged from 8 to 41 s in length. Organisms cultured from infected joints included Staphylococcus pseudintermedius (n = 8, 44%), beta-hemolytic Streptococcus (n = 7, 39%), Pasteurella (n = 1, 6%), Staphylococcus schleiferi (n = 1, 6%), and Actinomyces (n = 1, 6%). All joints were reported to be infected with a single organism.

3.2 Arthroscopic evaluation

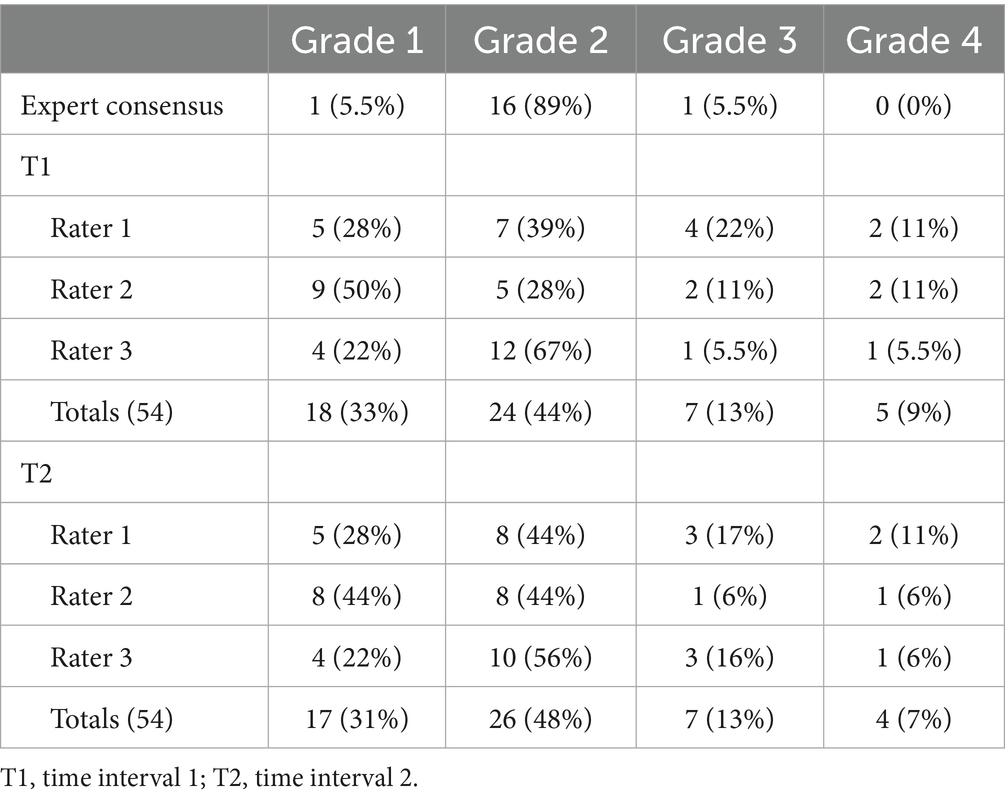

The results of the expert consensus and novice evaluations at both intervals are shown in Table 3. The expert consensus classified 5.6% (n = 1) as grade 1, 88.9% (n = 16) as grade 2, 5.6% (n = 1) as grade 3, and 0% (n = 0) as grade 4.
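The expert-consensus percentages are simple proportions of the 18 joints. A minimal Python sketch of that frequency and percentage calculation (Section 2.3), using the counts reported here; the function name and rounding to one decimal place are illustrative choices, and the order of grades in the list is arbitrary because only the counts matter.

```python
from collections import Counter


def grade_distribution(grades, levels=(1, 2, 3, 4)):
    """Count and percentage (1 decimal place) of cases at each Gächter grade."""
    if not grades:
        raise ValueError("no grades supplied")
    counts = Counter(grades)  # missing grades count as 0
    n = len(grades)
    return {g: (counts[g], round(100 * counts[g] / n, 1)) for g in levels}


# Expert consensus counts from the text: 1 x grade 1, 16 x grade 2, 1 x grade 3.
expert = [1] + [2] * 16 + [3]
print(grade_distribution(expert))
# {1: (1, 5.6), 2: (16, 88.9), 3: (1, 5.6), 4: (0, 0.0)}
```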

Table 3. Grades assigned to 18 septic joints utilizing the Gächter grading scale for both novice raters and an expert consensus at two time intervals.

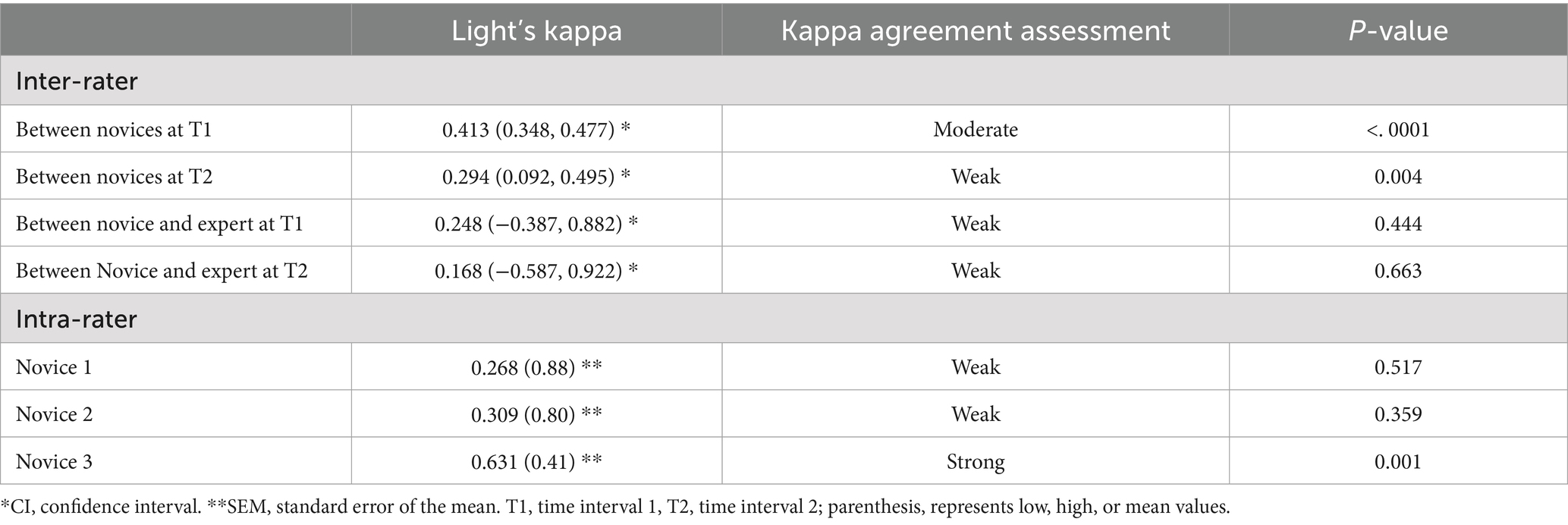

Inter- and intra-rater agreement among the novices, and agreement between the novices and the expert consensus, at both intervals are shown in Table 4.

Table 4. Inter- and intra-rater agreement values between 3 novice raters and an expert consensus for 18 dogs using the Gächter grading scale.

4 Discussion

The results of this study indicate that the Gächter grading scale was unreliable in dogs when applied by novice raters to pre-recorded videos and still-frame images. Both the intra-rater agreement of the three novice raters and their agreement with an expert consensus were consistently low when tested at two separate time intervals. Inter-rater agreement among the novices, while initially moderate, decreased between the two time intervals. Our hypothesis that the Gächter grading scale would show sufficient agreement to be used by inexperienced surgeons was not supported by these results.

Intra-rater agreement among novices between time intervals was moderate (0.402), suggesting that raters should review the grading scale and the criteria for each grade frequently until they are comfortable applying it. Several studies have documented a learning curve among surgical residents, with performance improving after lectures, live demonstrations by senior surgeons, and hands-on training (17, 18). Residents performing their first 100 surgeries were more than three times as likely to encounter complications as residents who had performed at least 600 surgeries. Intra-rater agreement could potentially improve with further training from experienced clinicians and more frequent application of the scale (19).

Inter-rater agreement was initially moderate (0.413) but declined to 0.294 between the time periods evaluated. Before the study grading, the novices attended two lecture-style training sessions (approximately 60 min each) that included discussion of example cases. The sessions were held 1 week apart, after which the novices were given five cases to grade individually and return within 7 days (20, 21). These five cases were not used in the final grading. Inter-rater agreement is critical for a diagnostic test to be useful and is commonly assessed for other tests in veterinary medicine to ensure reliability (22). Limited arthroscopic skill and limited familiarity with the anatomy and its potential variations could play a large part in a novice's ability to apply the grading scale to a septic joint. Because no comparable grading scale exists in the veterinary field, this new concept may be difficult for novices inexperienced with arthroscopy to adopt.

Of the 18 cases evaluated, 16 were considered grade 2 by the expert consensus. In a systematic review of 318 septic joints in humans, 75 cases (24%) were grade 1, 157 (49%) were grade 2, 69 (22%) were grade 3, and 17 (5%) were grade 4 (6). Grade 1 and 2 cases occurred when the interval between the onset of symptoms and treatment was approximately 7–15 days (7). Regardless of the procedure performed, prompt intervention is the most critical factor for eradicating infection and achieving good clinical outcomes (23). Infection of the joint and synovial membrane triggers an inflammatory response that releases destructive enzymes, and because synovial fluid is rich in nutrients, it makes an excellent growth medium for bacteria (24). Studies in rabbit models established that irreversible damage to cartilage, bone, capsule, and ligamentous structures could occur if intervention did not take place within 5 days of infection (23). Although the time from the onset of clinical disease to intervention was not assessed in the present study, rapid intervention may have contributed to most cases being classified as the relatively mild grade 2 on the Gächter scale.

Large-breed, middle-aged male dogs were most frequently represented here, consistent with previous studies (25). As in previous reports in both humans and dogs, the stifle was the most commonly affected joint (6, 24). Although no explanation for the stifle predilection has been reported, previous surgery and pre-existing joint disease, such as osteoarthritis, which frequently occurs in large- and giant-breed dogs, increase the risk of developing septic arthritis (24, 26–28). Future studies should document any pre-existing conditions affecting the joint. In the current study, all joints were infected with a single bacterium, although a variety of organisms were cultured across cases. This is consistent with previously reported studies but does not exclude the possibility of multiple organisms infecting a joint (4, 26).

Several limitations exist in the current study. A larger sample size would have been preferred, but septic joints are infrequent, and several cases were excluded because of a lack of a positive culture. A joint can be septic without yielding a positive culture, which further limits the number of cases available for study (4, 29). A larger sample would also likely include a wider range of grades; a limitation of the current study is the lack of variability, with most cases being grade 2. The arthroscopic images used in this study were not originally recorded for the application of the Gächter grading scale; therefore, images specifically documenting the presence or absence of the lesions on which the scale depends were not available. Had the images been obtained with the Gächter grading scale in mind, the outcome might have been different. Cases were also excluded because of poor-quality arthroscopic images. Quality images are necessary for the rater to identify redness of the synovial membrane, fibrinous deposits, purulent material, cartilage erosion, and/or pannus, which are required to apply the Gächter grading scale accurately. There was no subjective difference in the ability to assign a grade from video versus still frames; nevertheless, for consistency, future studies may consider using a single modality throughout. A final limitation is the number of evaluations performed by the novice raters. Additional evaluation sessions would allow a more definitive determination of whether the raters' application of the scale progressively worsened (or improved).

Future studies should assess inter-rater and intra-rater agreement among experienced raters to determine whether the low agreement demonstrated in this study reflects rater experience or whether the Gächter grading scale is difficult to apply in veterinary patients even for experienced clinicians. During development of the experienced consensus, the two experienced surgeons subjectively reached an agreed-upon grade very quickly and easily. It is the authors' opinion that the Gächter scale can be applied in dogs but likely requires experienced observers and appropriate training, which could be assessed in a future study. The observation that inter-rater agreement was initially moderate and then declined between the time periods evaluated suggests that more rigorous training and experience with the grading system before it is applied may improve the outcome. Further considerations include a larger case number to assess trends in grades, additional time periods, different training methods, and assessment of the value of the Gächter classification in predicting clinical outcome in veterinary patients.

In conclusion, although the Gächter classification has been shown to be a valuable prognostic tool for predicting the outcome of surgical treatment of septic joints in humans, the marked variability among the novice raters and their lack of agreement with the experienced consensus in the current study suggest that additional training time or alternative training methods are warranted before the scale is routinely applied in veterinary patients.

Data availability statement

The original contributions presented in the study are included in the article/supplementary material; further inquiries can be directed to the corresponding author.

Ethics statement

Ethical approval was not required for the study involving humans in accordance with the local legislation and institutional requirements. Written informed consent to participate in this study was not required from the participants or the participants’ legal guardians/next of kin in accordance with the national legislation and the institutional requirements. The manuscript presents research on animals that do not require ethical approval for their study.

Author contributions

TS: Conceptualization, Data curation, Investigation, Methodology, Writing – original draft, Writing – review & editing. MK: Conceptualization, Data curation, Methodology, Resources, Visualization, Writing – review & editing. JH: Formal analysis, Writing – review & editing. CB: Data curation, Writing – review & editing. WS: Writing – review & editing. RM: Conceptualization, Formal analysis, Methodology, Supervision, Writing – original draft, Writing – review & editing.

Funding

The author(s) declare that no financial support was received for the research and/or publication of this article.

Conflict of interest

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Publisher’s note

All claims expressed in this article are solely those of the authors and do not necessarily represent those of their affiliated organizations, or those of the publisher, the editors and the reviewers. Any product that may be evaluated in this article, or claim that may be made by its manufacturer, is not guaranteed or endorsed by the publisher.

References

1. Clements, DN, Owen, MR, Mosley, JR, Carmichael, S, Taylor, DJ, and Bennett, D. Retrospective study of bacterial infective arthritis in 31 dogs. J Small Anim Pract. (2005) 46:171–6. doi: 10.1111/j.1748-5827.2005.tb00307.x

2. Bennett, D, and Taylor, DJ. Bacterial infective arthritis in the dog. J Small Anim Pract. (1988) 29:207–30. doi: 10.1111/j.1748-5827.1988.tb02278.x

3. Kaandorp, CJ, Van Schaardenburg, D, Krijnen, P, Habbema, JD, and van de Laar, MA. Risk factors for septic arthritis in patients with joint disease. A prospective study. Arthritis Rheum. (1995) 38:1819–25. doi: 10.1002/art.1780381215

4. Smith, JW, Chalupa, P, and Shabaz Hasan, M. Infectious arthritis: clinical features, laboratory findings and treatment. Clin Microbiol Infect. (2006) 12:309–14. doi: 10.1111/j.1469-0691.2006.01366.x

5. Mathews, CJ, Weston, VC, Jones, A, Field, M, and Coakley, G. Bacterial septic arthritis in adults. Lancet. (2010) 375:846–55. doi: 10.1016/S0140-6736(09)61595-6

6. De Franco, C, Artiaco, S, de Matteo, V, Bistolfi, A, Balato, G, Vallefuoco, S, et al. The eradication rate of infection in septic knee arthritis according to the Gächter classification: a systematic review. Orthop Rev. (2022) 14:33754. doi: 10.52965/001c.33754

7. Aïm, F, Delambre, J, Bauer, T, and Hardy, P. Efficacy of arthroscopic treatment for resolving infection in septic arthritis of native joints. Orthop Traumatol Surg Res. (2015) 101:61–4. doi: 10.1016/j.otsr.2014.11.010

9. Yanmış, I, Ozkan, H, Koca, K, Kılınçoğlu, V, Bek, D, and Tunay, S. The relation between the arthroscopic findings and functional outcomes in patients with septic arthritis of the knee joint, treated with arthroscopic debridement and irrigation. Acta Orthop Traumatol Turc. (2011) 45:94–9. doi: 10.3944/AOTT.2011.2258

10. Conger, AJ. Integration and generalization of kappas for multiple raters. Psychol Bull. (1980) 88:322–8. doi: 10.1037/0033-2909.88.2.322

11. Light, RJ. Measures of response agreement for qualitative data: some generalizations and alternatives. Psychol Bull. (1971) 76:365–77. doi: 10.1037/h0031643

12. Koo, TK, and Li, MY. A guideline of selecting and reporting intraclass correlation coefficients for reliability research [published correction appears in J Chiropr Med. 2017;16(4):346]. J Chiropr Med. (2016) 15:155–63. doi: 10.1016/j.jcm.2016.02.012

13. R Core Team. R: a language and environment for statistical computing. R Foundation for Statistical Computing (2022). Available online at: https://www.R-project.org/ (Accessed April 19, 2023).

14. Gamer, M, Lemon, J, Fellows, I, and Singh, P. irr: various coefficients of interrater reliability and agreement. R package version 0.84.1. (2019). Available online at: https://CRAN.R-project.org/package=irr

15. Signorell, A. DescTools: tools for descriptive statistics. R package version 0.99.48. (2023). Available online at: https://CRAN.R-project.org/package=DescTools (Accessed April 19, 2023).

16. McHugh, ML. Interrater reliability: the kappa statistic. Biochem Med. (2012) 22:276–82. doi: 10.11613/BM.2012.031

17. Randleman, JB, Wolfe, JD, Woodward, M, Lynn, MJ, Cherwek, DH, and Srivastava, SK. The resident surgeon phacoemulsification learning curve. Arch Ophthalmol. (2007) 125:1215–9. doi: 10.1001/archopht.125.9.1215

18. Corey, RP, and Olson, RJ. Surgical outcomes of cataract extractions performed by residents using phacoemulsification. J Cataract Refract Surg. (1998) 24:66–72. doi: 10.1016/s0886-3350(98)80076-x

19. Gupta, S, Haripriya, A, Vardhan, SA, Ravilla, T, and Ravindran, RD. Residents' learning curve for manual small-incision cataract surgery at Aravind eye hospital, India. Ophthalmology. (2018) 125:1692–9. doi: 10.1016/j.ophtha.2018.04.033

20. González-García, R, Rodríguez-Campo, FJ, Escorial-Hernández, V, Muñoz-Guerra, MF, Sastre-Pérez, J, Naval-Gías, L, et al. Complications of temporomandibular joint arthroscopy: a retrospective analytic study of 670 arthroscopic procedures. J Oral Maxillofac Surg. (2006) 64:1587–91. doi: 10.1016/j.joms.2005.12.058

21. Larsen, MM, Buch, FO, Tour, G, Azarmehr, I, and Stokbro, K. Training arthrocentesis and arthroscopy: using surgical navigation to bend the learning curve. J Oral Maxillofacial Surg Med Pathol. (2023) 35:554–8. doi: 10.1016/j.ajoms.2023.03

22. Fettig, AA, Rand, WM, Sato, AF, Solano, M, McCarthy, RJ, and Boudrieau, RJ. Observer variability of tibial plateau slope measurement in 40 dogs with cranial cruciate ligament-deficient stifle joints. Vet Surg. (2003) 32:471–8. doi: 10.1053/jvet.2003.50054

23. Riegels-Nielsen, P, Frimodt-Möller, N, and Jensen, JS. Rabbit model of septic arthritis. Acta Orthop Scand. (1987) 58:14–9. doi: 10.3109/17453678709146335

24. Rao, N, and Esterhai, JL. Septic arthritis. AAOS Orthop Knowl Musculosk Infect. (2010) 13:155–63.

25. Mielke, B, Comerford, E, English, K, and Meeson, R. Spontaneous septic arthritis of canine elbows: twenty-one cases. Vet Comp Orthop Traumatol. (2018) 31:488–93. doi: 10.1055/s-0038-1668108

26. Marchevsky, AM, and Read, RA. Bacterial septic arthritis in 19 dogs. Aust Vet J. (1999) 77:233–7. doi: 10.1111/j.1751-0813.1999.tb11708.x

27. Witsberger, TH, Villamil, JA, Schultz, LG, Hahn, AW, and Cook, JL. Prevalence of and risk factors for hip dysplasia and cranial cruciate ligament deficiency in dogs. J Am Vet Med Assoc. (2008) 232:1818–24. doi: 10.2460/javma.232.12.1818

28. Duval, JM, Budsberg, SC, Flo, GL, and Sammarco, JL. Breed, sex, and body weight as risk factors for rupture of the cranial cruciate ligament in young dogs. J Am Vet Med Assoc. (1999) 215:811–4. doi: 10.2460/javma.1999.215.06.811

Keywords: Gächter, septic arthritis, septic joint, arthroscopy, inter-rater agreement, intrarater agreement

Citation: Stockman TI, Kowaleski MP, Hicks JM, Berns CN, Saunders WB and McCarthy RJ (2025) Inter- and intra-rater agreement among novices and comparison to an experienced consensus using the Gächter scale for the evaluation of septic joints. Front. Vet. Sci. 12:1577046. doi: 10.3389/fvets.2025.1577046

Edited by:

Jessica Graves, Cellular Longevity, Inc., United States

Reviewed by:

Lindsey Helms Boone, Auburn University, United States

Jarrod Troy, Iowa State University, United States

Copyright © 2025 Stockman, Kowaleski, Hicks, Berns, Saunders and McCarthy. This is an open-access article distributed under the terms of the Creative Commons Attribution License (CC BY). The use, distribution or reproduction in other forums is permitted, provided the original author(s) and the copyright owner(s) are credited and that the original publication in this journal is cited, in accordance with accepted academic practice. No use, distribution or reproduction is permitted which does not comply with these terms.

*Correspondence: Tiffany I. Stockman, Tiffany.Stockman@nva.com
